Abstract

In this paper, a multi-focus image fusion algorithm based on the distance-weighted regional energy and structure tensor in the non-subsampled contourlet transform domain is introduced. The distance-weighted regional energy-based fusion rule is used for the low-frequency components, and the structure tensor-based fusion rule is used for the high-frequency components; the fused sub-bands are integrated with the inverse non-subsampled contourlet transform to generate the fused multi-focus image. We conducted a series of simulations and experiments on the public multi-focus image dataset Lytro; the experimental results on 20 sets of data show that our algorithm has significant advantages over advanced algorithms and can produce clearer and more informative multi-focus fusion images.

Keywords: multi-focus image, image fusion, distance-weighted regional energy, structure tensor, non-subsampled contourlet transform

1. Introduction

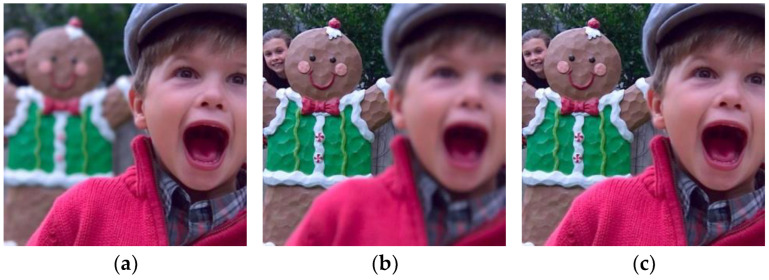

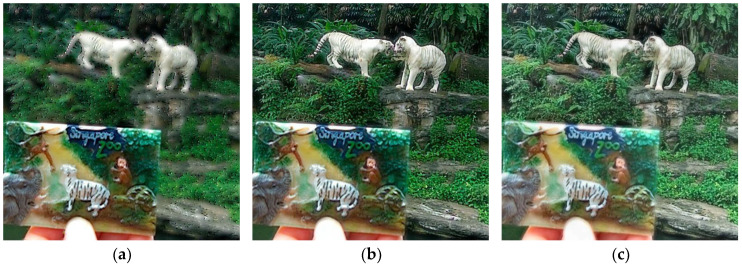

In order to obtain richer and more useful information and to improve the completeness and accuracy of scene description, multi-focus images obtained from video sensors are usually fused [1,2]. Multi-focus image fusion uses effective information processing methods to fuse clear and focused information from different images, resulting in a high-definition fully focused image [3,4,5]. Figure 1 depicts an example of multi-focus image fusion.

Figure 1.

Multi-focus image fusion. (a) Near-focused image; (b) far-focused image; and (c) fused image.

After many years of extensive and in-depth research, image fusion methods have made significant progress. Image fusion approaches can be divided into three categories: transform domain-based, edge-preserving filtering-based, and deep learning-based algorithms [6,7,8,9].

The most widely used transform domain-based methods include the curvelet transform [10], contourlet transform [11,12], and shearlet transform [13,14]. Kumar et al. [15] constructed an intelligent multi-modal image fusion technique utilizing the fast discrete curvelet transform and type-2 fuzzy entropy, and the fusion results demonstrate the efficiency of this model in terms of subjective and objective assessment. Kumar et al. [16] constructed an image fusion technique via an improved multi-objective meta-heuristic algorithm with fuzzy entropy in the fast discrete curvelet transform domain; a comparison with state-of-the-art models shows enhanced performance with respect to visual quality assessment. Li et al. [17] integrated the curvelet and discrete wavelet transforms for multi-focus image fusion and obtained advanced fusion performance. Zhang et al. [18] constructed an image fusion model using the contourlet transform, in which the average-based fusion rule and the region energy-based fusion rule are applied to the low- and high-frequency sub-bands, respectively; the fused image is analyzed using fast non-local clustering for multi-temporal synthetic aperture radar image change detection, and this technique generates state-of-the-art change detection performance on both small- and large-scale datasets. Li et al. [19] constructed an image fusion approach using sparse representation and local energy in the shearlet transform domain; this method can obtain good fusion performance. Hao et al. [20] introduced a multi-scale decomposition optimization-based image fusion method via gradient-weighted local energy: the non-subsampled shearlet transform is utilized to decompose the source images into low- and high-frequency sub-images; the low-frequency components are then divided into base layers and texture layers; the base layers are merged according to the intrinsic attribute-based energy fusion rule; and the structure tensor-based gradient-weighted local energy operator fusion rule is utilized to merge the texture layers and high-frequency sub-bands. This fusion method can achieve a superior fusion capability in both qualitative and quantitative assessments.

Methods based on edge-preserving filtering also hold an important position in the field of image fusion; examples include guided image filtering [21], cross bilateral filtering [22], Gaussian curvature filtering [23], and sub-window variance filtering [24]. Feng et al. [24] constructed an image fusion method based on multi-channel dynamic threshold neural P systems and a visual saliency map in the sub-window variance filter domain; this algorithm obtains effective fusion performance in both visual quality and quantitative evaluations. Zhang et al. [25] introduced a double joint edge preservation filter-based image fusion technique via a non-globally saliency gradient operator; it obtains excellent subjective and objective performance. Jiang et al. [26] introduced an image fusion approach that combines an entropy measure between intuitionistic fuzzy sets with Gaussian curvature filtering.

Image fusion approaches based on deep learning have achieved good performance, and these deep learning-based fusion models can be divided into supervised and unsupervised approaches [27,28]. In terms of supervised algorithms, several effective fusion methods have been proposed. The deep convolutional neural network introduced by Liu et al. [29] is used for multi-focus image fusion, and it generates good fusion results. The multi-scale visual attention deep convolutional neural network introduced by Lai et al. [30] is utilized to fuse multi-focus images. Wang et al. [31] introduced weakly supervised image fusion with modal synthesis and enhancement. The self-supervised residual feature learning model constructed by Wang et al. [32] is used to fuse multi-focus images. The attention mechanism-based image fusion approach via supervised learning was constructed by Jiang et al. [33]. In terms of unsupervised algorithms, Jin et al. [34] introduced the Transformer and U-Net for image fusion. Zhang et al. [35] presented an unsupervised generative adversarial network with adaptive and gradient joint constraints for image fusion. Liu et al. [36] constructed a generative adversarial network-based unsupervised back project dense network for image fusion. These deep learning-based fusion methods also achieve good performance.

In order to improve the fusion performance of multi-focus images, a distance-weighted regional energy-based multi-focus image fusion algorithm via the structure tensor in the non-subsampled contourlet transform domain is constructed in this paper. The distance-weighted regional energy-based fusion rule and the structure tensor-based fusion rule are used to merge the low- and high-frequency sub-images, respectively. The experimental results on the Lytro dataset show that the proposed fusion method produces fusion results that are superior to those of other traditional and deep learning methods in terms of both subjective visual and objective evaluation.

2. Backgrounds

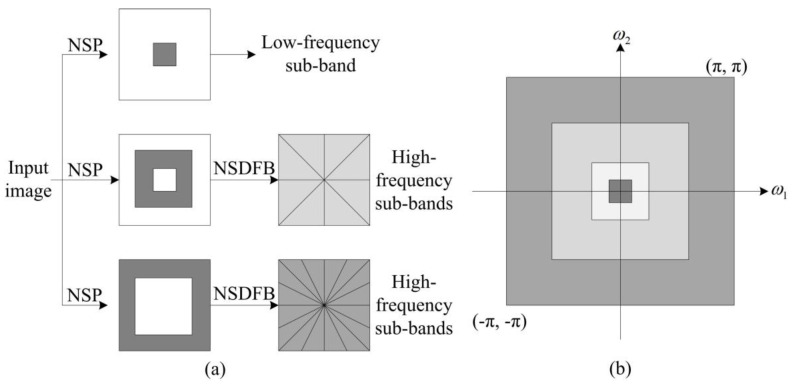

2.1. NSCT

The non-subsampled contourlet transform (NSCT) is a multi-scale, multi-directional, and translation-invariant transform [12]. Its basic framework is divided into two parts: the non-subsampled pyramid (NSP) decomposition and the non-subsampled directional filter bank (NSDFB) decomposition. The NSCT first uses the NSP to perform multi-scale decomposition on the source image and then uses the NSDFB to further decompose the high-frequency components by direction, ultimately obtaining sub-band images of the source image at different scales and in different directions [12]. Figure 2 shows the basic framework of the NSCT and its frequency domain partition.

Figure 2.

(a) NSCT basic framework structure diagram; (b) NSCT frequency domain partition diagram.
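The "non-subsampled" property of the pyramid stage can be illustrated with a much simpler stand-in. The sketch below is not the NSCT filter bank: it assumes an à trous (undecimated) pyramid built from a B3-spline kernel, and the function names `undecimated_pyramid` and `reconstruct` are hypothetical. It only shows that every sub-band keeps the size of the source image and that the bands sum back to the input.

```python
import numpy as np

def _atrous_smooth(img, level):
    """Separable B3-spline smoothing with taps spaced 2**level pixels apart."""
    taps = np.array([1, 4, 6, 4, 1], dtype=float) / 16.0
    step = 2 ** level
    out = np.asarray(img, dtype=float)
    for axis in (0, 1):
        pad = [(0, 0), (0, 0)]
        pad[axis] = (2 * step, 2 * step)
        padded = np.pad(out, pad, mode="reflect")
        n = out.shape[axis]
        acc = np.zeros_like(out)
        for k, w in enumerate(taps):
            # Tap k sits (k - 2) * step pixels away from the center pixel.
            idx = np.arange(k * step, k * step + n)
            acc += w * np.take(padded, idx, axis=axis)
        out = acc
    return out

def undecimated_pyramid(img, levels=3):
    """Return (low-frequency band, list of high-frequency bands), all full size."""
    approx = np.asarray(img, dtype=float)
    details = []
    for j in range(levels):
        smoother = _atrous_smooth(approx, j)
        details.append(approx - smoother)  # band-pass residual at scale j
        approx = smoother
    return approx, details

def reconstruct(approx, details):
    """Inverse of the sketch above: the bands simply add up."""
    return approx + sum(details)
```

Because no band is downsampled, a coefficient at a given location in any band refers to the same pixel location in the source image, which is what makes coefficient-wise fusion rules straightforward to apply.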

2.2. Structure Tensor

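For a multi-channel image $f(x, y) = [f_1(x, y), f_2(x, y), \ldots, f_m(x, y)]^{T}$, grayscale images and color images are the two special cases $m = 1$ and $m = 3$, respectively. The square of variation of $f$ at position $(x, y)$ in direction $\theta$ for a small step $\varepsilon > 0$ can be given by the following [37]:

| $(df)^2 = \lVert f(x + \varepsilon\cos\theta,\, y + \varepsilon\sin\theta) - f(x, y) \rVert_2^2 \approx \sum_{i=1}^{m} \left( \frac{\partial f_i}{\partial x}\varepsilon\cos\theta + \frac{\partial f_i}{\partial y}\varepsilon\sin\theta \right)^2$ | (1) |

The rate of change $C(\theta)$ of image $f$ at position $(x, y)$ can be expressed as follows:

| $C(\theta) = \sum_{i=1}^{m} \left( \frac{\partial f_i}{\partial x}\cos\theta + \frac{\partial f_i}{\partial y}\sin\theta \right)^2 = (\cos\theta, \sin\theta) \left[ \sum_{i=1}^{m} \nabla f_i \nabla f_i^{T} \right] (\cos\theta, \sin\theta)^{T}$ | (2) |

where $\nabla f_i = \left[ \frac{\partial f_i}{\partial x}, \frac{\partial f_i}{\partial y} \right]^{T}$, and the following second-moment positive semi-definite matrix is

| $P = \sum_{i=1}^{m} \nabla f_i \nabla f_i^{T} = \begin{bmatrix} E & F \\ F & G \end{bmatrix}$ | (3) |

where P is called the structure tensor and where E, F, and G are defined as

| $E = \sum_{i=1}^{m} \left( \frac{\partial f_i}{\partial x} \right)^2$ | (4) |

| $F = \sum_{i=1}^{m} \frac{\partial f_i}{\partial x} \frac{\partial f_i}{\partial y}$ | (5) |

| $G = \sum_{i=1}^{m} \left( \frac{\partial f_i}{\partial y} \right)^2$ | (6) |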

The structure tensor-based focus detection operator (STO) is given by

| (7) |

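where $\lambda_1$ and $\lambda_2$ are the eigenvalues of the structure tensor and can be computed by the following:

| $\lambda_{1,2} = \frac{1}{2} \left( E + G \pm \sqrt{(E - G)^2 + 4F^2} \right)$ | (8) |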
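As a rough illustration of the quantities in this subsection, the following sketch computes the structure tensor entries E, F, and G per pixel and then the two eigenvalues in closed form. It assumes finite-difference derivatives from `np.gradient` and no local smoothing of the tensor; the function name `structure_tensor_eigenvalues` is hypothetical. The sketch stops at the eigenvalues and does not attempt to reproduce the STO of Equation (7).

```python
import numpy as np

def structure_tensor_eigenvalues(image):
    """Per-pixel eigenvalues of the structure tensor.

    image: H x W (grayscale, m = 1) or H x W x m (multi-channel) array.
    Returns (lam1, lam2) with lam1 >= lam2 >= 0, each of shape H x W.
    """
    f = np.asarray(image, dtype=float)
    if f.ndim == 2:
        f = f[..., np.newaxis]  # treat grayscale as a one-channel image
    # Assumption: derivatives approximated by central differences.
    fy, fx = np.gradient(f, axis=(0, 1))
    E = np.sum(fx * fx, axis=-1)
    F = np.sum(fx * fy, axis=-1)
    G = np.sum(fy * fy, axis=-1)
    # Closed-form eigenvalues of the symmetric matrix [[E, F], [F, G]].
    root = np.sqrt((E - G) ** 2 + 4.0 * F ** 2)
    lam1 = 0.5 * (E + G + root)
    lam2 = 0.5 * (E + G - root)
    # Clip tiny negative values caused by floating-point round-off.
    return lam1, np.maximum(lam2, 0.0)
```

For a horizontal ramp, where the intensity grows by one per column, the gradient is (1, 0) everywhere, so the larger eigenvalue is 1 and the smaller is 0; stacking the same ramp into three channels triples the larger eigenvalue.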

3. The Proposed Multi-Focus Image Fusion Method

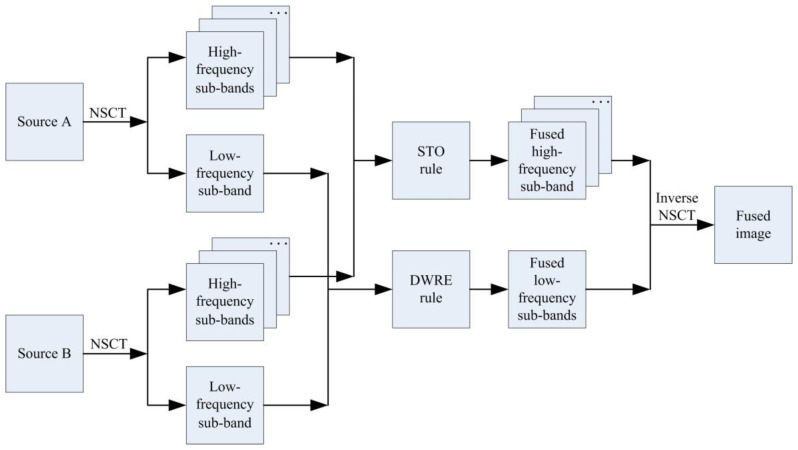

The multi-focus image fusion method based on the distance-weighted regional energy and structure tensor in the NSCT domain is proposed in this section, and the schematic of the proposed algorithm is depicted in Figure 3. This fusion method can be divided into four parts: NSCT decomposition, low-frequency components fusion, high-frequency components fusion, and the inverse NSCT. The details are given in the following subsections.

Figure 3.

The schematic of the proposed fusion algorithm.

3.1. NSCT Decomposition

The multi-focus images A and B were decomposed into low-frequency components and high-frequency components through the NSCT, and the corresponding coefficients are defined as and , respectively.

3.2. Low-Frequency Components Fusion

Low-frequency components contain more energy and information in the images. In this section, the distance-weighted regional energy (DWRE)-based rule was used to merge the low-pass sub-bands, and it is defined as follows [38]:

| (9) |

where shows a matrix that allocates weights to the neighboring coefficients and where is defined as

| (10) |

Here, each entry of is achieved by reciprocating ‘1 + distance of the respective position from its center, i.e., ’, which is depicted in the following:

| (11) |

The fused low-frequency component is constructed by the following:

| (12) |

where is the DWRE of estimated over a neighborhood centered at the th coefficient.
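The low-frequency rule can be sketched as follows. This is a minimal illustration under stated assumptions rather than the authors' implementation: the weight matrix is taken to be 3 × 3 (radius 1), the regional energy is taken to be the weighted sum of squared coefficients, borders are handled by reflection, and ties go to image A. The names `distance_weights`, `dwre`, and `fuse_low` are hypothetical.

```python
import numpy as np

def distance_weights(radius=1):
    """Weights 1 / (1 + Euclidean distance from the window center)."""
    ax = np.arange(-radius, radius + 1)
    dy, dx = np.meshgrid(ax, ax, indexing="ij")
    return 1.0 / (1.0 + np.hypot(dx, dy))

def dwre(band, radius=1):
    """Distance-weighted regional energy of a low-frequency band."""
    band = np.asarray(band, dtype=float)
    w = distance_weights(radius)
    # Assumption: energy = squared coefficient; reflect padding at borders.
    sq = np.pad(band ** 2, radius, mode="reflect")
    h, wd = band.shape
    out = np.zeros((h, wd))
    for m in range(2 * radius + 1):
        for n in range(2 * radius + 1):
            out += w[m, n] * sq[m:m + h, n:n + wd]
    return out

def fuse_low(low_a, low_b, radius=1):
    """Keep, per coefficient, the band with the larger DWRE."""
    low_a = np.asarray(low_a, dtype=float)
    low_b = np.asarray(low_b, dtype=float)
    take_a = dwre(low_a, radius) >= dwre(low_b, radius)
    return np.where(take_a, low_a, low_b)
```

With radius 1 the weights are 1 at the center, 1/2 at the four edge neighbors, and 1/(1 + √2) at the four corners, so closer neighbors contribute more to the regional energy than diagonal ones.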

3.3. High-Frequency Components Fusion

High-frequency components contain more details and noise in the images. In this section, the structure tensor-based focus detection operator (STO) fusion rule was used to process the high-frequency components, and the fused coefficients can be computed by

| (13) |

where

| (14) |

| (15) |

where shows the high-frequency coefficient of image at the -th scale and -th direction at location and where shows the structure salient image of computed by STO. is a local region centered at with the size of . Equation (14) is the classical operator called the consistency verification, which can improve the robustness and rectify some wrong focusing detection of .
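The consistency verification step can be sketched as a majority vote over a local window. The code below is an illustrative assumption, not the paper's exact formulation: the saliency maps are passed in by the caller (for example, any structure tensor-based focus measure), the initial decision picks the source with the larger saliency, and the window defaults to 5 × 5 with edge replication at the borders. The names `majority_filter` and `fuse_high` are hypothetical.

```python
import numpy as np

def majority_filter(decision, size=5):
    """Replace each binary decision by the majority vote in a size x size window."""
    decision = np.asarray(decision, dtype=bool)
    r = size // 2
    padded = np.pad(decision.astype(int), r, mode="edge")
    h, w = decision.shape
    votes = np.zeros((h, w), dtype=int)
    for m in range(size):
        for n in range(size):
            votes += padded[m:m + h, n:n + w]
    return votes > (size * size) // 2

def fuse_high(high_a, high_b, sal_a, sal_b, size=5):
    """Select high-frequency coefficients using a verified decision map.

    sal_a, sal_b: saliency (focus) maps of the two sub-bands, same shape.
    Returns (fused sub-band, boolean map that is True where A was chosen).
    """
    initial = np.asarray(sal_a) >= np.asarray(sal_b)  # ties go to A (assumption)
    verified = majority_filter(initial, size)
    return np.where(verified, high_a, high_b), verified
```

An isolated pixel whose initial decision disagrees with all of its neighbors is flipped by the vote, which is the kind of wrong focus detection the verification is meant to correct.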

3.4. Inverse NSCT

The final fused image was generated by the inverse NSCT, which was performed on fused low- and high-frequency components , and is defined as follows:

| (16) |

The main steps of the proposed multi-focus image fusion approach are summarized in Algorithm 1.

Algorithm 1 Proposed multi-focus image fusion method

- Input: the source images A and B
- Parameters: the number of NSCT decomposition levels L and the number of directions at each decomposition level
- Step 1: NSCT decomposition
  - For each source image, perform NSCT decomposition to generate its low- and high-frequency components.
- Step 2: Low-frequency components fusion
  - For each source image X, calculate the DWRE for LX utilizing Equations (9)–(11).
  - Merge LA and LB utilizing Equation (12) to generate LF.
- Step 3: High-frequency components fusion
  - For each level and each direction:
    - For each source image, calculate the structure salient image of the high-frequency sub-band by STO using Equation (7), and calculate the consistency verification using Equations (14) and (15).
    - Merge the high-frequency sub-bands of A and B using Equation (13).
- Step 4: Inverse NSCT
  - Perform the inverse NSCT on the fused low- and high-frequency components to generate F.
- Output: the fused image F.

4. Experimental Results and Discussions

In this section, the Lytro dataset with twenty image pairs (Figure 4) is used for the experiments, and our method is compared with eight state-of-the-art fusion algorithms, namely, multi-focus image fusion using a bilateral-gradient-based sharpness criterion (BGSC) [39], the non-subsampled contourlet transform and fuzzy-adaptive reduced pulse-coupled neural network (NSCT) [40], multi-focus image fusion in gradient domain (GD) [41], the unified densely connected network for image fusion (FusionDN) [42], the fast unified image fusion network based on the proportional maintenance of gradient and intensity (PMGI) [43], image fusion based on target-enhanced multi-scale transform decomposition (TEMST) [44], the unified unsupervised image fusion network (U2Fusion) [45], and zero-shot multi-focus image fusion (ZMFF) [3]. Both subjective and objective assessments are used. For the objective assessment, eight metrics are used: the edge-based similarity measurement QAB/F [46], the feature mutual information metric QFMI [47], the gradient-based metric QG [48], the structural similarity-based metric QE [48], the phase congruency-based metric QP [48], the structural similarity-based metric introduced by Yang et al. QY [48], the average gradient metric QAG [22], and the average pixel intensity metric QAPI [22]. The larger the values of these indicators, the better the fusion effect. In the proposed method, the size of the local region is set to 5 × 5; ‘pyrexc’ and ‘vk’ are used as the pyramid filter and directional filter, respectively; and four decomposition levels with 2, 2, 2, and 2 directions from the coarser scale to the finer scale are used.
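Two of the no-reference metrics listed above are simple enough to sketch directly. The code below uses one common definition of the average gradient (the mean of the root of half the summed squared forward differences); the normalization used in [22] may differ, so the absolute values are not guaranteed to match the tables. The function names are hypothetical, and a single-channel image is assumed.

```python
import numpy as np

def average_pixel_intensity(img):
    """Mean gray level of the fused image (a brightness indicator)."""
    return float(np.mean(np.asarray(img, dtype=float)))

def average_gradient(img):
    """Average gradient of a grayscale image (a sharpness indicator).

    Assumption: forward differences and the sqrt((dx^2 + dy^2) / 2) form.
    """
    f = np.asarray(img, dtype=float)
    dx = f[:-1, 1:] - f[:-1, :-1]  # horizontal forward difference
    dy = f[1:, :-1] - f[:-1, :-1]  # vertical forward difference
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))
```

A constant image has an average gradient of zero regardless of its brightness, which is why the two metrics are reported separately: one reflects sharpness and the other reflects overall intensity.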

Figure 4.

Lytro dataset.

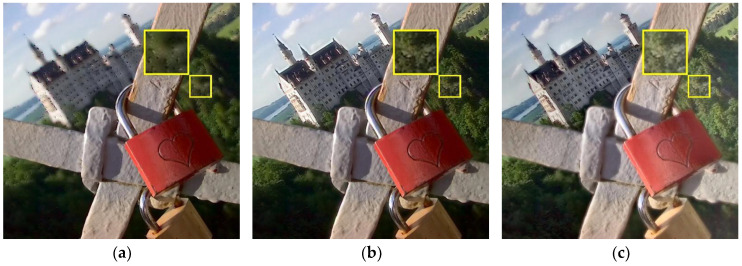

4.1. Qualitative Comparisons

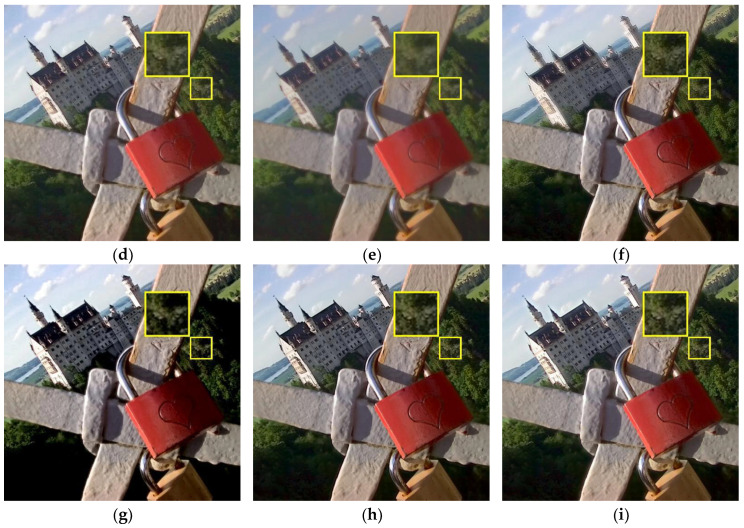

In this section, we selected five sets of data from the Lytro dataset to display the results; the qualitative comparisons of the different methods are shown in Figures 5–9. Some areas have been enlarged for easier observation and comparison. In Figure 5, the fusion result generated by the BGSC method has a weak fusion effect in the far-focus region, and black spots appear around the building; the fusion results computed by the NSCT, GD, FusionDN, PMGI, and TEMST methods are somewhat blurry; the U2Fusion method generates a dark fused image, which limits the observation of some areas in the image; and the ZMFF method produces a fully focused result. Compared with ZMFF, however, our result has moderate brightness and clear edges; in particular, the brightness of the red lock in the image is better than in the ZMFF result.

Figure 5.

Lytro-06 fusion results. (a) BGSC; (b) NSCT; (c) GD; (d) FusionDN; (e) PMGI; (f) TEMST; (g) U2Fusion; (h) ZMFF; and (i) proposed.

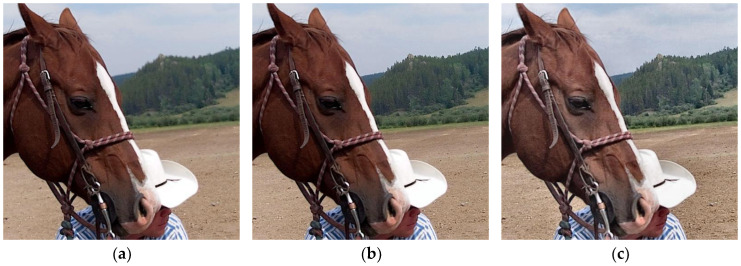

Figure 6.

Lytro-10 fusion results. (a) BGSC; (b) NSCT; (c) GD; (d) FusionDN; (e) PMGI; (f) TEMST; (g) U2Fusion; (h) ZMFF; and (i) proposed.

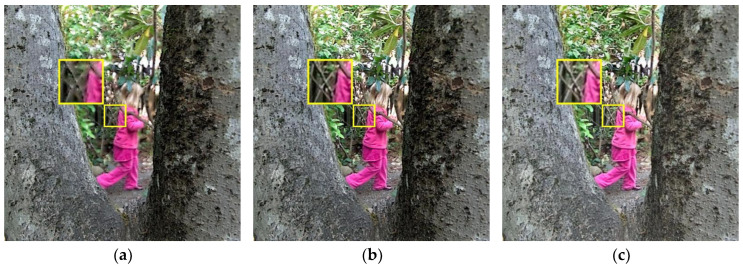

Figure 7.

Lytro-13 fusion results. (a) BGSC; (b) NSCT; (c) GD; (d) FusionDN; (e) PMGI; (f) TEMST; (g) U2Fusion; (h) ZMFF; and (i) proposed.

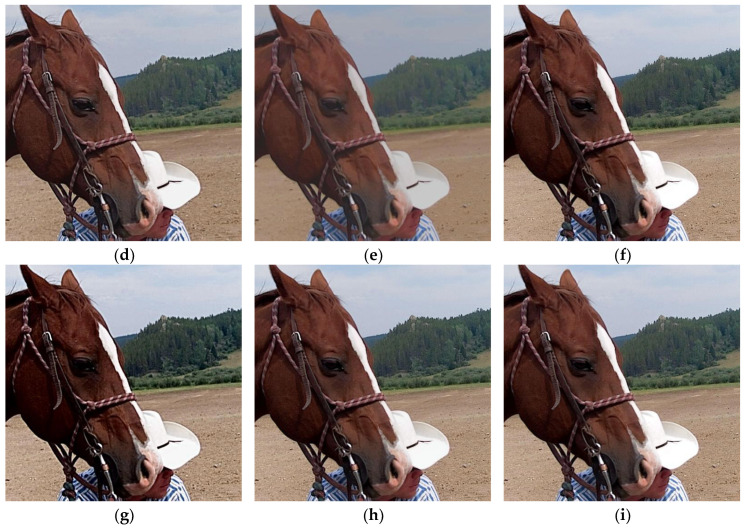

Figure 8.

Lytro-15 fusion results. (a) BGSC; (b) NSCT; (c) GD; (d) FusionDN; (e) PMGI; (f) TEMST; (g) U2Fusion; (h) ZMFF; and (i) proposed.

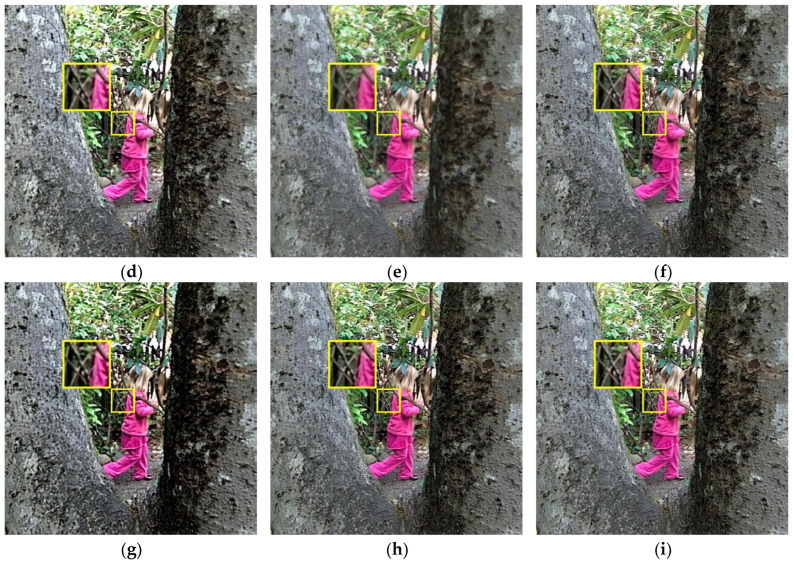

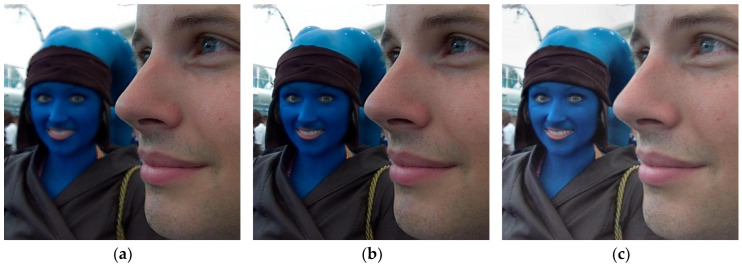

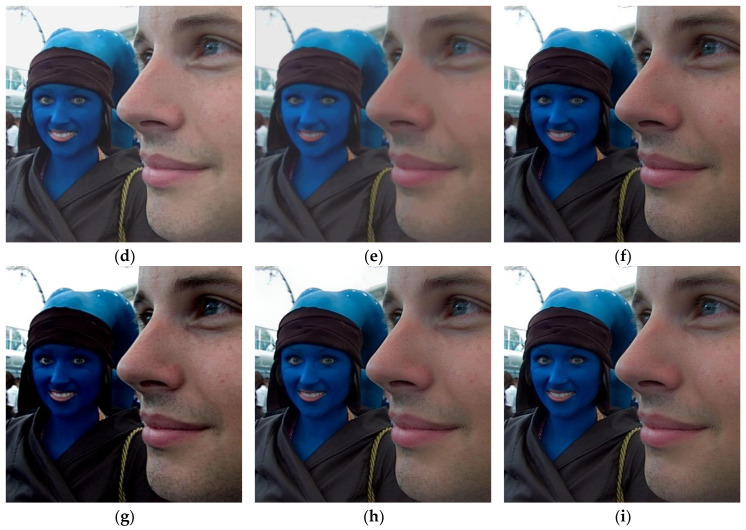

Figure 9.

Lytro-18 fusion results. (a) BGSC; (b) NSCT; (c) GD; (d) FusionDN; (e) PMGI; (f) TEMST; (g) U2Fusion; (h) ZMFF; and (i) proposed.

In Figure 6, we can see that the fusion results generated by the BGSC and PMGI methods are blurry, making it difficult to observe the detailed information in the fused images; the fusion images computed by NSCT, FusionDN, TEMST, and ZMFF are similar, these images all yielding fully focused image results; the GD method generates a high-brightness fusion image, with the color information in the image being partially lost; the U2Fusion method generates a sharpened fused image, with some areas of the image having lost information, such as the branches of the tree on the right side and the branches of the small tree that is far away in the image, all of which are darker in the image, which is not conducive to obtaining information in a fully focused image; and the fusion image calculated by the proposed method is an all-focused image, with the details and edge information of the image being well preserved and with moderate brightness and ease of observation.

In Figure 7, we can see that the fusion images calculated by the BGSC, GD, FusionDN, PMGI, and TEMST methods appear blurry, with the images generated by BGSC and TEMST exhibiting especially severe distortion; the image computed by the NSCT method is basically fully focused but contains some artifacts that look like texture overlays; the U2Fusion method generates a dark image; and the fusion image calculated by the ZMFF method is very close to the result obtained by our algorithm. However, our fusion effect is better, with a clearer image and an almost seamless integration of all the information into the fully focused image.

In Figure 8, we can see that the fused images computed by the BGSC, FusionDN, and PMGI methods appear blurry, especially the forest information in the far distance of the images, making it difficult to observe the details; the images generated by the NSCT, TEMST, and ZMFF methods are similar; the fused image obtained by the GD method has high brightness and clarity; the fused image computed by the U2Fusion method has some dark regions, especially in the mouth area of the horse, with the loss of information being severe; and the fused image achieved by the proposed method has moderate brightness and retains more image information.

In Figure 9, we can see that the fusion images obtained by the BGSC, FusionDN, and PMGI approaches exhibit a certain degree of the blurring phenomenon, which makes it difficult to observe the information in the images, the image generated by the BGSC method especially not achieving full focus and the information on the left side of the image being severely lost; the NSCT, TEMST, and ZMFF methods all obtain a basic fully focused image from which information about the entire scene can be observed; the GD method gives a high-brightness fusion image but can also cause some details in the image to be lost; the fused image achieved by the U2Fusion method has many darker areas, causing severe information loss (for example, the information about the girl’s clothing and headscarf in the image cannot be captured correctly); the fusion image obtained by our algorithm fully preserves the information of the two source images. Additionally, it not only has moderate brightness, but the detailed information of the entire scene can also be fully observed and obtained.

4.2. Quantitative Comparisons

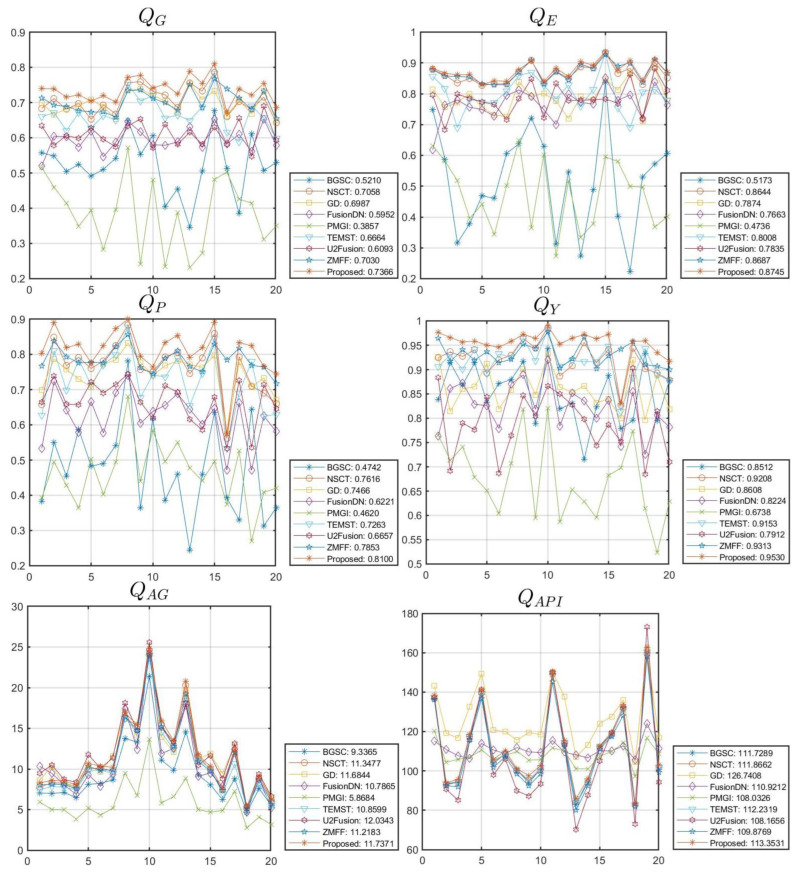

In this section, the quantitative comparisons of the different methods are shown in Tables 1–6 and Figure 10. In Table 1, we can see that the metrics data QAB/F, QFMI, QG, QE, QP, QY, and QAG computed by the proposed method are the best; the metric data QAPI generated by the GD method is the best, but the corresponding result of our algorithm still ranks third. In Table 2, we can see that the metrics data QAB/F, QFMI, QG, QE, QP, and QY generated by our method are the best. The metric data QAG generated by the U2Fusion method is the best, while our method ranks second; the metric data QAPI generated by the GD method is the best, and our method ranks fourth. In Table 3, we can see that the metrics data QAB/F, QFMI, QG, QE, QP, QY, and QAG computed by our method are the best; the metric data QAPI generated by the FusionDN method is the best, and our method ranks fourth. In Table 4, we can see that the metrics data QAB/F, QFMI, QG, QE, QP, and QY generated by our method are the best; the metrics data QAG and QAPI generated by the GD method are the best. In Table 5, we can see that the metrics data QAB/F, QFMI, QG, QE, QP, QY, and QAG computed by our method are the best; the metric data QAPI computed by the FusionDN method is the best.

Table 1.

The quantitative assessment of different methods in Figure 5.

| Method | QAB/F | QFMI | QG | QE | QP | QY | QAG | QAPI |
|---|---|---|---|---|---|---|---|---|
| BGSC | 0.5083 | 0.8910 | 0.5104 | 0.4608 | 0.4902 | 0.8714 | 8.2490 | 103.9694 |
| NSCT | 0.6931 | 0.8963 | 0.6934 | 0.8322 | 0.7818 | 0.9205 | 10.1363 | 104.1275 |
| GD | 0.6843 | 0.8914 | 0.6874 | 0.7312 | 0.7718 | 0.8186 | 9.6352 | 120.8782 |
| FusionDN | 0.5398 | 0.8872 | 0.5459 | 0.7274 | 0.5769 | 0.7789 | 7.8779 | 110.6457 |
| PMGI | 0.2819 | 0.8835 | 0.2829 | 0.3429 | 0.4029 | 0.6042 | 4.3240 | 105.6984 |
| TEMST | 0.6632 | 0.8924 | 0.6633 | 0.7713 | 0.7702 | 0.9323 | 9.8586 | 104.3450 |
| U2Fusion | 0.5919 | 0.8863 | 0.5957 | 0.7646 | 0.6907 | 0.6872 | 10.0790 | 97.9331 |
| ZMFF | 0.6702 | 0.8898 | 0.6736 | 0.8290 | 0.7763 | 0.9146 | 9.8506 | 101.9007 |
| Proposed | 0.7190 | 0.8968 | 0.7193 | 0.8419 | 0.8245 | 0.9461 | 10.3594 | 105.8613 |

Table 2.

The quantitative assessment of different methods in Figure 6.

| Method | QAB/F | QFMI | QG | QE | QP | QY | QAG | QAPI |
|---|---|---|---|---|---|---|---|---|
| BGSC | 0.6042 | 0.8409 | 0.6059 | 0.6289 | 0.6181 | 0.9424 | 21.4182 | 100.9177 |
| NSCT | 0.7292 | 0.8431 | 0.7321 | 0.8345 | 0.7498 | 0.9861 | 24.1701 | 100.8183 |
| GD | 0.7172 | 0.8397 | 0.7211 | 0.8010 | 0.7403 | 0.9313 | 24.0096 | 118.4325 |
| FusionDN | 0.5762 | 0.8321 | 0.5800 | 0.7486 | 0.6380 | 0.9205 | 24.6566 | 109.2992 |
| PMGI | 0.4743 | 0.8352 | 0.4806 | 0.6013 | 0.5841 | 0.8205 | 13.6582 | 105.7407 |
| TEMST | 0.7159 | 0.8404 | 0.7193 | 0.8274 | 0.7421 | 0.9815 | 23.7422 | 101.4723 |
| U2Fusion | 0.5667 | 0.8320 | 0.5712 | 0.7219 | 0.6186 | 0.8666 | 25.5782 | 93.3662 |
| ZMFF | 0.7089 | 0.8429 | 0.7120 | 0.8316 | 0.7443 | 0.9772 | 24.0680 | 98.6039 |
| Proposed | 0.7381 | 0.8453 | 0.7412 | 0.8398 | 0.7649 | 0.9893 | 24.7951 | 102.7896 |

Table 3.

The quantitative assessment of different methods in Figure 7.

| Method | QAB/F | QFMI | QG | QE | QP | QY | QAG | QAPI |
|---|---|---|---|---|---|---|---|---|
| BGSC | 0.3510 | 0.8138 | 0.3463 | 0.2734 | 0.2438 | 0.7150 | 14.5754 | 82.8519 |
| NSCT | 0.7609 | 0.8528 | 0.7562 | 0.8874 | 0.7451 | 0.9556 | 20.2824 | 84.1720 |
| GD | 0.7597 | 0.8477 | 0.7543 | 0.7906 | 0.7720 | 0.8668 | 19.6094 | 107.0923 |
| FusionDN | 0.6414 | 0.8398 | 0.6312 | 0.7718 | 0.6440 | 0.8356 | 17.8845 | 108.0876 |
| PMGI | 0.2369 | 0.8391 | 0.2309 | 0.3342 | 0.4773 | 0.6285 | 8.8447 | 101.0823 |
| TEMST | 0.6563 | 0.8416 | 0.6491 | 0.7598 | 0.6544 | 0.9165 | 18.4142 | 83.8760 |
| U2Fusion | 0.6239 | 0.8354 | 0.6157 | 0.7789 | 0.6156 | 0.7982 | 18.1150 | 70.1179 |
| ZMFF | 0.7585 | 0.8490 | 0.7513 | 0.8963 | 0.7652 | 0.9671 | 19.2529 | 79.9660 |
| Proposed | 0.7909 | 0.8535 | 0.7881 | 0.9041 | 0.7919 | 0.9720 | 20.7733 | 85.8043 |

Table 4.

The quantitative assessment of different methods in Figure 8.

| Method | QAB/F | QFMI | QG | QE | QP | QY | QAG | QAPI |
|---|---|---|---|---|---|---|---|---|
| BGSC | 0.6808 | 0.9452 | 0.6766 | 0.8362 | 0.6360 | 0.8873 | 8.0699 | 112.4417 |
| NSCT | 0.7890 | 0.9460 | 0.7850 | 0.9335 | 0.8585 | 0.9412 | 9.9235 | 112.0279 |
| GD | 0.7360 | 0.9247 | 0.7328 | 0.8463 | 0.7966 | 0.8397 | 11.8692 | 124.0953 |
| FusionDN | 0.6570 | 0.9302 | 0.6529 | 0.8512 | 0.6466 | 0.8367 | 9.5025 | 110.2910 |
| PMGI | 0.4846 | 0.9326 | 0.4817 | 0.5948 | 0.4954 | 0.6824 | 4.6840 | 107.4757 |
| TEMST | 0.7850 | 0.9446 | 0.7830 | 0.9336 | 0.8464 | 0.9477 | 10.1213 | 112.5434 |
| U2Fusion | 0.6308 | 0.9205 | 0.6295 | 0.7828 | 0.6792 | 0.7869 | 11.6540 | 105.0806 |
| ZMFF | 0.7695 | 0.9390 | 0.7673 | 0.9270 | 0.8293 | 0.9289 | 9.7681 | 111.2188 |
| Proposed | 0.8106 | 0.9463 | 0.8102 | 0.9367 | 0.8903 | 0.9723 | 10.3106 | 112.7676 |

Table 5.

The quantitative assessment of different methods in Figure 9.

| Method | QAB/F | QFMI | QG | QE | QP | QY | QAG | QAPI |
|---|---|---|---|---|---|---|---|---|
| BGSC | 0.6100 | 0.9308 | 0.6117 | 0.5300 | 0.6441 | 0.9339 | 4.6552 | 82.9920 |
| NSCT | 0.6804 | 0.9410 | 0.6808 | 0.8308 | 0.7105 | 0.9020 | 5.1003 | 82.5546 |
| GD | 0.6710 | 0.9301 | 0.6678 | 0.7118 | 0.7076 | 0.7964 | 5.3423 | 104.4747 |
| FusionDN | 0.5641 | 0.9328 | 0.5611 | 0.7192 | 0.4708 | 0.7252 | 4.7077 | 105.7243 |
| PMGI | 0.4156 | 0.9272 | 0.4147 | 0.4973 | 0.2699 | 0.6139 | 2.7373 | 97.1696 |
| TEMST | 0.6899 | 0.9375 | 0.6902 | 0.8064 | 0.7799 | 0.9433 | 5.1428 | 82.9629 |
| U2Fusion | 0.5494 | 0.9316 | 0.5469 | 0.7190 | 0.5362 | 0.6846 | 5.3458 | 72.9192 |
| ZMFF | 0.6831 | 0.9390 | 0.6807 | 0.8368 | 0.7691 | 0.9100 | 5.1441 | 81.7770 |
| Proposed | 0.7209 | 0.9444 | 0.7210 | 0.8440 | 0.8238 | 0.9587 | 5.4338 | 83.2692 |

Table 6.

The average quantitative assessment of different methods in Figure 10.

| Method | QAB/F | QFMI | QG | QE | QP | QY | QAG | QAPI |
|---|---|---|---|---|---|---|---|---|
| BGSC | 0.5242 | 0.8826 | 0.5210 | 0.5173 | 0.4742 | 0.8512 | 9.3365 | 111.7289 |
| NSCT | 0.7103 | 0.8972 | 0.7058 | 0.8644 | 0.7616 | 0.9208 | 11.3477 | 111.8662 |
| GD | 0.7034 | 0.8887 | 0.6987 | 0.7874 | 0.7466 | 0.8608 | 11.6844 | 126.7408 |
| FusionDN | 0.6018 | 0.8833 | 0.5952 | 0.7663 | 0.6221 | 0.8224 | 10.7865 | 110.9212 |
| PMGI | 0.3901 | 0.8815 | 0.3857 | 0.4736 | 0.4620 | 0.6738 | 5.8684 | 108.0326 |
| TEMST | 0.6722 | 0.8915 | 0.6664 | 0.8008 | 0.7263 | 0.9153 | 10.8599 | 112.2319 |
| U2Fusion | 0.6143 | 0.8844 | 0.6093 | 0.7835 | 0.6657 | 0.7912 | 12.0343 | 108.1656 |
| ZMFF | 0.7087 | 0.8925 | 0.7030 | 0.8687 | 0.7853 | 0.9313 | 11.2183 | 109.8769 |
| Proposed | 0.7388 | 0.8986 | 0.7366 | 0.8745 | 0.8100 | 0.9530 | 11.7371 | 113.3531 |

Figure 10.

The line chart of metrics data.

Figure 10 shows the objective experimental results of the different algorithms on the 20 image pairs; the results of the same method on different image pairs are connected into a curve, with the average indicator value shown on the right side of each indicator graph. Table 6 shows the average quantitative assessment of the different methods in Figure 10. In Figure 10 and Table 6, we can see that the average metrics data QAB/F, QFMI, QG, QE, QP, and QY generated by our method are the best; the average metrics data QAG and QAPI generated by the U2Fusion and GD methods are the best, respectively, and the two corresponding average metrics data computed by our method rank second.
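The ranking statements for the averaged results can be checked mechanically from the values in Table 6. The short sketch below copies those values and computes, for a given method, its rank on each metric (rank 1 is the largest value, since larger is treated as better for all eight indicators). The helper name `ranks` is hypothetical.

```python
# Average values from Table 6, in the column order of the table.
metrics = ["QAB/F", "QFMI", "QG", "QE", "QP", "QY", "QAG", "QAPI"]
table6 = {
    "BGSC":     [0.5242, 0.8826, 0.5210, 0.5173, 0.4742, 0.8512, 9.3365, 111.7289],
    "NSCT":     [0.7103, 0.8972, 0.7058, 0.8644, 0.7616, 0.9208, 11.3477, 111.8662],
    "GD":       [0.7034, 0.8887, 0.6987, 0.7874, 0.7466, 0.8608, 11.6844, 126.7408],
    "FusionDN": [0.6018, 0.8833, 0.5952, 0.7663, 0.6221, 0.8224, 10.7865, 110.9212],
    "PMGI":     [0.3901, 0.8815, 0.3857, 0.4736, 0.4620, 0.6738, 5.8684, 108.0326],
    "TEMST":    [0.6722, 0.8915, 0.6664, 0.8008, 0.7263, 0.9153, 10.8599, 112.2319],
    "U2Fusion": [0.6143, 0.8844, 0.6093, 0.7835, 0.6657, 0.7912, 12.0343, 108.1656],
    "ZMFF":     [0.7087, 0.8925, 0.7030, 0.8687, 0.7853, 0.9313, 11.2183, 109.8769],
    "Proposed": [0.7388, 0.8986, 0.7366, 0.8745, 0.8100, 0.9530, 11.7371, 113.3531],
}

def ranks(method, scores):
    """Rank of `method` on each metric: 1 + number of strictly larger values."""
    target = scores[method]
    return [1 + sum(row[k] > target[k] for row in scores.values())
            for k in range(len(target))]

for name, rank in zip(metrics, ranks("Proposed", table6)):
    print(f"{name}: rank {rank}")
# Ranks for "Proposed": [1, 1, 1, 1, 1, 1, 2, 2]
```

The output agrees with the text: the proposed method is first on the six reference-based metrics and second on QAG and QAPI.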

The qualitative and quantitative evaluations show that our algorithm performs well in the fusion of multi-focus images. Compared with the state-of-the-art algorithms, it achieves the best average values on six of the eight metrics and ranks second on the other two, and it produces fused images that are clear and rich in information.

5. Conclusions

In this paper, a novel multi-focus image fusion method based on the distance-weighted regional energy and structure tensor in the NSCT domain was proposed. The distance-weighted regional energy-based fusion rule was utilized to fuse the low-frequency sub-bands, and the structure tensor-based fusion rule was utilized to fuse the high-frequency sub-bands. The proposed method was tested on the Lytro dataset with 20 image pairs, and the fusion results demonstrate that our method can generate state-of-the-art fusion performance in terms of image information, definition, and brightness, making the seamless fusion of multi-focus images possible. In future work, we will improve and expand the application of this algorithm in the field of multi-modal image fusion so that it has better universality in image fusion.

Author Contributions

The experimental measurements and data collection were carried out by M.L., L.L., Z.J. and H.M. The manuscript was written by M.L. with the assistance of L.L., Q.J., Z.J., L.C. and H.M. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This work was supported by the National Science Foundation of China under Grant No. 62261053; the Shanghai Aerospace Science and Technology Innovation Fund under Grant No. SAST2019-048; and the Cross-Media Intelligent Technology Project of the Beijing National Research Center for Information Science and Technology (BNRist) under Grant No. BNR2019TD01022.


References

- 1.Zhang H., Xu H. Image fusion meets deep learning: A survey and perspective. Inf. Fusion. 2021;76:323–336. doi: 10.1016/j.inffus.2021.06.008. [DOI] [Google Scholar]

- 2.Karim S., Tong G. Current advances and future perspectives of image fusion: A comprehensive review. Inf. Fusion. 2023;90:185–217. doi: 10.1016/j.inffus.2022.09.019. [DOI] [Google Scholar]

- 3.Hu X., Jiang J., Liu X., Ma J. ZMFF: Zero-shot multi-focus image fusion. Inf. Fusion. 2023;92:127–138. doi: 10.1016/j.inffus.2022.11.014. [DOI] [Google Scholar]

- 4.Zafar R., Farid M., Khan M. Multi-focus image fusion: Algorithms, evaluation, and a library. J. Imaging. 2020;6:60. doi: 10.3390/jimaging6070060. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Dong Y., Chen Z., Li Z., Gao F. A multi-branch multi-scale deep learning image fusion algorithm based on DenseNet. Appl. Sci. 2022;12:10989. doi: 10.3390/app122110989. [DOI] [Google Scholar]

- 6.Singh S., Singh H. A review of image fusion: Methods, applications and performance metrics. Digit. Signal Process. 2023;137:104020. doi: 10.1016/j.dsp.2023.104020. [DOI] [Google Scholar]

- 7.Li L., Ma H., Jia Z., Si Y. A novel multiscale transform decomposition based multi-focus image fusion framework. Multimed. Tools Appl. 2021;80:12389–12409. doi: 10.1007/s11042-020-10462-y. [DOI] [Google Scholar]

- 8.Li L., Si Y., Wang L., Jia Z., Ma H. A novel approach for multi-focus image fusion based on SF-PAPCNN and ISML in NSST domain. Multimed. Tools Appl. 2020;79:24303–24328. doi: 10.1007/s11042-020-09154-4. [DOI] [Google Scholar]

- 9.Wu P., Hua Z., Li J. Multi-scale siamese networks for multi-focus image fusion. Multimed. Tools Appl. 2023;82:15651–15672. doi: 10.1007/s11042-022-13949-y. [DOI] [Google Scholar]

- 10.Candes E., Demanet L. Fast discrete curvelet transforms. Multiscale Model. Simul. 2006;5:861–899. doi: 10.1137/05064182X. [DOI] [Google Scholar]

- 11.Do M.N., Vetterli M. The contourlet transform: An efficient directional multiresolution image representation. IEEE Trans. Image Process. 2005;14:2091–2106. doi: 10.1109/TIP.2005.859376. [DOI] [PubMed] [Google Scholar]

- 12.Da A., Zhou J., Do M. The nonsubsampled contourlet transform: Theory, design, and applications. IEEE Trans. Image Process. 2006;15:3089–3101. doi: 10.1109/tip.2006.877507. [DOI] [PubMed] [Google Scholar]

- 13.Guo K., Labate D. Optimally sparse multidimensional representation using shearlets. SIAM J. Math. Anal. 2007;39:298–318. doi: 10.1137/060649781. [DOI] [Google Scholar]

- 14.Easley G., Labate D., Lim W.Q. Sparse directional image representations using the discrete shearlet transform. Appl. Comput. Harmon. Anal. 2008;25:25–46. doi: 10.1016/j.acha.2007.09.003. [DOI] [Google Scholar]

- 15.Kumar N., Prasad T., Prasad K. An intelligent multimodal medical image fusion model based on improved fast discrete curvelet transform and type-2 fuzzy entropy. Int. J. Fuzzy Syst. 2023;25:96–117. doi: 10.1007/s40815-022-01379-9. [DOI] [Google Scholar]

- 16.Kumar N., Prasad T., Prasad K. Multimodal medical image fusion with improved multi-objective meta-heuristic algorithm with fuzzy entropy. J. Inf. Knowl. Manag. 2023;22:2250063. doi: 10.1142/S0219649222500630. [DOI] [Google Scholar]

- 17.Li S., Yang B. Multifocus image fusion by combining curvelet and wavelet transform. Pattern Recognit. Lett. 2008;29:1295–1301. doi: 10.1016/j.patrec.2008.02.002. [DOI] [Google Scholar]

- 18. Zhang W., Jiao L., Liu F. Adaptive contourlet fusion clustering for SAR image change detection. IEEE Trans. Image Process. 2022;31:2295–2308. doi: 10.1109/TIP.2022.3154922.

- 19. Li L., Lv M., Jia Z., Ma H. Sparse representation-based multi-focus image fusion method via local energy in shearlet domain. Sensors. 2023;23:2888. doi: 10.3390/s23062888.

- 20. Hao H., Zhang B., Wang K. MGFuse: An infrared and visible image fusion algorithm based on multiscale decomposition optimization and gradient-weighted local energy. IEEE Access. 2023;11:33248–33260. doi: 10.1109/ACCESS.2023.3263183.

- 21. Li S., Kang X., Hu J. Image fusion with guided filtering. IEEE Trans. Image Process. 2013;22:2864–2875. doi: 10.1109/TIP.2013.2244222.

- 22. Shreyamsha Kumar B. Image fusion based on pixel significance using cross bilateral filter. Signal Image Video Process. 2015;9:1193–1204. doi: 10.1007/s11760-013-0556-9.

- 23. Tan W., Zhou H. Fusion of multi-focus images via a Gaussian curvature filter and synthetic focusing degree criterion. Appl. Opt. 2018;57:10092–10101. doi: 10.1364/AO.57.010092.

- 24. Feng X., Fang C., Qiu G. Multimodal medical image fusion based on visual saliency map and multichannel dynamic threshold neural P systems in sub-window variance filter domain. Biomed. Signal Process. Control. 2023;84:104794. doi: 10.1016/j.bspc.2023.104794.

- 25. Zhang Y., Lee H. Multi-sensor infrared and visible image fusion via double joint edge preservation filter and non-globally saliency gradient operator. IEEE Sens. J. 2023;23:10252–10267. doi: 10.1109/JSEN.2023.3262775.

- 26. Jiang Q., Huang J. Medical image fusion using a new entropy measure between intuitionistic fuzzy sets joint Gaussian curvature filter. IEEE Trans. Radiat. Plasma Med. Sci. 2023;7:494–508. doi: 10.1109/TRPMS.2023.3239520.

- 27. Zhang C. Multifocus image fusion using a convolutional elastic network. Multimed. Tools Appl. 2022;81:1395–1418. doi: 10.1007/s11042-021-11362-5.

- 28. Ma W., Wang K., Li J. Infrared and visible image fusion technology and application: A review. Sensors. 2023;23:599. doi: 10.3390/s23020599.

- 29. Liu Y., Chen X., Peng H. Multi-focus image fusion with a deep convolutional neural network. Inf. Fusion. 2017;36:191–207. doi: 10.1016/j.inffus.2016.12.001.

- 30. Lai R., Li Y., Guan J. Multi-scale visual attention deep convolutional neural network for multi-focus image fusion. IEEE Access. 2019;7:114385–114399. doi: 10.1109/ACCESS.2019.2935006.

- 31. Wang L., Liu Y. MSE-Fusion: Weakly supervised medical image fusion with modal synthesis and enhancement. Eng. Appl. Artif. Intell. 2023;119:105744. doi: 10.1016/j.engappai.2022.105744.

- 32. Wang Z., Li X. A self-supervised residual feature learning model for multifocus image fusion. IEEE Trans. Image Process. 2022;31:4527–4542. doi: 10.1109/TIP.2022.3184250.

- 33. Jiang L., Fan H., Li J. A multi-focus image fusion method based on attention mechanism and supervised learning. Appl. Intell. 2022;52:339–357. doi: 10.1007/s10489-021-02358-7.

- 34. Jin X., Xi X., Zhou D. An unsupervised multi-focus image fusion method based on Transformer and U-Net. IET Image Process. 2023;17:733–746. doi: 10.1049/ipr2.12668.

- 35. Zhang H., Le Z., Shao Z. MFF-GAN: An unsupervised generative adversarial network with adaptive and gradient joint constraints for multi-focus image fusion. Inf. Fusion. 2021;66:40–53. doi: 10.1016/j.inffus.2020.08.022.

- 36. Liu S., Yang L. BPDGAN: A GAN-based unsupervised back project dense network for multi-modal medical image fusion. Entropy. 2022;24:1823. doi: 10.3390/e24121823.

- 37. Li X., Zhou F., Tan H. Multi-focus image fusion based on nonsubsampled contourlet transform and residual removal. Signal Process. 2021;184:108062. doi: 10.1016/j.sigpro.2021.108062.

- 38. Panigrahy C., Seal A. Parameter adaptive unit-linking pulse coupled neural network based MRI-PET/SPECT image fusion. Biomed. Signal Process. Control. 2023;83:104659. doi: 10.1016/j.bspc.2023.104659.

- 39. Tian J., Chen L., Ma L. Multi-focus image fusion using a bilateral gradient-based sharpness criterion. Opt. Commun. 2011;284:80–87. doi: 10.1016/j.optcom.2010.08.085.

- 40. Das S., Kundu M.K. A neuro-fuzzy approach for medical image fusion. IEEE Trans. Biomed. Eng. 2013;60:3347–3353. doi: 10.1109/TBME.2013.2282461.

- 41. Paul S., Sevcenco I., Agathoklis P. Multi-exposure and multi-focus image fusion in gradient domain. J. Circuits Syst. Comput. 2016;25:1650123. doi: 10.1142/S0218126616501231.

- 42. Xu H., Ma J., Le Z. FusionDN: A unified densely connected network for image fusion; Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence (AAAI); New York, NY, USA. 7–12 February 2020; pp. 12484–12491.

- 43. Zhang H., Xu H., Xiao Y. Rethinking the image fusion: A fast unified image fusion network based on proportional maintenance of gradient and intensity; Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence (AAAI); New York, NY, USA. 7–12 February 2020; pp. 12797–12804.

- 44. Chen J., Li X., Luo L. Infrared and visible image fusion based on target-enhanced multiscale transform decomposition. Inf. Sci. 2020;508:64–78. doi: 10.1016/j.ins.2019.08.066.

- 45. Xu H., Ma J., Jiang J. U2Fusion: A unified unsupervised image fusion network. IEEE Trans. Pattern Anal. Mach. Intell. 2022;44:502–518. doi: 10.1109/TPAMI.2020.3012548.

- 46. Qu X., Yan J., Xiao H. Image fusion algorithm based on spatial frequency-motivated pulse coupled neural networks in nonsubsampled contourlet transform domain. Acta Autom. Sin. 2008;34:1508–1514. doi: 10.3724/SP.J.1004.2008.01508.

- 47. Haghighat M., Razian M. Fast-FMI: Non-reference image fusion metric; Proceedings of the IEEE 8th International Conference on Application of Information and Communication Technologies; Astana, Kazakhstan. 15–17 October 2014; pp. 424–426.

- 48. Liu Z., Blasch E., Xue Z. Objective assessment of multiresolution image fusion algorithms for context enhancement in night vision: A comparative study. IEEE Trans. Pattern Anal. Mach. Intell. 2012;34:94–109. doi: 10.1109/TPAMI.2011.109.

Data Availability Statement

Not applicable.