Abstract

Health care systems and nursing leaders aim to make evidence-based nurse staffing decisions. Understanding how nurses use and perceive available data to support safe staffing can strengthen learning health care systems and support evidence-based practice, particularly as data availability grows and nursing faces specific challenges in data usability. However, current literature offers sparse insight into the nature of data use and its challenges in inpatient nurse staffing management. We aimed to investigate how nurse leaders experience using data to guide their inpatient staffing management decisions in the Veterans Health Administration, the largest integrated health care system in the United States. We conducted semi-structured interviews with 27 Veterans Health Administration nurse leaders across five management levels and analyzed them using a constant comparative approach. Participants primarily reported using data for quality improvement, organizational learning, and organizational monitoring and support. Challenges included data fragmentation, data that were unavailable or unsuited to user needs, lack of knowledge about available data, and untimely reporting. Our findings suggest that prioritizing end-user experience and needs is necessary to better govern evidence-based data tools for improving nursing care. Continuous involvement of nurse leaders in data governance is integral to ensuring high-quality data that end-user nurses can use to guide decisions affecting patient care.

Keywords: learning health system, nursing, qualitative research, workforce

Introduction

Health care systems and nursing leaders aim to make evidence-based nurse staffing decisions, as inappropriately staffed nursing units can have detrimental effects on patient care, nurse well-being, and operational budgets.1-6 In learning health care systems, organizations use data generated as part of routine care for monitoring, coordination, reflection, and practice improvement.7-10 Understanding how nurses use and perceive available data and data tools to support safe staffing can strengthen learning health care systems and support evidence-based practice, particularly as data availability grows and nursing faces specific challenges in data usability.11-13

Background

The Veterans Health Administration (VA) is the largest integrated health care system in the United States and was an early adopter of information systems and data utilization in health care.14 As of 2016, VA data systems housed more than 1.5 petabytes of data.15

For 20 years, VA has developed tools to support staff utilization of data to improve nursing care, including the national VA Nursing Outcomes Database (VANOD) in 2002, the near real-time, aggregated bed tracking and information system known as Bed Management Solutions (BMS) in 2008, and the 2010 Staffing Methodology Directive standardizing data-driven nurse staffing models.16 Recently, VA nursing informatics developed and embedded over 1400 standardized nursing health factors, or nursing assessment data elements, into the current VA electronic health record (EHR) known as the Computerized Patient Record System (CPRS).17, 18

Health care systems gather large amounts of structured data elements to support operations and care delivery; however, nursing is among several clinical professions whose provision of care generates overwhelming amounts of unstructured data through the electronic health record, handwritten notes, and other means of data collection, all of which require extensive cleaning, integration, and transformation before they are usable. Further challenges, such as a lack of standardized nursing terminologies and data standards, fragmentation, and lack of interoperability, can hinder efforts to make data accessible, usable, relevant, and reliable for nurse end-users.19, 20 If not addressed, these challenges can limit nurse leaders' ability to make informed decisions to achieve or sustain adequate, safe staffing levels, which may in turn affect patient care and outcomes.

Globally, professional nursing organizations recommend that optimal staffing decisions rely on the professional judgment of nursing leaders who focus on patient safety and are equipped with real-time data.21 However, organizations vary widely in how they make available the data needed for informed, evidence-based nurse staffing decisions.22 Given the inevitable growth in data applications and the nursing-specific data challenges prevalent across the United States health care system, understanding how currently available data are used can help optimize health information systems for a learning health care system.19, 23, 24 Data challenges in health care, and particularly in nursing, are well recognized in the current literature, but to our knowledge, assessments of individual nurses' experiences with data use in the context of inpatient nurse staffing are sparse.25 We sought to fill this gap and build on existing literature by conducting one-on-one qualitative interviews to gather a range of VA nurse leaders' experiences and challenges in working with data, to inform the development of a prototype dashboard tailored to nurse staffing decision-making. Specifically, we investigated two research questions:

How do VA nurse leaders currently use data to guide inpatient staffing management decisions?

What challenges do VA nurse leaders encounter in using data to guide inpatient staffing management decisions?

Methods

Research Design

This study is part of a project aimed at developing a model to improve VA performance measurement. We employed a qualitative descriptive design to gather nurse leader perceptions about data guiding their inpatient staffing management decisions, and what data they need to improve evidence-based nursing care.26 We used the Consolidated Criteria for Reporting Qualitative Research (COREQ) to guide the development of this manuscript.27

Participants and Recruitment

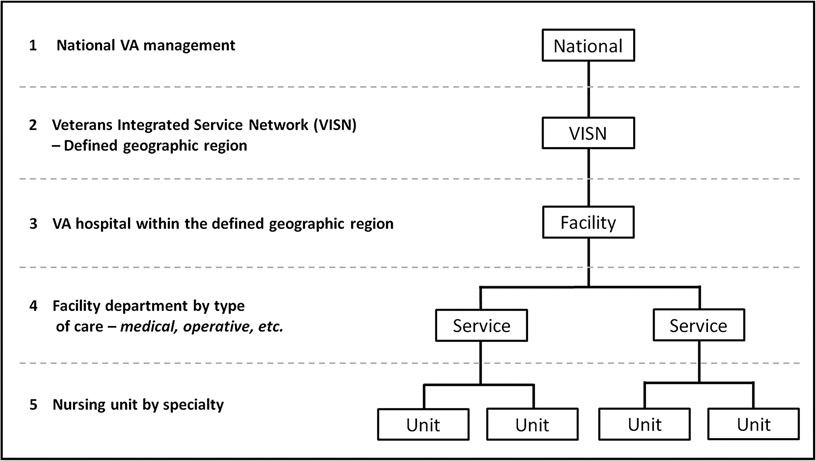

To enhance the rigor of our study and because staffing responsibilities and data needs may differ by leadership level, facility or network location, or inpatient unit type, we sought to obtain perspectives with comparable representation from each level of VA nursing leadership. Thus, we purposively sampled five to seven study participants from each of five organizational levels in the VA health care system (Figure 1).

Figure 1 – Simplified Veterans Health Administration (VA) System Organizational Chart From National to Unit Level.

There are 18 Veterans Integrated Service Networks (VISNs) that range in size from 5 to 11 hospitals per network. Facilities are categorized into one of five complexity levels based on hospital characteristics. Services are designated by care type. Units are designated by specialty. The number of services and units varies by facility.

Facilities were selected from different regional networks and complexity levels. The VA health care system currently comprises 18 regional networks, known as Veterans Integrated Service Networks (VISNs), which are defined by geographic region and range in size from 5 to 11 hospitals per network.28 A hospital's complexity level reflects its clinical complexity, based on its patient population, clinical services complexity, administrative complexity, and research and education.29 Each hospital is categorized into one of five complexity levels.29 For each selected facility, we targeted at least 1 facility-, 1 service-, and 1 unit-level interviewee based on the relevance of their role to inpatient nursing. In consultation with a study co-investigator ([LD]) who manages nursing patient care services at a large VA facility, we selected network- and national-level candidates based on the same criteria, as well as their professional connection with the co-investigator. Participants were unaware both of their referral by the co-investigator and of the co-investigator's role on our research team; likewise, the co-investigator was not told which candidates were ultimately included in the study.

We e-mailed prospective subjects a study description and participation information. Through an e-mail questionnaire, respondents were confirmed as eligible if they had worked at VA for more than one year and held a role related to inpatient nursing.

Recruitment of interview participants took place from March 2020 through March 2021, coinciding with surges of COVID-19 hospitalizations across the United States. Our team was cognizant of the challenges faced by nurse leaders during this time, and while the topic of nurse staffing was especially salient, some hospitals and prospective participants understandably declined participation due to the urgent demands of the pandemic. Despite these challenges, we were able to meet our recruitment targets for all but one category (service-level) of nurse leaders.

Data Collection

Two research assistants ([JW] and [CY]) conducted 27 one-on-one, semi-structured interviews lasting 15 to 48 minutes. Ms. [CY] holds a Master of Science in Public Health (MSPH) and Ms. [JW] holds a Master of Public Health (MPH), with seven and three years of research experience, respectively. Participants answered questions in four areas (see Supplement 1 for IRB-approved interview guides): VA experience and current role, VA data use, how their experiences with VA data relate to inpatient staffing responsibilities and recent decisions, and preferences for a dashboard tailored to their role. We asked at least one question from each area, plus follow-ups as needed. Each participant was interviewed once. Interviews were conducted and audio-recorded using Skype for Business and Microsoft Teams.

Data Analysis

We coded transcripts with the constant comparative method30 in Atlas.ti 8 (Atlas.ti GmbH, Berlin, Germany). Interview recordings were transcribed, and all personally or location-identifying information was redacted from the final transcripts. One interviewer ([JW]) and one research coordinator ([RS]) open coded one transcript from each organizational level to develop a codebook (Supplement 2). Our initial plan was to double-code 20% of transcripts and, once reasonable interrater reliability was reached, assign one coder per remaining transcript; however, the grounded nature of the themes we found made reasonable interrater reliability difficult to achieve. We therefore modified our plan: all interviews were coded twice and then reviewed by both coders for agreement. Disagreements on codes were resolved through discussion, and code resolutions were reflected in a final coded transcript. Once final coded transcripts were generated for all interviews, the most frequently mentioned codes across all interviews and organizational levels were grouped into larger themes based on the content and context of the quotes associated with each code.

Ethical Considerations

This study was approved by the Baylor College of Medicine Institutional Review Board (protocol H-38405). Before the interview, participants were informed that their participation was confidential and completely voluntary and that they could withdraw at any time during the study. Each participant provided verbal informed consent for participation and recording.

Results

We contacted 43 candidates and included a final sample of 27 participants in the study (Table 1). Of 12 facilities contacted, 5 participated, 5 declined, and 1 did not respond. One facility agreed to participate, but we could not find an eligible participant at the necessary organizational level.

Table 1 – Participant Recruitment.

Out of 43 candidates initially contacted, the final sample of 27 participants included in our study comprised 5 unit-, 4 service-, 6 facility-, 6 network-, and 6 national-level nurse leaders across 5 facilities, 6 networks, and 3 national offices.

| Recruitment outcome (total contacted, n=43) | Unit | Service | Facility | Network | National |
|---|---|---|---|---|---|
| Eligible; participated (n=27) | 5 | 4 | 6 | 6 | 6 |
| Ineligible; did not participate (n=7) | 1 | 2 | 0 | 3 | 1 |
| Unavailable; did not participate (n=2) | 0 | 1 | 0 | 1 | 0 |
| Not interested; did not participate (n=1) | 0 | 0 | 0 | 1 | 0 |
| No response; did not participate (n=6) | 1 | 1 | 0 | 4 | 0 |

Supplement 3 includes participant role details. Although several attempts did not yield our target number of service-level participants, we chose not to pursue further recruitment, given the challenging conditions of the COVID-19 pandemic and because we had reached data saturation after 26 participant interviews.

Research Question 1: How Do VA Nurse Leaders Currently Use Data to Guide Inpatient Staffing Management Decisions?

Quality Improvement

Participants reported using data to improve care quality and streamline care delivery, referencing nursing-sensitive quality indicators,31 patient safety indicators,32 and patient satisfaction metrics as key measures they use to evaluate quality of care and inform decision-making. For example, one service-level participant shared their involvement in their facility's infection control task force, which works to improve hospital-acquired infection rates (a nursing-sensitive quality indicator) (Supplement 4, A1). A network-level chief nursing officer noted their use of data from the Inpatient Evaluation Center (IPEC), which stores inpatient process and outcome measures, to assess staffing practices in light of documented patient outcomes (Supplement 4, A2).

Patient-centric measures may also be used to plan for the resources needed for adequate care, such as unit staffing and supplies, as a nurse manager noted when asked about preferences for an ideal dashboard (Supplement 4, A3).

Participants reported using data for process improvement efforts, including audits of nursing-sensitive patient safety and outcome indicators, establishment of staffing float pools, and evaluation of staffing adequacy as part of root cause analyses (RCAs) of patient safety incidents. Participants at all levels, but most frequently national-level nurse leaders, discussed using data for process improvement.

Organizational Learning

Generally, interview responses related to the organizational learning theme reflected intra-organizational information exchange, application of evidence-based practice, and comparisons of data.

Intra-Organizational Information Exchange.

Information exchange within and among VA facilities was the most prominent subcategory for the organizational learning theme. At one service-level participant’s facility, sharing data and best practices relating to care quality occurs frequently within their service, between services, and among facility nursing leadership (Supplement 4, A4). Nurse leaders may seek out best practices from facilities performing well on certain metrics (e.g., patient experience – Supplement 4, A5) to guide performance improvement at their own facility.

Finally, facilities may receive information directly from network- and national-level nurses, especially about issues influencing direct patient care. One network-level participant did this as part of their role in patient safety oversight, working with RCA teams to pull and manipulate relevant VA data, ranging from the facility to the national level, as well as non-VA data, when investigating patient safety incidents that automatically require an RCA (Supplement 4, A6).

Applications of Evidence-Based Practice.

Participants noted that using available data, generating data through research initiatives, and keeping abreast of external research data were integral to adopting evidence-based practices for effective staff management and care delivery, including continuing staff education and quality improvement. A facility-level participant emphasized how evidence-based practice is essential to a broader care model at their facility (Supplement 4, A7).

Data Comparisons.

Many VA data tools allow employees to view data for any VA facility or network. Participants reported using data comparisons for benchmarking, planning, or decision-making. A network-level participant shared that they compared nursing quality indicators across facilities statewide and academic teaching facilities nationally, to evaluate staffing impacts on patient outcomes in their network (Supplement 4, A8).

In contrast, a facility-level participant noted that comparative data for other emergency departments across the country would help inform staffing processes for their own facility’s emergency department; to their knowledge such data are unavailable (Supplement 4, A9).

Organizational Monitoring and Support

Another overarching theme reflected organizational monitoring and support, or the ways in which the VA’s organizational structure is utilized to monitor and assist facilities, leaders, and staff, with subthemes of organizational support, accountability, and advocacy.

Organizational Support.

Participants at all levels reported providing resources or direct assistance to subordinates or to more local organizational levels, though those who discussed using data to do so tended to be supervisory-level participants in facility- to national-level management. National-level leaders were the most likely to discuss providing organizational support, mainly educational support in accessing and using data for decision-making, as well as support in addressing process issues and developing tools for local nurse leaders. One national-level participant described the various data elements, including but not limited to nurse hours per patient day, skill mix, and vacancy rate, that they discussed with a facility when reviewing its staffing methodology (Supplement 4, A10). Facility- to national-level participants discussed using data to support staffing adequacy.

Despite widespread discussion of providing support to subordinates, no participants mentioned receiving support from superiors.

Accountability.

Participants at all levels except national noted the importance of data for accountability to superiors or monitoring agencies. Unit-level participants discussed accountability to service and facility leaders, and only service-level participants discussed accountability to outside organizations such as The Joint Commission.

Advocacy.

One participant each at the network, service, and unit levels discussed advocating for staff and serving as a liaison between subordinates and higher-level leadership. This advocacy often involved ensuring that subordinates had “what they actually need” by speaking directly to leadership on their behalf (Supplement 4, A11), but advocacy could also occur through serving on committees (Supplement 4, A12). A network-level participant mentioned ensuring adequate staffing for the hospitals in their network by using available data to monitor each facility's staffing levels in relation to observed patient outcomes and by working with facility executives to address issues and needs (Supplement 4, A11). As with organizational support, participants did not describe superiors advocating on their behalf; they discussed only their own role in advocating for subordinates.

Research Question 2: What Challenges Do VA Nurse Leaders Encounter in Using Data to Guide Their Inpatient Staffing Management Decisions?

Fragmentation

Data fragmentation was frequently cited as an organizational challenge. A network-level participant reported that even facilities within the same network have adopted nurse staffing software from different vendors, which can complicate real-time staffing monitoring (Supplement 4, C1). Also, because related data may be accessible only through disparate data tools, some participants spent unnecessary effort locating and then aggregating data to meet their needs. A facility-level participant expressed concern about this when trying to correlate staffing levels with other metrics such as care quality and staff engagement (Supplement 4, C2).

Participants noted inconsistencies in collection and reporting between data tools. Similar data may be presented differently across sources because of differing data definitions and interface configurations intended for specific user populations. As a result, end-users perceive inconsistencies in reported values, may question the data's underlying validity, and spend additional time investigating multiple versions of the desired metric to make an effective decision (Supplement 4, C3). A national-level participant described current efforts to standardize data definitions to reduce confusion, citing retirement eligibility as an example of a staffing data element whose values differ by source (Supplement 4, C4).

Data Are Unavailable or Unsuitable to User Need

The interviews revealed that data inaccessibility can result in knowledge gaps. For some participants, the challenge was not that certain data were unavailable; rather, the available data presented insufficient information or were not aggregated appropriately to meet their needs. Several participants resorted to manual data collection; as a national-level participant pointed out, relying on manual data capture for fluid processes such as float management can be needlessly inconvenient, especially during a crisis such as a pandemic (Supplement 4, C5).

Lack of Knowledge About Available Data

Participants' accounts suggest that VA data structures are not intuitive to users and lack adequate user guidance. At least one participant from each role level except national commented on their lack of knowledge about available data. One service-level participant reasoned that data tools with their desired metrics must exist but did not know how to access them (Supplement 4, C6). Another participant, at the unit level, recalled challenges in familiarizing themselves with VA data when first starting their role (Supplement 4, C7). Both participants expressed a desire for a VA “data for dummies” type of book.

Lack of Timely Reporting

Participants valued real-time or future-forecasted data. Reporting delays limited the use of data for real-time decisions and quality improvement and frustrated participants at all organizational levels. Delay times vary by tool; some data may be months old by the time they become available (Supplement 4, C8). For example, one nurse manager described the difficulty of addressing patient safety events in a timely manner because of the data system configuration and process at their facility (Supplement 4, C9).

Limitations

The COVID-19 pandemic, which was ongoing during data collection, affected interview responses. Many participants naturally discussed their pandemic experiences; therefore, the interview data may differ from data obtained under normal circumstances. A second limitation was the sample population. For convenience and efficiency, we focused our contact efforts on some facilities, as well as some network- and national-level candidates, based on professional connections through our nurse executive co-investigator. However, she was blinded to the identities of the participants ultimately selected for the study. Additionally, all participants were internal to the VA. Although we believe that our participants share common experiences with nurse leaders outside the VA, further research is necessary to establish the generalizability of the results.

Discussion

A data-driven culture was evident in the interviews, as every participant described how they use data. Our results revealed that VA nurse leaders across organizational levels rely on data, particularly real-time and future-forecasted data, to support quality improvement efforts, organizational communication and accountability, and institutional learning with respect to inpatient staffing and care. VA nurse leaders explore patterns and relationships in data for knowledge discovery, prediction, and evaluation, which corroborates existing research about nursing use of data.33, 34 The data applications we identified align with innovation, influence, and advocacy, which are defined as nurse leader core competencies in working toward the Triple Aim of improving the care experience, improving population health, and reducing per-capita health care costs.35

Participants at all organizational levels described intra-organizational collaboration. Yet participants discussed only their accountability to superiors and their assistance to subordinates, never support received from superiors, which suggests that either people remain unaware of helpful resources or those resources lack salience when discussing data and staffing. The benefits of having abundant data were clear; however, the interviews also revealed facets of VA data structure that may hinder usability and usefulness in informing nursing decisions. Participants cited challenges of data unavailability and unsuitability, fragmentation, lack of user guidance, and untimely reporting, which underscore the need to pursue the Quadruple Aim (an extension of the Triple Aim that adds improving provider work life), particularly in the realm of data usability.33, 36 The following quote captures the essence of VA nurse leaders' struggle to use available data effectively:

“ … one of the biggest challenges of this position is finding the data and then figuring out how to make it meaningful. … so that we can make good decisions, based on the data. … What is it really telling you? What was it measuring?”

-- Facility-level participant

The combination of abundant data with poor system design, inadequate standardization, and lack of interoperability extends beyond the VA.11, 20 Although our study focused on the VA nursing experience with data, our results corroborate non-VA literature that has also explored data-associated challenges in health care, and they affirm the universal need to optimize data relevance and usability for nurse leaders.20, 33, 37 In a dynamic patient care environment, nurse leaders have no choice but to develop workarounds for information deficiencies to fulfill their responsibilities, particularly in staffing inpatient units appropriately; therefore, current data infrastructure and processes must be streamlined to transform data from a burden into a resource. Our findings support others' recommendations to standardize nursing terminologies and data standards and to include nursing informatics experts in the development and implementation of health information technology,19 and they strengthen the call to include nurse leaders, as end-users, in discussions about data tool development. Perceptions obtained from VA nurse leaders build on the available literature as firsthand accounts that can inform the general development and process improvement of data infrastructure in other large health care systems internationally, such as the United Kingdom's National Health Service, whose experiences with system data infrastructure may share similarities with our results.38 Such improvement can ultimately lead to more nursing-relevant data science tools, embedded directly in current software applications, that support evidence-based decision-making. Based on the interview findings, our research team has prepared the following recommendations, which we plan to bring to upcoming discussions with regional and national VA nursing leaders and which we believe apply well beyond the VA.

Recommendations

Prioritize Data Standardization, Source Interoperability, and User Education

Accessibility, interpretability, and usability are necessary for users to maximize the benefit of available data.37 Our findings indicate that users would benefit from standardized formatting across data sources, convenient access to standardized user guides, and user education opportunities. Once information systems are established, consistent quality assurance and process improvement are essential, as is continued attention to users' graphical literacy and numeracy in data display design.39

Develop a Better Understanding of Nursing Culture

Attention to unique needs and shared values of nurses at all organizational levels is essential for aligning data tools with nurses’ preferred ways of working. For example, staffing adequacy is a daily concern in nursing; however, perceptions of contributing factors may differ by role level. Unit- and service-level participants emphasized clinical impacts, while supervisory-level participants emphasized non-clinical measures such as budgeting. Previous research suggests that directing attention to the nursing context and culture can yield benefits for patient outcomes and data science;40, 41 similarly, we recommend accounting for nursing culture, priorities, and challenges to optimize data systems.

More Frequent Communication Between Data Users and Developers

Regular communication between nursing leadership (i.e., the end-users) and data administrators is critical for effective data use. Broad, complex information systems such as EHRs especially require a strong understanding of both information technology and the target user population to develop data models that translate into user-friendly products. Closer collaboration among clinical nursing management, nursing informatics, and information technology is necessary to establish user needs, define effective and feasible solutions, and adapt data systems with refined clinical tools to efficiently generate reliable data for nurses to use.33 Using existing committees on which nurse leaders already serve could improve communication between data administrators and nursing leadership.

Conclusion

Applications of data benefit patients and providers in many areas, as demonstrated by the many data initiatives the VA has pioneered to improve nursing practice and the numerous improvements non-VA institutions have incorporated into system and clinical processes.8 Through the insights of VA nurse leaders from five organizational levels, our study explored how nurse leaders use data in their work and what challenges remain in using data most effectively. When nurse leaders have appropriate tools to leverage data for its intended purpose, they can make accurate, timely, evidence-based decisions in support of a thriving learning health care system.24

Implications for Nursing Practice and Policy

Our results highlight the importance of data for collaborative, evidence-based nursing management at the VA, from nurse managers who make direct care delivery decisions on the unit floor to senior leaders with broader nursing oversight across the VA system. However, realizing data's vast potential requires resolving major end-user challenges. Nurse leaders can play an active role in improving data governance to benefit nursing care. With their end-user experience and understanding of nursing culture and needs, they can provide valuable input to data governance committees to deploy nursing-friendly data tools. Constantly changing circumstances and patient needs necessitate continuous partnership among clinical nursing management, nursing informatics, information technology, and executive leadership to maintain high standards of data accessibility, usability, and overall value for end-users. Additionally, because data applications and challenges may differ across organizational levels, nurses at each organizational level must help define appropriate data priorities to optimize product development. High-quality data are key to providing high-quality care in a learning health care system.19, 23, 24 As major data stakeholders, nurse leaders should influence the governance of timely, nursing-relevant data to best serve their patients.

Supplementary Material

Acknowledgements:

We are grateful to Ms. Pooja Prasad for her preliminary help in process mapping, target facility selection, and material development early on in this study.

Conflicts of Interest and Source of Funding:

The research presented in this article was conducted at the Center for Innovations in Quality, Effectiveness, and Safety, Michael E. DeBakey VA Medical Center, Houston, TX (COIN grant (CIN 13-413)), where Dr. Laura Petersen is the director/PI. This research was funded by Veterans Health Administration Health Services Research & Development (VA HSR&D) IIR 15-438, VA HSR&D Rapid Response Project COVID C19 20-212, and VA HSR&D CIN 13-413. Outside of the support and funding for this study, one of our co-investigators, Dr. Sylvia Hysong, is also funded by the Agency for Healthcare Research & Quality (AHRQ) 1 R01 HS 025982. All other authors have no conflicts of interest, financial or otherwise, to declare.

Footnotes

Disclaimer: The views expressed in this article are those of the authors and do not necessarily reflect the position or policy of the U.S. Department of Veterans Affairs, the United States government or VHA’s academic affiliates.

Ethics Approval: This study was approved by the Baylor College of Medicine Institutional Review Board (protocol H-38405).

Permission to Reproduce Material from Other Sources: All tables and figures in our manuscript are original.

Clinical Trial Registration: This study was not a clinical trial; therefore, clinical trial registration is not applicable.

CRediT Statement: JW: Methodology, Validation, Formal analysis, Investigation, Resources, Data curation, Writing – Original draft, Writing – Review & editing, Visualization, Project administration. RS: Validation, Formal analysis, Resources, Data curation, Writing – Original draft, Writing – Review & editing, Visualization. CY: Conceptualization, Methodology, Investigation, Resources, Data curation, Writing – Review & editing, Project administration. MK: Conceptualization, Methodology, Resources, Writing – Review & editing, Supervision, Project administration. SH: Conceptualization, Methodology, Writing – Review & editing, Supervision. LD: Conceptualization, Validation, Writing – Review & editing, Supervision. PO: Conceptualization, Methodology, Writing – Review & editing. LP: Conceptualization, Methodology, Writing – Review & editing, Supervision, Funding acquisition.

Data Availability:

Limited data underlying this article are available in the article and in its online supplementary material. The full set of data underlying this article cannot be shared publicly, in order to protect the privacy of our study participants.

References

1. Kane RL, Shamliyan TA, Mueller C, Duval S, Wilt TJ. The association of registered nurse staffing levels and patient outcomes: systematic review and meta-analysis. Med Care. Dec 2007;45(12):1195–204. doi: 10.1097/MLR.0b013e3181468ca3

2. Dall'Ora C, Saville C, Rubbo B, Turner L, Jones J, Griffiths P. Nurse staffing levels and patient outcomes: A systematic review of longitudinal studies. Int J Nurs Stud. Oct 2022;134:104311. doi: 10.1016/j.ijnurstu.2022.104311

3. Blume KS, Dietermann K, Kirchner-Heklau U, et al. Staffing levels and nursing-sensitive patient outcomes: Umbrella review and qualitative study. Health Serv Res. Oct 2021;56(5):885–907. doi: 10.1111/1475-6773.13647

4. Lasater KB, Aiken LH, Sloane DM, et al. Chronic hospital nurse understaffing meets COVID-19: an observational study. BMJ Qual Saf. Aug 2021;30(8):639–647. doi: 10.1136/bmjqs-2020-011512

5. Dall'Ora C, Ball J, Reinius M, Griffiths P. Burnout in nursing: a theoretical review. Hum Resour Health. Jun 5 2020;18(1):41. doi: 10.1186/s12960-020-00469-9

6. McCue M, Mark BA, Harless DW. Nurse staffing, quality, and financial performance. J Health Care Finance. Summer 2003;29(4):54–76.

7. NEJM Catalyst. Healthcare big data and the promise of value-based care. Accessed 13 May 2021, https://catalyst.nejm.org/doi/full/10.1056/CAT.18.0290

8. Enticott J, Johnson A, Teede H. Learning health systems using data to drive healthcare improvement and impact: a systematic review. BMC Health Services Research. 2021;21(1):200. doi: 10.1186/s12913-021-06215-8

9. Chen LM, Kennedy EH, Sales A, Hofer TP. Use of health IT for higher-value critical care. The New England Journal of Medicine. 2013;368(7):594–7. doi: 10.1056/NEJMp1213273

10. Weaver SJ, Che XX, Petersen LA, Hysong SJ. Unpacking care coordination through a multiteam system lens: A conceptual framework and systematic review. Medical Care. Mar 2018;56(3):247–259. doi: 10.1097/mlr.0000000000000874

11. Blumer L, Giblin C, Lemermeyer G, Kwan JA. Wisdom within: Unlocking the potential of big data for nursing regulators. International Nursing Review. 2017;64(1):77–82. doi: 10.1111/inr.12315

12. Leary A, Cook R, Jones S, et al. Mining routinely collected acute data to reveal non-linear relationships between nurse staffing levels and outcomes. BMJ Open. 2016;6(12):e011177. doi: 10.1136/bmjopen-2016-011177

13. Van den Heede K, Cornelis J, Bouckaert N, Bruyneel L, Van de Voorde C, Sermeus W. Safe nurse staffing policies for hospitals in England, Ireland, California, Victoria and Queensland: A discussion paper. Health Policy. Oct 2020;124(10):1064–1073. doi: 10.1016/j.healthpol.2020.08.003

14. Deckro J, Phillips T, Davis A, Hehr AT, Ochylski S. Big data in the Veterans Health Administration: A nursing informatics perspective. Journal of Nursing Scholarship. 2021;53(3):288–295. doi: 10.1111/jnu.12631

15. Department of Veterans Affairs, VA Informatics and Computing Infrastructure. VINCI description for IRBs [Word document]. Washington, DC: United States Department of Veterans Affairs; 2016:1–3.

16. Annis AM, Robinson CH, Yankey N, et al. Factors associated with the implementation of a nurse staffing directive. The Journal of Nursing Administration. 2017;47(12):636–644. doi: 10.1097/nna.0000000000000559

17. Brown SH, Lincoln MJ, Groen PJ, Kolodner RM. VistA—U.S. Department of Veterans Affairs national-scale HIS. International Journal of Medical Informatics. 2003;69(2):135–156. doi: 10.1016/S1386-5056(02)00131-4

18. Office of Nursing Services. ONS Standardized Nursing Documentation [VA Internal SharePoint]. Unpublished internal document. 2021.

19. Sensmeier J. Big data and the future of nursing knowledge. Nursing Management. 2015;46(4):22–7. doi: 10.1097/01.NUMA.0000462365.53035.7d

20. Staggers N, Elias BL, Makar E, Alexander GL. The imperative of solving nurses' usability problems with health information technology. The Journal of Nursing Administration. 2018;48(4):191–196. doi: 10.1097/nna.0000000000000598

21. International Council of Nurses. Evidence-based safe nurse staffing. 2018. Accessed 14 November 2022, https://www.icn.ch/sites/default/files/inline-files/PS_C_%20Evidence%20based%20safe%20nurse%20staffing_1.pdf

22. Griffiths P, Saville C, Ball J, et al. Nursing workload, nurse staffing methodologies and tools: A systematic scoping review and discussion. Int J Nurs Stud. Mar 2020;103:103487. doi: 10.1016/j.ijnurstu.2019.103487

23. Hysong SJ, Knox MK, Haidet P. Examining clinical performance feedback in patient-aligned care teams. Journal of General Internal Medicine. 2014;29(Suppl 2):S667–74. doi: 10.1007/s11606-013-2707-7

24. Olsen L, Aisner D, McGinnis JM, eds. The learning healthcare system: Workshop summary. The National Academies Collection: Reports funded by National Institutes of Health. National Academies Press (US); 2007.

25. Robinson CH, Annis AM, Forman J, et al. Factors that affect implementation of a nurse staffing directive: Results from a qualitative multi-case evaluation. Journal of Advanced Nursing. 2016;72(8):1886–98. doi: 10.1111/jan.12961

26. Sandelowski M, Barroso J. Classifying the findings in qualitative studies. Qualitative Health Research. 2003;13(7):905–23. doi: 10.1177/1049732303253488

27. Tong A, Sainsbury P, Craig J. Consolidated criteria for reporting qualitative research (COREQ): A 32-item checklist for interviews and focus groups. International Journal for Quality in Health Care. 2007;19(6):349–57. doi: 10.1093/intqhc/mzm042

28. Office of Productivity, Efficiency and Staffing. VHA facility complexity model history. Washington, DC: United States Department of Veterans Affairs; 2020.

29. Office of Productivity, Efficiency, and Staffing. Data definitions: VHA facility complexity model [FY20 Complexity Model Data Definitions.pdf]. Facility Complexity Model, VHA Office of Productivity, Efficiency & Staffing (OPES) [VA Intranet]. Washington, DC: United States Department of Veterans Affairs; 2020.

30. Glaser BG. The constant comparative method of qualitative analysis. Social Problems. 1965;12(4):436–445. doi: 10.2307/798843

31. Lockhart L. Measuring nursing's impact. Nursing Made Incredibly Easy. 2018;16(2):55. doi: 10.1097/01.Nme.0000529956.73785.23

32. Agency for Healthcare Research and Quality. Patient safety indicators (PSI) overview. Updated n.d. Accessed 3 June 2021, https://www.qualityindicators.ahrq.gov/Modules/psi_resources.aspx#techspecs

33. Rossetti SC, Yen P-Y, Dykes PC, Schnock K, Cato K. Reengineering approaches for learning health systems: Applications in nursing research to learn from safety information gaps and workarounds to overcome electronic health record silos. In: Zheng K, Westbrook J, Kannampallil TG, Patel VL, eds. Cognitive informatics: Reengineering clinical workflow for safer and more efficient care. Springer International Publishing; 2019:115–148.

34. Westra BL, Sylvia M, Weinfurter EF, et al. Big data science: A literature review of nursing research exemplars. Nursing Outlook. 2017;65(5):549–561. doi: 10.1016/j.outlook.2016.11.021

35. Bowles J, Adams J, Batcheller J, Zimmermann D, Pappas S. The role of the nurse leader in advancing the Quadruple Aim. Nurse Leader. 2018;16:244–248. doi: 10.1016/j.mnl.2018.05.011

36. Bodenheimer T, Sinsky C. From Triple to Quadruple Aim: Care of the patient requires care of the provider. Annals of Family Medicine. 2014;12(6):573–576. doi: 10.1370/afm.1713

37. Adnan K, Akbar R, Khor SW, Ali ABA. Role and challenges of unstructured big data in healthcare. Third International Conference on Data Management, Analytics and Innovation - ICDMAI 2019. Lincoln University College, Kuala Lumpur, Malaysia: Springer Nature Singapore Pte Ltd; 2019. p. 301–323.

38. National Audit Office, Department of Health & Social Care, NHS England & NHS Improvement, NHS Digital. Digital transformation in the NHS. 2020. Accessed 24 June 2021, https://www.nao.org.uk/wp-content/uploads/2019/05/Digital-transformation-in-the-NHS.pdf

39. Lopez KD, Wilkie DJ, Yao Y, et al. Nurses' numeracy and graphical literacy: Informing studies of clinical decision support interfaces. Journal of Nursing Care Quality. 2016;31(2):124–30. doi: 10.1097/ncq.0000000000000149

40. Brennan PF, Bakken S. Nursing needs big data and big data needs nursing. Journal of Nursing Scholarship. 2015;47(5):477–484. doi: 10.1111/jnu.12159

41. Yanchus NJ, Ohler L, Crowe E, Teclaw R, Osatuke K. ‘You just can’t do it all’: A secondary analysis of nurses' perceptions of teamwork, staffing and workload. Journal of Research in Nursing. 2017;22(4):313–325. doi: 10.1177/1744987117710305
