Abstract

Morphometric (i.e., shape and size) differences in the anatomy of cortical structures are associated with neurodevelopmental and neuropsychiatric disorders. Such differences can be quantized and detected by a powerful tool called Labeled Cortical Distance Map (LCDM). The LCDM method provides distances of labeled gray matter (GM) voxels from the GM/white matter (WM) surface for specific cortical structures (or tissues). Here we describe a method to analyze morphometric variability in the particular tissue using LCDM distances. To extract more of the information provided by LCDM distances, we perform pooling and censoring of LCDM distances. In particular, we employ Brown-Forsythe (BF) test of homogeneity of variance (HOV) on the LCDM distances. HOV analysis of pooled distances provides an overall analysis of morphometric variability of the LCDMs due to the disease in question, while the HOV analysis of censored distances suggests the location(s) of significant variation in these differences (i.e., at which distance from the GM/WM surface the morphometric variability starts to be significant). We also check for the influence of assumption violations on the HOV analysis of LCDM distances. In particular, we demonstrate that BF HOV test is robust to assumption violations such as the non-normality and within sample dependence of the residuals from the median for pooled and censored distances and are robust to data aggregation which occurs in analysis of censored distances. We recommend HOV analysis as a complementary tool to the analysis of distribution/location differences. We also apply the methodology on simulated normal and exponential data sets and assess the performance of the methods when more of the underlying assumptions are satisfied. We illustrate the methodology on a real data example, namely, LCDM distances of GM voxels in ventral medial prefrontal cortices (VMPFCs) to see the effects of depression or being of high risk to depression on the morphometry of VMPFCs. The methodology used here is also valid for morphometric analysis of other cortical structures.

Keywords: Brown-Forsythe test, Censoring, Computational anatomy, Homogeneity of variance, Pooled distances, Simultaneous inference

SUBJECT CLASSIFICATIONS: Primary 62H35, secondary 62F03, 62P10, 62-07

1. INTRODUCTION

Quantification of morphometric properties of cortical structures is a major component of Computational Anatomy (CA). Recently, the laminar structure of the neo-cortex has received considerable attention thanks to advances in high resolution magnetic resonance imaging (MRI) technology and the development of CA methods (see, e.g., [1–6]). Specifically, Labeled Cortical Distance Mapping (LCDM) has been used for structural comparisons of cortical thickness in the cingulate cortex in studies of Alzheimer’s disease [7] and schizophrenia [40] in comparison to control subjects. The LCDM algorithm provides distances of labeled gray matter (GM) voxels from the GM/white matter (WM) surface for cortical structure of interest. Depending on the resolution of the GM voxels, the associated data set can be very large for each subject. Previously, the morphometric differences between the diagnostic groups were discovered by the analysis of LCDM distances, more specifically, by comparing the means and distributions of the LCDM distances [8,9]. However, in this article, cortical morphometric variability (possibly due to a disease or impairment) as measured by the LCDM distances is studied.

Cortical thinning has been observed in other regions in a variety of neuro-developmental and neuro-degenerative disorders (see above references for examples). In particular, functional imaging studies implicate the ventral medial prefrontal cortex (VMPFC) in major depressive disorders (MDD) [10,11] which have been correlated with shape changes observed in structural imaging studies [12,13]. Some specific regions of the prefrontal cortex play an important role in modulating emotions and mood. Structural imaging studies in MDD have largely focused on adult onset with only few focused on early onset MDD which has been associated with structural deficits in the subgenual prefrontal cortex, a subregion of the VMPFC. Furthermore, the whole VMPFC has been examined in a twin study of early onset MDD [13].

Several studies of the VMPFC and related structures have been obtained from analysis of the cortex as a whole [13–17], whereas others have pursued more attempts at the localized analysis attempts to deal with the highly folded and variable GM cortex [18] and to address issues of signal inhomogeneity or artifacts which can cause processing issues in this region. Also in the localized approach, the laminar shape (i.e., curvature/folding and thickness) of the cortex can be quantified in great detail. Two aspects of the laminar shape are structural formation (like surface and form of the cortex or curvature of the tissue) and scale or size (like volume and surface area). Thus, morphometry refers to all aspects of laminar structure, where “shape” refers to the surface structure and “size” refers to the scale of the tissue in question.

LCDMs can be used in many ways. For example, the 90th percentile distance of LCDMs can be used as a measure of cortical thickness [19]. Group comparisons can be performed on GM volumes (by the usual t-test) or on randomly selected subsamples from LCDM distances can be analyzed via Wilcoxon rank sum (WRS) test [7]. Various morphometric measures (i.e., volume, descriptive statistics based on LCDM distances such as median, mode, range, and variance) can be used for group comparisons; however, these variables were shown to have less power in discriminating the depressed subjects from healthy ones, possibly due to oversimplification of cortical characterization represented in LCDM distances [9]. To avoid this information loss, the LCDM distances can be pooled (i.e., merged) for each diagnostic group and various statistical tests (such as tests on differences in distribution (such as Kolmogorov-Smirnov (KS) test or WRS test) or location (i.e., tests on mean or median such as ANOVA F-tests and t-tests) can be performed by classical parametric or non-parametric tests [8]. Furthermore LCDM distances can be censored at various (consecutive) distance thresholds to determine the location (i.e., distance from the GM/WM surface) where significant differences in morphometry starts [20]. That is, at each step we only use the voxels with distances smaller than the threshold distance and we do not use the voxels with distances larger than the threshold distance, hence the term “censoring” for this process. Previously, the distributional and mean differences at the diagnostic group level are investigated using LCDM distances [8,9] and also the location (i.e., the distance from the GM/WM surface) of such differences were found by using censored LCDM distances [20]. But, in this article, the morphometric variability of the region of interest (ROI) due to the disease in question is assessed. Due to the structure of LCDM distances, the location, distribution, and variance comparisons might provide complementary information. In particular, LCDM distances are positive, and with a skewed right probability density function (pdf). Hence, the distributional and location analysis are not totally unrelated to the morphometric variability. Here, we investigate the similarities and differences between variability (or variance) analysis when compared to distributional and location analysis. The effect of a disease or a disorder on the variability may be important in the sense that, if, e.g., the disease reduces variability, then the diagnostic inference on the morphometry of the ROI will be more precise in indicating the possibility of the disease. However, variability analysis should be performed in addition to or in conjunction with the distributional or location analysis to obtain more reliable information.

The LCDM approach has been applied in clinical neuroimaging studies of the cingulate in subjects with Alzheimer’s Disease [21] and schizophrenia [22–24], the prefrontal cortex in subjects with schizophrenia [25], the parahippocampal gyrus in subjects with schizophrenia [26], the occipital cortex in visual attention [27,28], Area 46 of the frontal cortex in fetal irradiated macaques [29] and ERC in normal aging controls and in subjects with mild cognitive impairment [30]. Finally, our observation of variable cortical thickness in the left PT in three groups of age-matched and gender-matched controls and patients with schizophrenia and bipolar disorder [31] is consistent with post-mortem analysis [32]. The approach has also been extended to deal with deeply buried sulci by modeling image intensity stochastically based on the normal distance where the model includes cortical thickness as one of the parameters [1]; others have similarly adapted LCDMs [33,34]. The LCDM approach is similar to the voxel-based cortical thickness method (VBCT) [35] where each voxel in the GM has a thickness value associated with it, but our analysis of these voxel-based thickness values is different. In VBCT cortical thickness values are compared on a voxel-by-voxel basis as in SPM2 [36], while our analysis of LCDM distances allows us, for example, to first pool (i.e., merge) the distance values for each diagnostic group, and perform the comparisons on the overall distance (or thickness) level, rather than the voxel level for each individual. It has been shown that LCDMs are comparable to other methods for computing cortical thickness [37] and that LCDM profiles for whole brains are similar in shape [35,38]. LCDM is essentially a ROI approach and therefore differs from global ones such as FreeSurfer [39] which averages point-to-point distances between outer and inner cortical surfaces.

HOV analysis of pooled distances provides an overall analysis of morphometric variability of the LCDMs at the group level due to the disease in question, while the HOV analysis of censored distances suggests the location(s) of significant variation in these differences (i.e., at which distance(s) from the GM/WM surface the morphometric variability starts to be significant on the average) at the group level. We also check for the influence of the assumption violations on the HOV analysis of LCDM distances. In particular, we demonstrate that BF HOV test is robust to assumption violations by the LCDM distances such as nonnormality and within sample dependence of the residuals from the median. Furthermore, at each censoring step, the censored distances aggregate, which might confound the results of statistical testing. We investigate the influence of data aggregation with a Monte Carlo simulation analysis and show that such influence on the HOV test is only mild and negligible in practice. We also assess the performance of the methods on normal and exponential data sets to see the performance of the tests when more assumptions than those of LCDM distances are satisfied.

As an illustrative example, we perform LCDM analysis of GM tissue in VMPFCs in a study of early onset depression in twins. The methodology is applied to LCDMs generated for the VMPFC implicated in major depressive disorders (MDD) [10,11,13,18,40]. Furthermore, in analysis of censoring distances, we have the multiple testing problem when all censoring thresholds are considered together. We consider various corrections for simultaneous inference (i.e., p-value adjustment or correction for multiple testing) and compare these correction methods. We demonstrate that among these methods, Benjamini-Hochberg correction [41] has the best performance.

We describe the acquisition of LCDM distances for VMPFCs in Section 2.1, pooling and censoring of LCDM distances in Section 2.2., statistical methods we employ in Section 2.3, assess the influence of assumption of violations and data aggregation with an extensive Monte Carlo simulation study in Sections 3.1–3.3, assess the performance of the methods on normal and exponential data in Section 3.4, and discuss the simultaneous inference procedures for analysis of censored distances in Section 3.5. We present the HOV analysis of the example data set in Section 4, and discussion and conclusions in Section 5.

2. METHODS

2.1. Data acquisition

A cohort of 34 right-handed young female twin pairs between the ages of 15 and 24 years old were obtained from the Missouri Twin Registry and were used to study cortical changes in the VMPFC associated with MDD. Both monozygotic and dizygotic twin pairs were included, of which 14 pairs were controls (Ctrl) and 20 pairs had one twin affected with MDD, their cotwins are designated as the High Risk (HR) group. Three high resolution T1-weighted MPRAGE magnetic resonance scans of each subject in this population were acquired using a Siemens 1.5T Sonata scanner with 1 mm3 isotropic resolution. Images were then averaged, corrected for intensity inhomogeneity and interpolated to 0.5×0.5×0.5 mm3 isotropic voxels. Following Ratnanather et al. (2001), a ROI comprising the prefrontal cortex stripped of the basal ganglia, eyes, sinus, cavity, and temporal lobe was defined manually and segmented into gray matter (GM), white matter (WM), and cerebrospinal fluid (CSF) by Bayesian segmentation using the expectation maximization algorithm [42]. A triangulated representation of the cortex at the GM/WM boundary was generated using isosurface algorithms [43].

LCDM is a method to characterize the cortical laminar shape over a specified cortical ROI and are generated as follows: first, the ROI subvolume is partitioned by a regular lattice of voxels of specific size h, denoted V (h). Each voxel is a cube of size h×h×h (in some unit, say, mm3). Every voxel is labeled by tissue type as gray matter (GM), white matter (WM), and cerebrospinal fluid (CSF) [e.g. 2,42]). For every voxel in the ROI subvolume, the (normal) distance from the center of the voxel to the closest point on GM/WM surface is computed. Let S (Δ) be the triangulated graph representing the smooth boundary at the GM/WM surface. The distance computation algorithm is specified as follows [2,18,19]:

for all i do

sclosest ← a point in S (Δ) such that

for all sj ∈ S (Δ) do

d(sclosest, centroid(υi)) ≤ d(sj, centroid(υi))

end for

Di ← d(sclosest, centroid(υi))

end for

where d(·, ·) stands for the usual Euclidean distance, υi is the ith voxel, sj is the jth point in S (Δ) and Di is the ith distance (i.e., distance for the ith voxel). That is, an LCDM distance is a set distance function d : υi ∈ V → d(centroid(υi), S (Δ)), which is the distance between the centroid (or center of mass) of υi and the set S (Δ). More precisely,

| (1) |

where CM (·) stands for center of mass (or centroid), and ∥·∥2 is the usual L2-norm. A signed distance is used to indicate the location of each voxel with respect to the GM/WM surface; distances are positive for GM and CSF voxels, and negative for WM voxels. These distances constitute the LCDM distance data. We will only use LCDM distances for the GM of the ROI in our further analysis. Hence, LCDM distances refer to those of GM voxels for the rest of the article.

Let be the distance associated with kth voxel in GM of left VMPFC of subject j in group i for j = 1, 2, . . . , ni, i = 1, 2, 3 (group 1 is for MDD, group 2 for HR, and group 3 for Ctrl) and where is the number of voxels for left VMPFC of subject j in group i. Thus, n1 = 20, n2 = 20, and n3 = 28. Right VMPFC distances are denoted similarly as with being the number of voxels for right VMPFC of subject j in group i. Based on prior anatomical knowledge (e.g., [44]), cortical thickness of the VMPFC is roughly 6 mm so we only retain distances larger than −0.5 mm so that mislabeled WM is excluded from the data with an upper limit of 5.5 mm. This causes discarding a small portion of the LCDM distance data set. In particular, in our VMPFC LCDM distances data, it turns out that only 0.16% of left distances and 0.14% of right distances are below −0.5 mm; on the other hand, only 0.22% of left distances and 0.07% of right distances are above 5.5 mm.

By construction, most of GM distances are positive, most of WM distances are negative, and all of CSF distances are positive. Mismatch of the signs for some GM and WM voxels close to the GM/WM boundary are due to the way the surface is constructed in relation to how the pixels are labeled, such that a surface is always intersecting pixels, i.e., partial volume. Hence some appropriately labeled GM and WM pixels may fall on a side of surface that they should not belong to; however, these mislabeled voxels constitute a small number of voxels and do not affect the overall analysis. Reliability of LCDMs is dependent on reliability of GM segmentation and reconstruction of GM/WM surface which has been validated for several cortical structures including VMPFC [18], cingulate gyrus ([45]; [46]) and planum temporale [47].

2.2. Pooling and censoring of LCDM distances by group

We pool LCDM distances of subjects from the same diagnostic group or condition; that is, we pool the LCDM distances of all left MDD VMPFCs in one group, those of all left HR VMPFCs in another group, and those of all left Ctrl VMPFCs in another. Let be the set of LCDM distances of subject j from group i for j = 1, 2, . . . , ni and i = 1, 2, 3. Then we set

| (2) |

where is the set of left distances for group i with i = 1, 2, 3, is the ℓth distance value for the merged (or pooled) distances from left VMPFCs of subjects in group i for . We pool the right VMPFC LCDM distances in a similar fashion and replace L with R in the notation.

One of the underlying assumptions for pooling is that the distances from left VMPFCs of subjects with the same diagnostic condition have the same distribution (i.e., distances of left VMPFCs of subjects with MDD have the same distribution, say , those of HR have the same distribution, , and so do those of Ctrl group, with ); and the same holds for distances of right VMPFCs with distributions for i = 1, 2, 3. In other words, we assume that are identically distributed for all j = 1, . . . , ni and . So, for all j, k, and is a sample from the distribution ; likewise for all j, k. Hence the pooled distances are distributed as and for i = 1, 2, 3 and . We take this action under the presumption that the morphometry of VMPFCs of the healthy subjects are similar and those of subjects with the same disease are affected in the same manner, hence age and gender matched subjects with the same health condition (whether healthy or diseased) have VMPFCs similar in morphometry. We also denote all left pooled distances as

and similarly denote all right pooled distances DR as replace L with R in the notation.

Next, we partition the range of LCDM distances into bins of size δ, then we have ⌊dmax/δ⌋ many bins where ⌊s⌋ stands for the floor of (i.e., largest integer less than or equal to) s. To construct LCDM censored distances, we only retain distances less than or equal to a specified distance value denoted γδ,k. In particular, at step k, we only consider the voxels whose LCDM distances are less than equal to γδ,k = kδ. Thus we only consider the layer of the cortex with thickness of roughly kδ from the GM/WM surface. These distances are called the censored LCDM distances, which, for left VMPFCs, are denoted as

and for group i of left VMPFCs,

for i = 1, 2, 3 (i.e., for groups MDD, HR, and Ctrl, respectively). Censored LCDM distances for right VMPFCs are denoted similarly as and for group i of right VMPFCs as .

Censored distances depend on the bin size, δ and resolution of the voxels h. We recommend the use of a bin size between [h/10, h] (which corresponds to 0.01 to 0.5 mm for our data). Because, if they are too large, censored distances do not provide the desired resolution in the distances from the GM/WM surface, and if they are too small, they do not improve on the results of 0.01 mm but increase computation time. So the lower bound on the bin size is rather of practical choice. In what follows, we use dmax = 5.5 mm and δ = 0.01 mm. Therefore, we have k = 0, 1, 2, . . . , 551 and γδ,k = .00, .01, .02, . . . , 5.50 mm. To overcome the possible confounding effects of the mislabeled GM voxels close to the GM/WM surface, censored distances within [0.5, 5.5] mm are used for statistical inference, as these censored distances provide more reliable results. Moreover, the censored distance analysis is performed with the same tests as the pooled distance analysis performed in [20].

2.3. Statistical tests

Previously, we have analyzed the pooled and LCDM distances for differences in location and distribution in [8] and [20], respectively. In this article, we employ a test of equality or homogeneity of the variances (HOV) of pooled and censored distances which is important in its own right, because variance differences in distances between groups might be indicative of differences between the morphometric variations/variability of VMPFCs (due to the disease). That is, variance of LCDM distances for, e.g., left VMPFC of a subject is a measure of shape or size variation in that particular VMPFC.

HOV tests are usually employed to check an important assumption in ANOVA and the t-test for mean comparisons which states that the variances in the different groups are equal (i.e., homogeneous). The two most common HOV tests are the Levene’s test and the Brown-Forsythe test where the latter is a modification of Levene’s test. HOV assumption is not a crucial one for ANOVA methods, so we perform HOV tests not for assumption checking for ANOVA but for a different purpose: to determine the effect of a certain disease on the morphometric variability of a brain tissue. In Levene’s test ANOVA is performed on the absolute deviations (called residuals) of the values from the mean, and BF test does the same but on deviations from the median. The basic assumptions for these HOV tests are the same as the ANOVA assumptions, not on the original variable but on the residuals from the mean or median. That is, the residuals should enjoy within sample independence, between sample independence, normality (i.e., Gaussianity), and equality of the variances (of the residuals). It has been shown that BF test is more robust to the assumption violations [48], hence we prefer it over Levene’s test in our further HOV analysis of LCDM distances. Therefore, we perform HOV by using Brown-Forsythe’s (BF) HOV test (see, e.g., [49]). We apply a multi-group BF HOV test and if this is significant, then we perform (directional) pairwise HOV comparisons with Holm’s correction [50]. In the literature, BF HOV (and Levene’s HOV) tests are only used for two-sided alternatives, as they are based on ANOVA F-test on the residuals. However, in the two-sample case, ANOVA F-test and t-test are equivalent, and the latter can be used for directional alternatives. Hence for pairwise HOV comparisons (i.e., in the two-sample case), we use BF test as the usual t-test on the residuals from the median.

For the pooled LCDM distances by group and censored distances, there is an inherent spatial correlation between neighboring voxels which implies the dependence between LCDM distances (and hence the residuals from the medians) of the close-by voxels. Furthermore, LCDM distances (and hence the residuals from the medians) are significantly non-normal. Previously, it has been shown in [8] that the assumption violations for the parametric tests (ANOVA F-test and t-test) and for nonparametric tests (Kruskal-Wallis (KW) and WRS tests) have negligible effect on the empirical size and power performance of these tests. In this article, we also check the influence of assumption violations on the ANOVA of the residuals from the median, i.e., on BF HOV test.

The similarity in the morphometry of ROIs implies similarity of LCDM distances, which in turn implies similarity of the distributions of LCDM distances (hence similarity in the means, medians, and variances). That is, identical morphometry in the ROIs would imply identical distance distributions. But converse is not necessarily true in the sense that two ROIs might have similar distance distributions, but the corresponding morphometry could be very different. However, when the distance distributions are found to be different, it would logically imply different morphometry in the ROIs. For KW test, which is a nonparametric test, we test the equality of the distributions of the left pooled distances between groups; i.e., where is the distribution function of the left pooled distances for group i = 1, 2, . . . , k. The null hypothesis for the right distances is similar with L being replaced with R. For ANOVA F-tests with or without HOV, we test the equality of the means of the left pooled distances between k groups; i.e., where is the mean of the left pooled distances for group i = 1, 2, . . . , k. The null hypothesis for the right distances is similar with L being replaced with R.

Observe that KW, ANOVA or WRS tests suggest shape and size differences when rejected, in particular the direction of the alternatives for the WRS test might indicate cortical thinning. Similarity of the morphometry of ROIs will cause similarity of LCDM distances, which would also imply similarity of the variances of LCDM distances. Variance of distances is suggestive of morphometric variation in ROIs. So similar shapes and sizes imply similar variances, but not vice versa. For example, cortical thinning might reduce the variation in LCDM distances, and the larger the spread in the boundary (surface) of ROI, the larger the variance of LCDM distances. In HOV analysis, we test the equality of the variances of the left pooled distances between k groups; i.e.,

| (3) |

where is the variance of the left pooled distances for group i = 1, 2, . . . , k. The null hypothesis for the right distances is similar with L replaced with R.

3. THE INFLUENCE OF ASSUMPTION VIOLATIONS AND AGGREGATION OF CENSORED DISTANCES ON THE HOV TESTS: A MONTE CARLO STUDY

The influence of the assumption violations due to the spatial correlation and nonnormality (i.e., non-Gaussianity) in the pooled LCDM distances has been shown to have negligible effect on the tests of location and distribution (such as ANOVA F-test, pairwise t-tests, KW test, WRS test, and KS test) [8]. In censoring LCDM distances, in addition to the above violations, we have the issue of accumulation/aggregation of the distances at each step. This aggregation of the distances have been shown not to substantially influence the tests and their sensitivity to the differences between the groups [20]. In this article, we assess the influence of the above problems on HOV tests. That is, we use an extensive Monte Carlo simulation study to determine the influence of violations of within sample independence and normality of pooled LCDM distances on the BF HOV test; and in censoring distances, in addition to these violations, we investigate the effect of distance accumulation at each censoring step on the HOV test. We employ the same Monte Carlo simulation setting of [8] in our data generation. For completeness, we replicate the distance generation procedure below which is shown to generate distances resembling those of LCDM distances from real subjects; i.e., capturing the true randomness in LCDM distances.

3.1. Simulation of distances resembling LCDM distances

We choose the left VMPFC of HR subject 1 whose distances are denoted as . We partition the range of distances into intervals I0 := [−1, 0.5] mm, I1 := (0.5, 1.0] mm, I2 := (1.0, 1.5] mm, . . . , and I11 := (5.5, 6.0] mm. Let be the number of distances within interval Ii, i.e, , for i = 0, 1, 2, . . . , 11. Then for we have . A possible Monte Carlo simulation to obtain LCDM-like distances can be performed as follows. We generate n = 10000 numbers with replacement in {0, 1, 2, . . . , 11} proportional to the above frequencies, (the choice of n = 10000 is due to the fact that the number of distances for left VMPFC of HR subject 1 is 11659). Then we generate as many uniform numbers in (0, 1) (i.e., numbers from distribution) for each i ∈ {0, 1, 2, . . . , 11} as i occurs in the generated sample of 10000 numbers, and add these uniform numbers to i. Then we divide each distance by 2 to match the range of generated distances with [0, 6.0] which is roughly the range of . More specifically, we independently generate n numbers from {0, 1, 2, . . . , 11} with the discrete probability mass function for i = 0, 1, . . . , 11 and j = 1, 2, . . . , n. So, where

Let ni be the frequency of i among the n generated numbers from {0, 1, 2, . . . , 11} with distribution P0, for i = 0, 1, . . . , 11. Hence . Then the set of simulated distances is

See Figure 1 for histograms overlaid with the kernel density estimates of LCDM distances from HR subject 1 and 10000 simulated distances as described above. Notice that the histograms and the kernel density estimates are very similar, which indicates that distances generated by the above simulation procedure resembles distances from real-life VMPFCs.

Figure 1.

Histograms overlaid with the kernel density estimates of LCDM distances for the left VMPFC of HR subject 1 (top) and a simulated sample as described in Section 3.1 (bottom).

3.2. Empirical size estimates and size curves

3.2.1. Multi-sample case

In the multi-sample case, our null hypothesis is HOV (i.e., equality of the variances) of LCDM distances, which follows from the equality of the distribution of LCDM distances for the groups. For simplicity, we consider k = 3 groups, extension of the below discussion to k > 3 groups is straightforward. Thus, for the null case, we generate three samples , , and each of size nx, ny, and nz, respectively, as described above in Section 3.1 with the sample sizes for bins (stacks) being selected to be proportional to the frequencies ; i.e., distances are generated to be similar to the left VMPFC of HR subject 1. No generality is lost here, because distances for any other VMPFC can either be obtained by rescaling of the generated distances, or by modifying the frequencies in . In particular, sample is generated as

| (4) |

Samples and are generated similarly and denoted as and , respectively.

We repeat this sample generation procedure Nmc = 10000 times and count the number of times the null hypothesis is rejected at α = 0.05 level for BF test of HOV, KW test of distributional equality, and ANOVA F-tests (with and without HOV) of equality of mean distances, thus obtain the estimated significance levels under Ho. The estimated significance levels for various values of nx, ny, and nz are provided in Table 1, where is the empirical size estimate for BF test, is for KW test, is for ANOVA F-test with HOV, and is for ANOVA F-test without HOV; furthermore, is the proportion of agreement between KW test and ANOVA with HOV, i.e., the number of times out of 10000 Monte Carlo replicates both KW test and ANOVA F-test with HOV simultaneously reject the null hypothesis. Similarly, is the proportion of agreement between KW test and ANOVA F-test without HOV, and is the proportion of agreement between ANOVA F-tests with and without HOV, is the proportion of agreement between BF test and KW test, is the proportion of agreement between BF and ANOVA F-test with HOV, and is the proportion of agreement between BF test and ANOVA F-test without HOV. Using the asymptotic normality of the proportions, we test the equality of the empirical size estimates at 0.05 level, and compare the empirical sizes pairwise. We observe that all tests are at the desired level (i.e., empirical size estimates are not significantly different from the nominal level of 0.05; with Nmc = 10000, empirical size estimates within [.0464, .0536] are not significantly different from the nominal level of 0.05). Hence, if the distances are not that different; i.e., the frequency of distances for each bin and the distances for each bin are identically distributed for each group, the inherent spatial correlation does not seem to influence the significance levels. Moreover, the proportion of agreement between KW test and ANOVA F-tests with and without HOV are significantly less than 0.05 and also significantly less than the smaller of the empirical sizes in each pair (i.e., the proportion of agreement between KW test and ANOVA F-test with HOV is significantly smaller than the smaller empirical size of these tests). This implies KW test and ANOVA F-tests have significantly different rejection (and hence different acceptance) regions. This is in agreement with the fact that KW and ANOVA F-tests are actually testing different hypotheses; in fact, KW test is for distributional equality (based on ranks), while ANOVA F-tests are for equality of the means. On the other hand, the proportion of agreement between ANOVA F-tests, , is neither significantly smaller than 0.05, nor significantly smaller than the minimum of and . Hence, F1 and F2 tests have about the same rejection (and acceptance) regions. That is, under the simulation of the null case, HOV is retained, hence ANOVA F-tests with or without HOV have the same empirical size performance, and moreover, F1 and F2 basically test the same hypotheses. In Table 1, we also observe that proportions of agreement with BF test and other tests (i.e., KW, F1 and F2 tests) are all significantly smaller than 0.05 (in fact, they are about 0.005), and also significantly smaller than the minimum of the empirical sizes in each pair. This suggests that the rejection and acceptance regions for BF test and all other tests are very different. In fact, BF test is testing equality of variances, while the others are tests of location or distribution (such as equality of means or rankings). Furthermore, the proportions of agreement between the tests of location is much higher compared to those of BF test with other tests, as the corresponding rejection or acceptance regions have different intersection levels. In particular, the common rejection region for tests of location is much larger than the common rejection region of BF test with a test of location or distribution.

Table 1.

Estimated significance levels (i.e., empirical size estimates) and proportions of agreement between the test pairs based on Monte Carlo simulations of distances with three groups, , , and each of size nx, ny, and nz, respectively, with Nmc = 10000 Monte Carlo replicates.

| Empirical size | |||||

| (nx, ny, nz) | |||||

| (1000,1000,1000) | .0495a | .0512a | .0501a | .0506a | |

| (5000,5000,10000) | .0509a | .0474a | .0473a | .0477a | |

| (5000,7500,10000) | .0486a | .0506a | .0484a,< | .0484a,< | |

| (10000,10000,10000) | .0512a | .0515a | .0501a | .0500a | |

| Proportion of agreement | |||||

| .0403ℓ | .0406ℓ | .0492≈ | .0049ℓ | .0080ℓ | .0080ℓ |

| .0376ℓ | .0378ℓ | .0468≈ | .0044ℓ | .0067ℓ | .0068ℓ |

| .0396ℓ | .0395ℓ | .0479≈ | .0055ℓ | .0078ℓ | .0078ℓ |

| .0404ℓ | .0403ℓ | .0497≈ | .0041ℓ | .0074ℓ | .0076ℓ |

The empirical sizes in the same row with the same superscript are not significantly different from each other. Empirical size estimates within [.0464, .0536] are not significantly different from the nominal level of 0.05. The sample sizes for the proportion of agreement rows are same as those for the empirical size estimates. (In the superscripts “>” means the empirical size is significantly larger than 0.05; i.e., method is liberal; “<” means empirical size is significantly smaller than 0.05; i.e., method is conservative; “ℓ” means the proportion of agreement significantly less than the minimum of the empirical sizes; “≈” means the proportion of agreement not significantly less than the minimum of the corresponding empirical sizes)

For censoring, we use the pooled distances with nx = ny = nz = 10000. For example for sample , we partition the range of generated distances into bins of size δ = 0.01, then we have many bins where is the largest distance value in . At kth censoring step, we only consider the distances less than or equal to γδ,k = kδ. These distances are denoted as

Censored distances for samples and are obtained similarly from and and are denoted as and , respectively. For brevity in notation, we write as Var(X), as Var(Y), and as Var(Z). We repeat the same procedure Nmc = 1000 times. At each censoring step, we record the p-values for multi-group BF HOV test and KW test of distributional equality, and pairwise BF HOV tests and pairwise WRS tests. We also count the number of times the null hypothesis is rejected at α = 0.05 level for these tests, thus obtain the empirical significance levels (i.e., sizes) under Ho. The average p-values and empirical size estimates together with 95% confidence bands for multi-group BF HOV test are plotted in Figure 2; and for pairwise BF HOV test for the one-sided alternatives Var(X) < Var(Y) and Var(X) > Var(Y), the empirical sizes are about 0.05 and average p-values are about 0.50 for all the tests considered hence are not presented.

Figure 2.

The average p-values (top) and empirical size estimates (bottom) together with 95% confidence bands versus censoring distance values under the null case for multi-group BF HOV test.

3.2.2. Pairwise size comparisons

In the multi-sample case with three or more samples, the alternatives are general ones with no direction. However, if the multi-class omnibus tests (i.e., BF HOV test, and KW test, ANOVA F-tests for testing the distribution/location differences) are significant, then the next question of interest is which pair(s) of the samples exhibit differences and in which direction. To answer these questions, we can apply the two-sample versions of these tests, namely, BF test, WRS test, and Welch’s t-test, as pairwise post-hoc tests. This will enable us to assess whether the methods/tests detect the specific direction of difference(s) between the samples which are present by construction; and if so, whether they detect these differences with high power.

Remark 1:

Two-sample tests can serve as a post-hoc testing procedure, after the multi-sample test being significant. If there are only two samples in the data set, then these tests are the two-sample versions of the multi-sample tests. As post-hoc tests, they may suffer from the type I error inflation because the two-sample tests will be performed conditioning on the significant result of the multi-sample tests when there are three or more classes. However, the conditional size and power estimates (conditional on the multi-sample test being significant) are only slightly different from unconditional ones. Hence, we only present the unconditional size and power estimates in this article. Furthermore, we omit the Holm’s correction for pairwise comparisons between groups in our simulations (but in the application to the example data, Holm’s correction is applied for pairwise group comparisons) for the same reason and to better compare the proportions of agreement between the two sample tests.

We count the number of times the null hypothesis is rejected at α = 0.05 for the post-hoc tests (BF test, WRS test, and t-test) and also Lilliefor’s test of normality and KS test; thereby obtain the empirical size estimates for these tests. Although we have one type of alternative in the multi-sample case, for the two-sample case, except for Lilliefor’s test, there are three types of alternative hypotheses possible: two-sided, left-sided, and right-sided alternatives. The estimated significance levels are provided in Table 2, where is the empirical size estimate for BF test, is for WRS test, is for t-test, is for KS test. Furthermore, is the proportion of agreement between WRS test and t-test, is the proportion of agreement between WRS test and KS test, and is the proportion of agreement between the t-test and KS test, is the proportion of agreement between BF test and WRS tests, is the proportion of agreement between BF test and the t-test, and is the proportion of agreement between BF test and KS test. We only present comparison of samples and , since the sample size combinations for samples and are included in vs comparisons. For vs comparisons, the sample sizes (1000, 1000) and (10000, 10000) are included in vs comparisons, and (5000, 5000) and (5000, 7500) comparisons are omitted for brevity (as they would not provide anything new to the conclusions). Our samples are severely non-normal by construction, and normality is rejected for almost all samples generated, hence we omit the results of Lilliefor’s test. We observe that under Ho, the empirical significance levels are about the desired level for all three types of alternatives, although KS test is slightly conservative. Hence, if the distances are not that different; i.e., the frequency of distances for each bin and the distances for each bin are identically distributed for each group, the inherent spatial correlation does not influence the significance levels for these tests. However, WRS, t-test, and KS tests are used for testing different null hypotheses, so their acceptance and rejection regions are significantly different for LCDM distances, since the proportion of agreement for each pair is significantly smaller than the minimum of the empirical size estimates for each pair of tests. Among these tests, the proportion of agreement between KS test and t-test is smallest. Moreover, we observe that the proportion of agreement of BF test with each of WRS, t, and KS tests is significantly smaller than 0.05 (in fact, the range of is 0.0039 to 0.0055, the range of is 0.0060 to 0.0088, and the range of is 0.0063 to 0.0091), and these proportions are much smaller than the agreement proportions for each pair of WRS, t, and KS tests.

Table 2.

Estimated significance levels (i.e., empirical size estimates) under Ho where ry = rz = 1.0 and ηy = ηz = 0 based on Monte Carlo simulation of LCDM-like distances with two groups and each with size ny and nz, respectively, with Nmc = 10000 Monte Carlo replicates.

| Two-Sided Tests | |||||

| Empirical size | |||||

| (nx, ny) | |||||

| (1000,1000) | .0522a | .0482a | .0488a | .0471a | |

| (5000,10000) | .0512a | .0462a,< | .0474a | .0460a,< | |

| (7500,10000) | .0479a | .0511a | .0516a | .0479a | |

| (10000,10000) | .0496a | .0476a | .0487a | .0447a,< | |

| Proportion of agreement | |||||

| .0392ℓ | .0301ℓ | .0274ℓ | .0055ℓ | .0082ℓ | .0091ℓ |

| .0378ℓ | .0276ℓ | .0239ℓ | .0050ℓ | .0084ℓ | .0079ℓ |

| .0414ℓ | .0315ℓ | .0286ℓ | .0048ℓ | .0079ℓ | .0088ℓ |

| .0390ℓ | .0267ℓ | .0241ℓ | .0046ℓ | .0086ℓ | .0079ℓ |

| Left-Sided Tests | |||||

| Empirical size | |||||

| (1000,1000) | .0518a | .0435b,< | .0453b,< | .0432b,< | |

| (5000,10000) | .0522a | .0481a | .0495a | .0485a | |

| (7500,10000) | .0525a | .0510a | .0499a | .0478a | |

| (10000,10000) | .0482a | .0473a | .0471a | .0460a,< | |

| Proportion of agreement | |||||

| .0363ℓ | .0278ℓ | .0256ℓ | .0044ℓ | .0071ℓ | .0071ℓ |

| .0389ℓ | .0300ℓ | .0277ℓ | .0035ℓ | .0060ℓ | .0063ℓ |

| .0421ℓ | .0326ℓ | .0302ℓ | .0044ℓ | .0065ℓ | .0067ℓ |

| .0399ℓ | .0297ℓ | .0278ℓ | .0048ℓ | .0074ℓ | .0081ℓ |

| Right-Sided Tests | |||||

| Empirical size | |||||

| (1000,1000) | .0507a | .0537a | .0539a | .0508a | |

| (5000,10000) | .0513a | .0487a | .0486a | .0497a | |

| (7500,10000) | .0461a,< | .0488a | .0501a | .0495a | |

| (10000,10000) | .0500a | .0477a | .0481a | .0470a | |

| Proportion of agreement | |||||

| .0436ℓ | .0337ℓ | .0311ℓ | .0048ℓ | .0079ℓ | .0083ℓ |

| .0400ℓ | .0324ℓ | .0304ℓ | .0055ℓ | .0083ℓ | .0087ℓ |

| .0401ℓ | .0322ℓ | .0305ℓ | .0039ℓ | .0062ℓ | .0075ℓ |

| .0395ℓ | .0304ℓ | .0290ℓ | .0040ℓ | .0067ℓ | .0063ℓ |

Left-sided (resp. right-sided) tests are for values in tending to be smaller (resp. larger) than those in ). The sample sizes for the proportion of agreement rows are same as those for the empirical size estimates. The size estimates in the same row are superscripted so that order of significance difference is as the alphabetical order of the superscripts, and size estimates with the same superscript are not significantly different from each other. Superscripts for the proportions of agreement are as in Table 1

3.3. Empirical power comparisons

3.3.1. Multi-sample power comparisons of the pooled distances

For the alternative hypotheses in the multi-sample case, we again consider k = 3 groups, namely, , , and . Let η be a nonnegative integer and where is the ith value after the entries are sorted in descending order for i = 0, 1, 2, . . . , 11 and . Then we set the probability mass function to

Let r ≥ 1 be a real number, then the set of simulated distances is

Then for samples , , and , we set r = rx, η = ηx, r = ry, η = ηy, and r = rz, η = ηz, respectively and take n = nx = ny = nz = 10000. In our simulations, sample distances are generated as in the null case with rx = 1.0, ηx = 0; i.e., they are similar to distances of HR subject 1. So we have for i = 0, 1, . . . , 11 and where is defined in Section 3.1. Notice also that when ry = rz = 1.0 and ηy = ηz = 0, we obtain the null case of distributional equality between samples , and . For practical purposes, we require ηy and ηz to be in [0, 1000] and ry and rz to be in [0, 2), although larger values would also be conceivable. The alternative cases we consider are

| (5) |

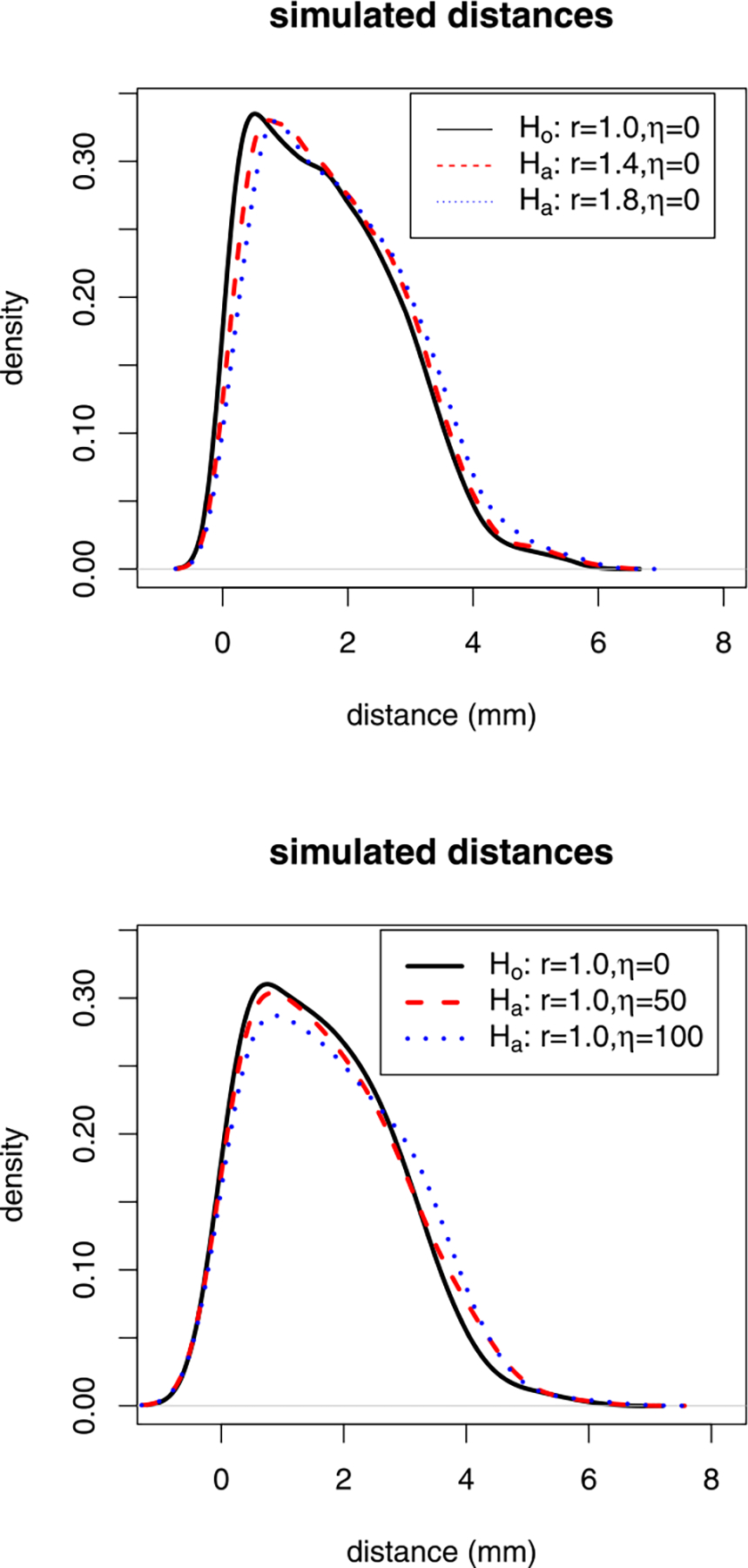

See Figure 3 for the kernel density estimates of sample distances under the null case and various alternatives.

Figure 3.

Plots of the kernel density estimates of the Monte Carlo simulated LCDM distances under the null case and alternatives with η = 0 and r ∈ {1.0, 1.4, 1.8} (top); null case and alternatives with r = 1.0 and η ∈ {0, 50, 100} (bottom). For the parameters r and η, see Section 3.3.1.

Remark 2:

Among the alternative parameters, r > 1 tends to make the corresponding samples less variable compared to the null samples. More specifically, r in (1, 2) would make a mild distance clustering for values around (i + r)/2 for i = 0, 1, . . . , 11 and does not increase the range and mean or medians of the distribution, but mostly changes the distribution and ranking of the distances and hence the locations of the samples. On the other hand, the parameters η > 0 would make the distributions more skewed to the right, and also modify the underlying probability mass function. That is, it would change the distribution of the distances, and increase the variability, mean/median and ranges of the distances. We observe in our simulations that impact of η > 0 on the variation of the samples is more severe compared to that on the distribution of the samples (see below).

The sample generation procedure is repeated Nmc = 10000 times. The empirical power estimates under Ha are obtained as follows: We count the number of times the null hypothesis is rejected at α = 0.05 for BF HOV test, KW test of distributional equality, and ANOVA F-tests (with and without HOV) of equality of mean distances. The empirical power estimates are provided in Table 3, where is the empirical power estimate for BF test, is for KW test, is for ANOVA F-test with HOV, and is for ANOVA F-test without HOV. Note that as n increases, the power estimates also increase under these alternative cases. Using the asymptotic normality of the empirical power estimates, we observe that under alternative cases L1 and L2, the variances of the distances are not that different, so we still have power estimates for BF test around .05 (i.e., in terms of variance differences, these alternatives are not different from the null case). However, under these alternatives, the distributions of the generated distances are different, hence the power estimates for the distribution and location tests (i.e., KW and ANOVA F-tests) are significantly larger than 0.05. In fact, the larger the ry − 1.0 and rz − 1.0, the higher the power estimates for KW and ANOVA F-tests. Under these alternatives, KW test tends to be more powerful than ANOVA F-tests, since such alternatives influence the distribution (hence ranking) of the distances, more than the means of the distances. Furthermore, under these alternatives, shape differences are more emphasized compared to size or scale differences; here “size” refers mostly to the distance with respect to the GM/WM surface. We also note that ANOVA F-tests (i.e., F1 and F2) have about the same power estimates.

Table 3.

The power estimates based on Monte Carlo simulation of distances under the alternative cases L1 – L4 with three groups, , , and each with size nx, ny, and nz, respectively, with Nmc = 10000 Monte Carlo replicates.

| L1 : (ry, rz, ηy, ηz) = (1.1, 1.0, 0, 0) | ||||

| (nx, ny, nz) | ||||

| (1000,1000,1000) | .0511b | .0778a | .0770a | .0768 a |

| (5000,5000,10000) | .0511c | .2281a | .2137b | .2114 b |

| (5000,7500,10000) | .0499 | .2903a | .2698b | .2694 b |

| (10000,10000,10000) | .0482c | .3900a | .3564b | .3559 b |

| L2 : (ry, rz, ηy, ηz) = (1.1, 1.2, 0, 0) | ||||

| (1000,1000,1000) | .0516c | .1396a | .1316ab | .1313b |

| (5000,5000,10000) | .0519c | .6725a | .6315b | .6317b |

| (5000,7500,10000) | .0513c | .6842a | .6396b | .6401b |

| (10000,10000,10000) | .0490c | .8410a | .8050b | .8050b |

| L3 : (ry, rz, ηy, ηz) = (1.0, 1.0, 10, 0) | ||||

| (1000,1000,1000) | .0899a | .0574c | .0728b | .0721b |

| (5000,5000,10000) | .3408a | .0767c | .1930b | .1854b |

| (5000,7500,10000) | .4378a | .0832c | .2415b | .2360b |

| (10000,10000,10000) | .5564a | .1006c | .3127b | .3061b |

| L4 : (ry, rz, ηy, ηz) = (1.0, 1.0, 10, 30) | ||||

| (1000,1000,1000) | .2236a | .0963c | .1519b | .1512b |

| (5000,5000,10000) | .9255a | .3986c | .7436b | .7537b |

| (5000,7500,10000) | .9214a | .4191c | .7578b | .7627b |

| (10000,10000,10000) | .9851a | .5352c | .8842b | .8835b |

The power estimates in the same row are superscripted so that order of significance difference is as the alphabetical order of the superscripts, and power estimates with the same superscript are not significantly different from each other

Under alternative cases L3 and L4, the variances of the distances tend to differ. Hence as ηy and ηz deviate more from 0 in the positive direction, the power estimates for BF test increase, and so do the power estimates of KW and ANOVA F-tests. Under these types of alternatives, BF test tends to be the most powerful of the tests considered, and ANOVA F-tests tend to be more powerful than KW tests, since the right skewness (tail) of distances are more emphasized, which in turn implies that the differences in the variances and in the mean distances are emphasized more. Under these alternatives, both the size or scale and shape are different. If the GM voxels from the GM/WM surface are at different distances, BF test is the most sensitive to the differences in LCDM distances, as these alternatives suggest more variability in LCDM distances. Furthermore, ANOVA F-tests are more sensitive to the differences in LCDM distances compared to KW test as these alternatives suggest more variability in means compared to the rankings. We also note that both ANOVA F-tests have about the same power estimates, which suggests that ANOVA F-test tends to be robust to deviations from HOV. Therefore, based on our Monte Carlo analysis, we observe that the spatial correlation between distances has a mild influence on the results. That is, the results based on BF HOV test on multiple samples are still reliable, although the assumption of within sample independence and normality of the residuals are violated.

3.3.2. Pairwise power comparisons for pooled distances

For the alternative cases L1 – L4, we determine which pairs of samples exhibit significant differences for the analysis of pooled distances. Note that in case L1 : (ry, rz, ηy, ηz) = (1.1, 1.0, 0, 0), the comparison of vs correspond to no difference, hence would be same as the size comparisons; and the same holds for case L3 : (ry, rz, ηy, ηz) = (1.0, 1.0, 10, 0), hence in these cases we omit the comparison of vs . In L1 : (ry, rz, ηy, ηz) = (1.1, 1.0, 0, 0) and L2 : (ry, rz, ηy, ηz) = (1.1, 1.2, 0, 0), the comparison of vs yield the same results, hence L2 is presented (for L1 and L2); the same holds for the comparison of L3 : (ry, rz, ηy, ηz) = (1.0, 1.0, 10, 0) and L4 : (ry, rz, ηy, ηz) = (1.0, 1.0, 10, 30), hence L4 is presented (for L3 and L4). In the alternative cases, we consider BF HOV test, WRS test, t-test as post-hoc tests for the pairwise analysis, and also Lilliefor’s test of normality and KS test, and estimate the empirical powers for these tests. We do not present the power estimates for Lilliefor’s test of normality, since our data is severely non-Gaussian by construction, and so we get power estimates of 1.00 under both null and alternative cases. The power estimates are provided in Table 4, where is the power estimate for BF test, is for WRS test, is for t-test, is for KS test. We only present the power estimates for the left-sided alternatives for , , and , since by construction, distances tend to be smaller than and distances, distances tend to be smaller than distances, and the power estimates in the reverse directions are virtually zero.

Table 4.

The power estimates of the tests for the pairwise comparisons of the samples based on Monte Carlo simulation of distances under the alternative cases L2 and L4 with three groups, , , and each with size nx, ny, and nz, respectively, with Nmc = 10000 Monte Carlo replicates.

| L2 : (ry, rz, ηy, ηz ) = (1.1, 1.2, 0, 0) | ||||

| (nx, ny, nz) | ||||

| (1000,1000,1000) | .0496b | .1231a | .1187a | .1198a |

| (5000,5000,10000) | .0591d | .3182b | .2975c | .3907a |

| (5000,7500,10000) | .0531d | .3445b | .3231c | .4514a |

| (10000,10000,10000) | .0528d | .4930b | .4618c | .6904a |

| (1000,1000,1000) | .0531c | .2709b | .2602b | .2868a |

| (5000,5000,10000) | .0665d | .8422b | .8183c | .9753a |

| (5000,7500,10000) | .0633d | .8415b | .8181c | .9720a |

| (10000,10000,10000) | .0659d | .9428b | .9277c | .9987a |

| (1000,1000,1000) | .0536d | .1368a | .1266b | .1323ab |

| (5000,5000,10000) | .0587d | .3950b | .3498c | .5084a |

| (5000,7500,10000) | .0577d | .4785b | .4269c | .6198a |

| (10000,10000,10000) | .0648d | .5338b | .4709c | .6896a |

| L4 : (ry, rz, ηy, ηz ) = (1.0, 1.0, 10, 30) | ||||

| (1000,1000,1000) | .1543b | .0714c | .1129a | .0601d |

| (5000,5000,10000) | .4201a | .1263c | .2698b | .0971d |

| (5000,7500,10000) | .4731a | .1302c | .2940b | .1019d |

| (10000,10000,10000) | .6494a | .1687c | .4137b | .1250d |

| (1000,1000,1000) | .4029a | .1798c | .2945b | .1438d |

| (5000,5000,10000) | .9793a | .5770c | .8869b | .8874b |

| (5000,7500,10000) | .9788a | .5856c | .8895b | .8863b |

| (10000,10000,10000) | .9975a | .7349d | .9670c | .9947b |

| (1000,1000,1000) | .1791a | .1278c | .1562b | .1064d |

| (5000,5000,10000) | .6172a | .3513d | .4914b | .3909c |

| (5000,7500,10000) | .7098a | .4290d | .5923c | .5511b |

| (10000,10000,10000) | .7601a | .4740c | .6537b | .6484b |

The superscripts are as in Table 3

Under alternative case L2, the variances of the distances are not that different, so we still have power estimates for BF test around 0.05 (that is, in terms of HOV, these cases are not significantly different from the null hypothesis). But the distributions start to differ; so as ry and rz deviate further away from 1.0 in the positive direction, the power estimates for WRS, t-test, and KS tests tend to increase. Furthermore, as the sample size, n, increases, the power estimates for these tests increase as well. As in the multi-sample case, under these alternatives, WRS test is more powerful than t-test, since the ranking of the distances are affected more than the mean distances under these alternatives. But KS test has the highest power estimates for all sample sizes considered. Thus, for differences in shape rather than the thickness from the GM/WM surface, KS test and WRS test are more sensitive (with the former test being more sensitive) than t-test.

Under alternative case L4, the variances of the distances tend to differ; as ηy and ηz deviate further away from 0 in the positive direction, the power estimates for BF test increase, and so do the power estimates of WRS, t-test, and KS tests. Note that as n increases, the power estimates also increase under each alternative case. Under these alternatives, t-test is more powerful than WRS test, since mean distances are more affected than the rankings under such alternatives. KS test has higher power estimates for larger deviations from the null case with larger sample sizes. Furthermore, BF test tends to have the highest power estimates under these alternatives. These alternatives imply that the distances of the GM voxels are at different scales, BF test has the best performance for small differences, while for large differences, KS and BF test have about the same performance that is better than the others.

Therefore, based on our Monte Carlo analysis, the spatial correlation between voxels (hence distances) has a mild influence, if any, on our results. That is, the results based on BF HOV test are still reliable, although assumptions of within sample independence and normality of the residuals are violated) and the results based on the other tests (WRS test, t-test, and KS tests) for two samples are still reliable, although the assumption of within sample independence is violated (and normality for the t-test is also violated). However, WRS test is more sensitive against the shape differences of GM of VMPFCs with similar distances from the GM/WM boundary and BF HOV test cannot detect such differences, since in this case, the variation of distances are not sufficiently different between the two groups. On the other hand, the t-test and KS test are more sensitive against the differences of GM tissue with different distances from the boundary compared to WRS test; we also notice that in this case, BF test has the best performance in detecting such deviations from the null case. That is, the variation of distances is more emphasized compared to the differences in central locations or ranking of the distances, hence the highest power for BF tests.

3.3.3. Pairwise power comparisons for censored distances

For the censoring distances, we consider the alternative hypothesis in which we generate sample as in the null case. For sample , we set ry = 1.2 and ηy = 0 and for sample , we set rz = 1.0 and ηz = 50. So the alternative hypothesis we consider is

| (6) |

and we use nx = ny = nz = 10000. So, is the same as (which is the null distribution) and where

Notice that sample is generated so that the rankings of distances are more different than those of sample rather than the distances from the GM/WM surface. By construction, sample would have larger variance than sample , while sample would have larger variance compared to other samples. Furthermore, by construction, sample contains distances that are more accumulated at intervals [0.5, 0.6], [1.0, 1.1], . . . ,[5.5, 5.6] compared to sample . Therefore, at distances around these intervals, the censored distances for sample tend to be smaller than censored distances for sample around γ.01,k for k = 50, 100, . . . , 550 (i.e., around k × 0.01 = 0.5, 1.0, . . . , 5.5). Also, the variance of sample would be smaller than sample around these intervals provided censoring distances are less than 4.0. On the other hand, comparing with , we see that sample is more likely to have distances more than 4.0 compared to those of sample while sample is more likely to have distances less than 4.0 compared to those of sample . Hence, we expect that for distances larger than 4.0, the censored distances for sample tend to be smaller than censored distances for sample at γ.01,k for k ≥ 400 (i.e., γ.01,k ≥ 4.0). The same trend is expected on variances of these samples. That is, the variance of sample would be larger than the variance of sample for censoring distances larger than 4.0.

We repeat the sample generation procedure Nmc = 10000 times. We count the number of times the null hypothesis is rejected at α = 0.05 for BF test, KW test, and pairwise WRS tests, thus obtain the empirical power estimates under Ha.

The average p-values together with 95% confidence bands versus censoring distance values for multi-group BF HOV test and for multi-group KW test are plotted in Figure 4. Observe that the differences in variance and distribution are at about the same censoring distances except for the distances between (2.5, 4.0): There are significant differences between group variances at about γ.01,k = 0.5, 1.0, . . . , 2.5, and for distance values larger than 4.0. The significant differences at steps of 0.5 increments is because of the construction of sample , and significant differences for distances larger than 4.0 are due to sample . However, for censoring distances within (2.5, 4.0), the samples seem to satisfy HOV, but they still tend to exhibit differences in distribution at about 2.5, 3.0, 3.5, and 4.0.

Figure 4.

The average p-values versus censoring distances for multi-group BF HOV test (top) and multi-group KW test (bottom) together with the 95% confidence bands (dashed lines) based on 10000 Monte Carlo replications of censored , , and sets that are generated under the alternative case L5. Horizontal lines are at 0.05.

The average p-values together with 95% confidence bands versus censoring distance values for BF HOV test for the one-sided alternatives Var(X) > Var(Y), Var(X) < Var(Z), and Var(Y) < Var(Z) and for WRS tests for the left-sided alternatives X < Y (which means values tend to be smaller than values), X < Z, and Y < Z are plotted in Figure 5. Based on BF HOV test for Var(X) > Var(Y) alternative, we observe that censored distances for sample tend to have smaller variance than censored distances for sample around γ.01,k for k = 50 and larger variance for k = 100, . . . , 250 (i.e., smaller variance around γ.01,k = 0.5 and larger variance around γ.01,k = 1.0, . . . , 2.5). For censored distances larger than 3.0, Var(X) and Var(Y) are not significantly different from each other. Except around γ.01,k = 0.5, by construction there is moderate clustering of sample distances around γ.01,k = 1.0, . . . , 2.5, which makes Var(Y) significantly smaller than Var(X). Based on WRS test for X < Y alternative, we observe that censored distances for sample tend to be smaller than censored distances for sample around γ.01,k for k = 50, 100, . . . , 350 and k ≥ 400 (i.e., around γ.01,k = 0.5, 1.0, . . . , 3.5 and at γ.01,k ≥ 4.0). For censored distances larger than 4.0, the proportions and are not large enough for samples and to balance the accumulation of distances around 4.0, 4.5, 5.0, and 5.5 for sample . Hence, censored distances for sample are significantly larger than those of sample for γ.01,k ≥ 3.5.

Figure 5.

The average p-values together with 95% confidence bands versus censoring distance values under the alternative case L5 based on 10000 Monte Carlo replications for pairwise BF HOV test for the one-sided alternatives Var(X) > Var(Y), Var(X) < Var(Z), and Var(Y) < Var(Z) and for pairwise WRS tests for the one-sided alternatives X < Y, X < Z, and Y > Z.

Based on BF test for Var(X) < Var(Z) alternative, we observe that censored distances for sample tend to have smaller variance than the censored distances for sample at γ.01,k for k ≥ 400 (i.e., at γ.01,k ≥ 4.0). Because, for censored distances larger than 4.0, the proportions have larger weights for sample . Hence, Var(Z) is significantly larger than Var(X) for γ.01,k ≥ 4.0. Based on WRS test for alternative, we observe that censored distances for sample tend to be smaller than censored distances for sample at γ.01,k for k ≥ 400 (i.e., at γ.01,k ≥ 4.0). Because for censored distances larger than 4.0, the proportions have larger weights for sample . Hence, censored distances for sample are significantly larger than those of sample for γ.01,k ≥ 4.0. Hence samples and show the same trend in variance and distribution under this alternative (which does not occur in general).

Based on BF test for Var(Y) < Var(Z) alternative, we observe that censored distances for sample tend to have smaller variance than censored distances for sample around γ.01,k for k = 100, . . . , 250 and k ≥ 400 (i.e., around γ.01,k = 1.0, . . . , 2.5 and γ.01,k ≥ 4.0). Furthermore, censored distances for sample tend to have larger variance than censored distances for sample around γ.01,k for k = 50 (i.e., around γ.01,k = 0.5). Except around γ.01,k = 0.5, by construction there is moderate clustering of sample distances around γ.01,k = 1.0, . . . , 2.5, which makes Var(Y) significantly smaller than Var(Z). On the other hand, for censored distances larger than 4.0, the proportions have larger weights for sample . Hence, Var(Z) is significantly larger than Var(Y) for γ.01,k ≥ 4.0. Based on WRS test for Y > Z alternative, we observe that censored distances for sample tend to be larger than censored distances for sample around γ.01,k for k = 50, 100, . . . , 350 (i.e., around γ.01,k = 0.5, 1.0, . . . , 3.5). For censored distances larger than 4.0, the proportions are not large enough for sample to make its censored distances larger than those of sample . Hence, censored distances for sample are not significantly different from those of sample for γ.01,k ≥ 4.0. This also occurs because the proportions have larger weights for distances less than 4.0, and any parameter affecting these distances have more influence in censored distance analysis. Moreover, for distances within (1.0, 3.0) variance differences and distributional differences are in the opposite direction. That is, for censoring distance in this interval, variance of sample is significantly smaller than variance of sample , while sample distances tend to be larger than sample distances at the same censoring distance values.

Remark 3:

We omit the assessment of assumption violations and data aggregation on the censoring distance analysis via Monte Carlo simulations, because the results are similar to the pairwise comparisons after the multi-group analysis in the multi-sample case in Section 3.2.2. That is, we have the same conclusions of power comparisons in that section extended to the two-sample case; i.e., the assumption violations and data aggregation have negligible influence on the size and power of the tests under consideration, in particular, for BF HOV test.

Remark 4:

The Choice of the Reference Subject for Monte Carlo Simulations:

We have chosen left VMPFC of HR subject 1 as our baseline or reference tissue in our Monte Carlo simulations. This subject is actually a typical one and does not seem to be an outlier in VMPFC morphometry, see, e.g., the kernel density estimate of LCDM distances in Figure 13 (where the reference subject is indicated with a thicker solid line). In fact, the choice of the reference subject is not so relevant in the simulations, as in the null case we generate distances from the distance distribution of this subject, so they would all be similar (in distances) to each other satisfying the underlying assumption behind pooling. Deviations from the null case (i.e., the alternatives) are generated by modifying the parameters (i.e., entries in the frequency vector ). Distances resembling other subjects can also be generated by the same approach. For example, to generate distances similar to subject 17 in the Ctrl group, we could have taken the frequency vector to be and then could have used it as our reference subject.

Figure 13.

Histograms overlaid with the kernel density estimates of the pooled LCDM distances for the left and right VMPFCs.

3.4. Comparison of the tests for normal and skewed data sets

3.4.1. Normal data

One of the main conclusions of this work is that HOV test is (and other tests are) valid and applicable in practice for LCDM distances, although some assumptions (such as within sample independence and normality) are violated. These tests can be much more powerful when the underlying assumptions are met by the data sets. In the presence of assumption violations, these tests might require very large samples to attain good power, but LCDM data sets tend to be extremely large by construction (as the voxel sizes are usually taken at mm or half mm resolution or adjusted accordingly based on the ROI considered) so sample size is not an issue for LCDM distances. However, we want to study and compare the size and power performance of the tests when the underlying assumptions are met, and thus consider first the normally distributed data sets that also satisfy within and between sample independence. We choose the same sample sizes used for the simulation of the LCDM-like distances in Sections 3.2 and 3.3.

With k = 3 groups, we generate three samples , , and each of size nx, ny, and nz, respectively, each from a normal distribution. In particular, we generate sample as

| (7) |

Samples and are generated similarly. For the null case, we choose μx = μy = μz = 3.35 and σx = σy = σz = 2.28; these choices are made so that the means and standard deviations of the normal data (approximately) match those of the data generated under the null case with (ry, rz, ηy, ηz) = (1.0, 1.0, 0, 0) in Section 3.2.

For the alternatives, we use

which correspond to cases L1 – L4, respectively. That is, the means and standard deviations for the normal data in cases N1 – N4 are chosen so that they (approximately) match those of the data from cases L1 – L4, respectively.

Under N1 (resp. N2), the samples and (resp. all samples) are different in mean but same in variance. Under N3, sample is different from the others in location and variance; and under N4, samples are all different both in mean and variance. The estimated significance levels based on Nmc = 10000 Monte Carlo replicates at α = 0.05 level for the multi-class tests are presented in Table 5 where all the tests are about the desired level of .05; and the same holds for the pairwise tests, hence their size estimates are not presented. So, the methods behave as expected in terms of empirical size when all the assumptions are satisfied. The proportions of agreement between the multi-sample and pairwise tests have the similar trend as in Tables 1 and 2 (hence omitted again).

Table 5.

Estimated significance levels (i.e., empirical size estimates) based on Monte Carlo simulations of normal data (left) and exponential data (right) with three groups, , , and each with size nx, ny, and nz, respectively, with Nmc = 10000 Monte Carlo replicates.

| Normal Data | ||||

| (nx, ny, nz) | ||||

| (1000,1000,1000) | .0498a | .0499a | .0503a | .0497a |

| (5000,5000,10000) | .0486a | .0529a | .0527a | .0522a |

| (5000,7500,10000) | .0530a | .0498a | .0519a | .0515a |

| (10000,10000,10000) | .0506a | .0511a | .0492a | .0492a |

| Exponential Data | ||||

| (1000,1000,1000) | .0455b,< | .0519a | .0492ab | .0498ab |

| (5000,5000,10000) | .0544a | .0500a | .0517a | .0524a |

| (5000,7500,10000) | .0481a | .0475a | .0463a,< | .0465a |

| (10000,10000,10000) | .0522a | .0531a | .0553a,> | .0550a,> |

Superscript labeling is as in Table 1

Pooled Analysis:

For pooled data, we present the power estimates for the multi-group tests under N1 – N4 in Table 6. Notice that and have very similar power for all cases. Under N1 and N2, BF test has power about the nominal level of the test (i.e., .05), and ANOVA F-tests tend to be more powerful compared to KW test for samples larger than 1000. On the other hand, under N3 and N4, BF test has much higher power compared to other tests, and ANOVA F-tests are slightly more powerful than KW test for samples larger than 1000. These results are in agreement with the fact than under N1 and N2, variances are equal, and means are different; while under N3 and N4, we have differences in mean and variance.

Table 6.

The power estimates for the multi-group tests based on Monte Carlo simulation of normal data under the given alternatives N1 – N4 (left) and exponential data under the alternatives E1 – E4 (right) with samples , , and each with size nx, ny, and nz, respectively, with Nmc = 10000 Monte Carlo replicates.

| N1 | ||||

| (nx, ny, nz) | ||||

| (1000,1000,1000) | .0498b | .0660a | .0645a | .0652 a |

| (5000,5000,10000) | .0486b | .1411a | .1453a | .1449 a |

| (5000,7500,10000) | .0530 b | .1760a | .1827a | .1829 a |

| (10000,10000,10000) | .0506b | .2216a | .2283a | .2277 a |

| N2 | ||||

| (1000,1000,1000) | .0498b | .0712a | .0688a | .0682a |

| (5000,5000,10000) | .0486b | .1836a | .1931a | .1934a |

| (5000,7500,10000) | .0530b | .1913a | .1940a | .1934a |

| (10000,10000,10000) | .0506c | .2864b | .2996a | .3000a |

| N3 | ||||

| (1000,1000,1000) | .0948a | .0650b | .0642b | .0648b |

| (5000,5000,10000) | .3342a | .1396b | .1462b | .1423b |

| (5000,7500,10000) | .4269a | .1741b | .1802b | .1784b |

| (10000,10000,10000) | .5619a | .2178b | .2258b | .2228b |

| N4 | ||||

| (1000,1000,1000) | .1610a | .0863b | .0860b | .0861b |

| (5000,5000,10000) | .7543a | .3133c | .3257bc | .3336b |

| (5000,7500,10000) | .7618a | .3153c | .3231bc | .3313b |

| (10000,10000,10000) | .9052a | .4546c | .4719b | .4741b |

| E1 | ||||

| (1000,1000,1000) | .0531c | .1410a | .0842b | .0857b |

| (5000,5000,10000) | .0556c | .6168a | .2811b | .2794b |

| (5000,7500,10000) | .0528c | .7312a | .3560b | .3574b |

| (10000,10000,10000) | .0549c | .8617a | .4561b | .4585b |

| E2 | ||||

| (1000,1000,1000) | .0555c | .3456a | .1620b | .1624b |

| (5000,5000,10000) | .0707c | .9916a | .7871b | .7877b |

| (5000,7500,10000) | .0631c | .9922a | .7940b | .7942b |

| (10000,10000,10000) | .0738c | .9997a | .9252b | .9252b |

| E3 | ||||

| (1000,1000,1000) | .0881a | .0513c | .0722b | .0722b |

| (5000,5000,10000) | .2873a | .0657c | .1997b | .1906b |

| (5000,7500,10000) | .3515a | .0684c | .2423b | .2357b |

| (10000,10000,10000) | .4600a | .0742c | .3159b | .3070b |

| E4 | ||||

| (1000,1000,1000) | .1310a | .0696c | .1100b | .1115b |

| (5000,5000,10000) | .6317a | .2194c | .5135b | .5270b |

| (5000,7500,10000) | .6378a | .2340c | .5172b | .5311b |

| (10000,10000,10000) | .8214a | .3078c | .6968b | .7010b |

The superscripts are as in Table 3

We present the power estimates for the pairwise tests under the alternative cases N1 – N4 in Table 7 where we only present , , and alternatives, as these are the only plausible alternatives by construction (except for N1 and N2, where variances are equal). Based on the same reasoning for presenting L2 and L4 only in Table 4, we only present cases N2 and N4 here. The pairwise tests are also performed so as to check whether the correct direction in the differences is detected for each comparison. As expected, under N2, power estimates of the BF HOV test is at about the nominal level of .05 since variances are equal by construction; t-test seems to be most powerful, then comes WRS test, and then KS test. So under N2, since all the assumptions (including equality of the variances) are met, the parametric test of location (i.e., t-test) is more powerful than the WRS and KS tests. However, under N4, BF test has the highest power; and for alternative WRS, t-test, and KS tests have power estimates as in case N2, but for and alternatives these tests are more powerful compared to the case N2; and as sample sizes increase, KS test has the highest power (since in this case, both location and scale are different, and WRS and t-tests are sensitive to location only). Furthermore, under N2 (resp. under N4), the power estimates for is higher for WRS, t-test, and KS test (resp. all tests) compared to other alternative directions, as sample is much different in location (resp. different in location and scale) than other samples in this case.

Table 7.

The power estimates for pairwise tests based on Monte Carlo simulation of normal data under the given alternatives N2 and N4 with samples , , and each with size nx, ny, and nz, respectively, with Nmc = 10000 Monte Carlo replicates.

| Normal Data | ||||

| N2 : μx = 3.35, μy = 3.39, μz = 3.40; σx = σy = σz = 2.28 | ||||

| (nx, ny, nz) | ||||

| (1000,1000,1000) | .0501b | .1008a | .1070a | .0942a |

| (5000,5000,10000) | .0519c | .2081a | .2144a | .1772b |

| (5000,7500,10000) | .0497c | .2435a | .2484a | .2062b |

| (10000,10000,10000) | .0516c | .3325a | .3455a | .2790b |

| (1000,1000,1000) | .0523c | .1225a | .1236a | .1121b |

| (5000,5000,10000) | .0478c | .3430a | .3546a | .2867b |

| (5000,7500,10000) | .0482c | .3463a | .3562a | .2972b |

| (10000,10000,10000) | .0507c | .4547a | .4660a | .3790b |

| (1000,1000,1000) | .0529b | .0619a | .0636a | .0574ab |

| (5000,5000,10000) | .0477c | .0869a | .0884a | .0766b |

| (5000,7500,10000) | .0479b | .0869a | .0872a | .0825a |

| (10000,10000,10000) | .0458c | .0919a | .0935a | .0827b |

| N4 : μx = 3.35, μy = 3.39, μz = 3.42; σx = 2.28, σy = 2.33, σz = 2.37 | ||||

| (1000,1000,1000) | .1535a | .1001b | .1064b | .0997b |

| (5000,5000,10000) | .4160a | .2054c | .2128b | .2198b |

| (5000,7500,10000) | .4694a | .2400c | .2468c | .2642b |

| (10000,10000,10000) | .6550a | .3280c | .3397c | .3861b |

| (1000,1000,1000) | .3149a | .1592b | .1650b | .1635b |

| (5000,5000,10000) | .9057a | .5258d | .5451c | .6696b |

| (5000,7500,10000) | .9074a | .5271d | .5480c | .6768b |

| (10000,10000,10000) | .9767a | .6722d | .6913c | .8385b |

| (1000,1000,1000) | .1303a | .0866bc | .0910b | .0822c |

| (5000,5000,10000) | .3615a | .1847bc | .1930b | .1981b |

| (5000,7500,10000) | .4287a | .2070c | .2106c | .2255b |

| (10000,10000,10000) | .4688a | .2302c | .2323c | .2496b |

The superscripts are as in Table 3

Censoring Analysis: