Abstract

We develop a weak-form sparse identification method for interacting particle systems (IPS) with the primary goals of reducing computational complexity for large particle number $N$ and offering robustness to either intrinsic or extrinsic noise. In particular, we use concepts from mean-field theory of IPS in combination with the weak-form sparse identification of nonlinear dynamics algorithm (WSINDy) to provide a fast and reliable system identification scheme for recovering the governing stochastic differential equations for an IPS when the number of particles per experiment $N$ is on the order of several thousands and the number of experiments $M$ is less than 100. This is in contrast to existing work showing that system identification for $N$ less than 100 and $M$ on the order of several thousand is feasible using strong-form methods. We prove that under some standard regularity assumptions the scheme converges with rate $\mathcal{O}(N^{-1/2})$ in the ordinary least squares setting and we demonstrate the convergence rate numerically on several systems in one and two spatial dimensions. Our examples include a canonical problem from homogenization theory (as a first step towards learning coarse-grained models), the dynamics of an attractive–repulsive swarm, and the IPS description of the parabolic–elliptic Keller–Segel model for chemotaxis. Code is available at https://github.com/MathBioCU/WSINDy_IPS.

Keywords: Data-driven modeling, Interacting particle systems, Weak form, Mean-field limit, Sparse regression

1. Problem statement

Recently there has been considerable interest in the methodology of data-driven discovery for governing equations. Building on the Sparse Identification of Nonlinear Dynamics (SINDy) [1], we developed a weak form version (WSINDy) for ODEs [2] and for PDEs [3]. In this work, we develop a formulation for discovering governing stochastic differential equations (SDEs) for interacting particle systems (IPS). To promote clarity and for reference later in the article, we first state the problem of interest. Subsequently, we will provide a discussion of background concepts and current results in the literature.

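Consider a particle system $X_t = (X_t^{(1)}, \dots, X_t^{(N)})$ where on some fixed time window $t \in [0, T]$, each particle $X_t^{(i)} \in \mathbb{R}^d$ evolves according to the overdamped dynamics

$$dX_t^{(i)} = -\nabla K * \mu_t^N\big(X_t^{(i)}\big)\,dt - \nabla V\big(X_t^{(i)}\big)\,dt + \sigma\big(X_t^{(i)}\big)\,dB_t^{(i)}, \qquad i = 1, \dots, N, \tag{1.1}$$

with initial data $X_0^{(i)}$ each drawn independently from some probability measure $\mu_0 \in \mathcal{P}_p(\mathbb{R}^d)$, where $\mathcal{P}_p(\mathbb{R}^d)$ is the space of probability measures on $\mathbb{R}^d$ with finite pth moment.1 Here, $K$ is the interaction potential defining the pairwise forces between particles, $V$ is the local potential containing all exogenous forces, $\sigma$ is the diffusivity, and $(B_t^{(i)})_{i=1,\dots,N}$ are independent Brownian motions each adapted to the same filtered probability space $(\Omega, \mathcal{B}, \mathbb{P}, (\mathcal{F}_t)_{t \ge 0})$. The empirical measure is defined

$$\mu_t^N := \frac{1}{N} \sum_{i=1}^{N} \delta_{X_t^{(i)}}$$

and the convolution $\nabla K * \mu_t^N$ is defined

$$\nabla K * \mu_t^N(x) := \int_{\mathbb{R}^d} \nabla K(x - y)\, d\mu_t^N(y) = \frac{1}{N} \sum_{i=1}^{N} \nabla K\big(x - X_t^{(i)}\big),$$

where we set $\nabla K(0) = 0$ whenever $\nabla K(0)$ is undefined. The recovery problem we wish to solve is the following.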

Let be discrete-time data at timepoints for i.i.d. trials of the process (1.1) with , , and and let be a corrupted dataset. For some fixed compact domain containing supp (), and finite-dimensional hypothesis spaces2 , , and , solve

The problem is clearly intractable because we do not have access to , , or , and moreover the interactions between these terms render simultaneous identification of them ill-posed. We consider two cases: (i) and , corresponding to purely extrinsic noise, and (ii) and , corresponding to purely intrinsic noise. The extrinsic noise case is important for many applications, such as cell tracking, where uncertainty is present in the position measurements. In this case we examine representing i.i.d. Gaussian noise with mean zero and variance3 added to each particle position in . In the case of purely intrinsic noise, identification of the diffusivity is required as well as the deterministic forces on each particle as defined by and . A natural next step is to consider the case with both extrinsic and intrinsic noise. However, the combined noise case is sufficiently nuanced as to render it beyond the scope of the article, and we leave it for future work.
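To make the data-generating process concrete, the following is a minimal sketch of an Euler–Maruyama simulation of overdamped dynamics of the form (1.1). It assumes that the drift is minus the averaged pairwise force minus the local force, that the diffusivity is a constant scalar, and that the pairwise force is set to zero at zero separation. The function name `simulate_ips` and its arguments are hypothetical and are not taken from the authors' code.

```python
import numpy as np

def simulate_ips(x0, grad_K, grad_V, sigma, dt, n_steps, rng):
    """Euler-Maruyama sketch for N interacting particles in d dimensions.

    x0      : array of shape (N, d), initial positions
    grad_K  : maps separations of shape (N, N, d) to pairwise forces (N, N, d)
    grad_V  : maps positions of shape (N, d) to local forces (N, d)
    sigma   : constant scalar diffusivity (an assumption of this sketch)
    """
    N, d = x0.shape
    X = np.empty((n_steps + 1, N, d))
    X[0] = x0
    idx = np.arange(N)
    for k in range(n_steps):
        x = X[k]
        sep = x[:, None, :] - x[None, :, :]      # sep[i, j] = x_i - x_j
        force = np.array(grad_K(sep), dtype=float)
        force[idx, idx] = 0.0                    # convention: grad K(0) = 0
        # Averaging over j costs O(N^2) per step, which is the expense
        # that a mean-field (histogram-based) treatment aims to avoid.
        drift = -force.mean(axis=1) - grad_V(x)
        noise = sigma * np.sqrt(dt) * rng.standard_normal((N, d))
        X[k + 1] = x + drift * dt + noise
    return X
```

For a quadratic interaction potential with no noise, each particle relaxes toward the (conserved) center of mass, which gives a simple sanity check on the sign conventions.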

2. Background

Interacting particle systems (IPS) such as (1.1) are used to describe physical and artificial phenomena in a range of fields including astrophysics [4,5], molecular dynamics [6], cellular biology [7-9], and opinion dynamics [10]. In many cases the number of particles $N$ is large, with cell migration experiments often tracking $10^3$–$10^6$ cells and simulations in physics (molecular dynamics, particle-in-cell, etc.) requiring $N$ in the range $10^6$–$10^{12}$. Inference of such systems from particle data thus requires efficient means of computing pairwise forces from interactions at each timestep for multiple candidate interaction potentials $K$. Frequently, so-called mean-field equations at the continuum level are sufficient to describe the evolution of the system; however, in many cases (e.g. chemotaxis in biology [11]) only phenomenological mean-field equations are available. Moreover, it is often unclear how many particles are needed for a mean-field description to suffice. Many disciplines are now developing machine learning techniques to extract coarse-grained dynamics from high-fidelity simulations (see [12] for a recent review in molecular dynamics). In this work we provide a means for inferring governing mean-field equations from particle data assumed to follow the dynamics (1.1) that is highly efficient for large $N$, and is effective in learning mean-field equations when $N$ is in the range $10^3$–$10^5$.

Inference of the drift and diffusion terms for stochastic differential equations (SDEs) is by now a mature field, with the primary method being maximum-likelihood estimation, which uses Girsanov’s theorem together with the Radon–Nikodym derivative to arrive at a log-likelihood function for regression. See [13, 14] for some early works and [15] for a textbook on this approach. More recently, sparse regression approaches using the Kramers–Moyal expansion have been developed [16-18] and the authors of [19] use sparse regression to learn population level ODEs from agent-based modeling simulations. The authors of [20] also derived a bias-correcting regression framework for inferring the drift and diffusion in underdamped Langevin dynamics, and in [21] a neural network-based algorithm for inferring SDEs was developed.

Only in the last few years have significant strides been made towards parameter inference of interacting particle systems such as (1.1) from data. Apart from some exceptions, such as a Gaussian process regression algorithm recently developed in [22], applications of maximum likelihood theory are by far the most frequently studied. An early but often overlooked work by Kasonga [23] extends the maximum-likelihood approach to inference of the interaction potential , assuming full availability of the continuous particle trajectories and the diffusivity . Two decades later, Bishwal [24] further extended this approach to discrete particle observations in the specific context of linear particle interactions. In both cases, a sequence of finite-dimensional subspaces is used to approximate the interaction function, and convergence is shown as the dimension of the subspace and number of particles both approach infinity. More recently, the maximum likelihood approach has been carried out in [25,26] in the case of radial interactions and in [27] in the case of linear particle interactions and single-trajectory data (i.e. one instance of the particle system). The authors of [28] recently developed an online maximum likelihood method for inference of IPS, and in [29] maximum likelihood is applied to parameter estimation in an IPS for pedestrian flow. It should also be noted that parameter estimation for IPS is common in biological sciences, with the most frequently used technique being nonlinear least squares with a cost function comprised of summary statistics [7,30].

The recovery problem is made challenging by the coupled effects of $K$, $V$, and $\sigma$. In each of the previously mentioned algorithms, the assumption is made that $\sigma$ is known and/or that $K$ takes a specific form (radial or linear). In addition, the maximum likelihood-based approach approximates the differential $dX_t^{(i)}$ of particle $i$ using a 1st-order finite difference: $dX_t^{(i)} \approx X_{t+\Delta t}^{(i)} - X_t^{(i)}$, which is especially ill-suited to problems involving extrinsic noise in the particle positions. Our primary goal is to show that the weak-form sparse regression framework allows for identification of the full model ($K$, $V$, $\sigma$), with significantly reduced computational complexity, when $N$ is on the order of several thousands or more. We use a two-step process: the density of particles is approximated using a density kernel G and then the WSINDy algorithm (weak-form sparse identification of nonlinear dynamics) is applied in the PDE setting [2,3]. WSINDy is a modified version of the original SINDy algorithm [1,31] where the weak formulation of the dynamics is enforced using a family of test functions that offers reduced computational complexity, high-accuracy recovery in low-noise regimes, and increased robustness to high-noise scenarios. The feasibility of this approach for IPS is grounded in the convergence of IPS to associated mean-field equations. The reduction in computational complexity follows from the reduction in evaluation of candidate potentials (as discussed in Section 4.2), as well as the convolutional nature of the weak-form algorithm.

To the best of our knowledge, we present here the first weak-form sparse regression approach for inference of interacting particle systems; however, we now review several related approaches that have recently been developed. In [32], the authors learn local hydrodynamic equations from active matter particle systems using the SINDy algorithm in the strong-form PDE setting. In contrast to [32], our approach learns nonlocal equations using the weak form; however, similarly to [32], we perform model selection and inference of parameters using sparse regression at the continuum level. The weak form provides an advantage because no smoothness is required on the particle density (for requisite smoothness the authors of [32] use a Gaussian kernel, which is more expensive to compute than simple particle binning as done here). The authors of [33] developed an integral formulation for inference of plasma physics models from PIC data using SINDy; however, their method involves first computing strong-form derivatives and then averaging, rather than integration by parts against test functions as done here, and as in [32], the learned models are local. In [34], the authors apply the maximum likelihood approach in the continuum setting on the underlying nonlocal Fokker–Planck equation and directly learn the nonlocal PDE using strong-form discretizations of the dynamics. While we similarly use the continuum setting for inference (albeit in weak form), our approach differs from [34] in that it is designed for the more realistic setting of discrete-time particle data, rather than pointwise data on the particle density (assumed to be smooth in [34]).

2.1. Contributions

The purpose of the present article is to show that the weak form provides an advantage in speed and accuracy compared with existing inference methods for particle systems when the number of particles is sufficiently large (on the order of several thousand or more). The key points of this article include:

(I) Formulation of a weak-form sparse recovery algorithm for simultaneous identification of the particle interaction force, local potential, and diffusivity from discrete-time particle data.

(II) Convergence with rate of the resulting full-rank least-squares solution as the number of particles $N \to \infty$ and timestep $\Delta t \to 0$.

(III) Numerical illustration of (II) along with robustness to either intrinsic randomness (e.g. Brownian motion) or extrinsic randomness (e.g. additive measurement noise).

2.2. Paper organization

In Section 3 we review results from mean-field theory used to show convergence of the weak-form method. In Section 4 we introduce the WSINDy algorithm applied to interacting particles, including hyperparameter selection, computational complexity, and convergence of the method under suitable assumptions in the limit of large $N$. Section 5 contains numerical examples exhibiting the convergence rates of the previous section and examining the robustness of the algorithm to various sources of corruption, and Section 6 contains a discussion of extensions and future directions. In the Appendix we provide information on the hyperparameters used (A.1), a derivation of the homogenized equation (5.3) (A.2), results and discussion for the case of small $N$ and large $M$ (in comparison with [26]) (A.3), and proofs of technical lemmas (A.4). Table 1 includes a list of notations used throughout.

Table 1.

Notations used throughout.

| Variable | Definition | Domain |
|---|---|---|
| $K$ | Pairwise interaction potential | |
| $V$ | Local potential | |
| $\sigma$ | Diffusivity | |
| $N$ | Number of particles per experiment | {2, 3,…} |
| $d$ | Dimension of latent space | |
| $T$ | Final time | (0, ∞) |
| (, , , ) | Filtered probability space | |
| | Independent Brownian motions on (, , , ) | |
| | particle in the particle system (1.1) at time | |
| | -particle system (1.1) at time | |
| | Empirical measure of | |
| | Distribution of | |
| | Mean-field process (3.2) at time | |
| | Distribution of | |
| | discrete timepoints | [0, $T$] |
| | Collection of independent samples of at | |
| | Sample of corrupted with i.i.d. additive noise | |
| | Approximate density from particle positions | |
| | Density kernel mapping to | |
| | Spatial support of , | Compact subset of |
| | Discretization of | |
| | Discrete approximate density | |
| | Semi-discrete inner product, trapezoidal rule over | |
| | Fully-discrete inner product, trapezoidal rule over | |
| | Library of candidate interaction forces | |
| | Library of candidate local forces | |
| | Library of candidate diffusivities | |
| | (, , ) | |
| | Set of test functions | |
| | Test functions used in this work (Eq. (4.4)) | |
| | Set of sparsity thresholds | |
| | Loss function for sparsity thresholds (Eq. (4.6)) | |

3. Review of mean-field theory

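Our weak-form approach utilizes that under fairly general assumptions the empirical measure $\mu_t^N$ of the process $X_t$ defined in (1.1) converges weakly to $\mu_t$, the distribution of the associated mean-field process $\bar{X}_t$ defined in (3.2). Specifically, under suitable assumptions on $K$, $V$, $\sigma$, and $\mu_0$, there exists $T > 0$ such that for all $t \in [0, T]$, the mean-field limit4

$$\lim_{N \to \infty} \mu_t^N = \mu_t$$

holds in the weak topology of measures,5 where $\mu_t$ is a weak-measure solution to the mean-field dynamics

$$\partial_t \mu_t = \nabla \cdot \big(\mu_t\, \nabla K * \mu_t\big) + \nabla \cdot \big(\mu_t \nabla V\big) + \frac{1}{2} \sum_{i,j=1}^{d} \frac{\partial^2}{\partial x_i \partial x_j} \Big( \mu_t\, (\sigma \sigma^T)_{ij} \Big). \tag{3.1}$$

Eq. (3.1) describes the evolution of the distribution of the McKean–Vlasov process

$$d\bar{X}_t = -\nabla K * \mu_t\big(\bar{X}_t\big)\,dt - \nabla V\big(\bar{X}_t\big)\,dt + \sigma\big(\bar{X}_t\big)\,dB_t. \tag{3.2}$$

This implies that as $N \to \infty$, an initially correlated particle system driven by pairwise interaction becomes uncorrelated and only interacts with its mean-field distribution $\mu_t$. In particular, the following theorem summarizes several mean-field results taken from the review article [35] with proofs in [36,37].6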

Theorem ([35-37]). Assume that is globally Lipschitz, , and . In addition assume that . Then for any , for all it holds that

(i) There exists a unique solution (, ) where is a strong solution to (3.2) and is a weak-measure solution to (3.1).

(ii) For any ,

(3.3) with depending on and .

(iii) For any , a.e. , the k-particle marginal

converges weakly to as , where is the distribution of .

The previous result immediately extends to the case of and both globally Lipschitz and has been extended to only locally-Lipschitz in [38], with Coulomb-type singularity at the origin in [39], and domains with boundaries in [40,41]. Analysis of the model (3.1) continues to evolve in various contexts, including analysis of equilibria [42-44] and connections to deep learning [45]. For our convergence result below we simply assume that , , and are such that (i) and (ii) from the above theorem hold.

3.1. Weak form

Despite the convergence of the empirical measure in the previous theorem, it is unclear at what particle number $N$ the mean-field equations become a suitable framework for inference using particle data, due to the complex variance structure at any finite $N$. A key piece of the present work is to show that the weak form of the mean-field equations does indeed provide a suitable setting when $N$ is at least several thousands. Moreover, since in many cases (3.1) can only be understood in a weak sense, the weak form is the natural framework for identification. We say that $\mu_t$ is a weak solution to (3.1) if for any compactly supported $\psi \in C^2(\mathbb{R}^d \times (0, T))$ it holds that

$$\int_0^T \!\! \int_{\mathbb{R}^d} \partial_t \psi \, d\mu_t \, dt = \int_0^T \!\! \int_{\mathbb{R}^d} \Big( \nabla \psi \cdot \nabla K * \mu_t + \nabla \psi \cdot \nabla V - \frac{1}{2} \mathrm{Tr}\big( \nabla^2 \psi \, \sigma \sigma^T \big) \Big) \, d\mu_t \, dt, \tag{3.4}$$

where $\nabla^2 \psi$ denotes the Hessian of $\psi$ and $\mathrm{Tr}(A)$ is the trace of the matrix $A$. Our method requires discretizing (3.4) for all $\psi \in \Psi$ where $\Psi$ is a suitable test function basis, and approximating the mean-field distribution $\mu_t$ with a density $U_t$ constructed from discrete particle data at time $t$. We then find $K$, $V$, and $\sigma$ within specified finite-dimensional function spaces.

4. Algorithm

We propose the general Algorithm 4.1 for discovery of mean-field equations from particle data. The inputs are a discrete-time sample containing $M$ experiments each with $N$ particle positions over $L$ timepoints. The following hyperparameters are defined by the user: (i) a kernel used to map the empirical measure to an approximate density, (ii) a spatial grid over which to evaluate the approximate density, (iii) a library of trial functions, (iv) a basis of test functions, (v) a quadrature rule over the spatiotemporal grid denoted by an inner product ⟨·, ·⟩, and (vi) sparsity factors for the modified sequential thresholding least-squares Algorithm 4.2 (MSTLS) reviewed below. We discuss choices of these hyperparameters in Section 4.1, computational complexity of the algorithm in Section 4.2, and convergence of the algorithm in Section 4.3. In Section 4.4 we briefly discuss gaps between theory and practice. Table 1 includes a list of notations used throughout.

| Algorithm 4.1 WSINDy for identifying mean-field Eq. (3.1) from particle data | |

|---|---|

4.1. Hyperparameter selection

4.1.1. Quadrature

We assume that the set of gridpoints in Algorithm 4.1 is chosen from some compact domain containing supp (). The choice of gridpoints (and of the domain) must be made in conjunction with the quadrature scheme, which includes integration in time using the given timepoints as well as space. For completeness, the inner products in lines 10, 16, 22, and 27 of Algorithm 4.1 are defined in the continuous setting by

and the convolution in line 10 is defined by

In the present work we adopt the scheme used in the application of WSINDy for local PDEs [3], which includes the trapezoidal rule in space and time with test functions compactly supported in . We take to be a rectangular domain enclosing supp () and to be equally-spaced in order to efficiently evaluate convolution terms. In what follows we denote by ⟨·, ·⟩ the continuous inner product, by $\langle \cdot, \cdot \rangle_h$ the inner product evaluated using the composite trapezoidal rule in space with meshwidth $h$ and Lebesgue integration in time, and by $\langle \cdot, \cdot \rangle_{h, \Delta t}$ the trapezoidal rule in both space and time, with meshwidth $h$ in space and $\Delta t$ in time. With some abuse of notation, will denote the convolution of and , understood to be discrete or continuous by the context. Note also that we denote by , , and the measures over defined by , and , respectively, where is the Lebesgue measure on [0, ].

4.1.2. Density kernel

Having chosen the domain containing the particle data , let be a partition of with indicating the size of the atoms . For the remainder of the paper we take to be hypercubes of equal side length in order to minimize computation time for integration, although this is by no means necessary. For particle positions , we define the histogram7

| (4.1) |

Here the density kernel is defined

and in this setting the corresponding spatial grid is the set of center-points of the bins , from which we define the discrete histogram data . The discrete histogram then serves as an approximation to the mean-field distribution .
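The histogram construction and its use inside a weak-form integral can be sketched in one dimension as follows. The sketch assumes equal-width bins and a piecewise-constant density, so that integrating a test function against the histogram reduces to evaluating the test function at bin centers. The names `histogram_density` and `weak_integral` are hypothetical helpers introduced for illustration only.

```python
import numpy as np

def histogram_density(positions, edges):
    """Piecewise-constant density estimate from 1D particle positions."""
    counts, _ = np.histogram(positions, bins=edges)
    widths = np.diff(edges)
    density = counts / (positions.size * widths)   # integrates to in-domain mass
    centers = 0.5 * (edges[:-1] + edges[1:])
    return centers, density, widths

def weak_integral(psi, centers, density, widths):
    """Integral of psi against the histogram, using bin-center evaluation."""
    return float(np.sum(psi(centers) * density * widths))

# Illustration with synthetic particles (standard normal, fixed seed).
rng = np.random.default_rng(0)
particles = rng.standard_normal(20000)
edges = np.linspace(-6.0, 6.0, 1201)               # bin width h = 0.01
centers, U, h = histogram_density(particles, edges)

# A compactly supported test function on [-3, 3] (illustrative choice).
psi = lambda x: np.clip(1.0 - (x / 3.0) ** 2, 0.0, None) ** 4

weak_value = weak_integral(psi, centers, U, h)
empirical_value = psi(particles).mean()            # integral against empirical measure
```

Because each particle only moves to its bin center, `weak_value` and `empirical_value` differ by at most half a bin width times the Lipschitz constant of the test function, no matter how few particles land in each bin.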

Pointwise estimation of densities from samples of particles usually requires large numbers of particles to achieve reasonably low variance, and in general the variance grows inversely proportional to the bin width . One benefit of the weak form is that integrating against a histogram does not suffer from the same increase in variance with small . In particular,

Lemma 1. Let be a sequence of -valued random variables such that the empirical measure of converges weakly to according to

| (4.2) |

for all and a universal constant. Let be the histogram computed with kernel using (4.1) with bins and equal sidelength . Then for any in compactly supported in , we have the mean-squared error (for depending on and )

Remark 1. We note that (4.2) follows immediately for i.i.d.,8 and also for a solution to (1.1) at time with mean-field distribution according to (3.3) (for suitable , , and ), which is the setting of the current article.

Proof of Lemma 1. First we note that by compact support of , the trapezoidal rule can be written

where the midpoint approximation of is given by

| (4.3) |

Hence we simply split the error and use (4.2):

The previous lemma in particular shows that small bin width does not negatively impact as an estimator of , which is in contrast to as a pointwise estimator of . For example, if we assume that is sampled from a density , it is well known that the mean-square optimal bin width is [46]. Summarizing this result, elementary computation reveals the pointwise bias for ,

for some . Letting , we have

For the variance we get

and hence a bound for the mean-squared error

Minimizing the bound over we find an approximately optimal bin width

which provides an overall pointwise root-mean-squared error of . Hence, not only does the weak form remove the inverse dependence in the variance, but fewer particles are needed to accurately approximate integrals of the density .
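As a worked illustration of the pointwise bias–variance tradeoff, the following sketches the standard computation in one dimension. It assumes that the particles are i.i.d. with a bounded density $\rho$ that is Lipschitz with constant $L$, and that $x$ lies in a bin $B$ of width $h$ containing $n_B$ particles; these symbols are introduced here for illustration and the constants are not claimed to match those of the original derivation.

```latex
\begin{align*}
U(x) &= \frac{n_B}{N h}, \qquad
\mathbb{E}[U(x)] = \frac{1}{h}\int_B \rho(y)\,dy = \rho(\xi)
  \quad \text{for some } \xi \in B, \\
\big|\mathbb{E}[U(x)] - \rho(x)\big| &= |\rho(\xi) - \rho(x)| \le L h, \\
\mathrm{Var}[U(x)] &= \frac{h\rho(\xi)\,\big(1 - h\rho(\xi)\big)}{N h^2}
  \le \frac{\|\rho\|_\infty}{N h}, \\
\mathbb{E}\big[(U(x) - \rho(x))^2\big] &\le L^2 h^2 + \frac{\|\rho\|_\infty}{N h}, \\
h^\star &= \Big(\frac{\|\rho\|_\infty}{2 L^2 N}\Big)^{1/3}
  \;\Longrightarrow\;
  \mathbb{E}\big[(U(x) - \rho(x))^2\big] \le 3 L^2 (h^\star)^2
  = \mathcal{O}\big(N^{-2/3}\big).
\end{align*}
```

Under these assumptions the pointwise root-mean-squared error is $\mathcal{O}(N^{-1/3})$, slower than the $N^{-1/2}$ behavior available for integrals of the histogram against a smooth test function.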

4.1.3. Test function basis

For the test functions we use the same approach as the PDE setting [3], namely we fix a reference test function and set

where is a fixed set of query points. This, together with a separable representation

enables construction of the linear system (, ) using the FFT. We choose , , of the form

| (4.4) |

where is the integer support parameter such that is supported on points of spacing and is the degree of . For simplicity we set for and , so that only the numbers , , , need to be specified.
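A one-dimensional reference test function of this type can be sketched as below. The sketch assumes the piecewise-polynomial form $\phi(x) = \max\{1 - (x/(m\Delta))^2, 0\}^p$ used in WSINDy for PDEs [3], sampled on $2m+1$ points of spacing $\Delta$; the helper name `bump_1d` is hypothetical.

```python
import numpy as np

def bump_1d(m, p, delta):
    """Sample phi(x) = max(1 - (x/(m*delta))^2, 0)^p and its derivative
    on the 2m+1 points x_k = k*delta, k = -m, ..., m."""
    x = delta * np.arange(-m, m + 1)
    s = x / (m * delta)                       # rescaled coordinate in [-1, 1]
    base = np.clip(1.0 - s ** 2, 0.0, None)
    phi = base ** p
    dphi = -2.0 * p * s * base ** (p - 1) / (m * delta)
    return x, phi, dphi

# Example: support [-1, 1] with 41 points and degree p = 4.
x, phi, dphi = bump_1d(m=20, p=4, delta=0.05)

# Discrete integration by parts against a smooth function u(x) = sin(x):
# <phi', u> should match -<phi, u'> up to quadrature error. Since phi vanishes
# at both endpoints, the trapezoidal rule is a plain Riemann sum here.
lhs = np.sum(dphi * np.sin(x)) * 0.05
rhs = -np.sum(phi * np.cos(x)) * 0.05
```

A separable space-time test function can then be formed as an outer product of two such arrays, for example `np.outer(phi_x, phi_t)`, which is what makes FFT-based evaluation of the weak-form integrals possible.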

Since has exactly weak derivatives, and must be at least as large as the maximum spatial and temporal derivatives appearing in the library , or , . Larger results in higher-accuracy enforcement of the weak form (3.4) in low-noise situations (see Lemma 2 of [2] for details), however the convergence analysis below indicates that smaller , , may reduce variance. The support parameter m determines the length and time scales of interest and must be chosen small enough to extract relevant scales yet large enough to sufficiently reduce variance.

In [3, Appendix A] the authors developed a changepoint algorithm to choose , , , automatically from the Fourier spectrum of the data . Here, for each of the three examples in Section 5, we fix across all particle numbers , extrinsic noises , and intrinsic noises , in order to instead focus on convergence in . To strike a balance between accuracy and small we choose and throughout. We used a combination of the changepoint algorithm and manual tuning to arrive at and which work well across all noise levels and numbers of particles examined. Query points are taken to be an equally-spaced subgrid of with spacing and for spatial and temporal coordinates. The resulting values , , , , , and determine the weak discretization scheme and can be found in Appendix A.1 for each example below.

The results in Section 5 appear robust to , . In addition, choosing and specific to each dataset using the changepoint method often improves results. Although automated in the changepoint algorithm, we recommend visualizing the overlap between the Fourier spectra of and when choosing , , , in order to directly observe which modes in the data will experience filtering under convolution with . In general, there is much flexibility in the choice of . Optimizing continues to be an active area of research.

4.1.4. Trial function library

The general Algorithm 4.1 does not impose a radial structure for the interaction potential , nor does it assume any prior knowledge that the particle system is in fact interacting. In the examples below,9 the libraries , , are composed of monomial and/or trigonometric terms to demonstrate that sparse regression is effective in selecting the correct combination of nonlocal drift, local drift, and diffusion terms. Rank deficiency can result, however, from naive choices of nonlocal and local bases. Consider the kernel , which satisfies

where and is the first moment of . Since is conserved in the model (3.2) posed in free-space,10 including the same power-law terms in both libraries and will lead to rank deficiency. This is easily avoided by incorporating known symmetries of the model (3.2), however in general we recommend that the user builds the library incrementally and monitors the condition number of while selecting terms.
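The rank deficiency described above can be reproduced numerically. The sketch below assumes, for illustration, the quadratic kernel $K(x) = \tfrac{1}{2}|x|^2$ in one dimension, for which the nonlocal force at $x$ equals $x$ minus the particle mean; the column names and the small three-term library are hypothetical.

```python
import numpy as np

def nonlocal_force_quadratic(x, particles):
    """(grad K * mu^N)(x) for the assumed kernel K(x) = x^2 / 2,
    i.e. the average of (x - X_j) over all particles X_j."""
    return np.mean(x[:, None] - particles[None, :], axis=1)

rng = np.random.default_rng(1)
particles = rng.normal(0.3, 1.0, size=500)
x = np.linspace(-2.0, 2.0, 41)

nonlocal_col = nonlocal_force_quadratic(x, particles)  # equals x - mean(particles)
local_col = x                                          # grad V for V(x) = x^2 / 2
const_col = np.ones_like(x)                            # constant local force

# The nonlocal column is a linear combination of the two local columns,
# so this three-column library has rank 2.
G = np.column_stack([nonlocal_col, local_col, const_col])
rank = np.linalg.matrix_rank(G)
cond = np.linalg.cond(G)
```

Monitoring `np.linalg.cond` while adding columns, as in the last line, is one simple way to follow the recommendation to build the library incrementally.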

4.1.5. Sparse regression

As in [3], we enforce sparsity using a modified sequential thresholding least-squares algorithm (MSTLS), included as Algorithm 4.2, where the “modifications” are two-fold. First, we incorporate into the thresholding step the magnitude of the overall term as well as the coefficient magnitude , by defining non-uniform lower and upper thresholds

| (4.5) |

where is the number of columns in . Second, we perform a grid search11 over candidate sparsity parameters and choose the parameter that is the smallest minimizer over of the cost function

| (4.6) |

where is the output of the sequential thresholding algorithm with non-uniform thresholds (4.5) and is the least-squares solution.12 The final coefficient vector is then set to .

We now review some aspects of Algorithm 4.2. Results from [47] on the convergence of STLS carry over for the inner loop of Algorithm 4.2, namely if is full-rank, the inner loop terminates in at most iterations with a resulting coefficient vector that is a local minimizer of the cost function . This implies that the full algorithm terminates in at most least-squares solves (each on a subset of columns of ).

When considering recovery of the true weight vector , Theorem 1 implies convergence in particle number of to when is full-rank. The rate of convergence depends implicitly on the condition number of , hence it is recommended that one builds the library incrementally, stopping before the condition number grows too large. If is rank deficient, classical recovery guarantees from compressive sensing do not necessarily apply, due to high correlations between the columns of (recall each column is constructed from the same dataset ).13 One may employ additional regularization (e.g. Tikhonov regularization as in [31]); however, in general, improving existing sparse regression algorithms for rank-deficient, noisy, and highly-correlated matrices is an active area of research.

| Algorithm 4.2 Modified sequential thresholding with automatic threshold selection | |

|---|---|

The bounds (4.5) enforce a quasi-dominant balance rule, such that is within orders of magnitude from and is within orders of magnitude from 1 (the coefficient of the time derivative). This is specifically designed to handle poorly-scaled data (see the Burgers and Korteweg–de Vries examples in [3]); however, we leave a more thorough examination of the thresholding requirements necessary for models with multiple scales to future work.

As the sum of two relative errors, minimizers of the cost function equally weight the accuracy and sparsity of . By choosing to be the smallest minimizer of over , we identify the thresholds such that as those resulting in an overfit model. We commonly choose to be log-equally spaced (e.g. 50 points from $10^{-4}$ to 1), and starting from a coarse grid, refine until the minimum of is stationary.
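The thresholding and grid search described in this subsection can be sketched as follows. This is an illustrative implementation under stated assumptions rather than a transcription of Algorithm 4.2: the bounds are assumed to take the form $\lambda \max\{1, \|b\|/\|G_i\|\} \le |w_i| \le \lambda^{-1} \min\{1, \|b\|/\|G_i\|\}$ as in WSINDy for PDEs [3], and the loss is assumed to be the relative residual change plus the fraction of retained terms. The function names are hypothetical.

```python
import numpy as np

def stls_nonuniform(G, b, lam):
    """Sequential thresholding with per-column lower/upper bounds (assumed form)."""
    J = G.shape[1]
    ratio = np.linalg.norm(b) / np.linalg.norm(G, axis=0)
    lower = lam * np.maximum(1.0, ratio)
    upper = np.minimum(1.0, ratio) / lam
    active = np.ones(J, dtype=bool)
    while True:
        w = np.zeros(J)
        if active.any():
            w[active] = np.linalg.lstsq(G[:, active], b, rcond=None)[0]
        keep = active & (np.abs(w) >= lower) & (np.abs(w) <= upper)
        if np.array_equal(keep, active):      # support is stationary
            return w
        active = keep                         # support strictly shrinks

def mstls(G, b, lambdas):
    """Grid search over thresholds; returns the smallest minimizer of the loss."""
    J = G.shape[1]
    w_ls = np.linalg.lstsq(G, b, rcond=None)[0]
    denom = np.linalg.norm(G @ w_ls)
    best = None
    for lam in np.sort(np.asarray(lambdas, dtype=float)):
        w = stls_nonuniform(G, b, lam)
        loss = np.linalg.norm(G @ (w - w_ls)) / denom + np.count_nonzero(w) / J
        if best is None or loss < best[0]:    # strict '<' keeps the smallest lam
            best = (loss, lam, w)
    return best[2], best[1]
```

On a well-conditioned synthetic system with two active terms out of six and small additive noise, calling `mstls(G, b, np.logspace(-4, 0, 50))` recovers the two-term support, since any extra term raises the sparsity part of the loss and any dropped true term raises the residual part.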

4.2. Computational complexity

To compute convolutions against for each , we first evaluate at the grid defined by

where is the spacing of and , , is the number of points in along the coordinate. Computing14 requires evaluations of , where is the number of points in . We then use the -dimensional FFT to compute the convolutions

where only entries corresponding to particle interactions within are retained. For this amounts to flops per timestep. For and higher dimensions, the -dimensional FFT is considerably slower unless one of the arrays is separable. To enforce separability, trial interaction potentials in can be chosen to be a sum of separable functions,

| (4.7) |

in which case only a series of one-dimensional FFTs are needed to compute , and again the cost is per timestep. When is not separable, a low-rank approximation can be computed from ,

| (4.8) |

which again reduces convolutions to a series of one-dimensional FFTs. For , this is accomplished using the truncated SVD, while for higher dimensions there does not exist a unique best rank-Q tensor approximation, although several efficient algorithms are available to compute a sufficiently accurate decomposition [49-51] (and the field of fast tensor decompositions is advancing rapidly).

We propose to compute convolutions by first computing a low-rank decomposition of using the randomized truncated SVD [52] or a suitable randomized tensor decomposition and then applying the -dimensional FFT as a series of one-dimensional FFTs. In the examples below we consider only and , and leave extension to higher dimensions to future work.
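The idea of replacing a two-dimensional convolution by a short series of one-dimensional FFT convolutions can be sketched as follows. For simplicity the sketch uses the deterministic SVD from NumPy in place of the randomized truncated SVD mentioned above, and it returns the full (untruncated) convolution rather than only the entries inside the domain. The function names are hypothetical.

```python
import numpy as np

def fft_conv_axis(U, v, axis):
    """Full linear convolution of U with the 1D vector v along one axis, via FFT."""
    n = U.shape[axis] + v.size - 1
    shape = [1] * U.ndim
    shape[axis] = -1
    v_hat = np.fft.rfft(v, n=n).reshape(shape)
    return np.fft.irfft(np.fft.rfft(U, n=n, axis=axis) * v_hat, n=n, axis=axis)

def conv2_lowrank(F, U, rank):
    """Convolve U with a rank-`rank` SVD approximation of the kernel samples F,
    using two 1D FFT convolutions per retained singular triplet."""
    A, s, Bt = np.linalg.svd(F, full_matrices=False)
    out = 0.0
    for q in range(rank):
        tmp = fft_conv_axis(U, s[q] * A[:, q], axis=0)
        out = out + fft_conv_axis(tmp, Bt[q], axis=1)
    return out

def conv2_fft(F, U):
    """Reference: full 2D convolution via a single 2D FFT."""
    s = (F.shape[0] + U.shape[0] - 1, F.shape[1] + U.shape[1] - 1)
    return np.fft.irfft2(np.fft.rfft2(F, s=s) * np.fft.rfft2(U, s=s), s=s)

# Illustration: samples of x^2 + y^2 form an exactly rank-2 kernel array.
rng = np.random.default_rng(0)
g = np.linspace(-1.0, 1.0, 15)
F = g[:, None] ** 2 + g[None, :] ** 2
U = rng.random((20, 20))
full = conv2_fft(F, U)
low = conv2_lowrank(F, U, rank=2)
```

For kernels that are not exactly low-rank, the truncation rank trades accuracy against the number of one-dimensional passes; the singular values `s` give a direct indication of what is discarded.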

Using low-rank approximations, the mean-field approach provides a significant reduction in computational complexity compared to direct evaluations of particle trajectories when is sufficiently large. A particle-level computation of the nonlocal force in weak-form requires evaluating terms of the form

For a single candidate interaction potential , a collection of test functions , and experiments, this amounts to function evaluations in and flops. If we use the proposed method, employing the convolutional weak form with a separable reference test function (as in WSINDy for PDEs [3]) and exploiting a rank approximation of when computing convolutions against interaction potential, we instead evaluate

using flops and only evaluations of , reused at each of the timepoints.15 Fig. 1 provides a visualization of the reduction in function evaluations for timepoints and experiments over a range of and (points along each spatial dimension when is a hypercube) in and spatial dimensions. Table 5 in Appendix A.1 lists walltimes for the examples below, showing that with particles the full algorithm implemented in MATLAB runs in under 10 s with all computations in serial on a laptop with an AMD Ryzen 7 PRO 4750U processor, while requiring less than 8 GB of RAM. The dependence on is only through the computation of the histogram, hence this approach may find applications in physical coarse-graining (e.g. of molecular dynamics or plasma simulations).

Fig. 1.

Factor by which the mean-field evaluation of interaction forces using histograms reduces total function evaluations as a function of particle number and average gridpoints per dimension for data with experiments each with timepoints. For example, with spatial dimensions (left) and particles, the number of function evaluations is reduced by at least a factor of $10^4$.

4.3. Convergence

We now show that the estimators , , and of the weak-form method converge with a rate when ordinary least squares are used (i.e. ) and only experiment is available. Here is the Hölder exponent of the sample paths of the process . We assume that , , , and the resulting histogram are as in Section 4.1.2. We make the following assumptions on the true model and resulting linear system throughout this section.

Assumption H. Let be fixed.

- (H.1) For each , is a strong solution to (1.1) for , and for some the sample paths are almost-surely -Hölder continuous, i.e. for some ,

- (H.2) The initial particle distribution satisfies the moment bound

- (H.3) and satisfy for some the growth bound:

- (H.4) For the same constant , it holds that16

- (H.5) The test functions are compactly supported and together with the library are such that has full column rank with17 almost surely for some constant .

- (H.6) The true functions , , and are in the span of .

We will now define some notation and state some technical lemmas with proofs found in Appendix A.4. Define the weak-form operator

| (4.9) |

where is a curve in , is a function compactly supported over , and is an inner product over . If is a weak solution to (3.1) and is the inner product then . If instead , then by Itô’s formula takes the form of an Itô integral, and we have the following:

Lemma 2. Under Assumptions (H.1)-(H.5), there exists a constant independent of such that

Proof. See Appendix A.4.

With the following lemma, we can relate the histogram to the empirical measure through using the inner product defined by trapezoidal-rule integration in space and continuous integration in time.

Lemma 3. Under Assumptions (H.1)-(H.5), for independent of and , it holds that

Proof. See Appendix A.4.

To incorporate discrete-time effects, we consider the difference between and , where recall that denotes trapezoidal rule integration in space with meshwidth and in time with sampling rate .

Lemma 4. Under Assumptions (H.1)-(H.5), for independent of , , and , it holds that

Proof. See Appendix A.4.

The previous estimates directly lead to the following bound on the model coefficients :

Theorem 1. Assume that Assumption H holds. Let be the learned model coefficients and the true model coefficients. For independent of , , and it holds that

Proof. Using that , , and are in the span of (H.6), we have that

where is the row of . From Lemmas 2-4 we have

Using that is full rank, it holds that , hence the result follows from the uniform bound on (H.5):

□

Under the assumption (H.6), an immediate corollary is

| (4.10) |

This follows from

and similarly for and . Finally, setting for will ensure convergence as and .
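As a purely numerical illustration of how a histogram-based weak-form integral behaves as the particle number grows, the following Python sketch estimates the integral of a test function against the particle density by binning and compares it with a fine-quadrature reference. Everything here is an assumption made for illustration: the particles are i.i.d. standard normal (no dynamics or interactions), `phi` is a hypothetical piecewise-polynomial bump, and the bin count is fixed. It shows the familiar Monte-Carlo N^{-1/2} scaling of the sampling error and is not a reproduction of Theorem 1 or of Algorithm 4.1.

```python
import numpy as np

def phi(x, a=2.0, p=4):
    """Piecewise-polynomial bump (1 - (x/a)^2)^p supported on [-a, a]."""
    return np.where(np.abs(x) < a, (1.0 - (x / a) ** 2) ** p, 0.0)

def histogram_estimate(x, edges):
    """Estimate E[phi(X)] from a histogram: sum of phi(bin center) * bin mass."""
    counts, _ = np.histogram(x, bins=edges)
    centers = 0.5 * (edges[:-1] + edges[1:])
    return np.sum(phi(centers) * counts) / x.size

def reference_value(n=200001, L=8.0):
    """Trapezoidal-rule reference for E[phi(X)] with X standard normal."""
    grid = np.linspace(-L, L, n)
    f = phi(grid) * np.exp(-0.5 * grid**2) / np.sqrt(2.0 * np.pi)
    dx = grid[1] - grid[0]
    return dx * (f.sum() - 0.5 * (f[0] + f[-1]))

def rms_errors(Ns, trials=200, bins=256, seed=0):
    """Root-mean-square error of the histogram estimate for each particle number."""
    rng = np.random.default_rng(seed)
    edges = np.linspace(-8.0, 8.0, bins + 1)
    exact = reference_value()
    out = []
    for N in Ns:
        errs = [histogram_estimate(rng.standard_normal(N), edges) - exact
                for _ in range(trials)]
        out.append(np.sqrt(np.mean(np.square(errs))))
    return np.array(out)

def fitted_rate(Ns, errs):
    """Slope of log(error) against log(N)."""
    return np.polyfit(np.log(Ns), np.log(errs), 1)[0]
```

Calling `fitted_rate(Ns, rms_errors(Ns))` with, say, `Ns = np.array([100, 400, 1600, 6400])` gives an empirical decay exponent; with the bin width held fixed, the binning error eventually dominates for very large particle numbers, which is why the bin width must shrink along with the sampling error.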

4.4. Theory vs. Practice

We now make several remarks about the practical performance of Algorithm 4.1 with respect to the theoretical convergence of Theorem 1.

Remark 2. An important case of Theorem 1 is , in which case itself is a weak-measure solution to the mean-field Eq. (3.1) and the algorithm returns, for , . This partially explains the accuracy observed for purely-extrinsic noise examples in Figs. 5 and 9. We note further that in the absence of noise ( and , not included in this work) Algorithm 4.1 recovers systems to high accuracy similarly to WSINDy applied to local dynamical systems [2,3].

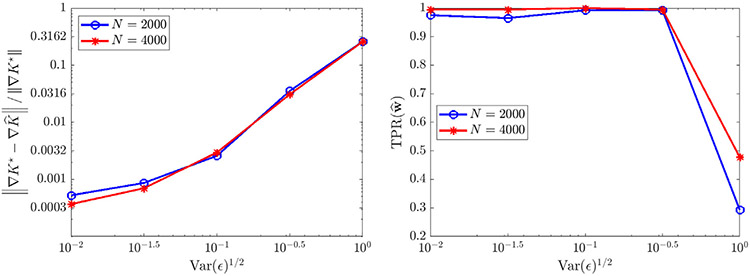

Fig. 5.

Recovery of (3.1) in one spatial dimension for and under different levels of observational noise . Left: relative error in learned interaction kernel . Middle: true positivity ratio for full model (3.1). Right: true positivity ratio for drift term.

Fig. 9.

Recovery of (3.1) in two spatial dimensions with given by (5.5) from deterministic particles () with extrinsic noise .

Remark 3. Algorithm 4.1 in general implements sparse regression, yet Theorem 1 deals with ordinary least squares. Since least squares is a common subroutine of many sparse regression algorithms (including the MSTLS algorithm used here), the result is still relevant to sparse regression. Lastly, the full-rank assumption on implies that as sequential thresholding reduces to least squares.
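The relationship between thresholding and least squares in Remark 3 can be seen in a minimal Python sketch of plain sequentially thresholded least squares. This is a simplified stand-in and not the MSTLS algorithm used in the paper; the function name `stls`, the single threshold `lam`, and the iteration cap are assumptions made for illustration. Each pass solves a least-squares problem on the currently active columns, and with a zero threshold the routine returns the ordinary least-squares solution.

```python
import numpy as np

def stls(G, b, lam, max_iter=20):
    """Sequentially thresholded least squares.

    Repeatedly zero out coefficients with |w_j| < lam and refit the
    remaining columns by ordinary least squares until the support is stable.
    """
    w = np.linalg.lstsq(G, b, rcond=None)[0]
    active = np.ones(G.shape[1], dtype=bool)
    for _ in range(max_iter):
        new_active = np.abs(w) >= lam
        if np.array_equal(new_active, active):
            break                               # support unchanged: converged
        active = new_active
        w = np.zeros(G.shape[1])
        if active.any():
            w[active] = np.linalg.lstsq(G[:, active], b, rcond=None)[0]
    return w
```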

Remark 4. Theorem 1 assumes data from a single experiment (), while the examples below show that experiments improve results. For any fixed , the limit results in convergence; however, the -fixed and limit does not, as this does not lead to the mean-field equations.18 The examples below show that using has a practical advantage, and in Appendix A.3 we demonstrate that even for small particle systems () the large regime yields satisfactory results.

Remark 5. Many interesting examples have non-Lipschitz , in particular a lack of smoothness at . If does not converge to a singular measure as , then the bound (A.4) holds for with a jump discontinuity at , where an additional term arises from pairwise interactions within an distance. The examples below are chosen in part to show that convergence holds for with jumps at the origin.

5. Examples

We now demonstrate the successful identification of several particle systems in one and two spatial dimensions as well as the convergence predicted in Theorem 1. In each case we use Algorithm 4.1 to discover a mean-field equation of the form (3.1) from discrete-time particle data. For each dataset we simulate the associated interacting particle system given by (1.1) using the Euler–Maruyama scheme (initial conditions and timestep are given in each example). We assess the ability of WSINDy to select the correct model using the true positivity ratio19

$$\mathrm{TPR} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN} + \mathrm{FP}} \tag{5.1}$$

where TP is the number of correctly identified nonzero coefficients, FN is the number of coefficients falsely identified as zero, and FP is the number of coefficients falsely identified as nonzero [53]. To demonstrate the convergence given by (4.10), for correctly identified models (i.e. ) we compute the relative -error of the recovered interaction force , local force , and diffusivity over and , respectively, denoting this by in the plots below. Results are averaged over 100 trials.
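For concreteness, the true positivity ratio can be computed from the supports of the learned and true coefficient vectors, assuming the form TP / (TP + FN + FP), which matches the description of TP, FN, and FP above. The function name `true_positivity_ratio` and the tolerance argument are hypothetical and introduced only for this sketch.

```python
import numpy as np

def true_positivity_ratio(w_learned, w_true, tol=0.0):
    """TPR = TP / (TP + FN + FP), computed from the supports of two coefficient vectors.

    A coefficient counts as nonzero when its magnitude exceeds `tol`.
    The ratio is undefined when both vectors are identically zero.
    """
    learned = np.abs(np.asarray(w_learned, dtype=float)) > tol
    true = np.abs(np.asarray(w_true, dtype=float)) > tol
    tp = np.sum(learned & true)       # correctly identified nonzero terms
    fn = np.sum(~learned & true)      # true terms falsely set to zero
    fp = np.sum(learned & ~true)      # spurious nonzero terms
    return tp / (tp + fn + fp)
```

A value of 1 is returned only when the learned support coincides exactly with the true support; missing half of the true terms with no spurious terms gives 0.5.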

For the computational grid we first compute the sample standard deviation of and we choose to be the rectangular grid extending from the mean of in each spatial dimension. We then set to have 128 points in and for dimensions, and 256 points in for , noting that these numbers are fairly arbitrary, and used to show that the grid need not be too large. We set the sparsity factors so that contains 100 equally spaced points from −4 to 0. More information on the specifications of each example can be found in Appendix A.1. (MATLAB code used to generate examples is available at https://github.com/MathBioCU/WSINDy_IPS.)
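A minimal Python sketch of this setup is given below. It rests on several assumptions that are not taken from the text: the number of standard deviations `n_std` is a placeholder, the standard deviation is computed per coordinate, and the 100 equally spaced points from −4 to 0 are interpreted as base-10 exponents of the candidate thresholds. The function names are hypothetical.

```python
import numpy as np

def build_grid(X, n_std=3.0, n_points=128):
    """Rectangular grid centered at the data mean.

    X has shape (n_samples, d) and pools all particle positions.
    Returns one 1D array of equally spaced gridpoints per spatial dimension,
    spanning n_std sample standard deviations on either side of the mean.
    """
    mean = X.mean(axis=0)
    std = X.std(axis=0, ddof=1)
    return [np.linspace(m - n_std * s, m + n_std * s, n_points)
            for m, s in zip(mean, std)]

def threshold_candidates(n=100, lo=-4.0, hi=0.0):
    """Candidate sparsity thresholds with equally spaced base-10 exponents."""
    return 10.0 ** np.linspace(lo, hi, n)
```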

5.1. Two-dimensional local model and homogenization

The first system we examine is a local model defined by the local potential and diffusivity , where is the identity in . This results in a constant advection, variable diffusivity mean-field model20

| (5.2) |

The purpose of this example is three-fold. First, we are interested in the ability of Algorithm 4.1 to correctly identify a local model from a library containing both local and nonlocal terms. Next, we evaluate whether the convergence is realized. Lastly, we investigate whether for large the weak-form identifies the associated homogenized equation (derived in Appendix A.2)

| (5.3) |

where is given by the harmonic mean of diffusivity:

For we evolve the particles from an initial Gaussian distribution with mean zero and covariance and record particle positions for 100 timesteps with (subsampled from a simulation with timestep 10^{-4}). We use a rectangular domain of approximate sidelength 10 and compute histograms with 128 bins in and for a spatial resolution of (see Fig. 2 for solution snapshots), over which . For we compare recovered equations with the full model (5.2), while for we compare with (5.3), for comparison computing over each domain using MATLAB’s integral2. Fig. 3 shows that as the particle number increases, we do in fact recover the desired equations, with approaching one as increases. For we observe convergence of the local potential and the diffusivity . For , we observe approximate convergence of , and converging to within 2% of , the homogenized diffusivity (higher accuracy can hardly be expected for since (5.3) is itself an approximation in the limit of infinite ).
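The harmonic mean that defines the homogenized diffusivity is straightforward to approximate numerically. The Python sketch below uses the midpoint rule on one periodic cell; the function name `harmonic_mean_periodic` and the diffusivities in the usage note are hypothetical choices for illustration and are not the diffusivity of (5.2).

```python
import numpy as np

def harmonic_mean_periodic(a, period=2.0 * np.pi, n=256, dim=1):
    """Harmonic mean of a positive periodic function over one cell.

    Uses the midpoint rule with n points per dimension, which is highly
    accurate for smooth periodic integrands.
    """
    pts = (np.arange(n) + 0.5) * period / n
    grids = np.meshgrid(*([pts] * dim), indexing="ij")
    return 1.0 / np.mean(1.0 / a(*grids))
```

For example, for the one-dimensional profile `lambda y: 2.0 + np.cos(y)` the harmonic mean is the square root of 3 (about 1.732), noticeably smaller than the arithmetic mean of 2, which illustrates how oscillations in the diffusivity lower the effective value.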

Fig. 2.

Snapshots at time (left) and (right) of histograms computed with 128 bins in and from 16,384 particles evolving under (5.2) with (top) and (bottom).

Fig. 3.

Convergence of (left) and (middle), recall denotes the norm, for (5.2) with , as well as (right). For , results are compared to the exact model (5.2), while for results are compared to the homogenized equation (5.3).

5.2. One-dimensional nonlocal model

We simulate the evolution of particle systems under the quadratic attraction/Newtonian repulsion potential

| (5.4) |

with no external potential (). The portion of , leading to a discontinuity in , is the one-dimensional free-space Green’s function for . For , when replaced by the corresponding Green’s function in dimensions, the distribution of particles evolves under into the characteristic of the unit ball in , which has implications for design and control of autonomous systems [54]. We compare three diffusivity profiles, corresponding to zero intrinsic noise, leading to constant-diffusivity intrinsic noise, and leading to variable-diffusivity intrinsic noise. With zero intrinsic noise , we examine the effect of extrinsic noise on recovery, and assume uncertainty in the particle positions due to measurement noise at each timestep, , for i.i.d. and {0.01, 0.0316, 0.1, 0.316}. In this way is the noise ratio, such that (computed with and stretched into column vectors).
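One way to generate measurement noise at a prescribed noise ratio is sketched below in Python. The scaling of the noise standard deviation by the root-mean-square of the data is an assumption chosen so that the ratio of the noise norm to the data norm is approximately the target value; the function name `add_measurement_noise` is hypothetical.

```python
import numpy as np

def add_measurement_noise(X, noise_ratio, rng):
    """Add i.i.d. Gaussian noise so that ||noise||_2 / ||X||_2 is close to noise_ratio.

    X holds particle positions (any shape); norms are taken over all entries
    stretched into a single vector.
    """
    X = np.asarray(X, dtype=float)
    rms = np.linalg.norm(X.ravel()) / np.sqrt(X.size)   # root-mean-square of the data
    noise = rng.normal(scale=noise_ratio * rms, size=X.shape)
    return X + noise, noise
```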

Measurement data consists of 100 timesteps at resolution , coarsened from simulations with timestep 0.001. Initial particle positions are drawn from a mixture of three Gaussians each with standard deviation 0.005. Histograms are constructed with 256 bins of width . Typical histograms for each noise level are shown in Fig. 4, computed from one experiment with particles.
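The data-generation step can be sketched with a basic Euler–Maruyama loop in Python. The sketch makes several assumptions that should be checked against (1.1) and (5.4): the drift is taken to be minus the averaged kernel gradient, the kernel is assumed to be K(r) = r^2/2 − |r| so that its derivative is r − sign(r), the diffusivity is a constant `sigma`, and there is no external potential. The function names, the mixture means used in the usage note, and the `save_every` coarsening parameter are hypothetical.

```python
import numpy as np

def grad_K(r):
    """Derivative of the assumed kernel K(r) = r^2/2 - |r| (sign(0) = 0)."""
    return r - np.sign(r)

def euler_maruyama(x0, dt, n_steps, sigma=0.0, save_every=1, seed=0):
    """Simulate a 1D interacting particle system with constant diffusivity.

    Assumed dynamics: dX_i = -(1/N) sum_j K'(X_i - X_j) dt + sigma dB_i.
    Returns an array of shape (n_saved, N) containing the initial state and
    every `save_every`-th subsequent state.
    """
    rng = np.random.default_rng(seed)
    x = np.array(x0, dtype=float)
    snapshots = [x.copy()]
    for step in range(1, n_steps + 1):
        drift = -grad_K(x[:, None] - x[None, :]).mean(axis=1)
        x = x + dt * drift + sigma * np.sqrt(dt) * rng.standard_normal(x.size)
        if step % save_every == 0:
            snapshots.append(x.copy())            # coarsen in time
    return np.array(snapshots)
```

With `sigma=0` and initial positions drawn from three narrow Gaussians (for instance centered at −0.5, 0, and 0.5), the particles under this assumed kernel spread toward a uniform arrangement on an interval of length close to 2 around the conserved center of mass.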

Fig. 4.

Histograms computed with 256 bins width from 8000 particles in 1D evolving under (5.4). Top left to top right: , , . Bottom: deterministic particles with i.i.d. Gaussian noise added to particle positions with resulting noise ratios (left to right) , 0.1, 0.316.

For the case of extrinsic noise (Fig. 5), we use only one experiment () and examine the number of particles and the noise ratio . We find that recovery is accurate and reliable for , yielding correct identification of with less than 1% relative error in at least 98/100 trials. Increasing from 500 to 8000 leads to minor improvements in accuracy for , but otherwise has little effect, implying that for low to moderate noise levels the mean-field equations are readily identifiable even from smaller particle systems. For (see Fig. 4 (bottom right) for an example histogram), we observe a decrease in (Fig. 5 middle panel) resulting from the generic identification of a linear diffusion term with . Using that , we can identify this as the best-fit intrinsic noise model. Furthermore, increases in lead to reliable identification of the drift term, as measured by (rightmost panel Fig. 5) which is the restriction of TPR to drift terms and .

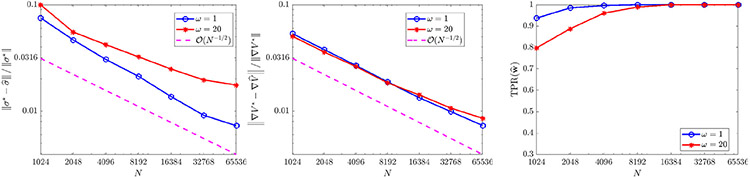

For constant diffusivity (Fig. 6), the full model is recovered with less than 3% errors in and in at least 98/100 trials when the total particle count is at least 8000, and yields errors less than 1% for . The error trends for and in this case both strongly agree with the predicted rate. For non-constant diffusivity (Fig. 7), we also observe robust recovery for with error trends close to , although the accuracy in and is diminished due to the strong order convergence of Euler–Maruyama applied to diffusivities that are unbounded in [55].

Fig. 6.

Recovery of (3.1) in one spatial dimension for and .

Fig. 7.

Recovery of (3.1) in one spatial dimension for and .

5.3. Two-dimensional nonlocal model

We now discuss an example of singular interaction in two spatial dimensions using the logarithmic potential

| (5.5) |

with constant diffusivity . This example corresponds to the parabolic–elliptic Keller–Segel model of chemotaxis, where is the critical diffusivity such that leads to diffusion-dominated spreading of particles throughout the domain (vanishing particle density at every point in ) and leads to aggregation-dominated concentration of the particle density to the Dirac delta located at the center of mass of the initial particle density [44,56]. For we examine the effect of additive i.i.d. measurement noise for {0.01, 0.0316, 0.1, 0.316, 1}.

We simulate the particle system with a cutoff potential

| (5.6) |

with , so that is Lipschitz and has a jump discontinuity at the origin. Initial particle positions are uniformly distributed on a disk of radius 2 and the particle position data consists of 81 timepoints recorded at a resolution , coarsened from 0.0025. Histograms are created with 128 × 128 bins in and of sidelength (see Fig. 8 for histogram snapshots over time). We examine experiments with or particles.

Fig. 8.

Histograms created from 4000 particles evolving under logarithmic attraction (Eq. (5.5)) with varying noise levels at times (left to right) , , and . Top: , (extrinsic only). Bottom: , (intrinsic only).

In Fig. 9 we observe a similar trend in the case as in the 1D nonlocal example, namely that recovery for is robust with low errors in (on the order of 0.0032), only in this case the full model is robustly recovered up to . At , with the method frequently identifies a diffusion term with , and for the method occasionally identifies the backwards diffusion equation , . This is easily prevented by enforcing positivity of ; however, we leave this and other constraints as an extension for future work.

With diffusivity , we obtain approximately greater than 0.95 for (Fig. 10, right), with an error trend in following an rate, and a trend in of roughly . Since convergence in for any fixed is not covered by the theorem above, this shows that combining multiple experiments may yield similar accuracy trends for moderately-sized particle systems.

Fig. 10.

Recovery of (3.1) in two spatial dimensions with given by (5.5) and .

6. Discussion

We have developed a weak-form method for sparse identification of governing equations for interacting particle systems using the formalism of mean-field equations. In particular, we have investigated two lines of inquiry: (1) is the mean-field setting applicable for inference from medium-size batches of particles, and (2) can a low-cost, low-regularity density approximation such as a histogram be used to enforce weak-form agreement with the mean-field PDE? We have demonstrated on several examples that the answer is yes to both questions, despite the fact that the mean-field equations are only valid in the limit of infinitely many particles (). This framework is suitable for systems of several thousand particles in one and two spatial dimensions, and we have proved convergence in for the associated least-squares problem using simple histograms as approximate particle densities. In addition, the sparse regression approach allows one to identify the full system, including interaction potential , local potential , and diffusivity .

It was initially unclear whether the mean-field setting could be utilized in weak form for finite particle batches, hence this can be seen as a proof of concept for particle systems with in the range 10^3–10^5. With convergence in and low computational complexity, our weak-form approach is well-suited as is for much larger particle systems. In the opposite regime, for small fixed , the authors of [26] show that their maximum likelihood-based method converges as (i.e. in the limit of infinite experiments). While the same convergence does not hold for our weak-form method, the results in Section 5 suggest that in practice, combining independent experiments each with particles improves results. Furthermore, we include evidence in Appendix A.3 that even for small , our method correctly identifies the mean-field model when is large enough, with performance similar to that in [26]. We leave a full investigation of the interplay between and to future work.

In the operable regime of , there is potential for improvements and extensions in many directions. On the subject of density estimation, histograms are highly efficient, yet they lead to piecewise-constant approximations of and hence errors. Choosing a density kernel to achieve high-accuracy quadrature without sacrificing the runtime of histogram computation seems prudent, although one must be cautious about making assumptions on the smoothness of mean-field distribution . For instance, in the 1D nonlocal example 5.2, discontinuities develop in for the case , hence a histogram approximation is more appropriate than using e.g. a Gaussian kernel.
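The caution about smoothing kernels can be illustrated with a small Python experiment. The setup is an assumption made for illustration and is not taken from the examples: particles are drawn uniformly on [−1, 1], so the density jumps from 0.5 to 0 at the endpoints, and a fixed-bandwidth Gaussian kernel estimate is written by hand (the function names and the bandwidth are hypothetical).

```python
import numpy as np

def histogram_density(x, edges):
    """Piecewise-constant density estimate: bin counts divided by N times bin width."""
    counts, _ = np.histogram(x, bins=edges)
    return counts / (x.size * np.diff(edges))

def gaussian_kernel_density(x, points, bandwidth):
    """Gaussian kernel density estimate evaluated at `points` with a fixed bandwidth."""
    z = (points[:, None] - x[None, :]) / bandwidth
    return np.exp(-0.5 * z**2).sum(axis=1) / (x.size * bandwidth * np.sqrt(2.0 * np.pi))

def compare_at_bin_centers(n_particles=20000, n_bins=40, bandwidth=0.1, seed=0):
    """Return bin centers with the histogram and kernel estimates for uniform samples on [-1, 1]."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(-1.0, 1.0, size=n_particles)
    edges = np.linspace(-1.0, 1.0, n_bins + 1)
    centers = 0.5 * (edges[:-1] + edges[1:])
    return centers, histogram_density(x, edges), gaussian_kernel_density(x, centers, bandwidth)
```

In the bin adjacent to the jump, the histogram value stays near the true density of 0.5, while the kernel estimate is pulled well below it because mass is smeared across the discontinuity; in the interior both estimates agree.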

The computational grid , quadrature method , and reference test function may also be optimized further or adapted to specific problems. The approach chosen here of equally-spaced and separable piecewise-polynomial , along with integration using the trapezoidal quadrature, has several advantages, including high accuracy and fast computation using convolutions. However, this may need adjustment for higher dimensions. It might be advantageous to adapt to the data ; however, this may prevent one from evaluating (, ) using the FFT if a non-uniform grid results, and hence increase the overall computational complexity. One could also use multiple reference test functions . The possibilities of varying the test functions (within the smoothness requirements of the library ) have been largely unexplored in weak-form identification methods.

Several theoretical questions remain unanswered, namely model recovery statistics for finite . As a consequence of Theorem 1, as well as convergence results on sequential thresholding [47], we have that being full-rank and containing the true model is sufficient to guarantee convergence as at the rate . Noise, whether extrinsic or intrinsic, for finite may result in identification of an incorrect model when is poorly-conditioned. The effect is more severe if the true model has a small coefficient, which requires a small threshold λ, which correspondingly may lead to a non-sparse solution. These are sensitivities of any sparse regression algorithm (see e.g. [57]) and accounting for the effect of noise and poor conditioning is an active area of research in equation discovery.

We also note that several researchers have focused on the uniqueness in kernel identifiability [34,58]. This issue does not directly apply to our scenario21 of identifying the triple (, , ). Moreover, in the cases we considered, we do not see any identifiability issues (e.g. rank deficiency) even in the high noise case with low particle number. Quantifying the transition to identifiability as as a function of the condition number is an important subject for future work.

For extensions, the example system (5.2) and resulting homogenization motivates further study of effective equations for systems with complex microstructure. In other fields this is described as coarse-graining. A related line of study is inference of 2nd-order particle systems, as explored in [32], which often lead to an infinite hierarchy of mean-field equations. Our weak-form approach may provide a principled method for truncating and closing such hierarchies using particle data. Another extension is to enforce convex constraints in the regression problem, such as lower bounds on diffusivity, or with long-range attraction depending on the distribution of pairwise distances (see [26] for further use of ). Finally, the framework we have introduced can easily be used to find nonlocal models from continuous solution data (e.g. given instead of ), whereby questions of nonlocal representations of models can be investigated.

Lastly, we note that MATLAB code is available at https://github.com/MathBioCU/WSINDy_IPS.

Table 3.

Trial function library for nonlocal 1D example (Section 5.2).

| Mean-field term | Trial function library |
|---|---|
| , {1, 2, 3, 4, 5, 6, 7} | |
| , {0, 2, 3, 4, 5, 6, 7, 8} | |
| , {0, 1, 2, 3, 4, 5, 6, 7, 8} | |

Table 4.

Trial function library for nonlocal 2D example (Section 5.3). Interaction potentials indicate cutoff potentials of the form (5.6) with such that the resulting potential is Lipschitz.

| Mean-field term | Trial function library |
|---|---|
| , | |

Acknowledgements

This research was supported in part by the NSF Mathematical Biology MODULUS grant 2054085, in part by the NSF/NIH Joint DMS/NIGMS Mathematical Biology Initiative grant R01GM126559, and in part by the NSF Computing and Communications Foundations grant 1815983. This work also utilized resources from the University of Colorado Boulder Research Computing Group, which is supported by the National Science Foundation (awards ACI-1532235 and ACI-1532236), the University of Colorado Boulder, and Colorado State University. The authors would also like to thank Prof. Vanja Dukić (University of Colorado at Boulder, Department of Applied Mathematics) for insightful discussions and helpful suggestions of references.

Appendix

A.1. Specifications for examples

In Tables 2-5 we include hyperparameter specifications and resulting attributes of Algorithm 4.1 applied to the three examples in Section 5. In particular, we report the typical walltime in Table 5, showing that on each example Algorithm 4.1 learns the mean-field equation from a dataset with ~64,000 particles in under 10 s.

Table 2.

Trial function library for local 2D example (Section 5.1).

| Mean-field term | Trial function library |
|---|---|
| , {1, 2, 3, 4, 5, 6, 7} | |
| , {0, 1, 2, 3, 4, 5}, {1, 2} | |
| , {0, 1, 2, 3, 4, 5} | |

Table 5.

Discretization parameters and general information for examples. The number of nonzeros in the true weight vector is given for each parameter set examined. Namely, for the local 2D example, results in a 4-term model, while the homogenized case results in a three-term model. For the nonlocal 1D example, result in 2-term, 3-term, and 5-term models, respectively, and for the nonlocal 2D example results in 1-term and 2-term models. The norm , condition number and walltime are listed for representative samples with 64,000 total particles.

| Example | (, ) | (, ) | (, ) | size() | (, ) |
|---|---|---|---|---|---|
| Local 2D | (31,16) | (5,3) | (10,5) | 128 × 128 × 101 | (0.078, 0.02) |
| Nonlocal 1D | (29,8) | (5,3) | (5,1) | 256 × 101 | (0.023, 0.01) |
| Nonlocal 2D | (25,8) | (5,3) | (8,1) | 128 × 128 × 81 | (0.047, 0.1) |

| Example | Nonzeros in true weight vector | size() | Norm | Condition number | Walltime |
|---|---|---|---|---|---|
| Local 2D | {4, 3} | 686 × 85 | 2.0 × 10^3 | 3.0 × 10^7 | 9.2 s |
| Nonlocal 1D | {2, 3, 5} | 3400 × 24 | 1.3 × 10^5 | 8.7 × 10^8 | 0.7 s |
| Nonlocal 2D | {1, 2} | 6500 × 59 | 1.1 × 10^4 | 6.4 × 10^6 | 8.5 s |

A.2. Derivation of homogenized equation (5.3)

We briefly provide a derivation of the homogenized equation (5.3) in the static case. Let be an open bounded domain with smooth boundary and be the -dimensional torus. Let be continuous and uniformly bounded below,

Then for any , the equation

has a unique weak solution given by

where is the Green’s function for with homogeneous Dirichlet boundary conditions on . By the coercivity of we have that is uniformly bounded in . By the lemma in [59, Section 2.4], up to a subsequence , there exists a function periodic in its second variable such that for any continuous function , we have

Setting , we see that on the same subsequence, . Applying the same lemma to the constant series and letting , we see that (up to possibly a second subsequence),

Letting and putting together the previous limits, we see that

and hence solves the homogenized equation

A.3. Recovery for small and large

The related maximum-likelihood approach [26] is shown to be suitable for small and large , hence a natural line of inquiry is the performance of Algorithm 4.1 in this regime. Theorem 1 does not apply to this regime, and in fact convergence of the algorithm is not expected: letting where is the approximate density constructed from experiment with particles, we have the weak-measure convergence as , where is the 1-particle marginal of the distribution of in . Unlike the mean-field distribution , is not a weak solution to the mean-field Fokker–Planck equation (3.1), instead we have

holding weakly, which depends on the 2-particle marginal [35]. Nevertheless, using the 1D nonlocal example in Section 5.2 with , we observe in Fig. 11 (right panel) that our weak-form algorithm correctly identifies the model in > 96% of trials with just particles per experiment when , and that error in (left panel) follows a trend. At experiments the error22 in is less than 1% and the runtime is approximately 0.9 s. The lack of convergence in is reflected in the diffusivity (middle panel of Fig. 11), where the error appears to plateau at around 1.7% for and at 3.5% for . The lower resolution (larger binwidth ) appears to yield slightly better results, possibly indicating that larger produces a coarse-graining effect such that over larger distances, although this effect deserves more thorough study in future work.

A.4. Technical lemmas

We now prove Lemmas 2-4 under Assumption H. First, some consequences of Assumption H. (I) The -Hölder continuity of sample paths (H.1) implies that for each ,

Together with the moment bound on (H.2), this implies

| (A.1) |

independent of . (II) The growth bounds on , , and (H.3)-(H.4) imply that for some ,

| (A.2) |

where is the Frobenius norm.

Proof of Lemma 2. Applying Itô’s formula to the process , we get that

Note that each integral on the right-hand side is a local martingale, since (A.2) and (H.5) ensure boundedness of over any compact set in , hence has mean zero. By independence of the Brownian motions , exchangeability of , the moment bound (A.1), and the growth bounds on (H.4), the Itô isometry gives us

where depends on , , , and . The result follows from Jensen’s inequality.23

Fig. 11.

Recovery of (3.1) in one spatial dimension for and with only particles per experiment.

Proof of Lemma 3. Using the notation from Lemma 1 to denote piecewise constant approximation of a function over the domain using the grid , we have

The right-hand side includes an interaction error followed by a sum of terms that are linear in the difference between a locally Lipschitz function and its piecewise constant approximation. Hence, we can bound using smoothness of (H.5), the moment assumptions on (H.2), and the growth assumptions on and (H.3)-(H.4). Specifically, for with center , the growth assumptions imply

for and depending on , , and , hence

| (A.3) |

Similarly, for the interaction error we use that for and with centers and , we have

with also depending on , , and . From this we have

| (A.4) |

The result follows from taking expectation and using the moment bound (A.1), where the final constant depends on , , , , , , and .

Proof of Lemma 4. Again rewriting the spatial trapezoidal-rule integration in the form , we see that

| (A.5) |

reduces to four terms of the form

for . Similarly to the bounds derived for in Lemma 3, the growth bounds on , and imply in general that

Rewriting the summands in ,

and using

where and , we see that for ,

Taking expectation on both sides and using the moment bound (A.1), we get

We get the same bound for . Summing over , and taking the average in , we then get

which implies the desired bound on the difference (A.5).

Footnotes

CRediT authorship contribution statement

Daniel A. Messenger: Concept for the article, Wrote the first draft, Editing, Performed the mathematical analysis, Wrote software, Selected examples, Ran simulations, Analyzed the data. David M. Bortz: Concept for the article, Editing.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

We define the moment of a probability measure for by .

The set is defined .

By we mean the identity in .

We use the notation to denote the evolution of probability measures. Subscripts will not be used to denote differentiation.

Meaning that for all continuous bounded functions , .

The indicator function is defined .

In this case (4.2) is the variance of a Monte-Carlo estimator for .

Details of the libraries used in examples can be found in Tables 2-4 in Appendix A.1.

This is not true in domains with boundaries, where nonlocalities can be seen to impart mean translation [42].

Note that this is feasible because the STLS algorithm terminates in finitely many iterations.

The Moore-Penrose inverse is defined for a rank- matrix using the reduced SVD as . The subscript denotes restriction to the first columns.

In particular, correlations result in large mutual incoherence, which renders algorithms such as Basis Pursuit, Orthogonal Matching Pursuit, and Hard Thresholding Pursuit useless (see [48, Chapter 5] for details).

Note that is simply shifted to lie in the positive orthant and reflected through each coordinate plane . In this way discretizes the set containing all observed interparticle distances.

We neglect the cost of computing the histogram and evaluating , together amounting to an additional flops, as these terms are lower order and reused in each column of and .

For the Frobenius norm is defined

is the induced matrix -norm of .

Note that the opposite convergence holds for the algorithm introduced in [26]: -fixed, results in recovery of .

For example, identification of the true model results in a , while identification of only half of the correct nonzero terms and no additional falsely identified terms results in .

Since the model is local, (5.2) is the Fokker-Planck equation for the distribution of each particle, rather than only in the limit of infinite particles.

E.g. due to multiple representations of the drift combining both nonlocal and local terms – see Section 4.1.4

References

- [1].Brunton Steven L., Proctor Joshua L., Kutz J. Nathan, Discovering governing equations from data by sparse identification of nonlinear dynamical systems, Proc. Natl. Acad. Sci 113 (15) (2016) 3932–3937. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [2].Messenger Daniel A., Bortz David M., Weak SINDy: Galerkin-based data-driven model selection, Multiscale Model. Simul 19 (3) (2021) 1474–1497. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [3].Messenger Daniel A., Bortz David M., Weak SINDy for partial differential equations, J. Comput. Phys (2021) 110525. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [4].Warren Michael S., Salmon John K., Astrophysical N-body simulations using hierarchical tree data structures, Proc. Supercomput (1992). [Google Scholar]

- [5].Guo Jiawei, The progress of three astrophysics simulation methods: Monte-Carlo, PIC and MHD, J. Phys. Conf. Ser. 2012 (1) (2021) 012136, IOP Publishing. [Google Scholar]

- [6].Lelievre Tony, Stoltz Gabriel, Partial differential equations and stochastic methods in molecular dynamics, Acta Numer. 25 (2016) 681–880. [Google Scholar]

- [7].Sepúlveda Néstor, Petitjean Laurence, Cochet Olivier, Grasland-Mongrain Erwan, Silberzan Pascal, Hakim Vincent, Collective cell motion in an epithelial sheet can be quantitatively described by a stochastic interacting particle model, PLoS Comput. Biol 9 (3) (2013) e1002944. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [8].Van Liedekerke Paul, Palm MM, Jagiella N, Drasdo Dirk, Simulating tissue mechanics with agent-based models: concepts, perspectives and some novel results, Comput. Part. Mech 2 (4) (2015) 401–444. [Google Scholar]

- [9].Bi Dapeng, Yang Xingbo, Marchetti M Cristina, Manning M Lisa, Motility-driven glass and jamming transitions in biological tissues, Phys. Rev. X 6 (2) (2016) 021011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [10].Blondel Vincent D., Hendrickx Julien M., Tsitsiklis John N., Continuous-time average-preserving opinion dynamics with opinion-dependent communications, SIAM J. Control Optim 48 (8) (2010) 5214–5240. [Google Scholar]

- [11].Keller Evelyn F., Segel Lee A., Model for chemotaxis, J. Theoret. Biol 30 (2) (1971) 225–234. [DOI] [PubMed] [Google Scholar]

- [12].Gkeka Paraskevi, Stoltz Gabriel, Farimani Amir Barati, Belkacemi Zineb, Ceriotti Michele, Chodera John D, Dinner Aaron R, Ferguson Andrew L, Maillet Jean-Bernard, Minoux Hervé, et al. , Machine learning force fields and coarse-grained variables in molecular dynamics: application to materials and biological systems, J. Chem. Theory Comput 16 (8) (2020) 4757–4775. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [13].Bibby Bo Martin, Sørensen Michael, Martingale estimation functions for discretely observed diffusion processes, Bernoulli (1995) 17–39.

- [14].Lo Andrew W., Maximum likelihood estimation of generalized Itô processes with discretely sampled data, Econom. Theory 4 (2) (1988) 231–247.

- [15].Bishwal Jaya P.N., Parameter Estimation in Stochastic Differential Equations, Springer, 2007.

- [16].Boninsegna Lorenzo, Nüske Feliks, Clementi Cecilia, Sparse learning of stochastic dynamical equations, J. Chem. Phys 148 (24) (2018) 241723.

- [17].Callaham Jared L, Loiseau J-C, Rigas Georgios, Brunton Steven L, Nonlinear stochastic modelling with Langevin regression, Proc. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci 477 (2250) (2021) 20210092.

- [18].Li Yang, Duan Jinqiao, Extracting governing laws from sample path data of non-Gaussian stochastic dynamical systems, 2021, arXiv preprint arXiv:2107.10127.

- [19].Nardini John T, Baker Ruth E, Simpson Matthew J, Flores Kevin B, Learning differential equation models from stochastic agent-based model simulations, J. R. Soc. Interface 18 (176) (2021) 20200987.

- [20].Brückner David B., Ronceray Pierre, Broedersz Chase P., Inferring the dynamics of underdamped stochastic systems, Phys. Rev. Lett 125 (5) (2020) 058103.

- [21].Chen Xiaoli, Yang Liu, Duan Jinqiao, Karniadakis George Em, Solving inverse stochastic problems from discrete particle observations using the Fokker–Planck equation and physics-informed neural networks, SIAM J. Sci. Comput 43 (3) (2021) B811–B830.

- [22].Feng Jinchao, Ren Yunxiang, Tang Sui, Data-driven discovery of interacting particle systems using Gaussian processes, 2021, arXiv preprint arXiv:2106.02735.

- [23].Kasonga Raphael A., Maximum likelihood theory for large interacting systems, SIAM J. Appl. Math 50 (3) (1990) 865–875.

- [24].Bishwal Jaya Prakash Narayan, et al., Estimation in interacting diffusions: Continuous and discrete sampling, Appl. Math 2 (9) (2011) 1154–1158.

- [25].Bongini Mattia, Fornasier Massimo, Hansen Markus, Maggioni Mauro, Inferring interaction rules from observations of evolutive systems I: The variational approach, Math. Models Methods Appl. Sci 27 (05) (2017) 909–951.

- [26].Lu Fei, Maggioni Mauro, Tang Sui, Learning interaction kernels in stochastic systems of interacting particles from multiple trajectories, Found. Comput. Math (2021) 1–55.

- [27].Chen Xiaohui, Maximum likelihood estimation of potential energy in interacting particle systems from single-trajectory data, Electron. Commun. Probab 26 (2021) 1–13.

- [28].Sharrock Louis, Kantas Nikolas, Parpas Panos, Pavliotis Grigorios A, Parameter estimation for the McKean-Vlasov stochastic differential equation, 2021, arXiv preprint arXiv:2106.13751.

- [29].Gomes Susana N., Stuart Andrew M., Wolfram Marie-Therese, Parameter estimation for macroscopic pedestrian dynamics models from microscopic data, SIAM J. Appl. Math 79 (4) (2019) 1475–1500.

- [30].Lukeman Ryan, Li Yue-Xian, Edelstein-Keshet Leah, Inferring individual rules from collective behavior, Proc. Natl. Acad. Sci 107 (28) (2010) 12576–12580.

- [31].Rudy Samuel H, Brunton Steven L, Proctor Joshua L, Kutz J Nathan, Data-driven discovery of partial differential equations, Sci. Adv 3 (4) (2017) e1602614.

- [32].Supekar Rohit, Song Boya, Hastewell Alasdair, Mietke Alexander, Dunkel Jörn, Learning hydrodynamic equations for active matter from particle simulations and experiments, 2021, arXiv preprint arXiv:2101.06568.

- [33].Alves E Paulo, Fiuza Frederico, Data-driven discovery of reduced plasma physics models from fully-kinetic simulations, 2020, arXiv preprint arXiv:2011.01927.

- [34].Lang Quanjun, Lu Fei, Learning interaction kernels in mean-field equations of 1st-order systems of interacting particles, 2020, arXiv preprint arXiv:2010.15694.

- [35].Jabin Pierre-Emmanuel, Wang Zhenfu, Mean field limit for stochastic particle systems, in: Active Particles, Volume 1, Springer, 2017, pp. 379–402.

- [36].Sznitman Alain-Sol, Topics in propagation of chaos, in: Ecole D’été de Probabilités de Saint-Flour XIX–1989, Springer, 1991, pp. 165–251.

- [37].Méléard Sylvie, Asymptotic behaviour of some interacting particle systems; McKean-Vlasov and Boltzmann models, in: Probabilistic Models for Nonlinear Partial Differential Equations, Springer, 1996, pp. 42–95.

- [38].Bolley François, Canizo José A, Carrillo José A, Stochastic mean-field limit: non-Lipschitz forces and swarming, Math. Models Methods Appl. Sci 21 (11) (2011) 2179–2210.

- [39].Boers Niklas, Pickl Peter, On mean field limits for dynamical systems, J. Stat. Phys 164 (1) (2016) 1–16.

- [40].Fetecau Razvan C., Huang Hui, Sun Weiran, Propagation of chaos for the Keller–Segel equation over bounded domains, J. Differential Equations 266 (4) (2019) 2142–2174.

- [41].Fetecau Razvan C, Huang Hui, Messenger Daniel, Sun Weiran, Zero-diffusion limit for aggregation equations over bounded domains, 2018, arXiv preprint arXiv:1809.01763.

- [42].Messenger Daniel A., Fetecau Razvan C., Equilibria of an aggregation model with linear diffusion in domains with boundaries, Math. Models Methods Appl. Sci 30 (04) (2020) 805–845.

- [43].Fetecau Razvan C., Kovacic Mitchell, Swarm equilibria in domains with boundaries, SIAM J. Appl. Dyn. Syst 16 (3) (2017) 1260–1308.

- [44].Carrillo JA, Delgadino MG, Patacchini FS, Existence of ground states for aggregation-diffusion equations, Anal. Appl 17 (03) (2019) 393–423.

- [45].Araújo Dyego, Oliveira Roberto I., Yukimura Daniel, A mean-field limit for certain deep neural networks, 2019, arXiv preprint arXiv:1906.00193.

- [46].Freedman David, Diaconis Persi, On the histogram as a density estimator: L2 theory, Z. Wahrscheinlichkeitstheor. Verwandte Gebiete 57 (4) (1981) 453–476.

- [47].Zhang Linan, Schaeffer Hayden, On the convergence of the SINDy algorithm, Multiscale Model. Simul 17 (3) (2019) 948–972.

- [48].Foucart Simon, Rauhut Holger, A Mathematical Introduction to Compressive Sensing, Birkhäuser Basel, 2013.

- [49].Malik Osman Asif, Becker Stephen, Low-rank Tucker decomposition of large tensors using TensorSketch, Adv. Neural Inf. Process. Syst 31 (2018) 10096–10106.

- [50].Sun Yiming, Guo Yang, Luo Charlene, Tropp Joel, Udell Madeleine, Low-rank Tucker approximation of a tensor from streaming data, SIAM J. Math. Data Sci 2 (4) (2020) 1123–1150.

- [51].Jang Jun-Gi, Kang U, D-Tucker: Fast and memory-efficient Tucker decomposition for dense tensors, in: 2020 IEEE 36th International Conference on Data Engineering (ICDE), IEEE, 2020, pp. 1850–1853.

- [52].Yu Wenjian, Gu Yu, Li Yaohang, Efficient randomized algorithms for the fixed-precision low-rank matrix approximation, SIAM J. Matrix Anal. Appl 39 (3) (2018) 1339–1359.

- [53].Lagergren John H., Nardini John T., Lavigne G. Michael, Rutter Erica M., Flores Kevin B., Learning partial differential equations for biological transport models from noisy spatio-temporal data, Proc. R. Soc. A 476 (2234) (2020).

- [54].Fetecau Razvan C., Huang Yanghong, Kolokolnikov Theodore, Swarm dynamics and equilibria for a nonlocal aggregation model, Nonlinearity 24 (10) (2011) 2681.

- [55].Milstein Grigorii Noikhovich, Numerical Integration of Stochastic Differential Equations, Vol. 313, Springer Science & Business Media, 1994.

- [56].Dolbeault Jean, Perthame Benoît, Optimal critical mass in the two dimensional Keller–Segel model in R^2, C. R. Math 339 (9) (2004) 611–616.

- [57].Cai T Tony, Wang Lie, Orthogonal matching pursuit for sparse signal recovery with noise, IEEE Trans. Inform. Theory 57 (7) (2011) 4680–4688.

- [58].Li Zhongyang, Lu Fei, Maggioni Mauro, Tang Sui, Zhang Cheng, On the identifiability of interaction functions in systems of interacting particles, Stoch. Processes Appl 132 (2021) 135–163.

- [59].Weinan E, Principles of Multiscale Modeling, Cambridge University Press, 2011.