Summary

Unpublished data and unused samples are common byproducts of research activity, but little is known about the scope and economic impact of their disuse. To fill this knowledge gap, we collected self-reported anonymous survey responses from 301 academic and government scientists from randomly selected institutions. Respondents estimated that they published ∼60% of their data and 95% had unpublished data. Of those collecting specimens, 60% stored unused samples. Systemic and logistical issues were identified as major contributory factors. The median cumulative self-reported estimated value of unused resources per researcher was $28,857, with life science ($36k) and government ($109k) researchers reporting the costliest assets. Using NSF headcounts, we estimated that the current cumulative value of unused resources at universities is approximately $6.2 billion, about 7% of the current annual R&D budget. These findings provide actionable information that can be used by decision makers to reduce obstacles that undermine scientific progress and productivity.

Subject areas: Biological sciences research methodologies, Surveying, Research methodology social sciences

Highlights

- Unpublished data and unused samples represent billions of dollars in stranded assets
- Unfinished projects and orphan data were the primary sources of unpublished data
- Unused samples are not shared because others don’t know they exist
- Addressing logistical problems can increase the efficiency of research

Introduction

In the United States over $600 billion is funneled into R&D each year to advance scientific knowledge and to address pressing societal needs.1 Despite efforts to guarantee a return on investment through mandated reporting and specified deliverables, the problem of unpublished data and unused specimens remains. Ironically, while unpublished data are almost universally acknowledged by scientists, relatively few studies have examined their economic impact or the reasons for their disuse.

Some scientists have been quite vocal about the inefficiency in research.2,3,4,5,6,7,8,9 These concerns reached a pinnacle in 2009 when Iain Chalmers and Paul Glasziou estimated that over 85% of biomedical research is avoidably wasted.10 Marija Purgar et al.11 arrived at a similar estimate for ecology. In both analyses, this waste was attributed to a variety of factors, including flaws in relevancy, design, methodology, and bias; however, the failure to publish and report data was one of the main causes.6,9

To date, most of the public discourse on unpublished data has been field-specific,7,11,12 with a central focus on highly visible biomedical studies such as clinical trials. However, it has been deduced that most “dark data” are actually held by a greater number of small labs receiving less sizable grants.8 In addition, unused samples have yet to be considered as part of this problem. To begin to address this knowledge gap, we collected anonymous information on unpublished data and unused samples from 301 US scientists representing a breadth of fields, institutional types and sizes, and research roles. Our goal was to quantify the amount of unpublished data and unused samples researchers possessed and to understand the reasons for their disuse.

Recent changes in publication practices have expanded what it means to “publish” data; thus, we established clear definitions for what constituted “unpublished data” (Table 1). Publishing data ahead of peer review (pre-prints) and uploading stand-alone data to repositories are valuable ways to promote data sharing among scientists and overcome many limitations of the peer-review publication system.13,14,15,16 Despite promising gains in popularity, widespread adoption has yet to be achieved and varies considerably by field,17,18,19 with researchers and publishers debating how this information should be used and presented.20,21,22,23 In the meantime, peer-reviewed manuscripts are still viewed by many as the “gold standard” for justifying experimental rationale and demonstrating productivity for grants and career advancement. Thus, we retained the traditional view of “publishing” as being in peer-reviewed scientific journals. However, many pre-prints published ahead of journal submission would be included in our definition of “publishing,” and using these new mechanisms does not prevent data from being published in peer-reviewed format. Study definitions are presented in Table 1.

Table 1.

Core definitions as provided in survey

| Terms | Definition as provided to respondents |
|---|---|
| Unpublished Data | “Publishable” data that have not been published in a peer-reviewed scientific journal, are not in articles currently under review, and are not a part of a publication to be submitted in the coming year. |
| Publishable Data | Data that are suitable for publication in a peer-reviewed scientific journal because they meet rigor and reproducibility standards in your field. Do not exclude data from your estimates based on their projected value or impact (i.e. negative or inconclusive results) |
| Negative Results | Publishable data in which there is a failure to reject the null hypothesis (i.e., an effect doesn’t happen). For negative results to be publishable, the proper positive and negative controls must be included and there should be no technical or experimental issues. |
| Orphan Data | Data that doesn’t “fit” well into the lab’s other papers but does not constitute an entire publishable unit |
| Ancillary Findings | Findings unrelated to lab’s mission |
| Publication Efficiency | 100% Efficiency = Publishing 100% of all publishable data in peer-reviewed journals |
| 1 Unit of Data | Sufficient data to create a single graph/table. |
| Unused Samples | Samples that are produced in excess, left-over from experiments or collections, or so easily generated that the laboratory would be willing to share them with other respected collaborators. DO NOT include Precious samples that the laboratory would be unwilling to share; Samples/specimens that are not suitable for use in publication due to insufficient rigor or reproducibility standards or technical/experimental issues. |

Results

Respondent demographics

To assemble contact lists for academic institutions, a random number generator was used to select institutions that were stratified by Carnegie Classification24 and sector. Carnegie Classification ranks accredited degree-granting institutions by factors such as the size of the student body, degrees conferred, and/or research dollars. We selected 22 R1 (doctoral universities—very high research activity), 15 R2 (doctoral universities—high research activity), 15 D/PU (doctoral/professional universities, moderate research activity), and 15 M1-3 academic institutions (Master’s colleges and universities; 1, larger; 2, medium; and 3, smaller programs) and 28 government research agencies that represented 39 states across the US. Contact lists were compiled from departmental websites or institutional directories and primarily included tenured and tenure-track faculty. Research staff, post-docs, and graduate students were also included if their contact information was available.

Survey invitations were sent to 10,206 contacts via email, and an additional 176 contacts were made via LinkedIn messages. Invitation emails to 568 accounts bounced due to defunct email addresses or recipients opting out of Survey Monkey emails. Of the 5,713 individuals who opened their invitation emails, 317 responded, for a 5.5% response rate and a 74% completion rate. The survey questions can be found in Data S1.

Sixteen responses were excluded from further evaluation due to incomplete information. The remaining 301 respondents were binned by research field into life sciences (54%), physical sciences (24%), social sciences (15%), or engineering (7%). Position and sector percentages summed to more than 100%, as some respondents held cross-institutional appointments. The majority of respondents were academic researchers affiliated with R1 institutions (58%), followed by R2 institutions (21%) and government researchers (14%). Researchers at D/PU and M1-3 institutions were collapsed into a single category (11%) due to low numbers. Most respondents were tenured faculty (44%), tenure-track faculty (14%), or non-tenure track faculty/postdocs (23%). Other position and demographic information is shown in Table 2.

Table 2.

Respondent demographics

| Respondent characteristics | Count | Percent |
|---|---|---|
| Gender | | |
| Male | 179 | 59% |
| Female | 109 | 36% |
| Non-Binary | 1 | 0% |
| Prefer not to answer | 12 | 4% |
| Total | 301 | 100% |
| Field | | |
| Life Sciences | 164 | 54% |
| Physical Sciences | 71 | 24% |
| Engineering & Technology | 20 | 7% |
| Social Sciences (Psych, Sociology, Anthrop) | 46 | 15% |
| Total | 301 | 100% |
| Sector/Tier (a) | | |
| Government | 43 | 14% |
| Academia - R1 | 174 | 58% |
| Academia - R2 | 63 | 21% |
| Academia - D/PU, M1-M3 | 33 | 11% |
| Industry | 2 | 1% |
| Non-Profit | 7 | 2% |
| Position (a) | | |
| Tenured Faculty | 133 | 44% |
| Tenure-Track Faculty | 41 | 14% |
| Government Scientist | 43 | 14% |
| Non-Tenure Track Faculty and Postdocs | 68 | 23% |
| Graduate Students | 22 | 7% |
| Lab Managers | 4 | 1% |
| Retired | 5 | 2% |
| Physicians | 17 | 6% |
| Veterinarians | 5 | 2% |
| Industry Scientist | 2 | 1% |

(a) Some individuals held dual affiliations and/or job titles.

Unpublished data

Respondents were asked detailed questions regarding their unpublished data, as defined in Table 1. The publication process is a lengthy continuum that can dead-end in the manuscript preparation or revision stage.7,12 To institute a reasonable time cutoff, we asked researchers to exclude from their responses data that were part of articles currently under review or part of a publication to be submitted in the coming year (which would include many pre-prints). Finally, we asked respondents to remain unbiased as to the data’s projected value or impact (i.e., not to exclude data in which the results were negative, null, or inconclusive). Additional definitions used in the study are presented in Table 1.

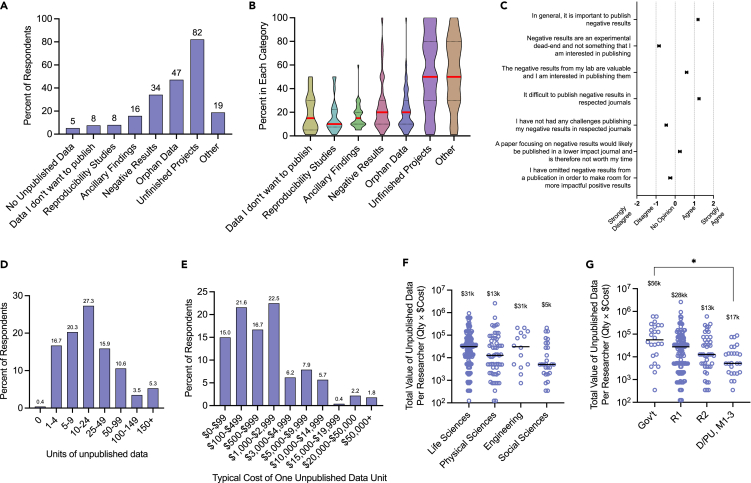

Based on the criteria given, 95% of respondents indicated that they possessed unpublished data. Examples of responses provided included: “gene overexpression in cell lines,” “plant physiology,” “training evaluation data,” “large longitudinal dataset of pregnancy,” “imaging studies on human brain tissue,” “attitudes and perceptions on local environmental issues,” “diversity and prevalence of pathogens,” “sediment transport to marshes,” etc. (Data S2). Respondents were then asked if they possessed unpublished data falling into certain categories (Figure 1A) and to estimate how much of their unpublished data fell into each of those categories (Figure 1B). Types of data meriting additional definitions included: ancillary findings, data unrelated to the lab’s mission; orphan data, data that did not “fit” well into the lab’s other papers but would not constitute a publishable unit; and data producing negative results (also termed “null data”), data for which there was a failure to reject the null hypothesis, including positive and negative controls, and collected without technical issues. As data could be assigned to more than one category, the sum of percentages exceeded 100%. “Unfinished projects,” the largest source of unpublished data, were reported by 82% of respondents and represented ∼50% of their unpublished data. “Orphan data” was the second most common category at 47%, followed by data producing “negative (null) results” at 34%. Write-in responses in the “other” category (19%) echoed issues related to unfinished projects, such as personnel changes, lack of time to write, or difficulty publishing (Figure S1A). The age of unpublished data is shown in Figure S1B.

Figure 1.

Self-reported estimates of the quantity, type, and cost of unpublished data among researchers

Unpublished data were defined as being: publishable (meets respondents’ field’s rigor and reproducibility standards; no technical issues); not currently published in a peer-reviewed scientific journal; not in articles currently under review; not part of a publication to be submitted in the coming year. Respondents were asked to remain agnostic of projected value or impact.

(A) Percent of researchers possessing each type of unpublished data. Types of data meriting additional definitions: Ancillary findings, data unrelated to the lab’s mission; Orphan data, data that does not “fit” well into the lab’s other papers but does not constitute an entire publishable unit; and negative results, data in which there was a failure to reject the null hypothesis, includes positive and negative controls, and was collected without technical issues.

(B) Violin plot depicting the percent of researchers’ unpublished data falling into each category. Median indicated by red line.

(C) Inventory of attitudes and experiences regarding the publishing of negative data. Mean and s.e.m. shown.

(D) Percent of respondents possessing indicated quantities of unpublished data and (E) Percent of respondents indicating average cost of producing one unit of data (excluding employee time cost).

(F and G) Calculated estimate of unpublished data per researcher (avg quantity × avg cost) stratified by field (F) and sector/tier (G). Median indicated by solid lines and annotations. ∗p < 0.05, One-way ANOVA of log transformed values with Tukey’s multiple comparisons. Mean and s.e.m. shown. n = 240–301.

To prepare for survey creation, we informally interviewed 13 scientists regarding their unused resources. These discussions revealed wide differences of opinion regarding whether negative results were “publishable” and prompted us to ask respondents to remain unbiased as to their data’s projected value or impact. To quantify varying opinions regarding the publishing of negative results, we presented respondents with various statements and ascertained the degree to which they agreed, disagreed, or held no opinion (Figure 1C). The strongest opinions were that publishing negative results was important (agreed to strongly agreed; mean ± s.e.m., 1.2 ± 0.5), but that publishing negative results in respected journals was difficult (agreed to strongly agreed; 1.25 ± 0.56).

Next, we sought to quantify the amount of unpublished data respondents had accumulated. To proceed, we defined a “unit” of unpublished data as being “sufficient to create a single table or graph.” We took this approach so that respondents could answer based on conventions within their field and area of research. In addition, this estimate would capture subsets of “orphan data” that may have been excluded from a larger set of data used for publication.

In keeping with this definition, most respondents estimated that they possessed 10–24 units of unpublished data, with over 60% possessing between 5 and 50 units (Figure 1D). Respondents were then asked to estimate the typical cost to produce one unit of data, including the cost of materials, supplies, services, etc. but excluding the salary costs of full-time laboratory personnel (Figure 1E). Most estimates (60.8%) fell between $100 and $2,999, but 10% of respondents estimated that the average unit of data cost them more than $10,000.

The midpoints of these ranges were then multiplied (quantity × cost) to calculate the approximate estimated value of unpublished data for each researcher. These values were stratified by field (Figure 1F) and tier/sector (Figure 1G). Researchers in engineering and life sciences possessed the highest median value of unpublished data ($31k), followed by the physical sciences ($13k) and social sciences ($5k). Researchers in the government sector possessed the costliest data ($56k), followed by R1 ($28k), D/PU and M1-3 ($17k), and R2 ($13k) institutions.
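The midpoint calculation described above can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, and the answer ranges in the example are assumed for demonstration (the survey's actual response bins are listed in Data S1 and are only partly quoted in the text).

```python
def midpoint(low, high):
    """Return the midpoint of a closed numeric range."""
    return (low + high) / 2.0


def estimated_value(quantity_range, cost_range):
    """Approximate value = midpoint(quantity range) x midpoint(unit-cost range)."""
    return midpoint(*quantity_range) * midpoint(*cost_range)


# Illustrative respondent (assumed ranges, not survey data):
# 10-24 units of unpublished data at $1,000-$2,999 per unit.
example = estimated_value((10, 24), (1000, 2999))
print(f"Estimated value of unpublished data: ${example:,.2f}")
```

Using range midpoints keeps the estimate simple, but any open-ended top range (for example, "more than $10,000") would need an assumed upper bound, which the sketch leaves to the caller.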

While these numbers represent self-reported estimates for the costs of data collection and processing over a range of data types, the findings demonstrate that unpublished data may represent a significant potential loss of resource investment and that systemic issues such as personnel turnover, time constraints, and perceived publication biases contribute to deficits in their utilization.

Publication efficiency and publication pressure

To gain further insights into how researchers viewed their own publishing activity, we asked respondents to estimate their publication efficiency, based on a theoretical scenario in which being 100% efficient means publishing 100% of all “publishable” data in peer-reviewed journals. These estimates were compared to self-reported estimates of publication pressure (Figures S2A–S2C). Estimates of publication efficiency were normally distributed with a mean ± s.d. of 59.18 ± 23.05% (Figure 2A). Publication efficiency did not significantly differ between researchers in different fields (Figure 2B) but was lower at D/PU and M1-3 academic institutions (Figure 2C), where perceived publication pressure was also reduced (Figure S2B). Among individual researchers, publication pressure had no significant relationship with either publication efficiency (p = 0.70) or the number of estimated unpublished data units (p = 0.83). There was a weak negative association between the number of unpublished data units and publication efficiency (rs = −0.22, p < 0.01).
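As an illustration of the kind of rank-based association reported above, the following sketch computes a rank correlation coefficient in plain Python. It assumes that "rs" denotes Spearman's rank correlation, which the text does not state explicitly; the function names and the example values are hypothetical and are not survey data.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        tied_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = tied_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical respondents: unpublished data units vs. efficiency (%).
units = [3, 17, 17, 37, 75]
efficiency = [80, 70, 60, 65, 40]
print(round(spearman_rho(units, efficiency), 2))
```

A rank-based measure suits this setting because the quantity answers were collected as ordered ranges rather than exact counts.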

Figure 2.

Publication efficiency estimates of self and peers

Participants were asked to imagine a theoretical scenario in which “100% efficiency = publishing 100% of all publishable data in peer-reviewed journals,” in keeping with previous definitions of “publishable data”.

(A) Self-reported estimates of publication efficiency from all respondents, with mean indicated by black line.

(B) Comparison of mean publication efficiency by field and (C) sector/tier. Mean and s.e.m. shown. ∗∗p < 0.01, One-way ANOVA with Tukey’s multiple comparisons. Participants were then asked to estimate the publication efficiency of the average research laboratory in their field, noted as “peers in field.”

(D) Histogram of differences between self and peers.

(E and F) Differences stratified by position (E) and gender (F). Mean and s.e.m. shown. ∗p < 0.05, One-way ANOVA with Tukey’s multiple comparisons (E) and unpaired t-test (F). n = 230–240.

We then asked respondents to estimate the publication efficiency of the average research laboratory in their field. Differences in the perception of efficiency between self and peers are shown in Figure 2D. On average, individuals felt that they were as efficient or slightly less efficient than their peers (mean, −3.19%; median, 0%); however, greater differences emerged when respondents were stratified by position (Figure 2E) and gender (Figure 2F). While the number of estimated unpublished data units was identical between males and females (mean, median of 10–24 units of data), females viewed themselves as being significantly less efficient (−6.50% versus −0.76%; p < 0.05). Together, these data offer additional psychological and sociological insights into the research and publication process.

Unused samples

Next, we asked respondents about their unused samples. To include as many research fields as possible, we broadly defined unused samples as “samples/specimens that are produced in excess, left-over from experiments or collections, or so easily generated that the laboratory would be willing to share them with other respected collaborators” and excluded “samples that the laboratory would be unwilling to share or are not suitable for use in publication due to insufficient rigor and/or reproducibility standards or technical/experimental issues.” Given the wide range of fields included in the survey, we allowed each researcher to decide what constituted an “unused,” “sharable” sample within their specialty.

A total of 53% of survey respondents collected samples or specimens as part of their research. Sixty percent of these individuals indicated that they had material meeting the definition of unused samples/specimens, with wide variation seen among respondents. Respondents were also asked to provide examples of their unused samples, which included, “serum and tissue from infected animals,” “whole animals; frozen tissues,” “frozen fruit flies from a selection experiment,” “embedded mouse brain tissue,” etc. (Data S3). A range of 10–100 samples was the most common answer (22%), but the median answer corresponded to 300–500 samples (Figure 3A). Respondents were then asked to estimate the average cost required to generate one sample, including the cost of materials, reagents, services, maintenance fees, disposables, etc. but excluding salary costs for full-time laboratory employees (Figure 3B). Seventy-five percent of respondents estimated this cost to be between $1 and $100 per sample. The age of samples reported ranged from 0 to 50 years (Figure 3C), implying gradual accumulation of unused samples over time.

Figure 3.

Self-reported estimates of the quantity and cost of unused samples

Unused or left-over samples were defined as “samples/specimens that are produced in excess, left-over from experiments or collections, or so easily generated that the laboratory would be willing to share them with other respected collaborators.” This excludes samples that the laboratory would be unwilling to share or are not suitable for use in publication due to insufficient rigor and/or reproducibility standards or technical/experimental issues. Of the 53% of respondents whose research entails sample or specimen collection, 60% indicated that they had unused samples that met this definition. These individuals then answered questions about the estimated quantity and cost of their unused samples.

(A) Assuming 1 specimen, tube, sample, aliquot, etc. = 1 Unit, percent of respondents with indicated unused sample quantities.

(B) Percent of respondents indicating the average estimated cost to generate one sample (excluding employee time cost).

(C) Age of samples.

(D and E) Approximate estimated value of unused samples per researcher (avg quantity × avg cost) stratified by field (D) and sector/tier (E). Median indicated by lines and annotations.

(F) Rating of obstacles preventing the sharing of unused samples with others. Mean and s.e.m. shown. n = 78–250.

For each researcher, the midpoints for the ranges they selected were multiplied (quantity × cost) to calculate the approximate estimated value of unused samples. These values were stratified by field (Figure 3D) and tier/sector (Figure 3E). Researchers in the life sciences possessed the costliest unused samples (median of $26k), followed by the physical and social sciences ($11k). None of the engineering and technology respondents possessed unused samples that met the specified criteria (Figure 3 legend). In terms of sector and tier, government researchers had the most expensive unused samples ($100k), followed by R1 researchers ($26k), R2 ($22k), and D/PU and M1-3 ($15k).

In addition, respondents identified obstacles that interfered with their ability to share their samples with potential collaborators (Figure 3F). These researchers rated others not knowing of the samples’ existence and not being able to find collaborators as larger obstacles than accessibility, selectivity, resource challenges, or confidentiality.

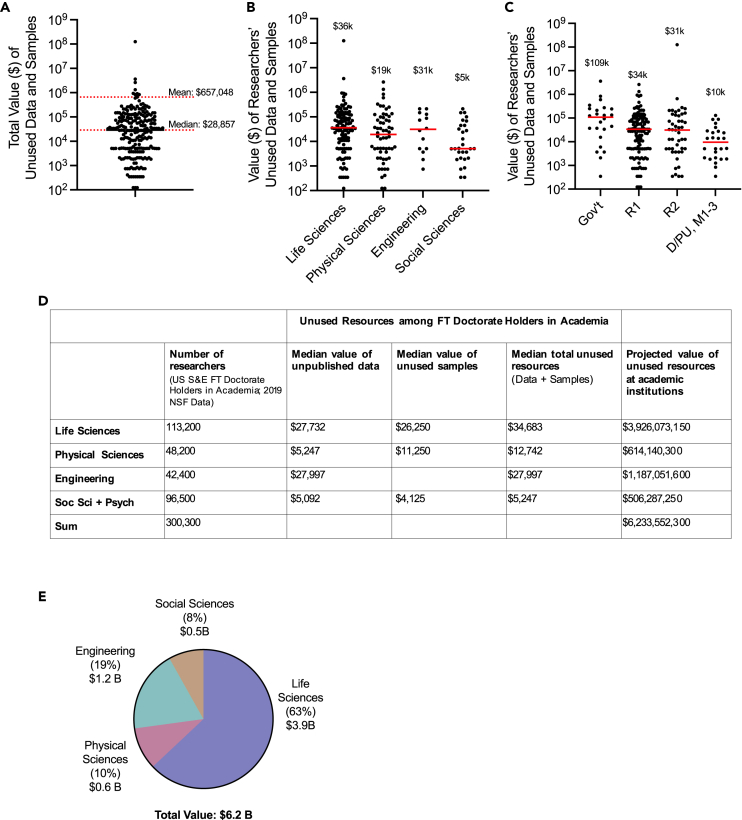

Total unused resources

To estimate the total cost of unused resources per investigator, the self-reported estimated costs of unpublished data and unused samples were summed for each researcher (Figure 4A). Across all researchers, the median value of total unused resources was $28,857. However, the possession of very costly assets among some researchers skewed the mean to $657,048. Researchers in the life sciences ($36k) and engineering ($31k) had the most expensive median unused resources compared to the physical ($19k) and social sciences ($5k). The median value of unused resources for government researchers ($109k) was approximately three times as high as at R1 institutions ($34k), followed by R2 ($31k) and D/PU and M1-3 institutions ($10k).
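The gap between the median and the mean reported above is typical of right-skewed data, where a few very large values pull the mean upward. The sketch below sums two per-researcher estimates and compares the two statistics; the dollar values and the function name are hypothetical placeholders chosen for illustration, not survey responses.

```python
from statistics import mean, median


def total_unused_value(data_values, sample_values):
    """Sum unpublished-data and unused-sample estimates per researcher."""
    return [d + s for d, s in zip(data_values, sample_values)]


# Hypothetical per-researcher estimates in dollars (not survey data).
# The last researcher holds very costly assets and skews the mean.
data_values = [5_000, 12_000, 30_000, 45_000, 2_000_000]
sample_values = [0, 8_000, 1_000, 20_000, 1_500_000]

totals = total_unused_value(data_values, sample_values)
print("median:", median(totals))
print("mean:  ", mean(totals))
```

With skew of this kind, the median is the more representative per-researcher figure, which is presumably why the text reports it as the headline value.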

Figure 4.

Estimate of the unused laboratory resource economy at US academic institutions

For each respondent, the self-reported estimates of unused data and samples were added together to calculate the total estimated value of their unused resources per researcher (A). These values were then stratified by field (B) and sector/tier (C). Medians are indicated by the red lines and annotations.

(D) To estimate the value of unused resources at US academic institutions, the median total value of unused resources (data + samples) was re-calculated for respondents who were full time (FT) doctorate holders in academia only. This value was then multiplied by corresponding NSF science and engineering (S&E) headcounts in each field.25

(E) Size estimate of the unused resource economy at US academic institutions.

These data were then used to estimate the total value of unused resources at U.S. academic institutions. We recalculated the median total unused resource value for full-time doctorate holders in academia only and then multiplied this number by corresponding NSF headcounts25 across research fields (Figure 4D). The resulting calculations projected a total of $6.2 billion in unused laboratory assets, the bulk of which may reside within the life sciences (65%, $3.9b; Figure 4E). Engineering researchers were estimated to hold 20% of unused assets, $1.2b, followed by the physical sciences (10%, $0.6b), and social sciences (8%, $0.5b). Although government researchers were included in our survey, they were excluded from this computation as similarly stratified headcount data were not available for this group.
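The extrapolation above amounts to multiplying a per-field median by a per-field headcount and summing across fields. The sketch below shows that structure with toy inputs, then checks the field totals quoted in the text against the stated grand total. The function names and the toy medians and headcounts are hypothetical; the actual NSF headcounts and academic-only medians appear in Figure 4D and are not reproduced here.

```python
def extrapolate_by_field(median_value, headcount):
    """Return {field: median per-researcher value x headcount} in dollars."""
    return {field: median_value[field] * headcount[field] for field in median_value}


def shares_percent(totals):
    """Return each field's percentage share of the grand total."""
    grand_total = sum(totals.values())
    return {field: 100.0 * value / grand_total for field, value in totals.items()}


# Toy inputs: hypothetical placeholders, NOT the NSF headcounts or survey medians.
toy = extrapolate_by_field(
    {"field_a": 10_000, "field_b": 20_000},
    {"field_a": 50, "field_b": 25},
)
print(toy)

# Field totals as quoted in the text, in billions of dollars.
reported_bn = {"life": 3.9, "engineering": 1.2, "physical": 0.6, "social": 0.5}
print(round(sum(reported_bn.values()), 1))
print({field: round(pct, 1) for field, pct in shares_percent(reported_bn).items()})
```

Shares recomputed from the rounded dollar figures may differ by a few points from the percentages printed in the text, which were presumably derived from unrounded values.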

Discussion

Economists define “stranded assets” as “assets that have suffered from unanticipated or premature write-downs, devaluation, or conversion to liabilities.”26,27 While this term is often used in the context of devalued real estate or inaccessible fossil fuels, it is highly applicable to unpublished data and unused samples among government and academic researchers. In analogous terms, the research process distills investments of time and money into data, the value of which is scientific knowledge and advances to meet societal needs. In some research fields, the generation or acquisition of samples is a vital, resource-intensive precursor to obtaining and using data. In addition, the publications that originate from scientific activity provide value in the form of career advancement, ability to obtain future grant funding, and societal impact. However, when data remain unpublished and samples are left unused, the value of these investments becomes “stranded” in a state of stored potential. In cases where these materials are time-sensitive or their existence remains unknown, their value may be lost completely.

Our data suggest that the typical researcher possesses about $29,000 in stranded assets, which means that the average R1 institution has millions of dollars in unused assets and, across US academic institutions, a grand total of $6.2 billion may be at stake. It is important to place these findings in temporal context and emphasize that this is a cumulative snapshot of unused resources. The rate of accumulation is currently unclear, as some respondents’ answers may represent an entire career’s worth of accumulated unused resources, while junior investigators may possess only a few years’ worth. While this figure does encompass an enormous amount of resources, it represents a fraction of the total academic R&D spending in a given year: about 7% of the $90 billion spent at universities in 2021.28 Moreover, it should again be emphasized that this is not an estimate of “waste” but instead the amount of stranded assets that are in danger of becoming waste.

Here, we extrapolated the approximate value of unused resources in academia; however, the total unused laboratory resource economy in the U.S. is likely orders of magnitude larger when considering inputs from the government sector and private industry. Others have given much larger estimates. In their Lancet paper on research waste, Glasziou and Chalmers (2009) surmised that the percent loss attributed to non-publication alone (if data passed muster in other areas) would be ∼25%. Given NIH’s $45 billion annual budget,29 this would translate to about $11 billion each year for biomedical research alone.
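The two back-of-envelope figures quoted in this part of the Discussion (the share of annual academic R&D spending and the annual loss implied by a 25% non-publication rate) can be reproduced with simple arithmetic. The sketch below uses only numbers stated in the text; the variable and function names are illustrative.

```python
def share_percent(part, whole):
    """Return part as a percentage of whole."""
    return 100.0 * part / whole


# All inputs are taken from the text, in billions of dollars.
stranded_assets_bn = 6.2    # estimated unused resources at US universities
academic_rd_2021_bn = 90.0  # academic R&D spending in 2021
nih_budget_bn = 45.0        # NIH annual budget
nonpublication_loss = 0.25  # fraction attributed to non-publication

rd_share = share_percent(stranded_assets_bn, academic_rd_2021_bn)
nih_loss_bn = nonpublication_loss * nih_budget_bn

print(f"Share of annual academic R&D: {rd_share:.1f}%")
print(f"Implied annual NIH loss: ${nih_loss_bn:.2f} billion")
```

Note that the two figures are not directly comparable: the first is a cumulative stock measured against one year of spending, while the second is an annual flow.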

Our findings suggest that data are often not withheld by choice, as only 8% of respondents indicated that they possessed data they did not want to publish. Instead, the evidence indicates that systemic issues and publication bottlenecks hamper the efficient conversion of data into peer-reviewed publications. During the manuscript preparation process, carving out a cohesive publishable unit from results often leads to data being “orphaned” because some datasets are not sufficiently robust to be published on their own or may be regarded as tangential. Indeed, 47% of respondents possessed unpublished data that met this description. Over a third possessed unpublished negative (null) results and felt strongly that these data are undervalued by peers and publishers. Finally, unfinished projects were by far the primary source of unpublished data, with personnel turnover and a lack of time identified as major culprits. These results echo findings from other studies where lack of time, directionality of findings, and stalling in the preparation/review process have all been identified as causes of unpublished data.7,12,30 With respect to unused samples, it appears that the opportunity to convert these stranded assets into usable resources via collaboration is stymied primarily by a logistical constraint: the lack of widespread knowledge of their availability.

Despite the obstacles faced by researchers in publishing, most feel their performance is commensurate with that of their peers. However, further stratification reveals that women tend to view themselves much more critically than men despite reporting identical quantities of unpublished data, a discrepancy that many would ascribe to “imposter syndrome.”31,32,33,34 Although the literature is divided over the effects of gender on imposter syndrome, this finding supports previous reports that women experience higher rates than men.34 Also of note is that the amount of publication pressure individuals experienced had no bearing on their perceived publication efficiency or the self-reported amount of unpublished data. Together, these data provide a unique view of the intersection of scientific productivity with factors such as stress, systemic issues, resource limitations, and self-image.

While it is not realistic to expect that all the challenges identified here can be completely overcome, there is hope that solutions can be identified. In the past 10 years, much attention has been given to shifting the publishing framework toward open science as a means of facilitating data sharing. As these practices become more mainstream, they represent an essential way to reduce waste in the form of unpublished data. Moreover, they may represent one of the few options to overcome publication bias against negative or null results.

While continued efforts in this area are valuable, our findings argue that tackling unfinished projects would also be fruitful, as they are a predominant source of unpublished data. In regard to unfinished projects, perhaps the greatest challenge is the fact that career development timelines and financial pressures are often not compatible with the pace and compensation structure of research. In academia, those directly involved with data generation (typically students and early career researchers) often leave their positions to acquire better salaries and to advance their careers, leaving behind unfinished projects. Indeed, our data provide actionable evidence and the financial impetus that decision-makers could use to justify policy changes targeting these problems. For example, requirements for better research planning can be put in place, such as transition plans for personnel changes and institutional incentives for completing projects and publishing manuscripts and/or raw data. One survey respondent suggested an “institutional requirement for delivering all unpublished data with details required for publication and an agreement for authorship before departure from the lab.” As the financial loss from unfinished projects is significant, implementing incentives to conclude experiments, publish results, and mentor replacement staff could also be justified.

There is also an important lesson to be learned from conventional “stranded assets” in economics: the way to make use of them is to repurpose them. Similarly, unpublished data and unused samples can be mobilized through new applications and collaborative efforts. Another respondent commented “sometimes we don’t see value in data … but others might find it very valuable” and suggested the need for collaborative tools and exchanges to help scientists “make mutual agreements for analysis/publication.” Such an approach could help make use of hard-to-publish negative results, reproducibility studies, pilot data, orphan data, or ancillary findings and would help overcome the central challenge of unused samples: knowledge of their availability.

Limitations of the study

These findings are based on the parameters and definitions set forth in the survey, which could under- or over-estimate the actual pool of unused resources in several respects. First, data that researchers anticipate will take longer than one year to submit to a peer-reviewed journal are counted as “unpublished.” This cut-off was established because many papers stall during manuscript preparation and the likelihood of publication tends to decline over time7,12; however, it is not uncommon for some completed projects to take multiple years to be submitted, so some data could have been prematurely counted as “unpublished.” Moreover, we did not count stand-alone submissions in data repositories or pre-prints, although many such data would have been included in our estimates as they are often posted ahead of or in tandem with peer-reviewed publications. Alternatively, the survey could have underestimated resources in that it did not quantify data that still need work in order to become “publishable.” Finally, we did not include the cost of employee time and effort in the value of data and samples, as we felt this would be too difficult to disentangle within the confines of this survey. This is arguably the most resource-intensive aspect of data and sample generation, which leads us to hypothesize that our findings are, overall, likely underestimates.

Another challenge of this survey was that the generalizability of the questions made it difficult to capture field or institution-specific idiosyncrasies. This is particularly challenging because some “units” of data may be large and complex, such as cohort or population data, and some may be small, such as data from a simple experiment.

As with any survey, concerns regarding response bias must be acknowledged. At 5.5%, our response rate was on the low side, but not unexpected for a “cold” email survey and the characteristics of the population being surveyed (i.e., busy professionals).35,36,37,38 However, lower response rates are not necessarily correlated with response bias39,40 and previous studies have demonstrated that response rates of 5% in similar respondent populations produce reliable survey results provided a sampling frame of at least 500 individuals.41 In support of this interpretation, our publication efficiency statistics of ∼60% align closely with previously reported publication rates of 58%,12 ∼45%,3 and >50%.4

STAR★Methods

Key resources table

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Deposited data | | |
| Survey Data | Mendeley Data | https://doi.org/10.17632/6zxhcdsf7x.1 |

Resource availability

Lead contact

Further information and requests for resources and reagents should be directed to and will be fulfilled by the lead contact, Dr. Emma Bowers (emma.ciel@gmail.com).

Materials availability

This study did not generate new unique reagents.

Experimental model and study participant details

In compliance with the institutional policy for human subjects research, the survey protocol was reviewed by the University of Arizona Institutional Review Board and deemed exempt from full review. The selection process is described in method details and respondent characteristics are included in Table 2. We did not collect information on ancestry, race, or ethnicity.

Method details

Survey creation

Prior to preparing the survey we informally interviewed 13 academic scientists about their unused data and samples, which provided preliminary information as to the scope of the problem and underlying issues. Using this information, survey questions were developed to assess the quantity and value of unused data and samples and the reasons for their disuse.

Selection process and deployment

US academic institutions were stratified by Carnegie Classification and a random number generator was used to select those to be included in the study. We selected 22 R1, 15 R2, 15 D/Pu, and 15 M1-3 institutions. For each institution, we sought individuals from the biomedical sciences, biological sciences, medicine, earth sciences, engineering, chemistry, physics/astronomy, and social sciences (psychology, sociology, anthropology). We focused on these fields because, in addition to comprising the bulk of R&D, they are the most likely to utilize tangible data and samples and can be assessed by our survey instrument.
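As an illustration only, the stratified random selection described above could be sketched as follows. The per-stratum targets are those reported in the text; the sampling frame, the institution names, the seed, and the function name `select_institutions` are hypothetical placeholders and do not reflect the actual lists or tooling used in the study.

```python
import random

# Number of institutions drawn per Carnegie stratum, as reported in the text.
STRATUM_TARGETS = {"R1": 22, "R2": 15, "D/Pu": 15, "M1-3": 15}


def select_institutions(frame, targets, seed=None):
    """Draw a simple random sample without replacement within each stratum."""
    rng = random.Random(seed)
    selection = {}
    for stratum, n in targets.items():
        candidates = frame[stratum]
        if n > len(candidates):
            raise ValueError(f"Stratum {stratum!r} has fewer than {n} institutions")
        selection[stratum] = sorted(rng.sample(candidates, n))
    return selection


# Hypothetical sampling frame: 100 placeholder names per stratum (an assumption,
# not the real Carnegie listings).
frame = {
    stratum: [f"{stratum} institution {i:03d}" for i in range(1, 101)]
    for stratum in STRATUM_TARGETS
}

selected = select_institutions(frame, STRATUM_TARGETS, seed=2022)
for stratum, names in selected.items():
    print(stratum, len(names))
```

Sampling within each stratum separately keeps every institution type represented at its target count regardless of how many institutions of that type exist in the frame.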

Within each subject area, we assembled contact lists from publicly available online institutional directories. Contacts consisted primarily of tenured and tenure-track faculty (65-80%, depending on the institution type). Research staff, post-docs, and graduate students were also included if their contact information was available.

Similarly, we assembled a list of government research laboratories and institutes and randomly selected 30 for study inclusion; however, contact information for many government agencies was limited. We did not include researchers in industry given the differences in organizational structure, staffing, publication pressure, and funding mechanisms.

In total, 9,149 contacts from academia and 1,057 contacts from government laboratories were collected. In addition, 176 invitations were sent out on LinkedIn to increase response rates. Informed consent was obtained on the first page of the survey. All respondents indicated that they were at least 18 years old, worked in a U.S.-based laboratory, and were directly involved in research. The survey was deployed from May 30, 2022 to September 2, 2022 using SurveyMonkey software, which also sent invitation and reminder emails. Responses were collected anonymously.

Quantification and statistical analysis

Statistical analysis was conducted using SPSS Statistics and GraphPad Prism. Results were considered significant at P<0.05. Sample sizes ranged from 78 to 301 and are indicated in the legend of each figure. Unpublished data and unused samples are presented as percent of respondents, median, and mean, where indicated. Multiple comparisons were made using a one-way ANOVA with Tukey’s multiple comparisons test. Single comparisons were made using an unpaired t-test. To calculate the value of unused resources at academic institutions, NSF headcounts of full-time doctorate holders in academia stratified by field were obtained from https://ncses.nsf.gov/pubs/nsb20212/table/SLBR-21. These numbers were multiplied by the median estimated value of total unused resources for US doctorate holders in academia (obtained from the survey results, excluding government researchers, students, and lab managers). We did not include estimates for the fields of mathematics and statistics or computer science.
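The extrapolation step amounts to multiplying each field's headcount by a per-researcher median and summing across fields. A minimal sketch of that arithmetic is given below, assuming a single median applied to every field. The headcounts, the median value, and the function name `extrapolate_unused_value` are hypothetical placeholders chosen for illustration; they are not the NSF figures or the survey result.

```python
def extrapolate_unused_value(headcounts, median_value_per_researcher):
    """Multiply each field's headcount by the per-researcher median and total them."""
    per_field = {
        field: count * median_value_per_researcher
        for field, count in headcounts.items()
    }
    return per_field, sum(per_field.values())


# Hypothetical headcounts of full-time doctorate holders by field (placeholders,
# not the NSF table values).
headcounts = {
    "life sciences": 1_000,
    "engineering": 500,
    "social sciences": 250,
}

# Hypothetical median value of unused resources per researcher, in US dollars
# (a placeholder, not the survey median).
MEDIAN_VALUE_USD = 30_000

per_field, total = extrapolate_unused_value(headcounts, MEDIAN_VALUE_USD)
for field, value in per_field.items():
    print(f"{field}: ${value:,}")
print(f"total: ${total:,}")
```

Using the median rather than the mean makes the estimate less sensitive to a small number of respondents reporting very large values.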

Acknowledgments

A National Science Foundation I-Corps grant and a Flinn Foundation Entrepreneurial fellowship provided support during the preliminary interviews that showed the value of a wider investigation. We gratefully acknowledge the support of Dr. Christine Flanagan, who served as editor and whose discussions helped to frame the survey content. Dr. Deborah Goldberg and Dr. Shaun McCullough provided valuable mentoring, advice, and input.

Author contributions

E.B., M.F., J.S., and K.S.R. provided essential input into formulation of the survey and interpretation of survey data. E.B. and M.F. composed the survey materials. E.B. and J.S. compiled email contact lists and sent survey participation requests. E.B., J.S., M.F., and K.S.R. composed the manuscript.

Declaration of interests

E.B. is the founder of LabPair, Inc., an early-stage startup aimed at helping scientists turn their unused data and samples into publications. J.S. is a part-time LabPair employee. M.F. and K.S.R. declare no competing interests. Data are available at Mendeley Data https://doi.org/10.17632/6zxhcdsf7x.1.

Inclusion and diversity

We support inclusive, diverse, and equitable conduct of research.

Published: June 19, 2023

Footnotes

Supplemental information can be found online at https://doi.org/10.1016/j.isci.2023.107166.

Data and code availability

- The survey data have been deposited at Mendeley Data and are publicly available as of the date of publication. The DOI is listed in the key resources table.

- This paper does not report original code.

- Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

References

- 1. Bouroush M., (NCSES), N.C. for S. and E.S. U.S. R&D Increased by $62 Billion in 2019 to $667 Billion; Estimate for 2020 Indicates a Further Rise to $708 Billion. 2022. https://ncses.nsf.gov/pubs/nsf22330/

- 2. Chan A.-W., Song F., Vickers A., Jefferson T., Dickersin K., Gøtzsche P.C., Krumholz H.M., Ghersi D., van der Worp H.B. Increasing value and reducing waste: addressing inaccessible research. Lancet. 2014;383:257–266. doi: 10.1016/S0140-6736(13)62296-5.

- 3. Scherer R.W., Meerpohl J.J., Pfeifer N., Schmucker C., Schwarzer G., von Elm E. Full publication of results initially presented in abstracts. Cochrane Database Syst. Rev. 2018;11:MR000005. doi: 10.1002/14651858.MR000005.pub4.

- 4. Hopewell S., Clarke M., Stewart L., Tierney J. Time to publication for results of clinical trials. Cochrane Database Syst. Rev. 2007;2007:MR000011. doi: 10.1002/14651858.MR000011.pub2.

- 5. Ziai H., Zhang R., Chan A.-W., Persaud N. Search for unpublished data by systematic reviewers: an audit. BMJ Open. 2017;7 doi: 10.1136/bmjopen-2017-017737.

- 6. Glasziou P., Chalmers I. Research Waste Is Still a Scandal—An Essay by Paul Glasziou and Iain Chalmers. BMJ. 2018;363

- 7. Pasalic D., Fuller C.D., Mainwaring W., Lin T.A., Miller A.B., Jethanandani A., Espinoza A.F., Grossberg A.J., Jagsi R., Das P., et al. Detecting the Dark Matter of Unpublished Clinical Cancer Studies: An Analysis of Phase 3 Randomized Controlled Trials. Mayo Clin. Proc. 2021;96:420–426. doi: 10.1016/j.mayocp.2020.08.015.

- 8. Heidorn P.B. Shedding light on the dark data in the long tail of science. Libr. Trends. 2008;57:280–299.

- 9. Altman D.G. The scandal of poor medical research. BMJ. 1994;308:283–284. doi: 10.1136/bmj.308.6924.283.

- 10. Chalmers I., Glasziou P. Avoidable waste in the production and reporting of research evidence. Lancet. 2009;114:1341–1345. doi: 10.1097/AOG.0b013e3181c3020d.

- 11. Purgar M., Klanjscek T., Culina A. Quantifying research waste in ecology. Nat. Ecol. Evol. 2022;6:1390–1397. doi: 10.1038/s41559-022-01820-0.

- 12. Chapman P.B., Liu N.J., Zhou Q., Iasonos A., Hanley S., Bosl G.J., Spriggs D.R. Time to publication of oncology trials and why some trials are never published. PLoS One. 2017;12 doi: 10.1371/journal.pone.0184025.

- 13. Gonzalez P., Wilson G.S., Purvis A.J. Peer review in academic publishing: Challenges in achieving the gold standard. J. Univ. Teach. Learn. Pract. 2022;19:1.

- 14. Sarabipour S., Debat H.J., Emmott E., Burgess S.J., Schwessinger B., Hensel Z. On the value of preprints: An early career researcher perspective. PLoS Biol. 2019;17 doi: 10.1371/journal.pbio.3000151.

- 15. Tennant J.P., Ross-Hellauer T. The limitations to our understanding of peer review. Res. Integr. peer Rev. 2020;5:6. doi: 10.1186/s41073-020-00092-1.

- 16. Puebla I. Preprints: a tool and a vehicle towards greater reproducibility in the life sciences. J. Rep. Neurosci. 2020;2:1465. doi: 10.31885/jrn.2.2021.1465.

- 17. Soderberg C.K., Errington T.M., Nosek B.A. Credibility of preprints: an interdisciplinary survey of researchers. R. Soc. Open Sci. 2020;7 doi: 10.1098/rsos.201520.

- 18. Kleinert S., Horton R., Editors of the Lancet Group Preprints with The Lancet are here to stay. Lancet. 2020;396:805. doi: 10.1016/S0140-6736(20)31950-4.

- 19. Brainard J. Researchers push preprint reviews to improve scientific communications - Shifts in research culture, incentives, and technology would be needed for wide adoption. Science (Washington, DC, U. S.) 2022 doi: 10.1126/science.adg2283.

- 20. Van Schalkwyk M.C.I., Hird T.R., Maani N., Petticrew M., Gilmore A.B. The Perils of Preprints. BMJ. 2020;370. doi: 10.1136/bmj.m3111.

- 21. Ravinetto R., et al. Rapid Response to: The Perils of Preprints. Time Is Ripe for “Good Preprint Practices.” BMJ. 2020 https://www.bmj.com/content/370/bmj.m3111/rr

- 22. Ravinetto R., Caillet C., Zaman M.H., Singh J.A., Guerin P.J., Ahmad A., Durán C.E., Jesani A., Palmero A., Merson L., et al. Preprints in times of COVID19: the time is ripe for agreeing on terminology and good practices. BMC Med. Ethics. 2021;22:106–115. doi: 10.1186/s12910-021-00667-7.

- 23. Clyne B., Walsh K.A., O’Murchu E., Sharp M.K., Comber L., O’Brien K.K., Smith S.M., Harrington P., O’Neill M., Teljeur C., Ryan M. Using preprints in evidence synthesis: Commentary on experience during the COVID-19 pandemic. J. Clin. Epidemiol. 2021;138:203–210. doi: 10.1016/j.jclinepi.2021.05.010.

- 24. Carnegie Classification Basic Classification Description. 2022. https://carnegieclassifications.acenet.edu/classification_descriptions/basic.php

- 25. National Science Board, N.S.F. The STEM Labor Force of Today: Scientists, Engineers and Skilled Technical Workers. Sci. Eng. Indic. 2022. NSB-2021-2. Alexandria, VA. 2021. https://ncses.nsf.gov/pubs/nsb20212/data#table-block

- 26. Caldecott B., Howarth N., McSharry P. Stranded Assets in Agriculture: Protecting Value from Environment-Related Risks. 2013.

- 27. Caldecott B. Introduction to special issue: stranded assets and the environment. J. Sustain. Financ. Invest. 2017;7:1–13. doi: 10.1080/20430795.2016.1266748.

- 28. National Center for Science and Engineering Statistics (NCSES) Universities Report Largest Growth in Federally Funded R&D Expenditures since FY 2011. 2022. https://ncses.nsf.gov/pubs/nsf23303

- 29. (NIH), N.I. of H. Budget. https://www.nih.gov/about-nih/what-we-do/budget.

- 30. Koricheva J. Non-Significant Results in Ecology: A Burden or a Blessing in Disguise? Oikos. 2003;102:397–401.

- 31. Clance P.R., Imes S.A. The imposter phenomenon in high achieving women: Dynamics and therapeutic intervention. Psychother. Theory Res. Pract. 1978;15:241–247.

- 32. Chrousos G.P., Mentis A.-F.A. Imposter syndrome threatens diversity. Science. 2020;367:749–750. doi: 10.1126/science.aba8039.

- 33. Abdelaal G. Coping with imposter syndrome in academia and research. Biochem. (Lond). 2020;42:62–64. doi: 10.1042/BIO20200033.

- 34. Bravata D., Madhusudhan D., Boroff M., Cokley K. Commentary: Prevalence, predictors, and treatment of imposter syndrome: A systematic review. J. Ment. Health Clin. Psychol. 2020;4:12–16.

- 35. Van Mol C. Improving web survey efficiency: the impact of an extra reminder and reminder content on web survey response. Int. J. Soc. Res. Methodol. 2017;20:317–327. doi: 10.1080/13645579.2016.1185255.

- 36. Daikeler J., Bošnjak M., Lozar Manfreda K. Web Versus Other Survey Modes: An Updated and Extended Meta-Analysis Comparing Response Rates. J. Surv. Stat. Methodol. 2020;8:513–539. doi: 10.1093/jssam/smz008.

- 37. Wu M.-J., Zhao K., Fils-Aime F. Response rates of online surveys in published research: A meta-analysis. Comput. Hum. Behav. Rep. 2022;7 doi: 10.1016/j.chbr.2022.100206.

- 38. Porter S.R., Umbach P.D. Student Survey Response Rates across Institutions: Why Do they Vary? Res. High. Educ. 2006;47:229–247. doi: 10.1007/s11162-005-8887-1.

- 39. Hendra R., Hill A. Rethinking Response Rates: New Evidence of Little Relationship Between Survey Response Rates and Nonresponse Bias. Eval. Rev. 2019;43:307–330. doi: 10.1177/0193841X18807719.

- 40. Massey D.S., Tourangeau R. Where Do We Go from Here? Nonresponse and Social Measurement. Ann. Am. Acad. Polit. Soc. Sci. 2013;645:222–236. doi: 10.1177/0002716212464191.

- 41. Fosnacht K., Sarraf S., Howe E., Peck L.K. How important are high response rates for college surveys? Rev. High. Ed. 2017;40:245–265.
