Abstract

Background:

Institutional review board (IRB) expertise is necessarily limited by maintaining a manageable board size. IRBs are therefore permitted by regulation to rely on outside experts for review. However, little is known about whether, when, why, and how IRBs use outside experts.

Methods:

We conducted a national survey of U.S. IRBs to characterize utilization of outside experts. The study used a descriptive, cross-sectional design to understand how IRBs engage with such experts and to identify areas where outside expertise is most frequently requested.

Results:

The survey response rate was 18.4%, with 55.4% of respondents reporting their institution’s IRB uses outside experts. Nearly all respondents who reported using outside experts indicated they do so less than once a month, but occasionally each year (95%). The most common method of identifying an outside expert was securing a previously known subject matter expert (83.3%). Most frequently, respondents sought consultation for scientific expertise not held by current members (69.6%). Almost all respondents whose IRBs had used outside experts reported an overall positive impact on the IRB review process (91.5%).

Conclusions:

Just over half of the IRBs in our sample report use of outside experts; among them, outside experts were described as helpful, but their use was infrequent overall. Many IRBs report not relying on outside experts at all. This raises important questions about what type of engagement with outside experts should be viewed as optimal to promote the highest quality review. For example, few respondents sought assistance from a Community Advisory Board, which could address expertise gaps in community perspectives. Further exploration is needed to understand how to optimize IRB use of outside experts, including how to recognize when expertise is lacking, what barriers IRBs face in using outside experts, and perspectives on how outside expert review impacts IRB decision-making and review quality.

Keywords: IRB, research ethics, human subjects research, outside expertise, consultant, IRB quality

Institutional Review Boards (IRBs) are charged with protecting the rights and welfare of research participants and ensuring compliance with research regulations. The IRB submission and review process should also avoid unnecessary burden on researchers, delay in study initiation, or changes to ongoing research. Achieving these goals requires extensive and wide-ranging expertise relevant to research ethics, regulatory and legal requirements, study design, scientific and medical context, participant populations, and research settings, among other topics.

The Department of Health and Human Services (HHS) Basic Policy for Protection of Human Research Subjects (Subpart A), otherwise known as the “Common Rule,” applies to most federally-funded research involving human subjects, with similar requirements governing FDA-regulated clinical investigations. The Common Rule requires that IRBs be composed of “at least five members, with varying backgrounds to promote complete and adequate review of research activities commonly conducted by the institution”. Additionally, the Common Rule mandates that an IRB “be sufficiently qualified through the experience and expertise of its members … to ascertain the acceptability of proposed research in terms of institutional commitments (including policies and resources) and regulations, applicable law, and standards of professional conduct and practice” (Protection of Human Subjects, 2018, 45 CFR 46.107(a)).

Given practical constraints around board size and the wide range of protocols that may be submitted for IRB review, even a board that has adequate expertise for its typical protocols may occasionally face a submission that is uncharacteristic of its regular portfolio. Recognizing this challenge, the regulations authorize IRBs to rely on experts outside their membership at their discretion (see 45 CFR 46.107(e) and 21 CFR 56.107(f)). Access to and utilization of appropriate expertise are critical elements in promoting the quality of IRB review, including the IRB’s effectiveness in assessing the scientific and social value of research, as well as any steps necessary for participant protection (Catania et al. 2008; Gold and Dewa 2005; Pritchard 2011).

Some have raised doubts about whether IRBs possess sufficient expertise in general to carry out their assigned role (Emanuel et al. 2004; Ferretti et al. 2020; McNeil 2007). Ethical oversight by individuals lacking appropriate expertise may lead to both under-protection, risking harm to participants, and overprotection, delaying or otherwise constraining potentially beneficial studies. Lack of expertise in review may lead to inadequate informed consent, for example, if risks and benefits are not adequately identified and disclosed. It may also result in suggested changes that weaken the scientific validity or overall value of the data produced. Inadequate review or inappropriate requirements may compromise the IRB’s credibility and participant protection, and permit research of dubious scientific validity and social value to proceed (Emanuel et al. 2004; Ferretti et al. 2020; Musoba, Jacob, and Robinson 2015; Silberman and Kahn 2011; Spellecy et al. 2018; Vitak et al. 2017).

Given the evolving nature of human subjects research and the diversity of research portfolios that an IRB may be responsible for, IRBs are likely to encounter protocols for which their existing membership lacks specific expertise. Involving outside experts in review can promote adequate protections in an individual study, while also contributing to the IRB’s broader understanding of complex and nuanced dimensions of a specific study design, population, technology, intervention, or therapy. However, little is known about whether, when, why, and how IRBs take advantage of the option to call on outside experts (Serpico et al. 2022).

To address gaps in what is known about the use of outside experts, we conducted a survey of U.S. IRBs to characterize their boards’ utilization of outside experts, understand how they evaluate their experiences with such experts, and identify the types of studies where outside expertise is most frequently utilized.

This survey was part of a larger project on outside expertise facilitated by the Consortium to Advance Effective Research Ethics Oversight (www.AEREO.org). AEREO is a collaborative group of leaders in human subjects research ethics and oversight who conduct conceptual and empirical research to understand and improve the quality and effectiveness of human research protection programs (HRPPs) and IRBs in ways that extend beyond regulatory compliance. For all the reasons described above, questions related to IRB expertise are highly relevant to IRB/HRPP performance in successfully protecting research participants and promoting ethical research. Other AEREO projects have examined existing IRB/HRPP quality assessment instruments, stakeholder definitions of IRB/HRPP quality and approaches to measurement, approaches to using precedents to improve IRB decisions, and approaches to providing key information in informed consent. Ongoing projects are examining the involvement of lay members on IRBs, IRB engagement with community advisory boards, approaches to balancing inclusion and protection of vulnerable research populations, IRB/HRPP understanding of and learning from participant experiences in research, and investigator perspectives on IRB value, among others.

The AEREO project on outside expertise is a multi-part, mixed methods study involving several phases. The first phase involved a systematic literature review on IRB use of outside experts, as well as the regulations and standards permitting IRBs to do so (manuscript in press). We identified critical gaps in the existing literature regarding the policies and practices relevant to consulting outside experts during IRB review, which guided the exploratory questions posed in this survey study.

Results from the second phase of this work, a national survey of IRBs, are reported here. Our team conducted this survey to better understand whether and how IRBs make use of outside experts, and what impact it has on IRB decision-making. The third phase of this project, qualitative interviews with survey respondents, is ongoing and will layer rich descriptions of experiences with outside experts on these quantitative results.

Methods and materials

Survey design

To inform our recruitment strategy and survey development, in December 2020 we conducted three formative interviews with selected AEREO members who have extensive experience leading HRPPs. We then sought feedback from the full AEREO membership (over 50 institutions) on survey domains, as well as the target population and recruitment strategy. Based on these discussions, we identified the following areas of focus for the survey: (1) the extent to which IRBs/HRPPs consult outside experts; (2) how IRBs identify outside experts; (3) the topics of studies or types of issues for which IRBs request outside expert consultation; and (4) overall impacts, advantages, and disadvantages of using outside experts. Between January and March 2021, our team developed the survey tool and pilot tested it with our three original context experts. The IRBs at HSPH, UPenn, Stanford, and Loyola authorized the conduct of this research.

Participants

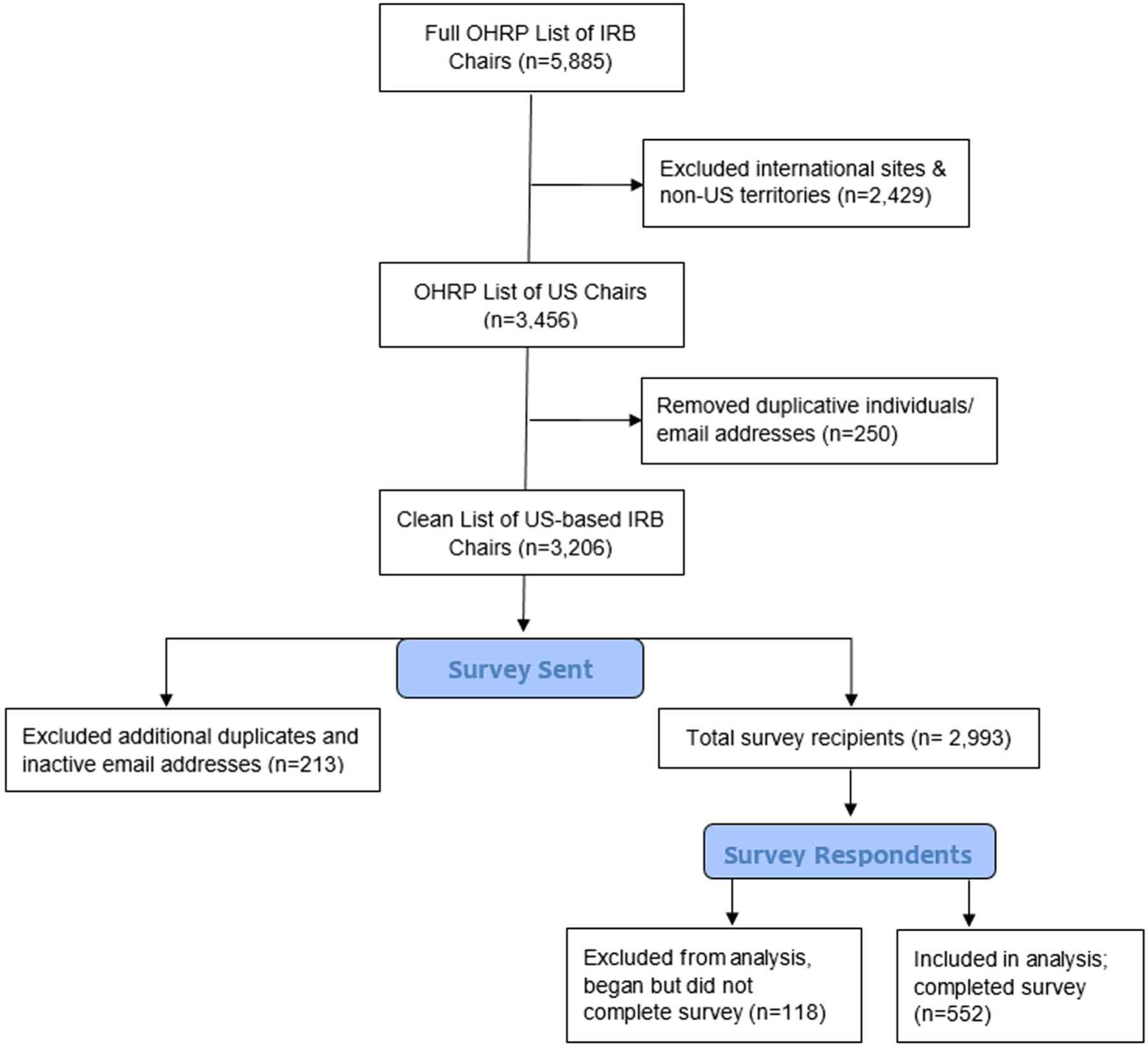

In February 2021, we obtained the complete list of all U.S. IRBs holding Federalwide Assurances (FWAs) registered with the U.S. Office for Human Research Protections (OHRP). This list included 5,885 IRB Chairs and their associated organization/IRB. We first filtered the list to remove international sites, keeping all 50 states as well as Puerto Rico, Guam, and American Samoa (N = 3,456 Chairs). We then removed duplicate individuals/email addresses. This left a total of 3,206 IRB Chairs (see Figure 1).

Figure 1.

Schema for Participant Sampling.

The survey was first sent to potential participants via email on March 24, 2021, with reminders sent on April 12th and April 26th. No incentives were offered for completion. Throughout the survey distribution and reminder follow-up periods, additional duplicates and inactive email addresses were discovered. Our final study population reflects a total of 2,993 IRB chairs with valid email addresses who received our survey via Qualtrics.
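The filtering steps described in this section amount to a subtraction at each stage. As a minimal sketch, the counts reported above can be tabulated as follows. The stage labels and the `attrition` helper are illustrative names introduced here, not part of the study materials.

```python
# Counts are taken from the Participants section; the stage labels are
# paraphrases introduced for illustration only.
stages = [
    ("IRB Chairs on the OHRP list", 5885),
    ("After removing international sites", 3456),
    ("After removing duplicate individuals/email addresses", 3206),
    ("Valid email addresses that received the survey", 2993),
]


def attrition(stage_counts):
    """Return (label, remaining, removed_at_this_step) for each stage."""
    rows = []
    previous = None
    for label, count in stage_counts:
        removed = 0 if previous is None else previous - count
        rows.append((label, count, removed))
        previous = count
    return rows


funnel = attrition(stages)
for label, count, removed in funnel:
    print(f"{label}: {count:,} remaining ({removed:,} removed)")
```

Laying the counts out this way makes it easy to confirm that each stage is no larger than the one before it.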

Procedures

We used Qualtrics to host and distribute the survey. In the initial recruitment email, chairs were asked to either complete the survey or forward the survey link to the institutional contact most knowledgeable about their IRB’s use of outside experts. We asked that respondents have firsthand knowledge of IRB review processes, engaging outside experts (whether their IRB does or does not), and/or HRPP operations.

Measures

The survey included questions focused on utilization frequency (formal and informal); factors that motivate IRBs to request outside expert review (e.g., topics, designs, populations, conditions, interventions, contexts, settings); what specific domains of expertise IRBs commonly seek/need consultation on; strategies that IRBs use to identify appropriate outside experts; and the overall impacts, advantages, and disadvantages of outside expert utilization. We also collected basic information about the institution, the respondent’s role in the HRPP, active protocol volume, and types of research reviewed. All questions in the survey were optional except for the primary branching question, which asked if respondents’ IRB/institution uses outside experts or consultants to assist with IRB review, citing the OHRP and FDA regulatory language related to use of outside experts. See Appendix A, supplementary materials for the full survey.

Data analysis

Both data reports and raw data were exported from Qualtrics at the conclusion of the survey. Descriptive statistics were used to summarize findings.

Results

Of the 2,993 IRB Chairs who received our survey via Qualtrics, a total of 670 opened the survey. The survey was completed by 552 individuals and these data were included in the analysis. The remaining 118 individuals opened the survey but did not answer the initial branching question about use of outside experts (and therefore did not advance to other questions). These surveys were neither included in the analysis nor counted toward the response rate. Figure 1 depicts the schema for determining the response rate of 18.4% (552/2,993). Although not all respondents answered every survey question, we report all results below out of 552 to avoid inflating response proportions.
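The response-rate arithmetic above can be reproduced in a few lines. This is a sketch under the definition stated in the text (completed surveys divided by surveys delivered). The function and variable names are illustrative assumptions.

```python
def response_rate(completed: int, delivered: int) -> float:
    """Completed surveys as a percentage of delivered surveys, to 1 decimal."""
    if delivered <= 0:
        raise ValueError("delivered must be a positive count")
    return round(100 * completed / delivered, 1)


delivered = 2993  # IRB Chairs who received the survey
opened = 670      # opened the survey
completed = 552   # answered the required branching question

# Opened but never answered the branching question; excluded from analysis.
excluded = opened - completed
rate = response_rate(completed, delivered)

print(f"Excluded partial opens: {excluded}")
print(f"Response rate: {rate}%")
```

Using the number of delivered surveys as the denominator, rather than the number opened, gives the more conservative figure reported in the text.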

Participant and IRB characteristics

Survey respondent and institutional characteristics are summarized in Table 1. Respondents came from a geographically diverse group of U.S.-based IRBs representing 49 states (Alaska not present) and Puerto Rico. About half of respondents (53.3%) were from a university/college and half of respondents’ IRBs have an average of 6–10 members (50%). Most respondents were in positions of HRPP Leadership (e.g., institutional official, chair, manager) (62.3%). Other respondents included HRPP staff, educators, government employees, and “other.” Overall, 57.4% of respondents’ IRBs predominantly review social/behavioral/educational research (SBER), 20.3% predominantly review biomedical/clinical research, and 16% review both types of research equally. Other institutional characteristics related to the number of IRB panels, number of active research protocols, and size of the HRPP staff are reported in Table 1.

Table 1.

Sample Characteristics (N = 552).

| | n | %* |

|---|---|---|

| Respondent’s Institution Type** | ||

| University/College Setting | 294 | 53.3% |

| Other (e.g. Commercial IRB, Federal Agency, Research Center, Public Institution) | 111 | 20.1% |

| Hospital/Medical Setting | 105 | 19% |

| Nonprofit/NGO/Foundation | 39 | 7.1% |

| Did Not Answer Question | 3 | 0.5% |

| Existence of standard operating procedures (SOPs) that describe IRB/HRPP’s process for requesting outside expert/consultant review | ||

| Do Not Have SOPs | 286 | 51.8% |

| Do Have SOPs | 160 | 29% |

| Did Not Answer Question | 106 | 19.2% |

| Respondent’s Primary Role/Position at Institution** | ||

| HRPP Leadership (IO, Chair, Manager) | 344 | 62.3% |

| Educator | 92 | 16.7% |

| Other (e.g. Government Employee, Research Staff, Student) | 58 | 10.5% |

| HRPP Staff (IRB Members & Admin) | 31 | 5.6% |

| Compliance/QA Staff | 6 | 1.1% |

| Did Not Answer Question | 21 | 3.8% |

| Total IRB panels at Institution | ||

| 1 | 383 | 69.4% |

| 2 | 50 | 9.1% |

| 3 | 25 | 4.5% |

| 4–10 | 52 | 9.4% |

| 11–15 | 2 | 0.4% |

| 15+ | 1 | 0.2% |

| Did Not Answer Question | 39 | 7% |

| Number of Staff/Administrators Supporting Institution’s IRB | ||

| 0–5 | 458 | 83% |

| 6+ | 63 | 11.4% |

| Did Not Answer Question | 31 | 5.6% |

| Total Active Human Subjects Research Projects Within IRB/HRPP’s portfolio*** | ||

| 0–500 | 393 | 71.1% |

| 500+ | 96 | 17.4% |

| Don’t know | 33 | 6% |

| Did Not Answer Question | 30 | 5.4% |

| Type of Research Predominantly Reviewed at Institution | ||

| Social/behavioral/educational | 317 | 57.4% |

| Biomedical/clinical | 112 | 20.3% |

| Both equally | 88 | 16% |

| Did Not Answer Question | 35 | 6.3% |

*Response to the required question “Does the IRB (or IRBs, if multiple panels exist) at your institution use outside experts or consultants to assist with IRB review?” Percentages may not add up to 100% due to missing data.

**Categories for this question were sourced from the PRIM&R Workload and Salary Survey. Respondents were asked to select the category most representative of their institution and we consolidated into the four categories shown here.

***“Active” is defined as any nonexempt project initially approved or reapproved under continuing review in the last 12 months. Respondents were asked to estimate.

Overall utilization of outside expertise

Of the 552 survey respondents, 55.4% reported their institution’s IRB uses outside experts and 44.6% reported their institution’s IRB does not. Nearly all respondents who reported using outside experts indicated that they do so less than once a month, but occasionally each year (95%); based on the response options provided in the survey, this number may include individuals who selected this response to reflect using outside experts only once a year or infrequently every few years. Table 2 compares respondent and IRB characteristics between users and non-users of outside experts.

Table 2.

Characteristics of institutions that use and don’t use outside experts (N = 552)*.

| | IRBs that Use Outside Experts (n = 306) | | IRBs that Do Not Use Outside Experts (n = 246) | |

|---|---|---|---|---|

| Respondent’s Institution Type** | n | % | n | % |

| University/College Setting | 164 | 30% | 123 | 22.3% |

| Hospital/Medical Setting | 68 | 12.3% | 35 | 6.3% |

| Other (e.g. Commercial IRB, Federal Agency, Research Center, Public Institution) | 55 | 10% | 55 | 10% |

| Nonprofit/NGO/Foundation | 12 | 2.2% | 27 | 5% |

| Total Active Human Subjects Research Projects Within IRB/HRPP’s portfolio*** | n | % | n | % |

| 0–500 | 189 | 34.2% | 204 | 37% |

| 500+ | 82 | 14.9% | 14 | 2.5% |

| Don’t know | 26 | 4.7% | 7 | 1.3% |

| Type of Research Predominantly Reviewed at Institution | n | % | n | % |

| Social/behavioral/educational | 166 | 30.1% | 157 | 28.4% |

| Biomedical/clinical | 76 | 14% | 38 | 6.9% |

| Both equally | 57 | 10.3% | 31 | 5.6% |

| Institution has standard operating procedures (SOPs) that describe IRB/HRPP’s process for requesting outside expert/consultant review | n | % | n | % |

| Do Not Have SOPs | 142 | 25.7% | 144 | 26.1% |

| Do Have SOPs | 106 | 19.2% | 54 | 9.8% |

*Response to the required question “Does the IRB (or IRBs, if multiple panels exist) at your institution use outside experts or consultants to assist with IRB review?” Percentages may not add up to 100% due to missing data.

**Categories for this question were sourced from the PRIM&R Workload and Salary Survey. Respondents were asked to select the category most representative of their institution and we consolidated into the four categories shown here.

***“Active” is defined as any nonexempt project initially approved or reapproved under continuing review in the last 12 months. Respondents were asked to estimate.

IRBs that utilize outside experts and those that do not are similar in terms of institution type, respondent’s institutional role, average number of IRB members, total number of active human subjects research protocols, and type of research predominantly reviewed. Groups differed in terms of having standard operating procedures (SOPs) for using outside experts, number of IRB panels at the institution, and number of IRB staff members.

Institutions that did not have SOPs on use of outside experts were almost evenly split in their utilization: 25.7% of all respondents reported no SOPs and use of outside experts, and 26.1% reported no SOPs and no use. Among institutions with relevant SOPs, a higher percentage of respondents reported using outside experts (19.2% of all respondents) than not using them (9.8%).
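The percentages in Table 2 use all 552 respondents as the denominator, so it can help to see the same counts expressed within each SOP group as well. The sketch below only re-expresses counts from Table 2 and is not an additional analysis. The `shares` helper and dictionary keys are illustrative assumptions.

```python
TOTAL_RESPONDENTS = 552

# (uses outside experts, does not use outside experts), counts from Table 2
sop_counts = {
    "no_sops": (142, 144),
    "has_sops": (106, 54),
}


def shares(users: int, non_users: int, total: int) -> dict:
    """Express one row of counts against two different denominators."""
    group_size = users + non_users
    return {
        "users_pct_of_total": 100 * users / total,
        "non_users_pct_of_total": 100 * non_users / total,
        "users_pct_within_group": 100 * users / group_size,
    }


sop_shares = {
    name: shares(users, non_users, TOTAL_RESPONDENTS)
    for name, (users, non_users) in sop_counts.items()
}

for name, values in sop_shares.items():
    formatted = {key: f"{value:.1f}%" for key, value in values.items()}
    print(name, formatted)
```

The "of total" values match the percentages printed in Table 2, while the "within group" values describe the split inside each SOP category.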

IRBs that do not utilize outside expertise

Of the 44.6% of respondents that reported their institution’s IRB does not use outside experts, the primary reasons cited were that the need had not arisen (76.8%), or they had no need for outside experts because their IRB composition adequately covers all areas of expertise currently needed (41.1%). No respondent indicated they had prior negative experience with outside experts deterring them from future use. Several respondents explained in open text responses that their institution’s portfolio is small and homogeneous, or that the majority of the research their IRB reviews meets the regulatory definition of “minimal risk” and thus the need for outside expertise has not arisen.

IRBs that utilize outside expertise

Table 3 illustrates how IRBs identify outside experts to assist with review. Respondents could select multiple methods from 13 options, including Other, and the mean number of choices selected was 3.2 (median = 3, range = 9). The most frequent method was identifying a previously known subject matter expert (83.3%). Other common methods of identification included requesting suggestions for potential reviewers from within the organization that are not current IRB members (49.7%), receiving a word of mouth referral (35%), requesting a former IRB member to serve as consultant (32.7%), and requesting suggestions for potential reviewers from affiliated organizations (e.g., Cancer Center, pediatric hospital) (25.2%). Infrequent methods of identifying potential outside experts included requesting suggestions from principal investigators (PIs) or seeking assistance from a community advisory board (CAB).

Table 3.

How do IRBs that use outside experts identify them (N = 306).

| | n* | % |

|---|---|---|

| Identify a known subject matter expert | 255 | 83.3% |

| Request suggestions for potential reviewers from within the organization that are not current IRB members | 152 | 49.7% |

| Receive a word of mouth referral | 107 | 35.0% |

| Request Former IRB Member serve as consultant | 100 | 32.7% |

| Request suggestions for potential reviewers from affiliated organizations (e.g., Cancer Center, pediatric hospital) | 77 | 25.2% |

| Request that an IRB member on another IRB panel within our institution, who has the needed expertise, serve as consultant | 62 | 20.3% |

| Request suggestions for potential reviewers from the Principal Investigator | 49 | 16.0% |

| Request suggestions for potential reviewers from a professional network/organization/forum | 42 | 13.7% |

| Seek assistance or request consult from a similar project or institutional advisory committee | 33 | 10.8% |

| Search publicly available information to identify experts or thought leaders in the domain/field | 33 | 10.8% |

| Seek assistance or request consult from a Community Advisory Board | 28 | 9.2% |

| Other [write in] | 23 | 7.5% |

| Request suggestions for potential reviewers from federal regulatory agencies (e.g. OHRP, FDA) | 15 | 4.9% |

*Respondents were allowed to choose multiple responses.
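Because respondents could select several methods, the percentages in Table 3 sum to more than 100%, and the mean number of selections can be checked from the column of counts. The sketch below assumes all 306 respondents in this group are in the denominator, as in the table. The shortened dictionary keys are labels introduced here for illustration.

```python
N_USERS = 306  # respondents whose IRBs use outside experts

# Counts (n) copied from Table 3; keys are abbreviated labels.
table3_counts = {
    "known_expert": 255,
    "within_org_suggestions": 152,
    "word_of_mouth": 107,
    "former_irb_member": 100,
    "affiliated_orgs": 77,
    "member_of_other_panel": 62,
    "principal_investigator": 49,
    "professional_network": 42,
    "advisory_committee": 33,
    "public_information_search": 33,
    "community_advisory_board": 28,
    "other": 23,
    "federal_agencies": 15,
}


def percent(n: int, denominator: int) -> float:
    """Share of respondents selecting an option, to 1 decimal place."""
    return round(100 * n / denominator, 1)


total_selections = sum(table3_counts.values())
mean_selections = round(total_selections / N_USERS, 1)
percentages = {key: percent(n, N_USERS) for key, n in table3_counts.items()}

print(f"Total selections: {total_selections}")
print(f"Mean selections per respondent: {mean_selections}")
```

Dividing total selections by the number of respondents recovers the mean reported in the text.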

Table 4 presents the range of areas, topics, and/or issues for which IRBs request outside expert(s). Again, respondents could select multiple options from 24 choices, including Other, with a mean number of 3.9 selected options (median = 3, range = 20). Most frequently, respondents reported that consultation was sought for scientific expertise not held by current members (69.6%). Less frequently respondents noted requesting outside expert review for: research with vulnerable population(s) (34.3%), risk assessment (30.7%), legal issues (28.1%), the use of FDA-regulated test articles (22.9%), specific therapeutic areas (e.g., oncology, orthopedics, surgery, burn treatment, stem cell treatment) (16%), and protocols that involve novel/emerging areas of study (9.2%).

Table 4.

Areas, topics, and issues for which IRBs use outside experts (N = 306).

| | n* | % |

|---|---|---|

| Scientific expertise not held by current members | 213 | 69.6% |

| Vulnerable population(s) | 105 | 34.3% |

| Risk assessment | 94 | 30.7% |

| Legal issues | 86 | 28.1% |

| Drug, device, and/or biologics use, testing, or intervention | 70 | 22.9% |

| Sensitive, stigmatized, and/or controversial topics (e.g. abortion, mental health, infidelity, sexual or gender identity) | 57 | 18.6% |

| Technology use, testing, or intervention | 55 | 18.0% |

| Specific therapeutic areas (e.g., oncology, orthopedics, surgical studies, burn studies, studies involving stem cells, genetically modified materials) | 49 | 16.0% |

| Study design | 48 | 15.7% |

| Interpretation of regulations | 47 | 15.4% |

| Cultural context – internationally (e.g. norms, practices, customs, beliefs) | 45 | 14.7% |

| Cultural context – within the US (e.g. norms, practices, customs, beliefs) | 44 | 14.4% |

| Statistical analysis | 40 | 13.1% |

| Novel/emerging areas of study** | 28 | 9.2% |

| Informed consent | 28 | 9.2% |

| Regional or location-based considerations (e.g. site-specific laws or regulations, local ethical review structure, socioeconomic issues, safety issues, travel restrictions) | 27 | 8.8% |

| Social justice, human/civil rights advocacy | 26 | 8.5% |

| Fair subject selection, inclusion/exclusion criteria | 26 | 8.5% |

| Recruitment methods | 24 | 7.8% |

| Other [write in] | 20 | 6.5% |

| Study team conflict of interests (perceived or actual) | 16 | 5.2% |

| Political topic of concern (e.g. structure of local government, stability of local government) | 15 | 4.9% |

| Participant compensation | 15 | 4.9% |

| Funding source | 4 | 1.3% |

*Respondents were allowed to choose multiple responses.

**Select topics of note written in by respondents included: COVID-19, first in human drug/device studies, stem cell research, transcranial magnetic stimulation, CAR-T cell therapy, bioterrorism agent research, novel psychiatric treatments, and CRISPR technology.

Table 5 summarizes how respondents whose IRBs use outside experts perceive the overall impact of doing so, as well as the perceived advantages, disadvantages, and challenges. Almost all respondents in this group (91.5%) reported that they perceived an overall positive impact of outside experts on the IRB review process. The most frequently reported advantages were that outside experts may contribute knowledge/expertise (94.4%), assist or support IRB decision making (85.3%), and provide additional input (77.8%); more than half of respondents also cited demonstration of due diligence on the part of the IRB (55.9%) and potential addition of objectivity to a specific review (52.9%). Disadvantages were that outside experts may have limited knowledge of research regulations and the role of the IRB (44.4%), may not provide useful/actionable feedback (37.9%), and may provide review beyond the scope of what they were asked to do (34.6%). Fifteen respondents (4.9%) who selected “Other” noted in free text that using outside experts could be too time consuming, extend review time, and delay IRB decisions.

Table 5.

IRBs Utilizing Outside Experts (N = 306).

| | n | % |

|---|---|---|

| Overall Impact of Using Outside Experts | ||

| Positive | 280 | 91.5% |

| Neutral | 22 | 7.2% |

| Negative | 0 | 0.0% |

| Potential advantages of utilizing outside experts/consultants* | ||

| May contribute knowledge/expertise | 289 | 94.4% |

| May assist/support IRB decision making | 261 | 85.3% |

| May provide additional input/second set of eyes | 238 | 77.8% |

| May demonstrate due diligence on the part of the IRB | 171 | 55.9% |

| May add objectivity | 162 | 52.9% |

| May allow IRBs to remain a reasonable size without having to cover all areas of expertise themselves | 111 | 36.3% |

| May allow IRBs to remain a reasonable size to attain quorum | 48 | 15.7% |

| May allow outside expert to learn more about the IRB before deciding if they would like to formally join | 29 | 9.5% |

| May provide audit coverage | 19 | 6.2% |

| Other [write in] | 6 | 2.0% |

| Potential disadvantages of utilizing outside experts/consultants* | ||

| May have limited knowledge of research regulations and the role of the IRB | 136 | 44.4% |

| May not provide useful/actionable feedback | 116 | 37.9% |

| May provide review beyond the scope of what they’re asked to do | 106 | 34.6% |

| May provide recommendations the IRB disagrees with | 51 | 16.7% |

| Other [write in] | 39 | 12.7% |

| Challenges that prevent seeking outside expert/consultant review* | ||

| We have not experienced any challenges | 181 | 59.2% |

| Pressure to review protocols quickly rather than taking time to seek consultation | 66 | 21.6% |

| Difficulty identifying a consultant with the necessary expertise | 65 | 21.2% |

| Concern that it may be perceived that the IRB isn’t adequately composed | 16 | 5.2% |

| Other [write in] | 15 | 4.9% |

| Concern for offending current IRB members who perceive they are experts in a specific area | 9 | 2.9% |

*Respondents were allowed to choose multiple responses.

Discussion

The Common Rule (45 CFR 46.107) and FDA regulations (21 CFR 56.107) mandate that IRBs be composed of “sufficiently qualified” members who provide “complete and adequate review of research activities commonly conducted by the institution.” However, complete domain expertise for any conceivable protocol is nearly impossible for any IRB.

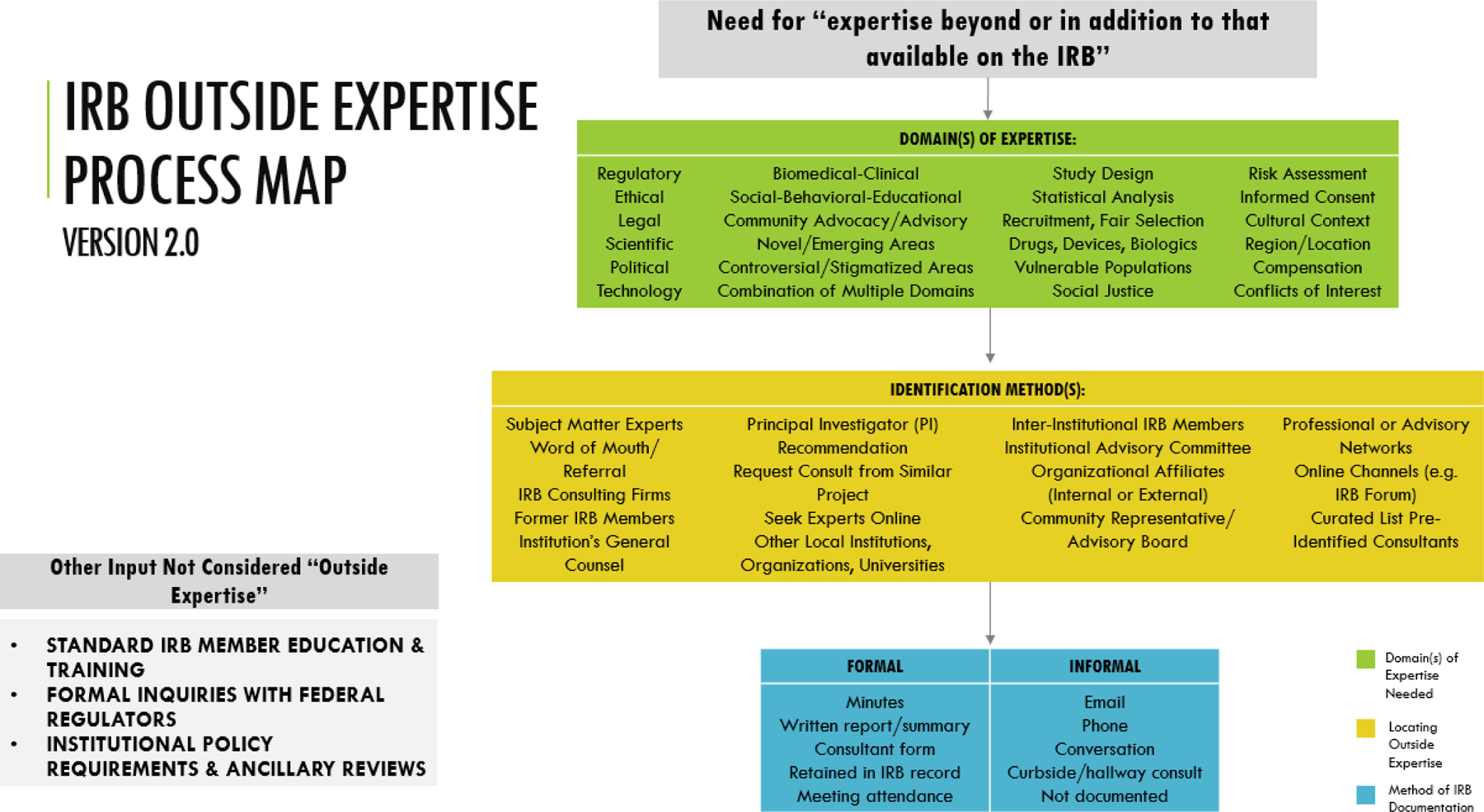

Our survey was part of a sequential mixed-methods initiative to investigate outside expertise, beginning with a literature review and followed by a qualitative interview study with survey respondents that will build on these findings. In our previous work, we identified critical gaps in the existing literature ([citation redacted per AJOB guidelines]) and created a process map (Figure 2) to guide our empirical research on these issues. The process map provides an overview of core elements for empirical inquiry, including: (1) the domains in which outside expertise is needed and sought; (2) how outside experts are located; and (3) the methods by which outside expertise is offered and documented. Based on findings from this survey, we added five domains of expertise to the process map (Recruitment, Fair Selection, Social Justice, Informed Consent, and Conflicts of Interest) and consolidated the identification methods IRBs use. The process map will inform ongoing qualitative interviews focused on how IRBs decide to seek outside expert reviews and how experts’ feedback is integrated into and influences IRB decisions.

Figure 2. IRB Outside Expertise Process Map Comparison. Based on findings from the survey, we were able to update the IRB Outside Expertise Process Map (Version 1 to Version 2) in the following ways:

1. Five domains of expertise were added (Recruitment, Fair Selection, Social Justice, Informed Consent, Conflicts of Interest)

2. Identification methods IRBs use were consolidated and no longer differentiated by Internal/External

Findings from our national survey of IRBs address gaps in the existing literature on use of outside experts by providing insight about whether (occasionally or not at all) and for what reasons IRBs choose to use them (largely scientific expertise, among others), how IRBs identify appropriate individuals to serve as outside experts (known expertise), and how IRB and HRPP leaders perceive the impact of using outside experts on the review process (overall positive). However, our results also highlight several open questions.

While many IRBs use outside experts, among those boards, use is relatively infrequent, usually less than once a month or just a few times each year. It is not clear whether this is perceived as, or actually is, an optimal frequency. These IRBs may not perceive a substantial need for frequent consults with outside experts, or perhaps they face barriers to using outside experts as frequently as they would like. Although many respondents from IRBs that do not utilize outside experts noted that the need had simply not yet arisen, it may be difficult to self-assess whether a board’s expertise is sufficient. Further, IRBs have a finite number of members at any given time, but the protocols they review could pose a wide range of research questions. Some of those questions will be typical and within the members’ expertise, but there is always the possibility of an atypical protocol submission. Thus, some respondents’ IRBs may not have used outside experts simply because the expertise reflected in their membership has been sufficient so far. The need may truly not have arisen for these boards, or perhaps the need did arise and they simply did not recognize it. Clearer guidance on when use of outside experts may be necessary and appropriate, potentially informed by the experiences of others, could be helpful across IRBs.

Previous research on IRB membership suggests that smaller IRBs frequently recruit members with scientific expertise from outside of their institution rather than from within, but does not articulate how or when they engage outside expertise (Catania et al. 2008). In our study, we found that use of outside experts is most common at smaller institutions, which perhaps should be expected given that smaller institutions are likely to have smaller HRPPs, and at institutions that primarily review social behavioral research. In the current qualitative component of our work, we are pursuing a deeper understanding of these trends.

Previous research suggests that IRB members “typically have partial knowledge of many areas of research, but sometimes lack in-depth knowledge about subfields in which research occurs” (Klitzman 2013, p. 765). Consistent with this claim, our study found that IRBs use outside experts primarily to meet a perceived need for specific scientific expertise. However, respondents also cited many other reasons, related to ethical, legal/regulatory, clinical, and study design concerns. Our data indicate that, overall, IRBs use outside experts for a wide range of reasons, supporting the general view that, given the dynamic nature of research, IRBs generally cannot possess all necessary expertise within their standing board membership. Yet our survey data cannot address whether outside experts are being used in every case in which they should be.

Specific concerns have been raised about the ability of IRBs to review research that involves nascent fields (e.g., xenotransplantation and data science) (Ferretti et al. 2020; Hurst et al. 2020). Our survey found that a small number of IRBs engage outside experts for exactly that purpose. This may be a more feasible option than working to build IRB capacity about specific areas that may only arise sporadically for a given board. It can also potentially help boards glean important insight for future review of related protocols.

Institutions that reported not using outside experts at all appear to generally perceive that outside experts are not needed, rather than that they are not accessible. However, we do not know whether those who claim they do not need outside experts are aware of their expertise gaps, or whether investigators or other boards would agree with these assessments.

Our data suggest that IRBs use a variety of methods to identify outside experts when they are perceived to be needed. Very few respondents reported seeking suggestions for outside reviewers from a professional organization or network outside their institution, or seeking publicly available information to identify experts or thought leaders in the domain/field. Additionally, 21.2% of respondents reported difficulty identifying a consultant with the necessary expertise. This infrequency and perceived barrier may indicate that these resources are currently missing and that IRBs may benefit from published, centralized lists of potential experts curated by a trusted source such as the Office for Human Research Protections (OHRP), Public Responsibility in Medicine and Research (PRIM&R), or the Association for the Accreditation of Human Research Protection Programs, Inc. (AAHRPP). The availability of such resources may reduce barriers for IRBs seeking to use outside experts, while also normalizing such use.

Few respondents reported seeking assistance from a Community Advisory Board (CAB) or suggestions from PIs about potential experts to engage. Given their direct experience with local populations and familiarity with community perspectives on research, CABs and their members could provide valuable insights and expertise when submitted protocols aim to recruit vulnerable groups or raise challenging ethical issues about which community perspectives could be relevant. Recent research suggests that institutional-level community advisory boards are common at academic research institutions, particularly those with NIH Clinical and Translational Science Award funding (Stewart et al. 2019; Wilkins et al. 2013). Yet it appears that their status as a source of expertise may be as yet unrecognized. IRBs should therefore consider developing relationships with local CABs and incorporating into their SOPs protocol characteristics that should prompt CAB review. A current AEREO project funded by the National Institutes of Health is exploring how increased collaboration between human research protections programs (HRPPs – the institutional offices within which IRBs sit) and institution-level community engagement activities could bring stakeholder perspectives to bear on research ethics oversight.

IRBs may worry that PIs are conflicted when suggesting relevant experts, but PIs may be in the best position to identify them, particularly in small subfields. Given that it is typical to ask investigators to recommend peer reviewers for their publications, IRBs should consider asking for these expert recommendations as needed and then vetting potential conflicts independently.

Overwhelmingly, respondents whose IRBs had utilized outside experts reported a positive impact (91.5%), and no one reported a negative experience, although this may reflect a clear selection bias. Respondents noted a wide range of potential advantages, which the IRB/HRPP professional community may benefit from considering. Reported disadvantages were theoretical but suggest there may be value in developing guidance about how best to secure high-quality outside consults.

Study limitations

Our findings are limited by a relatively low response rate compared with previous IRB surveys (Catania et al. 2008; Klitzman 2011; Klitzman 2013; Vitak et al. 2017), leading to potential lack of representativeness and limits to generalizability. As OHRP previously acknowledged in the Common Rule, there is a “lack of available data about IRB effectiveness and how IRBs function operationally,” resulting in a need to rely on “anecdotal evidence” (p. 53996). Given the dearth of publications on the topic of IRB expertise (Serpico et al. 2022), we believe our survey offers an important contribution in spite of these constraints.

Because data are lacking on general demographic characteristics of U.S. IRBs, we are unable to determine the extent to which our respondents are representative of U.S. IRBs more broadly. Another consequence of this gap is that it is not possible to compare responders with non-responders. The RAND Profile of Institutional Review Board Characteristics (Berry et al. 2019) seemed like a potentially useful benchmark against which to compare respondents’ institutions; however, RAND excluded smaller IRBs from its sample. Given our specific focus on outside expertise, and the possibility that outside expertise may be needed most on boards with smaller portfolios (i.e., fewer than 100 active protocols), the RAND sample is not a comparable reference.

Although the recipients were directed to pass the survey on to the most knowledgeable person about these matters at their institution, it is also possible that respondents did not have the most accurate or comprehensive knowledge of their IRB’s use of outside experts. We do not know how many respondents are from the same institution (e.g., from an institution with multiple IRBs or whose IRB(s) have multiple chairs).

Additionally, our survey did not provide an operational definition of the term “outside expert,” but rather relied on the language in the regulations, so respondents may have applied different definitions of who counts as an outside expert. Nor did we ask about other strategies beyond use of outside experts that could address gaps in board expertise, such as creating standalone boards, special review committees, consolidated boards with rotating members, statewide consortiums, outsourcing reviews to external oversight bodies, and internal institutional structures (Ferretti et al. 2020; McNeil 2007; Musoba, Jacob, and Robinson 2015; Stark 2007; Vitak et al. 2017).

Future research directions

Our survey provides primarily the perspective of IRB/HRPP leadership. Gathering information from those who have served as outside experts and examining their feedback on protocols could inform guidance on how to effectively and efficiently use outside expert input. IRB member and investigator perspectives are also needed to inform evaluation of the impact of outside expert review on IRB decision-making. Future research should address how HRPPs/IRBs determine whether outside expertise is needed and how use of outside experts impacts the quality of IRB decisions, researcher satisfaction with the IRB review process, IRB workload and time-to-approval, and participant safety/protections.

As noted above, we are currently building on this survey with in-depth, qualitative interviews with a subset of survey respondents. These interviews will allow us to obtain more nuanced descriptions of IRB experiences utilizing outside experts and the impacts on IRB quality, if any. We hope to identify unmet needs for different types of expertise, assess how IRBs vet the qualifications of outside experts and ensure the quality and usefulness of outside experts’ input, and evaluate the impact of experts’ input on IRB decisions and human research protections.

Conclusions

Just over half of the U.S. IRBs in our sample reported using outside experts, and those that did were largely satisfied with the outcomes. Important questions remain, though, about what level and type of engagement with outside experts should be viewed as optimal to promote the highest quality ethics review. Further exploration is needed to understand how to optimize use of outside experts, including how to recognize when expertise is lacking, what barriers IRBs face in using outside experts, and perspectives on the impact of outside expert review on IRB decision-making and quality of IRB review.

Acknowledgments

This work was completed under the auspices of the Consortium to Advance Effective Research Ethics Oversight (www.AEREO.org). We thank AEREO members for helpful suggestions regarding survey content and design. The U.S. Department of Health and Human Services Office for Human Research Protections provided the list of registered institutional review boards used for recruitment. We appreciate the contributions of those who agreed to participate in this survey.

Funding

The authors have not received any financial support specific to this research or manuscript preparation.

Footnotes

Supplemental data for this article is available online at https://doi.org/10.1080/23294515.2022.2090459.

Disclosure statement

Kimberley Serpico – I have no conflicts of interest to disclose relating to this work – past or present; existing or potential; personal, professional, scientific, and/or financial. Vasiliki Rahimzadeh – I have no conflicts of interest to disclose relating to this work – past or present; existing or potential; personal, professional, scientific, and/or financial. Luke Gelinas – I have no conflicts of interest to disclose relating to this work – past or present; existing or potential; personal, professional, scientific, and/or financial. Lauren Hartsmith – I have no conflicts of interest to disclose relating to this work – past or present; existing or potential; personal, professional, scientific, and/or financial. Holly Fernandez Lynch – I have no conflicts of interest to disclose relating to this work. I am the co-chair of the AEREO Consortium and a board member of Public Responsibility in Medicine & Research. Emily E. Anderson – I have no conflicts of interest to disclose relating to this work – past or present; existing or potential; personal, professional, scientific, and/or financial.

Data availability statement

Data supporting the study findings are available within the article and its supplementary materials. The full dataset is not publicly posted due to privacy and confidentiality restrictions promised to study participants. The authors will honor reasonable requests for access to the de-identified underlying data upon assurance of compliance with the terms of participant consent.

References

- Abbott L, and Grady C. 2011. A systematic review of the empirical literature evaluating IRBs: What we know and what we still need to learn. Journal of Empirical Research on Human Research Ethics 6 (1):3–19. doi: 10.1525/jer.2011.6.1.3.

- Berry SH, Khodyakov D, Grant D, Mendoza-Graf A, Bromley E, Karimi G, Levitan B, Liu K, and Newberry S. 2019. Profile of Institutional Review Board Characteristics Prior to the 2019 Implementation of the Revised Common Rule. RAND Corporation. Accessed March 3, 2022. https://www.rand.org/pubs/research_reports/RR2648.html.

- Catania JA, Lo B, Wolf LE, Dolcini MM, Pollack LM, Barker JC, Wertlieb S, and Henne J. 2008. Survey of U.S. Boards that review mental health-related research. Journal of Empirical Research on Human Research Ethics 3 (4):71–9. doi: 10.1525/jer.2008.3.4.71.

- Emanuel E, Wood A, Fleischman A, Bowen A, Getz K, Grady C, Levine C, Hammerschmidt D, Faden R, Eckenwiler L, et al. 2004. Oversight of human participants research: Identifying problems to evaluate reform proposals. Annals of Internal Medicine 141 (4):282–91. doi: 10.7326/0003-4819-141-4-200408170-00008.

- Ferretti A, Ienca M, Hurst S, and Vayena E. 2020. Big data, biomedical research, and ethics review: New challenges for IRBs. Ethics & Human Research 42 (5):17–28. doi: 10.1002/eahr.500065.

- Gold JL, and Dewa CS. 2005. Institutional review boards and multisite studies in health services research: Is there a better way? Health Services Research 40 (1):291–308. doi: 10.1111/j.1475-6773.2005.00354.x.

- Hurst DJ, Padilla LA, Trani C, McClintock A, Cooper DKC, Walters W, Hunter J, Eckhoff D, Cleveland D, and Paris W. 2020. Recommendations to the IRB review process in preparation of xenotransplantation clinical trials. Xenotransplantation (Københaven) 27 (2):e12587. doi: 10.1111/xen.12587.

- Klitzman R. 2011. The myth of community differences as the cause of variations among IRBs. AJOB Primary Research 2 (2):24–33. doi: 10.1080/21507716.2011.601284.

- Klitzman R. 2013. How good does the science have to be in proposals submitted to Institutional Review Boards? An Interview Study of Institutional Review Board personnel. Clinical Trials (London, England) 10 (5):761–6. doi: 10.1177/1740774513500080.

- McNeil C. 2007. Debate over institutional review boards continues as alternative options emerge. Journal of the National Cancer Institute 99 (7):502–3. doi: 10.1093/jnci/djk157.

- Musoba G, Jacob S, and Robinson L. 2015. The Institutional Review Board (IRB) and Faculty: Does the IRB challenge faculty professionalism in the social sciences? Qualitative Report, 2014-12.-.

- Pritchard IA. 2011. How Do IRB members make decisions? A review and research agenda. Journal of Empirical Research on Human Research Ethics 6 (2):31–46. doi: 10.1525/jer.2011.6.2.31.

- Serpico K, Rahimzadeh V, Anderson EE, Gelinas L, and Lynch HF. 2022. Institutional review board use of outside experts: What do we know? Ethics & Human Research 44 (2):26–32. doi: 10.1002/eahr.500121.

- Silberman G, and Kahn KL. 2011. Burdens on research imposed by Institutional Review Boards: The state of the evidence and its implications for regulatory reform. The Milbank Quarterly 89 (4):599–627. doi: 10.1111/j.1468-0009.2011.00644.x.

- Spellecy R, Eve AM, Connors ER, Shaker R, and Clark DC. 2018. The real-time IRB: A collaborative innovation to decrease IRB review time. Journal of Empirical Research on Human Research Ethics 13 (4):432–7. doi: 10.1177/1556264618780803.

- Stark L. 2007. Victims in our own minds? IRBs in myth and practice. Law & Society Review 41 (4):777–86. doi: 10.1111/j.1540-5893.2007.00323.x.

- Stewart MK, Boateng B, Joosten Y, Burshell D, Broughton H, Calhoun K, Huff Davis A, Hale R, Spencer N, Piechowski P, et al. 2019. Community advisory boards: Experiences and common practices of clinical and translational science award programs. Journal of Clinical and Translational Science 3 (5):218–26. doi: 10.1017/cts.2019.389.

- Vitak J, Proferes N, Shilton K, and Ashktorab Z. 2017. Ethics regulation in social computing research. Journal of Empirical Research on Human Research Ethics 12 (5):372–82. doi: 10.1177/1556264617725200.

- Wilkins CH, Spofford M, Williams N, McKeever C, Allen S, Brown J, Opp J, Richmond A, and Strelnick AH. 2013. Community representatives’ involvement in clinical and translational science awardee activities. Clinical and Translational Science 6 (4):292–6. doi: 10.1111/cts.12072.
