Abstract

Papilledema is optic disc swelling caused by increased intracranial pressure. Its diagnosis is based on ophthalmoscopy findings. Although important, ophthalmoscopy can be challenging for general physicians and nonophthalmic specialists. Artificial intelligence (AI) has the potential to be a useful tool for detecting fundus abnormalities, including papilledema, and may also be useful for grading papilledema. We aimed to review the latest advances in the diagnosis of papilledema using AI and to explore its potential. This review was conducted following the Preferred Reporting Items for Systematic Reviews and Meta-analyses guidelines. A systematic literature search was performed in four databases (PubMed, Cochrane, ProQuest, and Google Scholar) using the keywords “AI” and “papilledema,” including their synonyms. The literature search identified 372 articles, of which six met the eligibility criteria. Of these six articles, three assessed the use of AI for detecting papilledema, one evaluated AI for grading papilledema using the Frisén criteria, and two assessed AI for both detection and grading. The models for both papilledema detection and grading showed good diagnostic value, with high sensitivity (83.1%–99.82%), specificity (82.6%–98.65%), and accuracy (85.89%–99.89%). Although studies on the use of AI in papilledema are still limited, AI has shown promising potential for papilledema detection and grading. Further studies will help provide more evidence to support the use of AI in clinical practice.

Keywords: Artificial intelligence, fundus photography, papilledema

Introduction

Papilledema is optic disc swelling resulting from raised intracranial pressure (ICP). It differs from other causes of optic disc edema in that visual function is usually normal in the acute phase. Elevated ICP is transmitted through the subarachnoid space surrounding the optic nerve, where it impedes axoplasmic transport within the ganglion cell axons. The most common cause of papilledema, especially in patients under 50, is idiopathic intracranial hypertension (IIH).[1,2]

The annual incidence of IIH, the most common cause of papilledema, is estimated at 0.9/100,000 in the general population of the United States. IIH is most prevalent in obese women of childbearing age; in obese women aged 20–44 years, the incidence is 13/100,000.[3]

The characteristics of papilledema are determined by the extent of swelling of the papillary and peripapillary nerve fibers. As disc edema increases, the normally transparent retinal nerve fiber layer (RNFL) thickens, becomes opaque, and obscures the disc margin. With further swelling, the blood vessels become obscured, first at the disc margin and then on the surface of the optic disc.[4] The Frisén grade is an ordinal scale that defines six stages (Grades 0–5) of swelling.

Early detection of papilledema is crucial. Papilledema always has an underlying cause, which should be identified as soon as possible, because higher stages of papilledema could indicate a serious disease such as a brain tumor or malignant hypertension.[1]

In the emergency room, a general practitioner often assesses the patient first, confirms the clinical finding of papilledema, and refers the patient to an ophthalmologist. Although important, ophthalmoscopy can be challenging for general physicians and nonophthalmic specialists. Once an ophthalmologist labels the patient as having papilledema, the diagnosis is rarely questioned further, and the investigation pathway moves forward. A long sequence of supporting examinations can delay treatment.[5]

Papilledema treatment is specific to the underlying cause. Late diagnosis of the disease will lead to untreated papilledema. Without treatment, the pressure that causes papilledema can cause visual loss and permanent damage to one or both optic nerves. In addition, an untreated increase in pressure inside the head can lead to brain damage.[1]

Artificial intelligence (AI) is a branch of computer science that aims to build machines that mimic brain function; it has attracted considerable global interest. AI has been widely studied in ophthalmic image processing, mainly of fundus photographs, and has been used to diagnose diabetic retinopathy, age-related macular degeneration, cataracts, glaucoma, and other fundus abnormalities, including papilledema.[6,7] AI may also be useful for grading papilledema. We performed this systematic review to quantify the performance of AI in detecting and grading papilledema and to explore its potential.

Methods

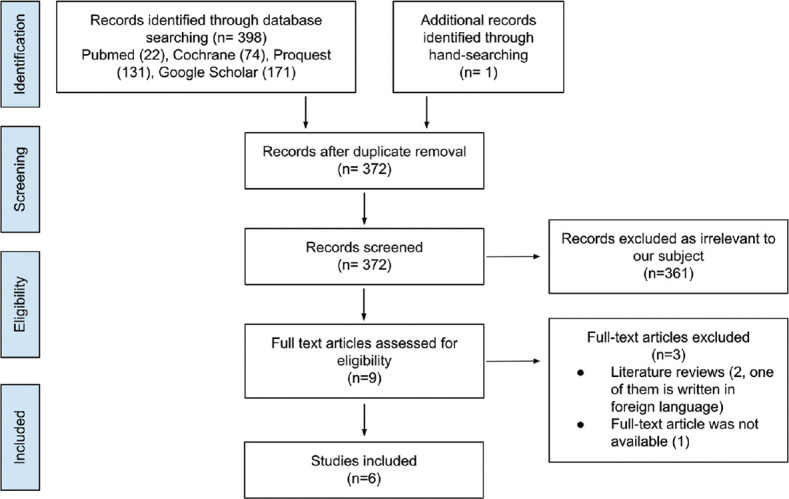

This review compares the performance of AI in detecting and grading papilledema with that of experts, with sensitivity, specificity, and accuracy as the primary outcomes. The review was conducted following the Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA) guidelines [Figure 1]. The PROSPERO registry number for this systematic review is CRD42022381586. The literature search was performed in PubMed, Cochrane, ProQuest, and Google Scholar, with no limitation on publication year, using various combinations of the following keywords: “artificial intelligence,” “deep learning,” “machine learning,” “papilledema,” “optic disc swelling,” and “optic disc edema.”

Figure 1.

Preferred Reporting Items for Systematic Reviews and Meta-analyses flowchart

The inclusion criteria were as follows: (1) studies providing information on detecting or grading papilledema from fundus photographs; (2) studies using an AI-based model; (3) studies on humans; and (4) validity studies. For studies assessing the ability of AI-based models to grade papilledema, we included only articles that graded papilledema using the Modified Frisén Scale. The exclusion criteria were as follows: (1) publication types such as literature reviews, guidelines, case reports or case series, comments, letters, and editorials; (2) no access to the full paper; and (3) studies written in a foreign language.

From each included article, the following data were extracted: (1) author and publication year; (2) classification of subjects; (3) number of datasets and test images; (4) name of the AI-based algorithm/model; and (5) reference standard. The primary outcomes were the sensitivity, specificity, and accuracy of the AI/machine learning/deep learning (DL) algorithm or model in detecting and grading papilledema. An appraisal tool from the Centre for Evidence-Based Medicine (CEBM), University of Oxford, was used to assess the validity of the included articles.
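The three primary outcomes are standard 2×2 diagnostic-accuracy metrics computed against the reference standard. The Python sketch below shows how they relate; the class name, field names, and counts are hypothetical and are not taken from any included study. It also checks a useful identity: accuracy is the prevalence-weighted average of sensitivity and specificity, so when all three are computed on the same test set, accuracy must lie between them.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    """Hypothetical 2x2 counts: AI model (index test) vs. reference standard."""
    tp: int  # papilledema per reference standard, flagged by the model
    fn: int  # papilledema per reference standard, missed by the model
    tn: int  # no papilledema per reference standard, not flagged
    fp: int  # no papilledema per reference standard, but flagged

    @property
    def total(self) -> int:
        return self.tp + self.fn + self.tn + self.fp

    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    def accuracy(self) -> float:
        return (self.tp + self.tn) / self.total

    def prevalence(self) -> float:
        return (self.tp + self.fn) / self.total


# Illustrative counts only (not from any included study).
cm = ConfusionMatrix(tp=90, fn=10, tn=80, fp=20)
print(f"sensitivity={cm.sensitivity():.1%} "
      f"specificity={cm.specificity():.1%} accuracy={cm.accuracy():.1%}")

# Accuracy = prevalence * sensitivity + (1 - prevalence) * specificity.
p = cm.prevalence()
weighted = p * cm.sensitivity() + (1 - p) * cm.specificity()
assert abs(cm.accuracy() - weighted) < 1e-12
```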

Results

Database searching and manual searching yielded 399 records, of which 372 were screened. A total of six articles were included in the review; all assessed the diagnostic value of AI-based models in detecting and/or grading papilledema. The article selection process, following the PRISMA guideline,[8] is shown in Figure 1.

In this review, we assessed the validity of each included study using the CEBM Diagnostic Study Appraisal Worksheet. All of the studies showed good validity. However, some studies did not completely report test characteristics such as the area under the curve (AUC), positive predictive value, and negative predictive value. Because the index test in this review is an automated, machine-based tool and the reference standard was clinician decision, we considered the comparison between the two valid and at low risk of bias. Validity assessment results for each domain are presented in Table 1.

Table 1.

Validity assessment results for each study

| Domains | Explanatory questions | Fatima et al.[12] (2017) | Milea D et al.[9] (2020) | Biousse V et al.[10] (2020) | Akbar S et al.[13] (2017) | Saba T et al.[14] (2021) | Vasseneix C et al.[11] (2021) |
|---|---|---|---|---|---|---|---|
| Validity | Was the diagnostic test evaluated in a representative spectrum of patients? | Yes | Yes | Yes | Yes | Yes | Yes |
| | Was the reference standard applied regardless of the index test result? | Yes | Yes | Yes | Yes | Yes | Yes |
| | Was there an independent, blind comparison between the index test and an appropriate reference (“gold”) standard of diagnosis? | Yes | Yes | Yes | Yes | Yes | Yes |
| Results | Are test characteristics presented? (Sn, Sp, PPV, NPV, AUC, accuracy) | Unclear | Yes | Yes | Unclear | Unclear | Yes |
| Applicability | Were the methods for performing the test described in sufficient detail to permit replication? | Yes | Yes | Yes | Yes | Yes | Yes |

Sn=Sensitivity, Sp=Specificity, PPV=Positive predictive value, NPV=Negative predictive value, AUC=Area under the curve

This review included six articles: three assessed the use of AI for detecting papilledema, one evaluated AI for grading papilledema using the Frisén criteria, and two assessed AI for both detection and grading. Combined, these six articles used 19,383 fundus photographs for training and validating their models. Publication years ranged from 2017 to 2021. The models varied, from support vector machine (SVM) classifiers operating on features extracted for papilledema detection to DL systems (DLS). Some studies also compared the accuracy of their model with the diagnoses made by ophthalmologists who were not given clinical information. The characteristics of each study are presented in Table 2.

Table 2.

Study characteristics

| Author (year) | Country | Classification | Total images | Model | Reference standard | Comparison |
|---|---|---|---|---|---|---|
| Fatima et al.[12] (2017) | Pakistan | Normal fundus; papilledema | 160 images from 2 datasets: 90 from STARE and 70 from a local dataset acquired from AFIO; 90 normal, 70 abnormal | Robust method to detect the location of the optic disc; SVM-RBF classifier to detect and grade papilledema | STARE annotations, re-annotated by two ophthalmologists, plus local dataset annotated by two ophthalmologists | None |
| Akbar S et al.[13] (2017) | Pakistan | Detection: normal fundus vs. papilledema; grading: mild vs. severe papilledema | 160 images from 2 datasets: STARE database and a local database collected by AFIO | Robust method to detect the location of the optic disc; SVM-RBF classifier to detect and grade papilledema | Classification by two ophthalmologists using the Frisén grading rule | None |
| Milea D et al.[9] (2020) | Singapore | Normal fundus; papilledema; disc with other abnormalities | 15,846 photographs from 7,532 patients; training and validation: 14,341 photographs from 6,769 patients; external testing: 1,505 photographs | BONSAI-DLS: U-Net to detect the location of the optic disc; DenseNet to classify the optic disc | Classification by the study steering committee | None |
| Biousse V et al.[10] (2020) | Singapore, USA | Normal fundus; papilledema; disc with other abnormalities | 800 photographs from 454 patients, used to compare the previously trained and validated system with expert neuro-ophthalmologists | BONSAI-DLS: U-Net to detect the location of the optic disc; DenseNet to classify the optic disc | Diagnosis provided by each center | Two expert neuro-ophthalmologists (without clinical information) |
| Saba T et al.[14] (2021) | Saudi Arabia | Detection: normal fundus vs. papilledema; grading: mild vs. severe papilledema | Training: 500 images; testing: 60 papilledema and 40 normal fundus images from the STARE dataset | DLS: DenseNet to classify the optic disc as papilledema or normal; U-Net to grade papilledema | Dataset annotation and ophthalmologist’s classification | None |
| Vasseneix C et al.[11] (2021) | Singapore | Grading: mild/moderate vs. severe papilledema | Training: 2,103 photographs from 965 patients (1,052 mild to moderate, 1,051 severe papilledema); testing: 214 photographs from 111 patients | DLS: U-Net to detect the location of the optic disc; VGGNet to grade papilledema | Consensus of an expert panel of 2 experts, with 2 additional neuro-ophthalmologists | Three independent neuro-ophthalmologists (masked to clinical information) |

RBF=Radial basis function, SVM=Support vector machine, BONSAI=Brain and Optic Nerve Study with Artificial Intelligence, DLS=Deep learning system, AFIO=Armed Forces Institute of Ophthalmology, STARE=Structured Analysis of the Retina

Milea et al., Biousse et al., and Vasseneix et al. reported using fundus images of papilledema with proven intracranial hypertension, so their papilledema labels were confirmed. Besides normal fundus images, they also included fundus images of other disc abnormalities, such as anterior ischemic optic neuropathy, optic atrophy, pseudopapilledema, and inflammatory optic neuropathy.[9-11] Biousse et al. also reported that the diagnoses of other disc abnormalities were supported by appropriate diagnostic examinations (optic atrophy confirmed by optical coherence tomography [OCT]; optic disc drusen confirmed by ultrasound). These models were therefore tested not only on differentiating papilledema from normal fundus images but also on differentiating it from other causes of optic disc abnormalities. The other three studies in this review, however, did not describe their labeling methods in detail because they used fundus images from existing datasets; their papilledema labels were therefore taken from the dataset annotations. No information on the labeling process of each database was given in these studies.[10]

The results of each study are listed in Table 3. Overall, the included studies showed that AI has good diagnostic value for both detecting and grading papilledema. Sensitivity ranged from 83.1% to 98.63% for papilledema detection and from 91.8% to 99.82% for papilledema grading. Specificity ranged from 84.7% to 97.83% for detection and from 82.6% to 98.65% for grading. Accuracy ranged from 85.89% to 99.17% for papilledema detection and from 87.9% to 99.89% for papilledema grading.

Table 3.

Performance in papilledema detection and grading

| Author (year) | AUC (95% CI) | Sensitivity (%) | Specificity (%) | Accuracy (%) | Secondary results |
|---|---|---|---|---|---|
| Papilledema detection | | | | | |
| Fatima et al.[12] (2017) | - | 83.94 | 88.39 | 85.89 | |
| Akbar et al.[13] (2017) | - | 90.01 | 96.39 | 92.86 | |
| Milea D et al.[9] (2020) | 0.96 (0.95–0.97) | 96.4 | 84.7 | 87.5 | |
| Biousse V et al.[10] (2020) | 0.96 (0.94–0.97) | 83.1 (not statistically different from Experts 1 and 2) | 94.3 (significantly better than Expert 1, P<0.001; identical to Expert 2) | 91.5 (not statistically different from Experts 1 and 2) | Time needed: DLS 25 s; Expert 1 61 min; Expert 2 74 min |
| Saba T et al.[14] (2021) | - | 98.63 | 97.83 | 99.17 | |
| Papilledema grading | | | | | |
| Akbar S et al.[13] (2017) | - | 97.32 | 96.90 | 97.85 | |
| Saba T et al.[14] (2021) | - | 99.82 | 98.65 | 99.89 | |
| Vasseneix C et al.[11] (2021) | 0.93 (0.89–0.96) | 91.8 (comparable to neuro-ophthalmologists: 91.8, P=1) | 82.6 (comparable to neuro-ophthalmologists: 73.9, P=0.09) | 87.9 (comparable to neuro-ophthalmologists: 84.1, P=0.19) | |

AUC=Area under the curve, CI=Confidence interval, DLS=Deep learning system
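The ranges reported in the Results and in the abstract can be recomputed directly from Table 3. The sketch below transcribes the table's sensitivity, specificity, and accuracy values (the dictionary keys are just short labels) and takes the minimum and maximum per task; the overall ranges are simply the union of the detection and grading rows.

```python
# Values transcribed from Table 3 as (sensitivity, specificity, accuracy), in %.
detection = {
    "Fatima 2017": (83.94, 88.39, 85.89),
    "Akbar 2017": (90.01, 96.39, 92.86),
    "Milea 2020": (96.4, 84.7, 87.5),
    "Biousse 2020": (83.1, 94.3, 91.5),
    "Saba 2021": (98.63, 97.83, 99.17),
}
grading = {
    "Akbar 2017": (97.32, 96.90, 97.85),
    "Saba 2021": (99.82, 98.65, 99.89),
    "Vasseneix 2021": (91.8, 82.6, 87.9),
}

METRICS = ("sensitivity", "specificity", "accuracy")


def metric_ranges(results):
    """Return {metric: (min, max)} across all studies in `results`."""
    return {
        name: (min(r[i] for r in results.values()), max(r[i] for r in results.values()))
        for i, name in enumerate(METRICS)
    }


for task, results in (("detection", detection), ("grading", grading)):
    for metric, (lo, hi) in metric_ranges(results).items():
        print(f"{task:9s} {metric:11s} {lo}%-{hi}%")

# Overall ranges pool both tasks (keys are prefixed because two studies appear in both).
pooled = {("detection", k): v for k, v in detection.items()}
pooled.update({("grading", k): v for k, v in grading.items()})
for metric, (lo, hi) in metric_ranges(pooled).items():
    print(f"overall   {metric:11s} {lo}%-{hi}%")
```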

Two studies compared the performance of AI-based models with that of ophthalmologists. Biousse et al.[10] reported that the performance of the AI model was not statistically different from that of the ophthalmologists, and its specificity was significantly better than that of one of the two experts. Vasseneix et al. likewise found that the performance of AI was similar to that of neuro-ophthalmologists.[11] Biousse et al. also reported that the AI-based model needed much less time to perform the examination than the ophthalmologists did.[10]
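To put the time difference in perspective, the reported reading times can be converted to time per photograph. This is a back-of-the-envelope sketch that assumes the times in Table 3 cover classification of the whole 800-photograph set listed for Biousse et al. in Table 2; that assumption is ours and is not stated explicitly here.

```python
# Figures from Biousse et al. as summarized in Tables 2 and 3.
# Assumption: each reported time covers all 800 photographs.
N_PHOTOS = 800
reading_time_s = {"DLS": 25, "Expert 1": 61 * 60, "Expert 2": 74 * 60}

for reader, seconds in reading_time_s.items():
    per_photo = seconds / N_PHOTOS
    ratio = seconds / reading_time_s["DLS"]
    print(f"{reader}: {per_photo:.3f} s per photograph ({ratio:.0f}x the DLS time)")
```

Under this assumption, the DLS needs a few hundredths of a second per photograph, whereas each expert needs several seconds.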

Discussion

This systematic review has shown that AI-based models are capable of detecting and grading papilledema from fundus photographs, with performance comparable to that of neuro-ophthalmologists. The models showed good diagnostic value for both papilledema detection and grading, with high sensitivity (83.1%–99.82%), specificity (82.6%–98.65%), and accuracy (85.89%–99.89%).

In addition to good diagnostic values (sensitivity, specificity, and accuracy), these models process data rapidly and can analyze large numbers of photographs within seconds.[10] This ability to examine numerous photographs quickly makes them a valuable examination tool. Because ophthalmoscopy may be challenging for general practitioners and nonophthalmologist specialists, AI-based models for detecting papilledema might be useful in evaluating patients with headaches or other neurologic symptoms.

The SVM classifiers with radial basis function (RBF) kernels used in the earlier studies by Fatima et al. and Akbar et al. detect papilledema automatically from features extracted from preprocessed fundus photographs, using a robust optic disc localization method and vessel segmentation.[12,13,15] These features fall into four groups based on their color, textural, vascular, and disc margin obscuration properties. The best features are then selected to form a feature matrix, which the supervised SVM classifier with an RBF kernel uses to decide whether the optic disc has papilledema.[12] Akbar et al. also used this model to distinguish normal optic discs from discs with papilledema and then graded the discs with papilledema as mild (Modified Frisén Scale Grades 1–2) or severe (Modified Frisén Scale Grades 3–5) based on three vascular features: vessel discontinuity index (VDI), VDI to disc proximity (VDIP), and kurtosis.[13]
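To make the role of the RBF kernel concrete, the sketch below evaluates the decision function of an already-trained kernel SVM, f(x) = Σᵢ αᵢyᵢ K(sᵢ, x) + b with K(x, z) = exp(−γ‖x − z‖²). Everything model-specific is hypothetical: the two normalized features, the support vectors, the dual coefficients, the intercept, and γ are made up for illustration, and training (solving the SVM optimization problem) and feature selection are omitted. It is not the implementation used by Fatima et al. or Akbar et al.

```python
import math


def rbf_kernel(x, z, gamma):
    """RBF kernel K(x, z) = exp(-gamma * ||x - z||^2)."""
    sq_dist = sum((xi - zi) ** 2 for xi, zi in zip(x, z))
    return math.exp(-gamma * sq_dist)


def svm_decision(x, support_vectors, dual_coefs, intercept, gamma):
    """Kernel SVM decision value f(x) = sum_i (alpha_i * y_i) * K(s_i, x) + b.

    dual_coefs holds alpha_i * y_i for each support vector s_i.
    A positive value is read here as 'papilledema', a negative one as 'normal'.
    """
    return sum(c * rbf_kernel(s, x, gamma)
               for s, c in zip(support_vectors, dual_coefs)) + intercept


# Hypothetical trained model on two normalized features
# (e.g., one vascular feature and one disc-margin obscuration feature).
support_vectors = [(0.2, 0.1), (0.3, 0.2), (0.8, 0.9), (0.7, 0.8)]
dual_coefs = [-1.0, -1.0, 1.0, 1.0]  # alpha_i * y_i, with y = +1 for papilledema
intercept = 0.0
gamma = 2.0

for features in [(0.25, 0.15), (0.75, 0.85)]:
    f = svm_decision(features, support_vectors, dual_coefs, intercept, gamma)
    label = "papilledema" if f > 0 else "normal"
    print(features, round(f, 3), label)
```

The kernel lets a linear decision rule in an implicit feature space separate classes that are not linearly separable in the original features, which is the property the Discussion credits for the SVM's competitive grading performance.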

More recent studies used DL models. Milea et al. and Biousse et al. used the Brain and Optic Nerve Study with Artificial Intelligence deep learning system (BONSAI-DLS), whose architecture combines a segmentation network (U-Net) with a classification network (DenseNet).[9,10] In BONSAI-DLS, U-Net localizes the optic disc on the fundus photograph, and DenseNet then classifies the optic disc into one of three classes (normal disc, disc with papilledema, or disc with other abnormalities). Vasseneix et al. also used U-Net to localize the optic disc and then used VGGNet to classify papilledema (only discs with papilledema were included in this study) as mild to moderate (Modified Frisén Scale Grades 1–3) or severe (Modified Frisén Scale Grades 4–5).[11] Saba et al. used a robust optic disc localization method before DenseNet classified the disc into two classes (normal disc or disc with papilledema); they then used U-Net to segment the blood vessels in papilledema images and graded the papilledema as mild or severe from VDI and VDIP calculations.[14,15]
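Because the studies dichotomized the Modified Frisén Scale at different cut-offs, the same disc can fall into different classes: a Grade 3 disc counts as severe under the scheme described for Akbar et al. but as mild to moderate under the scheme described for Vasseneix et al. The sketch below encodes only the two cut-offs stated in the text (the cut-off used by Saba et al. is not given here, so it is omitted); the dictionary and function names are illustrative.

```python
# Cut-offs described in the text (Modified Frisén Scale, grades 1-5 only):
#   Akbar et al.:     mild = grades 1-2, severe = grades 3-5
#   Vasseneix et al.: mild to moderate = grades 1-3, severe = grades 4-5
SCHEMES = {
    "Akbar": (3, "mild"),               # (lowest grade counted as severe, label below it)
    "Vasseneix": (4, "mild to moderate"),
}


def dichotomize(frisen_grade: int, scheme: str) -> str:
    """Map a Modified Frisén grade to the binary class used by a given study."""
    if not 1 <= frisen_grade <= 5:
        raise ValueError("only discs with papilledema (grades 1-5) are graded")
    severe_from, lower_label = SCHEMES[scheme]
    return "severe" if frisen_grade >= severe_from else lower_label


for grade in range(1, 6):
    print(grade, {name: dichotomize(grade, name) for name in SCHEMES})
```

This is one reason why grading sensitivities and specificities from different studies are not strictly interchangeable.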

Of the five studies in this review that assessed papilledema detection, the three that used DL models performed better overall than the two earlier studies that used SVM classifiers. Akbar et al. used an SVM with an RBF kernel to detect and grade papilledema and achieved grading performance comparable to that of the other two studies that graded papilledema using a DLS, and even better than that of the model by Vasseneix et al.[11,13] DL models depend heavily on data: large amounts of data are needed to train high-performing models that mimic human intelligence,[16] and the diversity of the data also influences model quality.[17] This may explain the higher diagnostic value of the DL models compared with SVM for papilledema detection, as the DL models were trained on larger datasets. Although SVMs are not well suited to large datasets, SVM showed grading performance comparable to that of DL models, owing to its ability to solve complex problems with a kernel function such as RBF.[18]

Besides evaluating the DLS in detecting and grading papilledema, two studies in this review also compared the performance of the DLS with that of neuro-ophthalmologists.[10,11] Overall, the DL models performed comparably to, or even better than, the neuro-ophthalmologists, and they were much faster. These studies also calculated agreement scores between the DLS and the neuro-ophthalmologists and among the neuro-ophthalmologists. In Biousse et al., the agreement scores between the DLS and the experts (Expert 1: 0.72; Expert 2: 0.65) and between the two experts (0.71) were all at the same level (moderate). In Vasseneix et al., by contrast, the agreement between the DLS and the experts (0.62; moderate) was at a higher level than the agreement among the experts (0.54; weak).[10,11]
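The agreement scores above are reported without naming the statistic. Assuming they are Cohen's kappa values, the sketch below computes kappa from two raters' labels and maps it to verbal levels. The levels used in the text ("moderate" for 0.62–0.72, "weak" for 0.54) are consistent with McHugh's (2012) interpretation bands, which we assume here; the photograph labels are hypothetical.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement from each rater's marginal label frequencies.
    expected = sum(count_a[c] * count_b[c] for c in count_a.keys() | count_b.keys()) / n ** 2
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


def mchugh_level(kappa):
    """Verbal interpretation bands from McHugh (2012) (assumed scale)."""
    if kappa <= 0.20:
        return "none"
    if kappa < 0.40:
        return "minimal"
    if kappa < 0.60:
        return "weak"
    if kappa < 0.80:
        return "moderate"
    if kappa <= 0.90:
        return "strong"
    return "almost perfect"


# Hypothetical labels for 10 photographs: N = normal, P = papilledema, O = other abnormality.
dls = ["P", "P", "N", "N", "O", "P", "N", "O", "P", "N"]
expert = ["P", "N", "N", "N", "O", "P", "N", "P", "P", "N"]
k = cohen_kappa(dls, expert)
print(f"kappa = {k:.2f} ({mchugh_level(k)})")

# The values reported in the text, mapped to the same bands.
for value in (0.72, 0.65, 0.71, 0.62, 0.54):
    print(value, mchugh_level(value))
```

Kappa discounts the agreement expected by chance from each rater's label frequencies, which is why it is preferred over raw percent agreement when comparing a model with experts.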

Despite these strengths, AI-based models have several limitations. Applicability issues, such as on-site deployment with digital cameras, regular model updates with new data, and the use of nonmydriatic digital cameras in clinical settings such as emergency departments (EDs), still need to be resolved to confirm the models' performance. Most data in these studies were obtained after pupillary dilation. Further studies are needed to verify whether the DLS can perform as well on nonmydriatic fundus photographs taken in hectic clinical settings such as the ED. Moreover, the diagnoses made by these models must be confirmed by a subsequent comprehensive examination, and the models cannot be the only tools for diagnosing papilledema.

The studies in this review used various digital fundus cameras, with no specific criteria for camera type. Because some studies used data from multiple centers or large datasets, the fundus images used for these models varied widely. Different camera types and settings might influence the quality of fundus images and therefore the models' performance. Evaluating each camera type separately might provide better information on the applicability of these models.

Cost-effectiveness is a challenge for AI applications, especially in low- and middle-income countries. The direct costs of hardware, AI software, system integration, and examinations, together with the indirect costs of camera operators and logistics, need to be weighed against the cost of a direct examination by an ophthalmologist. Acceptance of technologies such as AI is related to patient age, as older people may be less used to everyday technologies such as e-mail and online activities. Socioeconomic status also needs to be considered, given the gap in technology access and acceptance among minorities.

This review has some limitations. Because the reference standards are based on diagnoses made by neuro-ophthalmologists and collected from many centers worldwide, labeling errors are inevitable. Poor-quality images in the collected data also reduced data quality and lowered performance scores. All study data included in this review were retrospective, which resulted in an unequal distribution of data among groups. Objective data, such as OCT RNFL thickness or the macular ganglion cell complex, were not systematically collected. Some studies have proposed that OCT might be a better tool for detecting papilledema;[4,19] therefore, the development of AI-based models using OCT offers great potential.

Conclusion

AI-based models have shown good diagnostic value in detecting and grading papilledema. Further studies are needed to identify the most effective model for detecting and grading papilledema. To determine how best to use this new modality, studies on its cost-effectiveness and applicability in clinical settings are necessary.

Financial support and sponsorship

Nil.

Conflicts of interest

The authors declare that there are no conflicts of interest in this paper.

References

1. Rigi M, Almarzouqi SJ, Morgan ML, Lee AG. Papilledema: Epidemiology, etiology, and clinical management. Eye Brain. 2015;7:47–57. doi: 10.2147/EB.S69174.

2. Xie JS, Donaldson L, Margolin E. Papilledema: A review of etiology, pathophysiology, diagnosis, and management. Surv Ophthalmol. 2022;67:1135–59. doi: 10.1016/j.survophthal.2021.11.007.

3. Malhotra K, Padungkiatsagul T, Moss HE. Optical coherence tomography use in idiopathic intracranial hypertension. Ann Eye Sci. 2020;5:7. doi: 10.21037/aes.2019.12.06.

4. Sibony PA, Kupersmith MJ, Kardon RH. Optical coherence tomography neuro-toolbox for the diagnosis and management of papilledema, optic disc edema, and pseudopapilledema. J Neuroophthalmol. 2021;41:77–92. doi: 10.1097/WNO.0000000000001078.

5. Mollan SP, Markey KA, Benzimra JD, Jacks A, Matthews TD, Burdon MA, et al. A practical approach to, diagnosis, assessment and management of idiopathic intracranial hypertension. Pract Neurol. 2014;14:380–90. doi: 10.1136/practneurol-2014-000821.

6. Kapoor R, Walters SP, Al-Aswad LA. The current state of artificial intelligence in ophthalmology. Surv Ophthalmol. 2019;64:233–40. doi: 10.1016/j.survophthal.2018.09.002.

7. Ting DS, Pasquale LR, Peng L, Campbell JP, Lee AY, Raman R, et al. Artificial intelligence and deep learning in ophthalmology. Br J Ophthalmol. 2019;103:167–75. doi: 10.1136/bjophthalmol-2018-313173.

8. Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ. 2021;372:n71. doi: 10.1136/bmj.n71.

9. Milea D, Najjar RP, Zhubo J, Ting D, Vasseneix C, Xu X, et al. Artificial intelligence to detect papilledema from ocular fundus photographs. N Engl J Med. 2020;382:1687–95. doi: 10.1056/NEJMoa1917130.

10. Biousse V, Newman NJ, Najjar RP, Vasseneix C, Xu X, Ting DS, et al. Optic disc classification by deep learning versus expert neuro-ophthalmologists. Ann Neurol. 2020;88:785–95. doi: 10.1002/ana.25839.

11. Vasseneix C, Najjar RP, Xu X, Tang Z, Loo JL, Singhal S, et al. Accuracy of a deep learning system for classification of papilledema severity on ocular fundus photographs. Neurology. 2021;97:e369–77. doi: 10.1212/WNL.0000000000012226.

12. Fatima KN, Hassan T, Akram MU, Akhtar M, Butt WH. Fully automated diagnosis of papilledema through robust extraction of vascular patterns and ocular pathology from fundus photographs. Biomed Opt Express. 2017;8:1005–24. doi: 10.1364/BOE.8.001005.

13. Akbar S, Akram MU, Sharif M, Tariq A, Yasin UU. Decision support system for detection of papilledema through fundus retinal images. J Med Syst. 2017;41:66. doi: 10.1007/s10916-017-0712-9.

14. Saba T, Akbar S, Kolivand H, Ali Bahaj S. Automatic detection of papilledema through fundus retinal images using deep learning. Microsc Res Tech. 2021;84:3066–77. doi: 10.1002/jemt.23865.

15. Usman A, Khitran SA, Akram MU. A robust algorithm for optic disc segmentation from colored fundus images. In: Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). Berlin: Springer Verlag; 2014.

16. Raj M, Seamans R. Primer on artificial intelligence and robotics. J Organ Des. 2019;8:1–14.

17. Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316:2402–10. doi: 10.1001/jama.2016.17216.

18. Sonobe T, Tabuchi H, Ohsugi H, Masumoto H, Ishitobi N, Morita S, et al. Comparison between support vector machine and deep learning, machine-learning technologies for detecting epiretinal membrane using 3D-OCT. Int Ophthalmol. 2019;39:1871–7. doi: 10.1007/s10792-018-1016-x.

19. Ahuja S, Anand D, Dutta TK, Roopesh Kumar VR, Kar SS. Retinal nerve fiber layer thickness analysis in cases of papilledema using optical coherence tomography – A case control study. Clin Neurol Neurosurg. 2015;136:95–9. doi: 10.1016/j.clineuro.2015.05.002.