Summary

Language and music involve the productive combination of basic units into structures. It remains unclear whether brain regions sensitive to linguistic and musical structure are co-localized. We report an intraoperative awake craniotomy in which a left-hemispheric language-dominant professional musician underwent cortical stimulation mapping (CSM) and electrocorticography of music and language perception and production during repetition tasks. Musical sequences were melodic or amelodic, and differed in algorithmic compressibility (Lempel-Ziv complexity). Auditory recordings of sentences differed in syntactic complexity (single vs. multiple phrasal embeddings). CSM of posterior superior temporal gyrus (pSTG) disrupted music perception and production, along with speech production. pSTG and posterior middle temporal gyrus (pMTG) activated for language and music (broadband gamma; 70–150 Hz). pMTG activity was modulated by musical complexity, while pSTG activity was modulated by syntactic complexity. This points to shared resources for music and language comprehension, but distinct neural signatures for the processing of domain-specific structural features.

Subject areas: Musical acoustics, Biological sciences, Neuroscience, Sensory neuroscience

Highlights

• Cortical stimulation of posterior temporal cortex disrupts music and language
• Sites in posterior temporal cortex activate for both music and language
• Neighboring temporal sites index sensitivity to musical or syntactic complexity
• Posterior temporal cortex shares processing sites for units but not structures


Introduction

Language and music are universal human cognitive faculties. They both involve the generation of structured representations out of basic units. Prior work has compared the neural localization of these processes, though variability in experimental design has led to continuing debate.1,2,3,4 It has been suggested that, while language and music operate over distinct types of units (e.g., phonemes, morphemes, notes, chords), both faculties (re)combine discrete units into structures with defined hierarchies.5 Left hemisphere brain regions commonly implicated in music have been found to overlap with language regions, including superior temporal gyrus, inferior frontal gyrus, and inferior parietal lobule.6,7,8,9,10,11,12,13,14,15,16,17,18,19 At the same time, the substantial distinctions between language and music, such as differences in rhythmic structure, use of pitch, the “meaning” of the representational units, the type of structure-building mechanisms deployed, and broader ecological scope20 point to dissociable cognitive and neural processes. Consistent with the idea of dissociable resources for music and language, some properties of language (e.g., speech perception) have been shown to robustly dissociate from music in the brain,21,22 but many other properties of language have been given less extensive treatment. In particular, certain measures of linguistic and musical complexity remain to be explored at high spatiotemporal resolution. Since language and music appear to differ in their structure-building mechanisms, distinct measures of structural sensitivity may be needed to capture domain-specific effects of higher-order processing.

Prior work comparing representations of language and music using neuroimaging techniques has struggled to distinguish language-specific from music-specific responses at fine-grained spatiotemporal resolution. This is true not just for domain-specific units (words, tones), but also for domain-specific structures. In addition, these methods cannot attribute a causal role to music-responsive regions. By contrast, neuropsychological and lesion studies of individuals with acquired amusia or aphasia23,24 have emphasized dissociable processes, yet these studies do not assess function at the moment of cortical disruption, typically measuring performance days or weeks after the insult.

Addressing these topics further requires a direct approach with intracranial recordings, affording high spatiotemporal resolution of cortical function alongside causal perturbation, which may be achieved in an epilepsy monitoring unit25 or as part of an awake craniotomy.26 Cortical stimulation during an awake craniotomy is considered the gold standard for localizing, and thus preserving, critical cognitive faculties.27,28 Awake craniotomies have been widely adopted as a surgical technique for patients with intra-axial lesions in eloquent tissue,29,30 optimizing resection volume and minimizing morbidity.31 In both clinical and research contexts, intracranial electrical brain stimulation in awake neurosurgical patients is a useful tool for determining the computations subserved by distinct cortical loci, since it transiently disrupts function, simulating a focal lesion.32,33,34,35

It remains unclear whether, in professional musicians, language-dominant cortex plays a causal role in musical comprehension, and whether disruption of left posterior temporal regions impacts musical performance. A previous intraoperative mapping of the left hemisphere in two musicians found slowing and arrest of both speech production and music performance only in the posterior inferior frontal gyrus (pars opercularis) and ventral precentral gyrus, with stimulation of posterior superior temporal cortex resulting only in speech production errors.36 However, these patients had electrode coverage over only small portions of posterior superior temporal gyrus (pSTG). Even though musical rhythm and natural language syntax seem to overlap mostly in inferior frontal and supplementary motor regions,37 it remains unclear which portions of posterior and superior temporal regions are causally implicated in musical perception and production.38,39 The contributions of frontal versus temporal regions therefore remain a major point of contention.

Given that posterior temporal and inferior frontal cortices remain the major regions of interest with respect to joint music and language cortical activity, an intracranial investigation of this topic would ideally involve electrode coverage of both regions. We had the opportunity to monitor, via electrocorticography (ECoG), the processing of music and language in a professional musician who was undergoing an awake craniotomy to localize and preserve important functions during resection of a tumor from his language-dominant left temporal lobe. During the procedure, the patient was monitored with a subdural grid covering both lateral temporal and posterior inferior frontal cortex. We also conducted a cortical stimulation mapping (CSM) session, allowing us to determine which regions were causally implicated in musical and language comprehension and production. The experimental tasks were tailored to isolate basic melodic processing and language comprehension, in keeping with the surgical goal of preserving both functions.

Previous intracranial research into music and language processing using subdural grid electrodes has analyzed event-related potentials.40 Here, we utilized the high spatiotemporal resolution of intracranial electrodes to instead focus on broadband high gamma activity (BGA, 70–150 Hz) which has been shown to index local cortical processing.41,42,43,44,45
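As an illustration of the BGA measure, the sketch below extracts a 70–150 Hz amplitude envelope from a single channel and expresses values as percent change from a baseline segment. It assumes an ideal FFT band-pass combined with the analytic-signal (Hilbert) magnitude; the function names `bga_envelope` and `percent_change`, the sampling rate, and the filtering approach are hypothetical and do not describe the preprocessing used in this study (published pipelines often use smoother filters or average several sub-bands).

```python
import numpy as np


def bga_envelope(signal, fs, low=70.0, high=150.0):
    """Amplitude envelope of `signal` restricted to the [low, high] Hz band.

    Assumption: ideal (brick-wall) FFT band-pass plus the analytic-signal
    construction; real pipelines usually use smoother filters.
    """
    x = np.asarray(signal, dtype=float)
    spectrum = np.fft.fft(x)
    freqs = np.fft.fftfreq(x.size, d=1.0 / fs)
    # Keep only positive in-band frequencies, doubled: this yields the analytic
    # signal of the band-passed trace (negative frequencies and DC are zeroed).
    weights = np.where((freqs >= low) & (freqs <= high), 2.0, 0.0)
    analytic = np.fft.ifft(spectrum * weights)
    return np.abs(analytic)


def percent_change(values, baseline):
    """Express values as percent change from the mean of a baseline segment."""
    base = float(np.mean(baseline))
    return 100.0 * (np.asarray(values, dtype=float) - base) / base


if __name__ == "__main__":
    fs = 1000  # Hz, hypothetical sampling rate
    t = np.arange(fs) / fs  # 1 s of samples
    # 100 Hz component (in band) plus a 10 Hz component (out of band).
    x = 3.0 * np.cos(2 * np.pi * 100 * t) + 5.0 * np.cos(2 * np.pi * 10 * t)
    env = bga_envelope(x, fs)
    print(round(float(env.mean()), 3))  # 3.0
```

In this synthetic example the envelope stays at the amplitude of the 100 Hz component, because the 10 Hz component lies outside the band and is discarded.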

To detect effects of structure building in music and language, we measure the complexity of domain-specific structures, using clearly defined metrics for each domain. We abstract away from particular properties of elements (e.g., lexical features or semantic denotation for language, and tonal profile for music) to explore the assembly of basic linguistic and musical elements into larger structures. Music and language are not necessarily well matched in the aspects of structural complexity that define their core computational basis. Musical sequences can be highly complex across a range of dimensions (e.g., tone variety, rhythm, harmonic motifs, Shannon information), and natural language syntax can also be complex across various dimensions (e.g., dependency length, derivational complexity, hierarchical embedding). Natural language syntax is sensitive to hierarchical relations and hierarchical complexity, but not to linear order (i.e., syntactic rules apply across structural distance, not linear distance).46,47 We therefore explore measures of complexity that pertain to the elementary processes of assembling musical objects (tone variety) and linguistic objects (embedding depth). For example, a musical sequence can consist of repetitions of one or two tones in a simple AnBn pattern, or it can span a broader tone range that contributes to a sense of melodic structure. For language, a sentence can be syntactically simple (“The sun woke me up”), or it can involve a phrase embedded inside a larger structure (e.g., “I think that [the sun is shining]”). We focus on these measures because characterizing the neural signatures of these basic properties is a prerequisite for building a more comprehensive model of different types of musical and linguistic complexity.
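The Summary describes musical complexity in terms of algorithmic compressibility (Lempel-Ziv complexity). A minimal sketch of one common variant, the LZ76 phrase count, is shown below: each new phrase is extended for as long as it can be copied from earlier material, so repetitive sequences parse into few phrases. The exact variant and any normalization used in the study are not specified here, and the two six-tone sequences are hypothetical examples of an amelodic AnBn pattern and a melodic pattern.

```python
def lz76_complexity(seq):
    """Number of phrases in the LZ76 (Lempel-Ziv 1976) parsing of `seq`.

    Each phrase is extended while it can still be copied from earlier text
    (overlap allowed); the first symbol that breaks the copy ends the phrase.
    """
    s = tuple(seq)
    n = len(s)
    i = phrases = 0
    while i < n:
        length = 1
        while i + length <= n and _copyable(s, i, length):
            length += 1
        phrases += 1
        i += length
    return phrases


def _copyable(s, i, length):
    """True if s[i:i+length] also occurs starting at some position before i."""
    target = s[i:i + length]
    history = s[:i + length - 1]
    return any(history[j:j + length] == target for j in range(len(history) - length + 1))


low = ["C4", "C4", "C4", "D4", "D4", "D4"]   # hypothetical amelodic A3B3 sequence
high = ["C4", "E4", "G4", "D4", "F4", "A4"]  # hypothetical melodic sequence
print(lz76_complexity(low), lz76_complexity(high))  # 3 6
```

Under this sketch the amelodic sequence parses into three phrases (C · CCD · DD), whereas six distinct tones yield six phrases, so the melodic sequence is the less compressible of the two.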

While neural responses to musical spectral complexity (pitch, harmony, pitch variation) have been dissociated from responses to acoustic dynamics,48 structural complexity remains less well addressed. Perceived musical complexity, often grounded in expectancy violations and information theory, has a number of clear behavioral consequences.49,50 Posterior superior temporal regions have been implicated in the computation of abstract hierarchical structures.51,52,53,54,55 Because complexity accumulates as a sequence unfolds, processing demands should increase over time. Neural correlates of such increases over the course of sentence comprehension have been demonstrated in BOLD responses and high-frequency gamma power,56,57,58 and we hypothesized that the same would hold for music.

In keeping with the domain-specific structure-building differences reviewed above, recent fMRI research points to distinct cortical regions being implicated in musical structure parsing and language: language cortex is not sensitive to violations of melodic structure, and individuals with aphasia who cannot judge sentence grammaticality perform well on melody well-formedness judgments.59 This points to distinct areas being involved in domain-specific structure processing. However, that study did not provide a specific measure of linguistic complexity. In addition, its measure of melodic structure (melodies vs. scrambled melodies) was used to detect violations, rather than the elementary assembly of musical structure. Lastly, the melodies were familiar folk tunes, introducing effects not specific to structure composition.

In summary, we investigate here the spatiotemporal dynamics of musical and linguistic structure processing, by utilizing domain-specific measures of complexity, in combination with direct CSM to attribute causal involvement of specific portions of posterior temporal cortex in the comprehension and production of music and language.

Results

Patient profile

The patient was a right-handed, native English-speaking male in his thirties presenting with new-onset seizures resulting in temporary aphasia. His MRI revealed a left temporal lobe tumor spanning the inferior, middle, and superior temporal gyri. The patient was a professional musician with extensive piano experience dating back to childhood training. Left-hemispheric language dominance was confirmed with fMRI. Before surgery, the patient performed all tests planned for the operating room (OR) to establish baseline performance. At baseline, he performed at ceiling on all tested language and music tasks. During baseline testing, it was established that the patient could use a MIDI keyboard with his left and right hands separately. He scored 172/180 on the Montreal Battery of Evaluation of Amusia (MBEA).60 In the OR, the patient performed a finger-tapping test over a range of keys and practiced free-style play to become familiar with the keyboard setup.

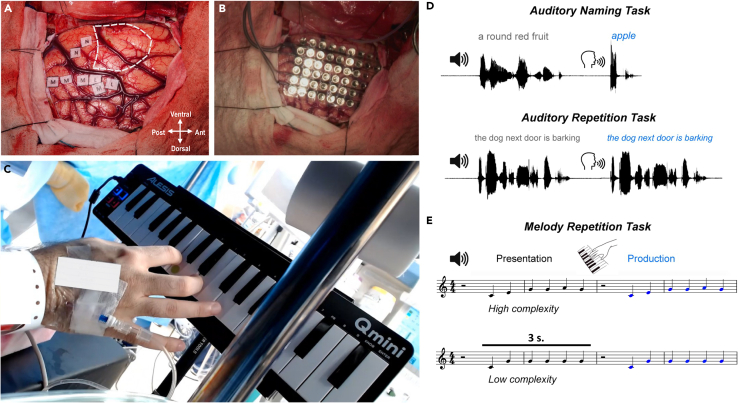

In the OR, the patient performed three main behavioral tasks (Figures 1D and 1E).

(1) Auditory Sentence Repetition: Repeating spoken sentences (e.g., “They drove the large truck home”) (ECoG, Stimulation).

(2) Melody Repetition: Repeating presented six-tone sequences, one-handed, using a MIDI keyboard (ECoG, Stimulation).

(3) Auditory Naming: Providing single-word answers to common object definitions (e.g., “A place you go to borrow books”)61 (Stimulation only).

Figure 1.

Intraoperative stimulation setup and task design

(A) Pre-resection intraoperative image of the craniotomy window, with dotted white lines outlining the tumor resection site.

(B) ECoG grid placed over craniotomy site (same orientation as [A]).

(C) The patient performed a melody repetition task with his dominant right hand using a MIDI keyboard setup during the operation.

(D) Schematic representation of the auditory naming-to-definition task, in which the surgeon verbally produced a definition and the patient responded with a one-word response, and the auditory repetition task, in which the patient repeated a phrase verbally produced by the surgeon.

(E) Schematic representation of the melody repetition task in which a high or low complexity, six-note sequence was played over speakers and the patient reproduced the sequence on the keyboard.

At his 4-month follow-up, the patient was confirmed to have fully preserved musical and language function, without evidence of deterioration.

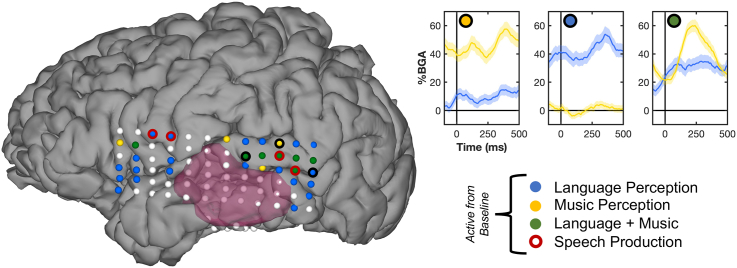

ECoG mapping of music and language

Activity was recorded through the ECoG grid during the sentence and melody repetition tasks (Figure 2). During the sentence repetition task, 28 lateral electrodes were significantly active above baseline (> 20% BGA increase, FDR-corrected q < 0.05) during the 100–400 ms period following presentation of each word. These language-active sites spanned posterior temporal and inferior frontal cortices. During tone presentation in the melody task, 11 electrodes were significantly active for each tone during the same time window, mostly clustered around pSTG. Seven electrodes, primarily located in pSTG, were significantly active for both music and language perception. During language production, two electrodes in ventral precentral gyrus and two in pSTG were significantly active.
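The electrode selection described above combines an effect-size threshold (> 20% BGA) with false discovery rate control (q < 0.05). The sketch below assumes the FDR correction is the standard Benjamini-Hochberg step-up procedure applied to per-electrode p-values, and adds a hypothetical helper `active_electrodes` that assumes both criteria must hold. How the p-values themselves are obtained (e.g., the Wilcoxon signed-rank tests named in the Figure 2 caption) is outside the scope of this sketch.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Benjamini-Hochberg step-up procedure; returns one bool per test."""
    m = len(p_values)
    order = sorted(range(m), key=lambda k: p_values[k])
    # Largest 1-based rank r with p_(r) <= (r / m) * q; every test up to r passes.
    cutoff = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            cutoff = rank
    passed = [False] * m
    for idx in order[:cutoff]:
        passed[idx] = True
    return passed


def active_electrodes(pct_change, p_values, min_pct=20.0, q=0.05):
    """Hypothetical helper: indices whose mean BGA change exceeds min_pct and survive FDR."""
    passed = benjamini_hochberg(p_values, q)
    return [i for i, (pct, ok) in enumerate(zip(pct_change, passed)) if ok and pct > min_pct]
```

Because the procedure is step-up, a p-value that misses its own rank threshold can still pass when a larger p-value further down the ranking meets its threshold: p-values of 0.02, 0.021, and 0.04 all pass at q = 0.05, even though 0.02 exceeds the rank-1 threshold of about 0.0167.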

Figure 2.

ECoG mapping of music and language

Subdural electrode array colored by task effect: electrodes showing significant activation relative to baseline during language perception (auditory sentence repetition task), music perception (tone sequence repetition task via keyboard play), both language and music perception, or speech production. The MRI-derived mask of pathological tumor tissue is marked in opaque crimson. Exemplar recording sites are plotted at top right (with a corresponding black circle denoting each site on the ECoG grid), showing responses to language (blue) and music (yellow). Responses are combined across all tones/words in each sequence. Periods of significant activity relative to baseline (500–100 ms pre-sequence) are marked by colored bars on each time trace (Wilcoxon signed rank, FDR-corrected q < 0.05). Activity was sampled across all tones and words, including mid-trial elements, which explains why active traces do not return to baseline before 0 ms. 28 electrodes were active for language (green + blue), and 11 were active for music (green + yellow).

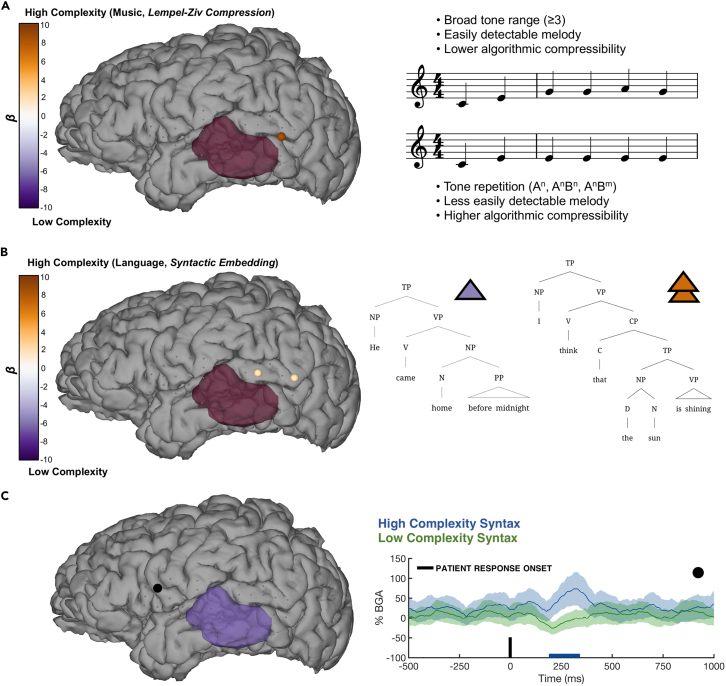

Multiple linear regression (MLR) was used to test whether the change in BGA across a sequence was significantly modulated by the complexity of the music and language stimuli. For the music task, one electrode in posterior middle temporal gyrus (pMTG), on the upper border with pSTG, showed a significant interaction between complexity and note position in the sequence, indexing a BGA increase that was greater for high-complexity than for low-complexity sequences (Figure 3A; MLR, t(360) = 3.38, β = 14.41, p = 0.0008, 95% CI 6.0 to 22.8). This electrode was significantly active for both language and music.
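The interaction test can be sketched as an ordinary least-squares model in which BGA (per tone or word, 100–400 ms after onset) is regressed on complexity, sequence position, and their product; the coefficient on the product term captures whether BGA rises faster across the sequence for high-complexity stimuli. The coding below (complexity 0 = low, 1 = high; 1-based positions), the function name `interaction_ols`, and the absence of other covariates are assumptions for illustration, not the study's exact model. Two-sided p-values and confidence intervals would additionally require the t distribution with `dof` degrees of freedom (e.g., `scipy.stats.t`).

```python
import numpy as np


def interaction_ols(bga, complexity, position):
    """OLS fit of bga ~ 1 + complexity + position + complexity * position.

    Returns (beta, t_stats, dof); beta[3] is the interaction coefficient.
    Hypothetical coding: complexity 0 = low, 1 = high; position = 1-based index.
    """
    y = np.asarray(bga, dtype=float)
    c = np.asarray(complexity, dtype=float)
    pos = np.asarray(position, dtype=float)
    X = np.column_stack([np.ones_like(y), c, pos, c * pos])
    beta, _, rank, _ = np.linalg.lstsq(X, y, rcond=None)
    dof = y.size - rank
    residuals = y - X @ beta
    sigma2 = residuals @ residuals / dof  # unbiased residual variance
    cov = sigma2 * np.linalg.inv(X.T @ X)  # covariance of the coefficient estimates
    t_stats = beta / np.sqrt(np.diag(cov))
    return beta, t_stats, dof


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    pos = np.tile(np.arange(1, 7), 60)  # six-element sequences
    comp = np.repeat([0, 1], 180)  # first half low, second half high complexity
    # Synthetic data with a true interaction coefficient of 3.
    y = 1.0 + 0.5 * comp + 2.0 * pos + 3.0 * comp * pos + rng.normal(0, 0.2, pos.size)
    beta, t, dof = interaction_ols(y, comp, pos)
    print(dof, round(float(beta[3]), 1))
```

On such synthetic data the recovered product-term coefficient lies close to its true value of 3 with a large t statistic; with real recordings, the sign and size of this coefficient correspond to the interaction effects reported above.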

Figure 3.

Intraoperative ECoG of musical and syntactic complexity

(A and B) Results from a multiple linear regression model plotted as colored electrodes on the cortical surface, modeling the impact of the interaction between sequence complexity and position in the sequence for (A) music processing and (B) sentence processing, on the broadband gamma activity (BGA; 70–150 Hz) 100–400 ms following each tone/word onset. Electrode color is scaled based on beta values, with high complexity in orange and low complexity in purple. The MRI-derived mask of pathological tumor tissue is marked in opaque crimson. Exemplar high and low complexity stimuli are highlighted.

(C) Effects of syntactic complexity during language production in the auditory repetition task, localized to inferior frontal cortex. Colored bar represents periods of significant difference (FDR-corrected, q < 0.05). Electrode of interest is denoted via black dot.

When comparing the language stimuli by syntactic complexity, two electrodes in pSTG exhibited a greater BGA increase for complex syntax (anterior electrode: t(214) = 2.84, β = 3.62, p = 0.004, 95% CI 1.11 to 6.13; posterior electrode: t(214) = 2.83, β = 3.54, p = 0.005, 95% CI 1.07 to 6.01) (Figure 3B). These electrodes were also significantly active for both language and music. The BGA difference for the language contrast was smaller, in terms of percentage change, than the difference for the music contrast, potentially because the musical sequences differed more saliently in task demands (i.e., amelodic AnBn sequences are easier to produce than sequences with clear melodies, whereas the two sentence types do not differ appreciably in difficulty for verbal repetition).
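For the language stimuli, the contrast is between sentences with a single versus multiple phrasal embeddings. Assuming sentences are hand-annotated with square brackets around embedded phrases, as in the introduction's example “I think that [the sun is shining]”, a minimal sketch for counting embeddings and their maximum nesting depth is shown below. The bracket annotation scheme, the function name `embedding_profile`, and the third example sentence are hypothetical; the study's own coding of syntactic complexity may differ.

```python
def embedding_profile(bracketed):
    """Return (number of embedded phrases, maximum nesting depth) for a
    sentence annotated with [ ] around each embedded phrase (hypothetical scheme)."""
    count = depth = max_depth = 0
    for ch in bracketed:
        if ch == "[":
            count += 1
            depth += 1
            max_depth = max(max_depth, depth)
        elif ch == "]":
            depth -= 1
            if depth < 0:
                raise ValueError("closing bracket without a matching opening bracket")
    if depth != 0:
        raise ValueError("unclosed bracket")
    return count, max_depth


print(embedding_profile("The sun woke me up"))                            # (0, 0)
print(embedding_profile("I think that [the sun is shining]"))             # (1, 1)
print(embedding_profile("She said [that I think [the sun is shining]]"))  # (2, 2)
```

Reporting both the count and the depth keeps the two notions apart: two sister embeddings would give a count of 2 but a depth of 1, whereas the nested example gives 2 for both.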

We also analyzed low frequency responses (2–15 Hz and 15–30 Hz) for these same language and music contrasts, and found no electrodes with significant interaction effects between position and complexity (all p > 0.05).

During sentence production, one electrode in inferior frontal cortex was significantly more active for sentences with higher syntactic complexity (FDR-corrected q < 0.05) from approximately 200–300 ms after articulation onset (Figure 3C). Although the two syntactic conditions differed slightly in recording length and number of words, the low-complexity stimuli never elicited significant activity at this frontal electrode, supporting the interpretation that this BGA modulation is related to the syntactic manipulation. We stress that this analysis remains exploratory, given the low trial numbers.

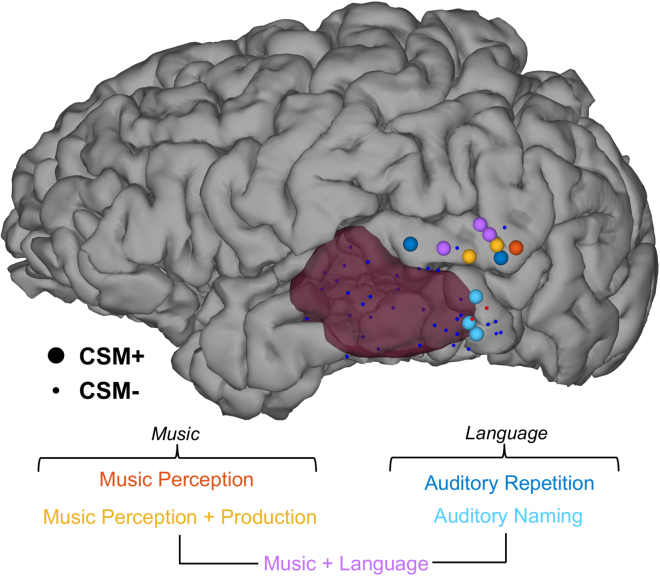

Cortical stimulation mapping of language and music

Alongside the ECoG tasks, direct CSM was performed in order to test the causal role of posterior and middle temporal areas in language and music processing prior to resection (Figure 4). During the auditory repetition and auditory naming language tasks, CSM was applied separately either during the perception or production phase of each trial. Five sites, clustered in pSTG, were positive during CSM for sentence repetition, resulting in disruption of comprehension. Only two sites tested in pSTG were CSM-negative for sentence repetition. Three sites, clustered in lower pMTG, were CSM-positive during the auditory naming task. No sites showed distinct effects across production and comprehension for the language tasks, with CSM during perception resulting in complete disruption of comprehension; hence, we only label CSM-positive sites by their language task, not period of stimulation.

Figure 4.

Intraoperative stimulation testing during music and language perception

CSM-positive sites for music perception (dark orange) and music perception and production (yellow), as well as sentence repetition (dark blue) and common object naming (light blue), alongside sites that were jointly positive for music and language perception (light purple). The MRI-derived mask of pathological tissue is highlighted in opaque crimson. CSM-positive sites are represented by large nodes; CSM-negative sites are represented by small nodes (small orange = negative for music; small blue = negative for language).

During the music mapping task, CSM was applied during perception, production, or both. Six sites, clustered in pSTG, were CSM-positive. At these six sites, error types were consistent regardless of when during the trial CSM was applied, so the sites are classified only by the trial phase(s) during which CSM was applied. The patient typically reported “interference” during attempted production or was simply unable to select the next note of the melody. Two sites in ventral lateral temporal cortex were tested for music perception and production, and both were CSM-negative. As shown in Figure 4, the CSM results indicate a clear overlap between sites implicated in language and music along pSTG.

The patient’s subjective reports during CSM for language mapping suggested disruption to phonological working memory (“It was scrambled”), in addition to disrupted memory access or déjà vu (“Random memories come in”; “I keep getting confused by memories”). CSM disruption during music presentation appeared to have a similar effect (“Keeps changing pitches”; “Too much extra interference”) (Video S1).

Video of cortical stimulation mapping during music production (keyboard play). Stimulated sites are colored on the patient’s cortical surface.

Discussion

We conducted ECoG monitoring and CSM of music and language perception and production in the language-dominant hemisphere of a professional musician. This was performed with the patient during an awake craniotomy to remove a left lateral mid-temporal lobe tumor. Our work was designed to generate relevant and actionable clinical information, and to support a basic scientific investigation into the organization of music and language processing.

We discovered spatially diverse responses within pSTG, with electrodes exhibiting sensitivity to music and language, either jointly or separately. These data also revealed distinct portions of pSTG and pMTG that indexed sensitivity to domain-specific linguistic complexity (pSTG) and musical complexity (pMTG). These complexity-sensitive sites were also active for both music and language, suggesting that the portions of cortex encoding sensitivity to structural complexity are also recruited for basic tone/word processing in both domains. This supports the hypothesis that music and language share neural resources for comprehension at the level of basic units (tones, words), whereas their domain-specific complexity (melodies, sentences) is indexed at distinct electrode sites. This provides further evidence that high gamma activity indexes cognitive operations pertinent to the construction of linguistic and musical structures.62 In addition, while a number of frontal electrodes were active for language and/or music, these sites were not sensitive to stimulus complexity during perception, suggesting instead a major role for posterior temporal regions. Although our analysis isolates effects of syntactic complexity and tone sequence compressibility, we cannot fully rule out contributions from auditory working memory. We note, however, that auditory working memory resources are usually attributed to Heschl’s gyrus, planum temporale, and dorsal frontal sites,63,64 rather than the more lateral and ventral pSTG and pMTG sites that we document here.

Stimulation of pSTG disrupted the patient’s music perception and production, along with his ability to produce speech, while stimulation of ventral lateral temporal cortex impacted only auditory naming. Stimulation of these auditory regions may have disrupted processing at a stage early enough to be shared by both musical and linguistic tasks. A non-exclusive alternative is that stimulation of pSTG impacted processing via current spread (e.g., along the arcuate fasciculus).65 These stimulation results raise the possibility that the BGA signatures we detected during ECoG monitoring index processes that play a causal role in music and auditory language comprehension. In addition, the close correspondence between activation profiles and cortical stimulation profiles further supports the reliability of high-frequency activity in capturing local cortical processing essential for music and language function. An important clinical implication is that, because stimulation of pSTG disrupted both music and language, intraoperative ECoG recordings can complement CSM in finely adjudicating the functional role of narrow portions of cortex. Rapid, live ECoG data analysis in the OR has already been demonstrated,26 and future research and interventions could make use of simultaneous monitoring and cortical mapping. Prior reports have shown that stimulation of left STG impairs speech perception25,66 and speech production,67 and we here extend these findings to melody as well as speech perception.

Our stimulation sites were closer to planum temporale than to other portions of auditory cortex (although most were still more ventral on the lateral surface), and this region has previously been implicated in speech production disruption.25 A previous intraoperative mapping in an amateur musician revealed cortical sites for music disruption that included the left pSTG and the supramarginal gyrus.30 Disruption of these regions appears to interfere with the large auditory-motor network recruited for musical performance. Another previous awake craniotomy in a professional musician found that CSM of right pSTG disrupted the patient’s ability to hum a short complex piano melody but did not affect auditory sentence repetition.67 Interestingly, that site in right pSTG is homologous to the site in left pSTG that was found here to be active for both music and language. While we did not find a clear functional segregation of music and language during CSM, we did find a clear separation in ECoG responses, which, when compared with prior stimulation work,67 points to possibly distinct roles for left and right pSTG. We also note that our ECoG activation profiles for language sites concord with speech-selective sites in previous joint ECoG-fMRI research, with language-selective electrodes in our study occupying lower STG sites.68

Previous intracranial research has implicated posterior temporal auditory cortex in generating melodic expectations,69 and pSTG activity correlates with musical intensity.70,71 In the current study, we extend the role of posterior temporal cortex to sensitivity to tone sequence complexity. This region seems to subserve variable computational demands pertaining to musical and linguistic complexity of distinct formats and representations (i.e., syntactic complexity along one dimension, and musical complexity along another). As such, posterior temporal regions may be engaged not only in linguistic structure-building53,72,73,74 but also in musical structure-building (Figure 3). Our work suggests that a finer functional map is needed than those implicating pSTG and pMTG grossly in language and/or music, since we found that distinct, closely neighboring sites index sensitivity to diverging complexity metrics. Future work could seek to determine which precise features of musical and linguistic complexity are coded by pSTG and pMTG, since complexity can be defined along a number of dimensions, such as recursively enumerated layers of embedding, predictability, and various measures of minimum description length. Our stimulus design and low trial numbers did not allow us to conduct these exploratory assessments.

We highlight that the type of complexity we analyzed differed between our musical and linguistic stimuli, and indeed many other distinctions can be drawn isolating language from other cognitive processes.75,76 We also stress that, in a given musical representation, certain features that can be quantified for their complexity might recede into the background (e.g., pitch or contour) while others (e.g., rhythmic complexity, grammar of the melody) might become more salient, depending on a variety of conditions including current task demands and attention.50 Since our task required holding a structure in memory in order to repeat it via keyboard production, we suspect that our measure of sequence compressibility was an ecologically valid means of isolating the more general issue of structural complexity, but future work could dissociate local cortical sensitivity to distinct types of complexity. For example, area 55b, a more dorsal region not covered by our electrodes, has been implicated in music perception and sensorimotor integration,77 and other distinct cortical sites may contribute variably to aspects of simple to complex musical structure processing. We also stress that our research was naturally constrained by clinical priorities, and our low trial numbers mean that these analyses should be regarded as exploratory and preliminary.

Left posterior temporal regions have previously been implicated in linguistic structures, such as phrasal and morphological hierarchies, while musical and melodic hierarchies have been found to recruit right posterior temporal and parietal cortex in individuals without a musical background.78,79 Our results suggest that, in professional musicians, the language-dominant left hemisphere can be recruited for parsing musical complexity, and that its stimulation can causally disrupt music perception. While the long-term memory representations required for processing complex music may be stored in the right temporal lobe, it is possible that the left hemisphere is recruited for generating more complex structures in professional musicians. Our finding of syntactic complexity effects in pSTG is also concordant with recent voxel-based lesion-symptom mapping results associating damage to pSTG with processing of syntactically complex sentences.80

Regarding the role of frontal cortex, spontaneous jazz improvisation, in contrast to over-learned sequences, results in greater activity in the frontal pole,9,81 and previous imaging work has emphasized contributions from Broca’s area to both natural language syntax and structured musical melodies.82 In the present study, we found greater activity for syntactically complex sentences at the early stage of the patient’s verbal articulation at a site over premotor cortex on the border of inferior frontal cortex. This suggests that this site was implicated in expressive complexity, while posterior temporal regions are recruited for parsing interpretive complexity, in line with frontotemporal dissociations posited in the literature.73,83,84 Additionally, stimulation of ventrolateral temporal cortex disrupted the language naming task but not music, supporting a role for this region in the ventral stream for speech comprehension. More superior portions were disrupted for both language and music, consistent with previous imaging literature.37,85 Frontal sites have been hypothesized to be involved in higher-level processes pertaining to the temporal order of sequences,19,86 and frontal ERP signatures recorded intracranially have been shown to dissociate musical notes from musical chunks.87 Given our sparse frontal coverage, we leave this issue for future research.

We have demonstrated here the viability of brief intraoperative research sessions for providing causal evidence about the cortical substrates of cognitive faculties such as language and music. Music is a culturally universal source of affective and aesthetic experience, much of which derives not from simple, repetitive tone concatenations but from the inference of some notion of structure, which we localize here to portions of pSTG and pMTG.

Limitations of the study

We note some limitations of our report: (1) we were not able to confirm our results with non-invasive pre-operative imaging using the same musical task; (2) we cannot directly generalize our results to healthy populations or to non-professional musicians; (3) we lack direct comparisons between this patient and other patients undergoing ECoG monitoring with the same musical and linguistic tasks, including comparisons with non-musicians; (4) our use of an electrode grid limited access to signals from sulcal structures that would be more accessible to depth probes. Nevertheless, our coverage was relatively dense over pSTG and pMTG, making it well suited to revealing rapid temporal and spectral dynamics. A healthy skepticism about the clinical value of intraoperative musical performances has served as a counterweight to the disproportionate media attention such cases have attracted. Even so, evidence has mounted that intraoperative music mapping can aid in the preservation of critical abilities during tumor resection by providing a firm basis for accurately monitoring the integrity of a complex function.88 While the 6-tone sequences used in our task are relatively basic, we stress that, because the task demanded musical recitation via keyboard play, the patient likely treated the stimuli in a manner approximating real-world musical engagement, lending the task some ecological validity. Even though many professional musicians may not regard our basic sequences as a clear example of “music,” our aim is to reveal the basic processing components of this cognitive system, much as linguists who study elementary syntactic phrases use stimuli (e.g., “red boat,” “the ship”) that many authors would not call ordinary language use, in order to expose essential computational features.

STAR★Methods

Key resources table

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Software and algorithms | | |
| AFNI/SUMA | Koelsch, et al.85 | afni.nimh.nih.gov |
| FreeSurfer | Cheung, et al.86 | freesurfer.net |
| MATLAB 2020b | MathWorks, MA, USA | mathworks.com |
| Other | | |
| Blackrock NeuroPort system | Blackrock Microsystems, UT, USA | blackrockmicro.com |
| Intracranial Electrodes (SDE) | PMT, MN, USA | pmtcorp.com |
| Robotic Surgical Assistant (ROSA) | Medtech, Montpellier, France | medtech.fr |

Resource availability

Lead contact

Further information and requests for resources should be directed to and will be fulfilled by the Lead Contact, Nitin Tandon (nitin.tandon@uth.tmc.edu).

Materials availability

This study did not generate new unique reagents.

Experimental model and study participants

Participants

The patient (native English-speaking male in his thirties) completed research activities after written informed consent was obtained, and consented to voice and face identity being revealed in the video. All experimental procedures were reviewed and approved by the Committee for the Protection of Human Subjects (CPHS) of the University of Texas Health Science Center at Houston as Protocol Number: HSC-MS-06-0385.

Method details

Electrode implantation and data recording

Data were acquired from subdural grid electrodes (SDEs): platinum-iridium electrodes embedded in a silicone elastomer sheet (PMT Corporation; top-hat design; 3 mm diameter cortical contact), surgically implanted during the awake craniotomy.26,89,90,91,92 Electrodes were localized manually in AFNI based on intraoperative photographs and on localizations from a surgical navigation system (StealthStation S8, Medtronic). Cortical surface reconstruction was performed using FreeSurfer and imported into AFNI, where the electrode positions were mapped onto the cortical surface.93,94 Data were digitized at 2 kHz using the NeuroPort recording system (Blackrock Microsystems, Salt Lake City, Utah), imported into MATLAB, referenced to a group of clean, unresponsive ventral temporal strip electrodes, and visually inspected for line noise and artifacts.
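The referencing step can be illustrated with a short NumPy sketch. The text does not specify how the reference group was combined; this example assumes the reference is the sample-by-sample mean of the chosen ventral temporal strip channels, subtracted from every channel. The function name and channel indices are hypothetical.

```python
import numpy as np

def rereference(data, ref_channels):
    """Subtract the mean of a set of reference channels from every channel.

    data: array of shape (n_channels, n_samples).
    ref_channels: indices of the clean, unresponsive channels used as the
        reference (hypothetical; chosen by visual inspection in practice).
    """
    data = np.asarray(data, dtype=float)
    reference = data[ref_channels].mean(axis=0)   # shape (n_samples,)
    return data - reference[np.newaxis, :]
```

Averaging over several quiet channels, rather than using a single one, is one way to make the reference less sensitive to noise on any individual contact.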

Stimuli and experimental design

For the music task, the patient listened to 6-tone musical sequences and then attempted to reproduce them on an electronic keyboard. Each tone lasted 500 ms, so each sequence lasted 3 seconds. The fundamental frequency of each note was set assuming logarithmic spacing, with C4 (“Middle C”) at 261.60 Hz. Each note was generated in MATLAB as a sine wave at its fundamental frequency, with a sampling frequency of 44,100 Hz, and gated with a 5 ms cosine ramp at onset and offset. Two types of sequences were designed: high and low complexity. High complexity sequences used a minimum of three different tones and could be perceived as exhibiting an easily detectable melody (e.g., ACDDCA, ACDACE); none of these sequences resembled familiar, common melodies. Low complexity sequences consisted only of tone repetitions (A^n, A^nB^n, A^nB^m, where A^n denotes tone A repeated n times). Experimental time was dictated by clinical constraints; in total, 36 high complexity trials and 25 low complexity trials were presented. The patient reproduced the musical sequences on a MIDI keyboard (Alesis Qmini portable 32-key MIDI keyboard, 2021 model, inMusic Brands, Inc., Rhode Island), affixed to a stand at a comfortable height and distance for playing with his dominant right hand during the procedure.
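To make the stimulus description concrete, the following sketch synthesizes a six-tone sequence in Python/NumPy rather than MATLAB. It assumes 12-tone equal-temperament spacing for the "logarithmic spacing" in the text, treats the letters in sequences such as ACDDCA as note names within C4–A4 (no half steps), and implements the 5 ms cosine gate as a raised-cosine ramp. The constants for sampling rate, C4 frequency, and durations come from the text; the function names are hypothetical and this is not the authors' stimulus code.

```python
import numpy as np

FS = 44_100        # sampling frequency (Hz), as stated in the text
C4_HZ = 261.60     # C4 ("Middle C") frequency, as stated in the text
# Semitone offsets above C4 for the six note identities (C4 through A4,
# excluding half steps). Equal-temperament spacing is an assumption.
SEMITONES = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9}

def note_frequency(name):
    """Fundamental frequency of a note name under logarithmic spacing."""
    return C4_HZ * 2.0 ** (SEMITONES[name] / 12.0)

def synth_note(freq, dur_s=0.5, ramp_s=0.005, fs=FS):
    """500 ms sine tone gated with raised-cosine onset/offset ramps."""
    t = np.arange(int(round(dur_s * fs))) / fs
    tone = np.sin(2 * np.pi * freq * t)
    n_ramp = int(round(ramp_s * fs))
    ramp = 0.5 * (1 - np.cos(np.pi * np.arange(n_ramp) / n_ramp))  # rises 0 -> ~1
    tone[:n_ramp] *= ramp           # fade in
    tone[-n_ramp:] *= ramp[::-1]    # fade out
    return tone

def synth_sequence(notes):
    """Concatenate six 500 ms notes into one 3 s sequence."""
    return np.concatenate([synth_note(note_frequency(n)) for n in notes])
```

For example, `synth_sequence("ACDDCA")` returns 132,300 samples (3 s at 44.1 kHz), and a low complexity A^3B^3 pattern could be rendered as `synth_sequence("CCCDDD")` under the same assumptions.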

For the language tasks, auditory speech stimuli were generated with Google Text-to-Speech using male and female WaveNet voices and delivered through a Bose Companion 2 Series III speaker placed near the patient. The sentences presented during the repetition task were divided into high and low complexity, based on whether they exhibited multiple or single syntactic embeddings (Table S1).95,96 High complexity sentences were slightly longer, as reflected in a significant difference in recording length (mean length: high complexity, 1.75 s; low complexity, 1.58 s; t(19) = -3.05, p = 0.002) and in the average number of words (all conditions: M = 5.75, range = 4, SD = 0.98; high complexity: M = 6.35, range = 3, SD = 0.81; low complexity: M = 5.15, range = 3, SD = 0.74). Experimental time was dictated by clinical constraints; in total, 40 language trials were presented (20 each of high and low syntactic complexity, in pseudorandom order).

While both of these complexity measures (melodic/amelodic, multiple/single syntactic embedding) are specific to each domain of processing, a more generic measure was also used to categorize the musical sequences. Given the strong connection between algorithmic complexity and cognitive processes ranging across memory, music, attention, and language,47,97,98 we used a Kolmogorov complexity estimate (Lempel-Ziv) to compute the algorithmic compressibility of each musical sequence.99,100,101 Sequence complexity is identified with richness of content: a signal or sequence is regarded as complex if it cannot be given a compressed representation. The algorithm scans an n-symbol sequence, S = s_1 s_2 ... s_n, from left to right and adds a new element to its memory each time it encounters a substring of consecutive symbols not previously encountered. This measure therefore takes a symbol string as input and outputs a normalized measure of complexity.102,103 In this analysis, the input was the sequence of note identities in each six-note sequence. The Lempel-Ziv complexity of each condition was as follows: low complexity, 1.11 ± 0.23 (mean ± SD); high complexity, 2.02 ± 0.34. Our sentence stimuli were distinguished by single vs. multiple syntactic embeddings, which refer to hierarchical structure not strictly reliant on the linear ordering rules pertinent to measuring string complexity; moreover, there was insufficient lexical or syntactic variation to measure the Lempel-Ziv complexity of a specific sentence feature.
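The left-to-right Lempel-Ziv scan described above can be sketched in Python using the Kaspar-Schuster implementation of the 1976 Lempel-Ziv parsing. The text does not state which normalization was used; this sketch assumes the common c(n)·log2(n)/n normalization, so its outputs are illustrative and are not guaranteed to reproduce the condition means reported above. The function names are hypothetical.

```python
import math

def lz76_complexity(s):
    """Number of phrases in the Lempel-Ziv (1976) parsing of sequence s,
    computed with the Kaspar-Schuster scanning algorithm."""
    n = len(s)
    if n < 2:
        return n
    i, k, l = 0, 1, 1   # i: candidate copy start, k: match length, l: current phrase start
    c, k_max = 1, 1     # c: phrase count (first symbol is always a new phrase)
    while True:
        if s[i + k - 1] == s[l + k - 1]:
            k += 1                      # the copy from the prefix extends
            if l + k > n:               # reached the end while copying
                c += 1
                break
        else:
            k_max = max(k_max, k)
            i += 1
            if i == l:                  # no earlier start extends further: close phrase
                c += 1
                l += k_max
                if l + 1 > n:
                    break
                i, k, k_max = 0, 1, 1
            else:
                k = 1
    return c

def normalized_lz(s):
    """Assumed normalization: c(n) * log2(n) / n."""
    n = len(s)
    return lz76_complexity(s) * math.log2(n) / n
```

Under this scheme, the repetition pattern AAAAAA parses into two phrases (A | AAAAA), AAABBB into three (A | AAB | BB), and the melodic sequence ACDDCA into five (A | C | D | DC | A), so the melodic sequence receives the higher normalized score.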

A further possibility would have been to explore distributional measures like entropy or surprisal, but we note here that these do not pertain to information about the domain-specific representations or structures, and call instead upon more domain-general processing systems and brain regions outside of language cortex.104,105,106 Other researchers have expressed skepticism regarding the usefulness of entropy reduction as a complexity metric.107 Distributional information can form a “cue” to structure in sequential messages, but is not a substitute for the structure itself.108

Quantification and statistical analysis

A total of 84 subdural grid electrode contacts were implanted in the patient: 72 across left posterior temporal lobe and ventral portions of frontal cortex, and the remaining 12 across the ventral temporal surface. The current analyses focus solely on the lateral grid. The patient’s craniotomy allowed access across superior and middle temporal cortices (Figure 1), providing an opportunity to evaluate the engagement of these regions in music and language. For each electrode, line noise and its harmonics were first removed (zero-phase 2nd-order Butterworth band-stop filters), and the raw data were then bandpass filtered into broadband high gamma activity (BGA; 70–150 Hz). A frequency-domain bandpass Hilbert transform (paired sigmoid flanks with half-width 1.5 Hz) was applied, and the analytic amplitude was smoothed (Savitzky-Golay FIR, 3rd order, frame length of 251 ms; MATLAB 2019b, MathWorks, Natick, MA). BGA was expressed as percentage change from the baseline level, defined as the window from 500 ms to 100 ms before the presentation of the first word or tone in each trial.
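The BGA pipeline above can be sketched in NumPy as follows. This is not the authors' MATLAB code: it assumes a 60 Hz line frequency, replaces the Butterworth band-stop filters with frequency-domain notches, treats the 1.5 Hz half-width as the scale of the sigmoid flanks, and converts the 251 ms Savitzky-Golay frame into an odd number of samples. The function names are hypothetical.

```python
import numpy as np

def _sigmoid(z):
    # Numerically stable logistic function (no overflow for large |z|).
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def band_amplitude(x, fs, lo=70.0, hi=150.0, flank_hz=1.5, line_hz=60.0, notch_hz=1.0):
    """Analytic amplitude of x in [lo, hi] Hz via a frequency-domain Hilbert transform."""
    freqs = np.fft.fftfreq(x.size, d=1.0 / fs)
    # Paired sigmoid flanks: smooth rise at `lo`, smooth fall at `hi`.
    gain = _sigmoid((freqs - lo) / flank_hz) * _sigmoid((hi - freqs) / flank_hz)
    # Assumed stand-in for the band-stop filters: zero bins near line noise and harmonics.
    for h in np.arange(line_hz, fs / 2, line_hz):
        gain[np.abs(np.abs(freqs) - h) < notch_hz] = 0.0
    # Analytic signal: drop negative frequencies, double positive ones.
    gain = np.where(freqs > 0, 2.0 * gain, 0.0)
    return np.abs(np.fft.ifft(np.fft.fft(x) * gain))

def savgol_smooth(y, window, order=3):
    """Savitzky-Golay smoothing: local least-squares polynomial fit, centre value."""
    half = window // 2
    offsets = np.arange(-half, half + 1)
    design = np.vander(offsets, order + 1, increasing=True)
    weights = np.linalg.pinv(design)[0]   # row returning the fitted value at offset 0
    return np.convolve(y, weights[::-1], mode="same")   # edges are zero-padded

def bga_percent_change(x, fs, times, baseline=(-0.5, -0.1), frame_ms=251.0):
    """Smoothed 70-150 Hz amplitude as percent change from the baseline window."""
    window = int(round(frame_ms / 1000.0 * fs)) | 1   # force an odd frame length
    amp = savgol_smooth(band_amplitude(x, fs), window)
    in_base = (times >= baseline[0]) & (times < baseline[1])
    base = amp[in_base].mean()
    return 100.0 * (amp - base) / base
```

Each call is assumed to process one trial, with `times` in seconds relative to the first word or tone; the default baseline matches the -500 to -100 ms window described above.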

For each electrode, the mean activity across the 100–400 ms window following each word or tone onset in the sequence was calculated. Active electrodes, used for further analyses, were defined as those showing a >20% BGA increase above the baseline period (-500 to -100 ms prior to sequence onset) with p < 0.05 (one-tailed t-test). We do not report the precise maximum BGA increase from baseline across conditions; instead, we report whether a given site exceeded these activity thresholds. For temporal analyses, periods of significant activation were tested using a one-tailed t-test at each time point and corrected for multiple comparisons with a Benjamini-Hochberg false discovery rate (FDR) threshold of q < 0.05. For speech production activation, the same BGA and significance thresholds were applied over the 100–1000 ms window after the onset of verbal articulation.
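The Benjamini-Hochberg correction applied to the per-time-point tests can be sketched as follows. This minimal NumPy version takes a vector of one-tailed p-values (obtained, for instance, from a one-sample t-test against baseline at each time point; that step is not shown) and returns which time points survive at q = 0.05. The function name is hypothetical.

```python
import numpy as np

def benjamini_hochberg(pvals, q=0.05):
    """Boolean mask of p-values declared significant at FDR level q (BH step-up)."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    # Compare the k-th smallest p-value with q * k / m.
    passes = p[order] <= q * np.arange(1, m + 1) / m
    significant = np.zeros(m, dtype=bool)
    if passes.any():
        k_max = np.nonzero(passes)[0].max()      # largest rank that passes
        significant[order[: k_max + 1]] = True   # reject every hypothesis up to that rank
    return significant
```

Because the procedure is step-up, a p-value that fails its own rank threshold is still declared significant if a larger p-value passes its threshold: for p = (0.04, 0.01, 0.045, 0.049), all four survive at q = 0.05.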

Multiple linear regression (MLR) was used to model the effects of multiple factors on the BGA response. For language stimuli, the fixed effects in the model were word frequency, word length, syntactic complexity, and word position. For music stimuli, the fixed effects were note identity (1 through 6, denoting C4 through A4, excluding half steps), interval size relative to the previous note, Lempel-Ziv complexity, and note position. The effect of interest in both models was the interaction of Lempel-Ziv complexity (music) or syntactic complexity (language) with position in the sequence, indexing a complexity-related effect over the course of the sequence. We report complexity effects because they are our central research focus; other factors (e.g., word frequency) were included not as components of analysis but as regressors of no interest. Data were assumed to be normally distributed for statistical comparison. The response variable was the mean BGA power over the 100 to 400 ms following each note or word onset. Electrodes with significant complexity effects were tested using a one-tailed t-test at each time point with a p-value threshold of 0.01. Because of low trial numbers, multiple comparison corrections were not applied to the MLR analysis; we instead kept to this low uncorrected p-value threshold.
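The regression with a complexity-by-position interaction can be sketched with ordinary least squares in NumPy. The software used for the MLR is not stated in the text; the regressors below mirror the language model (word frequency, word length, syntactic complexity, word position), but the data are synthetic and invented purely for illustration. A one-tailed p-value for the interaction could then be derived from its t statistic and the residual degrees of freedom (for example with SciPy's t distribution), which is not included here.

```python
import numpy as np

def fit_mlr(y, regressors):
    """Ordinary least squares with an intercept.

    regressors: dict mapping name -> 1-D array (one value per observation).
    Returns {name: (beta, t_statistic)}.
    """
    names = ["intercept"] + list(regressors)
    X = np.column_stack([np.ones(len(y))] + [np.asarray(v, float) for v in regressors.values()])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    dof = X.shape[0] - X.shape[1]
    sigma2 = resid @ resid / dof                       # residual variance
    se = np.sqrt(sigma2 * np.diag(np.linalg.inv(X.T @ X)))
    return {n: (b, b / s) for n, b, s in zip(names, beta, se)}

# Synthetic example (all values invented): 40 sentences x 6 word positions.
rng = np.random.default_rng(1)
n_trials, n_pos = 40, 6
complexity = np.repeat(np.arange(n_trials) % 2, n_pos).astype(float)  # 0 = simple, 1 = complex
position = np.tile(np.arange(1, n_pos + 1), n_trials).astype(float)
frequency = rng.normal(size=complexity.size)
length = rng.integers(2, 10, size=complexity.size).astype(float)
bga = (5 + 0.5 * frequency + 1.0 * length + 2.0 * complexity
       + 0.3 * position + 4.0 * complexity * position
       + rng.normal(scale=1.0, size=complexity.size))

results = fit_mlr(bga, {
    "frequency": frequency,            # regressor of no interest
    "length": length,                  # regressor of no interest
    "complexity": complexity,
    "position": position,
    "complexity_x_position": complexity * position,   # effect of interest
})
beta_int, t_int = results["complexity_x_position"]
```

The main effects of complexity and position stay in the model alongside their product, so the interaction term captures only how the complexity effect changes across positions.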

Cortical stimulation mapping

Cortical stimulation mapping (CSM) was carried out using an OCS2 handheld stimulator (5 mm electrode spacing; Integra LifeSciences, France). Stimulation consisted of 50 Hz, 500 μs square pulses at a current between 5 and 10 mA, set relative to an established baseline that did not result in after-discharges. Between 2 and 5 stimulation trials were delivered per site, depending on clinical judgment of functional disruption. For analysis, stimulation sites were inferred from gyral anatomy in the video recording and from MRI co-localization of the cortical surface.

Acknowledgments

We express our sincere gratitude to the patient who participated in this study and to the OR staff. The patient participated in the experiments after written informed consent was obtained. All experimental procedures were reviewed and approved by the Committee for the Protection of Human Subjects (CPHS) of the University of Texas Health Science Center at Houston as Protocol Number: HSC-MS-06-0385.

This work was supported by the National Institute of Neurological Disorders and Stroke NS098981 (to N.T.) and the National Institutes of Health R01EY028535 (to B.Z.M.).

Author contributions

M.J.M., E.M., X.S., B.Z.M., and N.T. designed research; M.J.M., E.M., X.S., O.W., C.W.M., and N.T. performed research; M.J.M., E.M., O.W., and K.S. analyzed data; M.J.M., E.M., O.W., B.Z.M., and N.T. edited the paper; N.T. conducted funding acquisition and neurosurgical procedures.

Declaration of interests

The authors declare no competing interests.

Published: June 28, 2023

Footnotes

Supplemental information can be found online at https://doi.org/10.1016/j.isci.2023.107223.

Contributor Information

Elliot Murphy, Email: elliot.murphy@uth.tmc.edu.

Nitin Tandon, Email: nitin.tandon@uth.tmc.edu.

Supplemental information

Table S1. List of sentences used during the sentence repetition task, labeled by syntactic complexity (0 = simple, 1 = complex).

Data and code availability

- The datasets generated from this research are not publicly available because they contain information that is not HIPAA-compliant and the participant from whom the data were collected did not consent to their public release. However, they are available from the lead contact on request.
- The custom code that supports the findings of this study is available from the lead contact on request.
- Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

References

- 1.Asano R., Boeckx C. Syntax in language and music: what is the right level of comparison? Front. Psychol. 2015;6:942. doi: 10.3389/fpsyg.2015.00942. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Asano R., Boeckx C., Seifert U. Hierarchical control as a shared neurocognitive mechanism for language and music. Cognition. 2021;216:104847. doi: 10.1016/j.cognition.2021.104847. [DOI] [PubMed] [Google Scholar]

- 3.Jackendoff R., Lerdahl F. The capacity for music: What is it, and what’s special about it? Cognition. 2006;100:33–72. doi: 10.1016/j.cognition.2005.11.005. [DOI] [PubMed] [Google Scholar]

- 4.Koelsch S., Rohrmeier M., Torrecuso R., Jentschke S. Processing of hierarchical syntactic structure in music. Proc. Natl. Acad. Sci. USA. 2013;110:15443–15448. doi: 10.1073/pnas.1300272110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Tsoulas G. Computations and interfaces: Some notes on the relation between the language and the music faculties. Music. Sci. 2010;14:11–41. doi: 10.1177/10298649100140S103. [DOI] [Google Scholar]

- 6.Angulo-Perkins A., Aubé W., Peretz I., Barrios F.A., Armony J.L., Concha L. Music listening engages specific cortical regions within the temporal lobes: Differences between musicians and non-musicians. Cortex. 2014;59:126–137. doi: 10.1016/j.cortex.2014.07.013. [DOI] [PubMed] [Google Scholar]

- 7.Ding Y., Zhang Y., Zhou W., Ling Z., Huang J., Hong B., Wang X. Neural Correlates of Music Listening and Recall in the Human Brain. J. Neurosci. 2019;39:8112–8123. doi: 10.1523/JNEUROSCI.1468-18.2019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Gandour J., Wong D., Lowe M., Dzemidzic M., Satthamnuwong N., Tong Y., Li X. A Cross-Linguistic fMRI Study of Spectral and Temporal Cues Underlying Phonological Processing. J. Cognit. Neurosci. 2002;14:1076–1087. doi: 10.1162/089892902320474526. [DOI] [PubMed] [Google Scholar]

- 9.Limb C.J., Braun A.R. Neural Substrates of Spontaneous Musical Performance: An fMRI Study of Jazz Improvisation. PLoS One. 2008;3:e1679. doi: 10.1371/journal.pone.0001679. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Riva M., Casarotti A., Comi A., Pessina F., Bello L. Brain and Music: An Intraoperative Stimulation Mapping Study of a Professional Opera Singer. World Neurosurgery. 2016;93:486.e13–486.e18. doi: 10.1016/j.wneu.2016.06.130. [DOI] [PubMed] [Google Scholar]

- 11.Rogalsky C., Rong F., Saberi K., Hickok G. Functional Anatomy of Language and Music Perception: Temporal and Structural Factors Investigated Using Functional Magnetic Resonance Imaging. J. Neurosci. 2011;31:3843–3852. doi: 10.1523/JNEUROSCI.4515-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Roux F.-E., Lubrano V., Lotterie J.-A., Giussani C., Pierroux C., Démonet J.F. When “Abegg” is read and (“A, B, E, G, G”) is not: a cortical stimulation study of musical score reading. JNS. 2007;106:1017–1027. doi: 10.3171/jns.2007.106.6.1017. [DOI] [PubMed] [Google Scholar]

- 13.Roux F.-E., Borsa S., Démonet J.F. The Mute Who Can Sing”: a cortical stimulation study on singing: Clinical article. JNS. 2009;110:282–288. doi: 10.3171/2007.9.17565. [DOI] [PubMed] [Google Scholar]

- 14.Russell S.M., Golfinos J.G. Amusia following resection of a Heschl gyrus glioma: Case report. J. Neurosurg. 2003;98:1109–1112. doi: 10.3171/jns.2003.98.5.1109. [DOI] [PubMed] [Google Scholar]

- 15.Schönwiesner M., Novitski N., Pakarinen S., Carlson S., Tervaniemi M., Näätänen R. Heschl’s Gyrus, Posterior Superior Temporal Gyrus, and Mid-Ventrolateral Prefrontal Cortex Have Different Roles in the Detection of Acoustic Changes. J. Neurophysiol. 2007;97:2075–2082. doi: 10.1152/jn.01083.2006. [DOI] [PubMed] [Google Scholar]

- 16.Trimble M., Hesdorffer D. Music and the brain: the neuroscience of music and musical appreciation. BJPsych Int. 2017;14:28–31. doi: 10.1192/s2056474000001720. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Uddén J., Bahlmann J. A rostro-caudal gradient of structured sequence processing in the left inferior frontal gyrus. Phil. Trans. R. Soc. B. 2012;367:2023–2032. doi: 10.1098/rstb.2012.0009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Vergara V.M., Norgaard M., Miller R., Beaty R.E., Dhakal K., Dhamala M., Calhoun V.D. Functional network connectivity during Jazz improvisation. Sci. Rep. 2021;11:19036. doi: 10.1038/s41598-021-98332-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Vuust P., Heggli O.A., Friston K.J., Kringelbach M.L. Music in the brain. Nat. Rev. Neurosci. 2022;23:287–305. doi: 10.1038/s41583-022-00578-5. [DOI] [PubMed] [Google Scholar]

- 20.Jackendoff R. Parallels and Nonparallels between Language and Music. Music Percept. 2009;26:195–204. doi: 10.1525/mp.2009.26.3.195. [DOI] [Google Scholar]

- 21.Boebinger D., Norman-Haignere S.V., McDermott J.H., Kanwisher N. Music-selective neural populations arise without musical training. J. Neurophysiol. 2021;125:2237–2263. doi: 10.1152/jn.00588.2020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Norman-Haignere S., Kanwisher N.G., McDermott J.H. Distinct Cortical Pathways for Music and Speech Revealed by Hypothesis-Free Voxel Decomposition. Neuron. 2015;88:1281–1296. doi: 10.1016/j.neuron.2015.11.035. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Liégeois-Chauvel C., Peretz I., Babaï M., Laguitton V., Chauvel P. Contribution of different cortical areas in the temporal lobes to music processing. Brain. 1998;121:1853–1867. doi: 10.1093/brain/121.10.1853. [DOI] [PubMed] [Google Scholar]

- 24.Peretz I. The nature of music from a biological perspective. Cognition. 2006;100:1–32. doi: 10.1016/j.cognition.2005.11.004. [DOI] [PubMed] [Google Scholar]

- 25.Forseth K.J., Hickok G., Rollo P.S., Tandon N. Language prediction mechanisms in human auditory cortex. Nat. Commun. 2020;11:5240. doi: 10.1038/s41467-020-19010-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Woolnough O., Snyder K.M., Morse C.W., McCarty M.J., Lhatoo S.D., Tandon N. Intraoperative localization and preservation of reading in ventral occipitotemporal cortex. J. Neurosurg. 2022;137:1610–1617. doi: 10.3171/2022.2.JNS22170. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Borchers S., Himmelbach M., Logothetis N., Karnath H.-O. Direct electrical stimulation of human cortex — the gold standard for mapping brain functions? Nat. Rev. Neurosci. 2011;13:63–70. doi: 10.1038/nrn3140. [DOI] [PubMed] [Google Scholar]

- 28.Siddiqi S.H., Kording K.P., Parvizi J., Fox M.D. Causal mapping of human brain function. Nat. Rev. Neurosci. 2022;23:361–375. doi: 10.1038/s41583-022-00583-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Dziedzic T., Bernstein M. Awake craniotomy for brain tumor: indications, technique and benefits. Expert Rev. Neurother. 2014;14:1405–1415. doi: 10.1586/14737175.2014.979793. [DOI] [PubMed] [Google Scholar]

- 30.Dziedzic T.A., Bala A., Podgórska A., Piwowarska J., Marchel A. Awake intraoperative mapping to identify cortical regions related to music performance: Technical note. J. Clin. Neurosci. 2021;83:64–67. doi: 10.1016/j.jocn.2020.11.027. [DOI] [PubMed] [Google Scholar]

- 31.De Benedictis A., Moritz-Gasser S., Duffau H. Awake Mapping Optimizes the Extent of Resection for Low-Grade Gliomas in Eloquent Areas. Neurosurgery. 2010;66:1074–1084. doi: 10.1227/01.NEU.0000369514.74284.78. [DOI] [PubMed] [Google Scholar]

- 32.Duffau H., Gatignol P., Mandonnet E., Peruzzi P., Tzourio-Mazoyer N., Capelle L. New insights into the anatomo-functional connectivity of the semantic system: a study using cortico-subcortical electrostimulations. Brain. 2005;128:797–810. doi: 10.1093/brain/awh423. [DOI] [PubMed] [Google Scholar]

- 33.Fried I., Katz A., McCarthy G., Sass K.J., Williamson P., Spencer S.S., Spencer D.D. Functional organization of human supplementary motor cortex studied by electrical stimulation. J. Neurosci. 1991;11:3656–3666. doi: 10.1523/JNEUROSCI.11-11-03656.1991. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Ojemann G., Ojemann J., Lettich E., Berger M. Cortical language localization in left, dominant hemisphere: An electrical stimulation mapping investigation in 117 patients. J. Neurosurg. 1989;71:316–326. doi: 10.3171/jns.1989.71.3.0316. [DOI] [PubMed] [Google Scholar]

- 35.Penfield W., Boldrey E. Somatic motor and sensory representation in the cerebral cortex of man as studied by electrical stimulation. Brain. 1937;60:389–443. doi: 10.1093/brain/60.4.389. [DOI] [Google Scholar]

- 36.Leonard M.K., Desai M., Hungate D., Cai R., Singhal N.S., Knowlton R.C., Chang E.F. Direct cortical stimulation of inferior frontal cortex disrupts both speech and music production in highly trained musicians. Cogn. Neuropsychol. 2019;36:158–166. doi: 10.1080/02643294.2018.1472559. [DOI] [PubMed] [Google Scholar]

- 37.Heard M., Lee Y.S. Shared neural resources of rhythm and syntax: An ALE meta-analysis. Neuropsychologia. 2020;137:107284. doi: 10.1016/j.neuropsychologia.2019.107284. [DOI] [PubMed] [Google Scholar]

- 38.Bass D.I., Shurtleff H., Warner M., Knott D., Poliakov A., Friedman S., Collins M.J., Lopez J., Lockrow J.P., Novotny E.J., et al. Awake Mapping of the Auditory Cortex during Tumor Resection in an Aspiring Musical Performer: A Case Report. Pediatr. Neurosurg. 2020;55:351–358. doi: 10.1159/000509328. [DOI] [PubMed] [Google Scholar]

- 39.Piai V., Vos S.H., Idelberger R., Gans P., Doorduin J., ter Laan M. Awake Surgery for a Violin Player: Monitoring Motor and Music Performance, A Case Report. Arch. Clin. Neuropsychol. 2019;34:132–137. doi: 10.1093/arclin/acy009. [DOI] [PubMed] [Google Scholar]

- 40.Sammler D., Koelsch S., Ball T., Brandt A., Grigutsch M., Huppertz H.-J., Knösche T.R., Wellmer J., Widman G., Elger C.E., et al. Co-localizing linguistic and musical syntax with intracranial EEG. Neuroimage. 2013;64:134–146. doi: 10.1016/j.neuroimage.2012.09.035. [DOI] [PubMed] [Google Scholar]

- 41.Buzsáki G., Watson B.O. Brain rhythms and neural syntax: implications for efficient coding of cognitive content and neuropsychiatric disease. Dialogues Clin. Neurosci. 2012;14:345–367. doi: 10.31887/DCNS.2012.14.4/gbuzsaki. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Hovsepyan S., Olasagasti I., Giraud A.-L. Combining predictive coding and neural oscillations enables online syllable recognition in natural speech. Nat. Commun. 2020;11:3117. doi: 10.1038/s41467-020-16956-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Jensen O., Spaak E., Zumer J.M. Magnetoencephalography: From Signals to Dynamic Cortical Networks. Second Edition. 2019. Human brain oscillations: From physiological mechanisms to analysis and cognition. [DOI] [Google Scholar]

- 44.Packard P.A., Steiger T.K., Fuentemilla L., Bunzeck N. Neural oscillations and event-related potentials reveal how semantic congruence drives long-term memory in both young and older humans. Sci. Rep. 2020;10:9116. doi: 10.1038/s41598-020-65872-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Woolnough O., Forseth K.J., Rollo P.S., Roccaforte Z.J., Tandon N. Event-related phase synchronization propagates rapidly across human ventral visual cortex. Neuroimage. 2022;256:119262. doi: 10.1016/j.neuroimage.2022.119262. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Everaert M.B.H., Huybregts M.A.C., Chomsky N., Berwick R.C., Bolhuis J.J. Structures, Not Strings: Linguistics as Part of the Cognitive Sciences. Trends Cognit. Sci. 2015;19:729–743. doi: 10.1016/j.tics.2015.09.008. [DOI] [PubMed] [Google Scholar]

- 47.Murphy E., Holmes E., Friston K. Natural Language Syntax Complies with the Free-Energy Principle. arXiv 2022. arXiv:2210.15098. 10.48550/arXiv.2210.15098. [DOI] [PMC free article] [PubMed]

- 48.Wollman I., Arias P., Aucouturier J.-J., Morillon B. Neural entrainment to music is sensitive to melodic spectral complexity. J. Neurophysiol. 2020;123:1063–1071. doi: 10.1152/jn.00758.2018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Eerola T. Expectancy-Violation and Information-Theoretic Models of Melodic Complexity. EMR. 2016;11:2. doi: 10.18061/emr.v11i1.4836. [DOI] [Google Scholar]

- 50.Margulis E.H. Toward A Better Understanding of Perceived Complexity in Music: A Commentary on Eerola (2016) EMR. 2016;11:18. doi: 10.18061/emr.v11i1.5275. [DOI] [Google Scholar]

- 51.Oxenham A.J. How we hear: the perception and neural coding of sound. Annu. Rev. Psychol. 2018;69:27–50. doi: 10.1146/annurev-psych-122216-011635. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Martins M.J.D., Krause C., Neville D.A., Pino D., Villringer A., Obrig H. Recursive hierarchical embedding in vision is impaired by posterior middle temporal gyrus lesions. Brain. 2019;142:3217–3229. doi: 10.1093/brain/awz242. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Flick G., Pylkkänen L. Isolating syntax in natural language: MEG evidence for an early contribution of left posterior temporal cortex. Cortex. 2020;127:42–57. doi: 10.1016/j.cortex.2020.01.025. [DOI] [PubMed] [Google Scholar]

- 54.Murphy E. 1st ed. Cambridge University Press; 2020. The Oscillatory Nature of Language. [DOI] [Google Scholar]

- 55.Pylkkänen L. Neural basis of basic composition: What we have learned from the red-boat studies and their extensions. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2020;375:20190299. doi: 10.1098/rstb.2019.0299. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Pallier C., Devauchelle A.-D., Dehaene S. Cortical representation of the constituent structure of sentences. Proc. Natl. Acad. Sci. USA. 2011;108:2522–2527. doi: 10.1073/pnas.1018711108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Fedorenko E., Scott T.L., Brunner P., Coon W.G., Pritchett B., Schalk G., Kanwisher N. Neural correlate of the construction of sentence meaning. Proc. Natl. Acad. Sci. USA. 2016;113:E6256–E6262. doi: 10.1073/pnas.1612132113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Woolnough O., Donos C., Murphy E., Rollo P.S., Roccaforte Z.J., Dehaene S., Tandon N. Spatiotemporally distributed frontotemporal networks for sentence reading. Proc. Natl. Acad. Sci. USA. 2023;120 doi: 10.1073/pnas.2300252120. e2300252120. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Chen X., Affourtit J., Ryskin R., Regev T.I., Norman-Haignere S., Jouravlev O., Malik-Moraleda S., Kean H., Varley R., Fedorenko E. The human language system, including its inferior frontal component in “Broca’s area,” does not support music perception. Cerebr. Cortex. 2023;33:7904–7929. doi: 10.1093/cercor/bhad087. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Peretz I., Champod A.S., Hyde K. Varieties of Musical Disorders. Ann. N. Y. Acad. Sci. 2003;999:58–75. doi: 10.1196/annals.1284.006. [DOI] [PubMed] [Google Scholar]

- 61.Hamberger M.J., Seidel W.T. Auditory and visual naming tests: Normative and patient data for accuracy, response time, and tip-of-the-tongue. J. Int. Neuropsychol. Soc. 2003;9:479–489. doi: 10.1017/S135561770393013X. [DOI] [PubMed] [Google Scholar]

- 62.Murphy E. ROSE: A neurocomputational architecture for syntax. arXiv 2023. arXiv:2303.08877. 10.48550/arXiv.2303.08877. [DOI]

- 63.Kumar S., Joseph S., Gander P.E., Barascud N., Halpern A.R., Griffiths T.D. A Brain System for Auditory Working Memory. J. Neurosci. 2016;36:4492–4505. doi: 10.1523/JNEUROSCI.4341-14.2016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Noyce A.L., Lefco R.W., Brissenden J.A., Tobyne S.M., Shinn-Cunningham B.G., Somers D.C. Extended Frontal Networks for Visual and Auditory Working Memory. Cerebr. Cortex. 2022;32:855–869. doi: 10.1093/cercor/bhab249. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Ellmore T.M., Beauchamp M.S., O’Neill T.J., Dreyer S., Tandon N. Relationships between essential cortical language sites and subcortical pathways: Clinical article. JNS. 2009;111:755–766. doi: 10.3171/2009.3.JNS081427. [DOI] [PubMed] [Google Scholar]

- 66.Hamilton L.S., Oganian Y., Hall J., Chang E.F. Parallel and distributed encoding of speech across human auditory cortex. Cell. 2021;184:4626–4639.e13. doi: 10.1016/j.cell.2021.07.019.

- 67.Garcea F.E., Chernoff B.L., Diamond B., Lewis W., Sims M.H., Tomlinson S.B., Teghipco A., Belkhir R., Gannon S.B., Erickson S., et al. Direct Electrical Stimulation in the Human Brain Disrupts Melody Processing. Curr. Biol. 2017;27:2684–2691.e7. doi: 10.1016/j.cub.2017.07.051.

- 68.Norman-Haignere S.V., Feather J., Boebinger D., Brunner P., Ritaccio A., McDermott J.H., Schalk G., Kanwisher N. A neural population selective for song in human auditory cortex. Curr. Biol. 2022;32:1470–1484.e12. doi: 10.1016/j.cub.2022.01.069.

- 69.Di Liberto G.M., Pelofi C., Bianco R., Patel P., Mehta A.D., Herrero J.L., de Cheveigné A., Shamma S., Mesgarani N. Cortical encoding of melodic expectations in human temporal cortex. Elife. 2020;9:e51784. doi: 10.7554/eLife.51784.

- 70.Potes C., Gunduz A., Brunner P., Schalk G. Dynamics of electrocorticographic (ECoG) activity in human temporal and frontal cortical areas during music listening. Neuroimage. 2012;61:841–848. doi: 10.1016/j.neuroimage.2012.04.022.

- 71.Sturm I., Blankertz B., Potes C., Schalk G., Curio G. ECoG high gamma activity reveals distinct cortical representations of lyrics passages, harmonic and timbre-related changes in a rock song. Front. Hum. Neurosci. 2014;8:798. doi: 10.3389/fnhum.2014.00798.

- 72.Benítez-Burraco A., Murphy E. Why Brain Oscillations Are Improving Our Understanding of Language. Front. Behav. Neurosci. 2019;13:190. doi: 10.3389/fnbeh.2019.00190.

- 73.Murphy E., Woolnough O., Rollo P.S., Roccaforte Z.J., Segaert K., Hagoort P., Tandon N. Minimal Phrase Composition Revealed by Intracranial Recordings. J. Neurosci. 2022;42:3216–3227. doi: 10.1523/JNEUROSCI.1575-21.2022.

- 74.Murphy E., Forseth K.J., Donos C., Rollo P.S., Tandon N. The spatiotemporal dynamics of semantic integration in the human brain. bioRxiv. 2022. doi: 10.1101/2022.09.02.506386.

- 75.Murphy E. The brain dynamics of linguistic computation. Front. Psychol. 2015;6:1515. doi: 10.3389/fpsyg.2015.01515.

- 76.Murphy E., Shim J.-Y. Copy invisibility and (non-)categorial labeling. Linguistic Research. 2020;37:187–215. doi: 10.17250/KHISLI.37.2.202006.002.

- 77.Siman-Tov T., Gordon C.R., Avisdris N., Shany O., Lerner A., Shuster O., Granot R.Y., Hendler T. The rediscovered motor-related area 55b emerges as a core hub of music perception. Commun. Biol. 2022;5:1104. doi: 10.1038/s42003-022-04009-0.

- 78.Martins M.J.D., Fischmeister F.P.S., Gingras B., Bianco R., Puig-Waldmueller E., Villringer A., Fitch W.T., Beisteiner R. Recursive music elucidates neural mechanisms supporting the generation and detection of melodic hierarchies. Brain Struct. Funct. 2020;225:1997–2015. doi: 10.1007/s00429-020-02105-7.

- 79.Royal I., Vuvan D.T., Zendel B.R., Robitaille N., Schönwiesner M., Peretz I. Activation in the Right Inferior Parietal Lobule Reflects the Representation of Musical Structure beyond Simple Pitch Discrimination. PLoS One. 2016;11:e0155291. doi: 10.1371/journal.pone.0155291.

- 80.Lukic S., Thompson C.K., Barbieri E., Chiappetta B., Bonakdarpour B., Kiran S., Rapp B., Parrish T.B., Caplan D. Common and distinct neural substrates of sentence production and comprehension. Neuroimage. 2021;224:117374. doi: 10.1016/j.neuroimage.2020.117374.

- 81.McPherson M.J., Barrett F.S., Lopez-Gonzalez M., Jiradejvong P., Limb C.J. Emotional Intent Modulates The Neural Substrates Of Creativity: An fMRI Study of Emotionally Targeted Improvisation in Jazz Musicians. Sci. Rep. 2016;6:18460. doi: 10.1038/srep18460.

- 82.Kunert R., Willems R.M., Casasanto D., Patel A.D., Hagoort P. Music and Language Syntax Interact in Broca’s Area: An fMRI Study. PLoS One. 2015;10:e0141069. doi: 10.1371/journal.pone.0141069.

- 83.Matchin W., Hickok G. The Cortical Organization of Syntax. Cerebr. Cortex. 2020;30:1481–1498. doi: 10.1093/cercor/bhz180.

- 84.Andrews J.P., Cahn N., Speidel B.A., Chung J.E., Levy D.F., Wilson S.M., Berger M.S., Chang E.F. Dissociation of Broca’s area from Broca’s aphasia in patients undergoing neurosurgical resections. J. Neurosurg. 2023;138:847–857. doi: 10.3171/2022.6.JNS2297.

- 85.Koelsch S. Wiley; 2012. Brain and Music.

- 86.Cheung V.K.M., Meyer L., Friederici A.D., Koelsch S. The right inferior frontal gyrus processes nested non-local dependencies in music. Sci. Rep. 2018;8:3822. doi: 10.1038/s41598-018-22144-9.

- 87.Feng Y., Quon R.J., Jobst B.C., Casey M.A. Evoked responses to note onsets and phrase boundaries in Mozart’s K448. Sci. Rep. 2022;12:9632. doi: 10.1038/s41598-022-13710-3.

- 88.Scerrati A., Labanti S., Lofrese G., Mongardi L., Cavallo M.A., Ricciardi L., De Bonis P. Artists playing music while undergoing brain surgery: A look into the scientific evidence and the social media perspective. Clin. Neurol. Neurosurg. 2020;196:105911. doi: 10.1016/j.clineuro.2020.105911.

- 89.Conner C.R., Ellmore T.M., Pieters T.A., DiSano M.A., Tandon N. Variability of the Relationship between Electrophysiology and BOLD-fMRI across Cortical Regions in Humans. J. Neurosci. 2011;31:12855–12865. doi: 10.1523/JNEUROSCI.1457-11.2011.

- 90.McCarty M.J., Woolnough O., Mosher J.C., Seymour J., Tandon N. The listening zone of human electrocorticographic field potential recordings. eNeuro. 2022. doi: 10.1523/ENEURO.0492-21.2022.

- 91.Pieters T.A., Conner C.R., Tandon N. Recursive grid partitioning on a cortical surface model: an optimized technique for the localization of implanted subdural electrodes: Clinical article. J. Neurosurg. 2013;118:1086–1097. doi: 10.3171/2013.2.JNS121450.

- 92.Tong B.A., Esquenazi Y., Johnson J., Zhu P., Tandon N. The Brain is Not Flat: Conformal Electrode Arrays Diminish Complications of Subdural Electrode Implantation, A Series of 117 Cases. World Neurosurg. 2020;144:e734–e742. doi: 10.1016/j.wneu.2020.09.063.

- 93.Cox R.W. AFNI: Software for Analysis and Visualization of Functional Magnetic Resonance Neuroimages. Comput. Biomed. Res. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- 94.Dale A.M., Fischl B., Sereno M.I. Cortical Surface-Based Analysis. Neuroimage. 1999;9:179–194. doi: 10.1006/nimg.1998.0395.

- 95.Adger D. Oxford University Press; 2003. Core Syntax: A Minimalist Approach.

- 96.Radford A. Cambridge University Press; 2004. Minimalist Syntax: Exploring the Structure of English.

- 97.van de Pol I., Lodder P., van Maanen L., Steinert-Threlkeld S., Szymanik J. Quantifiers Satisfying Semantic Universals Are Simpler. PsyArXiv. 2021.

- 98.Dehaene S., Al Roumi F., Lakretz Y., Planton S., Sablé-Meyer M. Symbols and mental programs: a hypothesis about human singularity. Trends Cognit. Sci. 2022;26:751–766. doi: 10.1016/j.tics.2022.06.010.

- 99.Kolmogorov A.N. Three approaches to the quantitative definition of information. Int. J. Comput. Math. 1968;2:157–168. doi: 10.1080/00207166808803030.

- 100.Lempel A., Ziv J. On the Complexity of Finite Sequences. IEEE Trans. Inf. Theor. 1976;22:75–81. doi: 10.1109/TIT.1976.1055501.

- 101.Li M., Vitányi P. Springer International Publishing; 2019. An Introduction to Kolmogorov Complexity and its Applications.

- 102.Faul S. Kolmogorov Complexity. 2022. MATLAB Central File Exchange. https://www.mathworks.com/matlabcentral/fileexchange/6886-kolmogorov-complexity.

- 103.Urwin S.G., Griffiths B., Allen J. Quantification of differences between nailfold capillaroscopy images with a scleroderma pattern and normal pattern using measures of geometric and algorithmic complexity. Physiol. Meas. 2017;38:N32–N41. doi: 10.1088/1361-6579/38/2/N32.

- 104.Nelson M.J., El Karoui I., Giber K., Yang X., Cohen L., Koopman H., Cash S.S., Naccache L., Hale J.T., Pallier C., Dehaene S. Neurophysiological dynamics of phrase-structure building during sentence processing. Proc. Natl. Acad. Sci. USA. 2017;114:E3669–E3678. doi: 10.1073/pnas.1701590114.

- 105.Brennan J.R., Stabler E.P., Van Wagenen S.E., Luh W.-M., Hale J.T. Abstract linguistic structure correlates with temporal activity during naturalistic comprehension. Brain Lang. 2016;157–158:81–94. doi: 10.1016/j.bandl.2016.04.008.

- 106.Hale J.T., Campanelli L., Li J., Bhattasali S., Pallier C., Brennan J.R. Neurocomputational Models of Language Processing. Annu. Rev. Linguist. 2022;8:427–446. doi: 10.1146/annurev-linguistics-051421-020803.

- 107.Levy R., Fedorenko E., Gibson E. The syntactic complexity of Russian relative clauses. J. Mem. Lang. 2013;69:461–496. doi: 10.1016/j.jml.2012.10.005.

- 108.Slaats S., Martin A.E. What’s Surprising about Surprisal. PsyArXiv. 2023.

Associated Data

Supplementary Materials

Video of cortical stimulation mapping during music production (keyboard playing). Stimulated sites are colored on the patient’s cortical surface.

List of sentences used during the sentence repetition task, labeled by syntactic complexity (0 = simple, 1 = complex).

Data Availability Statement

- The datasets generated during this research are not publicly available because they contain information whose public release would not comply with HIPAA, and because the participant from whom the data were collected did not consent to their public release. They are, however, available from the lead contact on request.

- The custom code that supports the findings of this study is available from the lead contact on request.

- Any additional information required to reanalyze the data reported in this paper is available from the lead contact on request.