Abstract.

Purpose

Hyperspectral imaging shows promise for surgical applications to non-invasively provide spatially resolved, spectral information. For calibration purposes, a white reference image of a highly reflective Lambertian surface should be obtained under the same imaging conditions. Standard white references are not sterilizable and so are unsuitable for surgical environments. We demonstrate the necessity for in situ white references and address this by proposing a novel, sterile, synthetic reference construction algorithm.

Approach

The use of white references obtained at distances and under lighting conditions that differ from those of the subject was examined. Spectral and color reconstructions were compared with standard measurements qualitatively and quantitatively, using normalized RMSE for spectra and $\Delta E$ for color. The algorithm forms a composite image from a video of a standard sterile ruler, whose imperfect reflectivity is compensated for. The reference is modeled as the product of independent spatial and spectral components and a scalar factor accounting for gain, exposure, and light intensity. Synthetic references are evaluated against ideal but non-sterile references using the same metrics alongside pixel-by-pixel errors. Finally, intraoperative integration is assessed through cadaveric experiments.

Results

Improper white balancing leads to increases in all quantitative and qualitative errors. Synthetic references achieve median pixel-by-pixel errors lower than 6.5% and produce similar reconstructions and errors to an ideal reference. The algorithm integrated well into surgical workflow, achieving median pixel-by-pixel errors of 4.77% while maintaining good spectral and color reconstruction.

Conclusions

We demonstrate the importance of in situ white referencing and present a novel synthetic referencing algorithm. This algorithm is suitable for surgery while maintaining the quality of classical data reconstruction.

Keywords: hyperspectral imaging, multispectral imaging, intraoperative, white balancing, illuminant correction, vignetting correction

1. Introduction

Hyperspectral imaging (HSI) has shown potential in pre-clinical and clinical studies to non-invasively provide information for disease diagnosis and surgical guidance.1–4 HSI provides multi-channel spectral imaging data across a wide field of view, where each channel represents a narrow spectral measurement centred around a given wavelength. This technique can also be referred to as multispectral imaging when there is a low number of bands; however, for simplicity, we refer to it as HSI in all cases. The diffusely reflected light is measured across a range of wavelengths for each pixel of an image. The diffuse reflection is determined by the scattering properties and the absorbing or emitting species in the tissues being examined.5 This provides the opportunity for non-invasive, quantitative analysis of these tissues to investigate physiologically relevant parameters, such as tissue oxygen saturation.6

There are three broad categories of HSI cameras: spatial scanning, spectral scanning, and snapshot acquisition. Spatial scanning is typically implemented with a linescan camera that acquires data across all wavelengths simultaneously but scans through each line of pixels sequentially. In contrast, spectral scanning cameras collect all pixels in a single wavelength band simultaneously using a band pass filter but scan through the wavelength bands sequentially. Both methods measure a complete hypercube directly, and these highly resolved spectral data have been used in the majority of medical applications published to date.4,7–9 However, these HSI approaches cannot provide real-time data, as their scanning mechanisms result in long acquisition times that are prone to motion artifacts. Snapshot mosaic cameras, by contrast, use sensors in which each pixel has a dedicated band pass filter. This provides information on one band per pixel but captures all pixels in a single shot,10 allowing highly time-resolved data to be obtained at the cost of lower spatial and spectral resolution. To obtain a full hypercube from a snapshot mosaic camera, the data must be demosaiced, with the missing information inferred using classical or learning-based interpolation,11 followed by spectral cross-talk correction to compensate for parasitic effects from neighboring bands.12 The increased temporal resolution afforded by effective snapshot mosaic demosaicing provides an opportunity to aid surgical guidance.13,14

To enable physiological parameter extraction from any of these imaging methods, accurate spectra must be extracted from hyperspectral images. This requires white balancing as an initial step. White balancing uses a white and a dark reference to account for lighting conditions, vignetting, and optical transmission through the set-up. The white reference image is typically taken of a well-characterized, uniform, highly reflective, Lambertian surface.1 However, established references, such as 95% reflective Spectralon tiles, cannot be sterilized and are delicate, making them challenging to integrate into a surgical environment. The white reference must therefore be acquired outside the surgical field, under imaging conditions that differ from those of the subject. Ideally, a new white reference should be acquired whenever the imaging conditions change, which at best disrupts the clinical workflow and at worst is impossible. This forces a choice between keeping the settings constant throughout the procedure, which limits the setup's use, and risking the loss of the ability to acquire quantitative spectral data.

To alleviate this issue, Ref. 15 proposed adaptations of several white balancing models, initially developed for standard color photography, to determine the light source spectrum from a hyperspectral image. These models either assume a range of colors within the scene, ensuring that at least one pixel per band displays the maximum value, or use a variation of the gray-world algorithm, which assumes that the mean of the image is gray. These assumptions do not hold well for surgical scenes, which often have largely homogeneous color schemes.
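To make these assumptions concrete, the sketch below implements the two generic estimators (gray-world and max-per-band) rather than the specific hyperspectral adaptations of Ref. 15; the function names are hypothetical. Under a spectrally flat illuminant, a homogeneous reddish scene pushes the gray-world estimate away from the true (flat) illuminant, which is the failure mode described above.

```python
import numpy as np


def gray_world_illuminant(cube):
    """Gray-world: assume the scene averages to gray, so the per-band mean tracks the illuminant."""
    per_band = cube.reshape(-1, cube.shape[-1]).mean(axis=0)
    return per_band / per_band.mean()  # relative illuminant spectrum with mean 1


def max_per_band_illuminant(cube):
    """Max-per-band (white-patch style): assume some pixel reaches the maximum in every band."""
    per_band = cube.reshape(-1, cube.shape[-1]).max(axis=0)
    return per_band / per_band.mean()


# Spectrally flat illuminant over 3 bands and a homogeneous, reddish "tissue" scene
illuminant = np.ones(3)
tissue_reflectance = np.array([0.2, 0.3, 0.9])
cube = np.broadcast_to(tissue_reflectance * illuminant, (4, 4, 3))

# The estimate inherits the scene colour instead of recovering the flat illuminant
estimate = gray_world_illuminant(cube)
```

Both estimators recover the illuminant only when the scene satisfies their assumption, for example a set of spectrally neutral (gray) surfaces of varying brightness.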

Spectral correction and spatial vignetting correction can also be performed independently. Reference 16 proposed using specular highlight analysis for spectral correction. This requires additional low-exposure images to prevent saturation in the specular reflection regions. It allows illuminant spectra to be determined intraoperatively without a reference; however, it cannot directly account for vignetting and can only provide relative data, as specular reflections cannot be quantitatively related to Lambertian reflections. These relative data can be used to extract ratiometric clinical parameters such as oxygen saturation.2,17 However, they may not allow quantitative measurement of all parameters, such as chromophore concentrations including hemoglobin, which are therefore often provided on a relative scale.7

Vignetting correction can be performed following spectral correction. For hyperspectral images, this assumes a constant optical configuration and requires significant characterization before use.18 In surgical settings, adjustable focal lengths often change the optical configuration, making this approach challenging to apply. Single-image vignetting correction models are available for RGB imaging;19 however, these also assume natural images with a variety of colors, which is not an appropriate assumption for surgical imaging.

Addressing the need for an intraoperative model to account for vignetting and illuminant spectrum while allowing quantitative spectral extraction is the focus of this paper, as a theater-ready solution to this problem has yet to be adopted.

In this paper, our contributions are threefold: (i) a novel synthetic reference generation algorithm using a widely available standard reference, (ii) quantification of the impact of improper white balancing, and (iii) demonstration of the suitability of these synthetic references in both a controlled and a surgical environment. To the best of our knowledge, the novelty of the synthetic reference algorithm lies in the hyperspectral composite raw image construction approach, the reflectivity correction approach, and the formalisation of a separable white reference model.

This study aims to use snapshot HSI to reproduce known spectra quantitatively using only reference objects widely available in the operating room. Section 2 describes the postprocessing steps required for snapshot hyperspectral imaging and details a novel synthetic reference generation algorithm and the error quantification methods used to evaluate it. Section 3 quantifies the impact of improper white balancing, followed by a comparison of synthetic references generated using this algorithm with measured non-sterile references obtained in the same conditions, alongside their associated reconstructed spectra. Finally, an intra-operative image of a human cadaveric spine, balanced with both a non-sterile measured reference and a synthetic reference, is shown. These results demonstrate a novel algorithm that enables white balancing in a fully sterile environment while recovering accurate quantitative spectra.

2. Materials and Methods

2.1. Hyperspectral Imaging Setup

A snapshot hyperspectral imaging camera with a mosaic pattern across the sensor is used in this work. More specifically, we use a 16-band visible-range snapshot mosaic camera (Ximea utilising the IMEC CMV2K-SSM4X4-VIS sensor, Germany) alongside a coupler (Karl Storz, NDTec, VCam HD-F-35 - Camera Lens Adapter, Germany) and a 90 deg exoscope (Karl Storz Endoscopy, VITOM Telescope 0° w Integ. Illuminator, United Kingdom), as detailed in Ref. 14. An exoscope is chosen to create a standalone imaging system and to ease translation to first-in-patient clinical use by minimizing disruption to the primary patient care workflow. A disposable sterile ruler commonly found in operating theaters, shown in Fig. 1(c), is used as the reference for constructing synthetic references.

Fig. 1.

(a) Hyperspectral imaging setup mounted to Kuka robot, (b) the colored checkerboard used to provide a variety of well characterized spectra, (c) the sterile ruler used to construct synthetic references, (d) the spectra of the Karl Storz D light C, Karl Storz power LED 300, and Karl Storz Xenon Nova 300 light sources, alongside (e) an sRGB reconstruction of an image of a snipped section of a ruler against tissue taken with the handheld hyperspectral imaging setup whose field of view is cropped due to the scope.

To capture data in a controlled laboratory environment, allowing quantitative investigation of the significance of white referencing, we augment our surgical imaging set-up with a robotic mount. The exoscope rotation is fixed, and either a Xenon light source (Karl Storz Endoscopy, Cold Light Fountain D light C, United Kingdom) or an LED light source (Karl Storz Endoscopy, Power LED 300, United Kingdom) is used; their area-normalized emission spectra are shown in Fig. 1(d). The system is mounted to a seven-degree-of-freedom robot (LBR, KUKA med7 R800, Germany), shown in Fig. 1(a), so that multiple images can be taken with a constant, reproducible camera position relative to the subject. A colored checkerboard target (datacolor, Spyder Checkr, United Kingdom), shown in Fig. 1(b), in which each tile has a well-characterized measured spectrum, is imaged at each distance.

For seamless integration into the intraoperative environment, the camera is used in a handheld configuration with a Xenon light source (Karl Storz Endoscopy, Xenon Nova 300, United Kingdom), whose area-normalized emission spectrum is shown in Fig. 1(d). A standard red, green, blue (sRGB) reconstruction of an image of a snipped section of this ruler on tissue, acquired with this handheld system, is shown in Fig. 1(e).

2.2. Estimating Reflectance from Hyperspectral Data

As detailed by Ref. 12, estimating reflectance from hyperspectral mosaic data necessitates a computational pipeline encompassing white balancing, demosaicing, and spectral cross-talk correction. Our white balancing approach is detailed in the subsequent sections. For demosaicing purposes, in this work, we rely on bilinear interpolation to maintain the spatial resolution of the raw mosaic image but more advanced approaches such as the learning-based one of Ref. 11 could also be employed with the work presented here. The demosaiced hypercube is then corrected pixel by pixel to account for parasitic spectral cross-talk effects and secondary peaks in the band responses within the sensor to return the final hypercube.12
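Bilinear demosaicing of a 4×4 mosaic can be implemented in several ways. The sketch below uses normalized convolution: each band's sparse samples are convolved with a separable tent kernel and divided by the convolved sampling mask, which reproduces linear interpolation between samples spaced 4 pixels apart. It assumes a raster-ordered filter layout (band index 4·(row mod 4) + (col mod 4)); the actual IMEC band order is sensor specific, and the function name is hypothetical.

```python
import numpy as np
from scipy.ndimage import convolve


def bilinear_demosaic(raw, pattern=4):
    """Bilinear demosaicing of one mosaic frame into an (H, W, pattern**2) cube.

    Assumes band b = pattern * (row % pattern) + (col % pattern), i.e. a
    raster-ordered filter layout, and H, W >= pattern.
    """
    raw = np.asarray(raw, dtype=float)
    h, w = raw.shape
    # Separable tent kernel: linear interpolation between samples `pattern` apart
    ramp = np.arange(1, pattern + 1, dtype=float)
    tent = np.concatenate([ramp, ramp[-2::-1]]) / pattern
    kernel = np.outer(tent, tent)

    cube = np.empty((h, w, pattern * pattern))
    for r in range(pattern):
        for c in range(pattern):
            mask = np.zeros((h, w))
            mask[r::pattern, c::pattern] = 1.0
            # Normalized convolution: dividing by the convolved mask avoids
            # darkening at the borders where fewer samples are available
            num = convolve(raw * mask, kernel, mode="constant", cval=0.0)
            den = convolve(mask, kernel, mode="constant", cval=0.0)
            cube[:, :, pattern * r + c] = num / den
    return cube
```

At the sampled positions each band keeps its raw value exactly; only the missing positions are interpolated, after which the cube would go through the per-pixel cross-talk correction of Ref. 12.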

2.2.1. White balancing

Hyperspectral images must be white balanced before further post-processing to account for the lighting conditions (including the light source and illumination distribution), optical transmission through the system, camera exposure, quantum efficiency of the sensor, and inherent sensor noise. Any bias due to sensor noise is accounted for using a dark reference, obtained with the lens cap covering the sensor, which is subtracted from the image. The remaining factors are accounted for using a white reference image, traditionally obtained from a uniformly highly reflective Lambertian surface. Standard practice is to use a 95% reflective Spectralon tile to provide the maximum possible intensity values. This white image is used to scale the image intensities between 0 and 1. For demosaiced data, these steps combine into the full white balancing step:

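$$R(x,y,\lambda) = \rho_W(\lambda)\,\frac{I(x,y,\lambda)/t_I - D(x,y,\lambda)/t_D}{W(x,y,\lambda)/t_W - D(x,y,\lambda)/t_D} \tag{1}$$

where $x$ and $y$ are the spatial co-ordinates and $\lambda$ is the spectral co-ordinate in the uncorrected image $I$, white reference $W$, dark reference $D$, and white-balanced reflectance estimate $R$ hypercubes, respectively. $t_I$, $t_W$, and $t_D$ are the exposure times used to acquire $I$, $W$, and $D$, and $\rho_W(\lambda)$ is the bandwise reflectivity of the white reference.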

To optimize computational performance, white balancing can be performed on the raw mosaic images before demosaicing. When using established white reference materials, the reflectivity of each band can be assumed to be a constant $\rho_W$. These conditions simplify Eq. (1) to

$$R^{m}(u,v) = \rho_W\,\frac{I^{m}(u,v)/t_I - D^{m}(u,v)/t_D}{W^{m}(u,v)/t_W - D^{m}(u,v)/t_D} \tag{2}$$

where the superscript $m$ denotes mosaic images with the spatio–spectral coordinates $u$ and $v$. In Eq. (2), spectral bands are implicitly indexed by the spatial coordinates $u$ and $v$, as each pixel in a snapshot mosaic sensor captures only a single spectral band.
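Because Eqs. (1) and (2) differ only in how bands are indexed, a single array function can cover both. A minimal sketch, assuming NumPy arrays of matching shape and a constant reflectivity of 0.95 for a 95% Spectralon tile; the function name and the small `eps` guard against division by near-zero values are our own additions.

```python
import numpy as np


def white_balance(image, white, dark, t_image, t_white, t_dark, rho=0.95, eps=1e-12):
    """Exposure-normalized, dark-corrected ratio scaled by the reference reflectivity.

    Works on raw mosaic frames (H, W) or demosaiced hypercubes (H, W, bands);
    for a hypercube, `rho` may be a per-band array broadcast along the last axis.
    """
    numerator = image / t_image - dark / t_dark
    denominator = white / t_white - dark / t_dark
    # Guard against division by ~0 (e.g. dark pixels outside the scope's content area)
    return rho * numerator / np.maximum(denominator, eps)
```

With a dark signal proportional to exposure, as in the usage test, the exposure normalization makes frames acquired at different exposure times directly comparable.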

In the remainder of this section, we focus on the denominator in Eq. (1) or Eq. (2). For brevity, empirically obtained images or videos will be assumed to be exposure corrected and dark corrected before use, whereas reflectivity correction will be discussed in detail in Sec. 2.5.2. We also assume that demosaicing and spectral crosstalk correction as per Ref. 12 is applied throughout.

2.3. Quantifying the Effect of Improper White Balancing

As current white references cannot be used within a sterile surgical field, changes in distance and lighting conditions between the acquisition of patient and calibration images are inevitable. In this section, we evaluate the effects of such changes, further considering that they are typically associated with changes in optical focus and low-level camera controls.

An image of each tile of the well-characterized checkerboard described in Sec. 2.1 is acquired under a set of imaging configurations (distance, light source, etc.) and processed to form a hypercube for each tile. Each tile is annotated with five circles of radius 30 pixels so that the annotations can be performed consistently at different distances, where the tile occupies different proportions of the content area. This allows the mean plus or minus the standard deviation of their spectra to be visualized against the spectrometer measurements of that tile as a qualitative measure of fit. This calculation is performed both for the quantitative spectra and for mean-normalized relative spectra, as relative spectra can be used to compute some physiologically relevant ratiometric parameters, as described in Sec. 1.
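A minimal sketch of the tile statistics, assuming the five circles are pooled into one set of pixels and that "mean-normalized" means dividing each pixel spectrum by its own mean across bands; both choices, and the function names, are assumptions for illustration.

```python
import numpy as np


def disk_mask(shape, center, radius):
    """Boolean disk of the given radius around center = (row, col)."""
    rows, cols = np.ogrid[:shape[0], :shape[1]]
    return (rows - center[0]) ** 2 + (cols - center[1]) ** 2 <= radius ** 2


def tile_spectrum(cube, centers, radius=30, relative=False):
    """Mean and standard deviation of the spectra inside the annotated circles of one tile.

    cube: (H, W, bands) reflectance hypercube; centers: list of (row, col).
    With relative=True, each pixel spectrum is first divided by its band mean.
    """
    mask = np.zeros(cube.shape[:2], dtype=bool)
    for center in centers:
        mask |= disk_mask(cube.shape[:2], center, radius)
    spectra = cube[mask]  # (n_pixels, bands)
    if relative:
        spectra = spectra / spectra.mean(axis=1, keepdims=True)
    return spectra.mean(axis=0), spectra.std(axis=0)
```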

These datasets were obtained at seven distances between 20 and 35 cm to cover the comfortable operating range of the exoscope-based set-up in theater, using two light sources: the Karl Storz D light C and the Karl Storz Power LED 300. This allows a variety of distances and lighting conditions to be compared, mimicking the discrepancies between white-referencing and imaging conditions encountered in sub-optimal clinical practice.

2.3.1. Spectral NRMSE

For quantitative comparison, the reconstructed spectrum at any given band is evaluated against the intensity at the same wavelength in the reference spectrum using the normalized root mean squared error (NRMSE) calculated across all bands:

| (3) |
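A sketch of an NRMSE computed across bands. The normalization term of Eq. (3) is an assumption here: the sketch divides the RMSE by the mean of the reference spectrum, and normalizing by the range of the reference is another common convention.

```python
import numpy as np


def nrmse(estimate, reference):
    """RMSE across bands, normalized by the mean of the reference spectrum (assumed)."""
    estimate = np.asarray(estimate, dtype=float)
    reference = np.asarray(reference, dtype=float)
    rmse = np.sqrt(np.mean((estimate - reference) ** 2))
    return rmse / np.mean(reference)
```

Normalizing by a property of the reference makes the metric insensitive to an overall rescaling of both spectra, which is why it can be compared across tiles of different brightness.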

2.3.2. Tristimulus color reconstruction and perceptual color differences

In addition, each tile image is converted to sRGB, and the center of each is composited to construct an sRGB checkerboard image for qualitative comparison with the original checkerboard in Fig. 1(b). The same region of each tile can also be converted to CIELAB for pixel-by-pixel comparison with the CIELAB values provided by the manufacturer.20 To measure the perceptual discrepancy in tristimulus color reconstruction, the $\Delta E$ (CIEDE2000) color difference defined in Ref. 21 is calculated (using the pyciede v0.0.21 pyciede2000.ciede2000 function). A value of $\Delta E < 1$ indicates an imperceptible difference in color, whereas values of up to 6 are considered acceptable for commercial reproduction using printing presses.

To compute the RGB and CIELAB images, the spectra are first converted to CIEXYZ22 using a camera-specific matrix. The CIEXYZ values can be converted to linear sRGB using a literature matrix corresponding to a standard D65 light source followed by conversion to sRGB using gamma correction to account for standard monitors.23,24
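The conversion chain can be sketched as follows. The camera-specific spectra-to-XYZ matrix is not given in the text, so it is a placeholder input; the XYZ-to-linear-sRGB matrix and gamma curve are the standard sRGB (IEC 61966-2-1, D65) ones, and the CIELAB conversion assumes a D65 reference white, which may differ from the convention used for the manufacturer's values.

```python
import numpy as np

# Standard XYZ -> linear sRGB matrix for a D65 white point (IEC 61966-2-1)
XYZ_TO_LINEAR_SRGB = np.array([
    [3.2406, -1.5372, -0.4986],
    [-0.9689, 1.8758, 0.0415],
    [0.0557, -0.2040, 1.0570],
])
D65_WHITE = np.array([0.95047, 1.0, 1.08883])


def spectra_to_xyz(cube, camera_matrix):
    """(..., bands) spectra -> (..., 3) XYZ with a (3, bands) camera-specific matrix."""
    return cube @ camera_matrix.T


def xyz_to_srgb(xyz):
    """XYZ -> gamma-encoded sRGB in [0, 1] for display on standard monitors."""
    linear = np.clip(xyz @ XYZ_TO_LINEAR_SRGB.T, 0.0, 1.0)
    return np.where(linear <= 0.0031308, 12.92 * linear,
                    1.055 * linear ** (1 / 2.4) - 0.055)


def xyz_to_lab(xyz, white=D65_WHITE):
    """XYZ -> CIELAB relative to the given reference white."""
    t = xyz / white
    delta = 6 / 29
    f = np.where(t > delta ** 3, np.cbrt(t), t / (3 * delta ** 2) + 4 / 29)
    L = 116 * f[..., 1] - 16
    a = 500 * (f[..., 0] - f[..., 1])
    b = 200 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)
```

As a sanity check, the D65 white point maps to sRGB (1, 1, 1) up to the rounding of the matrix and to CIELAB (100, 0, 0).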

2.4. White Reference Model

A measured white reference image can be demosaiced to a hypercube $W(x,y,\lambda)$ with a full spectrum per spatial pixel $(x,y)$. We propose to factorize the white reference as a product of a spatial-only vignetting $V(x,y)$, the spectral sensitivities of each band $S(\lambda)$, and a scalar factor $s$ that accounts for the overall light intensity. In addition, noise $\eta(x,y,\lambda)$ should be accounted for in both the spatial and spectral domains:

$$W(x,y,\lambda) = s\,V(x,y)\,S(\lambda) + \eta(x,y,\lambda) \tag{4}$$

The assumption of separability is motivated by vignetting and color constancy being addressed separately in the literature.16,18,19,25,26 By convention, to achieve a well-posed decomposition, we constrain the spectral sensitivities $S(\lambda)$ and the vignetting $V(x,y)$ such that $\frac{1}{N_\lambda}\sum_{\lambda} S(\lambda) = 1$, where $N_\lambda$ is the number of bands, and $\max_{x,y} V(x,y) = 1$. This ensures that: (1) spatial vignetting only accounts for variations in light intensity and optical transmission across the image; (2) spectral sensitivities only account for the relative efficiency of the different bands on the sensor and the spectral shape of the light source; and (3) the scalar factor only accounts for electronic gain, exposure, and overall light intensity settings. For simplicity, this model neglects chromatic aberrations in the optics and assumes that all pixels corresponding to a given band behave the same.

2.5. Synthetic White Reference Algorithm

Since a sterile standard reference equivalent to a uniformly reflective Lambertian surface is not commercially available, a standard sterile ruler shown in Fig. 1(c) is used. This can be placed in the sterile surgical field on the subject to ensure the imaging conditions are identical. The reverse of the ruler has a matte white appearance without markings. This ruler does not cover the full field of view and so images must be composited to estimate the vignetting over the entire field of view. To ensure full coverage, a short video of the sterile ruler crossing the field of view is captured to create a synthetic white reference. This allows the readily available, inexpensive reference to be used in a wide variety of operative cavity dimensions.

As compositing introduces imperfections, we exploit constraints in our white reference model to fit a more accurate estimation. The ruler is also not uniformly reflective across the wavelengths of interest and so this must be accounted for.

An overview of the resulting computational pipeline to convert a short video of the ruler into a synthetic white reference is shown in Fig. 2(a). This allows reconstruction of quantitatively accurate spectral data. In some cases, only relative data are required, and a simplified algorithm can be used to generate only the spectral sensitivities, as shown in Fig. 2(b). Individual steps are detailed in the remainder of the section.

Fig. 2.

Flow charts demonstrating the algorithms for using a sterile reference to white balance intra-operative data for (a) quantitative or (b) relative spectral reconstructions.

2.5.1. Generating composite image

Hyperspectral videos capture information across space, wavelength, and time. Owing to the mosaic structure, the stacked video frames form a spatio–spectral plane $(u,v)$ and a temporal axis $t$. Because of the sweeping motion of the bright ruler, each spatio–spectral pixel in the $(u,v)$ plane has a temporal intensity profile along the $t$ axis that alternates between background and sterile reference intensities. The temporal regions corresponding to the sterile reference are isolated, as detailed below, and their median is chosen as the value for that spatio–spectral location in the composite reference. To segment these temporal regions, we first pre-process the per-pixel temporal intensity profile to largely suppress background values by setting to 0 all values whose intensity lies below a threshold computed with the classical, parameter-free Otsu's method27 (using skimage v0.19.2 skimage.filters.threshold_otsu function). We further clamp high intensities close to saturation with a fixed threshold to remove the impact of specular reflections. These pre-processed temporal profiles are then smoothed using a Savitzky–Golay filter28 with window size 15 and polynomial order 2 (using SciPy v1.8.1 scipy.signal.savgol_filter function) to limit the impact of noise. Temporal gradients are then calculated from the resulting pre-processed intensities. Finally, the peaks of these gradients, detected using a peak picking algorithm that finds all local maxima by simple comparison of neighboring values and refines them based on their height and prominence (using SciPy v1.8.1 scipy.signal.find_peaks function), are used to identify the temporal regions of interest. The median of the raw values in these regions, prior to smoothing and thresholding, is then recorded as the estimated intensity at that spatial location in the initial mosaic composite reference $\tilde{W}^m$.

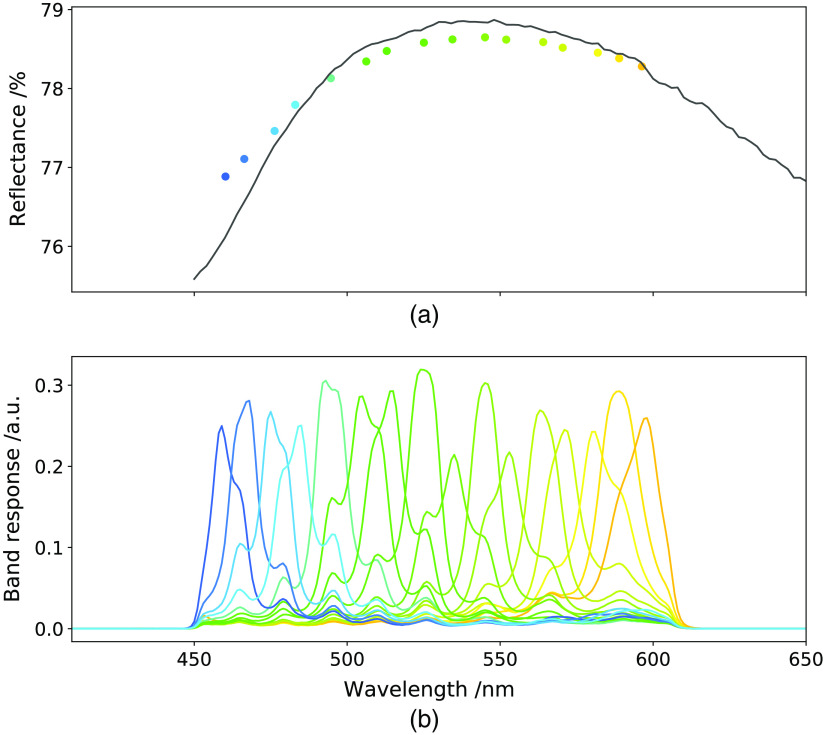

2.5.2. A priori ruler reflectivity correction

The sterile ruler was measured in the laboratory using a benchtop spectrophotometer to obtain its reflectance spectrum, shown in Fig. 3. The back of the ruler can be approximated as a Lambertian surface, as the variation in its spectrum with small changes in angle is small and images of the ruler appear matte with few specular reflections. Imaging is ideally fronto-parallel, and deviations from this are considered small enough to have a negligible effect. From there, an a priori reflectivity correction factor $c_b$ is calculated for each band $b$ of the mosaic sensor:

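$$c_b = \frac{\sum_{\lambda} \rho_r(\lambda)\,B_b(\lambda)}{\sum_{\lambda} B_b(\lambda)} \tag{5}$$

where $\rho_r(\lambda)$ is the measured reflectance spectrum of the ruler and $B_b(\lambda)$ is the response of band $b$ as provided by the sensor manufacturer. Both the reflectivity correction factors and the band responses are shown in Fig. 3.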

Fig. 3.

The reflectance spectrum of the ruler surface without markings and the appropriate reflectivity correction factors computed from (a) this and (b) the band responses of the camera.

Each pixel of the initial composite reference $\tilde{W}^m$ is then divided by the a priori correction factor $c_b$ of the corresponding band to form the final composite reference $W^m(u,v) = \tilde{W}^m(u,v)/c_{b(u,v)}$, where $b(u,v)$ is the band sampled at mosaic pixel $(u,v)$. We note that this correction captures the sensor band responses and the a priori known spectrum of the ruler but does not account for the unknown spectrum of the light source used intraoperatively. It is an essential step in generating synthetic references or spectral sensitivities. When generating synthetic references, this correction is applied to a composite reference, which may also present important imperfections due to noise and video input processing. We aim to mitigate these through our separable model fitting.
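A sketch of the correction, assuming the factor is the band-response-weighted mean of the ruler reflectance, with reflectance and band responses resampled onto one shared, uniform wavelength grid, and assuming a raster-ordered 4×4 band layout on the mosaic. Both function names are hypothetical.

```python
import numpy as np


def reflectivity_factors(ruler_reflectance, band_responses):
    """Band-response-weighted mean of the ruler reflectance for each band.

    ruler_reflectance: (L,) reflectance on a uniform wavelength grid.
    band_responses: (n_bands, L) sensor band responses on the same grid.
    """
    rho = np.asarray(ruler_reflectance, dtype=float)
    responses = np.asarray(band_responses, dtype=float)
    return responses @ rho / responses.sum(axis=1)


def correct_composite(composite, factors, pattern=4):
    """Divide each mosaic pixel by the factor of the band it samples.

    Assumes band b = pattern * (row % pattern) + (col % pattern).
    """
    rows, cols = np.indices(composite.shape)
    band = pattern * (rows % pattern) + (cols % pattern)
    return composite / np.asarray(factors)[band]
```

For a spectrally flat ruler the factors all equal its reflectance, so the correction reduces to the constant $\rho_W$ used for established references in Eq. (2).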

2.5.3. Separable model parameter fitting

A variety of methods can be used to calculate the factors in Eq. (4). We present three options that differ in how they model the vignetting and in whether the spectral sensitivities and scalar factor are fitted jointly or sequentially. For simplicity, we present the methods given a measured white reference $W(x,y,\lambda)$ as input, although they can also be applied to the demosaiced composite white reference.

Vignetting modeled non-parametrically

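The first method only makes use of the separability assumption, models the vignetting as a non-parametric function, and calculates the spectral sensitivities and scalar factor, with $W$, $s$, $V$, $S$, and $\eta$ as in Eq. (4). Since vignetting is modeled as independent of wavelength, it should be identical for all bands. Making use of our convention that $\frac{1}{N_\lambda}\sum_{\lambda} S(\lambda) = 1$, where $N_\lambda$ is the number of bands, and assuming a zero-mean noise pattern $\eta$, we find that, up to residual noise, Eq. (4) leads to

$$s\,V(x,y) \approx \bar{W}(x,y) = \frac{1}{N_\lambda}\sum_{\lambda} W(x,y,\lambda) \tag{6}$$

Making use of our second convention that $\max_{x,y} V(x,y) = 1$, we find that, up to residual noise:

$$s \approx \max_{x,y} \bar{W}(x,y) \tag{7}$$

$$V(x,y) \approx \frac{\bar{W}(x,y)}{s} \tag{8}$$

Finally, spectral sensitivities can be estimated as

$$S(\lambda) \approx \frac{1}{N_{xy}}\sum_{x,y} \frac{W(x,y,\lambda)}{s\,V(x,y)} \tag{9}$$

where $N_{xy}$ is the number of pixels.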

This method accounts more appropriately for imperfections in the system, such as dust on lenses; however, it is also more likely to retain imperfections from the input reference, for example, artifacts from the compositing process. Eq. (9) is also used to calculate the spectral sensitivities for balancing relative data, as in Fig. 2(b).
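A sketch of this non-parametric decomposition, assuming the normalization conventions that the spectral sensitivities average to one over the bands and that the vignetting peaks at one; the optional content-area `mask` and the function name are our own additions.

```python
import numpy as np


def decompose_nonparametric(white, mask=None):
    """Split a white-reference hypercube (H, W, bands) into s, V(x, y) and S(lambda).

    Assumes mean over bands of S = 1 and max over pixels of V = 1; `mask`
    (H, W bool) restricts the estimates to the scope's content area.
    """
    if mask is None:
        mask = np.ones(white.shape[:2], dtype=bool)
    w_bar = white.mean(axis=2)            # s * V(x, y): band mean, noise averaged out
    s = w_bar[mask].max()                 # scalar factor from the brightest pixel
    V = np.where(mask, w_bar / s, 0.0)    # vignetting with unit peak
    S = (white[mask] / (s * V[mask])[:, None]).mean(axis=0)  # per-band sensitivity
    return s, V, S
```

On a noise-free separable reference the three factors are recovered exactly; with noise, taking the maximum in the scalar-factor step makes $s$ slightly optimistic, which is one motivation for the parametric alternatives below.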

Vignetting modeled with a Gaussian function

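Alternatively, a parametric model can be used to capture the vignetting. As is standard in computer vision, we use a two-dimensional (2D), normalized, isotropic Gaussian for this purpose:

$$G(x,y;x_0,y_0,\sigma) = \exp\left(-\frac{(x-x_0)^2 + (y-y_0)^2}{2\sigma^2}\right) \tag{10}$$

where $x_0$ and $y_0$ represent the co-ordinates of the center of the Gaussian in the $x$ and $y$ directions, respectively, and $\sigma$ represents the standard deviation.

Our second method fits the Gaussian parameters, the scalar factor, and the spectral sensitivities using a joint least squares approach, with $W$, $s$, and $S$ as in Eq. (4):

$$\hat{x}_0,\hat{y}_0,\hat{\sigma},\hat{s},\hat{S} = \arg\min_{x_0,y_0,\sigma,s,S} \sum_{x,y,\lambda}\left[W(x,y,\lambda) - s\,G(x,y;x_0,y_0,\sigma)\,S(\lambda)\right]^2 \tag{11}$$

The third method calculates the scalar factor and spectral sensitivities as in the first method and fits the Gaussian to the non-parametric vignetting $V(x,y)$ of Eq. (8) using a least squares approach on the Gaussian parameters only:

$$\hat{x}_0,\hat{y}_0,\hat{\sigma} = \arg\min_{x_0,y_0,\sigma} \sum_{x,y}\left[V(x,y) - G(x,y;x_0,y_0,\sigma)\right]^2 \tag{12}$$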

As our experiments show, this third method is advantageous: the Gaussian fit mitigates imperfections in the composite reference while minimizing computational time.
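A sketch of the third method's Gaussian step using `scipy.optimize.least_squares`, followed by assembling a noise-free synthetic reference as scalar × vignetting × sensitivities. The starting point (image center, broad width), the positivity bound on σ, and the function names are assumptions made for illustration.

```python
import numpy as np
from scipy.optimize import least_squares


def gaussian_vignetting(params, rows, cols):
    """Unit-peak isotropic 2D Gaussian with center (x0, y0) and width sigma."""
    x0, y0, sigma = params
    return np.exp(-((cols - x0) ** 2 + (rows - y0) ** 2) / (2.0 * sigma ** 2))


def fit_gaussian_vignetting(V, mask=None):
    """Least-squares fit of the Gaussian to a non-parametric vignetting map V (H, W)."""
    h, w = V.shape
    rows, cols = np.mgrid[:h, :w].astype(float)
    if mask is None:
        mask = np.ones(V.shape, dtype=bool)

    def residuals(params):
        return (gaussian_vignetting(params, rows, cols) - V)[mask]

    start = np.array([w / 2.0, h / 2.0, max(h, w) / 2.0])  # image center, broad width
    fit = least_squares(residuals, start,
                        bounds=([-np.inf, -np.inf, 1e-6], [np.inf, np.inf, np.inf]))
    return fit.x, gaussian_vignetting(fit.x, rows, cols)


def synthetic_reference(s, vignetting, S):
    """Noise-free separable model s * G(x, y) * S(lambda) as an (H, W, bands) hypercube."""
    return s * vignetting[:, :, None] * np.asarray(S)[None, None, :]
```

Only three parameters are optimized, so the fit is fast, and the smooth Gaussian cannot reproduce high-frequency compositing artifacts present in the non-parametric map.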

Masking content area

Finally, as detailed in Refs. 29 and 30, imaging through a scope leads to a circular content area that needs to be taken into account in our computational pipeline. In this work, the content area disk is detected from a frame of a video using a pre-trained neural network30 (using the implementation in a GitHub repository available at https://github.com/RViMLab/endoscopy). The radius is reduced to 90% of its original size to discount edge effects. This circle is then used to mask the content area as the final step in generating the synthetic white reference.
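The masking step itself is simple once a circle is known. In the sketch below, the detected center and radius are plain inputs (the neural-network detector is not reproduced), the radius is shrunk to 90% as described, and setting pixels outside the disk to NaN is our own choice; the function names are hypothetical.

```python
import numpy as np


def content_area_mask(shape, center, radius, shrink=0.9):
    """Disk mask of the scope's content area, shrunk to discount edge effects.

    center = (row, col) and radius are assumed to come from a circle detector.
    """
    rows, cols = np.ogrid[:shape[0], :shape[1]]
    return (rows - center[0]) ** 2 + (cols - center[1]) ** 2 <= (shrink * radius) ** 2


def apply_content_mask(reference, mask):
    """Set pixels outside the content area to NaN in an (H, W, bands) reference."""
    return np.where(mask[:, :, None], reference, np.nan)
```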

2.5.4. Balancing using only spectral sensitivity for relative data

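For some use cases, absolute reflectance information is not required, so relative data are sufficient, as described in Sec. 1. When the spectral data at each pixel are normalized after Eq. (1) by their mean across bands, the spatial effects of the vignetting $V(x,y)$ and the absolute intensity information arising from the scalar factor $s$ are lost. In this context, only the spectral sensitivities $S(\lambda)$ are required for white balancing. Neglecting the dark images for simplicity, and with $I$ the uncorrected image and $N_\lambda$ the number of bands, this is performed as follows:

$$R_{\mathrm{rel}}(x,y,\lambda) = \frac{I(x,y,\lambda)/S(\lambda)}{\frac{1}{N_\lambda}\sum_{\lambda'} I(x,y,\lambda')/S(\lambda')} \tag{13}$$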
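A minimal sketch of relative balancing, assuming an (H, W, bands) image with the dark signal already neglected as in the text; the function name and the `eps` guard are hypothetical. The usage test shows why neither $V$ nor $s$ is needed: any per-pixel scale cancels in the normalization.

```python
import numpy as np


def relative_balance(image, S, eps=1e-12):
    """Divide out spectral sensitivities, then normalize each pixel by its band mean."""
    corrected = np.asarray(image, dtype=float) / np.asarray(S)[None, None, :]
    band_mean = corrected.mean(axis=2, keepdims=True)
    return corrected / np.maximum(band_mean, eps)
```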

However, this removes the possibility of proper sRGB or CIELAB reconstruction. The simplified algorithm, which uses only a single image of the ruler to generate these spectral sensitivities, is shown in Fig. 2(b).

2.6. Evaluation Methodology for Synthetic References

For each distance in our experimental setup, a video of the ruler moving across the field of view was taken against a static background. These videos were used to construct synthetic white references for each distance using the model described in Sec. 2.4, with Gaussian vignetting and a calculated scalar factor and spectral sensitivities (i.e., the third method of Sec. 2.5.3). The synthetic references were then used to reconstruct quantitative spectra from our checkerboard datasets, and the errors were calculated as in Sec. 2.3.

The similarity of the synthetic references to measured references was evaluated using a range of metrics. The pixel-by-pixel absolute percentage errors between the synthetic and measured references, and the absolute percentage errors between each set of spectral sensitivities were summarized in terms of median absolute percentage error (MedAPE):

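$$\mathrm{MedAPE}(a, b) = \operatorname{median}_{i}\left(\left|\frac{a_i - b_i}{b_i}\right|\right) \times 100\% \tag{14}$$

where $a$ is a given signal compared against a given reference $b$, both indexed by $i$.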
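A direct sketch of the MedAPE summary; the function name is hypothetical. Inputs are flattened, so the same call serves for pixel-by-pixel reference comparisons and for sets of spectral sensitivities.

```python
import numpy as np


def medape(signal, reference):
    """Median absolute percentage error of `signal` against `reference`."""
    signal = np.asarray(signal, dtype=float).ravel()
    reference = np.asarray(reference, dtype=float).ravel()
    return float(np.median(np.abs((signal - reference) / reference)) * 100.0)
```

For image references, the pixels outside the scope's content area would be excluded before the call, for example by indexing both arrays with a boolean mask, so that dark or undefined pixels do not enter the median.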

For the evaluation of the relative spectra obtained with the method presented in Sec. 2.5.4, a single set of spectral sensitivities is calculated for the given lighting conditions from a 150-pixel-radius region of interest in a single image of the ruler. These spectral sensitivities are then used to reconstruct the relative spectra at all distances, and the errors are calculated as in Sec. 2.3. As the intensity information is lost in relative data, good sRGB and CIELAB reconstructions cannot be computed, so this analysis is not performed in this case.

Finally, the synthetic reference method is evaluated in terms of surgical workflow integration in a sterile environment by its use in a human cadaveric spine surgery. Qualitative evaluation is performed on sRGB reconstructions as part of Sec. 3.3.1.

3. Results

3.1. Impact of Improper White Balancing

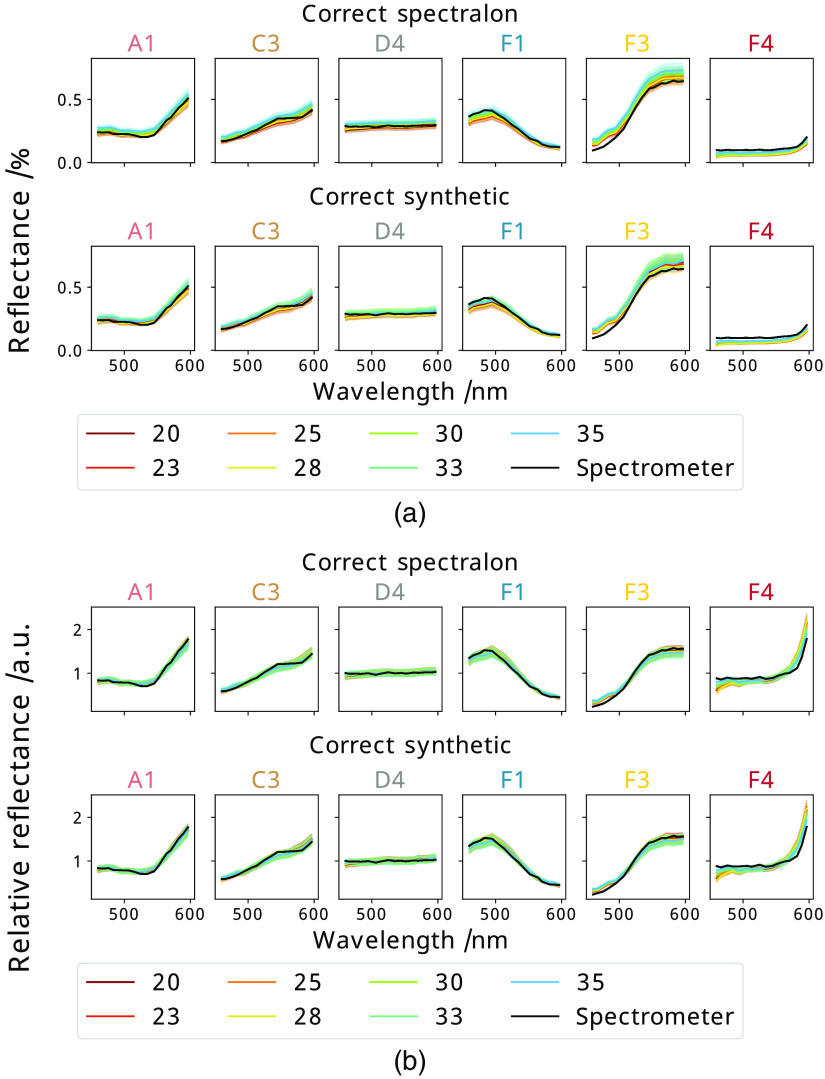

Datasets were obtained at a variety of distances and under different lighting conditions, as described in Sec. 2.3. To summarize these spectral data, six representative tiles (A1, C3, D4, F1, F3, F4) are displayed in Fig. 4 for each of the white balancing regimes investigated, and the sRGB reconstructions for each regime for the dataset at 20 cm are shown in Fig. 5. These tiles include primary colors and some colors expected in surgical scenes. The associated errors are shown in Table 1.

Fig. 4.

The mean spectrum of a selection of tiles as measured from each distance (cm) plotted against the spectrometer measurement of that tile, where each plot represents a new tile [labeled above it as corresponding to those in Fig. 1(b)] for both (a) quantitative and (b) relative data. At each distance, the data are balanced with either the correct Spectralon white reference image, the Spectralon reference obtained at 35 cm or the Spectralon reference obtained using a different light source.

Fig. 5.

sRGB reconstructions of the central of a selection of tiles [labeled below as corresponding to those in Fig. 1(b)] taken at 20 cm and balanced with either the correct Spectralon white reference image, the Spectralon reference obtained at 35 cm, or the Spectralon reference obtained using a different light source.

Table 1.

The mean NRMSE between the mean measured spectrum of each tile and its spectrometer spectrum for each quantitative and relative dataset, alongside the median pixel-by-pixel $\Delta E$ value between the composite CIELAB reconstructions and the literature values. These datasets are balanced with the correct Spectralon reference, the Spectralon reference obtained at 35 cm, or the Spectralon reference obtained using an LED light source.

| Distance (cm) | Quantitative NRMSE (%): correct Spectralon | Quantitative NRMSE (%): Spectralon at 35 cm | Quantitative NRMSE (%): Spectralon using LED | ΔE00: correct Spectralon | ΔE00: Spectralon at 35 cm | ΔE00: Spectralon using LED | Relative NRMSE (a.u.): correct Spectralon | Relative NRMSE (a.u.): Spectralon at 35 cm | Relative NRMSE (a.u.): Spectralon using LED |
|---|---|---|---|---|---|---|---|---|---|
| 20 | 0.154 | 0.266 | 0.422 | 6.52 | 7.07 | 18.1 | 0.0491 | 0.0454 | 0.388 |
| 23 | 0.161 | 0.206 | 0.404 | 6.76 | 6.33 | 17.6 | 0.0537 | 0.0540 | 0.403 |
| 25 | 0.137 | 0.151 | 0.441 | 7.03 | 7.68 | 17.7 | 0.0465 | 0.0454 | 0.387 |
| 28 | 0.157 | 0.182 | 0.407 | 6.56 | 6.41 | 16.8 | 0.0522 | 0.0534 | 0.402 |
| 30 | 0.124 | 0.134 | 0.484 | 7.37 | 7.87 | 17.3 | 0.0387 | 0.0381 | 0.383 |
| 33 | 0.124 | 0.126 | 0.497 | 7.45 | 7.11 | 17.4 | 0.0383 | 0.0390 | 0.382 |
| 35 | 0.119 | 0.119 | 0.466 | 7.96 | 7.96 | 16.6 | 0.0336 | 0.0336 | 0.385 |
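Table 1 summarizes spectral agreement with a normalized RMSE. The exact normalization is defined in Sec. 2 and is not restated in this section, so the minimal sketch below assumes range normalization (RMSE divided by the maximum minus the minimum of the spectrometer spectrum). The helper names `nrmse` and `mean_nrmse` are illustrative, not taken from the paper; multiplying by 100 gives a percentage, as in the quantitative columns.

```python
import math


def nrmse(measured, reference):
    """RMSE between two spectra divided by the range of the reference spectrum.

    Assumption: range normalization; the paper's exact definition may differ.
    """
    if not reference or len(measured) != len(reference):
        raise ValueError("spectra must be non-empty and of equal length")
    mse = sum((m - r) ** 2 for m, r in zip(measured, reference)) / len(reference)
    spread = max(reference) - min(reference)
    if spread == 0:
        raise ValueError("reference spectrum is constant; range is zero")
    return math.sqrt(mse) / spread


def mean_nrmse(tile_spectra, spectrometer_spectra):
    """Mean NRMSE over tiles, each tile compared with its own spectrometer spectrum."""
    scores = [nrmse(m, r) for m, r in zip(tile_spectra, spectrometer_spectra)]
    return sum(scores) / len(scores)


# Toy example: two tiles, three bands (made-up values), reported as a percentage.
print(100 * mean_nrmse([[1.0, 2.0, 3.0], [1.0, 2.0, 4.0]],
                       [[1.0, 2.0, 4.0], [1.0, 2.0, 4.0]]))
```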

The first regime shows the optimally reconstructed spectra for a range of camera-to-checkerboard distances. These are obtained by balancing each dataset, acquired with the Karl Storz D light C, using a measured Spectralon white reference acquired at the same position and with the same light source as the data; this is not possible in a surgical environment.
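Every regime applies the same balancing step: the raw data are divided by a white reference. As a minimal sketch, assuming a dark reference is also subtracted (standard practice, but not described in this section), each band of each pixel becomes (raw - dark) / (white - dark). Pixels are stored as plain lists of band values, and `white_balance` is a hypothetical helper name.

```python
def white_balance(raw, white, dark, eps=1e-12):
    """Reflectance estimate (raw - dark) / (white - dark) per pixel and band.

    raw, white, dark: lists of pixels, each pixel a list of band values,
    all with the same shape. Assumes a dark reference is available.
    """
    balanced = []
    for raw_px, white_px, dark_px in zip(raw, white, dark):
        pixel = []
        for r, w, d in zip(raw_px, white_px, dark_px):
            denom = w - d
            if abs(denom) < eps:
                raise ZeroDivisionError("white and dark references coincide")
            pixel.append((r - d) / denom)
        balanced.append(pixel)
    return balanced


# One pixel, two bands (illustrative numbers only).
print(white_balance([[60.0, 110.0]], [[110.0, 210.0]], [[10.0, 10.0]]))
```

In the first regime, `white` is the Spectralon reference acquired under exactly the data's conditions, which is what makes it the best-case baseline.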

To demonstrate the impact of acquiring the white reference at a different distance from the subject, the checkerboard data at all distances are balanced with the white reference obtained at 35 cm. A wider spread of intensities is visible in the quantitative spectra (Fig. 4), and the errors confirm that the quantitative NRMSE generally increases as the distance departs from that of the white reference (Table 1); at 20 cm, for example, it rises from 0.154% with the correct reference to 0.266%. In contrast, the ΔE00 values and sRGB reconstructions show no significant difference from the correctly balanced data, because much of the spectral information is lost on conversion to sRGB or CIELAB. This illustrates the additional information expected from hyperspectral imaging compared with conventional cameras.
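A toy model illustrates why the distance mismatch affects quantitative but not relative spectra. Under the separable model of Sec. 3.2, a change in distance alters the vignetting and overall intensity, which at any single pixel acts as a wavelength-independent gain. The sketch below assumes exactly that (a simplification) and assumes that relative spectra are obtained by dividing by the sum over bands; both the normalization and the helper names are illustrative.

```python
def normalize(spectrum):
    """Relative spectrum: divide by the sum over bands (assumed normalization)."""
    total = sum(spectrum)
    if total == 0:
        raise ValueError("cannot normalize an all-zero spectrum")
    return [v / total for v in spectrum]


def balance_with_gain_error(reflectance, gain):
    """Spectrum obtained when the white reference at this pixel is `gain` times
    brighter than the one matching the data, with the same spectral shape."""
    return [r / gain for r in reflectance]


true_reflectance = [0.2, 0.4, 0.6]
quantitative = balance_with_gain_error(true_reflectance, gain=1.25)

# Absolute values are biased by a factor 1 / gain ...
print(quantitative)
# ... but the relative spectrum is unchanged, up to floating-point rounding.
print(normalize(quantitative), normalize(true_reflectance))
```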

Another likely problem with limited white referencing in a surgical setting is changing lighting conditions. This was mimicked by acquiring a white reference with the Karl Storz LED light source at each distance and using it to balance data collected with a different light source, the Karl Storz D light C; these data were then processed as above. The resulting errors, spectra, and sRGB reconstructions clearly show that the white reference must account for the lighting conditions of the scene: the shape of both quantitative and relative spectra changes significantly (at 20 cm, the quantitative NRMSE rises from 0.154% to 0.422%), and this is also clearly visible in the sRGB reconstructions and in all error metrics. Any change in lighting must therefore be accounted for.
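A lighting mismatch behaves differently. If the data are acquired under one illuminant and balanced with a reference acquired under another, each band is scaled by the ratio of the two illuminant spectra. That ratio is wavelength dependent and therefore survives normalization. The sketch below assumes an identical sensor response and scene geometry for both acquisitions; the illuminant values are made up and are not measurements of the Karl Storz sources.

```python
def normalize(spectrum):
    """Relative spectrum: divide by the sum over bands (assumed normalization)."""
    total = sum(spectrum)
    return [v / total for v in spectrum]


def balance_with_wrong_illuminant(reflectance, illum_data, illum_ref):
    """Balanced spectrum when data lit by illum_data are divided by a white
    reference lit by illum_ref (same sensor and geometry assumed)."""
    return [r * e_d / e_r for r, e_d, e_r in zip(reflectance, illum_data, illum_ref)]


flat_tile = [0.5, 0.5, 0.5]    # spectrally flat (gray) tile
illum_data = [1.0, 1.0, 1.0]   # hypothetical illuminant used for the data
illum_ref = [0.5, 1.0, 2.0]    # hypothetical illuminant used for the reference

observed = balance_with_wrong_illuminant(flat_tile, illum_data, illum_ref)
print(observed)              # no longer flat
print(normalize(observed))   # the shape error persists after normalization
```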

3.2. Goodness of Fit of the Separable White Reference Models

The measured white references obtained at each distance were approximated using three methods: non-parametric; Gaussian vignetting with jointly fitted scalar factor and spectral sensitivities; and Gaussian vignetting with calculated scalar factor and spectral sensitivities. This quantifies the inherent error of the separable model and of each approximation. The pixel-by-pixel absolute percentage errors of each approximated reference relative to its measured reference are plotted in Fig. 6 and are low for all models. The Gaussian models show slightly higher errors, likely because a Gaussian fit cannot account for dust on the lenses in the set-up.
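In the notation assumed here, the separable model writes a white reference as W(p, λ) ≈ k · V(p) · S(λ), with a spatial vignetting term V, spectral sensitivities S, and a scalar factor k. The paper's non-parametric estimator is specified in Sec. 2; as an assumption, the sketch below uses one simple moment-based estimate (S from the spatial mean of each band, V from the band-wise mean of W / S), which recovers exactly separable data, and then reports the median of the pixel-by-pixel absolute percentage errors of the kind plotted in Fig. 6.

```python
import statistics


def separable_fit(white):
    """Fit white[p][l] ≈ k * V[p] * S[l] for a list of pixels of band values.

    Moment-based estimate (an assumption, not necessarily the paper's):
    S is normalized to mean 1 and V to maximum 1, so k carries the scale.
    """
    n_bands = len(white[0])
    band_means = [statistics.fmean(px[l] for px in white) for l in range(n_bands)]
    scale = statistics.fmean(band_means)
    S = [m / scale for m in band_means]
    spatial = [statistics.fmean(px[l] / S[l] for l in range(n_bands)) for px in white]
    k = max(spatial)
    V = [v / k for v in spatial]
    return k, V, S


def reconstruct(k, V, S):
    """Rebuild the modeled reference from its separable components."""
    return [[k * v * s for s in S] for v in V]


def median_abs_pct_error(modeled, measured):
    """Median over all pixels and bands of |modeled - measured| / measured * 100."""
    errors = [abs(a - m) / abs(m) * 100
              for mod_px, meas_px in zip(modeled, measured)
              for a, m in zip(mod_px, meas_px)]
    return statistics.median(errors)


# A synthetic, exactly separable reference: 3 pixels x 3 bands.
vignetting = [1.0, 0.5, 0.8]
sensitivity = [2.0, 3.0, 4.0]
white = [[10.0 * v * s for s in sensitivity] for v in vignetting]

k, V, S = separable_fit(white)
print(median_abs_pct_error(reconstruct(k, V, S), white))
```

On real references, dust or non-separable illumination would leave nonzero residuals, which is what the box plots in Fig. 6 summarize.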

Fig. 6.

Box plots showing the pixel-by-pixel absolute percentage errors in modeled references compared with the original references at a range of distances, using the non-parametric method, Gaussian vignetting with fitted scalar factor and spectral sensitivities, or Gaussian vignetting with calculated scalar factor and spectral sensitivities.

The spectral sensitivities obtained with each model were found to be invariant with respect to both distance and approximation method. Because any change in distance requires a change in optical focus, which in turn changes the optical vignetting, this invariance suggests that the spectral sensitivities are unaffected by optical vignetting or its modeling. This supports the assumption that the spatial and spectral components of white references can be separated.

These results indicate that white reference images can be well modeled by assuming separability of the spatial and spectral components, with the vignetting approximated by a 2D isotropic Gaussian.
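For the Gaussian variants, the spatial term is a 2D isotropic Gaussian, V(x, y) = exp(-((x - x0)^2 + (y - y0)^2) / (2 sigma^2)). Assuming the center (x0, y0) is known (for example, the optical axis) and intensities are positive, the amplitude and sigma can be recovered by ordinary least squares on log-intensity against squared radius, as in this sketch; a nonlinear fit would be needed if the center were also free. The function name is illustrative.

```python
import math


def fit_isotropic_gaussian(points, values, center):
    """Fit values ≈ A * exp(-r^2 / (2 * sigma^2)) with r measured from a known center.

    Linear least squares of log(value) on r^2, whose slope is -1 / (2 * sigma^2).
    points: list of (x, y); values: positive intensities. Returns (A, sigma).
    """
    x0, y0 = center
    r2 = [(x - x0) ** 2 + (y - y0) ** 2 for x, y in points]
    logs = [math.log(v) for v in values]
    mean_r2 = sum(r2) / len(r2)
    mean_log = sum(logs) / len(logs)
    cov = sum((a - mean_r2) * (b - mean_log) for a, b in zip(r2, logs))
    var = sum((a - mean_r2) ** 2 for a in r2)
    if var == 0:
        raise ValueError("need points at more than one radius")
    slope = cov / var
    if slope >= 0:
        raise ValueError("intensity does not fall off with radius")
    sigma = math.sqrt(-1.0 / (2.0 * slope))
    amplitude = math.exp(mean_log - slope * mean_r2)
    return amplitude, sigma


# Noise-free check on a 5 x 5 grid with A = 3 and sigma = 4 (illustrative values).
center = (2.0, 2.0)
points = [(x, y) for y in range(5) for x in range(5)]
values = [3.0 * math.exp(-((x - 2.0) ** 2 + (y - 2.0) ** 2) / (2 * 4.0 ** 2))
          for x, y in points]
print(fit_isotropic_gaussian(points, values, center))
```

In the separable model, the amplitude merges with the scalar factor k. Dust on the optics, identified above as a source of error, violates the smooth Gaussian form and remains in the residuals.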

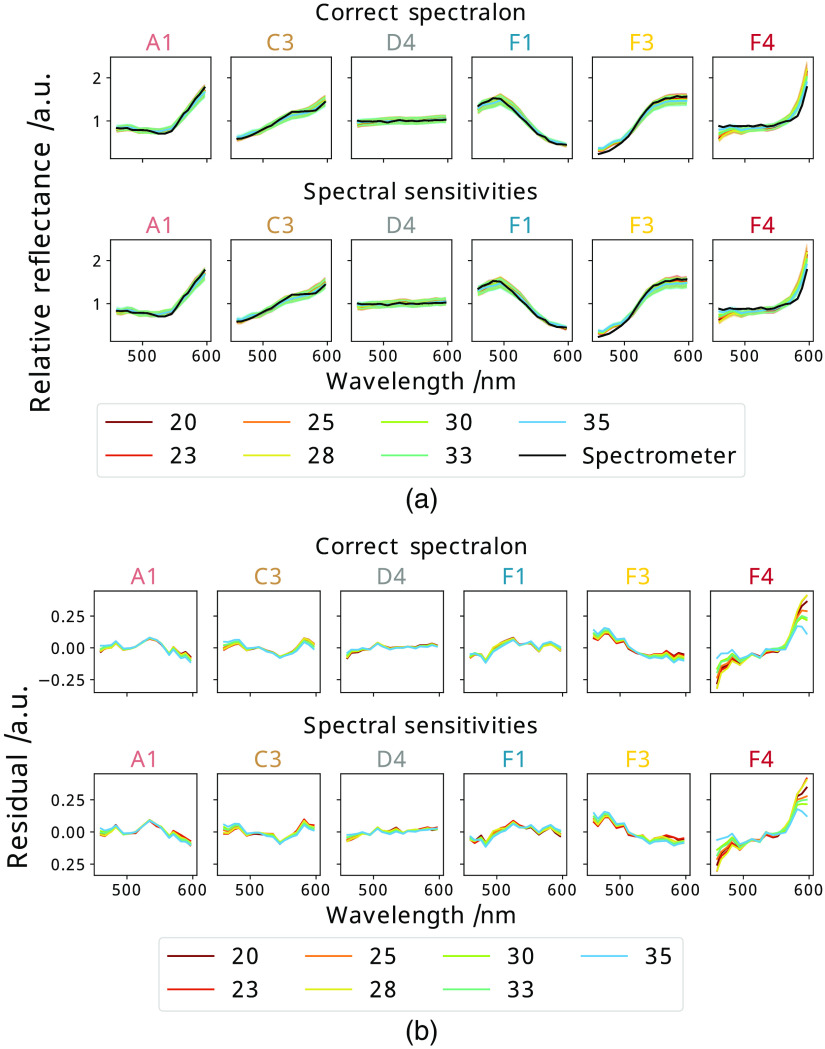

3.3. Evaluation of Synthetic References from Sterile Ruler Sweeps

The errors of the synthetic references relative to the measured references were calculated as described in Sec. 2.6 and are given in Table 2 for synthetic references generated from videos of the ruler against a fixed background, acquired at the same position as the data. These show that the method reproduces the measured Spectralon references well, particularly as the errors are comparable to the inherent errors in modeling the references shown in Fig. 6.
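Sec. 2 describes how the synthetic reference is built from the ruler video. As a rough, hypothetical sketch of the core idea (not the paper's implementation), each pixel can average the ruler's signal over the frames in which the ruler covers it, and then divide by the ruler's per-band reflectance to compensate for its imperfect reflectivity. The per-frame ruler masks are assumed to come from a segmentation step that is not shown, and the subsequent separable model fit is omitted.

```python
def composite_reference(frames, masks, ruler_reflectance):
    """Average ruler pixels over frames and correct for ruler reflectivity.

    frames: list of frames; each frame is a list of pixels; each pixel a list of bands.
    masks: per frame, a list of booleans, True where the ruler covers the pixel
           (assumed to come from a separate segmentation step).
    ruler_reflectance: per-band reflectance of the ruler (assumed known).
    Returns one spectrum per pixel, or None where the ruler was never observed.
    """
    n_pixels = len(frames[0])
    n_bands = len(ruler_reflectance)
    sums = [[0.0] * n_bands for _ in range(n_pixels)]
    counts = [0] * n_pixels
    for frame, mask in zip(frames, masks):
        for p, (pixel, on_ruler) in enumerate(zip(frame, mask)):
            if on_ruler:
                counts[p] += 1
                for band in range(n_bands):
                    sums[p][band] += pixel[band]
    composite = []
    for p in range(n_pixels):
        if counts[p] == 0:
            composite.append(None)  # ruler never seen here; left for a later model fit
        else:
            composite.append([sums[p][band] / counts[p] / ruler_reflectance[band]
                              for band in range(n_bands)])
    return composite


# Two frames, three pixels, two bands; the ruler never reaches pixel 2.
frames = [
    [[40.0, 80.0], [5.0, 5.0], [5.0, 5.0]],
    [[5.0, 5.0], [36.0, 72.0], [5.0, 5.0]],
]
masks = [[True, False, False], [False, True, False]]
print(composite_reference(frames, masks, ruler_reflectance=[0.5, 0.5]))
```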

Table 2.

Median absolute percentage error (MedAPE) in the spectral sensitivities and in the pixel-by-pixel values between the synthetic reference generated from a video of the sterile ruler and the reflectivity-corrected Spectralon reference for each distance, alongside the mean NRMSE between the mean measured spectrum of each tile and its spectrometer measurement when balancing the quantitative and relative datasets with each synthetic reference, and the median pixel-by-pixel ΔE00 between the composite checkerboard CIELAB reconstruction and the literature values. All values are given to 3 significant figures.

| Distance (cm) | MedAPE, spectral sensitivities (%) | MedAPE, pixel-by-pixel (%) | NRMSE, quantitative (%) | NRMSE, relative (a.u.) | Median ΔE00 |
|---|---|---|---|---|---|
| 20 | 0.166 | 2.37 | 0.148 | 0.0516 | 7.60 |
| 23 | 0.219 | 2.37 | 0.164 | 0.0557 | 7.77 |
| 25 | 0.167 | 3.20 | 0.137 | 0.0478 | 8.29 |
| 28 | 0.141 | 2.61 | 0.163 | 0.0538 | 7.32 |
| 30 | 0.165 | 2.36 | 0.125 | 0.0395 | 8.69 |
| 33 | 0.151 | 2.39 | 0.125 | 0.0393 | 8.84 |
| 35 | 0.494 | 6.38 | 0.117 | 0.0368 | 8.69 |

Fig. 7.

The mean spectrum of a selection of tiles as measured from each distance (cm) plotted against the spectrometer measurement of that tile, where each plot represents a new tile [labeled above it as corresponding to those in Fig. 1(b)] for both (a) quantitative and (b) relative data. At each distance, the data are balanced with either the correct Spectralon white reference image or the synthetic reference generated from the correct video of the sterile ruler.

Fig. 8.

sRGB reconstructions of the central region of a selection of tiles [labeled below as corresponding to those in Fig. 1(b)] acquired at 20 cm and balanced with either the correct Spectralon white reference image or the synthetic reference generated from the correct video of the sterile ruler.

These synthetic references are also used to balance the checkerboard data as in Sec. 2.3, and the corresponding errors are calculated. The results are summarized in Figs. 7 and 8, and the resulting errors are given in Table 2. These errors are very similar to those of the best-practice method (mean quantitative NRMSE of 0.140% versus 0.139% with the correct Spectralon reference), suggesting that the synthetic references are suitable for quantitative data reconstruction. Since the process is fully sterile, it can easily be repeated during surgery, allowing changes in distance and lighting conditions to be better accounted for.

For relative data, spectral sensitivities are calculated from a single image of the ruler under the Karl Storz D light C xenon light source and used to balance the data before normalization. The resulting spectra are summarized in Fig. 9 and the corresponding errors in Table 3. This shows that spectral sensitivities alone are sufficient for white balancing of relative data.
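Writing the reference in an assumed notation as k · V(p) · S(λ), normalization removes any per-pixel scalar, so relative balancing only needs the spectral sensitivities S(λ). The sketch below, assuming normalization by the sum over bands and using made-up sensitivity values, divides each spectrum by S(λ) and normalizes, so that the spatial term k · V(p) cancels.

```python
def relative_balance(spectrum, sensitivities):
    """Divide by spectral sensitivities, then normalize by the band sum (assumed)."""
    balanced = [v / s for v, s in zip(spectrum, sensitivities)]
    total = sum(balanced)
    if total == 0:
        raise ValueError("cannot normalize an all-zero spectrum")
    return [b / total for b in balanced]


sensitivities = [0.5, 1.0, 2.0]   # hypothetical S(λ)
reflectance = [0.2, 0.4, 0.6]     # hypothetical tile reflectance

# Two pixels viewing the same tile with different k * V(p) (vignetting, distance).
pixel_a = [3.7 * s * r for s, r in zip(sensitivities, reflectance)]
pixel_b = [0.9 * s * r for s, r in zip(sensitivities, reflectance)]
print(relative_balance(pixel_a, sensitivities))
print(relative_balance(pixel_b, sensitivities))
```

The same cancellation is why sRGB reconstruction is not possible from S(λ) alone: the absolute level carried by k · V(p) is discarded.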

Fig. 9.

(a) The relative mean spectrum of a selection of tiles as measured from each distance (cm) plotted against the spectrometer measurement of that tile, where each plot represents a new tile (labeled above it) as corresponding to the labels in Fig. 1(b), and (b) their respective residuals compared to the spectrometer ground truths. All distances of checkerboard data are white balanced with either the correct measured white reference or the spectral sensitivities generated from a single image of the sterile ruler.

Table 3.

NRMSE (3sf) when balancing with the spectral sensitivities generated from a single image of a sterile ruler for the mean measured spectrum of each tile compared to its spectrometer measurement.

| Distance (cm) | NRMSE for relative spectra (a.u.) |
|---|---|
| 20 | 0.0487 |
| 23 | 0.0555 |
| 25 | 0.0461 |
| 28 | 0.0535 |
| 30 | 0.0381 |
| 33 | 0.0386 |
| 35 | 0.0335 |

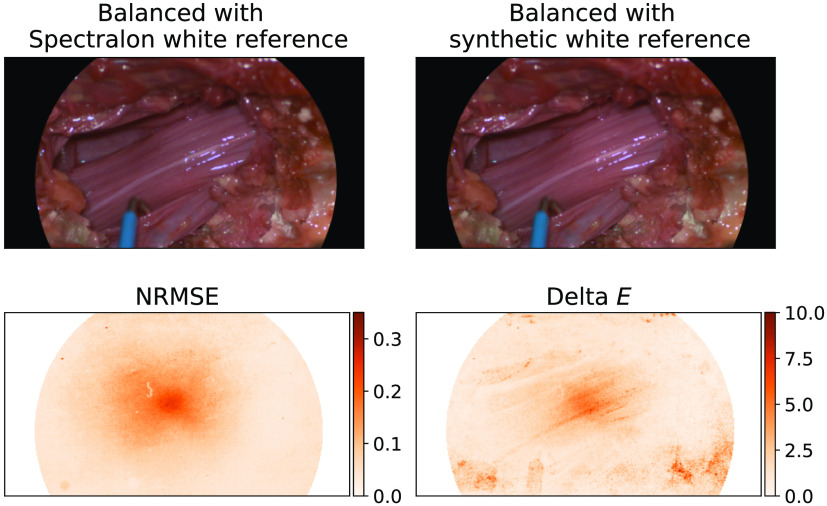

3.3.1. Integration into a surgical environment

Our proposed synthetic reference algorithm was easily integrated into the surgical workflow of a human cadaveric spine surgery. The study was approved by the local ethics committee of the canton of Zurich, Switzerland, under the number BASEC 202-01196. The video capture was adjusted to use a cut section of the ruler that fits within the surgical cavity, and the camera, rather than the ruler, was moved to capture the video. This adjustment is appropriate in this setting because the background tissue is nearly uniform and the cavity is evenly illuminated by a single light source. A measured Spectralon white reference was also acquired for comparison, with its distance judged by visual assessment of image focus. Between the synthetic and Spectralon references, the mean absolute percentage error in the spectral sensitivities is 0.852% and the median absolute percentage pixel-by-pixel error is 4.77%, showing that the synthetic reference is very similar to the best-case intraoperative measured Spectralon reference.

The NRMSE between the quantitative spectra reconstructed using the Spectralon and synthetic references was calculated for each pixel in the content area. It ranged from 0% to 0.356% with a median of 0.055%, showing that these spectra are extremely similar. sRGB reconstructions of the image balanced with the Spectralon white reference and with a synthetic white reference are shown in Fig. 10. ΔE00 values were calculated for each pixel between the CIELAB reconstructions of these images and are also displayed in Fig. 10; they range from 0 to 16.17 with a median of 1.37 in the content area, indicating a difference barely perceptible to the human eye. This suggests that a synthetic white reference performs similarly to the best-case intraoperative Spectralon white reference while being much more easily integrated into the surgical workflow, allowing changing conditions to be better accounted for.
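The cadaveric comparison summarizes per-pixel NRMSE within the content area of the scope. A minimal sketch, assuming a circular content area with known center and radius (content-area detection itself is not shown) and a range-normalized NRMSE (an assumption; the paper's definition is given in Sec. 2), computes the minimum, median, and maximum over the masked pixels. All helper names are hypothetical.

```python
import math
import statistics


def circular_mask(width, height, center, radius):
    """True for pixels inside a circle, a simple stand-in for the scope's content area."""
    cx, cy = center
    return [[(x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2 for x in range(width)]
            for y in range(height)]


def nrmse(test, reference):
    """RMSE divided by the range of the reference spectrum (assumed normalization)."""
    mse = sum((t - r) ** 2 for t, r in zip(test, reference)) / len(reference)
    return math.sqrt(mse) / (max(reference) - min(reference))


def summarize_in_content_area(synthetic, spectralon, mask):
    """(min, median, max) per-pixel NRMSE over pixels where mask is True.

    synthetic, spectralon: images as [row][col] -> spectrum (list of bands).
    """
    scores = [nrmse(s, r)
              for row_s, row_r, row_m in zip(synthetic, spectralon, mask)
              for s, r, inside in zip(row_s, row_r, row_m) if inside]
    return min(scores), statistics.median(scores), max(scores)


# 3 x 3 toy image: the corner pixel differs but lies outside the content area.
mask = circular_mask(3, 3, center=(1, 1), radius=1)
spectralon = [[[1.0, 2.0, 3.0] for _ in range(3)] for _ in range(3)]
synthetic = [[[1.0, 2.0, 3.0] for _ in range(3)] for _ in range(3)]
synthetic[1][1] = [1.0, 2.0, 4.0]
synthetic[0][0] = [9.0, 9.0, 9.0]
print(summarize_in_content_area(synthetic, spectralon, mask))
```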

Fig. 10.

sRGB reconstructions of human cadaveric rootlets balanced with a Spectralon reference and with a synthetic white reference generated from a video of a sterile ruler against tissue, together with the pixel-by-pixel NRMSE between the balanced spectra and the ΔE00 values between their CIELAB reconstructions. All images are masked to the content area of the scope.

4. Discussion

This study first examined the limitations of white balancing when the white reference is acquired under conditions different from those of the subject. Because no sterile reference is available, the white reference must be taken outside the sterile field, so it may be acquired at a different distance or under different lighting conditions from the subject. Investigating these effects shows little variation when data are correctly balanced, whereas departures in distance or lighting from the conditions of the white reference produce appreciable differences.

A change in distance primarily affects quantitative data, with clear effects at large differences in distance; however, these changes are not seen in the sRGB reconstructions. Relative data are robust to changes in distance because such changes alter only the absolute intensity, not the shape of the spectra, and absolute intensity is not relevant to the calculation of ratiometric parameters.

A change in lighting conditions has a severe effect on both quantitative and relative data because the shape of the spectra is altered. This effect is large enough to be readily apparent in the sRGB reconstructions.

The ability to model white references by separating the spatial and spectral components was then investigated. This produced relatively low inherent errors for all methods investigated. The approximation method modeling the vignetting as a 2D isotropic Gaussian with the scalar factor and spectral sensitivities calculated was chosen to allow for imperfections in the composite reference while maximizing computational efficiency. The spectral sensitivities in all three approximation methods showed no trends with distance, consistent with the assumption that the spatial and spectral effects can be treated separately.

Synthetic references were generated from short videos of the ruler and compared with the relevant measured references. The errors in the spectral sensitivities and pixel-by-pixel values of the synthetic references relative to the appropriate measured references (MedAPE of at most 0.494% and 6.38%, respectively; Table 2) suggest that there is little difference between the measured and synthetic references.

When these synthetic references were used to balance the datasets at various distances, the results appeared similar to using appropriate measured references, with mean NRMSE for the quantitative spectra of 0.140% and 0.139%, respectively. This demonstrates that these synthetic references can be used as an appropriate method of white balancing to obtain quantitatively accurate data.

For relative data, only the shape of the spectra is relevant, so only spectral sensitivities are required to white balance the data. The relevant spectral sensitivities are calculated once and used to balance all datasets, resulting in very low errors. This simplified method of white balancing can therefore be used when only relative data are required; however, good sRGB reconstructions cannot be generated from spectral sensitivities alone, since absolute intensity information is lost.

The application of this method in a surgical environment was tested in a human cadaveric spine surgery. The method integrated simply into the surgical environment while producing similar spectra and sRGB reconstructions (median NRMSE of 0.055% and median ΔE00 of 1.37), and the synthetic reference closely replicated the best-case scenario of a standard reference.

This method is expected to be applicable to a wide range of surgical applications in which the exoscope can be held at a constant distance for both the short ruler video and the imaging of interest. It has been investigated for fronto–parallel distances of 20 to 35 cm, which, based on the experience of the lead clinician on our neurosurgical study, facilitate good ergonomics for neurosurgery by enabling capture of a sufficiently wide field of view while resolving regions of interest in the depths of cavities. Exposure should be set to prevent saturation at any wavelength; however, changes in exposure could be corrected linearly. If lighting is uniform across the field of view, the video can be captured by moving the camera instead of the reference; however, shadows in the cavity can only be accounted for by moving the reference itself.
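The linear exposure correction mentioned above amounts to a single scale factor. Assuming a linear, unsaturated, dark-subtracted sensor response, a reference acquired with exposure time t_ref and linear gain g_ref is rescaled to the data settings by (t_data · g_data) / (t_ref · g_ref), which folds into the scalar factor of the separable model. The function name and the linear-gain convention are assumptions for illustration.

```python
def rescale_reference(white, t_ref, t_data, gain_ref=1.0, gain_data=1.0):
    """Scale a dark-subtracted white reference to the data's exposure and gain.

    white: list of pixels, each a list of band values.
    Assumes a linear sensor response, no saturation, and gains on a linear scale.
    """
    if t_ref <= 0 or gain_ref <= 0:
        raise ValueError("reference exposure and gain must be positive")
    factor = (t_data * gain_data) / (t_ref * gain_ref)
    return [[v * factor for v in pixel] for pixel in white]


# Reference at 10 ms, data at 20 ms: the reference values are doubled.
print(rescale_reference([[100.0, 200.0]], t_ref=10.0, t_data=20.0))
```

Gains reported in decibels would first need converting to a linear factor; saturated bands cannot be recovered by any rescaling, hence the advice to set exposure conservatively.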

This indicates a promising novel method for synthetic white balancing within the restricted intraoperative environment, provided that spatial and spectral independence holds, which is often the case with a single, uniform light source. The quantitative model is currently limited to fronto–parallel imaging and assumes that the imaging geometry does not deviate significantly from this, which limits its application; however, relative data can in principle be obtained at any angle using the spectral sensitivities alone.

4.1. Conclusions and Future Work

These data demonstrate a novel sterile synthetic white balancing algorithm capable of integrating easily into surgical workflow. This ensures quantitative accuracy of the spectral data obtained while minimizing disruption to the surgical procedure.

Future work should improve the computational efficiency of the composite ruler reference construction to allow real-time integration of the method. It should also be confirmed that the construction is robust to movement in the background, as this would enable the video to be obtained by moving the camera rather than the ruler, making the method easier to use in more restricted surgical cavities, assuming even illumination from a single light source. The method should further be adapted to account for dust in the system and for multiple light sources, validated with other operating scopes in place of the exoscope and at distances applicable to other open surgery geometries, and adapted for use at different imaging angles.

Acknowledgments

This study/project was funded by the National Institute for Health and Care Research (NIHR), award no. 202114. The views expressed are those of the authors and not necessarily those of the NIHR or the Department of Health and Social Care. This work was also supported by core funding from the Wellcome Trust (WT203148/Z16/Z) and the Engineering and Physical Sciences Research Council (EPSRC, award no. NA/A000049/1). This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement no. 101016985 (FAROS project). CH is supported by an InnovateUK Secondment Scholars Grant (project no. 75124). TV is supported by a Medtronic/Royal Academy of Engineering Research Chair (RCSRF1819\7\34). For the purpose of open access, the authors have applied a CC BY public copyright license to any Author Accepted Manuscript version arising from this submission.

Biographies

Anisha Bahl is a PhD student in the Surgical and Interventional Engineering Department of King’s College London. She received her Chemistry MChem degree in 2020 from the University of Oxford. Her main research interests are the integration of spectral measurement and imaging technologies to medical settings, and the extraction of physiological information from these technologies to aid medical decision making.

Biographies of the other authors are not available.

Disclosures

ME, JS, and TV are co-founders and shareholders of Hypervision Surgical.

Contributor Information

Anisha Bahl, Email: anisha.bahl@kcl.ac.uk.

Conor C. Horgan, Email: conor.horgan@hypervisionsurgical.com.

Mirek Janatka, Email: mirek.janatka@hypervisionsurgical.com.

Oscar J. MacCormac, Email: oscar.j.maccormac@kcl.ac.uk.

Philip Noonan, Email: philip.noonan@hypervisionsurgical.com.

Yijing Xie, Email: yijing.xie@kcl.ac.uk.

Jianrong Qiu, Email: jianrong.qiu@kcl.ac.uk.

Nicola Cavalcanti, Email: nicola.cavalcanti@balgrist.ch.

Philipp Fürnstahl, Email: philipp.furnstahl@balgrist.ch.

Michael Ebner, Email: michael.ebner@hypervisionsurgical.com.

Mads S. Bergholt, Email: mads.bergholt@kcl.ac.uk.

Jonathan Shapey, Email: jonathan.shapey@kcl.ac.uk.

Tom Vercauteren, Email: tom.vercauteren@kcl.ac.uk.

Data Availability Statement

The datasets presented in this article are not readily available because of their proprietary nature.

References

- 1. Lu G., Fei B., “Medical hyperspectral imaging: a review,” J. Biomed. Opt. 19(1), 010901 (2014). 10.1117/1.JBO.19.1.010901

- 2. Giannoni L., Lange F., Tachtsidis I., “Hyperspectral imaging solutions for brain tissue metabolic and hemodynamic monitoring: past, current and future developments,” J. Opt. 20(4), 044009 (2018). 10.1088/2040-8986/aab3a6

- 3. Calin M. A., et al., “Hyperspectral imaging in the medical field: present and future,” Appl. Spectrosc. Rev. 49(6), 435–447 (2014). 10.1080/05704928.2013.838678

- 4. Shapey J., et al., “Intraoperative multispectral and hyperspectral label-free imaging: a systematic review of in vivo clinical studies,” J. Biophotonics 12(9), e201800455 (2019). 10.1002/jbio.201800455

- 5. Jacques S. L., “Optical properties of biological tissues: a review,” Phys. Med. Biol. 58(11), R37–R61 (2013). 10.1088/0031-9155/58/11/R37

- 6. Clancy N. T., et al., “Surgical spectral imaging,” Med. Image Anal. 63, 101699 (2020). 10.1016/j.media.2020.101699

- 7. Kulcke A., et al., “A compact hyperspectral camera for measurement of perfusion parameters in medicine,” Biomedizinische Technik 63(5), 519–527 (2018). 10.1515/bmt-2017-0145

- 8. Giannoni L., et al., “A hyperspectral imaging system for mapping haemoglobin and cytochrome-c-oxidase concentration changes in the exposed cerebral cortex,” IEEE J. Sel. Top. Quantum Electron. 27(4), 1–11 (2021). 10.1109/JSTQE.2021.3053634

- 9. Yoon J., et al., “First experience in clinical application of hyperspectral endoscopy for evaluation of colonic polyps,” J. Biophotonics 14(9), e202100078 (2021). 10.1002/jbio.202100078

- 10. Geelen B., Tack N., Lambrechts A., “A compact snapshot multispectral imager with a monolithically integrated per-pixel filter mosaic,” Proc. SPIE 8974, 89740L (2014). 10.1117/12.2037607

- 11. Li P., et al., “Deep learning approach for hyperspectral image demosaicking, spectral correction and high-resolution RGB reconstruction,” Comput. Methods Biomech. Biomed. Eng. Imaging Visual. 10(4), 409–417 (2021). 10.1080/21681163.2021.1997646

- 12. Pichette J., et al., “Hyperspectral calibration method for CMOS-based hyperspectral sensors,” Proc. SPIE 10110, 101100H (2017). 10.1117/12.2253617

- 13. Ayala L., et al., “Video-rate multispectral imaging in laparoscopic surgery: first-in-human application,” (2021).

- 14. Ebner M., et al., “Intraoperative hyperspectral label-free imaging: from system design to first-in-patient translation,” J. Phys. D Appl. Phys. 54(29), 294003 (2021). 10.1088/1361-6463/abfbf6

- 15. Khan H. A., et al., “Illuminant estimation in multispectral imaging,” JOSA A 34(7), 1085–1098 (2017). 10.1364/JOSAA.34.001085

- 16. Ayala L., et al., “Light source calibration for multispectral imaging in surgery,” Int. J. Comput. Assist. Radiol. Surg. 15(7), 1117–1125 (2020). 10.1007/s11548-020-02195-y

- 17. MacKenzie L. E., Harvey A. R., “Oximetry using multispectral imaging: theory and application,” J. Opt. 20(6), 63501 (2018). 10.1088/2040-8986/aab74c

- 18. Jiang J., et al., “Analysis and evaluation of the image preprocessing process of a six-band multispectral camera mounted on an unmanned aerial vehicle for winter wheat monitoring,” Sensors 19(3), 747 (2019). 10.3390/s19030747

- 19. Cho H., Lee H., Lee S., “Radial bright channel prior for single image vignetting correction,” Lect. Notes Comput. Sci. 8690, 189–202 (2014). 10.1007/978-3-319-10605-2_13

- 20. Datacolor, “SpyderCheckr color data reference sheet,” Datacolor website, version 2, https://www.datacolor.com/spyder/downloads/SpyderCheckr_Color_Data_V2.pdf (accessed 2023).

- 21. Sharma G., Wu W., Dalal E. N., “The CIEDE2000 color-difference formula: implementation notes, supplementary test data, and mathematical observations,” Col. Res. Appl. 30, 21–30 (2005). 10.1002/col.20070

- 22. Smith T., Guild J., “The C.I.E. colorimetric standards and their use,” Trans. Opt. Soc. 33(3), 73–134 (1931). 10.1088/1475-4878/33/3/301

- 23. Magnusson M., et al., “Creating RGB images from hyperspectral images using a color matching function,” in Int. Geosci. Remote Sens. Symp., September, pp. 2045–2048 (2020). 10.1109/IGARSS39084.2020.9323397

- 24. Reinhard E., et al., Color Imaging: Fundamentals and Applications, CRC Press (2008).

- 25. Yu W., “Practical anti-vignetting methods for digital cameras,” IEEE Trans. Consum. Electron. 50(4), 975–983 (2004). 10.1109/TCE.2004.1362487

- 26. Foster D. H., “Color constancy,” Vis. Res. 51(7), 674–700 (2011). 10.1016/j.visres.2010.09.006

- 27. Otsu N., “Threshold selection method from gray-level histograms,” IEEE Trans. Syst. Man Cybern. SMC-9(1), 62–66 (1979). 10.1109/TSMC.1979.4310076

- 28. Savitzky A., Golay M. J. E., “Smoothing and differentiation of data by simplified least squares procedures,” Anal. Chem. 36(8), 1627–1639 (1964). 10.1021/ac60214a047

- 29. Münzer B., Schoeffmann K., Boszormenyi L., “Detection of circular content area in endoscopic videos,” in Proc. 26th IEEE Int. Symp. Comput.-Based Med. Syst., pp. 534–536 (2013). 10.1109/CBMS.2013.6627865

- 30. Huber M., et al., “Deep homography estimation in dynamic surgical scenes for laparoscopic camera motion extraction,” Comput. Methods Biomech. Biomed. Eng. Imaging Visual. 10(3), 321–329 (2022). 10.1080/21681163.2021.2002195
