Abstract

Conditioned reinforcers are widely used in applied behavior analysis. Basic-research evidence reveals that Pavlovian learning plays an important role in the acquisition and efficacy of new conditioned reinforcer functions. Thus, a better understanding of Pavlovian principles holds the promise of improving the efficacy of conditioned reinforcement in applied research and practice. This paper surveys how (and if) Pavlovian principles are presented in behavior-analytic textbooks; imprecisions and disconnects with contemporary Pavlovian empirical findings are highlighted. Thereafter, six practical principles of Pavlovian conditioning are presented along with empirical support and knowledge gaps that should be filled by applied and translational behavior-analytic researchers. Innovative applications of these principles are outlined for research in language acquisition, token reinforcement, and self-control.

Keywords: conditioned reinforcement, impulsivity, language acquisition, Pavlovian conditioning, praise, token economy

Two cornerstones of applied behavior analysis are (1) providing effective therapy that produces practical, noticeable improvements in client behavior and (2) building these therapies on a rigorous foundation of behavioral principles (Baer et al., 1968). These include both operant and Pavlovian principles.¹ In the landscape of empirically supported principles used by applied behavior analysts, reinforcement is undoubtedly at the summit, but a nearby peak must be conditioned reinforcement (Vollmer & Hackenberg, 2001). Tokens, points, and praise are widely employed in applied settings to establish and maintain new, more adaptive behaviors (Donahoe & Vegas, 2021; Hackenberg, 2018). These conditioned-reinforcing consequences, when effectively arranged, render applications more practical (e.g., fewer tangible and edible reinforcers are needed), portable, and helpful in bridging the delay between the desired response and backup reinforcer (Russell et al., 2018). For this reason, conditioned reinforcement has long been recognized as an effective clinical practice (American Psychological Association, 1993).

But these points have been made for decades and are well known to readers of this journal. In addition, excellent reviews of the conditioned and token reinforcement literatures have been published in this journal and elsewhere (Bell & McDevitt, 2014; Cló & Dounavi, 2020; Fantino, 1977; Hackenberg, 2009, 2018; Williams, 1994). Thus, we seek to do something different. This paper will focus on the less often articulated Pavlovian-learning principles underlying conditioned reinforcement. What are the basic Pavlovian research findings, relevant to conditioned reinforcement, that rise to the level of a principle (i.e., systematic experimental manipulations that produce replicable behavioral outcomes)? If these principles were better understood, would they inspire innovative research that develops more effective approaches to establishing and using conditioned reinforcers? Such developments require that knowledge gaps be identified and addressed.

Before diving into Pavlovian principles, a brief refresher on Pavlovian learning may be useful. To that end, imagine a hungry rat in an operant chamber that obtains free food (the unconditioned stimulus, US) once, on average, every 2 min (i.e., a variable-time, VT 120-s schedule). Before each of these free-food events, a cue light is illuminated for 3 s. With repeated exposure to this Pavlovian contingency (if light → then food), the light will acquire a conditioned-stimulus (CS) function. That is, when presented alone, it will evoke a variety of conditioned responses (e.g., salivation, amygdala activation, attentional bias toward and physically approaching the cue; Bermudez & Schultz, 2010; Bucker & Theeuwes, 2017; Pavlov, 1927; Robinson & Flagel, 2009).² Importantly, these conditioned responses evoked by the CS are not operant behaviors maintained by consequences (see the negative auto-maintenance and sensory preconditioning literatures; Davey et al., 1981; Rescorla, 1980; Seidel, 1959; Thompson, 1972; Williams & Williams, 1969).

There is wide agreement that Pavlovian learning plays an important role in the acquisition of a conditioned reinforcement function. For example, when we reviewed six commonly used applied behavior-analytic textbooks and edited volumes,³ Pavlovian learning was either explicitly identified as important (Chance, 1998; Cooper et al., 2020; Fisher et al., 2021) or implied as important by the use of terms like “pairing” and “associated” (Martin & Pear, 2019; Mayer et al., 2018; Miltenberger, 2016), two terms commonly used in the Pavlovian literature. This textbook linkage of conditioned reinforcement and Pavlovian learning is supported by the basic-research literature. As we will see throughout this paper, principles of effective Pavlovian conditioning align closely with the principles of effective conditioned reinforcement (Fantino, 1977; Mackintosh, 1974; Shahan, 2010; Skinner, 1938). Additionally, basic researchers working independently in the Pavlovian and operant domains arrived at very similar quantitative models of their respective phenomena (Gibbon & Balsam, 1981; Preston & Fantino, 1991; Shahan & Cunningham, 2015; Williams, 1994). Thus, a thorough understanding of Pavlovian learning principles should facilitate translational research and practice. But before those principles can be considered, it is important to clear the board of some commonly held misunderstandings about Pavlovian learning, misunderstandings inherent to imprecisions in the description of Pavlovian procedures.

THE IMPRECISION OF “PAIRING”

Five of the textbooks and chapters we reviewed (Cooper et al., 2020; Donahoe & Vegas, 2021; Martin & Pear, 2019; Mayer et al., 2018; Miltenberger, 2016) fairly uniformly describe the Pavlovian conditioning procedure as one in which a neutral stimulus (NS) is repeatedly paired with a US until the NS acquires a CS function. “Pairing” is a commonly used term in the Pavlovian literature and in applied behavior-analytic papers on conditioned reinforcement (e.g., Esch et al., 2009; Ivy et al., 2017). But what exactly does “pairing” mean?

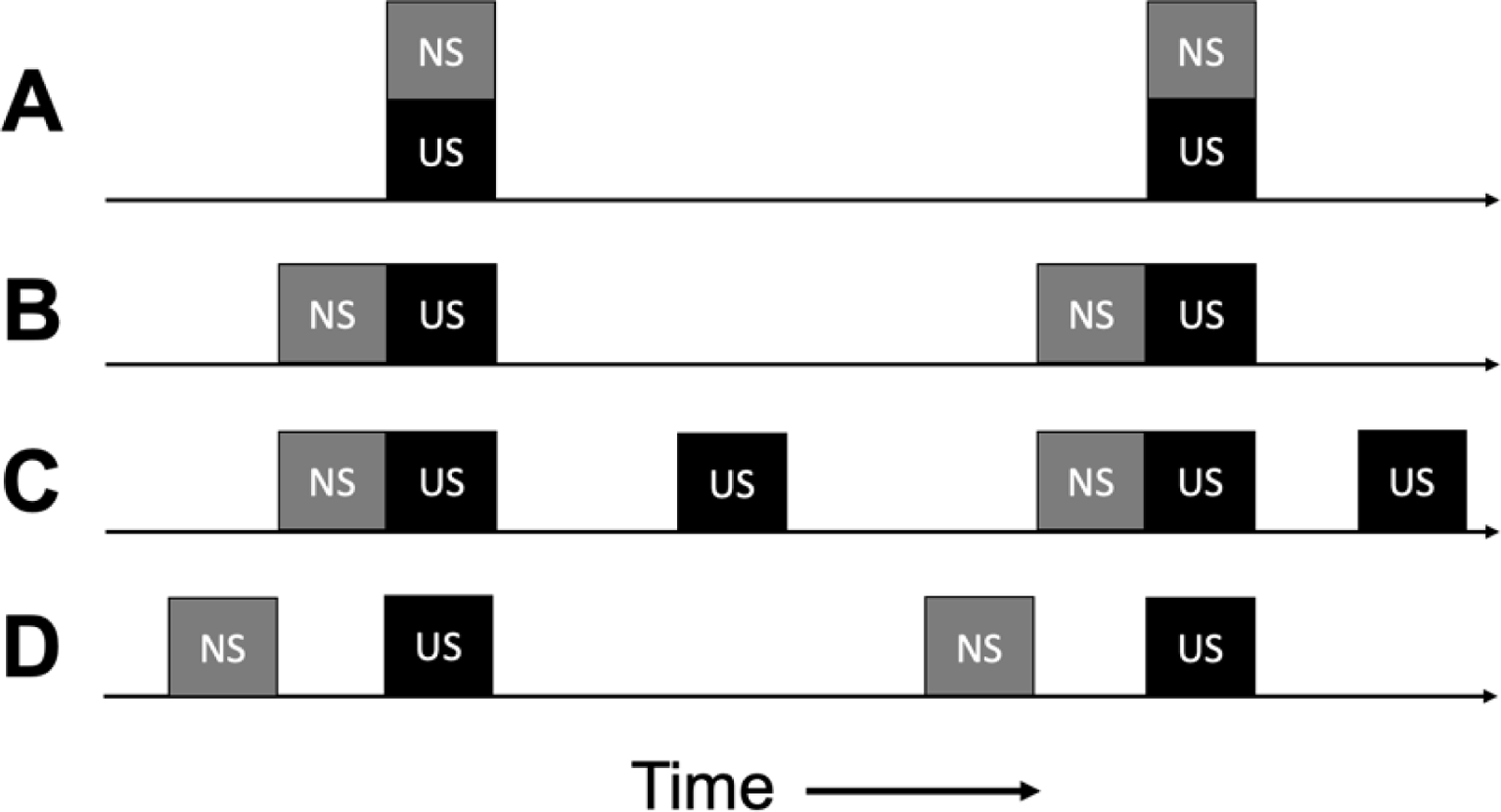

Cooper et al. (2020) describe Pavlovian conditioning as a “stimulus-stimulus pairing procedure” which is “most effective when the NS is presented just before or simultaneous with the US” (p. 31). “Simultaneous” pairing might be read to suggest that presenting the NS and US at the same time is an effective training method (see Figure 1A; left and right edges of the boxes above the timeline indicate the onset and offset of each stimulus). Martin and Pear (2019) visually illustrate NS-US pairing with simultaneous onsets of these two stimuli (their Figures 5.1–5.4) but indicate in the text that the NS should precede the US by approximately 0.5 s. Mayer et al. (2018) provide several examples of conditioned reinforcers in which the NS and US are presented simultaneously, and Cooper et al.’s Figure 2.1 illustrates respondent pairing as “NS+US,” which is open to interpretation. In Pavlovian circles, this arrangement is referred to as a simultaneous conditioning procedure, and it is generally ineffective (for review, see Lattal, 2013). That is, when the NS onset and offset occur in tandem with US onset and offset, the NS will evoke minimal (if any) conditioned responding when the NS is presented alone (e.g., Fitzwater & Thrush, 1956; Pavlov, 1927; White & Schlosberg, 1952).

FIGURE 1.

Presentations of neutral and unconditioned stimuli in Pavlovian conditioning procedures. Four ways in which the neutral stimulus (NS) and unconditioned stimulus (US) might be presented in time in these procedures.

Early experiments on conditioned reinforcement evaluated this simultaneous NS+US Pavlovian pairing procedure as a means of establishing a new conditioned reinforcer function. During the test of conditioned reinforcement that followed (i.e., does the rat engage in an operant response to produce the NS), the NS failed to acquire conditioned reinforcing properties (Bersh, 1951; Marx & Knarr, 1963; Schoenfeld et al., 1950; Stubbs & Cohen, 1972). Thus, we may conclude that stimulus-stimulus pairing does not mean that the would-be conditioned reinforcer (the NS) should be presented simultaneously with the appetitive US (i.e., the back-up reinforcer) during the Pavlovian conditioning phase. Perhaps when Cooper et al. (2020) wrote “simultaneous,” they meant that the NS preceding the US may partially overlap with the US, which is common in effective Pavlovian conditioning procedures.

The remainder of the Cooper et al. (2020) quote from above indicates that a Pavlovian conditioning procedure will be more effective if the NS occurs “just before” the US (Figure 1B). What counts as “just before” is not specified but will be given further consideration in the next section. For now, we will note that “just before” must mean that an effective Pavlovian conditioning procedure will ensure that the NS precedes the US. A similar conclusion was reached by Pavlov (1927) when he found that a NS→US sequence readily established a CS function, whereas simultaneously presenting these stimuli (Figure 1A) did not. Likewise, the early conditioned-reinforcement studies reporting that simultaneous NS+US pairings did not work also reported that a Pavlovian NS→US sequence reliably established a new conditioned-reinforcer function (Bersh, 1951; Marx & Knarr, 1963; Stubbs & Cohen, 1972). In sum, “pairing” is a frequently used but imprecise term. When used as synonymous with a procedure designed to change behavior, it fails to specify how and when the NS and US should be arranged.

“Pairing” implies the centrality of temporal contiguity

When Cooper et al. (2020) note that effective Pavlovian procedures present the NS just before or simultaneous with the US, the reader may deduce that pairing involves temporal contiguity. All the textbooks and chapters we reviewed agree on this point and some suggest that the optimal Pavlovian procedure will present the NS 0.5 s before the US, the so-called “half-second rule” (Donahoe & Vegas, 2021; Martin & Pear, 2019; Miltenberger, 2016). However, this textbook advice has been consistently rejected in seminal review papers in the Pavlovian and operant literatures (Balsam et al., 2010; Fantino, 1977; Lattal, 2013; Rescorla, 1988; Shahan, 2010). These authors outline considerable empirical evidence at odds with the theory that repeatedly presenting the NS and US in close temporal proximity (e.g., within 0.5 s of the US) is either a necessary or a sufficient condition for Pavlovian learning or for establishing a conditioned-reinforcement function. One reason for this stance has already been discussed—simultaneous presentation of the NS and US is generally ineffective, despite the two stimuli being entirely paired in time.

Another category of evidence against the pairing hypothesis is that if repeatedly presenting the NS just before the US were sufficient, then robust Pavlovian learning would occur whenever these stimuli co-occur. In Figure 1C, the NS→US sequence occurs just as often as it did in 1B. However, the US also occurs just as often without a preceding NS event. If this happens, the NS is far less likely to acquire a CS function (e.g., Rescorla, 1968), and hence, will not function as a conditioned reinforcer (Hyde, 1976). This might inadvertently occur in applied settings if the client’s preferred edible (US) is provided just as often without the would-be conditioned reinforcer (NS) as with it. Worse still, if the US occurs more often when the NS is not present than when it is “paired” with the US, the NS can acquire an inhibitory function; that is, conditioned responding will be less frequent when the NS is present, despite the fact that it is always temporally paired with the US (Gottlieb & Begej, 2014; Rescorla, 1969).
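The contrast among these arrangements can be sketched as a comparison of how often the US occurs with versus without a preceding NS. The following is a minimal, purely illustrative Python sketch; the function name `classify_contingency`, its arguments, and its qualitative labels are hypothetical and are not a quantitative model from the cited literature. It assumes the two counts are collected over comparable observation periods.

```python
def classify_contingency(us_with_ns: int, us_without_ns: int) -> str:
    """Return a rough qualitative label for an NS, given US counts.

    us_with_ns:    number of US events preceded by the NS
    us_without_ns: number of US events delivered with no preceding NS

    Simplifying assumption (ours, for illustration): the two counts
    come from comparable observation periods, as in Figure 1C.
    """
    if us_with_ns < 0 or us_without_ns < 0:
        raise ValueError("counts must be non-negative")
    if us_with_ns > 0 and us_without_ns == 0:
        # Every US is signaled by the NS (the Figure 1B arrangement).
        return "NS reliably signals the US"
    if us_with_ns > us_without_ns:
        # Some unsignaled USs occur, but fewer than signaled ones.
        return "NS signals the US imperfectly"
    if us_with_ns == us_without_ns:
        # The Figure 1C arrangement described in the text.
        return "NS is uninformative; CS function unlikely"
    # Unsignaled USs outnumber signaled ones.
    return "NS may acquire an inhibitory function"


if __name__ == "__main__":
    for counts in [(10, 0), (10, 10), (5, 10)]:
        print(counts, "->", classify_contingency(*counts))
```

In an applied setting, counts like these could be tallied from session records to check whether the preferred edible is being delivered about as often without the would-be conditioned reinforcer as with it.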

Yet another category of evidence against the pairing hypothesis is that a contiguous NS→US sequence, although often helpful (all else being equal), is not a necessary condition for establishing a CS function. Many Pavlovian studies have demonstrated an evocative effect of the CS when, for example, a 12-s no-stimulus interval separates CS offset and US onset (Figure 1D; e.g., Kaplan, 1984; Lucas et al., 1981). In these trace-conditioning procedures, the conditioned response is typically not evoked during the CS. It occurs instead during the so-called “trace interval” (the no-stimulus interval between CS and US), and increases as the US approaches (Marlin, 1981). Likewise, Pavlovian trace conditioning can be used to establish a new conditioned-reinforcer function (Jenkins, 1950; Thrailkill & Shahan, 2014; comparison of trace-only and random-SO groups in the latter study). Thus, the oft-quoted half-second rule of Pavlovian conditioning (and conditioned reinforcement) is, at minimum, incomplete. More broadly, the position that temporal contiguity between the NS (would-be conditioned reinforcer) and US (back-up reinforcer) is either necessary or sufficient may be rejected.

SIX PRINCIPLES OF PAVLOVIAN LEARNING AND CONDITIONED REINFORCEMENT

Having cleared the board of some common misunderstandings, we may now consider six principles of Pavlovian learning that applied conditioned-reinforcement researchers should be aware of as they translate principles into effective practice and fill remaining knowledge gaps in the literature. Some, but not all, of these principles are covered in the textbooks we reviewed. For the sake of comprehensiveness, we repeat those textbook principles here, though with pairing-extracting language modifications. The sequence of principles presented here roughly follows the sequence in which they would be considered when designing a Pavlovian procedure for establishing a new CS/conditioned-reinforcer function. For each principle, we outline the extant empirical support, identify knowledge gaps, and discuss how the principle might be employed in applied research. These principles have been better established in the Pavlovian learning literature than they have in operant, conditioned reinforcement experiments. In the Supporting Information, readers may find a list of the knowledge gaps and applied research opportunities identified throughout this paper. By filling these gaps, translational researchers can evaluate the full potential of these principles in enhancing the technology of effective conditioned reinforcement.

Principle 1: Choose a salient and novel NS

Prior to appetitive Pavlovian conditioning, the would-be CS/conditioned-reinforcer is an NS. Our immediate environments are filled with neutral, functionless stimuli, many of which we do not attend to. Learning a new “if CS → then US” Pavlovian contingency is facilitated by ensuring that the NS (the would-be CS/conditioned-reinforcer) is salient (see Hall et al., 1977, for empirical support), which is to say that there is a high probability of attending to the stimulus (Catania, 2013). One way to increase the salience of a stimulus is to make it novel, something not previously encountered (Horstmann, 2015). If the screen you are reading this on right now went completely red for 1 s and then returned to normal, this would be simultaneously salient and novel. It happened in a location where you were currently looking (salient) and, presumably, this has never happened before (novel). Another advantage of novel stimuli is that they have no unwanted/unknown experiential baggage that can negatively impact Pavlovian learning. For example, if the individual has previously encountered the NS without the US, then it will take longer for that stimulus to acquire a CS function when a Pavlovian contingency is introduced. This latent inhibition effect has been demonstrated in many species, including humans (Escobar et al., 2003; Lubow, 1973).

We are aware of no systematic experiments evaluating if these NS-history effects also slow the acquisition of a new conditioned-reinforcer function. Petursdottir et al. (2011) manipulated this variable, but because they could not reliably establish a CS/conditioned-reinforcer function, the manipulation did not shed light on the effect of latent inhibition for human subjects. Until this research gap is filled, it seems prudent to ensure that stimuli to be newly established as conditioned reinforcers are both salient and novel.

Counter to this advice is the common research practice of arranging a baseline session in which the NS is repeatedly presented without the US (e.g., Moher et al., 2008). The practice is designed to ensure that the putative NS does not already function as a conditioned reinforcer. However, latent inhibition predicts that this control procedure will have negative effects on subsequent Pavlovian learning. Other control procedures are available. For example, some applied researchers have adopted the practice of using two neutral stimuli (e.g., a red and a green token) and selecting one for NS→US presentations and the other for NS presentations at times uncorrelated with the US, with assignments counterbalanced across participants (e.g., Lepper & Petursdottir, 2017; Petursdottir et al., 2011). If, across participants, the only NS to acquire a CS/conditioned-reinforcer function is the one that occurred prior to the US, then confidence that the conditioned-reinforcer function was learned, rather than preexisting, increases with the sample size. For studies with just a single participant, this strategy will not be convincing, so a very brief pre-test of the conditioned-reinforcer efficacy of the NS would appear to be unavoidable.

The Principle-1 advice to use a novel NS is also inconsistent with current recommendations to find a conditioned reinforcer that is visually preferred or engaging to the individual (Hine et al., 2018). That recommendation is presumably given under the assumption (or clinical observations) that preferred objects are valued by the client, and this added value may aid in maintaining the desired operant behavior. Inconsistent with this assumption, Fernandez (2021) reported that “interest-based tokens” were no more effective in maintaining behavior than novel conditioned reinforcers. If the applied behavior-analytic researcher is interested in demonstrating conditioned reinforcement effects, it is important to control all aspects of the conditioned reinforcer, including past experience with that stimulus.

Principle 1 may also run counter to the previously mentioned half-second rule (i.e., the NS should be presented no more than 0.5 s before the US). This rule is generally ignored by Pavlovian researchers outside the eye-blink conditioning literature. Using such a brief NS may reduce the salience of that stimulus. Perhaps this explains why a common procedure in applied research is to present other stimuli along with the NS (e.g., calling the participant’s name; Petursdottir et al., 2011) so as to draw client attention to the imminent NS presentation. As discussed below in Principle 4, these additional attention-directing stimuli can inhibit or prevent the NS from acquiring a CS/conditioned-reinforcer function. Pavlovian experiments routinely use NS durations exceeding 0.5 s, which increases salience and may facilitate acquisition of the CS function.

Applying Principle 1

Before research begins, the applied behavior analyst should collaborate with stakeholders and members of the clinical team to identify a salient/novel NS. Salience increases with stimulus intensity, so whatever the dimension of the NS (visual, auditory, etc.), it should have greater intensity than other background stimuli in that same dimension. To promote further salience, the NS should be presented in a way that is hard to miss. Just as a red screen momentarily taking the place of this article would be highly salient to the reader, the salience of a visual NS will be enhanced if it is presented near the client and within their field of vision. Choosing a novel stimulus means the NS cannot be something the client sees, hears, smells, etc. daily (consider latent inhibition effects); an ideal NS will be a salient stimulus with which the client has no prior history.

In applied research settings, it may be useful to conduct a single test trial to evaluate if the NS is sufficiently salient/novel. No warning stimuli should precede that trial (e.g., moving the client to a new location or calling their name), which consists of the salient presentation of the NS (and nothing else). If the client orients toward the NS, even momentarily, the test is passed. If attention is not shifted, then a more salient NS should be selected. Such NS test-trials should be conducted just once, as repeatedly presenting the NS without the US could produce a latent-inhibition effect.

Some clinical populations (e.g., individuals with autism spectrum disorders, ASD) may have deficits in shifting their attention between stimuli or shifting from a preferred stimulus. The NS test just described may not be appropriate with those populations, though surprisingly little research has evaluated Pavlovian learning among individuals with ASD who require substantial or very substantial support (American Psychiatric Association, 2013; Powell et al., 2016). If the attention test is failed with more than one NS, then the test may need to be conducted in a location with fewer potential distractor stimuli. If the test is passed in that setting, then the Pavlovian training that follows (see below) should also be conducted in that setting. Once that training is complete, training may be migrated out of that setting, continuing until the new CS/conditioned-reinforcer is established in the settings in which it will be used.

Principle 2: Arrange an effective US/backup-reinforcer

After choosing a salient and novel NS, one must select an appetitive US (i.e., a reinforcer) to follow the NS. Principle 2 is straightforward and familiar to readers of this journal. The principle is exemplified in the widely followed advice to use generalized conditioned reinforcers, which have a variety of backup reinforcers, one of which should address currently operative motivating operations (e.g., Hackenberg, 2018; Martin & Pear, 2009).

Principle 2 applies to both Pavlovian learning and conditioned reinforcement. With respect to the former, if the US does not reliably elicit an unconditioned response, then the NS that precedes it will be similarly ineffective in acquiring a CS function. This has been evaluated in laboratory studies that manipulate the magnitude of the US or the motivating operation (e.g., animal subjects’ food deprivation level). The typical finding is that (a) the speed of acquisition of a CS function and (b) the magnitude of the conditioned response (CR) are determined by the size/efficacy of the US (Annau & Kamin, 1961; Barry, 1959; Morris & Bouton, 2006; Sparber et al., 1991).

The same is true when attempting to establish a new conditioned reinforcer function. Laboratory experiments that manipulate the quality/size of the backup reinforcer, or the motivating operation relevant to backup reinforcer efficacy, find that a more effective backup reinforcer facilitates establishing a new conditioned reinforcer function (Burton et al., 2011; Tabbara et al., 2016; Wolfe, 1936). Likewise, if a client in an applied setting will not engage in operant behavior to acquire the backup reinforcer, then they will not work for a stimulus signaling that the backup reinforcer is coming (Moher et al., 2008).

Applying Principle 2

Applying Principle 2 is easier in principle than it is in practice. Readers will be familiar with the many preference-assessment techniques used to identify stimuli that may function as reinforcers (e.g., Fisher et al., 1992; Pace et al., 1985). Although some conditioned reinforcement experiments have used preference assessments to select the US (e.g., Lepper & Petursdottir, 2017; Petursdottir et al., 2011), it is difficult to evaluate the utility of preference assessments in this context because Pavlovian training was often unsuccessful, perhaps because of lack of adherence to principles other than Principle 2.

A potential problem with the preference-assessment methodology is that a simple preference for one stimulus over another may not be predictive of how much operant behavior the preferred stimulus will maintain when it is arranged as a reinforcer (see Madden et al., 2023, for review). Although it is often impractical to do more than a preference assessment in applied settings (Poling, 2010), establishing the reinforcing function of the US is critical to the success of applied research using Pavlovian procedures. Thus, at minimum, it must be shown that the US will maintain operant behavior at an above-baseline level. Better still would be to demonstrate that the reinforcer will maintain operant behavior when more than one response is required per reinforcer.

Principle 3: Large C/T ratios are better than small ones

So far, an investigator adhering to Principles 1 and 2 will have identified an effective US/backup reinforcer and a salient/novel, attention-attracting stimulus that will acquire a CS/conditioned-reinforcer function, provided the remaining principles are followed. Next, we must consider the temporal logistics of arranging these stimulus events. Our textbooks specify that the NS must precede the US, but precisely when it should do so and, just as importantly, how much time should separate US events are left unspecified. These logistics are addressed by Principle 3: large C/T ratios are better than small ones.

The C/T ratio may be new to some readers, but it is at the core of important quantitative models of Pavlovian learning (Balsam & Gallistel, 2009) and conditioned reinforcement (Fantino, 1977; Shahan & Cunningham, 2015). The latter models make predictions that are very similar to even more complex quantitative models of conditioned reinforcement (Christensen & Grace, 2010; Grace, 1994; Mazur, 2001) which, perhaps because of this quantitative complexity, have not had much influence on the use of conditioned reinforcers in applied behavior-analytic research. To address this, the C/T ratio is offered as a practical simplification of more complex theories.

Like all ratios, the C/T ratio is a statement specifying a mathematical operation of division (i.e., C divided by T). The numerator of the ratio, C, stands for cycle time, which is the average time between US events. So, if in a Pavlovian conditioning experiment, free food pellets are provided to a rat once, on average, every 2 min, then C = 120 s. The denominator of the C/T ratio, T, refers to the interval from NS onset until US delivery; if the NS is a cue-light that is turned on for 3 s before the US occurs, then T = 3 s. Note that after the NS acquires a CS function, T refers to the interval from CS onset until US delivery.

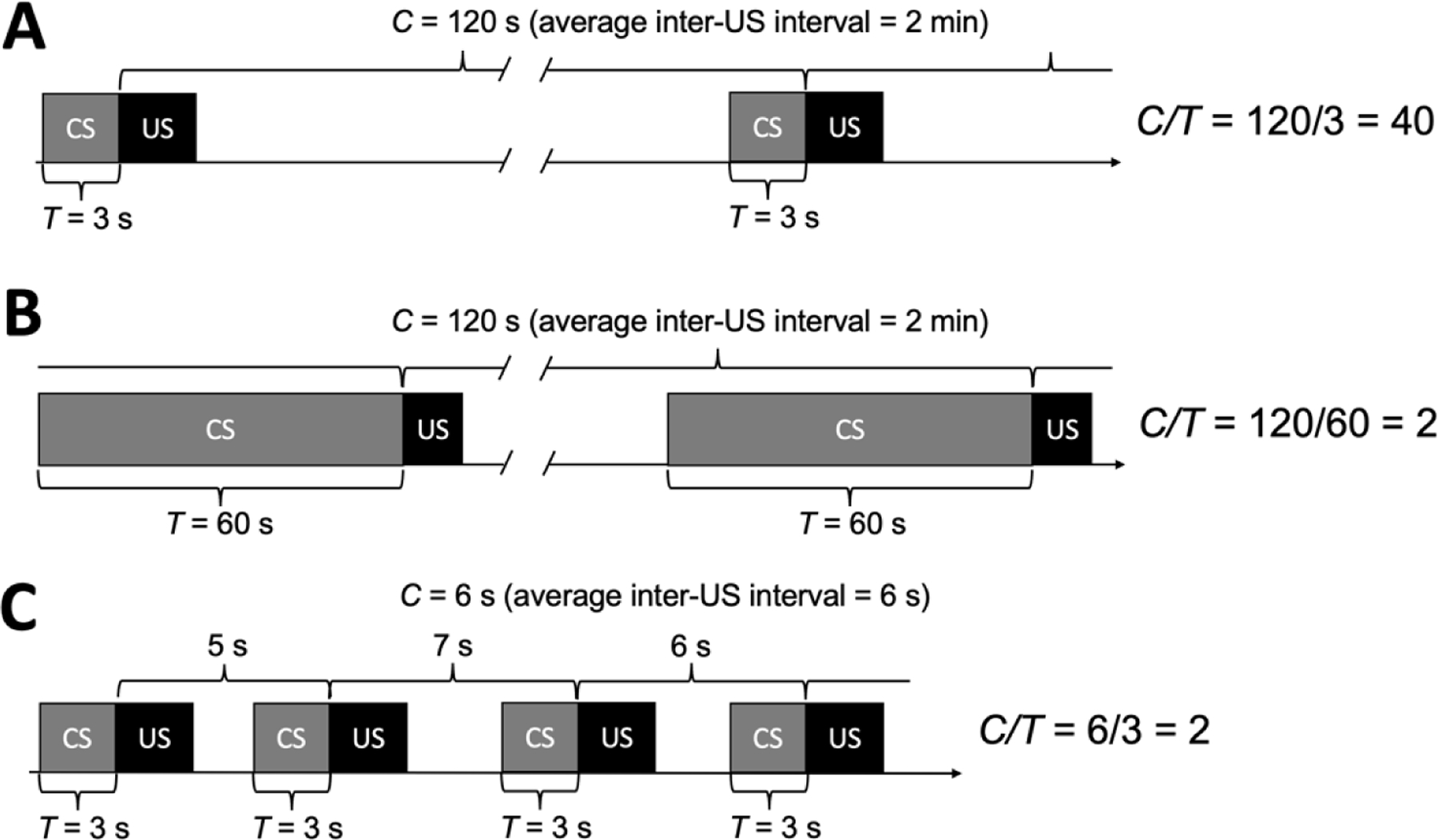

Having defined our terms, let us consider how C and T values influence Pavlovian learning. As previously noted, all else being equal, Pavlovian conditioning will often be more effective if there is temporal contiguity in the CS→US sequence. To illustrate how this is captured by C/T, we will keep C (the inter-US interval) constant at 120 s, and manipulate T. In Figure 2A, the cue-light (CS) is illuminated for 3 s prior to food delivery; therefore, T = 3 s. In Figure 2B, the light is illuminated for 60 s prior to food, so T = 60 s. Given these parameters, Principle 3 predicts that Pavlovian conditioning will work better (e.g., faster acquisition, higher conditioned-response rates) in Panel A, when T = 3 s. This common-knowledge temporal-contiguity principle is quantified by the C/T ratios to the right of the timelines. When the quotient of the C/T ratio is 40, it means that when the cue light comes on, food will be delivered 120/3 = 40 times sooner than normal (“normal” referring to the mean interval separating US events, C). In Panel B, when the C/T ratio is 2, food will be delivered 120/60 = 2 times sooner than normal; not bad, but nowhere near as good as 40 times sooner. By shortening T, we increase the C/T ratio and, according to Principle 3, large C/T ratios are better (more effective) than small ones.

FIGURE 2.

Three Pavlovian conditioning procedures. Panels A and B illustrate the two durations in the C/T ratio. C is the “cycle time” or the average interval separating successive unconditioned stimulus (US) events; T is the interval from conditioned stimulus (CS) onset to US delivery. By dividing C by T, we obtain a C/T ratio value (the quotient). In Panel C, the CS→US temporal contiguity is the same as in Panel A but the average inter-US interval (C) is shorter. Hence, Principle 3 holds that Panel A is the more effective procedure because of its longer duration of C.

Of course, T is only half of the story. The numerator, C, is also important in determining the quotient, but it is often overlooked. In Figure 2C, temporal contiguity between the cue-light and food is as it was in Figure 2A (T = 3 s), but the inter-US interval (C) is, on average, 6 s instead of 120 s. Decreasing C reduces the quotient (C/T = 2), which, according to Principle 3, will reduce the efficacy of the Pavlovian conditioning procedure relative to Figure 2A (C/T = 40). If all we know is that CS-US pairing is important, we would predict that these Figure 2A and 2C procedures would produce comparable outcomes—there has been no change in temporal contiguity. By contrast, Principle 3 holds that, all else being equal, longer inter-US intervals facilitate Pavlovian learning and make for more effective conditioned reinforcers.
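The arithmetic behind the three panels of Figure 2 can be reproduced in a few lines. The following is a minimal Python sketch using only the C and T values given in the text; the function name `ct_ratio` and the `panels` dictionary are our own hypothetical labels, introduced for illustration.

```python
def ct_ratio(c_seconds: float, t_seconds: float) -> float:
    """Return the C/T ratio.

    c_seconds: cycle time C, the average interval between US events
    t_seconds: T, the interval from NS/CS onset to US delivery
    """
    if c_seconds <= 0 or t_seconds <= 0:
        raise ValueError("C and T must both be positive durations")
    return c_seconds / t_seconds


# (C, T) in seconds for each panel of Figure 2, as described in the text.
panels = {
    "A": (120, 3),   # long inter-US interval, brief CS
    "B": (120, 60),  # same C as Panel A, much longer T
    "C": (6, 3),     # same T as Panel A, much shorter C
}

ratios = {name: ct_ratio(c, t) for name, (c, t) in panels.items()}

for name, value in ratios.items():
    print(f"Panel {name}: C/T = {value:g}")
```

Panels B and C arrive at the same small quotient by different routes (a long T versus a short C), which is why attending to temporal contiguity alone cannot distinguish Panel A from Panel C.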

A Principle 3 metaphor that may help readers remember and intuitively understand the C/T ratio is to think of an effective CS as a Paul Revere stimulus: its onset signals that “The US is coming! The US is coming!” (Madden et al., 2021).⁴ When Paul Revere shouted his famous warning that the British army was approaching the city, the townspeople learned that a normally infrequent US event (long-duration C) was imminent (brief T duration and large C/T ratio). This warning undoubtedly evoked a variety of emotional responses (e.g., fear, excitement) among the soldiers, tradespeople, and citizens nearby. Less useful would be if Paul’s warning mirrored Figure 2C; it would be like yelling, “The British are coming!” 3 s prior to each in a long line of troops entering the city. Because the C/T ratio value is so small, such “warnings” would evoke very little conditioned emotional responding; indeed, after the first in this series of warnings (the only one with a large C/T ratio), the rest are likely to be ignored. Thus, if we want our salient/novel NS to function as an effective CS/conditioned-reinforcer, Principle 3 holds that we will arrange a large C/T ratio.

The empirical support for Principle 3 will be separately evaluated in the Pavlovian and conditioned-reinforcement domains. First, there is a sizeable empirical literature showing that experimental manipulations of C and T, when they increase the C/T ratio, yield faster Pavlovian learning (i.e., fewer trials, or sessions, are needed before the NS acquires a CS function; e.g., Gibbon et al., 1977; Lattal, 1999; Perkins et al., 1975; Stein et al., 1958; Terrace et al., 1975; Ward et al., 2012). To our knowledge, only one human laboratory study has experimentally manipulated the duration of C. Consistent with Principle 3, Nelson et al. (2014) reported that increasing the interval between US events (alien spaceships appearing on the screen) decreased the number of Pavlovian training trials needed for a NS (light on the display panel) to acquire a CS function. To our knowledge, no human Pavlovian experiments have manipulated T. Clearly, further studies are needed both in basic and translational research.

Beyond acquisition speed, larger C/T ratios typically produce a CS that evokes more conditioned responding than is achieved with a small C/T ratio (Lattal, 1999; Nelson et al., 2014; Perkins et al., 1977). One form of that conditioned responding is physical attraction to the CS; that is, when a large C/T ratio is arranged with an appetitive US, nonhumans will often physically approach and interact with the CS (Burns & Domjan, 2001; Lee et al., 2018; Thomas & Papini, 2020; van Haaren et al., 1987). Similarly, humans will demonstrate a compulsive attentional bias to a Pavlovian CS in eye-tracking studies (Bucker & Theeuwes, 2017, 2018; Garofalo & di Pellegrino, 2015); although, to the best of our knowledge, no studies have evaluated if this bias is more robust or emerges more quickly with larger C/T ratios.

The other category of empirical support for Principle 3 comes from conditioned reinforcement experiments. Larger C/T ratios generally produce larger conditioned reinforcement effects. This has most often been demonstrated in concurrent-chains procedures where conditioned reinforcer efficacy is gauged by preference for one conditioned reinforcer over another. In this choice procedure, conditioned reinforcers with larger C/T ratios are preferred over smaller ratios (Fantino, 1977; Fantino et al., 1993; Williams & Fantino, 1978; see Shahan & Cunningham, 2015, for review). Additional evidence comes from the observing-response literature. In a nonhuman study of observing, contingencies alternate between periods of time when food can be obtained and periods when food is unavailable; however, the subject cannot tell which period they are in at any given time. In that context, pressing a lever produces a stimulus (e.g., a light) that is correlated with reinforcer-availability periods. If the lever is pressed at an above-baseline level, that stimulus (the light) functions as a conditioned reinforcer. In these observing experiments, conditioned reinforcers with larger C/T ratios maintain more observing responses (Auge, 1974; Case & Fantino, 1981; Roper & Zentall, 1999).

Surprisingly few studies have examined the effects of the C/T ratio on single-operant responding, and, to our knowledge, none have evaluated its effects on human single-operant behavior. This is a significant research gap because conditioned reinforcers are most often used in applied settings to maintain human operant behavior. That is, we are not usually asking clients to choose between conditioned reinforcers (concurrent chains) or to work to produce a schedule-correlated stimulus (observing procedure); we are trying to establish and maintain adaptive behavior with a conditioned reinforcing consequence.

In the nonhuman lab, we are aware of just two studies that have evaluated the effects of manipulating C or T on single-operant behavior maintained by a conditioned reinforcer. In the first, Bersh (1951) had rats complete six appetitive Pavlovian training sessions to establish a light as a CS. Across groups, the light was illuminated between 0.5 and 10 s prior to the delivery of food, which occurred once every C = 35 s, regardless of group; this produced C/T ratios ranging from 3.5 (35/10) to 70 (35/0.5).5 In post-training test sessions, pressing a lever turned on the light-CS for 1 s, but food was never delivered (Pavlovian extinction). Responding for the CS was lowest in the C/T = 3.5 group (T = 10 s) and rates in the remaining groups were significantly higher, though the latter were statistically undifferentiated from each other. The other experiment was conducted in our lab (Mahmoudi et al., 2022). Unlike Bersh, we manipulated the duration of C during Pavlovian training (14 s, 28 s, or 96 s), while holding T constant at 8 s. In a post-training test of conditioned reinforcement that was like that arranged by Bersh, rats in the C/T = 12 group responded to produce the CS significantly more than rats in the C/T = 1.75 or 3.5 groups; the latter two groups were statistically undifferentiated. Thus, consistent with Principle 3, large C/T ratios maintained more responding than small ones. However, neither study showed a direct relation between C/T value and rate of responding for the conditioned reinforcer (e.g., doubling C/T from 1.75 to 3.5 in the Mahmoudi et al., 2022, study did not increase responding).

Clearly more research is needed to flesh out the parametric relation between C/T value and single-operant responding maintained by a conditioned reinforcer. Only two nonhuman parametric studies have been conducted and the relation has not been investigated at all with humans. The few human studies evaluating C/T ratio effects on conditioned reinforcer efficacy have used concurrent-chains schedules in which participants choose between conditioned reinforcers (Alessandri et al., 2010, 2011; Belke et al., 1989; Stockhorst, 1994)6. This approach does not assess how much behavior can be maintained by a conditioned reinforcer. Future studies should take care to manipulate C and T and arrange some conditions with the same C/T ratio but composed of different C and T component values (e.g., 100/10 = 10 = 500/50). Finding an optimal range of C, T, and C/T values—those that (a) speed Pavlovian learning, (b) quickly establish a new conditioned-reinforcer function, and (c) produce a conditioned reinforcer that maintains peak single-operant responding—has important translational implications.

Applying Principle 3: Token reinforcers

Having identified the empirical support for Principle 3 and some remaining gaps to be filled, we will consider how existing knowledge might be useful in designing and implementing token reinforcer programs. To that end, imagine an individual working under a token system in which eight tokens must be earned before they can be exchanged for a backup reinforcer (a fixed-ratio [FR] 8 exchange-production schedule, to use the terminology of Hackenberg, 2009, 2018). In this arrangement, C corresponds to the average time between backup reinforcers and T is the time separating the delivery of any given token and the acquisition of the backup reinforcer. Let’s assume that the inter-backup-reinforcer interval is C = 160 s. If a token is earned once every 20 s, then after the first token is obtained, there are seven tokens × 20 s = 140 s left until the backup reinforcer is available. Assuming a 5-s interval for the eventual token exchange (after all eight tokens are acquired), the C/T value for this first token is 160/(140 + 5) = 1.1, which should have minimal conditioned-reinforcing efficacy. Consistent with this prediction, long pauses prior to working for the first token are commonly reported in the token reinforcement literature (e.g., Bullock & Hackenberg, 2006; Kelleher, 1958). By contrast, the C/T value for the last token, assuming the same 5-s interval from receipt to exchange, is 160/5 = 32; this higher C/T value accords with the characteristically brief latencies to begin working for the final token (Foster et al., 2001).
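The arithmetic in this FR 8 example can be extended to every token position. The following is a minimal sketch under the assumptions stated above (one token every 20 s, a 5-s exchange interval, and C equal to the number of tokens multiplied by the time per token); the function name and its parameters are illustrative and are not taken from any cited study.

```python
def token_ct_ratios(tokens_required=8, seconds_per_token=20.0, exchange_delay=5.0):
    """Return (token number, T in seconds, C/T) for each token in an
    FR exchange-production schedule. All parameter values are assumptions
    borrowed from the worked example in the text."""
    # C: average time between backup reinforcers (8 tokens x 20 s = 160 s)
    c = tokens_required * seconds_per_token
    rows = []
    for k in range(1, tokens_required + 1):
        # T: time from receipt of token k until the backup reinforcer,
        # i.e., time to earn the remaining tokens plus the exchange delay
        tokens_remaining = tokens_required - k
        t = tokens_remaining * seconds_per_token + exchange_delay
        rows.append((k, t, c / t))
    return rows


rows = token_ct_ratios()
for k, t, ratio in rows:
    print(f"token {k}: T = {t:.0f} s, C/T = {ratio:.1f}")
```

Under these assumptions, the C/T value rises with each successive token, from about 1.1 for the first token to 32 for the last.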

The traditional behavior-analytic account of long pauses under FR exchange-production schedules conceptualizes each response run, ending with a token reinforcer, as a single response unit. Such response units should, the theory goes, behave like single responses under a standard schedule of reinforcement, and considerable evidence supports this account (see Hackenberg, 2018). If simple FR schedules produce long post-reinforcer pauses, then the same should be true of FR exchange-production schedules. If simple variable-ratio (VR) schedules have shorter pauses and maintain more behavior than FR schedules at large ratio values (Ferster & Skinner, 1957; Madden et al., 2005; Zeiler, 1979), then the same should be true of VR and FR exchange-production schedules. Very few empirical tests of the predicted differences in VR vs. FR exchange-production schedules have been conducted, and those that have been conducted have produced equivocal outcomes (Hackenberg, 2018).

Should readers wish to further test this hypothesis in applied research, we encourage you to also test a unique prediction of Principle 3: under a VR exchange-production schedule, a larger net C/T ratio may be achieved by using discriminably different “win” and “no win” tokens. For example, when the client completes a task and earns a token, it is randomly drawn from a bag containing one blue token (the CS/conditioned-reinforcer) and seven brown ones (a VR 8 exchange-production schedule). If the blue token uniquely signals that the backup reinforcer is 5 s away, then C/T = 160/5 = 32. The brown tokens, when encountered, do not signal when the backup reinforcer will be delivered. Their effect appears limited to increasing the duration of C (Cunningham & Shahan, 2018; Shahan & Cunningham, 2015).7
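To illustrate why a bag holding one blue and seven brown tokens approximates a VR 8 exchange-production schedule, the sketch below simulates the draws. It assumes that each draw is made with replacement (the token returns to the bag), that one token is earned every 20 s as in the FR 8 example, and that the blue token is exchanged 5 s after it is drawn; the function name, seed, and number of simulated exchanges are hypothetical choices made only for illustration.

```python
import random


def mean_tokens_per_exchange(n_exchanges=20000, blue=1, brown=7, seed=0):
    """Simulate drawing tokens (with replacement, an assumption) until a
    blue token appears; return the mean number of draws per exchange."""
    rng = random.Random(seed)
    p_blue = blue / (blue + brown)
    total_draws = 0
    for _ in range(n_exchanges):
        draws = 1
        # Keep drawing while the current draw is a brown ("no win") token
        while rng.random() >= p_blue:
            draws += 1
        total_draws += draws
    return total_draws / n_exchanges


mean_tokens = mean_tokens_per_exchange()
seconds_per_token = 20.0   # assumed, as in the FR 8 example
blue_to_exchange = 5.0     # assumed T for the blue token
c = mean_tokens * seconds_per_token
print(f"mean tokens per exchange: {mean_tokens:.2f}")
print(f"approximate C = {c:.0f} s, C/T for the blue token = {c / blue_to_exchange:.1f}")
```

With these assumptions, the simulated mean should fall close to eight draws per exchange, so C stays near 160 s while T for the blue token is only 5 s.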

The efficacy of this differentially signaled VR exchange-production schedule has been demonstrated with nonhumans in single-operant experiments (e.g., Notterman, 1951) and in the suboptimal choice literature (Zentall, 2016). In the latter studies, pigeons and rats prefer a differentially signaled VR exchange-production schedule over a variety of alternatives that produce more food but with consequent stimuli that do not differentially signal upcoming “win” and “no win” events (Cunningham & Shahan, 2019; Molet et al., 2012; Stagner & Zentall, 2010; Zentall, 2016). In a comparable applied experiment, Lalli et al. (2000, Experiment 1) reported that two preschoolers with mild developmental delays similarly preferred a suboptimal alternative (differentially signaled VR-2 exchange-production schedule) over an optimal (FR 1) alternative.

To our knowledge, this differentially signaled VR exchange-production schedule has been evaluated only twice in applied research using response rate (rather than choice) as the dependent measure. In the first study, Van Houten and Nau (1980) arranged a VR-8 exchange-production schedule in an academic setting with hearing-disabled children. That is, if a child had been attending, on-task, and nondisruptive, they were allowed to randomly draw a token from a bag containing one blue token and seven brown ones. When a blue token was drawn, it was exchanged for a trinket toy just a few seconds later. This differentially signaled VR-8 exchange-production schedule maintained higher rates of academic behavior than an otherwise equivalent FR-8 schedule in which eight tokens had to be earned every time before the backup reinforcer could be obtained (see Bullock & Hackenberg, 2006; Foster et al., 2001; Notterman, 1951; Saltzman, 1949 for nonhuman systematic replications). The effect was replicated in the same project by Van Houten and Nau at VR- and FR-12 schedules, and teachers preferred the VR over the FR exchange-production schedule.

In the other applied study, Lalli et al. (2000; Experiment 3) reported that a 7-year-old with pervasive developmental delays made more functional communication responses than aggressive responses when the former were maintained by a differentially signaled VR-2 exchange-production schedule, and the latter were maintained on an FR-1 schedule for the same backup reinforcer. Although these findings are encouraging, more research is needed. Does the differentially signaled VR exchange-production schedule maintain more adaptive behavior than a simple VR exchange-production schedule? Can the Lalli et al. finding with one client be replicated with other clients, with different ages, challenging behaviors, intellectual capacities, and when discrete-trials procedures are employed?

Those questions notwithstanding, it appears that Principle 3 offers additional principled reasons to agree with Hackenberg (2018) that exchange-production schedules are “powerful variables” with “wide generality.” As we will see later in this paper (Principle 6), exchange-production schedules may yield operant behavior that is more resistant to Pavlovian extinction (i.e., token provided but never exchanged for a backup reinforcer) than behavior produced by manipulating token-production schedules. Where textbooks sometimes encourage applied behavior analysts to manipulate the response requirement for earning a token (e.g., Martin & Pear, 2019), we encourage readers to explore the largely untapped potential of differentially signaled VR exchange-production schedules.

A second application of Principle 3: Language acquisition

Because Principle 3 may be new to some readers, we provide a second area of application for your consideration, language acquisition among children with delayed speech (e.g., children diagnosed with ASD). One strategy for encouraging vocalizations is to alter the function of speech sounds, so they function as conditioned reinforcers. If this can be accomplished, then the child may increase their verbalizations to produce this auditory conditioned reinforcer (for review, see Petursdottir & Lepper, 2015; Shillingsburg et al., 2015). In the existing literature, this is accomplished using a stimulus-stimulus pairing procedure in which the therapist makes an auditory vocalization (e.g., “bah”) and then immediately gives the client a highly preferred item (i.e., a NS→US sequence). When this sequence is repeated many times, from a textbook account of conditioned reinforcement, all the boxes have been checked—repeated pairing of a salient NS (“bah”) with an effective US, with close temporal contiguity between the two. Therefore, the sound of “bah” should (a) acquire CS properties (e.g., a positive emotional response should be evoked by CS onset) and (b) acquire conditioned reinforcing properties (i.e., the client should say “bah” more often than before).

Looking at these procedures from a Principle-3 perspective, two intervals are of interest, C and T. From the reviews of this literature provided by Petursdottir and Lepper (2015) and Shillingsburg et al. (2015), it is clear that researchers have been conscientious about ensuring that “bah” is presented in close temporal proximity to the US, but the duration of T has received less systematic attention. Recalling that T is the interval from NS onset to US delivery, the duration of the NS can be an important contributor to T. The longer T is, the smaller the C/T ratio, all else being equal. Because smaller C/T ratios are less effective, Principle 3 provides a principled reason to advise against presenting the NS repeatedly before the US (e.g., “bah,” “bah,” “bah,” US; “bah,” “bah,” “bah”…), which is a common practice in this literature (e.g., Normand & Knoll, 2006). Although Miliotis et al. (2012) reported better outcomes with one NS presentation rather than three, insufficient research has systematically evaluated the effects of manipulating T in applied settings.

In this language-acquisition literature, even less attention has been paid to C (i.e., the inter-US interval). For example, it is common to report no procedural details from which C could be deduced (e.g., Moher et al., 2008; Smith et al., 1996) or to present “bah” immediately after the US is consumed (e.g., Carroll & Klatt, 2008). When the NS is presented right after the back-up reinforcer is consumed, the inter-US interval is nearly identical to the NS→US interval (i.e., C ≈ T), a condition known to produce little to no Pavlovian learning (Gibbon et al., 1975; Gibbon & Balsam, 1981). If the child says “bah” more often after these C ≈ T interventions (and they do with 25% of the targeted vocalizations; Carroll & Klatt, 2008; Esch et al., 2005; Miguel et al., 2002), then the improvement cannot be attributed to Pavlovian learning. Without a theoretical account of these limited successes, it may prove difficult to replicate them (they may be due to an unidentified procedural confound) or to integrate them with behavior-analytic principles (Baer et al., 1968).

Although the durations of C and T have been manipulated between subjects or studies in this language-acquisition literature, no study has been designed to systematically evaluate the efficacy of interventions arranging different values of C, T, and C/T ratios (while holding all other procedural variables constant). This is a significant research gap that should be filled. Principle 3 makes a clear prediction: when the principles of Pavlovian conditioning are adhered to, large C/T values will enhance (relative to small values) the CS and conditioned-reinforcer functions of “bah.” If research is conducted to evaluate this hypothesis, we recommend assessing the CS and conditioned-reinforcer functions independent of tests of increased participant vocalizations (e.g., Petursdottir et al., 2011); doing so provides an independent test of the efficacy of the Pavlovian procedures in establishing the core CS/conditioned-reinforcement functions.

Principle 4: The CS/conditioned-reinforcer should uniquely signal the sooner than normal arrival of the US/backup-reinforcer

With three principles under our belts, we have chosen our NS and our US, and we have ensured that the duration of C is much longer than the duration of T. Next, we must ensure that no other (non-target) stimulus signals that the US will arrive sooner (after T) than normal (again, “normal” is given by the inter-US interval, C), lest our target stimulus fail to acquire CS/conditioned-reinforcer properties. We will discuss two examples of this in the Pavlovian literature—overshadowing and blocking—both of which have important implications for the use of conditioned reinforcement in applied research.

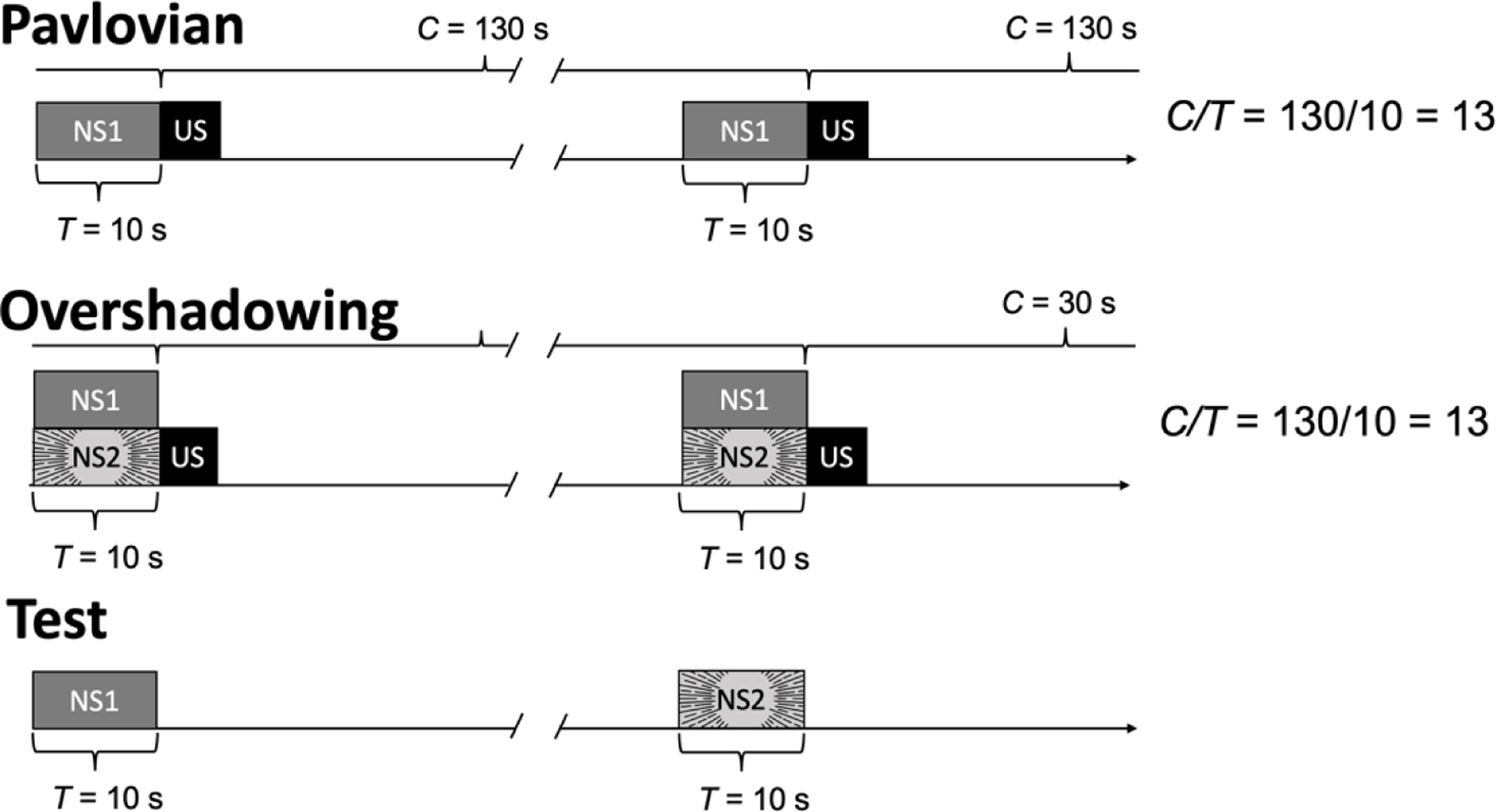

Overshadowing

Figure 3 illustrates a Pavlovian overshadowing procedure. For individuals assigned to the Pavlovian-training group (top panel), a neutral stimulus (NS1) is presented alone 10 s prior to the US; it uniquely signals that the appetitive US will arrive 13 times sooner than normal. For the overshadowing group (middle panel), training is different. Two neutral stimuli, NS1 and NS2, are presented together 10 s before the US. Neither of these neutral stimuli has a behavioral function (they are both neutral), but NS2 is more salient than NS1. Because of this difference in salience, NS2 is more likely to acquire a CS function than NS1. This outcome is revealed in the test phase (bottom panel), when NS1 and NS2 are periodically presented alone, without the US. For Pavlovian-trained subjects, NS1 evokes more conditioned responding than NS2, and the opposite is true among those in the overshadowing group (e.g., Jennings et al., 2007; Mackintosh, 1971; Pavlov, 1927). This is a replicable finding (see Gottlieb & Begej, 2014, for review), and overshadowing effects have been documented in human laboratory experiments (e.g., Chamizo et al., 2003; Prados, 2011). To our knowledge, no published studies have systematically explored overshadowing effects in a test of conditioned reinforcement, not in the lab, not with humans, nor in applied settings; these are significant gaps that should be filled.

FIGURE 3.

Pavlovian overshadowing procedures. The top panel shows the training phase for the Pavlovian group—a neutral stimulus (NS1) is presented 10 s before the unconditioned stimulus (US), and US events happen, on average, every 130 s. For the overshadowing group, everything is the same except a second, highly salient neutral stimulus (NS2) is presented simultaneously with NS1. In the test phase, NS1 is presented alone to evaluate if it will evoke a conditioned response.

Blocking

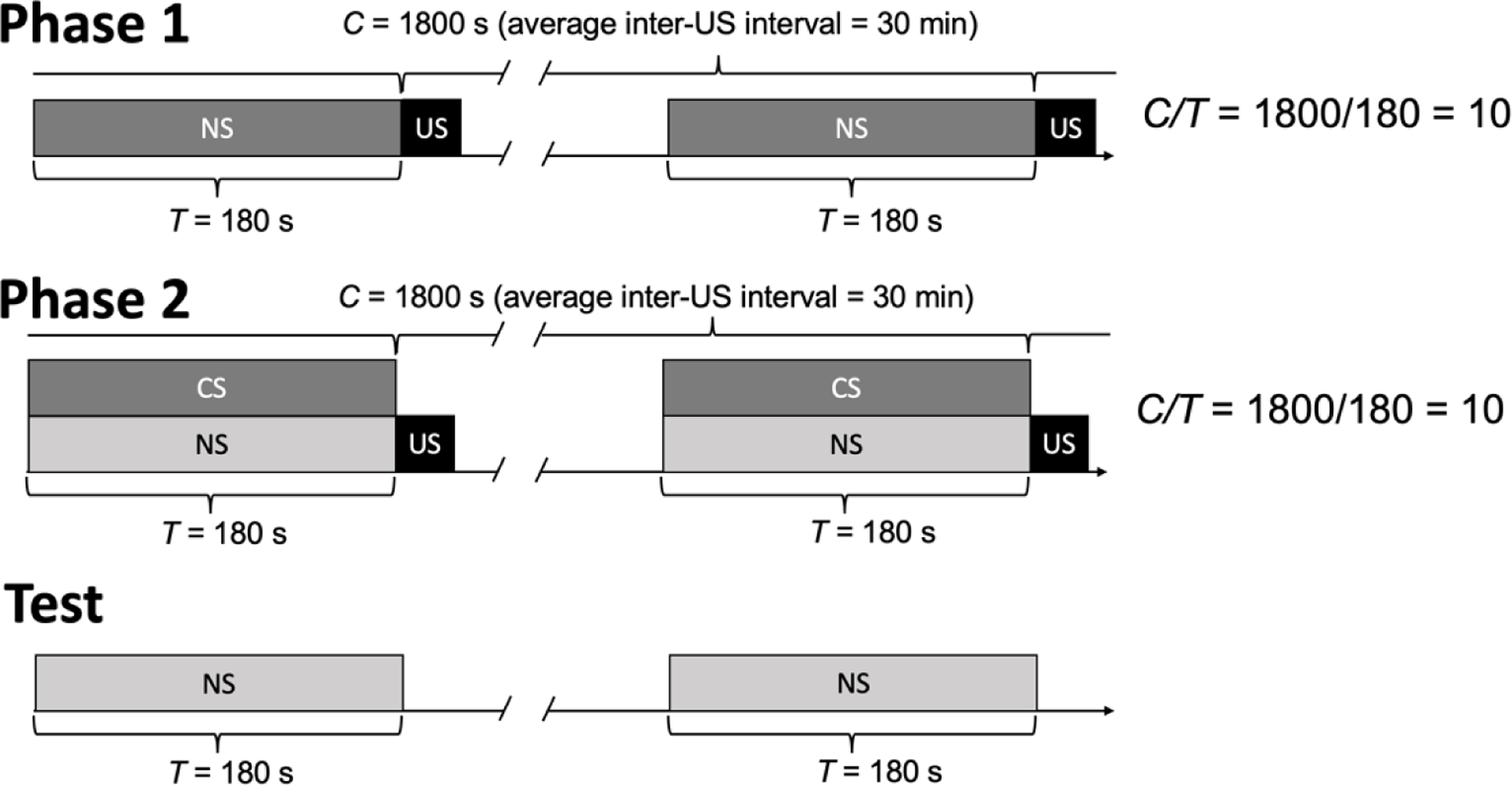

The other example of Principle 4 in the Pavlovian literature, blocking, is illustrated in Figure 4; parameters are from the famous Kamin (1968) experiment. In the first phase, a 180-s stimulus acquired CS properties because it signaled that the US would arrive 10 times faster than normal. In Phase 2, the now functional CS was presented simultaneously with a NS, both of which were followed by the US. In Kamin’s test phase, the NS from Phase 2 failed to acquire CS properties, whereas for a control group that did not complete Phase 1, the NS acquired a CS function. Although replications conducted in other labs, and with other CS and US stimuli, show that blocking is rarely complete, it is nonetheless clear that presenting a NS with a previously established CS hinders Pavlovian learning (Rescorla, 1999a; Soto, 2018), including with humans (e.g., Arcediano et al., 1997).

FIGURE 4.

Pavlovian blocking procedures involving a neutral stimulus (NS), conditioned stimulus (CS) and unconditioned stimulus (US). In Phase 1, the NS→US sequence at C/T = 10 establishes the CS function (C refers to the inter-US interval and T to the CS→US interval). In Phase 2, procedures are unchanged with the exception that an NS is presented simultaneously with the functional CS. In the test phase, the NS is presented alone to evaluate if it will evoke a conditioned response.

When it comes to the relevance of Principle 4 to conditioned reinforcement, several experiments have shown that presenting the would-be conditioned reinforcer with a previously established CS hinders acquisition of the conditioned-reinforcer function (Burke et al., 2007; Palmer, 1988; Panlilio et al., 2007; Vandbakk et al., 2020). For example, in the Burke et al. (2007) experiment, a CS function was established with rats using food as the US (C/T = 150/30 = 5). In the next phase, the established CS was presented simultaneously with a NS, both prior to US delivery (as in Phase 2 of Figure 4). In test sessions, rats made approximately 7.5 times more operant responses to produce the CS established in Phase 1, relative to the NS introduced in Phase 2, thereby suggesting an at least partial blocking of acquisition of the conditioned-reinforcer function.

Applying Principle 4

Applying Principle 4 means the applied behavior analyst should be on the lookout for other, non-target stimuli that are inadvertently correlated with the would-be conditioned reinforcer; the latter should be the only stimulus signaling the more imminent than normal arrival of the US. That non-target stimulus might be a salient NS (overshadowing) or a previously established CS (blocking). For example, presenting a NS with a highly salient praise event could have an overshadowing effect; if praise already functions as a CS/conditioned-reinforcer, it may block the NS from acquiring those functions. Likewise, if we are trying to establish the conditioned-reinforcer function of an auditory stimulus like “bah,” the vocal stimulus should not be presented at the same time that the therapist reaches for the backup reinforcer. The latter is a visual non-target stimulus that presumably already has a CS function—it signals that a normally rare backup reinforcer is now imminent. If “bah” is blocked in this way, then it is less likely to acquire conditioned reinforcer properties (see Petursdottir et al., 2011, for discussion).

In reviewing the applied literature, we encountered several apparent violations of Principle 4, though most of these involved presenting a salient stimulus before the NS→US trial began. For example, when Smith et al. (1996) were trying to establish the conditioned reinforcer function of an auditory stimulus like “bah,” they always cleared away the toys before saying “bah.” If a non-target, toy-clearing event signals the shift from a long inter-US interval (C) to its relatively imminent arrival (T), then the highly salient toy-clearing stimulus could overshadow “bah” and hinder its acquisition of a conditioned-reinforcer function (see Egger & Smith, 1962, for a laboratory demonstration of such overshadowing of conditioned reinforcement). Similarly, it is a common practice in this same applied literature to call the child’s name just before presenting the would-be conditioned reinforcer (e.g., Esch et al., 2009; Petursdottir et al., 2011). Again, this seemingly innocuous but highly salient non-target stimulus could overshadow the acquisition of the CS/conditioned-reinforcer function if that stimulus has already signaled the more imminent than normal arrival of the US. If Paul Revere’s brother, Tom Revere, warned the townspeople of the imminent British invasion just before Paul rode into town, Paul’s warning would largely be ignored. Principle 4 reminds us that the CS/conditioned-reinforcer must uniquely signal that the US/backup reinforcer is coming.

This common practice of attracting participant attention before presenting the NS raises the concern that the participant is not otherwise attending to the presentation of the NS. Returning to Principle 1, it is important to choose a novel and salient NS. This may be challenging in some populations of participants, but it is a challenge that must be met if we are to optimize Pavlovian learning.

Principle 5: Pavlovian learning is facilitated by arranging fewer trials per session

When considering how many Pavlovian training trials to arrange per session, intuition tells us that arranging more trials—more learning opportunities—is a good strategy. Our textbooks imply the same when they indicate that establishing a new CS function requires repeated pairing of NS and US events (Chance, 1998; Cooper et al., 2020; Martin & Pear, 2019; Mayer et al., 2018; Miltenberger, 2016). However, there are two reasons that intuition may be a poor guide. The first reason will take us back to Principle 3 and the second will lead us to argue for a separate principle (Principle 5), which cannot be accounted for by Principle 3 alone. Either way you look at it, per-trial Pavlovian learning is facilitated by arranging fewer NS→US trials per session.

Let’s tackle the first reason first. If an applied behavior analyst has scheduled 10 minutes for a Pavlovian training session, then the number of trials per session will influence the C/T ratio, which will influence Pavlovian learning and performance outcomes (Principle 3). For example, if 40 trials are completed per session (e.g., Dozier et al., 2012), then the average US→US interval (C) is approximately 600/40 = 15 s. If the NS→US interval is always 5 s, then C/T = 15/5 = 3. Principle 3 tells us that arranging such a low C/T value is counter to the goal of Pavlovian training; many more training trials will be necessary for acquisition than would be required if just four trials were arranged in each 10-min session. If all else is held constant in these four-trial sessions, then the average inter-US interval is 600/4 = 150 s, and C/T = 150/5 = 30. When Gottlieb (2008) conducted experiments arranging these kinds of manipulations with rats and mice, he found that arranging 8-fold more trials per session (4 vs. 32 trials) did not improve learning outcomes; indeed, by some performance measures, superior behavioral outcomes were obtained with fewer trials per session (see Papini & Overmier, 1985, for similar outcomes with pigeons). Thus, when session duration is fixed and the applied behavior analyst’s decision is how many trials should be conducted per session, the basic nonhuman literature suggests that more than three or four trials is unnecessary and, perhaps, contraindicated.
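The relation between trials per session and the C/T ratio in a fixed-duration session can be checked with a few lines of code. This is a minimal sketch that assumes, as in the example above, a 600-s session, a constant 5-s NS→US interval, and trials spread evenly across the session; the function name is illustrative.

```python
def ct_for_fixed_session(session_seconds, trials, t_seconds):
    """Return (C, C/T) when a fixed-duration session is divided evenly
    among the given number of NS->US trials (an assumed simplification)."""
    c = session_seconds / trials   # average US->US interval
    return c, c / t_seconds


results = {}
for trials in (40, 4):
    c, ratio = ct_for_fixed_session(session_seconds=600, trials=trials, t_seconds=5)
    results[trials] = (c, ratio)
    print(f"{trials} trials: C = {c:.0f} s, C/T = {ratio:.0f}")
```

Cutting the number of trials tenfold in the same 10-min session raises the C/T ratio tenfold, from 3 to 30.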

Now that readers are gaining expertise with Principle 3 and have been reminded of the importance of it during Pavlovian training, we can ask how many trials should be programmed in a Pavlovian training session in which the experimenter is careful to hold C/T constant. Does the trials-per-session effect hold up when Principle 3 predicts no change in behavioral outcomes? If that effect still holds true, then a new principle is needed.

Several laboratory experiments have examined the trials-per-session effect when C/T is held constant across groups of animals that complete different numbers of trials per session. The results of two of these experiments are shown in Figure 5. In the study conducted by Gallistel and Papachristos (2020; Figure 5A), mice were randomly assigned to groups that completed different numbers of trials per session (2.5 [on average], 10, or 40). As shown in Figure 5A, the new CS function was acquired in fewer trials when mice completed 2.5 trials per session; said another way, Pavlovian learning was facilitated (on a per-trial basis) by arranging fewer trials per session. Figure 5B shows the results of a similar experiment conducted with rats (C/T held constant across groups; Papini & Dudley, 1993). For rats assigned to the 1-trial per session group, the CS evoked conditioned responding after about 20 training trials (i.e., the fourth 5-trial block). By contrast, for rats in the 20-trials per session group, the NS failed to evoke conditioned responding after 40 training trials. Thus, as before, arranging fewer trials per session facilitated per-trial Pavlovian learning. Hence, Principle 5 holds that Pavlovian learning is facilitated on a per-trial basis by arranging fewer trials per session; this is true even when C/T is held constant.

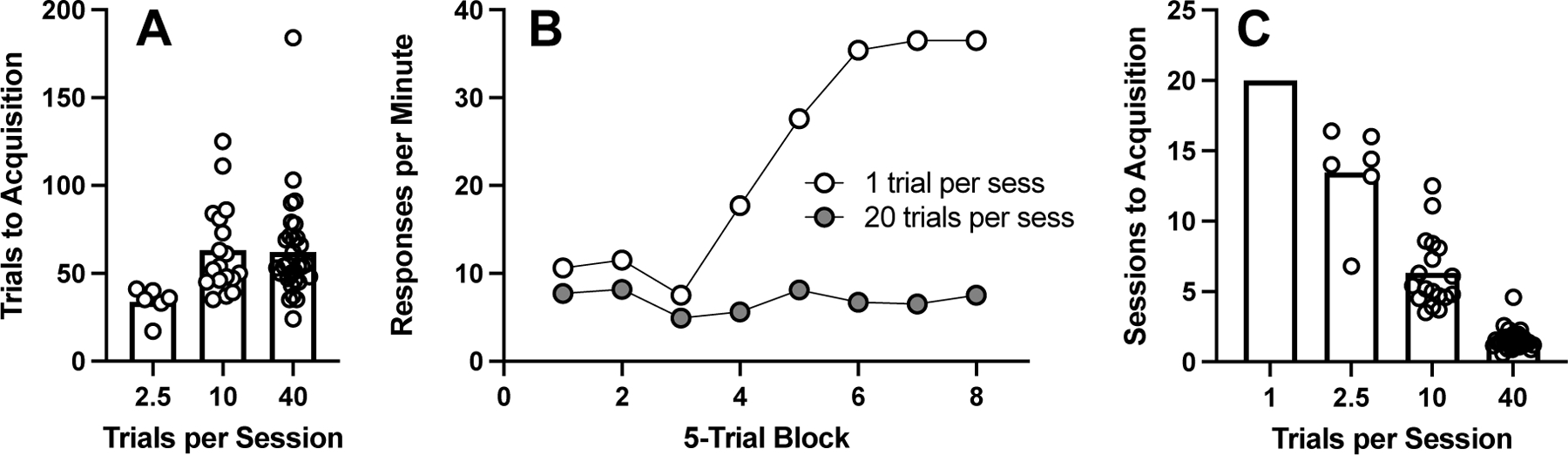

FIGURE 5.

Pavlovian acquisition data. Panel A: Number of Pavlovian training trials needed before mice met an acquisition criterion (i.e., the CS alone reliably evoked the conditioned response) plotted as a function of the number of NS→US trials completed per session (Gallistel & Papachristos, 2020). Panel B: Conditioned response rate in a rat autoshaping experiment (Papini & Dudley, 1993). In rats completing one trial per session, the NS evoked conditioned responding at above baseline levels by the 4th trial block. For rats in the 20-trials per session group, the NS was not yet evoking conditioned responding after 40 training trials. Panel C: Sessions to acquisition across groups that acquired the CS function.

Before we get too excited about this, have a look at the data in Figure 5C. In that panel we have calculated the number of sessions to acquisition based on the trials to acquisition data in Figure 5A and Figure 5B. Those data show that training goals are achieved more quickly (fewer sessions) by arranging more trials per session. Importantly, this will be true only if the C/T ratio is held constant when increasing the number of trials per session, as in the Gallistel and Papachristos (2020) and Papini and Dudley (1993) experiments. Arranging more training trials in a fixed-duration session will decrease C, and hence the C/T ratio, which will slow acquisition (Principle 3). To the best of our knowledge, the trials-per-session effect has not been investigated either with humans or in applied settings.

Shifting from Pavlovian to conditioned-reinforcement experiments, to our knowledge only one nonhuman laboratory study has explored this trials-per-session effect on conditioned-reinforcer outcomes (Bersh, 1951). In that study, different groups of rats completed either 2, 4, 8, 16, or 20 NS→US trials in each of four training sessions. Speed of acquisition was not a dependent measure, just operant response rates in a post-training test in which rats could press a lever to gain brief access to the CS alone. The only significant effect was that rats receiving 20 trials per session pressed the lever more than rats trained with four trials per session. Although this would seem to counter Principle 5, none of the other between-group differences (e.g., rats completing 2 vs. 20 trials per session) were significant. Bersh (1951) concluded that the trials-per-session effect was unreliable in his experiment, perhaps because he did not control many of the variables that are routinely controlled for in more contemporary research (e.g., session duration, C/T ratio). More research is needed on the isolated effects of trials per session in conditioned reinforcement experiments.

Applying Principle 5

The empirical evidence informing Principle 5 suggests that Pavlovian learning is facilitated on a per-trial basis when fewer training trials are arranged per session. This effect is observed when C/T values are held constant but, as noted above, the effect can also occur if fewer trials are arranged in fixed-duration sessions; this increases C/T, which facilitates learning and behavioral outcomes. Below we discuss three implications of these observations for applied research.

First, it is worth noting that applied researchers often conduct several brief sessions in a row in a single day, which is different from nonhuman studies in which just one session is conducted per day. Given that the findings supporting Principle 5 come from nonhuman studies, we should state the obvious, that readers should not implement Principle 5 by simply redefining their sessions as composed of fewer trials (e.g., four NS→US trials instead of 40) and then conducting additional back-to-back sessions until the prior number of trials is reached (e.g., 10 four-trial sessions = 40 trials). This shift in the definition of a session introduces no functional changes that would be expected to facilitate Pavlovian learning on a per-trial basis.

Second, there is some complementarity between Principles 3 and 5. In an applied study in which a salient/novel 10-s NS is slated to acquire a CS/conditioned-reinforcer function, Principle 3 encourages us to program a long inter-US interval, perhaps C = 300 s (C/T = 300/10 = 30). In many applied research settings, scheduling 20 such trials per session will be impractical, as sessions will last one hour and 40 minutes (20 × 300 s = 6,000 s). Thankfully, Principle 5 tells us that many trials per session are unnecessary; arranging fewer trials per session improves the efficiency of Pavlovian learning. Hence the complementarity of these principles.
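The arithmetic behind this trade-off can be sketched in a few lines of Python. The function names are hypothetical, and the calculation assumes, as in the example above, that each trial occupies one full inter-US interval, so that session length is simply the number of trials multiplied by C.

```python
def ct_ratio(inter_us_interval_s: float, ns_duration_s: float) -> float:
    """Return C/T: the inter-US interval (C) divided by the NS duration (T)."""
    if ns_duration_s <= 0:
        raise ValueError("NS duration must be positive")
    return inter_us_interval_s / ns_duration_s


def session_minutes(trials: int, inter_us_interval_s: float) -> float:
    """Approximate session length in minutes.

    Assumption (illustrative): one trial per inter-US interval,
    so the session lasts trials * C seconds.
    """
    return trials * inter_us_interval_s / 60


# Values from the example in the text: C = 300 s, T = 10 s.
print(ct_ratio(300, 10))         # 30.0
print(session_minutes(20, 300))  # 100.0, i.e., 1 h 40 min
print(session_minutes(4, 300))   # 20.0
```

Holding C/T at 30, cutting the session from 20 trials to four shortens it from 100 minutes to 20, which is the practical sense in which Principles 3 and 5 complement one another.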

The third implication of Principle 5 comes from the observation that, if the inter-US interval lasts an average of 300 s (5 min), there will be a good deal of downtime between Pavlovian training trials. For a rat in an operant chamber this is not a problem, but for a client who comes to the clinic for two hours a day, downtime is an inefficiency that runs counter to the goals of the behavioral interventions. Perhaps Principle 5 can inspire a novel approach to conducting Pavlovian training in applied settings. That approach will dispense with the practice of holding Pavlovian training sessions that have a discriminable beginning and end. Instead, NS→US trials will be conducted at randomly selected times during a clinic visit. That is, these trials will be overlaid on top of normal clinic activities. To reduce disruptions to these activities, we will schedule just a few trials per day, which will have the added benefit of increasing the inter-US interval, and therefore ensuring C/T is large. For example, if a client visits the clinic from 10:30 a.m. until 12:30 p.m., then the normally scheduled behavioral services would be provided throughout the visit; no dedicated session time will be set aside for a Pavlovian training session. Instead, at three random, pre-selected times (e.g., 10:47 a.m., 11:29 a.m., and 12:21 p.m. on Monday, with different randomly selected times on Tuesday and so forth), the salient/novel NS would be conspicuously presented for 10 s and the US provided after that. If the NS uniquely signals that the backup reinforcer will arrive more than 100 times faster than normal while at the clinic (e.g., on Monday C/T = an average of 1,460 s / 10 s = 146 times faster than normal), then acquisition of the CS/conditioned-reinforcer function should proceed as in Figure 5B. As this Pavlovian training continues across trials and days, noncontingent presentations of the NS alone may come to reliably evoke positive emotional responses (a conditioned response); when they do, this reveals that the NS has acquired a CS function and may subsequently function as a conditioned reinforcer.
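One way the random, pre-selected trial times might be generated is sketched below. This is only an illustration: the function names, the uniform sampling of onset times, and the minimum spacing between trials (`min_gap_s`) are assumptions introduced here, not procedures taken from the studies cited.

```python
import random


def schedule_trials(visit_start_s, visit_end_s, n_trials,
                    ns_duration_s=10, min_gap_s=300, rng=None):
    """Return sorted NS onset times (seconds since midnight) within a visit.

    Assumptions (illustrative only): onsets are drawn uniformly over the
    visit, every NS presentation ends before the visit does, and successive
    onsets are at least min_gap_s apart. Candidate schedules that violate
    the spacing rule are discarded and redrawn.
    """
    rng = rng or random.Random()
    latest_onset = visit_end_s - ns_duration_s
    for _ in range(10_000):
        onsets = sorted(rng.uniform(visit_start_s, latest_onset)
                        for _ in range(n_trials))
        gaps = [later - earlier for earlier, later in zip(onsets, onsets[1:])]
        if all(gap >= min_gap_s for gap in gaps):
            return onsets
    raise RuntimeError("could not place trials; relax min_gap_s or n_trials")


def to_clock(seconds):
    """Format seconds since midnight as HH:MM (24-hour clock)."""
    hours, remainder = divmod(int(seconds), 3600)
    return f"{hours:02d}:{remainder // 60:02d}"


# A visit from 10:30 a.m. to 12:30 p.m. with three trials, as in the example.
visit_start = 10 * 3600 + 30 * 60
visit_end = 12 * 3600 + 30 * 60
for day in ("Monday", "Tuesday"):
    onsets = schedule_trials(visit_start, visit_end, n_trials=3)
    print(day, [to_clock(t) for t in onsets])
```

Drawing a fresh schedule each day keeps trial times unpredictable, and the spacing rule is one simple way to keep the interval between successive US deliveries long relative to the 10-s NS.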

This “incidental” Pavlovian training approach is seemingly a good fit with those clinical settings in which staff and session-time resources are limited. However, as previously discussed in the context of Principle 1, some clinical populations may not shift their attention toward the NS when it is first presented, which will inhibit learning the temporal relation between that stimulus and the US that follows. Should this happen, Pavlovian training may need to occur in a less-distracting setting until the NS acquires a CS function. Training could then be continued in the settings in which the CS/conditioned-reinforcer will be used therapeutically.

Principle 6: A reliable NS→US contingency facilitates acquisition; an intermittent contingency increases resistance to Pavlovian extinction

Principle 6 has two components; the first is concerned with acquisition. That component holds that, all else being equal, acquisition of a Pavlovian CS function will occur more quickly if the NS is always followed by the US (Gibbon et al., 1980; Humphreys, 1939, 1940; Jenkins & Stanley, 1950; Pavlov, 1927). That is, acquisition will be slowed if the US follows the NS only some of the time (e.g., NS→ [p = 0.25 US])8. If the NS is encountered many times but it is not at all correlated with US events, then the NS will not acquire a CS function (Gottlieb & Begej, 2014).

Considering the implications of the first component of Principle 6 for conditioned reinforcement, new conditioned-reinforcer functions should be acquired faster if a reliable NS→US contingency is used; that is, if the would-be conditioned reinforcer is always, rather than only sometimes, followed by the backup reinforcer. Although this outcome seems obvious, to our knowledge, this prediction has never been empirically tested.

The second component of Principle 6 specifies that resistance to Pavlovian extinction is enhanced by sometimes presenting the CS without the US, a claim for which there is considerable empirical support (Chan & Harris, 2017; Fitzgerald, 1963; Gibbs et al., 1978; Harris et al., 2019; Haselgrove et al., 2004; Humphreys, 1939; Rescorla, 1999b). For example, in a series of rat experiments conducted by Chan and Harris (2019), the programmed probability of the US given the CS was a direct predictor of resistance to extinction. That is, if, during training, the US followed one in three (randomly selected) CS events (i.e., CS → [p = .33 US]) then, in the subsequent (post-training) extinction phase, three times as many CS-alone trials were required to extinguish conditioned responding, relative to rats with a training history of CS→ [p = 1 US]. If one in five CS events was followed by the US, extinction took five times as many trials.
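The proportional relation described above can be restated as a rule of thumb: the number of CS-alone trials needed for extinction scales with 1/p, where p is the probability of the US given the CS during training. The sketch below simply encodes that reading of the text. It is not a model fitted to the cited data, and the function name and the baseline of 20 trials are hypothetical.

```python
def predicted_extinction_trials(baseline_trials: float, p_us_given_cs: float) -> float:
    """Scale the trials-to-extinction baseline (training with p = 1) by 1/p.

    Assumption (illustrative): extinction trials are inversely proportional
    to the trained probability of the US given the CS.
    """
    if not 0 < p_us_given_cs <= 1:
        raise ValueError("p must be greater than 0 and no greater than 1")
    return baseline_trials / p_us_given_cs


baseline = 20  # hypothetical trials to extinction after CS -> [p = 1 US] training
for label, p in (("p = 1", 1.0), ("p = 1/3", 1 / 3), ("p = 1/5", 1 / 5)):
    trials = predicted_extinction_trials(baseline, p)
    print(f"{label}: about {trials:.0f} CS-alone trials ({trials / baseline:.0f}x baseline)")
```

Under this reading, training with one reinforced CS in three triples the expected number of extinction trials, and one in five multiplies it by five, matching the pattern reported in the text.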

When this second component of Principle 6 is applied to conditioned reinforcement, it suggests that arranging a NS → [p < 1 US] contingency during Pavlovian training should produce a conditioned reinforcer that is more resistant to Pavlovian extinction; that is, more likely to maintain operant behavior when the conditioned reinforcer is no longer exchanged for a backup reinforcer. Empirical support for this prediction is mixed. One of the better-controlled studies was conducted by Knott and Clayton (1966). Two experimental groups of rats completed four equal-duration Pavlovian training sessions, with 100 NS events in each. One group was trained with a NS→ [p = 1 US] contingency and the other group with a NS→ [p = 0.5 US] contingency. For both groups the appetitive US was a brief pulse of electrical brain stimulation. In the conditioned-reinforcement test that followed, rats could press a lever to gain 1-s access to the newly acquired CS, but not the US (Pavlovian extinction). Both groups responded at above control-group levels to produce the CS (a conditioned reinforcer effect), but the intermittent-US group’s responding proved to be more durable across Pavlovian extinction sessions. Although results consistent with this finding have been reported in other nonhuman labs (James, 1968; Klein, 1959) and with human children (Fort, 1961, 1965), these systematic replication studies exerted less control over confounding variables, so the supporting evidence is not entirely convincing. Adding further to that skepticism, other studies have failed to systematically replicate these outcomes (e.g., Fox & King, 1961; Jacobs, 1968). Thus, Principle 6 enjoys robust empirical support in the Pavlovian literature (i.e., acquisition of CS function), but application of the principle to conditioned reinforcement is not as clear and requires more systematic research.

Applying Principle 6

In our review of the applied behavior-analytic literature on acquisition of new conditioned-reinforcer functions, the first component of Principle 6 was uniformly followed (e.g., Dozier et al., 2012; Moher et al., 2008; Petursdottir et al., 2011). That is, researchers were careful to use a NS→[p = 1 US] contingency during Pavlovian training. As for the second component, we could find no applied studies that either trained with or moved to a NS→ [p < 1 US] contingency at any point during Pavlovian training. Therefore, to the best of our knowledge, no one has tested whether an intermittent Pavlovian contingency produces a conditioned reinforcer that is more robust to lapses in conditioned-reinforcement treatment fidelity (i.e., Pavlovian extinction – tokens received but not exchanged for backup reinforcers).

Instead, it is common to establish a new CS/conditioned-reinforcer function using a NS→[p = 1 US] contingency, use the CS as a conditioned reinforcer that is always exchanged for the backup reinforcer, and then to switch to a gradually more intermittent exchange-production contingency (e.g., Argueta et al., 2019; Leon et al., 2016). The latter contingencies were previously discussed in the context of Principle 3, and those points will not be repeated here. Instead, we will emphasize that Principle 6 predicts that training with a NS→ [p < 1.0 US] Pavlovian contingency should produce a conditioned reinforcer that is more resistant to treatment lapses that approximate Pavlovian extinction. Given the translational utility of such a finding, if it were to be confirmed, we would encourage readers to fill this research gap.

Finally, it is worth noting that the second component of Principle 6 is specific to the continued efficacy of a CS/conditioned-reinforcer in the face of Pavlovian extinction. It does not suggest that a CS→ [p < 1.0 US] contingency will produce a more preferred conditioned reinforcer than a CS→ [p = 1 US] contingency; it will not. If given a choice between the two, the more consistent CS will be preferred (Anselme, 2021).

TRANSLATING THE PRINCIPLES TO PROMOTE SELF-CONTROL

In this final section, we attempt to illustrate how the six practical principles of Pavlovian conditioning might prove useful in addressing a problem of interest to basic, translational, and applied researchers: reducing impulsive choice. Promoting self-control choice and delay tolerance has been a behavioral target for decades (e.g., Mazur & Logue, 1978; Schweitzer & Sulzer-Azaroff, 1988). In these studies, self-control is defined as preference for a larger-later over a smaller-sooner reward, and impulsive choice is defined as the opposite. Self-control can also involve adhering to an initial self-control choice (enrolling in substance-use treatment) when defections to impulsivity are possible (daily relapse opportunities). Because impulsive choice is robustly correlated with substance use, gambling, and other health-impacting behaviors (for meta-analyses, see Amlung et al., 2016; MacKillop et al., 2011; Weinsztok et al., 2021), there is good reason to target improvements in self-control. All else being equal, a child who cannot make or sustain a self-control choice is more likely to engage in disruptive behavior when asked to wait, relative to a child who patiently waits to obtain what they want (Brown et al., 2021; Ghaemmaghami et al., 2016).

The Pavlovian strategy for promoting self-control is simple—focus on the stimulus presented during the delay to the larger-later reward. To unpack this a bit, when the participant in a laboratory test of impulsive choice chooses the larger-later reward (the self-control choice), a delay-bridging stimulus is presented immediately, and remains in place until the large reward is obtained. The function of that delay-bridging stimulus may be a partial determinant of impulsive or self-control choice—it is, after all, a salient immediate consequence of making a self-control choice.

Peck et al. (2020) evaluated whether the function of the delay-bridging stimulus is aversive. In an impulsive-choice test phase, stable choices between smaller-sooner and larger-later rewards were assessed. When the latter reward was selected, a cue light bridged the delay to food. In the next phase, no food rewards were arranged; instead, the delay-bridging cue light was illuminated periodically during the session and rats could press an escape lever to turn it off. The prevalence of impulsive choice in the first phase was positively correlated with rates of escape-lever responding in the second phase. Said another way, rats that made a lot of impulsive choices found the delay-bridging stimulus aversive (they turned that light off); “self-controlled” rats did not. If the immediate consequence of a self-control choice is the presentation of an aversive stimulus, then impulsive choice may be partially maintained by avoidance of that aversive stimulus.

A Pavlovian approach to self-control training would alter the function of the delay-bridging stimulus before the test of impulsive choice. Rather than allowing that stimulus to acquire aversive properties, it would first be established as a CS/conditioned-reinforcer. In the subsequent test of impulsive choice, Pavlovian-trained subjects may make more self-control choices than control-group rats because doing so produces an immediate conditioned reinforcer (the delay-bridging stimulus). When Principles 1–6 are followed in the Pavlovian-training phase, the new CS/conditioned-reinforcement function can be established with rats after about 75 trials (Robinson & Flagel, 2009). By comparison, other learning-based interventions that reduce impulsive choice in rodents typically require thousands of training trials (see Rung & Madden, 2018; Smith et al., 2019, for reviews).

Delay-bridging stimuli, such as a clock-timer, have been used in translational and applied experiments. Although Vessells et al. (2018) and Vollmer et al. (1999) reported that these stimuli facilitate self-control choice among children with developmental disabilities, Newquist et al. (2012) reported that bridging the delay with a timer did not reduce impulsive choice among typically developing preschoolers (see Cardinal et al., 2000, for comparable results with rats). A seemingly critical difference between these studies is that Vessells et al. and Vollmer et al. arranged systematic training over many sessions (delay-fading; Mazur & Logue, 1978; Schweitzer & Sulzer-Azaroff, 1988), which may have reduced the aversive function of the delay-bridging stimulus. Newquist et al. and Cardinal et al. (2000) provided no such training when they found that delay-bridging stimuli were not helpful. The state of the literature, then, is that delay-bridging stimuli can promote self-control choice, but seemingly only when the non-aversive function of those stimuli is established through systematic training.