Abstract

Objectives

The aim of this study was to evaluate the diagnostic ability of deep learning models, particularly convolutional neural network models used for image classification, to detect femoroacetabular impingement (FAI) on hip radiographs.

Materials and methods

Between January 2010 and December 2020, the pelvic radiographs of 516 patients (270 males, 246 females; mean age: 39.1±3.8 years; range, 20 to 78 years) with hip pain were retrospectively analyzed. After the inclusion and exclusion criteria were applied, a total of 888 hip radiographs (380 diagnosed with FAI and 508 considered normal) were evaluated using deep learning methods. Pre-trained VGG-16, ResNet-101, MobileNetV2, and Inceptionv3 models were used for transfer learning.

Results

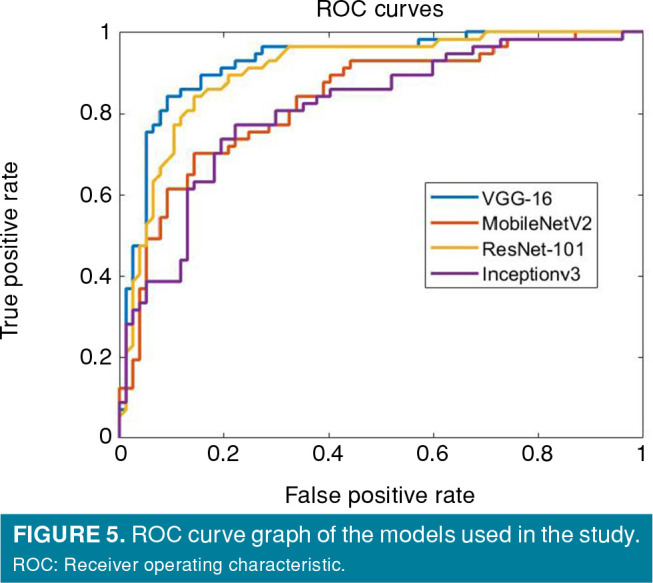

As assessed by accuracy, sensitivity, specificity, precision, F1 score, and area under the curve (AUC), the VGG-16 model outperformed the other pre-trained networks in diagnosing FAI, achieving 86.6% accuracy, 82.5% sensitivity, 89.6% specificity, 85.5% precision, an 83.9% F1 score, and an AUC of 0.92.

Conclusion

In patients with suspected FAI, pelvic radiography is the first imaging method to be applied, and deep learning methods can help in the diagnosis of this syndrome.

Keywords: Computer-assisted image processing, deep learning, femoroacetabular impingement, hip.

Introduction

Femoroacetabular impingement (FAI) is one of the major causes of hip pain in young adults. Its progression leads to osteoarthritis, which can be prevented with early diagnosis and treatment.[1] It is also important to distinguish FAI from other causes of hip pain to prevent unnecessary or incorrect treatment.[2]

The hip is a ball-and-socket joint in which the femoral head fits into the acetabulum and can move easily in all directions. In FAI, excess bone formation at the acetabular periphery or in the femoral head and neck region causes compression between the proximal femur and the acetabulum during normal hip movements.[1] Early in the course of the syndrome, pain occurs only when the hip approaches the limit of its normal range of motion; as the problem progresses, pain may also occur during activities of daily living. Although the pain in FAI is typically felt in the anterior hip and thigh, it may also radiate to the lateral and posterior aspects of the hip. Patients can usually indicate the painful area by grasping the lateral side of the hip, just above the greater trochanter, between the thumb and index finger; this is called the "C sign".[2]

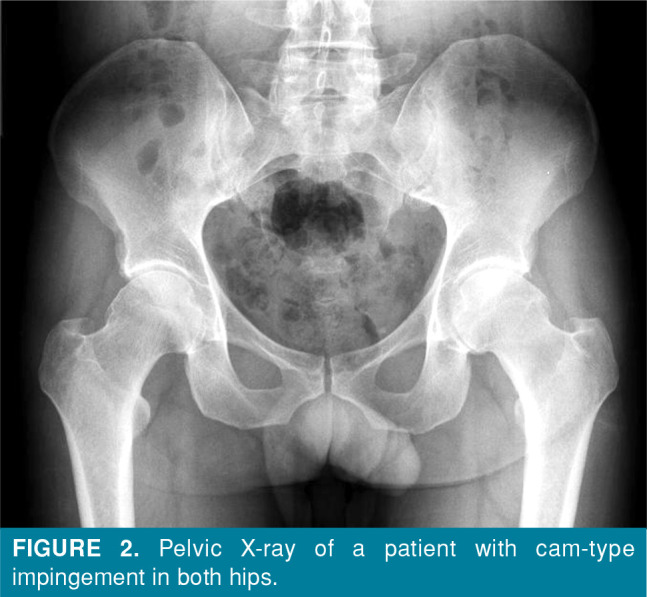

Conventional radiography is the first imaging method that should be performed to exclude other causes of non-traumatic hip pain in young adults, such as coxarthrosis due to developmental dysplasia of the hip, avascular necrosis of the femoral head, stress fractures, rheumatic diseases, and tumors of the hip region. In pincer-type impingement, the acetabular rim extends toward the femoral neck (collum femoris), resulting in impingement (Figure 1). In cam-type impingement, the femoral head and neck resemble a camshaft, and a pistol-grip deformity of the femoral head-neck region causes compression between the femoral neck and the acetabulum (Figure 2).[3] The frog-leg lateral, cross-table lateral, 45-degree Dunn, and false-profile views are conventional radiographic projections used in diagnosis.[1,4]

Figure 1. Pelvic X-ray of a patient with pincer-type impingement in both hips.

Figure 2. Pelvic X-ray of a patient with cam-type impingement in both hips.

Magnetic resonance (MR) imaging or MR arthrography can be used to investigate conditions such as labral damage and cartilage degeneration, which cannot be visualized on conventional radiographs.

Machine learning is the branch of artificial intelligence that addresses the problem of learning from data. Convolutional neural networks (CNNs) are machine learning models built from artificial neural networks. Whereas standard neural networks consist of fully connected layers, a CNN contains at least one convolutional layer.[5] In a CNN, features are extracted by applying filters to the image, and the network consists of an input layer, hidden layers, and an output layer. CNN performance generally improves as the amount of training data increases. Transfer learning, which reuses a previously trained model for a new problem, is used when the amount of data is limited. AlexNet, VGG-16, GoogLeNet, ResNet-50, and SqueezeNet are among the best-known pre-trained networks used for transfer learning.[6-8] Research has shown that deep learning methods, particularly transfer learning, are effective in medical image analysis while saving time and computational cost.[5]
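To make "applying filters to the image" concrete, the sketch below slides one 3×3 filter over a tiny grayscale image, which is the core operation of a convolutional layer (as in common deep learning libraries, it is computed as a cross-correlation, i.e. the kernel is not flipped). The toy image and the hand-picked vertical-edge filter are assumptions for illustration only; in a trained CNN the filter weights are learned from data, and each layer applies many filters in parallel.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over `image` with stride 1 and no padding ("valid" mode).

    Like CNN layers in common deep learning libraries, this computes a
    cross-correlation: the kernel is not flipped before sliding.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=float)
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x: np.ndarray) -> np.ndarray:
    """Non-linearity typically applied to a convolution's output."""
    return np.maximum(x, 0.0)


# Toy 5x5 image: dark on the left, bright on the right (a vertical edge)
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)

# Hand-picked vertical-edge filter (illustrative; a CNN learns its filters)
kernel = np.array([[-1, 0, 1],
                   [-1, 0, 1],
                   [-1, 0, 1]], dtype=float)

feature_map = relu(conv2d_valid(image, kernel))
print(feature_map)  # every row is [3. 3. 0.]: strong response where the edge lies
```

The resulting feature map is high only where the filter straddles the edge, which is the sense in which convolutional layers "determine features".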

Deep learning methods imitate the working mechanisms of neurons in the human brain while exploiting the processing power of computers. They are currently used for tasks such as fingerprint reading, license plate recognition, and face recognition, and they have been used successfully to colorize old grayscale photographs. Advances in artificial intelligence have allowed these methods to be widely used in medicine, particularly in image-based diagnosis.[8]

Computer-aided diagnosis systems assist physicians in interpreting medical images such as radiographs, MR images, and digital pathology images. Many recent studies have shown that medical image analysis can be performed successfully with deep learning methods, particularly in radiology, pathology, cardiology, dermatology, and ophthalmology.[9-17] In a recent study, deep learning methods successfully distinguished normal from pathological hips on hip ultrasonography images and also identified ultrasonography images that were unsuitable for evaluation.[14] Another study showed that sacroiliitis could be diagnosed on pelvic radiographs with pre-trained VGG-16, ResNet-101, and Inception-v3 networks.[16]

Radiography is the preferred first-line imaging method for patients presenting with musculoskeletal complaints because of its accessibility, low cost, and ease of interpretation. In patients presenting with hip pain, pelvic radiography is the first imaging method used; although the findings of FAI can be seen on the radiograph, the diagnosis may be missed by clinicians because of fatigue and workload. In the present study, we aimed to develop a computer-aided diagnosis method based on deep learning to assist clinicians in diagnosing FAI from pelvic radiographs.

Patients and Methods

Dataset

This single-center, retrospective study was conducted at Gazi University Faculty of Medicine, Department of Orthopaedics and Traumatology, between January 2010 and December 2020. Pelvic radiographs of 516 patients (270 males, 246 females; mean age: 39.1±3.8 years; range, 20 to 78 years) with hip pain were evaluated. After the pelvic radiographs were saved in JPEG format, the right and left hips were evaluated separately using the You Only Look Once (YOLO) object detection algorithm.[18] Exclusion criteria were the presence of protrusio acetabuli, osteoarthritis of Grade 2 (definite narrowing in the presence of definite osteophytes) or higher according to the Kellgren/Lawrence classification,[19] or subluxation of the femoral head. Radiographs showing traumatic or tumoral lesions in the pelvic bones or proximal femur were also excluded. In total, 1,032 hips of 516 patients were examined radiographically, and each hip image was independently classified as normal or FAI by two authors with four and 15 years of specialist experience, respectively. Radiographs that were not assigned to the same class by both researchers were excluded. Finally, 888 hip radiographs (380 diagnosed with FAI and 508 considered normal) were included in the study.

Data preprocessing

The radiographic images used in this study ranged from 560 to 2,400 pixels in width and from 560 to 2,700 pixels in height. Some radiographs contained directional markers, metal or clothing artifacts, dense intestinal gas, and pelvic organ shadows, which could adversely affect training; therefore, the images were cropped to include only the acetabulum, femoral head and neck, and trochanters. Because manual cropping would have been a lengthy process, the YOLOv4 algorithm was used to automate it. For this purpose, 20 images (16 for training and four for validation) were labeled in YOLO format on the MakeSense AI platform (a free online image-labeling tool), the YOLOv4 configuration file was set up for a single class, and the YOLOv4 training parameters were set as follows: batch size, 16; subdivisions, 8; momentum, 0.9; learning rate, 0.001. YOLOv4 uses a pretrained Darknet53-based backbone. With the object detector obtained after 2,000 training iterations in the Keras/TensorFlow environment, all images in the dataset were cropped automatically, with the non-maximum suppression algorithm applied to the detector's candidate bounding boxes.[20,21] Classification was performed on these cropped images (Figure 3). The dataset was randomly split into training, validation, and test sets (Table 1).
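Non-maximum suppression (NMS) reduces the detector's many overlapping candidate boxes to one box per detected hip. A minimal sketch of the classic greedy algorithm is shown below; the box format (x1, y1, x2, y2), the 0.5 intersection-over-union (IoU) threshold, and the example boxes are assumptions for illustration, since the study does not report its NMS settings, and its YOLOv4 implementation may differ in detail.

```python
import numpy as np


def iou(box: np.ndarray, boxes: np.ndarray) -> np.ndarray:
    """Intersection-over-union of one box with each row of `boxes` (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area_box = (box[2] - box[0]) * (box[3] - box[1])
    area_boxes = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area_box + area_boxes - inter)


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the most confident box, discard boxes overlapping it too much, repeat."""
    boxes = np.asarray(boxes, dtype=float)
    order = np.argsort(scores)[::-1]  # indices by descending confidence
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        overlaps = iou(boxes[best], boxes[rest])
        order = rest[overlaps <= iou_threshold]  # survivors go to the next round
    return keep


# Hypothetical detections on one pelvic radiograph: two overlapping boxes on
# one hip and one box on the other hip (coordinates are made up).
boxes = [[100, 200, 400, 500], [110, 210, 410, 510], [700, 200, 1000, 500]]
scores = np.array([0.95, 0.80, 0.90])
kept = non_max_suppression(boxes, scores)
print(kept)  # [0, 2]: one box per hip
```

Each kept box can then be used to slice the crop out of the radiograph array (rows y1:y2, columns x1:x2).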

Figure 3. Example of an image with bounding boxes and non-maximum suppression applied on pelvic radiography.

Table 1. The numbers of images used for training, validation, and testing.

| | Training (n) | Validation (n) | Test (n) | Total (n) |
|---|---|---|---|---|
| Abnormal (FAI) | 275 | 48 | 57 | 380 |
| Normal | 364 | 67 | 77 | 508 |
| Total | 639 | 115 | 134 | 888 |
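The study states only that the split was random. One way to obtain exactly the per-class counts in Table 1 is to shuffle each class separately and cut it at those counts, as sketched below; this per-class procedure, the seed, and the image identifiers are assumptions for illustration, not the authors' code.

```python
import random

# Per-class (training, validation, test) counts taken from Table 1
SPLIT_COUNTS = {
    "FAI": (275, 48, 57),
    "normal": (364, 67, 77),
}


def split_by_class(image_ids_by_class, counts=SPLIT_COUNTS, seed=42):
    """Shuffle each class separately and cut it into train/validation/test lists."""
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    splits = {"train": [], "val": [], "test": []}
    for label, ids in image_ids_by_class.items():
        n_train, n_val, n_test = counts[label]
        if len(ids) != n_train + n_val + n_test:
            raise ValueError(f"expected {n_train + n_val + n_test} {label} images, got {len(ids)}")
        shuffled = list(ids)
        rng.shuffle(shuffled)
        splits["train"] += [(i, label) for i in shuffled[:n_train]]
        splits["val"] += [(i, label) for i in shuffled[n_train:n_train + n_val]]
        splits["test"] += [(i, label) for i in shuffled[n_train + n_val:]]
    return splits


# Hypothetical identifiers standing in for the 380 FAI and 508 normal hip crops
ids = {
    "FAI": [f"fai_{k:03d}" for k in range(380)],
    "normal": [f"normal_{k:03d}" for k in range(508)],
}
splits = split_by_class(ids)
print({name: len(items) for name, items in splits.items()})  # {'train': 639, 'val': 115, 'test': 134}
```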

The validation set was used for hyperparameter tuning, and the test set was used only to evaluate model performance. The training parameters were as follows: optimizer, stochastic gradient descent with momentum (SGDM); mini-batch size, 16; L2 regularization, 0.004; initial learning rate, 3×10⁻⁴; validation frequency, 20; and number of epochs, 20.
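The optimizer settings can be illustrated with a single parameter update. The sketch below uses one common SGDM formulation in which L2 regularization adds λθ to the loss gradient. The learning rate and L2 factor are the values reported above; the momentum coefficient of 0.9 is an assumption (the study does not report it), and the toy quadratic loss stands in for the network's classification loss.

```python
import numpy as np

LEARNING_RATE = 3e-4  # initial learning rate reported in the study
L2_LAMBDA = 0.004     # L2 regularization factor reported in the study
MOMENTUM = 0.9        # assumption: the study does not report the momentum value


def sgdm_step(theta, velocity, grad, lr=LEARNING_RATE, l2=L2_LAMBDA, momentum=MOMENTUM):
    """One stochastic-gradient-descent-with-momentum update including an L2 penalty.

    The penalty (l2 / 2) * ||theta||^2 adds l2 * theta to the loss gradient;
    the velocity accumulates an exponentially decaying sum of past steps.
    """
    velocity = momentum * velocity - lr * (grad + l2 * theta)
    return theta + velocity, velocity


def toy_loss_grad(theta, target):
    """Gradient of the toy loss 0.5 * ||theta - target||^2 (stand-in for a network loss)."""
    return theta - target


target = np.array([1.0, -2.0])
theta = np.zeros(2)
velocity = np.zeros(2)
for _ in range(10_000):
    theta, velocity = sgdm_step(theta, velocity, toy_loss_grad(theta, target))

# The L2 penalty shrinks the solution toward zero: theta -> target / (1 + L2_LAMBDA)
print(theta)
```

The converged value sits slightly closer to zero than the unregularized optimum, which is the weight-shrinking effect that L2 regularization uses to limit overfitting.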

Data processing environment

This study was performed on a computer with a GeForce RTX 2060 graphics processing unit. The Keras/TensorFlow environment was used for object detection (the YOLO implementation), and MATLAB® with the Image Processing Toolbox™ was used for the classification task.[22,23] The YOLO algorithm automated the tedious and time-consuming preprocessing step: after being trained with 20 radiographs, the resulting model was used to crop all radiographs so that they included the femoral head, neck, and acetabulum. The cropped images were then classified using transfer learning.

Transfer learning and data augmentation

Transfer learning reuses models pre-trained on natural images from the ImageNet database for a new classification task. In this study, ImageNet-pretrained VGG-16, ResNet-101, MobileNetV2, and Inceptionv3 models were used for transfer learning because of our limited data. Data augmentation is applied when there are not enough data for the classification task: slightly modified copies of existing images are added to the dataset, which helps to improve model accuracy and prevent overfitting. In this study, rotation (−20° to +20°), translation, and flipping were applied to the images for data augmentation.
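The three augmentations can be sketched in NumPy as below. The ±20° rotation range comes from the study; the ±10-pixel translation range, the left-right flip with probability 0.5, nearest-neighbour interpolation, and zero fill are assumptions, because the study reports neither these settings nor its MATLAB augmentation code.

```python
import numpy as np


def rotate_nearest(image: np.ndarray, angle_deg: float) -> np.ndarray:
    """Rotate a 2-D image about its centre using nearest-neighbour sampling.

    Pixels that map from outside the original image are filled with zeros.
    """
    h, w = image.shape
    theta = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.indices((h, w))
    # Inverse mapping: rotate each output coordinate back to find its source pixel
    src_x = np.cos(theta) * (xs - cx) + np.sin(theta) * (ys - cy) + cx
    src_y = -np.sin(theta) * (xs - cx) + np.cos(theta) * (ys - cy) + cy
    src_x = np.rint(src_x).astype(int)
    src_y = np.rint(src_y).astype(int)
    inside = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(image)
    out[inside] = image[src_y[inside], src_x[inside]]
    return out


def translate(image: np.ndarray, dy: int, dx: int) -> np.ndarray:
    """Shift an image by (dy, dx) pixels with zero fill; assumes |dy| < height and |dx| < width."""
    h, w = image.shape
    out = np.zeros_like(image)
    out[max(dy, 0):h + min(dy, 0), max(dx, 0):w + min(dx, 0)] = \
        image[max(-dy, 0):h + min(-dy, 0), max(-dx, 0):w + min(-dx, 0)]
    return out


def augment(image: np.ndarray, rng: np.random.Generator,
            max_angle: float = 20.0, max_shift: int = 10) -> np.ndarray:
    """Random rotation in [-max_angle, +max_angle], random shift, and a random left-right flip."""
    angle = rng.uniform(-max_angle, max_angle)
    dy, dx = (int(v) for v in rng.integers(-max_shift, max_shift + 1, size=2))
    out = translate(rotate_nearest(image, angle), dy, dx)
    if rng.random() < 0.5:  # flip about half of the copies
        out = np.fliplr(out)
    return out


# Usage: generate augmented copies of a toy stand-in for a cropped hip image
rng = np.random.default_rng(seed=0)
hip_crop = np.random.default_rng(1).random((64, 64))
augmented = [augment(hip_crop, rng) for _ in range(5)]
print(len(augmented), augmented[0].shape)
```

Each call produces a slightly different copy of the same hip, so the network sees more variation in position and orientation without any new radiographs being labeled.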

Statistical analysis

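The performance of the models was evaluated using accuracy, sensitivity, specificity, precision, F1 score, and the area under the receiver operating characteristic (ROC) curve (AUC). The confusion matrices and ROC curves were obtained by testing the models on the test set, and the metrics were calculated with the following formulas (TP= true positive; FP= false positive; TN= true negative; FN= false negative):

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Sensitivity} = \frac{TP}{TP + FN}$$

$$\text{Specificity} = \frac{TN}{TN + FP}$$

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{F1 score} = \frac{2 \times \text{Precision} \times \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}}$$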
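As a worked check of the standard definitions of these metrics, the sketch below computes them from confusion-matrix counts. The counts used (TP=47, FP=8, TN=69, FN=10) are not reported in the text; they are back-calculated from the VGG-16 column of Table 2 together with the 57 FAI and 77 normal hips in the test set (Table 1), and they reproduce the reported VGG-16 values after rounding. The `ConfusionCounts` class and `classification_metrics` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # FAI hips classified as FAI
    fp: int  # normal hips classified as FAI
    tn: int  # normal hips classified as normal
    fn: int  # FAI hips classified as normal


def classification_metrics(c: ConfusionCounts) -> dict:
    """Threshold-dependent metrics computed from a binary confusion matrix."""
    sensitivity = c.tp / (c.tp + c.fn)  # true positive rate (recall)
    specificity = c.tn / (c.tn + c.fp)  # true negative rate
    precision = c.tp / (c.tp + c.fp)    # positive predictive value
    accuracy = (c.tp + c.tn) / (c.tp + c.tn + c.fp + c.fn)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)  # harmonic mean
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
    }


# Counts back-calculated from Table 2 (VGG-16) and the test-set sizes in Table 1
vgg16 = ConfusionCounts(tp=47, fp=8, tn=69, fn=10)
for name, value in classification_metrics(vgg16).items():
    print(f"{name}: {100 * value:.1f}%")
```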

Results

In this study, transfer learning was applied with pre-trained VGG-16, ResNet-101, MobileNetV2, and Inceptionv3 models, and the trained networks were then evaluated on the test data. The accuracy, sensitivity, specificity, precision, F1 score, and AUC values of the four models are shown in Table 2 and Figures 4 and 5. Among the pre-trained networks, the VGG-16 model achieved the best results for diagnosing FAI on pelvic radiographs on every reported performance metric.

Table 2. Performance results of the models.

| Metric | VGG-16 | ResNet-101 | Inceptionv3 | MobileNetV2 |
|---|---|---|---|---|
| Accuracy (%) | 86.6 | 82.8 | 78.7 | 77.6 |
| Sensitivity (%) | 82.5 | 77.2 | 73.7 | 64.9 |
| Specificity (%) | 89.6 | 87.0 | 81.8 | 87.0 |
| Precision (%) | 85.5 | 81.5 | 75.0 | 78.7 |
| F1 score (%) | 83.9 | 79.2 | 74.3 | 71.1 |
| Area under the curve | 0.92 | 0.88 | 0.84 | 0.80 |

Figure 4. Confusion matrices of the pre-trained models: VGG-16 (top left), ResNet-101 (top right), Inceptionv3 (bottom left), and MobileNetV2 (bottom right).

Figure 5. ROC curve graph of the models used in the study. ROC: Receiver operating characteristic.
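Unlike accuracy or sensitivity, the AUC summarizes the ROC curve over all decision thresholds. A minimal sketch, assuming each model outputs a predicted probability of FAI for every hip, computes it through its rank (Mann-Whitney) interpretation: the probability that a randomly chosen FAI hip scores higher than a randomly chosen normal hip, with ties counted as one half. The scores below are made up for illustration and are not the study's data; this is not the study's MATLAB implementation.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of positive and negative scores.

    labels: 1 for FAI, 0 for normal; scores: predicted probability of FAI.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0  # positive ranked above negative
            elif p == n:
                wins += 0.5  # tie counts as half
    return wins / (len(positives) * len(negatives))


# Made-up scores for four FAI hips (label 1) and four normal hips (label 0)
labels = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.95, 0.80, 0.60, 0.40, 0.70, 0.30, 0.20, 0.10]
print(roc_auc(labels, scores))  # 0.875
```

The pairwise loop costs O(n·m) comparisons, which is negligible for a test set of 134 hips; an AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation.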

Discussion

The results of this study showed that the VGG-16 model achieved the highest diagnostic performance for FAI among the models tested and could help clinicians in diagnosis. Previous studies have shown that hip osteoarthritis and sacroiliitis can be diagnosed successfully with deep learning methods on pelvic radiographs.[16,24-26] Jang et al.[27] showed that the original hip center in osteoarthritic hips could be determined successfully before total hip replacement surgery using deep learning methods on pelvic radiographs. In the current study, FAI, which is characterized not by hip arthrosis but by morphological abnormalities of the proximal femur and acetabular rim, could be diagnosed successfully with deep learning methods. To the best of our knowledge, this is the first study showing that FAI syndrome can be diagnosed with deep learning methods on pelvic radiographs.

Although artificial intelligence is not expected to replace healthcare professionals, it offers important benefits, such as enabling the correct diagnosis to be made as early as possible, reducing the risk of misdiagnosis or incomplete diagnosis, and accelerating the learning process. Transfer learning with pre-trained CNNs is suited to problems in which the amount of data is limited.[6,7] In this study, for the diagnosis of FAI from pelvic radiographs, the radiographs were first cropped with the object detector obtained using the YOLOv4 algorithm so that they included only the acetabulum, femoral head and neck, and trochanters.

Transfer learning was applied to these images with pre-trained VGG-16, ResNet-101, Inceptionv3, and MobileNetV2 networks, and the VGG-16 model performed better than the other pre-trained networks in diagnosing FAI from pelvic radiographs. Previous studies have also reported better results with the VGG-16 model.[7,14,24]

Femoroacetabular impingement is recognized as one of the important causes of secondary hip osteoarthritis. It should be considered in the differential diagnosis of conditions that cause pain originating from the hip joint in young individuals, such as femoral head avascular necrosis and rheumatic diseases.[28,29] Conventional radiography is routinely performed in patients presenting with hip pain, but FAI is one of the causes of hip pain that can be missed.[1] In patients with clinically suspected FAI syndrome, the morphology of the hip joint can be examined in different planes with computed tomography and MR imaging, and structures such as the labrum, cartilage, and joint capsule can be evaluated to reach the correct diagnosis. These modalities also allow the differential diagnosis of diseases that can be confused with FAI. However, because radiography is easily accessible and easy to evaluate, it is the imaging method used in the first stage.

The main limitation of this study is that we were unable to evaluate the intra- and interobserver agreement of the applied method. Another limitation is that only anteroposterior pelvic radiographs were evaluated for the diagnosis of FAI; other views, such as lateral and cross-table lateral radiographs, were not obtained because of the retrospective design of the study. As a result, FAI may have been missed in some patients. However, anteroposterior pelvic radiography is the routine imaging method in patients presenting with hip pain, and deep learning methods can help to detect FAI that might otherwise be overlooked at the initial evaluation.

In conclusion, pelvic radiography is the first imaging method to be applied in patients with suspected FAI syndrome, as it is an inexpensive, easily accessible, and easily evaluated method. The results of this study suggest that deep learning methods can help healthcare professionals in the early diagnosis of FAI syndrome.

Footnotes

Conflict of Interest: The authors declared no conflicts of interest with respect to the authorship and/or publication of this article.

Author Contributions: Idea/concept: U.K., E.A.; Design: E.A., Y.M.; Control/supervision: A.V.; Data collection and/or processing: I.K., M.Ç., A.V.; Analysis and/or interpretation: K.U.; Literature review: Y.M., E.A.; Writing the article: E.A.; Critical review: K.U.; References and funding: M.Ç., İ.K.; Materials: I.K., M.Ç., A.V.

Financial Disclosure: The authors received no financial support for the research and/or authorship of this article.

References

1. Ganz R, Parvizi J, Beck M, Leunig M, Nötzli H, Siebenrock KA. Femoroacetabular impingement: A cause for osteoarthritis of the hip. Clin Orthop Relat Res. 2003;(417):112–120. doi: 10.1097/01.blo.0000096804.78689.c2.

2. Dooley PJ. Femoroacetabular impingement syndrome: Nonarthritic hip pain in young adults. Can Fam Physician. 2008;54:42–47.

3. Macfarlane RJ, Haddad FS. The diagnosis and management of femoro-acetabular impingement. Ann R Coll Surg Engl. 2010;92:363–367. doi: 10.1308/003588410X12699663903791.

4. Jesse MK, Petersen B, Strickland C, Mei-Dan O. Normal anatomy and imaging of the hip: Emphasis on impingement assessment. Semin Musculoskelet Radiol. 2013;17:229–247. doi: 10.1055/s-0033-1348090.

5. Kim HE, Cosa-Linan A, Santhanam N, Jannesari M, Maros ME, Ganslandt T. Transfer learning for medical image classification: A literature review. BMC Med Imaging. 2022;22:69. doi: 10.1186/s12880-022-00793-7.

6. Anwar SM, Majid M, Qayyum A, Awais M, Alnowami M, Khan MK. Medical image analysis using convolutional neural networks: A review. J Med Syst. 2018;42:226. doi: 10.1007/s10916-018-1088-1.

7. Maraş Y, Tokdemir G, Üreten K, Atalar E, Duran S, Maraş H. Diagnosis of osteoarthritic changes, loss of cervical lordosis, and disc space narrowing on cervical radiographs with deep learning methods. Jt Dis Relat Surg. 2022;33:93–101. doi: 10.52312/jdrs.2022.445.

8. Wang H, Pujos-Guillot E, Comte B, de Miranda JL, Spiwok V, Chorbev I, et al. Deep learning in systems medicine. Brief Bioinform. 2021;22:1543–1559. doi: 10.1093/bib/bbaa237.

9. Singh S, Maxwell J, Baker JA, Nicholas JL, Lo JY. Computer-aided classification of breast masses: Performance and interobserver variability of expert radiologists versus residents. Radiology. 2011;258:73–80. doi: 10.1148/radiol.10081308.

10. Litjens G, Kooi T, Bejnordi BE, Setio AAA, Ciompi F, Ghafoorian M, et al. A survey on deep learning in medical image analysis. Med Image Anal. 2017;42:60–88. doi: 10.1016/j.media.2017.07.005.

11. Olsen TG, Jackson BH, Feeser TA, Kent MN, Moad JC, Krishnamurthy S, et al. Diagnostic performance of deep learning algorithms applied to three common diagnoses in dermatopathology. J Pathol Inform. 2018;9:32. doi: 10.4103/jpi.jpi_31_18.

12. Choi RY, Coyner AS, Kalpathy-Cramer J, Chiang MF, Campbell JP. Introduction to machine learning, neural networks, and deep learning. Transl Vis Sci Technol. 2020;9:14. doi: 10.1167/tvst.9.2.14.

13. Bizopoulos P, Koutsouris D. Deep learning in cardiology. IEEE Rev Biomed Eng. 2019;12:168–193. doi: 10.1109/RBME.2018.2885714.

14. Atalar H, Üreten K, Tokdemir G, Tolunay T, Çiçeklidağ M, Atik OŞ. The diagnosis of developmental dysplasia of the hip from hip ultrasonography images with deep learning methods. J Pediatr Orthop. 2023;43:132–137. doi: 10.1097/BPO.0000000000002294.

15. Atik OŞ. Artificial intelligence, machine learning, and deep learning in orthopedic surgery. Jt Dis Relat Surg. 2022;33:484–485. doi: 10.52312/jdrs.2022.57906.

16. Üreten K, Maraş Y, Duran S, Gök K. Deep learning methods in the diagnosis of sacroiliitis from plain pelvic radiographs. Mod Rheumatol. 2023;33:202–206. doi: 10.1093/mr/roab124.

17. Zou L, Yu S, Meng T, Zhang Z, Liang X, Xie Y. A technical review of convolutional neural network-based mammographic breast cancer diagnosis. Comput Math Methods Med. 2019;2019:6509357. doi: 10.1155/2019/6509357.

18. Tulbure AA, Tulbure AA, Dulf EH. A review on modern defect detection models using DCNNs - deep convolutional neural networks. J Adv Res. 2021;35:33–48. doi: 10.1016/j.jare.2021.03.015.

19. Kellgren JH, Lawrence JS. Radiological assessment of osteoarthrosis. Ann Rheum Dis. 1957;16:494–502. doi: 10.1136/ard.16.4.494.

20. Rothe R, Guillaumin M, Van Gool L. Non-maximum suppression for object detection by passing messages between windows. In: Asian Conference on Computer Vision. Cham: Springer; 2014. pp. 290–306.

21. Nie Y, Sommella P, O’Nils M, Liguori C, Lundgren J. Automatic detection of melanoma with YOLO deep convolutional neural networks. In: E-Health and Bioengineering Conference (EHB) 2019; September 30, 2019; Iasi, Romania. pp. 1–4.

22. Yamashita R, Nishio M, Do RKG, Togashi K. Convolutional neural networks: An overview and application in radiology. Insights Imaging. 2018;9:611–629. doi: 10.1007/s13244-018-0639-9.

23. Li J, Li S, Li X, Miao S, Dong C, Gao C, et al. Primary bone tumor detection and classification in full-field bone radiographs via YOLO deep learning model. Eur Radiol. 2022. doi: 10.1007/s00330-022-09289-y.

24. Üreten K, Arslan T, Gültekin KE, Demir AND, Özer HF, Bilgili Y. Detection of hip osteoarthritis by using plain pelvic radiographs with deep learning methods. Skeletal Radiol. 2020;49:1369–1374. doi: 10.1007/s00256-020-03433-9.

25. Xue Y, Zhang R, Deng Y, Chen K, Jiang T. A preliminary examination of the diagnostic value of deep learning in hip osteoarthritis. PLoS One. 2017;12:e0178992. doi: 10.1371/journal.pone.0178992.

26. von Schacky CE, Sohn JH, Liu F, Ozhinsky E, Jungmann PM, Nardo L, et al. Development and validation of a multitask deep learning model for severity grading of hip osteoarthritis features on radiographs. Radiology. 2020;295:136–145. doi: 10.1148/radiol.2020190925.

27. Jang SJ, Kunze KN, Vigdorchik JM, Jerabek SA, Mayman DJ, Sculco PK. John Charnley Award: Deep learning prediction of hip joint center on standard pelvis radiographs. J Arthroplasty. 2022;37:S400–S407. doi: 10.1016/j.arth.2022.03.033.

28. Peters CL, Erickson JA. Treatment of femoro-acetabular impingement with surgical dislocation and débridement in young adults. J Bone Joint Surg Am. 2006;88:1735–1741. doi: 10.2106/JBJS.E.00514.

29. Menge TJ, Briggs KK, Rahl MD, Philippon MJ. Hip arthroscopy for femoroacetabular impingement in adolescents: 10-year patient-reported outcomes. Am J Sports Med. 2021;49:76–81. doi: 10.1177/0363546520973977.