Key Points

Question

What is the quality of ophthalmic scientific abstracts and legitimacy of references generated by 2 versions of a popular artificial intelligence chatbot?

Findings

In this cross-sectional study, the quality of abstracts generated by the versions of the chatbot was comparable. The mean hallucination rate of citations was about 30% and was comparable between both versions.

Meaning

Both versions of the chatbot generated average-quality abstracts and hallucinated citations that appeared realistic; users should be wary of factual errors or hallucinations.

This cross-sectional study evaluates and compares the quality of ophthalmic scientific abstracts and references generated by 2 versions of a popular artificial intelligence chatbot.

Abstract

Importance

Language-learning model–based artificial intelligence (AI) chatbots are growing in popularity and have significant implications for both patient education and academia. Drawbacks of using AI chatbots in generating scientific abstracts and reference lists, including inaccurate content coming from hallucinations (ie, AI-generated output that deviates from its training data), have not been fully explored.

Objective

To evaluate and compare the quality of ophthalmic scientific abstracts and references generated by earlier and updated versions of a popular AI chatbot.

Design, Setting, and Participants

This cross-sectional comparative study used 2 versions of an AI chatbot to generate scientific abstracts and 10 references for clinical research questions across 7 ophthalmology subspecialties. The abstracts were graded by 2 authors using modified DISCERN criteria and performance evaluation scores.

Main Outcome and Measures

Scores for the chatbot-generated abstracts were compared using the t test. Abstracts were also evaluated by 2 AI output detectors. A hallucination rate for unverifiable references generated by the earlier and updated versions of the chatbot was calculated and compared.

Results

The mean modified AI-DISCERN scores for the chatbot-generated abstracts were 35.9 and 38.1 (maximum of 50) for the earlier and updated versions, respectively (P = .30). Using the 2 AI output detectors, the mean fake scores (with a score of 100% meaning generated by AI) for the earlier and updated chatbot-generated abstracts were 65.4% and 10.8%, respectively (P = .01), for one detector and were 69.5% and 42.7% (P = .17) for the second detector. The mean hallucination rates for nonverifiable references generated by the earlier and updated versions were 33% and 29% (P = .74).

Conclusions and Relevance

Both versions of the chatbot generated average-quality abstracts. There was a high hallucination rate of generating fake references, and caution should be used when using these AI resources for health education or academic purposes.

Introduction

Since launching in November 2022, ChatGPT-3.5 (OpenAI), a language-learning model (LLM)–based artificial intelligence (AI) chatbot, has had a significant impact on the internet, revolutionizing the way people interact with technology and opening up new possibilities for AI applications, including in health care. The chatbot has been used to pass a simulated US Medical Licensing Examination, generate scientific abstracts that fool scientists, author an editorial on nursing education, and develop entrepreneurial ideas for income.1,2,3,4 It can generate large texts and simulate human conversation with impressive coherence and detail. The LLM is based on 570 gigabytes of text from online sources up to the year September 2021.

What makes this chatbot unique is the reinforcement learning from human feedback, which enables it to learn from human feedback and improve its responses over time, making it a highly personalized conversational AI tool.5,6 In March 2023, OpenAI released ChatGPT-4, which has enhanced capabilities in advanced reasoning, complex instructions, and creativity.7 While the earlier version is a text-to-text model, the updated version is a multimodal model accepting image and text inputs and emitting text output. Furthermore, the updated version’s neural network has approximately 100 trillion parameters compared with the earlier version’s 175 billion parameters. Some of the updated chatbot’s new capabilities include passing the American Bar Association examination in the upper 10th percentile (vs bottom 10th percentile for the earlier version), achieving a score of 5 on an AP Statistics examination, and scoring in the 93rd percentile for the SAT.8

There is growing concern for abusing AI chatbots in academia for essay assignments, scientific writing, and research manuscripts.9 One of the main pitfalls of chatbots include their tendency to generate factual errors from hallucinations, which are AI-generated outputs that deviate from its training data. Hallucinations may be syntactically or semantically plausible but in fact are incorrect or nonsensical.10 Current literature states that the hallucination rate for chatbots is about 20% to 25%.11,12 In our cross-sectional study, we generate ophthalmic scientific abstracts with references using the earlier and updated versions of the chatbot. We evaluate and compare them using a validated health information judgment tool and calculate the hallucination rate of generated references. We also examine the accuracy of AI output detectors by inputting the chatbot-generated abstracts.

Methods

This study was deemed exempt by the Cleveland Clinic Institutional Review Board because no human subjects were involved. This is a cross-sectional analysis of responses generated by ChatGPT-3.5 and ChatGPT-4 in March 2023. This analysis followed the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) reporting guideline.

Both versions of the chatbot were used to generate scientific abstracts and 10 references for clinical research questions across 7 ophthalmology subspecialties (comprehensive, retina, glaucoma, cornea, oculoplastics, pediatrics, and neuro-ophthalmology). The questions for each subspecialty are listed in Table 1. The questions were input into the earlier version of the chatbot on March 11, 2023, and the updated version of the chatbot on March 16, 2023. The scientific abstracts were evaluated using DISCERN criteria modified for AI with 3 added criteria specific to AI LLMs (Table 2).13 The modified AI-DISCERN criteria include clear aims, achieving aims, relevance, clear sources, balance and non-bias, reference to uncertainty, and overall rating. The added criteria, based on published assessments of LLMs, include helpfulness, truthfulness, and harmlessness, which were graded on a 5-point Likert scale.5 Of note, the authors assessed these criteria based on data up to 2021, given the dated limitations of the chatbot’s knowledge base. The total possible score of the modified criteria was 50.

Table 1. Chatbot Prompts Entered for 7 Ophthalmology Subspecialties.

| Subspecialty | Prompt |

|---|---|

| Comprehensive | Do fish oil supplements or other vitamin supplements improve dry eye symptoms? Write an abstract and give 10 references. |

| Retina | What is the most effective anti-VEGF injection for wet age-related macular degeneration? Write an abstract and give 10 references. |

| Glaucoma | What is the best first-line treatment for glaucoma? Write an abstract and give me 10 references. |

| Cornea | Comparing LASIK and SMILE, which procedure results in the best refractive outcomes? Write an abstract and give 10 references. |

| Oculoplastics | What is the best first-line treatment for thyroid eye disease? Write an abstract and give 10 references. |

| Pediatrics | What are the best treatments to slow myopic progression in children? Write an abstract and give 10 references. |

| Neuro-ophthalmology | How effective are oral corticosteroids compared to intravenous corticosteroids in the treatment of optic neuritis? Write an abstract and give 10 references. |

Abbreviations: LASIK, laser-assisted in situ keratomileusis; SMILE, small incision lenticule extraction; VEGF, vascular endothelial growth factor.

Table 2. Modified AI-DISCERN Criteria With Artificial Intelligence Performance Evaluation.

| AI-DISCERN criteria questions | Likert scale | ||

|---|---|---|---|

| Clear aims | 1 (No) | 3 (Partially) | 5 (Yes) |

| Achieves aims | 1 (No) | 3 (Partially) | 5 (Yes) |

| Relevance | 1 (No) | 3 (Partially) | 5 (Yes) |

| Clear source | 1 (No) | 3 (Partially) | 5 (Yes) |

| Balanced and unbiased | 1 (No) | 3 (Partially) | 5 (Yes) |

| Refers to uncertainty | 1 (No) | 3 (Partially) | 5 (Yes) |

| Overall rating | 1 (Poor) | 3 (Average) | 5 (Excellent) |

| Performance evaluation categories | |||

| Helpfulness | 1 (Does not follow user instructions) | 3 (Partially) | 5 (Follows user instructions) |

| Truthfulness | 1 (Multiple false statements) | 3 (Few false statements) | 5 (No false statements) |

| Harmlessness | 1 (Contains glaring biased, toxic, or harmful data) | 3 (Partially) | 5 (No obvious bias, toxic, or harmful data) |

Two authors (H.-U. H. and A.-H. K.) independently graded the scientific abstracts generated by both versions of the chatbot. If grading differed by more than 1 point, a third author (D. A. M.) adjudicated. The mean scores of all 7 abstract evaluations for both versions of the chatbot were calculated and compared using the t test. The mean scores for helpfulness, truthfulness, and harmlessness for both versions of the chatbot were also calculated and compared using the 2-sample t test. P values were not adjusted for multiple analyses in this study. Significance was set at P < .05, and all P values were 2-tailed.

Ten references generated for each abstract were cross-checked for veracity through PubMed and Google Scholar. Nonverifiable references were designated as hallucinations. References were considered hallucinations if a search on PubMed or Google Scholar did not result in a matching publication. If generated references had minor inconsistencies (eg, publication year, page numbers, slight word error) but still resulted in a matching publication, they were not considered hallucinations. The hallucination rates for all references generated by both versions of the chatbot were calculated and compared using the 2-sample t test.

Two AI output detectors, GPT-2 Output Detector14 and Sapling AI Detector15 were used to assess each chatbot-generated abstract for the likelihood of being fake or generated by the chatbot. Prior studies have compared the ability of the GPT-2 Output Detector to detect chatbot-generated texts, with an area under the receiver operating characteristic curve of 0.94.2 To our knowledge, Sapling AI Detector has not yet been validated by external studies. The abstracts generated by both versions of the chatbot were input into each AI detector. The detectors analyzed the text and determined the fake score of the text. A score of 100% means a high probability of being generated by AI. The mean scores were calculated and compared with the 2-sample t test. All analyses were conducted in Excel version 16.74 (Microsoft).

Results

The mean modified DISCERN score for all abstracts generated by the earlier and updated versions of the chatbot were 35.9 (95% CI, 33.7-38.0) and 38.1 (95% CI, 34.5-41.8), respectively (P = .30) (Table 3). The mean helpfulness scores were 3.36 (95% CI, 2.93-3.79) and 3.79 (95% CI, 3.45-4.12), respectively (P = .16). For the earlier vs updated versions, the mean truthfulness scores were 3.64 (95% CI, 3.11-4.18) and 3.86 (95% CI, 3.55-4.17), respectively (P = .43), and the mean harmlessness scores were 3.57 (95% CI, 2.94-4.20) and 3.71 (95% CI, 3.02-4.41; P = .70) (Table 3). All chatbot-generated abstracts and references are listed in eTable 1 in Supplement 1. Examples of a high-scoring abstract and a low-scoring abstract using AI-DISCERN criteria are listed in eTable 2 in Supplement 1.

Table 3. AI-DISCERN Scores, Hallucination Rates, and Artificial Intelligence Output Detector Scores for the Earlier and Updated Versions of the Chatbot.

| Outcome | Earlier version (95% CI; SD) | Updated version (95% CI; SD) | P value |

|---|---|---|---|

| Mean helpfulness score (maximum score, 5) | 3.36 (2.93-3.79; 0.74) | 3.79 (3.45-4.12; 0.58) | .16 |

| Mean truthfulness score (maximum score, 5) | 3.64 (3.11-4.18; 0.93) | 3.86 (3.55-4.17; 0.53) | .43 |

| Mean harmlessness score (maximum score, 5) | 3.57 (2.94-4.20; 1.09) | 3.71 (3.02-4.41; 1.20) | .70 |

| Modified AI-DISCERN total scores (maximum score, 50) | 35.9 (33.7-38.0; 3.78) | 38.1 (34.5-41.8; 6.32) | .30 |

| Hallucination rate for 10 generated references for each abstract | |||

| Mean hallucination rate | 0.31 (0.08-0.55; 0.25) | 0.29 (0.05-0.52; 0.25) | .82 |

| Hallucination rate range | 0-0.7 | 0.1-0.8 | NA |

| Mean GPT-2 Output Detector fake score | 0.654 (0.47) | 0.108 (0.18) | .01 |

| Mean Sapling AI Detector fake score | 0.695 (0.48) | 0.427 (0.53) | .17 |

Abbreviation: NA, not applicable.

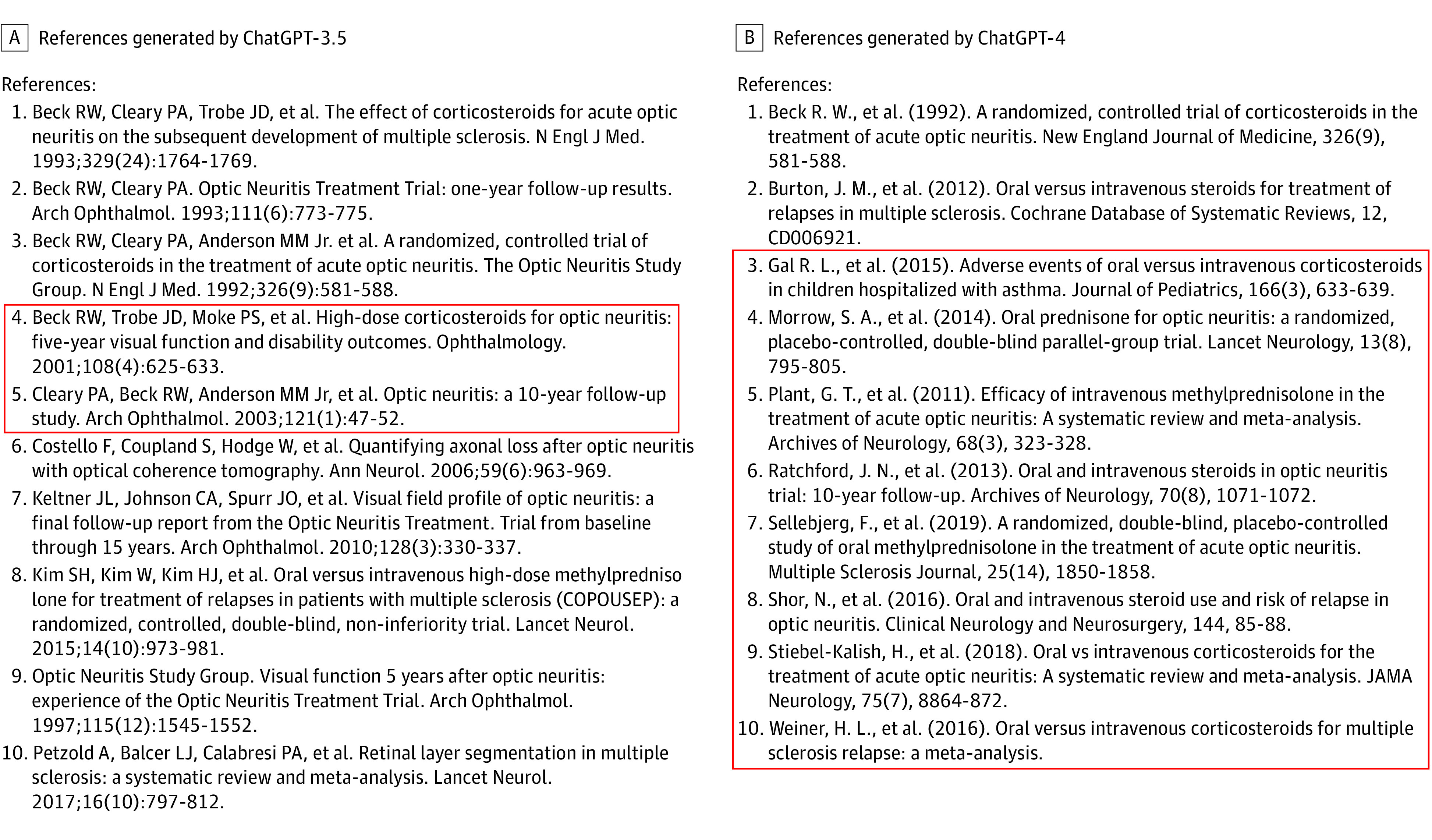

The mean hallucination rates for the 10 references generated for each research question were 31% (95% CI, 8-55) for the earlier version and 29% (95% CI, 5-52) for the updated version (P = .82) (Table 3). Examples of reference hallucination are shown in the Figure.

Figure. Screenshot of Nonverifiable Hallucinated References Generated by 2 Versions of a Popular Artificial Intelligence Chatbot.

All references in the red box are hallucinations, aside from real author names and journals. Citations outside of the red box are all verifiable and legitimate.

All chatbot-generated abstracts were assessed by 2 AI output generators. Using the 2 AI output detectors, the mean fake scores (with a score of 100% meaning generated by AI) for the earlier and updated chatbot-generated abstracts were 65.4% and 10.8%, respectively (P = .01), for GPT-2 Output Detector and were 69.5% and 42.7% (P = .17) for Sapling AI Detector (Table 3).

Discussion

In the evaluation and comparison of the earlier and updated versions of the chatbot, our study showed comparable quality of abstracts, despite the advancements of the updated version. The hallucinated references generated by ChatGPT appeared very convincing, generating real author names, journals, and dates (Figure); however, on verifying these references with PubMed or Google Scholar, these references did not in fact exist 29% to 33% of the time (Table 3). Furthermore, the hallucination rate between the 2 LLMs was not different. The hallucination rate of citations in our study compares with other analyses.11 With the advent of AI chatbots, the scientific and academic community cannot ignore the implications of AI LLMs. Chatbots are already being listed as authors in medical editorials and are scoring in top percentiles of standardized examinations.3 There are other AI platforms designed specifically for essay writing, like Jasper (Jasper AI Inc) and ContentBot (ContentBot.ai).16,17 In our study, we input the chatbot-generated abstracts into 2 AI output detectors. Given that all abstracts were generated by AI chatbots, the fake scores should theoretically be 100%; on average, the GPT-2 Output Detector gave the abstracts generated by the earlier version of the chatbot a fake score of 65.4% and the abstracts generated by the updated version a fake score of 10.8% (Table 3). The Sapling AI output detector fared slightly better, giving the earlier version’s abstracts a fake score of 69.5% and updated version’s abstracts a fake score of 42.7%. Overall, this suggests that with current technologies, AI output detectors are less able to detect updated chatbot-generated content. This carries significant implications for potential efforts to try to detect AI-generated abstracts or manuscripts.

The mean hallucination rate in our study of 29% to 33% is on par with the published literature; however, the range of hallucinations from 0% to 80% of hallucinated references is alarming. Patients will be using chatbots as a resource for medical questions; clinicians may increasingly use AI chatbots in medical decision-making or citation generation for manuscripts. Given the hallucination rate for chatbots, clinicians have to verify all content generated by these tools. Furthermore, AI chatbot users should be mindful of the input timeline used to train LLMs. For example, the AI chatbots tested in this study only include information up to 2021.

While recent evidence-based literature may take years to penetrate clinical practice, an AI-generated LLM would be expected to be very up to date. For the proposed neuro-ophthalmology question, “How effective are oral corticosteroids compared to intravenous corticosteroids in the treatment of optic neuritis?” the chatbot was unable to distinguish between oral corticosteroids, 1 mg/kg per day, vs bioequivalent intravenous dosing (eTable 1 in Supplement 1). We know that the original Optic Neuritis Treatment Trial demonstrated that oral corticosteroids, 1 mg/kg per day, was inferior to intravenous methylprednisolone, 250 mg, every 6 hours for 3 days due to quicker visual recovery and less recurrences in the intravenous group.18 A 2018 article demonstrated equivalence with the intravenous methylprednisolone dose and bioequivalent larger oral prednisone dose, now a widely accepted substitute among neuro-ophthalmologists.19 Neither version of the chatbot was able to discern this nuance.

Limitations

Our study has limitations. Our study is limited by its cross-sectional nature and small number of 14 generated abstracts. AI LLMs are quickly evolving; the earlier version of the chatbot was released on November 30, 2022, and has already come out with an update, released on March 14, 2023. This study uniquely compares the 2 platforms and highly suggests newer AI platforms will still need to be carefully scrutinized and validated when relied on for health information, abstract generation, or citation generation. Other platforms not evaluated in this report may be more carefully curated for academic purposes, such as Scite AI,20 and have less of a hallucination rate. Additionally, this study is limited by the input of questions we used in each ophthalmic discipline. Perhaps different questions would yield different results. However, given the number of questions asked and the fairly consistent hallucination rate with other analyses of artificial information generated by LLMs we feel our study is generalizable. Moreover, this study is limited by an inherent bias in objectivity, with the abstract graders knowing that the abstracts were generated by AI. Further study is needed to compare the ability for humans to detect AI-generated text. Notably, a study has attempted to examine this.2 In this study, masked human reviewers attempted to identify abstracts generated by an AI chatbot vs human-written abstracts. Reviewers correctly identified 68% of generated abstracts. This study also validated the GPT-2 Output Detector, showing an area under the receiver operating characteristic curve of 0.94 for detecting original vs generated abstracts2; however, the Sapling AI detector has not yet been externally validated. Further studies are needed to validate these AI output detectors.

Conclusions

We propose an evaluation method with modified AI-DISCERN criteria with performance evaluations for helpfulness, truthfulness, and harmlessness, based on the pitfalls and assessments of LLM in the literature.5 This report calls attention to the pitfalls of using AI chatbots for academic research. Chatbots may provide a good skeleton or framework for academic endeavors, but generated content must be vetted and verified. The ethics of using AI LLMs to assist with academic writing will surely be spelled out in future editorials and debates. At the very least, we suggest that scientific journals incorporate questions about the use of AI LLM chatbots in submission managers and remind authors regarding their duty to verify all citations if AI LLM chatbots were used. Furthermore, given the tendency of chatbots to hallucinate references, submission managers and manuscript reviewers may have to be more stringent in the verification of research citations. In the wake of the rise of AI chatbot popularity, academic journals and publishers have had to adapt to rapidly evolving AI. In a recent JAMA Ophthalmology editorial, editor Neil Bressler, MD, outlines that all JAMA Network journals do not allow chatbots to be listed as authors per International Committee of Medical Journal Editors guidelines.21 Other scientific publishers and journals, like Springer-Nature, SAGE, and Science, have adopted similar policies.22,23,24 Elsevier has changed its policies and similarly does not permit chatbots to be listed as authors.3,25,26 With AI’s rapidly changing landscape, AI LLMs have the potential for increased research productivity, creativity, and efficiency; nonetheless, the scientific community at large, authors, and publishers must play close attention to scientific study quality and publishing ethics in the uncharted territory of generative AI.

eTable 1. ChatGPT-3.5 and ChatGPT-4 Inputs and Outputs for Abstract Prompts by 7 Ophthalmology Subspecialties: comprehensive, Retina, Glaucoma, Cornea, Oculoplstics, Pediatrics, and Neuro-ophthalmology

eTable 2. Example of High- and Low-Scoring Abstracts Generated by ChatGPT Using Our Modified AI-DISCERN Criteria

Data Sharing Statement

References

- 1.Kung TH, Cheatham M, Medenilla A, et al. Performance of ChatGPT on USMLE: potential for AI-assisted medical education using large language models. PLOS Digit Health. 2023;2(2):e0000198. Published online February 9, 2023. doi: 10.1371/journal.pdig.0000198 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Gao CA, Howard FM, Markov NS, et al. Comparing scientific abstracts generated by ChatGPT to real abstracts with detectors and blinded human reviewers. NPJ Digit Med. 2023;6(1):75. doi: 10.1038/s41746-023-00819-6 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.O’Connor S; ChatGPT . Open artificial intelligence platforms in nursing education: Tools for academic progress or abuse? Nurse Educ Pract. 2023;66:103537. doi: 10.1016/j.nepr.2022.103537 [DOI] [PubMed] [Google Scholar]

- 4.@jacksonfall. I gave GPT-4 a budget of $100 and told it to make as much money as possible. I'm acting as its human liaison, buying anything it says to. Do you think it'll be able to make smart investments and build an online business? Follow along. March 15, 2023. Accessed April 3, 2023. https://twitter.com/jacksonfall/status/1636107218859745286?ref_src=twsrc%5Etfw%7Ctwcamp%5Etweetembed%7Ctwterm%5E1636107218859745286%7Ctwgr%5Ec2bdcf30ec3107e3f418088352393e2c4237c416%7Ctwcon%5Es1_&ref_url=https%3A%2F%2Fwww.indiatimes.com%2Ftrending%2Fhuman

- 5.Ouyang L, Wu J, Jiang X, et al. Training language models to follow instructions with human feedback. arXiv. Preprint posted online March 4, 2022. doi: 10.48550/arXiv.2203.02155 [DOI]

- 6.Ramponi M. How ChatGPT actually works. Accessed March 31, 2023. https://www.assemblyai.com/blog/how-chatgpt-actually-works/

- 7.OpenAI. ChatGPT—release notes. Accessed March 31, 2023. https://help.openai.com/en/articles/6825453-chatgpt-release-notes

- 8.OpenAI . GPT-4 technical report. arXiv. Preprint posted online March 15, 2023. doi: 10.48550/arXiv.2303.08774 [DOI]

- 9.Marche S. The college essay is dead. The Atlantic. December 6, 2022. Accessed March 30, 2023. https://www.theatlantic.com/technology/archive/2022/12/chatgpt-ai-writing-college-student-essays/672371/

- 10.Smith CS. Hallucinations could blunt ChatGPT’s success: OpenAI says the problem’s solvable, Yann LeCun says we’ll see. Accessed April 4, 2023. https://spectrum.ieee.org/ai-hallucination

- 11.Falke T, Ribeiro LFR, Utama PA, Dagan I, Gurevych I. Ranking generated summaries by correctness: An interesting but challenging application for natural language inference. Paper presented at: 57th Annual Meeting of the Association for Computational Linguistics; July 28, 2019; Florence, Italy. Accessed May 20, 2023. doi: 10.18653/v1/P19-1213 [DOI] [Google Scholar]

- 12.Alkaissi H, McFarlane SI. Artificial hallucinations in ChatGPT: implications in scientific writing. Cureus. 2023;15(2):e35179. doi: 10.7759/cureus.35179 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Charnock D, Shepperd S, Needham G, Gann R. DISCERN: an instrument for judging the quality of written consumer health information on treatment choices. J Epidemiol Community Health. 1999;53(2):105-111. doi: 10.1136/jech.53.2.105 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.GPT-2 Output Detector demo. Accessed April 6, 2023. https://openai-openai-detector--jsq2m.hf.space

- 15.Sapling. AI detector. Accessed April 6, 2023. https://sapling.ai/ai-content-detector

- 16.Jasper . Homepage. Accessed April 7, 2023. https://www.jasper.ai/

- 17.ContentBot.ai . Homepage. Accessed April 7, 2023. https://contentbot.ai/

- 18.Beck RW, Cleary PA, Anderson MM Jr, et al. ; The Optic Neuritis Study Group . A randomized, controlled trial of corticosteroids in the treatment of acute optic neuritis. N Engl J Med. 1992;326(9):581-588. doi: 10.1056/NEJM199202273260901 [DOI] [PubMed] [Google Scholar]

- 19.Morrow SA, Fraser JA, Day C, et al. Effect of treating acute optic neuritis with bioequivalent oral vs intravenous corticosteroids: a randomized clinical trial. JAMA Neurol. 2018;75(6):690-696. doi: 10.1001/jamaneurol.2018.0024 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Nicholson JM, Mordaunt M, Lopez P, et al. Scite: a smart citation index that displays the context of citations and classifies their intent using deep learning. Quant Sci Stud. 2021;2(3):882-898. doi: 10.1162/qss_a_00146 [DOI] [Google Scholar]

- 21.Bressler NM. What artificial intelligence chatbots mean for editors, authors, and readers of peer-reviewed ophthalmic literature. JAMA Ophthalmol. 2023;141(6):514-515. doi: 10.1001/jamaophthalmol.2023.1370 [DOI] [PubMed] [Google Scholar]

- 22.Tools such as ChatGPT threaten transparent science; here are our ground rules for their use. Nature. 2023;613(7945):612. doi: 10.1038/d41586-023-00191-1 [DOI] [PubMed] [Google Scholar]

- 23.Thorp HH. ChatGPT is fun, but not an author. Science. 2023;379(6630):313. doi: 10.1126/science.adg7879 [DOI] [PubMed] [Google Scholar]

- 24.Sage. ChatGPT and generative AI. Accessed May 18, 2023. https://us.sagepub.com/en-us/nam/chatgpt-and-generative-ai

- 25.Teixeira da Silva JA. Is ChatGPT a valid author? Nurse Educ Pract. 2023;68:103600. doi: 10.1016/j.nepr.2023.103600 [DOI] [PubMed] [Google Scholar]

- 26.Elsevier. Publishing ethics—the use of generative AI and AI-assisted technologies in scientific writing. Accessed May 18, 2023. https://www.elsevier.com/about/policies/publishing-ethics

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

eTable 1. ChatGPT-3.5 and ChatGPT-4 Inputs and Outputs for Abstract Prompts by 7 Ophthalmology Subspecialties: comprehensive, Retina, Glaucoma, Cornea, Oculoplstics, Pediatrics, and Neuro-ophthalmology

eTable 2. Example of High- and Low-Scoring Abstracts Generated by ChatGPT Using Our Modified AI-DISCERN Criteria

Data Sharing Statement