Abstract

Traditional Chinese Medicine (TCM), as an effective alternative medicine, uses tongue diagnosis as a major method to assess a patient’s health status by examining the color, shape, and texture of the tongue. Tongue images can also provide pre-disease indications before any significant disease symptoms appear, which offers a basis for preventive medicine and lifestyle adjustment. However, traditional tongue diagnosis has limitations, as the process may be subjective and inconsistent. Hence, computer-aided tongue diagnosis has great potential to provide more consistent and objective health assessments. This paper reviews current trends in TCM tongue diagnosis, including tongue image acquisition hardware, tongue segmentation, feature extraction, color correction, tongue classification, and tongue diagnosis systems. We also present a case study of TCM constitution classification based on tongue images.

Keywords: computerized diagnosis, image analysis, machine learning, mobile app, tongue diagnosis, traditional Chinese medicine

Introduction

Traditional Chinese Medicine (TCM) [1] has a long history in health care. TCM diagnosis is generally based on information obtained from four diagnostic processes: inspection, auscultation and olfaction, inquiry, and palpation. Inspection ranks first among the four, and the tongue is the primary subject of TCM inspection [2, 3]. As a simple, non-invasive, and valuable diagnostic procedure, tongue diagnosis [4] has been used by TCM practitioners for at least 2,000 years [5]. In TCM theory, the tongue is connected to the conditions of the organs and body fluids, as well as to the degree and progression of disease [6, 7]. The main features used in tongue diagnosis are color and coating. For example, the normal tongue is red with a thin white coating [8]. Characteristic changes such as a red body, a yellow coating, or a thick coating appear in some diseases. For instance, the tongues of patients with diabetes mellitus are often yellow with thick moss [9]; in cancer patients, the tongue color is mainly purple, and the tongue often either lacks coating or has thick, greasy, slippery moss [10]; in patients with acute ischemic stroke, tongues are often red and deviated, with greasy white moss [11]; red tongues with greasy white moss are also observed in patients with refractory Helicobacter pylori infection [12]. Tongue shape assessment is another important component of tongue diagnosis. Such geometric information includes thickness, size, cracks, and tooth marks. In patients with primary Sjögren’s syndrome, tongues are typically thin, red, without moss, or cracked [13]. In patients with primary insomnia, tongues are predominantly red and fat, with yellow and white greasy moss and tooth marks [14]; HIV patients also show red, fat tongues, but with thick white moss and tooth marks [15].

Tongue coating, a moss-like layer covering the tongue, is an important factor with many features, including color, degree of wetness, thickness, form, and distribution range, that reflect a patient’s disease and body condition.

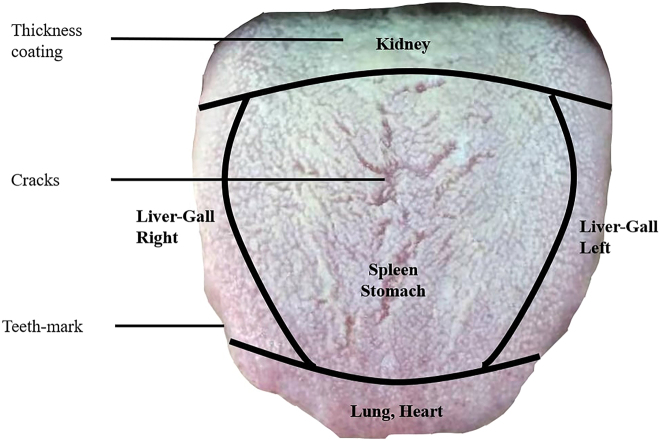

Changes in the tongue’s body, tip, and root may indicate particular pathologies [16]. The normal tongue is elliptical, but there are six other classes of tongue shape: square, rectangular, round, acute triangular, obtuse triangular, and hammer [17]. Numerous clinical reports [18], [19], [20] have associated tongue shapes with diseases. For instance, a round tongue is associated with gastritis, an obtuse triangular tongue with hyperthyroidism, and a square tongue with coronary heart disease or portal sphygmo-hypertension. Organ conditions, properties, and variations of pathogens can also be observed through the tongue. For example, variations in tongue fur represent exogenous pathogenic factors and the flow of the stomach [21]. TCM usually divides the tongue into five areas, as shown in Figure 1. The left and right areas, the tip, the middle, and the base of the tongue reflect the conditions of the liver and gallbladder, heart and lungs, spleen and stomach, and kidneys, respectively [22], [23], [24], [25].

Figure 1:

Organ correspondence of tongue regions.

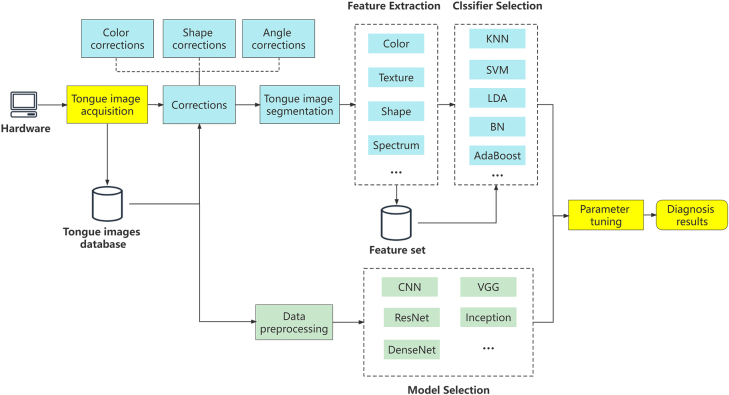

The advantage of tongue diagnosis is that it is simple and non-invasive. However, an objective and standardized examination is difficult to achieve. Changes in inspection conditions, such as the light source, significantly affect the results. Moreover, because the diagnosis relies on the doctor’s experience and knowledge, standardized results are hard to obtain. Various studies have recently been carried out to solve these problems [26]. In this review, we summarize the development of tongue diagnosis and current technologies. As shown in Figure 2, computerized tongue diagnosis generally follows one of two approaches. The first is the traditional machine learning approach, shown in the blue part of the figure: the raw tongue image is segmented, features such as color, texture, shape, and spectrum are extracted from the segmented tongue, and a classifier is then trained on these features to perform tasks such as classification and recognition. The second is the deep learning approach, shown in the green part of the figure, which usually trains directly on raw data and extracts features through convolution operations. In this paper, we review both approaches.
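The traditional pipeline (segment, extract features, classify) can be illustrated with a minimal Python sketch. It assumes a tongue mask is already available from a segmentation step, uses simple per-channel RGB mean and standard deviation as the color features, and swaps in a nearest-centroid classifier as a hypothetical stand-in for the SVMs and other classifiers used in the literature. The class names and synthetic data are invented for illustration.

```python
import numpy as np

def color_features(image, mask):
    """Mean and standard deviation of R, G, B over the segmented tongue pixels.

    image: H x W x 3 float array with values in [0, 1]
    mask:  H x W boolean array, True where segmentation found the tongue
    """
    pixels = image[mask]                      # N x 3 array of tongue pixels
    return np.concatenate([pixels.mean(axis=0), pixels.std(axis=0)])

class NearestCentroid:
    """Minimal nearest-centroid classifier (a stand-in for SVM, k-NN, etc.)."""

    def fit(self, X, y):
        y = np.asarray(y)
        self.labels_ = np.unique(y)
        self.centroids_ = np.stack([X[y == c].mean(axis=0) for c in self.labels_])
        return self

    def predict(self, X):
        # Euclidean distance from each sample to each class centroid
        d = np.linalg.norm(X[:, None, :] - self.centroids_[None, :, :], axis=2)
        return self.labels_[d.argmin(axis=1)]

# Toy usage with synthetic, hypothetical "tongue color" classes
rng = np.random.default_rng(0)

def synthetic_tongue(rgb):
    img = np.clip(np.array(rgb) + 0.02 * rng.standard_normal((32, 32, 3)), 0, 1)
    return color_features(img, np.ones((32, 32), dtype=bool))  # whole image as "tongue"

X = np.stack([synthetic_tongue(c) for c in
              [(0.80, 0.30, 0.30), (0.85, 0.35, 0.30), (0.50, 0.30, 0.60), (0.45, 0.30, 0.55)]])
y = ["red", "red", "purple", "purple"]
clf = NearestCentroid().fit(X, y)
print(clf.predict(np.stack([synthetic_tongue((0.82, 0.32, 0.30))])))
```

In practice, texture and shape descriptors would be concatenated with the color features, and a stronger classifier would be used; the structure of the pipeline stays the same.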

Figure 2:

General process of computerized tongue diagnosis. The blue parts represent the tongue diagnosis process using traditional machine learning methods, and the green parts represent the deep learning process.

The remainder of this paper is organized as follows. In the “Hardware for tongue images collection” section, we review existing hardware for tongue image collection. Then, in the “Tongue image analysis” and “Tongue diagnosis system (TDS)” sections, we review studies on tongue diagnosis, including tongue image segmentation, feature extraction, and color correction, as well as tongue classification and diagnosis systems. In the “An intelligent TCM constitution classification system based on tongue image” section, we present our own study as an application example of TCM constitution classification. Finally, in the “Discussions” section, we discuss current tongue diagnosis work and outline possible future research directions.

Hardware for tongue images collection

Tongue image acquisition is the first step in computerized tongue diagnosis. The quality of the acquired images strongly affects subsequent sample labeling and analysis. In particular, clear and complete tongue images benefit tongue segmentation and feature extraction when training machine learning models. However, image quality depends heavily on the acquisition hardware. Mainstream tongue image acquisition devices include commercial digital cameras and smartphones. Different camera and phone manufacturers use different internal sensors, and devices with high-performance optical sensors are more likely to capture clear, high-resolution tongue images. Table 1 summarizes the major hardware used for tongue image collection.

Table 1:

Tongue image collection hardware.

| Author | Year | Imaging camera (resolution in pixels) | Illumination | Color temperature | Color correction |
|---|---|---|---|---|---|
| Chiu [25] | 2000 | 2/3-inch CCD camera, 440 × 400 | Two fluorescent lamps in an office environment | 5,400 K | Printed color card |
| Cai [28] | 2002 | Commercial digital still camera, 640 × 480 | Office illumination | | Munsell ColorChecker |
| Jang [42] | 2002 | Watec WAT-202D CCD camera, 768 × 494 | Optical fiber source (250 W halogen lamp) | 4,000 K | None |
| Wei [60] | 2002 | Kodak DC 260, 1,536 × 1,024 | OSRAM L18/72–965 fluorescent lamp | 6,500 K | Printed color card |
| Wang [29] | 2004 | Canon G5, 1,024 × 768 | Four standard light sources in a dark chest | 5,000 K | Printed color card |
| Zhang [43] | 2005 | Sony 900E video camera, 720 × 576 | Two 70 W cold-light halogen lamps | 4,800 K | Printed color card |
| He [61] | 2007 | DH-HV3103 CMOS camera, 2,048 × 1,536 | PHILIPS YH22 circular fluorescent lamps | 7,200 K | |
| Liu [61] | 2007 | Hitachi KP-F120 CCD camera | Köhler illumination light source | | |
| Zhi [32] | 2007 | Hyperspectral camera | Köhler illumination light source | | |
| Lo [37] | 2013 | CCD camera | Circular LED lighting | | Datacolor Spyder 3 ELITE |
| Lu [38] | 2018 | Canon EOS 1200D | Simulated D65 illuminant environment | 6,500 K | ColorChecker Digital SG |
| Zhuo [39] | 2016 | Logitech HD Pro C920 camera | Simulated D65 illuminant environment | 6,500 K | Munsell color checker |
| Yamamoto [36] | 2010 | Hyperspectral camera, 480 × 640 | Artificial sunlight lamp | | |
| Kim [41] | 2008 | Digital camera, 1,280 × 960 | Standardized light sources | 5,500 K | Local minimum correction |
| Qi [40] | 2016 | Eolane digital camera, 2,048 × 1,536 | LED illuminator | 6,447 K | ColorChecker Digital SG |

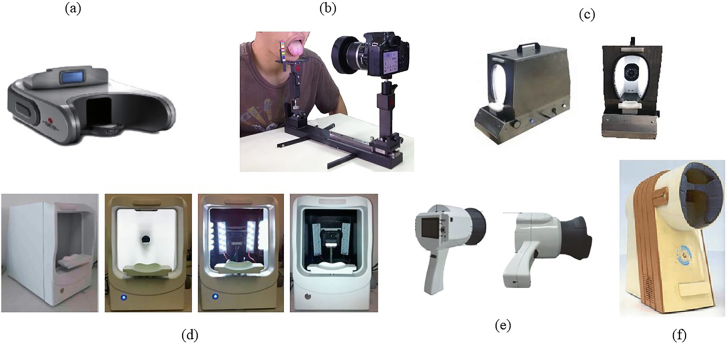

Most recently developed hardware consists of three major components (an image sensing module, an illumination module, and a computing and control module) [27], designed to obtain high-quality, reproducible tongue images under varying conditions. In early work, Cai et al. [28] applied a modified handheld color scanner with a microscope slide placed on the tongue. This method removes artifacts and avoids major color calibration. However, the scanner requires contact with the tongue, which is undesirable in a clinical setting. Hence, they later used a commercial digital camera (640 × 480 pixels) with a ColorChecker included in the image for non-contact tongue image acquisition, an approach that became a reference for subsequent tongue image acquisition devices. Since then, various research groups have developed and implemented their own digital acquisition devices for tongue data collection. For instance, Wang et al. [29] employed a CCD digital camera with a resolution of 1,024 × 768 pixels mounted on a face-supporting device. Jiang et al. [30] developed a tongue diagnosis system using a high-quality 7.2-megapixel digital camera. To minimize color errors, a Munsell color checker was embedded in their hardware for color calibration. Cibin et al. [31] collected tongue images with an in-house capture device consisting of a three-chip CCD camera with eight-bit resolution. Two D65 fluorescent tubes were placed symmetrically around the camera to produce uniform illumination, as shown in Figure 3A. The captured JPEG images range in size from 257 × 189 to 443 × 355 pixels.

Figure 3:

Tongue image collection hardware. (A) A tongue capture device consisting of a three-chip CCD camera [31]. (B) An automatic tongue diagnosis system using a camera with circular LED lighting, a color card, camera support, and a sliding rail for vertical adjustment [37]. (C) A device equipped with a Canon EOS 1200D and a simulated D65 illuminant environment [38]. (D) A tongue image capturing device with a Logitech Pro C920 camera and a D65 illuminant [39]. (E) The handheld TDA-1 tongue image capturing device [40]. (F) An integrated system with standardized light sources, a digital camera, and color correction [41].

CCD cameras have the advantages of small size, high reliability, and simple operation, but images captured by traditional CCD cameras have limitations. In particular, in RGB color space it is difficult to distinguish the tongue from neighboring tissues, and the tongue coating from the tongue body [32]. Some methods [33, 34] perform well only on tongue images acquired under particular conditions and often fail when image quality is less than ideal. Hyperspectral sensors, with hundreds of observation channels, offer higher spectral resolution and therefore greater discriminative capacity than multispectral sensors. Spectroscopy is a valuable tool in many applications. For example, in remote sensing, researchers have shown that hyperspectral data are adequate for material identification in scenes where other sensing modalities are ineffective [35]. Spectral measurements of human tissue have also been used in biomedical applications. Zhi et al. [32] obtained hyperspectral images with a dedicated capture device and used the hyperspectral properties of the tongue coating to build a Support Vector Machine (SVM) classifier. Yamamoto et al. [36] also used a hyperspectral camera to acquire hyperspectral images of the tongue surface. Their camera contains an array of transmissive grating sensors and an eight-bit monochrome CCD camera with 480 × 640 pixels, and it can capture a full-sized hyperspectral image every 16 s. They also used multiple-scattering reflection technology in the lamp to reduce reflection and a semi-closed box to block unwanted light. However, their hardware system did not include color correction. Overall, high-spectral-resolution hyperspectral sensors can achieve better tongue classification performance than conventional color images.

In addition to the acquisition devices mentioned above, more sophisticated hardware has also been developed. Lo et al. [37] developed an automatic tongue diagnosis system (ATDS) to assist TCM practitioners. The system consists of a camera with circular LED lighting, a camera support, and a color card, with a sliding rail for vertical adjustment, as shown in Figure 3B. The circular LED lighting provides a consistent and stable light source and compensates for variations in intensity and color temperature by calibrating brightness and color. Using a color bar placed beside the subject, the ATDS automatically corrects lighting and color deviations caused by changes in background lighting, which keeps image quality consistent even when images are taken under different circumstances.
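Color-card correction of this kind is often implemented as a matrix fitted between the measured and known patch colors. The sketch below is a minimal illustration under our own assumptions, not the ATDS method: it fits a 4 × 3 affine color-correction matrix by least squares with NumPy, and the patch values and color cast are simulated.

```python
import numpy as np

def fit_color_correction(measured, reference):
    """Fit a 4 x 3 affine color-correction matrix by least squares.

    measured:  K x 3 mean RGB values of the K color-card patches in the captured image
    reference: K x 3 known (target) RGB values of the same patches
    """
    measured = np.asarray(measured, dtype=float)
    A = np.hstack([measured, np.ones((measured.shape[0], 1))])  # append 1 for an offset term
    M, *_ = np.linalg.lstsq(A, np.asarray(reference, dtype=float), rcond=None)
    return M  # shape (4, 3)

def apply_color_correction(image, M):
    """Apply the fitted matrix to an H x W x 3 float image in [0, 1]."""
    flat = image.reshape(-1, 3)
    out = np.hstack([flat, np.ones((flat.shape[0], 1))]) @ M
    return np.clip(out, 0.0, 1.0).reshape(image.shape)

# Simulated data: a 24-patch card seen under a hypothetical warm color cast
rng = np.random.default_rng(0)
reference = rng.uniform(0.05, 0.95, size=(24, 3))
measured = reference * np.array([1.10, 0.95, 0.80]) + 0.02
M = fit_color_correction(measured, reference)
corrected = apply_color_correction(measured.reshape(24, 1, 3), M).reshape(24, 3)
print(np.abs(corrected - reference).max())  # close to 0 for this purely affine cast
```

A purely affine cast is recovered exactly here; real sensor and illuminant nonlinearities usually call for more patches and higher-order (for example, polynomial) terms.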

Furthermore, Lu et al. [38] used a device equipped with a manual camera (Canon EOS 1200D) in a simulated D65 illuminant environment, as shown in Figure 3C, which is intended to render average daylight with a correlated color temperature of approximately 6,500 K. Although such commercial cameras can capture higher-resolution images, they make the whole acquisition system bulky. To improve portability, Zhuo et al. [39] developed a tongue image capturing device based on the Logitech Pro C920 camera and a D65 illuminant whose brightness can be adjusted as required. Its appearance and internal structure are shown in Figure 3D. In addition, Qi et al. [40] introduced a handheld tongue imaging device called TDA-1, which consists of an image acquisition system, an LED illuminator, and a removable collecting ring. As shown in Figure 3E, its photosensitive component is a CCD in a small Eolane digital camera (Altek A12, China). The system captures color images at 2,048 × 1,536 pixels, and operators can adjust white balance, exposure time, exposure compensation, ISO speed, metering mode, flash mode, and other settings. As a result, the hardware provides a stable lighting environment for tongue image acquisition.
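The "approximately 6,500 K" figure for D65 can be checked from the chromaticity of its white point (x ≈ 0.3127, y ≈ 0.3290) using McCamy’s well-known cubic approximation for correlated color temperature. This is a side calculation for illustration only; it is not part of any cited device.

```python
def mccamy_cct(x, y):
    """Approximate correlated color temperature (K) from CIE 1931 (x, y) chromaticity.

    Uses McCamy's (1992) cubic approximation; accuracy degrades for
    chromaticities far from the blackbody locus.
    """
    n = (x - 0.3320) / (y - 0.1858)
    return -449.0 * n**3 + 3525.0 * n**2 - 6823.3 * n + 5520.33

print(round(mccamy_cct(0.31271, 0.32902)))  # D65 white point -> 6504
```

The same function gives roughly 2,857 K for CIE Illuminant A (x ≈ 0.4476, y ≈ 0.4074), close to its nominal 2,856 K.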

Other tongue acquisition devices follow different standards. Kim et al. [41] used standardized light sources, a digital camera, and color correction to acquire tongue images. As shown in Figure 3F, they designed the camera to fit the facial contour and used a strobe light with a 5,500 K color temperature to simulate a standard light source. Images were captured at 1,280 × 960 pixels in 24-bit RGB BMP format, with a cross mark at the image center. A key advantage of this system is a graphical user interface (GUI) that displays the tongue image on a monitor in real time. The operator can locate the center of the tongue and draw a cross on the image, which significantly improves the quality of tongue image acquisition. Jang et al. [42] chose the Watec WAT-202D CCD camera for tongue image collection. This type of camera has less distortion and a lower price than CMOS array sensors. It provides a maximum of 768 × 494 pixels with push-lock white balance, a 50 dB S/N ratio, and an NTSC signal. In their hardware, the light source was a 250 W halogen lamp with a 4,000 K color temperature. Unlike previously designed acquisition hardware, their system places more emphasis on the durability, power consumption, and cost of the device. Zhang et al. [43] chose a Sony 900E video camera as the image capture device, with a new-type 3CCD core that has relatively low distortion. This camera provides images of up to 720 × 576 pixels, a 50 dB S/N ratio, and both PAL and NTSC signals. The light source consists of two 70 W cold-light halogen lamps with a 4,800 K color temperature. To cope with their high heat emission, the light source was installed separately from the image acquisition part, with optical fiber used as a waveguide. Their tongue acquisition hardware was approved by six TCM experts and received a Chinese invention patent (No. 021324581).

In general, most acquisition devices can provide a stable light source environment for tongue diagnosis. However, there is still no uniform standard for tongue acquisition hardware parameters, such as the type of camera and light source conditions.

Tongue image analysis

TCM diagnosis based on tongue images often includes image segmentation, color correction, and tongue type classification, although the former two may be skipped when using deep-learning methods. In this section, we will explain each of them in detail.

Tongue segmentation and effective part extraction

In tongue image diagnosis, color, shape, movement, and coating are the main factors considered. The thickness of the tongue, the size and cracks of the tongue surface, and the tooth marks along the tongue edge are also important. Segmentation therefore not only filters out background interference but also matters greatly for subsequent classifier training. However, tongue image segmentation faces several challenges. The main one is that the color of the tongue is similar to that of the lips and face, which makes it difficult to separate the tongue from them.

Existing approaches to tongue image segmentation fall into two types: those based on traditional image processing and those based on deep learning. In early research, manual segmentation and automatic segmentation algorithms were used to delineate the tongue area. Some methods used adaptive thresholding [44], region growing and merging [45], and edge detection [46, 47] to segment the tongue body from tongue images. These algorithms require the user to draw an initial boundary and often fail to extract the tongue body completely from its surroundings. To make segmentation more automatic, more advanced image processing techniques were proposed. The active contour model (ACM), or Snakes [48], was developed for contour extraction and image interpretation. Snakes take a global view of edge detection by evaluating contour continuity and curvature together with the local strength of an edge. A major advantage of this approach over other techniques is that it integrates image data, an initial estimate of the contour, the desired contour properties, and knowledge-based constraints into a single extraction process [49].
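For reference, the energy minimized by a classic Snake can be written as follows. This is the standard textbook formulation, given here as the general form rather than the exact energy of any cited variant: the α term penalizes stretching (continuity), the β term penalizes bending (curvature), and the external term draws the contour toward strong image edges.

$$
E_{\text{snake}} = \int_0^1 \left[ \frac{1}{2}\left( \alpha(s)\,\lvert \mathbf{v}'(s) \rvert^2 + \beta(s)\,\lvert \mathbf{v}''(s) \rvert^2 \right) + E_{\text{ext}}\big(\mathbf{v}(s)\big) \right] ds,
\qquad
E_{\text{ext}}(x, y) = -\left\lvert \nabla \big( G_\sigma * I \big)(x, y) \right\rvert^2
$$

Here $\mathbf{v}(s) = (x(s), y(s))$ is the contour parameterized by $s \in [0, 1]$, $I$ is the image, and $G_\sigma$ is a Gaussian smoothing kernel. Because this energy is non-convex, minimization converges to a nearby local minimum, which is why the quality of the initial contour matters so much in practice.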

Many variants of ACM-based algorithms have been developed, differing mainly in their initialization methods and curve-evolution strategies. Regarding initialization, Pang et al. [49, 50] combined deformable template techniques with ACM to build a model called the Bi-Elliptical Deformable Contour (BEDC), introducing a new term, the template force, that maintains the global shape while locally deforming the details. The template force also prevents undesirable deformation effects of traditional Snakes, such as shrinking and clustering. Their method handled noise better than traditional Snakes. However, BEDC fails to find the correct tongue contour when the tongue edges are very vague or missing entirely. Zuo et al. [33] developed a tongue segmentation method combining a polar edge detector, edge filtering, edge binarization, an active contour model, and a procedure that filters out useless edges. Wu et al. [51] used the watershed transform to obtain an initial contour, which was then evolved as a Snake to converge to the exact edge. Regarding curve evolution, Yu et al. [52] extracted the tongue body by adding a color gradient to the gradient vector flow (GVF) Snake. Shi et al. [53] applied a double geodesic flow to extract the tongue body based on prior information about tongue shape and location. Later, Shi et al. [54] continued this work with a color-control geometric and gradient flow Snake algorithm that enhanced curve velocity. Ling et al. [55] presented a segmentation method combining gray histogram projection with automatic threshold selection: they located the tongue area through horizontal and vertical projections of the gray-scale image and then used Otsu’s method to select the segmentation threshold. Although these algorithms can separate the tongue from the original images, geodesic active contours are considerably more time-consuming than the parametric Snake model.

Based on the statistical distribution of tongue colors, Wang et al. [56] introduced a mathematically described tongue color space for diagnostic feature extraction. This color space is characterized by a tongue color gamut that defines the range of colors, the color centers of 12 tongue color categories, and the distribution of typical image features within the gamut. They built the tongue color gamut in the CIE chromaticity diagram with a color gamut boundary descriptor based on a one-class SVM. They then defined the centers of the 12 tongue color categories and related the tongue color space to the color distributions of various tongue features. The resulting descriptor covers 99.98% of tongue colors, and 98.11% of them are densely distributed within 98% of the tongue color gamut. These feature extraction methods provide valuable information for computerized tongue diagnosis.
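Building a gamut in the CIE chromaticity diagram, as Wang et al. [56] did, first requires mapping pixel colors to CIE xy chromaticity. A minimal sketch of that mapping, assuming the images are sRGB-encoded (real camera output generally needs device-specific calibration first), uses the standard sRGB decoding curve and the sRGB (D65) RGB-to-XYZ matrix. The one-class SVM boundary fitting itself is not shown.

```python
import numpy as np

# sRGB (D65) linear RGB -> CIE XYZ matrix (IEC 61966-2-1)
SRGB_TO_XYZ = np.array([[0.4124, 0.3576, 0.1805],
                        [0.2126, 0.7152, 0.0722],
                        [0.0193, 0.1192, 0.9505]])

def srgb_to_linear(c):
    """Undo the sRGB transfer curve; c holds encoded values in [0, 1]."""
    c = np.asarray(c, dtype=float)
    return np.where(c <= 0.04045, c / 12.92, ((c + 0.055) / 1.055) ** 2.4)

def srgb_to_xyz(rgb):
    """Convert sRGB values (... x 3) to CIE XYZ (... x 3)."""
    return srgb_to_linear(rgb) @ SRGB_TO_XYZ.T

def xyz_to_xy(xyz):
    """Project XYZ onto the CIE 1931 xy chromaticity plane."""
    s = xyz.sum(axis=-1, keepdims=True)
    return xyz[..., :2] / np.where(s == 0, 1.0, s)   # black maps to (0, 0)

# White should land on the D65 white point, approximately (0.3127, 0.3290)
print(np.round(xyz_to_xy(srgb_to_xyz(np.array([1.0, 1.0, 1.0]))), 4))

# A whole H x W x 3 image converts the same way, giving H x W x 2 chromaticities
image = np.random.default_rng(0).uniform(size=(4, 4, 3))
xy = xyz_to_xy(srgb_to_xyz(image))
```

The resulting (x, y) samples from many tongue images are what a gamut boundary descriptor would then be fitted to.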

Spectral image data have also been applied to tongue segmentation. Li et al. [57] presented a segmentation method based on hyperspectral tongue image data. They constructed a transformed data cube by computing the spectral angle (SA) between each pixel and every other pixel in the original data cube, and then used a one-dimensional edge detector to analyze each spectrum in the transformed SA cube. The tongue contour is extracted from the hyperspectral image according to the detected edges. This study offers a new perspective on tongue image segmentation. Other studies further improved the Snake method. For example, Liang et al. [58] proposed an efficient tongue segmentation approach combining a tongue shape feature with a Snakes correction model. They used the tongue image in the HSI color model to obtain a preliminary tongue contour, corrected it using the shape of the tongue, and then applied the Snakes model to obtain the final result. Other researchers have compared existing methods; for example, Wei et al. [59] compared the Canny, Snake, and threshold (Otsu’s thresholding algorithm) methods for edge segmentation. They found that the Canny algorithm is unsuitable for tongue segmentation because it may produce many false edges, and that Snake segmentation of the tongue requires many iterations to converge, which is computationally time-consuming. Otsu’s thresholding combined with filtering achieved easy, fast, and effective segmentation. This study offers practical guidance for selecting tongue segmentation algorithms.
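Otsu’s method, which Wei et al. [59] found easy, fast, and effective (and which Ling et al. [55] also used), picks the gray level that maximizes the between-class variance of the image histogram. The sketch below assumes an 8-bit grayscale input. The bounding-box helper is a hypothetical simplification of projection-based localization, not Ling et al.’s exact procedure, and which side of the threshold corresponds to the tongue depends on the image.

```python
import numpy as np

def otsu_threshold(gray):
    """Return the Otsu threshold t for an 8-bit image; foreground is gray > t."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    p = hist / hist.sum()                   # gray-level probabilities
    omega = np.cumsum(p)                    # probability of class 0 (levels <= t)
    mu = np.cumsum(p * np.arange(256))      # cumulative mean of class 0
    mu_total = mu[-1]
    denom = omega * (1.0 - omega)
    safe = np.where(denom > 0, denom, 1.0)  # avoid division by zero at the extremes
    # Between-class variance for every candidate threshold
    sigma_b2 = np.where(denom > 0, (mu_total * omega - mu) ** 2 / safe, 0.0)
    return int(np.argmax(sigma_b2))

def projection_bbox(mask):
    """Bounding box (row0, row1, col0, col1) from horizontal and vertical projections."""
    rows = np.flatnonzero(mask.sum(axis=1))   # horizontal projection
    cols = np.flatnonzero(mask.sum(axis=0))   # vertical projection
    return int(rows[0]), int(rows[-1]), int(cols[0]), int(cols[-1])

# Toy example: a bright 4 x 6 region on a dark background
gray = np.full((10, 10), 50, dtype=np.uint8)
gray[3:7, 2:8] = 200
t = otsu_threshold(gray)
mask = gray > t
print(t, mask.sum(), projection_bbox(mask))  # 50 24 (3, 6, 2, 7)
```

On real tongue photographs, a filtering step (as in Wei et al.) and a connected-component or projection step are usually needed to suppress lips and other regions that fall on the same side of the threshold.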

Some methods use multiple steps to achieve optimal tongue image segmentation and feature extraction. Kim et al. [41] segmented tongue images in several steps: preprocessing, over-segmentation, region merging, local minimum detection, local minimum correction, color edge detection, curve fitting, edge selection, and edge smoothing. During preprocessing, tongue images are resized to 533 × 400 pixels before histogram equalization and edge enhancement. A graph-based segmentation method is then applied, based on edge selection. However, the segmentation results in this study were evaluated with an integer score from 1 to 5, which lacks a precise assessment method. Zhang et al. [62] used the HSV color space for color feature extraction: after converting tongue images from RGB to HSV, they extracted the H and S components as color features. Texture features were extracted through statistical analysis of the gray level co-occurrence matrix (GLCM). Specifically, four features were used to characterize the greasiness of the tongue coating and the smoothness and wetness of the tongue body: contrast (CON), angular second moment (ASM), entropy (ENT), and correlation (COR). For tooth mark extraction, the Graham convex hull algorithm was used to construct the convex hull of the tongue body. This study used the HSV color space for tongue segmentation and for texture and color feature extraction, but its sample size was relatively small. Li et al. [63] established a method to count tooth marks. It uses Gradient Vector Flow (GVF) Snakes together with the curvature and gradient at every point of the tongue contour, and a tooth mark is defined by the curvatures and gradients of points on the boundary contour. In their experiments, the detection accuracy reached 98% compared with doctors’ judgments.

These traditional methods can produce satisfactory segmentation results to some extent. However, they have three main disadvantages: (1) they are sensitive to illumination changes and cluttered backgrounds; (2) they cannot accurately separate the tongue from the lips because of their similar colors, especially the Snake-based methods; and (3) most of them require preprocessing, such as tongue-body detection, or require an initial region to be specified before segmentation begins.
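The four GLCM texture features used by Zhang et al. [62] can be computed as follows. This is a minimal NumPy sketch under our own assumptions (a single horizontal offset, eight quantized gray levels, a symmetric matrix, and base-2 entropy); the original study’s parameters are not reported here. scikit-image’s `graycomatrix` and `graycoprops` provide a fuller implementation.

```python
import numpy as np

def glcm(gray, levels=8, offset=(0, 1)):
    """Normalized symmetric gray-level co-occurrence matrix for one non-negative offset.

    gray:   2-D uint8 image, quantized here from 256 to `levels` gray levels
    offset: (row, col) displacement with both components >= 0
    """
    dr, dc = offset
    assert dr >= 0 and dc >= 0, "this sketch only handles non-negative offsets"
    q = gray.astype(np.int64) * levels // 256     # 0..255 -> 0..levels-1
    h, w = q.shape
    a = q[:h - dr, :w - dc]                       # reference pixels
    b = q[dr:, dc:]                               # neighbors at the offset
    m = np.zeros((levels, levels))
    np.add.at(m, (a.ravel(), b.ravel()), 1)       # count co-occurring pairs
    m = m + m.T                                   # count both directions
    return m / m.sum()

def glcm_features(p):
    """Contrast, angular second moment, entropy, and correlation of a normalized GLCM."""
    i, j = np.indices(p.shape)
    contrast = np.sum(p * (i - j) ** 2)
    asm = np.sum(p ** 2)
    nz = p[p > 0]
    entropy = -np.sum(nz * np.log2(nz))
    mu_i, mu_j = np.sum(i * p), np.sum(j * p)
    sd_i = np.sqrt(np.sum(p * (i - mu_i) ** 2))
    sd_j = np.sqrt(np.sum(p * (j - mu_j) ** 2))
    if sd_i > 0 and sd_j > 0:
        corr = np.sum(p * (i - mu_i) * (j - mu_j)) / (sd_i * sd_j)
    else:
        corr = 1.0  # undefined for a constant image; 1.0 is the convention used here
    return {"CON": float(contrast), "ASM": float(asm),
            "ENT": float(entropy), "COR": float(corr)}

# Alternating black/white columns: maximal contrast, perfectly anti-correlated neighbors
stripes = np.tile(np.array([0, 255], dtype=np.uint8), (4, 4))
print(glcm_features(glcm(stripes)))
```

Averaging the features over several offsets (for example, 0°, 45°, 90°, and 135°) is a common way to make them less sensitive to orientation.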

In addition to traditional machine learning methods, some studies have applied deep learning to tongue image segmentation. Li et al. [64] proposed a real-time, automatic tongue image segmentation method using a lightweight encoder-decoder architecture. They also constructed a tongue image dataset of 5,600 images with high-quality segmentation labels for model training and testing. They demonstrated the model’s effectiveness on the BioHit, PolyU/HIT, and their own datasets, achieving intersection over union (IoU) scores of 99.15%, 95.69%, and 99.03%, respectively. Li et al. [65] proposed a three-step iterative fully convolutional network (TFCN) to extract the tongue body from the original image. Their method learns the alpha matte directly from the input image, correcting errors in intermediate steps without user interaction or initialization. Compared with GrabCut [66], Closed-Form matting [67], and KNN matting [68], their approach achieved an IoU of 97.94%, outperforming these methods. Qu et al. applied the SegNet encoder-decoder model to segment tongue images automatically. However, these solutions have drawbacks: they need additional preprocessing, such as brightness discrimination and image enhancement, which complicates the segmentation process.
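The IoU scores reported in these studies measure the overlap between a predicted mask A and the ground-truth mask B as |A ∩ B| / |A ∪ B|. A minimal NumPy version for binary masks follows; treating two empty masks as a perfect match is our own convention.

```python
import numpy as np

def iou(pred, target):
    """Intersection over union of two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    union = np.logical_or(pred, target).sum()
    if union == 0:
        return 1.0  # both masks empty: treated as perfect agreement (a convention)
    return float(np.logical_and(pred, target).sum() / union)

pred = np.zeros((4, 4), dtype=bool)
pred[1:3, 1:3] = True      # 4 pixels
target = np.zeros((4, 4), dtype=bool)
target[1:3, 1:4] = True    # 6 pixels, containing the prediction
print(round(iou(pred, target), 4))  # 4 / 6 -> 0.6667
```

Dataset-level figures are usually the mean of this per-image value, so a few badly segmented images can pull the score down noticeably.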

Encoder-decoder networks that rely on successive pooling layers lose some image detail. To address this drawback, Lin et al. [69] presented DeepTongue, an end-to-end trainable segmentation method using a deep convolutional neural network (CNN) based on ResNet. Without preprocessing, tongue images can be segmented quickly and accurately in a single forward pass through its 50-layer network. Huang et al. [70] proposed an end-to-end network called Tongue U-Net (TU-Net), which combines the classical U-Net structure with Squeeze-and-Excitation (SE), Dense Atrous Convolution (DAC), and Residual Multi-kernel Pooling (RMP) blocks. On a tongue dataset of 300 images, it performed better than other segmentation methods such as U-Net and Attention U-Net. Zhou et al. [71] presented TongueNet, a multi-task, end-to-end deep CNN for supervised tongue segmentation. They used a feature pyramid network built on context-aware residual blocks to extract multi-scale tongue features, and regions of interest (ROIs) from the feature maps were used for finer localization and segmentation. On a small-scale tongue dataset, they also applied U-Net for fast tongue segmentation, achieving a highest accuracy of 98.45% with an average of 0.267 s per image [72]. Zhu et al. [73] also used U-Net, to extract tongue contours from ultrasound images, and obtained superior performance; their experiments show that U-Net can extract frame-specific contours and is robust to misleading features in ultrasound tongue images. Tang et al. [74] proposed a Dilated Encode Network (De-Net) for automated segmentation of tongue images acquired by mobile devices in open environments. Unlike previous deep learning methods, which use successive pooling operations to enlarge the receptive field and capture more abstract features, their model uses an HCDC block that enlarges the receptive field with dilated convolutions without losing resolution, preserving both high-level features and high-resolution output. They compared their method with competitive methods, including SegNet, U-Net, and DeepTongue, and it obtained more accurate segmentation results on their tongue database, showing that it is effective for tongue image segmentation with mobile devices.
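The benefit of dilated convolutions can be checked with a short calculation: stacking stride-1 convolutions with kernel size k and dilation rates d₁, d₂, … gives a receptive field of 1 + Σ(k − 1)dᵢ without any pooling, so the output keeps the input resolution. The 1-D sketch below illustrates only this general principle; the dilation rates (1, 2, 4) are our assumption, not the actual configuration of the HCDC block.

```python
import numpy as np

def receptive_field(dilations, kernel_size=3):
    """Receptive field of stacked stride-1 convolutions with the given dilation rates."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)

def dilated_conv1d(x, kernel, dilation):
    """'Same'-padded 1-D dilated correlation: output length equals input length."""
    k = len(kernel)
    span = (k - 1) * dilation
    xp = np.pad(x, (span // 2, span - span // 2))
    return np.array([sum(kernel[t] * xp[i + t * dilation] for t in range(k))
                     for i in range(len(x))])

x = np.zeros(15)
x[7] = 1.0                         # unit impulse in the middle
y = x
for d in (1, 2, 4):                # three 3-tap layers, no pooling
    y = dilated_conv1d(y, np.ones(3), d)
print(receptive_field([1, 2, 4]), len(y), np.count_nonzero(y))  # 15 15 15
```

With ordinary (dilation 1) layers, the same three layers would reach only 7 samples; reaching 15 without dilation would require more layers or pooling, which reduces resolution.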

In another study, Huang et al. [75] presented an automated tongue image segmentation method using an enhanced fully convolutional network with an encoder-decoder structure. In a quantitative evaluation on 300 images from their tongue image dataset, the average precision was 95.66%. Xu et al. [76] proposed a Multi-Task Joint learning (MTL) method for segmenting and classifying tongue images. The method shares the underlying parameters and adds two task-specific loss functions. Moreover, two deep neural network variants (U-Net and Discriminative Filter Learning) are fused into the MTL framework. Experimental results show that the joint method outperforms existing tongue characterization methods. Yuan et al. [77] proposed a framework that integrates tongue detection and segmentation using cascaded CNNs with multitask learning. This method is specifically designed for mobile and embedded devices: their model is hundreds of times smaller than other deep learning models such as SegNet and iterative TFCN, while its accuracy is comparable. Tongue crack segmentation is also an essential component of computer-aided tongue diagnosis. Tongue cracks are fissures of different depths and shapes in the surface and muscle layer of the tongue. In most cases, a crack can be viewed as a variable curvilinear structure on the tongue surface whose depth is determined by the severity of atrophy and lesions of the tongue mucosa. The degree of tongue fissuring also reflects the health condition of internal organs [78].

Chen et al. [79] proposed a tongue crack extraction method based on the bottom-hat transform and Otsu's adaptive threshold, achieving an extraction accuracy of over 90%. Xue et al. [80] used AlexNet to extract deep features of the crack regions and trained a multi-instance support vector machine (SVM) to make the final detection decision. Chang et al. [81, 82] used ResNet50 as the backbone to recognize and localize tongue crack regions and visualized the fissure regions with Gradient-weighted Class Activation Mapping (Grad-CAM). However, these methods do not achieve precise pixel-level extraction because tongue crack boundaries are vague. Peng et al. [83] proposed a P-shaped neural network architecture based on a lightweight encoder-decoder structure to extract tongue cracks. They improved the U-Net structure, applied dual attention gates for better information fusion, and addressed class imbalance with an oversampling pre-training strategy. Their method extracted tongue cracks more accurately and in less time. In addition, applying an attention mechanism to discover new tongue features (e.g., a particular color in a region that is associated with a disease) may help TCM professionals define robust diagnosis protocols.
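The bottom-hat plus Otsu pipeline attributed to Chen et al. [79] can be sketched in NumPy as follows. This is a minimal illustration, not their implementation: the square 7 × 7 structuring element, edge padding, and function names are assumptions. The bottom-hat (closing minus image) brightens dark, thin structures such as cracks, and Otsu's method then picks the threshold that maximizes the between-class variance.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _grey_filter(img, size, reducer):
    """size x size max/min filter (grey dilation/erosion) with edge padding."""
    pad = size // 2
    padded = np.pad(img, pad, mode="edge")
    windows = sliding_window_view(padded, (size, size))
    return reducer(windows, axis=(-2, -1))


def bottom_hat(img, size=7):
    """Bottom-hat (black top-hat): morphological closing minus the image.

    Dark structures narrower than the structuring element (e.g. cracks) are
    filled in by the closing, so they stand out in the difference.
    """
    img = img.astype(np.float64)
    closed = _grey_filter(_grey_filter(img, size, np.max), size, np.min)
    return closed - img


def otsu_threshold(values):
    """Otsu's threshold for values in [0, 255] (maximizes between-class variance)."""
    hist, _ = np.histogram(values, bins=256, range=(0, 256))
    levels = np.arange(256)
    total = hist.sum()
    omega = np.cumsum(hist) / total            # probability of the class <= t
    mu = np.cumsum(hist * levels) / total      # cumulative mean up to t
    mu_total = mu[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu_total * omega - mu) ** 2 / (omega * (1.0 - omega))
    sigma_b = np.nan_to_num(sigma_b, nan=0.0, posinf=0.0, neginf=0.0)
    return int(np.argmax(sigma_b))


def extract_cracks(gray, size=7):
    """Binary crack mask: bottom-hat enhancement followed by Otsu thresholding."""
    enhanced = np.clip(bottom_hat(gray, size), 0, 255)
    return enhanced > otsu_threshold(enhanced)


tongue = np.full((32, 32), 200, dtype=np.uint8)
tongue[16, :] = 80                      # a one-pixel-wide dark "crack"
mask = extract_cracks(tongue)
print(mask.sum(), mask[16].all())       # 32 True
```

On real images, the structuring element must be wider than the cracks of interest; the synthetic patch above is only a sanity check.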

In general, most segmentation methods can separate the tongue region, but for images with low resolution or blurred tongue edges, accurate and effective tongue segmentation remains a significant challenge.

Tongue color correction

In TCM tongue analysis, color and color differences convey important diagnostic information. However, color deviations often arise during tongue image acquisition, because different types and brands of digital cameras may use different color spaces to acquire images. As a result, it is difficult to exchange or compare images reliably and meaningfully, and methods developed on, or results obtained from, such device-dependent images may have limited applicability. In addition, the color and intensity of the external light source affect tongue images. Therefore, tongue image color correction is an essential issue in automatic tongue diagnosis.

To reduce the interference of external light sources in tongue diagnosis, several color correction methods have been proposed [23, 43, 84–86]. Existing color correction methods can be classified into four categories: methods based on simple image statistics, color temperature curve calibration, double exposures, and supervised learning [87]. Among the supervised learning methods, polynomial-based correction and neural network mapping are the most widely used. However, most related research has focused on color correction for general imaging devices, such as digital cameras, cathode ray tube/liquid crystal display (CRT/LCD) monitors, and printers, whose color gamut covers almost the entire visible color range. Since the color gamut of human tongue images is much narrower, these color correction algorithms need to be adapted and optimized for tongue images.

In early research, Jang et al. [42] proposed a Trigonal Pyramid (TP) color reproduction method that requires no color checker as a reference; its disadvantage is a lack of objectivity. To compensate for errors in lighting, camera angle, and color representation during tongue image acquisition, color calibration methods use an embedded color checkerboard. The color correction process typically involves deriving a transformation between device-dependent camera RGB values and device-independent chromatic attributes with the aid of several reference colors, which are usually printed and arranged in a checkerboard chart called a ColorChecker [88–91]. The ColorChecker serves as the reference target for training a correction model and plays a crucial role in tongue color correction. Cai [28] developed a semi-automatic color calibration tool based on the Munsell ColorChecker [92], which contains 24 scientifically selected color patches, including additive primaries and the colors of common natural objects. The Munsell ColorChecker was designed in 1976 and is widely used in color reproduction for photography, television, and printing. Cai's software locates the points in each square of the color checker and applies a linear color calibration model to recover the original color of the tongue under various lighting conditions. However, the linear model does not yield satisfactory calibration results.
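Cai's linear model [28] is not specified in detail here. As a minimal sketch, assuming the model is an affine map fitted by least squares between the measured and reference colors of the 24 checker patches, it could look like the code below. The function names and the synthetic distortion are hypothetical.

```python
import numpy as np


def fit_linear_correction(measured, reference):
    """Least-squares affine map from measured to reference patch colors.

    measured, reference: (N, 3) arrays, one row per checker patch (e.g. N = 24).
    Returns a (4, 3) matrix M such that [R, G, B, 1] @ M approximates the reference.
    """
    X = np.hstack([measured, np.ones((measured.shape[0], 1))])
    M, *_ = np.linalg.lstsq(X, reference, rcond=None)
    return M


def apply_linear_correction(image, M):
    """Apply the fitted map to every pixel of an (..., 3) image."""
    flat = image.reshape(-1, 3).astype(np.float64)
    X = np.hstack([flat, np.ones((flat.shape[0], 1))])
    return (X @ M).reshape(image.shape)


# Synthetic check: distort 24 reference colors with a known affine transform.
rng = np.random.default_rng(0)
reference = rng.uniform(0.0, 1.0, size=(24, 3))
A = np.array([[0.90, 0.05, 0.00],
              [0.10, 0.80, 0.05],
              [0.00, 0.10, 1.10]])
measured = reference @ A + 0.02
M = fit_linear_correction(measured, reference)
print(np.allclose(apply_linear_correction(measured, M), reference))  # True
```

The synthetic distortion is itself affine, so it is recovered exactly. A real camera response is nonlinear, which explains why a purely linear model falls short.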

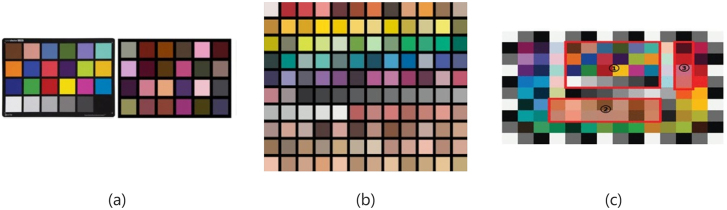

Among the supervised learning methods, polynomial regression-based correction is the most widely used because of its low computational complexity. Based on Support Vector Regression (SVR), Wang and Zhang [84] used a polynomial regression-based correction method for tongue image analysis. They proposed an optimized tongue color correction scheme to achieve accurate tongue image rendering: they chose the sRGB color space as the target color space and then optimized the color correction algorithms accordingly. The method reduces the color difference between images captured with different cameras or under different lighting conditions, reducing the distances between the color centers of tongue images by more than 95%. Their further research [85] conducted a thorough study on the design of a ColorChecker for more precise tongue color correction. Instead of the Munsell ColorChecker, they proposed a tongue color space-based color checker, as shown in Figure 4A. The Munsell ColorChecker was designed for natural colors in general rather than for tongue colors, so many of its colors, such as green and yellowish-green, are unlikely to appear in tongue images. Therefore, their checker is more accurate than the Munsell ColorChecker for tongue color correction.
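Polynomial regression-based correction extends the affine model with higher-order terms of the measured colors. The sketch below uses a 10-term second-order expansion (constant, linear, cross, and squared terms), which is one common choice and an assumption here; the exact terms and fitting details of Wang and Zhang [84] are not reproduced, and the function names are hypothetical.

```python
import numpy as np


def poly_terms(rgb):
    """Second-order expansion: 1, R, G, B, RG, RB, GB, R^2, G^2, B^2 (10 terms)."""
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[:, 0], rgb[:, 1], rgb[:, 2]
    return np.stack([np.ones_like(r), r, g, b,
                     r * g, r * b, g * b,
                     r * r, g * g, b * b], axis=1)


def fit_poly_correction(measured, reference):
    """Least-squares (10, 3) coefficient matrix from expanded terms to reference colors."""
    M, *_ = np.linalg.lstsq(poly_terms(measured), reference, rcond=None)
    return M


def apply_poly_correction(rgb, M):
    return poly_terms(rgb) @ M


# Synthetic check: reference colors generated from a known second-order mapping.
rng = np.random.default_rng(1)
measured = rng.uniform(0.0, 1.0, size=(24, 3))
M_true = rng.normal(size=(10, 3))
reference = poly_terms(measured) @ M_true
M = fit_poly_correction(measured, reference)
print(np.allclose(apply_poly_correction(measured, M), reference))  # True
```

With 24 patches and 10 coefficients per channel, the system is overdetermined. Higher orders fit the checker better but risk overfitting outside the checker's colors, one reason tongue-specific checkers matter.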

Figure 4:

Three common color checkers. (A) An improved checker with 24 colors [85]. (B) A checker with 120 colors [97]. (C) The ColorChecker SG, with the regions of interest highlighted [38].

To improve the correction accuracy of tongue colors, further studies have been reported. Hu et al. [93] applied the polynomial regression-based method to tongue image color correction and investigated the design of the model parameters. Unlike previous studies of tongue image analysis, in which tongues were captured using a device with a fixed camera position, camera setting, and lighting condition to simplify color correction, they proposed a lighting estimation method based on analyzing the CIE XYZ color information of photos taken with and without flash. As a result, their system works on smartphones, and users do not need to carry a color checker. Hu et al. [94] also applied SVM to predict the lighting condition and the corresponding color correction matrix from the color difference between images taken with and without flash. They also added a denoising step and incorporated the hue information of tongue images into the fur and fissure detection stage. Zhang et al. [86] proposed a novel method to calibrate the color distortion of tongue images captured under different lighting conditions. They adopted Li's regularized color clustering algorithm to produce the color codes of a new color checker [95]. To compare calibrations using different color codes, they combined the 24 standard color values provided by Microsoft Windows, the cluster values, and gray values into the color checker. Their experiments show that the SVR-based calibration method, combined with the proposed color checker, provides better overall performance than polynomial-based methods. To ensure better color representation of the tongue body, researchers in Ref. [96] introduced a new tongue Color Rendition Chart for color calibration algorithms. They built a statistical tongue color gamut from a tongue image database and determined the number of colors in the chart experimentally. Using the CIELAB and CIEDE2000 color difference formulas, they compared their tongue Color Rendition Chart with X-Rite's ColorChecker Color Rendition Chart and obtained a smaller error with 24 colors (Figure 4).
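For reference, the CIELAB (CIE 1976) color difference mentioned above is the Euclidean distance between two colors in $L^*a^*b^*$ coordinates. CIEDE2000 adds weighting functions for lightness, chroma, and hue, plus a rotation term for blue colors, and is not reproduced here. The numbers in the worked example are illustrative, not taken from [96].

$$
\Delta E^{*}_{ab} = \sqrt{(L^{*}_{1}-L^{*}_{2})^{2} + (a^{*}_{1}-a^{*}_{2})^{2} + (b^{*}_{1}-b^{*}_{2})^{2}}
$$

For example, for $(L^*, a^*, b^*) = (50, 10, 10)$ and $(52, 13, 16)$:

$$
\Delta E^{*}_{ab} = \sqrt{2^{2} + 3^{2} + 6^{2}} = \sqrt{49} = 7
$$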

To overcome the problem of too few samples, Wei et al. [98] adopted Partial Least Squares Regression (PLSR) to correct tongue images in the RGB color space. PLSR is a robust alternative to ordinary multiple regression that works with relatively few samples and tolerates multicollinearity among the variables. However, RGB is a device-dependent color space, which makes color differences computed in it difficult to interpret. Rosipal et al. [99] improved the fitting and prediction precision of PLSR by introducing a nonlinear kernel (K-PLSR) that maps the independent variable space to a high-dimensional feature space. Zhuo et al. [39] proposed a K-PLSR-based color correction method for tongue images in which the device-independent CIELAB color space is used to train the K-PLSR model. Their method corrects tongue images captured under different illumination conditions to a consistent rendering, suitable for standardized storage and automatic analysis in TCM.

Several studies have applied neural networks to tongue image color correction. Zhuo et al. [97] proposed a color correction algorithm for tongue images based on a simulated annealing (SA)–genetic algorithm (GA)–backpropagation (BP) neural network. They used only colors similar to those of the tongue body, tongue coating, and skin; the training samples consist of the 120 color patches of the checker shown in Figure 4B. They established a color mapping model to improve correction accuracy, taking the color checker samples captured in the acquisition environment as input data and the standard color data as output. Their experimental results demonstrate that the algorithm improves correction accuracy with much lower computational complexity. Zhang et al. [100] developed a neural network-based color correction algorithm incorporating an evolutionary computation method. Their experimental results demonstrate that their method achieves better correction performance than the polynomial regression model, the conventional back-propagation neural network, and the genetic algorithm-back-propagation neural network.

Lu et al. [38] proposed a Deep Color Correction Neural Network (DCCN) to model the relationship between tongue images captured under different lighting conditions and the target images. The DCCN learns the color mapping model through the operations in its hidden layers. In their convolutional neural network architecture, the number of layers is determined via the patches of the color checker, which consists of 140 patches, as shown in Figure 4C. Sui et al. [101] used the Root-Polynomial Color Correction (RPCC) algorithm to correct tongue color. They captured images of the tongue and an X-Rite ColorChecker Classic simultaneously with a mobile phone and used the CIE 1976 L*a*b* color difference equation to evaluate their algorithm. The experimental results demonstrate that RPCC improves color correction quality compared with the traditional polynomial regression algorithm and a back-propagation neural network. Zhu et al. [102] proposed a new image retrieval method based on multiple features of tongue images. For feature extraction, color is described in the HSI color space and texture by gray-level difference statistics. The color and texture features are then normalized into a 12-D color feature vector and an 8-D texture feature vector, so that the matching degree of images can be judged by calculating the weighted distance between the normalized results.
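Root-polynomial color correction (introduced by Finlayson and colleagues) replaces the polynomial terms with their roots so that the expansion scales linearly with exposure. The following minimal degree-2 sketch is not Sui et al.'s [101] implementation, and its function names are hypothetical. No constant term is included, because a constant would break the scaling property.

```python
import numpy as np


def root_poly_terms(rgb):
    """Degree-2 root-polynomial terms: R, G, B, sqrt(RG), sqrt(GB), sqrt(RB).

    Inputs must be non-negative (linear RGB), because of the square roots.
    """
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[:, 0], rgb[:, 1], rgb[:, 2]
    return np.stack([r, g, b, np.sqrt(r * g), np.sqrt(g * b), np.sqrt(r * b)], axis=1)


def fit_rpcc(measured, reference):
    """Least-squares (6, 3) matrix from root-polynomial terms to reference colors."""
    M, *_ = np.linalg.lstsq(root_poly_terms(measured), reference, rcond=None)
    return M


def apply_rpcc(rgb, M):
    return root_poly_terms(rgb) @ M


rng = np.random.default_rng(2)
measured = rng.uniform(0.05, 1.0, size=(24, 3))
reference = rng.uniform(0.0, 1.0, size=(24, 3))
M = fit_rpcc(measured, reference)
# Doubling the exposure doubles every term, so the corrected output doubles too.
print(np.allclose(apply_rpcc(2 * measured, M), 2 * apply_rpcc(measured, M)))  # True
```

An ordinary second-order polynomial lacks this property: doubling the input quadruples its squared terms. This is why root-polynomial terms suit images taken under varying illumination intensity.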

Tongue classification

In the TCM theory, a tongue is composed of a body, tip, and root [20]. These parts give the tongue its shape, which may change over time, and these changes may indicate pathologies. In oriental medicine, the classification of tongue images has important clinical diagnostic significance. Therefore, researchers have conducted many studies on the classification of tongue images. For example, Huang et al. [103] presented a classification approach for automatically recognizing and analyzing tongue shapes based on geometric features. The approach corrects the tongue deflection by applying three geometric criteria and then classifies tongue shapes according to seven geometric features defined by various measurements of length, area, and angle of the tongue. The results show that the proposed shape correction method reduces the deflection of tongue shapes in a test on a total of 362 tongue samples. It achieved an accuracy of 90.3%, making it more accurate than either the K-nearest neighbors (KNN) or Linear Discriminant Analysis (LDA) method.
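Huang et al.'s seven geometric features [103] are not defined in this text. To illustrate the kind of length, area, and ratio measurements involved, the sketch below computes a few simple features from a binary tongue mask. It assumes the mask has already been deflection-corrected so that the tongue is upright. The feature names and definitions are hypothetical.

```python
import numpy as np


def shape_features(mask):
    """Simple geometric measurements of a binary tongue mask (True = tongue)."""
    ys, xs = np.nonzero(mask)
    length = int(ys.max() - ys.min() + 1)      # vertical extent in pixels
    width = int(xs.max() - xs.min() + 1)       # horizontal extent in pixels
    area = int(np.count_nonzero(mask))
    return {
        "length": length,
        "width": width,
        "length_width_ratio": length / width,
        "area": area,
        # fraction of the bounding box covered by the tongue (1.0 for a rectangle)
        "bbox_fill_ratio": area / (length * width),
    }


mask = np.zeros((100, 100), dtype=bool)
mask[10:60, 20:50] = True                      # a 50 x 30 rectangular "tongue"
feats = shape_features(mask)
print(feats["length"], feats["width"], feats["area"])  # 50 30 1500
```

A classifier such as KNN or LDA would then operate on vectors of these measurements.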

Zhang et al. [104] proposed a tongue image classification method that applies a Sparse Representation Classifier (SRC) to geometry features. Based on areas, measurements, distances, and ratios of foreground pixels, they extracted 13 geometry features in total: width, length, length-width ratio, smaller-half-distance, center distance, center distance ratio, area, circle area, circle area ratio, square area, square area ratio, triangle area, and triangle area ratio. They then built two sub-dictionaries of geometry features, Healthy and Disease, for SRC classification. With 130 Healthy and 130 Disease samples, they obtained an average accuracy of 79.23%, a sensitivity of 86.15%, and a specificity of 72.31%. Zhang et al. [105] used geometric features for quantitative analysis through computerized methods. They used a decision tree model to classify the five tongue shapes defined in TCM (rectangle, acute triangle, obtuse triangle, square, and circle). The experimental dataset included 130 healthy and 542 diseased samples labeled by Western medical diagnosis, and the average accuracy over all shapes was 76.24%. This work provides a foundation for the objectification of tongue diagnosis. However, tongue image alignment poses significant challenges when extracting the shape and geometric features of the tongue: the tongue shape is highly variable, and tongues differ considerably between people [106]. Wu et al. [107] addressed tongue image alignment with a conformal mapping method. This method first establishes the mapping on the boundary using Fourier descriptors and then extends it to the interior region by means of the Cauchy integral and finite difference methods. The method is robust against tongue deformation and is faster and more accurate than the baseline method, providing an effective and accurate tool for deformable medical image alignment and disease detection.
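The sensitivity, specificity, and accuracy reported for [104] are mutually consistent on the balanced 130/130 dataset. The sketch below reproduces them from confusion-matrix counts. The counts (112 of 130 Disease and 94 of 130 Healthy samples correct) are our back-calculation from the reported percentages, not numbers given in [104].

```python
def binary_metrics(tp, fn, tn, fp):
    """Sensitivity, specificity, and accuracy from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)          # true positive rate on Disease samples
    specificity = tn / (tn + fp)          # true negative rate on Healthy samples
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    return sensitivity, specificity, accuracy


# 130 Disease and 130 Healthy samples; 112 and 94 correct, respectively.
sens, spec, acc = binary_metrics(tp=112, fn=18, tn=94, fp=36)
print(f"{sens:.2%} {spec:.2%} {acc:.2%}")  # 86.15% 72.31% 79.23%
```

On a balanced dataset, accuracy is simply the mean of sensitivity and specificity. This does not hold for imbalanced sets such as the 130 healthy versus 542 diseased samples in [105].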

In addition to classifying tongues by their geometric features, some researchers have classified them by their chromatic and textural features. For instance, Pang et al. [103] proposed a computerized tongue diagnosis method that uses a Bayesian network based on quantitative features, namely chromatic and textural measurements, as the decision model for diagnosis. Experiments on 455 inpatients affected by 13 common internal diseases and 70 healthy volunteers yielded a prediction accuracy of up to 75.8% for diagnosing four groups: healthy, pancreatitis, hypertension, and cerebral infarction. Gao et al. [108, 109] presented a computerized tongue inspection method based on SVM. They used chromatic and textural measures extracted from tongue images as two quantitative features and built the mapping between features and diseases using SVM and Bayesian networks. In experiments on 665 inpatients affected by six common internal diseases and 103 healthy volunteers, the estimated prediction accuracy of the multi-class SVM was 86.6%, outperforming joint Bayesian network (BN) classification.

In our study, Kanawong et al. [110] proposed a color-space-based feature set that can be extracted from tongue images of clinical patients to build an automated ZHENG classification system. ZHENG (TCM syndrome) is an integral and essential part of TCM theory [111]. It is a characteristic profile of all clinical manifestations that can be identified by TCM practitioners, representing all symptoms and signs (including tongue appearance and pulse feeling). Our experiment used a Multilayer Perceptron (MLP) and SVM to establish the relationship between tongue image features and ZHENG. The experimental results on 263 gastritis patients, most of whom had Cold ZHENG or Hot ZHENG, and a control group of 48 healthy volunteers demonstrated excellent performance.

Zhang et al. [112] introduced a new tongue color analysis system. They collected 1,045 images from 143 healthy people (the Healthy control group) and 902 patients (the Disease group), who were divided into 13 ailment groups and one miscellaneous group. On the CIE xy chromaticity diagram, they marked 12 colors to represent the tongue color gamut, and after image segmentation they converted RGB values to CIELAB. From the extracted features, they found that Disease tongues have higher proportions of red, deep red, black, gray, and yellow. They classified Healthy versus Disease using KNN and an SVM with a quadratic kernel, with features selected by sequential forward search. The 13 illnesses were then grouped into three clusters by fuzzy C-means (FCM), and classification was performed separately within each cluster. The system achieved an average accuracy of 91.99% in classifying Healthy versus Disease and more than 70% in distinguishing between different illnesses. Lo et al. [21] investigated discriminating tongue features that differentiate breast cancer (BC) patients from a normal control group and established a differentiating index to facilitate non-invasive detection of BC. They built an automatic tongue diagnosis system to extract tongue features for 60 BC patients and 70 normal persons, and the accuracy reached 80% using seven tongue features. Some studies combine chromatic and textural features of the tongue for classification. Researchers in Ref. [113] presented a tongue-computing model (TCoM) to diagnose appendicitis. Unlike other existing models and approaches, this model is validated against diagnostic results from Western medicine: the chromatic and textural measurements of a tongue obtained via image processing are compared with the corresponding Western medical diagnoses rather than with the judgment of a TCM doctor. This yields an evidence-based model, and such an approach may avoid some of the subjectivity in judging ZHENG. Their experiments selected 912 samples from a tongue image database of more than 12,000 tongue images, including 114 images of appendicitis and 798 samples from 13 other common diseases. They evaluated the performance of the color metrics in each color space (RGB, CIEYxy, CIELUV, CIELAB) and of textural metrics in different partitions of the tongue. The accuracy of appendicitis diagnosis is 92.28% after the identification of filiform papillae. Their results suggest that integrating TCM tongue images with Western diagnostic results may provide a viable method for objectifying tongue diagnosis. Zhang et al. [114] detected diabetes mellitus (DM) and nonproliferative diabetic retinopathy (NPDR) by combining tongue color, texture, and geometry features. They built a tongue color gamut with 12 colors, texture values from nine blocks, and geometry features from measurements, distances, areas, and ratios of the images. These features were then used for two classification tasks, Healthy versus DM and NPDR versus DM without NPDR, on a dataset of 130 Healthy and 296 DM samples, 29 of which were NPDR. The average accuracies reached 80.52% for Healthy versus DM and 80.33% for NPDR versus DM without NPDR.
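The 12 reference colors of Zhang et al. [112] are not listed in this section, so the sketch below uses a hypothetical three-color palette. It converts sRGB values to CIELAB with the standard sRGB/D65 formulas, assigns each tongue pixel to its nearest palette color by Euclidean (CIE76) distance, and reports the proportion of pixels per color. This is one plausible way to compute color-ratio features like those described above, not the authors' exact procedure.

```python
import numpy as np

_SRGB_TO_XYZ = np.array([[0.4124564, 0.3575761, 0.1804375],
                         [0.2126729, 0.7151522, 0.0721750],
                         [0.0193339, 0.1191920, 0.9503041]])
_D65_WHITE = np.array([0.95047, 1.0, 1.08883])


def srgb_to_lab(rgb):
    """Convert 8-bit sRGB values (..., 3) to CIELAB (D65 white point)."""
    c = np.asarray(rgb, dtype=np.float64) / 255.0
    linear = np.where(c <= 0.04045, c / 12.92, ((c + 0.055) / 1.055) ** 2.4)
    t = (linear @ _SRGB_TO_XYZ.T) / _D65_WHITE
    delta = 6.0 / 29.0
    f = np.where(t > delta ** 3, np.cbrt(t), t / (3 * delta ** 2) + 4.0 / 29.0)
    L = 116.0 * f[..., 1] - 16.0
    a = 500.0 * (f[..., 0] - f[..., 1])
    b = 200.0 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)


def color_proportions(tongue_pixels, palette):
    """Fraction of tongue pixels whose nearest palette color (in CIELAB) is each entry.

    tongue_pixels: (N, 3) sRGB values of the segmented tongue region.
    palette: dict mapping a color name to an sRGB triple (hypothetical values).
    """
    names = list(palette)
    ref_lab = srgb_to_lab(np.array([palette[n] for n in names]))
    pix_lab = srgb_to_lab(tongue_pixels)
    # Euclidean distance in CIELAB is the CIE76 color difference.
    dist = np.linalg.norm(pix_lab[:, None, :] - ref_lab[None, :, :], axis=-1)
    counts = np.bincount(dist.argmin(axis=1), minlength=len(names))
    return dict(zip(names, counts / len(tongue_pixels)))


palette = {"red": (200, 60, 60), "pale": (235, 200, 200), "purple": (120, 60, 140)}
pixels = np.array([(200, 60, 60)] * 3 + [(235, 200, 200)])
props = color_proportions(pixels, palette)
print(props["red"], props["pale"], props["purple"])  # 0.75 0.25 0.0
```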

Spectral information is also an important feature for the classification and diagnosis of tongue images. Hyperspectral images are finely sampled in the spectral dimension: unlike traditional color spaces such as RGB, they capture the spectrum at each pixel as well as an image for every spectral band. Zhi et al. [32] presented a classification method for chronic cholecystitis based on hyperspectral medical tongue images. They collected 375 tongue images from 300 patients and 75 healthy volunteers using a hyperspectral medical sensor. Their results show that hyperspectral tongue images yield better classification performance for patients than optical tongue images, indicating that hyperspectral cameras capture more feature information than color digital cameras. Wan et al. [115] described the characteristics of tongue images of lung cancer patients with different TCM syndromes and revealed the basic patterns of change in the tongue images. A total of 207 patients with lung cancer were divided into four syndrome groups, and the correct identification rate of the discriminant function on the raw data was 65.7%. Ding et al. [116] investigated a classification approach based on the doublet SVM that makes full use of the local information in tongue images to capture pathological characteristics robustly. They extracted Histogram of Oriented Gradients (HOG) [117] features, which describe local object appearance and shape, and then learned a distance metric with an SVM trained on doublets (pairs of tongue image samples) built from images with different labels. The prediction accuracy of their method is 89.1%, with a specificity of 61.3% and a sensitivity of 95.8%.

Tooth marks are also an important feature for tongue classification. In actual TCM diagnosis, a tooth-marked (crenated) tongue can provide valuable diagnostic information for TCM doctors. Li et al. [118] presented a multiple-instance method for classifying tooth-marked tongues. Because tooth marks lie along the lateral borders of the tongue, they first generated suspected regions and then used a deep ConvNet to extract features. Each tongue is represented by a bag of feature vectors, and a multiple-instance SVM makes the final classification. Their experiments show that the method dramatically improves accuracy and effectiveness. Zhang et al. [119] used a transductive support vector machine (TSVM) to improve accuracy and reduce the human labeling effort required by earlier methods. They organized tongue images into 13 binary classification problems and obtained a classification rate of about 85%. However, TSVM places high demands on the quality of the unlabeled tongue images: when their quality is poor, the classification rate drops, so the selection of images strongly influences the classification results. Following TSVM, they used a Universum SVM, which adds labeled data and selected irrelevant data (universum data) to the learning process to improve the classifier's performance [120]. Because not all universum data are useful for classification, they introduced a selection algorithm to select in-between universum (IBU) samples. Their results show that the Universum SVM outperforms a traditional SVM and that the parameters of the kernel functions also play an important role.
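The bag-level decision in multiple-instance SVMs such as the one used by Li et al. [118] can be sketched as follows. The sketch assumes a linear instance scorer that has already been trained: a tongue (bag) is labeled positive if its highest-scoring suspected region (instance) has a positive score. The weights and features below are hypothetical, and MI-SVM training itself is not shown.

```python
import numpy as np


def classify_bag(instances, w, b):
    """MI-SVM-style bag decision with a linear instance scorer.

    instances: (n_instances, d) features of the suspected regions of one tongue.
    w, b: weights and bias of an already-trained linear SVM (assumed given).
    Returns (bag_is_positive, index_of_highest_scoring_instance).
    """
    scores = np.asarray(instances, dtype=np.float64) @ np.asarray(w, dtype=np.float64) + b
    witness = int(np.argmax(scores))
    # Standard MI assumption: a bag is positive if at least one instance is
    # positive, i.e. if its maximum instance score exceeds zero.
    return bool(scores[witness] > 0), witness


# Three suspected border regions of one tongue, two features each (hypothetical).
print(classify_bag([[0.0, 0.0], [1.0, 2.0], [0.5, 0.0]], w=[1.0, 1.0], b=-2.0))  # (True, 1)
```

The returned "witness" index identifies which region drove the decision, which could be shown to a practitioner as the suspected tooth mark.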

Tongue coating is another common feature for classification. Li et al. [121] presented a method to classify rotten and greasy coatings. They used random oversampling, Gabor features, and distribution features of the tongue coating to address the unbalanced classification and texture recognition problems. Gabor features are rich, typical texture descriptors: Gabor filters are optimal in the sense of minimizing the joint two-dimensional uncertainty in space and frequency [122], and their micro-features are often used to characterize the underlying texture information [123]. Their experiment shows that the method achieves high accuracy on the unbalanced dataset. Huang et al. [124] employed a naïve Bayesian classifier to differentiate three categories of fur coating: greasy, sub-greasy, and normal. Their experiment shows that the method performs well in tongue coating classification. Xu et al. [125] established a tongue diagnosis method based on tongue images. They used CCD devices to acquire front and side images of the tongue, measured the tongue's length, width, and height, and derived from the data an optimal formula relating body surface area to the sum of tongue width and height. In clinical studies, the accuracy for fat and thin tongues was 93.40% and 88.57%, respectively. The method is also helpful in the diagnosis of diabetes mellitus, hypertension, chronic gastritis, and hyperthyroidism.
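As a minimal sketch of Gabor texture features (not the exact filter bank of Li et al. [121], whose parameters are not given here), the code builds real-valued Gabor kernels in the standard form, a Gaussian envelope multiplied by a cosine carrier. It then uses the mean absolute response of a small orientation bank as a texture descriptor. The kernel size and the σ, λ, and γ values are illustrative assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def gabor_kernel(size, sigma, theta, lambd, gamma=0.5, psi=0.0):
    """Real Gabor kernel: Gaussian envelope times a cosine carrier along angle theta."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(np.float64)
    x_r = x * np.cos(theta) + y * np.sin(theta)     # coordinate along the carrier
    y_r = -x * np.sin(theta) + y * np.cos(theta)    # coordinate across the carrier
    envelope = np.exp(-(x_r ** 2 + (gamma * y_r) ** 2) / (2.0 * sigma ** 2))
    carrier = np.cos(2.0 * np.pi * x_r / lambd + psi)
    return envelope * carrier


def gabor_energy(image, kernels):
    """Mean absolute filter response for each kernel (a simple texture descriptor)."""
    feats = []
    for k in kernels:
        windows = sliding_window_view(image.astype(np.float64), k.shape)
        # Correlation over 'valid' positions; it equals convolution for these
        # point-symmetric (psi = 0) kernels.
        response = np.einsum("ijkl,kl->ij", windows, k)
        feats.append(np.abs(response).mean())
    return np.array(feats)


angles = (0.0, np.pi / 4, np.pi / 2, 3 * np.pi / 4)
bank = [gabor_kernel(21, sigma=4.0, theta=t, lambd=8.0) for t in angles]
cols = np.arange(64)
stripes = np.tile(np.cos(2 * np.pi * cols / 8.0), (64, 1))  # intensity varies along x
energies = gabor_energy(stripes, bank)
print(int(energies.argmax()))  # 0: the theta = 0 filter matches vertical stripes
```

In a coating classifier, such energies (often over several wavelengths as well) would be computed on coating patches and concatenated with color and distribution features.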

In addition to traditional machine learning methods, some studies have used deep learning to classify tongue images. For example, Tang et al. [126] proposed a novel paradigm that classifies tongue images using Multiple-Instance Learning (MIL) and deep features. They first selected suspected rotten-greasy tongue coating patches and then used a deep CNN to extract features from each patch. Each tongue coating is then represented by a bag of feature vectors, and a Multiple-Instance Support Vector Machine (MI-SVM) performs the final classification. They achieved an accuracy of 85.0% and a recall rate (TPR) of 89.8%. Hou et al. [127] performed tongue color classification by modifying CaffeNet, using a dataset of about 1,500 tongue images that they built by preprocessing and enhancing tongue images. They modified the parameters of the traditional network for tongue color classification and then fine-tuned the model; their results show that the method is more practical and accurate than traditional methods. Huo et al. [128] presented a CNN method to classify three tongue types: tooth-marked, dot-sting, and fissured tongues. They used Gabor filtering and edge extraction for preprocessing and then optimized the CNN for tongue image training. The experimental results indicate that the preprocessing increases accuracy and shortens the training process for tongue shape classification. Fu et al. [129] proposed computerizing the assessment of tongue coating nature using deep neural networks. Their method combines basic image processing with deep learning, using the ReLU nonlinear activation function and dropout. In four-class and three-class experiments, the accuracy reached 0.87 and 0.95, respectively. Meng et al. [130] proposed a feature extraction framework called the constrained high dispersal neural network (CHDNet) to extract unbiased features and reduce human labor in tongue diagnosis. High dispersal and local response normalization operations are introduced to address feature redundancy. They tested the method on 267 gastritis patients and a control group of 48 healthy volunteers, and the results show that CHDNet is a promising method for tongue image classification in TCM research. Song et al. [131] used deep transfer learning for tongue image classification. They extracted tongue features with pre-trained networks (ResNet and Inception-V3) and replaced the output layer of the original network with global average pooling and a fully connected layer. Evaluated on a dataset of 2,245 tongue images collected from specialized TCM medical institutions, the method achieved an average classification accuracy of 95.92%. Ma et al. [132] designed a framework for constitution recognition comprising tongue image acquisition, image preprocessing, feature extraction, and constitution recognition. Images are first taken directly by a camera in an uncontrolled (in-the-wild) environment. Preprocessing then includes tongue detection and image segmentation: a Faster R-CNN detects the tongue, and a VGG network calibrates the detected tongue region. After feature extraction, constitution recognition is performed with a complexity perception method. These studies of tongue classification provide methodological support for tongue diagnosis. We summarize the classification methods and the diseases classified in Table 2.
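Song et al. [131] replaced the original output layer with global average pooling and a fully connected layer. The NumPy sketch below shows only that new head's forward pass on precomputed backbone activations. The shapes, the softmax output, and the function name are assumptions; the backbone and training are omitted.

```python
import numpy as np


def gap_classifier_head(feature_maps, weight, bias):
    """Global average pooling followed by a fully connected layer and softmax.

    feature_maps: (C, H, W) activations from a pre-trained backbone
                  (for example, C = 2048 for the last ResNet-50 stage).
    weight: (num_classes, C); bias: (num_classes,), the new trainable head.
    """
    pooled = feature_maps.mean(axis=(1, 2))        # (C,): one value per channel
    logits = weight @ pooled + bias                # (num_classes,)
    logits = logits - logits.max()                 # numerical stability for softmax
    probs = np.exp(logits)
    return probs / probs.sum()


fm = np.zeros((4, 7, 7))
fm[0] = 1.0                                        # only channel 0 is active
probs = gap_classifier_head(fm, weight=np.eye(3, 4), bias=np.zeros(3))
print(int(probs.argmax()))  # 0
```

Because pooling removes the spatial dimensions, the head has only C × num_classes + num_classes parameters. This small head suits fine-tuning on a few thousand tongue images.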

Table 2:

Tongue classification and diseases.

| Authors | Year | Classification type | Methods | Features | Sample size | Devices | Best accuracy |
|---|---|---|---|---|---|---|---|
| Pang [113] | 2005 | Appendicitis | Quantitative measurements | Color and textural | 912 | 3-CCD digital camera, standard D65 lights | 92.98% |
| Zhi [32] | 2007 | Chronic cholecystitis | SVM, RBFNN, KNN | Spectral properties | 375 | Hyperspectral medical sensor | 93.11% |
| Gao [108, 109] | 2007 | Six common internal diseases | Bayesian network, SVM | Color and textural metrics | 768 | | 86.60% |
| Huang [103] | 2009 | Tongue shapes | KNN, LDA | Geometric features | 362 | | 90.30% |
| Kanawong [110] | 2012 | ZHENG | AdaBoost, SVM, MLP | Color | 263 | | |
| Lo [21, 133] | 2016 | Breast cancer | Logistic regression | Color, thickness, shape, etc. | 130 | CCD camera with circular LED lighting | 80.00% |
| Ding [116] | 2016 | Gastritis | KNN, RF, SVM | Textural | 326 | Sony 3-CCD camera | 89.10% |
| Tang [126] | 2021 | Tongue coating | CNN, SVM | Coating | 274 | DS01-B Information Collection System of Tongue and Face Diagnosis | 85.00% |
| Hou [127] | 2017 | Tongue color | CNN | Color | 1,500 | | 93.00% |
| Li [118] | 2019 | Teeth marks | CNN, SVM | Teeth marks | 641 | | 76.20% |
| Fu [129] | 2017 | Tongue coating | CNN | Textural | 120 | | 91.70% |
| Huo [128] | 2017 | Tongue shapes | CNN | Textural, tongue edge | 718 | | 81.20% |
| Meng [130] | 2017 | Gastritis | CNN, SVM | Color and textural metrics | 315 | | 91.14% |
| Song [131] | 2020 | Tooth marks, cracks, etc. | ResNet, Inception | Tooth marks, cracks, thickness | 2,245 | Tongue diagnostic instrument | 95.92% |

Tongue diagnosis system (TDS)

A comprehensive tongue diagnosis system typically integrates all of these components: hardware, image segmentation and extraction, color correction, and image classification. For example, Zhang et al. [43] presented a computer-aided tongue diagnosis system (CATDS) consisting of five components: a user interface module, an acquisition module, a tongue image database, an image preprocessing module, and a diagnosis engine. The system aims to establish the relationship between quantitative features and diseases via Bayesian networks. Evaluated on 544 patients affected by nine common diseases and 56 healthy volunteers, it identified six groups (healthy, pulmonary heart disease, appendicitis, gastritis, pancreatitis, and bronchitis) with accuracy higher than 75%, and the whole diagnosis process takes less than 5 s. Jang et al. [42] developed a digital tongue inspection system comprising hardware for tongue image acquisition, image processing for color interpolation, edge detection for tongue area separation, tongue color detection, and a database and user interface for archiving and managing the acquired tongue images.

Kim et al. [134] presented a tongue diagnosis system to assess tongue coating thickness in patients with functional dyspepsia. Tongue images were acquired twice at a 30-min interval, and the tongues were classified into three categories: no coating, thin coating, and thick coating. The system consists of an image acquisition system, an LED illuminator, a case, and analysis software. It is equipped with a vision camera (HVR-2130CPA, Hyvision System, Korea) and an H2Z0414C-MP lens (Hyvision System, Korea). The camera captures color images at SXGA resolution (1,280 × 1,024 pixels) and automatically adjusts white balance, exposure time, and gain. To illuminate the dorsal surface of the tongue evenly, 12 white LED lamps are arranged around the camera, with thin double diffusion plates placed in front of the lamps. To extract the tongue area, 17 nodes are generated to determine the tongue boundary. The image is then converted from the RGB color space to the CIE-Lab color space to extract the coating area. For classification, they used a cutoff of 29.06% to distinguish no coating from thin coating and a cutoff of 63.51% to distinguish thin coating from thick coating.
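The two numeric steps described above, converting RGB to CIE-Lab and applying the two cutoffs, can be sketched in plain Python. The conversion uses the standard sRGB/D65 formulas. Treating the cutoffs as the percentage of the tongue area covered by coating, and the `<` boundary handling, are assumptions of this sketch; how coating pixels are selected in Lab space is not described here, so the pixel counts are taken as given.

```python
def srgb_to_lab(r, g, b):
    """Convert an 8-bit sRGB color to CIE L*a*b* using the D65 white point."""
    def linearize(c):
        # Undo the sRGB gamma curve.
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = linearize(r), linearize(g), linearize(b)
    # Linear sRGB -> CIE XYZ (D65).
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl
    xn, yn, zn = 0.95047, 1.0, 1.08883  # D65 reference white
    delta = 6 / 29

    def f(t):
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


# Cutoffs reported by Kim et al.; reading them as "percent of tongue area
# covered by coating" is an assumption of this sketch.
NO_TO_THIN_CUTOFF = 29.06
THIN_TO_THICK_CUTOFF = 63.51


def classify_coating(coating_pixels, tongue_pixels):
    """Map a coating-area percentage to one of the three thickness categories."""
    percent = 100.0 * coating_pixels / tongue_pixels
    if percent < NO_TO_THIN_CUTOFF:
        return "no coating"
    if percent < THIN_TO_THICK_CUTOFF:
        return "thin coating"
    return "thick coating"


print(srgb_to_lab(255, 255, 255))       # L* close to 100, a* and b* close to 0
print(classify_coating(4_500, 10_000))  # -> thin coating
```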

Lo et al. [133] presented an automatic tongue diagnosis system that uses discriminating tongue features to identify early-stage breast cancer (BC) patients. Nine tongue features (tongue color, tongue quality, tongue fissure, tongue fur, red dots, ecchymosis, tooth marks, saliva, and tongue shape) are evaluated separately in five tongue areas: the spleen–stomach, liver–gall-left, liver–gall-right, kidney, and heart–lung areas. The system's main functions are image capturing and color calibration, tongue area segmentation, and tongue feature extraction. For image analysis, they first isolated the tongue region to eliminate the lower face and the background surrounding the tongue. Tongue features were then extracted according to the aspect ratio, color composition, location, shape, and color distribution of the tongue. Next, the Mann–Whitney test, a non-parametric test that compares two independent groups without assuming normally distributed data, was used to identify features that differed significantly between the groups. A logistic regression model using five independently significant tongue features achieved 90% accuracy for non-BC individuals and 50% accuracy for early-stage BC patients. A model using six such features achieved 80% accuracy for non-BC individuals and 60% for early-stage BC patients. Finally, a model using seven tongue features also achieved 80% accuracy for non-BC individuals and 60% for early-stage BC patients.
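The feature-screening step can be illustrated with a pure-Python Mann–Whitney U test. This sketch omits the tie correction in the variance and uses the normal approximation for the p-value, which is rough for small samples; a library routine such as `scipy.stats.mannwhitneyu` (which also offers an exact method) is preferable in practice. The sample values are hypothetical.

```python
import math


def rank_with_ties(values):
    """Return 1-based ranks; tied values receive the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U_x, U_y, two-sided p) using the normal approximation."""
    n1, n2 = len(x), len(y)
    ranks = rank_with_ties(list(x) + list(y))
    u_x = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u_y = n1 * n2 - u_x
    mean_u = n1 * n2 / 2
    # Variance without tie correction.
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (min(u_x, u_y) - mean_u) / sd_u
    p = math.erfc(abs(z) / math.sqrt(2))
    return u_x, u_y, p


# Hypothetical values of one tongue feature in two groups.
controls = [0.21, 0.25, 0.19, 0.30, 0.27]
patients = [0.33, 0.41, 0.29, 0.38, 0.36]
print(mann_whitney_u(controls, patients))
```

Features whose p-value falls below the chosen significance level would then be candidates for the logistic regression model.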

Wu et al. [135] presented an automatic tongue diagnosis system (ATDS) to investigate the association between gastroesophageal reflux disease (GERD) and tongue manifestations and to explore its use for the noninvasive diagnosis of GERD. They used the system to acquire tongue images before endoscopic examination. Nine tongue features were extracted and analyzed with receiver operating characteristic (ROC) curves, analysis of variance, and logistic regression. The system contains a camera, a light-emitting diode (LED) light, a chin support, a color bar, and adjustment. It performs three main functions in sequence: image capturing and color calibration, tongue area segmentation, and tongue feature extraction. Tongue images are subdivided into five segments (the spleen–stomach, liver–gall-left, liver–gall-right, kidney, and heart–lung areas), and the extracted features include tongue shape, tongue color, tooth marks, tongue fissure, fur color, fur thickness, saliva, ecchymosis, and red dots. Based on the endoscopic findings, GERD lesions were graded manually from A to D. Categorical and continuous data were then tested with chi-square tests and analysis of variance (ANOVA), respectively, and the odds ratio and the probability of a binary response were estimated by logistic regression analysis. The resulting areas under the ROC curve (AUC) were 0.606 ± 0.049 for the amount of saliva and 0.615 ± 0.050 for tongue fur in the spleen–stomach area. Both the amount of saliva and the percentage of tongue fur in the spleen–stomach area might predict the risk and severity of GERD and might serve as noninvasive indicators of GERD. These systems have improved the efficiency of tongue diagnosis and helped TCM practitioners manage patient health data more efficiently. Table 3 summarizes the components and features of these tongue diagnosis systems.
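An AUC such as the 0.606 reported for saliva can be read as the probability that a randomly chosen GERD patient has a higher feature value than a randomly chosen non-GERD participant (0.5 corresponds to chance). A minimal sketch of that pairwise definition follows; the scores are hypothetical, and the standard errors quoted in the study are not computed here.

```python
def roc_auc(scores_pos, scores_neg):
    """AUC as P(positive score > negative score), counting ties as one half."""
    wins = 0.0
    for sp in scores_pos:
        for sn in scores_neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Hypothetical feature values (e.g. a saliva score) for GERD and non-GERD groups.
gerd = [3, 2, 2, 1, 3]
non_gerd = [1, 2, 1, 2, 3]
print(roc_auc(gerd, non_gerd))
```

This pairwise count equals the Mann–Whitney U statistic divided by the number of pairs; it is quadratic in the sample size, which is acceptable for cohorts of this scale.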

Table 3:

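Tongue diagnosis systems.

| Authors | Year | System name | System components | System features and functions |
|---|---|---|---|---|
| Min-Chun et al. [25] | 2000 | Automatic tongue diagnosis framework | Smartphone camera (images with and without flash); lighting condition classifier; color-checker-based color correction; SVM-based tongue feature detector | Analyzes smartphone tongue images under varying lighting; tongue features correlated with AST/ALT for early warning of liver disease |
| Jang et al. [42] | 2002 | Digital tongue inspection system | Image acquisition hardware; color interpolation; edge detection for tongue area separation; tongue color detection; database and user interface | Acquires tongue images, detects tongue color, and archives and manages the images |
| Zhang et al. [43] | 2007 | Computer-aided tongue diagnosis system | User interface module; acquisition module; tongue image database; image preprocessing module; diagnosis engine | Relates quantitative tongue features and disease |
| Kim et al. [134] | 2013 | Tongue diagnosis system for tongue coating thickness assessment with functional dyspepsia | Image acquisition system (vision camera, 12 white LEDs with diffusion plates); LED illuminator; case; analysis software | Tongue boundary detection with 17 nodes; CIE-Lab coating extraction; classifies coating as none, thin, or thick |
| Lo et al. [133] | 2015 | Automatic diagnosis system for tongue diagnosis of patients with early breast cancer | Image capturing and color calibration; tongue area segmentation; tongue feature extraction | Nine tongue features in five tongue areas used to distinguish early-stage breast cancer |
| Wu et al. [135] | 2020 | Automatic tongue diagnosis system for gastroesophageal reflux disease | Camera; LED light; chin support; color bar; adjustment | Image capturing and color calibration; tongue area segmentation; extraction of nine tongue features related to GERD |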

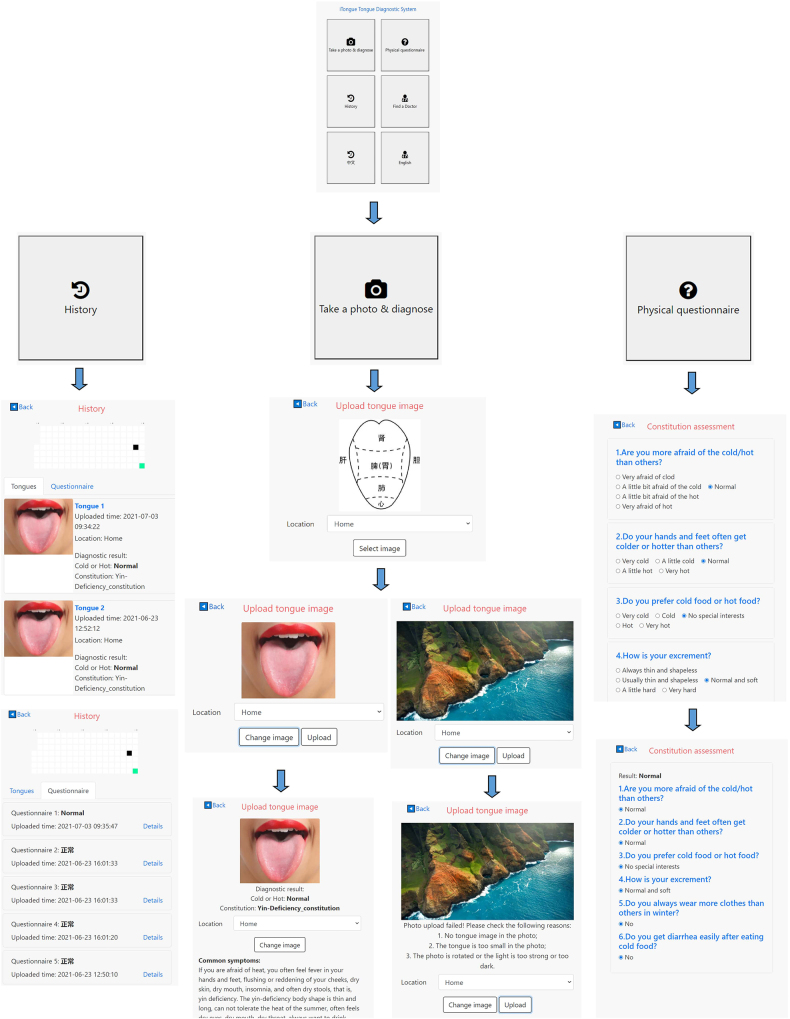

In addition to these desktop-based systems, some systems have been developed for mobile devices. For example, Ryu et al. [136] developed a tongue diagnosis system named TongueDx, which includes color correction and a data history. Users can track their health condition by recording the colors of the tongue coating and tongue body on their smartphones, and a line-graph function for coating and body color lets them follow their health condition promptly. Min-Chun et al. [25] presented an automatic tongue diagnosis framework to analyze tongue images taken with a smartphone. Because images of the same tongue may look quite different in color under different lighting conditions, they proposed a method to detect tongue features under varying lighting. They trained a lighting condition classifier based on the color distance between pairs of tongue images captured with and without the flash, and they used a color-checker-based correction method to train tongue image color correction matrices. They also trained an SVM-based tongue feature detector on color features for images taken under the standard lighting condition. They found that some tongue features are strongly correlated with aspartate aminotransferase (AST) or alanine aminotransferase (ALT) levels, suggesting that tongue features captured on a smartphone could be used for early warning of liver disease.
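The color-checker correction step can be illustrated as a least-squares fit of a 3 × 3 matrix that maps the RGB values measured on the chart patches to their known reference values. The linear matrix model, the 24-patch chart, and the synthetic patch values are assumptions for illustration; the exact form of the correction matrices used in [25] is not described here.

```python
import numpy as np


def fit_color_correction(measured, reference):
    """Least-squares 3x3 matrix M with measured @ M approximately equal to reference.

    measured, reference: (n_patches, 3) arrays of RGB values scaled to [0, 1].
    """
    M, *_ = np.linalg.lstsq(measured, reference, rcond=None)
    return M


def apply_color_correction(image, M):
    """Apply M to every pixel of an (H, W, 3) image and clip to [0, 1]."""
    corrected = image.reshape(-1, 3) @ M
    return np.clip(corrected, 0.0, 1.0).reshape(image.shape)


# Synthetic 24-patch chart: 'true_M' plays the role of an unknown color cast.
rng = np.random.default_rng(0)
measured = rng.uniform(0.05, 0.95, size=(24, 3))
true_M = np.array([[1.10, 0.05, 0.00],
                   [0.02, 0.95, 0.03],
                   [0.00, 0.04, 1.20]])
reference = measured @ true_M
M = fit_color_correction(measured, reference)
tongue_img = rng.uniform(0.0, 1.0, size=(4, 4, 3))
print(np.allclose(M, true_M), apply_color_correction(tongue_img, M).shape)
```

In a lighting-aware pipeline, one such matrix could be fitted per lighting condition and selected by the lighting classifier; polynomial color terms are a common extension when a linear map is not enough.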

Hu et al. [137] proposed an automatic tongue diagnosis system with four main components: a tongue photo-taking guide, tongue image color correction, tongue region segmentation, and tongue image diagnosis. In addition, an SVM-based lighting condition estimation method was used to handle unknown light sources and low-resolution images uploaded by users. Such tongue diagnosis systems can provide the general public and medical practitioners with convenient, prompt, and reliable assessments of health conditions. However, there is not yet a uniform standard for tongue diagnosis systems, which leads to inconsistent analysis results in clinical diagnosis.
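The SVM-based lighting estimation can be illustrated with a linear SVM trained by the Pegasos stochastic sub-gradient method. The two-dimensional features (standing in for, e.g., color statistics of an uploaded image) and the binary labels (+1 for standard lighting, −1 otherwise) are hypothetical; the features, kernel, and number of lighting classes used in [137] are not given here.

```python
import numpy as np


def train_linear_svm(X, y, lam=0.01, n_iter=2000, seed=0):
    """Train a linear SVM with the Pegasos stochastic sub-gradient method.

    X: (n, d) feature matrix; y: labels in {-1, +1}. A constant column is
    appended so the last weight acts as a (regularized) bias term.
    """
    rng = np.random.default_rng(seed)
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    w = np.zeros(Xb.shape[1])
    for t in range(1, n_iter + 1):
        i = rng.integers(Xb.shape[0])
        eta = 1.0 / (lam * t)              # Pegasos step size
        margin = y[i] * (Xb[i] @ w)        # margin under the current weights
        w *= 1.0 - eta * lam               # shrink (regularization step)
        if margin < 1.0:                   # hinge loss active: move toward sample
            w += eta * y[i] * Xb[i]
    return w


def predict_svm(w, X):
    """Return +1/-1 predictions for the rows of X."""
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    return np.where(Xb @ w >= 0.0, 1, -1)


# Hypothetical 2-D lighting features: +1 = standard lighting, -1 = other.
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(2.0, 0.3, (50, 2)), rng.normal(-2.0, 0.3, (50, 2))])
y = np.array([1] * 50 + [-1] * 50)
w = train_linear_svm(X, y)
print((predict_svm(w, X) == y).mean())
```

Several lighting conditions could be handled one-vs-rest, training one such classifier per condition.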

An intelligent TCM constitution classification system based on tongue image

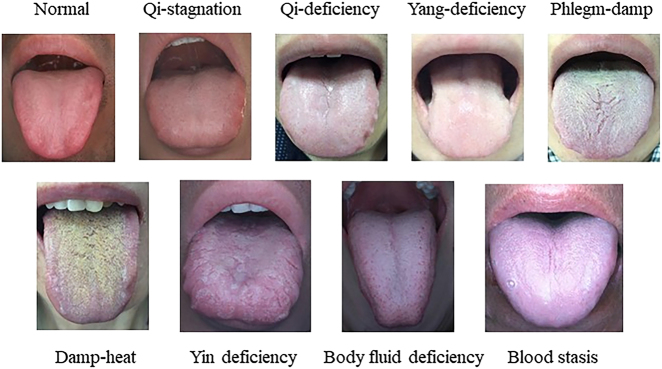

TCM emphasizes preventive and personalized medicine by comprehensively assessing an individual's physiological and pathological characteristics, which TCM calls the constitution. In TCM, body constitution is regarded as an inherent characteristic shaped by both innate and acquired factors over the course of a person's life; it is a comprehensive expression of physiological function and psychological state [138]. The type of body constitution is closely related to certain diseases and may even determine a person's predisposition to them [139, 140]. TCM constitution classification is empirical, drawing on the experience of many practitioners over the long history of TCM. Since 2005, Wang Qi's classification of TCM constitutions has been regarded as the standard for TCM constitution classification [141]. According to the national standard Classification and Determination of Constitution in TCM, published by the China Association of Chinese Medicine in 2009, constitutions are categorized into nine types: normal, Qi-stagnation, Qi-deficiency, Yang-deficiency, Phlegm-damp, Damp-heat, Yin-deficiency, Body fluid deficiency, and Blood stasis constitutions [142]. Example tongue images for these types are shown in Figure 5. Traditionally, the constitution type is determined from answers to a constitution questionnaire designed by TCM experts [143]. However, this method is easily influenced by the respondent's subjective judgment, and completing the whole test takes a long time [132]. Zhang et al. [144] explored the correlation between TCM constitution and facial features. All face images were taken with a facial biometric image acquisition system. Facial features were then extracted with a multi-channel detection method based on color spaces and histograms of gradients and oriented gradients. They also converted the pixels of each image into a one-dimensional vector.
After local binary pattern (LBP) feature maps were obtained, local histograms were used to analyze the regional distribution of texture. Over 10 randomized runs, LBP texture features achieved a classification accuracy of 36.72% ± 1.73%, higher than that of RGB pixel features. Lin et al. [145] analyzed clinical data with a topic model. A weighting mechanism was applied to each feature word to improve the distinguishability and interpretability of topics in a latent Dirichlet allocation (LDA) model. They applied TF-IDF and Gaussian-function weighting and compared the Kullback–Leibler (KL) distance, SVM classification accuracy, model complexity, and topic similarity, finding that weighting improved LDA performance at different levels. They then built a symptom–herb–therapy–diagnosis topic model and proposed the Multi-Relationship LDA topic model, which combines symptoms, herbs, therapies, and diagnoses in the TCM clinical setting. These studies use biomedical and computing technologies to determine constitution types more accurately and quickly. Tongue diagnosis offers a personalized, immediate, inexpensive, and non-invasive way to recognize the constitution [146, 147].
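The LBP step described above (a code map followed by regional histograms) can be sketched in NumPy with the basic 3 × 3, 8-neighbor operator. The operator variant, neighbor ordering, and grid size are assumptions; Zhang et al.'s exact configuration is not given here. `skimage.feature.local_binary_pattern` offers radius and uniform-pattern options if scikit-image is available.

```python
import numpy as np

# Neighbor offsets (row, col), clockwise from the top-left; bit k has weight 2**k.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_codes(gray):
    """Basic 3x3 LBP: set bit k when neighbor k is >= the center pixel."""
    gray = np.asarray(gray, dtype=float)
    h, w = gray.shape
    center = gray[1:h - 1, 1:w - 1]
    codes = np.zeros(center.shape, dtype=np.int64)
    for k, (dr, dc) in enumerate(OFFSETS):
        neighbor = gray[1 + dr:h - 1 + dr, 1 + dc:w - 1 + dc]
        codes += (neighbor >= center).astype(np.int64) << k
    return codes


def regional_histograms(codes, grid=(2, 2)):
    """Split the code map into grid cells and concatenate normalized 256-bin histograms."""
    feats = []
    for rows in np.array_split(codes, grid[0], axis=0):
        for cell in np.array_split(rows, grid[1], axis=1):
            hist = np.bincount(cell.ravel(), minlength=256).astype(float)
            feats.append(hist / max(cell.size, 1))
    return np.concatenate(feats)


# Random grayscale stand-in for a face or tongue region.
rng = np.random.default_rng(0)
gray = rng.integers(0, 256, size=(64, 64))
feats = regional_histograms(lbp_codes(gray), grid=(4, 4))
print(feats.shape)  # -> (4096,)
```

Concatenating per-cell histograms keeps coarse spatial layout, which is what lets the descriptor capture the regional distribution of texture.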

Figure 5:

Tongue image of nine constitutions in traditional Chinese medicine.

In this section, we implemented two deep learning models, VGG and ResNet, and two conventional machine learning methods, random forest (RF) and SVM. The experimental results show that deep learning is feasible for TCM constitution classification.

Data acquisition and preprocessing

The data in our experiment come from two sources. The first is the Shanghai University of Traditional Chinese Medicine, where 2,215 tongue images were collected by professional TCM personnel with the TFDA-1 digital tongue diagnosis instrument [148]. The second is the iTongue app, an intelligent personal health monitoring system based on tongue images, which is available on the Apple App Store and Android platforms; 2,572 tongue images were taken from our app server database. The lighting conditions in this second set are complex and inconsistent, but this variety covers the wide range of light sources users encounter when taking photos.

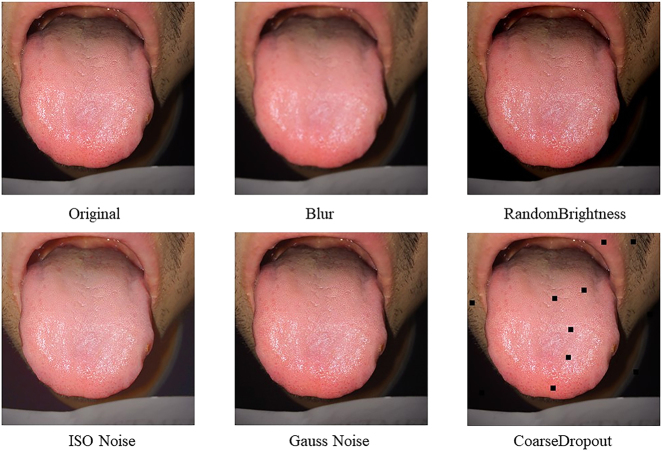

Because deep learning methods are used for constitution classification, a large number of tongue images is generally needed for training. In general, the more data a machine learning (ML) algorithm has access to, the more effective it can be. Even when the data are of lower quality, algorithms can perform better as long as the model can extract useful information from the original data set [149]. Data augmentation is therefore a common way to increase the size of the training set.

In the experiment, we performed data augmentation with Albumentations, a Python library for fast and flexible image augmentation [150]. As shown in Figure 6, we applied basic image transformations to the images in the original training set, such as blurring, random brightness, ISO noise, Gaussian noise, and coarse dropout. In this way, we expanded the training dataset to 20 times its original size. Key transformation parameters are shown in Table 4.

Figure 6:

Example of tongue image data augmentation.

Table 4:

Key parameters in image transformation.

| Methods | p | Range |
|---|---|---|
| Blur | 0.3 | blur_limit=(3, 7) |
| RandomBrightness | 0.5 | limit=(−0.2, 0.2) |
| ISONoise | 0.3 | intensity=(0.1, 0.5), color_shift=(0.01, 0.05) |
| GaussNoise | 0.2 | var_limit=(10.0, 50.0) |
| CoarseDropout | 0.5 | max_holes=8, max_height=8, max_width=8, min_holes=8, min_height=8, min_width=8 |

p represents the probability of applying the transform.
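To make the table's parameters concrete, the sketch below is a simplified NumPy re-implementation of four of the listed transforms with the same probabilities and ranges (ISO noise is omitted). It is not Albumentations code and does not reproduce the library's exact sampling: modelling the brightness change as an additive shift of the full 0–255 scale and the blur as a box filter are assumptions, and `expand_dataset` is a hypothetical helper that produces 20 augmented copies per image.

```python
import numpy as np


def box_blur(img, k):
    """Mean filter with an odd k x k kernel, using edge padding."""
    pad = k // 2
    padded = np.pad(img, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    h, w = img.shape[:2]
    out = np.zeros(img.shape, dtype=float)
    for dr in range(k):
        for dc in range(k):
            out += padded[dr:dr + h, dc:dc + w]
    return out / (k * k)


def augment(img, rng):
    """Randomly transform an (H, W, 3) image with values in [0, 255]."""
    out = img.astype(float)
    if rng.random() < 0.3:  # Blur: odd kernel size in [3, 7]
        out = box_blur(out, int(rng.choice([3, 5, 7])))
    if rng.random() < 0.5:  # brightness: additive shift within ±0.2 of full scale
        out = out + rng.uniform(-0.2, 0.2) * 255.0
    if rng.random() < 0.2:  # Gaussian noise with variance drawn from [10, 50]
        out = out + rng.normal(0.0, np.sqrt(rng.uniform(10.0, 50.0)), out.shape)
    if rng.random() < 0.5:  # coarse dropout: 8 holes of 8 x 8 pixels set to 0
        h, w = out.shape[:2]
        for _ in range(8):
            r = rng.integers(0, max(h - 8, 0) + 1)
            c = rng.integers(0, max(w - 8, 0) + 1)
            out[r:r + 8, c:c + 8] = 0.0
    return np.clip(out, 0.0, 255.0)


def expand_dataset(images, copies=20, seed=0):
    """Return `copies` augmented versions of every input image."""
    rng = np.random.default_rng(seed)
    return [augment(img, rng) for img in images for _ in range(copies)]


# Synthetic stand-in for a tongue photo.
images = [np.full((32, 32, 3), 128.0)]
augmented = expand_dataset(images, copies=20)
print(len(augmented), augmented[0].shape)
```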

We used 70% of the combined dataset as the training set and the remaining 30% as the test set, and applied the augmentation only to the training set. In total, 11,200 tongue images were used as the training set. We then trained the models with PyTorch, an open-source machine learning framework, and used ten-fold cross-validation to select the optimal model.
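The split and model-selection protocol can be sketched in plain Python. The seed and the stride-based fold assignment are assumptions, since the text does not say how the folds were formed, and `train_test_split_indices` and `k_fold_indices` are hypothetical helper names. Because each source image yields several augmented copies, keeping all copies of one image in the same fold avoids leaking near-duplicates between training and validation folds.

```python
import random


def train_test_split_indices(n, train_frac=0.7, seed=0):
    """Shuffle indices 0..n-1 and split them into training and test parts."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    cut = round(train_frac * n)
    return idx[:cut], idx[cut:]


def k_fold_indices(indices, k=10):
    """Yield (train, validation) index lists for k-fold cross-validation."""
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train, folds[i]


n_images = 2_215 + 2_572  # images from the two data sources
train_idx, test_idx = train_test_split_indices(n_images)
for fold_train, fold_val in k_fold_indices(train_idx, k=10):
    pass  # train on fold_train, evaluate on fold_val (model code not shown)
```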

Methods and experiments