Keywords: artificial intelligence, health care, medicine, physiology

Abstract

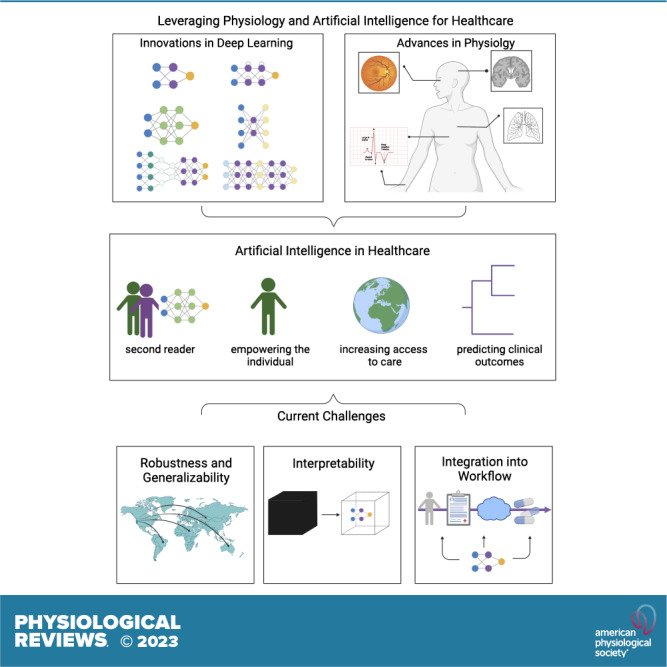

Artificial intelligence in health care has experienced remarkable innovation and progress in the last decade. Significant advancements can be attributed to the use of artificial intelligence to transform physiology data into advances in health care. In this review, we explore how past work has shaped the field and defined future challenges and directions. In particular, we focus on three areas of development. First, we give an overview of artificial intelligence, with special attention to the most relevant artificial intelligence models. We then detail how physiology data have been harnessed by artificial intelligence to advance the main areas of health care: automating existing health care tasks, increasing access to care, and augmenting health care capabilities. Finally, we discuss emerging concerns surrounding the use of individual physiology data and detail an increasingly important consideration for the field, namely the challenges of deploying artificial intelligence models to achieve meaningful clinical impact.

CLINICAL HIGHLIGHTS.

1) We discuss the historical milestones in artificial intelligence (AI) and physiology that have led to the recent resurgence of AI and its innovations in health care. We define AI, machine learning, and deep learning and delve into the origins of deep learning. We highlight how the synergy of innovations in neural networks, convolutions, backpropagation, GPUs, and large training datasets catalyzed the deep learning revolution of the early 2010s and made recent progress possible.

2) We give an overview of the procedure for training neural networks, which involves forward propagation, backpropagation, and an optimization algorithm that minimizes the loss.

3) We discuss the current classes of deep learning neural networks. The fundamental goal of deep learning models is to convert the input data into a faithful vector representation. The earliest classes of deep learning neural networks can be defined by the class of data they were designed to handle: feedforward networks for tabular data, convolutional neural networks for images, and recurrent neural networks for sequential data such as text. The development of attention models and transformers has revolutionized natural language processing, making ChatGPT possible, and these models are now also being applied to imaging datasets. Additionally, graph neural networks may be the most adept models to date at modeling the networks of biological processes.

4) In AI health care applications, physiology has been leveraged to create datasets, uncover correlations, and provide insights into underlying mechanisms. This has led to advancements in automating existing health care tasks, increasing access to health care, and augmenting existing capabilities.

5) The defining challenge of AI in health care has shifted from model development to model deployment. Implementation hurdles such as demonstrating the robustness, generalizability, and interpretability of models will become increasingly important. As AI health care platforms begin to mature and demonstrate potential for real clinical impact, how these platforms will be approved and regulated is now a leading problem. Finally, we discuss how including metrics beyond performance could help in developing tools that integrate better into clinical workflows.

1. INTRODUCTION

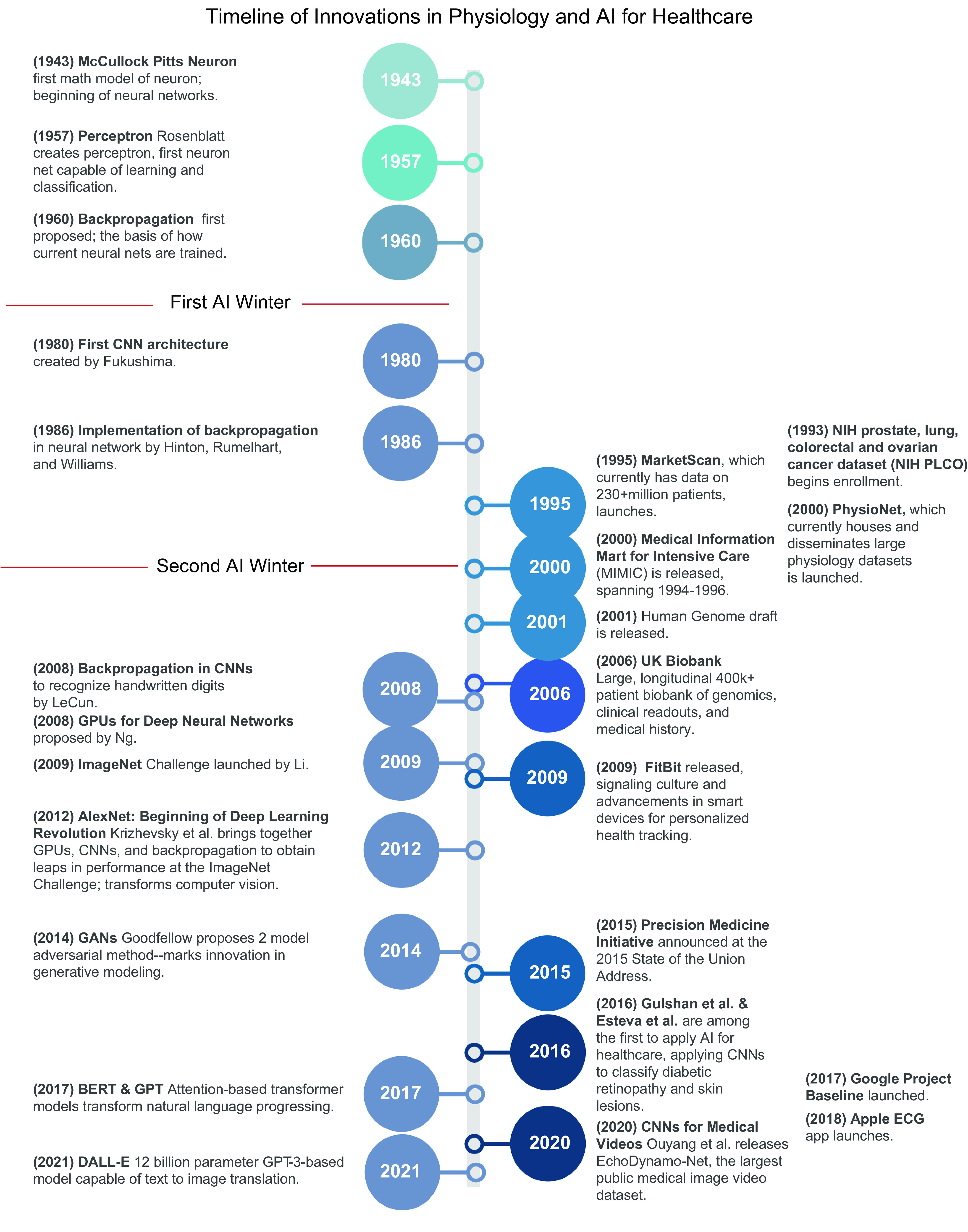

The last decade has seen unprecedented applications of artificial intelligence (AI) to health care (1–4). Advancements and innovations in AI in the past several years have been critical to this success (FIGURE 1). It is now possible to represent and model complex data to a degree not previously achievable, resulting in advancements in computer vision (5), natural language processing (6), and robotics (7). This has created opportunities to automate tasks and to learn from increasingly large datasets. Computer vision models have been applied to classify and segment medical images from nearly every organ system, including retinal optical coherence tomography (OCT) scans (8), brain computed tomography (CT) and magnetic resonance imaging (MRI) (9), and chest X-rays (10). Breakthroughs in natural language processing have enabled the conversion of patient interactions into clinical text, the interpretation and summarization of electronic medical records, and the captioning of medical images (11–13). Emerging advancements in reinforcement learning have enabled the prospective identification of health problems and the development of semiautonomous surgical robots (14), whereas graph neural networks provide the capability to model non-Euclidean data.

FIGURE 1.

Timeline of innovations in physiology and artificial intelligence (AI) for health care. Innovations in AI, with special focus on deep learning, are shown on the left, and advancements in physiology, particularly the creation of large physiology datasets, are shown on the right. 2012 marked the start of the current wave of deep learning. AlexNet successfully leveraged and harmonized a number of prior developments in deep learning [neural networks (1943, 1957), convolutional neural networks (1980, 2008), backpropagation (1986, 2008), training neural nets on graphics processing units (GPUs) (2008), and the importance of large training datasets (2009)] to demonstrate for the first time that deep neural networks [convolutional neural networks (CNNs)] trained via backpropagation on GPUs could realistically be implemented and deliver large increases in performance. Since 2012, the field of deep learning has undergone rapid expansion and innovation. AlexNet and subsequent work have led to consistent, stable, and mature high-performing models in computer vision; the development of generative adversarial networks (GANs) (2014) revolutionized generative modeling; and bidirectional encoder representations from transformers (BERT) and the generative pretrained transformer (GPT) have been breakthroughs in natural language processing. Simultaneously, a number of international, national, and institutional initiatives have led to the creation of detailed, high-resolution and/or high-frequency physiology datasets (1995, 2000, 2001, 2006, 2009, 2015, 2017, 2018). Whereas innovations in AI have been the catalyst for current applications of AI in health care, large physiology datasets represent the foundation. Work by Gulshan et al. and Esteva et al. marked some of the first robust applications of AI to health care. Image created with BioRender.com, with permission.

Equally important have been the datasets used to train the models, namely rich, clinically relevant physiology datasets. Concurrent with advancements in AI, a wealth of large, rich physiological datasets has been generated (15). Advancements in technologies such as genome sequencing, medical imaging, and personalized smart devices have allowed unrivaled characterization of human physiology. Their decreasing costs and widespread use led to large increases in the size and resolution of data (16). Simultaneously, initiatives in precision medicine promoted detailed collection of individuals' physiology data, delivering datasets that were varied and spanned the spectrum of human health (17–19). Advancements in data storage and the digitization of health records resulted in large databases and new ways to interact with clinically relevant physiology data.

Through leveraging large physiology datasets, AI models analyze, interpret, and extract physiology patterns that can be mapped to clinically meaningful interpretations and used to tackle and mitigate health care’s most pressing problems: rising costs, an increasing shortage of physicians, unequal access to care, and inefficiencies and errors that harm patient outcomes. In this review we aim to explore the advancements in AI and physiology data that have made the last decade of AI in health care possible. We begin with an overview of AI and discuss emerging and popular models (for terminology, see TABLE 1). We then discuss how AI has harnessed physiology data to deliver three primary impacts in health care: automating existing health care tasks, increasing access to care, and augmenting health care capabilities. We end the review with an overview of emerging trends and future directions. As AI health care platforms transition into clinical use, attention has shifted from the creation of AI platforms to their implementation, making deployment the next defining challenge. Matters such as generalizability, interpretability, and meaningful clinical impact are now driving considerations (20). Additionally, concerns have emerged about maintaining the privacy of the individual physiology data used to develop health care AI models.

Table 1.

Glossary of artificial intelligence terminology

| Term | Definition |
|---|---|
| Artificial intelligence | A field dedicated to building computational entities that mimic human intelligence and capabilities, namely natural language processing (communication), knowledge representation (understanding), automated reasoning (thinking), machine learning (learning), computer vision (sight), and robotics (movement) |
| Machine learning | A subfield of artificial intelligence that focuses on learning patterns within a dataset |
| Deep learning | An approach to machine learning that learns the features that best represent the data within a dataset, rather than relying on hand-engineered features |
| Supervised learning | A class of machine learning that trains on labeled datasets in order to map an input (data) to an output (label) |
| Unsupervised learning | A class of machine learning that trains on unlabeled datasets in order to learn inherent patterns and structure within a dataset |
| Reinforcement learning | A class of machine learning that learns to interact with an environment in order to optimize a goal |
| Features | Characteristics of a dataset that the model uses to find patterns within the dataset |
| Perceptron | The fundamental unit of a deep learning model. It receives input signals, aggregates and processes them, passes the result through an activation function, and emits an output signal. |
| Neural net | A network built from multiple layers of perceptrons (a multilayer perceptron) |
| Weights | Values learned during training. A weight determines how much each feature affects the prediction. |
| Biases | Learned constants added to the weighted sum of the features |
| Forward propagation | The first step of each training iteration, followed by backpropagation. Inputs are propagated forward through the network: they are combined with the current weights and biases and passed through the activation function. |
| Gradient descent | An optimization method that uses backpropagation to compute the local gradient of the loss function (the direction in which it increases most rapidly) and then takes a step in the opposite direction to move closer to the minimum |
| Saliency maps | A method used to interpret how a model makes predictions. It highlights the features or areas of an image that the model uses to make its predictions. |
| Hyperparameters | Settings chosen by the person training the model, rather than learned from the data, that control how the model is trained (e.g., learning rate, number of epochs) |

2. ARTIFICIAL INTELLIGENCE

2.1. Machine Learning

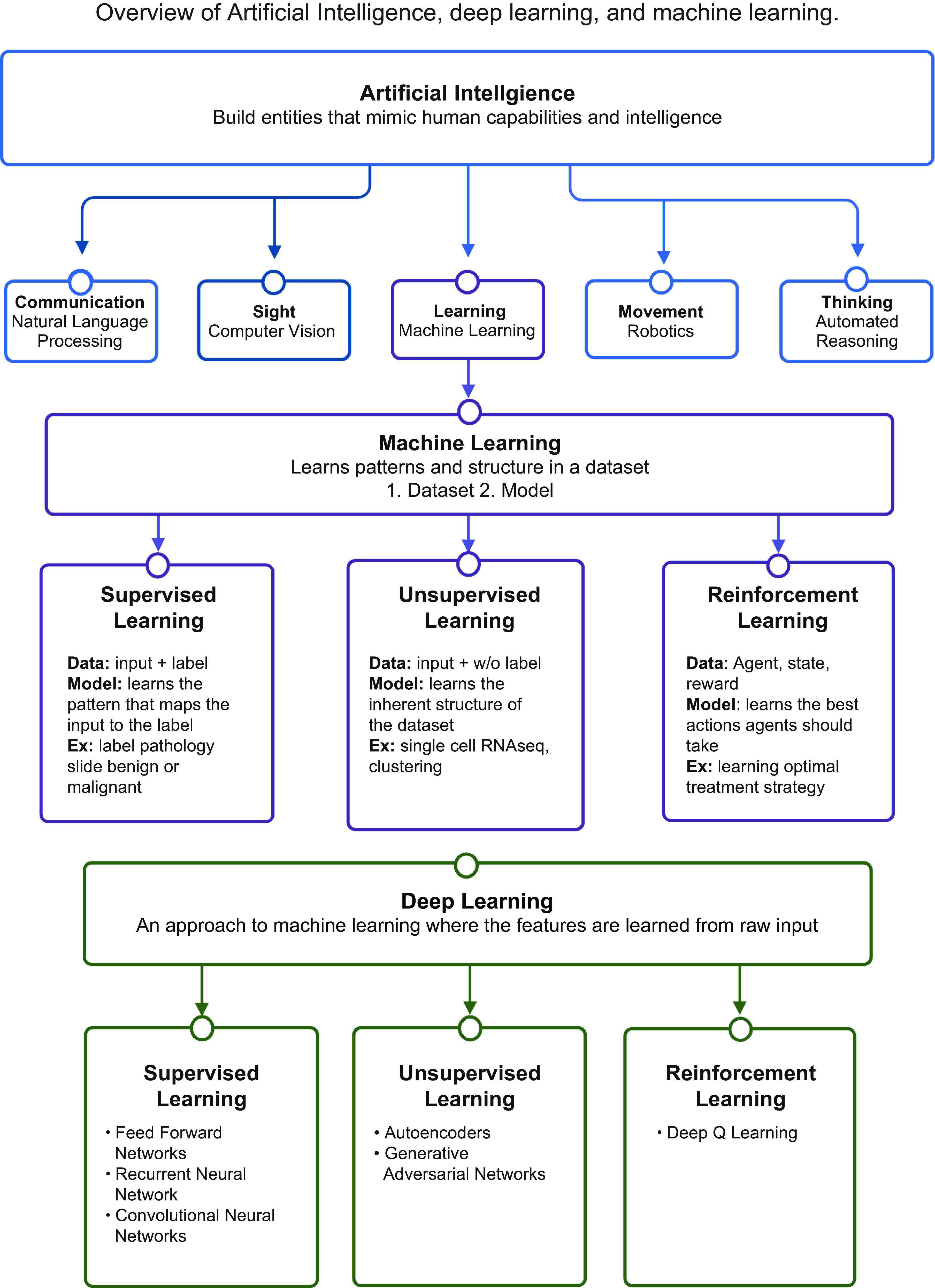

AI aims to build computational entities that mimic human intelligence and capabilities, namely natural language processing (communication), knowledge representation (understanding), automated reasoning (thinking), machine learning (learning), computer vision (sight), and robotics (movement) (21) (FIGURE 2). Machine learning, a subfield of AI, has garnered attention in the past decade owing to achievements that, on some tasks, have approached human abilities in learning and reasoning. At its core, machine learning can be defined as identifying patterns and structure in a dataset. Thus, a machine learning problem is defined by 1) the data and 2) the model used to learn from the data.

FIGURE 2.

Overview of artificial intelligence. An overview of artificial intelligence, machine learning, deep learning, and their relationship to one another. Machine learning is a subfield of artificial intelligence and is composed of 3 predominant classes. Furthermore, deep learning is a subfield of and approach to machine learning. Image created with BioRender.com, with permission.

A dataset is a representative sampling of the domain space. Datasets can be unlabeled or labeled, e.g., a pathology sample that is labeled “malignant” or “benign.” In health care, the primary sources of data are rooted in capturing the physiology of an individual and include 1) medical images, 2) text or electronic health records (EHRs), and 3) genomic sequences (22).

There are three predominant classes of models in machine learning: 1) Supervised learning learns from labeled datasets to identify patterns that map the input data to the output label. For example, supervised learning can be used to identify patterns that would map a pathology slide to its label, benign or malignant. There are two primary forms of supervised learning. Regression maps input features to an output that is numerical and continuous (e.g., predicting oxygen saturation levels), whereas classification maps input features to outputs that are discrete categories (e.g., classifying an EKG as tachycardic or not) (23). 2) Unsupervised learning, which deals with unlabeled data, aims to find inherent structure within the data, such as subclusters, outliers, or low-dimensional representations (3). As an example, dimensionality reduction and clustering are used to learn the structure within single-cell transcriptomic datasets to identify clusters of cell populations (24).
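The contrast between the first two classes can be made concrete with a short sketch. Assuming NumPy and synthetic two-dimensional data (not a real clinical dataset), a supervised nearest-centroid classifier uses the labels, whereas k-means clustering, a common unsupervised method, recovers the same two groups without ever seeing them. The function names `predict` and `kmeans` are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: two groups of patients, each described by two
# measurements. Group 0 is centered at (0, 0) and group 1 at (4, 4).
X = np.vstack([rng.normal(0.0, 0.5, size=(50, 2)),
               rng.normal(4.0, 0.5, size=(50, 2))])
y = np.array([0] * 50 + [1] * 50)  # labels: used only by the supervised method

# Supervised: use the labels to learn one centroid per class, then assign a
# new point to the class whose centroid is nearest (nearest-centroid classifier).
centroids = np.array([X[y == c].mean(axis=0) for c in (0, 1)])

def predict(points):
    dists = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
    return dists.argmin(axis=1)

# Unsupervised: k-means looks for k clusters without using the labels.
def kmeans(data, k, n_iter=20, seed=1):
    r = np.random.default_rng(seed)
    centers = data[r.choice(len(data), size=k, replace=False)]
    for _ in range(n_iter):
        dists = np.linalg.norm(data[:, None, :] - centers[None, :, :], axis=2)
        assign = dists.argmin(axis=1)
        for j in range(k):
            if np.any(assign == j):  # keep the old center if a cluster empties
                centers[j] = data[assign == j].mean(axis=0)
    return assign

clusters = kmeans(X, k=2)
print(predict(np.array([[0.2, -0.1], [3.9, 4.2]])))  # [0 1]
```

The cluster indices returned by k-means are arbitrary, so they may match the labels exactly or with 0 and 1 swapped; only the grouping itself is learned.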

Both supervised and unsupervised learning deal with fixed data in a static, nonchanging environment. However, many tasks in the world, and particularly in health care, are dynamic and interactive. 3) The third class of machine learning, reinforcement learning, involves learning to interact with an environment to optimize a goal (25, 26). Reinforcement learning draws on the concept of reinforcement in psychology, in which correct actions taken in an environment result in a reward, leading to learning of the actions that maximize the reward. Reinforcement learning is the basis for training AI platforms such as AlphaGo to beat opponents in games like Go and, in health care, for learning treatment strategies that optimize patient outcomes (4, 27).

2.2. Deep Learning

The resurgence of AI in the past decade can be attributed to the successful implementation of deep learning, a subfield of, and approach to, machine learning (28) (FIGURE 2). Before the widespread use of deep learning, machine learning required domain expertise and hand-engineering to select the features from which the models learned. Features are characteristics used to define the dataset and are the inputs that machine learning models use to find patterns. For example, features of a pathology slide, such as cell size or number of cell nuclei, can be used by the model to determine whether there is malignancy. Creating a proper featurization is a core problem of machine learning, as features dictate the patterns that can be learned. However, it can be difficult to achieve high performance with hand-selected features, because determining which features to select and how to define them can be challenging and require extensive domain expertise. Furthermore, traditional machine learning models must rely on previously defined features and cannot directly use raw data such as an image of a pathology sample.
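To make hand-engineered featurization concrete, the sketch below (NumPy, assuming R peaks have already been detected, with hypothetical timestamps rather than a real ECG) computes two expert-chosen features, mean heart rate and RR-interval variability, and applies the conventional 100 beats/min threshold for tachycardia. Whatever a downstream model learns is limited to these two numbers; the raw waveform itself is never used. The function name is illustrative.

```python
import numpy as np

# Hypothetical raw input: times (in seconds) of detected R peaks in an ECG.
# In traditional machine learning, an expert decides which features to compute.
def hand_engineered_features(r_peak_times):
    rr = np.diff(r_peak_times)          # RR intervals, in seconds
    heart_rate = 60.0 / rr.mean()       # feature 1: mean heart rate (beats/min)
    rr_variability = rr.std()           # feature 2: RR-interval variability (s)
    return np.array([heart_rate, rr_variability])

resting = np.arange(0, 10, 0.8)   # one beat every 0.8 s -> 75 beats/min
fast = np.arange(0, 10, 0.5)      # one beat every 0.5 s -> 120 beats/min

for name, peaks in [("resting", resting), ("fast", fast)]:
    hr, _ = hand_engineered_features(peaks)
    # The "model" here is just a fixed threshold on one hand-chosen feature.
    label = "tachycardic" if hr > 100 else "not tachycardic"
    print(f"{name}: {hr:.0f} bpm -> {label}")
# resting: 75 bpm -> not tachycardic
# fast: 120 bpm -> tachycardic
```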

Deep learning provides a solution by learning not just a mapping from features to an output but also which features best represent the data (28, 29). This is achieved by passing raw data through the layers of a neural network, where each layer receives the outputs of the previous layer and learns a representation of them. As the raw data progress from the initial layers to deeper layers of the network, simpler representations are combined into more complex ones at each step. For example, as an image of an eye passes through a deep learning network, the raw pixels are fed into the first few layers, which extract edges; subsequent layers combine these edges into corners and contours, which the last few layers further combine into a representation of an eye. Deep learning models can leverage larger datasets than earlier approaches, which can facilitate higher performance, and they can be implemented more rapidly and widely because they no longer need heavy human involvement or domain expertise for feature engineering.

2.2.1. Deep learning foundations.

Historically, deep learning has been built around optimizing performance on supervised learning tasks: training a model to map an input to an expected output (28). To do so, deep learning models have taken inspiration from neuroscience (4, 28). The fundamental unit of a deep learning model is the perceptron. The perceptron, one of the earliest artificial neural network models, dates back to the 1950s and aims to recapitulate the mechanics of a depolarizing neuron (30). Similar to a neuron, the perceptron receives input signals, aggregates and processes the inputs, passes the result through an activation function, and emits an output signal. Importantly, the perceptron was the first neural network to introduce learnable weights and biases into the functions used to aggregate and process inputs. The weights and biases are learned so that inputs are mapped more accurately to the desired outputs. Learning and updating weights and biases is a fundamental goal when training neural network models (29).
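A minimal sketch of the perceptron described above, written with NumPy: inputs are weighted, summed with a bias, and thresholded, and the weights and bias are learned with Rosenblatt's classic perceptron update rule. The logical-AND task is an illustrative assumption, chosen because it is linearly separable, which guarantees that the perceptron rule converges.

```python
import numpy as np

def step(z):
    # Threshold activation: output 1 ("fire") if the input reaches 0, else 0.
    return np.where(z >= 0, 1, 0)

def train_perceptron(X, y, lr=0.1, epochs=100):
    w = np.zeros(X.shape[1])  # learnable weights, one per input
    b = 0.0                   # learnable bias
    for _ in range(epochs):
        for xi, target in zip(X, y):
            error = target - step(np.dot(w, xi) + b)  # -1, 0, or +1
            # Rosenblatt's rule: move weights and bias toward the correct output.
            w += lr * error * xi
            b += lr * error
    return w, b

# Toy binary task (logical AND): output 1 only when both inputs are 1.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 0, 0, 1])

w, b = train_perceptron(X, y)
print(step(X @ w + b))  # [0 0 0 1]
```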

The process of training a neural network is analogous to how individuals typically learn: given a new task, an individual makes an attempt, compares the attempt with the anticipated output, and analyzes the differences to obtain feedback on how to better execute the task in future iterations. Deep learning formalizes and gives numerical structure to each component of this process (29) (FIGURE 3). Broadly, this process begins with forward propagation, in which inputs are propagated forward through the network (combined with the current weights and biases, which are often initialized randomly, and passed through the activation function) to produce an output. Next, the difference between the predicted model output and the ground-truth expected output, known as the loss, is computed by the loss function, a function that takes the predicted values and expected values (labels) and outputs a measure of discrepancy (31). The goal of training neural networks is to determine the weights and biases that minimize the loss. To find the minimum of the loss function, optimization techniques are used, of which gradient descent has been the most popular. Gradient descent uses backpropagation to compute the local gradient of the loss function with respect to the weights (the direction in which the loss increases most rapidly) and then takes a step in the opposite direction to move closer to the minimum. This information is used to update the weights. For a more rigorous explanation of model training, we refer the reader to Goodfellow et al. (29). With the weights updated, the model prediction is expected to deviate less from the ground truth. This process is repeated, iteratively updating the weights and biases until the difference between the model predictions and desired outputs is minimized, resulting in a model that makes predictions with high performance and is faithful to the desired output.
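The four steps above (forward propagation, loss computation, backpropagation, and a gradient descent update) can be sketched for a one-hidden-layer network in NumPy. The toy data, layer size, learning rate, and number of iterations are arbitrary illustrative choices, and the gradients are derived by hand from the chain rule rather than by an automatic-differentiation library.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: the label is 1 when the two inputs sum to more than 0.
X = rng.normal(size=(200, 2))
y = (X.sum(axis=1) > 0).astype(float).reshape(-1, 1)

# One hidden layer with 8 units; weights start random, biases start at zero.
W1 = rng.normal(scale=0.5, size=(2, 8))
b1 = np.zeros(8)
W2 = rng.normal(scale=0.5, size=(8, 1))
b2 = np.zeros(1)
lr = 0.5  # learning rate (a hyperparameter)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

losses = []
for _ in range(500):
    # 1) Forward propagation: apply weights and biases, then activation functions.
    h = np.tanh(X @ W1 + b1)
    p = sigmoid(h @ W2 + b2)

    # 2) Loss: binary cross-entropy between predictions and labels.
    loss = -np.mean(y * np.log(p + 1e-12) + (1 - y) * np.log(1 - p + 1e-12))
    losses.append(loss)

    # 3) Backpropagation: apply the chain rule from the loss back to each weight.
    dz2 = (p - y) / len(X)              # gradient w.r.t. the output pre-activation
    dW2, db2 = h.T @ dz2, dz2.sum(axis=0)
    dz1 = (dz2 @ W2.T) * (1 - h ** 2)   # tanh'(z) = 1 - tanh(z)^2
    dW1, db1 = X.T @ dz1, dz1.sum(axis=0)

    # 4) Gradient descent: step each parameter against its gradient.
    W1 -= lr * dW1
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2

accuracy = np.mean((p > 0.5) == (y == 1))
print(f"loss {losses[0]:.3f} -> {losses[-1]:.3f}, training accuracy {accuracy:.2f}")
```

Deep learning frameworks compute these gradients automatically, but the update each parameter receives has the same form: its current value minus the learning rate times the gradient of the loss.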

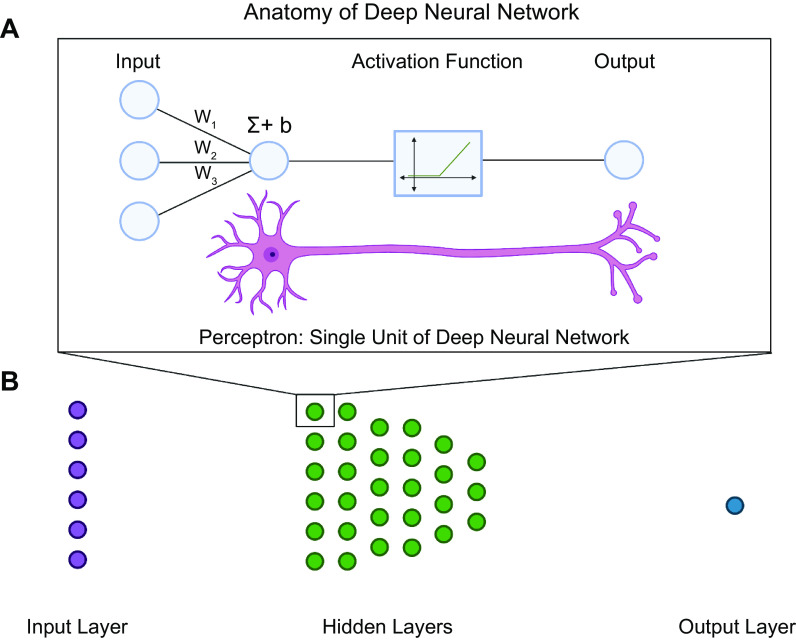

FIGURE 3.

Anatomy of a deep neural network. A: the fundamental unit of a deep neural network is a perceptron. A perceptron aims to model the dynamics of a neuron while also learning weights to perform binary classification. Similar to a neuron, the perceptron takes inputs from multiple sources. The inputs are multiplied by weights (W1–W3), summed (Σ), and then added to a learned constant, the bias (b). The result is passed through an activation function that determines whether the signal meets the threshold to be propagated. If the threshold is met, the signal is propagated; if it is not met, the signal is not propagated. This is analogous to binary classification, where signals that are propagated are classified as “1” and signals that are not propagated are classified as “0.” Stacked perceptrons make up a neural network layer. B: neural network layers make up a neural network. There are 3 main types of neural network layers: 1) input layer, where the input is fed into the network; 2) hidden layers, where weights and biases are learned; and 3) output layer, where the internal representation is mapped to the model prediction. Image created with BioRender.com, with permission.

2.2.2. Deep learning models.

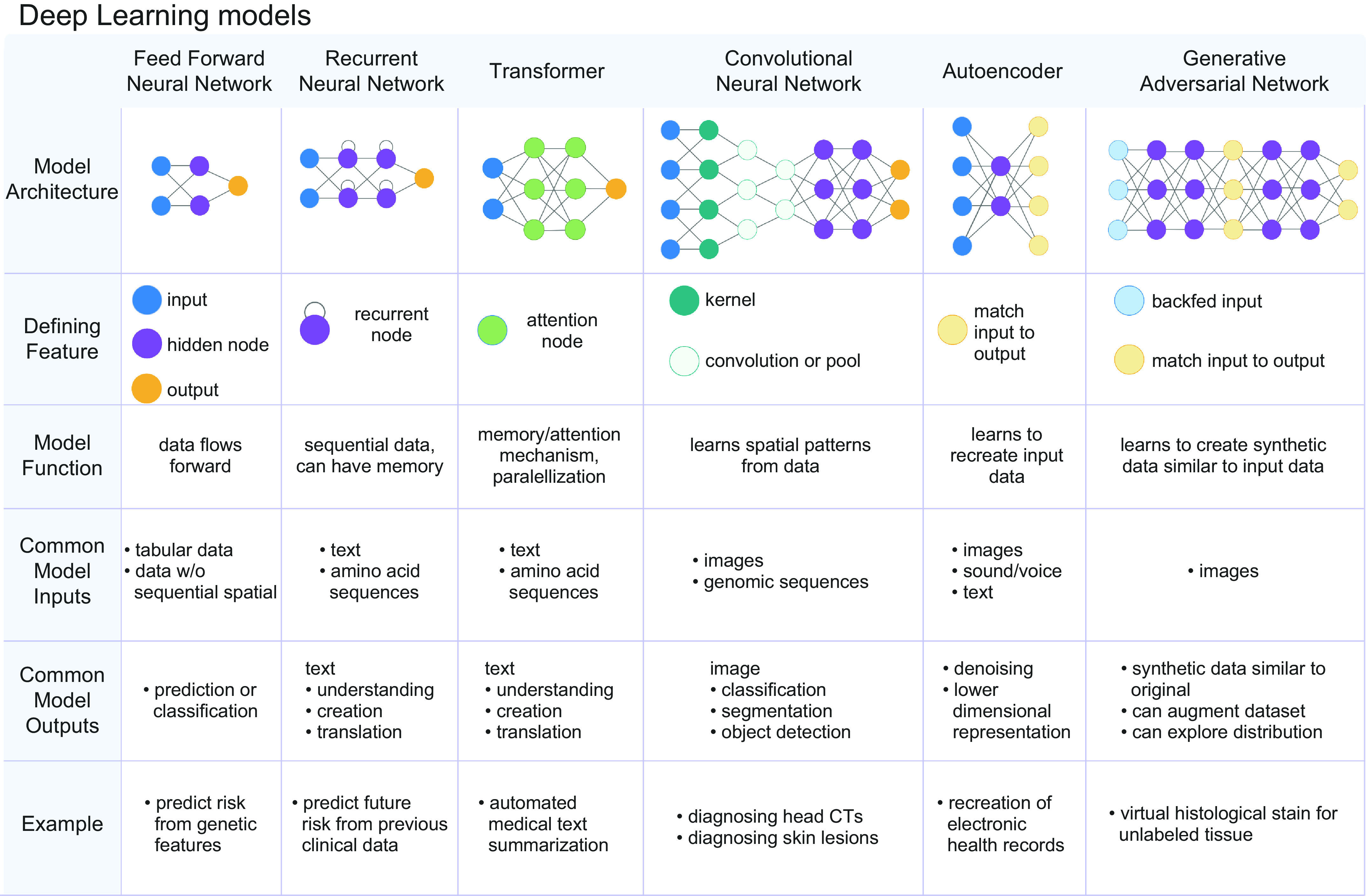

Deep learning neural networks are built from stacked layers of perceptrons (multilayer perceptrons) and are composed of three main types of layers: 1) an input layer (which feeds the input into the model), 2) hidden layers (which learn to featurize the input), and 3) an output classification or regression layer (which maps learned features and patterns to a prediction/output). Throughout the past decade, many design elements have been engineered into neural networks to improve general performance (e.g., batch normalization, dropout, skip connections) and/or to improve performance on specialized data (e.g., convolutions for spatial datasets and images, recurrent units and attention mechanisms for sequential datasets). There are now many classes of deep learning models that are variations of the fundamental feedforward architecture; they can be distinguished by the specialized modules they possess to handle specific classes of data (e.g., images, text, graphs) (FIGURE 4). Here, we survey a few of the most popular classes of deep learning models that have been used to leverage physiology data for health care applications.

FIGURE 4.

There are numerous classes of deep learning models. The different classes can be defined by the data type that the model has been designed to handle. Feedforward networks are the bread-and-butter neural networks and best handle tabular data. Recurrent neural networks possess a recurrent node, which allows the model to retain memory of previously seen information. This renders recurrent neural networks adept at handling sequential data. Transformers are a class of models composed of attention mechanisms. This gives transformers longer-range memory and greater efficiency and parallelization, which allows these models to handle longer sequences and larger datasets. Convolutional neural networks are defined by repeating convolutional layers, which are adept at analyzing the spatially arranged patterns commonly seen in images. Autoencoders are composed of encoders and decoders; they are adept at featurizing the input and reconstructing it to learn underlying patterns and structure within the data. Generative adversarial networks are composed of a pair of adversarial models, in which one model endeavors to generate synthetic data and the other endeavors to detect synthetic data. Image created with BioRender.com, with permission.

2.2.2.1. feedforward neural networks.

Feedforward networks are the foundational deep learning neural networks. They are composed of fully connected layers, in which every unit is connected to every unit in the next layer, and are called feedforward because information flows in one direction, from the input through the hidden layers to the output. They are used for tabular data or data that do not have a specific temporal or spatial structure.

2.2.2.2. recurrent neural networks and transformers.

Recurrent neural networks (RNNs) are defined by their recurrent module (32, 33), which feeds the network's internal (hidden) state back into itself at each step of a sequence, allowing the network to retain memory of previously seen information. As a result, RNNs are particularly adept at handling datasets with sequential information, such as language, genomic sequences, or clinical time series data (34, 35). Despite their successes, RNNs can be difficult to train, particularly on larger datasets, and can lose information from early in the sequence when handling longer sequences.
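The recurrence can be sketched in a few lines of NumPy. This is a simple Elman-style recurrent layer, assumed here for illustration (practical models usually use gated variants such as LSTMs or GRUs), with random weights standing in for learned ones and a hypothetical multivariate time series as input.

```python
import numpy as np

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4

# The same weights are reused at every time step (random here; learned in practice).
W_x = rng.normal(scale=0.5, size=(input_size, hidden_size))
W_h = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b = np.zeros(hidden_size)

def rnn_forward(sequence):
    """Run a simple recurrent layer over a sequence of input vectors."""
    h = np.zeros(hidden_size)  # the memory starts empty
    states = []
    for x_t in sequence:
        # The new hidden state mixes the current input with the previous state,
        # which is how information from earlier steps is carried forward.
        h = np.tanh(x_t @ W_x + h @ W_h + b)
        states.append(h)
    return np.stack(states)

# Hypothetical clinical time series: 5 time points x 3 measurements.
series = rng.normal(size=(5, input_size))
states = rnn_forward(series)
print(states.shape)  # (5, 4): one hidden state per time step
```

Because each state depends on the one before it, the loop cannot be parallelized across time steps, and the influence of early inputs must survive repeated multiplication by `W_h`, which is one intuition for why long sequences are hard for RNNs.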

Transformers were introduced by a team at Google Brain in 2017 to address the limitations of RNNs (36). In contrast to RNNs, transformers are able to capture longer-range dependencies and train on larger datasets. This is primarily because they do not use recurrent modules to handle sequential data and instead use attention mechanisms. Attention mechanisms featurize sequences by systematically relating every pair of tokens in a sequence. Importantly, attention mechanisms perform these operations in parallel rather than step by step, which increases computing efficiency, allows featurization of longer-range relations in the input sequence (1), and reduces training time relative to RNNs. Using transformers, researchers have been able to train on increasingly large datasets, leading to state-of-the-art performance on natural language processing tasks (6, 37). Improvements in natural language processing enable the development of text-oriented tasks in health care, such as clinical text summarization or clinical question-answering databases (11, 38). Outside of language, the attention module has been instrumental in a number of tasks, including the development of AlphaFold2, the deep learning model capable of predicting protein structure from amino acid sequences (39). For a more in-depth review of transformers, we refer the reader to Zhang et al. (1).
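Relating every pair of tokens can be illustrated with scaled dot-product attention, the core operation of the original transformer, sketched here in NumPy for a single attention head with no masking. The token embeddings and the projection matrices `W_q`, `W_k`, and `W_v` are random stand-ins for learned parameters.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    d_k = Q.shape[-1]
    # Score every pair of tokens at once with one matrix product (no recurrence).
    scores = Q @ K.T / np.sqrt(d_k)     # (n_tokens, n_tokens)
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
n_tokens, d_model = 6, 8
X = rng.normal(size=(n_tokens, d_model))  # hypothetical token embeddings
W_q, W_k, W_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

out, attn = scaled_dot_product_attention(X @ W_q, X @ W_k, X @ W_v)
print(out.shape, attn.shape)  # (6, 8) (6, 6)
```

Because all token pairs are scored in a single matrix product, every position is processed at once, which is the source of the parallelism noted above; a full transformer adds multiple heads, learned projections, positional information, and feedforward layers.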

2.2.2.3. convolutional neural networks.

Convolutional neural networks (CNNs) are defined by convolutional layers that make CNNs adept at handling spatially related data, such as images (40). CNN models are adept at three primary computer vision tasks: image classification, image segmentation, and object detection (4). CNNs were critical in defining much of the early and current deep learning landscape. In 2012, CNNs were utilized to achieve state-of-the-art performance at the annual ImageNet Large Scale Visual Recognition Challenge (ILSVRC), a competition that challenges researchers to develop models capable of classifying millions of images (40). This feat returned attention to neural networks, as it demonstrated that neural nets could deliver leaps in performance and be practically and feasibly trained, which addressed a long-standing criticism (41–43).
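The operation at the heart of a convolutional layer can be sketched in NumPy as a small kernel slid across an image. Here the kernel is set by hand to respond to vertical edges, an assumption made for illustration; in a trained CNN the kernel values are learned, and early layers often come to detect similar edge-like patterns. As in most deep learning libraries, the operation is technically a cross-correlation (the kernel is not flipped).

```python
import numpy as np

def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation, the operation CNN layers call convolution."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # The same small kernel is applied at every location (weight sharing).
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy 6x6 "image": dark on the left, bright on the right (a vertical edge).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-set vertical-edge kernel; in a trained CNN such values are learned.
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

feature_map = conv2d(image, kernel)
print(feature_map[0])  # [0. 3. 3. 0.]
```

The feature map responds only where the window straddles the edge, which is the kind of spatially local pattern detection that makes CNNs suited to images.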

As work on CNNs continued, CNNs contributed to the conceptualization and dissemination of transfer learning, now a staple method in deep learning training. Transfer learning involves pretraining a deep neural network on a large dataset (such datasets are frequently difficult to obtain or create), transferring what has been learned in the earlier layers, and retraining the final layers on a domain-specific dataset (3). Transfer learning takes advantage of the observation that the earlier layers of a CNN learn fundamental visual structures, such as curves, lines, and shapes, whereas the later layers learn domain-specific features, such as ground-glass opacities in chest X-rays. The development of transfer learning is one of the primary reasons that CNNs and deep learning have been able to propagate rapidly through health care applications. It lowers the barrier to entry from a multimillion-image dataset for training a deep model from scratch to a dataset of thousands of images for retraining the final few layers of the model. Applications of transfer learning have led to models that can screen for diabetic retinopathy (8) and classify pathology slides in 1/1,000th of the time it takes a pathologist to do so (44). Whereas the application of CNNs to analyze images is now a mature field, the use of CNNs to analyze videos, which adds the dimension of time, has emerged as a new frontier (45, 46), with EchoNet-Dynamic being the largest publicly available medical video dataset (47).
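The mechanics of transfer learning can be sketched with NumPy: a fixed ("frozen") feature extractor is reused and only a new final layer is trained on a small dataset. This is a schematic under explicit assumptions: `W_frozen` is a random stand-in for weights that, in practice, would come from pretraining on a large dataset such as ImageNet, and the small labeled dataset is synthetic.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for the early layers of a network pretrained on a large dataset.
# ASSUMPTION: real pretrained weights are replaced by fixed random weights,
# which are never updated below ("frozen").
W_frozen = rng.normal(size=(10, 32))

def frozen_features(X):
    return np.maximum(0.0, X @ W_frozen)  # ReLU features from the frozen layer

# Hypothetical small, domain-specific labeled dataset.
X_small = rng.normal(size=(300, 10))
y_small = (X_small[:, 0] > 0).astype(float)

# Compute the frozen features once, then train only a new final layer
# (logistic regression) on top of them.
F = frozen_features(X_small)
F = (F - F.mean(axis=0)) / (F.std(axis=0) + 1e-8)  # standardize each feature
w_head = np.zeros(F.shape[1])
b_head = 0.0
for _ in range(1000):
    p = 1.0 / (1.0 + np.exp(-(F @ w_head + b_head)))
    grad = p - y_small
    w_head -= 0.1 * F.T @ grad / len(F)
    b_head -= 0.1 * grad.mean()

train_acc = np.mean((p > 0.5) == (y_small == 1))
print(f"training accuracy of the retrained final layer: {train_acc:.2f}")
```

With genuinely pretrained weights, the frozen layers would supply the general-purpose features described above, and only the comparatively few parameters of the final layer would need to be learned from the domain-specific data.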

2.2.2.4. generative modeling.

In machine learning, models can be classified as discriminative or generative. Both classes of models learn the characteristics and statistics of datasets. Discriminative models use the learned patterns to distinguish between types of data, for example, chest X-rays that would be considered diseased versus healthy. Generative models, in contrast, use the learned characteristics and statistics of the dataset to generate additional synthetic data. A number of models exist for generative learning, including variational autoencoders (48), normalizing flows (49), and autoregressive models (50), but among the most popular in health care applications have been generative adversarial networks (GANs) (1, 51). GANs aim to learn the implicit distribution of a dataset using two adversarial models: 1) a generator that generates synthetic samples and 2) a discriminator that classifies each sample as fake (generated by the generator) or real (not generated by the generator) (52). Training the two models together pushes them to work “adversarially” against each other. The generator attempts to generate more realistic images in the hope of fooling the discriminator, while the discriminator learns to distinguish real from fake, pushing the generator to create still more realistic images. The result is a generator that has learned the implicit distribution of the data.
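The adversarial game described above is commonly written, in the standard GAN formulation, as a minimax objective in which the discriminator $D$ outputs the probability that a sample is real and the generator $G$ maps random noise $z$ (drawn from a simple prior $p_z$) to a synthetic sample:

$$
\min_{G}\,\max_{D}\; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator maximizes $V$ by assigning high probability to real samples $x$ and low probability to generated samples $G(z)$, while the generator minimizes $V$ by producing samples that the discriminator scores as real.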

In health care, GANs can serve many functions. GANs can create additional realistic data to augment a dataset (53), which helps overcome limitations common in health care, such as small datasets or datasets restricted by patient privacy (1, 54). Furthermore, GANs can be used to explore the full distribution of a clinical state (55). GANs have also facilitated the annotation of medical images and enabled text-to-image and image-to-image translation, such as translating MRI images to CT images (56).

2.2.2.5. graph neural networks.

The aforementioned models are designed to handle data whose elements relate to each other via grids (such as images) or sequences (such as text). However, many datasets are organized not in grids or sequences but in less regular structures, such as networks or graphs, in which entities (nodes) are linked by relationships (edges). Graph neural networks are a class of neural networks developed to handle data that exist as graphs (57, 58). They have been used to perform contact tracing for COVID-19, identify individuals who were exposed, and recommend indications for potential reopenings (59).
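A single message-passing step of a graph neural network can be sketched in NumPy using the widely used graph convolutional network (GCN) propagation rule of Kipf and Welling, in which each node's new features are a normalized sum of its own and its neighbors' features. The four-person contact graph, the single "known positive" feature, and the weight matrix fixed at 1 are hypothetical; this is not the method used in the cited contact-tracing work.

```python
import numpy as np

def gcn_layer(A, H, W):
    """One graph-convolution layer: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    A_hat = A + np.eye(len(A))                 # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    A_norm = A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]  # symmetric normalization
    # Each node's new features combine its own and its neighbors' features.
    return np.maximum(0.0, A_norm @ H @ W)

# Hypothetical contact network of four people (1 = recorded contact).
A = np.array([[0, 1, 1, 0],
              [1, 0, 0, 0],
              [1, 0, 0, 1],
              [0, 0, 1, 0]], dtype=float)
H = np.array([[1.0], [0.0], [0.0], [0.0]])  # one feature per node: 1 = known positive
W = np.array([[1.0]])                        # weight matrix (learned in practice)

one_hop = gcn_layer(A, H, W)
two_hop = gcn_layer(A, one_hop, W)
print(one_hop.ravel())  # node 3 is still 0: it has no direct contact with node 0
print(two_hop.ravel())  # after a second layer, the signal reaches node 3 via node 2
```

Stacking layers lets information travel one additional hop through the graph per layer, which is how a GNN can capture indirect relationships that do not fit a grid or a sequence.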

2.2.3. Reinforcement learning.

Reinforcement learning involves learning a series of decisions within a specific environment that optimize an outcome. The ability of deep learning to create complex, nonlinear featurizations of raw data has led to advancements in reinforcement learning in recent years (60). Deep reinforcement learning has succeeded at tasks that require complex, dynamic decision making, such as defeating chess masters or winning at 98 different video games (60). In health care, it is particularly well suited to scenarios with inherent time delays, in which decisions are made without immediate knowledge of their effectiveness and are instead evaluated by a long-term future reward (61). It has been used to select treatment regimens for chronic diseases, plan clinical decision strategies in conditions such as sepsis, and perform automated robotic surgery.
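How a delayed reward is credited to earlier decisions can be sketched with tabular Q-learning, a classic reinforcement learning algorithm, in plain NumPy. The five-state environment is a hypothetical toy: at each step the agent either continues toward a goal or stops and returns to the start, and a reward arrives only when the goal is reached. Deep reinforcement learning replaces the table `Q` with a neural network, but the update is similar in spirit.

```python
import numpy as np

rng = np.random.default_rng(0)
n_states, n_actions = 5, 2   # action 0 = "stop" (back to start), 1 = "continue"
goal = n_states - 1

def env_step(state, action):
    """Toy environment: the only reward arrives when the goal state is reached."""
    next_state = state + 1 if action == 1 else 0
    reward = 1.0 if next_state == goal else 0.0
    return next_state, reward, next_state == goal

Q = np.zeros((n_states, n_actions))    # estimated long-term value of each action
alpha, gamma, epsilon = 0.1, 0.9, 0.2  # learning rate, discount factor, exploration

for episode in range(500):
    state, done = 0, False
    while not done:
        if rng.random() < epsilon:     # explore: try a random action
            action = int(rng.integers(n_actions))
        else:                          # exploit: best current estimate (random tie-break)
            best = np.flatnonzero(Q[state] == Q[state].max())
            action = int(rng.choice(best))
        next_state, reward, done = env_step(state, action)
        # The delayed reward flows back to earlier states through the discounted
        # value of the best action in the next state.
        target = reward if done else reward + gamma * Q[next_state].max()
        Q[state, action] += alpha * (target - Q[state, action])
        state = next_state

print(Q.argmax(axis=1)[:goal])  # [1 1 1 1]
```

Although only the final step is ever rewarded, the learned policy chooses "continue" in every earlier state, because the discount factor `gamma` propagates the future reward backward through the value estimates.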

2.2.4. Deep learning model limitations.

Although deep learning models have delivered significant advancements, they are not without limitations. Deep learning models often require tuning of hyperparameters (such as learning rate and number of epochs), which can be difficult because models as complex as those in deep learning can be hard to bring to convergence. The problem of hyperparameter tuning is amplified by the large computing resources needed to train models, which reflect the growing size of models and their demand for large datasets. Deep learning models are also often described as “black box” models because they are difficult to interpret. One of the greatest strengths of deep learning models, learned features, also contributes to one of their greatest limitations: learned features are typically not linearly related to model outputs and can be difficult to connect to physiological mechanisms. Although methods are being developed to increase the interpretability of models, this remains one of the greatest hurdles to translation. Another significant limitation is the tendency of deep learning models to overfit to the training dataset and therefore perform poorly on datasets that differ even slightly in data collection, demographics, or distributions.
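Hyperparameter tuning and overfitting can be illustrated with a deliberately simple stand-in for a deep model: polynomial regression in NumPy, where the polynomial degree plays the role of a hyperparameter controlling model complexity. The noisy sine-wave data are synthetic. Candidate settings are compared on a held-out validation set rather than on the training data, because training error keeps falling as complexity grows even when the model generalizes worse.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)

def make_data(n):
    # Synthetic data: a sine wave plus measurement noise.
    x = rng.uniform(0.0, 1.0, n)
    return x, np.sin(2 * np.pi * x) + rng.normal(scale=0.2, size=n)

x_train, y_train = make_data(15)   # small training set
x_val, y_val = make_data(200)      # held-out validation set

def mse(model, x, y):
    return np.mean((model(x) - y) ** 2)

results = {}
for degree in [1, 3, 6, 12]:       # candidate values of the hyperparameter
    model = Polynomial.fit(x_train, y_train, deg=degree)
    results[degree] = (mse(model, x_train, y_train), mse(model, x_val, y_val))
    train_err, val_err = results[degree]
    print(f"degree {degree:2d}: train MSE {train_err:.3f}, validation MSE {val_err:.3f}")

# Select the setting that generalizes best, not the one that fits the training data best.
best_degree = min(results, key=lambda d: results[d][1])
print("selected degree:", best_degree)
```

Each candidate requires a full refit, which is cheap here but, for deep models, multiplies the already large computing cost of training.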

Traditional machine learning (ML) models, such as logistic regression, support vector machines, and random forest classifiers, are less severely hampered by these limitations, primarily because of their reduced complexity (i.e., fewer parameters) and their reliance on hand-engineered features. Traditional ML models are frequently interpretable: the relationships between input features and model outputs are explicit. Less hyperparameter tuning is also required, because models with fewer parameters and less complexity converge more easily. Finally, because traditional ML models are less complex and often include more regularization, in some situations they overfit less than deep learning models. Careful consideration of whether traditional ML models are more suitable for the physiology problem at hand is therefore frequently warranted.
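As an illustration of this interpretability, the fitted logistic regression coefficient w for a binary feature can be read directly as an odds ratio, exp(w). The pure-Python sketch below fits a single-feature model by gradient descent on a hypothetical cohort of 20 patients; in practice a library implementation, typically with regularization, would be used.

```python
import math


def fit_logistic(xs, ys, lr=0.5, epochs=5000):
    """Fit p(y = 1 | x) = sigmoid(w * x + b) by gradient descent on the mean log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            p = 1.0 / (1.0 + math.exp(-(w * x + b)))
            grad_w += (p - y) * x / n
            grad_b += (p - y) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical cohort: x = 1 if a risk factor is present, y = 1 if the outcome occurred.
xs = [1] * 10 + [0] * 10
ys = [1] * 6 + [0] * 4 + [1] * 2 + [0] * 8
w, b = fit_logistic(xs, ys)
print(f"coefficient w = {w:.3f}; odds ratio exp(w) = {math.exp(w):.2f}")
```

With a single binary feature, the fitted odds ratio converges to the empirical odds ratio (6/4) / (2/8) = 6, which a clinician can read as six-fold higher odds of the outcome when the risk factor is present.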

3. AI IN HEALTH CARE: HARNESSING PHYSIOLOGY FOR HEALTH CARE

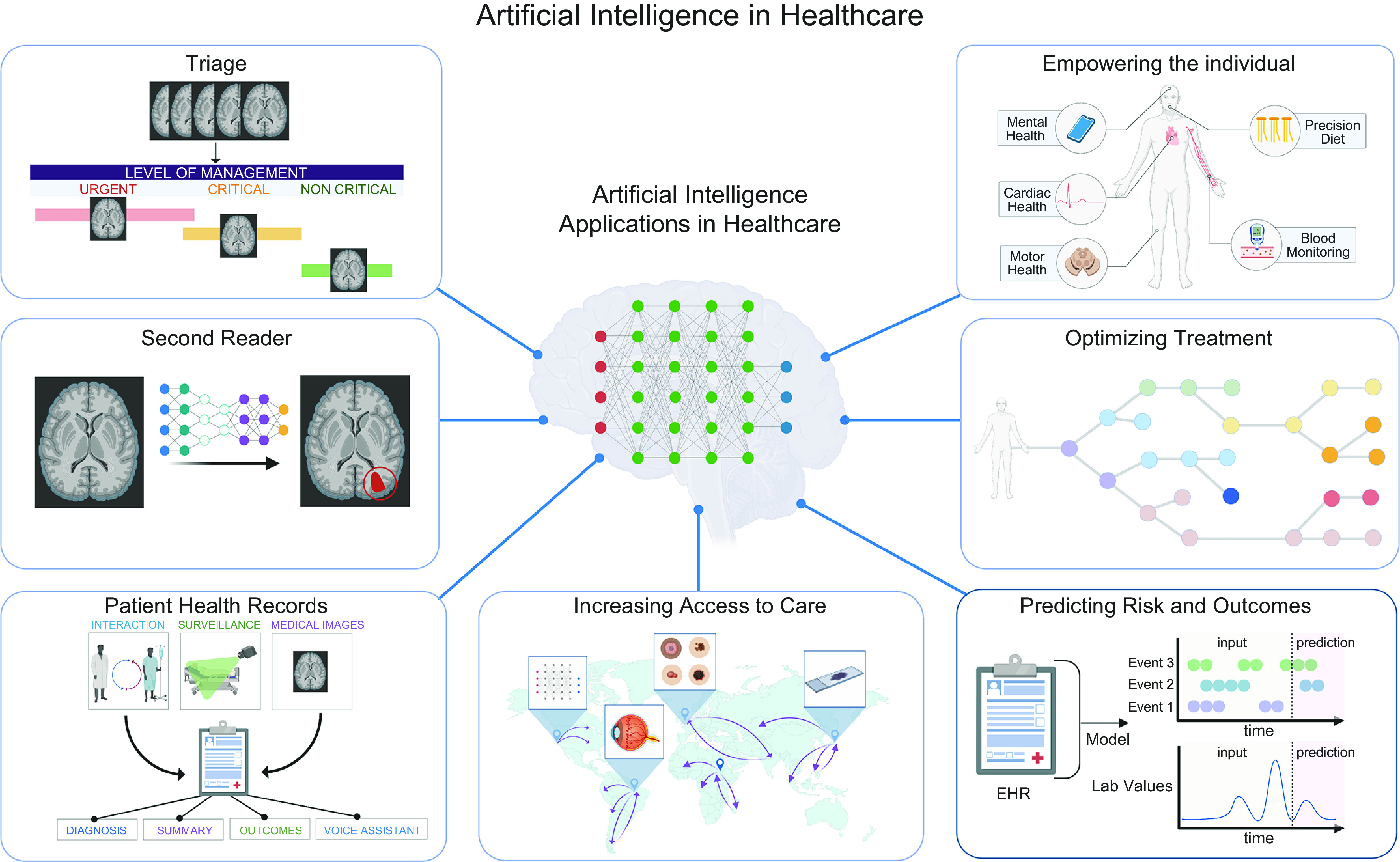

Health care is rich in large datasets that characterize the physiology of a patient, as evidenced by the hundreds of datasets that now populate PhysioNet (15), a repository of open-source physiology datasets. Here, we define physiology as the characterization of the normal functions of human organ systems and cells. Increasingly, physiology datasets characterize not only normal function but also pathophysiology, that is, how organ systems and cells behave when they become aberrant. In the past several decades, we have seen a revolution in the ability to collect physiology data at the patient level. This can be attributed to smart devices such as smartphones, watches, and glasses that capture high-frequency, longitudinal readouts of an individual, and to new medical technology that resolves an individual’s physiology at higher resolution (FIGURE 5). The physiology data that are collected are inherently meaningful: they reflect clinical status and clinical outcomes and can be correlated with underlying clinical states (62–64). In recent years, AI and machine learning have been leveraged to unearth patterns in these physiology datasets, identify the features that distinguish normal from pathological physiology, and map physiology data to their clinical interpretations. In doing so, they have deepened our understanding of both normal physiology and the physiology of disease. For example, a team at Google Brain used deep learning models and retinal fundus images to predict underlying cardiovascular health (65).
The capability to harness physiology data with AI has manifested in three main forms in health care: 1) automating existing health care tasks, where the interpretation of physiology data is automated; 2) increasing access to care, where easy-to-collect physiology data are mapped to underlying clinical states that would typically require more intensive equipment to determine; and 3) augmenting existing health care capabilities, where raw physiology data are aggregated to infer more complex, previously unknown disease mechanisms and clinical outcomes. Here, we discuss how physiology data have been leveraged by AI to improve patient care (FIGURE 6).

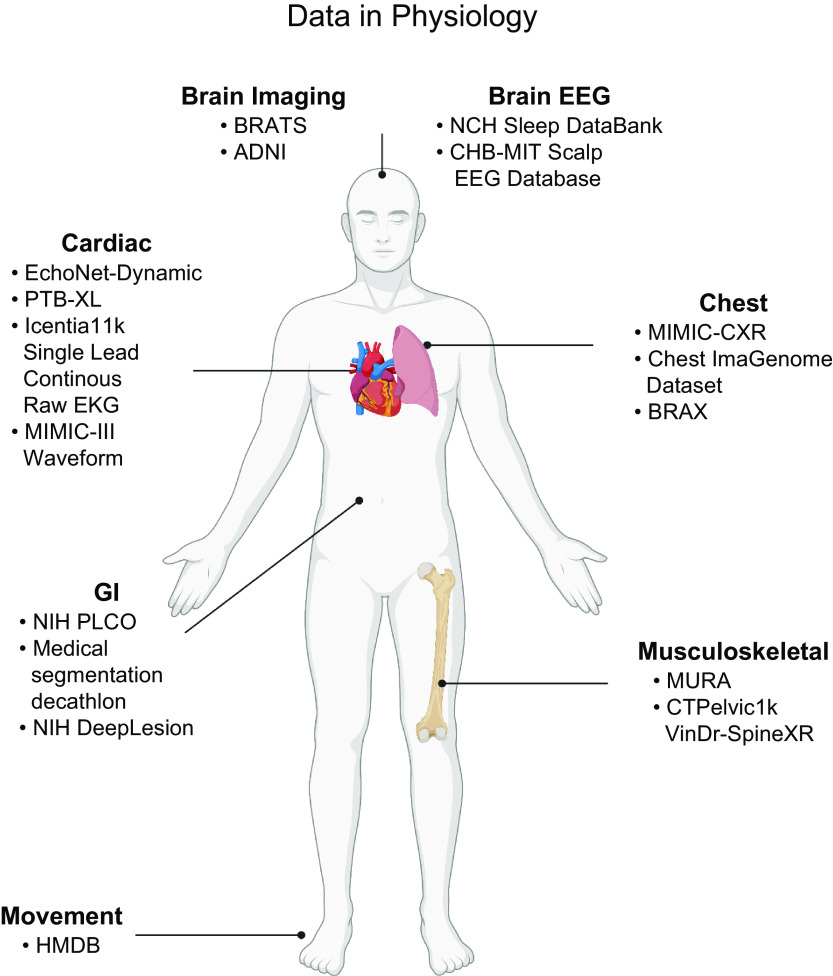

FIGURE 5.

Data in physiology. In the last three decades, there has been an increase in the size and resolution of physiology datasets. Owing to international, national, and institutional initiatives, there are now high-resolution and high-frequency datasets that describe the physiology of many organ systems within the body. Furthermore, the rise of smart devices in the last decade has seen an increase in individual physiology data. Image created with BioRender.com, with permission.

FIGURE 6.

Application of artificial intelligence (AI) in health care. Through leveraging physiology data, AI models are capable of mapping physiology patterns to clinical impacts. Main applications of AI in health care include automating existing health care tasks (triage, second reader, and patient health records); increasing access to health care (empowering the individual and increasing access to care); and augmenting health care capabilities (optimizing treatment and predicting risk and outcomes). EHR, electronic health record. Image created with BioRender.com, with permission.

3.1. Automating Existing Health Care Tasks

3.1.1. Triage.

In health care, where patient cases frequently outnumber health care providers, triage can be used to prioritize critical patients, decrease the time to treatment, and give patients greater clarity about whether medical attention is needed. Triage can be reframed as a supervised learning classification task, in which a model learns to classify patients into categories, such as “urgent” or “not urgent,” based on the underlying physiology and the degree of pathology in clinical readouts. Machine learning has historically been adept at classification tasks, and with the advent of deep learning, larger datasets have been leveraged to improve model performance (5, 66). In fields where triage is based on images or physical appearance, machine learning has matched the performance of physicians across a multitude of specialties, classifying skin lesions (66), referring retinopathy cases (67), and assessing fracture risk (68).

An important extension has been the application of AI platforms to triage emergent cases, where a delay of minutes in intervention can lead to irreversible damage and effective triage can shorten the time to intervention. A focus of the field has been triaging emergent neurological events, where “time is brain” (69). In one study, a three-dimensional (3-D) CNN developed to identify acute neurological events (head trauma and stroke) and reorder cases by predicted criticality matched the average sensitivity of specialists and flagged critical cases 150 times faster than humans (1.2 s vs. 177 s) (70).
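Reordering a reading queue by predicted criticality, as in the 3-D CNN study above, amounts to a priority queue keyed on the model's score. The sketch below uses Python's heapq module; the study identifiers and scores are hypothetical, and in a real system the scores would come from the triage model. Ties fall back to arrival order so that equally scored studies remain first come, first served.

```python
import heapq


def reorder_worklist(studies):
    """Return study IDs in descending order of model-predicted criticality.

    `studies` holds (study_id, arrival_order, predicted_score) tuples. Scores are negated
    because heapq is a min-heap; ties fall back to arrival order.
    """
    heap = [(-score, arrival, study_id) for study_id, arrival, score in studies]
    heapq.heapify(heap)
    return [heapq.heappop(heap)[2] for _ in range(len(heap))]


# Hypothetical incoming scans with hypothetical model scores.
queue = [("CT-001", 0, 0.10), ("CT-002", 1, 0.95), ("CT-003", 2, 0.40), ("CT-004", 3, 0.95)]
print(reorder_worklist(queue))
```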

Similar technologies are currently being deployed in the clinic. Viz LVO is the first Food and Drug Administration (FDA)-approved AI-based triage system for large vessel occlusions and is being used in >100 centers in the United States (71). Viz LVO analyzes incoming CT angiograms and alerts specialists through mobile notification when a stroke has been detected, with a median time of 6 min. In a small retrospective trial, patients triaged by Viz LVO saw triage time reduced by 22.5 min and a shorter overall length of hospital stay (72, 73).

Viz.ai is expanding to triage other acute neurological diseases (74) and COVID, and Aidoc (75, 76), ZebraMedical (77), and others have also developed FDA-approved triage platforms for emergent neurological (74), orthopedic (78), and pulmonary (75) events. AI-driven triage platforms are among the first forays of AI into the clinic. Their deployment will give much-needed insight into whether AI can be deployed at scale, integrate successfully into a clinician’s workflow, and improve outcomes for patients.

In nonemergent cases, AI triage platforms can be used to offset physician workload. A number of studies have found that when AI platforms were used to triage and reorder a physician’s workload according to severity, more than half and up to 88% of the workload could be excluded without sacrificing sensitivity (44, 79, 80). When multiple rounds of screening were used for diagnosis, AI triage platforms could ensure that more severe cases were detected in earlier rounds (79).
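One simple way to quantify this trade-off is to place the decision threshold at the lowest score assigned to any confirmed positive case, so that sensitivity on those cases stays at 100%, and then count how many cases fall below it. The scores and labels below are hypothetical. In practice the threshold would be chosen on a validation set and confirmed on held-out data, because sensitivity measured on the same cases used to set the threshold is optimistic.

```python
def workload_excluded_at_full_sensitivity(scores, labels):
    """Threshold at the lowest score of any positive case (sensitivity stays 1.0 on these
    cases) and return (threshold, fraction of cases scoring below it)."""
    threshold = min(s for s, y in zip(scores, labels) if y == 1)
    excluded = sum(1 for s in scores if s < threshold)
    return threshold, excluded / len(scores)


# Hypothetical model scores and ground-truth labels (1 = case needing attention).
scores = [0.02, 0.05, 0.10, 0.15, 0.20, 0.30, 0.45, 0.60, 0.80, 0.90]
labels = [0, 0, 0, 0, 0, 0, 1, 0, 1, 1]
threshold, fraction = workload_excluded_at_full_sensitivity(scores, labels)
print(f"threshold = {threshold}, fraction of workload deprioritized = {fraction:.0%}")
```

On these made-up cases, 6 of 10 studies fall below the threshold and could be deprioritized without missing any positive case.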

A final important role for AI triage is triaging patients before they arrive at the clinic. Initial triage depends on obtaining an accurate patient history, which can be automated through advancements in natural language processing and question-answering models. A number of AI platforms have used questionnaires or chatbots to interact with patients, assess their symptoms, and determine whether further medical attention is needed (81). Typically, these models are trained on existing electronic health records (EHRs) to learn the patterns of a disease and then combined with question-answering models to create an interactive platform. A few pretriage AI platforms are currently being deployed (82–84), with Babylon (85–87), in collaboration with the UK National Health Service, being one of the most notable.

3.1.2. Second reader.

Diagnostic error is one of the most common problems in patient care (88). It affects at least 1 in 20 adults in the United States each year, and most individuals are likely to experience one or more diagnostic errors in their lifetime (88–90). Having more than one health care provider review a case can decrease rates of diagnostic variation and error (89, 90), but because of a continual shortage of health care providers, multiple reads can be difficult to achieve.

Across specialties, AI has matched the performance of physicians in diagnostic tasks (8, 44, 91, 92), allowing AI platforms to take on the role of second readers. In a number of diagnostic tasks, models were found to outperform physicians (93–96). This reflects several advantages of AI systems. AI systems excel at tasks that require meticulous, laborious, repetitive calculations that are error prone for humans (47). Furthermore, models are capable of identifying cases that are missed by physicians, significantly reducing the false negative rate with only a moderate increase in false positives (94–96). AI platforms excel in this regard because, when trained on large datasets, they can efficiently leverage the collective experience of thousands of patient cases and physician diagnoses, experience that would take physicians years to acquire. AI second readers also excel in diagnostic scenarios that are difficult for the physician, such as endoscopy for colon cancer screening, where views can be obstructed (97, 98). Even with partial views of polyps, AI models were capable of alerting physicians to malignant lesions that would otherwise have gone undetected (99).
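The effect of adding an AI second reader can be illustrated with a simple “flag if either reader flags” rule: cases missed by one reader can be caught by the other, which raises sensitivity at the cost of some additional false positives. The ten cases and both sets of reads below are hypothetical, and real deployments may combine reads differently.

```python
def sensitivity_and_fpr(predictions, labels):
    """Return (sensitivity, false positive rate) for binary predictions."""
    tp = sum(1 for p, y in zip(predictions, labels) if p and y)
    fn = sum(1 for p, y in zip(predictions, labels) if not p and y)
    fp = sum(1 for p, y in zip(predictions, labels) if p and not y)
    tn = sum(1 for p, y in zip(predictions, labels) if not p and not y)
    return tp / (tp + fn), fp / (fp + tn)


# Hypothetical reads on ten cases (1 = abnormal); each reader misses a different positive case.
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
physician = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]
ai_reader = [1, 0, 1, 1, 1, 0, 0, 0, 0, 0]
# Second-reader rule assumed here: a case is flagged if either reader flags it.
combined = [int(p or a) for p, a in zip(physician, ai_reader)]

for name, reads in [("physician", physician), ("AI", ai_reader), ("combined", combined)]:
    print(name, sensitivity_and_fpr(reads, labels))
```

On these made-up reads, each reader alone has a sensitivity of 0.75 and a false positive rate of 1/6; the combined read reaches a sensitivity of 1.0 while the false positive rate rises to 1/3.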

AI platforms are increasingly being integrated into advanced imaging modalities (MRI, CT) to create computer-aided detection (CADe) and computer-aided diagnosis (CADx) systems (100). Many are now in active clinical use (101). Encouragingly, physicians assisted by AI second readers have been reported to perform better than when reading alone, demonstrating that AI second readers can be successfully integrated into a clinical workflow to have meaningful impact (102–106). However, how and when physicians are notified will require optimization: one study found that up to a fifth of alerts from the AI second reader were ignored, with redundancy and alarm fatigue cited as the most common reasons (102).

3.1.3. Electronic health records.

Electronic health records (EHRs) are a rich resource documenting patient clinical history and a method for interacting with clinical data (4). However, physician dissatisfaction with EHRs is well documented (107): EHR documentation is often laborious and is cited as a leading cause of physician burnout (108, 109). Furthermore, scribing patient notes has been shown to limit patient interactions and consequently to negatively affect patient care (110–112). Advancements in natural language processing have enabled high performance in text translation, comprehension, and generation (6). Applied to health care, these advances could streamline the EHR process through automated transcription of patient interactions (113), automated summarization of patient interactions into notes (114), and prediction of diagnoses (115, 116) and outcomes (115, 116) or generation of summaries from existing notes (117, 118).

Others have also combined two powerful fields of AI, computer vision and natural language processing, to achieve image-to-text translation (119). When utilized in medicine, image captioning can alleviate the bottleneck of creating summary reports for image-based fields such as pathology (120) and radiology (121–123).

A future frontier for EHRs enabled by machine learning is video- or audio-based EHRs. Continuous recordings of patients from thermal sensors or video that characterize a patient’s physiology (heart rate, body temperature, mobility, food intake, bowel movements) can be analyzed by CNNs and RNNs to translate video into text summaries of patient activity. The result is a living clinical document that records patient activity during a hospital stay at unparalleled resolution (7, 45, 46). And just as Apple’s Siri and Amazon’s Alexa have streamlined information retrieval, many groups have extended these voice assistants into the medical sector (124–126), which would allow health care providers to verbally access information about the patient and relevant clinical information in real time.

3.2. Increasing Access to Care

3.2.1. Increasing access to care in developing countries.

Low- and middle-income countries (LMICs) account for nearly 90% of the global burden of disease but have only a fraction of the health care workers compared with developed countries (127–129). Many current AI health care platforms can be deployed in low-cost settings, needing only the computing power of a smartphone and internet access. Utilizing machine learning to increase access to health care in LMICs has been a primary goal for the field (130).

One of the main applications of AI in LMICs is to leverage low-cost physiology readouts with AI models to develop a screening tool for diseases that can be managed or treated. A main focus has been building AI screening platforms for diabetic retinopathy (8, 91, 96, 131, 132), a leading cause of preventable blindness in LMICs. An important problem in creating AI platforms is ensuring high and consistent performance across countries, where incidence, presentation, and patient demographics can vary widely. To address this, researchers have evaluated model performance on large multiethnic population datasets (91) and prospectively in countries with low resource settings (96, 131, 132). Importantly, models trained on datasets from developed countries were found to generalize well when evaluated in LMICs (96, 131, 132).

Oftentimes a significant bottleneck for health care delivery in LMICs is a lack of equipment. Increasingly, researchers have developed low-cost equipment integrated with AI screening platforms to combat the dual problem of a dearth of health care providers and of resources. Recently, one group developed a low-cost ($180) device based on contrast-enhanced microholography, integrated with a machine learning model, for the molecular diagnosis of lymphoma. A diagnosis can be achieved in <1 min with internet access and in <10 min without it (133).

Similarly, the Prakash laboratory, a champion of frugal science, recently developed the Octopi, a $500 bright-field and fluorescent microscope capable of automated scanning (130, 134). When combined with a CNN trained on 20,000 blood-smeared slides of malaria parasites, the Octopi can be used for automated and real-time detection of malaria 120 times faster than manual analysis (134). Octopi are now being deployed in Peru, Uganda, and India (130).

3.2.2. Increasing access to care in developed countries.

Many developed countries anticipate a shortage of physicians within the next decade (135, 136). At the same time, the disease burden is expected to continue to grow. These issues are further compounded by uneven access to health care.

One hope is that AI health care diagnostic platforms can assist practitioners in providing care comparable to trained specialists, thus increasing the number of areas with access to specialized care (93). Additionally, other groups have utilized AI to develop models to bypass parts of the clinical workflow that may need specialized expertise or resources to accomplish, such as “augmented microscopy” to assist with pathology slide analysis (137) and real-time diagnosis of tumor biopsies (138, 139) or “digital stains” of histology slides (140) to bypass sample preparation.

Of the anticipated physician shortage, a quarter is projected to be in surgical specialties (136). Currently, many simple surgical tasks, such as suturing (14, 141) and knot tying, can be automated by AI platforms (142, 143). Automated robotic surgery combines computer vision, to understand the surgical terrain, with reinforcement learning, to optimize the sequence of steps (4). AI-automated surgery can be used to complement telesurgery and, perhaps in the future, to automate short minimally invasive or laparoscopic surgeries.

3.2.3. Empowering the individual.

A current revolution in health care is the unparalleled ability of individuals to collect real-time, dynamic, and noninvasive health data from wearable technology. These data put information back into the hands of the individual and can enable lifestyle changes (144), increased compliance with medical practices, precision and preventative medicine, and early diagnosis and intervention (145). Additionally, environmental and lifestyle factors are often not well captured in the current EHR but could be an important data type when considering the role of physiology in applications of AI in health care. Machine learning plays a pivotal role in translating the raw readouts from smart technology into understandable and actionable information for the user.

An important application of wearable technology is the use of noninvasive physiology readouts to detect diseases that are frequently asymptomatic or that require continuous monitoring to confirm a diagnosis (146). A great deal of work has involved training machine learning models to detect arrhythmias (62, 147), such as atrial fibrillation (148), tachycardia (149), or ectopic beats (150). Other readouts from wearable technology have enabled inference of hemoglobin levels from images of fingernails (151), prediction of diabetes risk with photoplethysmography (PPG) (152), diagnosis of sleep apnea (153), and characterization of motor and neurological diseases (154, 155).
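Many arrhythmia detectors start from beat-to-beat (RR) intervals extracted from ECG or PPG signals, because atrial fibrillation produces an irregularly irregular rhythm. As a minimal sketch, the function below computes a normalized RMSSD (the root mean square of successive RR differences divided by the mean RR interval), a common irregularity feature. The interval values and the 0.1 cutoff are hypothetical, and a trained model would typically combine many such features rather than apply a single threshold.

```python
import math

IRREGULARITY_CUTOFF = 0.1  # hypothetical cutoff, for illustration only


def normalized_rmssd(rr_intervals_s):
    """RMSSD of successive RR-interval differences, divided by the mean RR interval."""
    diffs = [b - a for a, b in zip(rr_intervals_s, rr_intervals_s[1:])]
    rmssd = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return rmssd / (sum(rr_intervals_s) / len(rr_intervals_s))


def flag_irregular_rhythm(rr_intervals_s):
    return normalized_rmssd(rr_intervals_s) > IRREGULARITY_CUTOFF


# Hypothetical RR intervals in seconds.
regular = [0.80, 0.81, 0.79, 0.80, 0.80, 0.81]
irregular = [0.62, 0.95, 0.70, 1.10, 0.58, 0.88]
print(flag_irregular_rhythm(regular), flag_irregular_rhythm(irregular))
```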

Outside of disease detection, wearable technologies play an important role in staying vigilant about one’s health, enabling precision and preventative health. Researchers have developed a machine learning model to predict blood triglyceride and glycemic responses after meals in an effort to facilitate precision nutrition to combat cardiometabolic disease (156). Additional applications have included medication compliance (157) and mental health monitoring (158, 159).

A current challenge for wearable technology is determining the optimal data collection source and frequency of collection to obtain noise-free and representative data for analysis. New technologies such as smart glasses, contact lenses, and toilets (64) have been developed to increase either the quality or scope of data collected (160). Machine learning models play an important role in processing the data, integrating multiple data sources, and mapping patterns with health outcomes.

3.3. Augmenting Existing Capabilities

3.3.1. Early prediction of risk and outcomes.

The capability to identify which individuals are at risk for disease can improve patient outcomes and optimize allocation of health resources. For many decades, models have been developed to predict health outcomes for these purposes (161). The increasing number of large clinical datasets paired with advancements in deep learning have led to models capable of predicting outcomes earlier and with higher accuracy (65, 162–164).
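Risk prediction models such as these are most often compared by the area under the receiver operating characteristic curve (AUROC). An equivalent definition is the probability that a randomly chosen patient who develops the outcome receives a higher risk score than a randomly chosen patient who does not, with ties counted as one half. The sketch below computes it directly from that definition on hypothetical scores; the quadratic pairwise loop is for clarity, and library routines sort the scores instead.

```python
def auroc(scores, labels):
    """Fraction of (positive, negative) pairs ranked correctly; ties count as one half."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical risk scores and outcomes (1 = outcome occurred).
print(auroc([0.9, 0.8, 0.4, 0.3, 0.2], [1, 0, 1, 0, 0]))
```

Here 5 of the 6 positive-negative pairs are ordered correctly, so the AUROC is 5/6.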

An area of focus has been predicting outcomes of acute adverse events (165, 166), owing to the large number of datasets with rich medical data on intensive care unit (ICU) patients (16, 167–169). In one instance, a recurrent neural network trained on EHRs from US Department of Veterans Affairs sites was capable of predicting the risk of acute kidney injury with a lead time of up to 48 h (35).
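Predicting an event up to 48 h in advance requires framing the training labels accordingly: each observation is marked positive if the event occurs within the prediction horizon that follows it. The sketch below shows one such labeling scheme under stated assumptions (observations every 12 h, a hypothetical onset at hour 80, and observations at or after onset excluded); it is not the labeling procedure of the cited study.

```python
def label_with_horizon(times_h, event_time_h, horizon_h=48.0):
    """Pair each observation time with a label: 1 if the event occurs within the next
    `horizon_h` hours, else 0. Observations at or after onset are dropped, because the
    model should only make predictions before the event has happened."""
    labeled = []
    for t in times_h:
        if event_time_h is not None and t >= event_time_h:
            continue
        positive = event_time_h is not None and event_time_h - t <= horizon_h
        labeled.append((t, int(positive)))
    return labeled


# Hypothetical admission: observations every 12 h, event onset at hour 80.
print(label_with_horizon([0, 12, 24, 36, 48, 60, 72, 84], event_time_h=80))
```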

Many risk prediction models are beginning to be evaluated for clinical use. A large body of work has focused on building models to predict sepsis, with models capable of predicting sepsis hours before its onset (170–173). One such model resulted in a 39.5% reduction in in-hospital mortality, a 32.3% reduction in hospital length of stay, and a 22.7% reduction in the 30-day readmission rate for sepsis-related patient stays (174) (NCT03960203). Many of these studies have used the Medical Information Mart for Intensive Care (MIMIC-III) dataset, which contains data from >40,000 patients admitted to critical care units between 2001 and 2012. Given the abundance of quantitative physiological data collected in critical care units, this dataset is one of the largest clinically annotated physiological datasets.

Another application has been the capability of AI risk models to identify new biomarkers and underlying disease mechanisms from physiology data (65, 175). In one instance, saliency maps derived from a model developed to predict the risk of developing diabetic retinopathy consistently highlighted regions that later progressed to microaneurysms, pointing to a potential, previously uninvestigated early biomarker for diabetic retinopathy (176). Similar analysis of models used to classify mesothelioma pathology slides identified epithelioid components, which recent reports have correlated with aggressive mesothelioma (177).

3.3.2. Optimizing and personalizing treatment.

Treatment often involves multistep decision making that takes into account both standard treatment strategies and the patient’s individual presentation. Current AI models have leveraged the collective experience captured in large clinical datasets, together with deep reinforcement learning, to select optimal, personalized treatment strategies (170–172, 178).

One such example has been the development of treatment strategies for sepsis (173), where the management of intravenous fluids and vasopressors has been a key clinical challenge. A group of researchers created the “AI Clinician,” a reinforcement learning model that suggests optimal treatments for adult patients with sepsis in the intensive care unit (ICU), using the MIMIC-III dataset. To train the AI Clinician, the researchers tracked 48 physiology variables from each patient during their hospital admission in 4-h increments over 72 h (179). Using these data, they then simulated different possible trajectories for each patient. Trajectories that led to patient survival were rewarded with a positive score, whereas those ending in patient death were given a negative score. Having mapped out numerous treatment strategies, the model could then determine the optimal sequence of treatments given the patient and their clinical state. When evaluated on a test dataset, the estimated value of the treatments selected by the AI Clinician was on average reliably higher than that of the treatments chosen by human clinicians, and patients whose actual treatment matched the AI Clinician’s recommendations had the lowest mortality (172).
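The core idea (reward survival, penalize death, and choose at each state the action with the highest expected long-term value) can be sketched with value iteration on a tiny Markov decision process. Everything in this example is hypothetical: two clinical states, two actions, made-up transition probabilities, and rewards of +1 and -1. The AI Clinician itself estimated its model from MIMIC-III data over many more states and dose-level actions, which this sketch does not attempt to reproduce.

```python
GAMMA = 0.99
# Hypothetical terminal rewards: +1 for survival, -1 for death; no reward for intermediate steps.
TERMINAL_REWARD = {"survived": 1.0, "died": -1.0}
# Hypothetical transition probabilities P(next_state | state, action).
TRANSITIONS = {
    "unstable": {
        "fluids": {"improving": 0.3, "unstable": 0.4, "died": 0.3},
        "vasopressors": {"improving": 0.6, "unstable": 0.3, "died": 0.1},
    },
    "improving": {
        "fluids": {"survived": 0.7, "improving": 0.3},
        "vasopressors": {"survived": 0.5, "improving": 0.3, "unstable": 0.2},
    },
}


def q_value(state, action, values):
    """Expected return of taking `action` in `state`, then following the current values."""
    total = 0.0
    for next_state, prob in TRANSITIONS[state][action].items():
        if next_state in TERMINAL_REWARD:
            total += prob * TERMINAL_REWARD[next_state]  # episode ends with the outcome reward
        else:
            total += prob * GAMMA * values[next_state]  # discounted value of continuing
    return total


def value_iteration(tol=1e-10):
    values = {state: 0.0 for state in TRANSITIONS}
    while True:
        updated = {s: max(q_value(s, a, values) for a in TRANSITIONS[s]) for s in TRANSITIONS}
        if max(abs(updated[s] - values[s]) for s in values) < tol:
            return updated
        values = updated


values = value_iteration()
policy = {s: max(TRANSITIONS[s], key=lambda a: q_value(s, a, values)) for s in TRANSITIONS}
print(policy)
```

With these numbers, the resulting policy favors vasopressors in the unstable state and fluids in the improving state; different probabilities would yield a different policy, which is why estimating them reliably from clinical data is the hard part.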

Similar modeling has been applied to optimizing cancer treatments, where the decisions of when to start treatment and which combination of treatments to select are critical for patient outcomes (180–185). As cancer treatments become more targeted and molecularly based, mapping a patient’s underlying physiology and pathology to treatments and treatment outcomes is becoming increasingly important. Owing to advancements in genomic technologies, there is now a growing field that uses genomics and machine learning to identify targeted treatments, predict treatment outcomes, and monitor treatment results. These technologies are revolutionizing tumor pathology: single-cell profiling of the transcriptome, proteome, and chromatin state allows unparalleled characterization and resolution of tumor physiology. A number of studies have used machine learning and single-cell sequencing of tumor biopsies to characterize the tumor’s physiology, underlying cell composition, and tumor environment and to map them to targeted therapies (186, 187). The emergence of spatial technologies now allows the incorporation of spatial features of tumor physiology, such as cell communities, cell-cell interactions, and tumor microenvironments. Owing to the potential complexity of spatial relationships and interactions between cells, deep learning models, in particular graph neural networks, have been leveraged to map spatial datasets to treatment outcomes (188).
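The basic operation a graph neural network applies to spatial data can be sketched in a few lines: cells become nodes, nearby cells are connected by edges, and each layer updates a cell's features by combining them with an aggregate of its neighbors' features. The example below builds a radius graph and applies one mean-aggregation layer. The cell positions, features, radius, and weight matrices are hypothetical (in a trained model the weights are learned), and published spatial models use more elaborate architectures.

```python
import math


def radius_graph(positions, radius):
    """Connect every pair of cells whose centroids lie within `radius` of each other."""
    neighbors = {i: [] for i in range(len(positions))}
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            if math.dist(positions[i], positions[j]) <= radius:
                neighbors[i].append(j)
                neighbors[j].append(i)
    return neighbors


def matvec(matrix, vector):
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def message_passing_layer(features, neighbors, w_self, w_neigh):
    """One layer: h_i' = ReLU(W_self h_i + W_neigh * mean of h_j over neighbors j)."""
    dim = len(features[0])
    updated = []
    for i, h in enumerate(features):
        nbrs = neighbors[i]
        mean = [sum(features[j][k] for j in nbrs) / len(nbrs) if nbrs else 0.0 for k in range(dim)]
        pre = [a + b for a, b in zip(matvec(w_self, h), matvec(w_neigh, mean))]
        updated.append([max(0.0, x) for x in pre])  # ReLU nonlinearity
    return updated


# Hypothetical cells: 2-D centroids and two marker intensities per cell.
positions = [(0.0, 0.0), (1.0, 0.0), (5.0, 5.0)]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
neighbors = radius_graph(positions, radius=2.0)
W_SELF = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical weights; learned in practice
W_NEIGH = [[0.5, 0.0], [0.0, 0.5]]
updated = message_passing_layer(features, neighbors, W_SELF, W_NEIGH)
print(updated)
```

The two adjacent cells now carry information about each other, while the isolated third cell keeps only its own features; stacking layers lets information flow across larger cell neighborhoods.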

In addition to tumor biopsies, cancer diagnostics and targeted therapeutics have leveraged another key aspect of tumor physiology: circulating tumor DNA (ctDNA). Here, machine learning has played a key role in mapping ctDNA to clinical outputs and enhancing our understanding of tumor physiology. Ensemble machine learning models have been developed to map ctDNA tumor burden to anticipated treatment outcomes (189). In doing so, these models have also elucidated mechanisms relating tumor physiology to pathology, such as the roles that fragmentomics (the analysis of DNA fragment lengths, as tumors tend to release shorter fragments) and DNA methylation states play in characterizing tumor burden (190, 191).
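A fragmentomic feature can be as simple as the proportion of short cell-free DNA fragments in a sample, which a model can then combine with other features such as methylation states. The sketch below computes that proportion; the 150 bp cutoff and the fragment lengths are hypothetical illustration values, not parameters from the cited studies.

```python
def short_fragment_fraction(fragment_lengths_bp, cutoff_bp=150):
    """Fraction of cell-free DNA fragments shorter than `cutoff_bp` base pairs."""
    short = sum(1 for length in fragment_lengths_bp if length < cutoff_bp)
    return short / len(fragment_lengths_bp)


# Hypothetical fragment lengths (bp) from two samples.
sample_a = [166, 167, 170, 165, 168, 140, 172, 166]
sample_b = [166, 132, 145, 167, 120, 138, 170, 149]
print(short_fragment_fraction(sample_a), short_fragment_fraction(sample_b))
```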

An exciting potential future application of machine learning for optimizing treatment strategies is the creation of a “google for patients” or a “digital twin.” A “google for patients” would enable searches for patients with similar demographics, omics profiles, physiology, and disease history to better inform treatment strategies. A small-scale version, SMILY (Similar Medical Images Like Yours), a searchable database of pathology slides that can be annotated, has been created, suggesting that this vision may come to fruition (192).
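At its core, a “google for patients” is a nearest-neighbor search: each patient (or slide) is represented as an embedding vector, for example one produced by a trained network, and the database is ranked by similarity to a query. The sketch below uses cosine similarity over a hypothetical three-patient database with made-up three-dimensional embeddings; systems at scale typically rely on approximate nearest-neighbor indexes rather than a full sort.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def most_similar(query, database, k=2):
    """Return the k patient IDs whose embeddings have the highest cosine similarity to `query`."""
    ranked = sorted(database.items(), key=lambda item: cosine(query, item[1]), reverse=True)
    return [patient_id for patient_id, _ in ranked[:k]]


# Hypothetical embeddings; in practice these would come from a trained model.
database = {
    "patient_A": [0.9, 0.1, 0.0],
    "patient_B": [0.1, 0.9, 0.2],
    "patient_C": [0.8, 0.2, 0.1],
}
print(most_similar([1.0, 0.0, 0.0], database, k=2))
```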

3.3.3. COVID and AI.

COVID-19, the worst global health pandemic in the last hundred years, has created many technical and medical problems. AI has been used to develop solutions to many of these problems, illustrating the many indispensable roles that AI now plays in health care (193, 194). CNNs were used to develop models for rapid triage and diagnosis of COVID patients (195). Natural language processing and EHRs were used to identify patients at greatest risk of mortality, and smartwatch data were used to detect individuals at risk of developing COVID (63). AI played a critical role in discovering therapies for COVID, from drug-repurposing neural networks to virtual screens for potential therapeutic targets (196). In an effort to increase public understanding, Salesforce developed “CO-search” to aggregate COVID literature into an interactive website with semantic search, question answering, and abstractive summarization (197).

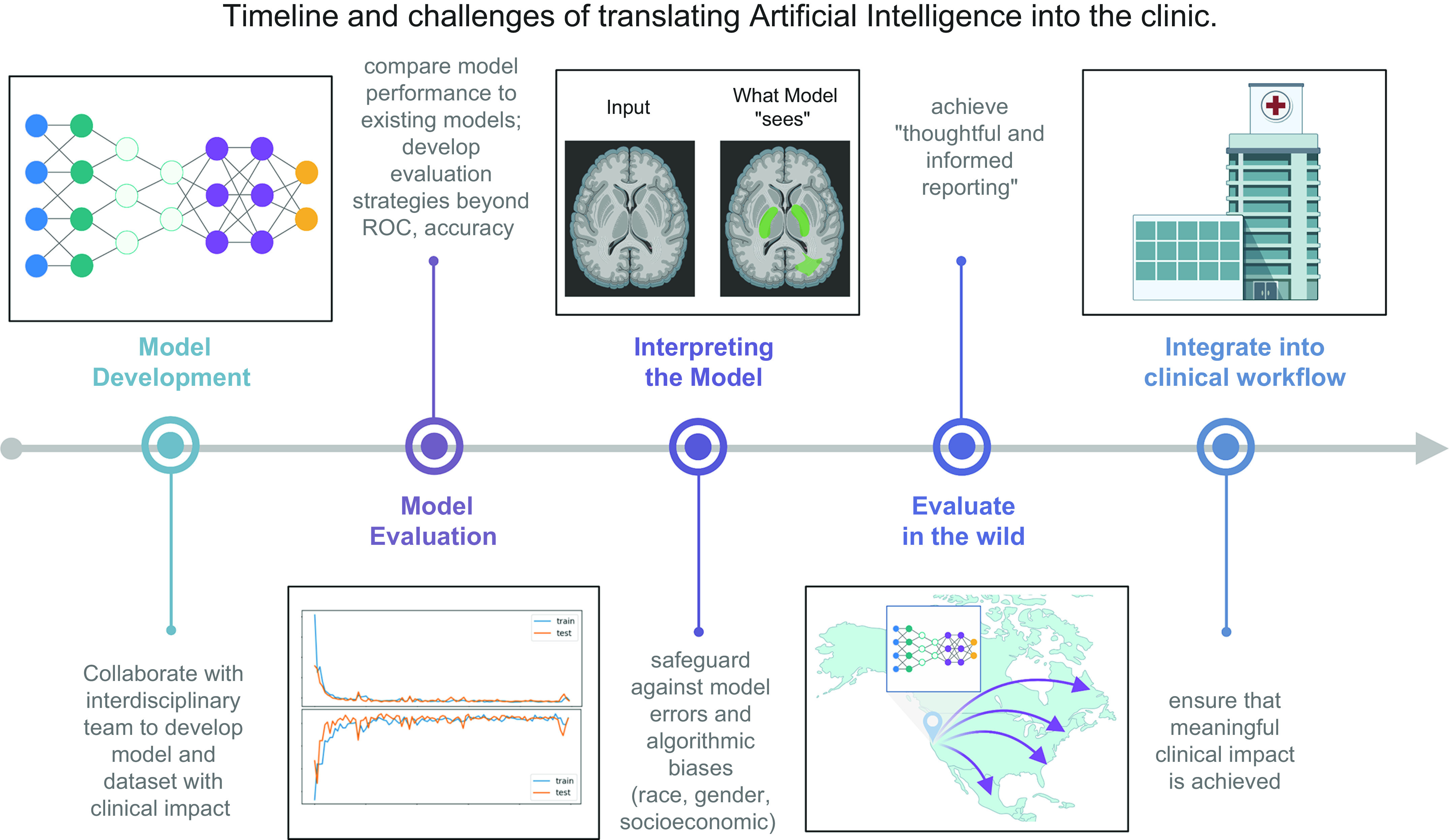

4. CURRENT CHALLENGES

Whereas the previous decade focused on demonstrating that AI models can leverage physiology data to deliver clinical interpretations, work in AI health care over the next few years will be defined by whether these initial findings can be translated into the clinic. Before AI can be translated into the clinic, we must address current concerns of 1) demonstrating generalizability and robustness through validation on multiple datasets, in multiple clinics, and prospectively; 2) earning the trust and understanding of health care providers and ensuring usability; and 3) integrating into the clinical workflow to tangibly improve care. Below we discuss challenges in translating AI models into a health care setting (FIGURE 7).

FIGURE 7.

Timeline and challenges of translating artificial intelligence (AI) into the clinic. The last decade of AI in health care has been focused on developing, applying, and optimizing models for applications in health care. Large physiology datasets and advancements in deep learning models have delivered AI models that can match or surpass human performance on a number of health care tasks. The next decade of AI in health care will focus on translating these models from research to clinical impact. Obstacles to deployment of AI models include demonstrating the robustness and generalizability of AI models, increasing interpretability of models, and integrating AI models into clinical workflows. ROC, receiver operating characteristic curve. Image created with BioRender.com, with permission.

4.1. Evaluating Models in the Wild

After developing an AI model, the next major milestone is to characterize its generalizability. Generalization is the ability to maintain model performance on previously unseen data. Clinical datasets used to train models are incredibly rich but may also carry, unknown to developers, characteristics specific to the institution (how and where the data were collected) or to the patient population (e.g., pediatric vs. adult). Current deep learning models may learn subtle patterns that do not reflect true or generalizable clinical patterns, resulting in decreased performance when evaluated on external datasets (198). It is critical to rigorously test AI models in the wild to answer these questions: 1) How does the model perform in a different institution, under different data collection workflows, and in the hands of a different individual? 2) Is model performance maintained in a different patient population? 3) Given that most models are trained on retrospective datasets, how does the model perform prospectively?

To address these questions, models in development are increasingly being evaluated on multihospital, multicountry, multipatient population datasets (91, 96, 131, 132, 199). The ultimate desired output from these evaluations is “thoughtful informed reporting” (20). It is necessary to state not only performance but also in which environments, with which data collection techniques and instruments, and in which patient demographics the model succeeds and can therefore be deployed (200). Informed reporting is a critical component of the recently released SPIRIT-AI (201) and CONSORT-AI (202) guidelines for clinical trials of AI platforms.
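Informed reporting of this kind starts with stratified evaluation: computing the same metrics separately for each site, device, or demographic group rather than only in aggregate, since a strong pooled result can hide a weak subgroup. The sketch below assumes a hypothetical list of (group, true label, prediction) records from two hospitals.

```python
from collections import defaultdict


def metrics_by_group(records):
    """records: iterable of (group, true_label, prediction) with binary labels and predictions.

    Returns {group: {"n", "sensitivity", "specificity"}}; a metric is None when undefined.
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for group, label, pred in records:
        if label:
            counts[group]["tp" if pred else "fn"] += 1
        else:
            counts[group]["fp" if pred else "tn"] += 1
    report = {}
    for group, c in counts.items():
        positives, negatives = c["tp"] + c["fn"], c["tn"] + c["fp"]
        report[group] = {
            "n": positives + negatives,
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
        }
    return report


# Hypothetical evaluation records from two hospitals.
records = [
    ("hospital_A", 1, 1), ("hospital_A", 1, 1), ("hospital_A", 0, 0), ("hospital_A", 0, 0),
    ("hospital_B", 1, 1), ("hospital_B", 1, 0), ("hospital_B", 0, 0), ("hospital_B", 0, 1),
]
for group, metrics in metrics_by_group(records).items():
    print(group, metrics)
```

Pooled over both hospitals, this made-up model would report a sensitivity of 0.75, masking the drop to 0.5 at hospital_B.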

A range of solutions has been proposed to facilitate model generalization. These include embedding safeguards directly into the model to improve generalizability (203); combating “data shift,” in which the distributions of the training and test datasets differ significantly because of evolving patient populations and clinical practices (204); improving the harmonization of data collection among institutions to facilitate multicenter evaluations; and creating gold standard datasets that reflect patient populations and are updated regularly (205). Gold standard datasets will be critical for comparing different models with similar clinical goals and have already been adopted in other fields (206, 207).
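A simple first check for data shift is to compare the distribution of an input feature in the training data with its distribution in newly collected data. The sketch below computes the two-sample Kolmogorov-Smirnov statistic (the largest vertical gap between the two empirical cumulative distribution functions) in pure Python. The heart-rate values are hypothetical, and when p-values are needed a library routine such as `scipy.stats.ks_2samp` could be used instead.

```python
import bisect


def ks_statistic(sample_a, sample_b):
    """Largest absolute gap between the empirical CDFs of two samples."""
    a, b = sorted(sample_a), sorted(sample_b)
    gap = 0.0
    for x in a + b:  # the supremum is attained at one of the observed values
        cdf_a = bisect.bisect_right(a, x) / len(a)
        cdf_b = bisect.bisect_right(b, x) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


# Hypothetical heart rates (beats/min) at training time vs. after deployment.
training_hr = [70, 72, 75, 78, 80]
deployment_hr = [90, 92, 95, 98, 100]
print(ks_statistic(training_hr, deployment_hr))
```

A statistic near 0 suggests similar distributions, whereas a value near 1, as in this deliberately shifted example, indicates that the deployment population no longer resembles the training data.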

However, data sharing and the creation of large-scale multicenter datasets are rife with obstacles. Lack of data standardization, incompatible systems and proprietary software, and concerns over patient privacy are common issues (205, 208, 209).

Federated learning has arisen as a possible solution (210). Federated learning rejects the notion that data must be aggregated in a single location to train a model; instead, it sends the model to where the training data are located and trains it locally. Only the locally trained models, not the training data themselves, are then sent back to a single location and aggregated. The result is one overall model that approximates a model trained on the entire pooled dataset. Federated learning has already begun delivering promising results in AI health care applications (211–213).
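The aggregation step is often implemented as federated averaging (FedAvg), in which the server averages locally trained parameters weighted by each site's number of examples. In the minimal sketch below, each hypothetical site fits a single parameter (the mean of its local values) by gradient descent, and only the fitted parameter and the example count leave the site. In this special case the weighted average exactly recovers the pooled-data estimate; for deep networks and heterogeneous sites, federated training only approximates centralized training.

```python
def local_train(theta, data, lr=0.1, steps=200):
    """Hypothetical local update: gradient descent on mean squared error for one parameter."""
    for _ in range(steps):
        grad = sum(2 * (theta - x) for x in data) / len(data)
        theta -= lr * grad
    return theta


def federated_average(site_updates):
    """Average parameter vectors weighted by each site's number of local examples."""
    total = sum(n for _, n in site_updates)
    dim = len(site_updates[0][0])
    return [sum(params[k] * n for params, n in site_updates) / total for k in range(dim)]


def federated_round(global_theta, site_datasets):
    """One round: every site trains locally; only (parameters, example count) leave the site."""
    updates = [([local_train(global_theta, data)], len(data)) for data in site_datasets]
    return federated_average(updates)[0]


# Hypothetical values held at two sites that never share raw data.
sites = [[1.0, 2.0, 3.0], [10.0, 20.0]]
print(federated_round(0.0, sites))
```

The result matches the mean of all five values (7.2) even though no site revealed its individual records.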

4.2. Interpretability, Usability, and Trust

A significant barrier to implementing current AI models, particularly deep learning models, is the difficulty of understanding how they arrive at their predictions. Deep learning models are frequently seen as black boxes for several reasons. Features learned by deep learning models are not usually understandable in terms of domain knowledge (i.e., clinical or biological concepts). Furthermore, the relationships among features, and between features and outputs, are typically nonlinear. Whereas a linear relationship can be summarized as “an increase in x leads to a proportional increase in y,” nonlinear relationships are difficult to explain semantically or in terms of simple concepts.

Although the lack of interpretability is problematic for any application of deep learning, it is particularly consequential in health care, where clinical decisions rest on explanations and evidence. Model interpretability plays a critical role in building trust and usability because it helps safeguard against model errors, such as learning from artifacts or encoding algorithmic biases (214, 215). If left undetected, such errors could harm patients.

Given its importance, developing methods to interpret AI models is an active area of research (216–221). In health care, saliency maps have been particularly useful in determining “what the machine is looking at” and “what the machine thinks and sees” (222–225). In one notable instance, saliency maps revealed that a deep learning model relied on nondisease features, such as the clinical center where the data were collected, to diagnose pneumonia from chest X-rays (198).
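A saliency map assigns each input element a score reflecting how sensitive the model's output is to that element. The minimal sketch below uses the magnitude of the gradient of the output with respect to each pixel, approximated by central finite differences rather than the automatic differentiation a deep learning framework would normally provide. The "model" is a hypothetical logistic function of a 3×3 "image" that, by construction, depends only on its top-left pixel, mimicking the kind of shortcut described above (a model keying on a corner marker rather than on anatomy).

```python
import math


def model(pixels):
    """Hypothetical classifier: a logistic score driven only by the top-left pixel."""
    return 1.0 / (1.0 + math.exp(-(4.0 * pixels[0][0] - 2.0)))


def saliency_map(f, image, eps=1e-4):
    """|df/dpixel| for every pixel, estimated by central finite differences."""
    rows, cols = len(image), len(image[0])
    sal = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            up = [row[:] for row in image]    # copy so the input is not mutated
            down = [row[:] for row in image]
            up[i][j] += eps
            down[i][j] -= eps
            sal[i][j] = abs(f(up) - f(down)) / (2 * eps)
    return sal


image = [[0.5, 0.2, 0.9],
         [0.1, 0.8, 0.3],
         [0.7, 0.4, 0.6]]
for row in saliency_map(model, image):
    print(" ".join(f"{v:.3f}" for v in row))
```

All of the saliency concentrates in the top-left cell, which is exactly the signal a reviewer would want surfaced: the model's decision does not depend on the rest of the image.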

Another increasingly common strategy is to report intermediate model steps or model predictions of related clinical outcomes, an approach that has been found to better mimic a clinician's workflow. When creating a model to predict acute renal failure, researchers reported model predictions for the laboratory values that clinicians customarily use to characterize renal failure (35). Similarly, in developing a model to diagnose heart failure from echocardiograms, researchers built a left ventricle segmenter, beat-to-beat evaluation, and ejection fraction classification, mirroring the calculations traditionally performed before heart failure is diagnosed from an echocardiogram (47). Importantly, when AI models are paired with interpretation mechanisms, physicians report improved usability and perform better, supporting the role that interpretation plays in implementing AI in health care (67, 104).
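The ejection fraction (EF) step follows the standard definition EF = (EDV − ESV) / EDV × 100%, where EDV and ESV are the end-diastolic and end-systolic left ventricular volumes. The sketch below shows how a pipeline might expose each intermediate quantity (per-beat EF and averaged EF) alongside the final label so that a clinician can audit every step. It is not the cited model's code: the per-beat volumes stand in for the output of a hypothetical segmentation model, and the 40% and 50% cutoffs are illustrative assumptions loosely based on common clinical EF categories.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Beat:
    edv_ml: float  # end-diastolic volume (hypothetically from a segmentation model)
    esv_ml: float  # end-systolic volume


def ejection_fraction(beat):
    """EF (%) = (EDV - ESV) / EDV * 100."""
    return (beat.edv_ml - beat.esv_ml) / beat.edv_ml * 100.0


def classify_ef(ef):
    """Illustrative cutoffs only; not taken from the cited study."""
    if ef <= 40.0:
        return "reduced"
    if ef < 50.0:
        return "mildly reduced"
    return "preserved"


def assess(beats):
    """Return every intermediate result, not just the final label."""
    per_beat = [ejection_fraction(b) for b in beats]  # beat-to-beat evaluation
    avg = mean(per_beat)
    return {"per_beat_ef": per_beat, "mean_ef": avg, "category": classify_ef(avg)}


beats = [Beat(120, 75), Beat(118, 72), Beat(125, 80)]
report = assess(beats)
print(report)
```

Returning the per-beat values rather than only the category lets a reviewer spot, for example, a single implausible beat that is dragging the average.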

4.3. AI Health Care and Regulation

Before AI models can be legally marketed and sold in the United States, they must receive regulatory authorization from the US Food and Drug Administration (FDA) (226). The FDA evaluates AI software as a medical device, so it must be granted de novo classification (if no comparable predicate device has previously been authorized), receive 510(k) clearance (if such a predicate device already exists), or be granted premarket approval (PMA) (if the device is considered high risk) (100). In each case, the AI device must be deemed safe and effective for use on patients on the basis of evidence from a clinical study conducted and submitted by the AI developers.

However, FDA evaluation standards for clinical studies have lagged somewhat behind the pace of technical development in medical AI. A study of the public summaries of FDA-approved AI medical devices from 2015 to 2020 revealed shortcomings in the evaluation process (227): most studies were performed retrospectively on data from a single clinical site, which can mask true clinical performance (227, 228), and most study reports lacked demographic subgroup analyses, which are important for detecting model biases (229–235). Across all studies, evaluation datasets were relatively small, and none was made publicly available.
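A demographic subgroup analysis can be as simple as recomputing a metric within each subgroup. The sketch below computes sensitivity (the true positive rate) overall and per subgroup; the records and the group labels "A" and "B" are hypothetical. In this made-up example an overall sensitivity of 0.85 conceals a sensitivity of only 0.60 in the smaller group.

```python
from collections import defaultdict


def sensitivity(pairs):
    """True positives / all actual positives, for (true_label, prediction) pairs."""
    positives = [(y, p) for y, p in pairs if y == 1]
    if not positives:
        return float("nan")
    return sum(p for _, p in positives) / len(positives)


def subgroup_sensitivity(records):
    """records: iterable of (group, true_label, predicted_label), labels in {0, 1}."""
    by_group = defaultdict(list)
    for group, y, p in records:
        by_group[group].append((y, p))
    return {g: sensitivity(pairs) for g, pairs in sorted(by_group.items())}


# Hypothetical evaluation records: (subgroup, true label, model prediction).
records = (
    [("A", 1, 1)] * 45 + [("A", 1, 0)] * 5 + [("A", 0, 0)] * 50
    + [("B", 1, 1)] * 6 + [("B", 1, 0)] * 4 + [("B", 0, 0)] * 10
)
overall = sensitivity([(y, p) for _, y, p in records])
print(f"overall: {overall:.2f}")
for group, sens in subgroup_sensitivity(records).items():
    print(f"group {group}: {sens:.2f}")
```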

After a device is approved, the FDA does not require postmarket surveillance as it does for drug approvals, making performance degradation over time difficult to measure and regulate (236, 237). Additionally, models cannot be updated or modified after approval (238, 239). In response to these concerns, the FDA has issued public statements and action plans calling for more rigorous evaluation of model biases, continuous postmarket monitoring, and model updating (240, 241).
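Continuous postmarket monitoring can be illustrated with a rolling-window check: track accuracy over the most recent cases and raise a flag when it falls a set margin below the accuracy measured at approval. The sketch below is a hypothetical example, not an FDA-specified procedure; the class name, window size, baseline, and tolerance are all assumptions.

```python
from collections import deque


class PerformanceMonitor:
    """Hypothetical rolling-window accuracy monitor for a deployed model."""

    def __init__(self, baseline_accuracy, window=100, tolerance=0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.recent = deque(maxlen=window)  # 1 if the prediction was correct, else 0

    def record(self, prediction, outcome):
        self.recent.append(int(prediction == outcome))

    def rolling_accuracy(self):
        return sum(self.recent) / len(self.recent) if self.recent else None

    def degraded(self):
        """Flag only once the window is full and accuracy has dropped past tolerance."""
        if len(self.recent) < self.recent.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance


monitor = PerformanceMonitor(baseline_accuracy=0.90, window=50)
# Simulated (prediction, outcome) stream: 90% correct for 50 cases, then 70% correct.
stream = [(1, 1)] * 45 + [(1, 0)] * 5 + [(1, 1)] * 35 + [(1, 0)] * 15

flagged_at = None
for i, (prediction, outcome) in enumerate(stream, start=1):
    monitor.record(prediction, outcome)
    if monitor.degraded():
        flagged_at = i
        break
print(f"degradation flagged at case {flagged_at}")
```

A fixed window trades sensitivity for stability: a shorter window reacts faster to a real decline but raises more false flags from ordinary case-to-case variation.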

Beyond FDA regulation, there are additional challenges to the successful clinical deployment of medical AI. The question of legal liability for malpractice, especially in the case of high-risk, fully autonomous diagnostic AI, remains unresolved (242, 243); insurance reimbursement schemes for AI algorithms are still in their nascent stages (244, 245); and publicly available, multisite gold standard evaluation datasets are limited (246), in part because of ongoing patient data privacy concerns (247).

4.4. AI Health Care and Quality Metrics

Although the FDA applies existing metrics when regulating and evaluating AI models, many additional metrics should be evaluated to obtain a holistic assessment of AI health care models. Choosing the right metrics to evaluate the performance of AI health care models is critical for their successful deployment. Historically, AI models have focused on maximizing sensitivity, specificity, and accuracy. However, as AI models begin to be deployed in real-world settings, it has increasingly been demonstrated that these metrics do not always correlate with how well models integrate into and assist clinical workflows (248). Numerous works have therefore explored other quality metrics for evaluating models. Rather than focusing solely on accuracy, these models are engineered to also optimize quality metrics such as cost (249), mortality (172), or medical ethics (250). CoAI was developed to optimize model performance under cost considerations, yielding cost-aware, low-cost predictions (249); its developers found that doing so could also make the model more robust, addressing a limitation that currently hinders many AI health care models. In another model, the AI Clinician, reinforcement learning was used on the MIMIC-III dataset to learn the treatment strategy that minimizes mortality or complications in patients with sepsis (172). Whether a model widens racial and gender health disparities is often a consideration when introducing novel technologies into clinical workflows. Work by Pierson et al. (250) found that a deep learning approach to assessing pain can provide a quantitative metric that may reduce health inequities.

These works raise an important consideration. Many current AI models focus on high accuracy, particularly in determining a diagnosis or label; in clinical workflows, however, AI-physiology models are used to make decisions and guide patient management. Thus, models trained to optimize additional quality metrics will be increasingly important in the coming years.
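The gap between optimizing accuracy and optimizing another quality metric already appears in something as simple as choosing a decision threshold. In the sketch below, which is a generic illustration and not the CoAI or AI Clinician method, a missed case (false negative) is assumed to cost 10 times as much as a false alarm; the scores, labels, and cost ratio are hypothetical. The accuracy-maximizing threshold (0.8) misses a true case, whereas the cost-minimizing threshold (0.3) catches every case at the price of slightly lower accuracy.

```python
def confusion_counts(scores, labels, threshold):
    """(TP, FP, FN, TN) when predicting positive for score >= threshold."""
    tp = fp = fn = tn = 0
    for s, y in zip(scores, labels):
        pred = s >= threshold
        if pred and y == 1:
            tp += 1
        elif pred:
            fp += 1
        elif y == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def accuracy(scores, labels, t):
    tp, fp, fn, tn = confusion_counts(scores, labels, t)
    return (tp + tn) / len(labels)


def total_cost(scores, labels, t, cost_fn=10.0, cost_fp=1.0):
    """Hypothetical cost: each missed case costs 10x a false alarm."""
    _, fp, fn, _ = confusion_counts(scores, labels, t)
    return cost_fn * fn + cost_fp * fp


scores = [0.9, 0.8, 0.6, 0.5, 0.3, 0.25, 0.2, 0.15, 0.1, 0.05]
labels = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
candidates = sorted(set(scores))

best_acc_t = max(candidates, key=lambda t: accuracy(scores, labels, t))
best_cost_t = min(candidates, key=lambda t: total_cost(scores, labels, t))
for name, t in (("accuracy-optimal", best_acc_t), ("cost-optimal", best_cost_t)):
    print(f"{name} threshold {t}: accuracy {accuracy(scores, labels, t):.1f}, "
          f"cost {total_cost(scores, labels, t):.0f}")
```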

4.5. Integrating into the Workflow and Evaluating Improved Care

A final hurdle is to successfully integrate AI platforms into the health care workflow and to evaluate whether they have a meaningful impact. Critical implementation questions must be answered before use: where to store the data and model, how users interact with the model, how and when to integrate the platform into the clinical workflow, how to educate users and beneficiaries of the system, what the follow-up action plans are, and how to proceed when the health care provider and the AI platform disagree (251–254). Furthermore, whether the AI model yields meaningful improvements in patient outcomes or clinical care must also be evaluated, as past deployments of computer-assisted devices increased costs without benefiting patients (255).

A number of AI models currently deployed in clinics or in clinical trials are addressing these issues (100). One general theme is how best to position the AI platform to avoid alert fatigue, in which health care providers overburdened by information start to ignore important notifications (102). Another common observation is that high model performance and accuracy do not always translate into improved patient outcomes or streamlined care (256, 257). This may be because customary model performance metrics, such as the area under the receiver operating characteristic curve (AUC), sensitivity, specificity, or accuracy, are not always the most informative metrics for evaluating patient outcomes. Identifying metrics beyond model performance to describe AI health care models is an increasingly common practice and an active area of study (253, 258, 259).
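A short worked calculation shows why strong sensitivity and specificity need not translate into useful alerts. By Bayes' theorem, the positive predictive value (PPV), the fraction of alerts that are true positives, is PPV = sens·p / (sens·p + (1 − spec)(1 − p)), where p is the prevalence of the condition. The numbers below are hypothetical: a model with 90% sensitivity and 90% specificity deployed where only 1% of patients have the condition would produce about 11 false alerts for every true one (PPV of roughly 8%), a setting ripe for alert fatigue.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """Fraction of positive alerts that are true positives (Bayes' theorem)."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


# Same hypothetical model (90% sensitivity, 90% specificity) at different prevalences.
for prevalence in (0.30, 0.10, 0.01):
    ppv = positive_predictive_value(0.90, 0.90, prevalence)
    print(f"prevalence {prevalence:.0%}: PPV {ppv:.1%}, "
          f"~{(1 - ppv) / ppv:.1f} false alerts per true alert")
```

The model itself is unchanged across the three rows; only the patient population differs, which is one reason the same performance figures can mean very different things once a model is deployed.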

A final consideration is educating stakeholders about AI in health care. Machine learning scientists who develop AI models must become increasingly informed about the limitations of physiology and clinical data and about how models can best be developed to solve impactful clinical problems. Health care providers have a duty not only to learn how to deploy AI platforms but also to understand the assumptions models make and the datasets they are trained on, so that they can safeguard patients against harm from the model. Finally, patients must have a working understanding of how AI models will affect their health care and of the role they play in the AI-health care ecosystem.

GRANTS

This work was supported in part by National Institutes of Health Grants F30HL156478 (to A.Z.), P30AG059307 (to J.Z.), U01MH098953 (to J.Z.), R01HL163680 (to J.C.W.), R01HL130020 (to J.C.W.), R01HL146690 (to J.C.W.), and R01HL126527 (to J.C.W.); by National Science Foundation Grant CAREER1942926 (to J.Z.); and by American Heart Association Grant 17MERIT3361009 (to J.C.W.).

DISCLOSURES

J.C.W. is a founder of Greenstone Biosciences, and A.Z. is a consultant for Greenstone Biosciences.

AUTHOR CONTRIBUTIONS

A.Z. and J.C.W. conceived and designed research; A.Z. and M.W. prepared figures; A.Z., Z.W., E.W., and M.W. drafted manuscript; A.Z., Z.W., E.W., M.W., M.P.S., J.Z., and J.C.W. edited and revised manuscript; A.Z., Z.W., E.W., M.P.S., J.Z., and J.C.W. approved final version of manuscript.

ACKNOWLEDGMENTS

Figures were created with BioRender.com.

REFERENCES

- 1. Zhang A, Xing L, Zou J, Wu JC. Shifting machine learning for healthcare from development to deployment and from models to data. Nat Biomed Eng 6: 1330–1345, 2022. doi: 10.1038/s41551-022-00898-y.

- 2. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med 25: 44–56, 2019. doi: 10.1038/s41591-018-0300-7.

- 3. Yu KH, Beam AL, Kohane IS. Artificial intelligence in healthcare. Nat Biomed Eng 2: 719–731, 2018. doi: 10.1038/s41551-018-0305-z.

- 4. Esteva A, Robicquet A, Ramsundar B, Kuleshov V, DePristo M, Chou K, Cui C, Corrado G, Thrun S, Dean J. A guide to deep learning in healthcare. Nat Med 25: 24–29, 2019. doi: 10.1038/s41591-018-0316-z.

- 5. Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems 25, edited by Pereira F, Burges CJ, Bottou L, Weinberger KQ. Red Hook, NY: Curran Associates, Inc., 2012, p. 1097–1105.

- 6. Devlin J, Chang MW, Lee K, Toutanova K. BERT: pre-training of deep bidirectional transformers for language understanding (Preprint). arXiv 1810.04805, 2018. doi: 10.48550/arxiv.1810.04805.

- 7. Yeung S, Downing NL, Fei-Fei L, Milstein A. Bedside computer vision—moving artificial intelligence from driver assistance to patient safety. N Engl J Med 378: 1271–1273, 2018. doi: 10.1056/NEJMp1716891.

- 8. Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, Venugopalan S, Widner K, Madams T, Cuadros J, Kim R, Raman R, Nelson PC, Mega JL, Webster DR. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 316: 2402–2410, 2016. doi: 10.1001/jama.2016.17216.

- 9. Xue Y, Xu T, Zhang H, Long LR, Huang X. SegAN: adversarial network with multi-scale L1 loss for medical image segmentation. Neuroinformatics 16: 383–392, 2018. doi: 10.1007/s12021-018-9377-x.

- 10. Wang G, Liu X, Shen J, Wang C, Li Z, Ye L, et al. A deep-learning pipeline for the diagnosis and discrimination of viral, non-viral and COVID-19 pneumonia from chest X-ray images. Nat Biomed Eng 5: 509–521, 2021. doi: 10.1038/s41551-021-00704-1.

- 11. Huang K, Altosaar J, Ranganath R. ClinicalBERT: modeling clinical notes and predicting hospital readmission. CHIL Workshop, 2020. doi: 10.48550/arXiv.1904.05342.

- 12. Smit A, Jain S, Rajpurkar P, Pareek A, Ng AY, Lungren MP. CheXbert: combining automatic labelers and expert annotations for accurate radiology report labeling using BERT. EMNLP, 2020. doi: 10.48550/arXiv.2004.09167.

- 13. Alsentzer E, Murphy J, Boag W, Weng W-H, Jindi D, Naumann T, McDermott M. Publicly available clinical BERT embeddings. In: Proceedings of the 2nd Clinical Natural Language Processing Workshop. Minneapolis, MN: Association for Computational Linguistics, 2019, p. 72–78. doi: 10.18653/v1/W19-1909.

- 14. Luongo F, Hakim R, Nguyen JH, Anandkumar A, Hung AJ. Deep learning-based computer vision to recognize and classify suturing gestures in robot-assisted surgery. Surgery 169: 1240–1244, 2021. doi: 10.1016/j.surg.2020.08.016.

- 15. Goldberger AL, Amaral LA, Glass L, Hausdorff JM, Ivanov PC, Mark RG, Mietus JE, Moody GB, Peng CK, Stanley HE. PhysioBank, PhysioToolkit, and PhysioNet: components of a new research resource for complex physiologic signals. Circulation 101: E215–E220, 2000. doi: 10.1161/01.cir.101.23.e215.

- 16. Johnson AE, Pollard TJ, Shen L, Lehman LW, Feng M, Ghassemi M, Moody B, Szolovits P, Celi LA, Mark RG. MIMIC-III, a freely accessible critical care database. Sci Data 3: 160035, 2016. doi: 10.1038/sdata.2016.35.