Abstract

To explore the mechanism by which the semantic satiation of emotional words affects facial expression processing, participants were asked to judge a facial expression (happiness or sadness) after an emotional word (哭(cry) or 笑(smile)) or a neutral word (阿(Ah), baseline condition) had been presented for 20 s. Participants were slower in judging valence-congruent facial expressions, and these faces elicited an enlarged (Experiment 1) or delayed (Experiment 2) N170 component relative to the baseline condition. Neither behavior nor the N170 differed significantly between the valence-incongruent and the baseline conditions. However, the amplitude of the LPC (Late Positive Complex) was smaller than in the baseline condition under both the valence-congruent and the valence-incongruent conditions. These results indicate that, in the early stage, the impeding effect of satiated emotional words is restricted to facial expressions with the same emotional valence; in the late stage, the impeding effect might spread to facial expressions with the opposite valence of the satiated emotional word.

Keywords: Semantic satiation, Facial expression, N170, LPC

Highlights

• A satiated emotional word delayed the valence-congruent face processing.
• Emotional semantic satiation effects appeared on two stages of face processing.
• Enlarged/prolonged N170 presented on the valence-congruent face processing.
• Decreased LPC presented on the valence congruent/incongruent face processing.

1. Introduction

Language, from a functional standpoint, can refine and facilitate face processing in at least two ways. Firstly, it can serve as a prime to pre-activate the related facial information [1]. Secondly, it may function as a context to reduce the uncertainty of incoming information [[2], [3], [4], [5], [6]].

However, the facilitation effect of language on facial information processing can turn into an inhibition effect if the accessibility of its semantic meaning is temporarily reduced. Through continuous repetition [7] or prolonged visual inspection [8], a stimulus word temporarily loses its meaning, a phenomenon known as semantic satiation. A satiated word then hinders the processing of subsequent relevant stimuli. For instance, when an emotional word was temporarily satiated, participants found it more difficult to categorize subsequent facial behaviors depicting emotion. This impedance occurred not only for faces sharing the same basic emotion category as the satiated word, but also for faces belonging to different emotion categories [9]. Furthermore, a satiated emotional word interfered with subsequent face processing even when the experimental task was not to judge the emotion of the face but to recognize whether the face had been presented before [10]. In summary, the effect of a satiated emotional word can spread to the processing of various related items. However, it is reasonable to speculate that the category-based effect decreases as the semantic distance between the satiated word and the target face increases.

Although satiated emotional words have repeatedly been shown to decrease the accuracy and/or the speed of subsequent face processing, the neuro-cognitive mechanism of this effect has not been established. To explore this issue, the present study combined a modified semantic satiation paradigm with the ERP technique, which offers millisecond resolution. Several ERP components could index the time course of the semantic satiation effect on face processing [11]. For example, the N170 is a face-specific component related to facial structure encoding [12,13]; its latency and amplitude increase with the difficulty of face processing [[14], [15], [16]]. The valence effect of emotional faces has also been observed as early as the N170 component [17,18]. Some researchers have pointed out that the emotional valence effect on the N170 results from the overlap of the face-specific N170 [12] and the emotion-sensitive early posterior negativity (EPN), which is considered to reflect enhanced sensory processing of emotional stimuli due to reflexive attention [19,20]. Consistent evidence shows that the EPN amplitude induced by emotional stimuli is larger than that induced by neutral stimuli, but there is no consensus on whether the EPN can differentiate between emotional categories [20,21]. Although it has been suggested that the EPN reflects the automatic capture of attention by emotional stimuli, this interpretation should be treated with caution, as recent evidence shows that attentional processing of emotional stimuli occurs only when they are relevant to the participants’ goals [[22], [23], [24], [25], [26]]. Since the present study analyzes the event-related potentials (ERPs) time-locked to happy and sad face photos, without neutral faces, we focus on the N170 rather than the EPN.

The emotional effect also appears in the late stage of face processing, indexed by a stable larger LPC (Late Positive Complex that appears 300 ms after stimulus onset) for emotional faces than for neutral ones [20,27,28]. The amplitude of LPC is regarded as reflecting an increased motivational significance and arousal value of emotional stimuli [19,29] and a continuous perceptual analysis due to the high intrinsic relevance of emotional stimuli [30]. In addition, different emotional facial expressions can be better discriminated in LPC than in earlier ERP components [18,31].

Besides the ERP technique, the present study introduced two further modifications to the semantic satiation paradigm used in previous studies [9,10,32]. Firstly, to avoid overestimating the semantic satiation effect, this study did not compare the satiated condition (e.g., a word repeated 30 times or presented for a long time) with the primed condition (e.g., a word repeated 3 times or presented briefly), as earlier studies did, because a brief presentation or a few repetitions could induce a priming effect and facilitate the processing of subsequent related information [33]. Although the semantic satiation effect can be induced by both repetition and prolonged viewing [8], frequent flickering of word stimuli would increase artifacts, either directly or by eliciting eye blinks or eye movements. The present study therefore induced semantic satiation through prolonged viewing [11] rather than repetition. In addition, Lindquist et al. suggested that control trials in which an irrelevant category word (e.g., “car”) is satiated before emotion perception deserve further study [9], so we satiated the neutral closed-class function word “阿” (Ah) in the control trials. The word “Ah” has no emotional semantic connection with the target faces.

Secondly, considering that positive and negative emotion processing have different manifestations and mechanisms [[34], [35], [36]], we investigated the semantic satiation effects of positive and negative emotional words separately in two experiments. On the one hand, separating the two experiments allowed the positive and the negative semantic satiation effects to be observed clearly, without unnecessary interactions among too many factors; on the other hand, the two experiments served as a near replication to examine the reliability of the semantic satiation effect. Specifically, we selected two groups of face photos depicting happy or sad emotion as the target stimuli, using “笑(smile)” and “哭(cry)” as the satiated emotional words. “笑(smile)” and “哭(cry)” name the most typical facial expressions of happy and sad emotion, respectively. In addition, “笑(smile)” and “哭(cry)” are very similar in morphological structure, which increases their comparability in the present study, because the physical characteristics of stimuli can affect EEG-ERP results.

We hypothesized that, compared with the satiated neutral word, the satiated emotional words, both negative and positive, would impair the processing of subsequent valence-congruent faces. In the behavioral data, such an effect would appear as decreased accuracy and/or delayed categorization. Correspondingly, the impaired processing would make facial structure encoding more difficult and require more attentional resources, indexed by increased N170 amplitude and/or longer N170 latency. In the late stage, the inhibition effect may manifest as a decreased LPC. This study might help to clarify the connections between language and facial expression processing, and in particular to reveal the time course of the semantic satiation effect on face processing.

2. Methods

2.1. Experiment 1

2.1.1. Participants

Twenty-six students (13 males, 13 females, aged 18–28 years, M = 22.69, SD = 2.13) were recruited to rate the coincidence degree of emotional characters of face images and words (“哭” (cry), “阿” (Ah), “笑” (smile)) stimuli (from 1 = completely accorded with sadness, 4 = neutral, to 7 = completely accorded with happiness), and the arousal of face images on a 7-point scale (from 1 = calming to 7 = exciting).

Twenty-seven students were recruited for the formal experiment. Three of them were excluded due to excessive signal artifacts (fewer than 75% clean ERP segments per condition), leaving 24 participants (14 females, 10 males, aged 20–26 years, M = 23, SD = 2.12) in the data analysis. A power analysis with G*Power 3.1 revealed that this sample size, with an estimated moderate to large effect size (0.5 < d < 0.8), would result in a power above .80 [37]. All participants were self-reported right-handers with normal or corrected-to-normal vision, and all provided informed consent before participating in the study. They were reimbursed with course credits or a moderate amount of money after completing the experiment. The protocol (No. LL2023019) was approved by the ethical review committee of the University.

2.1.2. Materials

Picture stimuli. Forty black-and-white photographs of different faces (20 happy (高兴) and 20 sad (悲伤)) were selected from the Chinese Facial Affective Picture System (CFAPS) [38]. There was no significant difference in emotional intensity (9-level ratings, from 1 = weakest to 9 = strongest) between the happy and the sad faces (Msad = 5.64, SD = 0.38, Mhappy = 5.66, SD = 0.32; t (19) = −0.20, p > .05). The numbers of male and female face images were balanced within each emotion category.

Results of the coincidence ratings revealed that the sad faces (Msad = 1.94, SD = 0.46; t (25) = 20.60, p = .001) and the happy faces (Mhappy = 5.83, SD = 0.69; t (25) = 41.68, p = .001) both differed from the neutral score (4) of the 1–7 scale, and that the sad and the happy faces differed from each other (t (25) = 18.74, p = .001). There was no significant difference in arousal between the happy and the sad faces (Msad = 4.88, SD = 1.15; Mhappy = 4.63, SD = 1.27; t (25) = 0.97, p > .05). All images were adjusted to 115 (W) × 100 (H) pixels using Photoshop software, with a resolution of 96 pixels. Together, these checks ensured that the sad and happy faces expressed opposite emotions but were comparable in emotional intensity and arousal.

Word stimuli. The Chinese characters “哭(cry)” and “阿(Ah)” were selected as the negative emotional word and the neutral word, respectively. Results for the coincidence degree of emotional characters (from 1 = completely accorded with sadness, 4 = neutral, to 7 = completely accorded with happiness) revealed that the coincidence ratings of “哭(cry)” (M哭 (cry) = 1.86, SD = 1.25) were significantly different from those of “阿(Ah)” (M阿 (Ah) = 3.95, SD = 0.58) (t (25) = 7.31, p = .001), while the ratings of “阿(Ah)” were not significantly different from the neutral score (4) of the 1–7 scale, t (25) = −0.37, p = .71. This ensured that “阿(Ah)” could represent the neutral word and “哭(cry)” could represent the sad word.

2.1.3. Procedures

Each participant was seated in a dimmed room approximately 100 cm from a 17-inch monitor. The refresh rate of the monitor was 75 Hz, and the resolution was 1280 × 1024 pixels. The visual angle was 4.5° (H) × 4.9° (V) for the facial images and 5.2° (H) × 4° (V) for the words. Participants were instructed to minimize blinking or eye movements and keep their eyes fixating at the center of the screen.
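The relation between viewing distance, stimulus size, and visual angle can be illustrated with a short calculation. The following is only a sketch: the physical on-screen sizes are not reported in the text, so the function `size_for_angle_cm` (a hypothetical helper name) derives them from the reported angles and the 100 cm viewing distance using the standard formula θ = 2·atan(s / 2d).

```python
import math

VIEWING_DISTANCE_CM = 100  # reported viewing distance


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by a stimulus of the given size."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def size_for_angle_cm(angle_deg: float, distance_cm: float) -> float:
    """Stimulus size (cm) needed to subtend the given visual angle."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


# On-screen face size implied by the reported 4.5 deg x 4.9 deg angles.
# These sizes are derived here for illustration; they are not reported values.
face_width_cm = size_for_angle_cm(4.5, VIEWING_DISTANCE_CM)
face_height_cm = size_for_angle_cm(4.9, VIEWING_DISTANCE_CM)

print(f"face width  ~ {face_width_cm:.2f} cm")
print(f"face height ~ {face_height_cm:.2f} cm")
```

Under these assumptions, a face image roughly 7.9 cm wide would subtend the reported 4.5° horizontally at 100 cm.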

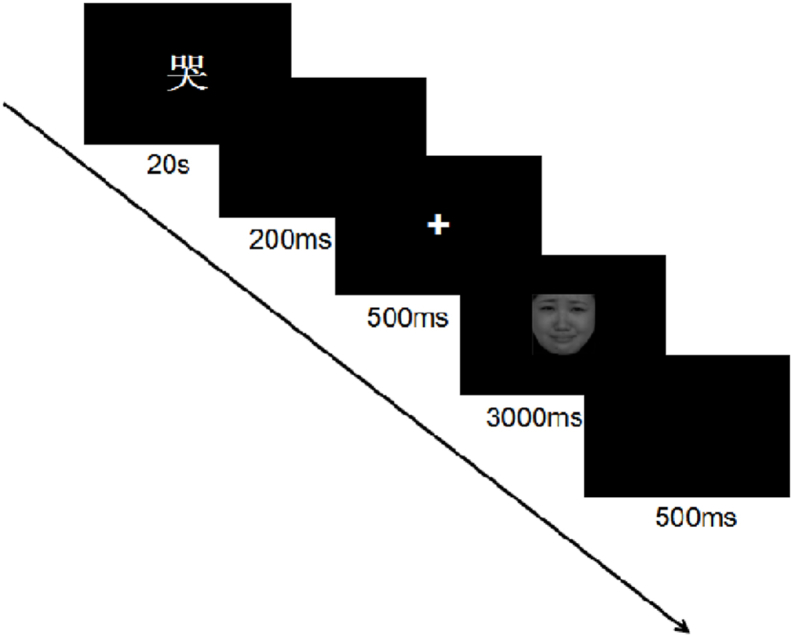

The experimental procedure was programmed with E-Prime 1.2 (Psychology Software Tools Inc., Pittsburgh, Pennsylvania, USA). The procedures in the practice and formal experiments were identical. The formal experiment consisted of four blocks separated by short breaks. Each block included 40 trials. Each trial began with either the word “哭(cry)” or the word “阿(Ah)”, presented at the center of the screen for 20 s [39]. The word flickered randomly one to three times during its presentation to ensure that participants were paying attention to it, and participants were required to press the space key as soon as it flickered. After the word, a blank screen was displayed for 200 ms, followed immediately by a 500 ms fixation cross. Next, a face image depicting either happy or sad emotion was displayed on the screen until participants judged its expression by pressing the corresponding key as accurately and quickly as possible, or until 3000 ms had elapsed. After a blank screen presented for 500 ms, the next trial began. Fig. 1 illustrates the sequence of events in a trial.

Fig. 1.

Example of a trial in Experiment 1.
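The trial structure described above can be written down as a small timing sketch. The durations come from the procedure description; the constant names, the function `trial_duration_ms`, and the assumption that trials were split equally across the four word-by-expression conditions are illustrative and not taken from the authors' E-Prime script.

```python
# Durations in milliseconds, as stated in the procedure description.
WORD_MS = 20_000             # satiation word on screen
BLANK_AFTER_WORD_MS = 200    # blank screen after the word
FIXATION_MS = 500            # fixation cross
FACE_TIMEOUT_MS = 3_000      # face disappears if no response by then
INTER_TRIAL_BLANK_MS = 500   # blank screen before the next trial

BLOCKS = 4
TRIALS_PER_BLOCK = 40


def trial_duration_ms(rt_ms: int) -> int:
    """Total duration of one trial for a given response time."""
    face_ms = min(rt_ms, FACE_TIMEOUT_MS)  # face is shown until response or timeout
    return (WORD_MS + BLANK_AFTER_WORD_MS + FIXATION_MS
            + face_ms + INTER_TRIAL_BLANK_MS)


total_trials = BLOCKS * TRIALS_PER_BLOCK
# Assumption: trials are split equally over 2 words x 2 facial expressions.
trials_per_condition = total_trials // 4

shortest_trial_ms = trial_duration_ms(0)
longest_trial_ms = trial_duration_ms(FACE_TIMEOUT_MS)
print(total_trials, trials_per_condition, shortest_trial_ms, longest_trial_ms)
```

Because the 20 s word dominates each trial, the four blocks would take close to an hour of stimulus time even before response times and breaks are counted.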

2.1.4. Data acquisition and pre-analysis of the ERPs

EEG signals were recorded using an elastic cap with 64 electrode sites placed according to the 10/20 system (Brain Products, Germany). All electrodes were sintered Ag/AgCl electrodes. The EEG was digitized with a sampling rate of 500 Hz and filtered online using 0.05–100 Hz band-pass filter. Electrode impedance was kept below 10 kΩ. All channels were referenced to the Cz electrode during acquisition.

EEG data were analyzed offline using the Brain Vision Analyzer (Brain Products, Germany). The continuous EEG data were filtered with a 30 Hz low-pass and 0.5 Hz high-pass, then re-calculated to average reference. Ocular artifacts were processed using ocular correction with independent component analysis (ICA). The data were then segmented relative to the onset of the face image (200 ms before and 1000 ms after) according to the experimental conditions. After being averaged, the segments were baseline-corrected relative to the mean amplitude of the 200 ms before stimulus onset.
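The segmentation and baseline-correction steps can be sketched in a few lines. This is a simplified illustration on a single channel stored as a plain list, not the Brain Vision Analyzer implementation; `extract_epoch` and `baseline_correct` are hypothetical helper names, and the 500 Hz rate and the −200 to 1000 ms window are the values reported in the text.

```python
SRATE_HZ = 500               # reported sampling rate
PRE_MS, POST_MS = 200, 1000  # reported segment limits around face onset


def ms_to_samples(ms: float, srate_hz: int = SRATE_HZ) -> int:
    """Convert a duration in milliseconds to a number of samples."""
    return int(round(ms * srate_hz / 1000))


def extract_epoch(signal, onset_index):
    """Cut the segment from 200 ms before to 1000 ms after stimulus onset."""
    start = onset_index - ms_to_samples(PRE_MS)
    stop = onset_index + ms_to_samples(POST_MS)
    if start < 0 or stop > len(signal):
        raise ValueError("epoch extends beyond the recording")
    return signal[start:stop]


def baseline_correct(epoch):
    """Subtract the mean of the 200 ms pre-stimulus interval from every sample."""
    n_base = ms_to_samples(PRE_MS)
    baseline = sum(epoch[:n_base]) / n_base
    return [value - baseline for value in epoch]


# Toy recording with a constant 5 uV offset; correction should remove it.
recording = [5.0] * 1000
epoch = extract_epoch(recording, onset_index=300)
corrected = baseline_correct(epoch)
print(len(epoch), max(abs(v) for v in corrected))
```

The same `baseline_correct` function applies whether it is given a single segment or an averaged waveform, as long as the first 200 ms of the input is the pre-stimulus interval.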

Segments with artifacts were excluded from analyses. The rejection criteria were a difference between the maximal and minimal voltage exceeding 200 μV, voltage steps exceeding 50 μV/ms, and electrical activity below 0.5 μV within a 200-ms interval. The number of valid trials was not significantly different between conditions (F (1, 23) = 1.63, p > .05) (M (哭 (cry)-sad) = 38.04, SE = 0.44; M (哭 (cry)-happy) = 37.67, SE = 0.68; M (阿 (Ah)-sad) = 35.50, SE = 0.75; M (阿 (Ah)-happy) = 36.08, SE = 0.50).
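The three rejection criteria can be expressed as a simple check on one segment of one channel. The sketch below is an approximation written for illustration; the exact way Brain Vision Analyzer evaluates each criterion may differ, and `has_artifact` and the synthetic test signals are hypothetical.

```python
import math


def has_artifact(segment_uv, srate_hz=500, max_range_uv=200.0,
                 max_step_uv_per_ms=50.0, min_activity_uv=0.5,
                 activity_window_ms=200):
    """Return True if the segment violates any of the three criteria."""
    ms_per_sample = 1000 / srate_hz

    # Criterion 1: max-min voltage difference above 200 uV.
    if max(segment_uv) - min(segment_uv) > max_range_uv:
        return True

    # Criterion 2: voltage step between adjacent samples above 50 uV/ms.
    for previous, current in zip(segment_uv, segment_uv[1:]):
        if abs(current - previous) / ms_per_sample > max_step_uv_per_ms:
            return True

    # Criterion 3: activity (max-min) below 0.5 uV in any 200 ms window.
    window = int(round(activity_window_ms / ms_per_sample))
    for start in range(len(segment_uv) - window + 1):
        chunk = segment_uv[start:start + window]
        if max(chunk) - min(chunk) < min_activity_uv:
            return True

    return False


# Synthetic 1200 ms segments at 500 Hz (600 samples), for illustration only.
clean = [20 * math.sin(2 * math.pi * 10 * i / 500) for i in range(600)]
flat = [0.0] * 600                                      # dead channel
jump = clean[:300] + [v + 150 for v in clean[300:]]     # sudden voltage step
drift = [0.5 * i for i in range(600)]                   # slow drift of ~300 uV

print(has_artifact(clean), has_artifact(flat),
      has_artifact(jump), has_artifact(drift))
```

In this toy example only the 10 Hz sine wave passes; the flat, stepped, and drifting segments are each rejected by a different criterion.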

3. Results

Repeated measures ANOVAs with Satiated Word (“哭(cry)” vs. “阿(Ah)”) and Facial Expression (happy vs. sad) as within factors were conducted on Reaction time (RT) and Accuracy (ACC), as well as the amplitude and latency of N170 and LPC components. For significant main effects and interactions, post-hoc tests were performed using Bonferroni correction. The p-values were adjusted by the Greenhouse-Geisser correction when the assumption of sphericity was violated.
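For readers who want to check the effect sizes in this section, partial eta squared can be recovered from an F statistic and its degrees of freedom through the standard identity ηp² = (F · df_effect) / (F · df_effect + df_error). The sketch below applies it to two F values reported in the behavioral results; the function name is illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared implied by an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values reported for the RT analysis of Experiment 1, both with df = (1, 23).
interaction = partial_eta_squared(5.196, 1, 23)
congruent_vs_baseline = partial_eta_squared(7.80, 1, 23)

print(round(interaction, 2), round(congruent_vs_baseline, 2))
```

Both values round to the effect sizes given in the text (0.18 and 0.25).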

3.1. Behavioral results

RT (Reaction time). The interaction between Satiated Word and Facial Expression reached statistical significance (F (1, 23) = 5.196, p = .032, ηp² = 0.18, 1-β = 0.59). Post-hoc analyses indicated that participants were slower in judging the sad face image after the satiated word “哭(cry)” (satiation-congruent condition) (M = 801.32 ms, SE = 32.46) than after the satiated word “阿(Ah)” (baseline condition) (M = 764.45 ms, SE = 26.31) (F (1, 23) = 7.80, p = .01, ηp² = 0.25, 1-β = 0.76). No significant difference was found between judging the happy face image after the satiated word “哭(cry)” (satiation-incongruent condition) (M = 699.33 ms, SE = 26.54) and after “阿(Ah)” (baseline condition) (M = 700.24 ms, SE = 27.97) (F (1, 23) = 0.01, p > .05). The results are illustrated in Fig. 2.

Fig. 2.

Mean RT of judging facial expression after different words (“哭(cry)” vs. “阿(Ah)”) satiated.

ACC (Accuracy). Neither the main effect of Satiated Word (F (1, 23) = 1.49, p > .05), nor the main effect of Facial Expression (F (1, 23) = 2.31, p > .05), nor the two-way interaction (F (1, 23) = 0.89, p > .05) was significant.

3.2. ERP results

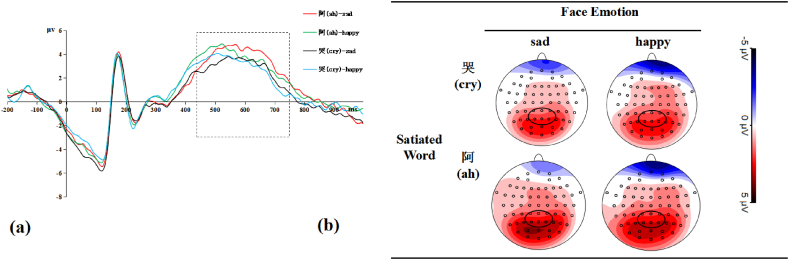

The time windows and the electrode clusters of N170 and LPC were chosen, respectively, based on visual inspection of the ERPs and previous studies [[40], [41], [42], [43]]. For the N170, peak amplitude and latency were calculated during 150∼230 ms over the electrode cluster of P7, P8, PO7, PO8 (see Fig. 3 (a, b)). For the LPC, the averaged amplitude was calculated from the time window of 450∼750 ms over the electrode cluster of CP1, CPz, CP2, P1, Pz, P2 (see Fig. 4 (a, b)).
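The two measures described above, a peak within 150–230 ms for the N170 and a mean amplitude within 450–750 ms for the LPC, can be sketched as follows. The code assumes a waveform that has already been averaged across the relevant electrode cluster and sampled at 500 Hz from −200 ms; the synthetic waveform and all function names are illustrative, not the authors' data or software.

```python
import math

SRATE_HZ = 500
EPOCH_START_MS = -200


def time_ms(index: int) -> float:
    """Time (ms, relative to face onset) of a sample index."""
    return EPOCH_START_MS + index * 1000 / SRATE_HZ


def window_indices(start_ms, stop_ms, n_samples):
    """Indices of the samples that fall inside [start_ms, stop_ms]."""
    return [i for i in range(n_samples) if start_ms <= time_ms(i) <= stop_ms]


def negative_peak(erp_uv, start_ms=150, stop_ms=230):
    """Most negative value in the window and its latency (N170-style measure)."""
    indices = window_indices(start_ms, stop_ms, len(erp_uv))
    peak_index = min(indices, key=lambda i: erp_uv[i])
    return erp_uv[peak_index], time_ms(peak_index)


def mean_amplitude(erp_uv, start_ms=450, stop_ms=750):
    """Average voltage in the window (LPC-style measure)."""
    indices = window_indices(start_ms, stop_ms, len(erp_uv))
    return sum(erp_uv[i] for i in indices) / len(indices)


def synthetic_erp():
    """Made-up waveform: a negative deflection near 176 ms plus a late positivity."""
    wave = []
    for i in range(600):
        t = time_ms(i)
        n170 = -4.7 * math.exp(-((t - 176) / 20) ** 2)
        lpc = 3.0 * math.exp(-((t - 600) / 150) ** 2)
        wave.append(n170 + lpc)
    return wave


erp = synthetic_erp()
n170_amplitude, n170_latency = negative_peak(erp)
lpc_amplitude = mean_amplitude(erp)
print(n170_latency, round(n170_amplitude, 2), round(lpc_amplitude, 2))
```

A peak measure is sensitive to latency shifts, which suits the N170, whereas a window mean is more robust for a broad component such as the LPC.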

Fig. 3.

(a) ERPs of the selected electrodes group (averaged across the electrodes of P7, P8, PO7, PO8) in each condition, the time window of N170 was marked with a dashed rectangular box. (b) Grand-average topographic maps during the time window (150–230 ms), the selected electrodes for calculating N170 were marked with a circle.

Fig. 4.

(a) Mean amplitude of the LPC component (averaged across the electrodes of P1, P2, Pz, CP1, CP2, CPz) in each condition, the time window of the LPC was marked with a dashed rectangular box. (b) Grand-average topographic maps during the time window (450–750 ms), the selected electrodes for calculating LPC were marked with a circle.

N170 (150–230 ms). A three-way repeated-measures ANOVA of peak amplitude with the factors Satiated Word (“哭(cry)”, “阿(Ah)”), Facial Expression (happy, sad), and Electrode Location (left side, right side) revealed that the interaction between Satiated Word and Facial Expression reached statistical significance (F (1, 23) = 6.74, p = .016, ηp² = 0.23, 1-β = 0.70). Post-hoc analyses indicated a larger N170 under the satiation-congruent condition (M = −4.74 μV, SE = 0.62) than under the baseline condition (M = −3.67 μV, SE = 0.65) (F (1, 23) = 9.89, p = .005, ηp² = 0.30, 1-β = 0.85), while there was no significant difference in N170 peak value between the satiation-incongruent condition (M = −4.49 μV, SE = 0.62) and the baseline condition (M = −4.53 μV, SE = 0.67) (F (1, 23) = 0.04, p > .05). The significant main effect of Satiated Word revealed a larger N170 after “哭(cry)” (M = −4.62 μV, SE = 0.60) than after “阿(Ah)” (M = −4.10 μV, SE = 0.65) (F (1, 23) = 7.01, p = .014, ηp² = 0.23, 1-β = 0.72). The significant main effect of Facial Expression revealed a larger N170 for happy faces (M = −4.51 μV, SE = 0.64) than for sad faces (M = −4.21 μV, SE = 0.61) (F (1, 23) = 5.14, p = .033, ηp² = 0.18, 1-β = 0.58); see Fig. 3 (a, b).

The repeated-measures ANOVA of latency yielded no significant main effects, two-way interactions, or three-way interaction (all ps > .05).

LPC (450–750 ms). A two-way repeated-measures ANOVA with the factors Satiated Word (“哭(cry)”, “阿(Ah)”) and Facial Expression (happy, sad) revealed a statistically significant main effect of Satiated Word on the mean amplitude of the LPC (F (1, 23) = 6.84, p = .015, ηp² = 0.23, 1-β = 0.71), with the LPC being larger after “阿(Ah)” (M = 3.71 μV, SE = 0.45) than after “哭(cry)” (M = 2.88 μV, SE = 0.56) (see Fig. 4 (a, b)). Neither the main effect of Facial Expression nor the two-way interaction was significant.

3.3. Experiment 2

3.3.1. Participants

Twenty-six new participants, none of whom had taken part in Experiment 1, were recruited. Two of them were excluded due to excessive signal artifacts (fewer than 75% clean ERP segments per condition), leaving 24 participants (14 females, 10 males, aged 20–26 years, M = 22.88, SD = 1.84) in the data analysis. As in Experiment 1, a power analysis with G*Power 3.1 indicated that this sample size, with an estimated moderate to large effect size (0.5 < d < 0.8), would result in a power above .80.

3.3.2. Materials

Picture stimuli. Same as Experiment 1.

Word stimuli. The Chinese characters “笑(smile)” and “阿(Ah)” were selected as the happy and the neutral words, respectively. In Chinese, “笑(smile)” is used more often than “高兴(happy)” to describe a positive face, and its character structure is similar to that of “哭(cry)”, which was used in Experiment 1. Results for the coincidence degree of emotional characters revealed that the coincidence ratings of “笑(smile)” (M笑 (smile) = 6.23, SD = 1.11) were significantly different from those of “阿(Ah)” (M阿 (Ah) = 3.95, SD = 0.58) (t (25) = −8.587, p = .001), while the ratings of “阿(Ah)” were not significantly different from the neutral score (4) of the 1–7 scale, t (25) = −0.37, p = .71.

3.3.3. Procedure

The experimental procedure was the same as that of Experiment 1 except that the satiated words were “笑(smile)” and “阿(Ah)”. See Fig. 5.

Fig. 5.

Example of a trial in Experiment 2.

3.3.4. Data acquisition and pre-analysis of the ERPs

The methods of acquiring and analyzing behavioral and EEG data were identical to those of Experiment 1. The number of valid trials was not significantly different between conditions (F (1, 23) = 3.42, p > .05, ηp² = 0.15) (M (笑 (smile)-sad) = 38.38, SE = 0.42; M (笑 (smile)-happy) = 37.81, SE = 0.71; M (阿 (Ah)-sad) = 36.00, SE = 0.41; M (阿 (Ah)-happy) = 36.48, SE = 0.43).

4. Results

Repeated measures ANOVAs with Satiated Word (“笑(smile)” vs. “阿(Ah)”) and Facial Expression (happy vs. sad) as within factors were conducted on Reaction time (RT) and Accuracy (ACC), as well as the amplitude and latency of N170 and LPC components. For significant main effects and interactions, post-hoc tests were performed using Bonferroni correction. The p-values were adjusted by the Greenhouse-Geisser correction when the assumption of sphericity was violated.

4.1. Behavioral results

RT (Reaction time). The interaction between Satiated Word and Facial Expression reached statistical significance, F (1, 23) = 4.37, p = .048, ηp² = 0.16, 1-β = 0.52. Post-hoc analyses indicated that participants responded more slowly under the satiation-congruent condition (“笑(smile)”-happy face) (M = 660.96 ms, SE = 26.13) than under the baseline condition (“阿(Ah)”-happy face) (M = 640.56 ms, SE = 26.06) (F (1, 23) = 6.05, p = .022, ηp² = 0.21, 1-β = 0.65), while no significant difference was found between the satiation-incongruent condition (“笑(smile)”-sad face) (M = 690.37 ms, SE = 32.47) and the baseline condition (“阿(Ah)”-sad face) (M = 701.52 ms, SE = 33.56) (F (1, 23) = 1.30, p > .05). See Fig. 6.

Fig. 6.

Mean RT of judging facial expression after different words (笑 (smile) vs. 阿 (Ah)) satiated.

ACC (Accuracy). Neither the main effects of Facial Expression and Satiated Word nor the two-way interaction was significant, all ps > .05.

4.2. ERP results

N170 (150–230 ms). A three-way repeated-measures ANOVA with the factors Satiated Word (“笑(smile)”, “阿(Ah)”), Facial Expression (happy, sad), and Electrode Location (left side, right side) was performed on the peak amplitude of the N170. Neither the main effect of Satiated Word nor that of Facial Expression was significant (both ps > .05). The main effect of Electrode Location was significant (F (1, 23) = 6.13, p = .021, ηp² = 0.21). There were no significant interaction effects (all ps > .05).

Analysis of the N170 latency yielded a significant interaction of Satiated Word by Facial Expression (F (1, 23) = 5.32, p = .03, ηp² = 0.19, 1-β = 0.60). Post-hoc analyses revealed that the satiation-congruent condition had a delayed N170 (M = 179.77 ms, SE = 2.99) compared to the baseline condition (M = 174.02 ms, SE = 2.09) (F (1, 23) = 11.41, p = .003, ηp² = 0.34, 1-β = 0.89), whereas there was no significant difference in N170 latency between the satiation-incongruent condition (M = 174.79 ms, SE = 3.07) and the baseline condition (M = 175.00 ms, SE = 2.30) (F (1, 23) = 0.01, p > .05). Results are illustrated in Fig. 7 (a, b).

Fig. 7.

(a) ERPs of the selected electrodes group (averaged across the electrodes of P7, P8, PO7, PO8) in each condition, the time window of N170 was marked with a dashed rectangular box. (b) Grand-average topographic maps during the time window (150–230 ms), the selected electrodes for calculating N170 were marked with a circle.

LPC (450–750 ms). A two-way repeated-measures ANOVA with the factors Satiated Word (“笑(smile)”, “阿(Ah)”) and Facial Expression (happy, sad) was performed on the mean amplitude of the LPC, which revealed a significant main effect of Satiated Word (F (1, 23) = 13.95, p = .001, ηp² = 0.38, 1-β = 0.95), with a larger LPC after “阿(Ah)” (M = 1.81 μV, SE = 0.39) than after “笑(smile)” (M = 1.23 μV, SE = 0.40). Neither the main effect of Facial Expression nor the two-way interaction between them was significant. Results are illustrated in Fig. 8 (a, b).

Fig. 8.

(a) Mean amplitude of the LPC component (averaged across the electrodes of P1, P2, Pz, CP1, CP2, CPz) in each condition, the time window of the LPC was marked with a dashed rectangular box. (b) Grand-average topographic maps during the time window (450–750 ms), the selected electrodes for calculating LPC were marked with a circle.

5. Discussion

In both experiments, participants were required to judge the facial expression (happiness or sadness) following an emotional or a neutral word that was presented for 20 s. The behavioral evidence revealed that participants were slower in judging the expression when the face image shared the same emotional valence with the emotional word than when the word was neutral (i.e., baseline condition). When the emotional word and the face image had incongruent valences, participants’ RT was not significantly different from the baseline condition.
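The size of the behavioral effect can be read directly from the condition means reported in the two Results sections. The sketch below simply subtracts each baseline mean from the corresponding satiation mean; the dictionary keys and the function name are illustrative labels, and the numbers are the reported mean RTs in milliseconds.

```python
# Mean RTs (ms) as reported in the behavioral results of each experiment.
MEAN_RT_MS = {
    "Experiment 1 (cry)": {
        "congruent": 801.32, "congruent_baseline": 764.45,      # sad faces
        "incongruent": 699.33, "incongruent_baseline": 700.24,  # happy faces
    },
    "Experiment 2 (smile)": {
        "congruent": 660.96, "congruent_baseline": 640.56,      # happy faces
        "incongruent": 690.37, "incongruent_baseline": 701.52,  # sad faces
    },
}


def satiation_effects(rt):
    """RT cost (ms) relative to baseline for congruent and incongruent faces."""
    congruent = round(rt["congruent"] - rt["congruent_baseline"], 2)
    incongruent = round(rt["incongruent"] - rt["incongruent_baseline"], 2)
    return congruent, incongruent


exp1_effects = satiation_effects(MEAN_RT_MS["Experiment 1 (cry)"])
exp2_effects = satiation_effects(MEAN_RT_MS["Experiment 2 (smile)"])
print(exp1_effects, exp2_effects)
```

The congruent-face slowing is about 37 ms in Experiment 1 and about 20 ms in Experiment 2, while the incongruent-face differences are small and were not statistically significant.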

ERP results showed that the satiated emotional word increased the amplitude or the latency of the N170 only for faces that matched the emotional valence of the preceding satiated word, whereas it decreased the amplitude of the LPC for all subsequent faces.

5.1. Consistency and conflict between the behavior and the ERP results

Firstly, the present study confirmed that, after a long presentation of an emotional word, participants' processing of the related emotional information in a face was impaired. To ensure that participants were paying attention during the emotional word presentation, we asked them to press the space key as soon as the word flickered. In addition, in the context of judging facial expressions, participants would automatically process the meaning of the emotional word [44,45] and become satiated after the prolonged presentation. That is, participants' processing of the emotional information was temporarily blocked, which in turn hindered their processing of similar emotional information in the subsequent face.

As for how far the effect of a satiated emotional word extends, Lindquist et al. proposed two hypotheses: the category-based hypothesis and the spreading activation hypothesis [9]. According to the category-based hypothesis, the effect of the semantic satiation of an emotional word is restricted to stimuli that belong to the same emotion category (e.g., a specific emotion) as the word. In contrast, the spreading activation hypothesis suggests that the effect of a satiated emotional word can extend to the processing of all semantically related emotional facial stimuli. Although these two hypotheses seem contradictory, Lindquist et al. found evidence for both in one study [9].

The behavioral results of the present study supported the category-based hypothesis, because the satiated emotional word prolonged the response time only for judging the emotion of faces belonging to the same emotion category. However, the ERP results could accommodate both the category-based hypothesis and the spreading activation hypothesis, in the early and late stages of face processing, respectively. The mechanism revealed by the ERP results might explain why both the category-based and the spreading activation hypotheses were supported in one of Lindquist et al.'s studies [9].

5.2. Category-based effect of emotional word semantic satiation in the early stage of face processing

The behavioral results of the present study were closely matched by the ERP evidence from the early stage of face processing, and together they supported the category-based hypothesis. The satiated emotional word modulated the N170 only for the subsequent valence-congruent face image; it did not affect the N170 for the valence-incongruent face image. The amplitude of the N170 in Experiment 1 and the latency of the N170 in Experiment 2 increased when the emotional valence of the face was the same as that of a previously satiated emotional word, compared with a satiated neutral word. Because the latency and amplitude of the N170 reflect the difficulty of face processing [[12], [13], [14]], these results provide electrophysiological evidence of increased difficulty in face processing after participants were satiated with a valence-congruent emotional word. Since “笑(smile)” and “哭(cry)” are words describing the facial expressions of happy and sad emotion, respectively, they have both conceptual and emotional meanings. It therefore remains an open question which of these meanings, once blocked, was the direct cause of the N170 results in the present study.

The different manifestations of the N170 results in Experiments 1 and 2 provide evidence that the effects were due to the blocking of the emotional valence of “笑(smile)” and “哭(cry)”. In Experiment 1, the satiated negative word increased the N170 for the sad face; in Experiment 2, the satiated positive word delayed the N170 for the happy face. This difference could be explained by different mechanisms underlying the facilitation of positive and negative facial expression processing [17]. As reported in previous studies, happy faces evoked a significantly earlier N170 than negative faces [46], while negative faces evoked a greater N170 amplitude than positive faces [47]. This difference might imply that negative emotion facilitates facial configuration processing by attracting more attention, reflected in an enhanced N170, whereas positive emotion facilitates it by starting facial configuration processing earlier, reflected in a shorter N170 latency. In the present study, however, these specific facilitation effects of the emotional faces were weakened or eliminated by the satiated emotional words. Therefore, compared with trials following the satiated neutral word, participants may have required more attention (i.e., an increased N170) to process the sad face configuration after a satiated negative word, and more time (i.e., a delayed N170) to process the happy face configuration after a satiated positive word.

5.3. Spreading activation effect of emotional word semantic satiation in the late stage of face processing

Both experiments revealed a significant main effect of satiated word type (emotional word vs. neutral word) in the late stage of face processing, indexed by the LPC. Specifically, the face image following an emotional word elicited a smaller LPC than that following a neutral word, regardless of whether the emotional valence of the face image was congruent or incongruent with that of the emotional word. This result indicates that the semantic satiation effect of the emotional word spread to the processing of the subsequent facial expression even when the two were opposite in emotional valence, which supports the spreading activation hypothesis [9].

The amplitude of the LPC reflects attention allocation to motivationally relevant information [19,29]. In the present study, the LPC for an emotional face image was attenuated after prolonged exposure to an emotional word. One possible reason is that participants became desensitized to emotional information because of the satiated emotional word, and that this state spread to the processing of all subsequent emotional stimuli. Specifically, the emotional information reduced at this stage might be emotional arousal, because the two groups of emotional words (negative vs. neutral and positive vs. neutral) were similar in arousal but different in emotional valence, yet their semantic satiation effects were similar at this stage.

Alternatively, the LPC can also reflect context updating [[48], [49], [50], [51]]. The LPC is small and flat when the stimulus is embedded in a relatively stable and consistent background, whereas it becomes larger and more variable if the background information is altered and/or replaced. Therefore, the attenuation of the LPC for the emotional face image after prolonged presentation of the emotional word in the present study could also be due to the emotional word providing a stable and congruent context for the upcoming emotional face.

6. Conclusion and limitation

In summary, the present study confirmed that, after prolonged presentation of an emotional word, subsequent face processing is hindered if the word and the face share a similar emotion category. This effect appears at two stages of face processing: in the early stage, the impact of the satiated emotional word is restricted to valence-congruent faces, but in the late stage it spreads to valence-incongruent faces. This means that the effect of a satiated emotional word starts within one category (e.g., valence) and then generalizes to a wider category, even including the category with the opposite emotional valence, although the effect decreases with the distance of the spread. These findings suggest that language plays an automatic and necessary role in classifying facial expressions.

However, the present study has several limitations. Only three words were used for satiation; the two emotional words (cry and smile) carry both conceptual and emotional meaning; and the emotional valence and the specific emotion categories of the faces were not separated. The mechanism of the semantic satiation effect therefore needs to be verified with other categories of emotional words and facial expressions.

Funding

This work was supported by the Education Department of Liaoning Province [LZ2020001].

Author contribution statement

Zhongqing Jiang: Conceived and designed the experiments; Analyzed and interpreted the data; Contributed reagents, materials, analysis tools or data; Wrote the paper.

Zhao Li: Conceived and designed the experiments; Performed the experiments; Analyzed and interpreted the data; Contributed reagents, materials, analysis tools or data; Wrote the paper.

Kewei Li: Conceived and designed the experiments; Performed the experiments; Analyzed and interpreted the data; Contributed reagents, materials, analysis tools or data.

Ying Liu: Conceived and designed the experiments; Analyzed and interpreted the data; Contributed reagents, materials, analysis tools or data.

Mingliang Gong, Junchen Shang, Wen Liu, Yangtao Liu: Analyzed and interpreted the data; Wrote the paper.

Data availability statement

Data associated with this study has been deposited at https://yunpan.360.cn/surl_yEb5qFCMNPQ (accession number: 287c).

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Footnotes

In Chinese, 阿 is a function word, pronounced Ah. It is usually used as a prefix for individuals' names.

M(哭(cry)-sad) means the satiated emotional word 哭 (cry) followed by a target sad expression. M(哭(cry)-happy) means the satiated emotional word 哭 (cry) followed by a target happy expression. M(阿(Ah)-sad) means the satiated neutral word 阿 (Ah) followed by a target sad expression. M(阿(Ah)-happy) means the satiated neutral word 阿 (Ah) followed by a target happy expression.

M(笑(smile)-sad) means the satiated emotional word 笑 (smile) followed by a target sad expression. M(笑(smile)-happy) means the satiated emotional word 笑 (smile) followed by a target happy expression. M(阿(Ah)-sad) means the satiated neutral word 阿 (Ah) followed by a target sad expression. M(阿(Ah)-happy) means the satiated neutral word 阿 (Ah) followed by a target happy expression.

References

- 1. Spruyt A., Hermans D., Houwer J.D., Eelen P. On the nature of the affective priming effect: affective priming of naming responses. Soc. Cognit. 2002;20(3):227–256. doi: 10.1521/soco.20.3.227.21106.

- 2. Aviezer H., Bentin S., Dudarev V., Hassin R.R. The automaticity of emotional face-context integration. Emotion. 2011;11(6):1406–1414. doi: 10.1037/a0023578.

- 3. Barrett L.F., Lindquist K.A., Gendron M. Language as context for the perception of emotion. Trends Cognit. Sci. 2007;11(8):327–332. doi: 10.1016/j.tics.2007.06.003.

- 4. Barrett L.F., Mesquita B., Gendron M. Context in emotion perception. Curr. Dir. Psychol. Sci. 2011;20(5):286–290. doi: 10.1177/0963721411422522.

- 5. Lindquist K.A., Gendron M. What's in a word? Language constructs emotion perception. Emot. Rev. 2013;5(1):66–71. doi: 10.1177/1754073912451351.

- 6. Wieser M.J., Brosch T. Faces in context: a review and systematization of contextual influences on affective face processing. Front. Psychol. 2012;3:471. doi: 10.3389/fpsyg.2012.00471.

- 7. Lambert W.E., Jakobovits L.A. Verbal satiation and changes in the intensity of meaning. J. Exp. Psychol. 1960;60(6):376–383. doi: 10.1037/h0045624.

- 8. Esposito N.J., Pelton L.H. Review of the measurement of semantic satiation. Psychol. Bull. 1971;75(5):330–346. doi: 10.1037/h0031001.

- 9. Lindquist K.A., Barrett L.F., Bliss-Moreau E., Russell J.A. Language and the perception of emotion. Emotion. 2006;6(1):125–138. doi: 10.1037/1528-3542.6.1.125.

- 10. Gendron M., Lindquist K.A., Barsalou L., Barrett L.F. Emotion words shape emotion percepts. Emotion. 2012;12(2):314–325. doi: 10.1037/a0026007.

- 11. Li Z., Zhu P., Liu Y., Jiang Z.Q. Gender word semantic satiation inhibits facial gender information processing. J. Psychophysiol. 2021;35(4):214–222. doi: 10.1027/0269-8803/a000274.

- 12. Bentin S., Allison T., Puce A., Perez E., McCarthy G. Electrophysiological studies of face perception in humans. J. Cognit. Neurosci. 1996;8(6):551–565. doi: 10.1162/jocn.1996.8.6.551.

- 13. Eimer M. Effects of face inversion on the structural encoding and recognition of faces: evidence from event-related brain potentials. Cognit. Brain Res. 2000;10(1–2):145–158. doi: 10.1016/S0926-6410(00)00038-0.

- 14. Guillaume C., Guillery-Girard B., Chaby L., Lebreton K., Hugueville L., Eustache F., Fiori N. The time course of repetition effects for familiar faces and objects: an ERP study. Brain Res. 2009;1248:149–161. doi: 10.1016/j.brainres.2008.10.069.

- 15. Itier R.J., Taylor M.J. Inversion and contrast polarity reversal affect both encoding and recognition processes of unfamiliar faces: a repetition study using ERPs. Neuroimage. 2002;15(2):353–372. doi: 10.1006/nimg.2001.0982.

- 16. Itier R.J., Taylor M.J. Effects of repetition learning on upright, inverted and contrast-reversed face processing using ERPs. Neuroimage. 2004;21(4):1518–1532. doi: 10.1016/j.neuroimage.2003.12.016.

- 17. Hinojosa J.A., Mercado F., Carretié L. N170 sensitivity to facial expression: a meta-analysis. Neurosci. Biobehav. Rev. 2015;55:498–509. doi: 10.1016/j.neubiorev.2015.06.002.

- 18. Luo W.B., Feng W.F., He W.Q., Wang N.Y., Luo Y.J. Three stages of facial expression processing: ERP study with rapid serial visual presentation. Neuroimage. 2010;49(2):1857–1867. doi: 10.1016/j.neuroimage.2009.09.018.

- 19. Pourtois G., Schettino A., Vuilleumier P. Brain mechanisms for emotional influences on perception and attention: what is magic and what is not. Biol. Psychol. 2013;92(3):492–512. doi: 10.1016/j.biopsycho.2012.02.007.

- 20. Schupp H.T., Ohman A., Junghofer M., Weike A.I., Stockburger J., Hamm A.O. The facilitated processing of threatening faces: an ERP analysis. Emotion. 2004;4(2):189–200. doi: 10.1037/1528-3542.4.2.189.

- 21. Schupp H.T., Junghofer M., Weike A.I., Hamm A.O. The selective processing of briefly presented affective pictures: an ERP analysis. Psychophysiology. 2004;41(3):441–449. doi: 10.1111/j.1469-8986.2004.00174.x.

- 22. Calbi M., Montalti M., Pederzani C., Arcuri E., Umiltà M.A., Gallese V., Mirabella G. Emotional body postures affect inhibitory control only when task-relevant. Front. Psychol. 2022;13 doi: 10.3389/fpsyg.2022.1035328.

- 23. Mancini C., Falciati L., Maioli C., Mirabella G. Threatening facial expressions impact goal-directed actions only if task-relevant. Brain Sci. 2020;10(11):794. doi: 10.3390/brainsci10110794.

- 24. Mancini C., Falciati L., Maioli C., Mirabella G. Happy facial expressions impair inhibitory control with respect to fearful facial expressions but only when task-relevant. Emotion. 2022;22(1):142–152. doi: 10.1037/emo0001058.

- 25. Mirabella G. The weight of emotions in decision-making: how fearful and happy facial stimuli modulate action readiness of goal-directed actions. Front. Psychol. 2018;9:1334. doi: 10.3389/fpsyg.2018.01334.

- 26. Mirabella G., Grassi M., Mezzarobba S., Bernardis P. Angry and happy expressions affect forward gait initiation only when task relevant. Emotion. 2023;23(2):387–399. doi: 10.1037/emo0001112.

- 27. Recio G., Sommer W., Schacht A. Electrophysiological correlates of perceiving and evaluating static and dynamic facial emotional expressions. Brain Res. 2011;1376:66–75. doi: 10.1016/j.brainres.2010.12.041.

- 28. Rellecke J., Sommer W., Schacht A. Does processing of emotional facial expressions depend on intention? Time-resolved evidence from event-related brain potentials. Biol. Psychol. 2012;90(1):23–32. doi: 10.1016/j.biopsycho.2012.02.002.

- 29. Ashley V., Vuilleumier P., Swick D. Time course and specificity of event-related potentials to emotional expressions. Neuroreport. 2004;15(1):211–216. doi: 10.1097/00001756-200401190-00041.

- 30. Schacht A., Sommer W. Emotions in word and face processing: early and late cortical responses. Brain Cognit. 2009;69(3):538–550. doi: 10.1016/j.bandc.2008.11.005.

- 31. Recio G., Schacht A., Sommer W. Recognizing dynamic facial expressions of emotion: specificity and intensity effects in event-related brain potentials. Biol. Psychol. 2014;96:111–125. doi: 10.1016/j.biopsycho.2013.12.003.

- 32. Lewis M.B., Ellis H.D. Satiation in name and face recognition. Mem. Cognit. 2000;28(5):783–788. doi: 10.3758/BF03198413.

- 33. Jiang Z.Q., Qu Y.H., Xiao Y.L., Wu Q., Xia L.K., Li W.H., Liu Y. Comparison of affective and semantic priming in different SOA. Cogn. Process. 2016;17(4):357–375. doi: 10.1007/s10339-016-0771-8.

- 34. Li W.H., Jiang Z.Q., Liu Y., Wu Q., Zhou Z.J., Jorgensen N., Li X., Li C. Positive and negative emotions modulate attention allocation in color-flanker task processing: evidence from event related potentials. Motiv. Emot. 2014;38(3):451–461. doi: 10.1007/s11031-013-9387-9.

- 35. Rossell S.L., Nobre A.C. Semantic priming of different affective categories. Emotion. 2004;4(4):354–363. doi: 10.1037/1528-3542.4.4.354.

- 36. Wentura D., Rothermund K., Bak P. Automatic vigilance: the attention-grabbing power of approach- and avoidance-related social information. J. Pers. Soc. Psychol. 2000;78(6):1024–1037. doi: 10.1037/0022-3514.78.6.1024.

- 37. Faul F., Erdfelder E., Lang A.G., Buchner A. G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods. 2007;39(2):175–191. doi: 10.3758/BF03193146.

- 38. Gong X., Huang Y.X., Wang Y., Luo Y.J. Revision of the Chinese facial affective picture system. Chin. Ment. Health J. 2011;25(1):40–46. https://psycnet.apa.org/record/2011-05085-005

- 39. Kasschau R.A. Semantic satiation as a function of duration of repetition and initial meaning intensity. J. Verbal Learn. Verbal Behav. 1969;8(1):36–42. doi: 10.1016/S0022-5371(69)80008-3.

- 40. Bentin S., Golland Y., Flevaris A., Robertson L.C., Moscovitch M. Processing the trees and the forest during initial stages of face perception: electrophysiological evidence. J. Cognit. Neurosci. 2006;18(8):1406–1421. doi: 10.1162/jocn.2006.18.8.1406.

- 41. Cheal J.L., Heisz J.J., Walsh J.A., Shedden J.M., Rutherford M.D. Afterimage induced neural activity during emotional face perception. Brain Res. 2014;1549:11–21. doi: 10.1016/j.brainres.2013.12.020.

- 42. Huang Y.X., Luo Y.J. Temporal course of emotional negativity bias: an ERP study. Neurosci. Lett. 2006;398(1–2):91–96. doi: 10.1016/j.neulet.2005.12.074.

- 43. Nemrodov D., Itier R.J. Is the rapid adaptation paradigm too rapid? Implications for face and object processing. Neuroimage. 2012;61(4):812–822. doi: 10.1016/j.neuroimage.2012.03.065.

- 44. Stroop J.R. Studies of interference in serial verbal reactions. J. Exp. Psychol. 1935;18(6):643–662. doi: 10.1037/h0054651.

- 45. Qiu J., Luo Y.J., Wang Q.H., Zhang F.H., Zhang Q.L. Brain mechanism of Stroop interference effect in Chinese characters. Brain Res. 2006;1072(1):186–193. doi: 10.1016/j.brainres.2005.12.029.

- 46. Batty M., Taylor M.J. Early processing of the six basic facial emotional expressions. Cognit. Brain Res. 2003;17(3):613–620. doi: 10.1016/S0926-6410(03)00174-5.

- 47. Brenner C.A., Rumak S.P., Burns A.M., Kieffaber P.D. The role of encoding and attention in facial emotion memory: an EEG investigation. Int. J. Psychophysiol. 2014;93(3):398–410. doi: 10.1016/j.ijpsycho.2014.06.006.

- 48. Davis T.M., Jerger J. The effect of middle age on the late positive component of the auditory event-related potential. J. Am. Acad. Audiol. 2014;25(2):199–209. doi: 10.3766/jaaa.25.2.8.

- 49. Frühholz S., Fehr T., Herrmann M. Early and late temporo-spatial effects of contextual interference during perception of facial affect. Int. J. Psychophysiol. 2009;74(1):1–13. doi: 10.1016/j.ijpsycho.2009.05.010.

- 50. Juottonen K., Revonsuo A., Lang H. Dissimilar age influences on two ERP waveforms (LPC and N400) reflecting semantic context effect. Cognit. Brain Res. 1996;4(2):99–107. doi: 10.1016/0926-6410(96)00022-5.

- 51. Xu Q., Yang Y.P., Tan Q., Zhang L. Facial expressions in context: electrophysiological correlates of the emotional congruency of facial expressions and background scenes. Front. Psychol. 2017;8:2175. doi: 10.3389/fpsyg.2017.02175.
