Abstract

The ability to attribute attentional states to other individuals is a highly adaptive socio-cognitive skill and thus may have evolved in many social species. However, whilst humans excel in this ability, even chimpanzees do not appear to understand accurately how visual attention works, particularly with regard to the function of the eyes. The complex socio-ecological background and socio-cognitive skill-set of bottlenose dolphins (Tursiops sp.), alongside the specialised training that captive dolphins typically undergo, make them an especially relevant candidate for an investigation into their sensitivity to human attentional states. Therefore, we tested 8 bottlenose dolphins on an object retrieval task. The dolphins were instructed by a trainer to fetch an object under various attentional state conditions involving the trainer’s eyes and face orientation: ‘not looking’, ‘half looking’, ‘eyes open’, and ‘eyes closed’. The dolphins showed an increased latency to retrieve the object in conditions where the trainer’s head and eyes cued a lack of attention to the dolphin, particularly when comparing the ‘eyes open’ and ‘eyes closed’ conditions; we therefore demonstrate that dolphins can be sensitive to human attentional features, namely the functionality of eyes. This study supports growing evidence that dolphins possess highly complex cognitive abilities, particularly in the social domain.

Subject terms: Animal behaviour, Behavioural ecology

Introduction

The ability to attribute attentional states to other individuals is a highly valuable socio-cognitive skill and may offer immediate adaptive benefits1,2. As well as allowing an individual to know whether it is being observed, attending to a conspecific’s or heterospecific’s attentional cues (e.g., by turning to look) can yield valuable information about food resources, predators, or social interactions. Furthermore, the ability to assess accurately what others are attending to is an essential requirement for effective communication, and thus the evolution of such an ability would be advantageous for many social species3. This is apparent in humans: from early infancy, children seem to understand the importance of eyes as an indicator of what another individual is attending to. By one year of age, children consistently follow their mother’s gaze4, and by two years old, they do not use head direction as an indicator of attention if an adult’s eyes are closed or covered5.

However, even chimpanzees, our closest living relatives, do not appear to understand accurately how visual perception works, particularly with regard to the role of the eyes in attention3,6–8. When tested on their understanding of the fundamental conditions underlying others’ perception (relating to body and face orientation as well as the state of the eyes), chimpanzees failed to attribute attention correctly except in the most basic condition, in which they chose between begging from a trainer facing them and one who had their back turned6. Comparable studies also suggest that several non-human primates fail to use eyes as an attentional cue, including chimpanzees7,8, rhesus macaques9,10, and capuchin monkeys11,12. In contrast, other studies report some evidence that the same species may use eyes as an attentional cue. When requesting food from a trainer by pointing to a baited cup, capuchin monkeys looked at the trainer’s face for longer when she returned their gaze than when she looked at the ceiling in a first experiment, and looked longer in eyes-open than in eyes-closed conditions in a second experiment13. However, the capuchins’ pointing behaviour did not differ between these conditions in either experiment. If the monkeys fully understood the role of eyes in attention, differences in pointing would be expected between these conditions. Furthermore, although Hostetter et al.3 found that chimpanzees used more vocalisations when a trainer’s eyes were closed (vs open), the same result was not found when the trainer’s eyes were covered (vs uncovered). This discrepancy reveals inconsistencies in the chimpanzees’ ability to interpret attentional states from the presence or absence of eye cues across experimental contexts. The inconsistent results of these studies may reflect an experimental failure to dissociate the different cues given by the trainers. 
For example, the orientation of the trainer’s body may provide information about their general inclination to give food, whereas the visibility of their face may provide information about their attentional state14,15. Unless these information sources are properly separated in the experimental design, the animals’ responses cannot be properly understood.

With socio-ecological backgrounds comparable to that of chimpanzees (including fission–fusion groups, social learning and culture, and complex cooperation16), bottlenose dolphins (Tursiops sp.) are often placed among the most cognitively advanced species, with abilities rivalling those of the non-human primates17–24. As highly social animals, bottlenose dolphins live in pods ranging in size from pairs to around 100 individuals25. They exhibit complex fission–fusion social dynamics26 and form one of the most intricate alliance systems documented in any non-human animal, great apes included27. Furthermore, dolphins engage in elaborate cooperative hunting strategies28, with division of labour and role specialisation29, and spread foraging strategies via social networks to form diverse cultures30,31. Living within such a complex social environment may demand a suite of socio-cognitive skills, such as multi-modal individual recognition32,33, selective social learning34, life-long social memory35, episodic-like memory in a social context36, and precise behavioural synchronisation37, as well as abilities that depend on social attention (paying attention to the attention of others)38. These include the ability to imitate21, the understanding and production of indicative pointing39–41, and the capacity to understand the focus of another individual’s gaze42–45. It would follow, then, that bottlenose dolphins may have evolved a socio-cognitive toolkit allowing them to use social information to attribute attentional states to other individuals. Alongside these complex socio-cognitive abilities, captive dolphins, such as those tested in this study, are often highly trained animals that routinely perform in shows in which their trainers command them to perform actions (e.g., do a back flip, search for and return a floating object, swim belly up in circles) in exchange for highly valued rewards. 
These interactions may prime performing dolphins to be more perceptive of their trainer’s attentional states.

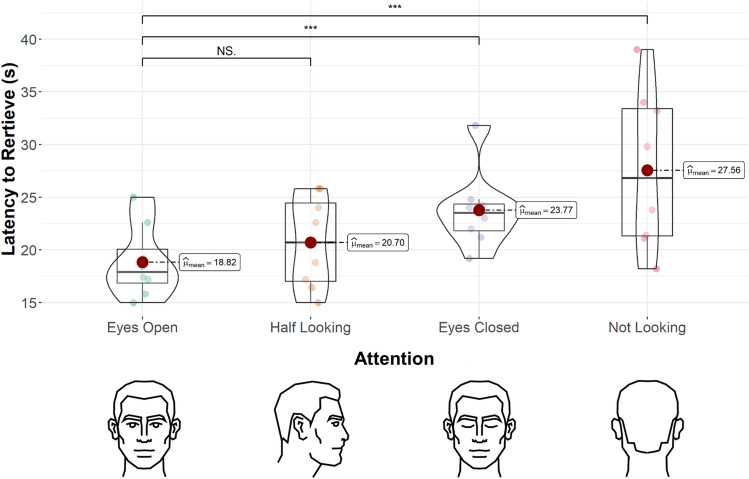

Therefore, the intricate socio-ecological background and advanced socio-cognitive skill-set of bottlenose dolphins, alongside the highly specialised training that captive dolphins typically undergo, make them a highly relevant candidate for an investigation into their sensitivity to human attentional states, including the functionality of eyes. In this study we tested 8 common bottlenose dolphins (Tursiops truncatus) on their sensitivity to human attentional states using an object retrieval task. In this task, the dolphins were commanded by a trainer to retrieve an object from the other side of a pool, whilst the visual cues suggestive of the trainer’s attentional level were varied across four conditions (Fig. 1): ‘eyes open’ (commanding with their head directed towards the dolphin and their eyes open); ‘half looking’ (commanding with their head at a right angle to the dolphin and their eyes open); ‘eyes closed’ (commanding with their head directed towards the dolphin but their eyes closed); and ‘not looking’ (commanding with their head turned away from the dolphin). To assess the sensitivity of the dolphins to the various attentional state features, we measured the latency to retrieve the object (the time from the moment the command was given to the moment the dolphin raised its body out of the water to give the object to the trainer). As dolphins can follow human head-directed gaze43, we predicted that the dolphins would show a greater latency to retrieve the object in the ‘not looking’ and ‘half looking’ conditions than in the ‘eyes open’ condition. 
However, in line with the literature suggesting that non-human primates fail to use eyes as an attentional cue6–12, or at best show only partial evidence of responding to eye-related cues3,13, and given the marked anatomical difference in eye position between dolphins and humans, we also predicted that there would be no significant difference in latency between the ‘eyes open’ and ‘eyes closed’ conditions.

Figure 1.

Dolphins’ latency (s) to retrieve the object by the trainer’s attention condition. Latency was measured as the time from the moment the command was given to the moment the dolphin raised its body out of the water to give the object to the trainer. Coloured dots show individual data points. *** indicates p < 0.05; NS indicates a non-significant difference.

Results and discussion

A generalised linear mixed model (GLMM) revealed that there was a significant effect of attention (χ2 = 18.15; n = 8; df = 3; p < 0.001; Fig. 1) and no significant effect of trial number (χ2 = 4.75; n = 8; df = 3; p = 0.19). Between conditions, there was a significant difference in latency to retrieve between ‘eyes open’ and ‘not looking’ (p < 0.001; Fig. 1), ‘half looking’ and ‘not looking’ (p = 0.002; Fig. 1), and ‘eyes open’ and ‘eyes closed’ (p = 0.03; Fig. 1). There was no significant difference between ‘eyes open’ and ‘half looking’ (p = 0.39; Fig. 1), ‘half looking’ and ‘eyes closed’ (p = 0.21; Fig. 1), and ‘eyes closed’ and ‘not looking’ (p = 0.06; Fig. 1).
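The likelihood ratio statistics above can be sanity-checked against their reported p-values. The sketch below is only an arithmetic check, not a re-analysis: it takes the reported χ2 values and degrees of freedom as inputs (not the underlying latency data) and computes the upper tail of a χ2 distribution with integer degrees of freedom using the standard closed-form recurrence. The function name `chi2_sf` is our own illustrative choice and uses only the Python standard library.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) for a chi-square variable with integer df >= 1.

    Uses the recurrence Q(k + 2) = Q(k) + (x/2)^(k/2) * exp(-x/2) / Gamma(k/2 + 1),
    starting from Q(1) = erfc(sqrt(x/2)) for odd df or Q(2) = exp(-x/2) for even df.
    """
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 1:
        q, k = math.erfc(math.sqrt(half)), 1
    else:
        q, k = math.exp(-half), 2
    while k < df:
        # Add the next series term, computed in log space to avoid overflow.
        q += math.exp((k / 2.0) * math.log(half) - half - math.lgamma(k / 2.0 + 1.0))
        k += 2
    return q


# Reported likelihood ratio statistics (df = 3 for both fixed effects).
p_attention = chi2_sf(18.15, 3)
p_trial = chi2_sf(4.75, 3)
print(f"attention condition: p = {p_attention:.5f}")
print(f"trial number: p = {p_trial:.2f}")
```

For the attention term this yields a value below 0.001, and for trial number it rounds to 0.19, in line with the values reported above. Note that df = 3 for trial number implies it entered the model as a four-level factor rather than as a continuous covariate.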

As the dolphins showed an increased latency to retrieve the object in conditions where the trainer’s head and eyes cued a lack of attention to the dolphin, these results suggest that dolphins can be sensitive to human attentional features. Because latency in the ‘not looking’ condition differed significantly from both the ‘half looking’ and ‘eyes open’ conditions, the dolphins, in line with our first prediction, appear to have used head direction to attribute human attentional states. However, contrary to our second prediction, we found a significant difference between ‘eyes open’ and ‘eyes closed’ but not between ‘eyes closed’ and ‘not looking’, suggesting that the dolphins could also use eye cues to attribute human attentional states. That said, the difference between the ‘eyes closed’ and ‘not looking’ conditions approached significance (p = 0.06) and might reach significance with a larger sample. The lack of difference between the ‘half looking’ condition and both the ‘eyes closed’ and ‘eyes open’ conditions may reflect confusion over conflicting head and eye cues. The dolphins’ ability to attend to eye cues is particularly interesting given the inconsistent evidence for this ability in chimpanzees and other non-human primates, and the distinct difference in eye placement between humans and dolphins. Therefore, these results, alongside other evidence21,32–37,39–44, raise the possibility that bottlenose dolphins possess complex social cognitive abilities.

Whilst this study is the first to provide evidence that bottlenose dolphins can use the functionality of eyes (open or closed) as an indicator of human attentional states, our findings are supported by other research demonstrating that dolphins can follow human gaze and pointing gestures42–44 and respond to human body orientation as a cue to attention. For example, in two-object choice tasks, dolphins can follow human gaze and pointing gestures42,43, and can identify the object a human is pointing to as well as its location44. However, they did not follow gaze cues when only the eyes were used (with the head stationary)43, or when the objects were placed at different distances from them (as opposed to laterally in front of them)44. Xitco et al.41 showed that dolphins pointed (faced in a direction for 2 s or longer) more towards jars containing food when a trainer was facing them than when the trainer’s body was turned away. Furthermore, the dolphins were significantly more likely to leave the testing apparatus during back-turned trials than during face-forward trials, and rarely pointed during trials in which the experimenter swam away after baiting the jars.

However, our inferences about which attentional cues (e.g., body and/or head) are used by dolphins differ from those of comparable studies that independently varied the orientation of these features46. For example, in a study in which bottlenose dolphins had to follow gestural commands given by humans in varied attentional states, Tomonaga et al.46 found that the dolphins’ behaviour seemed to be controlled by the orientation of the trainer’s body but not their head. However, as Tomonaga et al. rotated the trainer’s head by only 90 degrees, equivalent to our ‘half looking’ condition, they may have found an effect of head direction had they turned the head fully away from the dolphin, as in our ‘not looking’ condition. Furthermore, the authors argue that, because the dolphins were tested in a context very similar to their daily performance training and husbandry, using the same gestural commands within a strict positive reinforcement procedure, the dolphins may have ignored the subtle social cues presented by the trainers, having been trained extensively to follow only the command gestures. In contrast, we tested our dolphins outside their usual training context: in a physically separate pool (usually used for veterinary purposes), and with timings, trainers, and a command context that differed from their daily performance training (commands are usually given with the trainer’s full attention). Alternatively, the discrepancy in findings may reflect differences in the behavioural responses measured. Tomonaga et al. tested whether dolphins produced correct responses to commands, whereas we measured the latency to perform a behaviour. Our continuous measure may therefore have detected subtler differences across conditions than Tomonaga et al. could discern using binary (correct vs incorrect) measures. 
That said, altering the trainer’s body orientation in Tomonaga et al. necessarily changed what the commands looked like to the dolphins, so their responses may have reflected confusion over which command was being given rather than the trainers’ attention. The discrepancy may also be due to differences in the information that body and head direction convey14,15. Tempelmann et al.15 found that, as long as body orientation no longer restricted the giving of food, the orientation of the trainer’s head became the main factor dictating apes’ behaviour. As the trainer’s body remained in the same position across all conditions in the current study, unlike in Tomonaga et al.46, our dolphins appear to have attended to head and eye cues, consistent with the conclusions of Tempelmann et al.15.

A more parsimonious explanation for the increased latency in conditions differing from ‘eyes open’ may be that these conditions contradicted or disrupted the dolphins’ expectations of how a command of this nature is typically presented. Indeed, this sample of dolphins has been extensively trained with the ‘retrieve’ command in a context in which the trainer gives them full attention (i.e., head facing the dolphin with eyes open), so the situation experienced by the dolphins in this experiment was novel. The increased latency in conditions differing from ‘eyes open’ may therefore represent an expectancy violation due to confusion about the trainer’s behaviour. With the methods employed in this study, it is not possible to separate this possibility from attention attribution on the basis of the observed behavioural responses alone. However, we deem this explanation unlikely given the subtle visual difference between the ‘eyes open’ and ‘eyes closed’ conditions, and the lack of difference between the ‘eyes open’ and ‘half looking’ conditions and between the ‘eyes closed’ and ‘not looking’ conditions (although the latter approached significance (p = 0.06) and might reach significance in a better-powered study). Furthermore, even if such a violation of expectation occurred in the ‘eyes closed’ condition, it would still indicate a notable sensitivity to the human trainers’ eyes, irrespective of whether that sensitivity specifically reflects the attentional aspects of human vision.

Interestingly, whilst our dolphins demonstrated sensitivity to the factors involved in human visual attention, this ability does not seem to correspond directly to their primary method of communication in nature47,48. The ‘cooperative eye hypothesis’ states that human eyes, compared with those of other primates, produce particularly visible signals (owing to the white sclera contrasting with the darker iris), and that this feature may have evolved to facilitate cooperation with conspecifics through gaze following in close-range joint attentional and communicative interactions49. Therefore, the ability to attribute attentional states based on visual signals may have been strongly selected for in hominin evolution. Dolphins, on the other hand, appear to communicate and interpret their environment primarily through acoustic signals, using high-pitched whistles and echolocation clicks47,48. For example, in a cooperative task, dolphins used vocal signals to facilitate successful execution of the task, being significantly more likely to cooperate successfully when they produced whistles37, and less successful in the presence of distracting anthropogenic noise50. Dolphins also appear to use echolocation cues in role-specialised group hunting and produce more whistles during this group hunting strategy than when foraging alone29. Furthermore, males within alliances formed for coercive mate guarding, in which males cooperate to control female movements through aggression, use vocal communication to coordinate their movements51 and to increase their bond strength52 (with direct fitness implications53).

Nevertheless, dolphins do appear to rely to some extent on visual information in social interactions and cooperation. Although more successful when using whistles in the cooperative task, dolphins were still able to coordinate their behaviour without producing any whistles and thus, as the authors suggest, were likely using visual information in these cases37. Furthermore, coordinated movement, resulting in elaborate synchronous displays, has been shown to play an important role in dolphin social interactions. Most motor synchrony is observed between males within female-consortship alliances54 and includes joint surfacing, aerial leaps, and underwater turns in different directions (e.g., parallel swimming, swimming in opposite directions)26. These displays are most common in the presence of females but also likely function to strengthen the alliance54. These synchronous displays likely rely on visual information to coordinate movement, particularly as the synchrony is remarkably precise, with behaviours separated by just 80–130 ms54,55. Moreover, dolphins adopt an ‘S-shape’ posture as a signal of aggression in sexual interactions and in disciplinary behaviour toward juveniles47. Whilst these observations suggest a role for visual information in dolphin social interactions, there is no evidence of dolphins using conspecific eye-related cues as signals, either in the wild or in captivity. Although Johnson et al.45 report evidence of conspecific “gaze-following” in dolphins, this behaviour could rely on body, head, or movement cues alone, without the use of the eyes, and may also involve vocalisations. 
Therefore, the ‘cooperative eye hypothesis’ cannot by itself explain the behaviour of our dolphins, as it is unlikely that there has been significant selection in the dolphin lineage for the ability to attribute attentional states to conspecifics based on visual signals.

Consequently, the ability of our dolphins to attend to human attentional cues may stem from their training and development in captivity. Such specialised and intense training might have primed performing dolphins to be more perceptive of their trainers’ attentional states, as these are closely associated with the likelihood of the trainer requesting a particular behaviour and act as a reliable reference point for a dolphin's own behavioural regulation (i.e., if the trainer is not paying attention to me, then there is no need for me to attend to the trainer). Moreover, trainers often direct more than one dolphin simultaneously, and the dolphins are usually not given the same commands. For example, one dolphin might be asked to do a back flip whilst another is asked to show its flukes. The attentional state of the trainer (i.e., is the trainer looking at me or at the other dolphin?) therefore gives the dolphin a powerful cue to discern what it is being asked to do (and subsequently rewarded for). Perhaps, then, although this ability is not relevant in nature, these dolphins may have learned to use human eyes as a cue to attentional state in order to accurately interpret and perform the behaviours commanded by their trainers for rewards. Indeed, other species with comparable training relationships with humans, such as dogs and horses, have also shown sensitivity to human attentional states56–62. This sensitivity has been argued to be an innate ability shaped by selective pressures during domestication57 or, not mutually exclusively, the result of learning from repeated experiences with humans over years of training. 
The latter hypothesis is supported by a study of young domestic horses, which reports that although they could use a human trainer’s body orientation as an attentional cue, they could not, unlike trained adult horses61, use subtler cues such as head orientation and eye functionality62. Therefore, in at least some species, substantial experience seems to be required to develop the ability to attend to subtle human attentional cues.

As all the dolphins in this experiment were born and raised in captivity and have been trained to perform behaviours from an early age, it would be highly informative to assess the ability of untrained dolphins, whether wild or captive, to attribute human attentional states. It would also be enlightening to assess the attention attribution abilities of dolphins that, although wild and untrained (i.e., not trained to perform in shows), have had long-standing mutualistic interactions with humans, such as the population of bottlenose dolphins living off the coast of Laguna, Brazil63. These dolphins regularly cooperate with local artisanal fishers in a century-old practice in which the dolphins shoal fish towards the shore and experience increased foraging success as a result of the fishers casting their nets in synchrony with the dolphins’ dive cues64. As the dolphins modify their foraging behaviour, which they must do to actually benefit from the interaction, only when the fishers respond appropriately to their foraging cues65, it may pay for the dolphins to attend to the humans’ attentional features in order to maximise their foraging efficiency and avoid wasting effort on inattentive cooperators. Assessing variation in the ability to attribute attentional states to other individuals (namely humans) across dolphin populations with differing life experiences may reveal whether this ability is shared across the entire species or is flexible and therefore learned through relevant experience.

It could be argued, however, that given the limited evidence of dolphins relying on visual information (particularly eye-related cues) in social interactions, as well as the anatomical and sensory differences between dolphins and humans, the dolphins in this study had simply learned that retrieving the object is more “rewarding” when the trainer faces them with ‘eyes open’ than when the trainer is ‘not looking’, rather than having a full understanding of gaze direction and eye-related attention. However, as the experimental situation (in which the trainer gave the dolphin anything other than full ‘eyes open’ attention) was novel, and each dolphin participated in only a single trial per condition, the dolphins had no opportunity to learn this difference through repeated experience. Moreover, the dolphins were always rewarded equally across conditions, provided they successfully retrieved the object and gave it to the trainer, which further casts doubt on this argument.

Furthermore, whilst this study only measured the dolphins’ physical behaviour (latency to retrieve the object), a similar task in which vocalisations could be used (e.g., to attract the trainer’s attention when they are not looking or their eyes are closed) would be highly informative for understanding attention attribution in dolphins, as vocalisations appear to be much more important in bottlenose dolphin social interactions, as detailed above. In addition, although relatively large for comparative psychology experiments, our sample of 8 dolphins is fairly small for measuring variation in latency. Although we were able to detect significant variation between conditions even with this small sample, a replication with a larger sample would be valuable to validate the robustness of our results. Furthermore, as ‘latency to retrieve an object’ is a somewhat indirect measure that could be influenced by unmeasured factors not involving attentional state attribution, such as expectancy violation, investigating whether the trainer’s attentional state affects the dolphins’ responses in other tasks, for example trained performance behaviours such as a backflip, may corroborate the findings of this study (i.e., the dolphin may perform a behaviour less successfully when the trainer cues a lack of attention).

Nevertheless, this study adds valuable insight regarding the question of how non-human animals perceive and understand social information, which is a topic predominantly studied using chimpanzees and other non-human primates. As we build on evidence suggesting that dolphins may be able to attribute attentional states to humans41, and demonstrate for the first time the successful use of head orientation and eye functionality-related attentional cues, this discovery opens new avenues of research into dolphin social cognition as well as inter-species cooperation and communication.

Methods

Subjects and housing

Eight dolphins (3 females; 6–29 years old) participated in this study. This sample comprised all the dolphins available for testing (i.e., not calves and in full health). Dolphins were individually identified using their distinctive physical characteristics, such as facial differences and tooth rake patterns. The dolphins were grouped into three pods, housed in three adjacent pools (pool 1 = 2581 m³; pool 2 = 1840 m³; pool 3 = 1840 m³) at Zoomarine Italia (Torvaianica, Italy). This arrangement was already in place before the current experiment and was in line with individual social and breeding requirements. The pools were connected via gates, which also connected to two additional veterinary pools, one of which was used for this study (1020 m³). The experimental pool was not typically used for training purposes. Overall, the pools totalled 7667 m³. Each dolphin that participated in this study was born and raised in captivity. The dolphins had previously participated in other research using the same commands36. Zoomarine trains its dolphins to perform different behaviours for various reasons, including medical care and zoo performances, as is common in zoological settings66. This training begins when the dolphins are young and uses positive reinforcement-based operant conditioning and social learning. For testing, the dolphins were individually isolated in the experimental pool when possible; if not (e.g., when testing a cow with a young calf), the calf was kept on the other side of the pool by a trainer.

Procedures

The project was approved by the University of Cambridge Animal Welfare Ethical Review Body and was conducted under a university non-regulated procedure licence (OS2022/01), and all methods were performed in accordance with relevant guidelines and regulations. This study is reported in accordance with ARRIVE guidelines. Dolphins were tested on their sensitivity to human attentional cues using an object retrieval task. Before the onset of the current study, all dolphins had been trained by Zoomarine staff to retrieve an object floating in the water using a specific command. This command consisted of two consecutive hand motions pointing with a closed fist (index finger facing upwards) in the direction of the object. Before any command was given, both in normal Zoomarine training and in this study, individuals were encouraged to face the trainer and attend to the subsequent command using other trained signals: a flat palm facing up, used to encourage the dolphin to approach, and a flat palm facing down, used to signal that the dolphin was about to receive a command. Commands were only given once the dolphin was positioned directly in front of the commanding platform (the dolphins were trained to wait for the command in this position) and was facing the trainer (who was informed by other trainers in conditions in which they could not see the dolphin). In test trials, the dolphins were commanded by a trainer to retrieve an object from the other side of the pool, whilst the visual cues suggestive of the trainer’s attentional level were varied across four conditions (Fig. 1): ‘eyes open’ (commanding with their head directed towards the dolphin and their eyes open); ‘half looking’ (commanding with their head at a right angle to the dolphin and their eyes open); ‘eyes closed’ (commanding with their head directed towards the dolphin but their eyes closed); and ‘not looking’ (commanding with their head turned away from the dolphin). 
In all conditions, the trainer’s body remained perpendicular to the dolphin (so that the head could be swivelled in either direction without moving the body). The ‘eyes open’ condition can be considered a control condition, as it is the context in which commands are usually given. Each dolphin received one trial per condition in a randomised order (generated with the Excel function RAND()) to control for any effect of trial order; every dolphin had a unique trial order. To standardise the location of the object in the pool, a second trainer temporarily held it in position until the dolphin obtained it. To assess the sensitivity of the dolphins to the various attentional states, we measured the latency to retrieve the object (the time from the moment the command was given to the moment the dolphin raised its body out of the water to give the object to the trainer).
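The counterbalancing constraint described above (one trial per condition, with every dolphin receiving a different order) can be expressed compactly in code. The sketch below is a Python equivalent of that idea, not the authors' Excel procedure: it draws distinct orders without replacement from the 4! = 24 possible permutations of the four conditions. The dolphin identifiers and the seed are hypothetical placeholders.

```python
import itertools
import random

CONDITIONS = ("eyes open", "half looking", "eyes closed", "not looking")


def assign_trial_orders(dolphin_ids, seed=None):
    """Give each subject a distinct, randomly chosen order of the four conditions.

    There are 4! = 24 possible orders, so this supports up to 24 subjects.
    """
    all_orders = list(itertools.permutations(CONDITIONS))
    if len(dolphin_ids) > len(all_orders):
        raise ValueError("more subjects than distinct condition orders")
    rng = random.Random(seed)
    # Sampling without replacement guarantees that no two subjects share an order.
    chosen = rng.sample(all_orders, k=len(dolphin_ids))
    return dict(zip(dolphin_ids, chosen))


# Hypothetical identifiers for the 8 subjects; the seed is arbitrary.
orders = assign_trial_orders([f"dolphin_{i}" for i in range(1, 9)], seed=2022)
for dolphin, order in orders.items():
    print(dolphin, "->", ", ".join(order))
```

Fixing the seed makes the schedule reproducible, which may be useful when the order has to be documented before testing.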

Analysis

We video-recorded all trials and coded all videos using Solomon Coder67. A second coder, blind to the study’s aim, coded 20% of the videos, and inter-rater reliability was strong (Cohen’s kappa = 0.8). We fitted a generalised linear mixed model (GLMM) to test whether condition (attentional input) influenced the dolphins’ latency to retrieve the object, with attention condition and trial number as fixed effects and dolphin identity as a random effect. Fixed effects were assessed with likelihood ratio tests (drop1() function), followed by Tukey post hoc comparisons (package multcomp68, function glht()). We checked the model’s assumptions using the DHARMa package69. The model converged and exhibited a confidence interval of 97.5%. The assumption checks showed no deviation from the expected distribution but revealed some quantile deviations of the residuals against the predicted values.
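Cohen’s kappa compares the observed agreement between two coders, p_o, with the agreement expected by chance from each coder’s label frequencies, p_e, as κ = (p_o − p_e)/(1 − p_e). The Python sketch below is an illustrative, from-scratch implementation of that formula; the function name `cohens_kappa` and the example labels are hypothetical and are not taken from the study’s coding scheme. Kappa is defined for categorical codes, so the inputs are two equal-length sequences of labels for the same items.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders' categorical labels of the same items.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed proportion of
    agreement and p_e is the agreement expected by chance from each coder's
    marginal label frequencies.
    """
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("coders must label the same, non-empty set of items")
    n = len(coder_a)
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    categories = set(counts_a) | set(counts_b)
    p_e = sum(counts_a[c] * counts_b[c] for c in categories) / n**2
    if p_e == 1:
        # Both coders used one identical category throughout; kappa is
        # undefined here, and we return 1.0 by convention.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Hypothetical labels for four coded items.
    first_coder = [1, 1, 0, 0]
    second_coder = [1, 1, 0, 1]
    print(cohens_kappa(first_coder, second_coder))  # 0.5
```

With these hypothetical labels the coders agree on 3 of 4 items (p_o = 0.75) while chance agreement is 0.5, giving κ = 0.5; the study itself reports κ = 0.8.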

Acknowledgements

We thank Zoomarine Italia, Luigi Baciadonna, Cristina Pilenga, and Livio Favaro for providing access to the dolphin sample, and the dolphin trainers Angelo, Ilaria, Margherita, and Federica for their excellent support.

Author contributions

Both authors contributed equally to this work.

Data availability

The datasets generated and/or analysed during the current study are available from the corresponding author on reasonable request.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

James R. Davies, Email: jd940@cam.ac.uk

Elias Garcia-Pelegrin, Email: egarpel@nus.edu.sg

References

- 1. Tomasello M, Call J, Hare B. Five primate species follow the visual gaze of conspecifics. Anim. Behav. 1998;55:1063–1069. doi: 10.1006/anbe.1997.0636.

- 2. Itakura S. Gaze-following and joint visual attention in nonhuman animals. Jpn. Psychol. Res. 2004;46:216–226. doi: 10.1111/j.1468-5584.2004.00253.x.

- 3. Hostetter AB, Russell JL, Freeman H, Hopkins WD. Now you see me, now you don't: Evidence that chimpanzees understand the role of the eyes in attention. Anim. Cogn. 2007;10:55–62. doi: 10.1007/s10071-006-0031-x.

- 4. Butterworth G, Jarrett N. What minds have in common is space: Spatial mechanisms serving joint visual attention in infancy. Br. J. Dev. Psychol. 1991;9:55–72. doi: 10.1111/j.2044-835X.1991.tb00862.x.

- 5. Brooks R, Meltzoff AN. The importance of eyes: How infants interpret adult looking behavior. Dev. Psychol. 2002;38:958. doi: 10.1037/0012-1649.38.6.958.

- 6. Povinelli DJ, Eddy TJ, Hobson RP, Tomasello M. What young chimpanzees know about seeing. Monogr. Soc. Res. Child Dev. 1996;1996:189.

- 7. Call J, Hare BA, Tomasello M. Chimpanzee gaze following in an object-choice task. Anim. Cogn. 1998;1:89–99. doi: 10.1007/s100710050013.

- 8. Call J, Agnetta B, Tomasello M. Cues that chimpanzees do and do not use to find hidden objects. Anim. Cogn. 2000;3:23–34. doi: 10.1007/s100710050047.

- 9. Anderson JR, Montant M, Schmitt D. Rhesus monkeys fail to use gaze direction as an experimenter-given cue in an object-choice task. Behav. Proc. 1996;37:47–55. doi: 10.1016/0376-6357(95)00074-7.

- 10. Canteloup C, Bovet D, Meunier H. Intentional gestural communication and discrimination of human attentional states in rhesus macaques (Macaca mulatta). Anim. Cogn. 2015;18:875–883. doi: 10.1007/s10071-015-0856-2.

- 11. Anderson JR, Sallaberry P, Barbier H. Use of experimenter-given cues during object-choice tasks by capuchin monkeys. Anim. Behav. 1995;49:201–208. doi: 10.1016/0003-3472(95)80168-5.

- 12. Vick S-J, Anderson JR. Learning and limits of use of eye gaze by capuchin monkeys (Cebus apella) in an object-choice task. J. Comp. Psychol. 2000;114:200. doi: 10.1037/0735-7036.114.2.200.

- 13. Hattori Y, Kuroshima H, Fujita K. I know you are not looking at me: Capuchin monkeys’ (Cebus apella) sensitivity to human attentional states. Anim. Cogn. 2007;10:141–148. doi: 10.1007/s10071-006-0049-0.

- 14. Kaminski J, Call J, Tomasello M. Body orientation and face orientation: Two factors controlling apes’ begging behavior from humans. Anim. Cogn. 2004;7:216–223. doi: 10.1007/s10071-004-0214-2.

- 15. Tempelmann S, Kaminski J, Liebal K. Focus on the essential: All great apes know when others are being attentive. Anim. Cogn. 2011;14:433–439. doi: 10.1007/s10071-011-0378-5.

- 16. Box HO. Primate Behaviour and Social Ecology. Springer Science & Business Media; 2012.

- 17. Herman LM. Cetacean Behavior: Mechanisms and Functions. Wiley; 1980.

- 18. Smith JD, et al. The uncertain response in the bottlenosed dolphin (Tursiops truncatus). J. Exp. Psychol. Gen. 1995;124:391. doi: 10.1037/0096-3445.124.4.391.

- 19. McCowan B, Marino L, Vance E, Walke L, Reiss D. Bubble ring play of bottlenose dolphins (Tursiops truncatus): Implications for cognition. J. Comp. Psychol. 2000;114:98. doi: 10.1037/0735-7036.114.1.98.

- 20. Reiss D, Marino L. Mirror self-recognition in the bottlenose dolphin: A case of cognitive convergence. Proc. Natl. Acad. Sci. 2001;98:5937–5942. doi: 10.1073/pnas.101086398.

- 21. Herman, L. M. Imitation in animals and artifacts (eds. Dautenhahn, K. & Nehaniv, C. L.) 63–108 (Boston Review, 2002).

- 22. Herman LM. What laboratory research has told us about dolphin cognition. Int. J. Compar. Psychol. 2010;2010:23.

- 23. Marino, L. Whales and Dolphins (eds. Brakes, P. & Simmonds, M. P.) 143–156 (Routledge, 2013).

- 24. Jaakkola K, Guarino E, Donegan K, King SL. Bottlenose dolphins can understand their partner's role in a cooperative task. Proc. R. Soc. B: Biol. Sci. 2018;285:20180948. doi: 10.1098/rspb.2018.0948.

- 25. Shirihai H, Jarrett B, Kirwan GM. Whales, Dolphins, and Other Marine Mammals of the World. Princeton University Press; 2006.

- 26. Connor, R. C., Wells, R. S., Mann, J. & Read, A. J. Cetacean Societies: Field Studies of Dolphins and Whales (eds. Mann, J. et al.) 91–126 (University of Chicago Press, 2000).

- 27. Connor RC, Krützen M. Male dolphin alliances in Shark Bay: Changing perspectives in a 30-year study. Anim. Behav. 2015;103:223–235. doi: 10.1016/j.anbehav.2015.02.019.

- 28. Silber GK, Fertl D. Intentional beaching by bottlenose dolphins (Tursiops truncatus) in the Colorado River Delta, Mexico. Aquat. Mammals. 1995;21:183–186.

- 29. Hamilton RA, Gazda SK, King SL, Starkhammar J, Connor RC. Bottlenose dolphin communication during a role-specialized group foraging task. Behav. Proc. 2022;200:104691. doi: 10.1016/j.beproc.2022.104691.

- 30. Krützen M, et al. Cultural transmission of tool use in bottlenose dolphins. Proc. Natl. Acad. Sci. 2005;102:8939–8943. doi: 10.1073/pnas.0500232102.

- 31. Mann J, Stanton MA, Patterson EM, Bienenstock EJ, Singh LO. Social networks reveal cultural behaviour in tool-using dolphins. Nat. Commun. 2012;3:1–8. doi: 10.1038/ncomms1983.

- 32. Sayigh LS, et al. Individual recognition in wild bottlenose dolphins: A field test using playback experiments. Anim. Behav. 1999;57:41–50. doi: 10.1006/anbe.1998.0961.

- 33. Bruck JN, Walmsley SF, Janik VM. Cross-modal perception of identity by sound and taste in bottlenose dolphins. Sci. Adv. 2022;8:eabm7684. doi: 10.1126/sciadv.abm7684.

- 34. Kuczaj SA II, Yeater D, Highfill L. How selective is social learning in dolphins? Int. J. Compar. Psychol. 2012;2012:25.

- 35. Bruck JN. Decades-long social memory in bottlenose dolphins. Proc. R. Soc. B: Biol. Sci. 2013;280:20131726. doi: 10.1098/rspb.2013.1726.

- 36. Davies JR, et al. Episodic-like memory in common bottlenose dolphins. Curr. Biol. 2022;32:3436. doi: 10.1016/j.cub.2022.06.032.

- 37. King SL, Guarino E, Donegan K, McMullen C, Jaakkola K. Evidence that bottlenose dolphins can communicate with vocal signals to solve a cooperative task. R. Soc. Open Sci. 2021;8:202073. doi: 10.1098/rsos.202073.

- 38. Pack AA, Herman LM. Dolphin social cognition and joint attention: Our current understanding. Aquat. Mamm. 2006;32:443. doi: 10.1578/AM.32.4.2006.443.

- 39. Herman LM, et al. Dolphins (Tursiops truncatus) comprehend the referential character of the human pointing gesture. J. Comp. Psychol. 1999;113:347. doi: 10.1037/0735-7036.113.4.347.

- 40. Xitco MJ, Gory JD, Kuczaj SA. Spontaneous pointing by bottlenose dolphins (Tursiops truncatus). Anim. Cogn. 2001;4:115–123. doi: 10.1007/s100710100107.

- 41. Xitco MJ, Gory JD, Kuczaj SA. Dolphin pointing is linked to the attentional behavior of a receiver. Anim. Cogn. 2004;7:231–238. doi: 10.1007/s10071-004-0217-z.

- 42. Tschudin A, Call J, Dunbar RI, Harris G, van der Elst C. Comprehension of signs by dolphins (Tursiops truncatus). J. Comp. Psychol. 2001;115:100. doi: 10.1037/0735-7036.115.1.100.

- 43. Pack AA, Herman LM. Bottlenosed dolphins (Tursiops truncatus) comprehend the referent of both static and dynamic human gazing and pointing in an object-choice task. J. Comp. Psychol. 2004;118:160. doi: 10.1037/0735-7036.118.2.160.

- 44. Pack AA, Herman LM. The dolphin’s (Tursiops truncatus) understanding of human gazing and pointing: Knowing what and where. J. Comp. Psychol. 2007;121:34. doi: 10.1037/0735-7036.121.1.34.

- 45. Johnson CM, Ruiz-Mendoza C, Schoenbeck C. Conspecific “gaze following” in bottlenose dolphins. Anim. Cogn. 2022;25:1219–1229. doi: 10.1007/s10071-022-01665-x.

- 46. Tomonaga M, Uwano Y. Bottlenose dolphins' (Tursiops truncatus) theory of mind as demonstrated by responses to their trainers’ attentional states. Int. J. Compar. Psychol. 2010;2010:23.

- 47. Kremers D, et al. Sensory perception in cetaceans: Part I—current knowledge about dolphin senses as a representative species. Front. Ecol. Evol. 2016;4:49. doi: 10.3389/fevo.2016.00049.

- 48. Herzing DL. Making Sense of it All: Multimodal Dolphin Communication. The MIT Press; 2015.

- 49. Tomasello M, Hare B, Lehmann H, Call J. Reliance on head versus eyes in the gaze following of great apes and human infants: The cooperative eye hypothesis. J. Hum. Evol. 2007;52:314–320. doi: 10.1016/j.jhevol.2006.10.001.

- 50. Sørensen PM, et al. Anthropogenic noise impairs cooperation in bottlenose dolphins. Curr. Biol. 2023;2023:33. doi: 10.1016/j.cub.2022.12.063.

- 51. King SL, Allen SJ, Krützen M, Connor RC. Vocal behaviour of allied male dolphins during cooperative mate guarding. Anim. Cogn. 2019;22:991–1000. doi: 10.1007/s10071-019-01290-1.

- 52. Chereskin E, et al. Allied male dolphins use vocal exchanges to “bond at a distance”. Curr. Biol. 2022;32:1657–1663. doi: 10.1016/j.cub.2022.02.019.

- 53. Gerber L, et al. Social integration influences fitness in allied male dolphins. Curr. Biol. 2022;32:1664–1669. doi: 10.1016/j.cub.2022.03.027.

- 54. Connor RC, Smolker R, Bejder L. Synchrony, social behaviour and alliance affiliation in Indian Ocean bottlenose dolphins, Tursiops aduncus. Anim. Behav. 2006;72:1371–1378. doi: 10.1016/j.anbehav.2006.03.014.

- 55. Connor, R. C., Smolker, R. A. & Richards, A. F. Coalitions and Alliances in Humans and Other Animals (eds. Harcourt, A. H. & de Waal, F. B. M.) 415–443 (Oxford University Press, 1992).

- 56. Kaminski J, Hynds J, Morris P, Waller BM. Human attention affects facial expressions in domestic dogs. Sci. Rep. 2017;7:12914. doi: 10.1038/s41598-017-12781-x.

- 57. Virányi Z, Topál J, Gácsi M, Miklósi Á, Csányi V. Dogs respond appropriately to cues of humans’ attentional focus. Behav. Proc. 2004;66:161–172. doi: 10.1016/j.beproc.2004.01.012.

- 58. Gácsi M, Miklósi Á, Varga O, Topál J, Csányi V. Are readers of our face readers of our minds? Dogs (Canis familiaris) show situation-dependent recognition of human’s attention. Anim. Cogn. 2004;7:144–153. doi: 10.1007/s10071-003-0205-8.

- 59. Call J, Bräuer J, Kaminski J, Tomasello M. Domestic dogs (Canis familiaris) are sensitive to the attentional state of humans. J. Comp. Psychol. 2003;117:257. doi: 10.1037/0735-7036.117.3.257.

- 60. Schwab C, Huber L. Obey or not obey? Dogs (Canis familiaris) behave differently in response to attentional states of their owners. J. Comp. Psychol. 2006;120:169. doi: 10.1037/0735-7036.120.3.169.

- 61. Proops L, McComb K. Attributing attention: The use of human-given cues by domestic horses (Equus caballus). Anim. Cogn. 2010;13:197–205. doi: 10.1007/s10071-009-0257-5.

- 62. Proops L, Rayner J, Taylor AM, McComb K. The responses of young domestic horses to human-given cues. PLoS ONE. 2013;8:e67000. doi: 10.1371/journal.pone.0067000.

- 63. Daura-Jorge FG, Ingram SN, Simões-Lopes PC. Seasonal abundance and adult survival of bottlenose dolphins (Tursiops truncatus) in a community that cooperatively forages with fishermen in southern Brazil. Mar. Mamm. Sci. 2013;29:293–311. doi: 10.1111/j.1748-7692.2012.00571.x.

- 64. Pryor K, Lindbergh J. A dolphin-human fishing cooperative in Brazil. Mar. Mamm. Sci. 1990;6:77–82. doi: 10.1111/j.1748-7692.1990.tb00228.x.

- 65. Cantor M, Farine DR, Daura-Jorge FG. Foraging synchrony drives resilience in human–dolphin mutualism. Proc. Natl. Acad. Sci. 2023;120:e2207739120. doi: 10.1073/pnas.2207739120.

- 66. Garcia-Pelegrin E, Clark F, Miller R. Increasing animal cognition research in zoos. Zoo Biol. 2022;41:281–291. doi: 10.1002/zoo.21674.

- 67. Péter, A. Solomon coder (version beta 11.01.22): A simple solution for behavior coding. Computer program. http://solomoncoder.com (2011).

- 68. Hothorn, T. et al. Package ‘multcomp’. Simultaneous inference in general parametric models. R Project for Statistical Computing, Vienna, Austria (2016).

- 69. Hartig, F. Package ‘DHARMa’. R package (2017).
