Abstract

Most randomized controlled trials are unable to generate information about a product’s real-world effectiveness. Therefore, payers use real-world evidence (RWE) generated in observational studies to make decisions regarding formulary inclusion and coverage. While some payers generate their own RWE, most cautiously rely on RWE produced by manufacturers who have a strong financial interest in obtaining coverage for their products.

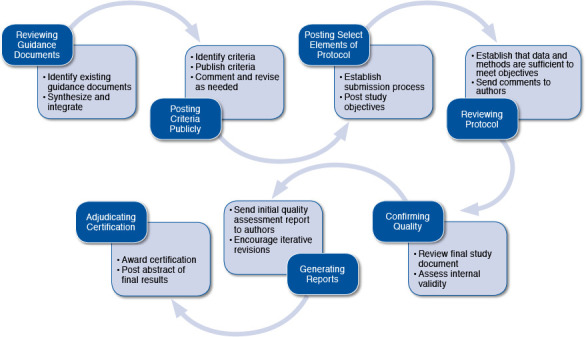

We propose a process by which an independent body would certify observational studies as generating valid and unbiased estimates of the effectiveness of the intervention under consideration. This proposed process includes (a) establishing transparent criteria for assessment, (b) implementing a process for receipt and review of observational study protocols from interested parties, (c) reviewing the submitted protocol and requesting any necessary revisions, (d) reviewing the study results, (e) assigning a certification status to the submitted evidence, and (f) communicating the certification status to all who seek to use this evidence for decision making.

Accrediting organizations such as the National Committee for Quality Assurance and the Joint Commission have comparable goals of providing assurance about quality to those who look to their accreditation results. Although we recognize potential barriers, including a slowing of evidence generation and costs, we anticipate that processes can be streamlined, such as when familiar methods or familiar datasets are used. The financial backing for such activities remains uncertain, as does identification of organizations that might serve this certification function. We suggest that the rigor and transparency that will be required with such a process, and the evidence that it will produce, will be valuable to decision makers.

Randomized clinical trials (RCTs) of prescription drugs and other medical products have long been considered the gold standard of evidence to support decision making by clinicians and policymakers. However, it is increasingly recognized that most RCTs are unable to generate information about a product’s real-world effectiveness. Measures such as long-term outcomes, comparisons among multiple treatment options, and utilization are not well captured in trials. Furthermore, benefit-risk balance and value for money are best learned in cost-effectiveness or cost-benefit analyses.1 The increased availability of data from electronic, clinical, and financial administrative data systems in the United States2 and around the world; the need for epidemiological information; and a growing appreciation for the limits of inference from RCTs alone have propelled interest in real-world evidence (RWE).

In a recent symposium held at Johns Hopkins University, we first explored barriers to the use of RWE and then had in-depth discussions about a series of options that could facilitate payers’ use of RWE that is generated by manufacturers and others. In this article, we address 1 option that was heavily discussed and deserves further consideration—a process of third-party certification of RWE.3

Barriers to Use of RWE

Although some payers generate limited RWE internally, many smaller payers need to rely extensively on RWE generated by manufacturers of the products they are considering for coverage. In addition, even large payers have the potential to benefit substantially from externally generated RWE. Despite the expected value, there are barriers that have historically prevented RWE’s widespread generation and use by payers.

Payers often discount or disregard the results of an observational study conducted by a product's manufacturer when determining the product's formulary placement or making other coverage decisions.4 Payers have concerns about the conflicts of interest of researchers employed or supported by the pharmaceutical or device industries. Although financially conflicted parties can produce high-quality and valid evidence, there are concerns about the possible influence of financial incentives on the evidence produced.

Other barriers to payers’ use of RWE are present as well. For example, in many cases payers may be uncertain that the results generated, even if internally valid, apply to their customer base.5 These challenges may be particularly pronounced when the evidence is generated using methods such as pragmatic or adaptive trial designs, or from combining results using Bayesian methods, or using retrospective cohort designs with large datasets or electronic health records that require multivariate adjustment techniques such as propensity score matching.

The potential threats to internal validity, particularly with observational study designs, are also very real and the solutions sometimes complex. These threats include challenges with respect to data completeness as well as the validity of available exposure and outcome variables. The researcher generating the evidence needs to be competent in state-of-the-art methods for observational data to ensure that confounders and biases, such as confounding by indication and immortal time bias, are adequately addressed.6,7
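Immortal time bias, one of the threats named above, can be illustrated with simple arithmetic. The following is a minimal sketch on a hypothetical four-patient cohort (the numbers, the `Patient` class, and the `rate_ratio` function are invented for illustration and come from no study). It compares a naive analysis, which counts a treated patient's entire follow-up as treated time, with an analysis that assigns the waiting period before treatment to the untreated group.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Patient:
    treatment_start: Optional[float]  # years after cohort entry; None if never treated
    follow_up_end: float              # years after cohort entry
    died: bool


# Hypothetical toy cohort, for illustration only.
COHORT = [
    Patient(2.0, 5.0, True),
    Patient(3.0, 6.0, False),
    Patient(None, 1.0, True),
    Patient(None, 4.0, False),
]


def rate_ratio(cohort, time_varying: bool) -> float:
    """Mortality rate ratio (treated vs. untreated) from person-time."""
    treated_time = untreated_time = 0.0
    treated_deaths = untreated_deaths = 0
    for p in cohort:
        if p.treatment_start is None:
            untreated_time += p.follow_up_end
            untreated_deaths += p.died
        else:
            # The wait before treatment is "immortal": the patient had to
            # survive it to be treated. The naive analysis (time_varying=False)
            # wrongly credits that time to the treated group.
            pre_treatment = p.treatment_start if time_varying else 0.0
            untreated_time += pre_treatment
            treated_time += p.follow_up_end - pre_treatment
            treated_deaths += p.died
    return (treated_deaths / treated_time) / (untreated_deaths / untreated_time)


naive = rate_ratio(COHORT, time_varying=False)
corrected = rate_ratio(COHORT, time_varying=True)
print(f"naive rate ratio:     {naive:.2f}")      # 0.45
print(f"corrected rate ratio: {corrected:.2f}")  # 1.67
```

In this toy cohort the naive analysis makes the treatment look protective, and the corrected analysis does not. A reviewer would need to catch a reversal of this kind.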

Historical Efforts to Improve Development and Use of RWE

Interest in and use of RWE have been growing for decades. In 2004, the International Society for Pharmacoeconomics and Outcomes Research (ISPOR) sponsored a Real-World Data Task Force, which culminated in the release of Using Real-World Data for Coverage and Payment Decisions: The ISPOR Real-World Data Task Force Report.1 The ISPOR report covers methodological considerations for researchers developing RWE, details the usefulness and limitations of RWE for payers, and discusses considerations for payers interpreting RWE data for payment decisions.

The ISPOR task force called for the development of additional comprehensive guidance to inform this field. During the past 10 years, working groups and varied stakeholders have convened with increasing frequency to inform researchers on best practices for producing RWE and to guide payers using RWE in making coverage and reimbursement decisions.

A number of U.S. organizations focus on health technology assessment and comprehensively review evidence. These evidence summaries typically include information from efficacy trials (both pre- and post-approval) and RWE from observational studies. For example, the Institute for Clinical and Economic Review independently evaluates the clinical effectiveness and comparative value of health care interventions, with its work informing diverse stakeholders. Similarly, the Agency for Healthcare Research and Quality funds large-scale systematic reviews of the evidence through its Evidence-based Practice Centers. These reviews are extensively used by payers but do not focus specifically on RWE. Health technology assessment is not what we are proposing here.

Using Certification to Facilitate Payer Adoption of RWE

We propose a voluntary, so-called Good Housekeeping Seal of Approval mechanism for the transparent review and certification of either prospective or retrospective observational research studies. There is an especially high level of uncertainty and even skepticism about the quality of the effectiveness research produced by manufacturers when it is supplied to support their products. Indeed, manufacturers often take steps to reduce the uncertainty: They employ academic researchers with a guarantee of freedom to publish, and they submit their analyses to peer-reviewed journals for independent validation. Some studies are even prospectively registered on ClinicalTrials.gov (https://clinicaltrials.gov/). However, there remains considerable, rightful concern about the value of peer review and sponsored research.8,9

Drawing on experience in other realms—from consumer products (e.g., the Good Housekeeping Seal of Approval) to university department accreditation—it is clear that independent private or public organizations can be used to monitor and certify quality. Although many strategies could facilitate payers’ use of manufacturer-generated RWE, we explore here the potential of a certification process. By certification we mean that results of observational studies conducted by manufacturers would be voluntarily submitted for review and certification by a third party that uses a transparent and rigorous process to evaluate the investigations and to confirm that they sufficiently fulfill criteria to produce internally valid results. This process will need to be implemented as transparently as possible, as has also been suggested for pharmacoeconomic studies.10,11

Such a process will be voluntary; however, companies that do not pursue certification of their observational research will risk not being able to include the study’s results in a submitted dossier, such as that recommended by the Academy of Managed Care Pharmacy for submissions for formulary consideration.12 We might also suggest that the dossiers highlight the studies that have been through the certification process to streamline the review by payers, who often receive a large body of documentation of mixed quality.

We expect that the certification process will require the establishment of a private organization, possibly hosted by an academic institution, group of institutions, or professional associations. Although the Centers for Medicare & Medicaid Services (CMS) is likely to benefit from this process, a public-private partnership toward this goal currently seems politically and legislatively infeasible. We note, however, that the National Quality Forum was created in 1999 as a public-private partnership in response to recommendations from the President’s Advisory Commission on Consumer Protection and Quality in the Health Care Industry. Having an independent organization will increase the likelihood that RWE will become integrated into evidence-based medicine and routine decision making. The establishment and conduct of the organization should involve all of the stakeholders and be as transparent as possible. This private organization, which we will refer to here as the “certifier,” will establish provisions for the activities described below.

Reviewing Guidance Documents

The certifier will review guidance documents produced by relevant organizations. These key guidance documents might include the 2005 FDA Guidance for Industry document entitled Good Pharmacovigilance Practices and Pharmacoepidemiologic Assessment13; the 2007 Using Real-World Data for Coverage and Payment Decisions: The ISPOR Real-World Data Task Force Report1; the International Society for Pharmacoepidemiology’s 2008 Guidelines for Good Pharmacoepidemiology Practices14; and the more recent Best Practices for Conducting and Reporting Pharmacoepidemiologic Safety Studies Using Electronic Healthcare Data.15 The certifier will look to European and Canadian guidelines as well, including the European Network of Centres for Pharmacoepidemiology and Pharmacovigilance’s Guide on Methodological Standards in Pharmacoepidemiology that was published in 2014.16 Additionally, other organizations have produced guidance documents about methods for evidence generation and evidence synthesis. Therefore, other important documents for possible review include the methods guide for observational research from the Agency for Healthcare Research and Quality,17 the GRACE principles,18 the methods standards put forth by the Patient-Centered Outcomes Research Institute,19 and other documents highlighted by Brixner et al. (2009).20 These guidance documents can inform the processes that the certifier establishes to judge the validity of submitted evidence.

FIGURE 1. Process of Real-World Evidence Certification

Posting Criteria Publicly

The certifier will then establish criteria for assessing the validity of submitted observational research, and these criteria will be publicly posted for comment by the affected parties, largely industry and payers. The certifier will incorporate these comments when designing the review process. Although this process can be modified over time, the certifier will aim for consistency and transparency from the outset.

Posting Select Elements of Protocol

The certifier will establish a process by which manufacturers or other researchers will submit observational research protocols for review. The protocols will not be publicly posted; however, there will be posting of elements of the planned observational research for public review, similar to the level of detail in the postings on the ClinicalTrials.gov website (https://clinicaltrials.gov/), including a statement about the primary outcome(s) of interest. The purpose of public posting is to improve transparency regarding selective outcomes reporting and reporting bias.

Reviewing Protocol

The certifier will review the submitted protocol and request revision if the level of detail is insufficient to judge whether the resultant study will generate valid evidence. The certifier’s task is to ensure that the research, if conducted according to protocol, will generate the data to meet its objectives. If a specific investigation is closely related in design to earlier studies (with perhaps different drug dosages or different comparators), the certifier will not have to request an additional detailed protocol. We anticipate that the processes can be streamlined, such as when familiar methods or familiar datasets are used. Datasets from commercial sources, such as those generated by Truven Health Analytics, Optum Labs, and IMS Health, as well as datasets from Medicare and Medicaid, have content that will in time become familiar to certifiers.

Confirming Quality

The investigators will conduct the observational research in accordance with the protocol and submit a document of results and any necessary appendix material for a complete review to the certifier. This process will presumably take place before any manuscript is submitted for peer review and publication, if desired. The certifier will confirm, using a detailed checklist, that the conduct of the research meets criteria for producing highly internally valid results. Elements of this checklist are likely to include many of those that are included in various risk-of-bias assessment instruments that are currently used for evaluating observational studies. Examples include the Newcastle-Ottawa tool,21 the Downs and Black instrument,22 and the newly developed ACROBAT-NRSI instrument from the Cochrane Collaboration.23 This last tool, published in 2014, is for assessing the risk of bias in nonrandomized studies that compare the health effects of 2 or more interventions. The tool focuses on internal validity and covers 7 domains through which bias might be introduced into a nonrandomized study. The external validity of a study and its applicability to the population that the payer covers will be left to the payers to assess, but the clear delineation of the inclusion and exclusion criteria is critical for interpreting the study results.
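A domain-based checklist of this kind might be represented as follows. This is a minimal sketch under stated assumptions: the seven domain labels are paraphrased from ACROBAT-NRSI and should be checked against the tool itself, and the roll-up rule (overall risk equals the worst domain judgment) and the certification threshold are illustrative assumptions, not rules specified in this article or by the tool.

```python
from enum import IntEnum


class Risk(IntEnum):
    LOW = 0
    MODERATE = 1
    SERIOUS = 2
    CRITICAL = 3


# Domain labels paraphrased from ACROBAT-NRSI; verify against the tool.
DOMAINS = (
    "confounding",
    "selection of participants",
    "measurement of interventions",
    "departures from intended interventions",
    "missing data",
    "measurement of outcomes",
    "selection of the reported result",
)


def overall_risk(judgments: dict) -> Risk:
    """Roll up per-domain judgments; assumed rule: the worst domain wins."""
    missing = [d for d in DOMAINS if d not in judgments]
    if missing:
        raise ValueError(f"unassessed domains: {missing}")
    return max(judgments[d] for d in DOMAINS)


def certifiable(judgments: dict, threshold: Risk = Risk.MODERATE) -> bool:
    """Hypothetical decision rule: certify if overall risk is at or below threshold."""
    return overall_risk(judgments) <= threshold


# Example: every domain judged low risk except confounding.
example = {d: Risk.LOW for d in DOMAINS}
example["confounding"] = Risk.MODERATE
print(overall_risk(example).name)  # MODERATE
print(certifiable(example))        # True
```

Requiring a judgment for every domain before any overall rating is returned mirrors the idea that certification rests on a complete review rather than a partial one.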

Generating Reports

The certifier will prepare a written report to the study’s authors describing the results of the evaluation and whether the observational research will be “certified” for payers’ use. If the study is found to have deficiencies that may affect the validity of the results, the authors will have the opportunity to revise the study and submit results again.

Alternatively, the authors might choose to accept a rating of less than the highest level of certification. If a study’s authors are dissatisfied or disagree with the results of the review, and yet decline to resubmit, they can appeal and this will be publicly noted. This process can be iterative, with several rounds of revisions and comments to improve the final study or to give the reviewers additional detail. The final report by the certifier will outline the strengths and weaknesses of the research and its conclusions.

Adjudicating Certification

When the certifier is satisfied that the research is valid, certification is awarded to the study. A certified study will be considered to have met standards for internal validity that make its results valuable to the public and others, including payers, and all can have confidence in using the study results for decision making in many contexts. An abstract of the study results could be posted along with the certification statement.
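The review steps described in the preceding subsections can be summarized as a small state machine. The sketch below is illustrative only: the stage names and the `follow` helper are hypothetical, and the outcome of an appeal (assumed here to return the study to review) is not specified in the proposal.

```python
from enum import Enum, auto


class Stage(Enum):
    PROTOCOL_SUBMITTED = auto()
    PROTOCOL_REVISION_REQUESTED = auto()
    PROTOCOL_ACCEPTED = auto()
    RESULTS_SUBMITTED = auto()
    RESULTS_REVISION_REQUESTED = auto()
    CERTIFIED = auto()
    LOWER_RATING_ACCEPTED = auto()
    APPEALED = auto()


# Allowed transitions between stages (hypothetical encoding of the process).
ALLOWED = {
    Stage.PROTOCOL_SUBMITTED: {Stage.PROTOCOL_REVISION_REQUESTED, Stage.PROTOCOL_ACCEPTED},
    Stage.PROTOCOL_REVISION_REQUESTED: {Stage.PROTOCOL_SUBMITTED},
    Stage.PROTOCOL_ACCEPTED: {Stage.RESULTS_SUBMITTED},
    Stage.RESULTS_SUBMITTED: {
        Stage.CERTIFIED,
        Stage.RESULTS_REVISION_REQUESTED,
        Stage.LOWER_RATING_ACCEPTED,
        Stage.APPEALED,
    },
    Stage.RESULTS_REVISION_REQUESTED: {Stage.RESULTS_SUBMITTED},
    Stage.APPEALED: {Stage.RESULTS_SUBMITTED},  # assumption: an appeal triggers re-review
    Stage.CERTIFIED: set(),
    Stage.LOWER_RATING_ACCEPTED: set(),
}


def follow(path):
    """Validate a sequence of stages and return the final one."""
    if not path or path[0] is not Stage.PROTOCOL_SUBMITTED:
        raise ValueError("a review must start with a submitted protocol")
    current = path[0]
    for nxt in path[1:]:
        if nxt not in ALLOWED[current]:
            raise ValueError(f"{current.name} -> {nxt.name} is not allowed")
        current = nxt
    return current


# A study whose results needed one round of revision before certification.
final = follow([
    Stage.PROTOCOL_SUBMITTED,
    Stage.PROTOCOL_ACCEPTED,
    Stage.RESULTS_SUBMITTED,
    Stage.RESULTS_REVISION_REQUESTED,
    Stage.RESULTS_SUBMITTED,
    Stage.CERTIFIED,
])
print(final.name)  # CERTIFIED
```

Encoding the transitions explicitly makes it impossible, in this sketch, for a study to be certified without passing through protocol review, which is the property the proposed process depends on.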

Relevant Precedents by Other Certifying Entities

There are parallels between the voluntary process proposed here and the Joint Commission’s and the National Committee for Quality Assurance’s (NCQA’s) accreditation processes. These organizations provide voluntary accreditation for health care facilities and health plans, respectively, and their accreditations are widely used by private and public payers.24,25 Widespread use of a certifying body occurs when accrediting organizations meet a need that has been identified by an industry or its customers, and when the accreditation standards are developed with the support and involvement of the industry.

The Joint Commission hospital accreditation program was developed by physicians in response to physicians’ and administrators’ needs for unbiased evaluations of the quality of care provided within their own health care organizations. Members of national health care professional organizations oversee the Joint Commission.26 The NCQA was developed by managed care trade organizations in response to a demand by employee health plan purchasers for quality information about these organizations. NCQA was founded, funded, and is overseen by members of managed care trade organizations, health maintenance organization leaders, major employee groups, health services researchers, clinicians, and consumer advocates.27

In the years since their creation, organizations with Joint Commission and NCQA accreditation have been deemed by state and federal government (CMS) to meet standards for participation in publicly funded health programs. Some health care facilities with Joint Commission accreditation receive CMS certification without an additional government approval process. Health plans with NCQA accreditation are deemed appropriate for participation in Medicare Advantage and the Federal Employees Health Benefits Program. Thus, a quasi-governmental and voluntary entity is a realistic possibility and could help solve the perceived problem of quality issues in RWE generation and acceptance in the United States. In time, this proposed RWE process could conceivably be used by CMS for its Medicare Part D coverage decisions. We envision that CMS could offer provisional coverage of a new medication and require the manufacturer to collect and certify evidence to demonstrate its effectiveness in a usual care setting.

Barriers to a Certification Process

There are several barriers to establishing an RWE certification process. The greatest challenge may be to ensure that the certifying organization has credibility and impartiality so that its decisions are considered valid. Furthermore, the range of expertise needed for a thorough review is very wide, and thus the skills, expertise of reviewers, and process for review may be as important as the criteria used in the review. Multidisciplinary review committees may be required.

The process may need to be significantly streamlined so that it is not seen as an unacceptable barrier to payer decision making. If there are datasets that are known to be complete and likely to be valuable across studies, a process by which datasets are certified will speed review. Similarly, if a manufacturer develops a set of analyses that are broadly applicable across products, such methods might be certified so that they can be applied without a great deal of additional review beyond confirmation of the validity of the exposure and outcome definitions, and review of the plan for adjustment. Speed is of the essence, and so the process of certification must be expeditious; otherwise, manufacturers may continue to rely on the status quo, including peer-reviewed publication as an indicator of quality.

There will need to be test cases presented to the consumers of this information and to manufacturers that illustrate how this process can benefit everyone. These test cases will likely need to include estimates of the cost-benefit balance from using a certification process. An additional barrier might be funding; however, payers and the affected industries have an interest in seeing a certification process succeed, and funding could come jointly from these parties. There is an inherent challenge in such an effort, insofar as the organization needs credibility to get volume, yet volume is required to get credibility.

Conclusions

The adequacy and acceptability of RWE have been hampered by a lack of standardization and by variability in methodologies and study conduct. Sound theoretical foundations, standardized methodological approaches, and research practices that serve to minimize real or perceived bias will increase the credibility and quality of RWE studies. An RWE certification process would be a consequential next step that may provide a structured way to allow for more widespread application of RWE in health care decision making.

The past decade has brought increasing access to large datasets from electronic health records and exciting linked datasets. The methodologies for causal inference from observational data continue to advance. If such a process of certification takes hold, we believe payers and other stakeholders will see the value of this process and will demand, or at least prefer, certified studies to inform their decision making. Payers might require that certified studies be included in dossiers submitted for their review, while the quality of nonrandomized studies included in systematic reviews could be substantially improved. However, we acknowledge barriers to implementing this certification process and anticipate that there could be slow uptake and some learning by doing.

Payers will recoup investments through the reduction of waste and inefficiencies for currently covered services. Manufacturers will benefit from having the evidence that they generate about the superiority of their products accepted confidently by payers. Alternatively, external foundation support can be sought for this activity, if this is recognized as a public good. Maintaining the status quo while preserving the potential to improve and innovate will require a cautious balance. Structured focus, transversal stakeholder collaboration, and a quest for methodological rigor will maintain a favorable paradigm for RWE.

References

- 1. Garrison LP Jr., Neumann PJ, Erickson P, Marshall D, Mullins CD. Using real-world data for coverage and payment decisions: the ISPOR Real-World Data Task Force report. Value Health. 2007;10(5):326-35.

- 2. Randhawa GS. Building electronic data infrastructure for comparative effectiveness research: accomplishments, lessons learned and future steps. J Comp Eff Res. 2014;3(6):567-72.

- 3. Real World Evidence Leadership Symposium: Realizing the full potential of RWE to support pricing and reimbursement decisions. Jointly sponsored by Johns Hopkins Center for Drug Safety and Effectiveness and IMS Health. November 4, 2014. Baltimore, MD.

- 4. Lexchin J, Bero LA, Djulbegovic B, Clark O. Pharmaceutical industry sponsorship and research outcome and quality: systematic review. BMJ. 2003;326(7400):1167-70.

- 5. Institute of Medicine. Evidence-Based Medicine and the Changing Nature of Healthcare: 2007 IOM Annual Meeting Summary. Washington, DC: National Academies Press; 2008.

- 6. Margulis AV, Pladevall M, Riera-Guardia N, et al. Quality assessment of observational studies in a drug-safety systematic review, comparison of two tools: the Newcastle-Ottawa Scale and the RTI item bank. Clin Epidemiol. 2014;6:359-68.

- 7. Deeks JJ, Dinnes J, D’Amico R, et al.; International Stroke Trial Collaborative Group; European Carotid Surgery Trial Collaborative Group. Evaluating non-randomised intervention studies. Health Technol Assess. 2003;7(27):1-173.

- 8. Bohannon J. Who’s afraid of peer review? Science. 2013;342(6154):60-65.

- 9. Jefferson T, Rudin M, Brodney Folse S, Davidoff F. Editorial peer review for improving the quality of reports of biomedical studies. Cochrane Database Syst Rev. 2007;(2):MR000016.

- 10. Siegel JE, Torrance GW, Russell LB, Luce BR, Weinstein MC, Gold MR. Guidelines for pharmacoeconomic studies. Recommendations from the Panel on Cost Effectiveness in Health and Medicine. Panel on Cost Effectiveness in Health and Medicine. Pharmacoeconomics. 1997;11(2):159-68.

- 11. Phillips KA, Chen JL. Impact of the U.S. panel on cost-effectiveness in health and medicine. Am J Prev Med. 2002;22(2):98-105.

- 12. Academy of Managed Care Pharmacy. The AMCP format for formulary submissions version 3.1. December 2012. Available at: www.amcp.org/practice-resources/amcp-format-formulary-submisions.pdf.

- 13. U.S. Food and Drug Administration. Guidance for industry. Good pharmacovigilance practices and pharmacoepidemiologic assessment. March 2005. Available at: http://www.fda.gov/downloads/RegulatoryInformation/Guidances/UCM126834.pdf. Accessed December 10, 2015.

- 14. International Society for Pharmacoepidemiology. Guidelines for good pharmacoepidemiology practices (GPP). Revised June 2015. Available at: https://www.pharmacoepi.org/resources/guidelines_08027.cfm. Accessed December 10, 2015.

- 15. U.S. Food and Drug Administration. Guidance for industry and FDA staff. Best practices for conducting and reporting pharmacoepidemiologic safety studies using electronic healthcare data. May 2013. Available at: http://www.fda.gov/downloads/drugs/guidancecomplianceregulatoryinformation/guidances/ucm243537.pdf. Accessed December 10, 2015.

- 16. The European Network of Centres for Pharmacoepidemiology and Pharmacovigilance (ENCePP). Guide on methodological standards in pharmacoepidemiology (revision 4). EMA/95098/2010. Available at: http://www.encepp.eu/standards_and_guidances/documents/ENCePPGuideofMethStandardsinPE_Rev4.pdf. Accessed December 10, 2015.

- 17. Velentgas P, Dreyer NA, Nourjah P, Smith SR, Torchia MM, eds. Developing a protocol for observational comparative effectiveness research: a user’s guide. AHRQ Publication No. 12(13)-EHC099. Rockville, MD: Agency for Healthcare Research and Quality; January 2013. Available at: http://effectivehealthcare.ahrq.gov/index.cfm/search-for-guides-reviews-and-reports/?productid=1166&pageaction=displayproduct. Accessed December 10, 2015.

- 18. Dreyer NA, Schneeweiss S, McNeil BJ, et al. GRACE principles: recognizing high-quality observational studies of comparative effectiveness. Am J Manag Care. 2010;16(6):467-71.

- 19. Patient-Centered Outcomes Research Institute. Research methodology. April 28, 2014. Available at: http://www.pcori.org/content/research-methodology. Accessed December 10, 2015.

- 20. Brixner DI, Holtorf AP, Neumann PJ, Malone DC, Watkins JB. Standardizing quality assessment of observational studies for decision making in health care. J Manag Care Pharm. 2009;15(3):275-83. Available at: http://www.amcp.org/data/jmcp/275-283.pdf.

- 21. Wells GA, Shea B, O’Connell D, et al. The Newcastle-Ottawa Scale (NOS) for assessing the quality of nonrandomised studies in meta-analyses. Available at: http://www.medicine.mcgill.ca/rtamblyn/Readings/The%20Newcastle%20-%20Scale%20for%20assessing%20the%20quality%20of%20nonrandomised%20studies%20in%20meta-analyses.pdf. Accessed December 10, 2015.

- 22. Downs SH, Black N. The feasibility of creating a checklist for the assessment of the methodological quality both of randomised and non-randomised studies of health care interventions. J Epidemiol Community Health. 1998;52(6):377-84.

- 23. Sterne JAC, Higgins JPT, Reeves BC, eds., on behalf of the development group for ACROBAT-NRSI. A Cochrane Risk Of Bias Assessment Tool: for Non-Randomized Studies of Interventions (ACROBAT-NRSI), Version 1.0.0. September 24, 2014. Available at: http://www.riskofbias.info. Accessed December 12, 2015.

- 24. National Committee for Quality Assurance. Health plan accreditation. Available at: http://www.ncqa.org/Programs/Accreditation/HealthPlanHP.aspx. Accessed December 10, 2015.

- 25. The Joint Commission. Facts about hospital accreditation. Available at: http://www.jointcommission.org/accreditation/accreditation_main.aspx. Accessed December 10, 2015.

- 26. Roberts JS, Coale JG, Redman RR. A history of the Joint Commission on accreditation of hospitals. JAMA. 1987;258(7):936-40.

- 27. Romano PM. Managed care accreditation: the process and early findings. J Health Care Qual. 1993;15(6):12-16.