Abstract

The role of perceived experts (i.e., medical professionals and biomedical scientists) as potential anti-vaccine influencers has not been characterized systematically. We describe the prevalence and importance of anti-vaccine perceived experts by constructing a coengagement network based on a Twitter data set containing over 4.2 million posts from April 2021. The coengagement network primarily broke into two large communities that differed in their stance toward COVID-19 vaccines, and misinformation was predominantly shared by the anti-vaccine community. Perceived experts had a sizable presence within the anti-vaccine community and shared academic sources at higher rates compared to others in that community. Perceived experts occupied important network positions as central anti-vaccine nodes and bridges between the anti- and pro-vaccine communities. Perceived experts received significantly more engagements than other individuals within the anti- and pro-vaccine communities and there was no significant difference in the influence boost for perceived experts between the two communities. Interventions designed to reduce the impact of perceived experts who spread anti-vaccine misinformation may be warranted.

2. Introduction

Vaccine refusal poses a major threat to public health, and has been a particular concern during the COVID-19 pandemic [Islam et al., 2020, Tangcharoensathien et al., 2020, Bonnevie et al., 2021, Carpiano et al., 2023]. An estimated 232,000 vaccine-preventable COVID-19 deaths occurred in unvaccinated adults in the United States across a fifteen-month period (May 2021 - September 2022) [Jia et al., 2023]. Exposure to misinformation (i.e., false or misleading claims) may reduce vaccine uptake, increasing individual risk of morbidity and mortality and potentially lead to disease outbreaks [Loomba et al., 2021, Wilson and Wiysonge, 2020]. The Internet, particularly social media, is an important source of both vaccine information and misinformation [Jones et al., 2012, Kata, 2010, Wawrzuta et al., 2021, Islam et al., 2020]. Social media surveillance has been proposed as a strategy to assess public opinion about vaccination and to study patterns in vaccine decision-making that may inform interventions [Cascini et al., 2022, Tavoschi et al., 2020, Boucher et al., 2021, Griffith et al., 2021, Tangcharoensathien et al., 2020]. For example, online social networks often contain influencers, users who play outsized roles in information propagation and receive significantly more engagement with their content than other users [Peng et al., 2017, Bakshy et al., 2011, Cha et al., 2010, Goyal et al., 2008, DeVerna et al., 2022]. Once identified, influencers may be targeted to optimize rapid dissemination of information (e.g., for advertising, public service announcements, or fact-checking purposes) [Peng et al., 2017, Bakshy et al., 2011, Nguyen et al., 2013, Candogan and Drakopoulos, 2017] or to reduce the propagation of harmful content with minimal intervention [Smith et al., 2021, DeVerna et al., 2022, Manoel Horta Ribeiro et al., 2018].

Information consumers often use markers of credibility to assess different sources [Acerbi, 2016, Sundar, 2007]. Specifically, prestige bias describes a heuristic where one preferentially learns from individuals who present signals associated with higher status (e.g., educational and professional credentials) [Jiménez and Mesoudi, 2019, Jucks and Thon, 2017, Berl et al., 2021]. Importantly, prestige-biased learning relies on signifiers of expertise that may or may not be accurate or correspond with actual competence in a given domain [Acerbi, 2016, Brand et al., 2020]. Therefore we will refer to perceived experts to denote individuals and organizations that signal biomedical expertise in their social media profiles, but note that credentials may be misrepresented or misunderstood. In particular, we focus on the understudied role of perceived experts as potential anti-vaccine influencers who accrue influence through prestige bias [Cascini et al., 2022, Tangcharoensathien et al., 2020]. Medical professionals, biomedical scientists, and organizations are trusted sources of medical information who may be especially effective at persuading people to get vaccinated and correcting misconceptions about disease and vaccines [Amazeen and Krishna, 2020, Vraga and Bode, 2017, Freed et al., 2011, Gesser-Edelsburg et al., 2018, Jucks and Thon, 2017], suggesting that prestige bias may apply to vaccination decisions, including for COVID-19 vaccines [Lopes and 2021, 2021, Reiter et al., 2020, Berenson et al., 2021].

Despite the large body of research on perceived experts who recommend vaccination, the prevalence and influence of perceived experts acting in the opposite role, as disseminators of false and misleading claims about health has not been studied directly. Prior work on anti-vaccine influencers suggested a category analogous to our definition of perceived experts and provided notable examples [Smith, 2017, Van Schalkwyk, 2019, The Virality Project, 2022, Carpiano et al., 2023]. For example, former physician Andrew Wakefield and other perceived experts promulgated the myth that the measles, mumps, and rubella (MMR) vaccine causes autism [Kata, 2010], perceived experts appeared in the viral Plandemic conspiracy documentary and other anti-vaccine films [Prasad, 2022, Bradshaw et al., 2020, 2022], and six of the twelve anti-vaccine influencers identified as part of the “Disinformation Dozen” responsible for a majority of anti-vaccine content on Facebook and Twitter included medical credentials in their social media profiles [Center for Countering Digital Hate, 2021]. Anti-vaccine users comprised a considerable proportion of apparent medical professionals (a subset of perceived experts excluding scientific researchers) sampled based on use of a particular hashtag (#DoctorsSpeakUp) [Bradshaw, 2022] or inclusion of certain keywords in their profiles [Ahamed et al., 2022, Kahveci et al., 2022]. The number and population share of perceived experts across the community of individuals on the microblogging website Twitter opposing COVID-19 vaccines has not been assessed systematically, an important step toward understanding the scale of this set of potential anti-vaccine influencers and one of the goals of this paper.

In addition to signaling expertise in their profiles, perceived experts may behave like biomedical experts by making scientific arguments and sharing scientific links, but also propagate misinformation by sharing unreliable sources. Anti-vaccine documentaries frequently utilize medical imagery and emphasize the scientific authority of perceived experts who appear in the films [Prasad, 2022, Bradshaw et al., 2020, Hughes et al., 2021]. Although vaccine opponents reject scientific consensus, many still value the brand of science and engage with peer reviewed literature [Koltai and Fleischmann, 2017]. Scientific articles are routinely shared by Twitter users who oppose vaccines and other public health measures (e.g., masks), but sources may be presented in a highly selective or misleading manner [van Schalkwyk et al., 2020, Van Schalkwyk, 2019, Abhari et al., 2023, Lee et al., 2021, Beers et al., 2022, Koltai and Fleischmann, 2017]. At the same time, misinformation claims from sources that often fail fact checks (i.e., low-quality sources) are pervasive within anti-vaccine communities, where they may exacerbate vaccine hesitancy [Loomba et al., 2021, Sharma et al., 2021, DeVerna et al., 2022, Muric et al., 2021]. Here, we investigate how much perceived experts in the anti-vaccine community share both misinformation and scientific sources compared to other users to understand the types of evidence perceived experts use to support their arguments.

After describing the types of information that perceived experts share, we evaluate their ability to reach large audiences who help spread their messages. Various network centrality metrics have been developed to describe the importance of a given node (in this case, user) to information flow based on connections to other nodes. For example, degree centrality is the count of edges (connections between nodes) linking a given node to other nodes, betweenness centrality is the number of times a given node lies on the shortest path between two other nodes, and eigenvector centrality scores nodes recursively based on the centrality of the nodes to which they are connected (Supplemental Table 1). Centrality metrics are commonly used to rank the importance of different users within a social media and help identify influential users [Cha et al., 2010, Hagen et al., 2018, Simmie et al., 2014, Riquelme and González-Cantergiani, 2016, Gilbert and Paulin, 2015]. Pro-vaccine perceived experts were highly central in other Twitter networks discussing vaccines, but no prior analysis has focused on the the centrality of perceived experts in the anti-vaccine community [Hagen et al., 2022, Sanawi et al., 2017]. In addition to a node’s centrality to the whole network, its ability to span opposing communities may be particularly significant [Beers et al., 2023]. “Cognitive bridges,” or users who share content of interest to both anti-vaccine and pro-vaccine communities may be particularly important due to their potential to connect vaccine skeptics with accurate information or to reduce vaccine confidence in pro-vaccine audiences [van Schalkwyk et al., 2020]. If perceived experts occupy central and bridging network positions, they may be well-positioned to share their opinions with other users and share (mis)information about vaccines.

In general, perceived expertise may increase user influence within anti-vaccine communities. Although vaccine opponents express distrust in scientific institutions and the medical community writ large, they simultaneously embrace perceived experts who oppose the scientific consensus as heroes and trusted sources [Kata, 2010, Prasad, 2022, Hughes et al., 2021, Bradshaw et al., 2022]. Medical misinformation claims attributed to perceived experts were some of the most popular and durable topics within misinformation communities on Twitter during the COVID-19 pandemic [Boucher et al., 2021, Haupt et al., 2021]. In fact, compared to individuals who agree with the scientific consensus, individuals who hold counter-consensus positions may actually be more likely to engage with perceived experts that align with their stances [Beers et al., 2022, Faasse et al., 2016, Wood, 2018]. This expectation is based on experimental work on source-message incongruence, which suggests that messages are more persuasive when they come from a surprising source [Manes, 2019, Wood and Eagly, 1980]. This phenomenon may extend to the case where perceived experts depart from the expected position of supporting vaccination. In an experiment where participants were presented with claims from different sources about a fictional vaccine, messages from doctors opposing vaccination were especially influential and were transmitted more effectively than pro-vaccine messages from doctors [Jiménez et al., 2018]. However, this effect has not been tested for actual perceived experts commenting on real vaccines. We posit that perceived experts who spread misinformation may be disproportionately amplified within the Twitter network and have a greater influence on opinion compared to those who advance consensus positions and encourage vaccination [Efstratiou and Caulfield, 2021]. By quantifying the relative impact of perceived experts within the anti-vaccine community compared to other individuals, we will establish whether they represent a particularly influential group that should be specifically considered in interventions to encourage vaccine uptake.

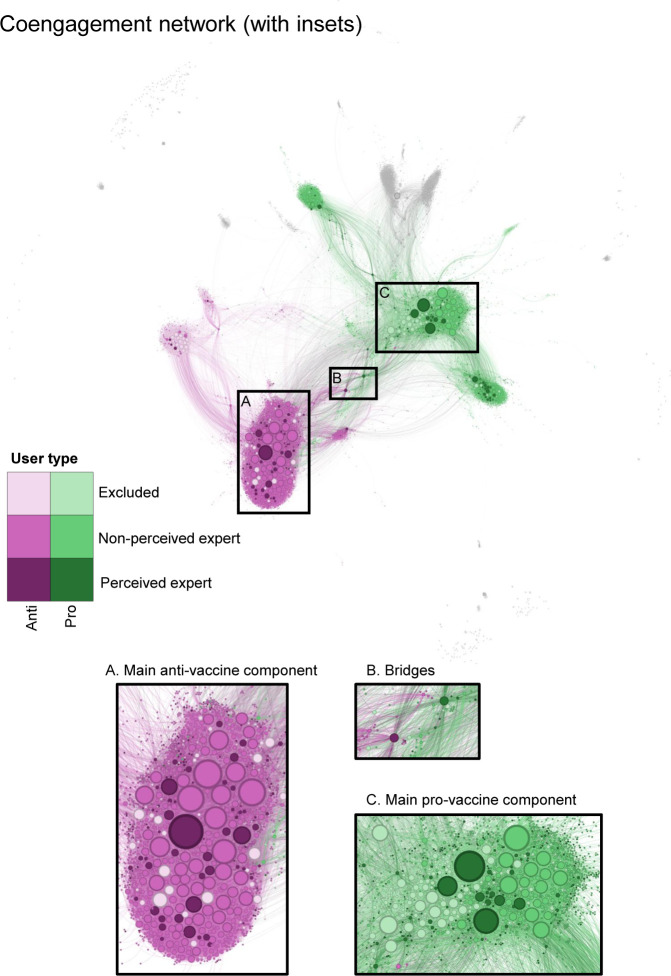

For this study, we collected over 4.2 million unique posts to Twitter containing keywords about COVID-19 vaccines during April 2021 (listed in Supplemental File 1). Users with shared audiences (i.e., those whose posts were shared at least twice by at least ten of the same users) were linked to form a coengagement network Figure 1, which is analogous to co-citation networks in bibliographic analyses that connect sources that are commonly referenced together [Beers et al., 2023]. This method groups users based on how their tweets are received instead of how they engage with other users (as would be the case if edges were based on whether users retweeted, mentioned, or followed each other) and filters the network to users who were retweeted by people who also retweeted other people in the network (i.e., users who have an audience). Profile names and descriptions for all accounts in the coengagement network were reviewed to identify individual users with English profiles and classify those with professional or academic biomedical credentials in their profiles as perceived experts. The network was separated into two main communities using the Infomap community detection algorithm, which identifies densely interconnected groups of nodes. Three coders assessed a sample of popular tweets from both communities and determined that users in one generally expressed a negative stance toward COVID-19 vaccines while users in the other were primarily positive, leading us to label the communities as anti- and pro-vaccine respectively.

Figure 1: The coengagement network of users tweeting about COVID-19 vaccines is divided into two large communities.

Users are represented as circles and scaled by degree centrality. Edges connect users that were retweeted at least twice by at least ten of the same users. Nodes in the two largest communities detected using the Infomap algorithm are colored in pink (anti-vaccine) and green (pro-vaccine). Shades indicate account type: excluded from analyses (light); individual perceived non-expert (medium); and perceived expert (dark). Nodes outside of the two largest communities are gray. Insets provide more detailed views of: (A) the main component of the anti-vaccine community, (B) bridges between the pro- and anti-vaccine communities, and (C) the main component of the pro-vaccine community. Each edge is colored based on the color of one of the two nodes it connects, randomly selected. A higher-resolution image without annotations is available as Supplemental Figure 6.

Our analysis is divided into two parts, where the first focuses on describing the community of perceived experts and the second evaluates their influence relative to other individuals. First, we characterized the prevalence of perceived experts and roles they play in conversations about COVID-19 vaccines, asking: (1) How many perceived experts are there in the anti- and pro-vaccine communities? and (2) How often do perceived experts in the anti-vaccine community share misinformation and scientific sources relative to other users? We first calculated the number and proportion of perceived experts in the anti- and pro-vaccine communities. To assess the types of evidence shared in the anti- and pro-vaccine communities depending on perceived expertise, we determined the proportion of links shared from domain names classified as low-quality sources by Media Bias/Fact Check or as academic by databases of research publications [Newbold et al., 2022] and pre-print servers [Kirkham et al., 2020].

Second, we tested whether perceived experts are particularly important in discussion around COVID-19 vaccines, asking: (3) Do perceived experts occupy key positions within the coengagement network (i.e., as central and bridging nodes)? and (4) Are perceived experts more influential than other individual users? First, we tested whether perceived experts were disproportionately part of the group of highly central users in the anti-vaccine and pro-vaccine community based on degree, betweenness, and eigenvector centrality Table 1. We also tested whether perceived experts were overrepresented among the users with the greatest community bridging scores relative to their share of the population. Second, we conducted propensity score matching to assess whether, on average, perceived experts received more engagements (i.e., likes and retweets) and had greater network centrality compared to other users with similar profile characteristics and posting behaviors who were not perceived experts. We conducted this analysis for both communities separately, hypothesizing that: perceived experts experienced an influence boost in both the anti-vaccine community (H1) and the pro-vaccine community (H2). We then compared the size of this effect between the two communities, hypothesizing that perceived experts experienced a larger influence boost within the anti-vaccine community due to source-message incongruence (H3).

3. Results

3.1. Perceived experts are present throughout the coengagement network, including in the anti-vaccine community

The coengagemement network consisted of 7,720 accounts (nodes) linked by 72,034 edges (Figure 1). 5,171 of those accounts had English language profiles and appeared to correspond to individual users. 24 of the individual users with English profiles added or removed expertise cues in their profiles over the course of the study and were thus excluded from the analysis. Of the remaining 5,147 individual users, 13.1% (678 users) were perceived experts. Perceived experts rarely provided cues of expertise in their name alone. Instead, almost all users indicated expertise in their description, with approximately half of users including expertise cues in both their name and description (Supplemental Figure 7). There was substantial agreement between coders on whether a user was in an excluded category, a perceived non-expert, or a perceived expert (κ = 0.715).

The two largest communities contained 79.6% of total accounts and 66.1% of English-language individual accounts in the network. Stance toward COVID-19 vaccines by users with the greatest degree centrality was relatively consistent, meaning that very few users posted a combination of tweets that were positive and negative in stance, although most users posted some neutral tweets as well (Supplemental Figure 8). Further, stance was generally shared within communities; popular tweets by central users in the anti- and pro-vaccine communities almost exclusively expressed negative and positive stances, respectively, or neutral stances, with a few exceptions. Although there was moderate inter-rater reliability (κ = 0.567) between coders on whether individual tweets were negative, positive or neutral about vaccines, there was high agreement on the overall stance of each user (κ = 0.805 for whether a given user posted more anti-vaccine tweets, pro-vaccine tweets, or equal numbers of either).

The pro-vaccine community was larger than the anti-vaccine community (3,443 and 2,704 users respectively). The anti-vaccine community was made up of a large, densely connected component split into two communities and linked to two additional medium-sized subcommunities (Figure 1A), which were dominated by users tweeting in languages other than English (Italian and French) (see Supplemental Figure 10 and Supplemental Table 4 for visualization and further discussion of subcommunities). The pro-vaccine community similarly consisted of one large component that was connected to the next largest community, which was focused on vaccination in India, and two medium-sized subcommunities (Figure 1C). The largest component of the pro-vaccine community was divided into two subcommunities, one of which was dominated by non-individual accounts. Perceived experts were present in both communities, but constituted a larger share of individual users in the pro-vaccine community (17.2 % or 386 accounts) compared to the anti-vaccine community (9.8 % or 185 accounts) (Figure 3). The two communities were connected by a few bridging nodes, including some perceived experts (Figure 1B).

Figure 3: Perceived experts are overrepresented as the most central users in the pro-vaccine (green) and anti-vaccine (pink) communities.

Plots are arranged in a grid where each row corresponds to users in one of the two largest communities: the anti- and pro-vaccine communities (top and bottom respectively). Each column corresponds to a different centrality metric: betweenness centrality (left), degree centrality (middle), and eigenvector centrality (right). For each plot, we subset the network to the nodes with the greatest values for a given centrality metric, where is full population size (all individual users in the community), 500, or 50 (x-axis). Bar height indicates the proportion of users in each subset that are perceived experts and error bars give 95% binomial proportion confidence intervals. Stars above the error bars indicate whether perceived experts are significantly overrepresented within a given sample of central users (one star indicates , two stars indicate , three stars indicate ).

3.2. Perceived experts in the anti-vaccine community share both low quality and academic sources

We found marked differences in sharing of low quality and academic sources depending on community and perceived expertise Figure 2. For both types of sources, we calculated a user-level metric (the proportion of users who shared at least one link of a given type; Figure 2B, D) and a link-level metric (the proportion of all links shared during the study period that were of a given type; Figure 2A, C). Perceived experts posted more frequently, and included links in a greater proportion of their posts (Supplemental Figure 9)

Figure 2: Users in the anti-vaccine community share low quality sources and perceived experts share academic research.

Each panel compares a different metric of link-sharing by perceived experts and perceived non-experts in the anti- (pink) and pro- (green) vaccine communities. The metrics are: (A) proportion of checked links that were from low quality sources, (B) proportion of users that shared at least one low quality source, (C) proportion of checked links that were from academic research sources, and (D) proportion of users that shared at least one academic research source. 95% binomial proportion confidence intervals are indicated by black error bars.

Compared to the pro-vaccine community, perceived experts and perceived non-experts in the anti-vaccine community shared low quality sources at significantly greater rates (p < 0.001 for proportion of links and proportion of users). Low quality sources were almost exclusively shared in the anti-vaccine community, although low quality sources generally comprised a relatively small proportion of assessed links (Figure 2A, B). Many users in the anti-vaccine community shared at least one low quality link (29% of perceived experts and 26% of perceived non-experts) compared to fewer than 0.5% of users in the pro-vaccine community (two perceived experts and seven perceived non-experts) (Figure 2B).

In both communities, perceived experts shared academic research links at a significantly greater rate compared to other individual users (Figure 2C, D) ( for proportion of links and proportion of users). There was greater academic link-sharing by perceived non-experts in the anti-vaccine community compared to the pro-vaccine community ( and for proportion of links and proportion of users respectively).

3.3. Perceived experts are overrepresented as central and bridging users

Although perceived experts represented a relatively small share of the individual users in the coengagement network, they disproportionately occupied important positions in the network as central and bridging nodes (Figure 3, Figure 4). Perceived experts were overrepresented among users with the greatest betweenness, degree, and eigenvector centrality in both communities, although this effect was not always statistically significant for the pro-vaccine community (Figure 3).

Figure 4: Perceived experts disproportionately act as key bridges between the pro-vaccine and anti-vaccine communities.

Bar height indicates the proportion of users in each population sample that are perceived experts and error bars give 95% confidence intervals for the proportions. The x-axis indicates the size of each subset (), corresponding to the full population of 5,147 (all individual users as a basis of comparison), and the 500, 50, or 10 users with the greatest community bridging score (x-axis). Stars above the error bars indicate whether perceived experts are significantly overrepresented within a given sample of bridging users (one star indicates , two stars indicate , three stars indicate ).

Perceived experts in both communities were highly overrepresented among the five hundred users with the greatest betweenness centrality ( for both communities) and overrepresented among the fifty users with the greatest betweenness centrality by a factor of about two ( and for the anti- and pro-vaccine communities respectively) (Figure 3).

Ranking on degree centrality and eigenvector centrality, perceived experts were more strongly overrepresented in the anti-vaccine community compared to the pro-vaccine community (Figure 3). Perceived experts in the anti-vaccine community were about two times more prevalent in the group of central users (20% of the fifty top users ranked on both metrics) compared to their share of the population, while perceived experts in the pro-vaccine community were overrepresented by a factor of approximately 1.6. By both metrics, perceived experts in the anti-vaccine community were significantly overrepresented in the 500 most central users ( and for degree and eigenvector centrality respectively) and the fifty most central users ( for both degree and eigenvector centrality). In the pro-vaccine community, perceived experts were significantly overrepresented in the 50 most central users ( for both degree and eigenvector centrality) but not in the 500 most central users ( and ). It is unsurprising that the results for eigenvector and degree centrality were similar given that the PageRank algorithm used to compute eigenvector centrality is proportional to degree centrality on an undirected graph [Grolmusz, 2015].

Perceived experts were significantly overrepresented in the group of the 500 and ten most important bridges between the pro- and anti-vaccine communities (, respectively), but not significantly overrepresented in the fifty most important bridges (). Figure 4. About 20% of the top 500 and top fifty users ranked by community bridging scores were perceived experts, and five of the 10 users with the greatest community bridging scores were perceived experts (Figure 4, Figure 1B). There was little variation in which users were highly ranked between different network metrics (Supplemental Figure 12, Supplemental Figure 13, Supplemental Figure 14, Supplemental Figure 15).

3.4. Perceived experts are more influential than other individuals in the anti- and pro- vaccine communities

Using propensity score matching, we achieved excellent balance across matching covariates within the anti-vaccine community (Supplemental Figure 16) and good matching within the pro-vaccine community for all variables except for the percent of posts with links (Supplemental Figure 17 and Supplemental Figure 18). There were minimal differences in the results of propensity score matching with and without matching on subcommunities (compare Figure 5 and Supplemental Figure 19; Supplemental Table 6, Supplemental Table 7, and Supplemental Table 8). Using these propensity-matched pairs for comparison, on average, perceived experts received more engagements (likes and retweets) on their posts, but were not more central than other individual users (Figure 5, Supplemental Figure 19, Supplemental Table 6, Supplemental Table 7, Supplemental Table 8).

Figure 5: In the anti-vaccine and pro-vaccine communities, perceived experts receive greater engagements compared to other users.

For each influence metric (y-axis, Supplemental Table 3), we plot the standardized average treatment effect on the treated (ATT) as a point and corresponding 95% confidence interval. Values are shown for the matching analysis directly comparing the anti-vaccine (pink) and pro-vaccine (green) communities (corresponding to Supplemental Table 8). Positive values (to the right of the vertical line) indicate an influence boost for perceived experts. Instances where the effects were significantly greater than zero () are indicated with an additional circle around the point estimate.

Perceived experts in the anti-vaccine community received 20% (95% CI: 3 – 43%) more retweets and 37% (95% CI: 9 – 63%) more likes on their median post than would be expected if they were not perceived experts (Figure 5, Supplemental Figure 19, Supplemental Table 6). We also found a significant effect of perceived expertise when using an alternative metric, the h-index, to assess engagements across tweets posted during the time period (ATT for natural logged retweets: 1.90 (95% CI: 0.36–3.44); ATT for natural logged likes: 3.43 (95% CI: 1.11–5.76)) (Figure 5, Supplemental Figure 19, Supplemental Table 6). In contrast to these measures of engagement, perceived expertise did not significantly affect centrality (betweenness, degree, and eigenvector) within the anti-vaccine community on average (Figure 5, Supplemental Table 8, Supplemental Table 6), although we found in the previous section that perceived experts were overrepresented in the tail of the distribution as highly central users compared to the full (unmatched) set of perceived non-experts (see subsection 7.8 for further discussion reconciling these two results).

Perceived experts in the pro-vaccine community also had a positive ATT across engagement metrics and additionally had a significantly positive ATT for betweenness centrality (Figure 5, Supplemental Figure 19, Supplemental Table 7). Although perceived experts in the pro-vaccine community tended to have a greater ATT across all influence metrics than those in the anti-vaccine community, the differences in ATT between groupswere not statistically significant for any influence metric (Supplemental Figure 20, Figure 5, Supplemental Table 9). Matching results were robust to matching parameters and exclusion of different matching covariates (Supplemental Figure 22, Supplemental Figure 23, and Supplemental Figure 24).

4. Discussion

The anti-vaccine community contains its own set of perceived experts. These perceived experts represent 9.8% of individual users within the anti-vaccine community, comprising a substantial group that extends beyond the handful of high-profile anti-vaccine influencers with biomedical credentials who have been noted anecdotally [Smith, 2017, The Virality Project, 2022]. Although surveys have found broad support for COVID-19 vaccination among medical providers [Callaghan et al., 2022, Bartoš et al., 2022], 28.9% of perceived experts in the two largest communities of the coengagement network were part of the anti-vaccine community, a proportion similar to those reported by other studies of COVID-19 vaccine attitudes expressed by medical professionals on Twitter [Ahamed et al., 2022, Kahveci et al., 2022, Bradshaw, 2022]. Anti-vaccine perceived experts are therefore overrepresented on Twitter compared to the share of actual biomedical experts who oppose Covid-19 vaccines, which may lead observers to underestimate the scientific consensus in favor of COVID-19 vaccination, in turn reducing vaccine uptake [Efstratiou and Caulfield, 2021, Bartoš et al., 2022, Motta et al., 2023].

Within the anti-vaccine community, perceived experts may combine misinformation with claims that appear scientific. Low quality sources in the coengagement network were overwhelmingly shared by the anti-vaccine community, and perceived experts shared low quality sources at similar rates compared to other individuals (Figure 2), suggesting that they directly contribute to the widespread misinformation in the anti-vaccine community noted by other studies [Memon and Carley, 2020, Muric et al., 2021, Jamison et al., 2020]. Perceived experts, including those in the anti-vaccine community, performed expertise by sharing and commenting on academic articles at much higher rates compared to other individual users (Figure 2C, D). Misinformation claims containing arguments that appear scientific may be particularly effective at reducing vaccine intent [Loomba et al., 2021], suggesting that perceived experts may be responsible for some of the most compelling anti-vaccine claims.

Perceived experts may also be well-poised to spread their claims online, as they disproportionately occupied key network positions between anti- and pro-vaccine communities and within the anti-vaccine community (Figure 3, Figure 4). Across various centrality metrics, perceived experts were overrepresented in the group of highly central users Figure 3, meaning that they reached (and had posts shared by) large and unique audiences. Perceived experts were half of the ten individuals users with the greatest community bridging scores, meaning that they were shared by audiences for users in both the anti- and pro-vaccine communities. Within this set of five perceived experts, four made highly technical arguments in favor of vaccines and corrected potential misunderstandings related to the frequency of vaccine-linked adverse events and to the severity of breakthrough infections reported at the time. Such users could play an important role in changing vaccine stance, although the two communities may share distinct subsets of their tweets for substantially different reasons [Van Schalkwyk, 2019, Beers et al., 2023]. For example, anti-vaccine audiences may retweet reports of adverse events out of concern that vaccines are unsafe while pro-vaccine audiences may share the same content to emphasize their rarity.

Our hypothesis that perceived experts are, on average, more influential than other users in the anti-vaccine community was supported by the finding that they received more engagement (i.e., likes and retweets) on their vaccine-related posts than similar users without credentials in their profiles Figure 5. We also found evidence of this effect in the pro-vaccine community. Perceived experts were not significantly more central on average than a matched set of perceived non-experts (except in the case of perceived experts in the pro-vaccine community who had greater betweenness centrality than perceived non-experts) Figure 5. These findings may be explained by the observations that matching covariates (e.g., follower count, post frequency) contribute importantly to centrality (Supplemental Figure 21) and that overrepresentation of perceived experts in the set of highly central users (i.e., the tail of the distribution) may not be sufficient to significantly increase the mean centrality of perceived experts compared to perceived non-experts Figure 3. There was no significant difference in the influence boost for perceived experts between the anti- and pro-vaccine communities, contradicting our hypothesis that perceived experts hold a greater advantage within the anti-vaccine community. However, this finding suggests that anti-vaccine audiences do value expert opinion, at least when it confirms their own stances, a result aligned with other studies that have found that scientists and medical professionals are popular sources amongst vaccine opponents [Koltai and Fleischmann, 2017, Kata, 2010, Prasad, 2022, Hughes et al., 2021, Bradshaw et al., 2022, Boucher et al., 2021, Haupt et al., 2021].

In sum, this works goes beyond high-profile examples of anti-vaccine perceived experts to systematically characterize the sizable population of anti-vaccine perceived experts who have a significant impact in the the Twitter conversation about COVID-19 vaccines. Our findings have implications for interventions focused on education of medical professionals and the general public. Educational interventions that encourage trust in science could backfire if individuals defer to anti-vaccine perceived experts who share low quality sources [O’Brien et al., 2021]. Instead, education efforts should focus on teaching the public how to evaluate source credibility to counter the potentially fallacious heuristic of deferring to individual perceived experts [O’Brien et al., 2021, Osborne and Pimentel, 2022, Sundar, 2007]. Although the sample of perceived experts in this study is not representative of the broader community of experts, surveys have found that a non-negligible minority of medical students and health professionals are vaccine hesitant and believe false claims about vaccine safety [Lucia et al., 2021, Mose et al., 2022, Callaghan et al., 2022, Le Marechal et al., 2018]. Given that perceived experts are particularly influential in vaccine conversations (Figure 3, Figure 4, Figure 5) and that healthcare providers with more knowledge about vaccines are more willing to recommend vaccination, efforts to educate healthcare professionals and bioscientists on vaccination and to overcome misinformation within this community may help to improve vaccine uptake [Paterson et al., 2016].

In addition to helping people evaluate vaccine information, interventions may focus on improving information quality by focusing on communications, social media platform design, and expert community self-governance. From a communication standpoint, greater engagement by perceived experts recommending COVID-19 vaccines and debunking medical misinformation may help to correct public misunderstandings about expert consensus based on the overrepresentation of anti-vaccine perceived experts on social media [Bartoš et al., 2022]. However, perceived experts already constituted one fifth of individuals in the pro-vaccine community according to our analysis [Hernandez et al., 2021, Gallagher et al., 2021, Hernández-García et al., 2021] and it is not clear whether individual pro-vaccine communicators will be especially persuasive to people who are already engaging with perceived experts who oppose vaccines. Instead, emphasizing the scientific consensus in favor of COVID-19 vaccines and avoiding false balance in communication may help ameliorate misconceptions [Bartoš et al., 2022, Ceccarelli, 2011]. Further, perceived experts and their professional organizations may build trust and disseminate health information more effectively by developing networked communication strategies to rapidly, openly, and factually address false claims that gain traction while clearly explaining areas of uncertainty and directly addressing legitimate safety concerns (as several of the most central perceived experts in the pro-vaccine community did during the study period, see 7.4) [Carpiano et al., 2023]. User-provided expertise cues were sufficient to garner greater engagement on Twitter in vaccine-related discussions, suggesting that individuals could misrepresent their own credentials to more effectively spread anti-vaccine misinformation (Figure 5). Platforms may counter potential deception by establishing a mechanism to verify academic and professional credentials and creating signals within profiles to identify authorities on health-related topics. These measures were implemented on Twitter in the early months of the COVID-19 pandemic, but have since been abandoned. Finally, this work illustrates the importance of self-regulation within expert communities, particularly as medical boards clarify that health professionals who spread vaccine misinformation may face disciplinary consequences [Federation of State Medical Boards Ethics and Professionalism Committee, 2022, SoRelle, 2022].

Limitations and Extensions

This study relies on proxies for tweet content and user activity that may miss important variation and nuance in stance. Breaking the network into pro- and anti-vaccine communities is common across studies of social media networks [Hagen et al., 2022, Sharma et al., 2021, ?, Jamison et al., 2020], and stance was generally consistent across popular tweets in either community (Figure 8), but there were several notable exceptions. Positive tweets from users in the anti-vaccine community tended to cite vaccine efficacy as an argument against mandating vaccines, while negative tweets from users in the pro-vaccine community (particularly those by perceived experts) praised vaccine regulators for responding to safety signals. Further, sharing a particular misinformation or academic link may not constitute endorsement, particularly in fact-checking contexts. Although academic sources were shared in the anti-vaccine community, academic sources may be misrepresented by these users [?Beers et al., 2022, Kata, 2010] or include articles that have been retracted [Van Schalkwyk, 2019, Abhari et al., 2023]. Future work may examine which academic links were shared within the anti-vaccine community and how these sources were interpreted. More detailed content analysis may reveal important differences between the communities beyond attitudes toward vaccine (e.g. attitudes toward non-pharmaceutical interventions and perceptions of severity of COVID-19 infection), heterogeneity in vaccine opinion within groups, and specific rhetorical strategies utilized by perceived experts in either group [Bradshaw et al., 2020, Maddox, 2022].

An important limitation of our study is that we were unable to assess how many users are exposed to a given tweet. By relying on engagements as an indicator of tweet popularity, we may underestimate the true reach of content. We also could not ascertain how exposure to vaccine-related information in this study influenced health decision-making and behavior, questions that could be directly evaluated in an experimental setting [Jiménez et al., 2018]. Further work may additionally examine the relative contribution of prestige bias to vaccine decision-making compared to other types of biases and how the effects of perceived expertise are moderated by other source characteristics (e.g., trust-worthiness and competence) [Pornpitakpan, 2004] and other forms of bias (e.g., confirmation bias, repetition bias, or success bias) [Acerbi, 2016, Jiménez et al., 2018, Brand et al., 2020, Berl et al., 2021, Nadarevic et al., 2020, Lachapelle et al., 2014, Lin et al., 2016].

This analysis is limited to a single month, based on events in the United States and English keywords, constrained to Twitter, and focused on conversations about COVID-19 vaccination. Initial vaccination capacity, trust in experts, and vaccine uptake vary considerably between countries [Wilson and Wiysonge, 2020] and our own analysis found considerable geographically clustering across the coengagement network (Figure 10). Further work may examine how the role of perceived experts in conversations about vaccination differed between regions and across different time periods (including prior to the COVID-19 pandemic and after the emergence of variants of concern with high breakthrough infection rates). Although we excluded the relatively small set of individuals who added or removed signals of expertise from their profile during the month of the study, extending the study period to expand this set of users could enable further examination of how user behavior and influence changes depending on perceived expertise [Hasan et al., 2022]. Twitter users are not representative of the general population [Wojcik and Hughes, 2019], and patterns in vaccine conversations on social media do not necessarily reflect actual vaccination trends [Cascini et al., 2022, Tavoschi et al., 2020]. The generalizability of these findings should be assessed on different social media platforms [Jones et al., 2012, Cascini et al., 2022, Tangcharoensathien et al., 2020]. The role of experts, particularly those who take counter-consensus positions, is relevant across other scientific topics including climate change, tobacco, and AIDS etiology and treatment [Oreskes and Conway, 2022, Epstein, 1996]. These methods could be applied to compare the role of perceived experts in conversations about different scientific and non-scientific topics (e.g., politics and entertainment) to test the extent to which domain specific credentials are necessary to be perceived as an experts and compare the effects of perceived expertise relevant to different conversations (e.g., whether politicians speaking on political matters receive an influence boost comparable to that of medical professionals and scientists discussing COVID-19 vaccines).

Conclusion

We found that the set of anti-vaccine perceived experts extends far beyond prominent examples noted by others previously [Smith, 2017, The Virality Project, 2022], suggesting that they should be addressed as a unique and sizable group that blends misinformation with arguments that appear scientific. We also found evidence that perceived experts are more influential than other individuals in the anti-vaccine community, as they disproportionately occupied central network positions where they could reach large audiences and received significantly more engagements (20% more likes and 37% more retweets) on their vaccine-related posts compared to perceived non-experts. Perceived experts are not only some of the most effective voices speaking out against vaccine misinformation; they may be some of its most persuasive sources.

5. Methods

5.1. Data collection

The Institutional Review Board of Washington University determined that this study (STUDY00017030) was exempt. Our analysis was conducted on a subset of collections of public tweets containing keywords related to vaccines and the COVID-19 pandemic, retrieved and stored in by the University of Washington Center for an Informed Public in real-time as they were posted. Tweets that were later deleted and public tweets from accounts that were suspended or became private are included in the dataset.

We constrained our search period to April of 2021, noting that all individuals sixteen and older were eligible for vaccination by April 19th, marking this time period as an especially critical window for vaccine decision-making [Roy, 2021]. Further, administration of the Johnson & Johnson (Janssen) vaccine was paused in the United States between April 13th and April 23rd while the Centers for Disease Control and Prevention (CDC) and Food and Drug Administration (FDA) investigated a safety signal involving six reported cases of severe blood clots [U.S. Food and Drug Administration, 2021]. Focusing on April of 2021 also allows us to examine how different communities reacted to credible news of a serious but rare vaccine safety signal. During the same time period, fact checkers and researchers responded to several false claims about vaccination, including rumors that vaccinated people were able to “shed” vaccine components that might infect and harm unvaccinated people [The Virality Project, 2021].

To focus our analysis on content related to COVID-19 vaccines, we selected tweets (i.e., posts) within the collections that mentioned keywords related to vaccines in general or the specific manufacturers of the three COVID-19 vaccines initially offered in the United States (Supplemental File 1). Although this protocol focuses on vaccine administration in the United States and English language, tweet selection was not constrained to a specific geographic region. In total, we retrieved 4,276,842 unique tweets including quote tweets (when another post is shared with commentary) and replies (a direct response to another user’s tweet) from April. 5,523,595 unique users participated in the Twitter conversation about COVID-19 vaccines during the study period by either posting original content or retweeting (i.e., sharing) another user’s tweet on the topic. We additionally retrieved retweets, quote tweets, and replies linked to tweets in the April collection that were posted within 28 days of the original tweet (extending the dataset to May 28th) to compare the number of likes and retweets each tweet in the April study period received across a window of the same length (four weeks).

We randomly generated a unique numeric identifier for each user to protect user privacy (particularly for users who are not public figures) while allowing our findings to be reproduced. Individual users are not named in the analyses, reinforcing that we aim to characterize the group of perceived experts within the anti-vaccine community instead of focusing on individual, high-profile examples. User profiles and numeric identifiers for accounts used by Twitter were stored separately from tweets, which were instead linked to the anonymous identifiers we assigned. We also stored a dictionary that allows for translation between these two distinct numeric identifiers and utilized the dictionary to connect statistics about tweets to properties of the network and users (e.g., to determine community stance after tagging the stance of a sample of popular tweets, to compare the types of links shared depending on perceived expertise and community, and to collect matching covariates based on Twitter activity and engagements). In tweets we coded for stance, we anonymized mentions of other usernames and removed links to other posts and images on Twitter to prevent user identification. Therefore, researchers were blinded to user identity when assessing tweet stance. We have made tweet and user information relevant to results in this study publicly available without the linking dictionary.

5.2. Coengagement network construction

We examined the activity of influential users with shared audiences by generating a coengagement network. First, we constructed a directed graph where each edge connects a user to another user whom they have retweeted at least twice. Next, we used a Docker container developed by Beers et al. [2023] to transform the network into an undirected graph. Edges link accounts that were retweeted at least twice by at least ten of the same users. The resulting graph therefore filters users to those that received some amount of repeated engagement from several accounts rather than those that produced a single viral tweet. We used the Infomap hierarchical clustering algorithm implemented at mapequation.org to detect communities within the coengagement network. Infomap balances the detection of potential substructures (i.e., subcommunities within larger communities) against concisely describing a random walker’s movements through the network to determine the total number of levels to use [Rosvall and Bergstrom, 2008, Holmgren et al., 2022]. The subcommunities detected using Infomap correspond well to communities detected using the Louvain method, an alternative community detection algorithm (Supplemental Figure 10, Supplemental Figure 11, Supplemental Table 4). Coengagement networks were visualized using the open-source software package Gephi with the ForceAtlas2 layout algorithm [Bastian et al., 2009, Jacomy et al., 2014].

5.3. Tagging profiles

Based on username, display name, and user description collected each time a user tweeted or was retweeted during the study period, we manually labeled each profile in this group as a perceived expert or not (Supplemental Figure 7). The lead author tagged all users in the coengagement network and two additional authors labeled a subset of 500 users to test robustness of tags based on interrater reliability. We first noted whether a profile indicated that the user primarily tweeted in a language other than English. Because we were unable to assess non-English expertise signals and tweets associated with these users, who may also have reached a substantially different audience compared to English-language accounts, we excluded non-English accounts from our analysis. We also noted accounts that appeared not to represent individuals (e.g., accounts for media groups, non-profit organizations, governmental agencies, and bot accounts). Non-English and non-individual accounts (2,549 accounts in total) remained in the network for visualizations and calculations related to network centrality but were excluded from analyses focused on comparing individuals with and without signals of expertise in their profiles.

For the remaining individual profiles, we noted whether there were signals of expertise in the account username or display name (which are listed with tweets in a user’s feed) or the account description (which is only visible if a user mouses over the author’s tweet or directly visits the account) (Supplemental Figure 7). Signals of expertise included academic prefixes (e.g., Dr. or Professor), suffixes (e.g., MD, MPH, RN, PhD), and professional information (e.g., scientist, retired nurse). We limited our definition of perceived expertise to include training or professional experience in a potentially relevant field but excluded individuals who expressed an interest in a related topic without providing qualifications (e.g., “virology is the coolest”). We included anonymous accounts and ones that may have been parodies (e.g., “Dr. Evil” and “The Mad Scientist”) since we expect that users evaluate profiles based on heuristics and without investigating the veracity of information provided [Sundar, 2007]. We assumed that medical and wellness professionals, including practitioners of alternative medicine, may broadly be perceived as experts regardless of specialty [Jiménez and Mesoudi, 2019]. To focus on biomedical expertise, we did not code users from other fields as perceived experts (e.g., science journalists, disability rights advocates, and governmental officials without biomedical backgrounds), acknowledging that these sources may provide trusted and knowledgeable perspectives relevant to health decision-making.

Users that indicated expertise in any part of their profile (names, description, or both) at any point in time were tagged as perceived experts based on experimental evidence that users with biomedical expertise signals in their profiles are perceived to have greater expertise on COVID-19 vaccines [Jalbert et al.]. Users that changed their profiles over the course of the study in a manner that changed whether they might be perceived as an expert (24 accounts) were excluded from the following analyses.

5.4. Determining community stance

To understand the stance of individuals in the two largest communities, we analyzed tweets that received retweets during April 2021. Of the 251,040 tweets satisfying those criteria, we tagged a subset posted by the ten perceived experts and perceived non-experts in both communities with the greatest degree centrality. We collected the ten most retweeted tweets by the forty selected users. A few of the users had fewer than ten tweets that were retweeted during the study period, so we instead retrieved all of their tweets that received retweets in April. In total, we reviewed 392 tweets.

For each tweet, three coders assessed stance toward COVID-19 vaccines as positive, negative, or neutral. Stance was evaluated as positive if the tweet supported vaccination, including by providing evidence that COVID-19 vaccines are safe and effective. Tweets expressing the opposite view were coded as negative. Stance was often implicit. For example, calls for deployment of vaccines to “hotspots” with elevated disease burden suggest that the author believes the vaccine can prevent disease, even if this rationale is not stated directly. Unless the author refuted or negatively reacted to a quote they provided, a tweet they quote tweeted, or an article they linked, the post was assumed to take the same stance as referenced sources. Tweets containing multiple contrasting viewpoints or providing information without a clearly implied position (e.g., sharing statistics about the pace of vaccination without any additional commentary) were coded as neutral. The neutral tag was also applied to tweets if the coder was uncertain about the argument being made or context was missing (e.g., criticism of an article that could not be retrieved). We noted many tweets during this time period that opposed vaccine mandates and coded these tweets as neutral unless the author justified their position using a specific argument regarding vaccine safety or efficacy. Tweets comparing multiple types of vaccines with negative stances toward some and positive stances toward others were tagged neutral. Tweets that took a uniform stance toward different types of COVID-19 vaccines while explaining their differences were coded based on the corresponding stance. Based on this analysis, we determined that negative stances toward COVID-19 vaccines were prevalent in tweets from one community, which we refer to as the anti-vaccine community in following analyses (Supplemental Figure 8). The other community, in which tweets mainly expressed positive stances, was the pro-vaccine community that served as a basis of comparison.

5.5. Academic and low-quality link-sharing

We assessed the types of sources users referenced in their tweets by comparing links shared to databases of: (1) low quality sources and (2) academic research journals and pre-print servers. We expanded shortened URLs using the RCurl package in R [Temple Lang, 2023]. In some cases, this process timed out or links connected to other content shared on Twitter, in which case links were excluded from the following analysis. Across community and perceived expertise, we compared the average number of tweets per user, proportion of tweets with links, and proportion of links that were checked, and number of links that were checked to ensure there was minimal risk of differences in link-sharing behavior leading to bias in the following analyses (Supplemental Figure 9). We checked the remaining 52,073 links, first determining whether the domain name was rated “low” or “very-low” quality by Media Bias/Fact Check according to the Iffy Index of Unreliable Sources [Golding, 2023, Van Zandt], a common proxy for misinformation sharing [Sharma et al., 2021, DeVerna et al., 2022]. To assess sharing of academic research, we also checked links to research publications [Priem et al., 2022] and pre-print servers used in biomedical and medical sciences [Kirkham et al., 2020]. For perceived experts and perceived non-experts in each of the two large communities, we calculated: (1) the proportion of assessed links from low quality or academic research sources and (2) the proportion of total users that shared at least one link from a low quality or academic research source in their original posts during April 2021. For each metric, we calculated the binomial proportion 95% confidence interval as where is the observed proportion and is the total links or users in a given category. To compare proportions for different categories of users, we conducted two proportion one-tailed Z-tests with Yates’ continuity correction to account for the small number of links from low quality sources shared by the pro-vaccine community.

5.6. Network centrality and bridging metrics

We calculated degree, betweenness, and eigenvector centrality to describe network position using the igraph package in R [Csardi and Nepusz, 2006] (see Supplemental Table 1 for a description of centrality metrics and their interpretation). Specifically, we calculated eigenvector centrality using the PageRank algorithm. We additionally detected nodes with audiences spanning the antiand pro-vaccine communities using a community bridging index calculated as the minimum number of edges that a node has linking it to either of the two communities.

We tested whether perceived experts were overrepresented within the group of highly ranked users by each metric by calculating the proportion of perceived experts in the top users ranked by a given metric (where varies between 500 and 50 for all metrics and ten for bridging score). Several perceived experts and perceived non-experts had the same bridging scores, leading to ties for ranking in the top 500 and 50 bridges. In these cases, we randomly drew 1,000 samples from the tied users without replacement to complete the set of top bridges and calculated the mean proportion of perceived experts across all samples. To calculate the 95% confidence interval in these cases, we added the margin of error to the .025th and 97.5th percentile proportion value across the 1,000 samples. For the remaining metrics and subsets, we calculated the binomial proportion 95% confidence interval as in the previous section except when examining the ten top bridges, in which case we calculated the Wilson score interval to account for the small sample size.

To test whether perceived experts were significantly overrepresented in each sample of highly ranked individuals, we conducted two proportion Z-tests with the alternative hypothesis that perceived experts were a significantly greater proportion of the top individuals than individuals in the complement (i.e., individuals who were not among the top individuals ranked by a given metric). When considering the top ten bridges, we instead used a Fischer’s exact t-test to account for the small sample size. We reported p-values for significance tests.

5.7. Matching to assess relative influence of perceived experts

This portion of the analysis was pre-registered on OSF Registries prior to hypothesis testing (https://osf.io/6u3rn). We assessed how perceived expertise affects influence using propensity score matching, a technique to select a sample of individual perceived non-experts with a similar covariate distribution to perceived exerts in the dataset [Rosenbaum and Rubin, 1983]. We can then compare the average influence between perceived experts and perceived non-experts while adjusting for confounding from covariates that may predict being a perceived expert without being a direct consequence of perceived expertise. Propensity score matching has been applied previously to compare the effectiveness of different users in the context of online advertising [Yan et al., 2018, Kuang et al., 2018] and to understand whether following prominent public health institutions on Twitter impacts vaccine sentiment [Rehman et al., 2016]. Propensity score matching was performed using the MatchIt package in R [Ho et al., 2011].

We matched on the following covariates: natural logged follower count at the beginning of April 2021, total on-topic posts during the study period (i.e., posts included in our data set because they contained a COVID-19 vaccine keyword), account creation date, whether the account is verified, percent of on-topic posts containing links, percent of on-topic tweets that were original posts versus retweets, posting time of day, and uniformity of posting date across the study period (Supplemental Table 2). For the first two analyses focused on relative influence within the anti-vaccine and pro-vaccine communities, we also matched on subcommunity assignment based on the Infomap community detection algorithm Figure 10. We used logistic regression to compute propensity score as the predicted probability that each user is a perceived expert given their covariates. Based on propensity scores, each perceived expert was matched with replacement to their three nearest neighbor perceived non-experts. To test the first two hypotheses about influence within the anti-vaccine and pro-vaccine communities respectively, we included only users in either community. To test the third hypothesis comparing the influence of perceived experts in the anti- versus pro-vaccine community, we calculated the interaction of perceived expertise and community. Sample sizes for all analyses are provided in Supplemental Table 5.

The outcome variables for influence are: eigenvector centrality, degree centrality, betweenness centrality, natural logged median likes, natural logged median retweets, h-index for likes, and h-index for retweets (Supplemental Table 3). h-index is the maximum number h such that the user wrote at least h original tweets (including replies and quote tweets) that received at least h likes or retweets. We calculated engagements (likes and retweets) for each tweet as the maximum number observed for that tweet in our collection within twenty-eight days of when it was posted. Importantly, we only retrieved like counts for a given tweet when another user engaged with the tweet through a quote tweet or retweet.

We then estimated the effect of perceived expertise on influence by calculating the average treatment effect on the treated , which corresponds to the difference in expected influence with and without perceived expertise given that a user is a perceived expert with covariates drawn from the distributions for the matched users :

ATT was estimated using the marginal effects package [Arel-Bundock, 2023]. We tested the robustness of our results to different matching parameters and covariates, as displayed in Supplemental Figure 22, Supplemental Figure 23, and Supplemental Figure 24.

Supplementary Material

Acknowledgments:

We thank Lia Bozarth and Alex Lodengraad for their work constructing and maintaining the Twitter collections used in these analyses, and we thank Lia Bozarth for querying the collection on our behalf. We thank Andrew Beers and Joey Schafer for their assistance with coengagement network methods. We thank Stephen Prochaska for his input on methods to code profiles and tweets and assess interrater reliability. We thank Maddy Jalbert, Luke Williams, Noah Louis-Ferdinand, Alexander Sherman, Caroline Glidden, Desire Nalukwago, Eloise Skinner, Emma Southworth, Isabel Delwel, Jasmine Childress, Kelsey Lyberger, Kimberly Cardenas, Lisa Couper, Marissa Childs, and Mauricio Cruz Loya for their helpful feedback on the manuscript. We additionally thank Ihsan Kahveci, members of the James Holland Jones’ lab, and members of the University of Washington’s Center for an Informed Public for their feedback and insights on this project.

Funding:

MJH was funded by the Achievement Rewards for College Scientists Scholarship, the National Institutes of Health (R35GM133439), the University of Washington’s Center for an Informed Public, and the John S. and James L. Knight Foundation. RM was funded by the Bryce and Bonnie Nelson Fellowship. EAM was funded by the National Science Foundation (DEB2011147, with the Fogarty International Center), the National Institutes of Health (R35GM133439, R01AI168097, and R01AI102918), the Stanford King Center on Global Development, Woods Institute for the Environment, Center for Innovation in Global Health, and the Terman Award. JDW was funded by the Knight Foundation and the National Science Foundation grants 2120496 and 2230616.

Footnotes

Conflicts of interest: All authors declare that there are no competing interests.

Data and code availability:

Code and data used to conduct main analyses and reproduce figures are available on Github at: https://github.com/mjharris95/perceived-experts

As explained in the repository, users were anonymized throughout the analysis and in the dataset. Additional information may be provided by the corresponding author on reasonable request.

8 Works Cited

- Abhari R., Villa-Turek E., Vincent N., Dambanemuya H., and Horvát E.. Retracted Articles about COVID-19 Vaccines Enable Vaccine Misinformation on Twitter, Mar. 2023. URL http://arxiv.org/abs/2303.16302. arXiv:2303.16302 [cs]. [Google Scholar]

- Acerbi A.. A Cultural Evolution Approach to Digital Media. Frontiers in Human Neuroscience, 10, 2016. ISSN 1662–5161. URL 10.3389/fnhum.2016.00636. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ahamed S. H. R., Shakil S., Lyu H., Zhang X., and Luo J.. Doctors vs. Nurses: Understanding the Great Divide in Vaccine Hesitancy among Healthcare Workers. In 2022 IEEE International Conference on Big Data (Big Data), pages 5865–5870, Osaka, Japan, Dec. 2022. IEEE. ISBN 978–1-66548–045-1. doi: 10.1109/BigData55660.2022.10020853. URL https://ieeexplore.ieee.org/document/10020853/. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Amazeen M. and Krishna A.. Correcting Vaccine Misinformation: Recognition and Effects of Source Type on Misinformation via Perceived Motivations and Credibility. SSRN Electronic Journal, 2020. ISSN 1556–5068. doi: 10.2139/ssrn.3698102. URL https://www.ssrn.com/abstract=3698102. [DOI]

- Arel-Bundock V.. marginaleffects: Predictions, Comparisons, Slopes, Marginal Means, and Hypothesis Tests. 2023. URL https://CRAN.R–project.org/package=marginaleffects. [Google Scholar]

- Bakshy E., Hofman J. M., Mason W. A., and Watts D. J.. Everyone’s an influencer: quantifying influence on twitter. In Proceedings of the fourth ACM international conference on Web search and data mining, WSDM ‘11, pages 65–74, New York, NY, USA, Feb. 2011. Association for Computing Machinery. ISBN 978–1-4503–0493-1. doi: 10.1145/1935826.1935845. URL 10.1145/1935826.1935845. [DOI] [Google Scholar]

- Bartoš V., Bauer M., Cahlíková J., and Chytilová J.. Communicating doctors’ consensus persistently increases COVID-19 vaccinations. Nature, 606(7914):542–549, June 2022. ISSN 0028–0836, 1476–4687. doi: 10.1038/s41586-022-04805-y. URL https://www.nature.com/articles/s41586-022-04805-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bastian M., Heymann S., and Jacomy M.. Gephi: An Open Source Software for Exploring and Manipulating Networks. Proceedings of the International AAAI Conference on Web and Social Media, 3(1):361–362, Mar. 2009. ISSN 2334–0770, 2162–3449. doi: 10.1609/icwsm.v3i1.13937. URL https://ojs.aaai.org/index.php/ICWSM/article/view/13937. [DOI] [Google Scholar]

- Beers A., Nguyên S., Starbird K., West J. D., and Spiro E. S.. The architects of perceived consensus. In review, Dec. 2022. [Google Scholar]

- Beers A., Schafer J. S., Kennedy I., Wack M., Spiro E. S., and Starbird K. . Followback Clusters, Satellite Audiences, and Bridge Nodes: Coengagement Networks for the 2020 US Election. Proceedings of the International AAAI Conference on Web and Social Media, June 2023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Berenson A. B., Chang M., Hirth J. M., and Kanukurthy M.. Intent to get vaccinated against COVID-19 among reproductive-aged women in Texas. Human Vaccines & Immunotherapeutics, 17(9):2914–2918, Sept. 2021. ISSN 2164–5515, 2164–554X. doi: 10.1080/21645515.2021.1918994. URL 10.1080/21645515.2021.1918994. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Berl R. E. W., Samarasinghe A. N., Roberts S. G., Jordan F. M., and Gavin M. C.. Prestige and content biases together shape the cultural transmission of narratives. Evolutionary Human Sciences, 3, 2021. ISSN 2513–843X. doi: 10.1017/ehs.2021.37. URL https://www.cambridge.org/core/journals/evolutionary-human-sciences/article/prestige-and-content-biases-together-shape-the-cultural-transmission-of-narratives/4BB933C47461D60F285514311028DA24.Publisher: Cambridge University Press. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bonnevie E., Gallegos-Jeffrey A., Goldbarg J., Byrd B., and Smyser J.. Quantifying the rise of vaccine opposition on Twitter during the COVID-19 pandemic. Journal of Communication in Healthcare, 14(1):12–19, Jan. 2021. ISSN 1753–8068. doi: 10.1080/17538068.2020.1858222. URL 10.1080/17538068.2020.1858222. Publisher: Taylor & Francis eprint: 10.1080/17538068.2020.1858222. [DOI] [Google Scholar]

- Boucher J.-C., Cornelson K., Benham J. L., Fullerton M. M., Tang T., Constantinescu C., Mourali M., Oxoby R. J., Marshall D. A., Hemmati H., Badami A., Hu J., and Lang R.. Analyzing Social Media to Explore the Attitudes and Behaviors Following the Announcement of Successful COVID-19 Vaccine Trials: Infodemiology Study. JMIR Infodemiology, 1(1):e28800, Aug. 2021. ISSN 2564–1891. doi: 10.2196/28800. URL https://infodemiology.jmir.org/2021/1/e28800. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bradshaw A. S.. #DoctorsSpeakUp: Exploration of Hashtag Hijacking by Anti-Vaccine Advocates and the Influence of Scientific Counterpublics on Twitter. Health Communication, pages 1–11, Apr. 2022. ISSN 1041–0236, 1532–7027. doi: 10.1080/10410236.2022.2058159. URL 10.1080/10410236.2022.2058159. [DOI] [PubMed] [Google Scholar]

- Bradshaw A. S., Treise D., Shelton S. S., Cretul M., Raisa A., Bajalia A., and Peek D.. Propagandizing anti-vaccination: Analysis of Vaccines Revealed documentary series. Vaccine, 38(8):2058–2069, Feb. 2020. ISSN 0264410X. doi: 10.1016/j.vaccine.2019.12.027. URL https://linkinghub.elsevier.com/retrieve/pii/S0264410X1931669X. [DOI] [PubMed] [Google Scholar]

- Bradshaw A. S., Shelton S. S., Fitzsimmons A., and Treise D.. ‘From cover-up to catastrophe:’ how the anti-vaccine propaganda documentary ‘ Vaxxed’ impacted student perceptions and intentions about MMR vaccination. Journal of Communication in Healthcare, pages 1–13, Sept. 2022. ISSN 1753–8068, 1753–8076. doi: 10.1080/17538068.2022.2117527. URL 10.1080/17538068.2022.2117527. [DOI] [Google Scholar]

- Brand C. O., Heap S., Morgan T. J. H., and Mesoudi A.. The emergence and adaptive use of prestige in an online social learning task. Scientific Reports, 10(1):12095, July 2020. ISSN 2045–2322. doi: 10.1038/s41598-020-68982-4. URL https://www.nature.com/articles/s41598-020-68982-4. Number: 1 Publisher: Nature Publishing Group. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Callaghan T., Washburn D., Goidel K., Nuzhath T., Spiegelman A., Scobee J., Moghtaderi A., and Motta M.. Imperfect messengers? An analysis of vaccine confidence among primary care physicians. Vaccine, 40(18):2588–2603, Apr. 2022. ISSN 0264–410X. doi: 10.1016/j.vaccine.2022.03.025. URL https://www.ncbi.nlm.nih.gov/pmc/articles/PMC8931689/. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Candogan O. and Drakopoulos K.. Optimal Signaling of Content Accuracy: Engagement vs. Misinformation. SSRN Scholarly Paper ID 3051275, Social Science Research Network, Rochester, NY, Oct. 2017. URL https://papers.ssrn.com/abstract=3051275. [Google Scholar]

- Carpiano R. M., Callaghan T., DiResta R., Brewer N. T., Clinton C., Galvani A. P., Lakshmanan R., Parmet W. E., Omer S. B., Buttenheim A. M., Benjamin R. M., Caplan A., Elharake J. A., Flowers L. C., Maldonado Y. A., Mello M. M., Opel D. J., Salmon D. A., Schwartz J. L., Sharfstein J. M., and Hotez P. J.. Confronting the evolution and expansion of anti-vaccine activism in the USA in the COVID-19 era. The Lancet, page S0140673623001368, Mar. 2023. ISSN 01406736. doi: 10.1016/S0140-6736(23)00136-8. URL https://linkinghub.elsevier.com/retrieve/pii/S0140673623001368. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cascini F., Pantovic A., Al-Ajlouni Y. A., Failla G., Puleo V., Melnyk A., Lontano A., and Ricciardi W.. Social media and attitudes towards a COVID-19 vaccination: A systematic review of the literature. eClinicalMedicine, 48:101454, June 2022. ISSN 25895370. doi: 10.1016/j.eclinm.2022.101454. URL https://linkinghub.elsevier.com/retrieve/pii/S2589537022001845. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ceccarelli L.. Manufactured Scientific Controversy: Science, Rhetoric, and Public Debate. Rhetoric and Public Affairs, 14(2):195–228, 2011. ISSN 1094–8392. URL https://www.jstor.org/stable/41940538. Publisher: Michigan State University Press. [Google Scholar]

- Center for Countering Digital Hate. The disinformation dozen: Why platforms must act on twelve leading online anti-vaxxers. Technical report, Mar. 2021. URL https://counterhate.com/wp-content/uploads/2022/05/210324-The-Disinformation-Dozen.pdf. [Google Scholar]

- Cha M., Haddadi H., Benevenuto F., and Gummadi K. P.. Measuring user influence in twitter: The million follower fallacy. Proceedings of the International AAAI Conference on Web and Social Media, 4(1):8, May 2010. [Google Scholar]

- Csardi G. and Nepusz T.. The igraph software package for complex network research. InterJournal, Complex Systems:1695, 2006. URL https://igraph.org. [Google Scholar]

- DeVerna M. R., Aiyappa R., Pacheco D., Bryden J., and Menczer F.. Identification and characterization of misinformation superspreaders on social media, July 2022. URL http://arxiv.org/abs/2207.09524. arXiv:2207.09524 [cs]. [Google Scholar]

- Efstratiou A. and Caulfield T.. Misrepresenting Scientific Consensus on COVID-19: The Amplification of Dissenting Scientists on Twitter. arXiv, Nov. 2021. URL http://arxiv.org/abs/2111.10594. arXiv: 2111.10594. [Google Scholar]

- Epstein S.. Impure science: AIDS, activism, and the politics of knowledge. Number 7 in Medicine and society. University of California press, Berkeley, 1996. ISBN 978–0-520–21445-3. [PubMed] [Google Scholar]

- Faasse K., Chatman C. J., and Martin L. R.. A comparison of language use in pro- and anti-vaccination comments in response to a high profile Facebook post. Vaccine, 34(47):5808–5814, Nov. 2016. ISSN 1873–2518. doi: 10.1016/j.vaccine.2016.09.029. [DOI] [PubMed] [Google Scholar]

- Federation of State Medical Boards Ethics and Professionalism Committee. Professional Expectations Regarding Medical Misinformation and Disinformation. Technical report, Apr. 2022. URL https://www.fsmb.org/siteassets/advocacy/policies/ethics-committee-report-misinformation-april-2022-final.pdf.

- Freed G. L., Clark S. J., Butchart A. T., Singer D. C., and Davis M. M.. Sources and perceived credibility of vaccine-safety information for parents. Pediatrics, 127 Suppl 1:S107–112, May 2011. ISSN 1098–4275. doi: 10.1542/peds.2010-1722P. [DOI] [PubMed] [Google Scholar]

- Gallagher R. J., Doroshenko L., Shugars S., Lazer D., and Foucault Welles B. . Sustained Online Amplification of COVID-19 Elites in the United States. Social Media + Society, 7(2):205630512110249, Apr. 2021. ISSN 2056–3051, 2056–3051. doi: 10.1177/20563051211024957. URL 10.1177/20563051211024957. [DOI] [Google Scholar]

- Gesser-Edelsburg A., Diamant A., Hijazi R., and Mesch G. S.. Correcting misinformation by health organizations during measles outbreaks: A controlled experiment. PLOS ONE, 13(12):e0209505, Dec. 2018. ISSN 1932–6203. doi: 10.1371/journal.pone.0209505. URL 10.1371/journal.pone.0209505. Publisher: Public Library of Science. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gilbert S. and Paulin D.. Tweet to Learn: Expertise and Centrality in Conference Twitter Networks. In 2015 48th Hawaii International Conference on System Sciences, pages 1920–1929, HI, USA, Jan. 2015. IEEE. ISBN 978–1-4799–7367-5. doi: 10.1109/HICSS.2015.231. URL http://ieeexplore.ieee.org/document/7070042/. [DOI] [Google Scholar]

- Golding B.. Iffy Index of Unreliable Sources, Jan. 2023. URL https://iffy.news/index/. [Google Scholar]

- Goyal A., Bonchi F., and Lakshmanan L. V.. Discovering leaders from community actions. In Proceeding of the 17th ACM conference on Information and knowledge mining - CIKM ‘08, page 499, Napa Valley, California, USA, 2008. ACM Press. ISBN 978–1-59593–991-3. doi: 10.1145/1458082.1458149. URL http://portal.acm.org/citation.cfm?doid=1458082.1458149. [DOI] [Google Scholar]