Abstract

The Long-read RNA-Seq Genome Annotation Assessment Project (LRGASP) Consortium was formed to evaluate the effectiveness of long-read approaches for transcriptome analysis. The consortium generated over 427 million long-read sequences from cDNA and direct RNA datasets, encompassing human, mouse, and manatee species, using different protocols and sequencing platforms. These data were utilized by developers to address challenges in transcript isoform detection and quantification, as well as de novo transcript isoform identification. The study revealed that libraries with longer, more accurate sequences produce more accurate transcripts than those with increased read depth, whereas greater read depth improved quantification accuracy. In well-annotated genomes, tools based on reference sequences demonstrated the best performance. When aiming to detect rare and novel transcripts or when using reference-free approaches, incorporating additional orthogonal data and replicate samples is advised. This collaborative study offers a benchmark for current practices and provides direction for future method development in transcriptome analysis.

Introduction

There is a growing trend of using long-read RNA-seq (lrRNA-seq) data for transcript identification and quantification, primarily with Oxford Nanopore Technologies (ONT) and Pacific Biosciences (PacBio) platforms1–4. Consequently, there is a need to evaluate these approaches for transcriptome analysis to compare the impact of different sequencing platforms, multiple sequencing library preparation methods, and computational analysis methods5–8.

A previous effort by the RNA-Seq Genome Annotation Assessment Project (RGASP) Consortium9,10 involved evaluating short-read Illumina RNA-seq for transcript identification. It revealed limitations in recalling full-length transcript products due to the complexity of eukaryotic transcriptomes. To evaluate long-read approaches for transcriptome analysis, we formed the Long-read RNA-Seq Genome Annotation Assessment Project (LRGASP) Consortium modeled after the previous GASP11, EGASP12, and RGASP9,10 efforts. For this project, we aimed for an open community effort to be as transparent and inclusive as possible in evaluating technologies and computational methods (Fig. 1).

Fig. 1. Overview of the Long-read RNA-seq Genome Annotation Assessment Project (LRGASP).

a, Data produced for LRGASP consist of multiple species, multiple sample types, multiple library protocols, and multiple sequencing platforms for comparison. b, Distribution of read lengths, identity Q score, and sequencing depth (per biological replicate) for the WTC11 sample. c, LRGASP as an open research community effort for benchmarking and evaluating long-read RNA-seq approaches. d, Number of isoforms reported by each tool on different data types for the human WTC11 sample for Challenge 1. e, Median TPM value reported by each tool on different data types for the human WTC11 sample for Challenge 2. f, Number of isoforms reported by each tool on different data types for the mouse ES data for Challenge 3. g, Pairwise relative overlap of unique junction chains (UJCs) reported by each submission. The UJCs reported by a submission are used as the reference set for each row. The fraction of overlap of UJCs from the column submission is shown as a heatmap. For example, a submission whose UJCs are a small subset of the UJCs reported by many other submissions will have high fractions in its row but low fractions in its column. Data only shown for WTC11 submissions. h, Spearman correlation of TPM values between submissions to Challenge 2. i, Pairwise relative overlap of UJCs reported by each submission. The UJCs reported by a submission are used as the reference set for each row. The fraction of overlap of UJCs from the column submission is shown as a heatmap.

The LRGASP Consortium evaluated three fundamental aspects of transcriptome analysis. First, we assessed the reconstruction of full-length transcripts expressed in a given sample from a well-curated eukaryotic genome, such as from human or mouse. Second, we evaluated the quantification of the abundance of each transcript. Finally, we assessed the de novo reconstruction of full-length transcripts from samples without a high-quality genome, which would be beneficial for annotating genes in non-model organisms. These evaluations became the basis of the three challenges that comprised the LRGASP effort (Box 1).

Box 1: Overview of the LRGASP Challenges.

Challenge 1: Transcript isoform detection with a high-quality genome

Goal: Identify which sequencing platform, library prep, and computational tool(s) combination gives the highest sensitivity and precision for transcript detection.

Challenge 2: Transcript isoform quantification

Goal: Identify which sequencing platform, library prep, and computational tool(s) combination gives the most accurate expression estimates.

Challenge 3: De novo transcript isoform identification

Goal: Identify which sequencing platform, library prep, and computational tool(s) combination gives the highest sensitivity and precision for transcript detection without a high-quality annotated genome.

Our benchmarking effort demonstrated the potential of long-read sequencing technologies in obtaining full-length transcripts and discovering new transcripts, even in well-annotated genomes. However, the agreement among bioinformatics tools was moderate, reflecting different goals in data analysis. Accurately detecting novel transcripts was more challenging than identifying transcript models already present in reference annotations. This indicates the need to improve de novo annotation of genomes using long-read data alone. Furthermore, transcript quantification accuracy varies significantly among bioinformatics tools depending on data scenarios, with long-read-based tools typically having lower quantitative accuracy than short-read-based tools due to low throughput and higher error rates. Improvements in long-read-based tools and increased throughput are expected to enhance their accuracy further. Evaluation analysis also highlights challenges in quantifying complex and lowly expressed transcripts. We experimentally validated many lowly expressed, single-sample transcripts, prompting further discussions on using long-read data for creating reference transcriptomes.

Results

LRGASP Data and study design

The LRGASP Consortium Organizers produced long-read and short-read RNA-seq data from aliquots of the same RNA samples using a variety of library protocols and sequencing platforms (Fig. 1a, Table 1, Extended Data Table 1). The Challenges 1 and 2 samples consist of human and mouse ENCODE biosamples with extensive chromatin-level functional data generated separately by the ENCODE Consortium. These include the human WTC11 iPSC cell line and a mouse 129/Castaneus ES cell line for Challenge 1 and a mix (H1-mix) of H1 human Embryonic Stem Cell (H1-hESC) and Definitive Endoderm derived from H1 (H1-DE) for Challenge 2. In addition, individual H1-hESC and H1-DE samples were sequenced on all platforms; however, those reads were only released after initial H1-mix predictions were submitted. All samples were grown as biological triplicates with the RNA extracted at one site, spiked with 5’-capped Spike-In RNA Variants (Lexogen SIRV-Set 4), and distributed to all production groups. A single pooled sample of manatee whole blood transcriptome was generated for Challenge 3. After sequencing, reads for human, mouse, and manatee samples were deposited at the ENCODE Data Coordination Center (DCC) for community access, including but not limited to usage for the challenges. We applied different cDNA preparation methods to each sample, including an early-access ONT cDNA kit (PCS110), ENCODE PacBio cDNA, R2C2 for ONT data with increased sequence accuracy, and CapTrap13 to enrich for 5’-capped RNAs (see Methods). CapTrap is derived from the CAGE technique14 and was adapted for lrRNA-seq. We also performed direct RNA sequencing (dRNA) with ONT.

Table 1:

Overview of LRGASP sequencing data.

| Sample | # of Reps | cDNA-PacBio | cDNA-ONT | dRNA-ONT | R2C2-ONT | CapTrap-PacBio | CapTrap-ONT | cDNA-Illumina |
|---|---|---|---|---|---|---|---|---|
| Mouse ES | 3 | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Human WTC11 | 3 | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Human H1-mix | 3 | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Human H1-hESC | 3 | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Human H1-DE | 3 | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Manatee PBMCs | 1 | Yes | Yes | No | No | No | No | Yes |

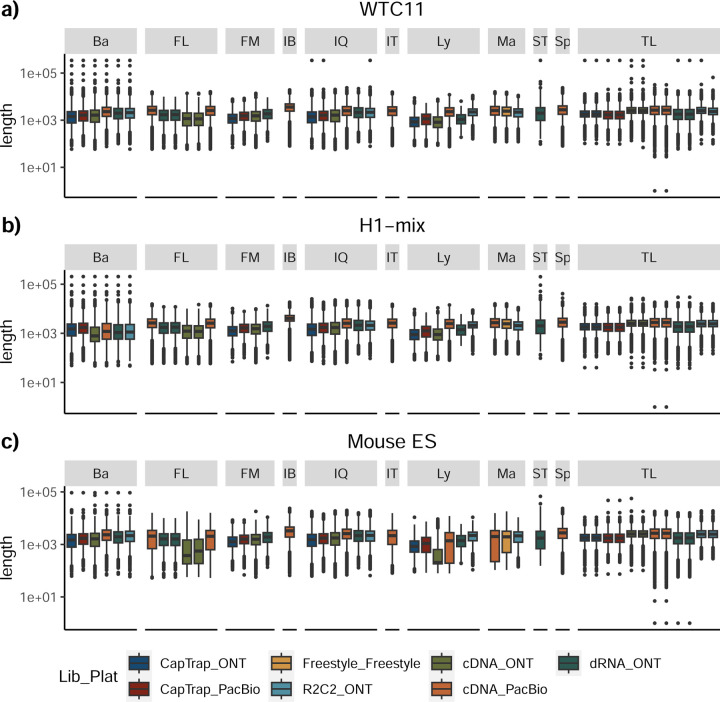

The quality of the LRGASP datasets was extensively assessed (Supplementary information: LRGASP Data QC). cDNA-PacBio and R2C2-ONT datasets contained the longest read-length distributions, while sequence quality (assessed as % identity after genome mapping) was higher for CapTrap-PacBio, cDNA-PacBio, and R2C2-ONT than other experimental approaches. We obtained approximately ten times more reads from CapTrap-ONT and cDNA-ONT than with other methods (Fig. 1b).

The overall design of the LRGASP Challenge aimed for a fair and transparent process of evaluating long-read methods. The LRGASP effort was announced to the broader research community via social media and the GENCODE main website to recruit tool developers to submit transcript detection and quantification predictions based on the LRGASP data. Having tool developers provide results, rather than a third party, was likely to result in the best-performing parameters for that tool, making for a more fair comparison. In addition to data, the tool developers were provided detailed descriptions of the evaluation metrics and benchmarks, including scripts for challenge evaluations (Fig. 1c, detailed in Supplementary information: Challenge submissions and timeline, Data and code availability). Benchmarks included ground truth datasets known and unknown to participants before submission. Additionally, several community video conferences were held to answer questions about the data and evaluation and receive feedback on improving the study. Tool developers submitted challenge predictions, which were evaluated and validated by a subgroup of the LRGASP organizers that did not submit predictions (Fig. 1c). The separation of evaluators from prediction submitters was made to reduce any bias in the evaluation.

The evaluation of the challenges consisted of both bioinformatic and experimental approaches. SQANTI315 was used to obtain transcript features and performance metrics (Table 2) computed based on SIRV-Set 4 spike-ins, simulated data, and a set of undisclosed, manually curated transcript models defined by GENCODE16. Human models were further compared to CAGE and Quant-seq data from the same samples. In addition, multiple evaluation metrics were designed for transcript quantification performance assessment both with and without known ground truth in different data scenarios. Experimental validation was performed on a select number of loci with either high agreement or disagreement between sequencing platforms or analysis pipelines, as well as several GENCODE annotated transcript models.

Table 2:

Transcript Classifications and Definitions used by the LRGASP computational evaluation.

| Classification | Description |
|---|---|
| Full Splice Match (FSM) | Transcripts matching a reference transcript at all splice junctions |
| Incomplete Splice Match (ISM) | Transcripts matching consecutive, but not all, splice junctions of the reference transcripts |
| Novel in Catalog (NIC) | Transcripts containing new combinations of 1) already annotated splice junctions, 2) novel splice junctions formed from already annotated donors and acceptors, or 3) unannotated intron retention |
| Novel Not in Catalog (NNC) | Transcripts using novel donors and/or acceptors |
| Reference Match (RM) | FSM transcript with 5´ and 3´ ends within 50 nts of the transcription start site (TSS)/transcription termination site (TTS) annotation |
| 3´ poly(A) supported | Transcript with poly(A) signal sequence support or short-read 3´ end sequencing (e.g. QuantSeq) support at the 3´ end |
| 5´ CAGE supported | Transcript with CAGE support at the 5´ end |
| 3´ reference supported | Transcript with 3´ end within 50 nts from a reference transcript TTS |
| 5´ reference supported | Transcript with 5´ end within 50 nts from a reference transcript TSS |
| Supported Reference Transcript Model (SRTM) | FSM/ISM transcript with 5´ end within 50 nts of the TSS or has CAGE support AND 3´ end within 50 nts of the TTS or has poly(A) signal sequence support or short-read 3´ end sequencing support |
| Supported Novel Transcript Model (SNTM) | NIC/NNC transcript with 5´ end within 50 nts of the TSS or CAGE support AND 3´ end within 50 nts of the TTS or has poly(A) signal sequence support or short-read 3´ end sequencing support AND Illumina read support at novel junctions |
| % Long Read Coverage (%LRC) | Fraction of the transcript model sequence length mapped by one or more long reads |
| Read multiplicity | Number of assigned transcripts per read |
| Redundancy | # LR transcript models / reference model |
| Longest Junction Chain | ISM: # junctions in ISM / # junctions in reference; NIC/NNC: # reference junctions / # junctions in NIC/NNC |
| Intron retention (IR) level | Number of IR within the NIC category |
| Illumina Splice Junction (SJ) Support | % SJ in transcript model with Illumina support |
| Full Illumina Splice Junction Support | % transcripts in category with all SJ supported |
| % Novel Junctions | # of new junctions / total # junctions |
| % Non-canonical junctions | # of non-canonical junctions / total # junctions |
| % Non-canonical transcripts | % transcripts with at least one non-canonical junction |
| Intra-priming | Evidence of intra-priming (described in ref. 15) |
| RT-switching | Evidence of RT-switching (described in ref. 15) |
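The four main structural categories in Table 2 can be illustrated with a short sketch. This is a simplified illustration, not the SQANTI3 implementation: the function names `classify` and `is_contiguous_subchain`, and the representation of a transcript as an ordered list of (donor, acceptor) coordinates, are assumptions made for this example. Strand, mono-exon transcripts, intron retention, and the remaining SQANTI categories are ignored.

```python
from typing import List, Sequence, Tuple

Junction = Tuple[int, int]  # (donor position, acceptor position); hypothetical encoding


def is_contiguous_subchain(query: Sequence[Junction], ref: Sequence[Junction]) -> bool:
    """Return True if `query` occurs as consecutive junctions inside `ref`."""
    n, m = len(query), len(ref)
    return any(tuple(ref[i:i + n]) == tuple(query) for i in range(m - n + 1))


def classify(query: Sequence[Junction], references: List[Sequence[Junction]]) -> str:
    """Assign FSM, ISM, NIC or NNC to a multi-exon transcript (simplified)."""
    if not query:
        raise ValueError("this sketch only handles multi-exon transcripts")
    # FSM: every splice junction matches one reference transcript.
    if any(tuple(query) == tuple(ref) for ref in references):
        return "FSM"
    # ISM: consecutive, but not all, junctions of a reference transcript.
    if any(len(query) < len(ref) and is_contiguous_subchain(query, ref)
           for ref in references):
        return "ISM"
    # NIC: only annotated donors and acceptors, in a new combination.
    donors = {d for ref in references for d, _ in ref}
    acceptors = {a for ref in references for _, a in ref}
    if all(d in donors and a in acceptors for d, a in query):
        return "NIC"
    # NNC: at least one novel donor or acceptor.
    return "NNC"


if __name__ == "__main__":
    refs = [[(100, 200), (300, 400), (500, 600)], [(100, 250), (300, 400)]]
    print(classify([(300, 400), (500, 600)], refs))
    print(classify([(100, 250), (300, 400), (500, 600)], refs))
```

In this toy annotation, the first query is a contiguous part of the first reference chain, and the second combines annotated donors and acceptors into a chain that no reference transcript contains.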

LRGASP Challenge submissions

A total of 14 tools and labs participated in LRGASP, submitting predictions for each of the three challenges (Extended Data Table 2). While submitters could choose the type of experimental procedure (a combination of library preparation and sequencing platform) they wished to participate in, predictions were required for all biological samples in the chosen experimental procedure to assess pipeline consistency. We received 141, 143, and 25 submissions for Challenges 1, 2, and 3, respectively.

We observed a large variability in the number and quantification of transcript models predicted by each submission, with differences of up to 10-fold in each challenge (Figs. 1d-f). Moreover, there was little overlap in the transcripts identified by any two pipelines in Challenges 1 and 3, and low pairwise correlations were detected for Challenge 2 quantification results (Figs. 1g-i, Extended Data Table 3). These results highlight the importance of the comprehensive benchmarking presented here.
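The pairwise relative overlap shown in Figs. 1g and 1i is asymmetric, which a small sketch makes explicit. The function name `relative_overlap` and the representation of each submission as a set of UJC identifiers are assumptions for illustration; the row submission is taken as the reference set, as described in the Fig. 1 legend.

```python
from typing import Dict, Set, Tuple


def relative_overlap(ujcs: Dict[str, Set[str]]) -> Dict[Tuple[str, str], float]:
    """For each (row, column) pair, return |row ∩ column| / |row|."""
    overlap = {}
    for row, row_set in ujcs.items():
        for col, col_set in ujcs.items():
            # The row submission is the reference set, so it is the denominator.
            shared = len(row_set & col_set)
            overlap[(row, col)] = shared / len(row_set) if row_set else 0.0
    return overlap


if __name__ == "__main__":
    # Hypothetical submissions: "small" reports a subset of what "large" reports.
    submissions = {
        "large": {"ujc1", "ujc2", "ujc3", "ujc4"},
        "small": {"ujc1", "ujc2"},
    }
    for pair, fraction in sorted(relative_overlap(submissions).items()):
        print(pair, fraction)
```

A submission that reports a small subset of the UJCs found by others therefore shows high fractions along its row and low fractions down its column.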

Challenge 1 Results and Evaluation: Transcript isoform detection with a high-quality genome

The evaluation of transcript model predictions in Challenge 1 used a variety of datasets, each addressing different performance aspects. When looking at predictions using datasets of real samples, we noticed that the fraction of the long reads used to build the transcript models (Percentage of Reads Used, PRU) varied greatly among and within methods. Tools such as LyRic13 utilized less than 20% of the available reads, while others, e.g., Iso_IB17, FLAMES18, and IsoQuant19, showed PRU greater than 100%, indicating that the same read supported more than one transcript model (Extended Data Fig. 1) and revealing very different strategies for reconstructing the transcriptomes. We evaluated the overall capacity of the experimental methods and tools for transcript and gene detection based on SQANTI categories and various orthogonal datasets, as described in Table 3. Results were consistent for all three samples (Extended Data Tables 4-6). Fig. 2a shows data for WTC11, while Extended Data Figs. 2,3 contain the results for H1-mix and mouse ES samples, respectively. We found a large variation in the number of known genes (399–23,647) and transcripts (524–329,131) detected across pipelines (Fig. 2a), although there was a consistent relationship between both figures, with most pipelines reporting on average 3–4 transcripts per gene, except for Spectra20 and Iso_IB, which reported a very large number of transcripts (~170K and 330K, respectively). Due to pipeline variation, we did not find a clear relationship between the number of reads, read length, or read quality and the number of detected transcripts (Extended Data Figs. 4–6). Instead, the number of detected genes and transcripts was determined to a great extent by the analysis tool (Fig. 2a and Extended Data Figs. 7,8). In general, pipelines detected more genes using cDNA library preparations and the PacBio sequencing platform. At the same time, there was no clear best library preparation method for ONT in terms of the number of detected genes (Extended Data Fig. 9) or transcripts (Extended Data Fig. 10). The same trend was observed when comparing results within each analysis tool (Extended Data Figs. 11–14).
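A PRU above 100% follows naturally if read-to-model assignments, rather than distinct reads, are counted. The sketch below assumes exactly that; the function name `percentage_of_reads_used` and the input layout are hypothetical, and the precise counting used in the evaluation may differ.

```python
from typing import Dict, List


def percentage_of_reads_used(reads_per_model: Dict[str, List[str]], total_reads: int) -> float:
    """Read-to-model assignments as a percentage of all available long reads."""
    if total_reads <= 0:
        raise ValueError("total_reads must be positive")
    # A read supporting two transcript models is counted twice (assumption).
    assignments = sum(len(reads) for reads in reads_per_model.values())
    return 100.0 * assignments / total_reads


if __name__ == "__main__":
    # Four reads in the library; reads r1 and r3 each support both models.
    models = {"tx_A": ["r1", "r2", "r3"], "tx_B": ["r3", "r4", "r1"]}
    print(percentage_of_reads_used(models, total_reads=4))
```

With six assignments over four reads, this toy library has a PRU of 150%, whereas a pipeline that discards most reads before building models would fall well below 100%.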

Table 3:

Metrics for evaluation against GENCODE annotation

| Metric | FSM | ISM | NIC | NNC | Others |
|---|---|---|---|---|---|
| Count | ✓ | ✓ | ✓ | ✓ | ✓ |
| Reference Match (RM)* | ✓ | | | | |
| 3´ poly(A) supported | ✓ | ✓ | ✓ | ✓ | |
| 5´ CAGE supported | ✓ | ✓ | ✓ | ✓ | |
| 3´ reference supported | ✓ | ✓ | ✓ | ✓ | |
| 5´ reference supported | ✓ | ✓ | ✓ | ✓ | |
| Supported Reference Transcript Model (SRTM) | ✓ | ✓ | | | |
| Supported Novel Transcript Model (SNTM) | | | ✓ | ✓ | |
| Distance (nts) to TSS/TTS of matched transcript | ✓ | ✓ | | | |
| Redundancy | ✓ | ✓ | | | |
| % Long Read Coverage (%LRC) | ✓ | | | | |
| Longest Junction Chain | | ✓ | ✓ | ✓ | |
| Intron retention level | | | ✓ | ✓ | |
| Illumina Splice Junction Support | ✓ | ✓ | ✓ | ✓ | ✓ |
| Full Illumina Splice Junction Support | ✓ | ✓ | ✓ | ✓ | ✓ |
| % Novel Junctions | | | ✓ | ✓ | |
| % Non-canonical junctions | ✓ | ✓ | ✓ | ✓ | ✓ |
| % Transcripts with non-canonical junctions | ✓ | ✓ | ✓ | ✓ | ✓ |
| Intra-priming | ✓ | ✓ | ✓ | ✓ | ✓ |
| RT-switching | ✓ | ✓ | ✓ | ✓ | ✓ |
| Number of exons | ✓ | ✓ | ✓ | ✓ | ✓ |

See Table 2 for description of LRGASP metrics

✓ indicates that the LRGASP metric in the row is applied to the structural category in the column

Figure 2: Overview of evaluation for Challenge 1: transcript identification with a reference annotation.

a) Number of genes and transcripts per submission. Abundance of the main structural categories and support by external data. b) Agreement in transcript detection as a function of the number of detecting pipelines. c) Performance based on spliced-short and unspliced-long SIRVs. d) Performance based on simulated data. e) Performance for known and novel transcripts based on 50 manually-annotated genes by GENCODE. Ba: Bambu, FM: FLAMES, FR: FLAIR, IQ: IsoQuant, IT: IsoTools, IB: Iso_IB, Ly: LyRic, Ma: Mandalorion, TL: TALON-LAPA, Sp: Spectra, ST: StringTie2.

Pipelines also varied greatly in detecting transcripts annotated in GENCODE (Full Splice Match, FSM), containing annotated splice junctions but unannotated transcript starts or ends (Incomplete Splice Match, ISM), containing novel junctions between annotated GENCODE splice sites (Novel In Catalog, NIC), or containing novel splice sites with respect to GENCODE (Novel Not in Catalog, NNC). Bambu21, FLAIR22, FLAMES, and IsoQuant consistently detected a high percentage of FSM and a low proportion of ISM transcripts. In contrast, TALON23,24, IsoTools25, and LyRic detected a relatively high number of ISM (Fig. 2a). The LyRic submission group noted that they did not use existing annotations to guide their analysis, which can explain their results. As for novel transcripts, Bambu was the tool that reported the lowest values for NNC and NIC. IsoQuant and TALON also had a low discovery of novel transcripts. FLAIR and Mandalorion26 pipelines typically returned around 20% NIC and low NNC percentages. LyRic and FLAMES were among the pipelines with the highest percentages of novel transcript detections, and so were Iso_IB and Spectra, which generally returned many isoforms, with only a small fraction of them being FSM. When these values were further stratified by library preparation and sequencing platform, a pattern similar to that of the total number of transcripts was observed: cDNA library preps, especially in combination with PacBio, more frequently had the highest number of FSM, NIC, and NNC, while there was no clear pattern for the detection of ISM (Extended Data Figs. 15–20). Within each analysis tool, SQANTI categories Antisense and Genic Genomic were more frequently detected using ONT sequencing data combined with cDNA or CapTrap. Intergenic transcripts were often found in cDNA library preparations (Extended Data Figs. 21,22).

We then evaluated the support of transcript models by reference annotation and orthogonal data derived from short-read sequencing (i.e., cDNA sequencing, CAGE, and Quant-seq). We observed that many pipelines had a high percentage of known transcripts with full support at TTS, TSS, and junctions (SRTM, see Methods), which was not mirrored by full support in novel transcript models (SNTM) (Fig. 2a). In general, tools analyzing cDNA-PacBio and cDNA-ONT data had high values of full support both for novel and known transcripts. A significant number of TALON pipelines had only moderate full support of known transcripts, possibly due to their high number of ISM. However, TALON showed consistent full support of novel transcripts in most cases. In contrast, methods such as LyRic, IsoQuant, FLAMES, and Bambu, which had high full support values for novel transcripts when analyzing cDNA-PacBio data, returned novel transcript models with lower support when processing ONT library preparations. When looking at the specific evidence for 5’ and 3’ ends, we found that, in general, pipelines were more successful in reporting experimentally supported 3’ ends than 5’ ends. At the same time, transcript models matched the reference TTS and TSS annotations relatively well. There were, though, significant differences between pipelines. Tools such as Bambu and IsoQuant reported a high percentage of transcripts matching reference TTSs and TSSs. However, their support by matched CAGE and Quant-seq experimental data was relatively low. In contrast, some of the LyRic and FLAMES submissions contained transcript models well supported by both types of experimental data, and Mandalorion had the highest CAGE support rates. TALON, Iso_IB, Spectra, and StringTie227 had intermediate support at both transcript ends, considering the reference annotation or the orthogonal experimental data. This result suggested that a number of lrRNA-seq analysis pipelines that rely on reference annotations may use this information to adjust or complete transcript model predictions. To assess this hypothesis, we evaluated the extent to which the actual long-read data supported the predicted transcript sequences provided by each analysis pipeline by calculating the Long Read Coverage (LRC), i.e., the percentage of transcript model nucleotides mapped by at least one long read, based on our independent alignments. We found that FLAMES, Iso_IB, IsoTools, LyRic, and Mandalorion had nearly 100% of their transcript models fully supported (>98% coverage), while for FLAIR, Spectra, TALON, IsoQuant, Bambu, and StringTie2, these percentages dropped to approximately 90, 90, 85, 75, 60, and 45%, respectively (Extended Data Fig. 23). This suggests that this second set of tools either uses a read alignment strategy distinct from the one used for the assessment or partially completes its transcript sequences using additional information such as the reference annotation or short-read data. Finally, we looked at the percentage of junctions with Illumina read support and canonical splice sites. We found these values were generally very high for all pipelines except Spectra, Iso_IB, and FLAMES using cDNA-ONT and CapTrap-ONT data, with LyRic_PacBio showing the highest percentage of splice junctions supported by Illumina reads (Fig. 2a). The distribution of detected gene biotypes was, however, very similar for all methods, with the large majority of detected genes being protein-coding (84.5%±6.5%), followed by lncRNAs (9.8%±4.1%) and pseudogenes (3.9%±2.2%) (Extended Data Fig. 24). Only Bambu reported an unusual number of non-coding genes when analyzing several library preparations of the H1-mix sample (Extended Data Fig. 25).
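The Long Read Coverage described above can be sketched as an interval-union calculation. This is a minimal illustration under stated assumptions: read alignments are already projected onto transcript coordinates as half-open (start, end) spans, and the function name `long_read_coverage` is hypothetical. It does not reproduce the alignment procedure used in the evaluation.

```python
from typing import Iterable, Tuple


def long_read_coverage(transcript_length: int, read_spans: Iterable[Tuple[int, int]]) -> float:
    """Percentage of transcript positions covered by at least one long read."""
    if transcript_length <= 0:
        raise ValueError("transcript_length must be positive")
    covered = 0
    covered_up_to = 0  # right edge of the region already counted
    for start, end in sorted(read_spans):
        # Skip the part of this span that earlier spans already covered,
        # and clip the span to the transcript boundaries.
        start = max(start, covered_up_to, 0)
        end = min(end, transcript_length)
        if end > start:
            covered += end - start
            covered_up_to = end
    return 100.0 * covered / transcript_length


if __name__ == "__main__":
    spans = [(0, 50), (40, 80), (90, 100)]
    lrc = long_read_coverage(100, spans)
    # The text treats models with >98% coverage as fully supported.
    print(lrc, lrc > 98)
```

Here positions 80 to 89 have no read support, so the model would not count as fully supported under the >98% threshold.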

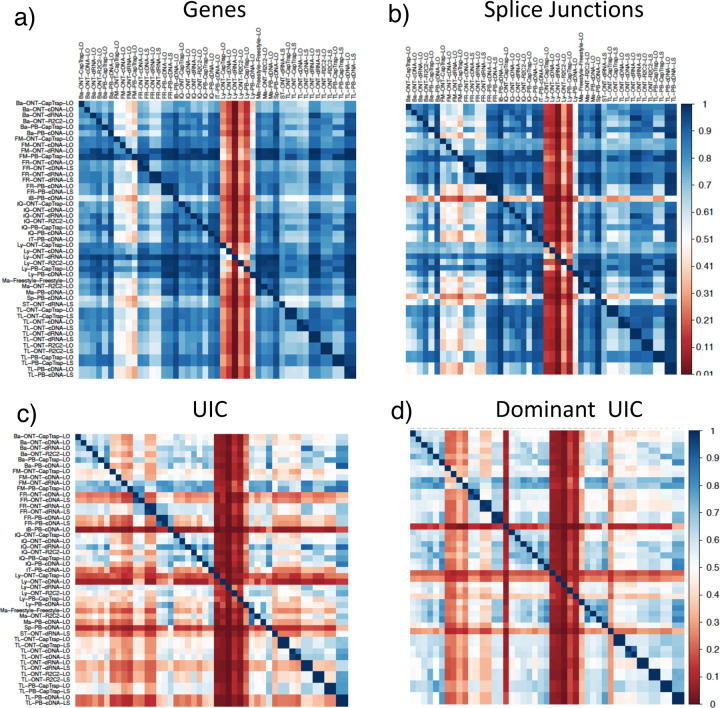

We then evaluated the agreement in the detection of known and novel Unique Intron Chains (UICs) across sequencing platforms, library preparations, and analysis pipelines (Extended Data Tables 3-7). When considering all 47 WTC11 submissions, detection by only one pipeline was the most frequent transcript class. The number of transcripts consistently detected by an increasing number of pipelines steadily decreased (Fig. 2b, Extended Data Fig. 26). Moreover, there was a clear relationship between a transcript's SQANTI structural category and the number of pipelines that detected it, with novel transcripts being more frequently identified by few pipelines and FSMs being nearly the only type of transcript found by more than 40 pipelines. Overall, the overlap in detection between any two pipelines was higher for genes and junctions than for UICs, even when we only considered dominant UICs accounting for over 50% of the gene expression (Extended Data Figs. 27–29), highlighting the disparity in the identification of transcript models across methodologies. We then re-evaluated the agreement in UIC detection by looking at the overlap of each analysis method with the rest and discarding those tools with a large number of detections (Spectra and Iso_IB). Results were highly reproducible for the three samples evaluated in Challenge 1. Overall, a significant number of UICs were detected by all analysis pipelines when considering each library preparation/sequencing platform combination separately. However, important differences were observed for different SQANTI categories and experimental methods (Extended Data Figs. 30–35). FSMs were the most consistently detected transcript type across tools, with tens of thousands of different UICs in cDNA-PacBio and R2C2-ONT datasets, while consistent detections of ISM, NIC, and NNC were in the hundreds for these experimental protocols. Other combinations (CapTrap-PacBio, CapTrap-ONT, and dRNA-ONT) returned agreement rates that were one order of magnitude lower: in the range of thousands for FSM and tens for ISM and NNC. Although detected by all individual tools, other structural categories, such as Fusion, Antisense, Genic Genomic, and Genic Intron, disappeared when imposing replicability across analysis methods. We also investigated the consistency in UIC detections for the same tool across different experimental datasets, with similar conclusions: FSM transcripts were the most repeatedly detected throughout experimental protocols, while ISM, NIC, and NNC counts dropped quickly as more protocols were considered, with a few hundred models being present in over three datasets. One exception was Mandalorion, which reported consistent numbers of UICs regardless of the experimental data used, even in the ISM, NIC, and NNC categories. UICs of other SQANTI categories were not consistently detected by multiple experimental protocols (Extended Data Fig. 36). LyRic was the tool with the lowest number of consistently detected transcript models. Next, we defined a set of “frequently detected transcripts” (FDT) as UICs present in at least three experimental datasets and detected by at least three analysis tools. This set contained around 45,000 UICs in each biological sample, representing ~8% of the total number of UICs found by any method. Of these, the great majority (~80%) were FSM, followed by NIC transcripts that accounted for ~10% of the FDT (Extended Data Fig. 37). This implies that, compared to all detections, FSM was enriched 4–5 fold in the FDT set, while the other categories were significantly depleted. Finally, the set of FDT was more frequently found by IsoQuant, Mandalorion, Bambu, TALON-LAPA, and FLAMES than by other tools, even when correcting for the number of analysis pipelines submitted by each lab (Extended Data Fig. 37).
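The FDT definition above amounts to a two-threshold filter over detection records. The sketch below assumes each record is a (UIC identifier, experimental dataset, analysis tool) triple and that the dataset and tool thresholds are counted independently; the function name `frequently_detected` and this input layout are illustrative choices, not the consortium's code.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def frequently_detected(
    detections: Iterable[Tuple[str, str, str]],
    min_datasets: int = 3,
    min_tools: int = 3,
) -> Set[str]:
    """Return UICs seen in >= min_datasets datasets and by >= min_tools tools."""
    datasets: Dict[str, Set[str]] = defaultdict(set)
    tools: Dict[str, Set[str]] = defaultdict(set)
    for uic, dataset, tool in detections:
        datasets[uic].add(dataset)
        tools[uic].add(tool)
    return {
        uic for uic in datasets
        if len(datasets[uic]) >= min_datasets and len(tools[uic]) >= min_tools
    }


if __name__ == "__main__":
    # Hypothetical records for two intron chains.
    records = [
        ("uic_1", "cDNA-PacBio", "Bambu"),
        ("uic_1", "cDNA-ONT", "IsoQuant"),
        ("uic_1", "R2C2-ONT", "FLAIR"),
        ("uic_2", "cDNA-PacBio", "Bambu"),
        ("uic_2", "cDNA-PacBio", "IsoQuant"),
        ("uic_2", "cDNA-PacBio", "FLAIR"),
    ]
    print(frequently_detected(records))
```

In the toy records, `uic_2` is reported by three tools but only in one experimental dataset, so it does not qualify.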

To understand which characteristics were driving differences in the detection of transcripts by the long-read technologies, we compared the expression level, transcript length, and number of exons of transcripts exclusively detected by each experimental method. We found that transcripts found only by pipelines processing PacBio reads were longer and had more exons and lower expression values than transcripts detected by Nanopore only or by both technologies (Extended Data Fig. 38). This pattern was also seen in the transcripts exclusively identified when tools analyzed cDNA data, and to a lesser extent R2C2-ONT data, compared to those only detected by other library preparation methods (Extended Data Fig. 39). cDNA-PacBio was the experimental procedure whose exclusively detected transcripts were the longest and had significantly lower expression (Extended Data Fig. 40). These results are in keeping with the read quality data and reveal that global capacity for transcript detection is associated with quality parameters of the sequencing reads. Interestingly, although these differences in transcript length were broadly recapitulated by analysis method, large transcripts (>10,000 nts in length) were exclusively reported by Bambu, IsoQuant, StringTie2, and TALON-LAPA (Extended Data Fig. 41).

The evaluation based on cell-line data lacks a ground truth that can be used to estimate the actual accuracy of the methods. We used a combination of spike-ins (SIRV), simulated data, and GENCODE manual annotation to estimate sensitivity, precision, and other performance metrics. Using the spliced SIRVs Lexogen dataset, most tools showed high sensitivity, except for TALON, while LyRic and Bambu sensitivity varied as a function of the library preparation method (Fig. 2c, Extended Data Tables 4-6). LyRic only had a sensitivity above 0.8 for the cDNA-PacBio sample, while Bambu showed lower sensitivity with R2C2-ONT and CapTrap-ONT. Precision was generally high for Bambu, IsoQuant, IsoTools, and Mandalorion and low for TALON, Iso_IB, and Spectra, with FLAMES, FLAIR, and LyRic showing variable results. F1 scores were, in general, similar to the precision values, with IsoQuant, Mandalorion, FLAIR, Bambu, and IsoTools being the highest. However, it should be noted that conclusions on performance consistency are biased by the actual samples analyzed by the different tools. For example, the IsoTools team only analyzed the cDNA-PacBio data and performed well, comparable to FLAIR and Bambu. However, these methods also submitted predictions for ONT samples and yielded more variable results.
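For reference, the three metrics used above follow the standard definitions, which can be sketched as below. The assumption here is that predicted and ground-truth transcript models have already been matched and reduced to comparable identifiers; how a prediction is matched to a SIRV model (for example, by junction chain and transcript ends) is outside this sketch, and the function name `evaluate` is hypothetical.

```python
from typing import Dict, Set


def evaluate(predicted: Set[str], truth: Set[str]) -> Dict[str, float]:
    """Sensitivity, precision and F1 of predicted models against a ground truth."""
    true_positives = len(predicted & truth)
    sensitivity = true_positives / len(truth) if truth else 0.0
    precision = true_positives / len(predicted) if predicted else 0.0
    # F1 is the harmonic mean of precision and sensitivity.
    total = precision + sensitivity
    f1 = 2 * precision * sensitivity / total if total else 0.0
    return {"sensitivity": sensitivity, "precision": precision, "f1": f1}


if __name__ == "__main__":
    # Hypothetical identifiers: three of four true models recovered, three false calls.
    truth = {"sirv_a", "sirv_b", "sirv_c", "sirv_d"}
    predicted = {"sirv_a", "sirv_b", "sirv_c", "extra_1", "extra_2", "extra_3"}
    print(evaluate(predicted, truth))
```

Because F1 is a harmonic mean, it is pulled toward the lower of the two values, which is consistent with F1 tracking precision when sensitivity is uniformly high.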

The SIRVs dataset also contained long, non-spliced transcripts that were evaluated separately. Interestingly, performance was very different with this subset (Fig. 2c, Extended Data Tables 3-5). FLAMES did not report any because it lacks support for single-exon transcripts, while Bambu had 100% sensitivity and precision in all library preparation protocols, possibly due to their support by reference annotation data (Extended Data Fig. 42). In general, sensitivity was lower across all analysis methods, except when using the cDNA-PacBio library preparation, for which it was 100% for most tools, including TALON and LyRic. Interestingly, when tools used CapTrap data, sensitivity was generally low, even in combination with PacBio sequencing, suggesting that the CapTrap library preparation was limited in capturing long molecules. Precision values were more variable and dependent on the tool and library preparation protocol. Since these SIRVs do not contain splice sites, low precision values indicate false variability at TTS and TSS. Results obtained with the long non-spliced dataset may reflect both the ability of the analysis tool to process non-spliced data and accurately define TSS and TTS and the capacity of the experimental protocol to capture long molecules. We therefore looked specifically at the long-read coverage for long SIRVs (Extended Data Fig. 42). We found that cDNA-PacBio was the combination where long reads most uniformly covered long SIRVs. However, coverage dropped strongly towards the 5’ end for the longest molecules. CapTrap library preparations, sequenced with either PacBio or Nanopore, showed a strong coverage drop, frequently down to 0, in the middle of the SIRV sequence. R2C2 preparations lacked complete coverage for the extremely long SIRVs, while dRNA data showed more uniform coverage, albeit with striped patterning denoting sequencing errors.

SIRVs-based evaluation, while allowing assessment of experimental protocols, represents a reduced dataset that might be biased by the knowledge of the ground truth and cannot evaluate the capacity of tools to accurately discover new transcripts, which is one of the major claims of long-read sequencing platforms. We used simulated data to provide a more extensive assessment scenario, including novel transcripts that were present in the synthetic dataset but not made available to the participants (Extended Data Tables 7,8). Using PacBio simulated data, we found that Mandalorion generally had good precision and sensitivity for annotated and novel transcripts (Fig. 2d and Extended Data Fig. 43). IsoTools and LyRic also generally performed well except for the precision of novel transcripts. Bambu and FLAIR showed high sensitivity and precision when considering known transcripts but considerably lower figures when looking for novel isoforms. This was particularly noticeable for FLAIR, which, when not using short-read data, could not discover new transcripts accurately. Spectra and Iso_IB conversely had high sensitivity for novel isoforms at the cost of lower precision. For all tools, sensitivity increased on highly expressed transcripts, and redundancy values were close to 1, except for Iso_IB and Spectra, which returned a higher number of redundant predictions. Simulation of Nanopore data resulted in datasets where most tools returned very low performance figures, possibly due to lower coverage of the simulated transcript models by the NanoSim28 reads (Extended Data Fig. 44). Exceptions were Bambu and IsoQuant, which had good precision for ONT-known simulated transcripts, and StringTie at metrics other than those related to novel transcript discovery. Overall, results using simulated data indicated lower sensitivity and precision for novel than for known transcripts, with these differences being considerable for some tools (Fig. 2d).

Synthetic data, allowing performance assessment on large datasets and novel transcripts, is limited by the properties of the simulation algorithms that are designed to reproduce sequencing errors but ignore other sources of artifacts such as library preparation and biological noise. To evaluate performance considering the complexity of real data, a set of 50 genes were selected for which the ground truth was set by exhaustive manual annotation by GENCODE annotators, and loci were unknown to submitters. Manually annotated loci were chosen for having mapped reads in all six library preparation/sequencing platform combinations and average to moderately high expression levels (Extended Data Fig. 45). GENCODE annotators evaluated the long read data for each experimental procedure independently and called transcript models in each case (See Supplementary Methods). Globally, 271 models were accepted as true transcripts in the WTC11 sample. cDNA-PacBio and cDNA-ONT were the experimental methods with the highest number of annotated transcripts (129 and 125, respectively), followed by R2C2-ONT and CapTrap-ONT (118 and 110, respectively). CapTrap-PacBio and dRNA-ONT had the lowest number (63 and 67, respectively), due to overall fewer aligned reads to the selected regions. Interestingly, the majority of transcripts in this subset were novel, with NNC being the most predominant SQANTI category. However, while FSM accounted only for 30% of these transcript models, they were the only category present within the transcripts detected in five or more experimental conditions. Most novel transcripts were found only in one dataset (Extended Data Fig. 45). Similar results were obtained on 50 loci annotated from the ES_mouse sample (Extended Data Fig. 46).

When pipelines were assessed based on this set of loci, we found that the analysis pipeline drove performance differences. All methods showed high precision at the gene level, but sensitivity was lower than with the previous evaluation datasets. FLAMES and LyRic showed overall low sensitivity, as did FLAIR on dRNA data and TALON on CapTrap and cDNA-ONT datasets. Bambu, IsoTools, IsoQuant, and Spectra showed the highest sensitivity at the gene detection level (Fig. 2e), followed by TALON and Mandalorion, which were more affected by the data type. A similar pattern of sensitivity and precision was observed when considering transcripts already present in the reference annotation (Fig. 2e). Sensitivity dropped dramatically for the transcripts classified as novel by the GENCODE annotators, being close to 0 in most cases, and only Iso_IB had a sensitivity higher than 0.4. In contrast, precision values varied greatly, ranging from 1 to 0 to non-computable even within the same tool, due to a generally low number of novel discoveries (<4) by most pipelines (Fig. 2f). Results were similar for the mouse ES annotated dataset (Extended Data Figs. 47,48).

Since our analysis of the manually annotated transcripts indicated that many new isoforms were found in only one dataset, we also calculated performance metrics considering only those transcripts detected in at least two experimental samples (114 transcripts) or with more than two reads (94 transcripts). These filters did not significantly affect the sensitivity of the methods and worsened the precision for novel transcript detection (Extended Data Figs. 49,50). When reevaluating performance based on library preparation method and sequencing platform, no clear best experimental method was evident; however, there was slightly higher precision for novel transcripts and higher sensitivity for known transcripts for tools working on PacBio, rather than Nanopore datasets (Extended Data Figs. 51,52).
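The two support filters described above can be sketched as follows. This is an illustrative example only: the data layout (a mapping from transcript ID to per-sample read counts), the function name, and the interpretation of "more than two reads" as a total across samples are assumptions and are not taken from the evaluation code.

```python
def filter_by_support(support, min_samples=None, min_total_reads=None):
    """Return the transcript IDs that pass the requested support filters.

    support: dict mapping transcript ID -> {sample name: read count}
    min_samples: keep transcripts seen in at least this many samples
    min_total_reads: keep transcripts with MORE than this many reads in total
                     (assumption: reads are summed across samples)
    """
    kept = set()
    for transcript_id, reads_by_sample in support.items():
        n_samples = sum(1 for n in reads_by_sample.values() if n > 0)
        total_reads = sum(reads_by_sample.values())
        if min_samples is not None and n_samples < min_samples:
            continue
        if min_total_reads is not None and total_reads <= min_total_reads:
            continue
        kept.add(transcript_id)
    return kept


# Hypothetical read support for three manually annotated transcripts.
support = {
    "T1": {"cDNA-PacBio": 3, "cDNA-ONT": 1},
    "T2": {"cDNA-PacBio": 1},
    "T3": {"dRNA-ONT": 5},
}
in_two_samples = filter_by_support(support, min_samples=2)
more_than_two_reads = filter_by_support(support, min_total_reads=2)
```

Performance metrics can then be recomputed against each filtered subset in place of the full set of annotated transcripts.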

In summary, the library preparation method, sequencing platform, and transcript analysis tool influenced many differences in the reported transcriptomes. Interestingly, the number of transcripts detected was not associated with the number of reads. Some tools are more reliant on annotation than others. For example, some tools correct their transcript models based on the annotation (Bambu, FLAMES, FLAIR, and IsoQuant), while other methods allow more novelty based on the actual data (Iso_IB, IsoTools, Mandalorion, Spades, TALON, LyRic).

We observe discrepancies among performance results depending on the benchmark. For example, LyRic performed poorly on SIRVs and GENCODE manual annotation but well on simulated data. In general, sensitivity for novel transcripts was higher when using simulated data than when using the GENCODE manual annotation, indicating challenges in benchmarking approaches. It could be argued that the GENCODE manual annotation is based on real data and therefore presents a more realistic annotation challenge; however, the manual review resulted in a bias towards selecting loci that had relatively fewer reads to review, with fewer replicates in different libraries.

Challenge 2 Results and Evaluation: Transcript isoform quantification

To evaluate the performance of transcript quantification, both with and without a ground truth, we generated 84 real sequencing datasets (including SIRV-set 4) from four human cell lines (H1-hESC, H1-DE, H1-mix, and WTC11) and six simulation datasets for Nanopore (NanoSim), PacBio (IsoSeqSim29) and Illumina (RSEM30) reads (Fig. 1a). We received a total of 143 submitted datasets from seven quantification tools (IsoQuant, Bambu, TALON, FLAIR, FLAMES, NanoSim and IsoTools) on six combinations of protocols-platforms (cDNA-PacBio, cDNA-ONT, dRNA-ONT, CapTrap-PacBio, CapTrap-ONT, and R2C2-ONT). As a control, short-read datasets (cDNA-Illumina) were also quantified using the RSEM tool with GENCODE reference annotation. We designed nine metrics for performance assessment under different data scenarios (Fig. 3a and Table 4). We further provided a benchmarking web application (https://lrrna-seq-quantification.org/), allowing users to upload their transcript quantification results and produce interactive evaluation reports in HTML and PDF format.

Fig. 3. Overview of performance evaluation for Challenge 2: transcript isoform quantification.

(a) Cartoon diagrams are used to explain 9 evaluation metrics under the ground truth given or not given.

(b) - (e) Overall evaluation results of 8 quantification tools and 7 protocols-platforms on real data with multiple replicates, cell mixing experiment, SIRV-set4 data and simulation data.

(f) - (g) Top-4 overall performance on quantification tools and protocols-platforms for each metric.

(h) Evaluation of quantification tools with respect to multiple transcript features, including the number of isoforms, number of exons, isoform length and a customized statistic K-value representing the complexity of exon-isoform structures. Here, we use the normalized MRD metric to evaluate performance on human cDNA-PacBio simulation data. Additionally, we show RSEM evaluation results with respect to transcript features based on human short-read simulation data as a control.

Table 4. Metrics for Challenge 2 evaluation

| Metrics | Description |
|---|---|
| Irreproducibility Measure (IM) and Area under the Coefficient of Variation Curve (ACVC) | IM and ACVC characterize the coefficient of variation of abundance estimates among multiple replicates. |
| Consistency Measure (CM) and Area under the Consistency Curve (ACC) | CM and ACC characterize the similarity of abundance profiles between pairs of replicates. |
| Resolution Entropy (RE) | RE characterizes the resolution of abundance estimation. |
| Spearman Correlation Coefficient (SCC) | SCC evaluates the monotonic relationship between the estimation and the ground truth. |
| Median Relative Difference (MRD) | MRD is the median of the relative difference of abundance estimates among all transcripts. |
| Normalized Root Mean Square Error (NRMSE) | NRMSE measures the normalized root mean square error between the estimation and the ground truth, which characterizes the variability of the quantification accuracy. |
| Percentage of Expressed Transcripts (PET) | PET characterizes the percentage of truly expressed transcripts in SIRV-set4 data. |

First, we assessed the overall performance of the eight quantification tools with different protocols-platforms under four different data scenarios by considering the metrics described above (Figs. 3b-f, and Extended Data Fig. 53).

For real data with multiple replicates, four metrics (Irreproducibility Measure/IM, Area under the Coefficient of Variation Curve/ACVC, Consistency Measure/CM, and Area under the Consistency Curve/ACC) were designed to evaluate the reproducibility and consistency of transcript abundance estimates among multiple replicates (Fig. 3b, Extended Data Figs. 54–55 and Extended Data Table 5). FLAMES, IsoQuant, and IsoTools had similar overall performance: low IM and ACVC and high CM and ACC. They achieved performance comparable to that of the control RSEM tool (e.g., 0.18–0.33 vs. 0.35 and 0.65–0.86 vs. 0.77 for IM and ACVC, 0.81–0.88 vs. 0.84 and 9.31–9.46 vs. 9.51 for CM and ACC). FLAIR and Bambu followed closely behind, performing slightly worse than RSEM but outperforming the other quantification tools (Fig. 3b). Specifically, IsoQuant on cDNA-ONT and CapTrap-ONT, as well as FLAMES on CapTrap-ONT, were the top three performers across all datasets (<0.15 and <0.53 for IM and ACVC, >0.89 and >9.53 for CM and ACC). Of note, all tools showed poor performance on dRNA-ONT (mean IM=0.66, ACVC=2.62, CM=0.64, and ACC=8.31), which may be attributed to the low throughput of dRNA-ONT (<1 million reads for each replicate).
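The reproducibility metrics build on the coefficient of variation (CV) of abundance estimates across replicates. The sketch below computes only the per-transcript CV; it does not reproduce the exact IM or ACVC formulas, which are defined elsewhere in this study. The use of the sample standard deviation and the function names are assumptions made for illustration.

```python
from statistics import mean, stdev


def coefficient_of_variation(values):
    """CV = standard deviation / mean, for one transcript across replicates.

    Assumptions: the sample standard deviation is used, and the CV of a
    transcript with zero mean abundance is reported as NaN.
    """
    average = mean(values)
    if average == 0:
        return float("nan")
    return stdev(values) / average


def cv_per_transcript(abundance):
    """abundance: dict mapping transcript ID -> list of TPMs, one per replicate."""
    return {tid: coefficient_of_variation(tpms) for tid, tpms in abundance.items()}


# Hypothetical TPM estimates from three replicates.
abundance = {"tx_high": [100.0, 110.0, 90.0], "tx_low": [1.0, 3.0, 0.5]}
for tid, cv in cv_per_transcript(abundance).items():
    print(tid, round(cv, 3))
```

A lower CV indicates more reproducible estimates. In this toy input the lowly expressed transcript has the higher CV, the pattern that abundance-stratified summaries of CV are designed to capture.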

In addition, the Resolution Entropy (RE) metric was used to characterize the resolution of transcript abundance estimates among multiple replicates in real data (Fig. 3b, Extended Data Fig. 56, and Extended Data Table 5). The top six tools (IsoQuant, IsoTools, FLAMES, FLAIR, TALON, and Bambu) achieved comparable resolution of transcript abundance, with RE values at least 2.7-fold higher than those of NanoSim and RSEM. This may be because NanoSim and RSEM use the GENCODE reference annotation, which contains a large number of transcripts not expressed in a specific sample, resulting in many transcripts with low expression in the quantification results (79.02% and 58.04% of transcripts with TPM<=1 in the H1-hESC sample).

Due to the challenges of transcript-level quantification and the lack of a gold standard in real data, we designed an evaluation strategy using a cell mixing experiment (see details in Extended Data Fig. 57a), in which two samples (H1-hESC and H1-DE) were mixed at an undisclosed ratio before sequencing and the resulting data were provided to the participants at an initial phase. After predictions of transcript abundance in the mixed sample, sequencing data from the individual H1-hESC and H1-DE samples were released, and participants submitted quantification separately on these two datasets. The quantification of the mixed sample should be equivalent to the abundance expected from combining the quantification of the individual cell lines at the mixing ratio. Three metrics (Spearman Correlation Coefficient/SCC, Median Relative Difference/MRD, and Normalized Root Mean Square Error/NRMSE) were used to evaluate quantification accuracy by comparing expected and observed abundance (Fig. 3c and Extended Data Table 10). Most quantification tools generally had a good correlation (0.74–0.87 for mean SCC) between expected abundance and observed abundance, except for Bambu (0.53). In particular, RSEM had the best performance in cell mixing experiments (Fig. 3c and Extended Data Fig. 57b), with the highest SCC (0.87) and lowest MRD (0.13) and NRMSE (0.38) values. Among the long-read-based tools, IsoQuant on cDNA-ONT had the best performance in MRD (0.14) and SCC (0.85), and FLAIR on cDNA-ONT had the lowest NRMSE (0.43).
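The logic of the cell mixing evaluation can be sketched as follows: the expected abundance of each transcript in the mix is a weighted combination of its abundances in the two individual samples, and the observed abundance is then compared with that expectation. The sketch is illustrative and rests on stated assumptions: the relative difference is taken as |observed − expected| / expected, and NRMSE is normalized by the standard deviation of the expected values. The exact normalizations used in this study may differ, and all function names are hypothetical.

```python
from math import sqrt
from statistics import mean, median, pstdev


def expected_mix(tpm_a, tpm_b, fraction_a):
    """Expected abundance when sample A contributes `fraction_a` of the mix."""
    return [fraction_a * a + (1 - fraction_a) * b for a, b in zip(tpm_a, tpm_b)]


def ranks(values):
    """1-based ranks, with tied values receiving their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation; undefined (division by zero) for constant input."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    """SCC: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def median_relative_difference(observed, expected):
    """MRD (assumed form): median of |obs - exp| / exp over transcripts with exp > 0."""
    return median([abs(o - e) / e for o, e in zip(observed, expected) if e > 0])


def nrmse(observed, expected):
    """NRMSE (assumed form): RMSE divided by the SD of the expected values."""
    rmse = sqrt(mean([(o - e) ** 2 for o, e in zip(observed, expected)]))
    return rmse / pstdev(expected)


# Hypothetical TPMs for four transcripts and a hypothetical 25:75 mixing ratio.
expected = expected_mix([10, 0, 5, 20], [0, 8, 5, 40], fraction_a=0.25)
observed = [3.0, 5.0, 5.5, 30.0]
print(spearman(observed, expected),
      median_relative_difference(observed, expected),
      nrmse(observed, expected))
```

Higher SCC and lower MRD and NRMSE indicate that the quantification of the mixed sample agrees more closely with the expectation derived from the individual samples.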

Furthermore, SIRV-set 4 and the simulation data were used to evaluate how close the estimates are to the ground truth values using four metrics: Percentage of Expressed Transcripts (PET), SCC, MRD, and NRMSE (Figs. 3d,e, Extended Data Figs. 58,59 and Extended Data Tables 11,12). Generally, for SIRV-set 4 data, there was a large variation in the number of SIRV transcripts with TPM>0 quantified by the tools (28–136). RSEM outperformed the long-read-based tools in average SCC (0.84 vs. 0.29–0.78), MRD (0.12 vs. 0.13–1.00), and NRMSE (0.45 vs. 0.89–2.19) scores. Among the long-read-based tools, NanoSim (SCC=0.78, MRD=0.23, and NRMSE=0.89) and IsoQuant (0.76, 0.19, and 0.89) had the best overall performance, and IsoTools (0.69, 0.13 and 1.02), FLAIR (0.73, 0.42 and 1.13) and Bambu (0.68, 0.79 and 1.55) followed behind. All tools except TALON and FLAMES quantified most of the regular and long SIRV transcripts with TPM>0 (PET>80%). Conversely, most methods performed poorly in quantifying ERCC transcripts with TPM>0 (PET<50%), which may be attributed to the low expression levels of many ERCC transcripts31,32.
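The PET metric can be illustrated with a short sketch: given the spike-in transcripts known to be present, it reports the percentage that a tool quantified with TPM>0. The function name, the data layout, and the transcript identifiers below are hypothetical and serve only to make the definition concrete.

```python
def percentage_expressed_transcripts(tpm_by_transcript, truly_expressed):
    """PET: percentage of truly expressed transcripts reported with TPM > 0.

    tpm_by_transcript: dict mapping transcript ID -> estimated TPM
    truly_expressed: IDs of transcripts known to be present (e.g. spike-ins);
                     IDs missing from the estimates are treated as TPM = 0.
    """
    truly_expressed = list(truly_expressed)
    detected = sum(1 for tid in truly_expressed
                   if tpm_by_transcript.get(tid, 0.0) > 0)
    return 100.0 * detected / len(truly_expressed)


# Hypothetical spike-in IDs and estimates: two of the four are detected.
spike_ins = ["spike_1", "spike_2", "spike_3", "spike_4"]
estimates = {"spike_1": 12.0, "spike_2": 0.0, "spike_4": 3.5}
print(percentage_expressed_transcripts(estimates, spike_ins))  # 50.0
```

PET only records whether a spiked transcript was detected at all, so it complements SCC, MRD, and NRMSE, which measure how accurate the estimated abundance is.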

For simulation data, all tools performed significantly better on PacBio data than ONT data (0.96 vs. 0.69 for mean SCC, 0.07 vs. 0.23 for mean MRD, and 0.25 vs. 0.78 for mean NRMSE). In particular, FLAIR, IsoQuant, IsoTools, and TALON on cDNA-PacBio had the highest correlation (SCC>0.97) between the estimation and the ground truth compared with other pipelines, which was slightly better than RSEM (SCC=0.90) and outperformed other long-read pipelines (SCC<0.83). Moreover, we observed that the transcript annotation accuracy significantly impacted quantitative accuracy. Specifically, when using an inaccurate annotation, RSEM yielded mean NRMSE values 2.74 and 3.27 times higher than those of LR-based tools and RSEM with accurate annotation, respectively (Extended Data Fig. 60, mean NRMSE: 1.70 vs. 0.62 vs. 0.52).

This emphasizes the critical importance of identifying a sample-specific accurate annotation for accurate transcript quantification.

Next, we evaluated the overall performance of the seven protocols-platforms across all quantification tools (Figs. 3b-e, Fig. 3g, and Extended Data Fig. 53).

In terms of reproducibility and consistency in real data (Fig. 3b), CapTrap-ONT, CapTrap-PacBio, cDNA-PacBio, and cDNA-ONT had similar overall performance: low IM and ACVC, and high CM and ACC, which was significantly better than dRNA-ONT and R2C2-ONT (0.20–0.37 vs. 0.61–0.63 for IM, 0.50–1.05 vs. 2.39–2.87 for ACVC, 0.82–0.89 vs. 0.69–0.76 for CM and 9.19–9.51 vs. 8.61–8.68 for ACC). This is probably due to the relatively lower sequencing depth of dRNA-ONT and R2C2-ONT compared with other protocols-platforms (Fig. 1b). In particular, CapTrap-ONT and cDNA-ONT had the lowest irreproducibility (mean IM=0.19 and 0.20, and ACVC=0.50 and 0.51) and highest consistency (mean CM=0.89 and 0.86, and ACC=9.49 and 9.51). In terms of abundance resolution, cDNA-PacBio and R2C2-ONT outperformed the other protocols-platforms, with RE values at least 2-fold higher than that of cDNA-ONT (Fig. 3b). Notably, the read length distributions of some protocols-platforms were bimodal in real data (cDNA-PacBio, CapTrap-PacBio, dRNA-ONT and, most clearly, R2C2-ONT; Extended Data Fig. 61). The sequencing error rate also varies across the different sequencing platforms (Fig. 1b). Some tools may have a distinct advantage when dealing with particular data types if they take into account the characteristics of these data (Fig. 3f).

For the cell mixing experiments (Fig. 3c and Extended Data Fig. 57b), CapTrap-PacBio, CapTrap-ONT, cDNA-PacBio, cDNA-ONT, and dRNA-ONT have similar performances with mean SCC scores between 0.73 and 0.83, while the remaining R2C2-ONT did not get to mean SCC scores above 0.60. MRD and NRMSE showed consistent evaluation results with SCC (e.g., 0.16–0.21 vs. 0.32 for MRD and 0.45–0.63 vs. 0.88 for NRMSE). In particular, CapTrap-PacBio exhibited the best overall quantification accuracy (SCC=0.83, MRD=0.16, and NRMSE=0.45) between expected abundance and observed abundance compared with other long-read-based protocols-platforms, which can achieve comparable performance with cDNA-Illumina.

For the SIRV-set 4 data, cDNA-PacBio had the best overall performance in long-read-based protocols-platforms, e.g., highest SCC (0.70 vs. 0.60–0.66) and lowest MRD (0.40 vs. 0.58–0.75) and NRMSE values (1.14 vs. 1.38–1.52). cDNA-ONT followed behind and outperformed the other four protocols-platforms (Fig. 3d). Notably, the ability of all protocols-platforms to quantify ERCC transcripts with TPM>0 (Mean PET=33.01%) was significantly worse than that of regular SIRV (Mean PET=82.17%) and long SIRV transcripts (Mean PET=69.75%). In particular, cDNA-ONT, cDNA-PacBio, CapTrap-ONT, and R2C2-ONT achieved similar performance with PET between 34.39% and 43.27% in quantifying ERCC transcripts, which was higher than dRNA-ONT (18.35%) and CapTrap-PacBio (27.99%). With respect to the long SIRV transcripts, all protocols-platforms, except for CapTrap-PacBio and dRNA-ONT, can quantify more than 70% of transcripts with TPM>0. All protocols-platforms performed well for the regular SIRV transcripts, among which dRNA-ONT, CapTrap-PacBio, cDNA-PacBio, and cDNA-ONT were the most prominent (PET>82.00%). Similar to SIRV-set 4 data, the simulation study showed that the cDNA-PacBio exhibited better performance in SCC, MRD, and NRMSE than cDNA-ONT and dRNA-ONT (Fig. 3e and Extended Data Fig. 59b).

Finally, we evaluated the performance of quantification tools for different sets of genes/transcripts grouped by transcript features, including transcript abundance, isoform number, exon number, isoform length, and a customized statistic K-value representing the complexity of exon-isoform structures (Fig. 3h and Extended Data Figs. 54b,c and 62).

For real data with multiple replicates, all quantification tools showed a decreased Coefficient of Variation (CV) and increased CM on six protocols-platforms with increasing transcript abundance (Extended Data Figs. 54b,c). Furthermore, we explored how the normalized MRD metric changes with different gene/transcript features based on the human cDNA-PacBio simulation data (Fig. 3h). The MRD scores of all quantification tools increased dramatically when the transcript abundance was TPM ≤ 2. Therefore, the variability and error of abundance estimation were greater for lowly expressed transcripts with all quantification tools. In addition, we found that all quantification tools performed poorly at high K-values and isoform numbers, indicating that more complex gene structures tend to be harder to quantify accurately. Notably, most tools had difficulty accurately quantifying transcripts with fewer than five exons but performed well for transcripts with between 5 and 15 exons, except for RSEM and Bambu. Interestingly, transcripts shorter than 1 kb had larger quantification errors, while the performance of different tools for transcripts longer than 1 kb varied significantly.

In summary, our evaluation results demonstrated that the performance of quantification tools varies depending on the features of genes and transcripts, and it remains challenging to accurately quantify transcripts with low expression and complex structure. Notably, there are significant performance differences among quantification tools under different data scenarios (Fig. 3f). Overall, RSEM outperformed long-read-based tools across different protocols-platforms and evaluation metrics. IsoQuant, IsoTools, and FLAIR exhibited superior overall performance among long-read-based tools across different data scenarios. Additionally, FLAMES demonstrated good reproducibility and consistency across multiple replicates in real data, while NanoSim achieved superior performance in quantifying SIRV transcripts. Among the protocols-platforms, cDNA-Illumina showed the best overall performance, ranking among the top 3 in all evaluation metrics except for RE (Fig. 3g). Meanwhile, cDNA-PacBio, cDNA-ONT, CapTrap-ONT, and CapTrap-PacBio exhibited overall good performance across various data scenarios, surpassing dRNA-ONT and R2C2-ONT. It is worth noting that some protocols-platforms (cDNA-PacBio, CapTrap-PacBio, dRNA-ONT and, most clearly, R2C2-ONT) exhibited bimodal distributions of read length in real data, and that the sequencing error rate varies among sequencing platforms. Therefore, tools that take the characteristics of the data into account may have a distinct advantage in dealing with specific data types.

Currently, the throughput of long reads is relatively low, and most of the long-read-based tools mainly focus on transcript identification. However, with the improvement of long-read-based tools and the increase in throughput, the quantification accuracy of long-read-based tools is likely to be further improved.

Challenge 3 Results and Evaluation: De novo transcript isoform identification

We evaluated the potential of long-read methods for transcriptome identification without a reference annotation using two types of samples. The mouse ES sample analyzed in Challenge 1 represents a case of high-quality genome assembly and data. The manatee white blood cell data depicts a typical scenario for a non-model species where limited genomics resources are available, and samples are directly taken from a field experiment. Additionally, the manatee sample had an excess of SIRV spike-ins, representing a challenging dataset. A draft genome of the manatee was assembled using Nanopore and Illumina genomic reads (Extended Data Fig. 63) and provided to submitters to support analysis. Still, no genome annotation was allowed in either the manatee or mouse analyses of Challenge 3. Matched short-read RNA-seq data was available to all submitters.

Four different tools were evaluated in Challenge 3: Bambu, StringTie2+IsoQuant, RNA-Bloom33, and rnaSPAdes34, which submitted a total of 17 and 8 transcriptome prediction sets for the mouse ES and manatee samples, respectively (Fig. 1f). While all long-read methods produced transcripts with high mapping rates to the genome, both for mouse and manatee data (Extended Data Fig. 1), the number of detected transcripts varied among analysis tools, ranging from ~20K to ~150K in mouse ES and from ~2K to ~500K in the manatee sample (Figs. 4a,b, Extended Data Tables 12-13). rnaSPAdes predicted the largest number of transcripts and the largest fraction of non-coding sequences, followed by RNA-Bloom (Fig. 4a). Bambu had the lowest number of predicted transcripts in all analyzed samples. Since the actual genome annotation of the mouse is available, a SQANTI structural classification analysis of this sample is possible. Remarkably, most transcripts detected by all methods under the reference annotation-free scenario for the mouse data were novel transcripts. rnaSPAdes predicted a large fraction of intergenic and antisense transcripts, RNA-Bloom was enriched in NNC, StringTie2+IsoQuant reported a high number of NIC and NNC, while for Bambu the most abundant novel class was ISM in most scenarios (Fig. 4a). This strongly contrasts with results obtained by these last two tools in Challenge 1 when guided by the reference annotation, where a large majority of transcript detections were FSM (Fig. 2a and Extended Data Fig. 65), and shows that, at least for Bambu and IsoQuant, the utilization of a reference annotation has a strong impact on their predicted transcript models. Since no reference annotation is available for manatee, a structural category analysis is not possible. However, we evaluated the number of transcripts per locus detected by each method. Bambu, rnaSPAdes, and RNA-Bloom predicted a single transcript for most loci. In contrast, StringTie2+IsoQuant predicted two or more transcripts for nearly half of the loci, especially when using cDNA-ONT data.

Figure 4. Evaluation of Challenge 3: transcript identification without a reference annotation.

a) Number of detected transcripts and distribution of SQANTI structural categories, Mouse ES sample. b) Number of detected transcripts and distribution of transcripts per loci, Manatee sample. c) Length distribution of Mouse ES transcripts predictions. d) Length distribution of Manatee transcripts predictions. e) Support by orthogonal data. f) BUSCO metrics. g) Performance metrics based on SIRVs. Sen: Sensitivity, PDR: Positive Detection Rate, Pre: Precision, nrPred: non-redundant Precision, FDR: False Discovery Rate, 1/Red: Inverse of Redundancy.

Without a reference annotation, Bambu, StringTie2+IsoQuant, and RNA-Bloom predicted mostly transcript models with lengths ranging between 1 kb and 3 kb. However, both Bambu and StringTie2+IsoQuant reported a significant number of short transcripts from the mouse ES cDNA-ONT dataset (Fig. 4c), possibly due to the large fraction of shorter reads in this sample (Fig. 1c), and Bambu had a lower transcript length distribution on the manatee cDNA-PacBio dataset (Fig. 4d). For all mouse ES and manatee samples, rnaSPAdes returned a large number of short transcripts that strongly pulled down the length distributions (Figs. 4c,d).

When looking at supporting orthogonal data for the mouse ES transcript models, we found a clear relationship between the total number of predicted transcripts and the orthogonal support: rnaSPAdes, which predicted a large number of transcripts, had the least support for Illumina, CAGE, and Quantseq junctions, 5’ end and 3’ end respectively, and the largest (~40%) number of non-canonical splice junctions. Bambu, on the contrary, with the least number of predicted transcripts, generally had the highest orthogonal support. RNA-Bloom had transcripts with only 50% of junctions supported by Illumina and a considerable fraction of non-canonical junctions, while support at 3’ and 5’ ends was moderate. StringTie2+IsoQuant produced transcripts with quality junctions but low CAGE support (Fig. 4e).

A majority of transcripts identified by Bambu and StringTie2+IsoQuant were predicted to be protein-coding both in the manatee and mouse ES samples, except for the CapTrap-ONT and cDNA-ONT datasets, in which ~25% of transcript models were predicted to be non-coding, which could be related to the higher number of reads in these datasets. RNA-Bloom predicted a similar percentage of protein-coding genes on the cDNA-ONT sample, but this percentage was significantly lower in rnaSPAdes transcript models. Moreover, all tools predicted a lower fraction of transcripts with coding potential in the manatee sample, ranging from ~70% for IsoQuant and Bambu to less than 20% for all rnaSPAdes predictions (Extended Data Fig. 66).

We used the BUSCO35 database of highly conserved genes to assess the relative completeness of the predicted transcriptomes. Interestingly, despite the observed differences in protein-coding transcript rates, BUSCO analysis indicated a relatively good performance in most cases. On the mouse ES sample, rnaSPAdes and RNA-Bloom detected above 60% of complete BUSCO genes, while Bambu only reached this threshold when analyzing cDNA-PacBio and R2C2-ONT data. In the case of the manatee sample, the highest BUSCO completeness (~50%) was achieved by IsoQuant and RNA-Bloom on the Nanopore datasets, rnaSPAdes returned ~30% of complete BUSCO genes, and performance for Bambu was poor. Consistent with these results, the fraction of incomplete BUSCO genes was generally lower in the mouse ES than in the manatee sample, and the highest ratio of incomplete BUSCO genes was provided by rnaSPAdes in the manatee sample (Fig. 4f).

Performance analysis using SIRV spike-ins revealed significant differences between tools and samples. On the mouse ES sample, SIRVs were detected with relatively high sensitivity (~70%) by RNA-Bloom. However, the precision was low, and the False Discovery Rate (FDR) was high. A similar pattern was observed for rnaSPAdes, although this tool had low sensitivity and a high Positive Detection Rate, indicating the detection of SIRVs by incomplete transcript models. RNA-Bloom and rnaSPAdes had low values for 1/Red, implying that multiple transcript models were predicted for the same SIRV. On the contrary, StringTie2+IsoQuant and Bambu had low sensitivity (~25%) but medium to high precision and better control of the FDR. For both tools, precision figures were better when analyzing cDNA-PacBio data (Fig. 4g). Analysis of the manatee sample, which contained an excess of SIRVs, resulted in generally poorer performance for all pipelines, especially for Bambu, which was unable to recover the spiked RNAs. On the manatee sample, the best sensitivity for SIRVs was obtained by RNA-Bloom (~70%) and the best precision by StringTie2+IsoQuant on cDNA-PacBio (~40%) (Fig. 4g). To understand whether the limited sensitivity of the pipelines was a data or an algorithm issue, we evaluated mapped SIRV reads with SQANTI. Interestingly, we found that over 90% and 46% of the SIRV reads were FSMs and that 90% and 64% of the SIRVs had at least one reference-match read in the manatee cDNA-PacBio and cDNA-ONT datasets, respectively (Extended Data Fig. 67), suggesting that data of sufficient quality were present and that sensitivity may instead be limited by the correct processing of the noisy reads.

As expected, transcript detection without a reference annotation is a challenging problem. Interestingly, transcripts with higher coverage, as in our SIRV spike-ins in the manatee sample, led to poorer performance for all tools, suggesting that accurate detection from highly expressed genes may be problematic. Overall, Bambu and IsoQuant had moderate to good precision but low sensitivity. RNA-Bloom had high sensitivity but low precision, and rnaSPAdes produced many fragmented and short transcripts with a high FDR.

Experimental validation of transcript isoform predictions

To experimentally validate isoforms, we targeted isoform-specific regions for PCR amplification followed by gel electrophoresis and sequencing of pooled amplicons via ONT and PacBio sequencing (Fig. 5a). To support isoform-specific PCR design, we developed Primers-JuJu, a program employing Primer336 for semi-automated isoform-specific primer design (see Supplementary Information). Due to practical limitations on the scale of experimental validation, we prioritized targets of interest in three comparison groups: GENCODE known and novel, consistently versus rarely identified novel isoforms, and ONT and PacBio preferential isoforms. The first comparison group included isoforms that GENCODE annotators identified from LRGASP data and that were classified as occurring in the GENCODE annotation (known) or not (novel). The second group compared isoforms identified consistently by more than half the pipelines or rarely by 1 or 2 pipelines. The final group consisted of isoforms preferentially identified in ONT libraries or only in PacBio libraries. From these comparison groups, we designed primers for 178 target regions, in which the length of the amplified region ranged from 120 to 4406 bp, with a median of 488 bp and an interquartile range (25th–75th percentile) of 305 to 795 bp.

Figure 5. Experimental validation of known and novel isoforms.

a) Schematic for the experimental validation pipeline. b) Example of a consistently detected NIC isoform (detected in over half of all LRGASP pipeline submissions) which was successfully validated by targeted PCR. The primer set amplifies a novel event of exon skipping (NIC). Only transcripts above ~5 CPM and part of the GENCODE Basic annotation are shown. c) Example of a successfully validated novel terminal exon, with ONT amplicon reads shown in the IGV track (PacBio produced similar results). d) Recovery rates for GENCODE annotated isoforms that are reference-matched (known), novel, and rejected. e) Recovery rates for consistently versus rarely detected isoforms, for known and novel isoforms. f) Recovery rates between isoforms that are more frequently identified in ONT versus PacBio pipelines. g-i) Relationship between estimated transcript abundances (calculated as the sum of reads across all WTC11 sequencing samples) and validation success for GENCODE (g), consistent versus rare (h), and platform-preferential (i) isoforms. j) Fraction of validated transcripts as a function of the number of WTC11 samples in which supportive reads were observed. k) Example of two de novo isoforms in manatee validated through isoform-specific PCR amplification; blue corresponds to supported transcripts and red to unsupported transcripts. l) PCR validation results for manatee isoforms for seven target genes.

Target validation was confirmed upon sequencing of the PCR-based amplicons on both ONT and PacBio platforms, and reads were aligned to determine support for the isoform (Fig. 5a, see Methods). Examples of a validated exon skipping event (NIC) and a novel terminal exon (NNC) are shown in Figs. 5b,c.

To evaluate GENCODE annotated isoforms, we compared groups of randomly selected isoforms that were 1) annotated (GENCODE-known, n=26), 2) novel and confirmed through manual annotation (GENCODE-novel, n=41), and 3) unsupported isoforms that were investigated but did not pass rigorous manual curation (GENCODE-rejected, n=9). As expected, we found a high validation rate for GENCODE-known, 81% (Fig. 5d). Of the GENCODE-known isoforms, we found that 5 of the 26 targets failed to validate. Re-checking these isoforms confirmed a high degree of support for annotated introns from short-read RNA-seq datasets in the recount3 database, and we speculate that the failed validation reflects suboptimal primer or PCR conditions. GENCODE-novel isoforms validated at a slightly lower but still high rate (63%) compared to GENCODE-known. Manual review again confirmed GENCODE-novel isoforms that failed to validate as correctly annotated but showed that they tended to be lower in abundance compared to their successfully validated counterparts (Fig. 5g). In some cases, GENCODE annotators had confidently annotated a full-length isoform from a single long read sequenced in one experiment, and such examples were more difficult to validate. As expected, only two out of nine GENCODE-rejected isoforms were amplified (22% validation rate). Notably, upon close examination, we found that validated examples were mismapping cases due to tandem repeats, where the validated transcript sequence was correct but not the original junction model, which was correctly rejected by GENCODE as not supported by the initial aligned reads.
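As a quick arithmetic check of the rates quoted above, the sketch below recomputes the validation rate for the two groups whose counts are given explicitly: GENCODE-known (n=26, five failures) and GENCODE-rejected (two of nine amplified). The helper name is hypothetical; the GENCODE-novel group is omitted because only its size and rate, not its validated count, are stated.

```python
def validation_rate(n_validated, n_tested):
    """Fraction of tested targets that were experimentally validated."""
    return n_validated / n_tested


# GENCODE-known: 26 targets tested, 5 failed to validate.
known_rate = validation_rate(26 - 5, 26)

# GENCODE-rejected: 2 of 9 targets were amplified.
rejected_rate = validation_rate(2, 9)

print(f"GENCODE-known:    {known_rate:.0%}")     # 81%
print(f"GENCODE-rejected: {rejected_rate:.0%}")  # 22%
```

With group sizes this small, a single additional success or failure shifts the rate by several percentage points, so the percentages are best read alongside the underlying counts.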

A large number of novel isoforms were detected in this study (e.g., 279,791 novel isoforms in WTC11, Fig. 1b). We found that 743 novel isoforms were detected consistently, but the vast majority, 242,125 isoforms, were rarely detected and found in only 1 or 2 of the pipelines. We obtained a 100% validation rate for consistently detected novel isoforms, underscoring their experimental amenability to PCR and sequencing-based processing (Fig. 5e). Strikingly, for isoforms with exceedingly low reproducibility across pipelines, we found a high validation rate of 91% and 57% for NIC and NNC isoforms, respectively. Though the validation rate was lower for rarely detected isoforms, as they comprise the vast majority of novel isoforms reported in this study, a simple linear extrapolation would imply that many such isoforms may be expressed in WTC11. Abundance correlated with validation rate (Fig. 5h), as found for the GENCODE validation set.

Lastly, we determined the validation rates of known and novel isoforms in common or preferentially detected in the ONT and PacBio platforms for their respective cDNA preparations. For example, an isoform detected in more than 50% of ONT pipelines but less than 50% of PacBio pipelines would be considered ONT-preferential and vice versa. We found that all known and novel isoforms found frequently (more than 50% of all pipelines) across both platforms were validated (Fig. 5f-i). We note that most validated isoforms were identified by amplicon sequencing on both ONT and PacBio. While the consistency of the validation is remarkable, we acknowledge that this subset's relatively small sample size limits drawing general conclusions regarding validation rates for platform-preferential isoforms.
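The platform-preference rule given above can be written as a small classifier on the fraction of pipelines detecting an isoform on each platform. This is a minimal sketch: the function name and the "unassigned" label (used for isoforms at exactly the threshold or below it on both platforms) are assumptions, since the text does not say how such cases were handled.

```python
def platform_preference(ont_fraction, pacbio_fraction, threshold=0.5):
    """Classify an isoform by the fraction of pipelines detecting it per platform.

    ont_fraction:    fraction of ONT pipelines reporting the isoform (0 to 1).
    pacbio_fraction: fraction of PacBio pipelines reporting the isoform (0 to 1).
    """
    ont_frequent = ont_fraction > threshold
    pacbio_frequent = pacbio_fraction > threshold

    if ont_frequent and pacbio_frequent:
        return "both platforms"
    if ont_frequent and pacbio_fraction < threshold:
        return "ONT-preferential"
    if pacbio_frequent and ont_fraction < threshold:
        return "PacBio-preferential"
    return "unassigned"  # assumed label for cases the rule does not cover


# Hypothetical detection fractions.
labels = [
    platform_preference(0.8, 0.2),
    platform_preference(0.1, 0.9),
    platform_preference(0.7, 0.6),
    platform_preference(0.3, 0.4),
]
```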

The validation experiments underscore that the transcript models from long-read pipelines will likely capture biologically real isoforms. In other words, novel isoform predictions generally have high accuracy, even if such isoforms are not consistently predicted across pipelines and platforms. Notably, our results suggest that validation success was related to the frequency of detection of the isoform, measured either by the number of WTC11 datasets (resulting from different combinations of library preparation and sequencing technologies) in which the isoform was detected (Fig. 5j) or by the total number of read counts supporting the isoform across WTC11 datasets (Extended Data Fig. 68). Other genomic features, such as transcript lengths, did not show a clear relationship with validation rates (Extended Data Fig. 69). While long-read transcript alignments are often able to resolve regions that are problematic for more accurate short-read alignments, these results highlight “blind spots” in a long-read-based transcript annotation, including imperfectly aligned reads (e.g., tandem repeats, inversions, short exon overhangs) in which isoform alignment can be highly affected by assumptions of the aligner. While expert human intervention can resolve many difficult cases, others remain unsolvable.

To validate long-read-based isoform discovery without a reference annotation, we focused on the manatee dataset. Compared to Challenges 1 and 2, Challenge 3 had fewer submissions; therefore, we established a goal of not explicitly comparing pipelines but, rather, assessing the ability of the long-read RNA-seq datasets, collectively, to return accurate transcript isoform annotation. To select genes and isoforms of interest, we used a targeted approach, focusing on a small, pre-defined list of genes related to immune pathways (see Methods).

Genes and their respective isoform targets were manually selected based on visualization and evaluation of the isoform structures on a custom UCSC Genome Browser track. We designed 22 primers that could potentially amplify 26 transcript predictions, with some of the primers targeting multiple transcripts. The length of the amplified region ranged from 78 to 2633 bp, with a median of 1038 bp and an interquartile range (25th to 75th percentile) of 379 to 1379 bp. Validation of targets was confirmed upon PacBio sequencing of the amplicons. In total, we assessed isoforms from seven manatee genes (example shown in Fig. 5k). For five of the genes, those with one or a few isoforms, all isoforms were validated. For the two genes for which many isoform models were predicted and for which there was more variability across participants, approximately half of the targets were validated (Fig. 5l).

Overall, we find a greater technical challenge and variability of “field collected” non-model organism LR data, as compared to the human or mouse datasets. Though our sample population was small, isoforms tended to validate when many pipelines agreed in their predictions of the number and identity of isoforms. In addition, ONT platforms tended to predict more isoforms than PacBio platforms, although with a higher false positive rate (Extended Data Fig. 70).

Discussion

The LRGASP project aimed to provide a thorough and impartial evaluation of long-read sequencing methods for characterizing the transcriptome. Replicated data was gathered for various sequencing platforms and library preparation methods, and 14 bioinformatics approaches took part in one or more of the three challenges. Predictions were submitted by the tool developers, allowing for optimal utilization of the methodologies, and evaluation scripts were provided along with the data to enable self-assessment and transparent review.

We found significant differences among sequencing platforms and library preparation methods in terms of the number and quality of reads obtained. ONT sequencing of cDNA and CapTrap libraries produced ten times more reads than other combinations, while cDNA-PacBio and R2C2-ONT provided the longest and most accurate reads. Interestingly, more reads did not consistently lead to more transcripts, indicating that read quality and length are important factors for transcript identification. We also found a large influence of the analysis tool on the results and identified fundamental differences in the strategy followed by each algorithm. Some tools used long reads as evidence to recall known transcripts, leading to predictions biased toward the reference annotation. Other methods inferred transcript models from the actual reads and were more receptive to novelty. Some of these approaches returned a broad set of transcript predictions based on the processed sequences, neglecting possible RNA degradation and library preparation errors. In contrast, others targeted the identification of novel transcripts with high confidence. These different conceptions of the data analysis challenged our evaluation strategy, prompting us to examine different aspects of the transcript model support. Thanks to the numerous evaluation metrics and orthogonal data sources used in the benchmark, we accomplished this.

Remarkably, our three approaches for which ground truth was available yielded different conclusions about performance. Assessment using SIRVs, where the annotation was known, concluded high accuracy for the majority of methods. In contrast, the simulated data indicated poorer performance for novel transcripts, and the GENCODE manual annotation revealed that most tools failed to predict most of the transcript models inferred by human annotators, likely due to their low expression. However, our experimental validation results confirmed the presence of many novel transcripts, highlighting the relevance of long-read sequencing technologies in profiling the complexity of transcriptomes.

The novel transcript class with the highest overall consensus and validation was the NIC, implying that novel combinations of splice junctions, TTS, and TSS in the transcriptome are to be expected, at least for well-characterized organisms like mouse and human. Surprisingly, ~50% of the tested NNCs were validated, indicating additional novelty and high false discovery in this category. These results point to the utility of having a deep reference annotation capturing the largest possible catalog of splice features. Interestingly, many of these novel transcripts were detected by just one or a few reads, found in only one or a few samples, yet still validated by PCR. This suggests that many rare RNA molecules are present in specific samples, raising questions about whether to consider them when reporting transcriptome composition using long reads. Arguably, while the comprehensive profiling of the RNA molecular content of a particular sample may require the inclusion of any detected transcript, including only consistently detected transcripts might be advisable when defining the transcriptional signature of cell types and cell states. The LRGASP results show that the analysis strategies implemented by the different tools are differently suited for these two scenarios.