Abstract

Protein engineering is an emerging field in biotechnology that has the potential to revolutionize various areas, such as antibody design, drug discovery, food security, ecology, and more. However, the mutational space involved is too vast to be handled through experimental means alone. Leveraging accumulative protein databases, machine learning (ML) models, particularly those based on natural language processing (NLP), have considerably expedited protein engineering. Moreover, advances in topological data analysis (TDA) and artificial intelligence-based protein structure prediction, such as AlphaFold2, have made more powerful structure-based ML-assisted protein engineering strategies possible. This review aims to offer a comprehensive, systematic, and indispensable set of methodological components, including TDA and NLP, for protein engineering and to facilitate their future development.

Keywords: Topological data analysis, Protein language models, Protein engineering, Deep learning and machine learning

Introduction

Protein engineering aims to design and discover proteins with desirable functions, such as improving the phenotype of living organisms, enhancing enzyme catalysis, and boosting antibody efficacy [1]. It has tremendous impacts on drug discovery, enzyme development and applications, the development of biosensors, diagnostics, and other biotechnology, as well as understanding the fundamental principles of the protein structure-function relationship and achieving environmental sustainability and diversity. Protein engineering has the potential to continue to drive innovation and improve our lives in the future.

Two traditional protein engineering approaches include directed evolution [2] and rational design [3, 4]. Directed evolution is a process used to create proteins or enzymes with improved or novel functions [5]. The method involves introducing mutations into the genetic code of a target protein and screening the resulting variants for improved function. The process is “directed” because it is guided by the desired outcome, such as increased activity, stability, specificity, binding affinity, and fitness. Rational design involves using knowledge of protein structure and function to engineer desirable specific changes to the protein sequence and/or structure [4, 6]. Both approaches resort to experimental screening of astronomically large mutational space, i.e., for protein of amino acid residues, which is expensive, time-consuming, and intractable [7]. As a result, only a small fraction of the mutational space can be explored experimentally even with the most advanced high-throughput screening technology.

Recently, data-driven machine learning has emerged as a new approach for directed evolution and protein engineering [8, 9]. Machine learning-assisted protein engineering (MLPE) refers to the use of machine learning models and techniques to improve the efficiency and effectiveness of protein engineering. MLPE not only reduces the cost and expedites the process of protein engineering, but also optimizes the screening and selection of protein variants [10], leading to the higher efficiency and productivity. Specifically, by using machine learning to analyze and predict the effects of mutations on protein function, researchers can rapidly generate and test large numbers of variants, which establish the protein-to-fitness map (i.e., fitness landscape) from sparsely sampled experimental data [11, 12]. This approach accelerates the process of protein engineering.

The process of data-driven MLPE typically involves several elements, including data collection and preprocessing, model design, feature extraction and selection, algorithm selection and design, model training and validation, experimental validation, and iterative model optimization. Driven by technological advancements in high-throughput sequencing and screening technologies, there has been a substantial accumulation of general-purpose experimental datasets on protein sequences, structures, and functions [13, 14]. These datasets, along with numerous protein-engineering specific deep mutational scanning (DMS) libraries [15], provide valuable resources for machine learning training and validation.

Data representation and feature extraction are crucial steps in the design of machine learning models, as they help to reduce the complexity of biological data and enable more effective model training and prediction. There are several typical types of feature embedding methods, including sequence-based, structure-based [16, 17], physics-based [18, 19], and hybrid methods [5]. Among them, sequence-based embeddings have been dominant due to the success of various natural language processing (NLP) methods such as long short-term memory (LSTM) [21], autoencoders [22], and Transformers [23], which allow unsupervised pre-training on large-scale sequence data. Structure-based embeddings take advantage of existing protein three-dimensional (3D) structures in the Protein Data Bank (PDB) [13] and advanced structure predictions such as AlphaFold2 [24]. These methods further exploit advanced mathematical tools, such as topological data analysis (TDA) [25, 26], differential geometry [27, 28], or graph approaches [29]. Physics-based methods utilize physical models, such as density functional theory [30], molecular mechanics [31], Poisson-Boltzmann model [32], etc. While these methods are highly interpretable, their performance often depends on model parametrization. Hybrid methods may select a combination of two or more types of features.

The designs and selections of MLPE algorithms depend on the availability of data and efficiency of experiments. In real-world scenarios, where smaller training datasets are prevalent, simpler machine learning algorithms such as support vector machines and ensemble methods are often employed for small training datasets, which is often the case in real scenarios. In contrast, deep neural networks are more suitable for larger training datasets. Although regression tasks are typically used to distinguish one set of mutations from another [8], unsupervised zero-shot learning methods can also be utilized to address scenarios with limited data availability [33, 34]. The iterative interplay between experiments and models is another crucial component in MLPE by iteratively screening new data to refine the models. Consequently, the selection of an appropriate MLPE model is influenced by factors like experimental frequency and throughput. This iterative refinement process enables MLPE to deliver optimized protein engineering outcomes.

MLPE has the potential to significantly accelerate the development of new and improved proteins, revolutionizing numerous areas of science and technology (Figure 1). Despite considerable advances in MLPE, challenges remain in many aspects, such as data preprocessing, feature extraction, integration with advanced algorithms, and iterative optimization through experimental validation. This review examines published works and offers insights into these technical advances. We place particular emphasis on the advanced mathematical TDA approaches, aiming to make them accessible to general readers. Furthermore, we review current advanced NLP-based models and efficient MLPE approaches. Last, we discuss potential future directions in the field.

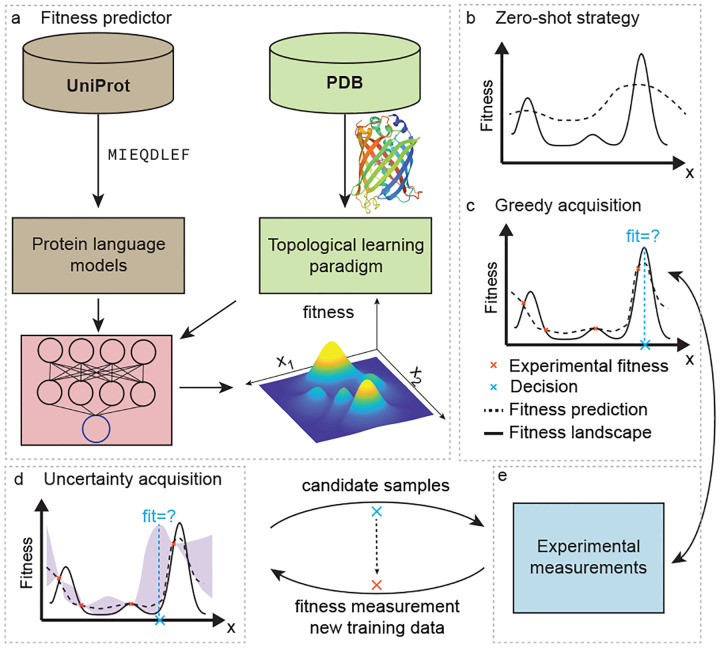

Fig. 1. Machine learning-assisted protein engineering (MLPE).

(a). Machine learning models build fitness predictor using structure and sequence protein data. (b). Zero-shot predictors navigate fitness landscape without labeled data. (c). Greedy acquisition exploits fitness using fitness predictions. (d). Uncertainty acquisition balances exploitation and exploration. The example shows a Gaussian upper confidence bound (UCB) acquisition. (e). Experimental measurements query fitness of candidate proteins in sequential optimization.

Sequence-based deep protein language models

In artificial intelligence, natural language processing (NLP) has recently gained much attention for representing and analyzing human language computationally [35]. NLP covers a wide range of tasks, including language translation, sentiment analysis, chatbot development, speech recognition, and information extraction, among others. The development and advancement of various machine learning models have been instrumental in tackling the complex challenges posed by NLP tasks.

Similar to human language, the primary structure of a protein is also represented by a string of amino acids, with 20 canonical amino acids. The analogy between protein sequences and human languages has inspired the development of computational methods for analyzing and understanding proteins using models adopted from NLP (Figure 1a). The self-supervised sequence-based protein language models have been applied to study the underlying patterns and relationships within protein sequences, predict their structural and functional properties, and facilitate protein engineering. These language models are pretrained on a given data allowing to model protein properties for each given protein. There are two major types of protein language models utilizing different resources of protein data [33] (Table 1). The first one is the local evolutionary models which focus on homologs of the target protein such as multiple sequence alignments (MSAs) to learn the evolutionary information from the mostly related mutations. The second one is the global evolutionary models which learn from large protein sequence databases such as UniProt [14] and Pfam [36].

Table 1.

Summary of protein language models.

| Model | Architecture | Max len | Dim | # para | Pretrained data | Time1 | |

|---|---|---|---|---|---|---|---|

| Source | Size | ||||||

| Local Models | |||||||

| Profile HMMs [37] | Hidden Markov | – | – | – | MSAs | – | Oct 2012 |

| EvMutation [38] | Potts Models | – | – | – | MSAs | – | Jan 2017 |

| MSA Transformer [39] | Transformer | 1024 | 768 | 100M | UniRef50 [14] | 26M | Feb 2021 |

| DeepSequence [22] | VAEs | – | – | – | MSAs | – | Dec 2017 |

| EVE [40] | Bayesian VAEs | – | – | – | MSAs | – | Oct 2021 |

| Global Models | |||||||

| TAPE ResNet [41] | ResNet | 1024 | 256 | 38M | Pfam [36] | 31M | Jun 2019 |

| TAPE LSTM [41] | LSTM | 1024 | 2048 | 38M | Pfam [36] | 31M | Jun 2019 |

| TAPE Transformer [41] | Transformer | 1024 | 512 | 38M | Pfam [36] | 31M | Jun 2019 |

| Bepler [42] | LSTM | 512 | 100 | 22M | Pfam [36] | 31M | Feb 2019 |

| UniRep [21] | LSTM | 512 | 1900 | 18M | UniRef50 [14] | 24M | Mar 2019 |

| eUniRep [43] | LSTM | 512 | 1900 | 18M | UniRef50 [14]; MSAs | 24M | Jan 2020 |

| ESM-1b [23] | Transformer | 1024 | 1280 | 650M | UniRef50 [14] | 250M | Dec 2020 |

| ESM-1v [44] | Transformer | 1024 | 1280 | 650M | UniRef90 [14] | 98M | Jul 2021 |

| ESM-IF1 [45] | Transformer | – | 512 | 124M | UniRef50 [14]; CATH [46] | 12M sequences; 16K structures | Sep 2022 |

| ProGen [47] | Transformer | 512 | – | 1.2B | UniParc [14]; UniprotKB [14]; Pfam [36]; NCBI Taxonomy [48] | 281M | Jul 2021 |

| ProteinBERT [49] | Transformer | 1024 | – | 16M | UniRef90 [14] | 106M | May 2021 |

| Tranception [15] | Transformer | 1024 | 1280 | 700M | UniRef100 [14] | 250M | May 2022 |

| ESM-2 [50] | Transformer | 1024 | 5120 | 15B | UniRef90 [14] | 65M | Oct 2022 |

# para: number of parameters which are only provided for deep learning models. Max len: maximum length of input sequence. Dim: latent space dimension. Size: pre-trained data size where it refers to number of sequences without specification except MSA transformer includes 26 millions of MSAs. K: thousands; M: millions; B: billions.

Time for the first preprint. The input data size, hidden layer dimension, and number of parameters are only provided for global models.

Local evolutionary models

To train a local evolutionary model, MSAs search strategies such as jackhmmer [51] and EvCouplings [52] are first employed. Taking MSAs as inputs, local evolutionary models learn the probabilistic distribution of mutations for a target protein. Probabilistic models, including Hidden Markov Models (HMMs) [53, 37] and Potts-based models [38], are popular in modeling mutational effects. Transformer models have been introduced to learn distribution from MSAs. The MSA Transformer [39] introduces a row- and column-attention mechanism. Recent years, variational autoencoders (VAEs) [54] serve as the alternate to model MSAs by including the dependency between residues and aligning all sequences to a probability distribution. The VAE model DeepSequence [22] and the Bayesian VAE model EVE [40] exhibit excellent performance in modeling mutational effects [55, 33, 5].

Global evolutionary models

With large-size data, global evolutionary models usually adopt the large NLP models. Convolutional Neural networks (CNNs) [56] models and residual network (ResNet) [57] have been employed for protein sequence analysis [41]. Large-scale models, such as long short-term memory (LSTM) [58], have also gained popularity as seen in Bepler [42], UniRep [21], and eUniRep [43]. In recent years, the Transformer architecture has achieved state-of-the-art performance in NLP by introducing the attention mechanism and the self-supervised learning via the masked filling training strategy [59, 60]. Inspired by these advances, Transformer-based protein language models provide new opportunities for building global evolutionary models. A variety of Transformer-based models have been developed such as evolutionary scale modeling (ESM) [23, 44], ProGen [47], ProteinBERT [49], Tranception [15] and ESM-2 [50].

Hybrid approach via fine-tune pre-training

Although global evolutionary models can learn a variety of sequences derived from natural evolution, they face challenges in concentrating on local information when predicting the effects of site-specific mutations in a target protein. To enhance the performance of global evolutionary models, fine-tuning strategies are subsequently implemented. Specifically, fine-tune strategy further refines the pre-trained global models with local information using MSAs or target training data. The fine-tuned eUniRep [43] shows significant improvement over UniRep [21]. Similar improvement was also reported for ESM models [23, 44]. The Tranception model also proposed a hybrid approach combining a global autoregressive inference and a local retrieval inference from MSAs [15]. Tranception achieved the advanced performance over other global and local models.

With various language models proposed, comprehensive studies on various models and the strategy in building downstream model is necessary. A study explored different approaches utilizing the sequence embedding to build downstream models [61]. Two other studies further benchmarked many unsupervised and supervised models in predicting protein fitness [55, 33].

Structure-based topological data analysis (TDA) models

Aided by advanced NLP algorithms, sequence-based models have become the dominant approach in MLPE [12, 11]. However, sequence-based models suffer from a lack of appropriate description of stereochemical information, such as cis-trans isomerism, conformational isomerism, enantiomers, etc. Therefore, sequence embeddings cannot distinguish stereoisomers, which are widely present in biological systems and play a crucial role in many chemical and biological processes. Structure-based models offer a solution to this problem. TDA has became a successful tool in building structure-based models for MLPE [5].

TDA is a mathematical framework based on algebraic topology [62, 63], which allows us to characterize complex geometric data, identify underlying geometric shapes, and uncover topological structures present in the data. TDA finds its applications in a wide range of fields, including neuroscience, biology, materials science, and computer vision. It is especially useful in situations where the data is complex, high-dimensional, and noisy, and where traditional statistical methods may not be effective. In this section, we provide an overview of various types of TDA methods (Table 2). In addition, we review graph neural networks, which are deep learning frameworks cognizant of topological structures, along with their applications in protein engineering. For those readers who are interested in the deep mathematical details of TDA, we have added a supplementary section dedicated to two TDA methods - persistent homology and persistent spectral graph (PSG) in Supplementary Methods.

Table 2.

Summary of topological data analysis (TDA) methods for structures.

| Method | Topological space | Node attribute | Edge attribute |

|---|---|---|---|

| Homology-based | |||

| Persistent Homology [62, 63] | Simplicial complex | None | None |

| Element-specific PH (ESPH) [16] | Simplicial complex | Group labeled | Group labeled |

| Persistent Cohomology [64] | Simplicial complex | Labeled | Labeled |

| Persistent Path Homology [65] | Path complex | Path | Directed |

| Persistent Flag Homology [66] | Flag complex | None | Directed |

| Evolutionary homology [67] | Simplicial complex | Weighted | Weighted |

| Weighted persistent homology [68] | Simplicial complex | Weighted | Weighted |

| Laplacian-based | |||

| Persistent Spectral Graph [3, 4] | Simplicial complex | None | None |

| Persistent Hodge Laplacians [71] | Manifold | Continuum | Continuum |

| Persistent Sheaf Laplacians [72] | Cellular complex | Labeled | Sheaf relation |

| Persistent Path Laplacians [73] | Path complex | Path | Direction |

| Persistent Hypergraph [74] | Hypergraph | Hypernode | Hyperedge |

| Persistent Directed Hypergraphs [75] | Hypergraph | Hypernode | Directed hyperedge |

Homology

The basic idea behind TDA is to represent the data as a point cloud in a high-dimensional topological space, and then study the topological invariants of this space, such as the genus number, Betti number, and Euler characteristic. Among them, the Betti numbers, specifically Betti zero, Betti one, and Betti two, can be interpreted as representing connectedness, holes, and voids, respectively [76, 77]. These numbers can be computed as the ranks of the corresponding homology groups in appropriate dimensions.

Homology groups are algebraic structures that are associated with topological spaces [76]. They provide information about the topological connectivity of geometric objects. The basic idea behind homology is to consider the cycles and boundaries of a space. Loosely speaking, a cycle is a set of points in the space that form a closed loop, while a boundary is a set of points that form the boundary of some region in the space. The homology group of a space is defined as the group of cycles modulo the group of boundaries. That is, we identify two cycles that differ by a boundary and consider them to be equivalent. The resulting homology group encodes information about the Betti numbers of the space.

Homology theory has many applications in mathematics and science. It is used to classify topological spaces in category theory, to study the properties of manifolds in differential geometry and algebraic geometry, and to analyze data in various scientific fields [76]. However, the original homology groups offer truly geometry-free representations and are too abstract to carry sufficient geometric information of data. Persistent homology was designed to improve homology groups’ ability for data analysis.

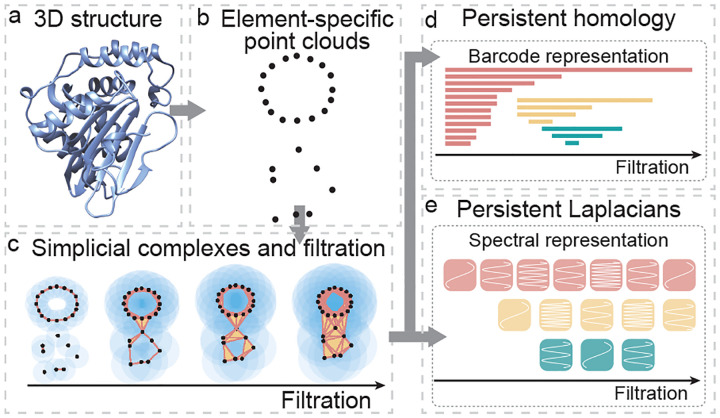

Persistent homology

Persistent homology is a relatively new tool in algebraic topology that is designed to incorporate multiscale topological analysis of data [62, 63]. The basic idea behind persistent homology is to construct a family of geometric shapes of the original data by filtration (Figure 2c). Filtration systematically enlarges the radius of each data point in a point cloud, leading to a family of topological spaces with distinct topological dimensions and connectivity. Homology groups are built from the family of shapes, giving rise to systematic changes in topological invariants, or Betti numbers, at various topological dimensions and geometric scales. Topological invariants based on Betti numbers are expressed in terms of persistence barcodes [78] (Figure 2d), persistence diagrams [79], persistence landscapes [80], or persistence images [81]. Persistent topological representations are widely used in applications, particularly in association with machine learning models [82].

Fig. 2. Conceptual illustration of the TDA-based protein modeling.

(a). A three-dimensional protein structure. (b). Point cloud representation of protein structure. (c). Simplicial complexes and filtration provide multiscale topological representation of the point cloud. (d). Persistent homology characterizes topological evolution of the point cloud. (e). Persistent Laplacian characterizes shape evolution of the point cloud.

Persistent homology is the most important approach in TDA (see Table 2 for a summary of major TDA approaches). It reveals the shape of data in terms of the topological invariants and has had tremendous success in scientific applications, including image and signal processing [83], machine learning [84], biology [82], and neuroscience[85]. Nonetheless, to effectively analyze complex biomolecular data, persistent homology requires further refinement and adjustment. [86].

Persistent cohomology and element-specific persistent homology

One major limitation of persistent homology is that it fails to describe heterogeneous information of data point [64]. In other words, it treats all entries in the point cloud equally without considering other important information about the data. Biomolecules, for example, contain many different element types and each atom may have a different atomic partial charge, atomic interaction environment, and electrostatic potential function that cannot be captured by persistent homology. Thus, it is crucial to have a topological technique that can incorporate both geometric and nongeometric information into a unified framework.

Persistent cohomology was developed to provide such a mathematical paradigm [64]. In this framework, nongeometric information can either be prescribed globally or reside locally on atoms, bonds, or many-body interactions. In topological terminology, nongeometric information is defined on simplicial complexes. This persistent cohomology-based approach can capture multiscale geometric features and reveal non-geometric interaction patterns through topological invariants, or enriched persistence barcodes. It has been demonstrated that persistent cohomology outperforms other methods in benchmark protein-ligand binding affinity prediction datasets [64], which is a non-trivial problem in computational drug discovery.

An alternative approach for addressing the limitation of persistent homology is to use element-specific persistent homology (ESPH) [16]. The motivation behind ESPH is the same as that for persistent cohomology, but ESPH is relatively simple. Basically, atoms in the original biomolecule are grouped according to their element types, such as C, N, O, S, H, etc. Then, their combinations, such as CC, CN, CO, etc., are identified, and persistent homology analysis is applied to the atoms in each element combination, resulting in ESPH analysis. As a result, ESPH reduces geometric and biological complexities and embeds chemical and biological information into topological abstraction. The ESPH approach was used to win the D3R Grand Challenges, a worldwide competition series in computer-aided drug design [87].

Persistent topological Laplacians

However, aforementioned TDA methods are still limited in describing complex data, such as its lack of description of non-topological changes (i.e., homotopic shape evolution) [5], its incapability of coping with directed networks and digraphs (i.e., atomic partial charges and polarizations, gene regulation networks), and its inability to characterize structured data (e.g., functional groups, binding domains, and motifs) [86]. These limitations necessitate the development of innovative strategies.

Persistent topological Laplacians (PTLs) are a new class of mathematical tools designed to overcome the aforementioned challenges in TDA [86]. One of the first methods in this class is the PSG [3], also known as persistent combinatorial Laplacians [3] or persistent Laplacians [4]. PSGs have both harmonic spectra with zero eigenvalues and non-harmonic spectra with non-zero eigenvalues (Figure 2e). The harmonic spectra recover all the topological invariants from persistent homology, while the non-harmonic spectra capture the homotopic shape evolution of data that cannot be described by persistent homology [86]. PSGs have been used for accurate forecasting of emerging dominant SARS-CoV-2 variants BA.4/BA.5 [88], facilitating machine learning-assisted protein engineering predictions [5], and other applications [89].

Like persistent homology, persistent Laplacians are limited in their ability to handle directed networks and atomic polarizations. To address these limitations, persistent path Laplacians have been developed [73]. Their harmonic spectra recover the topological invariants of persistent path homology [65], while their non-harmonic spectra capture homotopic shape evolution. Both persistent path Laplacians and persistent path homology were developed as a generalization of the path complex [90].

None of the PTLs mentioned above are capable of handling different types of elements in a molecule as persistent cohomology does. To overcome this limitation, persistent sheaf Laplacians [72] were designed, inspired by persistent cohomology [64], persistent Laplacians [3], and sheaf Laplacians for cellular sheaves [91]. The aim of persistent sheaf Laplacians is to discriminate between different objects in a point cloud. By associating a set of non-trivial labels with each point in a point cloud, a persistent module of sheaf cochain complexes is created, and the spectra of persistent sheaf Laplacians encode both geometrical and non-geometrical information [72]. The theory of persistent sheaf Laplacians is an elegant method for the fusion of different types of data and opens the door to future developments in TDA, geometric data analysis, and algebraic data analysis.

Persistent hypergraph Laplacians enable the topological description of internal structures or organizations in data [74]. Persistent hyperdigraph Laplacians further allow for the topological Laplacian modeling of directed hypergraphs [75]. These persistent topological Laplacians can be utilized to describe intermolecular and intramolecular interactions. As protein structures are inherently multiscale, it is natural to apply persistent hypergraph Laplacians and persistent hyperdigraph Laplacians to delineate the protein structure-function relationship.

Finally, unlike all the aforementioned PTLs, evolutionary de Rham-Hodge Laplacians or persistent Hodge Laplacians are defined on a family of filtration-induced differentiable manifolds [71]. They are particularly valuable for the multiscale topological analysis of volumetric data. Technically, a similar algebraic topology structure is shared by persistent Hodge Laplacians and persistent Laplacians, but the former is a continuum theory for volumetric data and the latter is a discrete formulation for point cloud. As such, their underlying mathematical definitions, i.e., differential forms on manifolds and simplicial complexes on graphs, are sharply different.

Deep graph neural networks and topological deep learning

Similar to topological data analysis, graph- and topology-based deep learning models have been proposed to capture connectivity and shape information of protein structure data. Graph neural networks (GNNs) consider the low-order interactions between vertices by aggregating information from neighbor vertices. A variety of popular graph neural network layers has been proposed, such as convolution graph networks (GCN) [92], graph attention networks (GAT) [93], graph sample and aggregate (GraphSAGE) [94], Graph Isomorphism Network (GIN) [95], and gated graph neural network [96].

With variety of architectures of GNN layers, self-supervised learning models are widely used for representation learning of graph-based data. Graph autoencoder (GAE) and variational graph autoencoder (VGAE) consist of both encoder and decoder, where the decoder employ a linear inner product to reconstruct adjacent matrix [97]. While most of graph-based self-supervised models only have encoder. Deep graph infomax (DGI) maximizes mutual information between a graph’s local and global features to achieve self-supervised learning [98]. Graph contrastive learning (GRACE) constructs positive and negative pairs from a single graph, and trains a GNN to differentiate between them [99]. Self-supervised graph transformer (SSGT) uses masked node prediction to train the model. Given a masked graph, it tries to predict the masked node’s attributes from the unmasked nodes [100].

In applications to learning protein structures, GCNs have been widely applied to building structure-to-function map of proteins [101, 102]. Moreover, self-supervised models provide powerful pre-trained model in learning representation of protein structures. GeoPPI [103] proposed a graph neural network-based autoencoder to extract structural embedding at the protein-protein binding interface. The subsequent downstream models allow accurate predictions for protein-protein binding affinity upon mutations [103] and further design effective antibody against SARS-CoV-2 variants [104]. GRACE has been applied to learn geometric representation of protein structures [105]. To adopt the critical biophysical properties and interactions between residues and atoms in protein structures, graph-based self-supervised learning models have been customized to achieve the specific functions. The inverse protein folding protocol was proposed to capture the complex structural dependencies between residues in its representation learning [106, 45]. OAGNNs was proposed to better sense the geometric characteristics such as nner-residue torsion angles, inter-residue orientations in its representation learning [107].

Topological deep learning, proposed by Cang and Wei in 2017 [108], is an emerging paradigm. It integrates topological representations with deep neural networks for protein fitness learning and prediction [108, 87, 5]. Similar graph and topology-based deep learning architectures have also been proposed to capture connectivity and shape information of protein structure data [88, 75]. Inspired by TDA, high-order interactions among neural nodes were proposed in -GNNs [109] and simplicial neural networks [110].

Artificial intelligence-aided protein engineering

Protein engineering is a typical black-box optimization problem, which focuses on finding the optimal solution without explicitly knowing the objective function and its gradient. In protein engineering, the goal in designing algorithms for this problem is to efficiently search for the best sequence within a large search space:

| (1) |

where is an unlabeled candidate sequence library, is a sequence in the library and is the unknown sequence-to-fitness map for optimization. The fitness landscape, , is a high-dimensional surface that maps amino acid sequences to properties such as activity, selectivity, stability, and other physicochemical features.

There are two practical challenges in protein engineering. First, the fitness landscape is usually epistatic [111, 112], where the contribution of individual amino acid residues to protein fitness have dependency to each other. The interdependence leads to complex, non-linear interactions among different residues. In other word, the fitness landscape contains large number of local optima. For example, in a four-site mutational fitness landscape for GB1 protein with 204 = 160,000 mutations, 30 local maximum fitness peaks were found [111]. Either traditional directed evolution experiments such as single-mutation walk and recombination, or machine learning models, is difficult to find the global optima without trapped at local one. Second, protein engineering process usually collects limited number of data comparing to the huge sequence library. There are an enormous number of ways to mutate any given protein: for a 300-amino-acid protein, there are 5,700 possible single-amino-acid substitutions and 32,381,700 ways to make just two substitutions with the 20 canonical amino acids [12]. Even with high-throughput experiments, only a small fraction of the sequence library can be screened. Despite this, many systems only have low-throughput assays such as membrane proteins [113], making the process more difficult.

With enriched data-driven protein modeling approaches from protein sequences to structures, recent advanced machine learning methods have been widely developed to accelerate protein engineering in silico (Figure 1a) [1, 11, 12, 114, 115]. Utilizing a limited experimental capacity, machine learning models can effectively augment the fitness evaluation process, enabling the exploration of a vast search space . This approach facilitates the discovery of optimal solutions within complex design spaces, despite constraints on the number of trials or experiments.

Using a limited number of experimentally labeled sequences, machine learning models can carry out zero-shot or few-shot predictions [11]. The accuracy of these predictions largely depends on the distribution of the training data, which influences the model’s ability to generalize to new sequences. Concretely, if the training data is representative or closer to a given sequence, the model is more likely to make accurate predictions for that specific sequence. Conversely, if the training data is not representative or distant from the given sequence, the model’s predictive accuracy may be compromised, leading to less reliable results. Therefore, MLPE are usually an iterative process between machine learning models and experimental screens. Incorporating the exploration-exploitation trade-off in this context is essential for achieving optimal results. During the iterative process, the model must balance exploration, where it seeks uncertain regions that machine learning models have low accuracy, with exploitation, where it refines and maximizes fitness based on previously gained knowledge. A right balance is critical to preventing overemphasis on either exploration or exploitation leading, which may lead to suboptimal solutions. In particular, the epistatic nature of protein fitness landscapes influences the exploration-exploitation trade-off in the design process.

MLPE methods need to take the experimental capacity into account when attempt to balance the exploitation-exploration. In this section, we discuss different strategies upon the number of experimental capacity. First, we discuss zero-shot strategy when no labeled experimental data is available. Second, we discuss supervised models for performing greedy search (i.e., exploitation). Last, we discuss uncertainty quantification models that balance exploration and exploitation trade-off.

Unsupervised zero-shot strategy

First, we review the zero-shot strategy that interrogates protein fitness with an unsupervised manner (Figure 1b and Table 3). This is designed for the scenarios in the early stage designs where no experiments have been conducted or the experimentally labeled data is too limited allowing accurate fitness predictions from supervised models [11, 5]. They delineate a fitness landscape at the early stage of protein engineering. Essential residues can be identified and prioritized for mutational experiments, allowing for a more targeted approach to protein engineering [22]. Additionally, the initial fitness landscape can be utilized to filter out protein candidates with a low likelihood of exhibiting the desired functionality. By focusing on sequences with higher probabilities, protein engineering process can be made more efficient and effective [34].

Table 3. Comparisons for fitness predictors.

Results were adopted from TopFit [5]. Performance was reported by average Spearman correlation over 34 DMS datasets and 20 repeats. Supervised model use ensemble regression from 18 regression models [5].

| Zero-shot predictors | ||||

|---|---|---|---|---|

| Model name | training set size | |||

| 0 | ||||

| ESM-1b PLL [23, 33] | 0.435 | |||

| eUniRep PLL [127] | 0.411 | |||

| EVE [40] | 0.497 | |||

| Tranception [15] | 0.478 | |||

| DeepSequence [22] | 0.504 | |||

| Supervised models | ||||

| Embedding name | training set size | |||

| 24 | 96 | 168 | 240 | |

| Persistent Homology [5] | 0.263 | 0.432 | 0.496 | 0.534 |

| Persistent Laplacian [5] | 0.280 | 0.457 | 0.525 | 0.564 |

| ESM-1b [23] | 0.219 | 0.421 | 0.494 | 0.537 |

| eUniRep [43] | 0.259 | 0.432 | 0.485 | 0.515 |

| Georgiev [127] | 0.169 | 0.326 | 0.402 | 0.446 |

| UniRep [21] | 0.183 | 0.347 | 0.420 | 0.462 |

| Onehot | 0.132 | 0.317 | 0.400 | 0.450 |

| Bepler [42] | 0.139 | 0.287 | 0.353 | 0.396 |

| TAPE LSTM [41] | 0.259 | 0.436 | 0.492 | 0.522 |

| TAPE ResNet [41] | 0.080 | 0.216 | 0.305 | 0.358 |

| TAPE Transformer [41] | 0.146 | 0.304 | 0.371 | 0.418 |

Zero-shot predictions rely on the model’s ability to recognize patterns in naturally observed proteins, enabling it to make informed predictions for new sequences without having direct training data for the target protein. As discussed in Section 3, protein language models, particularly generative models, learn the distribution of naturally observed proteins which are usually functional. The learned distribution can be used to assess the likelihood that a newly designed protein lies within the distribution of naturally occurring proteins, thus providing valuable insights into its potential functionality and stability [11].

VAEs are popular local evolutionary models for zero-shot predictions such as DeepSequence [22] and EVE models [40]. In VAEs, the conditional probability distribution is the decoder in a form of neural network with parameters , where is the sequence being query and is its latent space variable. Similar, encoder, , is modeled by another neural network with parameters to approximate the true posterior distribution . For a given sequence , its probabilistic likelihood in VAEs is parameterized by parameters . Direct computation of this probability, , is intractable in the general case. The evidence lower bound (ELBO) forming a variation inference [54] provides a lower bound of the log likelihood:

| (2) |

ELBO is taken as the scoring function to quantify the mutational likelihood of each query sequence. The ELBO-based zero-shot predictions show advanced performance reported in multiple works [33, 55, 5].

Transformer is the currently state-of-the-art model which has been used in many supervised tasks [23]. It learns a global distribution of nature proteins. It has also been proved to have advanced performance for zero-shot predictions [33, 44]. The training of Transformer uses mask filling that refers to the process of predicting masked amino acid in a given input sequence by leveraging the contextual information encoded in the Transformer’s self-attention mechanism [59, 60]. The mask filling procedure creates a classification layer on the top of the Transformer architecture. Given a sequence , the masked filling classifier generate probability distributions for amino acids at masked positions. Suppose has amino acids , by masking a single amino acid at -th position, the classifier calculates the conditional probability of , where is the remaining sequence excluding the masked -th position. To reduce the computational cost, the pseudo-log-likelihoods (PLLs) are usually used to estimate the log-likelihood of a given sequence [33, 34] :

| (3) |

The PPLs assume the independence between amino acids. To consider the dependence between amino acids, one can calculate the conditional probability by summing up all possible factorization [34]. But this approach leads to much higher computational cost.

Furthermore, many different strategies have been employed to make zero-shot predictions. Fine-tune model can improve the predictions by combining both local and global evolutionary models [43]. Tranception scores combine global autoregressive inference and an local MSAs retrieval inference to make more accurate predictions. In addition to these sequence-based models, the structure-based GNN-based models including ESM-1F [45] and RGC [116] have also been proposed by utilizing large-scale structural data from AlphaFold2. However, the structure-based model is still limited in accuracy comparing to sequence-based models.

Supervised regression models

Supervised regression models are among the most prevalent approaches used in guiding protein engineering, as they enable greedy search strategies to maximize protein fitness (Figure 1c). These models, including statistical, machine learning, and deep learning techniques, rely on a set of labeled data as their training set to predict the fitness landscape. By leveraging the information contained within the training data, supervised regression models can effectively estimate the relationship between protein sequences and their fitness, providing valuable insights for protein engineering and optimization [12, 1].

A variety of supervised models have been applied to predict protein fitness. In general, statistical models and machine learning models such as linear regression [117], ridge regression [33], support vector machine (SVM) [118], random forest [119], gradient boosting tree [120] have accurate performance for small training set. And deep learning methods such as deep neural networks [121], convolutional neural networks (CNNs) [17], attention-based neural networks [122] are more accurate with large size of training data. However, in protein engineering, the size of training data increases sequentially which make the supervised models difficult to provide accurate performance all time. Alternatively, the ensemble regression was proposed to provide robust fitness predictions despite of training data size [11, 123]. The ensemble regression average predictions from multiple supervised models and they provide more accurate and robust performance than single model [5]. To remove the inaccurate models in the average, cross-validation is usually used to rank accuracy of each model and only top models are taken to average the predictions. Paired with the zero-shot strategy, the ensemble regression trained on informed training set pre-selected by zero-shot predictions can efficiently pick up the global optimal protein with a few round of experiments [34, 124, 125]. And such approach has been applied to enable resource-efficient engineering CRISPR-Cas9 genome editor activities [126].

Rather than the architectures of supervised models, the predictive accuracy highly rely on the amount of information obtained from the featurization process (Table 3). The physical-chemical properties extract the properties of individual amino acids or atoms [127]. The energy-based scores provide descriptions for the overall property of the target protein [18]. However, neither of them successfully take the complex interactions between residues and atoms into account. To tackle this challenge, recent mathematics-initiated topological and geometric descriptors achieved great success in predicting protein fitness including protein-protein interactions [17], protein stability [120], enzyme activity, and antibody effectivity [5]. The aforementioned descriptors (Section 10) extract structural information from atoms at different characteristic lengths. Furthermore, the sequence-based protein language models provide another featurization strategies. The deep pre-trained models have the latent space which provide the informative representation of each given sequence. Building supervised models from the deep embedding exhibits accurate performance [5, 128]. Recent works combine different types of sequence-based features [129, 33] or combine structure-based and sequence-based features [5] show the complementary roles of different featurization approaches.

Active learning models for exploration-exploitation balance

With the extensive accurate protein-to-fitness machine learning models, active learning further designs iterative strategy between models and experiments to sequentially optimize fitness with the consideration of exploitation-exploration trade-off (Figure 1d–e) [115].

To balance the exploitation-exploration trade-off, the supervised models require to predict not only the protein fitness but also quantify the uncertainty of the given protein [130]. The most popular uncertainty quantification in protein engineering is Gaussian process (GP) [131], which automatically calibrate the balance. Especially, GP using the upper confidence bounds (UCBs) acquisition has efficient convergent rate theoretically for solving the black-box optimization (Equation 1). A variety protein engineering employed GP to accelerate the fitness optimization. For examples, the light-gated channelrhodopsins (ChRs) were engineered to improve photocurrence and light sensitivity [132, 133], green fluorescent protein has been engineered to become yellow fluorescence [134], acyl-ACP reductase was engineered to improve fatty alcohol production [135], P450 enzyme has been engineered to improve thermostability [136].

The tree-based search strategy is also efficient by building a hierarchical search path, such as the hierarchical optimistic optimization (HOO) [137], the deterministic optimistic optimization (DOO), and the simultaneous optimistic optimization (SOO) [138]. To handle the discrete mutational space in protein engineering, an unsupervised clustering approach was employed to construct the hierarchical tree structure [124, 125].

Recently, researchers have turned to generative models to quantify uncertainty in protein engineering, employing methods such as Variational Autoencoders (VAEs) [54, 22, 40], generative adversarial networks (GANs) [139, 140], and autoregressive language models [141, 15]. Generative models are a class of machine learning algorithms that aim to learn the underlying data distribution of a given dataset, in order to generate new, previously unseen data points that resemble the training data. These models capture the inherent structure and patterns present in the data, enabling them to create realistic and diverse samples that share the same characteristics as the original data. For examples, ProGen [47] is a large language model that generate functional protein sequences across diverse families. A Transformer-based antibody language models utilize fine-tuning processes to assist design antibody [142]. Recently, a novel Transformer-based model called ReLSO has been introduced [143]. This innovative approach simultaneously generates protein sequences and predicts their fitness using its latent space representation. The attention-based relationships learned by the jointly trained ReLSO model offer valuable insights into sequence-level fitness attribution, opening up new avenues for optimizing proteins.

Conclusions and future directions

In this review, we discuss the advanced deep protein language models for protein modeling. We further provide an introduction of topological data analysis methods and their applications in protein modeling. Relying on both structure-based and sequence-based models, MLPE methods were widely developed to accelerate protein engineering. In the future, various machine learning and deep learning will have potential perspectives in protein engineering.

Accurate structure prediction methods enhanced accurate structure-based models

Comparing to sequence data, three-dimensional protein structural data offer more comprehensive and explicit descriptions of the biophysical properties of a protein and its fitness. As a result, structure-based models usually provide superb performance than sequence-based models for supervised tasks with small training set [5, 120].

As protein sequence databases continue to grow, self-supervised models demonstrate their ability to effectively model proteins using large-scale data. The protein sequence database provides a vast amount of resources for building sequence-based models, such as UniProt [14] database contains hundreds of millions sequences. In contrast, protein structure databases are comparatively limited in size. The largest among them, Protein Data Bank (PDB), contains only 205 thousands of protein structures as of 2023 [13]. Due to the abundance of data resources, sequence-based models typically outperform structure-based models significantly [116].

To address the limited availability of structure data, researchers have focused on developing highly accurate deep learning techniques aimed at enabling large-scale structure predictions. These state-of-the-art methodologies have the potential to significantly expand the database of known protein structures. Two prominent methods are AlphaFold2 [24] and RosettaFold [144], which have demonstrated remarkable capabilities in predicting protein structures with atomic-level accuracy. By harnessing the power of cutting-edge deep learning algorithms, these tools have successfully facilitated the accurate prediction of protein structures, thus contributing to the expansion of the structural database.

Both AlphaFold2 and RosettaFold are alignment-based, which rely on MSAs of the target protein for structure prediction. Alignment-based approaches can be highly accurate when there are sufficient number of homologous sequences (that is, MSAs depth) in the database. Therefore, these methods may have reduced accuracy with low MSAs depth in database. In addition, the MSAs search is time consuming which slows down the prediction speed. Alternatively, alignment-free methods have also been proposed to tackle these limitations [145]. An early work RGN2 [146] exhibits more accurate predictions than AlphaFold2 on orphans proteins which lack of MSAs. Supervised transformer protein language models predict orphan protein structures [147]. With the development of variety of large-scale protein language models in recent years, the alignment-free structural prediction methods incorporate with these models to exhibit their accuracy and efficiency. For example, ESMFold [50] and OmegaFold [148] achieve similar accuracy with AlphaFold2 with faster speed. Moreover, extensive language model-based methods were developed for structural predictions of single-sequence and orphan proteins [149, 150, 151, 152]. Large-scale protein language models will provide powerful toolkit for protein structural predictions.

In building protein fitness model, the structural TDA-based model has exemplified that the AlphaFold2 structure is as reliable as the experimental structure [5]. The zero-shot model, ESM-IF1, also shows advanced performance with coupling with the large structure AlphaFold database [45]. In the light of the revolutionary structure predictive models, structure-based models will open up a new avenue in protein engineering, from directed evolution to de novo design [153, 154]. More sophisticated TDA methods will be demanded to handle the large-scale datasets. Large-scale deep graph neural networks will need to be further developed, for example, to consider the high-order interactions using simplicial neural networks [110, 155].

Large highthroughput datasets enabled larger scale models

Current MLPE methods are usually designed for limited training set. The ensemble regression is an effective approach to accurately learn the fitness landscape with small but increasing size of training sets from deep mutational scanning [34].

The breakthrough biotechnology, next-generation sequencing (NGS) [156] largely enhances the capacity of DMS for collecting supervised fitness data in various protein systems [112, 111, 157]. The resulting large-scale deep mutational scanning databases expand the exploration range of protein engineering. Deeper machine learning models are emerging to enhance the accuracy and adaptivity for protein engineering.

Supplementary Material

Key points.

Machine learning and deep learning techniques are revolutionizing protein engineering.

Topological data analysis enables advanced structure-based machine learning-assisted protein engineering approaches.

Deep protein language models extract critical evolutionary information from large-scale sequence databases.

Acknowledgments

This work was supported in part by NIH grants R01GM126189 and R01AI164266, NSF grants DMS-2052983, DMS-1761320, and IIS-1900473, NASA grant 80NSSC21M0023, Michigan Economic Development Corporation, MSU Foundation, Bristol-Myers Squibb 65109, and Pfizer.

Footnotes

Competing interests

No competing interest is declared.

References

- 1.Narayanan Harini, Dingfelder Fabian, Butté Alessandro, Lorenzen Nikolai, Sokolov Michael, and Arosio Paolo. Machine learning for biologics: opportunities for protein engineering, developability, and formulation. Trends in pharmacological sciences, 42(3):151–165, 2021. [DOI] [PubMed] [Google Scholar]

- 2.Arnold Frances H. Design by directed evolution. Accounts of chemical research, 31(3):125–131, 1998. [Google Scholar]

- 3.Karplus Martin and Kuriyan John. Molecular dynamics and protein function. Proceedings of the National Academy of Sciences, 102(19):6679–6685, 2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Boyken Scott E, Chen Zibo, Groves Benjamin, Langan Robert A, Oberdorfer Gustav, Ford Alex, Gilmore Jason M, Xu Chunfu, DiMaio Frank, Pereira Jose Henrique, et al. De novo design of protein homo-oligomers with modular hydrogen-bond network-mediated specificity. Science, 352(6286):680–687, 2016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Romero Philip A and Arnold Frances H. Exploring protein fitness landscapes by directed evolution. Nature reviews Molecular cell biology, 10(12):866–876, 2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Bhardwaj Gaurav, Mulligan Vikram Khipple, Bahl Christopher D, Gilmore Jason M, Harvey Peta J, Cheneval Olivier, Buchko Garry W, Pulavarti Surya VSRK, Kaas Quentin, Eletsky Alexander, et al. Accurate de novo design of hyperstable constrained peptides. Nature, 538(7625):329–335, 2016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Pierce Niles A and Winfree Erik. Protein design is np-hard. Protein engineering, 15(10):779–782, 2002. [DOI] [PubMed] [Google Scholar]

- 8.Siedhoff Niklas E, Schwaneberg Ulrich, and Davari Mehdi D. Machine learning-assisted enzyme engineering. Methods in enzymology, 643:281–315, 2020. [DOI] [PubMed] [Google Scholar]

- 9.Mazurenko Stanislav, Prokop Zbynek, and Damborsky Jiri. Machine learning in enzyme engineering. ACS Catalysis, 10(2):1210–1223, 2019. [Google Scholar]

- 10.Diaz Daniel J, Kulikova Anastasiya V, Ellington Andrew D, and Wilke Claus O. Using machine learning to predict the effects and consequences of mutations in proteins. Current Opinion in Structural Biology, 78:102518, 2023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Wittmann Bruce J, Johnston Kadina E, Wu Zachary, and Arnold Frances H. Advances in machine learning for directed evolution. Current opinion in structural biology, 69:11–18, 2021. [DOI] [PubMed] [Google Scholar]

- 12.Yang Kevin K, Wu Zachary, and Arnold Frances H. Machine-learning-guided directed evolution for protein engineering. Nature methods, 16(8):687–694, 2019. [DOI] [PubMed] [Google Scholar]

- 13.Berman Helen M, Westbrook John, Feng Zukang, Gilliland Gary, Bhat Talapady N, Weissig Helge, Shindyalov Ilya N, and Bourne Philip E. The protein data bank. Nucleic acids research, 28(1):235–242, 2000. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Uniprot: the universal protein knowledgebase in 2021. Nucleic Acids Research, 49(D1):D480–D489, 2021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Notin Pascal, Dias Mafalda, Frazer Jonathan, Hurtado Javier Marchena, Gomez Aidan N, Marks Debora, and Gal Yarin. Tranception: protein fitness prediction with autoregressive transformers and inference-time retrieval. In International Conference on Machine Learning, pages 16990–17017. PMLR, 2022. [Google Scholar]

- 16.Cang Zixuan and Wei Guo-Wei. Integration of element specific persistent homology and machine learning for protein-ligand binding affinity prediction. International journal for numerical methods in biomedical engineering, 34(2):e2914, 2018. [DOI] [PubMed] [Google Scholar]

- 17.Wang Menglun, Cang Zixuan, and Wei Guo-Wei. A topology-based network tree for the prediction of protein–protein binding affinity changes following mutation. Nature Machine Intelligence, 2(2):116–123, 2020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Schymkowitz Joost, Borg Jesper, Stricher Francois, Nys Robby, Rousseau Frederic, and Serrano Luis. The foldx web server: an online force field. Nucleic acids research, 33(suppl_2):W382–W388, 2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Leman Julia Koehler, Weitzner Brian D, Lewis Steven M, Adolf-Bryfogle Jared, Alam Nawsad, Alford Rebecca F, Aprahamian Melanie, Baker David, Barlow Kyle A, Barth Patrick, et al. Macromolecular modeling and design in rosetta: recent methods and frameworks. Nature methods, 17(7):665–680, 2020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Qiu Yuchi and Wei Guo-Wei. Persistent spectral theory-guided protein engineering. Nature Computational Science, pages 1–15, 2023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Alley Ethan C, Khimulya Grigory, Biswas Surojit, AlQuraishi Mohammed, and Church George M. Unified rational protein engineering with sequence-based deep representation learning. Nature Methods, 16(12):1315–1322, 2019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Riesselman Adam J, Ingraham John B, and Marks Debora S. Deep generative models of genetic variation capture the effects of mutations. Nature Methods, 15(10):816–822, 2018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Rives Alexander, Meier Joshua, Sercu Tom, Goyal Siddharth, Lin Zeming, Liu Jason, Guo Demi, Ott Myle, Zitnick C Lawrence, Ma Jerry, et al. Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences. Proceedings of the National Academy of Sciences, 118(15), 2021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Jumper John, Evans Richard, Pritzel Alexander, Green Tim, Figurnov Michael, Ronneberger Olaf, Tunyasuvunakool Kathryn, Bates Russ, Žídek Augustin, Potapenko Anna, et al. Highly accurate protein structure prediction with alphafold. Nature, 596(7873):583–589, 2021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Edelsbrunner Herbert and Harer John. Computational topology: an introduction. American Mathematical Soc., 2010. [Google Scholar]

- 26.Zomorodian Afra and Carlsson Gunnar. Computing persistent homology. Discrete & Computational Geometry, 33(2):249–274, 2005. [Google Scholar]

- 27.Nguyen Duc Duy and Wei Guo-Wei. Dg-gl: differential geometry-based geometric learning of molecular datasets. International journal for numerical methods in biomedical engineering, 35(3):e3179, 2019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Wee JunJie and Xia Kelin. Ollivier persistent ricci curvature-based machine learning for the protein–ligand binding affinity prediction. Journal of Chemical Information and Modeling, 61(4):1617–1626, 2021. [DOI] [PubMed] [Google Scholar]

- 29.Nguyen Duc Duy and Wei Guo-Wei. Agl-score: algebraic graph learning score for protein-ligand binding scoring, ranking, docking, and screening. Journal of chemical information and modeling, 59(7):3291–3304, 2019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Ryczko Kevin, Strubbe David A, and Tamblyn Isaac. Deep learning and density-functional theory. Physical Review A, 100(2):022512, 2019. [Google Scholar]

- 31.Butler Keith T, Davies Daniel W, Cartwright Hugh, Isayev Olexandr, and Walsh Aron. Machine learning for molecular and materials science. Nature, 559(7715):547–555, 2018. [DOI] [PubMed] [Google Scholar]

- 32.Chen Jiahui, Geng Weihua, and Wei Guo-Wei. Mlimc: Machine learning-based implicit-solvent monte carlo. Chinese journal of chemical physics, 34(6):683–694, 2021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Hsu Chloe, Nisonoff Hunter, Fannjiang Clara, and Listgarten Jennifer. Learning protein fitness models from evolutionary and assay-labeled data. Nature biotechnology, pages 1–9, 2022. [DOI] [PubMed] [Google Scholar]

- 34.Wittmann Bruce J, Yue Yisong, and Arnold Frances H. Informed training set design enables efficient machine learning-assisted directed protein evolution. Cell Systems, 12(11):1026–1045, 2021. [DOI] [PubMed] [Google Scholar]

- 35.Khurana Diksha, Koli Aditya, Khatter Kiran, and Singh Sukhdev. Natural language processing: State of the art, current trends and challenges. Multimedia tools and applications, 82(3):3713–3744, 2023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.El-Gebali Sara, Mistry Jaina, Bateman Alex, Eddy Sean R, Luciani Aurélien, Potter Simon C, Qureshi Matloob, Richardson Lorna J, Salazar Gustavo A, Smart Alfredo, et al. The pfam protein families database in 2019. Nucleic acids research, 47(D1):D427–D432, 2019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Shihab Hashem A, Gough Julian, Cooper David N, Stenson Peter D, Barker Gary LA, Edwards Keith J, Day Ian NM, and Gaunt Tom R. Predicting the functional, molecular, and phenotypic consequences of amino acid substitutions using hidden markov models. Human mutation, 34(1):57–65, 2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Hopf Thomas A, Ingraham John B, Poelwijk Frank J, Schärfe Charlotta PI, Springer Michael, Sander Chris, and Marks Debora S. Mutation effects predicted from sequence co-variation. Nature biotechnology, 35(2):128–135, 2017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Rao Roshan M, Liu Jason, Verkuil Robert, Meier Joshua, Canny John, Abbeel Pieter, Sercu Tom, and Rives Alexander. Msa transformer. In International Conference on Machine Learning, pages 8844–8856. PMLR, 2021. [Google Scholar]

- 40.Frazer Jonathan, Notin Pascal, Dias Mafalda, Gomez Aidan, Min Joseph K, Brock Kelly, Gal Yarin, and Marks Debora S. Disease variant prediction with deep generative models of evolutionary data. Nature, 599(7883):91–95, 2021. [DOI] [PubMed] [Google Scholar]

- 41.Rao Roshan, Bhattacharya Nicholas, Thomas Neil, Duan Yan, Chen Xi, Canny John, Abbeel Pieter, and Song Yun S. Evaluating protein transfer learning with tape. Advances in Neural Information Processing Systems, 32:9689, 2019. [PMC free article] [PubMed] [Google Scholar]

- 42.Bepler Tristan and Berger Bonnie. Learning protein sequence embeddings using information from structure. In International Conference on Learning Representations, 2018. [Google Scholar]

- 43.Biswas Surojit, Khimulya Grigory, Alley Ethan C, Esvelt Kevin M, and Church George M. Low-n protein engineering with data-efficient deep learning. Nature methods, 18(4):389–396, 2021. [DOI] [PubMed] [Google Scholar]

- 44.Meier Joshua, Rao Roshan, Verkuil Robert, Liu Jason, Sercu Tom, and Rives Alex. Language models enable zero-shot prediction of the effects of mutations on protein function. Advances in Neural Information Processing Systems, 34, 2021. [Google Scholar]

- 45.Hsu Chloe, Verkuil Robert, Liu Jason, Lin Zeming, Hie Brian, Sercu Tom, Lerer Adam, and Rives Alexander. Learning inverse folding from millions of predicted structures. In International Conference on Machine Learning, pages 8946–8970. PMLR, 2022. [Google Scholar]

- 46.Orengo Christine A, Michie Alex D, Jones Susan, Jones David T, Swindells Mark B, and Thornton Janet M. Catha–hierarchic classification of protein domain structures. Structure, 5(8):1093–1109, 1997. [DOI] [PubMed] [Google Scholar]

- 47.Madani Ali, Krause Ben, Greene Eric R, Subramanian Subu, Mohr Benjamin P, Holton James M, Olmos Jose Luis Jr, Xiong Caiming, Sun Zachary Z, Socher Richard, et al. Large language models generate functional protein sequences across diverse families. Nature Biotechnology, pages 1–8, 2023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Federhen Scott. The ncbi taxonomy database. Nucleic acids research, 40(D1):D136–D143, 2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Brandes Nadav, Ofer Dan, Peleg Yam, Rappoport Nadav, and Linial Michal. Proteinbert: a universal deep-learning model of protein sequence and function. Bioinformatics, 38(8):2102–2110, 2022. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Lin Zeming, Akin Halil, Rao Roshan, Hie Brian, Zhu Zhongkai, Lu Wenting, Smetanin Nikita, Verkuil Robert, Kabeli Ori, Shmueli Yaniv, et al. Evolutionary-scale prediction of atomic-level protein structure with a language model. Science, 379(6637):1123–1130, 2023. [DOI] [PubMed] [Google Scholar]

- 51.Eddy Sean R. Accelerated profile hmm searches. PLoS computational biology, 7(10):e1002195, 2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Hopf Thomas A, Green Anna G, Schubert Benjamin, Mersmann Sophia, Schärfe Charlotta PI, Ingraham John B, Toth-Petroczy Agnes, Brock Kelly, Riesselman Adam J, Palmedo Perry, et al. The evcouplings python framework for coevolutionary sequence analysis. Bioinformatics, 35(9):1582–1584, 2019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Rabiner Lawrence R. A tutorial on hidden markov models and selected applications in speech recognition. Proceedings of the IEEE, 77(2):257–286, 1989. [Google Scholar]

- 54.Kingma Diederik P and Welling Max. Auto-encoding variational bayes. arXiv preprint arXiv:1312.6114, 2013. [Google Scholar]

- 55.Livesey Benjamin J and Marsh Joseph A. Using deep mutational scanning to benchmark variant effect predictors and identify disease mutations. Molecular systems biology, 16(7):e9380, 2020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Kim Yoon. Convolutional neural networks for sentence classification. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 1746–1751, Doha, Qatar, October 2014. Association for Computational Linguistics. [Google Scholar]

- 57.He Kaiming, Zhang Xiangyu, Ren Shaoqing, and Sun Jian. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016. [Google Scholar]

- 58.Hochreiter Sepp and Schmidhuber Jürgen. Long short-term memory. Neural computation, 9(8):1735–1780, 1997. [DOI] [PubMed] [Google Scholar]

- 59.Vaswani Ashish, Shazeer Noam, Parmar Niki, Uszkoreit Jakob, Jones Llion, Gomez Aidan N, Kaiser Łukasz, and Polosukhin Illia. Attention is all you need. In Advances in neural information processing systems, pages 5998–6008, 2017. [Google Scholar]

- 60.Devlin Jacob, Chang Ming-Wei, Lee Kenton, and Toutanova Kristina. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, 2018. [Google Scholar]

- 61.Detlefsen Nicki Skafte, Hauberg Søren, and Boomsma Wouter. Learning meaningful representations of protein sequences. Nature communications, 13(1):1914, 2022. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Edelsbrunner Herbert, Harer John, et al. Persistent homology-a survey. Contemporary mathematics, 453(26):257–282, 2008. [Google Scholar]

- 63.Zomorodian Afra and Carlsson Gunnar. Computing persistent homology. In Proceedings of the twentieth annual symposium on Computational geometry, pages 347–356, 2004. [Google Scholar]

- 64.Cang Zixuan and Wei Guo-Wei. Persistent cohomology for data with multicomponent heterogeneous information. SIAM journal on mathematics of data science, 2(2):396–418,2020 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Chowdhury Samir and Mémoli Facundo. Persistent path homology of directed networks. In Proceedings of the Twenty-Ninth Annual ACM-SIAM Symposium on Discrete Algorithms, pages 1152–1169. SIAM, 2018. [Google Scholar]

- 66.Lütgehetmann Daniel, Govc Dejan, Smith Jason P, and Levi Ran. Computing persistent homology of directed flag complexes. Algorithms, 13(1):19, 2020. [Google Scholar]

- 67.Cang Zixuan, Munch Elizabeth, and Wei Guo-Wei. Evolutionary homology on coupled dynamical systems with applications to protein flexibility analysis. Journal of applied and computational topology, 4:481–507, 2020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Meng Zhenyu, Anand D Vijay, Lu Yunpeng, Wu Jie, and Xia Kelin. Weighted persistent homology for biomolecular data analysis. Scientific reports, 10(1):2079, 2020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Wang Rui, Nguyen Duc Duy, and Wei Guo-Wei. Persistent spectral graph. International journal for numerical methods in biomedical engineering, 36(9):e3376, 2020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.Mémoli Facundo, Wan Zhengchao, and Wang Yusu. Persistent laplacians: Properties, algorithms and implications. SIAM Journal on Mathematics of Data Science, 4(2):858–884, 2022. [Google Scholar]

- 71.Chen Jiahui, Zhao Rundong, Tong Yiying, and Wei Guo-Wei. Evolutionary de rham-hodge method. Discrete and continuous dynamical systems. Series B, 26(7):3785, 2021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 72.Wei Xiaoqi and Wei Guo-Wei. Persistent sheaf laplacians. arXiv preprint arXiv:2112.10906, 2021. [Google Scholar]

- 73.Wang Rui and Wei Guo-Wei. Persistent path laplacian. Foundations of Data Science, 5:26–55, 2023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Liu Xiang, Feng Huitao, Wu Jie, and Xia Kelin. Persistent spectral hypergraph based machine learning (psh-ml) for protein-ligand binding affinity prediction. Briefings in Bioinformatics, 22(5):bbab127, 2021. [DOI] [PubMed] [Google Scholar]

- 75.Chen Dong, Liu Jian, Wu Jie, and Wei Guo-Wei. Persistent hyperdigraph homology and persistent hyperdigraph laplacians. arXiv preprint arXiv:2304.00345, 2023. [Google Scholar]

- 76.Kaczynski Tomasz, Mischaikow Konstantin Michael, and Mrozek Marian. Computational homology, volume 3. Springer, 2004. [Google Scholar]

- 77.Wasserman Larry. Topological data analysis. Annual Review of Statistics and Its Application, 5:501–532, 2018. [Google Scholar]

- 78.Ghrist Robert. Barcodes: the persistent topology of data. Bulletin of the American Mathematical Society, 45(1):61–75, 2008. [Google Scholar]

- 79.Cohen-Steiner David, Edelsbrunner Herbert, and Harer John. Stability of persistence diagrams. In Proceedings of the twenty-first annual symposium on Computational geometry, pages 263–271, 2005. [Google Scholar]

- 80.Bubenik Peter et al. Statistical topological data analysis using persistence landscapes. J. Mach. Learn. Res., 16(1):77–102, 2015. [Google Scholar]

- 81.Adams Henry, Emerson Tegan, Kirby Michael, Neville Rachel, Peterson Chris, Shipman Patrick, Chepushtanova Sofya, Hanson Eric, Motta Francis, and Ziegelmeier Lori. Persistence images: A stable vector representation of persistent homology. Journal of Machine Learning Research, 18, 2017. [Google Scholar]

- 82.Cang Zixuan, Mu Lin, Wu Kedi, Opron Kristopher, Xia Kelin, and Wei Guo-Wei. A topological approach for protein classification. Computational and Mathematical Biophysics, 3(1), 2015. [Google Scholar]

- 83.Clough James R, Byrne Nicholas, Oksuz Ilkay, Zimmer Veronika A, Schnabel Julia A, and King Andrew P. A topological loss function for deep-learning based image segmentation using persistent homology. IEEE Transactions on Pattern Analysis and Machine Intelligence, 44(12):8766–8778, 2020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 84.Pun Chi Seng, Xia Kelin, and Lee Si Xian. Persistent-homology-based machine learning and its applications—a survey. arXiv preprint arXiv:1811.00252, 2018. [Google Scholar]

- 85.Stolz Bernadette J, Harrington Heather A, and Porter Mason A. Persistent homology of time-dependent functional networks constructed from coupled time series. Chaos: An Interdisciplinary Journal of Nonlinear Science, 27(4):047410, 2017. [DOI] [PubMed] [Google Scholar]

- 86.Wei Guo-Wei. Topological data analysis hearing the shapes of drums and bells. arXiv preprint arXiv:2301.05025, 2023. [Google Scholar]

- 87.Nguyen Duc Duy, Cang Zixuan, Wu Kedi, Wang Menglun, Cao Yin, and Wei Guo-Wei. Mathematical deep learning for pose and binding affinity prediction and ranking in d3r grand challenges. Journal of computer-aided molecular design, 33:71–82, 2019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 88.Chen Jiahui, Qiu Yuchi, Wang Rui, and Wei Guo-Wei. Persistent laplacian projected omicron ba. 4 and ba. 5 to become new dominating variants. Computers in Biology and Medicine, 151:106262, 2022. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 89.Meng Zhenyu and Xia Kelin. Persistent spectral-based machine learning (perspect ml) for protein-ligand binding affinity prediction. Science Advances, 7(19):eabc5329, 2021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 90.Grigor’yan AA, Lin Yong, Muranov Yu V, and Yau Shing-Tung. Path complexes and their homologies. Journal of Mathematical Sciences, 248:564–599, 2020. [Google Scholar]

- 91.Hansen Jakob and Ghrist Robert. Toward a spectral theory of cellular sheaves. Journal of Applied and Computational Topology, 3:315–358, 2019. [Google Scholar]

- 92.Kipf Thomas N and Welling Max. Semi-supervised classification with graph convolutional networks. arXiv preprint arXiv:1609.02907, 2016. [Google Scholar]

- 93.Veličković Petar, Cucurull Guillem, Casanova Arantxa, Romero Adriana, Lio Pietro, and Bengio Yoshua. Graph attention networks. arXiv preprint arXiv:1710.10903, 2017. [Google Scholar]

- 94.Hamilton Will, Ying Zhitao, and Leskovec Jure. Inductive representation learning on large graphs. Advances in neural information processing systems, 30, 2017. [Google Scholar]

- 95.Xu Keyulu, Hu Weihua, Leskovec Jure, and Jegelka Stefanie. How powerful are graph neural networks? arXiv preprint arXiv:1810.00826, 2018. [Google Scholar]

- 96.Li Yujia, Tarlow Daniel, Brockschmidt Marc, and Zemel Richard. Gated graph sequence neural networks. arXiv preprint arXiv:1511.05493, 2015. [Google Scholar]

- 97.Kipf Thomas N and Welling Max. Variational graph auto-encoders. arXiv preprint arXiv:1611.07308, 2016. [Google Scholar]

- 98.Veličković Petar, Fedus William, Hamilton William L, Liò Pietro, Bengio Yoshua, and Hjelm R Devon. Deep graph infomax. arXiv preprint arXiv:1809.10341, 2018. [Google Scholar]

- 99.You Yuning, Chen Tianlong, Sui Yongduo, Chen Ting, Wang Zhangyang, and Shen Yang. Graph contrastive learning with augmentations. Advances in neural information processing systems, 33:5812–5823, 2020. [Google Scholar]

- 100.Rong Yu, Bian Yatao, Xu Tingyang, Xie Weiyang, Wei Ying, Huang Wenbing, and Huang Junzhou. Self-supervised graph transformer on large-scale molecular data. Advances in Neural Information Processing Systems, 33:12559–12571, 2020. [Google Scholar]

- 101.Li Shuangli, Zhou Jingbo, Xu Tong, Huang Liang, Wang Fan, Xiong Haoyi, Huang Weili, Dou Dejing, and Xiong Hui. Structure-aware interactive graph neural networks for the prediction of protein-ligand binding affinity. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, pages 975–985, 2021. [Google Scholar]

- 102.Gligorijević Vladimir, Renfrew P Douglas, Kosciolek Tomasz, Leman Julia Koehler, Berenberg Daniel, Vatanen Tommi, Chandler Chris, Taylor Bryn C, Fisk Ian M, Vlamakis Hera, et al. Structure-based protein function prediction using graph convolutional networks. Nature communications, 12(1):3168, 2021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 103.Liu Xianggen, Luo Yunan, Li Pengyong, Song Sen, and Peng Jian. Deep geometric representations for modeling effects of mutations on protein-protein binding affinity. PLoS computational biology, 17(8):e1009284, 2021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 104.Shan Sisi, Luo Shitong, Yang Ziqing, Hong Junxian, Su Yufeng, Ding Fan, Fu Lili, Li Chenyu, Chen Peng, Ma Jianzhu, et al. Deep learning guided optimization of human antibody against sars-cov-2 variants with broad neutralization. Proceedings of the National Academy of Sciences, 119(11):e2122954119, 2022. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 105.Zhang Zuobai, Xu Minghao, Jamasb Arian, Chenthamarakshan Vijil, Lozano Aurelie, Das Payel, and Tang Jian. Protein representation learning by geometric structure pretraining. arXiv preprint arXiv:2203.06125, 2022. [Google Scholar]

- 106.Ingraham John, Garg Vikas, Barzilay Regina, and Jaakkola Tommi. Generative models for graph-based protein design. Advances in neural information processing systems, 32, 2019. [Google Scholar]

- 107.Li Jiahan, Luo Shitong, Deng Congyue, Cheng Chaoran, Guan Jiaqi, Guibas Leonidas, Ma Jianzhu, and Peng Jian. Orientation-aware graph neural networks for protein structure representation learning. 2022.

- 108.Cang Zixuan and Wei Guo-Wei. Topologynet: Topology based deep convolutional and multi-task neural networks for biomolecular property predictions. PLoS computational biology, 13(7):e1005690, 2017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 109.Morris Christopher, Ritzert Martin, Fey Matthias, Hamilton William L, Lenssen Jan Eric, Rattan Gaurav, and Grohe Martin. Weisfeiler and leman go neural: Higherorder graph neural networks. In Proceedings of the AAAI conference on artificial intelligence, volume 33, pages 4602–4609, 2019. [Google Scholar]

- 110.Ebli Stefania, Defferrard Michaël, and Spreemann Gard. Simplicial neural networks. arXiv preprint arXiv:2010.03633, 2020. [Google Scholar]

- 111.Wu Nicholas C, Dai Lei, Olson C Anders, Lloyd-Smith James O, and Sun Ren. Adaptation in protein fitness landscapes is facilitated by indirect paths. Elife, 5:e16965, 2016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 112.Podgornaia Anna I and Laub Michael T. Pervasive degeneracy and epistasis in a protein-protein interface. Science, 347(6222):673–677, 2015. [DOI] [PubMed] [Google Scholar]