Abstract

To navigate and guide adaptive behaviour in a dynamic environment, animals must accurately estimate their own motion relative to the external world. This is a fundamentally multisensory process involving integration of visual, vestibular and kinesthetic inputs. Ideal observer models, paired with careful neurophysiological investigation, helped to reveal how visual and vestibular signals are combined to support perception of linear self-motion direction, or heading. Recent work has extended these findings by emphasizing the dimension of time, both with regard to stimulus dynamics and the trade-off between speed and accuracy. Both time and certainty (i.e. the degree of confidence in a multisensory decision) are essential to the ecological goals of the system: terminating a decision process is necessary for timely action, and predicting one's accuracy is critical for making multiple decisions in a sequence, as in navigation. Here, we summarize a leading model for multisensory decision-making, then show how the model can be extended to study confidence in heading discrimination. Lastly, we preview ongoing efforts to bridge self-motion perception and navigation per se, including closed-loop virtual reality and active self-motion. The design of unconstrained, ethologically inspired tasks, accompanied by large-scale neural recordings, holds promise for a deeper understanding of spatial perception and decision-making in the behaving animal.

This article is part of the theme issue ‘Decision and control processes in multisensory perception’.

Keywords: vestibular, visual, self-motion, navigation

1. Introduction

Consider the challenge of scaling a wall at your local rock-climbing centre. A successful, fast climb to the top is facilitated by estimating an optimal route from an initial vantage point (or several) on the mat. During each movement across or up the wall, multiple sensory inputs are available to the brain to guide a successful climb: vestibular signals arising from motion of the head through space; visual signals from motion of the scene across the retina; proprioceptive and tactile signals indicating the position and motion of the limbs and the quality of a hand- or foothold. Small or slippery holds may render tactile information unreliable. Visual input could be ambiguous or uncertain, for example if one is climbing on an overhang or with reduced ambient light levels. Depending on the frequency and amplitude of head motion, vestibular inputs may be unreliable or fail to disambiguate translation from tilt. Thus, to estimate their ongoing motion with respect to the goal and select actions accordingly, the optimal climber will use information from all available sources, at each moment instinctively leaning more heavily on the more reliable ones.

With each self-motion judgement, two other features of a perceptual decision are at play. First, the timing of commitment to a decision about one's direction of motion must itself be decided. Fast decisions during a climb could yield a quicker finish, thereby conserving energy or winning a competition—but committing too early during movement risks a dangerous error. Second, a climber's confidence that they have made an accurate self-motion judgement is also critical. Low confidence may lead to a more tentative reach, allow for a re-evaluation or adjustment of trajectory (figure 1), or leave open the possibility of reverting to a previous position. Higher confidence, on the other hand, could drive a quicker motion and firmer limb placement, or allow for a riskier manoeuvre with a greater pay-off in terms of positioning for ultimate success. In a reinforcement learning framework, confidence can be seen as a critical modulator of learning rate, or as impetus for revising an agent's internal model [1,2]—for instance if a high-confidence decision is revealed to be an error, it means something about the world has probably changed.

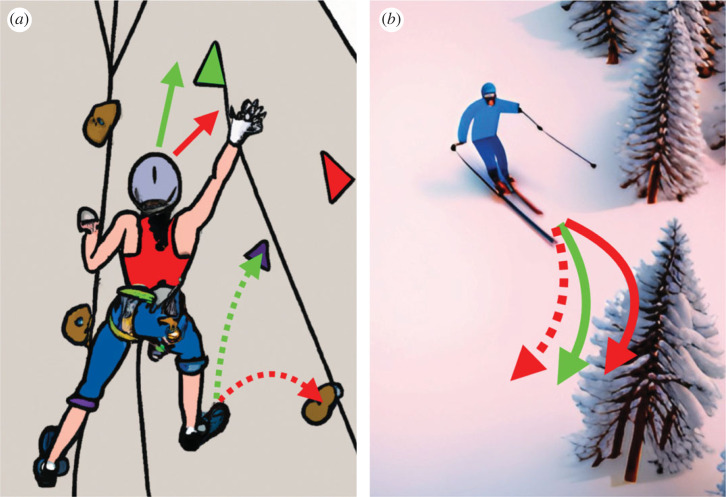

Figure 1.

Sequential self-motion decisions and the role of confidence. The brain executes a motor plan to reposition the body along a desired trajectory. Multisensory feedback allows perceptual judgement of the actual trajectory, but this may not be exactly as planned. (a) For the climber, the intended trajectory (green solid arrow) affords grasping the green handhold and advancing the foot upwards (green dashed arrow), but if the trajectory turns out to be more lateral (red solid arrow) this could prompt a more conservative approach (red handhold and dashed arrow). A low degree of confidence in the initial heading judgement would recommend the conservative strategy. (b) Similarly, the skier intends to direct her turn just in front of the next tree (green), but in actuality might be heading dangerously close to it (solid red). Low confidence should cause her to hedge and keep a more comfortable distance (dashed red). High confidence followed by a negative outcome (a branch to the face) should trigger adjustment of an internal model or sensory-motor mapping. Illustrations generated with the help of AI tools (DALL-E and Jasper AI).

In the following sections, we first summarize existing work on the integration of visual and vestibular cues for heading perception and discuss the importance of considering decision speed and confidence in multisensory decision-making. We present preliminary findings in a heading discrimination task that combines measurement of choice, reaction time (RT) and confidence, which may be considered a bridge towards more complex tasks with multiple decisions forming a hierarchical sequence. We then review the recent development of naturalistic paradigms which can be used to study self-motion perception during target-tracking and virtual-reality (VR) navigation. When paired with continuous behavioural monitoring and multi-area neural recordings, these ecologically inspired paradigms promise unprecedented neurobiological insights into spatial cognition and guidance of movement in the real world.

2. Visual-vestibular integration: raison d'être and neural substrates

Although vestibular sensations rarely impinge on conscious awareness under healthy conditions, the system continuously signals linear acceleration of the head in space [3] via the two otolith organs in each inner ear. Owing to fundamental physical constraints captured by Einstein's equivalence principle, the otoliths alone cannot distinguish translational inertial motion from a change in orientation relative to gravity. This ambiguity can be resolved using signals from the semicircular canals, which detect angular acceleration in three orthogonal planes [4]—although canal afferents themselves are relatively insensitive to low-frequency motion and static tilts [5]. These physical limitations can give rise to phenomena like the somatogravic illusion, the erroneous perception of linear acceleration as tilt [6]. Separately, scene motion across the retina (optic flow) can cause a profound sensation of self-motion, and has long been studied as the visual basis of heading judgements [7,8]. Yet vision also has shortcomings: the information quality of optic flow can vary dramatically with viewing conditions or behavioural context, and the visual system must contend with confounding movements of one's own eyes and head, and moving objects in the environment [7,9].

Fortunately, under most real-world conditions, informative signals are available from both vestibular and visual senses. If these signals arise from a common source, such as intentional self-generated locomotion, the information they provide is generally convergent. Since each source is encumbered with limitations and ambiguities it cannot resolve alone, it is natural to consider combining information from both when possible.

Indeed, monkeys and humans can accurately and precisely perceive heading from either visual [10] or vestibular cues [11], and performance improves when both are presented together [12,13], suggesting that these two sensory cues are combined to improve heading perception. In theory, statistically optimal integration (Bayesian or maximum-likelihood estimation (MLE)) [14–16] is realized by weighting the cues according to their reliability, and empirical investigations demonstrated behavioural signatures of near-optimal cue integration in a multisensory heading discrimination task [12,13]. This classic task has been used for a comparative understanding of human and non-human primate self-motion perception, and is well suited for the investigation of its neurophysiological correlates, through invasive recordings in trained macaque monkeys (see also Zeng et al. [17]).

A number of cortical areas with selectivity for visual and vestibular heading stimuli have been linked to a network for self-motion perception in both humans and monkeys [18,19]. Key nodes include the dorsal medial superior temporal area (MSTd), ventral intraparietal area (VIP), the smooth pursuit region of the frontal eye fields (FEFsem), and a multimodal region of the posterior sylvian fissure (VPS). Vestibular and visual motion signals, arriving from unimodal areas such as parieto-insular vestibular cortex and the middle temporal (MT) visual area, respectively, are thought to converge in these downstream multisensory areas [20,21]—although direct projections from MT to parts of the network other than MST and VIP have not been verified.

MSTd has been of long-standing interest in particular [22], as it contains a population of neurons with congruent selectivity for visual and vestibular heading. These neurons show striking correlates of both the increase in perceptual sensitivity during cue combination, and reliability-dependent cue weighting [12,23–25]. On the other hand, MSTd neurons lack a common spatial reference frame for visual and vestibular information (they remain eye- and head-centred, respectively) [26], although this may not be a necessity for effective integration [27]. Furthermore, although MSTd's velocity-dominated vestibular responses suggest a temporal integration from the periphery to match the dynamics of visual motion signals [28–30], reversible inactivation of MSTd has minimal effect on vestibular heading judgements [31], and downstream decision-related activity tracks vestibular acceleration [32]. Thus, while recordings in MSTd support some theoretical predictions regarding optimal cue combination, there remain inconsistencies and open questions regarding its role and the nature of network-level interactions subserving heading discrimination [33,34].

Other areas have their own quirks. The multimodal population in VPS is dominated by neurons with opposite tuning to the two modalities, implying a greater role in segregation than combination [35]. Area VIP, meanwhile, shows strong choice-correlated activity, but surprisingly no apparent causal role in heading discrimination [36]. To date, neurophysiological studies have shed some light on the possible functions of each area, but there remains work to reconcile various models of where and how near-optimal cue integration is achieved.

(a) Limitations of the conventional definition of optimality

Following the lead of earlier research, many of these studies implicitly assumed that subjects use all the information available throughout the stimulus presentation, or at least that the same subset of the presentation epoch is used for the unisensory and multisensory conditions. This assumption matters because testing normative models of cue integration generally requires estimating the reliability of individual cues to generate predictions for the multisensory percept; namely, how the cues should be weighted, and how much more precise the multisensory estimate should be. However, fixed-duration tasks permit the decision to be formed covertly at any time during stimulus presentation [37,38], and at different times for different trials/conditions, leaving it unclear how to compute the predictions for an optimal observer.

For instance, the classic Bayesian/MLE approach defines optimality as maximizing the precision of the combined estimate, given the precision of the unimodal estimates. For linear weighted integration with uncorrelated inputs, the MLE prediction is captured by the equation $\sigma_{comb}^2 = \frac{\sigma_{vis}^2 \sigma_{vest}^2}{\sigma_{vis}^2 + \sigma_{vest}^2}$, where $\sigma_{comb}^2$ is the variance of the combined estimate, and $\sigma_{vis}^2$ and $\sigma_{vest}^2$ are the variances of the unimodal estimates. Typically, performance on unimodal conditions is used to estimate the reliability of the signals being combined, and the prediction from the above equation is compared to performance in a multisensory condition. However, this comparison is invalid if different temporal windows are used on unisensory versus multisensory trials, or if the decision process differs in some other way across conditions (e.g. termination criteria; see below). Thus, a more general conception of optimality requires consideration of how the decision process unfolds in time.
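As a minimal sketch of how the static prediction is usually computed, the function below derives reliability weights (inverse variance, normalized to sum to one) and the predicted combined standard deviation from unimodal thresholds. The function name `mle_prediction` and the threshold values are hypothetical. As argued above, the prediction is only meaningful if unimodal and multisensory trials share the same temporal window and decision process.

```python
import math

def mle_prediction(sigma_vis, sigma_vest):
    """Static MLE prediction for two independent Gaussian cues with linear weighting.
    Returns (w_vis, w_vest, sigma_comb)."""
    r_vis, r_vest = 1 / sigma_vis**2, 1 / sigma_vest**2   # reliability = inverse variance
    w_vis = r_vis / (r_vis + r_vest)
    w_vest = r_vest / (r_vis + r_vest)
    var_comb = (sigma_vis**2 * sigma_vest**2) / (sigma_vis**2 + sigma_vest**2)
    return w_vis, w_vest, math.sqrt(var_comb)

# Hypothetical unimodal thresholds (degrees), e.g. from psychometric fits
w_vis, w_vest, sigma_comb = mle_prediction(sigma_vis=3.0, sigma_vest=4.0)
print(f"w_vis={w_vis:.2f}, w_vest={w_vest:.2f}, sigma_comb={sigma_comb:.2f}")
```

The predicted combined threshold is always below the better unimodal threshold; with equal thresholds it improves by a factor of √2.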

This is just one example of a broader problem with the conventional approach to defining and testing for optimality: experimental conditions where only one cue is presented may not accurately quantify the reliability of the cues when presented together ([39,40], and see Zaidel & Salomon [41], for a more nuanced and expansive view on this topic). Nevertheless, one can at least investigate the time course of multisensory decisions by measuring RT (see below), and by relating stimulus fluctuations [37,42] or neural activity [43,44] to behaviour in a temporally resolved fashion. In addition to providing an update to the concept of optimality [45], studying the temporal properties of individual decisions—including how the brain decides when to decide—is essential if we wish to understand how they are strung together into sequences, as is the case during real-world navigation.

3. Two key ingredients for sequential decisions in complex environments

(a) Decision termination and the speed-accuracy trade-off

The time it takes to reach a decision (perceptual, mnemonic or otherwise) has been a bedrock of quantitative psychology for many decades [46–49]. The need to place limits on the time of decision formation is especially salient during self-motion, where timely action can mean the difference between obstacle avoidance and collision, or catching versus missing a prey item. Yet we do not know exactly what determines the time of commitment to a heading judgement, a process made more complicated by the multiple, dynamic sources of information that must be taken into account.

A reasonable starting point is to assume, as is standard in the field, that the brain accumulates noisy evidence until an internal bound is reached, at which point the decision process is terminated and a choice is rendered [50]. Drugowitsch and colleagues therefore developed a multisensory drift-diffusion model in their investigation of choices and RT during multisensory heading discrimination [45]. As mentioned above, considering the dimension of time motivates an update to conventional theories of Bayes-optimal cue combination. In the classic (static) model [14,16], stimuli give rise to individual noisy estimates, and the multisensory estimate is their reliability-weighted average. By contrast, a dynamic model seeks to explain the combination of signals both across modalities and across time. In the model of Drugowitsch et al. [45], ‘momentary evidence’ at each time step is constructed by weighting visual and vestibular signals by their instantaneous reliability, which is a function of (i) a global stimulus property that varies across trials (e.g. visual motion coherence), and (ii) within-trial stimulus dynamics, namely the velocity profile for the visual cue and the acceleration profile for the vestibular cue. The temporal accumulation of this evidence to a bound gives rise to both the choice and RT, and the height of the bound dictates the trade-off between speed and accuracy.
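A minimal simulation sketch of this scheme follows. It assumes a Gaussian velocity profile, visual sensitivity proportional to coherence × velocity, vestibular sensitivity proportional to |acceleration|, unit-variance noise per unit time, and a fixed bound. The gains, the bound, and the function names (`motion_profiles`, `simulate_trials`) are illustrative assumptions, not the fitted parameters of Drugowitsch et al. [45]. Each momentary sample is weighted by its instantaneous sensitivity before accumulation; for Gaussian evidence this weighting makes the accumulated quantity proportional to the log-likelihood ratio.

```python
import numpy as np

def motion_profiles(duration=1.0, dt=0.001, sigma_t=0.15):
    """Gaussian velocity profile and |acceleration|, each scaled to a peak of 1."""
    n_steps = int(round(duration / dt))
    t = np.arange(n_steps) * dt
    vel = np.exp(-((t - duration / 2) ** 2) / (2 * sigma_t ** 2))
    acc = np.abs(np.gradient(vel, dt))
    return t, vel, acc / acc.max()

def simulate_trials(heading_deg, coherence, modality="comb", n_trials=500,
                    bound=8.0, k_vis_max=12.0, k_vest_max=8.0, dt=0.001, seed=0):
    """Bounded accumulation of reliability-weighted visual/vestibular evidence.
    Returns choice (+1 right, -1 left), RT in seconds, and whether the bound was hit."""
    rng = np.random.default_rng(seed)
    t, vel, acc = motion_profiles(dt=dt)
    # Hypothetical instantaneous sensitivities (assumed forms, see lead-in)
    k_vis = k_vis_max * coherence * vel if modality in ("vis", "comb") else np.zeros_like(t)
    k_vest = k_vest_max * acc if modality in ("vest", "comb") else np.zeros_like(t)
    signal = np.sin(np.deg2rad(heading_deg))
    shape = (n_trials, t.size)
    # Unimodal momentary evidence: mean k(t) * sin(heading) * dt, variance dt
    e_vis = k_vis * signal * dt + np.sqrt(dt) * rng.standard_normal(shape)
    e_vest = k_vest * signal * dt + np.sqrt(dt) * rng.standard_normal(shape)
    # Weight each sample by its instantaneous sensitivity, then accumulate over time
    dv = np.cumsum(k_vis * e_vis + k_vest * e_vest, axis=1)
    crossed = np.abs(dv) >= bound
    hit = crossed.any(axis=1)
    idx = np.where(hit, crossed.argmax(axis=1), t.size - 1)  # last sample if no crossing
    choice = np.where(dv[np.arange(n_trials), idx] >= 0, 1, -1)
    rt = (idx + 1) * dt
    return choice, rt, hit

# Usage: compare accuracy and mean RT across modality conditions (10 deg heading)
for modality in ("vis", "vest", "comb"):
    choice, rt, _ = simulate_trials(10.0, coherence=0.7, modality=modality)
    print(modality, round((choice == 1).mean(), 3), round(rt.mean(), 3))
```

Because the bound is fixed while sensitivity rises and falls with the motion profile, most bound crossings in this sketch cluster around the period of peak stimulus information.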

Crucially, when considered as a bounded process, multisensory decisions are not always more precise than unisensory ones, contrary to the prediction of the static model; when they are not, they ought to be faster, and this is what Drugowitsch et al. [45] found. Thus, when optimality is defined at the level of the momentary evidence, discrimination thresholds alone are not sufficient to assess optimality, as increasing decision speed at the cost of accuracy can be optimal in terms of maximizing reward rate [51]. Another important contribution of this work is to derive a normative solution for decisions where evidence reliability varies over short time scales [52], a common feature of natural environments but one that is largely unaddressed in classic studies of perceptual decision-making (but see [53] for a thorough and timely review of more recent efforts). It can be shown that optimal integration of time-varying evidence is theoretically tractable under certain assumptions [52], but whether and how the brain achieves this remains unresolved.
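The point that trading accuracy for speed can maximize reward rate can be made concrete with the standard closed-form expressions for a unit-variance drift-diffusion process with drift μ > 0, starting midway between bounds at ±a: accuracy = 1/(1 + e^(−2μa)) and mean decision time = (a/μ)·tanh(μa). The sketch below sweeps the bound under a hypothetical non-decision time and inter-trial interval, and assumes one unit of reward per correct choice.

```python
import numpy as np

def ddm_accuracy_and_mean_dt(drift, bound):
    """Closed-form accuracy and mean decision time for a unit-variance diffusion
    starting at 0 with absorbing bounds at +/-bound (drift > 0)."""
    accuracy = 1.0 / (1.0 + np.exp(-2.0 * drift * bound))
    mean_dt = (bound / drift) * np.tanh(drift * bound)
    return accuracy, mean_dt

def reward_rate(drift, bound, non_decision=0.3, inter_trial=2.0):
    """Expected correct choices per second (timing parameters are hypothetical)."""
    accuracy, mean_dt = ddm_accuracy_and_mean_dt(drift, bound)
    return accuracy / (mean_dt + non_decision + inter_trial)

bounds = np.linspace(0.1, 3.0, 30)
rates = reward_rate(1.0, bounds)              # vectorized over candidate bounds
best = bounds[np.argmax(rates)]
acc_best, _ = ddm_accuracy_and_mean_dt(1.0, best)
acc_max, _ = ddm_accuracy_and_mean_dt(1.0, bounds[-1])
print(f"reward-maximizing bound ~{best:.1f}: accuracy {acc_best:.2f} "
      f"vs {acc_max:.3f} at the highest bound tested")
```

With these settings the reward-maximizing bound is intermediate, so the optimal policy deliberately accepts a lower accuracy than the highest bound would yield.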

One approach to the question of neural implementation was undertaken by Hou et al. [32]. They suggested that integration of multiple dynamic evidence streams could be mediated by invariant linear combinations of neural inputs across time and sensory modalities, and observed neural activity consistent with this integration in the lateral intraparietal area (LIP) [32]. However, this experiment did not measure behavioural RT, and trial-averaged neural responses by themselves may not be diagnostic of bounded evidence accumulation [54]. Indeed, there is reason to wonder whether the brain actually uses a strategy of accumulating noisy samples of evidence in the heading task. The main motivation to accumulate evidence in the first place is to average out the noise, but this works best when the samples are independent [55] or at most weakly correlated. The degree to which self-motion fits the bill is unclear, given that the relevant signals have a high degree of autocorrelation (being tied to inertial motion, not an arbitrary pattern of inputs), and unique noise properties [56–58] whose implications for decision-making have not been fully explored.
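To illustrate why autocorrelation matters, the sketch below computes the effective number of independent samples obtained by averaging n samples of a stationary first-order autoregressive (AR(1)) process with lag-1 correlation φ. The AR(1) form is a simplifying assumption chosen for transparency; it is not a model of the actual noise properties of visual or vestibular signals.

```python
import numpy as np

def effective_sample_size(n, phi):
    """Effective number of independent samples when averaging n samples of a
    stationary, unit-variance AR(1) process with lag-1 autocorrelation phi."""
    k = np.arange(1, n)
    # Variance of the sample mean includes all pairwise covariances phi**k
    var_of_mean = (1 + 2 * np.sum((1 - k / n) * phi ** k)) / n
    return 1.0 / var_of_mean

for phi in (0.0, 0.5, 0.9, 0.99):
    print(phi, round(effective_sample_size(100, phi), 1))
```

With independent samples the benefit of averaging grows with n; with strong autocorrelation, 100 samples can carry the information of only a handful.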

Thus far the available evidence [32,45] (and see following section) is consistent with bounded accumulation, but it is still worth considering alternatives, such as taking a single ‘snapshot’ of evidence [38] at the predicted time of maximum information content (i.e. the peak of the velocity or acceleration profile [18]). At the other extreme, one might envision a continuous process best explained using elements of control theory [59,60], although the relationship between evidence and decision termination is less clear in such a framework. Previous work has shown that adjudicating candidate decision processes may require testing the same subjects in different task variants, for instance experimenter-controlled duration versus reaction-time versions [38]. Data from either variant by itself may be consistent with several distinct processes, so a more stringent test is to fit the data from one variant and use a subset of the fitted parameters to predict the other variant [38]. Neurophysiology could help as well, especially high-density recordings which permit single-trial analyses of decision variable representations [61–63]. This three-pronged strategy—behavioural readout of decision dynamics/termination, computational frameworks that accommodate time-varying signals, and neurophysiological approaches capable of linking the two—seems like a promising path towards expanding the neurobiology of decision-making to more natural multisensory contexts.

(b) Confidence in multisensory decisions: candidate models and a pilot experiment

In visual perception, the framework of bounded evidence accumulation has been extended to explain a third key outcome inherent to the decision-making process: confidence, defined as the graded, subjective belief that the current or pending decision is correct. Considered an elemental component of metacognition, confidence is of long-standing interest to psychologists and philosophers of mind, and (alongside RT) has figured prominently in psychophysical theory and experiment for over a century [64–67]. Yet it also serves a practical purpose in natural behaviour. Most real-world decisions are not made in isolation but are part of a sequence or hierarchy in which the appropriate choice depends on the unknown outcome of earlier decisions. In such a scenario, confidence functions as a proxy for feedback, a prediction of accuracy that informs the next choice in a sequence—or more generally, adjustments of decision strategy [68,69] or learning rate [1,70].

Almost all models of confidence devote a key role to the strength of the evidence informing the accompanying choice. In models based on signal detection theory (SDT) [71,72], observations further away from a decision criterion (i.e. stronger evidence) are more likely to have arisen from one distribution than the other, justifying higher confidence. When decision time is factored in and controlled by the subject, the accumulated evidence and the time taken to reach a decision map onto the probability of making a correct choice, and this mapping (or an approximation thereof) could be learned and used to assign a degree of confidence [73,74].

Interestingly, these two frameworks offer distinct interpretations for the empirical relationship between confidence and stimulus strength [74,75]: confidence increases with stimulus strength on correct trials, but often (though not always) decreases with stimulus strength on error trials. SDT attributes this to the fact that an observation leading to an error tends to lie closer to the criterion when d′ is large, whereas in accumulator models it can be explained by continued accumulation of contradictory evidence after an initial choice is made [74,76]. This discrepancy further underscores the relevance of time as a factor in decision-making, and especially confidence; indeed, failing to consider the time dimension can lead to misinterpretation of common measures of metacognitive performance [77].
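The SDT account of this pattern can be checked with a short simulation. It assumes equal-variance Gaussian observations, four hypothetical stimulus strengths (in d′ units) presented equally often on either side, and confidence defined as the posterior probability that the choice is correct given the observation. It covers only the static SDT explanation, not the post-decisional accumulation account.

```python
import numpy as np

rng = np.random.default_rng(1)
strengths = np.array([0.25, 0.5, 1.0, 2.0])   # hypothetical stimulus strengths (d' units)

def sdt_confidence(x):
    """P(sign(x) matches the true stimulus side | x), marginalizing over strengths."""
    ax = np.abs(x)[:, None]
    like_same = np.exp(-0.5 * (ax - strengths) ** 2)   # stimulus on the chosen side
    like_opp = np.exp(-0.5 * (ax + strengths) ** 2)    # stimulus on the other side
    return like_same.sum(axis=1) / (like_same + like_opp).sum(axis=1)

results = {}
for s in strengths:
    x = rng.normal(loc=s, scale=1.0, size=20000)      # true stimulus is +s
    conf = sdt_confidence(x)
    correct = x > 0                                   # criterion at zero
    results[s] = (conf[correct].mean(), conf[~correct].mean())
    print(f"strength {s}: correct {results[s][0]:.3f}, error {results[s][1]:.3f}")
```

Mean confidence rises with strength on correct trials and falls on error trials, because error observations cluster ever closer to the criterion as d′ grows.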

The study of confidence in perception has typically considered situations in which the relevant sensory evidence arises from just one external source. However, most real-world sensory experiences consist of concurrent inputs from multiple modalities, and some basic unanswered questions arise when studying metacognition in a multisensory context [78]. For example, we do not know whether confidence is generated by the same cue-integration process (be it evidence accumulation, Bayesian inference or something else) underlying the decision itself. Alternatively, confidence could be computed by a parallel process that is not contingent on moment-by-moment sensory evidence, or by a post-decision accumulation process [79] with distinct termination criteria [80]. A quantitative link between choice and confidence was supported by perturbations of visual cortical neurons supplying the evidence in a random-dot motion task [81,82], but these studies did not rule out post-decisional processing, and the decision was based on a single modality.

To begin to address this question for multisensory decisions, we reasoned that behavioural measures of choice and confidence should demonstrate similar dependence on relative cue reliability, which is classically assessed using a cue-conflict manipulation [13,16,83]. When cues are placed in conflict, the resulting bias in the psychometric function reflects the weight assigned to each cue during discrimination. By randomly interleaving different levels of relative reliability, one can test whether cues are reweighted on a trial-by-trial basis, as predicted by normative theory and demonstrated empirically in previous work. What remains to be seen is whether confidence judgements reflect the same reliability-based cue weighting that has been observed in choices.
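Under the static MLE model, the logic of the cue-conflict manipulation can be written out explicitly. If the visual heading is displaced by −Δ/2 and the vestibular heading by +Δ/2 from the nominal heading, the combined estimate is offset by (w_vest − w_vis)Δ/2, so the point of subjective equality (PSE) lies at −(w_vest − w_vis)Δ/2. The sketch below uses Δ = 3°, matching the conflict in the pilot experiment, with hypothetical unimodal thresholds standing in for low and high visual coherence. The function name is illustrative.

```python
import math

def cue_conflict_psychometric(heading, delta, sigma_vis, sigma_vest):
    """P(rightward) and PSE on a conflict trial under static MLE combination.
    Visual heading = heading - delta/2, vestibular = heading + delta/2 (degrees)."""
    r_vis, r_vest = 1 / sigma_vis**2, 1 / sigma_vest**2
    w_vis, w_vest = r_vis / (r_vis + r_vest), r_vest / (r_vis + r_vest)
    mean_est = w_vis * (heading - delta / 2) + w_vest * (heading + delta / 2)
    sigma_comb = math.sqrt(1 / (r_vis + r_vest))
    p_right = 0.5 * (1 + math.erf(mean_est / (sigma_comb * math.sqrt(2))))
    pse = -(w_vest - w_vis) * delta / 2          # heading at which p_right = 0.5
    return p_right, pse

# Hypothetical thresholds (deg): vision less reliable at low coherence, more at high
_, pse_low = cue_conflict_psychometric(0.0, 3.0, sigma_vis=6.0, sigma_vest=3.0)
_, pse_high = cue_conflict_psychometric(0.0, 3.0, sigma_vis=2.0, sigma_vest=3.0)
print(f"PSE low coherence: {pse_low:+.2f} deg, high coherence: {pse_high:+.2f} deg")
```

A negative PSE (curve shifted left, more rightward choices) indicates dominance of the rightward-displaced vestibular cue; a positive PSE indicates dominance of the leftward-displaced visual cue.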

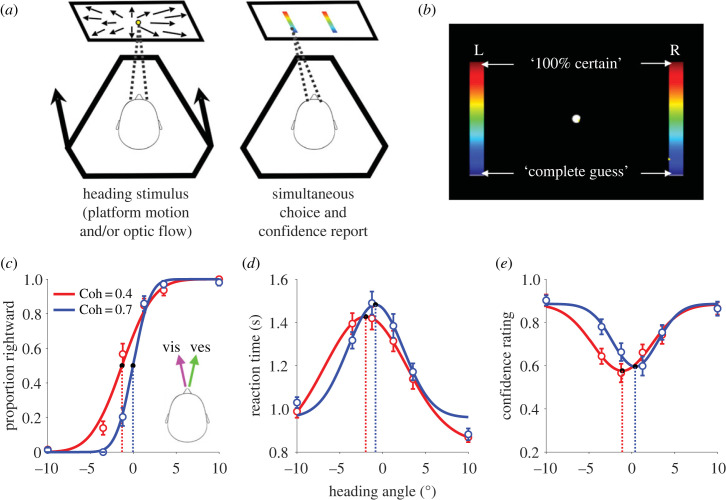

We adapted a well-established heading discrimination task [13] to include simultaneous reports of choice and confidence via a continuous rating scale [74]. The task also measures RT, allowing us to test whether a bounded accumulation process underlies all three behavioural variables in this task, as has been suggested for visual motion discrimination [75]. Human participants (n = 5) seated in a motion platform were instructed to report their heading (left or right) relative to a fixed reference of straight ahead. Each trial consisted of a translational heading stimulus comprising inertial motion and/or synchronous optic flow of a random-dot cloud. Participants indicated their choice and confidence by making a saccade to one of two colour-gradient bars (figure 2a,b). The top of each bar represented 100% certainty, and the bottom a complete guess. Participants were not given immediate feedback about their performance, but instead were shown their percentage of correct choices at the end of each set of 30 trials. Interleaved throughout the session were cue-conflict trials in which the heading angles specified by visual and vestibular cues were separated by 3° (Δ = ±3°), a subtle difference not readily noticeable and with no qualitative impact on overall confidence level. Relative cue reliability was controlled by the coherence of the random dots, also randomly interleaved.

Figure 2.

Pilot experiment with concurrent measurement of choice, RT, and confidence in heading discrimination. Human participants (n = 5 subjects, 4092 total trials) were seated in a cockpit-style chair within a motion platform, facing a rear-projection screen and eye-tracking camera. (a) Each trial consisted of a translational motion stimulus delivered visually (optic flow of a random-dot cloud with variable motion coherence, (Coh)) and/or by inertial motion of the platform. When ready, participants indicated whether their perceived heading was to the right or left relative to straight ahead, by making a saccade to one of two vertical colour-gradient bars. (b) Subjects were instructed to aim their saccade to a vertical position along the chosen target based on their confidence in the right/left choice, defined on a continuous scale from ‘complete guess’ to ‘100% certain’. (c) Proportion of rightward choices as a function of heading angle (positive = rightward) for cue-conflict trials where the visually-defined heading was displaced 1.5° to the left, and vestibular heading 1.5° to the right of the assigned heading angle on each trial. The two curves are for low (0.4, red) and high (0.7, blue) visual coherence. The results replicate the known effects of cue reliability on sensitivity (slope) and cue weights (bias) [11]. (d) Reaction time as a function of heading angle for the same conditions as in (c). (e) The same as (d) but for confidence ratings (mean saccade endpoint). Smooth curves are descriptive Gaussian fits (cumulative, regular or inverted), and dotted lines highlight the mean of the fitted Gaussians.

Cue reliability was reflected in the relative slope of psychometric functions (figure 2c; compare low (red) versus high (blue) visual coherence), and correspondingly in the relative width of the RT and confidence functions (figure 2d,e). At the same time, the psychometric functions reveal a bias indicative of trial-by-trial reliability-based cue weighting, as shown previously [13]. In the condition shown in figure 2c–e, vestibular heading was offset 1.5° to the right and visual 1.5° to the left of the angle specified by the abscissa, and hence participants made more rightward choices when the vestibular stimulus was more reliable (low visual coherence, red curve shifted to the left). Strikingly, the RT and confidence curves show very similar shifts (figure 2d,e), suggesting that the multisensory evidence guiding choice also underlies the degree of confidence in the choice [81]. Although it awaits quantitative confirmation in a larger dataset, this is, to our knowledge, the first indication that reliability-based cue combination at the level of choices also manifests in confidence and RT.

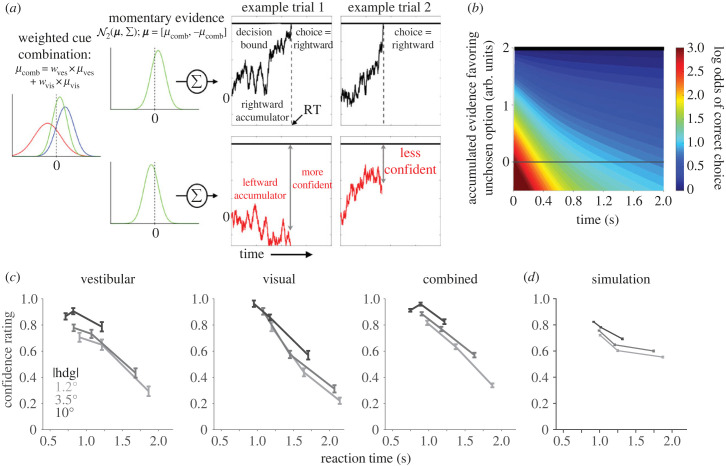

These preliminary findings are consistent with the hypothesis that confidence arises from the same evidence accumulation process that governs the decision (and its termination), rather than from a downstream or parallel mechanism independent of the reliability-based weighting process. We developed a multisensory decision model (figure 3a,b) that combines the reliability-weighted summation of momentary evidence from Drugowitsch and colleagues [45] with a two-dimensional accumulation process (anticorrelated race) that has successfully explained choice, RT, and confidence in a visual task [74,84]. In this model, evidence at each time step is drawn from a bivariate normal distribution with mean $[\mu_{comb}, -\mu_{comb}]$ and covariance $\Sigma = \begin{bmatrix} 1 & \rho \\ \rho & 1 \end{bmatrix}$, where the two dimensions correspond to the two choice alternatives (right versus left) and the evidence for each is partially anticorrelated (i.e. ρ < −0.5 [85]). The mean μcomb is assumed to reflect the optimal weighting of evidence [45] and is therefore biased towards the more reliable cue (figure 3a, left). Evidence samples are fed into competing accumulators, and the winning accumulator determines both the choice and the decision time. Because the winning accumulator is always at a fixed value at decision time (i.e. the bound), confidence is determined by the status of the losing accumulator (figure 3a, right): the closer the losing race was to its bound, the lower the degree of confidence. This intuitive relationship is formalized by calculating the log odds of a correct choice as a function of accumulated evidence and time ([74,84]; figure 3b). The accumulation process jointly dictates choice, RT, and confidence, and because this process is downstream of the weighted cue-combination step, all three behavioural variables should exhibit the same reliability-based shift. This is what we observed in the pilot experiment (figure 2c–e).
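A minimal simulation of the two stages described above is sketched below. It assumes a linear reliability-weighted drift (the `combined_drift` helper and its `gain` parameter are hypothetical), unit-variance accumulators with correlation ρ = −1/√2 (an illustrative value), a common bound, and a time limit after which the leading accumulator determines the choice. For brevity it returns the losing accumulator's distance from the bound as a raw confidence variable, rather than computing the full log-odds mapping of [74,84].

```python
import numpy as np

def combined_drift(heading, delta, sigma_vis, sigma_vest, gain=1.0):
    """Hypothetical reliability-weighted drift for a cue-conflict trial.
    Visual heading = heading - delta/2, vestibular = heading + delta/2 (degrees)."""
    r_vis, r_vest = 1 / sigma_vis**2, 1 / sigma_vest**2
    w_vis = r_vis / (r_vis + r_vest)
    return gain * (w_vis * (heading - delta / 2) + (1 - w_vis) * (heading + delta / 2))

def first_crossing(x, bound):
    """Index of the first sample >= bound in each row, or x.shape[1] if none."""
    crossed = x >= bound
    return np.where(crossed.any(axis=1), crossed.argmax(axis=1), x.shape[1])

def simulate_race(mu, n_trials=2000, bound=1.0, rho=-1 / np.sqrt(2),
                  dt=0.005, t_max=2.0, seed=0):
    """Anticorrelated race: returns choice (+1/-1), RT (s), loser's distance to bound."""
    rng = np.random.default_rng(seed)
    n_steps = int(round(t_max / dt))
    z1 = rng.standard_normal((n_trials, n_steps))
    z2 = rng.standard_normal((n_trials, n_steps))
    noise_left = rho * z1 + np.sqrt(1 - rho**2) * z2     # correlation rho with z1
    right = np.cumsum(mu * dt + np.sqrt(dt) * z1, axis=1)
    left = np.cumsum(-mu * dt + np.sqrt(dt) * noise_left, axis=1)
    i_r, i_l = first_crossing(right, bound), first_crossing(left, bound)
    idx = np.minimum(np.minimum(i_r, i_l), n_steps - 1)
    rows = np.arange(n_trials)
    r_end, l_end = right[rows, idx], left[rows, idx]
    # Winner crossed first; on ties or time-outs, the accumulator further ahead wins
    choose_right = np.where(i_r != i_l, i_r < i_l, r_end >= l_end)
    loser = np.where(choose_right, l_end, r_end)
    choice = np.where(choose_right, 1, -1)
    rt = (idx + 1) * dt
    # Raw confidence variable: larger distance of the loser from the bound = more confident
    return choice, rt, bound - loser

# Usage: vestibular cue (displaced rightwards) more reliable than vision
mu = combined_drift(heading=1.0, delta=3.0, sigma_vis=6.0, sigma_vest=3.0, gain=0.5)
choice, rt, conf_raw = simulate_race(mu)
print(f"mu_comb={mu:.2f}, P(right)={np.mean(choice == 1):.2f}, mean RT={rt.mean():.2f} s")
```

Because the reliability weighting acts on the drift before the race begins, any bias it introduces propagates to choice, RT and the confidence variable alike.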

Figure 3.

Multisensory bounded evidence accumulation model for unifying choice, reaction time (RT), and confidence. (a) Noisy momentary evidence is constructed as a reliability-weighted sum of heading evidence from visual and vestibular cues (red and blue distributions in leftmost panel). In this example, the two cues are conflicting and of different reliability (variance), and the resulting combined distribution (green curve) is biased towards the more reliable cue (here, shifted rightwards). Evidence favouring each of the two alternatives is accumulated separately and the choice is determined by which accumulator reaches its bound first; RT is given by the time of bound crossing (plus a small non-decision time). Two simulated trials are depicted. In both, the rightward accumulator wins the race, but in example trial 2, the decision maker is less confident because the losing accumulator was closer to the bound as compared to trial 1. (b) The model asserts that the brain has implicit knowledge of the relationship between the amount of evidence gathered by the losing accumulator and the probability (shown as the log odds) of making a correct choice, and uses this knowledge to compute confidence. (c) Confidence rating (mean ± s.e.) as a function of RT quantile, plotted separately for the three different heading angle magnitudes (|hdg|, leftward and rightward pooled) and three modality conditions: vestibular (platform motion only), visual (optic flow only), and combined. Data are from the same dataset as figure 2 (n = 5 subjects). (d) Simulations from the model also exhibit a negative relationship between confidence and RT, conditioned on |hdg|.

Under common assumptions, the mathematics of drift-diffusion guarantees that the mapping between evidence and confidence is time-dependent [73,74]: a given level of evidence favouring the unchosen option maps onto a probability of being correct, but this probability decreases with elapsed decision time (figure 3b). The prediction is that confidence should be inversely related to decision time, even for a fixed level of stimulus strength [74] (here, heading angle; figure 3d). Our preliminary results are consistent with this prediction as well (figure 3c), which supports the notion that elapsed decision time is a vital part of the computation of confidence.
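The time dependence can be seen in closed form for the simpler one-dimensional drift-diffusion model with symmetric bounds ±a and unit noise, used here as a stand-in for the two-dimensional race. If drift magnitude varies across trials (as heading angle does), a change of measure (Girsanov's theorem) shows that the first-passage density under drift μ is proportional to cosh(μa)·exp(−μ²t/2), so late bound crossings are relatively more likely under weak drifts. Because evidence at the bound is fixed in this case, P(correct) conditioned on decision time alone is the confidence mapping. The set of drift magnitudes and the uniform prior over them are hypothetical.

```python
import numpy as np

def p_correct_given_time(t, drifts, bound, prior=None):
    """P(correct | bound crossed at time t) for a unit-variance diffusion with
    bounds +/-bound, when drift magnitude is drawn from `drifts` with `prior`."""
    drifts = np.abs(np.asarray(drifts, dtype=float))
    prior = (np.full(drifts.size, 1.0 / drifts.size) if prior is None
             else np.asarray(prior, dtype=float))
    t = np.atleast_1d(np.asarray(t, dtype=float))[:, None]
    # Girsanov: crossing the bound in the drift direction at t has weight
    # exp(mu*a - mu^2 t/2) relative to zero drift; the wrong bound has exp(-mu*a - ...)
    w = prior * np.exp(-0.5 * drifts**2 * t)
    num = (w * np.exp(drifts * bound)).sum(axis=1)
    den = (w * 2 * np.cosh(drifts * bound)).sum(axis=1)
    return num / den

ts = np.array([0.25, 0.5, 1.0, 2.0])
print(np.round(p_correct_given_time(ts, drifts=[0.0, 0.5, 1.0, 2.0], bound=1.0), 3))
```

For any single drift the expression reduces to the time-invariant 1/(1 + e^(−2μa)); the decline with elapsed time arises only from uncertainty about stimulus strength.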

In summary, although more work is required to develop plausible alternatives for model comparison, the data are qualitatively consistent with a multisensory accumulator model such as the one in figure 3a. One major open question is where and how the weighted combination of momentary evidence is achieved in the brain. The output of this process—reliability-weighted accumulated evidence—appears to be represented in parietal and frontal cortices [32,86], but the upstream circuitry is unknown. As alluded to above, the dynamics of these accumulation-like signals differ for visual versus vestibular stimuli, suggesting they might originate from separate unimodal representations rather than a single multisensory representation such as that in MSTd. Simultaneous recordings from (and perturbations of) multiple nodes in the self-motion network, along with downstream decision-related areas, may be needed to resolve this question.

(c) Sequential self-motion judgements as a scaffold for navigation

Decision termination and confidence are critical in furnishing a decision-maker with the ability to make sequential judgements at appropriate intervals to achieve their goals. If external feedback about decision accuracy is available, decision-makers generally exhibit slower RTs after errors, consistent with an evolving trade-off between speed and accuracy based on recent experience [68,87]. On the other hand, in the absence of external feedback, as is frequently the case for real-world decisions, the internal ‘feedback’ furnished by a representation of confidence could drive adjustments of decision policy, through a modification of the termination bound or accumulation process [88,89].

In the case of self-motion, an individual decision could equate to a prediction of body position or orientation in the near future, derived from an integration of multisensory evidence and expected dynamics (spatio-temporal priors) over some period of time [90]. Performed repeatedly, these perceptual decisions constitute the building blocks for a path integration process, with the decision at one time-step feeding into the next. The perception of egocentric heading for path integration is a key component of real-world navigation, although successful navigation also draws on salient environmental information [91] and a psychological sense of self-location [92].

Since path integration accumulates errors, monitoring one's ongoing certainty and incorporating this into an evolving behavioural strategy could be quite useful, particularly when knowledge of one's current position or goal location is incomplete. This idea goes beyond using uncertainty to update a position estimate, in the manner of a Kalman filter [93,94]. Our conjecture is that a metacognitive certainty judgement accompanies each position update and can be used to guide higher-order decisions, for instance whether to maintain or reverse the current course, or to stop and sample more information.
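As a point of reference for this conjecture, the sketch below implements plain one-dimensional Kalman-style dead reckoning. Position is updated from a noisy velocity signal, so the posterior variance grows with every step. On top of this we add a hypothetical ‘metacognitive’ rule: when the posterior standard deviation (a stand-in for low certainty) exceeds `sd_threshold`, the agent stops to sample an external landmark. The function name, the noise levels and the thresholding rule are illustrative assumptions, not a model proposed in the cited work.

```python
import numpy as np

def path_integrate(velocity, dt, vel_noise_sd, landmark_sd, sd_threshold, seed=0):
    """1-D dead reckoning with a hypothetical confidence-triggered landmark check.

    Returns position estimates, posterior SDs and the number of landmark checks.
    """
    rng = np.random.default_rng(seed)
    true_pos, est, var = 0.0, 0.0, 0.0
    est_trace, sd_trace, n_checks = [], [], 0
    for v in velocity:
        true_pos += v * dt
        # Predict step: integrate a noisy velocity reading; variance accumulates.
        est += (v + rng.normal(0.0, vel_noise_sd)) * dt
        var += (vel_noise_sd * dt) ** 2
        # Hypothetical rule: low certainty (high SD) triggers an external fix.
        if np.sqrt(var) > sd_threshold:
            z = true_pos + rng.normal(0.0, landmark_sd)
            gain = var / (var + landmark_sd**2)  # Kalman gain
            est += gain * (z - est)
            var *= 1.0 - gain
            n_checks += 1
        est_trace.append(est)
        sd_trace.append(np.sqrt(var))
    return np.array(est_trace), np.array(sd_trace), n_checks

velocity = np.full(1000, 1.0)  # 1 m/s for 10 s at dt = 0.01 s (arbitrary values)
_, sd_free, n_free = path_integrate(velocity, 0.01, 0.5, 0.01, np.inf)
_, sd_checked, n_checked = path_integrate(velocity, 0.01, 0.5, 0.01, 0.05)
print(f"no checks: final SD {sd_free[-1]:.3f} m")
print(f"with checks: max SD {sd_checked.max():.3f} m after {n_checked} checks")
```

Without checks, the standard deviation grows as the square root of the number of steps. With the rule in place, it stays below the threshold as long as landmarks are more precise than the threshold itself.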

During truly continuous natural behaviour, one might assume that the time interval between successive position estimates should shrink to zero in the limit. However, given that upcoming spatio-temporal sequences of natural motion stimuli are generally predictable to the agent (through motor efference and plentiful experience), the interval between updates could be adjusted based on inferred changes in control dynamics of the current environment. In other words, although continuous computation of self-motion is necessary for reflexes and postural control, it may be unnecessary to maintain a perceptual estimate of self-motion at all times, agnostic to the current behavioural state. Instead, the brain could reduce the computational burden by only consulting the self-motion system when a salient transition or event boundary is detected. This might correspond to a change in expected reliability of different sensory cues, or in the control dynamics needed for that part of the environment [90], such as a change in ambient light level or terrain. The relationship between inputs (i.e. motor commands) and outputs (body motion) is predictable from an internal model of control dynamics and the autocorrelation structure of self-motion, but in situations where the environment changes rapidly, confidence judgements associated with each position update could play a key role. In the next section, we discuss increasing efforts to develop more closed-loop behavioural tasks to address the computational and neural bases of self-motion perception along these lines.
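One simple way to formalize ‘control dynamics’ is a first-order plant in which a command u drives velocity with time constant tau. A small tau approximates velocity control, and a large tau approximates acceleration-like control. The sketch below assumes the hypothetical parameterization v[t+1] = a·v[t] + (1 - a)·v_max·u[t], with a = exp(-dt/tau). It also computes the one-step prediction error of an internal model that assumes the wrong tau, which is one possible signal that the dynamics of the environment have changed. Function names and values are illustrative assumptions.

```python
import numpy as np

def simulate_velocity(commands, tau, dt=0.01, v_max=1.0):
    """First-order plant (assumed): v[t+1] = a*v[t] + (1 - a)*v_max*u[t], a = exp(-dt/tau)."""
    a = np.exp(-dt / tau)
    v = np.zeros(len(commands) + 1)
    for t, u in enumerate(commands):
        v[t + 1] = a * v[t] + (1.0 - a) * v_max * u
    return v[1:]

def prediction_error(v, commands, tau_model, dt=0.01, v_max=1.0):
    """One-step-ahead error of an internal model that assumes time constant tau_model."""
    a = np.exp(-dt / tau_model)
    v_prev = np.concatenate(([0.0], v[:-1]))
    v_pred = a * v_prev + (1.0 - a) * v_max * np.asarray(commands, float)
    return np.abs(v - v_pred)

commands = np.ones(300)  # full forward deflection held for 3 s (dt = 0.01 s)
v_fast = simulate_velocity(commands, tau=0.05)  # close to velocity control
v_slow = simulate_velocity(commands, tau=1.5)   # sluggish, acceleration-like control

print(f"velocity after 3 s: tau=0.05 -> {v_fast[-1]:.3f}, tau=1.5 -> {v_slow[-1]:.3f}")
# An internal model tuned to the fast plant predicts it exactly but not the slow one.
print(prediction_error(v_fast, commands, 0.05).max(), prediction_error(v_slow, commands, 0.05).max())
```

Under a sustained command, velocity approaches v_max as 1 - exp(-t/tau). A persistent prediction error under a mismatched model is the kind of event that could, under the conjecture above, prompt a fresh perceptual estimate.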

4. Where are we heading? Naturalistic self-motion

The motivation for framing self-motion perception as a decision-making process arises from a consideration of the goal it ultimately serves, which is to allow an agent to accurately orient and navigate within its environment. In such dynamic environments, the brain must use perceptual observations to guide subsequent actions, and, as described by classical reinforcement learning models, actions are chosen to maximize the likelihood of immediate and/or future desirable outcomes [95]. An agent may also select actions, including eye movements, to improve its ‘vantage point’ for new observations that could lead to desirable outcomes further down the line [96]. Previous studies of self-motion perception, by operating under fixed-gaze conditions and soliciting single, binary responses to passively experienced stimuli, decouple this natural link between perception and action, which may impose fundamental limitations on our ability to understand general principles of neural computation and behaviour.

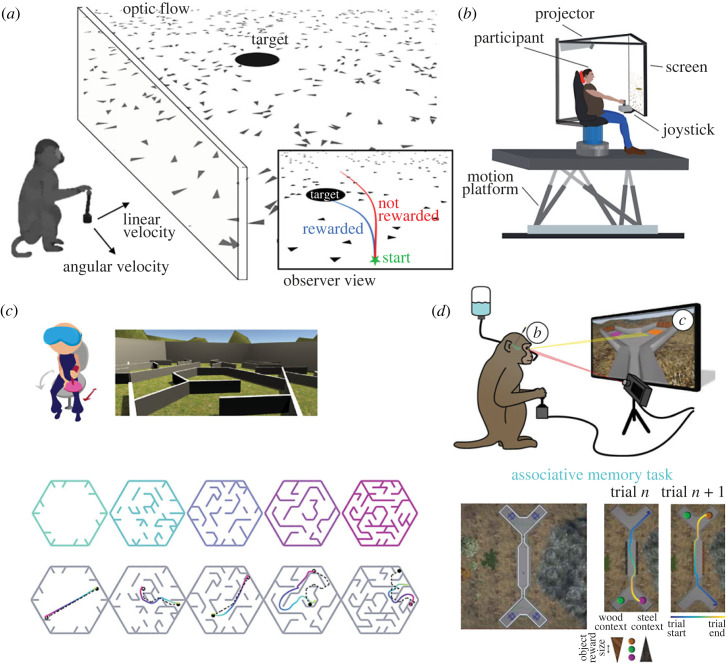

Real-world decision-making about self-motion can still be approximated in the laboratory, building on existing understanding of perception, by exploiting natural planning strategies in animal behaviour and tracking continuous variables within individual trials to generate rich, time-varying behavioural data. The appeal to naturalistic, evolutionarily ingrained behaviours in such tasks provides an opportunity to explore decision-making processes during self-motion across species, often while avoiding the need for heavily over-trained animals. Adopting this approach, a recent set of experiments instructed human and non-human primate subjects to navigate with a joystick to memorized target locations (fireflies) in a virtual environment with ground-plane optic flow cues [97–101] (figure 4a, and see [105]). In this task, the evolution of the optic flow pattern is driven by the subject's own movements in the virtual environment, maintaining a link between perception and action. The task also emulates foraging behaviour, a natural example of the use of path integration. Eye movements over the course of each trial, lasting up to several seconds, reliably track the evolving latent location of the target and correlate with success, reflecting the subject's dynamic belief about the target location [98]. Perturbations in optic flow or joystick gain indicate that humans and monkeys rely on optic flow to perform this task [101], and subjects are also able to rapidly and effectively generalize to novel task variants, including joystick gain changes, moving latent targets, selecting between two targets, and chasing an inexhaustible supply of flashing ‘fireflies’ over tens of minutes [102].
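Because subjects steer with linear and angular velocity, their trajectory follows from integrating those two signals over time, which is the path integration the task is designed to probe. The sketch below makes several assumptions:

- a unicycle model with a forward-Euler update;
- a start at the origin facing +y;
- positive angular velocity meaning a rightward turn;
- a circular reward zone.

The function names, units and reward radius are hypothetical and are not taken from the cited task code.

```python
import numpy as np

def integrate_path(lin_vel, ang_vel, dt):
    """Integrate joystick velocities (m/s, rad/s) into x, y positions (m)."""
    lin_vel = np.asarray(lin_vel, float)
    heading = np.cumsum(np.asarray(ang_vel, float) * dt)  # rad, 0 = straight ahead (+y)
    x = np.cumsum(lin_vel * np.sin(heading) * dt)
    y = np.cumsum(lin_vel * np.cos(heading) * dt)
    return x, y

def rewarded(x_stop, y_stop, target, radius):
    """True if the stopping point lies within `radius` of the target location."""
    return np.hypot(x_stop - target[0], y_stop - target[1]) <= radius

dt = 0.01
# 1 s straight ahead at 2 m/s, then check a target 2 m ahead with a 0.5 m zone.
x, y = integrate_path(np.full(100, 2.0), np.zeros(100), dt)
print(x[-1], y[-1], rewarded(x[-1], y[-1], target=(0.0, 2.0), radius=0.5))

# One full turn (2*pi rad in 1 s) at 1 m/s brings the agent back to the start.
x_c, y_c = integrate_path(np.full(100, 1.0), np.full(100, 2 * np.pi), dt)
print(x_c[-1], y_c[-1])
```

In the real task, the subject must carry out this integration internally from optic flow while the target is no longer visible. Any error in the velocity or heading estimates therefore compounds into the stopping error.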

Figure 4.

Virtual reality (VR) paradigms for bridging self-motion perception and spatial navigation. (a) Schematic of ‘fireflies’ task [97–101]. Human or monkey subjects control linear and angular velocity in a virtual environment via a joystick to navigate to a briefly shown target, using ground-plane optic flow cues. Reward is obtained by stopping within a zone surrounding the location of the target (inset). Reproduced with permission from [102]. (b) Experimental set-up in a multisensory fireflies task. Subjects sit within a 6-degree-of-freedom motion platform with coupled rotator. Reprinted from Stavropoulos et al. [90], Creative Commons Attribution License. (c) (Top) VR navigation task in [103]. Human subjects wearing a VR headset navigated in a virtual maze, using a joystick to control fore-aft motion and turning by rotating in a swivel chair. Screenshot shows first-person view. (Bottom) Layout of maze arenas used in the task, with increasing complexity defined by obstacle configuration. Example subject trajectories are shown in each maze. Empty and filled circles denote start and end point, respectively. Black dashed line shows optimal trajectory. Reprinted from Zhu et al. [103], Creative Commons Attribution License. (d) Other applications of monkey VR include tests of associative learning and memory, with concurrent recording in the hippocampus and prefrontal cortex. Reproduced with permission from [104].

A multisensory version of the firefly paradigm used gradual across-trial fluctuations in joystick parameters to assess the contribution of visual and vestibular cues and control dynamics to path integration [90]. The motion platform permits only a limited range of linear trajectories (figure 4b). To overcome this, Stavropoulos and colleagues exploited the tilt-translation (gravito-inertial) ambiguity to provide combined rotation and translation of the platform, accompanied by an optic flow stimulus in combined visuo-vestibular trials. This motion would be perceived as equivalent to the linear accelerations intended by subjects' joystick commands. Successful navigation in this task depends not only on integration of momentary sensory evidence, but on combining this information with an accurate internal model of latent control dynamics [90]. In particular, vestibular inputs alone generally provided unreliable cues for navigation compared to optic flow, and better performance was mainly seen in the presence of sustained acceleration, consistent with the dominance of acceleration-dependent responses within the vestibular processing hierarchy [106,107]. This also complements the observation that longer duration stimuli elicit greater reliance on visual (velocity) information [108], although velocity estimation from optic flow can still result in systematic undershoot biases owing to a prior expectation of slower velocities [97,99].
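The tilt-translation approach rests on the fact that otolith afferents respond to gravito-inertial acceleration. A static tilt of angle theta places a component g·sin(theta) of gravity along the body's horizontal axis. To the otoliths alone, this is indistinguishable from a sustained linear acceleration of that magnitude. A minimal sketch of the conversion follows. The function names are hypothetical and sign and axis conventions are ignored. Practical motion-cueing schemes also keep the tilt rate low so that canal signals of the rotation itself stay small, which this sketch does not model.

```python
import numpy as np

G = 9.81  # gravitational acceleration, m/s^2

def tilt_for_acceleration(accel):
    """Tilt angle (rad) whose gravity component g*sin(theta) equals `accel` (m/s^2)."""
    ratio = np.clip(np.asarray(accel, float) / G, -1.0, 1.0)
    return np.arcsin(ratio)

def equivalent_acceleration(theta):
    """Component of gravity (m/s^2) along the tilted body's horizontal axis."""
    return G * np.sin(theta)

for a in (0.1, 0.5, 1.0):
    theta = tilt_for_acceleration(a)
    print(f"{a:.1f} m/s^2 -> {np.degrees(theta):.2f} deg tilt")
```

Small tilts of a few degrees therefore suffice to mimic the modest, sustained accelerations typical of joystick-driven self-motion. This is one reason the approach can extend the effective range of a platform with limited linear travel.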

Joysticks, and the ‘continuous’ behaviour they permit, thus provide a useful tool to re-establish the link between perception and action, a link broken in classic psychophysical tasks with independent stimuli and discrete end-of-trial responses. Yet they remain an abstraction from true self-generated motion, or at least they impose a distinct mapping between intended actions and the gamut of idiothetic cues of self-motion (i.e. vestibular, proprioceptive and motor efference). This distinction has meaningful consequences for the central processing of vestibular information [109]. While steering-based navigation with real vestibular cues elicits responses in the brainstem vestibular nuclei, these responses are attenuated during true active self-motion [110], probably because the reafferent signals from actively generated movements are cancelled through a comparison with expected consequences within the cerebellum [111]. Nevertheless, modelling work [112] demonstrates that both active and passive vestibular stimuli can be processed within a single internal model that makes continuous predictions of expected sensory feedback. This supports the use of externally applied motion stimuli in experimental settings and underscores their relevance to real-world self-motion, such as during perturbations or mismatches between planned and executed movements [112].
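The cancellation idea can be illustrated with a deliberately simple toy. A hypothetical forward model predicts the sensory consequence of a motor command, and the residual (sensed minus predicted) stands in for the central vestibular response. The sketch assumes a perfect forward model with unit gain and arbitrary units. It is not the internal model of [112]; it only shows why passive motion passes through in full, self-generated motion is cancelled, and an unexpected perturbation during active motion survives.

```python
import numpy as np

def forward_model(motor_command, gain=1.0):
    """Hypothetical internal model: predicted head velocity = gain * command."""
    return gain * motor_command

def residual_response(head_velocity, motor_command):
    """Sensed head velocity minus its predicted (reafferent) component."""
    return head_velocity - forward_model(motor_command)

t = np.linspace(0.0, 1.0, 101)
profile = np.sin(np.pi * t)                            # head-velocity profile (a.u.)
perturb = np.where((t > 0.4) & (t < 0.6), 0.3, 0.0)    # unexpected external push

passive = residual_response(profile, np.zeros_like(t))            # no command issued
active = residual_response(profile, profile)                      # fully self-generated
active_perturbed = residual_response(profile + perturb, profile)  # self-generated + push

print(passive.max(), np.abs(active).max(), active_perturbed.max())
```

In this toy, only the part of the motion that the internal model did not predict survives cancellation. This is why externally applied stimuli remain informative about how the system handles perturbations and mismatches.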

(a) Active motion and virtual reality in monkeys: new applications

Closed-loop tasks which retain some grounding in established theory and produce rich behaviour in primate subjects open exciting new avenues for probing the neural basis of self-motion perception. Simultaneous recordings in MSTd and the dorsolateral prefrontal cortex in the fireflies task suggest that these areas, and the coupling between them, may represent important latent variables such as angular distance to the target [100], consistent with task strategies inferred from gaze location [98]. Going forward, it will be important to reconcile MSTd responses during goal-directed navigation with existing foundations from classic paradigms. This will help to understand the circumstances in which MSTd responses may shift from encoding current heading during smooth pursuit eye movements [113] to encoding angular or linear distance to a goal location [100,114]. It may also be interesting to ask whether variability in eye and joystick position could be a useful proxy measure of confidence, assuming that a greater degree of confidence is associated with greater movement vigor [115,116] or fewer changes-of-mind [84].

Gaze patterns have been shown to form an integral component of planning behaviour in larger, more complex virtual environments. Zhu et al. [103] found that human subjects' eye movements map out future trajectories to the goal, and relevant subgoals (turning locations), in an arena navigation task, emphasizing the value of natural oculomotor behaviour in untangling deliberations and strategies during sequential decision-making. In this task, fore-aft motion was controlled via a joystick, but subjects could rotate in the VR environment through actual movement on a 360° swivel chair. Although the primacy of actively sampled visual input is clear, such set-ups could provide cross-modal inputs from vestibular and proprioceptive systems of the head and neck, offering opportunities to extend investigations of multisensory self-motion perception into the realm of flexible goal-directed navigation. This less-constrained style of VR experiment has found its way into several domains of non-human primate systems neuroscience, including studies focused on learning and memory [104] (figure 4d), abstract decision-making [117] and visual experience [118]—further greying the traditional boundaries between these areas of study.

The emerging trend for more complex, naturalistic tasks, and the ability to extract meaningful, quantitative insights from them, is symbiotic with increasingly available technologies for large-scale recording of neural populations [119] and sophisticated analytical tools [120–122]. Such recordings have already highlighted how perception and behaviour arise from coordinated activity patterns across multiple areas [123–126], implementing computations that evolve over time and are best understood at the population level [127–133]. Making sense of high-dimensional neural data in the context of self-motion may require not only analysing the dynamics of neural activity on single trials [121,134] but also relating these dynamics to suitably high-dimensional behaviour, for instance by using new methods for pose estimation in unrestrained animals [135–138].

5. Summary and outlook

Self-motion perception is a fundamentally multimodal cognitive process, essential for survival in mobile organisms. Particularly indispensable to this process are inertial motion signals arising from the peripheral vestibular apparatus—semicircular canals and otolith organs—and optic flow responses to global motion of the visual scene. Classical psychophysical studies in humans and non-human primates, often combined with neurophysiology and normative theoretical approaches, have exposed key principles of heading perception grounded in the idea that sensory information is inherently probabilistic. At the cortical level, multisensory heading perception probably involves the concerted interaction of multiple areas, and ongoing work continues to piece together the response properties of cells across these areas, their potential roles, and the interactions between them.

The introduction of RT measurements in multisensory heading discrimination is an important step towards understanding self-motion decisions in an ecological setting and clarifying what is meant by ‘optimality.’ At both the computational and neural levels, subjective reports of certainty during multisensory heading perception could also uncover important features of time-dependent decision-making and sequential judgements of heading.

Nonetheless, these additions remain grounded in the passive, independent-trial approach of conventional psychophysics and neurophysiology, which is bound to provide a limited view of computation during natural self-motion and its associated decisions. There remain significant open questions about how and where noisy sensory information is integrated across modalities, over time, and combined with internal models of dynamics for the perception of self-motion in complex environments. The ability to uncover latent variables from continuous behaviour and neural population activity will be essential to this endeavour, unveiling mechanisms that bridge time scales from individual decisions to goal-directed navigation.

Acknowledgements

The authors thank Avery Wooten, Arielle Summitt, and Daniel Fraker for assistance with data collection, and Bill Nash, Bill Quinlan and Justin Killebrew for technical support.

Ethics

Data were collected under a protocol approved by the Homewood Institutional Review Board of Johns Hopkins University (protocol no. HIRB00008959). Each participant gave written informed consent prior to the experiments.

Data accessibility

Data are freely available from the GitHub repository: https://github.com/Fetschlab/dots3DMP_humanPilotData [139].

Authors' contributions

S.J.J.: conceptualization, data curation, formal analysis, investigation, methodology, project administration, validation, visualization, writing—original draft, writing—review and editing; D.R.H.: conceptualization, data curation, formal analysis, investigation, writing—review and editing; C.R.F.: conceptualization, data curation, formal analysis, funding acquisition, investigation, methodology, project administration, resources, supervision, visualization, writing—review and editing.

Conflict of interest declaration

We declare we have no competing interests.

Funding

This work was supported by the France-Merrick Foundation, the Whitehall Foundation, and the E. Matilda Ziegler Foundation for the Blind.

References

- 1.Lee D, Seo H, Jung MW. 2012. Neural basis of reinforcement learning and decision making. Annu. Rev. Neurosci. 35, 287-308. ( 10.1146/annurev-neuro-062111-150512) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Lak A, et al. 2020. Reinforcement biases subsequent perceptual decisions when confidence is low, a widespread behavioral phenomenon. eLife 9, e49834. ( 10.7554/eLife.49834) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Fernandez C, Goldberg JM. 1976. Physiology of peripheral neurons innervating otolith organs of the squirrel monkey. II. Directional selectivity and force-response relations. J. Neurophysiol. 39, 985-995. ( 10.1152/jn.1976.39.5.985) [DOI] [PubMed] [Google Scholar]

- 4.Fernandez C, Goldberg JM. 1971. Physiology of peripheral neurons innervating semicircular canals of the squirrel monkey. II. Response to sinusoidal stimulation and dynamics of peripheral vestibular system. J. Neurophysiol. 34, 661-675. ( 10.1152/jn.1971.34.4.661) [DOI] [PubMed] [Google Scholar]

- 5.Goldberg JM, Wilson VJ, Cullen KE, Angelaki DE, Broussard DM, Buttner-Ennever J, Fukushima K, Minor LB. 2012. The vestibular system: a sixth sense. Oxford, UK: Oxford University Press. [Google Scholar]

- 6.Clark B, Graybiel A. 1949. Linear acceleration and deceleration as factors influencing non-visual orientation during flight. J. Aviat. Med. 20, 92-101. [PubMed] [Google Scholar]

- 7.Gibson JJ. 1950. The perception of the visual world (ed. L Carmichael). Boston, MA: Houghton Mifflin. [Google Scholar]

- 8.Warren WH, Hannon DJ. 1988. Direction of self-motion is perceived from optical flow. Nature 336, 162-163. [Google Scholar]

- 9.Warren WH. 2003. Optic flow. In The visual neurosciences (eds Chalupa LM, Werner JS), pp. 1247-1259. Cambridge, MA: MIT Press. [Google Scholar]

- 10.Warren WH, Morris MW, Kalish M. 1988. Perception of translational heading from optical flow. J. Exp. Psychol. Hum. Percept. Perform. 14, 646-660. ( 10.1037/0096-1523.14.4.646) [DOI] [PubMed] [Google Scholar]

- 11.Benson AJ, Spencer MB, Stott JR. 1986. Thresholds for the detection of the direction of whole-body, linear movement in the horizontal plane. Aviat. Space Environ. Med. 57, 1088-1096. [PubMed] [Google Scholar]

- 12.Gu Y, Angelaki DE, DeAngelis GC. 2008. Neural correlates of multisensory cue integration in macaque MSTd. Nat. Neurosci. 11, 1201-1210. ( 10.1038/nn.2191) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Fetsch CR, Turner AH, DeAngelis GC, Angelaki DE. 2009. Dynamic reweighting of visual and vestibular cues during self-motion perception. J. Neurosci. 29, 15 601-15 612. ( 10.1523/jneurosci.2574-09.2009) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Landy MS, Maloney LT, Johnston EB, Young M. 1995. Measurement and modeling of depth cue combination: in defense of weak fusion. Vision Res. 35, 389-412. ( 10.1016/0042-6989(94)00176-M) [DOI] [PubMed] [Google Scholar]

- 15.Jacobs RA. 1999. Optimal integration of texture and motion cues to depth. Vision Res. 39, 3621-3629. ( 10.1016/s0042-6989(99)00088-7) [DOI] [PubMed] [Google Scholar]

- 16.Ernst MO, Banks MS. 2002. Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415, 429-433. ( 10.1038/415429a) [DOI] [PubMed] [Google Scholar]

- 17.Zeng Z, Zhang C, Gu Y. 2022. Visuo-vestibular heading perception: a model system to study multisensory decision making. Phil. Trans. R. Soc. B 378, 20220334. ( 10.1098/rstb.2022.0334) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Zhou L, Gu Y. 2023. Cortical mechanisms of multisensory linear self-motion perception. Neurosci. Bull. 39, 125-137. ( 10.1007/s12264-022-00916-8) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Frank SM, Greenlee MW. 2018. The parieto-insular vestibular cortex in humans: more than a single area? J. Neurophysiol. 120, 1438-1450. ( 10.1152/jn.00907.2017) [DOI] [PubMed] [Google Scholar]

- 20.Cheng Z, Gu Y. 2018. Vestibular system and self-motion. Front. Cell. Neurosci. 12, 456. ( 10.3389/fncel.2018.00456) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Guldin WO, Grüsser OJ. 1998. Is there a vestibular cortex? Trends Neurosci. 21, 254-259. ( 10.1016/S0166-2236(97)01211-3) [DOI] [PubMed] [Google Scholar]

- 22.Duffy CJ. 1998. MST neurons respond to optic flow and translational movement. J. Neurophysiol. 80, 1816-1827. ( 10.1152/jn.1998.80.4.1816) [DOI] [PubMed] [Google Scholar]

- 23.Morgan ML, DeAngelis GC, Angelaki DE. 2008. Multisensory integration in macaque visual cortex depends on cue reliability. Neuron 59, 662-673. ( 10.1016/j.neuron.2008.06.024) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Gu Y, Fetsch CR, Adeyemo B, DeAngelis GC, Angelaki DE. 2010. Decoding of MSTd population activity accounts for variations in the precision of heading perception. Neuron 66, 596-609. ( 10.1016/j.neuron.2010.04.026) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Fetsch CR, Pouget A, Deangelis GC, Angelaki DE. 2012. Neural correlates of reliability-based cue weighting during multisensory integration. Nat. Neurosci. 15, 146-154. ( 10.1038/nn.2983) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Fetsch CR, Wang S, Gu Y, DeAngelis GC, Angelaki DE. 2007. Spatial reference frames of visual, vestibular, and multimodal heading signals in the dorsal subdivision of the medial superior temporal area. J. Neurosci. 27, 700-712. ( 10.1523/JNEUROSCI.3553-06.2007) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Pouget A, Deneve S, Duhamel J-R. 2002. A computational perspective on the neural basis of multisensory spatial representations. Nat. Rev. Neurosci. 3, 741-747. ( 10.1038/nrn914) [DOI] [PubMed] [Google Scholar]

- 28.Chen A, DeAngelis GC, Angelaki DE. 2011. A comparison of vestibular spatiotemporal tuning in macaque parietoinsular vestibular cortex, ventral intraparietal area, and medial superior temporal area. J. Neurosci. 31, 3082-3094. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Fetsch CR, Rajguru SM, Karunaratne A, Gu Y, Angelaki DE, DeAngelis GC. 2010. Spatiotemporal properties of vestibular responses in area MSTd. J. Neurophysiol. 104, 1506-1522. ( 10.1152/jn.91247.2008) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Gu Y, Watkins PV, Angelaki DE, DeAngelis GC. 2006. Visual and nonvisual contributions to three-dimensional heading selectivity in the medial superior temporal area. J. Neurosci. 26, 73-85. ( 10.1523/jneurosci.2356-05.2006) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Gu Y, Deangelis GC, Angelaki DE. 2012. Causal links between dorsal medial superior temporal area neurons and multisensory heading perception. J. Neurosci. 32, 2299-2313. ( 10.1523/JNEUROSCI.5154-11.2012) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Hou H, Zheng Q, Zhao Y, Pouget A, Gu Y. 2019. Neural correlates of optimal multisensory decision making under time-varying reliabilities with an invariant linear probabilistic population code. Neuron 104, 1010-1021.e10. ( 10.1016/j.neuron.2019.08.038) [DOI] [PubMed] [Google Scholar]

- 33.Bizley JK, Jones GP, Town SM. 2016. Where are multisensory signals combined for perceptual decision-making? Curr. Opin. Neurobiol. 40, 31-37. ( 10.1016/j.conb.2016.06.003) [DOI] [PubMed] [Google Scholar]

- 34.Zhang W-h, Chen A, Rasch MJ, Wu S. 2016. Decentralized multisensory information integration in neural systems. J. Neurosci. 36, 532-547. ( 10.1523/JNEUROSCI.0578-15.2016) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Chen A, DeAngelis GC, Angelaki DE. 2011. Convergence of vestibular and visual self-motion signals in an area of the posterior sylvian fissure. J. Neurosci. 31, 11 617-11 627. ( 10.1523/JNEUROSCI.1266-11.2011) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Chen A, Gu Y, Liu S, DeAngelis GC, Angelaki DE. 2016. Evidence for a causal contribution of macaque vestibular, but not intraparietal, cortex to heading perception. J. Neurosci. 36, 3789-3798. ( 10.1523/JNEUROSCI.2485-15.2016) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Kiani R, Hanks TD, Shadlen MN. 2008. bounded integration in parietal cortex underlies decisions even when viewing duration is dictated by the environment. J. Neurosci. 28, 3017-3029. ( 10.1523/JNEUROSCI.4761-07.2008) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Stine GM, Zylberberg A, Ditterich J, Shadlen MN. 2020. Differentiating between integration and non-integration strategies in perceptual decision making. eLife 9, 1-28. ( 10.7554/eLife.55365) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Shalom S, Zaidel A. 2018. Better than optimal. Neuron 97, 484-487. ( 10.1016/j.neuron.2018.01.041) [DOI] [PubMed] [Google Scholar]

- 40.Rahnev D, Denison RN. 2018. Suboptimality in perceptual decision making. Behav. Brain Sci. 41, e223. ( 10.1017/S0140525X18000936) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Zaidel A, Salomon R. 2022. Multisensory decisions from self to world. Phil. Trans. R. Soc. B 378, 20220335. ( 10.1098/rstb.2022.0335) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Nienborg H, Cumming BG. 2009. Decision-related activity in sensory neurons reflects more than a neuron's causal effect. Nature 459, 89-92. ( 10.1038/nature07821) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Price NSC, Born RT. 2010. Timescales of sensory- and decision-related activity in the middle temporal and medial superior temporal areas. J. Neurosci. 30, 14 036-14 045. ( 10.1523/JNEUROSCI.2336-10.2010) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Yates JL, Park IM, Katz LN, Pillow JW, Huk AC. 2017. Functional dissection of signal and noise in MT and LIP during decision-making. Nat. Neurosci. 20, 1285-1292. ( 10.1038/nn.4611) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Drugowitsch J, DeAngelis GC, Klier EM, Angelaki DE, Pouget A. 2014. Optimal multisensory decision-making in a reaction-time task. eLife 3, e03005. ( 10.7554/eLife.03005) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Henmon VAC. 1911. The relation of the time of a judgment to its accuracy. Psychol. Rev. 18, 186-201. ( 10.1037/h0074579) [DOI] [Google Scholar]

- 47.Link SW. 2015. Psychophysical theory and laws, history of. In International encyclopedia of the social & behavioral sciences (ed. JD Wright), pp. 470-476. Oxford, UK: Elsevier. [Google Scholar]

- 48.Link SW, Heath RA. 1975. A sequential theory of psychological discrimination. Psychometrika 40, 77-105. ( 10.1007/BF02291481) [DOI] [Google Scholar]

- 49.Luce RD (ed.) 1986. Response times: their role in inferring elementary mental organization. New York, NY: Oxford University Press. [Google Scholar]

- 50.Gold JI, Shadlen MN. 2007. The neural basis of decision making. Annu. Rev. Neurosci. 30, 535-574. ( 10.1146/annurev.neuro.29.051605.113038) [DOI] [PubMed] [Google Scholar]

- 51.Drugowitsch J, Deangelis GC, Angelaki DE, Pouget A. 2015. Tuning the speed-accuracy trade-off to maximize reward rate in multisensory decision-making. eLife 4, 1-11. ( 10.7554/eLife.06678) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Drugowitsch J, Moreno-Bote R, Pouget A. 2014. Optimal decision-making with time-varying evidence reliability. Adv. Neural Inform. Process. Syst. 27, 748-756. [Google Scholar]

- 53.Kilpatrick ZP, Holmes WR, Eissa TL, Josić K. 2019. Optimal models of decision-making in dynamic environments. Curr. Opin. Neurobiol. 58, 54-60. ( 10.1016/j.conb.2019.06.006) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Latimer KW, Yates JL, Meister MLR, Huk AC, Pillow JW. 2015. Single-trial spike trains in parietal cortex reveal discrete steps during decision-making. Science 349, 184-187. ( 10.1126/science.aaa4056) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Bogacz R, Brown E, Moehlis J, Holmes P, Cohen JD. 2006. The physics of optimal decision making: a formal analysis of models of performance in two-alternative forced-choice tasks. Psychol. Rev. 113, 700-765. ( 10.1037/0033-295X.113.4.700) [DOI] [PubMed] [Google Scholar]

- 56.Jamali M, Chacron MJ, Cullen KE. 2016. Self-motion evokes precise spike timing in the primate vestibular system. Nat. Commun. 7, 13229. ( 10.1038/ncomms13229) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Carriot J, Cullen KE, Chacron MJ. 2021. The neural basis for violations of Weber's law in self-motion perception. Proc. Natl Acad. Sci. USA 118, e2025061118. ( 10.1073/pnas.2025061118) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Liu S, Gu Y, Deangelis GC, Angelaki DE. 2013. Choice-related activity and correlated noise in subcortical vestibular neurons. Nat. Neurosci. 16, 89-97. ( 10.1038/nn.3267) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Michalski J, Green AM, Cisek P. 2020. Reaching decisions during ongoing movements. J. Neurophysiol. 123, 1090-1102. ( 10.1152/jn.00613.2019) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Yoo SBM, Hayden BY, Pearson JM. 2021. Continuous decisions. Phil. Trans. R. Soc. B 376, 20190664. ( 10.1098/rstb.2019.0664) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Kiani R, Cueva CJ, Reppas JB, Newsome WT. 2014. Dynamics of neural population responses in prefrontal cortex indicate changes of mind on single trials. Curr. Biol. 24, 1542-1547. ( 10.1016/j.cub.2014.05.049) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Peixoto D, et al. 2021. Decoding and perturbing decision states in real time. Nature 591, 604-609. ( 10.1038/s41586-020-03181-9) [DOI] [PubMed] [Google Scholar]

- 63.Steinemann NA, Stine GM, Trautmann EM, Zylberberg A, Wolpert DM, Shadlen MN. 2022. Direct observation of the neural computations underlying a single decision. bioRxiv. 20.490321. ( 10.1101/2022.05.02.490321) [DOI]

- 64. Peirce CS, Jastrow J. 1884. On small differences in sensation. Mem. Natl Acad. Sci. 3, 75-83.

- 65. Fullerton GS, Cattell JM. 1892. On the perception of small differences: with special reference to the extent, force and time of movement. Philadelphia, PA: University of Pennsylvania Press.

- 66. Festinger L. 1943. Studies in decision: I. Decision-time, relative frequency of judgment and subjective confidence as related to physical stimulus difference. J. Exp. Psychol. 32, 291-306. (doi:10.1037/h0056685)

- 67. Vickers D. 1979. Decision processes in visual perception. New York, NY: Academic Press. See https://www.elsevier.com/books/decision-processes-in-visual-perception/vickers/978-0-12-721550-1.

- 68. Purcell BA, Kiani R. 2016. Neural mechanisms of post-error adjustments of decision policy in parietal cortex. Neuron 89, 658-671. (doi:10.1016/j.neuron.2015.12.027)

- 69. Desender K, Boldt A, Verguts T, Donner TH. 2019. Confidence predicts speed-accuracy tradeoff for subsequent decisions. eLife 8, e43499. (doi:10.7554/eLife.43499)

- 70. Drugowitsch J, Mendonça AG, Mainen ZF, Pouget A. 2019. Learning optimal decisions with confidence. Proc. Natl Acad. Sci. USA 116, 24 872-24 880. (doi:10.1073/pnas.1906787116)

- 71. Ferrell WR, McGoey PJ. 1980. A model of calibration for subjective probabilities. Organ. Behav. Hum. Perform. 26, 32-53. (doi:10.1016/0030-5073(80)90045-8)

- 72. Treisman M, Faulkner A. 1984. The setting and maintenance of criteria representing levels of confidence. J. Exp. Psychol. Hum. Percept. Perform. 10, 119-139. (doi:10.1037/0096-1523.10.1.119)

- 73. Kiani R, Shadlen MN. 2009. Representation of confidence associated with a decision by neurons in the parietal cortex. Science 324, 759-764. (doi:10.1126/science.1169405)

- 74. Kiani R, Corthell L, Shadlen MN. 2014. Choice certainty is informed by both evidence and decision time. Neuron 84, 1329-1342. (doi:10.1016/j.neuron.2014.12.015)

- 75. Fetsch CR, Kiani R, Shadlen MN. 2014. Predicting the accuracy of a decision: a neural mechanism of confidence. Cold Spring Harb. Symp. Quant. Biol. 79, 185-197. (doi:10.1101/sqb.2014.79.024893)

- 76. Desender K, Donner TH, Verguts T. 2021. Dynamic expressions of confidence within an evidence accumulation framework. Cognition 207, 104522. (doi:10.1016/j.cognition.2020.104522)

- 77. Desender K, Vermeylen L, Verguts T. 2022. Dynamic influences on static measures of metacognition. Nat. Commun. 13, 4208. (doi:10.1038/s41467-022-31727-0)

- 78. Deroy O, Spence C, Noppeney U. 2016. Metacognition in multisensory perception. Trends Cogn. Sci. 20, 736-747. (doi:10.1016/j.tics.2016.08.006)

- 79. Pleskac TJ, Busemeyer JR. 2010. Two-stage dynamic signal detection: a theory of choice, decision time, and confidence. Psychol. Rev. 117, 864-901. (doi:10.1037/a0019737)

- 80. Herregods S, Denmat PL, Desender K. 2023. Modelling speed-accuracy tradeoffs in the stopping rule for confidence judgments. bioRxiv 2023.02.27.530208. (doi:10.1101/2023.02.27.530208)

- 81. Fetsch CR, Kiani R, Newsome WT, Shadlen MN. 2014. Effects of cortical microstimulation on confidence in a perceptual decision. Neuron 83, 797-804. (doi:10.1016/j.neuron.2014.07.011)

- 82. Fetsch CR, Odean NN, Jeurissen D, El-Shamayleh Y, Horwitz GD, Shadlen MN. 2018. Focal optogenetic suppression in macaque area MT biases direction discrimination and decision confidence, but only transiently. eLife 7, e36523. (doi:10.7554/eLife.36523)

- 83. Alais D, Burr D. 2004. The ventriloquist effect results from near-optimal bimodal integration. Curr. Biol. 14, 257-262. (doi:10.1016/j.cub.2004.01.029)

- 84. van den Berg R, Anandalingam K, Zylberberg A, Kiani R, Shadlen MN, Wolpert DM. 2016. A common mechanism underlies changes of mind about decisions and confidence. eLife 5, e12192. (doi:10.7554/eLife.12192)

- 85. Shan H, Moreno-Bote R, Drugowitsch J. 2019. Family of closed-form solutions for two-dimensional correlated diffusion processes. Phys. Rev. E 100, 032132. (doi:10.1103/PhysRevE.100.032132)

- 86. Zheng Q, Zhou L, Gu Y. 2021. Temporal synchrony effects of optic flow and vestibular inputs on multisensory heading perception. Cell Rep. 37, 109999. (doi:10.1016/j.celrep.2021.109999)

- 87. Rabbitt P, Rodgers B. 1977. What does a man do after he makes an error? An analysis of response programming. Q. J. Exp. Psychol. 29, 727-743. (doi:10.1080/14640747708400645)

- 88. van den Berg R, Zylberberg A, Kiani R, Shadlen MN, Wolpert DM. 2016. Confidence is the bridge between multi-stage decisions. Curr. Biol. 26, 3157-3168. (doi:10.1016/j.cub.2016.10.021)

- 89. Balsdon T, Wyart V, Mamassian P. 2020. Confidence controls perceptual evidence accumulation. Nat. Commun. 11, 1753. (doi:10.1038/s41467-020-15561-w)

- 90. Stavropoulos A, Lakshminarasimhan KJ, Laurens J, Pitkow X, Angelaki DE. 2022. Influence of sensory modality and control dynamics on human path integration. eLife 11, e63405. (doi:10.7554/eLife.63405)

- 91. Barry C, Burgess N. 2014. Neural mechanisms of self-location. Curr. Biol. 24, R330-R339. (doi:10.1016/j.cub.2014.02.049)

- 92. Noel J-P, Angelaki DE. 2022. Cognitive, systems, and computational neurosciences of the self in motion. Annu. Rev. Psychol. 73, 103-129. (doi:10.1146/annurev-psych-021021-103038)

- 93. Petzschner FH, Glasauer S. 2011. Iterative Bayesian estimation as an explanation for range and regression effects: a study on human path integration. J. Neurosci. 31, 17 220-17 229. (doi:10.1523/JNEUROSCI.2028-11.2011)

- 94. Kutschireiter A, Basnak MA, Wilson RI, Drugowitsch J. 2023. Bayesian inference in ring attractor networks. Proc. Natl Acad. Sci. USA 120, e2210622120. (doi:10.1073/pnas.2210622120)

- 95. Sutton RS, Barto AG. 2018. Reinforcement learning: an introduction, 2nd edn. Cambridge, MA: MIT Press. See https://mitpress.mit.edu/9780262039246/reinforcement-learning/.

- 96. Kaplan R, Friston KJ. 2018. Planning and navigation as active inference. Biol. Cybern. 112, 323-343. (doi:10.1007/s00422-018-0753-2)

- 97. Lakshminarasimhan KJ, Petsalis M, Park H, DeAngelis GC, Pitkow X, Angelaki DE. 2018. A dynamic Bayesian observer model reveals origins of bias in visual path integration. Neuron 99, 194-206. (doi:10.1016/j.neuron.2018.05.040)

- 98. Lakshminarasimhan KJ, Avila E, Neyhart E, DeAngelis GC, Pitkow X, Angelaki DE. 2020. Tracking the mind's eye: primate gaze behavior during virtual visuomotor navigation reflects belief dynamics. Neuron 106, 1-13. (doi:10.1016/j.neuron.2020.02.023)

- 99. Noel J-P, Lakshminarasimhan KJ, Park H, Angelaki DE. 2020. Increased variability but intact integration during visual navigation in Autism Spectrum Disorder. Proc. Natl Acad. Sci. USA 117, 11 158-11 166. (doi:10.1073/pnas.2000216117)

- 100. Noel J-P, Balzani E, Avila E, Lakshminarasimhan KJ, Bruni S, Alefantis P, Savin C, Angelaki DE. 2022. Coding of latent variables in sensory, parietal, and frontal cortices during closed-loop virtual navigation. eLife 11, e80280. (doi:10.7554/eLife.80280)

- 101. Alefantis P, Lakshminarasimhan K, Avila E, Noel J-P, Pitkow X, Angelaki DE. 2022. Sensory evidence accumulation using optic flow in a naturalistic navigation task. J. Neurosci. 42, 5451-5462. (doi:10.1523/JNEUROSCI.2203-21.2022)

- 102. Noel J-P, Caziot B, Bruni S, Fitzgerald NE, Avila E, Angelaki DE. 2021. Supporting generalization in non-human primate behavior by tapping into structural knowledge: examples from sensorimotor mappings, inference, and decision-making. Prog. Neurobiol. 201, 101996. (doi:10.1016/j.pneurobio.2021.101996)

- 103. Zhu S, Lakshminarasimhan KJ, Arfaei N, Angelaki DE. 2022. Eye movements reveal spatiotemporal dynamics of visually-informed planning in navigation. eLife 11, e73097. (doi:10.7554/eLife.73097)

- 104. Gulli RA, Duong LR, Corrigan BW, Doucet G, Williams S, Fusi S, Martinez-Trujillo JC. 2020. Context-dependent representations of objects and space in the primate hippocampus during virtual navigation. Nat. Neurosci. 23, 103-112. (doi:10.1038/s41593-019-0548-3)

- 105. Noel J-P, Bill J, Ding H, Vastola J, DeAngelis G, Angelaki D, Drugowitsch J. 2022. Causal inference during closed-loop navigation: parsing of self- and object-motion. Phil. Trans. R. Soc. B 378, 20220344. (doi:10.1098/rstb.2022.0344)

- 106. Goldberg JM, Fernandez C. 1971. Physiology of peripheral neurons innervating semicircular canals of the squirrel monkey. I. Resting discharge and response to constant angular accelerations. J. Neurophysiol. 34, 635-660. (doi:10.1152/jn.1971.34.4.635)

- 107. Chen A, DeAngelis GC, Angelaki DE. 2010. Macaque parieto-insular vestibular cortex: responses to self-motion and optic flow. J. Neurosci. 30, 3022-3042. (doi:10.1523/JNEUROSCI.4029-09.2010)

- 108. de Winkel KN, Katliar M, Bülthoff HH. 2017. Causal inference in multisensory heading estimation. PLoS ONE 12, e0169676. (doi:10.1371/journal.pone.0169676)

- 109. Cullen KE. 2019. Vestibular processing during natural self-motion: implications for perception and action. Nat. Rev. Neurosci. 20, 346-363. (doi:10.1038/s41583-019-0153-1)

- 110. Roy JE, Cullen KE. 2001. Selective processing of vestibular reafference during self-generated head motion. J. Neurosci. 21, 2131-2142. (doi:10.1523/JNEUROSCI.21-06-02131.2001)

- 111. Brooks JX, Carriot J, Cullen KE. 2015. Learning to expect the unexpected: rapid updating in primate cerebellum during voluntary self-motion. Nat. Neurosci. 18, 1310-1317. (doi:10.1038/nn.4077)

- 112. Laurens J, Angelaki DE. 2017. A unified internal model theory to resolve the paradox of active versus passive self-motion sensation. eLife 6, e28074. (doi:10.7554/eLife.28074)

- 113. Komatsu H, Wurtz RH. 1988. Relation of cortical areas MT and MST to pursuit eye movements. III. Interaction with full-field visual stimulation. J. Neurophysiol. 60, 621-644. (doi:10.1152/jn.1988.60.2.621)

- 114. Jacob MS, Duffy CJ. 2015. Steering transforms the cortical representation of self-movement from direction to destination. J. Neurosci. 35, 16 055-16 063. (doi:10.1523/JNEUROSCI.2368-15.2015)

- 115. Seideman JA, Stanford TR, Salinas E. 2018. Saccade metrics reflect decision-making dynamics during urgent choices. Nat. Commun. 9, 2907. (doi:10.1038/s41467-018-05319-w)

- 116. Korbisch CC, Apuan DR, Shadmehr R, Ahmed AA. 2022. Saccade vigor reflects the rise of decision variables during deliberation. Curr. Biol. 32, 5374-5381.e4. (doi:10.1016/j.cub.2022.10.053)

- 117. Gulli R, Hashim R, Fusi S, Salzman D. 2022. Prog. No. 018.05. Neuroscience Meeting Planner. San Diego, CA: Society for Neuroscience.

- 118. Lu J, Mohan K, Tsao D. 2022. Prog. No. 132.20. Neuroscience Meeting Planner. San Diego, CA: Society for Neuroscience.

- 119. Jun JJ, et al. 2017. Fully integrated silicon probes for high-density recording of neural activity. Nature 551, 232-236. (doi:10.1038/nature24636)

- 120. Huk A, Bonnen K, He BJ. 2018. Beyond trial-based paradigms: continuous behavior, ongoing neural activity, and natural stimuli. J. Neurosci. 38, 7551-7558. (doi:10.1523/JNEUROSCI.1920-17.2018)

- 121. Pandarinath C, et al. 2018. Inferring single-trial neural population dynamics using sequential auto-encoders. Nat. Methods 15, 805-815. (doi:10.1038/s41592-018-0109-9)

- 122. Gokcen E, Jasper AI, Semedo JD, Zandvakili A, Kohn A, Machens CK, Yu BM. 2022. Disentangling the flow of signals between populations of neurons. Nat. Comput. Sci. 2, 512-525. (doi:10.1038/s43588-022-00282-5)

- 123. Inagaki HK, Chen S, Daie K, Finkelstein A, Fontolan L, Romani S, Svoboda K. 2022. Neural algorithms and circuits for motor planning. Annu. Rev. Neurosci. 45, 249-271. (doi:10.1146/annurev-neuro-092021-121730)

- 124. Kohn A, Jasper AI, Semedo JD, Gokcen E, Machens CK, Yu BM. 2020. Principles of corticocortical communication: proposed schemes and design considerations. Trends Neurosci. 43, 725-737. (doi:10.1016/j.tins.2020.07.001)

- 125. Machado TA, Kauvar IV, Deisseroth K. 2022. Multiregion neuronal activity: the forest and the trees. Nat. Rev. Neurosci. 23, 683-704. (doi:10.1038/s41583-022-00634-0)

- 126. Ruff DA, Ni AM, Cohen MR. 2018. Cognition as a window into neuronal population space. Annu. Rev. Neurosci. 41, 77-97. (doi:10.1146/annurev-neuro-080317-061936)