Abstract

Head CT, which includes the facial region, can visualize faces using 3D reconstruction, raising concern that individuals may be identified. We developed a new de-identification technique that distorts the faces of head CT images. Head CT images to be distorted were labeled as "original images" and the others as "reference images." Reconstructed face models of both were created, with 400 control points on the facial surfaces. All voxel positions in the original image were moved according to the deformation vectors required to move its control points to the corresponding control points on the reference image. Three face detection and identification programs were used to determine face detection rates and match confidence scores. Intracranial volume equivalence tests were performed before and after deformation, and correlation coefficients between intracranial pixel value histograms were calculated. Output accuracy of the deep learning model for intracranial segmentation was determined using the Dice Similarity Coefficient before and after deformation. The face detection rate was 100%, and match confidence scores were < 90. Equivalence testing of the intracranial volume revealed statistical equivalence before and after deformation. The median correlation coefficient between intracranial pixel value histograms before and after deformation was 0.9965, indicating high similarity. Dice Similarity Coefficient values of original and deformed images were statistically equivalent. We developed a technique to de-identify head CT images while maintaining the accuracy of deep learning models. The technique involves deforming images to prevent face identification, with minimal changes to the original information.

Keywords: Head CT images, Reconstructed face models, Personal information, De-identification, Deformation

Introduction

Data sharing is being widely promoted in medicine to advance research activities; however, there is increasing concern regarding the protection of personal information (El Emam et al., 2015; Gallagher, 2002; Huh, 2019; Poline et al., 2012; Raghupathi & Raghupathi, 2014; Scheinfeld, 2004; Vallance & Chalmers, 2013). Medical images such as head CT can be used to reproduce human facial features using 3D reconstruction (Bischoff-Grethe et al., 2007; Budin et al., 2008; Chen et al., 2014; Matlock et al., 2012; Mazura et al., 2012; Milchenko & Marcus, 2013; Prior et al., 2009; Theyers et al., 2021). The Health Insurance Portability and Accountability Act 2006 summit in the United States highlighted that 3D-reconstructed facial information could be personally identifiable (Steinberg, 2006). Tremendous changes in communication methods between medical professionals make it necessary to consider legal, social, and ethical issues regarding protecting personal information (Lamas et al., 2015; Lamas et al., 2016; Voßhoff et al., 2015).

Various studies indicate the risk of 3D facial reconstruction of head medical images in identifying patient faces (Chen et al., 2014; Mazura et al., 2012; Parks & Monson, 2017; Prior et al., 2009; Schwarz et al., 2019; Schwarz et al., 2021).

Typical existing de-identification techniques include deleting facial voxels (QuickShear, Defacing) (Bischoff-Grethe et al., 2007; Matlock et al., 2012), local deformation (Facial Deformation) (Budin et al., 2008), blurring (Face Masking) (Milchenko & Marcus, 2013), and face replacement (Reface) (Schwarz et al., 2021).

QuickShear and Defacing aim to prevent facial identification by deleting voxels in the face region and eliminating facial information. Loss of anatomical structures on the surface and inside the face results in considerable alteration of information and could impede data utilization (de Sitter et al., 2020). Removal of the entire face makes detecting faces from the reconstructed face models difficult. Images in which faces cannot be detected from the reconstructed face models have been shown to reduce the accuracy of medical image alignment, such as affine transforms. Face removal therefore affects downstream medical image processing in several ways, which may be problematic for surgical simulation and research. Facial Deformation only deforms characteristic facial regions and preserves the internal anatomy. Achieving sufficient de-identification using this technique requires such great deformations that the face shape cannot be preserved, making face detection from reconstructed models difficult. Face Masking smooths the face surface to prevent face identification; preventing face identification in this way requires such strong blurring that the anatomical structure of the face surface cannot be preserved, making face detection difficult. Reface performs face removal and then replaces the face with another face. In this technique, the change in information is so great that boundary areas between the original and additional images are created, tissue continuity is not maintained, and the integrity of the internal anatomical structures is greatly disrupted.

A report revealed that images processed with QuickShear, Defacing, and Face Masking for segmenting brain tissue and tumors achieved reduced output accuracy in deep learning models (de Sitter et al., 2020).

We aimed to develop a new de-identification technique in which (1) reconstructed face models remain face-detectable after processing, (2) the pre- and post-processing reconstructed face models are not judged to be the same person, (3) changes in information between pre- and post-processing are small, and (4) the post-processing images are suitable for deep learning.

Materials and Methods

Acquisition of CT Images

Non-contrast head CT images of 140 Japanese patients and volunteers admitted to our hospital between January 2021 and May 2022 were obtained. CT was performed using a 320-row multi-slice CT scanner (Aquilion ONE; Toshiba Medical Systems, Tokyo, Japan), using the following parameters: collimation, 0.5 mm; tube voltage, 120 kV; tube current, 200 mA; rotation time, 0.6 s; reconstruction section width, 0.5 mm; reconstruction interval, 0.5 mm; and voxel size, 0.43 × 0.43 × 1.00 mm. The imaging range included the entire face (eyes, nose, mouth, and ears). Images of patients < 20 years old (11 cases), patients with prominent skin or bone lesions (five), patients with specific facial features due to congenital anomalies or disease (one), and patients wearing oxygen masks (four) were excluded; 119 head CT images were included (Fig. 1). All procedures involving human participants were in accordance with the 1964 Helsinki declaration and its later amendments. The Institutional Review Board of our hospital approved the study protocol (approval number: #2021107NI). Written informed consent was obtained from all patients before participation.

Fig. 1.

Flowchart for selection of eligible data in this development study. A total of 140 non-contrast head CT images were obtained, and 119 images were included

Methods for Creating Reconstructed Face Models

Head CT images were input into the image processing software Amira 3D® (version 2021.1; Thermo Fisher Scientific, Waltham, MA, USA) (Thermo Fisher Scientific, 2022) using Digital Imaging and Communications in Medicine format to create reconstructed face models. The images were processed using the marching cubes algorithm with a simple thresholding method and visualized using the surface rendering method. Directional lighting was used, the object color scheme was gray, and the threshold was set to -200. Other than the head, the human body and head fixtures were deleted. Snapshots (.png) in the forward-facing position (arbitrarily set by the creator, a board-certified neurosurgeon) were used as visual information.

Methods of Deformation Processing

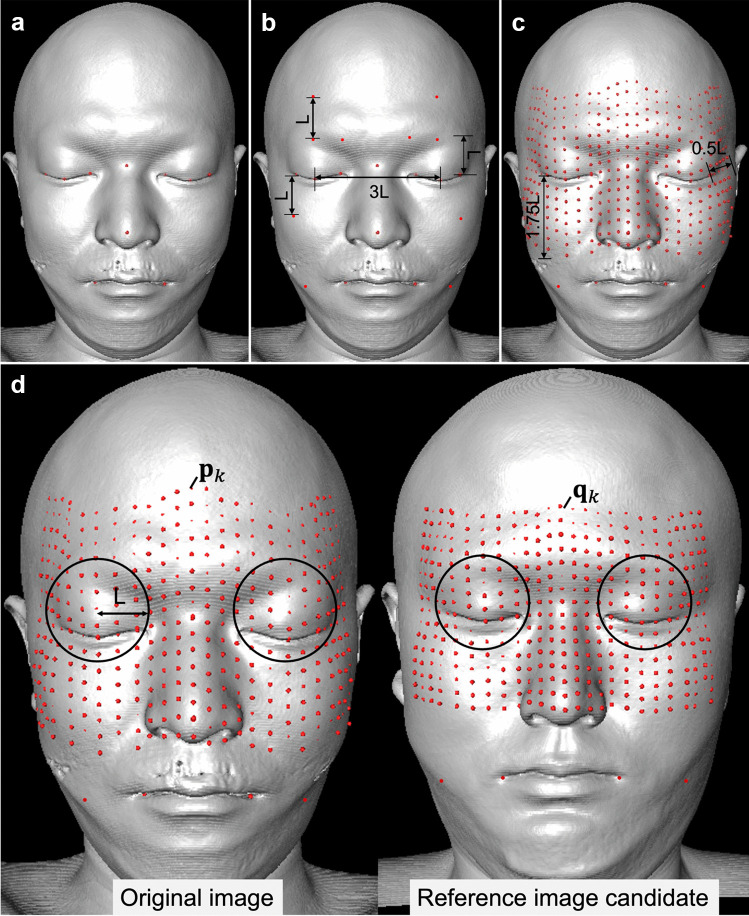

Standard control points (10 points) were set on the feature parts of the face (Fig. 2a).

- Eye: six points in total on the internal, midpoints, and external areas

- Nose: two points on the root and tip

- Mouth: two points on both corners of the mouth

Fig. 2.

Procedure for setting control points and selecting a reference image. a Ten points were set as standard control points: inner canthus, outer canthus, midpoints, nasal root, nasal tip, and both corners of the mouth. b Four control points were added for the eyebrows, two for the forehead, two for both cheeks, and two for the chin, for a total of 20 points. c Control points were added in a grid-like pattern to the area bounded by the set control points, and the area was expanded in the lateral direction of the outer canthus. The additional control points were limited to the area above the mouth, for a total of 400 points. d The area around the eyes is defined as the range within a sphere of radius L centered at the midpoint between the eyes and eyebrows. The image with the largest summed distance between the corresponding control points around the eye between the two images was used as the reference image. L: baseline distance (1/3 of the distance between the midpoints of both eyes), p: control point of the original image, q: control point of the reference image candidate, k: index of the control point

One-third of the distance between the midpoints of both eyes was used as the baseline distance (L), and the following 10 control points were added at locations along the geodesic line above and below the set control points (Fig. 2b).

- Eyebrow: four points on the midpoint (above the midpoint of the eyes) and the medial side (above the inner canthi)

- Forehead: two points on the midpoint (above the midpoint of the eyebrows)

- Cheek: two points on the lower part of the outer canthus

- Chin: two points on the corner line of the mouth below the outer canthus

In a grid-like pattern, additional control points were added to the area bounded by the 20 set control points. The range of control points to be added was extended by 0.5 L in the lateral direction of the outer canthi. The added control points were limited to the area above the mouth to minimize the influence of intraoral metal artifacts. Finally, 400 control points were set (Fig. 2c). All control points were set manually using Amira 3D®.

The 118 images other than the original image to be transformed were considered as the reference image candidate group, and the reference image was selected from among these images. The image with the greatest difference in shape around the eyes compared to the original image was selected as the reference image to deform the face and resemble a different face. Details of the reference image selection method are provided below.

Based on the assumption that the control points were set for the original image and all the reference images, registration between the original image and the reference image candidates was first performed. Scale alignment between the original image and reference image candidates was performed as a preprocessing step for registration. The scale of the reference image candidates was enlarged or reduced such that the distance between the midpoints of both eyes between the original image and the reference image candidates was equal.
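The scale-alignment step can be illustrated with a minimal Python sketch. It assumes uniform scaling about the coordinate origin and takes the two eye midpoints of each image as inputs; the function name `scale_candidate` and the example coordinates are hypothetical and are not taken from the study.

```python
import math

def scale_candidate(cand_points, cand_eye_mids, orig_eye_mids):
    """Uniformly scale a reference image candidate (assumption: about the origin)
    so that its inter-eye-midpoint distance equals that of the original image.

    cand_points   : list of (x, y, z) control points of the candidate
    cand_eye_mids : pair of (x, y, z) eye midpoints of the candidate
    orig_eye_mids : pair of (x, y, z) eye midpoints of the original image
    Returns the scale factor and the scaled control points.
    """
    target = math.dist(orig_eye_mids[0], orig_eye_mids[1])
    current = math.dist(cand_eye_mids[0], cand_eye_mids[1])
    s = target / current
    scaled = [tuple(s * c for c in point) for point in cand_points]
    return s, scaled

# Hypothetical coordinates in mm: the candidate is slightly smaller than the original.
factor, scaled_points = scale_candidate(
    [(-30.0, 0.0, 0.0), (30.0, 0.0, 0.0), (0.0, 10.0, 0.0)],
    ((-30.0, 0.0, 0.0), (30.0, 0.0, 0.0)),
    ((-33.0, 0.0, 0.0), (33.0, 0.0, 0.0)),
)
```

The subsequent rigid registration is not covered by this sketch.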

After scale alignment, the corresponding points (midpoints of the eyes, eyebrows, and forehead, six points in total for each image) were set for the original image and the reference image candidates, and registration was performed using the least-squares method (Abdi, 2007; Jiang, 1998), which minimizes the sum of the distances between the corresponding points. Because rigid body registration with six degrees of freedom (three translation degrees of freedom and three rotation degrees) was used, no interpolation was performed for the surface points between the control points.

Next, control points around the eyes were selected. The area around the eyes was defined as the range contained within a sphere of radius L (1/3 of the distance between the midpoints of both eyes) centered at the midpoint between the eyes and the eyebrows (Fig. 2d). Control points around the eyes were selected for each of the original and reference image candidates. The sum of the distances (D) between the corresponding control points in the two images was calculated.

$$D = \sum_{k=1}^{n} \lVert p_k - q_k \rVert \tag{1}$$

p is the control point of the original image, q the control point of the reference image candidate, k the control point index, and n the number of control points used in the evaluation. The image with the largest D is the reference image R, which is given by the following formula:

$$R = \underset{i}{\arg\max}\; D(i) \tag{2}$$

i is the index of the reference image candidate and D(i) is the sum of the distances between the control points when the reference image candidate i is used as the reference image.
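The reference image selection can be sketched in Python as follows. This is an illustrative sketch only: it assumes that the images are already scale-aligned and registered, that distances are Euclidean, and that the periorbital control points are chosen from the original image's points; the function names and the toy coordinates are hypothetical.

```python
import math

def periorbital_indices(points, center, radius):
    """Indices of control points lying within a sphere of the given radius (L)."""
    return [k for k, p in enumerate(points) if math.dist(p, center) <= radius]

def summed_distance(orig_pts, cand_pts, indices):
    """D: sum of distances between corresponding control points p_k and q_k."""
    return sum(math.dist(orig_pts[k], cand_pts[k]) for k in indices)

def select_reference(orig_pts, candidates, indices):
    """Return the candidate with the largest D, together with all D(i) values.

    candidates: dict mapping a candidate identifier to its control points.
    """
    scores = {name: summed_distance(orig_pts, pts, indices)
              for name, pts in candidates.items()}
    best = max(scores, key=scores.get)
    return best, scores

# Toy example with three control points; the third lies outside the sphere.
orig = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (50.0, 0.0, 0.0)]
idx = periorbital_indices(orig, (0.0, 0.0, 0.0), 20.0)
cands = {
    "A": [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (99.0, 0.0, 0.0)],
    "B": [(0.0, 3.0, 4.0), (1.0, 0.0, 0.0), (50.0, 0.0, 0.0)],
}
reference, d_values = select_reference(orig, cands, idx)
```

In this toy case, candidate "B" is selected because its periorbital control points lie farther from those of the original image.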

Based on the assumption that registration of the original and reference images was complete, voxels in the original image were moved according to the following procedure.

First, to deform the control points in the original image to the corresponding control points in the reference image, the deformation vector d of each control point is calculated using the following formula:

$$d_k = q_k - p_k \tag{3}$$

p is the control point in the original image, q is the control point in the reference image R, d is the deformation vector of the control point, and k is the index of the control point.

Next, the deformation vector Cj at the voxel coordinate Ij was calculated by computing a weighted addition of the deformation vectors of the surrounding control points using the following formula:

| 4 |

| 5 |

| 6 |

C is the deformation vector of each voxel in the original image, n is the number of control points, I is the voxel coordinates in the original image, j is the index of voxels in the original image, σ1 and σ2 are the standard deviations, G is the normal distribution, and w is the normal distribution divided by the center value. This formula shows that the deformation vectors of nearby control points affect C more when performing a weighted addition.

The deformation vectors of all voxels in the original image were calculated, and the deformed image was obtained by moving the voxel positions according to the deformation vectors (Fig. 3). The amount of voxel deformation was attenuated with the distance from the control points. 1σ was set to 7.5 mm, and the effect on voxels at distances greater than 3σ was ignored.
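To make the weighting idea concrete, the following Python sketch shows one plausible reading of the procedure; it is not the exact formulation used in this study. It assumes that each control point's contribution is the product of an attenuation term (a Gaussian divided by its center value, with σ1) and a normalized Gaussian blending weight (with σ2), that σ1 = σ2 = 7.5 mm, and that control points farther than 3σ from the voxel are ignored. All function names are hypothetical.

```python
import math

SIGMA_MM = 7.5  # 1 sigma, as stated in the text

def control_vectors(p, q):
    """Deformation vector d_k = q_k - p_k for each control point."""
    return [tuple(qc - pc for pc, qc in zip(pk, qk)) for pk, qk in zip(p, q)]

def voxel_deformation(voxel, p, d, sigma1=SIGMA_MM, sigma2=SIGMA_MM):
    """Deformation vector of one voxel (assumed form, see lead-in).

    voxel : (x, y, z) coordinate in mm
    p     : control points of the original image
    d     : deformation vectors of those control points
    """
    cutoff = 3.0 * max(sigma1, sigma2)
    near = []
    for pk, dk in zip(p, d):
        r = math.dist(voxel, pk)
        if r <= cutoff:  # ignore control points beyond 3 sigma
            near.append((r, dk))
    if not near:
        return (0.0, 0.0, 0.0)
    # Blending weights: nearer control points contribute more to the sum.
    blend = [math.exp(-r * r / (2.0 * sigma2 ** 2)) for r, _ in near]
    total = sum(blend)
    out = [0.0, 0.0, 0.0]
    for (r, dk), b in zip(near, blend):
        # Attenuation: Gaussian divided by its center value, equal to 1 at r = 0.
        atten = math.exp(-r * r / (2.0 * sigma1 ** 2))
        for axis in range(3):
            out[axis] += atten * (b / total) * dk[axis]
    return tuple(out)

# One control point moved 2 mm along x.
p = [(0.0, 0.0, 0.0)]
d = control_vectors(p, [(2.0, 0.0, 0.0)])
at_point = voxel_deformation((0.0, 0.0, 0.0), p, d)    # full displacement
one_sigma = voxel_deformation((7.5, 0.0, 0.0), p, d)   # attenuated
far_away = voxel_deformation((30.0, 0.0, 0.0), p, d)   # beyond 3 sigma
```

A complete implementation would also resample the volume after moving the voxels, which this sketch does not attempt.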

Fig. 3.

Movement of control points and voxels. The voxels in the original image are moved based on the deformation vector calculated for each control point. The closer the voxel is to the control points, the greater the deformation; the further away the voxel is from the control points, the more it decays. In addition, when adding voxel deformations, the closer the control points, the stronger the effect. C: deformation vector of each voxel in the original image; d: deformation vector of the control point; I: voxel coordinates in the original image; j: index of voxels in the original image; k: index of the control point; p: control point of the original image; q: control point of the reference image

Evaluation of Accuracy

1. Face detection test

Face detection tests were conducted using face detection programs provided by three software packages: Face API (Microsoft Corp., Redmond, WA, USA) (Azure Microsoft, 2022), Rekognition (Amazon.com, Inc, Seattle, WA, USA) (Amazon, 2022), and NeoFace KAOATO (NEC Corp., Tokyo, Japan) (NEC solution innovator, 2022). The face detection rate (percentage of faces detected from 119 cases) was calculated.

2. Face identification test

Face identification tests were conducted using the face identification programs provided by the same software packages. Face identification programs detect the feature points of each face and output the match confidence score, which indicates the confidence level that the two faces are the same person, based on the coincidence of these feature points.

For the reconstructed face models of the original images, seven snapshots (.png) were taken for each person at different angles: straight ahead, 10° and 20° left and right, and 10° up and down (119 cases, 833 snapshots). These snapshots were registered with the programs as Ground Truth. A snapshot of the reconstructed face model (straight ahead) of the deformed image was input into the programs as test data. Subsequently, the match confidence score between the original and deformed images of the same person was obtained. Match confidence scores are output in the range 0–100 (0: absolute disagreement, 100: absolute match); Face API outputs the match confidence scores in the range 0–1, so its output was multiplied by 100 to standardize the range. The match confidence scores were verified in all 119 cases (Fig. 4).
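The bookkeeping for this test can be sketched in a few lines of Python. The sketch does not call any of the three face identification services; it only illustrates the snapshot count, the rescaling of 0–1 scores to the 0–100 range, and a check against a threshold. The function names, the snapshot labels, and the example score are hypothetical.

```python
# Seven viewing angles per reconstructed face model (labels are illustrative).
SNAPSHOT_ANGLES = ["front", "left10", "left20", "right10", "right20", "up10", "down10"]
N_CASES = 119
N_SNAPSHOTS = len(SNAPSHOT_ANGLES) * N_CASES  # 7 x 119 registered snapshots

def to_percent_scale(score, scale_max):
    """Rescale a score reported on 0..scale_max to the common 0..100 range."""
    return score * (100.0 / scale_max)

def all_below_threshold(scores, threshold=90.0):
    """True if every match confidence score is below the threshold."""
    return all(s < threshold for s in scores)

# A made-up score on a 0-1 scale, rescaled to 0-100.
example = to_percent_scale(0.85, 1.0)
```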

3. Verification of information changes

Fig. 4.

Face identification test flow. The reconstructed face models of the original images were registered with the three face identification programs as Ground Truth (STEP 1). The reconstructed face model of the deformed image was input into the programs as test data. The match confidence score between the original and deformed images of the same person was obtained (STEP 2)

Intracranial volume was measured using the image processing software Amira 3D®. A seed was set in the thalamus, and intracranial segmentation was performed with thresholds ranging from -50 to 120. Unnecessary soft tissue and the spinal cord below the inferior end of the frontal lobe were removed. The volume of the segmentation area was measured. In all cases, intracranial volumes of the original and deformed images were measured and compared.

The similarity between the intracranial pixel-value histograms of the original and deformed images was verified. Intracranial regions in the two images were segmented using the method described above, and only the segmented intracranial regions were extracted from the volume data. The correlation coefficient (Guilford, 1956) between the two images was calculated using the normalized correlation to evaluate the similarity of the intracranial pixel value histograms in the images before and after deformation. The image processing software Amira 3D® was used for the entire process and calculation of the correlation coefficients. The correlation coefficient (r) is given by the following formula:

| 7 |
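As an illustration of the histogram comparison, the sketch below computes Pearson's correlation coefficient between the bin counts of two pixel-value histograms. Whether Amira 3D® computes exactly this quantity is an assumption, as are the bin width and the use of the segmentation thresholds (-50 to 120) as the histogram range; the function names are hypothetical.

```python
import math

def histogram(values, lo, hi, bins):
    """Count values falling in [lo, hi) using equally wide bins."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for v in values:
        if lo <= v < hi:
            index = min(int((v - lo) / width), bins - 1)  # guard against rounding
            counts[index] += 1
    return counts

def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

# Toy pixel values standing in for two segmented intracranial regions.
h_original = histogram([-50, 0, 30, 35, 119, 120], -50, 120, 17)
h_deformed = histogram([-49, 1, 31, 36, 118, 121], -50, 120, 17)
r = pearson_r(h_original, h_deformed)
```

A value of r close to 1 indicates that the two histograms have nearly the same shape.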

4. Verification of utilization

A deep learning model for intracranial segmentation was created using the Dragonfly® software (version 2021.3; Object Research Systems, Montreal, Canada) (Research Systems Object, 2022), which can create deep learning models for medical image segmentation. Of the 119 eligible images, 100 were used as training data, and 19 were used as test data.

The intracranial region was segmented in the same way as for the intracranial volume measurement and output as binary data (1: intracranial, 0: other regions) to create the Ground Truth. The original images and Ground Truth were used as the training dataset (100 cases) to train a 2D U-Net (Ronneberger et al., 2015; Presotto et al., 2022). Data augmentation was performed by flipping horizontally and vertically, rotating, shearing, and scaling. The parameters were a batch size of 512, 100 epochs, and categorical cross-entropy as the loss function. For the validation data, 20% of the training data was used (Fig. 5).

Fig. 5.

Output accuracy of deep learning models for intracranial segmentation. Left: The training dataset of 100 cases was used to train a 2D U-Net to create a deep learning model. Right: Nineteen cases, each with a corresponding original and deformed image, were used as test data. The DSC was calculated by inputting the test data into the deep learning model for intracranial segmentation. The DSCs for the original and deformed image inputs were calculated and compared. DSC: Dice Similarity Coefficient

The Dice Similarity Coefficient (DSC) (Dice, 1945) was used to evaluate the output accuracy of the deep learning model. In the original and deformed images, each of the 19 images not used for training was input into the deep learning model as test data. DSC was calculated and compared between the original and deformed image inputs (Fig. 5).
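For reference, the DSC of two binary masks A and B is 2|A ∩ B| / (|A| + |B|). A minimal Python sketch on flattened binary masks follows; the function name `dice` and the convention of returning 1.0 for two empty masks are choices made for this illustration.

```python
def dice(mask_a, mask_b):
    """Dice Similarity Coefficient of two equally long binary masks (1: intracranial)."""
    intersection = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    size = sum(1 for a in mask_a if a) + sum(1 for b in mask_b if b)
    if size == 0:
        return 1.0  # convention for two empty masks (assumption)
    return 2.0 * intersection / size

# Toy example: model output versus Ground Truth on four voxels.
prediction = [1, 1, 0, 0]
ground_truth = [1, 0, 0, 0]
score = dice(prediction, ground_truth)
```

In this toy case, one voxel overlaps and the two masks contain three foreground voxels in total, so the DSC is 2/3.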

Statistical Analysis

Equivalence tests (Dunnett & Gent, 1977) were performed to verify whether the intracranial volumes of the original and deformed images were equivalent. The equivalence margin was set at 1% of the mean intracranial volume of the original image, with a significance level of 5%. Bland–Altman analysis (Bland & Altman, 1986) was performed to verify the equivalence of the DSCs for the original and deformed images. Statistical analyses were performed using JMP Pro® 16 (SAS Institute Inc., Cary, NC, USA).
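The core quantities of a Bland–Altman analysis, the mean difference (bias) and the 95% limits of agreement, can be sketched in Python as follows. This is a generic illustration rather than the JMP Pro® procedure used in this study; the function name and the sample values are hypothetical, and the equivalence test itself is not covered.

```python
import statistics

def bland_altman(values_a, values_b):
    """Return (bias, lower limit, upper limit) for paired measurements.

    bias   : mean of the paired differences a - b
    limits : bias -/+ 1.96 x sample standard deviation of the differences
    """
    diffs = [a - b for a, b in zip(values_a, values_b)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)
    return bias, bias - 1.96 * sd, bias + 1.96 * sd

# Made-up paired values standing in for DSCs of original and deformed inputs.
bias, lower, upper = bland_altman([1.0, 2.0, 3.0], [0.5, 2.0, 2.5])
```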

Results

Head CT images were acquired from 119 participants. The study participants were 48 men and 71 women (mean age: 55 ± 18 [range: 21–92] years). The clinical characteristics of participants are presented in Table 1. The clinical diagnoses were cerebrovascular diseases in 34 (29%), brain tumors in 54 (45%), functional diseases in 22 (18%), and others in 9 (8%) cases.

Table 1.

Characteristics of Participants

| Characteristic | Value |
|---|---|
| No. of participants | 119 |
| Age (years)* | 55 ± 18 [21–92] |
| Sex | |
| Female | 71 (60) |
| Male | 48 (40) |
| Diagnosis | |
| Cerebrovascular disease | 34 (29) |
| Brain tumor | 54 (45) |
| Functional disorder | 22 (18) |
| Others | 9 (8) |

Except where indicated, data are numbers of patients, with percentages in parentheses

*Age is expressed in years as the mean ± standard deviation, with the range in brackets

Deformation Processing

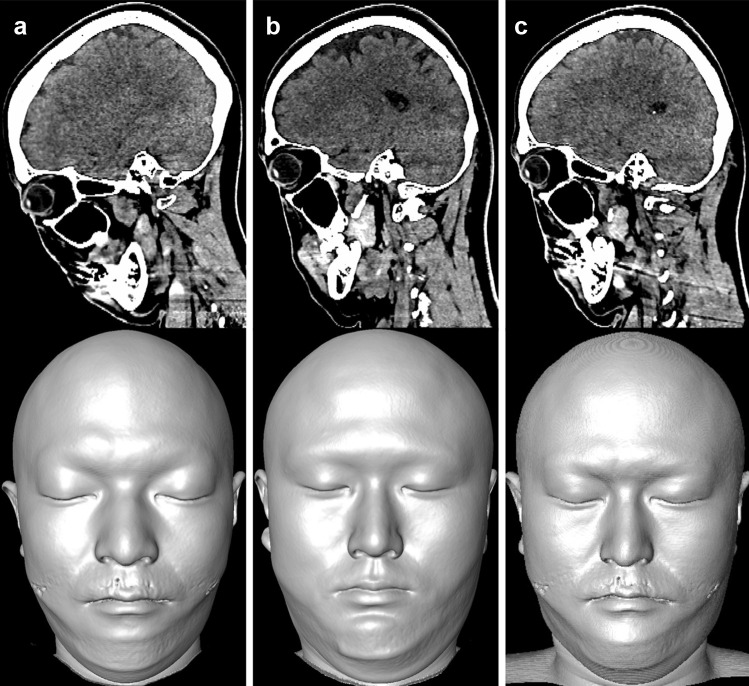

In all 119 cases, facial reconstruction models were created, and control points were set. Reference images could be selected in all cases, and deformation processing was performed on all original images using the selected reference images. The original, reference, and deformed images of one case are shown in Fig. 6.

Fig. 6.

Deformation processing for the original image. Top: CT volume data, Bottom: reconstructed face models from above images. a original image, b reference image, and c deformed image. In all cases, the control points could be set, the reference images were selected, and the deformed images were created

Evaluation of Accuracy

The face detection rate was 100% in all face detection programs for both reconstructed face models of the original images and those of deformed images.

The distribution of the match confidence scores is shown in Fig. 7. The median match confidence scores were 86.77 (interquartile range: 84.16–87.93) for Face API (Fig. 7a), 85.36 (interquartile range: 83.29–87.70) for Rekognition (Fig. 7b), and 84.59 (interquartile range: 81.07–88.04) for NeoFace KAOATO (Fig. 7c). In all cases, the match confidence scores were less than 90 for all face identification programs, and the distribution of match confidence scores did not differ among the three programs.

Fig. 7.

Match confidence scores distribution for each face identification program. Distribution of match confidence scores in a Face API, b Rekognition, and c NeoFace KAOATO. All face identification programs had match confidence scores below 90 in all cases, and there were no significant differences among the three programs

The mean ± standard deviation of the intracranial volume in the original images was 1412 ± 148 (× 10³ mm³), and that in the deformed images was 1410 ± 152 (× 10³ mm³).

The relationship between the intracranial volumes of the original and deformed images for each case is shown in Fig. 8. An equivalence test was performed using 1% of the mean intracranial volume in the original images (14 × 10³ mm³) as the equivalence margin. The difference between the means was above the lower limit (-14 × 10³ mm³; P = .0148) and below the upper limit (14 × 10³ mm³; P = .0012), indicating that the mean intracranial volumes of the original and deformed images were statistically equivalent; in other words, the difference between the two means lay within the equivalence margin. The % variance in intracranial volume difference was 105.5%, a small increment in the deformed images, but not an important difference.

Fig. 8.

Relationship between intracranial volume in original and deformed images. The intracranial volume changes due to deformation are shown in the scatter plot. The red line represents the identity line that shows a perfect match

The median correlation coefficient was 0.9965 (interquartile range: 0.9951–0.9974), and the intracranial pixel value histograms between the two images showed high similarity in all cases (Table 2).

Table 2.

Correlation coefficient of intracranial pixel value histograms

| Correlation coefficient | No. of cases |
|---|---|
| > 0.998 | 14 (12) |
| 0.996–0.998 | 58 (49) |
| 0.994–0.996 | 31 (26) |
| 0.992–0.994 | 7 (6) |
| < 0.992 | 9 (7) |

The correlation coefficients of the intracranial pixel value histograms between the original and deformed images were higher than 0.990 in all cases, indicating a high degree of similarity. Data are numbers of cases, with percentages in parentheses

The original and deformed images were input to the deep learning model for intracranial segmentation, and the DSC for the original and deformed images was calculated for all 19 cases. The DSC distribution is shown in Fig. 9. The median DSC was 0.9967 (interquartile range: 0.9962–0.9973) for the original images and 0.9952 (interquartile range: 0.9945–0.9958) for the deformed images; both values are high. A Bland–Altman analysis was performed to compare the DSCs of the original and deformed images. The mean differences in the DSCs between the two images were within an acceptable error margin (95% confidence interval). The DSCs of the original and deformed images were nearly statistically equivalent.

Fig. 9.

Distribution of the Dice Similarity Coefficient. Distribution of the Dice Similarity Coefficient (DSC) when the original and deformed images are input to the deep learning model for intracranial segmentation. The Bland–Altman analysis showed that the mean difference in DSCs in all cases was within the acceptable error margin. The DSCs of the original and deformed images could be interpreted as being close to equivalent

Discussion

Study Results Summary

We developed a new de-identification technique for head CT images that uses control points to deform original images so that they resemble reference images. The reconstructed face models of the deformed images were face-detectable and provided sufficient facial changes from the original images. The intracranial volume and pixel value histograms were equivalent before and after deformation. The output accuracy of the deep learning model for intracranial segmentation was equivalent between the original and deformed images.

Importantly, faces could likely be detected in all the reconstructed face models of the deformed images because using another person's image as a reference guaranteed that the destination was a human face, even if the control points moved significantly.

According to guidelines (European Data Protection Board, 2022) provided by the European Data Protection Board, the threshold for match confidence scores in critical security situations involving personal information, such as police and banks, is recommended to be ≥ 90 to consider it as the same person. However, no appropriate thresholds for face identification tests between reconstructed face models have been reported. Here, a match confidence score < 90, generally judged to indicate a different person, was accepted as a change in the face. The match confidence scores were < 90 for all face identification programs, possibly because the facial features of the original images were sufficiently altered to the facial features of the reference images, and the deformations were performed to achieve a closer resemblance to the faces of the reference images.

Because the accuracy of face identification by human visual assessment has been reported to be significantly less than that of face identification programs (Chen et al., 2014; Prior et al., 2009), a human visual assessment was not performed.

Intracranial volume has been reported to decrease by approximately 10% between 40 and 75 years old (Fillmore et al., 2015); therefore, the effect of a volume change < 1% in this study was considered sufficiently small. The correlation coefficients of the intracranial pixel value histograms between the original and deformed images were higher than 0.9, indicating a robust correlation. One reason for the small changes in intracranial information before and after deformation may be that the deformation was attenuated with distance from the face surface, so the deformation effect was smaller in the interior. The reasons for the equivalence of the DSCs could be the absence of high-impact processing, such as deletion and blurring, the suppression of internal deformations, and the absence of unnatural boundary areas.

Strengths and Novelties of this Technique

The problems with existing techniques are that face detection is no longer possible, substantial information changes occur during processing, and the output accuracy of deep learning models related to medical image segmentation is reduced. No technique has overcome all these problems. The de-identification technique proposed in this study has the potential to be more useful regarding face detection, information changes, and maintaining the accuracy of deep learning models. The main advantage of our technique over existing techniques is that no unnatural boundaries are generated, consistency is maintained, and the deformed image is indistinguishable from the original one when compared.

If de-identification techniques involve excessive processing, too much information is lost, and the processed images are impractical to use as research material. Conversely, if the degree of processing is too small, there is a greater concern that the face may be identified. There has always been a trade-off between information preservation and face identification prevention. The three main novelties of this technique are that control points were set for the deformation of the face surface, a reference image was used to move the control points, and the degree of deformation was attenuated according to the distance from the control points. Using the reference images, the shape of the face was preserved regardless of how far the control points moved. Because the control points were located only on the face surface, the deformation was attenuated according to the distance from the control points, thereby minimizing changes to intracranial information.

Limitations and Future Work

This study had several limitations. Only CT images were used, but in the future, the technique must also be applied to MRI to confirm its accuracy.

There is no established method to evaluate the success or failure of de-identification of medical images, and the degree of deformation required for a facial reconstruction model to be considered "a different face" is unknown. We reported only the technical details of de-identification, and it cannot be strictly asserted that the results of this study make it possible to legally anonymize the data. The concept of "ELSI" is considered an essential nontechnical issue when developing medical technology and sharing medical data (Fisher, 2005). ELSI stands for "Ethical, Legal, and Social Issues" and advocates the need to discuss ethical issues and their impact on individuals and society when new methods and technologies are not addressed by current laws. The proposed de-identification technique could contribute to the social and ethical treatment of personal information, even if legal interpretation is challenging.

Conclusion

A new de-identification technique was developed for head CT images that uses control points to deform original images toward reference images. In deformed images, the reconstructed face models were face-detectable and exhibited sufficient facial changes from the original in all cases; intracranial volume and intracranial pixel value histograms were equivalent before and after deformation. The output accuracy of the deep learning model for intracranial segmentation was equivalent between the original and deformed images.

Acknowledgment

We are thankful to the patients and hospital staff for their collaboration. We would also like to thank Editage (www.editage.jp) for English language editing.

Author Contributions

Uchida, Kin, and Saito conceived and designed the study. Uchida performed data acquisition, wrote the main manuscript text, and prepared all figures. Takashima and Kawahara performed the statistical analysis. Kin and Saito gave final approval of the submitted version. All authors reviewed the manuscript.

Funding

Open access funding provided by The University of Tokyo. This research was supported by JST CREST, Japan (grant number JPMJCR17A1) and JSPS KAKENHI, Japan (grant number JP21K09095).

Data Availability

Data generated or analyzed during the study are available from the corresponding author by request.

Declarations

Information Sharing Statement

Data are not publicly available. Amira 3D® is available at https://www.thermofisher.com/jp/en/home/electron-microscopy/products/software-em-3d-vis/amira-software/cell-biology.html. Face API is available at https://azure.microsoft.com/en-gb/products/cognitive-services/face. Rekognition is available at https://aws.amazon.com/rekognition/?nc1=h_ls. NeoFace KAOATO is available at https://www.nec-solutioninnovators.co.jp/sl/kaoato/index.html. Dragonfly is available at http://www.theobjects.com/dragonfly/.

Ethics Approval and Consent

The institutional review board of the University of Tokyo Hospital approved the study protocol (consent number #2021107NI), and written informed consent was obtained from all patients prior to participation.

Competing Interests

All authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Abdi H. The method of least squares. Encyclopedia of measurement and statistics. 2007;1:530–532.

- Amazon. (2022). Rekognition. Retrieved 12/09 from: https://aws.amazon.com/jp/rekognition/

- Azure Microsoft. (2022). Face API. Retrieved 09/12 from: https://azure.microsoft.com/ja-jp/services/cognitive-services/face/

- Bischoff-Grethe, A., Ozyurt, I. B., Busa, E., Quinn, B. T., Fennema-Notestine, C., Clark, C. P., . . . & Fischl, B. (2007). A technique for the deidentification of structural brain MR images. Hum Brain Mapp, 28(9), 892–903. doi: 10.1002/hbm.20312.

- Bland JM, Altman DG. Statistical methods for assessing agreement between two methods of clinical measurement. Lancet. 1986;1(8476):307–310. doi: 10.1016/S0140-6736(86)90837-8.

- Budin F, Zeng D, Ghosh A, Bullitt E. Preventing facial recognition when rendering MR images of the head in three dimensions. Med Image Anal. 2008;12(3):229–239. doi: 10.1016/j.media.2007.10.008.

- Chen JJ, Juluru K, Morgan T, Moffitt R, Siddiqui KM, Siegel EL. Implications of surface-rendered facial CT images in patient privacy. AJR Am J Roentgenol. 2014;202(6):1267–1271. doi: 10.2214/ajr.13.10608.

- de Sitter, A., Visser, M., Brouwer, I., Cover, K. S., van Schijndel, R. A., Eijgelaar, R. S., . . . & Vrenken, H. (2020). Facing privacy in neuroimaging: removing facial features degrades performance of image analysis methods. Eur Radiol, 30(2), 1062–1074. doi: 10.1007/s00330-019-06459-3.

- Dice LR. Measures of the amount of ecologic association between species. Ecology. 1945;26(3):297–302. doi: 10.2307/1932409.

- Dunnett CW, Gent M. Significance testing to establish equivalence between treatments, with special reference to data in the form of 2X2 tables. Biometrics. 1977;33(4):593–602. doi: 10.2307/2529457.

- El Emam, K., Rodgers, S., & Malin, B. (2015). Anonymising and sharing individual patient data. BMJ, 350, h1139. doi: 10.1136/bmj.h1139.

- European Data Protection Board. (2022). Guidelines 05/2022 on the use of facial recognition technology in the area of law enforcement. Retrieved 12/05 from: https://edpb.europa.eu/system/files/2022-05/edpb-guidelines_202205_frtlawenforcement_en_1.pdf

- Fillmore PT, Phillips-Meek MC, Richards JE. Age-specific MRI brain and head templates for healthy adults from 20 through 89 years of age. Front Aging Neurosci. 2015;7:44. doi: 10.3389/fnagi.2015.00044.

- Fisher E. Lessons learned from the Ethical, Legal and Social Implications program (ELSI): Planning societal implications research for the National Nanotechnology Program. Technology in Society. 2005;27(3):321–328. doi: 10.1016/j.techsoc.2005.04.006.

- Gallagher SM. Patient privacy: how far is too far? Ostomy Wound Manage. 2002;48(2):50–51.

- Guilford JP. Fundamental statistics in psychology and education. 3rd ed. McGraw-Hill; 1956.

- Huh S. Protection of Personal Information in Medical Journal Publications. Neurointervention. 2019;14(1):1–8. doi: 10.5469/neuroint.2019.00031.

- Jiang B-N. On the least-squares method. Computer methods in applied mechanics and engineering. 1998;152(1–2):239–257. doi: 10.1016/S0045-7825(97)00192-8.

- Lamas E, Barh A, Brown D, Jaulent MC. Ethical, Legal and Social Issues related to the health data-warehouses: re-using health data in the research and public health research. Stud Health Technol Inform. 2015;210:719–723.

- Lamas E, Salinas R, Vuillaume D. A New Challenge to Research Ethics: Patients-Led Research (PLR) and the Role of Internet Based Social Networks. Stud Health Technol Inform. 2016;221:36–40.

- Matlock, M., Schimke, N., Kong, L., Macke, S., & Hale, J. (2012). Systematic Redaction for Neuroimage Data. Int J Comput Models Algorithms Med, 3(2). doi: 10.4018/jcmam.2012040104.

- Mazura JC, Juluru K, Chen JJ, Morgan TA, John M, Siegel EL. Facial recognition software success rates for the identification of 3D surface reconstructed facial images: implications for patient privacy and security. J Digit Imaging. 2012;25(3):347–351. doi: 10.1007/s10278-011-9429-3.

- Milchenko M, Marcus D. Obscuring surface anatomy in volumetric imaging data. Neuroinformatics. 2013;11(1):65–75. doi: 10.1007/s12021-012-9160-3.

- NEC Solution Innovator. (2022). NeoFace KAOATO. Retrieved 09/11 from: https://www.nec-solutioninnovators.co.jp/sl/kaoato/index.html

- Parks CL, Monson KL. Automated Facial Recognition of Computed Tomography-Derived Facial Images: Patient Privacy Implications. J Digit Imaging. 2017;30(2):204–214. doi: 10.1007/s10278-016-9932-7.

- Poline, J. B., Breeze, J. L., Ghosh, S., Gorgolewski, K., Halchenko, Y. O., Hanke, M., . . . & Kennedy, D. N. (2012). Data sharing in neuroimaging research. Front Neuroinform, 6, 9. doi: 10.3389/fninf.2012.00009.

- Presotto, L., Bettinardi, V., Bagnalasta, M., Scifo, P., Savi, A., Vanoli, E. G., . . . & De Bernardi, E. (2022). Evaluation of a 2D UNet-Based Attenuation Correction Methodology for PET/MR Brain Studies. J Digit Imaging, 35(3), 432–445. doi: 10.1007/s10278-021-00551-1.

- Prior FW, Brunsden B, Hildebolt C, Nolan TS, Pringle M, Vaishnavi SN, Larson-Prior LJ. Facial recognition from volume-rendered magnetic resonance imaging data. IEEE Trans Inf Technol Biomed. 2009;13(1):5–9. doi: 10.1109/titb.2008.2003335.

- Raghupathi W, Raghupathi V. Big data analytics in healthcare: promise and potential. Health Inf Sci Syst. 2014;2:3. doi: 10.1186/2047-2501-2-3.

- Research Systems Object. (2022). Dragonfly. Retrieved 10/15 from: https://www.maxnt.co.jp/products/Dragonfly.html

- Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. Medical Image Computing and Computer-Assisted Intervention. 2015;9351:234–241.

- Scheinfeld N. Photographic images, digital imaging, dermatology, and the law. Arch Dermatol. 2004;140(4):473–476. doi: 10.1001/archderm.140.4.473.

- Schwarz, C. G., Kremers, W. K., Therneau, T. M., Sharp, R. R., Gunter, J. L., Vemuri, P., . . . & Jack, C. R., Jr. (2019). Identification of Anonymous MRI Research Participants with Face-Recognition Software. N Engl J Med, 381(17), 1684–1686. doi: 10.1056/NEJMc1908881.

- Schwarz, C. G., Kremers, W. K., Wiste, H. J., Gunter, J. L., Vemuri, P., Spychalla, A. J., . . . & Jack, C. R., Jr. (2021). Changing the face of neuroimaging research: Comparing a new MRI de-facing technique with popular alternatives. Neuroimage, 231, 117845. doi: 10.1016/j.neuroimage.2021.117845.

- Steinberg D. Privacy and security in a federated research network. In: Thirteenth National HIPAA Summit; 2006.

- Thermo Fisher Scientific. (2022). Amira Software for cell biology. Retrieved 10/15 from: https://www.thermofisher.com/jp/en/home/electron-microscopy/products/software-em-3d-vis/amira-software/cell-biology.html

- Theyers, A. E., Zamyadi, M., O'Reilly, M., Bartha, R., Symons, S., MacQueen, G. M., . . . & Arnott, S. R. (2021). Multisite Comparison of MRI Defacing Software Across Multiple Cohorts. Front Psychiatry, 12, 617997. doi: 10.3389/fpsyt.2021.617997.

- Vallance P, Chalmers I. Secure use of individual patient data from clinical trials. Lancet. 2013;382(9898):1073–1074. doi: 10.1016/s0140-6736(13)62001-2.

- Voßhoff, A., Raum, B., & Ernestus, W. (2015). [Telematics in the public health sector. Where is the protection of health data?] (Telematik im Gesundheitswesen. Wo bleibt der Schutz der Gesundheitsdaten?). Bundesgesundheitsblatt Gesundheitsforschung Gesundheitsschutz, 58(10), 1094–1100. doi: 10.1007/s00103-015-2222-6.
