Abstract

IoT in healthcare systems is currently a viable option for providing higher-quality medical care in contemporary e-healthcare. In this research, a trustworthy breast cancer classification method, the Feedback Artificial Crow Search (FACS)–based Shepherd Convolutional Neural Network (ShCNN), is developed for an Internet of Things (IoT)–based smart healthcare system. First, secure routing is carried out using the proposed FACS, which chooses the best routes by taking fitness measures such as distance, energy, link quality, and latency into account. FACS is obtained by merging the Crow Search Algorithm (CSA) and the Feedback Artificial Tree (FAT). After the routing phase is complete, breast cancer classification is started at the base station. The input mammography image is pre-processed and then passed to the feature extraction step, which yields features including area, mean, variance, energy, contrast, correlation, skewness, homogeneity, Gray Level Co-occurrence Matrix (GLCM), and Local Gabor Binary Pattern (LGBP). The data are next enhanced through data augmentation, and finally the ShCNN trained with the developed FACS algorithm is used to classify breast cancer. The performance of the FACS-based ShCNN is examined using six metrics, namely energy, delay, accuracy, sensitivity, specificity, and True Positive Rate (TPR), with a maximum energy of 0.562 J, a minimum delay of 0.452 s, the highest accuracy of 91.56%, the highest sensitivity of 96.10%, the highest specificity of 91.80%, and a maximum TPR of 99.45%.

Keywords: Breast cancer, Internet of Things, Crow Search Algorithm, Feedback Artificial Tree, Shepherd Convolutional Neural Network

Introduction

The IoT is a network of physical objects equipped with sensors, software, and other technologies that connect to other devices and exchange data with them over the Internet. Smart homes, smart grids, smart cities, sensor networks used in the body, and ad hoc networks used in vehicles are just a few of the latest applications that leverage IoT devices [1, 2]. Besides, IoT generally refers to systems of uniquely identifiable objects that operate independently and can connect to the Internet to transfer real-world data in digital form [3]. In addition, with IoT everything can be sensed, accessed, and organized within the dynamic, global structure of the Internet [4, 5]. The expansion of IoT relies on several advanced technologies, namely Wireless Sensor Networks (WSNs), information sensing, and cloud computing. At IoT End Nodes (IEN), a low-cost data acquisition system is essential in IoT-driven information models for gathering and processing data effectively [6].

Furthermore, the progression of IoT [7, 8] allows any object to become a source of network data through WSNs. This gives rise to a wide spectrum of diversified applications, such as smart grids [8, 9], smart homes [8, 10], and smart cities [8, 11]. Moreover, IoT has been broadly utilized to devise and promote healthcare systems [8, 12, 13] because of its potential to integrate communication resources and provide essential information to users. Additionally, health portals, electronic health records, and remote patient monitoring are just a few of the e-health services that can benefit from the vast amounts of data that healthcare organizations can collect from WSNs. IoT in healthcare, also known as Healthcare IoT (HIoT), is therefore expected to have a significant impact on the healthcare sector [14, 15].

The human body regulates the formation, growth, and death of cells within tissue. When this process starts to function abnormally, cells do not die at the rate they should. As a result, a disturbance in the usual regulation of the cell cycle leads to a cancerous tumor. The ratio of cell growth to cell death can gradually rise as a result of uncontrolled cell division and the accumulation of genetic errors, which is a key cause of cancer [16, 17]. Breast cancer is an abnormal growth that starts inside the milk ducts or breast lobules and can spread to other parts of the body [18]. Breast cancer is the second most common disease in the world. Therefore, it is critical to confirm the overall mortality rates from breast cancer when it is treated effectively [17]. Breast cancer is the sixth most common cause of mortality for women when compared to other cancers [18]. Breast cancer poses a risk to women’s health and life, with mortality and morbidity rates that rank first and second among female diseases. Detecting lumps at earlier stages can efficiently diminish breast cancer’s death rate [19]. Mammography is often used in the early detection of breast cancer due to its relatively low cost and excellent sensitivity to tiny lesions [20]. Numerous factors, including distraction, radiologist fatigue, the structural complexity of the breast, and the subtle characteristics of early-stage disease, could adversely affect the accuracy of the analysis. To address this issue effectively, computer-aided diagnosis (CAD) for breast cancer may be used [19].

When a mammogram is assessed for breast cancer detection, the mammographic image of the breast is typically pre-processed to exclude the pectoral muscle [21, 22]. The search for abnormalities in mammograms can be limited to the breast profile area by eliminating background areas and the pectoral muscle [17]. The usual CAD for breast cancer has three steps. First, the Region of Interest (RoI) in a pre-processed mammogram is identified in order to locate the tumor site. After that, the significant features, including shape, density, and texture, are extracted from the tumor region, and the feature vectors are generated manually. Finally, the feature vectors are classified to assist the diagnosis procedure, thereby classifying the tumors as malignant or benign. Deep learning is a promising field in which artificial intelligence (AI) and machine learning utilize multiple non-linear processing layers to learn features from data [17, 23]. Recently, AI techniques have assisted radiologists by enabling enhanced and faster mammography-based breast cancer detection. In addition, AI techniques examine mammograms at the pixel level and capture long-range spatial memory. Various researchers have explored AI techniques for enhancing the mammographic recognition of breast cancer. The techniques [24] employed in previous research works include wavelet energy entropy (WEE), biogeography-based optimization (BBO), support vector machine (SVM), cross-validation (CV), particle swarm optimization (PSO), and decision tree (DT) [19, 20].

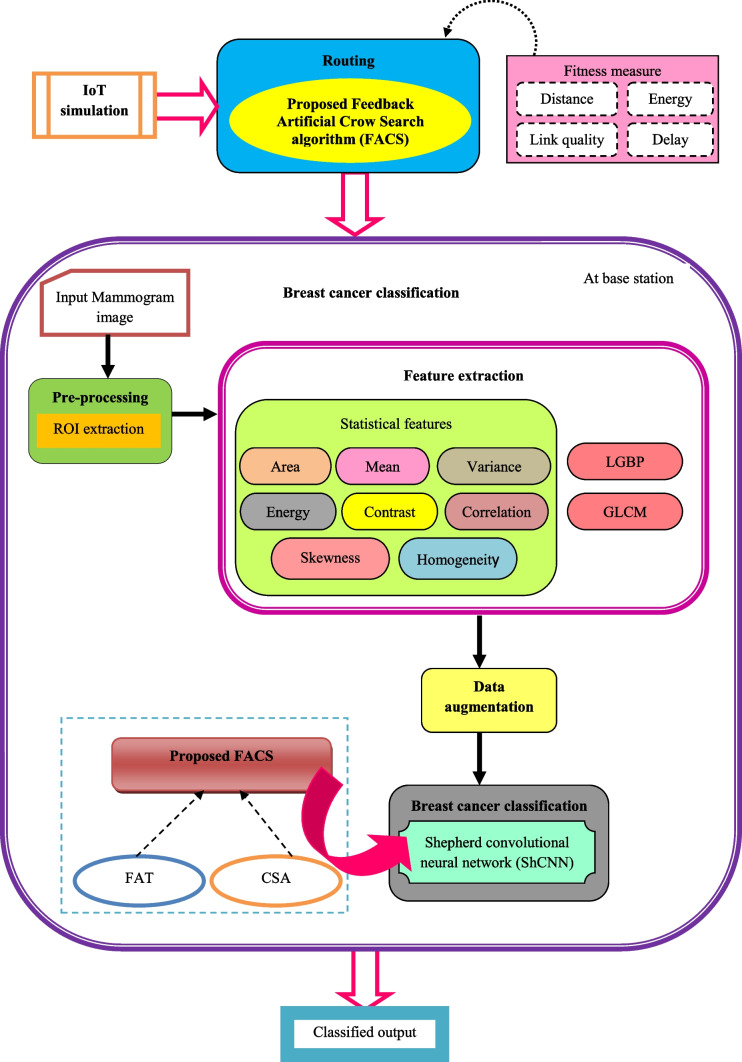

This work aims to provide a trustworthy method for breast cancer classification in IoT. First, the IoT simulation is completed, and then the proposed FACS is used to perform the routing process. The breast cancer classification phase is then started. The input mammography image is pre-processed before being delivered to the feature extraction phase, which captures the key characteristics required for further processing. The extracted features are then put through a data augmentation stage. Finally, the augmented data are submitted to the classification phase, where the proposed FACS-based ShCNN is employed to classify breast cancer.

The major contribution of the work is as follows.

Developed FACS-based ShCNN: using the new FACS-based ShCNN, a reliable method is built for performing breast cancer classification in IoT. The ShCNN classifier categorizes breast cancer, and its training is carried out using the proposed FACS, which is created by combining CSA and FAT.

The remainder of the article is organized as follows: “Motivation” reviews the various techniques used for IoT breast cancer categorization, and “IoT System Model” presents the IoT system model. The breast cancer categorization technique is described in “Proposed FACS-Based ShCNN for Breast Cancer Identification in IoT,” the experimental findings are presented in “Results and Discussion,” and the paper is concluded in “Conclusion.”

Motivation

This section presents various existing breast cancer classification techniques in IoT healthcare systems, together with their merits and demerits, which motivate the development of the proposed technique for breast cancer classification in IoT.

Literature Review

The various existing breast cancer categorization methods in IoT are reviewed in this section. A machine learning–driven diagnostic system for finding breast cancer in an IoT health setting was developed by Memon et al. [18]. This technique employed an iterative feature selection algorithm to determine appropriate features from the dataset for the classification process. Although this strategy produced the highest classification accuracy, it was unable to shorten the execution time. Zheng et al. [17] presented the Deep Learning Assisted Efficient Adaboost Algorithm (DLA-EABA) for early breast cancer diagnosis. The overlap problems were successfully decreased by this approach. However, the issues with computational complexity could not be solved using this method. Wang et al. [19] established breast cancer detection utilizing CNN deep features and unsupervised extreme learning machine (US-ELM) clustering. Here, the fusion set for categorizing breast cancer was developed by combining the texture, density, morphological, and deep features. This method efficiently minimized the execution time. However, the area under-segmentation was not optimal, affecting the detection process. Zhang et al. [24] developed a Graph Convolutional Network (GCN) and CNN for the classification of breast cancer. This process efficiently minimized the over-fitting problems, but the dataset employed in this approach was of limited size, which degraded the system’s performance.

Chanak and Banerjee [25] developed a distributed congestion control algorithm for resolving congestion issues in IoT-based smart healthcare systems. This technique employed a priority-based data routing scheme to increase reliability. Here, the average delay of data transmission was very low. Still, the major challenge lies in minimizing the execution time. Awan et al. [26] developed a multi-hop Priority-enabled Congestion-avoidance Routing Protocol to improve energy efficiency. Here, energy consumption and network traffic load were reduced by using data aggregation and filtering techniques. Although the processing time was cut down, the network’s stability was not improved. For healthcare applications, Ciciolu and Alhan [27] developed a novel energy-aware routing algorithm. This technique achieved minimum delay during data transmission. Despite its innovative techniques, this algorithm did not incorporate fuzzy logic for multi-attribute decision-making. Almalki et al. [28] created the Energy Efficient Routing Protocol with Dual Prediction Model (EERP-DPM) for IoT healthcare applications. Its routing mechanism minimizes transmission between the medical server and sensor nodes. This method effectively reduced the end-to-end delay during transmission. However, this technique did not provide a routing protocol that also manages the sensor nodes’ mobility to achieve effective results.

Challenges

The limitations of existing breast cancer categorization techniques in IoT are described below.

Mammography is the most used early cancer detection technique. However, it has limitations, such as limited dynamic range and low-contrast and grainy pictures [3].

A machine learning–enabled diagnostic system was introduced in [18] for detecting breast cancer in IoT healthcare systems. This model did not, however, take into account novel optimization algorithms, feature selection algorithms, or deep learning techniques to improve performance outcomes.

In [24], a GCN and CNN technique was presented for breast cancer classification, but the major challenge lies in analyzing whether a GCN with multiple layers will enhance the classification outcomes.

Infrared thermal imaging and a deep learning algorithm were launched in [16] for effective breast cancer detection. Still, this technique did not employ a thermal sensitivity camera to diagnose precisely.

In terms of particular health applications, certain health modalities might be insufficient or ineffective in providing substantial sources of information, which would therefore lessen the efficacy of the required procedures [3].

IoT System Model

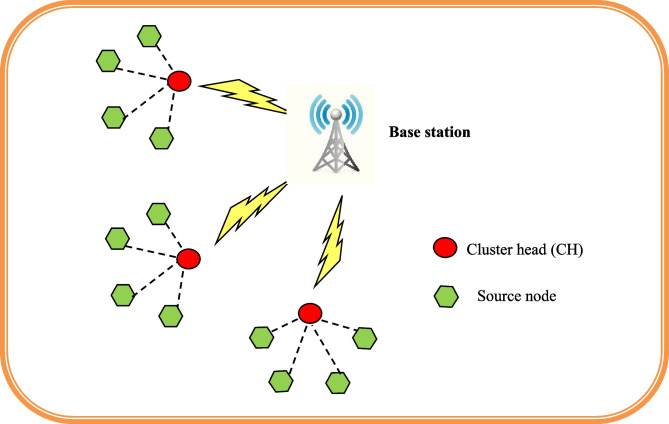

The IoT system model combines various sensors and systems connected wirelessly to the Internet to process and transmit data across an IoT network. The IoT system model is represented in Fig. 1. Typically, this topology includes a cluster head (CH), several nodes, and a base station (BS). Direct communication occurs at the selected radio frequency. The IoT nodes in the wireless network are uniformly distributed within an area of Yw by Zw meters, and each node has its own maximum radio communication range. Each node possesses a distinct ID, and the nodes are linked together to form clusters in the IoT network. The best location of the sink node in the IoT network is {0.5Yw, 0.5Zw}. Each node in the IoT network performs a dual role as a router and as a sensor. Once the nodes are established, the source node sends data packets to the base station along a chosen optimal path. The IoT nodes’ data packets are subsequently collected by the sink node. As a result, the CH mechanism has been modified to allow the IoT nodes to send data packets to the BS.

Fig. 1. System model of IoT

Energy Model

Every node has an initial energy E0, and the energy of the nodes is not rechargeable [29]. The loss of energy during the transmission of data packets from the jth node to the lth CH follows the multipath fading model. Because of the separation between the sender and receiver, a free-space model is also necessary. The power amplifier and radio electronics consume energy in the transmitter, whereas the radio electronics consume energy in the receiver. The energy dissipated by a node while transferring b bytes of data is represented as,

| 1 |

| 2 |

where Eelec represents the electronic energy, which depends on parameters such as modulation, spreading, filtering, amplification, and digital coding.

| 3 |

where Etrans denotes the transmitter energy, Eagg signifies the data aggregation energy, Eamp denotes the power amplifier energy, and ||Gj−Cl|| denotes the distance between the jth node and the lth CH. The energy dissipated at the CH when receiving b bytes of data is expressed as

| 4 |

After receiving or sending b bytes of data, the energy of each node is updated as,

| 5 |

| 6 |

The data transmission described above is repeated until the node is dead. A node is considered dead when its energy falls below zero.
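As a minimal sketch of how such an energy model is typically simulated, the Python below uses the first-order radio model that is common in the WSN literature. It is an assumption that Eqs. (1)–(6) take this form; the constant values, the crossover distance `D0`, and all function names are illustrative and are not taken from this work.

```python
import math

# Illustrative constants often used in WSN simulations (assumed, not from this work).
E_ELEC = 50e-9       # J per bit consumed by the radio electronics
E_FS = 10e-12        # J per bit per m^2, free-space amplifier coefficient
E_MP = 0.0013e-12    # J per bit per m^4, multipath amplifier coefficient
D0 = math.sqrt(E_FS / E_MP)  # crossover distance between the two models


def transmit_energy(n_bytes, distance):
    """Energy spent by a node to send n_bytes over the given distance (m)."""
    bits = 8 * n_bytes
    if distance < D0:
        amp = E_FS * distance ** 2   # free-space loss for short links
    else:
        amp = E_MP * distance ** 4   # multipath fading for long links
    return bits * (E_ELEC + amp)


def receive_energy(n_bytes):
    """Energy spent by a cluster head to receive n_bytes."""
    return 8 * n_bytes * E_ELEC


def simulate_rounds(initial_energy, n_bytes, distance):
    """Count transmissions performed until residual energy falls below zero."""
    energy, rounds = initial_energy, 0
    while energy >= 0:
        energy -= transmit_energy(n_bytes, distance)  # energy update per round
        rounds += 1
    return rounds
```

Under these assumed constants, a node that sends over a longer link dies after fewer rounds, which is the behavior the update rule in the text is meant to capture.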

Proposed FACS-Based ShCNN for Breast Cancer Identification in IoT

This section illustrates the design and development of the breast cancer identification method in an IoT setting. The key contribution of this research is an IoT breast cancer detection method that uses ShCNN [30] together with a proposed optimization strategy. Initially, the IoT simulation is performed, in which the nodes gather information regarding the patients. The proposed FACS algorithm is then used to perform routing while accounting for fitness factors such as energy, distance, delay, and link quality. The proposed FACS algorithm is newly formed by incorporating the CSA [31] and FAT [32] algorithms. Once the routing procedure is done, breast cancer classification is carried out at the base station. The proposed breast cancer classification approach comprises four steps: pre-processing, feature extraction, data augmentation, and classification. The mammography image is taken from the dataset and pre-processed using ROI extraction to remove unwanted pixels and image artifacts. Following pre-processing, features such as area, mean, variance, energy, contrast, correlation, skewness, homogeneity, GLCM [33], and LGBP [34] are extracted for further processing. After feature extraction, rotation, flipping, cropping, and zooming are used to augment the data. The ShCNN is trained using the FACS optimization technique and is then utilized to categorize breast cancer. The block diagram for the identification of breast cancer utilizing the created FACS-based ShCNN in an IoT system is shown in Fig. 2.

Fig. 2. Block diagram of breast cancer detection based on proposed FACS-based ShCNN in IoT system

Routing using Proposed FACS

The proposed FACS, which was created by combining FAT [32] and CSA [31], is used to carry out the routing procedure. FAT [32] is an updated version of the artificial tree algorithm that makes use of a feedback mechanism. It involves two operators, the self-propagating operator and the dispersive propagation operator. The tree’s bio-inspired structure is made up of leaves and branches. Here, a thicker branch designates a better solution, and the tree’s thickest trunk designates the optimal solution. The branch population is created by connecting the thin branches to the leaves. Crossover, self-evolution, and random operators are the FAT’s branch evolution operators. The random operator is the additional operator used to escape local optimum solutions. The solutions of all branches are updated based on the territory and the total number of other branches. When a new branch outperforms the old one, the new branch takes the place of the old branch. On the other hand, the meta-heuristic optimizer CSA [31] is inspired by crows’ clever behavior. CSA is a population-driven method based on the fact that crows conceal excess food and recover it when necessary. The key benefit of combining the two is that one algorithm can address the weaknesses of the other. Hence, incorporating these algorithms helps in solving the optimization issues. Hybridizing the FAT with the CSA improves the effectiveness of the proposed technique and reduces computational complexity issues.

Solution Encoding

The solution encoding of the developed FACS optimization approach is shown in this section. In this method, the solution vector is used to determine the effective nodes with respect to the node index q, and the solution vector has size 1×n. Additionally, the node index lies within the range 1≤q≤10. Figure 3 shows the FACS solution encoding.

Fig. 3. Solution encoding

Fitness Function

The fitness function is evaluated to select an optimal solution from the solution set. It is determined using several fitness factors, namely energy, distance, link quality, and delay, and is represented by the following equation.

| 7 |

where Eu represents the consumption of energy in the uth node, Duv denotes the Euclidean distance between uth and vth node, d represents the delay, and Luv signifies the link quality.

- Energy: energy measure defines the utilization of minimum energy for performing a particular task.

- Distance: the distance parameter is utilized for computing the space among the nodes. The least space is considered the best solution. However, the distance Duv is given by,

| 8 |

where Duv denotes the Euclidean distance between uth and vth node.

- Delay: the ratio of the number of nodes to all of the nodes along the path is used to quantify delay. The delay d is expressed as

| 9 |

where N denotes the total nodes, and p signifies the overall nodes in a path.

- Link quality: the expression of link quality is given by

| 10 |

where Puv represents the power, which is constant among uth and vth node, and Duv specifies the distance.
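The four fitness factors can be combined as in the following Python sketch. Several points here are assumptions, because the corresponding equation bodies are not reproduced in this text: the delay is taken as the number of nodes in the path divided by the total number of nodes, the link quality as power divided by distance (capped at 1), and the overall score as an equal-weight average to be minimized. The normalizers `d_max` and `e_max` and all function names are hypothetical.

```python
import math


def path_fitness(path, coords, energy, total_nodes, power, d_max, e_max):
    """Return a cost in [0, 1] for a candidate route (lower is better).

    path        -- list of node ids from source to base station
    coords      -- dict mapping node id to (x, y) position
    energy      -- dict mapping node id to energy consumed by that node
    total_nodes -- N, total number of nodes in the network
    power       -- assumed constant link power between neighbouring nodes
    d_max, e_max -- assumed normalizers for distance and energy
    """
    hops = list(zip(path, path[1:]))
    dists = [math.dist(coords[u], coords[v]) for u, v in hops]

    energy_term = sum(energy[u] for u in path) / (len(path) * e_max)
    distance_term = sum(dists) / (len(hops) * d_max)
    delay_term = len(path) / total_nodes              # assumed form: p / N
    link_term = sum(min(1.0, power / d) for d in dists) / len(hops)

    # Equal weights are an assumption; link quality is inverted because
    # a higher link quality should lower the cost.
    return (energy_term + distance_term + delay_term + (1.0 - link_term)) / 4.0
```

With this cost, a route whose hops are shorter scores lower than a detour through a distant node, so an optimizer that minimizes the cost prefers compact, energy-light paths.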

Algorithmic Phases of Developed FACS

The algorithmic phases of the developed FACS are explained below:

- Initialization: the following equation is used to initialize the branch population in the search space K.

| 11 |

Here, w specifies the number of branches in the branch population. Set the parameters, namely, the territory parameter (T), the search parameter (H), and the maximum function evaluation number (Rmax).

- Compute fitness function: the fitness value of each solution is measured using the fitness function in Eq. (7) to determine the optimal solution.

- Crossover operator: a new branch is built by combining the existing branch with linear interpolation and using only one-half of the branch territory at random. The crossover operator equation is written as follows:

| 12 |

where rand(0,1) signifies the random number that lies within range [0,1], Qnew specifies the position of the branch in the neighborhood of Qm, and Qnew denotes the location of the newly generated branch.

- Update solution of self-evolution operator: the standard equation of the self-evolution operator is mathematically expressed as

| 13 |

| 14 |

| 15 |

By integrating the CSA with FAT, the global search capability can be improved when solving various optimization issues. According to CSA [31], the update equation of the created FACS algorithm is as follows:

| 16 |

| 17 |

| 18 |

| 19 |

- Random operator: the crossover operator generates a new branch which is validated with the original search number Z(St) and is computed as

| 26 |

| 27 |

where Z denotes the search parameter that contains the constant value and Y(Qm) represents the maximum searching of the population of the branch Qm.

- Evaluating feasibility: the fitness value of each solution is calculated, and the one with the best fitness score is regarded as the best overall solution.

- Termination: the previous steps are repeated until the optimal solution is found. The pseudo code of the developed FACS is shown in Table 1.

Table 1. Pseudo code of developed FACS

| Sl. no | Pseudo code of developed FACS |
|---|---|
| 1 | Input: T, H, and w |
| 2 | Output: Qnew |
| 3 | Population initialization |
| 4 | Fitness function determination |
| 5 | for (m=1 to w) do |
| 6 | if (m=not crowd) |
| 7 | Using Eq. (12) execute crossover operator |
| 8 | else |
| 9 | Using Eq. (25) execute self-evolution operator |
| 10 | end if |
| 11 | end for |
| 12 | Using Eq. (27) execute random operator |
| 13 | Update branch t with new branch |
| 14 | Establish fresh branch populations |
| 15 | Reconsider the solution |
| 16 | Stop |

The developed FACS of smart IoT-based breast cancer classification is efficient in classifying breast cancer for achieving improved detection performance.
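For orientation, the crow search component on which FACS builds can be sketched as below. This is the standard CSA position and memory update from the optimization literature, written for minimization; it is not the hybrid FACS update, whose equations are not reproduced in this text. The parameter names `flight_length` and `awareness_prob` and the function name `csa_step` are illustrative.

```python
import random


def csa_step(positions, memories, fitness, flight_length=2.0,
             awareness_prob=0.1, bounds=(0.0, 1.0), rng=random):
    """One iteration of the standard Crow Search Algorithm (minimization)."""
    lo, hi = bounds
    n = len(positions)
    new_positions = []
    for i in range(n):
        j = rng.randrange(n)  # crow i follows a randomly chosen crow j
        if rng.random() >= awareness_prob:
            # Crow j is unaware: move towards the hiding place it remembers.
            r = rng.random()
            candidate = [x + r * flight_length * (m - x)
                         for x, m in zip(positions[i], memories[j])]
        else:
            # Crow j is aware: crow i is fooled and lands at a random place.
            candidate = [rng.uniform(lo, hi) for _ in positions[i]]
        # Keep the move only if it stays inside the search space.
        if all(lo <= c <= hi for c in candidate):
            new_positions.append(candidate)
        else:
            new_positions.append(list(positions[i]))
    # Each crow remembers the best position it has visited so far.
    for i, pos in enumerate(new_positions):
        if fitness(pos) < fitness(memories[i]):
            memories[i] = list(pos)
    return new_positions, memories
```

In a routing setting, each position would encode a candidate path and `fitness` would be the routing cost; that mapping is left out here to keep the sketch short.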

Breast Cancer Classification Using Proposed FACS

Once the routing is performed, the breast cancer categorization is initialized at the base station. The various steps to be performed in classifying breast cancer at the base station are the acquisition of input mammogram images, pre-processing, feature extraction, data augmentation, and breast cancer classification.

Input Mammogram Image Acquisition

The input mammography image used to carry out the breast cancer categorization process is obtained from the dataset. Consider the dataset I with a number of input images, represented as

| 28 |

where I denotes the database, demonstrates the total input mammogram images, Jk denotes the mammogram image at the kth index.

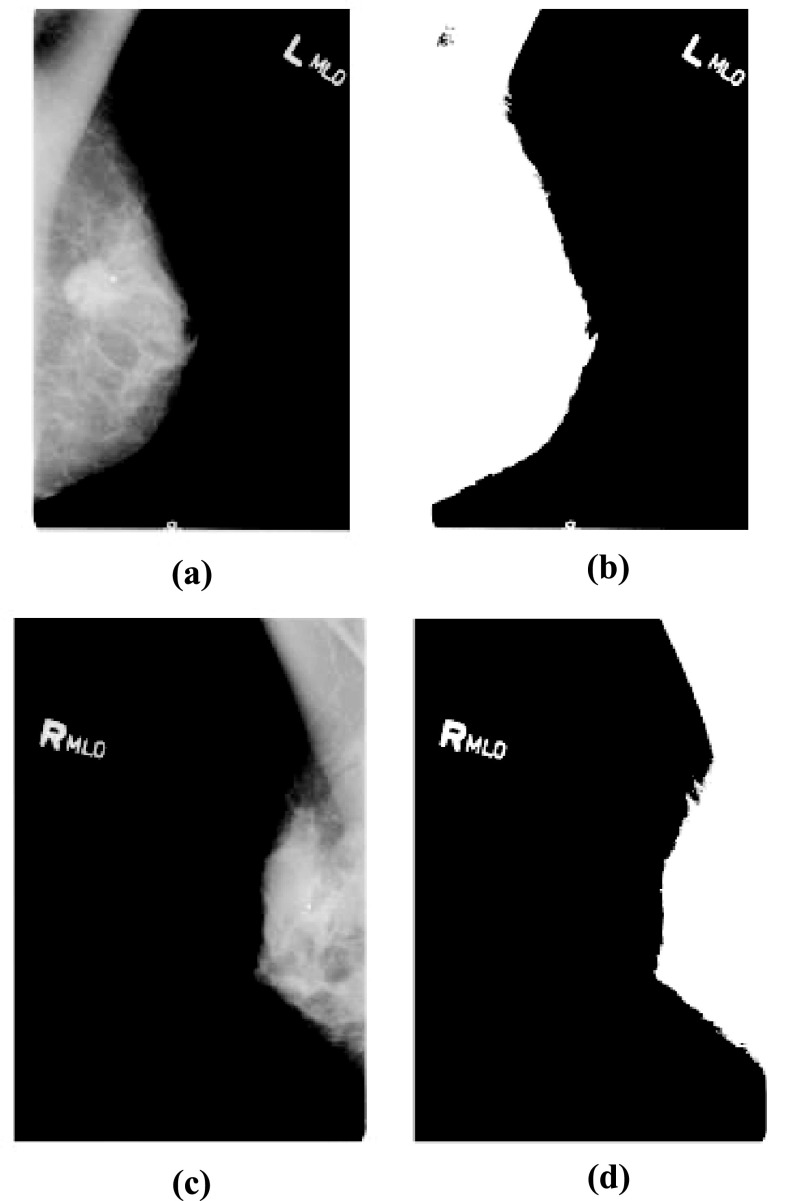

Pre-processing Using the ROI Extraction Technique

Following capture, the input mammography image undergoes pre-processing to remove noise variations and enhance the visual quality of the input images. Here, the input mammogram image Jk is given to the pre-processing phase, where pre-processing is done by the ROI extraction technique. The objective is to extract a precise region of the mammogram image by using the ROI extraction technique. By choosing a particular region, the computational complexity is efficiently reduced. The outer region of the input image is eliminated so that the exterior artifacts are discarded. The pre-processed outcome of the ROI-extracted image is denoted as Pt*.
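One simple way to realize such a region selection is to crop the image to the bounding box of its non-background pixels, as sketched below in Python. The thresholding rule and the function name `extract_roi` are assumptions made for illustration; the ROI extraction actually used in this work is not specified in enough detail to reproduce.

```python
def extract_roi(image, threshold=0):
    """Crop a 2-D image (list of rows) to the bounding box of pixels
    whose intensity exceeds the threshold; background is discarded."""
    rows = [r for r, row in enumerate(image)
            if any(p > threshold for p in row)]
    cols = [c for c in range(len(image[0]))
            if any(row[c] > threshold for row in image)]
    if not rows or not cols:
        return []  # nothing above the threshold, so no region to keep
    top, bottom = rows[0], rows[-1]
    left, right = cols[0], cols[-1]
    return [row[left:right + 1] for row in image[top:bottom + 1]]
```

Cropping first means that every later step works on fewer pixels, which is the reduction in computational complexity mentioned above.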

Feature Extraction

The pre-processed results are fed into the feature extraction phase to identify the salient characteristics after the pre-processing process is complete. Here, the pre-processed image’s various features include statistical, GLCM, and LGBP features.

Statistical Features

The features used to differentiate the content of the image are called statistical features; here they comprise area, mean, variance, energy, contrast, correlation, skewness, and homogeneity. The statistical features are described as follows:

- Area: area defines the boundary covered by cancer, and this feature is represented as f1.

- Mean: the mean is a computed feature used for determining the average value of the pixels in an image, which is depicted as

| 29 |

where h indicates total segments, e(Uh) signifies the pixel value of every segment, and |e(Uh)| denotes the total pixel represented in a segment. The mean feature is denoted as f2.

- Variance: variance is defined in terms of the mean value and is expressed as

| 30 |

The variance feature is denoted as f3.

- Energy: the energy of an individual segment is the total sum of the pixel energies present within the segment. The energy is represented as

| 31 |

Furthermore, the energy feature is represented as f4.

- Contrast: contrast is obtained by determining the luminance difference, which produces a differentiable image. Besides, this feature is signified by the dissimilarity between the colorfulness and brightness of an image. The contrast feature is depicted as,

| 32 |

where K denotes the difference in luminance value, and ∂ specifies the average value of luminance. The contrast feature is represented as f5.

- Correlation: correlation measures the strength and direction of the linear relation between two arbitrary values. The correlation calculation is done to estimate the separation among the variable pairs. Consider two images as arbitrary vectors P1 and P2 with all the pixels as dimensions. The correlation feature is expressed as

| 33 |

The result of the correlation is represented as f6.

- Skewness: skewness f7 defines the object’s shape using a numerical value.

- Homogeneity: homogeneity is defined as the local information obtained from the image representing region regularity. It incorporates two parts, namely standard deviation and discontinuity of intensities. The homogeneity is expressed by

| 34 |

where 0≤a≤A−1 and 0≤b≤S−1, Ya,b signifies pixel intensity at the location (a, b) of an image, denotes the size of window centered at (a, b) for dissimilarity calculation, and implies the dimension of window centered at (a, b) for dissimilarity calculation, R indicates the standard deviation, and S denotes the discontinuance. The homogeneity is represented as f8.
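Some of the statistical features above can be computed as in the following Python sketch. It uses the textbook definitions of mean, population variance, and skewness (the third standardized moment), and it takes the energy as the sum of squared pixel values; whether these match Eqs. (29)–(31) term by term is an assumption, and the function name is illustrative.

```python
def statistical_features(image):
    """Return mean, variance, energy and skewness of a 2-D image
    given as a non-empty list of rows of numeric pixel values."""
    pixels = [float(p) for row in image for p in row]
    n = len(pixels)
    mean = sum(pixels) / n
    # Population variance: average squared deviation from the mean.
    variance = sum((p - mean) ** 2 for p in pixels) / n
    # Energy taken as the sum of squared intensities (assumed definition).
    energy = sum(p * p for p in pixels)
    std = variance ** 0.5
    # Skewness is undefined for a constant image; report 0.0 in that case.
    if std == 0:
        skewness = 0.0
    else:
        skewness = sum((p - mean) ** 3 for p in pixels) / (n * std ** 3)
    return {"mean": mean, "variance": variance,
            "energy": energy, "skewness": skewness}
```

For the 2×2 image `[[1, 2], [3, 4]]` this gives a mean of 2.5, a variance of 1.25, an energy of 30, and a skewness of 0 because the values are symmetric around the mean.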

GLCM Feature

GLCM feature [33] is related to the wavelet transform. The aim of obtaining the GLCM feature is that the GLCM offers effective classification outcomes, and this feature’s computational complexity is very low. Additionally, the GLCM feature is used to compute the co-occurrence matrix for the entire image in the dataset while accounting for the pixel values, intensity values, orientation, and distance of each element. The equation for the normalization of GLCM is expressed as

| 35 |

Here, αu,v signifies the total occurrence of gray tones u and v, and V represents the overall gray tones specified by the quantization process. The number of columns and rows equals the total number of gray levels in the image. The GLCM feature is depicted as f9.
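The normalization described here can be illustrated with a short Python sketch that counts co-occurring gray-level pairs for one pixel offset and divides each count by the total. The default offset of one pixel to the right and the function name `normalized_glcm` are illustrative choices; the orientations and distances used in this work are not stated in this text.

```python
def normalized_glcm(image, levels, offset=(0, 1)):
    """Build a normalized gray-level co-occurrence matrix.

    image  -- 2-D list of integer gray tones in the range [0, levels)
    levels -- number of gray tones after quantization
    offset -- (row, column) displacement between the paired pixels
    """
    d_row, d_col = offset
    height, width = len(image), len(image[0])
    counts = [[0] * levels for _ in range(levels)]
    for r in range(height):
        for c in range(width):
            r2, c2 = r + d_row, c + d_col
            if 0 <= r2 < height and 0 <= c2 < width:
                # Count one occurrence of the tone pair (u, v).
                counts[image[r][c]][image[r2][c2]] += 1
    total = sum(sum(row) for row in counts)
    if total == 0:
        return [[0.0] * levels for _ in range(levels)]
    # Divide each count by the total so the entries sum to one.
    return [[n / total for n in row] for row in counts]
```

The result is a square matrix with one row and one column per gray level, matching the description above.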

LGBP

The main concept behind the LGBP approach [34] is that, rather than applying the LBP operator directly to raw images, it is applied to the Gabor-filtered input images. The LBP histogram features are extracted for every Gabor image. The LGBP feature vector is obtained by computing the histogram characteristics on every energy image. Hence, the feature size of the LGBP operator is higher than that of LBP due to the Gabor decomposition. The original LBP operator labels the pixels of an image by thresholding the 3×3 neighborhood of each pixel with the middle value nc and reading the result as a binary number. The combination of the Gabor filter and the LBP-extracted feature is called the LGBP operator. The thresholded value of the image is denoted in binary form, which is formulated as

| 36 |

In addition, the characteristics of LBP obtained at the pixel are illustrated as,

| 37 |

Therefore, the LGBP feature is indicated as f10.
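The thresholding step of the basic LBP operator can be sketched in Python as follows. The neighbor ordering (clockwise from the top-left pixel) and the use of `>=` for the comparison are common conventions assumed here, and the Gabor filtering that LGBP applies beforehand is omitted; in LGBP the same operator would be run on each Gabor magnitude image instead of the raw image.

```python
# Clockwise 3x3 neighbor offsets, starting at the top-left pixel (assumed order).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
           (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(image, r, c):
    """Return the 8-bit LBP code of the interior pixel at (r, c)."""
    center = image[r][c]
    code = 0
    for bit, (d_row, d_col) in enumerate(OFFSETS):
        # A neighbor at least as bright as the center contributes a 1 bit.
        if image[r + d_row][c + d_col] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """Histogram of LBP codes over all interior pixels of a 2-D image."""
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            hist[lbp_code(image, r, c)] += 1
    return hist
```

Concatenating such histograms over all Gabor images yields a feature vector that is longer than a plain LBP histogram, which is consistent with the size remark above.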

Thereafter, all of the features are joined to form a feature vector for classifying breast cancer, and the feature vector is represented as,

| 38 |

where F symbolizes the feature vector extracted from the segment. The produced feature vector F is passed to the data augmentation phase for further processing.

Augmentation of Data

The feature extraction output F is given as input to the data augmentation phase. Data augmentation is done to improve the breast cancer classification performance and to enlarge the dataset. This phase effectively reduces over-fitting problems. The data augmentation process is carried out by flipping, rotation, cropping, and zooming.

- Flipping: flipping is carried out by utilizing vertical or horizontal flips to flip the image orientation.

- Rotating: the rotating operation rotates the image by a particular angle.

- Zooming: zooming is used to enlarge or reduce the size of images.

- Cropping: cropping selects a region of the image and removes the rest, so that the selected region can be used in further processing.

Finally, the data augmentation result is denoted as Ms.
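The four augmentation operations can be illustrated on a small 2-D array, as in the Python sketch below. It assumes the operations act on an image stored as a list of rows, restricts rotation to 90 degrees clockwise, and uses nearest-neighbor enlargement for zooming; these restrictions and the function names are simplifications for illustration, not the settings used in this work.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def flip_vertical(image):
    """Mirror the image top to bottom."""
    return [list(row) for row in image[::-1]]


def rotate_90_clockwise(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(col) for col in zip(*image[::-1])]


def crop(image, top, left, height, width):
    """Keep only the height x width window starting at (top, left)."""
    return [row[left:left + width] for row in image[top:top + height]]


def zoom(image, factor):
    """Enlarge the image by an integer factor (nearest-neighbor)."""
    return [[image[r // factor][c // factor]
             for c in range(len(image[0]) * factor)]
            for r in range(len(image) * factor)]


def augment(image):
    """Return the original image together with four augmented variants."""
    return [image, flip_horizontal(image), flip_vertical(image),
            rotate_90_clockwise(image), zoom(image, 2)]
```

Each input image then contributes several training samples, which is how augmentation enlarges the dataset and helps against over-fitting.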

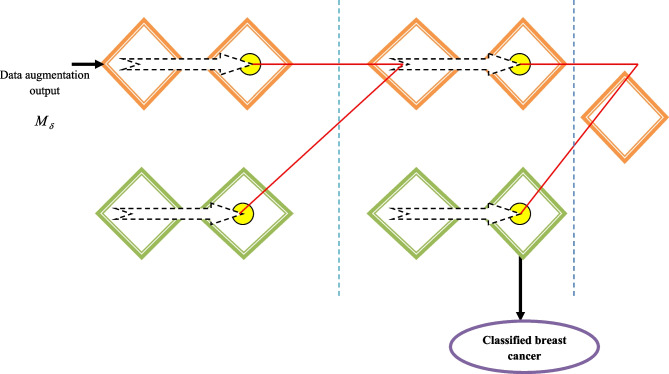

Breast Cancer Classification Using ShCNN

Once the data augmentation is done, the output of augmentation Mδ is given as input to the categorization phase to classify breast cancer. Here, the created FACS approach is used to train the ShCNN [30] classifier, which is used to categorize breast cancer. A major benefit of using the ShCNN classifier for breast cancer detection is its improved training speed, which yields strong classification outcomes. The architecture of ShCNN and its training process are illustrated below.

-

ShCNN architecture

The structure of the Shephard defines the recognized pixels by taking into consideration of the spatial distances to a processed pixel. The Shephard approach with respect to the structure of convolution is formulated as

where O and L define the input images, G represents the binary indicator, * signifies the convolutional operation, i denotes the coordinates of the image, and C signifies the kernel function where the weight of the function is inversely proportional to the distance between pixel to pixel for processing.39 The partition, which is element-wise, convolves the image and mask and manipulates the transmission procedure, which in turn defines how the information from the pixel is transmitted across the regions. Thus, this provides the capability to handle the process of interpolation among the non-uniformly spaced data and to devise feasible relocated variants. The network’s representation of the convolutional kernel, which results in modifications to the interpolation results, is a key feature. This leads to the introduction of the new convolutional layer for the new tensile model. The Shephard interpolation layer is the name given to the developed convolutional layer.-

Shepard interpolation layer

The trainable interpolation layer is expressed as

where $v$ denotes the layer index, $s$ in $B_s^v$ denotes the feature map index in the $v$-th layer, and $t$ in $B_t^{v-1}$ indicates the feature map index in the $(v-1)$-th layer. Besides, $G^v$ signifies the mask of the current layer and $B^{v-1}$ denotes the input, that is, the full set of feature maps in the $(v-1)$-th layer. $C_{st}$ are trainable kernels that appear in both the numerator and the denominator of the fraction: $C_{st}$ is convolved with the activations of the previous layer in the numerator and with the mask of the current layer $G^v$ in the denominator. In addition, $B^{v-1}$ may be the output feature map of a regular CNN layer, such as a pooling or convolutional layer, or of a preceding Shepard interpolation layer, in which case it is a function of $B^{v-2}$ and $G^{v-1}$. Stacking Shepard interpolation layers therefore yields a highly non-linear interpolation operator. The parameter $k$ indicates the bias term, and ℵ denotes the non-linearity applied by the network. $B$ is a smooth and differentiable function; hence, the standard backpropagation algorithm can be used to train the parameters. Additionally, more complex interpolation functions with several non-linear layers can be built from the interpolation layer. The mask is a binary map in which 1 marks the known region and 0 marks the missing region. The same kernel is applied to both the image and the mask. The mask of layer $v+1$ is generated automatically from the result of the previous convolved mask $C^v * G^v$ by setting insignificant values to zero and thresholding. This successive mask generation is important for images with large unknown regions, as in inpainting, where the complex propagation behavior is learned from data through non-linear interpolation with multi-stage Shepard interpolation layers. Additionally, the interpolation layer balances the depth of the network against the size of the kernel.
ShCNN is the name given to a CNN with Shepard interpolation layers. The ShCNN architecture is shown in Fig. 4. The ShCNN classifier output is denoted as Xo.
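Using the symbols defined above, the trainable Shepard interpolation layer can be written in the form given in [30]. The expression below is a reconstruction under that assumption, with ℵ written as `\aleph`, and should be checked against the original equation.

```latex
B_s^{v}\left(B^{v-1}, G^{v}\right)
  = \aleph\!\left( \sum_{t} \frac{C_{st} * B_t^{v-1}}{C_{st} * G^{v}} + k \right)
```

The sum runs over the feature maps $t$ of layer $v-1$, and the division is element-wise, so each output feature map is a normalized, mask-aware combination of the previous layer's activations.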

(b) Training procedure of ShCNN using proposed FACS

The training process of the ShCNN [30] is performed using the developed FACS, which is devised by combining the CSA [31] and FAT [32]. The algorithmic phases involved in training the ShCNN are described in “Algorithmic Phases of Developed FACS.”

Fig. 4.

Architecture of ShCNN

Results and Discussion

The developed FACS-based ShCNN is evaluated below using several measures: energy, delay, accuracy, sensitivity, specificity, and TPR.

Experimental Setup

The FACS-based ShCNN technique is implemented in MATLAB 2020a on a computer running Windows 10 with 15 GB of RAM and an Intel Core i3 processor. Details of the experimental setup are shown in Table 2.

Table 2.

Experimental setup

| Parameters | Values |
|---|---|
| Learning rate | 0.01 |
| Batch size | 32 |
| Epoch | 50 |

Dataset Description

The Mammographic Image Analysis Society (MIAS) (Dataset-1) and the Digital Database for Screening Mammography (DDSM) (Dataset-2) are the datasets utilized in this method.

Dataset-1

A digital mammography dataset was produced by the UK research group Mammographic Image Analysis Society (MIAS) to support the analysis of mammograms. Radiologists' “truth” markings indicate the areas where abnormalities are present. This dataset [35] contains 322 digitized films and is available on 2.3 GB 8 mm (ExaByte) tape. The images have been reduced to a 200 micron pixel edge and clipped or padded so that every image is 1024×1024 pixels.

Dataset-2

The Digital Database for Screening Mammography (DDSM) dataset was developed through cooperation between Massachusetts General Hospital, Sandia National Laboratories, and the Department of Computer Science and Engineering at the University of South Florida. This dataset [35] contains roughly 2500 studies, each of which includes two images of each breast along with patient-related data, including age at the time of study, breast density rating, subtlety rating for abnormalities, and abnormality description, and image-related data, including spatial resolution and scanner. Images containing suspicious areas have associated pixel-level ground truth information about the types and locations of those areas. In addition, software is provided to retrieve the mammograms and truth images and to measure the performance of automated image analysis algorithms.

Performance Evaluation Measures

The performance of the developed technique is evaluated using the metrics energy, delay, accuracy, sensitivity, specificity, and TPR.

Energy: the energy measured is illustrated in “Fitness Function.”

Delay: the equation for the delay metric is presented in Eq. (8).

Accuracy: accuracy measures the proportion of image samples that are correctly classified, that is, the true positives and true negatives among all samples, and is given as

$$\text{Accuracy} = \frac{P_t + N_t}{P_t + N_t + P_f + N_f}$$

Sensitivity: sensitivity measures the proportion of actual breast cancer cases that are correctly detected, and is expressed as

$$\text{Sensitivity} = \frac{P_t}{P_t + N_f}$$

Specificity: specificity measures the proportion of non-cancer cases that are correctly identified (the true-negative rate), and is expressed as

$$\text{Specificity} = \frac{N_t}{N_t + P_f}$$

where $P_t$ represents the true positives, $N_t$ the true negatives, $P_f$ the false positives, and $N_f$ the false negatives.
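The three classification measures can be computed directly from the confusion-matrix counts using their standard definitions. The short Python sketch below does so; the function name and the counts are hypothetical and are not taken from the paper's experiments.

```python
def classification_metrics(tp, tn, fp, fn):
    """Return accuracy, sensitivity, and specificity from confusion-matrix counts.

    tp, tn, fp, fn correspond to Pt, Nt, Pf, and Nf in the text.
    """
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    sensitivity = tp / (tp + fn)   # share of actual positives that are detected
    specificity = tn / (tn + fp)   # share of actual negatives that are rejected
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
    }


# Hypothetical counts, for illustration only.
metrics = classification_metrics(tp=45, tn=40, fp=10, fn=5)
# accuracy = 85/100, sensitivity = 45/50, specificity = 40/50
```

Multiplying each value by 100 gives the percentages in which the results are reported.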

Experimental Results

This section presents the overall findings of the proposed FACS-based ShCNN. Figure 5 shows the experimental outcomes of the developed FACS-based ShCNN. Figure 5a depicts the input image acquired from dataset-1; Fig. 5b shows the ROI-extracted output of dataset-1; Fig. 5c depicts the input image taken from dataset-2; and Fig. 5d displays the ROI-extracted output of dataset-2.

Fig. 5.

Experimental outcomes of developed FACS-based ShCNN. a Input image of dataset-1, b pre-processed result based on ROI extraction of dataset-1, c input image of dataset-2, d pre-processed result based on ROI extraction of dataset-2

Comparative Techniques

The developed technique is compared to other breast cancer classification methods presently used in Internet of Things (IoT) systems, such as Deep reinforcement learning-based Quality-of-Service (QoS)-aware Secure Routing Protocol (DQSP) + Adaboost [7, 17], DQSP + CNN [7, 24], DQSP + Deep learning [7, 16], and DQSP + Machine learning [7, 18].

Comparative Analysis

Analysis Using Dataset 1

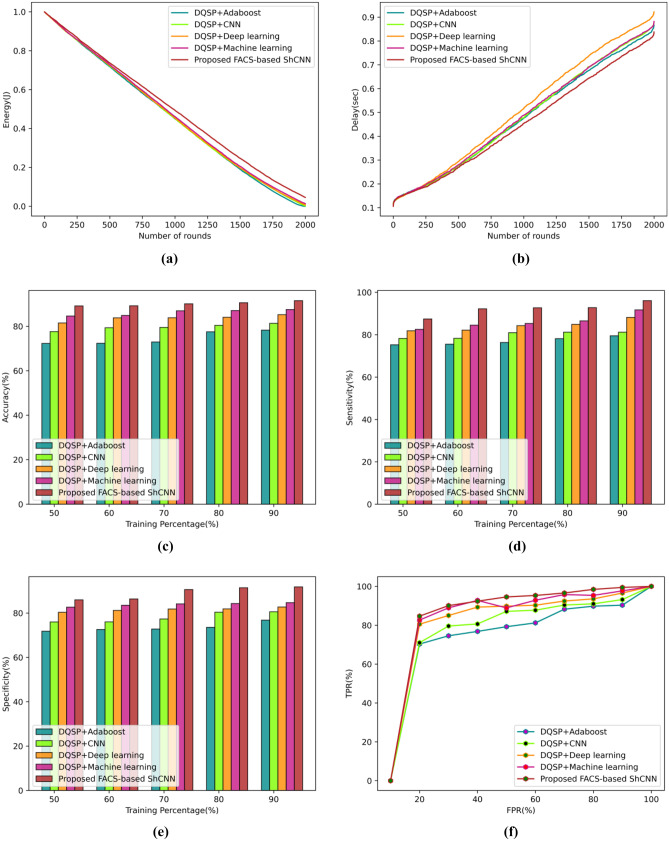

Figure 6 presents the analysis of the proposed technique on dataset-1 in terms of energy, delay, accuracy, sensitivity, specificity, and TPR. Figure 6a displays the evaluation using the energy metric. At round 300, DQSP + Adaboost records an energy value of 0.825 J, DQSP + CNN 0.827 J, DQSP + Deep learning 0.831 J, DQSP + machine learning 0.829 J, and the developed FACS-based ShCNN 0.839 J. The evaluation of the delay measure is shown in Fig. 6b. At round 500, the developed FACS-based ShCNN yields a delay of 0.269 s, whereas the earlier techniques (DQSP + Adaboost, DQSP + CNN, DQSP + Deep learning, and DQSP + machine learning) produce delay values of 0.276 s, 0.277 s, and 0.290 s. The evaluation using the accuracy metric is shown in Fig. 6c. For 80% training data, the proposed FACS-based ShCNN achieves an accuracy of 90.59%, whereas DQSP + Adaboost, DQSP + CNN, DQSP + Deep learning, and DQSP + machine learning achieve 77.53%, 80.42%, 84.05%, and 87.07%, respectively. The proposed FACS-based ShCNN thus improves on the existing techniques by 14.40%, 11.22%, 7.210%, and 3.878%. Figure 6d displays the evaluation using the sensitivity metric. For the training data considered, the sensitivity values of DQSP + Adaboost, DQSP + CNN, DQSP + Deep learning, DQSP + machine learning, and the proposed FACS-based ShCNN are 76.33%, 80.96%, 84.26%, 85.30%, and 92.69%, respectively. The corresponding improvements of the proposed FACS-based ShCNN over the conventional techniques are 17.65%, 12.65%, 9.092%, and 7.970%. The evaluation of the specificity metric is shown in Fig. 6e.
For 60% training data, the proposed FACS-based ShCNN achieves a specificity of 86.360%, whereas the existing techniques DQSP + Adaboost, DQSP + CNN, DQSP + Deep learning, and DQSP + machine learning achieve 72.57%, 76.01%, 81.21%, and 83.50%. The corresponding improvements of the proposed FACS-based ShCNN over the earlier methods are 15.95%, 11.97%, 5.957%, and 3.311%. The TPR assessment is shown in Fig. 6f. At a false positive rate (FPR) of 50%, the TPR of the proposed FACS-based ShCNN is 94.57%, while the TPR values of DQSP + Adaboost, DQSP + CNN, DQSP + Deep learning, and DQSP + machine learning are 79.24%, 87.14%, 89.81%, and 88.93%. The improvements of the proposed FACS-based ShCNN over the existing approaches are 16.203%, 7.848%, 5.027%, and 5.954%.
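The improvement percentages quoted for dataset-1 appear to be relative gains measured against the proposed method's own value, that is, (proposed − baseline) / proposed × 100. This formula is inferred from the reported numbers and is not stated explicitly in the text. The Python sketch below applies it to the accuracy values reported for 80% training data.

```python
def relative_improvement(proposed, baseline):
    """Gain of the proposed method over a baseline, as a percentage of the proposed value."""
    return (proposed - baseline) / proposed * 100.0


# Accuracy values (%) reported for dataset-1 with 80% training data.
proposed_accuracy = 90.59
baseline_accuracy = {
    "DQSP + Adaboost": 77.53,
    "DQSP + CNN": 80.42,
    "DQSP + Deep learning": 84.05,
    "DQSP + machine learning": 87.07,
}

improvements = {
    name: round(relative_improvement(proposed_accuracy, value), 2)
    for name, value in baseline_accuracy.items()
}
# The results agree with the reported 14.40%, 11.22%, 7.210%, and 3.878%
# to within a few hundredths of a percentage point.
```

The small residual differences are consistent with rounding of the underlying accuracy values.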

Fig. 6.

Comparative assessment of the developed technique using dataset-1 based on a energy, b delay, c accuracy, d sensitivity, e specificity, f TPR

Analysis Using Dataset 2

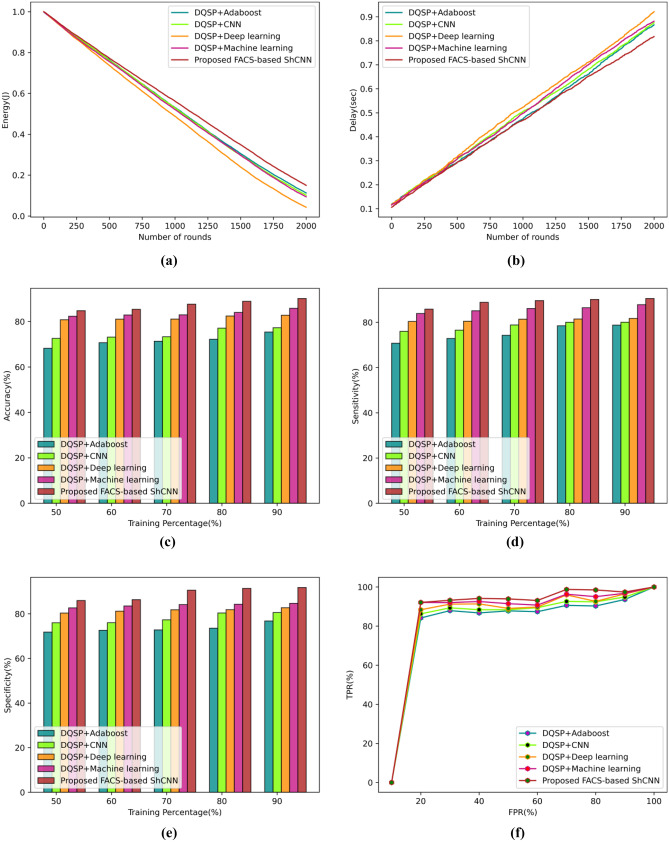

Figure 7 evaluates the approach on dataset-2 in terms of energy, delay, accuracy, sensitivity, specificity, and TPR. The evaluation based on energy is depicted in Fig. 7a. At round 400, the newly developed FACS-based ShCNN attains an energy value of 0.818 J; the energy values of the existing techniques (DQSP + Adaboost, DQSP + CNN, DQSP + Deep learning, and DQSP + machine learning) are plotted in Fig. 7a. The analysis using the delay measure is displayed in Fig. 7b. At round 500, the presented FACS-based ShCNN yields a delay of 0.292 s, whereas the existing techniques DQSP + Adaboost, DQSP + CNN, DQSP + Deep learning, and DQSP + machine learning yield delays of 0.306 s, 0.315 s, 0.293 s, and 0.307 s, respectively. The analysis using the accuracy metric is shown in Fig. 7c. For 50% training data, the accuracy values of DQSP + Adaboost, DQSP + CNN, DQSP + Deep learning, DQSP + machine learning, and the proposed FACS-based ShCNN are 68.215%, 72.576%, 80.826%, 82.358%, and 84.78%, respectively. The proposed FACS-based ShCNN performs 19.54%, 14.40%, 4.671%, and 2.864% better than the existing techniques. The assessment using the sensitivity metric is shown in Fig. 7d. For 60% training data, the sensitivity values obtained by DQSP + Adaboost, DQSP + CNN, DQSP + Deep learning, and DQSP + machine learning are 72.86%, 76.55%, 80.49%, and 85.13%, respectively, while the proposed FACS-based ShCNN achieves a sensitivity of 88.87%. The corresponding improvements of the proposed FACS-based ShCNN over the existing techniques are 18.00%, 13.85%, 9.431%, and 4.206%. Figure 7e displays the evaluation using the specificity metric.
For 70% training data, the specificity of DQSP + Adaboost is 70.57%, DQSP + CNN 76.29%, DQSP + Deep learning 77.69%, DQSP + machine learning 80.59%, and the proposed FACS-based ShCNN 86.83%. The improvements of the proposed FACS-based ShCNN over the existing methods are 18.72%, 12.13%, 10.51%, and 7.182%, respectively. Figure 7f depicts the examination of the TPR metric. At an FPR of 80%, the TPR values of DQSP + Adaboost, DQSP + CNN, DQSP + Deep learning, DQSP + machine learning, and the proposed FACS-based ShCNN are 90.28%, 92.42%, 95.04%, 92.64%, and 98.48%, respectively. The calculated improvements of the proposed FACS-based ShCNN over the existing approaches are 8.323%, 6.156%, 5.927%, and 3.496%.

Fig. 7.

Comparative assessment of developed technique using dataset-2 based on a energy, b delay, c accuracy, d sensitivity, e specificity, f TPR

Comparative Discussion

Table 3 compares the developed FACS-based ShCNN with the existing techniques on datasets 1 and 2 at 1000 rounds, 90% training data, and an FPR of 90%. For dataset 1, the energy values of the existing techniques DQSP + Adaboost, DQSP + CNN, DQSP + Deep learning, and DQSP + machine learning are 0.450 J, 0.452 J, 0.454 J, and 0.461 J, respectively, while the developed FACS-based ShCNN records 0.493 J. The delay values of DQSP + Adaboost, DQSP + CNN, DQSP + Deep learning, and DQSP + machine learning are 0.475 s, 0.477 s, 0.515 s, and 0.487 s, respectively, while the FACS-based ShCNN yields a delay of 0.452 s. The accuracy values obtained by DQSP + Adaboost, DQSP + CNN, DQSP + Deep learning, and DQSP + machine learning are 78.28%, 81.35%, 85.24%, and 87.55%, respectively, compared to the proposed FACS-based ShCNN's accuracy of 91.56%. DQSP + Adaboost yields a sensitivity of 79.50%, while DQSP + CNN, DQSP + Deep learning, DQSP + machine learning, and the proposed FACS-based ShCNN yield sensitivity values of 81.21%, 88.18%, 91.72%, and 96.10%, respectively. The existing techniques DQSP + Adaboost, DQSP + CNN, DQSP + Deep learning, and DQSP + machine learning attain specificity values of 76.81%, 80.58%, 82.74%, and 84.70%, respectively, while the FACS-based ShCNN attains a specificity of 91.80%. The TPR values obtained by DQSP + Adaboost, DQSP + CNN, DQSP + Deep learning, and DQSP + machine learning are 90.33%, 93.20%, 96.56%, and 97.67%, respectively, whereas the TPR of the proposed FACS-based ShCNN is 99.45%. Across both datasets, the proposed FACS-based ShCNN achieves a maximum energy of 0.562 J, a minimum delay of 0.452 s, and maximum accuracy, sensitivity, specificity, and TPR of 91.56%, 96.10%, 91.80%, and 99.45%. Table 3 summarizes these results.

Table 3.

Comparative discussion

| Dataset | Metric | DQSP + Adaboost | DQSP + CNN | DQSP + Deep learning | DQSP + Machine learning | Proposed FACS-based ShCNN |
|---|---|---|---|---|---|---|
| Dataset 1 | Energy (J) | 0.450 | 0.452 | 0.454 | 0.461 | 0.493 |
| Dataset 1 | Delay (s) | 0.475 | 0.477 | 0.515 | 0.487 | 0.452 |
| Dataset 1 | Accuracy (%) | 78.28 | 81.35 | 85.24 | 87.55 | 91.56 |
| Dataset 1 | Sensitivity (%) | 79.50 | 81.21 | 88.18 | 91.72 | 96.10 |
| Dataset 1 | Specificity (%) | 76.81 | 80.58 | 82.74 | 84.70 | 91.80 |
| Dataset 1 | TPR (%) | 90.33 | 93.20 | 96.56 | 97.67 | 99.45 |
| Dataset 2 | Energy (J) | 0.531 | 0.530 | 0.488 | 0.521 | 0.562 |
| Dataset 2 | Delay (s) | 0.473 | 0.504 | 0.522 | 0.498 | 0.468 |
| Dataset 2 | Accuracy (%) | 75.41 | 77.28 | 82.79 | 85.83 | 90.17 |
| Dataset 2 | Sensitivity (%) | 78.79 | 80.08 | 81.76 | 87.83 | 90.57 |
| Dataset 2 | Specificity (%) | 75.36 | 76.68 | 79.03 | 84.20 | 88.31 |
| Dataset 2 | TPR (%) | 93.60 | 94.87 | 96.46 | 96.77 | 97.43 |

Conclusion

This work presents a robust and efficient breast cancer classification approach for IoT-based healthcare, termed FACS-based ShCNN. The FACS is a new algorithm formed by combining CSA and FAT. Initially, the IoT network is simulated so that the simulated nodes collect the patient's information. The routing process is then carried out using the proposed FACS technique based on fitness parameters such as distance, energy, link quality, and delay. The breast cancer classification phase at the base station then comprises pre-processing, feature extraction, data augmentation, and classification. Here, RoI extraction is used to pre-process the mammogram image, after which significant features, such as statistical features, GLCM, and LGBP, are extracted, and data augmentation is then performed. The FACS-based ShCNN classifies breast cancer effectively, with values for energy, delay, accuracy, sensitivity, specificity, and TPR of 0.562 J, 0.452 s, 91.56%, 96.10%, 91.80%, and 99.45%, respectively. This performance is attributed to training the ShCNN with the developed FACS. Future work will explore a new deep learning classifier to further improve classification performance.

Author Contribution

Ramachandro Majji conceived the presented idea and designed the analysis. Also, he carried out the experiment and wrote the manuscript with support from Om Prakash P. G. and R. Rajeswari. All authors discussed the results and contributed to the final manuscript. All authors read and approved the final manuscript.

Data Availability

The datasets generated during and/or analyzed during the current study are available in the Mammographic Image Analysis Society repository, https://www.mammoimage.org/databases/. The datasets generated during and/or analyzed during the current study are available in the Digital Database for Screening Mammography (DDSM) repository, https://www.mammoimage.org/databases/.

Declarations

Ethics Approval

This paper does not contain any studies with human participants or animals performed by any of the authors.

Consent to Participate

Not applicable.

Consent for Publication

Not applicable.

Conflict of Interest

The authors declare no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Shende, Dipali K. and Yogesh S. Angal, and S.C. Patil. “An Iterative CrowWhale-Based Optimization Model for Energy-Aware Multicast Routing in IoT,” International Journal of Information Security and Privacy (IJISP) vol.16, no.1, pp.1–24, 2022.

- 2. Shende, Dipali & Sonavane, Shefali & Angal, Yogesh, “A Comprehensive Survey of the Routing Schemes for IoT applications”, Scalable Computing: Practice and Experience, vol.21, pp.203–216, 2020.

- 3. Jaglan, P., Dass, R. and Duhan, M., “Breast cancer detection techniques: issues and challenges”, Journal of The Institution of Engineers (India): Series B, vol.100, no.4, pp.379–386, August 2019.

- 4. K. Al-Saedi, M. Al-Emran, E. Abusham and S. A. El Rahman, “Mobile Payment Adoption: A Systematic Review of the UTAUT Model,” 2019 International Conference on Fourth Industrial Revolution (ICFIR), 2019, pp. 1–5.

- 5. V. Rupapara, M. Narra, N. K. Gunda, S. Gandhi and K. R. Thipparthy, “Maintaining Social Distancing in Pandemic Using Smartphones With Acoustic Waves,” in IEEE Transactions on Computational Social Systems, vol. 9, no. 2, pp. 605–611, 2022.

- 6. Gope, P., Gheraibia, Y., Kabir, S. and Sikdar, B., “A secure IoT-based modern healthcare system with fault-tolerant decision making process”, IEEE Journal of Biomedical and Health Informatics, July 2020.

- 7. Guo X, Lin H, Li Z, Peng M. Deep-reinforcement-learning-based QoS-aware secure routing for SDN-IoT. IEEE Internet of Things Journal. 2019;7(7):6242–6251. doi: 10.1109/JIOT.2019.2960033.

- 8. Guo X, Lin H, Wu Y, Peng M. A new data clustering strategy for enhancing mutual privacy in healthcare IoT systems. Future Generation Computer Systems. 2020;113:407–417. doi: 10.1016/j.future.2020.07.023.

- 9. Reka, S.S. and Dragicevic, T., “Future effectual role of energy delivery: A comprehensive review of Internet of Things and smart grid”, Renewable and Sustainable Energy Reviews, vol.91, pp.90–108, August 2018.

- 10. Stojkoska BLR, Trivodaliev KV. A review of Internet of Things for smart home: Challenges and solutions. Journal of Cleaner Production. 2017;140:1454–1464. doi: 10.1016/j.jclepro.2016.10.006.

- 11. Talari S, Shafie-Khah M, Siano P, Loia V, Tommasetti A, Catalão JP. A review of smart cities based on the internet of things concept. Energies. 2017;10(4):421. doi: 10.3390/en10040421.

- 12. Gope P, Hwang T. BSN-Care: A secure IoT-based modern healthcare system using body sensor network. IEEE Sensors Journal. 2015;16(5):1368–1376. doi: 10.1109/JSEN.2015.2502401.

- 13. Botti, Giovanni, “Correction of the naso-jugal groove.” Orbit (Amsterdam, Netherlands) vol.26, no.3, pp.193–202, 2007.

- 14. Mahmoud MM, Rodrigues JJ, Saleem K, Al-Muhtadi J, Kumar N, Korotaev V. Towards energy-aware fog-enabled cloud of things for healthcare. Computers & Electrical Engineering. 2018;67:58–69. doi: 10.1016/j.compeleceng.2018.02.047.

- 15. Alladi, T. and Chamola, V., “HARCI: A two-way authentication protocol for three entity healthcare IoT networks”, IEEE Journal on Selected Areas in Communications, September 2020.

- 16. Mambou, S.J., Maresova, P., Krejcar, O., Selamat, A. and Kuca, K., “Breast cancer detection using infrared thermal imaging and a deep learning model”, Sensors, vol.18, no.9, pp.2799, September 2018.

- 17. Zheng J, Lin D, Gao Z, Wang S, He M, Fan J. Deep learning assisted efficient AdaBoost algorithm for breast cancer detection and early diagnosis. IEEE Access. 2020;8(8):96946–96954. doi: 10.1109/ACCESS.2020.2993536.

- 18. Memon, M.H., Li, J.P., Haq, A.U., Memon, M.H. and Zhou, W., “Breast cancer detection in the IOT health environment using modified recursive feature selection”, Wireless Communications and Mobile Computing, November 2019.

- 19. Wang Z, Li M, Wang H, Jiang H, Yao Y, Zhang H, Xin J. Breast cancer detection using extreme learning machine based on feature fusion with CNN deep features. IEEE Access. 2019;7:105146–105158. doi: 10.1109/ACCESS.2019.2892795.

- 20. Sun X, Qian W, Song D. Ipsilateral-mammogram computer-aided detection of breast cancer. Computerized Medical Imaging and Graphics. 2004;28(3):151–158. doi: 10.1016/j.compmedimag.2003.11.004.

- 21. Raposio, Edoardo & Adami, Martina & Capello, Cecilia & Ferrando, Giovanni & Molinari, R & Renzi, M & Caregnato, P & Gualdi, Alessandro & Faggioni, M & Panarese, Paola & Santi, Pierluigi, “Intraoperative expansion of scalp flaps. Quantitative assessment”, Minerva chirurgica, vol.55, pp.629–34, 2000.

- 22. Fusini, Federico & Zanchini, Fabio, “Mini-open surgical treatment of an ex professional volleyball player with unresponsive Hoffa’s disease”, Minerva Ortopedica e Traumatologica, vol.67, pp.192–4, 2016.

- 23. Albarqouni S, Baur C, Achilles F, Belagiannis V, Demirci S, Navab N. Aggnet: deep learning from crowds for mitosis detection in breast cancer histology images. IEEE Transactions on Medical Imaging. 2016;35(5):1313–1321. doi: 10.1109/TMI.2016.2528120.

- 24. Zhang YD, Satapathy SC, Guttery DS, Górriz JM, Wang SH. Improved Breast Cancer Classification Through Combining Graph Convolutional Network and Convolutional Neural Network. Information Processing & Management. 2021;58(2):102439. doi: 10.1016/j.ipm.2020.102439.

- 25. Chanak P, Banerjee I. Congestion free routing mechanism for IoT-enabled wireless sensor networks for smart healthcare applications. IEEE Transactions on Consumer Electronics. 2020;66(3):223–232. doi: 10.1109/TCE.2020.2987433.

- 26. Awan KM, Ashraf N, Saleem MQ, Sheta OE, Qureshi KN, Zeb A, Haseeb K, Sadiq AS. A priority-based congestion-avoidance routing protocol using IoT-based heterogeneous medical sensors for energy efficiency in healthcare wireless body area networks. International Journal of Distributed Sensor Networks. 2019;15(6):1550147719853980. doi: 10.1177/1550147719853980.

- 27. Cicioğlu M, Çalhan A. SDN-based wireless body area network routing algorithm for healthcare architecture. ETRI Journal. 2019;41(4):452–464. doi: 10.4218/etrij.2018-0630.

- 28. Almalki FA, Ben Othman S, A Almalki F, Sakli H., “EERP-DPM: Energy Efficient Routing Protocol Using Dual Prediction Model for Healthcare Using IoT”, Journal of Healthcare Engineering, vol.2021, May 2021.

- 29. Kumar R, Kumar D. Multi-objective fractional artificial bee colony algorithm to energy aware routing protocol in wireless sensor network. Wireless Networks. 2016;22(5):1461–1474. doi: 10.1007/s11276-015-1039-4.

- 30. Ren, J.S., Xu, L., Yan, Q. and Sun, W., “Shepard convolutional neural networks”, In Proceedings of the 28th International Conference on Neural Information Processing Systems, vol.1, pp.901–909, December 2015.

- 31. Askarzadeh A. A novel metaheuristic method for solving constrained engineering optimization problems: crow search algorithm. Computers & Structures. 2016;169:1–12. doi: 10.1016/j.compstruc.2016.03.001.

- 32. Li, Q.Q., He, Z.C. and Li, E., “The feedback artificial tree (FAT) algorithm”, Soft Computing, pp.1–28, February 2020.

- 33. Varish N, Pal AK. A novel image retrieval scheme using gray level co-occurrence matrix descriptors of discrete cosine transform based residual image. Applied Intelligence. 2018;48(9):2930–2953. doi: 10.1007/s10489-017-1125-7.

- 34. Zhang, W., Shan, S., Gao, W., Chen, X. and Zhang, H., “Local gabor binary pattern histogram sequence (lgbphs): A novel non-statistical model for face representation and recognition”, In Proceedings of Tenth IEEE International Conference on Computer Vision (ICCV’05), vol.1, pp.786–791, October 2005.

- 35. MIAS and DDSM Dataset taken from, “https://www.mammoimage.org/databases/”, accessed on May 2021.
