Abstract

Multi-modal brain magnetic resonance imaging (MRI) data has been widely applied in vision-based brain tumor segmentation methods because different modalities provide complementary diagnostic information. In practice, multi-modal image data is often corrupted by noise or artifacts during scanning, which makes it difficult to build a universal model for subsequent segmentation and diagnosis from incomplete input data. Image completion has therefore become one of the most attractive topics in medical image pre-processing. It can not only help clinicians observe the patient’s lesion area more intuitively and comprehensively, but also save costs for patients and reduce their psychological pressure during tedious pathological examinations. Recently, many deep learning-based methods have been proposed to complete multi-modal image data and have performed well. However, current methods cannot fully capture the continuous semantic information between adjacent slices or the structural information of intra-slice features, which limits both the quality and the efficiency of completion. To solve these problems, we propose a novel generative adversarial network (GAN) framework, named random generative adversarial network (RAGAN), to complete the missing T1, T1ce, and FLAIR data from the given T2 modal data in real brain MRI. It consists of the following parts: (1) For the generator, we use T2 modal images and multi-modal classification labels from the same sample for cyclically supervised training of image generation, so as to realize the restoration of arbitrary modal images. (2) For the discriminator, a multi-branch network is proposed in which the primary branch judges whether a generated modal image is similar to the target modal image, while the auxiliary branch judges whether its essential visual features are similar to those of the target modal image.
We conduct qualitative and quantitative experimental validations on the BraTs2018 dataset, generating 10,686 MRI data in each missing modality. Real brain tumor morphology images are compared with synthetic brain tumor morphology images using PSNR and SSIM as evaluation metrics. Experiments demonstrate that the brightness, resolution, location, and morphology of brain tissue under different modalities are well reconstructed. As a further validation experiment, we feed a blend of synthetic and real images into a segmentation network. With the classic UNet as the segmentation network, the segmentation result is 77.58%. To further demonstrate the value of our proposed method, we use the stronger RES_UNet with deep supervision as the segmentation model, and the segmentation accuracy is 88.76%. Although our method does not significantly outperform other algorithms, the DICE value is 2% higher than that of the current state-of-the-art data completion algorithm TC-MGAN.

Keywords: Multimodality, Reconstruction consistency, Adversarial generation network, Image synthesis, Brain tumor segmentation

Introduction

Multi-modal brain magnetic resonance imaging (MRI) data has been widely applied in vision-based brain tumor computer-assisted diagnosis (CAD) systems because different modalities provide complementary diagnostic information. For example, T1, T2, FLAIR (fluid-attenuated inversion recovery), and T1ce (T1 contrast-enhanced) are required as common inputs for current popular brain tumor segmentation algorithms [1]. Multiple modalities clearly show different image characteristics of the brain and tumor structures and enable fine segmentation of brain tumors, which makes the performance of segmentation algorithms more convincing, especially for image segmentation methods based on deep learning (DL) [2, 3]. Jiang et al. found that adding an image reconstruction branch (VAE) to the UNet encoder–decoder has a regularizing effect on the encoder [4]. Isensee and Maier-Hein proposed dividing the segmentation task into two stages that can be trained end to end [5]. However, in the actual clinical MRI data collection process, data loss has become a key obstacle to using multi-modal images for computer-assisted diagnosis. For example, some patients’ movements during scanning cause image corruption, and some patients’ resistance to one of the tests results in incomplete data. Data from these patients, with missing modalities, cannot be used for segmentation tasks, which is a great waste of medical imaging resources. Therefore, it is necessary to complete the missing data to take full advantage of the multi-modal images.

Traditional approaches [6–8] for data completion estimate the missing modality of one sample from neighboring samples whose multi-modal data is complete. However, the recovered modality lacks semantic relevance to the other modalities of the target sample because it depends only on pixels from the same modality of other existing samples. Nowadays, many image generation methods [9–11] based on convolutional neural networks have been proposed for image data completion. They can complete the missing modalities from the existing modalities of a given sample via generative models. However, several problems still exist in current models: (1) most methods can only achieve 1-to-1 or n-to-1 image completion and cannot achieve mutual generation among arbitrary modalities of brain tumor MRI, as shown in Fig. 1a, b; (2) in the process of synthesizing the target modal image, they cannot guarantee that the characteristic information of the brain tumor is retained in the synthesized image.

Fig. 1.

Image translation using a Pix2Pix/CycleGAN (1-to-1), b CollaGAN (n-to-1), c TC-MGAN/ours (RAGAN) (1-to-n). In multi-domain image completion, our method (RAGAN) completes the missing domain images from the T2 domain image given as input

To solve these problems, in this work, we propose a novel generative adversarial network (GAN) to complete the T1, T1ce, and FLAIR images of the multi-modal MRI sample from the given T2 modal image, as shown in Fig. 1c. Our network consists of three parts: (1) a novel supervised training method for synthetic images, in which the U-Net generator takes T2 modal images and multi-modal classification labels as inputs and can synthesize arbitrary modal images; (2) a multi-branch network used as the discriminator, where the primary branch measures the pixel-wise similarity between the generated image and the target image, while the auxiliary branch judges whether the tumor region in the generated image is similar to that in the target modal image with respect to visual features; (3) a joint loss in which gradient penalty and reconstruction consistency terms are added to the Wasserstein adversarial loss of the Wasserstein GAN (WGAN), so that training can guarantee the accuracy of data completion and the learning efficiency simultaneously. Specifically, the main contributions of this work are summarized as follows:

We propose a novel GAN structure to complete T1, T1ce, and FLAIR modal MRI from a given T2 modal image of the same sample. For the generator, we use T2 modal images and multi-modal classification labels from the same sample for supervised training of image generation, so as to realize the restoration of any arbitrary modal image. For the discriminator, we design a multi-branch network to measure the pixel-wise similarity and the visual feature similarity between the tumor region in the generated image and that in the target image simultaneously.

We propose a novel loss function by combining the Wasserstein adversarial loss, the tumor reconstruction consistency loss, and the gradient penalty loss. With the proposed loss function, training achieves high data completion accuracy as well as fast convergence.

The experimental results on the public BraTs2018 dataset demonstrate that our proposed method provides recovered modal images that are not only of high quality, but also more sensitive in the tumor region, which improves the performance of the subsequent segmentation step.

The rest of this paper is organized as follows: “Related Work” reviews existing data completion methods. The architecture of our proposed data completion network and the joint loss are described in “Multi-Modality Image-to-Image Translation.” We present the training settings and analyze the experimental results in “Experimental Results and Analysis,” and the whole work is concluded in “Conclusion.”

Related Work

Although MRI of different modalities can provide supplementary information for clinical diagnosis, data with missing modalities often appear in multi-modal acquisition. To make effective use of such incomplete data and reduce the impact of the small-sample problem, researchers have proposed many methods, among which data completion is one of the most effective. Traditional completion algorithms include the expectation–maximization algorithm [8], the proximity algorithm [7], and the matrix completion algorithm [6]. However, these methods cannot effectively recover block-wise missing data, and when an entire modality is missing, estimating a large number of missing values makes their performance unstable. A Tucker decomposition algorithm based on multi-directional delayed embedding has been proposed, which considers a low-rank model of the tensor embedding space, but this method does not consider the possibility of extracting features and completing the tensor [12]. For rich datasets, basic data augmentation operations such as rotation, translation, and zooming can be performed [13]; these introduce more variability but cannot complete missing data.

With the development of deep learning, the generative adversarial network (GAN) [14] has become a widely studied image synthesis method, and its many variants can generate high-quality, realistic natural images. When first proposed, GAN was an unsupervised generation framework; in image synthesis, for example, random noise is mapped to realistic target images. Jog et al. used T1-weighted, T2-weighted, and PD-weighted images as input and used random forest regression to reconstruct FLAIR images; the resulting images were similar to real FLAIR images [15]. Later, the conditional GAN (CGAN) [16] added prior information such as labels or image features to the input instead of using noise alone, so that GAN can be regarded as a supervised generation framework. The generative characteristics of both frameworks have been used in various ways to synthesize certain types of medical images. For example, DCGAN [18] has been used to synthesize different types of liver CT lesions as data for lesion classification [17]. For data augmentation, Xu et al. [19] used DCGAN to synthesize realistic lung nodules that are difficult to distinguish from real ones, even for radiologists. CycleGAN [20], proposed by Zhu et al. for unpaired image-to-image translation, has been used to generate cardiac MRI and segmentation masks from cardiac CT slices and segmentation images [21]. Pix2Pix [10], proposed by Isola et al., has been slightly modified to generate high-resolution retinal images from binary images of the vascular tree, and pix2pix and CycleGAN have been used for cross-modal conversion between brain MRI T1 and T2 [9, 22]. CollaGAN [23] proposes a collaborative model that incorporates multiple domains to generate one missing domain. Sharma and Hamarneh proposed a multi-modal generative adversarial network (MM-GAN), which can use combined information from the available sequences to synthesize missing MR pulse sequences. Regardless of the number of missing sequences, the synthesis runs in a single forward pass of the network, and the running time is independent of the number of missing sequences [24]. Hamghalam et al. proposed a multimodal Gaussian process prior variational autoencoder (MGP-VAE), which uses a Gaussian process (GP) prior in the variational autoencoder (VAE) to exploit the correlations across patients and sub-modalities and estimate one or more missing modal images of a patient [25].

MRI scans usually have multiple modalities, whereas pix2pix, CycleGAN, and MGP-VAE can only synthesize images from one modality to another. For example, if we have T2 modal images and want to synthesize the other three modalities, we need to train three independent networks, which is inefficient. For unpaired multi-domain data, StarGAN [26] introduces domain labels into CycleGAN and enables a single network to transform the input image into any desired target domain. Inspired by StarGAN, for paired multi-modal MRI, this paper introduces the modal label into pix2pix, so that a single T2 modality can be used to synthesize the other three target modalities through a single generator network. To ensure that the brain tumor feature information in the generated modal image is the same as in the original T2 image, this paper proposes a multi-modal brain tumor reconstruction consistency loss that maintains the tumor feature information across multiple modal images.

Multi-Modality Image-to-Image Translation

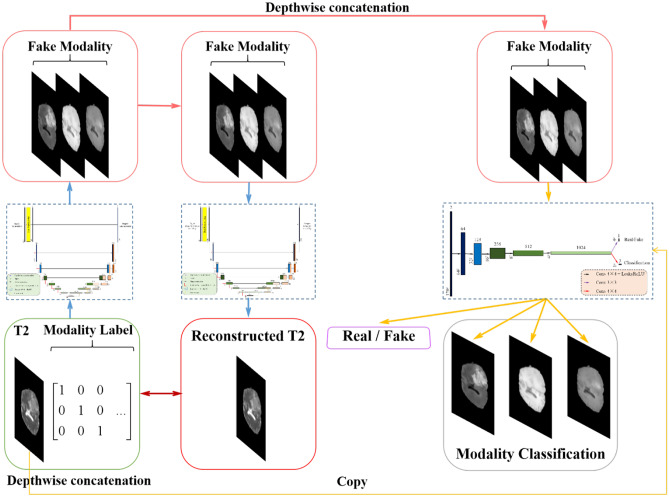

The algorithm framework designed in this paper is shown in Fig. 2. The so-called Random Generative Adversarial Networks (RAGAN) is composed of two parts: (1) a modified U-Net structure as generator whose network diagram is shown in Fig. 3 and (2) a multi-branch convolutional neural network (CNN) structure as discriminator whose network diagram is shown in Fig. 4. We will introduce these two parts as follows:

Fig. 2.

Overview of the proposed 1-to-n multi-domain completion framework, with three panels: original-to-target modality, target-to-original modality, and fooling the discriminator. RAGAN (Random Generative Adversarial Networks) is composed of a discriminator D and two generators G. G is trained to transform the modal image x into the modal image y, namely G(x, c) = y. The target modal label c is randomly selected during training so that G learns to flexibly transform the input into different modal images. The discriminator D distinguishes real from generated input data, and an auxiliary classifier is introduced into D

Fig. 3.

Overview of the network structure of the generator. In order to ensure that the converted image retains the brain tumor feature information of the input image, we designed a generator with a cyclic consistency structure

Fig. 4.

Overview of the discriminator network structure. With the auxiliary classifier, the discriminator D judges the authenticity of the input data and also predicts its modality, so that a single discriminator can handle multiple categories

Multi-Input U-Net for Multi-Modal Image Generation

Figure 2a shows the architecture of the proposed generator. Unlike the standard U-Net, whose input is the image only, our modified U-Net takes both the T2 modal image and the label image of the target modal image, with the label image serving as a complement. Figure 2b shows the different label images of the T1, T1ce, and FLAIR images. The generation process can be mathematically expressed as follows:

$$y = G(x, c) \tag{1}$$

where x represents the input T2 image, c denotes the “modal label,” which is created for each synthesized MRI sequence using one-hot encoding, and y is the generated modal image. Note that the generated image can be any of T1, T1ce, and FLAIR.
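To make the conditioning on the modal label concrete, the following minimal sketch builds a generator input from a T2 slice and a one-hot label. It assumes that the label is spatially replicated into constant planes and stacked with the image as extra channels, as is done in StarGAN; the paper does not spell out the exact encoding of its label image, and the names `MODALITIES`, `one_hot`, and `build_generator_input` are hypothetical.

```python
MODALITIES = ["T1", "T1ce", "FLAIR"]  # assumed label order, not given in the paper


def one_hot(target):
    """Return the one-hot modal label c for a target modality name."""
    return [1.0 if m == target else 0.0 for m in MODALITIES]


def build_generator_input(t2_slice, target):
    """Stack the T2 slice with one constant label plane per modality.

    t2_slice is a 2D list (H x W); the result is a list of 1 + 3 channels.
    """
    height, width = len(t2_slice), len(t2_slice[0])
    label_planes = [
        [[bit] * width for _ in range(height)] for bit in one_hot(target)
    ]
    return [t2_slice] + label_planes


t2 = [[0.1, 0.2], [0.3, 0.4]]  # toy 2 x 2 "slice"
g_input = build_generator_input(t2, "T1ce")
```

With this encoding, the same generator weights serve all three target modalities; only the label planes change between requests.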

By using both the T2 image and the label of the target modal image, the network can be more sensitive to the features that the target modal image inherits from the T2 modality. Figure 3 shows the cyclic process for one example: a T1ce image is generated from the T2 image, and a T2 image is then generated back from the T1ce image. The generated image is similar to the ground truth image, especially in the region containing the tumor.

Multi-Branch CNN for Fake Image and Target Image Discrimination

For a given input T2 image x and target modality label c, our goal is to generate an image that is as similar as possible to the target image and is correctly classified as the target modality c. The traditional CNN discriminator of a GAN focuses only on the pixel-wise similarity between the generated image and the ground truth and underestimates the key features that determine the modality of the generated image. To solve this problem, we propose a multi-branch CNN as the discriminator of the GAN. As shown in Fig. 4, we adopt a 5-layer CNN structure as the primary branch to measure the pixel-wise similarity between the generated image and the target image. At the last layer of the CNN, a fully convolutional layer with kernel size 1 × 1 is added as an auxiliary branch to judge whether the generated image can be classified as the target modality. The objective of the proposed discriminator is to make the visual features of the generated modal image as similar as possible to those of the target modality and sufficiently distinct from those of other modalities. The completed images can therefore describe the sample comprehensively from different aspects, which is the main value of multi-modal MRI.

Objective Function

To accurately restrain the network in generating the image close to the target modal image, the objective function of the proposed network is composed of four parts: (1) pixel-wise adversarial loss, (2) modal classification loss, (3) cycle consistency loss, and (4) gradient penalty loss.

Pixel-Wise Adversarial Loss

In order to make the generated image indistinguishable from the real image, the pixel-wise adversarial loss function is proposed based on pixel alignment as follows:

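$$\mathcal{L}_{adv} = \mathbb{E}_{y_t}\left[\log D_{src}(y_t)\right] + \mathbb{E}_{x,c}\left[\log\left(1 - D_{src}(G(x, c))\right)\right] + \lambda_{L1}\mathcal{L}_{L1} \tag{2}$$

where x is the input T2 modal image, c represents the target modality label, and G(x, c) is the result generated by the proposed generator, while $y_t$ denotes the target modality sample. $\mathbb{E}(\cdot)$ represents the expectation over the sampled image data, and $D_{src}(\cdot)$ is the probability distribution on a given source. $\mathcal{L}_{L1}$ is the loss based on the L1-norm between the generated image G(x, c) and the target image $y_t$, which can be mathematically expressed as follows:

$$\mathcal{L}_{L1} = \mathbb{E}_{x,c,y_t}\left[\lVert y_t - G(x, c) \rVert_1\right] \tag{3}$$

and $\lambda_{L1}$ is the hyper-parameter used to balance its weight.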
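As a small worked example of the L1 term, the sketch below computes the pixel-wise absolute difference between a generated image and its target. It averages over pixels rather than summing, which is an assumption (a common normalization that only rescales the weight of the term); the function name `l1_loss` and the toy values are hypothetical.

```python
def l1_loss(generated, target):
    """Mean absolute difference between two equally sized 2D images."""
    diffs = [
        abs(g - t)
        for g_row, t_row in zip(generated, target)
        for g, t in zip(g_row, t_row)
    ]
    return sum(diffs) / len(diffs)


generated = [[0.5, 0.0], [1.0, 0.25]]
target = [[0.0, 0.0], [1.0, 0.75]]
loss = l1_loss(generated, target)  # (0.5 + 0 + 0 + 0.5) / 4
```

Because every pixel contributes in proportion to its error, this term penalizes blurred edges and textures that the adversarial term alone may tolerate.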

Modal Classification Loss

To guarantee that the generated modal image y could be attributed to the same modality as the target image, we add an auxiliary classification branch in the proposed discriminator. Therefore, we propose a modal classification loss to improve the ability of the generator and discriminator in judging the image modality. The modal classification loss function for the discriminator is defined as follows:

$$\mathcal{L}_{cls}^{r} = \mathbb{E}_{y_t,c}\left[-\log D_{cls}(c \mid y_t)\right] \tag{4}$$

where $D_{cls}(c \mid y_t)$ represents the probability distribution on the modal label calculated by the discriminator, c is the given modality label, and x and $y_t$ denote the input T2 modal image and the target modal image, respectively. By minimizing this loss function, D learns to classify the real image into its corresponding target modal label c. In contrast, the modal classification loss function for the generator is defined as follows:

$$\mathcal{L}_{cls}^{f} = \mathbb{E}_{x,c}\left[-\log D_{cls}(c \mid G(x, c))\right] \tag{5}$$

where $D_{cls}(c \mid G(x, c))$ denotes the probability distribution on the modal label calculated by the discriminator for the generated image, while x and c are the same as those in Eq. 4. G tries to minimize this loss function to generate an image that can be classified as the target modality c.
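The modal classification loss can be illustrated with a softmax cross-entropy over the three modal labels. This is a sketch under the assumption that the auxiliary branch outputs one logit per modality; the logits below are made-up values, and `softmax` and `modal_classification_loss` are hypothetical helper names.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def modal_classification_loss(logits, label):
    """Negative log-probability assigned to the one-hot modal label."""
    probs = softmax(logits)
    return -sum(c * math.log(p) for c, p in zip(label, probs))


label_t1ce = [0.0, 1.0, 0.0]  # assumed order: T1, T1ce, FLAIR
confident = modal_classification_loss([0.0, 5.0, 0.0], label_t1ce)
confused = modal_classification_loss([0.0, 0.0, 0.0], label_t1ce)
```

The same function serves both roles: applied to real target images it trains D, and applied to generated images it pushes G toward outputs that D recognizes as the requested modality.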

Cycle Consistency Loss

By minimizing the adversarial loss and classification loss, the generator is trained to synthesize realistic images and classify them into the correct target modalities. However, it could not guarantee that the converted image preserves the semantic content of the input image, but only changes the relevant part of the input modality. In order to alleviate this problem, we propose to add a reconstruction module in the generator to inversely reconstruct the T2 image from the generated T1, T1ce, or FLAIR image so that the reconstructed image is close to the input T2 image. The difference between the reconstructed T2 image and the input T2 image is computed by the so-called cycle consistency loss, which is computed as follows:

$$\mathcal{L}_{rec} = \mathbb{E}_{x,c,c'}\left[\lVert x - G(G(x, c), c') \rVert_1\right] \tag{6}$$

where G takes the image G(x, c) converted by the previous generator and the modal label $c'$ of the original T2 modality (the target of the backward pass) as input and tries to reconstruct the image as close as possible to the original image x, and $\lVert \cdot \rVert_1$ denotes the L1 norm, while x and c are the same symbols as in the above discussion. Note that the network module for the backward transformation shares the same structure as the forward transformation generator G, which reduces the number of network parameters and increases the speed of network inference.
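The cycle can be sketched as two calls to the same generator followed by an L1 comparison with the input. The sketch assumes that the backward pass is conditioned on the label of the original T2 modality; `toy_generator` is a hypothetical stand-in that only shifts intensities, not the U-Net of the paper.

```python
def cycle_consistency_loss(generator, x, c_target, c_source):
    """Mean absolute error between x and its reconstruction G(G(x, c), c')."""
    forward = generator(x, c_target)
    reconstructed = generator(forward, c_source)
    diffs = [abs(a - b) for a, b in zip(x, reconstructed)]
    return sum(diffs) / len(diffs)


def toy_generator(image, label):
    """Hypothetical stand-in: shifts intensities by a label-dependent offset."""
    offset = {"T1ce": 0.5, "T2": -0.5}[label]
    return [pixel + offset for pixel in image]


def collapsing_generator(image, label):
    """Hypothetical generator that discards its input entirely."""
    return [0.0 for _ in image]


x = [0.0, 0.25, 1.0]  # toy flattened T2 image
loss_invertible = cycle_consistency_loss(toy_generator, x, "T1ce", "T2")
loss_collapsed = cycle_consistency_loss(collapsing_generator, x, "T1ce", "T2")
```

An invertible mapping yields zero loss, whereas a generator that discards the input content is penalized, which is the behavior the reconstruction term is meant to enforce.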

Joint Loss Function

In order to stabilize the training process and generate higher-quality images, we adopt the adversarial function of Wasserstein GAN [27] with Wasserstein adversarial loss and gradient penalty loss in the proposed network, which is defined as follows:

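$$\mathcal{L}_{adv} = \mathbb{E}_{y_t}\left[D_{src}(y_t)\right] - \mathbb{E}_{x,c}\left[D_{src}(G(x, c))\right] - \lambda_{gp}\,\mathbb{E}_{\hat{x}}\left[\left(\lVert \nabla_{\hat{x}} D_{src}(\hat{x}) \rVert_2 - 1\right)^2\right] + \lambda_{L1}\mathcal{L}_{L1} \tag{7}$$

where $\hat{x}$ is uniformly sampled along the straight line between a pair of real and synthesized images, $y_t$ is the target modal image, $\lambda_{gp}$ weights the gradient penalty, and the L1 term of Eq. 2 is retained.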
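The two ingredients of the gradient penalty, the interpolated sample and the penalty on the gradient norm, can be illustrated without an automatic differentiation library by using a linear critic, whose gradient is known in closed form. This is a toy sketch: the linear critic and the function names are assumptions for illustration, whereas the discriminator in the paper is a CNN whose gradient would be obtained by backpropagation.

```python
import math
import random


def interpolate(real, fake, eps):
    """Point on the straight line between a real and a generated sample."""
    return [eps * r + (1.0 - eps) * f for r, f in zip(real, fake)]


def gradient_penalty_linear(weights):
    """Penalty (||grad||_2 - 1)^2 for a linear critic D(x) = w . x.

    For a linear critic the gradient with respect to x is w everywhere,
    so the penalty does not depend on the interpolated point.
    """
    grad_norm = math.sqrt(sum(w * w for w in weights))
    return (grad_norm - 1.0) ** 2


rng = random.Random(0)
real, fake = [1.0, 0.0], [0.0, 1.0]
x_hat = interpolate(real, fake, rng.random())
penalty = gradient_penalty_linear([3.0, 4.0])  # gradient norm is 5
```

The penalty vanishes only when the critic has unit gradient norm at the sampled points, which is how the 1-Lipschitz constraint of the Wasserstein formulation is encouraged.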

Finally, the objective functions of G and D after optimization are summarized as follows:

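$$\mathcal{L}_{G} = \mathcal{L}_{adv} + \lambda_{cls}\mathcal{L}_{cls}^{f} + \lambda_{rec}\mathcal{L}_{rec} \tag{8}$$

$$\mathcal{L}_{D} = -\mathcal{L}_{adv} + \lambda_{cls}\mathcal{L}_{cls}^{r} \tag{9}$$

where $\mathcal{L}_{adv}$ is the adversarial loss of Eq. 7, $\mathcal{L}_{cls}^{r}$ and $\mathcal{L}_{cls}^{f}$ are the modal classification losses of Eqs. 4 and 5, $\mathcal{L}_{rec}$ is the cycle consistency loss of Eq. 6, and $\lambda_{cls}$ and $\lambda_{rec}$ are hyperparameters that control the relative importance of the modal classification and reconstruction losses, respectively. In all experiments, the loss weights are set empirically to 1, 10, and 100.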
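How the individual terms combine into the two objectives can be sketched as plain weighted sums. The combination below follows the StarGAN-style form that the paper cites as its inspiration; the default weights of 1 for classification and 10 for reconstruction are an assumed reading of the values listed in the text, and the scalar loss values are made up for illustration.

```python
def generator_loss(l_adv, l_cls_fake, l_rec, lambda_cls=1.0, lambda_rec=10.0):
    """L_G = L_adv + lambda_cls * L_cls^f + lambda_rec * L_rec.

    The default weights are assumptions, not confirmed by the paper.
    """
    return l_adv + lambda_cls * l_cls_fake + lambda_rec * l_rec


def discriminator_loss(l_adv, l_cls_real, lambda_cls=1.0):
    """L_D = -L_adv + lambda_cls * L_cls^r."""
    return -l_adv + lambda_cls * l_cls_real


l_g = generator_loss(l_adv=0.5, l_cls_fake=0.25, l_rec=0.125)
l_d = discriminator_loss(l_adv=0.5, l_cls_real=0.25)
```

The opposite signs on the adversarial term express the two-player game, while the classification and reconstruction terms are minimized by both the relevant player and never compete with each other.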

Experimental Results and Analysis

To evaluate the effect of our proposed method, we apply it to the BraTs2018 multi-modal brain tumor dataset and compare its performance with several state-of-the-art medical data completion methods, including pix2pix [10], MGP-VAE [25], and TC-MGAN [11]. To assess the quality of the completed modal images, the peak signal-to-noise ratio (PSNR) and the structural similarity index (SSIM) are used as quantitative metrics. To assess how much the completed modal images improve the subsequent segmentation, the DICE score is used. In the following, we introduce the dataset, the parameter settings, and the implementation of the experiments, and we compare the proposed method with state-of-the-art methods with respect to the different evaluation metrics.

Experimental Dataset

We use the BraTs2018 multi-modal brain tumor dataset, which contains 285 samples with four aligned MRI modalities (FLAIR, T1, T1ce, and T2); each volume has a size of 240 × 240 × 155. After filtering out the axial slices whose brain area contains fewer than 2000 pixels, 257 and 28 samples are randomly selected for training and testing, respectively. By extracting the 2D slices from the 3D volumes, we obtain 39,835 sets of T1, T1ce, T2, and FLAIR images for training and 4340 sets of T1, T1ce, T2, and FLAIR images for testing. We resize the images from 240 × 240 to 128 × 128. For the subsequent segmentation evaluation, two categories are labeled for brain tumor segmentation, i.e., tumor region and background region.
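The slice filtering step can be sketched as follows. The sketch assumes that the brain area of a slice is measured by counting its nonzero pixels, which the paper does not state explicitly; the function names and the toy slices are hypothetical.

```python
def brain_pixel_count(slice_2d):
    """Count nonzero pixels, used here as a proxy for brain area."""
    return sum(1 for row in slice_2d for value in row if value != 0)


def keep_informative_slices(volume, min_pixels=2000):
    """Keep axial slices with at least min_pixels nonzero pixels."""
    return [s for s in volume if brain_pixel_count(s) >= min_pixels]


empty = [[0] * 240 for _ in range(240)]
full = [[1] * 240 for _ in range(240)]
# 40 x 40 nonzero patch: 1600 pixels, below the threshold of 2000
small = [[1] * 40 + [0] * 200 for _ in range(40)] + [[0] * 240 for _ in range(200)]
kept = keep_informative_slices([empty, full, small])
```

Only the slice with enough nonzero pixels survives, so nearly empty slices at the top and bottom of a volume would not contribute training pairs.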

Parameter Setting

In the experiments, several parameter combinations are evaluated, and the parameters are set empirically as follows. The four loss weights are set to 0.1, 1, 10, and 100, respectively. We use the Adam optimizer with a batch size of 128 to minimize the objective, where β1 = 0.4 and β2 = 0.8. For data augmentation, we flip the images horizontally with a probability of 0.4 and train for 120 training cycles. The learning rate for the first 25 training cycles is set to 0.0002, and the learning rates for the following 25, 55, and 85 training cycles are set to 0.0001, 0.00005, and 0.00001, respectively. U-Net is used in this work to compare how much the synthetic images from different models improve brain tumor segmentation. We train the segmentation model for 30 training cycles with a learning rate of 0.001.
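One possible reading of the learning-rate schedule is a piecewise-constant function of the training cycle. The sketch below assumes that the rate changes at cycles 25, 55, and 85; the text is ambiguous about the exact boundaries, so these values and the function name `learning_rate` are assumptions rather than the authors' confirmed settings.

```python
def learning_rate(epoch):
    """Piecewise-constant schedule; boundaries 25/55/85 are an assumed reading."""
    if epoch < 25:
        return 2e-4
    if epoch < 55:
        return 1e-4
    if epoch < 85:
        return 5e-5
    return 1e-5


schedule = [learning_rate(e) for e in range(120)]  # 120 training cycles
```

A function of this shape can be passed to the scheduling hook of most training frameworks, which keeps the decay steps in one place.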

All the experiments are performed in Python with the TensorFlow and Keras libraries on an Intel Xeon Silver 4110 2.1 GHz PC with 32 GB of RAM and four NVIDIA TITAN Xp graphics processing unit cards with 12 GB of memory each.

Implementation of Experiments

We compare the proposed RAGAN method with three state-of-the-art multi-modal image completion methods: pix2pix [10], MGP-VAE [25], and TC-MGAN [11]. The TC-MGAN method proposes a multi-modality generative adversarial network (MGAN) to simultaneously synthesize three high-quality MRI modalities (FLAIR, T1, and T1ce) from one MRI modality, T2. Because it is important for MRI brain image synthesis to preserve the critical tumor information in the generated modalities, it introduces a multi-modality tumor consistency loss. MGP-VAE uses a Gaussian process (GP) prior in the variational autoencoder (VAE) to exploit the correlations among subjects/patients and sub-modalities, which allows it to fill in two or three missing modalities. The pix2pix method adopts U-Net as the generator and a patch-based fully convolutional network as the discriminator. The input to the discriminator is a channel-wise concatenation of the semantic label map and the corresponding image. The experiments are implemented as follows:

The test T2 images in the dataset are processed to provide synthesized T1, T1ce, and FLAIR images by the proposed method, as well as by TC-MGAN and pix2pix methods.

A blind reader study is performed on 20 groups of generated images for subjective analysis. The images processed by different methods are sent to two radiologists to independently score every group of T1, T1ce, and FLAIR generated from T2 image in terms of contrast retention, lesion discrimination, and overall quality on a five-point scale (1 = unacceptable and 5 = excellent). The mean and standard deviation values of the scores from the two radiologists are calculated as the expert validation.

For the synthesized image quality evaluation, the generated T1, T1ce, and FLAIR images are compared with the ground truth images to generate the peak signal-to-noise ratio (PSNR):

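$$\mathrm{PSNR} = 10 \log_{10}\left(\frac{MAX_I^2}{\mathrm{MSE}}\right) \tag{10}$$

where $MAX_I$ is the maximum pixel value of the image and MSE is the mean squared error between the generated image and the ground truth. The better the image quality, the smaller the noise and the MSE, and hence the larger the peak signal-to-noise ratio.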
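The PSNR computation can be written in a few lines. The sketch below assumes 8-bit intensities, so the maximum pixel value is 255 by default; the function name `psnr` and the toy images are hypothetical.

```python
import math


def psnr(generated, reference, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized 2D images."""
    errors = [
        (g - r) ** 2
        for g_row, r_row in zip(generated, reference)
        for g, r in zip(g_row, r_row)
    ]
    mse = sum(errors) / len(errors)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


reference = [[0.0, 255.0], [255.0, 0.0]]
noisy = [[0.0, 255.0], [255.0, 25.5]]
value = psnr(noisy, reference)  # MSE = 25.5^2 / 4, ratio = 400
```

In this toy case a single pixel is off by 10% of the dynamic range, which gives a ratio of 400 between the squared peak value and the MSE.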

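The structural similarity index (SSIM) is also used to evaluate the performance of the proposed method in generating the missing multi-modal images. SSIM is mathematically defined as follows:

$$\mathrm{SSIM}(X, Y) = \frac{2\mu_X\mu_Y + c_1}{\mu_X^2 + \mu_Y^2 + c_1} \cdot \frac{2\sigma_X\sigma_Y + c_2}{\sigma_X^2 + \sigma_Y^2 + c_2} \cdot \frac{\sigma_{XY} + c_3}{\sigma_X\sigma_Y + c_3} \tag{11}$$

where X and Y are the two images being compared, $\mu$ is the mean value of the corresponding subscript image, $\sigma^2$ is the variance of the corresponding subscript image, $\sigma_{XY}$ is the covariance of the two images, and $c_1$, $c_2$, and $c_3$ are all small constants to avoid the denominator being zero. The larger the value of SSIM, the closer the two images are.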
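A dependency-free sketch of SSIM is given below. It makes two simplifying assumptions that should be read as such: the statistics are computed once over the whole image instead of over local sliding windows, and the common choice $c_3 = c_2 / 2$ is used, which merges the contrast and structure terms into a single factor. The constants 0.01 and 0.03 are the conventional defaults, not values reported in the paper.

```python
def ssim_global(x, y, max_value=1.0):
    """SSIM computed once over whole images given as flat lists of pixels."""
    n = len(x)
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    c1 = (0.01 * max_value) ** 2  # stabilizing constants, conventional values
    c2 = (0.03 * max_value) ** 2
    luminance_term = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    # With c3 = c2 / 2 the contrast and structure terms collapse into one.
    structure_term = (2 * cov_xy + c2) / (var_x + var_y + c2)
    return luminance_term * structure_term


a = [0.0, 0.5, 1.0, 0.5]
b = [1.0, 0.5, 0.0, 0.5]  # same mean and variance as a, inverted structure
same = ssim_global(a, a)
inverted = ssim_global(a, b)
```

The inverted image has the same mean and variance as the original, so only the covariance term separates the two cases, which is the structural sensitivity that PSNR lacks.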

As shown in Fig. 4, the generated T1, T1ce, and FLAIR images, together with the input T2 image, are sent to the U-Net to segment the tumor in each sample. Then, the segmented tumor region and the ground truth are used to calculate the DICE score:

$$\mathrm{DICE} = \frac{2\,|X \cap Y|}{|X| + |Y|} \tag{12}$$

where |X ∩ Y| is the intersection between sample X and sample Y, and |X| and |Y| represent the number of elements in sample X and sample Y, respectively.
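For binary tumor/background masks, the DICE score reduces to counting overlapping foreground pixels. The sketch below works on flattened masks; returning 1.0 when both masks are empty is a convention chosen here, not something specified in the paper, and the function name `dice_score` is hypothetical.

```python
def dice_score(pred_mask, true_mask):
    """DICE = 2|X n Y| / (|X| + |Y|) for flat binary masks."""
    intersection = sum(1 for p, t in zip(pred_mask, true_mask) if p and t)
    size_sum = sum(1 for p in pred_mask if p) + sum(1 for t in true_mask if t)
    if size_sum == 0:
        return 1.0  # both masks empty: treated as perfect agreement
    return 2.0 * intersection / size_sum


pred = [1, 1, 0, 0, 1]
truth = [1, 0, 0, 1, 1]
score = dice_score(pred, truth)  # 2 * 2 / (3 + 3)
```

Because the background is ignored, the score is not inflated by the large non-tumor area that dominates each slice.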

Experimental Results

Ablation Studies

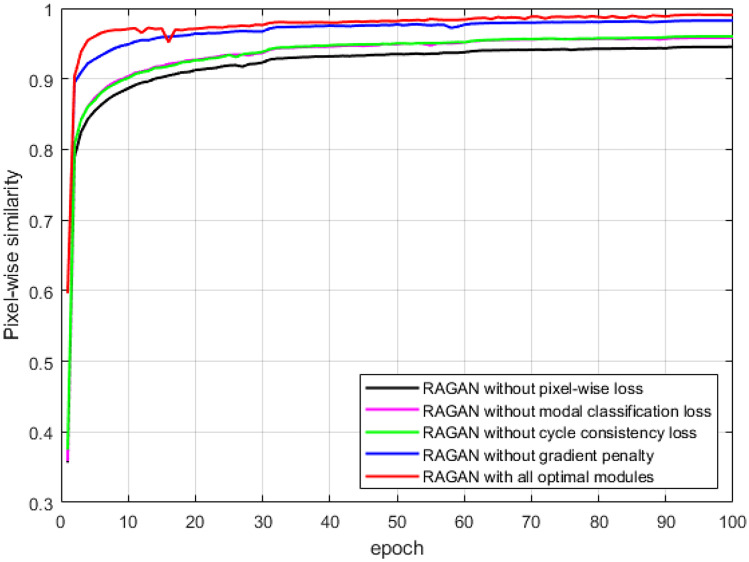

Figure 5 illustrates how the pixel-wise similarity between the ground truth images and the multi-modal images generated by RAGAN with different loss strategies changes with the number of epochs during training. Without the pixel-wise loss, the details of the edges and textures in the generated multi-modal images are degraded, leading to the lowest pixel-wise similarity. Training the network without the modal classification loss or the cycle consistency loss results in confusion between the generated image modalities. Training the network without the gradient penalty leads to slower convergence. In contrast, by combining the pixel-wise loss, modal classification loss, cycle consistency loss, and gradient penalty loss with the basic adversarial loss of the GAN, our proposed RAGAN model achieves a good balance between training accuracy and speed.

Fig. 5.

The line graph of the change of the pixel-wise similarity between the generated images and the ground truth images with the number of epochs during the training process

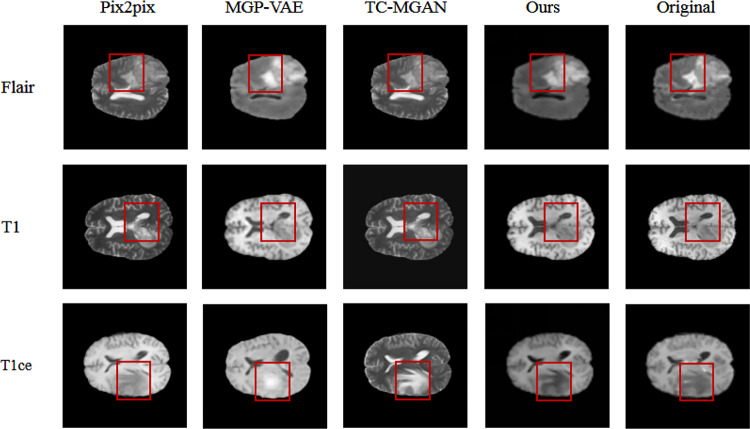

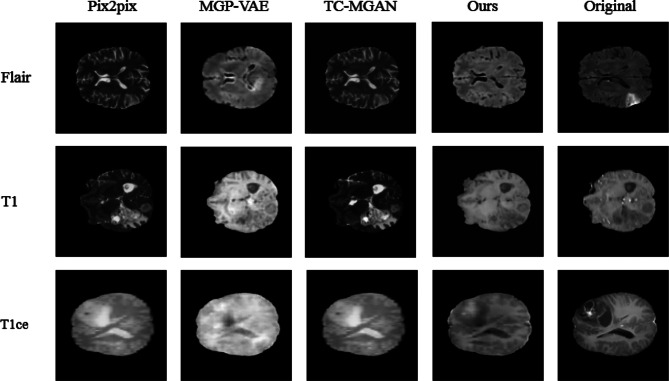

Results on Generating High-Quality Missing Modal Images

Figures 6 and 7 show the T1, T1ce, and FLAIR images generated from two given T2 modal images by different methods. pix2pix [10], MGP-VAE [25], and TC-MGAN [11] do not retain the tumor information from the T2 modal image well, so tumor features are lacking in the generated modal images. For example, as marked by the red rectangle in Fig. 6, the brain tumor features in their synthesized images are not very obvious. In comparison, our model generates better results in meaningful details, e.g., a more accurate and more prominent tumor region in BraTs2018. This is achieved by introducing the label of the missing modal image to the generator as a constraint, adding the auxiliary branch in the discriminator to classify the generated image into the target modality, and combining the adversarial loss [14], classification loss [11], and cycle consistency loss into a joint loss. All of these are essential for preserving the anatomical structures of brain tumors in medical images and therefore for providing high-quality multi-modal MRI.

Fig. 6.

Comparison of the images synthesized by different methods. Compared with other methods, the image generated by our proposed method is not only similar to the ground truth at the pixel level, but also maintains better semantic consistency with it

Fig. 7.

Image effects synthesized by different methods. Compared with other data synthesis algorithms, the image generated by our proposed method not only has image pixel-level similarity, but also maintains better semantic consistency with ground truth

Table 1 shows the mean and standard deviation values of the subjective quality scores from two experienced radiologists to judge the qualities of grouped T1, T1ce, and FLAIR modal images generated from the given T2 modal images by different methods. The proposed method gets the highest scores in contrast retention and lesion discrimination; therefore, it achieves a higher overall score than the other methods.

Table 1.

Subjective quality scores (mean ± standard deviation) for different algorithms

| Metric | pix2pix | MGP-VAE | TC-MGAN | OURS |
|---|---|---|---|---|
| Contrast retention | | | | |
| Lesion discrimination | | | | |
| Overall quality | | | | |

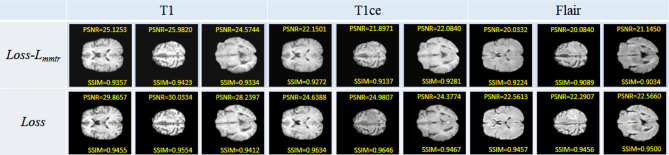

The quantitative results on all the testing data are shown in Table 2. The proposed method achieves the highest average SSIM for the T1, T1ce, and FLAIR modal images and the highest average PSNR for T1 and T1ce, while TC-MGAN attains the highest PSNR for FLAIR; overall, this supports our qualitative observations. To assess the improvement brought by the cyclic consistency loss function, a generative adversarial model is used as the baseline of this article and is tested on the BraTs dataset under the same conditions. The comparison result is shown in Fig. 8. The images synthesized by the model with the cyclic consistency loss are of better quality and have clearer contrast.

Table 2.

Quantitative comparison, using PSNR and SSIM, of the image quality synthesized by different methods

| Synthetic method | PSNR (T2–FLAIR) | PSNR (T2–T1) | PSNR (T2–T1ce) | SSIM (T2–FLAIR) | SSIM (T2–T1) | SSIM (T2–T1ce) |
|---|---|---|---|---|---|---|
| pix2pix | 21.1689 | 26.4856 | 22.1581 | 0.9104 | 0.9443 | 0.9286 |
| MGP-VAE | 22.5044 | 26.8596 | 21.5236 | 0.9187 | 0.9533 | 0.9168 |
| TC-MGAN | **23.7813** | 25.1862 | 22.5629 | 0.9382 | 0.9545 | 0.9326 |
| OURS | 22.2560 | **29.9289** | **24.6464** | **0.9478** | **0.9713** | **0.9537** |

The best quantitative performance is shown in bold emphasis

Fig. 8.

The introduction of cyclic consistency loss preserves the characteristic information of brain tumors to the greatest extent and generates data that is very similar to the original modal image

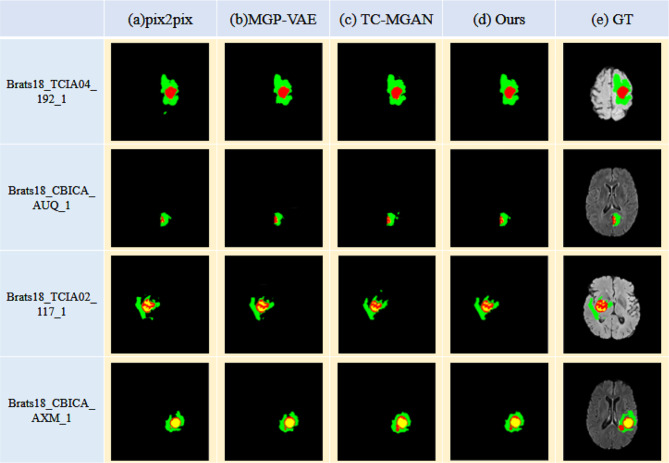

Results on Improving the Consequent Tumor Segmentation with the Completed Multi-Modal Images

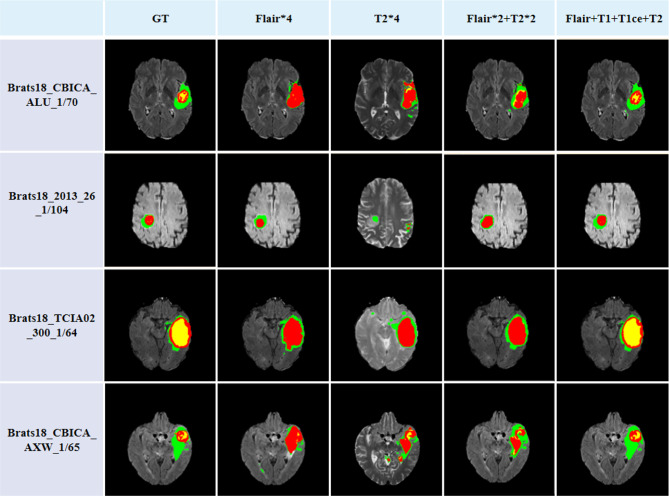

Another significant purpose of multi-modal image data completion is to improve the performance of the subsequent segmentation algorithm. Figure 9 shows the results of applying U-Net to segment tumors from multi-modal image data completed by different methods. As illustrated in Fig. 9a, b, using the multi-modal images generated by pix2pix and MGP-VAE as the input causes U-Net to misclassify several tumor regions as background. This is mainly because tumor regions with lower intensities than the background in the T1 and T1ce modal images are reconstructed as regions with higher intensities, while the tumor region with higher intensity than the background in the FLAIR modal image is generated with lower intensity. As shown in Fig. 9c, the TC-MGAN method focuses too heavily on pixel-wise similarity, which produces redundant detail inside the tumor region while losing several tumor boundary regions. The proposed method is designed to improve the subsequent segmentation by adding more constraints on the visual similarity between the generated image and the target image, which leads to better segmentation results that preserve the intact tumor regions.

We mixed the real and synthetic images in a 3:1 ratio and fed them to the classical segmentation network U-Net for tumor segmentation. The overall brain tumor segmentation accuracy reached 77.58%, which exceeds the accuracy obtained with the other data completion algorithms. To demonstrate the clinical value of this algorithm, we also use the RESUNet network fused with deep supervision for segmentation; the overall brain tumor segmentation accuracy can reach 88.76%. The quantitative results of the whole tumor segmentation are shown in Table 3, and the higher DICE score of the proposed method is consistent with our discussion above.

To verify the value of multi-modal datasets for image segmentation, image data analysis and comparison experiments are shown in Fig. 10. To use the same segmentation network and eliminate the influence of the number of channels, the single T2 modal image is copied four times, expanded into four channels, and fed into the segmentation network together. After training on multi-modal images, the brain tumor segmentation is visibly better and closer to the ground truth.
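The channel-replication step used for the T2-only comparison can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the function names, the channel-first layout, and the 240 × 240 slice size are assumptions made for the example.

```python
import numpy as np


def replicate_t2(t2_slice, n_channels=4):
    """Copy one T2 slice into n_channels identical channels (channel-first layout assumed)."""
    t2 = np.asarray(t2_slice)
    return np.repeat(t2[np.newaxis, ...], n_channels, axis=0)


def stack_modalities(t1, t1ce, t2, flair):
    """Build the multi-modal input by stacking four different modalities as channels."""
    return np.stack([t1, t1ce, t2, flair], axis=0)


if __name__ == "__main__":
    # Random arrays standing in for co-registered slices of one sample.
    rng = np.random.default_rng(0)
    t1, t1ce, t2, flair = (rng.random((240, 240)) for _ in range(4))

    t2_only_input = replicate_t2(t2)                       # shape (4, 240, 240)
    multi_modal_input = stack_modalities(t1, t1ce, t2, flair)  # shape (4, 240, 240)
    print(t2_only_input.shape, multi_modal_input.shape)
```

Because both inputs have the same shape, the same segmentation network can be trained on either one, so any difference in the results comes from the information content of the channels rather than from their number.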

Fig. 9.

Comparison of the segmentation results obtained with images synthesized by different methods. Our method retains tumor feature information to a greater extent, and its segmentation results are closer to the segmentation label

Table 3.

Quantitative analysis of brain tumor segmentation (DICE scores). The proposed image synthesis method outperforms the other synthesis methods, and introducing the synthetic images improves the accuracy of brain tumor segmentation

| Method | WT (DICE) | TC (DICE) | ET (DICE) |
|---|---|---|---|
| Only T2 + UNet | 0.7121 | 0.6473 | 0.5378 |
| No data expansion + UNet | 0.7224 | 0.6481 | 0.5490 |
| 3*pix2pix + UNet | 0.7450 | 0.6597 | 0.5682 |
| MGP-VAE + UNet | 0.7467 | 0.6634 | 0.5665 |
| TC-MGAN + UNet | 0.7518 | 0.6789 | 0.6124 |
| OURS + UNet | 0.7758 | 0.6911 | 0.6371 |
| OURS + RESUNET + DEEP | **0.8836** | **0.8056** | **0.7366** |

The best quantitative performance is shown in bold emphasis
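For readers unfamiliar with the metric in Table 3, the following sketch shows how a DICE score can be computed for the WT, TC, and ET regions. It is an illustration only. The function names are hypothetical, and the label grouping assumes the usual BraTS convention (1 = necrotic and non-enhancing core, 2 = edema, 4 = enhancing tumor), which this section does not state explicitly.

```python
import numpy as np


def dice_score(pred_mask, true_mask):
    """DICE = 2|A ∩ B| / (|A| + |B|) for two binary masks."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    denominator = pred.sum() + true.sum()
    if denominator == 0:
        return 1.0  # both masks empty: treated here as perfect agreement
    return 2.0 * np.logical_and(pred, true).sum() / denominator


def region_masks(label_map):
    """Split a label map into WT/TC/ET masks (assumed BraTS labels 1, 2, 4)."""
    labels = np.asarray(label_map)
    return {
        "WT": np.isin(labels, (1, 2, 4)),  # whole tumor
        "TC": np.isin(labels, (1, 4)),     # tumor core
        "ET": labels == 4,                 # enhancing tumor
    }


if __name__ == "__main__":
    # Tiny toy label maps standing in for a prediction and its ground truth.
    truth = np.array([[0, 1], [2, 4]])
    prediction = np.array([[0, 1], [0, 4]])
    true_regions = region_masks(truth)
    pred_regions = region_masks(prediction)
    for name in ("WT", "TC", "ET"):
        print(name, dice_score(pred_regions[name], true_regions[name]))
```

A score of 1 means the predicted and reference masks coincide exactly, and 0 means they do not overlap at all.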

Fig. 10.

Comparison of the impact of different modal data on the segmentation results. Multi-modal data not only helps to improve the accuracy of tumor segmentation, but also improves the network’s distinction between different substantive regions

Conclusion

In this work, we propose a novel generative adversarial network (GAN), named Random-GAN (RAGAN), for multi-modal brain MRI completion. The proposed network generates the missing T1, T1ce, and FLAIR modal images from a given T2 modal image. As the generator, we propose a novel U-Net structure that takes both the T2 modal image and the tumor segmentation label mask from another modal image of the same sample, so that any arbitrary modal image can be recovered. As the discriminator, we design a multi-branch network that simultaneously measures the pixel-wise similarity and the visual feature similarity between the tumor region in the generated image and that in the target image. We propose a novel loss function that combines the Wasserstein adversarial loss, the tumor reconstruction consistency loss, and the gradient penalty loss to achieve both high data completion accuracy and fast convergence. The experimental results on the public BraTS 2018 dataset show that the proposed method provides recovered modal images that are not only of high quality but also more sensitive in the tumor region, which improves the performance of the subsequent segmentation algorithm.

Author Contribution

All authors contributed to the study’s conception and design. Material preparation, data collection, and analysis were performed by Shuang Zhang, Jianning Chi, and Yang Jiang. The first draft of the manuscript was written by Yang Jiang and Shuang Zhang, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grants 61901098 and 61971118.

Data Availability

The data that support the findings of this study are openly available at www.med.upenn.edu/sbia/brats2018/data.html.

Declarations

Ethics Approval

The study did not require ethics approval.

Competing Interests

The authors declare no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

