Abstract

We aimed to develop and validate an instrument to detect hospital medication prescribing errors using repurposed clinical decision support system data. Despite significant efforts to eliminate medication prescribing errors, these events remain common in hospitals. Data from clinical decision support systems have not been used to identify prescribing errors as an instrument for physician-level performance. We evaluated medication order alerts generated by a knowledge-based electronic prescribing system occurring in one large academic medical center’s acute care facilities for patient encounters between 2009 and 2012. We developed and validated an instrument to detect medication prescribing errors through a clinical expert panel consensus process to assess physician quality of care. Six medication prescribing alert categories were evaluated for inclusion, one of which – dose – was included in the algorithm to detect prescribing errors. The instrument was 93% sensitive (recall), 51% specific, 40% precise (positive predictive value), and 62% accurate, with an F1 score of 55% and a negative predictive value of 95%. Using repurposed electronic prescribing system data, dose alert overrides can be used to systematically detect medication prescribing errors occurring in an inpatient setting with high sensitivity.

Keywords: Decision support systems, clinical; Quality of health care; Outcome and process assessment (health care); Medication errors; Medical informatics applications; Electronic health records

Introduction

Clinical decision support (CDS) systems embedded in Electronic Health Records (EHR) are common in modern healthcare delivery, particularly in electronic prescribing functions. Although CDS systems have been associated with improvements in patient safety and quality of care, [1–4] little is known about using data generated by CDS systems to measure clinician quality of care.

Despite great efforts to improve patient safety through meaningful use of EHRs, [5, 6] preventable medication errors continue to be among the most common clinical errors, particularly in acute care settings, [7, 8] and can lead to serious harm or death. [9–11] Prescribing errors are the most prevalent subclass of medication errors and occur at least once in 50% of hospitalizations with a median rate of 7% of all medication orders. [7, 8, 12] Many previous medication safety studies have employed manual chart review or direct clinical practice observation to study prescribing errors, [13, 14] but this approach is costly and the results may not be generalizable across hospitals nationally.

With the adoption of certified EHRs, computerized physician order entry (CPOE) capabilities and “off-the-shelf” knowledge-based CDS system tools have also been broadly adopted. Many efforts [15–17] have supported development of data standards that enable multi-institution interoperability to improve the coordination of care. [18–20] Although some challenges remain, [21] repurposed EHR data could potentially be used to conduct clinical research in a cost-effective manner. [18, 22, 23]

CDS systems have largely been considered a tool to promote best clinical practices and evidence-based decision-making at the point of care with the goal of improving clinical care quality, [24–27] but further studies are needed to understand the potential for using CDS data to develop performance measures for physicians, clinical services, and facilities.

In this study, we focused on the interaction between clinician prescribers and the CDS system interface to evaluate whether clinician-specific prescribing quality can be determined. We hypothesized that prescribing errors can be detected using data from CDS system-generated alerts triggered when a medication order fails to meet clinical safety requirements set by the knowledge-based system. A medication order that triggered an alert and was “overridden” by the clinician may be more likely to capture a medication order that represents a prescribing error. We describe a method for using repurposed CDS system-generated data to detect prescribing errors occurring in a hospital practice setting. We sought to develop and validate an algorithm to identify prescribing errors as an instrument to measure individual clinician-level care quality: a Composite ALgorithm to Identify Prescribing Errors (CALIPEr). We present this instrument and our analysis of its performance in measuring physician-level prescribing quality. Our goal was to build an instrument that is systematic, generalizable for use in other CDS-equipped hospitals, and generates metrics that may be linked to other prescribing processes impacting quality and safety of care.

Methods

Conceptual framework

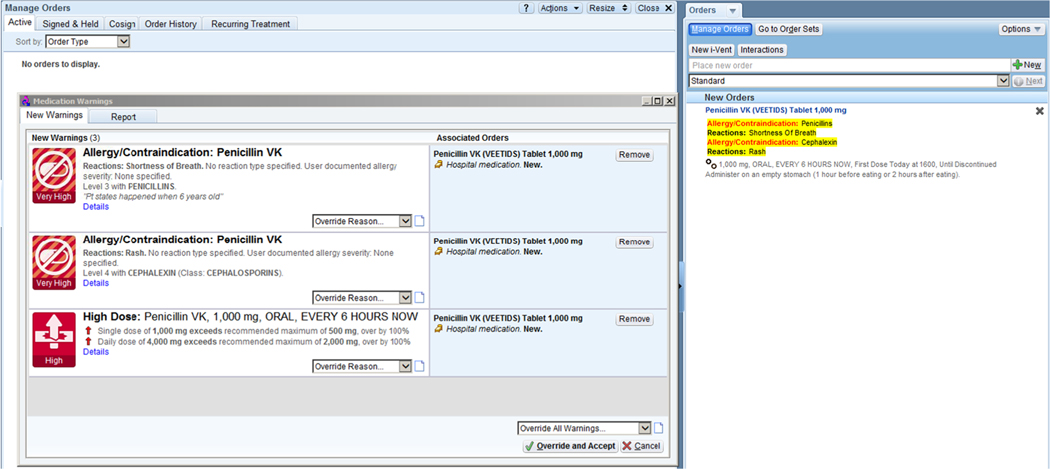

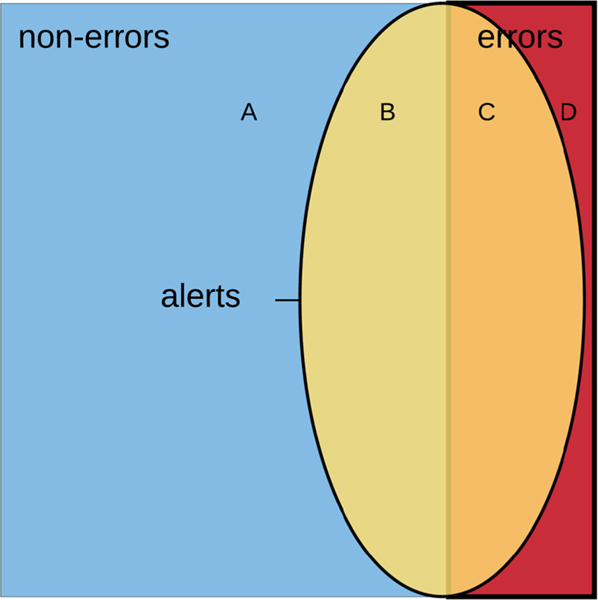

In 2007, the University of California, Davis (UC Davis) Health System deployed a knowledge-based CDS system which interrogated all signed medication orders using a predetermined set of criteria established by the pharmacy department. These criteria were derived from an “off-the-shelf” commercially available database maintained by First Databank, [28] with minor customizations to accommodate processes unique to the health system. Medication orders for hospitalized patients that required a clinician’s electronic signature were interrogated through an automated process; orders that did not satisfy the CDS system criteria were suspended and a pop-up “alert” window was generated with a message to the clinician describing the potential medication conflict (Fig. 1). Certain types of alert messages (e.g., dose alerts) also provided guidance for dosing based on age and/or weight. When an order was suspended, the clinician pursued one of the following paths: 1) override the alert without written justification; 2) override the alert with written justification; 3) modify the order to comply with pharmacy criteria; or 4) withdraw or cancel the order (Fig. 2). All submitted orders were verified by a pharmacist before the order was released for administration to the patient.

Fig. 1.

Example alert presented to the physician

Fig. 2.

Classification of all hospital medications prescribed at UC-Davis Medical Center during 2009–2012 using the CDS system-data generated algorithm

The CDS system alerts are intended to warn physicians of potential prescription errors but are deliberately designed to emphasize sensitivity over specificity, and therefore also generate alerts for orders that do not have the potential to cause harm. Our instrument development process therefore focused on enriching specificity by identifying and excluding medication order alerts that did not have significant potential for harm, or where clinical benefit would outweigh potential risk.

We hypothesized that CDS system alert information could be used to distinguish medication orders with the potential to cause harm from those less likely to cause harm. We defined a prescribing error to be a medication ordered that had high potential to cause preventable harm to the patient while considering the balance of clinical benefit and risk. In order to only capture orders that were likely to be unsafe we tended toward a conservative definition of prescribing error and classified orders – within the context of alert override category and medication class – that represented widely accepted patient care practices as non-errors.

By combining data from the medication order with information generated by the clinician–CDS system interaction, each order could be categorized into one of four mutually exclusive groups (Fig. 2): (a) clinically appropriate, non-error, no alert triggered; (b) clinically appropriate, non-error, alert triggered; (c) clinically inappropriate, ordered in error, alert triggered; or (d) clinically inappropriate, ordered in error, no alert triggered.
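The four groups follow from two binary attributes of an order: whether it triggered an alert and whether it was ordered in error. A minimal Python sketch of that mapping is shown below; the function name and boolean inputs are illustrative assumptions and are not part of the study software.

```python
def classify_order(alert_triggered: bool, ordered_in_error: bool) -> str:
    """Return the group label (a)-(d) described in the text for one order.

    Hypothetical helper: the study derived these attributes from CDS data
    and chart review, not from ready-made boolean flags.
    """
    if not ordered_in_error:
        # Clinically appropriate orders: groups (a) and (b).
        return "b" if alert_triggered else "a"
    # Orders entered in error: groups (c) and (d).
    return "c" if alert_triggered else "d"
```

Only groups (b) and (c) are observable from alert data alone, because groups (a) and (d) never generate an alert record.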

Data and setting

We obtained data sets from the UC Davis Health System EHR clinical data warehouse (Clarity, Epic Systems, Verona, WI): 1) all medication orders for any hospitalized patient electronically “signed” by a physician from March 2009 to December 2012; and 2) medication prescribing alerts generated by a knowledge-based CDS system in the Epic Willow inpatient pharmacy module. These data sets were loaded into a MySQL relational database instance (Oracle Corporation, Redwood City, CA) where further exclusions, described under Instrument development, were applied during extraction queries for subsequent analysis steps. The post-query data management, sampling, and analyses were performed using SAS version 9.4 (SAS Institute, Inc., Cary, NC, USA).

Instrument development

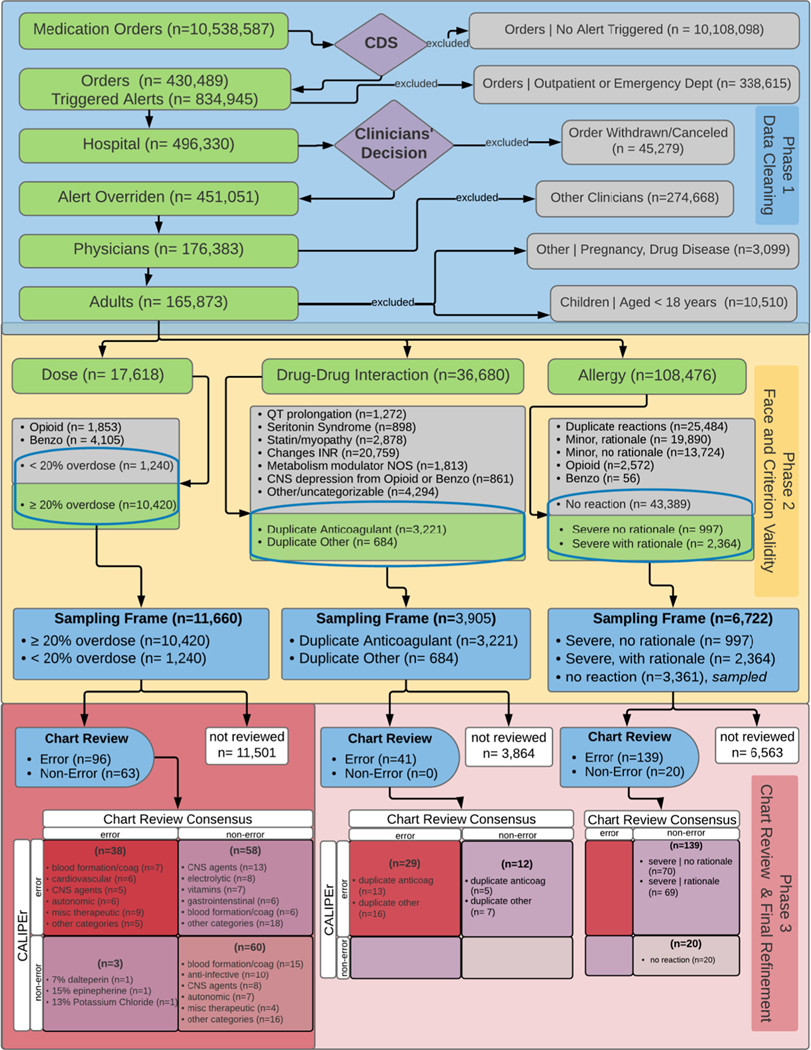

Instrument development comprised three phases: 1) dataset cleaning, 2) face validation, and 3) criterion validation through blinded chart review; the instrument was refined after chart review to improve specificity. Each alert definition was mutually exclusive and was grouped in one of the following categories: dose, drug-allergy [active and inactive ingredients], drug-disease, drug-drug interaction, duplicate medication, and pregnancy. However, a single medication order could trigger multiple alerts within one alert category or multiple alerts across several categories (Fig. 3, Phase 1, Appendix 1a). Using these data, we developed the CALIPEr in two phases (Fig. 3, Phases 1–2) and then validated the instrument (Fig. 3, Phase 3).

Fig. 3.

Work flow and data processing for CALIPEr

Phase 1: A priori inclusion and exclusion (dataset cleaning)

Two pharmacists and two physicians on our research team selected candidate prescribing errors from a representative sample of all medication orders. Medication orders were evaluated if they met the following criteria: 1) written for acute care adult patients who were hospitalized at the time of the order, 2) triggered at least one alert, 3) signed by a clinician, and 4) submitted for pharmacy verification by the physician (i.e., the alert warning was overridden). We retained alert overrides from three categories (dose, drug-allergy, and drug-drug interaction) and merged these records with the corresponding medication order for further investigation. Alert overrides in the drug-disease, duplicate medication, and pregnancy categories were rare and were excluded (Fig. 3, Phase 1).

Phase 2: Face validity

We only considered CDS system-defined alert records that were overridden by a physician to select medication orders that may have been entered in error. We reviewed information corresponding to a sample of alert overrides from each of the three alert categories separately (Fig. 3, Phase 2) while considering the clinical and pharmaceutical context available in these data sets (Appendix 1c, d, e). At least one licensed physician (senior faculty and/or senior internal medicine resident) and at least one licensed pharmacist (licensed expert medication safety pharmacist and/or pharmacy intern) independently reviewed each alert category. Then, the panel reviewed their findings during a research group discussion until a consensus was reached.

Dose

We considered additional data elements when reviewing the dose category — percent of maximum or minimum daily dose, percent of maximum or minimum single dose, the maximum or minimum dose frequency, or duration — to establish the threshold that defined error candidates. Based on our clinician panel recommendations, we defined a candidate error as an alert override that met at least one of the following criteria: the medication order 1) exceeded the maximum daily dose by ≥20%; 2) exceeded the maximum single dose by ≥20%; 3) was 50% less than the minimum daily dose; 4) was 50% less than the minimum single dose; 5) the dosing schedule was less than the minimum frequency or duration; or 6) the dosing schedule was greater than the maximum frequency or duration, as defined by First Databank. [28]

We excluded two drug classes, opioid agents and benzodiazepines, because these agents were being ordered across a broad therapeutic range and would require a more in-depth analysis of patient specific factors to determine if these medications were clinically appropriate.
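The dose criteria above amount to a rule that flags an override when any one threshold is met. The following Python sketch illustrates that logic under stated assumptions: the record layout and field names are hypothetical, percent deviations are assumed to be precomputed against the First Databank limits, and "50% less than the minimum" is read as "at least 50% below the minimum".

```python
from dataclasses import dataclass
from typing import Optional

# Drug classes excluded from the dose category, per the text above.
EXCLUDED_CLASSES = {"opioid", "benzodiazepine"}


@dataclass
class DoseOverride:
    """Hypothetical record for one overridden dose alert."""
    drug_class: str
    pct_over_max_daily: Optional[float] = None    # 25.0 = 25% above maximum daily dose
    pct_over_max_single: Optional[float] = None
    pct_under_min_daily: Optional[float] = None   # 50.0 = 50% below minimum daily dose
    pct_under_min_single: Optional[float] = None
    below_min_frequency_or_duration: bool = False
    above_max_frequency_or_duration: bool = False


def _at_least(value: Optional[float], threshold: float) -> bool:
    # A missing deviation means that limit was not exceeded.
    return value is not None and value >= threshold


def is_candidate_dose_error(o: DoseOverride) -> bool:
    """Apply the six phase 2 dose criteria; any single criterion suffices."""
    if o.drug_class.lower() in EXCLUDED_CLASSES:
        return False
    return (
        _at_least(o.pct_over_max_daily, 20.0)
        or _at_least(o.pct_over_max_single, 20.0)
        or _at_least(o.pct_under_min_daily, 50.0)
        or _at_least(o.pct_under_min_single, 50.0)
        or o.below_min_frequency_or_duration
        or o.above_max_frequency_or_duration
    )
```

For example, an order 25% above the maximum single dose would be flagged, while the same deviation for an opioid would not, because that class was excluded from this category.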

Drug-drug interaction

We examined specific interaction categories as defined by First Databank [28] (Appendix 1e). Drug-drug interaction alerts fell into nine themes involving increased risks related to prescribing medications that act by similar mechanisms or belong to similar pharmacologic classes. Examples included increased potential for QT interval prolongation, increased risk of serotonin syndrome, duplicate class therapy (e.g., simvastatin and atorvastatin) or changes in metabolism of one of the prescribed medications.

We consulted with a specialist for drug-drug alert overrides that could not be determined after panel review (e.g., antiretroviral therapies). Most alert overrides in this category warned prescribers of potential medication interactions to ensure clinicians were aware of risks when evaluating benefits of therapy. Among the nine themes identified by the review process, only two (duplicate anticoagulants and duplicate other [e.g., antibiotics and statins]), both representing duplicate therapeutic classes, were judged to have high potential to cause harm.

Allergy

An allergy alert was triggered if a patient had an EHR-documented history of an allergic reaction to the medication being ordered. This alert was also triggered for documented untoward side effects to medications. First, we defined severe reactions (e.g., anaphylaxis, angioedema, pancytopenia) and minor reactions (e.g., hives, itching, rash, and fever) to develop candidate error alert criteria from our review. Six or more distinct reactions may be reported within one alert. However, some alert overrides had no reaction characteristics information available and were excluded (Fig. 3).

We considered the type of allergic reaction and the clinician’s documented justification for overriding the alert (Appendix 1d). A candidate error satisfied at least one of the following criteria: 1) the medication ordered was associated with a severe reaction, with or without clinical justification; or 2) the order triggered an allergy alert indicating a minor reaction without clinical justification recorded. Alert overrides that indicated a minor reaction with a clinical justification (e.g., the physician indicated the patient “tolerated [the medication] before”, or “[the physician was] aware, will monitor,”) were considered non-errors.
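The phase 2 allergy criteria reduce to a two-input rule on reaction severity and the presence of a documented justification. A minimal Python sketch follows; the function name and the string labels for severity are assumptions made for illustration.

```python
def is_candidate_allergy_error(severity: str, has_justification: bool) -> bool:
    """Phase 2 allergy rule sketched from the criteria in the text.

    severity: "severe" (e.g., anaphylaxis) or "minor" (e.g., rash).
    These labels are hypothetical encodings of the reaction groups.
    """
    if severity == "severe":
        # Severe reactions were candidates with or without justification.
        return True
    if severity == "minor":
        # Minor reactions were candidates only when no justification was recorded.
        return not has_justification
    raise ValueError(f"unknown severity: {severity!r}")
```

This rule describes the candidate definition only; the allergy category was later dropped from the final instrument after chart review.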

Phase 3: Criterion validity and post-hoc refinement

Sample selection

We sought to validate the results of CALIPEr by selecting a random sample of medication orders triggering alerts that were overridden. The results from the CALIPEr error determination were compared with error determinations from a blinded manual chart review process. The sampling frame was restricted to medication orders that triggered an alert in the allergy, dose, and drug-drug interaction categories. Using the SURVEYSELECT procedure in SAS, we obtained a random sample without replacement from each category independently (Appendix 2). Each sample represented at least 1% of the candidate error alert overrides and complementary non-candidate error overrides.
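Sampling without replacement within each category can be illustrated in a few lines. The sketch below is a Python stand-in for the idea only; the study used SAS, and the function name, record layout, and seed are assumptions.

```python
import random


def stratified_sample(records, stratum_of, n_per_stratum, seed=0):
    """Draw a simple random sample without replacement from each stratum.

    records: list of order records (any objects).
    stratum_of: function mapping a record to its stratum name.
    n_per_stratum: dict of stratum name -> requested sample size.
    """
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    strata = {}
    for record in records:
        strata.setdefault(stratum_of(record), []).append(record)
    sample = {}
    for name, members in strata.items():
        # Never request more records than the stratum contains.
        k = min(n_per_stratum.get(name, 0), len(members))
        sample[name] = rng.sample(members, k)  # sampling without replacement
    return sample
```

Drawing each stratum independently keeps small categories represented in the review set even when one category dominates the sampling frame.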

Blinded chart review

Within each alert category, at least one licensed physician (one senior faculty and/or one senior internal medicine resident) and one licensed pharmacist (one expert medication safety pharmacist and/or one pharmacy intern) independently reviewed each patient’s EHR from the medication order time through the preceding 24-h period. The results from CALIPEr were concealed from all reviewers while they determined whether each medication order represented a prescribing error, non-error, or could not be clinically determined. We developed a set of decision rules for each alert category to standardize the chart review process (Appendix 3). For the drug-drug interaction category, we could not conceal the CALIPEr results from the reviewers; thus, we limited our manual review only to candidate errors. The results from the pharmacist and physician teams’ determinations were compared to calculate the interrater agreement (Cohen’s kappa). If the reviewers’ conclusions disagreed, the order was discussed until a consensus was reached. Orders that could not be clinically determined were excluded from the algorithm validation assessment. Based on the validation process, we made distinct category-specific modifications to the alert processing algorithm definitions (see Appendices 2 and 3).
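Cohen's kappa corrects the observed proportion of agreement between two raters for the agreement expected by chance. The sketch below computes it from two parallel lists of determinations; the function name and labels are illustrative, and this is not the study's analysis code.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of the raters' marginal label frequencies.
    labels = freq_a.keys() | freq_b.keys()
    expected = sum(freq_a[c] * freq_b[c] for c in labels) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)
```

Kappa can be much lower than raw percent agreement when one label dominates, which is why the two statistics should not be read interchangeably.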

Statistical analysis

We used the AHFS Pharmacologic-Therapeutic Classification schema [29] to calculate descriptive statistics for medication order characteristics and alert categories, and we used the final revised CALIPEr to determine the prescribing error rate (per 1000 orders) among physicians who ordered at least 100 medications for hospitalized patients (Table 1) as a potential representation of prescriber-level quality. We evaluated the criterion validity by calculating the binary classification performance characteristics (sensitivity, specificity, positive predictive value, negative predictive value, and positive likelihood ratio) for the error categories (Appendix 4).

Table 1.

CALIPEr performance characteristics for dose category

| Characteristic | Estimate | 95% Confidence Interval |
|---|---|---|
| Recall (Sensitivity) | 0.93 | [0.80, 0.98] |
| Precision (Positive Predictive Value) | 0.40 | [0.35, 0.45] |
| Accuracy | 0.62 | [0.54, 0.69] |
| F1 score | 0.55 | [0.48, 0.63] |
| Specificity | 0.51 | [0.42, 0.60] |
| Balanced Accuracy | 0.72 | [0.61, 0.79] |
| Negative Predictive Value | 0.95 | [0.87, 0.98] |
| Positive Likelihood Ratio | 1.89 | [1.54, 2.31] |
| Negative Likelihood Ratio | 0.14 | [0.05, 0.43] |
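Several rows of Table 1 are functions of sensitivity, specificity, and precision, so they can be cross-checked from the rounded estimates. The Python sketch below applies the standard definitions; the function name is an assumption, and the inputs are the rounded values from Table 1 rather than the underlying counts.

```python
def derived_metrics(sensitivity: float, specificity: float, precision: float) -> dict:
    """Derive summary metrics from sensitivity, specificity, and precision."""
    return {
        "balanced_accuracy": (sensitivity + specificity) / 2,
        "positive_likelihood_ratio": sensitivity / (1 - specificity),
        "negative_likelihood_ratio": (1 - sensitivity) / specificity,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }


# Rounded dose-category estimates taken from Table 1.
metrics = derived_metrics(sensitivity=0.93, specificity=0.51, precision=0.40)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

With these rounded inputs the sketch gives approximately 0.72, 1.90, 0.14, and 0.56; the small differences from the tabulated 1.89 and 0.55 are consistent with rounding of the inputs.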

Results

Medication orders and alerts (phase 1)

Between January 1, 2009 and December 31, 2012, 2530 physicians signed 10,538,587 medication orders during 27,626 hospital encounters, which triggered 834,945 alerts for clinicians. We excluded alerts that were triggered for outpatient orders for patients being discharged (n = 338,615), orders for patients not hospitalized (n = 340,495), and orders that were withdrawn after the alert (n = 45,279). We retained 165,873 alert overrides for further analyses (Fig. 3, Phase 1). Among these alert overrides, the most common medication orders were members of the following pharmacologic classes/subclasses: CNS Agents, Analgesics and Antipyretics, Opiate Agonists (41.3%); Analgesics and Antipyretics and Miscellaneous (15.0%); Anti-infective Agents (9.1%); Blood Formation, Coagulation, and Thrombosis (5.9%); and Cardiovascular Drugs (5.1%) (Appendix 5).

Clinician practice

Among clinicians who ordered at least 100 medications for hospitalized patients during the study period, clinicians signed an average of 1399 medication orders (SD 2487), with nearly five CALIPEr-determined prescribing errors (mean 4.8, standard deviation 10.8) per clinician and an average error rate of 3.3 per 1000 orders (median 0, standard deviation 6.7 per 1000 orders).
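A per-clinician error rate per 1000 orders can be summarized either as the mean of individual rates or as a pooled rate, and the two generally differ. The text does not state which was used, so the Python sketch below shows both; the function names and the (errors, orders) tuple layout are assumptions.

```python
from statistics import mean


def rates_per_1000(clinicians, min_orders=100):
    """Per-clinician error rates per 1000 orders.

    clinicians: iterable of (n_errors, n_orders) pairs.
    Clinicians with fewer than min_orders orders are skipped,
    mirroring the eligibility threshold described in the text.
    """
    return [1000.0 * e / n for e, n in clinicians if n >= min_orders]


def mean_clinician_rate(clinicians, min_orders=100):
    """Average of the individual clinicians' rates."""
    return mean(rates_per_1000(clinicians, min_orders))


def pooled_rate(clinicians, min_orders=100):
    """Total errors over total orders among eligible clinicians."""
    eligible = [(e, n) for e, n in clinicians if n >= min_orders]
    return 1000.0 * sum(e for e, _ in eligible) / sum(n for _, n in eligible)
```

The mean of individual rates weights every clinician equally, whereas the pooled rate weights clinicians by order volume, so high-volume prescribers dominate the latter.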

Candidate error characteristics (phase 2)

Within the dose alert override category, the median overdose error was 2.0 times (interquartile range 1.34, 2.23) the maximum single or daily dose limit. We found 160 distinct CDS system-defined drug-drug interaction alert overrides (Appendix 1e) from which we identified nine recurring themes such as QT interval prolongation, serotonin syndrome, change in metabolism of one or both medications, and duplicate drug class therapy. Most drug-drug interactions did not satisfy criteria for an error (89.3%); those that did satisfy the error criteria were duplicate drug class therapies. Allergic reactions to opioids (5%), benzodiazepine agents (<1%), and minor reactions (36%) such as nausea and dizziness were commonly documented. While the majority of alert overrides overall occurred in the allergy category (65%), most reactions noted were minor, duplicate, or did not indicate a clinically meaningful reaction. We retained 66% of dose alert overrides, 11% of drug-drug interaction alert overrides, and 6% of allergy overrides for manual review in phase 3.

Validation and post hoc reconciliation (phase 3)

In the first round of alert overrides reviewed from the dose category, we achieved 47% inter-rater agreement (Appendix 2). Through a group discussion, we reached inter-rater agreement on 98% and excluded 3 orders. Among dose alert overrides, false positive orders were most prevalent among inhaled anticholinergic agents and beta agonists or when the medication was being used for an atypical indication (e.g., ICU patients receiving greater than the maximum dose of ipratropium-albuterol nebulizer therapy, which was considered acceptable off-label dosing). We refined the algorithm to define these medication alert overrides as non-errors. False negatives (orders between 5% and 20% above the recommended maximum dose) occurred most frequently in orders for ibuprofen and albumin. Therefore, we modified the algorithm and lowered the overdose threshold to ≥13% for ibuprofen and ≥8% for albumin orders.
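The post hoc refinements to the overdose rule can be pictured as drug-specific overrides layered on the default 20% threshold. The Python sketch below is one possible encoding; the names, the class labels, and the choice to key non-error exemptions on drug class are assumptions.

```python
DEFAULT_OVERDOSE_THRESHOLD_PCT = 20.0

# Lowered thresholds introduced after chart review.
DRUG_SPECIFIC_THRESHOLD_PCT = {"ibuprofen": 13.0, "albumin": 8.0}

# Classes whose overdose alert overrides were redefined as non-errors.
NON_ERROR_CLASSES = {"inhaled anticholinergic", "inhaled beta agonist"}


def is_overdose_error_refined(drug_name: str, drug_class: str, pct_over_max: float) -> bool:
    """Refined overdose rule: exemptions first, then the applicable threshold."""
    if drug_class.lower() in NON_ERROR_CLASSES:
        return False
    threshold = DRUG_SPECIFIC_THRESHOLD_PCT.get(
        drug_name.lower(), DEFAULT_OVERDOSE_THRESHOLD_PCT
    )
    return pct_over_max >= threshold
```

Keeping the overrides in lookup tables rather than in branching logic would make it straightforward to adapt thresholds when the rule is validated at another site.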

In the first round of review from the drug-drug interaction category, we achieved 62% inter-rater agreement (Appendix 2). Through further group discussion, we reached inter-rater agreement on 94%.

None of the allergy alert overrides met the prescribing error definition during our manual chart review. To the contrary, even orders that triggered severe allergy warnings were deliberate and found to be associated with documentation of appropriate and safe clinician prescribing rationale elsewhere in the chart. Therefore, candidate errors within the allergy category were excluded from further consideration.

CALIPEr performance

Within the dose alert overrides, after post-validation modifications were included, we found the algorithm to have high recall of 92.7% [95% confidence interval, 80.1–98.5] and negative predictive value of 95% [95% confidence interval, 87–98], modest precision (positive predictive value) of 39.5% [95% confidence interval, 35.9–44.5], accuracy of 61.6%, and an F1 score of 55% [95% confidence interval, 48–63] (Table 1). Within the drug-drug interaction category, we found modest precision of 65% [95% confidence interval, 58–72], but we could not assess the other performance characteristics.

Discussion

Clinical decision support tools have become standard in the prescribing functions of nearly all commercially available hospital EHR systems. [21] Even though these systems were primarily intended to improve healthcare quality by informing clinicians’ decision-making process at the point-of-care, a byproduct of the CDS system – data generated by the user-CDS system interface – may also be useful to measure clinician-level care quality. Recent studies have begun to examine CDS alert overrides as a potential data source to detect adverse drug events in the hospital. [30–32] We present a method to measure clinician-level quality by detecting medication prescribing errors occurring in hospitals that is systematic, may be potentially generalizable, and uses data readily available in most acute care facilities. Medication errors have been described as an indicator of patient safety or quality by many investigators, [33–36] and alert overrides have recently gained attention as an indicator for adverse drug event risk, [25, 31] but we are not aware of prior studies which have repurposed CDS system data to measure physician performance. Our study illustrates a novel and efficient approach that potentially has broad applications in health services research and hospital quality improvement efforts. This concept may be used to develop similar tools in any EHR-CDS system equipped acute care facility and extended to measure other healthcare quality attributes.

Our method has limitations. Only errors in prescribing, a subset of all medication errors, can be detected by this approach. Errors occurring in transcription, dispensation, or administration cannot be detected, nor can prescribing errors related to the wrong patient or wrong indication (commission errors). Also, this method depends upon a commercial CDS system and is only able to detect medication prescribing errors related to dose alert overrides.

During the manual review phase to assess criterion validity, we could not blind the reviewer to the alert status of the medication order. Therefore, we restricted the sampling frame to orders that triggered an alert and assumed that algorithm-defined non-errors were similar to the medication orders that did not trigger an alert. Also, we excluded medication orders that were withdrawn or canceled because the order was never signed and did not have the potential to cause harm to the patient. However, further exploration of these orders may provide additional insight into understanding processes of care and how the CDS system affects clinician prescribing quality.

Additionally, CALIPEr was originally developed to measure prescribing error events and emphasized sensitivity over specificity. Although the method is sensitive, its marginal specificity may limit its use if precise discrimination of errors and non-errors is required. A goal of this project was to create a tool that was generalizable, but data from one CDS system within an academic medical center may not be generalizable to other acute care facilities. Some clinical processes, CDS system software attributes, or deployment characteristics may be unique to the institution; thus, additional validation and testing (for possible adaptation) is needed in other acute care settings.

However, this method could be inexpensively implemented and the concept is potentially generalizable in a variety of hospital settings. It does not depend upon human resource-intensive chart review [14] or direct clinical practice observation. [37, 38] It also demonstrates an application of complex repurposed data to measure performance of individual physicians that may be used to inform clinical processes and improve prescribing practices and patient safety. However, further validation in various clinical contexts is necessary to objectively evaluate the performance of this approach.

As CDS system developers confront the challenge of balancing alert accuracy, alert precision, and the threat of alert fatigue, data byproducts may become more granular and capture a broader array of prescribing errors. This may create an opportunity to refine the tool’s specificity to incorporate additional alert override categories (e.g., pregnancy), extending the tool’s potential to detect other types of prescribing errors and applicability in other clinical settings.

Future studies are needed to assess the performance characteristics and validate the CALIPEr in other settings, and additional work is needed to incorporate additional error types. Although these data have not been used for surveillance or to monitor clinician quality, this conceptual framework may lay the foundation for developing an instrument to measure clinician prescribing quality in real-time.

Acknowledgments

We thank Michael Fong and Terry Viera for providing the alert override data; Drs. Eduard Poltavskiy and Brian Chan for data management; Gary Tabler for his computational support; Rebecca Davis for guiding our literature search; and Regan Scott-Chin for her critical contributions drafting this manuscript.

Funding

The project described was supported by the National Center for Advancing Translational Sciences, National Institutes of Health, through grant number UL1TR000002 and linked award TL1TR000133 (DLC); and grant number T32HS022236 from the Agency for Healthcare Research and Quality (AHRQ) through the Quality Safety Comparative Effectiveness Research Training (QSCERT) Program (DLC); National Institutes of Health through grant UL1 TR001860-0002 (HB).

Appendix

Appendix 1a:

Consider the following example: A clinician ordered 1000 mg of penicillin be given four times per day to a patient with documented penicillin and cephalexin allergies. Based on criteria specified by First Databank, [28] the dose alert threshold for penicillin was defined as an order that exceeded 500 mg (maximum recommended single dose) or 2000 mg (maximum recommended daily dose), for a patient aged 12–109 years. Upon signing the medication order, three discrete alerts were triggered and displayed to the prescriber: 1) drug-allergy to penicillin; 2) drug-allergy to cephalexin; and 3) joint dose alert for a single and daily overdose threshold (Fig. 1).

Appendix 1b:

Many alert categories are triggered to warn prescribers of increased risk of QT prolongation, yet many medications that prolong the QT interval may be safely prescribed together if the patient is appropriately monitored in a clinical setting, so these alert overrides were not considered errors.

Appendix 1c:

Variables available during dose pre-review

| Domain | Variable |
|---|---|
| Medication | Name |
| | Dose |
| | Schedule |
| | Administration route |
| | Percent above/below daily limit |
| | Dose alert tolerance (daily) |
| | Percent above/below single limit |
| | Dose alert tolerance (single) |
| | Order associated with a medication panel |
| | Alert category |
| Clinician | Identifier |
| | Characteristics (Intern, Resident, Attending) |
| | Clinical service |
| | Override justification |
| Patient | Location in the hospital |
| | Admission time/date |
| | Discharge time/date |
| | Age |
| | Sex |

Appendix 1d:

Variables available during allergy pre-review

| Domain | Variable |
|---|---|
| Medication | Name |
| | Dose |
| | Schedule |
| | Administration route |
| | Allergy to medication or excipient |
| | Severity (Level 1–4) |
| | Order associated with a medication panel |
| | Alert category |
| Clinician | Identifier |
| | Characteristics (Intern, Resident, Attending) |
| | Clinical service |
| | Override justification |
| Patient | Location in the hospital |
| | Admission time/date |
| | Discharge time/date |
| | Age |
| | Sex |
| | Allergic Symptom(s) Reported |

Appendix 1e:

Variables available during drug-drug interaction pre-review

| Domain | Variable |
|---|---|
| Medication | Name |
| | Dose |
| | Schedule |
| | Administration route |
| | Other drug involved in putative interaction |
| | Clinical effect of drug-drug interaction |
| | Order associated with a medication panel |
| | Alert category |
| Clinician | Identifier |
| | Characteristics (Intern, Resident, Attending) |
| | Clinical service |
| | Override justification |
| | Plan to mitigate risk |
| Patient | Location in the hospital |
| | Admission time/date |
| | Discharge time/date |
| | Age |
| | Sex |

Appendix 2:

Review and sampling stratification

| Alert Override Category | Phase 2 | Phase 3 | Findings | Final determination | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

| ||||||||||||||

| Sampling Frame | Sample Size | Round 1 | Round 2 | |||||||||||

| n | working definition | Strata | n total = 162 | working definition (revised) | quartiles (percent overdose) | n | agreement | disagreement | agreement | disagreement | error | non-error | ||

| Dose | 17,618 | non-error | < 20% overdose | 1240 | non-erro | Q1 (6 to 8) | 26 | 16 | 10 | 26 | 0 | 2 | 24 | |

| Q2 (9 to 13) | 27 | 15 | 12 | 27 | 0 | 7 | 20 | |||||||

| Q3 (14 to 18) | 12 | 2 | 10 | 11 | 1 | 0 | 12 | |||||||

| Q4 (19 to 20) | 6 | 1 | 5 | 5 | 1 | 0 | 6 | |||||||

| error | ≥20% overdose | 10,420 | error | Q1 (21 to 49) | 21 | 12 | 9 | 21 | 0 | 8 | 13 | |||

| Q2 (50 to 99) | 16 | 8 | 8 | 16 | 0 | 7 | 9 | |||||||

| Q3 (100 to 220) | 36 | 13 | 23 | 35 | 1 | 10 | 26 | |||||||

| Q4 (220 to 100,000+) | 18 | 9 | 9 | 18 | 0 | 5 | 13 | |||||||

| n | working definition | Strata | n | working definition (revised) | categories | n total = 43 | agreement | disagreement | agreement | disagreement | error | non-error | could not be determined | |

| Drug-Drug Interaction | 36,680 | error | Duplicate Anticoagulant | 3221 | error | Duplicate Anticoagulant | 21 | 12 | 9 | 19 | 2 | 11 | 8 | 2 |

| Duplicate Other | 684 | Duplicate Other | 22 | 11 | 11 | 20 | 1 | 15 | 6 | 1 | ||||

| QT prolongation | 1272 | non-error | QT prolongation | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |||

| Serotonin Syndrome | 898 | Serotonin Syndrome | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ||||

| Statin/myopathy | 2878 | Statin/myopathy | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ||||

| Changes INR | 20,759 | Changes INR | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ||||

| Metabolism modulator NOS | 1813 | Metabolism modulator NOS | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ||||

| CNS depression from Opioid or Benzo | 861 | CNS depression from Opioid or Benzo | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ||||

| Other/uncategorizable | 4294 | Other/uncategorizable | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ||||

| n | working definition | Strata | n | working definition (revised) | categories | n total = 159 | agreement | disagreement | agreement | disagreement | error | non-error | ||

| Allergy | 108,476 | error | severe | no justification | 997 | error | severe | no justification | 70 | 68 | 2 | 70 | 0 | 0 | 70 | |

| severe | with justification | 2364 | severe | with justification | 69 | 68 | 1 | 69 | 0 | 0 | 69 | |||||

| minor | no justification | 13,724 | non-error | minor | no justification | 0 | 0 | *** | *** | *** | *** | |||||

| minor | with justification | 19,890 | minor | with justification | 20 | 20 | 0 | *** | *** | 0 | 20 | ||||

| Opioid | 2572 | Opioid | 0 | 0 | 0 | *** | *** | *** | *** | |||||

| Benzodiazepine | 56 | Benzodiazepine | 0 | *** | *** | *** | *** | *** | *** | |||||

| Duplicate reactions | 25,484 | *** | 0 | *** | *** | *** | *** | *** | ||||||

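The dose strata in the table above are defined by percent overdose, with a 20% cut-off separating the working definitions of error and non-error. The following is a minimal sketch of that working definition only, assuming percent overdose means the amount by which the ordered dose exceeds the CDS maximum, expressed as a percentage of that maximum (the table does not spell this out). The function names and the example doses are hypothetical, and the sketch does not reproduce the final chart-review determination.

```python
def percent_overdose(ordered_dose: float, max_dose: float) -> float:
    """Percent by which an ordered dose exceeds the configured maximum dose.

    Assumption: both doses are expressed in the same unit (for example mg/day).
    """
    if max_dose <= 0:
        raise ValueError("max_dose must be positive")
    return 100.0 * (ordered_dose - max_dose) / max_dose


def working_definition(pct_overdose: float, threshold: float = 20.0) -> str:
    """Apply the working definition: at or above the threshold is an 'error'."""
    return "error" if pct_overdose >= threshold else "non-error"


# Hypothetical orders against a hypothetical 1000 mg maximum daily dose.
for ordered in (1100.0, 1200.0):
    pct = percent_overdose(ordered, 1000.0)
    print(f"{ordered:.0f} mg ordered: {pct:.1f}% overdose, {working_definition(pct)}")
```

Keeping the threshold as a parameter makes it straightforward to rerun the stratification if the working definition is revised between review phases.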

Appendix 3: Decision rules for manual chart review

Dose: Two teams (two clinical medication safety pharmacists and two senior internal medicine resident physicians) each reviewed a stratified random sample of medication orders according to the following standardized process:

Patient chart identified by MRN.

Hospital encounter identified by date of order.

Medications searched for drug in question via filters.

Order date identified.

Order number verified.

Order details reviewed (including alerts).

MD progress notes reviewed for at least the 24 h period before the order was made.

- Patient’s history and physical, and discharge summary were reviewed, as necessary.

- To clarify the maximum recommended dose for the indication, Lexicomp was referenced as needed.

A decision was then made about whether or not the order in question could carry potential for harm to the patient.

Discrepancies in decisions between reviewing teams were then decided by consensus after group discussion (all research team members participating in discussion).
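The two-team comparison described above can be sketched as follows. This is an illustrative outline only, assuming each team records a yes/no decision on potential for harm for every sampled order; the `Review` class, its field names, and the example data are hypothetical and are not taken from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Review:
    order_id: str           # hypothetical order identifier
    pharmacist_team: bool   # True = order judged to carry potential for harm
    physician_team: bool    # True = order judged to carry potential for harm


def split_by_agreement(reviews):
    """Separate orders the teams agreed on from those needing group consensus."""
    agreed = [r for r in reviews if r.pharmacist_team == r.physician_team]
    disputed = [r for r in reviews if r.pharmacist_team != r.physician_team]
    return agreed, disputed


def percent_agreement(reviews):
    """Share of reviewed orders on which both teams reached the same decision."""
    if not reviews:
        raise ValueError("no reviews supplied")
    agreed, _ = split_by_agreement(reviews)
    return 100.0 * len(agreed) / len(reviews)


# Hypothetical sample of four reviewed orders.
sample = [
    Review("A", True, True),
    Review("B", False, False),
    Review("C", True, False),
    Review("D", False, False),
]
agreed, disputed = split_by_agreement(sample)
print(f"agreement: {percent_agreement(sample):.0f}%")
print("for consensus discussion:", [r.order_id for r in disputed])
```

Orders returned in `disputed` correspond to the discrepancies that were settled by group discussion.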


Decision rules were determined during discussion as follows:


| Dose - Examples of non-errors. | ||||

|---|---|---|---|---|

|

| ||||

| Example Number | Error category | Medication Order | CDS Rationale | Clinical Rationale |

|

| ||||

| 1 | Prior to admission (PTA) dose | lamotrigine (800 mg total daily) PTA | Patient taking more than max daily dose lamotrigine (700 mg total daily) PTA | |

| 2 | Appropriate dose for indication | Propofol 140 mg given for seizure | Over set max single dose of 50 mg | |

| 3 | Common off-label use | Albuterol/Ipratropium inhaler ordered 6 puffs Q4H. | Exceeds max daily dose of 12 puffs and max single dose of 3 puffs | This is commonly used off-label in this amount. |

| 4 | Approximate weight-based dose | Dalteparin 17,500 units ordered daily | Exceeds max calculated weight-based dose (16,340 units) | This is an appropriate approximation given practical logistics of dosing (RN would be unable to give this precise amount) |

| 5 | Bedside procedure, correct dose documented | Triamcinolone 10 mg intradermal ordered for single dose of 10 mg | Exceeds max single dose. | Only 1 mg (the recommended single dose) was documented by the MD as given for the procedure, so the correct dose was administered. |

| 6 | Inappropriate alert (not max dose) | Magnesium Gluconate 2 g PO ordered one time only. | Exceeds max single dose (1 g) | Above set max dose of 1 g but not an unsafe dose. |

| Dose - Examples of errors | ||||

|

| ||||

| Example Number | Error category | Medication Order | CDS Rationale | Clinical Rationale |

|

| ||||

| 7 | Overdose by max dose | Flurazepam 30–60 mg ordered daily at bedtime prn. | Max single and max daily dose 30 mg. | |

| 8 | Ordered without indication | Rifampin 600 mg IV ordered Q12H | Exceeded max daily dose of 1200 mg. | However, no apparent indication for patient to be on Rifampin. |

| 9 | Order conflicts with admin instructions | Ibuprofen 600 mg ordered Q4H prn. | Exceeds max daily dose of 3200 mg if all available doses are given. | Automated comments of order stated to not give more than 3200 mg; however, TDD per order is 3600 mg |

| 10 | Systems/Order set | Diltiazem 0.25 mg/kg ordered Q4H prn. | Daily dose of 1.5 mg/kg exceeds max daily dose of 1.05 mg/kg. | Ordered for arrhythmia per order set but patient without history of, or ongoing, arrhythmia. |

|

| ||||

| Drug-drug Interaction - Examples of non-errors | ||||

|

| ||||

| Example Number | Error category | Medication Order | CDS Rationale | Clinical Rationale |

|

| ||||

| 1 | Selected quinolones / class Ia & III antiarrhythmics | Moxifloxacin, Amiodarone Tablet 200 mg | increased risk for QT prolongation | benefit > risk for many patients, can be safely monitored. |

| 2 | tramadol / MAOIs | Linezolid, Tramadol Tablet 25 mg | increased risk for serotonin syndrome | Requires increased monitoring |

| 3 | simvastatin / diltiazem | simvastatin (> 10 mg); lovastatin (> 20 mg) / diltiazem | increased risk of statin myopathy | frequently done in the community if risk for CVD elevated with increased monitoring. |

| 4 | anticoagulants / metronidazole; tinidazole | Warfarin 12.5 mg Tablet, Metronidazole 500 mg | increased INR | Can be monitored and dose-adjusted |

| 5 | methotrexate / sulfonamides; trimethoprim | Trimethoprim 160 mg/Sulfamethoxazole 800 mg Tablet 1 tablet, Methotrexate Tablet 10 mg | methotrexate toxicity | Patients should be monitored for pancytopenia and myelotoxicity |

| 6 | methadone oral / oxycodone extended release tablet | Methadone Tablet 10 mg, Oxycodone SR Tablet 30 mg | increased CNS depression / duplicate opiate therapy | Best practice would be to administer one long-acting opiate at a time, but this may be done safely with monitoring in specific clinical settings |

| 7 | naltrexone / opioid analgesics | Naltrexone Tablet 50 mg, Morphine 1–4 mg | antagonist and agonist co-administered | frequently done in treatment of acute on chronic pain |

| Drug-drug Interaction - Examples of errors | ||||

|

| ||||

| Example Number | Error category | Medication Order | CDS Rationale | Clinical Rationale |

|

| ||||

| 8a | heparin subq / enoxaparin | Enoxaparin 120 mg, Heparin 5000 Units | duplicate anticoagulant | increased risk of bleeding with low likelihood of clinical benefit |

| 8b | heparins-dabigatran | Dabigatran Capsule 150 mg, Heparin 5000 Units | duplicate anticoagulant | increased risk of bleeding with low likelihood of clinical benefit |

| 9a | cgmp specific pde type-5 inhibitors / nitrates | Sildenafil Tablet 12.5 mg, Nitroglycerin Sublingual Tablet 0.4 mg | hypotension, duplicate class | SL NTG and PDE’s are OK if both are PRN and pt. is educated, but long-acting/standing nitrates are not OK with PDE’s: isosorbide mononitrate, transdermal patches, isosorbide mononitrate |

| 9b | gentamicin / tobramycin | Gentamicin, Tobramycin | duplicate class | increased risk of toxicity. Duplicate class |

Appendix 4:

CALIPEr performance characteristics calculations

| | | Consensus by Chart Review | |
|---|---|---|---|
| | | Error | Non-error |
| CALIPEr | Error | TP | FP |
| | Non-error | FN | TN |
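The performance characteristics follow from the four cells of the table above (TP, FP, FN, TN). The sketch below applies the standard definitions of sensitivity, specificity, positive and negative predictive value, accuracy, and F1 score. The function name and the example counts are hypothetical and are not the study's data.

```python
def performance_characteristics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Standard 2x2 metrics with chart-review consensus as the reference."""

    def ratio(numerator: float, denominator: float) -> float:
        # A ratio with an empty denominator is undefined; report it as NaN.
        return numerator / denominator if denominator else float("nan")

    sensitivity = ratio(tp, tp + fn)   # also called recall
    specificity = ratio(tn, tn + fp)
    ppv = ratio(tp, tp + fp)           # also called precision
    npv = ratio(tn, tn + fn)
    accuracy = ratio(tp + tn, tp + fp + fn + tn)
    f1 = ratio(2 * ppv * sensitivity, ppv + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": ppv,
        "npv": npv,
        "accuracy": accuracy,
        "f1": f1,
    }


# Hypothetical counts, for illustration only.
metrics = performance_characteristics(tp=30, fp=20, fn=10, tn=40)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```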

Appendix 5:

AHFS-defined medication order descriptive statistics

| AHFS Tier 1 | Tier 2 | Tier 3 | Frequency | Percent of Total |
|---|---|---|---|---|
| CNS Agents | Analgesics and Antipyretics | Opiate Agonists | 67,845 | 41.3 |
| Anti-infective Agents | | | 14,903 | 9.1 |
| CNS Agents | Analgesics and Antipyretics | | 13,556 | 8.3 |
| CNS Agents | Analgesics and Antipyretics | Analgesics and Antipyretics, Miscellaneous | 11,118 | 6.8 |
| Blood Formation, Coagulation, and Thrombosis | | | 9677 | 5.9 |
| Cardiovascular Drugs | | | 8381 | 5.1 |
| CNS Agents | Anxiolytics, Sedatives, and Hypnotics | | 7230 | 4.4 |
| CNS Agents | Analgesics and Antipyretics | Other Nonsteroidal Anti-Inflammatory Agents | 5203 | 3.2 |
| Gastrointestinal Drugs | | | 4505 | 2.7 |
| Autonomic Drugs | | | 3125 | 1.9 |
| Electrolytic, Caloric, and Water Balance | | | 2603 | 1.6 |
| CNS Agents | Analgesics and Antipyretics | Salicylates | 2251 | 1.4 |
| Antihistamine Drugs | | | 1909 | 1.2 |
| Hormones and Synthetic Substitutes | | | 1714 | 1.0 |
| CNS Agents | Psychotherapeutic Agents | | 1206 | 0.7 |
| CNS Agents | Anticonvulsants | | 931 | 0.6 |
| Vitamins | | | 653 | 0.4 |
| CNS Agents | Analgesics and Antipyretics | Opiate Partial Agonists | 558 | 0.3 |
| Local Anesthetics | | | 521 | 0.3 |
| Respiratory Tract Agents | | | 481 | 0.3 |
| Miscellaneous Therapeutic Agents | | | 458 | 0.3 |
| Antineoplastic Agents | | | 438 | 0.3 |
| Eye, Ear, Nose, and Throat (EENT) Preparations | | | 332 | 0.2 |
| Skin and Mucous Membrane Agents | | | 290 | 0.2 |
| CNS Agents, Miscellaneous | | | 283 | 0.2 |
| CNS Agents | General Anesthetics | | 221 | 0.1 |
| Blood Derivatives | | | 197 | 0.1 |
| Serums, Toxoids, and Vaccines | | | 139 | 0.1 |
| Smooth Muscle Relaxants | | | 91 | 0.1 |
| CNS Agents | Antiparkinsonian Agents | | 78 | 0.0 |
| CNS Agents | Analgesics and Antipyretics | Cyclooxygenase-2 (COX-2) Inhibitors | 77 | 0.0 |
| CNS Agents | Opiate Antagonists | | 75 | 0.0 |
| Oxytocics | | | 39 | 0.0 |
| CNS Agents | Antimanic Agents | | 34 | 0.0 |
| Heavy Metal Antagonists | | | 29 | 0.0 |
| CNS Agents | Antimigraine Agents | | 29 | 0.0 |
| CNS Agents | Anorexigenic Agents and Respiratory and Cerebral Stimulants, Miscellaneous | | 11 | 0.0 |
| Diagnostic Agents | | | 3 | 0.0 |
| Enzymes | | | 1 | 0.0 |
| CNS Agents | Fibromyalgia Agents | | 1 | 0.0 |
| Missing or Uncategorizable | | | 4677 | 1.8 |
| Total | | | 165,873 | 100 |

Footnotes

Compliance with ethical standards

Ethical approval: The study protocol (No. 329369) was reviewed by the Institutional Review Board at the University of California, Davis Medical Center.

Conflict of interest: No authors have any conflicts of interest or financial disclosures.

Informed consent: The study protocol was determined by the Institutional Review Board at the University of California, Davis Medical Center to be minimal risk, and a waiver of informed consent was granted.
