Abstract

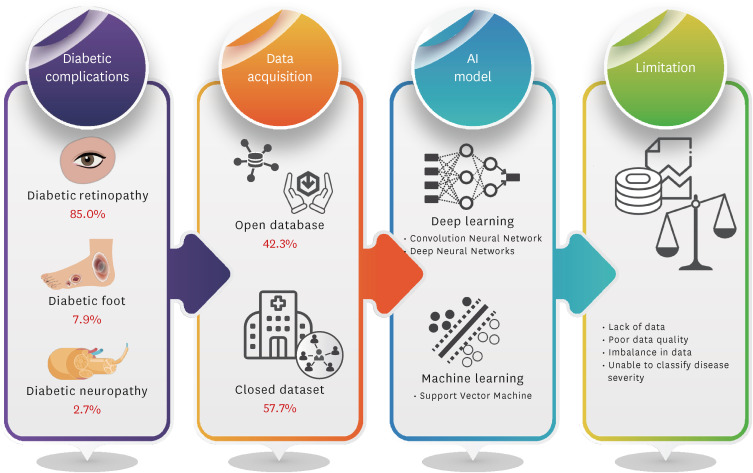

Artificial intelligence (AI)-based diagnostic technology using medical images can increase examination accessibility and support clinical decision-making for screening and diagnosis. To identify the machine learning algorithms applied to diabetes complications, we reviewed studies using medical image-based AI technology indexed in the National Library of Medicine PubMed and Excerpta Medica (EMBASE) databases. Lists of studies retrieved with diabetes diagnostic imaging and AI as keywords were combined. In total, 227 appropriate studies were selected. Studies applying AI models to diabetic retinopathy were the most frequent (85.0%, 193/227 cases), followed by diabetic foot (7.9%, 18/227 cases) and diabetic neuropathy (2.7%, 6/227 cases). The studies used open datasets (42.3%, 96/227 cases) or directly constructed data from fundoscopy or optical coherence tomography (57.7%, 131/227 cases). The major limitations in AI-based detection of diabetes complications using medical images were the lack of datasets (36.1%, 82/227 cases) and severity misclassification (26.4%, 60/227 cases). Although it remains difficult to use and fully trust AI-based imaging analysis technology clinically, it reduces clinicians’ time and labor, and expectations for its decision-support role are high. Broader data collection and the development of synthetic data technology stratified by disease severity are required to resolve data imbalance.

Keywords: Artificial Intelligence, Big Data, Deep Learning, Diagnostic Imaging, Diabetes Mellitus, Diabetic Retinopathy

Graphical Abstract

INTRODUCTION

Diabetes mellitus (DM), which is a chronic disease, is a major cause of diabetic retinopathy (DR), blindness, cardiovascular disease, heart failure, chronic renal failure, and lower extremity amputations.1,2,3,4 To prevent various DM complications, specific imaging tests are required, and it is important to quickly detect and actively treat the accompanying diseases through regular monitoring.5,6 Therefore, to diagnose and treat diabetes complications, such as retinopathy or arteriosclerosis, at an early stage, various images are often used, and it is essential to quickly analyze them to identify diabetes complications. Representative diagnostic imaging indicators of diabetes complications include fundus examination for the screening of DR7 and carotid ultrasonography for the early diagnosis of arteriosclerosis.8 However, the main problems with using medical images for early detection by physicians are inconsistencies in readout by physicians and long readout analysis times. In particular, although some reading standards for fundus examination or carotid ultrasonography have been set, the results can vary depending on the experiences or skills of the physicians who actually perform or read the test.9

Therefore, there has been a recent trend toward detecting diabetes complications from medical images using artificial intelligence (AI)-based analysis technology.10,11 Advancements in medical devices and AI-based analysis technology over the past few years have significantly improved the processing speed and accuracy of interpretation. AI technology is used in various applications in the medical field, including early detection of complications, patient condition monitoring, clinical decision-making, and treatment.12,13,14,15 In particular, AI-based medical image analysis technology is used for the diagnosis and prediction of various diabetes complications, and its accuracy is increasing through various machine learning and deep learning methods.16,17

Despite the rapid development of AI models based on medical images in the field of diabetes, problems remain with their clinical implementation in the real world. As AI model development for diabetes complication screening and diagnosis has become more common, the limitations of AI-based algorithm development are also being discussed. Therefore, it is necessary to understand the various limitations of AI models validated in the literature. However, few review studies of AI models have compared the limitations reported in previous studies.

This paper examines studies that developed AI models using medical images in the field of diabetes and reviews the limitations of those models. AI models using diagnostic images of major diabetes complications were reviewed from the viewpoints of methods, analysis tools, datasets, limitations, and future directions. The performance of the AI models, including accuracy, sensitivity (or recall), and specificity, was compared. We assessed the accuracy and effectiveness of AI-based medical image analysis technology in diagnosing diabetes and considered the future development direction and applicability of AI-based diagnostic image analysis technology. This study suggests the way forward for AI models in clinical applications and provides important insights for research on the development of AI-based medical image studies in DM.

LITERATURE SEARCH

A literature review was conducted using the National Library of Medicine PubMed database, a medical journal database, and the Excerpta Medica Database (EMBASE), a medical database provided by Elsevier and Ovid (Fig. 1). To collect literature suitable for this study, a list of studies using diabetes diagnostic images as keywords and a list of studies using AI as a keyword were combined. Considering advancements in AI analysis technology, only English-language articles published between 2012 and 2022 were reviewed. The PubMed search terms were (“Diabetes Mellitus/diagnostic imaging” [Mesh]) AND (“Artificial Intelligence” [Mesh]) and (“Diabetes Mellitus/diagnostic imaging” [Mesh]) AND “Machine learning” [Mesh]. As of September 22, 2022, 229 papers were collected. The EMBASE search terms were (“diabetes mellitus”/exp) AND (“deep learning”/exp) AND (“imaging”/exp), limited to human subject research, and 222 papers were collected.
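As an illustration of how the PubMed part of such a search could be reproduced programmatically, the following minimal sketch builds request URLs for the NCBI E-utilities ESearch endpoint. It is an assumption that the search could be run this way; the review does not state which interface was used. The function name `build_esearch_url` and the `retmax` value are hypothetical, and the English-language restriction is not encoded here.

```python
from urllib.parse import urlencode

# NCBI E-utilities ESearch endpoint (public API for querying PubMed).
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"

# The two PubMed queries quoted in the text, written with straight quotes.
PUBMED_QUERIES = [
    '("Diabetes Mellitus/diagnostic imaging"[Mesh]) AND ("Artificial Intelligence"[Mesh])',
    '("Diabetes Mellitus/diagnostic imaging"[Mesh]) AND "Machine learning"[Mesh]',
]


def build_esearch_url(term, start_year=2012, end_year=2022, retmax=500):
    """Build an ESearch URL limited to a publication-date range.

    retmax=500 is an arbitrary illustrative cap, not a value from the review.
    """
    params = {
        "db": "pubmed",
        "term": term,
        "datetype": "pdat",  # filter on publication date
        "mindate": str(start_year),
        "maxdate": str(end_year),
        "retmax": str(retmax),
    }
    return ESEARCH_URL + "?" + urlencode(params)


urls = [build_esearch_url(query) for query in PUBMED_QUERIES]
for url in urls:
    print(url)
```

The sketch only constructs the URLs; fetching them and merging the returned identifier lists would still be needed to approximate the combined list described above.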

Fig. 1. Study trial.

A comprehensive review of all the literature included in the collection list was initially conducted. First, 28 duplicate papers from the two databases were excluded. Sixteen papers for which the contents of the main text could not be confirmed were excluded because only abstracts were provided. Of the remaining full-text papers, 36 were excluded. Second, a detailed full-text review of the 371 papers was conducted by a medical information expert. Before the review, the criteria for whether the contents were appropriate for screening, diagnosis, and disease management of diabetes complications through AI-based image analysis were defined, and reviews were conducted accordingly. In this process, 35 AI and image analysis methodology studies that were not related to the subject of this study, such as comparison and reproduction of previously reported analysis models or studies on datasets, were excluded. Furthermore, 17 studies that were judged not to be directly related to diabetes, such as one animal model, four personal health records or questionnaire studies, brain aging prediction studies using brain imaging, and extensive retinal disease studies, were excluded. In total, 227 studies were selected after excluding 63 studies that mentioned diabetes only as a history or underlying disease and 24 studies that mentioned diabetes as a risk factor for other diseases.

AI-BASED MEDICAL IMAGE ANALYSIS IN DM

In this study, 227 AI-based studies on diabetes using medical images were reviewed (Fig. 2). Among these, AI-based imaging was studied most actively for DR, at 85% (193/227 cases). As the number of deep learning-based medical imaging studies, including those using a convolutional neural network (CNN), has increased significantly, many CNN-based medical imaging studies using fundoscopy or optical coherence tomography (OCT) have been reported since 2019 (Table 1).18,19,20,21,22,23,24,25,26,27,28,29,30 DR was followed by diabetic foot (7.9%, 18/227 cases) and diabetic neuropathy (2.7%, 6/227 cases) (Fig. 3A). Studies on the diabetic foot used thermograms and skin images, and AI-based studies using computed tomography (CT) images were attempted in diabetic neuropathy (Table 2).31,32,33,34,35,36,37 Studies of other conditions included pancreatic disease, fatty liver disease, and gestational DM (4.4%, 10/227 cases).

Fig. 2. Schematic diagram of AI-based medical image analysis in diabetes mellitus.

AI = artificial intelligence.

Table 1. Summary of classifiers, datasets, and limitations of artificial intelligence imaging technology for diabetes retinopathy.

| References | Classifiers | Datasets | Limitations | Performance (%), reported values in ACC/AUC/SP/SE column order |
|---|---|---|---|---|
| Liu et al., 2022 [18] | CNN | 600 patients; fundus photos; OCT images | Small size/imbalance/misclassification: images collected from a single regional hospital; inability to cover different age groups; uneven distribution of ratings owing to relatively small sample size | 94, 96, 91 |
| Malerbi et al., 2022 [19] | CNN | 824 patients; fundus photos | Small size/misclassification: lack of clinical and laboratory data; graded by one expert | 89, 61, 97 |
| Gao et al., 2021 [20] | CNN | 1,148 images; OCT and CFP images | Imbalance/misclassification: disequilibrium in the dataset itself; classifies only the presence or absence of retinal pathology, not the identification of specific retinal pathology | 88, 92, 89, 87 |
| Lu et al., 2021 [21] | CNN | 41,866 images; fundus photos | Small size/misclassification: no ability to screen for fundus diseases other than diabetic retinopathy (e.g., DME); verification only on local dataset (external validation required) | 98, 96, 90 |
| Tang et al., 2021 [22] | CNN | 9,392 images; ultrawide field scanning laser ophthalmoscopy images | Imbalance/misclassification/quality: various types of retinal diseases not included; lack of consistency in ground truth labeling; some DR lesions not captured | 92, 82, 86 |
| Lo et al., 2020 [23] | CNN | 3,618 images; OCT images | Misclassification: false-positive and false-negative errors found in patients with myopia | 99, 99, 98, 98 |
| Tang et al., 2021 [24] | CNN | 20 images; fundus photos | Small size/misclassification: difficult to generalize owing to small dataset; false positive found at the edge of the ground truth; false negatives found in several test images | 99, 99, 87 |
| Wu et al., 2021 [25] | CNN | 35,126 images; fundus photos | Small size/imbalance: imbalance in dataset (requires pre-training on a large dataset); training and validation time-consuming | 91, 98, 97, 92 |
| Toğaçar et al., 2022 [26] | CNN | 84,484 OCT images; 3,231 spectral domain-OCT images; 4,254 OCT images | Practical difficulties in clinical application: more time- and cost-consuming than a single CNN model; cost of training increased; clinical validation not performed | 99, 99, 100 |
| Wang et al., 2022 [27] | CNN | 35,001 images; fundus photos; Methods to evaluate segmentation and indexing techniques in the field of retinal ophthalmology dataset | Small size/data quality: restricted dataset (causing false positivity); blurring of the borders of the optic disc in some diseases, reducing detection; failed to integrate fusion model and anatomical landmark detector into one model | 90, 98, 90, 96 |
| Tang et al., 2021 [28] | CNN | 100,727 images; OCT images | Imbalance/misclassification: using only gradable OCT images; limited non-DME retinal abnormalities; difficulty in accurately distinguishing DME only with OCT images | 96, 96, 98, 94 |
| Guo et al., 2020 [29] | Deep CNN | 51 participants; OCT and OCT angiography images | Small size/imbalance/misclassification: problems with retinal fluid segmentation; inability to differentiate between intraretinal and subretinal fluids | 89, 99 |
| Arcadu et al., 2019 [30] | CNN | 17,997 images; CFPs and OCT | Clinical variables: difficult to generalize clinically beyond trained data; consideration of additional clinical variables as it is a proposed model based on time-domain OCT images | 97, 96, 87 |

ACC = accuracy, AUC = area under the curve, SP = specificity, SE = sensitivity, CNN = convolutional neural network, OCT = optical coherence tomography, CFP = color fundus photography, DME = diabetic macular edema, DR = diabetic retinopathy.

Fig. 3. AI-based medical image analysis in diabetes mellitus. (A) Type of disease. (B) Type of limitation of AI-based imaging analysis.

AI = artificial intelligence.

Table 2. Summary of classifiers, datasets, and limitations of various artificial intelligence imaging technologies for diabetes.

| References | Classifiers | Data | Limitations | Performance (%), reported values in ACC/AUC/SP/SE column order |
|---|---|---|---|---|
| Diabetic foot | | | | |
| Khandakar et al., 2022 [31] | CNN | 167 patients; foot-pair thermograms | Misclassification/clinical application: need to improve patient classification criteria according to the thermal change index; use of additional clinical indicators | 95, 97, 95 |
| Goyal et al., 2020 [32] | CNN | 1,459 images; skin images | Imbalance/data quality/clinical application: objective medical test results that need to be reflected; may be affected by external lighting and skin tone | 90, 83, 92, 88 |
| Goyal et al., 2019 [33] | CNN | 1,775 images; skin images | Small size/clinical application: the dataset being limited to use CNN; limitations of model application owing to limited resources of portable devices and real-time prediction | 91, 95 |
| Yogapriya et al., 2022 [34] | CNN | 5,890 images; skin images | Small size/data quality: requires more data samples under appropriate lighting conditions | 91, 93, 90 |
| Dremin et al., 2021 [35] | Artificial neural network | 40 patients; skin images | Small size/clinical application: system performance limited owing to real-time data processing time | 97, 85, 95 |
| Others | | | | |
| Wright et al., 2022 [36] | eXtreme gradient boosting | 878 images; CT images | Small size/misclassification/clinical application: difficulty in accurately determining the onset of the disease and the duration of the disease; inability to explain the severity of the disease | 65, 61, 62 |
| Tallam et al., 2022 [37] | Cycle generative adversarial network | 8,992 patients; CT images | Clinical variables: poor pancreatic segmentation performance; presence of a deviation from the CT scan date obtained owing to limitations of the retrospective study design; diabetes stage at the time of CT acquisition that cannot be clearly identified | 69, 68 |

ACC = accuracy, AUC = area under the curve, SP = specificity, SE = sensitivity, CNN = convolutional neural network, CT = computed tomography.

Deep learning methods for artificial intelligence in diabetes

Although results differed from study to study, the accuracy, sensitivity, and specificity of the AI models for diabetes imaging reviewed here were 69–100%, 51–100%, and 61–100%, respectively. The performance of AI models depends on the data size and image quality, but AI-based studies using medical images reported since 2018 have shown an average accuracy of 93%. Among the various AI methods, the most widely used was the CNN-based approach (63.5%, 144/227 cases). Of the 144 papers that used CNNs, 130 were published after 2019, and 45 (68.2%) of the 66 studies published in 2022 used CNNs. CNNs were followed by other deep learning methods, such as deep neural networks (DNNs), and by machine learning-based support vector machines (SVMs).
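For readers less familiar with the performance measures compared here, the sketch below shows how accuracy, sensitivity (recall), and specificity follow from a binary confusion matrix. The function name and the example counts are hypothetical and are not taken from any reviewed study.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Return accuracy, sensitivity (recall), and specificity as fractions.

    tp/fn count diseased cases that were detected/missed;
    tn/fp count healthy cases that were correctly cleared/wrongly flagged.
    """
    total = tp + fp + tn + fn
    if total == 0:
        raise ValueError("confusion matrix is empty")
    accuracy = (tp + tn) / total
    # Sensitivity: share of diseased cases the model detects.
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    # Specificity: share of healthy cases the model correctly clears.
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
    }


# Hypothetical screening result: 100 diseased and 900 healthy eyes.
example = diagnostic_metrics(tp=90, fp=45, tn=855, fn=10)
print(example)
```

With imbalanced data such as this example, accuracy is dominated by the healthy majority, which is one reason sensitivity and specificity are reported alongside it.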

In particular, the medical field in which AI technology was most actively applied was the diagnosis of DR. CNN-based imaging models for DR showed 72–100% accuracy, 51–100% sensitivity, and 61–100% specificity. This may be because a CNN is well suited to finding patterns in images, learning features directly from data, and classifying images using those patterns.38 Because a CNN receives data in the form of a matrix, it can minimize the information loss that occurs when an image is vectorized.39 A CNN resembles the human visual processing pathway, in which neurons in the visual cortex respond selectively to orientation.40 CNNs can contribute to classification, detection, segmentation, and image enhancement41 and have their greatest advantage in detecting lesions in medical images because they can take the image data themselves as input. Thus, they are useful for AI imaging models, such as those for DR detection.
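The point that a CNN operates on the image matrix directly, rather than on a flattened vector, can be illustrated with a single convolution step. The following is a minimal pure-Python sketch of the sliding-window operation used in CNN layers (implemented, as in most deep learning libraries, as cross-correlation); the toy image and the hand-written kernel are assumptions for illustration, whereas a real CNN learns its kernels from data.

```python
def conv2d(image, kernel):
    """Valid-mode 2D cross-correlation of a 2D list with a smaller 2D kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            # Weighted sum over the local neighbourhood; spatial layout is kept.
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output


# Toy 4x4 "image" whose right half is bright.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-written kernel that responds to dark-to-bright vertical edges.
edge_kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, edge_kernel)
print(feature_map)  # the middle column marks where the edge lies
```

The feature map is nonzero only where the edge lies, showing how a kernel responds to a local, oriented pattern while the neighbourhood structure of the image is preserved.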

Moreover, various DNNs (15.4%, 35/227 cases), such as generative adversarial networks (GANs) and deep fusion models, have been used, and attempts have been made to improve performance by combining more than one algorithm. In the reviewed studies, DNNs showed an accuracy of 85–98%, a sensitivity of 72–96%, and a specificity of 72–95%. A GAN utilizes a DNN in a form suitable for a specific purpose, such as data generation.42 As different types of medical datasets are becoming increasingly available, DNNs could be used as fused networks to analyze heterogeneous data (e.g., clinical and genomic data).43 SVMs (6.6%, 15/227 cases) were also used, with accuracy, sensitivity, and specificity of 85–98%, 73–99%, and 85–99%, respectively.

Diversity of dataset for artificial intelligence in diabetes

Images for DR screening were mostly acquired using fundoscopy or OCT (Table 1). A study that analyzed 456 OCT scans using a three-dimensional CNN algorithm reported 91% accuracy, 90% sensitivity, and 92% specificity.44 In a study using both fundus and OCT images for DR screening with a CNN-based AI model, simultaneous processing and analysis of both images improved the rate and accuracy of DR screening and contributed to early diabetic macular edema (DME) detection.18 However, the clear limitations of these studies were that they did not use diverse datasets and that their sample sizes were small. Therefore, future studies should expand their datasets to verify performance.

A large number of images and a large sample size are important for the development of an AI model; however, the diversity of images is also important for accuracy and reliability.45 Therefore, the data used for AI model development are built through multi-institutional studies, or public datasets, such as the Indian Diabetic Retinopathy Image Dataset, are sometimes used. Of the studies reviewed for diabetes imaging, 42.3% (96/227 cases) used open data. One example of a study using a large-scale dataset for DR screening used a real-world DR screening dataset consisting of 666,383 fundus images from 173,346 patients.46 The model proposed in that study consists of CNN-based image quality assessment, lesion awareness, and DR grading algorithms. However, there were limitations to the subclassification of lesions. In DR screening, a deep ensemble model with an open dataset provided an accuracy of 97.78%, sensitivity of 97.6%, and specificity of 99.3%, showing superior results to existing methods.47 In that study, a CNN algorithm was proposed for fundus feature extraction, and the DR classification accuracy was improved by combining features extracted from a pre-trained deep network.

Finally, for the clinical application of a diabetes complication test system, large-scale data must be established to develop a high-performance algorithm. The biggest factor behind the unrivaled development of DR-related AI diagnostic technology among diabetes complications was the size and utilization of open datasets. Additionally, high-quality data, including diverse data and multidimensional clinical information based on diseases and symptoms, must be established. Data classification performance can be improved by resolving data shortages and imbalances.
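One common way to reduce the effect of class imbalance during training, when collecting more data is not possible, is to weight each class inversely to its frequency. The sketch below is an illustrative assumption rather than a method taken from the reviewed studies; the severity labels and counts are hypothetical.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count).

    Rare classes receive weights above 1 and common classes below 1,
    so every class contributes equally to a weighted loss.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical severity labels: many "none", few "severe".
labels = ["none"] * 80 + ["mild"] * 15 + ["severe"] * 5
weights = inverse_frequency_weights(labels)
for cls, weight in weights.items():
    print(cls, round(weight, 3))
```

This is the same heuristic as the "balanced" class-weight option in scikit-learn. It rebalances the loss but does not add information, so it complements rather than replaces the collection of more severe-stage images.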

Integrating artificial intelligence models into digital health in diabetes

In the current era of digital health, patients manage their own health and check their disease status.48 In line with this trend, attempts have been made to increase convenience for users or patients, rather than physicians, by expanding the application range of DR screening through portable devices and efficient AI algorithms. A study using a portable retinal camera for DR screening reported an accuracy of 89% using a CNN algorithm.19 The eXtreme Inception (Xception) model used in that study is a CNN model announced by Google in 2017 that improves performance by using parameters efficiently.49

The deep fusion model was evaluated using an edge device with limited computing resources that could be mounted on a portable device and used easily by patients for an eye examination at an appropriate time.27 That study also reported good performance in classifying DME, with the deep fusion model achieving a sensitivity of 96% and a specificity of 90%.27

LIMITATIONS OF AI MODELS BASED ON DIABETES STUDIES

In this review, the limitations of AI-based imaging analytical model development in diabetes included the lack of data, data imbalance, poor image quality, difficulty in classifying disease severity, lack of clinical variables, labeling errors, and technical limitations of the algorithm. Regarding the development of an automated screening system for diabetes, lack of data was considered the major limitation (36.1%, 82/227 cases) (Fig. 3B). Poor data quality (25.6%, 58/227 cases) and data imbalance (15.9%, 36/227 cases) in the acquired data were associated with the limitations of disease severity classification (26.4%, 60/227 cases). Furthermore, the lack of patient clinical data variables and omission of detailed information during data acquisition were suggested as limitations for clinical application (24.7%, 56/227 cases). Because of these data limitations, an AI model might show exceptional performance on the data used for training but not on other datasets. This could be because the AI model does not adequately reflect the diversity of the data or because it is overfitted to data collected from a specific patient population or situation and does not detect the wide range of diabetes symptoms. Therefore, despite the development of various AI models, it has been noted that clinical application remains difficult because of these data limitations.45

It was also noted that AI algorithms must be improved to increase the performance of diabetes diagnosis models using medical images (18.1%, 41/227 cases). The limitations of AI algorithms can lead to results that do not adequately account for the various confounding variables to which medical images are exposed. Accordingly, the advancement of AI algorithms will support more accurate screening for diabetes complications by classifying patients according to their crucial clinical factors.

Lack of data, poor image quality, and difficulties in detailed disease classification were cited as major obstacles in the development of AI diagnostic models.45 This suggests that the required development environment may vary depending on the purpose of developing the AI models used in the medical field. In AI model development using medical data, lack and imbalance of data cause data bias, which can lead to inaccurate results in disease prediction and diagnosis. In particular, difficulties in severity and detailed classification, identified as major limitations in the development of diagnostic models, must be overcome for AI technology to be used more actively in the medical field.

The difficulty of securing actual medical images is a problem in AI-based medical imaging studies. Recently, synthetic medical data generated with a GAN have become an alternative for improving AI-based diagnostic technology by making high-quality medical data easier to secure.50 A GAN uses a generative neural network to generate an image and a discriminative neural network to determine whether the generated image is real or fake.51 A study of the pancreas using CT images collected from 8,992 patients showed a relatively low accuracy of 69%, but it attempted to increase the amount of training data by using a cycle GAN.37 The cycle GAN used there is a technique for generating oversampled training data, to which a function that can return the generated image to the original image was applied. This method can significantly supplement the amount of training data, which is important for deep learning performance.52
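The idea of returning a generated image to the original image corresponds to what is commonly called a cycle-consistency loss. The following minimal sketch illustrates that loss with toy functions standing in for the two generators; in a real cycle GAN both are neural networks trained together with discriminators, and all names and values here are hypothetical rather than taken from the cited study.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally sized flat pixel lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(x, forward, backward):
    """L1 distance between x and its reconstruction backward(forward(x))."""
    return l1_distance(x, backward(forward(x)))


# Toy stand-ins for trained generators (assumptions for illustration only).
def to_domain_b(pixels):
    # Forward generator: maps an image from domain A to domain B.
    return [2.0 * p + 0.1 for p in pixels]


def to_domain_a_good(pixels):
    # Backward generator that inverts the forward mapping.
    return [(p - 0.1) / 2.0 for p in pixels]


def to_domain_a_poor(pixels):
    # Backward generator that ignores the intensity offset.
    return [p / 2.0 for p in pixels]


image = [0.0, 0.25, 0.5, 1.0]
good_loss = cycle_consistency_loss(image, to_domain_b, to_domain_a_good)
poor_loss = cycle_consistency_loss(image, to_domain_b, to_domain_a_poor)
print(good_loss, poor_loss)
```

A low loss means the round trip recovers the original image, which constrains the generators to produce synthetic images that stay anatomically tied to their source rather than arbitrary plausible images.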

Given these limitations, it remains difficult to trust and widely use AI in clinical practice. In addition, for AI to be used effectively in the clinical field, the development of explainable AI algorithms and interoperability with existing medical systems, such as clinical decision support systems, should be considered. Moreover, variation in model performance depending on the dataset will be an important issue in the future.53 Whether a model can be used clinically should be considered and verified separately.54,55

FUTURE DIRECTION OF AI IN DM

DR was the most commonly studied diabetes complication in the studies examined in this review. In the development and verification of AI models using medical images, the collection of image data and the use of large-scale open data were active. In particular, the availability of various public datasets related to DR is hypothesized to explain why so many studies have addressed automated screening and diagnostic systems for DR. In the 227 studies examined in this review, the reported accuracy, specificity, and sensitivity indicated high performance. Most studies used CNN-based AI algorithms, which were developed to address the problems of processing images in existing DNNs.

Recently, the rapid and simple detection of diabetes complications using AI technology has developed into clinical decision support for physicians through advanced abnormality detection, using object detection and segmentation based on medical images.56 Deep learning algorithms have shown successful results in computer vision and image analysis tasks, and these technologies are being evaluated and expanded for application to medical images.57 AI technology that uses medical images in the field of DM has developed rapidly with the development of various AI algorithms. The advantage of the AI-based imaging analysis technique is that it increases patient access to tests at a lower cost and faster speed compared with detection performed by medical personnel.

First, most current AI models rely on a single type of data, such as imaging or genomics data. It will be necessary to advance AI technology by applying and verifying AI algorithms derived from various datasets in real-world scenarios. In the future, AI models will integrate multiple data types, such as clinical notes, imaging data, genomics data, and real-world evidence, for a comprehensive understanding of patient health and accurate diagnoses, leading to improved healthcare outcomes. Second, in terms of AI technology development, medical AI technology needs to move toward explainable AI for evidence-based clinical interventions. Deep learning models are considered “black boxes” with opaque decision-making, and explainable AI is crucial to enhance trust and acceptance in healthcare. Lastly, it is necessary to integrate complementary AI models to diagnose and monitor DM, which can promote rapid and efficient clinical decision-making. For example, AI models can be used to monitor a patient’s health status, detect early signs of disease, and make health predictions. This study enhances our understanding of AI-based diagnostic image analysis for diabetes complications and could contribute to advancing systems for the early detection and diagnosis of diabetes complications.

Footnotes

Funding: This research was supported by the Bio & Medical Technology Development Program of the National Research Foundation (NRF) funded by the Korean government (MSIT) (No. NRF-2019M3E5D3073104).

Disclosure: The authors have no potential conflicts of interest to disclose.

- Conceptualization: Chun JW, Kim HS.

- Data curation: Chun JW, Kim HS.

- Formal analysis: Chun JW, Kim HS.

- Methodology: Kim HS.

- Resources: Kim HS.

- Software: Kim HS.

- Supervision: Kim HS.

- Validation: Kim HS.

- Visualization: Chun JW, Kim HS.

- Writing - original draft: Chun JW, Kim HS.

- Writing - review & editing: Kim HS.

References

- 1.Park JH, Ha KH, Kim BY, Lee JH, Kim DJ. Trends in cardiovascular complications and mortality among patients with diabetes in South Korea. Diabetes Metab J. 2021;45(1):120–124. doi: 10.4093/dmj.2020.0175. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Kim J, Yoon SJ, Jo MW. Estimating the disease burden of Korean type 2 diabetes mellitus patients considering its complications. PLoS One. 2021;16(2):e0246635. doi: 10.1371/journal.pone.0246635. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Chawla A, Chawla R, Jaggi S. Microvasular and macrovascular complications in diabetes mellitus: distinct or continuum? Indian J Endocrinol Metab. 2016;20(4):546–551. doi: 10.4103/2230-8210.183480. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Kim H, Jung DY, Lee SH, Cho JH, Yim HW, Kim HS. Long-term risk of cardiovascular disease among type 2 diabetes patients according to average and visit-to-visit variations of HbA1c levels during the first 3 years of diabetes diagnosis. J Korean Med Sci. 2023;38(4):e24. doi: 10.3346/jkms.2023.38.e24. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Crasto W, Patel V, Davies MJ, Khunti K. Prevention of microvascular complications of diabetes. Endocrinol Metab Clin North Am. 2021;50(3):431–455. doi: 10.1016/j.ecl.2021.05.005. [DOI] [PubMed] [Google Scholar]

- 6.Bowling FL, Rashid ST, Boulton AJ. Preventing and treating foot complications associated with diabetes mellitus. Nat Rev Endocrinol. 2015;11(10):606–616. doi: 10.1038/nrendo.2015.130. [DOI] [PubMed] [Google Scholar]

- 7.Kwan CC, Fawzi AA. Imaging and biomarkers in diabetic macular edema and diabetic rRetinopathy. Curr Diab Rep. 2019;19(10):95. doi: 10.1007/s11892-019-1226-2. [DOI] [PubMed] [Google Scholar]

- 8.Polak JF, Szklo M, Kronmal RA, Burke GL, Shea S, Zavodni AE, et al. The value of carotid artery plaque and intima-media thickness for incident cardiovascular disease: the multi-ethnic study of atherosclerosis. J Am Heart Assoc. 2013;2(2):e000087. doi: 10.1161/JAHA.113.000087. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Itri JN, Tappouni RR, McEachern RO, Pesch AJ, Patel SH. Fundamentals of diagnostic error in imaging. Radiographics. 2018;38(6):1845–1865. doi: 10.1148/rg.2018180021. [DOI] [PubMed] [Google Scholar]

- 10.Pesapane F, Codari M, Sardanelli F. Artificial intelligence in medical imaging: threat or opportunity? Radiologists again at the forefront of innovation in medicine. Eur Radiol Exp. 2018;2(1):35. doi: 10.1186/s41747-018-0061-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Nomura A, Noguchi M, Kometani M, Furukawa K, Yoneda T. Artificial intelligence in current diabetes management and prediction. Curr Diab Rep. 2021;21(12):61. doi: 10.1007/s11892-021-01423-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Mintz Y, Brodie R. Introduction to artificial intelligence in medicine. Minim Invasive Ther Allied Technol. 2019;28(2):73–81. doi: 10.1080/13645706.2019.1575882.

- 13.Mahadevaiah G, Rv P, Bermejo I, Jaffray D, Dekker A, Wee L. Artificial intelligence-based clinical decision support in modern medical physics: Selection, acceptance, commissioning, and quality assurance. Med Phys. 2020;47(5):e228–e235. doi: 10.1002/mp.13562.

- 14.Shin J, Kim J, Lee C, Yoon JY, Kim S, Song S, et al. Development of various diabetes prediction models using machine learning techniques. Diabetes Metab J. 2022;46(4):650–657. doi: 10.4093/dmj.2021.0115.

- 15.Lee S, Jeon S, Kim HS. A study on methodologies of drug repositioning using biomedical big data: a focus on diabetes mellitus. Endocrinol Metab (Seoul) 2022;37(2):195–207. doi: 10.3803/EnM.2022.1404.

- 16.Chaki J, Ganesh ST, Cidham SK, Theertan SA. Machine learning and artificial intelligence based diabetes mellitus detection and self-management: a systematic review. J King Saud Univ Comput Inf Sci. 2022;34(6 Pt B):3204–3225.

- 17.Anaya-Isaza A, Mera-Jiménez L, Zequera-Diaz M. An overview of deep learning in medical imaging. Inform Med Unlocked. 2021;26:100723.

- 18.Liu R, Li Q, Xu F, Wang S, He J, Cao Y, et al. Application of artificial intelligence-based dual-modality analysis combining fundus photography and optical coherence tomography in diabetic retinopathy screening in a community hospital. Biomed Eng Online. 2022;21(1):47. doi: 10.1186/s12938-022-01018-2.

- 19.Malerbi FK, Andrade RE, Morales PH, Stuchi JA, Lencione D, de Paulo JV, et al. Diabetic retinopathy screening using artificial intelligence and handheld smartphone-based retinal camera. J Diabetes Sci Technol. 2022;16(3):716–723. doi: 10.1177/1932296820985567.

- 20.Gao Q, Amason J, Cousins S, Pajic M, Hadziahmetovic M. Automated identification of referable retinal pathology in teleophthalmology setting. Transl Vis Sci Technol. 2021;10(6):30. doi: 10.1167/tvst.10.6.30.

- 21.Lu L, Ren P, Lu Q, Zhou E, Yu W, Huang J, et al. Analyzing fundus images to detect diabetic retinopathy (DR) using deep learning system in the Yangtze River delta region of China. Ann Transl Med. 2021;9(3):226. doi: 10.21037/atm-20-3275.

- 22.Tang F, Luenam P, Ran AR, Quadeer AA, Raman R, Sen P, et al. Detection of diabetic retinopathy from ultra-widefield scanning laser ophthalmoscope images: a multicenter deep learning analysis. Ophthalmol Retina. 2021;5(11):1097–1106. doi: 10.1016/j.oret.2021.01.013.

- 23.Lo YC, Lin KH, Bair H, Sheu WH, Chang CS, Shen YC, et al. Epiretinal membrane detection at the ophthalmologist level using deep learning of optical coherence tomography. Sci Rep. 2020;10(1):8424. doi: 10.1038/s41598-020-65405-2.

- 24.Tang MC, Teoh SS, Ibrahim H, Embong Z. Neovascularization detection and localization in fundus images using deep learning. Sensors (Basel) 2021;21(16):5327. doi: 10.3390/s21165327.

- 25.Wu J, Hu R, Xiao Z, Chen J, Liu J. Vision transformer-based recognition of diabetic retinopathy grade. Med Phys. 2021;48(12):7850–7863. doi: 10.1002/mp.15312.

- 26.Toğaçar M, Ergen B, Tümen V. Use of dominant activations obtained by processing OCT images with the CNNs and slime mold method in retinal disease detection. Biocybern Biomed Eng. 2022;42(2):646–666.

- 27.Wang TY, Chen YH, Chen JT, Liu JT, Wu PY, Chang SY, et al. Diabetic macular edema detection using end-to-end deep fusion model and anatomical landmark visualization on an edge computing device. Front Med (Lausanne) 2022;9:851644. doi: 10.3389/fmed.2022.851644.

- 28.Tang F, Wang X, Ran AR, Chan CK, Ho M, Yip W, et al. A multitask deep-learning system to classify diabetic macular edema for different optical coherence tomography devices: a multicenter analysis. Diabetes Care. 2021;44(9):2078–2088. doi: 10.2337/dc20-3064.

- 29.Guo Y, Hormel TT, Xiong H, Wang J, Hwang TS, Jia Y. Automated segmentation of retinal fluid volumes from structural and angiographic optical coherence tomography using deep learning. Transl Vis Sci Technol. 2020;9(2):54. doi: 10.1167/tvst.9.2.54.

- 30.Arcadu F, Benmansour F, Maunz A, Michon J, Haskova Z, McClintock D, et al. Deep learning predicts OCT measures of diabetic macular thickening from color fundus photographs. Invest Ophthalmol Vis Sci. 2019;60(4):852–857. doi: 10.1167/iovs.18-25634.

- 31.Khandakar A, Chowdhury ME, Reaz MB, Ali SH, Kiranyaz S, Rahman T, et al. A novel machine learning approach for severity classification of diabetic foot complications using thermogram images. Sensors (Basel) 2022;22(11):4249. doi: 10.3390/s22114249.

- 32.Goyal M, Reeves ND, Rajbhandari S, Ahmad N, Wang C, Yap MH. Recognition of ischaemia and infection in diabetic foot ulcers: Dataset and techniques. Comput Biol Med. 2020;117:103616. doi: 10.1016/j.compbiomed.2020.103616.

- 33.Goyal M, Reeves ND, Rajbhandari S, Yap MH. Robust methods for real-time diabetic foot ulcer detection and localization on mobile devices. IEEE J Biomed Health Inform. 2019;23(4):1730–1741. doi: 10.1109/JBHI.2018.2868656.

- 34.Yogapriya J, Chandran V, Sumithra MG, Elakkiya B, Shamila Ebenezer A, Suresh Gnana Dhas C. Automated detection of infection in diabetic foot ulcer images using convolutional neural network. J Healthc Eng. 2022;2022:2349849. doi: 10.1155/2022/2349849.

- 35.Dremin V, Marcinkevics Z, Zherebtsov E, Popov A, Grabovskis A, Kronberga H, et al. Skin complications of diabetes mellitus revealed by polarized hyperspectral imaging and machine learning. IEEE Trans Med Imaging. 2021;40(4):1207–1216. doi: 10.1109/TMI.2021.3049591.

- 36.Wright DE, Mukherjee S, Patra A, Khasawneh H, Korfiatis P, Suman G, et al. Radiomics-based machine learning (ML) classifier for detection of type 2 diabetes on standard-of-care abdomen CTs: a proof-of-concept study. Abdom Radiol (NY) 2022;47(11):3806–3816. doi: 10.1007/s00261-022-03668-1.

- 37.Tallam H, Elton DC, Lee S, Wakim P, Pickhardt PJ, Summers RM. Fully automated abdominal CT biomarkers for type 2 diabetes using deep learning. Radiology. 2022;304(1):85–95. doi: 10.1148/radiol.211914.

- 38.Shen D, Wu G, Suk HI. Deep learning in medical image analysis. Annu Rev Biomed Eng. 2017;19(1):221–248. doi: 10.1146/annurev-bioeng-071516-044442.

- 39.Yamashita R, Nishio M, Do RK, Togashi K. Convolutional neural networks: an overview and application in radiology. Insights Imaging. 2018;9(4):611–629. doi: 10.1007/s13244-018-0639-9.

- 40.LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521(7553):436–444. doi: 10.1038/nature14539.

- 41.Anwar SM, Majid M, Qayyum A, Awais M, Alnowami M, Khan MK. Medical image analysis using convolutional neural networks: a review. J Med Syst. 2018;42(11):226. doi: 10.1007/s10916-018-1088-1.

- 42.Aggarwal A, Mittal M, Battineni G. Generative adversarial network: an overview of theory and applications. IJIM Data Insights. 2021;1(1):100004.

- 43.Stahlschmidt SR, Ulfenborg B, Synnergren J. Multimodal deep learning for biomedical data fusion: a review. Brief Bioinform. 2022;23(2):bbab569. doi: 10.1093/bib/bbab569.

- 44.Zang P, Hormel TT, Wang X, Tsuboi K, Huang D, Hwang TS, et al. A diabetic retinopathy classification framework based on deep-learning analysis of OCT angiography. Transl Vis Sci Technol. 2022;11(7):10. doi: 10.1167/tvst.11.7.10.

- 45.Kim HS, Kim DJ, Yoon KH. Medical big data is not yet available: why we need realism rather than exaggeration. Endocrinol Metab (Seoul) 2019;34(4):349–354. doi: 10.3803/EnM.2019.34.4.349.

- 46.Dai L, Wu L, Li H, Cai C, Wu Q, Kong H, et al. A deep learning system for detecting diabetic retinopathy across the disease spectrum. Nat Commun. 2021;12(1):3242. doi: 10.1038/s41467-021-23458-5.

- 47.Jagan Mohan N, Murugan R, Goel T, Mirjalili S, Roy P. A novel four-step feature selection technique for diabetic retinopathy grading. Phys Eng Sci Med. 2021;44(4):1351–1366. doi: 10.1007/s13246-021-01073-4.

- 48.Park JI, Lee HY, Kim H, Lee J, Shinn J, Kim HS. Lack of acceptance of digital healthcare in the medical market: addressing old problems raised by various clinical professionals and developing possible solutions. J Korean Med Sci. 2021;36(37):e253. doi: 10.3346/jkms.2021.36.e253.

- 49.Chollet F. Xception: deep learning with depthwise separable convolutions; Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2017 Jul 21-26; Honolulu, HI, USA. Piscataway, NJ, USA: IEEE; 2017. pp. 1251–1258.

- 50.Iqbal T, Ali H. Generative adversarial network for medical images (MI-GAN). J Med Syst. 2018;42(11):231. doi: 10.1007/s10916-018-1072-9.

- 51.Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, et al. Generative adversarial networks. Commun ACM. 2020;63(11):139–144.

- 52.Zhu JY, Park T, Isola P, Efros AA. Unpaired image-to-image translation using cycle-consistent adversarial networks; Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV); 2017 Oct 22-29; Venice, Italy. Piscataway, NJ, USA: IEEE; 2017. pp. 2223–2232.

- 53.AbdelMaksoud E, Barakat S, Elmogy M. A computer-aided diagnosis system for detecting various diabetic retinopathy grades based on a hybrid deep learning technique. Med Biol Eng Comput. 2022;60(7):2015–2038. doi: 10.1007/s11517-022-02564-6.

- 54.Zhang K, Liu X, Xu J, Yuan J, Cai W, Chen T, et al. Deep-learning models for the detection and incidence prediction of chronic kidney disease and type 2 diabetes from retinal fundus images. Nat Biomed Eng. 2021;5(6):533–545. doi: 10.1038/s41551-021-00745-6.

- 55.Kim HS. Decision-making in artificial intelligence: is it always correct? J Korean Med Sci. 2020;35(1):e1. doi: 10.3346/jkms.2020.35.e1.

- 56.Tsuneki M. Deep learning models in medical image analysis. J Oral Biosci. 2022;64(3):312–320. doi: 10.1016/j.job.2022.03.003.

- 57.Suganyadevi S, Seethalakshmi V, Balasamy K. A review on deep learning in medical image analysis. Int J Multimed Inf Retr. 2022;11(1):19–38. doi: 10.1007/s13735-021-00218-1.