Summary

We demonstrate that non-rigid deformations of, e.g., the mouth introduce bias in the vNav-based detection of brain motion, which causes artifacts. We present real-time brain extraction for vNavs and show that these artifacts can be removed by brain-masked registration.

Introduction

Volumetric navigators¹ (vNavs) interleaved within longer MRI sequences are an effective method for dynamically detecting and correcting head motion during the acquisition.² Motion is estimated by registering each vNav back to the first, assuming all of the anatomy in the navigator FOV moves rigidly. However, when the navigator FOV encompasses the entire head, non-rigid deformations, such as opening and closing the mouth, can bias the detection of brain motion. This leads to incorrect motion “correction” and ultimately undesired image artifacts.

We present an experiment showing that non-rigid motion biases the detection and introduces artifacts when the vNav-based motion estimates are used for correction. To address this, we developed robust ultra-fast brain extraction targeted to vNavs and demonstrated offline that leveraging the derived masks for brain-only registration substantially improves the correction.

Methods

Real-time brain extraction

The brain was identified in vNavs (3D EPI, 32³ 8-mm voxels) as a maximally stable extremal region³ (MSER). We implemented a 3D detector⁴ to extract global MSERs offline, i.e. clusters of N voxels at the minimum of their growth rate dN/dI over the entire range of intensities I (8 bits, Δ=127). The largest MSER was selected and morphologically opened with a spherical kernel of radius r=3 to remove parts of the neck. A typical vNav and derived mask are shown in Figure 1.

Figure 1.

Brain mask for robust estimation of rigid brain motion from volumetric navigators (vNavs). (A) Slice through a typical 32³ vNav at 8 mm isotropic resolution. (B) Automatically generated binary mask excluding non-brain voxels. (C) For robust registration at low resolution, the brain mask is morphologically dilated.
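The extraction steps described in this section can be sketched roughly as follows. This is a simplified illustration, not the detector used in the study: it tracks only the largest connected component at each 8-bit threshold rather than a full component tree, and the function names (`ball`, `largest_component`, `brain_mask`) as well as the default `delta=8` are assumptions chosen for the example; the default does not reproduce the Δ=127 setting quoted above.

```python
import numpy as np
from scipy import ndimage


def ball(radius):
    """Binary spherical structuring element with the given voxel radius."""
    r = int(radius)
    z, y, x = np.ogrid[-r:r + 1, -r:r + 1, -r:r + 1]
    return (x * x + y * y + z * z) <= r * r


def largest_component(binary):
    """Return the largest connected component of a binary volume."""
    labels, count = ndimage.label(binary)
    if count == 0:
        return np.zeros(binary.shape, dtype=bool)
    sizes = np.bincount(labels.ravel())[1:]  # drop background label 0
    return labels == (np.argmax(sizes) + 1)


def brain_mask(vnav, delta=8, open_radius=3):
    """Simplified MSER-style mask (hypothetical helper, not the study code)."""
    vol = np.asarray(vnav, dtype=float)
    span = vol.max() - vol.min()
    if span == 0:
        return np.zeros(vol.shape, dtype=bool)
    # Rescale intensities to 8 bits, as in the text.
    vol8 = np.round(255 * (vol - vol.min()) / span).astype(np.uint8)

    # Size N(I) of the largest bright region at every threshold I.
    sizes = np.array(
        [largest_component(vol8 >= t).sum() for t in range(256)], dtype=float
    )

    # Pick the threshold where the relative growth rate is smallest.
    best_t, best_q = None, np.inf
    for t in range(delta, 256 - delta):
        if sizes[t] == 0:
            continue
        q = (sizes[t - delta] - sizes[t + delta]) / sizes[t]
        if q < best_q:
            best_t, best_q = t, q
    if best_t is None:
        return np.zeros(vol.shape, dtype=bool)

    # Morphological opening removes thin attachments such as the neck.
    region = largest_component(vol8 >= best_t)
    return ndimage.binary_opening(region, structure=ball(open_radius))
```

On a synthetic volume with a bright sphere and a thin stalk attached to it, the opening step is what detaches the stalk while the sphere is retained.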

Evaluation of masking performance

We evaluated the accuracy of our masking procedure in n=258 vNav timeseries from the Human Connectome Project⁵,⁶ (HCP-A) using the Dice overlap metric. We compared the first vNav mask of each series to a down-sampled mask generated with FreeSurfer⁷ from the associated motion-corrected MPRAGE. Consistency across time was assessed by comparing the masks of each vNav series to its first frame.
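For reference, the Dice overlap between two binary masks A and B is 2|A∩B| / (|A| + |B|). A minimal sketch of both comparisons described above is given here; the function names `dice` and `series_consistency` are illustrative, and returning 1.0 for two empty masks is an assumption of this example.

```python
import numpy as np


def dice(mask_a, mask_b):
    """Dice overlap 2|A∩B| / (|A| + |B|) between two binary masks."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    total = a.sum() + b.sum()
    if total == 0:
        return 1.0  # assumption: two empty masks count as identical
    return 2.0 * np.logical_and(a, b).sum() / total


def series_consistency(masks):
    """Dice score of every later frame in a series against its first frame."""
    first = masks[0]
    return [dice(first, m) for m in masks[1:]]
```

Two masks of four voxels each that share two voxels give a score of 0.5, and identical masks give 1.0.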

Non-rigid head motion experiment

For this study, a subject was instructed to open and close their mouth at random times during a vNav-MPRAGE acquisition at 3T while otherwise remaining still (matrix 256×256×176, resolution 1×1×1 mm, TR/TI/TE 2500/1070/2.9 ms, FA 8°, readout bandwidth 240 Hz/px, GRAPPA R=2). For a fair comparison between registration methods, Siemens’ on-scanner PACE⁸ algorithm was used to estimate motion from vNavs without applying real-time corrections to the sequence.

Retrospective motion correction

Brain-masked registration of vNavs was performed offline using FSL/FLIRT⁹. For robust registration, the masks were morphologically dilated to include the scalp using a spherical kernel of radius r=2. RetroMocoBox¹⁰ was adapted to perform offline correction and GRAPPA reconstruction of vNav-MPRAGE k-space datasets based on different motion traces.
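The abstract does not state how the masks were passed to FLIRT. One plausible route, shown here purely as an assumption, is FLIRT's weighting-volume options (`-inweight`, `-refweight`) with a 6-degree-of-freedom rigid model. The sketch only assembles the command line; the helper name `flirt_command` and all file names are hypothetical.

```python
def flirt_command(moving, reference, moving_mask, reference_mask, out_matrix):
    """Build a rigid, mask-weighted FLIRT call (assumed usage, not the study's)."""
    return [
        "flirt",
        "-in", moving,                # vNav frame to register
        "-ref", reference,            # first vNav of the series
        "-dof", "6",                  # rigid-body model
        "-inweight", moving_mask,     # dilated brain mask of the moving frame
        "-refweight", reference_mask, # dilated brain mask of the reference
        "-omat", out_matrix,          # output 4x4 transform
    ]


# Hypothetical file names, for illustration only.
cmd = flirt_command(
    "vnav_0010.nii.gz", "vnav_0000.nii.gz",
    "mask_0010.nii.gz", "mask_0000.nii.gz",
    "vnav_0010_to_0000.mat",
)
print(" ".join(cmd))
```

The resulting list could be handed to `subprocess.run` on a system with FSL installed.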

Results

Masking performance

Figure 2A shows the distribution of Dice coefficients D. The overlap with masks from FreeSurfer was D=0.89±0.01 while the within time-series consistency was D=0.99±0.02. Typically, a few voxels at the outer edges of the masks changed labels between consecutive vNav frames (from brain to non-brain or vice versa) due to noise. Masking took about 0.02 seconds per vNav on a 3.3-GHz Intel Xeon CPU.

Figure 2.

(A) Dice scores for masks derived from 258 vNav timeseries. The first frame of each series was compared to a down-sampled mask generated by FreeSurfer (FS) for the associated motion-corrected MPRAGE image. Consistency was assessed by comparing the masks of each series to the first frame. (B) Shannon entropy (n=256 bins) for the retrospective correction of MPRAGE including jaw motion.

Bias due to non-rigid motion

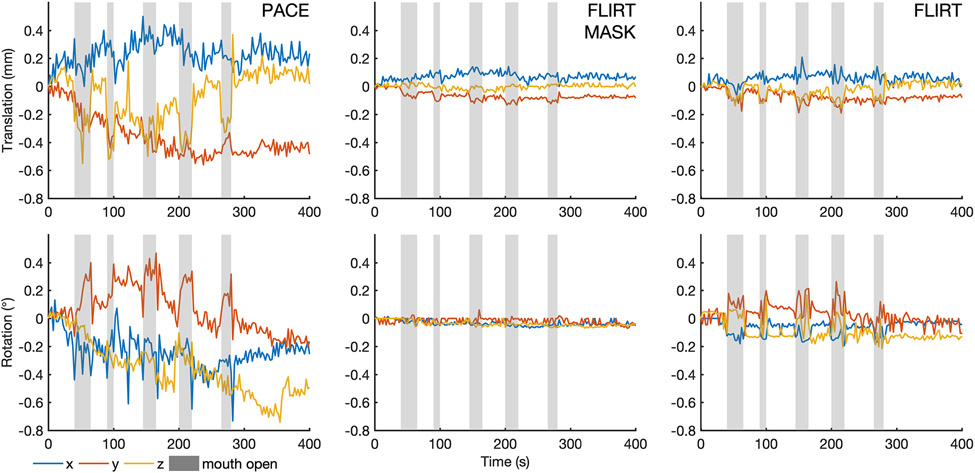

Figure 3 compares brain-motion estimates in the presence of local deformation. PACE estimated large head movements at the times the subject was instructed to open and close their mouth. FLIRT with brain masks detected substantially reduced translations and almost no rotations. In contrast, PACE also detected drifts in head orientation over time. FLIRT without masks estimated reduced motion as compared to PACE but was found to be more sensitive to jaw motion than brain-masked registration.

Figure 3.

Traces from vNavs during MPRAGE with jaw motion of an otherwise still subject. For accurate brain-motion correction, the detection must be insensitive to structures other than the brain. Siemens’ real-time registration PACE detected large head motion when the subject moved their jaw. FLIRT with brain masks estimated substantially reduced translations and almost no rotations. FLIRT only is shown for comparison. Note that FLIRT’s execution time would be incompatible with real-time use.
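Motion traces of this kind are typically derived from one rigid transform per vNav. As a hedged illustration, the sketch below reduces a 4×4 rigid matrix to a translation magnitude and a net rotation angle. The function name `motion_summary` is hypothetical, and the matrix is assumed to be expressed in millimetre world coordinates, which is not necessarily the native convention of PACE or FLIRT output.

```python
import numpy as np


def motion_summary(matrix):
    """Return (translation magnitude, net rotation angle in degrees)."""
    m = np.asarray(matrix, dtype=float)
    rotation, translation = m[:3, :3], m[:3, 3]
    # For a rotation matrix R, trace(R) = 1 + 2*cos(angle).
    cos_angle = np.clip((np.trace(rotation) - 1.0) / 2.0, -1.0, 1.0)
    angle = np.degrees(np.arccos(cos_angle))
    return float(np.linalg.norm(translation)), float(angle)
```

Applying this to every frame of a series yields two scalar traces that can be compared across registration methods.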

Retrospective motion correction

T1-weighted images are shown in Figure 4A after retrospective correction with the motion traces estimated by each algorithm. PACE introduced subtle artifacts that were removed by brain-specific registration, and this was reflected by changes in Shannon entropy (n=256 bins, see Figure 2B).

Figure 4.

Motion-correction enhancements in MPRAGE for an otherwise still subject who opened and closed their mouth at random times during the acquisition. Retrospective correction based on the motion estimated by PACE introduced subtle artifacts that were removed when using motion tracks from brain-masked registration.
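The Shannon entropy used to compare the corrected images can be computed from an intensity histogram. The sketch below assumes 256 equal-width bins spanning the image's intensity range and a base-2 logarithm; the abstract specifies only the number of bins, so the remaining choices and the function name `shannon_entropy` are assumptions.

```python
import numpy as np


def shannon_entropy(image, bins=256):
    """Shannon entropy (in bits) of the image intensity histogram."""
    values = np.asarray(image, dtype=float).ravel()
    counts, _ = np.histogram(values, bins=bins)
    p = counts[counts > 0] / counts.sum()  # skip empty bins: 0*log(0) := 0
    return float(-(p * np.log2(p)).sum())
```

A constant image has zero entropy, while an image split evenly between two intensities has an entropy of one bit.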

Discussion

We demonstrated that non-rigid head motion biases the detection of brain motion from vNavs with Siemens’ PACE algorithm and thereby introduces correction-related artifacts. We presented robust ultra-fast brain extraction from vNavs suitable for real-time use and showed that brain-masked registration removes the artifacts at 3T.

Further experiments may establish anatomy-specific registration for vNav-based correction at 7T, where large distortions around the sinuses are a known source of inaccuracy.¹¹ We expect that adapting our approach to children and infants¹² will increase the usefulness of vNavs, as bias is introduced if the shoulders or chest impinge on the navigator FOV.

While FLIRT reduced the bias from non-rigid deformations even without brain masks, we note that its execution time of about 6 seconds per vNav is incompatible with real-time application during MPRAGE. Further work is needed to incorporate our brain masks into an optimized on-scanner registration.

Conclusion

We presented enhancements to vNav-based motion correction using robust real-time brain masking and demonstrated that brain-specific registration removes correction-related artifacts in the presence of non-rigid head motion. This may be useful for imaging initiatives such as ABCD and HCP where subjects are often non-compliant.

Synopsis.

Volumetric navigators (vNavs) interleaved within longer MRI sequences are an effective method for dynamically detecting and correcting head motion during the acquisition. However, motion is estimated by registering each vNav back to the first, and bias can be introduced by non-rigid deformations of, e.g., the mouth, since the head is taken to be a rigid body. We demonstrate that this bias introduces correction-related artifacts. We present robust real-time brain extraction (0.02 s per vNav) and demonstrate offline that the bias and associated artifacts can be removed by using motion tracks from brain-masked registration.

Acknowledgements

This research was supported by the ABCD-USA Consortium (U24DA041123) and by NIH grants R00HD074649, U01AG052564, R01HD099846, R01HD093578, R01HD085813.

References

- 1. Tisdall MD, Hess AT, Reuter M, Meintjes EM, Fischl B, van der Kouwe AJW. Volumetric navigators for prospective motion correction and selective reacquisition in neuroanatomical MRI. Magn Reson Med. 2012;68(2):389–399.

- 2. Tisdall MD, Reuter M, Qureshi A, Buckner RL, Fischl B, van der Kouwe AJW. Prospective motion correction with volumetric navigators (vNavs) reduces the bias and variance in brain morphometry induced by subject motion. Neuroimage. 2016;127:11–22. doi: 10.1016/j.neuroimage.2015.11.054

- 3. Matas J, Chum O, Urban M, Pajdla T. Robust wide-baseline stereo from maximally stable extremal regions. Image Vis Comput. 2004;22(10):761–767. doi: 10.1016/J.IMAVIS.2004.02.006

- 4. Kristensen F, MacLean WJ. Real-Time Extraction of Maximally Stable Extremal Regions on an FPGA. In: 2007 IEEE International Symposium on Circuits and Systems. IEEE; 2007:165–168. doi: 10.1109/ISCAS.2007.378247

- 5. Harms MP, Somerville LH, Ances BM, et al. Extending the Human Connectome Project across ages: Imaging protocols for the Lifespan Development and Aging projects. Neuroimage. 2018;183:972–984. doi: 10.1016/J.NEUROIMAGE.2018.09.060

- 6. Bookheimer SY, Salat DH, Terpstra M, et al. The Lifespan Human Connectome Project in Aging: An overview. Neuroimage. 2019;185:335–348. doi: 10.1016/J.NEUROIMAGE.2018.10.009

- 7. Fischl B. FreeSurfer. Neuroimage. 2012;62(2):774–781. doi: 10.1016/J.NEUROIMAGE.2012.01.021

- 8. Thesen S, Heid O, Mueller E, Schad LR. Prospective acquisition correction for head motion with image-based tracking for real-time fMRI. Magn Reson Med. 2000;44(3):457–465. http://www.ncbi.nlm.nih.gov/pubmed/10975899.

- 9. Jenkinson M, Smith S. A global optimisation method for robust affine registration of brain images. Med Image Anal. 2001;5(2):143–156.

- 10. Gallichan D, Marques JP, Gruetter R. Retrospective correction of involuntary microscopic head movement using highly accelerated fat image navigators (3D FatNavs) at 7T. Magn Reson Med. 2015. doi: 10.1002/mrm.25670

- 11. Liu J, de Zwart JA, van Gelderen P, Murphy-Boesch J, Duyn JH. Effect of head motion on MRI B0 field distribution. Magn Reson Med. 2018;80(6):2538–2548. doi: 10.1002/mrm.27339

- 12. Brown TT, Kuperman JM, Erhart M, et al. Prospective motion correction of high-resolution magnetic resonance imaging data in children. Neuroimage. 2010;53(1):139–145. doi: 10.1016/j.neuroimage.2010.06.017