Abstract

In this work, we introduce a novel approach to model the effect of rain and fog on light detection and ranging (LiDAR) sensor performance for the simulation-based testing of LiDAR systems. The proposed methodology simulates rain and fog by rigorously applying the Mie scattering theory at two levels: the time domain, for transient analyses, and the point cloud level, for spatial analyses. The time domain analysis allows us to benchmark the virtual LiDAR signal attenuation and signal-to-noise ratio (SNR) caused by rain and fog droplets. In addition, the detection rate (DR), false detection rate (FDR), and distance error of the virtual LiDAR sensor due to rain and fog droplets are evaluated on the point cloud level. The mean absolute percentage error (MAPE) is used to quantify the deviation between the simulation and real measurement results on the time domain and point cloud levels for rain and fog droplets. The simulation and real measurement results match well on both levels if the simulated and real rain distributions are the same. Both the real and the virtual LiDAR sensor performance degrade more under the influence of fog droplets than under rain.

Keywords: LiDAR sensor, rain, fog, sunlight, advanced driver-assistance system, backscattering, Mie theory, open simulation interface, functional mock-up interface, functional mock-up unit

1. Introduction

Highly automated vehicles perceive their surroundings using environmental perception sensors, such as light detection and ranging (LiDAR), radio detection and ranging (RADAR), cameras, and ultrasonic sensors. LiDAR sensors have gained significant attention over the past few years for advanced driver-assistance system (ADAS) applications because they provide outstanding angular resolution and high ranging accuracy [1]. As a result, the automotive LiDAR sensor market is predicted to grow from 26 million USD in 2020 to around 2 billion USD in 2027 [2]. However, it is well known that LiDAR sensor performance degrades significantly under certain environmental conditions, such as rain, fog, snow, and sunlight. The effects of these phenomena must therefore be taken into account when designing LiDAR sensors, and the measurement performance of the sensors must be validated before they are used in highly automated driving. Furthermore, automated vehicles would need to be test-driven for billions of miles to demonstrate that they reliably prevent fatalities and injuries, which is not feasible in the real world due to cost and time constraints [3]. Simulation-based testing can be an alternative to tackle this challenge, but it requires that the effects of rain and fog be modeled in the LiDAR sensor models with a high degree of realism so that the models exhibit the complexity and behavior of real sensors.

In this work, we have modeled the rain and fog effect using the Mie scattering theory in the virtual LiDAR sensor developed by the authors in their previous work [4]. It should be noted that the sensor model considers scan pattern modeling and the real sensor’s complete signal processing. Moreover, it also considers the optical losses, inherent detector effects, effects generated by the electrical amplification, and noise produced by sunlight to generate a realistic output. The sensor model is developed using the standardized open simulation interface (OSI) and functional mock-up interfaces (FMI) to make it tool-independent [4]. We have conducted the rain measurements at the large-scale rain area of the National Research Institute for Earth Science and Disaster Prevention (NIED) in Japan [5] and the fog measurements at CARISSMA (Technische Hochschule Ingolstadt, Germany) [6]. The real measurements and the simulation results are compared to validate the modeling of the rain and fog effects on the time domain and point cloud levels. Furthermore, key performance indicators (KPIs) are defined to validate the rain and fog effects modeling at the time domain and point cloud levels.

The paper is structured as follows. Section 2 describes the LiDAR sensor background, followed by an overview of the related work in Section 3. The modeling of rain and fog effects is described in Section 4, and the results are discussed in Section 5. Finally, Section 6 and Section 7 provide the conclusion and outlook.

2. Working Principle

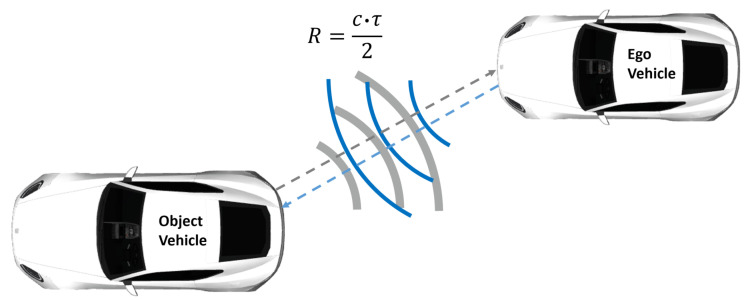

LiDAR is an optical technology that obtains distance information by bouncing light off an object. LiDAR sensors measure the round-trip delay time (RTDT) that laser light takes to hit an object and bounce back to the receiver to calculate the range, as shown in Figure 1.

Figure 1.

LiDAR working principle. The LiDAR sensor mounted on the ego vehicle simultaneously sends and receives laser light, which is partly reflected off the surface of the target, in order to measure the distance [4].

Mathematically, the measured range R can be written as:

$$R = \frac{c\,\tau}{2} \qquad (1)$$

where c is the speed of light and τ denotes the RTDT.
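The relation in Equation (1) can be illustrated with a short Python sketch; the helper names `range_from_rtdt` and `rtdt_from_range` are hypothetical and only serve this example.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def range_from_rtdt(tau_s: float) -> float:
    """Range R in metres for a round-trip delay time tau_s in seconds (R = c * tau / 2)."""
    if tau_s < 0.0:
        raise ValueError("the round-trip delay time must be non-negative")
    return C * tau_s / 2.0


def rtdt_from_range(r_m: float) -> float:
    """Inverse of Equation (1): round-trip delay time in seconds for a target at r_m metres."""
    return 2.0 * r_m / C


tau = rtdt_from_range(20.0)  # a target at 20 m
print(f"tau = {tau * 1e9:.1f} ns, R = {range_from_rtdt(tau):.2f} m")
```

Because of the factor 1/2, a timing error of 1 ns corresponds to a range error of roughly 15 cm.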

3. Related Work and Introduction of a Novel Approach

The influence of rain and fog on the LiDAR signal is well described in the literature. For example, Goodin et al. [7] proposed a mathematical model to predict the influence of rain on the received power and the range reduction, and then investigated how the rain-induced degradation of LiDAR performance affects an obstacle-detection algorithm. Wojtanowski et al. [8] described the signal attenuation and the decrease in the maximum detection range of LiDAR sensors operating at 905 nm and 1550 nm due to rain and fog. In addition, they showed that LiDAR sensors operating at 905 nm are less sensitive to environmental conditions than those operating at 1550 nm. Rasshofer et al. [9] used the Mie scattering theory to model the influence of rain, fog, and snow on the performance of LiDAR sensors. Their signal attenuation model shows good agreement with real measurements, but they do not quantify the attenuation of the LiDAR signals. Furthermore, they show that the maximum detection range of LiDAR sensors decreases as the rain rate increases up to 18 mm/h. Byeon et al. [10] also modeled the LiDAR signal attenuation caused by raindrops using the Mie scattering theory. They used the rain distributions of three regions to show that the LiDAR signal attenuation varies with the characteristics of the rain distribution. The LiDAR sensor model by Li et al. [11] considers the LiDAR signal attenuation caused by rain, fog, snow, and haze. Zhao et al. [12] extended the work of [11] and modeled the unwanted raw detections (false positives) caused by raindrops. They verified their model intuitively by comparing it with data obtained during uncontrolled outdoor measurements. In Ref. [13], the authors developed a model to predict and quantify the LiDAR signal attenuation due to raindrops; their simulation results matched the real measurements with a deviation of less than 7.5%. Hasirlioglu et al. [14,15] introduced a noise model that adds unwanted raw detections (false positives) to real LiDAR data based on a hit ratio, which determines whether the laser beam hits a raindrop. If the hit ratio exceeds a selected threshold, a false positive scan point is added to the real point cloud. One drawback of this approach, however, is that it is computationally expensive. They verified their modeling approach by comparing real point clouds modified by the noise model with point clouds obtained under rainy conditions. The intensity values of the false positive scan points are set empirically because no internal information about the LiDAR hardware is available. Berk et al. [16] proposed a probabilistic extension of the LiDAR equation to quantify the unwanted raw detections of LiDAR sensors due to raindrops. They combined the parameters of probabilistic rain distribution models with the Mie scattering theory and the LiDAR detection theory in a Monte Carlo Simulation. However, they did not verify their model against real measurements. In Ref. [17], the authors proposed a real-time LiDAR sensor model that considers the beam propagation characteristics and rain noise based on the probabilistic rain model developed in [16]. Their sensor model uses the ray casting module of Unreal Engine to generate LiDAR point clouds and is not validated against measurements of a real LiDAR sensor. Kilic et al. [18] introduced a physics-based simulation approach that adds the effects of rain, fog, and snow to real point clouds obtained under normal weather conditions. Their results show that the performance of a LiDAR-based detector improves once it is trained with LiDAR data obtained under both normal and adverse weather conditions. Hahner et al. [19] simulated the effect of fog on real LiDAR point clouds and used the resulting foggy data to train a LiDAR-based detector, improving its detection accuracy.

All the state-of-the-art works mentioned above primarily focus on modeling the LiDAR signal attenuation and on the stochastic simulation of backscattering (false positives) caused by rain and fog droplets. However, most authors neither quantified these errors nor validated their modeling approach by comparing simulations with real measurements. Some authors obtained real LiDAR point clouds under normal environmental conditions and stochastically added false positives caused by rain, fog, and snow. These approaches are helpful for use cases that require rain and fog data for ADAS testing regardless of their accuracy. However, they are less suitable where a high-fidelity virtual LiDAR sensor output is needed under adverse rain and fog conditions, because the behavior of the real sensor's optical detector and peak detection algorithm changes significantly with the rain intensity and the fog visibility distance V. For instance, LiDAR rays backscattered from rain or fog droplets also increase the background noise of the detector, so low-reflective targets close to the LiDAR sensor may be masked by the noise. This produces false negatives and significantly decreases the LiDAR sensor detection rate. Therefore, not every LiDAR ray backscattered from rain or fog droplets appears as a false positive scan point in the point cloud. Moreover, scattering by rain and fog droplets misaligns the LiDAR rays and shifts the location of the target peak, which ultimately results in a ranging error. An overview of the features, outputs, and validation approaches of the works mentioned above is given in Table 1.

Table 1.

Overview of the state-of-the-art LiDAR sensor model working principles and validation approaches.

| Authors | Covered Weather Phenomena | Covered Effects | Validation Approach |
|---|---|---|---|
| Goodin et al. [7] | Rain | Signal attenuation, false negative, ranging error, decrease in maximum detection range | Simulation results |
| Wojtanowski et al. [8] | Rain, fog, aerosols | Signal attenuation, target reflectivity, range degradation | Simulation results |
| Rasshofer et al. [9] | Rain, fog, snow | Signal attenuation, range degradation | Simulation results, qualitative comparison with real measurements for fog attenuation |
| Byeon et al. [10] | Rain | Signal attenuation | Simulation results |
| Li et al. [11] | Rain, fog, snow, haze | Signal attenuation | Simulation results |
| Zhao et al. [12] | Rain, fog, snow, haze | Signal attenuation, false positive | Quantitative comparison with measurements for rain |
| Guo et al. [13] | Rain | Signal attenuation | Qualitative comparison with measurements |
| Hasirlioglu et al. [14,15] | Rain | Signal attenuation, false positive | Quantitative comparison with measurements |
| Berk et al. [16] | Rain | Signal attenuation, false positive | Simulation results |
| Espineira et al. [17] | Rain | Signal attenuation, false positive | Simulation results |
| Kilic et al. [18] | Rain, fog, snow | Signal attenuation, false positive | Quantitative comparison with measurements |
| Hahner et al. [19] | Fog | Signal attenuation, false positive | Quantitative comparison with measurements |
| Haider et al. (proposed approach) | Rain, fog | Signal attenuation, SNR, false positive, false negative, ranging error | Quantitative comparison with measurements for all covered effects |

In this work, we introduce a novel approach to model the effect of rain and fog on the performance of the LiDAR sensor. The proposed methodology simulates rain and fog by rigorously applying the Mie scattering theory on the time domain, for transient analyses, and on the point cloud level, for spatial analyses. This methodology can be used to analyze the behavior of LiDAR detectors and peak detection algorithms under adverse rain and fog conditions. We have compared the simulation and real measurements on the time domain to quantify the LiDAR signal attenuation and the signal-to-noise ratio (SNR) caused by rain and fog droplets. The detection rate (DR), false detection rate (FDR), and ranging error due to rain and fog droplets are evaluated on the point cloud level. In addition, we have generated a virtual rain and fog model that considers the drop size distribution (DSD), falling velocity, gravity, drag forces, turbulent flow, and droplet deformation.

4. Modeling of the Rain and Fog Effect in the Virtual LiDAR Sensor

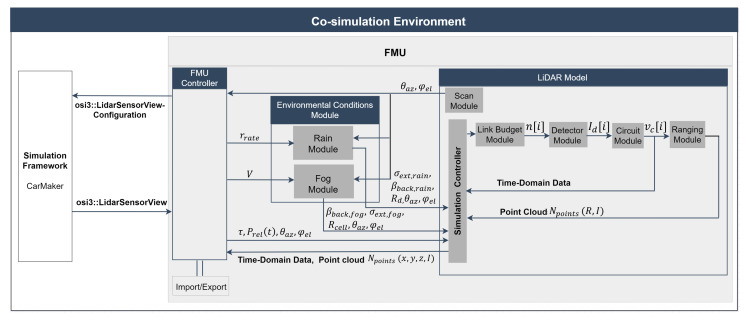

Figure 2 depicts the toolchain of the proposed approach and the signal processing steps used to model the rain and fog effect in the virtual LiDAR sensor. As mentioned in Section 1, the model is built using the standardized interfaces OSI and FMI and is integrated into the virtual environment of CarMaker. CarMaker provides a ray tracing framework with a bidirectional reflectance distribution function (BRDF) that considers the direction of the incident ray as well as the material surface and color properties [20]. The LiDAR model uses this ray tracing module of CarMaker. The material properties of the simulated objects, the angle-dependent spectral reflectance, and the reflection types (diffuse, specular, retroreflective, and transmissive) are specified in the material library of CarMaker.

Figure 2.

Co-simulation framework of the proposed approach to model the rain and fog effect in a virtual LiDAR sensor.

The FMU controller passes the required input configuration to the simulation framework via osi3::LidarSensorViewConfiguration. The simulation tool verifies the input configuration and provides the ray tracing detections, including their time delay and relative power, via osi3::LidarSensorView::reflection [4].

The FMU controller then calls the LiDAR model and passes the time delay, relative power, and azimuth and elevation angles of each detected ray for further processing. Next, the FMU controller calls the environmental condition module and passes the user-selected rain rate or visibility distance V to the rain or fog module. The rain/fog module creates virtual rain or fog, through which the scan module casts the LiDAR rays according to its scan pattern (azimuth and elevation angles). A collision detection algorithm then determines whether the transmitted ray of each scan point hits a rain or fog droplet; if it does, the backscattered coefficient and the extinction coefficient are calculated based on the DSD. The module also provides the spherical coordinates (range, azimuth, and elevation) of the rain or fog droplets that collided with the LiDAR ray. Next, the rain/fog module calls the LiDAR model and passes the droplet data for further processing. The central component of the LiDAR model is the simulation controller. It serves as the primary interface between the components of the model, for instance, for configuring the simulation pipeline, inserting the ray tracing and rain/fog module data, executing each simulation step, and retrieving the results.

The link budget module calculates the arrival of photons over time. The detector module captures these photon arrivals and converts them into a photocurrent signal. In this work, we have implemented a silicon photomultiplier (SiPM) as the detector. Next, the circuit module amplifies the detector's photocurrent signal and converts it into a voltage signal, which is processed by the ranging module. The ranging module, the last part of the toolchain, determines the range R and intensity I of the target for every reflected scan point based on the voltage signal received from the analog circuit. The LiDAR point cloud is exported in Cartesian coordinates.

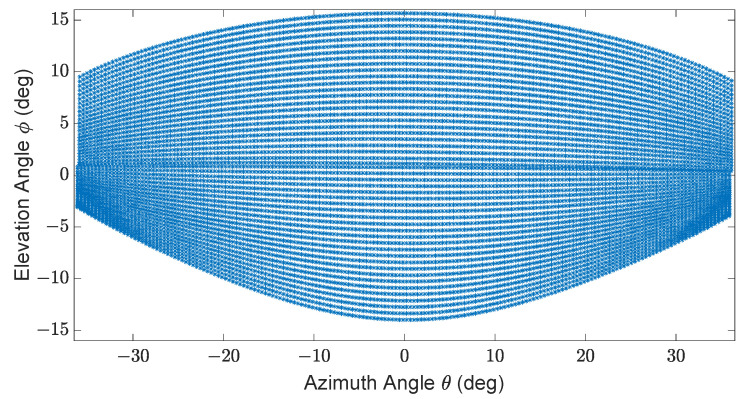

4.1. Scan Module

The scan module uses the scan pattern of the Blickfeld Cube 1, as shown in Figure 3. A detailed description of the Blickfeld Cube 1 scan pattern can be found in [21].

Figure 3.

Exemplary scan pattern of Cube 1, characterized by its horizontal and vertical FoV, 50 scan lines, horizontal angle spacing, frame rate, a maximum detection range of 250 m, and a minimum detection range.

4.2. Rain Module

The rain module generates virtual rain and calculates the extinction coefficient and the backscattered coefficient.

Virtual Rain Generation

Virtual rain is generated using the Monte Carlo Simulation. Monte Carlo Simulation uses a Mersenne Twister 19937 pseudo-random generator [22] to sample the given DSD using the inverse transform sampling method [23]. Each raindrop is generated individually based on an underlying DSD and terminal velocity. A similar approach was introduced by Zhao et al. [12] to generate unwanted raw data within the sensor models. DSD is the distribution of the number of raindrops by their diameter. Many types of DSD are described in the literature, including the Marshall–Palmer distribution, Gamma distribution, and Lognormal distribution [24,25,26]. In this work, we have used the Marshall–Palmer rain distribution and the large-scale rainfall simulator distribution recorded by a real disdrometer.

4.3. Marshall–Palmer Distribution

The Marshall–Palmer DSD is commonly used in the literature for characterizing rain, and it can be written as:

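$$N(D) = N_0\, e^{-\Lambda D}, \qquad \Lambda = 4.1\, R_R^{-0.21} \qquad (2)$$

where $N_0$ = 8000 m⁻³ mm⁻¹, Λ is the slope parameter in mm⁻¹, $R_R$ is the rain rate in mm/h, and D is the drop diameter in mm [24].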

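We use the inverse transform sampling method [23] to randomly generate raindrops that follow the Marshall–Palmer distribution. Normalizing Equation (2) to a probability density and inverting its cumulative distribution function gives the drop diameter:

$$D = -\frac{\ln(1-u)}{\Lambda} \qquad (3)$$

where u is a random variable uniformly distributed on [0, 1) [23]. The terminal velocity of the raindrops in m/s can be written as: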

| (4) |

where D is the raindrop diameter in mm [27].
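A minimal Python sketch of this sampling step is shown below. It is not the authors' implementation: it assumes the Marshall–Palmer parameters of Equation (2), uses CPython's `random.Random` (whose core generator is the Mersenne Twister MT19937) with an arbitrary seed, and draws diameters with the inverse CDF $D = -\ln(1-u)/\Lambda$. Drop positions and terminal velocities are not generated, and all function names are hypothetical.

```python
import math
import random

N0 = 8000.0  # Marshall–Palmer intercept parameter, m^-3 mm^-1


def mp_slope(rain_rate_mm_h: float) -> float:
    """Slope parameter Lambda in mm^-1 for a rain rate in mm/h (Lambda = 4.1 * R^-0.21)."""
    return 4.1 * rain_rate_mm_h ** -0.21


def mp_concentration(rain_rate_mm_h: float) -> float:
    """Total number of drops per cubic metre, i.e. the integral of N(D): N0 / Lambda."""
    return N0 / mp_slope(rain_rate_mm_h)


def sample_mp_diameters(rain_rate_mm_h: float, n: int, seed: int = 0) -> list:
    """Draw n drop diameters (mm) by inverse transform sampling, D = -ln(1 - u) / Lambda."""
    rng = random.Random(seed)  # CPython's random.Random is a Mersenne Twister (MT19937)
    lam = mp_slope(rain_rate_mm_h)
    # rng.random() returns u in [0, 1), so 1 - u is in (0, 1] and the logarithm stays finite.
    return [-math.log(1.0 - rng.random()) / lam for _ in range(n)]


drops = sample_mp_diameters(16.0, 100_000)
print(f"mean D = {sum(drops) / len(drops):.3f} mm (theory: {1.0 / mp_slope(16.0):.3f} mm)")
print(f"{mp_concentration(16.0):.0f} drops per m^3 at 16 mm/h")
```

Because the normalized Marshall–Palmer density is exponential, the sample mean should approach $1/\Lambda$, which gives a quick sanity check of the sampler. For a measured DSD, such as disdrometer data, the same idea applies with a tabulated, numerically inverted CDF.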

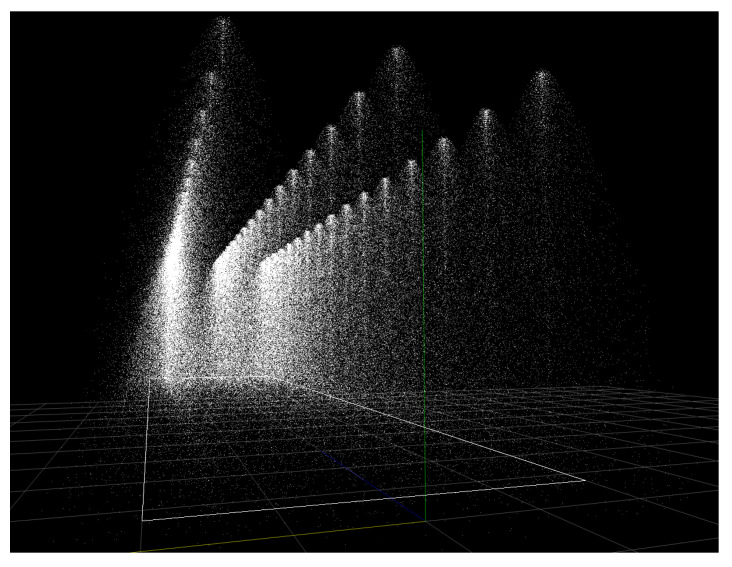

4.4. Physical Rain Model

The physical rain model aims to reproduce the characteristics of a rain simulator inside the virtual simulation environment. In this work, we have generated the virtual rain according to the rain distribution of the NIED rain facility recorded by the real disdrometer. Rather than being evenly distributed in the environment, the droplets are "spawned" from rain sources, such as sprinklers or a homogeneous planar rain source. Once spawned, their movement is determined by physical equations for gravity, drag forces, turbulent flow, and droplet deformation, following the concept published by John H. Van Boxel [28]. Figure 4 shows an exemplary visualization of a rain field generated with the physical rain model.

Figure 4.

Exemplary visualization of a rain field generated by sprinklers resembling a real-world rain simulator.

It is beyond the scope of this paper to explain the detailed working principle or the mathematical model of the physical rain model. The physical rain model provides access to all droplet properties, including position, speed, and diameter. Based on these properties and the characteristics of the sensor's beam, the backscattering and extinction effects can be applied to the virtual LiDAR signal.

4.5. Interaction Between the Electromagnetic Waves and Hydrometeors

There are three scattering theories (Rayleigh, Mie, and geometric/ray optics) that can explain the interaction between electromagnetic waves and hydrometeors, depending on the size parameter $x = \pi D/\lambda$, where D is the particle diameter and λ is the incident wavelength. When the particle is very small compared to the incident wavelength (x ≪ 1), the Rayleigh scattering theory is used. The Mie scattering theory is used when the particle size and the incident wavelength are comparable (x ≈ 1). Geometric/ray optics is used when the incident wavelength is very small compared to the particle (x ≫ 1). The average raindrop diameter D is 1 mm, while the average fog droplet diameter D is 10 µm. For a LiDAR sensor operating at a wavelength of 905 nm, the size parameter is therefore x ≈ 3471 for raindrops and x ≈ 35 for fog droplets [29].

Consequently, the interaction between LiDAR waves and raindrops falls in the regime of geometric/ray optics, whereas the interaction with fog droplets falls in the Mie regime. In Ref. [30], the authors found that the Mie scattering theory and geometric/ray optics agree well with each other for large size parameters. We therefore use the Mie scattering theory to model both the rain and the fog effect on LiDAR sensor performance.
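The size parameters quoted above can be reproduced with a few lines of Python. The sketch assumes $x = \pi D/\lambda$ with λ = 905 nm; the regime cut-offs 0.1 and 100 are illustrative choices, since the text only distinguishes x ≪ 1, x ≈ 1, and x ≫ 1. Function names are hypothetical.

```python
import math

WAVELENGTH_M = 905e-9  # LiDAR wavelength


def size_parameter(diameter_m: float, wavelength_m: float = WAVELENGTH_M) -> float:
    """Mie size parameter x = pi * D / lambda."""
    return math.pi * diameter_m / wavelength_m


def scattering_regime(x: float) -> str:
    """Coarse regime label; the cut-offs 0.1 and 100 are illustrative assumptions."""
    if x < 0.1:
        return "Rayleigh"
    if x <= 100.0:
        return "Mie"
    return "geometric optics"


for name, diameter in (("raindrop", 1e-3), ("fog droplet", 10e-6)):
    x = size_parameter(diameter)
    print(f"{name}: x = {x:.1f} ({scattering_regime(x)})")
```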

Mie Scattering Theory

Scattering is the process by which a particle changes the direction of the incident wave, while absorption is the process by which part of the incident energy is converted into heat. Both processes extract energy from the incident wave, and their combined effect is called extinction [31]. Mathematically, it can be written as:

$$Q_{\mathrm{ext}} = Q_{\mathrm{sca}} + Q_{\mathrm{abs}} \qquad (5)$$

where $Q_{\mathrm{ext}}$ is the extinction efficiency, $Q_{\mathrm{sca}}$ denotes the scattering efficiency, and $Q_{\mathrm{abs}}$ is the absorption efficiency [31]. In addition, raindrops scatter part of the incident LiDAR light back toward the sensor; this backscattering is quantified by the backscattered efficiency $Q_b$ [31].

The Mie scattering theory can be used to calculate the extinction efficiency , the scattering efficiency , and the backscattered efficiency for LiDAR sensors. They can be calculated as:

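$$Q_{\mathrm{ext}} = \frac{2}{x^2} \sum_{n=1}^{n_{\max}} (2n+1)\, \Re\{a_n + b_n\} \qquad (6)$$

$$Q_{\mathrm{sca}} = \frac{2}{x^2} \sum_{n=1}^{n_{\max}} (2n+1) \left(|a_n|^2 + |b_n|^2\right) \qquad (7)$$

$$Q_b = \frac{1}{x^2} \left| \sum_{n=1}^{n_{\max}} (2n+1)(-1)^n (a_n - b_n) \right|^2 \qquad (8)$$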

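where x is the size parameter, ℜ denotes the real part, $n_{\max}$ is the order at which the series is truncated, and $a_n$ and $b_n$ are the complex Mie coefficients [31]. The Mie coefficients can be calculated either directly from the spherical Bessel functions [32] or with the method introduced by Hong Du [33]. Because the Hong Du method is computationally less expensive, we have used it in this work. According to this algorithm, the Mie coefficients can be written as:

$$a_n = \frac{\left[\frac{r_n(mx)}{m} + \frac{n\,(1 - 1/m^2)}{x}\right]\psi_n(x) - \psi_{n-1}(x)}{\left[\frac{r_n(mx)}{m} + \frac{n\,(1 - 1/m^2)}{x}\right]\xi_n(x) - \xi_{n-1}(x)} \qquad (9)$$

and:

$$b_n = \frac{m\, r_n(mx)\, \psi_n(x) - \psi_{n-1}(x)}{m\, r_n(mx)\, \xi_n(x) - \xi_{n-1}(x)} \qquad (10)$$

where m is the complex refractive index of water, x is the size parameter, $r_n(mx) = \psi_{n-1}(mx)/\psi_n(mx)$ is the complex ratio of Riccati–Bessel functions, and $\psi_n$ and $\xi_n$ are Riccati–Bessel functions. The complex ratio can be calculated using upward and downward recurrences, which follow from the recurrence of $\psi_n$:

$$r_n(mx) = \frac{2n+1}{mx} - \frac{1}{r_{n+1}(mx)} \qquad (11)$$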

The downward recurrence of $r_n$ is initialized by:

| (12) |

and the values are then calculated iteratively down to n = 1. The starting order of the downward recurrence controls the precision of the Mie coefficients; [33] specifies how large it must be if six or more significant digits are required, and in this work it has been chosen to obtain a precision of seven significant digits. The Riccati–Bessel functions $\psi_n$ and $\chi_n$ required in Equation (9) can also be calculated using upward and downward recurrences:

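$$\psi_{n+1}(x) = \frac{2n+1}{x}\,\psi_n(x) - \psi_{n-1}(x) \qquad (13)$$

The complex function $\xi_n$ is defined as:

$$\xi_n(x) = \psi_n(x) - i\,\chi_n(x) \qquad (14)$$

where $\chi_n$ is the second Riccati–Bessel function, to which the same recurrence (13) applies. The upward recurrence of $\psi_n$ is initialized with $\psi_0(x) = \sin x$ and $\psi_1(x) = \sin x / x - \cos x$, and the upward recurrence of $\chi_n$ with $\chi_0(x) = \cos x$ and $\chi_1(x) = \cos x / x + \sin x$; the downward recurrences are initialized in the reverse manner [33].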
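Equations (6) to (14) can be combined into a compact routine. The Python sketch below illustrates the technique under stated assumptions rather than reproducing the authors' code: the truncation order $n_{\max} = x + 4x^{1/3} + 2$ (the criterion used in the widely known BHMIE routine), the starting order of the downward recurrence, and its initial value $(2n+1)/(mx)$ (the large-order limit of $r_n$) are our choices, and the function name is hypothetical. With the convention $\xi_n = \psi_n - i\chi_n$, an absorbing medium has a positive imaginary part of m.

```python
import math


def mie_efficiencies(m: complex, x: float) -> tuple:
    """Return (Q_ext, Q_sca, Q_b) of a homogeneous sphere (refractive index m, size parameter x).

    Mie coefficients follow the ratio form of Equations (9)-(10): r_n(mx) = psi_{n-1}(mx) / psi_n(mx)
    comes from the downward recurrence (11); psi_n(x) and chi_n(x) from the upward recurrence (13).
    """
    m = complex(m)
    n_max = int(round(x + 4.0 * x ** (1.0 / 3.0) + 2.0))  # truncation order (assumed criterion)
    mx = m * x
    n_start = int(max(n_max, abs(mx))) + 16              # start of downward recurrence (assumed)

    # Downward recurrence r_n = (2n+1)/(mx) - 1/r_{n+1}, seeded with the large-order limit.
    r = [0j] * (n_start + 2)
    r[n_start + 1] = (2 * n_start + 3) / mx
    for n in range(n_start, 0, -1):
        r[n] = (2 * n + 1) / mx - 1.0 / r[n + 1]

    # Riccati-Bessel functions of orders 0 and 1 (xi_n = psi_n - i * chi_n).
    psi_prev, psi = math.sin(x), math.sin(x) / x - math.cos(x)
    chi_prev, chi = math.cos(x), math.cos(x) / x + math.sin(x)

    s_ext, s_sca, s_back = 0.0, 0.0, 0j
    for n in range(1, n_max + 1):
        xi, xi_prev = complex(psi, -chi), complex(psi_prev, -chi_prev)
        ta = r[n] / m + n * (1.0 - 1.0 / m**2) / x   # bracket of Equation (9)
        tb = m * r[n]                                # bracket of Equation (10)
        a_n = (ta * psi - psi_prev) / (ta * xi - xi_prev)
        b_n = (tb * psi - psi_prev) / (tb * xi - xi_prev)
        s_ext += (2 * n + 1) * (a_n + b_n).real
        s_sca += (2 * n + 1) * (abs(a_n) ** 2 + abs(b_n) ** 2)
        s_back += (2 * n + 1) * (-1) ** n * (a_n - b_n)
        # Upward recurrence f_{n+1} = (2n+1)/x * f_n - f_{n-1} for psi and chi.
        psi_prev, psi = psi, (2 * n + 1) / x * psi - psi_prev
        chi_prev, chi = chi, (2 * n + 1) / x * chi - chi_prev

    return 2.0 * s_ext / x**2, 2.0 * s_sca / x**2, abs(s_back) ** 2 / x**2


print(mie_efficiencies(complex(1.33, 0.0), 34.7))  # fog droplet of about 10 um at 905 nm
```

For raindrops at 905 nm (x ≈ 3471), the series has several thousand terms, so tabulating the efficiencies once over a diameter grid, rather than recomputing them per droplet, is one way to keep the simulation fast.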

4.6. Calculation of Extinction Coefficients

The extinction efficiency and the drop size distribution described in the previous sections are used to calculate the extinction coefficient $\alpha_{\mathrm{ext}}$, and the backscattered efficiency is used to calculate the backscattered coefficient β. The extinction coefficient can be written as:

$$\alpha_{\mathrm{ext}} = \int_0^{\infty} \frac{\pi D^2}{4}\, Q_{\mathrm{ext}}(D)\, N(D)\, \mathrm{d}D \qquad (15)$$

where N(D) is the rain distribution and D is the drop diameter [29]. As mentioned earlier, we have used the rain distributions of Marshall–Palmer [24] and of the NIED rain simulator [5].
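For rain at 905 nm, the size parameter is in the thousands, so $Q_{\mathrm{ext}}$ can be approximated by its large-particle limit of 2. Under that assumption (ours, for illustration), the extinction integral with the Marshall–Palmer DSD has the closed form $\alpha_{\mathrm{ext}} = \pi N_0/\Lambda^3$, which the following Python sketch checks against a trapezoidal integration. The diameter cut-off of 10 mm and the function names are hypothetical.

```python
import math

N0 = 8000.0  # Marshall–Palmer intercept, m^-3 mm^-1


def mp_slope(rain_rate_mm_h: float) -> float:
    return 4.1 * rain_rate_mm_h ** -0.21  # mm^-1


def extinction_numeric(rain_rate_mm_h: float, q_ext: float = 2.0,
                       d_max_mm: float = 10.0, steps: int = 20_000) -> float:
    """Trapezoidal evaluation of alpha = integral of (pi D^2 / 4) Q_ext N(D) dD, returned in m^-1."""
    lam = mp_slope(rain_rate_mm_h)
    h = d_max_mm / steps
    total = 0.0
    for i in range(steps + 1):
        d = i * h  # drop diameter, mm
        f = math.pi * d * d / 4.0 * q_ext * N0 * math.exp(-lam * d)  # mm^2 * m^-3 * mm^-1
        total += f * (0.5 if i in (0, steps) else 1.0)
    return total * h * 1e-6  # mm^2 -> m^2, leaving m^-1


def extinction_closed_form(rain_rate_mm_h: float) -> float:
    """Same integral for Q_ext = 2 over all diameters: alpha = pi * N0 / Lambda^3 (m^-1)."""
    return math.pi * N0 / mp_slope(rain_rate_mm_h) ** 3 * 1e-6


for rate in (16.0, 32.0, 66.0, 98.0):
    a = extinction_closed_form(rate)
    print(f"{rate:4.0f} mm/h: alpha = {a:.2e} 1/m, two-way transmission over 20 m = {math.exp(-40.0 * a):.3f}")
```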

4.7. Calculation of Backscattered Coefficients

We introduce a novel approach to model the backscattering effect of raindrops for the LiDAR sensor. First, the Monte Carlo Simulation generates all rain droplets based on the DSD; then, a collision detection algorithm is applied between the transmitted ray of each scan point and the droplets. Using the beam characteristics, such as beam volume, origin, and direction, the algorithm determines which particles collide with the virtual LiDAR ray. These particles are described as a set of tuples:

$$\mathcal{P} = \{(r_i, \varphi_i, \theta_i, D_i)\}_{i=1}^{N_p} \qquad (16)$$

where $r_i$ is the distance of the i-th particle from the sensor, $\varphi_i$ is its azimuth angle, $\theta_i$ is its elevation angle, and $D_i$ is its diameter. The backscattered coefficient is calculated for every droplet at its distance $r_i$:

| (17) |

where $Q_b$ is the backscattered efficiency of the particle and N is the number of drops in 1/m [31].
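The collision step can be illustrated with a simplified Python sketch. It assumes, hypothetically, that droplet positions have already been transformed into a beam frame whose +x axis is the ray direction, that a droplet collides with the ray when its centre lies inside the rectangular beam cross-section at its axial distance, and that the beam widens as $w(r) = w_0 + 2r\tan(\theta/2)$ with a full divergence angle θ (see Section 4.8). It returns tuples of the form of Equation (16); the class and function names are illustrative.

```python
import math
from dataclasses import dataclass


@dataclass
class Droplet:
    x: float         # m, along the ray direction (beam frame)
    y: float         # m, horizontal offset from the beam axis
    z: float         # m, vertical offset from the beam axis
    diameter: float  # mm


def beam_width(r: float, w0: float, divergence: float) -> float:
    """Beam width (m) at axial distance r for near-field width w0 and full divergence angle (rad)."""
    return w0 + 2.0 * r * math.tan(divergence / 2.0)


def colliding_droplets(droplets, w_h0, w_v0, div_h, div_v, r_max):
    """Return (r, azimuth, elevation, D) tuples of droplets inside the beam, sorted by range."""
    hits = []
    for p in droplets:
        if not 0.0 < p.x <= r_max:
            continue  # behind the sensor or beyond the simulated range
        inside_h = abs(p.y) <= beam_width(p.x, w_h0, div_h) / 2.0
        inside_v = abs(p.z) <= beam_width(p.x, w_v0, div_v) / 2.0
        if inside_h and inside_v:
            r = math.sqrt(p.x ** 2 + p.y ** 2 + p.z ** 2)
            azimuth = math.atan2(p.y, p.x)
            elevation = math.atan2(p.z, math.hypot(p.x, p.y))
            hits.append((r, azimuth, elevation, p.diameter))
    return sorted(hits)
```

In a full simulation, each returned tuple would then be assigned its own backscattered coefficient at range $r_i$; a spatial index (for example, a grid of range cells) would avoid testing every droplet against every ray.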

4.8. Beam Characteristics

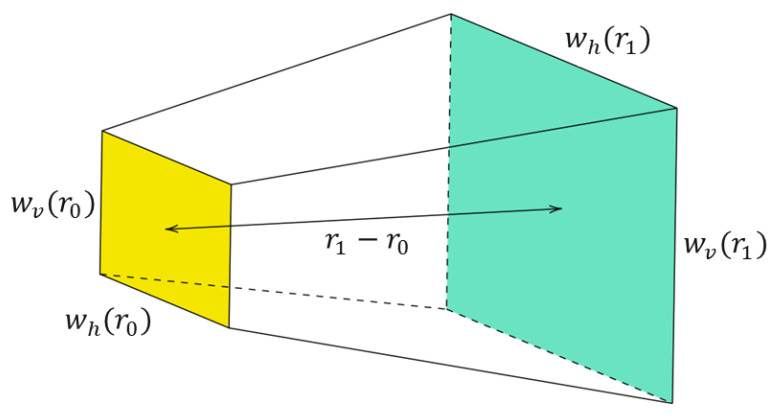

This work assumes a rectangular beam shape because the real LiDAR sensor used in this work has a rectangular beam; its near-field size can be described by the horizontal beam width $w_{h,0}$ and the vertical beam width $w_{v,0}$. With increasing range, the beam grows in both directions, as described by the full horizontal divergence angle $\theta_h$ and the full vertical divergence angle $\theta_v$. At a distance r from the sensor, the size of the beam is [12]:

$$w_h(r) = w_{h,0} + 2r\tan\left(\frac{\theta_h}{2}\right) \qquad (18)$$

and:

$$w_v(r) = w_{v,0} + 2r\tan\left(\frac{\theta_v}{2}\right) \qquad (19)$$

For the simulation of rain and fog effects, the volume of the beam between two given ranges $r_1$ and $r_2$ is required (for instance, the beam volume of each range cell). As shown in Figure 5, the beam between $r_1$ and $r_2$ has the shape of a pyramidal frustum, whose volume is given by:

$$V = \frac{h}{3}\left(A + B + \sqrt{AB}\right) \qquad (20)$$

where A is the area of the top face of the frustum (marked in yellow), B is the area of its base (shown in green), and h is its height. Applying this formula to the beam itself results in:

$$V(r_1, r_2) = \frac{r_2 - r_1}{3}\left(A(r_1) + A(r_2) + \sqrt{A(r_1)\,A(r_2)}\right) \qquad (21)$$

where $A(r) = w_h(r)\, w_v(r)$ is the cross-sectional area of the beam at range r.

Figure 5.

The geometry of a LiDAR ray from near-field to range considering beam divergence.

This equation can be used to determine the volume of a beam in the field of view (FoV) or calculate the beam volume for each range cell depending on the range resolution.
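The following Python sketch evaluates the frustum volume for each range cell. It assumes the beam widening $w(r) = w_0 + 2r\tan(\theta/2)$ and uses hypothetical function names and beam values. Note that the frustum formula is exact when the end faces are similar rectangles (for example, for zero near-field width); otherwise it approximates the integral of the cross-section over range.

```python
import math


def beam_area(r, w_h0, w_v0, div_h, div_v):
    """Rectangular beam cross-section (m^2) at range r; divergence angles are full angles in rad."""
    w_h = w_h0 + 2.0 * r * math.tan(div_h / 2.0)
    w_v = w_v0 + 2.0 * r * math.tan(div_v / 2.0)
    return w_h * w_v


def beam_volume(r1, r2, **beam):
    """Frustum volume between r1 and r2: (h / 3) * (A + B + sqrt(A * B))."""
    a, b = beam_area(r1, **beam), beam_area(r2, **beam)
    return (r2 - r1) / 3.0 * (a + b + math.sqrt(a * b))


def range_cell_volumes(r_min, r_max, cell, **beam):
    """Beam volume of every range cell of length `cell` between r_min and r_max."""
    n_cells = int(round((r_max - r_min) / cell))
    return [beam_volume(r_min + k * cell, r_min + (k + 1) * cell, **beam) for k in range(n_cells)]


beam = dict(w_h0=0.004, w_v0=0.012, div_h=0.0017, div_v=0.0017)  # hypothetical values
cells = range_cell_volumes(0.0, 20.0, 0.15, **beam)
print(f"{len(cells)} cells, first {cells[0]:.3e} m^3, last {cells[-1]:.3e} m^3")
```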

4.9. Fog Module

There are two main approaches to modeling the effect of fog on LiDAR signals: empirical formulas and statistical droplet size distributions, as introduced for rain in Section 4.2. Both have advantages and disadvantages. For instance, the LiDAR signal attenuation due to fog droplets can be modeled using the empirical formulas introduced in [34,35,36], but these formulas cannot model the backscattering from fog droplets. They are easy to implement and computationally inexpensive, whereas statistical distributions, which can model both the attenuation and the backscattering, are computationally expensive. In this work, we have therefore calculated the extinction coefficient as a function of the visibility distance V using the empirical formula recommended by the International Commission on Illumination (CIE) given in [9], which can be written as:

$$\alpha_{\mathrm{ext}} = \frac{\ln(20)}{V} \approx \frac{3}{V} \qquad (22)$$
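A small Python sketch of the fog attenuation chain follows. It assumes the CIE relation $\alpha_{\mathrm{ext}} = \ln(20)/V$ (the 5% contrast threshold of the meteorological optical range) and two-way Beer–Lambert attenuation; the function names are hypothetical.

```python
import math


def fog_extinction(visibility_m: float) -> float:
    """Extinction coefficient (1/m) from visibility V, assuming alpha = ln(20) / V (5 % threshold)."""
    if visibility_m <= 0.0:
        raise ValueError("visibility must be positive")
    return math.log(20.0) / visibility_m


def two_way_transmission(alpha: float, range_m: float) -> float:
    """Beer–Lambert round-trip transmission exp(-2 * alpha * R)."""
    return math.exp(-2.0 * alpha * range_m)


for v in (20.0, 50.0, 100.0):
    alpha = fog_extinction(v)
    print(f"V = {v:5.1f} m: alpha = {alpha:.4f} 1/m, T(20 m) = {two_way_transmission(alpha, 20.0):.4f}")
```

Under this relation, the round trip to a target at half the visibility distance leaves exactly 5% of the power, because the total optical path then equals V.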

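The backscattering coefficient is calculated using the Mie scattering theory and the Deirmendjian modified gamma distribution of fog droplet sizes, which can be written as:

$$N(D) = \frac{\rho\,\gamma\, b^{(\alpha+1)/\gamma}}{\Gamma\!\left(\frac{\alpha+1}{\gamma}\right)}\, D^{\alpha}\, e^{-b D^{\gamma}} \qquad (23)$$

where Γ is the gamma function, ρ is the total droplet concentration, α and γ are shape parameters, D is the droplet diameter, and b is given by:

$$b = \frac{\alpha}{\gamma\, D_c^{\gamma}} \qquad (24)$$

where $D_c$ denotes the mode diameter, i.e., the diameter of maximum frequency [9,12,37]. The distribution parameters for typical environmental conditions are given in Table 2.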
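To check the normalization of the modified gamma distribution, the Python sketch below evaluates it with parameters from Table 2 and integrates it numerically; the integral should recover the total concentration ρ, and the maximum should lie at $D_c$. It assumes that ρ is given in cm⁻³ and the last column of Table 2 is the mode diameter in µm; helper names are hypothetical.

```python
import math


def deirmendjian(d_um: float, rho: float, alpha: float, gamma: float, d_c: float) -> float:
    """Modified gamma DSD N(D) in cm^-3 um^-1 (Equations (23)-(24)); D and D_c in um."""
    b = alpha / (gamma * d_c ** gamma)  # places the mode of N(D) at D = D_c
    norm = rho * gamma * b ** ((alpha + 1.0) / gamma) / math.gamma((alpha + 1.0) / gamma)
    return norm * d_um ** alpha * math.exp(-b * d_um ** gamma)


def total_concentration(params, d_max_um: float = 200.0, steps: int = 50_000) -> float:
    """Midpoint-rule integral of N(D) from 0 to d_max_um; should recover rho."""
    h = d_max_um / steps
    return h * sum(deirmendjian((i + 0.5) * h, *params) for i in range(steps))


# (rho [cm^-3], alpha, gamma, D_c [um]) taken from Table 2
FOG = {
    "moderate advection fog": (20.0, 3.0, 1.0, 16.0),
    "strong advection fog": (20.0, 3.0, 1.0, 20.0),
    "Chu/Hogg fog": (20.0, 2.0, 0.5, 2.0),
}
for name, params in FOG.items():
    print(f"{name}: integral of N(D) = {total_concentration(params):.3f} cm^-3 (rho = {params[0]})")
```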

Table 2.

Parameters of droplet size distribution in fog using a gamma-function model [9].

| Weather Condition | ρ (cm⁻³) | α | γ | $D_c$ (µm) |
|---|---|---|---|---|
| Haze (coast) | 100 | 1 | 0.5 | 0.1 |
| Haze (continental) | 100 | 2 | 0.5 | 0.14 |
| Strong advection fog | 20 | 3 | 1.0 | 20.0 |
| Moderate advection fog | 20 | 3 | 1.0 | 16.0 |
| Strong spray | 100 | 6 | 1.0 | 8.00 |
| Moderate spray | 100 | 6 | 1.0 | 4.00 |
| Fog of type “Chu/Hogg” | 20 | 2 | 0.5 | 2.00 |

The concentration of fog particles in the atmosphere is significantly higher than that of raindrops, and their size D ranges from 0.1 µm to 20 µm [9,31]. The Monte Carlo Simulation approach adopted in Section 4.2 would be computationally very expensive if used to generate virtual fog. Therefore, unlike for rain, we have used an analytical method based on the Deirmendjian gamma distribution to generate homogeneously distributed fog particles. We have used Equation (8) to calculate the backscattered efficiency $Q_b$. To determine the backscattered coefficient, the virtual fog field is first divided into range cells based on the LiDAR range resolution. Then, all fog droplets within the LiDAR beam volume given by Equation (21) are considered in each range cell to determine the backscattered coefficient of the fog droplets. This can be written as:

| (25) |

where N(D) is the fog distribution, D denotes the drop diameter, and $Q_b$ is the backscattered efficiency [38].

4.10. Link Budget Module

The received power obtained from the ray tracing module considers neither the rain or fog effect nor the receiver optics losses. The link budget module accounts for the optical receiver losses and attenuates the received power according to the Beer–Lambert law, using the rain or fog extinction coefficient obtained from the rain/fog module. This can be written as:

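$$P_T = P_{tx}\, \frac{\rho_T \cos\vartheta\, D_{ap}^2}{4 R_T^2}\, \eta_{rx}\, e^{-2\alpha_{\mathrm{ext}} R_T} \qquad (26)$$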

where $\rho_T$ is the target reflectivity, $D_{ap}$ denotes the diameter of the optical aperture, $R_T$ is the target range, $\vartheta$ is the angle of the incident ray, $\eta_{rx}$ is the receiver optics loss factor, and $P_{tx}$ is the transmitted power [4,12]. The backscattered coefficient is used to calculate the power received from backscattering rain or fog droplets. The received power from the raindrops can be written as:

| (27) |

where $\beta_R$ is the backscattered coefficient of the raindrops and $r_R$ is the raindrop range [4,12]. The received power from the fog droplets in a range cell can be written as:

| (28) |

where $\beta_F$ is the backscattered coefficient of the fog droplets in a given range cell and $r_F$ is the distance of that range cell.
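A link budget of this kind can be sketched in Python as follows, assuming the common Lambertian-target form of the LiDAR equation with two-way Beer–Lambert attenuation. The parameter values (30 W peak power, 1 cm aperture, 3% target at 20 m) and function names are hypothetical and only illustrate how the extinction coefficient enters the received power.

```python
import math


def target_power(p_tx, reflectivity, aperture_d, r_t, incidence, eta_rx, alpha_ext):
    """Received power (W) from a Lambertian target with two-way Beer–Lambert attenuation."""
    geometric = p_tx * reflectivity * math.cos(incidence) * aperture_d ** 2 / (4.0 * r_t ** 2)
    return geometric * eta_rx * math.exp(-2.0 * alpha_ext * r_t)


def extra_loss_db(alpha_ext, r_t):
    """Additional two-way loss (dB) caused by rain or fog: 10 * log10(exp(2 * alpha * R))."""
    return 10.0 * math.log10(math.e) * 2.0 * alpha_ext * r_t


# Hypothetical example: 3 % target at 20 m, 1 cm aperture, 30 W peak power, 90 % receiver efficiency.
clear = target_power(30.0, 0.03, 0.01, 20.0, 0.0, 0.9, 0.0)
foggy = target_power(30.0, 0.03, 0.01, 20.0, 0.0, 0.9, 0.03)
print(f"clear: {clear:.3e} W, fog: {foggy:.3e} W, extra loss: {extra_loss_db(0.03, 20.0):.2f} dB")
```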

The total power $P_{tot}(t)$ received by the detector over time can originate from different sources, including the internal reflection $P_{int}(t)$, the power received from the target $P_T(t)$, and the power backscattered from rain and fog droplets $P_B(t)$:

$$P_{tot}(t) = P_{int}(t) + P_T(t) + P_B(t) \qquad (29)$$

To model the optics accurately at the photon level, the power signal must be sampled with a time interval Δt [39]. The sampled power takes the form:

$$P_{tot}[n] = P_{tot}(n\,\Delta t) \qquad (30)$$

with n = 0, 1, 2, …. The mean number of photons incident on the SiPM detector within one time bin can be written as:

$$\bar{N}_{ph}[n] = \frac{P_{tot}[n]\,\Delta t}{E_{ph}}, \qquad E_{ph} = h\nu \qquad (31)$$

where $E_{ph}$ is the energy of a single laser photon at the laser's wavelength, h is the Planck constant, and ν is the photon frequency [40]. Because photons arrive at random times, the detected signal exhibits Poisson-distributed shot noise, and the photon arrivals can be modeled as a Poisson process [41]:

$$N_{ph}[n] \sim \mathrm{Pois}\left(\bar{N}_{ph}[n]\right) \qquad (32)$$
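The photon statistics can be sketched in Python as follows. The sketch assumes a wavelength of 905 nm and uses Knuth's multiplication method for Poisson sampling, which is only practical for small means; for realistic photon numbers, a library sampler such as NumPy's `numpy.random.Generator.poisson` would be the usual choice. Function names are hypothetical.

```python
import math
import random

H = 6.62607015e-34   # Planck constant, J s
C = 299_792_458.0    # speed of light, m/s


def mean_photons(power_w: float, dt_s: float, wavelength_m: float = 905e-9) -> float:
    """Mean photon number in one time bin: P * dt / (h * nu), with nu = c / lambda."""
    return power_w * dt_s / (H * C / wavelength_m)


def poisson_sample(lam: float, rng: random.Random) -> int:
    """Knuth's multiplication method; suitable only for small means (exp(-lam) underflows)."""
    if lam > 700.0:
        raise ValueError("mean too large for this simple sampler; use a library sampler instead")
    limit, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def photon_counts(power_w, dt_s, seed=0):
    """Poisson photon counts for a sampled power signal P[n] (list of watts)."""
    rng = random.Random(seed)
    return [poisson_sample(mean_photons(p, dt_s), rng) for p in power_w]


# 1 nW of 905 nm light in a 1 ns bin carries about 4.6 photons on average.
print(mean_photons(1e-9, 1e-9), photon_counts([1e-9] * 10, 1e-9))
```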

| (32) |

4.11. Detector Module

We have implemented a SiPM detector module that provides an output current proportional to the number of incident photons [39]. Compared with a single-photon avalanche diode (SPAD), the SiPM detector offers better multi-photon detection sensitivity, photon number resolution, and an extended dynamic range [42,43]. The SiPM detector response to a given photon signal can be calculated as:

$$i_d[n] = S \sum_{k} N_{ph}[k]\, h_d[n-k] \qquad (33)$$

where S is the SiPM detector sensitivity and $h_d$ is the impulse response of the detector, which is given as:

| (34) |
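The convolution of the photon signal with the detector impulse response is sketched below in plain Python. Because the impulse response of Equation (34) is not reproduced here, the sketch uses a hypothetical bi-exponential response with unit sum; the time constants, the sensitivity value, and all function names are illustrative assumptions.

```python
import math


def convolve(signal, kernel):
    """Full discrete linear convolution of two sequences."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        if s:
            for j, k in enumerate(kernel):
                out[i + j] += s * k
    return out


def sipm_current(photons, sensitivity, impulse):
    """i_d[n] = S * sum_k N_ph[k] h_d[n - k], truncated to the length of the photon signal."""
    full = convolve(photons, impulse)
    return [sensitivity * v for v in full[: len(photons)]]


def biexp_impulse(n_taps, dt, tau_rise, tau_fall):
    """Hypothetical bi-exponential impulse response with unit sum (stand-in for Equation (34))."""
    h = [math.exp(-k * dt / tau_fall) - math.exp(-k * dt / tau_rise) for k in range(n_taps)]
    total = sum(h)
    return [v / total for v in h]


h_d = biexp_impulse(40, 1e-9, 1e-9, 8e-9)
current = sipm_current([0, 0, 3, 0, 0, 1] + [0] * 60, 1e-6, h_d)  # hypothetical sensitivity
print(max(current))
```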

4.12. Circuit Module

We use the small-signal transfer function of the analog circuit model to obtain the voltage signal:

| (35) |

where the expression combines the operating voltage of the circuit model with its small-signal voltage, which is computed using the discrete Fourier transform (DFT) and the inverse discrete Fourier transform (IDFT) [39].

4.13. Ranging Module

The ranging algorithm takes the voltage signal of the circuit module as input and calculates the target range R and the signal intensity I of each scan point. The range R is given in meters, while the intensity I is mapped linearly to an arbitrary integer scale from 0 to 4096, as used in the Cube 1 products. The algorithm applies several threshold levels to distinguish between internal reflection, noise, and target peaks. The target range is determined from the position of the target peaks relative to the internal reflection, while the signal intensity is calculated from the peak voltage levels [4].
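A strongly simplified version of this ranging step is sketched below. Unlike the algorithm described above, it uses a single noise threshold, treats the first peak above it as the internal reflection and the strongest later peak as the target, and converts the sample offset into a range via R = c·Δt/2. The function names, the positive peak polarity, and the full-scale voltage `v_max` used for the linear 0–4096 intensity mapping are hypothetical.

```python
import numpy as np

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def peaks_above(signal, threshold):
    """Indices of local maxima that exceed `threshold`."""
    s = np.asarray(signal, dtype=float)
    mid = s[1:-1]
    is_peak = (mid > threshold) & (mid >= s[:-2]) & (mid > s[2:])
    return np.flatnonzero(is_peak) + 1


def range_and_intensity(voltage, dt, threshold, v_max):
    """Hypothetical single-threshold ranging sketch.

    Returns (range in m, intensity on a 0-4096 integer scale), or None if
    no target peak follows the internal reflection.
    """
    v = np.asarray(voltage, dtype=float)
    peaks = peaks_above(v, threshold)
    if len(peaks) < 2:
        return None
    internal = peaks[0]                                # internal reflection
    target = peaks[1:][np.argmax(v[peaks[1:]])]        # strongest later peak
    # Round-trip time between internal reflection and target -> one-way range
    range_m = SPEED_OF_LIGHT * (target - internal) * dt / 2.0
    # Linear mapping of the peak voltage to the integer intensity scale
    intensity = int(round(float(np.clip(v[target] / v_max, 0.0, 1.0)) * 4096))
    return float(range_m), intensity
```

For example, an internal-reflection peak at sample 10 and a target peak at sample 143 with a 1 ns sampling interval give a range of about 19.94 m.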

5. Results

The rain and fog effect modeling is validated on the time domain and point cloud levels. As shown in the following, we have used a single-point scatter to validate the model on the time domain.

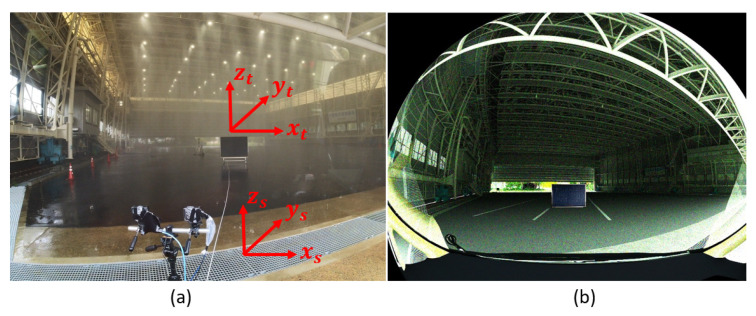

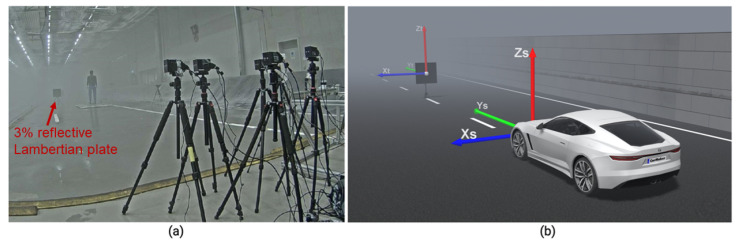

5.1. Validation of the Rain Effect Modeling on the Time Domain Level

The primary reason for verifying the LiDAR model on the time domain is to ensure that the link budget, detector, and circuit modules work as intended. Furthermore, comparing the time domain signals (TDS) establishes the association between the measured and modeled noise and amplitude levels, which are difficult to compare at the point cloud level. It is, therefore, convenient to quantify the LiDAR signal attenuation and the decrease in the SNR due to the rain and fog droplets on the time domain level. A 3%- and a 10%-reflective Lambertian plate were placed in front of the sensor at 20 m, and measurements were taken at rain rates of 16 mm/h, 32 mm/h, 66 mm/h, and 98 mm/h. An exemplary scenario for a 3% Lambertian plate is shown in Figure 6.

Figure 6.

(a) Real setup to validate the time domain and point cloud data. (b) Static simulation scene to validate the time domain and point cloud data. The 3%-reflective target with an area of × was placed in front of the sensor at different distances. The actual and the simulated sensor and target coordinates are the same. The ground truth distance is calculated from the sensor’s origin to the target’s center.

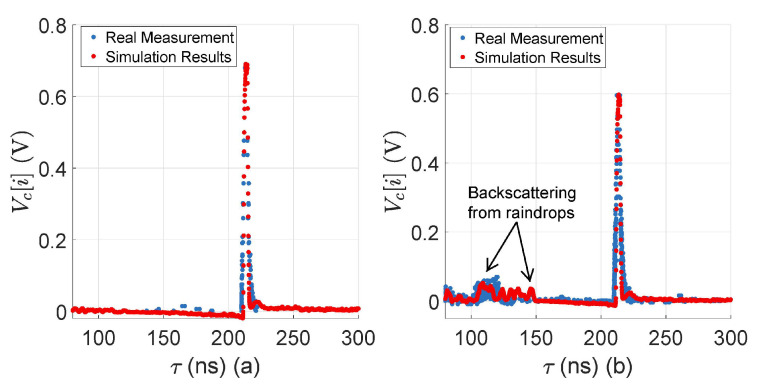

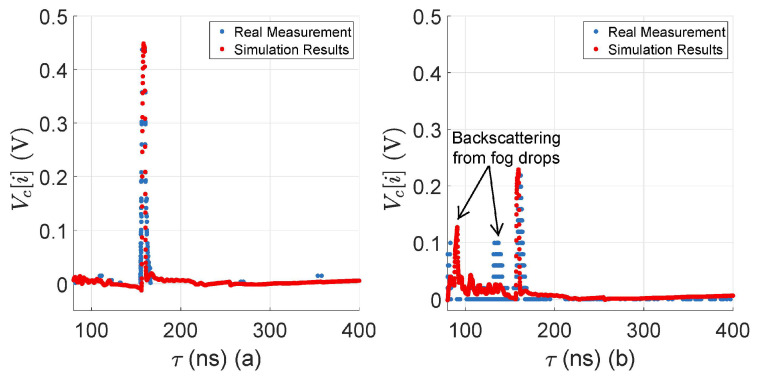

Figure 7 shows the simulated and the real measured TDS obtained from the 10%-reflective plate, both without rain and with rain at 16 mm/h. The target peaks and the amplitude level of the backscattering from raindrops match well. A slight difference remains in the amplitude of the raindrop backscattering because real-world rainfall is a random process whose exact behavior cannot be replicated in the simulation; nevertheless, the simulation and the real measurements agree well with each other.

Figure 7.

(a) LiDAR FMU and real measured TDS comparison obtained from the surface of a 10%-reflective Lambertian plate at 20 m without rain. The target peaks and noise levels match well. (b) LiDAR FMU and real measured TDS comparison obtained from the surface of a 10%-reflective Lambertian plate placed at 20 m with a rain rate of 16 mm/h. The target peaks and the amplitude level of the backscattering from raindrops match well. It should be noted that the relative distance is calculated from the internal reflection to the target peaks.

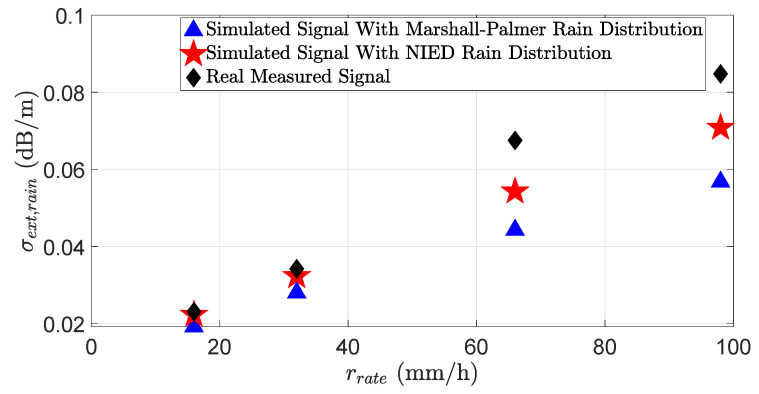

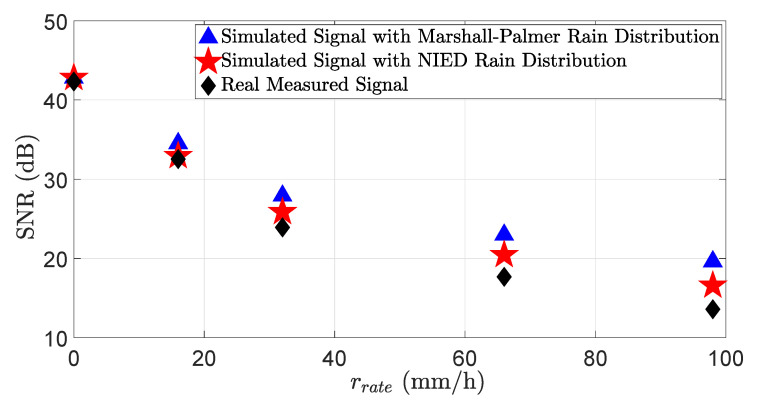

Figure 8 shows the LiDAR signal attenuation at different rain rates. The results show that the LiDAR signal attenuation depends significantly on the rain distribution; the simulation and real measurements therefore match well if the simulated rain distribution is close to the real distribution. Figure 9 shows the SNR of the simulated and real measured signals at different rain rates. The SNR can be calculated as:

$$\mathrm{SNR} = \frac{\mu}{\sigma} \tag{36}$$

where $\mu$ is the mean or expected signal value and $\sigma$ is the standard deviation of the noise [44]. At lower rain rates, the SNR of the simulated and measured signals match well, but the SNR mismatch grows as the rain rate increases, especially for the simulation results with the Marshall–Palmer rain distribution. Therefore, the simulated and real SNR also match well if the simulated rain distribution is close to the real distribution. Moreover, the backscattering from raindrops is a random process, and the virtual raindrops cannot always produce the same backscattering amplitude as real-world raindrops, which also contributes to the mismatch between the real and simulated SNR.
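As a minimal illustration of Equation (36), the snippet below estimates μ from samples of the target peak and σ from a signal-free noise window of a time domain trace. How the two windows are chosen is an assumption of this sketch; the function names, the (start, stop) index windows, and the use of the sample standard deviation are our own choices.

```python
import statistics


def snr(signal_samples, noise_samples):
    """SNR = mu / sigma, following Eq. (36).

    signal_samples: samples taken at the target peak (estimate of mu).
    noise_samples: samples from a signal-free window (estimate of sigma).
    """
    mu = statistics.fmean(signal_samples)
    sigma = statistics.stdev(noise_samples)   # sample standard deviation
    if sigma == 0:
        raise ValueError("noise window has zero spread; SNR is undefined")
    return mu / sigma


def snr_from_trace(trace, peak_window, noise_window):
    """Hypothetical helper: windows are (start, stop) index pairs into trace."""
    return snr(trace[slice(*peak_window)], trace[slice(*noise_window)])
```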

Figure 8.

Simulated and real LiDAR signal attenuation at different rain rates. The simulation results with the Marshall–Palmer and NIED rain distributions and the real measurements match very well at lower rain rates. However, as the rain rate increases, the mismatch between the simulated and measured signal attenuation also increases, especially for the simulation results with the Marshall–Palmer rain distribution.

Figure 9.

The SNR of the simulated and real measured signals at different rain rates. The simulation and real measurement results match very well at lower rain rates, but the mismatch between the simulated and real SNR increases as the rain rate increases, especially for the simulation results with the Marshall–Palmer rain distribution.

To quantify the difference between the simulated and real measured signals’ attenuation and SNR, we use the mean absolute percentage error (MAPE) metric:

$$\mathrm{MAPE} = \frac{100\%}{n} \sum_{i=1}^{n} \left| \frac{A_i - F_i}{A_i} \right| \tag{37}$$

where $A_i$ is the measured value, $F_i$ is the simulated value, and $n$ is the total number of data points [45]. The MAPE of the signal attenuation is 16.2% for the NIED rain distribution and 31.6% for the Marshall–Palmer rain distribution model. Moreover, the MAPE of the SNR is 4.3% for the NIED rain distribution and 9.1% for the Marshall–Palmer rain distribution model. These results show that the LiDAR sensor's behavior varies significantly depending on the rain distribution.
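Equation (37) translates directly into code. The sketch below is a plain-Python illustration with a hypothetical function name. It rejects zero measured values because the relative error is undefined there, which matters for KPIs such as the FDR that are exactly 0% in dry conditions; excluding such points before computing the MAPE is an assumption of this sketch.

```python
def mape(measured, simulated):
    """Mean absolute percentage error, Eq. (37), in percent.

    measured: real measurement values A_i; simulated: simulated values F_i.
    """
    if len(measured) != len(simulated) or len(measured) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(a == 0 for a in measured):
        # A zero measured value makes the relative error undefined
        raise ValueError("MAPE is undefined for zero measured values")
    total = sum(abs((a - f) / a) for a, f in zip(measured, simulated))
    return 100.0 * total / len(measured)
```

For example, `mape([100.0, 50.0], [90.0, 55.0])` returns 10.0, since both simulated values deviate by 10% from the measurements.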

5.2. Validation of the Rain Effect Modeling on the Point Cloud Level

We have introduced three KPIs to validate the rain effect modeling on the point cloud level: the LiDAR detection rate (DR), the false detection rate (FDR), and the distance error $\Delta d$.

- The DR is defined as the ratio between the number of returns obtained from the real or simulated object of interest (OOI) in rainy and in dry conditions. It can be written as:

  $$\mathrm{DR} = \frac{N_{\mathrm{OOI}}^{\mathrm{rain}}}{N_{\mathrm{OOI}}^{\mathrm{dry}}} \times 100\% \tag{38}$$

  It should be noted that the numbers of points obtained from the OOI in rainy and dry conditions are the means over all measurements of the same scenario.

- The FDR of the LiDAR sensor in rainy conditions can be written as:

  $$\mathrm{FDR} = \frac{N_{\mathrm{tot}} - N_{\mathrm{OOI}}^{\mathrm{rain}}}{N_{\mathrm{OOI}}^{\mathrm{dry}}} \times 100\% \tag{39}$$

  where $N_{\mathrm{tot}}$ is the total number of reflections between the sensor's minimum detection range and the simulated or real OOI, excluding any reflection from the OOI's surroundings, and $N_{\mathrm{OOI}}^{\mathrm{rain}}$ and $N_{\mathrm{OOI}}^{\mathrm{dry}}$ denote the LiDAR returns from the surface of the simulated or real OOI in rainy and dry conditions, respectively. These numbers of reflections are also the means over all measurements of the same scenario.

- The distance error of the point cloud received from the OOI in rainy and dry conditions, both simulated and real, can be written as:

  $$\Delta d = \left| d_{\mathrm{GT}} - \bar{d} \right| \tag{40}$$

  where $d_{\mathrm{GT}}$ is the ground truth distance and $\bar{d}$ is the mean distance of the reflections received from the surface of the simulated or real OOI in rainy or dry conditions. The ground truth distance is calculated from the sensor's origin to the target's center:

  $$d_{\mathrm{GT}} = \sqrt{(x_t - x_s)^2 + (y_t - y_s)^2 + (z_t - z_s)^2} \tag{41}$$

  where the subscripts $t$ and $s$ denote the target and sensor coordinates, respectively [46]. The OSI ground truth interface osi3::GroundTruth is used to retrieve the sensor origin and the target center position in 3D coordinates.
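The three KPIs can be computed from per-measurement point counts and distances as sketched below. This is a minimal plain-Python illustration with hypothetical function names. Averaging over repeated measurements of the same scenario follows the text; the FDR form (returns between the minimum range and the OOI that are not OOI returns, divided by the mean OOI returns in dry conditions) and the absolute value in the distance error are our ASSUMPTIONS about Equations (39) and (40).

```python
import math
import statistics


def detection_rate(n_ooi_rain, n_ooi_dry):
    """DR in percent, Eq. (38); inputs are per-measurement point counts."""
    return 100.0 * statistics.fmean(n_ooi_rain) / statistics.fmean(n_ooi_dry)


def false_detection_rate(n_total, n_ooi_rain, n_ooi_dry):
    """FDR in percent under an ASSUMED reading of Eq. (39).

    Non-OOI returns up to the OOI, normalized by the OOI returns in dry
    conditions; all inputs are per-measurement counts that get averaged.
    """
    false_returns = statistics.fmean(n_total) - statistics.fmean(n_ooi_rain)
    return 100.0 * false_returns / statistics.fmean(n_ooi_dry)


def ground_truth_distance(sensor_xyz, target_xyz):
    """Euclidean distance from the sensor origin to the target center, Eq. (41)."""
    return math.dist(sensor_xyz, target_xyz)


def distance_error(d_gt, ooi_distances):
    """ASSUMED absolute difference between ground truth and mean OOI distance."""
    return abs(d_gt - statistics.fmean(ooi_distances))
```

For example, `detection_rate([86, 90], [100, 100])` returns 88.0: on average, 88% of the dry-condition returns from the OOI survive in the rain.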

Table 3 gives the DR of the real and virtual LiDAR sensor in different rain conditions for a 3%-reflective Lambertian plate. The results show that, up to 20 m, the LiDAR sensor can reliably detect a very low-reflective target at a rain rate of 98 mm/h. It should be noted that these results were obtained in a rain facility where the rain was homogeneously distributed. For benchmarking the DR, we filtered out the points from the edges of the Lambertian plate, which reduces the effective area of the plate. The simulation and the real measurements show a good correlation with each other, with a MAPE of 2.1% for the DR.

Table 3.

The DR of the LiDAR sensor, for both a real and a virtual 3%-reflective Lambertian plate, without rain and at different rain rates. The simulation and real measurements show a good correlation. The table presents the mean over 154 measurements of the same scenario. $\mathrm{DR}_{\mathrm{real}}$ denotes the DR of the real LiDAR sensor, $\mathrm{DR}_{\mathrm{sim}}$ the DR of the virtual LiDAR sensor, and $\Delta\mathrm{DR} = |\mathrm{DR}_{\mathrm{real}} - \mathrm{DR}_{\mathrm{sim}}|$.

| Rain rate (mm/h) | R = 5 m, real (%) | R = 5 m, virtual (%) | R = 5 m, difference (%) | R = 10 m, real (%) | R = 10 m, virtual (%) | R = 10 m, difference (%) | R = 15 m, real (%) | R = 15 m, virtual (%) | R = 15 m, difference (%) | R = 20 m, real (%) | R = 20 m, virtual (%) | R = 20 m, difference (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 100.0 | 100.0 | 0.0 | 100.0 | 100.0 | 0.0 | 100.0 | 100.0 | 0.0 | 100.0 | 100.0 | 0.0 |
| 16 | 100.0 | 100.0 | 0.0 | 100.0 | 100.0 | 0.0 | 89.3 | 96.7 | 7.4 | 88.1 | 93.9 | 5.8 |
| 32 | 100.0 | 100.0 | 0.0 | 100.0 | 100.0 | 0.0 | 87.5 | 93.2 | 5.7 | 85.3 | 88.4 | 3.1 |
| 66 | 100.0 | 100.0 | 0.0 | 99.8 | 100.0 | 0.2 | 86.2 | 91.6 | 5.4 | 84.4 | 89.2 | 4.8 |
| 98 | 100.0 | 100.0 | 0.0 | 96.5 | 100.0 | 3.5 | 85.2 | 88.2 | 3.0 | 82.3 | 85.9 | 3.6 |

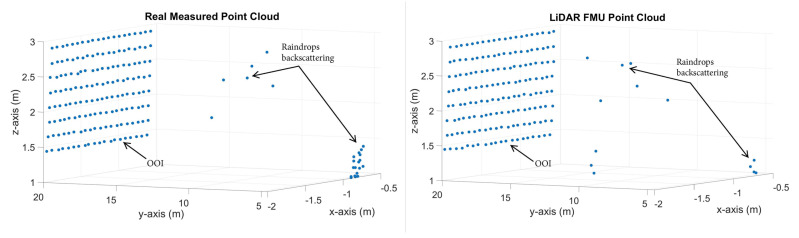

Table 4 shows the FDR of the real and the virtual LiDAR sensor in different rain conditions for a 3%-reflective Lambertian plate. It should be noted that the FDR counts the false-positive detections caused by raindrops. The results show that the FDR increases with both the rain rate and the distance between the sensor and the target: as the rain rate increases, the raindrops become larger, which leads to stronger backscattering of the LiDAR rays, and a longer distance to the target means that the LiDAR rays pass through more raindrops; both effects ultimately result in more false detections. The FDR also depends on the rain distribution and the sensor mounting position. Furthermore, the simulation and the real measurements show good agreement, with a MAPE of about 14.7% for the FDR. Exemplary point clouds obtained from the surface of the real and the simulated 3%-reflective Lambertian plate at a rain rate of 32 mm/h are shown in Figure 10.

Table 4.

The FDR of the LiDAR sensor, for both a real and a virtual 3%-reflective Lambertian plate, at different rain rates. The simulation and real measurements show a good correlation. The table presents the mean over 154 measurements of the same scenario. $\mathrm{FDR}_{\mathrm{real}}$ denotes the FDR of the real LiDAR sensor, $\mathrm{FDR}_{\mathrm{sim}}$ the FDR of the virtual LiDAR sensor, and $\Delta\mathrm{FDR} = |\mathrm{FDR}_{\mathrm{real}} - \mathrm{FDR}_{\mathrm{sim}}|$.

| Rain rate (mm/h) | R = 5 m, real (%) | R = 5 m, virtual (%) | R = 5 m, difference (%) | R = 10 m, real (%) | R = 10 m, virtual (%) | R = 10 m, difference (%) | R = 15 m, real (%) | R = 15 m, virtual (%) | R = 15 m, difference (%) | R = 20 m, real (%) | R = 20 m, virtual (%) | R = 20 m, difference (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| 16 | 0.8 | 0.3 | 0.5 | 1.8 | 1.4 | 0.4 | 3.2 | 2.9 | 0.3 | 5.5 | 4.1 | 1.4 |
| 32 | 1.6 | 1.0 | 1.6 | 4.8 | 3.1 | 1.7 | 7.1 | 5.8 | 1.4 | 7.3 | 7.6 | 0.3 |
| 66 | 1.7 | 1.2 | 0.5 | 7.0 | 5.7 | 1.3 | 18.9 | 14.6 | 4.3 | 19.6 | 18.2 | 1.4 |
| 98 | 2.4 | 1.9 | 0.5 | 9.1 | 6.9 | 2.2 | 20.4 | 17.2 | 3.2 | 22.7 | 20.2 | 2.5 |

Figure 10.

Exemplary visualization of the simulated and real point clouds obtained at a rain rate of 32 mm/h.

Table 5 gives the distance error of both the real and the virtual LiDAR sensor in different rain conditions. The distance error increases with the rain rate because the raindrops grow larger as the rain rate increases, and larger drops misalign the LiDAR rays more strongly when the rays hit them.

Table 5.

The distance error of the LiDAR sensor, for both a real and a virtual 3%-reflective Lambertian plate, at different rain rates. The simulation and real measurements show a good correlation. The table presents the mean over 154 measurements of the same scenario. $\Delta d_{\mathrm{real}}$ denotes the distance error of the real LiDAR sensor, $\Delta d_{\mathrm{sim}}$ the distance error of the virtual LiDAR sensor, and the difference column gives $|\Delta d_{\mathrm{real}} - \Delta d_{\mathrm{sim}}|$.

| Rain rate (mm/h) | R = 5 m, real (cm) | R = 5 m, virtual (cm) | R = 5 m, difference (cm) | R = 10 m, real (cm) | R = 10 m, virtual (cm) | R = 10 m, difference (cm) | R = 15 m, real (cm) | R = 15 m, virtual (cm) | R = 15 m, difference (cm) | R = 20 m, real (cm) | R = 20 m, virtual (cm) | R = 20 m, difference (cm) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.2 | 0.1 | 0.1 | 0.5 | 0.1 | 0.4 | 0.7 | 0.2 | 0.5 | 1.4 | 0.2 | 1.2 |
| 16 | 1.1 | 0.9 | 0.2 | 1.3 | 1.1 | 0.2 | 1.7 | 1.4 | 0.3 | 2.3 | 1.5 | 0.8 |
| 32 | 1.2 | 1.0 | 0.2 | 1.8 | 1.2 | 0.6 | 2.9 | 2.0 | 0.9 | 3.3 | 2.2 | 1.1 |
| 66 | 1.4 | 1.1 | 0.3 | 2.6 | 1.9 | 0.7 | 3.0 | 2.2 | 0.8 | 4.8 | 2.4 | 2.4 |
| 98 | 1.6 | 1.2 | 0.4 | 2.9 | 1.6 | 1.3 | 3.1 | 2.3 | 0.9 | 4.9 | 2.8 | 2.1 |

5.3. Validation of the Fog Effect Modeling on the Time Domain Level

To verify the modeling of the fog effect, we placed the 3%-reflective Lambertian plate in front of the sensor, as shown in Figure 11. Figure 12 shows the simulated and the real measured signals reflected from the surface of the 3%-reflective Lambertian plate, with and without fog.

Figure 11.

(a) The real setup for the fog measurement. (b) The static simulation scene for the validation of the fog effect. The 3%-reflective Lambertian plate was placed in front of the sensor. The real and virtual LiDAR sensor and target coordinates are the same.

Figure 12.

(a) LiDAR FMU and real measured TDS obtained from the surface of a 3%-reflective plate without fog. The target peaks and noise levels match well. (b) LiDAR FMU and real measured TDS obtained from the surface of a 3%-reflective plate with a fog visibility V of 140 m. It should be noted that the relative distance is calculated from the internal reflection to the target peaks.

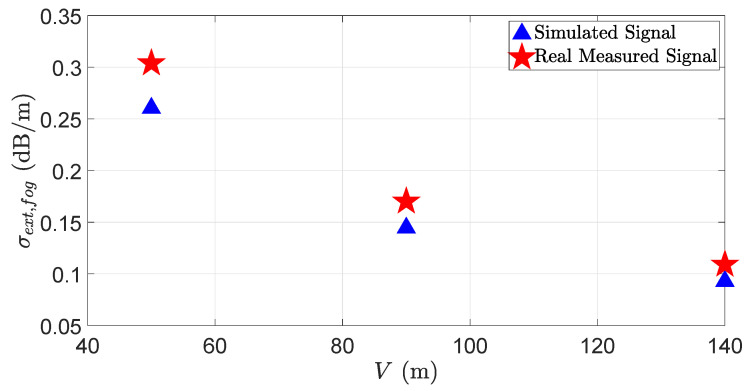

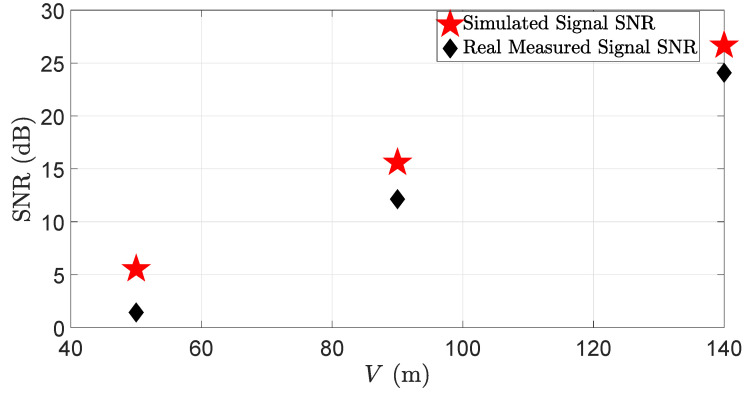

The results show that the simulated and the real measured target peaks and noise levels match well, both without and with fog. The peak location of the backscattering from the fog droplets differs between the simulation and the real measurement because backscattering is a random process, and replicating the exact behavior of real-world fog in the simulation is challenging. For instance, the fog droplet size changes during the fog life cycle [47,48], which affects the LiDAR sensor performance, and measuring and replicating this change in the virtual environment is difficult. Moreover, while constant visibility is easy to maintain in a virtual environment, it was difficult to maintain both in the real world and in laboratory-controlled conditions because of the limitations of the measurement instruments and the fog generation setup. Figure 13 and Figure 14 show the simulated and the real LiDAR signal attenuation and SNR for different visibility distances V. The LiDAR signal attenuation caused by fog droplets increases as the visibility distance V decreases; for instance, the LiDAR signal attenuation increases to dB with a visibility distance of 50 m. Dense fog also increases the mismatch between the simulated and the real measured signals for both the attenuation and the SNR. As mentioned above, the likely reasons for these deviations are the non-constant distribution of fog in the measurement area and the random backscattering process from fog droplets.

Figure 13.

The real and virtual LiDAR signal attenuation at different visibility distances V. The simulation and real measurement results match well at the higher visibility distances. However, as the visibility distance decreases due to fog, the mismatch between the simulated and real measured signal attenuation increases.

Figure 14.

The SNR of the simulated and real measured signals with different visibility distances V. The simulation and real measurement results match well at the higher visibility distances, but the mismatch between the simulated and real measured SNR increases as the visibility distance decreases.

We use the MAPE metric given in Equation (37) to quantify the difference between the simulated and the real measured results for fog. The MAPE is 13.9% for the signal attenuation and 15.7% for the SNR.

5.4. Validation of the Fog Effect Modeling on the Point Cloud Level

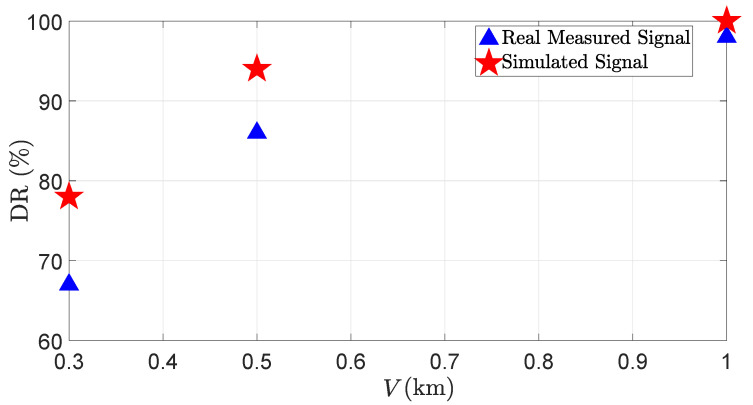

To validate the fog effect modeling on the point cloud level, we consider the same KPIs as given in Section 5.2. Figure 15 shows the DR of the real and the virtual LiDAR sensor for different visibility distances V.

Figure 15.

The DR of the LiDAR sensor for the real and virtual 3%-reflective Lambertian plate with different visibility distances V. The simulation and real measurements show a good correlation.

As the visibility distance decreases, the DR of the real and the virtual LiDAR sensors also decreases. Fog droplets range in size from 5 to 20 μm, and 1 m³ of air contains many times more fog droplets than raindrops [31]. LiDAR rays therefore penetrate rain more easily than fog, which is why the LiDAR signal attenuation is higher in dense fog than in heavy rain. Furthermore, the simulation and the real measurements show a good correlation, with a MAPE of 7.5% for the DR. Figure 16 shows the FDR of the real and the virtual LiDAR sensor due to fog.

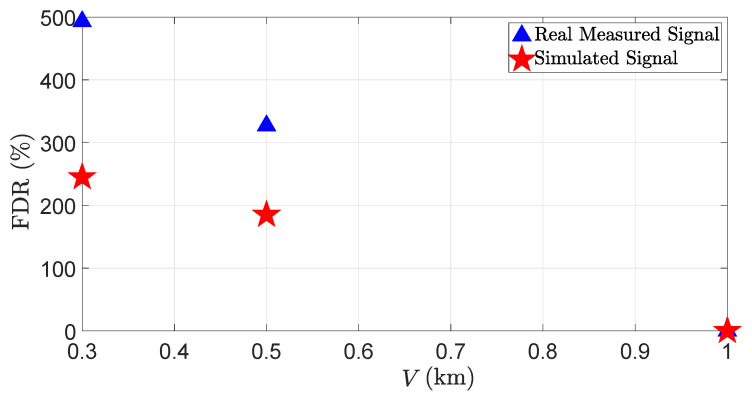

Figure 16.

The FDR of the LiDAR sensor for a real and virtual 3%-reflective Lambertian plate with different visibility distances V. The FDR increases as the visibility distance decreases. It should be noted that an FDR of 300% or 500% means that the LiDAR reflections received from the fog droplets are 3 or 5 times as many as those obtained from the OOI (see Equation (39)).

The results show that as the visibility distance V decreases, the FDR of the real and virtual LiDAR sensors increases, and the mismatch between the simulation and the real measurements for the FDR grows at low visibility distances. It should be noted that the backscattering from the fog droplets is a random process, and it is therefore impossible to model the exact behavior of real-world fog in the simulation. Furthermore, we neither measured nor modeled the real fog distribution due to limited resources, which could be another reason for the mismatch between the simulation and the real measurements. The MAPE for the FDR is 48.2%.

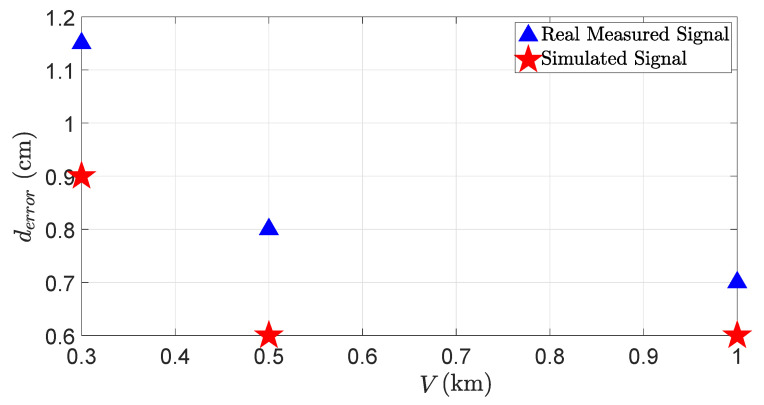

Figure 17 shows the distance error due to fog droplets. The distance error also increases as the visibility distance V decreases, but it does not exceed the maximum permissible error (MPE) of 2 cm specified by the manufacturer. Because fog droplets are several orders of magnitude smaller than raindrops, the scattering from fog droplets misaligns the LiDAR rays less than the scattering from raindrops. That is why the distance error due to fog droplets is smaller than that caused by raindrops.

Figure 17.

The distance error of the LiDAR sensor for the real and virtual 3%-reflective Lambertian plate with different visibility distances V. The distance error increases with the decrease in visibility distances. The simulation and real measurements show a good correlation.

6. Conclusions

In this work, we introduce a novel approach to model the rain and fog effect in the virtual LiDAR sensor with high fidelity. The presented approach allows the rain and fog effects to be simulated in the LiDAR model on the time domain and point cloud levels. Furthermore, validating the LiDAR sensor model on the time domain enabled us to benchmark the LiDAR signal attenuation and SNR. The results show that the virtual and real LiDAR sensor signal attenuation and SNR match well if the simulated and real rain distributions are the same; for instance, the MAPE of the signal attenuation is 16.2% for the NIED rain distribution and 31.6% for the Marshall–Palmer rain distribution, and the MAPE of the SNR is 4.3% for the NIED rain distribution and 9.1% for the Marshall–Palmer rain distribution model.

The results also show that the LiDAR signal attenuation caused by fog droplets increases as the visibility distance V decreases; for instance, the LiDAR signal attenuation increases to dB with a visibility distance of 50 m. Furthermore, the simulation and the real measurements show good agreement for the signal attenuation and SNR due to fog droplets, with a MAPE of about 13.9% for the signal attenuation and 15.7% for the SNR.

To validate the modeling of the effect of rain and fog at the point cloud level, we introduced three KPIs: DR, FDR, and distance error. The results show that DR decreases with increasing rain rate, although both the real and virtual LiDAR sensors could still detect the 3%-reflective target at 20 m at a rain rate of 98 mm/h. On the other hand, both FDR and distance error increase with the rain rate. Moreover, the simulation and the real measurements show a good correlation; for instance, the MAPE is 2.1% for DR and 14.7% for FDR.

DR decreases significantly in fog as the visibility distance decreases because the density of fog droplets in 1 m³ of air is many times higher than that of raindrops; as a consequence, LiDAR rays cannot easily penetrate fog. FDR also increases as the visibility distance V decreases, but the distance error remains below 2 cm. Because a fog droplet is several orders of magnitude smaller than a raindrop, the scattering from fog droplets misaligns the LiDAR rays less than the scattering from raindrops. The simulation and the real measurements correlate well for DR, with a MAPE of 7.5%, whereas the FDR shows a larger deviation, with a MAPE of 48.2%. It should be noted that the backscattering from the fog droplets is a random process, and it is therefore impossible to model the exact behavior of real-world fog in the simulation. Furthermore, we neither measured nor modeled the real fog distribution due to limited resources, which could be another reason for the mismatch between the simulation and the real measurements. Overall, the LiDAR sensor can detect the low-reflective target reliably in heavy rain, but reliable detection becomes very challenging once the visibility distance drops below 100 m.

7. Outlook

In the next step, we will train the deep learning network-based LiDAR detector with the data from the simulated rain and fog effect to improve object recognition in rainy and foggy situations.

Acknowledgments

The authors thank the DIVP consortium for providing technical assistance and equipment for measurements at NIED. In addition, the authors would like to thank colleagues from NIED and CARISSMA for providing technical services.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ADAS | Advanced driver-assistance system |
| BRDF | Bidirectional reflectance distribution function |
| CIE | International Commission on Illumination |
| DFT | Discrete Fourier transform |
| DR | Detection rate |
| DSD | Drop size distribution |
| FDR | False detection rate |
| FMU | Functional mock-up unit |
| FMI | Functional mock-up interface |
| FoV | Field of view |
| IDFT | Inverse discrete Fourier transform |
| KPIs | Key performance indicators |
| MAPE | Mean absolute percentage error |
| MPE | Maximum permissible error |
| NIED | National Research Institute for Earth Science and Disaster Prevention |
| OSI | Open simulation interface |
| OOI | Object of interest |
| RADAR | Radio detection and ranging |
| RTDT | Round-trip delay time |
| SNR | Signal-to-noise ratio |
| TDS | Time domain signals |

Author Contributions

Conceptualization, A.H.; methodology, A.H.; software, A.H., M.P. and M.H.K.; validation, A.H.; formal analysis, A.H.; data curation, A.H., S.K. and K.N.; writing—original draft preparation, A.H.; writing—review and editing, A.H., M.P., S.K., M.H.K., L.H., M.F., M.S., K.N., T.Z., A.E., T.P., H.I., M.J. and A.W.K.; visualization, A.H.; supervision, A.W.K. and T.Z.; project administration, T.Z. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This paper shows results from the VIVID project (German Japan Joint Virtual Validation Methodology for Intelligent Driving Systems). The authors acknowledge the financial support by the Federal Ministry of Education and Research of Germany in the framework of VIVID, grant number 16ME0170. The responsibility for the content remains entirely with the authors.

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

- 1.Bilik I. Comparative Analysis of Radar and Lidar Technologies for Automotive Applications. IEEE Intell. Transp. Sys. Mag. 2022;15:244–269. doi: 10.1109/MITS.2022.3162886. [DOI] [Google Scholar]

- 2.Yole Intelligence, with the Strong Push of Chinese Players Eager to Integrate Innovative LiDAR Technologies, the Automotive Industry will Reach $2.0B in 2027, August 2022. [(accessed on 10 February 2023)]. Available online: https://www.yolegroup.com/product/report/lidar—market–technology-trends-2022/

- 3.Kalra N., Paddock S.M. Driving to safety: How many miles of driving would it take to demonstrate autonomous vehicle reliability? Transp. Res. Part A Policy Pract. 2016;94:182–193. doi: 10.1016/j.tra.2016.09.010. [DOI] [Google Scholar]

- 4.Haider A., Pigniczki M., Köhler M.H., Fink M., Schardt M., Cichy Y., Zeh T., Haas L., Poguntke T., Jakobi M., et al. Development of High-Fidelity Automotive LiDAR Sensor Model with Standardized Interfaces. Sensors. 2022;22:7556. doi: 10.3390/s22197556. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.NIED Center for Advanced Research Facility: Evaluating the Latest Science and Technology for Disaster Resilience, to Make Society’S Standards for Performance. [(accessed on 15 February 2023)]. Available online: https://www.bosai.go.jp/e/research/center/shisetsu.html.

- 6.CARISSMA, Center of Automotive Research on Integrated Safety Systems and Measurement Area. [(accessed on 15 February 2023)]. Available online: https://www.thi.de/en/research/carissma/

- 7.Goodin C., Carruth D., Doude M., Hudson C. Predicting the Influence of Rain on LIDAR in ADAS. Electronics. 2019;8:89. doi: 10.3390/electronics8010089. [DOI] [Google Scholar]

- 8.Wojtanowski J., Zygmunt M., Kaszczuk M., Mierczyk Z., Muzal M. Comparison of 905 nm and 1550 nm semiconductor laser rangefinders’ performance deterioration due to adverse environmental conditions. Opto-Electron. Rev. 2014;22:183–190. doi: 10.2478/s11772-014-0190-2. [DOI] [Google Scholar]

- 9.Rasshofer R.H., Spies M., Spies H. Influences of weather phenomena on automotive laser radar systems. Adv. Radio Sci. 2011;9:49–60. doi: 10.5194/ars-9-49-2011. [DOI] [Google Scholar]

- 10.Byeon M., Yoon S.W. Analysis of Automotive Lidar Sensor Model Considering Scattering Effects in Regional Rain Environments. IEEE Access. 2020;8:102669–102679. doi: 10.1109/ACCESS.2020.2996366. [DOI] [Google Scholar]

- 11.Li Y., Wang Y., Deng W., Li X., Jiang L. LiDAR Sensor Modeling for ADAS Applications under a Virtual Driving Environment. SAE International; Warrendale, PA, USA: 2016. SAE Technical Paper 2016-01-1907. [Google Scholar]

- 12.Zhao J., Li Y., Zhu B., Deng W., Sun B. Method and Applications of Lidar Modeling for Virtual Testing of Intelligent Vehicles. IEEE Trans. Intell. Transp. Syst. 2021;22:2990–3000. doi: 10.1109/TITS.2020.2978438. [DOI] [Google Scholar]

- 13.Guo J., Zhang H., Zhang X.-J. Propagating Characteristics of Pulsed Laser in Rain. Int. J. Antennas Propag. 2015;2015:292905. doi: 10.1155/2015/292905. [DOI] [Google Scholar]

- 14.Hasirlioglu S., Riener A. A Model-Based Approach to Simulate Rain Effects on Automotive Surround Sensor Data; Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC); Maui, HI, USA. 4–7 November 2018; pp. 2609–2615. [Google Scholar]

- 15.Hasirlioglu S., Riener A. A General Approach for Simulating Rain Effects on Sensor Data in Real and Virtual Environments. IEEE Trans. Intell. Veh. 2020;5:426–438. doi: 10.1109/TIV.2019.2960944. [DOI] [Google Scholar]

- 16.Berk M., Dura M., Rivero J.V., Schubert O., Kroll H.M., Buschardt B., Straub D. A stochastic physical simulation framework to quantify the effect of rainfall on automotive lidar. SAE Int. J. Adv. Curr. Pract. Mobil. 2019;1:531–538. doi: 10.4271/2019-01-0134. [DOI] [Google Scholar]

- 17.Espineira J.P., Robinson J., Groenewald J., Chan P.H., Donzella V. Realistic LiDAR with noise model for real-time testing of automated vehicles in a virtual environment. IEEE Sens. J. 2021;21:9919–9926. doi: 10.1109/JSEN.2021.3059310. [DOI] [Google Scholar]

- 18.Kilic V., Hegde D., Sindagi V., Cooper A.B., Foster M.A., Patel V.M. Lidar Light Scattering Augmentation (LISA): Physics-based Simulation of Adverse Weather Conditions for 3D Object Detection. arXiv. 20212107.07004 [Google Scholar]

- 19.Hahner M., Sakaridis C., Dai D., Van Gool L. Fog simulation on real LiDAR point clouds for 3D object detection in adverse weather; Proceedings of the IEEE/CVF International Conference on Computer Vision; Online. 11–17 October 2021; pp. 15283–15292. [Google Scholar]

- 20.IPG CarMaker . Reference Manual Version 9.0.1. IPG Automotive GmbH; Karlsruhe, Germany: 2021. [Google Scholar]

- 21.Blickfeld Scan Pattern. [(accessed on 10 January 2023)]. Available online: https://docs.blickfeld.com/cube/latest/scan_pattern.html.

- 22.Matsumoto M., Nishimura T. Mersenne twister: A 623-dimensionally equidistributed uniform pseudo-random number generator. ACM Trans. Model. Comput. Simul. 1998;8:3–30. doi: 10.1145/272991.272995. [DOI] [Google Scholar]

- 23.Steele J.M. Non-Uniform Random Variate Generation (Luc Devroye) SIAM Rev. 1987;29:675–676. doi: 10.1137/1029148. [DOI] [Google Scholar]

- 24.Marshall J.S. The distribution of raindrops with size. J. Meteor. 1948;5:165–166. doi: 10.1175/1520-0469(1948)005<0165:TDORWS>2.0.CO;2. [DOI] [Google Scholar]

- 25. Martinez-Villalobos C., Neelin J.D. Why Do Precipitation Intensities Tend to Follow Gamma Distributions? J. Atmos. Sci. 2019;76:3611–3631. doi: 10.1175/JAS-D-18-0343.1.

- 26. Feingold G., Levin Z. The Lognormal Fit to Raindrop Spectra from Frontal Convective Clouds in Israel. J. Appl. Meteorol. Climatol. 1986;25:1346–1363. doi: 10.1175/1520-0450(1986)025<1346:TLFTRS>2.0.CO;2.

- 27. Atlas D., Ulbrich C.W. Path- and Area-Integrated Rainfall Measurement by Microwave Attenuation in the 1–3 cm Band. J. Appl. Meteorol. Climatol. 1977;16:1322–1331. doi: 10.1175/1520-0450(1977)016<1322:PAAIRM>2.0.CO;2.

- 28. Van Boxel J.H. Workshop on Wind and Water Erosion. University of Amsterdam; Amsterdam, The Netherlands: 1997. Numerical Model for the Fall Speed of Rain Drops in a Rain Fall Simulator; pp. 77–85.

- 29. Hasirlioglu S., Riener A. Introduction to rain and fog attenuation on automotive surround sensors; Proceedings of the IEEE 20th International Conference on Intelligent Transportation Systems (ITSC); Yokohama, Japan. 16–19 October 2017; pp. 16–19.

- 30. Liou K.-N., Hansen J.E. Intensity and polarization for single scattering by polydisperse spheres: A comparison of ray optics and Mie theory. J. Atmos. Sci. 1971;28:995–1004. doi: 10.1175/1520-0469(1971)028<0995:IAPFSS>2.0.CO;2.

- 31. Van de Hulst H.C. Light Scattering by Small Particles. Courier Corporation; North Chelmsford, MA, USA: 1981.

- 32. Bohren C.F., Huffman D.R. Absorption and Scattering of Light by Small Particles. 1st ed. John Wiley and Sons, Inc.; New York, NY, USA: 1983.

- 33. Du H. Mie-scattering calculation. Appl. Opt. 2004;43:1951–1956. doi: 10.1364/AO.43.001951.

- 34. Kruse P.W., McGlauchlin L.D., McQuistan R.B. Elements of Infrared Technology: Generation, Transmission and Detection. John Wiley and Sons, Inc.; New York, NY, USA: 1962.

- 35. Kim I.I., McArthur B., Korevaar E.J. Comparison of laser beam propagation at 785 nm and 1550 nm in fog and haze for optical wireless communications; Proceedings of the Optical Wireless Communications III; Boston, MA, USA. 5–8 November 2001; pp. 26–38.

- 36. Vasseur H., Gibbins C.J. Inference of fog characteristics from attenuation measurements at millimeter and optical wavelength. Radio Sci. 1996;31:1089–1097. doi: 10.1029/96RS01725.

- 37. Deirmendjian D. Electromagnetic Scattering on Spherical Polydispersions. Rand Corp; Santa Monica, CA, USA: 1969. p. 456.

- 38. Hasirlioglu S. Ph.D. Thesis. Universität Linz; Linz, Austria: 2020. A Novel Method for Simulation-Based Testing and Validation of Automotive Surround Sensors under Adverse Weather Conditions.

- 39. Fink M., Schardt M., Baier V., Wang K., Jakobi M., Koch A.W. Full-Waveform Modeling for Time-of-Flight Measurements based on Arrival Time of Photons. arXiv. 2022. arXiv:2208.03426.

- 40. French A., Taylor E. An Introduction to Quantum Physics. Norton; New York, NY, USA: 1978.

- 41. Fox A.M. Quantum Optics: An Introduction. Oxford University Press; New York, NY, USA: 2007. Oxford Master Series in Physics Atomic, Optical, and Laser Physics.

- 42. Pasquinelli K., Lussana R., Tisa S., Villa F., Zappa F. Single-Photon Detectors Modeling and Selection Criteria for High-Background LiDAR. IEEE Sens. J. 2020;20:7021–7032. doi: 10.1109/JSEN.2020.2977775.

- 43. Bretz T., Hebbeker T., Kemp J. Extending the dynamic range of SiPMs by understanding their non-linear behavior. arXiv. 2020. arXiv:2010.14886.

- 44. Schröder D.J. Astronomical Optics. 2nd ed. Academic Press; San Diego, CA, USA: 2000.

- 45. Swamidass P.M. Encyclopedia of Production and Manufacturing Management. Springer; Boston, MA, USA: 2000. Mean Absolute Percentage Error (MAPE).

- 46. Lang S., Murrow G. Geometry. Springer; New York, NY, USA: 1988. The Distance Formula; pp. 110–122.

- 47. Mazoyer M., Burnet F., Denjean C. Experimental study on the evolution of droplet size distribution during the fog life cycle. Atmos. Chem. Phys. 2022;22:11305–11321. doi: 10.5194/acp-22-11305-2022.

- 48. Wang Y., Lu C., Niu S., Lv J., Jia X., Xu X., Xue Y., Zhu L., Yan S. Diverse Dispersion Effects and Parameterization of Relative Dispersion in Urban Fog in Eastern China. J. Geophys. Res. Atmos. 2023;128:e2022JD037514. doi: 10.1029/2022JD037514.

Data Availability Statement

Not applicable.