Abstract

In daily life, prehension is typically not the end goal of hand-object interactions but a precursor for manipulation. Nevertheless, functional MRI (fMRI) studies investigating manual manipulation have primarily treated prehension as the end goal of an action. Here, we used slow event-related fMRI to investigate differences in neural activation patterns between prehension in isolation and prehension for object manipulation. Sixteen participants (seven males and nine females) were instructed either to simply grasp the handle of a rotatable dial (isolated prehension) or to grasp and turn it (prehension for object manipulation). We used representational similarity analysis (RSA) to investigate whether the experimental conditions could be discriminated from each other based on differences in task-related brain activation patterns. We also used temporal multivoxel pattern analysis (tMVPA) to examine the evolution of regional activation patterns over time. Importantly, we were able to differentiate isolated prehension and prehension for manipulation from activation patterns in the early visual cortex, the caudal intraparietal sulcus (cIPS), and the superior parietal lobule (SPL). Our findings indicate that the neural substrates of object manipulation extend beyond the putative cortical grasping network (anterior intraparietal sulcus, premotor and motor cortices) to include the superior parietal lobule and early visual cortex.

Keywords: cortical grasping network, fMRI, grasping motor control, multivariate analyses, parietal cortex

Significance Statement

A simple act such as turning an oven dial requires the CNS to encode not only the initial state of the object (starting dial orientation) but also the grasp posture appropriate for achieving the desired end state (final dial orientation) and the motor commands needed to reach that state. Using advanced temporal neuroimaging analysis techniques, we reveal how such actions unfold over time and how they differ between object manipulation (turning a dial) and grasping alone. We find that a combination of brain areas implicated in visual processing and sensorimotor integration can distinguish between the complex and simple tasks during planning, with neural patterns that approximate those observed during the actual execution of the action.

Introduction

The hand is central to our physical interactions with the environment. Typical hand-object interactions consist of sequential phases, starting with reaching and ending with object manipulation (Castiello, 2005). Electrophysiological studies in macaques have implicated a frontoparietal network in hand-object interactions (for review, see Gerbella et al., 2017). Previous functional MRI (fMRI) research (for review, see Errante et al., 2021) has identified a similar network in humans. This network comprises a dorsomedial pathway, consisting of the superior parietal occipital cortex (SPOC; corresponding to V6/V6A) and the dorsal premotor cortex (PMd), and a dorsolateral pathway, consisting of the anterior intraparietal sulcus (aIPS) and the ventral premotor cortex (PMv).

While past human neuroimaging studies have revealed the neural substrates of grasping, most have treated prehension as the end goal rather than as a step toward meaningful hand-object interactions. In contrast, real-world hand-object interactions typically involve grasping only as a prelude to subsequent actions such as manipulating or moving objects. Importantly, previous studies have shown that the final action goal shapes prehension: the goal affects initial prehension strategies, as in the “end-state comfort” effect (Rosenbaum et al., 1990) and in actions such as tool use (Comalli et al., 2016). Moreover, the end goal affects brain responses during action planning and prehension (Fogassi et al., 2005; Gallivan et al., 2016).

The purpose of the current study was to investigate how isolated and sequential actions unfold differently on a moment-to-moment basis. The temporal unfolding of brain activation during actions has been largely overlooked, or has been studied only with EEG, without localizing the specific brain regions involved (Guo et al., 2019). Most studies that have used multivoxel pattern analysis (MVPA) to investigate hand actions have averaged data within time bins for the Planning and Execution phases (Gallivan et al., 2011). Nevertheless, a few studies have revealed the temporal unfolding of MVPA grasping representations across sequential time points (Gallivan et al., 2013; Ariani et al., 2018). In addition, one study compared isolated actions (grasping an object) with sequential actions (grasping an object to move it to one of two locations), showing that activation patterns could be discriminated across the grasping network even during action planning (Gallivan et al., 2016).

Here, we examined both (univariate) activation levels and (multivariate) activation patterns, the latter using representational similarity analysis (RSA; Kriegeskorte et al., 2008). We also used a new methodological approach, temporal MVPA (tMVPA; Ramon et al., 2015; Vizioli et al., 2018), to examine representational similarity across trials at the same and at different time points, separately for isolated and sequential actions.
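The core computation behind tMVPA can be illustrated with a short Python sketch. This is not the authors' implementation: the function names, the z-scoring of patterns, the Fisher z-transform, the exclusion of self-pairs, and the toy data are our assumptions. Each entry [t1, t2] of the output averages the correlation between the voxel pattern of one trial at time t1 and that of another trial at time t2; subtracting between-condition from within-condition similarity gives a time-by-time map of how well two actions (e.g., Grasp vs Turn) can be distinguished.

```python
import numpy as np

def zscore_patterns(x):
    """z-score every pattern across voxels (last axis), using the population SD."""
    x = x - x.mean(axis=-1, keepdims=True)
    return x / x.std(axis=-1, keepdims=True)

def tmvpa_matrix(trials_a, trials_b, same_set):
    """Mean Fisher-z correlation between single-trial patterns at every pair of time points.

    trials_a, trials_b: arrays of shape (n_trials, n_timepoints, n_voxels).
    Entry [t1, t2] averages corr(trial i at t1, trial j at t2) over trial pairs.
    With same_set=True (both arguments hold the same trials), pairs with i == j
    are dropped so that a trial is never correlated with itself.
    """
    za, zb = zscore_patterns(trials_a), zscore_patterns(trials_b)
    # Mean product of z-scores equals Pearson r; result has shape (n_a, n_b, T, T).
    r = np.einsum("iav,jbv->ijab", za, zb) / za.shape[-1]
    if same_set:
        r = r[~np.eye(r.shape[0], dtype=bool)]   # (n_pairs, T, T), self-pairs removed
    else:
        r = r.reshape(-1, *r.shape[2:])          # (n_a * n_b, T, T)
    return np.arctanh(np.clip(r, -0.999999, 0.999999)).mean(axis=0)

# Toy data (hypothetical): 10 trials per condition, 16 TRs, 50 voxels.
rng = np.random.default_rng(0)
n_trials, n_trs, n_vox = 10, 16, 50
grasp = rng.normal(size=(n_trs, n_vox)) + 0.5 * rng.normal(size=(n_trials, n_trs, n_vox))
turn = rng.normal(size=(n_trs, n_vox)) + 0.5 * rng.normal(size=(n_trials, n_trs, n_vox))

within = (tmvpa_matrix(grasp, grasp, True) + tmvpa_matrix(turn, turn, True)) / 2
between = tmvpa_matrix(grasp, turn, False)
discrimination = within - between   # > 0 where the two actions are distinguishable
```

The diagonal of such a map corresponds to same-time comparisons, whereas off-diagonal entries ask whether a pattern in one phase (e.g., Plan) resembles that in another (e.g., Execute).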

We measured brain activation using fMRI while participants performed a motor task consisting either of simply grasping a dial (with two possible initial orientations) or of grasping followed by rotating the dial (clockwise or counterclockwise), as one might turn an oven dial (see Fig. 1). Based on earlier findings that brain regions within the grasping network plan the full action sequence, and not just the initial grasp (see Gallivan et al., 2016), we expected that tMVPA would reveal representations of the task (Grasp vs Turn) during the Plan phase as well as the Execute phase. Given that visual orientation (Kamitani and Tong, 2005), surface/object orientation (Shikata et al., 2001; Valyear et al., 2006; Rice et al., 2007), and grip orientation (Monaco et al., 2011) are represented in early visual cortex, the caudal intraparietal sulcus (cIPS), and reach-selective cortex (SPOC), respectively, we predicted that the representation of orientation would be biased toward the start orientation early in Planning, with greater emphasis on the end orientation as the movement progressed. Our tMVPA approach allowed us to examine, across different regions, how representations unfolded over time for isolated versus sequential actions. Specifically, we expected that regions sensitive to the kinematics of executed actions (e.g., M1 and S1) would show highly similar representations only during action execution, whereas regions involved in more abstract features of action planning (e.g., aIPS for coding object shape) would show similar representations across Planning and Execution.

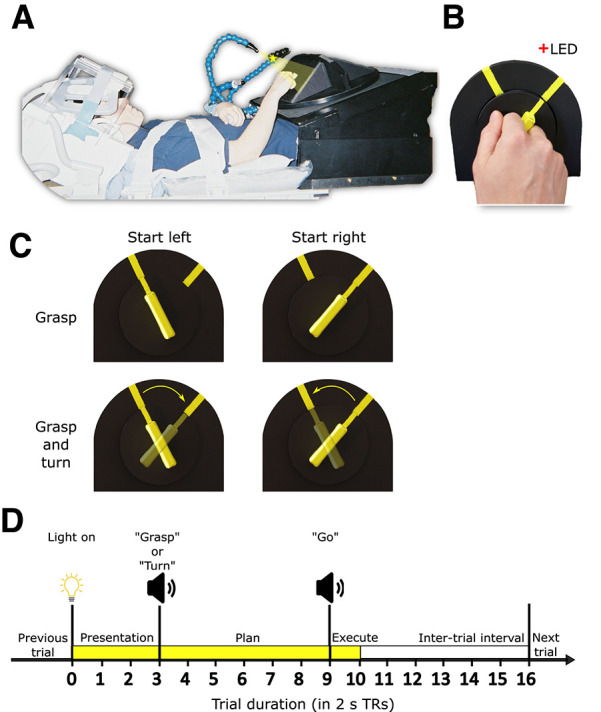

Figure 1.

Experimental set-up and design. A, Side view of the participant set-up in the fMRI scanner. B, Close-up of a participant using the instructed grip to grasp the experimental stimulus, a rotatable dial with a handle. C, Experimental conditions in a 2 (start orientation: left or right) × 2 (action: grasp or turn) design. D, Timing of each event-related trial. Trials began with the stimulus being illuminated (TR 0–3), marking the start of the Presentation phase. At TR 3, participants received task instructions, marking the start of the Planning phase (TR 3–9). At TR 9, participants received the “go” cue, marking the onset of action execution (TR 9–10). After 2 s (1 TR), the light was turned off and the intertrial interval began (TR 10–16).

Materials and Methods

Participants

Data from sixteen right-handed volunteers were used in the analysis (seven males, nine females; mean age, 24.4 years). Participants were recruited from the University of Western Ontario (London, Ontario, Canada) and provided informed consent in accordance with procedures approved by the University’s Health Sciences Research Ethics Board. Data from two additional participants (one male and one female) were collected but excluded because of excessive motion artifacts (see below, fMRI functional data processing).

Setup and apparatus

The experimental setup is illustrated in Figure 1A,B. Participants lay supine in a 3-Tesla MRI scanner with the head and head coil tilted ∼30° to allow direct viewing, without mirrors, of a manipulandum positioned above the participant’s hips. The manipulandum consisted of a black rotatable dial (9 cm in diameter; Fig. 1B) with a yellow rectangular handle (5-cm length × 1-cm width × 2-cm depth). The dial was mounted on a black surface, which was positioned such that the dial was approximately perpendicular to the participant’s line of gaze and comfortably within reach of the right arm. Two yellow markers on the black surface (Fig. 1B) indicated the start and end positions for turning the dial. A gray platform (not shown in Fig. 1A) was positioned above the participant’s lower torso and served as the home/resting position for the right arm between trials. Participants’ upper arms were braced above their torsos, just above the elbows (Fig. 1A), to limit shoulder movement, which can induce motion artifacts in fMRI signals. Participants could therefore rely only on elbow flexion/extension and forearm rotation to perform the experimental task. Given these constraints, the position and orientation of the dial were adjusted for each participant to optimize comfort during task performance and to ensure that the dial remained fully visible. The positions of the yellow markers were also adjusted for each participant individually so that dial rotation would not exceed 80% of the participant’s maximum range of motion when turning the dial clockwise or counterclockwise.

During the experiment, the dial was illuminated from the front by a bright yellow light-emitting diode (LED) attached to flexible plastic stalks (Fig. 1A; Loc-Line, Lockwood Products). A dim red LED (masked by a 0.1° aperture) was positioned ∼15° of visual angle above the rotatable dial and just behind it to provide a fixation point for participants (Fig. 1A,B). Experimental timing (see below) and lighting were controlled with in-house MATLAB scripts (The MathWorks Inc.).

Experiment design and timing

Behavioral task

This experiment used a 2 (starting orientation: left or right) × 2 (action: grasp or turn) delayed-movement paradigm (Fig. 1C). On each trial, the dial appeared in one of the two yellow-marked starting positions. In the grasp condition, participants reached toward the dial and squeezed it between the middle phalanges of the index and middle fingers (trial end position shown in Fig. 1A; hand shape during grasp shown in Fig. 1B). After completing the grasp, participants returned their arm to the home position. In the turn condition, participants performed the same reach-to-grasp action but then rotated the dial clockwise or counterclockwise after grasping it in the left or right start position, respectively (Fig. 1C). We chose the index-middle finger grip instead of a more natural precision grasp because it kept the grip highly similar across conditions and limited anticipatory changes in grip angle aimed at optimizing end-state comfort (e.g., placing the right thumb further up when planning to turn clockwise and further down when planning to turn counterclockwise). This avoids contaminating neural activation with low-level sensorimotor confounds such as digit positioning. Participants were instructed to keep the timing of all movements as similar as possible, such that the right hand left and returned to the home position at the same time across conditions (see below, Trial design). To isolate the visuomotor planning response from the visual and motor execution responses, we used a slow event-related paradigm with 32-s trials, consisting of three phases (Presentation, Plan, and Execute) followed by an intertrial interval (see Fig. 1D). We adapted this paradigm from previous fMRI studies that successfully isolated delay-period activity from transient neural responses following the onset of visual stimuli and movement execution (Beurze et al., 2007, 2009; Pertzov et al., 2011). Furthermore, our group has previously used this paradigm to isolate and decode planning-related neural activation before action execution (Gallivan et al., 2011, 2013).

Trial design

Before each trial, participants were in complete darkness except for the fixation LED, on which they were instructed to maintain their gaze. The trial began with a 6-s (three TRs of 2 s each) Presentation phase in which the illumination LED lit up the rotatable dial. After the Presentation phase, the 12-s (six-TR) Plan phase began with a voice cue (0.5-s duration), either “Grasp” or “Turn”, instructing the participant about the upcoming action. Because the dial was visible during the Presentation phase, participants could perceive the starting orientation of the yellow handle; however, they were instructed to begin the action only after they received the “go” cue. After the Plan phase, a 2-s (one-TR) Execute phase began with a 0.5-s beep (the “go” cue) cueing participants to initiate and execute the instructed action. Performing the entire action, consisting of reaching and grasping (with or without dial turning), took ∼2 s. The dial remained illuminated for 2 s after the “go” cue, allowing visual feedback during action execution. After the 2-s Execute phase, the illumination LED was turned off, cueing participants to let go of the dial and return their hand to the home position. Participants remained in this position in the dark for 12 s (six TRs; the intertrial interval) to allow the blood oxygenation level-dependent (BOLD) response to return to baseline before the next trial.
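The phase structure above can be tabulated TR by TR with a small Python sketch (the "ITI" label is our own shorthand for the intertrial interval). It reproduces the phase onsets shown in Figure 1D (Plan at TR 3, Execute at TR 9, intertrial interval at TR 10) and the 32-s trial length.

```python
# Phase durations (in TRs) from the Trial design description; TR = 2 s.
TR_S = 2.0
PHASES = [("Presentation", 3), ("Plan", 6), ("Execute", 1), ("ITI", 6)]

def trial_schedule(phases=PHASES, tr_s=TR_S):
    """Return (onset TR of each phase, phase label for every TR, trial length in s)."""
    onsets, labels = {}, []
    for name, n_trs in phases:
        onsets[name] = len(labels)      # phase starts at the current TR count
        labels.extend([name] * n_trs)
    return onsets, labels, len(labels) * tr_s

onsets, labels, trial_s = trial_schedule()
print(onsets)   # {'Presentation': 0, 'Plan': 3, 'Execute': 9, 'ITI': 10}
print(trial_s)  # 32.0
```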

Functional runs

Within each functional run, each of the four trial types (Grasp: left or right; Turn: from left to right or from right to left) was presented five times in pseudorandomized order, for a total of 20 trials. Each participant performed eight functional runs, yielding a total of 40 trials per condition (160 trials in total). Each functional run took between 10 and 11 min. For each participant, trial orders were counterbalanced across all functional runs so that each trial type was preceded and followed equally often by every trial type (including itself) across the entire experiment. During testing, the experimenter was positioned next to the setup to ensure that the dial was in the correct position before each trial and to adjust it manually if needed. The experimenter could also visually check whether the participant performed each trial correctly. Incorrect trials were defined as any trial in which the participant did not perform the task as instructed, for instance, turning instead of grasping, using an incorrect grip, failing to turn the dial smoothly, or letting the dial slip from the fingers.
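A first-order counterbalancing check of the kind described above can be sketched in Python by counting immediate transitions between trial types. The trial-type labels, the option to treat a sequence as cyclic, and the example order (a de Bruijn sequence) are illustrative assumptions, not the trial orders actually used. The last lines reproduce the trial counts and show that 20 trials of 32 s occupy about 10.7 min, consistent with the reported run length.

```python
from collections import Counter
from itertools import product

# Hypothetical labels for the four trial types.
TRIAL_TYPES = ["GraspL", "GraspR", "TurnLR", "TurnRL"]

def transition_counts(sequence, cyclic=False):
    """Count how often each trial type is immediately followed by each trial type."""
    pairs = list(zip(sequence[:-1], sequence[1:]))
    if cyclic and len(sequence) > 1:
        pairs.append((sequence[-1], sequence[0]))  # treat the order as a loop
    return Counter(pairs)

def is_first_order_balanced(sequence, types=TRIAL_TYPES, cyclic=False):
    """True if all ordered pairs of types (including repeats) occur equally often."""
    counts = transition_counts(sequence, cyclic)
    values = {counts[pair] for pair in product(types, repeat=2)}
    return len(values) == 1 and values.pop() > 0

# A de Bruijn order of the four types: every ordered pair occurs once (cyclically).
order = [TRIAL_TYPES[i] for i in [0, 0, 1, 0, 2, 0, 3, 1, 1, 2, 1, 3, 2, 2, 3, 3]]
print(is_first_order_balanced(order, cyclic=True))   # True
print(is_first_order_balanced(order, cyclic=False))  # False: the last-to-first pair is missing

# Trial counts and trial time per run from the text.
n_runs, trials_per_run, trial_s = 8, 20, 32
print(n_runs * trials_per_run, n_runs * trials_per_run // 4)  # 160 40
print(trials_per_run * trial_s / 60)  # about 10.7 min of trials per run
```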

Participants performed a separate practice session before the actual experiment to familiarize them with the motor task and ensure proper execution. The experimental session took approximately 3 h and consisted of preparation (i.e., informed consent, MRI safety, placing the participant in the scanner and setting up the behavioral task), eight functional runs and one anatomic scan. The anatomic scan was collected between the fourth and fifth functional runs to give participants a break from the task.

MRI acquisition

Imaging was performed using a 3-Tesla Siemens TIM MAGNETOM Trio MRI scanner at the Robarts Research Institute (London, ON, Canada). The T1-weighted anatomic image was collected using an ADNI MPRAGE sequence [repetition time (TR) = 2300 ms, echo time (TE) = 2.98 ms, field of view = 192 × 240 × 256 mm, matrix size = 192 × 240 × 256, flip angle = 9°, 1-mm isotropic voxels]. Functional MRI volumes sensitive to the BOLD signal were collected using a T2*-weighted single-shot gradient-echo echoplanar imaging (EPI) acquisition sequence (TR = 2000 ms, slice thickness = 3 mm, in-plane resolution = 3 × 3 mm, TE = 30 ms, field of view = 240 × 240 mm, matrix size = 80 × 80, flip angle = 90°) with an acceleration factor of 2 (integrated parallel acquisition techniques, iPAT) and generalized autocalibrating partially parallel acquisitions (GRAPPA) reconstruction. Each volume comprised 34 contiguous (i.e., no gap) oblique slices acquired at a caudal tilt of approximately 30° with respect to the anterior-to-posterior commissure (ACPC) plane, providing near whole-brain coverage. We used a combination of parallel imaging coils to achieve a good signal-to-noise ratio and to enable direct viewing of the rotatable dial without mirrors or occlusion. Specifically, we placed the posterior half of the 12-channel receive-only head coil (six channels) beneath the head and tilted it at an angle of ∼20°. To increase the head tilt to ∼30°, we placed additional foam padding below the head. We then suspended a four-channel receive-only flex coil over the forehead (Fig. 1A).
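The reported acquisition parameters can be cross-checked with a few lines of Python arithmetic (all values are taken from the text): an 80 × 80 matrix over a 240-mm field of view gives 3-mm in-plane voxels, matching the 3-mm slices; 34 contiguous 3-mm slices span 102 mm along the slice direction; and each 32-s trial spans 16 volumes.

```python
# Acquisition parameters taken from the text.
epi_fov_mm, epi_matrix = 240.0, 80
slice_thickness_mm, n_slices = 3.0, 34
anat_fov_mm = (192.0, 240.0, 256.0)
anat_matrix = (192, 240, 256)
tr_s, trial_s = 2.0, 32.0

epi_inplane_mm = epi_fov_mm / epi_matrix            # 3.0 mm, i.e. 3-mm isotropic EPI voxels
slab_mm = n_slices * slice_thickness_mm             # 102 mm covered by contiguous slices
anat_voxel_mm = [f / m for f, m in zip(anat_fov_mm, anat_matrix)]  # 1-mm isotropic
volumes_per_trial = int(trial_s / tr_s)             # 16 volumes per 32-s trial

print(epi_inplane_mm, slab_mm, anat_voxel_mm, volumes_per_trial)
```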

fMRI anatomic data processing

All fMRI preprocessing was performed in BrainVoyager version 22 (Brain Innovation). For the present study, we defined regions of interest (ROIs) in surface space rather than volumetric space because cortex-based alignment has been shown to improve group results by reducing intersubject differences in sulcal locations (Fischl et al., 1999; Frost and Goebel, 2012). We therefore performed surface reconstruction (mesh generation) and cortex-based alignment. Because we relied on the recommended approach and standard settings of BrainVoyager 22, these steps are described only briefly.

Folded mesh generation for each participant

In volumetric space, we performed the following steps. First, we corrected for intensity inhomogeneity. Then, because of the experimental head tilt, we rotated the anatomic data into ACPC space and normalized them to Montreal Neurological Institute (MNI) space. Next, we excluded the subcortical structures (labeled as white matter) and the cerebellum (removed from the anatomic volume) before mesh generation. We then defined the boundaries between white matter and gray matter and between gray matter and cerebrospinal fluid. Finally, after removing topologically incorrect bridges (Kriegeskorte and Goebel, 2001), we created a folded mesh (surface representation) of the left hemisphere only. We investigated only the left hemisphere because the motor task involved only the right hand, which previous work has shown predominantly activates the left (contralateral) hemisphere (Cavina-Pratesi et al., 2010, 2018; Gallivan et al., 2011, 2013).

Standardized folded mesh generation for each participant

Folded meshes created from anatomic files typically differ in their numbers of vertices across participants. To facilitate cortex-based alignment between participants, folded meshes were first transformed into high-resolution standardized folded meshes. Each folded mesh was first morphed into a spherical representation by smoothing (thus removing differences between sulci and gyri) and correcting for distortion. The spherical representation of each participant’s mesh was then mapped to a high-resolution standard sphere, yielding a standardized spherical representation of that mesh. The vertex positions of the participant’s original folded (not spherical) mesh were then used to generate a standardized folded mesh for each participant.
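The resampling step can be illustrated with a simplified Python sketch. It assumes nearest-neighbour matching on the sphere, whereas BrainVoyager's own implementation may interpolate within mesh triangles; the function name and toy data are hypothetical. For each vertex of the standard sphere, the sketch finds the closest vertex on the participant's sphere and copies that vertex's folded-mesh position, so that every participant ends up with the same number of vertices in corresponding order.

```python
import numpy as np

def standardize_folded_mesh(subject_sphere, subject_folded, standard_sphere):
    """Nearest-neighbour resampling of a folded mesh onto a standard sphere.

    subject_sphere:  (n_subj, 3) vertex positions of the participant's spherical mesh
    subject_folded:  (n_subj, 3) positions of the same vertices on the folded mesh
    standard_sphere: (n_std, 3) vertices of the high-resolution standard sphere
    Returns an (n_std, 3) standardized folded mesh.
    """
    a = subject_sphere / np.linalg.norm(subject_sphere, axis=1, keepdims=True)
    b = standard_sphere / np.linalg.norm(standard_sphere, axis=1, keepdims=True)
    # On the unit sphere, the nearest vertex is the one with the largest dot product.
    # (Dense n_std x n_subj matrix: fine for toy meshes, too large for real ones.)
    nearest = np.argmax(b @ a.T, axis=1)
    return subject_folded[nearest]

rng = np.random.default_rng(1)
sphere = rng.normal(size=(200, 3))            # toy participant sphere (directions only)
folded = 50.0 * rng.normal(size=(200, 3))     # toy folded coordinates (mm)
perm = rng.permutation(200)
standard = 100.0 * sphere[perm]               # same directions, other radius and order
std_folded = standardize_folded_mesh(sphere, folded, standard)
```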

Cortex-based alignment

Cortex-based alignment was performed following the approach of Frost and Goebel (2012) and Goebel et al. (2006): we aligned all individual standard meshes to a dynamically generated group average target mesh. Before aligning to the dynamic group average, we performed a prealignment (i.e., rigid sphere alignment). Cortex-based alignment itself generates a dynamic group average (a surface mesh based on all individual meshes) and, for each participant, sphere-to-sphere mapping files that enable transporting functional data from the individual mesh to the dynamic group average. Inverse sphere-to-sphere mapping files were also generated, which allow data (such as regions of interest) to be transported from the dynamic group average back to the individual meshes.

fMRI functional data processing

General preprocessing

All functional runs were screened for motion and magnet artifacts by examining the movement time courses and motion plots created by the motion correction algorithm (three translation and three rotation parameters). A run was discarded if translation exceeded 1 mm or rotation exceeded 1° between successive volumes. Based on this screening, all data from two participants and one run from an additional participant were discarded. Examination of the remaining data indicated negligible motion artifacts in the time courses (as shown in later figures).
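The exclusion rule above can be expressed as a short Python check. The parameter layout (translations in the first three columns, rotations in the last three) and applying each threshold to each parameter separately are assumptions. The toy example shows that a criterion based on successive volumes passes a slow 2-mm drift but flags a sudden 1.5° rotation.

```python
import numpy as np

def run_exceeds_motion(params, max_trans_mm=1.0, max_rot_deg=1.0):
    """Flag a run whose motion between successive volumes exceeds the thresholds.

    params: (n_volumes, 6) motion estimates; columns 0-2 are translations (mm) and
    columns 3-5 rotations (deg). This column order, and applying the thresholds to
    each parameter separately, are assumptions.
    """
    step = np.abs(np.diff(params, axis=0))      # volume-to-volume change
    return bool((step[:, :3] > max_trans_mm).any() or (step[:, 3:] > max_rot_deg).any())

n_vols = 320
drift = np.zeros((n_vols, 6))
drift[:, 0] = np.linspace(0.0, 2.0, n_vols)   # slow 2-mm drift: small steps
jump = np.zeros((n_vols, 6))
jump[100:, 4] = 1.5                             # sudden 1.5-degree rotation
print(run_exceeds_motion(drift), run_exceeds_motion(jump))  # False True
```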

Functional runs were co-registered with the anatomic data using boundary-based registration (Greve and Fischl, 2009). We subsequently performed slice-scan time correction, motion correction and MNI normalization. Next, we performed linear-trend removal and temporal high-pass filtering (using a cutoff of three sine and cosine cycles on the fast Fourier transform of the time courses).
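The text describes FFT-based filtering; a closely related regression formulation, shown here as a Python sketch (our choice, not necessarily BrainVoyager's implementation), removes a linear trend and the sine/cosine components completing one to three cycles per run. Assuming about 320 volumes per run (20 trials × 16 volumes), the task cycle of 20 cycles per run lies well above this cutoff, so task-related fluctuations survive while slow drifts are removed.

```python
import numpy as np

def detrend_highpass(ts, n_cycles=3):
    """Remove a linear trend and the lowest n_cycles sine/cosine pairs (per run).

    ts: (n_volumes,) or (n_volumes, n_voxels). The mean is preserved so that
    percent signal change can still be computed afterwards.
    """
    n = ts.shape[0]
    t = np.arange(n)
    cols = [np.ones(n), t - t.mean()]
    for k in range(1, n_cycles + 1):          # k full cycles per run
        cols += [np.sin(2 * np.pi * k * t / n), np.cos(2 * np.pi * k * t / n)]
    X = np.column_stack(cols)
    beta, *_ = np.linalg.lstsq(X, ts, rcond=None)
    return ts - X @ beta + ts.mean(axis=0)

n = 320
t = np.arange(n)
slow = 0.01 * t + 2.0 * np.sin(2 * np.pi * 2 * t / n)   # drift inside the removed band
fast = np.cos(2 * np.pi * 20 * t / n)                    # one cycle per 32-s trial
filtered = detrend_highpass(100.0 + slow + fast)
```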

Volumetric to surface-based time courses

Volumetric time courses were first aligned with the respective participant’s anatomic scan using boundary-based registration to ensure optimal alignment (Greve and Fischl, 2009). Volumetric time courses were then transformed to the respective participant’s standardized folded mesh using depth integration along the vertex normal (sampling from −1 to 3 mm relative to the gray-white matter boundary). Once transformed into surface space, functional runs were spatially smoothed. Finally, functional runs in individual standard-mesh space were mapped onto the dynamic group average mesh using the sphere-to-sphere mapping files generated during cortex-based alignment. This approach enabled us to model the average brain activation across all participants on the group mesh and to define ROIs based on hotspots of strongest activation in surface space.
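Depth integration can be sketched in Python as sampling the volume at several points along each vertex normal, from 1 mm inside to 3 mm outside the gray-white boundary, and averaging the samples. The 0.5-mm step, trilinear interpolation with SciPy's `map_coordinates`, simple averaging, and an axis-aligned voxel grid with its origin at 0 mm are assumptions for illustration.

```python
import numpy as np
from scipy.ndimage import map_coordinates

def depth_integrate(volume, voxel_mm, vertices, normals, depths_mm=None):
    """Average volume values sampled along each vertex normal.

    volume:   3-D array holding one functional volume
    voxel_mm: voxel size in mm (grid assumed axis-aligned with origin at 0 mm)
    vertices: (n, 3) gray/white-boundary vertex positions in mm
    normals:  (n, 3) vertex normals pointing toward the pial surface
    """
    if depths_mm is None:
        depths_mm = np.arange(-1.0, 3.0 + 1e-9, 0.5)   # -1, -0.5, ..., +3 mm
    unit = normals / np.linalg.norm(normals, axis=1, keepdims=True)
    points = vertices[:, None, :] + depths_mm[None, :, None] * unit[:, None, :]
    coords = (points.reshape(-1, 3) / voxel_mm).T          # (3, n * n_depths), voxel units
    values = map_coordinates(volume, coords, order=1, mode="nearest")  # trilinear
    return values.reshape(len(vertices), len(depths_mm)).mean(axis=1)

# Toy check: a volume whose value equals its x voxel index.
vol = np.indices((20, 20, 20))[0].astype(float)
verts = np.array([[30.0, 30.0, 30.0], [30.0, 30.0, 30.0]])
norms = np.array([[1.0, 0.0, 0.0], [0.0, 2.0, 0.0]])
print(depth_integrate(vol, 3.0, verts, norms))  # approx. [10.333, 10.0]
```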

Region of interest-based analysis

For the current project, we performed an ROI-based analysis of cortical regions at the peak foci of task-related activity within the hemisphere contralateral to the acting hand. To improve intersubject consistency and statistical power, we used cortex-based alignment for defining ROIs (Goebel et al., 2006; Frost and Goebel, 2012). We were primarily interested in cortical areas that constitute the cortical grasping and reaching networks investigated in previous studies by our group (Cavina-Pratesi et al., 2018) and others (Ariani et al., 2018). As in previous projects (Gallivan et al., 2013; Fabbri et al., 2016), we considered only the contralateral hemisphere, as it is well established that hand-object actions are strongly lateralized and elicit only limited activation in the ipsilateral hemisphere (Culham et al., 2006). Moreover, because our analysis of the left cerebral hemisphere already included 17 regions and we performed multiple analyses, some at many time points, we wanted to limit the complexity of the results and balance statistical rigor against statistical power.

We chose an ROI approach rather than a whole-brain cortex-based searchlight to optimize statistical power and computational processing time (Kriegeskorte, 2008; Frost and Goebel, 2012; Etzel et al., 2013). Because of the large number of comparisons performed in a searchlight analysis, only the most robust effects survive correction for multiple comparisons (Kriegeskorte, 2008; Kriegeskorte and Kievit, 2013). Moreover, tMVPA relies on single-trial correlations, which are computationally very intensive; performing this analysis with a voxel-based searchlight would likely take several months.

Defining regions of interest

To localize our regions of interest on the group mesh, we applied a general linear model (GLM) to our data in surface space. Predictors of interest were created from boxcar functions convolved with the two-γ hemodynamic response function (HRF). For each trial within each run of each participant, we aligned a boxcar function with the onset of each phase, with a duration matching that phase: three TRs for the Presentation phase, six TRs for the Plan phase, and one TR for the Execute phase. The six motion parameters (three translations and three rotations) were added as predictors of no interest, and each incorrect trial was assigned its own predictor of no interest. All regression coefficients (β weights) were defined relative to the baseline activity during the intertrial interval. In addition, time courses were converted to percent signal change before applying the random-effects GLM (RFX-GLM).
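The predictor construction can be sketched in Python as follows. The double-gamma shape parameters (6 and 16, with an undershoot ratio of 6) follow the widely used SPM-style canonical HRF and may differ from BrainVoyager's defaults; sampling the HRF only once per TR, the fixed trial order, and the omission of the motion and incorrect-trial predictors are simplifications. Because the phase predictors are modeled against an implicit baseline (periods not covered by any phase, mostly the intertrial interval), each β estimates the response relative to that baseline.

```python
import numpy as np
from scipy.stats import gamma

# Phase offset and duration (in TRs) within a trial, from the Trial design text.
PHASES = {"Presentation": (0, 3), "Plan": (3, 6), "Execute": (9, 1)}

def two_gamma_hrf(tr_s=2.0, length_s=32.0, peak_shape=6.0, under_shape=16.0, ratio=6.0):
    """Double-gamma HRF sampled once per TR (SPM-like shapes; an assumption)."""
    t = np.arange(0.0, length_s, tr_s)
    h = gamma.pdf(t, peak_shape) - gamma.pdf(t, under_shape) / ratio
    return h / h.max()

def design_matrix(n_vols, onsets_by_condition, hrf):
    """One HRF-convolved boxcar per condition x phase, plus a constant term."""
    names, cols = [], []
    for cond, onsets in onsets_by_condition.items():
        for phase, (offset, dur) in PHASES.items():
            box = np.zeros(n_vols)
            for on in onsets:
                box[on + offset:on + offset + dur] = 1.0
            cols.append(np.convolve(box, hrf)[:n_vols])
            names.append(f"{cond}_{phase}")
    cols.append(np.ones(n_vols))   # constant: level of the implicit baseline
    names.append("constant")
    return np.column_stack(cols), names

def percent_signal_change(ts):
    """Express each column of ts as percent deviation from its mean."""
    return 100.0 * (ts / ts.mean(axis=0) - 1.0)

# Toy run: 20 trials of 16 TRs, four conditions in a fixed cyclic order (hypothetical).
conds = ["GraspL", "GraspR", "TurnLR", "TurnRL"]
onsets = {c: [16 * k for k in range(20) if k % 4 == i] for i, c in enumerate(conds)}
X, names = design_matrix(320, onsets, two_gamma_hrf())
true_beta = np.arange(1.0, X.shape[1] + 1.0)
beta_hat, *_ = np.linalg.lstsq(X, X @ true_beta, rcond=None)
```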

To specify ROIs for our analyses, we searched at the group level for brain areas involved in the experimental task by contrasting activation during all three phases with baseline. This contrast was computed across all conditions to ensure that ROI selection based on local activation patterns was not biased by differences between specific conditions (e.g., Grasp vs Turn). Specifically, the contrast was: {Presentation [Grasp left + Grasp right + Turn left to right + Turn right to left] + Plan [Grasp left + Grasp right + Turn left to right + Turn right to left] + Execute [Grasp left + Grasp right + Turn left to right + Turn right to left]} > baseline. This contrast enabled us to identify regions that showed visual and/or motor activation associated with the task.
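A minimal Python sketch of this all-conditions contrast and its random-effects test follows. The predictor naming scheme, the one-sided one-sample t-test on per-participant contrast values, and the toy data are assumptions. Scaling the weights (e.g., 1/12 instead of 1 per predictor) would change the contrast value but not the t statistic.

```python
import numpy as np
from scipy.stats import ttest_1samp

TASK_PHASES = ("Presentation", "Plan", "Execute")

def all_task_contrast(names):
    """+1 on every condition x phase predictor, 0 on nuisance predictors."""
    return np.array([1.0 if n.rsplit("_", 1)[-1] in TASK_PHASES else 0.0 for n in names])

def rfx_contrast_test(betas, weights):
    """One-sided one-sample t-test (across participants) of contrast values against 0.

    betas: (n_participants, n_predictors) per-participant GLM estimates.
    """
    con = betas @ weights
    return ttest_1samp(con, 0.0, alternative="greater")

conds = ["GraspL", "GraspR", "TurnLR", "TurnRL"]          # hypothetical labels
names = [f"{c}_{p}" for c in conds for p in TASK_PHASES]
names += [f"motion_{i}" for i in range(6)] + ["constant"]
w = all_task_contrast(names)

rng = np.random.default_rng(2)
betas = rng.normal(0.0, 0.2, size=(16, len(names)))      # 16 toy participants
betas[:, w > 0] += 0.5            # toy data: positive responses in all 12 task predictors
res = rfx_contrast_test(betas, w)
```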

In BrainVoyager, area selection on a surface generates a hexagon surrounding the selected vertex. We decided on an area selection size of 100 (arbitrary units) as this value provided a good balance between inclusion of a sufficient number of vertices/voxels around each hotspot for multivariate analyses and avoiding overlap between ROIs (especially in the parietal lobe).

Regions of interest

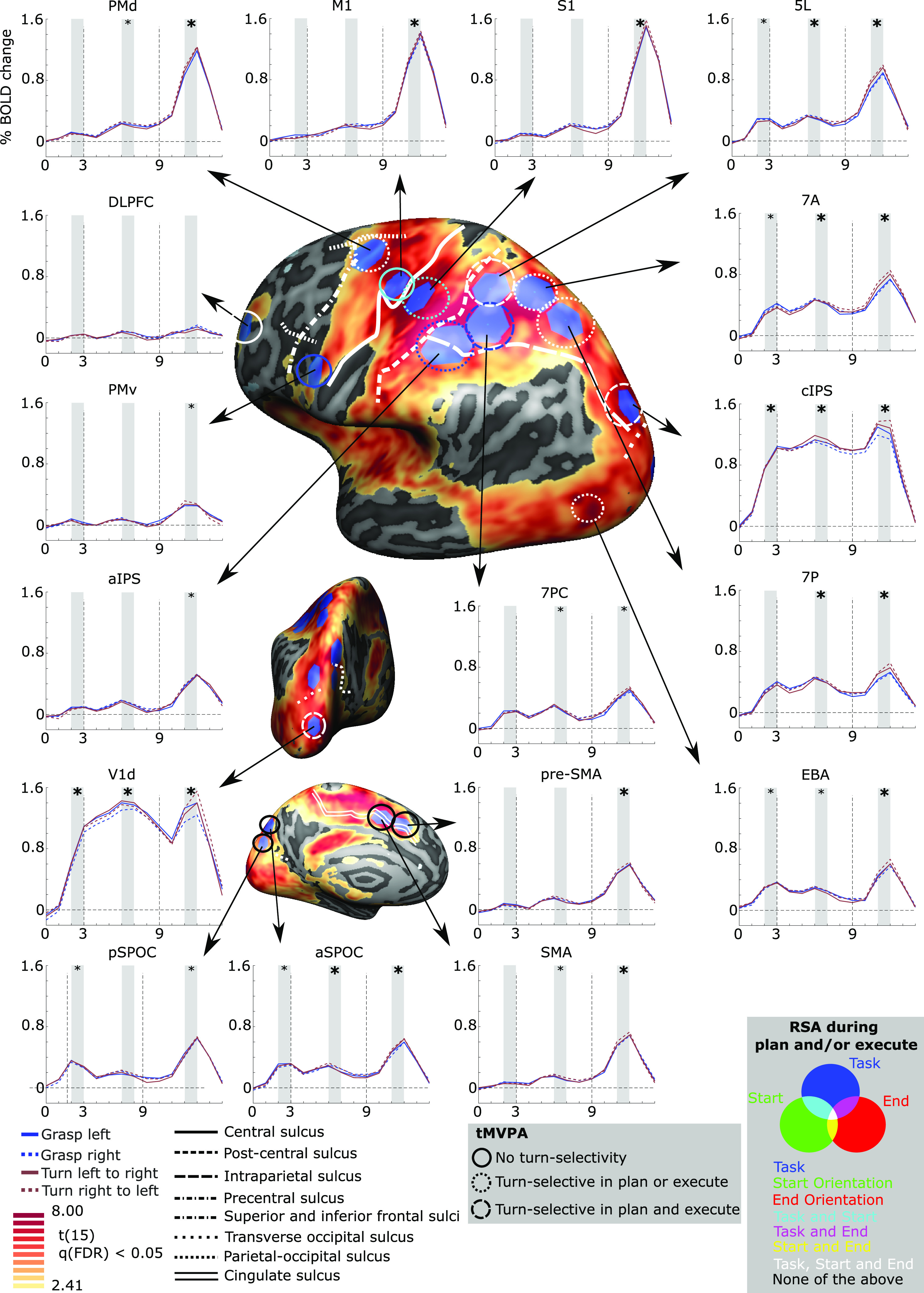

Selected regions of interest (Fig. 2) were defined in the left hemisphere. Most regions of interest were defined using the contrast above (all conditions > baseline), with two exceptions, as detailed below.

Figure 2.

Brain areas selected for multivariate analysis based on a univariate contrast. Cortical areas that exhibited larger responses during the experimental trials [(presentation + plan + execute) > baseline] are shown in yellow-to-red. Results calculated across all participants (RFX GLM) are displayed on the dynamic group average surface. The ROIs were selected in BrainVoyager software, which uses a hexagonal selection area, and then transformed to individual volumetric MNI space. Each ROI is linked to a graph of the group-average % signal change of BOLD activity (y-axis) over time (x-axis; each tick represents one 2-s TR, based on a deconvolution analysis) for each of the four conditions. Vertical dashed lines on the graphs indicate the start of the Planning phase (TR 3) and the Execution phase (TR 9). For the time courses, statistical tests were performed on the shaded time windows (e.g., the average of time points 2 and 3), averaged across the four conditions. A small asterisk indicates a value significantly >0 after Bonferroni correction within the ROI only; a large asterisk indicates a value significantly >0 after Bonferroni correction for all comparisons. On the surface brain, sulcal landmarks are denoted by white lines (stylized according to the corresponding legend). ROI acronyms are spelled out in the Materials and Methods. tMVPA results are indicated by different types of circles (e.g., dashed vs solid), and RSA results are shown by color-coding the tMVPA circles as a Venn diagram. The RSA and tMVPA results are described in the Results section and in Figures 5 and 6.
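The asterisk rule in the Figure 2 caption can be sketched in Python. The window definitions, the one-sided test, and treating "all comparisons" as all windows across the 17 ROIs are our assumptions. For each window, percent signal change is averaged across conditions and time points per participant, tested against 0, and compared with a Bonferroni threshold of α divided by the number of tests, either within the ROI or across all ROIs.

```python
import numpy as np
from scipy.stats import ttest_1samp

def window_stars(psc, windows, n_rois, alpha=0.05):
    """Bonferroni-corrected tests of time-course windows for one ROI.

    psc:     (n_participants, n_conditions, n_trs) percent signal change
    windows: list of TR-index lists, e.g. [[2, 3], [7, 8]]
    Returns (p, small_star, large_star) per window: small_star uses a
    within-ROI correction, large_star a correction across all ROIs.
    """
    per_subject = psc.mean(axis=1)                  # average the four conditions
    m_roi, m_all = len(windows), len(windows) * n_rois
    results = []
    for w in windows:
        x = per_subject[:, w].mean(axis=1)          # one value per participant
        p = ttest_1samp(x, 0.0, alternative="greater").pvalue
        results.append((p, p < alpha / m_roi, p < alpha / m_all))
    return results

# Toy data (hypothetical): 16 participants, 4 conditions, 16 TRs.
rng = np.random.default_rng(3)
psc = np.zeros((16, 4, 16))
psc[:, :, 2:4] = 1.0 + 0.1 * rng.normal(size=(16, 4, 2))    # clear response
signs = np.where(np.arange(16) % 2 == 0, 1.0, -1.0)
psc[:, :, 12:14] = signs[:, None, None]                      # mean exactly 0
results = window_stars(psc, [[2, 3], [12, 13]], n_rois=17)
```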

First, the involvement of the cortical grasping network (Gerbella et al., 2017) was investigated by including sensorimotor and visuomotor regions (Gallivan et al., 2011, 2013; Fabbri et al., 2016; Ariani et al., 2018; Cavina-Pratesi et al., 2018).

Primary motor cortex (M1): hotspot of strongest activation near the “hand knob” landmark (Gallivan et al., 2013; Yousry et al., 1997).

Dorsal premotor cortex (PMd): hotspot of strongest activation near the junction of the precentral sulcus and the superior frontal sulcus (Picard and Strick, 2001; Pilacinski et al., 2018).

Ventral premotor cortex (PMv): hotspot of strongest activation inferior and posterior to the junction of the inferior frontal sulcus and the precentral sulcus (Tomassini et al., 2007).

Primary somatosensory cortex (S1): hotspot of strongest activation anterior to the anterior intraparietal sulcus, encompassing the postcentral gyrus and sulcus (Gallivan et al., 2011, 2013).

Anterior intraparietal sulcus (aIPS): hotspot of strongest activation directly at the junction of the intraparietal sulcus and the postcentral sulcus (Culham et al., 2003).

Caudal intraparietal sulcus (cIPS): hotspot of strongest activation on the lateral side of the brain, anterior and superior to the junction between the intraparietal sulcus and the posterior occipital sulcus (Grefkes and Fink, 2005; Beurze et al., 2009). Comparisons with the Julich atlas (Richter et al., 2019) suggested overlap with hIP7 and/or hPO1.

Anterior superior parietal occipital cortex (aSPOC): hotspot of strongest activation on the medial side of the brain, anterior and superior to the parietal-occipital sulcus (Cavina-Pratesi et al., 2010), thought to correspond to area V6A (Pitzalis et al., 2015).

Posterior superior parietal occipital cortex (pSPOC): hotspot of strongest activation on the medial side of the brain, posterior and inferior to the parietal-occipital sulcus (Cavina-Pratesi et al., 2010), thought to correspond to area V6 (Pitzalis et al., 2015).

Second, the following medial and frontal regions were selected because of their involvement in motor planning and decision-making (Ariani et al., 2015; Badre and Nee, 2018; Cavina-Pratesi et al., 2018).

Dorsolateral prefrontal cortex (DLPFC): hotspot of strongest activation near the middle frontal gyrus (Mylius et al., 2013).

Supplementary motor area (SMA): hotspot of strongest activation adjacent to the medial end of the cingulate sulcus and posterior to the plane of the anterior commissure (Picard and Strick, 2001).

Presupplementary motor area (pre-SMA): hotspot of strongest activation superior to the cingulate sulcus, anterior to the plane of the anterior commissure and anterior and inferior to the hotspot of strongest activation selected for SMA (Picard and Strick, 2001).

Third, we included the superior parietal lobule (SPL) because of the extensive activation evoked by our task and by hand actions more generally (Ariani et al., 2018; Cavina-Pratesi et al., 2018). We defined the SPL as the area on the lateral/superior side of the brain bordered anteriorly by the postcentral sulcus, inferiorly by the intraparietal sulcus, and posteriorly by the parietal-occipital sulcus (Scheperjans et al., 2008a, b). Because of the large swathe of activation evoked by our contrast, we defined four ROIs within the SPL based on their positions relative to each other (to ensure minimal overlap) and to the anatomic landmarks bordering the SPL. The number of ROIs and their names were based on Scheperjans et al. (2008a).

7PC: hotspot of strongest activation located on the posterior wall of the postcentral sulcus and superior to the intraparietal sulcus. Because we also defined aIPS, 7PC was additionally required to lie superior to aIPS.

5L: hotspot of strongest activation located just posterior to the postcentral sulcus and superior to area 7PC.

7P: hotspot of strongest activation superior to the intraparietal sulcus and anterior to the parietal-occipital sulcus.

7A: hotspot of strongest activation in the postcentral gyrus, superior to 7PC, posterior to 5L and anterior to 7P.

Finally, two visual regions were selected.

Dorsal primary visual cortex (V1d): given that primary visual cortex (V1) responds to visual orientation (Kamitani and Tong, 2005), we wanted to investigate its response here. Because our target objects (and participants’ hands) fell within the lower visual field (below the fixation point), they would stimulate the dorsal divisions of early visual areas (Wandell et al., 2007).

Extrastriate body area (EBA): we were also interested in examining the response of the extrastriate body area, which has been implicated not only in the visual perception of bodies (Downing et al., 2001) but also in computing goals during action planning (Astafiev et al., 2004; Zimmermann et al., 2016). Because the EBA is not easy to distinguish from nearby regions of the lateral occipitotemporal cortex, we took the EBA from the Rosenke atlas. The procedure was nearly identical to that used for the other ROIs. The only difference was that we aligned all individual standard meshes to the Rosenke template (instead of the dynamic group average). This alignment provided sphere-to-sphere and inverse sphere-to-sphere mapping files. We used the latter to transport the EBA from the Rosenke template to the individual standard meshes, and all remaining steps were identical to those for the other ROIs. We defined the EBA as the combination of all body-selective patches, namely OTS-bodies, MTG-bodies, LOS-bodies, and ITG-bodies (for a full explanation of these regions, see Rosenke et al., 2021).

After ROIs were defined in group surface space, they were transformed to individual surface space using the inverse transformation files generated during cortex-based alignment. They were then transformed to individual volumetric MNI space using depth expansion (the inverse of depth integration; see above, fMRI functional data processing, Volumetric to surface-based time courses) along the vertex normals (from −1 to 3 mm). This approach allowed us to define ROIs on the group surface but extract functional data at the individual volumetric level.

Analysis of functional data

Functional data were extracted to perform deconvolution general linear models (deconvolution GLMs; Hinrichs et al., 2000), representational similarity analysis (RSA; Kriegeskorte, 2008) and temporal multivoxel pattern analysis (tMVPA; Vizioli et al., 2018). All analyses described below were performed with in-house MATLAB scripts, except for the initial processing of the fMRI data and the univariate analyses, which were both done in BrainVoyager.

Univariate analysis

We used a random-effects GLM with deconvolution to extract the time courses of activation in each ROI during the experimental task. For each experimental condition, we used 15 matchstick predictors (time points 0–14). The first predictor (“predictor 1”) was aligned with time point zero (Fig. 1D; “light on”) and the last predictor (“predictor 15”) was aligned with the drop in signal at the end of the trial (time point 14). Because of this clear drop in signal at time point 14, we did not include a predictor (a nonexistent “predictor 16”) for the last time point (time point 15; see Fig. 1). For each functional voxel in each ROI, we extracted baseline z-normalized estimates of the deconvolved BOLD response for all conditions. These estimates represent the mean-centered signal for each voxel and condition relative to the standard deviation of signal fluctuations. The estimates were then averaged across voxels within each ROI. This provided an estimate of the average time course of brain activation for each condition, ROI and participant.
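The Python sketch below gives a rough illustration of matchstick (finite impulse response) deconvolution. It builds one predictor per condition and time point and fits the model by ordinary least squares. It is not BrainVoyager’s implementation. The input format (onsets given in volumes), the constant baseline column and the per-voxel z-scoring are assumptions made for the example, and the function names are hypothetical.

```python
import numpy as np


def fir_design(onsets_by_condition, n_volumes, n_lags=15):
    """Matchstick (finite impulse response) design matrix.

    onsets_by_condition: list of integer arrays of onset volumes, one array per
    condition (hypothetical input format). Column c * n_lags + k equals 1 at
    volume onset + k for every onset of condition c; the last column is a
    constant that absorbs the intertrial baseline.
    """
    n_cond = len(onsets_by_condition)
    X = np.zeros((n_volumes, n_cond * n_lags + 1))
    for c, onsets in enumerate(onsets_by_condition):
        for onset in onsets:
            for k in range(n_lags):
                if onset + k < n_volumes:
                    X[onset + k, c * n_lags + k] = 1.0
    X[:, -1] = 1.0  # constant (baseline) column
    return X


def deconvolve(Y, X, n_cond, n_lags=15, zscore=True):
    """Ordinary least-squares fit of Y (volumes x voxels).

    Returns estimates shaped (n_cond, n_lags, n_voxels): one deconvolved
    value per condition, time point (0 .. n_lags - 1) and voxel.
    """
    if zscore:  # z-normalize each voxel's time course first (assumed step)
        Y = (Y - Y.mean(axis=0)) / Y.std(axis=0)
    betas, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return betas[:-1].reshape(n_cond, n_lags, -1)
```

Averaging the returned estimates over the voxel axis gives one time course per condition. This corresponds to the ROI-level averaging described above.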

Given previous work from our group demonstrating the involvement of our selected ROIs in hand-object interactions (Gallivan et al., 2009; Monaco et al., 2011; Fabbri et al., 2016), we included univariate time courses for comparison as they indicate changes in the signal and reveal the degree to which differences in activation levels are present (or not). The univariate time courses also demonstrate that our quality-assurance procedures, particularly motion screening, succeeded in generating clean data.

Time courses were generated for each ROI (Fig. 2) separately for each of the four conditions. However, the differences in univariate signals between conditions were small or negligible. Thus, to evaluate whether there was significant activation during each of the three phases of the trial, we compared the average activation levels (collapsed across the four conditions) with the intertrial baseline (time 0).

First, for each ROI in each participant, we averaged the data across conditions. Second, to reduce the number of statistical comparisons while targeting peak responses, we defined three distinct phases by averaging two time points for each: (1) Presentation, time points 2 and 3; (2) Planning, time points 6 and 7; and (3) Execution, time points 11 and 12. Third, we tested for significant changes relative to baseline (i.e., >0) using one-sample t tests, yielding three tests per ROI. Fourth, we corrected for multiple comparisons. Balancing Type I and Type II errors in fMRI research is well known to be challenging because of the amount of data and the number of comparisons involved. Although data from different ROIs are independent, the total number of comparisons across ROIs increases the likelihood of Type I errors. We therefore applied Bonferroni correction to the univariate analysis in two ways. The first included only comparisons within an ROI (i.e., three comparisons per ROI, thus α = 0.05/3). The second included all comparisons (i.e., three comparisons in each of the 17 ROIs, thus α = 0.05/51). The results can be found in Figure 2. A small asterisk indicates that the values are significant under the standard correction (3 comparisons), and a larger bold asterisk indicates that they are significant under the strict correction (51 comparisons). Values in the Results are reported as mean ± SEM. p-values are referred to as p and p(strict) for the 3-comparison and 51-comparison corrections, respectively. Note that we report p-values already corrected for the number of comparisons. For instance, an uncorrected p-value of 0.0035 would be reported as p = 0.0105 (corrected for three comparisons) and p(strict) = 0.1785 (corrected for 51 comparisons).
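The sketch below shows the phase averaging and the two Bonferroni corrections. It assumes each ROI’s data are stored as an array of participants × conditions × time points (a hypothetical layout). The text does not state whether the t tests were one- or two-tailed, so the `alternative` argument of `scipy.stats.ttest_1samp` is left as a parameter.

```python
import numpy as np
from scipy import stats

# Time points averaged into each phase, as listed above.
PHASES = {"Presentation": (2, 3), "Planning": (6, 7), "Execution": (11, 12)}


def bonferroni(p, n_tests):
    """Bonferroni-corrected p-value: multiplied by the number of tests, capped at 1."""
    return min(p * n_tests, 1.0)


def phase_tests(timecourses, n_rois=17, alternative="two-sided"):
    """One ROI: timecourses has shape (participants, conditions, time points).

    Averages across conditions, averages the two time points of each phase,
    and tests each phase mean against 0 with a one-sample t test.
    Returns {phase: (mean, sem, p, p_strict)}.
    """
    per_participant = timecourses.mean(axis=1)  # collapse the four conditions
    results = {}
    for phase, (t1, t2) in PHASES.items():
        x = per_participant[:, [t1, t2]].mean(axis=1)
        p = stats.ttest_1samp(x, 0.0, alternative=alternative).pvalue
        results[phase] = (x.mean(), stats.sem(x),
                          bonferroni(p, len(PHASES)),
                          bonferroni(p, len(PHASES) * n_rois))
    return results
```

The `bonferroni` helper reproduces the worked example above: 0.0035 × 3 = 0.0105 and 0.0035 × 51 = 0.1785.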

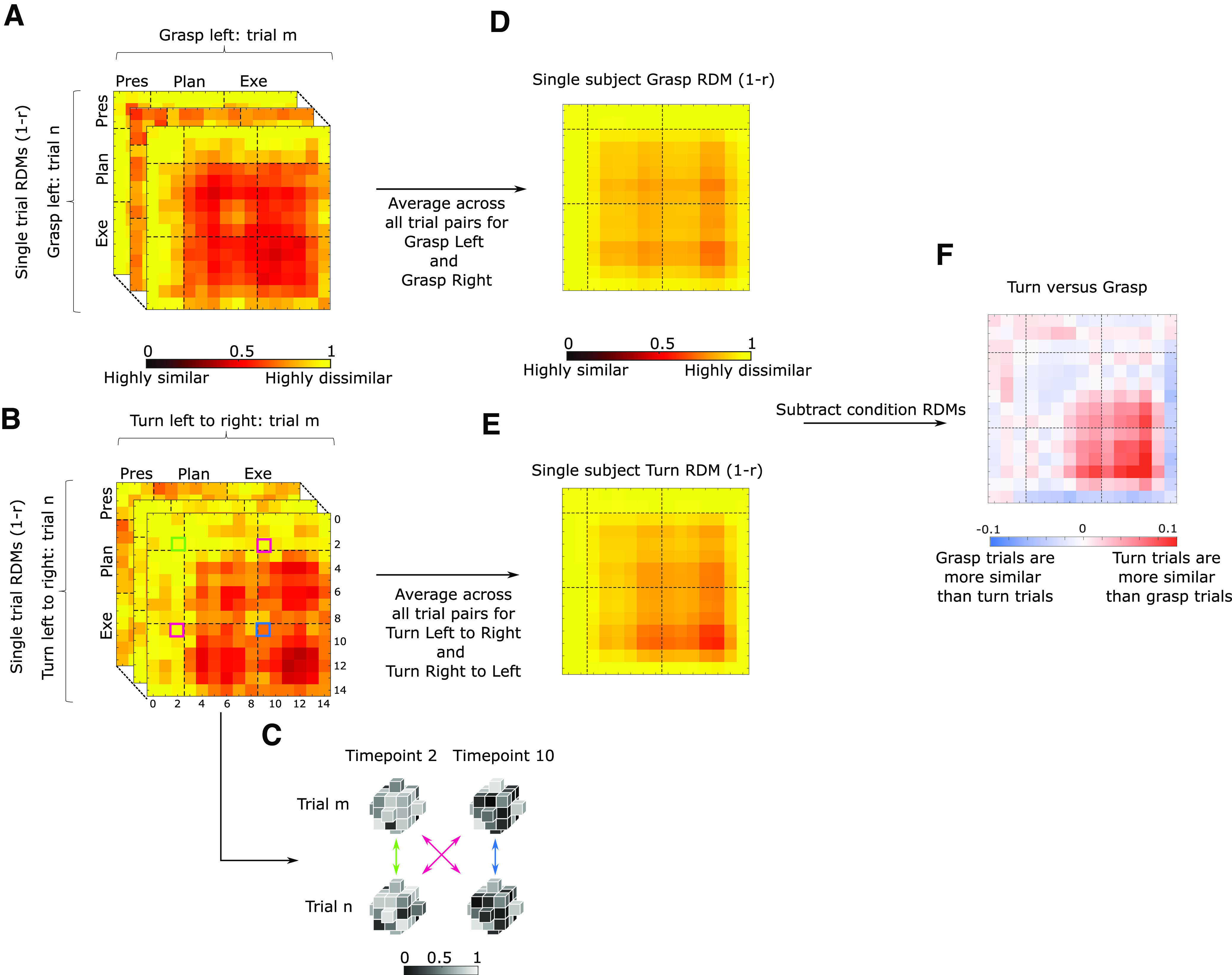

Temporal multivoxel pattern analysis

For tMVPA, we relied on the approach developed by Ramon et al. (2015) and Vizioli et al. (2018). Briefly, tMVPA was developed to investigate the temporal evolution of neural representations using multivariate analyses with a trial-wise approach. The tMVPA methods are schematized in Figure 3. For each voxel of each ROI of each participant, we performed a deconvolution GLM for every trial separately. The result was then transformed into BOLD percent signal change by dividing the raw BOLD time course by its mean. Note that for tMVPA we performed deconvolution GLMs for each trial (of the same condition) separately, whereas for the univariate analysis and RSA (see below) we ran deconvolution GLMs for each condition (i.e., across same-condition trials). From these single-trial activation patterns, we computed single-trial representational dissimilarity matrices (stRDMs; dissimilarity = 1 – r, with r the Pearson correlation) for each condition of each ROI of each participant. As shown in Figure 3A, an stRDM is generated by computing the dissimilarity between the activation pattern of the voxels at each time point of a given trial (“trial m”) and the activation pattern of the same voxels at each time point of another trial (“trial n”). This process is repeated until all unique within-condition trial pairings have been processed. The stRDMs were then averaged across the main diagonal to yield a diagonally symmetric matrix; that is, each matrix was averaged position-wise with its transpose. We performed this averaging because we were only interested in between-condition differences and not in differences between same-condition trials.
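Below is a minimal NumPy sketch of the stRDM computation for one ROI and one condition. It assumes each trial’s deconvolved responses are stored as a time points × voxels array (a hypothetical layout; the function names are ours). Each cell is 1 − Pearson r between two voxel patterns, and the matrix is averaged with its transpose as described above.

```python
import numpy as np
from itertools import combinations


def single_trial_rdm(trial_m, trial_n):
    """trial_m, trial_n: arrays (time points, voxels) for one ROI.

    Cell (i, j) is 1 - Pearson r between the voxel pattern at time i of
    trial m and the pattern at time j of trial n. The result is averaged
    with its transpose so that trial order does not matter.
    """
    n_t = trial_m.shape[0]
    # corrcoef stacks the rows of both inputs; the upper-right block holds
    # the correlations between rows of trial_m and rows of trial_n.
    r = np.corrcoef(trial_m, trial_n)[:n_t, n_t:]
    rdm = 1.0 - r
    return (rdm + rdm.T) / 2.0


def condition_strdms(trials):
    """trials: array (n_trials, time points, voxels) for one condition.

    Returns one symmetric stRDM per unique within-condition trial pair.
    """
    return np.stack([single_trial_rdm(trials[m], trials[n])
                     for m, n in combinations(range(len(trials)), 2)])
```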

Figure 3.

Overview of the steps to perform temporal multivoxel pattern analysis (for a full explanation, see Materials and Methods). The figure represents the steps taken for each participant separately. Within each condition, single-trial representational distance matrices (RDMs) are calculated for each time point of each trial pairing (A shows an example for grasping trials and B for turning trials). C, Gray cubes represent voxels of the same ROI at different time points for two sample trials, illustrating how the cells of each RDM are calculated. After calculating single-trial RDMs, a condition average is calculated (middle column; D, E for grasping and turning, respectively). These averages are then subtracted from each other (last column; F, red and blue matrix). Not shown in the figure (for results, see Figs. 4, 5): the subtraction matrices are then used for bootstrapping to determine whether the group average differs significantly from zero and to investigate whether one condition is more similar than the other at each time point.

To further clarify the calculation of the dissimilarity metric: in Figure 3B,C, the green highlighted square indicates the dissimilarity between all the voxels (of a given ROI) at time point 2 of Turn Left to Right trial m and the same voxels at time point 2 of Turn Left to Right trial n. The magenta highlighted squares indicate the averaged dissimilarity between time points 2 and 10 of trials m and n. As explained above, we averaged the dissimilarity between time point 2 of trial m and time point 10 of trial n (magenta highlighted square below the diagonal) with the dissimilarity between time point 10 of trial m and time point 2 of trial n (magenta highlighted square above the diagonal). Finally, the blue highlighted square indicates the dissimilarity between time point 10 of trials m and n. In sum, values on the main diagonal show the dissimilarity between within-condition trials at the same time point. Values off the main diagonal show the dissimilarity between within-condition trials at different (i.e., earlier or later) time points.

stRDMs were calculated for the four conditions separately, Fisher z-transformed and then averaged within conditions using a 10% trimmed mean (Vizioli et al., 2018), yielding, for example, an average RDM for grasp left. The averaged RDMs were then averaged across start orientations. For example, the RDMs for left and right start orientations were averaged to produce average Grasp and Turn RDMs (Fig. 3D,E, respectively). We did this because we were primarily interested in differences in neural representations between singular (grasping) and sequential (grasping then turning) actions, and we did not expect these differences to depend on start orientation. This approach resulted in two RDMs for each participant.
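The Fisher transform and trimmed averaging might look like the sketch below. Fisher’s z is defined for correlations, so the sketch converts each dissimilarity back to r = 1 − d before applying arctanh, and the output therefore grows with similarity. This ordering, the clipping constant and the dictionary keys are assumptions. `scipy.stats.trim_mean` with `proportiontocut=0.1` removes 10% of the values from each tail, which is one reading of “10% trimmed mean.”

```python
import numpy as np
from scipy.stats import trim_mean


def fisher_z_from_dissimilarity(d, eps=1e-6):
    """Convert dissimilarity d = 1 - r back to r, then apply Fisher's z = arctanh(r).

    r is clipped just inside (-1, 1) so that identical patterns do not give infinity.
    """
    r = np.clip(1.0 - np.asarray(d, dtype=float), -1.0 + eps, 1.0 - eps)
    return np.arctanh(r)


def average_condition(strdms, cut=0.10):
    """strdms: (trial pairs, T, T). Trimmed mean of the z values over trial pairs."""
    return trim_mean(fisher_z_from_dissimilarity(strdms), cut, axis=0)


def grasp_and_turn(strdms_by_condition):
    """Average the two start orientations within each task (hypothetical keys)."""
    avg = {name: average_condition(v) for name, v in strdms_by_condition.items()}
    grasp = (avg["grasp_left"] + avg["grasp_right"]) / 2.0
    turn = (avg["turn_left_to_right"] + avg["turn_right_to_left"]) / 2.0
    return grasp, turn
```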

In line with Vizioli et al. (2018), we performed statistical analysis on the Fisher z-transformed data; however, we used the nontransformed data for visualization to make the values more interpretable. To test for statistically significant differences between the grasp and turn RDMs, we subtracted, for each participant, the turn RDM from the grasp RDM. This produced a subtraction matrix (Turn vs Grasp; Fig. 3F), and we investigated where the subtraction differed significantly from zero. A positive value in the subtraction matrix therefore indicates that grasping trials are more dissimilar than turning trials at that time point. Conversely, a negative value indicates that turning trials are more dissimilar than grasping trials at that time point. Note that we tested whether each cell differed from zero instead of using the expanding sliding-window analysis of Ramon et al. (2015) and Vizioli et al. (2018). Our rationale was that those earlier studies relied on a visual task (face recognition), whereas our study relied on a motor task. Arguably, during motor preparation and execution, neural representations might evolve differently than purely visual responses. We therefore reasoned that testing each cell separately might reveal more about the temporal evolution of motor execution because, for instance, transitions in activation patterns between Planning and Execution might be brief or abrupt. Because we had averaged values across the main diagonal when calculating the dissimilarity matrices (Figs. 3, 5), these matrices were symmetric about the diagonal. Accordingly, we performed statistical analyses only on the values on and above the main diagonal of the Turn versus Grasp subtraction matrices (Fig. 3F).

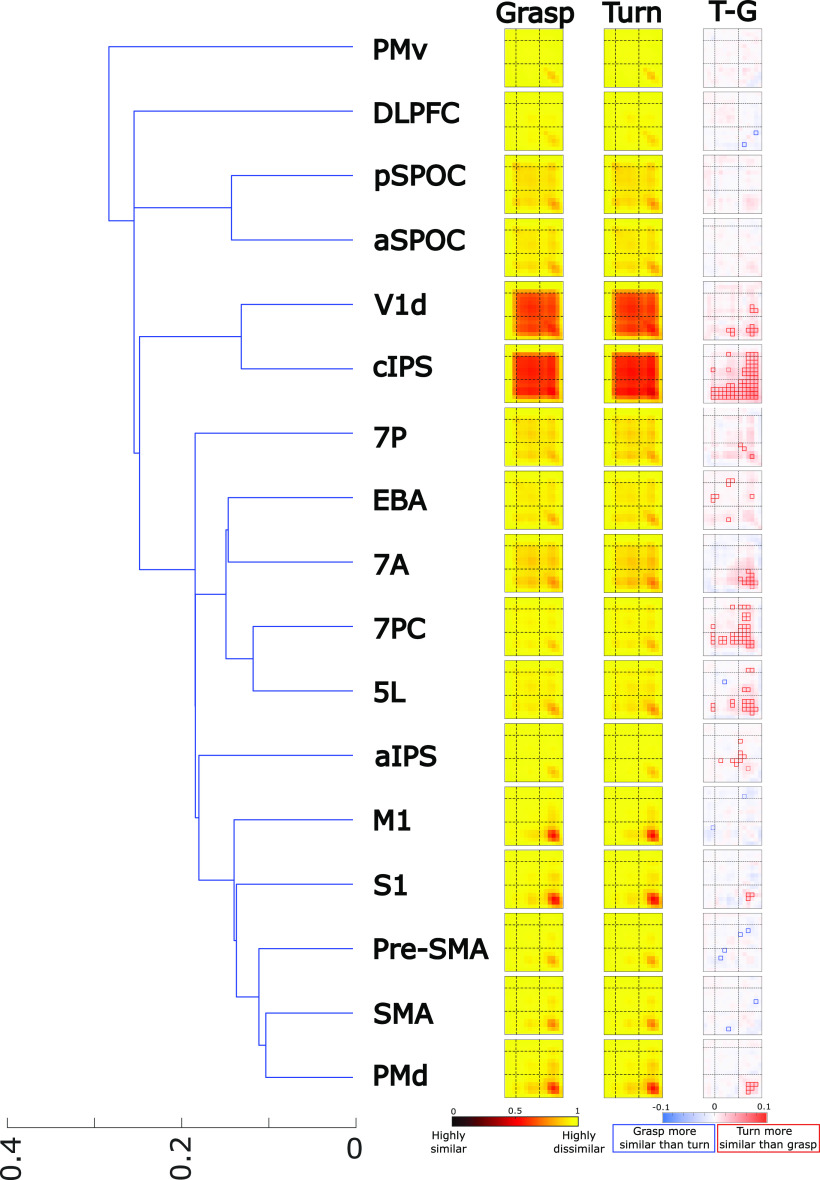

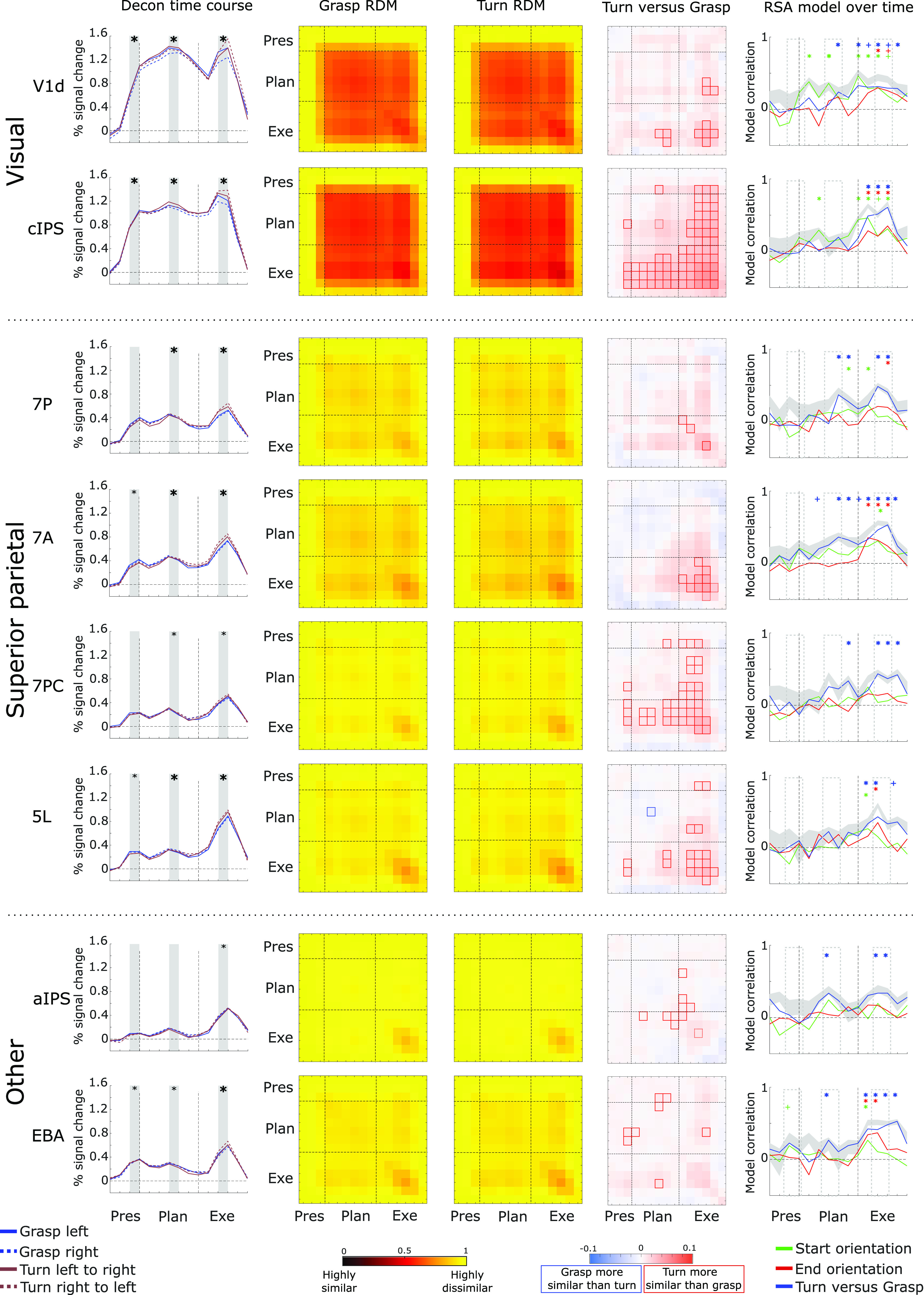

Figure 5.

Left, Hierarchical clustering of all the ROIs based on the averaged representational dissimilarity matrices (RDMs) of the Grasp and Turn conditions. We refer the reader to the legend of Figure 3 for a full explanation of the heatmaps. Note that T–G represents “Turn minus Grasp” and shows the final tMVPA result, marking cells with statistically significant differences in similarity (small red boxes = Turn > Grasp; small blue boxes = Grasp > Turn). The outline of each significance box indicates the significance level. A dashed outline means the cell was significant only when Bonferroni corrected for within-ROI comparisons. A solid outline means it was significant when Bonferroni corrected for all comparisons across all ROIs. Note that we only tested whether boxes differed significantly from zero (and not from each other).

To test for statistical significance, we performed a (1 – α) bootstrap confidence-interval analysis (critical α = 0.05) by sampling participants with replacement 500 times. As described above, we investigated whether the tMVPA subtraction values differed significantly from zero in either direction. Importantly, we excluded the first two time points (time points 0 and 1) and the last one (time point 14) from the statistical analyses, leaving 12 time points (time points 2–13). We did so for three reasons: no differences were expected before the BOLD response emerged, later time points reflect the poststimulus undershoot phase of the BOLD response, and the exclusion reduces the number of comparisons requiring Bonferroni correction. See Figure 1 for the trial timing and Figure 3 for the time points in the tMVPA analysis.
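One way to implement the bootstrap test is a percentile confidence interval of the group mean, resampling participants with replacement. The percentile method, the function name and the fixed random seed are assumptions. The Bonferroni correction simply divides α by the number of tests. Note that with 500 resamples and α = 0.05/78, the corrected quantiles fall at the extreme tails of the bootstrap distribution.

```python
import numpy as np


def bootstrap_ci(values, n_boot=500, alpha=0.05, n_tests=1, seed=0):
    """Percentile bootstrap CI of the group mean, resampling participants.

    values: (participants, cells). alpha is Bonferroni-divided by n_tests
    (e.g., 78 for the within-ROI correction). Returns the lower and upper
    bounds and a boolean mask of cells whose interval excludes zero.
    """
    rng = np.random.default_rng(seed)
    n = values.shape[0]
    idx = rng.integers(0, n, size=(n_boot, n))  # participant indices per resample
    boot_means = values[idx].mean(axis=1)  # (n_boot, cells)
    a = alpha / n_tests
    lower = np.quantile(boot_means, a / 2, axis=0)
    upper = np.quantile(boot_means, 1 - a / 2, axis=0)
    return lower, upper, (lower > 0) | (upper < 0)
```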

As for the univariate analysis, we accounted for multiple comparisons using Bonferroni correction in two ways. Our first, standard correction took into account only the number of comparisons within an ROI, not comparisons between regions. This resulted in correcting for 78 tests: the 66 unique off-diagonal pairs of the 12 included time points (the binomial coefficient C(12, 2)) plus the 12 included time points on the diagonal. The second, strict correction included comparisons across all regions at all time points and resulted in 936 tests. Accordingly, the critical α in the bootstrap confidence-interval analysis was Bonferroni corrected for the number of tests. Because reporting our tMVPA data in text or table format is impractical, we provide these results only in figure format (see data availability statement). In Figures 5 and 6, cells that differ significantly from zero have a dashed or solid outline for the standard and strict correction, respectively. Finally, we also qualitatively discuss the grasp and turn RDMs, whose difference constitutes the statistically tested tMVPA subtraction matrix, to facilitate conceptual understanding of the data. Note that this qualitative discussion is not incorporated into the results and discussion, because no statistical analysis was performed on these RDMs (it was performed on the subtraction matrix instead).

Figure 6.

Selection of ROIs that showed prominent differences in the tMVPA analysis. Some data are reproduced from subsets of Figures 2 (column 1) and 5 (columns 2–4, at higher resolution) to facilitate inspection and comparison across analysis methods for key regions. Column 1 shows the group average deconvolution time courses for the four conditions. The x-axis represents time (in 2 s TRs) and the y-axis represents % signal change in the BOLD signal. Vertical dashed lines represent the onset of the Planning phase (TR 3; after the initial Presentation phase) and of the Execution phase (TR 9) following the Planning phase. Columns 2 and 3 show the group average condition representational dissimilarity matrices for the grasp (column 2) and turn (column 3) conditions. Each cell of the matrix represents the dissimilarity between trials of the same condition at two time points. Dashed horizontal and vertical lines represent the same time points as in column 1: the first dashed horizontal/vertical line represents the start of the Planning phase (TR 3), and the second represents the start of the Execution phase (TR 9). Column 4, tMVPA results. Dashed lines have the same meaning as in columns 1–3. Red squares indicate pairs of time points at which turning trials are significantly more similar (less dissimilar) than grasping trials. Blue squares represent the opposite (grasping more similar than turning). Column 5, Representational similarity analysis using three models, as explained in the color legend, for each time point of the experimental trials. Solid lines represent the correlation between the data and each model over time. The shaded gray area represents the noise ceiling, with its lower and upper edges representing the lower and upper bounds. Dashed vertical lines have the same meaning as in the previous columns.
Colored “plus signs” indicate time points with statistically significant correlations for the respective model when FDR corrected for all within-ROI comparisons against zero. Colored asterisks indicate the same when FDR corrected for all comparisons (across all ROIs) against zero.

Hierarchical clustering

The tMVPA matrices (stRDMs) for Grasp and Turn revealed that different ROIs showed different temporal profiles. For example, some regions showed similar activation patterns throughout the entire trial, whereas other regions showed similar patterns only during motor execution (see Fig. 5). To group these different ROI timing patterns for display and discussion, we used hierarchical clustering. To do so, we averaged the grasp and turn RDMs within each participant to generate one RDM per participant and ROI. Next, for each participant, we calculated the Spearman correlations between all ROIs using the averaged RDMs. Each participant’s ROI correlation matrix was then transformed into a dissimilarity measure (1 – r). Finally, hierarchical clustering was performed using the ROI dissimilarity matrices of all participants.
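The clustering step could be sketched with SciPy as follows. The text does not specify how the RDMs were vectorized, how the participants’ dissimilarity matrices were combined, or which linkage rule was used. Taking the upper triangle, averaging 1 − ρ across participants and using average linkage are assumptions made here.

```python
import numpy as np
from scipy.stats import spearmanr
from scipy.cluster.hierarchy import linkage
from scipy.spatial.distance import squareform


def roi_linkage(grasp, turn, method="average"):
    """grasp, turn: (participants, rois, T, T) RDMs.

    Averages the two tasks, correlates ROIs (Spearman) within each participant
    on their vectorized upper-triangle RDMs, converts to 1 - rho, averages
    across participants (assumed), and returns a SciPy linkage matrix.
    """
    rdm = (grasp + turn) / 2.0
    n_sub, n_roi, n_t, _ = rdm.shape
    iu = np.triu_indices(n_t)
    dissim = np.zeros((n_roi, n_roi))
    for s in range(n_sub):
        vecs = rdm[s][:, iu[0], iu[1]]  # (rois, cells)
        rho, _ = spearmanr(vecs, axis=1)  # rows are variables -> (rois, rois)
        dissim += (1.0 - rho) / n_sub
    np.fill_diagonal(dissim, 0.0)
    return linkage(squareform(dissim, checks=False), method=method)
```

`scipy.cluster.hierarchy.dendrogram` can then draw the tree from the returned linkage matrix.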

Representational similarity analysis

We used RSA to examine the degree to which the pattern of activation across voxels within each ROI at each time point represented the start orientation, the end (goal) orientation, and the task, as illustrated in Figure 4A–C. For RSA, we relied on the methods described by Kriegeskorte (2008). By examining the degree to which different conditions evoke a similar pattern of brain activation within an ROI, the nature of neural coding (or representational geometry; Kriegeskorte and Kievit, 2013) can be assessed.

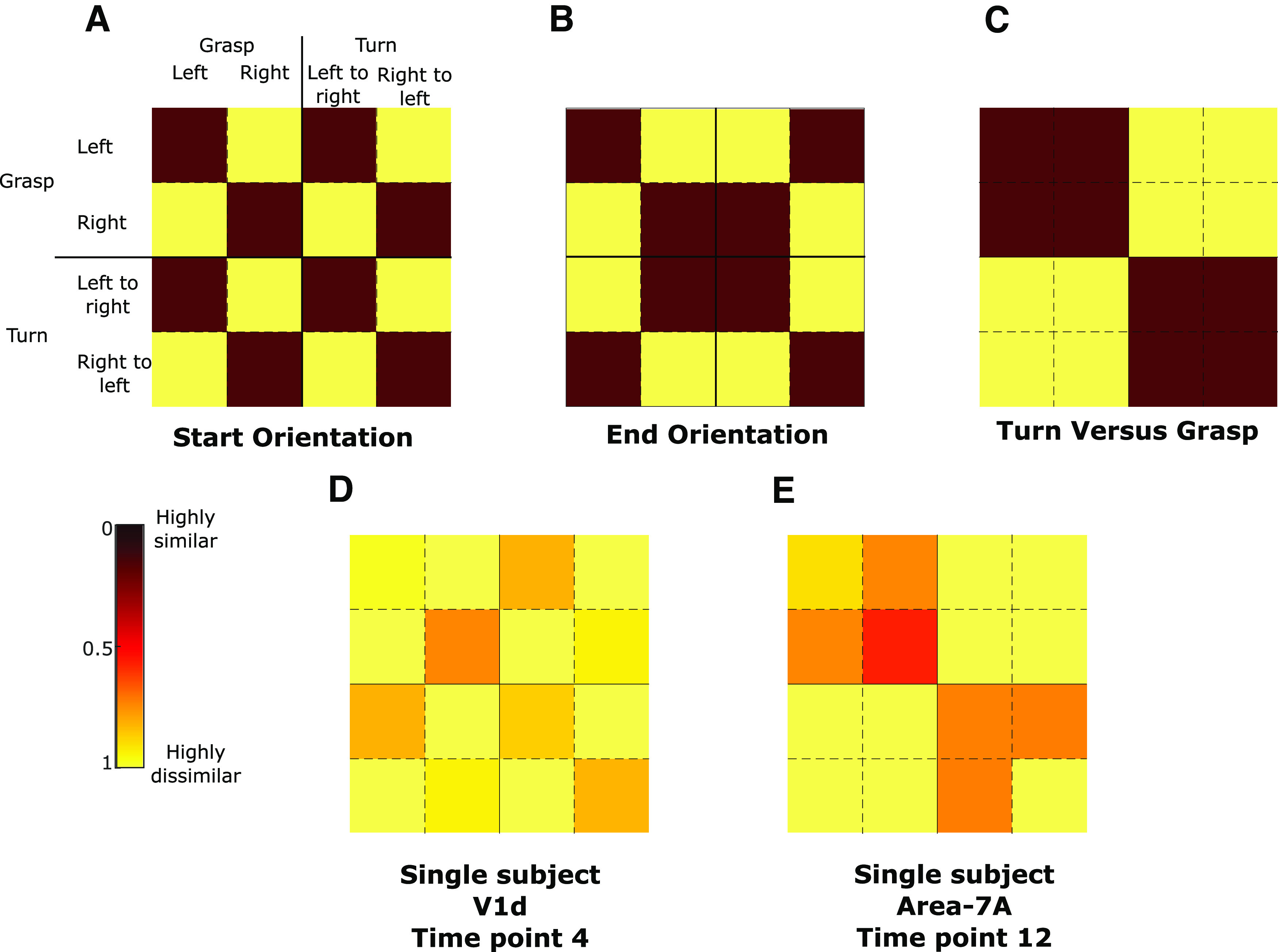

Figure 4.

Models used for representational similarity analysis (RSA). The first row shows the models for start orientation (A), end orientation (B), and turn versus grasp (C). The second row shows example data for one single time point for two regions of interest (D: V1d; E: area 7A) of one participant.

For each voxel of each ROI of each participant, we performed a deconvolution GLM. This was done for each condition and each run separately (but not for each trial, as in tMVPA). It provided the average time course of brain activation for each condition in each voxel of each ROI in each run of each participant. Then, for each voxel separately, the activations of the four conditions were normalized by subtracting the grand mean (i.e., the average across conditions) from the value of each condition in that voxel (Haxby et al., 2001). This normalization was done for each run separately. These steps yielded the normalized brain activation over time, averaged across same-condition trials within a run, for each condition, voxel, ROI, run and participant.
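The normalization is a single subtraction per run, time point and voxel. The sketch assumes the deconvolved estimates are stored as runs × conditions × time points × voxels (a hypothetical layout) and that the grand mean is taken separately at each time point.

```python
import numpy as np


def normalize_patterns(betas):
    """betas: (runs, conditions, time points, voxels) deconvolved estimates.

    Within each run, time point and voxel, subtract the mean across the
    conditions (the grand mean) from every condition's value.
    """
    return betas - betas.mean(axis=1, keepdims=True)
```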

Subsequently, we computed representational dissimilarity matrices (RDMs) within each ROI. At each time point, Pearson correlations were calculated between the activation patterns across all voxels of a given ROI (i.e., “within time point correlations”). These correlations were then transformed into a dissimilarity metric (1 – r). This was done within conditions (e.g., grasp left activations at time point 1 correlated with themselves at time point 1) and between conditions (e.g., grasp left activations at time point 1 correlated with turn left to right activations at time point 1). In this manner, RDMs quantify the dissimilarity in activation patterns between conditions, i.e., “how well activity at any time point in a given set of voxels in a given condition correlates with the activity at the same time point in the same voxels during a different condition.” Note that we did not perform autocorrelations (e.g., correlating grasp left activations during run 1 with themselves, which would yield correlation values of 1). Instead, to test the within-subject reliability of the RDM calculations, we used cross-validation. We split the data into all possible combinations of runs that yield two sets of four runs each (two examples: split 1 = correlate all even runs with the odd runs; split 2 = correlate the first four runs with the last four runs). Split data provide data-based estimates of dissimilarity even for the same condition, because no autocorrelations are performed; in unsplit data, this dissimilarity is necessarily zero. Data for all cells in the RDM are necessary for RSA on factorial designs to ensure that contrasts are balanced across orthogonal factors. Finally, the RDMs were Fisher transformed to obtain a similarity metric with an approximately Gaussian distribution.
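The cross-validation scheme can be sketched for one ROI and one time point. The sketch assumes eight runs (implied by “two sets of four runs each”) and patterns stored as runs × conditions × voxels. Averaging the split RDMs across all 35 splits and symmetrizing each split RDM are assumptions, not details stated above.

```python
import numpy as np
from itertools import combinations


def run_splits(n_runs=8):
    """Every division of the runs into two equal halves, each listed once."""
    runs = tuple(range(n_runs))
    splits = []
    for half in combinations(runs, n_runs // 2):
        other = tuple(r for r in runs if r not in half)
        if half < other:  # drop the mirror image of a split already listed
            splits.append((half, other))
    return splits


def split_rdm(patterns, split):
    """patterns: (runs, conditions, voxels) at one time point.

    Each condition's mean pattern in one half is correlated with every
    condition's mean pattern in the other half, so diagonal cells are
    estimated from independent data instead of being trivially zero.
    """
    a = patterns[list(split[0])].mean(axis=0)
    b = patterns[list(split[1])].mean(axis=0)
    n_cond = a.shape[0]
    rdm = 1.0 - np.corrcoef(a, b)[:n_cond, n_cond:]
    return (rdm + rdm.T) / 2.0  # which half comes first is arbitrary


def crossvalidated_rdm(patterns):
    """Average of the split-half RDMs over all splits (averaging is assumed)."""
    splits = run_splits(patterns.shape[0])
    return np.mean([split_rdm(patterns, s) for s in splits], axis=0)
```

For eight runs, `run_splits` yields C(8, 4)/2 = 35 splits. These include the even/odd and first-four/last-four examples given above.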

To test whether each region contained information about condition differences, we measured correlations between the RDM in each region (Fig. 4D,E) and three separate models that capture orthogonal components of the experimental task (Fig. 4A–C). This was done for each time point separately (the 15 time points included in the GLM, i.e., time points 0–14; time-resolved decoding). Note that, in contrast to the tMVPA, we computed the RSA for all time points because our selection of models included “two visual models,” which may already decode information during the earliest time points. The three models capture specific task attributes:

(1) Start orientation, regardless of task (i.e., grasping or turning). A time point with an RDM that correlates with this model would indicate encoding of the initial orientation of the handle (i.e., left or right).

(2) End orientation, regardless of the performed action (i.e., grasping or turning). A time point with an RDM that correlates with this model would indicate encoding of the final orientation of the handle (i.e., left or right) after task execution.

(3) Motor task, regardless of the initial/final orientation of the handle (i.e., left or right). A time point with an RDM that correlates with this model would indicate encoding of the task goal (i.e., grasping or turning).

The metric was calculated as the Spearman correlation (ρ) between the Fisher-transformed split RDMs and each model for each ROI and each participant. For each ROI, we also calculated the upper and lower bounds of the noise ceiling. Briefly, the noise ceiling is the expected RDM correlation achieved by the (unknown) true model given the noise in the data. It provides an estimate of the maximum correlation that could be expected for a given model in a given ROI (Kriegeskorte, 2008). RDMs were first rank transformed, following the original z-transformation. The upper bound of the noise ceiling, considered an overestimate of the maximum correlation, was calculated by iteratively correlating one participant’s RDM with the average RDM of all participants (thus including the given participant) and then averaging across all participants. The lower bound of the noise ceiling, considered an underestimate of the maximum correlation, was calculated by iteratively correlating one participant’s RDM with the average RDM of all other participants (thus excluding the given participant) and then averaging across participants.
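The three models and the noise-ceiling bounds can be written compactly. The sketch assumes the four conditions are ordered grasp left, grasp right, turn left to right, turn right to left (a hypothetical order), and that all 16 cells of each split RDM enter the Spearman correlation. Each model RDM contains 0 for condition pairs that share the attribute and 1 otherwise.

```python
import numpy as np
from scipy.stats import spearmanr

# Condition order (hypothetical): grasp left, grasp right, turn L->R, turn R->L
START = np.array(["L", "R", "L", "R"])
END = np.array(["L", "R", "R", "L"])
TASK = np.array(["G", "G", "T", "T"])


def model_rdm(labels):
    """0 where two conditions share the label, 1 otherwise."""
    return (labels[:, None] != labels[None, :]).astype(float)


MODELS = {"start": model_rdm(START), "end": model_rdm(END), "task": model_rdm(TASK)}


def model_fit(rdm, model):
    """Spearman rho between all cells of a (split) RDM and a model RDM."""
    return spearmanr(rdm.ravel(), model.ravel())[0]


def noise_ceiling(rdms):
    """rdms: (participants, 4, 4) at one time point.

    Upper bound: each participant vs the group mean including them.
    Lower bound: each participant vs the mean of all other participants.
    """
    n = len(rdms)
    mean_all = rdms.mean(axis=0)
    upper = np.mean([model_fit(rdms[i], mean_all) for i in range(n)])
    lower = np.mean([model_fit(rdms[i], np.delete(rdms, i, axis=0).mean(axis=0))
                     for i in range(n)])
    return lower, upper
```

Because the design is 2 × 2, the start, end and task models are mutually orthogonal: their pairwise correlations are zero.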

We used one-sample, one-tailed Student’s t tests (α = 0.05) to assess whether model correlations were significantly greater than zero at each time point in each ROI. Importantly, as for the tMVPA, we excluded the first two time points (time points 0 and 1) and the last one (time point 14), leaving 12 time points (time points 2–13) in the analysis. Note that we did not test whether models outperformed each other, as we were primarily interested in which type of information each ROI carried. To be consistent with previous studies combining motor tasks and RSA (Gallivan et al., 2013; Di Bono et al., 2015; Monaco et al., 2020), we corrected for multiple comparisons using the false discovery rate (FDR). As for the univariate analysis, we corrected within each ROI separately (12 time points × 3 models) and, in a stricter manner, across all regions (strict correction: 12 time points × 3 models × 17 ROIs). The results are shown in Figure 6. A “plus sign” marks a model that is significantly larger than zero at a given time point under the within-ROI correction only. When the model at that time point also reaches significance under the strict threshold, an asterisk is shown instead. Because of the amount of data (i.e., 12 time points × 3 models × 17 ROIs), we report only a selection of values in the Results section. Akin to the univariate analysis, Table 2 provides data for time points 2 and 3 (Presentation phase), 6 and 7 (Planning phase), and 11 and 12 (Execution phase) for the subset of ROIs shown in Figure 6. Again, note that we performed the statistical tests and the corrections for multiple comparisons over all 12 time points, not just the six mentioned here, to keep the statistical analysis in line with our tMVPA approach. For the same reason, we did not average time points within each phase here (e.g., time points 2 and 3 were not averaged into a single “Presentation phase”).
The values in Table 2 are the q-values from the strict FDR correction, together with the confidence intervals. The intervals are given as [lower threshold mean upper threshold] and are Bonferroni corrected for multiple comparisons in the strict manner (12 time points × 3 models × 17 ROIs). We corrected the confidence intervals with Bonferroni because the stepwise procedure of FDR does not translate readily to confidence intervals. As a result, our FDR-based interpretation (shown in Fig. 6) can reveal significant effects even when the Bonferroni-corrected confidence intervals (shown in Table 2) contain zero.
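The FDR step can be sketched with a hand-written Benjamini–Hochberg adjustment; recent SciPy releases also offer `scipy.stats.false_discovery_control`. Whether the authors used Benjamini–Hochberg specifically is an assumption. The sketch assumes one-tailed tests against zero and an array of participants × tested time points × models for one ROI. For the strict correction, the p-values from all 17 ROIs would be pooled (612 tests) before adjustment.

```python
import numpy as np
from scipy import stats


def bh_qvalues(p):
    """Benjamini-Hochberg adjusted p-values (q-values) for a 1-D array."""
    p = np.asarray(p, dtype=float)
    m = p.size
    order = np.argsort(p)
    ranked = p[order] * m / np.arange(1, m + 1)
    # enforce monotonicity, working down from the largest p-value
    ranked = np.minimum.accumulate(ranked[::-1])[::-1]
    q = np.empty(m)
    q[order] = np.minimum(ranked, 1.0)
    return q


def rsa_stats(rho):
    """rho: (participants, tested time points, models) for one ROI.

    One-sample, one-tailed t tests against zero, then FDR within the ROI.
    """
    p = stats.ttest_1samp(rho, 0.0, axis=0, alternative="greater").pvalue
    return bh_qvalues(p.ravel()).reshape(p.shape)
```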

Table 2.

Values represent model correlation values for each ROI at specific time points

| ROI | Model | TP 2 (Presentation) CI | TP 2 q | TP 3 (Presentation) CI | TP 3 q | TP 6 (Planning) CI | TP 6 q | TP 7 (Planning) CI | TP 7 q | TP 11 (Execution) CI | TP 11 q | TP 12 (Execution) CI | TP 12 q |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| V1d | Start Ori | −0.728 −0.187 0.355 | 0.979 | −0.411 0.231 0.873 | 0.192 | −0.246 0.36 0.966 | **0.048** | −0.337 0.155 0.648 | 0.244 | −0.033 0.302 0.637 | **0.008** | −0.164 0.187 0.538 | 0.07 |
| | End Ori | −0.551 0.009 0.569 | 0.691 | −0.494 −0.009 0.476 | 0.732 | −0.395 0.115 0.626 | 0.365 | −0.234 0.173 0.581 | 0.14 | −0.156 0.298 0.752 | **0.034** | −0.284 0.258 0.799 | 0.103 |
| | GvT | −0.619 −0.018 0.583 | 0.753 | −0.452 0.036 0.523 | 0.602 | −0.201 0.067 0.334 | 0.331 | −0.164 0.24 0.644 | **0.048** | −0.136 0.315 0.767 | **0.026** | −0.231 0.293 0.817 | 0.059 |
| cIPS | Start Ori | −0.698 −0.049 0.6 | 0.81 | −0.313 0.191 0.695 | 0.179 | −0.407 0.133 0.673 | 0.333 | −0.497 0.16 0.817 | 0.338 | −0.183 0.209 0.6 | 0.069 | 0.067 0.311 0.555 | **0.001** |
| | End Ori | −0.321 0.004 0.33 | 0.694 | −0.163 0.111 0.385 | 0.155 | −0.377 0.049 0.475 | 0.53 | −0.253 0.044 0.342 | 0.478 | −0.072 0.209 0.49 | **0.023** | 0.037 0.351 0.665 | **0.002** |
| | GvT | −0.599 −0.036 0.528 | 0.795 | −0.439 −0.018 0.403 | 0.773 | −0.378 0.076 0.529 | 0.451 | −0.397 0.195 0.788 | 0.229 | 0.119 0.515 0.912 | **0.001** | 0.243 0.609 0.974 | **0.001** |
| 7P | Start Ori | −0.573 −0.218 0.137 | 0.996 | −0.7 −0.138 0.425 | 0.943 | −0.253 0.124 0.502 | 0.229 | −0.297 0.111 0.519 | 0.299 | −0.358 0.062 0.482 | 0.479 | −0.288 0.076 0.439 | 0.394 |
| | End Ori | −0.59 0 0.59 | 0.699 | −0.583 −0.062 0.459 | 0.858 | −0.275 0.004 0.283 | 0.691 | −0.342 0.098 0.537 | 0.371 | −0.216 0.209 0.633 | 0.092 | −0.11 0.195 0.501 | **0.038** |
| | GvT | −0.611 −0.049 0.514 | 0.824 | −0.521 −0.058 0.405 | 0.86 | −0.691 −0.031 0.628 | 0.776 | −0.127 0.369 0.865 | **0.023** | −0.031 0.484 1 | **0.008** | −0.156 0.404 0.964 | **0.025** |
| 7A | Start Ori | −0.722 −0.089 0.544 | 0.873 | −0.328 0.213 0.754 | 0.164 | −0.314 0.209 0.732 | 0.158 | −0.278 0.133 0.545 | 0.235 | −0.037 0.311 0.659 | **0.009** | −0.2 0.169 0.537 | 0.116 |
| | End Ori | −0.677 −0.111 0.455 | 0.916 | −0.481 −0.04 0.401 | 0.829 | −0.473 −0.044 0.384 | 0.842 | −0.51 0 0.51 | 0.699 | −0.054 0.32 0.694 | **0.01** | −0.101 0.24 0.58 | **0.026** |
| | GvT | −0.508 0.04 0.588 | 0.602 | −0.278 0.2 0.678 | 0.145 | −0.317 0.253 0.823 | 0.129 | −0.171 0.364 0.899 | **0.029** | −0.078 0.462 1.002 | **0.01** | 0.061 0.533 1.005 | **0.002** |
| 7PC | Start Ori | −0.57 −0.133 0.304 | 0.969 | −0.53 −0.036 0.459 | 0.808 | −0.383 −0.022 0.339 | 0.795 | −0.473 0.004 0.482 | 0.699 | −0.414 0.04 0.494 | 0.58 | −0.403 0.062 0.527 | 0.504 |
| | End Ori | −0.597 −0.142 0.313 | 0.97 | −0.481 −0.031 0.419 | 0.806 | −0.392 0.102 0.597 | 0.395 | −0.528 −0.009 0.51 | 0.731 | −0.175 0.138 0.45 | 0.131 | −0.264 0.164 0.593 | 0.177 |
| | GvT | −0.527 0.044 0.616 | 0.599 | −0.656 −0.129 0.399 | 0.943 | −0.26 0.258 0.775 | 0.09 | −0.263 0.227 0.716 | 0.113 | −0.071 0.435 0.941 | **0.01** | −0.202 0.369 0.939 | **0.036** |
| 5L | Start Ori | −0.769 −0.227 0.316 | 0.988 | −0.463 0.04 0.543 | 0.596 | −0.395 0 0.395 | 0.699 | −0.519 −0.004 0.51 | 0.716 | −0.209 0.151 0.511 | 0.144 | −0.3 0 0.3 | 0.699 |
| | End Ori | −0.381 −0.053 0.274 | 0.887 | −0.434 0.058 0.549 | 0.526 | −0.236 0.062 0.36 | 0.393 | −0.262 0.182 0.627 | 0.151 | −0.029 0.346 0.722 | **0.008** | −0.322 0.098 0.518 | 0.353 |
| | GvT | −0.554 0.013 0.581 | 0.68 | −0.513 0.098 0.709 | 0.461 | −0.299 0.222 0.743 | 0.14 | −0.717 −0.009 0.699 | 0.723 | 0.026 0.426 0.826 | **0.003** | −0.328 0.329 0.986 | 0.088 |
| aIPS | Start Ori | −0.543 −0.151 0.241 | 0.983 | −0.567 −0.062 0.443 | 0.86 | −0.192 0.244 0.68 | 0.059 | −0.321 0.098 0.517 | 0.352 | −0.245 0.195 0.636 | 0.129 | −0.325 0.049 0.423 | 0.505 |
| | End Ori | −0.39 −0.018 0.354 | 0.776 | −0.593 −0.089 0.416 | 0.897 | −0.579 0.04 0.659 | 0.613 | −0.489 0.022 0.533 | 0.651 | −0.25 0.169 0.588 | 0.156 | −0.385 0.093 0.571 | 0.403 |
| | GvT | −0.47 0.036 0.541 | 0.602 | −0.665 −0.071 0.522 | 0.858 | −0.076 0.338 0.751 | **0.013** | −0.271 0.235 0.742 | 0.112 | −0.175 0.338 0.85 | **0.033** | −0.14 0.338 0.816 | **0.026** |
| EBA | Start Ori | −0.172 0.191 0.554 | 0.072 | −0.364 0.089 0.542 | 0.403 | −0.476 0.004 0.485 | 0.699 | −0.501 0.009 0.519 | 0.689 | −0.239 0.107 0.452 | 0.25 | −0.238 0.08 0.398 | 0.327 |
| | End Ori | −0.502 0.027 0.555 | 0.641 | −0.537 0.013 0.563 | 0.679 | −0.661 −0.004 0.652 | 0.716 | −0.479 −0.018 0.443 | 0.769 | −0.135 0.369 0.872 | **0.024** | −0.397 0.133 0.663 | 0.327 |
| | GvT | −0.297 0.227 0.75 | 0.135 | −0.439 0.138 0.715 | 0.343 | −0.17 0.267 0.703 | **0.044** | −0.439 0.107 0.652 | 0.403 | −0.058 0.413 0.884 | **0.009** | −0.047 0.48 1.007 | **0.008** |

Values depict the mean and its confidence interval, written as [lower bound mean upper bound]. Note that the confidence intervals are Bonferroni corrected in the strict manner (critical α divided by 12 time points × 3 models × 17 ROIs). The q-statistic is the p-value FDR corrected in the same strict manner. Significant values (outlined in bold) were defined as those having a q-value < 0.05 (see Materials and Methods, Representational similarity analysis). RSA values are given for the ROIs shown in Figure 6.
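The two strict corrections named in the caption can be illustrated with a short sketch. It assumes the Bonferroni divisor stated above (12 × 3 × 17 = 612 tests) and assumes that the FDR procedure is Benjamini–Hochberg, which this section does not specify; the function names are hypothetical and the demo p-values are invented, not taken from the table.

```python
# Sketch of the strict corrections described in the Table 2 caption.
# Assumption: the FDR procedure is Benjamini-Hochberg; function names are
# hypothetical and the p-values in the demo are invented.

N_TIME_POINTS = 12
N_MODELS = 3
N_ROIS = 17
N_TESTS = N_TIME_POINTS * N_MODELS * N_ROIS  # 612 comparisons in total


def bonferroni_alpha(alpha: float = 0.05, n_tests: int = N_TESTS) -> float:
    """Critical alpha after dividing by the total number of tests."""
    return alpha / n_tests


def bh_qvalues(pvals: list[float]) -> list[float]:
    """Benjamini-Hochberg adjusted p-values (q-values), in input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])  # ascending p
    q = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so q-values stay monotone.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvals[i] * m / rank)
        q[i] = running_min
    return q


if __name__ == "__main__":
    print(N_TESTS)                      # 612
    print(f"{bonferroni_alpha():.2e}")  # 8.17e-05
    print(bh_qvalues([0.001, 0.02, 0.03, 0.5]))
```

Under the strict scheme, all 612 p-values would be passed to `bh_qvalues` together rather than per ROI or per model.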

Table 1.

Number of voxels per region of interest

| Region of interest | Number of functional voxels |
|---|---|
| M1 | 98 ± 7 |
| S1 | 123 ± 11 |
| PMv | 107 ± 8 |
| PMd | 217 ± 9 |
| aSPOC | 178 ± 14 |
| pSPOC | 138 ± 7 |
| aIPS | 268 ± 17 |
| cIPS | 172 ± 10 |
| Pre-SMA | 108 ± 7 |
| SMA | 132 ± 10 |
| DLPFC | 129 ± 9 |
| V1d | 152 ± 13 |
| Area 5L | 167 ± 10 |
| Area 7A | 194 ± 12 |
| Area 7P | 150 ± 9 |
| Area 7PC | 198 ± 13 |
| EBA | 1163 ± 23 |

The average number of functional voxels (1-mm isotropic) per region of interest. Values shown are mean ± SEM.

Results

Qualitative examination of the deconvolution time courses (Fig. 2) and the tMVPA results indicated that different regions had different temporal profiles of activity. As shown in Figure 5, hierarchical clustering of the RDMs averaged across Grasp and Turn facilitated conceptual grouping of the ROIs for further investigation.

Notably, ROIs differed in the time ranges over which they showed reliable activation patterns across trials of the same type. Most strikingly, V1d and cIPS showed strong temporal similarity in activity patterns throughout the Plan and Execute phases of the trial, as indicated by the large block of high similarity (dark red) beginning early in Planning and continuing through late Execution (Fig. 5). That is, in these regions, voxel activation patterns were similar not only at matched time points (diagonal cells) but also across different time points throughout Planning and Execution (off-diagonal cells in the Planning and Execution phases). This indicates a consistent neural representation throughout the trials. Similar but weaker patterns were also observed in other regions, particularly aSPOC, pSPOC, 7P, EBA, 7A, 7PC, and 5L.
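The distinction between diagonal and off-diagonal consistency can be made concrete with a simplified sketch. It assumes Pearson correlation between the voxel patterns of *different* trials of the same condition at every pair of time points. tMVPA as used here works with dissimilarities (e.g., 1 − r), and the study's preprocessing is not reproduced. The function name and the synthetic data are hypothetical.

```python
import numpy as np


def temporal_consistency(data: np.ndarray) -> np.ndarray:
    """Time x time similarity of voxel patterns across *different* trials.

    data: (n_trials, n_time, n_voxels) for one condition (e.g., Grasp).
    S[t1, t2] is the Pearson r between trial i at t1 and trial j at t2,
    averaged over all ordered pairs i != j. Diagonal cells compare matched
    time points; off-diagonal cells compare different time points.
    """
    n_trials, n_time, n_vox = data.shape
    # z-score each pattern across voxels so that (z_a . z_b) / n_vox = r
    z = data - data.mean(axis=2, keepdims=True)
    z = z / z.std(axis=2, keepdims=True)
    sim = np.zeros((n_time, n_time))
    n_pairs = 0
    for i in range(n_trials):
        for j in range(n_trials):
            if i == j:
                continue  # skip within-trial comparisons
            sim += z[i] @ z[j].T / n_vox
            n_pairs += 1
    return sim / n_pairs


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    data = rng.normal(size=(10, 12, 200))         # toy: noise only
    data[:, 4:, :] += 2.0 * rng.normal(size=200)  # shared pattern from t = 4
    S = temporal_consistency(data)
    print(S[5, 10].round(2), S[0, 1].round(2))    # high vs. near zero
```

A pattern that persists from time point 4 onward produces a large high-similarity block that extends off the diagonal. This is the kind of signature described above for V1d and cIPS.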

In contrast, other regions (aIPS, M1, S1, pre-SMA, SMA, and PMd) showed trial-consistent activation patterns predominantly during the peak Execution period (dark red blocks in the Execution phase, i.e., in the lower right corners of the Grasp and Turn matrices). In some cases, patterns were also consistent between the peak Execution phase and earlier time points during Plan and Execute; M1 is a clear example (Fig. 5; off-diagonal cells between the Planning and Execution phases, visible as the reddish “wings” above and to the left of the peak similarity). PMv and DLPFC showed only weak consistency of patterns across trials, which was highest at the Execution peak.

Interesting patterns were also observed in the tMVPA difference in trial-by-trial consistency between Turn and Grasp (Fig. 5, final column). Notably, many regions showed higher consistency for Turn than for Grasp actions.

Based on these observations from Figure 5, we focused subsequent analysis and interpretation on the subset of ROIs shown in Figure 6.

Visual regions of V1d and cIPS

Univariate analysis

The first column in Figure 6 shows the deconvolution time courses for V1d and cIPS. Both regions show a steep increase in average voxel activation following object presentation compared with baseline (V1d = 0.31 ± 0.06, p < 0.001, p(strict) = 0.008; cIPS = 0.46 ± 0.07, p < 0.001, p(strict) < 0.006). Activation remains elevated throughout the Planning (V1d = 1.31 ± 0.19, p < 0.001, p(strict) < 0.001; cIPS = 1.09 ± 0.19, p < 0.001, p(strict) < 0.001) and Execution phases (V1d = 1.07 ± 0.22, p < 0.001, p(strict) = 0.013; cIPS = 1.15 ± 0.18, p < 0.001, p(strict) < 0.001).
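A minimal sketch of a baseline comparison of this kind follows. It assumes a two-sided one-sample t-test across the 16 participants and a "strict" correction that multiplies p by the 17 ROIs and caps the result at 1. The factor of 17 is inferred from the reported pairs (e.g., p = 0.037 versus p(strict) = 0.63 for area 7A in the SPL results) and is not stated in this section. The function name and toy values are hypothetical.

```python
import numpy as np
from scipy import stats

N_ROIS = 17  # assumed strict Bonferroni factor (one test per ROI)


def compare_to_baseline(values, n_rois: int = N_ROIS):
    """One-sample, two-sided t-test of per-participant activation vs. zero.

    values: one activation estimate per participant for one ROI and phase.
    Returns (mean, SEM, uncorrected p, strict p), where strict p is
    p * n_rois capped at 1 (assumed form of the "p(strict)" correction).
    """
    x = np.asarray(values, dtype=float)
    res = stats.ttest_1samp(x, popmean=0.0)
    sem = x.std(ddof=1) / np.sqrt(x.size)
    p_strict = min(1.0, float(res.pvalue) * n_rois)
    return float(x.mean()), float(sem), float(res.pvalue), p_strict


if __name__ == "__main__":
    # Hypothetical values for 16 participants (not the study's data).
    toy = [0.1, 0.2, 0.3, 0.4] * 4
    print(compare_to_baseline(toy))
```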

Qualitative (nonstatistical) assessment of the condition RDMs

In Figure 6, the second and third columns show the condition RDMs for Grasp and Turn, respectively. For both regions, within-condition similarity of activation patterns increases strongly following object presentation. Given the sluggish hemodynamic response and the results of the univariate analysis, this early increase in pattern similarity is plausibly driven primarily by a visual response. Notably, within-condition similarity of cIPS activation patterns increases further toward peak Execution (approximately time points 11–13).

Temporal multivoxel pattern analysis

The fourth column in Figure 6 depicts the Turn minus Grasp difference, on which we performed the statistical analyses described previously. For V1d, tMVPA cannot discriminate the Grasp and Turn conditions at matched time points (along the diagonal) during motor Planning. That is, subtracting the Grasp dissimilarity from the Turn dissimilarity (Fig. 6, second and third columns) does not yield a difference that is statistically different from zero. However, tMVPA does discriminate the activation patterns of grasping and turning during late Execution (i.e., the “plus sign” of significant squares in the Grasp vs Turn RDM for V1d), suggesting that V1d may encode changes in visual information (e.g., increased motion during turning) or nonvisual motor components. tMVPA also discriminated activity patterns for grasping versus turning when dissimilarities were computed between time points of the late Planning phase and time points of the late Execution phase (i.e., the three adjacent significant squares in the Grasp vs Turn RDM for V1d). These findings suggest that activation patterns in V1d become tuned toward either grasping or turning during late motor Planning and that V1d may not solely encode visual information. Similar but stronger effects were found for cIPS: tMVPA discriminated cIPS activation patterns during most of the Execution phase, as well as when dissimilarities were computed between time points of the Execution phase and early and late time points of the Planning phase (i.e., the vertical and horizontal “wings” of significant squares above and to the left of the Execution phase). This suggests that task representations in cIPS emerge early in the Planning period.
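The Turn minus Grasp comparison can be sketched as a cell-wise test against zero across participants. Here we assume a one-sample t-test for each cell of the time-by-time matrix, followed by Benjamini–Hochberg FDR control. The procedure actually used is the one described in Materials and Methods. The function names and toy data below are hypothetical.

```python
import numpy as np
from scipy import stats


def bh_reject(pvals: np.ndarray, q: float = 0.05) -> np.ndarray:
    """Benjamini-Hochberg: boolean mask of p-values significant at FDR q."""
    p = np.asarray(pvals).ravel()
    m = p.size
    order = np.argsort(p)
    below = p[order] <= q * np.arange(1, m + 1) / m
    reject = np.zeros(m, dtype=bool)
    if below.any():
        k = np.nonzero(below)[0].max()  # largest rank meeting its threshold
        reject[order[: k + 1]] = True
    return reject.reshape(np.shape(pvals))


def turn_minus_grasp(turn: np.ndarray, grasp: np.ndarray, q: float = 0.05):
    """Cell-wise test of Turn - Grasp dissimilarity against zero.

    turn, grasp: (n_participants, n_time, n_time) dissimilarity matrices.
    Returns the mean difference matrix and a mask of FDR-significant cells.
    """
    diff = turn - grasp
    res = stats.ttest_1samp(diff, popmean=0.0, axis=0)
    return diff.mean(axis=0), bh_reject(res.pvalue, q)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    grasp = rng.normal(0.5, 0.1, size=(16, 12, 12))  # toy data
    turn = grasp + rng.normal(0.0, 0.1, size=(16, 12, 12))
    turn[:, 9:, 9:] += 0.3  # toy effect confined to late time points
    mean_diff, sig = turn_minus_grasp(turn, grasp)
    print(sig.astype(int))
```

Cells on the diagonal test matched time points, while off-diagonal cells test the cross-phase comparisons (e.g., late Planning versus late Execution) discussed above.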

Representational similarity analysis

For the RSA, we tested how well multivariate activation patterns fit three models representing start orientation, end orientation, and task (Turn vs Grasp) over time (Fig. 6, last column). The confidence intervals and q-values for a selection of time points (Fig. 6, last column, time points within transparent rectangles with dashed gray outlines) are provided in Table 2. Notably, all three types of information appear to be represented in both V1d and cIPS during action Execution, whereas starting orientation already appeared to be represented during the early Planning phase. In addition, during the late Planning phase, both starting orientation and Grasp versus Turn appeared to be represented in V1d (see Table 2 for time points 6 and 7). Although decoding of start orientation during Planning and of end orientation during Execution may reflect the processing of simple visual orientation information (Kamitani and Tong, 2005), the overlapping coding of start and end orientation during Execution (Fig. 6, last column, V1d and cIPS; green and red traces) suggests a much more complex representation. Notably, V1d represented the task during Planning, before any action had been initiated, perhaps in anticipation of the visual consequences of the upcoming action.
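Model-based RSA of this kind can be sketched with categorical model RDMs. This is a minimal illustration that assumes binary (same/different) model RDMs and uses Spearman correlation over the lower triangle as the fit measure. The condition labels below are hypothetical placeholders, not the study's actual dial orientations or design. In particular, setting end equal to start for Grasp trials is an assumption of the toy example.

```python
import numpy as np
from scipy.stats import spearmanr


def model_rdm(labels) -> np.ndarray:
    """Categorical model RDM: 0 if two conditions share a label, else 1."""
    lab = np.asarray(labels)
    return (lab[:, None] != lab[None, :]).astype(float)


def rsa_fit(neural_rdm: np.ndarray, models: dict) -> dict:
    """Spearman rho between the neural RDM and each model RDM, using the
    off-diagonal lower triangle (each condition pair counted once)."""
    il = np.tril_indices(neural_rdm.shape[0], k=-1)
    return {name: float(spearmanr(neural_rdm[il], rdm[il])[0])
            for name, rdm in models.items()}


if __name__ == "__main__":
    # Hypothetical 6-condition design; labels are placeholders only.
    task = ["grasp"] * 3 + ["turn"] * 3
    start = ["o1", "o2", "o3"] * 2
    end = ["o1", "o2", "o3", "o2", "o3", "o1"]
    models = {"start": model_rdm(start), "end": model_rdm(end),
              "task": model_rdm(task)}
    rng = np.random.default_rng(1)
    neural = models["task"] + rng.normal(0.0, 0.05, (6, 6))  # task-driven toy
    print(rsa_fit(neural, models))
```

Repeating such a fit at each time point yields one trace per model, analogous to the green (start), red (end), and blue (task) traces in Figure 6.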

In sum, although our findings are consistent with a role for V1d and cIPS as predominantly visual regions, two observations suggest that these regions may already encode motor components during the Planning phase: the similarity in activation patterns (tMVPA) between the Planning and Execution phases, in particular for cIPS (Fig. 6, fourth column), and the coding of the motor task by V1d during Planning (Fig. 6, last column, blue trace).

Regions of the SPL: 7A, 7PC, 5L, and 7P

Univariate analysis

Although the increase following object presentation was weak in these regions, reaching uncorrected significance only in areas 7A and 5L and surviving strict correction in none of them (area 7P = 0.13 ± 0.05, p = 0.09, p(strict) = 1.00; area 7A = 0.16 ± 0.05, p = 0.037, p(strict) = 0.63; area 7PC = 0.11 ± 0.06, p = 0.36, p(strict) = 1.00; area 5L = 0.15 ± 0.05, p = 0.040, p(strict) = 0.679), all regions showed a significant increase following action Planning (area 7P = 0.40 ± 0.08, p < 0.001, p(strict) < 0.001; area 7A = 0.42 ± 0.08, p < 0.001, p(strict) = 0.01; area 7PC = 0.25 ± 0.07, p = 0.006, p(strict) = 0.103; area 5L = 0.28 ± 0.06, p = 0.001, p(strict) = 0.017) and Execution (area 7P = 0.36 ± 0.07, p < 0.001, p(strict) = 0.009; area 7A = 0.49 ± 0.09, p < 0.001, p(strict) = 0.003; area 7PC = 0.30 ± 0.08, p = 0.006, p(strict) = 0.104; area 5L = 0.54 ± 0.09, p < 0.001, p(strict) = 0.002). As shown in the first column in Figure 6, these ROIs show a different trend from cIPS and V1d (i.e., primarily a significant increase in activation following action Planning rather than object presentation), which makes it plausible that the SPL subregions have a primarily visuomotor involvement.

Qualitative (nonstatistical) assessment of the condition RDMs

The condition RDMs in Figure 6 show that the ROIs within the SPL have similar activity patterns. First, similarity between trials within each condition increases following object presentation, in particular during early Planning. Second, this similarity is then maintained until a second increase during the Execution phase. Notably, this pattern in the condition RDMs of the SPL regions resembles that of V1d and cIPS, although it appears weaker (i.e., less red). Most importantly, the increase in within-condition trial similarity following object presentation seems weaker for the SPL regions than for V1d and cIPS, suggesting that these regions are likely less modulated by this type of motor task.

Temporal multivoxel pattern analysis

In line with the univariate analysis and the condition RDMs, tMVPA indicates that the SPL regions may be involved in the motor task, in particular during motor Execution (Fig. 6, column 4). For area 7A, tMVPA discriminated activation patterns for grasping and turning during the later Execution phase. Area 7PC showed results similar to those for cIPS (see Visual regions of V1d and cIPS): tMVPA discriminated grasping and turning throughout both earlier and later stages of the Execution phase, as well as when dissimilarities were computed between time points of the Planning phase and time points of the Execution phase. These findings suggest that area 7PC may already anticipate turning actions during the Planning phase. The results for area 5L are somewhat similar to those for areas 7A and 7PC: tMVPA primarily differentiates grasping and turning during the later Execution phase. To a more limited extent than in area 7PC, it also does so when dissimilarity is computed between time points of the early/late Planning phase and time points of the Execution phase. For area 7P, tMVPA significantly discriminated activation patterns associated with grasping and turning only during the Execution phase. Overall, tMVPA can discriminate grasping from turning based on the activation patterns of each of the four regions, although this ability is weakest in 7P, stronger in 7A and 5L, and strongest in 7PC.

Representational similarity analysis

The RSA results (Fig. 6, column 5) indicate that the SPL regions may have a visuomotor rather than a purely visual involvement: starting orientation cannot be decoded from the SPL during the Planning phase (Fig. 6, last column, 7P, 7A, 7PC, and 5L, green trace). In support of our tMVPA results, grasping versus turning can be decoded from all four SPL regions (Fig. 6, last column, 7P, 7A, 7PC, and 5L, blue trace), primarily during the Execution phase. Significant decoding is also observed during the late Planning phase for areas 7A and 7P (see Table 2 for time points 6 and 7). Interestingly, in area 7PC only turning versus grasping can be decoded, suggesting that this region might be mainly involved in rotating the wrist, regardless of direction, and may not integrate visual information. By contrast, both start and end orientation can be decoded from the other regions (areas 7A, 5L, and 7P) during action Execution, suggesting that these regions may encode specific wrist orientations or may still be involved in visuomotor integration.

In sum, both tMVPA and RSA seem to provide evidence for the involvement of the SPL in sequential actions. That is, sequential (grasping then turning) actions can be differentiated from single grasping actions based on the activation patterns in areas 7A, 7PC, 5L, and 7P. In particular, for areas 7A and 7P, RSA can already do so during motor Planning.

EBA and aIPS

Univariate analysis