Abstract

Introduction

Artificial intelligence (AI) can be applied in numerous ways to improve the outcomes of medical education. In Iran, the extent to which medical practitioners and students are ready to work with and leverage this paradigm is unclear. This study investigated the psychometric properties of a Persian version of the Medical Artificial Intelligence Readiness Scale for Medical Students (MAIRS-MS), developed by Karaca, et al. in 2021. In future studies, the medical AI readiness of Iranian medical students could be investigated using this scale, and effective interventions could be planned and implemented according to the results.

Methods

In this study, 502 medical students (mean age 22.66 ± 2.767 years; 55% female) responded to the Persian questionnaire in an online survey. The original questionnaire was translated into Persian using a back-translation procedure, and all participants completed the demographic component and the entire MAIRS-MS. Internal and external consistency, construct validity, and exploratory and confirmatory factor analyses were examined. P ≤ 0.05 was considered statistically significant.

Results

Four subscales emerged from the exploratory factor analysis (Cognition, Ability, Vision, and Ethics), and confirmatory factor analysis confirmed the four subscales. The Cronbach alpha value for internal consistency was 0.944 for the total scale and 0.886, 0.905, 0.865, and 0.856 for cognition, ability, vision, and ethics, respectively.

Conclusions

The Persian version of MAIRS-MS was fairly equivalent to the original one regarding the conceptual and linguistic aspects. This study also confirmed the validity and reliability of the Persian version of MAIRS-MS. Therefore, the Persian version can be a suitable and brief instrument to assess Iranian Medical Students’ readiness for medical artificial intelligence.

Keywords: Artificial intelligence, Medical education, Medical students, Psychometric properties, Validity and reliability

Introduction

The origin of artificial intelligence (AI) can be traced back to the 1950s when artificial neural networks were created [1]. The Accreditation Council for Graduate Medical Education (ACGME) has revised its internal medicine guidelines in light of AI’s potential to improve and reshape patient treatment and clinical management plans. In its 2019 guidelines, the American Medical Association (AMA) promoted AI implementation and discussed payment, regulation, deployment, and liability issues [2].

Numerous healthcare concerns can be addressed globally using AI and its various applications, such as making and simplifying diagnoses, big-data analytics, administration, and overall clinical decision-making [3, 4]. Moreover, this approach enables physicians to provide diagnoses and prognoses rapidly by minimizing the effort needed to analyze digital information [5, 6]. The question is not whether AI will be applied to healthcare but rather when it will become a standard approach to optimizing healthcare practice [4]. In this context, AI is expected to become essential to physicians’ professional practice [7–9].

AI is highly technical, requiring computer science knowledge and mathematical understanding as the basic foundations [10]. Training healthcare providers with adequate knowledge of AI presents unique challenges to clinical relevance, content selection, and the methods for teaching the concepts [11]. To achieve these, we need curriculum integration, performance assessments, and both interdisciplinary and research-based teaching guidelines [5, 12]. This process involves more than just learning technical skills associated with computer programming. Rather, one must have a comprehensive knowledge of basic, clinical and evidence-based medicine, together with biostatistics and data science [13]. In this context, it is critical for medical students to be provided with curricular and extracurricular opportunities to learn about technical limitations, clinical applications, and the ethical perspective of AI tools [14].

Therefore, it is imperative to integrate technical and non-technical principles of AI into medical students’ training. However, current teaching approaches in medical schools cover AI only to a limited extent in their curricula [11]. Our review of the recent literature uncovered the following important points on medical education: (a) the need for AI in the curricula of undergraduate medical education (UME), (b) recommendations for such curricular delivery, (c) suggested AI curriculum contents, particularly a focus on the ethical aspects of machine learning (ML) and AI, (d) the integration of AI into UME curricula, and (e) the need to cultivate uniquely human skills, such as empathy for patients, in the face of AI-driven development and challenges [15].

Students in medical and dental schools currently have a good understanding of AI concepts, hold an optimistic attitude toward AI, and are interested in seeing it incorporated into their curricula [16]. These students also strongly believe that AI will profoundly impact medical education in the near future [17]. However, the curricula in medical schools do not currently have an AI component [3]. Although AI has numerous applications that can improve the clinical management of patients, it remains unclear to what extent students and medical practitioners are using them in their education and later in medical practice [2, 4].

Aim of the study

Karaca, et al. [18] developed a reliable and valid psychometric measurement tool to assess medical students’ perceived readiness for AI developments and their applications in medicine. Thus, this study aimed to investigate the psychometric properties of a Persian version of the Medical Artificial Intelligence Readiness Scale for Medical Students (MAIRS-MS) among Iranian medical students.

Methods

Sample

The sample for the present study was selected from among the Iranian medical students at the Faculty of Medicine, Mashhad University of Medical Sciences, using convenience sampling. The students were informed through email and social media messages (WhatsApp and Telegram) in which the link to the online MAIRS-MS survey was posted. A total of 2,338 students were eligible as the research population. Completed surveys were received from 502 medical students (275 female and 227 male).

Demographic information

The study participants responded to demographic questions on gender, age, and their current phase of the Doctor of Medicine (MD) curriculum (basic science, preclinical, or clinical), in addition to the questions specific to MAIRS-MS.

Medical Artificial Intelligence Readiness Scale for Medical Students (MAIRS-MS): MAIRS-MS was developed by Karaca, et al. [18] and comprises 22 questions in four categories: cognition, ability, vision, and ethics. In the original study, the Cronbach alpha values were 0.83 for cognition, 0.77 for ability, 0.723 for vision, and 0.632 for ethics. Also, the four-factor structure model fitted well according to the respective indices (CFI = 0.938; TLI = 0.928; NNFI = 0.928; RMSEA = 0.094; and SRMR = 0.057).

Translation process

Before translation, consent was obtained from the author of the original questionnaire via email. First, each item of the English questionnaire was translated into Persian by two academic experts in English translation. Second, another two bilingual university instructors examined each translated item for meaning, accuracy, wording, spelling, and grammar. The items were then back-translated into English, and the back-translated questionnaire was compared with the original. Necessary revisions were made to the survey questions based on the reviewers’ suggestions and feedback. Third, five faculty members specializing in AI checked each item to ensure that the translation conveyed the exact meaning. All of these individuals were fluent in both Persian and English. Based on the experts’ subsequent feedback, the wording of seven questions was revised. The final Persian version of the questionnaire was then approved; it consisted of 22 questions, each rated on a 5-point Likert scale (1 = strongly disagree to 5 = strongly agree).

Data Analysis

To conduct data analysis, the steps were carried out as follows.

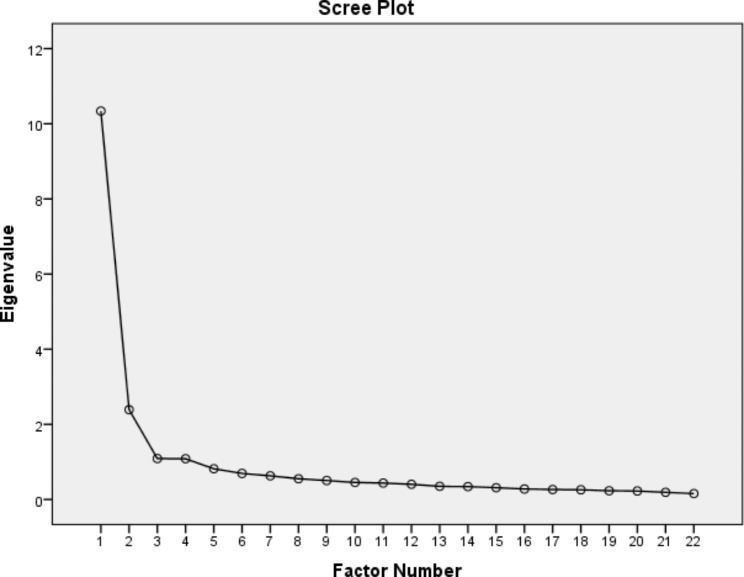

Exploratory factor analysis was conducted on one half of the sample (n = 251), for cross-validation, using principal axis factoring with varimax rotation [19, 20]. The following criteria were applied to extract the factors: (a) the eigenvalue-greater-than-one criterion of Kaiser and Guttman [21, 22], (b) parallel analysis and Velicer’s MAP test based on Hayton [23, 24], and (c) the scree test proposed by Cattell [25].

Confirmatory factor analysis was performed on the other half of the sample (n = 251) to cross-validate the analysis. The goodness-of-fit indices examined in this study were Chi-square, Chi-square/df, root mean square error of approximation, goodness of fit index, comparative fit index, normed fit index, non-normed fit index, adjusted goodness of fit index, incremental fit index, relative fit index, and standardized root mean square residual.
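The split-sample design described above can be sketched as follows. This is only an illustration under stated assumptions: the paper does not say how the two halves were formed, so a seeded random split is assumed here, and the function name `split_half`, the seed value, and the placeholder respondent IDs are hypothetical.

```python
import random


def split_half(respondent_ids, seed=0):
    """Randomly split respondents into two disjoint halves (assumed procedure)."""
    ids = list(respondent_ids)
    random.Random(seed).shuffle(ids)  # seeded so the split is reproducible
    mid = len(ids) // 2
    return ids[:mid], ids[mid:]


# 502 respondents, as in the study; the IDs themselves are placeholders.
efa_half, cfa_half = split_half(range(502))
print(len(efa_half), len(cfa_half))
```

With 502 respondents, each half contains 251 cases, which matches the subsample sizes reported for the exploratory and confirmatory analyses.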

Each dimension’s internal consistency reliability coefficient (Cronbach’s alpha and composite reliability) was calculated.
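For readers unfamiliar with the coefficient, Cronbach’s alpha for a subscale with k items is k/(k − 1) × (1 − sum of item variances / variance of the total score). The sketch below applies that standard formula to a small invented data set; it is not the SPSS routine used in the study, and the function name `cronbach_alpha` and the toy responses are assumptions made for illustration.

```python
from statistics import variance


def cronbach_alpha(rows):
    """rows: one list of item scores per respondent, all of equal length."""
    k = len(rows[0])
    # Sample variance of each item across respondents.
    item_vars = [variance([r[i] for r in rows]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(r) for r in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Toy responses on a 5-point scale (invented for illustration only).
toy = [
    [1, 2, 1],
    [3, 3, 4],
    [4, 5, 4],
    [5, 4, 5],
]
alpha = cronbach_alpha(toy)
print(round(alpha, 3))
```

Alpha approaches 1 when items rise and fall together across respondents, because the variance of the total score then greatly exceeds the sum of the individual item variances.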

The intra-class correlation coefficient was calculated to determine inter-rater reliability.

SPSS-23 was used to perform the exploratory factor analysis and to calculate the internal consistency and stability of the questionnaire. LISREL-8.70 software was used to perform the confirmatory factor analysis.

Results

Descriptive statistics

The 502 medical students were aged 17 to 36 years, with a mean age of 22.66 (± 2.767) years, and 55% of the sample were female. By curricular phase of the MD program, 146 (29%) were in basic science, 115 (23%) in the preclinical phase, and 241 (48%) in the clinical phase. The mean, standard deviation, minimum and maximum, skewness, and kurtosis of the scores are shown in Table 1. Because the sample was larger than 300 individuals, the Kolmogorov–Smirnov and Shapiro–Wilk tests might be unreliable, so normality was assessed from the skewness and kurtosis statistics instead. For structural equation modeling, skewness between −3 and +3 and kurtosis between −10 and +10 are considered acceptable [26]. In this study, the absolute values of skewness and kurtosis were all below one, indicating that the data were approximately normally distributed.

Table 1.

Descriptive statistics of MAIRS-MS scores

| Categories | Mean | SD | Min | Max | Skewness | Kurtosis |

|---|---|---|---|---|---|---|

| Total score | 63.09 | 15.92 | 22 | 110 | -0.184 | -0.113 |

| Cognition | 20.42 | 6.11 | 8 | 40 | 0.050 | -0.157 |

| Ability | 23.64 | 6.82 | 8 | 40 | -0.291 | -0.474 |

| Vision | 8.74 | 2.97 | 3 | 15 | -0.220 | -0.678 |

| Ethics | 10.30 | 2.74 | 3 | 15 | -0.666 | 0.187 |

Note: SD = Standard Deviation
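The normality screening described in the text can be reproduced directly from the skewness and kurtosis columns of Table 1. The snippet below is a minimal check; the values are copied from the table, while the helper name `within` is hypothetical.

```python
# (skewness, kurtosis) pairs copied from Table 1.
table1 = {
    "Total score": (-0.184, -0.113),
    "Cognition": (0.050, -0.157),
    "Ability": (-0.291, -0.474),
    "Vision": (-0.220, -0.678),
    "Ethics": (-0.666, 0.187),
}


def within(limit_skew, limit_kurt):
    """True if every scale stays inside the given absolute limits."""
    return all(
        abs(skew) < limit_skew and abs(kurt) < limit_kurt
        for skew, kurt in table1.values()
    )


print(within(3, 10))  # lenient limits cited for structural equation modeling
print(within(1, 1))   # stricter check: absolute values below one
```

Both checks pass for every scale, which is the basis for treating the score distributions as approximately normal.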

Exploratory factor analysis

The results of the exploratory factor analysis of the Persian version of MAIRS-MS are shown in Table 2. Principal axis factoring was used to extract the factors, resulting in four factors consistent with the original MAIRS-MS [18]. The number of factors indicated by parallel analysis and Velicer’s MAP test was also four. These factors accounted for 63% of the common variance, which was acceptable given that about 60% is the level commonly expected in social science studies [27, 28]. Of this 63%, 19% was explained by the first factor (Cognition), and the variances explained by factors 2, 3, and 4 were 18%, 14%, and 12%, respectively. Table 2 shows the loadings of the variables (i.e., questions) on the factors. Each question’s loading on its respective factor was above 0.40, indicating a strong relationship between the questions and their factors [29]. In addition, the Kaiser-Meyer-Olkin test result was 0.945 (i.e., above 0.60) and the Bartlett test result was satisfactory (χ² = 7478.39; df = 231; P ≤ 0.00001). The scree plot also shows the four factors with eigenvalues greater than one (Fig. 1).
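The degrees of freedom reported for the Bartlett test follow from the number of items. Assuming the standard form of Bartlett’s test of sphericity, which compares a p × p correlation matrix with the identity matrix, the degrees of freedom equal the number of distinct off-diagonal correlations, p(p − 1)/2. The helper name `bartlett_df` below is hypothetical.

```python
def bartlett_df(n_items):
    """Degrees of freedom for Bartlett's test of sphericity: p(p - 1) / 2."""
    return n_items * (n_items - 1) // 2


print(bartlett_df(22))  # 22 MAIRS-MS items
```

For the 22 items this gives 231, in agreement with the reported df.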

Table 2.

Exploratory factor analysis with principal axis factoring of MAIRS-MS (Varimax rotation transformation including factors with eigenvalue of one or more)

| Questions | Factor 1 (Cognition) | Factor 2 (Ability) | Factor 3 (Ethics) | Factor 4 (Vision) |

|---|---|---|---|---|

| 1. I can define the basic concepts of data science. | 0.561 * | 0.099 | 0.081 | 0.113 |

| 2. I can define the basic concepts of statistics. | 0.475 * | 0.141 | 0.100 | 0.111 |

| 3. I can explain how AI systems are trained. | 0.761 * | 0.207 | 0.035 | 0.233 |

| 4. I can define the basic concepts and terminology of AI. | 0.807 * | 0.208 | 0.020 | 0.183 |

| 5. I can properly analyze the data obtained by AI in healthcare. | 0.579 * | 0.250 | 0.169 | 0.207 |

| 6. I can differentiate the functions and features of AI related tools and applications. | 0.602 * | 0.280 | 0.074 | 0.230 |

| 7. I can organize workflows compatible with AI. | 0.585 * | 0.277 | 0.057 | 0.199 |

| 8. I can express the importance of data collection, analysis, evaluation and safety; for the development of AI in healthcare. | 0.409 * | 0.210 | 0.266 | 0.302 |

| 9. I can harness AI-based information combined with my professional knowledge. | 0.297 | 0.653 * | 0.256 | 0.217 |

| 10. I can use AI technologies effectively and efficiently in healthcare delivery. | 0.187 | 0.753 * | 0.285 | 0.234 |

| 11. I can use artificial intelligence applications in accordance with its purpose. | 0.181 | 0.714 * | 0.223 | 0.240 |

| 12. I can access, evaluate, use, share and create new knowledge using information and communication technologies. | 0.128 | 0.542 * | 0.271 | 0.173 |

| 13. I can explain how AI applications offer a solution to which problem in healthcare. | 0.220 | 0.484 * | 0.161 | 0.295 |

| 14. I find valuable to use AI for education, service and research purposes. | -0.007 | 0.622 * | 0.179 | 0.131 |

| 15. I can explain the AI applications used in healthcare services to the patient. | 0.201 | 0.414 * | 0.133 | 0.263 |

| 16. I can choose proper AI application for the problem encountered in healthcare. | 0.287 | 0.492 * | 0.269 | 0.208 |

| 17. I can explain the limitations of AI technology. | 0.226 | 0.271 | 0.173 | 0.684 * |

| 18. I can explain the strengths and weaknesses of AI technology. | 0.254 | 0.247 | 0.295 | 0.743 * |

| 19. I can foresee the opportunities and threats that AI technology can create. | 0.239 | 0.198 | 0.225 | 0.643 * |

| 20. I can use health data in accordance with legal and ethical norms. | 0.139 | 0.239 | 0.661 * | 0.236 |

| 21. I can conduct under ethical principles while using AI technologies. | 0.086 | 0.166 | 0.862 * | 0.128 |

| 22. I can follow legal regulations regarding the use of AI technologies in healthcare. | 0.091 | 0.146 | 0.762 * | 0.193 |

| Explained variance (%) | 18.999 * | 17.813 * | 14.056 * | 11.932 * |

| Cumulative explained variance (%) | 18.999 * | 36.812 * | 50.868 * | 62.800 * |

*Loadings with absolute values of ≥ 0.40 are marked with an asterisk
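The last row of Table 2 is the running total of the per-factor percentages in the row above it (the same percentages quoted in the text as 19%, 18%, 14%, and 12%). A short check, with the values copied from the table and the variable names chosen for illustration:

```python
from itertools import accumulate

# Percentage of variance attributed to each of the four factors (Table 2).
per_factor = [18.999, 17.813, 14.056, 11.932]

# Running total, rounded to the three decimals used in the table.
cumulative = [round(value, 3) for value in accumulate(per_factor)]
print(cumulative)
```

The final running total, 62.8%, is the figure rounded to 63% in the text.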

Fig. 1.

Scree plot of the principal axis factoring

Confirmatory factor analysis

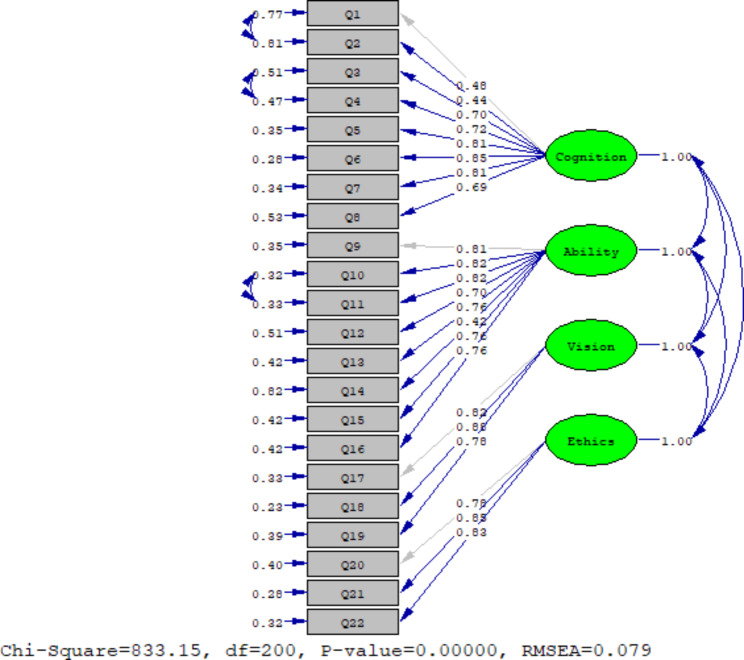

To evaluate the conceptual model of the original version of the questionnaire, confirmatory factor analysis was performed using LISREL-8.70 software. This was done on the second half of the sample (n = 251) to cross-validate the analysis. The estimation method was maximum likelihood, which assumes that the observed indicators follow a continuous, multivariate normal distribution. As shown in Fig. 2, the relationship of all extracted factors with the observed variables (i.e., questions) was desirable (standardized factor loadings greater than 0.40) [29]. Also, the fit indices (χ² = 833.15; df = 200; P ≤ 0.000001; RMSEA = 0.079) revealed an acceptable fit of the model to the data.

Fig. 2.

Standardized coefficients for the four-factor model of MAIRS-MS

Since values above 0.90 are considered acceptable for these fit indices, the values shown in Table 3 indicated a relatively good fit [30, 31]. First-order confirmatory factor analysis was also used to evaluate the construct validity of the questions. The unstandardized factor loading of each question, its R-squared (R2), and the average variance extracted (AVE) for each factor were calculated (Table 4). All factor loadings were greater than 0.40 and statistically significant (P ≤ 0.05; all T values greater than 1.96). Therefore, construct validity was established for all questions. In addition, the AVE index was greater than 0.50 for every factor. The AVE index represents the average variance in a factor’s questions that is explained by that factor; thus, the larger the index, the better the fit [32, 33].

Table 3.

Model fit indices determined via confirmatory factor analysis

| Fitness indices | Observed value | Expected value |

|---|---|---|

| Chi-square | 833.15 | - |

| df | 200 | - |

| Chi-square/df | 4.16 | ≤ 5 |

| RMSEA (90% CI) | 0.079 (0.074–0.085) | ≤ 0.08 |

| GFI | 0.93 | ≥ 0.90 |

| CFI | 0.92 | ≥ 0.90 |

| NFI | 0.90 | ≥ 0.90 |

| NNFI | 0.91 | ≥ 0.90 |

| AGFI | 0.92 | ≥ 0.90 |

| IFI | 0.92 | ≥ 0.90 |

| RFI | 0.93 | ≥ 0.90 |

| TLI | 0.92 | ≥ 0.90 |

| SRMR | 0.048 | ≤ 0.05 |

Note: RMSEA = Root Mean Square Error of Approximation; GFI = Goodness of Fit Index; CFI = Comparative Fit Index; NFI = Normed Fit Index; NNFI = Non-Normed Fit Index; AGFI = Adjusted Goodness of Fit Index; IFI = Incremental Fit Index; RFI = Relative Fit Index; TLI = Tucker–Lewis Index; SRMR = Standardized Root Mean Square Residual

Table 4.

Factor loading, T value for each question, and AVE for each factor

| Factors | Unstandardized factor loading | T value | R2 | AVE |

|---|---|---|---|---|

| Factor 1: (Cognition) | | | | |

| Question 1 | 1.00 | 10.22 | 0.43 | 0.56 |

| Question 2 | 1.24 | 9.72 | 0.49 | |

| Question 3 | 1.53 | 10.26 | 0.51 | |

| Question 4 | 1.51 | 10.41 | 0.53 | |

| Question 5 | 1.75 | 10.90 | 0.65 | |

| Question 6 | 1.77 | 11.09 | 0.72 | |

| Question 7 | 1.63 | 10.92 | 0.66 | |

| Question 8 | 1.70 | 10.17 | 0.47 | |

| Factor 2: (Ability) | | | | |

| Question 9 | 1.00 | 21.19 | 0.65 | 0.60 |

| Question 10 | 1.14 | 21.17 | 0.68 | |

| Question 11 | 1.14 | 21.00 | 0.67 | |

| Question 12 | 1.13 | 17.12 | 0.59 | |

| Question 13 | 1.12 | 17.11 | 0.58 | |

| Question 14 | 1.13 | 17.12 | 0.48 | |

| Question 15 | 1.15 | 19.04 | 0.58 | |

| Question 16 | 1.15 | 19.04 | 0.58 | |

| Factor 3: (Vision) | | | | |

| Question 17 | 1.00 | 21.87 | 0.67 | 0.68 |

| Question 18 | 1.14 | 22.07 | 0.77 | |

| Question 19 | 1.01 | 19.29 | 0.61 | |

| Factor 4: (Ethics) | | | | |

| Question 20 | 1.00 | 18.22 | 0.60 | 0.67 |

| Question 21 | 1.05 | 18.93 | 0.72 | |

| Question 22 | 1.05 | 18.54 | 0.68 | |
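The AVE column of Table 4 can be reproduced from the R2 column if AVE is taken as the mean of the R2 values of a factor’s questions. That definition is an assumption here (the paper does not state its formula), although it is the usual one when R2 is the squared standardized loading. Decimal arithmetic is used so that rounding to two places behaves as it would by hand.

```python
from decimal import Decimal, ROUND_HALF_UP

# R2 values per question, copied from Table 4.
r_squared = {
    "Cognition": ["0.43", "0.49", "0.51", "0.53", "0.65", "0.72", "0.66", "0.47"],
    "Ability": ["0.65", "0.68", "0.67", "0.59", "0.58", "0.48", "0.58", "0.58"],
    "Vision": ["0.67", "0.77", "0.61"],
    "Ethics": ["0.60", "0.72", "0.68"],
}


def average_variance_extracted(values):
    """Mean of the R2 values, rounded half-up to two decimals (assumed definition)."""
    mean = sum(Decimal(v) for v in values) / len(values)
    return mean.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


ave = {factor: average_variance_extracted(v) for factor, v in r_squared.items()}
for factor, value in ave.items():
    print(factor, value)
```

Under this assumption the computed values are 0.56, 0.60, 0.68, and 0.67, matching the AVE column for Cognition, Ability, Vision, and Ethics.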

To modify the original model, three error covariances were freed because the errors of the corresponding items were correlated: between questions 1 and 2 on the Cognition subscale (Chi-square decrease = 67.02), between questions 3 and 4 on the Cognition subscale (Chi-square decrease = 51.25), and between questions 10 and 11 on the Ability subscale (Chi-square decrease = 42.33). Modifying the original model improved the RMSEA by approximately 0.02 (from 0.094 to 0.079).
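The effect of these modifications on the model’s degrees of freedom can be checked by counting parameters. The sketch below assumes a simple-structure model (each question loads on one factor only), identification by fixing one loading per factor to 1 (consistent with the loadings of 1.00 in Table 4), and freely correlated factors; the function name `cfa_df` is hypothetical.

```python
def cfa_df(n_items, n_factors, n_error_covariances=0):
    """Degrees of freedom of a simple-structure CFA with marker-variable scaling."""
    moments = n_items * (n_items + 1) // 2             # unique variances and covariances
    loadings = n_items - n_factors                     # one loading per factor is fixed to 1
    factor_params = n_factors * (n_factors + 1) // 2   # factor variances and covariances
    error_variances = n_items                          # one error variance per question
    free = loadings + factor_params + error_variances + n_error_covariances
    return moments - free


print(cfa_df(22, 4))     # four-factor model before modification
print(cfa_df(22, 4, 3))  # after freeing three error covariances
```

Under these assumptions the unmodified model has 203 degrees of freedom, and freeing three error covariances leaves 200, the df reported in Table 3.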

Reliability: Cronbach alpha was used to investigate the internal consistency of the MAIRS-MS questions. The values for the Cognition, Ability, Vision, and Ethics subscales were 0.886, 0.905, 0.865, and 0.856, respectively, and the value for the total scale was 0.944. These values, which were similar to those of the original questionnaire, demonstrated acceptable internal consistency and reliability. In addition, the composite reliability coefficients for the subscales were 0.972 (Cognition), 0.884 (Ability), 0.879 (Vision), and 0.894 (Ethics), and the composite reliability coefficient for the total scale was 0.981. Finally, the inter-rater reliability for the subscales was 0.703 (Cognition), 0.753 (Ability), 0.979 (Vision), and 0.715 (Ethics). The intra-class correlation coefficient for the total scale was 0.871.

Discussion

AI has transformed healthcare procedures [34] and is expected to reshape the learning process in medical education and healthcare services as we move into a new era. In addition, natural language processing techniques and large language models have significantly advanced AI applications [35]. However, AI is not expected to replace physicians and professors in today’s academia; rather, it may modify their roles [36]. Given these developments, the current knowledge of medical students and practicing physicians on AI is likely to foster such an evolution [37, 38]. Furthermore, medical education faces pedagogical questions about how AI should be introduced into medical curricula to be maximally effective but not destructive. This is still a controversial subject in the delivery of healthcare to the public [7]. Despite its positive aspects, AI may not supply the emotions, empathy, and direct communication appropriate to the healthcare system. Thus, many unclear areas remain regarding the application of AI in medical education and the professional setting [18].

The present study was conducted to determine the psychometric properties of the Persian version of MAIRS-MS. The scale consisted of 22 items, and exploratory factor analysis revealed that the MAIRS-MS had four factors: cognition, ability, vision, and ethics. The results showed high consistency of the Persian version with the original questionnaire with respect to each of the four factors. Our statistical analyses indicated acceptable validity for all questions on the Persian version of the MAIRS-MS, and the reliability analyses yielded high coefficients. Hence, this study established the validity and reliability of the Persian version of MAIRS-MS.

Despite the lack of explicit opinions on the effects of AI on healthcare delivery and/or medical education, several studies have developed scales to evaluate the attitude, knowledge, and readiness of healthcare professionals and medical students regarding the introduction of AI in medicine and medical education. Among the currently available scales, MAIRS-MS was developed as a four-subscale tool to assess cognition, ability, vision, and ethics. The original version has been evaluated as a highly valid and reliable tool for monitoring medical students’ perceived readiness toward AI technologies and applications [18]. A Chinese scale has also been developed by Li, et al., which evaluates the personal relevance of medical AI, subjective norms and self-efficacy in learning AI, basic knowledge, behavioral intention, and the actual learning of AI. Although this 25-item scale is regarded as a valid and reliable tool in Chinese, its main aim was to understand medical students’ perceptions of and behavioral intentions toward learning AI, which differs somewhat from the aim of the original English MAIRS-MS [39].

Further, Boillat, et al. developed a questionnaire to capture medical students’ and physicians’ familiarity with AI in medicine and the challenges, barriers, and potential risks linked to the democratization of this new paradigm. The scale consisted of 31 items under five factors, including familiarity with medical AI, education and training, challenges and barriers in medical AI and its implementation, and the risks linked to medical AI [4]. Although the existing scales have some overlapping aspects, they are not identical. The differences among the various questionnaires may help cover gaps and allow appropriate scales to be used in specific populations to achieve particular goals.

Due to the impact of AI in medicine and medical education, several studies have evaluated medical students’ opinions on AI with the aim of further improving its use. Abid, et al. investigated Pakistani medical students’ attitudes and readiness toward AI [40]. In the United Kingdom, Sit, et al. revealed that 89% of medical students believed that teaching in AI would be beneficial to their future medical practice. In that study, 78% of the respondents agreed that students should receive training on AI as part of their medical curricula [41]. Conversely, there are significant issues regarding knowledge, attitudes, and readiness about AI in some developing countries. Hamd, et al. showed a lack of education and training programs for AI implementation and concluded that organizations were not well prepared and had to ensure readiness for AI [42]. In the United Arab Emirates, Boillat, et al. reported low familiarity with AI and called for specific training at medical schools and hospitals to ensure that practitioners and students can leverage this new paradigm to improve healthcare delivery and clinical outcomes [4]. It seems that the differences between developed and developing countries are largely due to their curricular designs, especially concerning the role of AI or lack thereof. Therefore, it is recommended that medical schools consider mechanisms for knowledge sharing about AI and develop curricula to teach the use of AI tools as a competency [43]. In addition, medical students should gain practical exposure to AI technologies [44]. The effort to ensure professional and student readiness will improve AI integration in practice [42].

Finally, as a strength of the current study, we evaluated the psychometric properties of a novel and beneficial questionnaire among Iranian medical students and explored their readiness for AI in their curricula. In future studies, students’ readiness for AI components in the medical curricula will be further investigated using the translated scale developed in the current study, and its efficacy and potential will be assessed in greater detail and from different perspectives.

Conclusions

The findings of this study revealed that the Persian MAIRS-MS was essentially equivalent in nature to the original one regarding the conceptual and linguistic aspects. This study also confirmed the validity and reliability of the Persian MAIRS-MS. Therefore, the Persian version can be a suitable and brief instrument to assess the readiness of Iranian medical students with respect to the inclusion of AI in their medical education and its impact on their future medical practice.

Acknowledgements

We thank our colleagues and the participants who provided expertise and insight that greatly assisted the research. The authors appreciate Dr. Ayhan Caliskan, who critically appraised our manuscript, and his valuable suggestions helped improve the article.

Abbreviations

- MAIRS-MS

Medical Artificial Intelligence Readiness Scale for Medical Students

- AI

Artificial Intelligence

- ACGME

Accreditation Council for Graduate Medical Education

- AMA

American Medical Association

- UME

Undergraduate Medical Education

- ML

Machine Learning

- MD

Doctor of Medicine

- SD

Standard Deviation

- AVE

Average Variance Extracted

- RMSEA

Root Mean Square Error of Approximation

- GFI

Goodness of Fit Index

- CFI

Comparative Fit Index

- NFI

Normed Fit Index

- NNFI

Non-Normed Fit Index

- AGFI

Adjusted Goodness of Fit Index

- IFI

Incremental Fit Index

- RFI

Relative Fit Index

- TLI

Tucker–Lewis Index

- SRMR

Standardized Root Mean Square Residual

Author contributions

Study concept and design: H. M. and M. M.; Data collection: A. M.; Data analysis and interpretation of results: M. M.; Drafting of the manuscript: A. M., M. M., A. E., and H. M.; Critical revision of the manuscript for important intellectual content: A. E. and H. M. All authors read and approved the final manuscript.

Funding

This research was funded by the Research Council of Mashhad University of Medical Sciences, Iran (https://research.mums.ac.ir: Grant Number 992291).

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author upon reasonable request.

Declarations

Competing interests

The authors declare no competing interests.

Ethics approval and consent to participate

All procedures were in line with Iran National Committee for Ethics in Biomedical Research (https://ethics.research.ac.ir; Approval ID: IR.MUMS.REC.1400.086; Evaluated by: Research Ethics Committees of Mashhad University of Medical Sciences; Approval Date: 2021-07-03). Informed consent was obtained from all participants of the study. All methods were performed in accordance with the Declaration of Helsinki.

Consent for publication

Not applicable.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Carin L. On Artificial Intelligence and Deep Learning within Medical Education. Acad Med. 2020;95:10–S11. doi: 10.1097/acm.0000000000003630. [DOI] [PubMed] [Google Scholar]

- 2.Valikodath NG, Cole E, Ting DSW, Campbell JP, Pasquale LR, Chiang MF, et al. Impact of Artificial Intelligence on Medical Education in Ophthalmology. Transl Vis Sci Technoly. 2021;10(7):14–25. doi: 10.1167/tvst.10.7.14. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Grunhut J, Marques O, Wyatt ATM. Needs, Challenges, and applications of Artificial Intelligence in Medical Education Curriculum. JMIR Med Educ. 2022;8(2):e35587. doi: 10.2196/35587. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Boillat T, Nawaz FA, Rivas H. Readiness to Embrace Artificial intelligence among medical doctors and students: questionnaire-based study. JMIR Med Educ. 2022;8(2):e34973. doi: 10.2196/34973. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Han ER, Yeo S, Kim MJ, Lee YH, Park KH, Roh H. Medical education trends for future physicians in the era of advanced technology and artificial intelligence: an integrative review. BMC Med Educ. 2019;19(1):460–75. doi: 10.1186/s12909-019-1891-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Santos JC, Wong JHD, Pallath V, Ng KH. The perceptions of medical physicists towards relevance and impact of artificial intelligence. Phys Eng Sci Med. 2021;44(3):833–41. doi: 10.1007/s13246-021-01036-9. [DOI] [PubMed] [Google Scholar]

- 7.van der Niet AG, Bleakley A. Where medical education meets artificial intelligence: ‘Does technology care?‘. Med Educ. 2021;55(1):30–6. doi: 10.1111/medu.14131. [DOI] [PubMed] [Google Scholar]

- 8.Vedula SS, Ghazi A, Collins JW, Pugh C, Stefanidis D, Meireles O, et al. Artificial Intelligence Methods and Artificial Intelligence-Enabled Metrics for Surgical Education: a Multidisciplinary Consensus. J Am Coll Surg. 2022;234(6):1181–92. doi: 10.1097/xcs.0000000000000190. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Ward TM, Mascagni P, Madani A, Padoy N, Perretta S, Hashimoto DA. Surgical data science and artificial intelligence for surgical education. J Surg Oncol. 2021;124(2):221–30. doi: 10.1002/jso.26496. [DOI] [PubMed] [Google Scholar]

- 10.Webster CS. Artificial intelligence and the adoption of new technology in medical education. Med Educ. 2021;55(1):6–7. doi: 10.1111/medu.14409. [DOI] [PubMed] [Google Scholar]

- 11.Hu R, Fan KY, Pandey P, Hu Z, Yau O, Teng M, et al. Insights from teaching artificial intelligence to medical students in Canada. Commun Med. 2022;2(1):63. doi: 10.1038/s43856-022-00125-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12. Lang J, Repp H. Artificial intelligence in medical education and the meaning of interaction with natural intelligence - an interdisciplinary approach. GMS J Med Educ. 2020;37(6):Doc59. doi: 10.3205/zma001352.

- 13. Park SH, Do K-H, Kim S, Park JH, Lim Y-S, Cho AR. What should medical students know about artificial intelligence in medicine? J Educ Eval Health Prof. 2019;16:18. doi: 10.3352/jeehp.2019.16.18.

- 14. McCoy LG, Nagaraj S, Morgado F, Harish V, Das S, Celi LA. What do medical students actually need to know about artificial intelligence? npj Digit Med. 2020;3(1):86. doi: 10.1038/s41746-020-0294-7.

- 15. Lee J, Wu AS, Li D, Kulasegaram KM. Artificial Intelligence in Undergraduate Medical Education: a scoping review. Acad Med. 2021;96(11S):S62–S70. doi: 10.1097/acm.0000000000004291.

- 16. Bisdas S, Topriceanu C-C, Zakrzewska Z, Irimia A-V, Shakallis L, Subhash J, et al. Artificial Intelligence in Medicine: A Multinational Multi-Center Survey on the Medical and Dental Students’ Perception. Front Public Health. 2021;9. doi: 10.3389/fpubh.2021.795284.

- 17. Park CJ, Yi PH, Siegel EL. Medical Student Perspectives on the impact of Artificial Intelligence on the practice of Medicine. Curr Probl Diagn Radiol. 2021;50(5):614–9. doi: 10.1067/j.cpradiol.2020.06.011.

- 18. Karaca O, Çalışkan SA, Demir K. Medical artificial intelligence readiness scale for medical students (MAIRS-MS) – development, validity and reliability study. BMC Med Educ. 2021;21(1):112–21. doi: 10.1186/s12909-021-02546-6.

- 19. Fabrigar LR, Wegener DT, MacCallum RC, Strahan EJ. Evaluating the use of exploratory factor analysis in psychological research. Psychol Methods. 1999;4(3):272–99. doi: 10.1037/1082-989X.4.3.272.

- 20. Tabachnick BG, Fidell LS, Ullman JB. Using multivariate statistics. Boston, MA: Pearson; 2007.

- 21. Guttman L. Some necessary conditions for common-factor analysis. Psychometrika. 1954;19(2):149–61. doi: 10.1007/BF02289162.

- 22. Kaiser HF. A note on Guttman’s lower bound for the number of common factors. Br J Stat Psychol. 1961;14(1). doi: 10.1111/j.2044-8317.1961.tb00061.x.

- 23. O’Connor BP. SPSS and SAS programs for determining the number of components using parallel analysis and Velicer’s MAP test. Behav Res Methods Instrum Comput. 2000;32(3):396–402. doi: 10.3758/BF03200807.

- 24. Hayton JC, Allen DG, Scarpello V. Factor retention decisions in exploratory factor analysis: a tutorial on parallel analysis. Organ Res Methods. 2004;7(2):191–205. doi: 10.1177/1094428104263675.

- 25. Cattell RB. The scree test for the number of factors. Multivar Behav Res. 2010;1(2):245–76. doi: 10.1207/s15327906mbr0102_10.

- 26. Brown TA. Confirmatory factor analysis for applied research. Guilford Publications; 2015.

- 27. Hoyle RH. Determining the number of factors in exploratory. The Sage handbook of quantitative methodology for the social sciences. 2004.

- 28. Scherer RF, Luther DC, Wiebe FA, Adams JS. Dimensionality of coping: factor stability using the ways of coping questionnaire. Psychol Rep. 1988;62(3):763–70. doi: 10.2466/pr0.1988.62.3.763.

- 29. Stevens JP. Applied multivariate statistics for the social sciences. Routledge; 2012.

- 30. Rao CR, Miller JP, Rao D. Essential statistical methods for medical statistics. North Holland; 2011.

- 31. Raykov T, Marcoulides GA. On multilevel model reliability estimation from the perspective of structural equation modeling. Struct Equ Model. 2006;13(1):130–41. doi: 10.1207/s15328007sem1301_7.

- 32. Fornell C, Larcker DF. Structural equation models with unobservable variables and measurement error: Algebra and statistics. J Mark Res. 1981;18(3):382–8. doi: 10.2307/3150980.

- 33. Fornell C. Issues in the application of covariance structure analysis: a comment. J Consum Res. 1983;9(4):443–8. doi: 10.1086/208938.

- 34. Al Kuwaiti A, Nazer K, Al-Reedy A, Al-Shehri S, Al-Muhanna A, Subbarayalu AV, et al. A review of the role of Artificial Intelligence in Healthcare. J Pers Med. 2023;13(6). doi: 10.3390/jpm13060951.

- 35. Alqahtani T, Badreldin HA, Alrashed M, Alshaya AI, Alghamdi SS, Bin Saleh K, et al. The emergent role of artificial intelligence, natural learning processing, and large language models in higher education and research. Res Social Adm Pharm. 2023. doi: 10.1016/j.sapharm.2023.05.016.

- 36. Masters K. Artificial intelligence in medical education. Med Teach. 2019;41(9):976–80. doi: 10.1080/0142159X.2019.1595557.

- 37. Bhardwaj D. Artificial Intelligence: Patient Care and Health Professional’s Education. J Clin Diagn Res. 2019;13(1). doi: 10.7860/JCDR/2019/38035.12453.

- 38. AkbariRad M, Moodi Ghalibaf A. A revolution in Medical Education: are we ready to apply Artificial Intelligence? Future of Medical Education Journal. 2022;12(2):61–2. doi: 10.22038/fmej.2022.63275.1456.

- 39. Li X, Jiang MY-c, Jong MS-y, Zhang X, Chai C-s. Understanding medical students’ perceptions of and behavioral intentions toward learning Artificial Intelligence: a Survey Study. Int J Environ Res Public Health. 2022;19(14):8733–50. doi: 10.3390/ijerph19148733.

- 40. Abid S, Awan B, Ismail T, Sarwar N, Sarwar G, Tariq M, et al. Artificial intelligence: medical student’s attitude in district Peshawar Pakistan. Pakistan J Public Health. 2019;9(1):19–21. doi: 10.32413/pjph.v9i1.295.

- 41. Sit C, Srinivasan R, Amlani A, Muthuswamy K, Azam A, Monzon L, et al. Attitudes and perceptions of UK medical students towards artificial intelligence and radiology: a multicentre survey. Insights Imaging. 2020;11(1). doi: 10.1186/s13244-019-0830-7.

- 42. Hamd Z, Elshami W, Al Kawas S, Aljuaid H, Abuzaid MM. A closer look at the current knowledge and prospects of artificial intelligence integration in dentistry practice: a cross-sectional study. Heliyon. 2023;9(6):e17089. doi: 10.1016/j.heliyon.2023.e17089.

- 43. Hashimoto DA, Johnson KB. The Use of Artificial Intelligence Tools to prepare Medical School Applications. Acad Med. 2023. doi: 10.1097/acm.0000000000005309.

- 44. Ampofo JW, Emery CV, Ofori IN. Assessing the level of understanding (knowledge) and awareness of diagnostic imaging students in Ghana on Artificial Intelligence and its applications in Medical Imaging. Radiol Res Pract. 2023;2023:4704342. doi: 10.1155/2023/4704342.

Data Availability Statement

The datasets used and/or analyzed during the current study are available from the corresponding author upon reasonable request.