Abstract

In this paper, we present and compare four methods to enforce Dirichlet boundary conditions in Physics-Informed Neural Networks (PINNs) and Variational Physics-Informed Neural Networks (VPINNs). Such conditions are usually imposed by adding penalization terms in the loss function and properly choosing the corresponding scaling coefficients; however, in practice, this requires an expensive tuning phase. We show through several numerical tests that modifying the output of the neural network to exactly match the prescribed values leads to more efficient and accurate solvers. The best results are achieved by exactly enforcing the Dirichlet boundary conditions by means of an approximate distance function. We also show that variationally imposing the Dirichlet boundary conditions via Nitsche's method leads to suboptimal solvers.

MSC: 35A15, 65L10, 65L20, 65K10, 68T05

Keywords: Dirichlet boundary conditions, PINN, VPINN, Deep neural networks, Approximate distance function

1. Introduction

Physics-Informed Neural Networks (PINNs), proposed in [1] after the initial pioneering contributions of Lagaris et al. [2], [3], [4], are rapidly emerging computational methods to solve partial differential equations (PDEs). In its basic formulation, a PINN is a neural network that is trained to minimize the PDE residual on a given set of collocation points in order to compute a corresponding approximate solution. In particular, the fact that the PDE solution is sought in a nonlinear space via a nonlinear optimizer distinguishes PINNs from classical computational methods. This provides PINNs flexibility, since the same code can be used to solve completely different problems by adapting the neural network loss function that is used in the training phase. Moreover, due to the intrinsic nonlinearity and the adaptive architecture of the neural network, PINNs can efficiently solve inverse [5], [6], [7], parametric [8], high-dimensional [9], [10] as well as nonlinear [11] problems. Another important feature characterizing PINNs is that it is possible to combine distinct types of information within the same loss function to readily modify the optimization process. This is useful, for instance, to effortlessly integrate (synthetic or experimental) external data into the training phase to obtain an approximate solution that is computed using both data and physics [12].

In order to improve the original PINN idea, several extensions have been developed. Some of these developments include the Deep Ritz method (DRM) [13], in which the energy functional of a variational problem is minimized; the conservative PINN (cPINN) [14], where the approximate solution is computed by a domain-decomposition approach enforcing flux conservation at the interfaces, as well as its improvement in the extended PINN (XPINN) [15]; and the variational PINN (VPINN) [16], [17], in which the loss function is defined by exploiting the variational structure of the underlying PDE.

Most of the existing PINN approaches enforce the essential (Dirichlet) boundary conditions by means of additional penalization terms in the loss function, each multiplied by a constant weighting factor; see for instance [18], [19], [20], [21], [22], [23], [24], [25], [26]. This list is by no means exhaustive, and we refer to [27], [28], [29] for more detailed overviews of the PINN literature. However, such an approach may lead to poor approximation, and therefore several techniques to improve it have been proposed. In [30] and [31], adaptive scaling parameters are proposed to balance the different terms in the loss functions. In particular, in [30] the parameters are updated during the minimization to maximize the loss function via backpropagation, whereas in [31] a fixed learning rate annealing procedure is adopted. Other alternatives are related to adaptive sampling strategies (e.g., [32], [33], [34]) or to specific techniques such as the Neural Tangent Kernel [35].

Note that although it is possible to automatically tune these scaling parameters during the training, such techniques require more involved implementations and in most cases lead to intrusive methods, since the optimizer has to be modified. Instead, in this paper, we focus on three simple and non-intrusive approaches to impose Dirichlet boundary conditions and we compare their accuracy and efficiency. The proposed approaches are tested using standard PINNs and interpolated VPINNs, which have been proven to be more stable than standard VPINNs [36].

The main contributions of this paper are as follows:

1. We present three non-standard approaches to enforce Dirichlet boundary conditions on PINNs and VPINNs, and discuss their mathematical formulation and their pros and cons. Two of them, based on the use of an approximate distance function, modify the output of the neural network to exactly impose such conditions, whereas the last one enforces them approximately by a weak formulation of the equation.

2. The performance of the distinct approaches to impose Dirichlet boundary conditions is assessed on various test cases. On average, we find that exactly imposing the boundary conditions leads to more efficient and accurate solvers. We also compare the interpolated VPINN to the standard PINN, and observe that the different approaches used to enforce the boundary conditions affect these two models in similar ways.

The structure of the remainder of this paper is as follows. In Section 2, the PINN and VPINN formulations are described: first, we describe the neural network architecture in Section 2.1 and then focus on the loss functions that characterize the two models in Section 2.2. Subsequently, in Section 3, we present the four approaches to impose Dirichlet boundary conditions; three of them can be used with both PINNs and VPINNs, whereas the last one can be used only with VPINNs because it relies on the variational formulation. Numerical results are presented in Section 4. In Section 4.1, we first analyze the convergence rate of the VPINN with respect to mesh refinement for a second-order elliptic problem. In doing so, we demonstrate that when the neural network is properly trained, identical optimal convergence rates are realized by all approaches only if the PDE solution is simple enough. Otherwise, only enforcing the Dirichlet boundary conditions with Nitsche's method or exactly imposing them via approximate distance functions ensures the theoretical convergence rate. In addition, we compare the behavior of the loss function and the error while increasing the number of epochs, as well as the behavior of the error when the network architecture is varied. In Section 4.2, we show that it is also possible to efficiently solve second-order parametric nonlinear elliptic problems. Furthermore, in Sections 4.3–4.5, we compare the performance of all approaches on PINNs and VPINNs by solving a linear elasticity problem and a stabilized Eikonal equation over an L-shaped domain, and a convection problem. Finally, in Section 5, we close with our main findings and present a few perspectives for future work.

2. PINNs and interpolated variational PINNs

In this section, we describe the PINN and VPINN that are used in Section 4. In particular, in Section 2.1 the neural network architecture is presented, and the construction of the loss functions is discussed in Section 2.2.

2.1. Neural network description

In this work we compare the efficiency of four approaches to enforce Dirichlet boundary conditions in PINNs and VPINNs. The main difference between these two numerical models is the training loss function; the architecture of the neural network is the same and is independent of the way the boundary conditions are imposed.

In our numerical experiments we only consider fully-connected feed forward neural networks with a fixed architecture. Such neural networks can be represented as nonlinear parametric functions that can be evaluated via the following recursive formula:

$$
\mathbf{x}_0 = \mathbf{x}, \qquad \mathbf{x}_i = \sigma_i(A_i \mathbf{x}_{i-1} + \mathbf{b}_i), \quad i = 1, \dots, L, \qquad w(\mathbf{x}) = \mathbf{x}_L. \tag{2.1}
$$

In particular, with the notation of (2.1), $\mathbf{x}_0 \in \mathbb{R}^{N_0}$ is the neural network input vector, $\mathbf{x}_L \in \mathbb{R}^{N_L}$ is the neural network output vector, the neural network architecture consists of an input layer, $L-1$ hidden layers and one output layer, $A_i \in \mathbb{R}^{N_i \times N_{i-1}}$ and $\mathbf{b}_i \in \mathbb{R}^{N_i}$ are matrices and vectors containing the neural network weights, and $\sigma_i$ is the activation function of the i-th layer and is element-wise applied to its input vector. We also remark that the i-th layer is said to contain $N_i$ neurons and that $\sigma_i$ has to be nonlinear for any $1 \le i \le L-1$. Common nonlinear activation functions are the rectified linear unit ($\mathrm{ReLU}(x) = \max\{0, x\}$), the hyperbolic tangent and the sigmoid function. In this work, we take $\sigma_L$ to be the identity function in order to avoid imposing any constraint on the neural network output.

The weights contained in $A_i$ and $\mathbf{b}_i$ can be logically reorganized in a single vector. The goal of the training phase is to find a weight vector that minimizes the loss function; however, since such a loss function is nonlinear with respect to the weights and the corresponding manifold is extremely complicated, we can at best find good local minima.
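The recursive evaluation described in this section can be sketched in a few lines of plain Python. This is only a minimal illustration, assuming the standard recursion (an affine map followed by an element-wise activation in every hidden layer, and an identity output layer); the function name `forward` and the tiny 2-3-1 network with hand-picked weights are hypothetical and are not taken from the paper.

```python
import math

def forward(x, weights, biases):
    """Evaluate a fully-connected network: tanh on hidden layers, identity on the last one."""
    n_layers = len(weights)
    for i, (A, b) in enumerate(zip(weights, biases)):
        # Affine map A x + b, written out with nested lists.
        z = [sum(a * xj for a, xj in zip(row, x)) + bi for row, bi in zip(A, b)]
        # The output layer is linear, so the network output is unconstrained.
        x = z if i == n_layers - 1 else [math.tanh(v) for v in z]
    return x

# Hypothetical 2-3-1 network (two inputs, three hidden neurons, one output).
A1 = [[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]]
b1 = [0.0, 0.0, 0.0]
A2 = [[1.0, 1.0, 1.0]]
b2 = [0.5]

out = forward([0.0, 0.0], [A1, A2], [b1, b2])
```

In practice the weights are trainable variables of a deep-learning framework; the nested lists here only make the recursion explicit.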

2.2. PINN and interpolated VPINN loss functions

For the sake of simplicity, the loss function for PINN and interpolated VPINN is stated for second-order elliptic boundary-value problems. However, the discussion can be directly generalized to different PDEs, and in Section 4, numerical results associated with other problems are also presented.

Let us consider the model problem:

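$$
\begin{cases}
Lu := -\nabla \cdot (\mu \nabla u) + \beta \cdot \nabla u + \sigma u = f & \text{in } \Omega, \\
u = g & \text{on } \Gamma_D, \\
\mu\, \partial_n u = \psi & \text{on } \Gamma_N,
\end{cases} \tag{2.2}
$$

where $\Omega \subset \mathbb{R}^n$ is a bounded domain whose Lipschitz boundary ∂Ω is partitioned as $\partial\Omega = \Gamma_D \cup \Gamma_N$, with $\mathrm{meas}(\Gamma_D) > 0$. For the well-posedness of the boundary-value problem we require μ, $\sigma \in L^\infty(\Omega)$ and $\beta \in (W^{1,\infty}(\Omega))^n$ satisfying, in the entire domain Ω, $\mu \ge \mu_0$ for some strictly positive constant $\mu_0$ and $\sigma - \frac{1}{2} \nabla \cdot \beta \ge 0$. Moreover, $f \in L^2(\Omega)$, $\psi \in L^2(\Gamma_N)$ and $g = \bar{u}|_{\Gamma_D}$ for some $\bar{u} \in H^1(\Omega)$. We point out that even if these assumptions ensure the well-posedness of the problem, PINNs and VPINNs often struggle to compute low regularity solutions. We refer to [37] for a recent example of a neural network based model that overcomes this issue.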

In order to train a PINN, one introduces a set of collocation points and evaluates the corresponding equation residuals . Such residuals, for problem (2.2), are defined as:

| (2.3) |

Since we are interested in a neural network that satisfies the PDE in a discrete sense, the loss function minimized during the PINN training is:

| (2.4) |

In (2.4), when is sufficiently large and is close to zero, the function represented by the neural network output approximately satisfies the PDE and can thus be considered a good approximation of the exact solution. Other terms are often added to impose the boundary conditions or improve the training, which are discussed in Section 3.
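To make the collocation idea concrete, the following sketch evaluates a residual-based loss for a one-dimensional model problem. It is only an illustration under stated assumptions: the problem $-u'' = f$ on $(0, 1)$, the mean-of-squares normalization, and the names `residual` and `pinn_loss` are all hypothetical choices, and a central finite difference stands in for the automatic differentiation that a real PINN would use.

```python
import math

def residual(w, f, x, h=1e-3):
    """Strong-form residual of -w'' = f at the point x (second derivative by central difference)."""
    w_xx = (w(x - h) - 2.0 * w(x) + w(x + h)) / (h * h)
    return -w_xx - f(x)

def pinn_loss(w, f, points):
    """Mean of the squared residuals over the collocation points (assumed normalization)."""
    return sum(residual(w, f, x) ** 2 for x in points) / len(points)

f = lambda x: math.pi ** 2 * math.sin(math.pi * x)  # forcing term for u(x) = sin(pi x)
exact = lambda x: math.sin(math.pi * x)              # candidate that satisfies the PDE
wrong = lambda x: x * (1.0 - x)                      # candidate that does not

points = [(i + 0.5) / 20 for i in range(20)]         # interior collocation points
loss_exact = pinn_loss(exact, f, points)
loss_wrong = pinn_loss(wrong, f, points)
```

Both candidates vanish at the boundary, yet only the first one has a small loss: the residual loss measures how well the PDE is satisfied and, on its own, says nothing about the boundary conditions.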

Let us now focus on the interpolated VPINN proposed in [36]. We introduce the function spaces and , the bilinear form and the linear form ,

The variational counterpart of problem (2.2) thus reads: Find such that:

| (2.5) |

In order to discretize problem (2.5), we use two discrete function spaces. Inspired by the Petrov-Galerkin framework, we denote the discrete trial space by and the discrete test space by . The functions comprising such spaces are generated on two conforming, shape-regular and nested partitions and with compatible meshsizes H and h, respectively. Assuming that is the finer mesh, one can claim that and that every element of is strictly contained in an element of .

Denoting by the space of piecewise polynomial functions of order over and the space of piecewise polynomial functions of order over that vanish on , we define the discrete variational problem as: Find such that:

| (2.6) |

where is a suitable piecewise polynomial approximation of g. A representation of the spaces and in a one-dimensional domain is provided in Figs. 1a and 1b. Examples of pair of meshes and are shown in Fig. 1c.

Figure 1.

Pair of meshes and corresponding basis functions of a one-dimensional discretization (left) and nested meshes $\mathcal{T}_H$ and $\mathcal{T}_h$ in a two-dimensional domain (right). (a) Basis functions of $V_h$. The filled circles (red) are the nodes of the corresponding mesh $\mathcal{T}_h$; (b) Basis functions of $U_H$. The filled circles (blue) are the vertex nodes that define the elements of the corresponding mesh $\mathcal{T}_H$; and (c) Meshes used in the numerical experiments of Sections 4.3 and 4.4. The blue mesh is $\mathcal{T}_H$, the red one is $\mathcal{T}_h$. All the figures are obtained with $q = 3$, $k_{test} = 1$, $k_{int} = 4$.

In order to obtain computable forms and , we introduce elemental quadrature rules of order q and define and as the approximations of and computed with such quadrature rules. In [36], under suitable assumptions, an a priori error estimate with respect to mesh refinement has been proved when . It is then possible to define the computable variational residuals associated with the basis functions of as:

| (2.7) |

Consequently, in order to compute an approximate solution of problem (2.6), one seeks a function that minimizes the quantity:

| (2.8) |

and satisfies the imposed boundary conditions. We refer to Section 3 for a detailed description of different approaches used to impose Dirichlet boundary conditions. It should be noted that, since in Sections 4.2–4.5 we consider problems other than (2.2), the residuals in (2.7) have to be suitably modified, while the loss function structure defined in (2.8) is maintained.

We are interested in using a neural network to find the minimizer of . We thus denote by an interpolation operator used to map the function associated with the neural network to its interpolating element in , and train the neural network to minimize the quantity . We highlight that in order to construct the function , the neural network has to be evaluated only on interpolation points . Then, assuming that is a Lagrange basis such that for every , it holds:

| (2.9) |
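The role of the interpolation operator can be illustrated on a single one-dimensional element. The sketch below is a simplification, not the piecewise construction on a mesh used by the interpolated VPINN: it builds the Lagrange basis on a handful of hypothetical nodes and forms the interpolant from the values of a stand-in function `w` at those nodes only. All names are illustrative.

```python
def lagrange_basis(nodes, j, x):
    """j-th Lagrange basis function on the given nodes: equals 1 at nodes[j], 0 at the others."""
    val = 1.0
    for m, xm in enumerate(nodes):
        if m != j:
            val *= (x - xm) / (nodes[j] - xm)
    return val

def interpolate(w, nodes):
    """Return the interpolant of w; w is evaluated only at the interpolation nodes."""
    values = [w(xj) for xj in nodes]
    return lambda x: sum(v * lagrange_basis(nodes, j, x) for j, v in enumerate(values))

nodes = [0.0, 0.25, 0.5, 0.75, 1.0]   # one element of degree 4 (hypothetical)
w = lambda x: x ** 3 - 2.0 * x        # stand-in for the neural network output
w_interp = interpolate(w, nodes)
```

Because the interpolant is a polynomial on each element, its derivatives and integrals can be computed exactly with standard finite element tools, which is what makes the variational residuals computable.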

We remark that the approaches proposed in Section 3 can also be used on non-interpolated VPINNs. However, we restrict our analysis to interpolated VPINNs because of their better stability properties (see Fig. 11 and the corresponding discussion).

Figure 11.

$H^1$ errors using method $M_B$ for standard (left) and interpolated VPINNs (right) as a function of the hyperparameters.

3. Mathematical formulation

We compare four methods to impose Dirichlet boundary conditions on PINNs and VPINNs. We do not consider Neumann or Robin boundary conditions since they can be weakly enforced by the trained VPINN due to the chosen variational formulation (computations using PINNs are discussed in [38]). We also highlight that method $M_D$ below can be used only with VPINNs because it relies on the variational formulation of the PDE. We analyze the following methods:

- $M_A$: Incorporation of an additional cost in the loss function that penalizes unsatisfied boundary conditions; this is the standard approach in PINNs and VPINNs because of its simplicity and effectiveness. In fact, it is possible to choose control points on the Dirichlet boundary and modify the loss functions defined in (2.4) or (2.8) as follows:

| (3.1) |

or

| (3.2) |

where λ is a model hyperparameter. Note that on considering the interpolated VPINN and exploiting the solution structure in (2.9), it is possible to ensure the uniqueness of the numerical solution by choosing the control points as the interpolation points belonging to the Dirichlet boundary. We also highlight that such a method can be easily adapted to impose other types of boundary conditions just by adding suitable terms to (3.1) and (3.2). On the other hand, despite its simplicity, the main drawback of this approach is that it leads to a more complex multi-objective optimization problem.

-

:Exactly imposing the Dirichlet boundary conditions as described in [38] and [36]. In this method we add a non-trainable layer B at the end of the neural network to modify its output w according to the rule:

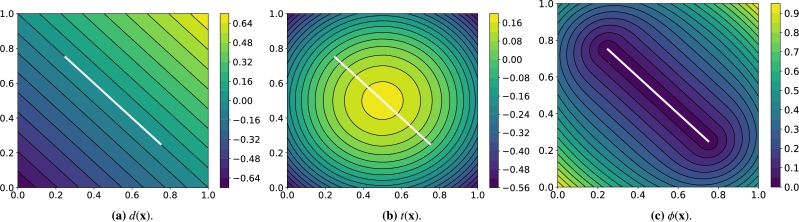

where is an extension of the function g inside the domain Ω (i.e., ) and is an approximate distance function (ADF) to the boundary , i.e., if and only if , and it is positive elsewhere. During the training phase one minimizes the quantity or .(3.3) For the sake of simplicity, we only consider ADFs for two-dimensional unions of segments, even though the approach generalizes to more complex geometries. Following the derivation of and ϕ in [38], we start by defining d as the signed distance function from to the line defined by the segment AB of length L with vertices and :

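Then, we denote $\mathbf{x}_c = (\mathbf{x}_A + \mathbf{x}_B)/2$ to be the center of AB and define $t$ as the following trimming function:

$$
t(\mathbf{x}) = \frac{1}{L} \left[ \left( \frac{L}{2} \right)^2 - \| \mathbf{x} - \mathbf{x}_c \|^2 \right].
$$

Note that $t(\mathbf{x}) = 0$ defines a circle of center $\mathbf{x}_c$. Finally, the ADF to AB is defined as

$$
\phi(\mathbf{x}) = \sqrt{ d^2 + \left( \frac{\sqrt{t^2 + d^4} - t}{2} \right)^2 }.
$$

A graphical representation of $d$, $t$ and $\phi$ for an inclined line segment is shown in Figs. 2a, 2b and 2c, respectively.

Assuming that $\Gamma_D$ can be expressed as the union of segments $s_1, \dots, s_{n_s}$, then the ADF to $\Gamma_D$, normalized up to order $m$, is defined as:

$$
\phi = \frac{1}{\sqrt[m]{\dfrac{1}{\phi_1^m} + \dfrac{1}{\phi_2^m} + \cdots + \dfrac{1}{\phi_{n_s}^m}}},
$$

where $\phi_i$ is the ADF to the segment $s_i$ (see [39]). We remark that an ADF normalized up to order $m$ is an ADF such that, for every regular point of $\Gamma_D$, the following holds:

$$
\frac{\partial \phi}{\partial \nu} = 1, \qquad \frac{\partial^k \phi}{\partial \nu^k} = 0, \quad k = 2, \dots, m, \tag{3.4}
$$

with $\nu$ the inward unit normal to $\Gamma_D$.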

Such a normalization is useful to impose constraints associated with the solution derivatives and to obtain ADFs with about the same order of magnitude in every region of the domain Ω. However, one of the main limitations of this approach with collocation-PINN is that Δϕ tends to infinity near the vertices of $\Gamma_D$ (see Appendix A for an example). This phenomenon produces oscillations in the numerical solutions, hence collocation points that are close to such vertices should not be selected. On the other hand, when only first derivatives are present in the weak formulation of second-order problems (as in the present study), then one can choose quadrature points that are very close to the vertices of $\Gamma_D$.

When a function $\bar{g}$ is not known, it is possible to construct it using transfinite interpolation. Let $g_i$ be a function such that $g_i|_{s_i} = g|_{s_i}$, then $\bar{g}$ can be defined as:

$$
\bar{g} = \sum_{i=1}^{n_s} \omega_i\, g_i,
$$

where $\omega_i$ is defined as:

$$
\omega_i = \frac{\phi_i^{-1}}{\sum_{j=1}^{n_s} \phi_j^{-1}} = \frac{\prod_{j \ne i} \phi_j}{\sum_{k=1}^{n_s} \prod_{j \ne k} \phi_j}.
$$

Note that since $s_i$ is a segment, a function $g_i$ can be readily defined at any arbitrary point $\mathbf{x}$ just by evaluating $g$ at the orthogonal projection of $\mathbf{x}$ onto $s_i$.

- $M_C$: Exactly imposing the Dirichlet boundary conditions as in $M_B$ but without normalizing the ADF. Therefore, we consider a different function $\phi$ in (3.3), namely

$$
\phi = \prod_{i} \phi_i,
$$

where $\phi_i$ is the ADF to the i-th segment of $\Gamma_D$. This ensures that $\phi$ and all its derivatives exist and are bounded in Ω, although $\phi$ may be very small in regions close to many segments.

- $M_D$: Using Nitsche's method [40]. The goal of this method is to variationally impose the Dirichlet boundary conditions. In doing so, the network architecture is not modified with additional layers (as in $M_B$ and $M_C$) and a single objective function suffices for network training.

To do so, one enlarges the test space $V_h$ to contain all piecewise polynomials of order $k_{test}$ defined on its mesh, and modifies the residuals defined in (2.7) in the following way:

| (3.5) |

where γ is a sufficiently large positive constant and the index set is now an enlarged one, corresponding to the enlarged basis of $V_h$. Thanks to the scaling term that magnifies the boundary mismatch when fine meshes are used, the choice of γ is not as important as the one of λ in method $M_A$. This property is confirmed by the numerical results shown in Figs. 8 and 10. Since there is no ambiguity, we maintain the same symbols introduced in Section 2.2 for the test space, its basis and the index set; they always represent the enlarged sets when method $M_D$ is considered. Note that when $w$ satisfies the Dirichlet boundary conditions, the terms added in (3.5) vanish.

Figure 2.

Representation of the signed distance function d(x) to a straight line (left), the trimming function t(x) (middle) and the approximate distance function ϕ(x) to a segment (right).
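The ADF construction used by the two exact-imposition methods can be sketched as follows. This is a minimal illustration and not the authors' implementation: the closed forms for the signed distance, the trimming function and the segment ADF follow the construction commonly given in the ADF literature cited as [38], the joining rule is the one that yields normalization up to order $m$, and the product rule is the unnormalized alternative. The function names and the unit-square boundary are hypothetical.

```python
import math

def segment_adf(x, y, a, b):
    """Approximate distance from (x, y) to the segment with end points a and b."""
    (xa, ya), (xb, yb) = a, b
    length = math.hypot(xb - xa, yb - ya)
    # Signed distance to the infinite line through a and b.
    d = ((x - xa) * (yb - ya) - (y - ya) * (xb - xa)) / length
    # Trimming function: non-negative inside the circle having the segment as a diameter.
    xc, yc = 0.5 * (xa + xb), 0.5 * (ya + yb)
    t = ((0.5 * length) ** 2 - ((x - xc) ** 2 + (y - yc) ** 2)) / length
    varphi = math.sqrt(t * t + d ** 4)
    return math.sqrt(d * d + (0.5 * (varphi - t)) ** 2)

def joined_adf(x, y, segments, m=1):
    """ADF to a union of segments, joined so that it is normalized up to order m."""
    phis = [segment_adf(x, y, a, b) for a, b in segments]
    if any(p == 0.0 for p in phis):
        return 0.0  # the point lies on the boundary
    return 1.0 / sum(p ** (-m) for p in phis) ** (1.0 / m)

def product_adf(x, y, segments):
    """Unnormalized alternative: product of the segment ADFs."""
    prod = 1.0
    for a, b in segments:
        prod *= segment_adf(x, y, a, b)
    return prod

# Hypothetical Dirichlet boundary: the four edges of the unit square.
square = [((0.0, 0.0), (1.0, 0.0)), ((1.0, 0.0), (1.0, 1.0)),
          ((1.0, 1.0), (0.0, 1.0)), ((0.0, 1.0), (0.0, 0.0))]
```

Both functions vanish on the boundary, but at the center of the square the product is noticeably smaller than the joined ADF, which illustrates why the unnormalized variant can become very small in regions close to many segments.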

Figure 8.

Error decay obtained with $M_A$ and different values of λ. Forcing term and Dirichlet boundary conditions are set such that the exact solution is (4.3). The theoretical convergence rate is $k_{int}$. (a) Convergence rates: 3.66 ($\lambda = 10^{3}$), 2.05 ($\lambda = 1$), 0.01 ($\lambda = 10^{-3}$). (b) Convergence rates: 5.85 ($\lambda = 10^{3}$), 4.42 ($\lambda = 1$), 2.89 ($\lambda = 10^{-3}$). (c) Convergence rates: 3.95 ($\lambda = 10^{3}$), 3.68 ($\lambda = 1$), 3.71 ($\lambda = 10^{-3}$).

Figure 10.

Error decay obtained with $M_D$ and different values of γ. Forcing term and Dirichlet boundary conditions are set such that the exact solution is (4.3). The theoretical convergence rate is $k_{int}$. (a) Convergence rates: 4.18 (γ = 0.1), 4.79 (γ = 1), 3.78 (γ = 10). (b) Convergence rates: 6.54 (γ = 0.1), 6.51 (γ = 1), 7.06 (γ = 10). (c) Convergence rates: 4.19 (γ = 0.1), 4.19 (γ = 1), 4.20 (γ = 10).

We point out that method $M_A$ is often referred to as soft boundary condition imposition, whereas $M_B$ and $M_C$ are known as hard boundary condition impositions. Hence, we can treat $M_D$ as weak boundary condition imposition.
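The difference between soft and hard imposition can be seen in a one-dimensional toy setting. The sketch below is an assumption-laden illustration: the domain is the interval $(0, 1)$, the function vanishing on the boundary is simply $x(1 - x)$, the extension of the boundary data is a linear interpolant, and the penalty is a weighted sum of squared mismatches at the control points. The names `penalty_term` and `hard_output` are hypothetical.

```python
def penalty_term(w, g, control_points, lam):
    """Soft imposition: lam times the sum of squared boundary mismatches."""
    return lam * sum((w(x) - g(x)) ** 2 for x in control_points)

def hard_output(w, g0, g1):
    """Hard imposition on (0, 1): multiply w by a function vanishing on the boundary, add an extension of g."""
    phi = lambda x: x * (1.0 - x)              # zero only at x = 0 and x = 1
    g_bar = lambda x: g0 * (1.0 - x) + g1 * x  # linear extension of the boundary data
    return lambda x: phi(x) * w(x) + g_bar(x)

w = lambda x: 3.0 * x + 0.7              # stand-in for the raw network output
g = lambda x: 2.0 if x == 0.0 else -1.0  # boundary data: g(0) = 2, g(1) = -1

bw = hard_output(w, 2.0, -1.0)
soft = penalty_term(w, g, [0.0, 1.0], lam=10.0)
```

Whatever the raw output `w` is, the modified output `bw` matches the boundary data exactly, so its penalty is zero and no weighting coefficient has to be tuned; with soft imposition the mismatch is only driven towards zero by the optimizer, at a rate that depends on `lam`.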

4. Numerical results

In this section, the methods $M_A$, $M_B$, $M_C$ and $M_D$ discussed in Section 3 are analyzed and compared. In each numerical experiment the neural network is a fully-connected feed-forward neural network as described in Section 2.1. The corresponding architecture is composed of 4 hidden layers with 50 neurons in each layer and with the hyperbolic tangent as the activation function, while the output layer is a linear layer with one or two neurons.

In order to properly minimize the loss function we use the first-order ADAM optimizer [41] with an exponentially decaying learning rate, and after a prescribed number of epochs, the second-order BFGS method [42] is used until a maximum number of iterations is reached, or it is not possible to further improve the objective function (i.e., when two consecutive iterates are identical, up to machine precision). When the interpolated VPINN is used, the training set consists of all the interpolation nodes and no regularization is applied, since the interpolation operator already filters out the high frequencies of the neural network. Instead, when the PINN is used, the training set contains a set of control points inside the domain Ω and, when $M_A$ is employed, a set of control points on the boundary ∂Ω. Moreover, in order to stabilize the PINN, the regularization term

| (4.1) |

is added to the loss function, where is the vector containing all the neural network weights defined in Section 2.1 and . The value of this parameter has been chosen through several numerical experiments to minimize the norm of the error.

The computer code to perform the numerical experiments is written in Python, while the neural networks and the optimizers are implemented using the open-source Python package TensorFlow [43]. The loss function gradient with respect to the neural network weights and the PINN output gradient with respect to the spatial coordinates are always computed with the automatic differentiation available in TensorFlow [44]. On the other hand, the VPINN output gradient with respect to its input is computed by means of suitable projection matrices as described in [36].

4.1. Rate of convergence for second-order elliptic problems

We focus on the VPINN model and show that the a priori error estimate proved in [36] for second-order elliptic problems holds even on varying the way in which the boundary conditions are imposed. On letting , we consider problem (2.2) in the domain with the physical parameters

We consider two test cases. In the first one the Dirichlet boundary conditions and forcing term are chosen so that the exact solution is

| (4.2) |

whereas in the second one they are chosen such that the exact solution is more oscillatory. Its expression is:

| (4.3) |

Such a solution is shown in Fig. 3a, whereas an example of numerical error corresponding to the VPINN in which Dirichlet boundary conditions are imposed using method $M_B$ is shown in Fig. 3b; it exhibits a rather uniform distribution of the error, which is not localized near boundaries. We remark that in these numerical tests and in the subsequent ones, the function $\bar{g}$ used in $M_B$ and $M_C$ is computed via transfinite interpolation.

Figure 3.

Exact solution $u$ (left) and a plot of the absolute error with VPINN and method $M_B$ in which the Dirichlet boundary conditions are imposed on every edge of ∂Ω (right).

We vary both the order of the quadrature rule and the degree of the test functions, train the same model on different meshes, and impose the Dirichlet boundary conditions with the proposed approaches. In Figs. 4a–4c, 5a–5c and 6a–6c, in which the exact solution is the one in (4.2), we observe close agreement with the results shown in [36]. In fact, when the loss is properly minimized, all the approaches perform comparably and the corresponding empirical convergence rates are always close to the theoretical rate of $k_{int}$. We point out that in [36] we prove that, when the solution is regular enough and a method similar to $M_B$ is used to enforce the boundary conditions, the convergence rate is $k_{int}$. Here, instead, we show that the same behavior is observed even if the boundary conditions are enforced in different ways. Note in particular that the choice $m = 1$ or $m = 2$ in $M_B$, and the choice $\gamma = 0.1$, $\gamma = 1$ or $\gamma = 10$ in $M_D$, yield nearly identical results (see Figs. 5 and 6).
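An empirical convergence rate of the kind reported in the figure captions can be estimated from a sequence of mesh sizes and errors. The text does not state how the rates were computed; the sketch below assumes a least-squares fit of the slope in log-log scale, which is one common choice. The function name and the synthetic data are hypothetical.

```python
import math

def convergence_rate(mesh_sizes, errors):
    """Least-squares slope of log(error) versus log(mesh size)."""
    xs = [math.log(h) for h in mesh_sizes]
    ys = [math.log(e) for e in errors]
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    den = sum((x - x_mean) ** 2 for x in xs)
    return num / den

# Synthetic errors that decay exactly like h^4 (not data from the paper).
hs = [0.5, 0.25, 0.125, 0.0625]
errs = [3.0 * h ** 4 for h in hs]
rate = convergence_rate(hs, errs)
```

A fitted slope close to the theoretical rate indicates that the error is dominated by the discretization; a noisy or much smaller slope suggests that the optimization error or the boundary treatment is limiting the accuracy.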

Figure 4.

Error decay obtained with $M_A$ and different values of λ. Forcing term and Dirichlet boundary conditions are set such that the exact solution is (4.2). The theoretical convergence rate is $k_{int}$. (a) Convergence rates: 4.04 ($\lambda = 10^{3}$), 3.99 ($\lambda = 1$), 3.42 ($\lambda = 10^{-3}$). (b) Convergence rates: 6.18 ($\lambda = 10^{3}$), 6.00 ($\lambda = 1$), 5.52 ($\lambda = 10^{-3}$). (c) Convergence rates: 4.50 ($\lambda = 10^{3}$), 4.44 ($\lambda = 1$), 5.29 ($\lambda = 10^{-3}$).

Figure 5.

Error decay obtained with $M_B$, with different values of $m$, and $M_C$. Forcing term and Dirichlet boundary conditions are set such that the exact solution is (4.2). The theoretical convergence rate is $k_{int}$. (a) Convergence rates: 4.05 ($M_B$, $m = 1$), 4.05 ($M_B$, $m = 2$), 4.06 ($M_C$). (b) Convergence rates: 6.24 ($M_B$, $m = 1$), 6.25 ($M_B$, $m = 2$), 6.25 ($M_C$). (c) Convergence rates: 4.43 ($M_B$, $m = 1$), 4.43 ($M_B$, $m = 2$), 4.67 ($M_C$).

Figure 6.

Error decay obtained with $M_D$ and different values of γ. Forcing term and Dirichlet boundary conditions are set such that the exact solution is (4.2). The theoretical convergence rate is $k_{int}$. (a) Convergence rates: 3.98 (γ = 0.1), 3.89 (γ = 1), 4.39 (γ = 10). (b) Convergence rates: 6.45 (γ = 0.1), 5.69 (γ = 1), 6.90 (γ = 10). (c) Convergence rates: 4.46 (γ = 0.1), 4.43 (γ = 1), 4.43 (γ = 10).

We highlight that the different methods, while delivering similar empirical convergence rates with respect to mesh refinement, exhibit very different performance during training. To observe this phenomenon, let us train multiple identical neural networks on the same mesh but impose the Dirichlet boundary conditions in different ways. Here we only consider quadrature rules of order and piecewise linear test functions. The values of the loss function and of the error prediction during training are presented in Figs. 7a and 7b, respectively. A vertical line separates the epochs where the ADAM optimizer is used from the ones where the BFGS optimizer is used.

Figure 7.

Training loss (left) and $H^1$ error prediction (right) for the VPINN. The first 5000 epochs are performed with a standard ADAM optimizer, the remaining ones with the BFGS optimizer. The exact solution is given in (4.3).

It can be noted that the most efficient method is , as it converges faster and to a more accurate solution, while method is characterized by very fast convergence only when the BFGS optimizer is adopted. Such an optimizer is also crucial to train the VPINN with ; in fact the corresponding error does not decrease when the ADAM optimizer is used. Instead, the convergence obtained using method seems independent of the choice of the optimizer. It is important to remark that all the loss functions are decreasing even when the error is constant. This implies that there exist other sources of error that dominate and that a very small loss function does not ensure a very accurate solution; this phenomenon is also observed in Fig. 3 of [45] and is discussed in greater detail therein.

Note that, if we change the forcing term and Dirichlet boundary conditions to consider the more oscillatory exact solution in (4.3), some approaches do not ensure the theoretical convergence rate (see Figs. 8a–8c, 9a–9c and 10a–10c). In fact, in Fig. 8 it is evident that, in this case, large values of λ are required to properly enforce the Dirichlet boundary conditions. In Fig. 9, instead, we can observe that the VPINN trained with method $M_C$ is often inaccurate and the corresponding error decay is very noisy. The performance of methods $M_B$ and $M_D$ seems independent of the complexity of the forcing term and boundary conditions.

Figure 9.

Error decay obtained with $M_B$, with different values of $m$, and $M_C$. Forcing term and Dirichlet boundary conditions are set such that the exact solution is (4.3). The theoretical convergence rate is $k_{int}$. (a) Convergence rates: 3.90 ($M_B$, $m = 1$), 3.88 ($M_B$, $m = 2$), 2.36 ($M_C$). (b) Convergence rates: 5.85 ($M_B$, $m = 1$), 4.42 ($M_B$, $m = 2$), 2.89 ($M_C$). (c) Convergence rates: 4.60 ($M_B$, $m = 1$), 4.59 ($M_B$, $m = 2$), -0.43 ($M_C$).

In order to show that interpolation acts as a stabilization, we fix a mesh and vary the number of layers and neurons of the neural network. The boundary conditions are imposed using method with and the exact solution is the one in (4.2); the results are shown in Fig. 11. The number L of layers varies in , whereas the number of neurons in each hidden layer belongs to the set . In Fig. 11a we show the performance of a non-interpolated VPINN trained with the regularization in (4.1), where . It can be noted that the error is high when the neural network is small because of its poor approximation capability, and that it decreases with intermediate values of the two hyperparameters. However, when the neural networks contain more than 100 neurons in each layer the error increases because of uncontrolled spurious zero-energy modes and the fact that we are looking for good local minima in a very high-dimensional space. On the other hand, when the VPINN is interpolated and the neural network is sufficiently rich, the error is constant and independent of the network dimension (see Fig. 11b). In addition, note that the average accuracy of an interpolated VPINN is better than its non-interpolated counterpart.

4.2. Application to nonlinear parametric problem

Let us now extend our analysis to nonlinear and parametric PDEs. Since in the previous section we observed that method $M_B$ performs the best, in this example we do not consider $M_C$ and $M_D$. We focus on the following problem:

| (4.4) |

It has been observed in [36] that considering constant or variable coefficients does not influence VPINN convergence. Hence, we choose , , and assume that the exact solution is

| (4.5) |

where is a scalar parameter.

In order to train the VPINN to solve problem (4.4), we minimize

when is used, or

when is used instead. Here is a finite set of parameter values and is the residual obtained using the i-th test function and the parameter p. In the numerical computations, we use and the VPINN is trained with and .

In Fig. 12, we report the behavior of the loss function and the average error:

It is noted that the loss functions behave qualitatively similarly (see Fig. 12a). On the other hand, higher values of λ lead to lower errors when $M_A$ is adopted, but the most stable and accurate approach remains $M_B$.

Figure 12.

(a) Training loss and (b) $H^1$ error prediction for the VPINN. The first 10000 epochs are performed with a standard ADAM optimizer, the remaining ones with the BFGS optimizer. The exact solution is given in (4.5).

Moreover, when the VPINN is trained, it can be evaluated at arbitrary locations in the parameter domain , yielding the error plot shown in Fig. 13. Therein, for each dot and for each point belonging to the solid lines, given the parameter value represented on the horizontal axis, its value on the vertical axis represents the error between the VPINN solution and the exact solution . Note that dots are associated with parameter values that are chosen in during the training, whereas solid lines are the predictions to assess the accuracy of the models for intermediate values of p. Such lines thus show the error for values of the parameter not used during the training.

Figure 13.

H1 error for different parameter values in problem (4.4).

4.3. Deformation of an elastic body

We consider the deformation of a linear elastic solid in the region , which is subjected to a body force field f and Dirichlet boundary conditions imposed on . The elastostatic boundary-value problem is:

| (4.6) |

In (4.6), is the Cauchy stress tensor, is the small strain tensor and (4.6c) is the isotropic linear elastic constitutive relation. The Lamé parameters λ and μ are related to the Young modulus E and the Poisson ratio ν via
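For reference, a minimal sketch of one standard form of this conversion is given below. It uses the three-dimensional (equivalently, plane-strain) relations λ = Eν / ((1 + ν)(1 − 2ν)) and μ = E / (2(1 + ν)); whether the experiments use these or the plane-stress variant is not visible in this excerpt, so this choice, as well as the function name `lame_parameters`, is an assumption.

```python
from typing import Tuple


def lame_parameters(young: float, poisson: float) -> Tuple[float, float]:
    """Return (lambda, mu) from Young's modulus E and Poisson's ratio nu.

    Assumes the 3D / plane-strain relations; plane stress would use
    lambda = E * nu / (1 - nu**2) instead.
    """
    if young <= 0.0:
        raise ValueError("Young's modulus must be positive")
    if not -1.0 < poisson < 0.5:
        raise ValueError("Poisson's ratio must lie in (-1, 0.5)")
    lam = young * poisson / ((1.0 + poisson) * (1.0 - 2.0 * poisson))
    mu = young / (2.0 * (1.0 + poisson))
    return lam, mu


# Illustrative values only (not the ones used in the experiments).
lam_example, mu_example = lame_parameters(1.0, 0.25)
```

The guard on ν reflects the fact that λ diverges as ν approaches 0.5, i.e. in the incompressible limit.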

For the numerical experiments, we choose , and the following body force field and boundary data:

The variational formulation of problem (4.6a) reads as: Find such that:

where is the natural lifting of the boundary data. Such a formulation is used to compute the quantity in (2.8), where the modified residuals

replace the ones defined in (2.7). For this and the subsequent test cases, we will also provide a comparison with the results obtained by a PINN, in order to give a more complete view of the performance of the methods. The modified residuals required in the PINN loss function are defined as:

Since the exact solution is not known, we produce a very accurate numerical solution for comparison (shown in Figs. 14a and 14b), using the open-source FEM solver FEniCS [46].

Figure 14.

Reference finite element displacement field solution for problem (4.6). The x-component (left) and y-component (right) of uh are shown.

Problem (4.6) is solved by training a VPINN on the mesh shown in Fig. 1c with and . Then, in order to compare the performance of PINN and VPINN, a standard PINN is trained to solve the same problem. To verify whether the distribution of the collocation points affects the PINN accuracy, we first train it using the interpolation nodes of the VPINN training as collocation points, and then we train it with the same number of uniformly distributed collocation points.

For these three models we analyze the error during the neural network training for a fixed training set dimension; the results are reported in Figs. 15a–15c. Since Figs. 15b and 15c are very similar, we deduce that, in this case, the distribution of the collocation points used in the PINN training does not significantly affect the relative performance of the different approaches.

Figure 15.

H1 error decay during the neural network training when solving problem (4.6). (a) VPINN error: H1 error of the most accurate solution is 0.020; (b) PINN error: model is trained with collocation points distributed on a Delaunay mesh and the H1 error of the most accurate solution is 0.070; and (c) PINN error: model is trained with collocation points from a uniform distribution and the H1 error of the most accurate solution is 0.047. The legend in (a) also applies to (b) and (c).

It can be observed that method is always the most efficient approach and converges to more accurate solutions. Exactly imposing the Dirichlet boundary conditions via can be considered a good alternative, since the solutions at convergence obtained with the VPINN and with the PINN trained on randomly distributed collocation points are very similar to the ones computed using , although the convergence is slower. The most commonly used approach, , instead depends on the choice of the non-trainable parameter λ. In this case, large values of λ ensure accurate solutions and acceptably efficient training phases, but suitable values are problem dependent and can often be found only after a potentially expensive tuning. Indeed, choosing the wrong values of λ can ruin the efficiency and the accuracy of the method, as can be observed in Fig. 15 when or . We also highlight that the performance of method is very similar to that of method when reasonable values of λ are chosen.

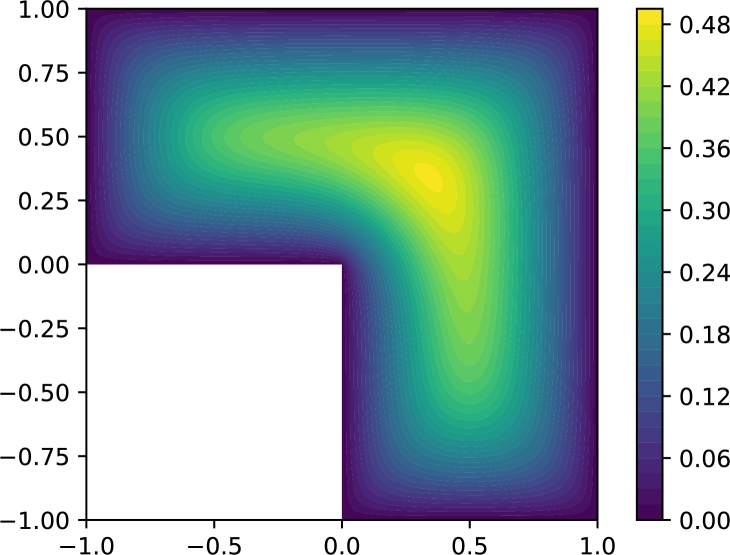

4.4. Stabilized Eikonal equation

In this section we consider the stabilized Eikonal equation, which is a nonlinear second-order PDE and reads as:

| (4.7) |

where ε is a small positive constant. Note that when , and , the exact solution is the distance function to the boundary and the problem can be efficiently solved by the fast sweeping method [47] or by the fast marching method [48]. In our numerical computations we set and and we introduce a weak diffusivity with to guarantee uniqueness of the solution.
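To make the connection with the distance function concrete, the sketch below applies fast sweeping to the simplest non-stabilized case: the one-dimensional problem |u'| = 1 on (0, 1) with u(0) = u(1) = 0, whose solution is the distance to the boundary, min(x, 1 − x). The unit right-hand side, the homogeneous boundary data, the absence of diffusion and the one-dimensional domain are all assumptions chosen for illustration; they are not the settings of the experiments in this section, and the function name `fast_sweep_1d` is hypothetical.

```python
from typing import List


def fast_sweep_1d(n: int, max_sweeps: int = 100) -> List[float]:
    """Solve |u'| = 1 on (0, 1), u(0) = u(1) = 0, on n + 1 uniform nodes.

    Uses the upwind update u_i = min(u_i, min(u_{i-1}, u_{i+1}) + h) with
    alternating left-to-right and right-to-left Gauss-Seidel sweeps.
    """
    h = 1.0 / n
    u = [float("inf")] * (n + 1)
    u[0] = 0.0
    u[n] = 0.0
    for _ in range(max_sweeps):
        changed = False
        for order in (range(1, n), range(n - 1, 0, -1)):
            for i in order:
                candidate = min(u[i - 1], u[i + 1]) + h
                if candidate < u[i]:
                    u[i] = candidate
                    changed = True
        if not changed:  # a full pair of sweeps left the solution unchanged
            break
    return u


n_cells = 10
u_sweep = fast_sweep_1d(n_cells)
# Distance to the boundary {0, 1} at the same nodes, for comparison.
u_distance = [min(i, n_cells - i) / n_cells for i in range(n_cells + 1)]
```

In one dimension a single pair of sweeps already yields the converged solution; in higher dimensions the same idea is applied along several sweeping directions.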

The PINN and VPINN residuals associated with problem (4.7) that extend the residuals in (2.3) and (2.7), respectively, are defined as:

and

We compute the VPINN and PINN numerical solutions as described in Section 4.3 and compute the corresponding errors using a finite element reference solution that is computed on a much finer mesh (see Fig. 16).

Figure 16.

Reference finite element solution for problem (4.7).

As in Fig. 15, in Fig. 17 we show the decay of the error during the training for the different methods. Again, it can be noted that the most accurate method is always ; is a valid alternative provided λ is properly chosen. However, when the value of λ is not suitably chosen, convergence can be completely ruined (see, for instance, the curves associated with in Figs. 17b and 17c) or a second-order optimizer is required to retain convergence (see all the curves computed with ). Moreover, similar convergence issues are present when or are employed.

Figure 17.

H1 error decay during the neural network training when solving problem (4.7). (a) VPINN error: H1 error of the most accurate solution is 0.021; (b) PINN error: model is trained with collocation points distributed on a Delaunay mesh and the H1 error of the most accurate solution is 0.085; and (c) PINN error: model is trained with collocation points from a uniform distribution and the H1 error of the most accurate solution is 0.029. The legend in (a) also applies to (b) and (c).

4.5. One-dimensional convection problem

As a final example, we consider a one-dimensional convection problem on the space-time domain . As discussed in [49], when such a hyperbolic PDE is solved with a PINN, failure modes may arise due to the very complex loss landscape. The model problem reads as:

| (4.8) |

Let us consider the boundary condition and the initial condition . The corresponding exact solution is . We solve problem (4.8) with the convection coefficient .

Given a set of collocation points , and a suitable set of space-time test functions , the PINN and VPINN residuals that are used to train the models are given by

and

respectively. When the boundary conditions are exactly imposed (i.e., when or are used), the function is constructed as , where and is a function that vanishes on the Dirichlet boundary of . Note that, due to the simplicity of the spatial domain , there is no reason to distinguish between and . Therefore, we just consider the function in both approaches.
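The construction described above can be sketched as follows: the model output is the sum of an extension of the prescribed data and the product of a function vanishing on the Dirichlet boundary with the raw network output. Everything specific in this sketch is hypothetical: the unit space-time square, the Dirichlet portion {x = 0} ∪ {t = 0}, the coefficient `BETA`, the travelling-wave data, the choice φ(x, t) = x t, and the `raw_network` stand-in for a trained neural network.

```python
import math

BETA = 1.0  # hypothetical convection coefficient


def data(x: float, t: float) -> float:
    """Hypothetical boundary/initial data (trace of a travelling wave)."""
    return math.sin(x - BETA * t)


def lifting(x: float, t: float) -> float:
    """Extension that matches `data` on both {x = 0} and {t = 0}."""
    return data(0.0, t) + data(x, 0.0) - data(0.0, 0.0)


def phi(x: float, t: float) -> float:
    """Vanishes on {x = 0} and {t = 0}, positive inside the domain."""
    return x * t


def raw_network(x: float, t: float) -> float:
    """Stand-in for the unconstrained neural network output."""
    return math.tanh(0.7 * x - 1.3 * t + 0.2)


def constrained_output(x: float, t: float) -> float:
    """Model output that satisfies the Dirichlet data by construction."""
    return lifting(x, t) + phi(x, t) * raw_network(x, t)


samples = [0.0, 0.25, 0.5, 1.0]
boundary_gap = max(
    max(abs(constrained_output(0.0, s) - data(0.0, s)) for s in samples),
    max(abs(constrained_output(s, 0.0) - data(s, 0.0)) for s in samples),
)
```

Whatever values the network takes, `boundary_gap` is zero up to rounding, so no boundary term is needed in the loss and the optimizer only has to reduce the PDE residual.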

The numerical results obtained using the different approaches are presented in Fig. 18. In Fig. 18a, problem (4.8) is solved with the VPINN method. In this case, is slightly more accurate and efficient than (or since they coincide) if λ is chosen properly. However, when the value of λ is not optimal, the solution is significantly less accurate. Once more, method is not competitive with the other approaches. On the other hand, when PINN is considered, exactly imposing the boundary conditions ensures better accuracy and efficiency than using , regardless of the value of λ (see Figs. 18b and 18c).

Figure 18.

H1 error decay during the neural network training when solving problem (4.8). (a) VPINN error: H1 error of the most accurate solution is 0.077; (b) PINN error: model is trained with collocation points distributed on a Delaunay mesh and the H1 error of the most accurate solution is 0.125; and (c) PINN error: model is trained with collocation points from a uniform distribution and the H1 error of the most accurate solution is 0.051. The legend in (a) also applies to (b) and (c).

5. Conclusions

In this paper, we analyzed the formulation and the performance of four different approaches to enforce Dirichlet boundary conditions in PINNs and VPINNs on arbitrary polygonal domains. In the first approach, which is the most commonly used when training PINNs, the boundary conditions are imposed by means of additional terms in the loss function that penalize the discrepancy between the neural network output and the prescribed boundary conditions. The subsequent two approaches exactly enforce the boundary conditions and differ in the way they modify the model output in order to force it to satisfy the desired conditions. The last approach, which can be used only when the loss function is derived from the weak formulation of the PDE, is based on Nitsche's method and enforces the boundary conditions variationally.

We have shown that, for the considered second-order elliptic PDEs, and always ensure the theoretically predicted convergence rate with respect to mesh refinement, regardless of the value of the involved parameter. Instead, methods and ensure it only if the exact solution does not exhibit a strongly oscillatory behavior.

In general, we observed that the most efficient and accurate approach is the one introduced in [38] (method ), which is based on the use of a class of approximate distance functions. A variant of this approach (method ) leads to suboptimal results and may even ruin the convergence of the method (as in Fig. 17c). Imposing the boundary conditions via an additional cost term (method ) can be considered a valid alternative, but the choice of the additional penalization parameter is crucial because wrong values can prevent convergence to the correct solution or dramatically slow down the training. In the numerical experiments presented here we fixed the penalization parameter; as discussed in the Introduction, it is possible to tune it during training, but we chose to fix it in order to compare non-intrusive methods with simple implementations. Finally, we observed that Nitsche's method (method ) is in some cases similar to with an acceptable value of λ, while in other cases it requires a second-order optimizer to converge to the correct solution.

Among possible extensions of this work, we mention applications to high-dimensional PDEs over complex geometries, where we expect methods and to be even more efficient than their alternatives. In fact, such methods can enforce the correct conditions on each portion of the boundary, whereas methods and are likely to be less robust and efficient.

CRediT authorship contribution statement

S. Berrone; C. Canuto; N. Sukumar: Conceived and designed the experiments; Analyzed and interpreted the data; Contributed reagents, materials, analysis tools or data.

M. Pintore: Conceived and designed the experiments; Performed the experiments; Analyzed and interpreted the data; Contributed reagents, materials, analysis tools or data; Wrote the paper.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

CC, SB and MP performed this research in the framework of the Italian MIUR Award “Dipartimenti di Eccellenza 2018-2022” granted to the Department of Mathematical Sciences, Politecnico di Torino (CUP: E11G18000350001). The research leading to this paper has also been partially supported by the SmartData@PoliTO center for Big Data and Machine Learning technologies. SB was supported by the Italian MIUR PRIN Project 201744KLJL-004, CC was supported by the Italian MIUR PRIN Project 201752HKH8-003. CC, SB and MP are members of the Italian INdAM-GNCS research group.

Contributor Information

S. Berrone, Email: stefano.berrone@polito.it.

C. Canuto, Email: claudio.canuto@polito.it.

M. Pintore, Email: moreno.pintore@polito.it.

N. Sukumar, Email: nsukumar@ucdavis.edu.

Appendix A. On the Laplacian of the approximate distance function

In [38], the blow-up of the Laplacian of ϕ in (3.4) is discussed. Herein, we illustrate this issue in the simple setting in which is composed of two intersecting edges.

Let Ω be the non-negative quadrant and let us consider the (semi-infinite) segments and . For the sake of simplicity, we consider the exact distance functions and . Let us compute the ADF of order to the boundary . Substituting in (3.4), ϕ can be written as:

The gradient of ϕ is:

Note that the gradient is bounded on , and in particular:

where α is a strictly positive constant, i.e. ϕ is an ADF of order 1 and ∇ϕ is bounded along any straight line intersecting the origin and entering inside the domain. The Laplacian of ϕ is:

Consider the limit at along the line , for :

Therefore, Δϕ is unbounded at the origin.
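The blow-up can also be checked numerically. The sketch below assumes that, for two segments and order 1, (3.4) reduces to the R-equivalence composition ϕ = ϕ1 ϕ2 / (ϕ1 + ϕ2) of [38] with the exact distances x and y, so that ϕ = xy / (x + y) and Δϕ = −2(x² + y²) / (x + y)³. This reading of (3.4), which is not reproduced in this excerpt, and all function names are assumptions.

```python
from typing import Callable, List


def adf_order1(x: float, y: float) -> float:
    """Assumed order-1 ADF for the quadrant: phi = x * y / (x + y)."""
    return x * y / (x + y)


def laplacian_closed_form(x: float, y: float) -> float:
    """Laplacian of adf_order1, obtained by differentiating twice by hand."""
    return -2.0 * (x * x + y * y) / (x + y) ** 3


def laplacian_fd(f: Callable[[float, float], float],
                 x: float, y: float, h: float) -> float:
    """Five-point finite-difference approximation of the Laplacian."""
    return (f(x + h, y) + f(x - h, y) + f(x, y + h) + f(x, y - h)
            - 4.0 * f(x, y)) / (h * h)


def laplacian_along_ray(alpha: float, xs: List[float]) -> List[float]:
    """Closed-form Laplacian sampled on the ray y = alpha * x."""
    return [laplacian_closed_form(x, alpha * x) for x in xs]


distances = [1e-1, 1e-2, 1e-3]
values_on_diagonal = laplacian_along_ray(1.0, distances)
fd_check = laplacian_fd(adf_order1, 0.1, 0.1, 1e-3)
```

On the ray y = αx the closed form gives Δϕ = −2(1 + α²) / ((1 + α)³ x), so the product x Δϕ stays constant while Δϕ itself grows like 1/x as the origin is approached.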

For , the function ϕ is:

Its gradient is:

which in polar coordinates (, ) is expressed as:

In polar coordinates, we can write

Similarly,

holds, which implies:

Thus, as in the case , when .
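As a hedged cross-check of the polar-coordinate argument, the computation can be written out under the assumption that, for two segments and order 2, (3.4) reduces to the R-equivalence composition of [38] with the exact distances x and y. The explicit expressions below follow from that assumption and are not copied from the original equations.

```latex
\begin{align*}
\phi &= \frac{x\,y}{\sqrt{x^{2}+y^{2}}}
      = r\cos\theta\,\sin\theta
      = \frac{r}{2}\,\sin 2\theta ,
      \qquad r>0,\ \theta\in\left(0,\tfrac{\pi}{2}\right), \\[4pt]
|\nabla\phi|^{2} &= \left(\frac{\partial\phi}{\partial r}\right)^{2}
      + \frac{1}{r^{2}}\left(\frac{\partial\phi}{\partial\theta}\right)^{2}
      = \frac{1}{4}\sin^{2}2\theta + \cos^{2}2\theta \le 1 , \\[4pt]
\Delta\phi &= \frac{\partial^{2}\phi}{\partial r^{2}}
      + \frac{1}{r}\frac{\partial\phi}{\partial r}
      + \frac{1}{r^{2}}\frac{\partial^{2}\phi}{\partial\theta^{2}}
      = 0 + \frac{\sin 2\theta}{2r} - \frac{2\sin 2\theta}{r}
      = -\frac{3\,\sin 2\theta}{2\,r} .
\end{align*}
```

Under this assumption the gradient stays bounded, whereas |Δϕ| grows like 1/r along every ray with θ in (0, π/2), which is consistent with the conclusion stated above.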

We point out that, for and , respectively:

Therefore, ϕ is an ADF that is normalized up to order 2.

Data availability statement

Data will be made available on request.

References

- 1. Raissi M., Perdikaris P., Karniadakis G. Physics-informed neural networks: a deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 2019;378:686–707.

- 2. Lagaris I.E., Likas A., Fotiadis D.I. Artificial neural network methods in quantum mechanics. Comput. Phys. Commun. 1997;104:1–14.

- 3. Lagaris I.E., Likas A., Fotiadis D.I. Artificial neural networks for solving ordinary and partial differential equations. IEEE Trans. Neural Netw. 1998;9:987–1000. doi: 10.1109/72.712178.

- 4. Lagaris I.E., Likas A.C., Papageorgiou D.G. Neural-network methods for boundary value problems with irregular boundaries. IEEE Trans. Neural Netw. 2000;11:1041–1049. doi: 10.1109/72.870037.

- 5. Chen Y., Lu L., Karniadakis G.E., Negro L.D. Physics-informed neural networks for inverse problems in nano-optics and metamaterials. Opt. Express. 2020;28:11618–11633. doi: 10.1364/OE.384875.

- 6. Guo Q., Zhao Y., Lu C., Luo J. High-dimensional inverse modeling of hydraulic tomography by physics informed neural network (HT-PINN). J. Hydrol. 2023;616.

- 7. Mishra S., Molinaro R. Estimates on the generalization error of physics-informed neural networks for approximating a class of inverse problems for PDEs. IMA J. Numer. Anal. 2021.

- 8. Gao H., Sun L., Wang J.-X. PhyGeoNet: physics-informed geometry-adaptive convolutional neural networks for solving parameterized steady-state PDEs on irregular domain. J. Comput. Phys. 2021;428.

- 9. Han J., Jentzen A., Weinan E. Solving high-dimensional partial differential equations using deep learning. Proc. Natl. Acad. Sci. 2018;115:8505–8510. doi: 10.1073/pnas.1718942115.

- 10. Lanthaler S., Mishra S., Karniadakis G.E. Error estimates for DeepONets: a deep learning framework in infinite dimensions. Trans. Math. Appl. 2022;6.

- 11. Jiang X., Wang D., Fan Q., Zhang M., Lu C., Tao Lau A.P. Solving the nonlinear Schrödinger equation in optical fibers using physics-informed neural network. 2021 Optical Fiber Communications Conference and Exhibition (OFC). 2021; pp. 1–3.

- 12. Chen Z., Liu Y., Sun H. Physics-informed learning of governing equations from scarce data. Nat. Commun. 2021;12:1–13. doi: 10.1038/s41467-021-26434-1.

- 13. Weinan E., Yu B. The deep Ritz method: a deep learning-based numerical algorithm for solving variational problems. Commun. Math. Stat. 2018;6:1–12.

- 14. Jagtap A.D., Kharazmi E., Karniadakis G.E. Conservative physics-informed neural networks on discrete domains for conservation laws: applications to forward and inverse problems. Comput. Methods Appl. Mech. Eng. 2020;365.

- 15. Jagtap A.D., Karniadakis G.E. Extended physics-informed neural networks (XPINNs): a generalized space-time domain decomposition based deep learning framework for nonlinear partial differential equations. Commun. Comput. Phys. 2020;28:2002–2041.

- 16. Kharazmi E., Zhang Z., Karniadakis G. VPINNs: variational physics-informed neural networks for solving partial differential equations. 2019. arXiv:1912.00873 arXiv preprint.

- 17. Kharazmi E., Zhang Z., Karniadakis G. hp-VPINNs: variational physics-informed neural networks with domain decomposition. Comput. Methods Appl. Mech. Eng. 2021;374.

- 18. De Ryck T., Jagtap A., Mishra S. Error estimates for physics-informed neural networks approximating the Navier-Stokes equations. IMA J. Numer. Anal. 2023.

- 19. De Ryck T., Mishra S. Error analysis for physics informed neural networks (PINNs) approximating Kolmogorov PDEs. Adv. Comput. Math. 2022;48.

- 20. Demo N., Strazzullo M., Rozza G. An extended physics informed neural network for preliminary analysis of parametric optimal control problems. 2021. arXiv:2110.13530 arXiv preprint.

- 21. Hu R., Lin Q., Raydan A., Tang S. Higher-order error estimates for physics-informed neural networks approximating the primitive equations. 2022. arXiv:2209.11929 arXiv preprint.

- 22. Pu J., Li J., Chen Y. Solving localized wave solutions of the derivative nonlinear Schrödinger equation using an improved PINN method. Nonlinear Dyn. 2021;105:1723–1739.

- 23. Sirignano J., Spiliopoulos K. DGM: a deep learning algorithm for solving partial differential equations. J. Comput. Phys. 2018;375:1339–1364.

- 24. Tartakovsky A., Marrero C., Perdikaris P., Tartakovsky G., Barajas-Solano D. Learning parameters and constitutive relationships with physics informed deep neural networks. 2018. arXiv:1808.03398 arXiv preprint.

- 25. Yang L., Meng X., Karniadakis G. B-PINNs: Bayesian physics-informed neural networks for forward and inverse PDE problems with noisy data. J. Comput. Phys. 2021;425.

- 26. Zhu Y., Zabaras N., Koutsourelakis P., Perdikaris P. Physics-constrained deep learning for high-dimensional surrogate modeling and uncertainty quantification without labeled data. J. Comput. Phys. 2019;394:56–81.

- 27. Beck C., Hutzenthaler M., Jentzen A., Kuckuck B. An overview on deep learning-based approximation methods for partial differential equations. Discrete Contin. Dyn. Syst., Ser. B. 2022.

- 28. Cuomo S., Di Cola V.S., Giampaolo F., Rozza G., Raissi M., Piccialli F. Scientific machine learning through physics-informed neural networks: where we are and what's next. J. Sci. Comput. 2022;92.

- 29. Lawal Z., Yassin H., Lai D., Che Idris A. Physics-informed neural network (PINN) evolution and beyond: a systematic literature review and bibliometric analysis. Big Data Cogn. Comput. 2022;6.

- 30. McClenny L.D., Braga-Neto U.M. Self-adaptive physics-informed neural networks. J. Comput. Phys. 2023;474.

- 31. Wang S., Teng Y., Perdikaris P. Understanding and mitigating gradient flow pathologies in physics-informed neural networks. SIAM J. Sci. Comput. 2021;43:A3055–A3081.

- 32. Wight C.L., Zhao J. Solving Allen-Cahn and Cahn-Hilliard equations using the adaptive physics informed neural networks. Commun. Comput. Phys. 2021;29:930–954.

- 33. Tang K., Wan X., Liao Q. Adaptive deep density approximation for Fokker-Planck equations. J. Comput. Phys. 2022;457.

- 34. Feng X., Zeng L., Zhou T. Solving time dependent Fokker-Planck equations via temporal normalizing flow. 2021. arXiv:2112.14012 arXiv preprint.

- 35. Wang S., Yu X., Perdikaris P. When and why PINNs fail to train: a neural tangent kernel perspective. J. Comput. Phys. 2022;449.

- 36. Berrone S., Canuto C., Pintore M. Variational physics informed neural networks: the role of quadratures and test functions. J. Sci. Comput. 2022;92:1–27.

- 37. Taylor J.M., Pardo D., Muga I. A deep Fourier residual method for solving PDEs using neural networks. Comput. Methods Appl. Mech. Eng. 2023;405.

- 38. Sukumar N., Srivastava A. Exact imposition of boundary conditions with distance functions in physics-informed deep neural networks. Comput. Methods Appl. Mech. Eng. 2022;389.

- 39. Biswas A., Shapiro V. Approximate distance fields with non-vanishing gradients. Graph. Models. 2004;66:133–159.

- 40. Nitsche J.A. Über ein Variationsprinzip zur Lösung von Dirichlet-Problemen bei Verwendung von Teilräumen, die keinen Randbedingungen unterworfen sind. Abh. Math. Semin. Univ. Hamb. 1971;36:9–15.

- 41. Kingma D.P., Ba J. Adam: a method for stochastic optimization. 2014. arXiv:1412.6980 arXiv preprint.

- 42. Wright S., Nocedal J., et al. Numerical Optimization. vol. 35. 1999.

- 43. Abadi M., et al. TensorFlow: large-scale machine learning on heterogeneous systems. 2015. Software available from http://tensorflow.org/.

- 44. Baydin A.G., Pearlmutter B.A., Radul A.A., Siskind J.M. Automatic differentiation in machine learning: a survey. J. Mach. Learn. Res. 2018;18.

- 45. Berrone S., Canuto C., Pintore M. Solving PDEs by variational physics-informed neural networks: an a posteriori error analysis. Ann. Univ. Ferrara. 2022;68:575–595.

- 46. Alnaes M.S., Blechta J., Hake J., Johansson A., Kehlet B., Logg A., Richardson C., Ring J., Rognes M.E., Wells G.N. The FEniCS project version 1.5. Arch. Numer. Softw. 2015;3.

- 47. Zhao H. A fast sweeping method for Eikonal equations. Math. Comput. 2005;74:603–627.

- 48. Sethian J.A. Level Set Methods and Fast Marching Methods: Evolving Interfaces in Computational Geometry, Fluid Mechanics, Computer Vision, and Materials Science. vol. 3. Cambridge University Press; 1999.

- 49. Krishnapriyan A., Gholami A., Zhe S., Kirby R., Mahoney M.W. Characterizing possible failure modes in physics-informed neural networks. Adv. Neural Inf. Process. Syst. 2021;34:26548–26560.
