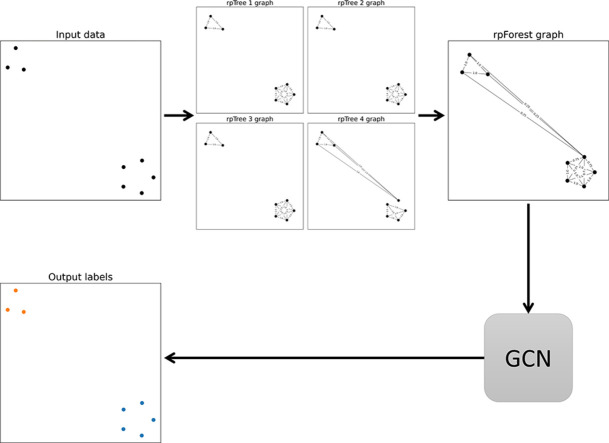

Graphical abstract

Keywords: Deep learning, Graph convolutional network (GCN), Graph neural network (GNN), Random projection forests

Method name: Random Projection Forest Initialization for Graph Convolutional Networks

Abstract

Graph convolutional networks (GCNs) were a great step towards extending deep learning to graphs. A GCN takes the graph $G$ and the feature matrix $X$ as inputs. However, in most cases the graph $G$ is missing and only the feature matrix $X$ is provided. To solve this problem, classical graphs such as the $k$-nearest neighbor ($k$-nn) graph are usually used to construct the graph and initialize the GCN. Although $k$-nn graphs are computationally efficient to construct, the resulting graph might not be very useful for learning. In a $k$-nn graph, points are restricted to a fixed number of edges, and all edges have equal weights. Our contribution is initializing the GCN using a graph with varying edge weights, which provides better performance than $k$-nn initialization. Our proposed method is based on the random projection forest (rpForest). rpForest enables us to assign varying weights to edges, indicating varying importance, which enhanced the learning. The number of trees $T$ is a hyperparameter in rpForest. We performed a spectral analysis to help set this parameter in the right range. In the experiments, initializing the GCN using rpForest provided better results than $k$-nn initialization.

- Constructing the graph using rpForest sets varying weights on edges, which represent the similarity between a pair of samples, unlike the $k$-nearest neighbor graph, where all weights are equal.
- Using the rpForest graph to initialize the GCN provides better results than $k$-nn initialization. The varying weights in the rpForest graph quantify the similarity between samples, which guided the GCN training to deliver better results.
- The rpForest graph involves tuning a hyperparameter (the number of trees $T$). We provide an informative way to set this hyperparameter through spectral analysis.

Specifications table

| Subject Area: | Computer Science |
|---|---|
| More specific subject area: | Graph Neural Network |
| Method name: | Random Projection Forest Initialization for Graph Convolutional Networks |
| Name and reference of original method: | N.A. |
| Resource availability: | https://github.com/mashaan14/RPTree-GCN |

Introduction

Convolutional neural networks (CNNs) have proved effective in many applications. The convolution component in CNNs is only applicable to fixed grids such as images and videos. Applying CNNs to non-grid data (graphs, for example) can be useful for many applications. Graph convolutional networks (GCNs) [1] introduced a convolution component designed for graphs, where vertices are allowed to have a varying number of neighbors, unlike fixed grids. GCNs have been used in several applications such as sentiment analysis [2], computer vision tasks [3], and ranking gas adsorption properties [4].

Because the arrangement of edges differs from one graph to another, a GCN needs the adjacency matrix to perform the convolutions. The GCN makes two changes to the input adjacency matrix: 1) it adds self-loops to include the vertex's own feature vector in the convolution, and 2) it normalizes the adjacency matrix using the degree matrix to avoid favoring vertices with many edges. These two changes are embedded in the convolution function of the GCN. Researchers have improved the GCN algorithm in different ways, such as increasing the depth of the GCN [5], implementing an attention mechanism [4], [6], or replacing self-loops with trainable skip connections [7]. Our focus is on creating an adjacency matrix in an unsupervised way.

A GCN can classify graph vertices efficiently if the adjacency matrix is given, but it cannot create an adjacency matrix from scratch. This opens a new research track on how to create an adjacency matrix for GCNs. An obvious solution is to use traditional methods to create the adjacency matrix, such as the fully connected graph, the $k$-nearest neighbor graph, and the $\epsilon$-neighborhood graph [8]. The number of edges in a fully connected graph grows quadratically with the number of vertices $n$ (it has $n(n-1)/2$ edges), which makes $k$-nn and $\epsilon$-graphs more appealing. Comparing the two, $k$-nn graphs can be implemented using efficient data structures such as $kd$-trees.

Franceschi et al. proposed a bi-level optimization for GCN graphs [9]. They used a number of sampled graphs to train the GCN and modified the original adjacency matrix based on the validation error. Although the method proposed by Franceschi et al. is effective in learning the adjacency matrix, it still starts from the $k$-nearest neighbor graph. $k$-nn graphs have two problems. First, all vertices in a $k$-nn graph are restricted to $k$ edges, which limits the ability of vertices to connect to more similar neighbors. Second, all edges in a $k$-nn graph are assigned equal weights, which gives them the same level of importance when passed through a GCN.

We present a new method to construct the adjacency matrix for GCNs. We used random projection forests (rpForest) [10], [11] to construct the adjacency matrix. An rpForest is a collection of random projection trees (rpTrees). rpTrees use random directions to partition the data points into tree nodes [12], [13], [14]. A leaf node in an rpTree represents a small region that contains similar points. We connect all points in a leaf node in each rpTree. If an edge persists over multiple rpTrees, it is assigned a higher weight. This solves the equal weights problem in $k$-nn graphs. Also, since leaf nodes can have a varying number of points, points can connect to more or fewer neighbors depending on the density inside the leaf node. The experiments showed that a GCN with rpForest initialization performed better than a GCN with $k$-nn initialization. Our contributions are:

- Initializing the GCN using a graph based on rpForest, which allows points to connect to a varying number of neighbors and assigns weights proportional to the edge's occurrence in the rpForest.
- Providing a spectral analysis to set the hyperparameter (the number of trees $T$) in rpForest.

Related work

This section discusses the recent advancements in graph convolutional networks, graph construction methods, and random projection forests. These three topics form the basis of our proposed method.

Graph convolutional networks (GCNs)

The successful application of convolutional neural network (CNN) on imagery data has stimulated research to extend the convolution concept beyond images. Images can be viewed as a special case of graphs where edges and vertices are ordered on a fixed grid. The problem with applying convolutions on graphs is that vertices have a varying number of edges. GCN extended the convolution to graphs by performing three steps: 1) feature vectors are averaged within the node’s local neighborhood, 2) the averaged features are transformed linearly, and 3) a nonlinear activation is applied to the averaged features [15].

Wu et al. [16] categorized graph convolutional network methods into two categories: 1) spectral-based GCNs and 2) spatial-based GCNs. Spectral-based GCNs rely on spectral graph theory. The intuition is that the graph Laplacian carries rich information about the graph geometry. A symmetric graph Laplacian is defined as $L_{sym} = I - D^{-1/2} A D^{-1/2}$ [8], [17], where $A$ is the adjacency matrix and $D$ is the degree matrix, whose diagonal holds the degree of each vertex, $D_{ii} = \sum_j A_{ij}$. The graph Fourier transform projects an input graph signal $x$ onto an embedding space whose basis is formed by the eigenvectors of the normalized graph Laplacian $L_{sym}$. The process of graph convolution can be thought of as convolving an input signal $x$ with a filter $g_\theta$, as shown in Eq. (1):

$$g_\theta \star x = U g_\theta U^\top x \tag{1}$$

where $\theta$ is a parameter that controls the filter and $U$ is the matrix of eigenvectors ordered by eigenvalues. Bruna et al. represented the filter as a set of learnable parameters [18]. Henaff et al. [19] extended the model proposed by Bruna et al. [18] to datasets where graphs are unavailable. Wu et al. [16] identified three limitations of spectral-based GCNs: 1) any change in the graph structure changes the embedding space, 2) the learned filters are domain specific and cannot be applied to different graphs, and 3) they require an eigendecomposition step, which is computationally expensive ($\mathcal{O}(n^3)$).
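As a rough illustration of filtering in the graph Fourier domain, the sketch below builds the symmetric normalized Laplacian of a small path graph with NumPy, takes its eigenvectors, and applies a diagonal filter. The function names, the toy graph, and the choice of one free filter value per eigenvalue are assumptions made for illustration; they are not taken from the cited implementations.

```python
import numpy as np

def sym_laplacian(A):
    """Symmetric normalized Laplacian I - D^{-1/2} A D^{-1/2}."""
    deg = A.sum(axis=1)
    d_inv_sqrt = np.zeros_like(deg)
    nonzero = deg > 0
    d_inv_sqrt[nonzero] = deg[nonzero] ** -0.5  # isolated vertices keep 0
    return np.eye(A.shape[0]) - d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]

def spectral_conv(A, x, theta):
    """Apply a diagonal spectral filter: U diag(theta) U^T x."""
    _, U = np.linalg.eigh(sym_laplacian(A))  # columns ordered by eigenvalue
    return U @ (theta * (U.T @ x))

# Toy example (assumed data): path graph 0-1-2-3 and a signal on its vertices.
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
x = np.array([1.0, 2.0, 3.0, 4.0])

L_sym = sym_laplacian(A)
eigenvalues = np.linalg.eigvalsh(L_sym)

unchanged = spectral_conv(A, x, np.ones(4))     # all-pass filter returns x
laplacian_x = spectral_conv(A, x, eigenvalues)  # theta = eigenvalues gives L_sym x
```

The call to `np.linalg.eigh` is the eigendecomposition step that makes this approach expensive on large graphs.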

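Although the graph spectrum provides rich information, avoiding the eigendecomposition step is a huge boost in terms of performance. Hammond et al. [20] proposed an approximation of the filter $g_\theta$ via Chebyshev polynomials $T_k(x)$, which was deployed into GCN by Defferrard et al. [21]. The Chebyshev polynomials are recursively defined as:

$$T_k(x) = 2x\,T_{k-1}(x) - T_{k-2}(x) \tag{2}$$

with $T_0(x) = 1$ and $T_1(x) = x$. This formulation allows us to perform the convolution on graphs as:

$$g_{\theta'} \star x \approx \sum_{k=0}^{K} \theta'_k\, T_k(\tilde{L})\, x \tag{3}$$

with $\tilde{L} = \frac{2}{\lambda_{max}} L_{sym} - I$, where $L_{sym} = I - D^{-1/2} A D^{-1/2}$ is the normalized graph Laplacian and $\lambda_{max}$ is its largest eigenvalue. This localization is a $K$th-order neighborhood, which means that it depends only on the nodes that are at most $K$ steps away from the central node. This graph convolution was simplified by Kipf and Welling [1]. They limited the layer-wise convolution operation to $K = 1$ and set $\lambda_{max} \approx 2$. Using these settings, the graph convolution in Eq. (3) can be simplified to:

$$g_{\theta'} \star x \approx \theta'_0\, x + \theta'_1 \left(L_{sym} - I\right) x = \theta'_0\, x - \theta'_1\, D^{-1/2} A D^{-1/2} x \tag{4}$$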
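The Chebyshev recurrence can be evaluated directly on vectors, so no eigendecomposition is needed. The following is a minimal NumPy sketch under stated assumptions: the helper name `cheb_conv`, the triangle graph, and the coefficient values are illustrative only. It also checks numerically that the first-order case with the largest eigenvalue approximated by 2 reduces to the simplified form.

```python
import numpy as np

def cheb_conv(L_tilde, x, theta):
    """Compute sum_k theta[k] * T_k(L_tilde) @ x with the Chebyshev recurrence."""
    t_prev = x                                # T_0(L) x = x
    out = theta[0] * t_prev
    if len(theta) > 1:
        t_curr = L_tilde @ x                  # T_1(L) x = L x
        out = out + theta[1] * t_curr
        for k in range(2, len(theta)):
            t_next = 2.0 * (L_tilde @ t_curr) - t_prev   # T_k = 2 L T_{k-1} - T_{k-2}
            out = out + theta[k] * t_next
            t_prev, t_curr = t_curr, t_next
    return out

# Toy example (assumed data): triangle graph.
A = np.array([[0, 1, 1],
              [1, 0, 1],
              [1, 1, 0]], dtype=float)
d = A.sum(axis=1)
S = A / np.sqrt(np.outer(d, d))               # D^{-1/2} A D^{-1/2}
L_sym = np.eye(3) - S
L_tilde = L_sym - np.eye(3)                   # (2 / lambda_max) L - I with lambda_max ~ 2
x = np.array([1.0, -2.0, 0.5])

first_order = cheb_conv(L_tilde, x, [0.3, 0.7])        # K = 1
second_order = cheb_conv(L_tilde, x, [0.0, 0.0, 1.0])  # isolates T_2
```

Each extra order costs one more sparse matrix-vector product, which is why the polynomial form scales better than filtering in the eigenbasis.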

The time complexity for GCN is $\mathcal{O}(L \lVert A \rVert_0 F + L N F^2)$, where $L$ is the number of layers, $A$ is the adjacency matrix, $F$ is the number of features, and $N$ is the number of nodes [22].

Graph construction

All methods explained in the previous section assume that the graph is already constructed. But this is not the case in many practical applications, where only the feature matrix is provided. When a graph is missing, the most common way to construct it is to use the Gaussian heat kernel [23]:

$$A_{ij} = \exp\left(-\frac{d^2(i,j)}{\sigma^2}\right) \tag{5}$$

where $d(i,j)$ is the distance between the samples $i$ and $j$. The problem with the heat kernel is that it heavily depends on the selection of the scaling parameter $\sigma$, and usually the user has to try different values and select the best one. This was improved by the self-tuning diffusion kernel [24]:

$$A_{ij} = \exp\left(-\frac{d^2(i,j)}{\sigma_i \sigma_j}\right) \tag{6}$$

where $\sigma_i$ is the distance from the point $i$ to its $K$th neighbor. Zelnik-Manor and Perona set $K = 7$ [24].
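The two kernels differ only in how the scale is chosen: one global value versus one local value per point. The sketch below is an illustrative NumPy version, not the authors' code; the function names are hypothetical, Euclidean distance is assumed, and zeroing the diagonal (no self-loops) is an added assumption.

```python
import numpy as np

def pairwise_sq_dists(X):
    """Squared Euclidean distance between every pair of rows in X."""
    diff = X[:, None, :] - X[None, :, :]
    return (diff ** 2).sum(axis=-1)

def heat_kernel(X, sigma):
    """Gaussian heat kernel with one global scale sigma."""
    A = np.exp(-pairwise_sq_dists(X) / sigma ** 2)
    np.fill_diagonal(A, 0.0)                  # assumption: no self-loops
    return A

def self_tuning_kernel(X, K=7):
    """Self-tuning kernel: sigma_i is the distance to the K-th neighbor of i."""
    if X.shape[0] <= K:
        raise ValueError("need more than K points")
    d2 = pairwise_sq_dists(X)
    # After sorting each row, column 0 is the point itself (distance 0),
    # so column K holds the distance to the K-th nearest neighbor.
    sigma = np.sort(np.sqrt(d2), axis=1)[:, K]
    A = np.exp(-d2 / np.outer(sigma, sigma))  # duplicates would give sigma = 0
    np.fill_diagonal(A, 0.0)
    return A

# Toy example (assumed data): 20 random points in 2D.
X_demo = np.random.default_rng(0).normal(size=(20, 2))
A_heat = heat_kernel(X_demo, sigma=1.0)
A_self = self_tuning_kernel(X_demo, K=7)
```

Both functions build a dense matrix from all pairwise distances, so they are practical only for small datasets.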

Constructing a graph using these two approaches requires pairwise comparisons, which means computations in the order of $\mathcal{O}(n^2)$. To avoid these computations, one could use a more efficient data structure. $k$-nearest neighbor graphs are usually implemented using $k$-dimensional trees (also known as $kd$-trees) [25]. $kd$-trees start by selecting the dimension with maximum dispersion. Along that dimension, they split at the median and place whatever is less than the median in the left child and whatever is greater than the median in the right child. After several recursive executions, the $kd$-tree algorithm scans the leaf nodes and returns the $k$ nearest neighbors. The $k$-nearest neighbor graph is defined as:

$$A_{ij} = \begin{cases} 1, & \text{if } j \in kNN(i) \\ 0, & \text{otherwise} \end{cases} \tag{7}$$

where $kNN(i)$ denotes the set of the $k$ nearest neighbors of point $i$. We can identify two problems with a $k$-nearest neighbor graph: 1) it assigns equal weights to edges, which gives all edges the same importance, and 2) it restricts all points to have $k$ edges regardless of their position in the feature space.
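To make the two limitations concrete, here is a small brute-force sketch of a $k$-nn adjacency matrix in NumPy. It uses pairwise distances rather than a tree structure, and the function name and toy data are assumptions for illustration. Every row ends up with exactly $k$ ones, so the matrix has $nk$ nonzero entries, all with the same weight.

```python
import numpy as np

def knn_graph(X, k):
    """Binary adjacency matrix: A[i, j] = 1 if j is among the k nearest neighbors of i."""
    n = X.shape[0]
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    np.fill_diagonal(d2, np.inf)              # a point is not its own neighbor
    nbrs = np.argsort(d2, axis=1)[:, :k]      # indices of the k closest points per row
    A = np.zeros((n, n))
    A[np.repeat(np.arange(n), k), nbrs.ravel()] = 1.0
    return A

# Toy example (assumed data): four points on a line.
X_line = np.array([[0.0], [1.0], [3.0], [10.0]])
A_knn = knn_graph(X_line, k=1)
```

Note that the result is not symmetric in general: point 3 (at 10.0) selects point 2 (at 3.0) as its neighbor, but point 2 selects point 1.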

Random projection forests (rpForests)

A random projection forest (rpForest) is a collection of random projection trees (rpTrees) [12], [26]. rpTrees use the same principle as $kd$-trees, that is, partitioning the feature space and placing points in a binary tree. The difference is that a $kd$-tree splits along the existing dimensions, while an rpTree splits along random directions. In rpTrees, the root node contains all the data points, and the leaf nodes contain disjoint subsets of these data points. Each internal node in an rpTree holds a random projection direction and a scalar split point along that random direction. Fig. 1 shows an example of an rpTree.

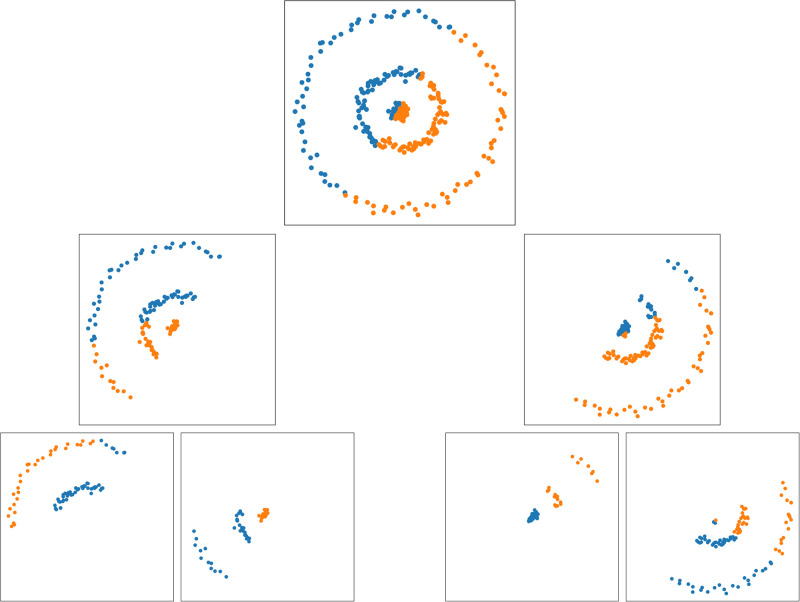

Fig. 1.

An example of running rpTree algorithm on points in 2D. At each node in the tree, a random direction is selected, and all points are projected onto it. Points are split at the median, where points less than the median (points in blue) are placed in the left child, and points larger than the median (points in orange) are placed in the right child. (Best viewed in color). (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

The most common use of rpTrees is performing $k$-nearest neighbor search. Yan et al. [11] used rpForest (i.e., a collection of rpTrees) to perform $k$-nn search. They modified the splitting rule by selecting three random directions and then projecting onto the one that yields the maximum dispersion of points. rpTrees were also used for anomaly detection by Chen et al. [27]. They modified the splitting rule as well: if the points form two Gaussian components, these two components are split into the left and right nodes. Tavallali et al. [28] proposed a $k$-means tree, which outputs the centroids of clusters. All these modifications of rpTree are supported by the empirical evidence provided by Ram and Gray [29]. They stated that the best performing binary space-partitioning trees are the ones that have better vector quantization and large partition margins. But these modifications add extra computations to the rpTree algorithm.

Proposed method

Our proposed method has two components: a neural network and a graph construction component. The next section explains the neural network component, followed by two sections discussing the graph construction component.

GCN and LDS

Graph convolutional networks (GCNs) are used for semi-supervised node classification. A GCN propagation rule at the first layer is defined as:

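$$H^{(1)} = \sigma\left(A X W^{(0)}\right) \tag{8}$$

where $A$ is the adjacency matrix, $X$ is the feature matrix, $\sigma(\cdot)$ is the activation function, and $W^{(0)}$ is the weight matrix for the first neural network layer. Two problems arise from this definition. First, the node's own feature vector is not included since $A$ has zeros on the diagonal. This can be solved by allowing self-loops and rewriting the adjacency matrix as $\tilde{A} = A + I$. The second problem is the normalization of the adjacency matrix, which can be solved by normalizing $\tilde{A}$ using its degree matrix $\tilde{D}$. The adjacency matrix becomes $\hat{A} = \tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2}$. By applying these changes, the GCN propagation rule in Eq. (8) can be rewritten as:

$$H^{(1)} = \sigma\left(\tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2} X W^{(0)}\right) \tag{9}$$

The most common architecture is a two-layer GCN, which can be defined using the following formula:

$$Z = \mathrm{softmax}\left(\hat{A}\, \mathrm{ReLU}\left(\hat{A} X W^{(0)}\right) W^{(1)}\right) \tag{10}$$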
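A forward pass of a two-layer GCN takes only a few lines once the adjacency matrix has self-loops added and is symmetrically normalized. The NumPy sketch below is for illustration only: the weights are random rather than trained, the helper names are hypothetical, and it assumes nonnegative edge weights so that every degree is at least 1 after adding self-loops.

```python
import numpy as np

def normalize_adj(A):
    """Add self-loops, then apply symmetric degree normalization."""
    A_tilde = A + np.eye(A.shape[0])
    d_inv_sqrt = A_tilde.sum(axis=1) ** -0.5  # degrees >= 1 for nonnegative weights
    return d_inv_sqrt[:, None] * A_tilde * d_inv_sqrt[None, :]

def softmax(Z):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    E = np.exp(Z - Z.max(axis=1, keepdims=True))
    return E / E.sum(axis=1, keepdims=True)

def gcn_forward(A, X, W0, W1):
    """Two-layer GCN: softmax(A_hat ReLU(A_hat X W0) W1)."""
    A_hat = normalize_adj(A)
    H = np.maximum(A_hat @ X @ W0, 0.0)       # first layer with ReLU
    return softmax(A_hat @ H @ W1)            # class probabilities per node

# Toy example (assumed data): 4 nodes, 3 features, 5 hidden units, 2 classes.
rng = np.random.default_rng(0)
A = np.array([[0.0, 1.0, 0.25, 0.0],
              [1.0, 0.0, 0.0, 0.0],
              [0.25, 0.0, 0.0, 0.75],
              [0.0, 0.0, 0.75, 0.0]])
X = rng.normal(size=(4, 3))
W0 = rng.normal(size=(3, 5))
W1 = rng.normal(size=(5, 2))
Z = gcn_forward(A, X, W0, W1)
```

Because the adjacency matrix enters only through `normalize_adj`, a weighted graph such as the one in this toy example can be passed in without changing the network code.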

Franceschi et al. [9] proposed the Learning Discrete Structures (LDS) method to learn the adjacency matrix $A$. They used a $k$-nearest neighbor graph to initialize the graph convolutional network (GCN). The methodology involves four steps: initialization, sampling, inner optimization, and outer optimization. First, a parameter $\theta$ is initialized to the adjacency matrix of the $k$-nn graph, and the GCN is run once to initialize its parameters. Then, the method iteratively samples graphs from $\theta$ to optimize the GCN parameters (inner optimizer) and $\theta$ (outer optimizer) in a bilevel optimization.

In our experiments, we used both methods, GCN and LDS, to evaluate their performance when initialized using different graphs. The initializations we used in the experiments are $k$-nearest neighbor graph initialization and random projection forest (rpForest) initialization.

$k$-nearest neighbor initialization

A GCN needs a graph to perform the convolutions. A common choice is to use the $k$-nearest neighbor graph to initialize the GCN. In a $k$-nn graph, each point is connected to its $k$ nearest neighbors. Intuitively, the adjacency matrix $A$ contains $nk$ nonzero entries since we have $n$ points and each one of them has $k$ edges. A formal definition of $k$-nn graphs is given in Eq. (7).

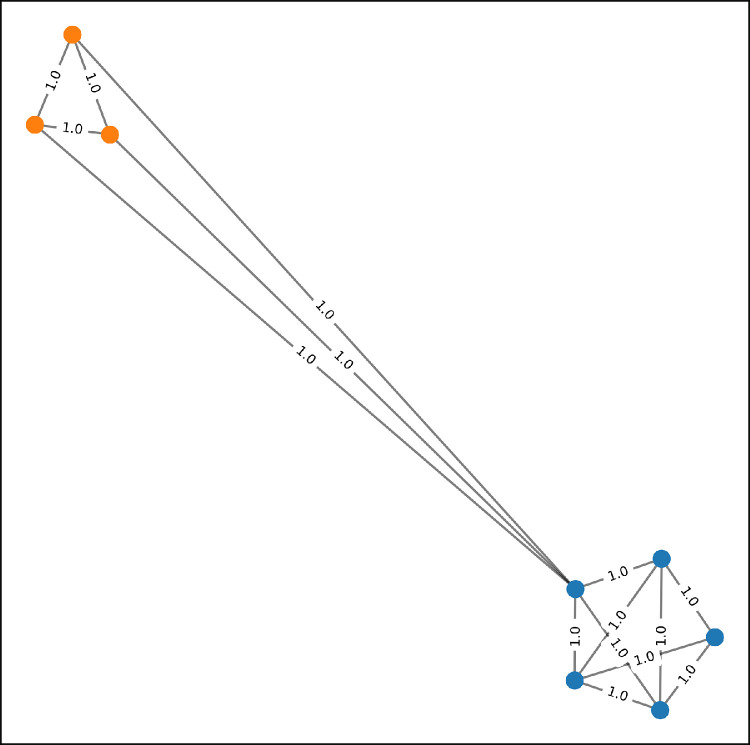

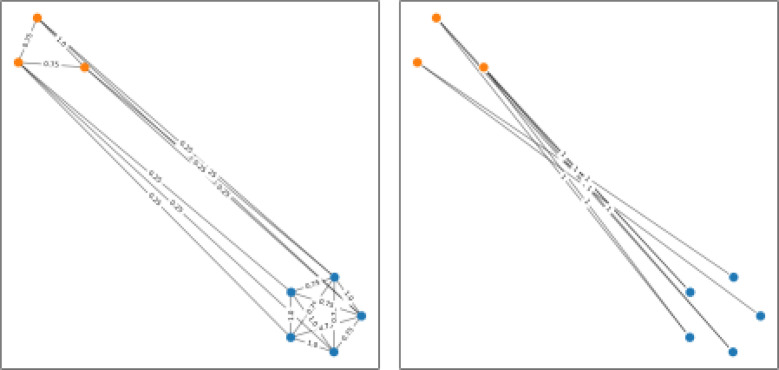

An example of a $k$-nearest neighbor graph is shown in Fig. 2. In that figure, we have three points in the blue class and five points in the orange class. The graph was constructed with a fixed value of $k$. Note that some edges connect two points from two different classes. The existence of these edges is undesirable because they could confuse the classifier. But in an unsupervised graph construction, these edges are sometimes unavoidable. The solution is to assign a small weight to the edges connecting two different classes. Unfortunately, we cannot do that in $k$-nn graphs because all edges get an equal weight.

Fig. 2.

A $k$-nn graph; all edges were assigned equal weights, even the ones connecting two different classes. (Best viewed in color).

$k$-nn provides a fast initialization for graph convolutional networks (GCNs). But it assigns equal weights to all edges, which gives the edges spanning two classes the same importance as edges within one class. To avoid this problem, we need a graph construction scheme that assigns small weights to edges connecting two classes.

rpForest initialization

The random projection tree (rpTree) is a binary space-partitioning tree. The root node contains all points in the dataset. For each node in the tree, the method picks a random direction $r$. The direction $r$ is a vector with $d$ entries, where $d$ is the number of dimensions in the dataset. All points in the tree node are projected onto $r$. Then, a split point $c$ is selected randomly from a bounded range along $r$. In the projection space, a point that is less than $c$ is placed in the left child; otherwise, it is placed in the right child. rpTrees are particularly useful for $k$-nearest neighbor search. In this paper, however, we use them for graph construction.
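The recursive partitioning can be sketched in a few lines of NumPy. This is an illustrative version under stated assumptions, not the authors' implementation: the split point is taken at the median of the projected values (as in the Fig. 1 example) instead of a randomly selected point, recursion stops at a hypothetical `max_leaf_size` parameter, and only the leaf memberships are returned rather than a full tree object.

```python
import numpy as np

def rptree_leaves(X, idx, rng, max_leaf_size=4):
    """Recursively split the points X[idx]; return one index array per leaf."""
    if len(idx) <= max_leaf_size:
        return [idx]
    r = rng.normal(size=X.shape[1])           # random direction with d entries
    r /= np.linalg.norm(r)
    proj = X[idx] @ r                         # project the node's points onto r
    c = np.median(proj)                       # assumption: split at the median
    left, right = idx[proj < c], idx[proj >= c]
    if len(left) == 0 or len(right) == 0:     # degenerate split (e.g. duplicates)
        return [idx]
    return (rptree_leaves(X, left, rng, max_leaf_size)
            + rptree_leaves(X, right, rng, max_leaf_size))

# Toy example (assumed data): 50 random points in 3D.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(50, 3))
leaves = rptree_leaves(X_demo, np.arange(50), rng)
```

The leaves are disjoint and together cover every point, which is the property the graph construction relies on.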

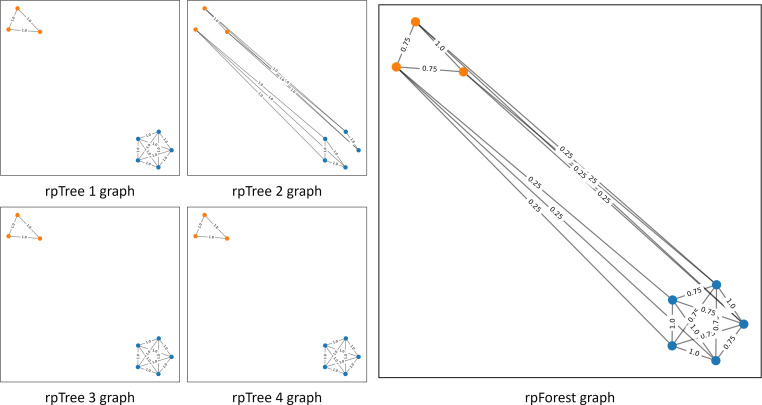

A collection of rpTrees is called an rpForest. rpForests were proposed by Yan et al. [10], [11], who applied them to spectral clustering similarity and $k$-nearest neighbor search. We used rpForests to construct a graph and use it as an input to the GCN. rpForests helped us overcome the two problems we identified with $k$-nn graphs: equal weights on edges, and restricting points to a fixed number of neighbors. We constructed a number of rpTrees. Then, we connected all points falling into the same leaf node. The intuition is simple: if a pair of points falls into the same leaf node in all rpTrees, they are connected with the maximum weight. Otherwise, the weight on the edge connecting them is proportional to the number of leaf nodes they fall in together. Also, the points are not restricted to a fixed number of edges because the number of points varies from one leaf node to another.

Fig. 3 illustrates how we constructed a graph using rpForest. In that figure, we used four rpTrees, each of which has two levels, meaning we only perform the split once. Apart from rpTree 2, all trees split the two classes into two different leaf nodes. The final graph aggregates all edges in the trees. The edges connecting the two classes were assigned a small weight (0.25) because they only appear in one tree out of four. Edges connecting points from the same class were assigned higher weights (1 or 0.75) because they appear either in all trees or in three out of four trees.

Fig. 3.

An rpForest graph built from four rpTrees; edges connecting the two classes were assigned a small weight (0.25) because they only appear in one tree out of four. (Best viewed in color).
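The weighting rule can be reproduced on a toy example that mirrors the four-tree illustration. In the sketch below, each tree is represented simply as a list of leaves (index lists); this input format, the function name, and the specific point indices are assumptions made for illustration. Points 0 to 2 stand for one class and points 3 to 7 for the other, and only the second tree puts a point from the second class in the same leaf as the first class.

```python
import numpy as np

def rpforest_graph(forest, n):
    """Edge weight = fraction of trees in which two points share a leaf."""
    A = np.zeros((n, n))
    for leaves in forest:                     # one list of leaves per tree
        for leaf in leaves:
            leaf = np.asarray(leaf)
            A[np.ix_(leaf, leaf)] += 1.0      # connect all points in the same leaf
    A /= len(forest)
    np.fill_diagonal(A, 0.0)                  # assumption: no self-loops here
    return A

# Toy forest (assumed data): four two-level trees over 8 points.
clean_split = [[0, 1, 2], [3, 4, 5, 6, 7]]    # separates the two classes
mixed_split = [[0, 1, 2, 3], [4, 5, 6, 7]]    # point 3 lands with the other class
forest = [clean_split, mixed_split, clean_split, clean_split]

A_rp = rpforest_graph(forest, n=8)
```

Here `A_rp[0, 1]` is 1.0 (same leaf in all four trees), `A_rp[3, 4]` is 0.75 (three of four trees), and `A_rp[0, 3]` is 0.25 (one of four trees), while pairs that never share a leaf, such as points 0 and 4, get no edge.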

Experiments and discussions

We designed our experiments to test how $k$-nn and rpForest graphs affect the performance of GCN [1] and LDS [9]. Unlike GCN, LDS iteratively improves the original graph based on the validation error. For the $k$-nn graph, we used the same settings as the LDS algorithm, where $k$ was set to 10. For the rpForest graph, we set the number of trees to 10. In the next section, we provide an empirical examination showing why it is safe to use $T = 10$ as the number of trees. The number of layers in the GCN was kept below 4 to prevent a drop in performance [5]. We used two evaluation metrics: 1) the test accuracy to evaluate the performance, and 2) the number of edges in the graph to evaluate the storage efficiency.

We modified the original Python files provided with GCN and LDS to include the code for the rpForest graph. The code used to produce the experiments is available at https://github.com/mashaan14/RPTree-GCN. All experiments were coded in Python 3 and run on a machine with 20 GB of memory and a 3.10 GHz Intel Core i5-10500 CPU.

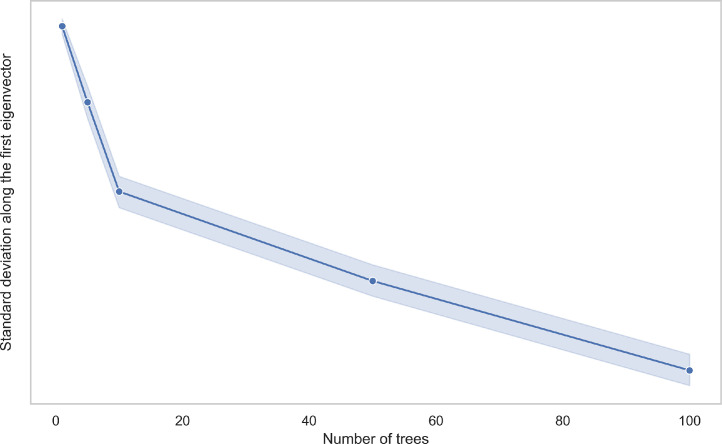

Using spectral analysis to set the number of trees

We construct the graph from the leaf nodes of the rpForest. The number of rpTrees $T$ is a hyperparameter of rpForest, and tuning $T$ strongly influences the resulting graph. Two problems can arise from different values of $T$. The first occurs when $T$ is set too low, which risks feeding a disconnected graph to the GCN. A disconnected graph could mean that one of the classes is not connected, which negatively affects the performance of the GCN. The second occurs when $T$ is set too high. This leads to a graph with many edges, which could affect the memory efficiency of our method.

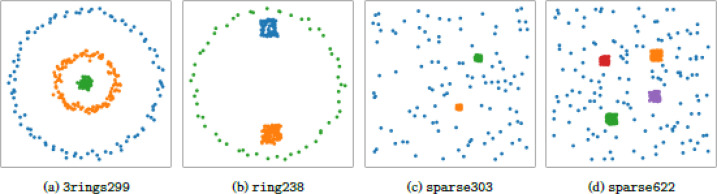

Spectral analysis provides an elegant way to check graph connectivity. For a connected graph, the eigenvector associated with the smallest eigenvalue of the graph Laplacian should be constant. This was stated in Proposition 2 by von Luxburg [8], who wrote: “In a graph consisting of only one connected component we thus only have the constant one vector as eigenvector with eigenvalue 0”. So, we used the standard deviation of the entries of this eigenvector to check whether the graph is connected. If the graph is connected (i.e., it contains a single connected component), the standard deviation will be small. We used the ring238 dataset, which is a 2D dataset shown in Fig. 5. In Fig. 4, there is a clear elbow point in $T$, which means the graph becomes connected from this point onwards.

Fig. 5.

2-dimensional datasets used in the experiments.

Fig. 4.

Measuring the standard deviation of points along the eigenvector associated with the smallest eigenvalue; the marked value of $T$ represents an elbow point.
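The connectivity check can be sketched with NumPy as follows. This is a minimal illustration that assumes the unnormalized Laplacian (degree matrix minus adjacency matrix), for which the eigenvector of eigenvalue 0 of a connected graph is constant; the function name and the two toy graphs are hypothetical.

```python
import numpy as np

def smallest_eigvec_std(A):
    """Return the Laplacian eigenvalues and the std of the first eigenvector."""
    L = np.diag(A.sum(axis=1)) - A            # unnormalized Laplacian D - A
    eigvals, eigvecs = np.linalg.eigh(L)      # eigenvalues in ascending order
    return eigvals, float(np.std(eigvecs[:, 0]))

# Connected toy graph (assumed data): path 0-1-2-3.
path = np.zeros((4, 4))
for i in range(3):
    path[i, i + 1] = path[i + 1, i] = 1.0

# Disconnected toy graph: remove the middle edge, leaving 0-1 and 2-3.
two_parts = path.copy()
two_parts[1, 2] = two_parts[2, 1] = 0.0

vals_connected, std_connected = smallest_eigvec_std(path)
vals_split, std_split = smallest_eigvec_std(two_parts)
```

For the connected graph the standard deviation is zero up to floating-point error. For the disconnected graph the eigenvalue 0 is repeated, so the solver may return any vector from that eigenspace, and the standard deviation is typically well above zero. Counting the eigenvalues that are numerically zero gives the number of connected components and can serve as a cross-check.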

The second problem related to the number of trees is setting $T$ to a large value, which affects memory efficiency. Naturally, if we start with a low $T$, some edges will be missing. These edges are created as we increase $T$. At some point, the graph will have the same edges even if we increase $T$ further. Based on our empirical analysis, we set $T = 10$ in all experiments.
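One way to observe this saturation is to count the distinct edges after each additional tree. The sketch below is an assumption-laden illustration rather than the procedure used in the experiments: trees split at the median of the projections, recursion stops at a hypothetical `max_leaf_size`, and the data are random 2D points.

```python
import numpy as np

def rptree_leaves(X, idx, rng, max_leaf_size):
    """Median-split rpTree (assumed split rule); returns one index array per leaf."""
    if len(idx) <= max_leaf_size:
        return [idx]
    r = rng.normal(size=X.shape[1])
    proj = X[idx] @ r
    c = np.median(proj)
    left, right = idx[proj < c], idx[proj >= c]
    if len(left) == 0 or len(right) == 0:
        return [idx]
    return (rptree_leaves(X, left, rng, max_leaf_size)
            + rptree_leaves(X, right, rng, max_leaf_size))

def edge_counts(X, n_trees, max_leaf_size=10, seed=0):
    """Number of distinct undirected edges after 1, 2, ..., n_trees trees."""
    rng = np.random.default_rng(seed)
    n = X.shape[0]
    seen = np.zeros((n, n), dtype=bool)
    counts = []
    for _ in range(n_trees):
        for leaf in rptree_leaves(X, np.arange(n), rng, max_leaf_size):
            seen[np.ix_(leaf, leaf)] = True   # pairs sharing a leaf become edges
        np.fill_diagonal(seen, False)
        counts.append(int(seen.sum()) // 2)   # each edge is stored twice
    return counts

# Toy example (assumed data): 60 random points in 2D, up to 8 trees.
X_demo = np.random.default_rng(1).normal(size=(60, 2))
counts = edge_counts(X_demo, n_trees=8)
```

Since each tree can only add edges to the union, the counts never decrease; plotting them against the number of trees shows how quickly new edges stop appearing for a given dataset.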

GCN and LDS using $k$-nn and rpForest graphs

In this experiment, we compared the performance of GCN and LDS using $k$-nn and rpForest graphs. We used four 2-dimensional datasets. These 2D datasets are shown in Fig. 5 with their class labels. We also used four datasets retrieved from the scikit-learn library [30]. For the train and test splits, we used the same settings as in the LDS paper [9]. These settings are shown in Table 1.

Table 1.

Summary statistics of the datasets.

| Name | Samples | Features | Classes | Train/Valid/Test |
|---|---|---|---|---|
| 3rings299 | 299 | 2 | 3 | 10 / 20 / 279 |
| rings238 | 238 | 2 | 3 | 10 / 20 / 218 |
| spares303 | 303 | 2 | 3 | 10 / 20 / 283 |
| spares622 | 622 | 2 | 5 | 10 / 20 / 602 |
| Iris | 150 | 4 | 3 | 10 / 20 / 130 |
| Wine | 178 | 13 | 3 | 10 / 20 / 158 |
| Cancer | 569 | 30 | 2 | 10 / 20 / 539 |
| Digits | 1797 | 64 | 10 | 50 / 100 / 1647 |

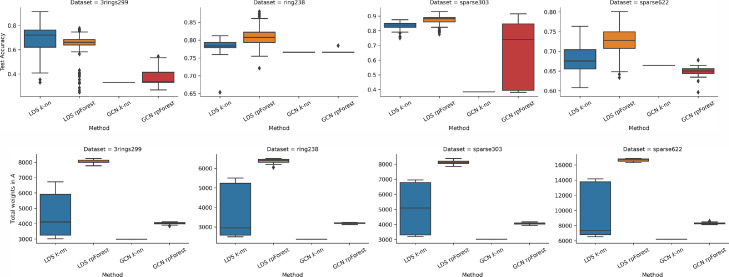

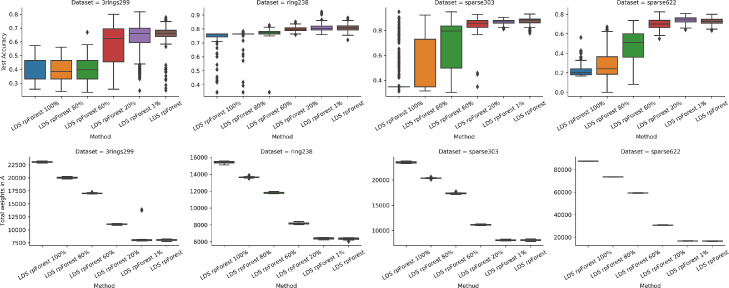

Fig. 6 shows the results of running GCN and LDS on the 2-dimensional datasets. In general, LDS performed better than GCN, whether it used a $k$-nn graph or an rpForest graph. This can be explained by how these two methods work. GCN takes the graph and runs it through the deep network; it cannot modify the graph by adding or removing edges. LDS, on the other hand, uses the validation error to modify the graph by keeping the edges that minimize the validation error. Of course, LDS needs more time than GCN.

Fig. 6.

Running LDS and GCN using $k$-nn and rpForest graphs on 2D datasets; (top) test accuracy; (bottom) total weights in the adjacency matrix $A$.

LDS performed better when given a graph based on rpForest compared to a $k$-nn graph. This was not the case with the 3rings299 dataset, where the linear splits made by rpForest break the two rings apart. We also observed that the rpForest graph improved the performance of GCN. The total weight in the adjacency matrix gives a hint about memory efficiency. Graphs in LDS carry more weight than those in GCN because LDS keeps modifying the graph by adding more edges. Another point to highlight is that rpForest graphs have more edges than $k$-nn graphs.

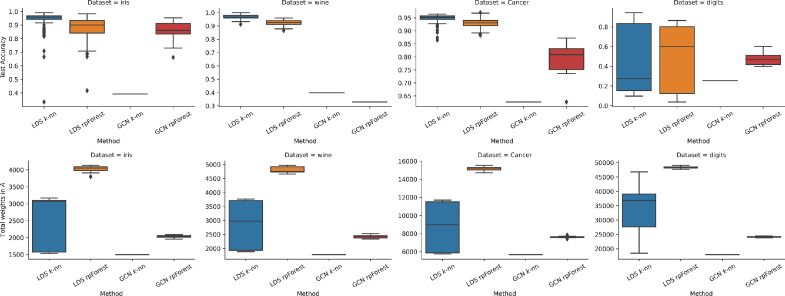

The results of the experiments on the scikit-learn datasets are shown in Fig. 7. The GCN test accuracy was very close to the one delivered by LDS on iris and digits, even though LDS has the ability to modify the graph. Another observation is that a GCN fed an rpForest graph performs better than one fed a $k$-nn graph. For the total weights metric, we made the same observation across all datasets: LDS requires more storage, especially when we feed it an rpForest graph, whereas GCN requires less storage.

Fig. 7.

Running LDS and GCN using $k$-nn and rpForest graphs on scikit-learn datasets; (top) test accuracy; (bottom) total weights in the adjacency matrix $A$.

LDS using rpForest graph with extra edges

The rpForest graph connects only the points that fall in the same leaf node. In this experiment, we investigate whether adding some edges between points from different leaf nodes would improve the performance. Fig. 8 shows an example of an rpForest graph and the edges that did not appear in it. We want to examine whether taking a percentage of these missing edges would increase the connectivity and consequently improve the performance.

Fig. 8.

(left) an rpForest graph; (right) edges that did not appear in the rpForest graph.

Fig. 9 shows the LDS test accuracies when extra edges are used; these extra edges did not improve the performance. In the most extreme case, when we included 100% of these edges, the performance dropped by 50% in some datasets. The memory footprint of these extra edges was also very large. These findings emphasize the ability of rpForest to find the most important edges for classification.

Fig. 9.

Running LDS using rpForest with extra edges; the percentage on the $x$-axis represents the percentage of extra edges; (top) test accuracy; (bottom) total weights in the adjacency matrix $A$.

One advantage of our method is that it assigns weights proportional to the edge's occurrence in the rpForest. This allows non-equal weights across the graph. A potential application of our method is the analysis of complex networks in the brain [31]. This is a research area in neuroscience that studies complex connectivity in neuronal circuit dynamics. The functional connectivity between brain areas can be modeled as edges on a graph. These edges must have varying weights, which our method provides.

Conclusion

Graphs are useful in modeling real-world relationships. That is why researchers have been keen on extending deep learning to graphs. One of the successful applications of deep learning on graphs is the graph convolutional network (GCN). The problem with a GCN is that it needs the graph prepared beforehand. In most cases, the graph must be constructed from the dataset. A common choice is to use the $k$-nearest neighbor graph. But $k$-nn assigns equal weights to all edges, which gives all edges the same importance during training.

We present a graph based on random projection forests (rpForest) with varying weights on edges. The weight on an edge was set proportional to the number of trees it appears in. The number of trees is a hyperparameter in rpForest that needs careful tuning. We performed a spectral analysis that helps us set this parameter within the right range. The experiments revealed that initializing a GCN using rpForest delivers better accuracy than $k$-nn initialization. We also showed that adding extra edges to the rpForest graph did not improve the performance, which suggests that the edges provided by rpForest are sufficient for learning.

For future work, we can try a different weight assignment strategy other than average, a Euclidean distance for example. Another potential extension to our work could be investigating how different binary space-partitioning trees would affect the performance of the GCN. Also, it is important to examine how rpForest graph would perform in different methods of graph neural networks (GNNs).

CRediT authorship contribution statement

Mashaan Alshammari: Conceptualization, Formal analysis, Investigation, Methodology, Project administration, Software, Visualization, Writing – original draft. John Stavrakakis: Conceptualization, Formal analysis, Investigation, Methodology, Validation, Writing – review & editing. Adel F. Ahmed: Conceptualization, Formal analysis, Supervision, Validation, Writing – review & editing, Funding acquisition. Masahiro Takatsuka: Conceptualization, Formal analysis, Supervision, Validation, Writing – review & editing.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Contributor Information

Mashaan Alshammari, Email: mashaan.awad1930@alum.kfupm.edu.sa.

John Stavrakakis, Email: john.stavrakakis@sydney.edu.au.

Adel F. Ahmed, Email: adelahmed@kfupm.edu.sa.

Masahiro Takatsuka, Email: masa.takatsuka@sydney.edu.au.

Data availability

I have shared a link to my code/data.

References

- 1.T. N. Kipf, M. Welling, Semi-supervised classification with graph convolutional networks, 2017, arXiv:1609.02907.

- 2.Phan H.T., Nguyen N.T., Hwang D. Aspect-level sentiment analysis: a survey of graph convolutional network methods. Inf. Fusion. 2023;91:149–172. doi: 10.1016/j.inffus.2022.10.004. [DOI] [Google Scholar]

- 3.Ren H., Lu W., Xiao Y., Chang X., Wang X., Dong Z., Fang D. Graph convolutional networks in language and vision: a survey. Knowl. Based Syst. 2022;251:109250. doi: 10.1016/j.knosys.2022.109250. [DOI] [Google Scholar]

- 4.G. Cong, A. Gupta, R. Neumann, M. de Bayser, M. Steiner, B. Ó. Conchúir, Prediction of adsorption in nano-pores with graph neural networks, 2022, arXiv:2209.07567.

- 5.Li G., Müller M., Qian G., Delgadillo I.C., Abualshour A., Thabet A., Ghanem B. DeepGCNs: making GCNs go as deep as CNNs. IEEE Trans. Pattern Anal. Mach. Intell. 2023;45(6):6923–6939. doi: 10.1109/TPAMI.2021.3074057. [DOI] [PubMed] [Google Scholar]

- 6.P. Veličković, G. Cucurull, A. Casanova, A. Romero, P. Lió, Y. Bengio, Graph attention networks, 2018, arXiv:1710.10903.

- 7.Tsitsulin A., Palowitch J., Perozzi B., Müller E. Graph clustering with graph neural networks. J. Mach. Learn. Res. 2023;24(127):1–21. [Google Scholar]; http://jmlr.org/papers/v24/20-998.html

- 8.von Luxburg U. A tutorial on spectral clustering. Stat. Comput. 2007;17(4):395–416. doi: 10.1007/s11222-007-9033-z. [DOI] [Google Scholar]

- 9.Franceschi L., Niepert M., Pontil M., He X. Proceedings of the 36th International Conference on Machine Learning. 2019. Learning discrete structures for graph neural networks; pp. 1972–1982. [Google Scholar]

- 10. Yan D., Wang Y., Wang J., Wang H., Li Z. 2018 IEEE International Conference on Big Data (Big Data) 2018. K-nearest neighbor search by random projection forests; pp. 4775–4781.

- 11. Yan D., Wang Y., Wang J., Wang H., Li Z. K-nearest neighbor search by random projection forests. IEEE Trans. Big Data. 2021;7(1):147–157. doi: 10.1109/TBDATA.2019.2908178.

- 12. Dasgupta S., Freund Y. Proceedings of the Fortieth Annual ACM Symposium on Theory of Computing, STOC ’08. Association for Computing Machinery; New York, NY, USA: 2008. Random projection trees and low dimensional manifolds; pp. 537–546.

- 13. Dasgupta S., Sinha K. Randomized partition trees for nearest neighbor search. Algorithmica. 2015;72(1):237–263. doi: 10.1007/s00453-014-9885-5.

- 14. Keivani O., Sinha K. Random projection-based auxiliary information can improve tree-based nearest neighbor search. Inf. Sci. (Ny) 2021;546:526–542. doi: 10.1016/j.ins.2020.08.054.

- 15. Wei L., Chen Z., Yin J., Zhu C., Zhou R., Liu J. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2023. Adaptive graph convolutional subspace clustering; pp. 6262–6271.

- 16. Wu Z., Pan S., Chen F., Long G., Zhang C., Yu P.S. A comprehensive survey on graph neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2021;32(1):4–24. doi: 10.1109/TNNLS.2020.2978386.

- 17. Ng A., Jordan M., Weiss Y. On spectral clustering: analysis and an algorithm. Adv. Neural Inf. Process. Syst. 2001;14.

- 18. J. Bruna, W. Zaremba, A. Szlam, Y. LeCun, Spectral networks and locally connected networks on graphs, 2013, doi: 10.48550/ARXIV.1312.6203.

- 19. M. Henaff, J. Bruna, Y. LeCun, Deep convolutional networks on graph-structured data, 2015, doi: 10.48550/ARXIV.1506.05163.

- 20. Hammond D.K., Vandergheynst P., Gribonval R. Wavelets on graphs via spectral graph theory. Appl. Comput. Harmon. Anal. 2011;30(2):129–150. doi: 10.1016/j.acha.2010.04.005.

- 21. M. Defferrard, X. Bresson, P. Vandergheynst, Convolutional neural networks on graphs with fast localized spectral filtering, 2016, doi: 10.48550/ARXIV.1606.09375.

- 22. You Y., Chen T., Wang Z., Shen Y. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2020. L2-GCN: layer-wise and learned efficient training of graph convolutional networks; pp. 2127–2135.

- 23. Belkin M., Niyogi P. Laplacian eigenmaps and spectral techniques for embedding and clustering. Adv. Neural Inf. Process. Syst. 2001;14.

- 24. Zelnik-Manor L., Perona P. Self-tuning spectral clustering. Adv. Neural Inf. Process. Syst. 2004;17.

- 25. Bentley J.L. Multidimensional binary search trees used for associative searching. Commun. ACM. 1975;18(9):509–517. doi: 10.1145/361002.361007.

- 26. Freund Y., Dasgupta S., Kabra M., Verma N. Learning the structure of manifolds using random projections. Adv. Neural Inf. Process. Syst. 2007;20.

- 27. Chen F., Liu Z., Sun M.T. 2015 IEEE International Conference on Image Processing (ICIP) 2015. Anomaly detection by using random projection forest; pp. 1210–1214.

- 28. Tavallali P., Tavallali P., Singhal M. K-means tree: an optimal clustering tree for unsupervised learning. J. Supercomput. 2021;77. doi: 10.1007/s11227-020-03436-2.

- 29. Ram P., Gray A. Which space partitioning tree to use for search? Adv. Neural Inf. Process. Syst. 2013;26.

- 30. Pedregosa F., Varoquaux G., Gramfort A., Michel V., Thirion B., Grisel O., Blondel M., Prettenhofer P., Weiss R., Dubourg V., Vanderplas J., Passos A., Cournapeau D., Brucher M., Perrot M., Duchesnay E. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 2011;12:2825–2830.

- 31. Stam C.J., Reijneveld J.C. Nonlinear Biomedical Physics. 2007. Graph theoretical analysis of complex networks in the brain.