Significance

Artificial intelligence (AI), once merely the draw and drama of science fiction, is now a feature of everyday life. AI is commonly used to generate recommendations, from the movies we watch to the medical procedures we endure. As AI recommendations become increasingly prevalent and the world grapples with the benefits and costs of AI, it is important to understand the factors that shape whether people accept or reject AI-based recommendations. We focus on one factor that is prevalent across nearly every society: religion. Research has not yet systematically examined how religion affects decision-making in light of emerging AI technologies, which inherently raise questions about the role and value of humans. In introducing this discussion, we find that God salience heightens AI acceptance.

Keywords: artificial intelligence, religion, algorithm aversion, decision-making

Abstract

Thinking about God promotes greater acceptance of artificial intelligence (AI)-based recommendations. Eight preregistered experiments (n = 2,462) reveal that when God is salient, people are more willing to consider AI-based recommendations than when God is not salient. Studies 1 and 2a to 2d demonstrate across a wide variety of contexts, from choosing entertainment and food to mutual funds and dental procedures, that God salience reduces reliance on human recommenders and heightens willingness to consider AI recommendations. Studies 3 and 4 demonstrate that the reduced reliance on humans is driven by a heightened feeling of smallness when God is salient, followed by a recognition of human fallibility. Study 5 addresses the similarity in mysteriousness between God and AI as an alternative, but unsupported, explanation. Finally, study 6 (n = 53,563) corroborates the experimental results with data from 21 countries on the usage of robo-advisors in financial decision-making.

Many of life’s most consequential decisions (deciding which medical procedure to undergo, which romantic partner to pursue, which financial or legal paths to follow, etc.) can now be largely delegated to artificial intelligence (AI). Empowered by algorithms that very often surpass humans in their efficiency and accuracy (1–3), AI has the potential to significantly affect people’s well-being and the world’s economy. Estimates suggest, in fact, that developments in AI will add $15.7 trillion to the global gross domestic product by 2030, driven by the more efficient production of goods and services and the strong demand for new offerings enabled by AI (4). Whether AI reaches its expected potential, however, will depend on the extent to which people are willing to embrace it in the years to come.

Despite AI’s ability to outperform humans in many contexts, people often exhibit a biased preference for human recommendations, a phenomenon known as algorithm aversion (5–8). For example, people trust medical recommendations less when they come from an algorithm than from a human doctor (9, 10) and rely less on advice from an algorithm than from a human when forecasting stock prices (7). People are particularly likely to assume that humans are more capable than algorithms when the judgments concern contexts that are subjective or hedonic in nature, or that require empathy and a consideration of individual uniqueness (10–12). Moreover, when an algorithm errs, people are more likely to transfer the perceived fallibility of that algorithm to other algorithms than they are to generalize a human’s error to other humans (5, 13). People are also less likely to believe that algorithms can learn from mistakes (14). Together, whether reflecting a negative bias toward algorithms, or an overly positive evaluation of the self, and by extension, other humans (15), such findings suggest that a deeper understanding of algorithm aversion will be important for individuals and society at large.

Of course, the degree to which people embrace AI varies based on a number of factors. Importantly, while research has identified several factors that inform when people are more or less likely to accept AI versus human recommendations, such as the characteristics of the recommenders [e.g., relative expertise and performance outcomes (16–18)] and the type of decision being made [subjective vs. objective (11)], little work has explored factors related to the individual user (19). We suggest that systematically identifying such factors will elucidate meaningful barriers to AI acceptance and enable researchers and organizations—across public and private spheres—to better understand and predict the pace at which AI is likely to be adopted. We suggest that religion, and more precisely, the salience of God, is one meaningful consideration.

Having permeated the existence of nearly every known society (20), religion has been a persistent and powerful influence in people’s lives throughout history and continues to shape the lives of billions of people around the world. Even those who are not explicitly religious are exposed to God-related concepts and ideas in daily life, whether through political debates, entertainment, or places of worship in their surroundings. Whether religion evolved as a tool for group cooperation (21, 22), a by-product of natural selection (20, 23–25), or a means of satisfying people’s needs for order and structure (26), it affects decision-making in important ways, particularly in social and moral domains. For example, religion influences (pro)social behavior (27), ingroup favoritism and outgroup derogation (28), and moral decisions that involve curbing self-interest to benefit others (29).

Importantly, a relatively nascent body of research shows that religion also influences how humans behave and make decisions in more mundane aspects of everyday life. For instance, there is growing evidence that religious reminders lower interest in self-improvement products (30), lessen reliance on brand name products (31), and decrease impulse grocery spending (32). These findings suggest that the impact of religion on human behavior is broad and that more research is needed to understand how religion influences decision-making, especially in light of massive advances in technology that have become integral to modern decision-contexts. The question of how religion affects decision-making in the face of AI is particularly interesting when considering that such technologies evoke fundamental questions about the value and role of humans (33); religion has faced such questions since its beginnings (34, 35).

To begin addressing the intersection of religion and AI, we investigate how the salience of God affects people’s propensity to rely on AI. We theorize that God salience—the extent to which individuals are actively thinking about God—is one important factor that may attenuate AI aversion. In broaching a relationship between religion and AI, we focus specifically on the salience of God for two main reasons. First, the centrality of God(s) or other supernatural deities is what is common across all large-scale religions (20, 36), as opposed to any specific set of beliefs or practices. Indeed, among all words that relate to religion, “God” is the most commonly used in the English language (37). Second, people are frequently exposed to reminders of God in their daily lives, even if they are not religious, suggesting that an effect of mere God salience may be relevant to more of the world’s population than a narrower focus on specific religious beliefs or activities.

We predict that God salience will dampen AI aversion in decision-making. That is, individuals will be less reliant on humans and more open to recommendations from AI systems when God is salient. This is because when God is salient, people feel smaller and are thus more likely to recognize themselves, and mankind more generally, as limited and fallible.

Unpacking our predictions, we first note that across the world’s major religions, the concept of God(s) represents supernatural entities with divine powers that greatly surpass those of humans (20). Accordingly, thoughts of God evoke feelings of awe (38–44), and such feelings lead people to feel smaller and less significant (39–43). The notion that the self feels small in relation to God is also supported by research on metaphors, which finds that God is cognitively represented as being in an elevated position relative to humans (45).

We suggest that because thoughts of God are likely to lead to a smaller, diminished sense of self, people will see themselves as more limited and fallible. This is consistent with prior research that suggests that awe, as well as thoughts of God more specifically, are associated with greater humility, or the realistic acceptance that one is limited (43, 44, 46, 47). Specifically, when individuals experience awe, having encountered an entity that is vast and challenging to their worldview, they demonstrate a more balanced view of their strengths and weaknesses (43). Similarly, when people recall a connection to the divine, they report both greater awe and humility. This is true for both those who are religious and those who are not (44).

While such prior work focuses on reports of individuals’ willingness to see their own limitations, we suggest that the humility inspired by God extends to a recognition of the limitations of mankind more generally. Humility is a fundamental tenet and virtue across the world’s major religions (46, 47), but it is able to be viewed as a virtue only because every human is presumed to have weaknesses and limitations (46). This assumption is salient across religions, from the teachings of Islam that encourage followers to seek the revelation of Allah over human intuition, to the lessons of Buddhism and Hinduism that emphasize minimal focus on self in favor of seeing the interconnection of all things, to the writings of Judaism and Christianity that emphasize the totality and glory of God in contrast to the limitations of human life (46–48). Additionally, empirical work points to the likelihood that people are more apt to see the limitations of mankind when God is salient. When individuals experience awe, as is often evoked by experiences with the divine, they feel more connected to others. In particular, they identify more closely with their groups, nation, and species (40, 42, 49, 50). We suggest that when individuals simultaneously recognize their own limitations and see themselves as similar to other humans, they will be more likely to acknowledge all humans as fallible.

In sum, we predict that thoughts of God will weaken the extent to which consumers favor humans over algorithms, driven by feelings of a small self and a recognition of human limitations. We provide empirical support for our predictions across a series of eight controlled and field experiments (all preregistered; N = 2,462) that employ different methods of heightening the salience of God to establish a causal relationship between God salience and algorithm aversion. We also examine a number of alternative explanations, including mood, deterministic beliefs, and perceptions of risk. Finally, results from a preregistered analysis of an international consumer survey (N = 53,563) with participants from 21 countries support our findings.

Results of Controlled Experiments

Study 1.

To determine the impact of God salience on the preference between human expert and AI recommendations across different tasks, we randomly assigned participants in the preregistered study 1 to either a high or low God salience condition. Participants in the high God salience condition wrote about what God means to them. In the low God salience condition, participants wrote about their day. Participants then indicated their relative preference between relying on a human recommendation versus an algorithm’s recommendation for 24 different contexts (0: strongly prefer algorithm; 100: strongly prefer human). Topics included things as trivial as watching a movie and as meaningful as choosing a romantic partner. After indicating their preferences, participants rated each task on its objectivity, consequentialness, and the extent to which one’s unique needs must be considered for the task to be completed successfully. As a manipulation check for God salience, participants also indicated the extent to which they thought about God while participating in the study.

First, assessing results on the manipulation check for God salience, the high salience condition reported thinking about God during the study more than the low salience condition [Mlow God salience = 1.32, SD = 0.79; Mhigh God salience = 2.24, SD = 1.53; F(1, 319) = 47.28, P < 0.001]. Second, we assessed attrition across conditions (51), particularly to ensure that participants did not differ in their propensity to complete the different study conditions as a function of their religious backgrounds, given our context. The attrition rates between the low salience (11.6%) and high salience (17.3%) conditions did not significantly differ from each other [χ2(1) = 2.61, P > 0.1]. Moreover, among participants who completed the survey, the strength of belief in God did not significantly differ across conditions [Mlow God salience = 48.51, SD = 42.11; Mhigh God salience = 55.48, SD = 42.72; F(1, 337) = 2.29, P > 0.13], and the two groups consisted of an equal proportion of participants affiliated with a religion [59.7% in the low salience condition vs. 63.2% in the high salience condition; χ2(1) = 0.44, P > 0.5].

For our preregistered main analysis, a one-way ANOVA on an index score computed by averaging each person’s responses across the 24 decisions resulted in a significant effect of God salience [F(1, 319) = 12.91, P < 0.001; effect size f = 0.20]. Supporting our predictions, participants’ preference for receiving recommendations from humans was significantly lower when they were reminded of God (Mlow God salience = 55.25, SD = 15.04; Mhigh God salience = 49.13, SD = 15.49). This effect was highly consistent across contexts; participants indicated a directionally—if not significantly—lower preference for human recommendations under high God salience than low God salience for each of the 24 decisions (SI Appendix, Table S2). As preregistered, we also conducted a multiple linear regression analysis, which regressed recommendation preferences on God salience, while controlling for three task characteristics. The effect of God salience remained significant (B = −5.43; t = −3.22, P = 0.001) after controlling for task consequentialness (B = 0.13; t = 1.28, P > 0.2), task objectivity (B = 0.06; t = 0.74, P > 0.45), and uniqueness (B = 0.17; t = 2.09, P = 0.037).
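
As a rough consistency check on the effect size reported above, Cohen's f can be recovered from the F statistic and its degrees of freedom, or approximated from the two condition means and SDs. The sketch below is illustrative only: the function names are hypothetical, and the second route assumes equal group sizes because the per-condition ns are not stated here.

```python
import math

def cohens_f_from_F(F, df_between, df_within):
    """Cohen's f from a one-way ANOVA F statistic: f = sqrt(F * df1 / df2)."""
    return math.sqrt(F * df_between / df_within)

def cohens_d_equal_n(m1, sd1, m2, sd2):
    """Cohen's d using a pooled SD. ASSUMPTION: the two groups are equal in size."""
    pooled_sd = math.sqrt((sd1 ** 2 + sd2 ** 2) / 2)
    return (m1 - m2) / pooled_sd

# Values reported for study 1: F(1, 319) = 12.91; means 55.25 vs. 49.13.
f_from_F = cohens_f_from_F(12.91, 1, 319)
d = cohens_d_equal_n(55.25, 15.04, 49.13, 15.49)
f_from_d = d / 2  # for two equal-sized groups, f = d / 2

print(round(f_from_F, 2), round(f_from_d, 2))
```

Both routes land at roughly 0.20, in line with the reported effect size.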

We also conducted exploratory analyses to assess the impact of God salience when controlling for additional measures (see SI Appendix for all analyses). The effect of God salience remained significant even when other variables were controlled for (P < 0.001). Additionally, we ran separate regression models to examine the potential moderating roles of religious affiliation (0: nonaffiliated; 1: affiliated) and God belief. The interaction of God salience by religious affiliation (B = −1.01; t = −0.29, P > 0.7) was not significant, nor was the interaction of God salience by God belief strength (B = −0.01; t = −0.24, P > 0.8).

Studies 2a to 2d.

Having provided initial evidence of the impact of God salience on people’s increased willingness to rely on AI across a variety of tasks, we tested our prediction in four specific domains—financial investment, music, food, and nutritional supplements—in online and field settings. In preregistered studies 2a and 2b, we manipulated God salience by asking participants to write either about what God(s) means to them or about what they did earlier in the day. Participants then proceeded to their respective decision tasks: In study 2a, we asked participants to state their preference between two hypothetical mutual funds, one recommended by a human and one by AI. In study 2b, participants chose to listen to and evaluate one of two songs, one recommended by a human music expert and one by AI. The song choice was real, and participants actually listened to their selection.

In preregistered study 2c, conducted in Turkey, a predominantly Muslim country (unlike studies 1 through 2b, which were run among US Americans), we manipulated God salience through the presence or absence of environmental cues. More specifically, half of participants were recruited in front of a mosque with a full view of the mosque, while the others were recruited nearby without any visible religious cues. Participants then indicated their preference between two snacks, one recommended by an expert nutritionist and one by AI specializing in nutritional advice. Additionally, participants indicated whether they had heard of or consumed the offered snack before and the extent to which they thought of God while making their choice. Because the call to prayer also heightens God salience, we noted down the exact time of data collection for each response in order to control for the duration since the last call to prayer.

In preregistered study 2d, conducted in a dental clinic in Turkey, we manipulated God salience through the music played in the waiting room. We alternated playing either a religious or nonreligious instrumental traditional Turkish song in the waiting room over 8 d of data collection. Between moving from the waiting room to the dentist’s chair, patients were invited to a short survey purportedly assessing their reaction to the music in the waiting room. All patients agreed to take the survey. After two initial song evaluation questions, patients rated the extent to which God-related thoughts had come to their mind in the waiting room. Next, they indicated which of two omega-3/fish oil supplements they preferred as a gift for participating in the survey. As in study 2c, one of the two options was presented as recommended by expert nutritionists and the other by AI specializing in nutritional advice. The assistant also documented the length of each patient’s stay in the waiting room, whether they had used any brand of omega-3/fish oil supplements before, and whether they had used or heard of the two specific brands presented in the survey. Finally, the assistant collected demographic information, including gender, age, religious affiliation, and belief in God.

We obtained consistent results across studies 2a to 2d in support of our predictions. In study 2a, 35.7% of participants in the low God salience condition picked the mutual fund recommended by the robo-advisor compared to 50.5% of participants in the high God salience condition [χ2(1) = 4.46, P = 0.035; Φ = 0.15]. In exploratory analyses, we found that the effect remains significant even when controlling for mood effects and demographics (B = 0.75; Wald = 5.63, P = 0.018; see SI Appendix, Table S3). Also, examining the potential moderating roles of religious affiliation (0: nonaffiliated; 1: affiliated) and God belief, we found insignificant interactions of God salience with religious affiliation (B = −0.47; Wald = 0.65, P = 0.42) and God belief (B = −0.002; Wald = 0.08, P = 0.77) in separate logistic regression models.

In study 2b, while 31% of participants in the low God salience condition listened to the song recommended by AI, 44.6% of those in the high God salience condition listened to the song recommended by AI [χ2(1) = 6.80, P = 0.009; Φ = 0.14], an effect that remained significant even after including additional control variables (P = 0.043; see SI Appendix, Table S4). In exploratory analyses, results revealed insignificant interactions of God salience with religious affiliation (0: nonaffiliated, 1: affiliated; B = 0.30; Wald = 0.45; P > 0.5) and God belief (B = 0.004, Wald = 0.48; P > 0.48).

In study 2c, the manipulation check for God salience indicated that the high God salience condition thought about God more than the low salience condition [Mlow God salience = 2.23, SD = 1.66; Mhigh God salience = 3.58, SD = 1.96; F(1, 348) = 47.83, P < 0.001]. As predicted, the results were consistent with the results of studies 2a and 2b; 20.6% of participants in the low God salience condition chose the snack recommended by AI, whereas 34.9% of participants in the high God salience condition chose the snack recommended by AI [χ2(1) = 8.91, P = 0.003; Φ = 0.16]. The effect remained significant even when we controlled for other measures that we preregistered (P = 0.008; see SI Appendix, Table S5). Additionally, separate regression models showed an insignificant interaction between God salience and religious affiliation (0: nonaffiliated, 1: affiliated; B = −20.78; Wald = 0.00; P > 0.99) and between God salience and God belief (B = −0.02; Wald = 2.64, P > 0.1). Of course, given that this study was conducted in a predominantly Muslim country, there was very little variation in God belief or religious affiliation (93.1% of participants indicated being affiliated with Islam). Interestingly, however, an additional exploratory analysis found that time since the last call to prayer significantly influenced the choice. A logistic regression model with God salience condition and time since the last call to prayer (in minutes) as predictor variables showed that the preference for the algorithm’s recommendation was significantly higher right after the call to prayer (B = −0.002; Wald = 4.22, P = 0.04). Importantly, the impact of God salience remained significant even after controlling for the call to prayer timing (B = 0.67; Wald = 7.40, P = 0.007).
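
To make the logistic regression coefficients above easier to read, they can be converted to odds ratios by exponentiation. The following is a minimal sketch with a hypothetical helper name; it assumes the coefficients are on the log-odds scale, and because B = −0.002 is itself rounded, the per-hour figure is only approximate.

```python
import math

def odds_ratio(b, units=1.0):
    """Odds ratio implied by a logit coefficient b over `units` of the predictor."""
    return math.exp(b * units)

# B = 0.67: high vs. low God salience, controlling for call-to-prayer timing.
or_salience = odds_ratio(0.67)

# B = -0.002 per minute since the last call to prayer, rescaled to one hour.
or_per_hour = odds_ratio(-0.002, units=60)

print(round(or_salience, 2), round(or_per_hour, 2))
```

Read this way, the odds of choosing the AI-recommended snack were roughly twice as high under high God salience and fell by about a tenth for each hour elapsed since the call to prayer.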

In study 2d, the manipulation check for God salience confirmed that the high God salience condition thought about God more than the low salience condition [Mlow God salience = 2.46, SD = 1.15; Mhigh God salience = 3.05, SD = 1.21; F(1, 189) = 11.93, P < 0.001]. Consistent with the prior studies and our predictions, 16.8% (vs. 29.2%) of participants in the low (vs. high) God salience condition chose the supplement recommended by AI [χ2(1) = 4.09, P = 0.043; Φ = 0.15], an effect that remained significant even when all preregistered covariates were controlled for (P = 0.045). Not surprisingly, with 96.9% of the sample reporting an affiliation with Islam, religious affiliation and belief in God did not influence choice (Ps = 1) nor did their interactions with God salience (Ps = 1). Interestingly, in another exploratory analysis, we found that the length of stay in the waiting room significantly increased the probability of choosing the supplement recommended by AI (B = 0.14; Wald = 4.47, P = 0.035) within the high God salience condition. In other words, the longer patients were exposed to the religious music, the more likely they were to choose the supplement recommended by the AI.
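
The P values and Φ coefficients reported for studies 2a to 2d can be approximately reproduced from the χ2 statistics alone. The sketch below uses only the standard library; the function names are illustrative, and the sample sizes for studies 2c and 2d are an assumption inferred from the manipulation-check degrees of freedom (N = error df + 2), not figures stated in the text.

```python
import math

def chi2_p_df1(chi2):
    """Upper-tail P value of a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(chi2 / 2))

def phi(chi2, n):
    """Phi coefficient for a 2 x 2 table: sqrt(chi2 / N)."""
    return math.sqrt(chi2 / n)

# Chi-square statistics as reported for each study.
reported = {"2a": 4.46, "2b": 6.80, "2c": 8.91, "2d": 4.09}
p_values = {study: chi2_p_df1(x) for study, x in reported.items()}

# ASSUMPTION: N = error df + 2, taken from F(1, 348) and F(1, 189).
phi_2c = phi(8.91, 348 + 2)
phi_2d = phi(4.09, 189 + 2)

for study, p in p_values.items():
    print(study, round(p, 3))
print(round(phi_2c, 2), round(phi_2d, 2))
```

Under these assumptions the recomputed values agree with the reported ones to the precision given.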

Study 3.

Next, in a preregistered experiment where we investigated the role of God salience in a medical decision-making context, we also tested our hypothesis regarding the underlying psychological process. We predicted that the effect of God salience on acceptance of AI recommendations would be serially mediated by feelings of small self and a belief in human imperfection. We first manipulated God salience through the writing exercise noted in study 1. We then measured the extent to which participants felt small and believed that humans are imperfect and fallible (see Materials and Methods for the procedure and all measures). Next, we sought to examine whether the effects might instead be driven by those in the high God salience condition being more indifferent to a choice between humans and AI because they are in a more positive mood and feel better about the potential outcomes or are more likely to believe that decisions are predetermined. To do so, we administered mood and fatalistic determinism scales. Participants then imagined a dental treatment scenario in which they needed to choose one of two treatments: a root canal or an implant. After indicating the perceived riskiness of making a wrong decision, participants made their choice.

We replicated the expected effect: 33.5% of participants in the low God salience condition preferred the recommendation by the AI, while 44.3% of participants in the high God salience condition preferred the recommendation by the AI [χ2(1) = 4.12, P = 0.042; Φ = 0.11]. As in studies reported so far, the effect of God salience remained significant when other measures were controlled for (P = 0.03; see SI Appendix, Table S6).

Analyzing the proposed psychological process, we found that God salience evoked significantly stronger feelings of smallness (B = 0.98; t = 6.26, P < 0.001; CI95% = [0.6722, 1.2874]). God salience also evoked higher beliefs of human imperfection (B = 0.18; t = 2.27, P = 0.024; CI95% = [0.0246, 0.3435]). Supporting our theorizing, the indirect effect of God salience on algorithm acceptance with small self as the proximal mediator and belief in human imperfection as the distal mediator was significant (B = 0.03, CI95% = [0.0005, 0.0817]). Of note, a model with small self and belief in human imperfection as parallel mediators resulted in an insignificant indirect effect of small self (B = 0.03; CI95% = [−0.1165, 0.2101]) and a significant effect of belief in human imperfection (B = 0.07; CI95% = [0.0058, 0.2002]).

Addressing alternative explanations as preregistered, three separate one-way ANOVAs showed that God salience did not influence positive mood (Mlow God salience = 2.61, SD = 0.92; Mhigh God salience = 2.71, SD = 0.90; P > 0.3), negative mood (Mlow God salience = 1.43, SD = 0.65; Mhigh God salience = 1.44, SD = 0.62; P > 0.7), or risk perceptions (Mlow God salience = 3.80, SD = 0.97; Mhigh God salience = 3.89, SD = 1.07; P > 0.3). Finally, although participants in the high God salience condition reported significantly higher levels of deterministic beliefs [Mlow God salience = 2.59, SD = 1.29; Mhigh God salience = 2.90, SD = 1.39; F(1, 338) = 4.46, P = 0.035], a mediation analysis yielded an insignificant indirect effect of God salience on choice through determinism (B = −0.02, CI95% = [−0.0998, 0.0275]), minimizing the possibility that people prefer AI to a greater extent under high (vs. low) God salience due to a heightened belief that human effort has little or no impact on outcomes.

Examining the potential moderating effect of God belief, a logistic regression model resulted in an insignificant interaction between God salience and God belief (B = −0.007; Wald = 1.71, P = 0.19) on choice. However, a separate model with God salience, religious affiliation (0: nonaffiliated; 1: affiliated), and their interaction as predictors revealed a significant main effect of God salience (B = 0.88; Wald = 7.20, P = 0.007), an insignificant main effect of religious affiliation (B = 1.082; Wald = 2.38, P = 0.12), and a marginally significant interaction term (B = −0.81; Wald = 3.22, P = 0.073). Probing this interaction, we found that unlike the prior studies, the effect of God salience was significant among those unaffiliated with a religion (Z = 2.68, P = 0.007; CI95% = [0.2382, 1.5295]) and not among those affiliated with a religion (Z = 0.22, P = 0.825; CI95% = [−0.5427, 0.6807]), though there was no statistically significant difference between religiously affiliated and unaffiliated participants under low God salience (P = 0.38) or high God salience (P = 0.10).

Study 4.

In preregistered study 4, which employed an incentive-compatible experimental design with a predominantly Muslim sample, we further examined the notion that God salience heightens the acceptance of AI relative to humans because humans are more likely to be viewed as imperfect. We reasoned that if God was made salient in a way that reinforced the perfection of God, participants would be more interested in AI than in the baseline (low God salience) condition. However, if God was made salient in a way that also associated humans with perfection, participants would not show an increased preference for AI relative to the baseline (low God salience) condition. Participants in this experiment were randomly assigned to one of three conditions [God salience: low, high (God perfection), and high (human perfection)]. In the low salience condition, participants wrote about a neutral quote. In the two high God salience conditions, participants wrote about a verse from the Quran regarding either 1) how flawless God is or 2) the perfection of the human form, as created by God. Next, they chose one of two cryptocurrencies. One of the cryptocurrencies was ostensibly recommended by top human traders. The other was recommended by top algorithms. Participants were entered into a lottery to actually win whichever cryptocoin they chose, enhancing the consequentialness of their choice.

As predicted, God salience significantly affected participants’ choice [χ2(2) = 7.16, P = 0.028; Φ = 0.125], and the effect remained significant even when preregistered variables were controlled for (P = 0.020; see SI Appendix, Table S7). The preference for the cryptocoin recommended by algorithms was significantly higher in the God perfection condition (49%) than in both the low God salience (35%; Wald = 6.14, P = 0.013) and the God/human perfection (36.9%; Wald = 4.34, P = 0.037) conditions. The relative preferences did not differ between the low salience and the God/human perfection conditions (Wald = 0.128, P = 0.72). In other words, when God was salient and his/her perfection was emphasized, people were more accepting of AI (presumably because thoughts of human imperfection were higher, as suggested by study 3). However, when God was salient, but human perfection was implied, God salience no longer increased acceptance of AI. As in previous studies, we ran two separate logistic regression models to examine the role of God belief and religious affiliation as potential moderators after collapsing the low salience and the high salience/human perfection conditions. The models revealed no significant interaction between God salience and religious affiliation (0: nonaffiliated, 1: affiliated; B = 0.13; Wald = 0.08, P > 0.7) or God belief (B = −0.001; Wald = 0.011, P > 0.9).

Study 5.

While studies 3 and 4 provide evidence consistent with our hypothesized process, whereby God salience heightens feelings of a small self and awareness of human imperfection, we also considered an alternative account. As most AI systems operate as a “black box” (52, 53) and consumers are not fully certain how AI systems make decisions, it is possible that consumers perceive AI decisions as being similar to the decision-making of God, which is also unknown to them, and that this perceived similarity heightens the preference for AI systems under God salience.

We addressed this possibility in preregistered study 5 by directly manipulating perceptions of AI either as a “black box” or as an explainable, nonmysterious system. We then asked participants to state their investment preference on a 101-point scale between two mutual funds, one recommended by a human and the other recommended by AI (0: strongly prefer algorithm, 100: strongly prefer human). The mysteriousness manipulation was successful; participants in the mysterious AI condition reported that it is significantly more uncertain to them how AI systems make decisions [Mmysterious AI = 4.91, SD = 1.63; Mnonmysterious AI = 3.10, SD = 1.50; F(1, 238) = 78.69, P < 0.001]. The main effect of the mysteriousness of the AI [F(1, 236) = 0.03, P > 0.8] and the interaction term [F(1, 236) = 0.006, P > 0.9] on the choice between the human and AI recommendations were insignificant. However, the main effect of God salience was significant [F(1, 236) = 9.25, P = 0.003]. Specifically, participants in the God salience condition exhibited a lower preference for the human recommendation (Mlow God salience = 55.61, SD = 17.12; Mhigh God salience = 47.74, SD = 22.48). This difference was significant both when the AI was a black box [Mlow God salience = 55.72, SD = 18.29; Mhigh God salience = 48.01, SD = 24.31; F(1, 236) = 4.89, P = 0.028] and when it was nonmysterious [Mlow God salience = 55.47, SD = 15.83; Mhigh God salience = 47.37, SD = 20.05; F(1, 236) = 4.41, P = 0.037].

The effect of God salience on choice remained significant when demographics were included as covariates (P = 0.002; see SI Appendix for details). Assessing the potential moderating role of religious affiliation in further exploratory analyses, a regression model resulted in an insignificant interaction between religious affiliation (0: nonaffiliated; 1: affiliated) and God salience (B = −2.09; t = −0.41, P > 0.68). However, we obtained significant effects of participants’ strength of belief in God (B = 0.26; t = 2.75, P = 0.006) and its interaction with God salience (B = −0.13; t = −2.24, P = 0.026) on choice. Probing the interaction revealed a significant difference between the high and low God salience conditions in their preference for the human recommendation only among participants who had relatively stronger beliefs in God (Mlow God salience = 60.87, Mhigh God salience = 47.50; B = −13.37; t = −3.72, P < 0.001). This significance was attenuated among participants who believed in God less strongly (Mlow God salience = 49.94, Mhigh God salience = 47.96; B = −1.98; t = −0.55, P > 0.5).
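The simple effects reported above can be checked against the cell means: each B equals the mean preference under high God salience minus the mean under low God salience. The sketch below only reproduces that arithmetic from the values in the text; the dictionary layout and function name are illustrative assumptions.

```python
# Mean preference for the human recommendation, as reported in the text.
cell_means = {
    "stronger belief": {"low": 60.87, "high": 47.50},
    "weaker belief": {"low": 49.94, "high": 47.96},
}


def simple_effect(cell: dict) -> float:
    """Difference in mean preference: high minus low God salience."""
    return round(cell["high"] - cell["low"], 2)


effects = {level: simple_effect(cell) for level, cell in cell_means.items()}
for level, effect in effects.items():
    print(f"{level}: {effect:+.2f}")
```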

Global Analysis of the Relationship between Religion and AI Aversion in Finance

Finally, leveraging a global consumer survey conducted between July 2021 and June 2022, we assessed the relationship between religion and AI aversion across 21 countries by investigating people’s use of AI in a financial context. Lacking a manipulation of God salience in these secondary data, we reasoned that God is more likely to be salient among those affiliated with a religion than those who are not and therefore used religious affiliation as our independent variable and rough proxy for God salience. As preregistered, individuals who indicated an affiliation with religion were categorized as “high God salience” and those who indicated being nonreligious or atheists were categorized as “low God salience.” The dependent variable was whether respondents had “ever used a robo-advisor (algorithm-based digital program) for finance issues and investments.” Those who had used a robo-advisor before (within the last year or beyond) received a score of 1 (n = 10,356; 19.3%). Those who had never used a robo-advisor or “didn’t know”* received a score of 0 (n = 43,207; 80.7%).
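The binary coding of the dependent variable described above can be sketched as follows. The response labels are hypothetical placeholders, since the exact wording of the survey's answer options is not given here; the counts are those reported in the text.

```python
# Hypothetical response labels; the survey's actual wording may differ.
USED = {"used within the last year", "used more than a year ago"}
NOT_USED = {"never used", "don't know"}


def code_robo_advisor_use(response: str) -> int:
    """Return 1 for any prior robo-advisor use, 0 for never used or don't know."""
    if response in USED:
        return 1
    if response in NOT_USED:
        return 0
    raise ValueError(f"Unexpected response: {response!r}")


# Counts as reported in the text.
n_used, n_not_used = 10_356, 43_207
n_total = n_used + n_not_used
share_used = round(100 * n_used / n_total, 1)
share_not_used = round(100 * n_not_used / n_total, 1)
print(n_total, share_used, share_not_used)
```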

Regressing robo-advisor use on God salience, logistic regression analyses indicate that high God salience was associated with a higher likelihood of using a robo-advisor than low God salience (B = 0.47, χ2 = 369.09; P < 0.0001). Of course, one limitation of such secondary data, in particular using religious affiliation as a proxy for God salience as opposed to being able to manipulate salience, is the fact that individuals affiliated with a religion may differ from those unaffiliated in ways that extend beyond the salience of God. While unable to account for a fully exhaustive list of potential differences, we find that the predicted relationship held even after controlling for a variety of preregistered covariates, including age, gender, country, education, employment status, household size, community size, community type, political views, and annual household income (B = 0.64, χ2 = 424.16; P < 0.0001) (SI Appendix, Table S8). Additionally, exploratory analyses suggest that the hypothesized pattern is unlikely to be explained by differences in access to or willingness to use financial tools more generally. Assessing whether participants use various financial tools (e.g., real estate, precious metals, credit card, savings account, etc.), results indicate no difference in the number of different financial products and investments currently used/owned based on God salience [F(1, 53561) = 1.36, P = 0.24; Mhigh God salience = 3.06, Mlow God salience = 3.08; see SI Appendix for further details and exploratory analyses].
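To aid interpretation, the logistic regression coefficients above can be expressed as odds ratios by exponentiation. This is a minimal sketch that assumes the reported B values are unstandardized log-odds coefficients, as is conventional for logistic regression; the function name is illustrative.

```python
import math


def odds_ratio(b: float) -> float:
    """Convert a logistic regression coefficient (log odds) into an odds ratio."""
    return math.exp(b)


# Coefficients as reported in the text.
or_unadjusted = odds_ratio(0.47)  # model without covariates
or_adjusted = odds_ratio(0.64)    # model with preregistered covariates

print(f"Unadjusted odds ratio: {or_unadjusted:.2f}")
print(f"Covariate-adjusted odds ratio: {or_adjusted:.2f}")
```

Under this assumption, the odds of having used a robo-advisor are roughly 1.6 times higher in the high God salience group without covariates and roughly 1.9 times higher with them.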

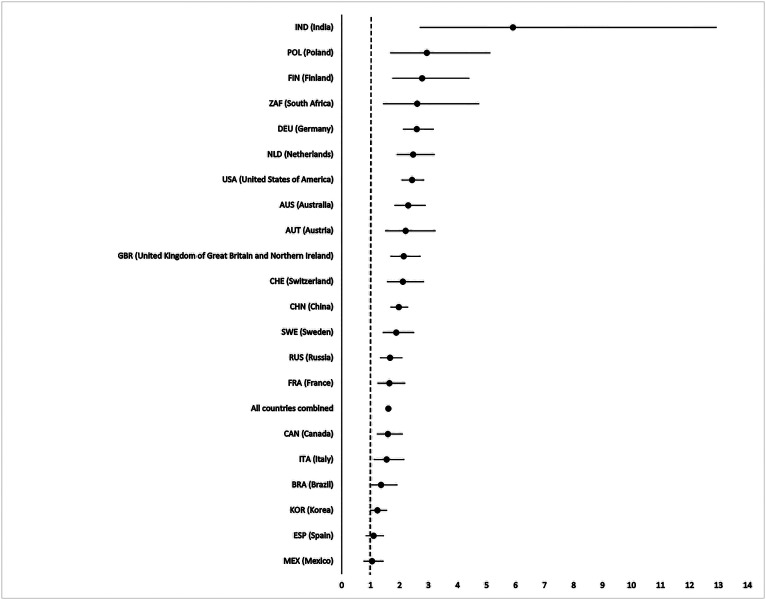

Finally, the effect of God salience on robo-advisor use was generally consistent across individual countries. The hypothesized relationship was supported at least directionally across all countries, though Mexico and Spain were particularly far from reaching statistical significance (Fig. 1 and SI Appendix, Table S11). Of note, even omitting India, the country exhibiting the strongest effect, the effect across the remaining countries is significant (B = 0.34, χ2 = 175.41; P < 0.0001). The hypothesized pattern was also consistent across different religious denominations (SI Appendix, Table S12). Affiliation with each religious denomination showed a higher likelihood of robo-advisor use than a lack of religious affiliation (all Ps < 0.0001).
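An approximate confidence interval for a pooled odds ratio can be recovered from a coefficient and its test statistic. The sketch below assumes that the reported χ2 is a Wald statistic with one degree of freedom, so that SE = B / sqrt(χ2); this is an assumption, not something stated in the text. It is applied to the estimate that omits India, and the result is not one of the country-level values plotted in Fig. 1.

```python
import math


def wald_ci_for_odds_ratio(b: float, chi2: float, z: float = 1.96):
    """Approximate 95% CI for exp(b), assuming chi2 is a 1-df Wald statistic."""
    se = abs(b) / math.sqrt(chi2)  # Wald chi2 = (b / se) ** 2
    return math.exp(b - z * se), math.exp(b), math.exp(b + z * se)


# Coefficient and chi-square reported for the analysis omitting India.
lower, estimate, upper = wald_ci_for_odds_ratio(0.34, 175.41)
print(f"OR = {estimate:.2f}, approx. 95% CI [{lower:.2f}, {upper:.2f}]")
```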

Fig. 1. Odds ratio and 95% CIs for the Global Consumer Survey data.

Discussion

AI is now a ubiquitous part of everyday life for much of the world—perhaps even akin to the pervasiveness of God. Given the diminished role of humans when viewed in relation to God and within AI operations, might there be a relationship between how thoughts of God affect people’s reactions to AI? Across several studies, our research demonstrates that thinking about God leads people to be more willing to accept recommendations from AI systems than they otherwise would. The results hold across a variety of recommendation contexts (financial, health, entertainment decisions), religious beliefs, and research methodologies (field and lab experiments, global survey). Thoughts of God lead individuals to feel smaller, rendering them more likely to recognize the fallibility of humans. They therefore find it less essential to rely on humans when making decisions and are more accepting of AI-based recommendations.

Importantly, these results extend prior research on the role of religion in decision-making. Prior research has largely focused on how religion affects social and moral decision-making (54, 55). The present findings suggest that religion has important implications for a wide swath of decisions, particularly as it relates to how decisions are made in the face of new technologies that mimic the traditional role of humans. By drawing a connection between how people view humans in relation to God (i.e., as smaller and flawed) and the decreased role that humans embody in AI, our work has broad implications for understanding the acceptance of AI as a decision-making tool. We also acknowledge the counterintuitiveness of the findings at first glance. Based on popular assumptions, one might assume that God salience leads to greater conservatism, less openness to new experiences, and decreased risk-taking, suggesting that people might be less open to the novel technology that drives AI when God is salient. However, empirical evidence provides a more complex picture. For example, prior research suggests that God salience may not necessarily lead to greater conservatism. While religious identification is positively associated with conservatism, spiritual identification is negatively associated with conservatism (56). Moreover, research suggests that there is no conclusive evidence that thoughts of God lead people to be more close-minded (57). Finally, God salience often leads to greater risk-taking, as long as one’s morals are not implicated (54, 55).

One key question raised by our findings, however, is the extent to which the effect of God salience on algorithm aversion is dependent upon the existence of underlying religious beliefs. We find few and inconsistent interactions between God salience and God belief or religious affiliation across studies (see studies 3 and 5). We therefore reason that the salience of God, irrespective of specific religious beliefs, is enough to activate a smaller sense of self and a recognition of human fallibility. This is supported by work that demonstrates that thoughts of the divine enhance humility among those who are religious and those who are not (44). There are likely implicit associations about supernatural beings learned early in life, regardless of one’s religious identification, and these implicit associations (such as God being vast and perfect, while humans are small and imperfect) are automatically activated regardless of one’s religious identification. We also note that while an important meta-analysis found that religious priming effects are stronger among believers or those who self-report higher levels of religiosity (27), research also suggests that this effect may be moderated by experimental design- and procedure-related issues such as the priming technique or the concepts used for priming religion (58) since different religious concepts generate different cognitive and motivational reactions (59–61). In fact, several studies that used relatively more explicit priming methods that induce God-related (instead of institutionalized religion-specific) thoughts (54, 55, 59, 62–65) find religious priming effects that are not moderated by the level of religiosity or belief in God. 
Our findings thus speak to the ongoing discussion on whether the effects of religious priming are universal (i.e., reflecting general, universal associations about the capabilities or qualities of God) or related to religious engagement [i.e., reflecting culture-specific values that are taught through one's religious upbringing (66, 67)]. Our findings suggest more of the former in the context of algorithm aversion.

It is important to note, however, that while religious affiliation is not necessary to see the effect of God salience on algorithm aversion, it is likely that God is more salient among those with an underlying religious affiliation, as they are more likely to intentionally and frequently interact with God. Thus, it is not surprising to see an effect of religious affiliation on algorithm aversion in the Global Consumer Survey. We suspect that religious affiliation is a weaker and noisier reflection of God salience though, which may be why we obtained a significant effect of religious affiliation on algorithm aversion in the highly powered consumer survey but not in the much smaller experiments. Still, in the experiments, exploratory analyses reveal directional, albeit nonsignificant, effects within the control conditions (no God salience manipulation). Specifically, investigating the control conditions across studies 2a to 2b, 3, and 4 (which share the same manipulation and the dependent variable format), there is an insignificant, but directional, pattern whereby religious affiliation is associated with greater AI acceptance (n = 626; B = −0.23; Wald = 1.83, P = 0.18). Similarly, investigating the control conditions across studies 1 and 5 (where we measured the dependent variable in a continuous format), there is a directional pattern such that religious affiliation is associated with greater AI acceptance (n = 287; B = −0.58; t = −1.26; P = 0.2).

Our results also extend work on AI aversion. Our work is consistent with prior findings on algorithm aversion that suggest that individuals often prefer human recommendations over algorithms (5, 11). We find that this preference weakens, but does not typically reverse, when God is salient. When God is salient, people simply become more accepting of AI recommendations than they would have otherwise been. While prior work has largely investigated characteristics of the decision context (e.g., subjectivity vs. objectivity) and characteristics of the recommending AI or human entity (e.g., expertise, success rate) as determinants of people’s reliance on humans versus AI (68), our work suggests that factors linked to the individual user, specifically as related to the salience of God, are important to consider. This work also builds on prior work on algorithm aversion that demonstrates that when people witness algorithms err, they exhibit greater algorithm aversion, as the fallibility of the algorithm becomes salient and generalized to other algorithms (5, 13). We demonstrate the other side of the coin—when the fallibility of humans is salient (via God salience), people exhibit less algorithm aversion. Effectively, algorithm aversion appears to strengthen as the perceived gap in fallibility between humans and algorithms becomes greater, whether this is because humans are perceived as more perfect than they are (as when God is not salient) or because algorithms are seen as less perfect (as when they err).

Massive advances in the “intelligence” of machines have the potential to change how people make decisions in nearly every aspect of life, from choosing a romantic partner to selecting a medical process, for better or worse. It appears that understanding how humans relate to God(s), the foundation of one of the world’s oldest institutions, may bring us closer to understanding people’s acceptance of some of the world’s newest technologies.

Materials and Methods

All procedures were approved by the institutional review boards of Duke University and Koç University. Participants provided informed consent and were informed that their identities would remain confidential and that they could leave the study at any time without giving a reason. Anonymized data for studies 1 to 5 and all preregistrations are available at Open Science Framework (https://doi.org/10.17605/OSF.IO/FDH4M) (69). Additional methodological information, sampling details, and full analyses are provided in SI Appendix.

Study 1.

We recruited 405 US-based participants on Prolific in return for monetary payment. Nine participants dropped out of the survey during the initial questions before being assigned to any experimental conditions, and 339 of the remaining 396 participants completed the survey (attrition rate: 14%; Mage = 37.8 y, 189 female and 9 nonbinary). As preregistered, we excluded participants who failed the attention check question and/or who started the survey from duplicate Internet Protocol (IP) addresses, resulting in a final sample of 321 participants.
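The sample flow above can be verified with simple arithmetic. The sketch below uses only the counts reported in the text; the variable names are illustrative.

```python
# Counts as reported for study 1.
recruited = 405
dropped_before_assignment = 9
completed = 339
final_sample = 321

assigned = recruited - dropped_before_assignment  # participants who reached a condition
attrition_rate = (assigned - completed) / assigned
excluded_after_completion = completed - final_sample  # attention check or duplicate IP

print(f"Assigned to a condition: {assigned}")
print(f"Attrition rate: {attrition_rate:.1%}")
print(f"Excluded after completion: {excluded_after_completion}")
```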

Participants who started the survey were randomly assigned to one of the two conditions after an initial attention check question: In the high salience condition, participants were asked to write about the role or impact of God, however they define it, in their lives. In the low salience condition, they wrote about the things they have done during the day (69). After the writing task, participants were given a list of 24 tasks, and they indicated on a 101-point scale their relative likelihood of following the recommendation of a computer algorithm over that of an equally effective human counterpart for each task (0: definitely prefer algorithm; 100: definitely prefer human).

Next, participants rated these tasks (on 101-point scales) on objectivity, consequentialness, and the importance of considering the unique characteristics of the situation or the individual in making a decision. To ensure that participants did not differ in their interpretation of these concepts, each question provided an explanation of what we meant by these terms (SI Appendix). Participants then completed a God salience manipulation check, indicating the extent to which they thought about God while participating in the study (1 = not at all, 5 = a great deal). Finally, participants indicated the strength of their belief in God on a 101-point scale (0: not at all believe; 100: strongly believe), gender, age, and religious affiliation (1: Christian; 2: Judaism; 3: Islam; 4: Hinduism; 5: Buddhism; 6: Other religion; 7: Agnostic; 8: Atheist).

Study 2a.

We recruited 202 US-based participants (Mage = 32.2 y, 148 female and 3 nonbinary) from Prolific in return for a small monetary payment. After the attention check, participants were randomly assigned to one of two God salience conditions, which employed the same manipulation as in study 1.

Next, we asked participants to imagine that they were considering investing in two hypothetical mutual funds that yielded comparable returns in the previous year. They further imagined that they came across two financial advisors—a human financial expert and a robo-advisor—with different recommendations. The two advisors were presented as having similar success in their past recommendations. At the end of the scenario, participants indicated which mutual fund they would invest in. We counterbalanced the recommendations. Finally, participants reported the strength of their belief in God on a 101-point scale (0: not at all believe; 100: strongly believe), gender, age, and religious affiliation (1: Christian; 2: Islam; 3: Judaism; 4: Hinduism; 5: Buddhism; 6: Nonreligious; 7: Other).

Study 2b.

US-based participants (n = 350, Mage = 33.04 y, 245 female and 12 nonbinary) were recruited on Prolific in return for monetary payment for a study ostensibly presented as a song evaluation survey. After an initial attention check question, participants were randomly assigned to one of the two God salience conditions. We used the same manipulation as in studies 1 and 2a.

After the manipulation, participants were told that the objective of the second part of the survey was to understand the appeal of hit Turkish songs to the general US population. We further informed participants that an algorithm and an expert musician, both equally successful in recommending songs to people based on their music preferences, would be picking two songs from Apple Music’s “Turkey: Top 100” chart list. Accordingly, participants were first presented with a list of 15 well-known songs, and they indicated the three songs that they liked most. Next, they were given two songs (by the same Turkish artist), one purportedly recommended by the algorithm and one by the human expert, based on the three songs they indicated liking in the prior task. We randomized i) the order of the visual presentation of the recommending agent and ii) the song that was recommended by each agent. After indicating their preference, participants listened to the song they chose and evaluated the song on a 7-point scale (1: “strongly disagree;” 7: “strongly agree”) with two items (“I like this song;” “I would be willing to explore more songs from this artist”). Finally, we measured the same demographic and control variables as in study 2a and thanked participants.

Study 2c.

We recruited two assistants who were blind to the research hypotheses. They collected the data simultaneously by recruiting participants (n = 350; Mage = 41.8 y, 192 male and 158 female) around a mid-sized mosque in Turkey. One assistant (24-y-old, male) recruited participants in front of the mosque with a full view of the mosque, while the other assistant (22-y-old, female) recruited participants on a separate, nearby street without a view of the mosque. Participants were invited to participate in the study, presented as a survey for understanding snack preferences among the general public. Those who agreed read a short scenario which briefly introduced a healthy protein bar brand that had entered the market in the country recently. Participants were asked to choose one of two flavors of the protein bar. Before making their choice, participants were informed that a nutritional expert recommended one of the two flavors, whereas an equally competent AI specializing in nutritional advice recommended the other flavor. We counterbalanced the flavor recommended by the human and the AI. After making their choice, participants reported their gender, age, religious self-identification (Islam/other), belief in God (on a 101-point scale), their familiarity with the brand (yes/no), and whether they consumed this brand before (yes/no). Finally, participants rated the extent to which they had God-related thoughts while making the choice (1: not at all; 7: very much). The research assistants also noted the exact time of the completion of the survey.

Study 2d.

We collaborated with a dental clinic in Turkey to recruit their patients as our participants over 8 d of data collection. A total of 191 Turkish participants participated in the study (Mage = 39.3 y, 95 male). We assigned one (vs. the other) of two traditional instrumental Turkish songs to four (vs. the other four) of the 8 d during which we conducted the study. Patients of the clinic listened to either a religious or a nonreligious song in the waiting room before proceeding to the dentist’s room. When a patient left the waiting room, an assistant (43-y-old, female) who was blind to the research hypotheses asked patients whether she could ask a question about the music they listened to. After answering the two initial questions about the music (like/recommend), patients were asked to choose one of the two omega-3/fish oil supplements as a gift for their feedback about the music. Next, patients indicated the extent to which God-related thoughts came to their mind while listening to the music in the waiting room (1: not at all; 5: to a great extent). They then reported their religious affiliation (1: Islam; 2: other), whether they believe in God (1: yes; 2: no), whether they had consumed omega-3/fish oil before, whether they had previously heard of and/or consumed any of the two options presented to them, their gender, and their age. The assistant noted down the exact time when each patient entered and left the waiting room, which we used to calculate the length of their stay in the waiting room.

Study 3.

US-based participants (n = 377; Mage = 37.9 y, 204 female and 11 nonbinary) were recruited on Prolific in exchange for money and were randomly assigned to one of the two God salience conditions. We used the same manipulation as in studies 1 through 2b. Next, we employed the four-item small-self scale (41) and four items we developed to measure participants’ beliefs in human imperfection (“We are all imperfect in many ways;” “All people have flaws;” “There is no perfect person;” “We all make mistakes;” Cronbach’s alpha = 0.90). To measure mood and deterministic beliefs, we then administered the Positive and Negative Affect Schedule (PANAS) (70) and the fatalistic determinism subscale of the Free Will and Determinism-Plus (FAD-Plus) scale (71).
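For readers unfamiliar with the reliability coefficient reported above, Cronbach's alpha can be computed from item-level scores as sketched below. The scores are invented purely for illustration and are not data from study 3; the function name is likewise an assumption.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha, where items[i][p] is participant p's score on item i."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # per-participant sum scores
    item_variance_sum = sum(variance(item) for item in items)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))


# Invented scores for four items and five participants (illustration only).
toy_items = [
    [5, 6, 7, 6, 4],
    [5, 7, 7, 5, 4],
    [6, 6, 7, 6, 3],
    [5, 6, 6, 6, 4],
]
print(f"alpha = {cronbach_alpha(toy_items):.2f}")
```

Alpha approaches 1 as the items covary more strongly relative to their individual variances, which is why a value of 0.90 is typically read as high internal consistency.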

This was followed by a dental treatment scenario in which participants imagined having a tooth with root decay. They were told that they would make a choice between one of two possible treatments: an implant or a root canal treatment. After a brief description of the two treatments, they imagined receiving two recommendations, one from AI and one from a dentist who had an equal rate of accuracy in their past recommendations. We counterbalanced the specific treatment recommended by the dentist and AI. After indicating their perceptions of the riskiness of the decision (55), participants indicated their preferred treatment, gender, age, strength of belief in God (on a 101-point scale), and religious affiliation (1: Christian; 2: Islam; 3: Judaism; 4: Hinduism; 5: Buddhism; 6: Nonreligious; 7: Other).

Study 4.

Four hundred fifty-seven participants (Mage = 27.4 y, 225 female) completed the survey in return for a chance to participate in a raffle to win a monetary prize. Participants were identified via a snowballing technique whereby students were asked to distribute the survey to their friends and family and received course credit for every five surveys completed, with a maximum of three course credits. Participants were randomly assigned to one of three conditions. In the low salience condition, participants wrote about a quote from Shakespeare. In the other two high God salience conditions, participants wrote about one of two verses from the Quran. One verse was about God’s perfection and the other was about the perfection of humans, as created by God. After indicating their gender, age, religious identification [1: Islam; 2: Christianity (including Orthodox); 3: None; 4: Other], and their strength of belief in God (on a 101-point scale), participants were told that the survey was over and that we were planning to give one participant—to be determined by a raffle—a monetary prize by the end of the week. We also told them that the monetary prize of 50 USD had been converted into two cryptocurrencies—ADA and XRP—at the beginning of the week and that the winner would get the USD equivalent of their preferred cryptocurrency at the end of the week. We clarified that the monetary prize could be higher or lower than 50 USD depending on the performance of the two cryptocurrencies, and we instructed them to choose the coin that they thought would outperform the other. One coin was recommended by human traders. The other coin was recommended by AI. We counterbalanced which coin was recommended by the human and AI. Participants then indicated their choice and were thanked.

Study 5.

US-based participants (n = 281, Mage = 36.7 y, 167 female and 1 nonbinary) were recruited on Prolific in return for monetary payment. The study had a 2 (algorithm: mysterious vs. nonmysterious) × 2 (God salience: low vs. high) between-subjects design. Participants were first randomly assigned to one of the two algorithm conditions. In the mysterious AI condition, participants read a short text which described the decision-making process of algorithms as a “black box.” In the nonmysterious AI condition, the algorithms were presented as nonmysterious systems that make decisions by using the decision rules provided to them by developers. Next, we manipulated God’s salience using the same writing task as in previous studies.
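The 2 × 2 between-subjects design above can be sketched as two independent random assignments. The code is illustrative and is not the survey platform's actual randomization logic. It also shows, as an inference rather than a reported figure, that an error term with 236 degrees of freedom in a four-cell ANOVA without covariates would correspond to 240 analyzed participants.

```python
import random
from itertools import product

ALGORITHM = ("mysterious", "nonmysterious")
GOD_SALIENCE = ("low", "high")
CELLS = list(product(ALGORITHM, GOD_SALIENCE))  # the four design cells


def assign_conditions(rng: random.Random) -> tuple:
    """Assign the algorithm framing first, then the God salience writing task."""
    return rng.choice(ALGORITHM), rng.choice(GOD_SALIENCE)


def analyzed_n_from_error_df(df_error: int, n_cells: int = len(CELLS)) -> int:
    """In a full-factorial between-subjects ANOVA, df_error = N - number of cells."""
    return df_error + n_cells


rng = random.Random(0)  # fixed seed so the illustration is reproducible
print(assign_conditions(rng))
print(analyzed_n_from_error_df(236))
```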

Participants then saw a graph showing the cumulative returns of two hypothetical mutual funds in the last 5 mo, whose monthly returns were highly correlated and which yielded the same cumulative return at the end of the last 5 mo. Participants were informed that a financial advisor recommends one of these two funds, whereas an equally competent robo-advisor recommends the other fund. We counterbalanced the specific fund recommended by each advisor. After reading this information, participants indicated their relative preference for investing in these two funds on a 101-point scale.

We then asked participants to indicate their certainty regarding how AI systems make decisions, how believable the article was, how much they enjoyed reading the article, to what extent the article is a good fit for a tech magazine, and the extent to which AI systems reminded them of God or a higher power in how they work. After answering a question about the title of the article, which we used as an attention check, participants indicated their age, gender, religious affiliation, and the strength of belief in God.

Global Consumer Survey.

We investigated the relationship between God salience and financial robo-advisor use with the Global Consumer Survey, conducted by Statista—a global market and consumer research firm—between July 2021 and June 2022. A total of 53,563 participants from 21 countries participated in the survey, which included questions about religion and financial robo-advisor use, as well as several other questions. Of note, the Global Consumer Survey uses a split questionnaire design such that not everyone who participates in the survey sees the same questions. The sample size and analyses include only respondents who saw both the religion and robo-advisor questions of interest. The measures identified in the preregistration as focal to our investigation are described in SI Appendix. Individuals who indicated an affiliation with religion (Christianity, Islam, Hinduism, Buddhism, Judaism, and Other) were categorized as “high God salience” (n = 35,332; 66%) and those who indicated being nonreligious or atheists were categorized as “low God salience” (n = 18,231; 34%). Those who preferred not to respond were excluded (n = 3,417). The dependent variable was whether respondents had “ever used a robo-advisor (algorithm-based digital program) for finance issues and investments.”
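The categorization of respondents into the God salience proxy can be sketched as follows. The religion labels follow the text, whereas the exact strings for the nonreligious, atheist, and nonresponse options are hypothetical; the group counts are those reported above.

```python
from typing import Optional

HIGH_SALIENCE = {"Christianity", "Islam", "Hinduism", "Buddhism", "Judaism", "Other"}
LOW_SALIENCE = {"Nonreligious", "Atheist"}  # hypothetical label wording


def god_salience_proxy(affiliation: str) -> Optional[str]:
    """Map religious affiliation to the proxy; None marks excluded respondents."""
    if affiliation in HIGH_SALIENCE:
        return "high"
    if affiliation in LOW_SALIENCE:
        return "low"
    return None  # e.g., preferred not to respond


# Group sizes as reported in the text.
n_high, n_low = 35_332, 18_231
n_analyzed = n_high + n_low
print(n_analyzed, round(100 * n_high / n_analyzed), round(100 * n_low / n_analyzed))
```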

Acknowledgments

We thank Jim Bettman and Joseph Reiff for their helpful feedback.

Author contributions

M.K. and K.M.C. designed research; performed research; analyzed data; and wrote the paper.

Competing interests

The authors declare no competing interest.

Footnotes

This article is a PNAS Direct Submission.

*The results do not meaningfully differ if “didn’t know” participants are excluded from analyses.

Data, Materials, and Software Availability

Anonymized data and preregistrations for studies 1 to 5 are publicly available via the Open Science Framework (https://doi.org/10.17605/OSF.IO/FDH4M) (69). Data for the Global Consumer Survey will be available upon request. Statista will provide a purpose-limited data transfer agreement for researchers who request the data. Recipients will receive a data set (for free) with selected variables when they agree to use them for the well-defined purpose of reviewing the existing study, but not to address new questions. Data and materials from all studies (except for the Global Consumer Survey) have been deposited in the Open Science Framework (https://doi.org/10.17605/OSF.IO/FDH4M).

References

- 1.Simonite T., 2014 in Computing: Breakthroughs in artificial intelligence. MIT Technology Review (2014). https://www.technologyreview.com/2014/12/29/169759/2014-in-computing-breakthroughs-in-artificial-intelligence/. Accessed 25 July 2023.

- 2.Kleinberg J., Lakkaraju H., Leskovec J., Ludwig J., Mullainathan S., Human decisions and machine predictions. Q. J. Econ. 133, 237–293 (2018). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Highhouse S., Stubborn reliance on intuition and subjectivity in employee selection. Ind. Organ. Psychol. 1, 333–342 (2008). [Google Scholar]

- 4.Rao A., Verweij G., Sizing the prize: What’s the real value of AI for your business and how can you capitalise? PwC (2017). https://www.pwc.com/gx/en/issues/analytics/assets/pwc-ai-analysis-sizing-the-prize-report.pdf. Accessed 25 July 2023.

- 5.Dietvorst B. J., Simmons J. P., Massey C., Algorithm aversion: People erroneously avoid algorithms after seeing them err. J. Exp. Psychol. Gen. 144, 114 (2015). [DOI] [PubMed] [Google Scholar]

- 6.Hastie R., Dawes R. M., Rational Choice in an Uncertain World: The Psychology of Judgment and Decision Making (Sage Publications, 2009). [Google Scholar]

- 7.Önkal D., Goodwin P., Thomson M., Gönül S., Pollock A., The relative influence of advice from human experts and statistical methods on forecast adjustments. J. Behav. Decis. Mak. 22, 390–409 (2009). [Google Scholar]

- 8.Dana J., Thomas R., In defense of clinical judgment and mechanical prediction. J. Behav. Decis. Mak. 19, 413–428 (2006). [Google Scholar]

- 9.Promberger M., Baron J., Do patients trust computers? J. Behav. Decis. Mak. 19, 455–468 (2006). [Google Scholar]

- 10.Longoni C., Bonezzi A., Morewedge C. K., Resistance to medical artificial intelligence. J. Consum. Res. 46, 629–650 (2019). [Google Scholar]

- 11.Castelo N., Bos M. W., Lehmann D. R., Task-dependent algorithm aversion. J. Mark. Res. 56, 809–825 (2019). [Google Scholar]

- 12.Heßler P. O., Pfeiffer J., Hafenbrädl S., When self-humanization leads to algorithm aversion: What users want from decision support systems on prosocial microlending platforms. Bus. Inf. Syst. Eng. 64, 275–292 (2022). [Google Scholar]

- 13.Longoni C., Cian L., Kyung E. J., Algorithmic transference: People overgeneralize failures of AI in the government. J. Mark. Res. 60, 170–188 (2023). [Google Scholar]

- 14.Reich T., Kaju A., Maglio S. J., How to overcome Algorithm aversion: Learning from mistakes. J. Consum. Psychol. 33, 285–302 (2022). [Google Scholar]

- 15.Morewedge C. K., Preference for human, not algorithm aversion. Trends Cogn. Sci. 26, 824–826 (2022). [DOI] [PubMed] [Google Scholar]

- 16. Bonaccio S., Dalal R. S., Advice taking and decision-making: An integrative literature review, and implications for the organizational sciences. Organ. Behav. Hum. Decis. Process. 101, 127–151 (2006).

- 17. Madhavan P., Wiegmann D. A., Similarities and differences between human–human and human–automation trust: An integrative review. Theor. Issues Ergon. Sci. 8, 277–301 (2007).

- 18. Hou Y.T.-Y., Jung M. F., Who is the expert? Reconciling algorithm aversion and algorithm appreciation in AI-supported decision making. Proc. ACM Human-Comput. Interact. 5, 1–25 (2021).

- 19. Sindermann C., et al., Acceptance and fear of Artificial Intelligence: Associations with personality in a German and a Chinese sample. Discov. Psychol. 2, 8 (2022).

- 20. Atran S., Norenzayan A., Religion’s evolutionary landscape: Counterintuition, commitment, compassion, communion. Behav. Brain Sci. 27, 713–730 (2004).

- 21. Johnson D., Bering J., Hand of God, mind of man: Punishment and cognition in the evolution of cooperation. Evol. Psychol. 4, 147470490600400130 (2006).

- 22. Johnson D., Krüger O., The good of wrath: Supernatural punishment and the evolution of cooperation. Polit. Theol. 5, 159–176 (2004).

- 23. Boyer P., Religion Explained (Random House, 2008).

- 24. Sosis R., Bressler E. R., Cooperation and commune longevity: A test of the costly signaling theory of religion. Cross-cultural Res. 37, 211–239 (2003).

- 25. Bloom P., Religion, morality, evolution. Annu. Rev. Psychol. 63, 179–199 (2012).

- 26. Laurin K., Kay A. C., "The motivational underpinnings of belief in God" in Advances in Experimental Social Psychology (Elsevier, 2017), pp. 201–257.

- 27. Shariff A. F., Willard A. K., Andersen T., Norenzayan A., Religious priming: A meta-analysis with a focus on prosociality. Personal Soc. Psychol. Rev. 20, 27–48 (2016).

- 28. Johnson M. K., Rowatt W. C., LaBouff J. P., Religiosity and prejudice revisited: In-group favoritism, out-group derogation, or both? Psychol. Relig. Spiritual. 4, 154 (2012).

- 29. McKay R., Whitehouse H., Religion and morality. Psychol. Bull. 141, 447 (2015).

- 30. Grewal L., Wu E. C., Cutright K. M., Loved as-is: How God salience lowers interest in self-improvement products. J. Consum. Res. 49, 154–174 (2022).

- 31. Shachar R., Erdem T., Cutright K. M., Fitzsimons G. J., Brands: The opiate of the nonreligious masses? Mark. Sci. 30, 92–110 (2011).

- 32. Kurt D., Inman J. J., Gino F., Religious shoppers spend less money. J. Exp. Soc. Psychol. 78, 116–124 (2018).

- 33. Anderson J., Rainie L., Luchsinger A., Artificial intelligence and the future of humans. Pew Res. Cent. 10, 1–120 (2018).

- 34. Davies B., An Introduction to the Philosophy of Religion (Oxford University Press, Oxford, 1993).

- 35. Rowe W. L., Baker R., Philosophy of Religion: An Introduction (Dickenson Publishing Company, Encino, CA, 1978).

- 36. Norenzayan A., Shariff A. F., The origin and evolution of religious prosociality. Science 322, 58–62 (2008).

- 37. Laurin K., Kay A. C., Fitzsimons G. M., Divergent effects of activating thoughts of God on self-regulation. J. Pers. Soc. Psychol. 102, 4–21 (2012).

- 38. Keltner D., Haidt J., Approaching awe, a moral, spiritual, and aesthetic emotion. Cogn. Emot. 17, 297–314 (2003).

- 39. Campos B., Shiota M. N., Keltner D., Gonzaga G. C., Goetz J. L., What is shared, what is different? Core relational themes and expressive displays of eight positive emotions. Cogn. Emot. 27, 37–52 (2013).

- 40. Shiota M. N., Keltner D., Mossman A., The nature of awe: Elicitors, appraisals, and effects on self-concept. Cogn. Emot. 21, 944–963 (2007).

- 41. Piff P. K., Dietze P., Feinberg M., Stancato D. M., Keltner D., Awe, the small self, and prosocial behavior. J. Pers. Soc. Psychol. 108, 883 (2015).

- 42. Bai Y., et al., Awe, the diminished self, and collective engagement: Universals and cultural variations in the small self. J. Pers. Soc. Psychol. 113, 185 (2017).

- 43. Stellar J. E., et al., Awe and humility. J. Pers. Soc. Psychol. 114, 258 (2018).

- 44. Preston J. L., Shin F., Spiritual experiences evoke awe through the small self in both religious and non-religious individuals. J. Exp. Soc. Psychol. 70, 212–221 (2017).

- 45. Meier B. P., Hauser D. J., Robinson M. D., Friesen C. K., Schjeldahl K., What’s “up” with God? Vertical space as a representation of the divine. J. Pers. Soc. Psychol. 93, 699 (2007).

- 46. Bollinger R. A., Hill P. C., "Humility" in Religion, Spirituality, and Positive Psychology (Praeger, 2012).

- 47. Davis D. E., Hook J. N., McAnnally-Linz R., Choe E., Placeres V., Humility, religion, and spirituality: A review of the literature. Psychol. Relig. Spiritual. 9, 242 (2017).

- 48. Porter S. L., et al., “Religious perspectives on humility” in Handbook of Humility (Routledge, 2016), pp. 63–77.

- 49. Van Cappellen P., Saroglou V., Awe activates religious and spiritual feelings and behavioral intentions. Psychol. Relig. Spiritual. 4, 223 (2012).

- 50. Pizarro J. J., et al., Self-transcendent emotions and their social effects: Awe, elevation and Kama Muta promote a human identification and motivations to help others. Front. Psychol. 12, 709859 (2021).

- 51. Zhou H., Fishbach A., The pitfall of experimenting on the web: How unattended selective attrition leads to surprising (yet false) research conclusions. J. Pers. Soc. Psychol. 111, 493 (2016).

- 52. Al-Amoudi I., Latsis J., “Anormative black boxes: Artificial intelligence and health policy” in Post-Human Institutions and Organizations (Routledge, 2019), pp. 119–142.

- 53. Cadario R., Longoni C., Morewedge C. K., Understanding, explaining, and utilizing medical artificial intelligence. Nat. Hum. Behav. 5, 1636–1642 (2021).

- 54. Chan K. Q., Tong E. M. W., Tan Y. L., Taking a leap of faith: Reminders of God lead to greater risk taking. Soc. Psychol. Personal. Sci. 5, 901–909 (2014).

- 55. Kupor D. M., Laurin K., Levav J., Anticipating divine protection? Reminders of God can increase nonmoral risk taking. Psychol. Sci. 26, 374–384 (2015).

- 56. Lockhart C., Sibley C. G., Osborne D., Religion makes—and unmakes—the status quo: Religiosity and spirituality have opposing effects on conservatism via RWA and SDO. Relig. Brain Behav. 10, 379–392 (2020).

- 57. Jackson J., Halberstadt J., Jong J., Felman H., Perceived openness to experience accounts for religious homogamy. Soc. Psychol. Personal. Sci. 6, 630–638 (2015).

- 58. Van Elk M., et al., Meta-analyses are no substitute for registered replications: A skeptical perspective on religious priming. Front. Psychol. 6, 1365 (2015).

- 59. Karataş M., Gürhan-Canli Z., A construal level account of the impact of religion and God on prosociality. Personal Soc. Psychol. Bull. 46, 1107–1120 (2020).

- 60. Preston J. L., Ritter R. S., Different effects of religion and God on prosociality with the ingroup and outgroup. Personal Soc. Psychol. Bull. 39, 1471–1483 (2013).

- 61. Ritter R. S., Preston J. L., Representations of religious words: Insights for religious priming research. J. Sci. Study Relig. 52, 494–507 (2013).

- 62. Kitchens M. B., Thinking about God causes internal reflection in believers and unbelievers of God. Self Identity 14, 724–747 (2015).

- 63. Laurin K., Fitzsimons G. M., Kay A. C., Social disadvantage and the self-regulatory function of justice beliefs. J. Pers. Soc. Psychol. 100, 149 (2011).

- 64. Wu E. C., Cutright K. M., In God’s hands: How reminders of God dampen the effectiveness of fear appeals. J. Mark. Res. 55, 119–131 (2018).

- 65. Yilmaz O., Bahçekapili H. G., Supernatural and secular monitors promote human cooperation only if they remind of punishment. Evol. Hum. Behav. 37, 79–84 (2016).

- 66. Gervais W. M., Willard A. K., Norenzayan A., Henrich J., The cultural transmission of faith: Why innate intuitions are necessary, but insufficient, to explain religious belief. Religion 41, 389–410 (2011).

- 67. Richerson P. J., Boyd R., The evolution of human ultra-sociality. Indoctrinability, Ideol. Warf. Evol. Perspect. 71–95 (1998).

- 68. Burton J. W., Stein M., Jensen T. B., A systematic review of algorithm aversion in augmented decision making. J. Behav. Decis. Mak. 33, 220–239 (2020).

- 69. Karataş M., Cutright K. M., Thinking about God increases acceptance of artificial intelligence in decision making. Open Science Framework. 10.17605/OSF.IO/FDH4M. Deposited 23 May 2023.

- 70. Watson D., Clark L. A., Tellegen A., Development and validation of brief measures of positive and negative affect: The PANAS scales. J. Pers. Soc. Psychol. 54, 1063–1070 (1988).

- 71. Paulhus D. L., Carey J. M., The FAD–Plus: Measuring lay beliefs regarding free will and related constructs. J. Pers. Assess. 93, 96–104 (2011).

Associated Data

Supplementary Materials

Appendix 01 (PDF)

Data Availability Statement

Anonymized data and preregistrations for studies 1 to 5 are publicly available via the Open Science Framework (https://doi.org/10.17605/OSF.IO/FDH4M) (69). Data for the Global Consumer Survey will be available upon request. Statista will provide a purpose-limited data transfer agreement for researchers who request the data. Recipients will receive a free data set with selected variables, provided they agree to use it only for the well-defined purpose of reviewing the existing study and not to address new questions. Data and materials from all studies (except for the Global Consumer Survey) have been deposited in the Open Science Framework (https://doi.org/10.17605/OSF.IO/FDH4M).