Abstract

Exploiting the random assignment of Medicaid beneficiaries to managed care plans, we find substantial plan-specific spending effects despite plans having identical cost sharing. Enrollment in the lowest-spending plan reduces spending by at least 25%—primarily through quantity reductions—relative to enrollment in the highest-spending plan. Rather than reducing “wasteful” spending, lower-spending plans broadly reduce medical service provision—including the provision of low-cost, high-value care—and worsen beneficiary satisfaction and health. Consumer demand follows spending: a 10 percent increase in plan-specific spending is associated with a 40 percent increase in market share. These facts have implications for the government’s contracting problem and program cost growth.

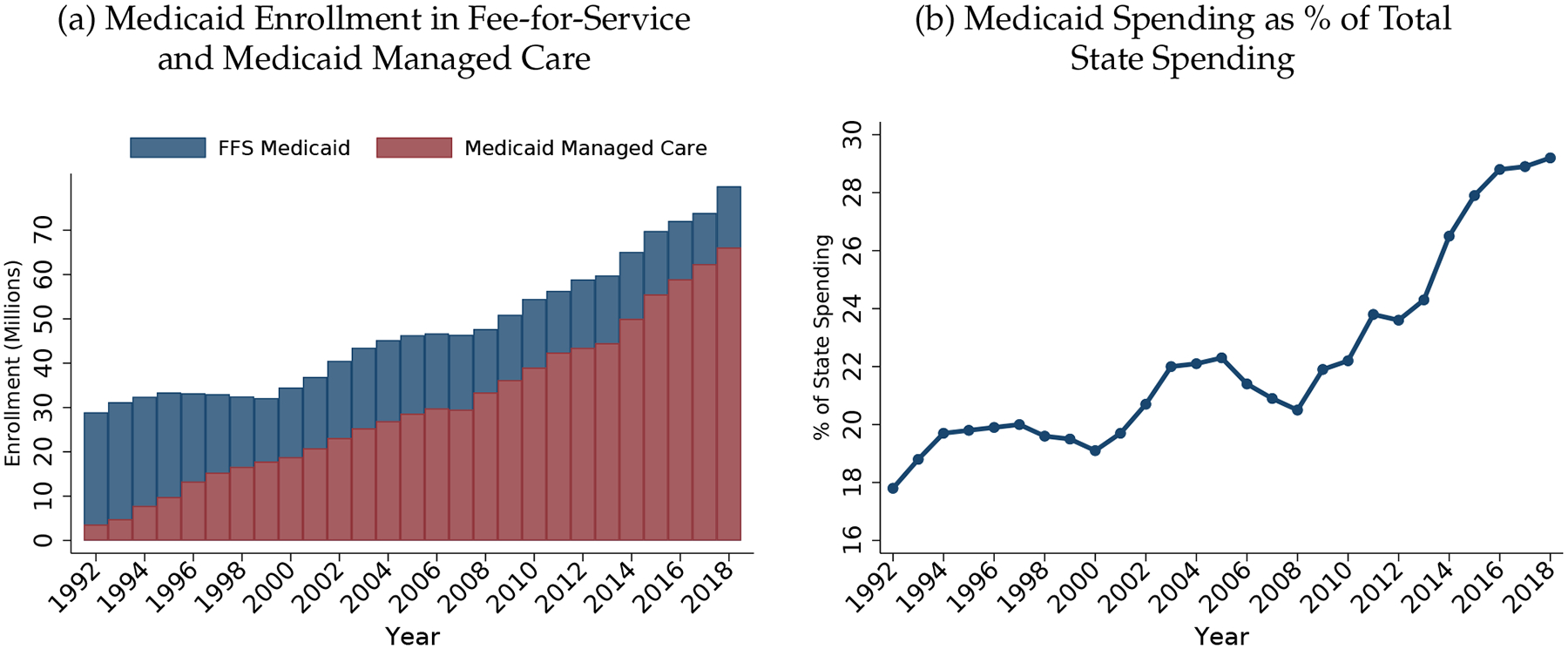

1. Introduction

Private managed care plans are the dominant form of healthcare delivery in the United States, including in publicly funded programs (Gruber, 2017). Although there is growing evidence on the roles of hospitals (Doyle et al., 2015; Hull, 2020), nursing homes (Einav, Finkelstein and Mahoney, 2022), and individual physicians (Kwok, 2019) in determining the allocation of healthcare goods and services, evidence on the impact of health plans is less complete. Several studies have documented the effects of demand-side cost sharing tools such as deductibles and copays.1 But a modern health plan consists of much more than just a schedule of consumer-facing prices, and relatively little is known about the extent to which plans can and do use other tools to influence healthcare consumption, clinical quality, and satisfaction. There is especially little evidence regarding such plan effects in the largest health insurance program in the United States—Medicaid. In addition to being an important program in its own right, Medicaid is an ideal setting to study how plans use managed care tools to influence the allocation of healthcare because cost-sharing is generally prohibited, so beneficiaries choose between managed care plans differentiated only in their supply-side features (provider networks, utilization management rules, etc.).2

In this paper, we examine three interrelated questions, drawing on evidence from Medicaid. In the first part, we ask whether private managed care plans can substantially affect patient healthcare spending (rather than merely attract high- or low-spending patients) without exposing consumers to cost-sharing. Second, we assess how spending reductions are achieved by managed care plans—and what trade-offs the savings entail. And, third, we ask whether competitive forces and consumer choice allocate beneficiaries to plans that efficiently constrain healthcare spending.

To investigate, we leverage the random assignment of nearly 70,000 beneficiaries to Medicaid managed care (MMC) plans from 2008 to 2012. The setting for our natural experiment is New York City, the second-largest MMC market in the United States, where ten plans competed for enrollees during our study period. As in many state Medicaid programs, beneficiaries in New York who did not actively choose a plan within a designated choice period were randomly assigned to one (a process known as “auto-assignment”), allowing us to estimate causal plan differences in healthcare spending and patient outcomes in an instrumental variables (IV) framework. The key identification challenge we overcome, the endogenous sorting of beneficiaries across plans (see, e.g., Geruso and Layton, 2017), parallels the difficulty of overcoming selection bias in other contexts inside and outside of healthcare, such as estimating physician effects (Doyle, Ewer and Wagner, 2010); hospital effects (Doyle et al., 2015; Hull, 2020); neighborhood effects (Finkelstein, Gentzkow and Williams, 2016, 2019; Chetty, Hendren and Katz, 2016; Chetty and Hendren, 2018a,b); and teacher and school effects (Chetty, Friedman and Rockoff, 2014a,b; Angrist et al., 2016, 2017).

As our first main result, we document statistically and economically significant causal variation in spending across plans. If an individual enrolls in the lowest-spending plan in the market, she will generate about 25% less in healthcare spending than if the same individual enrolled in the highest-spending plan in the market.3 This finding is, in itself, a striking new fact. To put this result in context, a 25% difference in total mean spending was close to the difference in the RAND health insurance experiment between the 0% and the 95% coinsurance arms (Manning et al., 1987). These results reveal that (at least some) insurers can significantly constrain healthcare spending, even in the absence of any demand-side cost-sharing (deductibles, coinsurance, and copayments). Comparing our IV estimates of plan spending effects based on random assignment to risk-adjusted observational measures reveals that they are correlated, but the risk-adjusted measures tend to overstate the causal differences in spending across plans.4

If lower negotiated provider prices accounted for the savings in low-spending plans, then spending reductions could have minimal effects on consumer well-being (being instead a transfer from providers to plans and ultimately to the public program, as we discuss below). However, we find that unlike in fully private health insurance markets (Cutler, McClellan and Newhouse, 2000; Gruber and McKnight, 2016; Cooper et al., 2019), differences in provider prices do not explain the differences in healthcare spending across plans in our publicly-funded insurance setting. Instead, lower-spending plans—disproportionately for-profit entities—constrain the quantity of healthcare goods and services received by program beneficiaries, particularly on the extensive margin. We find that enrolling in the lowest-spending plan reduces a beneficiary’s probability of receiving any care in a given month by about 5 percentage points (or 16 percent) relative to the highest spending plan.

The lower real resource use we document in low-spending plans suggests the possibility of a material trade-off, in which these plans restrict access to services, technologies, or providers valued by enrollees. In contrast, if lower-spending plans control cost by keeping beneficiaries healthy or better coordinating their care, consumers may be better off in these plans. (This is the positive case often made in favor of managed competition.) To assess this, we examine the types of services for which plans matter. We show that cost savings in the lower-spending plans are driven by broad-based reductions in care provided, including lower utilization of inpatient and outpatient care and prescription drugs.5 We further establish that lower-spending plans are not merely cutting low value services (e.g., imaging for an uncomplicated headache) and promoting high value services (e.g., statins to control cholesterol).6 Instead, managed care tools used by the lower-spending plans to constrain cost are blunt: Enrollees in the lower-spending plans used fewer of both low and high value services and were more likely to be hospitalized for avoidable reasons. An important implication of these findings is that—somewhat contrary to popular myth in the broader healthcare landscape—lower-spending plans are not achieving savings by keeping people healthy. They are restricting access to a broad set of services with potentially harmful health consequences.

Beneficiaries may or may not highly value the plan attributes reflected in these clinical measures. To build a more complete characterization of consumer well-being, we generate a novel revealed preference measure that uses the same identifying variation that identifies our plan effect estimates. The key insight is that beneficiaries’ willingness to continue to comply with the random assignment reveals important information—their plan preferences post-assignment. While imperfect compliance poses no problem for identification in our IV framework, it does create an opportunity for identifying revealed preference. Using our measure of experienced utility, we show that lower-spending plans are significantly more likely than higher-spending plans (71%) to lose auto-assignees due to noncompliance and that lower-spending plans (especially the three for-profits) are less likely to attract enrollees making active choices, including noncomplying enrollees switching away from their plan of assignment. This suggests a real trade-off between spending and beneficiary satisfaction, a supply-side analog to the trade-off between risk protection and moral hazard inherent in the use of demand-side cost-sharing.

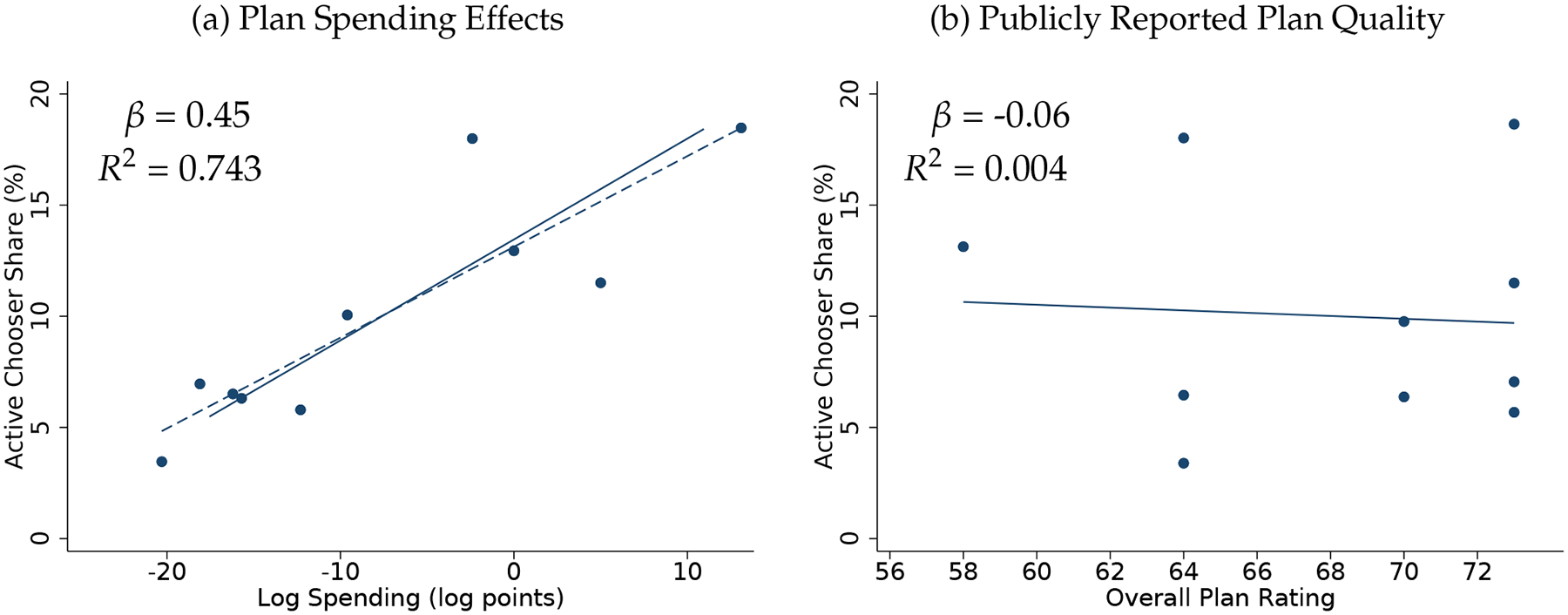

We conclude with an analysis of whether choice and competition in this setting lead beneficiaries to plans that effectively constrain spending, consistent with the positions of policymakers who advocate for the transition to private provision. What matters for the larger question of whether managed care can reduce spending in aggregate is the interaction of plan spending effects and enrollment flows among the overall population, including the active choosers not used in our IV analysis. There are many reasons to doubt that enrollment flows necessarily follow clinical measures of plan quality, given the type of choice frictions and imperfections often documented in this domain (e.g., Handel and Kolstad, 2015; Abaluck et al., 2021). Additionally, in Medicaid there are reasons to doubt that beneficiaries will flock to more efficient plans that are able to constrain spending, as plans have limited ability to pass savings back to beneficiaries in forms that beneficiaries value most, such as cash via lower premiums (as there are no premiums) and additional supplemental benefits (which are typically not allowed).7 This differs, for example, from managed competition in Medicare (Song, Landrum and Chernew, 2013; Duggan, Starc and Vabson, 2016; Cabral, Geruso and Mahoney, 2018; Curto et al., 2021). For these reasons, it is unclear ex ante what types of plans beneficiaries will prefer and thus what types of plans this market will reward.

We study this question by observing beneficiaries making active plan choices. We find that demand follows spending. Health plans with 10% higher spending on healthcare among the randomly-assigned enrollees have a 4.1 percentage point (41 percent) higher market share among enrollees making active choices. We further show that this pattern of demand following spending holds when examining both the origin and destination plans among auto-assignees who switch, when examining the initial choices of active chooser beneficiaries, and when examining the subsequent choices of active chooser beneficiaries who switch from their initial choice. Plan choices do not align with publicly-reported plan quality ratings, the one piece of information about plans provided to beneficiaries by the state at the time of choice. Instead, demand seems primarily tied to the ability to use care, and thus to higher levels of healthcare spending.
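The percentage-point and percent versions of this demand response can be reconciled with one line of arithmetic. The sketch below assumes the percent change is expressed relative to a baseline share $\bar{s}$, a symbol introduced here for illustration:

$$
\frac{4.1\ \text{pp}}{\bar{s}} = 0.41 \quad\Longrightarrow\quad \bar{s} = \frac{4.1\ \text{pp}}{0.41} = 10\ \text{pp}.
$$

A baseline of 10 percentage points equals the average market share in a market with ten plans, which matches the number of plans in the setting.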

Our results also imply that a state’s choice of which managed care plans to contract with is not an innocuous one. In our setting, if the state removed the four highest spending plans from the market via a more managed procurement process, spending would decline by $1.4 billion per year or 10% of total NY Medicaid spending on MMC in NYC. The trade-off for that procurement decision would be declines in utilization/access, beneficiary satisfaction, and beneficiary health outcomes (as our IV estimation documents).

This paper contributes to a nascent literature on the effects of health plans in settings where plans differ on more than cost-sharing parameters. This complements contemporaneous research on Medicare Advantage by Abaluck et al. (2020), Medicaid managed care in South Carolina by Garthwaite and Notowidigdo (2019) and in Louisiana by Wallace et al. (2022), and health plans serving the non-elderly, non-Medicaid population by Handel et al. (2019).8 This paper is also closely related to Wallace (2020), which uses the same random assignment in New York we use here, combined with within-plan geographic variation, to study how narrow provider networks affect beneficiary outcomes.

Our work also contributes to the literature on optimal insurance design in the presence of moral hazard. We provide new evidence on how an under-studied set of health plan features (those not related to cost-sharing) constrain moral hazard, adding to a smaller recent literature concerned with these features (see, e.g., Curto et al., 2017; Layton et al., 2019). Consistent with Garthwaite and Notowidigdo (2019), we find substantial causal heterogeneity across plans in spending and utilization that arises without any differences in consumer cost-sharing exposure. Thus, significantly constraining healthcare spending—with effects larger than what a high deductible has been shown to accomplish—does not require exposing consumers to out of pocket spending. In this way managed care circumvents the classic trade-off between financial risk protection and moral hazard noted by Zeckhauser (1970) and Pauly (1974).

Our findings also complement and extend an important literature dating back to the RAND health insurance experiment (Manning et al., 1987) that documents how consumer prices impact healthcare utilization. In RAND and the studies that have followed, patient cost-sharing has proven to be a blunt instrument, reducing the use of low- and high-value services alike (Brot-Goldberg et al., 2017). These findings sparked interest in whether managed care tools could better target inefficient utilization and manage the care of high-cost patients responsible for the majority of spending. But our results, along with prior work studying managed care in Medicare (Curto et al., 2017), indicate that supply-side tools exhibit many of the same features and limitations as demand-side tools. They lead to broad reductions in utilization, limiting both high- and low-value care rather than targeting “waste.”9 Our results do not rule out the possibility that managed care tools could be used to efficiently ration and target healthcare products or services, but they do provide a well-identified and important data point on the “bluntness” of supply-side restrictions in practice.

The rest of the article proceeds as follows. Section 2 describes our empirical setting and data. Section 3 presents our empirical framework. Section 4 presents our main plan effect estimates for healthcare spending. In Section 5 we decompose the plan spending effects into price and quantity, and assess their correlation with causal estimates of plan effects on clinical quality and consumer satisfaction. Section 6 discusses the implications of our results for the economics of Medicaid managed care. Section 7 concludes.

2. Data and Setting

2.1. Medicaid Managed Care in New York

New York State is similar to the broader US in its reliance on private managed care organizations (MCOs) to deliver Medicaid benefits to the majority of its Medicaid beneficiaries.10 New York is typical in that Medicaid beneficiaries may choose plans from a range of carriers that include national for-profits, local for-profits, and local non-profits, though we are not permitted to identify specific plans in our analysis. We focus on the five counties comprising New York City, where enrollment in managed care is mandatory and which together contain about two-thirds of the state’s Medicaid population. Restricting attention to a single large city allows us to identify differences across managed care plans operating in the same healthcare market.

Plans’ incentives for cost control and patient satisfaction were determined by the combination of the contract structure and the institutional feature that all plans would receive some enrollees through the auto-assignment process regardless of patient satisfaction or quality (above the state’s minimum threshold). Plans received a monthly capitation payment for each individual enrolled in the plan in a given month. At the beginning of the study period, these payments were plan-specific and were based on each plan’s spending patterns in prior years. By the end of the period, payments were set at the market level (i.e., made uniform across plans) and risk-adjusted according to each enrollee’s clinical conditions. Thus, throughout the study period, plans were the residual claimants on any healthcare spending reductions in a given plan-year, though the incentive to constrain spending was stronger later in the study period because of the dynamic incentives involved in rate-setting.11

In terms of attracting and retaining enrollees, plans may have had asymmetric strategies enabled by the presence of auto-assignment and enrollment inertia in this context. Below, we show that the pattern of enrollment and spending across plans would be consistent with some plans pursuing a high-margin, low-volume strategy and others pursuing a low-margin, high-volume strategy.12

2.2. Administrative Data and Outcomes

We obtained detailed administrative data from the New York State Department of Health (NYSDOH) for the non-elderly New York Medicaid population from 2008 to 2012 (New York State Department of Health, 2008–2012). Critically, the enrollment data include an indicator for whether a beneficiary made an active plan choice or was auto-assigned, and, for auto-assignees, the plan of assignment. Monthly plan enrollment data allow us to observe whether beneficiaries remained in their assigned plans. We describe auto-assignment (our identifying variation) in the next section.

The claims data used to assess plan impacts on healthcare spending include information on providers, transaction prices, procedures, and quantities. All managed care plans are required to submit standardized encounter data for the services they provide, and the NYSDOH has linked these data to their own administrative records for claims paid directly by the state through the FFS program. Thus, the assembled data (at the enrollee-by-encounter level) contain beneficiary-level demographic and enrollment data, plan-reported claims-level data for each beneficiary while in an MCO, NYSDOH-generated claims-level data for FFS services prior to MCO enrollment, and NYSDOH-generated claims-level data for FFS services carved out of MCO responsibility during MCO enrollment.

In principle, the quality of managed care encounter data reported by MCOs may vary across markets and across plans within a market. For example, nationally aggregated Medicaid managed care encounter data that are filtered through the Medicaid Analytic eXtract (MAX) are known to have quality problems for some states (though not New York in our time period; see Byrd, Dodd et al., 2015) and may discard information that is idiosyncratic to a particular state or time period. Our data, by contrast, come directly from the NYSDOH, and New York during our sample period is a high-quality outlier in terms of MCO claims validation (Lewin Group, 2012).

Using these data, we construct several beneficiary-month level outcomes:

Healthcare use, prices, and spending.

We observe all services paid for by the managed care plans and by fee-for-service Medicaid. Most beneficiaries spend a few months enrolled in the FFS program prior to choosing or being assigned to a managed care plan, allowing us to observe utilization under a common fee-for-service regime prior to randomization. This enables powerful balance tests on a variety of baseline characteristics. When we report total enrollee spending in managed care, we add together the components paid by the MCO plan as well as the services carved out from managed care financial responsibility and paid for via FFS by the state.

Healthcare quality.

We measure healthcare quality by adapting access measures developed by the Secretary of Health and Human Services (HHS) for the adult Medicaid population. We determined whether beneficiaries complied with recommended preventive care, measured as the frequency of flu vaccination for adults ages 18 to 64 as well as the number of breast cancer screenings, cervical cancer screenings, and chlamydia screenings in women. We also examined the frequency of avoidable hospitalizations (a surrogate health outcome), operationalized as admission rates for four conditions: diabetes short-term complications, chronic obstructive pulmonary disease (COPD) or asthma in older adults, heart failure, and asthma in younger adults. We use additional measures of potentially high- and low-value care that follow recent contributions in the literature (Schwartz et al., 2014; Brot-Goldberg et al., 2017). For example, as low-value care measures we assess the likelihood that an enrollee uses the emergency department for avoidable reasons (Medi-Cal Managed Care Division, 2012) and the hospital all-cause readmission rate (National Committee for Quality Assurance, NCQA) (Washington Health Alliance, 2015). We use General Equivalence Mappings from ICD-10-CM diagnosis codes to ICD-9 codes to assign low-value status to procedures in the data (CMS, 2018). We also use the IBM Micromedex RED BOOK to classify drugs into therapeutic classes (IBM, 2020).

Willingness-to-Stay.

Because Medicaid enrollees do not pay a premium (price) for enrolling with any of the plans in the market, we cannot measure beneficiary willingness-to-pay for one plan versus another. Instead, we assume beneficiaries’ preferences are revealed through their subsequent plan choices—voting with their feet. While switching rates are low, enrollees are not locked-in to their assigned plans: For the first three months after assignment they may switch for any reason, after which they can switch for “good cause.” As we discuss in Section 5.2, we measure willingness-to-stay as the likelihood that a randomly-assigned enrollee remains in her assigned plan. We also examine which plans auto-assignees switch into, once they make such a switch.

2.3. Auto-assignment to Plans

For our study period (2008–2012), beneficiaries in New York City had 30, 60, or 90 days to actively choose an MCO. In excess of 90 percent of beneficiaries did so. Our study design focuses on the beneficiaries who did not choose within the required time frame and were automatically assigned to a plan, a policy known as “auto-assignment.” These auto-assigned enrollees were randomly allocated across eligible plans with equal probability via a round robin approach:13 Each month, a person in the New York State Department of Health would start from a roster of Medicaid enrollees needing auto-assignment. They would then make assignments to plans in groups of about 20 beneficiaries, using an assignment “wheel.” Each group would be assigned to the qualifying plan appearing next on the wheel; then the wheel would cycle until all enrollees were assigned. In a typical month, more than 1,000 enrollees would be assigned in this manner. The following month, assignment would begin again from wherever the wheel had stopped in the prior month.
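The assignment wheel described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the state's actual procedure: the plan labels, roster IDs, and the function name `round_robin_assign` are hypothetical, the group size of 20 follows the approximate figure in the text, and plan eligibility rules are not modeled.

```python
def round_robin_assign(roster, plans, start=0, group_size=20):
    """Assign a roster to plans in consecutive groups, cycling through the plans.

    Returns (assignments, next_start). next_start is the wheel position at
    which assignment would resume in the following month.
    """
    assignments = {}
    position = start
    for offset in range(0, len(roster), group_size):
        plan = plans[position % len(plans)]  # plan appearing next on the wheel
        for beneficiary in roster[offset:offset + group_size]:
            assignments[beneficiary] = plan
        position += 1  # advance the wheel by one plan per group
    return assignments, position % len(plans)


# Hypothetical example: four plans, two monthly rosters.
plans = ["A", "B", "C", "D"]
month1 = [f"m1-{i}" for i in range(50)]  # groups of 20, 20, and 10
month2 = [f"m2-{i}" for i in range(25)]  # groups of 20 and 5

assigned1, wheel_after_m1 = round_robin_assign(month1, plans)
assigned2, wheel_after_m2 = round_robin_assign(month2, plans, start=wheel_after_m1)
```

In this toy run the first month uses plans A, B, and C, so the second month begins at plan D and then wraps around to plan A, mirroring the description that assignment resumes from wherever the wheel stopped.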

This was not a randomized controlled trial, and we had no involvement in the randomization process. The quasi-random assignment to plans is a standard part of NY Medicaid administration. We leverage the fact that this policy causes plan choice to be orthogonal to individual characteristics for the subset of the population subject to auto-assignment. Because beneficiaries can opt out of their assigned plans and switch to a different plan, we use an IV research design to address noncompliance. We use assignment to a plan as an instrument for enrollment in that plan. As we show below in Section 3, auto-assignment is a powerful instrument for enrollment, and balance tests (in which data on pre-assignment healthcare utilization allow us to explore correlation between assignment and predetermined characteristics) show no evidence against the assumption that assignment was as good as random.
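To make the logic of a balance test concrete, the sketch below computes a one-way F-statistic for whether a predetermined characteristic differs across assigned plans. It is a simplified illustration, not the paper's specification: it omits the assignment-cohort fixed effects, uses invented data, and the function name `balance_f_stat` is hypothetical. Without other controls, this statistic coincides with the joint F-test on plan indicators in a regression of the characteristic on those indicators.

```python
def balance_f_stat(values_by_plan):
    """One-way F-statistic: between-plan variation relative to within-plan variation."""
    groups = [g for g in values_by_plan.values() if g]
    n = sum(len(g) for g in groups)  # total observations
    k = len(groups)                  # number of plans
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    return (between / (k - 1)) / (within / (n - k))


# Invented pre-period values: identical across plans (as under random assignment)
# versus sorted across plans (as under selection).
balanced = {"A": [1, 2, 3], "B": [1, 2, 3], "C": [1, 2, 3]}
sorted_by_plan = {"A": [1, 2, 3], "B": [4, 5, 6], "C": [7, 8, 9]}

f_balanced = balance_f_stat(balanced)
f_sorted = balance_f_stat(sorted_by_plan)
```

A statistic near zero is what random assignment would tend to produce, while a large value signals that enrollee characteristics differ systematically across plans.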

The limited non-compliance that does occur is driven by the fact that after auto-assignment each beneficiary had three months to switch plans without cause before a nine-month lock-in period began.14 This is the primary explanation for imperfect compliance, which generates a first stage effect of assignment on enrollment smaller than 1.0, but poses no problem for the maintained exogeneity assumption. Additional institutional details regarding auto-assignment are available in Appendix A and are documented in Wallace (2020), which examines the effect of Medicaid managed care provider networks in New York.

We construct our “auto-assignee sample” with the following restrictions. First, we restrict the sample to beneficiaries aged 18 to 64. We exclude individuals aged 65 and older because they are excluded from managed care. We remove beneficiaries below age 18 because children are often non-randomly auto-assigned to their parents’ plans. Second, we exclude Medicaid beneficiaries with family members in a Medicaid managed care plan at the time of auto-assignment and beneficiaries who were enrolled in a managed care plan in the year prior to assignment. Plan assignments for these beneficiaries are automatic, but not random.15 Third, we restrict to beneficiaries with at least six months of post-assignment enrollment in Medicaid to allow us to observe plan effects on spending, utilization, and quality outcomes.

In primary analyses we restrict attention to the initial six months post-assignment. Enrollment is high and stable until six months and then drops off precipitously (see Appendix Figure A2). This is due to high levels of churn in the Medicaid program combined with a NY regulation guaranteeing Medicaid eligibility for six months following the beginning of an MMC enrollment spell. We show robustness of our main results to expanding the sample to include additional months in Section 4.2. The expanded-sample results are nearly identical.

These sample restrictions, further detailed in Appendix Table A1, leave us with 65,595 auto-assigned beneficiaries in five boroughs and ten plans. The final “auto-assignee” sample includes 258 month × county of enrollment cohorts (the unit of randomization). Table 1 presents summary statistics on this sample.16 In some analyses, we compare outcomes between auto-assigned beneficiaries and those who made active plan choices. To do so, we construct a 10% random sample of “active-choosers” (for computational feasibility), imposing the same basic sample restrictions we used for the auto-assigned beneficiaries. However, when testing for balance across plans on predetermined characteristics, we construct a random subsample of this 10% active-chooser sample that is equal in size to the auto-assignee sample to equalize statistical power across the two groups.

Table 1: Summary Statistics

| | Mean (1) | Std. Dev. (2) | Observations (3) |
|---|---|---|---|
| Demographics | | | |
| Female (%) | 40.1 | 49.0 | 393,570 |
| White (%) | 27.2 | 44.5 | 393,570 |
| Black (%) | 51.8 | 50.0 | 393,570 |
| Age (years) | 35.8 | 12.7 | 393,570 |
| Healthcare Spending, $ per enrollee-month | | | |
| Total | 510 | 2,877 | 393,570 |
| Office Visits | 21 | 165 | 393,570 |
| Clinic | 52 | 280 | 393,570 |
| Inpatient | 220 | 2,546 | 393,570 |
| Outpatient | 41 | 302 | 393,570 |
| Emergency Dept. | 16 | 100 | 393,570 |
| Pharmacy | 75 | 454 | 393,570 |
| All Other | 84 | 621 | 393,570 |
| Enrollees with Any Spending (%) | 34.87 | 47.65 | 393,570 |
| Spending Conditional on Any ($) | 1,462 | 4,727 | 137,222 |
| Drug Days Supply, days per enrollee-month | | | |
| Diabetes | 1.11 | 8.69 | 393,570 |
| Statins | 0.83 | 5.79 | 393,570 |
| Anti-Depressants | 1.31 | 7.80 | 393,570 |
| Anti-Psychotics | 1.49 | 8.64 | 393,570 |
| Anti-Hypertension | 1.32 | 7.91 | 393,570 |
| Anti-Stroke | 0.10 | 2.14 | 393,570 |
| Asthma | 0.46 | 4.11 | 393,570 |
| Contraceptives | 0.25 | 3.28 | 393,570 |
| High-Value Care, per 1,000 enrollee-months | | | |
| HbA1c Testing | 5.49 | 73.91 | 393,570 |
| Breast Cancer Screening | 1.47 | 38.29 | 393,570 |
| Cervical Cancer Screening | 7.29 | 85.05 | 393,570 |
| Chlamydia Screening | 6.61 | 81.01 | 393,570 |
| Low-Value Care, per 1,000 enrollee-months | | | |
| Abdomen CT | 0.33 | 18.17 | 393,570 |
| Imaging and Lab | 143.88 | 350.97 | 393,570 |
| Head Imaging for Uncomp. HA | 1.90 | 43.52 | 393,570 |
| Thorax CT | 0.09 | 9.43 | 393,570 |
| Avoidable Hospitalizations | 5.44 | 73.56 | 393,570 |
| All Cause Readmission | 0.29 | 18.59 | 393,570 |

Note: Table reports summary statistics for the auto-assignee sample (used in the main IV analysis) over the first 6 months post-assignment. Observations are at the enrollee-month level. See Section 2.3 for details on the auto-assignee sample restriction and Appendix B for detailed descriptions of the low- and high-value care measures.
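The entries in Table 1 can be cross-checked against one another with simple arithmetic. The relations below are a reader's consistency check built only from the reported figures, not a calculation taken from the paper:

$$
\begin{aligned}
\text{Enrollee-months} &= 65{,}595 \times 6 = 393{,}570,\\
\text{Share with any spending} &= \frac{137{,}222}{393{,}570} \approx 0.3487,\\
\text{Mean total spending} &\approx 0.3487 \times \$1{,}462 \approx \$510.
\end{aligned}
$$

The first line matches the observation count in column (3), the second matches the reported 34.87 percent of enrollee-months with any spending, and the third recovers the unconditional monthly mean from the conditional mean.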

3. Empirical Framework and First-Stage

3.1. Econometric Model

Our main empirical goal in this paper is to measure the causal effect of enrollment in health plan j ∈ J on outcomes at the beneficiary (i) or beneficiary-time (it) level. We follow Finkelstein, Gentzkow and Williams (2016) in modeling a data generating process for healthcare spending in which log spending (Y) is determined by a plan component (γ), a person-level component (ξ), time-varying observables (X), and a mean zero shock (ϵ).17 To recover plan effects, γj, we estimate regressions of the following form, combining the individual component, ξ, and the error, ϵ, into a compound error term μ:

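$$
Y_{it} = \sum_{j=1}^{9} \gamma_j \,\mathrm{Plan}_{jit} + \psi_{c(i)} + X_{it}\beta + \mu_{it} \tag{1}
$$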

In these regressions, an observation is a beneficiary-month.18 The subscript t denotes the month × year of the observation. The subscript c(i) denotes an assignment cohort of beneficiary i—fixed for each individual—defined by the county × month × year in which the beneficiary was originally assigned to a managed care plan. The regressors of interest are indicators for enrollment in month t in each of the nine plans competing in the New York City market (with the tenth plan as the omitted category), denoted Plan_jit and equal to 1 if beneficiary i is enrolled in plan j in month t and zero otherwise. Assignment cohort fixed effects ψc(i) (e.g., an indicator for being assigned in June 2011 in the Bronx) are included in all specifications, as this is the unit of randomization. The X vector of individual controls includes indicators for: sex, race (5 groups), deciles of spending in FFS prior to MMC enrollment, and each individual year of age (18 to 64).

To address the endogeneity of beneficiaries sorting across plans—correlation between plan choice and μit(≡ ξi + ϵit)—we exploit random assignment, which is cross-sectional within an assignment cohort. We restrict to individuals who were randomly auto-assigned to plans and instrument for plan enrollment indicators with plan assignment indicators, denoted Assigned_ji and equal to 1 if the beneficiary was assigned to plan j and 0 otherwise. There are ten plans that receive auto-assigned enrollees during our time period, requiring nine first-stage regressions (with plan 10 omitted):

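$$
\mathrm{Plan}_{kit} = \sum_{j=1}^{9} \lambda_{kj}\,\mathrm{Assigned}_{ji} + \psi^{k}_{c(i)} + X_{it}\beta^{k} + \eta_{kit}, \qquad k = 1, \dots, 9 \tag{2}
$$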

We use the nine first-stage regressions to predict enrollment in each plan. For each auto-assigned enrollee, only one of the plan assignment variables will be equal to one. The coefficient λkj captures the probability that an individual auto-assigned to plan j will be enrolled in plan k during the observation month, relative to the omitted plan. For each first-stage regression, a λkj equal to one when k = j and equal to zero when k ≠ j would indicate perfect compliance. The second stage estimating equation uses the vector of predicted enrollment values ($\widehat{\mathrm{Plan}}_{jit}$) from the first-stage regressions:

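$$
Y_{it} = \sum_{j=1}^{9} \gamma_j \,\widehat{\mathrm{Plan}}_{jit} + \psi_{c(i)} + X_{it}\beta + \mu_{it} \tag{3}
$$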

This IV strategy results in estimates of the plan effects, γj, that use only variation in enrollment due to quasi-random auto-assignment.
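The mechanics of instrumenting enrollment with assignment can be illustrated in a stripped-down case with one plan compared against an omitted plan and no covariates. In that special case the two-stage estimate reduces to the Wald ratio of the reduced form to the first stage. The sketch below is an assumption-laden toy, not the paper's estimator: the data are invented, the effect size `TRUE_EFFECT` is chosen for illustration, and cohort fixed effects and controls are left out.

```python
def mean(values):
    return sum(values) / len(values)


def wald_iv(z, d, y):
    """IV estimate with one binary instrument z, enrollment d, and outcome y."""
    y_z1 = mean([yi for zi, yi in zip(z, y) if zi == 1])
    y_z0 = mean([yi for zi, yi in zip(z, y) if zi == 0])
    d_z1 = mean([di for zi, di in zip(z, d) if zi == 1])
    d_z0 = mean([di for zi, di in zip(z, d) if zi == 0])
    first_stage = d_z1 - d_z0    # effect of assignment on enrollment
    reduced_form = y_z1 - y_z0   # effect of assignment on the outcome
    return reduced_form / first_stage, first_stage


# Invented toy data: 10 people assigned to the plan (z = 1), 10 to the omitted plan.
# One assignee switches away, so enrollment d differs from assignment z.
TRUE_EFFECT = -0.25  # hypothetical plan effect on log spending
z = [1] * 10 + [0] * 10
d = [1] * 9 + [0] * 11
y = [6.0 + TRUE_EFFECT * di for di in d]

iv_estimate, first_stage = wald_iv(z, d, y)
assignment_effect = mean(y[:10]) - mean(y[10:])  # reduced form only
```

Here the raw effect of assignment understates the enrollment effect because one assignee did not comply, and dividing by the first stage of 0.9 rescales it to the effect of enrollment.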

For some analyses, it is useful to reduce the dimensionality of the problem by grouping together plans. The grouping aids with statistical power, as well as with tractability of certain comparisons. In this modified IV regression specification, the endogenous variables are indicators for enrollment in any plan in each set, and the instruments are indicators for assignment to any plan in each set. These estimating equations take the form:

| (4) |

where we have divided plans into three groups: low, medium, high, with medium being the omitted category. (We define the groupings below.) The corresponding first stage regressions are analogous to Equation 2.19
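A grouped second stage of this kind can be sketched as follows. The symbols $\widehat{Low}_{it}$ and $\widehat{High}_{it}$ (predicted enrollment in any low- or high-spending plan), along with $Y_{it}$, $\rho$, and $\nu$, are notation assumed here for illustration rather than taken from the original display.

$$
Y_{it} = \rho + \gamma_{Low}\, \widehat{Low}_{it} + \gamma_{High}\, \widehat{High}_{it} + \psi_{c(i)} + X_i \nu + \mu_{it}
$$

Because the medium group is omitted, $\gamma_{Low}$ measures the effect of enrollment in a low-spending plan relative to a medium-spending plan, and $\gamma_{High}$ does the same for the high-spending group.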

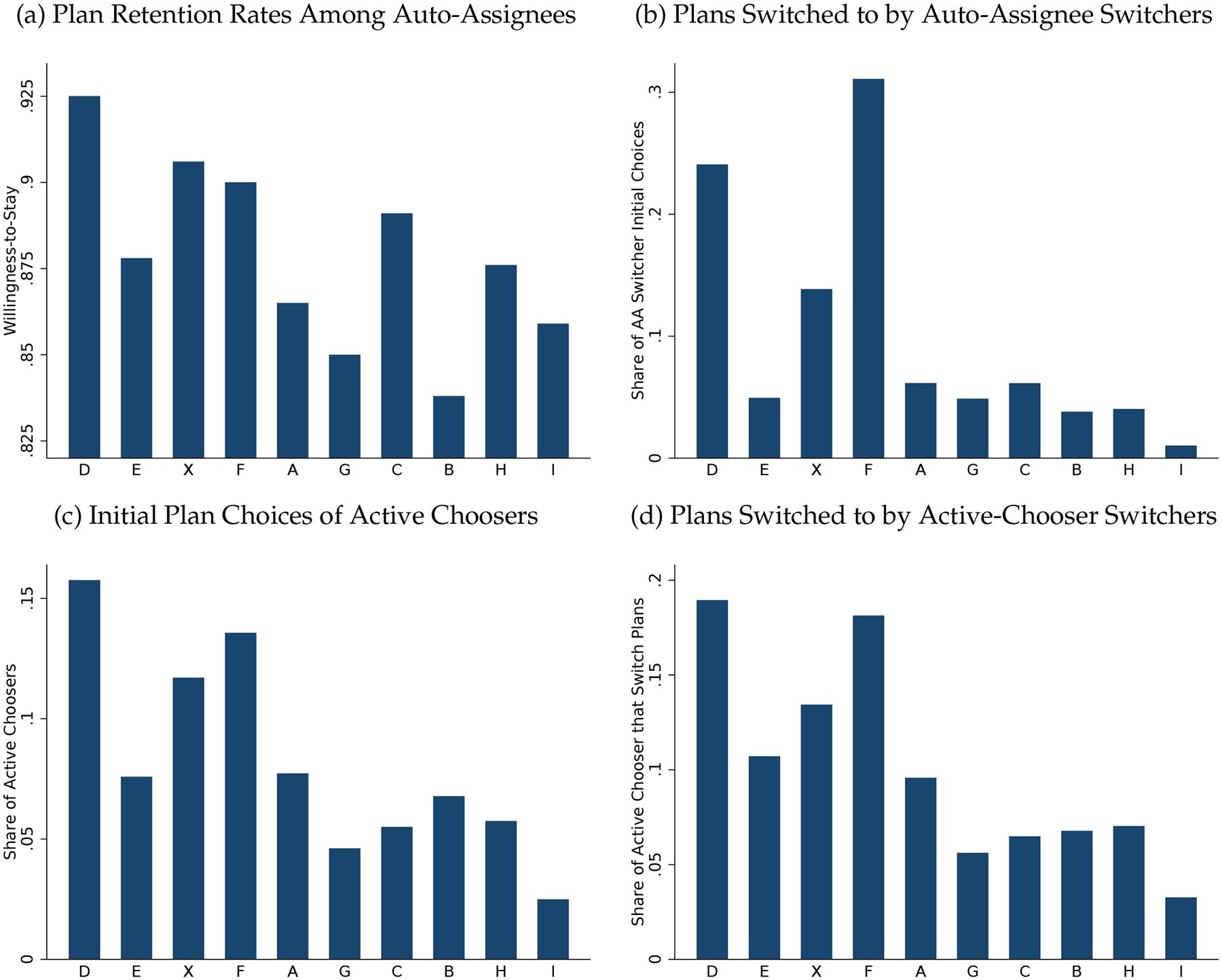

3.2. First-Stage and Instrument Validity

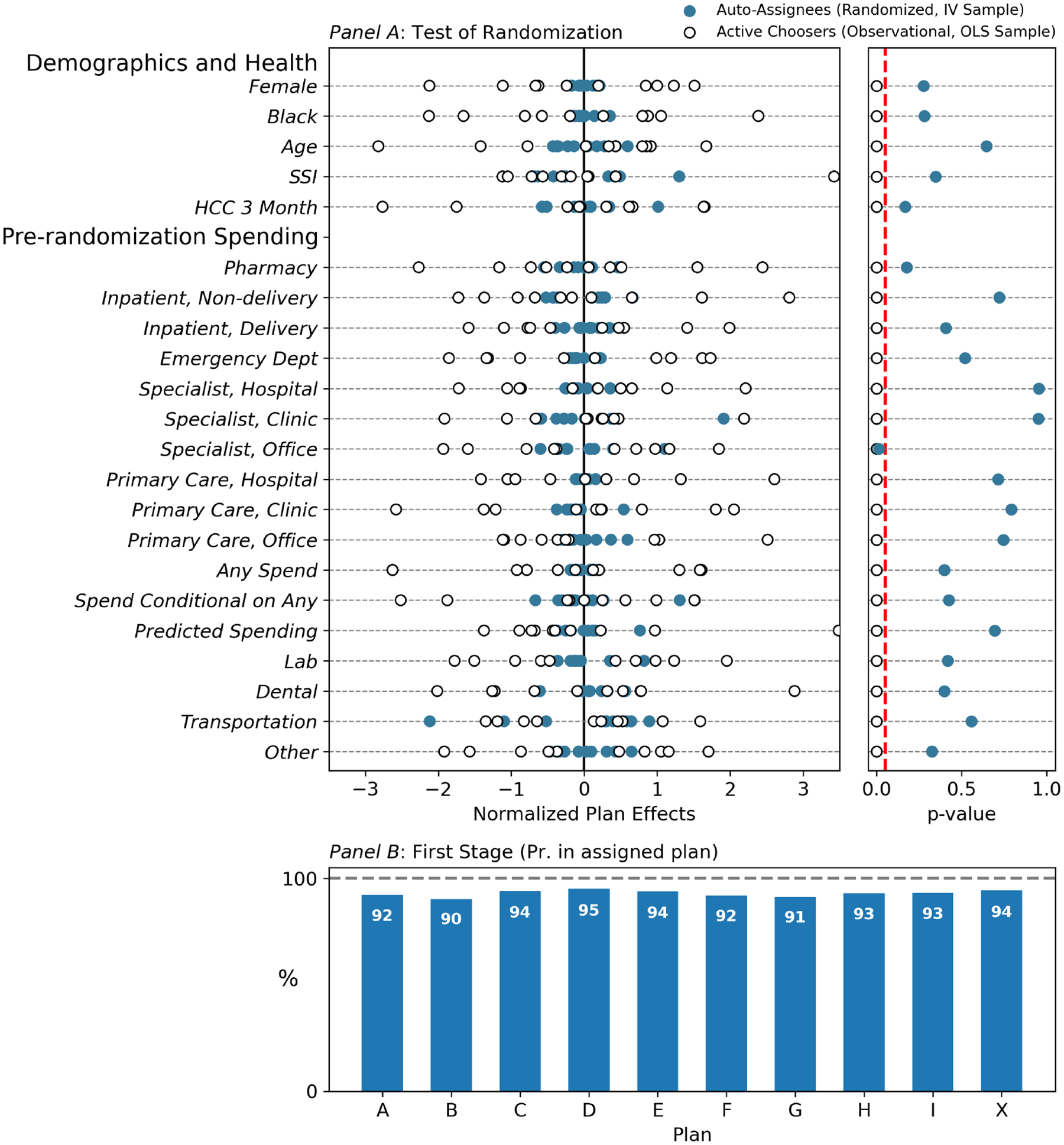

Panel (b) of Figure 2 plots λjj for each plan: roughly, the probability that a beneficiary who is auto-assigned to a plan is enrolled in that plan after assignment. For example, the estimate of λAA is 0.924, indicating that the probability of Plan A auto-assignees being observed in Plan A in each of the following six months is 0.924. Across all plans, beneficiaries spend on average more than ninety percent of follow-up beneficiary-months in their assigned plan. The high rate of compliance implies that the effects recovered by IV are unlikely to differ much from average treatment effects for the full auto-assignee sample. Table A3 lists all of the first-stage coefficient estimates, λkj. The overall first-stage F-statistic is reported in Table 2 and exceeds 7,000.

Figure 2:

First Stage and Instrument Balance on Predetermined Characteristics

Note: Figure displays a balance test for the randomization in panel (a) and first stage regression coefficients in panel (b). Pre-determined characteristics include demographics and healthcare utilization in FFS Medicaid prior to randomized auto-assignment to a managed care plan. Each enrollee spent a pre-period (often a few months, once retroactive enrollment is included) enrolled in the FFS program prior to choosing or being assigned to a managed care plan. For the balance test, two samples are used: the main IV analysis sample of auto-assignees (AA) and a same-sized random subsample of active choosers (AC) for comparison. On the left side of panel (a), each pre-determined characteristic is regressed on the set of indicators for the assigned plan (for auto-assignees) or for the chosen plan (for active choosers), and the plan effects are plotted. Separate regressions are run for the AA and AC groups, so that each horizontal line plots plan coefficients from two regressions. The plan effects are demeaned within the AA and AC groups separately, and then scaled by the same factor (the standard deviation of the combined set of demeaned plan effects). Hence, the scales (not displayed) differ for each dependent variable but are identical for the AA and AC regressions within a dependent variable. Tighter groupings of estimated plan coefficients indicate smaller differences across plans in the characteristics of enrollees. On the right side of panel (a), we show the p-values from F-tests that the plan effects in these regressions are jointly different from zero. Tabular versions of these results are in Table A4. Large p-values are consistent with random assignment. Small p-values indicate selection on observables. The vertical dashed line is at p=0.05. In panel (b), bar heights correspond to coefficients from the first stage regressions (Eq. 2), in which observations are enrollee-months, the coefficient plotted is on an indicator for assignment to plan j, and the dependent variable is enrollment in plan j. Bar heights can be interpreted as approximately the fraction of months auto-assignees remain in their plan of assignment. Table A3 reports all first stage coefficients.

Table 2:

Main Results: Plan Effects on Spending and Plan Switching

| Plan | (1) Number of Auto-Assignees (IV Sample) | (2) % of Active Choosers Selecting Plan | For-profit | (3) OLS: Log Spending | (4) OLS: Log Spending | (5) OLS: Log Spending, Weighted | (6) IV: Log Spending | (7) IV: Log Spending | (8) IV: Any Spending in Enrollee-Month? | (9) Willingness-to-Stay: Enrolled in Assigned Plan at 3 mos? | (10) Willingness-to-Stay: Enrolled in Assigned Plan at 6 mos? |
|---|---|---|---|---|---|---|---|---|---|---|---|
| D | 2,621 | 18.47 | | 0.165** (0.027) | −0.111** (0.023) | −0.064 (0.055) | 0.171** (0.050) | 0.130** (0.041) | 0.020** (0.007) | 0.014** (0.004) | 0.019** (0.005) |
| E | 6,763 | 11.51 | | 0.076* (0.033) | −0.084** (0.027) | −0.065 (0.055) | 0.058 (0.040) | 0.050 (0.033) | 0.007 (0.005) | −0.025** (0.003) | −0.028** (0.004) |
| X | 8,698 | 12.94 | | | | | | | | | |
| F | 8,057 | 17.99 | | 0.286** (0.030) | −0.126** (0.025) | −0.040 (0.048) | −0.011 (0.036) | −0.024 (0.031) | −0.007 (0.005) | −0.001 (0.003) | −0.006 (0.004) |
| A | 8,512 | 10.06 | | −0.265** (0.035) | −0.290** (0.029) | −0.276** (0.057) | −0.101* (0.042) | −0.097** (0.035) | −0.012* (0.005) | −0.029** (0.004) | −0.041** (0.004) |
| G | 8,444 | 5.79 | | −0.003 (0.038) | −0.225** (0.031) | −0.214** (0.068) | −0.134** (0.041) | −0.123** (0.034) | −0.021** (0.005) | −0.041** (0.004) | −0.056** (0.004) |
| C | 6,198 | 6.31 | | 0.066+ (0.037) | −0.142** (0.031) | −0.188** (0.067) | −0.166** (0.044) | −0.158** (0.036) | −0.023** (0.006) | −0.010* (0.004) | −0.015** (0.005) |
| B | 7,815 | 6.51 | ✓ | −0.551** (0.046) | −0.459** (0.035) | −0.394** (0.067) | −0.178** (0.042) | −0.162** (0.036) | −0.024** (0.006) | −0.047** (0.004) | −0.068** (0.004) |
| H | 7,066 | 6.96 | ✓ | −0.100* (0.042) | −0.125** (0.034) | −0.152* (0.070) | −0.158** (0.046) | −0.182** (0.038) | −0.024** (0.006) | −0.020** (0.004) | −0.030** (0.005) |
| I | 1,417 | 3.46 | ✓ | −0.522** (0.046) | −0.375** (0.040) | −0.423** (0.073) | −0.165+ (0.084) | −0.202** (0.069) | −0.036** (0.011) | −0.030** (0.006) | −0.047** (0.008) |
| Mean (spend displayed in dollars) | | | | 466.202 | 466.202 | 462.263 | 509.740 | 509.740 | 0.349 | 0.930 | 0.906 |
| County × Year × Month FEs | | | | X | X | X | X | X | X | X | X |
| Person-Level Controls | | | | | X | X | | X | X | X | X |
| First Stage F-Test P-Value | | | | | | | <0.001 | <0.001 | <0.001 | | |
| F-Test P-Value | | | | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 |
| Plan Effect SD | | | | 0.265 | 0.135 | 0.140 | 0.112 | 0.105 | 0.016 | 0.018 | 0.026 |
| Corrected SD | | | | 0.263 | 0.132 | 0.126 | 0.102 | 0.098 | 0.015 | 0.018 | 0.025 |
| Obs: Enrollees | | | | | | | | | | 65,595 | 65,595 |
| Obs: Enrollee × Months | | | | 592,692 | 592,692 | 392,026 | 393,570 | 393,570 | 393,570 | | |

Note: Table displays summary statistics and main results. Column 1 reports counts of auto-assignees. When aggregated over the study period, plans received different numbers of auto-assignees depending on whether the plans were offered in the county and eligible for auto-enrollees at the time of assignment (see Appendix A). Column 2 reports the percent of active choosers selecting each plan. Remaining columns report OLS or IV regression results, where dependent variables are indicated in the column headers. In columns 3–8, plan regressors correspond to the plan of current enrollment in the enrollee-month. For the IV regressions (columns 6–8), these are instrumented with plan of initial assignment. Kleibergen-Paap F statistics from the first stage are reported. See Table A3 for first stage coefficients. In columns 9 and 10, the dependent variable is an indicator for remaining in the auto-assigned plan at three and six months post-assignment, respectively. Observations are enrollee × months in columns 3 through 8 and enrollees in columns 9 and 10. OLS regressions include only active-choosers; see Table A24 for additional OLS results that pool the active chooser and auto-assignee (IV) samples. Person-level controls include: sex, 5 race categories, deciles of spending in FFS prior to MMC enrollment, and 47 age categories (single years from 18 to 64). All regressions control for county × year × month-of-assignment and the count of months since plan assignment/plan enrollment, both as saturated sets of indicators. Standard errors in parentheses are clustered at the county × year × month-of-assignment level. This is the level at which the randomization operates.

Significance: + p < 0.1, * p < 0.05, ** p < 0.01.

The statutory goal of the state Medicaid administrator was to randomly assign auto-assignees across the eligible plans. Panel (a) of Figure 2 presents a series of balance tests to assess the IV independence assumption to the extent possible, using information on predetermined characteristics like demographics, as well as pre-randomization medical expenditure. These characteristics are measured at the enrollee level rather than the enrollee-month level (collapsed to pre-assignment means for time-varying outcomes like monthly spending prior to assignment). To test for correlations between assignment and predetermined characteristics, each baseline characteristic is regressed on nine indicators for beneficiaries’ assigned plans (omitting one plan to prevent perfect collinearity). We perform this regression separately for auto-assignees and a random subsample of active-choosers of equal size to the auto-assignee sample to equalize statistical power across the two groups.

The two sides of panel (a) of Figure 2 offer different visualizations of the same underlying balance test regressions. On the left side, we plot the plan coefficients. Results from the active-chooser regressions are plotted as hollow circles and coefficients from the auto-assignee regressions are plotted as solid circles. To create a comparable scale across dependent variables, all coefficients here are normalized by the standard deviation of the combined set of demeaned plan effects. Importantly, within an outcome (row), a uniform normalization is applied to both the active chooser and auto-assignee samples, so that the spreads of plan effects can be compared. The larger spread apparent among the active chooser plan “effects” indicates that there is strong sorting to plans along predetermined enrollee characteristics among this group.

On the right side of panel (a) of Figure 2, we plot for each dependent variable the p-value from an F-test of whether the plan “effects” on predetermined characteristics are jointly different from zero, again separately for the active-chooser and auto-assignee samples.20 Successful random assignment would tend to generate large p-values, indicating no significant relationship between assigned plan and predetermined characteristics.

The results in the figure provide strong evidence of balance across plans for the auto-assignees, with plan effects tightly clustered around zero for all predetermined characteristics. The p-values exceed 0.05 for all but one characteristic. The test here is unusually strong: the panel nature of the data and the pre-assignment period during which we observe all healthcare utilization for all beneficiaries in the same fee-for-service program allow us to check for balance on exactly the type of healthcare utilization variables we examine as outcomes below, as opposed to merely a few demographic variables.

The analogous balance estimates for the active-choosers show that plan coefficients on predetermined characteristics are large, and each characteristic is predicted by plan choice with p < 0.05. The imbalance among a same-sized random subsample of active-choosers indicates that the lack of statistical imbalance among the auto-assignees is not due to noisy or uninformative observables. It also suggests that selection would be an important confounder in the absence of quasi-random assignment.
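The contrast between the two samples can be illustrated with a toy balance test. The sketch below is an assumption-laden simplification: it uses simulated data, three hypothetical plans, and a permutation p-value for a one-way F statistic in place of the regression-based F-tests with cohort fixed effects and clustered standard errors described above.

```python
import random


def f_statistic(values, labels):
    """One-way ANOVA F statistic: do group means of `values` differ across `labels`?"""
    groups = {}
    for v, g in zip(values, labels):
        groups.setdefault(g, []).append(v)
    n, k = len(values), len(groups)
    grand = sum(values) / n
    between = within = 0.0
    for vs in groups.values():
        m = sum(vs) / len(vs)
        between += len(vs) * (m - grand) ** 2
        within += sum((v - m) ** 2 for v in vs)
    return (between / (k - 1)) / (within / (n - k))


def permutation_p_value(values, labels, draws=500, seed=0):
    """Share of label shuffles whose F statistic is at least as large as the observed one."""
    rng = random.Random(seed)
    observed = f_statistic(values, labels)
    shuffled = list(labels)
    exceed = 0
    for _ in range(draws):
        rng.shuffle(shuffled)
        if f_statistic(values, shuffled) >= observed:
            exceed += 1
    return (exceed + 1) / (draws + 1)


rng = random.Random(1)
# Hypothetical predetermined characteristic, e.g. standardized pre-assignment spending.
baseline = [rng.gauss(0.0, 1.0) for _ in range(600)]
# Auto-assignees: plan is drawn at random, independent of the characteristic.
assigned = [rng.choice("ABC") for _ in baseline]
# Active choosers: plan choice depends on the characteristic (strong sorting, by construction).
chosen = ["A" if x < -0.5 else "C" if x > 0.5 else "B" for x in baseline]

p_auto = permutation_p_value(baseline, assigned)
p_active = permutation_p_value(baseline, chosen)
print("auto-assignee p-value:", round(p_auto, 3))
print("active-chooser p-value:", round(p_active, 3))
```

With sorting built into the simulated choices, the active-chooser p-value is very small, while the randomly assigned sample gives no systematic reason to reject balance.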

The exclusion restriction in our setting requires that the plan of assignment influences outcomes like healthcare utilization only via plan of enrollment. That is a natural assumption in this context, in which the plan of enrollment is the vehicle through which healthcare is provided. Although it is impossible to rule out, for example, that assignment to some plan (as distinct from enrollment in that plan) causes the healthcare utilization outcomes we document, such an interpretation would be significantly at odds with the existing small experimental and quasi-experimental literature on health plan effects. A more relevant potential violation of the exclusion restriction could occur if plan of assignment caused attrition out of the observation sample. This would be the case if plan of assignment caused beneficiaries to exit the Medicaid system altogether. (In contrast, exiting the plan of assignment or exiting the managed care program to enroll in FFS Medicaid would pose no problem, as enrollees in these scenarios would remain in our data.) We rule out the possibility of differential attrition from the sample directly in the data, showing no evidence of it over our study window (see Figure 5, discussed below).21

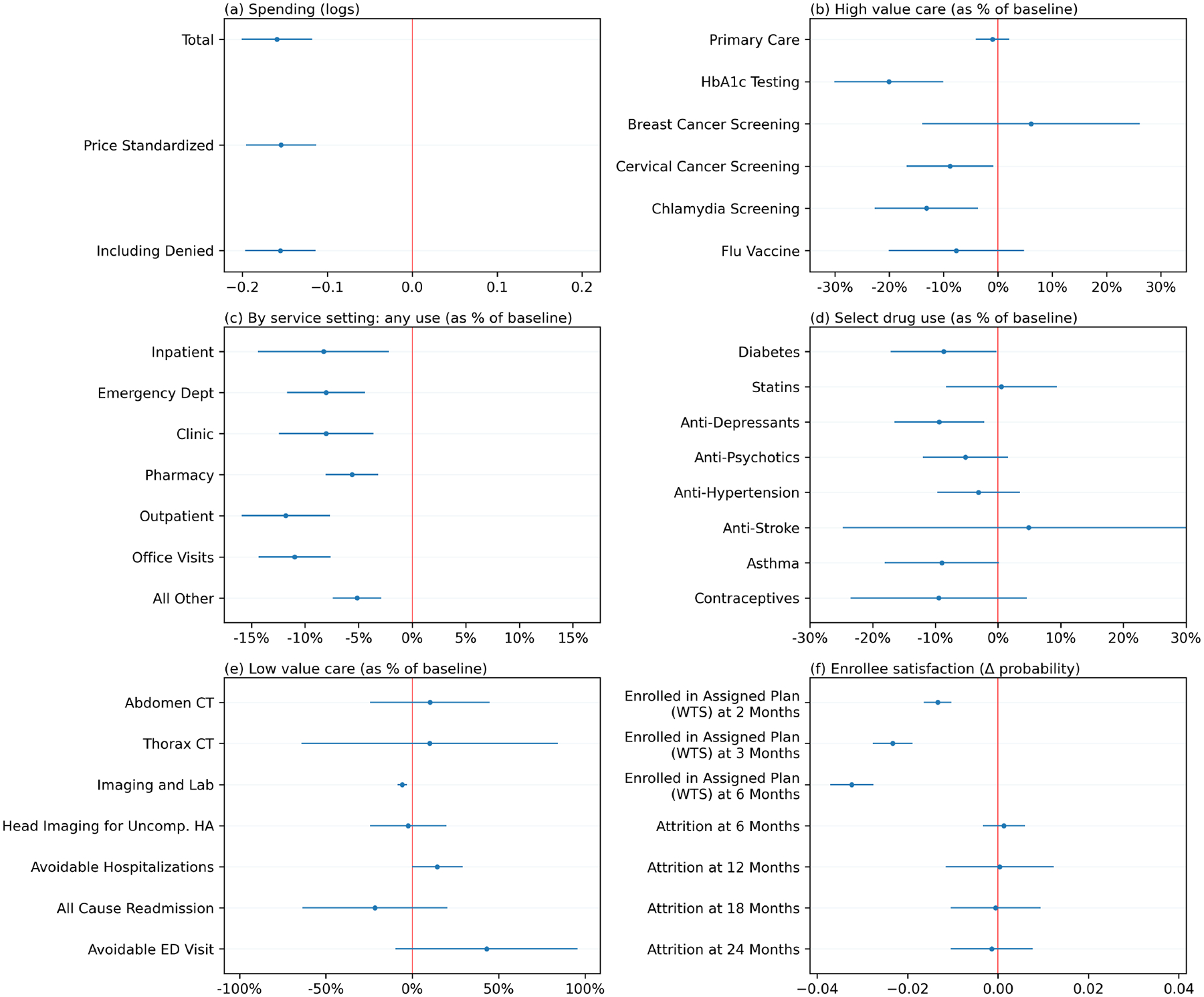

Figure 5:

Low- Versus Medium-Spending Plan Effects Across Settings and Outcomes

Note: Figure shows spending and utilization in low-spending plans compared to medium-spending plans across various categories and service settings. Plans are divided into three sets: low- (Plans A, B, C, G, H, I), medium- (Plans E, F, and X), and high- (Plan D) spending. We estimate a modified version of the IV regression in Eq. 3 in which the endogenous variables are indicators for enrollment in any plan in each set. Medium spending is the omitted category. The instruments are indicators for assignment to any plan in each set. We focus here on coefficients on the low-spending group indicator (γLow), because the high spender is a single plan outlier. (Figure A6 reports the analogous results for the single high-spending outlier.) Labels to the left within each panel describe the dependent variable. Coefficients are plotted with 95% confidence intervals. Coefficients in the first panel are effects on log spending. In the next four panels, coefficients are divided by the mean of the dependent variable in the omitted group to allow placing multiple outcomes on the same scale. In the last panel, which describes willingness to remain enrolled in the assigned plan (willingness-to-stay; WTS) and attrition out of sample, the dependent variables are indicators and the coefficients are not scaled. For example, a WTS coefficient of −0.03 would correspond to an effect in which enrollment in a low-spending plan, in place of a medium-spending plan, increased the probability of switching plans by three percentage points. For a complete tabulation of all regression results displayed in the Figure, see Tables A19, A20, A21, and A22.

3.3. External Validity

Our primary causal estimates rely on a sample of Medicaid enrollees who were auto-assigned to Medicaid managed care plans in New York City. This limits the external validity of our estimates in two ways: auto-assignees are a non-random sample of the Medicaid population, and New York City is an urban market that differs from other parts of the country.

Auto-assignment occurs only if enrollees do not select a plan.22 It is therefore useful to understand whether auto-assignees differ on observables from active choosers. Table A5 shows that auto-assignees differ somewhat from active-choosers on observables, being more likely to be Black males. But on overall healthcare spending, the groups appear similar. In fact, auto-assignees use slightly more care than active-choosers. The IV analysis thus estimates plan effects among individuals who use typical levels of care, rather than enrollees who are not actively engaged with the health care system. To maximize the generalizability of our estimates, in Section 4 we also include a set of analyses in which we re-estimate our primary specification after reweighting the auto-assignee sample to match the full Medicaid population on a rich set of demographic and baseline utilization characteristics, including baseline healthcare spending in the initial months of enrollment while all individuals (both active-choosers and auto-assignees) were in the same FFS program. However, the auto-assignee population may also differ from the average Medicaid enrollee on unobserved characteristics. For example, if it is easier to restrict access to care for auto-assignees (because, for example, they are selected on passivity), then the estimated range of spending effects in this paper may overstate the scope for plans to generate spending differences for the typical Medicaid enrollee.

Beyond the issues of generalizing from the auto-assignees to the full New York Medicaid population, it is useful to compare New York Medicaid to Medicaid programs elsewhere. Appendix Table A7 compares the characteristics of state Medicaid programs, including Medicaid spending per enrollee (Centers for Medicaid and Medicare Services, 2011), the fraction of Medicaid beneficiaries in managed care plans (United States Census Bureau, 2019), the number of plans serving in the state (Kaiser Family Foundation, 2019b), the share of these plans that are for-profit, and the share of Medicaid enrollees who are auto-assigned. The table shows that the Medicaid program in New York is typical in some ways: it contracts with a variety of plans and plan types, including national for-profits and various not-for-profits, and it uses auto-assignment. New York is atypical in other ways: a larger share of the population resides in urban areas (Iowa State University, Iowa Community Indicators Program, 2022), Medicaid managed care penetration is higher than in other states, and a relatively small share of enrollees are auto-assigned. Also, the fact that New York has an unusually large number of plans competing in the same market suggests that other states may not feature as much between-plan variation as we find in our study context. Given these considerations, the large range of plan effects we document below could be informative about the potential for plans to have large impacts on spending even without the ability to set cost-sharing, but may not be predictive of the range of plan effects in any particular Medicaid managed care market.

4. Plan Effects

4.1. Healthcare spending

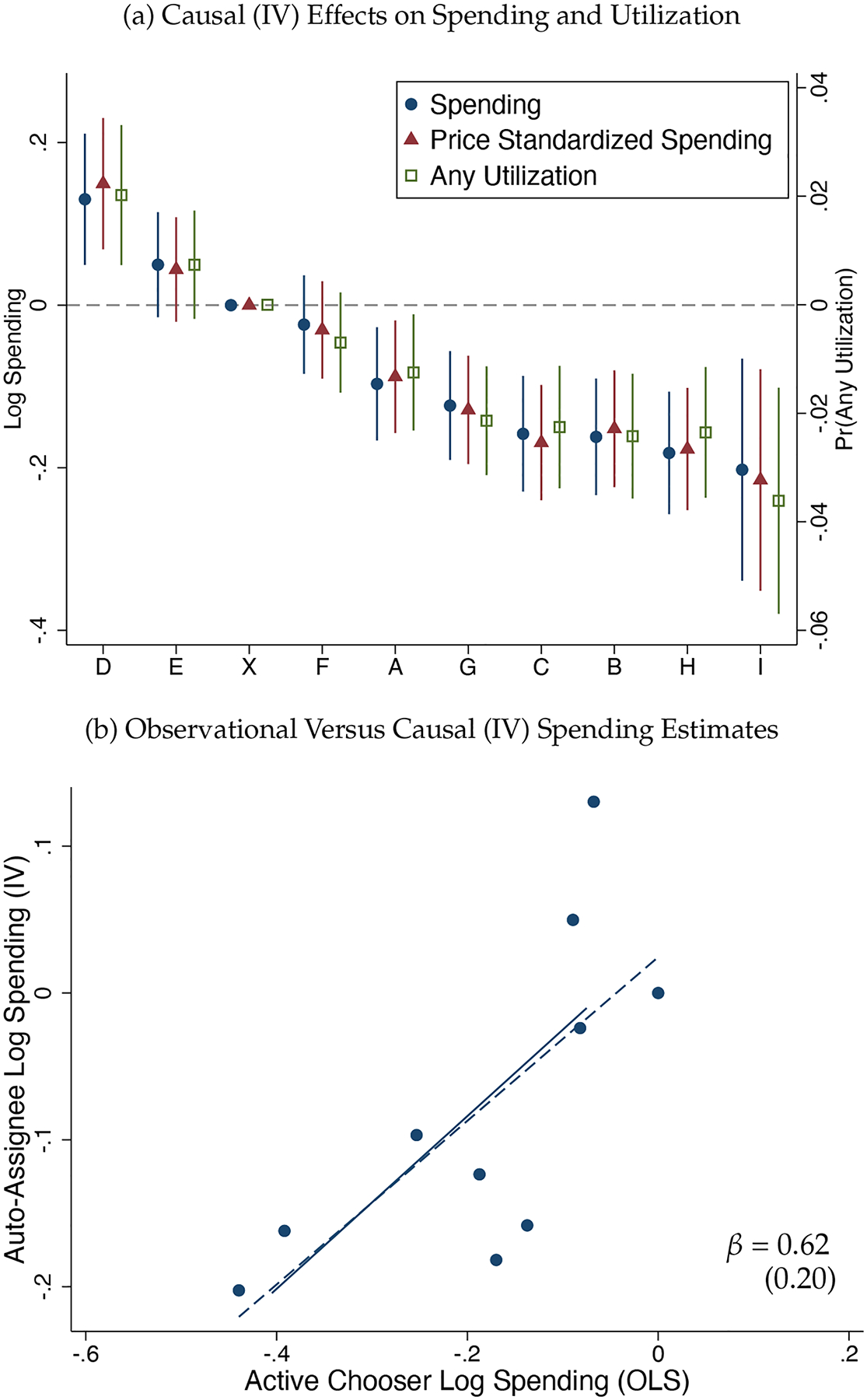

We start by presenting results for each plan’s causal effect on spending relative to an omitted plan, using the IV regression in Equation 3. Panel (a) of Figure 3 reports the main result—plan effects on monthly log spending from the IV regression. The plotted coefficients reveal substantial heterogeneity in spending and utilization across plans. Six plans (A, B, C, G, H, I) spent significantly less than the omitted plan (X), two plans (E and F) had spending levels similar to the omitted plan, and one plan (D) had significantly higher spending. Interestingly, the three lowest-spending plans (B, H, I) are the three for-profit plans in our setting. Comparing the highest-spending plan, D (+13.1% relative to the omitted plan), to the lowest-spending plan, I (−20.3% relative to the omitted plan), yields an overall range of about 33 percentage points. With an inference on winners correction (Andrews, Kitagawa and McCloskey, 2019), this range shrinks to 25 percentage points.23 The same panel also reports coefficients from a regression in which the dependent variable is an indicator for any utilization in the month. This regression reveals similar patterns, with lower-spending plans exhibiting lower probabilities of any utilization each month.
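Coefficients from a log-spending regression are measured in log points, which approximate percent changes only for small values. The sketch below reads the two effects quoted above as log points (an assumption about how they were computed) and converts the gap between the highest- and lowest-spending plans into an exact proportional difference.

```python
import math

# Plan effects quoted in the text, read here as log points relative to the omitted plan.
highest = 0.131   # Plan D
lowest = -0.203   # Plan I

gap_log_points = highest - lowest              # range across plans, in log points
pct_highest = math.exp(highest) - 1            # exact proportional effect of Plan D
pct_lowest = math.exp(lowest) - 1              # exact proportional effect of Plan I
reduction = 1 - math.exp(lowest - highest)     # how much lower spending is in I than in D

print(f"gap: {gap_log_points:.3f} log points")
print(f"Plan D: {pct_highest:+.1%}, Plan I: {pct_lowest:+.1%}")
print(f"lowest vs highest plan: {reduction:.1%} lower spending")
```

Under this reading, a gap of roughly 0.33 log points corresponds to spending about 28 percent lower in the lowest-spending plan than in the highest-spending plan, before any inference-on-winners correction.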

Figure 3:

Main Results: IV Plan Effects on Healthcare Utilization

Note: Figure displays a main result of the paper—plan effects on healthcare utilization identified by random plan assignment. Panel (a) plots IV coefficients corresponding to Eq. 3, where the dependent variable is log(total healthcare spending +1) and price-standardized spending on the left axis or an indicator for any spending in the enrollee-month on the right axis. Plan of enrollment is instrumented with plan of assignment. Coefficients are relative to the omitted plan, X. For the plot, plans are ordered by their spending effects. Whiskers indicate 95% confidence intervals. Standard errors are clustered at the county × year × month-of-assignment level. This is the level at which the randomization operates. Panel (b) compares the same IV estimates from panel (a) with the observational differences in spending across plans estimated in the active chooser sample. The dashed line is an OLS regression line fit to the ten points. The regression represented in the solid line uses an empirical Bayes procedure to shrink coefficients prior to estimation. The slope of the solid line is reported. Active chooser (observational) differences are estimated as OLS coefficients in a regression of log total monthly spending on a full set of plan indicators, as in Eq. 1. The active chooser sample is reweighted to match the IV sample on observables, including FFS healthcare utilization prior to managed care enrollment. Person-level controls are identical in the OLS and IV specifications. See the notes to Tables 2 and A11 for tabular forms of these results and for complete details on the control variables and reweighting.

These patterns are robust to alternative specifications and constructions of the dependent variable. Table 2 reports results with and without controls for pre-determined characteristics. We estimate a similar range in plan effects when the outcome is parameterized as the inverse hyperbolic sine of spending or Winsorized spending levels (Table A8), when we use a Poisson regression that places less emphasis on zeros relative to the log specification (Table A9), and when we aggregate spending over the entire six-month enrollment spell, rather than analyzing monthly outcomes (Table A10). Each approach is affected differently by the presence of zero-spending months at the person × month level, but all are broadly consistent: In some specifications, some of the plan effects of the “interior” plans attenuate, but the range between the highest and lowest spending plans is quite robust, always about the same range as in Figure 3. Further, the “any use” results in Figure 3 show clear evidence of significant variation in extensive margin plan effects on utilization, an outcome that is unaffected by outliers in the healthcare spending distribution and that is not subject to the issues caused by zeros in log or inverse hyperbolic sine specifications.

The similarity of plan effects on total spending and plan effects on an indicator for any utilization suggests that quantity differences may be more important than negotiated price differences in this context. To illustrate how much of the spending differences can be accounted for by prices, panel (a) of Figure 3 also reports coefficients from a version of the IV regression in which all claims have been repriced as if every plan transacted with providers at a common set of prices. Repricing has almost no effect on our estimates of plan spending coefficients, indicating that price differences cannot account for the spending differences we observe.24

The range of these estimates is large.25 For example, the range of our ten plan effects corresponds to 2.5 times the size of the spending difference between plans with no deductible versus a high deductible (Brot-Goldberg et al., 2017). This fact remains true even after correcting for the noisiness of the estimates: In Table 2, the standard deviation of estimated plan effects shrinks only a little when one adjusts for the standard error of the estimates (.102 vs .112).26 Yet, our estimates are considerably smaller than the observational, cross-sectional differences in plan spending. To better understand this relationship, panel (b) of Figure 3 plots plan effects identified via random assignment in the IV sample against plan effects (estimated via OLS) that compare the spending of enrollees making active plan choices. Both regressions include rich controls (risk adjusters) for observable enrollee characteristics, including deciles of ex-ante spending from the period prior to the beneficiary entering MMC, during which all beneficiaries were enrolled in FFS. Further, the active-chooser sample is reweighted to match the distribution of observables in the auto-assignee IV sample to provide the most consistent comparison the data allow. These coefficients are also reported in Tables 2 and A11.27
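One common way to adjust the dispersion of estimated effects for sampling noise is to subtract the average squared standard error from the variance of the estimates. The sketch below applies that adjustment to the IV log-spending coefficients in Table 2 (column 6). It is an illustration under assumptions rather than the documented procedure: the omitted plan is entered as an exact zero with no sampling error, and the population (divide-by-n) variance is used.

```python
import math

# IV log-spending coefficients and standard errors from Table 2, column 6.
estimates = {
    "D": (0.171, 0.050), "E": (0.058, 0.040), "F": (-0.011, 0.036),
    "A": (-0.101, 0.042), "G": (-0.134, 0.041), "C": (-0.166, 0.044),
    "B": (-0.178, 0.042), "H": (-0.158, 0.046), "I": (-0.165, 0.084),
    "X": (0.0, 0.0),  # omitted plan: assumed to enter as an exact zero
}

coefs = [c for c, _ in estimates.values()]
ses = [s for _, s in estimates.values()]
n = len(coefs)
mean_coef = sum(coefs) / n

raw_var = sum((c - mean_coef) ** 2 for c in coefs) / n   # variance of the point estimates
noise_var = sum(s ** 2 for s in ses) / n                 # average sampling variance
raw_sd = math.sqrt(raw_var)
corrected_sd = math.sqrt(max(raw_var - noise_var, 0.0))  # floor at zero if noise dominates

print(round(raw_sd, 3), round(corrected_sd, 3))
```

Under these assumptions the calculation returns 0.112 and 0.102, matching the Plan Effect SD and Corrected SD reported for that column to three decimals.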

Figure 3 indicates a noisy relationship between the observational and causal estimates. On average, enrolling in a plan with high risk-adjusted spending among active choosers (x-axis) will cause an enrollee to have higher spending (y-axis). But this average relationship masks substantial heterogeneity: The size of plan effects varies in the two sets of estimates, indicative of substantial selection across plans. On average, the observed selection is adverse: Higher-spending enrollees opt into plans with larger positive causal effects on spending. Such selection suggests that conventional cross-sectional comparisons of spending or other outcomes across plans would be difficult to interpret, as differences will be driven by both causal plan effects and residual selection. We find this even when adjusting for an unusually rich set of observables that include prior healthcare spending in a common FFS plan (which would be typically unavailable as a risk-adjuster for MCO plan effects).

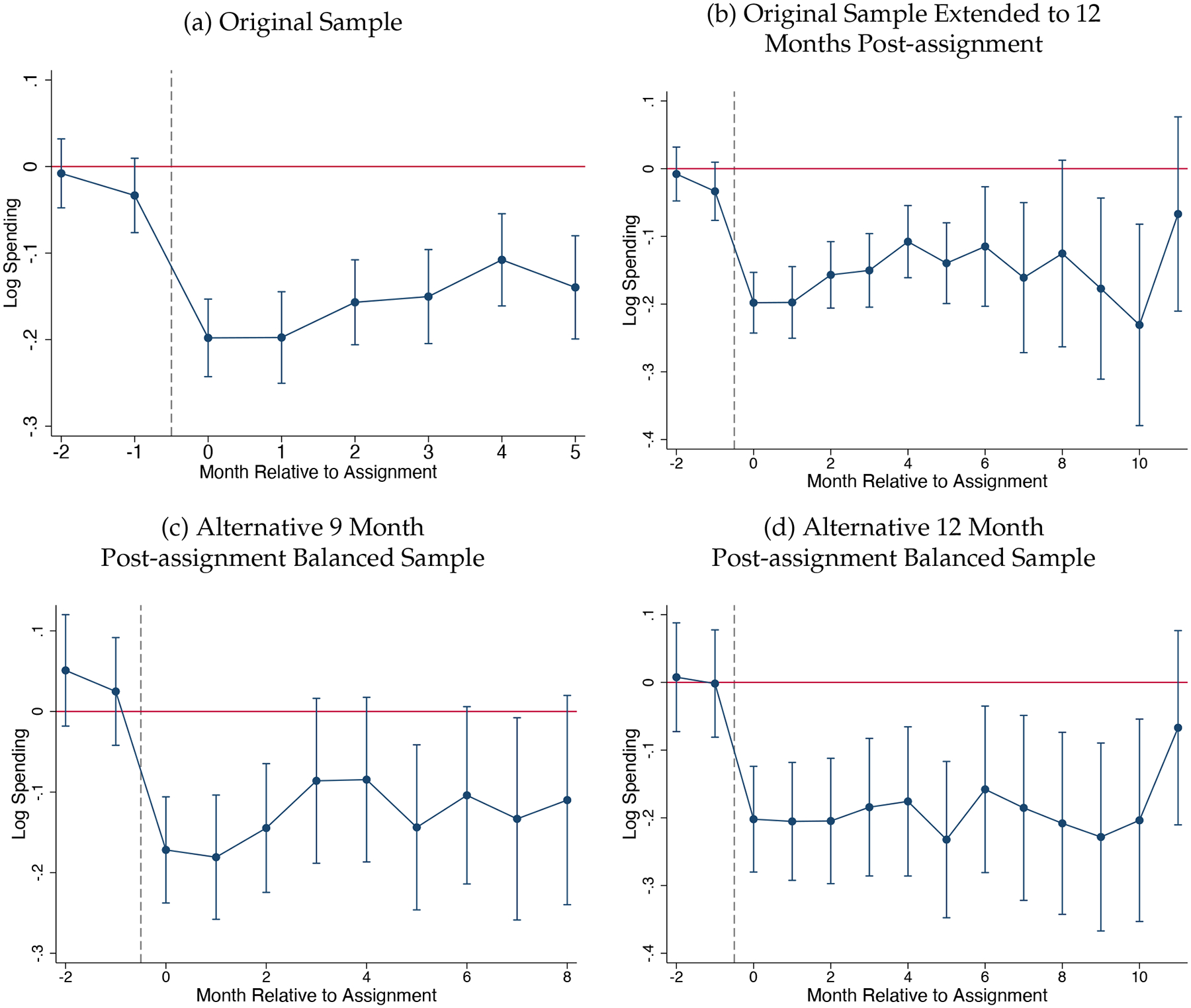

4.2. Effects over Enrollment Spells

How do these effects unfold over time? In Figure 4 we plot month-by-month event study versions of our IV regressions, in which time is relative to the month of auto-assignment. Rather than attempting to estimate nine plan effects interacted with indicators for each month of event time, we group plans together, dividing the ten plans into low- (Plans A, B, C, G, H, I), medium- (Plans E, F, and X), and high-spending (Plan D) groups based on the IV spending effects as described above.28 This both improves statistical power and allows for a simpler visual summary of the time patterns of effects. The specification follows Equation 4, but is estimated separately for each point in relative event time—for each of two months prior to random assignment and for each of six months post assignment.29 Because Plan D (the single outlier high-spending plan) is so different from the others in terms of overall spending, we focus on the low versus medium coefficients.

Figure 4:

Persistence: Effects By Time Since Assignment to a Plan

Figure 4 plots the IV estimates. Panels (a) and (b) use our original sample, with panel (a) showing only the first 6 months post-assignment and panel (b) extending up to 12 months post-assignment. As discussed in Section 2, our main auto-assignee sample restricts to observations in the first six months following plan assignment, because few auto-assignees remain enrolled after the sixth month post-assignment.30 Thus, there is no change in the composition of auto-assignees over time in panel (a). Panel (b), on the other hand, allows us to examine longer-run impacts but also introduces the possibility of composition bias as the sample becomes unbalanced starting in month 6. For panels (c) and (d) we generate new, smaller balanced samples of beneficiaries with at least 9 and at least 12 months of post-assignment enrollment, respectively, so that the patterns over time cannot be explained by a change in the composition of beneficiaries remaining enrolled in Medicaid.
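The difference between an unbalanced sample and a balanced one can be made concrete with a toy example. The record layout below (a hypothetical mapping from beneficiary identifiers to the post-assignment months in which each is observed, numbered from 1) is an assumption for illustration only.

```python
# Hypothetical enrollment records: beneficiary id -> post-assignment months observed.
enrolled_months = {
    "b1": {1, 2, 3, 4, 5, 6},
    "b2": {1, 2, 3, 4, 5, 6, 7, 8, 9},
    "b3": {1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12},
    "b4": {1, 2, 3, 4, 5, 6, 7, 8, 9, 10},
}


def balanced_sample(records, horizon):
    """Keep beneficiaries observed in every month 1..horizon after assignment."""
    required = set(range(1, horizon + 1))
    return sorted(b for b, months in records.items() if required <= months)


def unbalanced_counts(records, horizon):
    """Number of beneficiaries contributing to each event month with no restriction."""
    return {m: sum(m in months for months in records.values()) for m in range(1, horizon + 1)}


nine_month = balanced_sample(enrolled_months, 9)
twelve_month = balanced_sample(enrolled_months, 12)
counts = unbalanced_counts(enrolled_months, 12)

print(nine_month)     # beneficiaries in the 9-month balanced sample
print(twelve_month)   # beneficiaries in the 12-month balanced sample
print(counts)         # contributors per month in the unrestricted sample
```

In the unrestricted sample the set of contributors shrinks after month 6, so later estimates mix time effects with composition changes. Each balanced sample holds the set of beneficiaries fixed across all months at the cost of a smaller sample.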

Figure 4 shows that the effects begin immediately upon enrollment and then are generally stable over the entire enrollment spell. In the baseline sample (panel (a)), effects do appear somewhat larger in the first months post-assignment, but they are still large and significant by month 5. Further, the (insignificant) suggestion of attenuation over time in panel (a) is not replicated across alternative specifications, including in panel (b) which uses the same sample but allows the horizon to run out an additional six months. In Table A12 we present regression estimates for these different samples, pooling over all post-assignment months. The results in this table show that our estimates of the causal effects of low-spending plans on spending are remarkably consistent across these samples. Overall, Figure 4 and Table A12 show that spending effects remain large throughout the post-assignment months.31

4.3. Heterogeneity

In Figure A3 we plot coefficients of plan effects estimated separately in various subsamples of the auto-assignee sample. The three panels split the data by sex, median age, and baseline spending, where the latter is measured prior to assignment to a managed care plan, when enrollees received all care through the FFS system. Differences in plan effects by sex are mostly negligible. Differences by age and baseline spending are more substantial, with larger plan effects estimated for older and sicker groups. The regressions are underpowered to detect statistical differences across plans-by-groups, but the point estimates suggest that the overall plan effects are larger for sicker beneficiaries, proxied here by those who have used more care in the past, but still meaningful for healthier groups.

The final panel in Figure A3 aggregates the data differently, in order to gain statistical power and reveal the time pattern of effects. Here, as in Figure 4, the specification follows Equation 4, grouping plans into high-, medium-, and low-spending groups and allowing for heterogeneous treatment effects over event time. The figure plots the low (versus medium) coefficients estimated separately for two subsamples: those with no spending in the baseline period and those with positive spending. Consistent with the plan-level estimates, the impact of being assigned to a plan in the low-spending group (relative to the medium group) is largest for the sicker beneficiaries. The differences are statistically significant at the beginning of the event window and marginally significant at the end. Appendix Table A14 presents pooled regression results corresponding to each of the event studies in Figure A3, revealing that at this level of aggregation there is little heterogeneity in plan effects by age and sex but significant heterogeneity by baseline spending: Spending effects are 60% larger for beneficiaries with some baseline spending relative to beneficiaries with no baseline spending. Clearly, spending effects are not driven by healthy beneficiaries with minimal interaction with the healthcare system. Instead, effects are driven by sicker beneficiaries who frequently use care.

5. Quality and Satisfaction

In this section we evaluate whether the relative savings of lower-spending plans were associated with observable correlates of clinical plan quality and/or revealed enrollee preference.

5.1. Marginal Services

In the RAND HIE and the quasi-experimental studies that have followed it, patient cost-sharing has proven to be a blunt instrument, with deductibles and coinsurance affecting use of low- and high-value services alike. In our setting, are the utilization reductions achieved using non-cost-sharing tools similarly broad-based, or are the services that are marginal to enrollment in lower-spending plans more targeted—and perhaps of lower value?

In the remaining panels of Figure 5, we investigate whether the reductions in spending generated by managed care are similarly blunt or better targeted. We begin in the service panel by examining plan effects by type of service. Each row reports an IV coefficient estimate on low-spending plan enrollment. The dependent variables in the panel are indicators for any use of the service type in the enrollee-month, and coefficients are divided by the mean of the dependent variable in the omitted group in order to place multiple outcomes on the same scale. The panel shows that reductions in low-spending plans occur across all services: inpatient admissions, pharmacy, outpatient care, office visits, lab services, and dental care. The most-rationed services were office visits and hospital outpatient services. Beneficiaries assigned to the low-spending plans also had fewer emergency department (ED) visits, consistent with evidence that for some populations ED care may be a complement to, rather than a substitute for, other ambulatory care (Finkelstein et al., 2012; Cuddy and Currie, 2020).

So far, our findings do not rule out the possibility that low-spending plans invest in high-value treatments that make people healthier and decrease the need for costly inpatient and outpatient hospital treatments (e.g., ED utilization). To investigate this, we examine two sets of potentially high-value services that could produce spending offsets: high-value drugs and high-value services, including primary care.

The drug and high-value care panels in Figure 5 show no evidence that low-spending plans invest more in high-value drugs or preventive services. With respect to drugs, we focus on a set of maintenance drugs used to treat chronic conditions. Specifically, we estimate plan effects on diabetes drugs, statins, anti-depressants, anti-psychotics, anti-hypertensives, anti-stroke drugs, asthma drugs, and contraceptives. Rather than increase utilization, low-spending plans have limited effects on the utilization of most of these high-value drugs, with some suggestive evidence that they lower utilization for some. This is inconsistent with the idea that lower-spending plans use scalpel-like tools to reduce inefficient spending while improving or maintaining provision of high-value care: For many of these drugs, non-adherence can result in health deterioration and expensive hospitalizations.

The high-value care panel of Figure 5 analyzes six measures of compliance with recommended care developed by the Department of Health and Human Services for Medicaid enrollees: the use of primary care, the prevalence of HbA1c testing, breast cancer screening rates, cervical cancer screening rates, chlamydia screening rates, and flu vaccination rates. For primary care and breast cancer screening, we find no effect of low-spending plan enrollment. The coefficient for primary care, in particular, is a precisely estimated zero. For flu vaccinations, the effect is negative but insignificant. Among the other measures, we find that enrollment in a low-spending plan significantly reduces the use of recommended preventive care. In sum, there is no indication that low-spending plans achieve savings by promoting high-value care and achieving offsets. Instead, similar to what happens when consumers face a high deductible, supply-side managed care tools appear to constrain most care, with the exception of primary care (Figure A4), which we return to in Section 5.3.

Beyond plan effects on high-value services, we also estimate the effects of enrolling in a low-spending plan on the use of a variety of potentially low-value services, including inappropriate abdominal imaging, chest imaging, and head imaging for an uncomplicated headache (Schwartz et al., 2014; Charlesworth et al., 2016). With the exception of possibly reducing overall imaging (but not narrowly defined low-value imaging), the low-value care panel of Figure 5 shows no evidence that low-spending plans reduce the use of these low-value services. These results are somewhat in contrast to the finding that lower-spending plans make across-the-board reductions by service setting (inpatient, clinic, pharmacy, etc.), but they make it very clear that these plans are not selectively cutting out services that offer little value to patients. Indeed, these are the few services where utilization appears not to be affected by low-spending plans, though we note that confidence intervals are wide, leaving us unable to rule out significant decreases as well as significant increases in the use of these services.

Finally, as another dimension of heterogeneity, we can examine differences across plans in enrollee spending on services carved out of MMC plan contracts and always paid by the FFS program, even for beneficiaries enrolled in MMC. A minority of services for managed care enrollees are carved out and paid directly by the state on a fee-for-service basis. The claims data for these services are generated by the state and merged with the plan data. If carved-out services are substitutes for carved-in services, low-spending plans may strategically push beneficiaries to use carved-out services (for which plans bear no financial responsibility) in place of carved-in services (for which plans are the residual claimant). On the other hand, if plans impact spending on both carved-in and carved-out services similarly, then plan effects may show up even in carved-out FFS claims. In Appendix Figure A5, we estimate the IV plan effects on each spending component separately. The figure shows that the patterns of plan effects on FFS claims are tightly correlated with the patterns of managed care claims. Either there are important complementarities between managed-care-paid and FFS services, or cost-saving reductions are blunt, rather than strategically targeted.

Importantly, these results also carry the implication that plan spending differences are unlikely to be driven by differential reporting. The FFS services represent a data component that cannot be contaminated by plan reporting differentials. The plans themselves have no reporting role for these claims, yet we observe a tight correlation between plan effects on self-reported spending (via managed care encounter data) and plan effects on state-reported spending (via FFS claims), providing strong evidence that the differences in self-reported spending are not merely due to differential reporting.

5.2. Satisfaction and Health

In the Medicaid setting, beneficiaries enrolling in lower-spending plans are not subject to cost-sharing. Hence, the classic trade-off between financial risk protection and moral hazard (Zeckhauser, 1970) is absent. There may, however, be a trade-off between satisfaction and plan spending, as well as a potential trade-off between spending and health. We study the spending/satisfaction trade-off by estimating differences in the probability that an individual assigned to a low- versus medium-spending plan opts to stay in that plan after auto-assignment rather than switch to a different plan. Recall that enrollees can switch away from their plan of assignment. In the language of IV, enrollees who switch are never-takers with respect to the auto-assignment instrument. Random assignment allows us to interpret empirical differences in the likelihood of switching plans as causal effects of being assigned to those plans.

We operationalize this measure of beneficiary satisfaction as the probability that an auto-assignee remains enrolled in their assigned plan and call it “willingness-to-stay.” We measure willingness-to-stay at the individual level with an indicator variable equal to 1 if the individual is enrolled in their assigned plan 3 (or 6) months post-assignment and 0 otherwise. The key assumption underlying the interpretation is the typical one: that choices (to remain enrolled or switch plans) reveal preferences. Unlike willingness-to-pay, our measure of beneficiary satisfaction is not scaled to dollars, but rather reports probabilities of continued enrollment. Despite the scale limitations, one potential advantage of our measure is that it plausibly offers some insight on consumers’ experienced utility in a plan, as it is measured as a reaction to (i.e., causal effect of) being enrolled in a plan. For certain questions related to ex-post consumer evaluations, willingness-to-stay may be preferable to, for example, a willingness-to-pay measure derived from initial plan choices in a market setting with important information frictions.
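As a minimal sketch of how the willingness-to-stay indicator could be constructed, the snippet below assumes a hypothetical data layout in which each individual's enrollment history is a mapping from months post-assignment to a plan label. The function name and layout are illustrative assumptions, not the authors' code. Treating a month with no enrollment record as 0 follows the "0 otherwise" wording literally and is also an assumption.

```python
def willingness_to_stay(assigned_plan, enrolled_plan_by_month, horizon):
    """Return 1 if the individual is enrolled in their assigned plan
    `horizon` months post-assignment, and 0 otherwise.

    enrolled_plan_by_month: hypothetical dict {months_post_assignment: plan}.
    A missing month (no enrollment record) is coded as 0 (assumption).
    """
    return int(enrolled_plan_by_month.get(horizon) == assigned_plan)

# Hypothetical enrollee assigned to plan "A" who switches to "B" in month 4.
history = {1: "A", 2: "A", 3: "A", 4: "B", 5: "B", 6: "B"}
wts_3 = willingness_to_stay("A", history, horizon=3)  # still in plan A
wts_6 = willingness_to_stay("A", history, horizon=6)  # has switched away
```

Averaging this indicator across auto-assignees of a plan gives the share remaining enrolled at the chosen horizon.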

Plan effects on willingness-to-stay are reported in the last two columns of Table 2. These coefficient estimates range from −0.068 (Plan B) to 0.019 (Plan D), implying a range of differences in willingness-to-stay of 8.7 percentage points. Relative to the baseline rate of remaining in the assigned plan among those assigned to the omitted plan (90.6%), this represents an almost 10% difference, which we interpret as economically meaningful, especially given the likely high levels of inertia and inattention. Put another way, in the omitted plan, 9.4% of assigned beneficiaries left the plan by 6 months post-assignment. In Plan B, 16.2% (= 9.4% + 6.8%) of assigned beneficiaries left the plan by 6 months post-assignment, about a 70% difference in this exit rate.
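The arithmetic behind these magnitudes can be reproduced directly from the three numbers quoted in the text (the Plan B and Plan D coefficients and the 90.6% baseline). The variable names are illustrative; no values beyond those stated above are used.

```python
coef_plan_b = -0.068    # lowest willingness-to-stay coefficient (Plan B)
coef_plan_d = 0.019     # highest willingness-to-stay coefficient (Plan D)
baseline_stay = 0.906   # share remaining in the omitted plan

# Range of plan effects, in percentage points.
range_pp = (coef_plan_d - coef_plan_b) * 100

# Range relative to the baseline stay rate (the "almost 10%" figure).
relative_to_baseline = (coef_plan_d - coef_plan_b) / baseline_stay

# Exit rates: omitted plan versus Plan B.
exit_omitted = 1 - baseline_stay
exit_plan_b = exit_omitted - coef_plan_b

# Proportional increase in the exit rate (the "about 70%" figure).
relative_exit_increase = (exit_plan_b - exit_omitted) / exit_omitted

print(f"range: {range_pp:.1f} pp")
print(f"relative to baseline: {relative_to_baseline:.1%}")
print(f"exit rate in Plan B: {exit_plan_b:.1%}")
print(f"relative exit increase: {relative_exit_increase:.0%}")
```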

The enrollee satisfaction panel of Figure 5 shows that people are less likely to stay in lower-spending plans. This figure also shows how willingness-to-stay evolves over time, measuring willingness-to-stay at 3 and 6 months post-assignment. Overall, willingness-to-stay is lower in lower-spending plans and declines over the post-assignment window, reaching a differential of several percentage points (relative to willingness-to-stay in medium-spending plans) by six months post-assignment. This is consistent with enrollees learning about the poor subjective quality of low-spending plans over time. Appendix Figure A6 shows the analog for the one high-spending plan. There, beneficiaries are correspondingly more likely to stay in the high-spending plan than in the medium-spending plans. It is not possible to directly observe in these claims data whether the revealed dissatisfaction reflects difficulty scheduling appointments, restrictive gate-keeping by PCPs, or other factors, though we discuss the possible roles of these and other factors in Section 5.3.

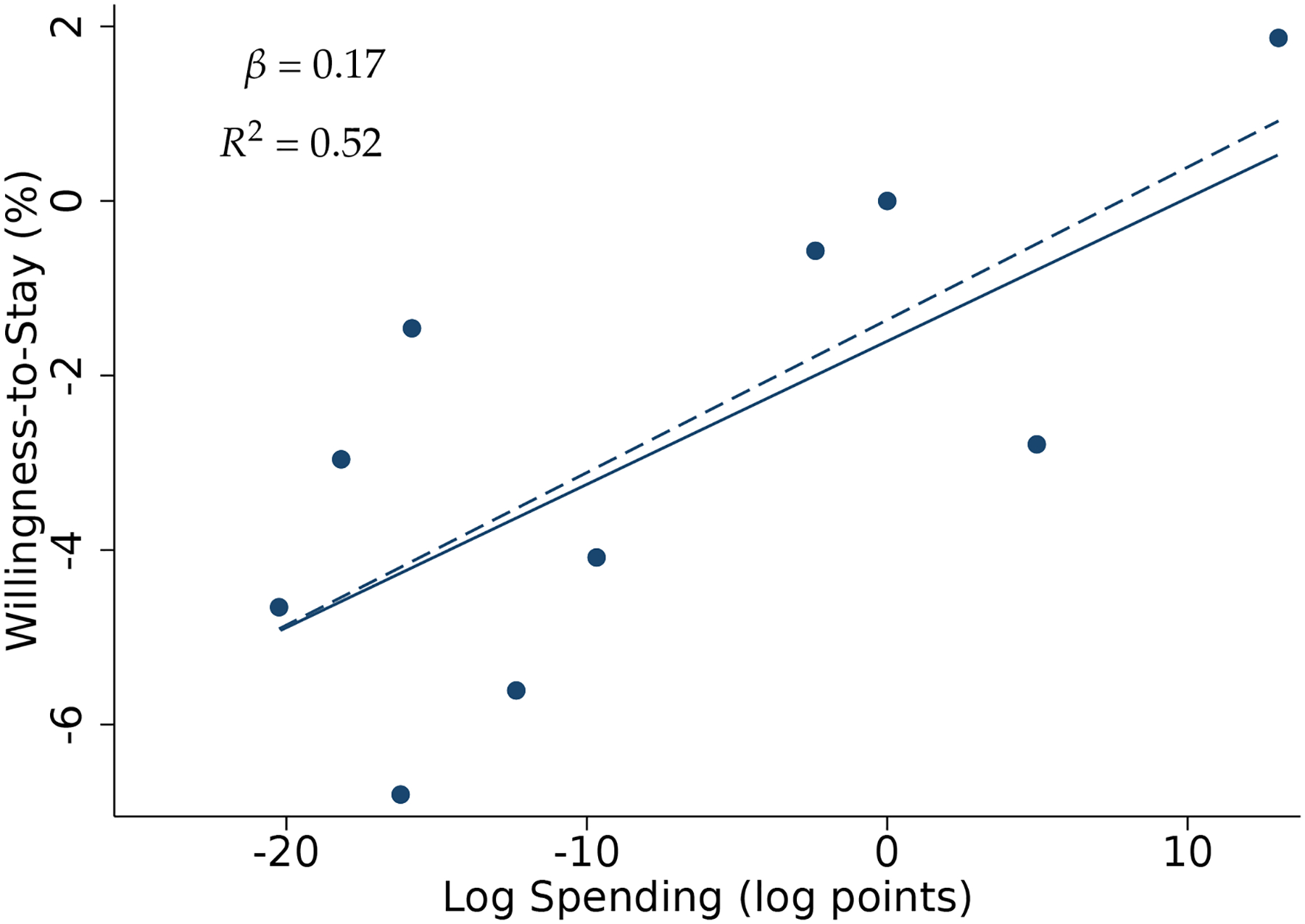

To give a finer view of these results, in Figure 6 we plot plan-level estimates of willingness-to-stay against the plan effects on spending. The relationship is clear, with higher-spending plans having higher estimates of willingness-to-stay. In Appendix Figure A7 we present a similar figure, stratifying the auto-assignees by whether or not they had any baseline spending. This figure suggests that sicker beneficiaries—those who use more care and so have more experience with their plans—drive the relationship. Thus, the plan effects we estimate via the claims data are strongly correlated with consumers’ actual experiences in the plans and their decisions over continued enrollment, consistent with a binding trade-off between plan spending and beneficiary satisfaction. In Section 6.1 we present additional evidence on the relationship between plan spending and enrollment flows, including among active-chooser beneficiaries.

Figure 6: Consumer Satisfaction Versus Plan Spending Effects

Note: Figure shows the strong correspondence between willingness-to-stay (WTS) and IV plan spending effects. WTS measures beneficiary satisfaction as the probability that a (randomly assigned) auto-assignee remains enrolled in their assigned plan through six months post-assignment. Each plan corresponds to one point, with the coordinates corresponding to the coefficient estimates from Table 2. The dashed line is an OLS regression line fit to the ten points. The regression represented in the solid line uses an empirical Bayes procedure to shrink coefficients prior to estimation. The slope and R² from the shrunken estimation are reported.
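The note does not specify the empirical Bayes procedure, so the following is only a sketch of one common approach: a normal-normal shrinkage of noisy estimates toward their mean, followed by an OLS fit. The function names, the choice of shrinkage target, and the variance estimator are all assumptions made for illustration and may differ from the authors' implementation.

```python
from statistics import mean

def ols_slope(x, y):
    """Slope of the OLS line of y on x (with an intercept)."""
    xb, yb = mean(x), mean(y)
    sxx = sum((xi - xb) ** 2 for xi in x)
    sxy = sum((xi - xb) * (yi - yb) for xi, yi in zip(x, y))
    return sxy / sxx

def eb_shrink(estimates, std_errors):
    """Shrink estimates toward their mean (assumed normal-normal model).

    Signal variance is estimated as the sample variance of the estimates
    minus the average squared standard error, floored at zero.
    Standard errors are assumed to be strictly positive.
    """
    grand = mean(estimates)
    total_var = sum((e - grand) ** 2 for e in estimates) / (len(estimates) - 1)
    noise_var = mean(se ** 2 for se in std_errors)
    signal_var = max(total_var - noise_var, 0.0)
    return [
        grand + signal_var / (signal_var + se ** 2) * (e - grand)
        for e, se in zip(estimates, std_errors)
    ]
```

Under these assumptions, one would shrink the plan-level spending effects and willingness-to-stay effects separately and then call `ols_slope` on the shrunken values. Noisier estimates are pulled more strongly toward the mean, which limits the influence of imprecisely estimated plans on the fitted line.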

To investigate the trade-off between spending and health, we use a standard, if imperfect, surrogate health outcome that can be constructed from claims data: hospitalizations that are potentially avoidable given appropriate treatment and management of a set of common conditions. The measures were developed by the Agency for Healthcare Research and Quality (AHRQ) for the Medicaid population. (See Appendix B for details.) Figure 5 shows that enrollees in the low-spending plans are 15% more likely to have an avoidable hospitalization despite having lower utilization for most other types of care. This result is particularly striking given our prior results showing that low-spending plans generate lower utilization for the vast majority of healthcare services. In contrast to most services, for the types of services whose utilization may indicate a deterioration of beneficiary health, low-spending plans generate higher levels of utilization.32 This suggests that the tools used by low-spending plans to constrain costs could have negative consequences for beneficiary health.

5.3. Summary and Potential Mechanisms

To summarize, our results show that even without exposing consumers to out-of-pocket spending, plans exert significant influence over total spending. In this sense, supply-side interventions by plans, as opposed to consumer cost-sharing, can constrain healthcare spending while circumventing the classic trade-off between financial risk protection and moral hazard (Zeckhauser, 1970; Pauly, 1974). However, those reductions are not a free lunch: costs are borne by beneficiaries in terms of the quality of care delivered, health outcomes, and a revealed-preference measure of satisfaction. Further, a key limitation of reducing spending via consumer cost sharing is replicated here: The impacts are blunt and broad-based, rather than targeted to low-value services.

Next, we briefly explore what we can learn about how plans achieve spending reductions. Since there is no consumer cost sharing in our setting, and the statutory scope of covered benefits is set by the state, causal differences in spending between plans must be driven by differences in their use of supply-side (i.e., managed care) tools. Though the term managed care can encompass a wide range of mechanisms, Glied (2000) summarizes the key methods as the selection and organization of providers (i.e., networks), the negotiation of payments to providers, and utilization management in its various forms. We therefore examine the potential channels through which the lower-spending plans constrain costs, to the extent possible given our data and setting, and discuss the implications of our findings for the economics of Medicaid managed care.