Highlights

- Nonlinear spherical stability analysis of acoustic bubbles.
- Fixed-point iteration technique to handle the implicit nature of the governing equations.
- Theoretical comparison with Gauss elimination.
- The role of GPU programming.
- Analysis of arithmetic operation counts.
- Analysis of memory bandwidth limitations.

Keywords: Acoustic cavitation, Bubble dynamics, Implicit dynamical system, Fixed-point iteration, High-performance computing

Abstract

A fixed-point iteration technique is presented to handle the implicit nature of the governing equations of nonlinear surface mode oscillations of acoustically excited microbubbles. The model is adopted from the theoretical work of Shaw [1], where the dynamics of the mean bubble radius and the surface modes are bi-directionally coupled via nonlinear terms. The model comprises a set of second-order ordinary differential equations. It extends the classic Keller–Miksis equation and the linearized dynamical equations for each surface mode. Only the implicit parts (containing the second derivatives) are reevaluated during the iteration process. The performance of the technique is tested at various parameter combinations. The majority of the test cases need only a single reevaluation to reach the prescribed error tolerance. Although its arithmetic operation count is higher than that of Gauss elimination, the technique is memory-friendly and matrix-free, which makes it a viable alternative for high-performance GPU computations of massive parameter studies.

1. Introduction

When a liquid domain is irradiated with high-intensity, high-frequency ultrasound, clusters of micron-sized bubbles are formed [2], [3]. The phenomenon is also called acoustic cavitation [4], [5]. During the radial pulsation of the bubbles, the compression phase can be so violent that temperatures of thousands of kelvin and pressures of hundreds of bars build up inside the bubble, inducing chemical reactions [6], [7], [8], [9], [10], [11], [12], [13], [14], [15], [16], [17], [18], [19]. Such extreme conditions are of keen interest to sonochemistry [20], [21], [22], which regards the bubbles as micron-sized chemical reactors. Sonochemistry has various industrial applications: wastewater treatment [23], [24], [25], [26], the synthesis of organic chemical species [27], [28], [29] and the production of metal nanoparticles [30], [31], [32], to name a few.

Sonochemistry faces major challenges in terms of energy efficiency and scale-up to magnitudes feasible for commercial applications [33], [34], [35]. One of the main reasons is that a large number of parameters need to be optimised, which reduces experimental studies to a trial-and-error approach. To highlight the magnitude of the problem, the reader is referred to a recent numerical study involving nearly 2 billion parameter combinations [36]. Therefore, numerical simulations of bubble clusters are a viable way to develop optimal, large-scale and energy-efficient operation strategies.

A suitable bubble cluster simulator also faces many challenges. First, bubbles nucleate at seemingly random positions; the source of such bubbles is still debated in the sonochemical community [37]. Second, due to primary and secondary Bjerknes forces, bubbles move in space, producing various forms of cluster structure [38], [2], [3]. Third, as bubbles translate in space and time, they can merge to form larger bubbles [39], [40]. Fourth, the radial dynamics of the individual bubbles is highly nonlinear and chaotic [41], [42], [43], [44], [45], [46], [47], [48], and it needs to be resolved appropriately to capture the energy-focusing process of the contraction phase [49], [50], [51]. If feasible, the chemical kinetics should also be solved to estimate the temporal composition of a bubble [6], [7], [8], [9], [10], [11], [12], [13], [14], [15], [16], [17], [18], [19]. Last but not least, large bubbles tend to be spherically unstable, and they can disintegrate into many smaller daughter bubbles [52]. Interaction of closely spaced bubbles can also disrupt the spherical stability [53].

This study focuses on shape instability, since it is a primary source of losing the energy focusing during a bubble collapse. The task is highly parallelisable for bubble cluster dynamics simulations or large-scale parameter scans, as the governing equations describing the shape stability of the individual bubbles are identical. This makes the employment of graphics processing units (GPUs) attractive, especially if one considers the trend in high-performance computing: more than of the peak processing power of the Frontier supercomputer (put into service in 2022) comes from GPUs [54]. Therefore, high-performance GPU programming is the best approach today for simulating large-scale parameter studies (in the order of millions or even billions of parameter combinations). However, an efficient GPU code needs careful thread and explicit memory management by the user; otherwise, the exploitation of the peak processing power can be as low as merely , see one of our recent publications for details [55]. The identification of the possible bottlenecks and their solutions is highly model-dependent. Thus, we proceed with a brief summary of the widely employed modelling techniques and with the justification of our choice.

Various approaches can be found in the literature with a variety of complexity to describe the shape deformation of a bubble. They are based on linear or non-linear perturbation theories; boundary integral method; boundary-fitted finite-volume (finite-element) method; or full 3D or 2D (axisymmetric) hydrodynamical simulations.

Linearised perturbation theories provide the simplest way of studying spherical stability [56], [57], [58], [59], [60]. Each shape mode is governed by a decoupled second-order ordinary differential equation. The coupling with the radial dynamics is unidirectional. That is, the radial dynamics of the bubble is incorporated as a parametric excitation into the governing equations of the shape modes; however, the shape modes themselves do not influence the radial dynamics. Due to the simplicity of the model, GPU implementation is straightforward, and it has already been done in our earlier publications [61], [62], [63]. The most severe limitation of this approach is its linearity: the amplitudes of the shape modes either decay to zero or grow without bound. Finite-amplitude surface mode oscillations, which are the dominant dynamics in many situations, cannot be analysed. In addition, if the transient behaviour is essential (for instance, when the main interest is the number of acoustic cycles the bubble survives without breaking up), the nonlinear coupling between the surface modes must be included to obtain realistic results, as the dynamics is far from the linear regime.

Including higher-order terms in a perturbation theory is the simplest way to extend a linearised model with nonlinear terms that can prevent the infinite growth of the surface modes and produce finite-amplitude, steady oscillations [64], [65], [1], [66], [67], [68], [69], [70], [71], [72], [73], [53], [74], [75]. There is a bi-directional coupling between the mean radial oscillation and the surface mode amplitude dynamics. In addition, the shape modes themselves are also mutually coupled; thus, this approach can capture the energy transfer between the surface modes and the mean radial pulsation. The model is composed of globally coupled second-order ordinary differential equations (albeit the model complexity is significantly increased compared to the linearised approach), for which the use of GPUs is already widespread [76], [77], [78], [79], [80], [81], [82]. However, due to the implicit nature of the coupling, a linear system of algebraic equations has to be solved at every function evaluation to obtain the second time derivatives of the mean radius and the surface mode amplitudes. This can severely impact the performance, since basic linear algebra tasks are typically memory bandwidth-limited [83], [84] (the computing units cannot be fed with enough data to keep them busy). The severity of the problem is demonstrated in Sec. 5.2.

Perturbation theory assumes that the amplitudes of the surface mode oscillations are small. Although taking into account higher-order terms in the series expansion can increase the validity limit, and in practice, the non-linear type of models can agree well with experimental results having relatively large amplitudes, the correctness of the results cannot be guaranteed far from the linear behaviour. Boundary Integral Method (BIM) is a viable option to eliminate restrictions for the magnitude of the deformation [85], [86], [87], [88], [89]. Also, it is a computationally efficient technique: only the surface of the bubble needs to be discretised. Therefore, the spatial dimension of the problem is reduced by one. In an axisymmetric case, this means the discretisation only along a one-dimensional curve.

The perturbation theory and the BIM approach, referred to as Reduced Order Models (ROMs), fundamentally deal with potential, inviscid and incompressible flows. Although the effects of viscosity and compressibility can be approximated with additional terms, the most accurate treatment of non-spherical bubble oscillation is the solution of the complete set of conservation equations of multiphase hydrodynamics [90], [91], [92], [93] (e.g. employing well-tested CFD software such as OpenFOAM [94], [95], [96] or ALPACA [97]). However, the computational demand of even the simplest 2D simulations is orders of magnitude higher than that of the ROM approaches. Therefore, they are infeasible for large-scale parameter scans of bubble clusters, regardless of the available computing power (e.g. via GPUs).

The boundary-fitted discretisation schemes are good candidates to solve the Navier–Stokes equations without simplification while keeping the bubble interface sharp and well-resolved [98], [99]. During the simulation, an orthogonal and time-dependent coordinate transformation ensures the automatic deformation of the mesh fitted to the boundary of the bubble [100], [101]. To the best of the authors' knowledge, the technique is suitable only for 2D axisymmetric problems. Although this technique handles the bubble interface efficiently, the solution of the underlying conservation equations is still resource-intensive.

Considering the previous discussion, the trade-off between the model complexity (its validity limit) and runtime is a severe issue for large-scale parameter studies. As a compromise, nonlinear perturbation theory and the BIM method are good candidates for moderately viscous liquids with weak compressibility. Both approaches are computationally efficient (compared to the CFD techniques) and can be applied to 3D cases; thus, both are widely employed in the literature, see the aforementioned references. Although the BIM has a larger validity range regarding bubble-shape deformation, the present study focuses on models based on the nonlinear perturbation theory (adopted from [1]). The reason is that the governing equations are composed of Ordinary Differential Equations (ODEs), which fit well into the existing GPU library of our research group [102], [103]. In addition, the validity range is large enough to detect the inception of losing the energy focus of bubble collapses. Even though a bubble is fragmented in the long term, such a model is also capable of approximating the number of acoustic cycles (or collapses) the bubble can survive.

As stated above, the primary numerical difficulty is related to the implicit nature of the coupled ODEs; that is, a linear system of equations has to be solved for every function evaluation, see Sec. 2 for more details. This is not an issue on conventional Central Processing Units (CPUs), as many commercial software packages (e.g. Maple or Mathematica) can handle implicit ODE systems automatically. In addition, they can even generate highly optimised Fortran code, making the application efficient for CPU clusters.

It is well-known that linear algebraic applications are usually limited by memory bandwidth, i.e., the system memory is not fast enough to feed the computing units with data [83], [84]. Assuming that one CPU core solves one ODE system and that the number of the employed surface modes is moderate (only a few tens maximum), the complete problem fits into the fast L1 cache of the CPU core. Such an extensive data reuse via the L1 cache eases the pressure on the slow system memory. Thus, for CPUs, system memory bandwidth is not a limiting factor.

For large-scale parameter studies, utilising the massive computing power of GPUs is a viable alternative (compared to CPUs). The millions or even billions of parameter combinations (independent tasks) can easily be distributed among a large number of GPU threads. The most straightforward parallelisation strategy is assigning one ODE system to a GPU thread, each with a different parameter set and/or initial conditions. However, while a large amount of fast cache memory is available for a single CPU thread, in the case of GPUs, hundreds or thousands of threads share a similar amount of fast on-chip cache memory. Therefore, direct or iterative solvers, where operations on moderately large or small matrices are involved, are memory bandwidth-bound approaches for the GPU [104]. The reason is simple: only a single matrix must be stored on a single CPU core, while hundreds or thousands of matrices must be stored somewhere in the GPU for a single streaming multiprocessor. The massive number of matrices is most probably stored in the slowest global memory of the GPU due to the fast on-chip memory capacity limitations (registers, shared memory or L1 cache), see Sec. 5.2 for details.
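The storage argument can be made concrete with a back-of-the-envelope estimate. The sketch below assumes one dense double-precision coefficient matrix per ODE system, sized (N+1) x (N+1) for N surface modes plus the radial unknown; the mode count, the number of resident threads and the on-chip capacity are hypothetical round numbers, not specifications of a particular GPU.

```python
BYTES_PER_DOUBLE = 8


def matrix_bytes(num_modes: int) -> int:
    """Bytes of one dense double-precision coefficient matrix of size
    (num_modes + 1) x (num_modes + 1); the extra row/column stands for the
    radial (zeroth-mode) unknown. This sizing is an illustrative assumption."""
    n = num_modes + 1
    return n * n * BYTES_PER_DOUBLE


def bytes_per_sm(num_modes: int, resident_threads: int) -> int:
    """Matrix storage if every resident thread of one streaming
    multiprocessor holds its own matrix (one ODE system per thread)."""
    return matrix_bytes(num_modes) * resident_threads


if __name__ == "__main__":
    modes = 20           # hypothetical: "a few tens" of surface modes at most
    threads = 1024       # hypothetical number of resident threads per SM
    on_chip_kib = 256    # hypothetical fast on-chip storage per SM, in KiB
    one = matrix_bytes(modes) / 1024
    total = bytes_per_sm(modes, threads) / 1024
    print(f"one matrix: {one:.1f} KiB")
    print(f"{threads} matrices: {total:.0f} KiB vs {on_chip_kib} KiB on chip")
```

A single matrix of a few KiB fits comfortably in a CPU core's L1 cache, whereas the per-SM total exceeds the assumed on-chip capacity by more than an order of magnitude, which is why the matrices would spill to global memory.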

A memory-friendly, low-storage (matrix-free) alternative is fixed-point iteration, since it requires only successive function evaluations using state variables that are already available. Unfortunately, the convergence of a fixed-point iteration is not guaranteed; it may converge slowly or even diverge. The main aim of the present study is to develop a fixed-point iteration technique with a fast convergence rate, specialised for the ODE systems originating from nonlinear perturbation theories. Altogether, four variants are tested on various parameter combinations of an acoustically excited microbubble. The best version requires only 1 to 3 function reevaluations to reach the prescribed error tolerance.

The performance of the fixed-point iteration technique is compared with Gauss elimination. It is demonstrated that in terms of the raw arithmetic operation count, Gauss elimination is superior. However, via simple elementary calculations, it is shown that the performance of the Gauss elimination is limited by memory bandwidth. Depending on the GPU architecture, this bottleneck can be so severe that the Gauss elimination becomes slower by to compared to the best fixed-point iteration variant. The pressure on the global memory can be eased by extensive data reuse in the user-programmable shared memory, e.g., by decomposing the coefficient matrix into smaller chunks. However, such a task is far from trivial, architecture-dependent, and still, the memory bottleneck can only be eliminated partially while the complexity of the code increases significantly. Keep in mind that a fixed-point iteration needs only the reevaluation of some parts of the ODE function. Therefore, detailed runtime comparisons with specific implementations with other software packages or algorithms are out of the scope of the paper. Instead, this study provides best and worst-case scenarios for selecting the proper algorithm depending on the available hardware and the scale of the problem.
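The memory-bandwidth argument can be illustrated with the standard roofline model, in which attainable throughput is the minimum of the compute peak and the product of arithmetic intensity and memory bandwidth. The sketch assumes a worst case in which Gauss elimination keeps the matrix in global memory and every multiply-add reads and writes one 8-byte matrix entry; the hardware figures are hypothetical, and the estimate is not the operation count derived in Sec. 5.1.

```python
def gauss_elimination_flops(n: int) -> float:
    """Leading-order flop count of Gauss elimination on an n x n system
    (about n^3/3 multiply-add pairs, i.e. 2 n^3 / 3 flops)."""
    return 2.0 * n**3 / 3.0


def gauss_elimination_bytes(n: int, bytes_per_value: int = 8) -> float:
    """Global-memory traffic under a worst-case assumption: every
    trailing-submatrix update reads and writes one matrix entry with no
    on-chip reuse (about n^3/3 reads plus n^3/3 writes)."""
    return 2.0 * bytes_per_value * n**3 / 3.0


def attainable_gflops(intensity: float, peak_gflops: float, bandwidth_gbs: float) -> float:
    """Roofline model: min(compute roof, arithmetic intensity x bandwidth)."""
    return min(peak_gflops, intensity * bandwidth_gbs)


if __name__ == "__main__":
    n = 21                             # hypothetical system size (radial + 20 modes)
    intensity = gauss_elimination_flops(n) / gauss_elimination_bytes(n)  # flop/byte
    peak, bandwidth = 1000.0, 900.0    # hypothetical FP64 GFLOPS and GB/s
    perf = attainable_gflops(intensity, peak, bandwidth)
    print(f"intensity = {intensity:.3f} flop/byte, "
          f"attainable = {perf:.1f} GFLOPS ({100 * perf / peak:.1f}% of peak)")
```

Under this assumption the intensity is 1/8 flop per byte independently of n, so the elimination is bandwidth-bound whenever the ratio of peak throughput to bandwidth exceeds 1/8, which is the case for typical GPUs. A matrix-free iteration whose operands stay in registers is not capped by this roof.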

2. Governing equations

This section describes the governing equations of the volume oscillation and the shape deformation of a single bubble subjected to acoustic forcing. The model is based on the work of Shaw [64], [65], [1]. Assuming axisymmetric shape deformation, the bubble surface is described by the infinite series

$$r(\theta, t) = R(t) + \sum_{n=2}^{\infty} a_n(t)\, P_n(\cos\theta), \qquad (1)$$

where $\theta$ is the polar angle measured from the symmetry axis, $R(t)$ is the mean bubble radius as a function of time $t$ (i.e. the volume oscillation, also referred to as the zeroth mode), $P_n$ are the Legendre polynomials of order $n$ (shape modes) and $a_n(t)$ are the corresponding shape mode amplitudes. In accordance with Eq. (1), the translational motion (first mode, $n = 1$) is neglected. The axisymmetric assumption is valid if the disturbance causing the deformation has a definite direction; for instance, the direction of the acoustic wave or the direction of a neighbouring bubble. The system consists of coupled, second-order nonlinear ODEs, which describe the temporal evolution of the mean bubble radius (volume oscillation, spherical part) and the surface mode amplitudes (non-spherical part). The nonlinear coupling terms in the ODEs describe the interaction between the surface modes and the volume oscillation.
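The series of Eq. (1) can be evaluated directly with NumPy's Legendre module. A minimal sketch, assuming the form r(θ, t) = R(t) + Σ_{n≥2} a_n(t) P_n(cos θ) with the translational mode n = 1 omitted; the function name and the radius and amplitude values are illustrative.

```python
import numpy as np
from numpy.polynomial import legendre


def surface_radius(theta, mean_radius, mode_amplitudes):
    """Bubble surface r(theta) = R + sum_{n>=2} a_n P_n(cos theta).

    mode_amplitudes[k] holds a_{k+2}; the n = 1 (translational) mode is
    excluded by leaving its Legendre coefficient at zero.
    """
    coeffs = np.zeros(len(mode_amplitudes) + 2)
    coeffs[0] = mean_radius          # zeroth mode: spherical part
    coeffs[2:] = mode_amplitudes     # shape modes n = 2, 3, ...
    return legendre.legval(np.cos(theta), coeffs)


theta = np.linspace(0.0, np.pi, 181)
r = surface_radius(theta, 50e-6, [5e-6, 2e-6])  # illustrative values in metres
print(r[0], r[-1])
```

Because P_n(1) = 1 and P_n(−1) = (−1)^n, the two poles give R + Σ a_n and R + Σ (−1)^n a_n, which is a quick sanity check of the coefficient layout.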

The equation describing the volume oscillation reads as

| (2) |

where $c_L$ is the sound speed of the surrounding liquid and the function

| (3) |

describes the dynamic mechanical equilibrium at the bubble interface. Here, $\rho_L$ is the liquid density, $R_E$ refers to the equilibrium bubble radius, $\gamma$ is the polytropic exponent (adiabatic behaviour) and $p_V$ is the vapour pressure. The pressure far away from the bubble is composed of a static ambient pressure $P_\infty$ and a periodic component with pressure amplitude $p_A$ and angular frequency $\omega$. The dynamic viscosity and the surface tension are represented by $\mu_L$ and $\sigma$, respectively. The equilibrium gas pressure inside the bubble can be expressed as

$$p_{G0} = \frac{2\sigma}{R_E} + P_\infty - p_V. \qquad (4)$$

The notation in Eq. (2) indicates the order of the series expansion of the perturbation method. Note that only second-order terms are presented, which describe the influence of the surface modes on the volume pulsation of the bubble. Without these terms, Eq. (2) reduces to the Keller–Miksis equation [105], which describes the radial pulsation of a spherically symmetric bubble and accounts for the compressibility of the liquid to first order. It is important to note that the original work of Shaw includes third-order terms. These terms significantly increase the complexity of the model and the computational demand; therefore, they are neglected throughout the present paper. It is demonstrated in Sec. 4 that keeping only the second-order terms and neglecting the translational motion already provides good agreement with experimental data.
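For reference, the spherical subcase can be sketched in code. The block assumes a Keller–Miksis form that is common in the literature, with wall pressure p_L = p_G0 (R_E/R)^{3γ} + p_V − 2σ/R − 4μU/R and far-field pressure P_∞ + p_A sin(ωt); the exact form of Eq. (18) is not reproduced in this text, so the sine forcing and the sign conventions are assumptions. The viscous part of dp_L/dt contains the wall acceleration, so it is moved to the left-hand side. The water-like parameter values in `WATER` are illustrative only.

```python
import math


def keller_miksis_acceleration(t, R, U, p):
    """Wall acceleration of an assumed spherical Keller–Miksis equation:
      (1 - U/c) R Rdd + (1 - U/(3c)) 3/2 U^2
          = (1 + U/c) (pL - pinf)/rho + R/(rho c) d(pL - pinf)/dt.
    The -4 mu Rdd / R part of dpL/dt is moved to the left-hand side."""
    c, rho, mu, sigma = p["c"], p["rho"], p["mu"], p["sigma"]
    gamma, pV, P_inf = p["gamma"], p["pV"], p["P_inf"]
    pA, omega, RE = p["pA"], p["omega"], p["RE"]

    pG0 = 2.0 * sigma / RE + P_inf - pV            # equilibrium gas pressure, Eq. (4)
    pG = pG0 * (RE / R) ** (3.0 * gamma)            # polytropic gas pressure
    pL = pG + pV - 2.0 * sigma / R - 4.0 * mu * U / R
    pinf = P_inf + pA * math.sin(omega * t)
    dpinf_dt = pA * omega * math.cos(omega * t)

    # dpL/dt without its Rdd-dependent viscous term
    dpL_dt_explicit = (-3.0 * gamma * pG * U / R
                       + 2.0 * sigma * U / R**2
                       + 4.0 * mu * U**2 / R**2)

    rhs = ((1.0 + U / c) * (pL - pinf) / rho
           + R / (rho * c) * (dpL_dt_explicit - dpinf_dt)
           - (1.0 - U / (3.0 * c)) * 1.5 * U**2)
    lhs_coeff = (1.0 - U / c) * R + 4.0 * mu / (rho * c)
    return rhs / lhs_coeff


# Illustrative water-like parameters (SI units); not the values used in this study
WATER = dict(c=1497.0, rho=997.0, mu=8.9e-4, sigma=0.072, gamma=1.4,
             pV=3166.0, P_inf=1.0e5, pA=0.0, omega=2 * math.pi * 20e3, RE=10e-6)

print(keller_miksis_acceleration(0.0, 0.9 * WATER["RE"], 0.0, WATER))
```

At rest at the equilibrium radius without forcing the acceleration vanishes, because Eq. (4) balances the wall pressure against P_∞; a compressed bubble at rest accelerates outwards.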

The second-order coupling terms can be divided into two parts. The inviscid part is defined as

| (5) |

while the damping part (effect of liquid viscosity) is written as

| (6) |

During the numerical simulations, the infinite series in Eqs. (5), (6) are truncated at a finite number . In the scientific literature, the truncation varies between to 16 [66]. In this study, the authors increased it to to be able to examine the behaviour of the higher modes as well.

The dynamics of each surface mode are governed by a second-order ODE:

| (7) |

Here, the left-hand side of the equation corresponds to the system used by Plesset [106] for linear stability analysis (linear in terms of ). The second-order terms realise the nonlinear feedback between the modes and the spherical oscillation (nonlinear in terms of ). As in the case of Eq. (2), the second-order terms can be divided into an inviscid part

| (8) |

and a damping part

| (9) |

where are order-3 hypermatrices composed of integrals of Legendre polynomials or evaluations of Legendre polynomials; for the definitions, the reader is referred to papers [64], [65].
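Each second-order term in Eqs. (8), (9) is a double sum over mode pairs weighted by an order-3 hypermatrix, so, if the hypermatrices are treated as dense, one evaluation for N retained modes costs on the order of N³ multiply-adds. A generic contraction of that shape can be sketched with `numpy.einsum`; the random arrays are placeholders, not the hypermatrices of [64], [65], and which amplitudes or velocities enter each sum is not reproduced here.

```python
import numpy as np


def contract_order3(H, x, y):
    """Return c_n = sum_{j,k} H[n, j, k] * x[j] * y[k] for every mode n."""
    return np.einsum("njk,j,k->n", H, x, y)


def contract_order3_loops(H, x, y):
    """Reference implementation with explicit loops (same result, slower)."""
    out = np.zeros(H.shape[0])
    for n in range(H.shape[0]):
        for j in range(H.shape[1]):
            for k in range(H.shape[2]):
                out[n] += H[n, j, k] * x[j] * y[k]
    return out


rng = np.random.default_rng(0)
N = 8                                  # hypothetical number of retained modes
H = rng.standard_normal((N, N, N))     # placeholder coefficients only
a = rng.standard_normal(N)             # e.g. mode amplitudes
adot = rng.standard_normal(N)          # e.g. mode velocities
c = contract_order3(H, a, adot)
print(c)
```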

2.1. Rearrangement of the ODE system suitable for fixed-point iteration

Rewriting the governing equations to a first-order system by introducing additional variables leads to

| (10) |

| (11) |

| (12) |

| (13) |

For an efficient fixed-point iteration technique to compute the second derivatives, Eqs. (2), (7) are reorganised to separate explicit parts (need a single evaluation) and implicit parts (need re-evaluations):

| (14) |

| (15) |

Observe that the order notation is omitted for clarity. In the implicit parts, the dependence on the second derivatives is also indicated explicitly, where . According to Eqs. (2), (7), the leading coefficients in Eqs. (14), (15) are

| (16) |

| (17) |

In Eq. (14), the function

| (18) |

is the well-known Keller–Miksis equation [105]. Although it is an explicit part, it is separated from to clearly highlight the subcase having spherical symmetry. The two other functions in Eq. (14) can be obtained by reorganising Eqs. (5), (6). The explicit part is

| (19) |

while the implicit part is defined as

| (20) |

By rearranging Eqs. (7), (8), (9), the components of Eq. (15) are

| (21) |

| (22) |

| (23) |

and

| (24) |

It should be stressed that only Eqs. (20), (22) and (24) are re-evaluated during the fixed-point iteration; thus, one iteration costs much less than a complete function evaluation.

3. Variants of fixed-point iteration techniques

This section gives only the definitions of the iteration variants, with short notes on their advantages and disadvantages. Detailed convergence characteristics are given in Sec. 4, and a parameter study is shown in Sec. 4.1. The number of iterations required to achieve a prescribed precision depends on the initial guess (how close it is to the solution) and on the convergence rate of the iteration (divergence is also possible).

It is practical to investigate the classical fixed-point iteration first, examine its behaviour and improve it if necessary. Based on Eqs. (14), (15), this baseline iteration, referred to as throughout the paper, reads

| (25) |

| (26) |

| (27) |

| (28) |

where the upper index in parentheses indicates the iteration number. Observe that the initial guess (zeroth index) is estimated from the explicit parts of the system. The initial bubble wall acceleration is computed from the Keller–Miksis equation with spherical symmetry. Next, the initial accelerations of the shape mode amplitudes are obtained from the linear part of their governing equations (which also requires the initial bubble wall acceleration). Initialising the fixed-point iteration via Eqs. (25), (26) is better than simply taking the initial guess, e.g., from the previous time step. It is shown in Sec. 4 that this algorithm needs a relatively large number of iterations to reach a given precision.
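The structure of such a baseline iteration can be illustrated on a small toy problem. The sketch is not the model of Sec. 2: it assumes a hypothetical linear implicit system in which `r` stands for the bubble wall acceleration and `q` for the shape-mode accelerations, with made-up coefficients (`A_r`, `e_km`, `e_r_nl`, `g`, `A_q`, `e_lin`, `e_nl`, `s`, `H`). It mirrors only the ideas in the text: the explicit parts are evaluated once, the initial guess comes from the spherical part and the linear mode equations, and each sweep re-evaluates only the implicit terms (a Jacobi-type update that uses the previous iterate everywhere). A dense direct solve with `numpy.linalg.solve` (LU-based Gauss elimination) serves as a reference.

```python
import numpy as np


def baseline_fixed_point(A_r, e_km, e_r_nl, g, A_q, e_lin, e_nl, s, H,
                         tol=1e-12, max_iter=200):
    """Jacobi-type fixed-point iteration on a hypothetical toy system
        A_r * r = e_km + e_r_nl + g @ q
        A_q * q = e_lin + e_nl + s * r + H @ q
    The explicit terms e_* are computed once by the caller; every sweep
    re-evaluates only the implicit terms g @ q, s * r and H @ q.
    Returns (r, q, k), where k is the number of sweeps performed."""
    # Initial guess: spherical part for r, then linear mode equations driven by r
    r = e_km / A_r
    q = (e_lin + s * r) / A_q
    for k in range(1, max_iter + 1):
        r_new = (e_km + e_r_nl + g @ q) / A_r
        q_new = (e_lin + e_nl + s * r + H @ q) / A_q  # uses the previous r
        done = (abs(r_new - r) <= tol * (1.0 + abs(r_new))
                and np.all(np.abs(q_new - q) <= tol * (1.0 + np.abs(q_new))))
        r, q = r_new, q_new
        if done:
            return r, q, k
    raise RuntimeError("fixed-point iteration did not converge")


# Hypothetical, weakly coupled coefficients
rng = np.random.default_rng(1)
m = 6
A_r, e_km, e_r_nl = 1.0, 0.3, 0.02
g = 0.02 * rng.standard_normal(m)
A_q = 1.0 + rng.random(m)
e_lin = rng.standard_normal(m)
e_nl = 0.05 * rng.standard_normal(m)
s = 0.3 * rng.standard_normal(m)
H = 0.05 * rng.standard_normal((m, m))

r, q, its = baseline_fixed_point(A_r, e_km, e_r_nl, g, A_q, e_lin, e_nl, s, H)

# Reference: assemble the same system as a dense matrix and solve it directly
M = np.zeros((m + 1, m + 1))
M[0, 0], M[0, 1:] = A_r, -g
M[1:, 0] = -s
M[1:, 1:] = np.diag(A_q) - H
y = np.linalg.solve(M, np.concatenate(([e_km + e_r_nl], e_lin + e_nl)))
print(its, abs(r - y[0]), np.max(np.abs(q - y[1:])))
```

The iteration never forms `M`; the dense assembly appears only to verify that both routes reach the same accelerations.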

For this reason, improved versions are needed. The variant is defined as follows:

| (29) |

| (30) |

| (31) |

| (32) |

where the initial guess and the calculation of the bubble wall acceleration via Eq. (31) remain the same. However, in this method the bubble wall acceleration obtained from Eq. (31) is used in Eq. (32) to calculate the second derivatives of the mode amplitudes. Contrary to the previous case, the bubble wall acceleration of the current iteration stage is thus used to update the accelerations of the mode amplitudes, in order to achieve faster convergence. Unfortunately, this substitution does not significantly reduce the iteration number or improve convergence.
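The difference between the baseline and this variant is the same as that between Jacobi-type and Gauss–Seidel-type sweeps. A minimal sketch on a hypothetical toy system (not the paper's equations; all coefficients are made up) toggles only whether the mode update uses the previous or the just-computed radial acceleration. Both orderings share the same fixed point, which is checked against a direct solve; the iteration counts printed for this toy should not be read as predictions for the bubble model.

```python
import numpy as np


def fixed_point(A_r, e_r, g, A_q, e_q, s, H, use_updated_radial,
                tol=1e-12, max_iter=200):
    """Toy implicit system: A_r * r = e_r + g @ q, A_q * q = e_q + s * r + H @ q.
    use_updated_radial=False: the mode update uses the previous r (Jacobi-type).
    use_updated_radial=True: it uses the r just computed in the same sweep
    (Gauss–Seidel-type). Returns (r, q, number_of_sweeps)."""
    r = e_r / A_r
    q = (e_q + s * r) / A_q
    for k in range(1, max_iter + 1):
        r_new = (e_r + g @ q) / A_r
        r_used = r_new if use_updated_radial else r
        q_new = (e_q + s * r_used + H @ q) / A_q
        done = (abs(r_new - r) <= tol * (1.0 + abs(r_new))
                and np.all(np.abs(q_new - q) <= tol * (1.0 + np.abs(q_new))))
        r, q = r_new, q_new
        if done:
            return r, q, k
    raise RuntimeError("fixed-point iteration did not converge")


rng = np.random.default_rng(1)
m = 6
A_r, e_r = 1.0, 0.3
g = 0.02 * rng.standard_normal(m)
A_q = 1.0 + rng.random(m)
e_q = rng.standard_normal(m)
s = 0.3 * rng.standard_normal(m)
H = 0.05 * rng.standard_normal((m, m))

results = {}
for flag in (False, True):
    results[flag] = fixed_point(A_r, e_r, g, A_q, e_q, s, H, use_updated_radial=flag)
    print(f"use_updated_radial={flag}: {results[flag][2]} sweeps")

# Direct dense solve of the same linear system for reference
M = np.zeros((m + 1, m + 1))
M[0, 0], M[0, 1:] = A_r, -g
M[1:, 0] = -s
M[1:, 1:] = np.diag(A_q) - H
y = np.linalg.solve(M, np.concatenate(([e_r], e_q)))
```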

Therefore, further modifications lead to the iteration technique :

| (33) |

| (34) |

| (35) |

| (36) |

| (37) |

where the initial guess and the calculation of the bubble wall acceleration remain the same as in the previous two cases. As an improvement, an intermediate (half) step is added via Eq. (36) in order to pre-approximate the accelerations of the mode amplitudes from the linear parts of the ODE system, employing the current bubble wall acceleration. In Eq. (37), this linear approximation is used. This slight modification improves the convergence rate significantly. Note that the term appears in both Eqs. (36) and (37); thus, it has to be reevaluated twice. This is taken into account via the factor in Eq. (53) during the computation of the arithmetic operation count in Sec. 5.1.

The final version called tries to further improve the iteration by introducing an additional intermediate approximation:

| (38) |

| (39) |

| (40) |

| (41) |

| (42) |

| (43) |

That is, the accelerations of the surface mode amplitudes are updated twice via the linear approximation. This increases the factor of the operation count to ; however, the extra intermediate step makes convergence even faster.

4. Convergence rate of the fixed-point iterations

The convergence rate has the largest impact on the computational performance of a fixed-point iteration technique. Simply put, fewer iterations mean fewer reevaluations and a lower arithmetic operation count. This section is devoted to a detailed analysis of the convergence rates of the fixed-point iteration variants introduced in Sec. 3. First, only two test cases with different parameter combinations are examined, for which experimental data are available to validate the numerical results. Next, the convergence rate is presented for a broader range of parameters.

The cases selected for a preliminary study are taken from the experimental work of Cleve et al. [67], see Figs. 5 and 6 therein. One has a dominant mode-2 oscillation with an equilibrium bubble radius of and a pressure amplitude of (Case A). The other has dominant mode-3 dynamics at and (Case B). For both cases, the driving frequency is . The rest of the parameters defined in Sec. 2 are as follows: , , , , , . Paper [67] presents the decomposition of the measured perimeter of the bubble into a sum of a spherical part and surface modes with the help of Legendre polynomials. Therefore, the measured and calculated time series curves can be compared directly.

The ODE system (10), (24) is solved by the adaptive fifth-order Runge–Kutta–Cash–Karp (RKCK) method with fourth-order embedded error estimation. The absolute and relative tolerances are set to . A single time step has six stages that require six function evaluations. The fixed-point iteration technique is embedded into the function evaluation to obtain the second derivatives and with a prescribed precision. Its error is defined as

| (44) |

where the vector f is composed of and . If the error is smaller than the tolerance written as

| (45) |

the fixed-point iteration is considered converged (and terminated). The comparison is element-wise; that is, every element of must be smaller than the corresponding element of . The absolute and the relative tolerances are set to , which is an order of magnitude smaller than that of the RKCK method, to minimise the effect of the fixed-point iteration on the time step size selection. The convergence check starts from , which compares the initial guess with the first complete evaluation of the ODE function. Thus, zero reevaluations are also possible if the initial guess based on the linear approximation is already precise enough.
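The stopping test and the reevaluation count can be sketched as follows. Eqs. (44), (45) are not reproduced in this text, so the sketch assumes a common form: the error is the element-wise absolute difference of successive iterates, and the tolerance is atol + rtol·|f| element-wise. The tolerance value 1e-10 in the example is hypothetical. Following the text, `iterates[0]` is the initial guess and `iterates[1]` the first complete evaluation, so a passing comparison at k = 1 means zero reevaluations.

```python
import numpy as np


def is_converged(f_new, f_old, atol, rtol):
    """Element-wise test, assumed form: |f_new - f_old| < atol + rtol * |f_new|
    must hold for every entry (f stacks radial and mode accelerations)."""
    f_new = np.asarray(f_new)
    err = np.abs(f_new - np.asarray(f_old))
    tol = atol + rtol * np.abs(f_new)
    return bool(np.all(err < tol))


def count_reevaluations(iterates, atol, rtol):
    """iterates[0]: initial guess; iterates[1]: first complete evaluation;
    every further entry is one reevaluation of the implicit parts.
    Returns the number of reevaluations needed (0 if iterates[1] already
    agrees with the initial guess) or None if no comparison passes."""
    for k in range(1, len(iterates)):
        if is_converged(iterates[k], iterates[k - 1], atol, rtol):
            return k - 1
    return None


atol = rtol = 1e-10   # hypothetical tolerances
seq = [np.array([1.0, 2.0]),
       np.array([1.001, 2.0]),
       np.array([1.001 + 1e-14, 2.0])]
print(count_reevaluations(seq, atol, rtol))
```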

The numerical simulations are summarised in Fig. 1. The left and right-hand sides are related to Cases A and B, respectively. The first row shows the mean bubble radius as a function of time. The second row depicts the time series curves of the two most significant mode amplitudes normalised by the actual mean bubble radius. Due to the normalisation, the deviation from the small perturbation regime can be visualised explicitly. In the third row, the mode amplitudes are also given in microns to compare the numerics directly with the measurements. The dimensionless time is defined via the period of the acoustic driving: means one acoustic cycle. Altogether, 300 cycles are simulated in both cases. In Fig. 1, only the transition from near equilibrium (in the absence of acoustic driving) to the steady oscillation is presented. The initial conditions were:

| (46) |

| (47) |

| (48) |

| (49) |

| (50) |

Fig. 1.

Time series curves for the preliminary test cases. Left-hand side: Case A at and . Right-hand side: Case B at and . For both cases, the driving frequency is . From top to bottom, the first, second and third rows represent the mean bubble radius , the normalised shape mode amplitudes and the shape mode amplitudes in micron, respectively.

The amplitudes of modes , , and show excellent agreement with the measured data presented in Figs. 5 and 6 of [67], although the values of are as high as approximately . Therefore, the model is valid not only for small perturbations but also for relatively large-amplitude oscillations. In addition, despite neglecting the translational motion of the bubble in our governing equations, which has an amplitude comparable to the equilibrium radius , the shape mode amplitudes still agree well with the measurements. From a numerical point of view, this means that the preliminary tests of the convergence rate of our fixed-point iteration variants are carried out in validated situations.

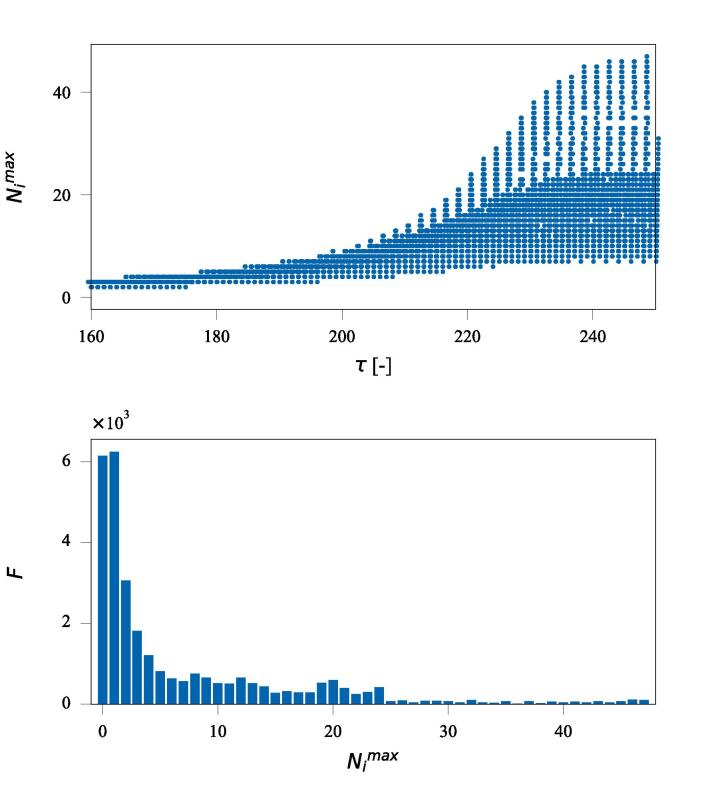

The required number of iterations varies from time step to time step and from function evaluation to function evaluation. This is demonstrated in the top panel of Fig. 2, where the maximum required iterations are shown as a function of time (Case A, fixed-point iteration variant ). Observe how the iteration numbers increase with the magnitude of the shape mode amplitudes; compare the bottom-left panel of Fig. 1 with the top panel of Fig. 2. The RKCK method has six stages (six function evaluations), which translates to six fixed-point iteration processes in a single time step. Generally, the number of iterations can differ at each stage. For simplicity, only the maximum value (worst-case scenario) is recorded per time step, denoted by $k_{\max}$. Due to the high variability of $k_{\max}$, only a statistical comparison of the convergence rates of the fixed-point iteration variants is possible. For this purpose, a histogram is created for each simulation, where the occurrences F of the values of $k_{\max}$ are presented, see the bottom panel of Fig. 2 as an example. The average iteration number, defined as

$$\bar{k} = \frac{1}{N_t} \sum_{i=1}^{N_t} k_{\max,i}, \qquad (51)$$

can characterise a complete simulation. The notation $N_t$ stands for the number of time steps, and $k_{\max,i}$ is the value of $k_{\max}$ in the $i$-th time step.
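The bookkeeping behind the histograms and the average iteration number is simple. A sketch, assuming the reevaluation counts of the six RKCK stages of every time step are available as an (N_t, 6) integer array; the array layout, the function name and the example counts are hypothetical.

```python
import numpy as np


def iteration_statistics(stage_counts):
    """Histogram and average of the per-step maximum reevaluation count.

    stage_counts has shape (N_t, 6): the count of each RKCK stage in every
    time step. Only the per-step maximum k_max is kept (worst case).
    Returns (F, k_bar, k_top): F[j] is the number of steps with k_max == j,
    k_bar the mean of k_max over all N_t steps, k_top its largest value."""
    k_max = np.asarray(stage_counts, dtype=int).max(axis=1)
    F = np.bincount(k_max)
    k_bar = k_max.mean()
    return F, k_bar, int(k_max.max())


stages = np.array([[0, 1, 1, 0, 1, 1],
                   [1, 1, 2, 1, 1, 1],
                   [1, 1, 1, 1, 1, 1],
                   [0, 0, 0, 0, 0, 1]])   # illustrative counts for 4 time steps
F, k_bar, k_top = iteration_statistics(stages)
print(F, k_bar, k_top)
```

The mean of k_max equals the histogram-weighted mean Σ j·F[j] / Σ F[j], so either the raw per-step values or the histogram alone suffices to report it.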

Fig. 2.

Iteration number for Case A applying the fixed-point iteration variant . Top: maximum iteration number at each time step as a function of time . Bottom: occurrences F of the values of for the complete simulation ().

Figure 3 summarises the histograms obtained for the two preliminary cases tested with the four fixed-point iteration variants; see the figure caption for the layout details. The convergence rate of variants (first row) and (second row) is poor. The required number of function reevaluations can be as high as nearly 70. According to the detailed performance analysis carried out in Sec. 5, such high reevaluation numbers make these fixed-point iteration techniques inferior to Gauss elimination, even though the fixed-point iteration is free of memory bandwidth limitations. A significant difference occurs between variants and ; compare rows two and three in Fig. 3. That is, an additional intermediate approximation for the acceleration of the shape mode amplitudes increases the convergence rate considerably. The maximum number of reevaluations for both test cases is five. Finally, introducing a second intermediate approximation (, fourth row) further improves the convergence rate. In Case A (left-hand side), the fixed-point iteration always converged within a single reevaluation during the complete integration of the ODE system. For Case B, this number increases to three; however, this is still a computationally efficient situation.

Fig. 3.

Summary of the histograms for Cases A (left side) and B (right side). The first, second, third and fourth rows correspond to fixed-point iteration variants and , respectively.

Table 1 extracts the main statistical quantities from the eight histograms presented in Fig. 3. The average iteration numbers and the maximum value of in the histograms confirm the above conclusions. Besides being memory-friendly, the fourth variant of the fixed-point iteration () has a fast convergence rate, which makes the technique low-cost in terms of arithmetic operation count as well.

Table 1.

Summary of the statistical properties of the fixed-point iteration variants tested on Cases A and B.

| Variant | Case A, mean iterations | Case A, max iterations | Case B, mean iterations | Case B, max iterations |
|---|---|---|---|---|
| 1 | 6.9 | 46 | 28.1 | 69 |
| 2 | 6.8 | 46 | 27.9 | 69 |
| 3 | 1.9 | 5 | 3.8 | 5 |
| 4 | 1.0 | 1 | 2.4 | 3 |
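Each entry of Table 1 condenses one histogram of Fig. 3 into a mean and a maximum. A minimal sketch of that reduction, assuming a histogram stored as a mapping from iteration count to number of occurrences (the sample data are invented for illustration, not read from Fig. 3):

```python
def histogram_stats(hist):
    """Return (mean, maximum) iteration count from {iterations: occurrences}."""
    total = sum(hist.values())
    mean = sum(k * f for k, f in hist.items()) / total
    peak = max(k for k, f in hist.items() if f > 0)
    return mean, peak


# Hypothetical occurrences: 700 time steps needed one iteration,
# 250 needed two and 50 needed three.
example = {1: 700, 2: 250, 3: 50}
mean, peak = histogram_stats(example)
print(f"mean = {mean:.2f}, max = {peak}")  # mean = 1.35, max = 3
```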

4.1. Convergence rate for a wide range of parameters

Additional simulations are performed to test the convergence rate of the best fixed-point iteration variant over a broader range of parameters. The driving frequency and the pressure amplitude are modified to and , respectively. Due to the much larger pressure amplitude, the radial oscillations of the bubble have much larger amplitudes. Consequently, stable surface mode oscillations (without break-up) occur at smaller bubble sizes. For this reason, the equilibrium radius, used as the control parameter, is varied between and with a resolution of 8192 points. The simulation was run on an Nvidia RTX A5000 GPU (Ampere architecture) with a double-precision peak performance of GFLOPS. The runtime was hours.

The first 268 acoustic cycles are regarded as transients, and the minima and maxima of the relative surface mode amplitudes are recorded in each of the subsequent 32 cycles. These values are shown in the top panel of Fig. 4 as a function of the control parameter, up to mode number six. In the case of bubble break-up, the complete simulation is discarded; see the missing parts at large equilibrium radii. Although the relative amplitude can reach values at which the governing equations might no longer be valid, the corresponding data are kept if stable oscillations are observed, in order to test the fixed-point iteration even under extreme conditions. The bottom panel shows the maximum number of function reevaluations over the complete integration as a function of the control parameter: it never exceeds one.
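The post-processing described above (discard the transient, then record per-cycle extrema) can be sketched as follows. The sketch assumes the amplitude is available on a uniform grid with a fixed number of samples per acoustic cycle; `samples_per_cycle` is a hypothetical parameter, since an adaptive integrator would in practice require dense output or resampling. The synthetic signal is illustrative only.

```python
import math


def cycle_extrema(signal, samples_per_cycle, transient_cycles=268, recorded_cycles=32):
    """Discard the transient cycles, then return (min, max) for each recorded cycle."""
    start = transient_cycles * samples_per_cycle
    extrema = []
    for cycle in range(recorded_cycles):
        first = start + cycle * samples_per_cycle
        chunk = signal[first:first + samples_per_cycle]
        if len(chunk) < samples_per_cycle:
            raise ValueError("signal is shorter than transient + recorded cycles")
        extrema.append((min(chunk), max(chunk)))
    return extrema


# Synthetic relative mode amplitude: a decaying transient plus a steady
# oscillation of amplitude 0.1 (illustrative only).
spc = 64
signal = [0.1 * math.sin(2 * math.pi * i / spc) + math.exp(-i / 1000.0)
          for i in range((268 + 32) * spc)]
extrema = cycle_extrema(signal, spc)
print(len(extrema), extrema[0])
```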

Fig. 4.

Parameter study to test the convergence rate of the best fixed-point iteration variant over a wider range of parameters. The driving frequency and pressure amplitude are and , respectively. The bubble size is varied between and with a resolution of 8192. Top panel: surface mode amplitudes. Bottom panel: maximum required number of function reevaluations.

5. Performance analysis in terms of GPU architecture

This section presents a theoretical performance comparison of our best fixed-point iteration technique with the Gauss elimination. As the Gauss elimination minimises the arithmetic operation count for solving a linear system of equations, it serves as a good baseline for comparison. It is shown that the arithmetic operation requirements and the hardware features of a GPU (the theoretical peak performance of the compute units and the theoretical peak bandwidth of the memory subsystems) suffice to estimate best- and worst-case runtimes. It is also demonstrated that memory bandwidth limitations can severely increase the overall runtime of an ODE function evaluation.

5.1. Analysis of the arithmetic operation count

The raw operation count of a numerical technique is a fundamental metric. Reevaluating Eq. (20) requires

| (52) |

additions and multiplications. Here we assume that the coefficients involving n are precomputed (possibly at compile time) and that the division by R is already available, e.g., from the evaluation of Eq. (19). Similarly, the arithmetic operation counts of Eqs. (22) and (24) are

| (53) |

and

| (54) |

respectively. It was shown in Sec. 3 that Eq. (22) needs to be reevaluated three times during a single iteration of the variant analysed here. This effect is taken into account by a multiplication factor whose value depends on the fixed-point iteration variant; in the current version, it equals three. The absence of division operations in the reevaluations is an important aspect of the proposed technique: an addition (subtraction) or multiplication is processed in one clock cycle, whereas a division needs approximately 50–70 clock cycles. That is, a division is an extremely expensive operation, and the number of divisions must be kept to a minimum. The overall operation count (additions and multiplications) of the fixed-point iteration is

| (55) |

where is the number of iterations required to achieve a given tolerance. The superscript indicates that only additions and multiplications are counted.

For the Gauss elimination, the baseline of the comparison, the number of additions and multiplications also depends cubically on the system size N:

$$ N_{\mathrm{G}}^{+,\times} = \frac{2}{3}N^3 + N^2 - \frac{5}{3}N = \frac{N(N-1)(2N+5)}{3}. \qquad (56) $$

Gauss elimination, however, involves a relatively large number of division operations:

$$ N_{\mathrm{G}}^{\div} = \frac{N(N+1)}{2}. \qquad (57) $$

Note that these divisions cannot be eliminated or precomputed at compile time. In addition, as the coefficient matrices differ from system to system, there are no common divisions shared between the GPU threads that could ease the arithmetic pressure. In the case of the Gauss elimination, the size of the linear system is N: one equation for the bubble wall acceleration and N − 1 for the mode amplitude accelerations. Observe that the summations in Eqs. (20) and (24) run from 2 to N (i.e., over the N − 1 modes); therefore, their reevaluation costs depend only on N − 1 (instead of N).
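The Gauss counts can be checked with an instrumented solver. The sketch below is textbook Gauss elimination without pivoting plus back substitution on an augmented N × (N + 1) matrix, for a system of size N (one radial and N − 1 mode accelerations); the no-pivoting convention is an assumption about how the counts were made. It reproduces the closed forms N(N − 1)(2N + 5)/3 and N(N + 1)/2, the add/mul and division rows of Tab. 2 for system sizes 8 and 33, and, for size 15, 2450 additions/multiplications, the value consistent with the equivalent count of 8450 listed in Tab. 2.

```python
def gauss_solve_counted(aug):
    """Gauss elimination without pivoting plus back substitution on an
    N x (N+1) augmented matrix (modified in place).
    Returns (solution, additions_and_multiplications, divisions)."""
    n = len(aug)
    add_mul = 0
    div = 0
    # forward elimination
    for k in range(n - 1):
        for i in range(k + 1, n):
            m = aug[i][k] / aug[k][k]
            div += 1
            for j in range(k + 1, n + 1):  # includes the right-hand-side column
                aug[i][j] -= m * aug[k][j]
                add_mul += 2  # one multiplication, one subtraction
            aug[i][k] = 0.0  # eliminated entry, set without arithmetic
    # back substitution
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = aug[i][n]
        for j in range(i + 1, n):
            s -= aug[i][j] * x[j]
            add_mul += 2
        x[i] = s / aug[i][i]
        div += 1
    return x, add_mul, div


def make_system(n):
    """Diagonally dominant test system whose exact solution is all ones."""
    a = [[1.0 / (i + j + 1) + (n if i == j else 0.0) for j in range(n)] for i in range(n)]
    return [row + [sum(row)] for row in a]


for n in (8, 15, 33):
    x, am, dv = gauss_solve_counted(make_system(n))
    # last column: equivalent count with a division weighted as 50 cycles
    print(n, am, dv, am + 50 * dv)
```

The divisions dominate the equivalent count: for system size 8, the 36 divisions weigh 1800 equivalent operations against 392 additions/multiplications.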

Table 2 summarises the required operation counts for three values of the system size. As the number of necessary function reevaluations is not known in advance for the fixed-point iteration technique, only the operation count of a single reevaluation (implicit part) is listed. For the Gauss elimination, an equivalent operation count is also calculated by multiplying the number of divisions by 50 (the minimum cost of a division in clock cycles) and adding the number of additions/multiplications (one clock cycle each). Although the fixed-point iteration needs only about one reevaluation in the majority of the examined cases, the ratio of the implicit part to the equivalent Gauss count shows that even a single reevaluation requires 1.3 to 4.9 times more operations than the Gauss elimination.

Table 2.

Summary of the arithmetic operation counts for different system sizes.

| System size | 8 | 15 | 33 |
|---|---|---|---|
| Fixed-point iteration (implicit part, single reevaluation) | 2842 | 22148 | 262592 |
| Gauss (add/mul) | 392 | 2450 | 24992 |
| Gauss (div) | 36 | 120 | 561 |
| Gauss (equivalent) | 2192 | 8450 | 53042 |
| Implicit part / Gauss (equivalent) | 1.3 | 2.6 | 4.9 |
| Explicit part | 10563 | 82866 | 984288 |
| Total (single function evaluation) | 13405 | 105014 | 1246880 |
| Implicit part / total | 0.21 | 0.21 | 0.21 |

Note that the operation counts of the fixed-point iteration are calculated only from Eqs. (20), (22) and (24); the operation count of the complete function evaluation is omitted. This makes the comparison with the Gauss elimination fair, since determining the elements of the coefficient matrix also requires evaluating the complete function. Nevertheless, the arithmetic operations needed to evaluate the explicit part of the ODE function are also an essential aspect of the comparison: what share of the total computational load falls on the fixed-point iteration or on the Gauss elimination?

The arithmetic operation count of the explicit parts defined by Eqs. (19), (21) and (23) is

| (58) |

assuming again that the coefficients involving n are precomputed and that the division by R is performed only once per function evaluation (i.e., it is neglected). The explicit part must be evaluated only once for both the fixed-point iteration and the Gauss elimination. The total operation count of the complete function evaluation is the sum of the implicit and explicit counts; it is nearly five times larger than the implicit part alone (a single reevaluation in the fixed-point iteration). Therefore, the overall advantage of the Gauss elimination in terms of operation count is much smaller than the factors of 1.3 to 4.9 listed in Tab. 2. In addition, to obtain a realistic picture, the memory hierarchy must also be taken into account.
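A rough worked example makes this concrete. Assuming one additional reevaluation of the implicit part (the typical case in Sec. 4) and ignoring memory effects, the counts of Tab. 2 give the following cost ratios of the fixed-point iteration to the Gauss elimination per function evaluation. The symbols $C_{\mathrm{expl}}$, $C_{\mathrm{impl}}$ and $C_{\mathrm{G}}$ (explicit part, implicit part and equivalent Gauss count) are introduced here only for this estimate:

$$
\frac{C_{\mathrm{FP}}}{C_{\mathrm{Gauss}}}
= \frac{C_{\mathrm{expl}} + 2\,C_{\mathrm{impl}}}{C_{\mathrm{expl}} + C_{\mathrm{impl}} + C_{\mathrm{G}}}
= \begin{cases}
\dfrac{10563 + 2\cdot 2842}{13405 + 2192} = \dfrac{16247}{15597} \approx 1.04, & \text{system size } 8,\\[2ex]
\dfrac{82866 + 2\cdot 22148}{105014 + 8450} = \dfrac{127162}{113464} \approx 1.12, & \text{system size } 15,\\[2ex]
\dfrac{984288 + 2\cdot 262592}{1246880 + 53042} = \dfrac{1509472}{1299922} \approx 1.16, & \text{system size } 33.
\end{cases}
$$

In terms of arithmetic alone, the fixed-point iteration is thus only about 4 to 16% more expensive per function evaluation.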

5.2. Possible performance issues in terms of GPU memory subsystem

The structure of the governing equations presented previously allows the identification of possible performance bottlenecks in terms of the memory subsystem (registers, shared memory and L2 cache) of the GPU. Employing Gauss elimination requires the storage of a corresponding augmented coefficient matrix A with a size of for each replication of the governing equations (i.e. for each GPU thread). If the memory subsystem of the GPU cannot be effectively utilised to store and use these coefficient matrices, the global memory bandwidth will limit the application performance.

Let us illustrate the magnitude of the problem with some reference numbers. First, Tab. 3 summarises the available fast, on-chip memory types of the latest Nvidia GPU architectures (as of Q2 2023). The last-level L2 cache of a GPU is shared among all threads, and its size is of the order of megabytes. Although it is not directly user programmable, a large portion of it can be set aside for persisting data (data frequently reused by the threads). Which data are kept in the persisting portion of the L2 cache is decided at runtime according to a user-specified hit ratio. Therefore, frequently used data (such as the coefficient matrices) are likely to remain in the L2 cache. This feature of Nvidia GPUs is available from CUDA 11.0 on devices of compute capability 8.0 and above (Ampere architecture onwards). The compute units of a GPU are partitioned into Streaming Multiprocessors (SMs). Each SM has a dedicated, user-programmable L1 cache called shared memory, whose size ranges up to a few hundred kilobytes (Tab. 3). Because it is user programmable, memory for the coefficient matrices can be allocated directly (if enough capacity is available). The register file is the fastest memory type (it has practically no latency). Each GPU thread has a dedicated set of registers. They are precious and scarce resources: a thread can use at most 255 32-bit registers, i.e., 127 double-precision registers, on all three architectures in Tab. 3.

Table 3.

Hardware features of different Nvidia GPU architectures (full implementation). The notation DP stands for double precision.

| | Volta (V100) | Ampere (A100) | Hopper (H100) |
|---|---|---|---|
| compute capability | 7.0 | 8.0 | 9.0 |
| clock rate (MHz) | 1530 | 1410 | 1755 |
| SM count | 84 | 128 | 144 |
| DP units per SM | 32 | 32 | 64 |
| DP units per GPU | 2688 | 4096 | 9216 |
| peak DP computing power (TFLOPS) | 8.2 | 11.6 | 32.3 |
| max registers per thread (DP) | 127 | 127 | 127 |
| max shared memory per SM (kB) | 96 | 164 | 228 |
| max L2 cache per GPU (MB) | 6 | 40 | 50 |
| global memory bandwidth (GB/s) | 900 | 1555 | 3352 |
| global memory latency (clock cycles) | 400 | 400 | 400 |
| shared memory bandwidth (GB/s) | 16451 | 23101 | 32348 |
| shared memory latency (clock cycles) | 20 | 20 | 20 |
| L2 cache bandwidth (GB/s) | 3133 | 7219 | 8986 |
| L2 cache latency (clock cycles) | 200 | 200 | 200 |
| arithmetic operation latency (clock cycles) | 4 | 4 | 4 |
| required memory bandwidth (GB/s) | 67381 | 94623 | 264996 |
| bandwidth ratio (required/global) | 75 | 61 | 79 |
| bandwidth ratio (required/L2 cache) | 22 | 13 | 29 |
| bandwidth ratio (required/shared) | 4 | 4 | 8 |

In a simplified view, every piece of data that does not fit into the aforementioned memory types resides in the slowest, global memory of the GPU. Its latency is high, of the order of several hundred clock cycles: this many cycles must pass after a load is issued before the data become available for computation. The GPU hides latency by keeping a large number of threads resident on an SM (at most 2048). That is, while some threads wait for data (either from memory or from previous computations), others might be ready to perform arithmetic operations. On the other hand, more resident threads per SM means fewer registers and less shared memory and L2 cache per thread. Thus, a compromise must be found between latency hiding and on-chip memory capacity, which is usually not trivial. Ideally, all data reside in registers, and the code runs practically free of memory latency; in such cases, a high number of resident threads per SM is not necessary. Unfortunately, our governing equations do not fall into this category; therefore, efficient memory and thread management are mandatory. The present study focuses only on memory bandwidth limitations, and performance issues caused by latency are neglected. This is a best-case scenario for the Gauss elimination, in which the number of threads residing simultaneously on an SM is reduced to allocate more registers and shared memory to each GPU thread. For this purpose, the number of threads per SM is set to only 256, which is small compared with the maximum of 2048 (12.5%). Table 3 summarises the estimated latencies of the different memory types; the arithmetic operation latency is also included for completeness. Although the number of threads per SM is the same for all architectures, the total number of threads launched per GPU differs because of the different SM counts; compare Tabs. 3 and 4.

Table 4.

Best- and worst-case scenarios of global memory pressure for different coefficient matrix sizes.

| System size | 8 | 15 | 33 |
|---|---|---|---|
| matrix size | 64 | 225 | 1089 |
| available registers per thread (DP) | 127 | 127 | 127 |
| threads per SM | 256 | 256 | 256 |
| threads per GPU (V100) | 21504 | 21504 | 21504 |
| threads per GPU (A100) | 32768 | 32768 | 32768 |
| threads per GPU (H100) | 36864 | 36864 | 36864 |
| registers req. (DP) | **64** | 225 | 1089 |
| shared memory req. (kB) | **128** | 450 | 2178 |
| L2 cache req. (MB, V100) | 10.5 | 36.9 | 178.7 |
| L2 cache req. (MB, A100) | **16.0** | 56.3 | 272.3 |
| L2 cache req. (MB, H100) | **18.0** | 63.3 | 306.3 |
| Gauss (equivalent arithm. op.) | 2192 | 8450 | 53042 |
| Gauss load/store, best | 144 | 480 | 2244 |
| Gauss load/store, worst | 406 | 2478 | 25056 |
| Gauss arith./mem., best | 15.2 | 17.6 | 23.6 |
| Gauss arith./mem., worst | 5.4 | 3.4 | 2.1 |
| Gauss slow-down factor (H100), best | 1.9 | 4.5 | 3.3 |
| Gauss slow-down factor (H100), worst | 5.5 | 23.2 | 37.3 |

Assuming that all operands of a series of arithmetic operations must be loaded from memory without data reuse, and that the operands are double-precision floating-point numbers (8 bytes), the required memory bandwidth in GB/s is

$$ B_{\mathrm{req}} = 8 \cdot 1024 \cdot P_{\mathrm{DP}}, \qquad (59) $$

where $P_{\mathrm{DP}}$ is the peak double-precision performance of the hardware in TFLOPS (tera floating-point operations per second). The ratio of the required and the available memory bandwidth is a valuable hardware metric: it gives the number of arithmetic operations that must be performed per memory transaction to eliminate bandwidth limitations. Table 3 summarises these hardware features for the different memory types and architectures. Observe that in the case of global memory, 60 to 80 arithmetic operations need to be performed per memory transaction. It must be stressed that the numbers of arithmetic and load/store operations are usually comparable in linear algebraic tasks. For instance, a vector-vector (dot) product of size n needs 2n memory loads (for the two vectors), whereas it needs only n additions and n multiplications. In other words, without extensive data reuse via registers, shared memory or the L2 cache, the runtime might increase by a factor of 60 to 80, and arithmetic operation counts, like the ones presented in Sec. 5.1, become meaningless as runtime predictors.
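The derived rows of Tab. 3 can be reproduced in a few lines. The sketch assumes that the peak DP performance equals twice the product of DP unit count and clock rate (one fused multiply-add per unit per cycle, counted as two operations) and uses a factor of 1024 when converting TB/s to GB/s; both conventions are inferred from the fact that they reproduce the tabulated values, not stated explicitly in the text.

```python
# Hardware figures from Tab. 3 (full-chip implementations).
GPUS = {
    "V100": dict(dp_units=2688, clock_mhz=1530, global_bw=900, l2_bw=3133, shared_bw=16451),
    "A100": dict(dp_units=4096, clock_mhz=1410, global_bw=1555, l2_bw=7219, shared_bw=23101),
    "H100": dict(dp_units=9216, clock_mhz=1755, global_bw=3352, l2_bw=8986, shared_bw=32348),
}
BYTES_PER_DOUBLE = 8


def peak_dp_tflops(dp_units, clock_mhz):
    """Peak DP rate, counting one fused multiply-add as two operations (assumption)."""
    return 2 * dp_units * clock_mhz * 1e6 / 1e12


def required_bandwidth_gbs(tflops):
    """One 8-byte operand per operation, no reuse; factor 1024 matches Tab. 3 (assumption)."""
    return BYTES_PER_DOUBLE * 1024 * tflops


for name, g in GPUS.items():
    p = peak_dp_tflops(g["dp_units"], g["clock_mhz"])
    req = required_bandwidth_gbs(p)
    print(f"{name}: {p:.1f} TFLOPS, required {req:.0f} GB/s, "
          f"ratio global {req / g['global_bw']:.0f}, L2 {req / g['l2_bw']:.0f}, "
          f"shared {req / g['shared_bw']:.0f}")
```

For the H100, for example, this gives roughly 265 TB/s of required bandwidth against 3.35 TB/s of global memory bandwidth, i.e., the ratio of 79 listed in Tab. 3.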

The memory requirements for storing the coefficient matrices for system sizes of 8, 15 and 33 are summarised in Tab. 4. The corresponding matrix sizes are 64, 225 and 1089, respectively. If one intends to store the coefficient matrices in the fastest memory type (registers), the required number of double-precision (DP) registers equals the coefficient matrix size. This can be satisfied only for the smallest system size (64 of the 127 available registers). However, other data, e.g., the state vector, the stages of the numerical integration or intermediate results, usually need to be stored in registers as well. Therefore, dedicating half of the registers to the coefficient matrix alone is possible but can still yield suboptimal performance. Variables that do not fit into the register file are automatically spilled to local memory, which physically resides in the slow global memory.

Putting the coefficient matrices into shared memory requires storing as many matrices as there are resident threads on the SM. With double-precision numbers (8 bytes), this means 128, 450 and 2178 kB for system sizes of 8, 15 and 33, respectively. Only the Ampere and Hopper architectures (164 kB and 228 kB per SM) can store the coefficient matrices, and only for the smallest system size.

The L2 cache requirement must be considered per GPU. Due to the different numbers of SMs, the total number of threads per GPU depends on the architecture; see Tab. 4. Accordingly, the required L2 cache capacity is a function of both the matrix size and the GPU architecture. The corresponding three rows of Tab. 4 present the storage requirements in megabytes. Again, only the newest Ampere and Hopper architectures have enough capacity (16.0 MB of 40 MB and 18.0 MB of 50 MB, respectively), and again only for the smallest system size.
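The storage rows of Tab. 4 follow from simple products. A minimal sketch, assuming 256 resident threads per SM, 8-byte entries, N × N entries per matrix (as in the "matrix size" row of Tab. 4) and binary kilo/mega prefixes, which together reproduce the tabulated values; the capacities are taken from Tab. 3.

```python
THREADS_PER_SM = 256
BYTES = 8
MAX_DP_REGISTERS = 127
ARCH = {  # SM count, shared memory per SM (kB), L2 cache per GPU (MB), from Tab. 3
    "V100": (84, 96, 6),
    "A100": (128, 164, 40),
    "H100": (144, 228, 50),
}


def storage_report(n):
    """Storage needed for one n x n coefficient matrix per thread, and whether it fits."""
    entries = n * n
    shared_kb = THREADS_PER_SM * entries * BYTES / 1024  # per SM
    report = {"registers_fit": entries <= MAX_DP_REGISTERS, "shared_kb": shared_kb}
    for name, (sms, smem_kb, l2_mb) in ARCH.items():
        threads = sms * THREADS_PER_SM
        l2_req_mb = threads * entries * BYTES / 1024**2  # per GPU
        report[name] = dict(threads=threads, l2_req_mb=l2_req_mb,
                            shared_fits=shared_kb <= smem_kb, l2_fits=l2_req_mb <= l2_mb)
    return report


for n in (8, 15, 33):
    r = storage_report(n)
    print(n, r["registers_fit"], r["shared_kb"],
          {k: round(r[k]["l2_req_mb"], 1) for k in ARCH})
```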

The motivation to develop a matrix-free solver is clearly demonstrated in this section. Storing coefficient matrices in fast on-chip GPU memories is feasible only for small problem sizes, see the bold numbers in Tab. 4. Otherwise, extensive global memory operations are expected that can degrade the performance of the code significantly. With fixed-point iteration techniques, the entire matrix storage problem can be eliminated.

How does this translate to the Gauss elimination? First, the required number of load/store memory transactions must be determined. In the best-case scenario, the number of memory transactions is twice the size of the augmented N × (N + 1) coefficient matrix of a system of size N (one store when it is computed and one load during the elimination):

$$ N_{\mathrm{mem}}^{\mathrm{best}} = 2N(N+1). \qquad (60) $$

Equation (60) assumes that all matrix elements are loaded only once. This means that the entire matrix fits into one of the fast memory types (e.g., shared memory). If there is insufficient fast memory capacity, the same elements must be loaded multiple times during the elimination process. Simply put, when an element is needed again, it is usually already evicted from the fast memory type by other data. Consequently, in the worst-case scenario, data is always loaded from the global memory during an arithmetic operation. In this situation, the load and store operations are calculated as

| (61) |

and

| (62) |

respectively. The best- and worst-case scenarios are shown in Tab. 4. The available ratio of arithmetic operations to memory transactions is based on the equivalent operation count. Comparing this ratio with the required ones in the last three rows of Tab. 3 shows that the Gauss elimination is limited by memory bandwidth (even in the best-case scenario). Dividing the required ratio by the available one yields the slow-down factors in the last two rows of Tab. 4 (H100 architecture only). Here we assume that for a system size of 8 the augmented coefficient matrix fits into the L2 cache, so the L2 cache ratio applies; for the larger systems, the global memory ratio is used.
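The slow-down factors of Tab. 4 can be recomputed from the quantities above. The sketch assumes, as stated in the text, that for the smallest system the matrix fits into the L2 cache of the H100 (so the L2 bandwidth ratio applies) and that the global memory ratio applies otherwise. The worst-case load/store totals are taken directly from Tab. 4 rather than recomputed.

```python
GAUSS_EQUIV = {8: 2192, 15: 8450, 33: 53042}       # equivalent arithmetic ops, Tab. 4
LOAD_STORE_WORST = {8: 406, 15: 2478, 33: 25056}    # worst-case memory ops, Tab. 4
H100_REQUIRED = {"L2": 264996 / 8986, "global": 264996 / 3352}  # Tab. 3 ratios


def best_case_memory_ops(n):
    """Store and load the n x (n+1) augmented matrix once each."""
    return 2 * n * (n + 1)


def slow_down(n, fits_in_l2):
    """(best, worst) slow-down: required ops per transaction / available ops per transaction."""
    required = H100_REQUIRED["L2" if fits_in_l2 else "global"]
    available_best = GAUSS_EQUIV[n] / best_case_memory_ops(n)
    available_worst = GAUSS_EQUIV[n] / LOAD_STORE_WORST[n]
    return required / available_best, required / available_worst


for n, in_l2 in ((8, True), (15, False), (33, False)):
    best, worst = slow_down(n, in_l2)
    print(n, round(best, 1), round(worst, 1))
```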

If there is not enough fast (e.g., shared) memory capacity, it is possible to divide the coefficient matrix into smaller blocks and to perform as many operations as possible on such a block before replacing it with other data from the global memory. Although these techniques can significantly reduce global memory transactions, they cannot eliminate memory bandwidth bottlenecks completely, as the same data still need to be loaded multiple times from the global memory [107]. In addition, these techniques significantly increase the complexity of the control flow of the program. Although such algorithms are beyond the scope of the present study, their performance must lie between the best- and worst-case scenarios.

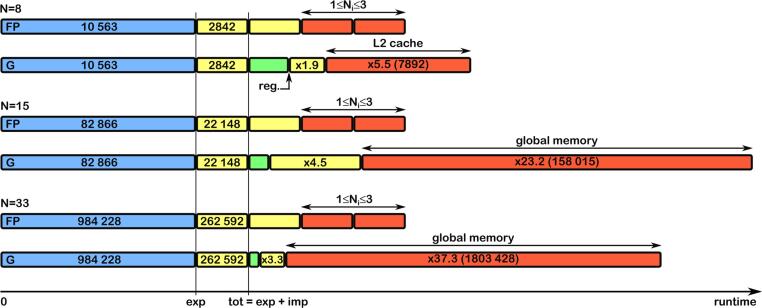

5.3. Overall performance comparison

Based on the theoretical considerations of Secs. 5.1 and 5.2, the estimated runtimes (measured in clock cycles) of the fixed-point iteration (FP) and the Gauss elimination (G) are compared in Fig. 5 for three system sizes. For both techniques, the complete ODE function has to be evaluated once, which includes the explicit part (blue rectangle) and one implicit part (yellow rectangle). The numbers are the arithmetic operation counts also given in Tab. 2. The horizontal axis is normalised by the total operation count of a complete function evaluation.

Fig. 5.

Best and worst case performance comparisons between the fixed-point iteration and the Gauss elimination.

The best variant of the fixed-point iteration technique needs one to three additional reevaluations of the implicit part, represented by the next three rectangles of equal length. The red parts mark the best- and worst-case scenarios that occurred for the parameter combinations examined in the present paper. In general, the fixed-point iteration technique provides predictable performance.

If the augmented coefficient matrix fits into the GPU registers, the Gauss elimination is free of memory bandwidth limitations. The green rectangles in Fig. 5 present such an optimal situation based only on the arithmetic operation counts. Although the Gauss elimination is superior in terms of raw arithmetic operation counts, the actual runtimes can be significantly higher due to memory bandwidth limitations. Applying the factors in the last two rows of Tab. 4 (H100 architecture), best- and worst-case scenarios can also be presented for the Gauss elimination; see the corresponding red rectangles. According to [107], the achieved performance of the Gauss–Jordan elimination is approximately between and . Therefore, the performance of the Gauss elimination for our bubble dynamics problem is very likely close to the presented worst-case scenario, which makes the fixed-point iteration the better alternative.
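The bar lengths of Fig. 5 can be approximated with a simple cost model. The sketch assumes that runtime is proportional to the equivalent operation count, that the fixed-point iteration costs one complete function evaluation plus `extra_reevals` reevaluations of the implicit part, and that the H100 slow-down factors of Tab. 4 multiply only the elimination itself. These are modelling assumptions for illustration, not necessarily the exact procedure used to draw the figure.

```python
EXPLICIT = {8: 10563, 15: 82866, 33: 984288}        # Tab. 2
IMPLICIT = {8: 2842, 15: 22148, 33: 262592}         # Tab. 2
GAUSS_EQUIV = {8: 2192, 15: 8450, 33: 53042}        # Tab. 2
SLOW_H100 = {8: (1.9, 5.5), 15: (4.5, 23.2), 33: (3.3, 37.3)}  # Tab. 4 (best, worst)


def fixed_point_cost(n, extra_reevals):
    """One complete evaluation plus extra reevaluations of the implicit part."""
    return EXPLICIT[n] + (1 + extra_reevals) * IMPLICIT[n]


def gauss_cost(n, slow_down=1.0):
    """One complete evaluation plus the (memory-penalised) elimination."""
    return EXPLICIT[n] + IMPLICIT[n] + slow_down * GAUSS_EQUIV[n]


for n in (8, 15, 33):
    total = EXPLICIT[n] + IMPLICIT[n]  # normalisation of the horizontal axis
    fp = [fixed_point_cost(n, k) / total for k in (1, 3)]
    g = [gauss_cost(n, s) / total for s in (1.0, *SLOW_H100[n])]
    print(n, [f"{v:.2f}" for v in fp], [f"{v:.2f}" for v in g])
```

With these numbers, for the largest system the worst-case Gauss estimate (about 2.6 in normalised units) exceeds even three extra fixed-point reevaluations (about 1.6), whereas the arithmetic-only Gauss estimate (about 1.04) would be the cheapest.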

It is important to note again that additional storage capacity is necessary for the state variables, the RKCK integrator stages and other intermediate variables. However, these requirements are identical for the fixed-point iteration and the Gauss elimination. Furthermore, the performance of the Gauss elimination might be degraded further by the lack of latency hiding; see Sec. 5.2 for the related discussion. Finally, a clever algorithm that exploits the shared memory capacity by decomposing the Gauss elimination into smaller subtasks comes at the cost of increased code complexity and some overhead, e.g., additional arithmetic operations. These issues are not considered in the present performance analysis.

6. Summary

Shape instability of acoustically driven microbubbles is a crucial aspect of sonochemistry. A shape-unstable bubble loses its energy-focusing potential, leading to a reduced chemical yield. Investigating the disintegration of bubbles and the number of acoustic cycles they can survive is a cumbersome task due to the large number of parameters involved. The complexity of the model and the peak processing power of the selected hardware determine the possible scale of a parameter study.

This paper focused on developing an efficient algorithm suitable for massive parameter scans of nonlinear spherical stability analysis on graphics processing units (GPUs). The main challenge is the solution of the emerging linear algebraic equation systems at every function evaluation during the numerical integration process of the governing equations (a set of second-order ordinary differential equations).

It is shown that the performance of the Gauss elimination is limited by memory bandwidth, although it is superior in terms of raw arithmetic operation counts. In the examined test cases, the runtime might increase by a factor as high as 37. Other direct or iterative solvers that involve a coefficient matrix are expected to suffer from the same limitations.

Due to its matrix-free nature, a fixed-point iteration is free of the aforementioned memory issues (only successive function reevaluations are necessary). However, its performance heavily relies on the number of iterations (reevaluations) required to achieve a prescribed tolerance. Moreover, fixed-point iterations can even diverge. This paper presents a specialised fixed-point iteration with a high convergence rate that needs only one to three reevaluations to reach the prescribed tolerance. Therefore, owing to its memory friendliness, the proposed technique is superior to the Gauss elimination despite its slightly higher arithmetic operation count.

CRediT authorship contribution statement

Péter Kalmár: Data curation, Formal analysis, Investigation, Methodology, Software, Validation, Visualization. Ferenc Hegedűs: Funding acquisition, Project administration, Writing – original draft, Writing – review & editing. Dániel Nagy: Software, Resources. Levente Sándor: Supervision, Project administration, Writing – original draft.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgement

Project No. TKP-6–6/PALY-2021 has been implemented with the support provided by the Ministry of Culture and Innovation of Hungary from the National Research, Development and Innovation Fund, financed under the TKP2021-NVA funding scheme. This paper was also supported by the János Bolyai Research Scholarship of the Hungarian Academy of Sciences, by the New National Excellence Program of the Ministry of Human Capacities (Kálmán Klapcsik: ÚNKP-22–5-BME-310, Dániel Nagy: ÚNKP-22–2-I-BME-68), and by the NVIDIA Corporation via the Academic Hardware Grants Program. The authors acknowledge the financial support of the Hungarian National Research, Development and Innovation Office via NKFIH grant OTKA FK-142376 (Ferenc Hegedűs) and PD-142254 (Kálmán Klapcsik).

The authors also thank Stephen J. Shaw for the valuable personal discussions that influenced our thoughts while developing the proposed fixed-point iteration technique.

Contributor Information

Péter Kalmár, Email: peter.kalmar@edu.bme.hu.

Ferenc Hegedűs, Email: fhegedus@hds.bme.hu.

Dániel Nagy, Email: nagyd@edu.bme.hu.

Levente Sándor, Email: lsandor@hds.bme.hu.

Kálmán Klapcsik, Email: kklapcsik@hds.bme.hu.

References

- 1.Shaw S.J. Nonspherical sub-millimeter gas bubble oscillations: Parametric forcing and nonlinear shape mode coupling. Phys. Fluids. 2017;29(12):300–311. [Google Scholar]

- 2.R. Mettin, Bubble structures in acoustic cavitation, in: Bubble and Particle Dynamics in Acoustic Fields: Modern Trends and Applications, Research Signpost, Trivandrum, Kerala, India, 2005.

- 3.Mettin R. Oscillations, Waves and Interactions: Sixty Years Drittes Physikalisches Institut; a Festschrift. Universitätsverlag Göttingen; Göttingen, Germany: 2007. From a single bubble to bubble structures in acoustic cavitation. [Google Scholar]

- 4.Leighton T.G. Academic press; London: 2012. The acoustic bubble. [Google Scholar]

- 5.Neppiras E.A. Acoustic cavitation. Phys. Rep. 1980;63(3):159–251. [Google Scholar]

- 6.Yasui K., Tuziuti T., Lee J., Kozuka T., Towata A., Iida Y. The range of ambient radius for an active bubble in sonoluminescence and sonochemical reactions. J. Chem. Phys. 2008;128(18) doi: 10.1063/1.2919119. [DOI] [PubMed] [Google Scholar]

- 7.Yasui K., Tuziuti T., Kozuka T., Towata A., Iida Y. Relationship between the bubble temperature and main oxidant created inside an air bubble under ultrasound. J. Chem. Phys. 2007;127(15) doi: 10.1063/1.2790420. [DOI] [PubMed] [Google Scholar]

- 8.Kerboua K., Hamdaoui O. Sonochemical production of hydrogen: Enhancement by summed harmonics excitation. Chem. Phys. 2019;519:27–37. [Google Scholar]

- 9.Kerboua K., Hamdaoui O. Numerical investigation of the effect of dual frequency sonication on stable bubble dynamics. Ultrasonics Sonochemistry. 2018;49:325–332. doi: 10.1016/j.ultsonch.2018.08.025. [DOI] [PubMed] [Google Scholar]

- 10.Merabet N., Kerboua K. Sonolytic and ultrasound-assisted techniques for hydrogen production: A review based on the role of ultrasound. Int. J. Hydrog. Energy. 2022;47(41):17879–17893. [Google Scholar]

- 11.Dehane A., Merouani S., Hamdaoui O., Alghyamah A. A complete analysis of the effects of transfer phenomenons and reaction heats on sono-hydrogen production from reacting bubbles: Impact of ambient bubble size. Int. J. Hydrog. Energy. 2021;46(36):18767–18779. [Google Scholar]

- 12.Storey B.D., Szeri A.J. Water vapour, sonoluminescence and sonochemistry. Proc. R. Soc. Lond. A. 2000;456(1999):1685–1709. [Google Scholar]

- 13.Stricker L., Lohse D. Radical production inside an acoustically driven microbubble. Ultrason. Sonochem. 2014;21(1):336–345. doi: 10.1016/j.ultsonch.2013.07.004. [DOI] [PubMed] [Google Scholar]

- 14.Kalmár C., Turányi T., Zsély I.G., Papp M., Hegedűs F. The importance of chemical mechanisms in sonochemical modelling. Ultrason. Sonochem. 2022;83 doi: 10.1016/j.ultsonch.2022.105925. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Kalmár C., Klapcsik K., Hegedűs F. Relationship between the radial dynamics and the chemical production of a harmonically driven spherical bubble. Ultrason. Sonochem. 2020;64 doi: 10.1016/j.ultsonch.2020.104989. [DOI] [PubMed] [Google Scholar]

- 16.Peng K., Tian S., Zhang Y., He Q., Wang Q. Penetration of hydroxyl radicals in the aqueous phase surrounding a cavitation bubble. Ultrason. Sonochem. 2022;91 doi: 10.1016/j.ultsonch.2022.106235. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Peng K., Qin F.G.F., Jiang R., Qu W., Wang Q. Production and dispersion of free radicals from transient cavitation bubbles: An integrated numerical scheme and applications. Ultrason. Sonochem. 2022;88 doi: 10.1016/j.ultsonch.2022.106067. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Lee G.L., Law M.C. Numerical modelling of single-bubble acoustic cavitation in water at saturation temperature. Chem. Eng. J. 2022;430 [Google Scholar]

- 19.Authier O., Ouhabaz H., Bedogni S. Modeling of sonochemistry in water in the presence of dissolved carbon dioxide. Ultrason. Sonochem. 2018;45:17–28. doi: 10.1016/j.ultsonch.2018.02.044. [DOI] [PubMed] [Google Scholar]

- 20.Cavalieri F., Chemat F., Okitsu K., Sambandam A., Yasui K., Zisu B. 1st Edition. Springer Singapore; Singapore: 2016. Handbook of Ultrasonics and Sonochemistry. [Google Scholar]

- 21.Thomson L.H., Doraiswamy L.K. Sonochemistry: science and engineering. Ind. Eng. Chem. Res. 1999;38(4):1215–1249. [Google Scholar]

- 22.Mason T.J. Some neglected or rejected paths in sonochemistry - A very personal view. Ultrason. Sonochem. 2015;25:89–93. doi: 10.1016/j.ultsonch.2014.11.014. [DOI] [PubMed] [Google Scholar]

- 23.Pradhan A.A., Gogate P.R. Degradation of p-nitrophenol using acoustic cavitation and Fenton chemistry. J. Hazard. Mater. 2010;173(1):517–522. doi: 10.1016/j.jhazmat.2009.08.115. [DOI] [PubMed] [Google Scholar]

- 24.Gogate P.R., Mujumdar S., Pandit A.B. Sonochemical reactors for waste water treatment: comparison using formic acid degradation as a model reaction. Adv. Environ. Res. 2003;7(2):283–299. [Google Scholar]

- 25.Mullakaev R.M., Mullakaev M.S. Development of a mobile sonochemical complex for wastewater treatment. Chem. Pet. Eng. 2021;57(5–6):484–492. [Google Scholar]

- 26.V. Pandur, J. Zevnik, D. Podbevšek, B. Stojkoviæ, D. Stopar, M. Dular, Water treatment by cavitation: Understanding it at a single bubble - bacterial cell level, Water Res. (2023) 119956. [DOI] [PubMed]

- 27.Martínez R.F., Cravotto G., Cintas P. Organic sonochemistry: A chemist’s timely perspective on mechanisms and reactivity. J. Org. Chem. 2021;86(20):13833–13856. doi: 10.1021/acs.joc.1c00805. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28. Sancheti S.V., Gogate P.R. A review of engineering aspects of intensification of chemical synthesis using ultrasound. Ultrason. Sonochem. 2017;36:527–543. doi: 10.1016/j.ultsonch.2016.08.009.

- 29. Xie L.-Y., Li Y.-J., Qu J., Duan Y., Hu J., Liu K.-J., Cao Z., He W.-M. A base-free, ultrasound accelerated one-pot synthesis of 2-sulfonylquinolines in water. Green Chem. 2017;19:5642–5646.

- 30. Okitsu K., Ashokkumar M., Grieser F. Sonochemical synthesis of gold nanoparticles: Effects of ultrasound frequency. J. Phys. Chem. B. 2005;109(44):20673–20675. doi: 10.1021/jp0549374.

- 31. Okitsu K., Yue A., Tanabe S., Matsumoto H. Sonochemical preparation and catalytic behavior of highly dispersed palladium nanoparticles on alumina. Chem. Mater. 2000;12(10):3006–3011.

- 32. Cao X., Koltypin Y., Prozorov R., Kataby G., Gedanken A. Preparation of amorphous Fe2O3 powder with different particle sizes. J. Mater. Chem. 1997;7:2447–2451.

- 33. Wood R.J., Lee J., Bussemaker M.J. A parametric review of sonochemistry: Control and augmentation of sonochemical activity in aqueous solutions. Ultrason. Sonochem. 2017;38:351–370. doi: 10.1016/j.ultsonch.2017.03.030.

- 34. Sutkar V.S., Gogate P.R. Design aspects of sonochemical reactors: Techniques for understanding cavitational activity distribution and effect of operating parameters. Chem. Eng. J. 2009;155(1):26–36.

- 35. Gogate P.R., Pandit A.B. Sonochemical reactors: scale up aspects. Ultrason. Sonochem. 2004;11(3–4):105–117. doi: 10.1016/j.ultsonch.2004.01.005.

- 36. Hegedűs F., Klapcsik K., Lauterborn W., Parlitz U., Mettin R. GPU accelerated study of a dual-frequency driven single bubble in a 6-dimensional parameter space: The active cavitation threshold. Ultrason. Sonochem. 2020;67 doi: 10.1016/j.ultsonch.2020.105067.

- 37. Rosselló J.M., Ohl C.-D. Clean production and characterization of nanobubbles using laser energy deposition. Ultrason. Sonochem. 2023;94 doi: 10.1016/j.ultsonch.2023.106321.

- 38. Doinikov A.A. Bjerknes forces and translational bubble dynamics. In: Bubble and Particle Dynamics in Acoustic Fields: Modern Trends and Applications. Research Signpost; Trivandrum, Kerala, India: 2005.

- 39. Rosselló J.M., Stephens D.S., Mettin R. Bubble “lightning” streamers from laser induced cavities in phosphoric acid.

- 40. Reuter F., Lesnik S., Ayaz-Bustami K., Brenner G., Mettin R. Bubble size measurements in different acoustic cavitation structures: Filaments, clusters, and the acoustically cavitated jet. Ultrason. Sonochem. 2019;55:383–394. doi: 10.1016/j.ultsonch.2018.05.003.

- 41. Lauterborn W., Parlitz U. Methods of chaos physics and their application to acoustics. J. Acoust. Soc. Am. 1988;84(6):1975–1993.

- 42. Hegedűs F. Topological analysis of the periodic structures in a harmonically driven bubble oscillator near Blake’s critical threshold: Infinite sequence of two-sided Farey ordering trees. Phys. Lett. A. 2016;380(9–10):1012–1022.

- 43. Klapcsik K., Varga R., Hegedűs F. Bi-parametric topology of subharmonics of an asymmetric bubble oscillator at high dissipation rate. Nonlinear Dyn. 2018;94(4):2373–2389.

- 44. Sojahrood A.J., Wegierak D., Haghi H., Karshafian R., Kolios M.C. A simple method to analyze the super-harmonic and ultra-harmonic behavior of the acoustically excited bubble oscillator. Ultrason. Sonochem. 2019;54:99–109. doi: 10.1016/j.ultsonch.2019.02.010.

- 45. Haghi H., Sojahrood A.J., Kolios M.C. Collective nonlinear behavior of interacting polydisperse microbubble clusters. Ultrason. Sonochem. 2019;58 doi: 10.1016/j.ultsonch.2019.104708.

- 46. Sojahrood A.J., Haghi H., Shirazi N.R., Karshafian R., Kolios M.C. On the threshold of 1/2 order subharmonic emissions in the oscillations of ultrasonically excited bubbles. Ultrasonics. 2021;112 doi: 10.1016/j.ultras.2021.106363.

- 47. Zhang Y., Zhang Y. Chaotic oscillations of gas bubbles under dual-frequency acoustic excitation. Ultrason. Sonochem. 2018;40:151–157. doi: 10.1016/j.ultsonch.2017.03.058.

- 48. Zhang Y., Zhang Y., Li S. Combination and simultaneous resonances of gas bubbles oscillating in liquids under dual-frequency acoustic excitation. Ultrason. Sonochem. 2017;35:431–439. doi: 10.1016/j.ultsonch.2016.10.022.

- 49. Ma J., Deng X., Hsiao C.-T., Chahine G.L. Hybrid message-passing interface-open multiprocessing accelerated Euler–Lagrange simulations of microbubble enhanced HIFU for tumor ablation. J. Biomech. Eng. 145(7).

- 50. Ayukai T., Kanagawa T. Derivation and stability analysis of two-fluid model equations for bubbly flow with bubble oscillations and thermal damping. Int. J. Multiph. Flow. 2023;104456.

- 51. Kawame T., Kanagawa T. Weakly nonlinear propagation of pressure waves in bubbly liquids with a polydispersity based on two-fluid model equations. Int. J. Multiph. Flow. 2023;164.

- 52. Lee J., Tuziuti T., Yasui K., Kentish S., Grieser F., Ashokkumar M., Iida Y. Influence of surface-active solutes on the coalescence, clustering, and fragmentation of acoustic bubbles confined in a microspace. J. Phys. Chem. C. 2007;111(51):19015–19023.

- 53. Kurihara E. Dynamical equations for oscillating nonspherical bubbles with nonlinear interactions. SIAM J. Appl. Dyn. Syst. 2017;16(1):139–158.

- 54. Oak Ridge Leadership Computing Facility. URL: https://www.olcf.ornl.gov/frontier/ (2022).

- 55. Nagy D., Plavecz L., Hegedűs F. The art of solving a large number of non-stiff, low-dimensional ordinary differential equation systems on GPUs and CPUs. Commun. Nonlinear Sci. Numer. Simul. 2022;112.

- 56. Hao Y., Prosperetti A. The effect of viscosity on the spherical stability of oscillating gas bubbles. Phys. Fluids. 1999;11(6):1309–1317.

- 57. Prosperetti A. Viscous effects on small-amplitude surface waves. Phys. Fluids. 1976;19(2):195–203.

- 58. Brenner M.P., Lohse D., Dupont T.F. Bubble shape oscillations and the onset of sonoluminescence. Phys. Rev. Lett. 1995;75(5):954–957. doi: 10.1103/PhysRevLett.75.954.

- 59. Hilgenfeldt S., Lohse D., Brenner M.P. Phase diagrams for sonoluminescing bubbles. Phys. Fluids. 1996;8(11):2808–2826.

- 60. Versluis M., Goertz D.E., Palanchon P., Heitman I.L., van der Meer S.M., Dollet B., de Jong N., Lohse D. Microbubble shape oscillations excited through ultrasonic parametric driving. Phys. Rev. E. 2010;82(2) doi: 10.1103/PhysRevE.82.026321.

- 61. Klapcsik K. Dataset of exponential growth rate values corresponding non-spherical bubble oscillations under dual-frequency acoustic irradiation. Data Brief. 2022;40 doi: 10.1016/j.dib.2022.107810.

- 62. Klapcsik K. GPU accelerated numerical investigation of the spherical stability of an acoustic cavitation bubble excited by dual-frequency. Ultrason. Sonochem. 2021;77 doi: 10.1016/j.ultsonch.2021.105684.

- 63. Klapcsik K., Hegedűs F. Study of non-spherical bubble oscillations under acoustic irradiation in viscous liquid. Ultrason. Sonochem. 2019;54:256–273. doi: 10.1016/j.ultsonch.2019.01.031.

- 64. Shaw S.J. Translation and oscillation of a bubble under axisymmetric deformation. Phys. Fluids. 2006;18(7):402–415.

- 65. Shaw S.J. The stability of a bubble in a weakly viscous liquid subject to an acoustic travelling wave. Phys. Fluids. 2009;21(2):1400–1417.

- 66. Shaw S.J. Nonspherical sub-millimetre resonantly excited bubble oscillations. Fluid Dyn. Res. 2019;51(3).

- 67. Cleve S., Guédra M., Mauger C., Inserra C., Blanc-Benon P. Microstreaming induced by acoustically trapped, non-spherically oscillating microbubbles. J. Fluid Mech. 2019;875:597–621.

- 68. Cleve S., Guédra M., Inserra C., Mauger C., Blanc-Benon P. Surface modes with controlled axisymmetry triggered by bubble coalescence in a high-amplitude acoustic field. Phys. Rev. E. 2018;98.

- 69. Guédra M., Cleve S., Mauger C., Blanc-Benon P., Inserra C. Dynamics of nonspherical microbubble oscillations above instability threshold. Phys. Rev. E. 2017;96 doi: 10.1103/PhysRevE.96.063104.

- 70. Guédra M., Inserra C. Bubble shape oscillations of finite amplitude. J. Fluid Mech. 2018;857:681–703.

- 71. Guédra M., Inserra C., Mauger C., Gilles B. Experimental evidence of nonlinear mode coupling between spherical and nonspherical oscillations of microbubbles. Phys. Rev. E. 2016;94(5) doi: 10.1103/PhysRevE.94.053115.

- 72. Regnault G., Mauger C., Blanc-Benon P., Doinikov A.A., Inserra C. Signatures of microstreaming patterns induced by non-spherically oscillating bubbles. J. Acoust. Soc. Am. 2021;150(2):1188–1197. doi: 10.1121/10.0005821.

- 73. Doinikov A.A. Translational motion of a bubble undergoing shape oscillations. J. Fluid Mech. 2004;501:1–24.

- 74. Maksimov A.O., Leighton T.G. Pattern formation on the surface of a bubble driven by an acoustic field. Proc. Math. Phys. Eng. Sci. 2012;468(2137):57–75.

- 75. Maksimov A.O. Hamiltonian description of bubble dynamics. J. Exp. Theor. Phys. 2008;106(2):355–370.

- 76. Hegedűs F. Program package MPGOS: challenges and solutions during the integration of a large number of independent ODE systems using GPUs. Commun. Nonlinear Sci. Numer. Simul. 2021;97.

- 77. Hegedűs F. MPGOS: GPU accelerated integrator for large number of independent ordinary differential equation systems. Budapest University of Technology and Economics; Budapest, Hungary: 2019.

- 78. Rackauckas C. A comparison between differential equation solver suites in MATLAB, R, Julia, Python, C, Mathematica, Maple, and Fortran. The Winnower. 2018;6:e153459.98975.

- 79. Rackauckas C., Nie Q. DifferentialEquations.jl - A performant and feature-rich ecosystem for solving differential equations in Julia. J. Open Res. Softw. 2017;5(1):15.

- 80. Ahnert K., Demidov D., Mulansky M. Solving Ordinary Differential Equations on GPUs. Springer International Publishing. 2014:125–157.

- 81. Al-Omari A.M., Griffith J., Scruse A., Robinson R.W., Schüttler H.-B., Arnold J. Ensemble methods for identifying RNA operons and regulons in the clock network of Neurospora crassa. IEEE Access. 2022;10:32510–32524.

- 82. Al-Omari A.M., Arnold J., Taha T., Schüttler H.-B. Solving large nonlinear systems of first-order ordinary differential equations with hierarchical structure using multi-GPGPUs and an adaptive Runge Kutta ODE solver. IEEE Access. 2013;1:770–777.

- 83. Clark M.A., Strelchenko A., Vaquero A., Wagner M., Weinberg E. Pushing memory bandwidth limitations through efficient implementations of Block-Krylov space solvers on GPUs. Comput. Phys. Commun. 2018;233:29–40.

- 84. Walden A., Zubair M., Stone C.P., Nielsen E.J. Memory optimizations for sparse linear algebra on GPU hardware. In: 2021 IEEE/ACM Workshop on Memory Centric High Performance Computing (MCHPC); 2021. pp. 25–32.

- 85. Calvisi M.L., Lindau O., Blake J.R., Szeri A.J. Shape stability and violent collapse of microbubbles in acoustic traveling waves. Phys. Fluids. 2007;19(4).

- 86. Wang Q.X., Blake J.R. Non-spherical bubble dynamics in a compressible liquid. Part 2. Acoustic standing wave. J. Fluid Mech. 2011;679:559–581.

- 87. Riccardi G., De Bernardis E. Numerical simulations of the dynamics and the acoustics of an axisymmetric bubble rising in an inviscid liquid. Eur. J. Mech. B. Fluids. 2020;79:121–140.

- 88. Li S., Saade Y., van der Meer D., Lohse D. Comparison of boundary integral and volume-of-fluid methods for compressible bubble dynamics. Int. J. Multiph. Flow. 2021;145.

- 89. Wang Q., Liu W., Corbett C., Smith W.R. Microbubble dynamics in a viscous compressible liquid subject to ultrasound. Phys. Fluids. 2022;34(1).

- 90. Li X., Bao F., Wang Y. Shape oscillation of a single microbubble in an ultrasound field. J. Nanotechnol. 2021;145.

- 91. Fuster D., Popinet S. An all-Mach method for the simulation of bubble dynamics problems in the presence of surface tension. J. Comput. Phys. 2018;374:752–768.

- 92. Denner F., Evrard F., van Wachem B. Modeling acoustic cavitation using a pressure-based algorithm for polytropic fluids. Fluids. 5(2).

- 93. Denner F., van Wachem B. A Unified Algorithm for Interfacial Flows with Incompressible and Compressible Fluids. Springer Nature Singapore; Singapore: 2022. pp. 179–208.

- 94. Yamamoto T., Hatanaka S., Komarov S.V. Fragmentation of cavitation bubble in ultrasound field under small pressure amplitude. Ultrason. Sonochem. 2019;58 doi: 10.1016/j.ultsonch.2019.104684.

- 95. Lechner C., Lauterborn W., Koch M., Mettin R. Jet formation from bubbles near a solid boundary in a compressible liquid: Numerical study of distance dependence. Phys. Rev. Fluids. 2020;5(9).