This cohort study uses data from the American College of Surgeons Trauma Quality Improvement Program database to examine disparities in access to postacute care and develop a machine learning model that can remedy them.

Key Points

Question

Can artificial intelligence (AI) be used to diagnose and remedy racial disparities in care?

Findings

In this cohort study, examining the AI logic uncovered significant disparities in access to postacute care after discharge, with race (Black patients compared with White) playing the second most important role. After fairness adjustment, disparities disappeared and a similar percentage of Black and White patients had a recommended discharge to postacute care.

Meaning

Interpretable machine learning methodologies are powerful tools to diagnose and remedy system-related bias in care, such as disparities in access to postinjury rehabilitation care.

Abstract

Importance

The use of artificial intelligence (AI) in clinical medicine risks perpetuating existing bias in care, such as disparities in access to postinjury rehabilitation services.

Objective

To leverage a novel, interpretable AI-based technology to uncover racial disparities in access to postinjury rehabilitation care and create an AI-based prescriptive tool to address these disparities.

Design, Setting, and Participants

This cohort study used data from the 2010-2016 American College of Surgeons Trauma Quality Improvement Program database for Black and White patients with a penetrating mechanism of injury. An interpretable AI methodology called optimal classification trees (OCTs) was applied in an 80:20 derivation/validation split to predict discharge disposition (home vs postacute care [PAC]). The interpretable nature of OCTs allowed for examination of the AI logic to identify racial disparities. A prescriptive mixed-integer optimization model using age, injury, and gender data was allowed to “fairness-flip” the recommended discharge destination for a subset of patients while minimizing the ratio of imbalance between Black and White patients. Three OCTs were developed to predict discharge disposition: the first 2 trees used unadjusted data (one without and one with the race variable), and the third tree used fairness-adjusted data.

Main Outcomes and Measures

Disparities and the discriminative performance (C statistic) were compared between fairness-adjusted and unadjusted OCTs.

Results

A total of 52 468 patients were included; the median (IQR) age was 29 (22-40) years, 46 189 patients (88.0%) were male, 31 470 (60.0%) were Black, and 20 998 (40.0%) were White. A total of 3800 Black patients (12.1%) were discharged to PAC, compared with 4504 White patients (21.5%; P < .001). Examining the AI logic uncovered significant disparities in PAC discharge destination access, with race playing the second most important role. The prescriptive fairness adjustment recommended flipping the discharge destination of 4.5% of the patients, with the performance of the adjusted model increasing from a C statistic of 0.79 to 0.87. After fairness adjustment, disparities disappeared, and a similar percentage of Black and White patients (15.8% vs 15.8%; P = .87) had a recommended discharge to PAC.

Conclusions and Relevance

In this study, we developed an accurate, machine learning–based, fairness-adjusted model that can identify barriers to discharge to postacute care. Instead of accidentally encoding bias, interpretable AI methodologies are powerful tools to diagnose and remedy system-related bias in care, such as disparities in access to postinjury rehabilitation care.

Introduction

The use of artificial intelligence, especially machine learning (ML), promises more accurate analytics that improve decision-making in medicine. Appropriate, accurate, and granular data are crucial to construct high-quality ML models. Inadequate or unreliable training data will result in suboptimal ML models with low prediction accuracy and serious patient care consequences, especially for racial and ethnic minority individuals.1,2 Even with reasonable data, care must still be taken to ensure that the ML models being used do not accidentally consolidate existing system-level disparities into decision-making tools.3 Concerns about artificial intelligence and ML noninterpretable “black box” methodologies incorporating bias and consolidating disparities are not new and have been described in many fields, including medicine and health care.4,5

Such disparities exist in the care of injured patients. Traumatic injury is associated with severe and debilitating outcomes, such as lower quality of life, limitations in daily activities, work disability, emotional distress, and chronic pain.6,7,8,9 As a result, the care of injured patients often extends beyond their first hospitalization, with many requiring prolonged services at a postacute care (PAC) facility, such as a rehabilitation center or skilled nursing facility. Disparities in access to PAC have been described: when compared with White patients, Black patients are more likely to be discharged home as opposed to rehabilitation centers or skilled nursing facilities after injury,10,11,12 potentially contributing to their higher rates of mortality and long-term impairment.13,14,15,16

Using the example of disparities in access to PAC, we sought to demonstrate that using interpretable ML algorithms, instead of black box ML methods, not only can avoid incorporating bias but can in fact help (1) uncover racial disparities in access to health care and (2) effectively address these disparities.

Methods

The study was reviewed and approved by the Mass General Brigham institutional review board. The institutional review board ruled the study exempt from informed consent because the data were publicly accessible, retrospectively obtained, and deidentified.

Data Source and Model Development

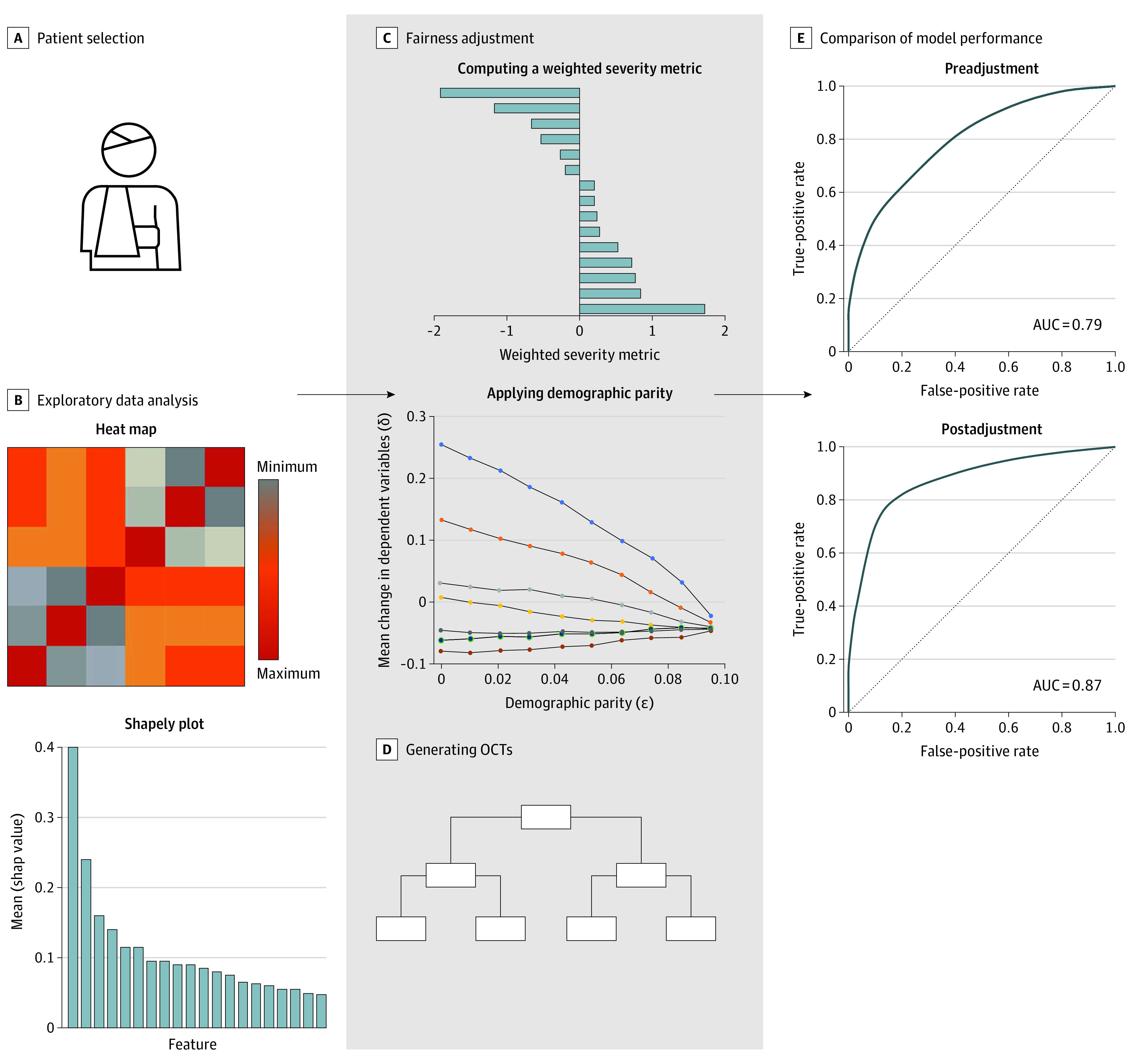

Figure 1 provides an overview of the model development. All patients with a penetrating mechanism of injury were selected from the 2010-2016 American College of Surgeons Trauma Quality Improvement Program database, which captures a subset of patients from the nationally representative National Trauma Data Bank. Race is defined by National Trauma Data Bank fields, classified based on self-report. To make sure there were sufficient data in each race category for the model development, only Black and White patients were included. We excluded patients who died while hospitalized, left against medical advice, or had missing discharge destination data. We used an interpretable ML methodology called optimal classification trees (OCTs) to develop, train, and validate the prediction algorithms, as described below.

Figure 1. Overview of Model Development.

The first column represents the data preparation. Black and White patients with a penetrating mechanism of injury were selected from the 2010-2016 American College of Surgeons Trauma Quality Improvement Program database. Data variables were reviewed, and relevant ones were included in the model. Exploratory data analysis (B) was performed by identifying cross-correlation between different features (heat map) and determining feature importance (Shapley plot). The second column represents the machine learning. Fairness adjustment (C) was achieved by computing a weighted severity metric based on relative feature importance, followed by application of demographic parity across all features. Optimal classification trees (OCTs) were developed to predict discharge disposition using fairness-adjusted and unadjusted data. The third column represents model evaluation. Model performance (E) was compared between fairness-adjusted and unadjusted OCTs using the area under the receiver operating characteristic curve (AUC).

Data Variables

The included data variables were demographic characteristics (age, sex, race, and ethnicity), vital signs at arrival to the emergency department (heart rate, systolic blood pressure, respiratory rate, oxygen saturation on room air, Glasgow Coma Scale score, and temperature), comorbidities (hypertension, congestive heart failure, current smoker, chronic kidney failure, history of stroke, diabetes, disseminated cancer, chronic obstructive pulmonary disease, cirrhosis, drug use history, history of myocardial infarction, history of peripheral vascular disease, alcohol use disorder, bleeding disorder, current chemotherapy), and injury characteristics (mechanism of injury, Abbreviated Injury Scale score per body region). All variables had less than 10% missing data except for temperature, which had 12.1% missing data. Missing data were imputed using the ML method optimal impute, which has demonstrated superior performance over traditional methods of imputation in predicting missing values.17 eTable 1 in Supplement 1 shows the percentage of values imputed by variable with missing observations.

OCTs and Fairness Adjustment

OCTs are a subset of decision trees that reboot themselves while considering variables at all levels of the tree to find the combination that maximizes accuracy. While providing higher accuracy, OCTs also preserve interpretability because of their tree structure, which follows a series of splits (nodes) on a small number of high-importance variables. This distinguishes OCTs from other ML methods, such as neural networks, which are often noninterpretable. Additional information on the model and its applications has been previously detailed.18,19,20,21,22

First, an OCT was applied in an 80:20 derivation/validation split of the original data set to predict discharge disposition. The latter was defined as discharge to home or to PAC, which included inpatient rehabilitation, skilled nursing facilities, and short-term hospitals. Two trees were generated: one without and one with the race variable. The interpretable nature of OCTs allowed for examination of the decision-making capabilities of the OCT and identification of racial disparities in access to PAC. Second, a prescriptive mixed-integer optimization model using age, injury, and gender data was allowed to “fairness-flip” the recommended discharge destination for a subset of patients. Fairness adjustment means that the algorithm chooses specific patients to flip their actual disposition from home to PAC or vice versa, in order to optimize the performance of the predictive model.
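As an illustration of what examining the logic of a tree can look like in practice, the sketch below walks a small nested-dictionary tree and reports the shallowest depth at which a given variable is used for a split. The tree here is a hypothetical toy: only its first 2 splits (emergency department disposition, then race) mirror the unadjusted tree described in this article, and the remaining nodes, the field names, and the helper `shallowest_depth` are assumptions made for the example rather than the OCT software's interface.

```python
from collections import deque

# Hypothetical toy tree. Only the first two splits follow the article
# (ED disposition, then race); every other node is invented for illustration.
toy_tree = {
    "feature": "ed_disposition",
    "children": [
        {
            "feature": "race",
            "children": [{"leaf": "A"}, {"leaf": "B"}],
        },
        {
            "feature": "max_ais",  # hypothetical split
            "children": [{"leaf": "C"}, {"leaf": "D"}],
        },
    ],
}


def shallowest_depth(tree, feature):
    """Return the 1-based depth of the first split on `feature`, or None."""
    queue = deque([(tree, 1)])  # breadth-first, so the first hit is the shallowest
    while queue:
        node, depth = queue.popleft()
        if node.get("feature") == feature:
            return depth
        for child in node.get("children", []):
            queue.append((child, depth + 1))
    return None


race_depth = shallowest_depth(toy_tree, "race")
print(f"race first appears at depth {race_depth}")
```

A protected attribute that surfaces at a shallow depth is a prompt to look more closely at the training data, which is the kind of inspection a black box model does not permit.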

To create this fairness-adjustment model, a logistic regression model was first used to determine the relative importance of injuries to different body regions and the associated Abbreviated Injury Scale score in predicting patient disposition. A weighted injury severity metric was then derived based on the results of the logistic regression. The mixed-integer optimization model was then allowed to fairness-flip the discharge disposition of certain patients to minimize the ratio of imbalance between Black and White patients; that is, to make the difference between White and Black patients’ utilization of PAC as small as possible. While doing that, the standard error or deviation of the weighted injury severity metric, age, and gender variables was kept within a fixed, tolerated range (determined by the optimization model itself). Those 3 variables were thus protected to maintain the integrity of the data set and ensure that the increase in discriminative performance, and subsequently in predictive power, is valid. A third tree was generated using fairness-adjusted data. The mixed-integer optimization methodology has been previously described.23
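The idea of fairness-flipping can be sketched with a much simpler greedy heuristic. The code below is not the mixed-integer optimization model used in this study: it swaps in a greedy rule that moves each group toward the overall PAC rate by flipping the most severely injured home-discharged patients to PAC in the under-referred group and the least severely injured PAC patients to home in the over-referred group. It also omits the constraints on the distribution of severity, age, and gender. The cohort is synthetic, and the function names, field names, and base rates are assumptions made for illustration.

```python
import random


def pac_rate(patients, group):
    """Share of a group's patients whose disposition is PAC."""
    members = [p for p in patients if p["race"] == group]
    return sum(p["pac"] for p in members) / len(members)


def greedy_parity_flip(patients):
    """Return (adjusted copy, number of flips) with near-equal PAC rates by group."""
    adjusted = [dict(p) for p in patients]  # leave the input untouched
    overall = sum(p["pac"] for p in adjusted) / len(adjusted)
    flips = 0
    for group in sorted({p["race"] for p in adjusted}):
        members = [p for p in adjusted if p["race"] == group]
        target = round(overall * len(members))
        current = sum(p["pac"] for p in members)
        if current < target:
            # Under-referred group: flip the most severely injured home patients to PAC.
            pool = sorted((p for p in members if not p["pac"]),
                          key=lambda p: p["severity"], reverse=True)
        else:
            # Over-referred group: flip the least severely injured PAC patients to home.
            pool = sorted((p for p in members if p["pac"]),
                          key=lambda p: p["severity"])
        for p in pool[:abs(target - current)]:
            p["pac"] = not p["pac"]
            flips += 1
    return adjusted, flips


# Synthetic cohort (assumption): PAC is likelier with higher severity,
# and the two groups start from different base rates.
rng = random.Random(0)
cohort = []
for race, n, base in (("Black", 600, 0.12), ("White", 400, 0.21)):
    for _ in range(n):
        severity = rng.random()
        pac = rng.random() < base + 0.1 * (severity - 0.5)
        cohort.append({"race": race, "severity": severity, "pac": pac})

adjusted, n_flips = greedy_parity_flip(cohort)
for label, data in (("before", cohort), ("after", adjusted)):
    print(label, round(pac_rate(data, "Black"), 3), round(pac_rate(data, "White"), 3))
print("flips:", n_flips)
```

Because each group is moved to the overall rate, the total number of PAC discharges is preserved up to rounding, which parallels the unchanged total reported for the optimization model.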

Model Performance

Disparities in access to PAC were compared before and after fairness adjustment. The discriminative performance (C statistic) was compared between fairness-adjusted and unadjusted OCTs. C statistics were determined as areas under the receiver operating characteristic curve (AUC) of the different models. The C statistic was measured across 10 random 80:20 derivation/validation splits of the data. This was performed to ensure that the findings were replicable across different splits of the data set.
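The evaluation loop described above can be sketched as follows. The C statistic is computed directly from its definition, as the probability that a randomly chosen PAC case receives a higher score than a randomly chosen home case, with ties counted as one-half. The data are synthetic, and the per-bin rate model is a crude stand-in for an OCT, so every name and number in the block is an assumption for illustration.

```python
import random

N_BINS = 5


def c_statistic(scores, labels):
    """AUC: probability that a positive case outranks a negative one (ties = 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def bin_index(severity):
    """Map a severity in [0, 1] to one of N_BINS equal-width bins."""
    return min(int(severity * N_BINS), N_BINS - 1)


def fit_bin_rates(rows):
    """Stand-in model: observed PAC rate within each severity bin."""
    counts = [[0, 0] for _ in range(N_BINS)]
    for severity, label in rows:
        counts[bin_index(severity)][0] += label
        counts[bin_index(severity)][1] += 1
    return [pos / max(total, 1) for pos, total in counts]


# Synthetic data (assumption): PAC probability rises with severity.
rng = random.Random(42)
data = []
for _ in range(1000):
    severity = rng.random()
    data.append((severity, rng.random() < 0.05 + 0.4 * severity))

aucs = []
for seed in range(10):  # 10 random 80:20 derivation/validation splits
    rows = data[:]
    random.Random(seed).shuffle(rows)
    cut = int(0.8 * len(rows))
    derivation, validation = rows[:cut], rows[cut:]
    rates = fit_bin_rates(derivation)
    scores = [rates[bin_index(s)] for s, _ in validation]
    labels = [y for _, y in validation]
    aucs.append(c_statistic(scores, labels))

mean_auc = sum(aucs) / len(aucs)
print(f"mean C statistic over {len(aucs)} splits: {mean_auc:.2f}")
```

Reporting the mean across seeds, rather than a single split, guards against a result that depends on one favorable partition of the data.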

Results

A total of 52 468 patients were included. The median (IQR) age was 29 (22-40) years, 46 189 patients (88.0%) were male, 31 470 (60.0%) were Black, and 20 998 (40.0%) were White. The most frequent comorbid conditions were smoking (30.6%), history of drug use (14.9%), and hypertension (9.2%). Most patients sustained gunshot wounds (67.7%). The Table describes the patient characteristics for the overall cohort.

Table. Patient Characteristics (N = 52 468).

| Characteristic | Overall cohort, No. (%) |
|---|---|
| Age, median (IQR), y | 29.0 (22.0-40.0) |
| Sex | |
| Male | 46 189 (88.03) |
| Female | 6279 (11.97) |
| Race<sup>a</sup> | |
| Black | 31 470 (59.98) |
| White | 20 998 (40.02) |
| ED vital signs, median (IQR) | |
| Systolic blood pressure | 130.0 (111.0-146.0) |
| Heart rate | 95.0 (81.0-110.0) |
| Respiratory rate | 20.0 (17.0-22.0) |
| Oxygen saturation | 99.0 (97.0-100.0) |
| GCS score at presentation | 15.0 (15.0-15.0) |
| Temperature, °C | 36.5 (36.2-36.8) |
| Comorbidities | |
| Alcohol use disorder | 4413 (8.41) |
| Bleeding disorder | 440 (0.84) |
| Current chemotherapy | 22 (0.04) |
| CHF | 190 (0.36) |
| Current smoker | 16 049 (30.59) |
| Chronic kidney failure | 70 (0.13) |
| History of stroke | 160 (0.31) |
| Diabetes | 1707 (3.25) |
| Disseminated cancer | 52 (0.10) |
| COPD | 2235 (4.26) |
| Chronic steroid use | 67 (0.13) |
| Cirrhosis | 120 (0.23) |
| Drug use history | 7836 (14.94) |
| History of MI | 99 (0.19) |
| History of PVD | 30 (0.06) |
| Hypertension | 4847 (9.24) |
| Arrived with signs of life | 52 386 (99.84) |
| Injury characteristics | |
| Mechanism of injury | |
| Penetrating, gunshot wound | 35 509 (67.68) |
| Penetrating, stab wound | 16 886 (32.18) |
| Penetrating, other/mixed | 73 (0.14) |
| Injury body region | |
| Head | 5651 (10.77) |
| Face | 6616 (12.61) |
| Neck | 3730 (7.11) |
| Thorax | 23 553 (44.89) |
| Abdomen | 19 266 (36.72) |
| Spine | 4806 (9.16) |
| Upper extremity | 11 490 (21.90) |
| Lower extremity | 11 018 (21.00) |
| Pelvis | 2781 (5.30) |
| Skin/soft tissue | 2036 (3.88) |
| Severe injury body region | |
| Head | 3872 (7.38) |
| Face | 94 (0.18) |
| Neck | 808 (1.54) |
| Thorax | 12 141 (23.14) |
| Abdomen | 10 079 (19.21) |
| Spine | 1847 (3.52) |
| Upper extremity | 1301 (2.48) |
| Lower extremity | 4371 (8.33) |
| Pelvis | 1139 (2.17) |
| External | 1 (0.001) |
| Outcomes | |
| Discharged to PAC (preadjustment) | 8304 (15.83) |
| Discharged to PAC (postadjustment) | 8304 (15.83) |
| Discharged to PAC (outcomes changed) | 2358 (4.50) |

Abbreviations: ED, emergency department; GCS, Glasgow Coma Scale; CHF, congestive heart failure; COPD, chronic obstructive pulmonary disease; MI, myocardial infarction; PVD, peripheral vascular disease; PAC, postacute care.

<sup>a</sup> Race is defined by National Trauma Data Bank fields, classified based on self-report. To make sure there were sufficient data in each race category for the model development, only Black and White patients were included.

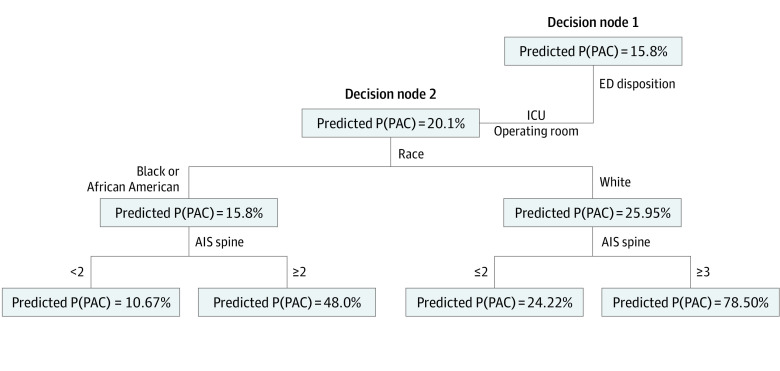

Diagnosing Disparities

Only 3800 Black patients (12.1%) were discharged to PAC, compared with 4504 White patients (21.5%; P < .001). The first OCT algorithm was developed using unadjusted data and included the race variable. Figure 2 shows details for the first 2 nodes of the OCT. The eFigure in Supplement 1 illustrates the comprehensive structure of the overall tree. At the first node, the tree branches to the left for patients who were admitted from the emergency department to the intensive care unit or to the operating room (as opposed to the floor). The predicted probability of discharge to a PAC, P(PAC), was 20.1%. Race appeared as the second major decision node in the initial unadjusted OCT. A variable that appears in an early node in the OCT carries more weight toward the final prediction than a variable that is further down the tree. The P(PAC) increased to 26.0% for White patients but decreased for Black patients to 15.8% thereafter.
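The size of the unadjusted gap can be checked from the reported counts alone. The text does not name the test behind the P value, so the sketch below assumes a pooled two-proportion z test (equivalent to a chi-square test without continuity correction) with a two-sided normal tail. The function name is hypothetical.

```python
import math


def two_proportion_z(x1, n1, x2, n2):
    """Pooled two-proportion z test; returns (z, two-sided P value)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal
    return z, p_value


# Counts reported in this article: PAC discharges among Black and White patients.
z, p_value = two_proportion_z(3800, 31470, 4504, 20998)
print(f"z = {z:.1f}, P < .001: {p_value < 0.001}")
```

Under this assumed test, the difference is far beyond the threshold for P < .001, in line with the reported result.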

Figure 2. First 2 Nodes of the Optimal Classification Tree Using Unadjusted Data.

AIS indicates Abbreviated Injury Scale; ED, emergency department; ICU, intensive care unit; P(PAC), probability of discharge to postacute care.

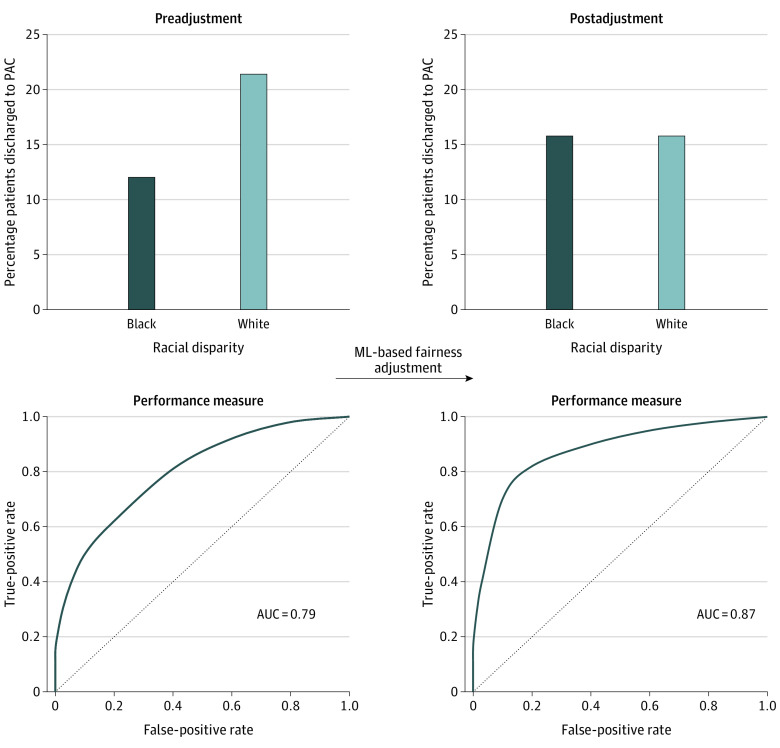

Remedying Disparities

Following the application of the mixed-integer optimization model to create a fairness-adjusted discharge disposition, the outcome was changed for 2358 patients (4.5%) from PAC to home or vice versa. The number of patients discharged to PAC before and after adjustment was the same (8304 patients). After fairness adjustment, 4979 Black patients (15.8%) and 3325 White patients (15.8%) had a recommended discharge to PAC (P = .87). Furthermore, fairness adjustment resulted in an improvement of the model performance from a mean C statistic of 0.79 to 0.87 across 10 random seeds of the data set (eTables 2 and 3 in Supplement 1). Figure 3 illustrates the percentage of patients discharged to PAC and model performance before and after ML-based fairness adjustment.
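The reported counts allow a short consistency check on the flips, sketched below with the figures given in this article. The variable names are assumptions; no new data are introduced.

```python
# Counts reported in this article.
before = {"Black": 3800, "White": 4504}   # discharged to PAC, unadjusted
after = {"Black": 4979, "White": 3325}    # recommended PAC, fairness adjusted
cohort_size = 52468
flipped = 2358

# Net change in PAC recommendations within each group.
net_change = {group: after[group] - before[group] for group in before}

# The total sent to PAC is the same before and after adjustment.
total_unchanged = sum(before.values()) == sum(after.values()) == 8304

# If the absolute net changes add up to the number of flips, no group
# had flips in both directions.
flips_explained = sum(abs(delta) for delta in net_change.values())
share_flipped = flipped / cohort_size

print(net_change, total_unchanged, flips_explained, f"{share_flipped:.1%}")
```

The net changes are +1179 for Black patients and −1179 for White patients, and their absolute values sum to the 2358 flipped outcomes. The counts are therefore consistent with every flip moving a Black patient from home to PAC or a White patient from PAC to home.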

Figure 3. Percentage of Patients Discharged to Postacute Care (PAC) and Model Performance Before and After Machine Learning (ML)–Based Fairness Adjustment.

AUC indicates area under the receiver operating characteristic curve.

Discussion

A serious concern for the use of ML methodologies in medicine and health care is the potential for accidental encoding of existing bias and disparities in the training data sets because of the black box, noninterpretable nature of most existing ML methodologies. Through this model predicting PAC discharge following injury, we have demonstrated that interpretable ML methodologies can be used not only to diagnose bias and disparities but also to possibly remedy them. This premise is supported by the fact that, after fairness adjustment, a similar percentage of Black and White patients had a recommended discharge to PAC and the predictive performance of our PAC discharge model improved. We believe that the use of this tool has the potential to overcome the blind spots that may exist not only in relation to the role of race in postinjury care of patients but potentially in other areas in health care.

We used this specific model studying PAC in trauma patients because there is an abundance of evidence in the literature about disparities in access to PAC. Chun Fat et al24 examined the utilization of postdischarge health care services by Black and White trauma patients in 3 major hospitals in Boston. After exact matching on age, gender, mechanism of injury, and injury severity, Black patients were still less likely to access postdischarge rehabilitation services and less likely to be seen in the outpatient setting for injury-related follow-up. Similarly, Meagher et al12 studied racial differences in discharge disposition of patients with traumatic brain injury using the Nationwide Inpatient Sample. After the application of a logistic regression model, insured Black patients had lower odds of being discharged to rehabilitation when compared with insured White patients.12 Our findings using non–fairness-adjusted ML are in line with the existing literature.

The discriminatory effect or bias of artificial intelligence and ML has received significant attention in the scientific world, including medicine, in recent years.3,4,5,25,26 Machine learning algorithms might reconstruct existent socioeconomic, racial, and ethnic strata based on factors concealed within the data that were used to train them. Bias can infiltrate ML models at every stage of development and deployment, in forms that include historical bias, representation bias, measurement bias, learning bias, and deployment bias, to name a few.26 Historical bias arises from real-world bias and leads to the training of models that reinforce preexisting disparities. This type of bias became evident in our unadjusted OCT algorithms as the predicted discharge to PAC varied significantly based on race, to the extent that race was the second node in our “raw” trees, suggesting a major role for race in PAC disposition in trauma patients. Carefully examining the interpretable ML tree allowed us to realize that the algorithm is simply reproducing the system-level disparities in the training data. Representation bias is another potential source of harm and often originates from a failure to represent minority groups appropriately in the training set.1,5 Esteva et al27 used 129 450 skin pictures to train a deep convolutional neural network that can classify skin lesions and recognize cancerous lesions. However, fewer than 5% of the pictures in the training set were of individuals with dark skin, which resulted in significant limitations to the use of the algorithm in this population.

Awareness of disparities in health care and subsequent ML models is the first step to reducing bias. Action can then be taken to improve existing models and create fair algorithms, but fairness adjustment is not a one-size-fits-all approach, and solutions need to target the specific type of bias that exists. The rationale for using fairness adjustment in our data was as follows: fairness entails sending patients who have the highest injury burden and the greatest likelihood of benefit from rehabilitation to PAC regardless of race. The subsequent increase in discriminative performance of the trees suggests that the resulting fairness-adjusted model was more accurate in predicting discharge disposition. This improvement likely stems from the creation of a more systematic approach to discharge prediction that emphasizes patient factors such as comorbidities, severity of injury, and nature of injury rather than race.

Machine learning–based models are most helpful when used at the bedside. To simplify the complexity of the OCT decision trees for the end user, our team previously created an interactive interface for prediction of outcomes after emergency surgery, the Predictive Optimal Trees in Emergency Surgery Risk (POTTER), and trauma, the Trauma Outcome Predictor (TOP).18,19 Following a series of short questions, the algorithm displays a specific risk percentage. The sequence of questions mimics the structure of the OCTs. Ultimately, this technology could be further simplified by incorporating the algorithms into the electronic health record (EHR) so that answers to the algorithm’s questions can be seamlessly pulled from the EHR, allowing for a smooth integration into a physician’s workflow. As part of this study, we aim to develop a similar interactive application to facilitate access to our fairness-adjusted model in real time.

Machine learning can make health care more efficient, accurate, and comprehensive. However, exposing areas of improvement in this relatively new field requires close collaboration between physicians and ML engineers. A clinician should be able to offer proactive input during algorithm creation and training, and the engineers should be able to actively seek input from the clinician when faced with ambiguous data and results. Interpretable (non–black box) ML models allow stakeholders, including clinicians, to examine the ML logic and determine potential sources of bias. In addition, with the growing use of ML in health care, it is now more important than ever to examine any algorithmic biases by comparing prediction accuracy across demographic groups. Clinicians and ML algorithm developers or engineers must collaborate to identify the origins of such algorithm bias and improve prediction models through better data collection and analyses.

Limitations

Our study has a few limitations. First, the predictive ability of OCTs is limited by the available data, and as with any big-data study, we are limited to the variables that exist in the Trauma Quality Improvement Program database. Additional factors such as the presence or absence of a social support network can play an important role in determining discharge disposition. Second, we only included Black and White patients who had penetrating injuries. We wanted this work to serve as a proof of concept for the use of ML models to alleviate racial disparities, and we chose this cohort as a specific starting point. The results might not be generalizable to other racial and ethnic groups. Third, we did not adjust for insurance status, which likely plays a role in discharge disposition.10,11 There is significant intersection between race and insurance status, and we felt that including insurance in our model at this early stage may introduce another source of bias into the data set and algorithms and that disparities in insurance are closely related to racial disparities. In future work of this kind, we will examine the role of insurance status similarly.

Conclusions

Interpretable ML methodologies are powerful tools to diagnose and remedy system-related bias in care, such as disparities in access to postinjury rehabilitation care. We developed an accurate, ML-based, fairness-adjusted model that can serve to alleviate racial disparities and identify barriers to discharge to postacute care. This work demonstrates the feasibility of such algorithms and can serve as a framework for decision-makers seeking equity.

eFigure. Complete optimal classification tree using unadjusted data with dotted rectangle delineating portion of the tree represented in Figure 2

eTable 1. Variables requiring imputation

eTable 2. Relative variable importance from a logistic regression model used to inform weighted severity metric

eTable 3. Metrics for the area under the curve (AUC) across the test set for 10 random seeds

Data sharing statement

References

1. Zou J, Schiebinger L. AI can be sexist and racist: it’s time to make it fair. Nature. 2018;559(7714):324-326. doi: 10.1038/d41586-018-05707-8
2. Chen IY, Szolovits P, Ghassemi M. Can AI help reduce disparities in general medical and mental health care? AMA J Ethics. 2019;21(2):E167-E179. doi: 10.1001/amajethics.2019.167
3. Maurer LR, Bertsimas D, Kaafarani HMA. Machine learning reimagined: the promise of interpretability to combat bias. Ann Surg. 2022;275(6):e738-e739. doi: 10.1097/SLA.0000000000005396
4. Naudts L. How machine learning generates unfair inequalities and how data protection instruments may help in mitigating them. In: Leenes R, van Brakel R, Gutwirth S, De Hert P, eds. Data Protection and Privacy: The Internet of Bodies. Hart Publishing; 2019: 71-92. doi: 10.5040/9781509926237.ch-003
5. Adamson AS, Smith A. Machine learning and health care disparities in dermatology. JAMA Dermatol. 2018;154(11):1247-1248. doi: 10.1001/jamadermatol.2018.2348
6. Velmahos CS, Herrera-Escobar JP, Al Rafai SS, et al. It still hurts! persistent pain and use of pain medication one year after injury. Am J Surg. 2019;218(5):864-868. doi: 10.1016/j.amjsurg.2019.03.022
7. Wegener ST, Castillo RC, Haythornthwaite J, MacKenzie EJ, Bosse MJ; LEAP Study Group. Psychological distress mediates the effect of pain on function. Pain. 2011;152(6):1349-1357. doi: 10.1016/j.pain.2011.02.020
8. MacKenzie EJ, Bosse MJ, Kellam JF, et al. Early predictors of long-term work disability after major limb trauma. J Trauma. 2006;61(3):688-694. doi: 10.1097/01.ta.0000195985.56153.68
9. Haider AH, Herrera-Escobar JP, Al Rafai SS, et al. Factors associated with long-term outcomes after injury: results of the Functional Outcomes and Recovery After Trauma Emergencies (FORTE) multicenter cohort study. Ann Surg. 2020;271(6):1165-1173. doi: 10.1097/SLA.0000000000003101
10. Englum BR, Villegas C, Bolorunduro O, et al. Racial, ethnic, and insurance status disparities in use of posthospitalization care after trauma. J Am Coll Surg. 2011;213(6):699-708. doi: 10.1016/j.jamcollsurg.2011.08.017
11. Sacks GD, Hill C, Rogers SO Jr. Insurance status and hospital discharge disposition after trauma: inequities in access to postacute care. J Trauma. 2011;71(4):1011-1015. doi: 10.1097/TA.0b013e3182092c27
12. Meagher AD, Beadles CA, Doorey J, Charles AG. Racial and ethnic disparities in discharge to rehabilitation following traumatic brain injury. J Neurosurg. 2015;122(3):595-601. doi: 10.3171/2014.10.JNS14187
13. Haider AH, Chang DC, Efron DT, Haut ER, Crandall M, Cornwell EE III. Race and insurance status as risk factors for trauma mortality. Arch Surg. 2008;143(10):945-949. doi: 10.1001/archsurg.143.10.945
14. Hicks CW, Hashmi ZG, Velopulos C, et al. Association between race and age in survival after trauma. JAMA Surg. 2014;149(7):642-647. doi: 10.1001/jamasurg.2014.166
15. Haider AH, Weygandt PL, Bentley JM, et al. Disparities in trauma care and outcomes in the United States: a systematic review and meta-analysis. J Trauma Acute Care Surg. 2013;74(5):1195-1205. doi: 10.1097/TA.0b013e31828c331d
16. Lin SF, Beck AN, Finch BK. Black-white disparity in disability among U.S. older adults: age, period, and cohort trends. J Gerontol B Psychol Sci Soc Sci. 2014;69(5):784-797. doi: 10.1093/geronb/gbu010
17. Bertsimas D, Pawlowski C, Zhuo YD. From predictive methods to missing data imputation: an optimization approach. J Mach Learn Res. 2018;18(196):1-39.
18. Bertsimas D, Dunn J, Velmahos GC, Kaafarani HMA. Surgical risk is not linear: derivation and validation of a novel, user-friendly, and machine-learning-based Predictive Optimal Trees in Emergency Surgery Risk (POTTER) calculator. Ann Surg. 2018;268(4):574-583. doi: 10.1097/SLA.0000000000002956
19. Maurer LR, Bertsimas D, Bouardi HT, et al. Trauma outcome predictor: an artificial intelligence interactive smartphone tool to predict outcomes in trauma patients. J Trauma Acute Care Surg. 2021;91(1):93-99. doi: 10.1097/TA.0000000000003158
20. Maurer LR, Chetlur P, Zhuo D, et al. Validation of the AI-based Predictive Optimal Trees in Emergency Surgery Risk (POTTER) calculator in patients 65 years and older. Ann Surg. 2023;277(1):e8-e15. doi: 10.1097/SLA.0000000000004714
21. El Hechi MW, Maurer LR, Levine J, et al. Validation of the artificial intelligence-based Predictive Optimal Trees in Emergency Surgery Risk (POTTER) calculator in emergency general surgery and emergency laparotomy patients. J Am Coll Surg. 2021;232(6):912-919.e1. doi: 10.1016/j.jamcollsurg.2021.02.009
22. El Hechi M, Gebran A, Bouardi HT, et al. Validation of the artificial intelligence-based trauma outcomes predictor (TOP) in patients 65 years and older. Surgery. 2022;171(6):1687-1694. doi: 10.1016/j.surg.2021.11.016
23. Bandi H, Bertsimas D. The price of diversity. Published online July 2, 2021. https://arxiv.org/abs/2107.03900
24. Chun Fat S, Herrera-Escobar JP, Seshadri AJ, et al. Racial disparities in post-discharge healthcare utilization after trauma. Am J Surg. 2019;218(5):842-846. doi: 10.1016/j.amjsurg.2019.03.024
25. Mhasawade V, Zhao Y, Chunara R. Machine learning and algorithmic fairness in public and population health. Nat Mach Intell. 2021;3(8):659-666. doi: 10.1038/s42256-021-00373-4
26. Suresh H, Guttag J. A framework for understanding sources of harm throughout the machine learning life cycle. ACM International Conference Proceeding Series. Association for Computing Machinery; October 2021. doi: 10.1145/3465416.3483305
27. Esteva A, Kuprel B, Novoa RA, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542(7639):115-118. doi: 10.1038/nature21056
