Abstract

The brain is a complex system comprising a myriad of interacting neurons, posing significant challenges in understanding its structure, function, and dynamics. Network science has emerged as a powerful tool for studying such interconnected systems, offering a framework for integrating multiscale data and complexity. To date, network methods have significantly advanced functional imaging studies of the human brain and have facilitated the development of control theory-based applications for directing brain activity. Here, we discuss emerging frontiers for network neuroscience in the brain atlas era, addressing the challenges and opportunities in integrating multiple data streams for understanding the neural transitions from development to healthy function to disease. We underscore the importance of fostering interdisciplinary opportunities through workshops, conferences, and funding initiatives, such as supporting students and postdoctoral fellows with interests in both disciplines. By bringing together the network science and neuroscience communities, we can develop novel network-based methods tailored to neural circuits, paving the way toward a deeper understanding of the brain and its functions, as well as offering new challenges for network science.

Keywords: Network Science, Connectomics, Neurodevelopment, Systems Neuroscience, Network Neuroscience, NeuroAI

Introduction

During the past two decades, network science has become a vital tool in the study of complex systems, offering a wide range of analytical and algorithmic techniques to explore the structure of complex, interconnected systems (Albert and Barabási, 2002; Newman, 2003). Previous reductionist approaches, built on decades of empirical research, have focused on the functioning of individual elements while neglecting how their interactions give rise to emergent aspects of organization. More recently, network approaches have helped map the interactions between molecules, cells, tissues, individuals, and organizations. It is becoming clear that network theory can aid neuroscience in understanding how distributed patterns of interactions create function, accounting for the complexity of integrated systems. At the same time, neuroscience introduces novel questions for network science, providing the potential for new tools and lines of inquiry.

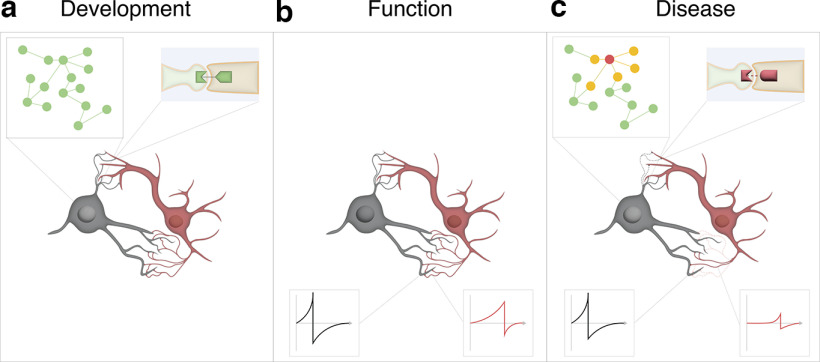

The brain, with its billions of cells connected by synapses, is the ultimate example of a complex system that cannot be understood through the study of individual components alone. In order to unveil the neural basis of complex behaviors and functions, such as perception, movement, cognition, memory, and emotion, we must acknowledge and catalog the interactions between the neurons, allowing us to integrate multiple levels of observations and apply diverse approaches, including computational and mathematical modeling (Fornito et al., 2015; Bassett et al., 2018; Pulvermüller et al., 2021). The goal of this perspective is to outline how network science provides valuable insights and methods that can greatly enhance our broader understanding of brain function. Toward this goal, The Kavli Foundation convened a workshop in which participants began to outline how recent network science techniques can contextualize the emerging wave of neuroscientific big data, focusing on three topics: neurodevelopment, functional brain data, and health and disease. Below, we summarize these discussion points and outline research frontiers in which the fields of network science and neuroscience can jointly benefit from defining common goals and language (Fig. 1).

Figure 1.

Multiscale interaction in network development, function, and disease. a, Development: Neural connectivity emerges as a function of cell identity, linking network dynamics across modalities and scales. Regulatory networks (top left) underlie cell differentiation, and protein–protein interactions guide morphologic maturation and synaptic specificity (top right). b, Function: Structural connectivity guides the emergent possibilities of functional networks, determining the strength with which one neuron can influence the next (bottom). c, Disease: In a diseased state, failures at multiple network levels lead to perturbed function. Genetic mutations cause disruptions in gene regulatory networks (top left), as well as conformational changes that alter protein–protein interactions (top right), potentially leading to loss of synaptic connectivity (dashed neurites). In turn, reduced connection strength between neurons disrupts activity propagation (bottom), providing links between genetic changes and cognitive dysfunction.

Techniques

Neuroscience

In the recent past, technical and experimental advancements in neuroscience have enabled scientists to study the brain at increasingly fine scales, ranging from coarse circuit analysis to whole-animal, cellular-level neural recording, connectivity mapping, and genetic profiling. While previous techniques already necessitated the use of graph theoretical tools, recent data collection methods have started to offer a consistent stream of multimodal and high-quality connectomic reconstructions that make the use of network science a necessity. For example, while a connectome of Caenorhabditis elegans has been available since 1986 (White et al., 1986), recent advances in electron microscopy (Abbott et al., 2020) have produced whole-animal wiring information in Ciona intestinalis (Ryan et al., 2016) and Platynereis dumerilii (Verasztó et al., 2020), as well as brain-wide connectivity maps for Drosophila at different stages of development (Eichler et al., 2017; Scheffer et al., 2020; Winding et al., 2023), along with partial connectomes for zebrafish (Hildebrand et al., 2017), mice (Bae et al., 2021), and humans (Shapson-Coe et al., 2021). Single-cell transcriptomics has also enabled rapid and diverse profiling of cellular identity across animals, developmental stages, and states of brain health (Zeng, 2022). Additional “bridge” techniques, such as spatial transcriptomics (Chen et al., 2019), allow rapid acquisition of multimodal datasets, promising both spatial and genetic information about cells from a single measurement. These datasets offer detailed connectivity and identity information about thousands, and soon millions, of neurons. To analyze these data and extract experimentally testable signals and hypotheses, we need to integrate all data points using network science tools, which in turn will require further advances in network science to address emerging challenges.

To study functional properties of individual neurons and neural networks in the living brain, in vivo techniques, such as two-photon microscopy (Grienberger and Konnerth, 2012) and multiunit electrode recording (Steinmetz et al., 2018), provide rapid profiling of local and mesoscale neuronal activity and anatomy in animal models, revealing principles of circuit organization and dynamic coding that underlie sensory perception, movement control, decision-making, and behavior generation. At present, however, these approaches are largely restricted to one or a few brain regions at a time. Technological advances are needed to monitor neuronal activity across multiple brain regions at high resolution, which is necessary to understand the dynamic interplay among the components of brain-wide circuits.

Currently, MRI is the primary technology for noninvasively mapping networks in the human brain, either by reconstructing white matter tracts using diffusion tensor imaging or by inferring axonal connectivity from the cytoarchitectural or morphometric similarity between brain regions. The analysis of brain-wide neural activity maps has already showcased the insight network neuroscience can bring (Sporns and Betzel, 2016; Shine and Poldrack, 2018; Lurie et al., 2020; Suárez et al., 2020), and the field continues to have immense potential. For instance, the rapid growth and widespread use of datasets such as the Allen Human Brain Atlas (Hawrylycz et al., 2012) highlight the need for a wider range of human brain atlases that document gene expression and other molecular or cellular phenotypes commensurate with structural phenotypes, such as volume and myelination. We must integrate multiple levels of analysis and apply diverse approaches, including computational and mathematical modeling, to unravel the complexity of brain networks and the roles of their many interacting components. Future functional brain profiling methods must also account for the multiple cell identities and network features that define neuronal systems.

Box 1:

Common concepts in network neuroscience

Studying the brain requires moving across scales and modalities, and a set of shared terms provides a common reference frame. Structural connectivity refers to “ground-truth,” physically instantiated networks, such as measured synaptic connections or axon tracts, while functional connectivity represents estimates of statistical dependencies between neural time courses. Similarly, dynamics of networks refers to changes in the connection topology itself, such as synaptic updates or pruning, while dynamics on networks refers to the neural activity patterns instantiated on top of the structural connectivity.
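To make the distinction concrete, the sketch below (Python with NumPy) builds a hypothetical structural network, runs simple noisy linear dynamics on it, and estimates functional connectivity as Pearson correlations between the simulated time courses. The chain topology, the `leak` and `coupling` values, and the linear update rule are illustrative assumptions, not a model of any real circuit.

```python
import numpy as np

# Hypothetical structural connectivity: an undirected chain of 5 regions
# (A[i, j] = 1 if regions i and j share a physical connection).
A = np.zeros((5, 5))
for i in range(4):
    A[i, i + 1] = A[i + 1, i] = 1.0


def simulate_activity(A, steps=5000, leak=0.5, coupling=0.2, seed=0):
    """Dynamics *on* a fixed network: noisy linear activity spreading along A.

    x(t+1) = leak * x(t) + coupling * A @ x(t) + noise.
    leak + coupling * (largest eigenvalue of A) < 1 keeps the process stable.
    """
    rng = np.random.default_rng(seed)
    n = A.shape[0]
    M = leak * np.eye(n) + coupling * A
    x = np.zeros(n)
    series = np.empty((steps, n))
    for t in range(steps):
        x = M @ x + rng.standard_normal(n)
        series[t] = x
    return series


X = simulate_activity(A)
# Functional connectivity: Pearson correlations between regional time courses.
FC = np.corrcoef(X, rowvar=False)
print(np.round(FC, 2))
```

Because activity spreads along multi-step paths, statistical dependencies can appear between regions that share no direct structural link, which is one reason functional and structural connectivity should not be equated.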

Network science

Traditionally, neural connectivity is modeled as a simple graph, formalizing the brain as a set of nodes (neurons) connected by links (synapses, gap junctions). Network science aims to go beyond the study of such simple graphs: starting from the adjacency matrix of the system, which encodes who is connected to whom, network science offers a suite of tools to characterize local and large-scale structure, ranging from degree distributions to community structure, degree correlations, and even controllability, which quantifies our ability to guide the dynamics of the circuit. Yet this “time-frozen” graph-based approach greatly oversimplifies the true complexity of the brain, ignoring the cell identities, signaling types, dynamics, and spatial and energetic constraints that shape this complex organ. Emerging approaches in network science offer tools to start capturing this rich complexity, helping us analyze structural and functional brain data across scales (Betzel and Bassett, 2017).
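As a minimal illustration of the adjacency-matrix view, the following sketch (Python with NumPy) computes the degree of each node, the degree distribution P(k), and one common measure of degree correlations, the degree assortativity coefficient. The six-neuron network is hypothetical, and real connectomes are typically directed and weighted, which this sketch ignores.

```python
import numpy as np

# Hypothetical undirected connectome with 6 neurons (1 = connected).
A = np.array([
    [0, 1, 1, 1, 1, 0],
    [1, 0, 1, 0, 0, 0],
    [1, 1, 0, 0, 0, 0],
    [1, 0, 0, 0, 1, 0],
    [1, 0, 0, 1, 0, 1],
    [0, 0, 0, 0, 1, 0],
])

degree = A.sum(axis=1)                    # number of partners per neuron
P_k = np.bincount(degree) / len(degree)   # degree distribution P(k), k = 0, 1, 2, ...


def degree_assortativity(A):
    """Pearson correlation between the degrees found at the two ends of each link."""
    k = A.sum(axis=1)
    i, j = np.nonzero(A)   # every undirected link appears once in each direction
    return np.corrcoef(k[i], k[j])[0, 1]


r = degree_assortativity(A)
print(degree, P_k, round(r, 2))
```

A negative coefficient, as in this example, indicates that high-degree nodes tend to link to low-degree ones.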

For example, multiplex and multilayer networks provide a framework for describing cell–cell relationships and hierarchies, capturing the circuit motifs that can significantly affect the dynamical and topological properties of functional networks. Indeed, multiplex networks can represent multiple types of connections, such as synapses, gap junctions, neuromodulators, and circulating gut peptides, within a single formal framework (Bianconi, 2018; Presigny and De Vico Fallani, 2022). Triadic interactions, in which a node affects the interaction between two other nodes, can also be incorporated, capturing, for example, how glia can influence the synaptic signal between neurons (Sun et al., 2023). These triadic interactions can lead to the emergence of higher-order networks, often represented as hypergraphs or simplicial complexes (Battiston et al., 2020, 2021; Bianconi, 2021; Torres et al., 2021). Promise Theory extends network analysis further by incorporating complex agent modeling, conditional linkage, and a process interconnection language, while accounting for the functional and structural diversity of cells and their roles (Burgess, 2015, 2021).
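One simple way to hold several connection types in a single object, sketched below under the assumption of a shared, ordered node set, is to stack one adjacency matrix per layer into a tensor. The example computes each neuron's degree per layer, its overlapping (summed) degree, and the fraction of connected pairs present in more than one layer. The two layers and their entries are hypothetical, and collapsing the directed chemical layer to undirected pairs is a simplifying choice.

```python
import numpy as np

# Hypothetical 4-neuron multiplex: one adjacency matrix per interaction type,
# all defined over the same ordered set of neurons.
chemical = np.array([[0, 1, 1, 0],    # directed chemical synapses (row -> column)
                     [0, 0, 1, 0],
                     [0, 0, 0, 1],
                     [1, 0, 0, 0]])
gap = np.array([[0, 1, 0, 0],         # gap junctions are symmetric
                [1, 0, 0, 0],
                [0, 0, 0, 1],
                [0, 0, 1, 0]])
layers = {"chemical": chemical, "gap_junction": gap}


def undirected(A):
    """Collapse direction: i and j are linked if either projects to the other."""
    return ((A + A.T) > 0).astype(int)


# Stack layers into an (L, N, N) tensor so every layer stays distinguishable.
tensor = np.stack([undirected(A) for A in layers.values()])

layer_degree = tensor.sum(axis=2)             # degree of each neuron in each layer
overlapping_degree = layer_degree.sum(axis=0)

# Edge overlap: fraction of connected pairs that appear in more than one layer.
upper = np.triu_indices(tensor.shape[1], k=1)
multiplicity = tensor[:, upper[0], upper[1]].sum(axis=0)
edge_overlap = (multiplicity > 1).sum() / (multiplicity > 0).sum()
print(layer_degree, overlapping_degree, edge_overlap)
```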

Traditional network-based analyses of the brain have largely ignored the spatial component of multiscale datasets, such as the geometry and morphology of neurons, treating neurons as point-like nodes rather than physical objects with length, volume, and a branching tree structure. At larger scales, numerous network models have attempted to incorporate the physical dimension or geometry of extended neural networks through considerations of wiring economy (Markov et al., 2013; Horvát et al., 2016), metabolic cost, and conduction delays (Bullmore and Sporns, 2012). The emerging study of physical networks promises tools to explore how the physicality and spatial organization of individual neurons, together with noncrossing constraints, affect the network structure of the whole brain (Pósfai et al., 2022). These approaches have the potential to address the metabolic cost of building and maintaining wiring and to incorporate the physical length of connections. There is a real need, for both network science and neuroscience, to go beyond simple connectivity information and incorporate the true physical nature of neurons, weighing cell properties together with their connections, to enrich our understanding of neuronal circuit operations.
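Wiring economy can be quantified in a simple way once nodes have positions. The sketch below, assuming NumPy and straight-line (Euclidean) link lengths, sums the length of all links and compares the result with networks that have the same number of links placed uniformly at random. The grid layout is hypothetical, and a degree-preserving randomization would be a stricter null model than the one used here; neither accounts for branching morphology or noncrossing constraints.

```python
import numpy as np


def wiring_cost(A, coords):
    """Total Euclidean length of all undirected links: a simple wiring-cost proxy."""
    i, j = np.nonzero(np.triu(A, k=1))
    return float(np.linalg.norm(coords[i] - coords[j], axis=1).sum())


def random_placement_cost(A, coords, n_samples=200, seed=0):
    """Mean cost when the same number of links is placed uniformly at random."""
    rng = np.random.default_rng(seed)
    pairs = np.column_stack(np.triu_indices(len(coords), k=1))
    m = int(np.triu(A, k=1).sum())
    costs = []
    for _ in range(n_samples):
        chosen = pairs[rng.choice(len(pairs), size=m, replace=False)]
        costs.append(np.linalg.norm(coords[chosen[:, 0]] - coords[chosen[:, 1]], axis=1).sum())
    return float(np.mean(costs))


# Hypothetical layout: 20 neurons on a 5 x 4 grid, each linked only to grid neighbours.
coords = np.array([(x, y) for x in range(5) for y in range(4)], dtype=float)
dist = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=2)
A = np.isclose(dist, 1.0).astype(int)

cost = wiring_cost(A, coords)
null_cost = random_placement_cost(A, coords)
print(cost, round(null_cost, 1))
```

On this grid every link has unit length, so the observed cost equals the number of links, well below the random-placement average.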

Application Areas

Neurodevelopment

Systems neuroscience and genomics have relied on a fruitful collaboration between theory and experiment. However, a similar symbiosis has so far escaped neurodevelopment. Neurodevelopment has strong core principles that are ripe for modeling, empowered by the recent availability of rich connectomics, genomics, and imaging datasets from which computational and network-based analyses can extract rich insights. For instance, in addition to whole-body behavior and neural recordings, C. elegans now has developmentally resolved connectomes and transcriptomes (Boeck et al., 2016; Witvliet et al., 2021), allowing for the integrated analysis of connectivity, genetics, activity, and behavior, and inspiring the ongoing acquisition of similar datasets for larger organisms. So far, network tools in brain science have mainly been used to map and analyze static networks, ignoring the temporal scale of brain connectivity and especially the temporal aspects of brain activity (i.e., network dynamics). However, a key insight of network science is that we must understand the regularities and rules governing the growth and assembly of networks (i.e., the evolving topology of their connectivity) to understand the origin of empirically observed network characteristics (A. L. Barabási and Albert, 1999). Network science thus offers important tools to address this gap and can provide a comprehensive quantitative framework to study the temporal unfolding of neurodevelopment across species. It offers the formal language to describe, and then to analyze, how emerging cell identity and its physical instantiation lead to the observed connectivity of the brain. Reciprocally, new insights from biological systems that first establish and then prune structured networks may inspire new network approaches (Woźniak et al., 2020).
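As an illustration of how a growth rule shapes global structure, the sketch below grows networks under two hypothetical attachment rules: preferential attachment, in the spirit of the Barabási–Albert model (each new node links to existing nodes with probability proportional to their degree), and uniform attachment. The seed core, the number of links per new node `m`, and the network size are illustrative assumptions; the sketch is not a model of neural development.

```python
import random
from collections import Counter


def grow_network(n_nodes, m=2, preferential=True, seed=0):
    """Grow an undirected network one node at a time; each newcomer adds m links."""
    rng = random.Random(seed)
    edges, endpoints = [], []          # endpoints lists every node once per link it has
    for i in range(m + 1):             # fully connected seed core of m + 1 nodes
        for j in range(i):
            edges.append((i, j))
            endpoints += [i, j]
    for new in range(m + 1, n_nodes):
        targets = set()
        while len(targets) < m:
            if preferential:
                # A random link endpoint is node i with probability k_i / sum(k).
                targets.add(rng.choice(endpoints))
            else:
                targets.add(rng.randrange(new))   # every existing node equally likely
        for t in sorted(targets):
            edges.append((new, t))
            endpoints += [new, t]
    return edges


def degree_counts(edges):
    return Counter(node for edge in edges for node in edge)


pa = degree_counts(grow_network(2000, preferential=True))
ua = degree_counts(grow_network(2000, preferential=False))
print("largest hub, preferential:", max(pa.values()))
print("largest hub, uniform:     ", max(ua.values()))
```

Only the attachment rule differs between the two runs, yet preferential attachment typically produces far larger hubs, that is, a heavier-tailed degree distribution.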

One central question in this field is how neuron identity, captured by gene expression profiles, location, and shape, determines the wiring patterns of neurons and leads to stereotyped connectivity and behavior. Network models of neurodevelopmental principles are therefore needed to validate hypotheses and make predictions for future experiments. For instance, models built on Roger Sperry's hypothesis that genetic compatibility drives neuronal connectivity have helped infer the protein interactions that underlie connectivity in C. elegans (D. L. Barabási and Barabási, 2020; Kovács et al., 2020) and in the Drosophila visual system (Kurmangaliyev et al., 2020). These models are most successful when they take into account the affordances of the niche in which organisms operate, including noise from data collection limitations and spatial restrictions, offering more accurate descriptions of the complex landscape of neuronal circuit construction.
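A compatibility-based wiring rule can be written compactly in matrix form. The sketch below, a simplified illustration in the spirit of genetic connectome models rather than the published method, predicts a directed link from neuron i to neuron j whenever i expresses a gene g and j expresses a gene h such that the pair (g, h) is marked as compatible in a rule matrix `O`, i.e., B = X O X^T thresholded at zero. The expression matrix `X` and the rules in `O` are hypothetical; in practice such rules would be inferred from data.

```python
import numpy as np

# Hypothetical expression matrix X: rows = neurons, columns = genes a, b, c
# (1 = the neuron expresses that recognition molecule).
X = np.array([
    [1, 0, 0],   # neuron 0: a
    [0, 1, 0],   # neuron 1: b
    [0, 1, 1],   # neuron 2: b, c
    [0, 0, 1],   # neuron 3: c
])

# Hypothetical rule matrix O: O[g, h] = 1 if a presynaptic neuron expressing gene g
# is compatible with a postsynaptic neuron expressing gene h.
O = np.array([
    [0, 1, 0],   # a -> b
    [0, 0, 1],   # b -> c
    [0, 0, 0],   # c pairs with nothing
])

# (X @ O @ X.T)[i, j] counts compatible gene pairs for the link i -> j; any pair suffices here.
B = (X @ O @ X.T > 0).astype(int)
np.fill_diagonal(B, 0)   # ignore self-connections in this sketch
print(B)
```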

Further work on cell migration, morphogenesis, and axon guidance can help unveil the spatiotemporal factors that lead to specific circuit implementations and overall network assembly. For example, the preconfigured dynamics of the hippocampus have been shown to be influenced by factors such as embryonic birthdate and neurogenesis rate (Huszár et al., 2022). Additionally, certain network features, including heavy-tailed degree distributions, modularity, and interconnected hubs, are now known to be present across species and scales in the brain (Towlson et al., 2013; Buzsáki and Mizuseki, 2014; van den Heuvel et al., 2016). One potential explanation for the conservation of these features is the existence of universal constraints on the brain's physical architecture that arise from the trade-off among developmental cost, physical constraints, and coding efficiency. In this context, it is likely that high-cost components, such as long-distance intermodular tracts, are topologically integrative to minimize the transmission time of signals between spatially distant brain regions (Bullmore and Sporns, 2012). Further research in this area has the potential to improve our understanding of the development and organization of the brain, with implications for the diagnosis and treatment of neurologic disorders, as we discuss later.
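The features mentioned above can be quantified with standard measures. The sketch below (Python with NumPy) computes Newman's modularity Q for a given partition and the participation coefficient, which flags nodes whose links are spread across modules (connector hubs). The two-module network and its partition are hypothetical; in practice the partition would itself be found by a community-detection algorithm.

```python
import numpy as np


def modularity(A, labels):
    """Newman's modularity Q of a partition of an undirected network."""
    k = A.sum(axis=1)
    two_m = k.sum()
    same = labels[:, None] == labels[None, :]
    return ((A - np.outer(k, k) / two_m) * same).sum() / two_m


def participation(A, labels):
    """Participation coefficient: 1 - sum over modules s of (k_is / k_i)^2."""
    k = A.sum(axis=1)
    P = np.ones(len(k))
    for s in np.unique(labels):
        k_s = A[:, labels == s].sum(axis=1)   # links from each node into module s
        P -= (k_s / k) ** 2
    return P


# Hypothetical network: two triangles (modules) joined by a single link 2-3.
edges = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]
A = np.zeros((6, 6))
for i, j in edges:
    A[i, j] = A[j, i] = 1
labels = np.array([0, 0, 0, 1, 1, 1])

Q = modularity(A, labels)
P = participation(A, labels)
print(round(Q, 3), np.round(P, 2))
```

Here the two bridging nodes (2 and 3) are the only ones with non-zero participation.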

Brains are networks that do

In technological networks, such as the Internet or a computer chip, structure and function are carefully separated: information is encoded into the signal, so the role of the network is only to guarantee routing paths between nodes. In the brain, however, action potentials do not encode information in isolation. Instead, the brain relies on population coding, meaning that information is encoded in the patterns of signals generated across multiple physical networks of connections. This makes the structure of the network more than a propagation backbone; it becomes an integral part of the algorithm itself (Molnár et al., 2020), so monitoring and quantifying network structure is critical for understanding how neuronal coding achieves information processing. The connectome therefore cannot be understood divorced from the context of the actions it performs, and the modules, metrics, and generative processes that support robust representation need to be integrated with the structural representation.

While many recent studies have revealed ways in which task structures are reflected in the networks of neural representations (Chung and Abbott, 2021), little work has been done to elucidate how such representational geometries arise mechanistically and dynamically. Future research should aim to unveil how connectivity patterns at multiple temporal and spatial scales influence population-level representational geometry, and how this leads to the implementation of behaviorally relevant task dynamics. For instance, hippocampal “cognitive maps” that support reasoning in different encountered task spaces have a natural extension to network formalism: each behavioral state can be a node, and possible transitions between states are edges (Muller, 1996; Eichenbaum and Cohen, 2004; Stachenfeld et al., 2017; George et al., 2021), a representation that can be extended to the challenge of inducing latent networks from sequential inputs (Raju et al., 2022). Further, we must account for the dual dynamics present in the brain: network connectivity defines the possible functions that can be supported, while, in the reverse direction, the functional dynamics of the network allow synapses to form and change, so that activity reshapes the connectivity of the underlying networks (Papadimitriou et al., 2020). Important insights into brain function can be revealed when dynamics taking place at the node level (single neurons, brain regions) are integrated with dynamics taking place on links (synaptic signals, edge signals) (Faskowitz et al., 2022) or on higher-order motifs (Santoro et al., 2023), which are driven by the network's cycle structure and its higher-order topology (Millán et al., 2020). For instance, studying symmetries, such as automorphisms (Morone and Makse, 2019) and fibrations (Morone et al., 2020), in structural and functional neural connectivity has helped unveil the building blocks of neural synchronization in the brain.
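The cognitive-map formalism can be sketched directly: observed transitions between behavioral states define a weighted, directed graph, and the successor representation, the model associated with Stachenfeld et al. (2017), summarizes that graph as the expected discounted future occupancy of each state, M = (I - gamma T)^-1. The trajectory, the discount factor `gamma`, and the counting-based estimate of the transition matrix `T` below are illustrative assumptions.

```python
import numpy as np


def transition_matrix(sequence, n_states):
    """Row-normalized counts of observed state-to-state transitions (the edges)."""
    T = np.zeros((n_states, n_states))
    for s, s_next in zip(sequence[:-1], sequence[1:]):
        T[s, s_next] += 1
    row_sums = T.sum(axis=1, keepdims=True)
    return np.divide(T, row_sums, out=np.zeros_like(T), where=row_sums > 0)


def successor_representation(T, gamma=0.9):
    """M = sum_t gamma^t T^t = (I - gamma T)^-1: expected discounted future occupancy."""
    return np.linalg.inv(np.eye(len(T)) - gamma * T)


# Hypothetical trajectory back and forth along a 4-state linear track.
walk = [0, 1, 2, 3, 2, 1, 0, 1, 2, 3, 2, 1, 0]
T = transition_matrix(walk, 4)
M = successor_representation(T)
print(np.round(M, 2))
```

When every state has an outgoing transition, each row of M sums to 1/(1 - gamma), and states reached sooner and more often from a given start receive larger entries.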
Graph neural networks (Battaglia et al., 2018; Bronstein et al., 2021), which combine the benefits of network topology and machine learning, may also help us relate connectomically constrained graphs to the neural dynamics that take place over them.
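To relate a connectome-constrained graph to the signals carried over it, a graph neural network layer aggregates each node's features over its neighbors. The sketch below implements one graph-convolution step with symmetric normalization, a widely used variant chosen here for brevity rather than a method prescribed by the works cited above; the chain graph, feature sizes, and untrained random weights are hypothetical.

```python
import numpy as np


def gcn_layer(A, H, W):
    """One graph-convolution step: each node averages its own and its neighbours'
    features (symmetric normalization), then applies a shared linear map and ReLU."""
    A_hat = A + np.eye(len(A))                  # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    A_norm = A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(A_norm @ H @ W, 0.0)


rng = np.random.default_rng(0)
# Hypothetical connectome-constrained graph: 5 neurons in an undirected chain.
A = np.zeros((5, 5))
for i in range(4):
    A[i, i + 1] = A[i + 1, i] = 1.0
H = rng.standard_normal((5, 3))   # e.g. per-neuron activity features
W = rng.standard_normal((3, 2))   # shared weights (untrained here)
H_next = gcn_layer(A, H, W)
print(np.round(H_next, 2))
```

After one layer, a node's output depends only on itself and its direct neighbors; stacking k layers propagates information k steps along the connectome.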

For a brain to perform the numerous processes it supports, it is expected to simultaneously control the activity of individual neurons, the dynamics of individual circuits, and ultimately the full network. This represents an enormously complex control task, as revealed by recent advances in network controllability that merged the tools of control theory and network science (Liu and Barabási, 2016). These tools help us identify the nodes through which one can control a complex neural circuit, much as a car is controlled through three core mechanisms: the steering wheel, gas pedal, and brake. Recent work used network control to predict the function of individual neurons in the C. elegans connectome, leading to the discovery of new neurons involved in the control of locomotion and offering direct experimental confirmation of falsifiable predictions derived from control principles (Yan et al., 2017). A fuller description of brain function requires a deeper understanding of the underlying control problems, which in turn requires simultaneous profiling and understanding of network structure and dynamics (Tang and Bassett, 2018; Stiso et al., 2019).
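For linear dynamics, structural controllability theory (reviewed in Liu and Barabási, 2016) links driver nodes to a maximum matching of the directed network: nodes whose incoming side is left unmatched must receive an independent control input, and at least one driver is always needed. The sketch below finds a maximum matching with a simple augmenting-path (Kuhn) algorithm in pure Python; the example graphs are hypothetical, and the particular driver set returned is one of possibly several equally small sets.

```python
def maximum_matching(n, edges):
    """Kuhn's augmenting-path algorithm on the bipartite out-copy/in-copy graph.
    Returns match_in, where match_in[v] = u if link u -> v is in the matching."""
    out_links = [[] for _ in range(n)]
    for u, v in edges:
        out_links[u].append(v)
    match_in = [None] * n

    def try_augment(u, visited):
        for v in out_links[u]:
            if v in visited:
                continue
            visited.add(v)
            # Take v if it is free, or if its current partner can be re-matched elsewhere.
            if match_in[v] is None or try_augment(match_in[v], visited):
                match_in[v] = u
                return True
        return False

    for u in range(n):
        try_augment(u, set())
    return match_in


def driver_nodes(n, edges):
    """Nodes whose in-copy is unmatched must receive an external control signal."""
    match_in = maximum_matching(n, edges)
    drivers = [v for v in range(n) if match_in[v] is None]
    return drivers if drivers else [0]   # perfect matching: any single node suffices


print(driver_nodes(4, [(0, 1), (0, 2), (0, 3)]))   # hub projecting to three neurons
print(driver_nodes(4, [(0, 1), (1, 2), (2, 3)]))   # feedforward chain
```

A hub that projects to three otherwise unconnected neurons needs three independent inputs, whereas a chain of the same size needs only one, at its start.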

Finally, machine learning methods have offered a unique approach for linking network structure to task performance (Chami et al., 2020; Veličković, 2023), allowing for rapid profiling of learning and behavior that can later inform how we query biological learning (Marblestone et al., 2016; Vu et al., 2018; Richards et al., 2019). To move forward, we must study the statistics of the weight structures of AI architectures that offer high performance on complex tasks, helping identify powerful subnetworks, or “winning tickets,” responsible for the majority of a system's performance (Frankle and Carbin, 2018). An alternative approach lies in identifying generative processes that produce high-performing networks. This is inspired by innate behaviors: animals arrive in the world with a set of evolution-tested preexisting dynamics, implying an optimized set of developmental processes that yield a fine-tuned functional connectome at birth (Zador, 2019; D. L. Barabási et al., 2023). This process, termed the “genomic bottleneck,” has the potential to greatly increase the flexibility and utility of AI systems (Koulakov et al., 2022). Indeed, developmentally inspired encodings of neural network weights have already shown high and stable performance on reinforcement learning, meta-learning, and transfer learning tasks (D. L. Barabási and Czégel, 2021; D. L. Barabási et al., 2022).
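The lottery-ticket procedure of Frankle and Carbin (2018) identifies winning tickets by training, pruning the smallest-magnitude weights, rewinding the surviving weights to their initial values, and retraining, often over several rounds. The sketch below shows only the core masking step, keeping a chosen fraction of the largest-magnitude weights of a hypothetical random matrix; no training is involved.

```python
import numpy as np


def magnitude_mask(W, keep_fraction):
    """Boolean mask that keeps the largest-magnitude `keep_fraction` of weights."""
    k = int(round(keep_fraction * W.size))
    mask = np.zeros(W.size, dtype=bool)
    if k > 0:   # guard: argsort(...)[-0:] would select every weight
        mask[np.argsort(np.abs(W), axis=None)[-k:]] = True
    return mask.reshape(W.shape)


rng = np.random.default_rng(0)
W = rng.standard_normal((8, 8))    # hypothetical trained weights
mask = magnitude_mask(W, keep_fraction=0.2)
W_pruned = W * mask                # candidate sparse subnetwork
print(int(mask.sum()), "of", W.size, "weights kept")
```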

Further work in these directions would require streamlined integration of powerful circuits identified in the connectome with machine learning systems. A major barrier lies in the complexity of the initial setup of tasks that the networks are asked to learn (Seshadhri et al., 2020), which are often embedded in complex packages, such as the MuJoCo simulated physics environment (Todorov et al., 2012). It is also challenging to supply custom topologies or weights to current machine learning packages, which would be needed to move past the standard feedforward, layered architectures. Addressing these challenges would allow the network science toolkit to define a systematic search of network priors in machine learning, thereby modeling the neuroevolutionary processes and neurodevelopmental solutions responsible for biological intelligence.
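One generic workaround for imposing a custom topology, sketched here in NumPy rather than in any particular machine learning package, is to keep a fixed binary mask derived from the connectome and multiply it into the weight matrix at every step, so that only anatomically present links can carry signal. The ring-shaped mask, the tanh rate dynamics, and the input weights below are hypothetical choices for illustration.

```python
import numpy as np


class MaskedRNN:
    """Rate network whose recurrent weights are confined to a fixed topology."""

    def __init__(self, mask, n_inputs, seed=0):
        rng = np.random.default_rng(seed)
        n = mask.shape[0]
        self.mask = mask.astype(float)
        # Recurrent weights exist only where the hypothetical connectome has a link.
        self.W = rng.standard_normal((n, n)) / np.sqrt(n) * self.mask
        self.W_in = rng.standard_normal((n, n_inputs))

    def step(self, x, u):
        # Masking again on every step means later weight updates cannot add links.
        return np.tanh((self.W * self.mask) @ x + self.W_in @ u)

    def run(self, inputs):
        x = np.zeros(self.mask.shape[0])
        states = []
        for u in inputs:
            x = self.step(x, u)
            states.append(x)
        return np.array(states)


# Hypothetical 6-neuron ring: neuron i projects only to neuron (i + 1) mod 6.
mask = np.roll(np.eye(6), shift=1, axis=0)
net = MaskedRNN(mask, n_inputs=2)
inputs = np.random.default_rng(1).standard_normal((50, 2))
states = net.run(inputs)
print(states.shape)
```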

Health and disease

The integration of connectivity and genetic data and their dynamic patterns is crucial for understanding the neural transitions from a healthy state to a disease state, particularly in the context of brain disorders, diseases, and mental illnesses, many of which are rooted in the early years of life. Large-scale MRI datasets have allowed for the modeling of normative trajectories of brain development (Bethlehem et al., 2022); however, major opportunities remain for network science to reveal the causes and pathophysiology of brain disorders through population analyses.
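Normative modeling treats a large reference cohort as a chart of expected values and scores individuals by their deviation from it. The sketch below uses synthetic data and a quadratic fit with constant residual spread, a deliberate simplification: lifespan brain charts such as those of Bethlehem et al. (2022) use more flexible models that also let the variance change with age. The brain measure and its age trend are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical reference cohort: a brain measure that rises, then declines, with age.
age = rng.uniform(5, 80, size=500)
measure = 100 + 2.0 * age - 0.025 * age**2 + rng.normal(0, 5, size=age.size)

# Normative curve: quadratic fit of the measure against age (constant noise assumed).
coeffs = np.polyfit(age, measure, deg=2)
residual_sd = np.std(measure - np.polyval(coeffs, age), ddof=3)


def normative_z(subject_age, subject_measure):
    """How many standard deviations an individual lies from the age-expected value."""
    return (subject_measure - np.polyval(coeffs, subject_age)) / residual_sd


print(round(float(normative_z(40, 140)), 2), round(float(normative_z(40, 120)), 2))
```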

In addition to the brain's own internal networks, the connections between the brain and other organs robustly affect neural development and function. Complex interactions have been revealed in the gut–brain axis, where microbiota can modulate immune and neural states, as well as in the brain's interaction with the reproductive system, which drives intricate fluctuations in levels of sex hormones during puberty, menopause, and pregnancy (Andreano et al., 2018; Pritschet et al., 2020). These body-wide interactions highlight the importance of a holistic exploration of neural networks together with the body (Buzsáki and Tingley, 2023).

Ultimately, to diagnose and treat disease, we must understand the temporally evolving complex interactions between genetic, disease, and drug networks and their impact on the connectome. Toward that goal, network neuroscience must partner with network medicine, which applies network science to subcellular interactions, aiming to diagnose, prevent, and treat diseases (A. L. Barabási et al., 2011). This need is reinforced by studies finding that high-degree hubs, located mainly in dorsolateral prefrontal, lateral and medial temporal, and cingulate areas of the human cortex, are co-located with an enrichment of neurodevelopmental and neurotransmitter-related genes and are implicated in the pathogenesis of schizophrenia (Morgan et al., 2019). Network medicine takes advantage of the structure of subcellular networks, as captured by experimentally mapped protein and noncoding interactions, to identify disease mechanisms, therapeutic targets, drug-repurposing opportunities, and biomarkers. In the case of brain diseases, mutations and other molecular changes that alter the subcellular networks within neurons and non-neuronal cells in turn affect the wiring and rewiring of the connectome and neural dynamics. Hence, effective interventions and treatments for brain disorders must confront this double network problem, accounting for the impact of changes in the subcellular network on connectivity and ultimately on brain function.
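A common network-medicine heuristic asks whether genes associated with a disease cluster into a connected neighborhood, or disease module, of the interactome. The sketch below, in pure Python, measures the size of the largest connected component formed by a gene set and compares it with random gene sets of the same size. The interactome, the gene names `g1` to `g10`, and the disease set are hypothetical placeholders.

```python
import random
from collections import deque

# Hypothetical interactome (undirected protein-protein interactions).
INTERACTOME = [
    ("g1", "g2"), ("g2", "g3"), ("g3", "g4"), ("g4", "g5"),
    ("g5", "g6"), ("g6", "g7"), ("g7", "g8"), ("g8", "g9"),
    ("g9", "g10"), ("g2", "g7"),
]


def largest_module_size(edges, genes):
    """Size of the largest connected component among `genes` (links between them only)."""
    genes = set(genes)
    neighbours = {g: set() for g in genes}
    for a, b in edges:
        if a in genes and b in genes:
            neighbours[a].add(b)
            neighbours[b].add(a)
    best, seen = 0, set()
    for start in genes:
        if start in seen:
            continue
        seen.add(start)
        queue, size = deque([start]), 0
        while queue:   # breadth-first search over one component
            node = queue.popleft()
            size += 1
            for nxt in neighbours[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        best = max(best, size)
    return best


def random_expectation(edges, n_genes, n_samples=1000, seed=0):
    """Mean largest-module size for random gene sets of the same size."""
    rng = random.Random(seed)
    nodes = sorted({node for edge in edges for node in edge})
    sizes = [largest_module_size(edges, rng.sample(nodes, n_genes)) for _ in range(n_samples)]
    return sum(sizes) / n_samples


disease_genes = ["g1", "g2", "g3", "g7"]
observed = largest_module_size(INTERACTOME, disease_genes)
expected = random_expectation(INTERACTOME, len(disease_genes))
print(observed, round(expected, 2))
```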

Discussion

In conclusion, major funding directives, such as the public-private funding alliance of the U.S. BRAIN Initiative, have significantly advanced the development of technologies for studying the brain across temporal and spatial scales and measurement modalities. Yet the massive amount of data produced by these tools, and expected to emerge from them, has created a complexity bottleneck. We need guiding frameworks to organize and conceptualize these data, leading to falsifiable hypotheses. Network science offers a natural match for this task, with the potential for integrating complexity across cell identities, signaling types, dynamics, and spatial and energetic constraints that shape brain development, function, and disease.

We need the joint engagement of network scientists and neuroscientists to develop novel network-based methods that address the unique priorities and challenges posed by brain research. Such approaches will need to account for the dynamic nature of connections in the brain, which are continually changing as a result of factors such as experience, aging, and disease, as well as for incomplete or uncertain reconstructions of brain connectivity. Continued advances in neuroscience have opened up exciting possibilities for a deeper understanding of the brain and its function, and now require input from network science to fully capture the dynamics of this complex system, with the goal of uncovering how neural identity, dynamics, behavior, and disease are linked.

These methodological advances can run in parallel with ever-increasing efforts toward adopting open-science practices, such as data and code sharing. Such efforts bring new challenges related to reproducibility and have, in some cases, surfaced findings that fail to replicate (Open Science Collaboration, 2015; Errington et al., 2021) or that exhibit substantial variability attributable to software (Bowring et al., 2019; Botvinik-Nezer et al., 2020) or analysis teams (Botvinik-Nezer et al., 2020). As a discipline, neuroimaging has championed open-science initiatives, promoting practices including detailed methodological descriptions, sharing of the data and code used to generate published results (Nichols et al., 2016), and even multiverse analyses that consider all plausible analytical variations (Dafflon et al., 2022).
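A multiverse analysis can be as simple as recomputing one network statistic under every combination of defensible analysis choices and reporting the whole range. The sketch below does this for the mean degree of a thresholded correlation network; the synthetic data, the two choices (threshold value and whether to use absolute correlations), and their options are hypothetical.

```python
import itertools

import numpy as np


def mean_degree(C, threshold, use_absolute):
    """Average number of links per node after thresholding a correlation matrix."""
    W = np.abs(C) if use_absolute else C
    A = (W > threshold) & ~np.eye(len(C), dtype=bool)   # drop self-links
    return A.sum() / len(C)


rng = np.random.default_rng(1)
X = rng.standard_normal((200, 10))   # synthetic data: 200 time points, 10 regions
X[:, 1] += X[:, 0]                   # hypothetical shared signal between regions 0 and 1
C = np.corrcoef(X, rowvar=False)

choices = {"threshold": [0.1, 0.2, 0.3], "use_absolute": [True, False]}
results = {
    combo: mean_degree(C, *combo)
    for combo in itertools.product(*choices.values())
}
for (threshold, use_absolute), value in sorted(results.items()):
    print(f"threshold={threshold}, absolute={use_absolute}: mean degree {value:.2f}")
```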

To achieve these goals, there is a need to facilitate greater interaction between the network science and neuroscience communities. A well-tested way is to offer interdisciplinary grants from public and private organizations, such as The Kavli Foundation, the National Institutes of Health, and the National Science Foundation, that focus on developing network tools for emerging neuroscience technologies and questions, as well as support for students and postdoctoral fellows with interests in both disciplines. These grants could also support workshops and conferences that bring together researchers from both fields, and provide funding for coursework in network neuroscience at the undergraduate and graduate levels. Actively fostering collaboration between these two fields will encourage the adaptation of novel network approaches to understanding biological data, a necessary step toward advancing our understanding of the brain in health and disease.

Footnotes

G.B. was supported by the Turing-Roche partnership and Royal Society IEC\NSFC\191147. H.M. was supported by National Institute of Biomedical Imaging and Bioengineering and National Institute of Mental Health through the National Institutes of Health BRAIN Initiative Grant R01EB028157. E.K.T. was supported by Government of Canada's New Frontiers in Research Fund NFRFE-2021-00420, and the Natural Sciences and Engineering Research Council of Canada reference number RGPIN-2021-02949. H.Z. was supported by National Institutes of Health BRAIN Initiative Grant U19MH114830. We thank The Kavli Foundation for organizing and supporting a convening in October 2022, “Network Science Meets Neuroscience,” which inspired this review; and Daria Koshkina for designing figures.

The authors declare no competing financial interests.

References

- Abbott LF, et al. (2020) The mind of a mouse. Cell 182:1372–1376. 10.1016/j.cell.2020.08.010

- Albert R, Barabási AL (2002) Statistical mechanics of complex networks. Rev Mod Phys 74:47–97. 10.1103/RevModPhys.74.47

- Andreano JM, Touroutoglou A, Dickerson B, Barrett LF (2018) Hormonal cycles, brain network connectivity, and windows of vulnerability to affective disorder. Trends Neurosci 41:660–676. 10.1016/j.tins.2018.08.007

- Bae JA, et al., MICrONS Consortium (2021) Functional connectomics spanning multiple areas of mouse visual cortex. bioRxiv 454025. 10.1101/2021.07.28.454025

- Barabási AL, Albert R (1999) Emergence of scaling in random networks. Science 286:509–512. 10.1126/science.286.5439.509

- Barabási AL, Gulbahce N, Loscalzo J (2011) Network medicine: a network-based approach to human disease. Nat Rev Genet 12:56–68. 10.1038/nrg2918

- Barabási DL, Barabási AL (2020) A genetic model of the connectome. Neuron 105:435–445.e5. 10.1016/j.neuron.2019.10.031

- Barabási DL, Czégel D (2021) Constructing graphs from genetic encodings. Sci Rep 11:13270. 10.1038/s41598-021-92577-2

- Barabási DL, Beynon T, Katona Á, Perez-Nieves N (2023) Complex computation from developmental priors. Nat Commun 14:2226.

- Barabási DL, Schuhknecht GFP, Engert F (2022) Nature over nurture: functional neuronal circuits emerge in the absence of developmental activity. bioRxiv 513526. 10.1101/2022.10.24.513526

- Bassett DS, Zurn P, Gold JI (2018) On the nature and use of models in network neuroscience. Nat Rev Neurosci 19:566–578. 10.1038/s41583-018-0038-8

- Battaglia PW, et al. (2018) Relational inductive biases, deep learning, and graph networks. arXiv. 10.48550/ARXIV.1806.01261

- Battiston F, et al. (2021) The physics of higher-order interactions in complex systems. Nat Phys 17:1093–1098. 10.1038/s41567-021-01371-4

- Battiston F, Cencetti G, Iacopini I, Latora V, Lucas M, Patania A, Young JG, Petri G (2020) Networks beyond pairwise interactions: structure and dynamics. Phys Rep 874:1–92. 10.1016/j.physrep.2020.05.004

- Bethlehem RA, et al., VETSA (2022) Brain charts for the human lifespan. Nature 604:525–533. 10.1038/s41586-022-04554-y

- Betzel RF, Bassett DS (2017) Multi-scale brain networks. Neuroimage 160:73–83. 10.1016/j.neuroimage.2016.11.006

- Bianconi G (2018) Multilayer networks. Oxford Scholarship Online. 10.1093/oso/9780198753919.001.0001

- Bianconi G (2021) Higher-order networks. Cambridge: Cambridge UP. 10.1017/9781108770996

- Boeck ME, Huynh C, Gevirtzman L, Thompson OA, Wang G, Kasper DM, Reinke V, Hillier LW, Waterston RH (2016) The time-resolved transcriptome of C. elegans. Genome Res 26:1441–1450. 10.1101/gr.202663.115

- Botvinik-Nezer R, et al. (2020) Variability in the analysis of a single neuroimaging dataset by many teams. Nature 582:84–88. 10.1038/s41586-020-2314-9

- Bowring A, Maumet C, Nichols TE (2019) Exploring the impact of analysis software on task fMRI results. Hum Brain Mapp 40:3362–3384. 10.1002/hbm.24603

- Bronstein MM, Bruna J, Cohen T, Veličković P (2021) Geometric deep learning: grids, groups, graphs, geodesics, and gauges. arXiv. 10.48550/ARXIV.2104.13478

- Bullmore E, Sporns O (2012) The economy of brain network organization. Nat Rev Neurosci 13:336–349. 10.1038/nrn3214

- Burgess M (2015) Spacetimes with semantics (II), scaling of agency, semantics, and tenancy. arXiv. 10.48550/ARXIV.1505.01716

- Burgess M (2021) Motion of the third kind (I) notes on the causal structure of virtual processes for privileged observers. ResearchGate. 10.13140/RG.2.2.30483.35361

- Buzsáki G, Mizuseki K (2014) The log-dynamic brain: how skewed distributions affect network operations. Nat Rev Neurosci 15:264–278. 10.1038/nrn3687

- Buzsáki G, Tingley D (2023) Cognition from the body-brain partnership: exaptation of memory. Annu Rev Neurosci 46:191–210. 10.1146/annurev-neuro-101222-110632

- Chami I, Abu-El-Haija S, Perozzi B, Ré C, Murphy K (2020) Machine learning on graphs: a model and comprehensive taxonomy. arXiv. 10.48550/arXiv.2005.03675

- Chen X, Sun YC, Zhan H, Kebschull JM, Fischer S, Matho K, Huang ZJ, Gillis J, Zador AM (2019) High-throughput mapping of long-range neuronal projection using in situ sequencing. Cell 179:772–786.e19. 10.1016/j.cell.2019.09.023

- Chung S, Abbott LF (2021) Neural population geometry: an approach for understanding biological and artificial neural networks. Curr Opin Neurobiol 70:137–144. 10.1016/j.conb.2021.10.010

- Dafflon J, Da Costa PF, Váša F, Monti RP, Bzdok D, Hellyer PJ, Turkheimer F, Smallwood J, Jones E, Leech R (2022) A guided multiverse study of neuroimaging analyses. Nat Commun 13:3758. 10.1038/s41467-022-31347-8

- Eichenbaum H, Cohen NJ (2004) From conditioning to conscious recollection: memory systems of the brain. Oxford: Oxford UP.

- Eichler K, et al. (2017) The complete connectome of a learning and memory centre in an insect brain. Nature 548:175–182. 10.1038/nature23455

- Errington TM, Denis A, Perfito N, Iorns E, Nosek BA (2021) Challenges for assessing replicability in preclinical cancer biology. Elife 10:e67995. 10.7554/Elife.67995

- Faskowitz J, Betzel RF, Sporns O (2022) Edges in brain networks: contributions to models of structure and function. Netw Neurosci 6:1–28. 10.1162/netn_a_00204

- Fornito A, Zalesky A, Breakspear M (2015) The connectomics of brain disorders. Nat Rev Neurosci 16:159–172. 10.1038/nrn3901

- Frankle J, Carbin M (2018) The lottery ticket hypothesis: finding sparse, trainable neural networks. arXiv. 10.48550/ARXIV.1803.03635

- George D, Rikhye RV, Gothoskar N, Swaroop Guntupalli J, Dedieu A, Lázaro-Gredilla M (2021) Clone-structured graph representations enable flexible learning and vicarious evaluation of cognitive maps. Nat Commun 12:2392. 10.1038/s41467-021-22559-5

- Grienberger C, Konnerth A (2012) Imaging calcium in neurons. Neuron 73:862–885. 10.1016/j.neuron.2012.02.011

- Hawrylycz MJ, et al. (2012) An anatomically comprehensive atlas of the adult human brain transcriptome. Nature 489:391–399. 10.1038/nature11405

- Hildebrand DG, et al. (2017) Whole-brain serial-section electron microscopy in larval zebrafish. Nature 545:345–349. 10.1038/nature22356

- Horvát S, Gămănuț R, Ercsey-Ravasz M, Magrou L, Gămănuț B, Van Essen DC, Burkhalter A, Knoblauch K, Toroczkai Z, Kennedy H (2016) Spatial embedding and wiring cost constrain the functional layout of the cortical network of rodents and primates. PLoS Biol 14:e1002512. 10.1371/journal.pbio.1002512

- Huszár R, Zhang Y, Blockus H, Buzsáki G (2022) Preconfigured dynamics in the hippocampus are guided by embryonic birthdate and rate of neurogenesis. Nat Neurosci 25:1201–1212. 10.1038/s41593-022-01138-x

- Koulakov A, Shuvaev S, Lachi D, Zador A (2022) Encoding innate ability through a genomic bottleneck. bioRxiv 435261. 10.1101/2021.03.16.435261

- Kovács IA, Barabási DL, Barabási AL (2020) Uncovering the genetic blueprint of the nervous system. Proc Natl Acad Sci USA 117:33570–33577. 10.1073/pnas.2009093117

- Kurmangaliyev YZ, Yoo J, Valdes-Aleman J, Sanfilippo P, Zipursky SL (2020) Transcriptional programs of circuit assembly in the Drosophila visual system. Neuron 108:1045–1057.e6. 10.1016/j.neuron.2020.10.006

- Liu YY, Barabási AL (2016) Control principles of complex systems. Rev Mod Phys 88:035006. 10.1103/RevModPhys.88.035006

- Lurie DJ, et al. (2020) Questions and controversies in the study of time-varying functional connectivity in resting fMRI. Netw Neurosci 4:30–69. 10.1162/netn_a_00116

- Marblestone AH, Wayne G, Kording KP (2016) Toward an integration of deep learning and neuroscience. Front Comput Neurosci 10:94. 10.3389/fncom.2016.00094

- Markov NT, Ercsey-Ravasz M, Van Essen DC, Knoblauch K, Toroczkai Z, Kennedy H (2013) Cortical high-density counterstream architectures. Science 342:1238406. 10.1126/science.1238406

- Millán AP, Torres JJ, Bianconi G (2020) Explosive higher-order Kuramoto dynamics on simplicial complexes. Phys Rev Lett 124:218301. 10.1103/PhysRevLett.124.218301

- Molnár F, Kharel SR, Hu XS, Toroczkai Z (2020) Accelerating a continuous-time analog SAT solver using GPUs. Comput Phys Commun 256:107469. 10.1016/j.cpc.2020.107469

- Morgan SE, et al. (2019) Cortical patterning of abnormal morphometric similarity in psychosis is associated with brain expression of schizophrenia-related genes. Proc Natl Acad Sci USA 116:9604–9609. 10.1073/pnas.1820754116

- Morone F, Makse HA (2019) Symmetry group factorization reveals the structure-function relation in the neural connectome of Caenorhabditis elegans. Nat Commun 10:4961. 10.1038/s41467-019-12675-8

- Morone F, Leifer I, Makse HA (2020) Fibration symmetries uncover the building blocks of biological networks. Proc Natl Acad Sci USA 117:8306–8314. 10.1073/pnas.1914628117

- Muller R (1996) A quarter of a century of place cells. Neuron 17:813–822. 10.1016/s0896-6273(00)80214-7

- Newman ME (2003) The structure and function of complex networks. SIAM Rev 45:167–256. 10.1137/S003614450342480

- Nichols TE, et al. (2016) Best practices in data analysis and sharing in neuroimaging using MRI. bioRxiv 054262. 10.1101/054262

- Open Science Collaboration (2015) Estimating the reproducibility of psychological science. Science 349:aac4716. 10.1126/science.aac4716

- Papadimitriou CH, Vempala SS, Mitropolsky D, Collins M, Maass W (2020) Brain computation by assemblies of neurons. Proc Natl Acad Sci USA 117:14464–14472. 10.1073/pnas.2001893117

- Pósfai M, Szegedy B, Bačić I, Blagojević L, Abért M, Kertész J, Lovász L, Barabási AL (2022) Understanding the impact of physicality on network structure. arXiv. 10.48550/ARXIV.2211.13265

- Presigny C, De Vico Fallani F (2022) Colloquium: multiscale modeling of brain network organization. Rev Mod Phys 94:031002. 10.1103/RevModPhys.94.031002

- Pritschet L, Santander T, Taylor CM, Layher E, Yu S, Miller MB, Grafton ST, Jacobs EG (2020) Functional reorganization of brain networks across the human menstrual cycle. Neuroimage 220:117091. 10.1016/j.neuroimage.2020.117091

- Pulvermüller F, Tomasello R, Henningsen-Schomers MR, Wennekers T (2021) Biological constraints on neural network models of cognitive function. Nat Rev Neurosci 22:488–502. 10.1038/s41583-021-00473-5

- Raju RV, Guntupalli JS, Zhou G, Lázaro-Gredilla M, George D (2022) Space is a latent sequence: structured sequence learning as a unified theory of representation in the hippocampus. arXiv. 10.48550/ARXIV.2212.01508

- Richards BA, et al. (2019) A deep learning framework for neuroscience. Nat Neurosci 22:1761–1770. 10.1038/s41593-019-0520-2

- Ryan K, Lu Z, Meinertzhagen IA (2016) The CNS connectome of a tadpole larva of Ciona intestinalis (L.) highlights sidedness in the brain of a chordate sibling. Elife 5:e16962. 10.7554/Elife.16962

- Santoro A, Battiston F, Petri G, Amico E (2023) Higher-order organization of multivariate time series. Nat Phys 19:221–229. 10.1038/s41567-022-01852-0

- Scheffer LK, et al. (2020) A connectome and analysis of the adult Drosophila central brain. Elife 9:e57443. 10.7554/Elife.57443

- Seshadhri C, Sharma A, Stolman A, Goel A (2020) The impossibility of low-rank representations for triangle-rich complex networks. Proc Natl Acad Sci USA 117:5631–5637. 10.1073/pnas.1911030117

- Shapson-Coe A, et al. (2021) A connectomic study of a petascale fragment of human cerebral cortex. bioRxiv 446289. 10.1101/2021.05.29.446289

- Shine JM, Poldrack RA (2018) Principles of dynamic network reconfiguration across diverse brain states. Neuroimage 180:396–405. 10.1016/j.neuroimage.2017.08.010

- Sporns O, Betzel RF (2016) Modular brain networks. Annu Rev Psychol 67:613–640. 10.1146/annurev-psych-122414-033634

- Stachenfeld KL, Botvinick MM, Gershman SJ (2017) The hippocampus as a predictive map. Nat Neurosci 20:1643–1653. 10.1038/nn.4650

- Steinmetz NA, Koch C, Harris KD, Carandini M (2018) Challenges and opportunities for large-scale electrophysiology with neuropixels probes. Curr Opin Neurobiol 50:92–100. 10.1016/j.conb.2018.01.009

- Stiso J, et al. (2019) White matter network architecture guides direct electrical stimulation through optimal state transitions. Cell Rep 28:2554–2566.e7. 10.1016/j.celrep.2019.08.008

- Suárez LE, Markello RD, Betzel RF, Misic B (2020) Linking structure and function in macroscale brain networks. Trends Cogn Sci 24:302–315. 10.1016/j.tics.2020.01.008

- Sun H, Radicchi F, Kurths J, Bianconi G (2023) The dynamic nature of percolation on networks with triadic interactions. Nat Commun 14:1308. 10.1038/s41467-023-37019-5

- Tang E, Bassett DS (2018) Colloquium: control of dynamics in brain networks. Rev Mod Phys 90:031003. 10.1103/RevModPhys.90.031003

- Todorov E, Erez T, Tassa Y (2012) MuJoCo: a physics engine for model-based control. 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems. 10.1109/iros.2012.6386109

- Torres L, Blevins AS, Bassett D, Eliassi-Rad T (2021) The why, how, and when of representations for complex systems. SIAM Rev 63:435–485. 10.1137/20M1355896

- Towlson EK, Vértes PE, Ahnert SE, Schafer WR, Bullmore ET (2013) The rich club of the C. elegans neuronal connectome. J Neurosci 33:6380–6387. 10.1523/JNEUROSCI.3784-12.2013

- van den Heuvel MP, Bullmore ET, Sporns O (2016) Comparative connectomics. Trends Cogn Sci 20:345–361. 10.1016/j.tics.2016.03.001

- Veličković P (2023) Everything is connected: graph neural networks. Curr Opin Struct Biol 79:102538. 10.1016/j.sbi.2023.102538

- Verasztó C, et al. (2020) Whole-animal connectome and cell-type complement of the three-segmented Platynereis dumerilii larva. bioRxiv 260984. 10.1101/2020.08.21.260984

- Vu MA, et al. (2018) A shared vision for machine learning in neuroscience. J Neurosci 38:1601–1607. 10.1523/JNEUROSCI.0508-17.2018

- White JG, Southgate E, Thomson JN, Brenner S (1986) The structure of the nervous system of the nematode Caenorhabditis elegans. Philos Trans R Soc Lond B Biol Sci 314:1–340. 10.1098/rstb.1986.0056

- Winding M, et al. (2023) The connectome of an insect brain. Science 379:eadd9330. 10.1126/science.add9330

- Witvliet D, et al. (2021) Connectomes across development reveal principles of brain maturation. Nature 596:257–261. 10.1038/s41586-021-03778-8

- Woźniak S, Pantazi A, Bohnstingl T, Eleftheriou E (2020) Deep learning incorporating biologically inspired neural dynamics and in-memory computing. Nat Mach Intell 2:325–336. 10.1038/s42256-020-0187-0

- Yan G, Vértes PE, Towlson EK, Chew YL, Walker DS, Schafer WR, Barabási AL (2017) Network control principles predict neuron function in the Caenorhabditis elegans connectome. Nature 550:519–523. 10.1038/nature24056

- Zador AM (2019) A critique of pure learning and what artificial neural networks can learn from animal brains. Nat Commun 10:3770. 10.1038/s41467-019-11786-6

- Zeng H (2022) What is a cell type and how to define it? Cell 185:2739–2755. 10.1016/j.cell.2022.06.031