Abstract

As the fourth paradigm of materials research and development, the materials genome paradigm can significantly improve the efficiency of research and development for austenitic stainless steel. In this study, experimental data on austenitic stainless steel were collected, and the chemical composition was optimized by combining machine learning with a genetic algorithm, so that production cost is reduced and the development of new steel grades is accelerated without sacrificing mechanical properties. Specifically, prediction models were established for four mechanical properties, with the gradient boosting regression (gbr) algorithm demonstrating higher prediction accuracy than the other commonly used machine learning algorithms tested. Bayesian optimization was then employed to tune the hyperparameters of the gbr algorithm and identify the best combination. The resulting mechanical property prediction models had good prediction accuracy on the test set (yield strength: R2 = 0.88, MAE = 4.89 MPa; ultimate tensile strength: R2 = 0.99, MAE = 2.65 MPa; elongation: R2 = 0.84, MAE = 1.42%; reduction in area: R2 = 0.88, MAE = 1.39%). Moreover, feature importance and Shapley Additive Explanation (SHAP) values were used to interpret the prediction models and to assess how the features influence the predicted properties. Finally, the NSGA-III algorithm was used to simultaneously maximize the four predicted mechanical properties within the search space, thereby obtaining the corresponding non-dominated solution set of chemical compositions and achieving the optimization of austenitic stainless-steel compositions.

Keywords: machine learning, austenitic stainless steel, genetic algorithm, composition optimization

1. Introduction

Figure 1 shows the four stages through which materials research and development has evolved [1]. The first stage is the empirical paradigm, which relies on laboratory experience and practical experiments on the structure, properties, preparation processes, and applications of substances. This paradigm provides a large amount of quantitative data to support repeatable conclusions but lacks a deep understanding of material properties in larger-scale systems. The next stage is the theoretical paradigm, which distills theoretical models from earlier experimental work and expresses them as laws in the form of mathematical equations. However, as systems become more complex, the calculations of theoretical models become inaccurate. With the advent of computers, the computational modeling paradigm began to use simulation techniques, such as finite element models and molecular dynamics simulations, to study material properties. Its advantage is that it can address problems that are difficult to study experimentally and can predict material properties quantitatively, thus advancing the earlier paradigms. Its disadvantage is that the simulation process must be defined rigorously and the initial parameters selected carefully. With further developments in computer science, the materials genomics paradigm has emerged in the past 20 years. This paradigm integrates experimental design, computer simulation, and theoretical analysis to systematically obtain large-scale materials data from high-throughput experiments, and then uses computer algorithms to analyze these data and infer unknown material properties. Its advantages are higher research efficiency and less trial and error.
Recently, this material research paradigm has been applied in various aspects, including material feature construction and selection [2,3,4,5], microstructure and property prediction [6,7,8,9], discovery of explicit structure–effect relationships [10,11,12,13], and material optimization design [14,15,16], greatly promoting the development of material science.

Figure 1.

Evolution of material research and development methods.

Austenitic stainless steel is a steel whose matrix consists primarily of austenite, which has a face-centered cubic crystal structure. It has excellent corrosion resistance and mechanical properties and is widely used in demanding service applications such as deep-drawn components, as well as pipelines, containers, and structural components exposed to acidic corrosive media. As the service environments of stainless steel become harsher, higher requirements are imposed on its performance, which makes designing new stainless steels that meet these demands a significant challenge. Traditional “trial-and-error” methods suffer from high experimental costs, long experimental cycles, and low R&D efficiency. In contrast, machine learning can largely avoid these issues and enable efficient material design. Research has shown that this approach is reliable for designing new materials. Diao et al. [17] used machine learning to establish a mechanical performance prediction model for carbon steel. They used the strength-ductility product as the optimization target and ultimately designed a carbon steel with excellent comprehensive performance. Reddy et al. [18] combined neural networks and genetic algorithms to optimize the chemical composition and heat treatment parameters of medium carbon steel to achieve desired mechanical properties. Shen et al. [19] introduced physical metallurgy models into machine learning models, effectively improving the accuracy of the predictions. Furthermore, their study showed that introducing physical metallurgy can improve design accuracy and efficiency by eliminating intermediate parameters that do not follow physical metallurgical principles during the machine learning process.

In this study, experimental data on the compositional features, process features, testing features, and mechanical properties of austenitic stainless steel were collected as a dataset. By establishing a high-precision performance prediction model and using the range of compositional feature changes as the search space, genetic algorithms were used to search for optimization in this space. The objective was to optimize the composition of austenitic stainless steel and improve the R&D efficiency of high-performance steel materials.

2. Materials and Methods

2.1. Optimal Design Strategy

To accelerate the search for optimal performance indicators in the search space, this study combined machine learning algorithms with non-dominated genetic algorithms to achieve optimized design of material composition. Figure 2 shows the specific research route. The first step was to collect a large amount of experimental data and construct the original dataset; then, high-throughput screening was conducted on the dataset according to the designed screening strategy to obtain a sub-dataset for learning. Feature selection combined with multiple machine learning regression models was used to compare and find the optimal algorithm, while the hyperparameters in the algorithm were adjusted using Bayesian optimization to establish the final machine learning model. Finally, using the machine learning model’s predictions in the search space as the target, multi-objective optimization was conducted using the NSGA-III algorithm to search for the optimal composition corresponding to the optimal results.

Figure 2.

Research route.

2.2. Dataset

The sample data used in this article were obtained from the experimental data of the Materials Algorithms Project Program Library, compiled from the Citrination database [20]. The dataset contains 2085 samples, including information such as the chemical composition of austenitic stainless steel, solid solution treatment temperature and time, cooling method, melting method, test conditions, mechanical properties, and test sample type. To keep all variables outside the scope of the study consistent, the screening conditions in Table 1 were applied, leaving 132 samples after screening. Table 2 shows the distribution range of the screened data. To eliminate the influence of differences in the magnitude of different features on the machine learning models, the input features were normalized by feature scaling. The scaling formula is shown in Equation (1) [21].

$$X^{*} = \frac{X - X_{\min}}{X_{\max} - X_{\min}} \tag{1}$$

where Xmin and Xmax are the minimum and maximum values of the input feature, X is the original feature value, and X* is the scaled feature value. Figure 3 shows the distribution of the original data after feature scaling. After feature scaling, all feature variables lie between 0 and 1. However, some of the features are unevenly distributed with a significant degree of skewness, indicating that the original data contain outlying values, which may affect the subsequent analysis.
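As a minimal sketch of the feature scaling in Equation (1), the snippet below scales one feature column to the interval [0, 1]. The function name `min_max_scale` and the handling of a constant column are assumptions made for illustration; only the end points of the Cr range come from Table 2, and the middle value is invented.

```python
def min_max_scale(values):
    """Scale a list of numbers to [0, 1] following Equation (1)."""
    x_min, x_max = min(values), max(values)
    if x_max == x_min:
        # Assumed convention: a constant feature is mapped to 0.0 to avoid division by zero.
        return [0.0 for _ in values]
    return [(x - x_min) / (x_max - x_min) for x in values]


# Cr contents (wt%): the minimum and maximum are taken from Table 2,
# the middle value is an illustrative assumption.
cr_content = [16.42, 17.33, 18.24]
scaled = min_max_scale(cr_content)
print(scaled)
```

The minimum of the column maps to 0 and the maximum to 1, so features with very different magnitudes (for example B content and test temperature) become comparable.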

Table 1.

Screening conditions.

| Features | Variable |
|---|---|
| Heat treatment | Water cooling after solid solution treatment |
| Composition | Mass fraction of each element |
| Steel type | Steel tube |
| Mechanical properties | Yield strength (YS), ultimate tensile strength (UTS), elongation (EL), and reduction of area (RA) |
| Test condition | Test temperature |
| Grain | Grain size |
| Melting mode | Arc furnace |

Table 2.

Spatial distribution range of filtered dataset.

| Category | Feature Name | Minimum | Maximum | Mean |
|---|---|---|---|---|
| Composition | Cr content (wt%) | 16.42 | 18.24 | 17.6113 |
| | Ni content (wt%) | 9.8 | 13.5 | 12.08947 |
| | Mo content (wt%) | 0.02 | 2.38 | 0.688626 |
| | Mn content (wt%) | 1.47 | 1.74 | 1.621221 |
| | Si content (wt%) | 0.52 | 0.82 | 0.638168 |
| | Nb content (wt%) | 0.005 | 0.79 | 0.198321 |
| | Ti content (wt%) | 0.011 | 0.53 | 0.142389 |
| | Cu content (wt%) | 0.05 | 0.17 | 0.103817 |
| | N content (wt%) | 0.013 | 0.038 | 0.024901 |
| | C content (wt%) | 0.04 | 0.09 | 0.059466 |
| | B content (wt%) | 0.0001 | 0.0013 | 0.059466 |
| | P content (wt%) | 0.019 | 0.028 | 0.022802 |
| | S content (wt%) | 0.006 | 0.017 | 0.011573 |
| | Al content (wt%) | 0.004 | 0.161 | 0.039153 |
| | Co content (wt%) | 0 | 0.37 | 0.08145 |
| | V content (wt%) | 0 | 0.33 | 0.007656 |
| Process | Solution treatment temperature/STT (K) | 1343 | 1473 | 1394 |
| | Solution treatment time/STt (s) | 600 | 1200 | 742 |
| Test | Test temperature/TT (K) | 298 | 1073 | 714 |
| Property | YS (MPa) | 108 | 239 | 153 |
| | UTS (MPa) | 203 | 620 | 416 |
| | EL (%) | 11 | 75 | 46 |
| | RA (%) | 14 | 82 | 66 |

Figure 3.

Distribution of original data after feature scaling.

2.3. Model Evaluation and Hyperparametric Optimization

In this study, nine common machine learning algorithms are employed, including decision tree (dtr), random forest (rfr), Adaboost (abg), gradient boosting machine (gbr), extremely randomized trees (etr), bagging regression (br), ridge regression (rdg), least squares orthogonal regression (lso), and XGBoost (xgb). Predictive models are established for the four types of property outputs using compositional features, process features, and testing features as inputs. To compare the performance of different algorithms in the model, fivefold cross-validation is utilized based on the mean absolute error (MAE) and the coefficient of determination (R-squared). Equations (2) and (3) show the calculation formulas for MAE and R2, respectively. The best machine learning algorithm is determined based on the evaluation metrics [22,23].

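$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|f_i - y_i\right| \tag{2}$$

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - f_i\right)^2}{\sum_{i=1}^{n}\left(y_i - y_{i,\mathrm{ave}}\right)^2} \tag{3}$$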

where n is the sample size, yi is the true value, yi,ave is the mean of the true value, and fi is the predicted value.
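The two evaluation metrics can be sketched in a few lines of plain Python. This is only an illustration of the definitions of MAE and R2 given above; the function names and the four yield-strength values are hypothetical and do not come from the dataset.

```python
def mean_absolute_error(y_true, y_pred):
    """MAE: average absolute difference between predicted and true values."""
    n = len(y_true)
    return sum(abs(f - y) for y, f in zip(y_true, y_pred)) / n


def r2_score(y_true, y_pred):
    """R2: one minus the ratio of residual to total sum of squares."""
    y_ave = sum(y_true) / len(y_true)
    ss_res = sum((y - f) ** 2 for y, f in zip(y_true, y_pred))
    ss_tot = sum((y - y_ave) ** 2 for y in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical true and predicted yield strengths (MPa), for illustration only.
y_true = [150.0, 160.0, 170.0, 180.0]
y_pred = [152.0, 158.0, 171.0, 179.0]

mae = mean_absolute_error(y_true, y_pred)
r2 = r2_score(y_true, y_pred)
print(mae, r2)
```

A lower MAE and an R2 closer to 1 both indicate a better fit, which is why the two metrics are used together when comparing algorithms.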

In machine learning, the performance of a model depends not only on the algorithm used but also on the hyperparameters selected for that algorithm. For instance, the gbr algorithm has many hyperparameters, such as n_estimators, learning_rate, max_depth, subsample, min_samples_split, and min_samples_leaf, whose significance is listed in Table 3. The combination of these hyperparameters determines whether the model underfits or overfits, so it is necessary to optimize them and determine the best combination. There are generally three methods for hyperparameter optimization in machine learning, namely Grid Search [24], Random Search [25], and Bayesian Optimization [26]. When there are many hyperparameters and the search space is large, Grid Search and Random Search may take too long to run, so Bayesian Optimization is often used instead. The basic idea of Bayesian optimization [27,28,29] is to determine the optimal hyperparameter combination by repeatedly evaluating the objective function at selected hyperparameter combinations, treating the objective function as a black-box function of the hyperparameters. In each iteration, the probabilistic model of the objective function is updated with the results observed so far, and the next combination is chosen to maximize the expected value of the objective function. In more detail: suppose the goal is to find a hyperparameter combination θ ∈ Θ, where Θ is the space of all possible combinations, such that the optimal θ* maximizes the score of the objective function F(θ). An empirical probability model P(F,θ) is first established, which represents the joint distribution of the unknown objective value F and the unknown parameter θ. Based on Bayes' theorem, the posterior probability can be expressed using Equation (4):

$$P(\theta \mid F) = \frac{P(F \mid \theta)\,P(\theta)}{P(F)} \tag{4}$$

where P(F|θ) is the conditional probability density of the objective function value F given the hyperparameters θ, i.e., the probability of observing the output F once θ has been fixed. P(θ) is the prior distribution, i.e., the “subjective belief” about the hyperparameters θ before any data are observed, while P(F) is the normalization constant. Once the initial surrogate model has been computed, it guides the tuning process: at each iteration a new hyperparameter combination is selected, and the objective function is run to collect the result. By incorporating the information gained from each sampling point, Bayesian optimization updates the posterior distribution of the objective function, making it more likely to find the global optimum. Finally, after several iterations, the maximum value found in the observation space is taken as the estimated optimum of the objective function F.

Table 3.

Significance of hyperparameters and their impact on the model.

| Hyperparameter | Significance |
|---|---|
| n_estimators | The number of weak learners, that is, the number of subtrees. More trees can improve the model accuracy, but at the same time, it will reduce the running speed of the model, and too many trees may lead to overfitting. |
| learning_rate | The step size used in each iteration. If the step size is set too large, it may cause the gradient to descend too quickly and fail to converge; conversely, if the step size is set too small, it may take a very long time to reach the optimal result. |
| max_depth | This parameter limits the depth of the decision tree, controlling the complexity and prediction accuracy of the model. Increasing max_depth will make the model more complex and more prone to overfitting, while smaller values may lead to underfitting. |
| subsample | The proportion of randomly sampled data for each tree. It is used to control the number of samples in each tree of the training dataset and can be used to solve overfitting problems. |
| min_samples_split | The minimum number of observations required for a split at an internal node. This parameter can limit the depth of subtree split and prevent overfitting. |
| min_samples_leaf | The minimum number of samples required to be in a leaf node. Smaller leaf sizes correspond to higher variance and may lead to overfitting problems. |

3. Results and Discussion

3.1. Model Establishment

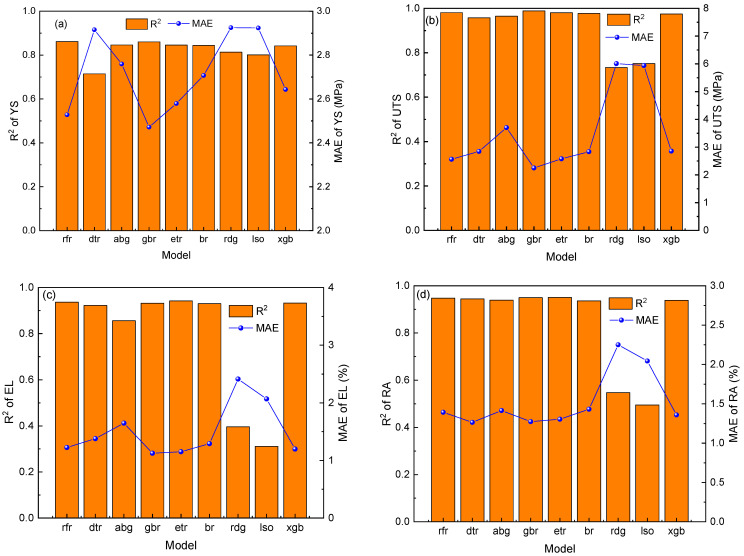

Figure 4 shows the performance of the dataset on different machine learning models. It can be observed that the gbr algorithm has the highest R2 value and the lowest MAE value, indicating that the gbr algorithm has the best performance in predicting four properties. Therefore, this article will use the gbr algorithm to construct a performance prediction model for further research.

Figure 4.

Performance of the dataset on different machine learning models (a) YS; (b) UTS; (c) EL; (d) RA.

The accuracy of the subsequent machine learning model is directly affected by the number of input features. Therefore, it is necessary to screen the input features and eliminate redundant ones. This study uses correlation filtering to select key feature variables, with the Pearson correlation coefficient [30,31] used to analyze the correlation between the data. When the absolute value of the correlation coefficient between two features is greater than 0.95, it is generally believed that there is a strong linear correlation between them, and one of the two should be deleted. To determine which one to delete, a machine learning model is established after each feature of the pair is deleted in turn, and the prediction errors of the resulting models are compared, with the feature associated with the larger prediction error being deleted. Figure 5a shows the correlation coefficients between the features; some of them exceed 0.95, so screening is required. As a result, the Co, V, and solution treatment time features are removed. Figure 5b shows the correlation between the mechanical properties. Strength and plasticity are conventionally negatively correlated; however, because the test temperature is included in the input features and varies across the samples, the strength and plasticity in this dataset exhibit a certain degree of positive correlation.
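The correlation filter described above can be sketched as follows. This is a simplified illustration rather than the authors' implementation: the feature names and values are invented, and the snippet only flags pairs whose absolute Pearson coefficient exceeds 0.95. The decision of which feature in a pair to drop, which the study makes by comparing model errors, is left out.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def highly_correlated_pairs(features, threshold=0.95):
    """Return (name_a, name_b, r) for every feature pair with |r| above the threshold."""
    pairs = []
    for (name_a, col_a), (name_b, col_b) in combinations(features.items(), 2):
        r = pearson(col_a, col_b)
        if abs(r) > threshold:
            pairs.append((name_a, name_b, r))
    return pairs


# Hypothetical feature columns: feat_b is almost a multiple of feat_a.
features = {
    "feat_a": [1.0, 2.0, 3.0, 4.0, 5.0],
    "feat_b": [2.1, 3.9, 6.2, 8.0, 9.8],
    "feat_c": [5.0, 1.0, 4.0, 2.0, 3.0],
}
pairs = highly_correlated_pairs(features)
print(pairs)
```

Only the pair (`feat_a`, `feat_b`) is flagged here; in the study, each flagged pair would then be resolved by retraining the model with one feature removed at a time.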

Figure 5.

Correlation analysis between data: (a) between features; (b) between properties.

3.2. Hyperparametric Optimization

After determining the optimal algorithm to be GBR, it is necessary to optimize the hyperparameters in GBR to improve model performance. Before tuning, the original dataset is divided using the hold-out method, with 80% as the training dataset and 20% as the testing dataset. The hyperparameters listed in Section 2.3 are the tuning targets. The evaluation metric is MAE, and fivefold cross-validation on the training dataset is used to obtain the best parameter combination for each property prediction model. Figure 6 shows the change in MAE for the four properties over the iterations of Bayesian optimization. As the number of iterations increases, the MAE of the four mechanical properties gradually stabilizes, indicating that the optimization converges. The corresponding optimized hyperparameters are shown in Table 4. Figure 7 compares the actual values with the values predicted by the machine learning models built with the optimized hyperparameters, for both the training set and the test set. The actual and predicted values of the four mechanical properties are mainly distributed along the diagonal line, indicating that the models predict well. The R2 of the four mechanical properties is greater than 0.95 in the training set and greater than 0.8 in the testing set, which means that the models have good prediction ability for unseen data, providing a foundation for subsequent composition optimization.
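The data partitioning described above (an 80/20 hold-out split followed by fivefold cross-validation on the training part) can be sketched with index lists alone. The helper names, the random seed, and the interleaved way of forming folds are assumptions for illustration; the sample count of 132 is the size of the screened dataset reported in Section 2.2.

```python
import random


def hold_out_split(n_samples, test_fraction=0.2, seed=0):
    """Shuffle sample indices and split them into training and test indices."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_test = round(n_samples * test_fraction)
    return indices[n_test:], indices[:n_test]


def k_fold(indices, k=5):
    """Yield (train, validation) index lists for k-fold cross-validation."""
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        validation = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation


train_idx, test_idx = hold_out_split(132)
splits = list(k_fold(train_idx, k=5))
print(len(train_idx), len(test_idx), [len(v) for _, v in splits])
```

The test indices are never used during tuning: each candidate hyperparameter combination would be scored by its average MAE over the five validation folds, and the held-out 20% is only used for the final evaluation.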

Figure 6.

MAE with Bayesian optimization iterations: (a) YS; (b) UTS; (c) EL; (d) RA.

Table 4.

The best hyperparameters found by Bayesian optimization.

| Property | n_estimators | learning_rate | max_depth | subsample | min_samples_split | min_samples_leaf |
|---|---|---|---|---|---|---|
| YS | 223 | 0.03773 | 2 | 0.5 | 24 | 1 |
| UTS | 347 | 0.08097 | 20 | 1.0 | 27 | 5 |
| EL | 500 | 0.03750 | 8 | 0.7287 | 20 | 4 |
| RA | 383 | 0.09559 | 2 | 1.0 | 20 | 1 |
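For reuse, the hyperparameters in Table 4 can be written as a small configuration mapping, one entry per property. The dictionary name `GBR_PARAMS` is hypothetical. The paper does not name the library it used, so the constructor in the closing comment is an assumption: the parameter names match those of scikit-learn's `GradientBoostingRegressor`.

```python
# Optimized hyperparameters from Table 4, keyed by mechanical property.
GBR_PARAMS = {
    "YS": {"n_estimators": 223, "learning_rate": 0.03773, "max_depth": 2,
           "subsample": 0.5, "min_samples_split": 24, "min_samples_leaf": 1},
    "UTS": {"n_estimators": 347, "learning_rate": 0.08097, "max_depth": 20,
            "subsample": 1.0, "min_samples_split": 27, "min_samples_leaf": 5},
    "EL": {"n_estimators": 500, "learning_rate": 0.03750, "max_depth": 8,
           "subsample": 0.7287, "min_samples_split": 20, "min_samples_leaf": 4},
    "RA": {"n_estimators": 383, "learning_rate": 0.09559, "max_depth": 2,
           "subsample": 1.0, "min_samples_split": 20, "min_samples_leaf": 1},
}

# Assumed usage with scikit-learn (not confirmed by the paper):
#   from sklearn.ensemble import GradientBoostingRegressor
#   model_ys = GradientBoostingRegressor(**GBR_PARAMS["YS"])
```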

Figure 7.

Comparison of predicted and actual values of the model in the training dataset and test set: (a) YS; (b) UTS; (c) EL; (d) RA.

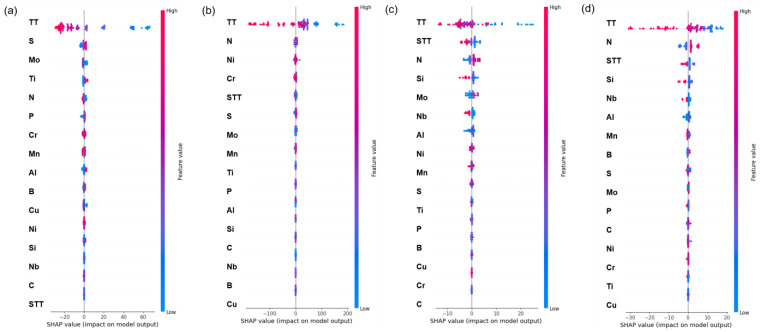

3.3. Interpretable Analysis

The above discussion indicates that machine learning models can effectively predict mechanical properties from the input features. However, because the prediction model is a black box, it is not clear how the features affect the properties. Therefore, it is necessary to analyze the relationship between features and properties in an interpretable way. Figure 8 shows the feature importance ranking for the four properties after the machine learning models are established. For all four properties, the test temperature is the most influential of the input features, which is consistent with the conventional view in materials science [32,33,34,35]. However, Figure 8 cannot show how a feature affects a property. Taking the test temperature as an example, it is impossible to tell whether a higher test temperature decreases or increases the final property. Therefore, the SHAP (Shapley Additive Explanation) value is introduced to analyze how features specifically affect the properties [36,37,38,39]. The SHAP value is based on the concept of “Shapley values” from cooperative game theory. The mathematical principle of the Shapley value is as follows: for a set of N participants, each participant can form coalitions with subsets of the other N−1 participants. The Shapley value evaluates the contribution of each participant to the different coalitions and averages these contributions over all coalitions. Specifically, for participant i, each coalition is considered in two cases: with i and without i. The Shapley value takes the difference between the benefits of the two cases for each coalition and averages these differences to obtain the Shapley value of i. In machine learning models, each feature can be viewed as a participant in a game, and the model prediction can be viewed as the benefit of the game. The SHAP value is thus a method for explaining the contribution of each feature to an individual model prediction. It can be calculated from the model prediction, the input features, and weighted random sampling of feature combinations: the contribution of each feature is evaluated for each combination, and the SHAP value is obtained as the weighted average. Figure 9 shows the SHAP value distribution of each feature for each sample. Taking Figure 9a as an example, the horizontal axis gives the SHAP value, and the vertical axis lists the features. Each point represents a sample. The redder the point, the larger the corresponding feature value, and the bluer the point, the smaller the corresponding feature value. When the test temperature is low, the SHAP values of the samples lie on the positive half-axis, indicating that a low test temperature raises the predicted yield strength. When the test temperature is high, the SHAP values lie on the negative half-axis, indicating that a high test temperature lowers the predicted yield strength. By introducing the SHAP value, the contribution of each feature to the final model prediction can be analyzed effectively, improving the interpretability of the machine learning model. Figure 9 also shows the effect of alloying elements on the mechanical properties of austenitic stainless steel. For example, Mn, a common deoxidizer in iron and steel smelting, is always present in stainless steel. At the same time, as an austenite-forming element, Mn can stabilize the austenite phase and replace part of the Ni, but excessive Mn forms MnS inclusions with S, which degrade the properties of stainless steel [40].
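The averaging over coalitions described above can be made concrete with an exact Shapley computation on a toy game. This is a minimal sketch, not the SHAP library and not the authors' workflow: the three "players" (TT, C, N) and all payoff numbers are invented, and the payoff function simply stands in for a model prediction.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, value):
    """Exact Shapley values: weighted average of marginal contributions over all coalitions."""
    n = len(players)
    phi = {}
    for i in players:
        others = [p for p in players if p != i]
        total = 0.0
        for size in range(n):
            for subset in combinations(others, size):
                coalition = frozenset(subset)
                # Probability that exactly this coalition precedes player i in a random ordering.
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                total += weight * (value(coalition | {i}) - value(coalition))
        phi[i] = total
    return phi


def toy_value(coalition):
    """Hypothetical payoff: additive effects plus an interaction between C and N."""
    base = {"TT": -30.0, "C": 10.0, "N": 5.0}
    payoff = sum(base[p] for p in coalition)
    if "C" in coalition and "N" in coalition:
        payoff += 4.0
    return payoff


phi = shapley_values(["TT", "C", "N"], toy_value)
print(phi)
```

The interaction bonus of 4 is shared equally between C and N, and the three values sum to the payoff of the full coalition. The same additivity is what allows SHAP values to decompose a single prediction into per-feature contributions.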

Figure 8.

Feature importance ranking of four properties.

Figure 9.

Distribution of feature SHAP values: (a) YS; (b) UTS; (c) EL; (d) RA.

3.4. Genetic Algorithm Optimization

To achieve the simultaneous optimization of the four mechanical properties, this study uses the NSGA-III algorithm to search the composition space of the target steel, accelerating the research and development of high-performance stainless steel. The NSGA-III algorithm [41,42,43,44,45] is an improved version of the NSGA-II algorithm. Compared with NSGA-II, the main improvement of NSGA-III is that it can handle optimization problems with more than three objectives. The core idea of the NSGA-III algorithm is to maintain the diversity of the population by retaining more non-dominated solutions. Specifically, the algorithm divides the population into multiple tiers by non-dominated sorting: the solutions within a tier are mutually non-dominated, and each solution in a later tier is dominated by at least one solution in an earlier tier. With this method, NSGA-III can effectively control the diversity of the population and provide better local optimal solutions while still searching for global optimal solutions.
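The division of a population into tiers can be illustrated with a simple non-dominated sort for maximization. This is a minimal sketch under stated assumptions: it uses a straightforward repeated-filtering approach rather than the fast non-dominated sort used in NSGA implementations, it omits the reference-point selection of NSGA-III, and the (UTS, EL) candidate values are invented.

```python
def dominates(a, b):
    """True if a is no worse than b in every objective and better in at least one (maximization)."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def non_dominated_sort(points):
    """Split point indices into successive tiers of mutually non-dominated solutions."""
    remaining = list(range(len(points)))
    tiers = []
    while remaining:
        tier = [i for i in remaining
                if not any(dominates(points[j], points[i]) for j in remaining if j != i)]
        tiers.append(tier)
        remaining = [i for i in remaining if i not in tier]
    return tiers


# Hypothetical (UTS in MPa, EL in %) predictions for five candidate compositions.
candidates = [(600, 70), (610, 65), (590, 60), (605, 72), (580, 50)]
tiers = non_dominated_sort(candidates)
print(tiers)
```

Candidates 1 and 3 form the first tier because neither beats the other in both objectives; candidate 0 falls into the second tier because candidate 3 is better in both UTS and EL.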

After determining the algorithm, the next step is to define the search space and constraints. The main purpose of this study is to optimize the composition of austenitic stainless steel. Therefore, only the composition features vary in the search space; the solution treatment temperature is fixed at 1343 K and the test temperature at 298 K. The composition features comprise the contents of 14 alloying elements. Among them, Cr and Ni, which are the largest alloy additions in stainless steel, are limited to 16.42–17 wt.% and 9.8–10 wt.%, respectively, to reduce production costs. The ranges of the other alloy contents are the same as in the dataset, and the variation step of each alloy component is 0.001. In addition, to ensure that the optimized stainless steel has an austenitic structure, expert knowledge from the materials field is used to constrain the alloy composition. The ferrite-forming elements, represented by Cr (Cr equivalent), and the austenite-forming elements, represented by Ni (Ni equivalent), jointly determine the microstructure of stainless steel; Equations (5) and (6) show the calculation formulas for Cr equivalent and Ni equivalent, respectively [46]. Figure 10 shows the effect of Cr equivalent and Ni equivalent on the microstructure of stainless steel. To constrain the structure to austenite, the Cr equivalent and Ni equivalent are required to fall within the red shaded area in Figure 10.

| (5) |

| (6) |

where each element symbol (Cr, Si, Mo, …) denotes the mass fraction of that element.
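The box constraints of the search space can be sketched as a simple bounds table with a membership check. The Cr and Ni limits are the restricted ranges stated above and the other limits are the minimum and maximum values in Table 2. The names `BOUNDS`, `FIXED`, and `in_search_space` are hypothetical, and the Cr-equivalent and Ni-equivalent constraint is not included. The example composition is solution No. 1 from Table 5.

```python
# Lower and upper limits (wt%) for the 14 composition features in the search space.
BOUNDS = {
    "Cr": (16.42, 17.0), "Ni": (9.8, 10.0), "Mo": (0.02, 2.38), "Mn": (1.47, 1.74),
    "Si": (0.52, 0.82), "Nb": (0.005, 0.79), "Ti": (0.011, 0.53), "Cu": (0.05, 0.17),
    "N": (0.013, 0.038), "C": (0.04, 0.09), "B": (0.0001, 0.0013), "P": (0.019, 0.028),
    "S": (0.006, 0.017), "Al": (0.004, 0.161),
}

# Features held constant during optimization (temperatures in K).
FIXED = {"solution_treatment_temperature": 1343, "test_temperature": 298}


def in_search_space(composition):
    """Check that every element content lies within its box constraint."""
    return all(low <= composition[element] <= high
               for element, (low, high) in BOUNDS.items())


# Solution No. 1 from Table 5.
candidate_1 = {
    "Cr": 16.98, "Ni": 9.80, "Mo": 0.121, "Mn": 1.481, "Si": 0.597, "Nb": 0.375,
    "Ti": 0.021, "Cu": 0.051, "N": 0.026, "C": 0.090, "B": 0.00039, "P": 0.023,
    "S": 0.00604, "Al": 0.0138,
}
print(in_search_space(candidate_1))
```

A composition with Cr raised to 18.0 wt% would be rejected by this check even though it lies within the range of the original dataset, which reflects the cost-driven restriction on Cr.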

Figure 10.

Diagram of the influence of Cr equivalent and Ni equivalent on the microstructure of stainless steel.

After defining the search space and constraints, the parameters of the NSGA-III algorithm are determined. The initial population size for genetic evolution is set to 300, and the number of generations is set to 500. The Polymutation mutation operator is used with a mutation rate of 0.02, and the XOVR crossover operator is used with a crossover rate of 0.9. The chromosome is encoded using the real number encoding RI. Based on the above constraints and composition search range, a selection strategy based on non-dominated sorting and crowding distance is used. After 500 generations of evolutionary search, the non-dominated solution set for the four mechanical properties is obtained. Figure 11 shows the distributions of the original data and of the optimized data. Even with the Cr and Ni contents constrained, there are still compositions whose predicted properties are close to the highest values in the dataset, indicating that the composition optimization is effective. Therefore, provided that the prediction model is reliable, the search strategy of the genetic algorithm is beneficial to the integrated optimization design of multi-objective performance. Table 5 gives the alloy compositions corresponding to the maximum value of each of the four mechanical properties in the non-dominated solution set; none of them coincides with a composition in the initial dataset. Compared with the typical 18Cr8Ni stainless steel [47] (YS > 205 MPa, UTS > 520 MPa, EL > 40%, RA > 60%), the predicted mechanical properties of the optimized stainless steel are better, but the corrosion resistance may be reduced because of the smaller additions of alloying elements.

Figure 11.

Original and optimized property distributions at test temperature 298 K: (a) EL and UTS; (b) RA and YS.

Table 5.

The alloy compositions (wt%) corresponding to the maximum of each of the four properties (UTS/MPa, EL/%, YS/MPa, RA/%).

| No | Cr | Ni | Mo | Mn | Si | Nb | Ti | Cu | N | C | B | P | S | Al | UTS | EL | YS | RA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 16.98 | 9.80 | 0.121 | 1.481 | 0.597 | 0.375 | 0.021 | 0.051 | 0.026 | 0.090 | 0.00039 | 0.023 | 0.00604 | 0.0138 | 611 | 72.5 | 221 | 81.5 |
| 2 | 16.99 | 9.98 | 0.0395 | 1.568 | 0.598 | 0.276 | 0.521 | 0.052 | 0.025 | 0.043 | 0.00113 | 0.022 | 0.01700 | 0.1438 | 614 | 68.7 | 223 | 81.9 |
| 3 | 16.99 | 9.96 | 1.020 | 1.471 | 0.597 | 0.116 | 0.103 | 0.050 | 0.013 | 0.041 | 0.00092 | 0.022 | 0.01263 | 0.0141 | 601 | 70.5 | 224 | 84.6 |
| 4 | 16.84 | 9.92 | 0.181 | 1.671 | 0.597 | 0.295 | 0.529 | 0.050 | 0.026 | 0.042 | 0.00017 | 0.023 | 0.00625 | 0.0142 | 603 | 68.2 | 229 | 79.8 |

However, further experimental verification is still needed. The main purpose of this study is to explore the feasibility of a multi-objective optimization scheme for austenitic stainless-steel performance and provide optimized composition to guide the next step of experimental design [48,49].

4. Conclusions

(1) Nine machine learning algorithms were used to establish prediction models for the mechanical properties of austenitic stainless steel. The results show that the gradient boosting regression (gbr) algorithm has the highest prediction accuracy and the best fit.

(2) Bayesian optimization was used to optimize the hyperparameters of the gbr algorithm, and the best parameter combination for each of the four mechanical properties was obtained. The resulting mechanical property prediction models had good prediction accuracy on the test set (YS: R2 = 0.88, MAE = 4.89 MPa; UTS: R2 = 0.99, MAE = 2.65 MPa; EL: R2 = 0.84, MAE = 1.42%; RA: R2 = 0.88, MAE = 1.39%).

(3) Feature importance and SHAP values were used to interpret the property prediction models. The results indicate that the test temperature is the most important feature affecting the properties, with low and high test temperatures having positive and negative effects, respectively.

(4) The NSGA-III algorithm was used to optimize the four mechanical properties of austenitic stainless steel, with the constraints and search space established based on expert knowledge. A new austenitic stainless-steel composition with excellent predicted performance was obtained.

(5) Combining machine learning with a genetic algorithm to find the optimal property values in the search space can accelerate materials research and development and provide some guidance for the design of new materials.

Acknowledgments

This work is supported by grants from the National Key Research and Development Program of China (Grant No. 2021YFB3702500). The authors are very grateful to the reviewers and editors for their valuable suggestions, which have helped improve the paper substantially.

Author Contributions

Data curation, C.L. and X.W.; formal analysis, C.L. and W.C.; investigation, C.L., X.W., J.Y. and W.C.; conceptualization, H.S.; methodology, C.L., H.S. and J.Y.; software, C.L., H.S.; validation, C.L. and J.Y.; funding acquisition, H.S. and J.Y.; supervision, H.S.; writing—original draft preparation, C.L.; writing—review and editing, H.S. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research was funded by the National Key Research and Development Program of China (Grant No. 2021YFB3702500).

References

1. Agrawal A., Choudhary A. Perspective: Materials informatics and big data: Realization of the “fourth paradigm” of science in materials science. APL Mater. 2016;4:053208. doi: 10.1063/1.4946894.

2. Zhang H., Fu H., He X., Wang C., Jiang L., Chen L., Xie J. Dramatically enhanced combination of ultimate tensile strength and electric conductivity of alloys via machine learning screening. Acta Mater. 2020;200:803–810. doi: 10.1016/j.actamat.2020.09.068.

3. Pattanayak S., Dey S., Chatterjee S., Chowdhury S.G., Datta S. Computational intelligence based designing of microalloyed pipeline steel. Comput. Mater. Sci. 2015;104:60–68. doi: 10.1016/j.commatsci.2015.03.029.

4. Weng B., Song Z., Zhu R., Yan Q., Sun Q., Grice C.G., Yan Y., Yin W.-J. Simple descriptor derived from symbolic regression accelerating the discovery of new perovskite catalysts. Nat. Commun. 2020;11:3513. doi: 10.1038/s41467-020-17263-9.

5. Xu G., Zhang X., Xu J. Data Augmentation of Micrographs and Prediction of Impact Toughness for Cast Austenitic Steel by Machine Learning. Metals. 2023;13:107. doi: 10.3390/met13010107.

6. Qin Z., Wang Z., Wang Y., Zhang L., Li W., Liu J., Wang Z., Li Z., Pan J., Zhao L. Phase prediction of Ni-base superalloys via high-throughput experiments and machine learning. Mater. Res. Lett. 2021;9:32–40. doi: 10.1080/21663831.2020.1815093.

7. Yu J., Guo S., Chen Y., Han J., Lu Y., Jiang Q., Wang C., Liu X. A two-stage predicting model for γ′ solvus temperature of L12-strengthened Co-base superalloys based on machine learning. Intermetallics. 2019;110:106466. doi: 10.1016/j.intermet.2019.04.009.

8. Li Y., Guo W. Machine-learning model for predicting phase formations of high-entropy alloys. Phys. Rev. Mater. 2019;3:095005. doi: 10.1103/PhysRevMaterials.3.095005.

9. Zhou Z., Zhou Y., He Q., Ding Z., Li F., Yang Y. Machine learning guided appraisal and exploration of phase design for high entropy alloys. NPJ Comput. Mater. 2019;5:128. doi: 10.1038/s41524-019-0265-1.

10. Kong C.S., Luo W., Arapan S., Villars P., Iwata S., Ahuja R., Rajan K. Information-theoretic approach for the discovery of design rules for crystal chemistry. J. Chem. Inf. Model. 2012;52:1812–1820. doi: 10.1021/ci200628z.

11. Xue D., Xue D., Yuan R., Zhou Y., Balachandran P.V., Ding X., Sun J., Lookman T. An informatics approach to transformation temperatures of NiTi-based shape memory alloys. Acta Mater. 2017;125:532–541. doi: 10.1016/j.actamat.2016.12.009.

12. Wen C., Wang C., Zhang Y., Antonov S., Xue D., Lookman T., Su Y. Modeling solid solution strengthening in high entropy alloys using machine learning. Acta Mater. 2021;212:116917. doi: 10.1016/j.actamat.2021.116917.

13. Zhu Q., Liu Z., Yan J. Machine learning for metal additive manufacturing: Predicting temperature and melt pool fluid dynamics using physics-informed neural networks. Comput. Mech. 2021;67:619–635. doi: 10.1007/s00466-020-01952-9.

14. Zhu C., Li C., Wu D., Ye W., Shi S., Ming H., Zhang X., Zhou K. A titanium alloys design method based on high-throughput experiments and machine learning. J. Mater. Res. Technol. 2021;11:2336–2353. doi: 10.1016/j.jmrt.2021.02.055.

15. Jiang L., Wang C., Fu H., Shen J., Zhang Z., Xie J. Discovery of aluminum alloys with ultra-strength and high-toughness via a property-oriented design strategy. J. Mater. Sci. Technol. 2022;98:33–43. doi: 10.1016/j.jmst.2021.05.011.

16. Konno T., Kurokawa H., Nabeshima F., Sakishita Y., Ogawa R., Hosako I., Maeda A. Deep learning model for finding new superconductors. Phys. Rev. B. 2021;103:014509. doi: 10.1103/PhysRevB.103.014509.

17. Diao Y., Yan L., Gao K. A strategy assisted machine learning to process multi-objective optimization for improving mechanical properties of carbon steels. J. Mater. Sci. Technol. 2022;109:86–93. doi: 10.1016/j.jmst.2021.09.004.

18. Reddy N., Krishnaiah J., Young H.B., Lee J.S. Design of medium carbon steels by computational intelligence techniques. Comput. Mater. Sci. 2015;101:120–126. doi: 10.1016/j.commatsci.2015.01.031.

19. Shen C., Wang C., Wei X., Li Y., van der Zwaag S., Xu W. Physical metallurgy-guided machine learning and artificial intelligent design of ultrahigh-strength stainless steel. Acta Mater. 2019;179:201–214. doi: 10.1016/j.actamat.2019.08.033.

20. Available online: https://citrination.com/datasets/114165/show_files/ (accessed on 12 July 2023).

21. Cavanaugh M., Buchheit R., Birbilis N. Modeling the environmental dependence of pit growth using neural network approaches. Corros. Sci. 2010;52:3070–3077. doi: 10.1016/j.corsci.2010.05.027.

22. Yan L., Diao Y., Gao K. Analysis of environmental factors affecting the atmospheric corrosion rate of low-alloy steel using random forest-based models. Materials. 2020;13:3266. doi: 10.3390/ma13153266.

23. Wen Y., Cai C., Liu X., Pei J., Zhu X., Xiao T. Corrosion rate prediction of 3C steel under different seawater environment by using support vector regression. Corros. Sci. 2009;51:349–355. doi: 10.1016/j.corsci.2008.10.038.

24. Jiang X., Yin H.-Q., Zhang C., Zhang R.-J., Zhang K.-Q., Deng Z.-H., Liu G.-Q., Qu X.-H. An materials informatics approach to Ni-based single crystal superalloys lattice misfit prediction. Comput. Mater. Sci. 2018;143:295–300. doi: 10.1016/j.commatsci.2017.09.061.

25. Bergstra J., Bengio Y. Random Search for Hyper-Parameter Optimization. J. Mach. Learn. Res. 2012;13:281–305.

26. Zhang W., Wu C., Zhong H., Li Y., Wang L. Prediction of undrained shear strength using extreme gradient boosting and random forest based on Bayesian optimization. Geosci. Front. 2021;12:469–477. doi: 10.1016/j.gsf.2020.03.007.

27. Snoek J., Larochelle H., Adams R.P. Practical Bayesian optimization of machine learning algorithms. Adv. Neural Inf. Process. Syst. 2012;25:1–9.

28. Greenhill S., Rana S., Gupta S. Bayesian optimization for adaptive experimental design: A review. IEEE Access. 2020;8:13937–13948. doi: 10.1109/ACCESS.2020.2966228.

29. Hernández-Lobato J.M., Gelbart M.A., Adams R.P., Hoffman M.W., Ghahramani Z. A general framework for constrained Bayesian optimization using information-based search. J. Mach. Learn. Res. 2016;17:1–53.

30. Roy A., Babuska T., Krick B., Balasubramanian G. Machine learned feature identification for predicting phase and Young’s modulus of low-, medium- and high-entropy alloys. Scr. Mater. 2020;185:152–158. doi: 10.1016/j.scriptamat.2020.04.016.

31. Yan L., Diao Y., Lang Z., Gao K. Corrosion rate prediction and influencing factors evaluation of low-alloy steels in marine atmosphere using machine learning approach. Sci. Technol. Adv. Mater. 2020;21:359–370. doi: 10.1080/14686996.2020.1746196.

32. Choudhary B., Samuel E.I., Bhanu Sankara Rao K., Mannan S. Tensile stress–strain and work hardening behaviour of 316LN austenitic stainless steel. Mater. Sci. Technol. 2001;17:223–231. doi: 10.1179/026708301101509890.

33. Aghaie-Khafri M., Zargaran A. High temperature tensile behavior of a PH stainless steel. Mater. Sci. Eng. A. 2010;527:4727–4732. doi: 10.1016/j.msea.2010.03.099.

34. Kim J.W., Byun T.S. Analysis of tensile deformation and failure in austenitic stainless steels: Part I—Temperature dependence. J. Nucl. Mater. 2010;396:1–9. doi: 10.1016/j.jnucmat.2009.08.010.

35. Yanushkevich Z., Lugovskaya A., Belyakov A., Kaibyshev R. Deformation microstructures and tensile properties of an austenitic stainless steel subjected to multiple warm rolling. Mater. Sci. Eng. A. 2016;667:279–285. doi: 10.1016/j.msea.2016.05.008.

36. Lipovetsky S., Conklin M. Analysis of regression in game theory approach. Appl. Stoch. Models Bus. Ind. 2001;17:319–330. doi: 10.1002/asmb.446.

37. Štrumbelj E., Kononenko I. Explaining prediction models and individual predictions with feature contributions. Knowl. Inf. Syst. 2014;41:647–665. doi: 10.1007/s10115-013-0679-x.

38. Lundberg S.M., Lee S.-I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 2017;30:4768–4777.

39. Lundberg S.M., Erion G., Chen H., DeGrave A., Prutkin J.M., Nair B., Katz R., Himmelfarb J., Bansal N., Lee S.-I. From local explanations to global understanding with explainable AI for trees. Nat. Mach. Intell. 2020;2:56–67. doi: 10.1038/s42256-019-0138-9.

40. Pardo A., Merino M.C., Coy A.E., Viejo F., Arrabal R., Matykina E. Pitting corrosion behaviour of austenitic stainless steels—Combining effects of Mn and Mo additions. Corros. Sci. 2008;50:1796–1806. doi: 10.1016/j.corsci.2008.04.005.

41. Deb K., Jain H. An evolutionary many-objective optimization algorithm using reference-point-based nondominated sorting approach, part I: Solving problems with box constraints. IEEE Trans. Evol. Comput. 2013;18:577–601. doi: 10.1109/TEVC.2013.2281535.

42. Jain H., Deb K. An evolutionary many-objective optimization algorithm using reference-point based nondominated sorting approach, part II: Handling constraints and extending to an adaptive approach. IEEE Trans. Evol. Comput. 2013;18:602–622. doi: 10.1109/TEVC.2013.2281534.

43. Yuan Y., Xu H., Wang B. An improved NSGA-III procedure for evolutionary many-objective optimization; Proceedings of the 2014 Annual Conference on Genetic and Evolutionary Computation; New York, NY, USA. 12–16 July 2014; pp. 661–668.

44. Chand S., Wagner M. Evolutionary many-objective optimization: A quick-start guide. Surv. Oper. Res. Manag. Sci. 2015;20:35–42. doi: 10.1016/j.sorms.2015.08.001.

45. Vesikar Y., Deb K., Blank J. Reference point based NSGA-III for preferred solutions; Proceedings of the 2018 IEEE Symposium Series on Computational Intelligence (SSCI); Bengaluru, India. 18–21 November 2018; Piscataway, NJ, USA: IEEE; pp. 1587–1594.

46. Lu S. Introduction to Stainless Steel. Chemical Industry Press; Beijing, China: 2013.

47. Hong I.T., Koo C.H. Antibacterial properties, corrosion resistance and mechanical properties of Cu-modified SUS 304 stainless steel. Mater. Sci. Eng. A. 2005;393:213–222. doi: 10.1016/j.msea.2004.10.032.

48. Veiga F., Bhujangrao T., Suárez A., Aldalur E., Goenaga I., Gil-Hernandez D. Validation of the Mechanical Behavior of an Aeronautical Fixing Turret Produced by a Design for Additive Manufacturing (DfAM). Polymers. 2022;14:2177. doi: 10.3390/polym14112177.

49. Veiga F., Suárez A., Aldalur E., Goenaga I., Amondarain J. Wire Arc Additive Manufacturing Process for Topologically Optimized Aeronautical Fixtures. 3D Print. Addit. Manuf. 2021;10:23–33. doi: 10.1089/3dp.2021.0008.
