Abstract

ChatGPT has promising applications in health care, but potential ethical issues need to be addressed proactively to prevent harm. ChatGPT presents potential ethical challenges from legal, humanistic, algorithmic, and informational perspectives. Legal ethics concerns arise from the unclear allocation of responsibility when patient harm occurs and from potential breaches of patient privacy due to data collection. Clear rules and legal boundaries are needed to properly allocate liability and protect users. Humanistic ethics concerns arise from the potential disruption of the physician-patient relationship, humanistic care, and issues of integrity. Overreliance on artificial intelligence (AI) can undermine compassion and erode trust. Transparency and disclosure of AI-generated content are critical to maintaining integrity. Algorithmic ethics raise concerns about algorithmic bias, responsibility, transparency and explainability, as well as validation and evaluation. Information ethics include data bias, validity, and effectiveness. Biased training data can lead to biased output, and overreliance on ChatGPT can reduce patient adherence and encourage self-diagnosis. Ensuring the accuracy, reliability, and validity of ChatGPT-generated content requires rigorous validation and ongoing updates based on clinical practice. To navigate the evolving ethical landscape of AI, AI in health care must adhere to the strictest ethical standards. Through comprehensive ethical guidelines, health care professionals can ensure the responsible use of ChatGPT, promote accurate and reliable information exchange, protect patient privacy, and empower patients to make informed decisions about their health care.

Keywords: ethics, ChatGPT, artificial intelligence, AI, large language models, health care, artificial intelligence development, development, algorithm, patient safety, patient privacy, safety, privacy

Introduction

ChatGPT (OpenAI) is a large language model (LLM) and an artificial intelligence (AI) chatbot [1]. Its ability to access and analyze large amounts of information allows it to generate, categorize, and summarize text with high coherence and accuracy [2]. The user-friendly interface and features of ChatGPT have made it a preferred tool for tasks such as academic writing and examinations, garnering significant interest in the field [3-6]. Although guidelines for AI bots like ChatGPT are still being developed, ChatGPT development has exceeded initial expectations [7,8]. The latest iteration, GPT-4, surpasses ChatGPT in terms of advanced reasoning, text processing capabilities, and image analysis, and it even demonstrates a degree of “creativity” [9]. Several initiatives and organizations are working to develop ethical standards for AI, including the Partnership on AI [10], the AI Now Institute [11], the European Commission’s Ethics Guidelines for Trustworthy AI [12], and AI4People [13]. Many of these standards have been incorporated into the practices of scientific publishers and universities, such as the Committee on Publication Ethics (COPE) [14], the International Committee of Medical Journal Editors (ICMJE) [15], and Wiley’s Research Integrity and Publishing Ethics Guidelines [16]. However, the lack of established ethical review standards for AI systems, such as ChatGPT and GPT-4, poses challenges not only in academic publishing and education but also in various fields of research.

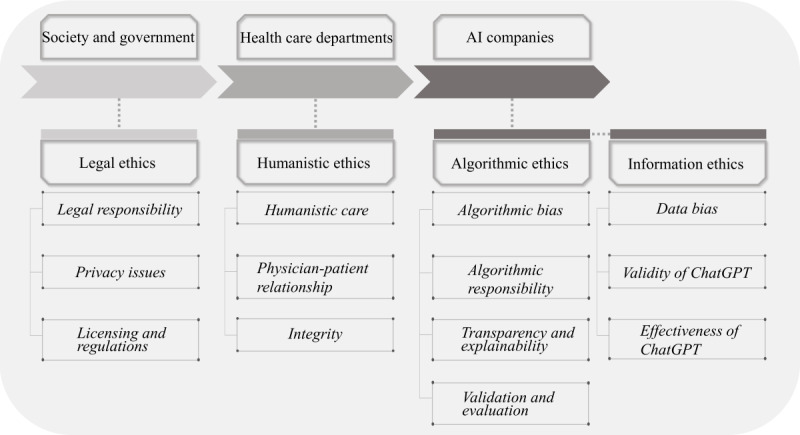

In health care, ChatGPT offers numerous benefits [17], including optimizing radiology reporting [18], generating patient discharge summaries [19], improving patient care [20], providing antimicrobial advice [21], and improving clinical decision support [22]. However, it is essential that ChatGPT adheres to principles such as beneficence, justice and fairness, medical integrity, nonmaleficence, privacy, responsibility, and transparency to prevent potential human harm [23,24]. To ensure the safe use and regulation of this technology and to facilitate public understanding, concern, and participation in the discussion, we systematically explored the potential ethical concerns associated with ChatGPT. We considered 3 primary entities: society and government, health care departments, and AI companies. The regulatory framework established for these 3 primary entities took into account legal ethics, humanistic ethics, algorithmic ethics, and information ethics, encompassing a total of 13 points (Figure 1) [25]. Our aim was to provide a comprehensive examination of the ethical implications of ChatGPT in health care. Given the swift advancement of AI, it is crucial to adopt a rational approach that balances the benefits and risks associated with this progress.

Figure 1. The regulatory framework of artificial intelligence (AI).

Legal Ethics

Overview

The legal ethics surrounding the use of ChatGPT in health care are an important consideration [25,26]. Essential factors to consider include the following: determining legal responsibility in cases where ChatGPT advice leads to harm or adverse outcomes; the collection and storage of sensitive patient information, which raises privacy issues; and the need to consider licensing and regulatory requirements for health care professionals when incorporating ChatGPT into clinical practice.

Legal Responsibility

The potential for ChatGPT to provide inappropriate medical advice in real cases raises significant legal concerns [8]. From a legal perspective, AI lacks the legal status of a human being, leaving humans as the ultimate duty bearers [27]. However, determining legal responsibility in instances where a patient is harmed can indeed become a complex issue. The question arises as to who should be held accountable—the patient, the treating hospital, or OpenAI. The ambiguity underscores the need for comprehensive legal frameworks and guidelines to clearly define and allocate responsibility for the use of AI in health care.

OpenAI has taken steps to address these concerns by publishing detailed security standards, usage guidelines, and basic bylaws, and it has explicitly identified situations where the use of ChatGPT is prohibited. It is important to note, however, that none of these measures are currently mandatory [2]. ChatGPT is open to all registered users without restriction. Furthermore, OpenAI explicitly disclaims any responsibility for the generated texts [28]. Consequently, it seems that the burden of any errors rests solely on the user [8]. This again raises the question of who should be held responsible if inaccurate or inappropriate advice leads to harm. Clear regulations and legal restrictions are needed to properly allocate responsibility and protect users. Such regulations and laws can help establish guidelines for the use of AI systems, such as ChatGPT, in health care and outline the legal obligations of developers, health care providers, and other stakeholders.

Privacy Issues

Privacy issues are an important aspect when using ChatGPT in health care settings [26]. The collection, storage, and processing of sensitive patient information raise important privacy issues that need to be addressed to ensure the confidentiality and protection of personal data. One of the concerns is the possibility of unauthorized access or data breaches. As ChatGPT interacts with patients and health care providers, it may gather and store personal health information. This information could encompass medical histories, test results, diagnoses, and other sensitive data. Protecting this information is critical to maintaining patient privacy and complying with applicable privacy regulations, such as the Health Insurance Portability and Accountability Act (HIPAA) in the United States or similar laws in other countries [29,30].

Another privacy concern is the risk of reidentification. Even if the data collected by ChatGPT are deidentified, there is still the potential for individuals to be reidentified by combining them with other available data sources [31]. Preventing reidentification requires strong anonymization techniques and strict access controls to prevent unauthorized linking of data.
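As a rough illustration of the reidentification risk described above, the following Python sketch checks how many records share each combination of quasi-identifiers, which is the idea behind k-anonymity. This is one common anonymization criterion used here for illustration, not a method prescribed by the cited work. The field names (`age_band`, `zip3`, `sex`) and the sample records are hypothetical.

```python
from collections import Counter


def group_sizes(records, quasi_identifiers):
    """Count records per combination of quasi-identifier values."""
    return Counter(tuple(r[q] for q in quasi_identifiers) for r in records)


def k_anonymity(records, quasi_identifiers):
    """Return the size of the smallest quasi-identifier group (0 if no records)."""
    sizes = group_sizes(records, quasi_identifiers)
    return min(sizes.values()) if sizes else 0


def risky_groups(records, quasi_identifiers, k):
    """Return the quasi-identifier combinations shared by fewer than k records."""
    sizes = group_sizes(records, quasi_identifiers)
    return sorted(key for key, n in sizes.items() if n < k)


# Hypothetical deidentified records (no names), for illustration only.
records = [
    {"age_band": "30-39", "zip3": "021", "sex": "F", "diagnosis": "asthma"},
    {"age_band": "30-39", "zip3": "021", "sex": "F", "diagnosis": "diabetes"},
    {"age_band": "40-49", "zip3": "021", "sex": "M", "diagnosis": "asthma"},
]
QUASI_IDENTIFIERS = ["age_band", "zip3", "sex"]

if __name__ == "__main__":
    print("k =", k_anonymity(records, QUASI_IDENTIFIERS))
    print("groups below k=2:", risky_groups(records, QUASI_IDENTIFIERS, k=2))
```

A group of size 1 means that a single person is uniquely described by those attributes, so linking the record to an outside data source could reveal the diagnosis. In practice, such groups would typically be generalized or suppressed before the data are shared.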

Transparency in the use of data is also essential [32]. Patients should be informed that their data would be used by ChatGPT, and they should be given the opportunity to provide informed consent. A clear and understandable privacy policy should be in place outlining the purposes for which data are collected, where they are stored, and the measures taken to protect patient privacy [33,34].

In addition, ChatGPT’s advanced features, such as natural language processing and machine learning, may pose privacy risks [35,36]. The model may inadvertently expose sensitive information or provide inaccurate responses that could compromise patient privacy or well-being. Regular monitoring and auditing of system performance and data processing methods is essential to identify and address any privacy issues that may arise.

To mitigate privacy risks, health care organizations should implement robust security measures, including encryption, access controls, and regular vulnerability assessments [10]. A data governance framework should be in place to ensure compliance with privacy regulations and to promote responsible data handling practices. By implementing strong privacy protections and ensuring transparency and accountability in the use of ChatGPT, health care organizations can maximize the benefits of AI while protecting patient privacy and trust.

Licensing and Regulations

Because ChatGPT is an AI-powered tool that interacts with patients and provides medical advice or support, licensing and strict regulation are necessary. These measures could help ensure that ChatGPT’s application in health care meets the relevant regulatory and licensing requirements for patient safety, ethical standards, and legal compliance.

Depending on the jurisdiction, health care professionals who use or rely on ChatGPT may need to hold a valid license and comply with specific regulations governing their practice. These regulations are intended to ensure that health care services provided through AI tools meet the necessary standards of care and professionalism. In addition, regulatory bodies, such as health authorities or medical boards, may need to establish guidelines or frameworks that specifically address the use of AI in health care. These guidelines could cover issues such as data privacy and security, the accuracy and reliability of AI-generated content, informed consent, and the roles and responsibilities of health care professionals when using AI tools, such as ChatGPT. Proactive and flexible regulations are necessary to ensure that AI effectively benefits patients [37]. Rigid or incomplete regulations can be detrimental and may hinder the development of AI. The current regulatory landscape for AI is still evolving [38], with ongoing efforts to establish more rigorous oversight mechanisms [39]. Although OpenAI has implemented privacy provisions and promises to handle information in an anonymized or deidentified form, the lack of sufficient regulations remains a concern [35].

Furthermore, regulatory oversight may be required to assess and approve the use of ChatGPT for specific health care applications or situations. Regulators may evaluate the safety, efficacy, and performance of AI systems, such as ChatGPT, before they are deployed in a clinical setting. This evaluation process helps to ensure that AI tools meet established standards and do not pose undue risks to patients or health care providers.

Humanistic Ethics

Overview

Humanistic ethics should guide the use of ChatGPT in health care, emphasizing the importance of a person-centered approach, respect for the physician-patient relationship, and integrity with patients. Health care professionals can take advantage of ChatGPT while upholding the core values of compassion, empathy, and personalized care [40,41]. Humanistic ethics in health care using ChatGPT include key aspects such as humanistic care, respect for the physician-patient relationship, and integrity.

Humanistic Care

Humanistic ethics emphasize the significance of providing compassionate and individualized care [41,42]. When using ChatGPT, health care professionals should prioritize the well-being and emotional requirements of patients, ensuring that their care is not solely driven by AI-generated recommendations. Humanistic care involves offering empathetic support, actively listening to patients, and tailoring treatment plans based on a comprehensive understanding of their unique circumstances [41,43]. Although ChatGPT can provide efficient and accurate information, it lacks the human touch and empathy that are crucial in health care interactions. Health care professionals should be mindful of patients’ emotional needs and ensure that the involvement of ChatGPT does not undermine compassionate care and the overall patient experience.

Physician-Patient Relationship

Humanistic ethics underscore the significance of the physician-patient relationship as a central component of health care [44]. When using ChatGPT, health care professionals should ensure that the presence of AI does not compromise this relationship. Health care professionals should use ChatGPT as a tool to enhance their expertise, aid in decision-making, and facilitate communication while maintaining the human connection and trust that underpin effective health care.

Integrity

Integrity is considered a fundamental ethical principle in health care [45]. Humanistic ethics in health care using ChatGPT should involve maintaining integrity [46]. This includes transparent disclosure of AI involvement in patient care, accurate representation of ChatGPT’s limitations and capabilities, and maintaining honesty and accuracy in the communication of medical information. Health care professionals should ensure that AI-generated content, such as reports or recommendations, is grounded in evidence-based medicine and aligns with established clinical guidelines.

By incorporating humanistic care, preserving the physician-patient relationship, and maintaining integrity, health care professionals can navigate the ethical implications of using ChatGPT in health care while promoting patient-centered care, empathy, and the responsible and ethical integration of AI.

Algorithmic Ethics

Overview

Algorithmic ethics in health care involving the use of ChatGPT require careful consideration of ethical principles and guidelines by health care professionals and organizations. This involves addressing the ethical implications and challenges associated with the algorithms and underlying technology that power ChatGPT. Below are some key aspects of algorithmic ethics in health care.

Algorithmic Bias

Algorithmic bias refers to biases that arise as a result of the design, implementation, or decision-making processes within the algorithms themselves [47,48]. It occurs when the algorithms, despite being trained on unbiased data, exhibit biased behavior or produce discriminatory outcomes. Algorithmic bias can arise from several sources, including biased feature selection, biased model design, or biased decision rules [47,49]. It can amplify and exacerbate existing social, cultural, or historical biases, leading to unfair treatment or discrimination against certain individuals or groups [50-52]. In essence, data bias originates from biased data used to train the model, while algorithmic bias stems from biased decision-making processes within the model itself. Data bias can directly contribute to algorithmic bias [53], but it is possible for algorithmic bias to occur even with unbiased training data if the model’s design or decision-making mechanisms introduce bias [54,55]. This algorithmic bias could lead to clinical errors with significant consequences [56]. Even small biases in widely used algorithms can have serious consequences [57]. Concerns about the training set and underlying LLM of ChatGPT arise because OpenAI has not disclosed these details, raising suspicions that the inner workings of the AI may be hidden [58]. Besides the numerous researchers and individuals who are currently jailbreaking and testing the penetrability of generative AI, the lack of transparency in the system prevents external researchers from evaluating the bot and identifying potential algorithmic biases. It is imperative to require algorithmic transparency for all LLMs to ensure the responsible use of AI by physicians and patients. Evidence should be developed through indirect access based on results, not access to a black box. Rigorous testing and regulation of AI algorithms are necessary to protect human health [59].
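One way to read the call above for evidence based on results rather than access to a black box is an outcome audit: the same kind of question is posed for different patient groups, and the rates of a given outcome are compared. The Python sketch below computes per-group rates and the largest gap between them (often called a demographic parity gap). This is a common fairness measure chosen here for illustration and is not named in the article. The group labels and the binary outcome (for example, whether a response recommended a specialist referral) are hypothetical.

```python
from collections import defaultdict


def positive_rates(observations):
    """Return the share of positive outcomes per group.

    observations: iterable of (group_label, outcome) pairs, where outcome is truthy or falsy.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in observations:
        totals[group] += 1
        positives[group] += int(bool(outcome))
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(observations):
    """Return the difference between the highest and lowest group rates (0.0 if empty)."""
    rates = positive_rates(observations)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


# Hypothetical audit results: (patient group, 1 if referral was recommended else 0).
observations = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

if __name__ == "__main__":
    print(positive_rates(observations))
    print("parity gap:", parity_gap(observations))
```

A large gap does not by itself prove algorithmic bias, because the groups may differ clinically, but it flags where closer expert review of the outputs is warranted.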

Algorithmic Responsibility

When using ChatGPT, it is important to have a clear division of responsibilities between patients, physicians, and OpenAI. Patients take responsibility for the questions they ask, ensuring that they are appropriate and relevant. Physicians, on the other hand, need to recognize and mitigate the potential “automation bias” that can result from overreliance on algorithms [60,61]. As the developer of the algorithm, OpenAI is responsible for its design and operation. It is incumbent upon OpenAI to ensure that the ChatGPT algorithm is autonomous and beneficial to patients and to justify its design choices, settings, and overall impact on society [62]. Accountability for patient protection should be rigorously enforced [63]. Health care professionals must understand their roles and responsibilities when using AI technologies such as ChatGPT and ensure that they take ultimate responsibility for decisions made based on the generated content.

Transparency and Explainability

Transparency is a fundamental aspect of algorithmic ethics, and explainability is seen as a component of transparency [64,65]. Transparency refers to the openness and clarity of how algorithms and AI systems operate, make decisions, and generate outputs [66]. A transparent AI system provides visibility into its inner workings, enabling users and stakeholders to understand the factors that lead to its outputs. Explainability refers to the ability to provide understandable explanations for the decisions and recommendations made by an AI system [66]. Explainability is essential for users, such as health care professionals and patients, to trust AI systems and to understand the reasons behind their outputs. When transparency is ensured, AI systems like ChatGPT become more accountable and explainable. Explainability allows for the identification of potential biases, errors, or unintended consequences and facilitates the assessment of their ethical implications. In addition, transparency and explainability help build trust and acceptance among users and stakeholders, addressing concerns about the “black box” nature of AI systems. The transparency of the algorithm enables health care professionals to comprehend how ChatGPT formulates its recommendations and allows them to explain its reasoning process to patients.

Validation and Evaluation

Validation and evaluation are important components of algorithmic ethics [67]. They are the means by which researchers and practitioners assess the performance, accuracy, and reliability of AI algorithms [68]. Health care professionals should evaluate the accuracy, reliability, and effectiveness of the recommendations generated and compare them with established clinical guidelines and best practices. Such evaluation could help promote fairness, transparency, and accountability in algorithmic decision-making, ultimately improving the quality of patient care and outcomes.
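To make the comparison with clinical guidelines concrete, the following Python sketch scores a set of generated recommendations against guideline-based reference labels using raw agreement and Cohen's kappa, which corrects agreement for chance. The choice of kappa, the label values (`treat`, `refer`), and the sample data are assumptions made for illustration. The article does not specify an evaluation metric.

```python
from collections import Counter


def observed_agreement(model_labels, reference_labels):
    """Return the fraction of cases where the two label lists agree."""
    if len(model_labels) != len(reference_labels) or not model_labels:
        raise ValueError("label lists must be non-empty and of equal length")
    matches = sum(m == r for m, r in zip(model_labels, reference_labels))
    return matches / len(model_labels)


def cohens_kappa(model_labels, reference_labels):
    """Return Cohen's kappa: agreement between the two lists, corrected for chance."""
    p_observed = observed_agreement(model_labels, reference_labels)
    n = len(model_labels)
    model_counts = Counter(model_labels)
    reference_counts = Counter(reference_labels)
    labels = set(model_counts) | set(reference_counts)
    # Chance agreement: probability both sources pick the same label independently.
    p_expected = sum(model_counts[l] * reference_counts[l] for l in labels) / (n * n)
    if p_expected == 1.0:
        # Both lists use one identical label throughout; treat as perfect agreement.
        return 1.0
    return (p_observed - p_expected) / (1.0 - p_expected)


# Hypothetical labels: what the chatbot recommended vs what the guideline indicates.
model_labels = ["treat", "treat", "refer", "refer", "treat", "refer"]
guideline_labels = ["treat", "refer", "refer", "refer", "treat", "treat"]

if __name__ == "__main__":
    print("agreement:", round(observed_agreement(model_labels, guideline_labels), 3))
    print("kappa:", round(cohens_kappa(model_labels, guideline_labels), 3))
```

Agreement statistics of this kind summarize performance on a fixed test set only. They do not replace expert review of individual responses, particularly for cases where an error could cause harm.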

Information Ethics

Overview

Information ethics in health care using ChatGPT encompasses the responsible and ethical handling of data to ensure accuracy, validity, and effectiveness in the information provided by ChatGPT. It includes several key considerations.

Data Bias

Data bias refers to the presence of biases in the training data used to develop AI models, which may not be representative of the real world or may contain systematic biases [69]. Data bias can cause AI systems to make inaccurate or unfair predictions or decisions. These biases can be unintentionally embedded in the data due to various factors, such as sampling methods, data collection processes, or human biases present in the data sources [7,70,71]. Data bias can lead to skewed outcomes and predictions, as the model learns from the biased data and perpetuates the same biases in its results [72]. AI systems, including ChatGPT, are susceptible to data bias resulting from their training data, particularly in paramedical treatments, such as developing treatment plans. AI algorithms can only generate content based on the information they have been trained on and lack the ability to generate novel ideas. If the training data used for ChatGPT are biased, the bot may inadvertently perpetuate this bias [72]. Furthermore, as the output of ChatGPT can be used to train future iterations of the model, any bias present in the data may persist without human intervention [5].

Validity of ChatGPT

Validity refers to the accuracy, reliability, and appropriateness of the information provided by ChatGPT. As the content generated by ChatGPT can directly affect the health and well-being of patients, it is crucial to prioritize accuracy and reliability to avoid potential harm or misinformation [73]. Comprehensive validation of ChatGPT output can only be achieved through meticulous annotation of large data sets by human experts, resulting in truly valuable and reliable data [3]. However, in the case of ChatGPT, the aggregation of text and the lack of accessible source information make querying and validating responses challenging [1]. As a result, the manual validation required for ChatGPT would be extremely time and resource intensive.

Despite some limited validation efforts, ChatGPT still requires further error correction [74]. Currently, the references generated by the bot have not undergone extensive validation, leaving users to rely on their own judgment to assess the accuracy of the content [75]. This subjective assessment carries a high risk of adverse consequences. To ensure the safe and effective use of ChatGPT in health care, it is imperative that the model is trained on a substantial amount of data annotated by clinical experts and validated by physicians. This rigorous validation process could increase the reliability and trustworthiness of ChatGPT responses, ultimately benefiting patient care.

Effectiveness of ChatGPT

Our concerns about the effectiveness of ChatGPT in health care revolve around 2 key issues: accuracy and limitations [76]. Accuracy refers to the ability of ChatGPT to generate correct and reliable information or responses in health care–related tasks. Limitations encompass the boundaries and shortcomings of ChatGPT’s capabilities, such as potential biases, lack of contextual understanding, or inability to handle complex medical scenarios.

First, ChatGPT has the potential to provide logically coherent but incorrect responses or inaccurate information due to its inability to consciously assess the accuracy of its output text [75,77]. In particular, cases of apparent error have been identified in the provision of discharge summaries and radiology reports by ChatGPT [18,19]. Therefore, clinicians should exercise caution and not overrely on ChatGPT advice, but instead, they should select clinically appropriate information.

Second, due to the nature of its training data [78], the information incorporated into ChatGPT may have delays and incompleteness [8,79]. This limitation raises concerns about its ability to provide up-to-date and comprehensive insights into the latest medical and professional research [75]. To address this, ChatGPT needs to undergo specific training and continuous updates tailored to the needs of clinical practice [73]. By moving away from fictional scenarios and focusing on providing effective answers to real health care questions [3,21], ChatGPT can increase its utility in assisting clinicians and patients.

The Influence and Future of ChatGPT in Health Care

ChatGPT has gained widespread popularity and is beginning to reshape working practices around the world [80]. With the introduction of GPT-4, AI technology is advancing at an unprecedented rate, leading to disruptive innovation [81]. This rapid progress points to a future where AI surpasses human capabilities in processing information, including text and images. However, physicians need not resist this technological development or fear being replaced by AI [82]. In fact, the judicious use of AI can reduce procedural tasks and unleash human creativity [82]. Rather than focusing on rote learning, physicians can improve their critical thinking skills more broadly [83]. AI could be a driving force in the advancement of health care, but its integration must undergo rigorous ethical scrutiny before it is fully embraced by society.

To ensure that fundamental principles such as beneficence, nonmaleficence, medical integrity, and justice are upheld [23], AI in health care must adhere to the strictest ethical standards. Comprehensive ethical guidelines could provide essential legal, ethical, algorithmic, and informational support for patients, physicians, and health care researchers. By establishing a robust ethical framework, we can harness the potential of AI while safeguarding the well-being of individuals. This technology is expected to make a significant contribution to humanity and medicine, shaping a future where AI serves as a valuable tool to advance health care and improve patient outcomes.

Acknowledgments

This manuscript was drafted without the use of artificial intelligence techniques. After preparing the manuscript, we used ChatGPT to aid in the creation of supplementary materials. Detailed prompts, inputs, and outputs from this interaction are included in Multimedia Appendix 1 for transparency.

Abbreviations

- AI: artificial intelligence
- COPE: Committee on Publication Ethics
- HIPAA: Health Insurance Portability and Accountability Act
- ICMJE: International Committee of Medical Journal Editors
- LLM: large language model

Multimedia Appendix 1. Detailed prompts, inputs, and outputs from interaction with ChatGPT.

Footnotes

Conflicts of Interest: None declared.

References

- 1.ChatGPT. [2023-03-30]. https://chat.openai.com/

- 2.Introducing ChatGPT. [2023-03-30]. https://openai.com/blog/chatgpt .

- 3.Alvero R. ChatGPT: rumors of human providers' demise have been greatly exaggerated. Fertil Steril. 2023 Jun;119(6):930–931. doi: 10.1016/j.fertnstert.2023.03.010.S0015-0282(23)00217-0 [DOI] [PubMed] [Google Scholar]

- 4.Stokel-Walker C. AI bot ChatGPT writes smart essays - should professors worry? Nature. 2022 Dec 09; doi: 10.1038/d41586-022-04397-7.10.1038/d41586-022-04397-7 [DOI] [PubMed] [Google Scholar]

- 5.The Lancet Digital Health ChatGPT: friend or foe? Lancet Digit Health. 2023 Mar;5(3):e102. doi: 10.1016/S2589-7500(23)00023-7. https://linkinghub.elsevier.com/retrieve/pii/S2589-7500(23)00023-7 .S2589-7500(23)00023-7 [DOI] [PubMed] [Google Scholar]

- 6.Anders BA. Is using ChatGPT cheating, plagiarism, both, neither, or forward thinking? Patterns (N Y) 2023 Mar 10;4(3):100694. doi: 10.1016/j.patter.2023.100694. https://linkinghub.elsevier.com/retrieve/pii/S2666-3899(23)00025-9 .S2666-3899(23)00025-9 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Liebrenz M, Schleifer R, Buadze A, Bhugra D, Smith A. Generating scholarly content with ChatGPT: ethical challenges for medical publishing. Lancet Digit Health. 2023 Mar;5(3):e105–e106. doi: 10.1016/s2589-7500(23)00019-5. [DOI] [PubMed] [Google Scholar]

- 8.Chatbots, generative AI, and scholarly manuscripts. WAME. [2023-03-31]. https://wame.org/page3.php?id=106 . [DOI] [PMC free article] [PubMed]

- 9.GPT-4 is OpenAI’s most advanced system, producing safer and more useful responses. OpenAI. [2023-03-30]. https://openai.com/product/gpt-4 .

- 10.Safety critical AI. Partnership on AI. [2023-06-30]. https://partnershiponai.org/program/safety-critical-ai/

- 11.ChatGPT and more: large scale AI models entrench big tech power. AI Now Institute. [2023-06-30]. https://ainowinstitute.org/publication/large-scale-ai-models .

- 12.Ethics guidelines for trustworthy AI. FUTURIUM - European Commission. [2023-06-30]. https://ec.europa.eu/futurium/en/ai-alliance-consultation .

- 13.Floridi L, Cowls J, Beltrametti M, Chatila R, Chazerand P, Dignum V, Luetge C, Madelin R, Pagallo U, Rossi F, Schafer B, Valcke P, Vayena E. AI4People-An ethical framework for a good AI society: opportunities, risks, principles, and recommendations. Minds Mach (Dordr) 2018;28(4):689–707. doi: 10.1007/s11023-018-9482-5. http://hdl.handle.net/2318/1728327 .9482 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Promoting integrity in research and its publication. COPE: Committee on Publication Ethics. [2023-07-01]. https://publicationethics.org/

- 15.Defining the role of authors and contributors. ICMJE. [2023-03-31]. https://www.icmje.org/recommendations/browse/roles-and-responsibilities/defining-the-role-of-authors-and-contributors.html .

- 16.Best practice guidelines on research integrity and publishing ethics. Wiley Author Services. [2023-07-01]. https://authorservices.wiley.com/ethics-guidelines/index.html .

- 17.Liu J, Wang C, Liu S. Utility of ChatGPT in clinical practice. J Med Internet Res. 2023 Jun 28;25:e48568. doi: 10.2196/48568. https://www.jmir.org/2023//e48568/ v25i1e48568 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Jeblick K, Schachtner B, Dexl J, Mittermeier A, Stüber A, Topalis J, Weber T, Wesp P, Sabel B, Ricke J, Ingrisch M. ChatGPT makes medicine easy to swallow: an exploratory case study on simplified radiology reports. arXiv. doi: 10.48550/arXiv.2212.14882. Preprint posted online Dec 30, 2022. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Patel SB, Lam K. ChatGPT: the future of discharge summaries? Lancet Digit Health. 2023 Mar;5(3):e107–e108. doi: 10.1016/S2589-7500(23)00021-3. https://linkinghub.elsevier.com/retrieve/pii/S2589-7500(23)00021-3 .S2589-7500(23)00021-3 [DOI] [PubMed] [Google Scholar]

- 20.D'Amico RS, White TG, Shah HA, Langer DJ. I asked a ChatGPT to write an editorial about how we can incorporate chatbots into neurosurgical research and patient care…. Neurosurgery. 2023 Apr 01;92(4):663–664. doi: 10.1227/neu.0000000000002414.00006123-202304000-00002 [DOI] [PubMed] [Google Scholar]

- 21.Howard A, Hope W, Gerada A. ChatGPT and antimicrobial advice: the end of the consulting infection doctor? Lancet Infect Dis. 2023 Apr;23(4):405–406. doi: 10.1016/S1473-3099(23)00113-5.S1473-3099(23)00113-5 [DOI] [PubMed] [Google Scholar]

- 22.Liu S, Wright AP, Patterson BL, Wanderer JP, Turer RW, Nelson SD, McCoy AB, Sittig DF, Wright A. Assessing the value of ChatGPT for clinical decision support optimization. medRxiv. doi: 10.1101/2023.02.21.23286254. doi: 10.1101/2023.02.21.23286254. Preprint posted online on Feb 23, 2023. 2023.02.21.23286254 [DOI] [Google Scholar]

- 23.Shen Y, Heacock L, Elias J, Hentel KD, Reig B, Shih G, Moy L. ChatGPT and other large language models are double-edged swords. Radiology. 2023 Apr;307(2):e230163. doi: 10.1148/radiol.230163. [DOI] [PubMed] [Google Scholar]

- 24.Jobin A, Ienca M, Vayena E. The global landscape of AI ethics guidelines. Nat Mach Intell. 2019 Sep 02;1(9):389–399. doi: 10.1038/s42256-019-0088-2. [DOI] [Google Scholar]

- 25.Cath C. Governing artificial intelligence: ethical, legal and technical opportunities and challenges. Philos Trans A Math Phys Eng Sci. 2018 Oct 15;376(2133):20180080. doi: 10.1098/rsta.2018.0080. http://europepmc.org/abstract/MED/30322996 .rsta.2018.0080 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Naik N, Hameed BMZ, Shetty DK, Swain D, Shah M, Paul R, Aggarwal K, Ibrahim S, Patil V, Smriti K, Shetty S, Rai BP, Chlosta P, Somani BK. Legal and ethical consideration in artificial intelligence in healthcare: who takes responsibility? Front Surg. 2022;9:862322. doi: 10.3389/fsurg.2022.862322. https://europepmc.org/abstract/MED/35360424

- 27.Zhang J, Zhang Z. Ethics and governance of trustworthy medical artificial intelligence. BMC Med Inform Decis Mak. 2023 Jan 13;23(1):7. doi: 10.1186/s12911-023-02103-9. https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-023-02103-9

- 28.Terms of use. OpenAI. [2023-03-31]. https://openai.com/policies/terms-of-use .

- 29.Su Z, McDonnell D, Bentley BL, He J, Shi F, Cheshmehzangi A, Ahmad J, Jia P. Addressing biodisaster X threats with artificial intelligence and 6G technologies: literature review and critical insights. J Med Internet Res. 2021 May 25;23(5):e26109. doi: 10.2196/26109. https://www.jmir.org/2021/5/e26109/

- 30.Summary of the HIPAA security rule. US Department of Health and Human Services. [2023-07-04]. https://www.hhs.gov/hipaa/for-professionals/security/laws-regulations/index.html .

- 31.El Emam K, Jonker E, Arbuckle L, Malin B. A systematic review of re-identification attacks on health data. PLoS One. 2011;6(12):e28071. doi: 10.1371/journal.pone.0028071. http://dx.plos.org/10.1371/journal.pone.0028071

- 32.Bazoukis G, Hall J, Loscalzo J, Antman EM, Fuster V, Armoundas AA. The inclusion of augmented intelligence in medicine: a framework for successful implementation. Cell Rep Med. 2022 Jan 18;3(1):100485. doi: 10.1016/j.xcrm.2021.100485.

- 33.March 20 ChatGPT outage: Here’s what happened. OpenAI. [2023-07-01]. https://openai.com/blog/march-20-chatgpt-outage .

- 34.Luo E, Bhuiyan MZA, Wang G, Rahman MA, Wu J, Atiquzzaman M. PrivacyProtector: privacy-protected patient data collection in IoT-based healthcare systems. IEEE Commun Mag. 2018 Feb;56(2):163–168. doi: 10.1109/mcom.2018.1700364.

- 35.Privacy policy. OpenAI. [2023-03-31]. https://openai.com/policies/privacy-policy .

- 36.Ford E, Oswald M, Hassan L, Bozentko K, Nenadic G, Cassell J. Should free-text data in electronic medical records be shared for research? A citizens' jury study in the UK. J Med Ethics. 2020 Jun;46(6):367–377. doi: 10.1136/medethics-2019-105472. http://jme.bmj.com/lookup/pmidlookup?view=long&pmid=32457202

- 37.Zanca F, Brusasco C, Pesapane F, Kwade Z, Beckers R, Avanzo M. Regulatory aspects of the use of artificial intelligence medical software. Semin Radiat Oncol. 2022 Oct;32(4):432–441. doi: 10.1016/j.semradonc.2022.06.012.

- 38.Center for Devices and Radiological Health. Artificial intelligence and machine learning in software as a medical device. FDA. 2022 Sep 27. [2023-04-02]. https://tinyurl.com/rwrh739a

- 39.Goodman RS, Patrinely JR, Osterman T, Wheless L, Johnson DB. On the cusp: Considering the impact of artificial intelligence language models in healthcare. Med. 2023 Mar 10;4(3):139–140. doi: 10.1016/j.medj.2023.02.008.

- 40.Kerasidou A, Bærøe K, Berger Z, Caruso Brown AE. The need for empathetic healthcare systems. J Med Ethics. 2020 Jul 24;47(12):e27. doi: 10.1136/medethics-2019-105921. http://jme.bmj.com/lookup/pmidlookup?view=long&pmid=32709754

- 41.Coghlan S. Robots and the possibility of humanistic care. Int J Soc Robot. 2022;14(10):2095–2108. doi: 10.1007/s12369-021-00804-7. https://europepmc.org/abstract/MED/34221183

- 42.Taggart G. Compassionate pedagogy: the ethics of care in early childhood professionalism. EECERJ. 2016:173. doi: 10.1080/1350293X.2014.970847.

- 43.Jeffrey D. Empathy, sympathy and compassion in healthcare: Is there a problem? Is there a difference? Does it matter? J R Soc Med. 2016 Dec;109(12):446–452. doi: 10.1177/0141076816680120. https://europepmc.org/abstract/MED/27923897

- 44.Vearrier L, Henderson CM. Utilitarian principlism as a framework for crisis healthcare ethics. HEC Forum. 2021 Jun 15;33(1-2):45–60. doi: 10.1007/s10730-020-09431-7. https://europepmc.org/abstract/MED/33449232

- 45.Rider EA, Kurtz S, Slade D, Longmaid HE, Ho M, Pun JK, Eggins S, Branch WT. The International Charter for Human Values in Healthcare: an interprofessional global collaboration to enhance values and communication in healthcare. Patient Educ Couns. 2014 Sep;96(3):273–80. doi: 10.1016/j.pec.2014.06.017. https://linkinghub.elsevier.com/retrieve/pii/S0738-3991(14)00272-9

- 46.Solimini R, Busardò FP, Gibelli F, Sirignano A, Ricci G. Ethical and legal challenges of telemedicine in the era of the COVID-19 pandemic. Medicina (Kaunas). 2021 Nov 30;57(12):1314. doi: 10.3390/medicina57121314. https://www.mdpi.com/resolver?pii=medicina57121314

- 47.Kordzadeh N, Ghasemaghaei M. Algorithmic bias: review, synthesis, and future research directions. Eur J Inf Syst. 2022 May 4;:388. doi: 10.1080/0960085x.2021.1927212.

- 48.Akter S, Dwivedi YK, Sajib S, Biswas K, Bandara RJ, Michael K. Algorithmic bias in machine learning-based marketing models. J Bus Res. 2022 May;144:201–216. doi: 10.1016/j.jbusres.2022.01.083.

- 49.Tsamados A, Aggarwal N, Cowls J, Morley J, Roberts H, Taddeo M, Floridi L. The ethics of algorithms: key problems and solutions. AI & Soc. 2021 Feb 20;37(1):215–230. doi: 10.1007/s00146-021-01154-8.

- 50.Fletcher R, Nakeshimana A, Olubeko O. Addressing fairness, bias, and appropriate use of artificial intelligence and machine learning in global health. Frontiers in Artificial Intelligence. 2021. [2023-07-03]. https://www.frontiersin.org/articles/10.3389/frai.2020.561802

- 51.Starke G, De Clercq E, Elger BS. Towards a pragmatist dealing with algorithmic bias in medical machine learning. Med Health Care Philos. 2021 Sep;24(3):341–349. doi: 10.1007/s11019-021-10008-5. https://europepmc.org/abstract/MED/33713239

- 52.Mitchell M, Baker D, Moorosi N, Denton E, Hutchinson B, Hanna A, Gebru T, Morgenstern J. Diversity and inclusion metrics in subset selection. AIES '20: AAAI/ACM Conference on AI, Ethics, and Society; Feb 7-9; New York, NY. 2020. pp. 117–123.

- 53.Parikh RB, Teeple S, Navathe AS. Addressing bias in artificial intelligence in health care. JAMA. 2019 Nov 22;:2377–2378. doi: 10.1001/jama.2019.18058.

- 54.Sun W, Nasraoui O, Shafto P. Evolution and impact of bias in human and machine learning algorithm interaction. PLoS One. 2020 Aug 13;15(8):e0235502. doi: 10.1371/journal.pone.0235502. https://dx.plos.org/10.1371/journal.pone.0235502

- 55.Akter S, McCarthy G, Sajib S, Michael K, Dwivedi YK, D’Ambra J, Shen K. Algorithmic bias in data-driven innovation in the age of AI. Int J Inf Manag. 2021 Oct;60:102387. doi: 10.1016/j.ijinfomgt.2021.102387.

- 56.Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med. 2019 Jan;25(1):44–56. doi: 10.1038/s41591-018-0300-7.

- 57.Madhusoodanan J. Is a racially-biased algorithm delaying health care for one million Black people? Nature. 2020 Dec;588(7839):546–547. doi: 10.1038/d41586-020-03419-6.

- 58.van Dis EAM, Bollen J, Zuidema W, van Rooij R, Bockting CL. ChatGPT: five priorities for research. Nature. 2023 Feb 03;614(7947):224–226. doi: 10.1038/d41586-023-00288-7.

- 59.Liu X, Glocker B, McCradden MM, Ghassemi M, Denniston AK, Oakden-Rayner L. The medical algorithmic audit. Lancet Digit Health. 2022 May;4(5):e384–e397. doi: 10.1016/S2589-7500(22)00003-6. https://linkinghub.elsevier.com/retrieve/pii/S2589-7500(22)00003-6

- 60.Skitka LJ, Mosier KL, Burdick M. Does automation bias decision-making? Int J Hum Comput. 1999 Nov;51(5):991–1006. doi: 10.1006/ijhc.1999.0252.

- 61.Lyell D, Coiera E. Automation bias and verification complexity: a systematic review. J Am Med Inform Assoc. 2017 Mar 01;24(2):423–431. doi: 10.1093/jamia/ocw105.

- 62.Busuioc M. Accountable artificial intelligence: holding algorithms to account. Public Adm Rev. 2021;81(5):825–836. doi: 10.1111/puar.13293. https://europepmc.org/abstract/MED/34690372

- 63.Skitka LJ, Mosier K, Burdick MD. Accountability and automation bias. Int J Hum Comput. 2000 Apr;52(4):701–717. doi: 10.1006/ijhc.1999.0349.

- 64.Balasubramaniam N, Kauppinen M, Rannisto A, Hiekkanen K, Kujala S. Transparency and explainability of AI systems: from ethical guidelines to requirements. Information and Software Technology. 2023 Jul;159:107197. doi: 10.1016/j.infsof.2023.107197.

- 65.Wachter S, Mittelstadt B, Floridi L. Transparent, explainable, and accountable AI for robotics. Sci Robot. 2017 May 31;2(6):eaan6080. doi: 10.1126/scirobotics.aan6080.

- 66.Williams R, Cloete R, Cobbe J, Cottrill C, Edwards P, Markovic M, Naja I, Ryan F, Singh J, Pang W. From transparency to accountability of intelligent systems: moving beyond aspirations. Data & Policy. Cambridge, United Kingdom: Cambridge University Press; 2022 Feb 18.

- 67.Raghavan M, Barocas S, Kleinberg J, Levy K. Mitigating bias in algorithmic hiring: evaluating claims and practices. Conference on Fairness, Accountability, and Transparency (FAT* '20); Jan 27-30; Barcelona, Spain. 2020. pp. 469–481.

- 68.Goldsack JC, Coravos A, Bakker JP, Bent B, Dowling AV, Fitzer-Attas C, Godfrey A, Godino JG, Gujar N, Izmailova E, Manta C, Peterson B, Vandendriessche B, Wood WA, Wang KW, Dunn J. Verification, analytical validation, and clinical validation (V3): the foundation of determining fit-for-purpose for Biometric Monitoring Technologies (BioMeTs). NPJ Digit Med. 2020;3:55. doi: 10.1038/s41746-020-0260-4. http://europepmc.org/abstract/MED/32337371

- 69.Choi Y, Yu W, Nagarajan MB, Teng P, Goldin JG, Raman SS, Enzmann DR, Kim GHJ, Brown MS. Translating AI to clinical practice: overcoming data shift with explainability. Radiographics. 2023 May;43(5):e220105. doi: 10.1148/rg.220105.

- 70.Tan J, Liu W, Xie M, Song H, Liu A, Zhao M, Zhang G. A low redundancy data collection scheme to maximize lifetime using matrix completion technique. J Wireless Com Network. 2019 Jan 8;2019(1). doi: 10.1186/s13638-018-1313-0.

- 71.Huse SM, Young VB, Morrison HG, Antonopoulos DA, Kwon J, Dalal S, Arrieta R, Hubert NA, Shen L, Vineis JH, Koval JC, Sogin ML, Chang EB, Raffals LE. Comparison of brush and biopsy sampling methods of the ileal pouch for assessment of mucosa-associated microbiota of human subjects. Microbiome. 2014 Feb 14;2(1):5. doi: 10.1186/2049-2618-2-5. https://microbiomejournal.biomedcentral.com/articles/10.1186/2049-2618-2-5

- 72.Cascella M, Montomoli J, Bellini V, Bignami E. Evaluating the feasibility of ChatGPT in healthcare: an analysis of multiple clinical and research scenarios. J Med Syst. 2023 Mar 04;47(1):33. doi: 10.1007/s10916-023-01925-4. https://europepmc.org/abstract/MED/36869927

- 73.[No authors listed]. Will ChatGPT transform healthcare? Nat Med. 2023 Mar 14;29(3):505–506. doi: 10.1038/s41591-023-02289-5.

- 74.Johnson D, Goodman R, Patrinely J, Stone C, Zimmerman E, Donald R, Chang S, Berkowitz S, Finn A, Jahangir E, Scoville E, Reese T, Friedman D, Bastarache J, van der Heijden Y, Wright J, Carter N, Alexander M, Choe J, Chastain C, Zic J, Horst S, Turker I, Agarwal R, Osmundson E, Idrees K, Kiernan C, Padmanabhan C, Bailey C, Schlegel C, Chambless L, Gibson M, Osterman T, Wheless L. Assessing the accuracy and reliability of AI-generated medical responses: an evaluation of the Chat-GPT model. Res Sq. 2023 Feb 28. doi: 10.21203/rs.3.rs-2566942/v1. https://europepmc.org/abstract/MED/36909565

- 75.Stokel-Walker C, Van Noorden R. What ChatGPT and generative AI mean for science. Nature. 2023 Feb 06;614(7947):214–216. doi: 10.1038/d41586-023-00340-6.

- 76.Wang G, Badal A, Jia X, Maltz JS, Mueller K, Myers KJ, Niu C, Vannier M, Yan P, Yu Z, Zeng R. Development of metaverse for intelligent healthcare. Nat Mach Intell. 2022 Nov;4(11):922–929. doi: 10.1038/s42256-022-00549-6.

- 77.Korngiebel DM, Mooney SD. Considering the possibilities and pitfalls of Generative Pre-trained Transformer 3 (GPT-3) in healthcare delivery. NPJ Digit Med. 2021 Jun 03;4(1):93. doi: 10.1038/s41746-021-00464-x.

- 78.Boßelmann CM, Leu C, Lal D. Are AI language models such as ChatGPT ready to improve the care of individuals with epilepsy? Epilepsia. 2023 May;64(5):1195–1199. doi: 10.1111/epi.17570.

- 79.Grünebaum A, Chervenak J, Pollet SL, Katz A, Chervenak FA. The exciting potential for ChatGPT in obstetrics and gynecology. Am J Obstet Gynecol. 2023 Jun;228(6):696–705. doi: 10.1016/j.ajog.2023.03.009.

- 80.Eloundou T, Manning S, Mishkin P, Rock D. GPTs are GPTs: an early look at the labor market impact potential of large language models. arXiv. doi: 10.48550/arXiv.2303.10130. Preprint posted online Mar 17, 2023.

- 81.Sardana D, Fagan TR, Wright JT. ChatGPT: A disruptive innovation or disrupting innovation in academia? J Am Dent Assoc. 2023 May;154(5):361–364. doi: 10.1016/j.adaj.2023.02.008.

- 82.DiGiorgio AM, Ehrenfeld JM. J Med Syst. 2023 Mar 04;47(1):32. doi: 10.1007/s10916-023-01926-3.

- 83.Castelvecchi D. Are ChatGPT and AlphaCode going to replace programmers? Nature. 2022 Dec 08. doi: 10.1038/d41586-022-04383-z.

Supplementary Materials

Detailed prompts, inputs, and outputs from interaction with ChatGPT.