Abstract

Many physics-based and machine-learned scoring functions (SFs) used to predict protein-ligand binding free energies have been trained on the PDBBind dataset. However, it remains controversial whether new SFs are actually improving, since the general, refined, and core datasets of PDBBind are cross-contaminated with proteins and ligands of high similarity, and hence the SFs may not perform comparably well in binding prediction for new protein-ligand complexes. In this work we have carefully prepared a cleaned PDBBind dataset of non-covalent binders that is split into training, validation, and test datasets to control for data leakage. The resulting leak-proof (LP)-PDBBind data is used to retrain four popular SFs: AutoDock Vina, Random Forest (RF)-Score, InteractionGraphNet (IGN), and DeepDTA, to better test their capabilities when applied to new protein-ligand complexes. In particular we have formulated a new independent dataset, BDB2020+, by matching high quality binding free energies from BindingDB with co-crystalized ligand-protein complexes from the PDB that have been deposited since 2020. Based on all the benchmark results, the retrained models using LP-PDBBind that rely on 3D information perform consistently among the best, with IGN especially being recommended for scoring and ranking applications for new protein-ligand systems.

Introduction

Scoring functions (SFs) are crucial in computer aided drug discovery, utilized for selecting the most probable binding pose geometry and/or free energy of binding between a ligand and a protein.1,2 There are a plethora of SFs being developed and widely used by computational chemists, but they can be broadly categorized into either physical scoring functions (PSFs),3–13 or machine learning scoring functions (MLSFs).14–20 PSFs are designed to model intermolecular interactions or missing free energy components, and can benefit both from better design of the functional form, many-body physics, as well as the availability of training data for parameterization of their semi-empirical or empirical functions.3–9 Knowledge based SFs by contrast are less reliant on physical interaction modeling and are far more dependent on experimental information,11,21,22 culminating in the current development and use of sophisticated machine learning models14–20 whose much larger parameter spaces are optimized on large, high quality datasets.

However, it is still an open question as to whether the new MLSFs have truly surpassed traditional PSFs in actual predictive performance, or how well any given PSF or MLSF performs on real-world protein-ligand binding applications.23–26 It is already known that any SF model can be overtrained so that it has exceptional performance on the training dataset, a problem which can be mitigated by several known regularization strategies to provide better generalization to an independent test set.27,28 Equally important, however, is to control for data leakage into the test set itself. Uncontrolled leakage can lead to false confidence in predictive capacity when the training dataset has high similarity to the test set, and manifests as poor generalization when the sequence or structure similarity is low.24

The majority of protein-ligand interaction SF predictors, whether physical or machine-learned, have been trained on the PDBBind dataset.29 The Comparative Assessment of Scoring Functions (CASF) benchmark, which assesses the scoring power, ranking power, docking power and screening power of various SFs, was also conducted on the PDBBind core set.30 More specifically, PDBBind is a curated set of ~20K protein-ligand complex structures and their experimentally measured binding affinities, in which the “general” and “refined” data subsets are used for training, and a separate “core” set is used for testing. However, not all protein-ligand complex structures in the general and refined datasets are as high-quality as those in the core set, which contains protein-ligand complexes with the best structural resolution and most reliable binding affinity data.29 Additionally, the average size of the ligands in the core set is smaller than that in the rest of the PDBBind dataset, which may indicate that the core set contains easier prediction targets.31

To make fairer comparisons, Stärk et al. suggested splitting PDBBind according to a time cutoff in the creation of the EquiBind dataset.31 This idea mimics a “blind test” setting, in which the model can only be trained with data released in 2019 or earlier, and predictions are made on data released after 2019, so that the test data will never be covered by training data. This time-based data splitting marks a significant step forward in reporting more realistic evaluation performance of the tested SFs. However, as we show below, the majority of the core data records in the PDBBind dataset have proteins and/or ligands identical to those found in the general and/or refined sets. As such, most empirical PSF and MLSF models have been trained with significant data leakage, and thus their reported performance on the core set is a true measure only for new protein-ligand complexes with high similarity to the training data, and will inevitably have limited transferability to low-similarity protein-ligand scenarios. Hence a purely time-based splitting will not solve the problem of data leakage. Specifically, since new drugs are being developed that can interact with popular protein targets that have been established for years,32,33 and existing drug molecules may also be tested on new proteins,34 the latest experiments frequently contain proteins or ligands that are almost identical to those in earlier assays. Under this circumstance, a time-based splitting of the dataset is still not an ideal solution.

In this paper, we aim to reorganize PDBBind data into a new set of train, validation and test datasets, which we call Leak Proof PDBBind (LP-PDBBind), by incorporating a carefully designed algorithm to control the data similarity between the train, validation and test datasets. Furthermore, we also carefully clean the PDBBind data to eliminate covalently bound ligand-protein complexes (thus focusing on non-covalent binding), to eliminate ligands containing atomic elements that occur with very low frequency, to remove obvious steric clashes, and to maintain consistency in reported binding free energies and their units. We then use the cleaned LP-PDBBind data for the development of new versions of several popular SFs, including AutoDock Vina,3 RF-Score,14 IGN,15 and DeepDTA.18 Furthermore, in order to provide a truly independent benchmark for the new SFs that result from retraining using the cleaned LP-PDBBind, we created a new evaluation dataset, BDB2020+. BDB2020+ is compiled based on data records in the BindingDB dataset35 that were deposited after year 2020, and further filtered according to the same similarity control criteria used for the development of the new LP-PDBBind. As a further test of ranking power, we additionally prepared two sets of experimental binding affinity data for different ligand complexes of the SARS-CoV-2 main protease (Mpro)36 and epidermal growth factor receptor (EGFR),37 neither of which was included in the PDBBind dataset (although Mpro has similar SARS-CoV-1 protease proteins in PDBBind).

The results show that 3-dimensional structure-based SFs like AutoDock Vina, IGN and RF-Score are able to improve significantly when the model is trained using the new training set provided by LP-PDBBind, while purely data-driven approaches that only use 1-dimensional string representations for the proteins and ligands, such as DeepDTA, perform worse, indicating their reduced capability to generalize. Given that the same model architecture or functional form can still achieve enhanced performance with our new splitting of PDBBind, which contains even less training data than what these models originally used, we believe the cleaned and resplit LP-PDBBind data provides a better way of utilizing the existing PDBBind dataset, and provides a more realistic and meaningful benchmark to help develop higher quality and more generalizable SFs for real-world applications. All data and analysis scripts are available in our lab’s GitHub repository (https://github.com/THGLab/LP-PDBBind/tree/master).

Method

Description for processing the PDBBind dataset

The PDBBind v2020 dataset includes 14108 protein-ligand complexes in the general set, 5050 complexes in the refined set and 285 complexes in the core set.30 All PDB files were downloaded from RCSB to recover the original headers, and the categories of the proteins were defined according to whether the corresponding keywords occurred in the header. The categories we considered include transport proteins, hydrolases, transferases, transcription proteins, lyases, oxidoreductases, isomerases, ligases, membrane proteins, viral proteins, chaperone proteins, and metalloproteins. A protein file with none of these keywords in the header was assigned to a generic “other” category. Protein sequences were extracted directly from the PDBBind dataset files using the SEQRES records, and sequences for proteins with multiple chains were concatenated using a colon (:) symbol. Additionally, the SMILES strings for each of the ligands were extracted using the rdkit package38 from either the .mol2 or .sdf file provided in the original PDBBind dataset. The .mol2 files were used with higher priority due to their generally better description of bond orders in the ligands compared with the .sdf files in the PDBBind dataset. The latter were used instead when rdkit failed to read in .mol2 files.

Identification of Covalent and Non-covalent Binders in PDBBind

It is important to treat covalent and non-covalent binders separately in PDBBind, because most existing algorithms that predict protein-ligand binding primarily focus on non-covalent interactions. As far as we are aware, there has been no systematic study of whether the binders in PDBBind are covalent or non-covalent. Relying on the CovBinderInPDB39 repository, we have identified covalent binders in the PDBBind dataset. The ligand names were extracted from the PDB files by computing the minimum distance between any atom of the ligands in the PDBBind database and the residues in the PDB files downloaded from the RCSB database. If the minimum distance was less than 1Å, the matched residue name was compared with the record in CovBinderInPDB to identify whether the ligand is a covalent binder. If the minimum distance was more than 1Å or the residue name did not match the record in CovBinderInPDB, the structures were manually checked to identify whether the ligands are covalent binders or not. Ultimately 893 covalent binders and 18550 non-covalent binders were identified in the PDBBind dataset. The identifiers of the 893 covalent binders are reported in the Supplementary Information.

Cleaning the PDBBind dataset

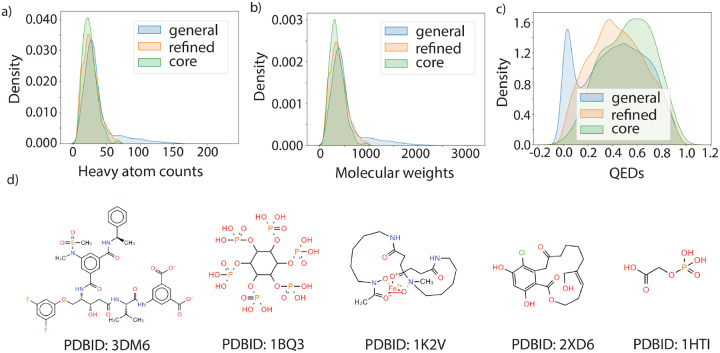

The majority of protein-ligand affinity prediction models are designed for drug-like ligands. However, a careful investigation of the ligands in the PDBBind dataset revealed that not all the ligands are drug-like. Figure 1(a–c) shows the distributions of the number of heavy atoms, molecular weights and quantitative estimate of drug-likeness (QED)40 for ligands in the general, refined and core sets of the PDBBind dataset. Some randomly sampled ligand structures are visualized in Figure 1(d). Specifically, some structures contain unusually large macrocycles, are peptide-like, or contain long aliphatic chains. Although these protein-ligand complexes may still provide information about structure-affinity relationships, they may also contaminate the dataset and introduce non-transferable bias into the machine learning models trained on them. A small portion of the complex structures in the PDBBind dataset also contained steric clashes, and there was one structure for which the ligand was far away from the protein (PDBID: 2R1W); these should be excluded from the dataset due to the low quality of the complex structures. The list of PDBIDs containing steric clashes (minimum heavy atom distance <1.75Å) is also provided in Supplementary Information.

Figure 1: Property distributions and example ligands in the PDBBind dataset.

(a) Distribution of number of heavy atoms, (b) molecular weights and (c) QED values for ligands in general, refined and core set of PDBBind dataset. (d) Example of ligand structures in the PDBBind dataset, with the corresponding PDBIDs

Additionally, some proteins and ligands in the dataset contain uncommon elements. The proportion of occurrence for each element that occurred in the PDBBind dataset is summarized in Supplementary Table 1 for proteins and Supplementary Table 2 for ligands. It is difficult for machine learning models to learn protein-ligand interactions when uncommon elements are present in the PDBBind dataset. Therefore, we have defined a “cleaner” version of PDBBind which only contains data with ligand QED values larger than 0.2, protein and ligand elements that each occur in at least 1‰ of the data (more than 19 occurrences in the dataset), and a minimum heavy-atom distance between protein and ligand that falls between 1.75Å and 4Å. We call this subset of the data Clean Level 1 (CL1).
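The geometric and drug-likeness parts of the CL1 criteria can be sketched as follows. This is a minimal illustration rather than the authors' script: the function names and the plain coordinate lists are hypothetical, the QED value is assumed to be precomputed elsewhere, the distance bounds are assumed to be inclusive, and the element-frequency criterion is omitted.

```python
import math

def min_heavy_atom_distance(protein_xyz, ligand_xyz):
    """Smallest distance (in Å) between any protein and any ligand heavy atom."""
    return min(math.dist(p, l) for p in protein_xyz for l in ligand_xyz)

def passes_cl1_geometry_and_qed(qed, protein_xyz, ligand_xyz,
                                qed_min=0.2, d_min=1.75, d_max=4.0):
    """Check the QED and minimum-distance criteria described for CL1.

    Assumptions: bounds are inclusive; element-frequency filtering is not shown.
    """
    d = min_heavy_atom_distance(protein_xyz, ligand_xyz)
    return qed > qed_min and d_min <= d <= d_max

# Toy coordinates (hypothetical): closest protein atom is 3 Å from the ligand atom.
protein = [(3.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
ligand = [(0.0, 0.0, 0.0)]
ok = passes_cl1_geometry_and_qed(0.5, protein, ligand)
clash = passes_cl1_geometry_and_qed(0.5, [(1.0, 0.0, 0.0)], ligand)
```

A complex with a minimum distance below 1.75Å is rejected as a steric clash, and one above 4Å is rejected because the ligand is not in contact with the protein.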

The binding affinity in terms of ΔG is directly related to the dissociation constant Kd or the inhibition constant Ki through the formula ΔG = −RT ln(K).41 However, a large portion of the data in PDBBind is reported in terms of IC50, which cannot be easily translated to ΔG due to its dependence on other experimental conditions and inhibition mechanisms.42 The IC50 values for the same protein-ligand complex can vary by up to one order of magnitude across different assays. In addition, some data in PDBBind were not reported as exact values. Therefore, a second clean level (CL2) was defined on top of CL1 that additionally requires the target values to be converted from records with “Kd = xxx” or “Ki = xxx”, to ensure the reliability of experimental binding free energy data. Finally, considering the original splitting of PDBBind into general/refined/core was based on structure quality,29 we also defined a third and highest quality level of data (CL3) that only retained data from the refined and core sets of PDBBind while still complying with all other quality-control metrics that we have defined. We did not use CL3 in this study, but identifiers of these protein-ligand complexes are available at https://github.com/THGLab/LP-PDBBind/tree/master along with the CL1 and CL2 datasets.
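As a worked illustration of this conversion, the sketch below reads K in the formula as the association constant 1/Kd, so that ΔG = −RT ln(1/Kd) = RT ln(Kd) and tighter binders have more negative ΔG. The temperature of 298.15 K and the function name are assumptions for this example and are not taken from the paper.

```python
import math

R_KCAL = 1.987204e-3  # gas constant in kcal/(mol*K)

def delta_g_from_kd(kd_molar, temperature=298.15):
    """Binding free energy in kcal/mol from a dissociation constant in mol/L.

    Assumes a 1 M standard state and T = 298.15 K unless specified otherwise.
    """
    return R_KCAL * temperature * math.log(kd_molar)

dg_nanomolar = delta_g_from_kd(1e-9)   # roughly -12.3 kcal/mol
dg_micromolar = delta_g_from_kd(1e-6)  # weaker binder, less negative
```

Each factor of ten in Kd changes ΔG by RT ln(10), which is about 1.4 kcal/mol at room temperature. This is why an order-of-magnitude spread in IC50 values is significant on the scale of the errors reported for these models.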

Similarity calculation of protein and ligands in the PDBBind dataset

Similarities for each ligand to any other ligand in the whole PDBBind dataset were calculated based on the Morgan fingerprints of the ligands using 1024 bits,43 and the Dice similarity is reported for the ligand pairs according to the following:44

$$
S_{\mathrm{Dice}}(A, B) = \frac{2\,|A \cap B|}{|A| + |B|} \tag{1}
$$

where |A∩B| counts the number of bits set to ON in the fingerprints of both ligands A and B, and |X| counts the number of bits set to ON in the fingerprint of a single ligand X. A radius of 4 was first used when calculating Morgan fingerprints of the ligands; if the similarity between two ligands was calculated to be 1, it was recalculated using Morgan fingerprints with a radius of 10 to allow more careful validation of ligand identity. If the similarity was still 1 with the extended radius, the canonical SMILES strings of the two molecules were compared, and any discrepancy in the canonical SMILES set the similarity to 0.99. These steps were designed to ensure that ligands with a similarity of 1 are strictly identical.
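The Dice similarity and the identity check described above can be sketched in plain Python, representing each fingerprint as the set of its ON bit indices. The function names are hypothetical, the fingerprints and canonical SMILES are assumed to be generated elsewhere (for example with rdkit), and returning 0 for two empty fingerprints is a convention chosen only for this example.

```python
def dice_similarity(bits_a, bits_b):
    """Dice similarity between two fingerprints given as collections of ON bit indices."""
    a, b = set(bits_a), set(bits_b)
    if not a and not b:
        return 0.0  # convention for this sketch only
    return 2 * len(a & b) / (len(a) + len(b))

def checked_similarity(sim_radius4, sim_radius10, canonical_a, canonical_b):
    """Apply the staged identity check: radius 4, then radius 10, then canonical SMILES."""
    if sim_radius4 < 1.0:
        return sim_radius4
    if sim_radius10 < 1.0:
        return sim_radius10
    # Fingerprints agree at both radii; only identical SMILES keep a similarity of 1.
    return 1.0 if canonical_a == canonical_b else 0.99

example = dice_similarity({1, 2, 3, 4}, {3, 4, 5})  # 2*2 / (4+3)
```

In the toy example two bits are shared, so the similarity is 4/7.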

Similarities for proteins were calculated based on the aligned sequences of the proteins. Considering that it is unlikely that two proteins belonging to different functional categories are similar, the sequence similarities were calculated only for proteins in the same category (i.e. transport proteins, hydrolases, etc.), and any two proteins that belong to different categories were defined to have a similarity of 0. Within each category, every pair of protein sequences was aligned using the Needleman-Wunsch alignment algorithm,45 and the similarity was calculated as the number of aligned residues divided by the total length of the aligned sequence.
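A minimal sketch of this sequence similarity is given below. It is not the authors' implementation: the scoring scheme (match +1, mismatch −1, gap −1) is an assumption, and "aligned residues" is interpreted here as alignment columns in which both sequences carry the same residue.

```python
def needleman_wunsch(a, b, match=1, mismatch=-1, gap=-1):
    """Global alignment of two sequences; returns the two gapped strings."""
    n, m = len(a), len(b)
    score = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        score[i][0] = i * gap
    for j in range(1, m + 1):
        score[0][j] = j * gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            sub = match if a[i - 1] == b[j - 1] else mismatch
            score[i][j] = max(score[i - 1][j - 1] + sub,
                              score[i - 1][j] + gap,
                              score[i][j - 1] + gap)
    # Trace back from the bottom-right corner to recover one optimal alignment.
    al_a, al_b = [], []
    i, j = n, m
    while i > 0 or j > 0:
        sub = match if i > 0 and j > 0 and a[i - 1] == b[j - 1] else mismatch
        if i > 0 and j > 0 and score[i][j] == score[i - 1][j - 1] + sub:
            al_a.append(a[i - 1]); al_b.append(b[j - 1]); i -= 1; j -= 1
        elif i > 0 and score[i][j] == score[i - 1][j] + gap:
            al_a.append(a[i - 1]); al_b.append("-"); i -= 1
        else:
            al_a.append("-"); al_b.append(b[j - 1]); j -= 1
    return "".join(reversed(al_a)), "".join(reversed(al_b))

def sequence_similarity(a, b):
    """Identical aligned positions divided by the alignment length."""
    al_a, al_b = needleman_wunsch(a, b)
    identical = sum(x == y and x != "-" for x, y in zip(al_a, al_b))
    return identical / len(al_a)

aligned = needleman_wunsch("ACDEFG", "ACDFG")
similarity = sequence_similarity("ACDEFG", "ACDFG")  # 5 identical columns out of 6
```

In practice a substitution matrix and affine gap penalties would likely be used for protein sequences. The uniform scores here only keep the example short.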

New splitting of PDBBind dataset

We have formulated a new splitting of the PDBBind dataset to minimize the overlap between training, validation and test data as much as possible in order to eliminate the risk of data leakage. Data splitting was first done inside each protein category, and an iterative process was employed to separate the dataset step by step using the following algorithm.

In the first iteration, 5 complexes were randomly selected as seed data in the test set, and all data in the same protein category that have protein sequence similarity greater than 0.9 or ligand similarity greater than 0.99 to the seed data were added to the test set as well. Protein-ligand complex data that have protein sequence similarity greater than 0.5 or ligand similarity greater than 0.99 were added to the validation set. Any data newly added to the test set in this iteration become seed data in the next iteration, and the iteration continues until no new data are added to the test set. Next, a similar process was applied to the validation data, adding all remaining data that have protein sequence similarity greater than 0.5 or ligand similarity greater than 0.99 into the validation set. The remaining data are then defined as the training set.
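The iterative procedure can be sketched as below for a single protein category. This is one reading of the description, not the authors' code: seeds are passed in explicitly instead of being drawn at random, the similarity matrices are assumed to be precomputed, and all function and variable names are hypothetical.

```python
def grow(members, pool, prot_sim, lig_sim, prot_cut, lig_cut):
    """Move items from pool into members while any is too similar to a newly added member."""
    frontier = set(members)
    while frontier:
        hits = {i for i in pool
                if any(prot_sim[i][j] > prot_cut or lig_sim[i][j] > lig_cut
                       for j in frontier)}
        members |= hits
        pool -= hits
        frontier = hits  # newly added items act as seeds in the next iteration
    return members

def leak_proof_split(n, prot_sim, lig_sim, seeds):
    """Return (train, validation, test) index lists for n complexes."""
    pool = set(range(n)) - set(seeds)
    test = grow(set(seeds), pool, prot_sim, lig_sim, 0.9, 0.99)
    # Remaining items moderately similar to the test set start the validation set.
    val_seeds = {i for i in pool
                 if any(prot_sim[i][j] > 0.5 or lig_sim[i][j] > 0.99 for j in test)}
    pool -= val_seeds
    val = grow(val_seeds, pool, prot_sim, lig_sim, 0.5, 0.99)
    return sorted(pool), sorted(val), sorted(test)

# Toy similarity matrices (hypothetical values) for five complexes.
n = 5
prot = [[1.0 if i == j else 0.1 for j in range(n)] for i in range(n)]
lig = [[1.0 if i == j else 0.2 for j in range(n)] for i in range(n)]
for i, j, s in [(0, 1, 0.95), (1, 2, 0.6), (2, 3, 0.55)]:
    prot[i][j] = prot[j][i] = s
train, val, test = leak_proof_split(n, prot, lig, seeds=[0])
```

In the toy case complex 1 joins the test set through its 0.95 protein similarity to the seed, complexes 2 and 3 are chained into the validation set through the 0.5 threshold, and only complex 4 remains for training.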

The number of training, validation and test data under LP-PDBBind in each category is reported in Table 1. After data splitting by category, the protein-ligand complexes for training, validation, and testing were combined to define the splitting for the whole dataset. However, after combination the ligand similarity might still exceed 0.99 for data from different categories. Therefore, any data in the combined training set that have ligand similarity greater than 0.99 to any data in the validation or test set were discarded altogether. After this cleaning step, the resulting training, validation, and test sets contained 11513, 2422 and 4860 data points, respectively. The new splitting procedure ensures that the data in the training set have a maximum protein sequence similarity of 0.5 and a maximum ligand similarity of 0.99 to any data in the validation or test datasets, and that the maximum similarity between any validation and test data is 0.9 for protein sequence similarity and 0.99 for ligand similarity.

Table 1:

Number of train, validation and test data in each protein category before merging for the new split of PDBBind

| Protein type | Train | Validation | Test |
|---|---|---|---|
| hydrolase | 4038 | 318 | 1377 |
| transferase | 2226 | 1228 | 1837 |
| other | 3050 | 405 | 134 |
| transcription | 642 | 52 | 298 |
| lyase | 331 | 71 | 465 |
| transport | 503 | 35 | 83 |
| oxidoreductase | 342 | 52 | 182 |
| ligase | 342 | 38 | 90 |
| isomerase | 199 | 70 | 64 |
| chaperone | 50 | 66 | 184 |
| membrane | 157 | 56 | 71 |
| viral | 196 | 18 | 53 |
| metal containing | 85 | 13 | 22 |

Compilation of the BDB2020+ dataset

Because many of the recent SFs have been trained on PDBBind, utilizing a subset of PDBBind as the test dataset risks data leakage, and we have therefore created a new benchmark dataset that is independent of PDBBind. To fulfill the need for a fair benchmark, we looked for the latest records deposited in BindingDB,35,46 one of the largest public binding affinity repositories, which provides additional experimental conditions including assay information, pH and temperature. However, BindingDB does not guarantee that each record has an associated 3D complex structure.

We have developed a workflow to match complex structures in RCSB PDB with records in BindingDB, and the flowchart is illustrated in Supplementary Figure S1. Starting with the original BindingDB dataset, the records with potential matched structures in the RCSB database were identified by searching the InChI keys that occur in BindingDB to find all PDB records that contain the same ligand, and then further filtering based on the PDBID of the target chain that was also recorded in the BindingDB dataset. Release dates of the PDB structures were extracted from the RCSB PDB records and only records after year 2020 were selected for further processing. Additionally, we employed the same similarity criteria that were used in developing LP-PDBBind, and removed all data that have sequence similarity greater than 50% or ligand similarity greater than 99%.

The filtered PDB files were downloaded from the RCSB database and small molecule ligands were extracted from the PDB files. Since a complex may contain multiple ligands, of which only one matches the record in BindingDB, we selected the ligand in the PDB that has the best structural match with the SMILES provided in BindingDB, while ensuring that the number of heavy atoms is exactly the same. We then used rdkit to reassign bond orders to the extracted ligands using the BindingDB SMILES as reference. This step was necessary because bond orders are usually not present in a PDB file and are typically inferred from local atomic geometries, which sometimes results in unreasonable bonding structures; the bond order reassignment step thus ensures that the processed structures are chemically reasonable. Additionally, any chain that is within 5Å of the ligand was compared with the interacting chain sequence in the BindingDB record. A reliable match was only made when the consecutive aligned residues were exactly the same. The reason for keeping a strict alignment criterion is that if the protein contains mutations, the binding affinity might change significantly, in which case the BindingDB record will not represent the true binding affinity for the complex structure in the PDB and is not usable for the benchmark. After discarding all unmatched data, we obtained 130 data records, of which 115 contain accurate binding affinity data and define the BDB2020+ test dataset.

Model retraining procedures

All models were retrained using the CL1 version of the LP-PDBBind training dataset to achieve a balance between data cleanliness and the amount of training data available. For model validation and testing, the non-covalent LP-PDBBind validation and test data at CL2 were used to ensure higher data quality; the results can therefore be considered an optimistic estimate of the performance of these models on high quality data.

AutoDock Vina Retraining

For each molecule in the LP-PDBBind training set, the 6 individual terms (gauss1, gauss2, repulsion, hydrophobic, hydrogen, rot) of AutoDock Vina3 were calculated using the vina binary by setting the weight of one term to 1 and the weights of all other terms to 0. The weights of these six terms were optimized by minimizing the mean absolute error between the weighted sum of the six terms and the binding free energy using the Nelder-Mead optimization algorithm.47 The final retrained AutoDock Vina parameters are provided in Supplementary Table 4 and all further evaluations of retrained models are done with these modified weights.
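The objective minimized in this retraining can be sketched as follows. The per-term values and weights below are toy numbers, not Vina output, and the function names are hypothetical. The optimizer itself is omitted; a Nelder-Mead implementation such as `scipy.optimize.minimize` with `method="Nelder-Mead"` could be applied to this function.

```python
TERM_NAMES = ("gauss1", "gauss2", "repulsion", "hydrophobic", "hydrogen", "rot")

def weighted_score(terms, weights):
    """Weighted sum of the six per-complex term values."""
    return sum(w * t for w, t in zip(weights, terms))

def mean_absolute_error(weights, term_table, delta_g):
    """MAE between weighted sums and experimental binding free energies."""
    errors = [abs(weighted_score(terms, weights) - dg)
              for terms, dg in zip(term_table, delta_g)]
    return sum(errors) / len(errors)

# Toy data (hypothetical): two complexes, six term values each.
term_table = [[1.0, 0.0, 0.0, 0.0, 0.0, 0.0],
              [0.0, 2.0, 0.0, 0.0, 0.0, 0.0]]
delta_g = [-1.0, -3.0]
weights = [-1.0] * len(TERM_NAMES)
mae = mean_absolute_error(weights, term_table, delta_g)  # predictions are -1 and -2
```

Because only six weights are fitted, a derivative-free simplex method is practical here, and the absolute-error objective is not differentiable everywhere in any case.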

RF-Score Retraining

RF-Score (RF) is a Random Forest model that predicts binding affinities from the number of occurrences of a particular protein-ligand atom type pair interacting within a certain distance range.14 Here we followed the RF-Score-v1 approach, in which nine common elemental atom types (C, N, O, F, P, S, Cl, Br, I) for both the protein and the ligand were considered and neighboring contacts between a protein-ligand atom pair were defined within 12 Å. For fairness of comparison, we trained an RF model on the PDBbind2007 refined set, denoted as the original model, and on the LP-PDBBind training data, denoted as the retrained model, using the same script.
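The contact-count featurization can be illustrated with the sketch below. It is a simplified stand-in for the RF-Score-v1 descriptors, not the original script: atoms are given as hypothetical `(element, (x, y, z))` tuples, all 9×9 element pairs are enumerated, and the ordering of the feature vector is an arbitrary choice made for this example.

```python
import math
from itertools import product

ELEMENTS = ("C", "N", "O", "F", "P", "S", "Cl", "Br", "I")
PAIRS = list(product(ELEMENTS, ELEMENTS))  # (protein element, ligand element)

def contact_count_features(protein_atoms, ligand_atoms, cutoff=12.0):
    """Count protein-ligand atom pairs of each element-type pair within the cutoff (Å)."""
    counts = dict.fromkeys(PAIRS, 0)
    for p_el, p_xyz in protein_atoms:
        for l_el, l_xyz in ligand_atoms:
            if (p_el, l_el) in counts and math.dist(p_xyz, l_xyz) < cutoff:
                counts[(p_el, l_el)] += 1
    return [counts[pair] for pair in PAIRS]

# Toy complex (hypothetical coordinates).
protein_atoms = [("C", (0.0, 0.0, 0.0)), ("N", (20.0, 0.0, 0.0))]
ligand_atoms = [("O", (5.0, 0.0, 0.0)), ("C", (1.0, 0.0, 0.0))]
features = contact_count_features(protein_atoms, ligand_atoms)
```

In the toy complex only the protein carbon is within 12 Å of the ligand atoms, so the C-C and C-O entries are 1 and all others are 0. Such a vector would then be passed to a random forest regressor.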

IGN Retraining

InteractionGraphNet (IGN) utilizes two independent graph convolution modules to sequentially learn the intramolecular and intermolecular interactions from the 3D structures of protein-ligand complexes to predict binding affinities.15 The code for retraining the IGN model (https://github.com/THGLab/LP-PDBBind/tree/master/model_retraining/IGN) was adapted from the original training scripts using the same feature size and layer numbers as the original published model with only the training data modified. Due to molecule generation errors in RDKit,38 which is required for featurizing the 3D structures into graph representations, only 7277 complexes from the LP-PDBBind training set were used.

DeepDTA Retraining

DeepDTA is a Y-shaped 1D convolutional neural network that takes protein sequences and ligand isomeric SMILES strings as input and outputs the predicted binding affinities.18 Since the method was not originally trained on the PDBBind dataset, we retrained the model with the original PDBBind general and refined sets using a PyTorch implementation of the original code: https://github.com/THGLab/LP-PDBBind/tree/master/model_retraining/deepdta. More specifically, we did a 90–10 train-validation split on the data for training and then tested its performance on the core set. Different protein and ligand kernel sizes were used as suggested by the original work, and the best-performing parameters were chosen to serve as the original model in our performance comparison tables. The retrained model with LP-PDBBind was also generated following the same scheme.

Since the 1D convolution requires a fixed size for the protein sequences and ligands, proteins with sequence lengths longer than 2000 and ligands with isomeric SMILES lengths longer than 200 were discarded during all training processes, resulting in a loss of around 100 protein-ligand pairs. Also, since the ligand encoding was based on the training set only, all ligands in the test set and real-world examples whose isomeric SMILES strings contain unseen tokens were discarded, resulting in a loss of around 15 data points. It is worth noting that these losses of data affect both the original model and the retrained model, so they do not affect our conclusions in this work.
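The two filters described above can be sketched as follows. Character-level tokenization of SMILES is an assumption made for this example (the actual encoding may differ), and the function names are hypothetical.

```python
MAX_SEQ_LEN = 2000     # maximum protein sequence length kept
MAX_SMILES_LEN = 200   # maximum isomeric SMILES length kept

def build_vocab(train_smiles):
    """Collect the set of tokens (here: single characters) seen in training SMILES."""
    return {ch for smiles in train_smiles for ch in smiles}

def keep_pair(sequence, smiles, vocab=None):
    """Return True if a protein-ligand pair survives the length and vocabulary filters."""
    if len(sequence) > MAX_SEQ_LEN or len(smiles) > MAX_SMILES_LEN:
        return False
    if vocab is not None and not set(smiles) <= vocab:
        return False  # ligand contains a token never seen during training
    return True

vocab = build_vocab(["CCO", "c1ccccc1N"])
kept = keep_pair("MKT" * 100, "CCN", vocab)
unseen = keep_pair("MKT" * 100, "CCBr", vocab)  # 'B' and 'r' are not in the vocabulary
```

The vocabulary check applies only to evaluation data, which is why `vocab` is optional: training pairs are filtered by length alone.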

Results

Analysis of PDBBind Splittings

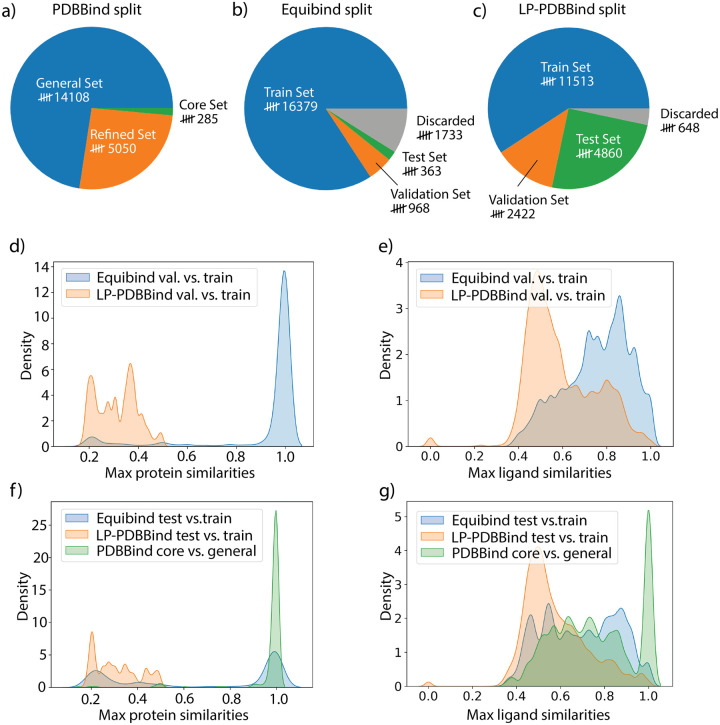

Data distributions of PDBBind under the original split (general set/refined set/core set), the Equibind split (train/validation/test) and LP-PDBBind (train/validation/test) are provided in Figure 2(a–c). LP-PDBBind has significantly extended the size of the test set compared with the original PDBBind split and the more recent Equibind split. A bigger test set provides a more accurate evaluation of model performance when the model is applied to data it has not been trained on. By contrast, the number of data in the training set is much smaller in LP-PDBBind. As we will show later, the shrinkage in the training set is necessary, because it keeps the similarity with the validation/test sets sufficiently low. Compared with the Equibind split, we have also decreased the number of discarded data, because we only discard data when they are highly similar to other data in the train, validation or test set.

Figure 2: Data statistics under different splits of the PDBBind dataset.

Number of data in different splits of the PDBBind dataset: (a) general, refined and core set in original PDBBind split; (b) train, validation, test set and discarded data in Equibind split; (c) train, validation, test set and discarded data in LP-PDBBind. Comparison of (d) maximum protein similarities and (e) ligand similarities between validation set and train set under Equibind split and LP-PDBBind. Comparison of (f) maximum protein similarities and (g) ligand similarities between test set and train set (or core set and general set in original PDBBind split) under different splittings.

One of the main purposes of defining the new split of PDBBind is to prevent data leakage between training, validation, and test data. To understand whether the new split has solved the data leakage issue, the maximum similarity for proteins and ligands between the training, validation, and test data under the EquiBind split and LP-PDBBind, and the maximum similarity between the general set and core set under the original PDBBind split, are summarized in Figure 2(d–g). The original PDBBind split has significant protein and ligand overlap between the general and core sets, as can be seen in the sharp peak at a similarity of 1.0 in Figure 2(f–g). The same level of similarity between the refined and core sets in the original PDBBind split is shown in Supplementary Figure 2. Since many machine learning models use the PDBBind core set as the test dataset without carefully excluding similar data from their respective training datasets, the reported performance of these models may be overly optimistic, may not reflect their true generalizability, and needs to be reevaluated.

The Equibind split is a significant step forward in reorganizing the PDBBind data more reasonably. Its time-based cutoff in defining the test dataset decreased the chance of data leakage, but still did not eliminate the possibility of highly similar data occurring in both the train and test sets. By comparison, LP-PDBBind minimizes overlap between data in the train and test sets by design, keeping the maximum sequence similarity between any protein in the test set and any protein in the train set below 0.5, and the maximum ligand similarity below 0.99 (Figure 2(f–g)). Therefore, results for a model trained with the LP-PDBBind train set and evaluated with the LP-PDBBind test set should better reflect the performance of the model when applied to a new protein-ligand complex that may be very different from the data used for training the model.

To help with training more transferable models, we have also defined the validation set to be as different from the train set as the test set is. Figure 2(d–e) illustrates the maximum protein similarities and maximum ligand similarities between the validation and training sets for the Equibind split and LP-PDBBind, respectively. The similarity between validation and training sets under LP-PDBBind is also well controlled so that the validation set can be used to select the most transferable model or hyperparameters when training the model. By comparison, the validation set in the Equibind split is too similar to the train set, hence overfitting will not be effectively captured when monitoring model performance on the validation set. As shown in Supplementary Figure 3, under both the Equibind and LP-PDBBind splits of PDBBind, the validation and test sets have a wide range of (dis)similarities in proteins and ligands. Therefore, using the validation set for model selection will not lead to overfitting and should thus increase transferability to the test set.

Evaluating Retrained Models with LP-PDBBind

The LP-PDBBind CL1 cleaned data for non-covalent binders of PDBBind were used to retrain AutoDock Vina,3 IGN,15 the 2010 RF-Score14 and DeepDTA.18 These models cover a wide range of different approaches for scoring, and are representative of different dimensions of the SF space of models. AutoDock Vina is a PSF that contains molecular interaction terms consisting of a van der Waals-like potential (defined by a combination of a repulsion term and two attractive Gaussians), a nondirectional hydrogen-bond term, a hydrophobic term, and a conformational entropy penalty, all of which are weighted by empirical parameters.3,48 The other three methods belong to the MLSF category, but differ vastly in their feature sets. The original RF-Score model utilizes the occurrence count of intermolecular distances between elemental atom types of protein and ligands based on 3D structures, and uses a random forest model to make predictions on binding affinities.14 IGN utilizes a graph neural network operating on the 3D complex structures, and the node and edge features are straightforward information about atoms and bonds, including atom types, atom hybridization, bond order, etc.15 Finally, DeepDTA is a purely data-driven approach which does not rely on physical interactions or 3D structural information. Instead, it uses 1D convolutions on the string representations of the proteins and ligands to make predictions.18 After retraining, the performance of the new AutoDock Vina, RF-Score, IGN, and DeepDTA models is compared with that of the old models on the non-covalent LP-PDBBind test set using the CL2 data, the new BDB2020+ benchmark data, and the Mpro and EGFR applications.

Table 2 provides the root mean square error (RMSE) of the binding affinity prediction (ΔGbind) on the training, validation, and test data for the models retrained with LP-PDBBind, and compares it with the performance of the original models on the LP-PDBBind test data. Supplementary Figure 4 shows the scatter plots of the same data and reports the correlation coefficient between predicted and experimental binding affinities. Due to the data leakage issue, the performance of the original models on the LP-PDBBind test data is overestimated for the MLSFs. By comparison, AutoDock vina, due to its small number of trainable parameters, does not suffer from the data leakage issue and has achieved a lower RMSE after retraining. Among the MLSF models, IGN has the smallest generalization gap, and is also the best performing model when evaluated using the LP-PDBBind test set. Since random forest models can overfit training data relatively easily,27 the RF-Score model has exceptional performance on the LP-PDBBind training dataset, but its validation and test performance is slightly worse than that of the IGN model. DeepDTA also performs quite well on the training dataset, but exhibits a large generalization gap with respect to the validation dataset, and has similar performance to AutoDock vina on the test dataset. Overall, the original MLSF models have seen some of the LP-PDBBind test proteins and ligands in their training, and thus they appear to perform better than they actually do when data leakage is controlled for using the LP-PDBBind data.

Table 2:

The training and test errors for the original and retrained models using LP-PDBBind for AutoDock vina, IGN, RF-Score, and DeepDTA in terms of root mean square error (RMSE) in kcal/mol

| Model | RMSE original (test) | RMSE retrained (train) | RMSE retrained (validation) | RMSE retrained (test) |
|---|---|---|---|---|
| AutoDock Vina | 2.85 | 2.42 | 2.29 | 2.56 |
| IGN | 1.82 | 1.69 | 1.93 | 2.03 |
| RF-Score | 1.89 | 0.68 | 2.13 | 2.09 |
| DeepDTA | 1.34 | 0.64 | 2.74 | 2.45 |
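
As a worked illustration of the generalization gap discussed for Table 2, the sketch below takes the retrained RMSE values from the table and computes the gap as test RMSE minus training RMSE. That definition is an assumption made here for illustration; the text does not give an explicit formula.

```python
# Retrained-model RMSE values (kcal/mol) copied from Table 2.
rmse = {
    "AutoDock Vina": {"train": 2.42, "validation": 2.29, "test": 2.56},
    "IGN": {"train": 1.69, "validation": 1.93, "test": 2.03},
    "RF-Score": {"train": 0.68, "validation": 2.13, "test": 2.09},
    "DeepDTA": {"train": 0.64, "validation": 2.74, "test": 2.45},
}

# Assumed definition: generalization gap = test RMSE - train RMSE.
gaps = {model: round(v["test"] - v["train"], 2) for model, v in rmse.items()}

# Restrict to the machine-learned scoring functions when comparing gaps.
mlsf_models = ["IGN", "RF-Score", "DeepDTA"]
smallest_mlsf_gap = min(mlsf_models, key=gaps.get)

for model, gap in gaps.items():
    print(f"{model:14s} gap = {gap:.2f} kcal/mol")
print("Smallest gap among MLSFs:", smallest_mlsf_gap)
```

Under this definition the gaps are 0.14 (AutoDock Vina), 0.34 (IGN), 1.41 (RF-Score), and 1.81 kcal/mol (DeepDTA), consistent with IGN having the smallest gap among the MLSFs.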

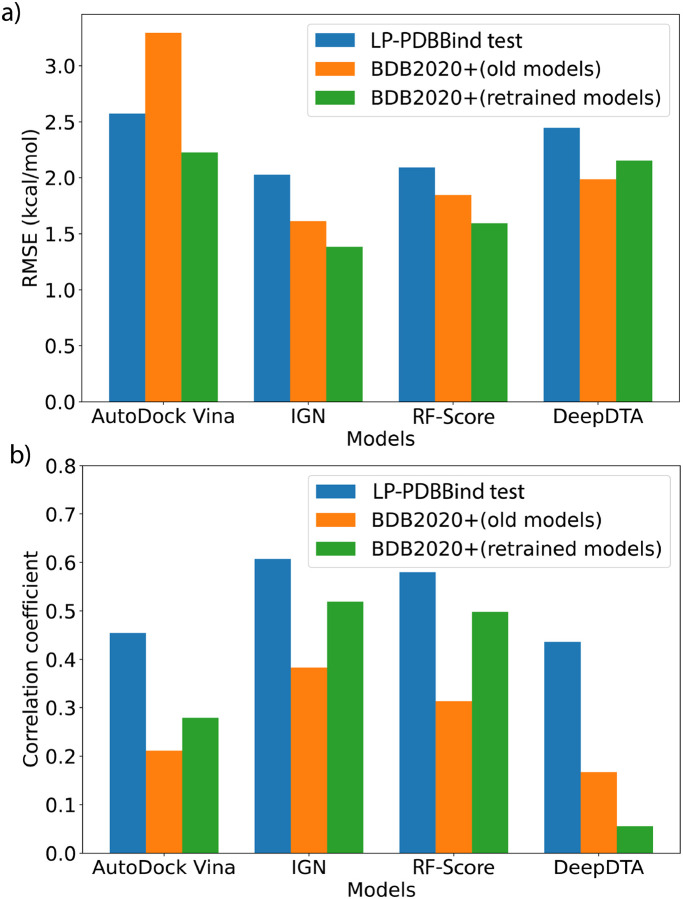

Figure 3 and Table 3 summarize the scoring performance of the original and retrained models on the independent BDB2020+ benchmark dataset; the corresponding scatter plots for the original and retrained models are provided in Supplementary Figure 5. We see that upon retraining, the three models based on 3D structures have all improved significantly on the BDB2020+ benchmark set. In terms of RMSE, AutoDock vina decreased by about 1 kcal/mol while IGN and RF-Score decreased by 0.2–0.3 kcal/mol. The changes in the correlation coefficients are more pronounced: both IGN and RF-Score have achieved correlation coefficients of at least 0.5; in relative terms, AutoDock vina and IGN improved by 30–40% and RF-Score improved by more than 60%. On the other hand, the performance of DeepDTA declined after retraining: its RMSE increased by 8% and the retrained model has almost no correlation with experimental measurements. It is reasonable to expect a purely sequence-based method not to perform well when the training dataset contains more variety, since it is much more challenging for the model to find the patterns of the interaction modes between the protein and ligand from sequence information alone. For the 3D structure-based models, by contrast, a diverse training dataset actually helps with finding more transferable features, which leads to the overall performance enhancements on the BDB2020+ evaluation dataset.

Figure 3: Performance comparisons using different models and different benchmark datasets.

(a) Comparison of the root mean square error (RMSE) for different models. Lower is better. Blue bars indicate RMSEs on the LP-PDBBind test dataset using retrained models, orange bars indicate RMSEs on BDB2020+ for the models without retraining using LP-PDBBind, and green bars indicate RMSEs on BDB2020+ for the models retrained using LP-PDBBind. (b) Comparison of the Pearson correlation coefficient (R) for different models.

Table 3:

Performance comparisons on the BDB2020+ benchmark set in terms of root mean square error (RMSE) and Pearson correlation coefficient (R) for different models before and after retraining using LP-PDBBind.

| Model | RMSE original (kcal/mol) | RMSE retrained (kcal/mol) | RMSE difference | R original | R retrained | R difference |
|---|---|---|---|---|---|---|
| AutoDock Vina | 3.29 | 2.23 | −32% | 0.21 | 0.28 | +33% |
| IGN | 1.61 | 1.38 | −14% | 0.38 | 0.52 | +37% |
| RF-Score | 1.84 | 1.59 | −14% | 0.31 | 0.50 | +61% |
| DeepDTA | 1.99 | 2.15 | +8% | 0.17 | 0.06 | −65% |
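
The percentage differences in Table 3 follow from the original and retrained values as a relative change with respect to the original model. The short sketch below reproduces them; the helper name `percent_change` is introduced here only for illustration.

```python
def percent_change(original: float, retrained: float) -> float:
    """Relative change of the retrained value with respect to the original, in %."""
    return 100.0 * (retrained - original) / abs(original)


# (original, retrained) pairs copied from Table 3.
rmse_pairs = {
    "AutoDock Vina": (3.29, 2.23),
    "IGN": (1.61, 1.38),
    "RF-Score": (1.84, 1.59),
    "DeepDTA": (1.99, 2.15),
}
r_pairs = {
    "AutoDock Vina": (0.21, 0.28),
    "IGN": (0.38, 0.52),
    "RF-Score": (0.31, 0.50),
    "DeepDTA": (0.17, 0.06),
}

rmse_change = {m: round(percent_change(*p)) for m, p in rmse_pairs.items()}
r_change = {m: round(percent_change(*p)) for m, p in r_pairs.items()}

for model in rmse_pairs:
    print(f"{model:14s} RMSE {rmse_change[model]:+d}%   R {r_change[model]:+d}%")
```

A negative RMSE change and a positive R change both indicate improvement after retraining.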

Figure 3 also compares the performance of the four models on BDB2020+ with the results evaluated on the LP-PDBBind test dataset. Interestingly, we find that all models have achieved lower RMSE on the BDB2020+ dataset but also lower Pearson correlation coefficients, R. This seemingly contradictory result is actually reasonable given the differences in data distribution between the two evaluation benchmarks. The LP-PDBBind test dataset contains much more data than BDB2020+, and also spans a wider range of binding affinity values. The measured −log(Kd) values in the LP-PDBBind test dataset range from 0 to 12 (i.e. 12 orders of magnitude), whereas those in the BDB2020+ dataset only range from 4 to 10. Given that extreme predictions from a robust ML model are unlikely, a narrower range of binding affinities means the overall error will be smaller. However, it also poses a challenge for differentiating between more tightly clustered data points, and therefore makes it more difficult to achieve high correlation coefficients.
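
The effect of the affinity range on RMSE and R can be illustrated with a small synthetic experiment. The sketch below is not based on any of the actual models or datasets: it assumes a hypothetical predictor that shrinks its output towards a central value of 6 (slope 0.5) and adds Gaussian noise, and it samples true −log(Kd) values uniformly over the two ranges quoted above. All parameter values are illustrative assumptions, and errors are in −log(Kd) units rather than kcal/mol.

```python
import math
import random


def rmse(pred, true):
    """Root mean square error between two equally long sequences."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true))


def pearson(x, y):
    """Pearson correlation coefficient."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def simulate(low, high, n=5000, center=6.0, slope=0.5, noise=1.0, seed=0):
    """Score a hypothetical 'shrinking' predictor on uniformly sampled affinities."""
    rng = random.Random(seed)
    true = [rng.uniform(low, high) for _ in range(n)]
    # Predictions are pulled towards `center`, so extreme values are rarely predicted.
    pred = [center + slope * (t - center) + rng.gauss(0.0, noise) for t in true]
    return rmse(pred, true), pearson(pred, true)


rmse_wide, r_wide = simulate(0.0, 12.0)     # range like the LP-PDBBind test set
rmse_narrow, r_narrow = simulate(4.0, 10.0)  # range like BDB2020+

print(f"wide range:   RMSE = {rmse_wide:.2f}, R = {r_wide:.2f}")
print(f"narrow range: RMSE = {rmse_narrow:.2f}, R = {r_narrow:.2f}")
```

With the same predictor, the narrower range gives both a lower RMSE and a lower R, which is the qualitative pattern described in the text.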

Nevertheless, we see that the relative rankings of the four methods are consistent between different evaluation benchmarks and different metrics. IGN and RF-Score perform better than AutoDock vina, and DeepDTA is not as competitive as the other models that use 3D structural information. In terms of the generalization gap to new data, we also see that the correlation differences are the smallest for IGN and RF-Score, while DeepDTA has a very large generalization gap. These results are consistent with the overall performances of the models, and provide evidence that modern MLSFs can indeed surpass PSFs such as AutoDock vina, even when protein and ligand similarities are low. However, 3D information is essential to ensure that the model has transferable performance.

Evaluating the Ranking Capabilities of the Retrained Models

The improvement in scoring power, despite being important, still does not fully reflect the performance capability of the SFs in real world applications. Therefore, we have prepared two additional datasets of protein-ligand complexes with the same protein and different ligands to evaluate the ranking accuracy of the SFs before and after retraining using LP-PDBBind. The first case is the SARS-CoV-2 main protease (Mpro), for which a wide variety of potential inhibitors have been developed.36 We have manually extracted published co-crystal structures of Mpro with a number of non-covalent inhibitors,49–61 and prepared a dataset containing 40 structures and corresponding experimental binding affinity measurements. The second dataset involves the epidermal growth factor receptor (EGFR), a receptor tyrosine kinase related to multiple cancers including lung cancer, pancreatic cancer and breast cancer.37 Similarly, we have selected 23 representative non-covalent protein-ligand complex structures of EGFR with binding affinities taken from BindingDB.62–76

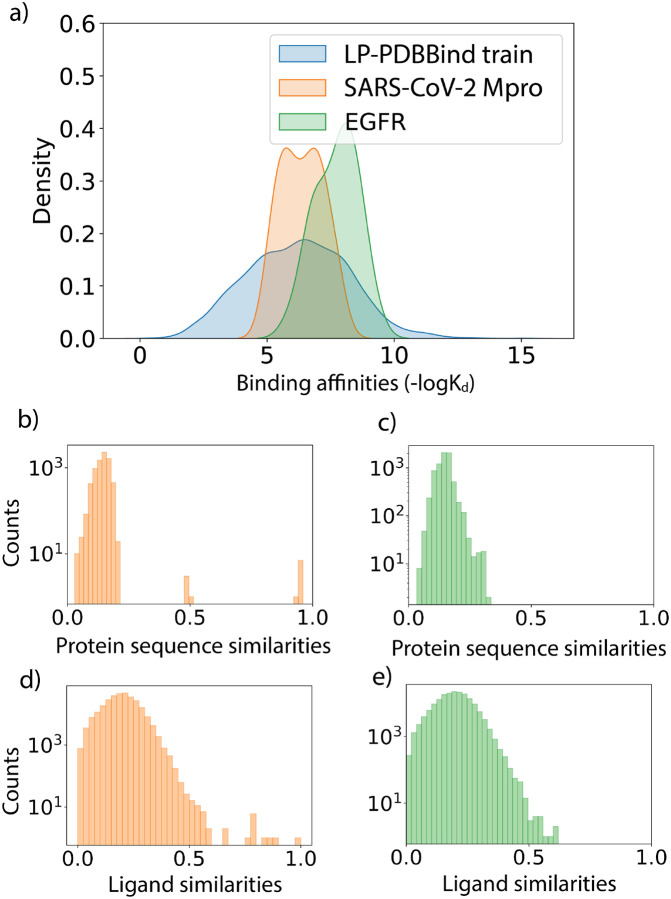

Figure 4 shows the distributions of experimental free energies and protein and ligand similarities when comparing the LP-PDBBind training data with the Mpro and EGFR datasets. The binding affinities for the Mpro and EGFR systems are found to be in a narrower range than the LP-PDBBind training data. While the average binding affinity of the Mpro dataset is roughly in line with the LP-PDBBind training dataset, the ligands in the EGFR dataset have an overall tendency to bind more strongly to their target. This shift in the average indicates that the EGFR dataset is more "out-of-distribution" than the Mpro dataset. Figure 4b–e further shows that the proteins and ligands of the two evaluation datasets are overall very dissimilar to those in the LP-PDBBind training dataset, with the exception of a small fraction of protein sequence and ligand similarity attributable to the SARS-CoV-1 Mpro training entry PDBID: 3V3M.77 Hence the Mpro and EGFR evaluations reflect two representative scenarios of using the SFs on a protein-ligand system that is similar to, or different from, what was included in the PDBBind dataset.
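
Because affinities are discussed both as −log(Kd) values and as free energies in kcal/mol, a short conversion sketch may help when reading the figures and tables. It uses the standard relation ΔG = RT ln(Kd), with Kd expressed relative to a 1 M standard state; the temperature of 298.15 K is an assumption made here and is not stated in this section.

```python
import math

# Gas constant in kcal/(mol K).
R_KCAL = 1.987204e-3


def pkd_to_dg(pkd: float, temperature: float = 298.15) -> float:
    """Convert -log10(Kd) to a binding free energy in kcal/mol.

    Uses dG = RT ln(Kd) = -ln(10) * R * T * pKd, with Kd relative to 1 M.
    The default temperature is an assumed value.
    """
    return -math.log(10.0) * R_KCAL * temperature * pkd


for pkd in (4.0, 6.0, 10.0, 12.0):
    print(f"-log(Kd) = {pkd:4.1f}  ->  dG = {pkd_to_dg(pkd):6.2f} kcal/mol")
```

At this temperature one unit of −log(Kd) corresponds to roughly 1.36 kcal/mol, so a −log(Kd) span of 0 to 12 covers about 16 kcal/mol.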

Figure 4: Data statistics for the SARS-CoV-2 main protease (Mpro) benchmark set and epidermal growth factor receptor (EGFR) benchmark set.

(a) Distributions of the binding affinity data (−logKd) in the LP-PDBBind train dataset in blue, Mpro benchmark set in orange and EGFR set in green. (b-c) Distributions of protein sequence similarities between the Mpro protein (b) and EGFR protein (c) with proteins in the LP-PDBBind train dataset. (d-e) Distributions of ligand fingerprint similarities between molecules in the Mpro benchmark set (d) and EGFR benchmark set (e) with ligands in the LP-PDBBind train dataset.

Table 4 summarizes the RMSE, Pearson correlation coefficients (R) and Spearman correlation coefficients (RS or ranking power) for the data in the Mpro and EGFR evaluation sets using both the original models and the models retrained using LP-PDBBind. The prediction scatter plots for the Mpro benchmark dataset and EGFR benchmark dataset are provided in Supplementary Figures 6 and 7. For the Mpro dataset, AutoDock vina and RF-Score exhibit some modest improvement in all the metrics. The RMSE of the retrained IGN model decreased by 0.42 kcal/mol, but the correlations in terms of R and RS have also become slightly lower. The performance of the DeepDTA model is largely unchanged on the Mpro dataset. Taking all metrics into account, AutoDock vina is the best performing SF for the Mpro dataset, although the differentiation among all models is not large.
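
For readers who want to reproduce these three metrics on their own predictions, the following is a minimal standard-library sketch. The experimental and predicted values are hypothetical toy numbers, not data from the Mpro or EGFR sets, and Spearman's coefficient is computed as the Pearson correlation of average ranks.

```python
import math


def rmse(pred, true):
    """Root mean square error."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true))


def pearson(x, y):
    """Pearson correlation coefficient (R)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation (RS): Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical binding free energies in kcal/mol for five ligands of one target.
experimental = [-9.1, -8.4, -7.9, -7.2, -6.5]
predicted = [-8.0, -8.2, -7.5, -7.4, -7.0]

err = rmse(predicted, experimental)
r = pearson(predicted, experimental)
rs = spearman(predicted, experimental)
print(f"RMSE = {err:.2f} kcal/mol, R = {r:.2f}, RS = {rs:.2f}")
```

In this toy series only the two strongest binders are swapped in the predicted order, so RS stays high even though the absolute errors are not negligible, which is why ranking power is reported separately from RMSE.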

Table 4:

Performance comparisons on the SARS-CoV-2 main protease (Mpro) and epidermal growth factor receptor (EGFR) benchmark sets in terms of root mean square error (RMSE) in kcal/mol, Pearson correlation coefficient (R) and Spearman correlation coefficient (RS) for different models before and after retraining using LP-PDBBind.

| Dataset | Model | RMSE original | RMSE retrained | R original | R retrained | RS original | RS retrained |
|---|---|---|---|---|---|---|---|
| Mpro | AutoDock Vina | 1.20 | 1.17 | 0.55 | 0.66 | 0.51 | 0.68 |
| Mpro | IGN | 1.86 | 1.44 | 0.64 | 0.61 | 0.69 | 0.65 |
| Mpro | RF-Score | 2.06 | 1.64 | 0.43 | 0.52 | 0.47 | 0.58 |
| Mpro | DeepDTA | 1.18 | 1.12 | 0.59 | 0.63 | 0.60 | 0.66 |
| EGFR | AutoDock Vina | 3.11 | 1.59 | 0.25 | 0.38 | 0.21 | 0.36 |
| EGFR | IGN | 1.06 | 0.96 | 0.36 | 0.65 | 0.17 | 0.62 |
| EGFR | RF-Score | 1.57 | 0.97 | −0.15 | 0.52 | −0.18 | 0.45 |
| EGFR | DeepDTA | 1.22 | 1.38 | 0.23 | 0.06 | 0.20 | 0.23 |

However, the results on the EGFR dataset exhibit much larger variations for the different models, and the changes are significant for all the SFs. There is a dramatic decrease of ~50% in RMSE for AutoDock vina, and both R and RS have increased by more than 50%. IGN has a slight decrease in RMSE but a remarkable increase in the correlation coefficients. The retrained IGN model achieves 0.65 in Pearson correlation coefficient and 0.62 in Spearman correlation coefficient, which are the highest among all models and do not differ much from the Mpro results, thus showing good generalizability. RF-Score has also benefitted quite significantly from retraining, with a 0.6 kcal/mol decrease in RMSE, and the correlation coefficients have improved from −0.15 to 0.52 in terms of R and from −0.18 to 0.45 in terms of RS. The importance of 3D information is also illustrated by considering a method that ignores it: DeepDTA performs comparably to the other SFs on the Mpro dataset with RS = 0.66, but reaches only RS = 0.23 on the EGFR dataset. Overall, the performance rankings of the four SFs are still consistent with those obtained from the LP-PDBBind test set benchmark and the BDB2020+ benchmark. But the EGFR benchmark emphasizes that new applications will benefit from the better generalizability of the newly retrained models, and that 3D information is vital for exploiting the PDBBind data.

Discussion and Conclusion

The area of computational drug discovery relies on generalizable scoring functions that have robust scoring and ranking power for the binding affinities of ligand-protein complexes. However, due to the lack of independently built datasets that test the true generalizability of the SFs, it is hard to differentiate among the plethora of models which have been trained and tested on the original split of the PDBBind dataset. As we have shown, there is too much overlap between the PDBBind general and refined data used for training and the core subset, leading to the possibility of inflated performance metrics that in turn lead to false confidence in how such models will perform on new protein-ligand complexes.

In order to reduce data similarity between the training, validation, and test data of the PDBBind dataset, we have developed LP-PDBBind using an iterative process that selects the most similar data first into the test set, and then into the validation set, so that the final training dataset has low similarity with the validation and test datasets. We have also cleaned the PDBBind data in multiple ways: CL1 removed covalent ligand-protein complexes, the low populations of drug molecules with underrepresented chemical elements, and complexes with steric clashes. In addition to CL1, the CL2 level of cleaning aimed for consistent measures of binding free energies by either converting Kd or eliminating data reported as IC50. Finally, CL3 eliminated the general set to perform splits on the refined and core set data, which are deemed to be of higher quality. However, there is always a trade-off between the quality of data and the amount of data, and all of the results reported here were based on training on CL1 data and testing on CL2. One possibility to achieve better performance in the future is to train a base model using the larger amount of data with CL1, and then fine-tune using less abundant but higher quality data such as CL3. In addition, we have isolated the covalent binding data from PDBBind, which may help newly formulated SFs predict this type of protein-ligand complex.

It is important to understand whether the improved performance of the latest SFs is due to better methodologies that can truly generalize to unseen data. This is especially true for MLSFs because their complex architecture and large number of parameters allow them to memorize data in the training dataset, which, if leaked to the test set, will obscure the benchmarks. To provide a benchmark dataset truly independent of PDBBind, we have compiled the BDB2020+ dataset from BindingDB entries deposited after 2020, and further ensured that there is no overlap with PDBBind. Additionally, we tested ranking power through construction of the Mpro and EGFR ligand-protein complex series. The SARS-CoV-2 Mpro protein has high-similarity counterparts in the PDBBind dataset, whereas the other series involving EGFR does not have anything similar in the training dataset of PDBBind. These new data should also be useful in future evaluation and/or fine-tuning of any scoring function.

In this work we utilized the new split of PDBBind using CL1 to retrain AutoDock vina, IGN, RF-Score and DeepDTA and compared the old models with the retrained models on the LP-PDBBind test set, as well as the fully independent BDB2020+ data, Mpro series, and EGFR series. We have demonstrated that using a different splitting of the same dataset leads to significant performance improvements, provided that the SF relies on features that take into account the 3D structures. Furthermore, we have shown that retrained MLSFs can indeed surpass traditional PSFs, even when protein and ligand similarities are low. The comparisons between the different benchmark results for the SFs can be well explained by the "difficulty level" of these datasets, and provide insights about the generalizability of various models. We found that well performing models also have more stable ranking results for different ligands towards a protein target. When the target system is similar to data included in the training dataset, the differentiation between models is not obvious. However, when the protein target is not similar to anything in the training dataset, we found that different SFs demonstrate quite different generalization capabilities, and the IGN model retrained with LP-PDBBind is recommended due to its reliably good scoring and ranking power.

Given that the benchmarks are done with experimental protein-ligand complex structures, the superior performance of the retrained models does not necessarily mean they also have better docking power for recognizing native ligand bound poses. Nevertheless, the improvements shown for scoring power and ranking power are meaningful, because the virtual screening process can be broken into multiple steps, and we could use one SF for docking and another for scoring. Furthermore, it is reasonable to expect that SFs trained with LP-PDBBind will also have better generalizability in terms of docking or screening capabilities, and it would be worthwhile to generate decoy structures for the BDB2020+ dataset to better benchmark the docking and screening powers of the SFs independent of the PDBBind dataset. In summary, the cleaned LP-PDBBind data in its current form is a valuable resource for training more transferable SFs, and we hope reporting evaluation metrics on the BDB2020+ dataset can also become a common practice for future SFs.

Acknowledgement

This work was supported by National Institute of Allergy and Infectious Disease grant U19-AI171954. This research used computational resources of the National Energy Research Scientific Computing Center, a DOE Office of Science User Facility supported by the Office of Science of the U.S. Department of Energy under Contract No. DE-AC02-05CH11231.

Supporting Information Available

Computational procedures and characterization of the PDBBind, EquiBind, and LP-PDBBind data sets, cleaning procedures, and results for original and retrained scoring functions.

References

- (1).Huang S.-Y.; Grinter S. Z.; Zou X. Scoring functions and their evaluation methods for protein–ligand docking: recent advances and future directions. Physical Chemistry Chemical Physics 2010, 12, 12899–12908. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (2).Cheng T.; Li X.; Li Y.; Liu Z.; Wang R. Comparative assessment of scoring functions on a diverse test set. Journal of chemical information and modeling 2009, 49, 1079–1093. [DOI] [PubMed] [Google Scholar]

- (3).Trott O.; Olson A. J. AutoDock Vina: improving the speed and accuracy of docking with a new scoring function, efficient optimization, and multithreading. Journal of computational chemistry 2010, 31, 455–461. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (4).Morris G. M.; Huey R.; Lindstrom W.; Sanner M. F.; Belew R. K.; Goodsell D. S.; Olson A. J. AutoDock4 and AutoDockTools4: Automated docking with selective receptor flexibility. Journal of computational chemistry 2009, 30, 2785–2791. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (5).Friesner R. A.; Banks J. L.; Murphy R. B.; Halgren T. A.; Klicic J. J.; Mainz D. T.; Repasky M. P.; Knoll E. H.; Shelley M.; Perry J. K., et al. Glide: a new approach for rapid, accurate docking and scoring. 1. Method and assessment of docking accuracy. Journal of medicinal chemistry 2004, 47, 1739–1749. [DOI] [PubMed] [Google Scholar]

- (6).Friesner R. A.; Murphy R. B.; Repasky M. P.; Frye L. L.; Greenwood J. R.; Halgren T. A.; Sanschagrin P. C.; Mainz D. T. Extra precision glide: Docking and scoring incorporating a model of hydrophobic enclosure for protein- ligand complexes. Journal of medicinal chemistry 2006, 49, 6177–6196. [DOI] [PubMed] [Google Scholar]

- (7).Qiu Y. et al. Development and Benchmarking of Open Force Field v1.0.0-the Parsley Small-Molecule Force Field. J Chem Theory Comput 2021, 17, 6262–6280. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (8).Boothroyd S. et al. Development and Benchmarking of Open Force Field 2.0.0: The Sage Small Molecule Force Field. Journal of Chemical Theory and Computation 2023, 19, 3251–3275. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (9).Wang R.; Lai L.; Wang S. Further development and validation of empirical scoring functions for structure-based binding affinity prediction. Journal of computer-aided molecular design 2002, 16, 11–26. [DOI] [PubMed] [Google Scholar]

- (10).Jones G.; Willett P.; Glen R. C.; Leach A. R.; Taylor R. Development and validation of a genetic algorithm for flexible docking. Journal of molecular biology 1997, 267, 727–748. [DOI] [PubMed] [Google Scholar]

- (11).Muegge I. PMF scoring revisited. Journal of medicinal chemistry 2006, 49, 5895–5902. [DOI] [PubMed] [Google Scholar]

- (12).Huang N.; Kalyanaraman C.; Bernacki K.; Jacobson M. P. Molecular mechanics methods for predicting protein–ligand binding. Physical Chemistry Chemical Physics 2006, 8, 5166–5177. [DOI] [PubMed] [Google Scholar]

- (13).Dittrich J.; Schmidt D.; Pfleger C.; Gohlke H. Converging a knowledge-based scoring function: DrugScore2018. Journal of chemical information and modeling 2018, 59, 509–521. [DOI] [PubMed] [Google Scholar]

- (14).Ballester P. J.; Mitchell J. B. A machine learning approach to predicting protein–ligand binding affinity with applications to molecular docking. Bioinformatics 2010, 26, 1169–1175. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (15).Jiang D.; Hsieh C.-Y.; Wu Z.; Kang Y.; Wang J.; Wang E.; Liao B.; Shen C.; Xu L.; Wu J., et al. Interactiongraphnet: A novel and efficient deep graph representation learning framework for accurate protein–ligand interaction predictions. Journal of medicinal chemistry 2021, 64, 18209–18232. [DOI] [PubMed] [Google Scholar]

- (16).Moon S.; Zhung W.; Yang S.; Lim J.; Kim W. Y. PIGNet: a physics-informed deep learning model toward generalized drug–target interaction predictions. Chemical Science 2022, 13, 3661–3673. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (17).Shen C.; Zhang X.; Deng Y.; Gao J.; Wang D.; Xu L.; Pan P.; Hou T.; Kang Y. Boosting protein–ligand binding pose prediction and virtual screening based on residue–atom distance likelihood potential and graph transformer. Journal of Medicinal Chemistry 2022, 65, 10691–10706. [DOI] [PubMed] [Google Scholar]

- (18).Ozturk H.; Ozgur A.; Ozkirimli E. DeepDTA: deep drug–target binding affinity prediction. Bioinformatics 2018, 34, i821–i829. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (19).Somnath V. R.; Bunne C.; Krause A. Multi-scale representation learning on proteins. Advances in Neural Information Processing Systems 2021, 34, 25244–25255. [Google Scholar]

- (20).Lu W.; Wu Q.; Zhang J.; Rao J.; Li C.; Zheng S. Tankbind: Trigonometry-aware neural networks for drug-protein binding structure prediction. bioRxiv 2022, 2022–06. [Google Scholar]

- (21).Gohlke H.; Hendlich M.; Klebe G. Knowledge-based scoring function to predict protein-ligand interactions. Journal of molecular biology 2000, 295, 337–356. [DOI] [PubMed] [Google Scholar]

- (22).Mooij W. T.; Verdonk M. L. General and targeted statistical potentials for protein–ligand interactions. Proteins: Structure, Function, and Bioinformatics 2005, 61, 272–287. [DOI] [PubMed] [Google Scholar]

- (23).Shen C.; Weng G.; Zhang X.; Leung E. L.-H.; Yao X.; Pang J.; Chai X.; Li D.; Wang E.; Cao D., et al. Accuracy or novelty: what can we gain from target-specific machine-learning-based scoring functions in virtual screening? Briefings in Bioinformatics 2021, 22, bbaa410. [DOI] [PubMed] [Google Scholar]

- (24).Li Y.; Yang J. Structural and sequence similarity makes a significant impact on machine-learning-based scoring functions for protein–ligand interactions. Journal of chemical information and modeling 2017, 57, 1007–1012. [DOI] [PubMed] [Google Scholar]

- (25).Li H.; Peng J.; Leung Y.; Leung K.-S.; Wong M.-H.; Lu G.; Ballester P. J. The impact of protein structure and sequence similarity on the accuracy of machine-learning scoring functions for binding affinity prediction. Biomolecules 2018, 8, 12. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (26).Yu Y.; Lu S.; Gao Z.; Zheng H.; Ke G. Do deep learning models really outperform traditional approaches in molecular docking? arXiv preprint arXiv:2302.07134 2023, [Google Scholar]

- (27).Haghighatlari M.; Li J.; Heidar-Zadeh F.; Liu Y.; Guan X.; Head-Gordon T. Learning to Make Chemical Predictions: The Interplay of Feature Representation, Data, and Machine Learning Methods. Chem 2020, 6, 1527–1542. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (28).Wang L.-P.; Martinez T. J.; Pande V. S. Building Force Fields: An Automatic, Systematic, and Reproducible Approach. The Journal of Physical Chemistry Letters 2014, 5, 1885–1891. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (29).Liu Z.; Li Y.; Han L.; Li J.; Liu J.; Zhao Z.; Nie W.; Liu Y.; Wang R. PDB-wide collection of binding data: current status of the PDBbind database. Bioinformatics 2015, 31, 405–412. [DOI] [PubMed] [Google Scholar]

- (30).Su M.; Yang Q.; Du Y.; Feng G.; Liu Z.; Li Y.; Wang R. Comparative assessment of scoring functions: the CASF-2016 update. Journal of chemical information and modeling 2018, 59, 895–913. [DOI] [PubMed] [Google Scholar]

- (31).Stärk H.; Ganea O.; Pattanaik L.; Barzilay R.; Jaakkola T. Equibind: Geometric deep learning for drug binding structure prediction. International conference on machine learning. 2022; pp 20503–20521. [Google Scholar]

- (32).Zervosen A.; Sauvage E.; Frère J.-M.; Charlier P.; Luxen A. Development of new drugs for an old target—the penicillin binding proteins. Molecules 2012, 17, 12478–12505. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (33).Newman A. H.; Ku T.; Jordan C. J.; Bonifazi A.; Xi Z.-X. New drugs, old targets: tweaking the dopamine system to treat psychostimulant use disorders. Annual review of pharmacology and toxicology 2021, 61, 609–628. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (34).Pushpakom S.; Iorio F.; Eyers P. A.; Escott K. J.; Hopper S.; Wells A.; Doig A.; Guilliams T.; Latimer J.; McNamee C., et al. Drug repurposing: progress, challenges and recommendations. Nature reviews Drug discovery 2019, 18, 41–58. [DOI] [PubMed] [Google Scholar]

- (35).Liu T.; Lin Y.; Wen X.; Jorissen R. N.; Gilson M. K. BindingDB: a web-accessible database of experimentally determined protein–ligand binding affinities. Nucleic acids research 2007, 35, D198–D201. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (36).Gao K.; Wang R.; Chen J.; Tepe J. J.; Huang F.; Wei G.-W. Perspectives on SARS-CoV-2 main protease inhibitors. Journal of medicinal chemistry 2021, 64, 16922–16955. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (37).Herbst R. S. Review of epidermal growth factor receptor biology. International Journal of Radiation Oncology* Biology* Physics 2004, 59, S21–S26. [DOI] [PubMed] [Google Scholar]

- (38).Landrum G., et al. RDKit: A software suite for cheminformatics, computational chemistry, and predictive modeling. Greg Landrum 2013, 8. [Google Scholar]

- (39).Guo X.-K.; Zhang Y. CovBinderInPDB: A Structure-Based Covalent Binder Database. Journal of Chemical Information and Modeling 2022, 62, 6057–6068. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (40).Bickerton G. R.; Paolini G. V.; Besnard J.; Muresan S.; Hopkins A. L. Quantifying the chemical beauty of drugs. Nature chemistry 2012, 4, 90–98. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (41).Fermi E. Thermodynamics; Courier Corporation, 2012. [Google Scholar]

- (42).MarÉchal E. Measuring bioactivity: KI, IC50 and EC50. Chemogenomics and Chemical Genetics: A User’s Introduction for Biologists, Chemists and Informaticians 2011, 55–65. [Google Scholar]

- (43).Rogers D.; Hahn M. Extended-connectivity fingerprints. Journal of chemical information and modeling 2010, 50, 742–754. [DOI] [PubMed] [Google Scholar]

- (44).Dice L. R. Measures of the amount of ecologic association between species. Ecology 1945, 26, 297–302. [Google Scholar]

- (45).Needleman S. B.; Wunsch C. D. A general method applicable to the search for similarities in the amino acid sequence of two proteins. Journal of molecular biology 1970, 48, 443–453. [DOI] [PubMed] [Google Scholar]

- (46).Gilson M. K.; Liu T.; Baitaluk M.; Nicola G.; Hwang L.; Chong J. BindingDB in 2015: A public database for medicinal chemistry, computational chemistry and systems pharmacology. Nucleic Acids Research 2016, 44, D1045–D1053. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (47).Nelder J. A.; Mead R. A simplex method for function minimization. The computer journal 1965, 7, 308–313. [Google Scholar]

- (48).Eberhardt J.; Santos-Martins D.; Tillack A. F.; Forli S. AutoDock Vina 1.2.0: New Docking Methods, Expanded Force Field, and Python Bindings. Journal of Chemical Information and Modeling 2021, 61, 3891–3898. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (49).Unoh Y.; Uehara S.; Nakahara K.; Nobori H.; Yamatsu Y.; Yamamoto S.; Maruyama Y.; Taoda Y.; Kasamatsu K.; Suto T., et al. Discovery of S-217622, a noncovalent oral SARS-CoV-2 3CL protease inhibitor clinical candidate for treating COVID-19. Journal of medicinal chemistry 2022, 65, 6499–6512. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (50).Lockbaum G. J.; Reyes A. C.; Lee J. M.; Tilvawala R.; Nalivaika E. A.; Ali A.; Kurt Yilmaz N.; Thompson P. R.; Schiffer C. A. Crystal structure of SARS-CoV-2 main protease in complex with the non-covalent inhibitor ML188. Viruses 2021, 13, 174. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (51).Su H.-x.; Yao S.; Zhao W.-f.; Li M.-j.; Liu J.; Shang W.-j.; Xie H.; Ke C.-q.; Hu H.-c.; Gao M.-n., et al. Anti-SARS-CoV-2 activities in vitro of Shuanghuanglian preparations and bioactive ingredients. Acta Pharmacologica Sinica 2020, 41, 1167–1177. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (52).Günther S.; Reinke P. Y.; Fernández-García Y.; Lieske J.; Lane T. J.; Ginn H. M.; Koua F. H.; Ehrt C.; Ewert W.; Oberthuer D., et al. X-ray screening identifies active site and allosteric inhibitors of SARS-CoV-2 main protease. Science 2021, 372, 642–646. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (53).Drayman N.; DeMarco J. K.; Jones K. A.; Azizi S.-A.; Froggatt H. M.; Tan K.; Maltseva N. I.; Chen S.; Nicolaescu V.; Dvorkin S., et al. Masitinib is a broad coronavirus 3CL inhibitor that blocks replication of SARS-CoV-2. Science 2021, 373, 931–936. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (54).Kitamura N.; Sacco M. D.; Ma C.; Hu Y.; Townsend J. A.; Meng X.; Zhang F.; Zhang X.; Ba M.; Szeto T., et al. Expedited approach toward the rational design of noncovalent SARS-CoV-2 main protease inhibitors. Journal of medicinal chemistry 2021, 65, 2848–2865. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (55).Zhang C.-H.; Stone E. A.; Deshmukh M.; Ippolito J. A.; Ghahremanpour M. M.; Tirado-Rives J.; Spasov K. A.; Zhang S.; Takeo Y.; Kudalkar S. N., et al. Potent noncovalent inhibitors of the main protease of SARS-CoV-2 from molecular sculpting of the drug perampanel guided by free energy perturbation calculations. ACS central science 2021, 7, 467–475. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (56).Glaser J.; Sedova A.; Galanie S.; Kneller D. W.; Davidson R. B.; Maradzike E.; Del Galdo S.; Labbé A.; Hsu D. J.; Agarwal R., et al. Hit expansion of a noncovalent SARS-CoV-2 main protease inhibitor. ACS Pharmacology & Translational Science 2022, 5, 255–265. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (57).Deshmukh M. G.; Ippolito J. A.; Zhang C.-H.; Stone E. A.; Reilly R. A.; Miller S. J.; Jorgensen W. L.; Anderson K. S. Structure-guided design of a perampanel-derived pharmacophore targeting the SARS-CoV-2 main protease. Structure 2021, 29, 823–833.

- (58).Hou N.; Shuai L.; Zhang L.; Xie X.; Tang K.; Zhu Y.; Yu Y.; Zhang W.; Tan Q.; Zhong G., et al. Development of highly potent noncovalent inhibitors of SARS-CoV-2 3CLpro. ACS Central Science 2023, 9, 217–227.

- (59).Kneller D. W.; Li H.; Galanie S.; Phillips G.; Labbé A.; Weiss K. L.; Zhang Q.; Arnould M. A.; Clyde A.; Ma H., et al. Structural, electronic, and electrostatic determinants for inhibitor binding to subsites S1 and S2 in SARS-CoV-2 main protease. Journal of Medicinal Chemistry 2021, 64, 17366–17383.

- (60).Gao S.; Sylvester K.; Song L.; Claff T.; Jing L.; Woodson M.; Weisse R. H.; Cheng Y.; Schaakel L.; Petry M., et al. Discovery and crystallographic studies of trisubstituted piperazine derivatives as non-covalent SARS-CoV-2 main protease inhibitors with high target specificity and low toxicity. Journal of Medicinal Chemistry 2022, 65, 13343–13364.

- (61).Han S. H.; Goins C. M.; Arya T.; Shin W.-J.; Maw J.; Hooper A.; Sonawane D. P.; Porter M. R.; Bannister B. E.; Crouch R. D., et al. Structure-based optimization of ML300-derived, noncovalent inhibitors targeting the severe acute respiratory syndrome coronavirus 3CL protease (SARS-CoV-2 3CLpro). Journal of Medicinal Chemistry 2021, 65, 2880–2904.

- (62).Yun C.-H.; Boggon T. J.; Li Y.; Woo M. S.; Greulich H.; Meyerson M.; Eck M. J. Structures of lung cancer-derived EGFR mutants and inhibitor complexes: mechanism of activation and insights into differential inhibitor sensitivity. Cancer Cell 2007, 11, 217–227.

- (63).Zhu S.-J.; Zhao P.; Yang J.; Ma R.; Yan X.-E.; Yang S.-Y.; Yang J.-W.; Yun C. Structural insights into drug development strategy targeting EGFR T790M/C797S. Oncotarget 2018, 9, 13652–13665.

- (64).Yan X.-E.; Zhu S.-J.; Liang L.; Zhao P.; Choi H. G.; Yun C.-H. Structural basis of mutant-selectivity and drug-resistance related to CO-1686. Oncotarget 2017, 8, 53508.

- (65).Peng Y.-H.; Shiao H.-Y.; Tu C.-H.; Liu P.-M.; Hsu J. T.-A.; Amancha P. K.; Wu J.-S.; Coumar M. S.; Chen C.-H.; Wang S.-Y., et al. Protein kinase inhibitor design by targeting the Asp-Phe-Gly (DFG) motif: the role of the DFG motif in the design of epidermal growth factor receptor inhibitors. Journal of Medicinal Chemistry 2013, 56, 3889–3903.

- (66).Hanan E. J.; Baumgardner M.; Bryan M. C.; Chen Y.; Eigenbrot C.; Fan P.; Gu X.-H.; La H.; Malek S.; Purkey H. E., et al. 4-Aminoindazolyl-dihydrofuro[3,4-d]pyrimidines as non-covalent inhibitors of mutant epidermal growth factor receptor tyrosine kinase. Bioorganic & Medicinal Chemistry Letters 2016, 26, 534–539.

- (67).Sogabe S.; Kawakita Y.; Igaki S.; Iwata H.; Miki H.; Cary D. R.; Takagi T.; Takagi S.; Ohta Y.; Ishikawa T. Structure-based approach for the discovery of pyrrolo[3,2-d]pyrimidine-based EGFR T790M/L858R mutant inhibitors. ACS Medicinal Chemistry Letters 2013, 4, 201–205.

- (68).Stamos J.; Sliwkowski M. X.; Eigenbrot C. Structure of the epidermal growth factor receptor kinase domain alone and in complex with a 4-anilinoquinazoline inhibitor. Journal of Biological Chemistry 2002, 277, 46265–46272.

- (69).Bryan M. C.; Burdick D. J.; Chan B. K.; Chen Y.; Clausen S.; Dotson J.; Eigenbrot C.; Elliott R.; Hanan E. J.; Heald R., et al. Pyridones as highly selective, noncovalent inhibitors of T790M double mutants of EGFR. ACS Medicinal Chemistry Letters 2016, 7, 100–104.

- (70).Peng Y.-H.; Shiao H.-Y.; Tu C.-H.; Liu P.-M.; Hsu J. T.-A.; Amancha P. K.; Wu J.-S.; Coumar M. S.; Chen C.-H.; Wang S.-Y., et al. Protein kinase inhibitor design by targeting the Asp-Phe-Gly (DFG) motif: the role of the DFG motif in the design of epidermal growth factor receptor inhibitors. Journal of Medicinal Chemistry 2013, 56, 3889–3903.

- (71).Aertgeerts K.; Skene R.; Yano J.; Sang B.-C.; Zou H.; Snell G.; Jennings A.; Iwamoto K.; Habuka N.; Hirokawa A., et al. Structural analysis of the mechanism of inhibition and allosteric activation of the kinase domain of HER2 protein. Journal of Biological Chemistry 2011, 286, 18756–18765.

- (72).Planken S.; Behenna D. C.; Nair S. K.; Johnson T. O.; Nagata A.; Almaden C.; Bailey S.; Ballard T. E.; Bernier L.; Cheng H., et al. Discovery of N-((3R,4R)-4-fluoro-1-(6-((3-methoxy-1-methyl-1H-pyrazol-4-yl)amino)-9-methyl-9H-purin-2-yl)pyrrolidine-3-yl)acrylamide (PF-06747775) through structure-based drug design: A high affinity irreversible inhibitor targeting oncogenic EGFR mutants with selectivity over wild-type EGFR. Journal of Medicinal Chemistry 2017, 60, 3002–3019.

- (73).Yun C.-H.; Mengwasser K. E.; Toms A. V.; Woo M. S.; Greulich H.; Wong K.-K.; Meyerson M.; Eck M. J. The T790M mutation in EGFR kinase causes drug resistance by increasing the affinity for ATP. Proceedings of the National Academy of Sciences 2008, 105, 2070–2075.

- (74).Xu G.; Searle L. L.; Hughes T. V.; Beck A. K.; Connolly P. J.; Abad M. C.; Neeper M. P.; Struble G. T.; Springer B. A.; Emanuel S. L., et al. Discovery of novel 4-amino-6-arylaminopyrimidine-5-carbaldehyde oximes as dual inhibitors of EGFR and ErbB-2 protein tyrosine kinases. Bioorganic & Medicinal Chemistry Letters 2008, 18, 3495–3499.

- (75).Yosaatmadja Y.; Silva S.; Dickson J. M.; Patterson A. V.; Smaill J. B.; Flanagan J. U.; McKeage M. J.; Squire C. J. Binding mode of the breakthrough inhibitor AZD9291 to epidermal growth factor receptor revealed. Journal of Structural Biology 2015, 192, 539–544.

- (76).Wood E. R.; Truesdale A. T.; McDonald O. B.; Yuan D.; Hassell A.; Dickerson S. H.; Ellis B.; Pennisi C.; Horne E.; Lackey K., et al. A unique structure for epidermal growth factor receptor bound to GW572016 (Lapatinib): relationships among protein conformation, inhibitor off-rate, and receptor activity in tumor cells. Cancer Research 2004, 64, 6652–6659.

- (77).Jacobs J.; Grum-Tokars V.; Zhou Y.; Turlington M.; Saldanha S. A.; Chase P.; Eggler A.; Dawson E. S.; Baez-Santos Y. M.; Tomar S., et al. Discovery, synthesis, and structure-based optimization of a series of N-(tert-butyl)-2-(N-arylamido)-2-(pyridin-3-yl)acetamides (ML188) as potent noncovalent small molecule inhibitors of the severe acute respiratory syndrome coronavirus (SARS-CoV) 3CL protease. Journal of Medicinal Chemistry 2013, 56, 534–546.
