Abstract

Surveys provide evidence on knowledge, attitudes, and behaviors in the social sciences and, in health care, can quantify qualitative research findings and assist in policymaking. A survey-designed research project asks questions of individuals, and from the answers the researcher can generalize the findings from a sample of respondents to a population. This overview can serve as a guide to conducting survey research that provides answers for practitioners, educators, and leaders, but only if the right questions and methods are used. The main advantage of surveys is economical online access to participants. A major disadvantage of survey research is the low response rate in most situations. Online surveys have many limitations that should be anticipated before conducting a survey and then described after the survey is complete. Any conclusions and recommendations should be supported by evidence in a clear and objective manner. Presenting evidence in a structured format is crucial, but well-developed reporting guidelines are still needed for researchers who conduct survey research.

Keywords: survey research, study design, scientific method, research question, sampling, sampling methods, target audience, population, instrumentation, survey tools

Introduction

Survey research is about asking the right questions. A questioning attitude that seeks to evaluate and investigate is the first step in the research process.1 A good survey is harder to do than it might seem. In the social sciences, surveys can provide evidence about good practice, knowledge, behaviors, and attitudes.2 In health care, surveys can be used to validate qualitative research data and to address policymaking concerns by ranking or prioritizing outcomes. Surveys can also be economical to conduct. Before online surveys became a common tool, mailed surveys were the norm. Perhaps the best-known mailed survey is the United States Census, which is used to describe the characteristics of the population of the United States. A mailed survey requires home addresses for postal delivery and a wait for completed surveys to be mailed back. Compared with traditional methods, online survey research in a virtual community is easier, less expensive in most cases, and faster at reaching populations. However, disadvantages exist, ranging from sampling error, low response rates, and self-selection to deception. Researchers should carefully consider these limitations when writing their results. In addition, some journals are hesitant to publish surveys because of poor quality.3,4 Fortunately, Respiratory Care has published surveys in recent years that are good examples for guidance.5–7 A survey-designed research project provides quantitative data by asking questions of individuals. From this data collection, the researcher can generalize the findings from a sample of respondents to a population. This review presents the purpose and essential components of survey research as well as a structure for writing about the findings.

Methods

Novice researchers should begin with a topic of interest and ask what is important about that topic. A literature review using related key words can help narrow the topic for clarity and focus, and it can also reveal questions that remain unanswered. Several questions are needed to cover a topic sufficiently, so the researcher should ask: Is this a topic that can yield good questions? Is it possible to describe or explain how to answer these questions? One way to generate a wide variety of concepts related to the topic is to invite experts or peers to help write questions. This can be done informally through interviews, in which suggested questions serve as cues or prompts for the interviewees' responses. A more formal approach is a focus group in which prompts or key words related to the topic serve as a brainstorming exercise. This helps to determine which questions are missing and is a productive way to discern the right questions to include in a survey. However, if creating a new instrument is not possible, a literature review of surveys that match the topic of interest may provide options to consider.

Survey Instrumentation

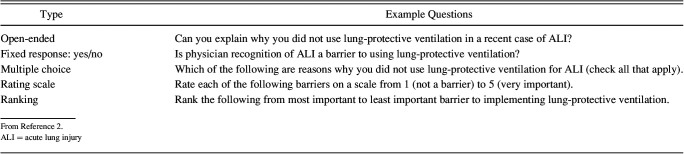

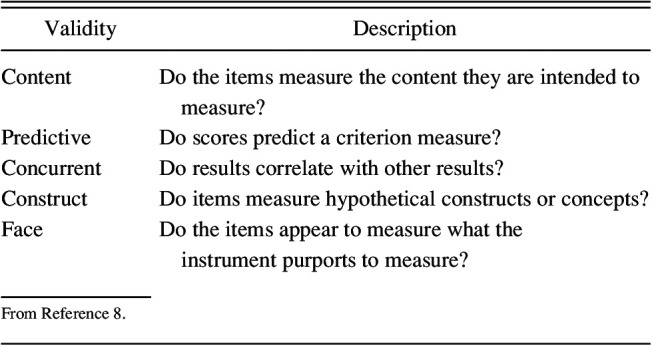

It is important to identify the type of survey instrument to use: a self-designed instrument, a modified instrument, or an intact instrument developed by someone else. If an acceptable validated survey instrument is found, the best practice is to request permission to use it, or to modify it, before use. Efforts by the original authors to establish validity and reliability should also be noted. Validity measures include content validity, predictive validity, concurrent validity, construct validity or internal consistency, and face validity (Table 1).8 Reliability tests include tests of stability, internal consistency, and inter-rater reliability for equivalence (Table 2).9 When a new instrument is created, the generated questions require pilot testing and statistical analysis to establish face, content, criterion, predictive, and construct validity. The same is true when modified or combined instruments are used, because the original validity measures may no longer apply; re-establishing validity and reliability is therefore important. One test of reliability is inter-rater reliability, which can be assessed by calculating the percentage of items on which a set of raters agree. This percent agreement ranges from 0 to 100, with 0 indicating no agreement between raters and 100 indicating perfect agreement. A rubric or scoring guide assists with scoring and makes the scores more stable and reliable, because all raters use the same criteria to make their judgments. In other words, the subjective scores of the individual raters differ little, so stable and consistent scoring can be expected.
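The two reliability statistics named above can be computed with a few lines of code. The sketch below is a minimal Python illustration, not a prescribed procedure: percent agreement follows the definition in the text (the share of items on which all raters give the same score), and Cronbach's alpha is shown as one widely used statistic for internal consistency, which is our choice because the text does not name a specific statistic. The function names and the list-based data layout are hypothetical.

```python
from statistics import variance


def percent_agreement(*raters):
    """Percentage (0 to 100) of items on which every rater gave the same score.

    Each argument is one rater's list of scores, in the same item order.
    """
    if len(raters) < 2:
        raise ValueError("need at least two raters")
    n_items = len(raters[0])
    if n_items == 0 or any(len(r) != n_items for r in raters):
        raise ValueError("all raters must score the same, non-empty set of items")
    # zip(*raters) yields one tuple of scores per item; a set of size 1 means
    # all raters agreed on that item.
    agreed = sum(1 for scores in zip(*raters) if len(set(scores)) == 1)
    return 100.0 * agreed / n_items


def cronbach_alpha(items):
    """Cronbach's alpha for internal consistency.

    items: one list of scores per survey item, each ordered by respondent,
    so items[i][j] is respondent j's score on item i.
    alpha = k / (k - 1) * (1 - sum of item variances / variance of totals)
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(items[0])
    if n < 2 or any(len(item) != n for item in items):
        raise ValueError("each item needs the same number (>= 2) of respondents")
    item_var_sum = sum(variance(item) for item in items)
    # Total score per respondent across all items.
    totals = [sum(scores) for scores in zip(*items)]
    total_var = variance(totals)
    return (k / (k - 1)) * (1 - item_var_sum / total_var)
```

For example, `percent_agreement([3, 4, 2, 5], [3, 4, 3, 5])` gives 75.0 because the two raters agree on three of four items. Percent agreement does not correct for agreement expected by chance; chance-corrected statistics such as Cohen's kappa are often reported alongside it.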

Table 1.

Validity Descriptions

Table 2.

Reliability Measures

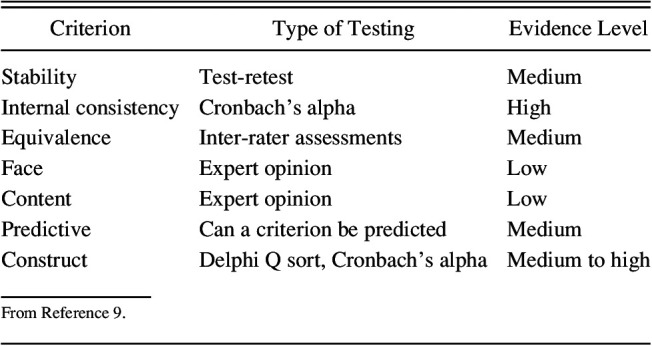

Knowing whether an instrument is valid and reliable provides confidence in data collection and is essential for survey methods. Once an instrument is determined to be useful, the researcher decides how to collect the information, including which scale or measure to use. Will questions be presented as multiple-choice selections; simple nominal or true/false questions; ordinal ratings from high to low; pictorial scales, such as ☺ or ☹; a traditional agreement range such as a Likert scale; a sliding scale (1 to 10); or open-ended questions? Rubenfeld2 provides example questions and the type of scale to use for each (Table 3). Mandatory questions should be avoided, and options such as “don't know,” “don't wish to answer,” or “not applicable” should be included. Regardless of the scale chosen, it is good practice to test the instrument for reading level, word count, and time to complete. Tools for determining reading level include the Flesch–Kincaid Grade Level and the Flesch Reading Ease score. The readability of every survey item should be no higher than the 8th-grade reading level.10 In addition, researchers should take the survey themselves as respondents so that they experience what the sample population is being asked to do. Pre-testing the instrument on a small sample is also good practice for assessing reliability, finding previously missed errors, identifying questions that may be unclear, and gathering general feedback.
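The Flesch–Kincaid Grade Level and Flesch Reading Ease scores mentioned above are simple functions of words per sentence and syllables per word. The Python sketch below uses the standard published coefficients, but its syllable counter is a rough vowel-group heuristic (an assumption), so results will differ somewhat from dedicated readability tools. The function names and the grade-8 screening helper are hypothetical.

```python
import re


def count_syllables(word):
    """Rough syllable estimate: count vowel groups, drop a silent final 'e'.

    This heuristic is an assumption for illustration; dedicated readability
    tools use dictionaries and more rules, so their counts will differ.
    """
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def readability(text):
    """Return (Flesch-Kincaid Grade Level, Flesch Reading Ease) for text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one word and sentence")
    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    grade = 0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59
    ease = 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word
    return grade, ease


def items_above_grade(items, max_grade=8.0):
    """List survey items whose estimated grade level exceeds max_grade."""
    return [item for item in items if readability(item)[0] > max_grade]
```

Running `items_above_grade(survey_items)` on a draft lists the items that may need rewording before pre-testing.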

Table 3.

Types and Examples of Survey Questions

Before conducting survey research, submit the survey instrument and methods for review to an institutional review board, also known as a research ethics committee.11 Survey research is generally exempt from full institutional review board review because surveys rarely expose respondents to more than the minimal risks of everyday life.12 However, determining whether survey research is exempt is not the researcher's decision; it is the role of the institutional review board or ethics committee. Failure to apply for and receive an approval or exemption letter can be a reason for rejection by journal editors.

Population and Sample

The intended population should be familiar with the subject to be surveyed. For example, asking respiratory therapists for their insights into or knowledge of flying an airplane will probably not provide useful answers unless the respondents hold a pilot's license. The researcher should also define the size of the population under consideration and how this population will be identified. If the researcher has access to the names and contact information of the population and can sample directly, this is referred to as a single-stage sampling design. In multistage sampling, the researcher first selects clusters, such as groups or organizations, obtains names from them, and then samples individuals directly within each cluster.13,14 Both methods are also referred to as probability sampling.15 Researchers should strive for random sampling, which gives each individual an equal chance of being selected. Non-probability sampling is less desirable because it typically assumes that small groups of samples adequately represent the population. Examples of this type of sampling are convenience and snowball sampling, which do not involve direct recruitment but rely on the availability of individuals. For example, Stelfox et al16 used a snowball sampling technique that efficiently identified clinical experts but resulted in unequal sampling from the different clinical areas. Only a small minority of respondents worked principally in a rehabilitation or chronic care facility, but these were clinicians experienced in the management of patients with tracheostomies who represented > 100 medical centers. Hence, non-probability sampling provides less certainty and allows fewer generalizations to a population than random sampling, which is more rigorous. Be mindful of sampling bias, because respondents may differ from the population the researcher intended to sample.
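The single-stage and multistage designs described above can be sketched with Python's standard `random` module. In this illustration the sampling frame, the hospital clusters, and the identifiers are hypothetical; a fixed seed is used only so that the draw can be reproduced and documented.

```python
import random


def simple_random_sample(frame, n, seed=None):
    """Single-stage design: draw n names directly from the full list (frame),
    giving every listed individual an equal chance of selection."""
    rng = random.Random(seed)
    return rng.sample(list(frame), n)


def two_stage_cluster_sample(clusters, n_clusters, n_per_cluster, seed=None):
    """Multistage design: randomly pick clusters (e.g., hospitals), then
    randomly sample individuals from each selected cluster's member list.

    clusters: dict mapping a cluster name to a list of member identifiers.
    """
    rng = random.Random(seed)
    # Sort the cluster names so the draw is reproducible for a given seed.
    chosen = rng.sample(sorted(clusters), n_clusters)
    sample = []
    for name in chosen:
        members = clusters[name]
        k = min(n_per_cluster, len(members))
        sample.extend(rng.sample(members, k))
    return sample


if __name__ == "__main__":
    # Hypothetical sampling frame: 10 hospitals with 20 therapists each.
    clusters = {
        f"hospital_{h:02d}": [f"RT_{h:02d}_{i:02d}" for i in range(20)]
        for h in range(10)
    }
    frame = [member for members in clusters.values() for member in members]
    print(simple_random_sample(frame, 5, seed=42))
    print(two_stage_cluster_sample(clusters, 3, 4, seed=42))
```

With equal-sized clusters, as in this example, every therapist has the same chance of selection in both designs; when cluster sizes differ, the multistage design needs weighting or probability-proportional-to-size selection of clusters to keep selection chances equal.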

Target Audience

Target audiences in survey research are determined by selecting individuals who are familiar or experienced with the topic of interest and are therefore able to provide responses. These are the selection criteria, stated as inclusion and exclusion criteria. For instance, suppose a survey project seeks women who left college after 3 years of matriculation without a bachelor's degree. To sample enough women to be confident in the results, calculate a sample size to estimate the number of women needed to participate. A related calculation uses the expected effect size, the magnitude of the difference the study aims to detect, to determine how many participants are needed to detect a difference in treatment. It is best to consult a statistician for guidance on the number of participants needed (the sample size) and the margin of error. Recruiting those who meet the selection criteria is crucial. Historical methods include recruiting via telephone calls, the postal service, and in-person solicitation. Although these methods may still be used, online surveys sent via e-mail or social media platforms are a fast-growing method. A Google Scholar search for the pros and cons of online surveys published since 2019 returned > 16,000 results.17 The advantages of online surveys are little or no expense and speed: as e-mails are sent, responses are uploaded into a software data analysis portal that helps collate the results without the coding required by legacy software. The major disadvantage of online surveys, such as those seen in AARConnect (American Association for Respiratory Care, Irving, Texas; https://connect.aarc.org/home), is that the overall population size is unknown, so generalizations can be difficult to make; this limitation must be acknowledged. To counter this limitation, a larger number of respondents is needed to reduce this type of sampling error and to achieve statistical significance.
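The text recommends consulting a statistician; the Python sketch below only illustrates the kind of calculation involved, using textbook normal-approximation formulas chosen here for illustration: the sample size needed to estimate a proportion within a given margin of error (with an optional finite population correction when the population size is known), and the number per group needed to detect a standardized effect size in a two-group comparison. The function names are hypothetical, and real studies may need other designs, such as t-based or cluster-adjusted calculations.

```python
import math
from statistics import NormalDist


def sample_size_for_proportion(margin_of_error, p=0.5, confidence=0.95,
                               population_size=None):
    """Respondents needed to estimate a proportion within +/- margin_of_error.

    Uses n0 = z^2 * p * (1 - p) / e^2; p = 0.5 is the most conservative guess.
    If population_size is known, the finite population correction is applied.
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    n = z ** 2 * p * (1 - p) / margin_of_error ** 2
    if population_size is not None:
        n = n / (1 + (n - 1) / population_size)
    return math.ceil(n)


def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Participants per group to detect a standardized mean difference
    (Cohen's d = effect_size) between two groups, normal approximation."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / effect_size ** 2)


def surveys_to_send(required_respondents, expected_response_rate):
    """Inflate the target so that enough completed surveys come back."""
    return math.ceil(required_respondents / expected_response_rate)
```

For example, estimating a proportion within ±5 percentage points at 95% confidence needs 385 respondents, or 278 if the population is known to be 1,000; detecting a medium effect (d = 0.5) with 80% power at α = 0.05 needs about 63 participants per group. If a 45% response rate is expected, `surveys_to_send(385, 0.45)` suggests sending about 856 surveys.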

Think of the survey instrument as a recruiter for your target audience. The instrument should use bold fonts for headers and labels, use soft colors judiciously, and leave breaks and empty space on the paper or screen so the survey is not cluttered. Too many words are a distraction. Give specific directions and ask the participants to respond. If a paper survey is used, the cover sheet serves as the informed consent; in this case, the participant's signature and a witness's signature are required. For online surveys, most institutional review boards or research ethics committees accept clicking through to the next web page or screen as the participant's assent. At the end of the survey, include a note thanking respondents for their time, or simply write “Thank you.” Some researchers add a sentence that allows respondents to request a copy of the results. This is a nice gesture, but an abstract of the findings is a better choice than raw data.

An important consideration is where to place demographic questions on the survey instrument, because this information is needed to describe the characteristics of the respondents. Traditionally trained survey researchers position demographic questions at the end of the survey so that respondents answer the research questions first and only then answer personal questions that may be perceived as offensive. However, studies have found that placing demographic questions at the beginning of a survey increased the response rate for the demographic questions and did not affect the response rate for the non-demographic questions.18,19 Placement may therefore be a matter of convenience with no effect on the responses provided, but researchers should select demographic questions and their placement with the same thoughtful process used for all other questions.20,21 Regardless of placement, weekly reminders are needed to achieve the best possible response rate. If the survey instrument is delivered by e-mail, incentives can also be offered to those who return the survey. Other strategies to increase response are to contact participants personally, resample by widening the inclusion criteria, and recruit supervisors who are aware of the survey to encourage their employees to respond.

Analysis

Data should be reported in a structured, stepwise approach, and a table is a good visual for presenting this information. Provide a rationale for the statistics used, state the unit of measurement if applicable, and comment on whether the data met the assumptions of the statistics used. Creswell and Creswell9 provide a process for presenting results. The first step is to report the number of returned and non-returned surveys. This is best presented in table form along with demographic data, with special attention to respondents and non-respondents. Demographic data describe characteristics of the respondents, such as the number of males and females, educational levels, ages, and occupations, and provide a picture of who responded. For surveys sent via postal mail, low response rates can be problematic because a 70% response rate was once considered optimal.9 However, for e-mailed surveys or surveys posted to social media, a 45% response rate is the current acceptable norm.22 Next, discuss whether response bias was accounted for by determining whether non-respondents had any effect on survey estimates. In other words, would the overall results of the survey differ if the non-respondents had responded? It is important to ensure that the demographics of the respondents and non-respondents are similar, that is, that the respondents are representative of the larger population.
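The first reporting step, counting returned and non-returned surveys, and a simple check for non-response bias can be sketched as follows. The check assumes that at least one demographic variable (for example, sex or credential) is known for everyone in the sampling frame, such as from a membership list; the two-proportion z test is our choice of method (for a 2 × 2 table it is equivalent to an uncorrected chi-square test), and the function names are hypothetical.

```python
from statistics import NormalDist


def response_rate(returned, distributed):
    """Percentage of distributed surveys that were returned."""
    if distributed <= 0 or not 0 <= returned <= distributed:
        raise ValueError("need distributed > 0 and 0 <= returned <= distributed")
    return 100.0 * returned / distributed


def two_proportion_z_test(x1, n1, x2, n2):
    """Compare a proportion (e.g., % female) between two groups, such as
    respondents (x1 of n1) and non-respondents (x2 of n2).

    Returns (z, two-sided p value) using the pooled-proportion standard error.
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = (pooled * (1 - pooled) * (1 / n1 + 1 / n2)) ** 0.5
    if se == 0:
        # Everyone (or no one) has the characteristic: no detectable difference.
        return 0.0, 1.0
    z = (p1 - p2) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value
```

A small p value, as in `two_proportion_z_test(70, 100, 30, 100)`, would suggest that respondents and non-respondents differ on that characteristic, so the results may not generalize; a large p value does not by itself prove that the groups are alike.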

Provide details of the methods by describing each independent and dependent variable. For continuous data, report means, standard deviations, and ranges; for data that are ordinal or not normally distributed, provide nonparametric summary data, such as medians and interquartile ranges; and for nominal data, provide proportions. This information can be included in a table, an appendix, or an online supplement, or summarized in the body of the paper. If a newly created instrument is used, describe how scales were built, for example by using factor analysis, which requires statistical software that can identify which items group together into underlying constructs. This analysis can also support reliability and validity measures such as internal consistency and construct validity. Regardless, report all procedures and statistical programs used so that readers can understand the rationale for the mathematical functions applied. P values for tests of significance should be provided as well.
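The summaries described above can be organized by data type. In this Python sketch, the 5-point Likert coding, the list of non-substantive options (taken from the “don't know” style options recommended earlier), and the function names are hypothetical; using the median and interquartile range as the nonparametric summary is our choice, and non-substantive answers are counted separately rather than scored.

```python
from collections import Counter
from statistics import mean, median, quantiles, stdev

# Hypothetical non-substantive options, excluded from scale summaries.
NON_SUBSTANTIVE = {"don't know", "don't wish to answer", "not applicable"}

# Hypothetical 5-point Likert coding.
LIKERT = {"strongly disagree": 1, "disagree": 2, "neutral": 3,
          "agree": 4, "strongly agree": 5}


def summarize_continuous(values):
    """Mean, standard deviation, and range for continuous data."""
    return {"n": len(values), "mean": mean(values), "sd": stdev(values),
            "min": min(values), "max": max(values)}


def summarize_ordinal(responses, coding=LIKERT):
    """Median and interquartile range of coded responses.

    Non-substantive answers are counted separately instead of being given a
    scale value; any other response missing from `coding` raises KeyError.
    """
    scored = [coding[r] for r in responses if r not in NON_SUBSTANTIVE]
    q1, _, q3 = quantiles(scored, n=4)
    return {"n": len(scored), "median": median(scored), "iqr": q3 - q1,
            "non_substantive": len(responses) - len(scored)}


def summarize_nominal(responses):
    """Proportion of responses in each category."""
    counts = Counter(responses)
    total = len(responses)
    return {category: count / total for category, count in counts.items()}
```

Reporting the count of non-substantive answers alongside the median keeps readers aware of how many respondents did not choose a scale point.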

Reporting Results

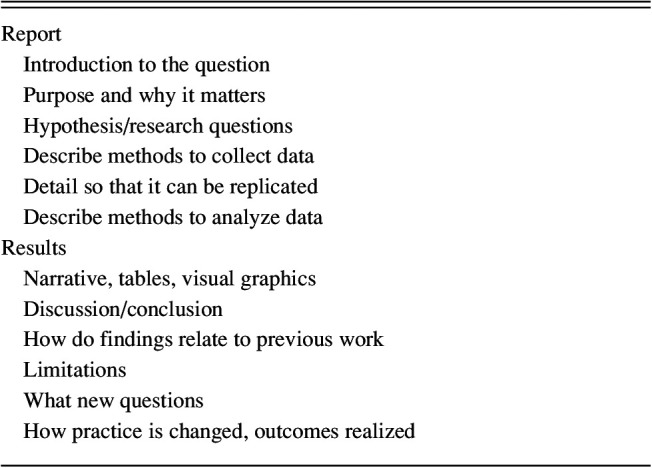

Rigorous discussion sections are needed that specifically address how the results relate to the study questions; list strengths and weaknesses as well as sources of bias or imprecision; and, importantly, state whether any generalizations can be made. Guidance is lacking, and there is no true consensus on the optimal reporting of survey results.23 Organizations in various fields (psychology, information systems) have attempted to create reporting checklists, but no consensus was found in the literature. The scientific method of reporting is a good model, but a more detailed approach for survey research is presented in Table 4. Two examples of survey research with discussion sections written by respiratory therapists as sole authors are Becker24 and Goodfellow.25 Above all, researchers should strive for transparency, reproducibility, and properly sized studies in survey research.

Table 4.

Reporting Results

Summary

Survey research requires concentration and many upfront tasks before the study begins. It is important to ask the right questions and to have the best instrument available. The population should be defined, and sample size, effect size, and validity measures should be determined so that the survey can answer the research questions. Analysis of the responses by statistical tests and descriptive measures should be provided in enough detail to allow the reader to visualize the respondents and the results. Lastly, the conclusions and any recommendations should be supported by evidence in a clear and objective manner. Well-developed reporting guidelines are needed for researchers who conduct survey research.

Footnotes

Dr Goodfellow presented a version of this paper at both the AARC Summer Forum, held July 25, 2022, in Palm Springs, California, and AARC Congress 2022, held November 9–12, 2022, in New Orleans, Louisiana.

No funding support was received.

Dr Goodfellow has relationships with Respiratory Care as a section editor, with the American Association for Respiratory Care as Director of Clinical Practice Guidelines Development, and with Georgia State University as Professor of Respiratory Therapy.

REFERENCES

- 1. Durbin CG Jr. How to come up with a good research question: framing the hypothesis. Respir Care 2004;49(10):1195–1198.

- 2. Rubenfeld GD. Surveys: an introduction. Respir Care 2004;49(10):1181–1185.

- 3. Tait AR, Voepel-Lewis T. Survey research: it's just a few questions, right? Paediatr Anaesth 2015;25(7):656–662.

- 4. Story DA, Tait AR. Survey research. Anesthesiology 2019;130(2):192–202.

- 5. Quach S, Reise K, McGregor C, Papaconstantinou E, Nonoyama ML. A Delphi survey of Canadian respiratory therapists' practice statements on pediatric mechanical ventilation. Respir Care 2022;67(11):1420–1436.

- 6. Miller AG, Roberts KJ, Smith BJ, Burr KL, Hinkson CR, Hoerr CA, et al. Prevalence of burnout among respiratory therapists amid the COVID-19 pandemic. Respir Care 2021;66(11):1639–1648.

- 7. Willis LD, Spray BJ, Edmondson E, Pruss K, Jambhekar SK. Positive airway pressure device care and cleaning practices in the pediatric home. Respir Care 2023;68(1):87–91.

- 8. Borg WR, Gall JP, Gall MD. Applying educational research: a practical guide. 3rd edition. White Plains, New York: Longman; 1993.

- 9. Creswell JW, Creswell JD. Research design: qualitative, quantitative, and mixed methods approaches. 5th edition. Los Angeles: Sage; 2018.

- 10. Informed Consent Guidance - How to Prepare a Readable Consent Form. https://www.hopkinsmedicine.org/institutional_review_board/guidelines_policies/guidelines/informed_consent_ii.html#:∼:text=The%20JHM%20IRB%20recommends%20that,than%20an%208th%20grade%20level. Accessed February 22, 2023.

- 11. Grady C. Institutional review boards: purposes and challenges. Chest 2015;148(5):1148–1155.

- 12. American Association for Public Opinion Research. 2014. https://www-archive.aapor.org/Standards-Ethics/Institutional-Review-Boards/Full-AAPOR-IRB-Statement.aspx. Accessed February 22, 2023.

- 13. Babbie E. The basics of social research. 4th edition. Belmont, California: Thomson Wadsworth; 2008.

- 14. Fink A, Bourque LB, Fielder EP. The survey kit: how to conduct self-administered and mail surveys. 2nd edition. Thousand Oaks, California: Sage; 2003.

- 15. Taherdoost H. Sampling methods in research methodology: how to choose a sampling technique for research. Int J Acad Res Manage 2016;5(2):18–27.

- 16. Stelfox HT, Crimi C, Berra L, Noto A, Schmidt U, Bigatello LM, Hess D. Determinants of tracheostomy decannulation: an international survey. Crit Care 2008;12(1):R26.

- 17. Google Scholar. Search: pros and cons of online survey, 2019 onward. https://scholar.google.com/scholar?as_ylo=2019&q=pros+and+cons+of+online+survey&hl=en&a. Accessed February 19, 2023.

- 18. Teclaw R, Price MV, Osatuke K. Demographic question placement: effect on item response rates and means of a Veterans Health Administration survey. J Bus Psychol 2012;27(3):281–290.

- 19. Drummond FJ, Sharp L, Carsin A-E, Kelleher T, Comber H. Questionnaire order significantly increased response to a postal survey sent to primary care physicians. J Clin Epidemiol 2008;61(2):177–185.

- 20. Hughes JL, Camden AA, Yangchen T. Rethinking and updating demographic questions: guidance to improve descriptions of research samples. Psi Chi J Psychol Res 2016;21(3):138–151.

- 21. Green RG, Murphy KD, Snyder SM. Should demographics be placed at the end or at the beginning of mailed questionnaires? An empirical answer to a persistent methodological question. Social Work Res 2000;24(4):237–241.

- 22. Leslie LL. Increasing response rates to long questionnaires. J Educ Res 1970;63(8):347–350.

- 23. Bennett C, Khangura S, Brehaut JC, Graham ID, Moher D, Potter BK, Grimshaw JM. Reporting guidelines for survey research: an analysis of published guidance and reporting practices. PLoS Med 2011;8(8):e1001069.

- 24. Becker EA. Respiratory care managers' preference regarding baccalaureate and master's degree education for respiratory therapists. Respir Care 2003;48(9):840–858.

- 25. Goodfellow LT. Respiratory therapists and critical thinking behaviors: a self-assessment. J Allied Health 2001;30(1):20–25.