Abstract

Preknowledge cheating jeopardizes the validity of inferences based on test results. Many methods have been developed to detect preknowledge cheating by jointly analyzing item responses and response times. Gaze fixations, an essential eye-tracker measure, can be utilized to help detect aberrant testing behavior with improved accuracy beyond using product and process data types in isolation. As such, this study proposes a mixture hierarchical model that integrates item responses, response times, and visual fixation counts collected from an eye-tracker (a) to detect aberrant test takers who have different levels of preknowledge and (b) to account for nuances in behavioral patterns between normally-behaved and aberrant examinees. A Bayesian approach to estimating model parameters is carried out via an MCMC algorithm. Finally, the proposed model is applied to experimental data to illustrate how the model can be used to identify test takers having preknowledge on the test items.

Keywords: technology enhanced assessment, joint modeling, item response theory, response times, gaze-fixation counts, eye-tracking

The growing popularity of remote, visual-based assessment and testing necessitates continual upgrading of the tools used to assess test takers’ behaviors. For instance, many at-home testing programs were launched during the COVID-19 pandemic, offering test takers a safe and convenient option to take their exams at home rather than at a test center. In such remote and difficult-to-supervise settings, how to ensure that test takers actively and appropriately answer questions without cheating is an essential and timely question. In response, several testing companies currently use online human proctors to remotely monitor test-taking activity and performance. An obvious, primary goal of such a proctoring system was to reduce the prevalence of cheating behavior while test centers were closed due to travel restrictions and lockdown conditions.

However, shortcomings and limitations of online human proctoring systems have been recognized by educators and practitioners, and exploited by students. Many are related to test security. For example, the actual test taker could ask or hire someone to impersonate them and take the exam in their place. This could occur at different stages of the examination delivery process, especially during a “water- or bathroom-break,” and could be considered a new form of copy-cheating. Using advanced technology such as micro-inductive earpieces, micro-projectors, and cameras is another way “tech-savvy” students can cheat during online proctored exams. Students, for instance, may hide micro-cameras facing the laptop screen to record questions. Then, “expert tutors” can provide answers via micro-inductive earpieces, which are hard to detect even when the test taker is monitored by an online proctor. Finally, cheating can occur by writing short, small notes and concealing them from the online-proctor camera. As mice adapt their behaviors to avoid more clever mousetraps, some test takers will continue to find ways to exploit testing environments that are administered online. Although these aberrant testing behaviors may seem far-fetched, they have nonetheless been flagged in online proctored exams, undermining the inferences drawn from possibly contaminated test scores.

As a potential solution, eye-tracking technology could be utilized to monitor examinees’ online test-taking behaviors in a noninvasive manner. Many open-source pupil-detection algorithms have been developed and deployed, allowing a low-cost web-based camera to capture eye movements in conjunction with gaze estimation. This technology is an attractive alternative to online human proctoring because of its accessibility and affordability, especially for large-scale educational assessments taken at home (Fuhl, Santini et al., 2016; Fuhl, Tonsen et al., 2016). Eye-tracking involves measuring either where the eye is focused or the motion of the eye as individual examinees view test items delivered online. Their gaze patterns indicate (a) where on the screen they are gazing; (b) how long they look at an item; (c) when their focus shifts from item to item; (d) which facets of the online interface they miss; and (e) whether the eye-related measures come from the same or different persons (Man & Harring, 2021). Therefore, potential cheating behaviors could be detected by analyzing the intensively collected eye-movement indicators from an eye-tracker along with other multimodal data such as item responses and response times. All this disparate yet complementary information could be utilized to detect aberrant test-taking behaviors like preknowledge cheating.

Among the many types of cheating, preknowledge cheating is considered a prominent, ongoing issue that testing companies are eager to resolve, especially in online testing settings. Item preknowledge refers to some test takers having prior access to test questions and/or answers before taking the assessment (McLeod et al., 2003; Sinharay, 2020). In remote test-taking settings, the concept of preknowledge cheating is extended to include cases in which tech-savvy test takers use high-tech devices to record questions and then receive help from “expert tutors” in unexpected ways. Detection of this type of fraudulent testing behavior relies heavily on statistical analysis of multimodal data because the patterns of cheating are camouflaged and concealed, showing few external signs that a proctor could discern (Bliss, 2012; Toton & Maynes, 2019). This is because the retrieval of answers, whether received from “tutors” via high-tech devices or recalled from items memorized during “breaks,” occurs internally rather than externally (Toton & Maynes, 2019).

Many methods have been proposed to detect preknowledge cheating, including person-fit statistics, experiment-based evaluation, and data-mining-based methods, which analyze either item response patterns or response time patterns. Of the many indices, a few popular, representative person-fit statistics based on item responses are the Guttman error (Guttman, 1944), the $l_z$ index (Drasgow et al., 1985), and the $H^T$ index (Sijtsma & Meijer, 1992). Representative indices based on item response times are RT residual-based methods (Qian et al., 2016; van der Linden & Guo, 2008) and the indices proposed by Man et al. (2018), Fox and Marianti (2017; see also Marianti et al., 2014), and Sinharay (2018).

However, few attempts have been made to jointly model eye-tracking indicators with item responses and response times in a unified framework to detect preknowledge cheating. In this study, a multilevel mixture (ML-mixture) three-way factor model is proposed to detect test takers having preknowledge of test items by jointly modeling item responses, RTs, and visual fixation counts (VFCs). The proposed model, an extension of the Bayesian multilevel modeling framework proposed by van der Linden (2007) and Wang and Xu (2015), allows the associations among the latent factors underlying item responses, RTs, and VFCs (ability, working speed, and test engagement, respectively) to be investigated across latent classes.

In this three-way ML-mixture modeling approach, the Rasch model, an RT model, and a visual fixation counts model are specified at the measurement level. The variance-covariance structures of the person-side and item-side parameters are specified at level two. Bayesian estimation is used to estimate the proposed three-way ML-mixture joint model. Experimental data collected in an eye-tracking lab will be analyzed. The data come from a study in which participants were randomly assigned to one of three treatment conditions.

Multilevel Mixture Model Construction

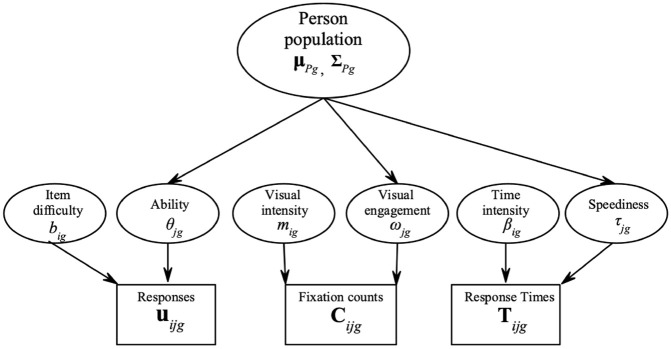

The ML-mixture model (visualized in Figure 1) assumes that two latent classes exist among test takers, and each latent class captures a specific type of testing behavior, such as normal responding and preknowledge cheating. To identify the two latent groups via item responses, response times, and visual fixation counts, three conditional mixture probabilities are specified for item responses, response times, and visual fixations, respectively.

Figure 1.

ML-Mixture Three-Way Joint Model of Item Response, Response Time, and Visual Fixation Counts. $\boldsymbol{\mu}_{P_g}$, Mean Vector of Person-Side Parameters; $\boldsymbol{\Sigma}_{P_g}$, Covariance of Person-Side Parameters; $g$ Indicates Different Latent Class

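For item responses, the conditional probability for a correct response can be specified as

$$P(Y_{ij} = 1) = \pi_0 P_{ij0} + \pi_1 P_{ij1}, \tag{1}$$

where $P_{ij0}$ is the probability of a correct response to item $i$ ($i = 1, \ldots, I$) by person $j$ ($j = 1, \ldots, J$) in the normally behaved responding group, while $P_{ij1}$ quantifies the probability of a correct response from a test taker who has item preknowledge. The mixing proportions $\pi_0$ and $\pi_1$ are the probabilities of belonging to the normally behaved and preknowledge latent classes, respectively, with $\pi_0 + \pi_1 = 1$.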

Similarly, for response times, the conditional density of the response time required for test taker $j$ to respond to item $i$ can be specified as

$$f(t_{ij}) = \pi_0 f_0(t_{ij}) + \pi_1 f_1(t_{ij}), \tag{2}$$

where $f(t_{ij})$ is the density of the observed response times across the latent classes, $f_1(t_{ij})$ represents the probability density for the time spent by a preknowledge cheater on answering an item, $f_0(t_{ij})$ denotes the probability density for the time spent by a normally behaved test taker on answering an item, and $\pi_0$ and $\pi_1$ are the class mixing proportions.

In parallel, for visual fixation counts, the conditional probability of the number of visual fixations required for test taker $j$ to respond to item $i$ can be specified as

$$h(v_{ij}) = \pi_0 h_0(v_{ij}) + \pi_1 h_1(v_{ij}), \tag{3}$$

where $h(v_{ij})$ is the probability function of the collected visual fixation counts across the latent classes, $h_1(v_{ij})$ represents the probability of the number of visual fixations generated by a preknowledge cheater while answering an item, $h_0(v_{ij})$ denotes the probability of the number of visual fixations generated by a normally behaved test taker while answering an item, and $\pi_0$ and $\pi_1$ are the class mixing proportions.

To estimate the conditional probabilities for item responses, response times, and visual fixation counts, three respective measurement models (a one-parameter logistic model, a lognormal response time model, and a visual fixation counts model) are specified at the measurement level of the multilevel structure to facilitate identifying the latent classes.

Measurement Models

Item Response Model

A class-specific 1-PL model (i.e., the Rasch model; Lord, 1952; Rasch, 1960) was chosen to model the relation between item responses and the latent ability reflecting responding accuracy within each latent class. The model is specified as

$$P_{ijg} = P(Y_{ij} = 1 \mid \theta_j, b_{ig}) = \frac{\exp(\theta_j - b_{ig})}{1 + \exp(\theta_j - b_{ig})}, \tag{4}$$

where $P_{ijg}$ is the probability of a correct response to item $i$ ($i = 1, \ldots, I$) by person $j$ ($j = 1, \ldots, J$) in latent class $g$, where $g$ only takes on the values of 0 or 1, indicating normal or aberrant responding behaviors, respectively. The parameter $b_{ig}$ denotes the difficulty of item $i$ in latent class $g$, and $\theta_j$ is a general latent ability parameter for person $j$. By fitting the Rasch model across the latent classes, nuances in responding patterns between normally behaved test takers and those having item preknowledge can be detected.
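As a minimal sketch of how a class-specific Rasch probability behaves, the snippet below evaluates the standard Rasch response function for one item under two difficulty values. The function name `rasch_prob` and all numeric values are illustrative assumptions, not estimates from this study.

```python
import math

def rasch_prob(theta: float, b: float) -> float:
    """Probability of a correct response under the Rasch (1-PL) model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

# Hypothetical class-specific difficulties for one item (illustrative only).
b_normal = 0.5          # difficulty in the normally behaved class
b_preknowledge = -1.5   # lower difficulty in the preknowledge class
theta = 0.0             # a test taker of average ability

p_normal = rasch_prob(theta, b_normal)
p_preknowledge = rasch_prob(theta, b_preknowledge)
print(f"normal: {p_normal:.3f}, preknowledge: {p_preknowledge:.3f}")
```

Under these assumed values, the same test taker is far more likely to answer correctly when the item behaves as it does in the preknowledge class, which is the kind of contrast the mixture model relies on.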

Response Time Model

In addition to the 1-PL model for item responses, a log-normal RT model (van der Linden, 2006) is selected to depict a test taker’s responding speed. The log-normal RT model for a specific latent class $g$ is defined as

$$\log t_{ij} \mid g \sim N\!\left(\beta_{ig} - \tau_j,\ \alpha_{ig}^{-2}\right), \tag{5}$$

where $\log t_{ij}$ denotes the log-transformed RT that test taker $j$ used to decode item $i$. A person-side latent parameter, $\tau_j$, denotes the working efficiency of test taker $j$ in decoding a set of items. The item parameter $\beta_{ig}$ indicates time intensity, that is, the average of $\log t_{ij}$ for item $i$. Parameter $\alpha_{ig}$ represents the item time discrimination parameter reflecting the dispersion of $\log t_{ij}$ for item $i$. Akin to the class-specific 1-PL model, by modeling the person-side parameters in different latent classes, the behavioral differences in overall working efficiency between normally behaved test takers and cheaters can also be investigated.
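The following sketch evaluates the log-density of a log response time under a log-normal RT model of the van der Linden (2006) type, in which the log time is normal with mean equal to time intensity minus working speed and standard deviation equal to the inverse of the time discrimination. The function name and all parameter values are assumptions chosen for illustration.

```python
import math

def log_rt_logpdf(log_t: float, beta: float, tau: float, alpha: float) -> float:
    """Log-density of a log response time distributed Normal(beta - tau, 1 / alpha**2)."""
    z = alpha * (log_t - (beta - tau))
    return math.log(alpha) - 0.5 * math.log(2.0 * math.pi) - 0.5 * z * z

# Hypothetical values (illustrative only): an 8-second response to one item.
log_t = math.log(8.0)
tau = 0.0                 # average working speed
alpha = 2.0               # time discrimination, shared by both classes here
beta_normal = 3.0         # time intensity in the normally behaved class
beta_preknowledge = 2.0   # lower time intensity in the preknowledge class

ll_normal = log_rt_logpdf(log_t, beta_normal, tau, alpha)
ll_preknowledge = log_rt_logpdf(log_t, beta_preknowledge, tau, alpha)
print(f"log-density, normal: {ll_normal:.3f}; preknowledge: {ll_preknowledge:.3f}")
```

With these assumed values, a short response time is better explained by the class with the lower time intensity.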

Visual Fixation Counts Model

Following Man and Harring (2019), a negative binomial fixation (NBF) model is used to model the association between observed visual fixation counts and latent visual engagement, which is specified as

$$V_{ij} \mid g \sim \text{NB}\!\left(\mu_{ijg},\ r_{ig}\right), \qquad \mu_{ijg} = \exp(m_{ig} + \omega_j), \tag{6}$$

where $V_{ij}$ indicates the observed fixation count generated by test taker $j$ on answering item $i$, and its expectation is denoted as $\mu_{ijg}$; the item-side parameter $m_{ig}$ depicts the visual intensity of item $i$, which reflects the average amount of visual effort needed for a group of examinees to complete the item. The person-specific parameter, $\omega_j$, represents an individual’s test engagement level across all the items. In addition, an item-specific visual discrimination parameter, $\kappa_{ig}$, is defined as a function of $r_{ig}$, where $r_{ig} > 0$ denotes the shape parameter of item $i$, indicating the dispersion of the fixation counts on item $i$.
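A small numerical sketch of a negative binomial count model with a log-linear mean may help make the roles of the parameters concrete. It assumes the mean-and-shape parameterization (variance equal to the mean plus the squared mean divided by the shape); the parameterization, the function name, and all values are assumptions for illustration rather than the authors' exact specification.

```python
import math

def nb_logpmf(v: int, mu: float, r: float) -> float:
    """Log-pmf of a negative binomial count with mean mu and shape r."""
    return (math.lgamma(v + r) - math.lgamma(r) - math.lgamma(v + 1)
            + r * math.log(r / (r + mu)) + v * math.log(mu / (r + mu)))

# Hypothetical values (illustrative only).
m = 3.2       # visual intensity of the item on the log scale
omega = 0.0   # average visual engagement
r = 5.0       # shape parameter; smaller values mean more overdispersion
mu = math.exp(m + omega)   # expected fixation count

# Numerical check that the pmf sums to one and has the intended mean.
support = range(0, 2001)
pmf = [math.exp(nb_logpmf(v, mu, r)) for v in support]
total = sum(pmf)
mean = sum(v * p for v, p in zip(support, pmf))
print(f"expected count: {mu:.2f}, pmf total: {total:.6f}, pmf mean: {mean:.2f}")
```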

Person-Side Structural Model at Level Two

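The structural model incorporates one person-domain variance-covariance matrix describing the dependencies among three person-side parameters, which are (a) latent ability $\theta_j$, (b) working efficiency $\tau_j$, and (c) visual engagement $\omega_j$, estimated from the level-1 measurement models for each latent class. Similar to the hierarchical model proposed by van der Linden (2007), these three person-side latent variables are assumed to follow a multivariate normal distribution such that

$$(\theta_j, \tau_j, \omega_j)^{\top} \mid g \sim \text{MVN}\!\left(\boldsymbol{\mu}_{P_g}, \boldsymbol{\Sigma}_{P_g}\right), \tag{7}$$

with mean vector, $\boldsymbol{\mu}_{P_g} = (\mu_{\theta g}, \mu_{\tau g}, \mu_{\omega g})^{\top}$, and covariance matrix

$$\boldsymbol{\Sigma}_{P_g} = \begin{pmatrix} \sigma^2_{\theta g} & \sigma_{\theta\tau g} & \sigma_{\theta\omega g} \\ \sigma_{\theta\tau g} & \sigma^2_{\tau g} & \sigma_{\tau\omega g} \\ \sigma_{\theta\omega g} & \sigma_{\tau\omega g} & \sigma^2_{\omega g} \end{pmatrix}. \tag{8}$$

The variances of the latent constructs are represented by the diagonal components of $\boldsymbol{\Sigma}_{P_g}$, while the covariances between any pair of latent constructs are represented by the off-diagonal components. The correlation between latent ability and speediness of test takers in a specific latent class, for example, can be computed in a straightforward manner from the variance and covariance components of $\boldsymbol{\Sigma}_{P_g}$ as $\rho_{\theta\tau g} = \sigma_{\theta\tau g} / \sqrt{\sigma^2_{\theta g}\,\sigma^2_{\tau g}}$.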
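The conversion from a person-side covariance matrix to correlations can be sketched as follows. The matrix entries are hypothetical and the helper name `cov_to_corr` is an assumption; the computation itself is the usual division of each covariance by the product of the two standard deviations.

```python
import math

def cov_to_corr(cov):
    """Convert a covariance matrix (list of lists) to a correlation matrix."""
    sd = [math.sqrt(cov[k][k]) for k in range(len(cov))]
    return [[cov[a][c] / (sd[a] * sd[c]) for c in range(len(cov))]
            for a in range(len(cov))]

# Hypothetical class-specific covariance matrix (illustrative only);
# rows and columns are ordered as ability, working efficiency, visual engagement.
sigma_p = [
    [1.00, -0.30, 0.20],
    [-0.30, 0.25, -0.10],
    [0.20, -0.10, 0.16],
]
corr = cov_to_corr(sigma_p)
rho_ability_speed = corr[0][1]
print(round(rho_ability_speed, 2))
```

Comparing such correlation matrices between the two latent classes is one way to read the structural differences described in this section.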

By estimating class-specific parameters in the person-domain structural variance-covariance matrices, the relations among person parameters can be manifested across latent classes. Structural differences across latent classes represent distinct test-taking behavioral patterns regarding the normally behaved test takers and the ones who have preknowledge of test items.

Figure 1 displays the graphical representation of the ML-mixture model that jointly models item responses, response times, and visual fixation counts across latent classes.

Testing Differential Item Functioning Across Latent Classes

Differential item functioning (DIF) occurs when different groups of test takers respond differently to the same item (Hambleton et al., 1991; Smith & Prometric, 2004). Typically, DIF is assessed based on observed grouping variables like gender or SES. However, in a mixture modeling framework, DIF is evaluated based on the latent classes, which relies on the assumption that the latent classes have been correctly identified.

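To examine whether item DIF exists across latent classes, standardized Wald tests of the item DIF in the item parameters (i.e., $b_i$, $\beta_i$, $m_i$) were conducted (Man & Harring, 2019). These are defined as

$$W_{b_i} = \frac{\overline{\text{DIF}}_{b_i}}{SD\!\left(\text{DIF}_{b_i}\right)}, \qquad W_{\beta_i} = \frac{\overline{\text{DIF}}_{\beta_i}}{SD\!\left(\text{DIF}_{\beta_i}\right)}, \qquad W_{m_i} = \frac{\overline{\text{DIF}}_{m_i}}{SD\!\left(\text{DIF}_{m_i}\right)},$$

where $\overline{\text{DIF}}$ indicates the posterior mean difference in the corresponding item parameter, while $SD(\text{DIF})$ indicates the posterior standard deviation of that difference. For instance, DIF in item difficulties is defined as $\text{DIF}_{b_i} = b_{i0} - b_{i1}$, where the second subscript indexes the latent class. For example, $\text{DIF}_{b_1}$ describes the DIF in difficulty for item 1 between the two latent classes. The calculated $W$ test statistics are compared with the corresponding critical value of 1.96 at an $\alpha$ level of 0.05.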
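A standardized Wald statistic of this kind can be sketched from posterior draws as the mean of the class difference divided by its standard deviation. The draws below are simulated stand-ins, and the function name `wald_dif` is an assumption; in practice the draws would come from the fitted model.

```python
import random
import statistics

def wald_dif(draws_class0, draws_class1):
    """Posterior mean of the class difference divided by its posterior SD."""
    diffs = [x0 - x1 for x0, x1 in zip(draws_class0, draws_class1)]
    return statistics.fmean(diffs) / statistics.stdev(diffs)

# Simulated stand-ins for MCMC draws of one item's difficulty in each class
# (hypothetical values; real draws would come from the fitted model).
rng = random.Random(2024)
b_class0 = [rng.gauss(0.5, 0.3) for _ in range(2000)]
b_class1 = [rng.gauss(-1.5, 0.3) for _ in range(2000)]

w = wald_dif(b_class0, b_class1)
flagged = abs(w) > 1.96   # two-sided comparison at alpha = 0.05
print(f"W = {w:.2f}, DIF flagged: {flagged}")
```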

Bayesian Estimation Using MCMC Sampling

Just Another Gibbs Sampler (JAGS; Plummer, 2015), a Bayesian estimation tool accessed through the R2jags package (Su & Yajima, 2015), was used for model parameter estimation, and the coda package was used to assess model convergence. Four chains of 60,000 total iterations each were run with a thinning interval of four to reduce autocorrelation between draws. Model parameter estimates and standard deviations were summarized from the posterior densities using the last 10,000 iterations following a burn-in of 50,000 draws. Convergence of all model parameters was assessed with the potential scale reduction factor (PSRF; Gelman et al., 2003). A PSRF value of 1.2 or below for each model parameter was used as the convergence criterion in this study.
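To illustrate the convergence criterion, the sketch below computes a basic Gelman-Rubin PSRF for a single parameter from several equal-length chains. It is a simplified textbook version written for illustration, not the exact computation performed by the coda package, and the chains are simulated.

```python
import random
import statistics

def psrf(chains):
    """Potential scale reduction factor for one parameter from equal-length chains."""
    n = len(chains[0])
    chain_means = [statistics.fmean(c) for c in chains]
    within = statistics.fmean([statistics.variance(c) for c in chains])
    between = n * statistics.variance(chain_means)
    pooled = (n - 1) / n * within + between / n
    return (pooled / within) ** 0.5

rng = random.Random(7)
# Four simulated chains targeting the same distribution (hypothetical draws).
mixed = [[rng.gauss(0.0, 1.0) for _ in range(2500)] for _ in range(4)]
# Same setup, but one chain is stuck around a different value.
stuck = [list(c) for c in mixed[:3]] + [[rng.gauss(3.0, 1.0) for _ in range(2500)]]

r_mixed, r_stuck = psrf(mixed), psrf(stuck)
print(f"PSRF mixed: {r_mixed:.3f}, stuck: {r_stuck:.3f}")
print("converged:", r_mixed <= 1.2, r_stuck <= 1.2)
```

Chains that agree yield a value close to 1, whereas a chain exploring a different region inflates the between-chain variance and pushes the PSRF above the 1.2 cutoff.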

Model Identification and Scalability

According to Paek and Cho (2015), interpreting and further utilizing model parameters within a mixture IRT framework requires that the model is identified within each class and that parameter estimates across latent classes are on a common scale. We discuss each of these in turn explicating how we intend to satisfy both identifiability and scalability conditions.

To properly identify the scales of the latent variables, model constraints are needed either on the item side (fixing the sum of the item thresholds to zero) or on the person side (fixing the expectation of the latent ability parameter to zero). In this study, to ensure the identifiability of the model, constraints were placed on the person-side parameters. Within each latent class $g$, the population mean of the latent ability for the 1-PL model, $\mu_{\theta g}$, was set to 0 (Lord, 1952), and the item discrimination parameter for each item was fixed at one. For the log-normal RT model, the population mean of latent speediness, $\mu_{\tau g}$, was constrained to 0 as well (van der Linden, 2006). For the NBF model, the population mean of the latent person-side visual engagement parameter, $\mu_{\omega g}$, was also fixed to 0 (Man & Harring, 2019):

$$\boldsymbol{\mu}_{P_g} = (\mu_{\theta g}, \mu_{\tau g}, \mu_{\omega g})^{\top} = (0, 0, 0)^{\top}. \tag{9}$$

Paek and Cho (2015) note that when the estimation method (in this study, an MCMC algorithm within a Bayesian approach) accommodates the latent class population distributions as part of the modeling, constraints on the population ability distributions of the latent classes (i.e., $\boldsymbol{\mu}_{P_g} = \mathbf{0}$) ensure parameter estimate comparability within a latent class. A common scale was achieved across latent classes primarily because the two latent classes share the same population means and variances, which also holds in the real data because the experimental design randomly assigned test takers to one of the conditions.

Label Switching Identification for Mixture Distributions

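Identification is a potentially critical challenge in Bayesian estimation of mixture models. Identification of a mixture model requires that distinct parameter values result in distinct probability distributions. Identifiability of a mixture distribution is defined as follows (McLachlan & Peel, 2000). Let

$$f(y; \boldsymbol{\Psi}) = \sum_{k=1}^{K} \pi_k f_k(y; \boldsymbol{\xi}_k) \quad \text{and} \quad f(y; \boldsymbol{\Psi}^{*}) = \sum_{k=1}^{K^{*}} \pi_k^{*} f_k(y; \boldsymbol{\xi}_k^{*}) \tag{10}$$

be any two members of a parametric family of mixture densities, where $\boldsymbol{\xi}_k$ collects the parameters of the $k$th component. The family is identifiable if $f(y; \boldsymbol{\Psi}) \equiv f(y; \boldsymbol{\Psi}^{*})$ if and only if $K = K^{*}$ and the component labels can be permuted so that $\pi_k = \pi_k^{*}$ and $f_k(y; \boldsymbol{\xi}_k) \equiv f_k(y; \boldsymbol{\xi}_k^{*})$ for $k = 1, \ldots, K$. The sign $\equiv$ implies the equivalence of the densities of the observed $y$, where $y$ is the realization of the random vector $Y$ given the $K$-component mixture.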

The invariance of the likelihood under relabeling of the mixture components (Diebolt & Robert, 1994; Redner & Walker, 1984) is a serious problem that must be handled. Several methods have been proposed to tackle the issue. For example, Vermunt and Magidson (2005) imposed strongly informative priors to uniquely determine parameter estimates. Another commonly used method is to impose a set of identifiability constraints on the parameter space that help identify the latent components and prevent the latent class labels from switching across iterations within an MCMC chain (Cho et al., 2010; Stephens, 2000).

In this study, several constraints were imposed on the item-side parameters to differentiate the two latent groups: normally behaved and aberrantly behaved test takers. First, item difficulties ($b_{i1}$) for the preknowledge-cheating latent group are constrained to be lower than those for the normally behaved test takers ($b_{i0}$); that is, $b_{i1} < b_{i0}$. This is sensible because having preknowledge of test items could reduce the difficulty levels of the exposed items. Similarly, item time intensities ($\beta_{i1}$) for the preknowledge-cheating latent group are constrained to be lower than those for the normally behaved test takers; that is, $\beta_{i1} < \beta_{i0}$. Having preknowledge of test items could reduce the expected working time on the exposed items. Finally, item visual intensities ($m_{i1}$) for the preknowledge-cheating latent group are constrained to be lower than those for the normally behaved test takers; that is, $m_{i1} < m_{i0}$. We believe this is a sensible choice because having preknowledge of test items could reduce the expected visual effort spent on solving the exposed items.

Prior Distributions

Weakly informative priors are used in this study to increase the generalizability of our code by imposing vague prior beliefs on the parameters to be estimated. Priors were set in the same way in Man et al. (2022) and Man and Harring (2019). The prior specification for the person parameters across the two latent classes, referring to Equation 7, of the three-way joint model follows a trivariate normal distribution in which the means $\mu_{\theta g}$, $\mu_{\tau g}$, and $\mu_{\omega g}$ are all fixed to 0, and

$$\boldsymbol{\Sigma}_{P_g} \sim \text{Inverse-Wishart}\!\left(\mathbf{I}_g, \nu\right),$$

where $\mathbf{I}_g$ is a 3 × 3 identity matrix for a specific latent class $g$, and $\nu$ is the degrees of freedom, fixed at 3.

The prior distributions of the item parameters are specified separately for each latent class. For the normally behaved latent class,

For the latent class of test takers having preknowledge of the test items, label-switching constraints are imposed on the means of the item parameters by subtracting item DIFs, which are constrained to be larger than 0, from the corresponding item parameters of the first latent class. By applying these constraints to the means of the item difficulties, time intensities, and visual intensities of the second latent class, the two latent classes can be correctly identified. The prior of each item parameter for the preknowledge-cheating latent class was specified as

where $\text{DIF}_{b_i}, \text{DIF}_{\beta_i}, \text{DIF}_{m_i} > 0$, which are the item DIFs across latent classes defined previously.

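To identify the two latent classes, the class membership of test taker $j$ is assigned a categorical prior, $g_j \sim \text{dcat}(\boldsymbol{\pi})$. The probability mass function of the dcat distribution is defined as $P(g_j = g) = \pi_g$. Here $\boldsymbol{\pi} = (\pi_0, \pi_1)$ is a set of mixture proportion parameters, which must be non-zero and satisfy $\pi_0 + \pi_1 = 1$. Also, to sample $\boldsymbol{\pi}$ from a Dirichlet distribution, independent $\delta_g$, $g = 0$ or 1, are sampled from the Gamma distribution, $\text{Gamma}(a_g, 1)$, and normalized as $\pi_g = \delta_g / (\delta_0 + \delta_1)$ (Cho et al., 2013).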
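The Gamma-based route to Dirichlet-distributed mixing proportions can be sketched in a few lines: draw independent Gamma variates with unit scale and normalize them to sum to one. The shape parameters below are hypothetical, and the helper name is an assumption; in the study itself this step is handled inside JAGS.

```python
import random

def sample_mixing_proportions(shapes, rng):
    """Draw Dirichlet-distributed proportions by normalizing independent Gamma draws."""
    gammas = [rng.gammavariate(a, 1.0) for a in shapes]
    total = sum(gammas)
    return [g / total for g in gammas]

rng = random.Random(11)
# Hypothetical Dirichlet shape parameters for the two classes (illustrative only).
pi = sample_mixing_proportions([1.0, 1.0], rng)

# Draw a class label (0 = normally behaved, 1 = preknowledge) for five test takers.
labels = rng.choices([0, 1], weights=pi, k=5)
print([round(p, 3) for p in pi], labels)
```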

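The full joint likelihood function of person and item parameters for the ML-mixture model is as follows:

$$L = \prod_{j=1}^{J} \sum_{g=0}^{1} \pi_g \prod_{i=1}^{I} P_{ijg}^{\,y_{ij}} \left(1 - P_{ijg}\right)^{1 - y_{ij}} f\!\left(t_{ij} \mid \tau_j, \beta_{ig}, \alpha_{ig}\right) h\!\left(v_{ij} \mid \omega_j, m_{ig}, \kappa_{ig}\right),$$

where $P_{ij0}$ quantifies the probability of a correct response from a normally behaved test taker, while $1 - P_{ij0}$ quantifies the probability of an incorrect response from a normally behaved test taker. $b_{i0}$, $\beta_{i0}$, $\alpha_{i0}$, $m_{i0}$, and $\kappa_{i0}$ represent the item difficulty, time intensity, time discrimination, visual intensity, and visual discrimination parameters for the latent normally behaved group. Conversely, $P_{ij1}$ quantifies the probability of a correct response from a preknowledge cheater, and $b_{i1}$, $\beta_{i1}$, $\alpha_{i1}$, $m_{i1}$, and $\kappa_{i1}$ represent the item difficulty, time intensity, time discrimination, visual intensity, and visual discrimination parameters for the latent preknowledge-cheating group. In addition, $\pi_0 + \pi_1 = 1$.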
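As an illustrative sketch of how the three data sources combine to separate the classes, the code below applies Bayes' rule to one test taker: each class's mixing proportion is multiplied by the product of the Rasch, log-normal RT, and negative binomial likelihoods under that class's item parameters, and the results are normalized. It treats all parameters as known and holds the person parameters fixed across classes, which is a simplification; in the actual MCMC, class membership is sampled jointly with everything else. Function names, the mean-and-shape negative binomial parameterization, and every numeric value are assumptions.

```python
import math

def log_lik_item(y, log_t, v, theta, tau, omega, b, beta, alpha, m, r):
    """Joint log-likelihood of one response, log response time, and fixation count."""
    p = 1.0 / (1.0 + math.exp(-(theta - b)))            # Rasch probability
    ll_y = math.log(p) if y == 1 else math.log(1.0 - p)
    z = alpha * (log_t - (beta - tau))                  # log-normal RT model
    ll_t = math.log(alpha) - 0.5 * math.log(2.0 * math.pi) - 0.5 * z * z
    mu = math.exp(m + omega)                            # negative binomial mean
    ll_v = (math.lgamma(v + r) - math.lgamma(r) - math.lgamma(v + 1)
            + r * math.log(r / (r + mu)) + v * math.log(mu / (r + mu)))
    return ll_y + ll_t + ll_v

def class_posterior(data, person, item_params, mix):
    """Posterior class probabilities via Bayes' rule, using log-sum-exp for stability."""
    log_post = []
    for g, pi_g in enumerate(mix):
        ll = math.log(pi_g)
        for (y, log_t, v), item in zip(data, item_params[g]):
            ll += log_lik_item(y, log_t, v, *person, *item)
        log_post.append(ll)
    top = max(log_post)
    weights = [math.exp(lp - top) for lp in log_post]
    return [w / sum(weights) for w in weights]

# Hypothetical test taker: correct, fast, and with few fixations on two items.
data = [(1, math.log(6.0), 18), (1, math.log(8.0), 22)]
person = (0.0, 0.0, 0.0)  # ability, working speed, visual engagement
# Hypothetical item parameters per class: (b, beta, alpha, m, r) for each item.
item_params = [
    [(0.5, 3.0, 2.0, 4.0, 5.0), (0.5, 3.0, 2.0, 4.0, 5.0)],    # class 0: normal
    [(-1.5, 2.0, 2.0, 3.0, 5.0), (-1.5, 2.0, 2.0, 3.0, 5.0)],  # class 1: preknowledge
]
posterior = class_posterior(data, person, item_params, mix=[0.5, 0.5])
print([round(p, 4) for p in posterior])
```

Because all three data types point in the same direction for this hypothetical test taker, the posterior weight concentrates on the preknowledge class.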

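The joint posterior probability for the proposed model can be represented as

$$p\!\left(\boldsymbol{\theta}, \boldsymbol{\tau}, \boldsymbol{\omega}, \mathbf{b}, \boldsymbol{\beta}, \boldsymbol{\alpha}, \mathbf{m}, \boldsymbol{\kappa}, \boldsymbol{\pi}, \boldsymbol{\Sigma}_{P} \mid \mathbf{y}, \mathbf{t}, \mathbf{v}\right) \propto L \times p\!\left(\boldsymbol{\theta}, \boldsymbol{\tau}, \boldsymbol{\omega} \mid \boldsymbol{\mu}_{P}, \boldsymbol{\Sigma}_{P}\right) p\!\left(\boldsymbol{\Sigma}_{P}\right) p\!\left(\mathbf{b}, \boldsymbol{\beta}, \boldsymbol{\alpha}, \mathbf{m}, \boldsymbol{\kappa}\right) p(\boldsymbol{\pi}).$$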

Outcome Measures for Model Selection and Classification Accuracy Evaluation

To evaluate the performance of the proposed method in classifying aberrant and non-aberrant test takers, the numbers of test takers in each of these categories were cross-tabulated. Schematically, these are true positives, false positives, false negatives, and true negatives, labeled as TP, FP, FN, and TN. To summarize the results, outcome measures consisting of sensitivity, specificity, and overall accuracy were calculated as follows:

$$\text{Sensitivity} = \frac{TP}{TP + FN}, \qquad \text{Specificity} = \frac{TN}{TN + FP}, \qquad \text{Accuracy} = \frac{TP + TN}{TP + FP + FN + TN}.$$
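These outcome measures follow directly from the four cell counts of the cross-tabulation. The sketch below uses the standard definitions (sensitivity as TP over TP plus FN, specificity as TN over TN plus FP, accuracy as correct classifications over all cases); the counts are hypothetical and are not results from this study.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Sensitivity, specificity, and overall accuracy from a 2 x 2 classification table."""
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
    }

# Hypothetical counts (illustrative only, not results from the study).
metrics = classification_metrics(tp=40, fp=5, fn=10, tn=45)
print(metrics)
```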

Real Data Analysis

The data were fitted with the proposed ML-mixture three-way joint model of item responses, response times, and visual fixation counts. Parameter estimates of the level-1 measurement models are presented for the different latent classes. Moreover, the trade-offs among the person-side parameters at level 2 are explored by reporting the estimated class-specific variance-covariance matrices.

Data Description

The eye-tracking study was conducted at a university with IRB approval. The dataset used for this study includes $J = 200$ university students with normal or corrected vision who were recruited for the study. Students were required to take an exam consisting of $I = 10$ questions related to verbal reasoning. The test material implemented for the current study imitated the composition of a high-stakes credentialing exam. The numbers of subjects randomly assigned to the two experimental conditions are summarized in Table 1.

Table 1.

Number of Subjects in Each Condition.

| | Normally behaved condition | Preknowledge-cheated condition |
|---|---|---|
| Number of subjects | 93 | 107 |

Note. Normally behaved condition: participants in the control condition who did not receive any test preparation materials. Preknowledge-cheated condition: participants in this condition would receive similar exam questions and the answer key.

Table 2.

Item-Specific PPP-Values Across Items.

| | Item 1 | Item 2 | Item 3 | Item 4 | Item 5 | Item 6 | Item 7 | Item 8 | Item 9 | Item 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| IRT | 0.469 | 0.494 | 0.499 | 0.538 | 0.572 | 0.514 | 0.548 | 0.581 | 0.541 | 0.517 |
| RTM | 0.502 | 0.500 | 0.508 | 0.501 | 0.498 | 0.502 | 0.509 | 0.502 | 0.497 | 0.502 |
| VFM | 0.825 | 0.719 | 0.362 | 0.311 | 0.288 | 0.438 | 0.601 | 0.273 | 0.456 | 0.388 |

Note. IRT = item response model; PPP = Posterior predictive p-values; RTM = response time model; VFM = visual fixations model.

Students were seated around 80 cm away from a monitor with an eye-tracking device, Gazepoint, placed beneath the screen. Gazepoint is an accessible and reliable experimental eye-tracker with a 60 Hz sampling rate and 0.5–1 degree of visual angle precision that is often used for eye-tracking research. Students were required to complete a short test consisting of verbal reasoning items. The test structure followed the format of one portion of a high-stakes certification exam. As the participants answered the questions, item responses, response times, and gaze fixation counts within the areas of interest (AOIs) were collected simultaneously. For detailed information regarding the usage and setup of the eye-tracker, interested readers can visit the Gazepoint website for tutorials (https://www.gazept.com/tutorials/).

Data Visualization

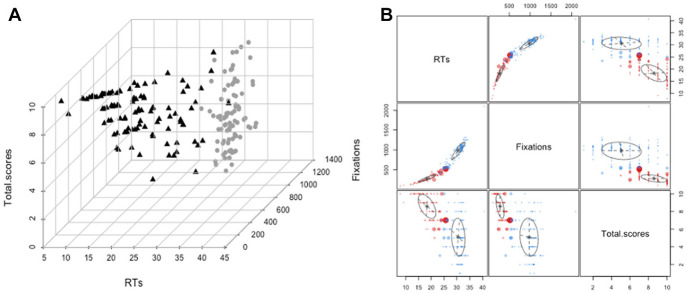

To better understand the data and to model it appropriately for accurate inferences, the collected data were explored by showing the three-dimensional structure of the data space and the bivariate scatterplots of three variables, with mixture densities estimated using the mclust package (Fraley & Raftery, 2007). The three variables, aggregated for each test taker, are total response time, responding accuracy, and total fixations across all items. Figure 2A depicts the 3D position of the data point for each individual. The 3D plot suggests that two latent groups can be extracted from the data cloud. Moreover, Figure 2B displays all scatterplots with the fitted mixture densities based on the total values of each variable for each test taker. Based on the fitted density contours, two latent classes are expected across all the pairwise scatterplots. For example, on the top right of Figure 2B, the two bivariate Gaussian densities placed on the data points show that the data may reveal two latent groups in terms of answering accuracy and working efficiency. Visualizing the major variables aids in determining how many latent classes may be anticipated and how to set proper priors for model estimation.

Figure 2.

Figure 2(A): 3D Visualization of the Collected Data. Figure 2(B): Scatterplots of Essential Variables Across Three Conditions. The Variable Names Shown in the Matrix From the Top Left to the Bottom Right Are Total.Score, Total.Gaze, and Total.Time. The Distribution of Each Variable Is Listed on the Diagonal of the Plot Matrix. The Bivariate Scatterplots Are Listed on the Off-Diagonal.

The proposed ML-mixture model of item responses, RTs, and visual fixation counts was fitted to the data to understand and evaluate the pattern differences in test-taking behaviors across latent classes. Parameter estimates of the level-1 measurement models across the two latent classes are summarized. Furthermore, differences in test-taking behavioral patterns are reported through the corresponding covariance estimates across the identified latent groups. In addition, posterior predictive model checking (PPMC; Gelman et al., 2014) was used to evaluate model–data fit. A posterior predictive p-value (PPP-value) within the range of 0.05 to 0.95 indicates adequate model–data fit (Sinharay et al., 2006). Table 2 displays the item-specific PPP-values. In general, the majority of the PPP-values were close to 0.5 for the IRT, lognormal RT, and NBF models, showing satisfactory fit across the three measurement models.
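A PPP-value can be sketched as the share of posterior draws for which a discrepancy measure computed on replicated data is at least as large as the same measure computed on the observed data. The toy example below uses a single binary item with a conjugate Beta posterior and the number of correct responses as the discrepancy; the setup, names, and values are assumptions for illustration and are much simpler than the checks applied to the three measurement models.

```python
import random

def ppp_value(observed, replicated):
    """Share of posterior draws whose replicated discrepancy is at least the observed one."""
    pairs = list(zip(observed, replicated))
    return sum(rep >= obs for obs, rep in pairs) / len(pairs)

# Toy setup (hypothetical): 60 correct answers out of 100 on one item, with
# posterior draws of the success probability taken from a Beta(61, 41) distribution.
rng = random.Random(3)
n_persons, n_correct, n_draws = 100, 60, 2000
observed, replicated = [], []
for _ in range(n_draws):
    p = rng.betavariate(n_correct + 1, n_persons - n_correct + 1)
    rep_correct = sum(rng.random() < p for _ in range(n_persons))
    observed.append(n_correct)      # discrepancy: number correct in the observed data
    replicated.append(rep_correct)  # same discrepancy in the replicated data

ppp = ppp_value(observed, replicated)
print(f"PPP-value: {ppp:.3f}, adequate fit: {0.05 < ppp < 0.95}")
```

Values near 0.5 indicate that the observed data look typical of data generated by the fitted model, while values near 0 or 1 signal misfit.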

Item Characteristics Nuances Across Latent Groups

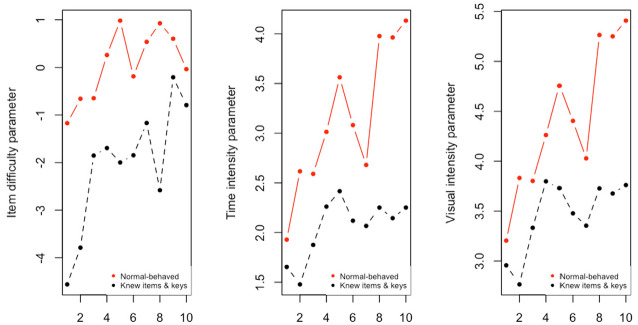

Figure 3 shows item parameter estimates across the two latent classes. The dashed lines in Figure 3 correspond to the estimated finite mixture components of item difficulties, time intensities, and visual intensities, reflecting the impact of having preknowledge of the items. The solid lines show the estimated item difficulties, time intensities, and visual intensities for those classified as normally behaved test takers. Due to the constraints imposed for identifying latent classes, item difficulties ($b$), time intensities ($\beta$), and visual intensities ($m$), on average, tend to show lower values in the preknowledge-cheating latent group than in the normally behaved latent class. This finding is consistent with one of the conclusions reached by Man and Harring (2021), which may be attributed to the fact that test takers tend to spend less time and less visual effort on an exam whose items they have previously practiced.

Figure 3.

Item Parameter Estimates Across Identified Latent Classes

In terms of item difficulties, the items appeared, on average, to be less difficult for examinees belonging to the preknowledge-cheating latent group than for those belonging to the normally behaved latent group. For the latent class with normal test-taking behavior, $b_{i0}$ ranged from −1.17 to 0.98 over the 10 items. In contrast, $b_{i1}$ ranged from −4.56 to −0.21 for the latent group with preknowledge test-taking behavior, as shown in Table 3. In terms of item DIFs across the two latent groups, the item difficulty differences ranged from 0.76 to 3.51, with an average difference of 2.11. In addition, credible intervals of the DIFs in item difficulties are reported along with the Wald statistics, which revealed significantly large difficulty DIFs across all items when compared with the cutoff values of ±1.96.

Table 3.

Impact of Having Preknowledge of Test Items on Item DIFs

| Par. | Classes | Item 1 | Item 2 | Item 3 | Item 4 | Item 5 | Item 6 | Item 7 | Item 8 | Item 9 | Item 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| $b$ | C1 | −1.17 | −0.66 | −0.65 | 0.26 | 0.98 | −0.19 | 0.54 | 0.93 | 0.6 | −0.04 |
| | C2 | −4.56 | −3.79 | −1.85 | −1.70 | −2.0 | −1.85 | −1.17 | −2.58 | −0.21 | −0.79 |
| $\text{DIF}_{b}$ | | 3.41 | 3.13 | 1.20 | 1.96 | 2.98 | 1.66 | 1.71 | 3.51 | 0.81 | 0.76 |
| CI | | (2.04, 5.13) | (2.06, 4.35) | (0.46, 1.98) | (1.25, 2.72) | (2.22, 3.79) | (0.94, 2.43) | (1.02, 2.44) | (2.69, 4.42) | (0.17, 1.48) | (0.15, 1.42) |
| $W_{b}$ | | 4.4 | 5.3 | 3.13 | 5.21 | 7.43 | 4.36 | 4.78 | 7.99 | 2.42 | 2.27 |
| $\beta$ | C1 | 1.93 | 2.61 | 2.59 | 3.01 | 3.56 | 3.08 | 2.68 | 3.98 | 3.96 | 4.13 |
| | C2 | 1.65 | 1.48 | 1.88 | 2.26 | 2.42 | 2.12 | 2.07 | 2.25 | 2.15 | 2.25 |
| $\text{DIF}_{\beta}$ | | 0.25 | 1.07 | 0.47 | 0.46 | 1.03 | 0.93 | 0.67 | 1.54 | 1.57 | 1.65 |
| CI | | (0.13, 0.42) | (1.01, 1.27) | (0.56, 0.88) | (0.57, 0.94) | (1.02, 1.27) | (0.82, 1.10) | (0.47, 0.76) | (1.58, 1.87) | (1.67, 1.96) | (1.74, 2.03) |
| $W_{\beta}$ | | 3.82 | 16.66 | 5.95 | 4.99 | 15.55 | 13.43 | 9.77 | 22.61 | 21.86 | 24.22 |
| $m$ | C1 | 3.20 | 3.83 | 3.80 | 4.26 | 4.76 | 4.40 | 4.03 | 5.26 | 5.25 | 5.41 |
| | C2 | 2.96 | 2.77 | 3.33 | 3.80 | 3.73 | 3.48 | 3.35 | 3.73 | 3.68 | 3.76 |
| $\text{DIF}_{m}$ | | 0.27 | 1.14 | 0.72 | 0.75 | 1.15 | 0.96 | 0.61 | 1.73 | 1.82 | 1.88 |
| CI | | (0.13, 0.37) | (0.95, 1.19) | (0.32, 0.62) | (0.29, 0.64) | (0.90, 1.15) | (0.80, 1.06) | (0.54, 0.81) | (1.41, 1.67) | (1.44, 1.71) | (1.52, 1.78) |
| $W_{m}$ | | 3.56 | 16.74 | 8.83 | 7.83 | 16.61 | 13.00 | 8.17 | 23.31 | 23.92 | 25.41 |

Note. $b$: difficulty; $m$: visual intensity; $\beta$: time intensity; $\text{DIF}_{b}$: difference in item difficulty across latent classes; $\text{DIF}_{m}$: difference in item visual intensity across latent classes; $\text{DIF}_{\beta}$: difference in item time intensity across latent classes; $W_{b}$: Wald test statistic of difference in item difficulty; $W_{m}$: Wald test statistic of difference in item visual intensity; $W_{\beta}$: Wald test statistic of difference in item time intensity; C1 indicates normally-behaved latent group; C2 indicates preknowledge-cheating latent group. DIF = differential item functioning; CI = credible interval.

Similarly, test takers in the latent class for preknowledge cheating behavior tended to spend less time finishing their exams. Time-intensity estimates varied between 1.93 and 4.13 for the latent class with normal test-taking behavior. In contrast, time-intensity estimates for the aberrantly behaving latent class ranged between 1.48 and 2.42. In addition, the Wald statistics and credible intervals presented in Table 3 for testing item DIFs in time intensities confirm that the differences in the time intensities are statistically significant across the two latent classes.
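The DIF summaries discussed above (a difference, its credible interval, and a Wald statistic compared with ±1.96) can be sketched from posterior draws. This is a minimal illustration, assuming the Wald statistic is the posterior mean difference divided by its posterior standard deviation and that the interval is equal-tailed; this section does not show the computation actually used in the study. The function name `dif_summary` and the simulated draws are hypothetical.

```python
import numpy as np


def dif_summary(draws_c1, draws_c2, level=0.95):
    """Summarize a between-class difference in one item parameter.

    draws_c1, draws_c2: posterior draws of the same item parameter in the
    normally behaved (C1) and preknowledge (C2) classes, paired by iteration.
    """
    diff = np.asarray(draws_c1, dtype=float) - np.asarray(draws_c2, dtype=float)
    tail = (1.0 - level) / 2.0
    # Equal-tailed credible interval of the difference
    lower, upper = np.quantile(diff, [tail, 1.0 - tail])
    # Assumed Wald form: posterior mean over posterior standard deviation
    wald = diff.mean() / diff.std(ddof=1)
    return {
        "diff": float(diff.mean()),
        "ci": (float(lower), float(upper)),
        "wald": float(wald),
    }


# Hypothetical draws for illustration only; these are NOT the study's MCMC output.
rng = np.random.default_rng(2024)
draws_c1 = rng.normal(loc=0.98, scale=0.25, size=4000)
draws_c2 = rng.normal(loc=-2.00, scale=0.30, size=4000)

summary = dif_summary(draws_c1, draws_c2)
flagged = abs(summary["wald"]) > 1.96  # compare with the ±1.96 cutoff
print(summary, flagged)
```

An item is flagged here when the absolute Wald statistic exceeds 1.96, which mirrors the cutoff quoted in the text; a credible interval that excludes zero gives the same qualitative conclusion.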

The impact of having preknowledge on visual intensities is similar to that observed for response accuracy and time intensity: test takers classified in the preknowledge latent class tend to put less visual effort into tracking information to decode test items. The visual intensities for test takers in the normally behaved latent class ranged from 3.20 to 5.41, equivalent to about 25 to 224 fixation counts after exponentiating the estimated visual intensity values. In contrast, visual intensities for the preknowledge-cheating latent class varied from 2.77 to 3.80, which is about 16 to 44 fixation counts averaged across all the test takers. Similarly, the Wald statistics and credible intervals supported the conclusion that the average visual effort test takers devoted to answering items differed significantly across latent classes, a pattern of DIF similar to that found for time intensity.
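The conversion from visual intensity to fixation counts described above is a plain exponentiation. The sketch below assumes, as the paragraph states, that visual intensities are on a log scale; the helper name `to_fixation_count` is hypothetical, and the inputs are the range endpoints quoted in the text.

```python
import math

# Log-scale visual-intensity endpoints quoted in the text
normal_bounds = (3.20, 5.41)        # normally behaved latent class
preknowledge_bounds = (2.77, 3.80)  # preknowledge-cheating latent class


def to_fixation_count(log_intensity):
    """Back-transform a log-scale visual intensity to a fixation count."""
    return math.exp(log_intensity)


normal_counts = [to_fixation_count(v) for v in normal_bounds]
preknowledge_counts = [to_fixation_count(v) for v in preknowledge_bounds]

print([round(c, 1) for c in normal_counts])
print([round(c, 1) for c in preknowledge_counts])
```

The results land close to the counts quoted above; the upper endpoint for the preknowledge class falls between 44 and 45.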

Behavioral Pattern Differences Across Latent Groups

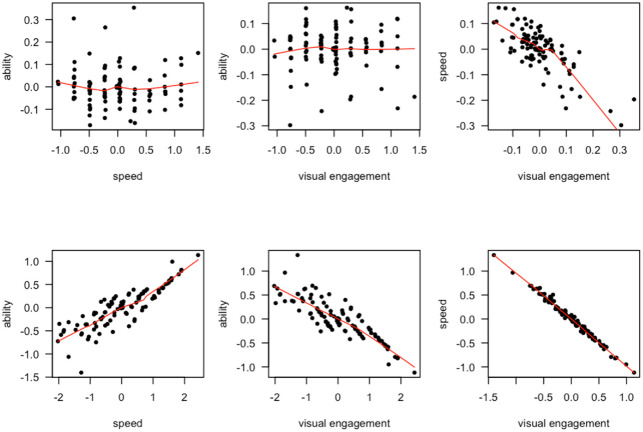

Figure 4 depicts behavioral pattern variations between the normally and aberrantly behaved test takers. The behavioral pattern differences are illustrated via the correlation matrices listed in Table 4 and the pairwise scatterplots of the estimated latent constructs (ability, visual engagement, and processing speed) across the two identified latent classes. For the latent preknowledge-cheating group, a high positive association between latent ability and working speed (M = 0.711, CI [0.542, 0.842]), as seen in Figure 4, was expected. In addition, large negative relationships were found between latent visual engagement and both latent ability (M = −0.713, CI [−0.843, −0.545]) and working speed (M = −0.899, CI [−0.936, −0.846]). In contrast, those who answered normally exhibited a trivial correlation between their latent ability and processing speed (M = −0.011, CI [−0.265, 0.238]). A similarly trivial correlation was identified between their latent ability and degree of visual engagement (M = −0.008, CI [−0.252, 0.243]). Intriguingly, processing speed and gaze exhibited a negative association (M = −0.301, CI [−0.532, −0.073]).

Figure 4.

Scatterplots for Person-Side Parameter Estimates. A Loess Nonparametric Smoothed Curve Is Plotted for Each Scatterplot

Table 4.

Person-Side Correlation Matrix Estimates

| Parameter | Normally behaved: M | Normally behaved: CI | Preknowledge-cheating: M | Preknowledge-cheating: CI |
|---|---|---|---|---|
| Correlation: ability, visual engagement | −0.008 | [−0.252, 0.243] | −0.713 | [−0.843, −0.545] |
| Correlation: ability, speed | −0.011 | [−0.265, 0.238] | 0.711 | [0.542, 0.842] |
| Correlation: visual engagement, speed | −0.301 | [−0.532, −0.073] | −0.899 | [−0.936, −0.846] |

Note. CI = confidence interval.

This result implies that when test takers answered normally, their latent ability was not related to their response speed. For example, high-ability test takers could answer either quickly or slowly. Moreover, the visual engagement of normally behaving test takers was also unrelated to their ability levels. In contrast, test takers with preknowledge, who know the answer keys of the test items, tend to answer quickly without paying careful attention to the content of the questions.
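A rough way to inspect such class-specific patterns is to compute pairwise correlations among person-parameter estimates within each latent class. The sketch below is a simplified stand-in: Table 4 reports correlations estimated within the model, whereas this example computes sample Pearson correlations on simulated estimates. The function name, the column order, and the simulated data are all hypothetical.

```python
import numpy as np

# Assumed column order of the person-parameter estimates
NAMES = ("ability", "visual_engagement", "speed")


def class_correlations(estimates, labels):
    """Return a 3x3 Pearson correlation matrix for each latent class.

    estimates: array of shape (n_persons, 3), columns ordered as NAMES.
    labels: array of shape (n_persons,) holding integer class labels.
    """
    estimates = np.asarray(estimates, dtype=float)
    labels = np.asarray(labels)
    return {
        int(g): np.corrcoef(estimates[labels == g], rowvar=False)
        for g in np.unique(labels)
    }


# Simulated estimates for illustration only; NOT the study's data.
rng = np.random.default_rng(7)
cov_normal = np.array([[1.0, 0.0, 0.0],
                       [0.0, 1.0, -0.3],
                       [0.0, -0.3, 1.0]])
cov_preknowledge = np.array([[1.0, -0.7, 0.7],
                             [-0.7, 1.0, -0.9],
                             [0.7, -0.9, 1.0]])
est = np.vstack([
    rng.multivariate_normal(np.zeros(3), cov_normal, size=200),
    rng.multivariate_normal(np.zeros(3), cov_preknowledge, size=200),
])
labels = np.repeat([1, 2], 200)  # 1 = normally behaved, 2 = preknowledge

corr = class_correlations(est, labels)
print(np.round(corr[1], 2))
print(np.round(corr[2], 2))
```

With class labels taken from a fitted mixture model, the off-diagonal entries of each matrix would play the role of the three correlations summarized in Table 4.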

Detection Accuracy

As a means of evaluating how well the proposed mixture method detects preknowledge cheating, Table 5 cross-tabulates the numbers of test takers in each of the crossed categories, labeled TP, FP, FN, and TN as explained previously. The overall sensitivity of the proposed mixture method is perfect (100%), and the specificity is approximately 96%. The overall classification accuracy is 98% (196 of 200 test takers correctly classified). The results indicate that the overall rate for detecting preknowledge cheating with the proposed method is high for the given dataset. Also, the high specificity rate implies that the proposed method could potentially protect normally behaved test takers from being incorrectly classified as cheaters.

Table 5.

Classification.

| Actual label | Predicted: Normal | Predicted: Aberrant | Row total |
|---|---|---|---|
| Normal | 93 (100%) | 0 (0%) | 93 |
| Aberrant | 4 (3.74%) | 103 (96.26%) | 107 |
| Column total | 97 | 103 | 200 |
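The row percentages and the overall classification rate follow directly from the counts in Table 5. The sketch below recomputes them; the dictionary layout and function names are hypothetical, and only the four cell counts are taken from the table.

```python
# Counts from Table 5: outer key = actual label, inner key = predicted label
table5 = {
    "Normal": {"Normal": 93, "Aberrant": 0},
    "Aberrant": {"Normal": 4, "Aberrant": 103},
}


def row_percentages(table):
    """Percentage of each actual class assigned to each predicted class."""
    out = {}
    for actual, row in table.items():
        total = sum(row.values())
        out[actual] = {pred: 100.0 * n / total for pred, n in row.items()}
    return out


def overall_accuracy(table):
    """Share of all test takers whose predicted label matches the actual one."""
    correct = sum(table[label][label] for label in table)
    total = sum(sum(row.values()) for row in table.values())
    return correct / total


pct = row_percentages(table5)
acc = overall_accuracy(table5)
print(pct, acc)
```

Reporting the per-row percentages alongside the overall rate keeps the two kinds of misclassification visible separately, which matters when the classes are of unequal size.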

Discussion

The use of technology-enhanced assessment systems has allowed practitioners and education specialists to better understand the behavioral characteristics of groups with different response styles by drawing on enriched information compiled from the log files of biometric and computational devices, such as extracted RTs and VFCs. As previously stated, home examinations are gaining in popularity, and the opportunity for examinees to cheat during them using a range of advanced technologies is growing. Consequently, it is vital to preserve the integrity of an exam by securing it with multiple data sources beyond item responses. When only response correctness is evaluated or modeled, it is difficult for practitioners and researchers to discriminate between test takers with high ability and those with prior knowledge of test content. If RTs and visual attention are evaluated together with response accuracy, cheating cases, particularly those involving prior knowledge of test questions, can be separated from high-ability candidates more precisely.

The suggested ML-mixture three-way joint model can aid in (a) accurately distinguishing abnormal test takers with prior knowledge of test items from those with normal behavior, (b) automatically estimating the person-side and item-side parameters for the various latent groups, and (c) investigating pattern differences in the trade-offs among visual attention, working speed, and accuracy across the manifested latent classes. It does so by jointly modeling visual fixation counts collected from an eye-tracker with conventional psychometric information such as item responses and response times, thereby accounting for differences in test-taking behavior. These demonstrated relationships may aid practitioners in comprehending and explaining the distinctions between the recognized types of responding actions. Potentially, the proposed model could serve as a useful tool for detecting aberrant test takers, thereby helping to keep home-based online assessments as secure as feasible.

The results from the real data example suggested that the proposed ML-mixture model yields at least two desirable outcomes. First, both item- and person-side parameters can be accurately estimated within specific latent classes. Accurately estimated item parameters can be used for future applications, such as helping practitioners and substantive researchers gain a deeper understanding of the behavioral nuances and cognitive processes displayed by test takers from different groups, such as normally and aberrantly responding groups, in technology-enhanced environments. Second, aberrant test takers can be accurately classified concurrently. For example, the estimated class-specific labels might indicate which items/individuals have aberrant test-taking potential.

Although the proposed model showed promise in the current study, several limitations need to be acknowledged. First, the current Bayesian implementation enables accurate characterization of parameter uncertainty. However, because of the computational intensity associated with Bayesian estimation, the suggested approach is best suited for post hoc analysis rather than for detecting cheating cases in real time. Furthermore, the current model includes two latent classes for distinguishing individuals who have prior knowledge of test items from those who behave normally. To identify more cheating subcategories, model constraints need to be further extended to accommodate more latent classes. In addition, although credible intervals were reported for the DIFs, scale comparability was not tested throughout. Moreover, due to the limited sample size, the current model used only the Rasch model for the item responses; alternative IRT models, such as partial credit and testlet models, might be used for more complex answer structures with more data.

In conclusion, the proposed model could be expanded. For instance, to study how preknowledge cheating could affect the mastery of latent skill attributes, it may be worthwhile to substitute a cognitive diagnostic model for the Rasch model as a prospective next step in this line of research. In addition, many other biometric variables, such as heart rate and blinking rate, could be added to the proposed modeling framework either as covariates or as independent latent constructs. These additions could provide more refined formative feedback to practitioners for a better understanding of cheating mechanisms and ultimately boost the cheating detection rate.

FN are those test takers incorrectly classified as aberrant; FP are ones incorrectly classified as non-aberrant; TP are ones correctly classified as aberrant; TN are ones correctly classified as non-aberrant.

Footnotes

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The authors received no financial support for the research, authorship, and/or publication of this article.

ORCID iDs: Kaiwen Man  https://orcid.org/0000-0002-9696-9726


Jeffrey R. Harring  https://orcid.org/0000-0002-7102-0303


References

- Bliss T. J. (2012). Statistical Methods to Detect Cheating on Tests: A Review of the Literature (Unpublished doctoral dissertation). Brigham Young University, Provo, UT. [Google Scholar]

- Cho S.-J., Cohen A. S., Kim S.-H. (2013). Markov chain Monte Carlo estimation of a mixture item response theory model. Journal of Statistical Computation and Simulation, 83(2), 278–306. [Google Scholar]

- Cho S.-J., Cohen A. S., Kim S.-H., Bottge B. (2010). Latent transition analysis with a mixture item response theory measurement model. Applied Psychological Measurement, 34(7), 483–504. [Google Scholar]

- Diebolt J., Robert C. P. (1994). Estimation of finite mixture distributions through Bayesian sampling. Journal of the Royal Statistical Society: Series B (Methodological), 56(2), 363–375. [Google Scholar]

- Drasgow F., Levine M. V., Williams E. A. (1985). Appropriateness measurement with polychotomous item response models and standardized indices. British Journal of Mathematical and Statistical Psychology, 38(1), 67–86. [Google Scholar]

- Fox J.-P., Marianti S. (2017). Person-fit statistics for joint models for accuracy and speed. Journal of Educational Measurement, 54(2), 243–262. [Google Scholar]

- Fraley C., Raftery A. (2007). Model-based methods of classification: Using the mclust software in chemometrics. Journal of Statistical Software, 18, 1–13. [Google Scholar]

- Fuhl W., Santini T. C., Kübler T., Kasneci E. (2016). EISe: Ellipse selection for robust pupil detection in real-world environments In Proceedings of the ninth biennial ACM symposium on eye tracking research & applications, Association for Computing Machinery, New York, NY, pp. 123–130. [Google Scholar]

- Fuhl W., Tonsen M., Bulling A., Kasneci E. (2016). Pupil detection in the wild: An evaluation of the state of the art in mobile head-mounted eye tracking. Machine Vision and Applications, 27(1), 1275–1288. [Google Scholar]

- Gelman A., Carlin J. B., Stern H. S., Rubin D. B. (2003). Bayesian data analysis. Chapman & Hall. [Google Scholar]

- Gelman A., Meng X. L., Stern H. (1996). Posterior predictive assessment of model fitness via realized discrepancies. Statistica sinica, 733–760. [Google Scholar]

- Guttman L. (1944). A basis for scaling qualitative data. American Sociological Review, 9, 139–150. [Google Scholar]

- Hambleton R. K., Swaminathan H., Rogers H. J. (1991). Fundamentals of item response theory. SAGE. [Google Scholar]

- Lord F. M. (1952). A theory of test scores. Psychometric Corporation. [Google Scholar]

- Man K., Harring J. R. (2019). Negative binomial models for visual fixation counts on test items. Educational and Psychological Measurement, 79(4), 617–635. 10.1177/0013164418824148 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Man K., Harring J. R. (2021). Assessing preknowledge cheating via innovative measures: A multiple-group analysis of jointly modeling item responses, response times, and visual fixation counts. Educational and Psychological Measurement, 81(3), 441–465. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Man K., Harring J. R., Ouyang Y., Thomas S. L. (2018). Response time based nonparametric Kullback-Leibler divergence measure for detecting aberrant test-taking behavior. International Journal of Testing, 18, 155–177. 10.1080/15305058.2018.1429446 [DOI] [Google Scholar]

- Man K., Harring J. R., Zhan P. (2022). Bridging models of biometric and psychometric assessment: A three-way joint modeling approach of item responses, response times, and gaze fixation counts. Applied Psychological Measurement, 46, 361–381. 10.1177/01466216221089344 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Marianti S., Fox J.-P., Avetisyan M., Veldkamp B. P., Tijmstra J. (2014). Testing for aberrant behavior in response time modeling. Journal of Educational and Behavioral Statistics, 39(6), 426–451. [Google Scholar]

- McLachlan G., Peel D. (2000). Mixtures of factor analyzers. In Langley P. (ed.), Proceedings of the Seventeenth International Conference on Machine Learning. Morgan Kaufmann, San Francisco, pp. 599–606. [Google Scholar]

- McLeod L., Lewis C., Thissen D. (2003). A Bayesian method for the detection of item preknowledge in computerized adaptive testing. Applied Psychological Measurement, 27(2), 121–137. [Google Scholar]

- Paek I., Cho S.-J. (2015). A note on parameter estimate comparability: Across latent classes in mixture IRT modeling. Applied Psychological Measurement, 39(2), 135–143. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Plummer M. (2015). Jags: Just another Gibbs sampler (Version 4.0.0) [Computer software]. http://mcmc-jags.sourceforge.net/

- Qian H., Staniewska D., Reckase M., Woo A. (2016). Using response time to detect item preknowledge in computer-based licensure examinations. Educational Measurement: Issues and Practice, 35(1), 38–47. [Google Scholar]

- Rasch G. (1960). Studies in mathematical psychology: I. Probabilistic models for some intelligence and attainment tests. Nielsen Lydiche. [Google Scholar]

- Redner R. A., Walker H. F. (1984). Mixture densities, maximum likelihood and the Em algorithm. SIAM Review, 26(2), 195–239. [Google Scholar]

- Sijtsma K., Meijer R. R. (1992). A method for investigating the intersection of item response functions in Mokken’s nonparametric IRT model. Applied Psychological Measurement, 16(2), 149–157. [Google Scholar]

- Sinharay S. (2018). A new person-fit statistic for the lognormal model for response times. Journal of Educational Measurement, 55(4), 457–476. [Google Scholar]

- Sinharay S. (2020). Detection of item preknowledge using response times. Applied Psychological Measurement, 45(5), 376–392. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sinharay S., Johnson M. S., Stern H. S. (2006). Posterior predictive assessment of item response theory models. Applied Psychological Measurement, 30(4), 298–321. 10.1177/0146621605285517 [DOI] [Google Scholar]

- Smith R. W., Prometric T. (2004). The impact of braindump sites on item exposure and item parameter drift [Paper presentation]. Annual Meeting of the American Education Research Association, San Diego, CA. [Google Scholar]

- Stephens M. (2000). Dealing with label switching in mixture models. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 62(4), 795–809. [Google Scholar]

- Su Y. S., Yajima M. (2015). Package `R2jags’. R package version 0.03-08, URL http://CRAN.R-project.org/package=R2jags

- Toton S. L., Maynes D. D. (2019). Detecting examinees with pre-knowledge in experimental data using conditional scaling of response times. Frontiers in Education, 4, Article 49. [Google Scholar]

- Toton S. L., Maynes D. D. (2019). Detecting examinees with pre-knowledge in experimental data using conditional scaling of response times. Frontiers in Education, 4. https://www.frontiersin.org/article/10.3389/feduc.2019.00049 [Google Scholar]

- van der Linden W. J. (2006). A lognormal model for response times on test items. Journal of Educational and Behavioral Statistics, 31, 181–204. [Google Scholar]

- van der Linden W. J. (2007). A hierarchical framework for modeling speed and accuracy on test items. Psychometrika, 72, 287–308. [Google Scholar]

- van der Linden W. J., Guo F. (2008). Bayesian procedures for identifying aberrant response-time patterns in adaptive testing. Psychometrika, 73, 365–384. [Google Scholar]

- Vermunt J. K., Magidson J. (2005). Hierarchical mixture models for nested data structures. In: Weihs C., Gaul W. (Eds.), Classification—The ubiquitous challenge (pp. 240–247). Springer. [Google Scholar]

- Wang C., Xu G. (2015). A mixture hierarchical model for response times and response accuracy. British Journal of Mathematical and Statistical Psychology, 68(3), 456–477. [DOI] [PubMed] [Google Scholar]