Abstract

Recent approaches to the detection of cheaters in tests employ detectors from the field of machine learning. Detectors based on supervised learning algorithms achieve high accuracy but require labeled data sets with identified cheaters for training. Labeled data sets are usually not available at an early stage of the assessment period. In this article, we discuss the approach of adapting a detector that was trained previously with a labeled training data set to a new unlabeled data set. The training and the new data set may contain data from different tests. The adaptation of detectors to new data or tasks is denominated as transfer learning in the field of machine learning. We first discuss the conditions under which a detector of cheating can be transferred. We then investigate whether the conditions are met in a real data set. We finally evaluate the benefits of transferring a detector of cheating. We find that a transferred detector has higher accuracy than an unsupervised detector of cheating. A naive transfer that consists of a simple reuse of the detector increases the accuracy considerably. A transfer via a self-labeling (SETRED) algorithm increases the accuracy slightly more than the naive transfer. The findings suggest that the detection of cheating might be improved by using existing detectors of cheating at an early stage of an assessment period.

Keywords: cheating, data mining, transfer learning

Standardized tests are omnipresent in higher education and large-scale assessment. In some of these tests, the results have significant consequences for the test takers (e.g., in admission or licensure tests). This motivates some test takers to increase their test scores by actions that do not conform to the code of conduct set by the test provider. Test takers, for example, use unauthorized materials, copy answers, or acquire test questions before taking the test (Bernardi et al., 2008). Such forms of irregular test-taking behavior are usually denoted as cheating. Test takers who cheat tend to score better than comparable test takers who do not. It is therefore of great importance to identify and exclude cheaters in order to maintain test fairness. Methods to detect cheaters have been investigated intensively in psychometrics; for an overview, see the three recently published monographs of Cizek and Wollack (2017b), Kingston and Clark (2014), and Wollack and Fremer (2013).

A promising approach to the detection of cheating consists of an analysis of process data. Process data are data that describe aspects of a test taker’s behavior during the test. Examples are the time spent on the test items (Kroehne & Goldhammer, 2018) and change scores (Bishop & Egan, 2017), but also measures related to page focus (Diedenhofen & Musch, 2017), head movement (Chuang et al., 2017), fixations (Man & Harring, 2020; Ponce et al., 2020), and the number of item revisits (Bezirhan et al., 2021). As process data describe aspects of the problem-solving process, they should be indicative of unusual, possibly unauthorized ways of test taking.

Although process data may be analyzed with any statistical procedure, it has become popular to analyze them using classifiers from the field of machine learning; see, for example, Burlak et al. (2006), Chen and Chen (2017), Kim et al. (2017), Man et al. (2019), Ranger et al. (2020), Zhou and Jiao (2022), and Zopluoglu (2019). In machine learning, a detector of cheating is built from a classifier that learns to distinguish regular responders from cheaters. Such a detector is capable of considering multiple indicators of cheating simultaneously and of combining them in a flexible way. Two different forms of machine learning are established, supervised and unsupervised machine learning (Hastie et al., 2009, p. 2). In supervised machine learning, a detector is trained to classify a test taker either as a cheater or as a regular responder. The detector receives process data of numerous test takers and labels that mark each test taker as cheater or regular responder. The detector then learns to separate the cheaters from the regular responders by acquiring a prediction function that maximizes a measure of the prediction accuracy. Supervised detectors may be implemented with neural nets, support vector machines, classification trees, and naive Bayes classifiers, for example, (Hastie et al., 2009). In unsupervised machine learning, a detector searches for clusters or outliers. The detector receives a data set that contains the process data of numerous test takers but no labels that indicate the cheaters. The detector then groups similar test takers into homogeneous clusters or partitions the indicator space into areas of high (regular data) and low density (outliers). Although unsupervised detectors do not search explicitly for cheaters, they detect cheaters indirectly in case cheaters constitute separate clusters. 
Unsupervised detectors may be implemented with k-means cluster analysis, Gaussian mixture models, one-class support vector machines and all approaches to outlier detection that were suggested in the literature (Hodge & Austin, 2004; Zimek et al., 2012).

Detectors based on supervised machine learning can achieve a remarkable classification performance. In the study of Man et al. (2019), for example, a random forest achieved a sensitivity of 46% and a specificity of 99% in a credentialing test. Supervised machine learning, however, is often not feasible in practice as the training phase requires a data set with labeled test takers. Such a data set is usually not available. Detectors based on unsupervised machine learning, in contrast, do not require a data set with labeled test takers. They have, however, performed less well than detectors based on supervised machine learning (Man et al., 2019). In this article, we discuss a way to combine supervised and unsupervised machine learning. We use a detector that was trained with a labeled training data set and adapt the detector to unlabeled target data. The two data sets may contain data from different tests. The transfer of knowledge from one domain to another is a problem that has been discussed intensively in machine learning under the name of transfer learning or domain adaptation; for overviews of transfer learning, see Kouw and Loog (2019), Pan and Yang (2010), Quiñonero Candela et al. (2008), Weiss et al. (2016), and L. Zhang and Gao (2019).

In this article, we make several contributions. In the first section of the article, we address the question of knowledge transfer from a theoretical perspective. We review conditions under which a detector can be applied to new data and describe approaches to transfer learning. In the second section of the article, we investigate whether the conditions for transfer learning are met in real data. Then, we study whether the accuracy of the detection of cheating can be increased by transferring a detector that was trained with an alternative data set. We compare two approaches to transfer a detector and try a way to evaluate the accuracy of the transferred detector.

Conditions for Knowledge Transfer

In this section, we review previous findings on transfer learning. In the review, we follow closely the overviews given by Kouw and Loog (2019) and K. Zhang et al. (2013). Throughout the article, we consider the following scenario. We assume that a first data set is available, which we henceforth denote as the training data set. The training data set contains indicators of cheating $x$ and labels $y$ (cheating: $y = 1$, noncheating: $y = 0$) of test takers who took a standardized test. The test takers are considered a random sample from a first population, which we denote as the training population. The observed labels and indicators of cheating are interpreted as realizations of the random variables $Y$ and $X$. The distribution of the random variables in the training population can be represented by the joint density function $p_{\text{train}}(x, y)$. The training data set is used to train a detector that predicts the label from the indicators of cheating. A detector consists of a function $h(x; \theta)$ that assigns a label ($\hat{y} \in \{0, 1\}$) to all values of $x$. The symbol $\theta$ denotes the parameters of the detector (e.g., the weights of a neural net) that have to be specified before the detector can be used. Learning to predict cheating consists in determining the values of the parameters.

Classifications are not always correct. To evaluate a detector, a loss function $\ell(h(x; \theta), y)$ is defined. The loss function assigns a value to the output of the detector as a function of the true label $y$. The zero-one loss, for example, assigns a value of $0$ to correct predictions ($h(x; \theta) = y$) and a value of $1$ to incorrect predictions ($h(x; \theta) \neq y$). The loss that is expected when a specific detector is used in the training population is $E_{\text{train}}[\ell(h(X; \theta), Y)]$, where the subscript indicates that the expectation is taken with respect to $p_{\text{train}}(x, y)$. The optimal detector from the class of all detectors $h(x; \theta)$ with $\theta \in \Theta$ has those parameter values that minimize the expected loss. When training a detector with a training data set, one aims at determining the optimal parameter values $\theta^{*}_{\text{train}}$. This is done by choosing those parameter values $\hat{\theta}_{\text{train}}$ that minimize the observed loss in the training data. When the size $n$ of the training sample increases, the chosen parameter values converge to the optimal values in probability, provided some regularity conditions hold. Having trained the detector with the training data set, the detector can be employed to detect cheaters in new data.

Assume that the detector is used for the detection of cheaters in a new data set (denoted henceforth as target data set). The target data set is similar to the training data set in the sense that it contains the same indicators of cheating. The target data set, however, does not contain labels and may have been collected with a different test (e.g., different form, content, and length) or under different circumstances (e.g., different testing time) than the training data set. The test takers that provided the target data are considered a random sample from a population, which we denote as the target population. The unknown label and the indicators of cheating of a test taker are interpreted as realizations of the random variables $Y$ and $X$ with joint density function $p_{\text{target}}(x, y)$ in the target population. Using a detector of cheating in the target population implies the expected loss $E_{\text{target}}[\ell(h(X; \theta), Y)]$, where the expectation is over the distribution $p_{\text{target}}(x, y)$. The optimal parameter values that minimize this expected loss are denoted as $\theta^{*}_{\text{target}}$. Estimates of these optimal parameter values, however, are not available. Instead, the parameter values $\hat{\theta}_{\text{train}}$ from the training sample are used and the test takers in the target sample are classified according to the detector $h(x; \hat{\theta}_{\text{train}})$. Using an estimate of $\theta^{*}_{\text{train}}$, however, is not necessarily optimal for the target population. Optimality requires that $\hat{\theta}_{\text{train}}$ is a good estimate of $\theta^{*}_{\text{target}}$. This rests on a strong assumption: the joint distribution of $X$ and $Y$ has to be equal in the training and the target population, as

$$E_{\text{target}}[\ell(h(X; \theta), Y)] = E_{\text{train}}\left[\frac{p_{\text{target}}(X, Y)}{p_{\text{train}}(X, Y)}\, \ell(h(X; \theta), Y)\right], \tag{1}$$

which simplifies to $E_{\text{train}}[\ell(h(X; \theta), Y)]$ when $p_{\text{target}}(x, y) = p_{\text{train}}(x, y)$. This condition, however, may be violated in practice.
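The reweighting argument behind Equation 1 can be checked numerically on a toy example. The following sketch assumes a single coarse indicator with three levels and made-up joint probabilities for the training and the target population; the detector, the probabilities, and all names are illustrative assumptions and are not taken from the study.

```python
# Toy check: the expected loss in the target population equals the expected
# loss in the training population after weighting by the density ratio.
# All probabilities are made-up illustration values.

# Joint probabilities p(x, y) for indicator level x in {0, 1, 2} and label y in {0, 1}.
p_train = {(0, 0): 0.50, (1, 0): 0.30, (2, 0): 0.10,
           (0, 1): 0.01, (1, 1): 0.03, (2, 1): 0.06}
p_target = {(0, 0): 0.35, (1, 0): 0.35, (2, 0): 0.10,
            (0, 1): 0.02, (1, 1): 0.06, (2, 1): 0.12}


def detector(x):
    """Hypothetical detector: flag a cheater (1) at the highest indicator level."""
    return 1 if x == 2 else 0


def zero_one_loss(y_pred, y_true):
    return 0.0 if y_pred == y_true else 1.0


def expected_loss(p, weights=None):
    """Expected zero-one loss under p, optionally with importance weights."""
    total = 0.0
    for (x, y), prob in p.items():
        w = 1.0 if weights is None else weights[(x, y)]
        total += w * prob * zero_one_loss(detector(x), y)
    return total


# Importance weights: ratio of target to training joint probability.
weights = {key: p_target[key] / p_train[key] for key in p_train}

risk_train = expected_loss(p_train)
risk_target = expected_loss(p_target)
risk_train_weighted = expected_loss(p_train, weights)
```

In this toy setting the unweighted training loss differs from the target loss, whereas the weighted training loss reproduces it. In practice the weights are unknown because the target data carry no labels, which is the problem the three cases below address.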

In the following, we discuss different ways in which the two distributions may differ. The joint distribution of $X$ and $Y$ in the training population can be factored as

$$p_{\text{train}}(x, y) = p_{\text{train}}(x \mid y)\, p_{\text{train}}(y). \tag{2}$$

In Equation 2, the distribution $p_{\text{train}}(y)$ is a Bernoulli distribution whose parameter determines the baseline rate of cheating. The baseline rate of cheating is the proportion of cheaters in the training population. It is specific to the population and depends on the test, the assessment condition, and contextual factors (Rettinger & Kramer, 2009). In educational testing, the proportion is typically between 0.05 and 0.10 (McCabe, 2016). The distribution $p_{\text{train}}(x \mid y)$ is the conditional distribution of the indicators in the cheaters or the regular responders. The joint distribution in the target population can be factored in the same way as $p_{\text{target}}(x, y) = p_{\text{target}}(x \mid y)\, p_{\text{target}}(y)$. Depending on which factors of $p_{\text{train}}(x, y)$ and $p_{\text{target}}(x, y)$ are identical, three different cases can be distinguished.

Case I: In the first case, we assume that the conditional distributions of the indicators are identical ($p_{\text{train}}(x \mid y) = p_{\text{target}}(x \mid y)$), but the baseline rates of cheating differ ($p_{\text{train}}(y) \neq p_{\text{target}}(y)$). This case is denoted as prior shift (Kouw & Loog, 2019). Prior shift requires that the response mode (regular responding versus cheating) solely determines the indicators of cheating. That is, the data generating mechanisms underlying $p_{\text{train}}(x \mid y)$ and $p_{\text{target}}(x \mid y)$ depend on $y$, but not on those context factors that have changed from the first to the second assessment. Prior shift, for example, might occur when the test material and the form of cheating remain the same, but the frequency of cheating increases because supervision is less strict, test takers lose their fear of getting caught, or leaked items become more widespread. When prior shift occurs, the distribution of the indicators in the training population differs from the distribution of the indicators in the target population. A detector trained with the training data is thus not optimal for the target data (Elkan, 2001). The optimal Bayes classifier with zero-one loss, for example, partitions the indicator space into subspaces corresponding to cheaters and regular responders at the boundary $p_{\text{target}}(y = 1 \mid x) = p_{\text{target}}(y = 0 \mid x)$ in the target population. This can be re-expressed as $p_{\text{target}}(x \mid y = 1) / p_{\text{target}}(x \mid y = 0) = p_{\text{target}}(y = 0) / p_{\text{target}}(y = 1)$. As the right-hand side depends on the baseline rate of cheating, prior shift invalidates the decision criterion of the detector when it is applied to the target data set.
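How a change in the baseline rate moves the Bayes decision boundary can be illustrated with a single indicator. The sketch below assumes, purely for illustration, that the indicator is normally distributed with mean 0 in regular responders and mean 2 in cheaters (common standard deviation 1) and that the baseline rate rises from 0.03 to 0.10. None of these values come from the credentialing data.

```python
import math


def normal_pdf(x, mean, sd):
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))


def posterior_cheater(x, rate, mean_regular=0.0, mean_cheater=2.0, sd=1.0):
    """Posterior probability of cheating given the indicator value and the baseline rate."""
    num = rate * normal_pdf(x, mean_cheater, sd)
    den = num + (1 - rate) * normal_pdf(x, mean_regular, sd)
    return num / den


def bayes_threshold(rate, mean_regular=0.0, mean_cheater=2.0, sd=1.0):
    """Indicator value at which both classes are equally probable (equal-variance case)."""
    midpoint = (mean_regular + mean_cheater) / 2
    shift = sd ** 2 * math.log((1 - rate) / rate) / (mean_cheater - mean_regular)
    return midpoint + shift


threshold_train = bayes_threshold(0.03)   # boundary learned under the training rate
threshold_target = bayes_threshold(0.10)  # boundary that would be optimal in the target population
```

Under these assumptions the boundary optimal for the target population lies below the one learned from the training data, so a reused detector would flag too few test takers even though the conditional distributions are unchanged.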

The effect of a prior shift can be corrected by weighting the data by $p_{\text{target}}(y) / p_{\text{train}}(y)$ when the detector is trained with the training data set. This is due to Equation 1, Equation 2, and the assumption that $p_{\text{train}}(x \mid y) = p_{\text{target}}(x \mid y)$. Weighting by $p_{\text{target}}(y) / p_{\text{train}}(y)$ requires an estimate of the baseline rate of cheating in the training and the target population. For the training population, the estimate is simply the proportion of cheaters in the training data set. For the target population, the baseline rate of cheating cannot be estimated directly as the target data are not labeled. A crude estimate of $p_{\text{target}}(y)$ can be determined by a first application of the preliminary detector or an application of an unsupervised learning algorithm (e.g., Gaussian mixture model). More refined approaches exploit the fact that $p_{\text{target}}(x) = \sum_{y} p_{\text{train}}(x \mid y)\, p_{\text{target}}(y)$; note that $p_{\text{target}}(x)$ and $p_{\text{train}}(x \mid y)$ can be estimated with the data that are available (K. Zhang et al., 2013).
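The idea of recovering the target baseline rate from unlabeled data can be sketched with a simple mixture argument. The example below is not the kernel-based procedure of K. Zhang et al. (2013); it swaps in a plain EM iteration over the mixing proportion, with the class-conditional densities held fixed at values assumed to be known from the training data. The densities, sample size, and rates are simulated assumptions.

```python
import math
import random


def normal_pdf(x, mean, sd):
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))


def estimate_target_rate(target_x, dens_regular, dens_cheater, n_iter=100):
    """EM for the mixing proportion only; class-conditional densities stay fixed."""
    f0 = [dens_regular(x) for x in target_x]
    f1 = [dens_cheater(x) for x in target_x]
    rate = 0.5  # starting value
    for _ in range(n_iter):
        # E-step: posterior probability of cheating for every test taker.
        resp = [rate * b / (rate * b + (1 - rate) * a) for a, b in zip(f0, f1)]
        # M-step: the new rate is the mean posterior probability.
        rate = sum(resp) / len(resp)
    return rate


# Simulated unlabeled target data with a true baseline rate of 0.10 (assumption).
rng = random.Random(2024)
target_x = [rng.gauss(2.0, 1.0) if rng.random() < 0.10 else rng.gauss(0.0, 1.0)
            for _ in range(10000)]

rate_train = 0.03  # proportion of cheaters in the (hypothetical) training data
rate_target_hat = estimate_target_rate(
    target_x,
    lambda x: normal_pdf(x, 0.0, 1.0),  # assumed density in regular responders
    lambda x: normal_pdf(x, 2.0, 1.0),  # assumed density in cheaters
)

# Weights for the labeled training cases when the detector is retrained.
weights = {1: rate_target_hat / rate_train,
           0: (1 - rate_target_hat) / (1 - rate_train)}
```

With these weights, training cases labeled as cheaters count more and regular responders slightly less, which mimics the higher baseline rate of the target population.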

Case II: In the second case, we assume that the baseline rates of cheating are identical in the training and the target population ($p_{\text{train}}(y) = p_{\text{target}}(y)$) but that the conditional distributions of the indicators are different ($p_{\text{train}}(x \mid y) \neq p_{\text{target}}(x \mid y)$). This is denoted as concept drift in the literature (Kouw & Loog, 2019). In concept drift, the distribution of the indicators of cheating is influenced not only by the response mode but also by factors of the context. Concept drift occurs, for example, when the form of cheating changes. This happens, for instance, when the amount of preknowledge increases during the assessment period. In such cases, the distribution of the indicators (e.g., total testing time) might be different in the cheaters ($p_{\text{train}}(x \mid y = 1) \neq p_{\text{target}}(x \mid y = 1)$) but not in the regular responders ($p_{\text{train}}(x \mid y = 0) = p_{\text{target}}(x \mid y = 0)$). Concept drift may also follow changes in the test material or the testing context. In this case, the distribution of the indicators might be different in both the cheaters and the regular responders ($p_{\text{train}}(x \mid y = 1) \neq p_{\text{target}}(x \mid y = 1)$, $p_{\text{train}}(x \mid y = 0) \neq p_{\text{target}}(x \mid y = 0)$). Concept drift occurs when the indicators of cheating are not ancillary statistics but depend on the difficulty of the items, the length of the test, the time demand of the items, or the sample size. Concept drift implies that the distribution of the indicators in the training population differs from the distribution of the indicators in the target population. A detector trained with the training data is not optimal for the target data in this case.

Concept drift can be accounted for by weighting the training data, by transforming the target data, or by retraining the detector. All three approaches are discussed in the following. Parallel to Case I, the effect of concept drift can be corrected by weighting the data by $p_{\text{target}}(x \mid y) / p_{\text{train}}(x \mid y)$ when the detector is trained with the training data set. This is due to Equation 1, Equation 2, and the assumption that $p_{\text{train}}(y) = p_{\text{target}}(y)$. Weighting by $p_{\text{target}}(x \mid y) / p_{\text{train}}(x \mid y)$, however, is rarely feasible in practice for several reasons. Estimating the ratio of densities is difficult and often results in large standard errors of estimation (Huang et al., 2006). Furthermore, due to a lack of labels in the target data, there is no direct estimator of $p_{\text{target}}(x \mid y = 0)$ and $p_{\text{target}}(x \mid y = 1)$. Provided that the distribution of the indicators is identical in the regular responders ($p_{\text{train}}(x \mid y = 0) = p_{\text{target}}(x \mid y = 0)$), one may estimate the distribution $p_{\text{target}}(x \mid y = 1)$ with a mixture model. In the more general case, a different solution is necessary. If the distributions of the indicators in the training and the target population simply differ in the locations and the scales, the weights can be estimated by a kernel embedding of the conditional distributions as proposed by K. Zhang et al. (2013).

Instead of weighting the training data when training the detector, one can transform the target data. In case of concept drift, there is a transformation that transforms the indicators in such a way that the transformed indicators have the same conditional distribution in the target and the training population. In case the conditional distributions of the indicators in cheaters and regular responders differ in the same way, the transformation does not depend on $y$. This, for example, occurs in visual recognition where data differ with respect to perspective or resolution but are otherwise comparable (Shao et al., 2014). In psychological assessment, this happens when contextual changes have the same effects on cheaters as on regular responders. New items, for example, might increase the response time by the same amount of time in both groups. When the transformation does not depend on $y$, it is justified to apply a transformation that transforms $p_{\text{target}}(x)$ into $p_{\text{train}}(x)$. As no labels are required for this transformation, it can be determined with the available data (e.g., Pan et al., 2011).

The last option to handle concept drift is self-labeling, a technique that consists in simultaneously updating the parameters of a detector and the labels of the originally unlabeled test takers in the target sample (Long et al., 2014; Triguero et al., 2015). As there are many different variants of self-labeling, we only describe the general approach; see the empirical application for a detailed description of a specific variant. In the present context, the test takers in the target sample are labeled as regular responders or as cheaters with a detector that was previously trained with the training data. From the labeled target data, those test takers are selected for which the detector makes the most confident predictions. The selected test takers are then added to the training data and the detector is trained anew with the augmented data set. Labeling and retraining are repeated until the detector does not change anymore. More recent approaches combine self-labeling methods with down-weighting the instances of the training data (e.g., Bruzzone & Marconcini, 2010). Here, in each iteration, one adds labeled test takers of the target data set and down-weights or removes test takers of the training data set. This slowly decreases the influence of the original training data on the detector.
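The general self-labeling loop described above can be sketched as follows. This is a minimal illustration of the generic scheme, not of a specific published variant: it assumes a single indicator, a classifier with one normal density per class, a confidence cutoff of 0.95, and simulated data in which the target indicator is shifted by half a standard deviation. All of these choices are assumptions made for the example.

```python
import math
import random
import statistics


def fit(xs, ys):
    """Fit one normal density per class plus the class proportions."""
    model = {}
    for label in (0, 1):
        values = [x for x, y in zip(xs, ys) if y == label]
        model[label] = (statistics.mean(values), statistics.stdev(values),
                        len(values) / len(xs))
    return model


def posterior_cheater(model, x):
    dens = {}
    for label, (mean, sd, prior) in model.items():
        dens[label] = prior * math.exp(-0.5 * ((x - mean) / sd) ** 2) / sd
    return dens[1] / (dens[0] + dens[1])


def self_label(train_x, train_y, target_x, confidence=0.95, max_cycles=20):
    model = fit(train_x, train_y)
    pseudo = {}  # index of target case -> predicted label
    for _ in range(max_cycles):
        new_pseudo = {}
        for i, x in enumerate(target_x):
            p = posterior_cheater(model, x)
            if max(p, 1 - p) >= confidence:  # keep only confident predictions
                new_pseudo[i] = 1 if p >= 0.5 else 0
        if new_pseudo == pseudo:  # labels stable: stop
            break
        pseudo = new_pseudo
        xs = train_x + [target_x[i] for i in pseudo]
        ys = train_y + [pseudo[i] for i in pseudo]
        model = fit(xs, ys)  # retrain on the augmented data
    return model, pseudo


def accuracy(model, xs, ys):
    hits = sum((posterior_cheater(model, x) >= 0.5) == (y == 1) for x, y in zip(xs, ys))
    return hits / len(xs)


rng = random.Random(7)
train_x = [rng.gauss(0.0, 1.0) for _ in range(300)] + [rng.gauss(4.0, 1.0) for _ in range(30)]
train_y = [0] * 300 + [1] * 30
target_x = [rng.gauss(0.5, 1.0) for _ in range(300)] + [rng.gauss(4.5, 1.0) for _ in range(30)]
target_y = [0] * 300 + [1] * 30  # used only to score the sketch, never for training

naive_model = fit(train_x, train_y)
adapted_model, pseudo_labels = self_label(train_x, train_y, target_x)
accuracy_naive = accuracy(naive_model, target_x, target_y)
accuracy_adapted = accuracy(adapted_model, target_x, target_y)
```

The loop relabels all target cases in every cycle and stops once the set of confident labels no longer changes. Variants that down-weight or drop the original training cases would modify the line that builds the augmented data set.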

Case III: In the third case, we assume that both factors of Equation 2 are different in the target population, the baseline rates of cheating ($p_{\text{train}}(y) \neq p_{\text{target}}(y)$) and the conditional distributions of the indicators ($p_{\text{train}}(x \mid y) \neq p_{\text{target}}(x \mid y)$). This is the combination of Case I and Case II. We refer to this case as domain shift. Domain shift impairs the usage of the trained detector for the target data as the distribution of the indicators in the training population differs from the distribution of the indicators in the target population.

Domain shift can be accounted for by weighting the training data or by retraining the detector. Similar to Case I and Case II, the effects of domain shift can be countered by weighting the data by $p_{\text{target}}(x, y) / p_{\text{train}}(x, y)$ when training the detector with the training data. This is a direct consequence of Equation 1 and Equation 2. A direct application of this result is infeasible as the labels in the target sample are not known such that $p_{\text{target}}(x, y)$ cannot be determined. Unfortunately, there is no way to estimate the weights directly unless further assumptions are made about the difference between $p_{\text{train}}(y)$ and $p_{\text{target}}(y)$ and the difference between $p_{\text{train}}(x \mid y)$ and $p_{\text{target}}(x \mid y)$. When the shift consists of a change in the baseline rates of cheating and a scale and location shift of $p_{\text{train}}(x \mid y)$, the approach of K. Zhang et al. (2013) can be generalized to the case of a domain shift. The second option to handle domain shift is self-labeling. This will work as long as the difference between the two distributions is not too large. For self-labeling, the same procedures can be used as in Case II.

Empirical Application

When a detector is transferred from training to target data, its performance depends crucially on the similarity of the baseline rates of cheating and the conditional distributions of the indicators in the training and the target population (Mansour et al., 2009; Xu & Mannor, 2012). As there are numerous reasons why the baseline rates of cheating and the conditional distributions of the indicators might differ, it is not clear whether a transferred detector will perform well in practice. In the following, we investigate the performance of a transferred detector by means of an empirical example. In the study, we considered several popular indicators of cheating that were used in previous papers on cheating detection. We first tested whether the distribution of the indicators differs between data sets. We then compared the performance of a transferred detector to the performance of an unsupervised detector that was trained solely with the unlabeled target data. We considered two forms of transfer. In the first form, the trained detector was simply applied to the new data without any adaptations to possible distributional changes. In the second form, the trained detector was retrained via a self-labeling approach. We finally investigated whether the transferred detector can be back-transferred to the original data set. As the target data are not labeled in practice, the detection accuracy of the transferred detector cannot be estimated directly. A back-transfer was suggested as a way to evaluate the success of the transfer in this situation (Bruzzone & Marconcini, 2010).

Data

The data consisted of the responses and the response times in a credentialing test (Cizek & Wollack, 2017a). The credentialing test was employed in two forms, Form A and Form B, each consisting of 170 scored items. Both forms have 87 items in common. The remaining 83 items are specific to each form. Form A was taken by 1,636 test takers and Form B by 1,644 test takers. In the data set, all test takers were classified either as regular responders or as cheaters by the testing company. In Form A, 2.8% of the test takers were flagged as cheaters. In Form B, 2.9% of the test takers were flagged as cheaters. We chose the credentialing data set as it is one of the few data sets with identified cheaters. Only when cheaters are identified is it possible to evaluate the accuracy of a detector. Furthermore, the credentialing data are a good representative of the data one would analyze for cheating. It is a computer-based high-stakes test with single-choice response format that is employed over a longer testing period; note that the credentialing data have been analyzed before by Boughton et al. (2017), Man et al. (2019), and Zopluoglu (2019), to mention just a few.

For the present purpose, we only considered the specific items that were contained in either Form A or Form B. The selected items constituted two item sets with no overlap. We denote the corresponding data sets as data set A-L and data set B-L in the following. As the data sets A-L and B-L had no item in common, they can be regarded as data from different tests. We then split the data set A-L into two subtests of 42 and 41 items. Data set A-R1 contained the data in the 42 items with odd item number and data set A-R2 the data in the 41 items with even item number. We split the data set B-L in the same way into data set B-R1 and data set B-R2. This resulted in six data sets containing the responses and response times on tests of different content and length. We split the credentialing data in this way as we wanted to investigate the transfer of a cheating detector when tests differ in content and length.

Indicators of Cheating

In the study, we used indicators of cheating based on the responses, the response times, or both. We favored nonparametric indicators of cheating that do not require strong distributional assumptions about the responses and the response times. This was motivated by the fact that such assumptions are often not met in practice. Although we also included a few parametric indicators of cheating, we did not use them for tests of model fit but simply treated them as further nonparametric indicators. In total, we used ten indicators of cheating, two based on the responses of a test taker, two based on the similarity of the responses of different test takers, five based on the response times of a test taker, and one based on the responses and response times of a test taker. Due to space limitations, we give only a short description of the indicators; see the references for a more detailed description.

Indicator $H^T$

$H^T$ (Sijtsma, 1986) is a measure of person fit on the basis of the responses. $H^T$ is the average covariance of the responses of a test taker and the responses of the remaining test takers. This covariance is then normalized by its maximum attainable value. Small values of $H^T$ indicate an irregular response process.

Indicator C

$C$ (Sato, 1975) is a measure of person fit that assesses the Guttman homogeneity of a response pattern. Response patterns are Guttman homogeneous when solving a question implies that all easier questions have been solved as well. $C$ is based on the covariance between the responses of a test taker and the solution probabilities of the items divided by the covariance a perfect Guttman pattern would have. $C$ is zero when a response pattern is perfectly Guttman homogeneous. Large values of $C$ indicate an irregular response process.

Indicator ZT

$ZT$ is the standardized log-transformed total testing time of a test taker. In order to prevent masking, the standardization is done with robust estimates of location and scale. Large absolute values of $ZT$ indicate outliers and might be a sign of an irregular response process.
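A robust standardization of this kind can be sketched in a few lines. The text does not state which robust estimators were used; the example assumes the median as location and the scaled median absolute deviation as scale, and the testing times are invented.

```python
import math
import statistics


def zt(total_times):
    """Robustly standardized log total testing times (median/MAD is an assumption)."""
    logs = [math.log(t) for t in total_times]
    center = statistics.median(logs)
    mad = statistics.median([abs(v - center) for v in logs])
    scale = 1.4826 * mad  # makes the MAD consistent with the SD under normality
    return [(v - center) / scale for v in logs]


# Hypothetical total testing times in seconds; the last test taker is very fast.
times = [3000, 3200, 3400, 3600, 3800, 900]
zt_values = zt(times)
```

Because median and MAD are barely affected by the single fast test taker, that value stands out clearly, whereas a mean and standard deviation computed from the same data would be pulled toward it.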

Indicator KL

$KL$ (Man et al., 2018) is a measure of person fit on the basis of the response times. $KL$ evaluates whether a test taker distributes his or her testing time over the items in a similar way as the remaining test takers. $KL$ is the Kullback–Leibler divergence between the percentage shares of the response times of a test taker in his or her total testing time and the average shares of the sample. $KL$ is zero in case of perfect agreement. Large values of $KL$ indicate an irregular response process.
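The computation can be sketched as follows. The direction of the divergence (test taker relative to the sample average) and the use of the whole sample for the average shares are assumptions of this example, and the response times are invented.

```python
import math


def time_shares(times):
    """Share of each item's response time in the total testing time."""
    total = sum(times)
    return [t / total for t in times]


def kl_indicator(response_times):
    """One divergence value per test taker; rows are test takers, columns are items."""
    shares = [time_shares(row) for row in response_times]
    n_items = len(shares[0])
    mean_shares = [sum(row[j] for row in shares) / len(shares) for j in range(n_items)]
    return [sum(p * math.log(p / q) for p, q in zip(row, mean_shares)) for row in shares]


# Hypothetical response times (seconds) of four test takers on four items.
response_times = [
    [30, 60, 90, 120],
    [28, 62, 88, 122],
    [33, 58, 93, 116],
    [100, 20, 20, 10],  # spends most of the time on the first item
]
kl_values = kl_indicator(response_times)
```

The fourth test taker allocates the testing time very differently from the rest and receives by far the largest value. All response times must be positive for the logarithm to be defined.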

Indicator L

$L$ is a measure of person fit on the basis of the response times. For $L$, the log-response times are centered over the items and the test takers. The centered response times are divided by the item-specific standard deviation (double standardization; Fekken & Holden, 1992). The standardized response times of a test taker are then squared and summed. $L$ approximates the statistics proposed by Marianti et al. (2014) and Sinharay (2018). As such, the expected value of $L$ is close to the number of items minus one in case the response times are log-normally distributed. Values near zero and large values indicate an irregular response process.

Indicator

This indicator (Ranger et al., 2021) is a measure of person fit on the basis of the response times that parallels $H^T$ (Sijtsma, 1986). It is the average correlation between the response times of a test taker and the response times of the remaining test takers. Small values of the indicator point to an irregular response process.

Indicator

This indicator is a measure of person fit on the basis of the response times. For this indicator, the continuous response times are discretized itemwise into zero and one at item-specific thresholds. Then, the $U3$ statistic (van der Flier, 1982) is determined for the binary response time pattern of a test taker. $U3$ is the sum of weighted Guttman errors (violations of Guttman homogeneity) that is scaled to $[0, 1]$. Large values of the indicator point to an irregular response process.

Indicator /

These two indicators measure answer copying on the basis of the responses of the test takers. They are related to the $K$ statistic (Holland, 1996; Sotaridona & Meijer, 2002). The $K$ statistic is used to test the hypothesis that the number of identical incorrect responses between a suspected copier and a supposed source is due to chance. The $p$ value of the test is determined by a binomial distribution and a linear approximation of the agreement probability. For the present purpose, we determined the number of identical incorrect responses and the $p$ values for all pairs of test takers. The first indicator is the maximal number of identical responses and the second indicator the minimal $p$ value of a test taker. High values of the first and low values of the second indicator are indicative of an irregular response process.

Indicator CD

$CD$ (Ranger et al., 2020) is a measure of person fit on the basis of the responses and response times. $CD$ is Cook’s distance in the regression of the total testing time on the test score. The distance of a test taker is large when the test score of the test taker is an outlier and the response time has a large regression residual. High values of the $CD$ statistic are indicative of an irregular response process.
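Cook's distance in a simple linear regression can be computed directly from the residuals and the leverages. The sketch below uses the textbook formula for a regression with intercept and one predictor; the scores and testing times are invented, and the function name is hypothetical.

```python
def cooks_distance(scores, times):
    """Cook's distance for each test taker in the regression of time on score."""
    n = len(scores)
    mean_x = sum(scores) / n
    mean_y = sum(times) / n
    sxx = sum((x - mean_x) ** 2 for x in scores)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(scores, times)) / sxx
    intercept = mean_y - slope * mean_x
    residuals = [y - (intercept + slope * x) for x, y in zip(scores, times)]
    n_params = 2  # intercept and slope
    s2 = sum(e ** 2 for e in residuals) / (n - n_params)  # residual variance
    leverage = [1 / n + (x - mean_x) ** 2 / sxx for x in scores]
    return [e ** 2 / (n_params * s2) * h / (1 - h) ** 2
            for e, h in zip(residuals, leverage)]


# Hypothetical test scores and total testing times (minutes).
scores = [50, 55, 60, 65, 70, 75, 80, 98]
times = [170, 168, 165, 160, 158, 155, 150, 60]  # last: very high score, very short time
cd_values = cooks_distance(scores, times)
```

The last test taker combines an extreme score (high leverage) with a large residual and therefore receives the largest distance.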

We used these indicators as they had good discriminatory power in previous studies (Man et al., 2018; Ranger et al., 2020, 2021) and did not correlate too highly. The indicators were determined with the R packages CopyDetect (Zopluoglu, 2018), PerFit (Tendeiro et al., 2016) or with scripts written by the authors. Prior to data analysis, the indicators in each data set were standardized. We used robust estimates of location and scale in order to reduce the influence of the cheaters which might provide outlying values. The robust estimates were determined with the R package robust (Wang et al., 2013).

Method

We investigated whether the distributions of the indicators of cheating differ between the data sets. For this purpose, we tested for all pairs of data sets the equality of the joint distribution of the indicators with two multivariate two-sample tests. The first test (MMD test) was a kernel two-sample test on the basis of the maximum mean discrepancy (Gretton et al., 2012). For this test, the expectations of functions of the indicators in the two populations are considered. One could, for example, determine the expected product of all indicators in the training and the target population. The discrepancy between the two distributions is then assessed by the difference in the expectations. The maximum mean discrepancy is the largest difference that can be achieved over all continuous functions that are subject to certain technical bounds. The MMD test is thus not restricted to a comparison of a limited set of moments but employs the most discriminating statistic that is available. It can be shown that two multivariate distributions are identical in case the maximum mean discrepancy is zero. The MMD test was performed with the R package maotai (Kisung, 2022). We used the unbiased version of the test and a radial basis function kernel. The second test (RF test) was a test on the basis of a random forest classifier (Hediger et al., 2022). The RF test determines whether a random forest classifier is able to separate observations from two data sets beyond chance level. The RF test was performed with the R package hypoRF (Hediger et al., 2021). The two tests are complementary. The MMD test is recommended for the detection of differences in the dependency structure, while the RF test is recommended for the detection of differences in the marginal distributions (Hediger et al., 2022). We also conducted a post hoc analysis with two-sample Kolmogorov–Smirnov tests in order to determine which indicators are distributed differently.
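The logic of the MMD test can be sketched without any package. The example below computes the unbiased estimate of the squared maximum mean discrepancy with a radial basis function kernel and obtains a p value by permuting the group membership. It is an illustration only and does not reproduce the maotai implementation; the fixed bandwidth, the number of permutations, and the simulated two-dimensional data are assumptions.

```python
import math
import random


def rbf_kernel_matrix(points, bandwidth):
    """Kernel values for all pairs of pooled observations."""
    n = len(points)
    K = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i, n):
            sq = sum((a - b) ** 2 for a, b in zip(points[i], points[j]))
            K[i][j] = K[j][i] = math.exp(-sq / (2 * bandwidth ** 2))
    return K


def mmd2_unbiased(K, idx_a, idx_b):
    """Unbiased estimate of the squared MMD from a precomputed kernel matrix."""
    m, n = len(idx_a), len(idx_b)
    within_a = sum(K[i][j] for i in idx_a for j in idx_a if i != j) / (m * (m - 1))
    within_b = sum(K[i][j] for i in idx_b for j in idx_b if i != j) / (n * (n - 1))
    between = sum(K[i][j] for i in idx_a for j in idx_b) / (m * n)
    return within_a + within_b - 2 * between


def mmd_permutation_test(sample_a, sample_b, bandwidth=1.0, n_perm=200, seed=0):
    pooled = sample_a + sample_b
    K = rbf_kernel_matrix(pooled, bandwidth)
    m = len(sample_a)
    indices = list(range(len(pooled)))
    observed = mmd2_unbiased(K, indices[:m], indices[m:])
    rng = random.Random(seed)
    exceed = 0
    for _ in range(n_perm):
        rng.shuffle(indices)  # random reassignment of observations to the two groups
        if mmd2_unbiased(K, indices[:m], indices[m:]) >= observed:
            exceed += 1
    return observed, (exceed + 1) / (n_perm + 1)


# Simulated standardized indicators from two data sets; the second is shifted.
rng = random.Random(11)
sample_a = [(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(40)]
sample_b = [(rng.gauss(1.5, 1.0), rng.gauss(1.5, 1.0)) for _ in range(40)]
mmd2, p_value = mmd_permutation_test(sample_a, sample_b)
```

A small p value indicates that the two samples are unlikely to come from the same distribution. In applications the bandwidth is often chosen from the data, for instance by a median heuristic, rather than fixed in advance.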

We then trained a detector of cheating. As the detector of cheating, we used a quadratic discriminant classifier (Hastie et al., 2009, pp. 110–112). We chose a quadratic discriminant classifier as it achieved similar accuracy as alternative methods of machine learning (Man et al., 2019) but was simpler to train and did not require tuning. We proceeded as follows. We fit the quadratic discriminant classifier to a first data set (training data) using the known labels of the test takers. The training data set was chosen from the six data sets A-L, A-R1, A-R2, B-L, B-R1, or B-R2. We used the R package RSSL (Krijthe, 2016) for model fitting. We then chose a new data set (target data) for which we aimed at identifying the cheaters. The target data set was chosen from the remaining data sets such that there was no overlap in items or test takers. When using A-L as training data set, for example, it was only paired with target data set B-L, B-R1, or B-R2. Although the target data were labeled, we pretended that the labels were not known. As a detector of cheating, we reused the trained quadratic discriminant classifier for the target data. We predicted the conditional probabilities of being a regular responder or a cheater with the reused quadratic discriminant classifier and classified the test taker into the more probable class.
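The naive transfer can be illustrated with a small quadratic discriminant classifier written from scratch. The sketch is restricted to two indicators so that the covariance matrix can be inverted by hand; it does not use the RSSL package, and the simulated training and target data (including the small shift in the target data) are assumptions made for the example.

```python
import math
import random


def fit_qda(rows, labels):
    """Class-specific mean vector, covariance matrix, and prior for two indicators."""
    params = {}
    for label in (0, 1):
        pts = [r for r, y in zip(rows, labels) if y == label]
        n = len(pts)
        m0 = sum(p[0] for p in pts) / n
        m1 = sum(p[1] for p in pts) / n
        s00 = sum((p[0] - m0) ** 2 for p in pts) / (n - 1)
        s11 = sum((p[1] - m1) ** 2 for p in pts) / (n - 1)
        s01 = sum((p[0] - m0) * (p[1] - m1) for p in pts) / (n - 1)
        params[label] = {"mean": (m0, m1), "cov": (s00, s01, s11), "prior": n / len(rows)}
    return params


def log_score(x, p):
    """Log prior plus log normal density, up to a constant shared by both classes."""
    (m0, m1), (s00, s01, s11) = p["mean"], p["cov"]
    det = s00 * s11 - s01 ** 2
    d0, d1 = x[0] - m0, x[1] - m1
    maha = (s11 * d0 * d0 - 2 * s01 * d0 * d1 + s00 * d1 * d1) / det
    return math.log(p["prior"]) - 0.5 * math.log(det) - 0.5 * maha


def predict(x, params):
    """Classify into the more probable class (1 = cheater)."""
    return 1 if log_score(x, params[1]) > log_score(x, params[0]) else 0


def simulate(n_regular, n_cheater, shift, rng):
    rows = [(rng.gauss(0.0, 1.0) + shift, rng.gauss(0.0, 1.0) + shift)
            for _ in range(n_regular)]
    rows += [(rng.gauss(3.0, 1.5) + shift, rng.gauss(3.0, 1.5) + shift)
             for _ in range(n_cheater)]
    return rows, [0] * n_regular + [1] * n_cheater


rng = random.Random(3)
train_rows, train_labels = simulate(400, 40, 0.0, rng)
target_rows, target_labels = simulate(400, 40, 0.3, rng)  # labels used only for scoring

detector = fit_qda(train_rows, train_labels)          # trained once on labeled data
predictions = [predict(x, detector) for x in target_rows]  # reused without adaptation
accuracy_naive = sum(p == y for p, y in zip(predictions, target_labels)) / len(target_labels)
```

The detector is fitted once and then applied unchanged, which is all a naive transfer amounts to. Any loss in accuracy relative to a detector trained on labeled target data reflects the distributional differences discussed in the first section.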

Simply reusing a detector is a naive transfer in which no adaptations to possible distributional differences are made. We additionally transferred the trained quadratic discriminant classifier to the target data via a self-labeling approach. This was done in order to compare the detection performance of the naive transfer to that of a more sophisticated transfer. The self-labeling approach was based on the SETRED algorithm of Li and Zhou (2005). The SETRED algorithm is an algorithm for semisupervised learning. It uses a pretrained classifier to predict the labels of unlabeled data (here, the target data). In the current application, this was the quadratic discriminant classifier that was trained with the training data. Then, the target data points with the most confident predictions are selected and included in the original training data set. This constitutes the augmented training data set. The augmented data set is then checked for possible incorrect label assignments. This is done via a comparison of the predicted label of a target data point with the labels of the training data in a neighborhood of the target data point. In case of low agreement, the prediction is considered unreliable and the target data point is removed from the augmented data set. The classifier is then fit anew to the augmented data set in the same way as it was fit to the original training data. This process of prediction, data augmentation, data cleaning, and retraining is repeated until the detector does not change anymore or a maximum number of cycles is exceeded. We used the implementation of the SETRED algorithm provided in the R package ssc (González et al., 2019). After the transfer, we predicted the labels of all test takers in the target data set. We predicted the conditional probabilities of being a regular responder or a cheater with the transferred quadratic discriminant classifier and classified the test taker into the more probable class. In practice, the SETRED algorithm can be used for all data sets provided that they contain the same variables.
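The cycle of prediction, data augmentation, data cleaning, and retraining can be sketched as follows. The sketch is a simplified self-labeling loop and not the SETRED algorithm as implemented in the ssc package: the cleaning step is replaced by a plain majority vote among the nearest labeled points, the classifier is univariate, and all names, tuning values, and data are hypothetical.

```python
import math
from statistics import mean, variance


def fit(values, labels):
    """Per-class prior, mean, and variance of a univariate quadratic discriminant classifier."""
    model = {}
    for cls in (0, 1):  # 0 = regular responder, 1 = cheater
        vals = [v for v, lab in zip(values, labels) if lab == cls]
        model[cls] = (len(vals) / len(values), mean(vals), variance(vals))
    return model


def posterior(model, value):
    """Posterior class probabilities for one indicator value."""
    joint = {}
    for cls, (prior, mu, var) in model.items():
        joint[cls] = prior * math.exp(-(value - mu) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)
    total = joint[0] + joint[1]
    return {cls: j / total for cls, j in joint.items()}


def predict(model, value):
    """Label of the more probable class."""
    post = posterior(model, value)
    return max(post, key=post.get)


def neighbors_agree(value, label, values, labels, k=3):
    """Cleaning step: keep a point only if most of its k nearest labeled points share its label."""
    nearest = sorted(zip(values, labels), key=lambda pair: abs(pair[0] - value))[:k]
    return sum(1 for _, lab in nearest if lab == label) > k / 2


def self_label(train_x, train_y, target_x, per_round=2, max_rounds=10):
    """Simplified self-labeling transfer of a classifier to unlabeled target data."""
    values, labels, pool = list(train_x), list(train_y), list(target_x)
    model = fit(values, labels)
    for _ in range(max_rounds):
        # Prediction: rank the unlabeled target points by the confidence of their label.
        scored = sorted(((max(posterior(model, v).values()), v, predict(model, v)) for v in pool),
                        reverse=True)
        # Augmentation and cleaning: take the most confident points, drop unreliable ones.
        accepted = [(v, lab) for _, v, lab in scored[:per_round]
                    if neighbors_agree(v, lab, values, labels)]
        if not accepted:
            break  # nothing reliable left to add, so the detector no longer changes
        for v, lab in accepted:
            values.append(v)
            labels.append(lab)
            pool.remove(v)
        # Retraining on the augmented data set.
        model = fit(values, labels)
    return model


# Hypothetical labeled training data and unlabeled, slightly shifted target data.
train_x = [-0.9, -0.4, 0.1, 0.3, -0.2, 0.6, -0.7, 0.0, 0.4, -0.1, -4.0, -2.5]
train_y = [0] * 10 + [1] * 2
target_x = [0.5, 0.9, 0.2, 1.1, 0.7, -0.3, -2.0, -2.8]
adapted = self_label(train_x, train_y, target_x)
target_labels = [predict(adapted, v) for v in target_x]
print(target_labels)
```

The per-round batch size and the neighborhood size `k` are arbitrary choices in this sketch; the SETRED algorithm itself bases the cleaning step on a statistical test in a neighborhood graph (Li & Zhou, 2005).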

We additionally fit a Gaussian mixture model with two mixture components to the target data. Fitting a Gaussian mixture model does not require labeled data and would have been one of the few popular options to detect cheaters in new data (Man et al., 2019). Test takers were assigned to the component with the highest posterior probability. We considered the component with the smaller prior probability as representing cheating. The Gaussian mixture model was fit with the R package mclust (Scrucca et al., 2016). We considered the Gaussian mixture model as a benchmark for the two transferred quadratic discriminant classifiers. Without labeled target data, one would either have to use a method from unsupervised machine learning or a method from supervised machine learning that was trained with other data.
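The unsupervised benchmark can be illustrated with a small expectation-maximization routine. This is a univariate sketch with a deterministic start and not the mclust implementation; the function names and the data are hypothetical.

```python
import math


def component_densities(value, weight, mu, var):
    """Weighted Gaussian densities of both mixture components at one value."""
    return [
        weight[k] * math.exp(-(value - mu[k]) ** 2 / (2 * var[k])) / math.sqrt(2 * math.pi * var[k])
        for k in (0, 1)
    ]


def fit_two_component_gmm(values, n_iter=100):
    """Fit a univariate two-component Gaussian mixture with the EM algorithm."""
    n = len(values)
    grand_mean = sum(values) / n
    grand_var = sum((v - grand_mean) ** 2 for v in values) / n
    # Deterministic start: one component at each extreme of the data.
    weight, mu, var = [0.5, 0.5], [min(values), max(values)], [grand_var, grand_var]
    for _ in range(n_iter):
        # E-step: posterior probability of each component for every value.
        resp = []
        for v in values:
            dens = component_densities(v, weight, mu, var)
            resp.append([d / sum(dens) for d in dens])
        # M-step: update weights, means, and variances from the posteriors.
        for k in (0, 1):
            total = sum(r[k] for r in resp)
            weight[k] = total / n
            mu[k] = sum(r[k] * v for r, v in zip(resp, values)) / total
            var[k] = max(sum(r[k] * (v - mu[k]) ** 2 for r, v in zip(resp, values)) / total, 1e-6)
    return weight, mu, var


def flag_minority_component(values, weight, mu, var):
    """Indices of test takers whose more probable component is the one with the smaller weight."""
    minority = 0 if weight[0] < weight[1] else 1
    flagged = []
    for i, v in enumerate(values):
        dens = component_densities(v, weight, mu, var)
        if dens[minority] > dens[1 - minority]:
            flagged.append(i)
    return flagged


# Hypothetical unlabeled target data: 16 regular responders followed by 4 cheaters.
values = [-0.9, -0.4, 0.1, 0.3, -0.2, 0.6, -0.7, 0.0, 0.4, -0.1,
          0.5, 0.9, 0.2, 1.1, 0.7, -0.3, -4.0, -2.5, -2.8, -3.3]
weight, mu, var = fit_two_component_gmm(values)
flagged = flag_minority_component(values, weight, mu, var)
print(flagged)
```

As in the procedure described above, the component with the smaller mixing weight is interpreted as representing cheating; no labels enter the fit.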

We evaluated the detection accuracy of all three detectors, the reused quadratic discriminant classifier, the transferred quadratic discriminant classifier, and the Gaussian mixture model using the known labels in the target data set. We considered the detection accuracy of the Gaussian mixture model as a benchmark for the two transferred quadratic discriminant classifiers. We considered the detection accuracy of the reused quadratic discriminant classifier as a benchmark for the classifier that was transferred with the SETRED algorithm. As measures of the detection accuracy, we used the hit rate (relative detection frequency of cheaters), the rate of false alarms (relative frequency of mislabeled regular responders), and the area under the curve (AUC) of the receiver–operating characteristic (ROC). These quantities were determined with the R package pROC (Robin et al., 2011).
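The three accuracy measures can be written down compactly. The sketch below is not the pROC implementation; it computes the AUC as the share of cheater and regular responder pairs in which the cheater receives the higher score, and the labels and scores are hypothetical.

```python
def hit_rate(labels, predicted):
    """Relative frequency of cheaters (label 1) that are flagged."""
    flags = [p for lab, p in zip(labels, predicted) if lab == 1]
    return sum(flags) / len(flags)


def false_alarm_rate(labels, predicted):
    """Relative frequency of regular responders (label 0) that are flagged."""
    flags = [p for lab, p in zip(labels, predicted) if lab == 0]
    return sum(flags) / len(flags)


def auc(labels, scores):
    """Area under the ROC curve via pairwise comparisons; ties count one half."""
    cheaters = [s for lab, s in zip(labels, scores) if lab == 1]
    regulars = [s for lab, s in zip(labels, scores) if lab == 0]
    wins = 0.0
    for c in cheaters:
        for r in regulars:
            if c > r:
                wins += 1.0
            elif c == r:
                wins += 0.5
    return wins / (len(cheaters) * len(regulars))


# Hypothetical true labels and predicted probabilities of being a cheater.
labels = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
scores = [0.05, 0.10, 0.20, 0.60, 0.15, 0.30, 0.90, 0.40, 0.70, 0.55]
predicted = [1 if s > 0.5 else 0 for s in scores]

hr = hit_rate(labels, predicted)
fa = false_alarm_rate(labels, predicted)
area = auc(labels, scores)
print(hr, fa, area)
```

The hit rate and the rate of false alarms depend on the classification threshold (here 0.5), whereas the AUC summarizes the ranking of the test takers over all thresholds.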

In practice, the target data are not labeled. Hence, it is neither possible to evaluate the detection accuracy nor to assess whether the transfer was successful. As a solution, Bruzzone and Marconcini (2010) suggested evaluating the transfer of a detector by an evaluation of a back-transfer of the detector from the target data to the training data. As the labels are known in the training data, the detection accuracy of a back-transferred detector can be evaluated. A successful back-transfer is then considered indicative of a successful transfer. The suggestion was motivated by the assumption that relations, when they exist, can be reversed. To investigate whether this procedure is useful in the present context, we exchanged the roles of the training and the target data. We first labeled the target data with the transferred quadratic discriminant classifier and considered the predicted labels as the true ones. We then fit a quadratic discriminant classifier to the labeled target data. This classifier would be the classifier to transfer if the target data were the training data. The classifier was then back-transferred to the training data via the SETRED algorithm. In doing so, we ignored the labels of the training data. The procedure was identical to the first application of the SETRED algorithm. With the back-transferred quadratic discriminant classifier, we predicted the labels of all test takers in the training data set. We then evaluated the detection accuracy of the back-transferred quadratic discriminant classifier in the training data by means of the AUC. We also evaluated the accuracy of the classifier that was trained on the labeled target data when predicting the labels of the training data. The benefit of the back-transfer was assessed by the performance difference between the two classifiers. The benefit of the back-transfer was considered a proxy of the benefit of the transfer.

Results

The means (M) and standard deviations (SD) of the standardized indicators of cheating are reported in Table 1 for the different data sets separately for the regular responders (RR) and the cheaters (CT); note that the mean and the standard deviation of the standardized indicators are not zero and one, respectively, as we used robust estimates of scale and location for the standardization and standardization was done with the whole data set.

Table 1.

Mean (M) and Standard Deviation (SD) of the Standardized Indicators of Cheating in the Different Data Sets Separately for Regular Responders (RR) and Cheaters (CT) as Well as the Sample Size (n)

| Ind | Stat | A-L RR | A-L CT | A-R1 RR | A-R1 CT | A-R2 RR | A-R2 CT | B-L RR | B-L CT | B-R1 RR | B-R1 CT | B-R2 RR | B-R2 CT |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
|  | n | 1,590 | 46 | 1,590 | 46 | 1,590 | 46 | 1,595 | 48 | 1,595 | 48 | 1,595 | 48 |
| ZT | M | −0.50 | −2.51 | −0.49 | −2.39 | −0.40 | −2.31 | −0.54 | −2.98 | −0.33 | −2.38 | −0.44 | −2.59 |
|  | SD | 1.58 | 3.28 | 1.57 | 3.12 | 1.61 | 3.22 | 1.62 | 3.37 | 1.49 | 3.02 | 1.48 | 2.83 |
| KL | M | 0.12 | 0.85 | 0.15 | 0.98 | 0.12 | 0.56 | 0.17 | 0.99 | 0.21 | 0.84 | 0.24 | 1.15 |
|  | SD | 1.11 | 2.49 | 1.14 | 2.53 | 1.05 | 2.05 | 1.12 | 2.63 | 1.09 | 2.17 | 1.19 | 2.89 |
| L | M | 0.10 | 1.79 | 0.12 | 1.90 | 0.10 | 1.28 | 0.17 | 2.02 | 0.19 | 1.51 | 0.25 | 2.28 |
|  | SD | 1.29 | 5.52 | 1.36 | 5.45 | 1.13 | 4.49 | 1.43 | 6.03 | 1.28 | 4.65 | 1.49 | 6.60 |
|  | M | −0.01 | −0.16 | −0.01 | −0.15 | −0.05 | −0.14 | −0.07 | −0.41 | −0.08 | −0.37 | −0.09 | −0.34 |
|  | SD | 0.94 | 1.23 | 0.91 | 1.08 | 0.90 | 1.14 | 0.99 | 1.16 | 0.95 | 0.99 | 0.94 | 1.12 |
| UT3 | M | 0.03 | 0.17 | 0.03 | 0.10 | 0.05 | 0.25 | 0.03 | −0.09 | 0.07 | 0.14 | 0.10 | −0.07 |
|  | SD | 0.94 | 1.20 | 0.92 | 0.92 | 0.91 | 0.99 | 0.95 | 1.53 | 0.99 | 1.44 | 0.98 | 1.46 |
|  | M | 0.07 | 0.19 | 0.11 | 0.03 | 0.02 | 0.24 | 0.06 | −0.27 | 0.04 | −0.05 | 0.06 | −0.38 |
|  | SD | 0.88 | 0.88 | 0.92 | 0.99 | 0.91 | 0.83 | 0.95 | 1.14 | 0.90 | 1.03 | 0.97 | 1.00 |
| C | M | 0.01 | −0.07 | −0.05 | 0.05 | 0.03 | −0.17 | 0.02 | 0.33 | 0.00 | 0.08 | 0.03 | 0.47 |
|  | SD | 0.85 | 0.92 | 0.86 | 0.91 | 0.87 | 0.88 | 0.90 | 1.12 | 0.87 | 1.01 | 0.89 | 0.96 |
|  | M | 0.03 | 0.31 | 0.00 | −0.03 | −0.02 | 0.03 | 0.08 | 0.49 | 0.01 | 0.39 | −0.07 | −0.17 |
|  | SD | 0.90 | 1.34 | 0.85 | 1.05 | 0.87 | 1.06 | 0.96 | 1.39 | 0.90 | 1.23 | 0.95 | 1.29 |
|  | M | −0.03 | 0.27 | 0.01 | −0.11 | −0.08 | 0.12 | 0.00 | 0.27 | −0.06 | 0.29 | −0.15 | −0.39 |
|  | SD | 0.89 | 1.37 | 0.92 | 1.26 | 0.87 | 1.32 | 0.95 | 1.51 | 0.91 | 1.19 | 0.97 | 1.56 |
| CD | M | 2.28 | 23.45 | 2.05 | 25.35 | 2.80 | 22.64 | 1.90 | 24.10 | 1.93 | 18.49 | 1.81 | 27.02 |
|  | SD | 10.75 | 51.61 | 9.99 | 59.20 | 9.21 | 47.51 | 9.76 | 49.63 | 9.40 | 37.37 | 9.32 | 62.56 |

Note. A-L/B-L = data sets containing all items; A-R1/B-R1 = data sets containing items with odd number; A-R2/B-R2 = data set containing items with even number; RR = regular responders; CT = cheaters; ZT = standardized log-transformed total testing time; KL = Kullback–Leibler divergence between the percentage shares of the response times; L = sum of squared double standardized response times; = average -correlation between a test taker’s and remaining test takers’ response times; = sum of weighted Guttman errors in discrete response time pattern; = average covariance between a test taker’s and the remaining test takers’ responses; C = Guttman homogeneity of a response pattern; and = indicators of answer copying on basis of test takers’ responses; CD = Cook’s distance.

As the indicators tap different aspects of the data and are not normally distributed, it is difficult to compare values of the different indicators although they are standardized. The values of an indicator, however, can be compared between cheaters and regular responders. A measure of the separation of both groups is the mean difference divided by the standard deviation of the regular responders. We chose to relate the mean difference to the standard deviation of the regular responders because the standard deviation differs between cheaters and regular responders and we did not want to pool them. The quantity is similar to Cohen’s d. Values of 0.2, 0.5, and 0.8 are considered small, medium, and large effects, respectively (Cohen, 1988).

The indicators based on response time differ with respect to their capability to distinguish regular responders from cheaters. For ZT, KL, and L, the average values of regular responders and cheaters differ moderately. Mean differences are on average 1.33, 0.65, and 1.23 SD. For and UT3, the means differ less. Mean differences are generally smaller for indicators based on the responses than for indicators based on the response times. For , C, , and , the average mean difference between regular responders and cheaters is about 0.1 SD. Indicator CD, that combines responses and response times, is very powerful. Mean differences are on average about 2.2 SD. Table 1 also suggests that the variance of the indicators tends to be larger in the cheaters than in the regular responders.
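The average separation reported for ZT can be reproduced from the entries of Table 1. The following Python sketch copies the means and standard deviations of ZT from Table 1; the variable and function names are chosen for illustration.

```python
# (mean RR, SD RR, mean CT) of indicator ZT in the six data sets, copied from Table 1.
zt_table1 = {
    "A-L": (-0.50, 1.58, -2.51),
    "A-R1": (-0.49, 1.57, -2.39),
    "A-R2": (-0.40, 1.61, -2.31),
    "B-L": (-0.54, 1.62, -2.98),
    "B-R1": (-0.33, 1.49, -2.38),
    "B-R2": (-0.44, 1.48, -2.59),
}


def separation(mean_rr, sd_rr, mean_ct):
    """Absolute mean difference in units of the regular responders' standard deviation."""
    return abs(mean_ct - mean_rr) / sd_rr


per_data_set = {name: separation(*stats) for name, stats in zt_table1.items()}
average_separation = sum(per_data_set.values()) / len(per_data_set)
print(round(average_separation, 2))  # 1.33
```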

We tested whether the joint distribution of the indicators of cheating is identical in the different data sets. For this purpose, we compared the multivariate distribution of the indicators in the data sets that were used as training data to the distribution in the data sets that were used as target data. We paired the data sets and tested the equality of the distribution with two-sample tests. Results for the two tests, the MMD test and the RF test, are reported in Table 2, where the value of the test statistic and the p value of the test can be found. Each cell contains the results for a pair of two data sets; note that small values of p are evidence against the hypothesis that the distributions are identical. Results are limited to the regular responders. The number of cheaters is too small for valid statements about the distribution of the indicators. The p values of the RF test are determined with a bootstrap. For this reason, some p values are identical, as the p value is a fraction of the bootstrap samples.

Table 2.

Test Statistic (T) and p Value (p) of Two Tests for the Equality of the Distribution of the Indicators in the Different Samples of the Regular Responders

| Data | Result | B-L MMD | B-L RF | B-R1 MMD | B-R1 RF | B-R2 MMD | B-R2 RF |
|---|---|---|---|---|---|---|---|
| A-L | T | 0.000 | 0.930 | 0.001 | 0.860 | 0.001 | 0.831 |
|  | p | .082 | .010 | .037 | .010 | .011 | .010 |
| A-R1 | T | 0.001 | 0.804 | 0.001 | 0.834 | 0.001 | 0.839 |
|  | p | .012 | .010 | .007 | .010 | .013 | .010 |
| A-R2 | T | 0.003 | 0.741 | 0.001 | 0.868 | 0.003 | 0.843 |
|  | p | .001 | .010 | .002 | .010 | .001 | .010 |

Note. n = 1,590 in data sets A-L, A-R1, A-R2; n = 1,595 in data sets B-L, B-R1, B-R2. Each cell contains the results of the two-sample tests when the data set in the row and the data set in the column are compared. MMD = kernel two-sample test; RF = test based on a random forest.

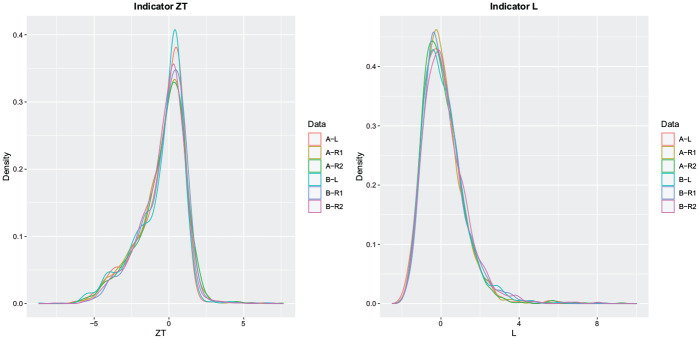

According to the results in Table 2, the indicators are distributed differently in the data sets. This result was to be expected, as only indicators L and are ancillary statistics, that is, are statistics whose distribution does not depend on unknown parameters; note, however, that L is only an ancillary statistic when the response times are log-normally distributed, an assumption that might not hold in practice. A post hoc analysis of distributional differences with univariate two-sample Kolmogorov–Smirnov tests suggested that distributional differences are most pronounced for indicator ZT, L, and CD. A kernel density estimate of the distribution of ZT and L is visualized in Figure 1 for the six data sets. Although the general form is similar, there are differences in the tails of the distribution. The tail behavior, however, is very important for the detection of cheating as cheaters have outlying values.

Figure 1.

Distribution of Indicator ZT and Indicator L in the Regular Responders and the Six Data Sets (A-L, A-R1, A-R2, B-L, B-R1, B-R2)

We investigated whether and to what extent a detector of cheating trained with a first data set (training data) can be transferred to a second data set (target data). We proceeded as follows. We first chose one of the data sets as training data and fit a quadratic discriminant classifier to it. The quadratic discriminant classifier was then reused in order to classify the test takers in the target data (naive transfer). We additionally transferred the trained quadratic discriminant classifier via a self-labeling algorithm to the target data (SETRED transfer). Test takers were classified as cheaters with the transferred quadratic discriminant classifier as before. We finally fit a Gaussian mixture model to the target data (unsupervised machine learning approach). The Gaussian mixture model (GMM) was likewise used for classifying test takers as regular responders or cheaters. The hit rates (HR), the rates of false alarms (FA), and the AUCs are reported for the different classifiers and all combinations of training and target data in Table 3; note that higher values of AUC imply higher accuracy.

Table 3.

Hit Rates (HR), Rates of False Alarms (FA), Areas Under the Curve (AUCs), and Relative Gains (D1, D2) in AUC of Three Detectors of Cheating for Different Transfer Tasks Consisting in Different Combinations of Training and Target Data

| Train | Target | GMM HR | GMM FA | GMM AUC | Naive HR | Naive FA | Naive AUC | SETRED HR | SETRED FA | SETRED AUC | D1 | D2 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A-L | B-L | 0.604 | 0.164 | 0.649 | 0.375 | 0.009 | 0.894 | 0.479 | 0.018 | 0.919 | 0.269 | 0.024 |
|  | B-R1 | 0.604 | 0.139 | 0.705 | 0.292 | 0.008 | 0.865 | 0.417 | 0.011 | 0.864 | 0.158 | −0.002 |
|  | B-R2 | 0.562 | 0.157 | 0.771 | 0.271 | 0.013 | 0.784 | 0.333 | 0.016 | 0.837 | 0.066 | 0.053 |
| A-R1 | B-L | 0.604 | 0.164 | 0.649 | 0.375 | 0.013 | 0.881 | 0.479 | 0.020 | 0.848 | 0.199 | −0.033 |
|  | B-R1 | 0.604 | 0.139 | 0.705 | 0.271 | 0.009 | 0.852 | 0.396 | 0.016 | 0.865 | 0.160 | 0.013 |
|  | B-R2 | 0.562 | 0.157 | 0.771 | 0.333 | 0.017 | 0.804 | 0.396 | 0.040 | 0.804 | 0.033 | 0.000 |
| A-R2 | B-L | 0.604 | 0.164 | 0.649 | 0.271 | 0.011 | 0.837 | 0.479 | 0.016 | 0.879 | 0.230 | 0.042 |
|  | B-R1 | 0.604 | 0.139 | 0.705 | 0.208 | 0.010 | 0.831 | 0.396 | 0.013 | 0.868 | 0.162 | 0.037 |
|  | B-R2 | 0.562 | 0.157 | 0.771 | 0.229 | 0.012 | 0.775 | 0.333 | 0.017 | 0.821 | 0.050 | 0.045 |
| B-L | A-L | 0.522 | 0.175 | 0.715 | 0.326 | 0.012 | 0.848 | 0.457 | 0.020 | 0.869 | 0.154 | 0.020 |
|  | A-R1 | 0.609 | 0.152 | 0.799 | 0.239 | 0.009 | 0.795 | 0.239 | 0.013 | 0.835 | 0.035 | 0.040 |
|  | A-R2 | 0.543 | 0.184 | 0.697 | 0.261 | 0.016 | 0.803 | 0.391 | 0.025 | 0.807 | 0.109 | 0.003 |
| B-R1 | A-L | 0.522 | 0.175 | 0.715 | 0.391 | 0.016 | 0.868 | 0.457 | 0.040 | 0.869 | 0.154 | 0.001 |
|  | A-R1 | 0.609 | 0.152 | 0.799 | 0.239 | 0.011 | 0.822 | 0.304 | 0.026 | 0.806 | 0.007 | −0.016 |
|  | A-R2 | 0.543 | 0.184 | 0.697 | 0.370 | 0.021 | 0.821 | 0.391 | 0.031 | 0.827 | 0.130 | 0.006 |
| B-R2 | A-L | 0.522 | 0.175 | 0.715 | 0.348 | 0.016 | 0.841 | 0.478 | 0.026 | 0.840 | 0.125 | −0.001 |
|  | A-R1 | 0.609 | 0.152 | 0.799 | 0.239 | 0.011 | 0.846 | 0.348 | 0.019 | 0.856 | 0.057 | 0.010 |
|  | A-R2 | 0.543 | 0.184 | 0.697 | 0.348 | 0.019 | 0.778 | 0.413 | 0.031 | 0.790 | 0.093 | 0.012 |

Note. GMM = Gaussian mixture model; Naive = reuse of quadratic discriminant classifier; SETRED = transfer of quadratic discriminant classifier via SETRED. D1 = AUC(SETRED) − AUC(GMM); D2 = AUC(SETRED) − AUC(Naive). HR = hit rate; FA = rate of false alarms; AUC = area under the curve.

The Gaussian mixture model has limited value as a detector of cheating in practice. It is true that its discriminatory power is acceptable according to the AUC, which is above 0.7 for most target data sets (Mandrekar, 2010). Its usefulness in practice, however, is limited by the high rate of false alarms. The two quadratic discriminant classifiers perform better. Their AUC is above 0.8 and thus excellent in most transfer tasks (Mandrekar, 2010). The criterion for separating regular responders from cheaters is also more conservative. Both transferred classifiers have a lower rate of false alarms, but also a lower hit rate than the Gaussian mixture model. This suggests that it might be better to reuse a detector of cheating for a new data set than to use an unsupervised method for clustering or outlier detection. The transferred quadratic discriminant classifier (SETRED transfer) achieves a higher AUC than the reused quadratic discriminant classifier (naive transfer) in 13 of the 18 transfer tasks (Table 3, D2). Via the SETRED transfer, the AUC can be increased on average by 0.014 over a naive transfer. This is a modest improvement of the discriminatory power. The SETRED transfer also adjusts the decision criterion. In contrast to the naive transfer, the hit rates are higher when the SETRED algorithm is used. In the transfer from the A-L to the B-R1 data set, the hit rate increases from 0.292 to 0.417, for example.
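The comparison of the SETRED transfer with the naive transfer can be summarized directly from the D2 column of Table 3. The following Python sketch uses the rounded values as printed in the table, so the results are subject to rounding; the variable names are chosen for illustration.

```python
# D2 = AUC(SETRED) - AUC(Naive) for the 18 transfer tasks, in the row order of Table 3.
d2 = [0.024, -0.002, 0.053, -0.033, 0.013, 0.000, 0.042, 0.037, 0.045,
      0.020, 0.040, 0.003, 0.001, -0.016, 0.006, -0.001, 0.010, 0.012]

n_tasks = len(d2)
n_improved = sum(1 for d in d2 if d > 0)  # tasks in which SETRED has the higher AUC
mean_gain = sum(d2) / n_tasks             # average change in AUC over the naive transfer

print(n_improved, n_tasks, round(mean_gain, 3))  # 13 18 0.014
```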

In practice, the target data are not labeled. For this reason, it is not possible to evaluate the transferred detector as we did in Table 3. Bruzzone and Marconcini (2010) suggested assessing the benefit of a transfer indirectly via the benefit of a back-transfer. As the training data are labeled, the accuracy of a back-transferred detector can be assessed. For this purpose, we fit a quadratic discriminant classifier to the target data that were labeled with the transferred quadratic discriminant classifier. This was the classifier to transfer. The classifier was then transferred back from the target data to the training data via the SETRED algorithm. Table 4 contains the AUC of the back-transferred classifier in the training data. In addition, we included the AUC of the classifier to transfer when it was reused in the training data without any adaptations as a standard of reference. The difference between the two AUCs denotes the utility of the back-transfer. A positive difference indicates that the back-transfer was successful. A negative difference indicates the contrary. Motivation for this analysis was the question of whether the benefit of the back-transfer (Table 4, D) is related to the benefit of the transfer (Table 3, D2).

Table 4.

Areas Under the Curve (AUCs) and Difference (D) in AUC of Two Detectors of Cheating When the Detector Is Transferred From the Target Data Back to the Training Data for Different Transfer Tasks

| Train | Retrain | Target | Naive | SETRED | D |
|---|---|---|---|---|---|
| A-L | B-L | A-L | 0.845 | 0.855 | 0.011 |
|  | B-R1 |  | 0.779 | 0.837 | 0.057 |
|  | B-R2 |  | 0.796 | 0.821 | 0.025 |
| A-R1 | B-L | A-R1 | 0.811 | 0.808 | −0.002 |
|  | B-R1 |  | 0.786 | 0.788 | 0.002 |
|  | B-R2 |  | 0.833 | 0.829 | −0.004 |
| A-R2 | B-L | A-R2 | 0.760 | 0.765 | 0.005 |
|  | B-R1 |  | 0.737 | 0.772 | 0.035 |
|  | B-R2 |  | 0.745 | 0.779 | 0.034 |
| B-L | A-L | B-L | 0.886 | 0.878 | −0.008 |
|  | A-R1 |  | 0.722 | 0.787 | 0.065 |
|  | A-R2 |  | 0.850 | 0.850 | 0.001 |
| B-R1 | A-L | B-R1 | 0.858 | 0.856 | −0.002 |
|  | A-R1 |  | 0.782 | 0.804 | 0.022 |
|  | A-R2 |  | 0.858 | 0.858 | 0.000 |
| B-R2 | A-L | B-R2 | 0.817 | 0.810 | −0.007 |
|  | A-R1 |  | 0.777 | 0.803 | 0.026 |
|  | A-R2 |  | 0.846 | 0.830 | −0.016 |

Note. AUCs = Areas Under the Curve; Naive = reuse of transferred quadratic discriminant classifier; SETRED = Transfer of transferred quadratic discriminant classifier via SETRED.

There is only a weak relation between the utility of the transfer and the utility of the back-transfer. The change in AUC from a naive transfer to the transfer via the SETRED algorithm when transferring the classifier from the training to the target data (Table 3, D2) differs from the change when transferring the classifier from the target to the training data (Table 4, D). According to Table 3, the transfer via the SETRED algorithm was not successful in transfer A-R1 → B-L and in transfer B-R1 → A-R1. The difference in AUC when performing the corresponding back-transfers, however, did not indicate the decrease. According to Table 4, the back-transfer suggests that the transfer B-R2 → A-R2 was not successful. This, however, is not the case, as here the AUC increased after the transfer (see Table 3, D2). In general, the changes in AUC in the back-transfer are not indicative of changes in the transfer.
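One way to quantify the relation between the two columns is a product-moment correlation. The following Python sketch is an illustration and not part of the reported analysis; it assumes that the rows of Table 3 and Table 4 refer to the same transfer tasks in the same order and uses the rounded values as printed.

```python
import math

# D2 from Table 3 (benefit of SETRED over the naive transfer), in row order.
d2_transfer = [0.024, -0.002, 0.053, -0.033, 0.013, 0.000, 0.042, 0.037, 0.045,
               0.020, 0.040, 0.003, 0.001, -0.016, 0.006, -0.001, 0.010, 0.012]
# D from Table 4 (benefit of SETRED over the naive reuse in the back-transfer), in row order.
d_back = [0.011, 0.057, 0.025, -0.002, 0.002, -0.004, 0.005, 0.035, 0.034,
          -0.008, 0.065, 0.001, -0.002, 0.022, 0.000, -0.007, 0.026, -0.016]


def pearson(xs, ys):
    """Product-moment correlation of two equally long sequences."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(ss_x * ss_y)


r = pearson(d2_transfer, d_back)
print(round(r, 2))
```

With only 18 transfer tasks, such a coefficient is a rough descriptive summary; the task-by-task comparison in the text remains the primary evidence.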

Discussion

The detection of cheaters is an important topic in large-scale assessment. A promising approach consists in using several indicators of cheating and methods developed in the field of machine learning (Man et al., 2019). Methods for supervised machine learning usually achieve high accuracy rates. They, however, require a sample with known cheaters for training. Labeled data are usually not available in practice. Methods for unsupervised machine learning, on the other hand, do not require labeled data. They are based on algorithms for the detection of clusters or outliers and achieve a separation of regular responders from cheaters themselves. Methods for unsupervised machine learning, however, do not achieve the high accuracy of the supervised methods. This is due to the fact that they detect any form of unusual test-taking behavior, not just cheating. In this article, we investigated whether it is possible to combine elements of supervised and unsupervised machine learning. We investigated whether it is possible to transfer a trained detector to a new data set. Often, there is already some detector of cheating that was used in the past. It is tempting to use the existing detector for future detection tasks.

Whether and to what benefit a trained detector can be transferred to new data depends on several factors. This was discussed in the first section of the article where we gave a short overview of transfer learning. In short, a successful transfer is only possible in case the distributions of the indicators do not differ between the training and target population and the frequency of cheating is roughly the same. However, even in case the distributions are not identical, a transferred detector might perform better than an unsupervised detector that was trained with the target data.

In the empirical part of the article, we investigated the benefits of transferring a detector of cheating from a training data set to a target data set. Tests suggested that the distributions of the indicators of cheating were different in the training and target data. This implies that the detector that was fit to the training data is not optimal for the target data. Our investigation, however, demonstrated that despite the distributional differences, the trained detector had higher detection accuracy than a Gaussian mixture model fit to the target data. Using the more sophisticated SETRED algorithm to transfer the detector did improve the accuracy slightly. In addition to the transfer via the SETRED algorithm, we also considered a transfer via a belief-based Gaussian mixture model (Biecek et al., 2012). In the belief-based Gaussian mixture model, a standard Gaussian mixture model is combined with posterior probabilities from an existing detector. This transfer, as well as the DASVM transfer of Bruzzone and Marconcini (2010), did not outperform the transfer via the SETRED algorithm. This is probably due to the small number of cheaters in the target data that complicates a full domain adaptation.

We also investigated whether the performance of the SETRED algorithm can be gauged by a back-transfer. Contrary to our expectations and in contrast to the findings of Bruzzone and Marconcini (2010), the payoff of using the SETRED algorithm in the back-transfer task was not indicative of the actual payoff of using the SETRED algorithm in the transfer task. This might be due to the fact that in our application, the distributional differences between the training and the target data were small. The finding might also indicate a general problem of the back-transfer. The predicted labels in the target data set conform to the transferred model and thus might reflect the structure of the training data more strongly than the structure of the target data. In any case, the weakness of the relation implies that the decision between reusing a detector and using the SETRED algorithm cannot be made mechanically on the basis of the performance in the back-transfer. We suggest selecting a specific detector by a careful inspection of the instances the detector labels as cheaters in the target data set. As was stated by Weinstein (2017), statistical analyses are an important tool to detect cheaters but should be complemented by collecting and analyzing further evidence.

Some limitations of our study have to be mentioned. First, we only investigated the transfer between similar data sets. The training and target data set differed in the content and the number of items. The baseline rates of cheating and the general testing context, however, were similar. We chose this setting as we considered it most relevant for practice. In educational testing, the achievement tests usually do not change much from one year to the next. In large-scale assessment, old items are continually replaced by new items. In adaptive testing, the tests are similar but not identical for all test takers. The question of whether a detector can be transferred when the training and target data differ to a greater extent, for example, in the response format or the form of cheating, requires further investigation. Some accordance between the training and the target data, however, is necessary for transfer learning. Second, we treated all cheaters as a homogeneous group of irregular responders. We transferred a detector that solely distinguished two groups, regular responders and cheaters. Recent transfer algorithms, however, allow the creation of new classes in the target data set (L. Zhang & Gao, 2019). Such algorithms might be worth investigating, as new forms of cheating may occur during a test program.

Despite these limitations, our findings have implications for practice. In large-scale assessment or educational testing, similar tests are often used over a longer period of time. During this period, some experience might have been gained about which aspects of test taking or test results are indicative of cheating. In the optimal case, this experience has been formalized in mathematical rules that separate cheaters from regular responders. These rules are the core of a detector of cheating. It is tempting to reuse an established detector of cheating in new assessment periods or tasks. Whether this is justified depends on the similarity of the tests and the testing conditions. Several scenarios can be distinguished. In the simplest case, a test is repeated under exactly the same conditions. This might happen in educational testing when a test is reused in different cohorts of students. In this case, there may be more cheaters and new forms of cheating when the test is repeated, but the conditional distribution of the indicators in the regular test takers stays the same. This scenario is less critical than the scenario considered in the empirical study. A trained detector should be transferable in this case. Sometimes, when a test is repeated, a part of the items is exchanged. This occurs in educational testing, but also when tests are used continuously. Again, there may be more cheaters and new forms of cheating when the test is repeated. The conditional distribution of the indicators in the regular test takers might change slightly. This scenario is also less critical than the scenario considered in the empirical study. In most cases, a transferred detector will achieve more or less the same accuracy in the new data as in the old data. Often, one uses different, but parallel tests in different assessment periods. There will not necessarily be more cheaters or new forms of cheating, as test material cannot be leaked. The distribution of the indicators might change in the regular test takers. The difference, however, will not be large in the case of parallel tests. This is exactly the scenario that was considered in the empirical study. Our findings suggest that a detector can be transferred. Often, a test is employed for the first time and there is no similar test for which a detector of cheating exists. In this case, there is no guarantee that a transferred detector performs well. A transfer might still be possible in case the conditional distributions of the indicators are not too different. Nevertheless, it is advisable to handle the predictions of a transferred detector with care in this case.

What can be done when there is no detector that can be transferred because there are no training data with characteristics similar to those of the target data? In this case, it might be possible to acquire data of regular responders in a pretest, induce some test takers to cheat or simulate data of cheaters, and use this first data set as training data for a detector; see, for example, the study of Ranger et al. (2020). Alternatively, one might try to combine different detectors in order to reduce the dependency on specific test data. This requires a transfer from multiple sources (Mansour et al., 2008). This, however, is a topic of future research.

Similar to K. Zhang et al. (2013), we will not consider the alternative factorization as cheating determines the indicators of cheating and not vice versa. We therefore will not review the case of covariate shift or sample selection that has been discussed in the literature on transfer learning intensively; see, for example, Shimodaira (2000) or Zadrozny (2004).

Footnotes

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The authors received no financial support for the research, authorship, and/or publication of this article.

ORCID iD: Jochen Ranger  https://orcid.org/0000-0001-5110-1213


References

- Bernardi R., Baca A., Landers K., Witek M. (2008). Methods of cheating and deterrents to classroom cheating: An international study. Ethics & Behavior, 18, 373–391. 10.1080/10508420701713030

- Bezirhan U., von Davier M., Grabovsky I. (2021). Modeling item revisit behavior: The hierarchical speed-accuracy-revisits model. Educational and Psychological Measurement, 81, 363–387. 10.1177/0013164420950556

- Biecek P., Szczurek E., Vingron M., Tiuryn J. (2012). The R package bgmm: Mixture modeling with uncertain knowledge. Journal of Statistical Software, 47, 1–31. 10.18637/jss.v047.i03

- Bishop S., Egan K. (2017). Detecting erasures and unusual gain scores. In Cizek G., Wollack J. (Eds.), Handbook of quantitative methods for detecting cheating on tests (pp. 193–213). Routledge. 10.4324/9781315743097.ch10

- Boughton K., Smith J., Ren H. (2017). Using response time data to detect compromised items and/or people. In Cizek G., Wollack J. (Eds.), Handbook of quantitative methods for detecting cheating on tests (pp. 177–190). Routledge. 10.4324/9781315743097

- Bruzzone L., Marconcini M. (2010). Domain adaptation problems: A DASVM classification technique and a circular validation strategy. IEEE Transactions on Pattern Analysis and Machine Intelligence, 32, 770–787. 10.1109/TPAMI.2009.57

- Burlak G., Hernández J., Ochoa A., Muñoz J. (2006, September 26–29). The use of data mining to determine cheating in online student assessment [Conference session]. CERMA’06: Electronics, Robotics and Automotive Mechanics Conference, Cuernavaca, Mexico. 10.1109/CERMA.2006.91

- Chen M., Chen C. (2017). Detect exam cheating pattern by data mining. In Tallón-Ballesteros A., Li K. (Eds.), Fuzzy systems and data mining (pp. 25–34). IOS Press. 10.3233/978-1-61499-828-0-25

- Chuang C., Craig S., Femiani J. (2017). Detecting probable cheating during online assessments based on time delay and head pose. Higher Education Research & Development, 36, 1123–1137. 10.1080/07294360.2017.1303456

- Cizek G., Wollack J. (2017a). Exploring cheating on tests: The context, the concern, and the challenges. In Cizek G., Wollack J. (Eds.), Handbook of quantitative methods for detecting cheating on tests (pp. 3–19). Routledge. 10.4324/9781315743097

- Cizek G., Wollack J. (Eds.). (2017b). Handbook of quantitative methods for detecting cheating on tests. Routledge. 10.4324/9781315743097

- Cohen J. (1988). Statistical power analysis for the behavioral sciences. Routledge. 10.4324/9780203771587

- Diedenhofen B., Musch J. (2017). PageFocus: Using paradata to detect and prevent cheating on online achievement tests. Behavior Research Methods, 49, 1444–1459. 10.3758/s13428-016-0800-7

- Elkan C. (2001, August 4–10). The foundation of cost-sensitive learning [Conference session]. IJCAI’01: Proceedings of the 17th International Joint Conference on Artificial Intelligence, Washington, DC, United States. 10.5555/1642194.1642224

- Fekken G., Holden R. (1992). Response latency evidence for viewing personality traits as schema indicators. Journal of Research in Personality, 26, 103–120. 10.1016/0092-6566(92)90047-8

- González M., Rosado-Falcón O., Rodríguez J. (2019). ssc: Semi-supervised classification methods (R Package Version 2.1-0). https://CRAN.R-project.org/package=ssc

- Gretton A., Borgwardt K., Rasch M., Schölkopf B., Smola A. (2012). A kernel two-sample test. Journal of Machine Learning Research, 13, 723–773. https://dl.acm.org/doi/10.5555/2188385.2188410

- Hastie T., Tibshirani R., Friedman J. (2009). The elements of statistical learning. Springer. 10.1007/978-0-387-84858-7

- Hediger S., Michel L., Näf J. (2021). hypoRF: Random forest two-sample tests (R Package Version 1.0.0). https://CRAN.R-project.org/package=hypoRF

- Hediger S., Michel L., Näf J. (2022). On the use of random forest for two-sample testing. Computational Statistics and Data Analysis, 170, 107435. 10.1016/j.csda.2022.107435

- Hodge V., Austin J. (2004). A survey of outlier detection methodologies. Artificial Intelligence Review, 22, 85–126. 10.1023/B:AIRE.0000045502.10941.a9

- Holland P. (1996). Assessing unusual agreement between the incorrect answers of two examinees using the K-index: Statistical theory and empirical support (Tech. Rep. No. RR-96-07). Educational Testing Service. 10.1002/j.2333-8504.1996.tb01685.x

- Huang J., Smola A., Gretton A., Borgwardt K., Schölkopf B. (2006, December 4–7). Correcting sample selection bias by unlabeled data [Conference session]. NIPS’06: Proceedings of the 19th International Conference on Neural Information Processing Systems, Vancouver, British Columbia, Canada. https://dl.acm.org/doi/10.5555/2976456.2976532

- Kim D., Woo A., Dickison P. (2017). Identifying and investigating aberrant responses using psychometrics-based and machine learning-based approaches. In Cizek G., Wollack J. (Eds.), Handbook of quantitative methods for detecting cheating on tests (pp. 71–97). Routledge. 10.4324/9781315743097

- Kingston N., Clark A. (Eds.). (2014). Test fraud: Statistical detection and methodology. Routledge. 10.4324/9781315884677

- Kisung Y. (2022). maotai: Tools for matrix algebra, optimization and inference (R Package Version 0.2.4). https://CRAN.R-project.org/package=maotai

- Kouw W., Loog M. (2019). An introduction to domain adaptation and transfer learning. arXiv e-prints, 1812.11806. https://arxiv.org/abs/1812.11806

- Krijthe J. H. (2016). RSSL: R package for semi-supervised learning. In Kerautret B., Colom M., Monasse P. (Eds.), Reproducible research in pattern recognition (pp. 104–115). Springer. 10.1007/978-3-319-56414-2_8

- Kroehne U., Goldhammer F. (2018). How to conceptualize, represent, and analyze log data from technology-based assessments? A generic framework and an application to questionnaire items. Behaviormetrika, 45, 527–563. 10.1007/s41237-018-0063-y

- Li M., Zhou Z. (2005). Self-training with editing. In Ho T., Cheung D., Liu H. (Eds.), Advances in knowledge discovery and data mining (pp. 611–621). Springer. 10.1007/11430919_71

- Long M., Wang J., Ding G., Pan S., Yu P. (2014). Adaptation regularization: A general framework for transfer learning. IEEE Transactions on Knowledge and Data Engineering, 26, 1076–1089. 10.1109/TKDE.2013.111

- Man K., Harring J. (2020). Assessing preknowledge cheating via innovative measures: A multiple-group analysis of jointly modeling item responses, response times, and visual fixation counts. Educational and Psychological Measurement, 81, 441–465. 10.1177/0013164420968630

- Man K., Harring J., Ouyang Y., Thomas S. (2018). Response time based nonparametric Kullback-Leibler divergence measure for detecting aberrant test-taking behavior. International Journal of Testing, 18, 155–177. 10.1080/15305058.2018.1429446

- Man K., Harring J., Sinharay S. (2019). Use of data mining methods to detect test fraud. Journal of Educational Measurement, 56, 251–279. 10.1111/jedm.12208

- Mandrekar J. (2010). Receiver operating characteristic curve in diagnostic test assessment. Journal of Thoracic Oncology, 5, 1315–1316. 10.1097/JTO.0b013e3181ec173d

- Mansour Y., Mohri M., Rostamizadeh A. (2008). Domain adaptation with multiple sources. In Koller D., Schuurmans D., Bengio Y., Bottou L. (Eds.), Advances in neural information processing systems (pp. 1–8). Curran Associates.

- Mansour Y., Mohri M., Rostamizadeh A. (2009, June 18–21). Domain adaptation: Learning bounds and algorithms [Conference session]. COLT’09: Proceedings of the 22nd Annual Conference on Learning Theory, Montréal, Québec, Canada. 10.48550/arXiv.0902.3430

- Marianti S., Fox J.-P., Avetisyan M., Veldkamp M., Tijmstra J. (2014). Testing for aberrant behavior in response time modeling. Journal of Educational and Behavioral Statistics, 39, 426–451. 10.3102/1076998614559412

- McCabe D. (2016). Cheating and honor: Lessons from a long-term research project. In Bretag T. (Ed.), Handbook of academic integrity (pp. 187–198). Springer. 10.1007/978-981-287-098-8_35

- Pan S., Tsang I., Kwok J., Yang Q. (2011). Domain adaptation via transfer component analysis. IEEE Transactions on Neural Networks, 22, 199–210. 10.1109/TNN.2010.2091281

- Pan S., Yang Q. (2010). A survey on transfer learning. IEEE Transactions on Knowledge and Data Engineering, 22, 1345–1359. 10.1109/TKDE.2009.191

- Ponce H., Mayer R., Sitthiworachart J., López M. (2020). Effects on response time and accuracy of technology-enhanced cloze tests: An eye-tracking study. Educational Technology Research and Development, 68, 2033–2053. 10.1007/s11423-020-09740-1

- Quiñonero Candela J., Sugiyama M., Schwaighofer A., Lawrence N. (2008). Dataset shift in machine learning. MIT Press. 10.7551/MITPRESS/9780262170055.001.0001

- Ranger J., Schmidt N., Wolgast A. (2020). The detection of cheating on e-exams in higher education—The performance of several old and some new indicators. Frontiers in Psychology, 11, Article 2390. 10.3389/fpsyg.2020.568825

- Ranger J., Schmidt N., Wolgast A. (2021). Detection of cheating on e-exams—The performance of transferred detection rules. In Schubert T., Gerth M. (Eds.), Multimediales Lehren und Lernen an der Martin-Luther-Universität Halle-Wittenberg. Befunde und Ansätze aus dem Forschungsförderprogramm des Zentrums für multimediales Lehren und Lernen (pp. 131–146). LLZ. 10.25673/38465

- Rettinger D., Kramer Y. (2009). Situational and personal causes of student cheating. Research in Higher Education, 50, 293–313. 10.1007/s11162-008-9116-5

- Robin X., Turck N., Hainard A., Tiberti N., Lisacek J., Sanchez J., Müller M. (2011). pROC: An open-source package for R and S+ to analyze and compare ROC curves. BMC Bioinformatics, 12, Article 77. 10.1186/1471-2105-12-77

- Sato C. (Ed.) (1975). The construction and interpretation of S-P tables. Meiji Tosho.

- Scrucca L., Fop M., Murphy T., Raftery A. (2016). mclust 5: Clustering, classification and density estimation using Gaussian finite mixture models. The R Journal, 8, 289–317. 10.32614/RJ-2016-021

- Shao L., Zhu F., Li X. (2014). Transfer learning for visual categorization: A survey. IEEE Transactions on Neural Networks and Learning Systems, 26, 1019–1034. 10.1109/TNNLS.2014.2330900

- Shimodaira H. (2000). Improving predictive inference under covariate shift by weighting the log-likelihood function. Journal of Statistical Planning and Inference, 90, 227–244. 10.1016/S0378-3758(00)00115-4

- Sijtsma K. (1986). A coefficient of deviance of response patterns. Kwantitatieve Methoden, 7, 131–145.

- Sinharay S. (2018). A new person-fit statistic for the lognormal model for response times. Journal of Educational Measurement, 55, 457–476. 10.1111/jedm.12188

- Sotaridona L., Meijer R. (2002). Statistical properties of the K-index for detecting answer copying. Journal of Educational Measurement, 39, 115–132. https://www.jstor.org/stable/1435251

- Tendeiro J., Meijer R., Niessen A. (2016). PerFit: An R package for person-fit analysis in IRT. Journal of Statistical Software, 74, 1–27. 10.18637/jss.v074.i05

- Triguero I., García S., Herrera F. (2015). Self-labeled techniques for semi-supervised learning: Taxonomy, software and empirical study. Knowledge and Information Systems, 42, 245–284. 10.1007/s10115-013-0706-y

- van der Flier H. (1982). Deviant response patterns and comparability of test scores. Journal of Cross-Cultural Psychology, 13, 267–298. 10.1177/0022002182013003001

- Wang J., Zamar R., Marazzi A., Yohai V., Salibian-Barrera M., Maronna R., . . . Konis K. (2013). robust: Robust library (R Package Version 0.4-15). http://CRAN.R-project.org/package=robust

- Weinstein M. (2017). When numbers are not enough—Collection and use of collateral evidence to assess the ethics and professionalism of examinees suspected of test fraud. In Cizek G., Wollack J. (Eds.), Handbook of quantitative methods for detecting cheating on tests (pp. 3–19). Routledge. 10.4324/9781315743097

- Weiss K., Khoshgoftaar T., Wang D. (2016). A survey of transfer learning. Journal of Big Data, 3, 9. 10.1186/s40537-016-0043-6

- Wollack J., Fremer J. (Eds.). (2013). Handbook of test security. Routledge. 10.4324/9780203664803

- Xu H., Mannor S. (2012). Robustness and generalization. Machine Learning, 86, 391–423. 10.1007/s10994-011-5268-1