Abstract

The accurate estimation of cell surface receptor abundance for single cell transcriptomics data is important for the tasks of cell type and phenotype categorization and cell-cell interaction quantification. We previously developed an unsupervised receptor abundance estimation technique named SPECK (Surface Protein abundance Estimation using CKmeans-based clustered thresholding) to address the challenges associated with accurate abundance estimation. In that paper, we concluded that SPECK results in improved concordance with Cellular Indexing of Transcriptomes and Epitopes by Sequencing (CITE-seq) data relative to comparative unsupervised abundance estimation techniques using only single-cell RNA-sequencing (scRNA-seq) data. In this paper, we outline a new supervised receptor abundance estimation method called STREAK (gene Set Testing-based Receptor abundance Estimation using Adjusted distances and cKmeans thresholding) that leverages associations learned from joint scRNA-seq/CITE-seq training data and a thresholded gene set scoring mechanism to estimate receptor abundance for scRNA-seq target data. We evaluate STREAK relative to both unsupervised and supervised receptor abundance estimation techniques using two evaluation approaches on six joint scRNA-seq/CITE-seq datasets that represent four human and mouse tissue types. We conclude that STREAK outperforms other abundance estimation strategies and provides a more biologically interpretable and transparent statistical model.

Author summary

Herein, we present an overview of our recently developed supervised receptor abundance estimation technique, STREAK (gene Set Testing-based Receptor abundance Estimation using Adjusted distances and cKmeans thresholding), which leverages co-expression associations learned from joint scRNA-seq/CITE-seq data to perform approximate abundance estimation. More specifically, STREAK functions by utilizing these expression associations to develop weighted membership gene sets, which are next thresholded following a gene set scoring procedure. These thresholded scores are set to the estimated abundance profiles.

We validate STREAK relative to both unsupervised and supervised estimation approaches using two evaluation strategies, cross-validation and cross-training, on four tissue types: peripheral blood mononuclear cells, mesothelial cells, monocytes and lymphoid tissue. We conclude that STREAK outperforms comparative receptor abundance estimation approaches while providing a more biologically interpretable and transparent statistical model, facilitated by the customizable gene set scoring procedure of VAM (the Variance-adjusted Mahalanobis distance measure).

Introduction

Single cell RNA-sequencing (scRNA-seq) technologies, such as the 10X Chromium system [1], can now cost effectively profile gene expression in tens-of-thousands of cells dissociated from a single tissue sample [2, 3]. The transcriptomic data captured via scRNA-seq gives researchers unprecedented insight into the cell types and phenotypes that comprise complex tissues such as the tumor microenvironment or brain. Single cell transcriptomics can also facilitate the characterization of cell-cell signaling [4] and the identification of co-regulated genetic modules and gene-regulatory networks [5]. A critical element of these cell type/phenotype identification and cell-cell interaction analysis tasks is the accurate estimation of receptor protein abundance. Although direct receptor protein measurements are sometimes available, via either fluorescence-activated cell sorting (FACS)-based [6] enrichment prior to scRNA-seq analysis or through joint scRNA-seq/CITE-seq (Cellular Indexing of Transcriptomes and Epitopes by Sequencing) [7] profiling, most single cell data sets capture only gene expression values. For such data, receptor abundance estimates can be generated using either a supervised method (i.e., a method that leverages associations learned on joint transcriptomic/proteomic training data) or an unsupervised method (i.e., a method that generates estimates from the target scRNA-seq data without reference to a trained model).

A common unsupervised approach for estimating receptor abundance uses the expression of the associated mRNA transcript as a proxy for the receptor protein. While this approach is plausible, it often results in low quality estimates given the significant sparsity of scRNA-seq data [8]. This sparsity problem is well illustrated by scRNA-seq data for FAC sorted immune cells from Zheng et al. [1], which found that a large fraction of cells have no detectable expression of the transcript whose corresponding receptor is positively expressed in those cells, e.g., only 20% of CD19+ B cells expressed the CD19 transcript.

To overcome scRNA-seq sparsity, the standard bioinformatics workflow uses the average expression of the receptor transcript across large populations or clusters of cells to estimate receptor protein abundance. Although a cluster-based analysis mitigates sparsity, it has two major limitations. First, it assumes that receptor abundance is uniform across all cells in a given cluster, ignoring potentially significant within-cluster heterogeneity. Second, it generates only a small number of independent receptor abundance estimates (one per cluster), which limits insight into the joint distribution of different receptors. A key benefit of single cell transcriptomics is the dramatic increase in sample size, with each cell providing a distinct expression profile. These large sample sizes can significantly improve estimates of the marginal and joint distribution of gene expression values. To support the cluster-free estimation of receptor abundance, we recently developed the Surface Protein abundance Estimation using CKmeans-based clustered thresholding (SPECK) method. SPECK uses a low-rank reconstruction of scRNA-seq data followed by a clustering-based thresholding of the reconstructed gene expression values to generate non-sparse estimates of receptor transcripts. While the SPECK approach significantly outperforms both the naïve approach of directly using the receptor transcript and comparative reduced rank reconstruction (RRR)-based abundance estimation strategies, its accuracy is poor for several biologically important receptors, e.g., CD69, for which protein abundance is not closely correlated with transcript expression [9]. For such receptors, a supervised approach is needed that can leverage joint transcriptomic/proteomic data to identify the gene expression signature that is most closely associated with protein abundance.

Existing supervised methods for receptor abundance estimation include cTP-net (single cell Transcriptome to Protein prediction with deep neural network) [10] and PIKE-R2P (Protein–protein Interaction network-based Knowledge Embedding with graph neural network for single-cell RNA to Protein prediction) [11]. cTP-net uses a multiple branch deep neural network (MB-DNN) on joint scRNA-seq/CITE-seq training data to generate cell-level surface protein abundance estimates for target scRNA-seq data. While the abundance estimates generated using cTP-net are highly correlated with corresponding protein measurements, only a select set of 24 immunophenotype markers/receptors is currently supported. Additionally, since cTP-net uses a deep learning model trained on the same immune cell populations (i.e., peripheral blood mononuclear cells (PBMC), cord blood mononuclear cells (CBMC) and bone marrow mononuclear cells (BMMC)) to perform estimation via transfer learning, it may not be able to capture gene expression patterns that are specific to an individual dataset or that more broadly generalize to non-immune-related surface markers. PIKE-R2P uses a protein-protein interaction (PPI)-based graph neural network (GNN) integrated with prior knowledge embeddings to estimate receptor abundance values. This method assumes that since gene expression regulation mechanisms are likely shared between proteins, such similarities can be used to generate protein-protein interactions that can then be leveraged in a GNN. Like cTP-net, PIKE-R2P is limited to an analysis and assessment of model prediction results on a small group of 10 receptors. A second important limitation of neural network-based models such as PIKE-R2P and cTP-net is that the weights retrieved via the training process are generally not transparent or biologically interpretable enough to be manually fine-tuned by medical practitioners.

Given the performance issues with unsupervised methods like SPECK and the limited support, generalizability, and applicability of existing supervised estimation techniques such as cTP-net, alternative receptor abundance estimation methods are needed. Here, we propose a novel supervised approach for cell-level receptor abundance estimation for scRNA-seq data named STREAK (gene Set Testing-based Receptor abundance Estimation using Adjusted distances and cKmeans thresholding) that leverages co-expression associations learned from joint scRNA-seq/CITE-seq training data to perform thresholded gene set scoring on target scRNA-seq data. We validate STREAK on six datasets representing four different tissue types and compare its performance against both unsupervised receptor abundance estimation techniques, such as SPECK and normalized RNA transcript counts, and supervised methods. In addition to supervised receptor abundance estimation techniques that leverage trained associations learned from separate training datasets like cTP-net, we also evaluate STREAK against the Random Forest (RF) and Support Vector Machines (SVM) algorithms that are trained on a small separate subset of cells from the same joint scRNA-seq/CITE-seq dataset as the target data. This latter supervised comparison with the RF and the SVM model is motivated by a recent study that analyzed performance of tree-based ensemble methods and neural networks and found RF to have a superior performance over neural nets for the task of receptor abundance estimation [12]. We do not perform comparison against PIKE-R2P since, while an initial implementation exists on GitHub, the upstream package dependencies are not adequately documented. We evaluate all comparisons between the estimated receptor abundance profiles and CITE-seq ADT data using the Spearman rank correlation coefficient.
Overall, we observe that STREAK has superior performance relative to comparative methods on the six analyzed datasets, which highlights the accuracy and generalizability of our proposed estimation approach. Moreover, since it allows the specification of custom gene weights for subsequent gene set scoring, STREAK has potential clinical utility as an interpretable and adaptable receptor abundance estimation strategy.

Materials and methods

STREAK method overview

STREAK performs receptor abundance estimation for target scRNA-seq data using trained associations learned from joint gene expression and protein abundance data (see Fig 1). These associations are used to construct weighted receptor membership gene sets, with the set for each receptor containing genes whose normalized and reconstructed scRNA-seq expression values are most strongly correlated with CITE-seq protein abundance (see Algorithm 1). The weighted gene sets are next leveraged in the gene set scoring step to produce cell-specific scores for each receptor. Lastly, a thresholding mechanism is applied to the resulting scores and the receptor abundance estimates are set to this thresholded output (see Algorithm 2).

Fig 1.

STREAK schematic with step (1) corresponding to the training co-expression analysis and step (2) corresponding to gene set scoring and subsequent clustering and thresholding to achieve cell-specific estimated receptor abundance profiles.

Algorithm 1 STREAK Algorithm (Receptor gene set construction)

Require: XtR (scRNA-seq training counts)

Require: XtP (CITE-seq training counts)

Ensure: A (gene sets weighted membership matrix)

Normalization and RRR of training data

1: X̂tR ← rank-k reconstruction of log-normalized XtR; XtP ← CLR-normalized XtP

Co-expression analysis

2: for i ← 1 to h do ▹ Associations between scRNA-seq and CITE-seq training data

3: for j ← 1 to n do

4: rx ← rank(X̂tR[, j])

5: ry ← rank(XtP[, i])

6: A[j, i] ← cor(rx, ry) ▹ Pearson correlation on rank data (i.e., Spearman rank correlation)

7: end for

8: end for

Algorithm 2 STREAK Algorithm (Receptor abundance estimation)

Require: XR (scRNA-seq target counts)

Require: A (gene sets weighted membership matrix)

Ensure: SR (estimated abundance profiles)

Normalization and RRR of target data

1: testRRR ← rank-k reconstruction of log-normalized XR (i.e., X̂R)

Gene set scoring and thresholding

2: for k ← 1 to h do

3: topGenes ← names(A[1 : 10, k])

4: topGenesWeights ← A[1 : 10, k]

5: ▹ Top 10 most co-expressed genes

6: vamOut ← vam(testRRR, gene.weights = topGenesWeights, gamma = T, center = F) ▹ Gene set scoring

7: vamCDF ← vamOut[“cdf.value”]

8: vamSqDist ← vamOut[“distance.sq”]

9: ckRes ← Ckmeans.1d.dp(vamSqDist, k = c(1 : 4)) ▹ Clustering of cell-specific squared distances

10: numCluster ← ckRes[“cluster”]

11: valCenters ← ckRes[“centers”]

12: if length(valCenters) > 1 then

13: minVal ← which(valCenters == min(valCenters))

14: minNum ← which(numCluster == minVal)

15: vamCDF[minNum] ← 0 ▹ Thresholding of cell-specific gene set scores

16: end if

17: SR ← vamCDF

18: end for

Receptor gene set construction

In order to generate weighted gene sets for all supported receptors, we performed co-expression analysis on joint scRNA-seq/CITE-seq training data. It is important to note, however, that the weighted gene sets used by STREAK can be generated using alternative approaches, e.g., manually specified based on prior biological knowledge. The first step in the training process used for this paper generated X̂tR, a rank-k reconstruction of the m1 × n training scRNA-seq matrix XtR holding the log-normalized gene expression counts for n genes in m1 cells. The RRR of the scRNA-seq data was performed using the randomized SVD algorithm from the rsvd R package [13] based on the rank selection procedure proposed in the SPECK paper [9], which utilizes the rate of change in the standard deviation of the non-centered sample principal components to compute the estimated rank-k. The analogous m1 × h CITE-seq counts matrix XtP from the joint scRNA-seq/CITE-seq training data was normalized using the centered log-ratio (CLR) transformation. Both the log-normalization and CLR transformation were performed using Seurat [14–17]. Following normalization and RRR, the X̂tR and XtP matrices were used to define gene sets for each of the h supported receptors, with the set for each receptor containing the genes whose reconstructed expression values from X̂tR have the largest positive Spearman rank correlation with the corresponding receptor’s normalized CITE-seq values from XtP. The rank correlation values were also used to define positive weights for the genes in each set. For the results reported in this paper, a gene set size of 10 was used.
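The gene set construction step can be illustrated with a minimal Python sketch. It assumes the reconstructed expression values and the CLR-normalized ADT values are already available as plain lists; the function names (`ranks`, `spearman`, `build_gene_set`) and the toy inputs are hypothetical and are not part of the STREAK package.

```python
from statistics import mean


def ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for pos in range(i, j + 1):
            result[order[pos]] = avg
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation; returns 0.0 when either vector is constant."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / (sxx * syy) ** 0.5


def spearman(x, y):
    """Spearman rank correlation, i.e., Pearson correlation on ranks."""
    return pearson(ranks(x), ranks(y))


def build_gene_set(expr_by_gene, receptor_adt, set_size=10):
    """Keep the set_size genes with the largest positive rank correlation.

    expr_by_gene maps a gene name to its per-cell reconstructed expression;
    receptor_adt holds the per-cell normalized ADT values for one receptor.
    The returned dict maps each retained gene to its correlation (its weight).
    """
    rho = {g: spearman(v, receptor_adt) for g, v in expr_by_gene.items()}
    positive = sorted(((g, r) for g, r in rho.items() if r > 0),
                      key=lambda item: item[1], reverse=True)
    return dict(positive[:set_size])
```

Restricting the set to positively correlated genes means the correlations can be reused directly as positive weights, which matches the description above.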

Receptor abundance estimation

Single cell gene set scoring

Given weighted gene sets for h target receptors, we used a thresholded single cell gene set scoring mechanism to generate receptor abundance estimates. For an m2 × n matrix XR that holds scRNA-seq data for m2 cells and n genes, we first performed normalization and RRR to output the m2 × n matrix X̂R. We next computed cell-level scores for each of the h target receptors from X̂R using the Variance-adjusted Mahalanobis (VAM) gene set scoring method [18].

Execution of the VAM method requires two input matrices:

X̂R: an m2 × n scRNA-seq target matrix containing the positive normalized and RRR counts for n genes in m2 cells.

A: an n × h matrix that captures the weighted annotation of n genes to h gene sets, i.e., gene sets for each of the h receptor proteins. If gene i is included in gene set j, then element ai,j holds the gene weight; otherwise, ai,j = 0.

VAM outputs a m2 × h matrix, M, that holds the cell-specific squared modified Mahalanobis distances for m2 cells and h gene sets, and a m2 × h matrix, S, that holds the cell-level scores for m2 cells and h gene sets. The computation for both matrices M and S is detailed below (see the VAM paper [18] for additional details).

Technical variances estimation: The length n vector σ̂² holding the technical variance of each gene in X̂R is first computed using the Seurat variance decomposition approach for either the log-normalized or SCTransform-normalized data [19]. Alternatively, the elements of σ̂² can be set to the sample variance of each gene in X̂R under the assumption that the observed marginal variance of each gene is entirely technical.

Modified Mahalanobis distances computation: Let M be the m2 × h matrix of squared values of a modified Mahalanobis distance. Each column k of M, which holds the cell-level squared distances for gene set k, is calculated as

$$M[\,,k] = \mathrm{diag}\left(X_k \left(I_g \hat{\sigma}^2_k\right)^{-1} X_k^T\right)$$

where g corresponds to the size of the gene set k, Xk is an m2 × g matrix containing the g columns of X̂R relating to the members of set k (i.e., the g elements of column k of A with non-zero values), Ig is a g × g identity matrix, and σ̂²k contains the elements of σ̂² associated with the g genes in set k. To prioritize genes with large weights, the elements of the vector σ̂²k are divided by the corresponding elements of column k of A. This modification shrinks the effective variance for genes with large weights, resulting in a larger Mahalanobis distance. Modified Mahalanobis distances are additionally recomputed on a version of X̂R where the row labels of each column are randomly permuted, which captures the distribution of the squared modified Mahalanobis distances under the H0 that the normalized and RRR expression values in X̂R are uncorrelated, with only the technical variance. Xp thereby represents the row-permuted version of X̂R and Mp represents the m2 × h matrix that contains the squared modified Mahalanobis distances computed on Xp.
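Because the covariance matrix in this distance is diagonal, the squared distance for one cell reduces to a weighted sum of squared expression values. The sketch below is a simplified illustration under that reading, with no mean centering (as in the center = F setting shown in Algorithm 2). The function names and toy inputs are hypothetical, and this is not the VAM package implementation.

```python
import random


def squared_vam_distances(cells, tech_var, weights):
    """Squared modified Mahalanobis distance for each cell in one gene set.

    cells:    list of per-cell lists holding expression for the g set genes
    tech_var: list of g technical variances
    weights:  list of g positive gene weights
    Dividing each variance by its weight shrinks the effective variance of
    heavily weighted genes and therefore inflates their contribution.
    """
    adj_var = [v / w for v, w in zip(tech_var, weights)]
    return [sum(x * x / s for x, s in zip(cell, adj_var)) for cell in cells]


def permute_columns(cells, seed=0):
    """Shuffle each gene column independently to break gene-gene correlation."""
    rng = random.Random(seed)
    columns = [list(col) for col in zip(*cells)]
    for col in columns:
        rng.shuffle(col)
    return [list(row) for row in zip(*columns)]


# Toy inputs (illustrative values only, not data from the paper).
toy_cells = [[1.0, 2.0], [0.0, 1.0]]
observed = squared_vam_distances(toy_cells, [0.5, 2.0], [1.0, 0.5])
null = squared_vam_distances(permute_columns(toy_cells), [0.5, 2.0], [1.0, 0.5])
```

Here `observed` plays the role of one column of M and `null` the role of the matching column of Mp.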

Gamma distribution fit: A gamma distribution is individually fit to the non-zero elements in each column of Mp using the method of maximum likelihood. Alternatively, gamma distributions can be fit directly on M to alleviate the computational costs of generating Xp and Mp.

Cell-specific scores computation: Cell-level gene set scores, matrix S, are defined to be the gamma cumulative distribution function (CDF) value for each element of M.
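Written out, the score for cell i and gene set k is the fitted gamma CDF evaluated at the corresponding squared distance. The shape-rate parameterization and the symbols α̂k and β̂k for the fitted parameters are notational assumptions made here for illustration:

```latex
S_{i,k} \;=\; F_{\Gamma}\!\left(M_{i,k};\, \hat{\alpha}_k, \hat{\beta}_k\right)
        \;=\; \frac{\gamma\!\left(\hat{\alpha}_k,\; \hat{\beta}_k M_{i,k}\right)}{\Gamma\!\left(\hat{\alpha}_k\right)}
```

Here γ(·,·) denotes the lower incomplete gamma function. Since the CDF is monotonically increasing, cells with larger squared distances receive scores closer to 1.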

One-dimensional clustering and thresholding

Following the generation of cell-specific squared distances, matrix M, and cell-specific gene set scores, matrix S, we used the Ckmeans.1d.dp algorithm from Ckmeans.1d.dp v3.3.3 [20, 21] to perform one-dimensional clustering on M. Each column k of M, which holds the cell-specific squared distances for gene set k, was clustered with the number of computed clusters bounded between one and four. If more than one cluster was identified, then all the non-zero cell-specific gene set scores corresponding to the indices of the least-valued cluster for k from the analogous matrix S were set to zero. All zero values corresponding to the indices of the least-valued cluster and the remaining non-zero and zero values corresponding to the indices of the higher-valued clusters for k were retained. If only one cluster was identified for gene set k of M, then thresholding was not performed and the analogous cell-specific gene set scores for gene set k of S were preserved as estimated abundance profiles.
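The clustering and thresholding step can be sketched in Python as follows. This is a simplified stand-in rather than the Ckmeans.1d.dp implementation: it computes an optimal one-dimensional k-means partition by dynamic programming for a number of clusters k supplied by the caller, whereas Ckmeans.1d.dp also selects the number of clusters within the requested range. The function names are hypothetical.

```python
def kmeans_1d(values, k):
    """Optimal 1D k-means via dynamic programming for a fixed k.

    Returns (labels, centers): labels follow the input order and clusters
    are numbered from the lowest center (0) to the highest (k - 1).
    """
    n = len(values)
    if k < 1 or k > n:
        raise ValueError("k must be between 1 and the number of values")
    order = sorted(range(n), key=lambda i: values[i])
    xs = [values[i] for i in order]
    # Prefix sums give the within-segment sum of squares in O(1).
    p1, p2 = [0.0], [0.0]
    for x in xs:
        p1.append(p1[-1] + x)
        p2.append(p2[-1] + x * x)

    def sse(i, j):
        s = p1[j] - p1[i]
        return (p2[j] - p2[i]) - s * s / (j - i)

    inf = float("inf")
    cost = [[inf] * (n + 1) for _ in range(k + 1)]
    cut = [[0] * (n + 1) for _ in range(k + 1)]
    cost[0][0] = 0.0
    for c in range(1, k + 1):
        for j in range(c, n + 1):
            for i in range(c - 1, j):
                cand = cost[c - 1][i] + sse(i, j)
                if cand < cost[c][j]:
                    cost[c][j], cut[c][j] = cand, i
    # Backtrack from the last cluster to recover segment boundaries.
    labels_sorted, centers, j = [0] * n, [0.0] * k, n
    for c in range(k, 0, -1):
        i = cut[c][j]
        centers[c - 1] = (p1[j] - p1[i]) / (j - i)
        for t in range(i, j):
            labels_sorted[t] = c - 1
        j = i
    labels = [0] * n
    for pos, idx in enumerate(order):
        labels[idx] = labels_sorted[pos]
    return labels, centers


def threshold_scores(scores, sq_distances, k):
    """Zero the scores of cells that fall in the lowest-center cluster."""
    labels, centers = kmeans_1d(sq_distances, k)
    if len(centers) == 1:
        return list(scores)  # single cluster: scores are kept unchanged
    lowest = centers.index(min(centers))
    return [0.0 if lab == lowest else s for s, lab in zip(scores, labels)]
```

Clustering is done on the squared distances while the zeroing is applied to the scores, mirroring the use of M and S described above.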

Evaluation

Datasets

Comparative evaluation of STREAK was performed on six publicly accessible joint scRNA-seq/CITE-seq datasets generated on four human and mouse tissue types: 1) the Hao et al. [14] human PBMC dataset (GEO [22] series GSE164378) contains 161,764 cells profiled using 10X Chromium 3’ with 228 TotalSeq A antibodies, 2) the Unterman et al. [23] human PBMC dataset (GEO series GSE155224) contains 163,452 cells profiled using 10X Chromium 5’ with 189 TotalSeq C antibodies, 3) the 10X Genomics [24] human extranodal marginal zone B-cell tumor/mucosa-associated lymphoid tissue (MALT) dataset contains 8,412 cells profiled using 10X Chromium 3’ with 17 TotalSeq B antibodies, 4) the Lakkis et al. [25] human blood monocyte and dendritic cell dataset profiled with 238 antibodies, 5) the Ma et al. [26] malignant peritoneal mesothelioma (MPEM) dataset profiled with 46 antibodies and 6) the Gayoso et al. [27] Mus musculus dataset (GSE150599) profiled with 102 mouse antibodies. The Hao data was generated on PBMC samples obtained from eight volunteers enrolled in an HIV vaccine trial [28, 29], with ages ranging from 20 to 49 years. In comparison, the PBMC samples for the Unterman data were obtained from 10 COVID-19 patients and 13 matched controls with a mean age of 71 years. The two PBMC datasets thus consisted of different underlying patient populations with varying age ranges. All six scRNA-seq/CITE-seq datasets were processed using Seurat v.4.1.0 [14–17] in R v.4.1.2 [30].

We performed method evaluation using two approaches. First, we used a 5-fold cross-validation strategy where we utilized a subset of cells from each of the six joint scRNA-seq/CITE-seq datasets as training data and a second distinct subset of the same dataset as target data. This approach is suitable for analytical scenarios where joint scRNA-seq/CITE-seq data is only captured for a subset of cells from the original, larger population of cells for which only scRNA-seq data is available. Given this first scenario, receptor abundance levels can be estimated by mapping associations learned on the smaller subset of cells with joint scRNA-seq/CITE-seq training data to the larger population with only scRNA-seq data. Our second evaluation strategy used a cross-training approach to learn and evaluate associations on separate datasets. This latter approach, which was used to learn associations on the Hao data and evaluate on the Unterman data, is suitable for situations where co-expression patterns learned from one joint scRNA-seq/CITE-seq data can be leveraged to perform receptor abundance estimation for another disjoint dataset that is of the same tissue type as the first data but only contains quantified scRNA-seq expression profiles. For both the cross-validation and the cross-training approaches, concordance between the estimated receptor abundance values for the target scRNA-seq data and the analogous ADT transcripts from the same joint, target scRNA-seq/CITE-seq data was quantified using the Spearman rank correlation, which was used to measure relationships between the relative ranks of the estimated abundance values [31].

Comparison methods

STREAK was evaluated against existing unsupervised and supervised-learning-based receptor abundance estimation techniques. Aside from the normalized RNA transcript approach, SPECK was the only unsupervised receptor abundance estimation method evaluated since it previously performed better than the comparative unsupervised techniques MAGIC (Markov Affinity-based Graph Imputation of Cells) [32] and ALRA (Adaptively thresholded Low-Rank Approximation) [33]. All comparative approaches are detailed below.

Normalized RNA transcript: RNA transcript associated with the scRNA-seq count matrix was normalized using Seurat’s log-normalization procedure [14–17] and set as the estimated receptor abundance.

SPECK: The scRNA-seq count matrix was normalized, reconstructed via RRR and thresholded with the speck function from the SPECK v0.1.1 R package [34]. Receptor abundance was set to this estimated output.

cTP-net: Estimation was performed on the scRNA-seq count matrix with the cTPnet function and default parameters using cTP-net v1.0.3 R package [10]. Receptor abundance was set to this estimated output. The scRNA-seq matrix was not denoised with the SAVER-X package since cTP-net maintainers note that cTP-net can predict protein abundance relatively accurately without denoising. Denoising using SAVER-X was additionally not performed due to the associated high time complexity for large datasets and pending Python package dependency updates required by the SAVER-X package maintainers.

Random Forest (RF): A random forest model was trained using the randomForest function with default parameters from the randomForest v4.7-1.1 R package [35]. Model training leveraged the top 10 genes whose normalized expression values from the scRNA-seq component of the scRNA-seq/CITE-seq joint training data had the largest positive Spearman rank correlation with the receptor’s normalized ADT values from the corresponding CITE-seq component of the same joint training data. This trained RF model was applied to the target scRNA-seq data to generate estimated receptor abundance values.

Support Vector Machines (SVM): An SVM model was trained using the svm function with default parameters from the e1071 v1.7.13 R package [36]. Similar to the random forest approach detailed above, the model was trained on the top 10 genes whose normalized scRNA-seq expression values were most correlated with the corresponding ADT values from the joint scRNA-seq/CITE-seq training data. The trained SVM model was then applied to the target scRNA-seq data to generate estimated receptor abundance values.
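For reference, the per-cell normalizations that underlie the normalized RNA transcript baseline and the ADT preprocessing can be sketched as follows. The log-normalization follows the usual form of scaling each cell to a fixed total (10,000 by default) and applying log1p. The CLR function uses a log1p-based variant that we assume resembles the one applied by Seurat; the function names are hypothetical, and the margin over which CLR is computed is left to the caller.

```python
import math


def log_normalize(counts, scale_factor=10_000):
    """Log-normalize raw counts for one cell across its genes."""
    total = sum(counts)
    if total == 0:
        return [0.0 for _ in counts]
    return [math.log1p(c / total * scale_factor) for c in counts]


def clr(counts):
    """Centered log-ratio variant based on log1p (assumed form).

    The reference value is exp(mean of log1p over the vector), where zero
    entries contribute 0 to the sum but still count toward the length.
    """
    log_ref = sum(math.log1p(c) for c in counts if c > 0) / len(counts)
    ref = math.exp(log_ref)
    return [math.log1p(c / ref) for c in counts]
```

Both functions keep zero counts at zero, so the sparsity of the raw data carries over to the baseline estimates.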

Benchmark setup

Each of the cross-validation and cross-training evaluation approaches had a distinct benchmark setup. For the cross-validation approach, five individual subsets of different sizes were selected from each dataset to get a 20–80 train-test split where 20% of total cells were used as training data and 80% of cells were used as test data. The number of cells used in the training and test subsets and the number of total cells (i.e., sum of cells from the training and target data subsets) for each dataset are indicated in Tables 1 and 2 for the cross-validation approach. Evaluation using the PBMC cross-training strategy was performed on gene sets trained on a subset of 5,000 cells from the Hao data. Each trained subset was evaluated on five subsets of 5,000, 7,000 and 10,000 cells and one subset of 50,000 cells from the Unterman data.
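One way to read the 20–80 setup is that a sampled subset of cells is partitioned into five disjoint folds, each of which serves once as training data while the remaining four folds form the target data. This interpretation of the fold assignment is an assumption, and the helper below (`five_fold_splits`) is a hypothetical illustration rather than the code used for the paper.

```python
import random


def five_fold_splits(cell_ids, n_folds=5, seed=0):
    """Return (training, target) pairs; each fold trains once (20% of cells)."""
    rng = random.Random(seed)
    shuffled = list(cell_ids)
    rng.shuffle(shuffled)
    folds = [shuffled[i::n_folds] for i in range(n_folds)]
    splits = []
    for i, train in enumerate(folds):
        target = [c for j, fold in enumerate(folds) if j != i for c in fold]
        splits.append((train, target))
    return splits
```

With 5,000 sampled cells this yields five splits of 1,000 training and 4,000 target cells, matching the first row of each dataset in Table 2.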

Table 1. Joint scRNA-seq/CITE-seq datasets used for method evaluation.

| Species | Source Tissue | Final Subset Size | Number of Antibodies |
|---|---|---|---|
| Human | PBMC Hao [14] | 60,000 | 217 |
| Human | PBMC Unterman [23] | 50,000 | 167 |
| Human | MALT [Mucosa-Associated Lymphoid Tissue] [24] | 8,412 | 17 |
| Human | Monocytes [25] | 37,000 | 238 |
| Human | MPEM [Malignant Peritoneal Mesothelioma] [26] | 4,969 | 46 |
| Mouse | Spleen and Lymph Nodes [37] | 20,000 | 102 |

Table 2. Number of cells in individual training and target data subsets and total number of cells for each of the six datasets for the 5-fold cross-validation evaluation approach.

| Dataset | Number of Training Cells | Number of Target Cells | Total Number of Cells |
|---|---|---|---|
| PBMC Hao | 1,000 | 4,000 | 5,000 |
| | 2,500 | 10,000 | 12,500 |
| | 5,000 | 20,000 | 25,000 |
| | 10,000 | 40,000 | 50,000 |
| | 12,000 | 48,000 | 60,000 |
| PBMC Unterman | 1,000 | 4,000 | 5,000 |
| | 2,500 | 10,000 | 12,500 |
| | 5,000 | 20,000 | 25,000 |
| | 10,000 | 40,000 | 50,000 |
| MALT | 1,000 | 4,000 | 5,000 |
| | 1,250 | 5,000 | 6,250 |
| | 1,500 | 6,000 | 7,500 |
| | 1,682 | 6,728 | 8,410 |
| Monocytes | 7,422 | 29,690 | 37,112 |
| MPEM | 994 | 3,975 | 4,969 |
| Spleen and Lymph Nodes | 3,940 | 15,758 | 19,698 |

Lower and upper cell count limits for the cross-validation approach were individually determined for each dataset. For the Hao data, the upper limit of 60,000 total cells (i.e., 12,000 training cells and 48,000 target cells) was determined by the capacity to perform RRR on 16 CPU cores without any virtual memory allocation errors. For the Unterman data, the upper limit of 50,000 total cells (i.e., 10,000 training cells and 40,000 target cells) was determined by the total number of cells in the CITE-seq assay (50,438 cells). For the MALT data, the upper limit of 8,410 total cells (i.e., 1,682 training cells and 6,728 target cells) was similarly determined by the total number of cells in the analogous CITE-seq assay (8,412 cells). The upper limit of 50,000 target cells for the cross-training approach was determined by the total number of cells in the CITE-seq assay corresponding to the Unterman data (50,438 cells).

From the initial 228 antibodies included in the Hao data, antibodies mapping to multiple HGNC (HUGO Gene Nomenclature Committee) [38] symbols or antibodies with their HGNC symbols not present in the feature/gene names corresponding to the scRNA-seq matrix were removed. Final assessment was performed for 217 antibodies for each subset of the Hao data as evaluated with the 5-fold cross-validation strategy. From the initial 200 antibodies included in the Unterman data, antibodies mapping to multiple HGNC symbols and mouse/rat specific antibodies were removed, resulting in 168 antibodies. Since assessment was performed for a varying number of cells, antibodies not expressed in smaller cell groups were dropped, resulting in assessment of either 167 or 168 antibodies for the Unterman data as evaluated with the cross-validation strategy. From the initial 17 antibodies included in the MALT data, three mouse/rat specific antibodies (IgG2a, IgG1 and IgG2b control) were removed. Final assessment was performed on 14 antibodies for the MALT data. For the cross-training approach, 124 antibodies, overlapping between the 217 antibodies from the Hao data and the 168 antibodies from the Unterman data, were assessed.

Results

STREAK generates receptor abundance estimates that are highly correlated with CITE-seq data

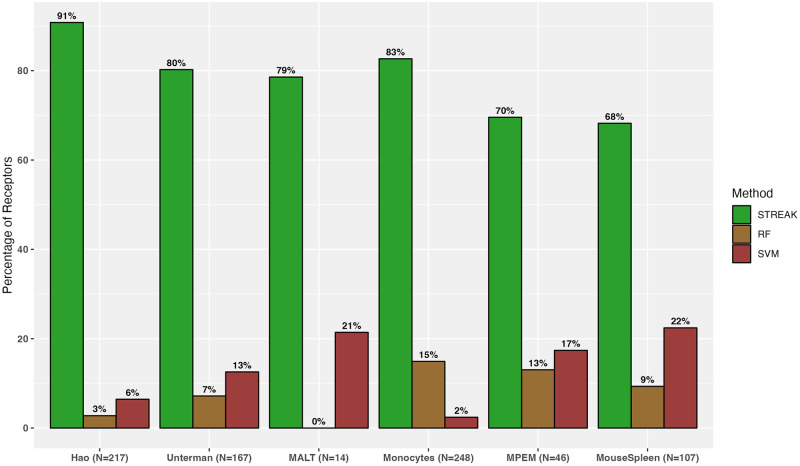

We first quantified the proportion of abundance profiles that have the highest Spearman rank correlation with the corresponding CITE-seq measurements when estimated using STREAK, SPECK or the normalized RNA method for the 5-fold cross-validation approach. We performed this computation for five cell subsets of the Hao data, ranging from 1,000 training and 4,000 target cells to 12,000 training and 48,000 target cells, and for four subsets each of the Unterman data, ranging from 1,000 training and 4,000 target cells to 10,000 training and 40,000 target cells, and the MALT data, ranging from 1,000 training and 4,000 target cells to 1,682 training and 6,728 target cells. We visualized the proportion estimates in a collective figure for all datasets combined (Hao, Unterman, MALT, Monocytes, MPEM and Mouse Spleen and Lymph Nodes), as indicated by Figs 2 and 3, as well as in separate graphical representations for each dataset, as displayed by S5A, S5B and S5C Fig for the Hao, Unterman and MALT datasets, respectively. Overall, the results indicate that the percentage of receptors for which the STREAK estimates have the highest rank correlation with analogous CITE-seq data is considerably larger than the corresponding percentage for SPECK or the normalized RNA approach for each of the Hao, Unterman and MALT datasets.
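The summary statistic described above, the percentage of receptors for which each method attains the highest correlation, can be computed with a short Python sketch. The function name and the input layout are hypothetical, and ties are resolved in favor of the method listed first, which is an assumption rather than a documented rule.

```python
def best_method_percentages(correlations):
    """Percentage of receptors for which each method ranks highest.

    correlations maps a method name to a dict of receptor -> Spearman
    correlation with CITE-seq data. Only receptors scored by every method
    are compared.
    """
    methods = list(correlations)
    shared = set.intersection(*(set(correlations[m]) for m in methods))
    if not shared:
        return {m: 0.0 for m in methods}
    wins = {m: 0 for m in methods}
    for receptor in shared:
        best = max(methods, key=lambda m: correlations[m][receptor])
        wins[best] += 1
    return {m: 100.0 * wins[m] / len(shared) for m in methods}
```

Restricting the comparison to receptors shared by all methods mirrors the way results are reported separately for the subset of receptors supported by cTP-net.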

Fig 2.

Percentage of receptors for which a given technique (STREAK, SPECK, normalized RNA transcript, Random Forest or Support Vector Machines) generates estimates with the highest average rank correlation with the associated CITE-seq data over five subsets of training data consisting of 12,000 cells from the Hao dataset, 10,000 cells from the Unterman data, 1,682 cells from the MALT data, 3,940 cells from the Mouse Spleen data, 7,422 cells from the Monocytes data and 994 cells from the MPEM dataset.

Fig 3.

Percentage of receptors for which a given technique (STREAK, SPECK, normalized RNA transcript, Random Forest or Support Vector Machines) generates estimates with the highest average rank correlation with the associated CITE-seq data. Results are computed on the subset of receptors supported by the cTP-net methods.

In addition to comparisons against existing unsupervised receptor abundance estimation strategies as displayed by Fig 4, we compared STREAK against supervised abundance estimation methods such as cTP-net and the RF model and similarly quantified the proportion of receptors for which the estimated abundance generated by each technique was most highly correlated with CITE-seq data. We quantified these comparisons for all datasets combined, as shown by Figs 5 and 6, as well as for individual datasets. S5D–S5F Fig present comparisons between STREAK and cTP-net while S5G–S5I Fig display comparisons between STREAK and the RF model for the Hao, Unterman and MALT datasets, respectively. Both sets of figures show that a large percentage of abundance profiles estimated using STREAK are most highly correlated with corresponding CITE-seq data, which supports the conclusion that STREAK overall performs better than cTP-net and the RF model across these datasets and training subset sizes.

Fig 4.

Percentage of receptors for which a given unsupervised abundance estimation technique (STREAK, SPECK and the normalized RNA transcript) generates estimates with the highest average rank correlation with the associated CITE-seq data.

Fig 5.

Percentage of receptors for which a given supervised abundance estimation technique (STREAK, the Random Forest and Support Vector Machines algorithms) generates estimates with the highest average rank correlation with the associated CITE-seq data.

Fig 6.

Percentage of receptors for which a given supervised abundance estimation technique (STREAK, cTP-net, and the Random Forest and Support Vector Machines algorithms) generates estimates with the highest average rank correlation with the associated CITE-seq data for a subset of receptors with defined cTP-net expression.

We next compared STREAK against unsupervised and supervised receptor abundance estimation approaches for the cross-training evaluation strategy, as indicated by Fig 7. We observed an equivalent pattern of improved concordance of the STREAK-based receptor abundance estimates with CITE-seq data relative to the estimates produced using SPECK, the normalized RNA approach, cTP-net, and the RF model. While the relative performance benefit of STREAK is smaller for the cross-training evaluation approach than for the cross-validation strategy, the results nevertheless emphasize STREAK’s ability to consistently generate more accurate abundance estimates than comparative methods. The fact that STREAK’s relative performance is largely insensitive to training and test dataset size across the three main assessed datasets (PBMC Hao, PBMC Unterman and the 10X Genomics MALT) and two evaluation strategies further supports the robustness of this approach for receptor abundance estimation.

Fig 7.

Percentage of receptors with highest average Spearman rank correlations between CITE-seq data and abundance profiles estimated using STREAK, SPECK, the normalized RNA approach, cTP-net or RF using the cross-training evaluation approach (Fig 1A–1C). The horizontal axis for these plots indicates the number of target cells evaluated from the Unterman data.

In addition to the bar-chart evaluation, which assesses the proportion of receptors that are most correlated with CITE-seq data when estimated using STREAK, SPECK, the normalized RNA transcript, the cTP-net approach and the RF and SVM algorithms, we evaluated STREAK using correlation-correlation scatterplots. Figs 8 and 9 display the percentage of receptors that lie above the diagonal (y = x) line for STREAK versus each of the comparative approaches for the PBMC Hao and the Mouse Spleen datasets. Overall, we observed that a large percentage of receptors lie above the y = x line in all of the subplots, indicating that STREAK provides more accurate receptor abundance estimates than the comparison methods. A similar pattern is found for the PBMC Unterman, MALT, Monocytes and MPEM datasets, as shown in S1–S4 Figs.
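The summary statistic reported in these scatterplots can be sketched as follows. The function name `percent_above_diagonal` and the toy correlation values are hypothetical, and the sketch assumes that the STREAK correlations are plotted on the vertical axis, so that a point above the y = x line corresponds to a receptor for which STREAK attains the higher correlation.

```python
def percent_above_diagonal(comparison_corr, streak_corr):
    """Percentage of receptors whose point (x, y) lies strictly above y = x.

    comparison_corr: per-receptor correlations for the comparison method (x axis)
    streak_corr:     per-receptor correlations for STREAK (y axis, assumed)
    """
    if len(comparison_corr) != len(streak_corr) or not comparison_corr:
        raise ValueError("inputs must be non-empty and of equal length")
    above = sum(1 for x, y in zip(comparison_corr, streak_corr) if y > x)
    return 100.0 * above / len(comparison_corr)


# Toy, made-up correlations for four receptors.
comparison = [0.2, 0.5, 0.7, 0.1]
streak = [0.3, 0.4, 0.8, 0.1]
print(percent_above_diagonal(comparison, streak))
```

Points that fall exactly on the diagonal are not counted as above it in this sketch; whether ties are counted is a design choice that should be stated alongside any reported percentage.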

Fig 8.

Correlation versus correlation scatter plots for the PBMC Hao data. Each point corresponds to a receptor from a maximum sample size of 217 receptors. LOESS (locally estimated scatterplot smoothing) function is applied to smooth out conditional means. Individual correlations are computed using the Spearman rank correlation metric.

Fig 9.

Correlation versus correlation (computed using the Spearman rank correlation metric) scatter plots for the Mouse Spleen data. Each point corresponds to a receptor from a maximum sample size of 107 receptors.

STREAK as a tool for selecting the optimal abundance estimation strategy for specific receptors

Following an examination of overall rank correlation trends between CITE-seq data and corresponding abundance profiles estimated using STREAK and comparative methods across different training and test dataset sizes, we inspected individual trends in rank correlations over a select train/test split group for all six datasets, including the Hao, Unterman, MALT, Monocytes, MPEM and Mouse Spleen and Lymph Nodes. For the 5-fold cross-validation evaluation approach, we trained and evaluated abundance values estimated using STREAK, SPECK, the normalized RNA approach, cTP-net and the RF model using train/test splits of 12,000/48,000, 10,000/40,000, 1,682/6,728, 7,422/29,690, 994/3,975 and 3,940/15,758 cells for the Hao, Unterman, MALT, Monocytes, MPEM and Mouse Spleen and Lymph Nodes datasets, respectively. We visualized rank correlations between estimated abundance values and CITE-seq data using heatmaps. Asterisk text format was used to indicate receptors whose STREAK-based estimates have the highest rank correlation with analogous CITE-seq data in each plot. Figs 10 and 11 display these correlations between CITE-seq data and abundance profiles generated using all unsupervised and supervised estimation approaches (STREAK, SPECK, RNA, cTP-net, RF and SVM) for the Hao and the Mouse Spleen and Lymph Nodes datasets, respectively. These figures, along with the corresponding S13–S16 Figs, show that the abundance values estimated with STREAK have relatively higher individual rank correlations with analogous CITE-seq data compared to alternative estimation strategies.

Fig 10.

Average rank correlations between CITE-seq data and receptor abundance values estimated using STREAK and all comparative methods as evaluated using the 5-fold cross-validation approach for training data consisting of 12,000 cells from the Hao data. Asterisk text format is used to indicate receptors for which the STREAK estimate has highest correlation with corresponding CITE-seq data among all evaluated methods. Limits of the gradient color scale are determined by the minimum and maximum average correlation values for all comparative methods combined.

Fig 11.

Average rank correlations between CITE-seq data and receptor abundance values estimated using STREAK and comparative methods as evaluated using the 5-fold cross-validation approach for training data consisting of 3,940 cells from the Mouse Spleen dataset.

We similarly visualized rank correlations between CITE-seq data and receptor abundance profiles estimated using co-expression associations learned from a subset of 5,000 cells from the Hao data and comparative unsupervised and supervised abundance estimation methods as evaluated on a subset of 50,000 cells from the Unterman data for the cross-training approach. These results, which are displayed in Fig 12, show that while STREAK’s performance as evaluated using the 5-fold cross-validation approach is superior to its performance when using the cross-training approach, STREAK has significantly better performance than the unsupervised techniques for the cross-training approach. Thus, while it is optimal to use trained associations learned from a subset of the same joint scRNA-seq/CITE-seq dataset as used for target estimation, there is still utility to leveraging associations from an independent scRNA-seq/CITE-seq dataset measured on the same tissue type as the target data.

Fig 12.

Average rank correlations between CITE-seq data and abundance values estimated using STREAK and comparative methods evaluated using the cross-training approach trained on a subset of 5,000 cells from the Hao data and evaluated on a subset of 50,000 cells from the Unterman data.

Beyond their practical value for evaluating estimated receptor abundance values, these correlation heatmaps can be leveraged to help identify an optimal abundance estimation approach for specific receptors. As shown in the heatmaps, while STREAK provides the best estimate for a majority of receptors, it is not optimal in all cases. For example, the rank correlation computed between the estimated CD14 abundance profiles and the corresponding CITE-seq values for the Hao data is higher for SPECK (ρ = 0.754) than for STREAK (ρ = 0.744) (see Fig 10). Similarly, the rank correlation between the CD301 abundance profiles and the corresponding CITE-seq values for the Hao data is higher for the normalized RNA transcript (ρ = 0.105) than for STREAK (ρ = 0.078). Likewise, when assessing STREAK against supervised abundance estimation techniques, the cTP-net-based profiles for the CD45RA receptor from the Hao data are more correlated with corresponding CITE-seq values (ρ = 0.720) than the STREAK-based abundance profiles (ρ = 0.708) (see Fig 10). These plots thus provide users with practical guidance when selecting the optimal abundance estimation approach for specific receptors.
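As a small illustration of this per-receptor selection, the sketch below picks the method with the highest rank correlation for each of the three receptors discussed above. Only the correlation values quoted in the text are included, so the comparison for each receptor is limited to the two methods reported here. The function name `best_method` and the dictionary layout are hypothetical.

```python
# Rank correlations quoted in the text for the Hao data.
reported = {
    "CD14": {"STREAK": 0.744, "SPECK": 0.754},
    "CD301": {"STREAK": 0.078, "RNA": 0.105},
    "CD45RA": {"STREAK": 0.708, "cTP-net": 0.720},
}


def best_method(correlations):
    """Map each receptor to the method with the highest rank correlation."""
    return {
        receptor: max(by_method, key=by_method.get)
        for receptor, by_method in correlations.items()
    }


for receptor, method in best_method(reported).items():
    print(receptor, method)
```

In practice, the input would be the full receptor-by-method correlation matrix underlying a heatmap such as Fig 10, and a user might also require a minimum margin between the best and second-best method before departing from a default choice.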

STREAK thresholding mechanism provides a considerable advantage over simple gene set scoring

Our next analysis evaluated the distinctive contributions of the gene set scoring and thresholding steps on STREAK’s performance. For this purpose, we compared the gene set scores (i.e., the CDF values) generated directly by VAM with the thresholded VAM scores used by STREAK. Fig 13A, 13B and 13C indicate the proportion of receptors that have high rank correlations with analogous CITE-seq data when estimated using STREAK and VAM for the Hao, Unterman and MALT datasets, respectively, as evaluated using the 5-fold cross-validation approach. Overall, a greater percentage of receptors estimated with STREAK have higher rank correlations with CITE-seq values for all datasets and every training subset size, except the 1,000 cells training subset of the Hao data, versus direct use of the VAM scores. This result emphasizes the important contribution of thresholding in further improving the concordance of the estimated receptor abundance profiles with analogous CITE-seq data over and above VAM-based set scoring.
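The effect of the thresholding step can be conveyed with a simplified sketch. It assumes that thresholding clusters the cell-level scores for a receptor in one dimension and sets the scores in the lowest-valued cluster to zero. For brevity, the sketch fixes the number of clusters at two and finds the optimal split by exhaustive search, which is a stand-in for, and not a reimplementation of, the Ckmeans.1d.dp algorithm [21]. The function name `two_cluster_threshold` and the example scores are hypothetical.

```python
def two_cluster_threshold(scores):
    """Zero out the lower of two one-dimensional clusters of scores.

    The sorted scores are split into two contiguous groups so that the total
    within-group sum of squares is minimal (optimal 1-D k-means with k = 2).
    Scores in the lower group are set to 0; the rest are returned unchanged.
    """
    n = len(scores)
    if n < 2 or min(scores) == max(scores):
        return list(scores)  # nothing to separate

    ordered = sorted(scores)

    def sum_of_squares(values):
        mean = sum(values) / len(values)
        return sum((v - mean) ** 2 for v in values)

    # Exhaustive search over cut points; quadratic time, fine for a sketch.
    best_cut = min(
        range(1, n),
        key=lambda c: sum_of_squares(ordered[:c]) + sum_of_squares(ordered[c:]),
    )
    cutoff = ordered[best_cut]  # smallest score in the upper cluster
    return [s if s >= cutoff else 0.0 for s in scores]


# Toy, made-up gene set scores (CDF values) for five cells.
cell_scores = [0.05, 0.10, 0.08, 0.90, 0.95]
print(two_cluster_threshold(cell_scores))
```

The intent of such a step is to treat low scores as background and retain only scores consistent with genuine receptor expression, which is one plausible reason why thresholded scores agree better with CITE-seq data than the raw gene set scores.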

Fig 13.

Gene set scoring versus thresholding sensitivity analysis examining frequency of receptors with highest average rank correlations between CITE-seq data and abundance values estimated using STREAK (i.e., estimation via gene set scoring followed by thresholding) or VAM (i.e., estimation using just gene set scoring) evaluated using the 5-fold cross-validation approach with the indicated training data ranging from 1,000 to 12,000 cells for the Hao data (Fig 13A), 1,000 to 10,000 cells for the Unterman data (Fig 13B) and 1,000 to 1,682 cells for the MALT data (Fig 13C).

STREAK is relatively insensitive to both training data subset size and gene set size

Following an assessment of the individual contributions of the gene set scoring and thresholding steps to STREAK performance, we implemented sensitivity analyses to examine the influence of training data size on STREAK’s performance for the cross-training evaluation approach. To accomplish this, we evaluated STREAK against SPECK, the normalized RNA transcript, the RF model, and cTP-net using co-expression associations constructed from the joint scRNA-seq/CITE-seq Hao training data consisting of 5,000, 7,000, 10,000, 20,000 and 30,000 cells. Each set of trained associations was evaluated on five subsets of 5,000, 7,000 and 10,000 cells and a subset of 50,000 cells from the Unterman data. S7A–S7E Fig plot the proportion of receptors that have the highest rank correlations with CITE-seq data when abundance is estimated using STREAK, SPECK or the normalized RNA transcript with training performed on 5,000, 7,000, 10,000, 20,000 or 30,000 cells from the Hao data. Similarly, S8A–S8E Fig indicate the analogous proportion of receptors that have the highest rank correlations with CITE-seq data when estimated using STREAK and cTP-net while S9A–S9E Fig visualize the equivalent results for the RF model trained on co-expression associations learned from subsets of 5,000, 7,000, 10,000, 20,000 and 30,000 cells from the Hao data. These results demonstrate that the relative performance of STREAK is consistent across all training cell subsets, thereby underscoring STREAK’s robustness to training data size for the cross-training evaluation strategy.

Our subsequent examination assessed STREAK’s sensitivity to gene set size. We performed this analysis for the Hao data and the 5-fold cross-validation evaluation strategy using trained associations from the top 5, 10, 15, 20, 25 and 30 scRNA-seq transcripts most correlated with CITE-seq data. S10 Fig quantifies the proportion of receptors that have the highest rank correlations with CITE-seq data when estimated using STREAK versus when estimated using SPECK or the normalized RNA approach. Similarly, S11 Fig compares the proportion of receptors that have the highest correlation with the corresponding CITE-seq data when estimated using STREAK versus cTP-net while S12 Fig compares this proportion for STREAK versus the RF model. All three plots emphasize that STREAK is generally insensitive to gene set size and consistently performs better than the comparative estimation methods given a gene set size range of 5 to 30 genes.
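To make the notion of gene set size concrete, the following sketch selects, for a single receptor, the k genes whose expression is most strongly rank-correlated with the measured abundance of that receptor in the training data. It is an illustration under stated assumptions and not the STREAK package implementation: the function names (`average_ranks`, `spearman`, `top_k_gene_set`), the data layout and the use of the correlation itself as the gene weight are hypothetical.

```python
def average_ranks(values):
    """Return 1-based ranks, assigning tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation, i.e. the Pearson correlation of the ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        return 0.0  # constant vector: treated as uncorrelated (a convention chosen here)
    return cov / (var_x * var_y) ** 0.5


def top_k_gene_set(receptor_abundance, expression, k):
    """Return the k genes most correlated with a receptor, with their correlations.

    receptor_abundance: [CITE-seq abundance per training cell]
    expression:         {gene: [scRNA-seq expression per training cell]}
    The result is a list of (gene, correlation) pairs in decreasing order;
    using the correlation as the gene weight is an assumption of this sketch.
    """
    rho = {gene: spearman(values, receptor_abundance) for gene, values in expression.items()}
    ranked = sorted(rho.items(), key=lambda item: item[1], reverse=True)
    return ranked[:k]


# Toy, made-up training data: one receptor and three genes over five cells.
abundance = [1, 2, 3, 4, 5]
expression = {
    "gene_1": [1, 2, 3, 4, 5],
    "gene_2": [5, 4, 3, 2, 1],
    "gene_3": [1, 3, 2, 4, 5],
}
print(top_k_gene_set(abundance, expression, k=2))
```

Varying k from 5 to 30 in such a procedure corresponds to the gene set sizes examined in this sensitivity analysis. Because every gene is compared against the receptor, the cost of this step grows with the number of genes and receptors in the training data.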

Discussion

In this paper, we detail a novel supervised receptor abundance estimation method, STREAK, which functions by first learning associations between gene expression and protein abundance data using joint scRNA-seq/CITE-seq training data and then leveraging these associations to perform thresholded gene set scoring on the target scRNA-seq data. We evaluate this method using two evaluation strategies on six joint scRNA-seq/CITE-seq datasets representing four different tissue types and two organisms: the human PBMC Hao, PBMC Unterman, MALT, Monocytes and MPEM datasets and the mouse Spleen and Lymph Nodes dataset. We compare STREAK’s performance against both unsupervised abundance estimation techniques such as SPECK and the normalized RNA approach and supervised methods such as cTP-net, RF, and SVM. This evaluation demonstrates that for the majority of the analyzed receptors, STREAK abundance estimates are more accurate than those produced by alternative techniques, as assessed by the Spearman rank correlation between estimated abundance profiles and associated CITE-seq data.

A key strength of STREAK in comparison to neural network or ensemble-based supervised receptor abundance estimation techniques is that the weighted gene sets used for cell-level estimation are simple to interpret and customize. Researchers can easily add or remove genes and adjust weights for empirically derived sets or define entirely new gene sets to reflect specific biological knowledge or better adapt to the expected pattern of gene expression in a given tissue type or environment.

One limitation of STREAK is that receptor gene set construction will typically require access to joint scRNA-seq/CITE-seq training data that is ideally measured on the same tissue type (and under similar biological conditions) as the target scRNA-seq data. A related limitation is that this gene set construction step is computationally expensive since it entails comparison of each CITE-seq ADT transcript with every gene in the scRNA-seq expression matrix. While both limitations can be avoided if users feel comfortable manually defining the receptor gene sets, we anticipate that access to experimental training data will be necessary to create accurate estimates for most receptor proteins. Our current R package implementation for STREAK is available on CRAN [39]. This package supports both the gene set construction and the receptor abundance estimation components of the STREAK algorithm. The gene set construction functionality can moreover be modified to compute co-expression associations using metrics other than the Spearman rank correlation.

In short, we outline a new supervised receptor abundance estimation method that leverages joint associations between transcriptomics and proteomics data to generate abundance estimates using thresholded cell-level gene set scores. The STREAK method produces more accurate abundance estimates relative to other unsupervised and supervised abundance estimation techniques with the potential to significantly improve the performance of downstream single cell analysis tasks such as cell typing/phenotyping and cell-cell signaling estimation.

Supporting information

Correlation versus correlation scatter plots for the PBMC Unterman data. Each point corresponds to a receptor from a sample size of 167 receptors.

(TIFF)

Correlation versus correlation scatter plots for the MALT data. Each point corresponds to a receptor from a sample size of 14 receptors.

(TIFF)

Correlation versus correlation scatter plots for the Monocytes data. Each point corresponds to a receptor from a sample size of 252 receptors.

(TIFF)

Correlation versus correlation scatter plots for the MPEM data. Each point corresponds to a receptor from a sample size of 46 receptors.

(TIFF)

Frequency of receptors with highest average Spearman rank correlations between CITE-seq data and abundance profiles estimated using STREAK, SPECK and normalized RNA approach or cTP-net or RF for the 5-fold cross-validation approach with training data ranging from 1,000 to 12,000 cells for the Hao data (S5A, S5D, S5G), 1,000 to 10,000 cells for the Unterman data (S5B, S5E, S5H) and 1,000 to 1,682 cells for the MALT data (S5C, S5F, S5I) and 5,000 cells from the Hao data for the cross-training evaluation approach (S5J, S5K, S5L). The horizontal axis for the 5-fold cross-validation evaluation plots (S5A-S5I) indicates the number of cells used for training while the horizontal axis for the cross-training evaluation plots (S5J-S5L) indicates the number of target cells evaluated from the Unterman data.

(TIFF)

Percentage of receptors with highest average Spearman rank correlations between CITE-seq data and abundance profiles estimated using STREAK, SPECK and normalized RNA approach or cTP-net or RF for the cross-training evaluation approach with 5,000 training cells from the Hao data (S6A, S6B, S6C). The horizontal axis for these plots (S6A-S6C) indicates the number of target cells evaluated from the Unterman data.

(TIFF)

Training data sensitivity analysis examining frequency of receptors with highest average rank correlations between CITE-seq data and abundance values estimated using STREAK, SPECK and normalized RNA transcript evaluated using the cross-training approach with Hao training data consisting of 5,000 (S7A), 7,000 (S7B), 10,000 (S7C), 20,000 (S7D) and 30,000 (S7E) cells. The horizontal axis for each plot indicates the number of target cells evaluated from the Unterman data.

(TIFF)

Training data sensitivity analysis examining frequency of receptors with highest average rank correlations between CITE-seq data and abundance values estimated using STREAK and cTP-net via the cross-training strategy.

(TIFF)

Training data sensitivity analysis examining frequency of receptors with highest average rank correlations between CITE-seq data and abundance values estimated using STREAK and the RF model via the cross-training strategy.

(TIFF)

Gene set size sensitivity analysis examining frequency of receptors with highest average rank correlations between CITE-seq data and abundance values estimated using STREAK, SPECK and normalized RNA transcript evaluated using the 5-fold cross-validation approach with the indicated training data ranging from 1,000 to 12,000 cells for the Hao data and gene set size consisting of 5, 10, 15, 20, 25 and 30 genes.

(TIFF)

Gene set size sensitivity analysis between CITE-seq data and abundance profiles estimated with STREAK and cTP-net using the 5-fold cross-validation approach with training data ranging from 1,000 to 12,000 cells for the Hao data and gene set size consisting of 5, 10, 15, 20, 25 and 30 genes.

(TIFF)

Gene set size sensitivity analysis between CITE-seq data and abundance profiles estimated with STREAK and the RF model using the 5-fold cross-validation approach with training data ranging from 1,000 to 12,000 cells for the Hao data and gene set size consisting of 5, 10, 15, 20, 25 and 30 genes.

(TIFF)

Average rank correlations between CITE-seq data and receptor abundance values estimated using STREAK and comparative methods as evaluated using the 5-fold cross-validation approach for training data consisting of 12,000 cells from the Unterman dataset.

(TIFF)

Average rank correlations between CITE-seq data and receptor abundance values estimated using STREAK and comparative methods as evaluated using the 5-fold cross-validation approach for training data consisting of 1,682 cells from the MALT dataset.

(TIFF)

Average rank correlations between CITE-seq data and receptor abundance values estimated using STREAK and comparative methods as evaluated using the 5-fold cross-validation approach for training data consisting of 7,422 cells from the Monocytes dataset.

(TIFF)

Average rank correlations between CITE-seq data and receptor abundance values estimated using STREAK and comparative methods as evaluated using the 5-fold cross-validation approach for training data consisting of 994 cells from the MPEM dataset.

(TIFF)

Acknowledgments

We would like to acknowledge the supportive environment at the Geisel School of Medicine at Dartmouth where this research was performed.

Data Availability

All relevant data are within the manuscript and its Supporting information files. Please see our Github repository for the associated STREAK package at [https://github.com/azkajavaid/STREAK].

Funding Statement

This work was funded by National Institutes of Health grants R35GM146586, R21CA253408, P20GM130454 and P30CA023108. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript. No authors received a salary from any of the funders.

References

- 1. Zheng GXY, Terry JM, Belgrader P, Ryvkin P, Bent ZW, Wilson R, et al. Massively parallel digital transcriptional profiling of single cells. Nature Communications. 2017;8(1):14049. doi: 10.1038/ncomms14049

- 2. Tang F, Barbacioru C, Wang Y, Nordman E, Lee C, Xu N, et al. mRNA-Seq whole-transcriptome analysis of a single cell. Nature Methods. 2009;6(5):377–382. doi: 10.1038/nmeth.1315

- 3. Tirosh I, Izar B, Prakadan SM, Wadsworth MH, Treacy D, Trombetta JJ, et al. Dissecting the multicellular ecosystem of metastatic melanoma by single-cell RNA-seq. Science. 2016;352(6282):189–196. doi: 10.1126/science.aad0501

- 4. Armingol E, Officer A, Harismendy O, Lewis NE. Deciphering cell–cell interactions and communication from gene expression. Nature Reviews Genetics. 2021;22(2):71–88. doi: 10.1038/s41576-020-00292-x

- 5. Haque A, Engel J, Teichmann SA, Lönnberg T. A practical guide to single-cell RNA-sequencing for biomedical research and clinical applications. Genome Medicine. 2017;9(1):75. doi: 10.1186/s13073-017-0467-4

- 6. Bonner WA, Hulett HR, Sweet RG, Herzenberg LA. Fluorescence Activated Cell Sorting. Review of Scientific Instruments. 1972;43(3):404. doi: 10.1063/1.1685647

- 7. Stoeckius M, Hafemeister C, Stephenson W, Houck-Loomis B, Chattopadhyay PK, Swerdlow H, et al. Simultaneous epitope and transcriptome measurement in single cells. Nature Methods. 2017;14(9):865–868. doi: 10.1038/nmeth.4380

- 8. Lähnemann D, Köster J, Szczurek E, McCarthy DJ, Hicks SC, Robinson MD, et al. Eleven grand challenges in single-cell data science. Genome Biology. 2020;21(1):31. doi: 10.1186/s13059-020-1926-6

- 9. Javaid A, Frost HR. SPECK: an unsupervised learning approach for cell surface receptor abundance estimation for single-cell RNA-sequencing data. Bioinformatics Advances. 2023;3(1):vbad073. doi: 10.1093/bioadv/vbad073

- 10. Zhou Z, Ye C, Wang J, Zhang NR. Surface protein imputation from single cell transcriptomes by deep neural networks. Nature Communications. 2020;11(1):651. doi: 10.1038/s41467-020-14391-0

- 11. Dai X, Xu F, Wang S, Mundra PA, Zheng J. PIKE-R2P: Protein–protein interaction network-based knowledge embedding with graph neural network for single-cell RNA to protein prediction. BMC Bioinformatics. 2021;22(Suppl 6). doi: 10.1186/s12859-021-04022-w

- 12. Xu F, Wang S, Dai X, Mundra PA, Zheng J. Ensemble learning models that predict surface protein abundance from single-cell multimodal omics data. Methods. 2021;189:65–73. doi: 10.1016/j.ymeth.2020.10.001

- 13. Erichson NB, et al. Randomized Matrix Decompositions Using R. Journal of Statistical Software. 2019;89(11):1–48. doi: 10.18637/jss.v089.i11

- 14. Hao Y, Hao S, Andersen-Nissen E, Mauck WM, Zheng S, Butler A, et al. Integrated analysis of multimodal single-cell data. Cell. 2021;184(13):3573–3587.e29. doi: 10.1016/j.cell.2021.04.048

- 15. Stuart T, Butler A, Hoffman P, Hafemeister C, Papalexi E, Mauck WM, et al. Comprehensive Integration of Single-Cell Data. Cell. 2019;177(7):1888–1902.e21. doi: 10.1016/j.cell.2019.05.031

- 16. Butler A, Hoffman P, Smibert P, Papalexi E, Satija R. Integrating single-cell transcriptomic data across different conditions, technologies, and species. Nature Biotechnology. 2018;36:411–420. doi: 10.1038/nbt.4096

- 17. Satija R, Farrell JA, Gennert D, Schier AF, Regev A. Spatial reconstruction of single-cell gene expression data. Nature Biotechnology. 2015;33(5):495–502. doi: 10.1038/nbt.3192

- 18. Frost HR. Variance-adjusted Mahalanobis (VAM): a fast and accurate method for cell-specific gene set scoring; p. 20.

- 19. Hafemeister C, Satija R. Normalization and variance stabilization of single-cell RNA-seq data using regularized negative binomial regression. Genome Biology. 2019;20(1):296. doi: 10.1186/s13059-019-1874-1

- 20. Song M, Zhong H. Efficient weighted univariate clustering maps outstanding dysregulated genomic zones in human cancers. Bioinformatics. 2020;36(20):5027–5036. doi: 10.1093/bioinformatics/btaa613

- 21. Wang H, Song M. Ckmeans.1d.dp: Optimal k-means Clustering in One Dimension by Dynamic Programming. The R Journal. 2011;3(2):29. doi: 10.32614/RJ-2011-015

- 22. Edgar R, Domrachev M, Lash AE. Gene Expression Omnibus: NCBI gene expression and hybridization array data repository. Nucleic Acids Research. 2002;30(1):207–210. doi: 10.1093/nar/30.1.207

- 23. Unterman A, Sumida TS, Nouri N, Yan X, Zhao AY, Gasque V, et al. Single-cell multi-omics reveals dyssynchrony of the innate and adaptive immune system in progressive COVID-19. Nature Communications. 2022;13(1):440. doi: 10.1038/s41467-021-27716-4

- 24. 10k Cells from a MALT Tumor—Gene Expression with a Panel of TotalSeq-B Antibodies. Available from: https://www.10xgenomics.com/resources/datasets/10-k-cells-from-a-malt-tumor-gene-expression-and-cell-surface-protein-3-standard-3-0-0.

- 25. Lakkis J, Schroeder A, Su K, Lee MYY, Bashore AC, Reilly MP, et al. A multi-use deep learning method for CITE-seq and single-cell RNA-seq data integration with cell surface protein prediction and imputation. Nature Machine Intelligence. 2022;4(11):940–952. doi: 10.1038/s42256-022-00545-w

- 26. Ma X, Somasundaram A, Qi Z, Hartman D, Singh H, Osmanbeyoglu H. SPaRTAN, a computational framework for linking cell-surface receptors to transcriptional regulators. Nucleic Acids Research. 2021;49(17):9633–9647. doi: 10.1093/nar/gkab745

- 27. Gayoso A, Steier Z, Lopez R, Regier J, Nazor KL, Streets A, et al. Joint probabilistic modeling of single-cell multi-omic data with totalVI. Nature Methods. 2021;18(3):272–282. doi: 10.1038/s41592-020-01050-x

- 28. Elizaga ML, Li SS, Kochar NK, Wilson GJ, Allen MA, Tieu HVN, et al. Safety and tolerability of HIV-1 multiantigen pDNA vaccine given with IL-12 plasmid DNA via electroporation, boosted with a recombinant vesicular stomatitis virus HIV Gag vaccine in healthy volunteers in a randomized, controlled clinical trial. PLOS ONE. 2018;13(9):e0202753. doi: 10.1371/journal.pone.0202753

- 29. Li SS, Kochar NK, Elizaga M, Hay CM, Wilson GJ, Cohen KW, et al. DNA Priming Increases Frequency of T-Cell Responses to a Vesicular Stomatitis Virus HIV Vaccine with Specific Enhancement of CD8+ T-Cell Responses by Interleukin-12 Plasmid DNA. Clinical and Vaccine Immunology. 2017;24(11):e00263–17. doi: 10.1128/CVI.00263-17

- 30. R: The R Project for Statistical Computing. Available from: https://www.r-project.org/.

- 31. Schober P, Boer C, Schwarte LA. Correlation Coefficients: Appropriate Use and Interpretation. Anesthesia & Analgesia. 2018;126(5):1763–1768. doi: 10.1213/ANE.0000000000002864

- 32. Dijk Dv, Sharma R, Nainys J, Yim K, Kathail P, Carr AJ, et al. Recovering Gene Interactions from Single-Cell Data Using Data Diffusion. Cell. 2018;174(3):716–729.e27. doi: 10.1016/j.cell.2018.05.061

- 33. Linderman GC, Zhao J, Roulis M, Bielecki P, Flavell RA, Nadler B, et al. Zero-preserving imputation of single-cell RNA-seq data. Nature Communications. 2022;13(1):192. doi: 10.1038/s41467-021-27729-z

- 34. Javaid A, Frost HR. SPECK: Receptor Abundance Estimation using Reduced Rank Reconstruction and Clustered Thresholding; 2022. https://CRAN.R-project.org/package=SPECK.

- 35. Liaw A, Wiener M. Classification and Regression by randomForest. R News. 2002;2(3):18–22.

- 36. Meyer D, Dimitriadou E, Hornik K, Weingessel A, Leisch F, et al. e1071: Misc Functions of the Department of Statistics, Probability Theory Group (Formerly: E1071), TU Wien; 2023. Available from: https://CRAN.R-project.org/package=e1071.

- 37. Gayoso A, Steier Z, Lopez R, Regier J, Nazor KL, Streets A, et al. Joint probabilistic modeling of single-cell multi-omic data with totalVI. Nature Methods. 2021;18(3):272–282. doi: 10.1038/s41592-020-01050-x

- 38. Tweedie S, Braschi B, Gray K, Jones TEM, Seal R, Yates B, et al. Genenames.org: the HGNC and VGNC resources in 2021. Nucleic Acids Research. 2021;49(D1):D939–D946. doi: 10.1093/nar/gkaa980

- 39. Javaid A, Frost HR. STREAK: Receptor Abundance Estimation using Feature Selection and Gene Set Scoring; 2022. https://CRAN.R-project.org/package=STREAK.