Abstract

A rapidly emerging application of network neuroscience in neuroimaging studies has provided useful tools to understand individual differences in intrinsic brain function by mapping spontaneous brain activity, namely intrinsic functional network neuroscience (ifNN). However, the variability of methodologies applied across the ifNN studies—with respect to node definition, edge construction, and graph measurements—makes it difficult to directly compare findings and also challenging for end users to select the optimal strategies for mapping individual differences in brain networks. Here, we aim to provide a benchmark for best ifNN practices by systematically comparing the measurement reliability of individual differences under different ifNN analytical strategies using the test-retest design of the Human Connectome Project. The results uncovered four essential principles to guide ifNN studies: (1) use a whole brain parcellation to define network nodes, including subcortical and cerebellar regions; (2) construct functional networks using spontaneous brain activity in multiple slow bands; and (3) optimize topological economy of networks at individual level; and (4) characterize information flow with specific metrics of integration and segregation. We built an interactive online resource of reliability assessments for future ifNN (https://ibraindata.com/research/ifNN).

Keywords: Individual difference, Reliability, Open science, Spontaneous brain activity, Connectome

Author Summary

It is an essential mission for neuroscience to understand the individual differences in brain function. Graph or network theory offer novel methods of network neuroscience to address such a challenge. This article documents optimal strategies on the test-retest reliability of measuring individual differences in intrinsic brain networks of spontaneous activity. The analytical pipelines are identified to optimize for highly reliable, individualized network measurements. These pipelines optimize network metrics for high interindividual variances and low inner-individual variances by defining network nodes with whole-brain parcellations, deriving the connectivity with spontaneous high-frequency slow-band oscillations, constructing brain graphs with topology-based methods for edge filtering, and favoring multilevel or multimodal metrics. These psychometric findings are critical for translating the functional network neuroscience into clinical or other personalized practices requiring neuroimaging markers.

INTRODUCTION

Over the past two decades, network neuroscience has helped transform the field of neuroscience (D. Bassett et al., 2020), providing a quantitative methodology framework for modeling brains as graphs (or networks) composed of nodes (brain regions) and edges (their connections), namely connectomics (Sporns, 2013a). The organization and topology of macroscale brain networks can be characterized by a growing suite of connectomic measurements including efficiency, centrality, clustering, small-word topology, rich-club, and so forth (Craddock et al., 2013). In parallel, resting-state fMRI (rfMRI) has opened up new avenues toward understanding the intrinsic human brain function (Biswal et al., 2010). In conjunction with network neuroscience, rfMRI has led to the emergence of a multidisciplinary field, intrinsic functional connectomics or network neuroscience (ifNN), in which the brain’s intrinsic, interregional connectivity is estimated from rfMRI recordings. It has been widely used to investigate the system-level organization of the human brain function and its relationship with individual differences (Dubois & Adolphs, 2016) in developmental (Zuo et al., 2017), sociocultural (Pessoa, 2018), and clinical conditions (Fornito, Zalesky, & Breakspear, 2015).

Highly reliable measurements are essential for studying individual differences. In general, reliability characterizes a proportion of measurement variability between different subjects relative to the overall variability including both between-subject and within-subject (i.e., random) components (Xing & Zuo, 2018). It is commonly used to assess the consistency or agreement between measurements, or the ability to obtain consistent measures over time. Beyond that, it can also serve as a measure of discriminability (Xing & Zuo, 2018; Zuo, Biswal, & Poldrack, 2019a; Zuo, Xu, & Milham, 2019b). For example, if a measurement can more sufficiently capture individual characteristics (i.e., better differentiate a group of individuals), it will produce higher between-subject variability and thus higher reliability than a measurement underestimating the between-subject variability. Such reliability concept has well-established statistical theory and applications in fields such as psychology (Elliott, Knodt, Caspi, Moffitt, & Hariri, 2021a) and medicine (Kraemer, 2014) where it is used in psychometric theory and diagnosis theory, respectively. Specifically, in psychology, reliability is important for assessing the validity of psychological tests, and in medicine, it is important for accurately diagnosing and treating patients. In the field of human brain mapping, more recent studies have demonstrated that the measurement reliability is equivalent to the “fingerprint” or discriminability of the measurement under the Gaussian distribution (Bridgeford et al., 2021; Milham, Vogelstein, & Xu, 2021). Therefore, the optimization of measurement reliability of the individual differences can help guide ifNN processing and analysis pipelines for individualized or personalized (e.g., neurodevelopmental; Herting, Gautam, Chen, Mezher, & Vetter, 2018) or clinical (Matthews & Hampshire, 2016) research.

Previous studies have demonstrated that many functional network measurements with rfMRI have limited reliability (Noble, Scheinost, & Constable, 2019; Zuo & Xing, 2014). These low levels of reliability could be an indication of failure in handling individual variability at different levels (Elliott, Knodt, & Hariri, 2021b; Hallquist & Hillary, 2019). In particular, experimental design and processing decisions related to scan duration, determining frequency range, and regressing global signal have impacts on rfMRI measurements and thus their reliability (Noble et al., 2019; Zuo et al., 2013). Although less focused on reliability, existing network neuroscience studies revealed that their findings are influenced by choices of parcellation templates (Bryce et al., 2021; Wang et al., 2009), edge construction and definition, and choice of graph metrics (Liang et al., 2012). How these decisions affect the measurement reliability in ifNN deserves further investigation. These analytical choices have been implemented in different software packages but can vary from one package to another, and thus introduce more analytic variability (Botvinik-Nezer et al., 2020). Beyond limited examinations on reliability (Aurich, Filho, da Silva, & Franco, 2015; Braun et al., 2012; Termenon, Jaillard, Delon-Martin, & Achard, 2016), a systematic investigation into the measurement reliability is warranted to guide ifNN software use and analyses.

We conducted a systematic ifNN reliability analysis using the test-retest rfMRI data from the Human Connectome Project (HCP). The HCP has developed its imaging acquisition and data preprocessing (Glasser et al., 2013) by integrating various strategies optimized for reliability in previous studies (Noble et al., 2019; Noble, Scheinost, & Constable, 2021; Zuo & Xing, 2014; Zuo et al., 2013). We thus analyzed the minimally preprocessed HCP rfMRI data and focused our work on four key postanalytic stages: node definition, edge construction, network measurement, and reliability assessments. In the end, we propose a set of principles to guide researchers in performing reliable ifNN, advancing the fieldstandard call for the best practices in network neuroscience. We released all the codes and reliability data by building an online platform for sharing the data and computational resources to foster future ifNN.

THEORY AND METHODS

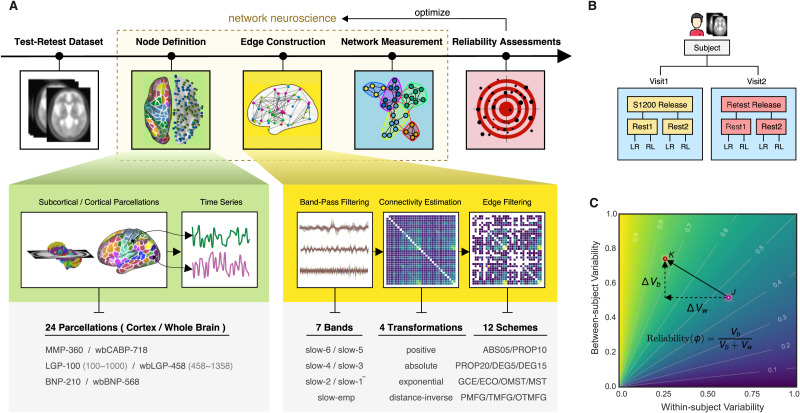

A typical analysis pipeline in ifNN includes steps for node definition (parcellations) and edge construction (frequency bands, connectivity estimation and filtering schemes) (Figure 1A). To determine an optimal pipeline, we combine the most reliable strategies across different parts of the analysis by comparing the reliability of derived global network metrics. The HCP test-retest data were employed for reliability evaluation (Figure 1B) using the intraclass correlation (ICC) statistics on the measurement reliability. Overall reliability assessments associated with the various analytic strategies as well as their impact on between- and within-subject variability (Figure 1C) are investigated. We calculated the between-subject variability (Vb) and within-subject variability (Vw) and normalized them to values between 0 and 1 by the total sample variances. The changes in these variability measures, ΔVb and ΔVw, were used to create a reliability gradient represented by a vector. The length of the arrow reflects the amplitude of the change in reliability when comparing one choice (pink circle, J) to another choice (red circle, K). The direction of the arrow, JK, indicates the sources of the change in reliability. In this case, the reliability increases from a moderate to a substantial level with an increase in between-subject variability (ΔVb > 0) and a decrease in within-subject variability (ΔVw < 0). We then determine the optimized pipelines based on the highest reliability measurements, while documenting the derived both global and local network metrics and both their reliability and variability at an individual level. We first introduce the reliability theory and assessments.

Figure 1. .

Analytical pipelines for reliable ifNN. (A) There are five stages during our analyses: (1) test-retest dataset (white box) downloaded from HCP website; (2) node definition (green box) defining nodes using a set of brain areas of 24 different partitions of the human brain; (3) edge construction (yellow box) estimating individual correlation matrices using the six frequency bands (slow 1–6) from Buzsaki’s theoretical framework on the brain oscillations as well as the widely used empirical frequency band (Slow-emp) and transferring these matrices into adjacency matrices using 7 × 4 × 12 different strategies on edge construction including band-pass filtering, connectivity estimation and edge filtering; (4) network analysis (blue box) systematically calculating various brain graph metrics on measurements of information flow; and (5) reliability assessment (red box) evaluating test-retest reliability with massive linear mixed models. (B) The test-retest data shared multimodal MRI datasets of 46 subjects in the HCP S1200 release and the HCP Retest release. Each subject underwent the first four test scans on 2 days (two scans per day: Rest1 and Rest2) and return several months later to finish the four retest scans on another 2 days. (C) Measurement reliability refers to the interindividual or between-subject variability Vb relative to the intraindividual or within-subject variability Vw. Variability of both between-subject (Vb) and within-subject (Vw) are normalized into between 0 and 1 by the total sample variances. Their changes (ΔVb and ΔVw) introduce a reliability gradient as represented by the vector (the black arrow). The length of the arrow reflects the amplitude of reliability changes when the reliability assessment from one choice (pink circle, J) to another choice (red circle, K). Further, the arrow’s direction (JK) indicates the sources of this reliability change. Here the reliability becomes from moderate to substantial level with increases of between-subject variability (ΔVb > 0) and decreases of within-subject variability (ΔVw < 0).

Reliability Theory and Assessments

Measurement reliability is defined as the extent to which measurements can be replicated across multiple repeated measuring occasions (Xing & Zuo, 2018; Zuo, Biswal, & Poldrack, 2019a; Zuo, Xu, & Milham, 2019b). Test-retest reliability is the closeness of the agreement between the results of successive measurements of the same measure and carried out under the same conditions of measurement.

Linear mixed models.

As a group-level statistic, reliability refers to the interindividual or between-subject variability Vb relative to the intraindividual or within-subject variability Vw. Both the intra- and inter-individual variances can be estimated using linear mixed model (LMM). In this study, given a functional graph metric ϕ, we considered a random sample of P subjects with N repeated measurements of a continuous variable in M visits. ϕijk (for i = 1, … N and j = 1, …, M, and k = 1, …, P) denotes the metric from the kth subject’s jth visit and ith measurement occasions. The three-level LMM models ϕijk as the following equations:

where γ000 is a fixed parameter (the group mean) and p0k, v0jk, and eijk are independent random effects normally distributed with a mean of 0 and variances σp02, σv02, and σe2. The term p0k is the subject effect, v0jk is the visit effect, and eijk is the measurement residual. Age, gender, and interval (Δt) between two visits are covariants.

ICC estimation.

These variances are used to calculate the test-retest reliability, which is measured by the dependability coefficient and reflects the absolute agreement of measurements. The dependability coefficient is a form of ICC commonly, which is the ratio of the variances due to the object of measurement versus sources of error. To avoid negative ICC values and obtain more accurate estimation of the sample ICC, the variance components in model are usually estimated with the restricted maximum likelihood approach with the covariance structure of an unrestricted symmetrical matrix (Zuo et al., 2013).

| (ICC) |

The ICC statistics on the measurement reliability are categorized into five common levels: 0 < ICC ≤ 0.2 (slight); 0.2 < ICC ≤ 0.4 (fair); 0.4 < ICC ≤ 0.6 (moderate); 0.6 < ICC ≤ 0.8 (substantial); and 0.8 < ICC < 1.0 (almost perfect). A metric with moderate to almost perfect test-retest reliability (ICC ≥ 0.4) is commonly expected in practice. The ICC level should not be judged only based upon the point statistical estimation of ICC but its confidence intervals (CI) (Koo & Li, 2016). We employed the nonparametric conditional bootstrap method for 1,000 times to estimate their 95% CIs.

Statistics evaluation.

Our analyses can produce big data of 524,160 ICCs (419,328 for the global network metrics). These ICCs are grouped into four categories (parcellation, frequency band, connectivity transformation, and edge filtering scheme), each of which has different choices. Given each choice of a category, we estimated its density distributions of ICCs and calculated two descriptive statistics: (1) mean ICC values, which measures the general reliability under the given choice; (2) number of almost perfect (noap) ICC values, which measures the potential reliability under the given choice.

We further perform Friedman rank sum test to evaluate whether the location parameters of the distribution of ICCs are the same in each choice. Once the Friedman test is significant, we employ the pairwise Wilcoxon signed rank test for post hoc evaluations to compare ICCs between each pair of the distributions under different choices. The statistical significance levels are corrected with Bonferroni method for controlling the familywise error rate at a level of 0.05. We develop a method to visualize and evaluate the change of ICCs (i.e., reliability gradient) between different choices (Figure 1C). Specifically, the reliability can be plotted as a function of Vb and Vw in its anatomy plane (Xing & Zuo, 2018; Zuo, Xu, & Milham, 2019b). The gradient of reliability between two choices is modeled by the vector (i.e., the black arrow), and decomposed into changes of individual variability. The systematic evaluation on the reliability of the global network metrics determines the optimal network neuroscience by combining the most reliable pipeline choices, which further generated the nodal metrics’ reliability.

Specifically, using the HCP test-retest dataset, our analytic procedure implemented the four postanalytic stages: node definition, edge construction, network measurement, and reliability assessments. The test-retest rfMRI dataset underwent the standardized preprocessing pipeline developed by the HCP team (Glasser et al., 2013). The second step defines nodes (green box) using sets of brain areas based on 24 partitions, and then extracts the nodal time series. During the third step (yellow box), individual correlation matrices are first estimated based upon the six frequency bands derived from Buzsaki’s theoretical framework on brain oscillations (Buzsáki & Draguhn, 2004) along with the classical band widely used (0.01–0.08 Hz). These matrices are then converted into adjacency matrices using 4 × 12 = 48 strategies on edge filtering. In the fourth step, we performed graph analyses (blue box) by systematically calculating the brain graph metrics at global, modular, and nodal scales. Finally, test-retest reliability was evaluated (red box) as ICCs with the linear mixed models as described above. We present details of the other three stages of analyses in the following sections.

Test-Retest Dataset

The WU-Minn Consortium in HCP shared a set of test-retest multimodal MRI datasets of 46 subjects from both the S1200 release and the Retest release. These subjects were retested using the full HCP 3T multimodal imaging and behavioral protocol. Each subject underwent the four scans on 2 days (two scans per day: Rest1 versus Rest2) during the first visit and returned several months later to finish the four scans on another two days during the second visit (Figure 1B). The test-retest interval ranged from 18 to 328 days (mean: 4.74 months; standard deviation: 2.12 months). Only 41 subjects (28 females, age range: 26–35 years; 13 males, age range: 22–33 years) had full length rfMRI data across all the eight scans, 2 visits × 2 days × 2 (LR and RL encoding directions), and were included in the subsequent analyses. Then we averaged across the RL and LR encodings for each day, so each subject had four repeated measurements in the ICC estimation. This sample size is larger than the minimal sample size (N = 35) for fair reliability with 80% power and significance level of 0.05 based on the above-mentioned test-retest design (four observations per subject) (Bujang & Baharum, 2017). The HCP rfMRI protocols for scanning and preprocessing images have been optimized for reliability.

During the scanning, participants were instructed to keep their eyes open and to let their mind wander while fixating on a cross-hair projected on a dark background. Data were collected at the 3T Siemens Connectome Skyra MRI scanner with a 32-channel head coil. All functional images were acquired using a multiband gradient-echo EPI imaging sequence (2 mm isotropic voxel, 72 axial slices, TR = 720 ms, TE = 33.1 ms, flip angle = 52°, field of view = 208 × 180 mm2, matrix size = 104 × 90 and a multiband factor of 8). A total of 1,200 images was acquired for a duration of 14 min and 24 s. Details on the imaging protocols can be found in Smith et al. (2013).

The protocols of rfMRI image preprocessing and artifact removal procedures are documented in detail elsewhere and generated the minimally preprocessed HCP rfMRI images. Artifacts were removed using the ICA-based X-noiseifier (ICA + FIX) procedure, followed by MS-MAll for intersubject registration. The preprocessed rfMRI data were represented as a time series of grayordinates (4D), combining both cortical surface vertices and subcortical voxels (Glasser et al., 2013).

Node Definition

A brain graph defines a node as a brain area, which is generally derived by an element of brain parcellation (parcel) according to borders or landmarks of brain anatomy, structure, or function as well as an element of volume (voxel) in imaging signal acquisition or a cluster of voxels (Sporns, 2013b). Due to the high computational demand of voxel-based brain graph, in this study, we defined nodes as parcels according to the following brain parcellation strategies (Figure 2A). A surface-based approach has been demonstrated to outperform other approaches for fMRI analysis (Coalson, Van Essen, & Glasser, 2018; Zuo et al., 2013), and thus the nodes are defined in the surface space (total 24 surface parcellation choices). Of note, we adopted a naming convention for brain parcellations as follows: ParcAbbr-NumberOfParcels’ (e.g., LGP-100 or its whole-brain version wbLGP-458).

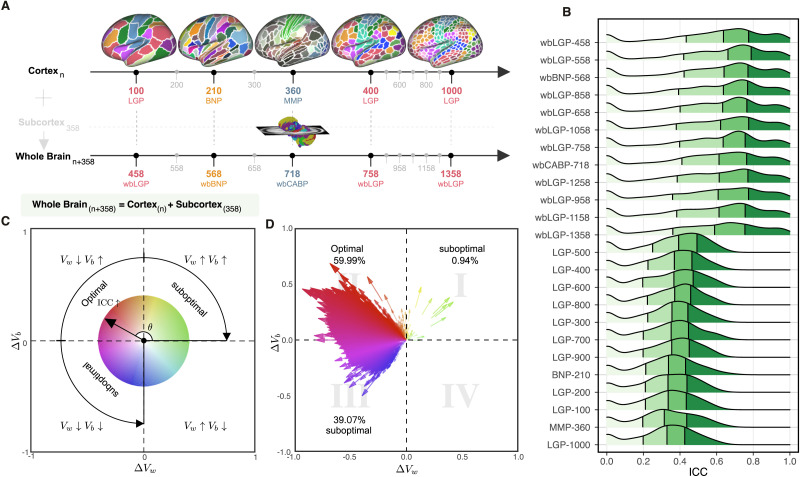

Figure 2. .

Parcellation choices impact measurement reliability and individual variability. (A) Node definitions are derived from the process of spatially partitioning the human cortex and whole brain (including both cortical and subcortical nodes) at various resolutions; see more details of these name abbreviations in Theory and Methods. (B) Density plots are visualized for distributions of the ICCs under the various parcellation choices on node definition. These density distributions are ranked from top to bottom according to decreases of the mean ICCs while the four colors depict the four quantiles. (C) Reliability gradient between any one whole-brain parcellation choice and its corresponding cortical parcellation choice is decomposed into the axis of changes of the between-subject variability (ΔVb) and the axis of changes of the within-subject variability (ΔVw). This gradient can be represented as a vector, which is the black arrow from the origin with an angle θ with the x axis, while the color encodes this angle and the transparency or the length reflects the magnitude of the degree of ICC improvement. According to the anatomy of reliability, the optimal space is in the second quadrant (quadII) while the first and third quadrant (quadI and quadIII) are suboptimal for reliability. (D) The improvement in the reliability of the pipeline, which is defined from the cortical parcellations to the corresponding whole-brain parcellations (including the subcortex), is illustrated by gradient arrows in the plane of individual variability, while controlling for all other processing steps. Each arrow represents a specific global metric, while controlling for all other processing steps. The position of the arrows reflects the magnitude of between- and within-subject variability changes (ΔVb, ΔVw), and the size of the arrows indicates the magnitude of ICC changes.

HCP multimodal parcellation (MMP).

A cortical parcellation generated from multimodal images of 210 adults from the HCP database, using a semiautomated approach (Glasser et al., 2016). Cortical regions are delineated with respect to their function, connectivity, cortical architecture, and topography, as well as expert knowledge and meta-analysis results from the literature (Glasser et al., 2016). The atlas contains 180 parcels for each hemisphere.

Local-global parcellation (LGP).

A gradient-weighted Markov random field model integrating local gradient and global similarity approaches produces the novel parcellations (Schaefer et al., 2018). The final version of LGP comes with a multiscale cortical atlas including 100, 200, 300, 400, 500, 600, 700, 800, 900, and 1,000 parcels (equal numbers across the two hemispheres). One benefit of using LGP is to have nodes with almost the same size, and these nodes are also assigned to the 4acommon large-scale functional networks (Thomas Yeo et al., 2011).

Brainnetome parcellation (BNP).

Both anatomical landmarks and connectivity-driven information are employed to develop this volumetric brain parcellation (Fan et al., 2016). Specifically, anatomical regions defined as in Desikan et al. (2006) are parcellated into subregions using functional and structural connectivity fingerprints from HCP datasets. Cortical parcels are obtained by projecting their volume space to surface space. It is noticed that the original BNP contains both cortical (105 areas per hemisphere) and subcortical (36 areas) regions but only the 210 cortical parcels are included for the subsequent analyses.

Whole-brain parcellation (wb).

Inclusion of subcortical areas has been shown to contain unignorable influences on brain graph analyses (D. Greene et al., 2020; Noble et al., 2019), and we thus also constructed brain graphs with subcortical structures in volume space as nodes by adding these nodes to the cortical brain graphs. To get a high-resolution subcortical parcellation, we adopted the 358 subcortical parcels in Ji et al. (2019). The authors employed data of 337 unrelated HCP healthy volunteers and extended the MMP cortical network partition into subcortex. This results in a set of whole-brain parcellations by combining these subcortical parcels with the aforementioned cortical parcellations, namely wbMMP, wbLGP, and wbBNP. We noticed that the wbMMP-718 has been named by Ji et al. (2019) as the Cole-Anticevic Brain-wide Network Partition, and we thus renamed the wbMMP-718 as wbCABP-718 for consistency.

Edge Construction

After defining the node with each parcellation, in each parcel, regional mean time series were estimated by averaging the vertex time series at each time point. To construct an edge between a pair of nodes, their representative time series entered into the following steps in order: band-pass filtering, internode connectivity transformation, and edge filtering.

Band-pass filtering.

Resting-state functional connectivity studies have typically focused on fluctuations below 0.08 Hz or 0.1 Hz (Biswal, Zerrin Yetkin, Haughton, & Hyde, 1995; Fox & Raichle, 2007) and assumed that only these frequencies contribute significantly to inter-regional functional connectivity while other frequencies are artifacts (Cordes et al., 2001). In contrast, however, other studies have found that specific frequency bands of the rfMRI oscillations make unique and neurobiologically meaningful contributions to resting-state functional connectivity (Salvador et al., 2005; Zuo & Xing, 2014). More recently, with fast fMRI methods, some meaningful FC patterns were reported across much higher frequency bands (Boubela et al., 2013). These observations motivate exploring a range of frequency bands beyond those typically studied in resting-state functional connectivity studies.

Buzsáki and Draguhn (2004) proposed a hierarchical organization of frequency bands driven by the natural logarithm linear law. This offers a theoretical template for partitioning rfMRI frequency content into multiple bands (Figure 3A). The frequencies occupied by these bands have a relatively constant relationship to each other on a natural logarithmic scale and have a constant ratio between any given pair of neighboring frequencies (Buzsáki, 2009). These different oscillations are linked to different neural activities, including cognition, emotion regulation, and memory (Achard, Salvador, Whitcher, Suckling, & Bullmore, 2006; Buzsáki, 2009; Fox & Raichle, 2007). Advanced by the fast imaging protocols offered by the HCP scanner, the short scan interval (TR = 720 ms) allows us to obtain more oscillation classes that the traditional rfMRI method. We incorporate Buzsaki’s framework (Buzsáki & Draguhn, 2004; Penttonen & Buzsáki, 2003) with the HCP fast-TR datasets by using the DREAM toolbox (Gong et al., 2021) in the Connectome Computation System (Xing, Xu, Jiang, Wang, & Zuo, 2022; Xu, Yang, Jiang, Xing, & Zuo, 2015). It decomposed the time series into the six slow bands as illustrated in Figure 3A.

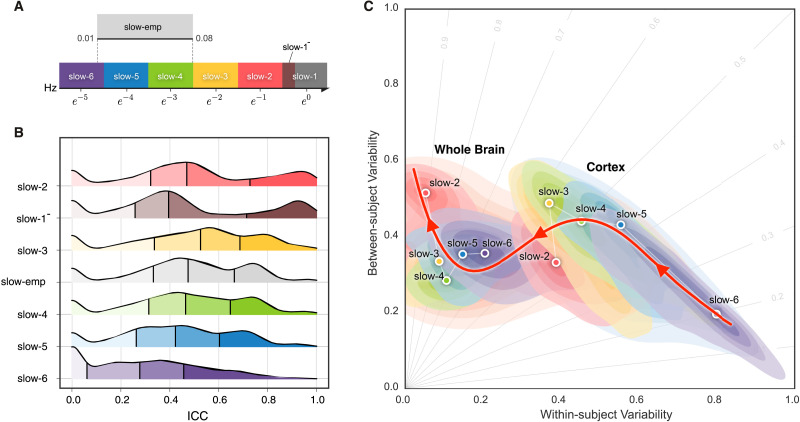

Figure 3. .

Reliability gradient across the slow bands and changes of related individual variability. (A) Classes of frequency bands for slow oscillations derived from the natural logarithm linear law. (B) Density plots are visualized for the ICC distributions under the various frequency bands. These density distributions are ranked from top to bottom according to decreases of the mean ICCs, while the vertical lines depict the four quartiles. (C) Network measurements are projected onto the reliability anatomy plane coordinated by both between- and within-subject variability. These dot plots are fitted into the topographic (contour) maps where the local maxima for each band is labeled as a circle. To highlight the trend of increasing reliability as the frequency band increases, a fourth-order polynomial curve (represented by a red line) is fitted to the frequency contour plot peak points, tracing the reliability flow along slow-to-fast oscillations in the cortex and whole brain.

Connectivity transformation.

For each scan, individual nodal representative time series were band-pass filtered with each of the six frequency bands, and another empirical frequency band, slow-emp (0.01–0.08 Hz). The Pearson’s correlation rij ∈ [−1, 1] between the filtered time series of each pair of nodes i = 1, …, N, j = 1, …, N was calculated (N is the number of nodes). These correlation values provided an estimation on the edge strengths between the two nodes and formed a N × N symmetric correlation matrix R = (rij) for each given subject, scan, parcellation, and frequency band.

Many network metrics are not well defined for negatively weighted connections. In order to ensure that the connection weights are positive only, we applied four types of transformations to the symmetric correlation matrix: the positive (Equation pos), absolute (Equation abs), exponential (Equation exp) and distance-inverse (Equation div) functions, respectively. This avoids the negative values in the internode connectivity matrix W = (wij) where zij = tanh−1 (rij) is Fisher’s z–transformation.

| (pos) |

| (abs) |

| (exp) |

| (div) |

The connectivity matrix represents a set of the node parcels and relational quantities between each pair of the nodes, and will serve as the basis of following edge filtering procedure for generation of the final brain graphs.

Edge filtering.

In a graph, edges represent a set of relevant interactions of crucial importance to obtain parsimonious descriptions of complex networks. Filtering valid edges can be highly challenging due to the lack of ‘ground truth’ of the human brain connectome. To provide a reliable way of building candidate edges, we sampled the following 12 schemes on edge filtering and applied them to the connectivity matrices.

Absolute weight thresholding (ABS).

This approach selects those edges that exceed a manually defined absolute threshold (e.g., correlations higher than 0.5), setting all correlations smaller than 0.5 to 0 (ABS05). This is a simple approach to reconstruct networks (Hagmann et al., 2007).

Proportional thresholding (PROP).

It is a common step in the reconstruction of functional brain networks to ensure equal edge density across subjects (D. Bassett et al., 2009; Rubinov, Sporns, van Leeuwen, & Breakspear, 2009; van den Heuvel et al., 2017). It keeps the number of connections fixed across all individuals to rule out the influence of network density on the computation and comparison of graph metrics across groups. This approach includes the selection of a fixed percentage of the strongest conncections as edges in each individual network or brain graph. Compared to ABS, PROP has been argued to reliably separate density from topological effects (Braun et al., 2012; Ginestet, Nichols, Bullmore, & Simmons, 2011) and to result in more stable network metrics (Garrison, Scheinost, Finn, Shen, & Constable, 2015). This makes it a commonly used approach for network construction and analysis in disease-related studies. Here, we focused on two thresholds that are commonly reported in the literature: 10% (PROP10) and 20% (PROP20).

Degree thresholding (DEG).

The structure of a graph can be biased by the number of existing edges. Accordingly, statistical measures derived from the graph should be compared against graphs that preserve the same average degree, K. A threshold of the degree can be chosen to produce graphs with a fixed mean degree (e.g., K = 5, DEG5), which is the average nodal degrees of an individual graph from a single subject’s scan. Many network neuroscience studies have taken this choice for K = 5 (S. I. Dimitriadis, Laskaris, Del Rio-Portilla, & Koudounis, 2009; Micheloyannis et al., 2006; Milo et al., 2002; Stam, Jones, Nolte, Breakspear, & Scheltens, 2007). We also include the DEG15 for denser graphs of the brain networks.

Global cost efficiency optimization (GCE).

Given a network with a cost ρ, its global efficiency is a function of the cost Eg(ρ), and its GCE is J(ρ) = Eg(ρ) − ρ. Several studies suggested that brain networks, in particular those with small-world topology, maximize their global cost efficiency (D. S. Bassett et al., 2008), that is, Jmax = maxρ J(ρ). Computationally, this scheme is implemented by looping all network costs (e.g., adding edges with weights in order) to find the Jmax where the corresponding edge weight was determined as the threshold for edge filtering. In this sense, GCE is an individualized and optimized version of ABS, PROP, and DEG, while the latter three are commonly employed with a fixed threshold for all individuals.

Overall efficiency cost optimization (ECO).

Both global and local efficiency are important graph features to characterize the structure of complex systems in terms of integration and segregation of information (Latora & Marchiori, 2001). ECO was proposed to determine a network density threshold for filtering out the weakest links (De Vico Fallani, Latora, & Chavez, 2017). It maximizes an extension of Jmax, the ratio between the overall (both global and local) efficiency and its wiring cost maxρ Jext(ρ) = (Eg(ρ) + Eloc(ρ))/ρ, where Eloc denotes the network local efficiency. The study (Latora & Marchiori, 2001) also demonstrated that, to maximize J, these networks have to be sparse with an average node degree K ≃ 3.

Minimum spanning tree (MST).

This is an increasingly popular method for identifying the smallest and most essential set of connections while ensuring that the network forms a fully connected graph (Guo, Qin, Chen, Xu, & Xiang, 2017; Meier, Tewarie, & Van Mieghem, 2015; Otte et al., 2015; van Nieuwenhuizen et al., 2018). The tenet of using MST is to summarize information and index the structure of the graph, and thus remove edges with redundant information (Mantegna, 1999). Specifically, an MST filtered graph will contain N nodes connected via N − 1 connections with minimal cost and no loops. This addresses key issues in existing topology filtering schemes that rely on arbitrary and user-specified absolute thresholds or densities.

Orthogonal minimum spanning tree (OMST).

This topological filtering scheme was proposed recently (S. Dimitriadis, Antonakakis, Simos, Fletcher, & Papanicolaou, 2017) to maximize the information flow over the network versus the cost by selecting the connections via the OMSTs. It samples the full-weighted brain network over consecutive rounds of MST that are orthogonal to each other. Practically, we extracted the first MST, and then we cleared their connections and we tracked the second MST from the rest of the network connections, and so forth. Such an iterative procedure (stopped by the Mth MST) can get orthogonal MSTs and topologically filter brain network by optimizing the GCE under the constrains by the MST, leading to an integration of both GCE and MST:

Planar maximally filtered graph (PMFG).

The idea underneath PMFG (Tumminello, Aste, Di Matteo, & Mantegna, 2005) is to filter a dense matrix of weights by retaining the largest possible subgraph while imposing global constraints on the derived network topology. Edges with the strong connection weights are retained while constraining the subgraph to be a (spanning) tree globally. Similarly, during the PMFG construction, the largest weights are retained while constraining the subgraph to be a planar graph globally. The PMFG algorithm searches for the maximum weighted planar subgraph by adding edges one by one. The resulting matrix is sparse with 3(N − 2) edges. It starts by sorting all the edges of a dense matrix of weights in nonincreasing order and tries to insert every edge in the PMFG. Edges that violate the planarity constraint are discarded.

Triangulated maximally filtered graph (TMFG).

The algorithm for implementing PMFG is computationally expensive, and is therefore impractical when applied to large brain networks (Massara, Di Matteo, & Aste, 2016). A more efficient algorithm, TMFG, was developed that exhibited greatly reduced computational complexity compared to PMFG. This method captures the most relevant information between nodes by approximating the network connectivity matrix with the endorsement association matrix and minimizing spurious associations. The TMFG-derived network contains three-node (triangle) and four-node (tetrahedron) cliques, imposing a nested hierarchy and automatically generates a chordal network (Massara et al., 2016; Song, Di Matteo, & Aste, 2012). Although TMFG is not widely applied in network neuroscience studies, it has been applied elsewhere and proven to be a suitable choice for modeling interrelationships between psychological constructs like personality traits (Christensen, Kenett, Aste, Silvia, & Kwapil, 2018).

Orthogonal TMF graph (OTMFG).

To combine both the TMFG’s efficiency and OMST’s accuracy, we propose OTMFG to maximize the information flow over the network versus the cost by selecting the connections of the orthogonal TMFG. It samples the full-weighted brain network over consecutive rounds of TMFG that are orthogonal to each other.

In summary, as illustrated in Figure 4A, the 12 edge filtering schemes transform a fully weighted matrix into a sparse matrix to represent the corresponding brain network. They can be categorized into two classes: threshold-based versus topology-based schemes. ABS05, PROP10, PROP20, DEG5, DEG15, ECO, and GCE rely on a threshold for filtering and retaining edges with higher weights than the threshold. These schemes normally ignore the topological structure of the entire network and can result in isolated nodes. In contrast, the topology-based methods including MST, OMST, PMFG, TMFG, and OTMFG all consider the global network topology in determining which edges to retain. As illustrated in Figure 4B, all the schemes are plotted in the ρ − Jmax plane for their network economics.

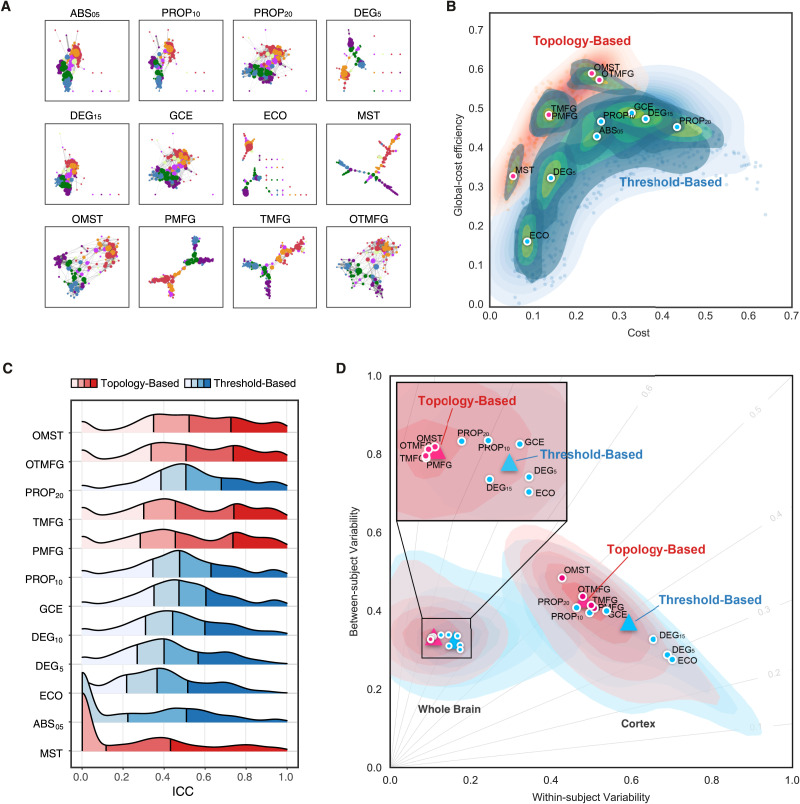

Figure 4. .

Edge filtering schemes and their networking performance. (A) Twelve schemes of filtering edge are applied to an individual connectivity matrix, resulting in the 12 brain networks with their nodes colored as the Yeo2011-7Networks (Thomas Yeo et al., 2011). (B) Global cost efficiency are plotted against network wiring costs of all the brain networks derived with the 12 edge filtering schemes from all the individual rfMRI scans. Red dots represent the topology-based, while blue dots are for threshold-based networks. These dot plots are fitted into the topographic (contour) maps where the local maxima for each filtering choice is labeled as a circle. (C) Density plots are for ICC distributions under various the 12 edge filtering schemes. These density distributions are ranked from top to bottom according to decreases of the mean ICCs, while the two colors depict the topology-based and threshold-based schemes. Four quartiles were indicated by vertical lines. (D) Network measurements are projected onto the reliability anatomy plane coordinated by both between- and within-subject variability. Red dots represent the topology-based, while blue dots are for threshold-based networks. The topographic (contour) maps fit the dots and label the local maxima as a circle for each scheme and the global maxima as a triangle for the topology and threshold groups, respectively.

Network Analysis

We performed graph theory–driven network analysis by calculating several common graph-based metrics for the resulting graphs. These measures, broadly, can be interpreted based on whether they characterize the extent to which network structure allows for integrated or segregation information flow. Examples of integrative measures include average shortest path length (Lp), global efficiency (Eg), and pseudo diameter (D). Segregation measures include clustering coefficient (Cp), local efficiency (Elocal), transitivity (Tr), modularity (Q), and a suite of nodal centrality measures (Table 1). All the metrics are calculated using the Brain Connectivity Toolbox (Rubinov & Sporns, 2010). We employed graph-tool (https://graph-tool.skewed.de) and NetworKit (https://networkit.github.io) to achieve high performance comparable (both in memory usage and computation time) to that of a pure C/C++ library.

Table 1. .

A list of the employed network metrics derived with graph theory

| Measure | Attribute | Symbol | Reference | |

|---|---|---|---|---|

| Global | Integration | Global efficiency of the network | Eg | Latora & Marchiori, 2001 |

| Average shortest path length of the network | Lp | Watts & Strogatz, 1998 | ||

| Pseudo diameter of the network | D | Bouttier, Di Francesco, & Guitter, 2003 | ||

| Segregation | Clustering coefficient of the network | Cp | Watts & Strogatz, 1998 | |

| Local efficiency of the network | Elocal | Latora & Marchiori, 2001 | ||

| Modularity of the network | Q | Newman, 2004 | ||

| Transitivity of the network | Tr | Newman, 2003 | ||

| Nodal | Centrality | Local characteristic path length of nodes | Lpi | Watts & Strogatz, 1998 |

| Efficiency of nodes | Enodal,i | Latora & Marchiori, 2001 | ||

| Local efficiency of nodes | Elocal,i | Latora & Marchiori, 2001 | ||

| Clustering coefficient of nodes | Cpi | Watts & Strogatz, 1998 | ||

| Pagerank centrality of nodes | Pci | Page, Brin, Motwani, & Winograd, 1999 | ||

| Degree centrality of nodes | Dci | Pastor-Satorras, Vázquez, & Vespignani, 2001 | ||

| Eigenvector centrality of nodes | Eci | Newman, 2008 | ||

| Resolvent centrality of nodes | Rci | Estrada & Higham, 2010 | ||

| Subgraph centrality of nodes | Sci | Estrada & Rodriguez-Velazquez, 2005 | ||

| Betweenness centrality of nodes | Bci | Freeman, 1978 |

RESULTS

Whole-brain Networks Are More Reliable Than Cortical Networks

We evaluated reliability based on 24 different parcellation choices (Figure 2A). In the following parts of the paper, we name a parcellation as ‘ParcAbbr-NumberOfParcels’ (e.g., LGP-100 or its whole-brain version wbLGP-458). We found significant differences in ICC distributions across the 24 parcellation choices (Figure 2B, Friedman rank sum test: χ2 = 20379.07, df = 23, p < 2.2 × 10−16, effect size WKendall = 0.377). The mean ICCs range from slight (LGP-1000) to substantial (wbLGP-458). Given a particular parcellation and definition of nodes, we illustrate the density distribution of its ICCs under all other strategies (edge definition and metric derivation). Notably, whole-brain parcellations yield higher measurement reliability than parcellations of cerebral cortex on their own (the effect sizes > 0.65). This improvement in reliability seems not simply a by-product of having more parcels. We chose the parcellations in which the number of parcels (400 ≤ n ≤ 1,000) almost overlapped between the cortex and the whole brain, and found no correlation between the number of parcels and the median ICCs (r = −0.11, p = 0.7). We report the mean ICC and the number of almost perfect (noap) ICCs (≥0.8) as the descriptive statistics for the density distributions. The wbLGP-458 (mean ICC: 0.671; noap ICC: 519), wbLGP-558 (mean ICC: 0.671; noap ICC: 540) and The wbBNP-568 (mean ICC: 0.664; noap ICC: 511) are the three most reliable choices (see more details of the post hoc Wilcoxon signed rank test in Supporting Information Table S7). Among the cortical parcellations, the LGP-500 (mean ICC: 0.362; noap ICC: 0), LGP-400 (mean ICC: 0.342; noap ICC: 0), and LGP-600 (mean ICC: 0.340; noap ICC: 0) are the three most reliable choices (Supporting Information Table S3).

To better understand the effect of introducing 358 subcortical parcels into the cortical parcellations, we decomposed the reliability changes into a two-dimensional representation of changes of individual variability (Figure 2C, and D). This idea was motivated by the analysis of reliability derived with individual variability (Xing & Zuo, 2018; Zuo, Xu, & Milham, 2019b) as in Figure 1C. For each ICC under a given parcellation choice, we calculated the related between-subject variability Vb and within-subject variability Vw. Changes in the individual variability associated with the reliability improvements from cortical to whole-brain pipelines were plotted along with ΔVb and ΔVw as arrows. These arrows are distributed across the three quadrants (quadI: 0.94%; quadII: 59.99%; quadIII: 39.07%). We noticed that most of these arrows were distributed into the optimal quadrant where the improvements of test-retest reliability by the whole-brain parcellation choices largely attributing to the increases of between-subject variability and decreases of within-subject variability. The decreases of both between-subject and within-subject variability may also strengthen the measurement reliability (the suboptimal quadIII in Figure 2).

Spontaneous Brain Activity Portrays More Reliable Networks in Higher Slow Bands

Brain oscillations are hierarchically organized, and their frequency bands were theoretically driven by the natural logarithm linear law (Buzsáki & Draguhn, 2004). By analogy, rfMRI oscillations can, similarly, be partitioned into distinct frequency bands. Advanced by the fast imaging protocols (TR = 720 ms), HCP test-retest data allow to obtain more oscillation classes than traditional rfMRI acquisitions (typical TR = 2 s). We incorporate Buzsaki’s framework with the HCP dataset using the DREAM toolbox (Gong et al., 2021) in the Connectome Computation System to decompose the time series into the six slow bands (Figure 3A): slow-6 (0.0069–0.0116 Hz), slow-5 (0.0116–0.0301 Hz), slow-4 (0.0301–0.0822 Hz), slow-3 (0.0822–0.2234 Hz), slow-2 (0.2234–0.6065 Hz), and slow-1− (0.6065–0.6944 Hz).

We noticed that, due to the limited sampling rate (TR), this slow-1− only covers a small part of the full slow-1 band (0.6065–1.6487 Hz) – we indicate this above. We also included the frequency band, slow-emp (0.01–0.08 Hz) for the sake of comparison, as it is covers a range commonly used in rfMRI studies. A significant effect on order (χ2 = 9283.536, df = 6, p < 2.2 × 10−16, WKendall = 0.192) across the frequency bands was revealed based on the density distributions of ICC (Figure 3B): slow-2, slow-1−, slow-3, slow-emp, slow-4, slow-5, and slow-6. Post hoc paired tests indicated that any pairs of neighboring bands are significantly different from one another, with measurement reliability increasing with faster frequency bands. Note, however, that slow-1− (mean ICC: 0.564) did not fit into this trend, possibly due to its limited coverage of the full band. But remarkably, slow-1− exhibited the largest number of almost prefect ICCs for potential reliability (noap ICC: 1746). Slow-emp (mean ICC: 0.519; noap ICC: 434) contains overlapping frequencies with both slow-4 (mean ICC: 0.560; noap ICC: 441) and slow-5 (mean ICC: 0.494; noap ICC: 285), and higher ICCs than the two bands but the effect sizes are small to moderate (slow-emp vs. slow-4: 0.193; slow-emp vs. slow-5: 0.485). Slow-6 is the choice with the lowest ICCs (mean ICC: 0.331; noap ICC: 154) compared to other bands (large effect sizes: r > 0.57).

To visualize reliability variation across frequency bands, we plotted a trajectory tracing reliability flow along the five full (slow-6 to slow-2) bands in the reliability plane, whose axes correspond to between-versus within-subject variability (Figure 3C). As expected, this nonlinear trajectory contains two stages of almost linear changes of the network measurement reliability from slow to fast oscillations: whole brain versus cortex. In each case, the reliability improvements attribute to both increases of between-subject variability and decreases of within-subject variability while the improvements of whole-brain network measurement reliability were largely driven by the increased variability between subjects.

Topological Economics Individualize Highly Reliable Functional Brain Networks

Estimating functional connections can be highly challenging due to the absence of a ‘ground truth’ human functional connectome. To provide a reliable way of building candidate edges of the connections, we sampled the 12 schemes on graph edge filtering (Figure 4A), which turn a fully connected matrix into a sparse graphical representation of the corresponding brain network. These schemes can be categorized into two classes: threshold-based versus topology-based schemes. Threshold-based schemes usually use a threshold to preserve those edges whose strengths are above a cutoff value, such as ABS05, PROP10, PROP20, DEG5, and DEG15. Threshold-based schemes are widely used in network neuroscience and ignore the intrinsic topological structure of the entire brain network (e.g., leading to multiple connected components or isolated nodes). In contrast, topology-based schemes such as MST, OMST, PMFG and TMFG come from other scientific disciplines and are optimized based on the entire network topology (see Materials and Methods). To combine both the TMFG’s efficiency and OMST’s accuracy, we proposed the OTMFG. All the schemes are plotted in the plane of cost versus global cost efficiency to better visualize the economical properties of the derived networks (Figure 4B). These plots are fitted into the topographic (contour) maps where the local maxima for each filtering choice is labeled as a circle. The human brain networks achieve higher global efficiency with lower cost by using topology-based schemes compared to threshold-based schemes, suggesting increasingly optimal economics.

Significant differences in test-retest reliability were detectable across these 12 edge filtering schemes (χ2 = 9784.317, df = 11, p < 2.2 × 10−16, WKendall = 0.189, see Figure 4C). Among the topology-based schemes, OMST (mean ICC: 0.608; noap ICC: 765), OTMFG (mean ICC: 0.602; noap ICC: 781), and TMFG (mean ICC: 0.570; noap ICC: 767) were the three most reliable choices. They showed significantly greater reliability than the three most reliable threshold-based, respectively: PROP20 (mean ICC: 0.593; noap ICC: 632), PROP10 (mean ICC: 549; noap ICC: 445), and GCE (mean ICC: 0.533; noap ICC: 352). Mean reliability of MST are slight to fair (mean ICC: 0.309) but its number of almost perfect reliability (noap ICC:362) is still higher than all threshold-based schemes except PROP10 and PROP20.

Network measurements are labeled based on topology and threshold groups and projected onto the reliability anatomy plane, whose axes represent between- and within-subject variability (Figure 4D). The contour maps are reconstructed for each scheme based upon the individual variability of all the related network measurements. The topology-based methods (red) showed overall higher ICCs than the threshold-based methods (blue), improvements that could be attributed to increases in between-subject variability and decreases of within-subject variability. These observations are consistent between cortex and whole-brain networks while topology-based whole brain network are almost perfectly reliable (meaning almost perfect reliability, i.e., ICC ≥ 0.8).

We also explored connection transformation and edge weights, two factors included in edge filtering, the choices of connectivity transformation, and weighing edges, regarding their measurement reliability. Positive (Equation pos) (mean ICC: 0.512; noap ICC: 1,031) and exponential (Equation exp) transformation (mean ICC: 0.509; noap ICC: 1,855) were the two most reliable choices. Comparing to the positive and absolute (Equation abs) (mean ICC: 0.508; noap ICC: 1,050) transformation, the exponential and distance-inverse (Equation div) (mean ICC: 0.500; noap ICC: 1,031) transformation show larger number of almost perfect ICCs. Weighted graphs are also more reliable than the binary graphs, while the normalized weighted graphs demonstrated the highest ICCs, reflecting both the increased between-subject variability and decreased within-subject variability.

Network Integration and Segregation Can Serve Reliable Metrics of Information Flow

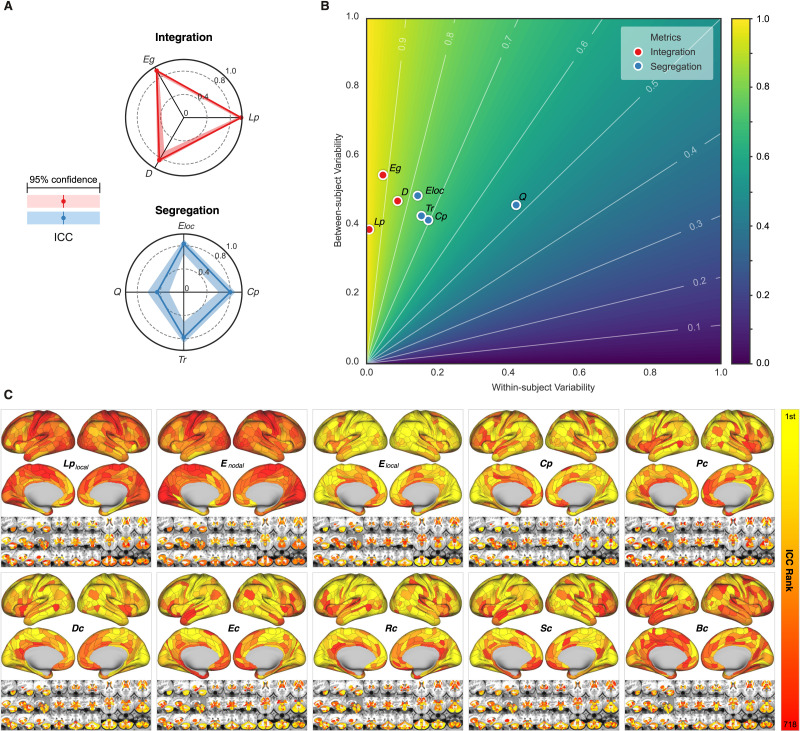

The previous extensive data analysis suggests that the optimally reliable pipeline should (1) define network nodes using a whole-brain parcellation, (2) filter the time series with higher frequency bands, (3) transform the connectivity using positive transformation, and (4) construct network edges using topology-based methods and normalized weights. Using the optimal pipelines, we evaluated the reliability levels of various metrics from network neuroscience and their differences across individuals. Focusing on the optimized pipeline with the highest ICCs of the various choices (wbLGP-458, slow-2, pos, normalized weights, OMST), we reported test-retest reliability of the measurements as well as their corresponding individual variability. In Figure 5A, we found that the global network measurements of information segregation and integration are at the level of almost perfect reliability except for the modularity Q (ICC = 0.46, 95% CI = [0.252, 0.625]). These high-level ICCs are derived with large between-subject variability and small within-subject variability (Figure 5B). These findings are reproducible across the other two parcellation choices (wbCABP-718, wbBNP-458). In consideration of “Ease-of-Use” for researchers and higher cortical resolution, we mapped the “Out-of-the-Box” Cole-Anticevic Brain-wide Network Partition (wbCABP-718) for nodal metrics visualization.

Figure 5. .

Measurement reliability and variability of global/nodal network metrics under the optimized pipeline. (A) Spider plots are visualized for ICCs (test-retest) with the 95% confidence intervals (CIs, shadow bands) for the global metrics of network integration, segregation. Integrative measures include average shortest path length (Lp), global efficiency (Eg), and pseudo diameter (D). Segregation measures include clustering coefficient (Cp), local efficiency (Eloc), transitivity (Tr), and modularity (Q). (B) The reliability anatomy was plotted as a function of between-subject variability (Vb) and within-subject variability (Vw). (C) Ranks of ICCs across the 360 cortical parcels and the 358 subcortical parcels in the optimal pipeline (wbCABP-718, slow-2, pos, OMST) are depicted. Ten nodal metrics are assessed including local characteristic path length of node (Lplocal), nodal efficiency (Enodal), local efficiency of nodes (Elocal), nodal clustering coefficient (Cp), pagerank centrality (Pc), degree centrality (Dc), eigenvector centrality (Ec), resolvent centrality (Rc), subgraph centrality (Sc), and betweeness centrality (Bc).

Similar to the global metrics, shortest path length Lp and nodal efficiency Enodal exhibited the highest ICCs (almost perfect test-retest reliability), while ICCs of other nodal metrics remained less than 0.6. To visualize node-level network metrics, we reported results derived from the wbCABP-718 choice. To improve spatial contrasts of reliability, we ranked the parcels according to their ICCs and visualized the ranks in Figure 5C. Most nodal metrics are more reliable across the 360 cortical areas than the 358 subcortical areas (Wilcoxon tests: all p values less than 0.001, corrected for multiple comparisons). However, Lp, Enodal, and Bc exhibited higher across subcortical areas than cortical areas (corrected p < 0.001). Across the human cerebral cortex, the right hemispheric areas demonstrated more reliable Cp (corrected p < 0.0036) than the left hemispheric areas. Interesting patterns of the reliability gradient are also observable along large-scale anatomical directions (dorsal > ventral, posterior > anterior) across the nodal metrics of information segregation and centrality. These spatial configuration profiles on the reliability reflected their correspondence on interindividual variability of these metrics, characterising the network information flow through the slow-2 band.

Building An Open Resource for Reliable Network Neuroscience

The results presented here represent huge costs in terms of computational resources (more than 1,728,000 core-hours on CNGrid), supported by Chinese Academy of Sciences (https://cscgrid.cas.cn). Derivations of the ICCs and their linear mixed models were implemented in R and Python. As is our practice in open science, we have started to provide an online platform on the reliability assessments (https://ibraindata.com/research/ifNN/reliabilityassessment). The big reliability data were designed into an online database for providing the community a resource to search reliable choices and help with final decision-making. The website for this online database provided more details of the reliability data use. We shared all the codes, figures, and other reliability resources via the website (https://ibraindata.com/research/ifNN/database).

DISCUSSION

This study examined the series of processing and analysis decisions in constructing graphical representations of brains’ intrinsic spontaneous activity. The focus, here, was on identifying the pipeline that generated reliable, individualized networks and network metrics. The results of our study suggest that to derive reliable global network metrics showing higher interindividual variances and lower intraindividual variances, one should use whole-brain parcellations to define network nodes, focus on higher frequencies in the slow band for time series filtering to derive the connectivity, and use the topology-based methods for edge filtering to construct sparse brain graphs. Regarding network metrics, multilevel or multimodal metrics appear more reliable than single-level or single-model metrics. Deriving reliable measurements is critical in network neuroscience, especially for translating network neuroscience into personalized practice. Based on these results, we provide four principles toward optimal functional network neuroscience for reliability of measuring individual differences.

Principle I: Use A Whole Brain Parcellation to Define Network Nodes

The basic unit of a graph is the node. However, variability across brain parcellations can yield different graphs, distorting network metrics and making it difficult to compare findings across studies (Wang et al., 2009; Zalesky et al., 2010). In many clinical applications (Fornito et al., 2015; Matthews & Hampshire, 2016) researchers aim to identify disease-specific connectivity profiles of the whole brain, including cortical and subcortical structures, as well as cerebellum. A recent review has raised the concern that many studies have focused on restricted sets of nodes, for example, cortex only, and has called for a field standard for the best practices in clinical network neuroscience (Hallquist & Hillary, 2019), which requires almost perfectly reliable measurements (Xing & Zuo, 2018). Our meta-reliability assessments revealed such high reliability of measurements made involving functional brain networks can be achieved, through the inclusion of high-resolution subcortical nodes. This provides strong evidence that whole-brain node use should be part of the standard analysis pipeline for network neuroscience applications. These improvements of reliability can be attributed to increases in between-subject variability coupled with reductions in within-subject variability relative to networks of cortical regions alone. One possible neuroanatomical explanation is that distant areas of cerebral cortex are interconnected by the basal ganglia and thalamus while also communicating with different regions of the cerebellum via polysynaptic circuits, forming an integrated connectome (Bostan & Strick, 2018). These subcortical structures have been suggested to play a role in both primary (e.g., motor) and higher order function (e.g., learning and memory), while studies using rfMRI have delineated the resting-state functional connectivity (RSFC) maps between these subcortical structures and cortical networks of both primary and high-order functions. Interestingly, a recent work revealed that interindividual variance in cerebellar RSFC networks exceeds that of cortex (Marek et al., 2018). Meanwhile, these RSFC maps are highly individualized and stable within individuals (D. Greene et al., 2020), indicating that they possess reliable characteristics. In line with our observations, we argue that inclusion of the subcortical structures as network nodes can enhance the between-subject variability and stabilize the within-subject variability by providing more comprehensive measurements on the entirety of the brain connectivity.

Principle II: Generate Functional Networks using Spontaneous Brain Activity in Multiple Slow Bands

It has been a common practice in rfMRI research to estimate the RSFC profile based on BOLD time series of the intrinsic spontaneous brain activity from the low frequency (0.01–0.1 Hz or 0.01–0.08 Hz). However, the test-retest reliability of RSFC measurements derived from this frequency band has been limited, with ICCs less than 0.4 (Noble et al., 2019; Zuo & Xing, 2014). Existing studies, however, have advocated adopting a multifrequency perspective to examine the amplitude of brain activity at rest (Zuo et al., 2010) and its network properties (Achard et al., 2006). This approach has been spurred along by recent advances in multibanded acquisitions and fast imaging protocols, offering rfMRI studies a way to examine spontaneous brain activity at much higher frequencies that may contain neurobiologically meaningful signals (Gong et al., 2021). Our study provides strong evidence of highly reliable signals across higher slow-frequency bands, which are derived with the hierarchical frequency band theory of neuronal oscillation system (Buzsáki & Draguhn, 2004). Specifically, a spectrum of reliability increases was evident from slow bands to fast bands. This reflects greater variability of the network measurements between subjects and less measurement variability within subject between the higher and lower bands of the slow frequencies. In theory, each frequency band has an independent role in supporting brain function. Lower frequency bands are thought to support more general or global computation with long-distance connections to integrate specific or local computation, which are driven by higher slow bands based on short-distance connections (Buzsáki, 2009). Our findings of high reliability (interindividual differences) are perfectly consistent with this theory from a perspective of individual variability. Previous findings have found that high-order associative (e.g., default mode and cognitive control) networks are more reliable than the primary (e.g., somatomotor and visual) networks (Noble et al., 2019; Zuo & Xing, 2014; Zuo, Xu, & Milham, 2019b). A novel frequency-based perspective on these network-level individual differences can be inspired directly by our observations on the multiple bands.

Principle III: Optimize Topological Economy to Construct Network Connections at Individual Level

There is no gold standard on for human functional connectomes, leading to a plurality of approaches for inferring and constructing brain network connections. Threshold-based methods focus on the absolute strength of connectivity, retaining connections that are above some user-defined threshold and oftentimes involve applying the same threshold to all subjects. Although this approach mitigates potential biases in network metrics associated with differences in network density, it may inadvertently also lead to decreased variability between subjects. This is supported by our results showing that threshold-based methods yield low reliability of network measurements. On the other hand, the human brain is a complex network that is also near optimal in terms of connectional economy, balancing trade-offs of cost with functionality (Bullmore & Sporns, 2012). In line with this view, certain classes of topology-based methods for connection definition may hold promise for individualized network construction. Specifically, each individual brain optimizes its economic wiring in terms of cost and efficiency, reaching a trade-off between minimizing costs and allowing the emergence of adaptive topology. Our results demonstrate that such highly individualized functional connectomes generated by the topology-based methods are more reliable than those generated by the threshold-methods. This reflects the increase of individual differences in functional connectomes attributing to the optimal wiring economics at individual level. The topological optimization also brings other benefits such as ensuring that a graph forms a single connected component and preserving weak connections. Indeed, there is increasing evidence supporting the hypothesis that weak connections are neurobiologically meaningful and explain individual differences in mind, behavior, and demographics as well as disorders (Santarnecchi, Galli, Polizzotto, Rossi, & Rossi, 2014). Weak connections in a graph may be consistent across datasets and reproducible within the same individual over multiple scanning sessions and therefore be reliable. Weak connections might also play nontrivial roles in transformed versions of the original brain network, for example, so-called “edge-based functional connectivity” (Faskowitz, Esfahlani, Jo, Sporns, & Betzel, 2020). Among these topology-based methods, MST is the simplest and most promising filtering method if computational efficiency is the priority. MST can obtain a graph with the same number of nodes and edges, and it is not sensitive to scaling effects, because its structure only depends on the order rather than the absolute values of the edges. Although MST loses some local network measurements due to the limited number of edges, it has some other unique metrics that can be calculated (e.g., leaf fraction, tree hierarchy). A better alternative might be TMFG, which is computationally very efficient and statistically robust, while the OMST and OTMFG are the most reliable choices by prioritizing significant individual differences.

Principle IV: Characterize Information Flow with Both Network Integration and Segregation Metrics

Intrinsic functional networks reflect the outcomes of communication processes and information flows between pairs of brain regions. How the information and other signals propagate between pairs of brain regions can be assayed using network neuroscientific metrics and is essential to understanding normative connectome function and its variation in clinical settings. While the ground truth of functional connectome remains unknown (and may not exist), network models can help validate the imaging-based reconstructions of human functional networks (D. Bassett et al., 2020). From a perspective of individual differences, reliable network measures are the basis of achieving valid ifNN measurements (Zuo, Xu, & Milham, 2019b). Our findings indicated that both the brain network segregation and integration could be reliably measured with functional connectomics using rfMRI by the optimized pipelines. At the global level, measures of information integration, for example, characteristic path length and efficiency, were more reliable than those of information segregation, for example, modularity and clustering coefficient. Our results also revealed that measures of integration were more stable across different scan sessions (i.e., the test-retest) for an individual subject than the segregation measurements, while interindividual variability are measured at the similar level for both integration and segregation metrics. At the nodal level, mapping reliability of the network measurements revealed interesting spatial patterns. Specifically, we found that cortical areas were generally associated with more reliable local measurements compared to subcortical areas. This may reflect different functional roles for human cortex and subcortex. For example, the differences in reliability of path-based metrics might reflect the fact that there are more cortical within-community paths, while between-community paths are more common in subcortex. Beyond this cortical-subcortical gradient, reliability of the nodal information flow also fit the left-right asymmetry and dorsal-ventral as well as posterior-anterior gradient, implying the potential validity of individual differences in information flow attributing to evolutionary, genetic, and anatomical factors (Chen et al., 2013; Rakic, 2009). To facilitate the utility of reliable network integration and segregation metrics in ifNN, we integrated all the reliability resources into an online platform for reliability queries on specific metrics of information flow (https://ibraindata.com/research/ifNN).

Reproducibility and Generalizability

Both reproducibility and generalizability are cornerstones of modern sciences, and remain challenging as a scientific research frontier (Munafo et al., 2017; Yarkoni, 2022). In this research, we adopt a big data approach by deeply sampling the parameters (more than 524k parametric settings) of various steps in the network construction and analysis pipeline to systematically explore the reliability of functional brain network measurements. This provided robust experimental evidence supporting four key principles that will foster optimal ifNN research and application. These principles can serve as the basis for building guidelines on the use of ifNN to map individual differences. Standard guidelines are essential for improvements of reproducibility and generalizability in research practice, and our work provides basic resources, initiating such standardization in future network neuroscience. We note, however, that while our approach was extensive, it was not exhaustive (likely impossible)—the analytical sampling procedure could miss many other existing choices. The processing decisions that yield reliable connectomic measurements may yield the most reliable network statistics, but there may be another way to process data that yields overall a higher level of reliability in network measures. Regarding the statistical benefits of our sampling analytics in the parametric space of the ifNN pipelines, we discuss below the implications of the present research for reproducible and generalizable network neuroscience.

Reliability and validity.

Pipelines of generating highly reliable measurements are central to the experimental design of studying individual differences (Matheson, 2019; Zuo, Xu, & Milham, 2019b). Given a statistical power, for a fixed sample size, experiments designed with more reliable pipelines can detect bigger effects of interests. On the other side, to detect a fixed effect size, experiments designed with more reliable pipelines would be more powerful or logistically economical (e.g., needless samples). This has very important implications on the recent arguments about “big data versus small data” (Marek et al., 2022; Rosenberg & Finn, 2022; Tibon, Geerligs, & Campbell, 2022), which must take the reliability into account when first designing an experiment (Gratton, Nelson, & Gordon, 2022), and has been increasingly appreciated by the field of network neuroscience (Helwegen, Libedinsky, & van den Heuvel, 2023). From a perspective of experimental design, reliability is more straightforward to reproducibility but validity is related to generalizability. Therefore, we clarify that the measurement reliability is not the final goal but the validity (Finn & Rosenberg, 2021; Noble et al., 2021), which is not easily ready for a direct examination as a reliability assessment (Zuo, Xu, & Milham, 2019b). The reliable pipeline we proposed produced biologically plausible findings according to the four principles we discussed, likely reflecting its potential validity of measuring individual differences in intrinsic brain functional organization. Validation on the use of our proposed principles represents a promising arena for fostering future network neuroscience studies such as personality (Hilger & Markett, 2021) or brain developmental charts (Bethlehem et al., 2022), with potential novel fMRI paradigms (Elliott, Knodt, & Hariri, 2021b; Finn, Glerean, Hasson, & Vanderwal, 2022) or more precise neuroimaging technology (Toi et al., 2022).

Reliability does not necessarily equate to but indeed provides an up bound of validity. In some cases, increasing reliability may cause a decrease in validity, particularly if the sources of reliability are not related to the underlying construct of interests. For example, physiological noise and head motion can be highly reliable as biological traits, but may not be involved in the investigated cognitive processes. Previous studies have shown that head motion can introduce artifacts into the fMRI data, which can affect the reliability of functional connectivity measures (Power et al., 2014). In particular, head motion may have a nonuniform impact on different edge filtering methods and network metrics. Certain methods that rely on the strength of functional connections, such as threshold-based approaches, may be more sensitive to head motion artifacts than topology-based methods that focus on the overall structure of the network. Measures that are highly reliable due to the inclusion of these contaminants may not be valid indicators of the underlying construct. However, head motion may not always be purely noise and may contain some neurobiologically meaningful signals (Zeng et al., 2014; Zhou et al., 2016). Therefore, it is important to carefully consider the potential impact of head motion when choosing an edge filtering method and interpreting the resulting functional connectivity measures, as well as the trade-off between controlling for motion artifacts and preserving potentially meaningful signals in the data. In the context of graph theory, noise can affect both the reliability and validity of graph metrics. For example, noise in the data can result in higher reliability of certain graph metrics, but this may not necessarily reflect the true underlying network structure. This is because noise may lead to inflated correlations between certain regions, resulting in overestimations of network connectivity and thus higher reliability. However, these measurements may not be valid indicators of the true network structure and may not accurately reflect the underlying cognitive processes being studied. On the other hand, the removal of noise may lead to decreased reliability, but may improve the validity of the measurement by reducing the influence of unrelated sources of variance. The optimal choices for maximizing reliability in our study may also have implications for interpretability and generalizability. For example, the inclusion of subcortical structures in the parcellation scheme may increase the interpretability of the results, as these structures play a key role in the functional organization of the brain. On the other hand, the choice of connectivity transformation and edge weighting may have implications for the generalizability of the results, as different methods may produce different results depending on the specific characteristics of the data. Further research is warranted to fully understand the consequences of these choices on interpretability, generalizability, and other aspects of the measurement process.

The rfMRI datasets minimally preprocessed by the HCP pipeline are employed for our study while many different pipelines are available for rfMRI data preprocessing (see a list of pipelines in Xu et al., 2015). These different pipelines vary across parametric settings and orders of various steps of preprocessing, and thus can have different impacts on the reliability of measuring spontaneous brain activity (Li et al., 2022). Therefore, it is very important to validate whether the present findings are reproducible under another preprocessing pipeline. Accordingly, we repeated our analyses by leveraging another widely accepted preprocessing pipeline, fMRIPrep (Esteban et al., 2019). As documented in the Supporting Information, the major findings supporting the principal guidelines are reproducible while the measurement reliability derived with fMRIPrep are generally lower than those with the HCP pipeline. Various within-pipeline parametric settings also as exist other choices not sampled by our experimental design but remain potentials for further investigation. For example, edge filtering methods are commonly used for identification and retain only the most important edges in a graph, based on criteria such as statistical significance or functional relevance. However, this approach has the potential to introduce bias and subjectivity in the selection process, and may not fully capture the higher order structure of a network system. Algebraic topology, as demonstrated by Giusti, Ghrist, and Bassett (2016) and other recent studies, offers a promising alternative for high-order edge filtering. By representing relationships between objects as higher dimensional simplices instead of edges, simplicial complexes can characterize polyadic interactions and capture more nuanced aspects of complex network organization. With the increasing availability of computational tools for the application of algebraic topology to real data, this framework has the potential to surpass graph theory in understanding the complexities of neural systems.

Other fMRI paradigms.