Abstract

An enduring question in cognitive science is how perceptually novel objects are processed. Progress on this question has been limited by the absence of a standardised set of object-like stimuli that appear realistic, but cannot possibly have been previously encountered. To this end, we created a dataset, at the core of which are images of 400 perceptually novel objects. These stimuli were created using Generative Adversarial Networks that integrated features of everyday stimuli to produce a set of synthetic objects that appear entirely plausible, yet do not in fact exist. We curated an accompanying dataset of 400 familiar stimuli, which were matched in terms of size, contrast, luminance, and colourfulness. For each object, we quantified its key visual properties (edge density, entropy, symmetry, complexity, and spectral signatures). We also confirmed that adult observers (N = 390) perceive the novel objects to be less familiar, yet similarly engaging, relative to the familiar objects. This dataset serves as an open resource to facilitate future studies on visual perception.

Subject terms: Human behaviour, Psychology

Background & Summary

Object perception has long been an area of interest in the visual neurosciences1–10. When viewing a scene, our attention is typically captured by objects that are salient (which may be driven by low-level visual features such as contrast11–13), and guided by our expectations (based on prior knowledge of the objects themselves13,14). A particularly topical question is how the brain processes object information in the absence of any such prior beliefs. Examining how perceptually novel objects are processed, and what features of those objects guide curiosity, exploration and learning14–22, therefore has the potential to provide significant insights into the structure of the visual hierarchy.

Although several studies have focused on the processing of perceptually novel objects, reconciling their disparate findings has been challenging because of the heterogeneity in stimulus sets used. For example, some studies have used stimuli that are abstract (e.g., shapes, fractals) or configurally impossible16,18–20,23–25. A limitation of such stimuli is that they differ fundamentally in their structure or meaning relative to everyday objects, and may consequently be less engaging to an observer. Other studies have used: objects that are simply less common; everyday items photographed in unusual configurations; or different arrangements of familiar elements (e.g., blocks or plastic shapes)25,26. However, such approaches risk the diametrically opposite issue of all objects being potentially considered familiar and recognisable.

To understand how an object is visually processed independent of any prior expectations requires a set of objects that is: (1) realistic; (2) novel (i.e., could not possibly have been previously encountered); and (3) no less engaging than more familiar objects. Furthermore, in order to compare the visual processing of novel vs familiar objects, it is critical to ensure that differences in object type are not confounded by low-level visual features, such as size, contrast, luminance, or colourfulness. Although there are several well-curated datasets of familiar, real-world objects27–32, there is only one comparable dataset of novel objects (the Novel Object and Unusual Name Database; NOUN26). However, this dataset comprises only 64 objects, most of which are unusual (rather than truly novel) objects made of simpler shapes. Thus, there remains a conspicuous lack of standardised stimuli to facilitate research into object novelty.

To fill this gap, we trained Generative Adversarial Networks (GANs) – a class of machine learning algorithm – on everyday objects, with the goal of integrating their features to generate a set of 400 entirely novel, yet realistic, stimuli. To facilitate comparisons between the processing of novel and familiar objects, we curated an accompanying set of everyday objects sourced from existing open-source datasets (the Bank of Standardized Stimuli, BOSS33,34) and free online images (see Attributions in the dataset), and carefully matched their key low-level perceptual features (e.g., size, contrast, luminance, colourfulness). In an online study of 390 adult observers, we then confirmed that these novel stimuli were subjectively less familiar, but similarly engaging, relative to the familiar stimuli. The outcome is ‘IMAGINE’ (IMages of AI-Generated Imaginary Novel Entities) – a well-matched, standardised dataset of novel and familiar objects that can be readily applied in future investigations of object perception and perceptual novelty.

Methods

Stimulus generation

Novel objects

Novel objects were created using the artbreeder website (https://artbreeder.com), which generates artificial images of objects using a GAN algorithm. GANs are a class of machine learning algorithm in which learning occurs via competition in a two-player minimax game between two models: a generator model that attempts to produce a data sample that replicates the underlying latent properties of a training dataset, and a discriminator model that estimates the likelihood of a data sample being originally part of the training data35. That is, the generator’s goal is to maximise the number of false positives the discriminator produces, while the discriminator aims to increase its classification accuracy. Performance on each of these goals is captured by separate loss functions for the generator and discriminator, which are then used to improve subsequent training rounds. Ultimately, this competition allows GANs to produce data that are comparable to the original training data. In the case of images, this results in a generator network that has learnt to model a data distribution from which it can produce artificial images (e.g., objects) that appear as though they were part of the real training set.
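For reference, the two-player game described above is usually written as a single value function, following the original GAN formulation35. The symbols below (G for the generator, D for the discriminator, p_data for the training distribution and p_z for the latent prior) are the standard notation of that work, and are not specific to artbreeder:

$$
\min_{G}\,\max_{D}\; V(D, G) = \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_{z}(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator maximises V by assigning high probability to real samples and low probability to generated ones, whereas the generator minimises V by producing samples that the discriminator classifies as real.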

Artbreeder applies the commonly used BigGAN36 and styleGAN23 architectures to generate artificial images of objects. First, an image is created using these GANs, with the option to restrict the latent space sampled (truncation). Next, using Compositional Pattern Producing Networks (CPPNs)37, predefined networks that produce known classes of images (“genes”) can be added to the original “parent” image network to produce a new image that includes both features from the original and the selected “genes”. In practice, this allows one image (e.g., a dog) to be “bred” with other types of images (e.g., a toaster) to produce a hybrid dog*toaster object. Importantly, CPPNs allow the “genes” that produce the resultant image to be independently altered. Thus, different variations of an image can be created by bespoke tweaking of specific genes.
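The truncation option mentioned above can be illustrated with a minimal sketch. It assumes the truncation trick as described for BigGAN36, in which latent values that fall outside a chosen range are resampled until they fall inside it. The function name, latent dimensionality and threshold are illustrative assumptions, and this is not artbreeder's own implementation.

```python
import random


def truncated_normal_latent(dim, threshold, rng=None):
    """Sample a latent vector from a standard normal, resampling any
    component whose magnitude exceeds `threshold` (assumed truncation trick)."""
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    rng = rng or random.Random()
    latent = []
    for _ in range(dim):
        value = rng.gauss(0.0, 1.0)
        # Resample until the component lies inside [-threshold, threshold].
        while abs(value) > threshold:
            value = rng.gauss(0.0, 1.0)
        latent.append(value)
    return latent
```

Smaller thresholds restrict sampling to the high-density centre of the latent space, which generally trades image variety for fidelity.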

For this dataset, each novel object was based on a different set of parents and genes. We selected parent images from artbreeder’s “general” class that had white/light-coloured backgrounds, which were more likely to produce images of single objects, rather than scenes. We then trained the GANs on the selected parent images, and pseudo-randomly selected genes that maximised the likelihood of creating distinct novel objects. We generated an initial set of 761 novel images. As a quality control measure, each image was manually inspected and assessed by one or more of the authors (PC and/or EC) for:

Clarity – Stimuli were excluded if they were poorly defined (i.e., lacking clear form or internal detail);

Similarity to other objects – We ensured that the generated images were distinct from commonplace objects, or other novel or familiar objects in the dataset;

Artefacts and fragments – Occasionally, during the image creation process, a well-defined primary object would be accompanied by blurred or indistinct shadows or fragments separate to the object itself. We used image-editing software to remove any such artefacts from the final image (GNU Image Manipulation Program; GIMP).

This resulted in a curated data set of 400 novel objects (see Figs. 1, 5 for exemplar images).

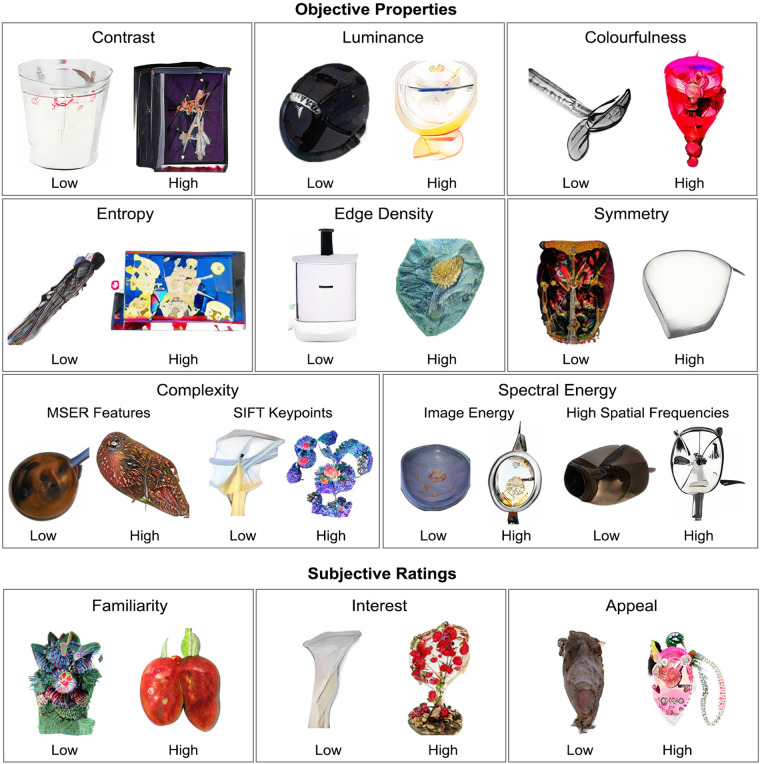

Fig. 1.

Exemplars of familiar and novel objects contained in IMAGINE.

Fig. 5.

Exemplars of novel objects that are representative of those at the extreme of the distributions shown in Fig. 4.

Familiar objects

To benchmark the properties of these novel stimuli to those of real-life objects, we curated a companion data set comprising an equivalent number of familiar objects. We pooled images from the Bank of Standardised Stimuli (BOSS; both Phase I and II)33,34, as well as online sources of appropriately licenced images. The BOSS dataset comprises a set of standardised images, which were photographed and digitally edited to ensure a) only the object remained in frame and b) luminance and colouring were equalised33,34. The familiarity of the BOSS images has been previously confirmed by having participants rate their familiarity with the objects, and assessing the degree to which participants agree on the names of the objects.

For our purposes, we manually inspected all items in the BOSS dataset, and excluded those that were dated (e.g., slides, camera film), affectively valenced (e.g., weapons, religious iconography), or highly culturally specific (e.g., American football helmet). We also selected objects that were generally of a similar shape to our novel stimuli, which tended to be confined to the central portion of the canvas (e.g., we excluded elongated objects, such as broom, ruler). We supplemented the BOSS images with those from other internet sources, which were selected with similar criteria (attributions are provided in the released dataset).

Image standardisation

First, we standardised the image size (in pixels) of all novel and familiar objects. We used cubic interpolation implemented in GIMP to ensure that all objects were 150,000 pixels ± 10% in size. We ran any image whose quality was reduced following resizing (35 novel objects) through the auto-enhancement procedure from Claid Studio (https://claid.ai). All object backgrounds were recoloured to white. Each object’s canvas was cropped to include a 100-pixel white border around its perimeter.
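The size criterion can be expressed as a short calculation. The sketch below is illustrative only: the resizing itself was done in GIMP, and the function names are assumptions, as is the reading that the 100-pixel border is added on every side of the object's bounding box.

```python
import math

TARGET_AREA = 150_000  # target object size in pixels (from the text)
TOLERANCE = 0.10       # accepted deviation of ±10% (from the text)
BORDER = 100           # white border in pixels (from the text)


def area_scale_factor(object_area, target_area=TARGET_AREA):
    """Linear scale factor that brings an object's pixel area to the target.

    Area grows with the square of the linear scale, hence the square root.
    """
    if object_area <= 0:
        raise ValueError("object_area must be positive")
    return math.sqrt(target_area / object_area)


def within_tolerance(object_area, target_area=TARGET_AREA, tolerance=TOLERANCE):
    """True if the object's area lies within the accepted band around the target."""
    return abs(object_area - target_area) <= tolerance * target_area


def canvas_size(bbox_width, bbox_height, border=BORDER):
    """Canvas dimensions after adding the border on every side (assumed)."""
    return bbox_width + 2 * border, bbox_height + 2 * border
```

For example, an object occupying 600,000 pixels would need its width and height halved to reach the 150,000-pixel target.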

After standardising the size of each object, we ensured that the set of novel and familiar stimuli were closely matched in terms of key perceptual features. Specifically, we selected a subset of familiar objects that matched the novel objects in terms of:

Contrast – The standard deviation of the normalised, greyscale image (i.e., the standard deviation of the intensity values for the image);

Luminance – The average of the normalised greyscale image;

Colourfulness – A linear combination of the mean and standard deviation of the object’s colour plane38. To compute this value, we first constructed a simple opponent colour space for each image (comprising rg [red-green] and yb [yellow-blue]). Next, we computed the mean and standard deviation of both the rg and yb colour spaces, and combined these values linearly to obtain the colourfulness score.
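The three matching features can be sketched in plain Python as follows. Several details are assumptions rather than the authors' confirmed method: the greyscale conversion uses ITU-R BT.601 luma weights, and the colourfulness formula follows the widely used opponent-space metric of Hasler and Süsstrunk (rg = R − G, yb = 0.5(R + G) − B, with a weight of 0.3 on the mean term), which matches the description above but is not stated explicitly in the text. Pixels are given as a flat list of 8-bit (R, G, B) tuples.

```python
from math import hypot
from statistics import fmean, pstdev


def to_grey(pixel):
    """Normalised greyscale intensity in [0, 1] from an 8-bit (R, G, B) pixel.

    Assumption: ITU-R BT.601 luma weights.
    """
    r, g, b = pixel
    return (0.299 * r + 0.587 * g + 0.114 * b) / 255.0


def luminance(pixels):
    """Mean of the normalised greyscale image."""
    return fmean([to_grey(p) for p in pixels])


def contrast(pixels):
    """Standard deviation of the normalised greyscale image."""
    return pstdev([to_grey(p) for p in pixels])


def colourfulness(pixels):
    """Opponent-space colourfulness (assumed Hasler-Süsstrunk form)."""
    rg = [r - g for r, g, b in pixels]
    yb = [0.5 * (r + g) - b for r, g, b in pixels]
    sigma = hypot(pstdev(rg), pstdev(yb))  # combined standard deviation
    mu = hypot(fmean(rg), fmean(yb))       # combined mean
    return sigma + 0.3 * mu
```

Under these definitions, a purely grey image has a colourfulness of zero, because both opponent channels are zero at every pixel.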

In addition, we computed other image properties that are known to influence perceptual processing38–43. These include:

Edge density – The proportion of the total image space that consists of edges, as defined by the Canny44 procedure implemented with MATLAB’s edge function;

Entropy – The degree of randomness in an image, which provides a measure of the average amount of information it contains. Entropy, H, was defined in terms of the Shannon entropy function: $H = -\sum_{k} P_k \log_2 P_k$, where $P_k$ is the probability of image pixels with value k. Images were converted to greyscale prior to calculation, so that k reflects image intensity, as implemented with skimage’s Shannon entropy function45;

Symmetry39 – The discrepancy in the number of pixels between the two halves of an object split along multiple planes, obtained by applying a Radon transform to the image. The transform was applied using skimage’s radon function, with symmetry computed as the mean of the transform;

Object complexity – We applied two separate, commonly used algorithms that define complexity as a function of the number of distinct features embedded within an object:

- Maximally Stable Extremal Regions (MSER) – This algorithm detects ‘distinct features’ as areas of an image with consistent intensity values. That is, consistent intensity values reflect patches of “texture” on an image, with more textures associated with a more complex image. We used MATLAB’s detectMSERFeatures algorithm.

- Scale-Invariant Feature Transform (SIFT)46 – This defines the number of ‘key points’ in an image based on features of an image that are robustly detected when the image is rescaled or re-orientated. We employed the OpenCV implementation of the SIFT algorithm.

Spectral energy – A common approach to control for low-level visual confounds in psychophysics and neuroimaging experiments is to ensure that stimuli are matched in spectral energy38. We used the Natural Image Statistical Toolbox for MATLAB42 with default parameters to measure the spectral profile of each image in terms of its:

- Image energy – The frequency that contains 80% of the image energy.

- High spatial frequencies – The proportion of high spatial frequencies in the image (>10 cycles per image).
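Two of the definitions above are simple enough to sketch directly. The code below is a plain-Python illustration, not the skimage or MATLAB routines used for the dataset. It assumes a base-2 logarithm for entropy (so values are in bits) and assumes that a binary edge map has already been produced by an edge detector such as Canny.

```python
from collections import Counter
from math import log2


def shannon_entropy(intensities):
    """Shannon entropy (in bits) of a flat sequence of greyscale values."""
    n = len(intensities)
    counts = Counter(intensities)
    # P_k is the fraction of pixels that take the value k.
    return -sum((c / n) * log2(c / n) for c in counts.values())


def edge_density(edge_map):
    """Proportion of pixels flagged as edges in a binary edge map (rows of 0/1)."""
    total = sum(len(row) for row in edge_map)
    edges = sum(sum(row) for row in edge_map)
    return edges / total
```

A uniform image has zero entropy, whereas an image split evenly between two intensity values has an entropy of one bit.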

Data Records

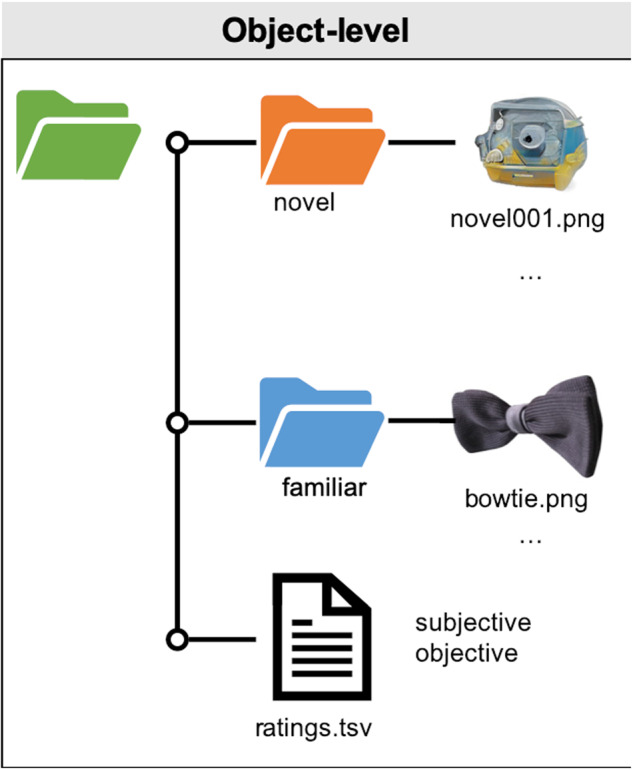

Data are stored using the Open Science Framework (OSF) online platform as the IMAGINE dataset47. The released dataset contains standardised images of 400 novel and 400 familiar objects. Novel and familiar objects have been sorted into their respective folders (Fig. 2). Filenames for novel objects are prefixed by ‘novel’, followed by a three-digit code (e.g., ‘novel001’). Filenames for familiar objects comprise their original filenames from the BOSS dataset33,34 (e.g., aceofdiamond) or a descriptor if sourced from other repositories (e.g., bananas). All images are provided in compressed JPEG format (with a white background) and as PNG files (with a transparent background). An accompanying ratings.tsv file contains a summary of the perceptual properties of each object, and data from the online validation study that provides mean familiarity, interest and appeal ratings of every object.
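As a starting point for working with the ratings file, the sketch below reads a tab-separated table into a list of dictionaries. The column names and values in the in-memory excerpt (object, category, familiarity) are hypothetical placeholders; the actual header of ratings.tsv should be checked before use.

```python
import csv
import io


def load_ratings(handle):
    """Read a tab-separated ratings table into a list of row dictionaries."""
    return list(csv.DictReader(handle, delimiter="\t"))


# Hypothetical two-row excerpt used purely for illustration; the real
# column names must be taken from the header of the released file.
example = io.StringIO(
    "object\tcategory\tfamiliarity\n"
    "novel001\tnovel\t2.1\n"
    "bananas\tfamiliar\t6.4\n"
)
rows = load_ratings(example)
novel_rows = [row for row in rows if row["category"] == "novel"]
```

With the released file, the same function could be called as `load_ratings(open("ratings.tsv", newline="", encoding="utf-8"))`, assuming the file sits in the working directory.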

Fig. 2.

Dataset structure. Data are organised at the item-level, with separate folders for JPEG and PNG formats.

Technical Validation

Our technical validation comprised two parts. First, we confirmed that our corpuses of novel and familiar objects were closely matched in terms of size, contrast, luminance, and colourfulness. Second, we verified that our novel images were indeed perceived by human observers to be subjectively less familiar than the images of existing objects, and evoked comparable levels of interest.

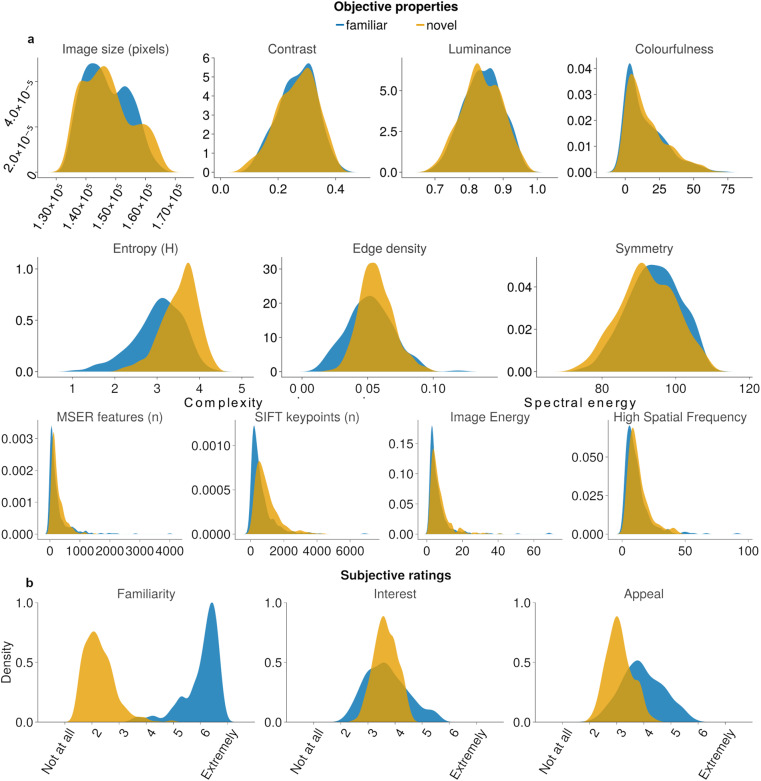

Standardisation of object properties

Many metrics violated assumptions of normality, so we compared perceptual features of the novel vs familiar objects using non-parametric statistics (Mann-Whitney U). We report both frequentist statistics and their Bayesian analogues to guide interpretations of any differences between object categories (Figs. 4, 5). Critically, there were no differences between the novel and familiar objects in terms of their size, contrast, luminance, or colourfulness (size, novel median = 146,464 ± 407 SEM, familiar median = 146,020 ± 364, U = 79542, p = 0.889, BF10 = 0.081; contrast, novel = 0.27 ± 0.003, familiar = 0.27 ± 0.003, U = 79478, p = 0.873, BF10 = 0.078; luminance, novel = 0.83 ± 0.003, familiar = 0.84 ± 0.003, U = 77312, p = 0.411, BF10 = 0.100; colourfulness, novel = 11.3 ± 0.720, familiar = 9.9 ± 0.729, U = 75491, p = 0.168, BF10 = 0.183; Fig. 4a). This confirms that our approach was able to generate a set of novel objects that were matched to a set of existing familiar objects in terms of their key lower-level perceptual features.
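To make the U statistic concrete, the following is a minimal standard-library sketch of the rank-based computation with midranks for ties. It returns the statistic for each sample and omits p-values and Bayes factors. It is an illustration of the test, not the analysis code used for the dataset, and the function names are assumptions.

```python
def midranks(values):
    """1-based ranks of `values`, with tied values sharing their mean rank."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U_x, U_y); the two statistics always sum to len(x) * len(y)."""
    ranks = midranks(list(x) + list(y))
    rank_sum_x = sum(ranks[:len(x)])
    u_x = rank_sum_x - len(x) * (len(x) + 1) / 2
    u_y = len(x) * len(y) - u_x
    return u_x, u_y
```

With 400 objects per category, U can range from 0 to 160,000, so values close to 80,000 (as reported for size, contrast, luminance and colourfulness) indicate heavily overlapping distributions.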

Fig. 4.

(a) Objective and (b) subjective properties of novel and familiar objects. Distributions visualised as density plots, with y-axes reflecting kernel density estimates. Note: MSER; maximally stable extremal regions, SIFT; scale-invariant feature transformation.

For completeness, we also computed the more complex perceptual features of each object. We found that, relative to familiar objects, our set of novel objects had greater edge densities, greater entropy, less symmetry, greater complexity, and higher spectral energy (edge densities, novel = 0.056 ± 6.63 × 10⁻⁴, familiar = 0.052 ± 8.83 × 10⁻⁴, U = 66245, p < 0.0001, BF10 = 207; entropy, novel = 3.61 ± 0.021, familiar = 3.09 ± 0.029, U = 37686, p < 0.0001, BF10 = 1.22 × 10¹¹; symmetry, novel = 91.9 ± 0.376, familiar = 94.2 ± 0.351, U = 69190, p < 0.0001, BF10 = 14.3; MSER, novel = 179 ± 10.7, familiar = 120 ± 20.4, U = 62511, p < 0.0001, BF10 = 462; SIFT keypoints, novel = 769 ± 34.4, familiar = 404 ± 36.1, U = 48764, p < 0.0001, BF10 = 1.56 × 10⁷; image energy, novel = 5 ± 0.27, familiar = 4 ± 0.36, U = 66059, p < 0.0001, BF10 = 38.4; high spatial frequencies, novel = 10.6 ± 0.42, familiar = 8.74 ± 0.49, U = 65810, BF10 = 75.3; Fig. 4a).

Finally, we ensured that the GAN-generated objects were indeed perceived as less familiar than the objects from the BOSS database, and that they evoked comparable levels of interest. To do so, we conducted an online validation study, in which participants assessed their degree of familiarity of objects in each category, as well as their degree of interest in each object. As a supplementary question, we also asked participants to rate the degree to which they found each object appealing.

Online validation study

Participants

435 participants completed this study, which was conducted on the Cloud Research platform48 (integrated with the Amazon Mechanical Turk; MTurk). We excluded 23 participants for attempting the study more than once. A further 22 were excluded for perseverative responses (e.g., providing the same response for every object). The final sample comprised 390 participants (205 self-identified males, 181 self-identified females, 2 non-binary, 2 unspecified), aged between 18 and 76 (mean = 40.32, SD = 12.12). All participants were from the United States, and were reimbursed US$2.20 for their time. Data were collected between August 2022 and January 2023. Participants provided informed consent, and study protocols were approved by Monash University’s Human Research Ethics Committee (ID: CF20/23934).
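The exclusion arithmetic and one possible screening rule can be sketched as follows. The rule shown (identical ratings on every trial) corresponds to the example given above; the full set of criteria used to identify perseverative responding is not specified in the text, so this function is an assumption for illustration.

```python
def is_perseverative(ratings):
    """True if a participant gave the identical rating on every trial."""
    return len(ratings) > 1 and len(set(ratings)) == 1


def final_sample_size(n_completed, n_repeat_attempts, n_perseverative):
    """Participants remaining after both exclusion steps."""
    return n_completed - n_repeat_attempts - n_perseverative
```

Applied to the figures reported above, 435 completions minus 23 repeat attempts and 22 perseverative responders leaves the final sample of 390.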

Experimental design

The task was implemented using jsPsych (version 7.2.1)49, with the experiment housed on https://cognition.run. To ensure that stimuli could be viewed with sufficient clarity and resolution, participants could only perform the task on a desktop or laptop computer (the task was aborted if an attempt was made to run it on a mobile phone). Each participant was required to rate a random subset of 50 familiar and 50 novel objects, which were presented in random order (Fig. 3).

Fig. 3.

Overall study design. Participants viewed and rated a selection of 50 familiar and 50 novel items on familiarity, interest and appeal.
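The per-participant stimulus selection can be sketched as below. The actual task was implemented in jsPsych; this Python version only illustrates the sampling logic, and the familiar-object identifiers are hypothetical placeholders (the released familiar files use descriptive names).

```python
import random


def draw_trials(novel_ids, familiar_ids, n_per_category=50, rng=None):
    """Pick a random subset from each category and shuffle them together."""
    rng = rng or random.Random()
    trials = rng.sample(novel_ids, n_per_category)
    trials += rng.sample(familiar_ids, n_per_category)
    rng.shuffle(trials)  # random presentation order across both categories
    return trials


# Novel identifiers follow the 'novel' + three-digit pattern of the dataset.
novel_ids = [f"novel{i:03d}" for i in range(1, 401)]
# Hypothetical stand-ins for the 400 familiar-object filenames.
familiar_ids = [f"familiar{i:03d}" for i in range(1, 401)]
```

Passing a seeded generator, for example `draw_trials(novel_ids, familiar_ids, rng=random.Random(1))`, makes a given participant's selection reproducible.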

Each trial was self-paced, and began with the object presented at the centre of the screen. Participants could freely view and interact with the object using their mouse. Objects could be stretched and rotated, and participants could pan and zoom into or out of each image. A reset button could be used to reset the image to its original configuration.

Once satisfied, participants pressed a “continue” button, and were presented with three questions: (1) “How familiar is this object?” (2) “How interesting do you find this object?” and (3) “How appealing is this object?” Responses were recorded on a 7-point Likert scale ranging from Not at all (1) to Extremely (7). Once all responses were complete, participants pressed a “continue” button, and the next object was then displayed.

Results

The key comparison was in familiarity ratings between novel and familiar objects. This provided decisive evidence that GAN-generated objects were indeed perceived as less familiar than the BOSS objects (Fig. 4b; novel median = 2.19 ± 0.028 SEM, familiar median = 6.26 ± 0.033; U = 134, p < 0.0001, BF10 = 5.10 × 10²¹). In addition, the novel and familiar objects evoked similar degrees of interest (novel = 3.63 ± 0.021, familiar = 3.61 ± 0.039, U = 78509, p = 0.788, BF10 = 0.085). Interestingly, we found familiar objects were more appealing than novel objects (novel = 3.04 ± 0.023, familiar = 3.87 ± 0.038, U = 28272, p < 0.0001, BF10 = 2.19 × 10¹⁴).

In sum, the ‘IMAGINE’ dataset comprises 400 artificially-generated objects that are entirely plausible, yet do not in fact exist. These objects have been carefully standardised and matched to a complementary dataset of 400 familiar objects. Importantly, the novel objects are perceived by adult observers to be less familiar than the familiar objects, but similarly engaging. We hope that this stimulus set will facilitate future research in the neuroscience of object processing and perceptual novelty.

Usage Notes

Users may choose to sample a subset of the familiar and novel stimuli for their unique purposes. Here, we have matched objects according to size, contrast, luminance and colourfulness. Although there may be significant statistical differences in symmetry and complexity across the corpus of novel and familiar objects as a whole, there is sufficient overlap between these features (Fig. 4) such that users will be able to sample a subset of novel and familiar objects which are matched across those features should they wish. Likewise, there may be sufficient variability within subjective ratings to use familiar and novel objects that are comparable on interest or appeal if required.
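One way to draw such a matched subset is greedy one-to-one matching within a tolerance (a caliper) on the feature of interest. The sketch below is one possible approach under that assumption and is not a procedure used by the authors; the function name, caliper and feature values are illustrative.

```python
def match_within_caliper(novel, familiar, caliper):
    """Greedily pair each novel object with the closest unused familiar object.

    `novel` and `familiar` map object names to a single feature value.
    A pair is kept only if the two values differ by at most `caliper`.
    """
    available = dict(familiar)
    pairs = []
    for novel_name, novel_value in sorted(novel.items(), key=lambda kv: kv[1]):
        if not available:
            break
        closest = min(available, key=lambda name: abs(available[name] - novel_value))
        if abs(available[closest] - novel_value) <= caliper:
            pairs.append((novel_name, closest))
            del available[closest]  # each familiar object is used at most once
    return pairs
```

The resulting pairs define equally sized novel and familiar subsets. Greedy matching is not guaranteed to find the largest possible set of pairs, so users with strict requirements may prefer an optimal matching method.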

We also note that our online validation study comprised a group of participants that were exclusively based in the U.S.A. The subjective ratings of familiarity, interest and appeal are therefore limited to that population. Although we anticipate that most cultures would broadly consider the novel objects to be ‘less familiar’, the precise degree to which a novel stimulus is perceived as less familiar in other cultures, and the relative difference in ratings for novel and familiar objects, cannot be assumed.

Finally, we note that certain aspects of our procedure required manual inspection of the novel objects as a quality control measure. This was a critical aspect of our protocol to verify that the generated images were clear, plausible, distinct, and free of artefacts. Given recent advances in the development of artificial intelligence techniques, such steps could potentially be automated in the future.

Acknowledgements

This work was supported by the Australian Research Council (DP180102383 to TC and SB; FT220100294 to TC), and the Office of Naval Research (Global) (TC). PC was supported by funding from the Australian Research Council (DP180102383), and the Office of Naval Research (Global). We thank Luke McCaffrey and Nadja Duszniak for assistance with image creation.

Author contributions

P.C., conceptualisation, methodology, software, investigation, validation, data curation, writing – original draft, writing – review and editing. E.C., investigation, validation, writing – review and editing. S.B., supervision, funding acquisition, writing – review and editing. T.C., conceptualisation, supervision, funding acquisition, writing – review and editing.

Code availability

Software to generate novel objects is available at https://artbreeder.com. Code to perform data collection (i.e., run the online experiment) was created using jsPsych (version 7.2.1) and a modified version of the mouse-tracking extension (all available at47). Code to extract the objective properties of each object, and to compile the subjective ratings from our online study, was written in Python, MATLAB and Julia (available at47).

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Patrick S. Cooper, Email: patrick.cooper@monash.edu

Trevor T.-J. Chong, Email: trevor.chong@monash.edu

References

- 1. Grill-Spector K. The neural basis of object perception. Curr Opin Neurobiol. 2003;13:159–166. doi: 10.1016/s0959-4388(03)00040-0.

- 2. Treisman A. Feature binding, attention and object perception. Philosophical Transactions Royal Soc Lond Ser B Biological Sci. 1998;353:1295–1306. doi: 10.1098/rstb.1998.0284.

- 3. Treisman A, Paterson R. Emergent features, attention, and object perception. J Exp Psychology Hum Percept Perform. 1984;10:12–31. doi: 10.1037//0096-1523.10.1.12.

- 4. Ashby FG, Prinzmetal W, Ivry R, Maddox WT. A Formal Theory of Feature Binding in Object Perception. Psychol Rev. 1996;103:165–192. doi: 10.1037/0033-295x.103.1.165.

- 5. Kourtzi Z, Kanwisher N. Representation of Perceived Object Shape by the Human Lateral Occipital Complex. Science. 2001;293:1506–1509. doi: 10.1126/science.1061133.

- 6. Haxby JV, et al. The Effect of Face Inversion on Activity in Human Neural Systems for Face and Object Perception. Neuron. 1999;22:189–199. doi: 10.1016/s0896-6273(00)80690-x.

- 7. Ungerleider LG, Bell AH. Uncovering the visual “alphabet”: Advances in our understanding of object perception. Vision Res. 2011;51:782–799. doi: 10.1016/j.visres.2010.10.002.

- 8. Behrmann M, Kimchi R. What Does Visual Agnosia Tell Us About Perceptual Organization and Its Relationship to Object Perception? J Exp Psychology Hum Percept Perform. 2003;29:19–42. doi: 10.1037//0096-1523.29.1.19.

- 9. Logothetis NK. Visual Object Recognition. Annu Rev Neurosci. 1996;19:577–621. doi: 10.1146/annurev.ne.19.030196.003045.

- 10. DiCarlo JJ, Cox DD. Untangling invariant object recognition. Trends Cogn Sci. 2007;11:333–341. doi: 10.1016/j.tics.2007.06.010.

- 11. Morrone MC, Denti V, Spinelli D. Color and luminance contrasts attract independent attention. Current biology. 2002;12:1134–1137. doi: 10.1016/s0960-9822(02)00921-1.

- 12. Beck DM, Kastner S. Stimulus context modulates competition in human extrastriate cortex. Nat Neurosci. 2005;8:1110–1116. doi: 10.1038/nn1501.

- 13. Pooresmaeili A, Poort J, Thiele A, Roelfsema PR. Separable codes for attention and luminance contrast in the primary visual cortex. J Neurosci. 2010;30:12701–12711. doi: 10.1523/JNEUROSCI.1388-10.2010.

- 14. Kersten D, Mamassian P, Yuille A. Object Perception as Bayesian Inference. Annu Rev Psychol. 2004;55:271–304. doi: 10.1146/annurev.psych.55.090902.142005.

- 15. Parr T, Friston KJ. Attention or salience? Curr Opin Psychology. 2018;29:1–5. doi: 10.1016/j.copsyc.2018.10.006.

- 16. Op de Beeck HP, Deutsch JA, Vanduffel W, Kanwisher NG, DiCarlo JJ. A Stable Topography of Selectivity for Unfamiliar Shape Classes in Monkey Inferior Temporal Cortex. Cereb Cortex. 2008;18:1676–1694. doi: 10.1093/cercor/bhm196.

- 17. Bar M. Visual objects in context. Nat Rev Neurosci. 2004;5:617–629. doi: 10.1038/nrn1476.

- 18. Gauthier I, Tarr MJ. Becoming a “Greeble” Expert: Exploring Mechanisms for Face Recognition. Vision Res. 1997;37:1673–1682. doi: 10.1016/s0042-6989(96)00286-6.

- 19. Hegdé J, Bart E, Kersten D. Fragment-Based Learning of Visual Object Categories. Curr Biol. 2008;18:597–601. doi: 10.1016/j.cub.2008.03.058.

- 20. Hegdé J, Thompson SK, Brady M, Kersten D. Object recognition in clutter: cortical responses depend on the type of learning. Front Hum Neurosci. 2012;6:170. doi: 10.3389/fnhum.2012.00170.

- 21. Palmeri, T. J., & Tarr, M. Visual object perception and long-term memory. In Visual memory, 163–207 (2008).

- 22. Op de Beeck HP, Baker CI. The neural basis of visual object learning. Trends Cogn Sci. 2010;14:22–30. doi: 10.1016/j.tics.2009.11.002.

- 23. Tian M, Yamins D, Grill-Spector K. Learning the 3-D structure of objects from 2-D views depends on shape, not format. J Vision. 2016;16:7. doi: 10.1167/16.7.7.

- 24. Freud E, Ganal T, Avidan G. Representations of possible and impossible objects in the visual cortex: Evidence from fMRI adaptation. Neuroimage. 2013;64:685–692. doi: 10.1016/j.neuroimage.2012.08.070.

- 25. Chen X, Twomey KE, Westermann G. Curiosity enhances incidental object encoding in 8-month-old infants. J Exp Child Psychol. 2022;223:105508. doi: 10.1016/j.jecp.2022.105508.

- 26. Horst JS, Hout MC. The Novel Object and Unusual Name (NOUN) Database: A collection of novel images for use in experimental research. Behav Res Methods. 2016;48:1393–1409. doi: 10.3758/s13428-015-0647-3.

- 27. Migo EM, Montaldi D, Mayes AR. A visual object stimulus database with standardized similarity information. Behav Res Methods. 2013;45:344–354. doi: 10.3758/s13428-012-0255-4.

- 28. Hout MC, Goldinger SD, Brady KJ. MM-MDS: A Multidimensional Scaling Database with Similarity Ratings for 240 Object Categories from the Massive Memory Picture Database. Plos One. 2014;9:e112644. doi: 10.1371/journal.pone.0112644.

- 29. Konkle T, Brady TF, Alvarez GA, Oliva A. Scene Memory Is More Detailed Than You Think. Psychol Sci. 2010;21:1551–1556. doi: 10.1177/0956797610385359.

- 30. Brady TF, Konkle T, Alvarez GA, Oliva A. Visual long-term memory has a massive storage capacity for object details. Proc National Acad Sci. 2008;105:14325–14329. doi: 10.1073/pnas.0803390105.

- 31. Dan-Glauser ES, Scherer KR. The Geneva affective picture database (GAPED): a new 730-picture database focusing on valence and normative significance. Behav Res Methods. 2011;43:468. doi: 10.3758/s13428-011-0064-1.

- 32. Hebart MN, et al. THINGS: A database of 1,854 object concepts and more than 26,000 naturalistic object images. Plos One. 2019;14:e0223792. doi: 10.1371/journal.pone.0223792.

- 33. Brodeur MB, Guérard K, Bouras M. Bank of Standardized Stimuli (BOSS) Phase II: 930 New Normative Photos. Plos One. 2014;9:e106953. doi: 10.1371/journal.pone.0106953.

- 34. Brodeur MB, Dionne-Dostie E, Montreuil T, Lepage M. The Bank of Standardized Stimuli (BOSS), a New Set of 480 Normative Photos of Objects to Be Used as Visual Stimuli in Cognitive Research. Plos One. 2010;5:e10773. doi: 10.1371/journal.pone.0010773.

- 35. Goodfellow, I. J. et al. Generative Adversarial Nets. Adv Neural Inf Process Syst (2014).

- 36. Brock A, Donahue J, Simonyan K. Large Scale GAN Training for High Fidelity Natural Image Synthesis. arXiv. 2018. doi: 10.48550/arxiv.1809.11096.

- 37. Stanley KO. Compositional pattern producing networks: A novel abstraction of development. Genet Program Evol M. 2007;8:131–162.

- 38. Torralba A, Oliva A. Statistics of Natural Images Categories. Network: Computation in Neural Systems. 2003;14:391–412.

- 39. Rosenholtz R, Li Y, Nakano L. Measuring visual clutter. J Vision. 2007;7:17. doi: 10.1167/7.2.17.

- 40. Corchs SE, Ciocca G, Bricolo E, Gasparini F. Predicting Complexity Perception of Real World Images. Plos One. 2016;11:e0157986. doi: 10.1371/journal.pone.0157986.

- 41. Nagle F, Lavie N. Predicting human complexity perception of real-world scenes. Roy Soc Open Sci. 2020;7:191487. doi: 10.1098/rsos.191487.

- 42. Bainbridge WA, Oliva A. A toolbox and sample object perception data for equalization of natural images. Data in Brief. 2015;5:846–851. doi: 10.1016/j.dib.2015.10.030.

- 43. Artese, M. T., Ciocca, G. & Gagliardi, I. Good 50x70 Project: A portal for Cultural And Social Campaigns. in Archiving Conference vol. 1 213–218 (2014).

- 44. Ding L, Goshtasby A. On the Canny edge detector. Pattern Recogn. 2001;34:721–725.

- 45. Shannon CE. A mathematical theory of communication. Bell Syst. Tech. J. 1948;27:379–423.

- 46. Lowe DG. Distinctive Image Features from Scale-Invariant Keypoints. Int J Comput Vision. 2004;60:91–110.

- 47. Cooper PS, Colton E, Bode S, Chong T. 2023. ‘IMAGINE’ (IMages of AI-Generated Imaginary Entities) OSF.

- 48. Litman L, Robinson J, Abberbock T. TurkPrime.com: A versatile crowdsourcing data acquisition platform for the behavioral sciences. Behav Res Methods. 2017;49:433–442. doi: 10.3758/s13428-016-0727-z.

- 49. de Leeuw JR. jsPsych: A JavaScript library for creating behavioral experiments in a Web browser. Behav Res Methods. 2015;47:1–12. doi: 10.3758/s13428-014-0458-y.
