Abstract

Artificial Intelligence (AI) is increasingly influential across various sectors, including healthcare, with the potential to revolutionize clinical practice. However, risks associated with AI adoption in medicine have also been identified. Despite the general understanding that AI will impact healthcare, studies that assess the perceptions of medical doctors about AI use in medicine are still scarce. We set out to survey the medical doctors licensed to practice medicine in Portugal about the impact, advantages, and disadvantages of AI adoption in clinical practice. We designed an observational, descriptive, cross-sectional study with a quantitative approach and developed an online survey which addressed the following aspects: impact on healthcare quality of the extraction and processing of health data via AI; delegation of clinical procedures to AI tools; perception of the impact of AI in clinical practice; perceived advantages of using AI in clinical practice; perceived disadvantages of using AI in clinical practice; and predisposition to adopt AI in professional activity. Our sample was also characterized in demographic and professional terms and in terms of digital use and proficiency. We obtained 1013 valid, fully answered questionnaires (a sample representative of the total universe of medical doctors licensed to practice in Portugal at a 99% confidence level, p < 0.01). Our results reveal that, in general terms, the medical community surveyed is optimistic about AI use in medicine and is predisposed to adopt it, while still aware of some disadvantages and challenges to AI use in healthcare. Most medical doctors surveyed are also convinced that AI should be part of medical education. These findings contribute to facilitating the professional integration of AI in medical practice in Portugal, aiding the seamless integration of AI into clinical workflows by leveraging its perceived strengths according to healthcare professionals.
This study identifies challenges such as gaps in medical curricula, which hinder the adoption of AI applications due to inadequate digital health training. Due to high professional integration in the healthcare sector, particularly within the European Union, our results are also relevant for other jurisdictions and across diverse healthcare systems.

Introduction

The scientific foundations of Artificial Intelligence (AI) were established almost 70 years ago [1]. Depending on context, AI can be defined differently [2–7]. In summary, AI is a computer science field that develops systems capable of manipulating concepts and data, using heuristics, representing knowledge and incorporating inaccurate or incomplete data, allowing solutions, and facilitating learning [8].

AI progress to date has been described in generations: application to generic tasks, such as image, text and sound recognition, analysis, and interpretation (1st generation) [7, 9–12]; software that can act autonomously, comparable to intelligent human life (2nd generation) [13]; and self-aware systems, which surpass human intelligence and can be applied in any area (3rd generation) [6]. Currently, the use of first-generation AI has expanded across different sectors and generative AI has been considered by some authors as an early example of 2nd generation AI [13–17].

In healthcare, AI has demonstrated its capability to assist healthcare professionals with administrative and clinical tasks, including routine work [18], diagnosis and prescription decision-making [2, 19]. Furthermore, AI has been proposed as a potential solution for significant healthcare challenges, including reducing medical errors in diagnostics, drug treatments, and surgeries, optimizing resource utilization, and improving workflows [20, 21]. Its application in performing routine and repetitive tasks shows potential for enhancing efficiency, accuracy, and impartiality in healthcare [22].

Due to its expanding reach and broad potential, some argue that implementation of AI tools can revolutionize healthcare [23], particularly given the abundance of data, complex problems, and diverse operational contexts in the health sector, including medicine [22, 24]. With the advancements in big data analytics, AI can uncover crucial information and extract knowledge that may be inaccessible even to skilled medical professionals [25]. Promising AI applications include integrating health information, user education, pandemic and epidemic prevention, medical diagnosis, and decision support models across various clinical contexts [26–36].

However, AI applications in healthcare also elicit significant technical, ethical, legal, and social challenges [20, 37–40]. Building trust and confidence in AI’s positive outcomes in medicine is essential for its acceptance and adoption by health professionals [41–43]. Therefore, evaluating healthcare professionals’ perspectives on AI’s potential and impact is essential for successful integration [42, 44–47]. Recent studies indicate that some professionals remain reticent about preparedness, resource availability, and economic implications of AI implementation [48–50]. Furthermore, education and training on AI are identified as important requirements [49]. Additionally, involving all stakeholders is deemed fundamental [46] and some medical specialists view AI as a "co-pilot" rather than an independent clinical decision-maker [49]. Connectivity issues, infrastructure gaps, and potential negative consequences also raise concerns [48, 51, 52]. In parallel, studies among medical students indicate their awareness of AI’s potential in healthcare, particularly in radiology, and their willingness to embrace it in their daily lives [45, 50, 53, 54]. They recognize the importance of including AI training in medical education, as students who received such training feel more confident in utilizing AI [55]. Both healthcare professionals and patients express skepticism and hope concerning privacy and health data protection in AI applications [52], underscoring the need to test and adapt AI systems in healthcare to meet stakeholders’ needs [56].

Despite the growing usage of AI and the accumulating evidence about its potential and impact in healthcare, obstacles to AI adoption persist, including regulatory issues, the digital divide, economic constraints, literacy levels and cultural resistance from users and administrative bodies. Furthermore, there is a lack of sufficient studies assessing medical doctors’ perspectives on this issue [41, 56, 57]. To address this gap, our nationwide survey aims to assess the potential and impact of AI in healthcare from the viewpoint of physicians with different specialties, experience, and working contexts in Portugal. Understanding these perceptions will contribute to promoting the inclusive adoption of AI in healthcare.

Methods

Study design, study population and questionnaire

We designed an observational, descriptive, cross-sectional study with a quantitative approach. To assess the perceptions of Portuguese physicians regarding the use of AI in healthcare, we developed an online survey using the SurveyMonkey platform [58–60] entitled "Artificial Intelligence in healthcare provision and the perspective of Portuguese physicians". This survey was composed of seven broad questions divided into "sub-questions", with response options on a semantic differential scale ranging from "1" ("strongly disagree") to "6" ("strongly agree"). Survey questions addressed the following aspects: extraction and processing of health data via AI; delegation of clinical procedures to AI tools; perception of the impact of AI in clinical practice; perceived advantages of using AI in clinical practice; perceived disadvantages of using AI in clinical practice; and predisposition to adopt AI in their own professional activity. The survey also included seven questions to assess the following dimensions of the respondents: demographic characterization (gender and age); professional characterization (medical specialty, years of experience and place of clinical practice); and digital use and proficiency characterization (use of ICT and self-perception of AI knowledge and digital technology command).

Our survey was pre-tested in a convenience sample of 11 doctors from different specialties (Pediatrics, Public Health, Nephrology, General Practice, Rheumatology and Oncology). As a result of this test, the definitions, expressions and concepts included in the survey questions were revised for clarity.

A link to the survey was emailed to every licensed physician in Portugal through the Portuguese Medical Association, which reviewed the study protocol and survey and agreed to collaborate in its dissemination. Respondents were informed about the context and objectives of the study and were asked to voluntarily consent to participation (respondents were given access to the survey questions only after expressly selecting the consent option). Respondents were also informed that the study was anonymous and that study results could be used for scientific publication purposes only.

Research ethics principles and legally applicable requirements were fully complied with.

Data processing and statistical analysis

Considering that there are approximately 54,450 physicians officially licensed in Portugal, 656 valid answers would be required for a confidence level of 99% (p< 0.01), with a margin of error of 5% [61]. We obtained 1013 valid, fully answered questionnaires during the data collection period between September 18th and October 4th, 2019.
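The required sample size quoted above can be reproduced with a short calculation. The sketch below assumes Cochran's formula with a finite population correction and a worst-case proportion of 0.5; the text cites [61] for the calculation without naming the formula, so this is one plausible reconstruction rather than the authors' documented procedure. The function and parameter names are illustrative.

```python
import math
from statistics import NormalDist


def required_sample_size(population, confidence=0.99, margin=0.05, proportion=0.5):
    """Cochran's sample size with finite population correction (assumed method)."""
    # Two-sided critical value of the standard normal distribution.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Sample size for an infinitely large population.
    n0 = z ** 2 * proportion * (1 - proportion) / margin ** 2
    # Correction for a finite population of known size.
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


# Approximate number of licensed physicians in Portugal, as stated in the text.
print(required_sample_size(54_450))  # 656
```

Under these assumptions the result matches the 656 valid answers stated in the text.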

Results were transferred to IBM® SPSS® software (version 28) for data processing and statistical analysis. Univariate analysis, consisting of descriptive statistics of the sample and frequency analysis of each variable, was performed. In addition, score variables were built to facilitate bivariate statistical analysis (correlation tests) involving questions that contained semantic differentials (Questions 2, 3, 5, 6 and 7). To this end, responses "1", "2" and "3" were grouped into a larger category ("disagree"), and responses "4", "5" and "6" into a different larger category ("agree"). Each score was calculated as the arithmetic mean of the answers to the "sub-questions" included in each question. No score was built for Question 4, because its sub-questions lacked a unifying meaning, which resulted in low internal consistency. Each score was evaluated for internal consistency using Cronbach's alpha, revealing high consistency (Question 2 α = 0.975; Question 3 α = 0.896; Question 5 α = 0.934; Question 6 α = 0.889; and Question 7 α = 0.934; S5 Table) [62]. A score variable was also created for question 13, with responses "never", "monthly", and "weekly" grouped into the category "low use", and responses "daily" into "high use". Additionally, for question 14, responses "1", "2", and "3" were grouped into "disagree", and responses "4", "5", and "6" into "agree".
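As an illustration of the score construction described above, the following sketch computes a per-respondent score (the mean of the sub-question answers), the agree/disagree grouping of a single response, and Cronbach's alpha from the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of totals). The analysis in the study was run in SPSS; this standard-library Python version only mirrors the arithmetic. The answer matrix is invented for demonstration and the function names are hypothetical.

```python
from statistics import mean, variance


def cronbach_alpha(responses):
    """responses: one list per respondent, holding one 1-6 answer per sub-question."""
    k = len(responses[0])
    # Sample variance of each sub-question across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's summed answers.
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def question_score(row):
    """Score for one question: arithmetic mean of its sub-question answers."""
    return mean(row)


def dichotomize(answer):
    """Group a 1-6 response: 1-3 -> 'disagree', 4-6 -> 'agree'."""
    return "agree" if answer >= 4 else "disagree"


# Hypothetical answers of five respondents to three sub-questions.
answers = [
    [5, 5, 6],
    [2, 3, 2],
    [4, 4, 5],
    [1, 2, 1],
    [6, 5, 6],
]
alpha = cronbach_alpha(answers)
scores = [question_score(row) for row in answers]
print(round(alpha, 3), scores, dichotomize(answers[0][0]))
```

How the averaged score itself was mapped onto the two categories is not stated in the text, so the sketch applies the grouping only to individual responses.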

In order to further facilitate the bivariate analysis, two dichotomous variables were built for Question 12 corresponding to place of clinical practice: Public (including National Health Service (NHS) Primary Care and NHS Hospital) versus Private (including Private Healthcare, Private Hospital and Private Offices/Clinics); and Primary Health Care (including Primary Health Care of the Portuguese NHS and Primary Health Care in a private unit) versus Hospitals (including Portuguese NHS Hospital, Private Hospital and Hospital of the social sector). A third category labelled "Other" (including Hospitals of the social sector, Insurance Companies/Subsystems, and private practices/clinics) was created to include places of professional practice which did not belong elsewhere and were also residual considering the total sample.
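Because respondents could select more than one place of practice, each dichotomous variable is best read as a flag that is true when at least one selected setting belongs to the group. The sketch below shows one way to derive such flags. The setting labels are hypothetical identifiers, the mapping of "Private Healthcare" to private primary care is an assumption, and the residual "Other" category is left out because its composition overlaps with the groups above.

```python
# Hypothetical setting labels; the groupings follow the paragraph above.
PUBLIC = {"nhs_primary_care", "nhs_hospital"}
PRIVATE = {"private_primary_care", "private_hospital", "private_clinic"}
PRIMARY_CARE = {"nhs_primary_care", "private_primary_care"}
HOSPITAL = {"nhs_hospital", "private_hospital", "social_sector_hospital"}


def practice_flags(selected):
    """Turn a multi-select answer into one boolean flag per grouping."""
    chosen = set(selected)
    return {
        "public": bool(chosen & PUBLIC),
        "private": bool(chosen & PRIVATE),
        "primary_care": bool(chosen & PRIMARY_CARE),
        "hospital": bool(chosen & HOSPITAL),
    }


# A respondent working both in an NHS hospital and in a private clinic.
print(practice_flags(["nhs_hospital", "private_clinic"]))
```

A respondent can therefore count toward both the public and the private group, which is why the grouped percentages in Table 1 add up to more than 100%.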

Bivariate analysis was conducted by assessing the statistical association between the variables under study through statistical tests. Non-parametric tests were used because the variables were not normally distributed (confirmed by the Kolmogorov-Smirnov test). According to the characteristics of each variable, the non-parametric tests performed were the Spearman correlation test and the Mann-Whitney U test. An association between variables was considered statistically significant when the p-value (Sig) was smaller than 0.05 (95% confidence level).
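For readers unfamiliar with the two tests, the sketch below shows what each statistic measures. Spearman's ρ is the Pearson correlation of the ranked values, and the Mann-Whitney U statistic is derived from the rank sum of one group within the pooled sample. It is a standard-library Python illustration on invented data, not the SPSS procedure used in the study, and it does not compute p-values. In practice a statistics package would be used, for example SciPy's `scipy.stats.spearmanr` and `scipy.stats.mannwhitneyu`.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1 to n; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # positions are 0-based, ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def mann_whitney_u(group_a, group_b):
    """Return (U for group_a, smaller of the two U values)."""
    ranks = average_ranks(list(group_a) + list(group_b))
    n1, n2 = len(group_a), len(group_b)
    rank_sum_a = sum(ranks[:n1])
    u1 = rank_sum_a - n1 * (n1 + 1) / 2
    u2 = n1 * n2 - u1
    return u1, min(u1, u2)


# Hypothetical data: ICT-use scores vs. AI-delegation scores, and
# delegation scores for two groups of respondents.
ict_use = [2.0, 3.5, 4.0, 5.5, 6.0]
delegation = [2.5, 3.0, 4.5, 5.0, 5.5]
print(spearman_rho(ict_use, delegation))
print(mann_whitney_u([4.5, 5.0, 5.5], [2.5, 3.0, 4.5]))
```

Both statistics depend only on ranks, which is why they suit ordinal answers such as the 1 to 6 agreement scale and do not require normally distributed data.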

Results

Demographic characterization of the study population

Demographic characteristics of the surveyed population are presented in Table 1. Survey respondents were mostly women (female to male ratio 55.0:45.0; n = 557 and n = 456, respectively), with a mean age of 46 years (46.14 ± 15.69). The largest age group was 30 to 39 years old (25.6%), while only 6.5% of respondents were 70 or more years old (Table 1).

Table 1. Demographic and professional characteristics of respondents.

| Characteristic | Category | n | % |
|---|---|---|---|
| Age (mean ± SD) | 46.14 ± 15.69 | | |
| Age intervals (N = 999) | 20–29 years old | 183 | 18.3 |
| | 30–39 years old | 257 | 25.6 |
| | 40–49 years old | 134 | 13.4 |
| | 50–59 years old | 153 | 15.3 |
| | 60–69 years old | 209 | 20.9 |
| | 70 years old or older | 63 | 6.5 |
| Gender (N = 1013) | Male | 456 | 45 |
| | Female | 557 | 55 |
| Setting of professional practice | Hospital of the NHS | 545 | 53.8 |
| | Primary healthcare of the NHS | 278 | 27.4 |
| | Medical clinic/office of the private sector | 265 | 26.2 |
| | Hospital of the private sector | 221 | 21.8 |
| | Primary healthcare of the private sector | 51 | 5 |
| | Hospital of the social sector | 25 | 2.5 |
| | Insurance or health subsystem | 24 | 2.4 |
| | NHS sector | 779 | 76.9 |
| | Private sector | 431 | 42.5 |
| | Primary health care | 306 | 30.2 |
| | Hospital care | 660 | 65.2 |
| Professional experience (N = 981) | < 1 year | 246 | 25.1 |
| | 1–9 years | 215 | 21.9 |
| | 10–19 years | 122 | 12.4 |
| | 20–29 years | 176 | 18 |
| | 30–39 years | 166 | 16.9 |
| | > 40 years | 56 | 5.7 |
| Medical specialty (N = 1013) | General and Family Medicine | 271 | 26.8 |
| | Internal Medicine | 70 | 6.9 |
| | Pediatric Medicine | 50 | 4.9 |
| | Anesthesiology | 45 | 4.4 |
| | Medical Internship (Common year, no specialty) | 40 | 3.9 |
| | General Surgery | 37 | 3.7 |
| | Public Health | 31 | 3.1 |
| | Radiology | 32 | 3.2 |
| | Gynecology/Obstetrics | 28 | 2.8 |
| | Psychiatry | 26 | 2.6 |
| | Ophthalmology | 24 | 2.4 |
| | Clinical Pathology | 20 | 2 |
| | Orthopedics | 20 | 2 |
| | Occupational Medicine | 19 | 1.9 |
| | Rheumatology | 19 | 1.9 |
| | Physical and Rehabilitation Medicine | 18 | 1.8 |
| | Intensive Care Medicine | 17 | 1.7 |
| | Dermatology and Venereology | 15 | 1.5 |
| | Medical Oncology | 16 | 1.6 |
| | Psychiatry (Childhood and Adolescence) | 13 | 1.3 |
| | Cardiology | 12 | 1.2 |
| | Neurology | 12 | 1.2 |
| | Anatomic Pathology | 11 | 1.1 |
| | Immunology/Allergy | 11 | 1.1 |
| | Nephrology | 11 | 1.1 |
| | Otorhinolaryngology | 11 | 1.1 |
| | Neurosurgery | 10 | 1 |
| | Other | 124 | 12.4 |

Descriptive statistics of the study population including age, gender, years of professional experience, setting of professional practice and medical specialty.

Professional characterization of the study population

Physicians from all medical specialties were surveyed. The most represented specialty was General and Family Medicine (26.8%), followed by Internal Medicine (6.9%) and Pediatric Medicine (4.9%). For clarity and data processing purposes, less represented medical specialties (< 1%) were grouped and classified as "Other" (Table 1).

In terms of professional experience as a medical specialist, the largest group of respondents had less than one year of experience (25.1%), followed by those with one to nine years of experience (21.9%) (Table 1).

Regarding the setting of professional practice, most respondents worked in the Portuguese NHS (76.9%), either in a public hospital (53.8%) or in public primary healthcare (27.4%) (Table 1). In the private sector, 26.2% of respondents worked in a medical clinic/office and 21.8% worked in a hospital (Table 1). As medicine in Portugal is not mandatorily practiced in a regimen of exclusivity, respondents could select more than one setting of practice.

Characterization of the study population in terms of use and command of Information and Communication Technologies (ICT)

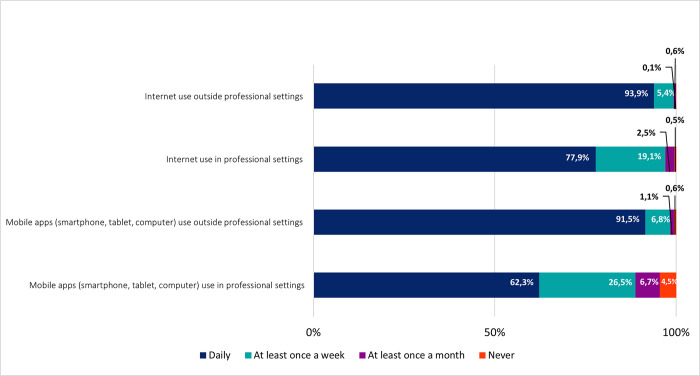

Most respondents reported daily use of the internet and mobile apps in non-professional settings (93.9% and 91.5%, respectively) (Fig 1). Although less frequently, most respondents also reported daily use of the internet and mobile apps in professional settings (77.9% and 62.3%, respectively). When weekly and daily use are combined, the large majority of respondents reported using the internet (97%) and mobile apps (88.8%) in professional settings. Taken together, our results show that medical doctors in Portugal are frequent users of ICT.

Fig 1. Use of information and communication technologies (ICT).

Frequencies corresponding to periodicity of use of Information and Communication Technologies (ICT). Results correspond to answers to the question “How often do you perform each of the following activities?”.

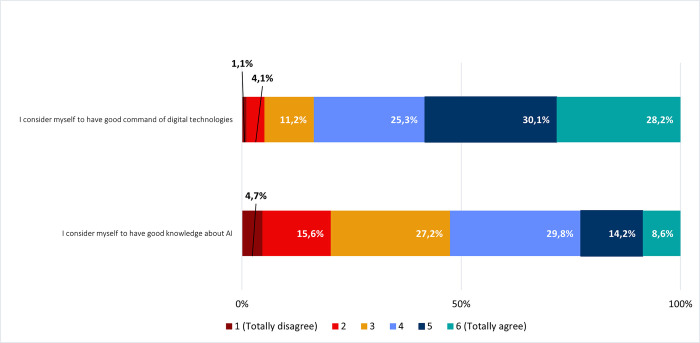

To evaluate the self-perceived command of digital technologies and knowledge about AI, we asked respondents to estimate their literacy levels on a 6-level Likert scale [63, 64] (Fig 2). Most respondents (83.6%) considered that they had a good command of digital technologies (levels 4, 5 and 6 combined). In contrast, most respondents estimated their level of knowledge about AI as intermediate (29.8% for level 4 and 27.2% for level 3, resulting in 57% when combined).

Fig 2. Self-perceived command of digital technologies and knowledge about AI.

Frequencies corresponding to self-perceived command of digital technologies and knowledge about AI. Results correspond to agreement with statements in the left column, according to a Likert scale.

Perceptions regarding the impact of AI on healthcare

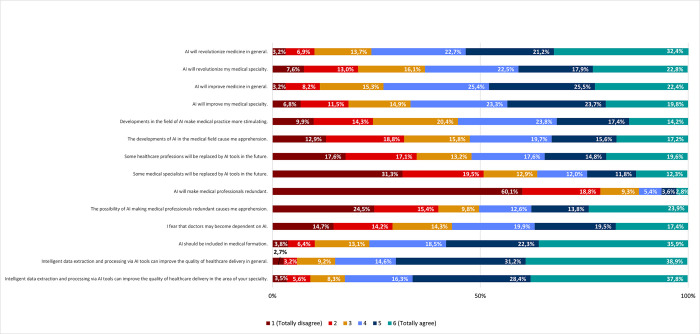

To evaluate the perceptions of medical doctors in Portugal regarding the impact of AI in healthcare, we first asked respondents to indicate their agreement or disagreement (according to a 6-level Likert scale) with a series of related statements. Most respondents agreed that AI will have an impact on medicine in general. In particular, 76.3% agreed (32.4% totally agree; only 3.2% totally disagree) that AI will revolutionize medicine and 73.3% agreed (22.4% totally agree; only 3.2% totally disagree) that it will improve it (Fig 3). Accordingly, most respondents also agreed that AI should be included in medical education (76.7% as a combination of levels 4, 5 and 6; 35.9% totally agree; 3.8% totally disagree). Regarding the impact of AI on their own medical specialties, respondents were slightly less assertive. Nonetheless, 63.2% still agreed that AI will revolutionize their specialty and 66.8% agreed that it will improve it. Furthermore, 55.4% agreed that developments in AI will make medical practice more stimulating (Fig 3).

Fig 3. Impact and potential of the use of AI in healthcare delivery.

Frequencies corresponding to impact and potential of the use of AI in healthcare delivery. Results correspond to agreement with statements in the left column, according to a Likert scale.

Despite the assessment that AI will have a significant impact on the medical profession, 88.2% of respondents disagreed (60.1% totally disagree) with the general statement that AI will make medical doctors redundant. However, disagreement was reduced to 63.7% regarding whether medical specialists will be replaced by AI in the future and, notably, 52% of respondents agreed that some healthcare professions will be replaced by AI in the future. Furthermore, approximately half of those surveyed (50.3%) agreed that the possibility of medical doctors becoming redundant due to AI causes apprehension, as do the developments of AI in the medical field (52.5%). Accordingly, 56.8% of respondents fear that medical doctors may become dependent on AI (Fig 3).

Specifically, regarding the potential of AI-mediated health data processing, 84.7% of respondents agreed that it would improve the quality of healthcare delivery in general (38.9% totally agree), and 82.5% agreed (37.8% totally agree) that it would improve the quality of healthcare delivery in their specific specialty (Fig 3).

Perceptions regarding the use of AI in healthcare

This study also aimed to assess physicians’ perceptions regarding the use of AI in healthcare according to four different dimensions: delegation of tasks, specific advantages, specific disadvantages, and predisposition to adopt AI use.

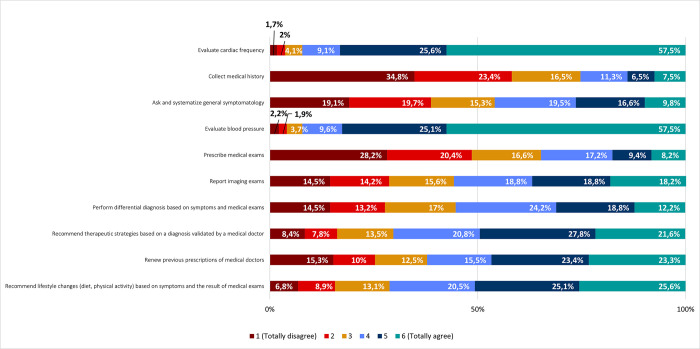

I. Task delegation

Respondents agreed with delegating various tasks to AI, including evaluating heart rate (92.2%; 57.5% totally agree) and blood pressure (92.2%; 57.5% totally agree); recommending lifestyle changes based on symptoms and the results of medical exams (71.2%); recommending therapeutic strategies based on a diagnosis validated by a medical doctor (70.2%); renewing previous prescriptions of medical doctors (62.2%); reporting imaging exams (55.8%); and performing differential diagnosis based on symptoms and medical exams (55.2%) (Fig 4).

Fig 4. Task delegation to AI tools.

Frequencies corresponding to task delegation to Artificial Intelligence tools. Results correspond to agreement with statements in the left column (in response to the following question: "Among the following procedures, please indicate the degree of agreement that it can be delegated to an AI tool"), according to a Likert scale.

In contrast, most respondents disagreed with delegating other tasks to AI, in particular obtaining medical history (74.7%; 34.8% totally disagree); prescribing medical exams (65.2%; 28.2% totally disagree); and asking about and systematizing general symptomatology (54.1%) (Fig 4).

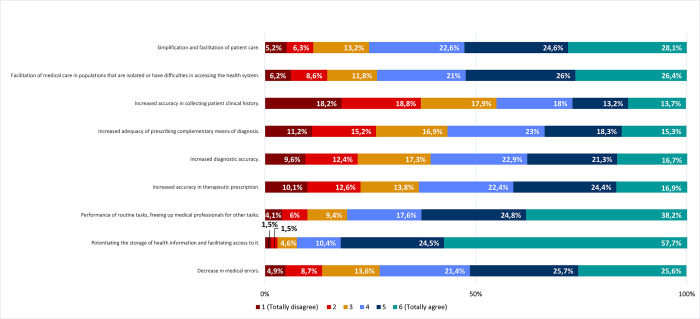

II. Specific advantages

Respondents agreed with several advantages of using AI, such as enhancing the storage of health information and facilitating access to it (92.6%); fulfilling routine tasks, while freeing medical professionals for other tasks (80.6%); simplifying and streamlining patient care (75.3%); facilitating access to healthcare for isolated populations or others (73.4%); reducing medical errors (72.7%); and increasing accuracy in therapeutic prescriptions (63.7%), in diagnosis (60.9%), and in the adequacy of the prescription of complementary diagnostic procedures (56.6%) (Fig 5).

Fig 5. Specific advantages of using AI in healthcare.

Frequencies corresponding to agreement/disagreement with specific advantages of using Artificial Intelligence tools in healthcare. Results correspond to agreement with statements in the left column (in response to the following question: "Please indicate your degree of agreement with the following possible advantages of using AI tools in healthcare"), according to a Likert scale.

In contrast, and in line with their disagreement with delegating the collection of medical history to AI (Fig 4), respondents disagreed that AI has the advantage of increasing accuracy in collecting patient clinical history (55.2% disagree; 18.2% totally disagree) (Fig 5).

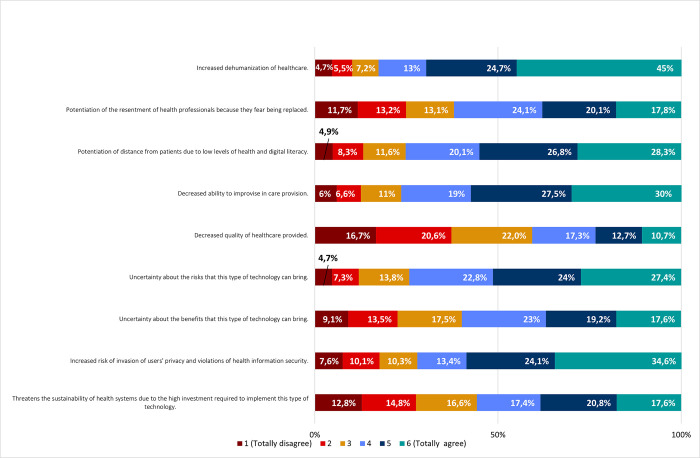

III. Specific disadvantages

Regarding disadvantages of AI use, our results revealed a general agreement among respondents. Respondents agreed that the following are disadvantages of AI use in healthcare: increased dehumanization of health care (82.7%); decreased ability to improvise in care provision (76.5%); increased distance from patients due to low levels of health and digital literacy (75.2%); uncertainty about the risks (74.2%); increased risk of invasion of users' privacy and violations of health information security (72.1%); increased resentment among health professionals due to fear of being replaced (62%); uncertainty about benefits (59.8%); and threat to the sustainability of health systems due to the high investment required (55.8%) (Fig 6).

Fig 6. Specific disadvantages of using AI in healthcare.

Frequencies corresponding to agreement/disagreement with specific disadvantages of using Artificial Intelligence tools in healthcare. Results correspond to agreement with statements in the left column (in response to the following question: "Please indicate your degree of agreement with the following possible disadvantages of using AI tools in healthcare"), according to a Likert scale.

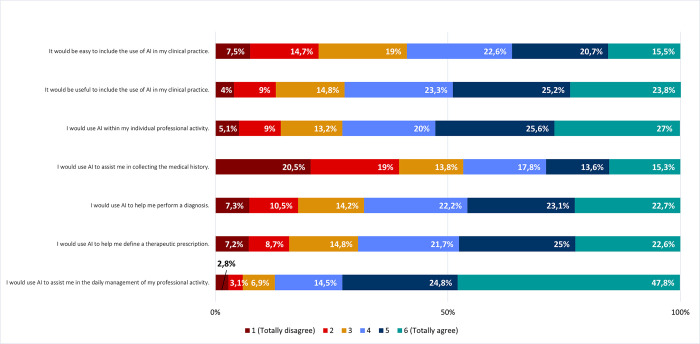

IV. Predisposition to use AI in clinical practice

Regarding the predisposition to use AI in their own clinical practice, respondents agreed that it would be useful (72.3%; 23.8% totally agree) and easy (58.8%; 15.5% totally agree) (Fig 7). Accordingly, most respondents admitted they would use AI in their professional activity (72.6%; 27% totally agree). Specifically, respondents admitted they would use AI in the daily management of their professional practice, for example for writing in the electronic health record and for scheduling and booking appointments (87.1%; 47.8% totally agree). Most respondents also admitted they would use AI to assist them in defining therapeutic prescriptions (69.3%; 22.6% totally agree) and making diagnoses (68%; 22.7% totally agree). In accordance with responses to previous questions (Figs 4 and 5), respondents were divided about using AI to assist them in collecting the patient's medical history (53.3%; 20.5% totally disagree) (Fig 7).

Fig 7. Predisposition to adopt AI in clinical practice.

Frequencies corresponding to agreement/disagreement with predisposition to adopt AI in clinical practice. Results correspond to agreement with statements in the left column (in response to the following question: "Please indicate your agreement with the following statements regarding the use of AI in your clinical practice"), according to a Likert scale.

Bivariate analysis: Correlation and association between different AI perceptions and study population characteristics

Through bivariate analysis of our results, we found a positive and statistically significant association between the general (professional and non-professional) use of ICT and the perception that application of AI in health data extraction and processing improves healthcare quality (ρ = 0.252; p < 0.001). Respondents with higher digital and AI command also agreed more with this perception (ρ = 0.158; p < 0.001) (S1 Table). We also found a significant association between gender and the perception that application of AI in health data extraction and processing improves healthcare quality (p < 0.001) (S6 Table), with men tending to agree more (88.7%) than women (83.7%) (S2 Table). In contrast, no association was found between this AI perception indicator and the respondent's place of clinical practice (S6 Table). Regarding the delegation of clinical procedures to AI tools, the higher the respondent's general (professional and non-professional) ICT use (ρ = 0.170; p < 0.001) and AI and digital command (ρ = 0.096; p < 0.001), the higher the agreement with delegation to AI (S1 Table). We also found that men agreed more (76.0%) than women (59.9%) (S2 Table) with the delegation of clinical procedures to AI tools (p < 0.001) (S6 Table).

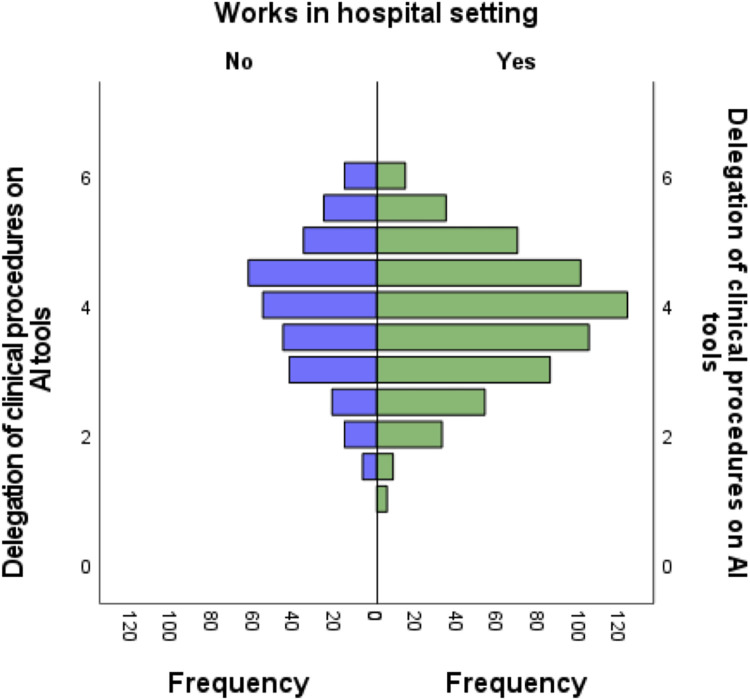

We also found a statistically significant association (ρ = 0.119; p < 0.001) between age and delegation of clinical procedures to AI tools, indicating that the older the respondent, the higher the expressed agreement with delegation to AI (S1 Table). In accordance with this observation, respondents with more years of professional experience expressed higher agreement with delegation of clinical procedures to AI (ρ = 0.113; p < 0.001) (S1 Table). Furthermore, respondents who work in a hospital environment expressed higher agreement with delegation of clinical procedures to AI (65.45%) (Fig 8) (p < 0.05) (S6 Table). In contrast, no statistically significant relationship was observed between this AI perception indicator and working in primary care, in the public sector or in the private sector (S6 Table).

Fig 8. Agreement to delegate clinical procedures to AI tools as expressed by medical doctors who work in hospital settings.

Association between the score corresponding to question 3 “Delegation of clinical procedures on AI tools” and results of respondents who work in hospital settings (p < 0.05). Independent-samples Mann-Whitney U test.

Regarding the perceived advantages of AI in healthcare, we found a statistically significant association between this indicator and gender, with men perceiving more advantages than women (85.1% and 73.1%, respectively). Conversely, women perceived more disadvantages than men (85.6% and 68.1%, respectively) (S2 and S6 Tables).

ICT use (ρ = 0.204; p < 0.001) and digital and AI command (ρ = 0.141; p < 0.001) were also significantly associated with perceived advantages, both with positive correlation coefficients. Consistently, respondents who expressed higher agreement with the delegation of clinical procedures to AI perceived more advantages (ρ = 0.771; p < 0.001) and fewer disadvantages (ρ = -0.276; p < 0.001) of AI use in healthcare (S1 Table). Furthermore, the higher the ICT use (ρ = -0.202; p < 0.001) and digital and AI command (ρ = -0.101; p < 0.001) of respondents, the lower their perceived disadvantages of AI use in healthcare (S1 Table).

Additionally, higher ICT use and higher digital and AI command corresponded to higher predisposition to adopt AI tools in clinical practice (ρ = 0.263; p < 0.001 and ρ = 0.139; p < 0.001, respectively) (S1 Table). As observed for other indicators of AI use in healthcare, we also found a statistically significant association between gender and predisposition to adopt AI in clinical practice (p < 0.001) (S6 Table), with men agreeing more (81.8%) than women (68.4%) (S2 Table). In our study, we did not find significant differences between medical specialties regarding the various AI perception indicators assessed. However, we did observe a statistically significant result (p < 0.033) for radiologists regarding the "predisposition for using AI in clinical practice" indicator compared to other medical specialties (S3 Table). This suggests that radiologists might have a higher inclination to utilize AI tools in their clinical practice compared to other medical specialties.

Finally, respondents who expressed higher predisposition for AI adoption in their clinical practice also expressed higher agreement with delegation of clinical procedures to AI tools (ρ = 0.689; p < 0.001) and agreed more that application of AI in health data extraction and processing improves healthcare quality (ρ = 0.661; p < 0.001). Respondents who perceived more advantages in AI use agreed more with adopting AI in their clinical practice (ρ = 0.819; p < 0.001). Conversely, those who perceived more disadvantages in AI use showed less predisposition for AI adoption in their clinical practice, a statistically significant association with a negative correlation (ρ = -0.373; p < 0.001) (S1 Table).

Discussion

This study aimed to assess the perception of Portuguese medical doctors regarding the potential and impact of AI in healthcare. Our survey reached all licensed medical doctors in Portugal, with a higher representation of General Practice and Family Medicine specialists (26.8%), which aligns with the national distribution [65].

Most respondents in our study reported being frequent digital users and having good knowledge of digital technologies. However, their knowledge of AI was generally rated as intermediate, possibly because AI is a relatively new field compared to other digital technologies. This finding is consistent with previous research in healthcare [63, 66–70].

In our study, respondents considered that most tasks can be delegated to AI tools, particularly medical doctors working in hospital settings. This finding might reveal lower risk aversion and/or optimism toward the potential of technology, perhaps due to the fact that medical doctors working in hospitals have more contact with technology [71].

Obtaining medical history and prescribing medical exams were the tasks that respondents were least willing to delegate. Consistently, most respondents did not consider that AI has the advantage of increasing accuracy in performing these tasks. Future work should explore why medical doctors perceive obtaining medical history as a less delegable task. It could be due to its inherent complexity, its variability, or its reliance on direct communication between the doctor and the patient. It has long been established that good communication and empathy skills are fundamental to strengthening the (human) doctor-patient relationship and improving healthcare quality [72–74]. Furthermore, several studies have highlighted the collection of medical history as a limitation of digital health [75, 76]. In contrast, AI is currently used to deliver personalized patient care, which is usually perceived as synonymous with higher accuracy [23, 77, 78]. Other studies have shown that using AI tools to take medical history has advantages: the interaction is not time-constrained, so patient response times are not limited to seconds; computers do not use heuristics; and these systems may increase the quality of care by supporting the optimization of staff assignments [79, 80].

Most respondents did not consider that prescribing complementary diagnostic tests can be delegated to AI tools. Nonetheless, using these tools to adjust the prescription of complementary diagnostic tests is perceived as an advantage. These results may indicate that medical doctors view AI essentially as a support instrument (a very valuable assistant or reviewer) but not as a substitute, which highlights a mixture of trust and skepticism [81]. Other results from this study are consistent with this hypothesis: simpler routine clinical activities, such as checking blood pressure and heart rate, obtained greater agreement for delegation to AI tools. Moreover, the most frequently perceived advantage of AI was the increased storage of health information and the facilitation of health data access, which is compatible with the use of AI to assist the daily management of professional activity and also with the previously documented predisposition for delegating to AI the systematization of the patient's general symptomatology [41].

While most respondents believe that their specific occupation will not be replaced by AI in the future and that their profession will not become redundant, they also identify, as a disadvantage of using AI in clinical practice, the resentment that AI implementation may cause among medical doctors who fear being replaced. Additionally, many respondents acknowledge the possibility of AI replacing other healthcare professionals, aligning with existing evidence that foresees the impact of AI on certain healthcare professions [24, 48, 82–84]. For example, in the field of radiology, evidence shows that using AI has broader implications outside the traditional activities of lesion detection and characterization [85]. Nonetheless, in alignment with similar studies that assessed physicians' perspectives on AI use, our research revealed that physicians have a positive attitude toward integrating AI into their practice. They did not primarily express concern about being replaced by AI, and they believe AI training and education should be enhanced [86–89].

However, variations within the medical profession exist. For example, other studies indicate that surgeons are less worried about job replacement compared to other specialties due to the intricate surgical procedures and direct interaction with patients that their role entails [42]. Despite this fact, our analysis did not reveal significant differences between medical specialties. However, it is worth noting that in our study radiologists reported a higher inclination to adopt AI tools in their clinical practice compared to other medical specialties. While not present for all indicators assessed, this difference observed for radiologists aligns with the more established use of AI in this medical specialty compared to others [90–93].

Our study showed that most physicians believe that AI could reduce medical errors. AI technology is capable of highlighting hidden health information in large databases in order to aid clinical decision-making, which can help reduce the misdiagnoses that are inevitable in human practice [5, 14, 94, 95]. Furthermore, AI use in clinical practice can significantly relieve the pressure of routine work [41], freeing up more time for patient care and quality improvement [40, 82, 96]. Nonetheless, the fact that many participants in our study believe that AI can dehumanize medical care highlights the need to implement mechanisms that ensure automation-driven efficiency gains in healthcare do not equate to devaluing human interaction [24]. A balanced and complementary human-machine interaction can foster dialogue and proximity between doctors and patients, especially when clinicians are released from routine work and are better equipped with knowledge resulting from the analysis of large amounts of data [97]. Such analysis depends on big data that are clean and readily available to AI systems. Additionally, bias detection, correction, and data contextualization are crucial steps that must be carried out [98].

Ideally, achieving this balanced human-machine interaction would require a collaborative effort, involving healthcare professionals, technical experts, policy makers, and patients to establish guidelines, standards, and ethical considerations that ensure the effective and responsible integration of AI in healthcare.

Another key finding from our study is that medical doctors practicing in Portugal believe that AI can facilitate healthcare access for isolated populations. Importantly, providing care to isolated populations and improving mobile connectivity through technological devices can enable clinicians and public health researchers to better understand variability between individuals and populations, providing more individualized care and planning more appropriate preventive therapeutic measures [23, 28, 78, 99, 100]. Concomitantly, patients armed with their own health data (combined with information from central databases) can better communicate with health professionals and feel more empowered to access healthcare [101]. On the flip side, risks of privacy invasion and security breaches of health information can diminish trust in digital health and put patients off technology adoption [22]. Our results show that privacy and security risks are perceived by medical doctors in Portugal as a downside of AI. As other studies have noted, the successful integration of AI in medicine relies on the establishment of robust data privacy and security safeguards [19, 77, 98, 102, 103]. This concern is shared by African radiographers, who also express concerns about job security and data protection, which emphasizes the critical need to address these and other issues for the successful implementation of advanced medical imaging technologies on a global scale [104, 105].

Globally, AI implementation in healthcare has been proposed to depend on success factors, including policy setting, technological implementation, and medical and economic impact measurement, each of which leads to specific recommendations, including risk-adjustment and “privacy by design” policies as well as quantification of medical and economic impact [106].

In the EU, healthcare is a priority sector for AI implementation, and countries have proposed regulatory frameworks for health data management. However, specific policies vary, and some are still being defined [107].

Furthermore, AI adoption in healthcare remains limited to certain areas, partly due to insufficient trust in AI-driven decision support, which differs among countries [108].

Addressing country-specific and cultural concerns regarding AI use in the healthcare sector is crucial. Reported disparities in AI scientific output, collaboration, and adoption between larger and smaller EU countries have prompted experts to call for coordinated approaches at the European level [107].

A significant finding in our study was that some respondents perceived a reduced ability to improvise, a skill that medical doctors develop through years of practice, as a disadvantage of AI in healthcare [109]. As AI implementation gathers pace, it is fundamental to understand its impact at this level. Representing improvisation (or intuition) in healthcare as an exclusively human quality calls for careful reflection in light of the nature of the medical act in its different dimensions, including risk, uncertainty, and responsibility [110]. AI-based systems, with their learning and self-correction capabilities, have the potential to enhance accuracy in health outcomes by incorporating feedback and extracting information from a large user population for real-time risk warnings and diagnostic predictions [5, 41]. In parallel, the risk of automation bias arises when clinicians become overly dependent on decision support systems, which can lead to de-skilling of human professionals [111]. Studies suggest the importance of maximizing the benefits of AI tools while minimizing over-reliance through constant checking alerts that prevent unchecked decisions [111, 112].

Our results also show that, as expected, the more willing a medical doctor is to use AI in their clinical practice and the more predisposed they are to delegate clinical tasks to AI tools, the more advantages (and fewer disadvantages) they perceive in using AI in healthcare. This is coherent with a view of AI use in medicine built on a human-machine trust relationship, which respects ethical principles and fosters AI adoption [113–117].

Furthermore, in our study, the older and more experienced the respondents, the higher their agreement with delegating clinical tasks to AI. This is an interesting finding. Further investigation should focus on the specific reasons for this result, but one can speculate that experience in clinical practice may lead to better prioritization, easier recognition of medical tasks that may be delegable to AI tools, and a greater human focus on procedures that are considered not delegable to AI. Also, some studies have reported beliefs that older adults are less compatible with digital health innovation than younger adults [118]. Care must be exercised not to extend the same idea to professional adoption, which could disproportionately exclude more experienced professionals from AI use.

The advancement and implementation of AI technologies in medical imaging should be accompanied by adequate professional training. This applies not only to medical specialties like radiology, which lead in AI implementation due to their technologically enhanced nature, but also extends to other medical specialties [119]. As demonstrated in the literature, while there are numerous benefits of AI-enabled clinical workflows, such advancements can also bring about disruption of traditional roles and patient-centered care [24, 46, 120]. However, these challenges can be managed by promoting the education of the medical workforce [46, 50, 119]. Therefore, it is widely recognized that healthcare professionals need to improve their digital and AI literacy to effectively adopt AI in clinical practice [24, 46]. Our study underscores the perception that AI should be an integral part of medical training, emphasizing the need to educate all stakeholders about AI applications, limitations, and specific challenges [46, 50]. Universities and healthcare courses play a central role in facilitating this transition and promoting the inclusion of AI in medical education [53, 102]. Notably, AI integration in medical education is already evident in certain specialties like Radiology, owing to the extensive use of medical imaging and diagnostic possibilities [53, 102]. The results of our study align with other evidence indicating that medical doctors are receptive to learning more about AI and consider it important for their practice [45, 48, 49, 53–55, 121]. Considering the rapid proliferation of AI in healthcare, it is crucial to accelerate the inclusion of digital health disciplines in medical degrees to prepare future healthcare professionals for the challenges and opportunities presented by AI [122].

Our study has significant implications for research, policy, and practice. By surveying a large sample of medical doctors, we identified an overall optimism about the potential of AI and a significant predisposition for AI adoption, which highlights the need to further research the nature of the obstacles and resistance to AI use that still exist. Future policy should focus on maximizing the potential of AI use in clinical practice considering the views of health professionals, while urgently addressing identified challenges, in particular the existing gaps in medical curricula, which limit the use of state-of-the-art AI applications in healthcare due to suboptimal digital health training.

Limitations

Respondents were surveyed using an online questionnaire, which can limit the participation of some potential respondents. Most respondents had less than 10 years of experience. Nonetheless, we believe that this reflects the national reality. Furthermore, our sample size takes into account the number of licensed physicians in Portugal, guaranteeing a statistical confidence level of 99% (with a margin of error of 5%) within the specific context of Portugal. Cultural and context specificities of clinical practice in Portugal should be considered. The fact that many respondents hold jobs across public, private, or social sector work settings may limit conclusions about professional environments. Extrapolation of the results of this study to other settings should be carried out in a rigorous and controlled way. AI implementation is influenced by various factors that go beyond physician perceptions. These factors include patient literacy levels, systems interoperability, health reimbursement arrangements, and legal and regulatory contexts, among others. Nonetheless, due to high levels of professional integration in the healthcare sector, at least within the European Union, our results should also be relevant for analysis in other contexts.
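
The sample-size reasoning above (99% confidence level, 5% margin of error) can be illustrated with a short sketch based on Cochran's formula with a finite population correction. This is an illustration under stated assumptions and not necessarily the exact calculation performed for this study: the function name is ours, the expected proportion of 0.5 is the usual conservative assumption, and the population size is a hypothetical placeholder rather than the actual number of licensed physicians.

```python
from math import ceil
from statistics import NormalDist


def required_sample_size(population, confidence=0.99, margin=0.05, proportion=0.5):
    """Minimum sample size for estimating a proportion in a finite population."""
    # Two-sided critical value of the standard normal distribution.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Cochran's formula for an effectively infinite population.
    n0 = (z ** 2) * proportion * (1 - proportion) / margin ** 2
    # Finite population correction.
    n = n0 / (1 + (n0 - 1) / population)
    return ceil(n)


# HYPOTHETICAL population size, for illustration only (not the real figure).
hypothetical_population = 50_000
n_required = required_sample_size(hypothetical_population)
print(n_required)
```

Under these assumptions, the required sample cannot exceed the infinite-population value of about 664 respondents, whatever the population size, which is below the 1013 valid questionnaires obtained in this study.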

Conclusions

This study concludes that medical doctors licensed to practice in Portugal are in agreement about the significant impact of AI on medicine, including their own specialty, and advocate for its inclusion in medical training. AI is seen as a valuable tool for healthcare professionals, offering benefits such as improved access to health data, increased accuracy, reduced errors, and relief of medical doctors from routine tasks, thereby enhancing the quality of healthcare. However, physicians reported resistance to utilizing AI for obtaining medical history and prescribing tests. Concerns were raised about potential dehumanization of healthcare, decreased ability to improvise when necessary, and risks to privacy and confidentiality. Interestingly, older and more experienced doctors displayed greater optimism and openness towards incorporating AI in medicine.

By conducting a nationwide survey of physicians' perspectives, this study significantly contributes to facilitating the professional integration of AI in medical practice. The high professional integration observed in the healthcare sector, especially within the European Union (EU), makes our results relevant for broader geographical contexts and diverse healthcare systems. Furthermore, our findings emphasize the importance of expediting the optimization of AI use in healthcare and the integration of AI skills training into medical curricula.

Supporting information

S1 Table. Coefficients of correlation between AI perceptions (scores) and different study population characteristics. ns. = not significant; ** Correlation is significant at the 0.01 level (2-tailed); * Correlation is significant at the 0.05 level (2-tailed); AI in DEP: Application of AI in health data extraction and processing (Question 2); Delegation on AI: Delegation of clinical procedures on AI tools (Question 3); Adv. of AI: Specific advantages of AI (Question 5); Disadv. of AI: Specific disadvantages of using AI (Question 6); Pred. for using AI: Predisposition for using AI in clinical practice (Question 7); ICT use: Use of information and communication technologies (Question 13); Com. of DT and AI: Self-perceived command of digital technologies and knowledge about AI (Question 14); YPE: Years of Professional Experience.

(DOCX)

S2 Table. Description of score results according to gender (statistically significant associations).

(DOCX)

S3 Table. Description of score results according to radiology and other medical specialties (statistically significant associations).

(DOCX)

S4 Table. Description of general statistics related to the scores and their respective questions.

(DOCX)

S5 Table. Results of Cronbach's alpha test (questions 2, 3, 5, 6 and 7 of our survey) as a measure of internal consistency (reliability test), and the respective coefficient level (Cronbach's alpha coefficient: more than 0.9: Excellent; 0.80–0.89: Good; 0.70–0.79: Acceptable; 0.60–0.69: Questionable; 0.50–0.59: Poor; less than 0.50: Unacceptable). 1* Corrected total item correlation [62].

(DOCX)

S6 Table. U-test values for statistically significant associations between AI perceptions (scores) and different study population characteristics. ns. = not significant; ** Significant at the 0.01 level (2-tailed); * Significant at the 0.05 level (2-tailed); AI in DEP: Application of AI in health data extraction and processing (Question 2); Delegation on AI: Delegation of clinical procedures on AI tools (Question 3); Adv. of AI: Specific advantages of AI (Question 5); Disadv. of AI: Specific disadvantages of using AI (Question 6); Pred. for using AI: Predisposition for using AI in clinical practice (Question 7); ICT use: Use of information and communication technologies (Question 13); Com. of DT and AI: Self-perceived command of digital technologies and knowledge about AI (Question 14); YPE: Years of Professional Experience; Public: Public Sector; Private: Private Sector; PHC: Primary Health Care; HC: Hospital Care.

(DOCX)

Acknowledgments

The authors gratefully acknowledge the collaboration of the Portuguese Medical Association in disseminating the questionnaire of this study among registered physicians in Portugal.

Data Availability

All relevant data are within the paper and its Supporting Information files.

Funding Statement

The present publication was funded by Fundação Ciência e Tecnologia, IP national support through CHRC (UIDP/04923/2020). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1.Mintz Y, Brodie R. Introduction to artificial intelligence in medicine. Minim Invasive Ther Allied Technol. 2019;28(2). doi: 10.1080/13645706.2019.1575882 [DOI] [PubMed] [Google Scholar]

- 2.Loftus TJ, Tighe PJ, Filiberto AC, Efron PA, Brakenridge SC, Mohr AM, et al. Artificial Intelligence and surgical decision-making. JAMA Surg. 2020;155(2). doi: 10.1001/jamasurg.2019.4917 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Bottino A, Laurentini A. Experimenting with nonintrusive motion capture in a virtual environment. Vis Comput. 2001;17(1). [Google Scholar]

- 4.Millington I, Funge J. Artificial Intelligence for games. 2nd ed. CRC, PRESS. Boca Raton; 2009. [Google Scholar]

- 5.Jiang F, Jiang Y, Zhi H, Dong Y, Li H, Ma S, et al. Artificial intelligence in healthcare: past, present and future. Stroke Vasc Neurol. 2017;2(4). doi: 10.1136/svn-2017-000101 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Kaplan A, Haenlein M. Siri, Siri, in my hand: Who’s the fairest in the land? On the interpretations, illustrations, and implications of artificial intelligence. Bus Horiz. 2019;62(1). [Google Scholar]

- 7.Bryson JJ. The Past Decade and Future of AI’s Impact on Society. Towards a New Enlightenment? A Transcendent Decade. Madrid: Turner; 2019. [Google Scholar]

- 8.Mendes RD. Artificial Intelligence: expert systems in information management. Ciência da Informação. 1997;26(1). [Google Scholar]

- 9.Zeng Y, Lu E, Sun Y, Tian R. Responsible facial recognition and beyond. Cornell Unversity. 2019; [Google Scholar]

- 10.Martinez-Martin N. What are important ethical implications of using facial recognition technology in health care? AMA J Ethics. 2019;21(2). doi: 10.1001/amajethics.2019.180 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Chang WJ, Schmelzer M, Kopp F, Hsu CH, Su JP, Chen LB, et al. A deep learning facial expression recognition based scoring system for restaurants. In: 2019 International Conference on Artificial Intelligence in Information and Communication (ICAIIC). IEEE; 2019.

- 12.Raj M, Seamans R. Primer on artificial intelligence and robotics. J Organ Des. 2019;8(1). [Google Scholar]

- 13.Smith BC. The promise of artificial intelligence: reckoning and judgment. MIT Press. 2019; [Google Scholar]

- 14.Magrabi F, Ammenwerth E, McNair JB, De Keizer NF, Hyppönen H, Nykänen P, et al. Artificial Intelligence in clinical decision support: challenges for evaluating AI and practical implications. Yearb Med Inform. 2019;28(1). doi: 10.1055/s-0039-1677903 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Park CW, Seo SW, Kang N, Ko BS, Choi BW, Park CM, et al. Artificial Intelligence in Health Care: Current Applications and Issues. Vol. 35, World Journal of Orthopedics. J Korean Med Sci; 2020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Harrer S. Attention is not all you need: the complicated case of ethically using large language models in healthcare and medicine. eBioMedicine. 2023;90:104512. doi: 10.1016/j.ebiom.2023.104512 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Hurvitz N, Azmanov H, Kesler A, Ilan Y. Establishing a second-generation artificial intelligence-based system for improving diagnosis, treatment, and monitoring of patients with rare diseases. Eur J Hum Genet. 2021;29(10):1485–90. doi: 10.1038/s41431-021-00928-4 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Panch T, Szolovits P, Atun R. Artificial intelligence, machine learning and health systems. J Glob Health. 2018;8(2). doi: 10.7189/jogh.08.020303 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med. 2019. Jan 7;25(1). doi: 10.1038/s41591-018-0300-7 [DOI] [PubMed] [Google Scholar]

- 20.Challen R, Denny J, Pitt M, Gompels L, Edwards T, Tsaneva-Atanasova K. Artificial intelligence, bias and clinical safety. BMJ Qual Saf. 2019;28(3). doi: 10.1136/bmjqs-2018-008370 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Huang G, Wei X, Tang H, Bai F, Lin X, Xue D. A systematic review and meta-analysis of diagnostic performance and physicians’ perceptions of artificial intelligence (AI)-assisted CT diagnostic technology for the classification of pulmonary nodules. J Thorac Dis. 2021;13(8). doi: 10.21037/jtd-21-810 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Organization for Economic Co-operation and Devolopment´s. TRUSTWORTHY AI [Internet]. SAUDI ARABIA; 2020. Available from: https://www.oecd.org/termsandconditions/

- 23.Johnson KB, Wei WQ, Weeraratne D, Frisse ME, Misulis K, Rhee K, et al. Precision Medicine, AI, and the future of personalized health care. Clin Transl Sci. 2021;14(1). doi: 10.1111/cts.12884 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Topol EJ. Preparing the healthcare workforce to deliver the digital future The Topol Review An independent report on behalf of the Secretary of State for Health and Social Care [Internet]. 2019. Available from: https://topol.hee.nhs.uk/wp-content/uploads/HEE-Topol-Review-2019.pdf [Google Scholar]

- 25.Yang YC, Islam SU, Noor A, Khan S, Afsar W, Nazir S. Influential usage of big data and Artificial Intelligence in healthcare. Asghar MZ, editor. Comput Math Methods Med. 2021;2021. doi: 10.1155/2021/5812499 [DOI] [PMC free article] [PubMed] [Google Scholar] [Retracted]

- 26.Esengönül M, Marta A, Beirão J, Pires IM, Cunha A. A systematic review of Artificial Intelligence applications used for inherited retinal disease management. Medicina (B Aires). 2022;58(4). doi: 10.3390/medicina58040504 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Jones OT, Matin RN, van der Schaar M, Prathivadi Bhayankaram K, Ranmuthu CKI, Islam MS, et al. Artificial intelligence and machine learning algorithms for early detection of skin cancer in community and primary care settings: a systematic review. Lancet Digit Heal. 2022;4(6). doi: 10.1016/S2589-7500(22)00023-1 [DOI] [PubMed] [Google Scholar]

- 28.Shaban-Nejad A, Michalowski M, Buckeridge DL. Health intelligence: how artificial intelligence transforms population and personalized health. npj Digit Med. 2018;1(1):53. doi: 10.1038/s41746-018-0058-9 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Edrees H, Song W, Syrowatka A, Simona A, Amato MG, Bates DW. Intelligent Telehealth in pharmacovigilance: a future perspective. Drug Saf. 2022;45(5). doi: 10.1007/s40264-022-01172-5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Rezayi S, R Niakan Kalhori S, Saeedi S. Effectiveness of Artificial Intelligence for personalized medicine in neoplasms: a systematic review. Biomed Res Int. 2022;2022. doi: 10.1155/2022/7842566 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Zare S, Meidani Z, Ouhadian M, Akbari H, Zand F, Fakharian E, et al. Identification of data elements for blood gas analysis dataset: a base for developing registries and artificial intelligence-based systems. BMC Health Serv Res. 2022;22(1). doi: 10.1186/s12913-022-07706-y [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Baxi V, Edwards R, Montalto M, Saha S. Digital pathology and artificial intelligence in translational medicine and clinical practice. Mod Pathol. 2022;35(1). doi: 10.1038/s41379-021-00919-2 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Malik YS, Sircar S, Bhat S, Ansari MI, Pande T, Kumar P, et al. How artificial intelligence may help the Covid‐19 pandemic: Pitfalls and lessons for the future. Rev Med Virol. 2021;31(5). doi: 10.1002/rmv.2205 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Bera K, Braman N, Gupta A, Velcheti V, Madabhushi A. Predicting cancer outcomes with radiomics and artificial intelligence in radiology. Nat Rev Clin Oncol. 2022;19(2). doi: 10.1038/s41571-021-00560-7 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Bordoloi D, Singh V, Sanober S, Buhari SM, Ujjan JA, Boddu R. Deep learning in healthcare system for quality of service. J Healthc Eng. 2022;2022. doi: 10.1155/2022/8169203 [DOI] [PMC free article] [PubMed] [Google Scholar] [Retracted]

- 36.Kamruzzaman MM, Alrashdi I, Alqazzaz A. New opportunities, challenges, and applications of edge-AI for connected healthcare in internet of medical things for smart cities. Chakraborty C, editor. J Healthc Eng. 2022;2022. [DOI] [PMC free article] [PubMed] [Google Scholar] [Retracted]

- 37.McKay F, Williams BJ, Prestwich G, Bansal D, Hallowell N, Treanor D. The ethical challenges of artificial intelligence-driven digital pathology. J Pathol Clin Res. 2022;8(3). doi: 10.1002/cjp2.263 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Schwalbe N, Wahl B. Artificial intelligence and the future of global health. Lancet. 2020;395(10236). doi: 10.1016/S0140-6736(20)30226-9 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Price WN, Cohen IG. Privacy in the age of medical big data. Nat Med. 2019;25(1). doi: 10.1038/s41591-018-0272-7 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Martinho A, Kroesen M, Chorus C. A healthy debate: Exploring the views of medical doctors on the ethics of artificial intelligence. Artif Intell Med. 2021;121. doi: 10.1016/j.artmed.2021.102190 [DOI] [PubMed] [Google Scholar]

- 41.Fan W, Liu J, Zhu S, Pardalos PM. Investigating the impacting factors for the healthcare professionals to adopt artificial intelligence-based medical diagnosis support system (AIMDSS). Ann Oper Res. 2020;294(1–2). [Google Scholar]

- 42.Voskens FJ, Abbing JR, Ruys AT, Ruurda JP, Broeders IAMJ. A nationwide survey on the perceptions of general surgeons on artificial intelligence. Artif Intell Surg. 2022;2(1). [Google Scholar]

- 43.Bisdas S, Topriceanu CC, Zakrzewska Z, Irimia AV, Shakallis L, Subhash J, et al. Artificial Intelligence in medicine: a multinational multi-center survey on the medical and dental students’ perception. Front Public Heal. 2021;9. doi: 10.3389/fpubh.2021.795284 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Scott IA, Carter SM, Coiera E. Exploring stakeholder attitudes towards AI in clinical practice. BMJ Heal Care Informatics. 2021;28(1). doi: 10.1136/bmjhci-2021-100450 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Santos JC, Wong JHD, Pallath V, Ng KH. The perceptions of medical physicists towards relevance and impact of artificial intelligence. Phys Eng Sci Med. 2021;44(3). doi: 10.1007/s13246-021-01036-9 [DOI] [PubMed] [Google Scholar]

- 46.Laï MC, Brian M, Mamzer MF. Perceptions of artificial intelligence in healthcare: findings from a qualitative survey study among actors in France. J Transl Med. 2020;18(1). doi: 10.1186/s12967-019-02204-y [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Master Z, Campo-Engelstein L, Caulfield T. Scientists’ perspectives on consent in the context of biobanking research. Eur J Hum Genet. 2015;23(5):569–74. doi: 10.1038/ejhg.2014.143 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Botwe BO, Akudjedu TN, Antwi WK, Rockson P, Mkoloma SS, Balogun EO, et al. The integration of artificial intelligence in medical imaging practice: Perspectives of African radiographers. Radiography. 2021;27(3). doi: 10.1016/j.radi.2021.01.008 [DOI] [PubMed] [Google Scholar]

- 49.Yang L, Ene IC, Arabi Belaghi R, Koff D, Stein N, Santaguida P. Stakeholders’ perspectives on the future of artificial intelligence in radiology: a scoping review. Eur Radiol. 2022;32(3). doi: 10.1007/s00330-021-08214-z [DOI] [PubMed] [Google Scholar]

- 50.Castagno S, Khalifa M. Perceptions of Artificial Intelligence among healthcare staff: a qualitative survey study. Front Artif Intell. 2020;3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Shaw J, Rudzicz F, Jamieson T, Goldfarb A. Artificial Intelligence and the Implementation Challenge. J Med Internet Res. 2019;21(7). doi: 10.2196/13659 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.McCradden MD, Baba A, Saha A, Ahmad S, Boparai K, Fadaiefard P, et al. Ethical concerns around use of artificial intelligence in health care research from the perspective of patients with meningioma, caregivers and health care providers: a qualitative study. C Open. 2020;8(1). doi: 10.9778/cmajo.20190151 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Pinto dos Santos D, Giese D, Brodehl S, Chon SH, Staab W, Kleinert R, et al. Medical students’ attitude towards artificial intelligence: a multicentre survey. Eur Radiol. 2019;29(4). doi: 10.1007/s00330-018-5601-1

- 54.Sit C, Srinivasan R, Amlani A, Muthuswamy K, Azam A, Monzon L, et al. Attitudes and perceptions of UK medical students towards artificial intelligence and radiology: a multicentre survey. Insights Imaging. 2020;11(1). doi: 10.1186/s13244-019-0830-7

- 55.Park CJ, Yi PH, Siegel EL. Medical student perspectives on the impact of Artificial Intelligence on the practice of medicine. Curr Probl Diagn Radiol. 2021;50(5). doi: 10.1067/j.cpradiol.2020.06.011

- 56.Petitgand C, Motulsky A, Denis JL, Régis C. Investigating the barriers to physician adoption of an artificial intelligence-based decision support system in emergency care: An interpretative qualitative study. Stud Health Technol Inform. 2020;270. doi: 10.3233/SHTI200312

- 57.Panch T, Mattie H, Celi LA. The “inconvenient truth” about AI in healthcare. npj Digit Med. 2019;2(1).

- 58.Elawady A, Khalil A, Assaf O, Toure S, Cassidy C. Telemedicine during COVID-19: a survey of Health Care Professionals’ perceptions. Monaldi Arch Chest Dis. 2020 Sep 22;90(4):576–81. doi: 10.4081/monaldi.2020.1528

- 59.Bramstedt KA, Ierna B, Woodcroft-Brown V. Using SurveyMonkey® to teach safe social media strategies to medical students in their clinical years. Commun Med. 2015;11(2).

- 60.George DR, Dreibelbis TD, Aumiller B. Google Docs and SurveyMonkey™: lecture-based active learning tools. Med Educ. 2013;47(5).

- 61.Dean AG, Sullivan KM, Soe MM. OpenEpi Menu [Internet]. OpenEpi: Open Source Epidemiologic Statistics for Public Health. 2013 [cited 2022 Jun 10]. Available from: https://www.openepi.com/Menu/OE_Menu.htm

- 62.Feldt LS. A test of the hypothesis that Cronbach’s alpha reliability coefficient is the same for two tests administered to the same sample. Psychometrika. 1980;45(1).

- 63.Özkaya G, Aydin MO, Alper Z. Distance education perception scale for medical students: a validity and reliability study. BMC Med Educ. 2021;21(1):400. doi: 10.1186/s12909-021-02839-w

- 64.Jebb AT, Ng V, Tay L. A Review of Key Likert Scale Development Advances: 1995–2019. Front Psychol. 2021;12:1590. doi: 10.3389/fpsyg.2021.637547

- 65.Instituto Nacional de Estatística, PORDATA. Doctors: non-specialists and specialist by specialist area. [Internet]. 2021. Available from: https://www.pordata.pt/en/Portugal/Doctors+non+specialists+and+specialist+by+specialist+area-147

- 66.Zeng X, Zhang Y, Kwong JSW, Zhang C, Li S, Sun F, et al. The methodological quality assessment tools for preclinical and clinical studies, systematic review and meta-analysis, and clinical practice guideline: A systematic review. J Evid Based Med. 2015;8(1). doi: 10.1111/jebm.12141

- 67.Abdullah R, Fakieh B. Health Care employees’ perceptions of the use of Artificial Intelligence applications: survey study. J Med Internet Res. 2020;22(5). doi: 10.2196/17620

- 68.Dorsey ER, Topol EJ. Telemedicine 2020 and the next decade. Lancet. 2020;395(10227). doi: 10.1016/S0140-6736(20)30424-4

- 69.Shinners L, Grace S, Smith S, Stephens A, Aggar C. Exploring healthcare professionals’ perceptions of artificial intelligence: Piloting the Shinners Artificial Intelligence Perception tool. Digit Heal. 2022;8. doi: 10.1177/20552076221078110

- 70.Antes AL, Burrous S, Sisk BA, Schuelke MJ, Keune JD, DuBois JM. Exploring perceptions of healthcare technologies enabled by artificial intelligence: an online, scenario-based survey. BMC Med Inform Decis Mak. 2021;21(1). doi: 10.1186/s12911-021-01586-8

- 71.Lorenzetti J, Trindade LL, de Pires DEP, Ramos FRS. Technology, technological innovation and health: A necessary reflection. Texto e Context Enferm. 2012;21(2).

- 72.Hall JA. Affective and Nonverbal Aspects of the Medical Visit. In: The Medical Interview Frontiers of Primary Care. New York: Springer; 1995.

- 73.Zandbelt LC, Smets EMA, Oort FJ, Godfried MH, De Haes HCJM. Satisfaction with the outpatient encounter: A comparison of patients’ and physicians’ views. J Gen Intern Med. 2004;19(11). doi: 10.1111/j.1525-1497.2004.30420.x

- 74.Klein Buller M, Buller DB. Physicians’ communication style and patient satisfaction. J Health Soc Behav. 1987;28(4).

- 75.Lino L, Martins H. Medical History Taking Using Electronic Medical Records: A Systematic Review. Int J Digit Heal. 2021 May 7;1(1).

- 76.Stevenson FA, Kerr C, Murray E, Nazareth I. Information from the Internet and the doctor-patient relationship: the patient perspective–a qualitative study. BMC Fam Pract. 2007;8(1). doi: 10.1186/1471-2296-8-47

- 77.Khoury MJ, Iademarco MF, Riley WT. Precision public health for the era of precision medicine. Am J Prev Med. 2016 Mar;50(3). doi: 10.1016/j.amepre.2015.08.031

- 78.Schleidgen S, Klingler C, Bertram T, Rogowski WH, Marckmann G. What is personalized medicine: sharpening a vague term based on a systematic literature review. BMC Med Ethics. 2013;14(1). doi: 10.1186/1472-6939-14-55

- 79.Zakim D, Brandberg H, El Amrani S, Hultgren A, Stathakarou N, Nifakos S, et al. Computerized history-taking improves data quality for clinical decision-making: Comparison of EHR and computer-acquired history data in patients with chest pain. Bahl A, editor. PLoS One. 2021;16(9). doi: 10.1371/journal.pone.0257677

- 80.Harada Y, Shimizu T. Impact of a commercial Artificial Intelligence–driven patient self-assessment solution on waiting times at general internal medicine outpatient departments: retrospective study. JMIR Med Informatics. 2020;8(8). doi: 10.2196/21056

- 81.Topol EJ. Deep medicine. New York: Basic Books; 2019.

- 82.Pesapane F, Codari M, Sardanelli F. Artificial intelligence in medical imaging: threat or opportunity? Radiologists again at the forefront of innovation in medicine. Eur Radiol Exp. 2018;2(1). doi: 10.1186/s41747-018-0061-6

- 83.Durrani H. Healthcare and healthcare systems: inspiring progress and future prospects. mHealth. 2016;2. doi: 10.3978/j.issn.2306-9740.2016.01.03

- 84.Chen M, Zhang B, Cai Z, Seery S, Gonzalez MJ, Ali NM, et al. Acceptance of clinical artificial intelligence among physicians and medical students: A systematic review with cross-sectional survey. Front Med. 2022;9(August). doi: 10.3389/fmed.2022.990604

- 85.Neri E, de Souza N, Brady A, Bayarri AA, Becker CD, Coppola F, et al. What the radiologist should know about artificial intelligence–an ESR white paper. Insights Imaging. 2019;10(1):44. doi: 10.1186/s13244-019-0738-2

- 86.Coppola F, Faggioni L, Regge D, Giovagnoni A, Golfieri R, Bibbolino C, et al. Artificial intelligence: radiologists’ expectations and opinions gleaned from a nationwide online survey. Radiol Med. 2021;126(1):63–71. doi: 10.1007/s11547-020-01205-y

- 87.Mosch L, Fürstenau D, Brandt J, Wagnitz J, Klopfenstein SAI, Poncette AS, et al. The medical profession transformed by artificial intelligence: Qualitative study. Digit Heal. 2022 Jan 13;8:205520762211439. doi: 10.1177/20552076221143903

- 88.Blease C, Locher C, Leon-Carlyle M, Doraiswamy M. Artificial intelligence and the future of psychiatry: Qualitative findings from a global physician survey. Digit Heal. 2020 Jan 27;6:205520762096835. doi: 10.1177/2055207620968355

- 89.Pecqueux M, Riediger C, Distler M, Oehme F, Bork U, Kolbinger FR, et al. The use and future perspective of Artificial Intelligence: A survey among German surgeons. Front Public Heal. 2022;10. doi: 10.3389/fpubh.2022.982335

- 90.Liew C. The future of radiology augmented with artificial intelligence: a strategy for success. Eur J Radiol. 2018;102:152–6. doi: 10.1016/j.ejrad.2018.03.019

- 91.Benjamens S, Dhunnoo P, Meskó B. The state of artificial intelligence-based FDA-approved medical devices and algorithms: an online database. npj Digit Med. 2020;3(1):118. doi: 10.1038/s41746-020-00324-0

- 92.Meskó B, Görög M. A short guide for medical professionals in the era of artificial intelligence. npj Digit Med. 2020;3(1):126.

- 93.Pakdemirli E, Wegner U. Artificial intelligence in various medical fields with emphasis on radiology: statistical evaluation of the literature. Cureus. 2020;12(10). doi: 10.7759/cureus.10961

- 94.Stanfill MH, Marc DT. Health information management: implications of Artificial Intelligence on healthcare data and information management. Yearb Med Inform. 2019;28(01). doi: 10.1055/s-0039-1677913

- 95.Neill DB. Using artificial intelligence to improve hospital inpatient care. IEEE Intell Syst. 2013;28(2).

- 96.Meskó B, Hetényi G, Gyorffy Z. Will artificial intelligence solve the human resource crisis in healthcare? BMC Health Serv Res. 2018;18. doi: 10.1186/s12913-018-3359-4

- 97.Gopal G, Suter-Crazzolara C, Toldo L, Eberhardt W. Digital transformation in healthcare–architectures of present and future information technologies. Clin Chem Lab Med. 2019;57(3). doi: 10.1515/cclm-2018-0658

- 98.Cordeiro JV. Digital technologies and data science as health enablers: an outline of appealing promises and compelling ethical, legal, and social challenges. Front Med. 2021;8. doi: 10.3389/fmed.2021.647897

- 99.Grant M, Hill S. Improving outcomes through personalised medicine: Working at the cutting edge of science to improve patients’ lives. 2016.

- 100.Weeramanthri TS, Dawkins HJS, Baynam G, Ward PR. Editorial: Precision Public Health. Front Public Heal. 2018;6(April). doi: 10.3389/fpubh.2018.00121

- 101.Parish JM. The patient will see you now: the future of medicine is in your hands. J Clin Sleep Med. 2015;11(06).

- 102.Lim SS, Phan TD, Law M, Goh GS, Moriarty HK, Lukies MW, et al. Non‐radiologist perception of the use of artificial intelligence (AI) in diagnostic medical imaging reports. J Med Imaging Radiat Oncol. 2022; doi: 10.1111/1754-9485.13388

- 103.Liyanage H, Liaw ST, Jonnagaddala J, Schreiber R, Kuziemsky C, Terry AL, et al. Artificial Intelligence in primary health Care: perceptions, issues, and challenges. Yearb Med Inform. 2019;28(01). doi: 10.1055/s-0039-1677901

- 104.Antwi WK, Akudjedu TN, Botwe BO. Artificial intelligence in medical imaging practice in Africa: a qualitative content analysis study of radiographers’ perspectives. Insights Imaging. 2021 Dec 16;12(1):80. doi: 10.1186/s13244-021-01028-z

- 105.Botwe BO, Antwi WK, Arkoh S, Akudjedu TN. Radiographers’ perspectives on the emerging integration of artificial intelligence into diagnostic imaging: The Ghana study. J Med Radiat Sci. 2021 Sep 14;68(3):260–8. doi: 10.1002/jmrs.460

- 106.Wolff J, Pauling J, Keck A, Baumbach J. Success factors of artificial intelligence implementation in healthcare. Front Digit Heal. 2021 Jun 16;3(June):1–11. doi: 10.3389/fdgth.2021.594971

- 107.European Commission. Study on eHealth, interoperability of health data and artificial intelligence for health and care in the European Union. Lot 2: artificial intelligence for health and care in the EU. Final study report. 1st ed. Brussels: Luxembourg: Publications Office of the European Union; 2021. 66 p.

- 108.European Commission. Study on eHealth, interoperability of health data and artificial intelligence for health and care in the European Union. Lot 2: artificial intelligence for health and care in the EU. Country Factsheets. 1st ed. Brussels: Luxembourg: Publications Office of the European Union; 2021. 66 p.

- 109.Kahneman D. Thinking, Fast and Slow. New York: Farrar, Straus and Giroux; 2011.

- 110.Epstein S. Demystifying intuition: what it is, what it does, and how it does it. Psychol Inq. 2010 Nov 30;21(4).

- 111.Lyell D, Magrabi F, Raban MZ, Pont LG, Baysari MT, Day RO, et al. Automation bias in electronic prescribing. BMC Med Inform Decis Mak. 2017;17(1). doi: 10.1186/s12911-017-0425-5

- 112.Lyell D, Coiera E. Automation bias and verification complexity: a systematic review. J Am Med Informatics Assoc. 2017;24(2). doi: 10.1093/jamia/ocw105

- 113.Tonekaboni S, Joshi S, McCradden MD, Goldenberg A. What clinicians want: contextualizing explainable machine learning for clinical end use. Proc Mach Learn Res. 2019.

- 114.Jacobs M, He J, Pradier MF, Lam B, Ahn AC, McCoy TH, et al. Designing AI for trust and collaboration in time-constrained medical decisions: A sociotechnical lens. In: Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems. New York, NY, USA: ACM; 2021.

- 115.Walter Z, Lopez MS. Physician acceptance of information technologies: Role of perceived threat to professional autonomy. Decis Support Syst. 2008 Dec;46(1).

- 116.Lee JD, See KA. Trust in Automation: designing for appropriate reliance. Hum Factors J Hum Factors Ergon Soc. 2004;46(1). doi: 10.1518/hfes.46.1.50_30392