Abstract

Background

Bibliographic databases provide access to an international body of scientific literature in health and medical sciences. Systematic reviews are an important source of evidence for clinicians, researchers, consumers, and policymakers as they address a specific health‐related question and use explicit methods to identify, appraise and synthesize evidence from which conclusions can be drawn and decisions made.

Methodological search filters help database end‐users search the literature effectively with different levels of sensitivity and specificity. These filters have been developed for various study designs and have been found to be particularly useful for intervention studies. Other filters have been developed for finding systematic reviews. Given the variety and number of available search filters for systematic reviews, a review is needed to provide evidence about their retrieval properties at the time they were developed.

Objectives

To review systematically empirical studies that report the development, evaluation, or comparison of search filters to retrieve reports of systematic reviews in MEDLINE and Embase.

Search methods

We searched the following databases from inception to January 2023: MEDLINE; Embase; PsycINFO; Library, Information Science & Technology Abstracts (LISTA); and Science Citation Index (Web of Science).

Selection criteria

We included studies if one of their primary objectives was the development, evaluation, or comparison of a search filter that could be used to retrieve systematic reviews on MEDLINE, Embase, or both.

Data collection and analysis

Two review authors independently extracted data using a pre‐specified and piloted data extraction form based on the InterTASC Information Specialist Subgroup (ISSG) Search Filter Evaluation Checklist.

Main results

We identified eight studies that developed filters for MEDLINE and three studies that developed filters for Embase. Most studies are old, and some were limited to systematic reviews in specific clinical areas. Six included studies reported the sensitivity of their developed filter. Seven studies reported precision and six studies reported specificity. Only one study reported the number needed to read and positive predictive value. None of the filters were designed to differentiate systematic reviews on the basis of their methodological quality. For MEDLINE, all filters showed similar sensitivity and precision, and one filter showed higher levels of specificity. For Embase, filters showed variable sensitivity and precision, with limited study reports that may affect accuracy assessments. The reporting of these studies had some limitations, and the assessments of their accuracy may suffer from indirectness, because most filters were developed before the release of the PRISMA 2009 statement or had a limited scope in the selection of systematic review topics.

Search filters for MEDLINE

Three studies produced filters with sensitivity > 90% with variable degrees of precision, and only one of them was developed and validated in a gold‐standard database, which allowed the calculation of specificity. The other two search filters had lower levels of sensitivity. One of these produced a filter with higher levels of specificity (> 90%). All filters showed similar sensitivity and precision in the external validation, except for one that was not externally validated and another that was conceptually derived and only externally validated.

Search filters for Embase

We identified three studies that developed filters for this database. One of these studies developed filters with variable sensitivity and precision, including highly sensitive strategies (> 90%); however, these filters were not externally validated. Another study produced a filter with a lower sensitivity (72.7%) but high specificity (99.1%), with similar performance in the external validation.

Authors' conclusions

Studies reporting the development, evaluation, or comparison of search filters to retrieve reports of systematic reviews in MEDLINE showed similar sensitivity and precision, with one filter showing higher levels of specificity. For Embase, filters showed variable sensitivity and precision, with limited information about how the filters were produced, which leaves us uncertain about their performance assessments. Newer filters had limitations in their methods or scope, including very focused subject topics for their gold standards, which limits their applicability to other topics. Our findings highlight the need for consensus guidance on the development of search filters and for standardized reporting of search filters, as we found highly heterogeneous development methods, accuracy assessments and outcome selection. New strategies adaptable across interfaces could enhance their usability. Moreover, the performance of existing filters needs to be evaluated in light of the impact of reporting guidelines, including PRISMA 2009, on how systematic reviews are reported. Finally, future filter development should also consider comparing filters against a common reference set to establish comparative performance, and assessing the quality of the systematic reviews retrieved by the filters.

Plain language summary

How can we best filter systematic reviews in MEDLINE and Embase?

Key messages

A wide range of search filters to retrieve systematic reviews were evaluated. Although many had acceptable sensitivity (missed few relevant reviews) and specificity (excluded most irrelevant records), no single filter can be recommended, since most were derived from older sets of reviews that may not reflect current reporting characteristics and standards.

What are search filters for systematic reviews?

Search filters combine words and phrases to retrieve records with a common feature (e.g. study design, clinical topic) and are typically evaluated in terms of their sensitivity and precision. Systematic reviews summarise and synthesise scientific evidence and represent an important source of information for healthcare professionals. Databases provide access to them, and search filters can be used to retrieve systematic reviews pragmatically.

What did we want to find out?

We wanted to identify search filters for systematic reviews, assess their quality and retrieve data on their sensitivity, specificity and precision.

What did we do?

We searched for studies that developed, evaluated or compared a search filter that could be used to retrieve systematic reviews in MEDLINE, Embase, or both. We identified eight studies that developed filters for MEDLINE and three studies that developed filters for Embase.

What did we find?

For MEDLINE, all filters showed similar sensitivity and precision, and one filter showed higher levels of specificity. For Embase, filters showed variable sensitivity and precision, with limited study reports that may affect accuracy assessments.

What are the limitations of the evidence?

Some filters were developed for specific topics (e.g. public health), and most were developed using older studies, which may not reflect how systematic reviews are currently reported. Moreover, filters may not be able to discern between high‐ and low‐quality reviews.

How up‐to‐date is the evidence?

The evidence is up‐to‐date to January 2023.

Summary of findings

Summary of findings 1. Summary of findings of the available filters.

Range of measurements of accuracy from a) internal validation, b) external validation and c) independent evaluations.

| Study | Database (interface) | Year of development/year of external evaluation | Sensitivity^a | Specificity^a | Precision^a | NNR^a | Comments |
|---|---|---|---|---|---|---|---|
| Filters with external evaluations and acceptable sensitivity and precision^b | | | | | | | |
| Shojania 2001 | MEDLINE (PubMed) | 1999 to 2000/2007, 2012 and 2021 | b) 93 to 97%; c) 62 to 90% | c) 97.2 to 99.1% | c) 1.7 to 33.2% | c) 3.01 to 57.8 | Filter with independent evaluations with lower sensitivity. |
| Boynton 1998 | MEDLINE (Ovid) | 1992 to 1995/2001 and 2012 | a) 39 to 98%; c) 47.8 to 99.5% | c) 75.6 to 99.6% | a) 12 to 79%; c) 0.1 to 2.1% | a) 2.04 to 8.33; c) 46.7 to 1395 | Filter developed in an old dataset but with recent positive independent evaluations. High sensitivity but low precision. |
| Wilczynski 2007 | Embase (Ovid) | 2000/2012 | a) 61.4 to 94.6%; c) 63.4 to 96.3% | a) 63.7 to 99.3%; c) 72.3 to 99.5% | a) 2 to 40.9%; b) 0 to 0.9% | a) 2.44 to 50; b) 117.8 to 2709.5 | Four filters with different sensitivity and specificity profiles with consistent independent evaluations. |
| Wilczynski 2007 | MEDLINE (Ovid) | 2000/2012 | a) 75.2 to 100%; b) 71.2 to 99.9%; c) 81.6 to 99.0% | a) 63.5 to 99.4%; b) 52 to 99.2%; c) 62 to 99.3% | a) 3.41 to 60.2%; b) 3.14 to 57.1%; c) 0 to 2% | a) 1.66 to 29.33; b) 1.75 to 31.84; c) 49.4 to 2191.2 | Three filters with different sensitivity and specificity profiles with consistent independent evaluations. |
| Filters focused on specific topics | | | | | | | |
| Boluyt 2008 | MEDLINE (PubMed) | 1994 to 2004 | b) 68 to 96% | N/A | b) 2 to 45% | 2.22 to 50 | Adaptation of existing search filters (Boynton 1998; Shojania 2001; White 2001; Wilczynski 2007) for systematic reviews on child health. |
| Lee 2012 | MEDLINE (Ovid) | 2004 to 2005 | a) 86.8%; b) 89.9% | a) 99.2%; b) 98.9% | a) 1.1%; b) 1.4% | a) 91.6; b) 71.4 | Filter with a focus on public health topics, without independent evaluations, with suboptimal sensitivity. |
| Lee 2012 | Embase (Ovid) | 2004 to 2005 | a) 72.7%; b) 87.9% | a) 99.1%; b) 98.2% | a) 0.6%; b) 0.5% | a) 171.6; b) 186.0 | |
| Avau 2021 | MEDLINE (PubMed) | 2019‐2020 | N/A | b) 97% | b) 9.7% | b) 10 | Filter with a focus on first aid, without independent evaluations. |
| Avau 2021 | Embase (Elsevier) | 2019‐2020 | N/A | b) 96% | b) 5.4% | b) 19 | |
| Others | | | | | | | |
| White 2001 | MEDLINE (Ovid) | 1995 to 1997 | a) 67.1 to 87.1%; b) 84.2% | a) 89.2 to 99.4%; b) 93% | N/A | N/A | Filter without independent evaluations, with suboptimal sensitivity. |
| Salvador‐Oliván 2021 | MEDLINE (PubMed) | 2020 | N/A | N/A | b) 83.8% | b) 1.19 | Filter without independent evaluation, assessed for retrieving "possible systematic reviews". |

Definition of outcome measures:

- Sensitivity: Proportion of systematic reviews that are correctly identified using the methodological filter.

- Specificity: Proportion of records that are not systematic reviews and are correctly not identified by the methodological filter.

- Precision: Proportion of systematic reviews that are identified from all records retrieved using the methodological filter.

- Number needed to read (NNR): 1/precision

N/A: Not available. NR: not reported.

^a Range of point estimates across different reports, evaluations and subsets of filters.

^b Sensitivity > 90% and precision > 10%.

See Table 2 for a more detailed summary of the number of filters developed and evaluated in each database.

Table 2. Performance of each search filter.

| Author/year | Reference standard/database (interface) | Internal validity (with 95% confidence interval when available) | External validity (with 95% confidence interval when available) | External evaluations |
|---|---|---|---|---|
| Boynton 1998 | Quasi‐gold standard: handsearch of high‐impact journals and electronic searches (1992‐1995); MEDLINE (Ovid) | 8 filters using objective measures (A, B, C, D, E, F, H, J) and 3 filters using expert input (K, L, M). Sensitivity: A: 66%; B: 95%; C: 92%; D: 39%; E: 29%; F: 61%; H: 98%; J: 98%; K: 55%; L: 89%; M: 58%. Precision: A: 26%; B: 12%; C: 23%; D: 49%; E: 79%; F: 42%; H: 19%; J: 20%; K: 71%; L: 31%; M: 37%. Specificity: not reported. NNR (a): A: 3.85; B: 8.33; C: 4.35; D: 2.04; E: 1.27; F: 2.38; H: 5.26; J: 5; K: 1.43; L: 3.23; M: 2.7 | No external validation | By Lee 2012. Sensitivity maximiser: sensitivity 99.5% (97.3 to 99.9), specificity 75.6% (75.6 to 75.6), precision 0.1% (0.1 to 0.1), NNR 1395.1 (1387.7 to 1437.2); precision query (> 70%): sensitivity 47.8% (41.2 to 54.6), specificity 99.6% (99.6 to 99.6), precision 2.1% (1.8 to 2.5), NNR 46.7 (40.9 to 54.4). By White 2001. Most sensitive strategy: sensitivity 93.6%, precision 11.3% |
| Shojania 2001 | Quasi‐gold standard 1: systematic reviews from DARE (2000); quasi‐gold standard 2: handsearch of ACP Journal Club (1999‐2000); MEDLINE (PubMed) | No internal validation | Gold standard 1: sensitivity 93% (86 to 97); gold standard 2: sensitivity 97% (91 to 99); specificity not reported | By Wilczynski 2007: sensitivity 90.0% (87.9 to 92.2), specificity 97.2% (97.0 to 97.4), precision 33.2% (31.2 to 35.2), NNR (a) 3.01 (2.84 to 3.21). By Lee 2012: sensitivity 85.5% (80.1 to 89.7), specificity 99.1% (99.1 to 99.1), precision 1.7% (1.6 to 1.8), NNR 57.8 (55.1 to 61.8). By Salvador‐Oliván 2021: sensitivity 62% (b) |
| White 2001 | Gold standard (1995 to 1997): handsearch of five journals (Annals of Internal Medicine, Archives of Internal Medicine, BMJ, JAMA and The Lancet), including 110 systematic reviews, 110 reviews (not systematic) and 125 non‐review articles; MEDLINE (Ovid) | 5 filters (A, B, C, D, E). Sensitivity: A: 73.4%; B: 67.1%; C: 81.9%; D: 87.1%; E: 77.2%. Specificity: A: 93.3%; B: 94.9%; C: 99.4%; D: 89.2%; E: 94.9% | Filter A: sensitivity 84.2%, specificity 93.0% | None |
| Wilczynski 2007 | Gold standard 1: handsearch of 55 journals (2000); Embase (Ovid) | Best sensitivity: sensitivity 94.6% (91.5 to 97.6), specificity 63.7% (63.2 to 64.3), precision 2.0% (1.8 to 2.3), accuracy 64.0% (63.4 to 64.5), NNR (a) 50. Best specificity: sensitivity 61.4% (54.9 to 67.8), specificity 99.3% (99.2 to 99.4), precision 40.9% (35.6 to 46.2), accuracy 99.0% (98.9 to 99.1), NNR (a) 2.44. Small drop in specificity with a substantive gain in sensitivity: sensitivity 75.0% (69.3 to 80.7), specificity 98.5% (98.4 to 98.7), precision 29.2% (25.4 to 32.9), accuracy 98.4% (98.2 to 98.5), NNR (a) 3.42. Best optimisation of sensitivity and specificity: sensitivity 92.3% (88.7 to 95.8), specificity 87.7% (87.3 to 88.1), precision 5.6% (4.9 to 6.4), accuracy 87.7% (87.3 to 88.1), NNR (a) 17.86 | No external validation | By Lee 2012. Best sensitivity: sensitivity 96.3% (90.8 to 98.5), specificity 72.3% (72.3 to 72.3), precision 0% (0 to 0), NNR 2709.5 (2622.5 to 2945.2). Small drop in specificity with a substantive gain in sensitivity: sensitivity 75.7% (66.7 to 82.8), specificity 99.3% (99.3 to 99.3), precision 1.1% (1 to 1.2), NNR 88.2 (80.5 to 100.1). Best optimisation of sensitivity and specificity: sensitivity 96.3% (90.8 to 98.5), specificity 85.5% (85.5 to 85.5), precision 0.1% (0.1 to 0.1), NNR 1403.4 (1363.4 to 1502.0). Best specificity: sensitivity 63.4% (28.0 to 45.9), specificity 99.5% (99.5 to 99.5), precision 0.9% (0.7 to 1.1), NNR 117.8 (93.4 to 154.2) |
| Wilczynski 2007 | Gold standard 1: handsearch of 55 journals (2000); gold standard 2: Validation DS ‐ CDST; gold standard 3: full validation DB; MEDLINE (Ovid) | Top sensitivity strategies: sensitivity 100% (97.3 to 100), specificity 63.5% (62.5 to 64.4), precision 3.41% (2.86 to 4.03), NNR (a) 29.33. Top strategy minimising the difference between sensitivity and specificity: sensitivity 92.5% (86.6 to 96.3), specificity 93.0% (92.5 to 93.5), precision 14.6% (12.3 to 17.2), NNR (a) 6.85. Top precision performer: sensitivity 75.2% (67.0 to 82.3), specificity 99.4% (99.2 to 99.5), precision 60.2% (52.4 to 67.7), NNR (a) 1.66 | Top sensitivity strategies: sensitivity 99.9% (99.6 to 100), specificity 52.0% (51.6 to 52.5), precision 3.14% (2.92 to 3.37), NNR (a) 31.84. Top strategy minimising the difference between sensitivity and specificity: sensitivity 98.0% (97.0 to 99.0), specificity 90.8% (90.5 to 91.1), precision 14.2% (13.3 to 15.2), NNR (a) 7.04. Top precision performer: sensitivity 71.2% (68.0 to 74.4), specificity 99.2% (99.1 to 99.3), precision 57.1% (53.9 to 60.3), NNR (a) 1.75 | By Lee 2012. Top sensitivity strategies: sensitivity 99.0% (96.5 to 99.7), specificity 62.0% (62.0 to 62.0), precision 0% (0 to 0), NNR 2191.2 (2166.3 to 2284.3). Balanced query (sensitivity > specificity): sensitivity 99.0% (96.5 to 99.7), specificity 87.6% (87.6 to 87.6), precision 0.1% (0.1 to 0.1), NNR 712.4 (706.7 to 733.4). Balanced query (specificity > sensitivity): sensitivity 87.9% (82.8 to 91.7), specificity 98.5% (98.5 to 98.5), precision 1.1% (1.0 to 1.1), NNR 94.9 (90.9 to 100.9). Specific query: sensitivity 81.6% (75.8 to 86.3), specificity 99.3% (99.3 to 99.3), precision 2.0% (1.9 to 2.3), NNR 49.4 (46.7 to 53.2) |
| Boluyt 2008 | Gold standard: established by searching DARE for systematic reviews (SRs) of child health and by handsearching various journals for SRs (1994 to 2004); MEDLINE (PubMed) | No internal validation | Sensitivity: 1. Shojania+child 74% (69 to 78); 2. Boynton+child 95% (92 to 97); 3. White 1+child 93% (91 to 96); 4. White 2+child 94% (91 to 96); 5. Montori 1+child 96% (93 to 97); 6. Montori 2+child 68% (64 to 73); 7. Montori 3+child 94% (91 to 96); 8. Montori 4+child 72% (67 to 76); 9. PubMed+child 76% (72 to 80). Precision: 1. Shojania+child 45% (36 to 55); 2. Boynton+child 3% (1 to 9); 3. White 2+child 2% (1 to 7); 4. Montori 2+child 45% (36 to 55); 5. Montori 3+child 3% (1 to 9); 6. PubMed+child 32% (24 to 42). NNR (a): 1. Shojania+child 2.22; 2. Boynton+child 33.3; 3. White 2+child 50; 4. Montori 2+child 2.22; 5. Montori 3+child 33.33; 6. PubMed+child 3.13 | None |
| Lee 2012 | One gold standard for each database: manual screening (2004‐2005); MEDLINE (Ovid) and Embase (Ovid) | MEDLINE: sensitivity 86.8% (75.2 to 93.5), specificity 99.2% (99.2 to 99.2), precision 1.1% (0.9 to 1.2), NNR 91.6 (85.0 to 105.9) | MEDLINE: sensitivity 89.9% (85.0 to 93.3), specificity 98.9% (98.9 to 98.9), precision 1.4% (1.3 to 1.5), NNR 71.4 (68.7 to 75.5) | None |
| Lee 2012 | Embase (Ovid) | Embase: sensitivity 72.7% (55.8 to 84.9), specificity 99.1% (99.1 to 99.1), precision 0.6% (0.4 to 0.7), NNR 171.6 (146.7 to 224.6) | Embase: sensitivity 87.9% (80.2 to 92.8), specificity 98.2% (98.2 to 98.2), precision 0.5% (0.5 to 0.6), NNR 186.0 (176.0 to 208.9) | |
| Avau 2021 | Quasi‐gold standard for MEDLINE (PubMed) (2019‐2020): 77 SR references collected in 33 evidence summaries on different first aid topics | No internal validation | Specificity: 97%; precision: 9.7%; NNR: 10 | None |
| Avau 2021 | Quasi‐gold standard for Embase (Embase.com) (2019‐2020): 70 SR references collected in 35 evidence summaries | No internal validation | Specificity: 96%; precision: 5.4%; NNR: 19 | None |
| Salvador‐Oliván 2021 | No gold standard: a set of probable systematic reviews was identified using the PubMed search filter for systematic reviews; MEDLINE (PubMed) | No internal validation | Precision* 83.8% (range 72.3 to 96.7%); NNR (a) 1.19 | None |

Definition of outcome measures:

- Sensitivity: Proportion of systematic reviews that are correctly identified using the methodological filter.

- Specificity: Proportion of records that are not systematic reviews and are correctly not identified by the methodological filter.

- Precision: Proportion of systematic reviews that are identified from all records retrieved using the methodological filter.

- Number needed to read (NNR): 1/precision

(a) Calculated from precision. * For "probable systematic reviews". SR: systematic review. (b) This version of the filter was modified by PubMed; this number indicates the proportion of potential systematic reviews retrieved by the PubMed filter.
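Several NNR values in the tables are marked (a) because they were derived from precision rather than reported directly. The following is a minimal sketch of that arithmetic, assuming precision is expressed as a percentage; the function name is hypothetical, and the inputs are precision values copied from the tables above.

```python
# Sketch: number needed to read (NNR) = 1 / precision.
# Precision is given as a percentage, so NNR = 100 / precision_percent.
# nnr_from_precision is a hypothetical helper, not part of any published tool.

def nnr_from_precision(precision_percent: float) -> float:
    """Return NNR for a precision expressed as a percentage."""
    if precision_percent <= 0:
        # A filter that retrieves no systematic reviews has no finite NNR.
        raise ValueError("NNR is undefined when precision is 0%")
    return 100.0 / precision_percent


# Precision values as reported in the tables; reported NNR in the comments.
reported_precision = {
    "Boynton 1998, filter A": 26.0,               # reported NNR 3.85
    "Boynton 1998, filter E": 79.0,               # reported NNR 1.27
    "Shojania 2001 (by Wilczynski 2007)": 33.2,   # reported NNR 3.01
    "Salvador-Olivan 2021": 83.8,                 # reported NNR 1.19
}

for label, precision in reported_precision.items():
    print(f"{label}: NNR = {nnr_from_precision(precision):.2f}")
```

Rows that list a precision of 0% alongside a finite NNR (for example, 2709.5) were presumably computed from the unrounded precision, which is below 0.05% and therefore rounds to 0%.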

Background

Bibliographic databases, such as MEDLINE and Embase, provide access to an international body of scientific literature in health and medical sciences. They provide bibliographic citation information and, frequently, abstracts or links to full‐text publications. These databases also provide controlled vocabulary (index terms) to make it easier to index, catalogue and search biomedical and health‐related information and documents (Dhammi 2014; Leydesdorff 2016; Lipscomb 2000).

Systematic reviews of the literature are an important source of evidence for clinicians, researchers, consumers, and policymakers. They typically address a specific health‐related question using explicit methods to identify, appraise and synthesize research‐based evidence and present it in an accessible format, providing more reliable findings from which conclusions can be drawn and decisions made when reviews are well conducted (Chandler 2019). Systematic reviews can also be a useful starting point for researchers by identifying gaps in the evidence (Ioannidis 2016). Cochrane provides two main guidelines for the development and reporting of systematic reviews. These are the Cochrane Handbook for Systematic Reviews of Interventions (Higgins 2022b) and the Methodological Expectations of Cochrane Intervention Reviews (known as the MECIR standards) (Higgins 2022a). The Preferred Reporting Items for Systematic Reviews and Meta‐Analyses (PRISMA) statement (Moher 2009; Page 2021) provides guidance on how to report systematic reviews; there is evidence that adherence to these guidelines has improved over time (Page 2016); however, there is still scope for improvement. Updates to the PRISMA statement, and adherence to it, may have improved the reporting of systematic reviews, which in turn may affect the performance of search filters.

Systematic reviews are also widely used to develop clinical practice guidelines (IOM 2011), overviews (Becker 2011), and other forms of evidence synthesis, such as evidence mappings (Bragge 2011). Consequently, the appropriate and prompt identification of systematic reviews is necessary for many important purposes.

Description of the methods being investigated

Search filters were originally defined by Wilczynski 1995 as a list of terms that can improve the detection of studies of high quality for clinical practice. The Cochrane Handbook defines search filters as "search strategies that are designed to retrieve specific types of records, such as those of a particular methodological design" (Lefebvre 2022). Alternative terms include clinical queries, hedges, optimal search filters, optimal search strategies, quality filters, search filters, or search strategies (Jenkins 2004). Although the original definition by Wilczynski and colleagues referred to study quality, methodological search filters are not necessarily designed to retrieve studies according to their quality. Some search filters have been assessed to determine how effective they are at identifying relevant articles while avoiding the detection of irrelevant articles. For the purpose of this review, we restricted the definition of "search filters" to search strategies with a formally published test of diagnostic performance (e.g. sensitivity, specificity or precision); we refer to other methods for retrieving specific types of records simply as "search strategies". As the amount of research evidence continues to increase rapidly in some areas, and indexing is not consistent across all study designs, the use of search filters has been advocated to assist the searching process, because they reduce the total number of records found and increase the likelihood that those records will be of interest. For that reason, the performance of a search filter is usually judged by its capacity to retrieve as many relevant citations as possible while omitting irrelevant results (Wilczynski 2005), the aim being to reduce the number of irrelevant citations that have to be screened to find a relevant systematic review (Bachmann 2002).

Currently, MEDLINE can be searched via PubMed (www.pubmed.gov), which provides two publication type descriptors related to the retrieval of systematic reviews: "meta‐analysis" (introduced in 1993), which might not be useful for systematic reviews that do not include a meta‐analysis; and "review" (introduced in 1966), which may not differentiate systematic reviews from narrative reviews. More recently, PubMed incorporated a filter to retrieve "systematic reviews" through the system interface (systematic review subset) (Shojania 2001). This filter was originally intended to retrieve citations identified as systematic reviews, meta‐analyses, reviews of clinical trials, evidence‐based medicine, consensus development conferences, guidelines, and citations to articles from journals specialising in reviews of value to clinicians. It has been updated periodically and now contains terms more specific to systematic reviews (the last update was in December 2018). These modifications were pragmatic and have not undergone testing for sensitivity, specificity, precision, or accuracy in a formal validation process (Bradley 2010). Recently, the National Library of Medicine added new terminology to the Medical Subject Headings: "Systematic review as topic" and "Systematic review" [publication type], defined as "A review of primary literature in health and health policy that attempts to identify, appraise, and synthesise all the empirical evidence that meets specified eligibility criteria to answer a given research question ... aimed at minimising bias in order to produce more reliable findings regarding the effects of interventions for prevention, treatment, and rehabilitation that can be used to inform decision making" (NLM 2019a). The "systematic review as topic" heading will help with identifying studies that address systematic reviews as a method, and the "systematic review" publication type will help to identify systematic reviews themselves. However, the value of these index terms depends on how consistently and appropriately indexers apply them (NLM 2019b; NLM 2019c).
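As an illustration of how the "systematic review" publication type might be combined with a topic search, the sketch below assembles a PubMed query using the `[pt]` (publication type) field tag and builds, without sending, a request URL for NCBI's E-utilities `esearch` endpoint. The topic string and helper names are hypothetical. PubMed also exposes the systematic review subset described above through its own subset tag (commonly written `systematic[sb]`), which could be substituted in the same way; field tags should be checked against current PubMed documentation before use.

```python
# Sketch: restrict a topic search to records indexed with the
# "Systematic Review" publication type, then build an esearch URL.
# pubmed_sr_query and esearch_url are hypothetical helpers; no request is sent.
from urllib.parse import urlencode

ESEARCH = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def pubmed_sr_query(topic: str) -> str:
    """AND a topic with the systematic review publication type tag [pt]."""
    return f"({topic}) AND systematic review[pt]"


def esearch_url(term: str, retmax: int = 20) -> str:
    """Assemble an esearch URL for the PubMed database."""
    params = {"db": "pubmed", "term": term, "retmax": retmax}
    return ESEARCH + "?" + urlencode(params)


query = pubmed_sr_query("first aid")
print(query)  # (first aid) AND systematic review[pt]
print(esearch_url(query))
```

Because a publication type restriction relies entirely on indexing, records that have not yet been indexed, or that were indexed inconsistently, may be missed; this is the limitation that text‐word search filters try to address.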

Elsevier indexes Embase citations with the check tag "systematic review" for studies that systematically summarise all the available evidence (Embase Indexing Guide 2020). Over the years, several organisations and individuals have developed and evaluated search filters to retrieve systematic reviews in Embase (SIGN; Wilczynski 2007). Other filters have been developed for finding systematic reviews in MEDLINE via Ovid, such as the one by Montori and colleagues, who created their filter by assessing index terms and text words and through discussions with clinicians and biomedical librarians (Montori 2005).

How these methods might work

Methodological search filters are used to help end‐users search the literature effectively (Jenkins 2004). Filters have been developed with different levels of sensitivity and specificity according to the requirements of the users (for instance, those with high sensitivity, high specificity, a balance between sensitivity and specificity or a balance between sensitivity and precision) (Brettle 1998; Glanville 2000; Jenkins 2004).
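To make the choice between these profiles concrete, the following minimal sketch selects a filter according to a user's requirement. The sensitivity and specificity values are the internal‐validation estimates reported for Wilczynski 2007 (MEDLINE, Ovid) in Table 2; the selection rules and function name are illustrative assumptions, not a recommendation from this review.

```python
# Sketch: picking a filter profile to match a user's requirement.
# Values: Wilczynski 2007, MEDLINE (Ovid), internal validation (Table 2).
from typing import NamedTuple


class FilterProfile(NamedTuple):
    name: str
    sensitivity: float  # percent
    specificity: float  # percent


candidates = [
    FilterProfile("top sensitivity", 100.0, 63.5),
    FilterProfile("balanced (min |sens - spec|)", 92.5, 93.0),
    FilterProfile("top precision", 75.2, 99.4),
]


def pick(profiles, requirement: str) -> FilterProfile:
    """Hypothetical selection rule for one of three user requirements."""
    if requirement == "sensitivity":
        return max(profiles, key=lambda p: p.sensitivity)
    if requirement == "specificity":
        return max(profiles, key=lambda p: p.specificity)
    if requirement == "balance":
        # Smallest gap between sensitivity and specificity.
        return min(profiles, key=lambda p: abs(p.sensitivity - p.specificity))
    raise ValueError(f"unknown requirement: {requirement}")


for req in ("sensitivity", "specificity", "balance"):
    print(req, "->", pick(candidates, req).name)
```

A searcher building an exhaustive evidence synthesis would usually accept the low specificity of the first profile, whereas a clinician looking for a few reviews quickly might prefer the most specific one.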

Methodological search filters have been developed for various study designs and have been found to be particularly useful for intervention studies. Within the Cochrane Handbook, for example, a highly sensitive search strategy is proposed for identifying reports of randomised trials (Lefebvre 2022); and there are Cochrane Reviews of the evidence on filters for retrieving studies of diagnostic test accuracy (Beynon 2013) and observational studies (Li 2019). However, there is no guidance for finding the best filters for identifying systematic reviews (Becker 2011).

Why it is important to do this review

Systematic reviews provide core material for guidelines, overviews, health technology assessments and other forms of evidence synthesis, as well as being an invaluable tool for decision‐making (Sprakel 2019). Authors of these documents are faced with a choice of different methods to retrieve systematic reviews for their research question or clinical scenario. Given the variety and number of available search filters for systematic reviews, a review is needed to provide evidence about their retrieval properties at the time they were developed. We have restricted our review scope to MEDLINE and Embase since these are large and widely used bibliographic databases, often suggested as mandatory when conducting evidence syntheses in health care (Bramer 2017). We expect that the findings of our review will aid those who use systematic methods for information retrieval (e.g. researchers conducting overviews or evidence maps) who wish to use validated search filters with adequate sensitivity and specificity, whereas other stakeholders (e.g. clinicians and consumers) might use search filters that are built into search interfaces, such as the "systematic review" filter in PubMed/MEDLINE.

Objectives

To review systematically empirical studies that report the development, evaluation, or comparison of search filters to retrieve reports of systematic reviews in MEDLINE and Embase.

Methods

Criteria for considering studies for this review

Types of studies

We included studies if one of their main objectives was the development, evaluation, or comparison of a search filter that could be used to identify systematic reviews in MEDLINE, Embase, or both. A development study is one in which a filter was generated and tested for its ability to identify relevant articles while avoiding the detection of irrelevant articles. An evaluation study is one in which these properties of a developed filter are tested in a new reference set of relevant studies. A comparison study is one in which different search filters are tested in a reference set to compare their properties. We considered systematic reviews as defined by the study authors.
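The testing step shared by development, evaluation and comparison studies can be sketched as running a filter over a reference set in which every record has already been labelled as a systematic review or not, then tallying the four cells of a 2×2 table (a: reviews retrieved, b: non‐reviews retrieved, c: reviews missed, d: non‐reviews not retrieved). The records and filter terms below are hypothetical toy data, and matching phrases in titles is a deliberate simplification of real database searching.

```python
# Sketch: tallying 2x2 cells for a toy filter over a labelled reference set.
# Records and FILTER_TERMS are hypothetical; real filters search indexed fields.

records = [
    {"title": "A systematic review of splinting", "is_sr": True},
    {"title": "Meta-analysis of burn dressings", "is_sr": True},
    {"title": "Review of CPR training: a pooled analysis", "is_sr": True},
    {"title": "Protocol for a systematic review of ankle sprains", "is_sr": False},
    {"title": "Narrative review of wound care", "is_sr": False},
    {"title": "Randomised trial of cold water cooling", "is_sr": False},
]

FILTER_TERMS = ("systematic review", "meta-analysis")  # hypothetical filter


def retrieved(record) -> bool:
    """Toy filter: Boolean OR of case-insensitive phrase matches in the title."""
    title = record["title"].lower()
    return any(term in title for term in FILTER_TERMS)


def tally(records):
    """Return (a, b, c, d) for the filter against the reference labels."""
    a = sum(1 for r in records if retrieved(r) and r["is_sr"])
    b = sum(1 for r in records if retrieved(r) and not r["is_sr"])
    c = sum(1 for r in records if not retrieved(r) and r["is_sr"])
    d = sum(1 for r in records if not retrieved(r) and not r["is_sr"])
    return a, b, c, d


print(tally(records))  # (2, 1, 1, 2)
```

The missed "pooled analysis" and the retrieved protocol illustrate the two kinds of error that filter developers trade off when adding or removing terms.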

Types of data

We collected the following information from the included studies.

Methodological filters: fully detailed search strategies

Dates the searches were conducted

Years covered by the searches

Electronic bibliographic database (MEDLINE or Embase) and interface used (e.g. Ovid or PubMed)

Healthcare topic

Characteristics of the gold standard used to test the filter

Outcome measures (e.g. sensitivity, specificity, or precision)

Types of methods

Search strategies for identifying reports of systematic reviews in MEDLINE and Embase.

Types of outcome measures

We included any of the following outcome measures (see Table 1 below for more information).

Primary outcomes

Sensitivity: proportion of systematic reviews that are correctly identified using the methodological filter.

Specificity: proportion of records that are not systematic reviews and are correctly not identified by the methodological filter.

Precision: proportion of systematic reviews that are identified from all records retrieved using the methodological filter.

Table 1. Definition of outcome measures of this review

| Searches with methodological filters | Gold standard: systematic review | Gold standard: not systematic review |
|---|---|---|
| Detected by the filter | a | b |
| Not detected by the filter | c | d |

- Sensitivity = a / (a + c)
- Specificity = d / (b + d)
- Precision = a / (a + b)
- Accuracy = (a + d) / (a + b + c + d)
- Number needed to read = 1 / precision = (a + b) / a
- Systematic reviews in the gold standard = a + c
- Non‐systematic reviews in the gold standard = b + d
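The Table 1 formulas can be expressed directly in code. This is a minimal sketch in which `a`, `b`, `c` and `d` follow the cell definitions of Table 1; the class name and the example counts are hypothetical.

```python
# Sketch of the Table 1 outcome measures computed from the 2x2 cell counts.
# FilterCounts is a hypothetical container; the example counts are invented.
from dataclasses import dataclass


@dataclass(frozen=True)
class FilterCounts:
    a: int  # systematic reviews detected by the filter
    b: int  # non-systematic reviews detected by the filter
    c: int  # systematic reviews not detected
    d: int  # non-systematic reviews not detected

    def sensitivity(self) -> float:
        return self.a / (self.a + self.c)

    def specificity(self) -> float:
        return self.d / (self.b + self.d)

    def precision(self) -> float:
        return self.a / (self.a + self.b)

    def accuracy(self) -> float:
        return (self.a + self.d) / (self.a + self.b + self.c + self.d)

    def nnr(self) -> float:
        # Number needed to read: 1 / precision == (a + b) / a
        return (self.a + self.b) / self.a


counts = FilterCounts(a=90, b=810, c=10, d=9090)
print(f"sensitivity {counts.sensitivity():.1%}")  # 90.0%
print(f"specificity {counts.specificity():.1%}")  # 91.8%
print(f"precision   {counts.precision():.1%}")    # 10.0%
print(f"accuracy    {counts.accuracy():.1%}")     # 91.8%
print(f"NNR         {counts.nnr():.1f}")          # 10.0
```

With only 100 systematic reviews among 10,000 records, a filter with about 92% specificity still retrieves nine non‐reviews for every review. This is why precision falls steeply when a reference set contains few systematic reviews, as in the very low precision values reported by Lee 2012.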

We defined a priori the levels of sensitivity (more than 90%) and precision (more than 10%) in external validation studies that would be acceptable thresholds for use when searching for systematic reviews (Beynon 2013).
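Both thresholds must be exceeded at the same time. The sketch below applies them to the external‐validation estimates reported for the three Wilczynski 2007 MEDLINE (Ovid) strategies in Table 2; the function name is hypothetical, and strict inequalities are assumed because the thresholds are stated as "more than".

```python
# Sketch: a priori acceptability thresholds (sensitivity > 90%, precision > 10%).
# meets_thresholds is a hypothetical helper; inputs are reported percentages.

SENSITIVITY_THRESHOLD = 90.0  # percent, strictly greater than
PRECISION_THRESHOLD = 10.0    # percent, strictly greater than


def meets_thresholds(sensitivity: float, precision: float) -> bool:
    return sensitivity > SENSITIVITY_THRESHOLD and precision > PRECISION_THRESHOLD


# Wilczynski 2007, MEDLINE (Ovid), external validation: (sensitivity, precision)
external = {
    "top sensitivity": (99.9, 3.14),
    "minimising |sens - spec|": (98.0, 14.2),
    "top precision": (71.2, 57.1),
}

for name, (sens, prec) in external.items():
    print(name, meets_thresholds(sens, prec))  # False, True, False in order
```

Only the strategy that balances sensitivity and specificity passes; the most sensitive strategy fails on precision, and the most precise one fails on sensitivity.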

Secondary outcomes

Accuracy: proportion of records that were adequately classified using the methodological filter

Number needed to read (NNR): 1/precision

Quality assessment of the systematic reviews retrieved and missed by the search strategy ("a" + "c" in Table 1), as analysed by the developers of the filter. This includes whether the authors of the included studies provided details on the quality of reviews that were retrieved or missed by the search filter; for instance, the proportion of high‐quality reviews missed by the search filter.

Definitions adapted from Cooper 2018.
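The Table 1 formulas can be expressed as a small Python sketch, assuming the four cell counts are known from a gold standard in which every record has been classified as a systematic review or not. With a quasi‐gold standard, b and d are not known, so specificity and accuracy cannot be computed. The class and method names below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoByTwo:
    """Cell counts from Table 1 (filter result versus gold standard)."""
    a: int  # systematic reviews retrieved by the filter
    b: int  # non-systematic reviews retrieved by the filter
    c: int  # systematic reviews missed by the filter
    d: int  # non-systematic reviews correctly not retrieved

    def sensitivity(self) -> float:
        return self.a / (self.a + self.c)

    def specificity(self) -> float:
        return self.d / (self.b + self.d)

    def precision(self) -> float:
        return self.a / (self.a + self.b)

    def accuracy(self) -> float:
        return (self.a + self.d) / (self.a + self.b + self.c + self.d)

    def number_needed_to_read(self) -> float:
        # NNR = 1 / precision = (a + b) / a; raises ZeroDivisionError if a == 0.
        return (self.a + self.b) / self.a


# Hypothetical counts, not taken from any included study.
table = TwoByTwo(a=90, b=810, c=10, d=9090)
print(table.sensitivity())            # 0.9
print(table.precision())              # 0.1
print(round(table.specificity(), 3))  # 0.918
print(table.number_needed_to_read())  # 10.0
```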

Search methods for identification of studies

Electronic searches

We searched the following databases from inception to January 2023.

MEDLINE Ovid SP and Epub Ahead of Print, In‐Process, In‐Data‐Review & Other Non‐Indexed Citations, Daily and Versions (from 1946 to January 2023);

Embase (Elsevier.com; from 1974 to January 2023);

PsycINFO Ovid SP (from 1967 to January 2023);

Library, Information Science & Technology Abstracts (LISTA) (EBSCO; from inception to January 2023);

Science Citation Index (Web of Science, Clarivate; from 1964 to January 2023).

For detailed search strategies for each database, see Appendix 1. We did not restrict searches by the language of publication.

Searching other resources

To identify additional published, unpublished and ongoing studies:

relevant studies identified from the above sources were entered into PubMed, and the Related Articles feature was used; and

reference lists of all relevant studies were assessed (Horsley 2011).

We also searched the websites of, among others:

the InterTASC Information Specialists’ Sub‐Group (www.intertasc.org.uk/subgroups/issg);

the Health Information Research Unit (McMaster) (https://hiru.mcmaster.ca/hiru/).

Data collection and analysis

Selection of studies

Four review authors (CMEL, VG, VV, JVAF) worked independently in pairs to screen the titles and abstracts of all retrieved records and assess papers for eligibility. Any disagreements were resolved by discussion or consultation with a third author (IS) to reach a consensus.

Full copies of the relevant reports were obtained for records possibly meeting the inclusion criteria. Each full report was assessed independently in pairs by four review authors (CMEL, VG, VV, JVAF) to determine if it met the inclusion criteria for the review. Any disagreements were resolved by discussion or by consultation with a third author (IS) to reach a consensus.

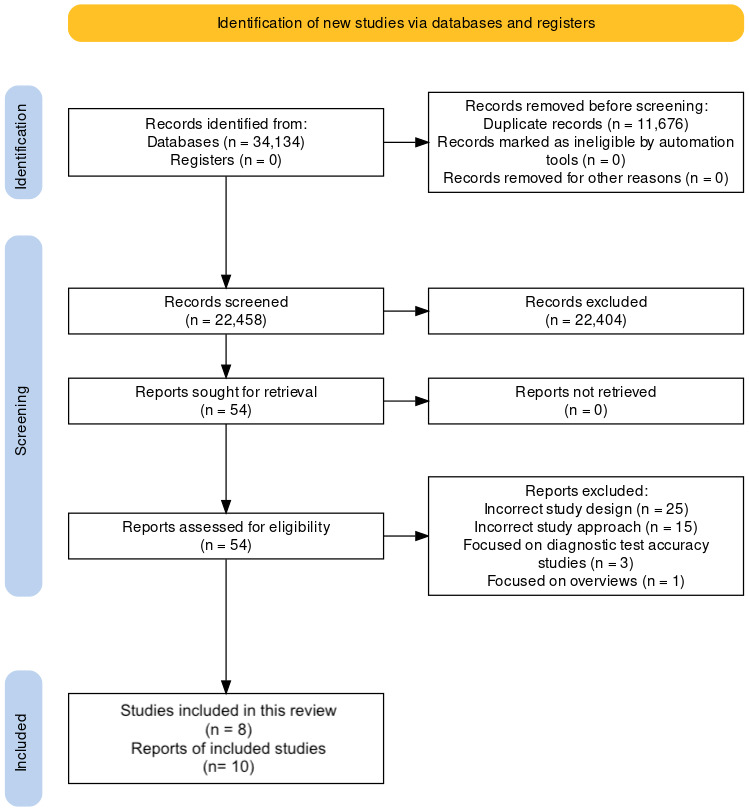

We presented results following a PRISMA 2020 flow diagram (Haddaway 2022; Page 2021).

Data extraction and management

Two review authors (LG and CMEL) independently extracted data, using a piloted pre‐specified data extraction form. We extracted information on the following.

Citation details for the study

Methodological filter used

Dates the searches were conducted

Years covered by the searches

Search interface used (e.g. Ovid or PubMed)

Healthcare topic

Gold standard

Outcome measures (e.g. sensitivity, specificity, or precision)

Any disagreements were resolved by discussion or by consultation with a third review author to reach a consensus. If there were studies with incomplete or missing data, we attempted to contact the corresponding author (Young 2011).

Assessment of risk of bias in included studies

A small number of critical appraisal tools have been developed to assess the quality of methodological search filters (Bak 2009; Glanville 2008; Jenkins 2004). The included studies were assessed against the search filter appraisal checklist proposed by the UK InterTASC Information Specialists’ Sub‐Group (Glanville 2008) and reported in the Cochrane Handbook (Lefebvre 2022).

Two review authors (LIG and CMEL) completed the appraisal checklist (Appendix 2). Any disagreements were resolved by discussion or by consultation with the remaining group of authors (VV, JVAF, or IS) to reach a consensus.

Data synthesis

We synthesized filter performance measures separately for MEDLINE and Embase. We tabulated the performance measures reported by development and evaluation studies, grouped by individual filter, so that a filter's originally reported performance could be compared with its performance in later evaluation studies. Where sensitivity, specificity, or precision (with 95% confidence intervals (CI)) were not reported in the original reports, we calculated them from 2 x 2 tables, where possible. We built data extraction tables from the evaluation fields of the InterTASC Information Specialists’ Sub‐Group (ISSG) search filter appraisal checklist (Glanville 2008) to assess how the studies of each filter were reported, covering, for example, the methodological objective of the filter (retrieval of systematic reviews) and the search interface, the reference standard, the method used to prepare the gold standard, sensitivity and precision, and external validation, among other elements.
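The review does not state which method was used to compute 95% CIs from 2 x 2 tables. As one possible approach, assumed here rather than taken from the review, the Wilson score interval for a proportion such as sensitivity, a / (a + c), can be sketched as follows (the function name is hypothetical):

```python
import math


def wilson_ci(successes: int, total: int,
              z: float = 1.959963984540054) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (default: about 95%)."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= successes <= total:
        raise ValueError("successes must lie between 0 and total")
    p = successes / total
    z2 = z * z
    denom = 1 + z2 / total
    centre = (p + z2 / (2 * total)) / denom
    half_width = z * math.sqrt(p * (1 - p) / total + z2 / (4 * total * total)) / denom
    # Clamp to [0, 1] to absorb floating-point rounding at the extremes.
    return max(0.0, centre - half_width), min(1.0, centre + half_width)


# Sensitivity with hypothetical counts a = 90 and a + c = 100.
low, high = wilson_ci(90, 100)
print(f"sensitivity 0.90, 95% CI {low:.3f} to {high:.3f}")
```

The Wilson interval is chosen here because, unlike the simple normal approximation, it stays within 0 to 1 when sensitivity is close to 100%, which is common for search filters.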

Subgroup analysis and investigation of heterogeneity

Where sufficient data were available, we planned to perform subgroup analyses based on the following characteristics.

Dates the searches were conducted: searches conducted before the release of the PRISMA statement in 2009 versus those conducted after its release (because the PRISMA guidance may affect how systematic reviews are reported)

Search interface used (e.g. PubMed or Ovid)

Healthcare topic: searches conducted within a specific health topic (e.g. public health, cardiovascular disease, etc.) versus those conducted across the biomedical literature or within a core set of non‐specialized biomedical journals (e.g. Core Clinical Journals)

Results

Description of studies

For study details, see Characteristics of included studies; Characteristics of excluded studies.

Results of the search

We retrieved 34,134 records from searches of the MEDLINE, Embase, PsycINFO, LISTA and Science Citation Index electronic databases. We did not identify any studies through additional methods. After removing 11,676 duplicates, 22,458 records remained for title and abstract screening, of which we excluded 22,404 as irrelevant. We assessed the full texts of the remaining 54 records for inclusion. Finally, we included eight studies reported in 10 publications (Figure 1). See Characteristics of included studies; Characteristics of excluded studies. We did not classify any study as awaiting classification. We did not identify any ongoing studies.

Figure 1. PRISMA flow diagram

Included studies

All included studies focused on methodological filters to retrieve systematic reviews. Four studies were development studies (Boynton 1998; Lee 2012; White 2001; Wilczynski 2007). Seven studies were evaluation studies (Avau 2021; Boluyt 2008; Lee 2012; Salvador‐Oliván 2021; Shojania 2001; White 2001; Wilczynski 2007). Seven studies compared their filter with other available filters, some of them included in this review (Avau 2021; Boluyt 2008; Boynton 1998; Lee 2012; Salvador‐Oliván 2021; White 2001; Wilczynski 2007).

The included studies developed filters for the following databases.

MEDLINE (Ovid): Boynton 1998; Lee 2012; White 2001; Wilczynski 2007

MEDLINE (PubMed): Avau 2021; Boluyt 2008; Salvador‐Oliván 2021; Shojania 2001

Embase (Ovid): Lee 2012; Wilczynski 2007

Embase (via Elsevier): Avau 2021

The main objective of the studies that developed filters for MEDLINE was to improve the performance in the retrieval of systematic reviews by improving sensitivity, specificity and precision through different search approaches. These approaches included manual or electronic searches for selecting relevant terms.

The Ovid platform was used for two of the Embase filters (Lee 2012; Wilczynski 2007). Their declared objective was to retrieve systematic reviews while improving the precision and sensitivity of the search strategy.

Each study may have developed and validated a variable number of filters, and a filter might have been tested in more than one dataset and compared with other filters. See Table 2 (below) for a summary of the databases, the gold standards or quasi‐gold standards, and the types of validation and comparisons of the included studies.

Methods and platform for the development of the search filter

The following methods were used to develop the search filter.

Analysis of term frequency in MEDLINE (Boynton 1998) or in a pre‐defined set of citations using text mining software (White 2001)

Expert input (Boynton 1998)

Previously validated filters (Lee 2012)

A combination of relevant publication types with title and text words typically found in systematic reviews (Shojania 2001)

Using methodological search terms and phrases, including indexing terms and text words from clinical articles from a subset of journal articles (Wilczynski 2007)

The terms used in the search strategies were searched using various field labels, for example, abstract or subject heading.
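Term frequency analysis, as used by Boynton 1998, can be illustrated by counting how many gold‐standard records contain each word; a term's document frequency divided by the size of the gold standard approximates the sensitivity of that term on its own. This is a minimal sketch only: the records, the tokenisation rule and the stop‐word list are hypothetical, and the included studies used their own procedures (White 2001, for example, used text mining software).

```python
import re
from collections import Counter

# Hypothetical stop-word list; real analyses would use a fuller one.
STOPWORDS = {"and", "of", "the", "in", "were"}


def term_document_frequency(records: list[str]) -> Counter:
    """Count, for each lower-cased word, how many records contain it at least once."""
    counts: Counter = Counter()
    for text in records:
        # Words of two or more letters; hyphenated forms such as "meta-analysis" stay whole.
        words = set(re.findall(r"[a-z][a-z\-]+", text.lower())) - STOPWORDS
        counts.update(words)
    return counts


# Hypothetical gold-standard titles, for illustration only.
gold_standard = [
    "A systematic review and meta-analysis of statins",
    "Meta-analysis of randomised trials of aspirin",
    "Systematic review of exercise therapy; MEDLINE and Embase were searched",
]
freq = term_document_frequency(gold_standard)
n = len(gold_standard)
for term, k in freq.most_common(3):
    print(f"{term}: in {k}/{n} records ({k / n:.0%})")
```

Counting each word once per record, rather than every occurrence, keeps the count aligned with sensitivity, which is defined per record.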

Gold standard/Quasi‐Gold Standard

Gold standards were constructed in various ways: from indexing terms and keywords referring to systematic review methods (Lee 2012), by handsearching several journals (White 2001), or with guidance from clinicians and librarians (Wilczynski 2007).

Other studies used a quasi‐gold standard, i.e. a database in which the authors identified systematic reviews, but did not classify the other records as non‐systematic reviews. In these studies, specificity could not be assessed, only sensitivity and precision (Avau 2021; Boynton 1998; Shojania 2001).
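The reason specificity cannot be assessed with a quasi‐gold standard can be shown in a few lines. Sensitivity becomes relative recall: the share of the quasi‐gold‐standard records that the filter retrieves. Retrieved records outside the quasi‐gold standard, however, are unclassified, so they cannot be counted as true or false positives without further screening, and records known not to be systematic reviews (cell d in Table 1) are never identified. A minimal sketch with hypothetical record identifiers:

```python
def relative_recall(quasi_gold_standard: set[str], retrieved: set[str]) -> float:
    """Share of quasi-gold-standard records that the filter retrieves."""
    if not quasi_gold_standard:
        raise ValueError("quasi-gold standard is empty")
    return len(quasi_gold_standard & retrieved) / len(quasi_gold_standard)


# Hypothetical record IDs, for illustration only.
qgs = {"101", "102", "103", "104"}
retrieved = {"101", "102", "104", "900", "901"}
print(relative_recall(qgs, retrieved))  # 0.75

# "900" and "901" are retrieved but unclassified: without screening them we
# cannot tell whether they are systematic reviews, and no record is known to
# be a non-systematic review, so specificity d / (b + d) cannot be computed.
```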

Validation

One study (Lee 2012) performed external validation with a dataset of indexed articles. Another study (Wilczynski 2007) performed two external validations: one with a set of records that included the Cochrane Database of Systematic Reviews and one without it. For Embase, both search filters were validated internally, but only one (Lee 2012) was validated both internally and externally. Three studies did not perform external validation (Boynton 1998; Shojania 2001; White 2001).

Comparisons to other filters

Most studies compared their filters with other available filters, such as the Hunt and McKibbon full and brief strategies (Hunt 1997) and the Centre for Reviews and Dissemination (CRD) brief and full strategies (NHS 1996). Some filters included in this review were compared with other filters also included in this review. Only one study (Shojania 2001) did not compare its filter with other commonly used filters.

Outcome measures

Six studies reported the sensitivity of their developed filter (Boluyt 2008; Boynton 1998; Lee 2012; Shojania 2001; White 2001; Wilczynski 2007). Seven studies reported precision (Avau 2021; Boluyt 2008; Boynton 1998; Lee 2012; Salvador‐Oliván 2021; Shojania 2001; Wilczynski 2007), and six studies (Avau 2021; Boynton 1998; Lee 2012; Shojania 2001; White 2001; Wilczynski 2007) reported specificity. Only one study reported positive predictive value (Shojania 2001).

Table 2. Summary characteristics of search filters.

| Author/year | Database (platform) | Gold standard | Type of validation | Comparisons to other filters | Available outcome measures |

| Boynton 1998 | MEDLINE (Ovid) | Quasi‐gold standard: handsearch and electronic search (n = 288 records) | Internal | Hunt and McKibbon full and brief (Hunt 1997); Centre for Reviews and Dissemination full and brief (NHS 1996) | Sensitivity, specificity and precision |

| Shojania 2001* | MEDLINE (PubMed) | Gold standard 1: systematic reviews from DARE (n = 100 records); gold standard 2: handsearched from AC J Club (n = 104 records) | External | None | Sensitivity, specificity, precision, positive predictive value |

| White 2001 | MEDLINE (Ovid) | 1 GS, handsearch; QGS (SR): n = 110 records; non‐SR: n = 110 records; non‐review: n = 125 records | Internal / External | Boynton 1998; NHS 1996 | Sensitivity and specificity |

| Wilczynski 2007 | Embase (Ovid) | Gold standard 1: handsearch of 55 journals (n = 27,769 records) | Internal | None | Sensitivity, specificity, accuracy and precision |

| Wilczynski 2007 | MEDLINE (Ovid) | Gold standard 1: handsearch of journals (n = 27,769 records); gold standard 2: validation dataset, CDSR (n = 10,446 records); gold standard 3: full validation database (n = 49,028 records) | Internal / External | Hunt 1997; NHS 1996; Shojania 2001; Wilczynski 2010 | Sensitivity, specificity and precision |

| Boluyt 2008* | MEDLINE (PubMed) | 1 GS, established by searching DARE for systematic reviews of children's health and by handsearching various journals for systematic reviews | External | Shojania 2001; Boynton 1998; White 2001; Montori 2005; Cochrane Child Health Field 2006 | Sensitivity and precision |

| Lee 2012* | MEDLINE (Ovid); Embase (Ovid) | 1 GS for each database: manual screening (n = 387 records) | Internal / External | Boynton 1998; BMJ Clinical Evidence; Hunt 1997; NHS 1996; SIGN; Shojania 2001; Wilczynski 2007 | Sensitivity, specificity, precision, NNR |

| Avau 2021* | MEDLINE (PubMed); Embase (via Elsevier) | 1 quasi‐GS for MEDLINE (PubMed) (n = 77 records); 1 quasi‐GS for Embase (Embase.com) (n = 70 records) | External | SIGN | Specificity, precision, NNR |

| Salvador‐Oliván 2021 | MEDLINE (PubMed) | No GS developed | External | PubMed SR filter; Shojania 2001 | Precision, NNR |

Footnote: CDSR: Cochrane Database of Systematic Reviews; DARE: Database of Abstracts of Reviews of Effects; GS: gold standard; NNR: number needed to read; QGS: quasi‐gold standard; SIGN: Scottish Intercollegiate Guidelines Network; SR: systematic review. * Filter developed for a specific clinical area (first aid in Avau 2021; child health in Boluyt 2008; public health in Lee 2012; and multiple topics, such as colorectal cancer, thrombolytic therapy for venous thromboembolism, and treatment of dementia, in Shojania 2001).

Excluded studies

We excluded 44 records after full‐text selection.

We excluded 25 due to an incorrect study design that did not focus on the methodological development of the filters.

We excluded 15 because of an incorrect study approach: they did not report search strategies to retrieve systematic reviews in PubMed or Embase.

Three records focused on diagnostic test accuracy studies.

One study focused on overviews.

See Characteristics of excluded studies for more details.
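As a consistency check on the study flow, the counts reported under Results of the search and Excluded studies can be reconciled with a few lines of Python (a sketch; the variable names and the shortened exclusion labels are illustrative):

```python
# Counts reported in "Results of the search" and "Excluded studies".
records_identified = 34_134
duplicates_removed = 11_676
excluded_title_abstract = 22_404
excluded_full_text = {
    "study design not focused on filter development": 25,
    "no search strategy for systematic reviews in PubMed or Embase": 15,
    "diagnostic test accuracy studies": 3,
    "overviews": 1,
}

screened = records_identified - duplicates_removed
full_text_assessed = screened - excluded_title_abstract
included_publications = full_text_assessed - sum(excluded_full_text.values())

print(screened, full_text_assessed, included_publications)  # 22458 54 10
```

The result matches the 10 publications reporting the eight included studies.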

Risk of bias in included studies

All studies stated their objectives clearly, identifying the focus of their research and the database and interface used. The focus of the filters was also clearly reported. Four studies described additional specific topics for their filter's focus (for example, colorectal cancer, thrombolytic therapy for venous thromboembolism, and treatment of dementia in Shojania 2001 or public health in Lee 2012).

The identification of the number of gold standards or quasi‐gold standards of known relevant records was clearly reported, when applicable. The size of the gold standard was not reported in some studies (Lee 2012; Shojania 2001). The size of gold standards was relatively small, ranging from 70 to 387 for most of the studies, with the exception of Wilczynski 2007, which screened 27,769, 10,446 and 49,028 records for each gold standard to evaluate the search filters.

The methods used to identify the search terms incorporated in the filters varied widely across studies and were not clearly reported.

Internal validity testing was reported in four studies (Boynton 1998; Lee 2012; White 2001; Wilczynski 2007). Most studies reported several tested strategies, with various performance measures for each, although some studies reported only a single value for each performance measure, with no measure of variance.

External validity testing was performed in seven studies (Avau 2021; Boluyt 2008; Lee 2012; Salvador‐Oliván 2021; Shojania 2001; White 2001; Wilczynski 2007). In these studies, various strategies were tested, and several performance measures were also reported.

Most studies addressed their limitations in the discussion section, and the review team identified no additional potential limitations when appraising them. Most studies also compared their filters with other available filters, but the references for the compared filters were not consistently reported.

See Additional Table 3; Table 4; Table 5; Table 6; Table 7; Table 8; Table 9; Table 10 for a detailed assessment of each included study using the InterTASC appraisal checklist.

2. InterTASC ‐ Avau 2021.

| A. Information | |

| A.1 State the author’s objective | Validate search filters for systematic reviews, intervention and observational studies translated from Ovid MEDLINE and Embase syntax to PubMed and Embase.com (Elsevier) |

| A.2 State the focus of the research. | Balance of sensitivity and number needed to read (NNR) |

| A.3 Database(s) and search interface(s). | MEDLINE (PubMed) and MEDLINE (Ovid); Embase (Embase.com) |

| A.4 Describe the methodological focus of the filter (e.g. RCTs). | Systematic reviews, Intervention studies, Observational studies |

| A.5 Describe any other topic that forms an additional focus of the filter (e.g. clinical topics such as breast cancer, geographic location such as Asia or population grouping such as paediatrics). | Yes, first aid |

| A.6 Other observations. | |

| B. Identification of a gold standard (GS) of known relevant records | |

| B.1 Did the authors identify one or more gold standards (GSs)? | 1 GS for MEDLINE (PubMed); 1 GS for Embase (Embase.com) |

| B.2 How did the authors identify the records in each GS? | Each record was: 1) obtained from searches on PubMed or Embase developed for an evidence summary informing our 2019 Sub‐Saharan Africa Advanced First Aid Manual or 2020 updates to our Flanders, Belgium, or Sub‐Saharan Africa Basic First Aid Guidelines; 2) identified as a relevant systematic review, intervention study, or observational study, as judged by the reviewer of the evidence summary according to predefined study selection criteria described in CEBaP’s methodological charter; and 3) originally retrieved without using a methodological search filter. Records from different searches were accrued until a minimum of 70 relevant publications of a specific study design were included in a gold standard. For PubMed, the reference gold standard consisted of 77 systematic review references, collected in 33 evidence summaries on different first aid topics. For Embase, the reference gold standard consisted of 70 systematic review references, collected in 35 evidence summaries. |

| B.3 Report the dates of the records in each GS. | Not reported |

| B.4 What are the inclusion criteria for each GS? | Yes, detailed in Appendix B.2 |

| B.5 Describe the size of each GS and the authors’ justification, if provided (for example the size of the gold standard may have been determined by a power calculation) | ‐ PubMed: The reference GS consisted of 77 SR references, collected in 33 evidence summaries on different first aid topics. ‐ Embase: The reference GS consisted of 70 SR references, collected in 35 evidence summaries. |

| B.6 Are there limitations to the gold standard(s)? | Search dates limited and the filters evaluated in this paper were specifically tested on searches of evidence summaries used for first aid guideline projects. |

| B.7 How was each gold standard used? | To test external validity. |

| B.8 Other observations. | |

| C. How did the researchers identify the search terms in their filter(s) (select all that apply)? | |

| C.1 Adapted a published search strategy. | The systematic review filters tested were translated from existing filters from SIGN, designed for Ovid MEDLINE and Ovid Embase. “The SR filters tested were translated from existing SIGN filters, designed for Ovid MEDLINE and Ovid Embase, into PubMed and Embase.com syntax. Adaptations were made to accommodate the SR‐related index terms that were added to the PubMed MeSH tree and Embase Emtree after the development of the SIGN filters. For PubMed, "Systematic Review"[PT] and "Systematic Reviews as Topic"[MeSH] were included in the filter. For Embase, we included 'meta‐analysis (topic)'/exp, 'systematic review (topic)'/exp, and 'systematic review'/exp in the filter." |

| C.2 Asked experts for suggestions of relevant terms. | Not reported |

| C.3 Used a database thesaurus. | MEDLINE: MeSH Embase: Emtree |

| C.4 Statistical analysis of terms in a gold standard set of records (see B above). | Not reported |

| C.5 Extracted terms from the gold standard set of records (see B above). | Not reported |

| C.6 Extracted terms from some relevant records (but not a gold standard). | Not reported |

| C.7 Tick all types of search terms tested. | Subject headings Text words (e.g. in title, abstract) Publication types Subheadings |

| C.8 Include the citation of any adapted strategies. | Scottish Intercollegiate Guidelines Network. Search filters [Internet]. Edinburgh, UK: Healthcare Improvement Scotland [cited Nov 25 2020]. Available from: <https://www.sign.ac.uk/what-we-do/methodology/search-filters/>. |

| C.9 How were the (final) combination(s) of search terms selected? | Not reported |

| C.10 Were the search terms combined (using Boolean logic) in a way that is likely to retrieve the studies of interest? | PubMed filter: ((“Meta‐Analysis as Topic”[MeSH] OR metaanaly*[TIAB] OR metaanaly*[TIAB] OR “Meta‐Analysis”[PT] OR “Systematic Review”[PT] OR “Systematic Reviews as Topic”[MeSH] OR systematic review*[TIAB] OR systematic overview*[TIAB] OR “Review Literature as Topic”[MeSH]) OR (cochrane[TIAB] OR embase[TIAB] OR psychlit[TIAB] OR psyclit[TIAB] OR psychinfo[TIAB] OR psycinfo[TIAB] OR cinahl[TIAB] OR cinhal[TIAB] OR “science citation index”[TIAB] OR bids[TIAB] OR cancerlit[TIAB]) OR (reference list*[TIAB] OR bibliograph*[TIAB] OR hand‐search*[TIAB] OR “relevant journals”[TIAB] OR manual search*[TIAB]) OR ((“selection criteria”[TIAB] OR “data extraction”[TIAB]) AND “Review”[PT])) NOT (“Comment”[PT] OR “Letter”[PT] OR “Editorial”[PT] OR (“Animals”[MeSH] NOT (“Animals”[MeSH] AND “Humans”[MeSH]))) Embase filter: ((‘meta analysis (topic)’/exp OR ‘metaanalysis’/exp OR (meta NEXT/1 analy*):ab,ti OR metaanaly*:ab,ti OR ‘systematic review (topic)’/exp OR ‘systematic review’/exp OR (systematic NEXT/1 review*):ab,ti OR (systematic NEXT/1 overview*):ab,ti) OR (cancerlit:ab,ti OR cochrane:ab,ti OR embase:ab,ti OR psychlit:ab,ti OR psyclit:ab,ti OR psychinfo:ab,ti OR psycinfo:ab,ti OR cinahl:ab,ti OR cinhal:ab,ti OR ‘science citation index’:ab,ti OR bids:ab,ti) OR ((reference NEXT/1 list*):ab,ti OR bibliograph*:ab,ti OR hand‐search*:ab,ti OR (manual NEXT/1 search*):ab,ti OR ‘relevant journals’:ab,ti) OR ((‘data extraction’:ab,ti OR ‘selection criteria’:ab,ti) AND review/it)) NOT (letter/it OR editorial/it OR (‘animal’/exp NOT (‘animal’/exp AND ‘human’/exp))) |

| C.11 Other observations. | Not reported |

| D. Internal validity testing (This type of testing is possible when the search filter terms were developed from a known gold standard set of records). | |

| D.1 How many filters were tested for internal validity? | Not applicable |

| For each filter report the following information | |

| D.2 Was the performance of the search filter tested on the gold standard from which it was derived? | Not applicable |

| D.3 Report sensitivity data (a single value, a range, ‘Unclear’* or ‘not reported’, as appropriate). *Please describe. | Not applicable |

| D.4 Report precision data (a single value, a range, ‘Unclear’* or ‘not reported’ as appropriate). *Please describe. | Not applicable |

| D.5 Report specificity data (a single value, a range, ‘Unclear’* or ‘not reported’ as appropriate). *Please describe. | Not applicable |

| D.6 Other performance measures reported. | Not applicable |

| D.7 Other observations. | Not applicable |

| E. External validity testing (This section relates to testing the search filter on records that are different from the records used to identify the search terms) | |

| E.1 How many filters were tested for external validity on records different from those used to identify the search terms? | 2 filters (reported in Appendix 4) |

| E.2 Describe the validation set(s) of records, including the interface. | PubMed: the reference GS consisted of 77 systematic review references, collected in 33 evidence summaries on different first aid topics. Embase: the reference GS consisted of 70 systematic review references, collected in 35 evidence summaries. |

| E.3 On which validation set(s) was the filter tested? | Not reported. |

| E.4 Report sensitivity data for each validation set (a single value, a range or ‘Unclear’ or ‘not reported’, as appropriate). | Not reported |

| E.5 Report precision data for each validation set (report a single value, a range or ‘Unclear’ or ‘not reported’, as appropriate). | Single value: PubMed 9.7%; Embase 5.4% |

| E.6 Report specificity data for each validation set (a single value, a range or ‘Unclear’ or ‘not reported’, as appropriate). | Single value: PubMed 97%; Embase 96% |

| E.7 Other performance measures reported. | NNR – Single value: 10 |

| E.8 Other observations. | |

| F. Limitations and comparisons. | |

| F.1 Did the authors discuss any limitations to their research? | Yes. First, the filters evaluated in this paper were specifically tested on searches of evidence summaries used for first aid guideline projects. Second, they used the relative recall technique, which reflects the "real world" use of search filters more closely than composing a reference gold standard by handsearching journals. Third, systematic searches for CEBaP guideline projects are more pragmatic than searches for systematic reviews, in that they try to balance methodological rigour with the time constraints associated with guideline production. |

| F.2 Are there other potential limitations to this research that you have noticed? | Not reported |

| F.3 Report any comparisons of the performance of the filter against other relevant published filters (sensitivity, precision, specificity or other measures). | Not reported |

| F.4 Include the citations of any compared filters. | Not reported |

| F.5 Other observations and / or comments. | Not reported |

| G. Other comments. This section can be used to provide any other comments. Selected prompts for issues to bear in mind are given below. | |

| G.1 Have you noticed any errors in the document that might impact on the usability of the filter? | Not reported |

| G.2 Are there any published errata or comments (for example in the MEDLINE record)? | Not reported |

| G.3 Is there public access to pre‐publication history and / or correspondence? | Not reported |

| G.4 Are further data available on a linked site or from the authors? | Not reported |

| G.5 Include references to related papers and/or other relevant material. | Not reported |

| G.6 Other comments. | Not reported |
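To apply a published filter such as the Avau 2021 PubMed filter reported in item C.10 above, it is typically combined with a topic search using AND. The sketch below only builds a request URL for the NCBI E‑utilities `esearch` endpoint and does not send it. The topic query, the `retmax` value, the function name and the abbreviated filter excerpt are illustrative assumptions; in practice the full filter should be pasted in unchanged, with straight ASCII quotes.

```python
from urllib.parse import parse_qs, urlencode, urlparse

ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"

# Abbreviated, hypothetical excerpt of the Avau 2021 PubMed filter (item C.10);
# not the complete filter.
SR_FILTER_EXCERPT = (
    '("Meta-Analysis as Topic"[MeSH] OR metaanaly*[TIAB] OR "Meta-Analysis"[PT] '
    'OR "Systematic Review"[PT] OR systematic review*[TIAB]) '
    'NOT ("Comment"[PT] OR "Letter"[PT] OR "Editorial"[PT])'
)


def build_esearch_url(topic_query: str, sr_filter: str, retmax: int = 20) -> str:
    """Combine a topic query with a methodological filter using AND."""
    term = f"({topic_query}) AND ({sr_filter})"
    params = {"db": "pubmed", "term": term, "retmax": retmax, "retmode": "json"}
    return f"{ESEARCH_URL}?{urlencode(params)}"


# Hypothetical topic query, for illustration only.
url = build_esearch_url("burns[TIAB] AND first aid[TIAB]", SR_FILTER_EXCERPT)
print(url)
# Decode the query string to confirm the combined search term is intact.
print(parse_qs(urlparse(url).query)["term"][0])
```

Wrapping both the topic query and the filter in parentheses keeps the filter's internal OR and NOT operators from binding to the topic terms.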

3. InterTASC ‐ Boynton 1998.

| A. Information | |

| A.1 State the author’s objective | "To develop a highly sensitive search strategy to identify systematic reviews of intervention, including meta‐analyses, indexed in MEDLINE and to evaluate this strategy in terms of its sensitivity and precision." |

| A.2 State the focus of the research. | Sensitivity‐maximising; precision‐maximising |

| A.3 Database(s) and search interface(s). | MEDLINE (Ovid) |

| A.4 Describe the methodological focus of the filter (e.g. RCTs). | Systematic reviews |

| A.5 Describe any other topic that forms an additional focus of the filter (e.g. clinical topics such as breast cancer, geographic location such as Asia or population grouping such as paediatrics). | N/A |

| A.6 Other observations. | N/A |

| B. Identification of a gold standard (GS) of known relevant records | |

| B.1 Did the authors identify one or more gold standards (GSs)? | 1 GS. To test the sensitivity and precision of various search terms, a ‘quasi‐gold standard’ of systematic reviews was established by a combination of hand and electronic searching. |

| B.2 How did the authors identify the records in each GS? | The ‘quasi‐gold standard’ was produced using: (1) Institute for Scientific Information (ISI) citation index impact factors to identify six journals from the top ten high‐impact‐factor journals in the ISI ‘Medicine general and internal’ category, which were searched by hand for the year; and (2) a search strategy to identify candidate systematic reviews in MEDLINE (Ovid). |

| B.3 Report the dates of the records in each GS. | 1992 and 1995 |

| B.4 What are the inclusion criteria for each GS? | Decisions on whether to include studies in the ‘quasi‐gold standard’ were made independently by two authors (JB and JG), using a CRD quick‐assessment sifting process. |

| B.5 Describe the size of each GS and the authors’ justification, if provided (for example the size of the gold standard may have been determined by a power calculation) | 288 papers. Ninety per cent of these systematic reviews were identified by hand searching and a further 10% by searching MEDLINE. |

| B.6 Are there limitations to the gold standard(s)? | Search dates limited (1992‐1995) |

| B.7 How was each gold standard used? | ‐ To identify potential search terms ‐ To derive potential strategies (groups of terms) ‐ To test internal validity ‐ To test external validity |

| B.8 Other observations. | No |

| C. How did the researchers identify the search terms in their filter(s) (select all that apply)? | |

| C.1 Adapted a published search strategy. | No |

| C.2 Asked experts for suggestions of relevant terms. | No |

| C.3 Used a database thesaurus. | MeSH (subject headings) |

| C.4 Statistical analysis of terms in a gold standard set of records (see B above). | The list of candidate terms for search strategies was derived by analysing the MEDLINE records of the 288 articles in the ‘quasi‐gold standard’ for word frequency. |

| C.5 Extracted terms from the gold standard set of records (see B above). | No |

| C.6 Extracted terms from some relevant records (but not a gold standard). | No |

| C.7 Tick all types of search terms tested. | ‐ Subject headings ‐ Text words (e.g. in title, abstract) ‐ Publication types |

| C.8 Include the citation of any adapted strategies. | N/A |

| C.9 How were the (final) combination(s) of search terms selected? | The list of terms was sorted according to the best results for sensitivity and precision. |

| C.10 Were the search terms combined (using Boolean logic) in a way that is likely to retrieve the studies of interest? | Yes; only the MEDLINE search is reported (Appendix 1). |

| C.11 Other observations. | No |

| D. Internal validity testing (This type of testing is possible when the search filter terms were developed from a known gold standard set of records). | |

| D.1 How many filters were tested for internal validity? | 8 filters: Strategy A: sensitivity ≥ 50%, precision ≥ 25%; Strategy B: sensitivity ≥ 25%, precision ≥ 10%; Strategy C: sensitivity ≥ 25%, precision ≥ 25%; Strategy D: sensitivity ≥ 25%, precision ≥ 50%; Strategy E: sensitivity ≥ 25%, precision ≥ 70%; Strategy F: sensitivity ≥ 50%, precision ≥ 10%; Strategy H: sensitivity ≥ 25%, precision ≥ 10%; Strategy J: sensitivity ≥ 15%, precision ≥ 25%. 3 additional filters were tested with expert input. |

| For each filter report the following information | |

| D.2 Was the performance of the search filter tested on the gold standard from which it was derived? | Yes, the search strategies were compared against the performance of other published search strategies in relation to the ‘quasi‐gold standard’ and the OVID MEDLINE interface. |

| D.3 Report sensitivity data (a single value, a range, ‘Unclear’* or ‘not reported’, as appropriate). *Please describe. | Yes, Strategy A: 66%, Strategy B: 95%, Strategy C: 92%, Strategy D: 39%, Strategy E: 29%, Strategy F: 61%, Strategy H: 98%, Strategy J: 98% |

| D.4 Report precision data (a single value, a range, ‘Unclear’* or ‘not reported’ as appropriate). *Please describe. | A single value: Strategy A: 26%, Strategy B: 12%, Strategy C: 23%, Strategy D: 49%, Strategy E: 79%, Strategy F: 42%, Strategy H: 19%, Strategy J: 20% |

| D.5 Report specificity data (a single value, a range, ‘Unclear’* or ‘not reported’ as appropriate). *Please describe. | No |

| D.6 Other performance measures reported. | No |

| D.7 Other observations. | No |

| E. External validity testing (This section relates to testing the search filter on records that are different from the records used to identify the search terms) | |

| E.1 How many filters were tested for external validity on records different from those used to identify the search terms? | No external validity test performed |

| E.2 Describe the validation set(s) of records, including the interface. | N/A |

| E.3 On which validation set(s) was the filter tested? | N/A |

| E.4 Report sensitivity data for each validation set (a single value, a range or ‘Unclear’ or ‘not reported’, as appropriate). | N/A |

| E.5 Report precision data for each validation set (report a single value, a range or ‘Unclear’ or ‘not reported’, as appropriate). | N/A |

| E.6 Report specificity data for each validation set (a single value, a range or ‘Unclear’ or ‘not reported’, as appropriate). | N/A |

| E.7 Other performance measures reported. | N/A |

| E.8 Other observations. | No |

| F. Limitations and comparisons. | |

| F.1 Did the authors discuss any limitations to their research? | Yes: “This study is based on six ‘high‐impact’ factor English‐language journals which may not be representative of health care journals as a whole”; “The years chosen for the creation of the ‘quasi‐gold standard’ may present a limitation of this study”; “The CRD definition of systematic reviews and the associated criteria used by CRD to identify articles for inclusion in DARE, as outlined in Section 1.1 above, may be criticised for being too demanding” |

| F.2 Are there other potential limitations to this research that you have noticed? | No |

| F.3 Report any comparisons of the performance of the filter against other relevant published filters (sensitivity, precision, specificity or other measures). | Yes: Hunt and McKibbon full: Sensitivity 41%, Precision 75%. Hunt and McKibbon brief: Sensitivity 40%, Precision 75%. CRD full: Sensitivity 84%, Precision 31%. CRD brief: Sensitivity 41%, Precision 64%. |

| F.4 Include the citations of any compared filters. | Yes (Hunt 1997; NHS 1996) |

| F.5 Other observations and / or comments. | No |

| G. Other comments. This section can be used to provide any other comments. Selected prompts for issues to bear in mind are given below. | |

| G.1 Have you noticed any errors in the document that might impact on the usability of the filter? | No |

| G.2 Are there any published errata or comments (for example in the MEDLINE record)? | No |

| G.3 Is there public access to pre‐publication history and / or correspondence? | No |

| G.4 Are further data available on a linked site or from the authors? | No |

| G.5 Include references to related papers and/or other relevant material. | No |

| G.6 Other comments. | No |
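This study does not report the number needed to read (NNR), but NNR is simply the reciprocal of precision, so it can be derived from the D.4 values to show the screening burden each strategy implies. The sketch below is a minimal illustration under that definition; the function name `number_needed_to_read` is hypothetical and the NNR values are derived here, not reported by the study authors.

```python
# Precision values (%) as reported in D.4 for each strategy.
precision_pct = {"A": 26, "B": 12, "C": 23, "D": 49, "E": 79, "F": 42, "H": 19, "J": 20}


def number_needed_to_read(precision_percent: float) -> float:
    """Return NNR = 1 / precision, with precision given as a percentage.

    NNR is the average number of retrieved records that must be screened
    to find one relevant record (here, one systematic review).
    """
    if not 0 < precision_percent <= 100:
        raise ValueError("precision must be in the interval (0, 100]")
    return 100.0 / precision_percent


for strategy, p in precision_pct.items():
    print(f"Strategy {strategy}: precision {p}% -> NNR {number_needed_to_read(p):.1f}")
```

For example, Strategy E (precision 79%) implies reading about 1.3 records per relevant review, whereas Strategy B (precision 12%) implies about 8.3.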

4. InterTASC ‐ Boluyt 2008.

| A. Information | |

| A.1 State the author’s objective | To determine the sensitivity and precision of existing search strategies for retrieving child health systematic reviews in MEDLINE using PubMed. |

| A.2 State the focus of the research. | Sensitivity‐maximising; precision‐maximising |

| A.3 Database(s) and search interface(s) . | MEDLINE (PubMed) |

| A.4 Describe the methodological focus of the filter (e.g. RCTs). | Systematic reviews and meta‐analyses. |

| A.5 Describe any other topic that forms an additional focus of the filter (e.g. clinical topics such as breast cancer, geographic location such as Asia or population grouping such as paediatrics). | Child health |

| A.6 Other observations. | No |

| B. Identification of a gold standard (GS) of known relevant records | |

| B.1 Did the authors identify one or more gold standards (GSs)? | One GS. To measure the sensitivity of the search strategies, a reference standard set of systematic reviews (SRs) was established by searching DARE for SRs of child health and by hand‐searching several journals for SRs. |

| B.2 How did the authors identify the records in each GS? | All titles and abstracts in DARE (Cochrane Library, Issue 2, 2004) were searched for SRs of child health also indexed in MEDLINE. We hand‐searched 7 MEDLINE‐indexed pediatric journals with a variety of impact factors and for which full‐text electronic copies were available in our medical library. All issues of each journal were searched for the following 5 years: 1994, 1997, 2000, 2002 and 2004 |

| B.3 Report the dates of the records in each GS. | 1994, 1997, 2000, 2002 and 2004 |

| B.4 What are the inclusion criteria for each GS? | “Any literature review, meta‐analysis, or other article that explicitly indicates the use of a strategy for locating evidence by mentioning at least the databases that were searched and reviewing the empirical evidence on children” |

| B.5 Describe the size of each GS and the authors’ justification, if provided (for example the size of the gold standard may have been determined by a power calculation) | Total reference standard: 387 (298 identified in DARE + 115 found by hand search − 26 overlapping records = 387) |

| B.6 Are there limitations to the gold standard(s)? | Subset of the MEDLINE database |

| B.7 How was each gold standard used? | To test external validity |

| B.8 Other observations. | |

| C. How did the researchers identify the search terms in their filter(s) (select all that apply)? | |

| C.1 Adapted a published search strategy. | To identify articles reporting the development and validation of systematic review search filters in MEDLINE, the authors searched MEDLINE from January 1995 to January 2006 with the following MeSH terms: MEDLINE, Information Storage and Retrieval/methods, and Review, Literature. In addition, reference lists of relevant articles were reviewed and content experts were contacted to find further studies. To improve accuracy, the authors combined the systematic review filters with a sensitive child filter developed by the Cochrane Field of Child Health to retrieve only studies in children. |

| C.2 Asked experts for suggestions of relevant terms. | Contact with experts |

| C.3 Used a database thesaurus. | MeSH terms |

| C.4 Statistical analysis of terms in a gold standard set of records (see B above). | Not reported |

| C.5 Extracted terms from the gold standard set of records (see B above). | Not reported |

| C.6 Extracted terms from some relevant records (but not a gold standard). | Not reported |

| C.7 Tick all types of search terms tested. | Subject headings; text words (e.g. in title, abstract); publication types |

| C.8 Include the citation of any adapted strategies. | Yes: Shojania, Boynton, White, Montori, PubMed plus child |

| C.9 How were the (final) combination(s) of search terms selected? | Not applicable. |

| C.10 Were the search terms combined (using Boolean logic) in a way that is likely to retrieve the studies of interest? | Not reported. |

| C.11 Other observations. | |

| D. Internal validity testing (This type of testing is possible when the search filter terms were developed from a known gold standard set of records). | |

| D.1 How many filters were tested for internal validity? | Not applicable. |

| For each filter report the following information | |

| D.2 Was the performance of the search filter tested on the gold standard from which it was derived? | Not applicable. |

| D.3 Report sensitivity data (a single value, a range, ‘Unclear’* or ‘not reported’, as appropriate). *Please describe. | Not applicable. |

| D.4 Report precision data (a single value, a range, ‘Unclear’* or ‘not reported’ as appropriate). *Please describe. | Not applicable. |

| D.5 Report specificity data (a single value, a range, ‘Unclear’* or ‘not reported’ as appropriate). *Please describe. | Not applicable. |

| D.6 Other performance measures reported. | Not applicable. |

| D.7 Other observations. | |

| E. External validity testing (This section relates to testing the search filter on records that are different from the records used to identify the search terms) | |

| E.1 How many filters were tested for external validity on records different from those used to identify the search terms? | Nine: (1) Shojania plus child; (2) Boynton plus child; (3) White 1 plus child; (4) White 2 plus child; (5) Montori 1 plus child; (6) Montori 2 plus child; (7) Montori 3 plus child; (8) Montori 4 plus child; (9) PubMed plus child |

| E.2 Describe the validation set(s) of records, including the interface. | Total reference standard: 387 true child health systematic reviews found in MEDLINE (limits 1990‐2006). |

| E.3 On which validation set(s) was the filter tested? | The reference standard, searched within all PubMed records |

| E.4 Report sensitivity data for each validation set (a single value, a range or ‘Unclear’ or ‘not reported’, as appropriate). | Shojania + child: 74% (95% CI 69 to 78); Boynton + child: 95% (95% CI 92 to 97); White 1 + child: 93% (95% CI 91 to 96); White 2 + child: 94% (95% CI 91 to 96); Montori 1 + child: 96% (95% CI 93 to 97); Montori 2 + child: 68% (95% CI 64 to 73); Montori 3 + child: 94% (95% CI 91 to 96); Montori 4 + child: 72% (95% CI 67 to 76); PubMed + child: 76% (95% CI 72 to 80) |

| E.5 Report precision data for each validation set (report a single value, a range or ‘Unclear’ or ‘not reported’, as appropriate). | Reported for six of the nine filters: Shojania + child: 45% (95% CI 36 to 55); Boynton + child: 3% (95% CI 1 to 9); White 2 + child: 2% (95% CI 1 to 7); Montori 2 + child: 45% (95% CI 36 to 55); Montori 3 + child: 3% (95% CI 1 to 9); PubMed + child: 32% (95% CI 24 to 42) |

| E.6 Report specificity data for each validation set (a single value, a range or ‘Unclear’ or ‘not reported’, as appropriate). | Not reported |

| E.7 Other performance measures reported. | Not reported |

| E.8 Other observations. | |

| F. Limitations and comparisons. | |

| F.1 Did the authors discuss any limitations to their research? | Yes: broad definition of systematic reviews |

| F.2 Are there other potential limitations to this research that you have noticed? | Not reported |

| F.3 Report any comparisons of the performance of the filter against other relevant published filters (sensitivity, precision, specificity or other measures). | Not reported |

| F.4 Include the citations of any compared filters. | Not reported |

| F.5 Other observations and / or comments. | Not reported |

| G. Other comments. This section can be used to provide any other comments. Selected prompts for issues to bear in mind are given below. | |

| G.1 Have you noticed any errors in the document that might impact on the usability of the filter? | Not reported |

| G.2 Are there any published errata or comments (for example in the MEDLINE record)? | Not reported |

| G.3 Is there public access to pre‐publication history and / or correspondence? | Not reported |

| G.4 Are further data available on a linked site or from the authors? | Not reported |

| G.5 Include references to related papers and/or other relevant material. | Not reported |

| G.6 Other comments. | |
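Two pieces of arithmetic underlie the Boluyt 2008 figures: the de‐duplicated size of the reference standard (B.5) and a sensitivity with a 95% confidence interval (E.4). The sketch below reproduces both under stated assumptions. The CI method used by the authors is not given in this extraction, so the Wilson score interval is swapped in here as one common choice; the count of 286 retrieved records is back‐calculated from the reported 74% sensitivity and is not a figure reported by the study. All function names are hypothetical.

```python
import math

# Reference standard components as reported in B.5 (Boluyt 2008).
DARE_RECORDS = 298
HAND_SEARCH_RECORDS = 115
OVERLAP = 26


def deduplicated_total(a: int, b: int, overlap: int) -> int:
    """Size of the union of two record sets: |A| + |B| - |A and B|."""
    return a + b - overlap


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion such as sensitivity."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


n_rs = deduplicated_total(DARE_RECORDS, HAND_SEARCH_RECORDS, OVERLAP)

# Assumption: approximate count of reference-standard SRs retrieved by
# Shojania + child, back-calculated from the reported 74% sensitivity.
retrieved = round(0.74 * n_rs)
low, high = wilson_interval(retrieved, n_rs)

print(f"Reference standard: {n_rs} records")
print(f"Sensitivity {retrieved / n_rs:.0%} (95% CI {low:.0%} to {high:.0%})")
```

With these inputs the Wilson interval comes out at roughly 69% to 78%, close to the interval reported for Shojania + child, although a different CI method could give slightly different bounds.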

5. InterTASC ‐ Lee 2012.

| A. Information | |

| A.1 State the author’s objective | "The objective is to describe the development and validation of the health‐evidence.ca systematic review search filter and to compare its performance with other available systematic review filters." |

| A.2 State the focus of the research. | Balance of sensitivity |

| A.3 Database(s) and search interface(s) . | MEDLINE, EMBASE, and CINAHL (Ovid) |

| A.4 Describe the methodological focus of the filter (e.g. RCTs). | Systematic reviews |

| A.5 Describe any other topic that forms an additional focus of the filter (e.g. clinical topics such as breast cancer, geographic location such as Asia or population grouping such as paediatrics). | Included reviews should meet the criteria for relevance and should be systematic reviews that focus on public health, provide outcome data on the effectiveness of interventions, and include a documented search strategy. |

| A.6 Other observations. | No |

| B. Identification of a gold standard (GS) of known relevant records | |

| B.1 Did the authors identify one or more gold standards (GSs)? | One GS. “We considered this set (the electronic database searches plus additional search strategies), the ‘gold standard’ for health‐evidence.ca.” |

| B.2 How did the authors identify the records in each GS? | “Our PH search filter typically yielded a very high volume of results with very low precision. For example, between January 2006 and December 2007, of the 136,427 titles screened, 409 were relevant for the health‐evidence.ca registry, or in other words, precision was 0.3%. In addition to using the PH search filter, more than 40 public health‐relevant journals were hand searched annually, as well as the reference lists of all relevant reviews. Given this systematic search of the published review literature, we were reasonably confident that our retrieval methods were capturing a near complete set of relevant articles.” |

| B.3 Report the dates of the records in each GS. | Jan 2006 to Dec 2007 |

| B.4 What are the inclusion criteria for each GS? | Systematic reviews that focus on public health, provide outcome data on the effectiveness of interventions, and include a documented search strategy. |

| B.5 Describe the size of each GS and the authors’ justification, if provided (for example the size of the gold standard may have been determined by a power calculation) | Not reported |

| B.6 Are there limitations to the gold standard(s)? | No |

| B.7 How was each gold standard used? | To test internal validity |

| B.8 Other observations. | No |

| C. How did the researchers identify the search terms in their filter(s) (select all that apply)? | |