Abstract

Purpose of review

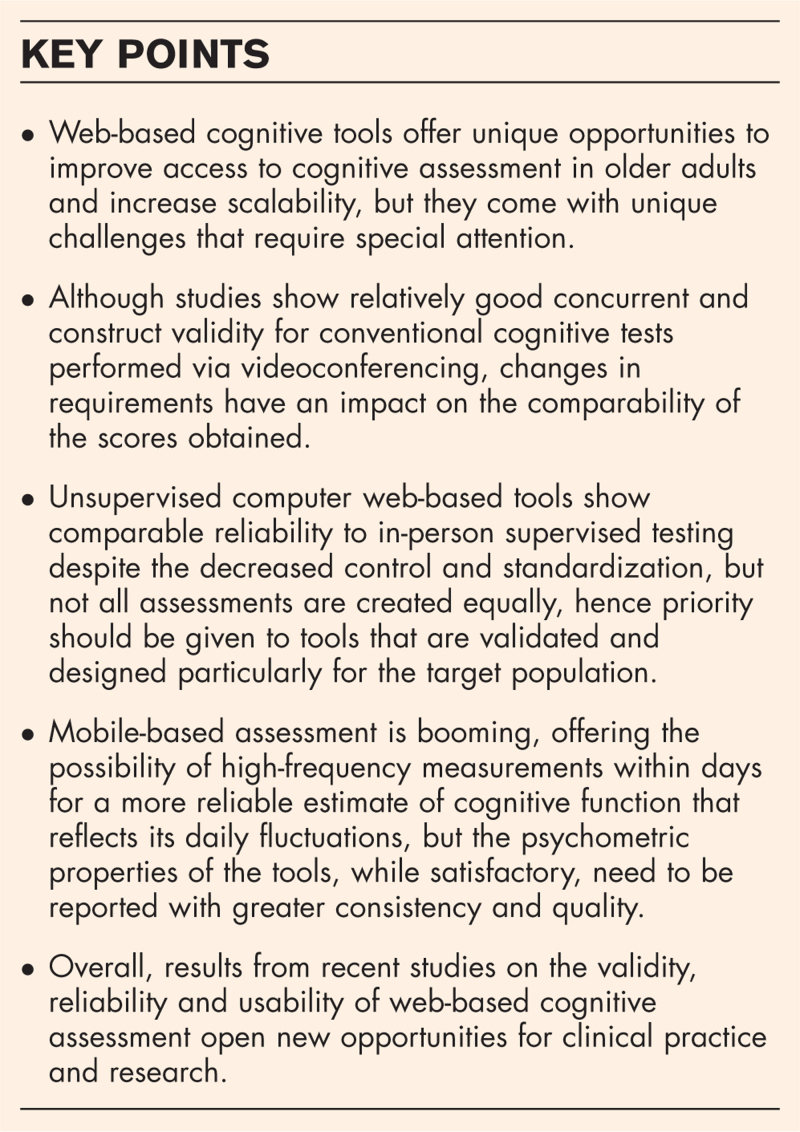

The use of digital tools for remote cognitive measurement of older adults is generating increasing interest due to the numerous advantages offered for accessibility and scalability. However, these tools also pose distinctive challenges, necessitating a thorough analysis of their psychometric properties, feasibility and acceptability.

Recent findings

In this narrative review, we present the recent literature on the use of web-based cognitive assessment to characterize cognition in older adults and to contribute to the diagnosis of age-related neurodegenerative diseases. We present and discuss three types of web-based cognitive assessments: conventional cognitive tests administered through videoconferencing; unsupervised web-based assessments conducted on a computer; and unsupervised web-based assessments performed on smartphones.

Summary

There has been considerable progress in documenting the properties, strengths and limitations of web-based cognitive assessments. For the three types of assessments reported here, the findings support their promising potential for older adults. However, certain aspects, such as the construct validity of these tools and the development of robust norms, remain less well documented. Nonetheless, the beneficial potential of these tools, and their current validation and feasibility data, justify their application [see Supplementary Digital Content (SDC)].

Keywords: aging, cognitive assessment, digital health, unsupervised mobile cognitive tests, unsupervised web-based cognitive tests, videoconference-based cognitive assessment

INTRODUCTION

Thirty years ago, a group of researchers and clinicians at the Institut universitaire de gériatrie de Montréal (IUGM) created an award-winning computerized memory battery. This battery was poised to revolutionize cognitive assessment by providing precisely controlled measurements in terms of temporal parameters and delivery, while streamlining the workflow for clinicians [1]. Unfortunately, the anticipated benefits did not materialize. Decades later, the field has yet to fully embrace web-based tools in both clinical and laboratory settings. Changing how a field practices is notoriously difficult, and innovation is hard to implement. There are many reasons why this might have been the case for web-based cognitive assessment. Most clinicians and researchers were trained in, and frequently use, traditional in-person psychometric testing. By contrast, the online environment is newer and hence less familiar. Clinicians may also hesitate because online formats offer less control and standardization, and they may wonder whether these tools are sufficiently validated and normed. Given the consequences of a diagnosis of dementia from a cognitive assessment, the need for properly designed and validated tests is particularly critical. Another possible reason is that switching to online testing requires access to computerized tests, the capacity to host web-based data collection and storage, and the capacity to process and analyze large-scale web-based data. In addition, clinicians may feel overwhelmed by the number of web-based tests, especially as there are no recommendations or major consensus from the field on which tools to use. Finally, clinicians may think that older adults will not be comfortable with computerized testing due to the digital divide. For all these reasons, experts may be open to the idea of web-based testing in theory, but have difficulty implementing it in practice.

However, this situation has the potential to change dramatically over the next few years. The COVID-19 pandemic has reshaped the landscape, ushering in a new era in which clinicians and researchers have become aware of the enormous but under-exploited potential of technology for cognitive assessment. Extended periods of confinement and safety concerns, which limited hospital visits and direct contact with vulnerable older adults, compelled many clinicians and researchers to adopt remote assessment methods (e.g., [2,3]).

It is important to acknowledge that telehealth and web-based assessments existed long before the COVID-19 pandemic. One example is the Cambridge Neuropsychological Test Automated Battery (CANTAB) system, which was initially developed in the 1980s to assess older adults suspected to have dementia [4,5]. As early as 2012, the American Academy of Clinical Neuropsychology and the National Academy of Neuropsychology published a joint position paper with recommendations on this topic [6]. However, the COVID-19 pandemic brought a dramatic shift that transformed web-based cognitive assessments from sophisticated tools for specific research interests into tools likely to make a substantial impact in both clinical practice and research.

In this narrative review, we examine recent literature (2021–2023) on the use of web-based cognitive assessment in older adults, focusing on its potential for characterizing cognition and contributing to the diagnosis of age-related neurodegenerative diseases. Furthermore, we examine the limitations of these tools and explore potential avenues for further advancements in this field. Digital neuropsychology refers to the assessment of cognition using either computerized or web-based resources [7,8]. Web-based cognitive assessment refers to any cognitive test or battery that requires access to the internet. Here, we present and discuss three types of web-based cognitive assessments: conventional cognitive tests administered through videoconferencing; unsupervised web-based assessments conducted on a computer; and unsupervised web-based assessments performed on smartphones or other portable devices. For each type of assessment, we present available recent data on psychometric validation, examining criterion validity (via concurrent validity and within-individual comparability), construct validity, and test-retest reliability. Concurrent validity measures whether web-based tests are related to in-person versions, while within-individual comparability measures whether the web-based and in-person versions yield similar scores. Construct validity concerns whether a test accurately reflects what it is intended to measure; it is examined through the test's sensitivity to characteristics expected to be associated with it (for instance, diagnostic group or age) or through whether tests show similar inter-correlations. Test-retest reliability measures consistency over repeated testing. Where available, we present findings on aspects of the user experience, including acceptability and usability of the tools. We also discuss the unique challenges and potential contributions associated with each assessment type. To conclude, we provide an overview of the overall potential, identify key issues, and share perspectives in this regard.

Box 1.


VIDEOCONFERENCING

Videoconferencing has been used to administer conventional in-person cognitive tests remotely and under supervision, following the same protocols as a conventional in-person test. Thus, the examiner is present virtually and can provide instructions, record responses and the time required to perform the test, as well as provide feedback and verify that the test is carried out correctly. In some cases, the tests need to be adapted for remote administration by removing or modifying items that are not suitable for remote testing. This includes elements of the test that involve motor components or require copying tasks. In most cases, however, test elements do not need to be modified and can be used largely the same way as when administered in-person.

A few recent studies or literature reviews [3,9,10▪▪,11,12▪] have investigated the concurrent validity and comparability between videoconference-based assessments of commonly used cognitive tests and their in-person counterparts. Hunter et al. [10▪▪] conducted a literature review identifying 12 studies (740 participants) that compared in-person with videoconference-based assessment in older adults with a range of cognitive impairments (from mild cognitive impairment (MCI) to dementia). Results indicate that many of the tests currently used in clinical practice [e.g., Mini-Mental State Examination (MMSE), Severe MMSE (SMMSE), Letter Fluency, and Hopkins Verbal Learning Test-Revised (HVLT-R)] show good concurrent validity compared to in-person administration. It should be noted, however, that the participants included in the studies had, on average, a relatively high level of education (mean of 14.1 years). Additionally, concurrent validity was often measured in controlled environments (usually a research facility) and therefore may not be as high for people who are disadvantaged or less proficient with technology, or when the test is completed in the person's home. Beishon et al. [12▪] examined the literature on common global cognition tests [e.g., Montreal Cognitive Assessment (MoCA), MMSE, Rowland Universal Dementia Assessment Scale (RUDAS)] and also reported high concurrent validity, but scores often differed between the remote and in-person versions, indicating insufficient comparability (see also [9]). It is interesting to note that the remote version of the Kimberley Indigenous Cognitive Assessment (KICA-screen) showed good concurrent validity and comparability compared to the in-person version when tested in Aboriginal and Torres Strait Islander people [13▪]. This supports the hypothesis that remote assessment could reduce barriers related to accessing dementia care in rural and isolated areas.

Videoconferencing assessment proved to have good diagnostic validity, particularly in distinguishing people with dementia from healthy older adults. However, its precision in identifying individuals with MCI was found to be relatively lower [10▪▪,11], although there are still very few studies that have assessed diagnostic validity.

Finally, the limited number of studies that have examined user experience report good acceptability of remote testing through videoconferencing, even among diverse populations and patients with dementia [13▪,14,15]. However, challenges have been reported, specifically for certain linguistic tasks (e.g., monosyllabic repetition) or visual items (e.g., the Visual Object and Space Perception Battery (VOSP) object decision task) [14].

In sum, most studies comparing videoconference-based to in-person traditional cognitive tests report good concurrent validity but poor or fair comparability. Lack of comparability means that individual participants do not necessarily obtain the same score on the videoconference and in-person versions of the assessment. Thus, despite the apparent similarities and seemingly minor changes when the test is administered remotely, there are differences in the underlying construct and/or level of difficulty. Differences in the underlying construct imply that the presence of an impairment may indicate different cognitive deficits when using different versions of the assessment. Differences in the level of difficulty indicate that cut-off values and normative data cannot be transposed directly from in-person to remote versions of the same test. It is therefore important to provide more research data to not only determine the criterion validity of cognitive tests administered through videoconferencing compared to their in-person version, but also to understand their construct validity. Furthermore, there is a need to develop norms and cut-off values that are appropriate for these particular versions of assessments.
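The distinction between concurrent validity and comparability can be made concrete with a small numerical sketch. The scores, the cut-off of 26, and the function names below are hypothetical and chosen only for illustration; they are not data from any of the reviewed studies. The sketch shows how remote and in-person scores can correlate almost perfectly while every participant still scores lower remotely, so that a cut-off derived in person flags a different number of people.

```python
from statistics import mean

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5

def mean_difference(reference, other):
    """Average within-person difference (other minus reference)."""
    return mean(b - a for a, b in zip(reference, other))

# Hypothetical scores for six participants (illustration only, not study data).
in_person = [30, 28, 27, 25, 22, 18]
remote = [28, 26, 25, 23, 20, 16]  # each participant scores 2 points lower

# Concurrent validity: are the two versions related?
r = pearson_r(in_person, remote)

# Comparability: do the two versions yield similar scores?
bias = mean_difference(in_person, remote)

# Hypothetical cut-off: scores below 26 are flagged for follow-up.
CUTOFF = 26
flagged_in_person = sum(score < CUTOFF for score in in_person)
flagged_remote = sum(score < CUTOFF for score in remote)

print(f"correlation = {r:.2f}, mean difference = {bias}")
print(f"flagged in person: {flagged_in_person}, flagged remotely: {flagged_remote}")
```

In this constructed case the correlation is essentially 1 while the mean difference is 2 points, which is why a high correlation alone does not justify reusing in-person norms for a remote version.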

UNSUPERVISED COMPUTER WEB-BASED COGNITIVE ASSESSMENTS

Unsupervised web-based computer cognitive assessments consist of self-administered online tasks completed remotely. These assessments are typically short web-versions of lab or clinical cognitive tasks, although a few recent developments include more ecologically valid tasks or a virtual reality environment [16]. Unsupervised tests allow the collection of data from tens of thousands of individuals [17–19]. The scale and age range in these large datasets offer an unprecedented opportunity for researchers to study population-level variability in cognitive performance [17,18], examine how cognition relates to demographic or lifestyle risk factors for dementia (e.g., age, sex, education, smoking [20,21]), and detect subtle effects, such as sex differences [22].

A recent systematic review identified over 3000 unsupervised web-based cognitive assessment tools [23]. However, it is important to note that these tools exhibit substantial differences in development and validation. The main foreseeable challenge for unsupervised computer assessments is the increase in confounding factors due to loss of experimenter control and standardization. However, counterbalanced within-person testing has demonstrated that the testing environment does not significantly impact the scores of older adults from unsupervised at-home testing compared to supervised lab testing [24]. Within-person studies also show good test-retest reliability [25,26,27▪,28], good concurrent validity compared to a gold-standard clinician administered neuropsychological assessment [29,30,31▪] and poor to good convergent validity compared to analogous neuropsychological tasks [24,25,28,32,33▪,34▪] in older adults and in age-related neurodegenerative diseases or conditions (MCI, Alzheimer's disease, dementia with Lewy bodies, and Parkinson's disease). Unsupervised online assessments have successfully identified effects that are consistently found with supervised in-person testing, such as age differences and between-task correlations [35,36▪]. This suggests excellent construct validity, although with slightly lower scores on fine motor or fast reaction time tests due to differences in device latencies and interfaces [19,32]. Unsupervised computer assessments also show good diagnostic validity for neurodegenerative diseases [30,34▪,37,38] and associations with neurodegenerative biomarkers for dementia [26].

Due to the unsupervised nature of such tests, it is especially critical to assess and report measures of user experience. User experience studies find that most older adults successfully understand and complete online tasks [24,39–41], even though they subjectively report greater anxiety and difficulty than younger adults [28]. Studies have indicated relatively positive user experiences and comparable scores across variations in familiarity or attitudes to technology use [24,27▪,41]. Interestingly, some studies have reported that older and middle-aged adults can even present more reliable scores than younger adults, possibly due to fewer distractions [35,42], and have lower attrition levels than younger adults for multiple tests [27▪]. Older adults also report an eagerness to use technology, and actively incorporate it into their daily lives, particularly touchscreen devices, as the direct motor-screen interactions are easy to navigate [43]. Unsupervised computer assessments have also demonstrated feasibility in people with MCI and mild AD [44].

While unsupervised computer assessments herald a new era of efficiency and scalability in cognitive testing, they come with unique challenges. Rigorous data examination and cleaning are imperative when handling large online datasets [36▪]. Furthermore, test developers should pay attention to task adaptations for older adults, such as clear task instructions, more practice trials, video tutorials, or a lower/adaptive starting difficulty [39,45].

UNSUPERVISED MOBILE WEB-BASED COGNITIVE ASSESSMENTS

Unsupervised mobile web-based cognitive assessments consist of digitized versions of existing conventional cognitive tools or newly developed laboratory tools. They are completed online by individuals without supervision using their own mobile devices, usually smartphones.

A major asset of mobile web-based assessments is the possibility of performing repeated high-frequency assessments over multiple days and on various occasions [27▪]. This approach provides an estimate of the day-to-day cognitive functioning of individuals, accounting for within-individual variability, and improves reliability compared to conventional cognitive assessments that capture performance only at a single time point [46▪▪]. Repeated assessments may be particularly relevant when assessing individuals with neurodegenerative disorders as their cognitive performance can vary significantly depending on the time of day [47,48▪▪]. The variability observed in mobile cognitive performance assessments may have the potential to identify subtle changes in cognitive function that may help detect MCI [49].
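One way to see why aggregating repeated sessions can improve reliability is the classical Spearman-Brown formula, which is brought in here as an illustration and is not taken from the studies cited above. The sketch assumes that sessions behave as parallel measurements, an assumption that real mobile data may not satisfy; the session scores, the single-session reliability of 0.5, and the function names are hypothetical.

```python
from statistics import mean, stdev

def spearman_brown(single_session_reliability, n_sessions):
    """Projected reliability of the average of n parallel sessions.

    Assumes every session measures the same construct with the same
    reliability (classical test theory); this is an idealization.
    """
    r = single_session_reliability
    return n_sessions * r / (1 + (n_sessions - 1) * r)

def summarize_sessions(scores):
    """Summarize one person's repeated scores.

    The mean estimates typical performance; the standard deviation
    indexes within-individual variability across sessions.
    """
    return {"mean": mean(scores), "sd": stdev(scores)}

# Hypothetical scores from five brief sessions by one participant.
sessions = [12, 15, 11, 14, 13]
summary = summarize_sessions(sessions)

# Hypothetical single-session reliability of 0.5, averaged over 8 sessions.
projected = spearman_brown(0.5, 8)

print(f"mean = {summary['mean']}, sd = {summary['sd']:.2f}")
print(f"projected reliability over 8 sessions = {projected:.2f}")
```

Under these assumptions, a modest single-session reliability rises substantially once several sessions are averaged, and the per-person standard deviation is retained as a separate measure of day-to-day fluctuation.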

Although there have been few studies conducted on mobile assessments as this field is fairly new, recent studies show that mobile tools can in some cases achieve high between-person reliability (i.e., whether inter-individual differences are comparable over repeated testing) [46▪▪,50–54] and good test-retest reliability over intervals ranging from several days to several months [46▪▪,50,55,56▪]. This suggests that high-frequency measurement can be used with confidence to assess within-person fluctuations in cognitive performance. However, test-retest reliability is highly variable, ranging from poor to exceptional, and is not systematically reported [57].

Some mobile-based assessment tools (e.g., Trail Making Test, MoCA, or MMSE) also demonstrate very good concurrent validity, as evidenced by high correlations with their conventional in-person versions [49,50,55]. Recent studies also show good construct validity of mobile-based assessments through sensitivity to age and education effects, as well as to fluid and neuroimaging biomarkers that index AD progression. These biomarkers include measures such as cortical thickness, hippocampal volume, and levels of amyloid and tau [46▪▪,50,55,58–60]. Moreover, mobile-based assessments have good diagnostic accuracy in distinguishing people with MCI or AD from cognitively healthy older adults [55], and sometimes have greater accuracy than conventional in-person tools, such as the MoCA [61].

In terms of user experience, studies show good-to-high completion rates, with relatively low drop-out rates, even for cognitive assessments completed several times per day [46▪▪,50,51,54,55,56▪]. Older participants have shown a tendency to stay engaged in remote studies for longer durations compared to younger participants [62]. Additionally, they have expressed positive perceptions of mobile-based assessments, finding them acceptable and enjoyable (e.g., [50]).

Mobile-based assessments can be used to design tests that are less expensive and time consuming than conventional in-person assessments. However, frequent assessments can be challenging to complete, hence concise or shortened measures are recommended to provide participants the autonomy they need to plan tasks according to their daily schedule [63]. Furthermore, while many tools are now available, many do not provide psychometric data that are critical for clinical use, such as normative data and sensitivity or specificity values [23]. Finally, there are also several practical limitations to consider, such as potential variability in finger and hand dexterity, as well as variations in screen size [64].
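Sensitivity and specificity, the values that many tools fail to report, can be computed from scores and a reference diagnosis as sketched below. The scores, diagnoses, cut-off, and function name are hypothetical and serve only to show the calculation; the sketch also assumes that lower scores indicate worse performance.

```python
def diagnostic_accuracy(scores, has_impairment, cutoff):
    """Sensitivity and specificity of a 'score below cutoff' rule.

    Assumes lower scores indicate worse performance and that both
    groups (impaired and unimpaired) contain at least one person.
    """
    tp = fn = tn = fp = 0
    for score, impaired in zip(scores, has_impairment):
        flagged = score < cutoff
        if impaired and flagged:
            tp += 1  # impaired and correctly flagged
        elif impaired:
            fn += 1  # impaired but missed
        elif flagged:
            fp += 1  # unimpaired but flagged
        else:
            tn += 1  # unimpaired and correctly not flagged
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }

# Hypothetical data: four unimpaired and four impaired participants.
scores = [29, 27, 26, 24, 25, 22, 20, 27]
has_impairment = [False, False, False, False, True, True, True, True]

result = diagnostic_accuracy(scores, has_impairment, cutoff=26)
print(result)
```

Repeating the calculation over a range of cut-offs would show the trade-off between the two values, which is one reason tool-specific cut-offs need to be established empirically.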

CONCLUSION

Interest in the vast potential offered by remote assessment is growing. Web-based assessments (especially supervised ones) demonstrate good concurrent validity and expected associations (e.g., with biomarkers, age, clinical status), but can show insufficient comparability with conventional in-person versions, which suggests they reflect slightly different constructs or levels of difficulty. Psychometric properties are largely good, but vary considerably from one tool to another. Web-based assessments are generally well accepted by older adults, although further user experience research is needed.

Web-based testing offers advantages of better accessibility and inclusivity (e.g., for remote or vulnerable populations) (e.g., [13▪]) and thus overcomes barriers to recruitment, testing, and retention [65▪]. Unsupervised assessments can be easily and inexpensively distributed world-wide, and mobile device options offer particularly exceptional portability. Such assessments enable measuring cognition across multiple sessions, shifting from traditional single-session in-person neuropsychological testing to repeated assessments [35,66]. This scalability offers larger sample sizes, greater sample diversity, and lower experimenter effects [46▪▪].

Although web-based assessments offer clear advantages, they also present certain challenges [67▪]. Issues remain that might hinder validity and usability, such as hardware differences or technical issues [8,62]. While web-based assessments save time and resources in the long term, it is important to initially invest in tool development, validation, and technical adaptation. Older adults still face challenges due to the digital divide (e.g., technology anxiety, limited digital literacy [68]), although confinement during the COVID-19 pandemic increased technology usage in this group [69,70]. Finally, privacy and ethical issues must be considered, particularly when communicating results [63].

In conclusion, although cognitive assessments administered in person by a clinician are currently considered the gold standard for clinical diagnosis and characterization, the landscape is evolving. The role of web-based assessments in cognitive evaluation is gaining recognition, although their widespread adoption depends on objectives and contexts [67▪]. For example, unsupervised assessments may be useful for efficient, wide-reaching initial screening and for indicating when further testing is needed. Supervised videoconferencing may represent an interesting option for vulnerable people or those living in rural or remote areas. In the long term, advances in digital health are expected to change how clinical and scientific work is conducted. It is, however, important to ensure scientifically and ethically sound practices when developing and adopting digital health solutions.

Acknowledgements

We would like to thank Ève Samson for her assistance in preparing the manuscript and Annie Webb for English revision.

Financial support and sponsorship

This work received support from grants awarded by the Canadian Institutes of Health Research (CIHR), Canada Research Chairs program, and Natural Sciences and Engineering Research Council of Canada (NSERC) to SB; a fellowship from the Quebec Consortium for the early identification of Alzheimer's disease (CIMA-Q), Lemaire Family Foundation, and a salary supported by the Canadian Consortium for Neurodegeneration in Aging (CCNA) funds to AAL; and a fellowship from the Research Center of the Institut universitaire de gériatrie de Montréal (CRIUGM), from SB's NSERC and CIHR funds to RP. SB also received support from the Fonds de recherche du Québec – Santé, the Social Sciences and Humanities Research Council of Canada, the Canadian Consortium on Neurodegeneration and the Alzheimer Society of Canada.

Funding support: Canadian Institutes of Health Research, Canada Research Chairs and Natural Sciences and Engineering Research Council of Canada.

Conflicts of interest

There are no conflicts of interest.

Supplementary Material

Footnotes

Supplemental digital content is available for this article.

REFERENCES AND RECOMMENDED READING

Papers of particular interest, published within the annual period of review, have been highlighted as:

▪ of special interest

▪▪ of outstanding interest

REFERENCES

- 1. Chatelois J, Pineau H, Belleville S, et al. Batterie informatisée d’évaluation de la mémoire inspirée de l’approche cognitive. Can Psychol 1993; 34:45.

- 2. Collins JT, Mohamed B, Bayer A. Feasibility of remote memory clinics using the plan, do, study, act (PDSA) cycle. Age Ageing 2021; 50:2259–2263.

- 3. Elbaz S, Cinalioglu K, Sekhon K, et al. A systematic review of telemedicine for older adults with dementia during COVID-19: an alternative to in-person health services? Front Neurol 2021; 12:761965.

- 4. Robbins TW, James M, Owen AM, et al. Cambridge Neuropsychological Test Automated Battery (CANTAB): a factor analytic study of a large sample of normal elderly volunteers. Dement Geriatr Cogn Disord 1994; 5:266–281.

- 5. Sahakian BJ, Owen A. Computerized assessment in neuropsychiatry using CANTAB: discussion paper. J R Soc Med 1992; 85:399.

- 6. Bauer RM, Iverson GL, Cernich AN, et al. Computerized neuropsychological assessment devices: Joint position paper of the American Academy of Clinical Neuropsychology and the National Academy of Neuropsychology. Arch Clin Neuropsychol 2012; 27:362–373.

- 7. Van Patten R. Introduction to the special issue-neuropsychology from a distance: psychometric properties and clinical utility of remote neurocognitive tests. Taylor & Francis; 2021. pp. 767–773.

- 8. Germine L, Reinecke K, Chaytor NS. Digital neuropsychology: challenges and opportunities at the intersection of science and software. Clin Neuropsychol 2019; 33:271–286.

- 9. Hernandez HHC, Ong PL, Anthony P, et al. Cognitive assessment by telemedicine: reliability and agreement between face-to-face and remote videoconference-based cognitive tests in older adults attending a memory clinic. Ann Geriatr Med Res 2022; 26:42–48.

- 10▪▪. Hunter MB, Jenkins N, Dolan C, et al. Reliability of telephone and videoconference methods of cognitive assessment in older adults with and without dementia. J Alzheimers Dis 2021; 81:1625–1647. This article provides psychometric properties of well known global cognition tests when conducted through videoconferencing in older adults with and without dementia.

- 11. McCleery J, Laverty J, Quinn TJ. Diagnostic test accuracy of telehealth assessment for dementia and mild cognitive impairment. Cochrane Database Syst Rev 2021; 7:CD013786.

- 12▪. Beishon LC, Elliott E, Hietamies TM, et al. Diagnostic test accuracy of remote, multidomain cognitive assessment (telephone and video call) for dementia. Cochrane Database Syst Rev 2022; 4:CD013724. This article reports psychometric properties of videoconferencing methods for cognitive assessment in older adults with and without dementia.

- 13▪. Russell S, Quigley R, Strivens E, et al. Validation of the Kimberley Indigenous Cognitive Assessment short form (KICA-screen) for telehealth. J Telemed Telecare 2021; 27:54–58. This study examines the validity and user experience of cognitive assessment via videoconferencing in an Indigenous population, making it unique and interesting.

- 14. Requena-Komuro M-C, Jiang J, Dobson L, et al. Remote versus face-to-face neuropsychological testing for dementia research: a comparative study in people with Alzheimer's disease, frontotemporal dementia and healthy older individuals. BMJ Open 2022; 12:e064576.

- 15. Rao LA, Roberts AC, Schafer R, et al. The reliability of telepractice administration of the western aphasia battery-revised in persons with primary progressive aphasia. Am J Speech Lang Pathol 2022; 31:881–895.

- 16. Wiley K, Robinson R, Mandryk RL. The making and evaluation of digital games used for the assessment of attention: Systematic review. JMIR Serious Games 2021; 9:e26449.

- 17. Jaffe PI, Kaluszka A, Ng NF, et al. A massive dataset of the NeuroCognitive Performance Test, a web-based cognitive assessment. Sci Data 2022; 9:758.

- 18. LaPlume AA, Anderson ND, McKetton L, et al. When I’m 64: age-related variability in over 40,000 online cognitive test takers. J Gerontol B Psychol Sci Soc Sci 2022; 77:104–117.

- 19. Passell E, Strong RW, Rutter LA, et al. Cognitive test scores vary with choice of personal digital device. Behav Res Methods 2021; 53:2544–2557.

- 20. Eastman JA, Kaup AR, Bahorik AL, et al. Remote assessment of cardiovascular risk factors and cognition in middle-aged and older adults: proof-of-concept study. JMIR Form Res 2022; 6:e30410.

- 21. LaPlume AA, McKetton L, Levine B, et al. The adverse effect of modifiable dementia risk factors on cognition amplifies across the adult lifespan. Alzheimers Dement (Amst) 2022; 14:e12337.

- 22. LaPlume AA, McKetton L, Anderson ND, et al. Sex differences and modifiable dementia risk factors synergistically influence memory over the adult lifespan. Alzheimers Dement (Amst) 2022; 14:e12301.

- 23. Charalambous AP, Pye A, Yeung WK, et al. Tools for app-and web-based self-testing of cognitive impairment: systematic search and evaluation. J Med Internet Res 2020; 22:e14551.

- 24. Cyr AA, Romero K, Galin-Corini L. Web-based cognitive testing of older adults in person versus at home: within-subjects comparison study. JMIR Aging 2021; 4:e23384.

- 25. Dorociak KE, Mattek N, Lee J, et al. The survey for memory, attention, and reaction time (SMART): development and validation of a brief web-based measure of cognition for older adults. Gerontology 2021; 67:740–752.

- 26. Stricker NH, Stricker JL, Karstens AJ, et al. A novel computer adaptive word list memory test optimized for remote assessment: psychometric properties and associations with neurodegenerative biomarkers in older women without dementia. Alzheimers Dement (Amst) 2022; 14:e12299.

- 27▪. Berron D, Ziegler G, Vieweg P, et al. Feasibility of digital memory assessments in an unsupervised and remote study setting. Front Digit Health 2022; 4:892997. Large-scale study (n = 1407) demonstrating the feasibility, high usability, good retest reliability and preliminary findings of high construct validity of smartphone tools assessing pattern separation and pattern completion. It also identifies critical factors to consider in future studies.

- 28. Kochan NA, Heffernan M, Valenzuela M, et al. Reliability, validity, and user-experience of remote unsupervised computerized neuropsychological assessments in community-living 55- to 75-year-olds. J Alzheimers Dis 2022; 90:1629–1645.

- 29.Alty J, Bai Q, George RJS, et al. TasTest: moving towards a digital screening test for preclinical Alzheimer's disease. Alzheimers Dement 2021; 17:e058732. [Google Scholar]

- 30.Bottiroli S, Bernini S, Cavallini E, et al. The smart aging platform for assessing early phases of cognitive impairment in patients with neurodegenerative diseases. Front Psychol 2021; 12:635410. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31▪.Doraiswamy PM, Goldberg TE, Qian M, et al. Validity of the web-based, self-directed, NeuroCognitive Performance Test in mild cognitive impairment. J Alzheimers Dis 2022; 86:1131–1136. [DOI] [PubMed] [Google Scholar]; Unsupervised computer web-based cognitive assessment shows high concurrent validity with analogous neuropsychological tests and with Mini-Mental Status Examination (MMSE) in people with mild cognitive impairment.

- 32.Backx R, Skirrow C, Dente P, et al. Comparing web-based and lab-based cognitive assessment using the Cambridge Neuropsychological Test Automated Battery: a within-subjects counterbalanced study. J Med Internet Res 2020; 22:e16792.

- 33▪.Paterson TSE, Sivajohan B, Gardner S, et al. Accuracy of a self-administered online cognitive assessment in detecting amnestic mild cognitive impairment. J Gerontol B Psychol Sci Soc Sci 2022; 77:341–350. Unsupervised computer web-based cognitive assessment shows good diagnostic validity for mild cognitive impairment in older adults (with fewer inconclusive classifications than the clinician-administered Montreal Cognitive Assessment, MoCA) and good convergent validity compared with a gold-standard neuropsychological battery.

- 34▪.van Gils AM, van de Beek M, van Unnik A, et al. Optimizing cCOG, a web-based tool, to detect dementia with Lewy bodies. Alzheimers Dement (Amst) 2022; 14:e12379. Good diagnostic validity and convergent validity for an unsupervised computer cognitive assessment in older adults with Alzheimer's disease, older adults with dementia with Lewy bodies, and controls.

- 35.Bauza M, Krstulovic M, Krupic J. Unsupervised, frequent and remote: a novel platform for personalised digital phenotyping of long-term memory in humans. PLoS One 2023; 18:e0284220.

- 36▪.LaPlume AA. Challenges and solutions for data analysis in an adult lifespan study of over 100,000 online cognitive test completions. SAGE Res Methods Cases 2022; doi: 10.4135/9781529600865. Methodological paper on appropriate techniques to visualize, clean, and analyze data from unsupervised web-based cognitive assessments, using insights from over 100,000 online test completions.

- 37.Brooker H, Williams G, Hampshire A, et al. FLAME: a computerized neuropsychological composite for trials in early dementia. Alzheimers Dement (Amst) 2020; 12:e12098.

- 38.Mackin RS, Insel PS, Truran D, et al. Unsupervised online neuropsychological test performance for individuals with mild cognitive impairment and dementia: results from the Brain Health Registry. Alzheimers Dement (Amst) 2018; 10:573–582.

- 39.Capizzi R, Fisher M, Biagianti B, et al. Testing a novel web-based neurocognitive battery in the general community: validation and usability study. J Med Internet Res 2021; 23:e25082.

- 40.Leese MI, Mattek N, Bernstein JPK, et al. The survey for memory, attention, and reaction time (SMART): preliminary normative online panel data and user attitudes for a brief web-based cognitive performance measure. Clin Neuropsychol 2022; 1–19. doi: 10.1080/13854046.2022.2103033. [Online ahead of print].

- 41.Tagliabue CF, Bissig D, Kaye J, et al. Feasibility of remote unsupervised cognitive screening with SATURN in older adults. J Appl Gerontol 2023; 7334648231166894. doi: 10.1177/07334648231166894. [Online ahead of print].

- 42.Madero EN, Anderson J, Bott NT, et al. Environmental distractions during unsupervised remote digital cognitive assessment. J Prev Alzheimers Dis 2021; 8:263–266.

- 43.Tsoy E, Zygouris S, Possin KL. Current state of self-administered brief computerized cognitive assessments for detection of cognitive disorders in older adults: a systematic review. J Prev Alzheimers Dis 2021; 8:267–276.

- 44.Howell T, Gummadi S, Bui C, et al. Development and implementation of an electronic Clinical Dementia Rating and Financial Capacity Instrument-Short Form. Alzheimers Dement (Amst) 2022; 14:e12331.

- 45.Troyer AK, Rowe G, Murphy KJ, et al. Development and evaluation of a self-administered on-line test of memory and attention for middle-aged and older adults. Front Aging Neurosci 2014; 6:335.

- 46▪▪.Nicosia J, Aschenbrenner AJ, Balota DA, et al. Unsupervised high-frequency smartphone-based cognitive assessments are reliable, valid, and feasible in older adults at risk for Alzheimer's disease. J Int Neuropsychol Soc 2023; 29:459–471. An exemplary study of 268 cognitively normal older adults (aged 65–97 years) and 22 individuals with very mild dementia (aged 61–88 years), using a smartphone tool that assesses associative memory, processing speed, and working memory. It demonstrates high adherence, low dropout, acceptability, high between-person and test-retest reliability, and good construct and criterion validity, with correlations with baseline AD biomarker burden similar in magnitude to those of conventional cognitive measures.

- 47.Matar E, Shine JM, Halliday GM, et al. Cognitive fluctuations in Lewy body dementia: towards a pathophysiological framework. Brain 2020; 143:31–46.

- 48▪▪.Wilks H, Aschenbrenner AJ, Gordon BA, et al. Sharper in the morning: cognitive time of day effects revealed with high-frequency smartphone testing. J Clin Exp Neuropsychol 2021; 43:825–837. A study that captured circadian fluctuations in cognition in individuals at risk for Alzheimer disease (AD) using a remotely administered smartphone assessment that samples cognition rapidly and repeatedly over several days.

- 49.Cerino ES, Katz MJ, Wang C, et al. Variability in cognitive performance on mobile devices is sensitive to mild cognitive impairment: results from the Einstein Aging Study. Front Digit Health 2021; 3:758031.

- 50.Brewster PW, Rush J, Ozen L, et al. Feasibility and psychometric integrity of mobile phone-based intensive measurement of cognition in older adults. Exp Aging Res 2021; 47:303–321.

- 51.Moore RC, Ackerman RA, Russell MT, et al. Feasibility and validity of ecological momentary cognitive testing among older adults with mild cognitive impairment. Front Digit Health 2022; 4:149.

- 52.Singh S, Strong R, Xu I, et al. Ecological momentary assessment of cognition in clinical and community samples: reliability and validity study. J Med Internet Res 2023; 25:e45028.

- 53.Sliwinski MJ, Mogle JA, Hyun J, et al. Reliability and validity of ambulatory cognitive assessments. Assessment 2018; 25:14–30.

- 54.Weizenbaum EL, Fulford D, Torous J, et al. Smartphone-based neuropsychological assessment in Parkinson's disease: feasibility, validity, and contextually driven variability in cognition. J Int Neuropsychol Soc 2022; 28:401–413.

- 55.Koo BM, Vizer LM. Mobile technology for cognitive assessment of older adults: a scoping review. Innov Aging 2019; 3:igy038.

- 56▪.Thompson LI, Harrington KD, Roque N, et al. A highly feasible, reliable, and fully remote protocol for mobile app-based cognitive assessment in cognitively healthy older adults. Alzheimers Dement 2022; 14:e12283. Nice study on unimpaired older adults (N = 52) demonstrating exceptionally high retest reliability, good convergent validity, and significantly higher adherence rates than typically reported in the existing digital health assessment literature for a smartphone tool that assesses visual working memory, processing speed, and episodic memory in short bursts.

- 57.Binng D, Splonskowski M, Jacova C. Distance assessment for detecting cognitive impairment in older adults: a systematic review of psychometric evidence. Dement Geriatr Cogn Disord 2021; 49:456–470.

- 58.Öhman F, Hassenstab J, Berron D, et al. Current advances in digital cognitive assessment for preclinical Alzheimer's disease. Alzheimers Dement 2021; 13:e12217.

- 59.Papp KV, Samaroo A, Chou HC, et al. Unsupervised mobile cognitive testing for use in preclinical Alzheimer's disease. Alzheimers Dement 2021; 13:e12243.

- 60.Snitz BE, Tudorascu DL, Yu Z, et al. Associations between NIH Toolbox Cognition Battery and in vivo brain amyloid and tau pathology in nondemented older adults. Alzheimers Dement (Amst) 2020; 12:e12018.

- 61.Possin KL, Moskowitz T, Erlhoff SJ, et al. The brain health assessment for detecting and diagnosing neurocognitive disorders. J Am Geriatr Soc 2018; 66:150–156.

- 62.Pratap A, Neto EC, Snyder P, et al. Indicators of retention in remote digital health studies: a cross-study evaluation of 100,000 participants. NPJ Digit Med 2020; 3:21.

- 63.Lancaster C, Koychev I, Blane J, et al. Evaluating the feasibility of frequent cognitive assessment using the Mezurio smartphone app: observational and interview study in adults with elevated dementia risk. JMIR mHealth and uHealth 2020; 8:e16142.

- 64.Jenkins A, Lindsay S, Eslambolchilar P, et al. Administering cognitive tests through touch screen tablet devices: potential issues. J Alzheimers Dis 2016; 54:1169–1182.

- 65▪.Leroy V, Gana W, Aïdoud A, et al. Digital health technologies and Alzheimer's disease clinical trials: might decentralized clinical trials increase participation by people with cognitive impairment? Alzheimers Res Ther 2023; 15:1–4. This paper presents an interesting perspective on clinical trials, illustrating the impact of web-based evaluations on research feasibility and accessibility.

- 66.Hassenstab J, Aschenbrenner AJ, Balota DA, et al. Remote cognitive assessment approaches in the Dominantly Inherited Alzheimer Network (DIAN). Alzheimers Dement 2020; 16 (S6):e038144.

- 67▪.O’Connell ME, Vellani S, Robertson S, et al. Going from zero to 100 in remote dementia research: a practical guide. J Med Internet Res 2021; 23:e24098. The paper provides interesting and practical information on optimal conditions for remote assessment.

- 68.Wilson SA, Byrne P, Rodgers SE, et al. A systematic review of smartphone and tablet use by older adults with and without cognitive impairment. Innov Aging 2022; 6:igac002.

- 69. AGE-WELL. COVID-19 has significantly increased the use of many technologies among older Canadians: Poll. Available at: https://agewell-nce.ca/archives/10884 [cited 2023 June 23].

- 70. Pew Research Center. Mobile fact sheet. April 7, 2021. Available at: https://www.pewresearch.org/internet/fact-sheet/mobile/ [cited 2023 June 22].

Associated Data
