Abstract

Digital cognitive aids have the potential to serve as clinical decision support platforms, triggering alerts about process delays and recommending interventions. In this mixed-methods study, we examined how a digital checklist for pediatric trauma resuscitation could trigger decision support alerts and recommendations. We identified two criteria that cognitive aids must satisfy to support these alerts: (1) context information must be entered in a timely, accurate, and standardized manner, and (2) task status must be accurately documented. Using co-design sessions and near-live simulations, we created two checklist features to satisfy these criteria: a form for entering the pre-hospital information and a progress slider for documenting the progression of a multi-step task. We evaluated these two features in the wild, contributing guidelines for designing these features on cognitive aids to support alerts and recommendations in time- and safety-critical scenarios.

Additional Keywords and Phrases: clinical decision support system, alerts, trauma resuscitation, cognitive aid, alert fatigue, electronic documentation

1. INTRODUCTION

Clinical decision support systems (CDSSs) have long been used in healthcare settings to aid decision-making, but human-computer interaction issues have reduced their adoption and effectiveness [40,63]. Most CDSSs have been built as stand-alone systems [11,44,52,85,95] because they are straightforward to develop and can be easily shared between different institutions [91]. Despite these benefits, stand-alone CDSSs often require additional work and can interrupt clinician workflows and increase provider cognitive load [85]. To mitigate this adoption barrier, prior research has proposed integrating decision support into existing clinical systems [86]. In primary care and inpatient settings, decision support has been integrated into archival systems, such as electronic health records (EHRs) [24,25,64] and computerized order entry systems [51]. Archival systems, however, are cumbersome for high-stakes, time-critical events occurring in emergency medical settings [89]. Clinicians in these settings either rely on a scribe to document the event [88] or use the archival system after the event [13]. Unlike archival systems, digital cognitive aids have become widely used during emergency medical care [1,45,49,57]. Cognitive aids are “implementation tools” designed to help users complete a task or series of tasks [58]. The aids are used concurrently with work and include checklists (or “task lists”), flowcharts, mnemonics, and manuals [59]. Because digital cognitive aids have had higher adoption rates [14], decision support features could be integrated into these aids to support clinicians during decision making.

Our long-term goal has been to design and evaluate digital cognitive aids to support decision making during time-critical clinical events. We situated this work in the context of pediatric trauma resuscitation because this setting requires rapid decisions about life-saving interventions [20]. In pediatric trauma resuscitation, an interdisciplinary team cares for a severely injured child during a short window of time. Surgical residents and fellows, along with emergency department (ED) physicians, serve as team leaders. Decision support is critical in resuscitations because clinicians’ cognitive load is substantially higher during medical crises that involve patients with potentially life-threatening injuries [83]. Even highly-trained resuscitation teams can make errors leading to preventable deaths [31]. Prior research identified several types of errors in time-critical, team-based medical work, including interpretation errors (failures to make correct diagnoses promptly) and management errors (failures to track task progress) [76]. Despite prior attempts to reduce these types of errors by implementing CDSSs, usability issues (e.g., poor workflow integration) have limited system adoption [74,94].

In this paper, we studied how cognitive aids can be transitioned into platforms for triggering decision support alerts aimed at mitigating interpretation and management errors. Decision support platforms have two components: (1) acquisition of the data needed to determine if decision support alerts should be triggered, and (2) communication of the alerts to users in some form. Here, we focus on the first component—obtaining the data needed to determine if alerts should be triggered—by studying two alerts: (1) an interpretation alert about an increased risk of needing a blood transfusion, and (2) a management alert about delays in establishing intravenous (IV) or intraosseous (IO) access. We had three research questions: (1) What types of design changes are needed to transition cognitive aids from “task lists” into decision support platforms that trigger alerts? (2) How can features on cognitive aids be designed to improve the acquisition of the data needed for decision support? (3) How do clinicians use these features to document context information and task status during time-critical medical events? To answer these research questions, we first studied how an existing checklist supported data acquisition for triggering management and interpretation alerts. We found that the checklist design poorly supported the input of context information (for interpretation alerts) and accurate documentation of multi-step tasks (for management alerts). We then used co-design sessions and near-live simulations to design two new checklist features: a pre-hospital form for obtaining context information (e.g., the patient’s injury type) and a progress slider to improve documentation accuracy. Finally, we evaluated these new features in the wild during 78 actual resuscitations. Team leaders used the pre-hospital form in most cases but documented some items less frequently than others. 
Because the pre-hospital form was used in most cases, the information entered on the form could be used to determine the patient’s risk of needing a blood transfusion and trigger appropriate decision support alerts. The accuracy of these alerts, however, could be limited due to incomplete documentation. Premature documentation of multi-step tasks decreased after we introduced the progress slider, potentially leading to more accurate alerts in cases with delays in establishing intravenous (IV) access and other multi-step tasks. However, false alerts would also increase in cases without delays because leaders frequently did not update the status of the slider after task completion.

Using our findings, we make two contributions to HCI: (1) an understanding of how cognitive aids (specifically “task lists”) can be used as decision support platforms to trigger alerts aimed at mitigating errors during time-critical decision making, and (2) design guidelines for accurately capturing context information and task status required for decision support alerts on cognitive aids. These contributions are not limited to clinical settings but could inform the design of cognitive aids in other settings with the potential for interpretation or management errors, such as driving [38] and aviation [22,37].

2. RELATED WORK

Below we review prior work on common errors occurring during time-critical medical events. We also discuss research on the use of cognitive aids and clinical decision support systems in clinical settings.

2.1. Computerized Support to Address Errors During Time-Critical Medical Work

Prior research identified four error types in time-critical, team-based medical work: communication errors, management errors (failures to track task progress), vigilance errors (failures to block team members’ errors), and interpretation errors (failures to make correct diagnoses promptly) [76]. We chose to focus on interpretation and management errors because a cognitive aid used by an individual user may be less effective in reducing other errors that involve entire teams. Several technologies have been designed to address errors in time-critical medical work [33,43,47,74,93,94]. For example, multiple CDSSs were implemented to reduce interpretation errors by assisting physicians in making rapid diagnoses with limited information, but their adoption has been limited due to poor workflow integration [74,94]. Other studies examined how cognitive aids could reduce delays and procedural errors [33,93]. Because limited provider attention has been a key issue during dynamic medical events, some cognitive aids have started showing intervention prompts and timers to help users manage task performance [93]. However, research on the design and use of alerts on cognitive aids is scarce. A recent study found that an alert on a checklist reduced delays in documenting time-critical information [61], suggesting that alerts on cognitive aids can be effective. In this study, we contribute new insight and guidelines for incorporating alerts within cognitive aids to mitigate interpretation and management errors.

2.2. Cognitive Aids in Clinical Settings: Information Displays and Checklists

Shared information displays are frequently used as cognitive aids in clinical settings to improve situational awareness and teamwork [12,27,69]. Digital information displays are especially advantageous in time-critical settings because they can adapt to the situation, presenting only relevant information [27]. Although information displays may improve overall teamwork, their success has been limited because (1) displays only increase the situational awareness of select team members [69] and (2) data entry and accuracy issues prevent using displays in real time [12]. Checklists are another type of cognitive aid used in clinical settings. Prior work has designed and implemented checklists for different resuscitation settings [32,47–49,68] and intensive care units [21,33,87]. Earlier studies compared paper and digital checklists, finding fewer unchecked items on the digital forms [47,87] and no difference in data quality between the two modalities [30]. Checklists used by an individual team member can also impact the entire team and their work in hierarchical team settings [33]. Most checklists for intensive care units and resuscitation settings are in the form of task lists, while checklists that support diagnostic thinking instead of task completion have been developed for outpatient settings [50].

Past research has also explored how the design of cognitive aids should evolve to better support users, increase adoption, and avoid fatigue [29,46,93]. Digital aids can be designed to adapt to different scenarios in time-critical medical events [93]. Non-routine cases would especially benefit from adaptive cognitive aids that highlight tasks normally not performed in routine cases [46]. Cognitive aids require context information, such as task and patient status, to provide the right content at the right time in a medical event [29]. We build on this prior work by studying how “task list” checklists can obtain accurate context information. In addition to supporting decision-support alerts, this context information would also allow for more dynamic and adaptive cognitive aids.

2.3. Clinical Decision Support Systems: Design Issues and Barriers to Implementation

Both medical [40,63,82,86] and HCI [11,42,44,52,90,95,96] studies have evaluated the use of CDSSs in healthcare settings. Medical studies found mixed results, with drug ordering systems improving clinical performance and diagnostic systems having low accuracy because of poor data availability [40,86]. Some studies found HCI issues, such as delayed alert activation [63] and data entry burden [40]. We next review HCI studies that focused on CDSS design. We also discuss the barriers to implementation and adoption of these systems.

2.3.1. Design of Clinical Decision Support Systems

Prior HCI studies of CDSSs have focused on the types of support for different clinical settings [42,44,95,96]. For example, the design of decision support for intensive care units should be evidence-based and support three phases of decision making: (1) deciding appropriate interventions, (2) implementing the interventions, and (3) monitoring the patient [42]. CDSSs for surgical teams should (1) support autonomy, (2) provide appropriate aid given the setting, (3) enhance teamwork and communication, and (4) allow for inputting different types of data while requiring minimal interaction from clinicians [44].

A common issue with CDSSs has been alert fatigue, where users frequently ignore or override alerts [63]. Past work has proposed different strategies for mitigating alert fatigue in CDSSs and other alerting systems [10,17,35,62,95], such as triggering related alerts together [35]. Other studies examined how alerts could be designed to reduce fatigue [10,17,62,95]. Proposed design strategies involve the use of vibrotactile modalities [17] and peripheral interactions (e.g., turning off an alarm by pressing a foot pedal) [10]. Another strategy includes varying the alert format based on clinicians’ intentions [95]. Recommendations should be “unremarkable” most of the time, and only appear in more intrusive formats when the system’s recommendations conflict with clinicians’ plans. The accuracy of an alert could also impact its intrusiveness, with more intrusive designs used for alerts with high accuracy rates [62]. However, research on the accuracy of alerts triggered by data entered on cognitive aids is currently limited. In this paper, we begin addressing this gap by evaluating the accuracy of alerts triggered by data recorded on a digital checklist.

2.3.2. Barriers to Implementation and Adoption of Clinical Decision Support Systems

Although the design of CDSSs has improved over time, different barriers have prevented the implementation and adoption of these systems [96]. The main challenge has been obtaining the contextual data required for accurate recommendations and alerts. This challenge is especially present in diagnostic CDSSs because they require more data than CDSSs for preventative care or drug prescription [40]. A common method for obtaining the required data has been manual input by clinicians. Data entry burdens, however, can deter clinicians from using the system or lead to incomplete information, negatively impacting the accuracy of the alerts [40]. Because clinicians may only have time for data entry after they have made decisions, alerts and recommendations may come too late in their workflows [90]. As a result, continued research is needed to study how data entry can be improved in CDSSs to allow for more accurate and timely decision support alerts.

Documentation practices in electronic health records (EHRs) have been studied extensively [26]. Electronic documentation can increase provider workload [5,66,71] and affect their thought processes [9,67,100]. Tensions can also arise when providers undergo additional work to enter data for secondary purposes, such as research [5]. Several studies have examined documentation practices during time-critical medical events, focusing on temporality [39], compliance [23], and the differences between paper and electronic documentation [19]. Even when teams have a dedicated role for documentation, not all information about an event is recorded. In a recent study, medical scribes documented less than half of the reports provided during a resuscitation on an electronic flowsheet [39]. Clinicians in fast-paced settings may use transitional artifacts, like paper notes, to record information before they are able to document it in formal electronic systems [13].

Integrating data from other electronic systems (e.g., patient monitoring devices) can reduce the data entry burden in CDSSs [65]. Not all data required for decision support, however, can be found in an electronic system. For example, patient home life and social support are important factors in decision-making, but this information is not frequently captured in medical histories [96]. In intensive care settings, data required for decision support is captured at different times by different providers, with some information recorded on paper records [42]. While most studies investigated documentation in archival (e.g., EHRs and flowsheets) and ordering systems, documentation on cognitive aids remains understudied. We expand this prior work by addressing how cognitive aids can be used to capture the data required for triggering decision support alerts.

3. STUDY OVERVIEW

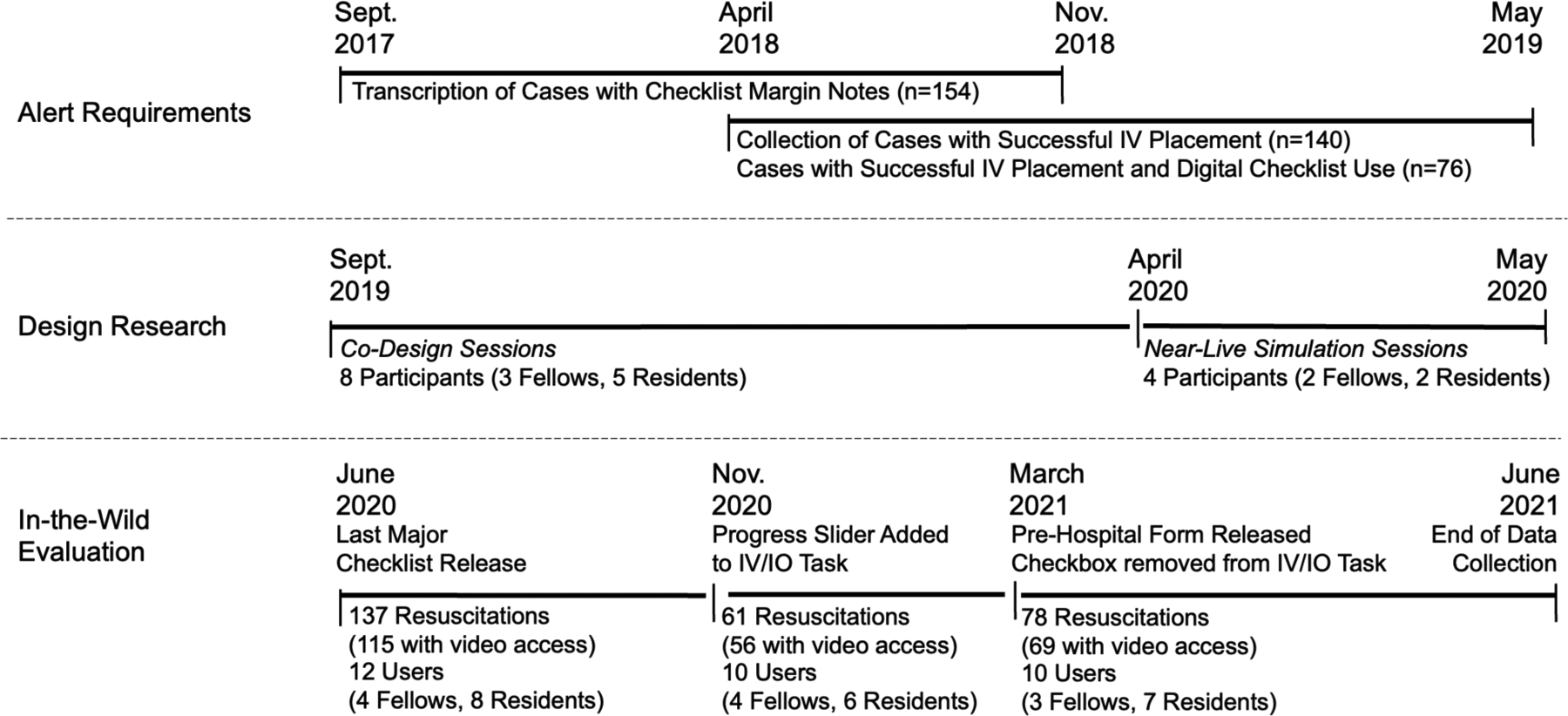

Our study had three main components: (1) alert requirements, (2) design research, and (3) in-the-wild evaluation (Figure 1). To determine the alert requirements, we analyzed past cases to understand how the checklist interface could support data acquisition required for alerts. In the design research, we conducted co-design and near-live simulation sessions to design and evaluate features for obtaining these data. We then evaluated the modified checklist design in the wild. This study was approved by the hospital’s Institutional Review Board (IRB).

Figure 1: Study overview and data collection timeline for the three study components.

3.1. Research Site and Trauma Team Members

Our research site is a Level 1 pediatric trauma center in the United States, treating over 500 patients each year. When a child is injured, the responding emergency medical services (EMS) use an alert system to notify the trauma center. In this alert, the EMS provide information about the incoming patient, including age, mechanism of injury, and symptoms. This alert is transcribed by the hospital staff, who activate the trauma team through a page system. Team members report to the trauma bay after receiving the page, occasionally arriving after the patient. When EMS arrive with the patient, they also report the patient’s injuries and treatments. The trauma team is co-led by a surgical fellow or resident and an emergency department (ED) physician. Together, they guide the team through the Advanced Trauma Life Support (ATLS) protocol [3]. The surgical leader uses a digital checklist listing the resuscitation tasks (Figure 2). In the pre-arrival period, the team prepares for the patient. In the primary survey phase, the examiner evaluates the patient’s airway, breathing, and circulatory status, while the bedside nurses obtain vital signs and intravenous (IV) access. The team then examines the patient to identify other injuries (secondary survey) and discusses the next steps (departure plan). Depending on the injuries, other roles may get involved, such as anesthesiologists and respiratory therapists. Each resuscitation is recorded for quality assurance purposes using an always-on video and audio recording system with three cameras and an array of microphones. While consent from the patient’s caregiver is not required to review checklist data, consent is required to review the videos for research purposes. Videos for consented cases are stored and viewed according to IRB-approved research protocols at the trauma center.

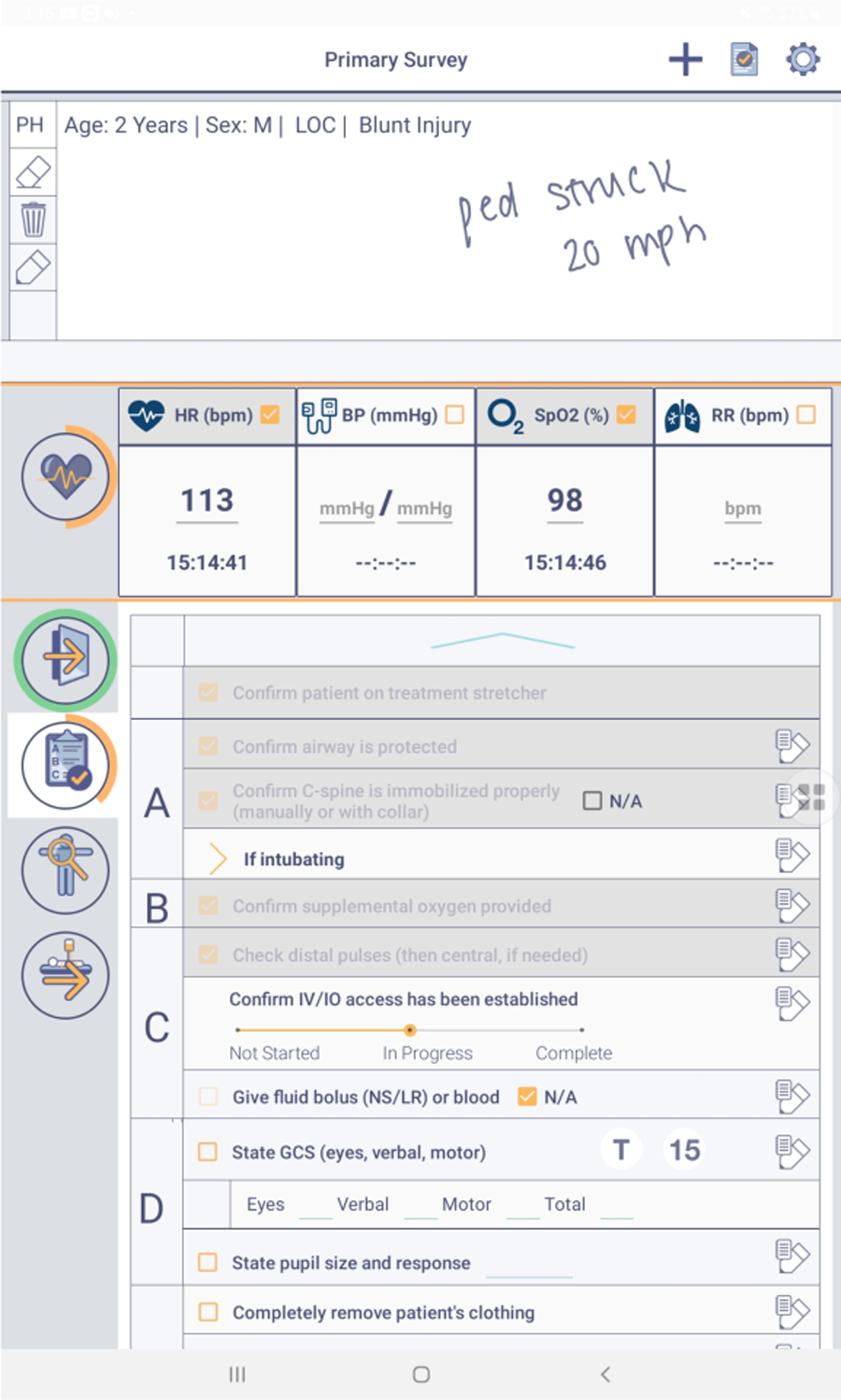

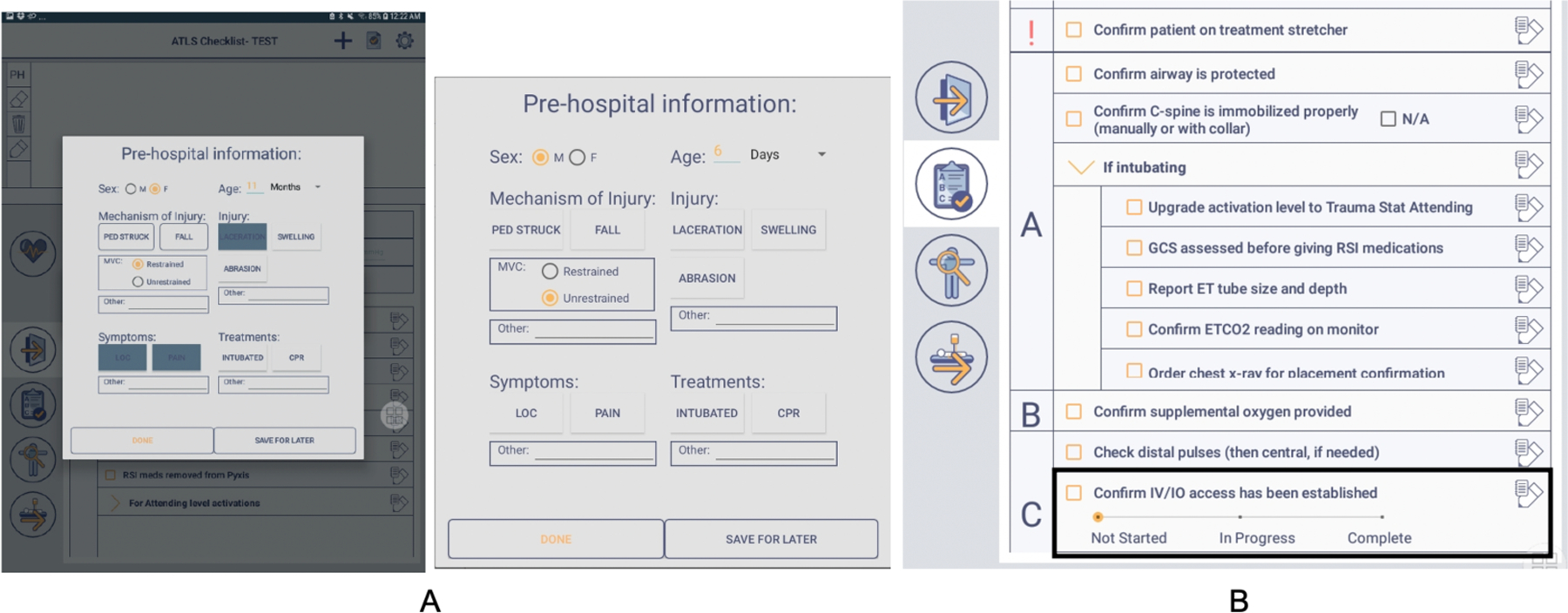

Figure 2: Digital checklist used at the hospital.

3.2. Digital Checklist

Clinicians at the hospital have been using a digital checklist for trauma resuscitation since 2017 (Figure 2). The checklist is implemented on a Samsung Galaxy tablet and used by the surgical team leader to ensure adherence to the ATLS protocol. The checklist has five sections: two for the primary and secondary ATLS surveys, a pre-arrival section, a vital signs section, and a prepare for travel section. Each section contains a list of tasks that should be completed by the team. Next to each task is a checkbox and a place to take handwritten notes about the task. A few tasks have spaces for typing values (e.g., the blood pressure task has a space to type the blood pressure value). A notetaking area is placed at the top of the checklist where users can handwrite any information using the stylus. When leaders click the button to submit the checklist, a window appears with any unchecked items, giving the leader a final opportunity to check off the tasks. Each use of the checklist produces a text file log and a screenshot of the notes. The log file also contains timestamps for each check-off and any typed values.

3.3. Study Participants

Our study participants were surgical team leaders with experience in using the digital checklist during trauma resuscitations at the hospital. The surgical team leaders are either fellows or senior residents. While both fellows and senior residents have training in trauma resuscitation, fellows are more experienced with pediatric patients than senior residents. Fellows rotate at the trauma center for two years, while senior residents rotate for two months. At the beginning of their rotation, each leader receives training on the digital checklist and consents to using the tool as part of the research study. All team leaders who participated in the co-design sessions and near-live simulation sessions received monetary compensation for their time.

4. ALERT REQUIREMENTS

In this study, we focused on supporting two alerts: (1) an alert about an increased risk of requiring blood transfusion (interpretation alert) and (2) an alert about delays in establishing IV access (management alert). Context information and task status are required to determine if these alerts should be triggered during a medical event. To determine the design changes needed to support these types of alerts on cognitive aids (our first research question), we evaluated how the current “task list” design supported timely and accurate documentation of context information and task status.

4.1. Interpretation Alert: Increased Risk of Requiring Blood Transfusion

To understand how a digital cognitive aid could support alerts aimed at mitigating interpretation errors, we explored an alert indicating that a patient is at an increased risk of requiring blood transfusion. Severe blood loss contributes to about 30% of pediatric deaths after traumatic injury [70]. Timely life-saving interventions, such as massive blood transfusion, lead to better patient outcomes [72]. Our collaborating clinical team identified four variables that could predict the need for blood transfusion in pediatric trauma patients based on literature review [e.g., 4]: patient age, injury type, pre-arrival vital signs, and pre-arrival Glasgow Coma Score (GCS), a measure of consciousness. We then reviewed past resuscitation cases where leaders used the digital checklist to identify design requirements for obtaining these four variables.
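As an illustration only, the four predictor variables named above could be held in a single record so that missing entries are easy to detect. The sketch below is not the study's implementation: the class name, field names, and value formats are hypothetical, and it deliberately contains no risk thresholds because none are given in the text. It also assumes, for illustration, that the alert would be evaluated only when all four variables are documented.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class PreArrivalContext:
    """The four variables named in the text; None means 'not documented'.

    All field names and value formats are hypothetical.
    """
    age_years: Optional[float] = None
    injury_type: Optional[str] = None          # e.g. a coded or free-text value
    pre_arrival_vitals: Optional[dict] = None  # e.g. {"sbp": 118, "dbp": 68}
    pre_arrival_gcs: Optional[int] = None      # Glasgow Coma Score


def missing_variables(ctx: PreArrivalContext) -> List[str]:
    """Return the names of the variables that have not been documented."""
    return [f.name for f in fields(ctx) if getattr(ctx, f.name) is None]


def can_evaluate_risk(ctx: PreArrivalContext) -> bool:
    """Assumption: the alert is evaluated only when all four variables exist."""
    return not missing_variables(ctx)
```

A record with only age and GCS filled in would report `injury_type` and `pre_arrival_vitals` as missing, which is the kind of incomplete documentation that could limit alert accuracy.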

4.1.1. Analyzing Information Recorded on Checklist: Methods and Findings

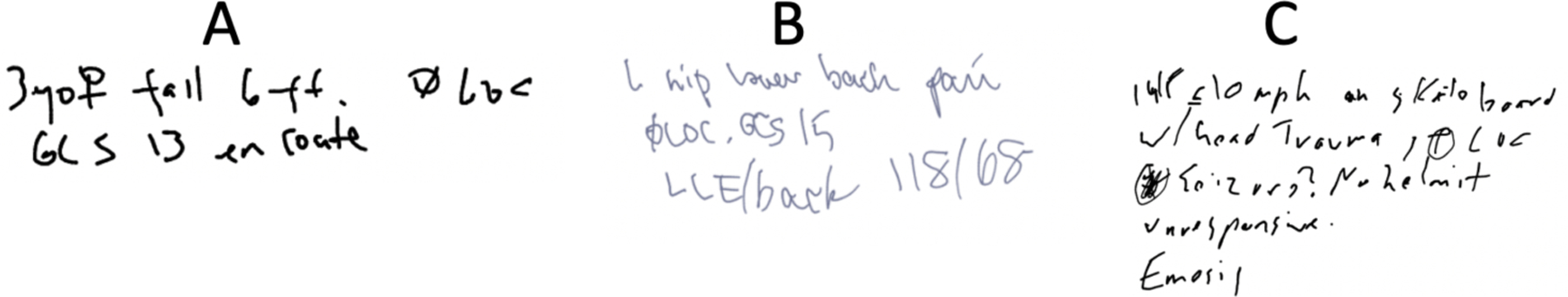

Because the digital checklist is primarily a task list, it does not contain dedicated spaces for entering context information required to determine risk of needing a blood transfusion. In our prior work, however, we found that leaders can use task lists as a memory externalization tool, handwriting notes about patient status and pre-hospital information [47,77]. We transcribed and reviewed the margin notes from 154 digital checklist cases between September 2017 and November 2018, observing instances of handwritten pre-arrival context information needed for determining bleeding risk (Figure 3). We calculated the word frequencies, finding that the most common words fell into four categories: (1) demographic information (e.g., age, sex), (2) mechanism of injury (e.g., pedestrian struck, fall), (3) symptoms (e.g., loss of consciousness, pain), and (4) treatments (e.g., intubated). Although these notes contained information that could indicate bleeding risk, the notes were not taken in a standardized manner and did not always include all four variables. As part of the design research, we studied how the task list could be modified to standardize the entry of required context information, focusing on variables associated with an increased risk of bleeding.

Figure 3: Examples of handwritten notes taken by users on the digital checklist. (A) 3yo [gender symbol] fell 6 ft, Ø LOC [loss of consciousness], GCS 13 en route. (B) L hip lower back pain Ø LOC. GCS 15 LLE/back 118/68 [blood pressure]. (C) 14F <=10 mph on skateboard w/ head trauma, ⊕ LOC [illegible text] no helmet, unresponsive, [illegible text].
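The word-frequency step described in Section 4.1.1 can be sketched with standard-library tools. This is a minimal illustration, not the analysis code used in the study: the sample notes are hypothetical strings loosely modeled on the Figure 3 examples, and the tokenization rule is an assumption.

```python
import re
from collections import Counter

# Hypothetical transcribed notes, loosely modeled on the Figure 3 examples.
notes = [
    "3yo fell 6 ft, no LOC, GCS 13 en route",
    "L hip lower back pain, no LOC, GCS 15",
    "14F on skateboard w/ head trauma, LOC, no helmet, unresponsive",
]


def word_frequencies(texts):
    """Count lowercase tokens (letters, digits, and '/') across all notes."""
    counts = Counter()
    for text in texts:
        counts.update(re.findall(r"[a-z0-9/]+", text.lower()))
    return counts


freq = word_frequencies(notes)
# The most frequent tokens can then be reviewed and grouped into categories by hand.
print(freq.most_common(5))
```

Grouping the frequent tokens into categories such as demographics, mechanism of injury, symptoms, and treatments remains a manual, interpretive step in this sketch.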

4.2. Management Alert: Delays in Establishing IV/IO Access

To determine how a digital cognitive aid could support alerts aimed at mitigating management errors, we explored an alert highlighting delays in establishing IV/IO access. According to ATLS protocol, access to the circulatory system should be promptly obtained [3]. This access is essential for administering medications, fluids, and blood products required for managing life-threatening conditions [56]. The process of obtaining IV access involves multiple steps: a provider must place a tourniquet, locate a vein, and successfully insert a needle into the vein. Several attempts may be needed to successfully place an IV. If the team has difficulty obtaining IV access, they may instead obtain IO access, which requires drilling into the bone. Some patients will arrive at the trauma center with existing IV or IO access, having received this access either from EMS or at another institution. Given the importance of promptly establishing IV/IO access, we studied how an alert could be created on the checklist to inform the leader of delays in this multi-step process. This alert could suggest alternative means, like using a vein finder or obtaining IO access. We first analyzed the timing of successful IV placements in past cases to determine when an alert should be triggered if IV access had not been established. We then performed a retrospective analysis to examine the accuracy of an IV/IO alert on the checklist.

4.2.1. Determining When to Trigger the Alert

To determine the appropriate time for triggering the alert, we considered a combination of the time- and process-based approaches [61]. In a time-based approach, the alert is triggered if a task is not completed after a certain period. In a process-based approach, the alert is triggered if a task is not completed after a certain point in the process. We reviewed videos from 140 past cases between April 2018 and May 2019, finding that the median time from patient arrival until establishing IV access was about seven minutes. In our combined time- and process-based approach, the alert would be triggered (1) if IV access had not been marked as complete on the checklist within seven minutes of the first item check-off on the primary survey section (roughly indicating the time of patient arrival) or (2) if the task had not been marked as complete when the last task on the secondary survey section was checked off.
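The combined trigger rule can be expressed compactly. The following is a sketch under stated assumptions rather than the checklist's actual logic: the function and parameter names are hypothetical, timestamps are assumed to be available as `datetime` values, and the two conditions are combined by taking whichever occurs first.

```python
from datetime import datetime, timedelta
from typing import Optional

# Seven minutes: the approximate median time to IV access reported in the text.
TIME_THRESHOLD = timedelta(minutes=7)


def alert_time(first_primary_checkoff: datetime,
               last_secondary_checkoff: Optional[datetime]) -> datetime:
    """Earliest moment at which either trigger condition is reached."""
    time_based = first_primary_checkoff + TIME_THRESHOLD
    if last_secondary_checkoff is None:  # secondary survey not finished
        return time_based
    return min(time_based, last_secondary_checkoff)


def should_trigger(first_primary_checkoff: datetime,
                   last_secondary_checkoff: Optional[datetime],
                   iv_checked_at: Optional[datetime]) -> bool:
    """True if the IV/IO task is still unchecked at the alert time."""
    t = alert_time(first_primary_checkoff, last_secondary_checkoff)
    return iv_checked_at is None or iv_checked_at > t
```

Because the rule depends only on check-off timestamps, its accuracy is bounded by how faithfully those check-offs reflect the real state of the task.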

4.2.2. Assessing the Alert Accuracy: Methods and Findings

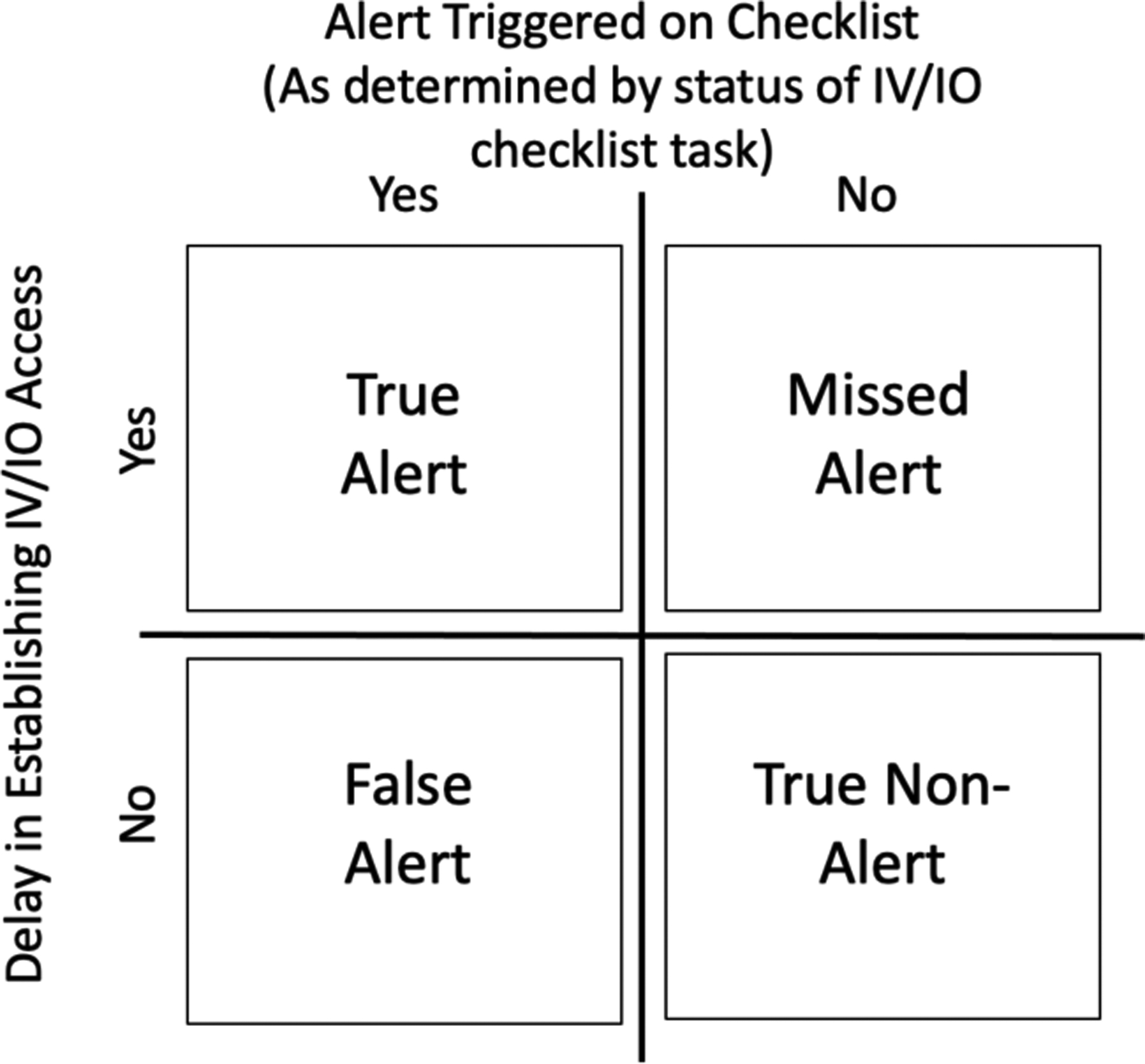

We used a retrospective analysis to determine if the checklist system could accurately trigger alerts about delays in establishing IV access. In retrospective analysis, data from past cases are used to evaluate alerts on metrics such as sensitivity and specificity before releasing the alerts [62]. Low sensitivity (a low proportion of true alerts among cases in which the condition is present) means that the alert often fails to trigger when it should, while low specificity (a low proportion of true non-alerts among cases in which the condition is absent) leads to more false alerts. The digital checklist was used in 76 of the 140 cases that we reviewed when determining the median time for establishing IV access. To perform the retrospective analysis, we examined digital checklist logs from these 76 cases, focusing on the “Confirm IV/IO access has been established” task. Per protocol, this checkbox should be checked off immediately after IV/IO access is obtained. From the video review of all 140 cases, we had a record of the time when IV/IO access was obtained in each case. We parsed each checklist log with a Python script. The script used the timestamps of primary and secondary survey task check-offs to determine the alert time (i.e., seven minutes after the first primary survey check-off or after the last secondary survey check-off, whichever event came first). The script also determined if the IV/IO task was unchecked at this time, which would have triggered the alert. Using the alert status (triggered or not triggered) and the IV placement status at the time of the alert (obtained or not obtained), we classified the 76 cases into four groups: missed alert, false alert, true alert, and true non-alert (Figure 4). We found that this alert would not have been triggered in most cases (69.4%) with delays in establishing IV access because team leaders checked off the IV/IO task before it was completed. Because of these premature check-offs, the task status on the checklist did not match the actual status of the task.
In our prior work, we found that users frequently mark multi-step tasks as complete when the team begins the task, instead of waiting to check them off at task completion [49,60]. To support management alerts about delays in multi-step tasks, we studied how to improve the design of multi-step tasks on the checklist to increase documentation accuracy.
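The four-group classification and the two metrics can be sketched as follows. This is an illustrative reconstruction, not the study's script: the function names are hypothetical, each case is assumed to be reduced to two booleans (whether the alert would have been triggered, and whether IV access had actually been obtained at the alert time), and no study data are reproduced.

```python
from collections import Counter


def classify(alert_triggered: bool, iv_obtained: bool) -> str:
    """Map one case to one of the four groups described in the text."""
    if alert_triggered:
        return "false alert" if iv_obtained else "true alert"
    return "true non-alert" if iv_obtained else "missed alert"


def sensitivity_specificity(cases):
    """cases: iterable of (alert_triggered, iv_obtained) pairs.

    Sensitivity = true alerts / all cases with a delay.
    Specificity = true non-alerts / all cases without a delay.
    Returns None for a metric whose denominator is zero.
    """
    counts = Counter(classify(a, o) for a, o in cases)
    delayed = counts["true alert"] + counts["missed alert"]
    not_delayed = counts["true non-alert"] + counts["false alert"]
    sens = counts["true alert"] / delayed if delayed else None
    spec = counts["true non-alert"] / not_delayed if not_delayed else None
    return sens, spec
```

Under this framing, a premature check-off in a delayed case appears as a missed alert and lowers sensitivity, while a task left unchecked after completion appears as a false alert and lowers specificity.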

Figure 4: Classification of alert status.

5. DESIGN RESEARCH

To answer our second research question, we used co-design sessions to develop features for improving the documentation of context information and increasing the accuracy of documenting multi-step task status. We evaluated these new decision support features using near-live simulations (Figure 1).

5.1. Co-Design Sessions

5.1.1. Procedure

From September 2019 through April 2020, we conducted four hour-long co-design sessions with eight surgical fellows and residents. Co-design sessions allow participants to directly engage with the design process by collaborating with researchers, expressing independent opinions, and making decisions [34,75]. The first three sessions were held in person, with three participants in the first session and two participants in the following two sessions. Due to the COVID-19 pandemic, we conducted the fourth session virtually over the video-conferencing platform Zoom with one participant. All participants had experience in using the checklist during trauma resuscitations. We began the session by asking participants to describe their experiences with the checklist from actual events to ground their design thinking. We also presented an overview of the current state of the checklist. Participants then individually identified issues with the checklist design and shared them with the group. Next, participants in the three in-person sessions used cardboard cutouts in a group activity to design an “ideal” checklist before drawing individual sketches of features that might resolve current issues. Participants then discussed their sketches with the group and voted to prioritize potential new features by placing stickers on Post-it notes. In the remote session we allocated extra time for sketching because we skipped the cardboard cutouts activity. We collected several types of data, including video recordings of participants discussing their sketches, photos of the sketches, photos from the prioritization activity, and notes taken by researchers during the sessions. We then inductively analyzed these data to identify current issues with the checklist, features that should be introduced on the checklist, and design ideas for these features.

5.1.2. Findings

Participants described several issues with the current version of the checklist and proposed numerous design ideas. Here, we focus on the challenges and design ideas related to documenting context information about the patient and capturing the status of multi-step tasks. Leaders highlighted that the checklist only supported marking task completion. They used the example of the IV/IO task (“Confirm IV/IO access is established”), discussing how the team may not start this task or may be in the process of obtaining IV access when the leader reaches this item on the checklist. The leader would then either skip the task or prematurely mark it as completed. One participant described a case where they wrote “will do later” [S#4, P#8] in the notes section next to the task. These conversations highlighted how the leaders needed an approach to mark the phases of multi-step tasks, rather than just checking tasks as completed or not completed.

Participants also discussed design ideas for capturing pre-hospital context information about the patient, providing different reasons for why this feature would be useful. One participant drew a detailed pre-hospital form for the checklist, with spaces for documenting the patient’s age, mechanism of injury and severity level, time of injury, pre-arrival interventions, level of consciousness, and important medical history. They described how this form would better facilitate documentation of the pre-hospital information than handwriting the information in the margin notes section:

“Instead of writing ‘13yo female, fall from bike, no helmet,’ if you click on ‘bike’ for mechanism of injury, it will drop down with options for ‘helmeted’ and ‘unhelmeted’… Instead of having to handwrite all that stuff, you could just click through it.”

[S#4, P#8]

Another participant described how information in a pre-hospital form could be used to generate a one-line summary of the case to support team preparation for the patient:

“The section for pre-hospital could be a quick one-liner so it is ‘MVC, unrestrained, how fast was the car, was it a baby in a car seat, any injuries,’ and then we can prepare by looking at this information and thinking about, ‘unrestrained means we need a collar…’”

[S#1, P#3]

Participants also discussed how the pre-hospital information could be used to adapt the tasks presented on the checklist or support decision making by suggesting different algorithms:

“You can check ‘Is this a burn?’ and then there is a suggested algorithm [for treating burn injuries] that pops up on the side and you can click on it and look at it.”

[S#1, P#2]

Another participant agreed, stating “you could do burn, blunt, and penetrating… that would be helpful if you could categorize it from the beginning” [S#1, P#1]. One participant similarly described how information recorded in the primary survey could be used to prompt the leader to consider ordering a certain type of imaging test:

“If somewhere you’re recording the primary survey and the GCS [Glasgow Coma Score] is 13, then it prompts you ‘Do you want a head CT?’”

[S#1, P#2]

When voting on the new features for the checklist, all four sessions prioritized using context information entered on the checklist to recommend interventions. Because leaders currently rely on their recollection of the diagnostic algorithms or reference them manually, they thought this feature would aid their decision making.

5.2. Design and Evaluation of Decision Support Features

5.2.1. Decision Support Features Design Process

We added two new features to the checklist interface: (1) a pre-hospital form (Figure 5(a)), and (2) a progress slider for the “Confirm IV/IO access is established” task (Figure 5(b)). We used participant sketches from the co-design sessions and our analysis of past margin notes to inform the pre-hospital form design. We included the four categories (demographic information, injury type, symptoms, and treatments) identified in the notes analysis. In the co-design sessions, participants also discussed the need to mark a task as “in progress,” rather than only “complete” or “not complete” through a checkbox. We implemented a progress slider for the IV/IO task to allow users to move between task statuses. To keep the slider simple, we provided three status options: “Not Started,” “In Progress,” and “Completed.” The progress slider was initially set to “Not Started,” with users able to move it to either “In Progress” or “Completed.”
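As an illustration only, the three-position slider can be thought of as a small ordered set of task states with a log of status changes. The sketch below is not the checklist's implementation: the names `TaskStatus` and `ProgressSlider`, the timestamped history, and the choice to let the user select any position in any order are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from enum import Enum


class TaskStatus(Enum):
    """The three slider positions described in the text."""
    NOT_STARTED = "Not Started"
    IN_PROGRESS = "In Progress"
    COMPLETED = "Completed"


@dataclass
class ProgressSlider:
    """Hypothetical model of a multi-step task on the checklist."""
    task: str
    status: TaskStatus = TaskStatus.NOT_STARTED
    # (seconds since case start, status) pairs; an assumed log format.
    history: list = field(default_factory=list)

    def move_to(self, status: TaskStatus, at_seconds: float) -> None:
        # Assumption: the user may select any position, in any order.
        self.status = status
        self.history.append((at_seconds, status))

    def is_complete(self) -> bool:
        return self.status is TaskStatus.COMPLETED


slider = ProgressSlider(task="Confirm IV/IO access is established")
slider.move_to(TaskStatus.IN_PROGRESS, at_seconds=95.0)
slider.move_to(TaskStatus.COMPLETED, at_seconds=260.0)
```

Keeping a history of status changes, rather than only the current position, is what would later allow a retrospective comparison between when a task was marked and when it was actually performed.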

Figure 5:

Screenshots of two features evaluated during the near-live simulations: (A) pre-hospital form and (B) progress slider for the IV/IO task.

5.2.2. Near-Live Simulations: Procedure

Throughout April and May 2020, we conducted four near-live simulation sessions to evaluate these new decision support features. During near-live simulations, a single user watches videos of simulated cases while using a system and thinking aloud. Near-live simulation sessions can highlight usability issues without requiring an entire clinical team to perform the simulation [54,94]. All four participants had experience in using the checklist in actual resuscitations. Three of the participants had also participated in earlier co-design sessions. Each simulation session was an hour long and held virtually over Zoom. Clinicians participated from the hospital, using the new version of the checklist on a tablet. One researcher facilitated the session, while two other researchers observed and took notes. We began the session by asking the participant to describe their last trauma resuscitation to ground their thinking based on real-world scenarios. We then introduced the new checklist features and provided brief training. Next, we asked the participant to watch two videos of simulated cases while using the checklist as if they were the leader. In the videos, a trauma team treated a “patient” (simulation mannequin) suffering from life-threatening injuries. The patient’s age and type of injury differed between the two videos. While the participant watched the videos, we remotely accessed their tablet, informing the participant that we could view their interactions with the checklist. The facilitator shared their screen with the other two researchers who took notes on how the participant was using the new features. After the participant completed both simulation cases, we discussed each feature, asking them if they used it, their thoughts on the feature, and any potential changes they would make. We concluded by having the participant compare this new checklist to the current version and discuss how the new features would impact their work.

We analyzed the observation notes from the near-live simulation sessions using an inductive qualitative content analysis approach [15]. The goal of the analysis was to both highlight how the participants were using these new features and identify potential design improvements. The first author performed the analysis using NVivo, a qualitative data analysis software, open coding the notes related to the pre-hospital form and progress slider and iteratively connecting the open codes to identify themes. These themes were discussed and refined in meetings with the wider research team.

5.2.3. Near-Live Simulations: Findings

All four participants used the pre-hospital form during both cases in their session. We identified three themes related to participants’ use of the pre-hospital form: (1) different documentation practices for the sporadic information, (2) persistence of handwritten notes, and (3) concerns about the quality of pre-arrival information.

We observed two different practices for documenting the sporadic information shared about the patient. During the simulations, all four participants completed the pre-hospital form before patient arrival, documenting the information from the simulated pager system. When the EMS arrived with the patient in the video, two participants [P#1,2] reopened the pre-hospital form to enter additional information provided by the EMS. The other two participants [P#3,4] handwrote this information in the margin note area instead of reopening and updating the pre-hospital form. We also identified a persistence of handwritten notes even with the use of the pre-hospital form. Although information from the pre-hospital form appeared in the summary line on top of the margin note, two participants [P#3,4] copied the information they entered in the pre-hospital form into the margin note. One participant explained that handwriting a one-line summary had become a “habit” [P#3] and that they might stop with acclimatization to the pre-hospital form.

During the post-simulation debriefing, participants highlighted concerns about the quality of the pre-arrival information. Three participants [P#2,3,4] discussed how context information about the patient may be unknown or inaccurate. Participants #2 and #3 suggested mitigating this problem by adding an “unknown” option for certain fields on the pre-hospital form. Participant #4 discussed how they would be hesitant to document information provided by EMS on the pre-hospital form due to concerns about the reliability of this information. Several changes were recommended for the pre-hospital form. Two participants [P#2,3] suggested making the form simpler and easier to use (e.g., changing the default in the age dropdown from “days” to “years” [P#2]). They also suggested removing the “Other” section in each category because they found it easier to handwrite that information in the margin note instead of typing it. Participant #3 stated they would not add anything else to the form to avoid complexity. Three participants [P#1,2,3] said they would use the pre-hospital form in actual cases. Participants #1 and #2 highlighted how converting the pre-hospital information into a one-line summary aided their thought processes, explaining that the summary was “a basic reminder of what you need to be thinking about” [P#1] and “it’s not a burden to check a few more boxes if it makes things smoother” [P#1]. Participant #4, however, expressed a preference for handwriting notes in the margin note area. They highlighted how they would prefer having that information auto-populated on the checklist, assuming this automation would make the tool easier to use and less distracting.

We also identified one theme in participant reactions to the IV/IO progress slider: concerns about returning to the feature to update task status. All four participants used the IV/IO progress slider in at least one of their two simulation videos. During discussion, Participant #1 stressed the importance of ensuring that IV/IO access is established but was concerned they would not go back to mark the task as complete after moving to another section of the checklist. They discussed how they needed to be selective about the items they returned to since multiple tasks could be unchecked. Participant #3 went back to the primary survey section to change the task status from in-progress to complete during the simulation. This participant suggested moving uncompleted tasks from one section over to the next to facilitate this multi-step interaction and ensure the tasks are marked as complete. However, moving unchecked tasks between sections could be complicated when teams do not follow the ATLS protocol and leaders frequently jump between checklist sections. Participant #4 suggested putting the status of the IV/IO task in a “global view,” explaining that “once you scroll away, you lose track of it.”

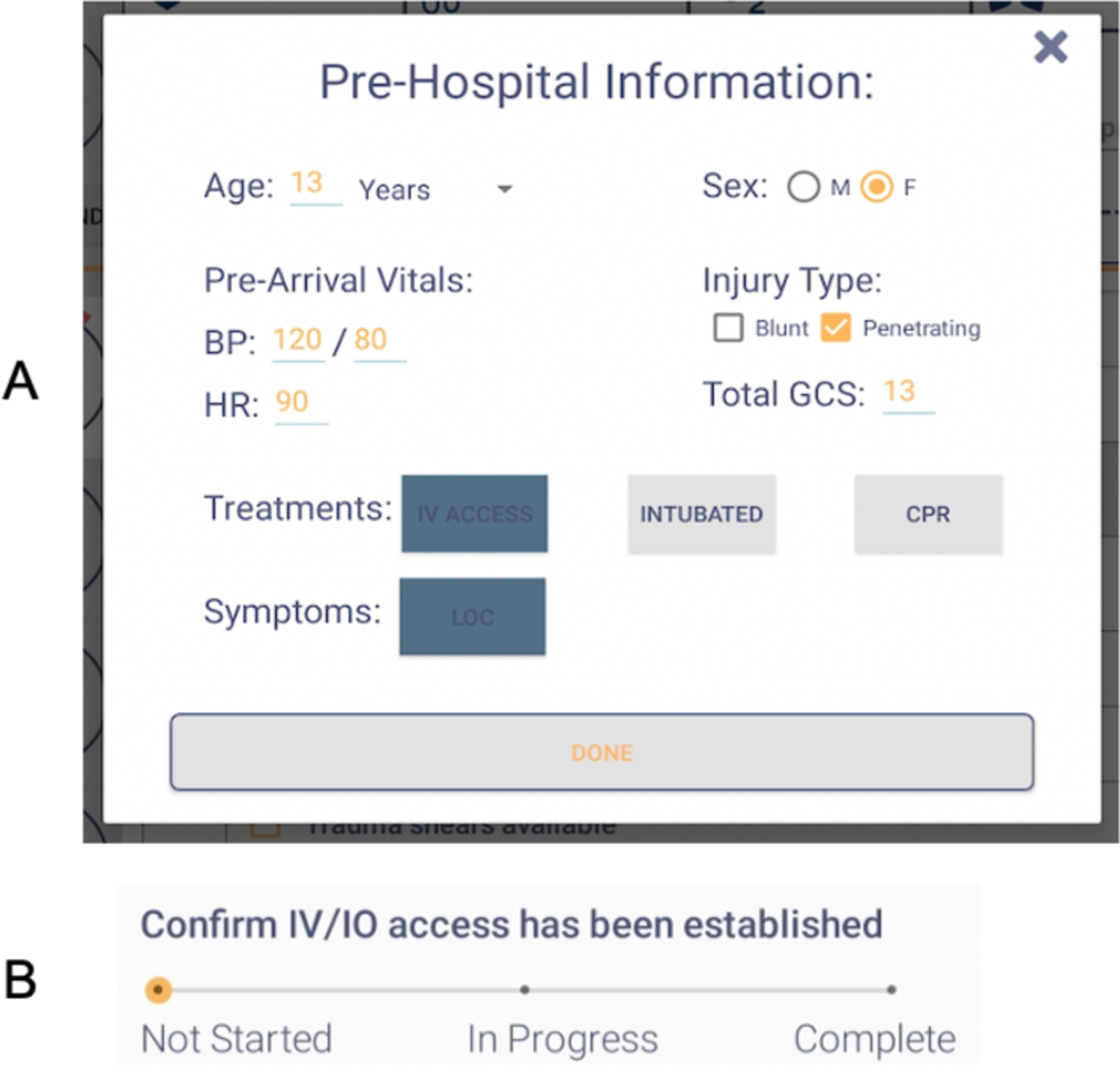

5.3. Final Designs for Decision-Support Checklist Features

Using the results of the near-live simulation sessions, we finalized the design for the pre-hospital form (Figure 6(a)). Leaders expressed different preferences for the pre-hospital information types they record on the checklist. Our goal was to design a simple form while capturing the information most useful for decision support. Combining user data with input from our clinical collaborators, we simplified or removed extraneous sections from the initial design, such as changing the mechanism of injury section to specify only blunt or penetrating mechanism. Because the pre-arrival GCS and vital sign measurements are also needed for decision support, we added these fields to the form. The form’s input was now modified to dynamically update some items on the checklist. For example, selecting pre-arrival IV access on the form automatically sets the IV/IO task item on the checklist to complete. Finally, we had the form appear on the screen as soon as the leader began using the checklist, removing the need to manually open it at the beginning of the resuscitation. Leaders are not required to fill out any parts of the pre-hospital form, can exit the form at any time, and still have the margin note area to take handwritten notes. They can also click a button on the main screen to reopen the form. No changes were made to the initial design of the progress slider based on findings from the near-live simulations (Figure 6(b)).
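The dynamic update described above can be sketched as a single rule that maps form input to checklist state. This is a hedged illustration, not the deployed logic: `PreHospitalForm`, `apply_form_to_checklist`, the field names, the dictionary keys, and the `airway` task are hypothetical. Only the rule stated in the text (documented pre-arrival IV access sets the IV/IO task to complete) is encoded, and every field is optional because leaders are not required to fill out the form.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PreHospitalForm:
    """Hypothetical subset of form fields; None means "not documented"."""
    age_years: Optional[int] = None
    mechanism: Optional[str] = None            # "blunt" or "penetrating"
    pre_arrival_iv_access: Optional[bool] = None
    pre_arrival_gcs: Optional[int] = None


def apply_form_to_checklist(form: PreHospitalForm, checklist: dict) -> dict:
    """Return a copy of the checklist updated from the form input.

    Encodes only the example from the text: documented pre-arrival
    IV access marks the IV/IO task as completed.
    """
    updated = dict(checklist)
    if form.pre_arrival_iv_access:
        updated["iv_io_access"] = "Completed"
    return updated


# Task keys and starting statuses are made up for the example.
checklist = {"iv_io_access": "Not Started", "airway": "Not Started"}
form = PreHospitalForm(age_years=13, mechanism="blunt", pre_arrival_iv_access=True)
updated = apply_form_to_checklist(form, checklist)
```

Returning a copy instead of mutating the checklist in place keeps the leader's own entries intact if the form is later reopened and corrected; this is a design choice of the sketch, not something reported in the study.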

Figure 6:

Final designs for (A) pre-hospital form and (B) progress slider.

6. IN-THE-WILD EVALUATION

To answer our third research question, we released the new features on the checklist at the hospital to study how clinicians used the features to document context information and task status in actual cases (Figure 1). We could release these features for actual use because they were evaluated for usability in the near-live simulations and leaders could choose not to use either feature during patient care. To understand how leaders used the pre-hospital form, we (1) calculated documentation rates for different variables and evaluated their accuracy, and (2) reviewed videos from cases with pre-hospital form use. Because the information entered on the form can be used to trigger decision support alerts aimed at mitigating interpretation errors, studying the documentation rates and accuracy can provide insight into the accuracy of these alerts. To determine the effects of the progress slider on the accuracy of an alert about delays in establishing IV access, we retrospectively analyzed the slider use by examining both the checklist logs and videos of cases.

6.1. Pre-Hospital Form

We released the pre-hospital form on the checklist at our research site on March 22, 2021. In the three months of data collection (through June 30, 2021), leaders used the checklist during 78 trauma resuscitations.

6.1.1. Pre-Hospital Information Documentation Rates: Methods

To evaluate documentation rates and accuracy, we examined the checklist logs and notes, along with patient charts. We began by transcribing the handwritten margin notes taken before the release of the pre-hospital form (June 1, 2020–March 21, 2021), noting each time a variable from the pre-hospital form was captured in the handwritten note. After the release of the pre-hospital form, the checklist logs contained the timestamps when the leaders opened and closed the form, along with the timestamps and values for documented data. Using these data, we calculated documentation rates for different fields on the pre-hospital form. We then compared documentation between the handwritten margin notes and pre-hospital form. To evaluate the accuracy of the pre-hospital form documentation, we compared the data entered on the form to the data documented in patient charts. We could not evaluate the accuracy of the GCS, heart rate, and blood pressure values because these values fluctuate over time and we could not ensure that the values recorded in the patient chart were measured at the same time as the values recorded on the digital checklist.
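The two measures described above can be sketched as follows. This is a minimal illustration under stated assumptions: each case is represented as a dictionary holding the values entered on the form and the values found in the patient chart, a field counts as documented when its form value is not `None`, and accuracy is computed only over documented cases. The record layout and the function names are hypothetical, and the records are made-up examples rather than study data.

```python
def documentation_rate(cases, field_name):
    """Share of cases in which the form field was filled in (not None)."""
    documented = sum(1 for c in cases if c["form"].get(field_name) is not None)
    return documented / len(cases)


def accuracy(cases, field_name):
    """Among cases with the field documented, share matching the chart."""
    documented = [c for c in cases if c["form"].get(field_name) is not None]
    if not documented:
        return None  # nothing documented, so accuracy is undefined
    matches = sum(
        1 for c in documented if c["form"][field_name] == c["chart"][field_name]
    )
    return matches / len(documented)


# Made-up illustrative records, not study data.
cases = [
    {"form": {"age": 13, "sex": "F"}, "chart": {"age": 13, "sex": "F"}},
    {"form": {"age": 4, "sex": None}, "chart": {"age": 3, "sex": "M"}},
    {"form": {"age": None, "sex": "M"}, "chart": {"age": 7, "sex": "M"}},
    {"form": {"age": 9, "sex": "M"}, "chart": {"age": 9, "sex": "M"}},
]

age_rate = documentation_rate(cases, "age")
age_accuracy = accuracy(cases, "age")
```

Exact matching is a reasonable comparison only for stable variables such as age, sex, and injury type; as noted above, fluctuating measurements such as GCS, heart rate, and blood pressure cannot be checked this way.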

6.1.2. Pre-Hospital Information Documentation Rates: Findings

Leaders used the pre-hospital form in 85% of the cases, frequently entering the age, sex, and injury type variables in the form (Table 1). The pre-arrival clinical measures (e.g., GCS, heart rate, blood pressure) were documented more often in the form than in the margin notes but had lower documentation rates when compared to the age, sex, and injury type. The checklist also has dedicated spaces for recording the GCS, heart rate, and blood pressure values measured by the team in the trauma bay. Pre-hospital variables were documented less frequently than variables measured during the resuscitation, highlighting the challenge of documenting pre-hospital information. While the pre-arrival GCS was documented in the pre-hospital form in only 37.7% of cases, the GCS calculated during the resuscitation was recorded in 97.4% of cases. Leaders also documented the heart rate and blood pressure values measured in the trauma bay in 90.1% of cases, while the pre-arrival heart rate and blood pressure values were documented in 15.6% and 11.7% of cases, respectively. Variables in symptoms (e.g., loss of consciousness) and treatments (e.g., intubation, CPR, IV access) also had lower documentation rates in the pre-hospital form when compared to the age, sex, and injury type variables.

Table 1:

Documentation rates in cases before and after the introduction of the pre-hospital form.

| Variable | Checklist Margin Note (n=198) (June 1, 2020 – March 21, 2021) | Pre-Hospital Form (n=78) (March 22, 2021 – June 30, 2021) |
|---|---|---|
| Age (%) | 85 (42.9) | 66 (84.6) |
| Sex (%) | 51 (25.8) | 58 (74.4) |
| Injury Type (%) | 96 (48.4) | 50 (64.1) |
| GCS (%) | 7 (3.5) | 30 (38.5) |
| Heart Rate (%) | 0 (0.0) | 12 (15.6) |
| Blood Pressure (%) | 0 (0.0) | 9 (11.7) |
| Pre-arrival IV Access (%) | 0 out of 92 cases (0.0) | 6 out of 37 cases (16.2) |
| Loss of Consciousness (%) | 8 out of 36 cases (22.0) | 1 out of 10 cases (10.0) |
| Intubation (%) | 2 out of 4 cases (50.0) | 1 out of 2 cases (50.0) |
| CPR (%) | 2 out of 3 cases (66.7) | 0 out of 1 case (0.0) |

We found high levels of accuracy in the recorded age (61/66, 92.4%), sex (54/58, 93.1%), and injury type (50/50, 100.0%) variables. Of the five age discrepancies, one entry was off by four years, while the other four differed by only a year or two. In two cases, the age was also inaccurate on the page sent at the beginning of the case. When evaluating variables in the treatments and symptoms categories, we found inaccuracies in the pre-arrival IV access and loss of consciousness variables. Leaders documented pre-arrival IV access in nine cases, but three of those cases did not have established access. Of the three cases marked as having loss of consciousness, one was marked as “unknown” in the chart, while the chart for another stated “no loss of consciousness.” We did not find any cases in which intubation or CPR was incorrectly selected in the pre-hospital form.

6.1.3. Video Review: Methods

To understand how the leaders used the pre-hospital form and the factors affecting its use, we reviewed videos from 11 resuscitations with pre-hospital form use. We started by randomly selecting 10 cases to review, ensuring an even distribution of cases throughout the post-intervention period (i.e., after the pre-hospital form release). When reviewing those cases, we observed that one case had more severe injuries than the others. We purposefully selected an additional severe case for review to determine if case severity impacted pre-hospital form use. One researcher reviewed each video, recording their observations. The researcher noted when different team members arrived, how the team communicated about the information documented on the form, and how the leader interacted with the checklist during the EMS report and patient handover. Another researcher transcribed the page texts sent to the trauma teams before patient arrival. We then analyzed the observations and page texts using an inductive qualitative analysis approach [28]. The two researchers independently examined the observation notes and page texts to begin identifying patterns of the pre-hospital form use. The researchers then met to discuss the patterns and iteratively finalize the themes.

6.1.4. Video Review: Findings

We identified four themes when analyzing videos of resuscitations with pre-hospital form use:

Team members arrive at different times resulting in multiple team briefings.

In seven of 11 cases, the ED leader arrived and briefed team members on the incoming resuscitation before the surgical leader arrived. In one case, the ED leader briefed the team twice before the surgical leader arrived. During the second briefing, they asked the team to introduce themselves, acknowledging that the full team had not arrived yet. Upon arrival, the surgical leader asked the ED leader for the results of a scan and then completed the pre-hospital form on the checklist. A few minutes later, the ED leader briefed the team for the third time.

Sources of the pre-hospital information depend on patient and leader arrival.

Leaders used different data sources to document the pre-hospital information depending on their time of arrival. When arriving before the patient, leaders filled out the form with the information conveyed in the pre-arrival page and through the ED leader’s update. In two cases, however, the team arrived at the same time as the patient. In these cases, the surgical leader completed the form using their observations and the EMS report instead of the page. For example, the team in one case had no advance notice that a patient was arriving, receiving the page “Trauma Stat **NOW** in the ER” with no additional information. The surgical leader arrived less than a minute before the patient and started filling out the pre-hospital form as EMS brought the patient into the room. The surgical leader left the pre-hospital form open for three minutes while the examiner was receiving basic information about the patient and starting the primary survey. After the examiner asked the patient for their age, the leader documented it in the form. The leader then entered the vital signs shown on the room monitor on the form.

Leaders will reopen the form to correct information but will not reopen it to enter new information.

The leaders documented an incorrect age or sex in three of the 11 cases, reopening the form to correct the information in two cases. Although leaders reopened the form to correct existing information, they entered new information in the margin note area instead of reopening the form. In one case, the leader asked the team if the patient was two years old, documenting this age on the pre-hospital form after receiving confirmation. Shortly after, the ED leader stated in their briefing that the patient was three years old, while EMS reported four years during patient handover. During the EMS report, the surgical leader took handwritten notes about the patient’s pre-arrival GCS score and loss of consciousness. They then reopened the form to correct the patient’s age but did not add the GCS score or loss of consciousness to the form.

Leaders manage uncertainty and incomplete information while documenting pre-hospital information.

In most cases, leaders had incomplete or uncertain information while filling out the pre-hospital form. The pre-arrival page texts from all cases infrequently included information about the GCS (20.7%), heart rate (31.1%), and blood pressure (29.9%) measurements. Page texts in two video-reviewed cases stated “alert and oriented” instead of providing a GCS value. In one of these cases, the leader documented a value of 15 (normal) for the pre-arrival GCS. The leader in the other case did not document a GCS value. Even in cases with the page containing the exact pre-arrival vital signs, leaders used phrases like “hemodynamically stable” and “normal vital signs” in their briefings, instead of exact values. Uncertainty and confusion also arose from the dynamic nature of the emergency department. In one case, two patients were arriving at the same time, leading to confusion about which patient the team would treat. Team members assumed they were treating one of the patients and the leader filled out the pre-hospital form with the information known about that patient.

6.2. IV/IO Task Progress Slider

6.2.1. Methods

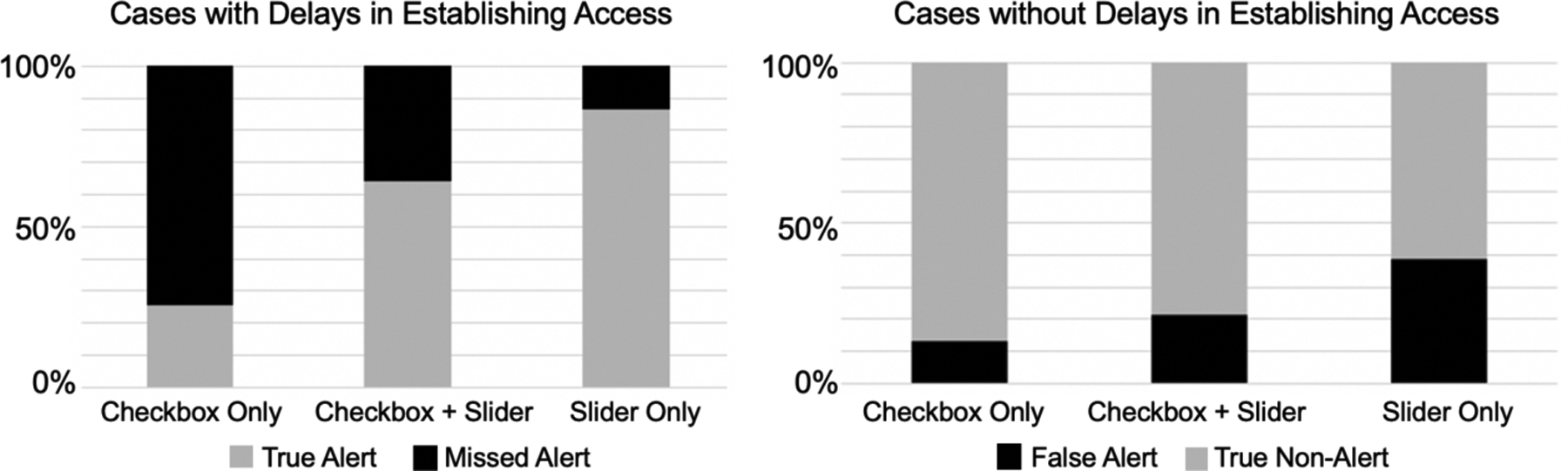

We first added the progress slider to the IV/IO task on November 9, 2020. After releasing the slider, we reviewed checklist logs from 29 cases, finding that the progress slider was used in only two cases. From user feedback received through our clinical collaborators, we found that using both the checkbox and progress slider for this task was confusing (Figure 5(b)). We removed the checkbox on March 22, 2021, leaving just the progress slider (Figure 6(b)). After completing data collection in June 2021, we performed another retrospective analysis to evaluate how the progress slider would have impacted the accuracy of the alert for delays in establishing IV access. We reviewed 246 video-consented cases from June 2020 through June 2021 to determine when IV/IO access was established. We could not analyze six consented cases due to corrupted video files. The review process consisted of several steps. First, we completed a chart review to determine if the patient arrived with IV/IO access. For cases where the patient did not arrive with access, we reviewed each video, timestamping each step of the IV placement process (e.g., placing the tourniquet, inserting the catheter, and attaching the syringe to confirm placement). We also determined the time when the alert would have been triggered and noted if the task was marked as completed on the checklist at that time. Using these two data sources, we classified the alert status into the four categories used in our initial alert research (Figure 4): missed alert, false alert, true alert, or true non-alert. We then performed univariate analysis (chi-square) to compare the categorizations between the three periods: (1) checkbox only (n=115), (2) checkbox and progress slider (n=56), and (3) progress slider only (n=69).
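The classification step can be sketched as a comparison of two timestamps against the time at which the alert would fire. The sketch rests on our reading of the four categories and should be treated as an assumption: a case counts as delayed when access was not established by the alert time, and the alert is assumed to be suppressed once the task is marked complete on the checklist. The function name, the use of seconds from patient arrival, and the 300-second threshold are hypothetical placeholders, not values from the study.

```python
from typing import Optional

# Hypothetical threshold in seconds from patient arrival; not a study value.
ALERT_TIME_S = 300.0


def classify_alert(access_established_at: Optional[float],
                   marked_complete_at: Optional[float],
                   alert_time: float = ALERT_TIME_S) -> str:
    """Classify one case at the moment the delay alert would fire.

    Times are seconds from patient arrival; None means "never happened".
    A patient who arrives with access has access_established_at == 0.
    """
    delayed = access_established_at is None or access_established_at > alert_time
    marked = marked_complete_at is not None and marked_complete_at <= alert_time
    alert_fires = not marked  # assumed: marking the task complete suppresses the alert
    if delayed and alert_fires:
        return "true alert"
    if delayed and not alert_fires:
        return "missed alert"      # premature documentation hides a real delay
    if not delayed and alert_fires:
        return "false alert"       # access obtained but never (or too late) marked
    return "true non-alert"
```

For example, `classify_alert(400, 120)` describes a task marked complete before access was obtained and yields a missed alert, while `classify_alert(0, None)` describes a patient who arrived with access that was never documented and yields a false alert.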

6.2.2. Findings

We found no difference in the number of cases with delays in establishing IV/IO access between the three periods: (1) checkbox only (31/115, 27.0%), (2) checkbox and progress slider (14/56, 25.0%), and (3) progress slider only (15/69, 21.7%) (p=0.7). After the introduction of the slider, premature documentation of IV/IO task completion in cases without pre-arrival access decreased from 67.8% with the checkbox-only design to 30.3% with the slider-only design. This reduction in premature documentation would have contributed to fewer missed alerts in cases with delays, improving the alert’s sensitivity (Table 2, Figure 7). False alerts, however, would have increased in cases without delays in establishing IV access, with 30 cases having false alerts. We further examined these 30 cases to understand why false alerts would have occurred. Sixteen cases included patients who arrived with IV access and 14 had patients who arrived without access. The leader only marked the IV/IO task as complete in five of the 16 cases with pre-arrival access and did so after the time when the alert would have been triggered. For the 14 cases that did not have pre-arrival IV access, the leaders did not mark the task as complete after IV access was established in the room, or they marked it as complete after the false alert would have been triggered. Team members in these cases had not started obtaining IV access (10 cases) or were in the process of obtaining access (4 cases) when the leaders reached the IV/IO access task on the checklist. The leaders then left the slider at the “Not Started” or “In Progress” points before moving to the next item on the checklist. In seven cases, the leader never returned to the IV/IO task to mark it as complete even though the team obtained access. In the other seven cases, the leader either returned to the slider to mark the task as complete or marked the task as done on the summary screen that appeared upon checklist submission. 
The median time between task completion and the task being marked as complete on the checklist was seven minutes (IQR: 5.4–8.1). The false alert would have been triggered during this time.

Table 2:

Number of missed and false alerts in cases with different IV/IO task designs.

| Alert Type | Checkbox Only (n = 115) | Checkbox & Slider (n = 56) | Slider Only (n = 69) | p-value |
|---|---|---|---|---|
| Missed alerts in cases with delays (%) | 23/31 (74.2) | 5/14 (35.7) | 2/15 (13.3) | <0.001 |
| False alerts in cases without delays (%) | 11/84 (13.1) | 9/42 (21.4) | 21/54 (38.9) | <0.01 |

Figure 7:

Percentage of missed alerts in cases with delays in establishing IV/IO access (left) and percentage of false alerts in cases without delays (right) with three different task designs.
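For readers who want to retrace the comparison across the three periods, the following is a minimal sketch of a Pearson chi-square test on the reported counts. It assumes an uncorrected test of independence on a 2 × 3 table (outcome present or absent, by period); the exact procedure and software used in the study are not stated, so the function names and this choice are assumptions. The p-value helper relies on the fact that a chi-square distribution with two degrees of freedom has the upper-tail probability exp(−x/2), which avoids any dependency beyond the standard library.

```python
import math


def chi_square_2xk(events, totals):
    """Pearson chi-square test of independence for a 2 x k table.

    events[i] is the number of cases with the outcome in group i and
    totals[i] is the group size. Returns (statistic, degrees of freedom).
    """
    event_rate = sum(events) / sum(totals)
    statistic = 0.0
    for observed, n in zip(events, totals):
        expected_yes = n * event_rate
        expected_no = n * (1 - event_rate)
        statistic += (observed - expected_yes) ** 2 / expected_yes
        statistic += ((n - observed) - expected_no) ** 2 / expected_no
    return statistic, len(totals) - 1


def p_value_df2(statistic):
    """Upper-tail p-value; valid only for exactly 2 degrees of freedom."""
    return math.exp(-statistic / 2)


# Counts as reported in Section 6.2.2 and Table 2, ordered by period:
# checkbox only, checkbox and slider, slider only.
delays = chi_square_2xk([31, 14, 15], [115, 56, 69])
missed = chi_square_2xk([23, 5, 2], [31, 14, 15])
false_alerts = chi_square_2xk([11, 9, 21], [84, 42, 54])

for name, (stat, df) in [("delays", delays), ("missed", missed),
                         ("false alerts", false_alerts)]:
    print(f"{name}: chi2={stat:.2f}, df={df}, p={p_value_df2(stat):.4f}")
```

Under these assumptions, the sketch yields p-values in the same ranges as those reported (about 0.7 for delays, below 0.001 for missed alerts, and below 0.01 for false alerts). With more than three groups, the closed-form p-value no longer applies and a statistics library would be needed.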

7. DISCUSSION

To enable alerts on cognitive aids, context information and task status must be captured in a timely, accurate, and standardized manner. The current “task list” design found on most cognitive aids does not fully support documentation of the context information and task status needed for triggering accurate decision support alerts. We explored two features on a digital checklist for a time-critical medical process to address this limitation. We first created a form for documenting pre-hospital information about the patient. Because the accuracy and frequency of documentation would impact the accuracy of alerts triggered by the information entered on the form, we studied how clinicians used this form in the wild. Although clinicians frequently used the form during the in-the-wild evaluation, some variables were infrequently documented. This infrequent documentation was partially due to challenges with incomplete and sporadic information and time constraints. We also introduced a progress slider for the IV/IO task to facilitate tracking of multi-step task status. After performing a retrospective analysis of live cases in which this feature was used, we found that the number of missed alerts in cases with delays in establishing IV/IO access would have decreased with the use of the slider. The number of false alerts, however, would have increased in cases without delays because users did not always update the status of the slider after task completion. Using these findings, we next discuss how cognitive aids can serve as decision support platforms in settings with time-critical decision making and the potential for interpretation or management errors. We also discuss guidelines for designing cognitive aid features that promote timely and accurate documentation of context information and multi-step task status.

7.1. Cognitive Aids as Decision Support Platforms

Our findings highlight how cognitive aids can evolve and advance to better support users. While the ability to accurately capture context information is required for decision support alerts, it is also necessary to improve the design of cognitive aids to support advanced interactions. Prior studies have proposed that cognitive aids should progress by becoming dynamic and context-aware, selecting content based on the specifics of the event [29,46,93]. To become adaptive and context-aware, a cognitive aid requires context information to determine how the content should be altered. In our study, we used context information captured in the pre-hospital form to dynamically alter the checklist content. For example, a task was set to the “not applicable” status when the user specified a certain injury type on the pre-hospital form. Visual clutter, distraction, and fatigue are reduced when cognitive aids adapt to present only relevant tasks [29]. In addition to supporting the user in tracking task status, an adaptive cognitive aid could also assist in decision making by signaling when to consider different interventions. If an adaptive cognitive aid supports decision making, cognitive aids may become decision support platforms even before the addition of alerts. Research on both cognitive aids and decision support systems has stressed the importance of displaying the right content at the right time in the correct format [29,81], strengthening the argument for further studying how these two types of systems can be integrated.

Although we have focused on mitigating interpretation and management errors in a clinical setting, our insights and design guidelines go beyond medical domains. The transition of cognitive aids into decision support platforms could occur in any setting where users must make rapid decisions or perform tasks in a timely manner and where the potential for interpretation or management errors is high. Interpretation errors can occur across domains that involve changing information and rapid decision making, such as driving [38] and aviation [22,37]. For example, air traffic controllers must interpret and integrate many different types of information when making decisions, such as aircraft types, routes, and altitudes [22]. Similarly, pilots can make interpretation errors when assessing aircraft instruments [36]. Because pilots use checklists during both routine flights [8] and emergency situations [37], decision support could be integrated into their checklists to mitigate interpretation and management errors. We next discuss guidelines for designing cognitive aid features that promote timely and accurate documentation of context information and multi-step task status. These guidelines may be generalizable to other contexts where teams make time-critical decisions and perform dynamic tasks.

7.2. Supporting Alerts for Mitigating Interpretation Errors in Time-Critical, Team-Based Work

Decision support systems that suggest potential interventions and mitigate interpretation errors through alerts require real-time context information. Our pre-hospital form required manual effort to capture context information, but technological advances could reduce the documentation burden. Recent work has examined the use of natural language processing for obtaining context information during patient encounters, allowing for hands-free documentation [55,73,98]. Integrations with other systems could also capture context data, reducing the amount of manual documentation and better enabling decision support features [65]. For example, integrations between emergency medical services (EMS) and ED systems could provide the context information needed for some alerts, minimizing documentation of pre-arrival data by ED clinicians. To achieve this integration, electronic documentation practices must first be improved for EMS teams [7,97]. Even when electronic documentation systems exist, interoperability issues often prevent systems from sharing information [65]. Until advancements in electronic documentation are made and interoperability issues are resolved, we still need to understand how digital cognitive aids can support manual documentation of context information to provide decision support alerts. Using the findings from our study and prior work, we discuss several guidelines for designing cognitive aid features that promote rapid documentation of context data in medical and other domains where teams must rapidly document data while managing uncertainty and changing information.

Consider structured data entry to capture context information.

Our co-design participants suggested a checklist section that allowed for structured data entry of context information about the patient. Some participants also proposed using the recorded context information to trigger decision support features, such as alerts. Past work found that senior physicians perceived no need for decision support tools during complex medical processes [88]. Because the participants in our study were rotating at the hospital and less experienced with the pediatric patient population, they might have perceived a higher need for decision support than senior physicians. In addition, clinicians in intensive care settings frequently consider the opinions of other team members and work with different types of equipment, which may make them more open to CDSSs than clinicians in other specialties [39]. Structured data entry for capturing context information could also apply to the design of cognitive aids used in other domains. For example, general aviation pilots frequently record information from air traffic control on paper notes [80]. A cognitive aid could support structured data entry of the information received from air traffic control and use that information to provide decision support. Evaluating the effects of this transition from free-form note-taking to structured data entry on pilots’ cognitive processing will be critical. Clinicians in our study thought that structured data entry would support their clinical reasoning and better prepare them for the patient. These perceptions contradicted findings from prior work that showed how physicians’ documentation shifted from clinical reasoning to mechanical data entry after the introduction of an ordering system that relied on structured data entry [92].

Provide options for entering or selecting classifications.

We found instances where pre-event notifications had classifications instead of exact values for pre-arrival measurements. Instead of only providing space to enter numeric values, the system could also allow indicating the classification of a measurement when the exact value is not known or cannot be entered because of time limitations. For example, the system could have an abnormal/normal classification added to the vital signs or a scale added to the GCS measurement that assesses the patient’s consciousness (e.g., alert, verbal, pain, unresponsive). Because selecting an item is easier than typing information, the use of classifications could better facilitate data input [42]. In addition to facilitating data entry during time-critical decision making, the ability to quickly select classifications could also support pilots who have trouble typing on touchpads during turbulence [18]. Adding classifications could increase documentation rates, leading to more accurate decision support alerts. Classifications, however, are more subjective than numeric values. Inaccurate decision support alerts could be triggered if a user interprets the classification differently than the decision support system.
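One way to picture this guideline is a measurement record that accepts either an exact value or a selected classification, with the alert logic falling back to the classification when no number was entered. The following is a minimal sketch under stated assumptions: the class, the function, and the numeric range passed by the caller are hypothetical placeholders, not clinical thresholds or the design of our system.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical labels mirroring the abnormal/normal option discussed above.
NORMAL, ABNORMAL = "normal", "abnormal"


@dataclass
class Measurement:
    name: str
    value: Optional[float] = None          # exact value, when known
    classification: Optional[str] = None   # selected when no exact value is available


def is_abnormal(m: Measurement, low: float, high: float) -> Optional[bool]:
    """Return True or False when the entry supports a decision, None when undocumented.

    low/high are placeholder bounds supplied by the caller for this sketch.
    """
    if m.value is not None:
        # Prefer the exact value because it is less subjective.
        return not (low <= m.value <= high)
    if m.classification is not None:
        return m.classification == ABNORMAL
    return None
```

Preferring the numeric value when both are present reflects the concern raised above that classifications are more subjective. Returning `None` for an empty entry keeps “not documented” distinct from “normal.”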

Support documenting uncertainty and different situations.

Our pre-hospital form only allowed users to select whether a patient had certain attributes. Users were also not required to complete these fields on the form. When attributes were left unselected, it was unclear if (a) the patient did not have those attributes, (b) the leader did not know if the patient had those attributes, or (c) the leader chose not to document the field. While the form only supported “yes/no” status, the attributes had additional states during cases: “unknown” and “not documented.” Uncertainty is not limited to clinical settings. For example, operators of railroad control rooms frequently have to manage uncertain information, such as the behavior of drivers or passengers, when making decisions [84]. Informal documentation practices, such as handwritten notes, afford the capture of uncertain and incomplete information, which is still important in decision making [99]. To become a decision support platform, a cognitive aid should support documenting these different states in a standardized manner because of their different implications for triggering decision support features. For example, a decision support system designed to aid anesthesia teams during surgery displayed three potential states for attributes: (1) present, (2) absent, or (3) undetectable by the system [44]. Similarly, a cognitive aid could allow the user to indicate when a status is currently unknown by the team [50]. If the status of an attribute is critical for determining decision support and the user has not documented the status, a reminder could be incorporated within the cognitive aid interface to prompt documentation.
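The distinction between these states can be made explicit in the data model. The sketch below is an assumption-laden illustration rather than our implementation: the enumeration and the `next_step` function are hypothetical. It separates the “yes/no” values from “unknown” and “not documented,” and requests a reminder only when an undocumented attribute is critical for decision support.

```python
from enum import Enum


class AttributeState(Enum):
    PRESENT = "present"
    ABSENT = "absent"
    UNKNOWN = "unknown"                # the team does not yet know
    NOT_DOCUMENTED = "not documented"  # the field was left untouched


def next_step(state: AttributeState, critical: bool) -> str:
    """Decide what the cognitive aid should do for one attribute.

    Returns "evaluate" (enough information to run alert rules),
    "remind" (prompt the user to document), or "wait".
    """
    if state is AttributeState.NOT_DOCUMENTED:
        return "remind" if critical else "wait"
    if state is AttributeState.UNKNOWN:
        # Documented uncertainty: do not nag, but do not trigger alerts either.
        return "wait"
    return "evaluate"
```

Treating “unknown” as a documented value means the reminder stops once the user confirms that the team lacks the information.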

Allow other team members to document and view information.

Designing decision support systems for a specific user in a team-based process can create issues because other team members lack visibility into the system [79]. In time-critical events with ad hoc teams, team members may arrive at different times. As a result, decisions may be made before a team member using the cognitive aid arrives. Although a cognitive aid may be primarily used by one team role, allowing other roles to document data could help trigger decision support features more quickly, while discussions about the event are occurring between the present team members. Clearly denoting which team role should document data in the absence of the primary cognitive aid user could help avoid confusion. Prior work has also found that decision support systems in team settings support collaborative decision-making [42]. The information documented in the cognitive aid could be included on a wall display to help late-arriving members understand the currently known information about the patient and collaborate more easily with other team members. Presenting information on a shared display could also help all team members establish a shared mental model. Prior studies in different domains, such as surgery [92] and search and rescue [41], have highlighted the importance of establishing and maintaining shared mental models during crisis management.

Support documentation of data at multiple times.

Ad hoc, knowledge-based teams may learn about changes in status and new data at different points before, during, and after the event or work process. For example, prior work found that clinicians document sporadic information in accessible transitional artifacts (e.g., paper notes) during medical events and then formally document this data in archival systems after the event [13]. In our study, users frequently handwrote information received throughout the event in the already accessible note area instead of reopening the form to document context information. Based on our findings, features for documenting status information and other data should be easily accessible at any time or place in the system to support the capture of evolving information. Capturing this evolving information would allow for more specific and precise decision support alerts.

7.3. Supporting Alerts for Mitigating Management Errors

Despite our cognitive aid being designed as a “task list,” we observed issues with accurately capturing the status of multi-step tasks. Multi-step tasks have greater potential for delays than single-step tasks and would benefit from alerts about delays. Users, however, have a harder time accurately documenting multi-step task status, creating barriers to triggering accurate alerts on cognitive aids about delays. We propose three guidelines for designing features that track the status of multi-step activities in dynamic settings:

Clearly define the stages of progress in multi-step tasks.

In addition to supporting awareness of overall task status, cognitive aids can also display subtasks and their respective statuses. For example, prior checklists designed for creative (e.g., website design) [6] and medical [16] work used hierarchical structures to display the status of both a task and its subtasks. In our study, the multi-step task to establish IV/IO access was originally represented as a single checkbox, which only indicated overall task status (“complete” or “not complete”) and did not provide insight into the progress of the subtasks. The redesigned IV/IO task featuring a progress slider provided more information about task status by including several different stages. Because the stages may contain different information depending on the steps of the task, they can be used to trigger specific alerts or other decision support features. Our slider had three stages: “Not Started,” “In Progress,” and “Complete,” representing the task status, but these stages could have different meanings to different users. For example, some users may consider the task as in progress when they instruct the team to start the task, while others might consider it as in progress when the team starts with the first step of the task. An alternative approach to using task status (e.g., “Not Started,” “In Progress”) as the stages in the slider could be using the specific subtasks (e.g., “Perform venipuncture”). Clearly defining the task stages represented on the cognitive aid would reduce ambiguity and improve decision support features related to delays. The stages need to be represented at the appropriate level of granularity for a given domain so that they provide the information needed for alerts about delays without greatly increasing the documentation burden.
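To make the link between documented stages and delay alerts concrete, the following sketch models the three slider stages and raises an alert when a task is not complete after a time limit. It is a minimal illustration under stated assumptions: the class names, the timestamp format, and the time limit are hypothetical and do not describe the alert logic or thresholds used in our study.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Stage(IntEnum):
    NOT_STARTED = 0
    IN_PROGRESS = 1
    COMPLETE = 2


@dataclass
class MultiStepTask:
    name: str
    stage: Stage = Stage.NOT_STARTED
    history: list = field(default_factory=list)  # (seconds since event start, Stage)

    def set_stage(self, stage: Stage, at_seconds: int) -> None:
        """Record a slider change together with the time it was documented."""
        self.stage = stage
        self.history.append((at_seconds, stage))


def delay_alert(task: MultiStepTask, now_seconds: int, limit_seconds: int):
    """Return an alert message if the task is not complete after the limit, else None.

    limit_seconds is a placeholder threshold chosen by the caller.
    """
    if task.stage is Stage.COMPLETE or now_seconds < limit_seconds:
        return None
    label = task.stage.name.replace("_", " ").lower()
    return f"{task.name}: {label} after {now_seconds} s"
```

Because the alert text includes the documented stage, a “not started” delay and an “in progress” delay can be worded differently. The sketch also shows why a slider that is not updated produces false alerts: the function only sees the documented stage, not the actual state of the task.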

Allow users to continuously view and change the status for multi-step tasks.

Because the progress slider was in one section of the checklist, users in our study would scroll away from the slider as they moved to other sections. During the near-live simulation sessions, some participants were concerned they would forget to go back to change the slider status. Indeed, we observed during the in-the-wild evaluation that users did not update the status of the slider or only updated it after a delay. The failure to update the slider status would have led to more false alerts. Users may update the status of a multi-step task more frequently if they can always view and change it. A prior study found that clinicians documented information more quickly when using a cognitive aid that continuously displayed buttons for marking task completion during resuscitations [32]. Improving the timeliness of task status updates would lead to more accurate decision support alerts. After completion, the multi-step task could be removed from the global view to reduce the amount of information shown to the user and focus their attention on the other tasks that need completion.

Consider how the design might impact the accuracy of alerts.

Because extra work is required to distinguish true alerts from false alerts [2], false alerts are detrimental in safety-critical domains, such as clinical [62], driving [53], and rail traffic control [78] settings. A high number of false alerts can reduce a user’s trust in and compliance with a system [53]. Retrospective analyses can be an effective method to estimate the effects of different cognitive aid feature designs on the proportion of true and false alerts. Our retrospective analysis of the IV/IO task progress slider use showed that the slider would have increased the number of true alerts, while also leading to more false alerts. Because the design of the checklist item (e.g., checkbox vs. slider) can impact the proportion of missed and false alerts, designers should consider the implications of false and missed alerts when designing the cognitive aid features. In situations where the user can easily assess an alert’s accuracy, feature designs that lead to more false alerts (but also more true alerts) may be more acceptable. Understanding the false alert rate before implementation can also influence how the alerts are designed. For example, prior work has proposed that alerts with higher false alarm rates should use less intrusive designs [62]. Until improvements are made in recording multi-step task status, alerts about delays in task completion may need to be designed less intrusively to mitigate issues with inaccuracy that could lead to alert fatigue.
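A retrospective comparison of this kind can be sketched as a tally over past cases, where each case records whether an alert would have fired from the documented status and whether a delay actually occurred according to a reference source. The function names and the case format below are assumptions for illustration. The sketch is not the analysis procedure we used, and any input data supplied to it would be the caller's own.

```python
from collections import Counter


def classify(alert_fired: bool, delay_occurred: bool) -> str:
    """Label one case by comparing the simulated alert with the reference outcome."""
    if alert_fired and delay_occurred:
        return "true alert"
    if alert_fired:
        return "false alert"
    if delay_occurred:
        return "missed alert"
    return "no alert needed"


def summarize(cases):
    """Tally outcomes for (alert_fired, delay_occurred) pairs.

    Returns the counts and the share of fired alerts that were false.
    """
    counts = Counter(classify(fired, delayed) for fired, delayed in cases)
    fired_total = counts["true alert"] + counts["false alert"]
    false_share = counts["false alert"] / fired_total if fired_total else 0.0
    return counts, false_share
```

Running the same tally once per candidate design (e.g., checkbox vs. slider) on the same set of cases would allow the true, false, and missed alert counts to be compared before deciding how intrusive the alert should be.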

7.4. Study Limitations